99 machine learning case studies from 91 enterprises by 2024.

- 99 use cases in 17 industries

- 14 business processes in 14 business functions

- Implementations in 91 companies in 20 countries

- 10 benefits

- Growth over 6 years

- 9 vendors which created these case studies

Which industries leverage machine learning?

The most common industry using machine learning is financial services, which is mentioned in 19% of case studies.

The most common industries using machine learning are:

- Financial services

Which business functions leverage machine learning?

The most common business function using machine learning is analytics, which is mentioned in 14 case studies.

Most common business functions using machine learning are:

Which processes leverage machine learning?

The top process reported in machine learning case studies is Credit appraisal.

Most common business processes using machine learning are:

- Credit appraisal

- Financial planning & analysis

- Marketing analytics

- Data quality management

- Innovation management

- Product development

- Shipping / transportation management

- Financial risk management

- Customer journey mapping

- Incident management

- Performance management

- Sales forecasting

- Campaign management

- Data governance

What is the geographical distribution of machine learning case studies?

Countries that use machine learning most commonly are listed below.

- United States of America

- United Kingdom

What are machine learning’s use cases?

The most common use case of machine learning is customer segmentation, which is mentioned in 27% of case studies.

What are machine learning’s benefits?

The most common benefit of machine learning is time saving, which is mentioned in 24% of case studies.

How are machine learning case studies growing?

Growth by vendor.

Leading vendors in terms of case study contributions to machine learning are:

- CognitiveScale

Growth over time

Years in which:

- The first case study in our DB was published: 2016

- Most machine learning case studies have been published: 2019

- The highest increase in the number of case studies was reported vs the previous year: 2018

- The largest decrease in the number of case studies was reported vs the previous year: 2020

Comprehensive list of machine learning case studies

AIMultiple identified 99 case studies in machine learning covering 10 benefits and 99 use cases. You can learn more about these case studies in the table below:

Machine Learning Case Studies with Powerful Insights

Explore the potential of machine learning through these practical machine learning case studies and success stories in various industries. | ProjectPro

Machine learning is revolutionizing how different industries function, from healthcare to finance to transportation. If you're curious about how this technology is applied in real-world scenarios, look no further. In this blog, we'll explore some exciting machine learning case studies that showcase the potential of this powerful emerging technology.

Machine-learning-based applications have quickly transformed working methods in the technology world. They are changing the way we work, live, and interact with the world around us, from personalized recommendations on streaming platforms to self-driving cars.

But while the technology of artificial intelligence and machine learning may seem abstract or daunting to some, its applications are incredibly tangible and impactful. Data scientists use machine learning algorithms to predict equipment failures in manufacturing, improve cancer diagnoses in healthcare, and even detect fraudulent activity in finance. If you're interested in learning more about how machine learning is applied in real-world scenarios, you are on the right page. This blog will explore in depth how machine learning applications are used for solving real-world problems.

We'll start with a few case studies from GitHub that examine how businesses use machine learning to retain their customers and improve customer satisfaction. We'll also look at how machine learning, with the help of the Python programming language, is used to detect and prevent fraud in the financial sector, and how it can save companies millions of dollars in losses. Next, we will examine how top companies use machine learning to solve various business problems. Additionally, we'll explore how machine learning is used in the healthcare industry, and how this technology can improve patient outcomes and save lives.

By going through these case studies, you will better understand how machine learning is transforming work across different industries. So, let's get started!

Table of Contents

- Machine Learning Case Studies on GitHub

- Machine Learning Case Studies in Python

- Company-Specific Machine Learning Case Studies

- Machine Learning Case Studies in Biology and Healthcare

- AWS Machine Learning Case Studies

- Azure Machine Learning Case Studies

- How to Prepare for Machine Learning Case Studies Interview

This section has machine learning case studies along with their GitHub repository that contains the sample code.

1. Customer Churn Prediction

Predicting customer churn is essential for businesses interested in retaining customers and maximizing their profits. By leveraging historical customer data, machine learning algorithms can identify patterns and factors that are correlated with churn, enabling businesses to take proactive steps to prevent it.

In this case study, you will study how a telecom company uses machine learning for customer churn prediction. The available data contains information about the services each customer signed up for, their contact information, monthly charges, and their demographics. The goal is to first analyze the data at hand using Exploratory Data Analysis (EDA) methods, which helps in picking a suitable machine learning algorithm. The five machine learning models used in this case study are AdaBoost, Gradient Boosting, Random Forest, Support Vector Machines, and K-Nearest Neighbors. These models are used to determine which customers are at risk of churn.
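To make the idea concrete, here is a minimal sketch of one of the model families named above, K-Nearest Neighbors, written with only the Python standard library. The toy rows, the two features (monthly charges and tenure in months), and the function name `knn_predict` are assumptions made for illustration; they are not taken from the linked repository, which works on the full telecom dataset.

```python
from collections import Counter
from math import dist

# Hypothetical toy data: (monthly_charges, tenure_months) -> 1 if churned, 0 if stayed.
TRAIN = [
    ((95.0, 2), 1),
    ((89.5, 5), 1),
    ((99.0, 1), 1),
    ((45.0, 40), 0),
    ((30.5, 60), 0),
    ((55.0, 36), 0),
]


def knn_predict(train, point, k=3):
    """Return the majority label among the k training rows closest to `point`."""
    # Sort training rows by Euclidean distance to the query point, keep the k nearest.
    neighbours = sorted(train, key=lambda row: dist(row[0], point))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    print(knn_predict(TRAIN, (92.0, 3)))   # high charges, short tenure
    print(knn_predict(TRAIN, (40.0, 48)))  # low charges, long tenure
```

On real data the features would normally be scaled before computing distances, since otherwise the column with the largest numeric range dominates the result.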

By using machine learning for churn prediction, businesses can better understand customer behavior, identify areas for improvement, and implement targeted retention strategies. It can result in increased customer loyalty, higher revenue, and a better understanding of customer needs and preferences. This case study example will help you understand how machine learning is a valuable tool for any business looking to improve customer retention and stay ahead of the competition.

GitHub Repository: https://github.com/Pradnya1208/Telecom-Customer-Churn-prediction

2. Market Basket Analysis

Market basket analysis is a common application of machine learning in retail and e-commerce, where it is used to identify patterns and relationships between products that are frequently purchased together. By leveraging this information, businesses can make informed decisions about product placement, promotions, and pricing strategies.

In this case study, you will utilize the EDA methods to carefully analyze the relationships among different variables in the data. Next, you will study how to use the Apriori algorithm to identify frequent itemsets and association rules, which describe the likelihood of a product being purchased given the presence of another product. These rules can generate recommendations, optimize product placement, and increase sales, and they can also be used for customer segmentation.
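The Apriori idea described above can be sketched in a few lines of plain Python. The baskets below are made up for illustration, and the helper names (`support`, `frequent_itemsets`, `confidence`) are hypothetical; a real project would more likely rely on an existing library implementation.

```python
from itertools import combinations

# Hypothetical transactions; each basket is a set of product names.
BASKETS = [
    {"bread", "milk"},
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"milk", "eggs"},
    {"bread", "butter", "eggs"},
]


def support(itemset, baskets):
    """Fraction of baskets that contain every item in `itemset`."""
    return sum(itemset <= basket for basket in baskets) / len(baskets)


def frequent_itemsets(baskets, min_support):
    """Level-wise Apriori search: larger candidates are built only from frequent itemsets."""
    items = sorted({item for basket in baskets for item in basket})
    current = [frozenset([i]) for i in items
               if support(frozenset([i]), baskets) >= min_support]
    result = {}
    size = 1
    while current:
        for itemset in current:
            result[itemset] = support(itemset, baskets)
        size += 1
        # Join step: union pairs of frequent itemsets to form candidates of the next size.
        candidates = {a | b for a in current for b in current if len(a | b) == size}
        # Prune step: every subset one item smaller must already be frequent.
        current = [
            c for c in candidates
            if all(frozenset(s) in result for s in combinations(c, size - 1))
            and support(c, baskets) >= min_support
        ]
    return result


def confidence(antecedent, consequent, baskets):
    """Estimate how often the consequent appears in baskets containing the antecedent."""
    return support(antecedent | consequent, baskets) / support(antecedent, baskets)


if __name__ == "__main__":
    for itemset, s in frequent_itemsets(BASKETS, min_support=0.4).items():
        print(sorted(itemset), round(s, 2))
    print(confidence(frozenset({"butter"}), frozenset({"bread"}), BASKETS))
```

With these baskets, every basket that contains butter also contains bread, so the rule "butter implies bread" has a confidence of 1.0, while the pair bread and eggs falls below the 0.4 support threshold and is discarded.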

Using machine learning for market basket analysis allows businesses to understand customer behavior better, identify cross-selling opportunities, and increase customer satisfaction. It has the potential to result in increased revenue, improved customer loyalty, and a better understanding of customer needs and preferences.

GitHub Repository: https://github.com/kkrusere/Market-Basket-Analysis-on-the-Online-Retail-Data

3. Predicting Prices for Airbnb

Airbnb is a tech company that enables hosts to rent out their homes, apartments, or rooms to guests interested in temporary lodging. One of the key challenges hosts face is optimizing the rent prices for the customers. With the help of machine learning, hosts can have rough estimates of the rental costs based on various factors such as location, property type, amenities, and availability.

The first step, in this case study, is to clean the dataset to handle missing values, duplicates, and outliers. In the same step, the data is transformed, and the data is prepared for modeling with the help of feature engineering methods. The next step is to perform EDA to understand how the rental listings are spread across different cities in the US. Next, you will learn how to visualize how prices change over time, looking at trends for different seasons, months, days of the week, and times of the day.

The final step involves implementing ML models like linear regression (ridge and lasso), Naive Bayes, and Random Forests to produce price estimates for listings. You will learn how to compare the outcome of these models and evaluate their performance.

GitHub Repository: https://github.com/samuelklam/airbnb-pricing-prediction

4. Titanic Disaster Analysis

The Titanic Machine Learning Case Study is a classic example in the field of data science and machine learning. The study is based on the dataset of passengers aboard the Titanic when it sank in 1912. The study's goal is to predict whether a passenger survived or not based on their demographic and other information.

The dataset contains information on 891 passengers, including their age, gender, ticket class, fare paid, as well as whether or not they survived the disaster. The first step in the analysis is to explore the dataset and identify any missing values or outliers. Once this is done, the data is preprocessed to prepare it for modeling.

The next step is to build a predictive model using various machine learning algorithms, such as logistic regression, decision trees, and random forests. These models are trained on a subset of the data and evaluated on another subset to ensure they can generalize well to new data.

Finally, the model is used to make predictions on a test dataset, and the model performance is measured using various metrics such as accuracy, precision, and recall. The study results can be used to improve safety protocols and inform future disaster response efforts.
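As a quick illustration of the metrics mentioned above, the sketch below computes accuracy, precision, and recall from scratch. The label lists are invented for the example (1 means survived, 0 means did not survive), and the function name `classification_metrics` is hypothetical.

```python
def classification_metrics(y_true, y_pred):
    """Return (accuracy, precision, recall) for binary labels where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)  # true positives
    fp = sum(t == 0 and p == 1 for t, p in pairs)  # false positives
    fn = sum(t == 1 and p == 0 for t, p in pairs)  # false negatives
    correct = sum(t == p for t, p in pairs)

    accuracy = correct / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall


if __name__ == "__main__":
    # Hypothetical labels: 1 = survived, 0 = did not survive.
    actual = [1, 0, 1, 1, 0, 0, 1, 0]
    predicted = [1, 0, 0, 1, 0, 1, 1, 0]
    print(classification_metrics(actual, predicted))
```

Here six of eight predictions are correct, and there are three true positives, one false positive, and one false negative, so all three metrics come out to 0.75.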

GitHub Repository: https://github.com/ashishpatel26/Titanic-Machine-Learning-from-Disaster

If you are looking for sample machine learning case studies in Python, keep reading.

5. Loan Application Classification

Financial institutions receive tons of requests for lending money by borrowers and making decisions for each request is a crucial task. Manually processing these requests can be a time-consuming and error-prone process, so there is an increasing demand for machine learning to improve this process by automation.

You can work on this Loan Dataset on Kaggle to get started on one of the most realistic case studies in the financial industry. The dataset contains 614 records across 13 columns. Follow the steps below to get started on this case study.

- Analyze the dataset and explore how various factors such as gender, marital status, and employment affect the loan amount and the status of the loan application.

- Select the features to automate the classification of loan applications.

- Apply machine learning models such as logistic regression, decision trees, and random forests to the features and compare their performance using statistical metrics.
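The modelling step above can be sketched with a tiny logistic regression trained by batch gradient descent. Everything in this example is an assumption made for illustration: the two features (income scaled to the range 0 to 1, and a credit history flag), the six rows, and the function names. It is not the Kaggle dataset or a tuned model.

```python
from math import exp


def sigmoid(z):
    """Map a real-valued score to a probability between 0 and 1."""
    return 1.0 / (1.0 + exp(-z))


def train_logistic(rows, labels, lr=0.5, epochs=2000):
    """Fit weights and bias by batch gradient descent on the logistic loss."""
    n = len(rows)
    weights = [0.0] * len(rows[0])
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in zip(rows, labels):
            # Prediction error for this row: predicted probability minus true label.
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for j, xi in enumerate(x):
                grad_w[j] += error * xi
            grad_b += error
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def predict(weights, bias, x):
    """Return 1 (approve) if the predicted probability is at least 0.5, else 0 (reject)."""
    return 1 if sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) >= 0.5 else 0


# Hypothetical applicants: (scaled_income, has_credit_history) -> loan approved?
ROWS = [(0.2, 0), (0.3, 0), (0.4, 0), (0.6, 1), (0.7, 1), (0.9, 1)]
LABELS = [0, 0, 0, 1, 1, 1]

if __name__ == "__main__":
    w, b = train_logistic(ROWS, LABELS)
    print(predict(w, b, (0.8, 1)), predict(w, b, (0.25, 0)))
```

Decision trees and random forests would be compared against this baseline on held-out data, using the same metrics for each model.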

This case study falls under the umbrella of supervised learning problems in machine learning and demonstrates how ML models are used to automate tasks in the financial industry.

6. Computer Price Estimation

Whenever one thinks of buying a new computer, the first thing that comes to mind is to curate a list of hardware specifications that best suit their needs. The next step is browsing different websites and looking for the cheapest option available. Performing all these processes can be time-consuming and require a lot of effort. But you don’t have to worry as machine learning can help you build a system that can estimate the price of a computer system by taking into account its various features.

This sample basic computer dataset on Kaggle can help you develop a price estimation model that analyzes historical data and identifies patterns and trends in the relationship between computer specifications and prices. By training on this data, a machine learning model can learn to predict prices for new or unseen computer configurations. Machine learning algorithms such as K-Nearest Neighbours, Decision Trees, Random Forests, AdaBoost, and XGBoost can capture complex relationships between features and prices, leading to more accurate price estimates.

Besides saving time and effort compared to manual estimation methods, this project also has a business use case as it can provide stakeholders with valuable insights into market trends and consumer preferences.

7. House Price Prediction

Here is a machine learning case study that aims to predict the median value of owner-occupied homes in Boston suburbs based on various features such as crime rate, number of rooms, and pupil-teacher ratio.

Start working on this study by collecting the data from the publicly available UCI Machine Learning Repository, which contains information about 506 neighborhoods in the Boston area. The dataset includes 13 features such as per capita crime rate, average number of rooms per dwelling, and the proportion of owner-occupied units built before 1940. You can gain more insights into this data by using EDA techniques. Then prepare the dataset for implementing ML models by handling missing values, converting categorical features to numerical ones, and scaling the data.

Use machine learning algorithms such as Linear Regression, Lasso Regression, and Random Forest to predict house prices for different neighborhoods in the Boston area. Select the best model by comparing the performance of each one using metrics such as mean squared error, mean absolute error, and R-squared.
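The three comparison metrics named above are straightforward to compute by hand. The sketch below uses invented house values (in thousands of dollars) rather than real predictions, and the function name `regression_metrics` is hypothetical.

```python
def regression_metrics(y_true, y_pred):
    """Return (mean squared error, mean absolute error, R-squared)."""
    n = len(y_true)
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    mse = sum(r * r for r in residuals) / n
    mae = sum(abs(r) for r in residuals) / n

    # R-squared compares the model's squared error with that of always predicting the mean.
    mean_true = sum(y_true) / n
    ss_res = sum(r * r for r in residuals)
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    r2 = 1.0 - ss_res / ss_tot
    return mse, mae, r2


if __name__ == "__main__":
    # Hypothetical median home values and model predictions, in $1000s.
    actual = [20.0, 30.0, 25.0, 35.0]
    predicted = [22.0, 29.0, 24.0, 37.0]
    print(regression_metrics(actual, predicted))
```

For these numbers the errors are 2, 1, 1, and 2, giving an MSE of 2.5, an MAE of 1.5, and an R-squared of 0.92. Lower MSE and MAE and higher R-squared indicate a better fit.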

This section has machine learning case studies of different firms across various industries.

8. Machine Learning Case Study on Dell

Dell Technologies is a multinational technology company that designs, develops, and sells computers, servers, data storage devices, network switches, software, and other technology products and services. Dell is one of the world's most prominent PC vendors and serves customers in over 180 countries. As data is an integral component of Dell's hard drive, Dell's marketing team required a data-focused solution that would improve response rates and demonstrate why some words and phrases are more effective than others.

Dell partnered with Persado, a firm that uses AI to create marketing content. Persado helped Dell revamp its email marketing strategy and leverage data analytics to capture its audience's attention. The statistics revealed that the partnership resulted in a noticeable increase in customer engagement: page visits rose by 22% on average, and CTR increased by 50% on average.

Dell currently relies on ML methods to improve their marketing strategy for emails, banners, direct mail, Facebook ads, and radio content.

9. Machine Learning Case Study on Harley Davidson

In the current environment, it is challenging to stand out with traditional marketing. That makes Albert, an artificial-intelligence-powered robot, appealing for a business like Harley Davidson. Thanks to machine learning and artificial intelligence, robots are now directing traffic, creating news stories, working in hotels, and even running McDonald's.

Albert can be applied to many marketing channels, including email and social media. It automatically prepares customized creative copy and forecasts which customers are most likely to convert.

One company that has made use of Albert is Harley Davidson. The business examined customer data to identify the behavior of past clients who had made purchases and spent more time than usual across different pages on the website. With this knowledge, Albert divided the customer base into groups and adjusted the scale of test campaigns accordingly.

Results reveal that using Albert increased Harley Davidson's sales by 40%. The brand also saw a 2,930% spike in leads, 50% of which came from very effective "lookalikes" found by machine learning and artificial intelligence.

10. Machine Learning Case Study on Zomato

Zomato is a popular online platform that provides restaurant search and discovery services, online ordering and delivery, and customer reviews and ratings. Founded in India in 2008, the company has expanded to over 24 countries and serves millions of users globally. Over the years, it has become a popular choice for consumers to browse the ratings of different restaurants in their area.

To provide the best restaurant options to its customers, Zomato hand-picks the restaurants that are likely to perform well in the future. Machine learning can help Zomato make such decisions by considering different restaurant features. You can work on this sample Zomato Restaurants Data and experiment with how machine learning can be useful to Zomato. The dataset has the details of 9551 restaurants. The first step should be a careful analysis of the data to identify outliers and missing values. Treat them using statistical methods, and then use regression models to predict the ratings of different restaurants.

The Zomato Case study is one of the most popular machine learning startup case studies among data science enthusiasts.

11. Machine Learning Case Study on Tesla

Tesla, Inc. is an American electric vehicle and clean energy company founded in 2003 by Martin Eberhard and Marc Tarpenning; Elon Musk joined as an early investor in 2004 and later became CEO. The company designs, manufactures, and sells electric cars, battery storage systems, and solar products. Tesla has pioneered the electric vehicle industry and has popularized high-capacity lithium-ion batteries and regenerative braking systems. The company strongly focuses on innovation, sustainability, and reducing the world's dependence on fossil fuels.

Tesla uses machine learning in various ways to enhance the performance and features of its electric vehicles. One of the most notable applications of machine learning at Tesla is in its Autopilot system, which uses a combination of cameras, sensors, and machine learning algorithms to enable advanced driver assistance features such as lane centering, adaptive cruise control, and automatic emergency braking.

Tesla's Autopilot system uses deep neural networks to process large amounts of real-world driving data and accurately predict driving behavior and potential hazards. It enables the system to learn and adapt over time, improving its accuracy and responsiveness.

Additionally, Tesla also uses machine learning in its battery management systems to optimize the performance and longevity of its batteries. Machine learning algorithms are used to model and predict the behavior of the batteries under different conditions, enabling Tesla to optimize charging rates, temperature control, and other factors to maximize the lifespan and performance of its batteries.

12. Machine Learning Case Study on Amazon

Amazon Prime Video uses machine learning to ensure high video quality for its users. The company has developed a system that analyzes video content and applies various techniques to enhance the viewing experience.

The system uses machine learning algorithms to automatically detect and correct issues such as unexpected black frames, blocky frames, and audio noise. Residual neural networks are used to detect block corruption. After training the model on a large dataset, a threshold of 0.07 was set for the corrupted-area ratio to mark frames that have block corruption. To detect unwanted noise in the audio, a model based on a pre-trained audio neural network classifies a one-second audio sample into one of these classes: audio hum, audio distortion, audio hiss, audio clicks, and no defect. Lip-sync issues are handled using the SyncNet architecture.
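The thresholding step can be illustrated with a short sketch. It assumes, purely for illustration, that the neural network outputs a grid of 0/1 flags per frame (1 meaning a block looks corrupted) and that a frame is flagged when the share of corrupted blocks exceeds 0.07. The grid layout, the strict comparison, and the function names are assumptions, not details of Amazon's system.

```python
CORRUPTED_AREA_THRESHOLD = 0.07  # threshold value quoted in the text


def corrupted_area_ratio(block_mask):
    """Share of blocks in the frame that the detector marked as corrupted (1)."""
    total = sum(len(row) for row in block_mask)
    corrupted = sum(sum(row) for row in block_mask)
    return corrupted / total


def has_block_corruption(block_mask, threshold=CORRUPTED_AREA_THRESHOLD):
    """Flag the frame when the corrupted-area ratio exceeds the threshold (assumed rule)."""
    return corrupted_area_ratio(block_mask) > threshold


if __name__ == "__main__":
    # Hypothetical 4x5 grid of per-block detector outputs for one frame.
    frame = [
        [0, 0, 0, 0, 0],
        [0, 1, 1, 0, 0],
        [0, 0, 0, 0, 0],
        [0, 0, 0, 0, 0],
    ]
    print(corrupted_area_ratio(frame), has_block_corruption(frame))
```

In this example 2 of 20 blocks are marked, a ratio of 0.1, so the frame is flagged; a frame with a single marked block (0.05) would pass.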

By using machine learning to optimize video quality, Amazon can deliver a consistent and high-quality viewing experience to its users, regardless of the device or network conditions they are using. It helps maintain customer satisfaction and loyalty and ensures that Amazon remains a competitive video streaming market leader.

Machine learning applications are not limited to financial and tech use cases. They are also used in the healthcare industry. So, here are a few machine learning case studies that showcase the use of this technology in the biology and healthcare domain.

13. Microbiome Therapeutics Development

The development of microbiome therapeutics involves the study of the interactions between the human microbiome and various diseases and identifying specific microbial strains or compositions that can be used to treat or prevent these diseases. Machine learning plays a crucial role in this process by enabling the analysis of large, complex datasets and identifying patterns and correlations that would be difficult or impossible to detect through traditional methods.

Machine learning algorithms can analyze microbiome data at various levels, including taxonomic composition, functional pathways, and gene expression profiles. These algorithms can identify specific microbial strains or communities associated with different diseases or conditions and can be used to develop targeted therapies.

Besides that, machine learning can be used to optimize the design and delivery of microbiome therapeutics. For example, machine learning algorithms can be used to predict the efficacy of different microbial strains or compositions and optimize these therapies' dosage and delivery mechanisms.

14. Mental Illness Diagnosis

Machine learning is increasingly being used to develop predictive models for diagnosing and managing mental illness. One of the critical advantages of machine learning in this context is its ability to analyze large, complex datasets and identify patterns and correlations that would be difficult for human experts to detect.

Machine learning algorithms can be trained on various data sources, including clinical assessments, self-reported symptoms, and physiological measures such as brain imaging or heart rate variability. These algorithms can then be used to develop predictive models to identify individuals at high risk of developing a mental illness or who are likely to experience a particular symptom or condition.

One example of machine learning being used to predict mental illness is in the development of suicide risk assessment tools. These tools use machine learning algorithms to analyze various risk factors, such as demographic information, medical history, and social media activity, to identify individuals at risk of suicide. These tools can be used to guide early intervention and support for individuals struggling with mental health issues.

One can also build a chatbot using machine learning and Natural Language Processing that analyzes the user's responses and recommends steps they can take immediately.

15. 3D Bioprinting

Another popular subject in the biotechnology industry is Bioprinting. Based on a computerized blueprint, the printer prints biological tissues like skin, organs, blood arteries, and bones layer by layer using cells and biomaterials, also known as bioinks.

They can be made in printers more ethically and economically than by relying on organ donations. Additionally, synthetic construct tissue is used for drug testing instead of testing on animals or people. Due to its tremendous complexity, the entire technology is still in its early stages of maturity. Data science is one of the most essential components to handle this complexity of printing.

The qualities of the bioinks, which have inherent variability, or the many printing parameters, are just a couple of the many variables that affect the printing process and quality. For instance, Bayesian optimization improves the likelihood of producing useable output and optimizes the printing process.

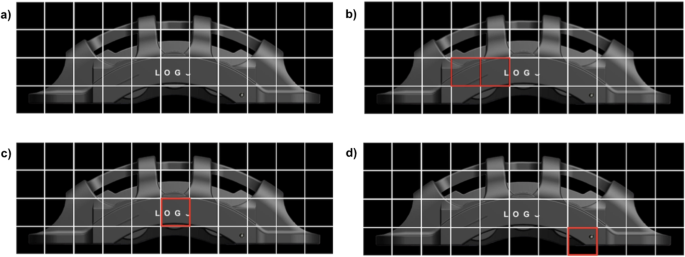

A crucial element of the procedure is the printing speed. Siamese network models are used to estimate the optimal speed. Convolutional neural networks are applied to photographs of the layer-by-layer tissue to detect material or tissue abnormalities.

In this section, you will find a list of machine learning case studies that have utilized Amazon Web Services to create machine learning based solutions.

16. Machine Learning Case Study on Autodesk

Autodesk is a US-based software company that provides solutions for 3D design, engineering, and entertainment industries. The company offers a wide range of software products and services, including computer-aided design (CAD) software, 3D animation software, and other tools used in architecture, construction, engineering, manufacturing, media and entertainment industries.

Autodesk utilizes machine learning (ML) models that are constructed on Amazon SageMaker, a managed ML service provided by Amazon Web Services (AWS), to assist designers in categorizing and sifting through a multitude of versions created by generative design procedures and selecting the most optimal design. ML techniques built with Amazon SageMaker help Autodesk progress from intuitive design to exploring the boundaries of generative design for their customers to produce innovative products that can even be life-changing. As an example, Edera Safety, a design studio located in Austria, created a superior and more effective spine protector by utilizing Autodesk's generative design process constructed on AWS.

17. Machine Learning Case Study on Capital One

Capital One is a financial services company in the United States that offers a range of financial products and services to consumers, small businesses, and commercial clients. The company provides credit cards, loans, savings and checking accounts, investment services, and other financial products and services.

Capital One leverages AWS to transform data into valuable insights using machine learning, enabling the company to innovate rapidly on behalf of its customers. To power its machine-learning innovation, Capital One utilizes a range of AWS services such as Amazon Elastic Compute Cloud (Amazon EC2), Amazon Relational Database Service (Amazon RDS), and AWS Lambda. AWS is enabling Capital One to implement flexible DevOps processes, enabling the company to introduce new products and features to the market in just a few weeks instead of several months or years. Additionally, AWS assists Capital One in providing data to and facilitating the training of sophisticated machine-learning analysis and customer-service solutions. The company also integrates its contact centers with its CRM and other critical systems, while simultaneously attracting promising entry-level and mid-career developers and engineers with the opportunity to gain knowledge and innovate with the most up-to-date cloud technologies.

18. Machine Learning Case Study on BuildFax

In 2008, BuildFax began by collecting widely scattered building permit data from different parts of the United States and distributing it to various businesses, including building inspectors, insurance companies, and economic analysts. Today, it offers custom-made solutions to these professions and several other services. These services comprise indices that monitor trends like commercial construction, and housing remodels.

Source: aws.amazon.com/solutions/case-studies

The primary customer base of BuildFax is insurance companies, which spend billions of dollars on roof losses. BuildFax helps these customers develop policies and premiums by evaluating roof losses for them. Initially, it relied on general data and ZIP codes to build predictive models, but these were inaccurate and somewhat complex. It needed a solution that could produce more accurate, property-specific estimates, so it chose Amazon Machine Learning for predictive modeling. With Amazon Machine Learning, the company can offer insurance companies and builders personalized roof-age and job-cost estimates that are specific to a particular property, rather than depending on more generalized estimates based on ZIP codes. It now uses customers' data and data from public sources to create predictive models.

This section will present you with a list of machine learning case studies that showcase how companies have leveraged Microsoft Azure Services for completing machine learning tasks in their firm.

19. Machine Learning Case Study for an Enterprise Company

Consider a company (an Azure customer) in the Electronic Design Automation industry that provides software, hardware, and IP for electronic systems and semiconductor companies. Its finance team was struggling to manage accounts receivable efficiently, so it wanted to use machine learning to predict payment outcomes and reduce outstanding receivables. The team faced a major challenge with managing change data capture using Azure Data Factory. A3S provided a solution by automating data migration from SAP ECC to Azure Synapse and offering fully automated analytics as a service, which helped the company streamline its accounts receivable management. The company was able to achieve the entire scenario, from data ingestion to analytics, within a week, and it plans to use A3S for other analytics initiatives.

20. Machine Learning Case Study on Shell

Royal Dutch Shell, a global company whose operations range from oil wells to retail petrol stations, is using computer vision technology to automate safety checks at its service stations. In partnership with Microsoft, it has developed a project called Video Analytics for Downstream Retail (VADR) that uses machine vision and image processing to detect dangerous behavior and alert the servicemen. It uses OpenCV and Azure Databricks in the background, highlighting how Azure can be used for personalised applications. Once the project shows good results in the countries where it has been deployed (Thailand and Singapore), Shell plans to roll out VADR globally.

21. Machine Learning Case Study on TransLink

TransLink, a transportation company in Vancouver, deployed 18,000 different sets of machine learning models using Azure Machine Learning to predict bus departure times and determine bus crowdedness. The models take into account factors such as traffic, bad weather and at-capacity buses. The deployment led to an improvement in predicted bus departure times of 74%. The company also created a mobile app that allows people to plan their trips based on how at-capacity a bus might be at different times of day.

22. Machine Learning Case Study on XBox

Microsoft Azure Personaliser is a cloud-based service that uses reinforcement learning to select the best content for customers based on up-to-date information about them, the context, and the application. Custom recommender services can also be created using Azure Machine Learning. The Xbox One group used Cognitive Services Personaliser to find content suited to each user, which resulted in a 40% increase in user engagement compared to a random personalisation policy on the Xbox platform.

All the case studies mentioned in this blog will help you explore how machine learning solves real problems across different industries. If you are preparing for an interview and intend to show that you have mastered implementing ML algorithms, do not stop after working through them; practice more such case studies in machine learning.

And if you have decided to dive deeper into machine learning, data science, and big data, be sure to check out ProjectPro, which offers a repository of solved projects in data science and big data. With a wide range of projects, you can explore different techniques and approaches and build your machine learning and data science skills. Our repository has a project for each one of you, irrespective of your academic and professional background. The customer-specific learning path is likely to help you find your way to making a mark in this newly emerging field. So why wait? Start exploring today and see what you can accomplish with big data and data science!

1. What is a case study in machine learning?

A case study in machine learning is an in-depth analysis of a real-world problem or scenario, where machine learning techniques are applied to solve the problem or provide insights. Case studies can provide valuable insights into the application of machine learning and can be used as a basis for further research or development.

2. What is a good use case for machine learning?

A good use case for machine learning is any scenario with a large and complex dataset and where there is a need to identify patterns, predict outcomes, or automate decision-making based on that data. It could include fraud detection, predictive maintenance, recommendation systems, and image or speech recognition, among others.

3. What are the 3 basic types of machine learning problems?

The three basic types of machine learning problems are supervised learning, unsupervised learning, and reinforcement learning. In supervised learning, the algorithm is trained on labeled data. In unsupervised learning, the algorithm seeks to identify patterns in unlabeled data. In reinforcement learning, the algorithm learns through trial and error based on feedback from the environment.

4. What are the 4 basics of machine learning?

The four basics of machine learning are data preparation, model selection, model training, and model evaluation. Data preparation involves collecting, cleaning, and preparing data for use in training models. Model selection involves choosing the appropriate algorithm for a given task. Model training involves optimizing the chosen algorithm to achieve the desired outcome. Model evaluation consists of assessing the performance of the trained model on new data.

About the Author

Manika Nagpal is a versatile professional with a strong background in both Physics and Data Science. As a Senior Analyst at ProjectPro, she leverages her expertise in data science and writing to create engaging and insightful blogs that help businesses and individuals stay up-to-date with the

© 2024 Iconiq Inc.

- Open access

- Published: 09 September 2022

Machine learning in project analytics: a data-driven framework and case study

- Shahadat Uddin 1 ,

- Stephen Ong 1 &

- Haohui Lu 1

Scientific Reports volume 12, Article number: 15252 (2022)

- Applied mathematics

- Computational science

The analytic procedures incorporated to facilitate the delivery of projects are often referred to as project analytics. Existing techniques focus on retrospective reporting and understanding the underlying relationships to make informed decisions. Although machine learning algorithms have been widely used in addressing problems within various contexts (e.g., streamlining the design of construction projects), limited studies have evaluated pre-existing machine learning methods within the delivery of construction projects. Due to this, the current research aims to contribute further to this convergence between artificial intelligence and the execution of construction projects through the evaluation of a specific set of machine learning algorithms. This study proposes a machine learning-based data-driven research framework for addressing problems related to project analytics. It then illustrates an example of the application of this framework. In this illustration, existing data from an open-source data repository on construction projects and cost overrun frequencies was studied, and several machine learning models (from Python’s Scikit-learn package) were tested and evaluated. The data consisted of 44 independent variables (from materials to labour and contracting) and one dependent variable (project cost overrun frequency), which has been categorised for processing under several machine learning models. These models include support vector machine, logistic regression, k-nearest neighbour, random forest, stacking (ensemble) model and artificial neural network. Feature selection and evaluation methods, including univariate feature selection, recursive feature elimination, SelectFromModel and the confusion matrix, were applied to determine the most accurate prediction model. This study also discusses the generalisability of using the proposed research framework in other research contexts within the field of project management. The proposed framework, its illustration in the context of construction projects and its potential to be adopted in different contexts will significantly contribute to project practitioners, stakeholders and academics in addressing many project-related issues.

Introduction

Successful projects require the presence of appropriate information and technology 1 . Project analytics provides an avenue for informed decisions to be made through the lifecycle of a project. Project analytics applies various statistics (e.g., earned value analysis or Monte Carlo simulation) among other models to make evidence-based decisions. They are used to manage risks as well as project execution 2 . There is a tendency for project analytics to be employed due to other additional benefits, including an ability to forecast and make predictions, benchmark with other projects, and determine trends such as those that are time-dependent 3 , 4 , 5 . There has been increasing interest in project analytics and how current technology applications can be incorporated and utilised 6 . Broadly, project analytics can be understood on five levels 4 . The first is descriptive analytics which incorporates retrospective reporting. The second is known as diagnostic analytics , which aims to understand the interrelationships and underlying causes and effects. The third is predictive analytics which seeks to make predictions. Subsequent to this is prescriptive analytics , which prescribes steps following predictions. Finally, cognitive analytics aims to predict future problems. The first three levels can be applied with ease with the help of technology. The fourth and fifth steps require data that is generally more difficult to obtain as they may be less accessible or unstructured. Further, although project key performance indicators can be challenging to define 2 , identifying common measurable features facilitates this 7 . It is anticipated that project analytics will continue to experience development due to its direct benefits to the major baseline measures focused on productivity, profitability, cost, and time 8 . The nature of project management itself is fluid and flexible, and project analytics allows an avenue for which machine learning algorithms can be applied 9 .

Machine learning within the field of project analytics falls into the category of cognitive analytics, which deals with problem prediction. Generally, machine learning explores the possibilities of computers to improve processes through training or experience 10 . It can also build on the pre-existing capabilities and techniques prevalent within management to accomplish complex tasks 11 . Due to its practical use and broad applicability, recent developments have led to the invention and introduction of newer and more innovative machine learning algorithms and techniques. Artificial intelligence, for instance, allows for software to develop computer vision, speech recognition, natural language processing, robot control, and other applications 10 . Specific to the construction industry, it is now used to monitor construction environments through a virtual reality and building information modelling replication 12 or risk prediction 13 . Within other industries, such as consumer services and transport, machine learning is being applied to improve consumer experiences and satisfaction 10 , 14 and reduce the human errors of traffic controllers 15 . Recent applications and development of machine learning broadly fall into the categories of classification, regression, ranking, clustering, dimensionality reduction and manifold learning 16 . Current learning models include linear predictors, boosting, stochastic gradient descent, kernel methods, and nearest neighbour, among others 11 . Newer and more applications and learning models are continuously being introduced to improve accessibility and effectiveness.

Specific to the management of construction projects, other studies have also been made to understand how copious amounts of project data can be used 17 , the importance of ontology and semantics throughout the nexus between artificial intelligence and construction projects 18 , 19 as well as novel approaches to the challenges within this integration of fields 20 , 21 , 22 . There have been limited applications of pre-existing machine learning models on construction cost overruns. They have predominantly focussed on applications to streamline the design processes within construction 23 , 24 , 25 , 26 , and those which have investigated project profitability have not incorporated the types and combinations of algorithms used within this study 6 , 27 . Furthermore, existing applications have largely been skewed towards one type or another 28 , 29 .

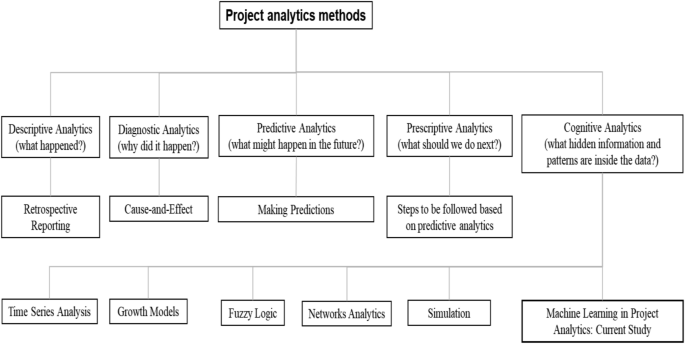

In addition to the frequently used earned value method (EVM), researchers have been applying many other powerful quantitative methods to address a diverse range of project analytics research problems over time. Examples of those methods include time series analysis, fuzzy logic, simulation, network analytics, and network correlation and regression. Time series analysis uses longitudinal data to forecast an underlying project's future needs, such as the time and cost 30 , 31 , 32 . Few other methods are combined with EVM to find a better solution for the underlying research problems. For example, Narbaev and De Marco 33 integrated growth models and EVM for forecasting project cost at completion using data from construction projects. For analysing the ongoing progress of projects having ambiguous or linguistic outcomes, fuzzy logic is often combined with EVM 34 , 35 , 36 . Yu et al. 36 applied fuzzy theory and EVM for schedule management. Ponz-Tienda et al. 35 found that using fuzzy arithmetic on EVM provided more objective results in uncertain environments than the traditional methodology. Bonato et al. 37 integrated EVM with Monte Carlo simulation to predict the final cost of three engineering projects. Batselier and Vanhoucke 38 compared the accuracy of the project time and cost forecasting using EVM and simulation. They found that the simulation results supported findings from the EVM. Network methods are primarily used to analyse project stakeholder networks. Yang and Zou 39 developed a social network theory-based model to explore stakeholder-associated risks and their interactions in complex green building projects. Uddin 40 proposed a social network analytics-based framework for analysing stakeholder networks. Ong and Uddin 41 further applied network correlation and regression to examine the co-evolution of stakeholder networks in collaborative healthcare projects. 
Although many other methods have already been used, as is evident in the current literature, machine learning methods or models are yet to be adopted for addressing research problems related to project analytics. The current investigation is derived from the cognitive analytics component of project analytics. It proposes an approach for determining hidden information and patterns to assist with project delivery. Figure 1 presents a tree diagram of the different levels of project analytics and their associated methods from the literature. It also shows the existing methods within the cognitive component of project analytics, which is where the application of machine learning sits.

Figure 1. A tree diagram of different project analytics methods, which also shows where the current study belongs. Although earned value analysis is commonly used in project analytics, we do not include it in this figure since it is used in the first three levels of project analytics.

Machine learning models have several notable advantages over traditional statistical methods that play a significant role in project analytics 42 . First, machine learning algorithms can quickly identify trends and patterns by simultaneously analysing a large volume of data. Second, they are more capable of continuous improvement: they can improve their accuracy and efficiency for decision-making through subsequent training on new data. Third, machine learning algorithms efficiently handle multi-dimensional and multi-variety data in dynamic or uncertain environments. Fourth, they are well suited to automating various decision-making tasks. For example, machine learning-based sentiment analysis can easily identify a negative tweet and automatically take further necessary steps. Last but not least, machine learning has been helpful across various industries, from defence to education 43 . Current research has seen the development of several different branches of artificial intelligence (including robotics, automated planning and scheduling and optimisation) within safety monitoring, risk prediction, cost estimation and so on 44 . This has progressed from the applications of regression on project cost overruns 45 to the current deep-learning implementations within the construction industry 46 . Despite this, the uses remain largely limited and are still in a developmental state. The benefits of applications are noted, such as optimising and streamlining existing processes; however, high initial costs form a barrier to accessibility 44 .

The primary goal of this study is to demonstrate the applicability of different machine learning algorithms in addressing problems related to project analytics. Limitations in applying machine learning algorithms within the context of construction projects have been explored previously. However, preceding research has mainly been conducted to improve the design processes specific to construction 23 , 24 , and those investigating project profitabilities have not incorporated the types and combinations of algorithms used within this study 6 , 27 . For instance, preceding research has incorporated a different combination of machine learning algorithms in predicting construction delays 47 . To contribute to this study direction, this study first proposes a machine learning-based data-driven research framework for project analytics. It then applies this framework to a case study of construction projects. Although there are three different types of machine learning (supervised, unsupervised and semi-supervised), supervised machine learning models are most commonly used due to their efficiency and effectiveness in addressing many real-world problems 48 . Therefore, we will use machine learning to represent supervised machine learning throughout the rest of this article. The contribution of this study is significant in that it considers the applications of machine learning within project management. Project management is often thought of as being very fluid in nature, and because of this, applications of machine learning are often more difficult 9 , 49 . Further to this, existing implementations have largely been limited to safety monitoring, risk prediction, cost estimation and so on 44 . Through the evaluation of machine learning applications, this study further presents a case study in which algorithms are used to model the relationship between project attributes and a project performance measure (i.e., cost overrun frequency).

Machine learning-based framework for project analytics

When and why machine learning for project analytics

Machine learning models are typically used for research problems that involve predicting the classification outcome of a categorical dependent variable. Therefore, they can be applied in the context of project analytics if the underlying objective variable is a categorical one. If that objective variable is non-categorical, it must first be converted into a categorical variable. For example, if the objective or target variable is the project cost, we can convert this variable into a categorical variable by taking only two possible values. The first value would be 0 to indicate a low-cost project, and the second could be 1 for showing a high-cost project. The average or median cost value for all projects under consideration can be considered for splitting project costs into low-cost and high-cost categories.
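The median split described above can be sketched in a few lines. This is a minimal illustration rather than the study's code: the function name `binarise_by_median` and the cost figures are hypothetical, and treating values strictly above the median as high-cost is an assumption.

```python
from statistics import median


def binarise_by_median(costs):
    """Label each cost 0 (low-cost) or 1 (high-cost) relative to the median."""
    cutoff = median(costs)
    # Assumption: values strictly above the median count as high-cost.
    return [1 if cost > cutoff else 0 for cost in costs]


# Hypothetical project costs in arbitrary currency units.
project_costs = [120, 340, 90, 560, 210, 400]
labels = binarise_by_median(project_costs)
print(labels)
```

Using the mean instead of the median only requires swapping the cutoff; the median is less sensitive to a few very expensive projects.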

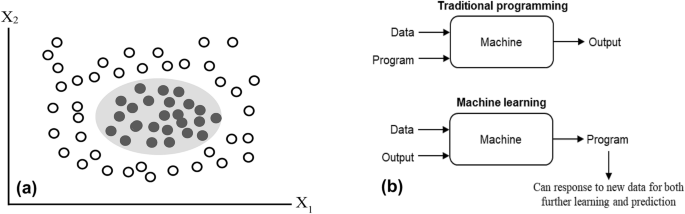

For data-driven decision-making, machine learning models are advantageous. This is because traditional statistical methods (e.g., ordinary least square (OLS) regression) make assumptions about the underlying research data to produce explicit formulae for the objective target measures. Unlike these statistical methods, machine learning algorithms figure out patterns on their own directly from the data. For instance, for a non-linear but separable dataset, an OLS regression model will not be the right choice due to its assumption that the underlying data must be linear. However, a machine learning model can easily separate the dataset into the underlying classes. Figure 2 (a) presents a situation where machine learning models perform better than traditional statistical methods.

Figure 2. (a) An illustration showing the superior performance of machine learning models compared with the traditional statistical models using an abstract dataset with two attributes (X1 and X2). The data points within this abstract dataset consist of two classes: one represented with a transparent circle and the second class illustrated with a black-filled circle. These data points are non-linear but separable. Traditional statistical models (e.g., ordinary least square regression) will not accurately separate these data points, whereas a suitable machine learning model can separate them without making errors. (b) Traditional programming versus machine learning.

Similarly, machine learning models are compelling if the underlying research dataset has many attributes or independent measures. Such models can identify features that significantly contribute to the corresponding classification performance regardless of their distributions or collinearity. Traditional statistical methods are prone to biased results when the independent variables are correlated. Current machine learning-based studies specific to project analytics remain limited. Despite this, there have been tangential studies on the use of artificial intelligence to improve cost estimation as well as risk prediction 44 . Additionally, models have been implemented in the optimisation of existing processes 50 .

Machine learning versus traditional programming

Machine learning can be thought of as a process of teaching a machine (i.e., computers) to learn from data and adjust or apply its present knowledge when exposed to new data 42 . It is a type of artificial intelligence that enables computers to learn from examples or experiences. Traditional programming requires some input data and some logic in the form of code (program) to generate the output. Unlike traditional programming, the input data and their corresponding output are fed to an algorithm to create a program in machine learning. This resultant program can capture powerful insights into the data pattern and can be used to predict future outcomes. Figure 2 (b) shows the difference between machine learning and traditional programming.
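The contrast can be made concrete with a toy sketch. In the first function the decision logic is written by hand; in the second, the logic (here just a cost threshold) is derived from example inputs and their known outputs. The function names, the threshold of 300 and the data are all hypothetical, and a single learned threshold is a deliberately simple stand-in for a real learning algorithm.

```python
def handwritten_rule(cost):
    """Traditional programming: the programmer supplies the logic."""
    return 1 if cost > 300 else 0


def learn_threshold_rule(costs, labels):
    """Machine learning in miniature: derive the rule from inputs and outputs."""
    def errors(threshold):
        # Count training examples that the rule "cost > threshold" gets wrong.
        return sum((1 if c > threshold else 0) != y for c, y in zip(costs, labels))

    best_threshold = min(sorted(set(costs)), key=errors)
    return lambda cost: 1 if cost > best_threshold else 0


# Hypothetical training examples: costs and their known classes.
train_costs = [100, 150, 200, 400, 450, 500]
train_labels = [0, 0, 0, 1, 1, 1]

learned_rule = learn_threshold_rule(train_costs, train_labels)
print(learned_rule(180), learned_rule(250))
```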

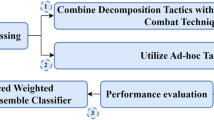

Proposed machine learning-based framework

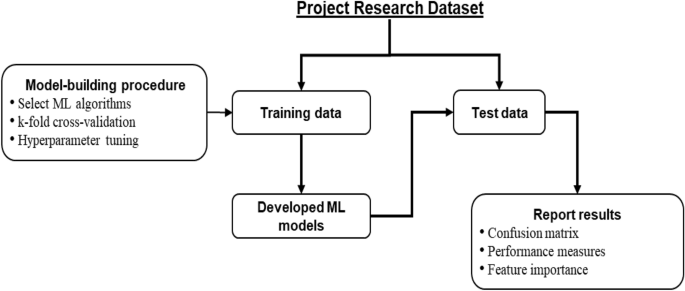

Figure 3 illustrates the proposed machine learning-based research framework of this study. The framework starts with breaking the project research dataset into the training and test components. As mentioned in the previous section, the research dataset may have many categorical and/or nominal independent variables, but its single dependent variable must be categorical. Although there is no strict rule for this split, the training data size is generally more than or equal to 50% of the original dataset 48 .
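A minimal sketch of the training/test split follows, using only the Python standard library. The 70% training fraction, the fixed seed and the helper name `split_train_test` are assumptions for illustration; the text only requires that the training share be at least 50%.

```python
import random


def split_train_test(rows, train_fraction=0.7, seed=42):
    """Shuffle rows reproducibly and split them into training and test lists."""
    if not 0.5 <= train_fraction < 1.0:
        raise ValueError("train_fraction is expected to lie in [0.5, 1.0)")
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Hypothetical dataset of 100 row identifiers.
rows = list(range(100))
train_rows, test_rows = split_train_test(rows)
print(len(train_rows), len(test_rows))
```

Shuffling before the cut avoids any ordering in the source data leaking into the split.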

Figure 3. The proposed machine learning-based data-driven framework.

Machine learning algorithms can handle variables that have only numerical outcomes. So, when one or more of the underlying categorical variables have a textual or string outcome, we must first convert them into the corresponding numerical values. Suppose a variable can take only three textual outcomes (low, medium and high). In that case, we could consider, for example, 1 to represent low , 2 to represent medium , and 3 to represent high . Other statistical techniques, such as the RIDIT (relative to an identified distribution) scoring 51 , can also be used to convert ordered categorical measurements into quantitative ones. RIDIT is a parametric approach that uses probabilistic comparison to determine the statistical differences between ordered categorical groups. The remaining components of the proposed framework have been briefly described in the following subsections.
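The low/medium/high mapping above might be implemented as follows. The mapping values come from the text; the helper name `encode_ordinal` and the sample responses are hypothetical, and normalising case and whitespace is an added assumption about how the raw survey strings look.

```python
# Mapping taken from the example in the text: low -> 1, medium -> 2, high -> 3.
ORDINAL_CODES = {"low": 1, "medium": 2, "high": 3}


def encode_ordinal(values, codes=ORDINAL_CODES):
    """Convert ordered textual categories into their numeric codes."""
    # Assumption: raw strings may differ in case or carry stray whitespace.
    return [codes[value.strip().lower()] for value in values]


# Hypothetical survey responses.
responses = ["Low", "high", " medium ", "low"]
encoded = encode_ordinal(responses)
print(encoded)
```

An unknown category raises `KeyError`, which is usually preferable to silently assigning a code.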

Model-building procedure

The next step of the framework is to follow the model-building procedure to develop the desired machine learning models using the training data. The first step of this procedure is to select suitable machine learning algorithms or models. Among the available machine learning algorithms, the commonly used ones are support vector machine, logistic regression, k-nearest neighbours, artificial neural network, decision tree and random forest 52 . One can also select an ensemble machine learning model as the desired algorithm. An ensemble machine learning method uses multiple algorithms or the same algorithm multiple times to achieve better predictive performance than could be obtained from any of the constituent learning models alone 52 . Three widely used ensemble approaches are bagging, boosting and stacking. In bagging, several equal-sized subsets are drawn from the research dataset (typically by sampling with replacement), the underlying machine learning algorithm is applied to each subset, and the resulting predictions are aggregated. In boosting, models are fitted sequentially on samples of the dataset, with each new model trained to compensate for the weaknesses observed in the immediately preceding model. Stacking combines different weak machine learning models in a heterogeneous way to improve the predictive performance. A familiar example of an ensemble is the random forest algorithm, which combines many different decision tree models 42 .

Second, each selected machine learning model will be processed through the k-fold cross-validation approach to improve predictive efficiency. In k-fold cross-validation, the training data is divided into k folds. In each iteration, (k-1) folds are used to train the selected machine learning models, and the remaining fold is used for validation purposes. This process continues until each of the k folds has been used once for validation. The final predictive efficiency of the trained models is based on the average of the outcomes from these iterations. In addition to this average value, researchers report the standard deviation of the results across iterations as a measure of how stable the predictive training efficiency is. Supplementary Fig 1 shows an illustration of k-fold cross-validation.
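The fold rotation can be sketched with the standard library alone. The helper names, the tiny label list and the majority-class "model" are hypothetical stand-ins chosen so the example is self-contained; a real study would plug in an actual classifier where `majority_baseline` appears.

```python
from statistics import mean, stdev


def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs; each fold validates exactly once."""
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size


def cross_validate(n_samples, k, fit_and_score):
    """Return the mean and standard deviation of the per-fold scores."""
    scores = [fit_and_score(tr, va) for tr, va in k_fold_indices(n_samples, k)]
    return mean(scores), stdev(scores)


# Hypothetical binary labels; the "model" simply predicts the majority class.
labels = [0, 1, 1, 0, 1, 1, 0, 1, 1, 1]


def majority_baseline(train_idx, val_idx):
    train_labels = [labels[i] for i in train_idx]
    majority = max(set(train_labels), key=train_labels.count)
    return sum(labels[i] == majority for i in val_idx) / len(val_idx)


avg_score, score_spread = cross_validate(len(labels), k=5, fit_and_score=majority_baseline)
print(avg_score, score_spread)
```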

Third, most machine learning algorithms require pre-defined values for certain parameters, known as hyperparameters; the process of choosing these values is known as hyperparameter tuning. The settings of these parameters play a vital role in the achieved performance of the underlying algorithm. For a given machine learning algorithm, the optimal value for these parameters can be different from one dataset to another. The same algorithm needs to run multiple times with different parameter values to find its optimal parameter value for a given dataset. Many search methods are available in the literature, such as the Grid search 53 , to find the optimal parameter value. In the Grid search, the candidate values of the hyperparameters form a discrete grid. Each grid point represents a specific combination of the underlying model parameters. The parameter values of the point that results in the best performance are the optimal parameter values 53 .
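An exhaustive grid search can be sketched as below. The scoring function `toy_score` is a made-up stand-in for a cross-validated model score, and the parameter names `C` and `gamma` are used only as familiar examples of hyperparameters; none of this is taken from the study's configuration.

```python
from itertools import product


def grid_search(param_grid, score_fn):
    """Evaluate every grid point and return the best parameters and score."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_score(C, gamma):
    """Hypothetical score surface that peaks at C=10, gamma=0.1."""
    return -((C - 10) ** 2) - (gamma - 0.1) ** 2


grid = {"C": [0.1, 1, 10, 100], "gamma": [0.01, 0.1, 1]}
best_params, best_score = grid_search(grid, toy_score)
print(best_params)
```

The number of evaluations is the product of the list lengths (12 here), which is why grids are usually kept coarse.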

Testing of the developed models and reporting results

Once the desired machine learning models have been developed using the training data, they need to be tested using the test data. The underlying trained model is then applied to predict its dependent variable for each data instance. Therefore, for each data instance, two categorical outcomes will be available for its dependent variable: one predicted using the underlying trained model, and the other is the actual category. These predicted and actual categorical outcome values are used to report the results of the underlying machine learning model.

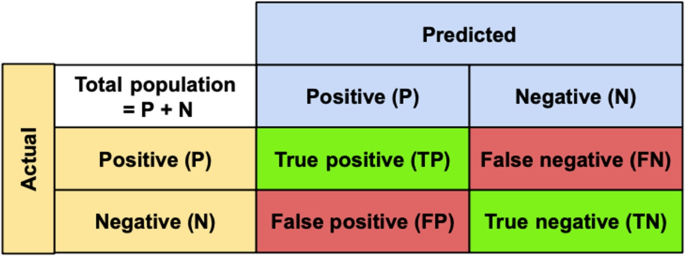

The fundamental tool to report results from machine learning models is the confusion matrix, which consists of four integer values 48 . The first value represents the number of positive cases correctly identified as positive by the underlying trained model (true-positive). The second value indicates the number of positive instances incorrectly identified as negative (false-negative). The third value represents the number of negative cases incorrectly identified as positive (false-positive). Finally, the fourth value indicates the number of negative instances correctly identified as negative (true-negative). Researchers also use a few performance measures based on the four values of the confusion matrix to report machine learning results. The most used measure is accuracy which is the ratio of the number of correct predictions (true-positive + true-negative) and the total number of data instances (sum of all four values of the confusion matrix). Other measures commonly used to report machine learning results are precision, recall and F1-score. Precision refers to the ratio between true-positives and the total number of positive predictions (i.e., true-positive + false-positive), often used to indicate the quality of a positive prediction made by a model 48 . Recall, also known as the true-positive rate, is calculated by dividing true-positive by the number of data instances that should have been predicted as positive (i.e., true-positive + false-negative). F1-score is the harmonic mean of the last two measures, i.e., [(2 × Precision × Recall)/(Precision + Recall)] and the error-rate equals to (1-Accuracy).
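The measures defined above follow directly from the four confusion-matrix counts. The sketch below is a plain-Python illustration; the function names and the actual/predicted label lists are hypothetical, and returning 0.0 when a denominator is zero is an assumed convention.

```python
def confusion_counts(actual, predicted, positive=1):
    """Return (true-positive, false-negative, false-positive, true-negative)."""
    tp = fn = fp = tn = 0
    for a, p in zip(actual, predicted):
        if a == positive:
            if p == positive:
                tp += 1
            else:
                fn += 1
        elif p == positive:
            fp += 1
        else:
            tn += 1
    return tp, fn, fp, tn


def summarise(tp, fn, fp, tn):
    """Compute accuracy, precision, recall, F1-score and error rate."""
    accuracy = (tp + tn) / (tp + fn + fp + tn)
    # Assumption: report 0.0 when a ratio's denominator is zero.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall,
            "f1": f1, "error_rate": 1 - accuracy}


# Hypothetical actual and predicted classes for ten test instances.
actual = [1, 1, 1, 1, 0, 0, 0, 0, 1, 0]
predicted = [1, 1, 1, 0, 0, 0, 1, 0, 1, 0]

counts = confusion_counts(actual, predicted)
report = summarise(*counts)
print(counts, report)
```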

Another essential tool for reporting machine learning results is variable or feature importance, which identifies a list of independent variables (features) contributing most to the classification performance. The importance of a variable refers to how much a given machine learning algorithm uses that variable in making accurate predictions 54 . The widely used technique for identifying variable importance is the principal component analysis. It reduces the dimensionality of the data while minimising information loss, which eventually increases the interpretability of the underlying machine learning outcome. It further helps in finding the important features in a dataset as well as plotting them in 2D and 3D 54 .

Ethical approval

Ethical approval is not required for this study since this study used publicly available data for research investigation purposes. All research was performed in accordance with relevant guidelines/regulations.

Informed consent

Due to the nature of the data sources, informed consent was not required for this study.

Case study: an application of the proposed framework

This section illustrates an application of this study’s proposed framework (Fig. 3) in a construction project context. We will apply this framework to classify projects into two classes based on their cost overrun experience. Projects that rarely experience a cost overrun belong to the first class (Rare class). The second class comprises projects that often experience a cost overrun (Often class). In doing so, we consider a list of independent variables or features.

Data source

The research dataset is taken from an open-source data repository, Kaggle 55 . This survey-based research dataset was collected to explore the causes of the project cost overrun in Indian construction projects 45 , consisting of 44 independent variables or features and one dependent variable. The independent variables cover a wide range of cost overrun factors, from materials and labour to contractual issues and the scope of the work. The dependent variable is the frequency of experiencing project cost overrun (rare or often). The dataset size is 139; 65 belong to the rare class, and the remaining 74 are from the often class. We converted each categorical variable with a textual or string outcome into an appropriate numerical value range to prepare the dataset for machine learning analysis. For example, we used 1 and 2 to represent rare and often class, respectively. The correlation matrix among the 44 features is presented in Supplementary Fig 2 .

Machine learning algorithms

This study considered four machine learning algorithms to explore the causes of project cost overrun using the research dataset mentioned above. They are support vector machine, logistic regression, k- nearest neighbours and random forest.

Support vector machine (SVM) is a process applied to understand data. For instance, if one wants to determine and interpret which projects are classified as programmatically successful through the processing of precedent data information, SVM would provide a practical approach for prediction. SVM functions by assigning labels to objects 56 . The comparison attributes are used to cluster these objects into different groups or classes by maximising their marginal distances and minimising the classification errors. The attributes are plotted multi-dimensionally, allowing a separation line, known as a hyperplane , see supplementary Fig 3 (a), to distinguish between underlying classes or groups 52 . Support vectors are the data points that lie closest to the decision boundary on both sides. In Supplementary Fig 3 (a), they are the circles (both transparent and shaded ones) close to the hyperplane. Support vectors play an essential role in deciding the position and orientation of the hyperplane. Various computational methods, including a kernel function to create more derived attributes, are applied to accommodate this process 56 . Support vector machines are not only limited to binary classes but can also be generalised to a larger variety of classifications. This is accomplished through the training of separate SVMs 56 .

Logistic regression (LR) builds on the linear regression model and predicts the outcome of a dichotomous variable 57; for example, the presence or absence of an event. It uses a scatterplot to understand the connection between one or more independent variables and the dependent variable (see Supplementary Fig 3 (b)). The LR model fits the data to a sigmoidal curve instead of a straight line. The natural logarithm of the odds (the logit) is used when developing the model. The model provides a value between 0 and 1 that is interpreted as the probability of class membership. The best parameter estimates are obtained iteratively, refining approximate estimates until a level of stability is reached 58. Generally, LR offers a straightforward approach for determining and observing interrelationships. It is more efficient compared to ordinary regressions 59.
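The sigmoidal mapping and its link to the natural logarithm can be illustrated with a short sketch. The weights and intercept below are made-up values for demonstration only; a fitted model would estimate them from data.

```python
import math

def sigmoid(z):
    """Map any real number to a value strictly between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def predict_probability(features, weights, intercept):
    """Probability of class membership from a linear combination of features."""
    z = intercept + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)

def log_odds(p):
    """Natural logarithm of the odds; the inverse of the sigmoid."""
    return math.log(p / (1.0 - p))

# Hypothetical coefficients: the linear score is -1.0 + 0.5 * 2.0 = 0.0.
p = predict_probability([2.0], weights=[0.5], intercept=-1.0)
```

A linear score of zero corresponds to a probability of 0.5, which is the usual decision threshold between the two classes.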

The k-nearest neighbours (KNN) algorithm plots prior information and uses a specified number of neighbours (k) to determine the most likely scenario 52. This method finds the nearest training examples using a distance measure. The final classification is made by counting the most common scenario, or votes, among the specified neighbours. As illustrated in Supplementary Fig 3 (c), the four nearest neighbours in the small circle are three grey squares and one white square. The majority class is grey. Hence, KNN will predict the instance (i.e., Χ) as grey. On the other hand, if we look at the larger circle of the same figure, the nearest neighbours consist of ten white squares and four grey squares. The majority class is white. Thus, KNN will classify the instance as white. KNN’s advantage lies in its ability to produce a simplified result and handle missing data 60. In summary, KNN utilises similarities (as well as differences) and distances in the process of developing models.
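The voting behaviour described for the two circles can be reproduced with a small, dependency-free sketch. The coordinates are invented so that the four nearest neighbours are three grey and one white, while the fourteen nearest are ten white and four grey; they do not come from the supplementary figure.

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k):
    """Return the majority label among the k training points nearest to query."""
    by_distance = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

# Illustrative layout around a query at the origin.
grey = [(1.0, 0.0), (1.1, 0.0), (1.2, 0.0), (5.0, 0.0)]
white = [(1.3, 0.0)] + [(3.0 + 0.1 * i, 0.0) for i in range(9)]
points = grey + white
labels = ["grey"] * len(grey) + ["white"] * len(white)

small_circle = knn_predict(points, labels, (0.0, 0.0), k=4)
large_circle = knn_predict(points, labels, (0.0, 0.0), k=14)
```

The same query is classified differently for the two values of k, which is why k is normally treated as a hyperparameter to be tuned.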

Random forest (RF) is a machine learning method that consists of many decision trees. A decision tree is a tree-like structure where each internal node represents a test on an input attribute. It may have multiple internal nodes at different levels, and the leaf or terminal nodes represent the decision outcomes. Each tree produces an outcome for a distinct part of the input vector. For numerical outcomes, the forest takes the average value across trees, and for discrete outcomes, it takes the number of votes 52. Supplementary Fig 3 (d) shows three decision trees to illustrate the function of a random forest. The outcomes from trees 1, 2 and 3 are class B, class A and class A, respectively. According to the majority vote, the final prediction will be class A. Because each tree considers specific attributes, RF can tend to emphasise some attributes over others, which may result in some attributes being unevenly weighted 52. Advantages of the random forest include its ability to handle multidimensionality and multicollinearity in data, despite its sensitivity to sampling design.
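The aggregation step of a random forest can be sketched on its own, independently of how the individual trees are grown. The helper names below are hypothetical; the vote list mirrors the three-tree example from Supplementary Fig 3 (d), and the averaging variant applies when tree outputs are numerical.

```python
from collections import Counter
from statistics import mean

def forest_classify(tree_votes):
    """Majority vote over the class predicted by each tree."""
    return Counter(tree_votes).most_common(1)[0][0]

def forest_regress(tree_outputs):
    """Average of the numerical outputs of each tree."""
    return mean(tree_outputs)

# Trees 1, 2 and 3 predict class B, class A and class A, respectively.
final_class = forest_classify(["B", "A", "A"])

# Made-up numerical outputs from three trees.
final_value = forest_regress([1.0, 2.0, 3.0])
```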

Artificial neural network (ANN) simulates the way in which human brains work. This is accomplished by modelling logical propositions and incorporating weighted inputs, a transfer function and one output 61 (Supplementary Fig 3 (e)). It is advantageous because it can be used to model non-linear relationships and handle multivariate data 62. There are two types of ANN: supervised and unsupervised. ANN learns through three major avenues: error-back propagation (supervised), the Kohonen (unsupervised) and the counter-propagation ANN (supervised) 62. ANN has been used in a myriad of applications ranging from pharmaceuticals 61 to electronic devices 63. It also possesses great levels of fault tolerance 64 and learns by example and through self-organisation 65.

Ensemble techniques are a type of machine learning methodology in which numerous basic classifiers are combined to generate an optimal model 66. An ensemble technique combines many models into a single model that aims to offset the weaknesses of each individual learner, resulting in a stronger model with improved performance. The stacking model is a general architecture comprised of two classifier levels: base classifiers and a meta-learner 67. The base classifiers are trained with the training dataset, and a new dataset is constructed from their predictions for the meta-learner. Afterwards, this new dataset is used to train the meta-learner. This study uses four models (SVM, LR, KNN and RF) as base classifiers and LR as the meta-learner, as illustrated in Supplementary Fig 3 (f).
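One way to express this two-level architecture is scikit-learn's `StackingClassifier`, sketched below. The synthetic data from `make_classification`, the default hyperparameters and the choice of `StackingClassifier` itself are assumptions made for illustration; the text does not state how the stacking model was implemented.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC

# Synthetic stand-in with the same shape as the case-study data (139 x 44).
X, y = make_classification(n_samples=139, n_features=44,
                           n_informative=10, random_state=0)

# Level 0: the four base classifiers named in the text.
base_classifiers = [
    ("svm", SVC(random_state=0)),
    ("lr", LogisticRegression(max_iter=1000)),
    ("knn", KNeighborsClassifier()),
    ("rf", RandomForestClassifier(n_estimators=100, random_state=0)),
]

# Level 1: logistic regression trained on cross-validated base predictions.
stack = StackingClassifier(
    estimators=base_classifiers,
    final_estimator=LogisticRegression(max_iter=1000),
    cv=5,
)
stack.fit(X, y)
predicted = stack.predict(X[:5])
```

The internal cross-validation means the meta-learner is trained on predictions the base classifiers made for samples they had not seen, which limits leakage between the two levels.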

Feature selection

The process of selecting the optimal feature subset that significantly influences the predicted outcomes, which can improve model performance and reduce running time, is known as feature selection. This study considers three feature selection approaches: univariate feature selection (UFS), recursive feature elimination (RFE) and SelectFromModel (SFM). UFS examines each feature separately to determine the strength of its relationship with the response variable 68. This method is straightforward to use and comprehend and helps acquire a deeper understanding of data. In this study, we calculate the chi-square value between each feature and the response variable. RFE is a type of backward feature elimination in which the model is first fit using all features in the given dataset, and the least important features are then removed one by one 69. The model is refit after each removal until the desired number of features, which is specified by a parameter, is left. SFM is used to choose effective features based on the feature importance of the best-performing model 70. This approach selects features by establishing a threshold based on feature significance as indicated by the model on the training set. Features whose importance is above the threshold are kept, while those whose importance is below the threshold are removed. In this study, we apply SFM after we compare the performance of the machine learning methods. Afterwards, we train the best-performing model again using the features selected by the SFM approach.
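The three approaches map onto scikit-learn classes of similar names, as sketched below on synthetic data. The number of features kept by UFS and RFE (19), the mean-importance threshold for SFM, the random forest settings and the min-max scaling (used because the chi-square score requires non-negative inputs) are all assumptions for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import RFE, SelectFromModel, SelectKBest, chi2
from sklearn.preprocessing import MinMaxScaler

# Synthetic stand-in with the same shape as the case-study data (139 x 44).
X, y = make_classification(n_samples=139, n_features=44,
                           n_informative=10, random_state=0)
X = MinMaxScaler().fit_transform(X)  # chi2 needs non-negative feature values

# UFS: score each feature on its own with the chi-square statistic.
ufs = SelectKBest(score_func=chi2, k=19).fit(X, y)

# RFE: refit the model repeatedly, dropping the least important feature each time.
rfe = RFE(RandomForestClassifier(n_estimators=50, random_state=0),
          n_features_to_select=19, step=1).fit(X, y)

# SFM: keep features whose importance is at least the mean importance.
sfm = SelectFromModel(RandomForestClassifier(n_estimators=50, random_state=0),
                      threshold="mean").fit(X, y)

n_ufs = int(ufs.get_support().sum())
n_rfe = int(rfe.get_support().sum())
n_sfm = int(sfm.get_support().sum())
```

With UFS and RFE the analyst fixes the number of retained features in advance, whereas with SFM the number follows from the chosen threshold.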

Findings from the case study

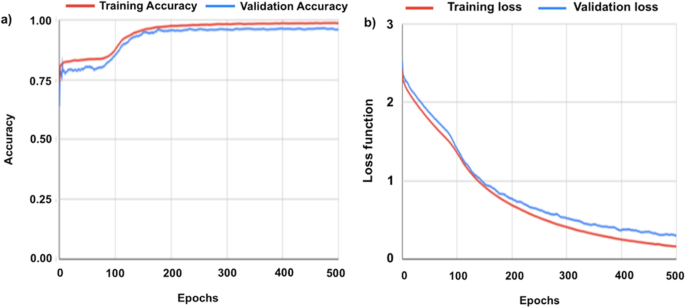

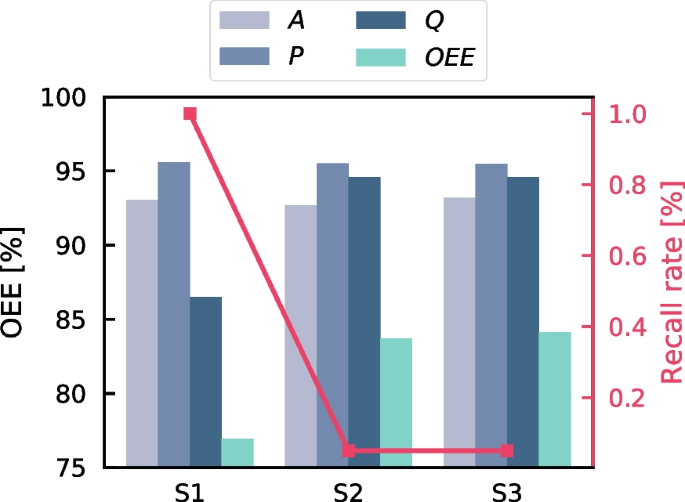

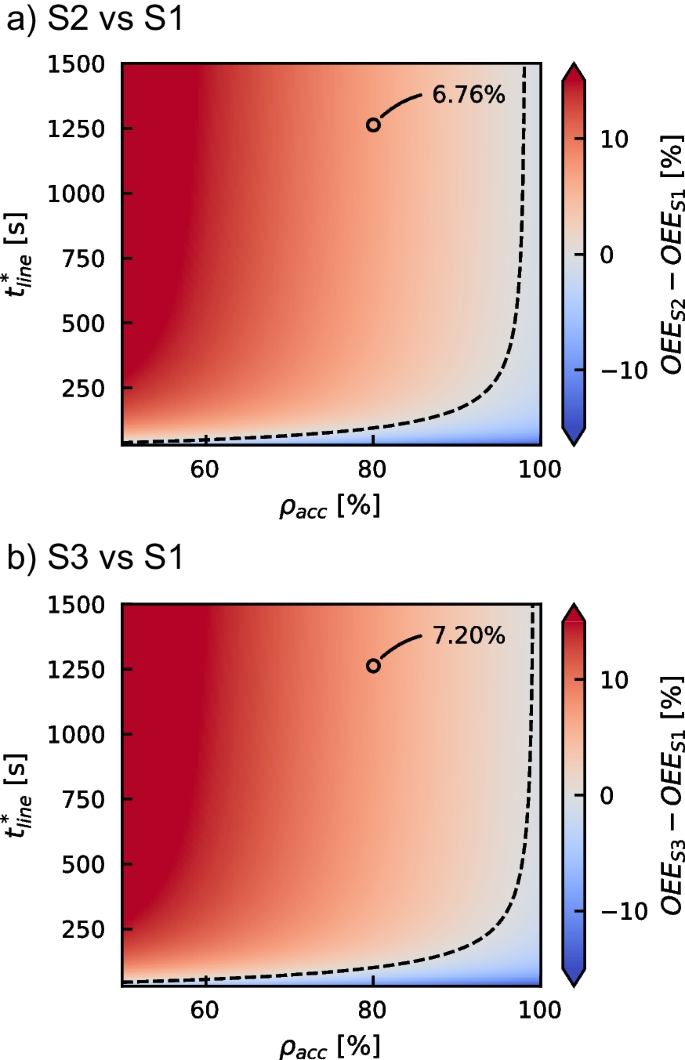

We split the dataset 70:30 into training and test sets for the machine learning algorithms described above. We used Python’s Scikit-learn package to implement these algorithms 70. Using the training data, we first developed six models based on these six algorithms. We used five-fold cross-validation, with accuracy as the target metric. Then, we applied these models to the test data. We also tuned the hyperparameters of each algorithm to obtain the best possible classification outcome. Table 1 shows the performance outcomes for each algorithm during the training and test phases. The hyperparameter settings for each algorithm are listed in Supplementary Table 1.
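A minimal sketch of this workflow for a single algorithm is given below. The synthetic data, the stratified split, the random seeds and the small parameter grid are assumptions; the actual hyperparameter settings are those listed in Supplementary Table 1 and are not reproduced here.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic stand-in with the same shape as the case-study data (139 x 44).
X, y = make_classification(n_samples=139, n_features=44, random_state=0)

# 70:30 split into training and test sets.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.30, random_state=0, stratify=y
)

# Hypothetical grid; five-fold cross-validation scored on accuracy.
param_grid = {"n_estimators": [50, 100], "max_depth": [3, None]}
search = GridSearchCV(RandomForestClassifier(random_state=0),
                      param_grid, cv=5, scoring="accuracy")
search.fit(X_train, y_train)

cv_accuracy = search.best_score_          # mean accuracy over the five folds
test_accuracy = search.score(X_test, y_test)  # accuracy on the held-out 30%
```

Tuning only on the training portion keeps the test set untouched until the final evaluation, so the test accuracy is not biased by the hyperparameter search.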

As revealed in Table 1, random forest outperformed the other algorithms in terms of accuracy for both the training and test phases. It showed an accuracy of 78.14% and 77.50% for the training and test phases, respectively. The second-best performer in the training phase is k-nearest neighbours (76.98%). For the test phase, the support vector machine, k-nearest neighbours and artificial neural network are tied for second place (72.50%).

Since random forest showed the best performance, we explored further based on this algorithm. We applied the three approaches (UFS, RFE and SFM) for feature optimisation on the random forest. The result is presented in Table 2. SFM shows the best outcome among these three approaches. Its accuracy is 85.00%, whereas the accuracies of UFS and RFE are 77.50% and 72.50%, respectively. As can be seen in Table 2, the accuracy for the testing phase increases from 77.50% in Table 1 (b) to 85.00% with the SFM feature optimisation. Table 3 shows the 19 selected features from the SFM output. Out of 44 features, SFM found that 19 of them play a significant role in predicting the outcomes.

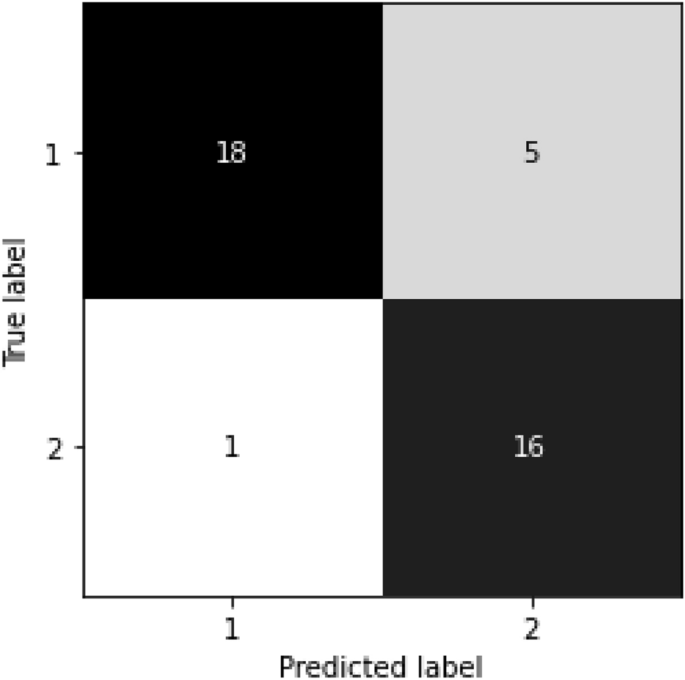

Further, Fig. 4 illustrates the confusion matrix when the random forest model with the SFM feature optimiser was applied to the test data. There are 18 true-positive, five false-negative, one false-positive and 16 true-negative cases. Therefore, the accuracy for the test phase is (18 + 16)/(18 + 5 + 1 + 16) = 85.00%.
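The accuracy calculation from the four confusion-matrix counts can be checked with a few lines of Python. The function names are illustrative; the counts are the ones reported above.

```python
def accuracy(tp, fn, fp, tn):
    """Share of all test cases that were classified correctly."""
    return (tp + tn) / (tp + fn + fp + tn)

def error_rate(tp, fn, fp, tn):
    """Share of all test cases that were misclassified."""
    return (fn + fp) / (tp + fn + fp + tn)

# Counts reported for the random forest model with the SFM feature optimiser.
counts = {"tp": 18, "fn": 5, "fp": 1, "tn": 16}
test_accuracy = accuracy(**counts)   # 34 correct out of 40
test_error = error_rate(**counts)    # 6 misclassified out of 40
```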

Confusion matrix results based on the random forest model with the SFM feature optimiser (1 for the rare class and 2 for the often class).

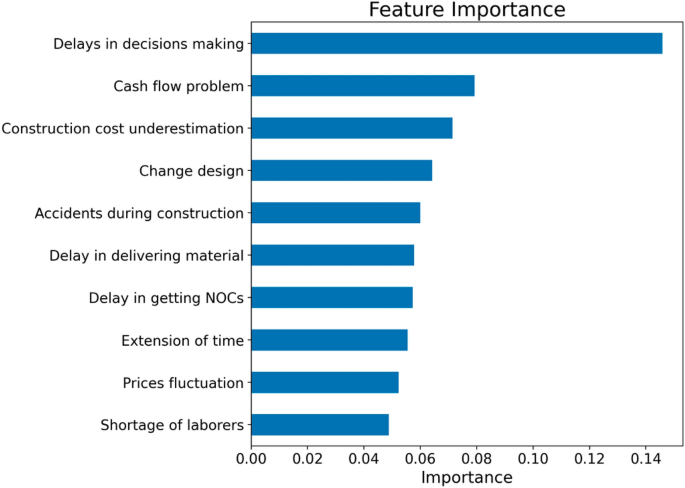

Figure 5 illustrates the top-10 most important features or variables based on the random forest algorithm with the SFM optimiser. We used feature importance based on the mean decrease in impurity to identify this list of important variables. Mean decrease in impurity computes each feature’s importance as the sum over the number of splits that include the feature, in proportion to the number of samples it splits 71. According to this figure, the delays in decision making attribute contributed most to the classification performance of the random forest algorithm, followed by the cash flow problem and construction cost underestimation attributes. The current construction project literature also highlights these top-10 factors as significant contributors to project cost overrun. For example, using construction project data from Jordan, Al-Hazim et al. 72 ranked 20 causes for cost overrun, including causes similar to these.
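In scikit-learn, the impurity-based importances of a fitted random forest are exposed through the `feature_importances_` attribute. The sketch below ranks them on synthetic data with 19 features; the data, the number of trees and the random seed are assumptions, so the ranking has no relation to the one in Fig. 5.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic stand-in: 139 instances with 19 features.
X, y = make_classification(n_samples=139, n_features=19,
                           n_informative=6, random_state=0)

forest = RandomForestClassifier(n_estimators=200, random_state=0).fit(X, y)

# Mean decrease in impurity, averaged over trees and normalised to sum to one.
importances = forest.feature_importances_

# Column indices of the ten most important features, most important first.
top10 = np.argsort(importances)[::-1][:10]
```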

Feature importance (top-10 out of 19) based on the random forest model with the SFM feature optimiser.

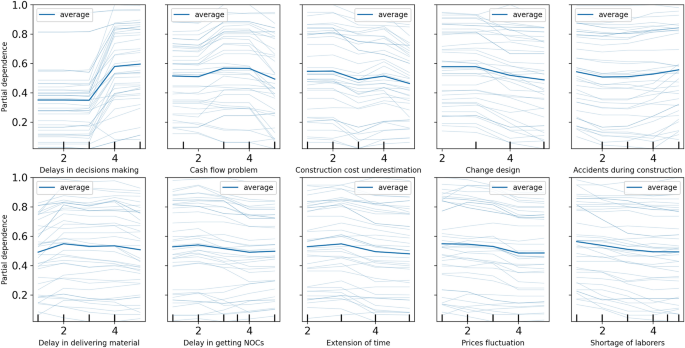

Further, we conduct a sensitivity analysis of the model’s ten most important features (from Fig. 5) to explore how a change in each feature affects the cost overrun. We utilise the partial dependence plot (PDP), which is a typical visualisation tool for non-parametric models 73, to display the outcomes of this analysis. A PDP can demonstrate whether the relation between the target and a feature is linear, monotonic, or more complicated. The result of the sensitivity analysis is presented in Fig. 6. For the ‘delays in decision making’ attribute, the PDP shows that the probability is below 0.4 until the rating value is three and increases afterwards. A higher value for this attribute indicates a higher risk of cost overrun. On the other hand, no significant differences can be seen for the remaining nine features when their values change.
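The values behind a partial dependence curve can be computed with `sklearn.inspection.partial_dependence`, as in the sketch below. The data are synthetic: three features hold ratings from 1 to 5, and the class label is constructed to depend only on the first feature, so the curve is known to rise with the rating. None of this reproduces the case-study data or Fig. 6.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import partial_dependence

rng = np.random.default_rng(0)

# Three rating-style features with values 1..5, stored as floats.
X = rng.integers(1, 6, size=(139, 3)).astype(float)

# Constructed label: 1 when the first rating is 4 or 5, otherwise 0.
y = (X[:, 0] >= 4).astype(int)

forest = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)

# Average predicted probability of class 1 as the first feature is varied.
result = partial_dependence(forest, X, features=[0],
                            kind="average", grid_resolution=10)
pd_curve = result["average"][0]
```

Each point on the curve is the model's mean predicted probability when every instance has the first feature set to one grid value while the other features keep their observed values.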

The result of the sensitivity analysis from the partial dependency plot tool for the ten most important features.

Summary of the case study

We illustrated an application of the proposed machine learning-based research framework in classifying construction projects. RF showed the highest accuracy in predicting the test dataset. For a new data instance that has values for its 19 features but no information on its classification, RF can identify its class (rare or often) correctly with a probability of 85.00%. If more data are provided to the machine learning algorithms, in addition to the 139 instances of the case study, their accuracy and efficiency in project classification are expected to improve with subsequent training. For example, if we provide 100 more data instances, these algorithms will have an additional 70 instances for training with a 70:30 split. This capacity for continuous improvement puts the machine learning algorithms in a superior position over other traditional methods. In the current literature, some studies explore the factors contributing to project delay or cost overrun. In most cases, they applied factor analysis or other related statistical methods for research data analysis 72, 74, 75. In addition to identifying important attributes, the proposed machine learning-based framework identified the ranking of factors and how eliminating less important factors affects the prediction accuracy when applied to this case study.

We shared the Python software developed to implement the four machine learning algorithms considered in this case study on GitHub 76, a software hosting website. A user-friendly version of this software can be accessed at https://share.streamlit.io/haohuilu/pa/main/app.py . The accuracy findings from this link could differ slightly from one run to another due to the hyperparameter settings of the corresponding machine learning algorithms.