What Is Computer Vision?

Computer vision is a field of artificial intelligence (AI) that applies machine learning to images and videos to understand media and make decisions about them. With computer vision, we can, in a sense, give vision to software and technology.

How Does Computer Vision Work?

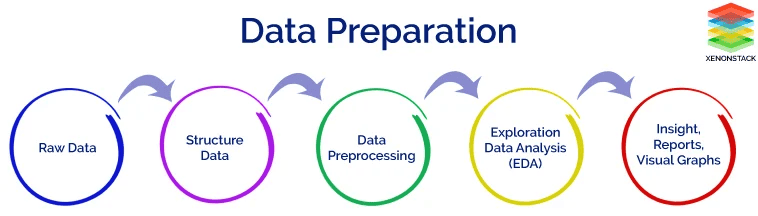

Computer vision programs use a combination of techniques to process raw images and turn them into usable data and insights.

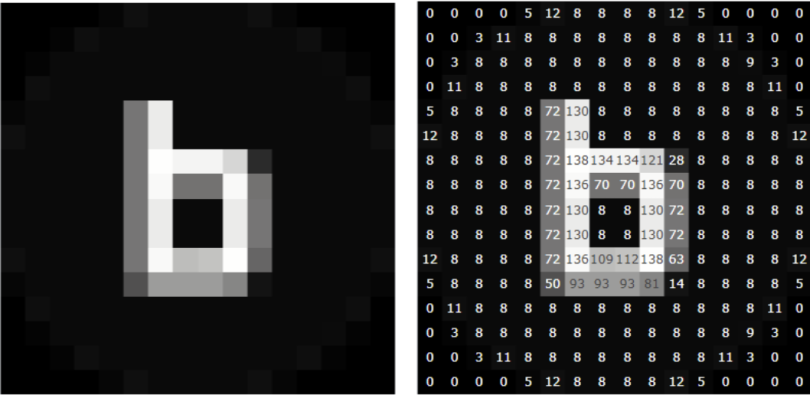

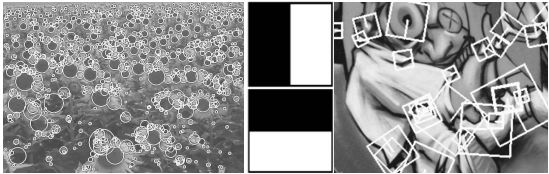

The basis for much computer vision work is 2D images, as shown below. While images may seem like a complex input, we can decompose them into raw numbers. An image is just a grid of individual pixels, and each pixel can be represented by a single number (grayscale) or by a combination of numbers, such as (255, 0, 0) for pure red in RGB.
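To make the "images are numbers" idea concrete, here is a minimal sketch in plain Python. The tiny images and the `to_grayscale` helper are made up for illustration, and averaging the three channels is only one simple (assumed) way to reduce a color pixel to a single number.

```python
# A tiny 3x3 grayscale image: one number per pixel (0 = black, 255 = white).
gray = [
    [0, 128, 255],
    [0, 128, 255],
    [0, 128, 255],
]

# A 1x2 RGB image: each pixel is a (red, green, blue) triple.
rgb = [[(255, 0, 0), (0, 0, 255)]]  # a pure red pixel next to a pure blue one


def to_grayscale(pixel):
    """Average the three channels (an assumed, deliberately simple conversion)."""
    r, g, b = pixel
    return (r + g + b) // 3


height, width = len(gray), len(gray[0])
print(height, width)             # image dimensions in pixels
print(to_grayscale(rgb[0][0]))   # the red pixel reduced to a single number
```

Real images work the same way, only with far more pixels per row and column.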

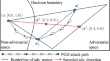

Once we’ve translated an image to a set of numbers, a computer vision algorithm processes them. One common approach is a convolutional neural network (CNN), which uses layers to group pixels together in order to create successively more meaningful representations of the data. A CNN may first translate pixels into lines, which are then combined to form features such as eyes and finally combined to create more complex items such as face shapes.
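The first step, turning pixels into lines, can be sketched with a single hand-written filter. This is an illustration only: the `convolve2d` helper, the toy image, and the filter values are assumptions, and a real CNN learns its filter weights from data rather than having them picked by hand.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding) and sum the elementwise products.

    Like most deep learning libraries, this does not flip the kernel,
    so strictly speaking it computes a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# 4x4 image: dark left half, bright right half (a vertical edge in the middle).
image = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [0, 0, 255, 255],
]

# Hand-picked vertical-edge filter (hypothetical values for illustration).
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, vertical_edge)
for row in feature_map:
    print(row)
```

The feature map is large only where the filter sits on the dark-to-bright boundary and zero elsewhere, which is what "detecting a line" means at this level.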

Why Is Computer Vision Important?

Computer vision has been around since as early as the 1950s and continues to be a popular field of research with many applications. According to the deep learning research group BitRefine, we should expect the computer vision industry to grow to nearly 50 billion USD in 2022, with 75 percent of the revenue deriving from hardware.

The importance of computer vision comes from the increasing need for computers to be able to understand the human environment. To understand the environment, it helps if computers can see what we do, which means mimicking the sense of human vision. This is especially important as we develop more complex AI systems that are more human-like in their abilities.

Computer Vision Examples

Computer vision is often used in everyday life and its applications range from simple to very complex.

Optical character recognition (OCR) is one of the most widespread applications of computer vision. The best-known case today is Google Translate, which can take an image of anything, from menus to signboards, and convert it into text that the program then translates into the user’s native language. We can also apply OCR in other use cases such as automated tolling of cars on highways and translating hand-written documents into digital counterparts.

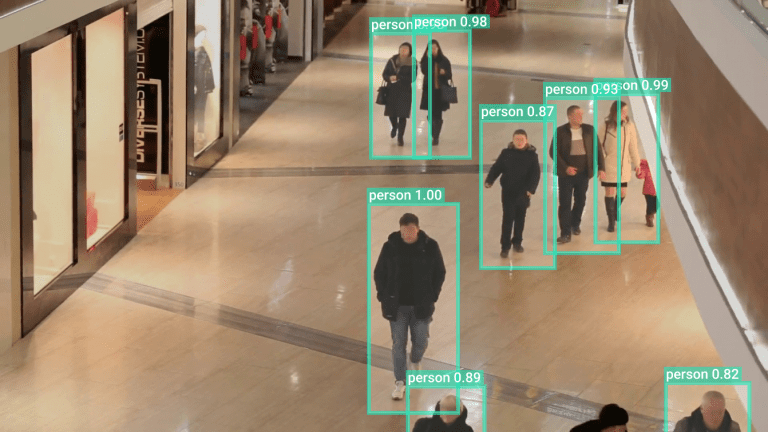

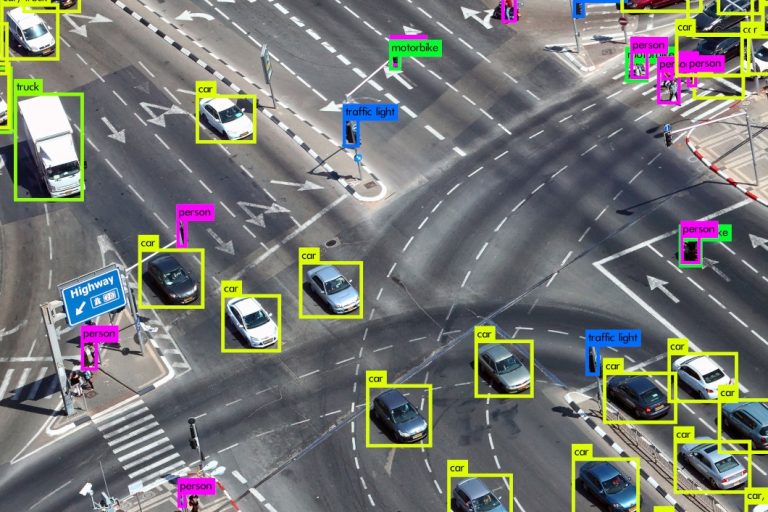

A more recent application, which is still under development and will play a big role in the future of transportation, is object recognition. In object recognition, an algorithm takes an input image and searches for a set of objects within it, drawing a boundary around each object and labeling it. This application is critical in self-driving cars, which need to quickly identify their surroundings in order to decide on the best course of action.

Computer Vision Applications

- Facial recognition

- Self-driving cars

- Robotic automation

- Medical anomaly detection

- Sports performance analysis

- Manufacturing fault detection

- Agricultural monitoring

- Plant species classification

- Text parsing

What Are the Risks of Computer Vision?

As with all technology, computer vision is a tool, which means that it can have benefits, but also risks. Computer vision has many applications in everyday life that make it a useful part of modern society but recent concerns have been raised around privacy. The issue that we see most often in the media is around facial recognition. Facial recognition technology uses computer vision to identify specific people in photos and videos. In its lightest form it’s used by companies such as Meta or Google to suggest people to tag in photos, but it can also be used by law enforcement agencies to track suspicious individuals. Some people feel facial recognition violates privacy, especially when private companies may use it to track customers to learn their movements and buying patterns.

Computer vision is a field of artificial intelligence (AI) that uses machine learning and neural networks to teach computers and systems to derive meaningful information from digital images, videos and other visual inputs—and to make recommendations or take actions when they see defects or issues.

If AI enables computers to think, computer vision enables them to see, observe and understand.

Computer vision works much the same as human vision, except humans have a head start. Human sight has the advantage of lifetimes of context to train how to tell objects apart, how far away they are, whether they are moving or something is wrong with an image.

Computer vision trains machines to perform these functions, but it must do it in much less time with cameras, data and algorithms rather than retinas, optic nerves and a visual cortex. Because a system trained to inspect products or watch a production asset can analyze thousands of products or processes a minute, noticing imperceptible defects or issues, it can quickly surpass human capabilities.

Computer vision is used in industries that range from energy and utilities to manufacturing and automotive—and the market is continuing to grow. It is expected to reach USD 48.6 billion by 2022. 1

Computer vision needs lots of data. It runs analyses of data over and over until it discerns distinctions and ultimately recognizes images. For example, to train a computer to recognize automobile tires, it needs to be fed vast quantities of tire images and tire-related items to learn the differences and recognize a tire, especially one with no defects.

Two essential technologies are used to accomplish this: a type of machine learning called deep learning and a convolutional neural network (CNN).

Machine learning uses algorithmic models that enable a computer to teach itself about the context of visual data. If enough data is fed through the model, the computer will “look” at the data and teach itself to tell one image from another. Algorithms enable the machine to learn by itself, rather than someone programming it to recognize an image.

A CNN helps a machine learning or deep learning model “look” by treating an image as a grid of pixel values. It slides small filters over that grid to perform convolutions (a mathematical operation on two functions that produces a third function) and uses the results to make predictions about what it is “seeing.” During training, the images carry tags or labels, and the neural network runs convolutions and checks the accuracy of its predictions against those labels in a series of iterations until the predictions start to come true. It is then recognizing or seeing images in a way similar to humans.

Much like a human making out an image at a distance, a CNN first discerns hard edges and simple shapes, then fills in information as it runs iterations of its predictions. A CNN is used to understand single images. A recurrent neural network (RNN) is used in a similar way for video applications to help computers understand how pictures in a series of frames are related to one another.
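The predict, check, and adjust cycle described above can be sketched with a far simpler model than a CNN. The example below is a single-neuron perceptron, swapped in purely for brevity; the four-pixel "images", their labels, and the function names are all hypothetical.

```python
def predict(weights, bias, pixels):
    """Return 1 ("bright image") if the weighted pixel sum is positive, else 0."""
    score = sum(w * p for w, p in zip(weights, pixels)) + bias
    return 1 if score > 0 else 0


def train(samples, max_passes=100):
    """Repeat passes over the labeled data until every prediction is correct."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for passes in range(1, max_passes + 1):
        mistakes = 0
        for pixels, label in samples:
            error = label - predict(weights, bias, pixels)  # -1, 0 or +1
            if error != 0:
                mistakes += 1
                # Nudge the weights toward the correct answer.
                weights = [w + error * p for w, p in zip(weights, pixels)]
                bias += error
        if mistakes == 0:  # predictions have "come true" on the training set
            break
    return weights, bias, passes


# Toy 4-pixel images scaled to 0..1, labeled 1 (bright) or 0 (dark).
samples = [
    ([0.9, 0.8, 1.0, 0.9], 1),
    ([0.1, 0.0, 0.2, 0.1], 0),
    ([0.8, 1.0, 0.7, 0.9], 1),
    ([0.2, 0.1, 0.0, 0.3], 0),
]

weights, bias, passes = train(samples)
print("passes needed:", passes)
print(predict(weights, bias, [0.95, 0.9, 0.85, 1.0]))  # an unseen bright image
```

A CNN follows the same loop, but the adjustable numbers are the filter weights of its convolutional layers and the updates come from gradient descent rather than this simple rule.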

Scientists and engineers have been trying to develop ways for machines to see and understand visual data for about 60 years. Experimentation began in 1959 when neurophysiologists showed a cat an array of images, attempting to correlate a response in its brain. They discovered that it responded first to hard edges or lines and scientifically, this meant that image processing starts with simple shapes like straight edges. 2

At about the same time, the first computer image scanning technology was developed, enabling computers to digitize and acquire images. Another milestone was reached in 1963 when computers were able to transform two-dimensional images into three-dimensional forms. In the 1960s, AI emerged as an academic field of study and it also marked the beginning of the AI quest to solve the human vision problem.

1974 saw the introduction of optical character recognition (OCR) technology, which could recognize text printed in any font or typeface. 3 Similarly, intelligent character recognition (ICR) could decipher hand-written text using neural networks. 4 Since then, OCR and ICR have found their way into document and invoice processing, vehicle plate recognition, mobile payments, machine conversion and other common applications.

In 1982, neuroscientist David Marr established that vision works hierarchically and introduced algorithms for machines to detect edges, corners, curves and similar basic shapes. Concurrently, computer scientist Kunihiko Fukushima developed a network of cells that could recognize patterns. The network, called the Neocognitron, included convolutional layers in a neural network.

By 2000, the focus of study was on object recognition, and by 2001, the first real-time face recognition applications appeared. Standardization of how visual data sets are tagged and annotated emerged through the 2000s. In 2010, the ImageNet data set became available. It contained millions of tagged images across a thousand object classes and provided a foundation for the CNNs and deep learning models used today. In 2012, a team from the University of Toronto entered a CNN into an image recognition contest. The model, called AlexNet, significantly reduced the error rate for image recognition. Since this breakthrough, error rates have fallen to just a few percent. 5

There is a lot of research being done in the computer vision field, but it doesn't stop there. Real-world applications demonstrate how important computer vision is to endeavors in business, entertainment, transportation, healthcare and everyday life. A key driver for the growth of these applications is the flood of visual information flowing from smartphones, security systems, traffic cameras and other visually instrumented devices. This data could play a major role in operations across industries, but today goes unused. The information creates a test bed to train computer vision applications and a launchpad for them to become part of a range of human activities:

- IBM used computer vision to create My Moments for the 2018 Masters golf tournament. IBM Watson® watched hundreds of hours of Masters footage and could identify the sights (and sounds) of significant shots. It curated these key moments and delivered them to fans as personalized highlight reels.

- Google Translate lets users point a smartphone camera at a sign in another language and almost immediately obtain a translation of the sign in their preferred language. 6

- The development of self-driving vehicles relies on computer vision to make sense of the visual input from a car’s cameras and other sensors. It’s essential to identify other cars, traffic signs, lane markers, pedestrians, bicycles and all of the other visual information encountered on the road.

- IBM is applying computer vision technology with partners like Verizon to bring intelligent AI to the edge and to help automotive manufacturers identify quality defects before a vehicle leaves the factory.

Many organizations don’t have the resources to fund computer vision labs and create deep learning models and neural networks. They may also lack the computing power that is required to process huge sets of visual data. Companies such as IBM are helping by offering computer vision software development services. These services deliver pre-built learning models available from the cloud—and also ease demand on computing resources. Users connect to the services through an application programming interface (API) and use them to develop computer vision applications.

IBM has also introduced a computer vision platform that addresses both developmental and computing resource concerns. IBM Maximo® Visual Inspection includes tools that enable subject matter experts to label, train and deploy deep learning vision models—without coding or deep learning expertise. The vision models can be deployed in local data centers, the cloud and edge devices.

While it’s getting easier to obtain resources to develop computer vision applications, an important question to answer early on is: What exactly will these applications do? Understanding and defining specific computer vision tasks can focus and validate projects and applications and make it easier to get started.

Here are a few examples of established computer vision tasks:

- Image classification sees an image and can classify it (a dog, an apple, a person’s face). More precisely, it is able to accurately predict that a given image belongs to a certain class. For example, a social media company might want to use it to automatically identify and segregate objectionable images uploaded by users.

- Object detection can use image classification to identify a certain class of object and then detect and tabulate its appearances in an image or video. Examples include detecting damage on an assembly line or identifying machinery that requires maintenance.

- Object tracking follows or tracks an object once it is detected. This task is often executed with images captured in sequence or real-time video feeds. Autonomous vehicles, for example, need to not only classify and detect objects such as pedestrians, other cars and road infrastructure, they need to track them in motion to avoid collisions and obey traffic laws. 7

- Content-based image retrieval uses computer vision to browse, search and retrieve images from large data stores, based on the content of the images rather than metadata tags associated with them. This task can incorporate automatic image annotation that replaces manual image tagging. These tasks can be used for digital asset management systems and can increase the accuracy of search and retrieval.
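As a rough illustration of the last task, content-based retrieval can be sketched by describing each image with a brightness histogram and returning the stored image whose histogram is closest to the query. Everything here is an assumption made for the example: the image names, the pixel values, and the choice of a four-bin histogram as the "content" descriptor. Production systems typically use learned features instead.

```python
import math


def histogram(pixels, bins=4):
    """Share of grayscale values (0-255) falling into each equal-width bin."""
    counts = [0] * bins
    for value in pixels:
        counts[min(value * bins // 256, bins - 1)] += 1
    return [c / len(pixels) for c in counts]


def distance(a, b):
    """Euclidean distance between two histograms."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Hypothetical image store: name -> flattened grayscale pixels.
store = {
    "night_sky": [5, 10, 20, 15, 30, 8],
    "snow_field": [250, 240, 245, 255, 230, 248],
    "gray_wall": [120, 130, 125, 128, 135, 122],
}

# Index the store once by content, not by metadata tags.
index = {name: histogram(pixels) for name, pixels in store.items()}


def retrieve(query_pixels):
    """Return the name of the stored image most similar to the query."""
    query = histogram(query_pixels)
    return min(index, key=lambda name: distance(index[name], query))


print(retrieve([235, 250, 242, 251, 247, 239]))  # a bright query image
```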

1. 7 Amazing Examples of Computer And Machine Vision In Practice, Bernard Marr, Forbes, April 8, 2019, https://www.forbes.com/sites/bernardmarr/2019/04/08/7-amazing-examples-of-computer-and-machine-vision-in-practice/#3dbb3f751018

2. A Brief History of Computer Vision (and Convolutional Neural Networks), Rostyslav Demush, Hacker Noon, February 27, 2019, https://hackernoon.com/a-brief-history-of-computer-vision-and-convolutional-neural-networks-8fe8aacc79f3

3. Optical character recognition, Wikipedia

4. Intelligent character recognition, Wikipedia

5. A Brief History of Computer Vision (and Convolutional Neural Networks), Rostyslav Demush, Hacker Noon, February 27, 2019

6. 7 Amazing Examples of Computer And Machine Vision In Practice, Bernard Marr, Forbes, April 8, 2019

7. The 5 Computer Vision Techniques That Will Change How You See The World, James Le, Heartbeat, April 12, 2018

Top Computer Vision Papers of All Time (Updated 2024)

Today’s boom in computer vision (CV) started at the beginning of the 21st century with the breakthrough of deep learning models and convolutional neural networks (CNNs). The main CV methods include image classification, image localization, object detection, and segmentation.

In this article, we dive into some of the most significant research papers that triggered the rapid development of computer vision. We split them into two categories – classical CV approaches, and papers based on deep-learning. We chose the following papers based on their influence, quality, and applicability.

- Gradient-based Learning Applied to Document Recognition (1998)

- Distinctive Image Features from Scale-Invariant Keypoints (2004)

- Histograms of Oriented Gradients for Human Detection (2005)

- SURF: Speeded Up Robust Features (2006)

- ImageNet Classification with Deep Convolutional Neural Networks (2012)

- Very Deep Convolutional Networks for Large-Scale Image Recognition (2014)

- GoogLeNet – Going Deeper with Convolutions (2014)

- ResNet – Deep Residual Learning for Image Recognition (2015)

- Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks (2015)

- YOLO: You Only Look Once: Unified, Real-Time Object Detection (2016)

- Mask R-CNN (2017)

- EfficientNet – Rethinking Model Scaling for Convolutional Neural Networks (2019)

Classic Computer Vision Papers

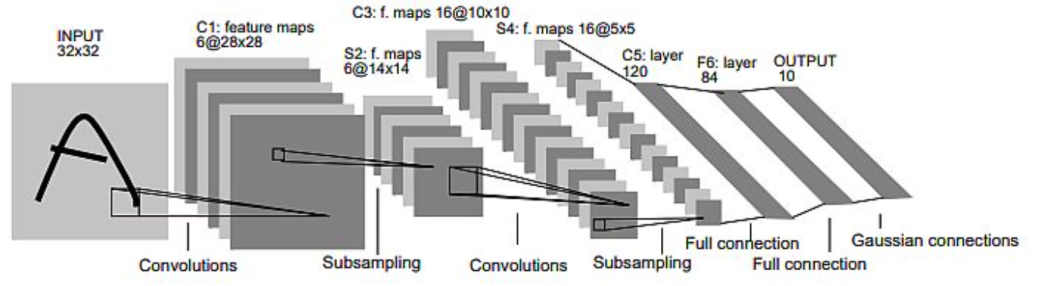

Yann LeCun, Leon Bottou, Yoshua Bengio, and Patrick Haffner published the LeNet paper in 1998. They introduced the concept of a trainable Graph Transformer Network (GTN) for handwritten character and word recognition. They researched discriminative and non-discriminative gradient-based techniques for training the recognizer without manual segmentation and labeling.

Characteristics of the model:

- The first convolutional layer of LeNet-5 (C1) has 6 feature maps and 156 trainable parameters.

- The input is a 32×32 pixel image and the output layer is composed of Euclidean Radial Basis Function units (RBF) one for each class (letter).

- The training set consists of 60,000 examples, and the authors achieved a 0.35% error rate on the training set (after 19 passes).
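The figure of 156 trainable parameters can be reproduced with a little arithmetic. The sketch below assumes 5×5 filters with one bias each and a single-channel input, details taken from the LeNet paper rather than from the list above; the helper names are made up for the example.

```python
def conv_output_size(input_size, kernel_size, stride=1, padding=0):
    """Width (or height) of a convolution's output feature map."""
    return (input_size + 2 * padding - kernel_size) // stride + 1


def conv_params(kernel_size, in_channels, out_channels):
    """Weights plus one bias per output feature map."""
    return (kernel_size * kernel_size * in_channels + 1) * out_channels


# Assumed first layer: 32x32 grayscale input, six 5x5 filters, no padding.
c1_size = conv_output_size(32, 5)
c1_params = conv_params(kernel_size=5, in_channels=1, out_channels=6)

print(c1_size, c1_params)
```

Each of the six filters has 25 weights and one bias, which is where 6 × 26 = 156 comes from.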

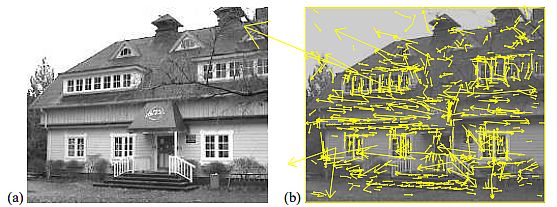

David Lowe (2004) proposed a method for extracting distinctive invariant features from images and used them to perform reliable matching between different views of an object or scene. The paper introduced the Scale Invariant Feature Transform (SIFT), which transforms image data into scale-invariant coordinates relative to local features.

Model characteristics:

- The method generates large numbers of features that densely cover the image over the full range of scales and locations.

- To reliably detect small objects in cluttered backgrounds, the model needs to correctly match at least 3 features from each object.

- For image matching and recognition, the model extracts SIFT features from a set of reference images stored in a database.

- SIFT matches a new image by individually comparing each of its features to this database, using Euclidean distance.
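The matching step in the last bullet can be sketched as a nearest-neighbor search. This is a simplified illustration, not Lowe's full pipeline: the four-dimensional descriptors and object labels are invented (real SIFT descriptors have 128 dimensions), and refinements from the paper, such as comparing the nearest and second-nearest distances, are omitted.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two descriptors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def match_features(query_descriptors, database):
    """For each query descriptor, return (label, distance) of its nearest database entry."""
    matches = []
    for q in query_descriptors:
        label, descriptor = min(database, key=lambda item: euclidean(q, item[1]))
        matches.append((label, euclidean(q, descriptor)))
    return matches


# Hypothetical reference database: (object label, toy 4-D descriptor).
database = [
    ("mug", [0.9, 0.1, 0.0, 0.2]),
    ("mug", [0.8, 0.2, 0.1, 0.1]),
    ("book", [0.1, 0.9, 0.7, 0.0]),
    ("book", [0.0, 0.8, 0.9, 0.1]),
]

# Descriptors extracted from a new image (also made up).
query = [[0.85, 0.15, 0.05, 0.15], [0.05, 0.85, 0.8, 0.05]]

matches = match_features(query, database)
labels = [label for label, _ in matches]
print(labels)
```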

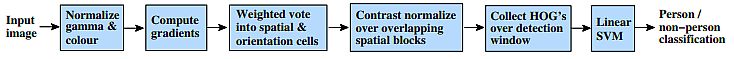

Navneet Dalal and Bill Triggs studied feature sets for robust visual object recognition, using linear SVM-based human detection as a test case. They showed experimentally that grids of Histograms of Oriented Gradient (HOG) descriptors significantly outperform existing feature sets for human detection.

Authors’ achievements:

- The histogram method gave near-perfect separation on the original MIT pedestrian database.

- For good results, the model requires fine-scale gradients, fine orientation binning, relatively coarse spatial binning, and high-quality local contrast normalization in overlapping descriptor blocks.

- The researchers introduced a more challenging dataset containing over 1800 annotated human images with many pose variations and backgrounds.

- In the standard detector, each HOG cell appears four times with different normalizations, which improves performance to 89%.
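The core of a HOG descriptor is a per-cell histogram of gradient orientations. The sketch below computes one such histogram under simplifying assumptions: nine unsigned orientation bins over 0 to 180 degrees, centered differences, border pixels skipped, and no vote interpolation or block normalization. The function name and the toy cell are hypothetical.

```python
import math


def cell_histogram(cell, bins=9):
    """Orientation histogram of one grayscale cell, weighted by gradient magnitude."""
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    for y in range(1, len(cell) - 1):
        for x in range(1, len(cell[0]) - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]   # horizontal intensity change
            gy = cell[y + 1][x] - cell[y - 1][x]   # vertical intensity change
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0  # unsigned orientation
            hist[int(angle // bin_width) % bins] += magnitude
    return hist


# 4x4 cell containing a vertical edge: intensity changes only along x.
cell = [
    [0, 0, 100, 100],
    [0, 0, 100, 100],
    [0, 0, 100, 100],
    [0, 0, 100, 100],
]

hist = cell_histogram(cell)
print(hist)
```

All the gradient energy lands in the first bin because every gradient in this cell points horizontally. A full detector concatenates many such histograms after normalizing them over overlapping blocks.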

Herbert Bay, Tinne Tuytelaars, and Luc Van Gool presented a scale- and rotation-invariant interest point detector and descriptor called SURF (Speeded Up Robust Features). It outperforms previously proposed schemes in repeatability, distinctiveness, and robustness, while computing much faster. The authors relied on integral images for image convolutions and built on the strengths of the leading existing detectors and descriptors.

- Applied a Hessian matrix-based measure for the detector, and a distribution-based descriptor, simplifying these methods to the essential.

- Presented experimental results on a standard evaluation set, as well as on imagery obtained in the context of a real-life object recognition application.

- SURF showed strong performance – SURF-128 with an 85.7% recognition rate, followed by U-SURF (83.8%) and SURF (82.6%).
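Much of SURF's speed comes from the integral images mentioned above: once the table is built, the sum of any rectangular region takes four lookups, regardless of its size. The sketch below shows the data structure itself, not the SURF detector; the function names and the 3×3 image are assumptions for illustration.

```python
def integral_image(image):
    """Build a table where ii[y][x] is the sum of image rows < y and columns < x.

    The extra zero row and column remove the need for boundary checks.
    """
    h, w = len(image), len(image[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        for x in range(w):
            ii[y + 1][x + 1] = image[y][x] + ii[y][x + 1] + ii[y + 1][x] - ii[y][x]
    return ii


def box_sum(ii, top, left, bottom, right):
    """Sum of pixels in rows top..bottom-1 and columns left..right-1 (four lookups)."""
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


image = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]

ii = integral_image(image)
total = box_sum(ii, 0, 0, 3, 3)        # the whole image
lower_right = box_sum(ii, 1, 1, 3, 3)  # 5 + 6 + 8 + 9
print(total, lower_right)
```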

Papers Based on Deep-Learning Models

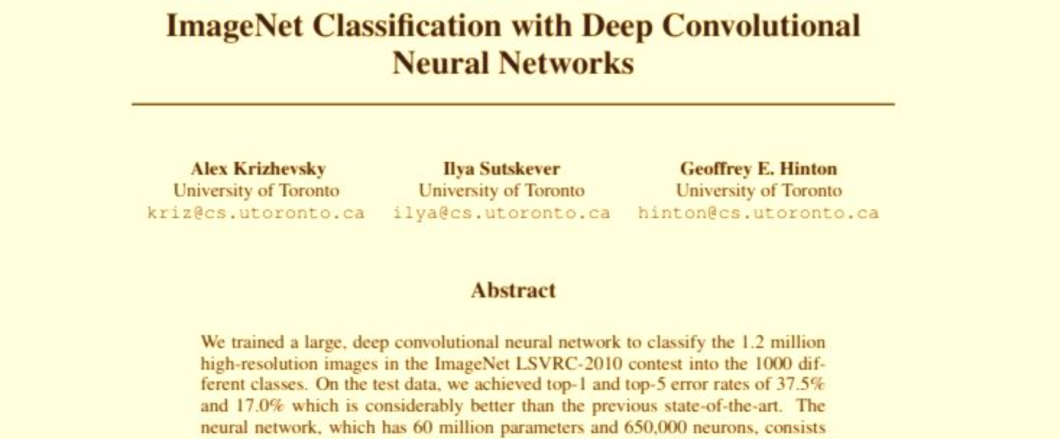

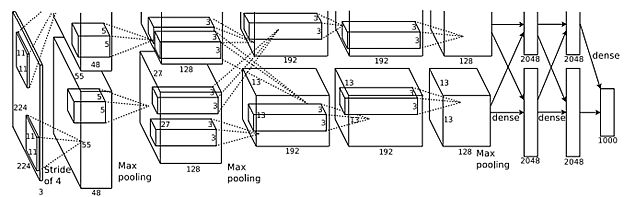

Alex Krizhevsky and his team won the ImageNet Challenge in 2012 with a deep convolutional neural network. They trained one of the largest CNNs at that time on the subsets of ImageNet used in the ILSVRC-2010 and ILSVRC-2012 challenges and achieved the best results reported on these datasets. They wrote a highly optimized GPU implementation of 2D convolution and the other operations required for CNN training, and made it publicly available.

- The final CNN contained five convolutional and three fully connected layers, and the depth was quite significant.

- They found that removing any convolutional layer (each containing less than 1% of the model’s parameters) resulted in inferior performance.

- The same CNN, with an extra sixth convolutional layer, was used to classify the entire ImageNet Fall 2011 release (15M images, 22K categories).

- After fine-tuning on ImageNet-2012 it gave an error rate of 16.6%.

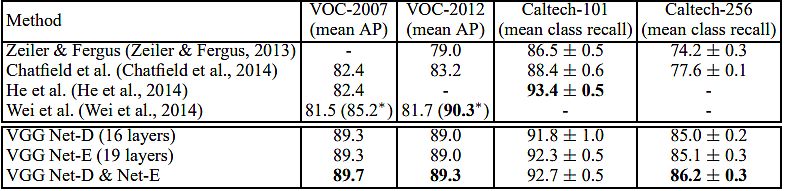

Karen Simonyan and Andrew Zisserman (Oxford University) investigated the effect of convolutional network depth on accuracy in the large-scale image recognition setting. Their main contribution is a thorough evaluation of networks of increasing depth using an architecture with very small (3×3) convolution filters, specifically focusing on very deep convolutional networks (VGG). They showed that a significant improvement on the prior-art configurations can be achieved by pushing the depth to 16–19 weight layers.

- Their ImageNet Challenge 2014 submission secured the first and second places in the localization and classification tracks respectively.

- They showed that their representations generalize well to other datasets, where they achieved state-of-the-art results.

- They made their two best-performing ConvNet models publicly available to facilitate further research on deep visual representations in computer vision.
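One reason very small 3×3 filters work well in deep stacks is simple arithmetic: three stacked 3×3 layers see the same 7×7 region as a single 7×7 layer but use fewer weights. The sketch below checks this; the channel count of 64 is an illustrative assumption, biases are ignored, and the helper names are made up.

```python
def receptive_field(num_layers, kernel_size):
    """Input region seen by one output unit after stacked stride-1 convolutions."""
    return 1 + num_layers * (kernel_size - 1)


def conv_weights(kernel_size, channels, num_layers=1):
    """Weight count for layers that keep the channel count fixed (biases ignored)."""
    return num_layers * kernel_size * kernel_size * channels * channels


channels = 64  # illustrative choice

stacked_field = receptive_field(num_layers=3, kernel_size=3)
single_field = receptive_field(num_layers=1, kernel_size=7)
stacked_weights = conv_weights(3, channels, num_layers=3)
single_weights = conv_weights(7, channels)

print(stacked_field, single_field)      # same coverage
print(stacked_weights, single_weights)  # fewer weights in the stack
```

The stack also applies a non-linearity after each of its three layers instead of just one.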

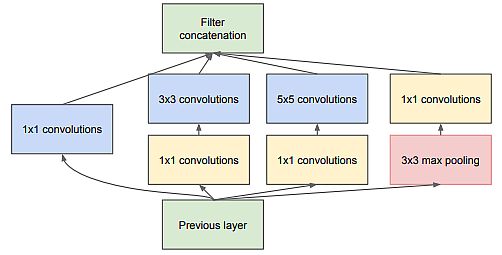

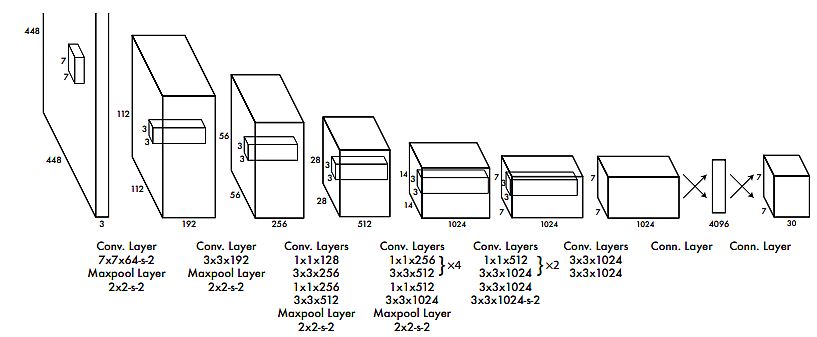

The Google team (Christian Szegedy, Wei Liu, et al.) proposed a deep convolutional neural network architecture codenamed Inception. They intended to set the new state of the art for classification and detection in the ImageNet Large-Scale Visual Recognition Challenge 2014 (ILSVRC14). The main hallmark of their architecture was the improved utilization of the computing resources inside the network.

- A carefully crafted design that allows for increasing the depth and width of the network while keeping the computational budget constant.

- Their submission for ILSVRC14 was called GoogLeNet, a 22-layer deep network. Its quality was assessed in the context of classification and detection.

- They added 200 region proposals coming from multi-box, increasing the coverage from 92% to 93%.

- Lastly, they used an ensemble of 6 ConvNets when classifying each region, which improved results from 40% to 43.9% accuracy.

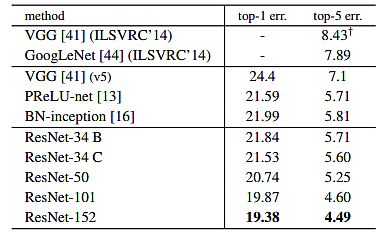

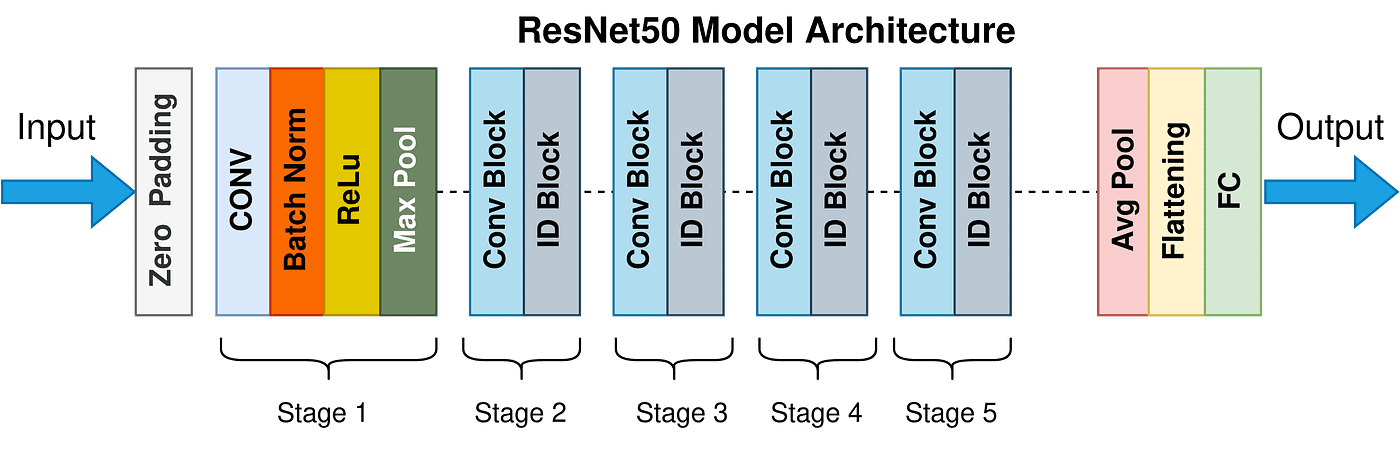

Microsoft researchers Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun presented a residual learning framework (ResNet) to ease the training of networks that are substantially deeper than those used previously. They reformulated the layers as learning residual functions with reference to the layer inputs, instead of learning unreferenced functions.

- They evaluated residual nets with a depth of up to 152 layers – 8× deeper than VGG nets, but still having lower complexity.

- An ensemble of these residual nets won 1st place on the ILSVRC 2015 classification task.

- The team also analyzed CIFAR-10 with 100 and 1000 layers. On the COCO object detection dataset, the deeper representations gave a 28% relative improvement.

- Moreover, in the ILSVRC & COCO 2015 competitions, they won 1st place on the tasks of ImageNet detection, ImageNet localization, COCO detection, and COCO segmentation.
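The residual idea can be shown in a few lines: instead of learning an output directly, a block learns a correction F(x) that is added to its own input. The sketch below uses tiny fully connected layers in plain Python for readability, whereas the paper's blocks are convolutional; the function names and weights are assumptions.

```python
def relu(v):
    """Elementwise rectified linear unit."""
    return [max(0.0, x) for x in v]


def linear(weights, v):
    """Matrix-vector product (one row of weights per output value)."""
    return [sum(w * x for w, x in zip(row, v)) for row in weights]


def residual_block(x, w1, w2):
    """Compute relu(F(x) + x), where F(x) = w2 * relu(w1 * x)."""
    fx = linear(w2, relu(linear(w1, x)))
    return relu([f + xi for f, xi in zip(fx, x)])


x = [1.0, 2.0]
zeros = [[0.0, 0.0], [0.0, 0.0]]

# With all-zero weights F(x) is zero, so the block passes its input through.
y = residual_block(x, zeros, zeros)
print(y)
```

Because a block with zero weights leaves its (non-negative) input unchanged, adding more blocks does not have to make the network worse, which helps explain why very deep residual nets remain trainable.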

Shaoqing Ren, Kaiming He, Ross Girshick, and Jian Sun introduced the Region Proposal Network (RPN), which shares full-image convolutional features with the detection network, thereby enabling nearly cost-free region proposals. Their RPN is a fully convolutional network that simultaneously predicts object bounds and objectness scores at each position. They trained the RPN end-to-end to generate high-quality region proposals, which Fast R-CNN uses for detection.

- Merged RPN and Fast R-CNN into a single network by sharing their convolutional features. In the terminology of neural networks with “attention” mechanisms, the RPN tells the unified network where to look.

- For the very deep VGG-16 model, their detection system had a frame rate of 5fps on a GPU.

- Achieved state-of-the-art object detection accuracy on PASCAL VOC 2007, 2012, and MS COCO datasets with only 300 proposals per image.

- In the ILSVRC and COCO 2015 competitions, Faster R-CNN and RPN were the foundations of the 1st-place winning entries in several tracks.

Joseph Redmon, Santosh Divvala, Ross Girshick, and Ali Farhadi developed YOLO, an innovative approach to object detection. Instead of repurposing classifiers to perform detection, the authors framed object detection as a regression problem to spatially separated bounding boxes and associated class probabilities. A single neural network predicts bounding boxes and class probabilities directly from full images in one evaluation. Since the whole detection pipeline is a single network, it can be optimized end-to-end directly on detection performance.

- The base YOLO model processed images in real-time at 45 frames per second.

- A smaller version of the network, Fast YOLO, processed 155 frames per second, while still achieving double the mAP of other real-time detectors.

- Compared to state-of-the-art detection systems, YOLO was making more localization errors, but was less likely to predict false positives in the background.

- YOLO learned very general representations of objects and outperformed other detection methods, including DPM and R-CNN, when generalizing from natural images to other domains such as artwork.
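Treating detection as regression means the network emits one fixed-size block of numbers per image. The sketch below works out that size using the settings reported in the YOLO paper (a 7×7 grid, 2 boxes per cell, 20 classes); those values and the helper names are not part of the summary above and are included as assumptions.

```python
def yolo_output_shape(grid_size, boxes_per_cell, num_classes):
    """Shape of the prediction tensor for one image.

    Each box contributes 5 numbers (x, y, width, height, confidence);
    each grid cell also predicts one probability per class.
    """
    return (grid_size, grid_size, boxes_per_cell * 5 + num_classes)


def class_confidence(class_probability, box_confidence):
    """Score for a specific class in a specific box."""
    return class_probability * box_confidence


shape = yolo_output_shape(grid_size=7, boxes_per_cell=2, num_classes=20)
values_per_image = shape[0] * shape[1] * shape[2]

print(shape, values_per_image)
```

Every one of these values comes out of a single forward pass, which is what allows the frame rates quoted above.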

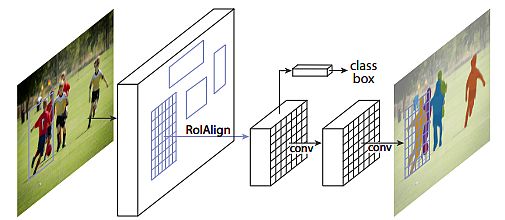

Kaiming He, Georgia Gkioxari, Piotr Dollar, and Ross Girshick (Facebook) presented a conceptually simple, flexible, and general framework for object instance segmentation. Their approach could detect objects in an image, while simultaneously generating a high-quality segmentation mask for each instance. The method, called Mask R-CNN, extended Faster R-CNN by adding a branch for predicting an object mask in parallel with the existing branch for bounding box recognition.

- Mask R-CNN is simple to train and adds only a small overhead to Faster R-CNN, running at 5 fps.

- Showed top results in all three tracks of the COCO suite of challenges: instance segmentation, bounding box object detection, and person keypoint detection.

- Mask R-CNN outperformed all existing, single-model entries on every task, including the COCO 2016 challenge winners.

- The model served as a solid baseline and eased future research in instance-level recognition.

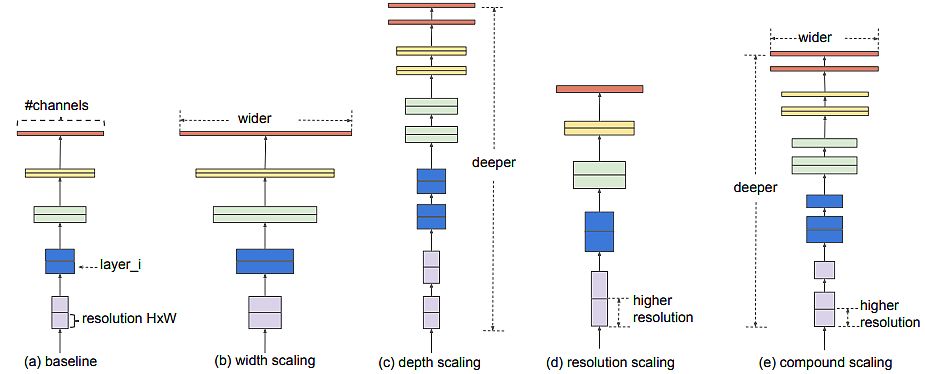

Mingxing Tan and Quoc V. Le, the authors of EfficientNet, studied model scaling and found that carefully balancing network depth, width, and resolution can lead to better performance. They proposed a new scaling method that uniformly scales all three dimensions (depth, width, and resolution) using a simple but effective compound coefficient. They demonstrated the effectiveness of this method by scaling up MobileNets and ResNet.

- Designed a new baseline network and scaled it up to obtain a family of models called EfficientNets, which achieved much better accuracy and efficiency than previous ConvNets.

- EfficientNet-B7 achieved state-of-the-art 84.3% top-1 accuracy on ImageNet, while being 8.4x smaller and 6.1x faster on inference than the best existing ConvNet.

- It also transferred well and achieved state-of-the-art accuracy on CIFAR-100 (91.7%), Flowers (98.8%), and 3 other transfer learning datasets, with much fewer parameters.
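Compound scaling can be sketched numerically. One coefficient, phi, raises three fixed bases to the same power, so depth, width, and resolution grow together. The base values 1.2, 1.1, and 1.15 are the ones reported in the EfficientNet paper and are not stated in the summary above; the function names are made up for the example.

```python
# Assumed bases from the paper: depth, width, and resolution multipliers per step.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15


def compound_scale(phi):
    """Return (depth, width, resolution) multipliers for compound coefficient phi."""
    return ALPHA ** phi, BETA ** phi, GAMMA ** phi


def flops_multiplier(phi):
    """Approximate compute growth: linear in depth, quadratic in width and resolution."""
    depth, width, resolution = compound_scale(phi)
    return depth * width ** 2 * resolution ** 2


for phi in range(4):
    depth, width, resolution = compound_scale(phi)
    print(phi, round(depth, 2), round(width, 2), round(resolution, 2),
          round(flops_multiplier(phi), 2))
```

With these bases, each increment of phi roughly doubles the compute budget, which makes the coefficient a convenient knob for trading accuracy against cost.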

Foundations of Computer Vision

Antonio Torralba, Phillip Isola, and William Freeman

April 16, 2024

This is a draft site for the book.

Dedicated to all the pixels.

About this Book

This book covers foundational topics within computer vision, with an image processing and machine learning perspective. We want to build the reader’s intuition and so we include many visualizations. The audience is undergraduate and graduate students who are entering the field, but we hope experienced practitioners will find the book valuable as well.

Our initial goal was to write a large book that provided a good coverage of the field. Unfortunately, the field of computer vision is just too large for that. So, we decided to write a small book instead, limiting each chapter to no more than five pages. Such a goal forced us to really focus on the important concepts necessary to understand each topic. Writing a short book was perfect because we did not have time to write a long book and you did not have time to read it. Unfortunately, we have failed at that goal, too.

Writing this Book

To appreciate the path we took to write this book, let’s look at some data first. The accompanying plot shows the number of pages written as a function of time since we first mentioned the idea to MIT Press on November 24, 2010.

Writing this book has not been a linear process. As the plot shows, the evolution of the manuscript length is non-monotonic, with a period when the book shrank before growing again. Lots of things have happened since we started thinking about this book in November 2010; yes, it has taken us more than 10 years to write this book. If we knew on the first day all the work that is involved in writing a book like this one there is no way we would have started. However, from today’s vantage point, with most of the work behind us, we feel happy we started this journey. We learned a lot by writing and working out the many examples we show in this book, and we hope you will too by reading and reproducing the examples yourself.

When we started writing the book, the field was moving ahead steadily, but unaware of the revolution that was about to unfold in less than 2 years. Fortunately, the deep learning revolution in 2012 made the foundations of the field more solid, providing tools to build working implementations of many of the original ideas that were introduced in the field since it began. During the first years after 2012, some of the early ideas were forgotten due to the popularity of the new approaches, but over time many of them returned. We find it interesting to look at the process of writing this book with the perspective of the changes that were happening in the field. Figure 1 shows some important events in the field of artificial intelligence (AI) that took place while writing this book.

Structure of the Book

Computer vision has undergone a revolution over the last decade. It may seem like the methods we use now bear little relationship to the methods of 10 years ago. But that’s not the case. The names have changed, yes, and some ideas are genuinely new, but the methods of today in fact have deep roots in the history of computer vision and AI. Throughout this book we will emphasize the unifying themes behind the concepts we present. Some chapters revisit concepts presented earlier from different perspectives.

One of the central metaphors of vision is that of multiple views. There is a true physical scene out there and we view it from different angles, with different sensors, and at different times. Through the collection of views we come to understand the underlying reality. This book also presents a collection of views, and our goal will be to identify the underlying foundations.

The book is organized in multiple parts, of a few chapters each, devoted to a coherent topic within computer vision. It is preferable to read them in that order as most of the chapters assume familiarity with the topics covered before them. The parts are as follows:

Part I discusses some motivational topics to introduce the problem of vision and to place it in its societal context. We will introduce a simple vision system that will let us present concepts that will be useful throughout the book, and to refresh some of the basic mathematical tools.

Part II covers the image formation process.

Part III covers the foundations of learning using vision examples to introduce concepts of broad applicability.

Part IV provides an introduction to signal and image processing, which is foundational to computer vision.

Part V describes a collection of useful linear filters (Gaussian kernels, binomial filters, image derivatives, Laplacian filter, and temporal filters) and some of their applications.

Part VI describes multiscale image representations.

Part VII describes neural networks for vision, including convolutional neural networks, recurrent neural networks, and transformers. Those chapters will focus on the main principles without going into describing specific architectures.

Part VIII introduces statistical models of images and graphical models.

Part IX focuses on two powerful modeling approaches in the age of neural nets: generative modeling and representation learning. Generative image models are statistical image models that create synthetic images that follow the rules of natural image formation and proper geometry. Representation learning seeks to find useful abstract representations of images, such as vector embeddings.

Part X is composed of brief chapters that discuss some of the challenges that arise from building learning-based vision systems.

Part XI introduces geometry tools and their use in computer vision to reconstruct the 3D world structure from 2D images.

Part XII focuses on processing sequences and how to measure motion.

Part XIII deals with scene understanding and object detection.

Part XIV is a collection of chapters with advice for junior researchers on effective methods of giving presentations, writing papers, and the mentality of an effective researcher.

Part XV returns to the simple visual system and applies some of the techniques presented in the book to solve the toy problem introduced in Part I.

What Do We Not Cover?

This should be a long section, but we will keep it short. We do not provide a review on the current state of the art of computer vision; we focus instead on the foundational concepts. We do not cover in depth the many applications of computer vision such as shape analysis, object tracking, person pose analysis, or face recognition. Many of those topics are better studied by reading the latest publications from computer vision conferences and specialized monographs.

Related Books

We want to mention a number of related books that we’ve had the pleasure to learn from. For a number of years, we taught our computer vision class from Computer Vision: A Modern Approach by Forsyth and Ponce (2012), and have also used Szeliski’s (2022) book, Computer Vision: Algorithms and Applications. These are excellent general texts. Robot Vision, by Horn (1986), is an older textbook, but covers physics-based fundamentals very well. The book that enticed one of us into computer vision is still in print: Vision by Marr (2010). The intuitions are timeless and the writing is wonderful.

The geometry of vision through multiple cameras is covered thoroughly in the classic Multiple View Geometry in Computer Vision by Hartley and Zisserman (2004). Solid Shape by Koenderink (1990) offers a general treatment of three-dimensional (3D) geometry. Useful and related books include Three-Dimensional Computer Vision by Faugeras (1993) and Introductory Techniques for 3D Computer Vision by Trucco and Verri (1998).

A number of recent textbooks focus on learning. Our favorites are MacKay (2003), Bishop (2006), Murphy (2022), and Goodfellow, Bengio, and Courville (2016). Probabilistic models for vision are well covered in the textbook of Prince (2012).

Vision Science: Photons to Phenomenology by Palmer (1999) is a wonderful book covering human visual perception. It includes some chapters discussing connections between studies in visual cognition and computer vision. This is an indispensable book if you are interested in the science of vision.

Signal Processing for Computer Vision by Granlund and Knutsson (1995) covers many basics of low-level vision. Ullman insightfully addresses High-level Vision in his book of that title, Ullman (2000).

Finally, a favorite book of ours, about light and vision, is Light and Color in the Outdoors, by Minnaert (2012), a delightful treatment of optical effects in nature.

Acknowledgments

We thank our teachers, students, and colleagues all over the world who have taught us so much and have brought us so much joy in conversations about research. This book also builds on many computer vision courses taught around the world that helped us decide which topics should be included. We thank everyone that made their slides and syllabus available. A lot of the material in this book has been created while preparing the MIT course, “Advances in Computer Vision.”

We thank our colleagues who gave us comments on the book: Ted Adelson, David Brainard, Fredo Durand, David Fouhey, Agata Lapedriza, Pietro Perona, Olga Russakovsky, Rick Szeliski, Greg Wornell, Jose María Llauradó, and Alyosha Efros. A special thanks goes to David Fouhey and Rick Szeliski for all the help and advice they provided. We also thank Rob Fergus and Yusuf Aytar for early contributions to this manuscript. Many colleagues and students have helped with proofreading the book and with some of the experiments. Special thanks to Manel Baradad, Sarah Schwettmann, Krishna Murthy Jatavallabhula, Wei-Chiu Ma, Kabir Swain, Adrian Rodriguez Muñoz, Tongzhou Wang, Jacob Huh, Yen-Chen Lin, Pratyusha Sharma, Joanna Materzynska, and Shuang Li. Thanks to Manel Baradad for his help on the experiments in , to Krishna Murthy Jatavallabhula for helping with the code for , and Aina Torralba for help designing the book cover and several figures.

Antonio Torralba thanks Juan, Idoia, Ade, Sergio, Aina, Alberto, and Agata for all their support over many years.

Phillip Isola thanks Pam, John, Justine, Anna, DeDe, and Daryl for being a wonderful source of support along this journey.

William Freeman thanks Franny, Roz, Taylor, Maddie, Michael, and Joseph for their love and support.

Computer Vision: 10 Papers to Start

Dec 25, 2015

“How do I know what papers to read in computer vision? There are so many. And they are so different.” Graduate Student. Xi’An. China. November, 2011.

This is a quote from an opinion paper by my advisor. Having worked on computer vision for nearly 2 years, I can absolutely relate to the comment. The diversity of computer vision may be especially confusing for beginners.

This post serves as a humble attempt to answer the opening question. Of course it is subjective, but it should be a good starting point.

This post is intended for computer vision beginners, mostly undergraduate students. An important lesson is that, unlike in undergraduate education, when doing research you learn primarily from reading papers, which is why I am recommending 10 to start.

Before getting to the list, it is good to know where CV papers are usually published. CV people like to publish in conferences. The three top-tier CV conferences are CVPR (every year), ICCV (odd years), and ECCV (even years). Since CV is an application of machine learning, people also publish in NIPS and ICML. ICLR is new but rapidly rising to the top tier. As for journals, PAMI and IJCV are the best.

I am partitioning the 10 papers into 5 categories, and the list is loosely sorted by publication time. Here it goes!

Finding good features has always been a core problem of computer vision. A good feature can summarize the information of the image and enable the subsequent use of powerful mathematical tools. In the 2000s, a lot of feature designs were proposed.

Distinctive Image Features from Scale-Invariant Keypoints, IJCV 2004

The SIFT feature is designed to establish correspondences between two images. Its most important applications are in reconstruction and tracking.

Histograms of Oriented Gradients for Human Detection, CVPR 2005

HOG follows the same feature-design philosophy as SIFT, but is even simpler. While SIFT is geared toward low-level understanding, HOG is geared toward high-level understanding.
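To make the idea of a hand-designed gradient feature concrete, here is a minimal sketch of the core of a HOG-style descriptor: an orientation histogram for a single cell, weighted by gradient magnitude. It is a simplification and not the paper's full method; the function name `cell_histogram`, the 9-bin default, and the toy patches are assumptions made for illustration, and the sketch leaves out the bin interpolation and block normalization that a full HOG pipeline uses.

```python
import math


def cell_histogram(patch, n_bins=9):
    """Orientation histogram for one cell, weighted by gradient magnitude.

    `patch` is a list of rows of grayscale values. Orientations are
    unsigned, so they fall in [0, 180) degrees.
    """
    height, width = len(patch), len(patch[0])
    hist = [0.0] * n_bins
    bin_width = 180.0 / n_bins
    # Central differences are only defined for interior pixels.
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = patch[y][x + 1] - patch[y][x - 1]
            gy = patch[y + 1][x] - patch[y - 1][x]
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            # The final modulo guards against angle rounding up to 180.0.
            hist[int(angle // bin_width) % n_bins] += magnitude
    return hist


# A vertical edge (dark left, bright right) has purely horizontal gradients.
vertical_edge = [[0, 0, 0, 10, 10, 10] for _ in range(6)]
# A horizontal edge (dark top, bright bottom) has purely vertical gradients.
horizontal_edge = [[0] * 6 for _ in range(3)] + [[10] * 6 for _ in range(3)]

hist_v = cell_histogram(vertical_edge)
hist_h = cell_histogram(horizontal_edge)
print(hist_v.index(max(hist_v)), hist_h.index(max(hist_h)))
```

The two toy patches put all of their gradient energy into different bins (the 0 degree bin and the 90 degree bin), which is what lets a downstream classifier tell edge orientations apart.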

Reconstruction

Reconstruction is an important branch of computer vision. Since the 2000s, structure from motion (SfM) has been formalized and is still the standard practice today.

Photo Tourism: Exploring Photo Collections in 3D, ACM Transactions on Graphics 2006

This paper uses SfM to reconstruct scenes from photos collected from the internet. Since then, the core pipeline has remained more or less the same, and people have sought improvements in, for instance, scalability and visualization. An extended version was later published in IJCV.

Graphical Models

A graphical model is a machine learning tool that tries to capture the relationships between random variables. It is quite general in nature, and is suitable for many computer vision tasks.

Structured Learning and Prediction in Computer Vision, Foundations and Trends in Computer Graphics and Vision 2011

This 180+ page paper is one of the first papers that I read, and it remains my personal favourite. It is a comprehensive overview of both the theory and the application of graphical models in various computer vision tasks.

Progress in computer vision depends heavily on good datasets. Evaluation on a suitable and unbiased dataset is what validates a proposed algorithm. Interestingly, the evolution of datasets also reflects the progress of computer vision research.

The PASCAL Visual Object Classes (VOC) Challenge, IJCV 2010

PASCAL VOC is the standard evaluation dataset for semantic segmentation and object detection. While the annual challenge has ended, the evaluation server is still open, and the leaderboard is definitely something you want to check out to find the state-of-the-art results and algorithms. There is also a recent retrospective paper in IJCV.

ImageNet: A Large-Scale Hierarchical Image Database, CVPR 2009

ImageNet is the first large-scale dataset, containing millions of images; its widely used challenge subset covers 1000 categories. It is the standard evaluation dataset for classification, and is one of the driving forces behind the recent success of deep convolutional neural networks. There is also a recent retrospective paper in IJCV.

Microsoft COCO: Common Objects in Context, ECCV 2014

This dataset is relatively new. Similar to PASCAL VOC, it targets instance segmentation and object detection, but the number of images is much larger. More interestingly, it contains language descriptions for each image, bridging computer vision with natural language processing.

Deep Learning

I am sure you have heard of deep learning. It is an end-to-end hierarchical model optimized simply by the chain rule and gradient descent. What makes it powerful is its very large number of parameters, which enable unprecedented representation capacity.
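As a rough illustration of "chain rule and gradient descent", the sketch below fits a two-parameter, two-layer model by hand-written backpropagation. Everything in it is an assumption chosen for illustration (the model `w2 * relu(w1 * x)`, the function name `train`, the learning rate, and the toy data generated from y = 3x); real networks differ mainly in scale, not in the mechanics shown here.

```python
def train(samples, lr=0.05, steps=500):
    """Fit y ~ w2 * relu(w1 * x) with gradient descent on squared error."""
    w1, w2 = 0.5, 0.5
    n = len(samples)
    for _ in range(steps):
        grad_w1 = grad_w2 = 0.0
        for x, y in samples:
            # Forward pass through the two "layers".
            pre = w1 * x
            hidden = max(0.0, pre)
            pred = w2 * hidden
            # Backward pass: apply the chain rule one layer at a time.
            d_pred = 2.0 * (pred - y)           # dL/dpred
            grad_w2 += d_pred * hidden          # dL/dw2
            d_hidden = d_pred * w2              # dL/dhidden
            d_pre = d_hidden if pre > 0 else 0.0  # through the ReLU
            grad_w1 += d_pre * x                # dL/dw1
        # Gradient descent step on the mean gradient.
        w1 -= lr * grad_w1 / n
        w2 -= lr * grad_w2 / n
    return w1, w2


# Toy data from y = 3x; the product w1 * w2 should approach 3.
samples = [(x, 3.0 * x) for x in (0.5, 1.0, 1.5, 2.0)]
w1, w2 = train(samples)
print(round(w1 * w2, 4))
```

Only the product `w1 * w2` is determined by the data, which is a small-scale hint of why heavily parameterized models have many equivalent solutions.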

ImageNet Classification with Deep Convolutional Neural Networks, NIPS 2012

This paper marks the big breakthrough of applying deep learning to computer vision. Made possible by the large ImageNet dataset and fast GPUs, the model took about a week to train and outperformed traditional methods on image classification by roughly 10 percentage points.

DeCAF: A Deep Convolutional Activation Feature for Generic Visual Recognition, ICML 2014

This paper shows that while the model mentioned above is trained for image classification, its intermediate representation is a powerful feature that can transfer to other tasks. This comes back to finding good features for images. In high-level tasks, deep features consistently outperform traditional features.

Visualizing and Understanding Convolutional Networks, ECCV 2014

Understanding what is actually going on inside a deep neural network remains a challenging task. This paper is perhaps the most famous and important work towards this goal. It looks at individual neurons and uses deconvolution to visualize them. However, there is still much to be done.

Again, this has been a humble attempt to address the opening question. Hope these excellent papers can kindle your enthusiasm for computer vision!

Merry Christmas!

Resources for Computer Vision Professionals

With the ever-growing interest in computer vision, the research, applications, and commercial possibilities for this technology are immense. Discover how the world of computer vision is evolving and explore the career opportunities that are newly emerging.

Page content:

- What Is Computer Vision
- The Fundamentals of Computer Vision
- Where Is Computer Vision Headed
- Transportation & Aviation
- Security & Privacy
- Entertainment
- Agriculture
- Career Opportunities
- Computer Vision Engineers
- XR Design/Graphics Engineers
- Data Visualization Engineers
- Challenges and Limitations of Computer Vision Technology
- Ethics, Standards, Diversity, and Inclusion
- Ethics in Computer Vision
- Standards & Inclusion in XR
- Diversity in Visualization Research
- Voices from the Community
- IEEE Computer Society Fellow: Greg Welch
- Insights and Trends from CVPR
- Blurred Lines Between Computer Vision and Computer Graphics
- NeRF Research on the Rise
- Burgeoning Development of Content Generation
- Re-emergence of Classic Computer Vision
- Synthetic Data
- Dependable Facial Recognition Research

On this resource page you’ll learn…

- Foundations of Computer Vision: Understand the core principles of computer vision and gain insights into how these systems work.

- Market Projections: Gain insight into the anticipated growth of the computer vision market, projected to reach $20.88 billion USD by 2030, with impacts on key domains such as transportation, healthcare, security, entertainment, and agriculture.

- Opportunities in Research and Development: Learn about the increasing demand for research and development in the expanding landscape of computer vision, and discover the rising job opportunities within this dynamic field.

- Industry Impact and Challenges: Uncover the transformative effects of computer vision across various sectors, while acknowledging the existing limitations and barriers that require attention.

- Ethical Considerations: Examine the ethical concerns of computer vision, including the pressing need for transparency, fairness, accountability, privacy, and the adoption of best practices to ensure responsible deployment.

“‘Intelligent’ computers require knowledge of their environment, and the most effective means of acquiring such knowledge is by seeing. Vision opens a new realm of computer applications,” Computer magazine, May 1973.

Grounded in the principles of artificial intelligence (AI), computer vision provides machines the capability to perceive and analyze visual data such as images, graphics, and videos. The intention is similar to AI — to automate decisions — yet its area of focus is exclusive to activities a human’s visual system would generally conduct. IBM describes the contrast lucidly: “If AI enables computers to think, computer vision enables them to see, observe, and understand.”

Computer vision, which seems like a modern innovation, is the outcome of extensive research stretching back to the 1960s. Beginning with Seymour Papert’s Summer Vision Project of 1966, computer vision has been in development for decades, improving along the way and creating new possibilities. Though complex, the way these systems work can be broken down into four fundamental steps:

- Visual data such as images or video is taken into the computer vision systems as input. Since images are made up of pixels, these machines process information at the pixel level.

- To analyze the data, distinctive features in the image, such as contours, corners, or colors, are identified using algorithms and models.

- Through the process of identification, the computer recognizes objects such as people, as well as certain behaviors in the visuals. With machine learning, the computer can improve this ability over time.

- Finally, the computer can provide an output based on this interpretation. Put simply, this is when the computer communicates what it’s seeing.
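The four steps above can be sketched in a few lines of code. This is a toy illustration only: the function names, the threshold of 50, and the rule "any edge means an object" are assumptions made for the example, and a real system would use a learned model for the recognition step rather than a fixed rule.

```python
def extract_edges(image, threshold=50):
    """Step 2: flag horizontal intensity jumps larger than a threshold."""
    return [
        [abs(row[x + 1] - row[x]) > threshold for x in range(len(row) - 1)]
        for row in image
    ]


def recognize(edges):
    """Step 3: a toy rule standing in for a trained model."""
    edge_count = sum(flag for row in edges for flag in row)
    label = "object" if edge_count > 0 else "empty"
    return label, edge_count


def describe(image):
    """Steps 1 to 4: pixel grid in, human-readable interpretation out."""
    label, edge_count = recognize(extract_edges(image))
    return f"{label} ({edge_count} edge pixels)"


# Step 1: images arrive as grids of pixel intensities (0 to 255).
blank = [[0, 0, 0, 0] for _ in range(3)]
square = [
    [0, 0, 0, 0],
    [0, 255, 255, 0],
    [0, 0, 0, 0],
]

print(describe(blank))   # empty (0 edge pixels)
print(describe(square))  # object (2 edge pixels)
```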

Before computer vision reached today’s applications, key pioneers led the way. For example, the Optical Character Recognition system was developed by Ray Kurzweil of Kurzweil Computer Products, Inc. in 1974. This system could recognize and process printed text, no matter the font and without manual entry. When combined with machine learning and enhanced with text-to-speech features, the technology was used to read for the blind.

This is just one pivotal example of the many applications that display the power and impact of computer vision. Thanks to waves of developments and crucial research, the technology has improved several domains of human life including transportation, healthcare, security, entertainment, and agriculture. Because of this, it is no surprise that the market of computer vision is expected to expand in the very near future.

According to the Top Trends in Computer Vision Report, which reviews the latest trends covered at the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), the computer vision industry generated over $12.14 billion USD in 2022 and has a projected growth rate of 7%, with $20.88 billion USD expected by 2030.

Revenue is projected to increase due to the surging need for the technology in various fields, like transportation, healthcare, and security. Moreover, according to PS Market Research, XR entertainment systems, which were worth $38.3 billion in 2022, are predicted to reach $394.8 billion by 2030.
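As a quick sanity check on these figures, the sketch below compounds the 2022 computer vision revenue at 7% per year and back-solves the annual growth rate implied by the XR forecast. It assumes simple annual compounding over the eight years from 2022 to 2030, which the cited reports do not state explicitly; the function names are illustrative.

```python
def project(value, annual_rate, years):
    """Compound a starting value forward at a fixed annual rate."""
    return value * (1.0 + annual_rate) ** years


def implied_rate(start, end, years):
    """Annual growth rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


years = 2030 - 2022

# Computer vision market: $12.14B in 2022 growing at 7% per year.
cv_2030 = project(12.14, 0.07, years)

# XR entertainment: $38.3B in 2022 forecast to reach $394.8B in 2030.
xr_rate = implied_rate(38.3, 394.8, years)

print(f"Computer vision, 2030: ${cv_2030:.2f}B")
print(f"XR implied annual growth: {xr_rate:.1%}")
```

Under that assumption the computer vision projection comes out at about $20.86 billion, consistent with the quoted $20.88 billion once rounding of the growth rate is allowed for, while the XR forecast implies growth of roughly 34% per year.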

Discover the Future of Computer Vision at IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)

- The U.S. National Highway Traffic Safety Administration (NHTSA) has reported that 94% of critical collisions are caused by human error. With the help of computer vision, advanced cameras and sensors allow vehicles to analyze surroundings, detect objects such as pedestrians and other vehicles, and safely navigate around them. Furthermore, the technology is also used within the aviation sector to create flight simulators. Within these sectors, Extended Reality (XR) is also used to simulate flight training while reducing costs, time, and possible damages to aircraft.

- Toward Fully Autonomous and Networked Vehicles

- Autonomous Driving Technologies Special Technical Community

- Using Extended Reality in Flight Simulators: A Literature Review

Learn more about computer vision and automated vehicles by taking the IEEE course on ‘Using Machine Vision Perception to Control Automated Vehicle Maneuvering’

- Computer vision is also the technology to thank for an improved patient experience within the healthcare system. This includes medical treatments and procedures. Specifically, computer vision has transformed the capabilities of medical imaging data, which allows practitioners to diagnose, monitor, or treat medical conditions. The technology also permits augmented reality (AR)-assisted surgical guidance, which can visualize human anatomy and aid practitioners when performing operations such as neurosurgical procedures.

- AR-Assisted Surgical Guidance System for Ventriculostomy

- Augmented and Virtual Reality in Surgery

- Standardizing 3D Medical Imaging

- Driven by progress made within machine learning, edge computing, IoT, and AI, computer vision makes it possible to mitigate security threats in real time. For example, with the help of image processing and statistical pattern recognition, biometrics allow computers to recognize persons based on physiological characteristics, such as faces or fingerprints. Additionally, computer vision supports smart security surveillance. This includes cameras placed in different areas within a city that monitor and detect threatening behavior. Privacy-preserving biometrics is attracting growing attention, as it may help resolve concerns related to cryptographic authentication processes.

- The Interplay of AI and Biometrics: Challenges and Opportunities

- Biometrics and Privacy-Preservation: How Do They Evolve?

- Biometrics Based Access Framework for Secure Cloud Computing

XR gaming blurs the line between virtual and physical realities, simulating new worlds and adventures in which players can be fully immersed. According to XR Today, the technology has transformed social gatherings by giving its users the ability to create virtual events and exhibitions anywhere at any time.

- Virtual Reality: A Journey from Vision to Commodity

- Affective Virtual Reality: How to Design Artificial Experiences Impacting Human Emotions

Learn More About Virtual Reality and its Applications at IEEE VR 2024

- According to researchers, insects affect 35% of farmland. Understanding and monitoring the role insects play in agriculture is vital for food production; however, it can be very labor-intensive and may even be unreliable at times. Computer vision can potentially improve this process by monitoring it automatically. On top of that, computer vision offers the opportunity to give automated machine systems ‘eyes’, enabling them to navigate fields without manual labor.

- Towards Computer Vision and Deep Learning Facilitated Pollination Monitoring for Agriculture

- The 1st Agriculture-Vision Challenge: Methods and Results

- Agriculture-Vision: A Large Aerial Image Database for Agricultural Pattern Analysis

According to the US Bureau of Labor Statistics, employment of professionals in the computer and information science industry is expected to increase significantly over the next decade, rising 21% by 2031. To fill these new roles, experts in computer vision, extended reality (XR), and data visualization will be needed.

- Computer vision engineers work in highly collaborative environments, usually guided by the needs of their clients. In addition to building architectures and using algorithms, their typical areas of expertise include image classification, face detection, pose estimation, and optical flow. Within this field, time is mainly spent developing models, retraining them, and creating reliable datasets.

- Skills: Developing image analysis algorithms, deep learning architectures, image processing and visualization, computer vision libraries, and data flow programming

- Salary: $160K USD (This is a salary estimation for United States employees according to talent.com. View estimates for other countries via Salary Expert.)

- Degree: Bachelor’s in mathematics, computer vision, computer science, machine learning, information systems

- IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)

- IEEE/CVF International Conference on Computer Vision

- Technical Community on Pattern Analysis and Machine Intelligence

- Those within the XR industry, such as XR Design/Graphics Engineers, use their knowledge of computer vision to bring creative projects to life. Furthermore, they research and develop technology that augments reality, re-creates real-life environments, or generates other spaces that users can interact with virtually. Working cross-functionally with creative teams, they use their knowledge of computer vision to aid the design, optimization, integration, and testing of XR devices and products such as video games and other entertainment systems.

- Skills: 3D visualization tools/art, coding languages such as Python, C/C++, and/or Java, linear algebra, multimedia software stacks and frameworks

- Salary: $107,000 USD (This is a salary estimation for United States employees according to circuitstream.com. View estimates for other countries via Salary Expert.)

- Degree: Bachelor’s in Computer engineering, mathematics, or related fields of study. Master’s in Human Centered Design and Engineering or Interaction Design

- IEEE Virtual Reality 2024

- Technical Community on Intelligent Informatics

- The power of visualizing data helps decision makers to recognize and address patterns and mistakes in their information, allowing them to make educated choices for their organization. Data visualization engineers create visual representations of data, then build dashboards for different business departments to inspect. They play a pivotal role in the process of informed decision-making.

- Skills: Business Intelligence (BI) tools, data analysis, Python-based visualizations, data visualization tools such as Tableau, Yellowfin, and Qlik Sense, and mathematics/statistics

- Salary: $96,317 (This is a salary estimation for United States employees according to salary.com. View estimates for other countries via Salary Expert.)

- Degree: Bachelor’s degree in computer science, computer information systems, software engineering, or a closely related field. Master’s degree in Data Analytics or Visualization

- IEEE VIS: Visualization & Visual Analytics

- Technical Community on Visualization and Graphics

While computer vision has made significant improvements, challenges still prevail, emphasizing the necessity for continuous research and development in the field. This includes concerns related to data quality and bias. It’s important to note that any technology created or managed by humans is susceptible to biases. To ensure accurate detections and optimal functionality, these systems must be developed with diversity in inputs.

Moreover, the question remains: Can a computer not only perceive but truly comprehend its observations? It is crucial to build trust in these systems by ensuring they interpret what they observe with minimal errors, which in turn supports wider adoption.

Lastly, security and privacy stand as major considerations for any widely adopted technology. However, these aspects continue to be challenging, with room for improvement. In the context of facial recognition, this issue is particularly pronounced and ongoing, necessitating continued scrutiny.

As the usage of computer vision technology progresses, ethics considerations have begun dominating the discussion. It’s crucial to examine specifics related to computer vision rather than depending on the general ethics linked to AI. These conversations are taking place during conferences, standards development and working groups, and research projects.

The IEEE/CVF Computer Vision and Pattern Recognition Conference (CVPR) aims to initiate further discussion within computer vision applications and research. In 2022, researchers were encouraged to submit papers and proposals that discuss the potential negative societal impacts of their proposed research and possible ways to mitigate them. Potential ethical concerns include the safety of living beings, privacy, environmental impact, and economic security.

The organizers prioritized transparency and stated, “Grappling with ethics is a difficult problem for the field [computer vision], and thinking about ethics is still relatively new to many authors… In certain cases, it will not be possible to draw a bright line between ethical and unethical.”

The committee of IEEE/CVF CVPR 2023 planned to continue this conversation at the next annual conference and called for papers that focus on transparency, fairness, accountability, privacy, and ethics in vision.

With regard to ethics for XR specifically, IEEE is laying the foundation through standardization. As stated in IEEE Spectrum, “… the IEEE Standards Association (IEEE SA) is working to help define, develop, and deploy the technologies, applications, and governance practices needed to help turn metaverse concepts into practical realities, and to drive new markets.”

It’s also vital to keep in mind that this cutting-edge technology should be made accessible. For instance, it needs to accommodate people who are visually impaired. The study “Toward inclusivity: Virtual Reality Museums for the Visually Impaired” examines how narration and spatialized “reference” audio, along with haptic feedback, can effectively replace the traditional use of vision in virtual reality. The study found that people with visual impairments could locate objects more quickly with the aid of enhanced audio and tactile feedback.

Lastly, IEEE Transactions on Visualization and Computer Graphics (IEEE TVCG) conducted an analysis of gender representation among the attendees, organizers, and presenters at the IEEE Visualization (VIS) conference over the last 30 years. It found that the proportion of female authors increased from 9% in the first five years of the conference to 22% in the last five years.

The IEEE Computer Society urges academics and practitioners to send any ideas that may advance the dialogue to [email protected], since it is efforts such as these that have the potential to push the industry toward a brighter future.

IEEE Computer Society Fellow Greg Welch, a computer scientist and engineer, is the AdventHealth Endowed Chair in Healthcare Simulation in UCF’s College of Nursing and co-director of the UCF Synthetic Reality Laboratory. In 2021, Welch reached Fellow status for contributions to tracking methods in augmented reality applications. His primary area of study is virtual reality (VR) and augmented reality (AR), collectively known as “XR,” with a focus on both hardware and software applications.

Currently, Welch researches how humans perceive AR-related experiences when interacting with the technology. He also leads the pending NSF project “Virtual Experience Research Accelerator (VERA),” a system intended to improve the process of generating VR-related research for scientists.

When asked what advice Welch had for readers with an interest in pursuing a similar path, he mentioned how beneficial ongoing exploration can be, “The field changes fast — something that is hot today might not be tomorrow. In addition, a broader perspective can enable one to see connections and opportunities.”

He recommends taking advantage of community resources and networking opportunities, “From an experiential perspective, get involved! The community [IEEE Computer Society] would not exist without volunteers, but there are so many benefits — it really is true that you get out what you put in.”

Computer vision remains a dynamic and evolving field. Technological advances introduce new opportunities and efficiencies, and they are met with challenges in the form of new theoretical and societal considerations.

From privacy and algorithmic fairness to the feasibility of wide-scale adoption, this is one of the most exciting eras in computer vision. The market is expected to reach US $20.88 billion by 2030, growing 7% annually.

Environmental Factors Shaping Computer Vision

- Increased industry demand – Industries ranging from finance and healthcare to retail and security are exploring how computer vision supports their emerging needs. This emphasis means research continues to focus on accessing and manipulating data in strategic, efficient, and highly accurate new ways.

- Data accessibility – The quality and integrity of data remain pivotal to results. Computer vision researchers are exploring how to achieve highly accurate results with smaller data sets, as well as with new techniques. In addition, more emphasis has been placed on synthetic data as a way to expand use cases, improve availability, and address security issues around data sets.

- Data privacy and bias – As computer vision techniques progress, how the data is used becomes a chief consideration. Advanced algorithms create unparalleled results, but personal security, bias, and societal factors come into play. Continued work will focus on the ethics surrounding these achievements.

Here are a few key observations, developments, and considerations for the field, informed by insights from the IEEE Computer Vision and Pattern Recognition Conference (CVPR).

“Half the papers in computer vision look like computer graphics. Instead of collecting data you can now simulate and that is very powerful.”

– Rama Chellappa, Johns Hopkins University

“NeRF research is a hot focus right now. It continues to generate jaw-dropping images and is a beautiful blend of computer graphics and computer vision. Computer vision scientists think of cameras as scientific measuring devices that can do more than capture visually pleasing 2D images. These algorithms are a continuation of that. The cameras will be designed to get better computational photography, unifying computer graphics, computational photography, and computer vision.”

– Kristin Dana, Rutgers University

“Another trend is content generation: DALL-E can now generate images out of OpenAI. It makes some computational sense that we should be able to do it. When we think and have a text description, our brains generate an image even though we haven’t seen it, like when we read a book and generate an image in our heads. The algorithms are capturing that ability, and it’s remarkable. But with these content generation algorithms comes the potential for bias, and we have our work ahead of us in considering how they can and should be used.”

“The community is at a unique junction where while some papers focus on core technical research combining classical and modern deep networks, others focus on classical problems and innovative solutions.”

– Richa Singh, IIT Jodhpur

“There’s a tendency to move from real data to synthetic data if it is working, if it is effective. Cameras can only capture what has happened; whereas synthesis can imagine and produce whatever you wish. So, there is more variety in the synthetic data. And the privacy concerns are less.”

“The Computer Vision, Pattern Recognition, and Machine Learning community at large is focusing on developing ingenious algorithms not only for difficult scenarios, unconstrained environments, but also being trustworthy and dependable.”

computer vision Recently Published Documents

2D Computer Vision

A survey on generative adversarial networks: variants, applications, and training.

Generative models have gained considerable attention in unsupervised learning via a new and practical framework called Generative Adversarial Networks (GANs), owing to their outstanding data generation capability. Many GAN models have been proposed, and several practical applications have emerged in various domains of computer vision and machine learning. Despite GANs’ excellent success, there are still obstacles to stable training. The problems are Nash equilibrium, internal covariate shift, mode collapse, vanishing gradient, and lack of proper evaluation metrics. Therefore, stable training is a crucial issue in different applications for the success of GANs. Herein, we survey several training solutions proposed by different researchers to stabilize GAN training. We discuss (I) the original GAN model and its modified versions, (II) a detailed analysis of various GAN applications in different domains, and (III) a detailed study of the various GAN training obstacles as well as training solutions. Finally, we reveal several open issues and research directions on the topic.

Efficient Channel Attention Based Encoder–Decoder Approach for Image Captioning in Hindi

Image captioning refers to the process of generating a textual description of the objects and activities present in a given image. It connects two fields of artificial intelligence: computer vision and natural language processing, which deal with image understanding and language modeling, respectively. In the existing literature, most work on image captioning has been carried out in the English language. This article presents a novel method for image captioning in the Hindi language using an encoder–decoder based deep learning architecture with efficient channel attention. The key contribution of this work is the deployment of an efficient channel attention mechanism with Bahdanau attention and a gated recurrent unit for developing an image captioning model in the Hindi language. Color images usually consist of three channels, namely red, green, and blue. The channel attention mechanism focuses on an image’s important channels while performing the convolution, that is, it assigns higher importance to specific channels over others. The channel attention mechanism has been shown to have great potential for improving the efficiency of deep convolutional neural networks (CNNs). The proposed encoder–decoder architecture utilizes the recently introduced ECA-NET CNN to integrate the channel attention mechanism. Hindi is the fourth most spoken language globally, widely spoken in India and South Asia; it is India’s official language. By translating the well-known MSCOCO dataset from English to Hindi, a dataset for image captioning in Hindi is manually created. The efficiency of the proposed method is compared with other baselines in terms of Bilingual Evaluation Understudy (BLEU) scores, and the results obtained illustrate that the proposed method outperforms other baselines. The proposed method has attained improvements of 0.59%, 2.51%, 4.38%, and 3.30% in terms of BLEU-1, BLEU-2, BLEU-3, and BLEU-4 scores, respectively, with respect to the state of the art. The quality of the generated captions is further assessed manually in terms of adequacy and fluency to illustrate the proposed method’s efficacy.
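
The idea of weighting some channels more heavily than others can be illustrated with a small sketch. The code below is not the article's model or the ECA-NET implementation; it is a minimal pure-Python approximation of the general pattern, assuming a toy 3-channel feature map and a fixed, hypothetical 1D kernel in place of learned weights. Each channel is averaged to a single descriptor, a small 1D convolution mixes neighboring descriptors, a sigmoid turns the result into a weight between 0 and 1, and each channel is scaled by its weight.

```python
import math


def global_average_pool(feature_map):
    """Reduce each channel (a 2D list of floats) to its mean value."""
    return [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature_map
    ]


def conv1d_same(values, kernel):
    """1D cross-correlation with zero padding; kernel length must be odd."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(values) + [0.0] * pad
    return [
        sum(kernel[j] * padded[i + j] for j in range(len(kernel)))
        for i in range(len(values))
    ]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(feature_map, kernel):
    """Return the channel-rescaled feature map and the per-channel weights."""
    descriptors = global_average_pool(feature_map)
    weights = [sigmoid(v) for v in conv1d_same(descriptors, kernel)]
    scaled = [
        [[w * pixel for pixel in row] for row in channel]
        for channel, w in zip(feature_map, weights)
    ]
    return scaled, weights


# Toy 2x2 feature map with three channels (values are made up).
feature_map = [
    [[1.0, 1.0], [1.0, 1.0]],
    [[0.0, 0.0], [0.0, 0.0]],
    [[2.0, 2.0], [2.0, 2.0]],
]
# Hypothetical fixed kernel; in a trained network these values are learned.
kernel = [0.25, 0.5, 0.25]

scaled, weights = channel_attention(feature_map, kernel)
print(weights)
```

In a trained network the kernel values would be learned together with the rest of the model, so the weights would reflect which channels help the captioning task rather than a hand-picked smoothing filter.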

Feature Matching-based Approaches to Improve the Robustness of Android Visual GUI Testing