Understand the power and capabilities of Power BI. My highest goal is to enable everyone to get the most out of their data with Power BI.

Assign Power BI workspaces to a capacity automatically

In recent discussions with customers, I was asked if there is an automatic way to assign workspaces to dedicated capacities like Power BI Premium or Embedded. Obviously, you can do it manually through the Power BI Admin Portal, but how can you automate it in a scenario where you have to assign hundreds of workspaces based on different conditions? I’m sure you know my answer to this question: through the Power BI REST API! Let me walk you through how to achieve it.

Prerequisites

You’ll need a few things to automate this:

- Service Principal

- A dedicated capacity (Power BI Premium, Embedded, or Premium per User license)

- Python skills

- An understanding of REST APIs

Setting the scene

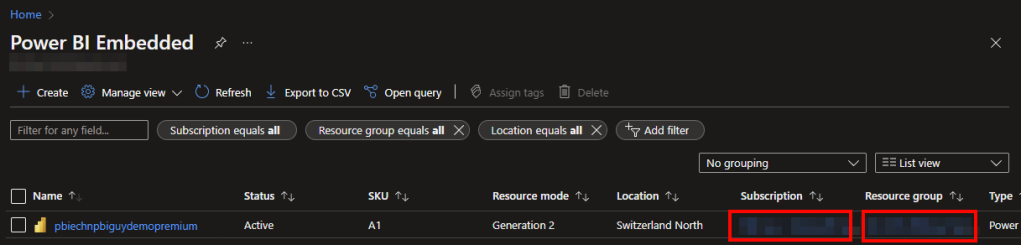

For my demo, I’m going to use a Power BI Embedded capacity – a so-called A-SKU – from the Azure Portal. If you’re interested in how to create an Embedded capacity, follow this link.

Further, I’m going to reuse my already created Service Principal (SP). I blogged about how to create an SP, which settings you need to enable in your Power BI Service, and which permissions are needed here.

Lastly, I have to make sure my SP can assign workspaces to the capacity. According to the documentation (see here), the SP needs admin rights on the workspace as well as capacity assignment permissions for that capacity.

Unfortunately, the Admin REST API does not (yet?) support Service Principals for assigning workspaces to a capacity. Therefore, we have to make sure that the SP is an admin of each workspace we wish to move to a capacity. Luckily, there is an Admin API to add yourself or an SP as Admin of a workspace (see here). If you’re interested in a blog about assigning an SP to different workspaces, let me know and I’ll be happy to blog about it.

So, let’s make sure the SP has sufficient permissions.

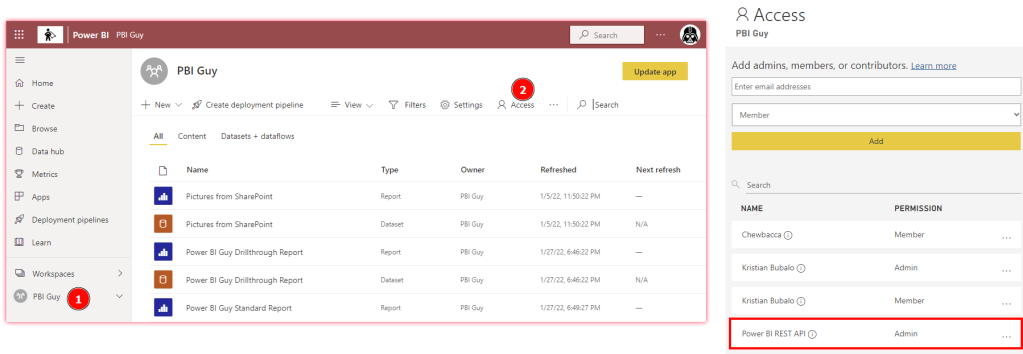

Add SP as Admin to a workspace

This step is pretty easy and straightforward. Just head over to powerbi.com, select your workspace, click on Access, and make sure your SP is added as Admin.

As you can see in the screenshot above, the workspace is not assigned to a capacity yet; otherwise, it would show a diamond icon to the right of the workspace name (PBI Guy).

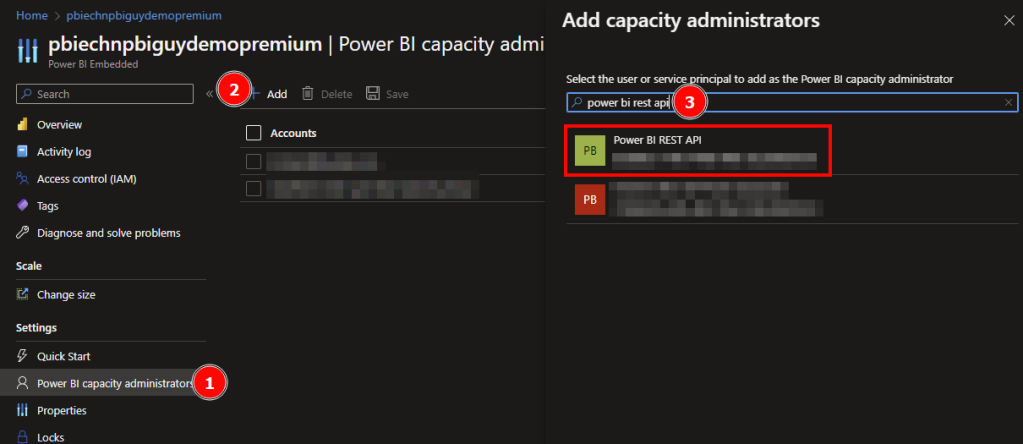

Add SP as Capacity Admin

In the case of Power BI Embedded, you can’t differentiate between admins and contributors as you can with Premium. Therefore, I have to add the SP as an admin in the Azure Portal. To do that, I log in to the Azure Portal, select my Power BI Embedded capacity, and click on Power BI capacity administrators. Once there, click + Add, search for your SP, and add it. That’s it. Just make sure your Embedded capacity is running; otherwise, you can’t add a new admin.

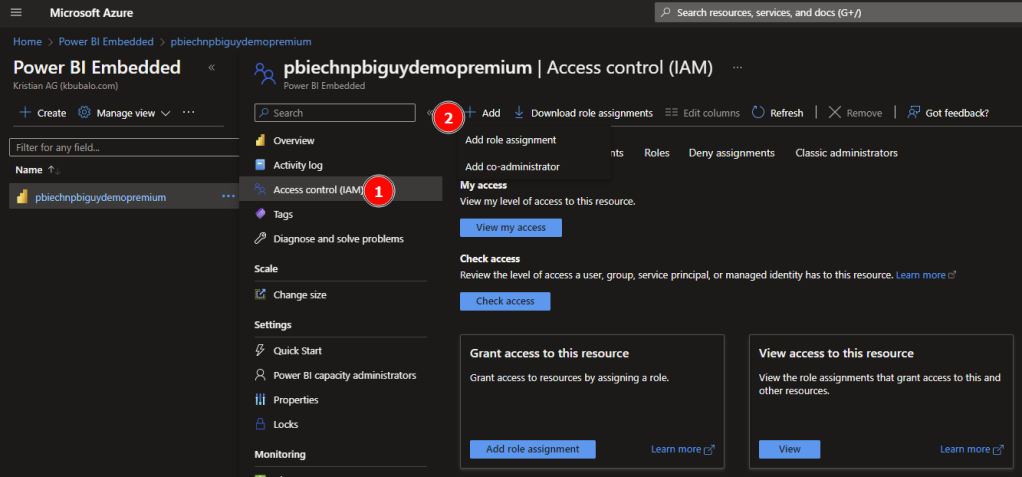

Further, we have to make sure the Service Principal is allowed to start and pause the Embedded capacity. This is done through Access control (IAM) in the left-hand pane. Once selected, click + Add and select Add role assignment.

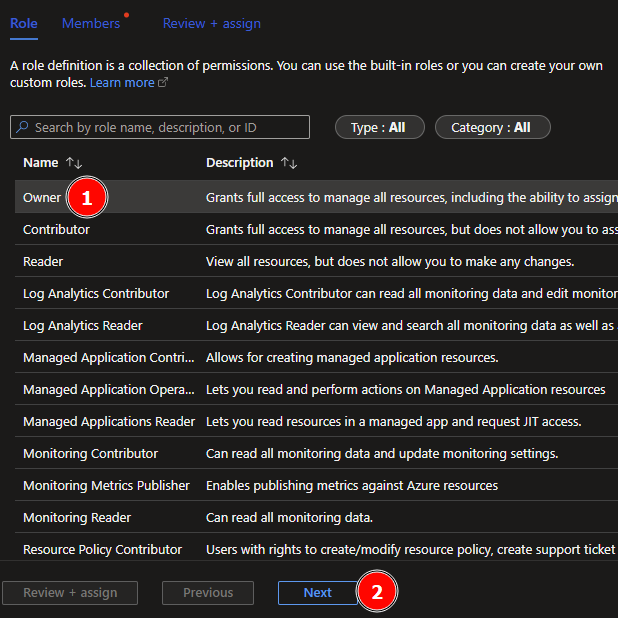

Next, select the needed role. In my case, I give the SP the Owner role, but Contributor would be sufficient as well. Once selected, hit Next.

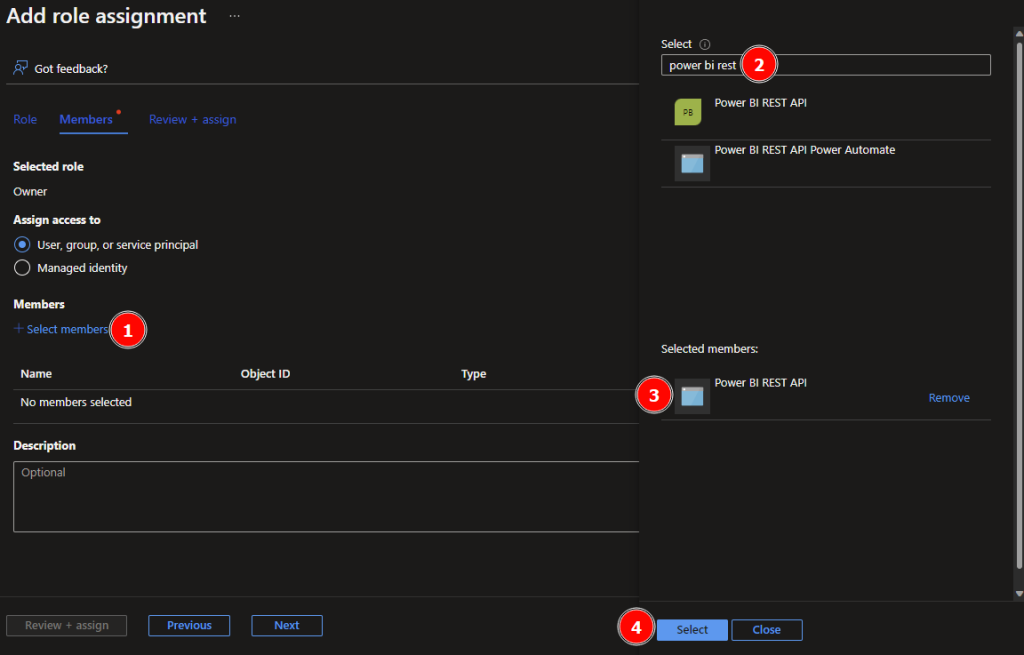

On the next screen, click + Select members, then search for the SP and add it. Click Select to proceed.

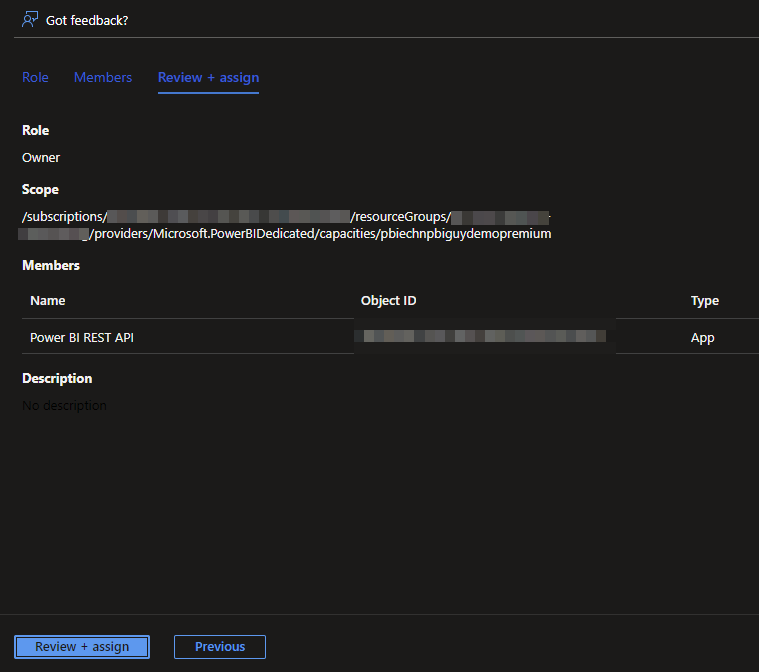

Lastly, hit Review + assign to check your configuration.

If everything looks as expected, hit Review + assign again. We’re now good to go and can create our Python script.

It’s time for magic!

As usual, in my first block of code I’m going to import the needed libraries.

In my second block, I specify all required variables which we will use later on.

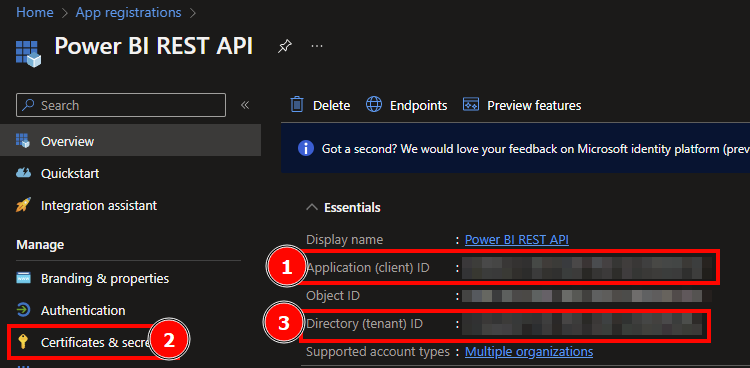

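The client ID and the tenant ID can be found on the Overview page of your SP. The secret is created under Certificates & secrets and is only displayed once, right after you create it, so store it safely.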

The domain is everything after the @ of your email address, e.g. an address ending in @pbiguy.com would mean “pbiguy.com”.

The authority URL and the scope shouldn’t be touched, as they are needed to authenticate against the Power BI Service. Lastly, the subscription and the resource group name can be found in the Azure Portal on the Power BI Embedded overview page.

Just keep in mind to use the Subscription ID, not the name!

The next piece of code grabs a token on behalf of the SP.
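The variables and the token request described above could look like the following minimal sketch. It assumes the `msal` package and uses placeholder values; `get_pbi_token` is a hypothetical helper name, not necessarily the author’s code, and this version builds the authority URL from the tenant ID.

```python
# Placeholder values: replace them with your own Service Principal details
client_id = "<your-client-id>"
client_secret = "<your-client-secret>"
tenant_id = "<your-tenant-id>"

# Authority URL and scope for the Power BI Service; these normally stay as they are
authority_url = f"https://login.microsoftonline.com/{tenant_id}"
scope = ["https://analysis.windows.net/powerbi/api/.default"]

# Azure details of the Embedded capacity (use the subscription ID, not its name!)
subscription_id = "<your-subscription-id>"
resource_group_name = "<your-resource-group-name>"


def get_pbi_token() -> str:
    """Acquire a Power BI access token for the SP (client credentials flow)."""
    import msal  # pip install msal; imported here so the sketch loads without it

    app = msal.ConfidentialClientApplication(
        client_id, authority=authority_url, client_credential=client_secret
    )
    result = app.acquire_token_for_client(scopes=scope)
    if "access_token" not in result:
        raise RuntimeError(f"Token request failed: {result.get('error_description')}")
    return result["access_token"]
```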

In my next step, I want to list all capacities I have access to. Because I’ll need to do that twice within the code (explanation will follow), I create a function so I don’t have to duplicate my code. The function returns a Pandas DataFrame with all capacities, including further details.
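A function like get_all_capacities could be sketched as follows, assuming the `requests` and `pandas` packages and a Power BI token obtained as above. It calls the documented `GET /v1.0/myorg/capacities` endpoint and turns its `value` array into a DataFrame; the `CAPACITIES_URL` constant is a hypothetical name.

```python
import pandas as pd
import requests

CAPACITIES_URL = "https://api.powerbi.com/v1.0/myorg/capacities"


def get_all_capacities(token: str) -> pd.DataFrame:
    """List all capacities the caller has access to as a DataFrame."""
    headers = {"Authorization": f"Bearer {token}"}
    response = requests.get(CAPACITIES_URL, headers=headers, timeout=30)
    response.raise_for_status()  # fail loudly on 401/403 instead of returning junk
    # The capacities are returned in the "value" array of the JSON response
    return pd.DataFrame(response.json()["value"])
```

Each row carries fields such as `id`, `displayName`, `sku`, and `state`, which the following steps rely on.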

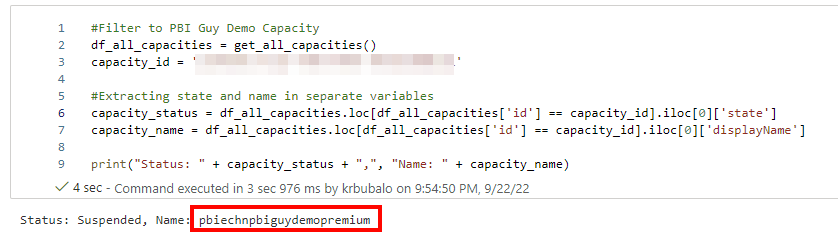

Next, I want to select my desired capacity, which in my case is the Power BI Embedded one. So, I call the function to get all capacities and filter the result, based on the capacity ID, down to my Embedded one. To make sure the right one is selected, I print out the capacity name as well as its status (running or paused).
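Filtering the capacities down to one ID might look like this minimal sketch, assuming `pandas`. The helper `select_capacity` is hypothetical, and the sample rows are made-up data shaped like the capacities response, not real output.

```python
import pandas as pd


def select_capacity(capacities: pd.DataFrame, capacity_id: str) -> pd.Series:
    """Return the single capacity row whose ID matches capacity_id."""
    selected = capacities[capacities["id"] == capacity_id]
    if selected.empty:
        raise ValueError(f"Capacity {capacity_id} not found or not accessible")
    return selected.iloc[0]


# Hypothetical sample data shaped like the GET /capacities response
capacities = pd.DataFrame([
    {"id": "1111", "displayName": "embeddedcapacity", "sku": "A1", "state": "Suspended"},
    {"id": "2222", "displayName": "premiumcapacity", "sku": "P1", "state": "Active"},
])

capacity = select_capacity(capacities, "1111")
capacity_name, capacity_status = capacity["displayName"], capacity["state"]
print(f"{capacity_name}: {capacity_status}")
```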

The result is as desired: only my Embedded capacity is selected.

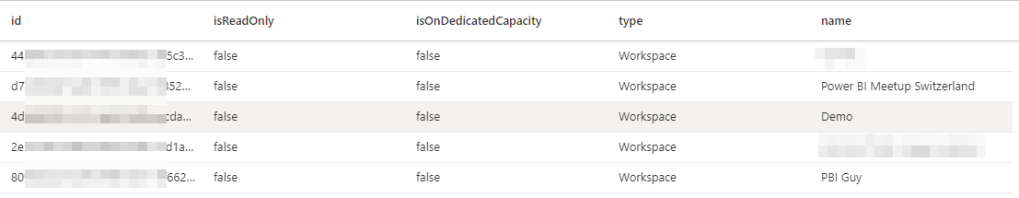

Now that I have the needed capacity, it’s time to get all workspaces I wish to assign to it. Therefore, my next step is to call the REST API to list all workspaces the SP has access to. To get an overview, I display the DataFrame at the end.

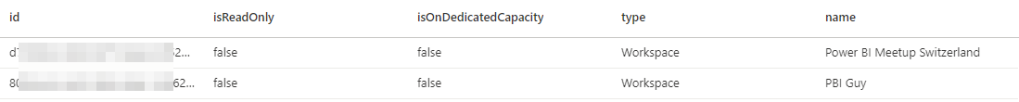

For my purpose, I filter the workspaces to only include those with “BI” in the name. Of course, you can create further conditions and filter options based on your needs.

Again, I display the DataFrame at the end to check my filter and selection. Looks good so far.
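Listing and filtering the workspaces can be sketched like this, assuming `requests`, `pandas`, and a Power BI token. `GET /v1.0/myorg/groups` returns the workspaces the SP is a member of; `get_all_workspaces` and `filter_workspaces` are hypothetical helper names, and the match on “BI” is case-sensitive, just like a plain substring check.

```python
import pandas as pd
import requests

GROUPS_URL = "https://api.powerbi.com/v1.0/myorg/groups"


def get_all_workspaces(token: str) -> pd.DataFrame:
    """List all workspaces the SP has access to as a DataFrame."""
    headers = {"Authorization": f"Bearer {token}"}
    response = requests.get(GROUPS_URL, headers=headers, timeout=30)
    response.raise_for_status()
    return pd.DataFrame(response.json()["value"])


def filter_workspaces(workspaces: pd.DataFrame, text: str = "BI") -> pd.DataFrame:
    """Keep only workspaces whose name contains `text` (case-sensitive)."""
    mask = workspaces["name"].str.contains(text, case=True, regex=False)
    return workspaces[mask]
```

Further conditions can be combined into the same boolean mask, for example with `&` on another column of the DataFrame.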

Let’s do a quick recap of what we achieved so far. We have selected the capacity to which we want to assign our workspaces. We have also selected all the workspaces we wish to assign to our capacity. As a next step, we have to assign them. But before doing so, especially in the case of Power BI Embedded, we have to make sure that the capacity is running and not paused. Thus, my next block of code checks the status and, if the capacity is paused (suspended), starts (activates) it. This step is not necessary for Premium capacities, as they are always active.

I’ll first create a function to get an Azure token. This one differs from the Power BI one, as we have to log in to Azure and not Power BI.
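A get_az_token function could be sketched as follows, assuming the `msal` package. It uses the same client credentials flow as the Power BI token; only the scope changes and now points to Azure Resource Manager. The parameter list and the `AZURE_SCOPE` constant are assumptions of this sketch.

```python
AZURE_SCOPE = ["https://management.azure.com/.default"]


def get_az_token(client_id: str, client_secret: str, tenant_id: str) -> str:
    """Acquire an Azure Resource Manager token for the SP."""
    import msal  # pip install msal; imported here so the sketch loads without it

    app = msal.ConfidentialClientApplication(
        client_id,
        authority=f"https://login.microsoftonline.com/{tenant_id}",
        client_credential=client_secret,
    )
    # Same flow as for Power BI, only the scope differs
    result = app.acquire_token_for_client(scopes=AZURE_SCOPE)
    if "access_token" not in result:
        raise RuntimeError(f"Token request failed: {result.get('error_description')}")
    return result["access_token"]
```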

Next, I define a function to create the URL to start or pause the capacity. As the two REST API URLs are almost identical and only the last piece (the status variable) differs, a function lets me reuse the code instead of duplicating the URL.
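A create_url function along these lines could target the Azure Resource Manager resume and suspend actions of `Microsoft.PowerBIDedicated` capacities. This sketch takes every URL part as a parameter so it stands alone; the `api-version` value is an assumption, so check the current version in the ARM reference.

```python
API_VERSION = "2021-01-01"  # assumption: verify against the Azure REST API reference


def create_url(subscription_id: str, resource_group_name: str,
               capacity_name: str, status: str) -> str:
    """Build the ARM URL to resume or suspend a Power BI Embedded capacity."""
    if status not in ("resume", "suspend"):
        raise ValueError("status must be 'resume' or 'suspend'")
    # capacity_name is the capacity's resource name as shown in the Azure Portal
    return (
        "https://management.azure.com/subscriptions/"
        f"{subscription_id}/resourceGroups/{resource_group_name}"
        "/providers/Microsoft.PowerBIDedicated/capacities/"
        f"{capacity_name}/{status}?api-version={API_VERSION}"
    )
```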

Lastly, I use the capacity status from my previous code to check whether it’s suspended. If so, I call the previously created function to get an Azure token and call the REST API to resume the capacity. At the end of the code, I print out a message based on the status code received.
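The resume step could be sketched like this, assuming `requests`, an Azure token, and a resume URL built as described above. The helper name `resume_if_suspended` is hypothetical; ARM answers long-running actions like this with 200 or 202, so both count as accepted here.

```python
import requests


def resume_if_suspended(capacity_status: str, resume_url: str, az_token: str):
    """Resume the capacity if it is suspended; return the HTTP status code or None."""
    if capacity_status != "Suspended":
        print("Capacity is not suspended, nothing to do.")
        return None
    headers = {"Authorization": f"Bearer {az_token}"}
    response = requests.post(resume_url, headers=headers, timeout=60)
    if response.status_code in (200, 202):
        print("Resume request accepted, the capacity is starting.")
    else:
        print(f"Resume failed: {response.status_code} {response.text}")
    return response.status_code
```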

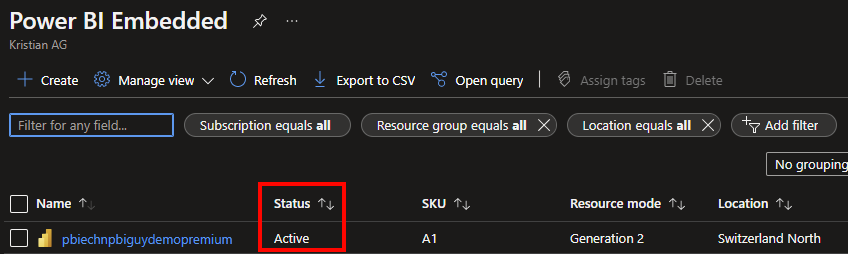

As it takes some time to activate the capacity, I check in my next code block whether the capacity is really active. Otherwise, I would get an error message trying to assign a workspace to a suspended capacity. I call the get_all_capacities function again to get all capacities, filter down to my desired one, and save the status in a separate variable called capacity_status. Next, I run a while loop as long as the status is suspended, checking every 5 seconds until the status has changed to active. This way I make sure the capacity is really in an active state.
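The polling loop could be sketched as below. `wait_until_active` is a hypothetical helper that accepts any function returning the current state, which keeps it independent of how the status is fetched. Unlike the loop described above, it waits for any non-active state to end and adds a timeout, so a capacity that never comes up doesn’t hang the script.

```python
import time
from typing import Callable


def wait_until_active(get_status: Callable[[], str],
                      interval_seconds: float = 5,
                      timeout_seconds: float = 600) -> str:
    """Poll get_status() every interval_seconds until it returns 'Active'."""
    deadline = time.monotonic() + timeout_seconds
    status = get_status()
    while status != "Active":
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Capacity still '{status}' after {timeout_seconds}s")
        time.sleep(interval_seconds)
        status = get_status()
    return status
```

In the flow of this post, `get_status` would call get_all_capacities again, filter to the selected capacity ID, and return its `state`.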

Let’s check in the Azure Portal whether the capacity is really running. I select the general overview of the Power BI Embedded service and see that my Embedded capacity has an active status – great!

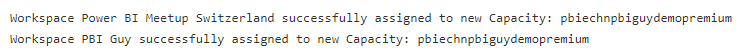

Finally, I can now assign my workspaces to the capacity. I create a for-each loop over my selected workspaces DataFrame to assign each workspace individually to the capacity (this API doesn’t support bulk updates). In the loop, I extract the workspace ID and name, update the URL for the REST API call (including the workspace ID), and specify the required body. In there, you’ll find the capacity_id variable specifying which capacity we wish to assign the workspace to. At the end, I call the REST API and print a message based on the status code received. If the call is successful, the message confirms the workspace and capacity name.

If you wish to unassign a workspace from a capacity and move it back to the Power BI Service (shared capacity), just use the 00000000-0000-0000-0000-000000000000 GUID for the capacity_id variable.
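The assignment loop could be sketched as follows, assuming `requests`, `pandas`, a Power BI token, and a DataFrame with `id` and `name` columns as returned by the groups endpoint. It calls `POST /v1.0/myorg/groups/{groupId}/AssignToCapacity` once per workspace; `assign_workspaces` and `SHARED_CAPACITY_ID` are hypothetical names.

```python
import pandas as pd
import requests

# Assigning this all-zero GUID moves a workspace back to the shared capacity
SHARED_CAPACITY_ID = "00000000-0000-0000-0000-000000000000"


def assign_workspaces(workspaces: pd.DataFrame, capacity_id: str, token: str) -> dict:
    """Assign each workspace to the capacity, one API call per workspace."""
    headers = {"Authorization": f"Bearer {token}"}
    results = {}
    for _, row in workspaces.iterrows():
        workspace_id, workspace_name = row["id"], row["name"]
        url = f"https://api.powerbi.com/v1.0/myorg/groups/{workspace_id}/AssignToCapacity"
        body = {"capacityId": capacity_id}
        response = requests.post(url, headers=headers, json=body, timeout=30)
        if response.status_code == 200:
            print(f"Workspace '{workspace_name}' assigned to capacity {capacity_id}")
        else:
            print(f"Failed for '{workspace_name}': {response.status_code} {response.text}")
        results[workspace_name] = response.status_code
    return results
```

Returning a dictionary of status codes per workspace makes it easy to spot failures when hundreds of workspaces are processed.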

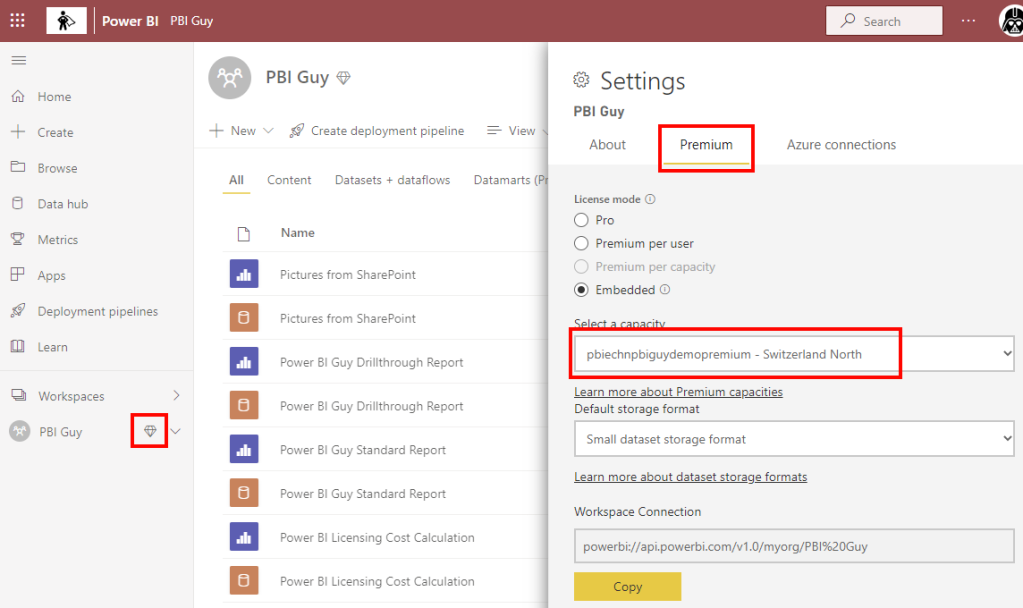

Let’s check in the Power BI Service if it really worked.

Great! The first sign that it worked is the diamond icon to the right of the workspace name. To make sure the workspace is assigned to the right capacity, I also check the workspace settings. Looks perfect!

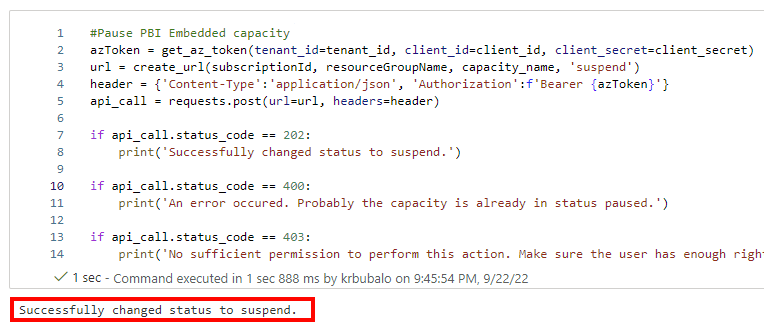

My last step in the Python code is to pause the capacity, making sure no unnecessary costs occur, as I’m using the Power BI Embedded one. Depending on the number of workspaces, the Azure token could have expired by now. Therefore, I call the get_az_token function again to get a fresh token. Afterwards, I call the create_url function, this time with the suspend status, and save the result to the url variable. Then I call the REST API to pause the capacity. Lastly, I again print out a message based on the response from the REST API.
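The pause step could be sketched like this, assuming `requests` and a suspend URL built as described above. The hypothetical helper `pause_capacity` takes a token function rather than a token, so a fresh Azure token is requested right before the call, which covers the expiry concern mentioned above.

```python
from typing import Callable

import requests


def pause_capacity(suspend_url: str, get_token: Callable[[], str]) -> int:
    """Suspend the capacity, requesting a fresh Azure token first."""
    # A long assignment loop may outlive the first token, so fetch a new one
    headers = {"Authorization": f"Bearer {get_token()}"}
    response = requests.post(suspend_url, headers=headers, timeout=60)
    if response.status_code in (200, 202):
        print("Suspend request accepted, the capacity is pausing.")
    else:
        print(f"Suspend failed: {response.status_code} {response.text}")
    return response.status_code
```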

Once the code is executed, the output shows that the status of the capacity has changed.

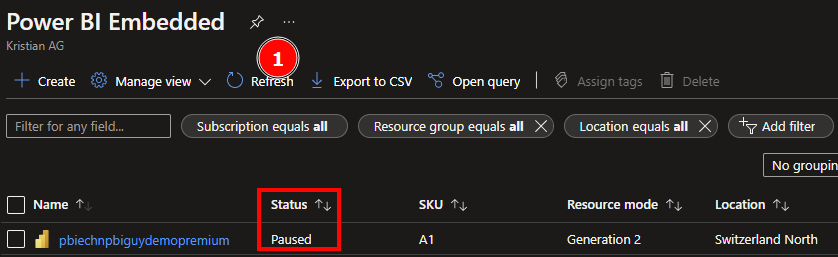

Let’s again check in the Azure Portal.

After hitting the refresh button, I see the capacity is really paused – excellent!

With this piece of code, I can automatically assign workspaces to a specific capacity based on my needs! As I worked with an Embedded one, I even started and paused the capacity automatically through the REST API – awesome!

Please let me know if this post was helpful and give me some feedback. Also feel free to contact me if you have any questions.

If you’re interested in the files used in this blog, check out my GitHub repo https://github.com/PBI-Guy/blog


7 thoughts on “Assign Power BI workspaces to a capacity automatically”

Fantastic post

Do you know, please, how to add an SP as contributor (add/remove WS) to a Premium capacity (not Embedded)? Is it via PowerShell? Because it’s impossible to add it manually (email address not valid).

Thanks very much!

Can you add the Service Principal to a Security Group and add this group to the Contributors of your Premium capacity? ( https://learn.microsoft.com/en-us/power-bi/enterprise/service-admin-premium-manage#manage-user-permissions )

Thanks a lot, Guy! I guess we can use your nice & very useful Python scripts in Azure Data Factory via Azure Batch tasks. If you are close to Microsoft, do you know, by any chance, if Python support in ADF will be improved, simplified, modernized, etc.?

Apache Airflow DAGs use Python itself to manage tasks, organized with dependencies and relationships that say how they should run.

Not that, of course, in ADF ;>), but improved handling of Python scripts in ADF.

I guess so yes but haven’t tested it 🙂 I’m not very close to the ADF team and can’t find anything on the roadmap so perhaps through the community you can raise the idea for a better python script handling?

Thanks, Guy, for the detailed explanation! I need a little help with #Filter to needed workspaces. In this case, all workspaces with “BI” in the name will be used. How can I achieve the same via PowerShell? It would be helpful if you could help me with the PS script to filter to the needed workspaces.

I’m unfortunately not really good with PowerShell (that’s the reason why I use Python :D) but something like this should work:

`Get-PowerBIWorkspace -Scope Organization -Filter "tolower(name) eq 'my workspace name'"`

I’m using “tolower” at the beginning to lowercase the workspace name, as the filter is case sensitive; that’s why the value you compare against has to be written in lowercase as well. As the filter option is passed through OData, you can use operators other than eq (which means equals). Check all filter options here: https://www.odata.org/documentation/odata-version-2-0/uri-conventions/ under 4.5 Filter System Query Option ($filter)

Hope that helps.

[…] In one of my previous blog posts I showed how to create a Service Principal ( https://pbi-guy.com/2022/03/10/power-bi-and-activity-logs-with-python/ ) and also did a walk-through how to give the sufficient permission to the SP to start and pause the Embedded Capacity ( https://pbi-guy.com/2022/09/22/assign-power-bi-workspaces-to-a-capacity-automatically/ ). […]


How To Use The Power BI Premium Capacity Metrics App

Organizations spend lots of money making sure they have the right resources to support their Power BI platform and to ensure a good developer and end-user experience. This is especially true if your organization has purchased its own Premium Capacity. Because of this large investment, you’ll want to make sure that you have what you need to appropriately monitor your organization’s Premium capacities.

Enter the Power BI Premium Capacity Metrics App, a canned app designed to give admins the necessary monitoring capabilities to make informed decisions about their Premium Capacity.

In this blog, we’ll explain the PBI Premium Capacity Metrics App, describe how to install it, and provide some tips on how to use it.

What Is The PBI Premium Capacity Metrics App?

The PBI Premium Capacity Metrics App is a template app, meaning it’s publicly available to use within your organization’s tenant. This app equips Power BI admins to monitor their capacity’s resources and identify issues and areas for improvement.

Let’s start with an example of how you, as a Power BI admin, might use this app.

When a dedicated capacity in Power BI reaches its limit, meaning its compute resources are fully utilized, the admin team receives an email explaining that the capacity will be throttled. Throttling degrades dataset and report performance, which negatively impacts the user experience.

As an admin, you can use the app to identify where the issue came from, the exact point in time that it occurred, and even the user that caused it so that you can make them aware and provide mitigation tips.

As previously stated, the PBI Premium Capacity Metrics App is a template app and is available in the AppSource to be installed (instructions for installation are in the next section).

How To Install And Set Up The PBI Premium Capacity Metrics App

Installing the PBI Premium Capacity Metrics App is easy and can be done directly from Power BI Service.

Step 1: Open the “Apps” page using the left panel and click the green “Get apps” button on the top right.

Step 2: Navigate to the “Template apps” section, search for Premium Capacity Utilization, and click on the app icon (note that a previous version is still available; make sure you pick the one in the screenshot below).

Step 3: In the next two pop-up windows, click the blue “Get It Now” button.

Step 4: Click the “Install” button in the next window (this step may take a few minutes).

Installing this app will automatically create a new workspace with the app already installed in it; there is no alternative to this and you will not be able to install it into an existing workspace.

Step 5: Open the newly created workspace, which follows the naming convention shown in the screenshot below. Click the “Connect your data” button on the yellow banner at the top.

Step 6: Fill out the information in the pop-up window accordingly. If you have multiple capacities, you do NOT need multiple apps: just use one of the IDs, and the app automatically connects to the others in the same tenant.

Step 7: Follow the prompts on the next screen and click the “Sign in and connect” button.

Step 8: Add the app to your list of favorites by going back to the “Apps” page using the left panel, then clicking on the “Get apps” button and searching for the Premium Capacity Utilization and Metrics App.

How To Use The PBI Premium Capacity Metrics App For Performance Monitoring

Using the PBI Premium Capacity Metrics App can be daunting because of the amount of information it can provide. For that reason, we will use this section to introduce the different components of the app and provide some advice on where a user’s time is best spent.

The app has five pages that admins can use to monitor their capacity and triage any issues that come up. Each page is described in more detail below.

At the top of each page is a slicer for the user to select which capacity they would like to view.

Overview Page

The Overview page provides high-level details of the overall performance of the capacity and has three sections.

Artifacts

This section is the left portion of the page and consists of two visuals stacked on top of each other.

Focus on the matrix at the bottom left, which displays metrics (described in the table below) for each Power BI item in the selected capacity. Use this matrix to highlight problem artifacts that could be draining your capacity’s resources, and expand an individual artifact to see which kind of operation could be causing the problem.

Performance

The Performance section is to the right of the Artifacts section and has four visuals. This section is perhaps the most important, as it helps you pinpoint exactly when, where, and by whom a capacity was pushed to its maximum threshold.

CPU over time: the visual at the top displays CPU usage for the capacity, broken out by background and interactive activities. Background operations are activities that are not directly triggered by users, such as data refreshes. Interactive operations, by contrast, are triggered by users, such as loading pages or filtering.

Right-click to drill through any part of the visual to open the Timepoint Details page to view exactly what background and interactive operations caused a spike at that given moment.

Overloaded minutes per hour: displays a value showing how severely an overload affected the performance of an artifact.

Item size: shows the memory recorded for the Power BI items in the capacity over time.

Performance profile: displays report performance broken out into three different categories (Fast/Moderate/Slow). The performance aggregation is taken from the number of operations conducted on an artifact.

Weekly Trendlines

This section has four sparklines that provide quick, high-level insights into weekly trends.

Evidence Page

The Evidence page of the app helps to identify which Power BI artifacts are leading to overload and which items are impacted by that overload.

Overloaded items: the most important visual here is the table at the bottom left, which can display either Overloaders or Overloaded Artifacts. Overloaders highlights items that cause highly impactful overload events. Toggle the visual to Overloaded Artifacts to display the items most impacted by overloading in the past two weeks. Users can drill through an individual artifact to get more information specific to it.

Overloading windows: helps users understand whether overload or autoscale events occurred because of a single artifact or several.

Items overloaded (seconds): use this visual to understand whether items that are overloading impact their own performance or the performance of other items.

Number of users overloaded: use this visual to understand whether a single user or several users were impacted by an overload event. This helps you understand how widespread the issue is.

Refresh Page

This entire page is dedicated to helping users identify refresh performance issues. It can be accessed for an entire capacity or as a drill-through for specific items. The selections made at the top of the page apply to all of the visuals on the page.

Refresh by item: shows the breakdown of items by the metric selected in the slicer described above. This visual helps you understand which optimizations for an item could reduce the capacity footprint.

CPU: the columns in this visual represent the number of CPU seconds used to finish a single operation per hour across a fourteen-day period.

Duration and Operations visuals: the columns in these visuals show the number of seconds it took to finish a single operation, per hour, across a fourteen-day period.

Refresh Detail: this matrix shows the metadata for all individual refresh operations that occurred. Use the ratio column to understand the ratio between CPU time and processing time. A low ratio means there are inefficiencies that cause Power BI to spend more time waiting for the data source and less time executing the refresh.
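To make the ratio more concrete, here is a small sketch assuming the ratio is CPU seconds divided by the refresh’s processing (wall-clock) duration in seconds, which matches the description above. The function name and the two refreshes are hypothetical.

```python
def refresh_ratio(cpu_seconds: float, duration_seconds: float) -> float:
    """CPU time of a refresh divided by its processing duration."""
    if duration_seconds <= 0:
        raise ValueError("duration_seconds must be positive")
    return cpu_seconds / duration_seconds


# Two hypothetical refreshes with the same duration but very different CPU use
print(refresh_ratio(cpu_seconds=540, duration_seconds=600))  # 0.9
print(refresh_ratio(cpu_seconds=60, duration_seconds=600))   # 0.1
```

The second refresh spends most of its ten minutes waiting, which points to the data source rather than the model. Since refreshes can run work in parallel, CPU time may exceed the duration, so ratios above 1 are possible.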

Timepoint Details Page

This page is perhaps the most important page when determining the causal factors that lead to overload or your dedicated capacity reaching its maximum threshold. Access it by drilling through various points in other pages to view the operations that occurred at that time.

The page is broken into two primary sections. There is a table for interactive operations and a table for background operations. As described before, interactive operations are activities performed by users at the front end, such as loading pages, making visualization selections, and filtering.

Background operations are not caused directly by users; dataset refreshes are an example. Each table displays the exact artifact, the operation, the start and end time, whether the operation succeeded, and helpful metrics that describe its impact on the capacity at that point in time.

I recommend using this view when you want to know the specific operation that caused an overload and would like to contact the user.

Item Details Page

The Item Details page is another drill-through page that offers very low-level information specific to a single item that the user drills through. This page displays the information provided for the past two weeks regarding CPU usage, Users, Duration, Overloading, and more.

Organizations spend lots of time, money, and resources ensuring their teams have what they need to report on their business metrics efficiently. If your organization has licensed a dedicated Power BI Premium Capacity, you should monitor it and act proactively to find efficiencies that lead to better and faster insights, a better user experience, and ultimately greater adoption.

The PBI Premium Capacity Metrics App is an excellent tool that helps organizations do that, and since it’s a template app, it requires no effort to develop and minimal effort to install.

If you have more questions about the PBI Premium Capacity Metrics App or Power BI in general, our experienced Power Platform experts will gladly guide you.


A Complete Guide to Power BI Pricing and Capacity Management

- February 2, 2023

As business intelligence programs are becoming more sophisticated and nuanced, Power BI has found a unique position in the market as a go-to BI tool. As an increasing number of organizations are adopting Power BI, they’ve got lots of questions about the various aspects of Power BI like capacity and pricing.

As a Microsoft Gold Certified Partner with more than a decade of experience implementing digital solutions, CloudMoyo’s experts have shared some insights that can help your organization identify which solution might fit your enterprise BI needs. Keep reading for an overview of dedicated capacities, supported workloads, content sharing, and other Power BI features that might be helpful in your decision-making!

Power BI Capacity Management

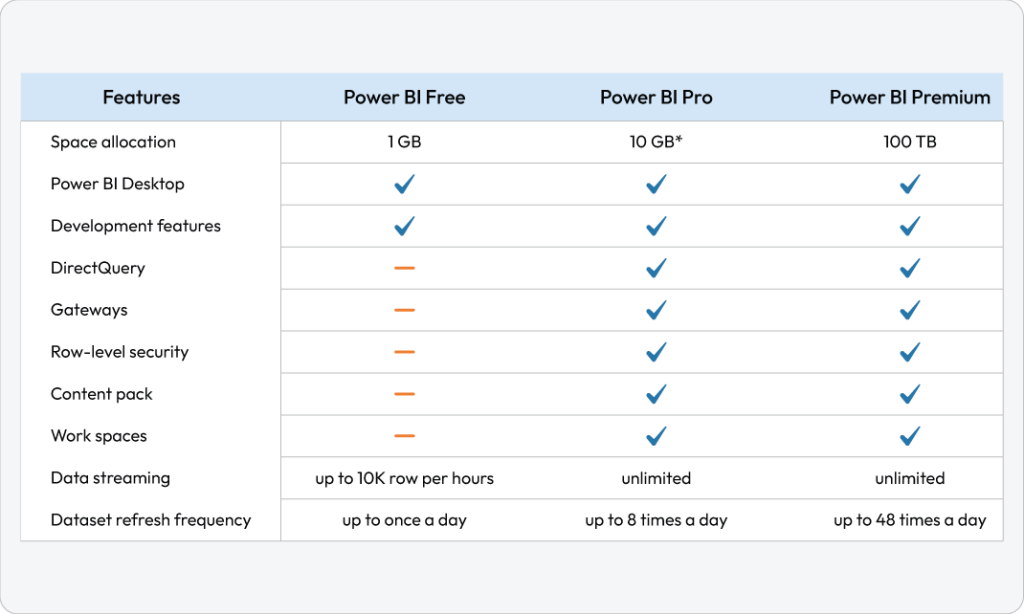

Power BI offers three tiers of service based on usage – Free, Pro, and Premium:

- Power BI Free for Content Creation: Use the free Power BI Desktop tool to author reports and prepare, model, and create data visualizations.

- Power BI Pro for Content Publication: Collaborate with colleagues, model data, author content, share dashboards, publish reports, and perform ad hoc analysis.

- Power BI Premium for Content Consumption: Read and interact with pre-published dashboards and reports with either a per-user Power BI Pro license or a Power BI Premium license for large-scale databases.

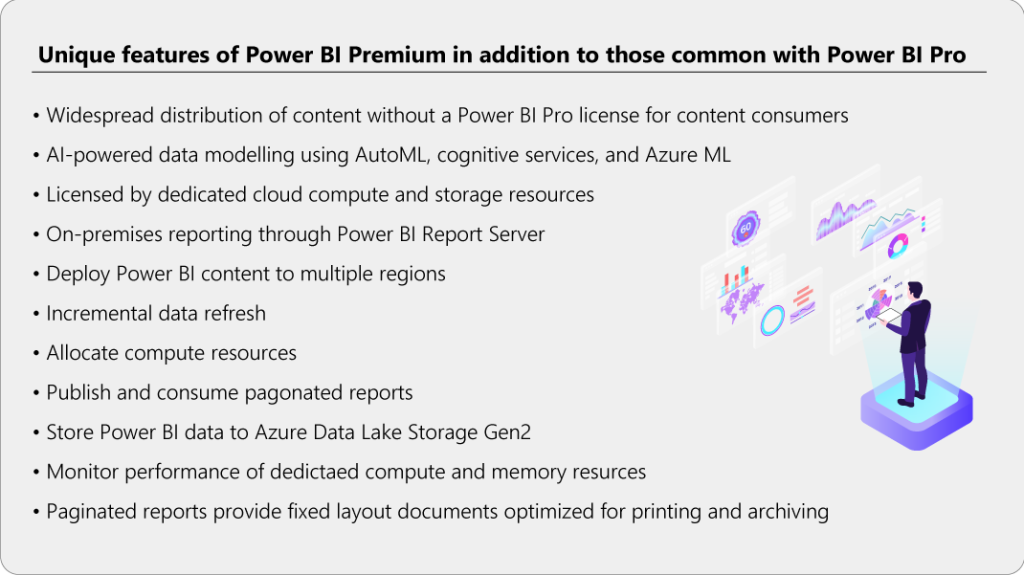

While Power BI Pro and Premium both have their own set of extensive features, we’ve highlighted some of the unique features of Power BI Premium in Figure 1. These features are in addition to those common with Power BI Pro.

Power BI Pricing Management

The total number of users who interact with Power BI reports or dashboards, and how they break down by category, are the biggest factors in estimating the cost of Power BI for your organization. There are three categories of users:

- Pro Users: These users need to collaborate, author content, model data, perform ad hoc analysis, share dashboards, and publish reports.

- Frequent Users: Users who frequently interact with reports or dashboards.

- Occasional Users: Users who occasionally consume reports and dashboards.

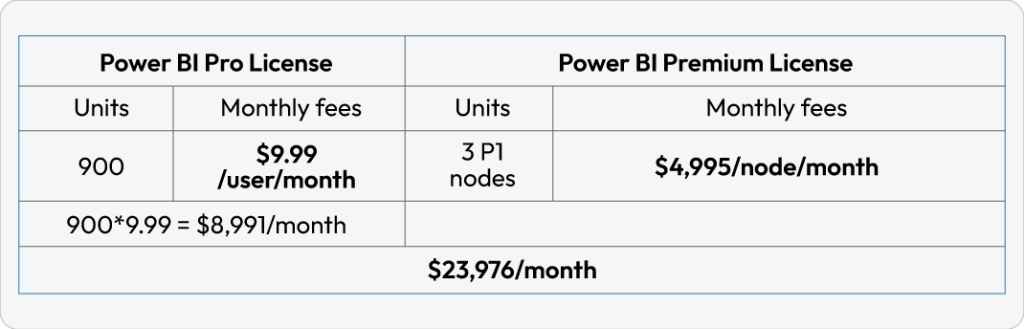

Based on these identified user types, the Power BI pricing calculator can be used to estimate the cost. Let’s work with an example here:

Suppose an organization has a total of 4500 users who’ll have access to Power BI. Let’s divide these users into 3 categories – 20% pro users, 35% frequent users, and 45% occasional users. Based on the pricing calculator, the total cost for these 4500 users is $23,976/month.
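The user split in this example translates into the following head counts per category. This sketch only reproduces the arithmetic of the split; the monthly dollar figure comes from Microsoft’s pricing calculator and isn’t computed here.

```python
total_users = 4500
# Category shares in percent, as given in the example above
shares_percent = {"pro": 20, "frequent": 35, "occasional": 45}

# Integer arithmetic avoids floating-point rounding on the percentages
counts = {category: total_users * pct // 100 for category, pct in shares_percent.items()}
print(counts)  # {'pro': 900, 'frequent': 1575, 'occasional': 2025}
```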

Power BI Premium

Power BI Premium provides dedicated and enhanced resources to run the Power BI service for your organization with features like:

- Greater scale and performance

- Flexibility to license by capacity

- Unify self-service and enterprise BI

- Extend on-premises BI with Power BI Report Server

- Support for data residency by region (Multi-Geo)

- Share data with anyone without purchasing a per-user license

The Office 365 subscription of Power BI Premium is available in two SKU (Stock-Keeping Unit) families:

- P SKUs (P1-P3) for embedding and enterprise features. The commitment is monthly or yearly, and it’s billed monthly. This includes a license to install Power BI Report Server on-premises.

- EM SKUs (EM1-EM3) for organizational embedding. The commitment is yearly and is billed monthly. EM1 and EM2 SKUs are available only through volume licensing plans. You can’t purchase them directly.

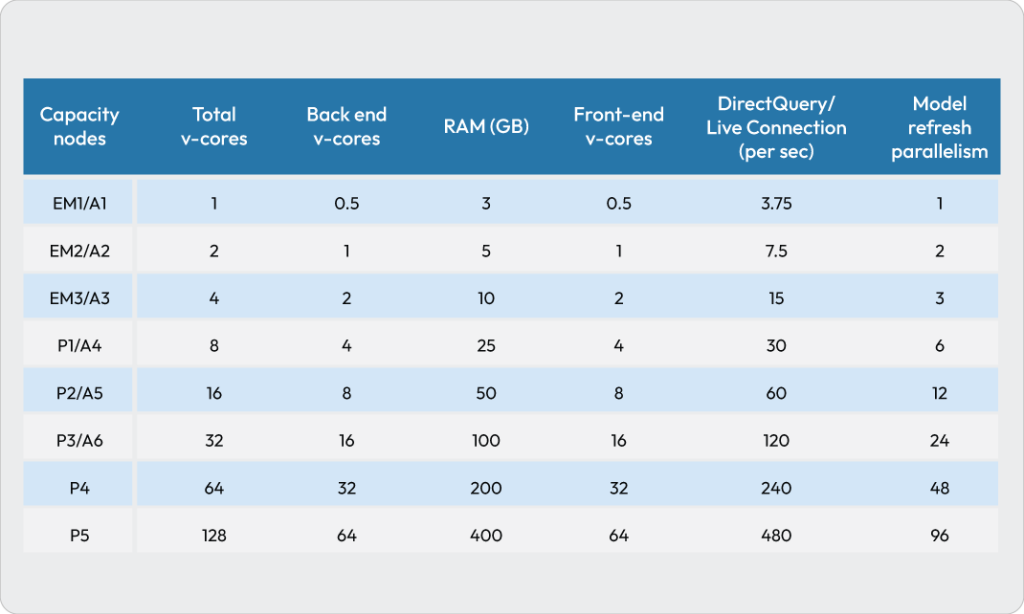

Capacity Nodes

As described earlier, there are two Power BI Premium SKU families – EM and P. Each SKU represents a set amount of storage, memory, and processor resources, and all SKUs are considered capacity nodes. Each SKU also has operational limits on the number of DirectQuery and Live Connections per second and on the number of parallel model refreshes.

Processing is achieved by a set number of v-cores, divided equally between the back-end and the front-end. Back-end v-cores are assigned a fixed amount of memory that’s primarily used to host models, also known as active datasets. Back-end v-cores are responsible for core Power BI functionality, including query processing, cache management, running R services, model refresh, natural language processing, and server-side rendering of reports and images.

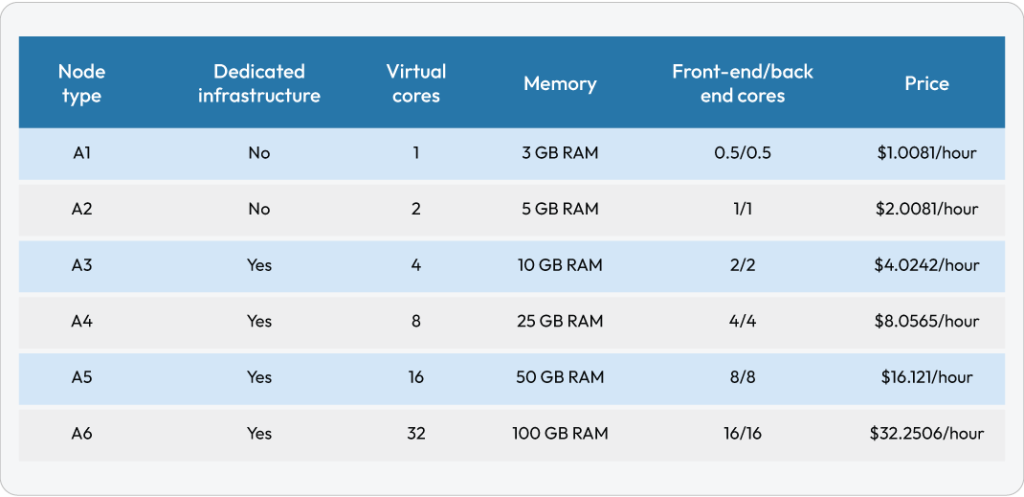

Front-end v-cores are responsible for web services, dashboard and report document management, access rights management, scheduling, APIs, uploads and downloads, and everything related to the user experience. Storage is set to 100 TB per capacity node. The resources and limitations of each Premium SKU (and equivalently sized A SKU) are described in Table 2:

Workload Configuration in Premium Capacity Using Power BI Admin Portal

If the default settings don’t meet your capacity’s resource requirements, you can change the memory and other workload settings. The steps to configure workloads in the Power BI admin portal are:

- In Capacity settings > PREMIUM CAPACITIES, select a capacity.

- Under MORE OPTIONS, expand Workloads.

- Enable one or more workloads and set a value for Max Memory and other settings.

- Select Apply.
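These portal steps can also be scripted. The sketch below assumes the Power BI REST API's Capacities - Patch Workload operation (PATCH .../capacities/{capacityId}/Workloads/{workloadName}) and its `state` and `maxMemoryPercentageSetByUser` body fields. The helper name is hypothetical, token acquisition is omitted, and the workload names and fields should be verified against the current API reference.

```python
import json
import urllib.request
from typing import Optional

API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def build_patch_workload_request(
    capacity_id: str,
    workload_name: str,
    enabled: bool,
    max_memory_percent: Optional[int],
    access_token: str,
) -> urllib.request.Request:
    """Build (but do not send) a PATCH request that configures one workload."""
    body = {"state": "Enabled" if enabled else "Disabled"}
    if max_memory_percent is not None:
        # Max Memory is a percentage of the capacity's memory.
        if not 0 < max_memory_percent <= 100:
            raise ValueError("max_memory_percent must be between 1 and 100")
        body["maxMemoryPercentageSetByUser"] = max_memory_percent
    return urllib.request.Request(
        url=f"{API_ROOT}/capacities/{capacity_id}/Workloads/{workload_name}",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="PATCH",
    )
```

Sending it is then a matter of `urllib.request.urlopen(req)`. The request is built separately so it can be inspected or logged before anything changes on the capacity.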

The parameters that contribute to workloads in a Premium capacity are: AI workload, datasets, max intermediate row set count, max offline dataset size, max result row set count, query memory limit, query timeout, automatic page refresh (in preview), dataflows, container size, and paginated reports.

Power BI Embedded

The total cost of Power BI Embedded depends on the type of node chosen and the number of nodes deployed. Node types differ in their number of v-cores and amount of RAM. Microsoft publishes Power BI Embedded pricing on an hourly and monthly basis for each region. Table 3 covers the hourly pricing for the Central U.S. region.
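The cost arithmetic can be written out as a small function. This is a sketch that assumes no specific prices: take the hourly rate from Table 3 or the current Azure pricing page. The 730 hours per month figure is an assumption based on the convention Azure commonly uses for monthly estimates. Because A SKUs can be paused, the hours actually running can be lower.

```python
# Assumption: Azure's usual convention of 730 hours in a billing month.
HOURS_PER_MONTH = 730


def embedded_cost(hourly_rate: float, nodes: int,
                  hours_running: float = HOURS_PER_MONTH) -> float:
    """Estimated cost = hourly rate x number of nodes x hours the nodes run."""
    if hourly_rate < 0 or nodes < 0 or hours_running < 0:
        raise ValueError("inputs must be non-negative")
    return round(hourly_rate * nodes * hours_running, 2)
```

For instance, `embedded_cost(rate, 2)` estimates two nodes running all month. Passing a smaller `hours_running` models a capacity that is paused outside business hours.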

Frequently Asked Questions (by CloudMoyo Customers)

When should I choose Power BI Pro for deployment?

For small and large deployments, Power BI Pro works great to deliver full Power BI capabilities to all users. Employees across roles and departments can engage in ad-hoc analysis, dashboard sharing and report publishing, collaboration, and other related activities.

Not all my users require the full capabilities of Power BI Pro – do I still need a Power BI Pro license?

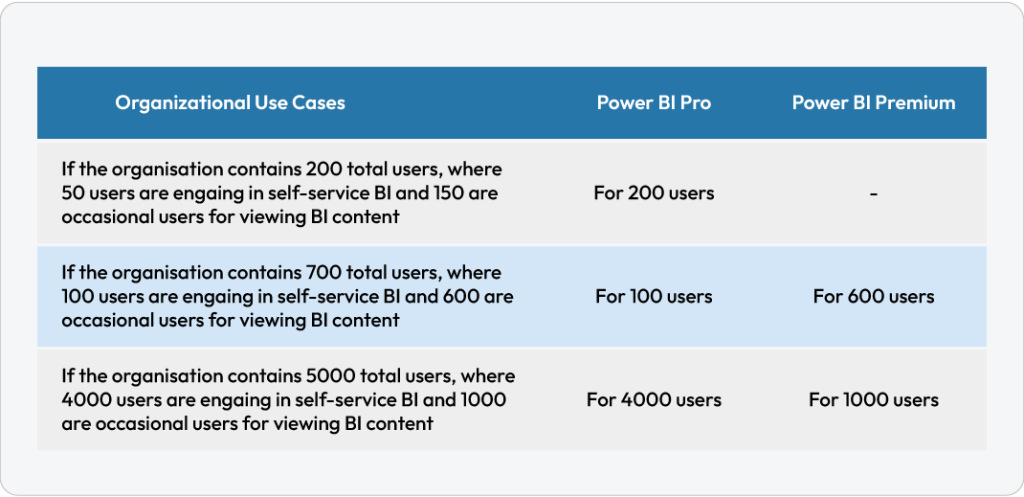

Even if you have Power BI Premium, you need Power BI Pro to publish reports, share dashboards, collaborate with colleagues in workspaces, and engage in other related activities. To use a Power BI Premium capacity, you need to assign a workspace to that capacity. The following use cases will help you understand when to choose Power BI Pro, Premium, or both.
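Workspace assignment can also be automated through the Power BI REST API. This sketch builds a request for the Groups - AssignToCapacity operation (POST .../groups/{groupId}/AssignToCapacity with a `capacityId` body) but does not send it. The helper name is hypothetical and token acquisition is omitted. The caller is assumed to be a workspace admin with assignment permissions on the capacity. Passing the all-zero GUID moves the workspace back to shared capacity.

```python
import json
import urllib.request

API_ROOT = "https://api.powerbi.com/v1.0/myorg"
# The all-zero GUID unassigns the workspace (moves it back to shared capacity).
SHARED_CAPACITY_ID = "00000000-0000-0000-0000-000000000000"


def build_assign_to_capacity_request(workspace_id: str, capacity_id: str,
                                     access_token: str) -> urllib.request.Request:
    """Build (but do not send) a request that assigns a workspace to a capacity."""
    body = json.dumps({"capacityId": capacity_id}).encode("utf-8")
    return urllib.request.Request(
        url=f"{API_ROOT}/groups/{workspace_id}/AssignToCapacity",
        data=body,
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Looping over a list of workspace IDs and calling `urllib.request.urlopen` on each request is enough to assign them in bulk.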

Do you need self-service BI?

Self-service BI isn’t just a trend anymore – it’s become a necessity for efficient information sharing within an organization. Various professionals can collaborate, publish, share, and perform ad-hoc analysis easily with advanced self-service BI tools.

Can Power BI support big data analytics and on-premises, as well as cloud reporting?

Power BI Premium provides enterprise BI, big data analytics, cloud, and on-premises reporting along with advanced administration and deployment controls. It also provides dedicated cloud computing and storage resources that allow any user to consume Power BI content.

So, Do I Need Power BI?

Depending on your enterprise business intelligence needs, it's important to choose the right Power BI offering! From the number of users to pricing to the features of each Power BI option, we hope this guide helps you decide.

Every organization is unique in its needs and goals. And the right technology partner can help you identify the best solution based on your enterprise needs! CloudMoyo experts have decades of experience working with various technologies, including Power BI and other Microsoft tools to transform your organization with resilience. Our goal is to support organizations on their digital transformation journeys, future-proofing their business with agility and scalability as they grow.


Originally posted 06/18/2020, Updated 02/02/2023.

By Abhay Jadhav, Analytics team CloudMoyo


13 May 2024

PowerBI premium capacity administration: dataset monitoring and tuning

Over the years, Power BI has evolved into a complex and varied ecosystem of tools and solutions, which in turn demands several supporting roles: developers, data engineers, and data scientists, of course, but also one more, the capacity administrator. One person may cover several of these roles, but the roles are distinct in nature.

Capacity Administration

The role of the capacity admin is to make sure that all tools and users play well together, and sometimes hard proof that something is wrong is needed before it can be fixed. This article describes how the capacity admin can gather enough data from the Fabric capacity to help developers fine-tune their solutions and get the most value from the software, as well as from the insights the developers provide.

As part of capacity administration, a Power BI admin needs to monitor and fine-tune the performance of the environment while providing best-practice guidance to the developers who publish reports to the shared environment. I have seen both sides: I was a Power BI developer for many years, optimizing performance and fighting for shared resources, and for the past year I have been a Power BI admin. These are two different points of view. Developers have their own tools (Power BI Desktop, DAX Studio, the Power BI Desktop profiler, and so on), which give them granular performance data from the local environment. Admins have tools that show the bigger picture: the actual resource utilization in the shared environment where the reports are published.

Wouldn’t it be best to combine both views and thus create a more reliable and better-performing environment?

For an admin, one way to get a good overview of the Premium Capacity and its resource usage is to use the Fabric Capacity Metrics app which is provided by Microsoft. (For more info, read here: https://learn.microsoft.com/en-us/fabric/enterprise/metrics-app )

In my previous article, “What is Microsoft Fabric all about?”, I explained how the resources of Power BI capacities are expected to work and what triggers throttling. In short, when we use Power BI services, we get the processing capacity we have subscribed to. Once we exceed it, the users of the entire capacity get “punished” by being throttled or even rejected. Recently, a team of developers brought down an entire Power BI Premium capacity by publishing a single report without testing it and without knowing how to monitor its performance in the Power BI portal after publishing.

One Problem Is The Variability

At times a capacity may be completely adequate, but at other times it may be under significant resource pressure. If that pressure is sustained for longer periods, it can lead to throttling for everything running on the Premium capacity. When this happens, all users and all workspaces will likely suffer the delays of a slowed-down environment. In one real-life example, a single report brought down an entire capacity because its measures had more than 10 nested levels of DAX. Using the techniques described in this article, the admin and the developers were able to restore the service quickly. They also learned how to proactively investigate and prevent future outages with the same techniques.

Of course, one way to deal with throttling caused by resource overutilization is to enable the AutoScale function. This would work well in cases when the Capacity is used for critical production reports and the stakeholders have endlessly deep pockets.

Sooner or later, however, it would be a great idea to do some performance tuning.

Viewing the Metrics

If we turn to the previously mentioned Fabric Capacity Metrics app, we might find something similar to this in the metrics report:

Looking further in the details, we might find which workspaces and which objects within them are causing the most pressure:

Finally, in the Fabric Capacity Metrics report we can also view a short list of statistics about the operations which take the most resources:

In this case, this dataset looks like a great candidate for a performance tuning session: its query is used by 91 users and consumes an enormous number of CU seconds.

So far, this is the admin's viewpoint. If the admin is actively monitoring the system, they will send the developers a clear message that there is a great opportunity for optimization.

What would the developers do, though?

As mentioned before, Power BI developers use Performance Analyzer (on the Optimize tab in Power BI Desktop), which is a great tool:

It gives insight into query durations, and we can copy the DAX queries and fine-tune them in DAX Studio. We can even connect DAX Studio to the local Power BI Desktop process and replay and fine-tune the DAX queries on the developer's machine.

Performance Analyzer, however, is not enough to judge the performance of reports and datasets in the Power BI Service portal. Other factors come into play in the Service portal, and the environments may be drastically different. For example, there might be a VPN in the way, or data transfer between cloud services may differ depending on where the developer's machine is. All of this makes debugging on local development machines much harder. Furthermore, datasets and reports in the Service portal usually have varied usage patterns. Everything may behave predictably during development, but once users touch the system, we get unexpected results.

This is the same challenge we have had in database development and query tuning for decades, and this is why there are so many posters and T-Shirts with the words “But it worked on my machine…”

This is the main reason to collect performance data from the portal rather than rely solely on what happens on the developers' machines.

In this case we know which reports and datasets use the most resources, but we need the tools to gather their performance metrics in context, not only on our development machines. In database development and query tuning, the next logical step would be to turn to our extended events and see what happens in the environment where the objects are.

The next tool that comes to mind is SQL Server Management Studio. After all, we can connect to SSAS objects and use Extended Events to trace what is hurting performance.

We can easily do this for on-prem SSAS instances:

But if we try to connect to the PowerBI service, we simply do not see the Management section:

We can use Extended Events with on-premises Analysis Services, but they are not available when we connect to the Service portal.

Luckily, in this case we can turn to the good old (already deprecated) SQL Server Profiler. Extended Events are available for on-premises Analysis Services, but none are available for Azure Analysis Services.

There are a few considerations, however. First, Profiler only works with datasets published in a Premium workspace (Premium, Premium Per User, or Power BI Embedded). Second, we can trace only one dataset at a time.

To trace a dataset, we need to be able to connect to the Workspace XMLA endpoint.

To use the XMLA endpoint, the Power BI admin first needs to enable a couple of admin settings:

- The XMLA endpoint must be enabled for the capacity.

- The Allow XMLA endpoints and Analyze in Excel with on-premises datasets setting must be enabled at the tenant level, and the user who will perform the tracing must be on its list of allowed users.

To run a trace for a dataset, the first thing we need is the XMLA endpoint connection string. It has the format powerbi://api.powerbi.com/v1.0/myorg/[workspace name].

The easiest way to get it is to go to the Premium Workspace, click on Settings > Premium > Workspace Connection and copy the string.
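Profiler only needs the endpoint itself as the server name. Other Analysis Services clients, such as DAX Studio or ADOMD.NET-based tools, often take a full connection string instead. The helper below is a hypothetical sketch. It assumes the commercial-cloud endpoint format `powerbi://api.powerbi.com/v1.0/myorg/<workspace name>` and uses `Initial Catalog` for the dataset name, which corresponds to the dataset you specify on Profiler's Connection Properties tab.

```python
# Assumption: commercial-cloud XMLA endpoint root (sovereign clouds differ).
XMLA_ROOT = "powerbi://api.powerbi.com/v1.0/myorg"


def xmla_endpoint(workspace_name: str) -> str:
    """Server name to paste into Profiler for a Premium workspace."""
    return f"{XMLA_ROOT}/{workspace_name}"


def xmla_connection_string(workspace_name: str, dataset_name: str) -> str:
    """Analysis Services-style connection string: endpoint plus dataset."""
    # Values containing ';' or '"' would need quoting; this sketch rejects them.
    for value in (workspace_name, dataset_name):
        if ";" in value or '"' in value:
            raise ValueError(f"unsupported character in {value!r}")
    return f"Data Source={xmla_endpoint(workspace_name)};Initial Catalog={dataset_name}"
```

Workspace names with spaces are passed through unchanged, just as they appear in the copied connection string.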

Starting the trace

As promised, now we will start working with the Profiler. We select Server Type to be Analysis Services and we connect to the XMLA endpoint with whatever authentication method is needed.

Before clicking Connect, it is very important to go to the Connection Properties tab and specify the dataset name:

If the dataset name is not specified correctly, an error like this appears:

Now we can click Connect and choose which events to collect. For this guide, we can simply select the Standard template.

When we click Run, we start capturing events in real time:

Note the XML chunk at the end of the Query Begin event, which contains valuable session information. First of all, we have captured the actual DAX query, and we also have some context information. Formatted, it looks like this:
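When many traces need to be compared, the XML chunk can be flattened programmatically. The sketch below is an assumption-heavy illustration. The `PropertyList` root in the `urn:schemas-microsoft-com:xml-analysis` namespace and the `Catalog` and `ApplicationContext` elements are modeled on what such trace payloads typically contain. The JSON keys inside `ApplicationContext` (`DatasetId`, `Sources`, `ReportId`, `VisualId`) are also assumptions. The sample is hypothetical and trimmed, so compare it against your own trace.

```python
import json
import xml.etree.ElementTree as ET


def parse_property_list(xml_text: str) -> dict:
    """Flatten a <PropertyList> fragment into {element name: text}."""
    root = ET.fromstring(xml_text.strip())
    props = {}
    for child in root:
        # ElementTree reports namespaced tags as "{namespace}Name"; keep only Name.
        name = child.tag.split("}", 1)[-1]
        props[name] = (child.text or "").strip()
    # Assumption: ApplicationContext, when present, carries a JSON document.
    context = props.get("ApplicationContext")
    if context:
        try:
            props["ApplicationContext"] = json.loads(context)
        except json.JSONDecodeError:
            pass  # keep the raw text if it is not valid JSON
    return props


# Hypothetical, trimmed sample; real traces contain more properties.
SAMPLE = """
<PropertyList xmlns="urn:schemas-microsoft-com:xml-analysis">
  <Catalog>SalesModel</Catalog>
  <ApplicationContext>{"DatasetId": "ds-1", "Sources": [{"ReportId": "rpt-1", "VisualId": "vis-1"}]}</ApplicationContext>
</PropertyList>
"""
```

`parse_property_list(SAMPLE)["ApplicationContext"]["Sources"]` then points straight at the report and visual that issued the query.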

This is a great set of information, since it gives the developers real insight into the performance of their dataset in context. The developer can see who the user was, what the application context was, and what the performance metrics were for each interaction. This information can be used to take the DAX query and fine-tune it. It also tells us exactly which user submitted the request, so we can talk to them about their needs; it may turn out that there is an easier way to get the work done. We also know which report and which visual are consuming the resources, so we can revisit those items.

From here on, the developers may take the best candidates for query optimization and they may work their way through optimizing the resources of the shared capacity.

A broader approach to performance tuning

So far, I have described the ad-hoc way of using SQL Server Profiler traces to capture valuable information about dataset and query performance in a Power BI Premium workspace.

There is, however, another way to capture performance information from a Power BI workspace assigned to a Premium capacity: each workspace can individually be connected to an Azure Log Analytics workspace.

This is fairly simple. Create an Azure Log Analytics workspace, then open the Power BI workspace settings, go to the Azure connections tab, and point to the Log Analytics workspace where you want the data stored.

There are some important differences between Trace and Log Analytics, however.

For example, several developers can run a trace at any time when they are testing the environment, and this trace would not cost anything. The trace can be started and stopped at any time.

Log analytics collects data continuously and it incurs costs. Furthermore, specific permissions need to be granted and managed for the Log Analytics workspace, which creates a bit of administration work.

Another aspect, most visible to me as a Power BI Global admin, is that with Profiler traces I can audit different datasets without worrying whether the workspaces are already connected to some Log Analytics instance or whether I have access to it. This really helps me have quick conversations with Power BI developers and run ad-hoc pair-programming and tuning sessions.

How Log Analytics works

Here is how it works: when we connect a workspace to Log Analytics, the data starts flowing to a predefined log called PowerBIDatasetsWorkspace (here is the official documentation for it: https://learn.microsoft.com/en-us/azure/azure-monitor/reference/tables/PowerBIDatasetsWorkspace )

It takes some time for data to start flowing into the logs. In my experience, it takes about 5 minutes.

Once the data is in the log, we can query it with KQL. For example, here is a query that returns the average query duration by day for the last 30 days.
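The original query did not survive extraction. What follows is a hypothetical reconstruction, not the author's query. It assumes the `PowerBIDatasetsWorkspace` columns `TimeGenerated`, `OperationName`, and `DurationMs`, and it runs the KQL from Python with the `azure-monitor-query` and `azure-identity` packages (`LogsQueryClient.query_workspace`). Note that `workspace_id` is the Log Analytics workspace ID, not the Power BI workspace ID.

```python
from datetime import timedelta

# Hypothetical reconstruction: average QueryEnd duration per day, last 30 days.
AVG_DURATION_BY_DAY = """
PowerBIDatasetsWorkspace
| where TimeGenerated > ago(30d)
| where OperationName == "QueryEnd"
| summarize AvgDurationMs = avg(DurationMs) by Day = bin(TimeGenerated, 1d)
| order by Day asc
"""


def run_log_analytics_query(workspace_id: str, query: str, days: int = 30) -> list[dict]:
    """Run a KQL query against a Log Analytics workspace; return rows as dicts."""
    # Imported here so the module still loads without the Azure SDK installed.
    from azure.identity import DefaultAzureCredential
    from azure.monitor.query import LogsQueryClient, LogsQueryStatus

    client = LogsQueryClient(DefaultAzureCredential())
    response = client.query_workspace(workspace_id, query, timespan=timedelta(days=days))
    if response.status != LogsQueryStatus.SUCCESS:
        raise RuntimeError(f"query did not fully succeed: {response.status}")
    table = response.tables[0]
    return [dict(zip(table.columns, row)) for row in table.rows]
```

Calling `run_log_analytics_query("<log-analytics-workspace-id>", AVG_DURATION_BY_DAY)` returns one dict per day. Partial results are treated as errors here to keep the sketch simple.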

The result of this query is trivial and won't tell us much.

We could spend some time writing a more advanced KQL query that returns the query count, distinct users, and average duration per workspace for the last 30 days:
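This query was also lost in extraction. Below is a hypothetical KQL version, together with a pure-Python equivalent of its `summarize` step that is useful for sanity-checking exported rows offline. The columns `ExecutingUser`, `CpuTimeMs`, and `PowerBIWorkspaceName` are assumptions based on the `PowerBIDatasetsWorkspace` table reference. The Python function expects events already filtered to `QueryEnd`. KQL's `dcount()` is an estimate while the Python count is exact, so small differences on large data are expected.

```python
from collections import defaultdict

# Hypothetical per-workspace overview for the last 30 days.
WORKSPACE_OVERVIEW = """
PowerBIDatasetsWorkspace
| where TimeGenerated > ago(30d)
| where OperationName == "QueryEnd"
| summarize QueryCount = count(),
            DistinctUsers = dcount(ExecutingUser),
            AvgDurationMs = avg(DurationMs),
            TotalCpuTimeMs = sum(CpuTimeMs)
    by PowerBIWorkspaceName
| order by QueryCount desc
"""


def workspace_overview(events: list[dict]) -> list[dict]:
    """Group QueryEnd events by workspace and aggregate like the KQL above."""
    groups = defaultdict(list)
    for event in events:
        groups[event["PowerBIWorkspaceName"]].append(event)
    rows = []
    for name, evs in groups.items():
        rows.append({
            "PowerBIWorkspaceName": name,
            "QueryCount": len(evs),
            "DistinctUsers": len({e["ExecutingUser"] for e in evs}),
            "AvgDurationMs": sum(e["DurationMs"] for e in evs) / len(evs),
            "TotalCpuTimeMs": sum(e["CpuTimeMs"] for e in evs),
        })
    # Busiest workspaces first, matching "order by QueryCount desc".
    return sorted(rows, key=lambda r: r["QueryCount"], reverse=True)
```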

The result may look like this:

What we see here is a simple aggregation of resource usage over time for our Premium Capacity – how many queries vs. how many users and the aggregated CPU and Duration. This particular query can be used as an overview, but it is also worth noting that the underlying data is quite granular and we can filter by a specific user or even look for specific patterns within the submitted queries.

For further dive into KQL I would recommend the official documentation as a starting point: https://learn.microsoft.com/en-us/azure/data-explorer/kusto/query/

As mentioned, there are options: we can either do on-the-spot profiling or collect log data for our workspaces and query it later.

After all, this is an iterative process. After the fine-tuning sessions (whether with Profiler traces or Log Analytics), developers may ask the Power BI admin for another view of the capacity metrics report to see how their work has improved the environment as a whole. The admin can then help them assess the overall health of the environment.

In this article we saw the different views of a Power BI admin and a Power BI Developer: their tools, options, and workloads.

Happy debugging!


Fabriano Richard

Fabriano has a background of many years working with the Microsoft stack, starting with SQL Server 2000, going all the way to Azure and PowerBI administration. Over more than 20 years Fabriano has worked on assignments involving database architecture, Microsoft SQL Server data platform, data model design, database design, integration solutions, business intelligence, reporting, as well as performance optimization and systems scalability. For the past 2 years he has actively developed PowerBI systems and administered Premium Capacities / Microsoft Fabric workloads.


Four Questions to Ask When Planning a Power BI Premium Deployment

As adoption of Power BI grows, more and more organizations are finding value in purchasing and deploying Power BI Premium. Power BI Premium offers a different licensing structure where an organization can purchase dedicated capacity and users who only consume reports and dashboards aren’t required to have a Power BI Pro license. The move from shared to dedicated capacity brings with it:

- larger storage limits

- support for larger data sets

- higher refresh rates

- support for incremental refresh

- support for Power BI Embedded

- use of Power BI Report Server

Power BI Premium requires some additional deployment planning over simply using shared capacity in PowerBI.com. Listed below are important questions to answer as you get started with Power BI Premium.

1. How will we allocate Power BI Premium capacity?

When you purchase Power BI Premium, you are purchasing an amount of capacity (virtual cores) rather than a number of nodes. If you purchased the P2 plan, you would have 16 v-cores. You could have one node with all of the v-cores, or you could have 2 nodes with 8 v-cores each. You’ll want to take into consideration memory and CPU usage based upon dataset sizes and refresh rates, as well as the need for autonomy (dedicated capacity) of departments or groups within your organization.
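Enumerating the options shows which node layouts a purchase allows. This Python sketch assumes P1 = 8, P2 = 16, and P3 = 32 v-cores, which is consistent with the 16 v-cores quoted for P2, and it considers only P-SKU node sizes. The helper name is hypothetical, and the way the current admin portal lets you split a capacity may differ.

```python
# Assumption: v-cores per P-SKU node.
NODE_VCORES = {"P1": 8, "P2": 16, "P3": 32}


def node_layouts(total_vcores: int) -> list[list[str]]:
    """List every combination of P-SKU nodes whose v-cores sum to total_vcores."""
    skus = sorted(NODE_VCORES, key=NODE_VCORES.get, reverse=True)  # largest first
    layouts: list[list[str]] = []

    def search(remaining: int, start: int, current: list[str]) -> None:
        if remaining == 0:
            layouts.append(list(current))
            return
        # Only consider SKUs at or after `start` so each combination appears once.
        for i in range(start, len(skus)):
            size = NODE_VCORES[skus[i]]
            if size <= remaining:
                current.append(skus[i])
                search(remaining - size, i, current)
                current.pop()

    search(total_vcores, 0, [])
    return layouts
```

For the P2 example, `node_layouts(16)` returns `[["P2"], ["P1", "P1"]]`: one node with all 16 v-cores, or two nodes with 8 each.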

2. Who will manage each capacity?

Power BI Premium brings new management roles in PowerBI.com. In addition to the Office 365 admin and Power BI admin roles, there are capacity admins and capacity assignment permissions. Capacity admins can assign workspaces to their capacity and update the capacity assignment list. Users with capacity assignment permissions can add any workspace in which they are an admin to dedicated capacity. It can be helpful to allow some groups to manage that for themselves rather than to have to submit a help ticket to IT or the BI team when they want to move workspaces in or out of dedicated capacity. If you have multiple groups on one capacity, it might be best to have a capacity admin from each of those groups. But those capacity admins will need to communicate so they don’t overcommit the capacity and cause poor performance for the other groups.

3. How will we assign workspaces to each capacity?

Not every workspace needs to be in dedicated capacity. As the number of workspaces grows, all of your workspaces may not fit in dedicated capacity. You could start out putting every workspace in dedicated capacity until it fills up and then re-evaluate, or you could come up with guidelines as to which workspaces should be assigned to a capacity. Some organizations leave all personal workspaces in shared capacity and put all app workspaces in dedicated capacity. Some organizations assign workspaces to dedicated capacity to maximize their use of Power BI Free licenses for users who only consume content. Other organizations identify important or frequently used workspaces and assign only those to dedicated capacity.
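If you pick the "personal workspaces stay shared, app workspaces go dedicated" guideline, the selection step is easy to automate. The sketch below filters a list of workspace records. The field names (`id`, `type`, `state`, `capacityId`) and the `PersonalGroup`/`Personal` type values are assumptions modeled on the Power BI Admin API's workspace listing, and the function name is hypothetical. The resulting IDs could then be passed to an assignment call such as AssignToCapacity.

```python
# Assumption: personal workspaces are reported with one of these type values.
PERSONAL_TYPES = {"PersonalGroup", "Personal"}


def select_for_dedicated(workspaces: list[dict], target_capacity_id: str) -> list[str]:
    """Return IDs of workspaces that should be moved to the target capacity."""
    target = target_capacity_id.lower()
    selected = []
    for ws in workspaces:
        if ws.get("type") in PERSONAL_TYPES:
            continue  # guideline: personal workspaces stay in shared capacity
        if ws.get("state", "Active") != "Active":
            continue  # skip deleted or orphaned workspaces
        if (ws.get("capacityId") or "").lower() == target:
            continue  # already on the target capacity (GUID case ignored)
        selected.append(ws["id"])
    return selected
```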

4. Should we allow users to sign up for Power BI Free licenses on their own, or block them from doing so?

Often, the deployment of Power BI Premium brings new users to your Power BI tenant. By default, any user can sign up with their Azure Active Directory credentials. Some organizations turn off that ability and require the O365 admin to assign a license to users before they can use PowerBI.com. This might be a good fit if your organization requires training on Power BI or data security before users may begin to use Power BI.

Power BI Premium can bring interactive analytics to a larger audience within your organization, but it takes some additional planning and management. If you would like help planning your Power BI Premium deployment, please contact us. We would be happy to share our knowledge and experience.


Microsoft Power BI Cookbook by Brett Powell


Power BI premium capacity admins

- Office 365 Global Admins and Power BI Admins are Capacity Admins of Power BI Premium capacities by default.

- These admins can assign users as Capacity Admins per capacity during initial setup of the capacity and later via User Permissions within the Premium settings of a capacity in the Power BI Admin Portal.

- Capacity Admins have administrative control over the given capacity but must also be granted assignment permissions in the Users with assignment permissions setting to assign workspaces to premium capacities if the capacity admin will be responsible for associating an app workspace to premium capacity.

- Power BI Admins are expected to have the ability to assign individual workspaces to premium capacity ...


Power BI pricing

Compare business intelligence plans and pricing.

Free account

- Included in Microsoft Fabric free account

- No credit card required

- Upgrade to Pro or Premium to share reports

Power BI Pro

- Publish and share Power BI reports

- Included in Microsoft 365 E5 and Office 365 E5

- Available to buy now with a credit card [1]

Power BI Premium Per User

- Includes all the features available with Power BI Pro

- Access larger model sizes

- More frequent refreshes

- Available to buy now with a credit card [1]

Power BI Embedded

- Brand Power BI reports as your own

- Automate monitoring, management, and deployment

- Reduce developer overhead

Fabric Capacity Reservation

- Annual purchase, saving 40.5% over pay-as-you-go prices

- Microsoft Azure Consumption Commitment eligible

- Per-user licenses required for certain activities [5]

Fabric Capacity Pay-As-You-Go

- Dynamically scale up or down and pause capacity

- Microsoft Azure Consumption Commitment (MACC) eligible

Power BI Premium in Microsoft Fabric

- Includes all the features available in Power BI Premium per user

- Use autoscale to respond to occasional, unplanned overage spikes in capacity [4]

- Power BI Premium capacity is being retired soon.


Prices shown are for marketing purposes only and may not be reflective of actual list price due to currency, country, and regional variant factors. Your actual price will be reflected at checkout. All offers are subject to product service limits. Contact a Microsoft representative to learn more about enterprise pricing and offers.

Academic, government, and nonprofit pricing are available.

Power BI is available in China operated by 21Vianet.

[1] Power BI Pro and Power BI Premium per user subscriptions are available for self-service purchase, as well as through the Microsoft 365 admin center. Purchasing Microsoft Fabric requires access to the Microsoft 365 admin center. Learn more about available Power BI purchasing and licensing options.

[2] A $10.00 per user/month add-on is available for users with Power BI Pro, Microsoft 365 E5, and Office 365 E5 licenses to step up to Power BI Premium per user. Learn more about purchasing Power BI Premium per user.

[3] Power BI Pro license is required for all Power BI Premium (“P”) and Fabric Capacity (“F”) SKUs to publish Power BI content to Microsoft Fabric. Enabling content consumers to review and interact with Power BI reports without additional paid per-user licenses is available at P1 and above (and F64 and above).

[4] Autoscale is an optional add-on that requires Power BI Premium per capacity (Gen2) and Azure subscriptions. Limits can be preset with autoscale to control costs, such as thresholds on vCore scaling or total subscription charges.

[5] A capacity-based license (P1 or F64 and above only) allows Pro or PPU users to create and save content in Fabric or Premium capacity workspaces. They can then share that workspace with colleagues who have any license type including a free account. Only users with Pro or PPU licenses can create and save content in Premium capacities, and only if their organization purchased Premium capacity.

[6] Representative of features available in general availability (GA) status.

[7] Power BI Desktop is the data exploration and report authoring experience for Power BI, and it is available as a free download.

[8] Power BI report consumption without paid per-user licenses applies to Fabric SKUs F64 and above, and Power BI Premium per capacity SKUs P1 and above.

[9] See the Power BI model memory size limit capacity and SKUs.

[10] For storing Power BI data sets only.


Organizational licenses and subscriptions for Fabric and Power BI


There are two ways for users to get a license: from an administrator or through self-service. This article is for administrators, who purchase and manage services and licenses.

Global and Billing administrators can sign up for the Power BI service and Fabric. After signing up and purchasing a subscription or a free trial, Billing, License, User, and Global administrators assign licenses to users in their organization.

If you give them permission, users in your organization can get one of the organization's licenses simply by signing in to Power BI (app.powerbi.com) or Fabric (app.fabric.microsoft.com). When a user signs in, they automatically receive a license. Administrators can set these permissions by following Enable and disable self-service.

If you're an individual, you can sign up for your own free, Pro, Premium Per User, and trial licenses. This process is called self-service. To learn more, see the self-service process.

Fabric and the Power BI service

Power BI is available as a standalone SaaS service and as one of the services integrated with Microsoft Fabric. Administration and licensing of the Power BI service is now integrated with Microsoft Fabric.

Licenses and subscriptions

Fabric and the Power BI service are both SaaS platforms and require licenses and at least one capacity subscription. There are some differences in how these licenses and capacities are acquired.

Power BI licenses

Use of the Power BI service requires a license and a capacity.

Per user license - Per user licenses allow users to work in the Power BI service. The options are Fabric free, Pro, and Premium Per User (PPU).

Capacity subscription - There are two types of Power BI subscriptions for organizations: standard and Premium. Premium provides enhancements to Power BI and a comprehensive portfolio of Premium features. Power BI Premium is a tenant-level Microsoft 365 subscription, available in a choice of SKUs (Stock Keeping Units).

Each Microsoft 365 E5 subscription includes standard capacity and a Pro license.

Fabric licenses

To access the Fabric SaaS platform, you need a license and a capacity.

- Per user license - Per user licenses allow users to work in Fabric. The options are Fabric free, Pro, and Premium Per User (PPU).

- Capacity license - An organizational license that provides a pool of resources for Fabric operations. Capacity licenses are divided into stock keeping units (SKUs). Each SKU provides a different number of capacity units (CUs) which are used to calculate the capacity's compute power.

Which admins can purchase licenses and subscriptions

Admin roles for purchasing licenses and subscriptions.

You must belong to the Global or Billing admin role to purchase or assign licenses for your organization. Admin roles are assigned from the Microsoft Entra admin center or the Microsoft 365 admin center. For more information about admin roles in Microsoft Entra ID, see View and assign administrator roles in Microsoft Entra ID. To learn more about admin roles in Microsoft 365, including best practices, see About admin roles.

Admin roles for managing licenses and subscriptions

After the licenses and subscriptions are purchased, Global, Billing, License, or User admins can manage licenses for an organization. For details about these four admin roles, see Admin roles.

Get Fabric or Power BI for your organization

Fabric and the Power BI service are available for organizations to purchase and to try for free. For detailed instructions, select the option you prefer.

For up-to-date pricing information, see Pricing & product comparison.

Purchase a Fabric subscription. If you're ready to purchase, your options include bulk purchasing for your organization or enabling your users to upgrade their own licenses.

After you purchase a Fabric subscription, enable Fabric for your organization. This includes setting up tenants and capacities.

Purchase a Power BI subscription and licenses. Power BI Pro is included in Microsoft 365 E5. Otherwise, you can purchase Pro or PPU licenses from the Microsoft pricing site, through Microsoft 365, or through a Microsoft partner. After your purchase, you can assign licenses to individual users or allow self-service. For more information, see enabling your users to upgrade their own licenses.

Start a free 60-day Fabric trial for your organization. The trial includes a trial capacity, 1 TB of storage, and access to all of the Fabric experiences and features.

Get free licenses for all of your users. The Fabric free license doesn't include a Fabric capacity. The Fabric free license includes access to the paid features of the Power BI service.

Start a free 30-day trial of Power BI Pro. The trial includes up to 25 licenses. After the 30 days expire, you're charged for the licenses.

View existing licenses

To see which users in your organization already have a license, see View and manage user licenses.

Enable and disable self-service sign-up and purchase

You purchased licenses or signed up for an organizational trial. Now, decide whether your users can assign themselves a license or give themselves an automatic license upgrade. Here are links to help you decide which settings to use.

Self-service

- When to use self-service sign-up and purchase

- Manage self-service purchases and trials

- Manage self-service license requests in the Microsoft 365 admin center

- Manage self-service sign-up subscriptions in the Microsoft 365 admin center

- How to combine self-service settings

Guest user licenses

You might want to distribute content to users who are outside of your organization. You can share content with external users by inviting them to view content as a guest. Microsoft Entra business-to-business (Microsoft Entra B2B) enables sharing with external guest users. Prerequisites:

- The ability to share content with external users must be enabled.

- The guest user must have the proper licensing in place to view the shared content.

For more information about guest user access, see Distribute Power BI content to external guest users with Microsoft Entra B2B.

Other articles for admins

There are many other admin tasks related to licenses and subscriptions. Some of them are listed and linked here.

- Assign and manage licenses with Microsoft 365 and with the Azure portal.

- Remove, reassign, enable, or disable a license

- Cancel a trial

- Take over a license or tenant

- Handle expiring trials.

- Handle expiring licenses for Power BI

Power BI license expiration

There's a grace period after a Power BI license expires. For licenses that are part of a volume license purchase, the grace period is 90 days. If you bought the license directly, the grace period is 30 days. For license trials, there is no grace period.
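The grace-period rules above reduce to a small lookup. This is a minimal sketch, assuming the expiry date is known and treating the grace period as a whole number of calendar days added to it; the purchase-type labels (`"volume"`, `"direct"`, `"trial"`) and the function name are hypothetical.

```python
from datetime import date, timedelta

# Grace periods from the paragraph above, in days. The keys are hypothetical labels.
GRACE_DAYS = {
    "volume": 90,  # license bought as part of a volume license purchase
    "direct": 30,  # license bought directly
    "trial": 0,    # trials have no grace period
}

def grace_period_end(expiry: date, purchase_type: str) -> date:
    """Return the date the grace period ends for a license that expired on `expiry`."""
    return expiry + timedelta(days=GRACE_DAYS[purchase_type])


print(grace_period_end(date(2024, 1, 1), "volume"))  # → 2024-03-31
```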

Power BI has the same license lifecycle as Microsoft 365. For more information, see What happens to my data and access when my Microsoft 365 for business subscription ends.

Manage subscriptions included with Microsoft 365 E5 SKUs

A Microsoft 365 E5 subscription includes Power BI Pro licenses. To learn how to manage licenses, see Administration overview.

Related content

- Purchase and assign Power BI Pro licenses

- About self-service sign-up

- Business subscriptions and billing documentation

- Find Power BI users that are signed in

- Self service FAQ


Chris Webb's BI Blog

Microsoft Fabric, Power BI, Analysis Services, DAX, M, MDX, Power Query, Power Pivot and Excel

Migrating From Power BI P-SKU Premium Capacities To F-SKU Capacities Is Not The Same Thing As Enabling Fabric

Since the announcement in March that Power BI Premium P-SKUs are being retired and that customers will need to migrate to F-SKU capacities instead, I have been asked the same question several times:

Why are you forcing me to migrate to Fabric???

This thread on Reddit is a great example. What I want to make clear in this post is the following:

Moving from P-SKU capacities to F-SKU capacities is not the same thing as enabling Fabric in your tenant

No-one is being forced to migrate from Power BI to Fabric and using F-SKU capacities does not mean you are using Fabric. Access to Fabric for your users is governed by the tenant-level settings documented here and these settings work the same way regardless of whether you’re using a P-SKU capacity or an F-SKU capacity. If you do not enable Fabric you can carry on using Power BI in exactly the same way as you did before, with exactly the same functionality, when you move to using an F-SKU capacity. Your users will not have the ability to create Fabric items like notebooks, warehouses, lakehouses and so on just because you’re using an F-SKU.

As the announcement blog post explains, moving to F-SKUs will involve changes about how and where you purchase your capacities and there will be some features that are only available in F-SKU capacities. Migrating workspaces to a new F-SKU capacity is fairly straightforward (and no different from moving a workspace from one P-SKU capacity to another) but if you have questions about how to perform the migration or how this affects how much you’re paying for Power BI you should contact your Microsoft account team.
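Moving a workspace from one capacity to another can also be scripted. The sketch below targets the Power BI REST API's Groups - Assign To Capacity operation (`POST https://api.powerbi.com/v1.0/myorg/groups/{groupId}/AssignToCapacity` with a `capacityId` in the JSON body). It assumes you already hold a Microsoft Entra access token for an identity that is an admin of each workspace and has assignment permissions on the target capacity; obtaining that token (for example with MSAL) is out of scope here, and the helper names are hypothetical. Only the Python standard library is used.

```python
import json
import urllib.request

API_ROOT = "https://api.powerbi.com/v1.0/myorg"

def build_assign_request(workspace_id: str, capacity_id: str, token: str) -> urllib.request.Request:
    """Build a POST request that assigns one workspace to the given capacity."""
    body = json.dumps({"capacityId": capacity_id}).encode("utf-8")
    return urllib.request.Request(
        url=f"{API_ROOT}/groups/{workspace_id}/AssignToCapacity",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )

def assign_workspaces(workspace_ids: list[str], capacity_id: str, token: str) -> dict[str, int]:
    """Assign each workspace to the capacity and return the HTTP status per workspace.

    urlopen raises urllib.error.HTTPError for 4xx/5xx responses, so a failure
    (for example, missing permissions) stops the loop at that workspace.
    """
    results = {}
    for workspace_id in workspace_ids:
        request = build_assign_request(workspace_id, capacity_id, token)
        with urllib.request.urlopen(request) as response:
            results[workspace_id] = response.status
    return results
```

For example, `assign_workspaces(["<workspace-id>"], "<f-sku-capacity-id>", token)` would attempt to move the listed workspaces onto the new F-SKU capacity, one request per workspace.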


Published by Chris Webb

My name is Chris Webb, and I work on the Fabric CAT team at Microsoft. I blog about Power BI, Power Query, SQL Server Analysis Services, Azure Analysis Services and Excel.

15 thoughts on “Migrating From Power BI P-SKU Premium Capacities To F-SKU Capacities Is Not The Same Thing As Enabling Fabric”

In the post, you mention some Fabric features are only available on F-SKUs. Can you provide more information?

There are some examples in the blog post that I linked to: trusted workspace access for OneLake shortcuts is one.

Aren’t F-SKUs a bit more pricey than their P-SKU counterparts?

Not really. Depends on how you buy them. There is a PAYG option that is more expensive, but very flexible. And there is a Reserved Capacity option that is the same price as the P SKUs.

Reserved Capacity requires an annual commitment, but for most customers, that’s normal.

Personally, I recommend Reserved Capacity for stable, big workloads and suggest looking at PAYG for Dev/Test environments.

With PAYG, you can scale as needed (up to look like Production and then back down). And… if you have a Dev environment that shuts down at night and weekends, it might be less expensive than Reserved Capacity. I ran the numbers and if you can “pause” your environment for more than 10 hours per day (like over night) then PAYG pricing is less than Reserved Capacity. BUT… you have to pause it. 🙂

Hope that helps. Andy http://www.directionsonmicrosoft.com
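Andy's break-even claim can be checked with simple arithmetic. This is a minimal sketch under stated assumptions: a paused capacity accrues no compute charge, PAYG is billed linearly for the hours it runs, and the reservation's effective hourly price is expressed as a fraction (`reserved_to_payg_ratio`) of the PAYG hourly price. No real prices are assumed; the ratio is an input you would take from current pricing, and the function names are hypothetical.

```python
HOURS_PER_DAY = 24

def break_even_pause_hours(reserved_to_payg_ratio: float) -> float:
    """Hours per day a PAYG capacity must be paused to match the reserved cost.

    PAYG cost per day     = payg_hourly * running_hours
    Reserved cost per day = reserved_to_payg_ratio * payg_hourly * 24
    These are equal when running_hours = 24 * ratio, i.e. when the capacity is
    paused for 24 * (1 - ratio) hours.
    """
    if not 0 < reserved_to_payg_ratio <= 1:
        raise ValueError("reserved_to_payg_ratio must be in (0, 1]")
    return HOURS_PER_DAY * (1 - reserved_to_payg_ratio)

def payg_is_cheaper(pause_hours_per_day: float, reserved_to_payg_ratio: float) -> bool:
    """True if pausing this many hours per day makes PAYG cheaper than reserving."""
    return pause_hours_per_day > break_even_pause_hours(reserved_to_payg_ratio)


# With a hypothetical ratio of 0.6, the break-even is about 9.6 paused hours per day.
print(round(break_even_pause_hours(0.6), 1))  # → 9.6
```

Under these assumptions, the break-even depends only on the price ratio: if a reservation cost about 60% of the PAYG rate, pausing for roughly 10 hours a day would be the tipping point Andy describes.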

Chris, thank you… Your comments are true and I can understand the frustration. There is no requirement to use the other Fabric experiences, yet.

But there are three items that are relevant to this discussion.

1. The Fabric licensing for Premium EM and A is bad news. All EM options (which include read-only rights for all users) are going away. The Fabric equivalent SKUs (in performance) are the lower F SKUs, which require Power BI Pro licensing for all read-only users. That means EM customers will see a big cost increase. We have a number of customers who are very, very upset about this change.

2. Power BI Report Server dual-use rights are going away. They are available with Premium P, but are not included with F SKUs. There was a bogus argument that they are not compatible with Fabric, but it was always paperwork, so that makes no sense. Again, a number of our customers are frustrated that they bought P SKUs in good faith to also run PBI Report Server on-prem and now… they have to purchase SQL Server Enterprise edition licenses and carry SA to do the same thing. That is a cost increase.

3. Finally, I’m seeing Power BI features go on the chopping block, only to be replaced by Fabric features. AutoML in Dataflows (being replaced by Data Science) is one, and the fact that Dataflows Gen1 is now called Gen1 leads me to believe it’s only a matter of time. So, while customers don’t have to use Fabric, the reality is that for some Power BI features that are migrating, it won’t be an option in the future.

Now, that sounded kind of harsh, and items 1 & 2 are because I don’t like cost increases. But I happen to be a big fan of Fabric and I think it’s a fabulous tool and the direction customers should go. But I would love to see a roadmap of Power BI features that are moving to Fabric, so customers can see the future, jump on board, and put together project plans to get there. That would really help…

Thanks… Andy http://www.directionsonmicrosoft.com

Licensing Power BI is non-trivial. Getting a reasonable bang-for-the-buck on reserved capacity is an almost impossible goal nowadays. I think reserved capacity is discouraged by Microsoft in favor of PAYG, and they certainly don’t give us the tools to get the most value out of reserved capacity.

Even before the F SKUs, Microsoft was continually changing the meaning of Premium capacity. For example, we used to have isolated background vs. foreground CPU. Now it is all fungible. Also, CPU seems to be fungible across TIME, and you can “borrow” from future CPU in a way that will “catch up with you” and create outages that last for two hours into the future or more!

The biggest problem with the fungibility of background and foreground CPU is the way the background CPU is averaged across the entire day. There is NO WAY to get full value out of the “overnight” CUs. Because even if you try to concentrate the background operations to happen at night, it will still incur a debt that will be paid in the future rather than using the capacity that is going to waste overnight.
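The point about overnight capacity follows from how background smoothing is modeled. This is a minimal sketch, assuming that a background job's CU-seconds are spread evenly over the 24 hours after it runs, measured in 30-second timepoints; both numbers are assumptions of this sketch, the function name is hypothetical, and the model ignores throttling and interactive load.

```python
SMOOTHING_WINDOW_SECONDS = 24 * 60 * 60  # assumed 24-hour smoothing window for background jobs
TIMEPOINT_SECONDS = 30                   # assumed length of one utilization timepoint

def smoothed_background_share(job_cu_seconds: float, capacity_cus: int) -> float:
    """Fraction of each timepoint's CU budget that one background job occupies.

    The job's CU-seconds are divided evenly across every timepoint in the
    smoothing window, so the result does not depend on when the job started.
    """
    timepoints = SMOOTHING_WINDOW_SECONDS // TIMEPOINT_SECONDS  # 2880 timepoints
    cu_seconds_per_timepoint = job_cu_seconds / timepoints
    budget_per_timepoint = capacity_cus * TIMEPOINT_SECONDS
    return cu_seconds_per_timepoint / budget_per_timepoint


# A job that burns half a day's worth of an F64's CU-seconds occupies 50% of
# every timepoint for the next 24 hours, whether it runs at 2 a.m. or 2 p.m.
print(smoothed_background_share(64 * 12 * 60 * 60, 64))  # → 0.5
```

Because the share is independent of start time, scheduling refreshes overnight does not free up daytime capacity under this model, which is the frustration described in the comment.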

If you use the reservation recommender in Azure (it’s turned on by default), you will get recommendations on which RI to buy based on your usage pattern. RIs are super granular so you can optimize your commitment and maximize savings.

Something that confuses me is that people say you have to have an F64 to have all the same features as a P1. However, I am not clear what “features” are missing if you got an F32 vs. an F64 other than “speed” (generic term). I have not seen that documented.

It’s because Free-license users in your tenant can only consume Power BI content on an F64 or above. With a P1, you only need a Pro account for development; in Fabric, that’s only possible with an F64 or above. If you want to use Power BI with free accounts on a smaller SKU, you need to develop a portal, as you do with Embedded.

So here’s how it works.

An F64 and higher actually includes a Power BI Premium P environment. Granted, it shares compute with the other Fabric experiences, but it provides a full Premium P environment with all the features of Premium P. That means it has multi-geo, larger data model sizes, dataflow pipelines, bring your own key for data encryption, etc. All the high-end stuff big orgs need, including 100TB of Power BI storage.

An F32 and lower actually “works with Power BI”. None of those lower F SKUs includes a Power BI environment directly. Instead, you have to fire up a Power BI Pro subscription, which comes with its own data storage and is limited compared to a Premium P environment (smaller model sizes, etc.). Now, what’s cool is that Fabric workspaces can include Power BI content and share security controls (“works with”). So… with a lower F SKU capacity, working with Power BI feels just like it does with the higher F SKUs, but the Power BI feature set is different.

Summary:

- F64 and higher: Power BI included and brings Premium P features

- F32 and lower: Power BI not included but works with Power BI Pro workspaces

Hope that helps. Andy Snodgrass http://www.directionsonmicrosoft.com

I know what you’re trying to explain here, but it’s not correct to say that “Power BI is not included” with F32 and lower. There’s a licensing difference: if you have a workspace on an F64 or above then you can use a Power BI Free licence to consume content in that workspace; if you are using an F32 the rules are the same as if you’re using Shared today, namely that you need a Power BI Pro licence to consume content as well as create it.
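The licensing rule in this reply can be written as a small decision function. This is a sketch, assuming only the two cases discussed in the thread (F SKUs at or above F64 versus smaller F SKUs) and ignoring PPU, Embedded, and guest-user scenarios; the function name and role labels are hypothetical.

```python
def minimum_license(role: str, f_sku_size: int) -> str:
    """Return the minimum per-user Power BI license for a workspace on an F SKU.

    role is "creator" (publishes content) or "viewer" (only consumes content).
    f_sku_size is the number in the SKU name, e.g. 64 for F64.
    """
    if role == "creator":
        # Creating content needs Pro on any F SKU (PPU is ignored in this sketch).
        return "Pro"
    if role == "viewer":
        # F64 and above: Free users can consume content.
        # Below F64: same rules as Shared capacity, so viewers need Pro.
        return "Free" if f_sku_size >= 64 else "Pro"
    raise ValueError(f"unknown role: {role!r}")
```

For example, `minimum_license("viewer", 32)` returns `"Pro"`, which is the cost difference the rest of this thread is discussing.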

Chris, I think we’re into semantics here. My point is that purchasing an F32 or lower SKU does not give me (creator or consumer) the right to use Power BI. I have to purchase Power BI separately.

So, in my mind, that means Power BI is not included with F32 or lower. I can still use Power BI in an F32 deployment, but I have to pay for it separately.

Does that sound right? Andy

I agree we’re just discussing terminology here. I still don’t like the phrase “Power BI is not included with F32 or lower” though. If you’re using Shared/Pro you still need licences to publish and consume, and you can’t say that Power BI is not included there.

In general, when I compare reserved capacity for an F64 and a P1, the base price of the F64 seems to be much higher.

However, as a nonprofit the P SKU nonprofit pricing is heavily discounted compared to regular pricing. Microsoft does not seem to offer discounts on the Azure side for F SKUs (only small generic Azure Grant for nonprofits that we already use).