Model-based test case generation and prioritization: a systematic literature review

- Regular Paper

- Published: 07 September 2021

- Volume 21, pages 717–753 (2022)

- Muhammad Luqman Mohd-Shafie¹ (ORCID: orcid.org/0000-0001-6988-8851),

- Wan Mohd Nasir Wan Kadir¹,

- Horst Lichter²,

- Muhammad Khatibsyarbini¹ &

- Mohd Adham Isa¹

Model-based test case generation (MB-TCG) and prioritization (MB-TCP) use models that represent the system under test (SUT) to generate and prioritize test cases in software testing. Both build on model-based testing (MBT), a technique that facilitates test automation. Automated testing is indispensable for complex, industrial-size systems because of its advantages over manual testing. In recent years, the number of MB-TCG and MB-TCP publications has grown encouragingly. However, the empirical studies conducted to validate these approaches deserve careful scrutiny, because they determine whether the reported results are valid and whether the approaches generalize to industrial contexts. This systematic review aims to identify and review the state of the art in MB-TCG, MB-TCP, and approaches that combine the two. The research questions were designed from the needs identified for this review, and keywords extracted from them were used to search the literature for studies that could answer them. Candidate studies also underwent a quality assessment so that only studies of sufficient quality were selected. For full transparency, all research data of this review are available in a public repository (Harvard Dataverse, https://doi.org/10.7910/DVN/20VASV). A total of 122 primary studies were selected: 100 propose MB-TCG approaches, 15 propose MB-TCP approaches, and seven propose approaches that combine MB-TCG and MB-TCP. One of the main findings is that the most common limitations of existing approaches are their dependency on specifications, the need for manual intervention, and limited scalability.
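
To make the distinction between MB-TCG and MB-TCP concrete, here is a minimal Python sketch under stated assumptions; it is an illustration, not the method of any particular study in the review. It assumes the SUT model is a finite state machine written as hypothetical `(source_state, event, target_state)` triples. Generation targets transition coverage (one abstract test per transition, reached via a breadth-first shortest path from the initial state), and prioritization uses the widely known "additional" greedy coverage heuristic. The function names `generate_tests` and `prioritize` and the toy card-reader model are invented for this example.

```python
from collections import deque

# Hypothetical SUT model format: a finite state machine (FSM) written as
# (source_state, event, target_state) triples. Not taken from the review.
Transition = tuple[str, str, str]


def generate_tests(transitions: list[Transition], initial: str) -> list[list[Transition]]:
    """MB-TCG sketch (transition coverage): one abstract test case per
    transition, i.e. a shortest path from `initial` that ends by firing it."""
    outgoing: dict[str, list[Transition]] = {}
    for t in transitions:
        outgoing.setdefault(t[0], []).append(t)

    # Breadth-first search records a shortest transition path to every reachable state.
    path_to: dict[str, list[Transition]] = {initial: []}
    queue = deque([initial])
    while queue:
        state = queue.popleft()
        for t in outgoing.get(state, []):
            if t[2] not in path_to:
                path_to[t[2]] = path_to[state] + [t]
                queue.append(t[2])

    # Transitions whose source state is unreachable get no test case.
    return [path_to[t[0]] + [t] for t in transitions if t[0] in path_to]


def prioritize(tests: list[list[Transition]]) -> list[list[Transition]]:
    """MB-TCP sketch ("additional" greedy): repeatedly pick the test that covers
    the most transitions not yet covered by the tests already picked."""
    remaining = list(tests)
    covered: set[Transition] = set()
    ordered: list[list[Transition]] = []
    while remaining:
        # max() returns the first best candidate, so ties (including the case where
        # nothing new is left to cover) keep the generation order.
        best = max(remaining, key=lambda test: len(set(test) - covered))
        ordered.append(best)
        covered |= set(best)
        remaining.remove(best)
    return ordered


# Usage: a toy, hypothetical card-reader model.
model = [
    ("Idle", "insert_card", "CardIn"),
    ("CardIn", "enter_pin", "Authenticated"),
    ("CardIn", "eject", "Idle"),
    ("Authenticated", "withdraw", "Idle"),
]
for test in prioritize(generate_tests(model, "Idle")):
    print([event for _, event, _ in test])
# ['insert_card', 'enter_pin', 'withdraw']
# ['insert_card', 'eject']
# ['insert_card']
# ['insert_card', 'enter_pin']
```

Prioritization here only reorders the generated suite and drops nothing, which is what separates it from test suite minimization. A practical MB-TCG tool would also have to concretize these abstract event sequences into executable tests against the SUT.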

Utting, M., Pretschner, A., Legeard, B.: A taxonomy of model-based testing approaches. Softw. Test. Verif. Reliab. 22 (5), 297–312 (2012). https://doi.org/10.1002/stvr.456

Article Google Scholar

Arora, P.K., Bhatia, R.: A systematic review of agent-based test case generation for regression testing. Arab. J. Sci. Eng. 43 (2), 447–470 (2018). https://doi.org/10.1007/s13369-017-2796-4

Setiani, N., Ferdiana, R., Santosa, P.I., Hartanto, R.: Literature review on test case generation approach. In: 2nd International Conference on Software Engineering and Information Management, Bali, Indonesia, pp. 91–95. ACM, (2019).

Catal, C., Mishra, D.: Test case prioritization: a systematic mapping study. Softw. Qual. J. 21 (3), 445–478 (2013). https://doi.org/10.1007/s11219-012-9181-z

Shah, S.A.A., Shahzad, R.K., Bukhari, S.S.A., Minhas, N.M., Humayun, M.: A review of class based test case generation techniques. JSW 11 (5), 464–480 (2016). https://doi.org/10.17706/jsw.11.5.464-480

Swain, S.K., Mohapatra, D.P., Mall, R.: Test case generation based on use case and sequence diagram. Int. J. Softw. Eng. 3 (2), 21–52 (2010)

Google Scholar

Prasanna, M., Sivanandam, S., Venkatesan, R., Sundarrajan, R.: A survey on automatic test case generation. Acad. Open Internet J. 15 (6) (2005). http://www.acadjournal.com/2005/v15/part6/p4/

Ingle, S., Mahamune, M.: An uml based software automatic test case generation: survey. Int. Res. J. Eng. Technol. 2 (1), 971–973 (2015)

Maheshwari, V., Prasanna, M.: Generation of test case using automation in software systems—a review. Indian J. Sci. Technol. 8 (35), 1 (2015)

Memon, A., Gao, Z., Nguyen, B., Dhanda, S., Nickell, E., Siemborski, R., Micco, J.: Taming Google-scale continuous testing. In: 39th International Conference on Software Engineering: Software Engineering in Practice Track, Buenos Aires, Argentina, pp. 233–242. IEEE (2017). https://doi.org/10.1109/ICSE-SEIP.2017.16

Singh, R.: Test case generation for object-oriented systems: a review. In: Fourth International Conference on Communication Systems and Network Technologies, Bhopal, India, pp. 981–989. IEEE (2014). https://doi.org/10.1109/CSNT.2014.201

Chugh, M.A.: GUI based test case generation—a review. Int. J. Recent Innov. Trends Comput. Commun. 5 (6), 273–276 (2017)

Kaur, K., Chopra, V.: Review of automatic test case generation from UML diagram using evolutionary algorithm. Int. J. Invent. Eng. Sci. 2 (11), 17–20 (2014)

Mahadik, P., Bhattacharyya, D., Jin Kim, H.: Techniques for automated test cases generation: a review. Int. J. Softw. Eng. Appl. 10 (12), 13–20 (2016)

Ali, S., Briand, L.C., Hemmati, H., Panesar-Walawege, R.K.: A systematic review of the application and empirical investigation of search-based test case generation. IEEE Trans. Softw. Eng. 36 (6), 742–762 (2009). https://doi.org/10.1109/TSE.2009.52

Bansal, P.: A critical review on test case prioritization and optimization using soft computing techniques. In: International Conference on Role of Technology in Nation Building (ICRTNB-2013), pp. 74–77 (2013)

Rajal, J.S., Sharma, S.: A review on various techniques for regression testing and test case prioritization. Int. J. Comput. Appl. 116 (16), 8–13 (2015)

Lasi, H., Fettke, P., Kemper, H.-G., Feld, T., Hoffmann, M.: Industry 4.0. Bus. Inf. Syst. Eng. 6 (4), 239–242 (2014). https://doi.org/10.1007/s12599-014-0334-4

Arrieta, A., Wang, S., Markiegi, U., Sagardui, G., Etxeberria, L.: Employing multi-objective search to enhance reactive test case generation and prioritization for testing industrial cyber-physical systems. IEEE Trans. Ind. Inform. 14 (3), 1055–1066 (2017). https://doi.org/10.1109/TII.2017.2788019

Ahmad, J., Baharom, S.: A systematic literature review of the test case prioritization technique for sequence of events. Int. J. Appl. Eng. Res. 12 (7), 1389–1395 (2017)

Rava, M., Wan-Kadir, W.M.: A review on prioritization techniques in regression testing. Int. J. Softw. Eng. Appl. 10 (1), 221–232 (2016). https://doi.org/10.14257/ijseia.2016.10.1.21

Qu, X., Cohen, M.B., Woolf, K.M.: Combinatorial interaction regression testing: a study of test case generation and prioritization. In: International Conference on Software Maintenance, Paris, France, pp. 255–264. IEEE (2007). https://doi.org/10.1109/ICSM.2007.4362638

Matinnejad, R., Nejati, S., Briand, L.C., Bruckmann, T.: Test generation and test prioritization for simulink models with dynamic behavior. IEEE Trans. Softw. Eng. 45 (9), 919–944 (2018). https://doi.org/10.1109/TSE.2018.2811489

Stallbaum, H., Metzger, A., Pohl, K.: An automated technique for risk-based test case generation and prioritization. In: Proceedings of the 3rd International Workshop on Automation of Software Test, Leipzig, Germany, pp. 67–70. ACM (2008). https://doi.org/10.1145/1370042.1370057

Tahiliani, S., Pandit, P.: A survey of UML-based approaches to testing. Int. J. Comput. Eng. Res. 2 (5), 1396–1400 (2012)

Nagpurkar, S., Gurav, Y.: A survey on test case generation from UML based requirement analysis model. Int. J. Adv. Res. Technol. 2 , 282–284 (2013)

Khandai, M., Acharya, A.A., Mohapatra, D.P.: A survey on test case generation from UML model. Int. J. Comput. Sci. Inf. Technol. 2 (3), 1164–1171 (2011)

Aggarwal, M., Sabharwal, S.: Test case generation from UML state machine diagram: a survey. In: Third International Conference on Computer and Communication Technology, Allahabad, India, pp. 133–140. IEEE (2012). https://doi.org/10.1109/ICCCT.2012.34

Pahwa, N., Solanki, K.: UML based test case generation methods: a review. Int. J. Comput. Appl. 95 (20), 1–6 (2014)

Shirole, M., Kumar, R.: UML behavioral model based test case generation: a survey. ACM SIGSOFT Softw. Eng. Notes 38 (4), 1–13 (2013). https://doi.org/10.1145/2492248.2492274

Mohanty, S., Acharya, A.A., Mohapatra, D.P.: A survey on model based test case prioritization. Int. J. Comput. Sci. Inf. Technol. 2 (3), 1042–1047 (2011)

Joshi, S.A., Tiple, B.: Literature review of model based test case prioritization. Int. J. Comput. Sci. Inf. Technol. 5 (5), 6736–6738 (2014)

Sharma, S., Singh, A.: Model-based test case prioritization using ACO: a review. In: Fourth International Conference on Parallel, Distributed and Grid Computing (PDGC), Waknaghat, India, pp. 177–181. IEEE (2016). https://doi.org/10.1109/PDGC.2016.7913140

Shafie, M.L., Kadir, W.M.W.: Model-based test case prioritization: a systematic literature review. J. Theor. Appl. Inf. Technol. (JATIT) 96 (14), 4548–4573 (2018)

Ahmad, T., Iqbal, J., Ashraf, A., Truscan, D., Porres, I.: Model-based testing using UML activity diagrams: a systematic mapping study. Comput. Sci. Rev. 33 , 98–112 (2019). https://doi.org/10.1016/j.cosrev.2019.07.001

Javed, H., Minhas, N.M., Abbas, A., Riaz, F.M.: Model based testing for web applications: a literature survey presented. J. Softw. 11 (4), 347–361 (2016). https://doi.org/10.17706/jsw.11.4.347-361

Sabbaghi, A., Keyvanpour, M.R.: State-based models in model-based testing: a systematic review. In: 4th International Conference on Knowledge-Based Engineering and Innovation (KBEI), Tehran, Iran, pp. 0942–0948. IEEE (2017). https://doi.org/10.1109/KBEI.2017.8324934

Ernits, J., Roo, R., Jacky, J., Veanes, M.: Model-based testing of web applications using NModel. In: Núñez, M., Baker, P., Merayo, M.G. (eds.) Testing of Software and Communication Systems, pp. 211–216. Springer, Berlin (2009)

Chapter Google Scholar

Pretschner, A., Philipps, J.: 10 methodological issues in model-based testing. In: Broy, M., Jonsson, B., Katoen, J.-P., Leucker, M., Pretschner, A. (eds.) Model-Based Testing of Reactive Systems, pp. 281–291. Springer, Berlin (2005)

Anand, S., Burke, E.K., Chen, T.Y., Clark, J., Cohen, M.B., Grieskamp, W., Harman, M., Harrold, M.J., Mcminn, P., Bertolino, A.: An orchestrated survey of methodologies for automated software test case generation. J. Syst. Softw. 86 (8), 1978–2001 (2013). https://doi.org/10.1016/j.jss.2013.02.061

Singh, Y., Kaur, A., Suri, B., Singhal, S.: Systematic literature review on regression test prioritization techniques. Informatica 36 (4), 379–408 (2012)

Yoo, S., Harman, M.: Regression testing minimization, selection and prioritization: a survey. Softw. Test. Verif. Reliab. 22 (2), 67–120 (2012). https://doi.org/10.1002/stvr.430

Keele, S.: Guidelines for performing systematic literature reviews in software engineering. In: Technical report, Ver. 2.3 EBSE Technical Report. EBSE. sn (2007)

Brereton, P., Kitchenham, B.A., Budgen, D., Turner, M., Khalil, M.: Lessons from applying the systematic literature review process within the software engineering domain. J. Syst. Softw. 80 (4), 571–583 (2007). https://doi.org/10.1016/j.jss.2006.07.009

Khatibsyarbini, M., Isa, M.A., Jawawi, D.N., Tumeng, R.: Test case prioritization approaches in regression testing: a systematic literature review. Inf. Softw. Technol. 93 , 74–93 (2018). https://doi.org/10.1016/j.infsof.2017.08.014

Gurbuz, H.G., Tekinerdogan, B.: Model-based testing for software safety: a systematic mapping study. Softw. Qual. J. 26 (4), 1327–1372 (2018). https://doi.org/10.1007/s11219-017-9386-2

Wohlin, C.: Guidelines for snowballing in systematic literature studies and a replication in software engineering. In: 18th International Conference on Evaluation and Assessment in Software Engineering, London, England, UK, pp. 1–10. ACM (2014). https://doi.org/10.1145/2601248.2601268

Mohd-Shafie, M.L.: Replication data for: model-based test case generation and prioritization: a systematic literature review. Harvard Dataverse (2020). https://doi.org/10.7910/DVN/20VASV

Nebut, C., Fleurey, F., Le Traon, Y., Jezequel, J.-M.: Automatic test generation: a use case driven approach. IEEE Trans. Softw. Eng. 32 (3), 140–155 (2006). https://doi.org/10.1109/TSE.2006.22

Belli, F., Hollmann, A.: Test generation and minimization with “basic” statecharts. In: Symposium on applied computing, Fortaleza, Ceara, Brazil, pp. 718–723. ACM (2008). https://doi.org/10.1145/1363686.1363856

Arcaini, P., Gargantini, A., Riccobene, E.: Improving model-based test generation by model decomposition. In: 10th Joint Meeting on Foundations of Software Engineering, New York, USA, pp. 119–130. ACM (2015). https://doi.org/10.1145/2786805.2786837

Belli, F., Beyazıt, M.: Exploiting model morphology for event-based testing. IEEE Trans. Softw. Eng. 41 (2), 113–134 (2015). https://doi.org/10.1109/TSE.2014.2360690

Gambi, A., Mayr-Dorn, C., Zeller, A.: Model-based testing of end-user collaboration intensive systems. In: Symposium on Applied Computing, Marrakech, Morocco, pp. 1213–1218. ACM (2017). https://doi.org/10.1145/3019612.3019778

Marchetto, A., Tonella, P., Ricca, F.: State-based testing of Ajax web applications. In: 1st International Conference on Software Testing, Verification, and Validation, Lillehammer, Norway, pp. 121–130. IEEE (2008). https://doi.org/10.1109/ICST.2008.22

Arcaini, P., Gargantini, A., Riccobene, E.: Decomposition-based approach for model-based test generation. IEEE Trans. Softw. Eng. 45 (5), 507–520 (2017). https://doi.org/10.1109/TSE.2017.2781231

Devroey, X., Perrouin, G., Schobbens, P.-Y.: Abstract test case generation for behavioural testing of software product lines. In: 18th International Software Product Line Conference: Companion Volume for Workshops, Demonstrations and Tools, Florence, Italy, pp. 86–93. ACM (2014). https://doi.org/10.1145/2647908.2655971

Belli, F., Budnik, C.J., Hollmann, A., Tuglular, T., Wong, W.E.: Model-based mutation testing—approach and case studies. Sci. Comput. Program. 120 , 25–48 (2016). https://doi.org/10.1016/j.scico.2016.01.003

Andrade, W.L., Machado, P.D.: Generating test cases for real-time systems based on symbolic models. IEEE Trans. Softw. Eng. 39 (9), 1216–1229 (2013). https://doi.org/10.1109/TSE.2013.13

Endo, A.T., Bernardino, M., Rodrigues, E.M., Simao, A., de Oliveira, F.M., Zorzo, A.F., Saad, R.: An industrial experience on using models to test web service-oriented applications. In: International Conference on Information Integration and Web-Based Applications & Services (IIWAS), Vienna, Austria, pp. 240–249. ACM (2013). https://doi.org/10.1145/2539150.2539188

Sun, C.-A., Zhang, B., Li, J.: TSGen: A UML activity diagram-based test scenario generation tool. In: International Conference on Computational Science and Engineering, Vancouver, BC, Canada pp. 853–858. IEEE (2009). https://doi.org/10.1109/CSE.2009.99

Yuan, X., Memon, A.M.: Generating event sequence-based test cases using GUI runtime state feedback. IEEE Trans. Softw. Eng. 36 (1), 81–95 (2009). https://doi.org/10.1109/TSE.2009.68

Endo, A.T., Simao, A.: Event tree algorithms to generate test sequences for composite Web services. Softw. Test. Verif. Reliab. 29 (3), e1637 (2019). https://doi.org/10.1002/stvr.1637

Chen, M., Qiu, X., Xu, W., Wang, L., Zhao, J., Li, X.: UML activity diagram-based automatic test case generation for Java programs. Comput. J. 52 (5), 545–556 (2009). https://doi.org/10.1093/comjnl/bxm057

Masood, A., Bhatti, R., Ghafoor, A., Mathur, A.P.: Scalable and effective test generation for role-based access control systems. IEEE Trans. Softw. Eng. 35 (5), 654–668 (2009). https://doi.org/10.1109/TSE.2009.35

Satpathy, M., Yeolekar, A., Peranandam, P., Ramesh, S.: Efficient coverage of parallel and hierarchical stateflow models for test case generation. Softw. Test. Verif. Reliab. 22 (7), 457–479 (2012). https://doi.org/10.1002/stvr.444

Swain, S.K., Mohapatra, D.P., Mall, R.: Test case generation based on state and activity models. J. Object Technol. 9 (5), 1–27 (2010)

El-Fakih, K., Yevtushenko, N., Bochmann, G.: FSM-based incremental conformance testing methods. IEEE Trans. Softw. Eng. 30 (7), 425–436 (2004). https://doi.org/10.1109/TSE.2004.31

Gallagher, L., Offutt, J., Cincotta, A.: Integration testing of object-oriented components using finite state machines. Softw. Test. Verif. Reliab. 16 (4), 215–266 (2006). https://doi.org/10.1002/stvr.340

Yang, R., Chen, Z., Xu, B., Wong, W.E., Zhang, J.: Improve the effectiveness of test case generation on efsm via automatic path feasibility analysis. In: 13th international symposium on high-assurance systems engineering, Boca Raton, FL, USA, pp. 17–24. IEEE (2011). https://doi.org/10.1109/HASE.2011.12

Liu, P., Xu, Z.: MTTool: a tool for software modeling and test generation. IEEE Access 6 , 56222–56237 (2018). https://doi.org/10.1109/ACCESS.2018.2872774

Zhang, M., Ali, S., Yue, T.: Uncertainty-wise test case generation and minimization for cyber-physical systems. J. Syst. Softw. 153 , 1–21 (2019). https://doi.org/10.1016/j.jss.2019.03.011

Keum, C., Kang, S., Ko, I.-Y., Baik, J., Choi, Y.-I.: Generating test cases for web services using extended finite state machine. In: IFIP International Conference on Testing of Communicating Systems, New York, USA, pp. 103–117. Springer (2006). https://doi.org/10.1007/11754008_7

Xu, D., Xu, W., Kent, M., Thomas, L., Wang, L.: An automated test generation technique for software quality assurance. IEEE Trans. Reliab. 64 (1), 247–268 (2014). https://doi.org/10.1109/TR.2014.2354172

Kalaee, A., Rafe, V.: Model-based test suite generation for graph transformation system using model simulation and search-based techniques. Inf. Softw. Technol. 108 , 1–29 (2018). https://doi.org/10.1016/j.infsof.2018.12.001

Asoudeh, N., Labiche, Y.: Multi-objective construction of an entire adequate test suite for an EFSM. In: 25th International Symposium on Software Reliability Engineering, Naples, Italy, pp. 288–299. IEEE (2014). https://doi.org/10.1109/ISSRE.2014.14

Arora, P.K., Bhatia, R.: Mobile agent-based regression test case generation using model and formal specifications. IET Softw. 12 (1), 30–40 (2017). https://doi.org/10.1049/iet-sen.2016.0203

Kalaji, A.S., Hierons, R.M., Swift, S.: An integrated search-based approach for automatic testing from extended finite state machine (EFSM) models. Inf. Softw. Technol. 53 (12), 1297–1318 (2011). https://doi.org/10.1016/j.infsof.2011.06.004

Belli, F., Endo, A.T., Linschulte, M., Simao, A.: A holistic approach to model-based testing of Web service compositions. Softw. Pract. Exp. 44 (2), 201–234 (2014). https://doi.org/10.1002/spe.2161

Anbunathan, R., Basu, A.: Combining genetic algorithm and pairwise testing for optimised test generation from UML ADs. IET Softw. 13 (5), 423–433 (2019). https://doi.org/10.1049/iet-sen.2018.5207

Ali, S., Briand, L.C., Rehman, M.J.-U., Asghar, H., Iqbal, M.Z.Z., Nadeem, A.: A state-based approach to integration testing based on UML models. Inf. Softw. Technol. 49 (11–12), 1087–1106 (2007). https://doi.org/10.1016/j.infsof.2006.11.002

Fraser, G., Wotawa, F.: Using model-checkers to generate and analyze property relevant test-cases. Softw. Qual. J. 16 (2), 161–183 (2008). https://doi.org/10.1007/s11219-007-9031-6

Singh, R., Bhatia, R., Singhrova, A.: Demand based test case generation for object oriented system. IET Softw. 13 (5), 403–413 (2019). https://doi.org/10.1049/iet-sen.2018.5043

Hessel, A., Pettersson, P.: A global algorithm for model-based test suite generation. Electron. Notes Theor. Comput. Sci. 190 (2), 47–59 (2007). https://doi.org/10.1016/j.entcs.2007.08.005

Wong, W.E., Restrepo, A., Choi, B.: Validation of SDL specifications using EFSM-based test generation. Inf. Softw. Technol. 51 (11), 1505–1519 (2009). https://doi.org/10.1016/j.infsof.2009.06.005

Samuel, P., Mall, R., Bothra, A.K.: Automatic test case generation using unified modeling language (UML) state diagrams. IET Softw. 2 (2), 79–93 (2008). https://doi.org/10.1049/iet-sen:20060061

Arcaini, P., Gargantini, A.: Test generation for sequential nets of abstract state machines with information passing. Sci. Comput. Program. 94 , 93–108 (2014). https://doi.org/10.1016/j.scico.2014.02.007

Gargantini, A., Riccobene, E., Rinzivillo, S.: Using Spin to generate tests from ASM specifications. In: International Workshop on Abstract State Machines, Turku, Finland, pp. 263–277. Springer (2003). https://doi.org/10.1007/3-540-36498-6_15

Kirkici, A., Gebizli, C.S., Sözer, H.: Risk-driven model-based testing of washing machine software: an industrial case study. In: International Conference on Software Testing, Verification and Validation Workshops (ICSTW), Vasteras, Sweden, pp. 398–403. IEEE (2018). https://doi.org/10.1109/ICSTW.2018.00080

Pradhan, S., Ray, M., Swain, S.K.: Transition coverage based test case generation from state chart diagram. J. King Saud Univ. Comput. Inf. Sci. (2019). https://doi.org/10.1016/j.jksuci.2019.05.005

Prasanna, M., Chandran, K.: Automatic test case generation for UML object diagrams using genetic algorithm. Int. J. Adv. Soft Comput. Appl 1 (1), 19–32 (2009)

Perrouin, G., Sen, S., Klein, J., Baudry, B., Le Traon, Y.: Automated and scalable t-wise test case generation strategies for software product lines. In: Third International Conference on Software Testing, Verification and Validation, Paris, France, pp. 459–468. IEEE (2010). https://doi.org/10.1109/ICST.2010.43

Nabuco, M., Paiva, A.C.: Model-based test case generation for web applications. In: International Conference on Computational Science and Its Applications, Guimarães, Portugal, pp. 248–262. Springer (2014). https://doi.org/10.1007/978-3-319-09153-2_19

Fraser, G., Wotawa, F.: Using LTL rewriting to improve the performance of model-checker based test-case generation. In: 3rd International Workshop on Advances in Model-Based Testing, London, UK, pp. 64–74. ACM (2007). https://doi.org/10.1145/1291535.1291542

Guan, J., Offutt, J.: A model-based testing technique for component-based real-time embedded systems. In: Eighth International Conference on Software Testing, Verification and Validation Workshops (ICSTW), Graz, Austria, pp. 1–10. IEEE (2015). https://doi.org/10.1109/ICSTW.2015.7107407

Hessel, A., Larsen, K.G., Nielsen, B., Pettersson, P., Skou, A.: Time-optimal real-time test case generation using UPPAAL. In: International Workshop on Formal Approaches to Software Testing, Montreal, QC, Canada, pp. 114–130. Springer (2003). https://doi.org/10.1007/978-3-540-24617-6_9

Nayak, A., Samanta, D.: Synthesis of test scenarios using UML sequence diagrams. Int. Schol. Res. Not. (2012). https://doi.org/10.5402/2012/324054

Enoiu, E.P., Sundmark, D., Pettersson, P.: Model-based test suite generation for function block diagrams using the Uppaal model checker. In: Sixth International Conference on Software Testing, Verification and Validation Workshops, Luxembourg, Luxembourg, pp. 158–167. IEEE (2013). https://doi.org/10.1109/ICSTW.2013.27

Ding, Z., Jiang, M., Chen, H., Jin, Z., Zhou, M.: Petri net based test case generation for evolved specification. Sci. China Inf. Sci. 59 (8), 080105 (2016). https://doi.org/10.1007/s11432-016-5598-5

Bahrin, N.K., Mohamad, R.: TCG algorithm approach for UML sequence diagram. In: 9th Malaysian Software Engineering Conference (MySEC), Kuala Lumpur, Malaysia, pp. 43–48. IEEE (2015). https://doi.org/10.1109/MySEC.2015.7475193

Lorber, F., Larsen, K.G., Nielsen, B.: Model-based mutation testing of real-time systems via model checking. In: International Conference on Software Testing, Verification and Validation Workshops (ICSTW), Vasteras, Sweden, pp. 59–68. IEEE (2018). https://doi.org/10.1109/ICSTW.2018.00029

Reis, S., Metzger, A., Pohl, K.: Integration testing in software product line engineering: a model-based technique. In: International Conference on Fundamental Approaches to Software Engineering, Braga, Portugal, pp. 321–335. Springer (2007). https://doi.org/10.1007/978-3-540-71289-3_25

Sarma, M., Mall, R.: System state coverage through automatic test case generation. Int. J. Inf. Commun. Technol. 1 (3–4), 347–372 (2008). https://doi.org/10.1504/IJICT.2008.024007

Marinescu, R., Enoiu, E., Seceleanu, C., Sundmark, D.: Automatic test generation for energy consumption of embedded systems modeled in EAST-ADL. In: International Conference on Software Testing, Verification and Validation Workshops (ICSTW), Tokyo, Japan, pp. 69–76. IEEE (2017). https://doi.org/10.1109/ICSTW.2017.19

Seqerloo, A.Y., Amiri, M.J., Parsa, S., Koupaee, M.: Automatic test cases generation from business process models. Requir. Eng. 24 (1), 119–132 (2019). https://doi.org/10.1007/s00766-018-0304-3

Khamaiseh, S., Chapman, P., Xu, D.: Model-based testing of obligatory ABAC systems. In: International Conference on Software Quality, Reliability and Security (QRS), Lisbon, Portugal, pp. 405–413. IEEE (2018). https://doi.org/10.1109/QRS.2018.00054

Yano, T., Martins, E., de Sousa, F.L.: MOST: a multi-objective search-based testing from EFSM. In: Fourth International Conference on Software Testing, Verification and Validation Workshops, Berlin, Germany, pp. 164–173. IEEE (2011). https://doi.org/10.1109/ICSTW.2011.37

De Souza, É.F., de Santiago Júnior, V.A., Vijaykumar, N.L.: H-Switch Cover: a new test criterion to generate test case from finite state machines. Softw. Qual. J. 25 (2), 373–405 (2017). https://doi.org/10.1007/s11219-015-9300-8

Fragal, V.H., Simao, A., Mousavi, M.R., Turker, U.C.: Extending HSI test generation method for software product lines. Comput. J. 62 (1), 109–129 (2018). https://doi.org/10.1093/comjnl/bxy046

Soucha, M., Bogdanov, K.: SPYH-method: an improvement in testing of finite-state machines. In: International Conference on Software Testing, Verification and Validation Workshops (ICSTW), Vasteras, Sweden, pp. 194–203. IEEE (2018). https://doi.org/10.1109/ICSTW.2018.00050

Paiva, S.C., Simao, A.: Generation of complete test suites from mealy input/output transition systems. Form. Asp. Comput. 28 (1), 65–78 (2016). https://doi.org/10.1007/s00165-015-0350-2

Article MathSciNet MATH Google Scholar

Wong, S., Ooi, C.Y., Hau, Y.W., Marsono, M.N., Shaikh-Husin, N.: Feasible transition path generation for EFSM-based system testing. In: International Symposium on Circuits and Systems (ISCAS), Beijing, China, pp. 1724–1727. IEEE (2013). https://doi.org/10.1109/ISCAS.2013.6572197

Kruse, P.M., Wegener, J.: Test sequence generation from classification trees. In: Fifth International Conference on Software Testing, Verification and Validation, Montreal, QC, Canada, pp. 539–548. IEEE (2012). https://doi.org/10.1109/ICST.2012.139

Nayak, A., Samanta, D.: Synthesis of test scenarios using UML activity diagrams. Softw. Syst. Model. 10 (1), 63–89 (2011). https://doi.org/10.1007/s10270-009-0133-4

Shu, T., Ding, Z., Chen, M., Xia, J.: A heuristic transition executability analysis method for generating EFSM-specified protocol test sequences. Inf. Sci. 370–371 , 63–78 (2016). https://doi.org/10.1016/j.ins.2016.07.059

Article MathSciNet Google Scholar

Bonfanti, S., Gargantini, A., Mashkoor, A.: Generation of C++ unit tests from abstract state machines specifications. In: International Conference on Software Testing, Verification and Validation Workshops (ICSTW), Vasteras, Sweden, pp. 185–193. IEEE (2018). https://doi.org/10.1109/ICSTW.2018.00049

Arcaini, P., Bolis, F., Gargantini, A.: Test generation for sequential nets of abstract state machines. In: International Conference on Abstract State Machines, Alloy, B, VDM, and Z, Pisa, Italy, pp. 36–50. Springer (2012). https://doi.org/10.1007/978-3-642-30885-7_3

Su, T., Meng, G., Chen, Y., Wu, K., Yang, W., Yao, Y., Pu, G., Liu, Y., Su, Z.: Guided, stochastic model-based GUI testing of Android apps. In: 11th Joint Meeting on Foundations of Software Engineering, Paderborn, Germany, pp. 245–256. ACM (2017). https://doi.org/10.1145/3106237.3106298

Thummalapenta, S., Lakshmi, K.V., Sinha, S., Sinha, N., Chandra, S.: Guided test generation for web applications. In: 35th International Conference on Software Engineering (ICSE), San Francisco, CA, USA, pp. 162–171. IEEE (2013). https://doi.org/10.1109/ICSE.2013.6606562

Enoiu, E.P., Čaušević, A., Ostrand, T.J., Weyuker, E.J., Sundmark, D., Pettersson, P.: Automated test generation using model checking: an industrial evaluation. Int. J. Softw. Tools Technol. Transf. 18 (3), 335–353 (2016). https://doi.org/10.1007/s10009-014-0355-9

Tonella, P., Tiella, R., Nguyen, C.D.: N-gram based test sequence generation from finite state models. In: International Workshop on Future Internet Testing, Istanbul, Turkey, pp. 59–74. Springer (2013). https://doi.org/10.1007/978-3-319-07785-7_4

Zhang, J., Yang, R., Chen, Z., Zhao, Z., Xu, B.: Automated EFSM-based test case generation with scatter search. In: 7th International Workshop on Automation of Software Test (AST), Zurich, Switzerland, pp. 76–82. IEEE (2012). https://doi.org/10.1109/IWAST.2012.6228994

Shin, K.-W., Lim, D.-J.: Model-based automatic test case generation for automotive embedded software testing. Int. J. Autom. Technol. 19 (1), 107–119 (2018). https://doi.org/10.1007/s12239-018-0011-6

Sulaiman, R.A., Jawawi, D.N., Abd Halim, S.: Derivation of test cases for model-based testing of software product line with hybrid heuristic approach. In: International Conference of Reliable Information and Communication Technology, Johor, Malaysia, pp. 199–208. Springer (2019). https://doi.org/10.1007/978-3-030-33582-3_19

Jiang, Z., Wu, X., Dong, Z., Mu, M.: Optimal test case generation for simulink models using slicing. In: International Conference on Software Quality, Reliability and Security Companion (QRS-C), Prague, Czech Republic, pp. 363–369. IEEE (2017). https://doi.org/10.1109/QRS-C.2017.67

Chen, M., Mishra, P., Kalita, D.: Efficient test case generation for validation of UML activity diagrams. Des. Autom. Embedd. Syst. 14 (2), 105–130 (2010). https://doi.org/10.1007/s10617-010-9052-4

Liu, B., Nejati, S., Briand, L.C.: Improving fault localization for Simulink models using search-based testing and prediction models. In: 24th International Conference on Software Analysis, Evolution and Reengineering (SANER), Klagenfurt, Austria, pp. 359–370. IEEE (2017). https://doi.org/10.1109/SANER.2017.7884636

Chen, Y., Wang, A., Wang, J., Liu, L., Song, Y., Ha, Q.: Automatic test transition paths generation approach from EFSM using state tree. In: International Conference on Software Quality, Reliability and Security Companion (QRS-C), Lisbon, Portugal, pp. 87–93. IEEE (2018). https://doi.org/10.1109/QRS-C.2018.00029

Choi, Y.-M., Lim, D.-J.: Model-based test suite generation using mutation analysis for fault localization. Appl. Sci. 9 (17), 3492 (2019). https://doi.org/10.3390/app9173492

Xu, D., El-Ariss, O., Xu, W., Wang, L.: Testing aspect-oriented programs with finite state machines. Softw. Test. Verif. Reliab. 22 (4), 267–293 (2012). https://doi.org/10.1002/stvr.440

Turlea, A., Ipate, F., Lefticaru, R.: Generating complex paths for testing from an EFSM. In: International Conference on Software Quality, Reliability and Security Companion (QRS-C), pp. 242–249. IEEE (2018). https://doi.org/10.1109/QRS-C.2018.00052

Rezaee, A., Zamani, B.: A novel approach to automatic model-based test case generation. Sci. Iran. Trans. Comput. Sci. Eng. Electr. Eng. 24 (6), 3132–3147 (2017). https://doi.org/10.24200/sci.2017.4528

Guo, J., Wang, W., Sun, L., Li, Z., Zhao, R.: A Test case generation method based on state importance of EFSM for web application. In: 13th International Conference on Software Engineering and Knowledge Engineering, San Francisco Bay, USA, pp. 548–547 (2018). https://doi.org/10.18293/SEKE2018-177

Sant, J., Souter, A., Greenwald, L.: An exploration of statistical models for automated test case generation. In: Third International Workshop on Dynamic Analysis, pp. 1–7. ACM (2005). https://doi.org/10.1145/1083246.1083256

Gadkari, A.A., Mohalik, S., Shashidhar, K., Yeolekar, A., Suresh, J., Ramesh, S.: Automatic generation of test-cases using model checking for SL/SF models. In: 4th Model-Driven Engineering, Verification and Validation Workshop, pp. 33–46 (2007)

Akbari, Z., Khoshnevis, S., Mohsenzadeh, M.: A method for prioritizing integration testing in software product lines based on feature model. Int. J. Softw. Eng. Knowl. Eng. 27 (04), 575–600 (2017). https://doi.org/10.1142/S0218194017500218

Zhang, H., Wu, H., Rountev, A.: Automated test generation for detection of leaks in Android applications. In: 11th International Workshop on Automation of Software Test, Austin, TX, pp. 64–70. ACM (2016). https://doi.org/10.1145/2896921.2896932

Sun, C.A., Zhao, Y., Pan, L., He, X., Towey, D.: A transformation-based approach to testing concurrent programs using UML activity diagrams. Softw. Pract. Exp. 46 (4), 551–576 (2016). https://doi.org/10.1002/spe.2324

Arora, V., Bhatia, R., Singh, M.: Synthesizing test scenarios in UML activity diagram using a bio-inspired approach. Comput. Lang. Syst. Struct. 50 , 1–19 (2017). https://doi.org/10.1016/j.cl.2017.05.002

Zhang, C., Cheng, H., Tang, E., Chen, X., Bu, L., Li, X.: Sketch-guided GUI test generation for mobile applications. In: International Conference on Automated Software Engineering (ASE), Urbana, IL, USA, pp. 38–43. IEEE (2017). https://doi.org/10.1109/ASE.2017.8115616

Mohalik, S., Gadkari, A.A., Yeolekar, A., Shashidhar, K., Ramesh, S.: Automatic test case generation from Simulink/Stateflow models using model checking. Softw. Test. Verif. Reliab. 24 (2), 155–180 (2014). https://doi.org/10.1002/stvr.1489

Enoiu, E.P., Sundmark, D., Čaušević, A., Feldt, R., Pettersson, P.: Mutation-based test generation for plc embedded software using model checking. In: IFIP International Conference on Testing Software and Systems, Graz, Austria, pp. 155–171. Springer (2016). https://doi.org/10.1007/978-3-319-47443-4_10

Gupta, P., Surve, P.: Model based approach to assist test case creation, execution, and maintenance for test automation. In: First International Workshop on End-to-End Test Script Engineering, Toronto, ON, Canada, pp. 1–7. ACM (2011). https://doi.org/10.1145/2002931.2002932

Peranandam, P., Raviram, S., Satpathy, M., Yeolekar, A., Gadkari, A., Ramesh, S.: An integrated test generation tool for enhanced coverage of Simulink/Stateflow models. In: Design, Automation & Test in Europe Conference & Exhibition (DATE), Dresden, Germany, pp. 308–311. IEEE (2012). https://doi.org/10.1109/DATE.2012.6176485

Vu, T.D., Pham, N.H., Nguyen, V.H.: A method for automated test cases generation from sequence diagrams and object constraint language for concurrent programs. VNU J. Comput. Sci. Commun. Eng. 31 (3), 28–42 (2016)

Wang, C., Pastore, F., Goknil, A., Briand, L., Iqbal, Z.: Automatic generation of system test cases from use case specifications. In: Proceedings of the 2015 International Symposium on Software Testing and Analysis, Baltimore, MD, USA, pp. 385–396. ACM (2015). https://doi.org/10.1145/2771783.2771812

Satpathy, M., Yeolekar, A., Ramesh, S.: Randomized directed testing (REDIRECT) for simulink/stateflow models. In: 8th ACM International Conference on Embedded Software, Atlanta, GA, USA, pp. 217–226. ACM (2008). https://doi.org/10.1145/1450058.1450088

Nguyen, C.D., Marchetto, A., Tonella, P.: Combining model-based and combinatorial testing for effective test case generation. In: International Symposium on Software Testing and Analysis, Minneapolis, MN, USA, pp. 100–110. ACM (2012). https://doi.org/10.1145/2338965.2336765

Belli, F., Beyazit, M., Takagi, T., Furukawa, Z.: Model-based mutation testing using pushdown automata. IEICE Trans. Inf. Syst. 95 (9), 2211–2218 (2012). https://doi.org/10.1587/transinf.E95.D.2211

Bryce, R.C., Sampath, S., Memon, A.M.: Developing a single model and test prioritization strategies for event-driven software. IEEE Trans. Softw. Eng. 37 (1), 48–64 (2011). https://doi.org/10.1109/TSE.2010.12

Emam, S.S., Miller, J.: Test case prioritization using extended digraphs. ACM Trans. Softw. Eng. Methodol. (TOSEM) 25 (1), 6 (2015). https://doi.org/10.1145/2789209

Fraser, G., Wotawa, F.: Test-case prioritization with model-checkers. In: 25th Conference on IASTED International (2007)

Rapos, E.J., Dingel, J.: Using fuzzy logic and symbolic execution to prioritize UML-RT test cases. In: International Conference on Software Testing, Verification and Validation (ICST), Graz, Austria, pp. 1–10. IEEE (2015). https://doi.org/10.1109/ICST.2015.7102610

Tahat, L., Korel, B., Koutsogiannakis, G., Almasri, N.: State-based models in regression test suite prioritization. Softw. Qual. J. 25 (3), 703–742 (2017). https://doi.org/10.1007/s11219-016-9330-x

Korel, B., Koutsogiannakis, G., Tahat, L.H.: Model-based test prioritization heuristic methods and their evaluation. In: 3rd International Workshop on Advances in Model-Based Testing, London, UK, pp. 34–43. ACM (2007). https://doi.org/10.1145/1291535.1291539

Morozov, A., Ding, K., Chen, T., Janschek, K.: Test suite prioritization for efficient regression testing of model-based automotive software. In: International Conference on Software Analysis, Testing and Evolution (SATE), Harbin, China, pp. 20–29. IEEE (2017). https://doi.org/10.1109/SATE.2017.11

Mahali, P., Mohapatra, D.P.: Model based test case prioritization using UML behavioural diagrams and association rule mining. Int. J. Syst. Assur. Eng. Manag. 9 (5), 1063–1079 (2018). https://doi.org/10.1007/s13198-018-0736-7

Acharya, A.A., Mahali, P., Mohapatra, D.P.: Model based test case prioritization using association rule mining. In: Jain, L.C., Behera, H.S., Mandal, J.K., Mohapatra, D.P. (eds.) Computational Intelligence in Data Mining, vol. 3, pp. 429–440. Springer, Berlin (2015)

Korel, B., Tahat, L.H., Harman, M.: Test prioritization using system models. In: 21st International Conference on Software Maintenance (ICSM'05), Budapest, Hungary, pp. 559–568. IEEE (2005). https://doi.org/10.1109/ICSM.2005.87

Shafie, M.L., Kadir, W.M.W.: Test case prioritization based on extended finite state machine model. J. Telecommun. Electron. Comput. Eng. (JTEC) 9 (3–3), 125–132 (2017)

Han, X., Zeng, H., Gao, H.: A heuristic model-based test prioritization method for regression testing. In: International Symposium on Computer, Consumer and Control, Taichung, Taiwan, pp. 886–889. IEEE (2012). https://doi.org/10.1109/IS3C.2012.226

Panigrahi, C.R., Mall, R.: Model-based regression test case prioritization. ACM SIGSOFT Softw. Eng. Notes 35 (6), 1–7 (2010). https://doi.org/10.1145/1874391.1874405

Gökçe, N., Belli, F., Eminli, M., Dincer, B.T.: Model-based test case prioritization using cluster analysis: a soft-computing approach. Turk. J. Electr. Eng. Comput. Sci. 23 (3), 623–640 (2015)

Briand, L., Labiche, Y., Chen, K.: A multi-objective genetic algorithm to rank state-based test cases. In: International Symposium on Search Based Software Engineering, St. Petersburg, Russia, pp. 66–80. Springer (2013). https://doi.org/10.1007/978-3-642-39742-4_7

Rathore, L.K., Sao, N.: An integrated model based test case prioritization using UML sequence and activity diagram. Int. J. Res. Comput. Appl. Robot. 3 (12), 31–41 (2015)

Kundu, D., Sarma, M., Samanta, D., Mall, R.: System testing for object-oriented systems with test case prioritization. Softw. Test. Verif. Reliab. 19 (4), 297–333 (2009). https://doi.org/10.1002/stvr.407

Acharya, A., Mohapatra, D.P., Panda, N.: Model based test case prioritization for testing component dependency in CBSD using UML sequence diagram. Int. J. Adv. Comput. Sci. Appl. 1 (6), 108–113 (2010)

Panthi, V., Mohapatra, D.P.: Generating and evaluating effectiveness of test sequences using state machine. Int. J. Syst. Assur. Eng. Manag. 8 (2), 242–252 (2017). https://doi.org/10.1007/s13198-016-0419-1

Swain, R.K., Panthi, V., Mohapatra, D.P., Behera, P.K.: Prioritizing test scenarios from UML communication and activity diagrams. Innov. Syst. Softw. Eng. 10 (3), 165–180 (2014). https://doi.org/10.1007/s11334-013-0228-5

Van Eck, N.J., Waltman, L.: Software survey: VOSviewer, a computer program for bibliometric mapping. Scientometrics 84 (2), 523–538 (2010). https://doi.org/10.1007/s11192-009-0146-3

Wang, J.: Formal Methods in Computer Science. CRC Press, Boca Raton (2019)

Belli, F., Budnik, C.J., White, L.: Event-based modelling, analysis and testing of user interactions: approach and case study. Softw. Test. Verif. Reliab. 16 (1), 3–32 (2006). https://doi.org/10.1002/stvr.335

Grochtmann, M., Grimm, K.: Classification trees for partition testing. Softw. Test. Verif. Reliab. 3 (2), 63–82 (1993). https://doi.org/10.1002/stvr.4370030203

Wohlin, C., Runeson, P., Höst, M., Ohlsson, M.C., Regnell, B., Wesslén, A.: Experimentation in Software Engineering. Springer, Berlin (2012)

Bittner, R.M., Fuentes, M., Rubinstein, D., Jansson, A., Choi, K., Kell, T.: mirdata: software for reproducible usage of datasets. In: International Society for Music Information Retrieval Conference (ISMIR), Delft, Netherlands, pp. 99–106 (2019). https://doi.org/10.5281/zenodo.3527750

Kochhar, P.S., Bissyandé, T.F., Lo, D., Jiang, L.: An empirical study of adoption of software testing in open source projects. In: 13th International Conference on Quality Software, Nanjing, China, pp. 103–112. IEEE (2013). https://doi.org/10.1109/QSIC.2013.57

Gousios, G., Pinzger, M., Deursen, A.v.: An exploratory study of the pull-based software development model. In: 36th International Conference on Software Engineering, Hyderabad, India, pp. 345–355 (2014). https://doi.org/10.1145/2568225.2568260

Elbaum, S., Malishevsky, A., Rothermel, G.: Incorporating varying test costs and fault severities into test case prioritization. In: Proceedings of the 23rd International Conference on Software Engineering, Toronto, Ontario, Canada, pp. 329–338. IEEE Computer Society (2001). https://doi.org/10.1109/ICSE.2001.919106

Huang, Y.-C., Peng, K.-L., Huang, C.-Y.: A history-based cost-cognizant test case prioritization technique in regression testing. J. Syst. Softw. 85 (3), 626–637 (2012). https://doi.org/10.1016/j.jss.2011.09.063

Do, H., Elbaum, S., Rothermel, G.: Supporting controlled experimentation with testing techniques: An infrastructure and its potential impact. Empir. Softw. Eng. 10 (4), 405–435 (2005). https://doi.org/10.1007/s10664-005-3861-2

Hanh, L.T.M., Binh, N.T., Tung, K.T.: Survey on mutation-based test data generation. Int. J. Electr. Comput. Eng. 5 (5) (2015)

McMinn, P.: Search-based software test data generation: a survey. Softw. Test. Verif. Reliab. 14 (2), 105–156 (2004). https://doi.org/10.1002/stvr.294

Li, W., Le Gall, F., Spaseski, N.: A survey on model-based testing tools for test case generation. In: International Conference on Tools and Methods for Program Analysis, Moscow, Russia, pp. 77–89. Springer (2017). https://doi.org/10.1007/978-3-319-71734-0_7

Ali, S., Iqbal, M.Z., Arcuri, A., Briand, L.C.: Generating test data from OCL constraints with search techniques. IEEE Trans. Softw. Eng. 39 (10), 1376–1402 (2013). https://doi.org/10.1109/TSE.2013.17

Saeed, A., Ab Hamid, S.H., Sani, A.A.: Cost and effectiveness of search-based techniques for model-based testing: an empirical analysis. Int. J. Softw. Eng. Knowl. Eng. 27 (04), 601–622 (2017). https://doi.org/10.1142/S021819401750022X

Hebig, R., Quang, T.H., Chaudron, M.R., Robles, G., Fernandez, M.A.: The quest for open source projects that use UML: mining GitHub. In: 19th International Conference on Model Driven Engineering Languages and Systems, Saint-Malo, France, pp. 173–183. ACM (2016). https://doi.org/10.1145/2976767.2976778

Mendonca, M., Branco, M., Cowan, D.: SPLOT: software product lines online tools. In: 24th ACM SIGPLAN Conference Companion on Object Oriented Programming Systems Languages and Applications, Orlando, FL, USA, pp. 761–762. ACM (2009). https://doi.org/10.1145/1639950.1640002

Acknowledgements

The research was funded by Universiti Teknologi Malaysia (UTM), and the Malaysian Ministry of Higher Education (MOHE) under the Industry-International Incentive Grant Scheme (IIIGS) (Vote Number: Q.J130000.3051.01M86), and the Academic Fellowship Scheme (SLAM). The authors would also like to express their deepest gratitude to the members of the Software Engineering Research Group (SERG) and the anonymous reviewers for their constructive comments and suggestions.

Author information

Authors and affiliations

Department of Software Engineering, School of Computing, Faculty of Engineering, Universiti Teknologi Malaysia, 81310, Johor Bahru, Johor, Malaysia

Muhammad Luqman Mohd-Shafie, Wan Mohd Nasir Wan Kadir, Muhammad Khatibsyarbini & Mohd Adham Isa

Group Software Construction, RWTH Aachen University, Aachen, NRW, Germany

Horst Lichter

Corresponding author

Correspondence to Muhammad Luqman Mohd-Shafie .

Additional information

Communicated by Benoit Combemale.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Mohd-Shafie, M.L., Kadir, W.M.N.W., Lichter, H. et al. Model-based test case generation and prioritization: a systematic literature review. Softw Syst Model 21 , 717–753 (2022). https://doi.org/10.1007/s10270-021-00924-8

Received : 29 December 2020

Revised : 28 July 2021

Accepted : 20 August 2021

Published : 07 September 2021

Issue Date : April 2022

DOI : https://doi.org/10.1007/s10270-021-00924-8

- Model-based testing

- Test case prioritization

- Test case generation

- Systematic literature review

How-to conduct a systematic literature review: A quick guide for computer science research

Angela Carrera-Rivera

a Faculty of Engineering, Mondragon University

William Ochoa

Felix Larrinaga

b Design Innovation Center (DBZ), Mondragon University

Associated Data

- No data was used for the research described in the article.

Performing a literature review is a critical first step in research for understanding the state of the art and identifying gaps and challenges in the field. A systematic literature review is a method that sets out a series of steps to organize the review methodically. In this paper, we present a guide designed for researchers, and in particular early-stage researchers, in the computer-science field. The contributions of this article are the following:

- Clearly defined strategies to follow for a systematic literature review in computer science research, and

- An algorithmic method to tackle a systematic literature review.

Graphical abstract

Specifications table

Method details

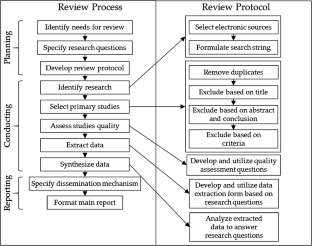

A Systematic Literature Review (SLR) is a research methodology for collecting, identifying, and critically analyzing the available research studies (e.g., articles, conference proceedings, books, dissertations) through a systematic procedure [12]. An SLR brings the reader up to date with the current literature on a subject [6]. Its goal is to review the critical points of current knowledge on a topic with respect to specific research questions and to suggest areas for further examination [5]. Defining an “Initial Idea”, or an interest in a subject to be studied, is the first step before starting an SLR. An early search of the relevant literature can help determine whether the topic is too broad to cover adequately in the available time frame and whether the focus needs to be narrowed. Reading some articles can help set the direction for a formal review, and formulating a potential research question (e.g., how is semantics involved in Industry 4.0?) can further facilitate this process. Once the focus has been established, an SLR can be undertaken to find more specific studies related to the variables in this question.

Although there are multiple approaches to performing an SLR ([5], [26], [27]), this work aims to provide a practical, step-by-step guide while citing useful examples for computer-science research. The methodology presented in this paper comprises two main phases, “Planning”, described in Section 2, and “Conducting”, described in Section 3, as depicted in the graphical abstract.

Defining the protocol is the first step of an SLR, since the protocol describes the procedures involved in the review and acts as a log of the activities to be performed. Obtaining opinions from peers while developing the protocol is encouraged: it helps ensure the review's consistency and validity and helps identify when modifications are necessary [20]. A further goal of the protocol is to ensure that the review can be replicated.

Define PICOC and synonyms

The PICOC (Population, Intervention, Comparison, Outcome, and Context) criteria break down the SLR's objectives into searchable keywords and help formulate research questions [27]. PICOC is widely used in the medical and social sciences to encourage researchers to consider the components of their research questions [14]. Kitchenham & Charters [6] compiled the list of PICOC elements and their corresponding terms in computer science, as presented in Table 1, which also includes keywords derived from the PICOC elements. From that point on, it is essential to think of synonyms or related terms that can later be used to build queries in the selected digital libraries. For instance, the keyword “context awareness” can also be linked to “context-aware”.

Planning Step 1 “Defining PICOC keywords and synonyms”.

Formulate research questions

Clearly defined research question(s) are the key elements which set the focus for study identification and data extraction [21] . These questions are formulated based on the PICOC criteria as presented in the example in Table 2 (PICOC keywords are underlined).

Research question examples.

Select digital library sources

The validity of a study depends on the proper selection of databases, since they must adequately cover the area under investigation [19]. The Web of Science (WoS) is an international, multidisciplinary tool for accessing literature in science, technology, biomedicine, and other disciplines. Scopus currently indexes 40,562 peer-reviewed journals, compared to 24,831 for WoS, which makes it the largest existing multidisciplinary database. However, it may also be necessary to include sources specific to computer science, such as EI Compendex, IEEE Xplore, and the ACM Digital Library. Table 3 compares the areas of expertise of a selection of databases.

Planning Step 3 “Select digital libraries”. Description of digital libraries in computer science and software engineering.

Define inclusion and exclusion criteria

Authors should define the inclusion and exclusion criteria before conducting the review to prevent bias, although these can be adjusted later if necessary. The selection of primary studies depends on these criteria. In this first selection, articles are included or excluded based on their abstracts and primary bibliographic data. When in doubt, the article is skimmed to decide its relevance to the review. Table 4 sets out some criteria types with descriptions and examples.
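As a minimal sketch of the metadata part of this first selection, the Python snippet below applies exclusion criteria in a fixed order and records the reason for each decision, which keeps the screening log auditable. The criteria (publication years 2015 to 2022, English only, journal or conference papers), the `Record` fields, and the sample titles are hypothetical placeholders rather than the entries of Table 4; judging relevance from the abstract remains a manual step.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """Minimal bibliographic record from a database export (hypothetical fields)."""
    title: str
    year: int
    language: str
    doc_type: str  # e.g. "journal", "conference", "book chapter"


# Hypothetical criteria for illustration; a real review takes them from its protocol.
YEAR_RANGE = (2015, 2022)
ACCEPTED_LANGUAGES = {"english"}
ACCEPTED_TYPES = {"journal", "conference"}


def screen(record: Record) -> tuple[bool, str]:
    """Apply metadata-based criteria in order and return (included, reason)."""
    first, last = YEAR_RANGE
    if not first <= record.year <= last:
        return False, "EC1: outside the publication window"
    if record.language.lower() not in ACCEPTED_LANGUAGES:
        return False, "EC2: not written in English"
    if record.doc_type.lower() not in ACCEPTED_TYPES:
        return False, "EC3: not a journal or conference paper"
    return True, "IC: meets all metadata criteria"


# Hypothetical records, for illustration only.
records = [
    Record("Semantic BPM for smart factories", 2019, "English", "journal"),
    Record("Ontology-driven manufacturing", 2012, "English", "conference"),
    Record("Digital twins in industry", 2020, "German", "journal"),
]
for rec in records:
    included, reason = screen(rec)
    print(f"{rec.title}: {'include' if included else 'exclude'} ({reason})")
```

Keeping the reason string alongside each decision makes it straightforward to report how many studies each criterion removed.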

Planning Step 4 “Define inclusion and exclusion criteria”. Examples of criteria type.

Define the Quality Assessment (QA) checklist

Assessing the quality of an article requires an artifact that describes how to perform a detailed assessment. A typical quality assessment is a checklist containing multiple factors to evaluate, with a numerical scale used to assess the criteria and quantify the QA [22]. Zhou et al. [25] presented a detailed description of assessment criteria in software engineering, classified into four main aspects of study quality: Reporting, Rigor, Credibility, and Relevance. Each of these criteria can be evaluated using, for instance, a Likert-type scale [17], as shown in Table 5. It is essential to use the same scale for all criteria in the quality assessment.

Planning Step 5 “Define QA assessment checklist”. Examples of QA scales and questions.

Define the “Data Extraction” form

The data extraction form represents the information necessary to answer the research questions established for the review. Synthesizing the articles is a crucial step when conducting research. Ramesh et al. [15] presented a classification scheme for computer science research, based on topics, research methods, and levels of analysis that can be used to categorize the articles selected. Classification methods and fields to consider when conducting a review are presented in Table 6 .

Planning Step 6 “Define data extraction form”. Examples of fields.

The extracted data must be relevant to the research questions, and the form should record which question each field relates to. Kitchenham & Charters [6] list further data worth capturing, such as conclusions, recommendations, strengths, and weaknesses. Although the data extraction form can be updated if more information is needed, this should be done with caution, since it can be time-consuming. It can therefore help to first acquire a general background in the research topic in order to define better data extraction criteria.
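One way to keep each field tied to a research question is to encode the form as a typed record plus an explicit field-to-question map. The sketch below assumes two hypothetical research questions and illustrative field names (`technology`, `evaluation`, and so on); it is not the form of any particular tool. The two sanity checks fail early if the form and the research questions drift apart after the form is updated.

```python
from dataclasses import dataclass, field, fields

# Hypothetical research questions; a real review uses its own formulated RQs.
RESEARCH_QUESTIONS = {
    "RQ1": "Which technologies are used?",
    "RQ2": "How are the proposed approaches evaluated?",
}


@dataclass
class ExtractionForm:
    """One filled-in data extraction form per included study (illustrative fields)."""
    study_id: str
    title: str
    year: int
    research_method: str   # classification field, e.g. "case study"
    technology: str        # answers RQ1
    evaluation: str        # answers RQ2
    strengths: list[str] = field(default_factory=list)
    weaknesses: list[str] = field(default_factory=list)


# Explicit record of which form field answers which research question.
FIELD_TO_RQ = {"technology": "RQ1", "evaluation": "RQ2"}

# Sanity checks: every mapped field exists and every mapped RQ is defined.
assert set(FIELD_TO_RQ) <= {f.name for f in fields(ExtractionForm)}
assert set(FIELD_TO_RQ.values()) <= set(RESEARCH_QUESTIONS)


def answers_for(forms: list[ExtractionForm], rq: str) -> list[tuple[str, dict[str, str]]]:
    """Collect, per study, the extracted values that address one research question."""
    rq_fields = [name for name, q in FIELD_TO_RQ.items() if q == rq]
    return [(form.study_id, {name: getattr(form, name) for name in rq_fields}) for form in forms]


# Hypothetical filled-in forms.
forms = [
    ExtractionForm("S01", "Example study A", 2020, "case study", "ontology", "industrial case"),
    ExtractionForm("S02", "Example study B", 2021, "experiment", "knowledge graph", "benchmark"),
]
print(answers_for(forms, "RQ1"))
```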

After defining the protocol, conducting the review requires following each of the steps previously described. Tools can simplify this task. Standard tools such as Excel or Google Sheets allow multiple researchers to work collaboratively. Another online tool, designed specifically for performing SLRs, is Parsif.al¹. This tool allows researchers, especially in software engineering, to define goals and objectives, import articles using BibTeX files, eliminate duplicates, define selection criteria, and generate reports.

Build digital library search strings

Search strings are built from the PICOC elements and their synonyms and executed in each digital library. Within a search string, synonyms are separated by the Boolean operator OR, while the groups of terms for different PICOC elements are enclosed in parentheses and joined with the Boolean operator AND. An example follows:

(“Smart Manufacturing” OR “Digital Manufacturing” OR “Smart Factory”) AND (“Business Process Management” OR “BPEL” OR “BPM” OR “BPMN”) AND (“Semantic Web” OR “Ontology” OR “Semantic” OR “Semantic Web Service”) AND (“Framework” OR “Extension” OR “Plugin” OR “Tool”)
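The same construction can be scripted so that the string is regenerated whenever a synonym is added to the PICOC table. The sketch below reuses the four synonym groups of the example; the function name `build_search_string` is illustrative, it emits straight double quotes, and each database's own field codes and query-length limits still have to be applied by hand.

```python
# Synonym groups from the example above: each inner list is one PICOC element.
PICOC_GROUPS = [
    ["Smart Manufacturing", "Digital Manufacturing", "Smart Factory"],
    ["Business Process Management", "BPEL", "BPM", "BPMN"],
    ["Semantic Web", "Ontology", "Semantic", "Semantic Web Service"],
    ["Framework", "Extension", "Plugin", "Tool"],
]


def build_search_string(groups: list[list[str]]) -> str:
    """OR the synonyms inside each group, wrap each group in parentheses, AND the groups."""
    clauses = []
    for synonyms in groups:
        if not synonyms:
            continue  # skip empty groups so no empty parentheses are emitted
        clauses.append("(" + " OR ".join(f'"{term}"' for term in synonyms) + ")")
    return " AND ".join(clauses)


print(build_search_string(PICOC_GROUPS))
```

Generating the string from a single list also guarantees balanced parentheses, which is easy to get wrong when editing long queries by hand.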

Gather studies

Databases that feature advanced search enable researchers to run queries restricted to titles, abstracts, and keywords, as well as to years or research areas. Fig. 1 presents an example of an advanced search in Scopus using titles, abstracts, and keywords (TITLE-ABS-KEY). Most databases allow the use of logical operators (e.g., AND, OR). In the example, the search is for “BIG DATA” combined with either “USER EXPERIENCE” or its synonym “UX”.

Example of Advanced search on Scopus.

In general, the bibliographic data of articles can be exported from the databases as a comma-separated values (CSV) or BibTeX file, which is helpful for data extraction and for quantitative and qualitative analysis. In addition, researchers should take advantage of reference-management software such as Zotero, Mendeley, EndNote, or JabRef, which can easily import this bibliographic information.

Study Selection and Refinement

The first step in this stage is to identify any duplicates that appear across the searches in the selected databases. Automated procedures, such as Excel formulas or short scripts in a programming language (e.g., Python), are convenient here.
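A minimal Python sketch of such a procedure follows. It assumes the exported records have already been loaded as dictionaries with a `title` key and an optional `doi` key (hypothetical field names; real CSV exports name their columns differently). Two records count as duplicates when their DOIs match case-insensitively or their titles match after lowercasing and stripping non-alphanumeric characters, and the first copy is kept. The title normalization is deliberately crude (it also drops accented letters), so flagged pairs are worth a quick manual check.

```python
import re


def normalize_title(title: str) -> str:
    """Lowercase and drop everything except ASCII letters and digits."""
    return re.sub(r"[^a-z0-9]", "", title.lower())


def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the first occurrence of each study, matching on DOI or normalized title."""
    seen: set[str] = set()
    unique = []
    for rec in records:
        keys = {"title:" + normalize_title(rec["title"])}
        doi = (rec.get("doi") or "").strip().lower()  # DOIs are case-insensitive
        if doi:
            keys.add("doi:" + doi)
        if seen.isdisjoint(keys):
            unique.append(rec)
        seen.update(keys)  # remember all keys, so later copies match either one
    return unique


# Hypothetical records merged from several database exports.
records = [
    {"title": "Big Data and User Experience", "doi": "10.1000/abc123", "source": "Scopus"},
    {"title": "Big data and user experience.", "doi": "10.1000/ABC123", "source": "Web of Science"},
    {"title": "UX analytics at scale", "doi": "", "source": "IEEE Xplore"},
]
print([rec["source"] for rec in deduplicate(records)])
```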

In the second step, articles are included or excluded according to the selection criteria, mainly by reading titles and abstracts. Finally, quality is assessed using the predefined scale. Fig. 2 shows an example of an article QA evaluation in Parsif.al using a simple scale. In this scenario, the scoring is YES = 1, PARTIALLY = 0.5, and NO or UNKNOWN = 0. A cut-off score should be defined to filter out articles that do not pass the QA. The QA requires a light review of the full text of each article.
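The scoring and cut-off rule can be expressed in a few lines. This sketch uses the YES/PARTIALLY/NO/UNKNOWN values from the Parsif.al example; the four-question checklist, the cut-off of 2.5, and the choice to treat a score equal to the cut-off as a pass are assumptions for illustration.

```python
# Scores from the Parsif.al example: YES = 1, PARTIALLY = 0.5, NO or UNKNOWN = 0.
SCORES = {"YES": 1.0, "PARTIALLY": 0.5, "NO": 0.0, "UNKNOWN": 0.0}


def qa_score(answers: dict[str, str]) -> float:
    """Sum the scores of all QA answers for one article."""
    return sum(SCORES[answer.upper()] for answer in answers.values())


def passes_qa(answers: dict[str, str], cutoff: float) -> bool:
    """An article passes if its total reaches the cut-off (inclusive, by assumption)."""
    return qa_score(answers) >= cutoff


# Hypothetical four-question checklist and cut-off.
CUTOFF = 2.5
article_a = {"QA1": "YES", "QA2": "PARTIALLY", "QA3": "NO", "QA4": "YES"}
article_b = {"QA1": "PARTIALLY", "QA2": "UNKNOWN", "QA3": "NO", "QA4": "YES"}
for name, answers in [("A", article_a), ("B", article_b)]:
    print(name, qa_score(answers), passes_qa(answers, CUTOFF))
```

With these answers, article A scores 2.5 and passes, while article B scores 1.5 and is filtered out.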

Performing quality assessment (QA) in Parsif.al.

Data extraction

Those articles that pass the study selection are then thoroughly and critically read. Next, the researcher completes the information required using the “data extraction” form, as illustrated in Fig. 3 , in this scenario using Parsif.al tool.

Example of data extraction form using Parsif.al.

The information required (study characteristics and findings) from each included study must be acquired and documented through careful reading. Data extraction is especially valuable when the data require manipulation, assumptions, or inferences. Information can then be synthesized from the extracted data for qualitative or quantitative analysis [16]. This documentation supports clarity, precise reporting, and the ability to scrutinize and replicate the examination.

Analysis and Report

The analysis phase examines the synthesized data and extracts meaningful information from the selected articles [10] . There are two main goals in this phase.

The first goal is to analyze the literature in terms of leading authors, journals, countries, and organizations; this also helps identify correlations among topics. Although not mandatory, this activity can help researchers position their work, find trends, and identify collaboration opportunities. Next, data from the selected articles can be analyzed using bibliometric analysis (BA). BA summarizes large amounts of bibliometric data to present the intellectual structure and emerging trends of a topic or research field [4]. Table 7 sets out some of the most common bibliometric analysis representations.

Techniques for bibliometric analysis and examples.

Several tools can perform this type of analysis, such as Excel and Google Sheets for statistical graphs, or programming languages such as Python, which offers multiple data visualization libraries (e.g., Matplotlib, Seaborn). Cluster maps based on bibliographic data (e.g., keywords, authors) can be built in VOSviewer, which makes it easy to identify clusters of related items [18]. In Fig. 4, node size represents the number of papers related to a keyword, and lines represent the links among keyword terms.
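The two quantities behind such a map can be illustrated with a small sketch: the number of papers in which each keyword appears (node size) and the number of papers in which each pair of keywords appears together (link weight). This is a simplified counting illustration, not VOSviewer's own procedure, which additionally normalizes, lays out, and clusters the network; the keyword lists are hypothetical. Note that "UX" and "User Experience" stay separate unless a synonym map merges them first.

```python
from collections import Counter
from itertools import combinations


def keyword_cooccurrence(papers: list[list[str]]) -> tuple[Counter, Counter]:
    """Count keyword occurrences (node size) and keyword-pair co-occurrences (link weight)."""
    occurrences: Counter = Counter()
    links: Counter = Counter()
    for keywords in papers:
        # Normalize case and count each keyword at most once per paper.
        unique = sorted({kw.strip().lower() for kw in keywords})
        occurrences.update(unique)
        # Sorted order makes ("a", "b") and ("b", "a") the same link.
        links.update(combinations(unique, 2))
    return occurrences, links


# Hypothetical author keywords of three papers.
papers = [
    ["Big Data", "User Experience", "Machine Learning"],
    ["big data", "UX"],
    ["Machine Learning", "Big Data"],
]
occurrences, links = keyword_cooccurrence(papers)
print(occurrences.most_common(2))
print(links.most_common(1))
```

The resulting counts can be exported as an edge list and visualized with the plotting libraries mentioned above.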

[1] Keyword co-relationship analysis using clustering in VOSviewer.

The second and most important goal is to answer the formulated research questions, which should include both a quantitative and a qualitative analysis. The quantitative analysis can use the data categorized, labelled, or coded in the extraction form (see “Define the ‘Data Extraction’ form”). These data can be transformed into numerical values to perform statistical analysis. One of the most widely employed methods is frequency analysis, which shows how often an event recurs and can also represent the percentage distribution of the population (e.g., percentage by technology type, frequency of use of different frameworks). Qualitative analysis includes narrating the results, discussing the way forward for future research, and drawing a conclusion.
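A frequency analysis of one coded field reduces to counting categories and dividing by the total. The sketch below assumes a hypothetical "technology type" field coded across five included studies; percentages are rounded to one decimal place, so in general they may not sum exactly to 100.

```python
from collections import Counter


def frequency_table(labels: list[str]) -> list[tuple[str, int, float]]:
    """Return (category, count, percentage) rows, most frequent first."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        return []
    # most_common() keeps first-encountered order for categories with equal counts.
    return [(label, n, round(100 * n / total, 1)) for label, n in counts.most_common()]


# Hypothetical "technology type" codes taken from five extraction forms.
technology = ["Ontology", "Ontology", "Knowledge graph", "Ontology", "Rule engine"]
for label, n, pct in frequency_table(technology):
    print(f"{label:<16}{n:>3}{pct:>7.1f}%")
```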

Finally, the literature review report should state the protocol so that other researchers can replicate the process and understand how the analysis was performed. In the protocol, it is essential to present the inclusion and exclusion criteria, the quality assessment, and the rationale behind these aspects.

The presentation and reporting of results depend on the structure that the researchers conducting the SLR give to the review; there is no single right answer. This structure should tie the studies together into key themes, characteristics, or subgroups [28].

An SLR can be an extensive and demanding task; however, its results are beneficial in providing a comprehensive overview of the available evidence on a given topic. For this reason, researchers should keep in mind that the entire SLR process is tailored to answering the research question(s). This article has detailed a practical guide to the essential steps for conducting an SLR in the context of computer science and software engineering, citing multiple helpful examples and tools. It is envisaged that this method will assist researchers, and particularly early-stage researchers, in following an algorithmic approach to this task. Finally, a quick checklist is presented in Appendix A as a companion to this article.

CRediT author statement

Angela Carrera-Rivera: Conceptualization, Methodology, Writing-Original. William Ochoa-Agurto: Methodology, Writing-Original. Felix Larrinaga: Reviewing and Supervision. Ganix Lasa: Reviewing and Supervision.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

Funding : This project has received funding from the European Union's Horizon 2020 research and innovation programme under the Marie Sklodowska-Curie Grant No. 814078.

Carrera-Rivera, A., Larrinaga, F., & Lasa, G. (2022). Context-awareness for the design of Smart-product service systems: Literature review. Computers in Industry, 142, 103730.

1 https://parsif.al/

Data Availability

SYSTEMATIC REVIEW article

This article is part of the Research Topic:

Ensuring Food Safety And Quality Throughout The Supply Chain

DO ORGANIC, CONVENTIONAL, AND INTENSIVE APPROACHES IN LIVESTOCK FARMING HAVE AN IMPACT ON THE CIRCULATION OF INFECTIOUS AGENTS? A SYSTEMATIC REVIEW, FOCUSED ON DAIRY CATTLE

Provisionally Accepted

- 1 Catholic University of the Sacred Heart, Italy

- 2 University of Milan, Italy

- 3 University of Parma, Italy

The final, formatted version of the article will be published soon.

A common assumption is that extensive and organic breeding systems are associated with a lower prevalence of infections in livestock animals than intensive ones. In addition, organic systems limit the use of antimicrobial drugs, which may lead to a lower emergence of antimicrobial resistance (AMR) in bacterial pathogens. To examine these issues while avoiding any a priori bias, we carried out a systematic literature search on dairy cattle breeding. The search targeted publications that compared different types of livestock farming (intensive, extensive, conventional, organic) in terms of the circulation of infectious diseases and AMR. A total of 101 papers were finally selected. These papers did not show any trend in the circulation of infections across the four types of breeding systems. However, AMR was more prevalent on conventional dairy farms than on organic ones. The prevalence of specific pathogens and types of resistance was frequently associated with specific risk factors that were not strictly related to the type of farming system. In summary, we did not find any convincing evidence that extensive and organic dairy farming bears any advantage over intensive and conventional farming in terms of the circulation of infectious agents. However, while most papers comparing organic and conventional production referred to some norms, guidelines, or certification to categorize the examined farms, the definition of extensive production appeared rather arbitrary in most cases, which suggests that the results of published studies should be interpreted with caution. In addition, further research is required, with appropriate statistical modelling of the cumulative correlations of various risk factors, to determine whether significant differences really exist under the different types of management.

Keywords: Intensive, Extensive, Organic, dairy farm, Infectious Disease, infectious agents, Antimicrobial resistance (AMR)

Received: 06 Mar 2024; Accepted: 09 May 2024.

Copyright: © 2024 Pajoro, Brilli, Pezzali, Kramer, Moroni and Bandi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY) . The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

* Correspondence: PhD. Claudio Bandi, University of Milan, Milan, 20122, Lombardy, Italy

The aim of this systematic review is to examine the existing literature on use case specifications research to identify (1) how use cases have evolved and how they are used to specify problem requirements, (2) their applicability in various software development activities, (3) their quality, and (4) the open issues and the future directions ...

Objective: The aim of this systematic review is to examine the existing literature for the evolution of use cases, their applications, quality assessments, open issues, and the future directions. Method: We perform an extensive keyword-based search to identify the relevant studies related to use case specifications research reported in journal ...

The paper by Tiwari and Gupta [14] presents a systematic literature review that examines the evolution of use cases, their applications, quality assessments, open issues and the future directions ...

This paper reports the findings of a systematic literature review that focuses on the use case specification research works published between 1992 and February 2014. A total of 119 studies were identified from the 'hits' of a two-stage keyword-based search. We frame four research questions to investigate use case specification evolution ...

A systematic transformation approach that automatically extracts various use case elements from textual problem specifications using a Natural Language (NL) parser, and then uses a questionnaire-based approach to develop the remaining unpopulated parts of the use case template.

The prioritization of software requirements is necessary for successful software development. A use case is a useful approach to represent and prioritize user-centric requirements. Use-case-based prioritization is used to rank use cases to attain a business value based on identified criteria. The research community has started applying use case modeling to emerging technologies such as the ...
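The snippet does not say which criteria are used or how they are combined. As a minimal sketch, assuming a simple weighted-sum model in which each use case is scored against a few criteria, the ranking step could look like the Python below. The criterion names, weights, scores, and the `UseCase`/`prioritize` helpers are hypothetical illustrations, not the method of any cited work.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class UseCase:
    """A use case with per-criterion scores (hypothetical 1-5 scale)."""
    name: str
    scores: dict[str, float] = field(default_factory=dict)


def prioritize(use_cases: list[UseCase],
               weights: dict[str, float]) -> list[tuple[str, float]]:
    """Rank use cases by a normalized weighted sum of criterion scores.

    Assumption: a plain weighted-sum model; a missing score counts as 0.
    Returns (name, score) pairs, highest score first.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive value")

    def value(uc: UseCase) -> float:
        # Divide by the total weight so the result stays on the score scale.
        return sum(w * uc.scores.get(c, 0.0) for c, w in weights.items()) / total

    scored = [(uc.name, value(uc)) for uc in use_cases]
    # sorted() is stable, so tied use cases keep their input order.
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical criteria and weights, chosen only for illustration.
    weights = {"business_value": 5, "risk": 3, "frequency": 2}
    cases = [
        UseCase("A", {"business_value": 5, "risk": 2, "frequency": 4}),
        UseCase("B", {"business_value": 3, "risk": 5, "frequency": 5}),
        UseCase("C", {"business_value": 4, "risk": 1, "frequency": 2}),
    ]
    for name, score in prioritize(cases, weights):
        print(f"{name}: {score:.2f}")  # B: 4.00, then A: 3.90, then C: 2.70
```

A weighted sum is only one possible aggregation; approaches such as pairwise comparison would replace `value` while keeping the same ranking step.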

Method details: Overview. A Systematic Literature Review (SLR) is a research methodology to collect, identify, and critically analyze the available research studies (e.g., articles, conference proceedings, books, dissertations) through a systematic procedure [12]. An SLR updates the reader with current literature about a subject [6]. The goal is to review critical points of current knowledge on a ...

The flexibility of the use cases in specifying requirements from informal textual descriptions to more formal ones makes them usable in different contexts and purposes. However, the versatility of use cases regarding their admissible structure raises a natural concern about the specification completeness.

Literature review is an essential feature of academic research. Fundamentally, knowledge advancement must be built on prior existing work. To push the knowledge frontier, we must know where the frontier is. By reviewing relevant literature, we understand the breadth and depth of the existing body of work and identify gaps to explore.

Compared to traditional literature overviews, which often leave a lot to the expertise of the authors, SRs treat the literature review process like a scientific process, and apply concepts of empirical research in order to make the review process more transparent and replicable and to reduce the possibility of bias.

Nicolás and Toval conducted a systematic review of the methods and techniques for transforming domain models (e.g., business models, UML models, and user interface models), use cases, scenarios, and user stories into textual requirements. In both of these reviews, the requirements sources contained static domain knowledge.

This paper aims to present a systematic literature review (SLR) to identify, gather and analyze strategies for UCM. During the SLR, two thousand two hundred sixty-six studies published between ...

Saurabh Tiwari and Atul Gupta. 2015. A systematic literature review of use case specifications research. Information and Software Technology 67, Supplement C (2015), 128–158.

Saurabh Tiwari and Atul Gupta. 2017. Investigating Comprehension and Learnability Aspects of Use Cases for Software Specification ...

Abstract. Performing a literature review is a critical first step in research toward understanding the state-of-the-art and identifying gaps and challenges in the field. A systematic literature review is a method that sets out a series of steps to methodically organize the review. In this paper, we present a guide designed for researchers and in ...

A systematic literature review (SLR) is presented to identify, gather, and analyze strategies for use-case modeling (UCM), which can help teachers adopt the most appropriate UCM strategies for their students. A major challenge in teaching UCM is to mitigate the difficulties that prevent students from producing high-quality use-case models. The strategies for UCM are ...

Although use case (UC) specifications are used to communicate requirements in detail, developers do not always follow them. This paper presents an empirical study carried out to understand why developers do not follow UC specifications and what difficulties they face when using UC specifications to generate prototypes.

Prior literature assumes that such 'maturity' leads to a better-quality understanding of stakeholders' desires and needs, and thus an increased likelihood that a resulting software will satisfy those requirements. This research paper found 140 studies to answer these questions.

The systematic, transparent searching techniques outlined in this article can be adopted and adapted for use in other forms of literature review (Grant & Booth 2009), for example, while the critical appraisal tools highlighted are appropriate for use in other contexts in which the reliability and applicability of medical research require ...

This literature review (LR) seeks to explore the approaches used for the automatic generation of test cases from use case specification and the method of validation for each approach. The rest of the paper is organized as follows. Section 2 discusses the methodology for the literature review.

This paper describes the results of a general systematic literature review (SLR) on the topic of generating test cases based on UML diagrams. ... Lionel Briand, and Zohaib Iqbal. 2015. Automatic generation of system test cases from use case specifications. In Proceedings of the 2015 International Symposium on Software Testing and Analysis ...

Most existing work does not use systematic literature review (SLR) for paper selection. There is a lack of work in specifying technical details such as sensor and controller details. Most of the research work has not studied energy consumption, utilization of renewable energy, stability, etc.
