Data analysis write-ups

What should a data-analysis write-up look like?

Writing up the results of a data analysis is not a skill that anyone is born with. It requires practice and, at least in the beginning, a bit of guidance.

Organization

When writing your report, organization will set you free. A good outline is: 1) overview of the problem, 2) your data and modeling approach, 3) the results of your data analysis (plots, numbers, etc), and 4) your substantive conclusions.

1) Overview Describe the problem. What substantive question are you trying to address? This needn’t be long, but it should be clear.

2) Data and model What data did you use to address the question, and how did you do it? When describing your approach, be specific. For example:

- Don’t say, “I ran a regression” when you instead can say, “I fit a linear regression model to predict price that included a house’s size and neighborhood as predictors.”

- Justify important features of your modeling approach. For example: “Neighborhood was included as a categorical predictor in the model because Figure 2 indicated clear differences in price across the neighborhoods.”
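To make the level of specificity in the bullets above concrete, here is a minimal sketch of the model they describe: price regressed on size, with neighborhood as a categorical predictor. The data values, the function names, and the choice of units are all hypothetical, and the fit uses only the Python standard library (ordinary least squares via the normal equations). In practice you would use a statistics package.

```python
# Hypothetical toy data: size in 1000s of square feet, price in $1000s.
sizes = [1.2, 1.5, 1.8, 1.1, 1.6, 2.0]
neighborhoods = ["A", "A", "A", "B", "B", "B"]
prices = [200.0, 230.0, 260.0, 220.0, 270.0, 310.0]


def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        known = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - known) / m[i][i]
    return x


def fit_price_model(sizes, neighborhoods, prices):
    """Least-squares fit of price ~ intercept + size + neighborhood dummies."""
    levels = sorted(set(neighborhoods))
    baseline, others = levels[0], levels[1:]
    names = ["intercept", "size"]
    names += [f"neighborhood[{lv}] vs {baseline}" for lv in others]
    # One design-matrix row per house: intercept, size, one dummy per non-baseline level.
    rows = [
        [1.0, s] + [1.0 if nb == lv else 0.0 for lv in others]
        for s, nb in zip(sizes, neighborhoods)
    ]
    k = len(names)
    # Normal equations: (X'X) beta = X'y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, prices)) for i in range(k)]
    return dict(zip(names, solve_linear_system(xtx, xty)))


coefs = fit_price_model(sizes, neighborhoods, prices)
for name, value in coefs.items():
    print(f"{name:<26}{value:10.2f}")
```

A sentence such as "I fit a linear regression of price on size, with neighborhood as a categorical predictor (baseline: neighborhood A)" tells the reader exactly which model produced these coefficients.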

Sometimes your Data and Model section will contain plots or tables, and sometimes it won’t. If you feel that a plot helps the reader understand the problem or data set itself, as opposed to your results, then go ahead and include it. Good examples are Tables 1 and 2 in the main paper on the PREDIMED study. These tables help the reader understand some important properties of the data and approach, but not the results of the study itself.

3) Results In your results section, include any figures and tables necessary to make your case. Label them (Figure 1, 2, etc.), give them informative captions, and refer to them in the text by their numbered labels where you discuss them. Typical things to include here are pictures of the data, pictures and tables that show the fitted model, and tables of model coefficients and summaries.

4) Conclusion What did you learn from the analysis? What is the answer, if any, to the question you set out to address?

General advice

Make the sections as short or long as they need to be. For example, a conclusions section is often pretty short, while a results section is usually a bit longer.

It’s OK to use the first person to avoid awkward or bizarre sentence constructions, but try to do so sparingly.

Do not include computer code unless explicitly called for. Note: model outputs do not count as computer code. Outputs should be used as evidence in your results section (ideally formatted in a nice way). By code, I mean the sequence of commands you used to process the data and produce the outputs.

When in doubt, use shorter words and sentences.

A very common way for reports to go wrong is when the writer simply narrates the thought process he or she followed: “First I did this, but it didn’t work. Then I did something else, and I found A, B, and C. I wasn’t really sure what to make of B, but C was interesting, so I followed up with D and E. Then having done this…” Do not do this. The desire for specificity is admirable, but the overall effect is one of amateurism. Follow the recommended outline above.

Here’s a good example of a write-up for an analysis of a few relatively simple problems. Because the problems are so straightforward, there’s not much of a need for an outline of the kind described above. Nonetheless, the spirit of these guidelines is clearly in evidence. Notice the clear exposition, the labeled figures and tables that are referred to in the text, and the careful integration of visual and numerical evidence into the overall argument. This is one worth emulating.

Academic Paper: Discussion and Analysis

5 min read • March 10, 2023

Dylan Black

Introduction

After presenting your data and results to readers, you have one final step before you can finally wrap up your paper and write a conclusion: analyzing your data! This is the big part of your paper that finally takes all the stuff you've been talking about - your method, the data you collected, the information presented in your literature review - and uses it to make a point!

The major question to be answered in your analysis section is simply "we have all this data, but what does it mean?" What questions does this data answer? How does it relate to your research question? Can this data be explained by, and is it consistent with, other papers? If not, why? These are the types of questions you'll be discussing in this section.

Writing a Discussion and Analysis

Explain what your data means.

The primary point of a discussion section is to explain to your readers, through both statistical means and thorough explanation, what your results mean for your project. In doing so, you want to be succinct, clear, and specific about how your data backs up the claims you are making. These claims should be directly tied back to the overall focus of your paper.

What is this overall focus, you may ask? Your research question! This discussion along with your conclusion forms the final analysis of your research - what answers did we find? Was our research successful? How do the results we found tie into and relate to the current consensus by the research community? Were our results expected or unexpected? Why or why not? These are all questions you may consider in writing your discussion section.

Why Did Your Results Happen?

After presenting your results in your results section, you may also want to explain why your results actually occurred. This is integral to gaining a full understanding of your results and the conclusions you can draw from them. For example, if data you found contradicts certain data points found in other studies, one of the most important aspects of your discussion of said data is going to be theorizing as to why this disparity took place.

Note that making broad, sweeping claims based on your data is not enough! Everything, and I mean just about everything you say in your discussions section must be backed up either by your own findings that you showed in your results section or past research that has been performed in your field.

For many situations, finding these answers is not easy, and a lot of thinking must be done as to why your results actually occurred the way they did. For some fields, specifically STEM-related fields, a discussion might dive into the theoretical foundations of your research, explaining interactions between parts of your study that led to your results. For others, like social sciences and humanities, results may be open to more interpretation.

However, "open to more interpretation" does not mean you can make claims willy nilly and claim "author's interpretation". In fact, such interpretation may be harder than STEM explanations! You will have to synthesize existing analysis on your topic and incorporate that in your analysis.

Discussion vs. Summary & Repetition

Quite possibly the biggest mistake made within a discussion section is simply restating your data in a different format. The role of the discussion section is to explain your data and what it means for your project. Many students, thinking they're making discussion and analysis, simply regurgitate their numbers back in full sentences with a surface-level explanation.

Phrases like "this shows" and others similar, while good building blocks and great planning tools, often lead to a relatively weak discussion that isn't very nuanced and doesn't lead to much new understanding.

Instead, your goal will be to, through this section and your conclusion, establish a new understanding and in the end, close your gap! To do this effectively, you not only will have to present the numbers and results of your study, but you'll also have to describe how such data forms a new idea that has not been found in prior research.

This, in essence, is the heart of research - finding something new that hasn't been studied before! I don't know if it's just us, but that's pretty darn cool and something that you as the researcher should be incredibly proud of yourself for accomplishing.

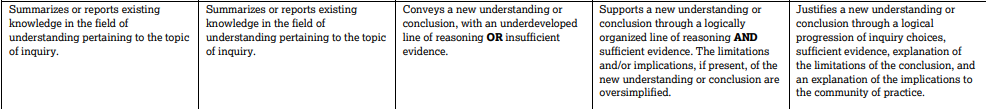

Rubric Points

Before we close out this guide, let's take a quick peek at our best friend: the AP Research Rubric for the Discussion and Conclusion sections.

Source: CollegeBoard

Scores of One and Two: Nothing New, Your Standard Essay

Responses that earn a score of one or two on this section of the AP Research Academic Paper typically don't find much new and by this point may not have a fully developed method nor well-thought-out results. For the most part, these are more similar to essays you may have written in a prior English class or AP Seminar than a true Research paper. Instead of finding new ideas, they summarize already existing information about a topic.

Score of Three: New Understanding, Not Enough Support

A score of three is the first row that establishes a new understanding! This is a great step forward from a one or a two. However, what differentiates a three from a four or a five is the explanation and support of such a new understanding. A paper that earns a three lacks in building a line of reasoning and does not present enough evidence, both from their results section and from already published research.

Scores of Four and Five: New Understanding With A Line of Reasoning

We've made it to the best of the best! With scores of four and five, successful papers describe a new understanding with an effective line of reasoning, sufficient evidence, and an all-around great presentation of how their results signify filling a gap and answering a research question.

As far as the discussions section goes, the difference between a four and a five is more on the side of complexity and nuance. Where a four hits all the marks and does it well, a five exceeds this and writes a truly exceptional analysis. Another area where these two sections differ is in the limitations described, which we discuss in the Conclusion section guide.

You did it!!!! You have, for the most part, finished the brunt of your research paper and are over the hump! All that's left to do is tackle the conclusion, which for most people tends to be the easiest section to write because all you do is summarize how your research question was answered and make some final points about how your research impacts your field.


Research Paper Writing: 6. Results / Analysis

Writing about the information

Depending on the style being written, a research paper may have two such sections: results and analysis. These sections are usually a straightforward account of exactly what the writer observed and found during the research. Include only the important findings, and avoid excess detail that can bury their meaning.

The results section should narrate the findings without interpreting or evaluating them, and should set up the discussion section of the research paper. The results are reported and reveal the analysis. The analysis section is where the writer describes what was done with the data. To write the analysis section, it is important to know what the analysis consisted of, but that does not mean the data must be included. The analysis should already be complete before the results section is written.

Written explanations

How should the analysis section be written?

- Should be a paragraph within the research paper

- Consider all the requirements (spacing, margins, and font)

- Should be the writer’s own explanation of the chosen problem

- Thorough evaluation of work

- Description of the weak and strong points

- Discussion of the effect and impact

- Includes criticism

How should the results section be written?

- Show the most relevant information in graphs, figures, and tables

- Include data that may be in the form of pictures, artifacts, notes, and interviews

- Clarify unclear points

- Present results with a short discussion explaining them at the end

- Include the negative results

- Provide stability, accuracy, and value

How the style is presented

Analysis section

- Includes a justification of the methods used

- Technical explanation

Results section

- Purely descriptive

- Easily explained for the targeted audience

- Data driven

Example of a Results Section

Publication Manual of the American Psychological Association Sixth Ed. 2010


- Last Updated: Nov 7, 2023 7:37 AM

- URL: https://wiu.libguides.com/researchpaperwriting

How to write statistical analysis section in medical research

Alok Kumar Dwivedi

Department of Molecular and Translational Medicine, Division of Biostatistics and Epidemiology, Texas Tech University Health Sciences Center El Paso, El Paso, Texas, USA

Associated Data

jim-2022-002479supp001.pdf

Data sharing not applicable as no datasets generated and/or analyzed for this study.

Reporting of statistical analysis is essential in any clinical and translational research study. However, medical research studies sometimes report statistical analysis that is either inappropriate or insufficient to attest to the accuracy and validity of findings and conclusions. Published works involving inaccurate statistical analyses and insufficient reporting influence the conduct of future scientific studies, including meta-analyses and medical decisions. Although the biostatistical practice has been improved over the years due to the involvement of statistical reviewers and collaborators in research studies, there remain areas of improvement for transparent reporting of the statistical analysis section in a study. Evidence-based biostatistics practice throughout the research is useful for generating reliable data and translating meaningful data to meaningful interpretation and decisions in medical research. Most existing research reporting guidelines do not provide guidance for reporting methods in the statistical analysis section that helps in evaluating the quality of findings and data interpretation. In this report, we highlight the global and critical steps to be reported in the statistical analysis of grants and research articles. We provide clarity and the importance of understanding study objective types, data generation process, effect size use, evidence-based biostatistical methods use, and development of statistical models through several thematic frameworks. We also provide published examples of adherence or non-adherence to methodological standards related to each step in the statistical analysis and their implications. We believe the suggestions provided in this report can have far-reaching implications for education and strengthening the quality of statistical reporting and biostatistical practice in medical research.

Introduction

Biostatistics is the overall approach to how we realistically and feasibly execute a research idea to produce meaningful data and translate data to meaningful interpretation and decisions. In this era of evidence-based medicine and practice, basic biostatistical knowledge becomes essential for critically appraising research articles and implementing findings for better patient management, improving healthcare, and research planning. 1 However, it may not be sufficient for the proper execution and reporting of statistical analyses in studies. 2 3 Three things are required for statistical analyses, namely knowledge of the conceptual framework of variables, research design, and evidence-based applications of statistical analysis with statistical software. 4 5 The conceptual framework provides possible biological and clinical pathways between independent variables and outcomes with role specification of variables. The research design provides a protocol of study design and data generation process (DGP), whereas the evidence-based statistical analysis approach provides guidance for selecting and implementing approaches after evaluating data with the research design. 2 5 Ocaña-Riola 6 reported that a substantial percentage of articles from high-impact medical journals contained errors in statistical analysis or data interpretation. These errors in statistical analyses and interpretation of results not only impact the reliability of research findings but also influence medical decision-making and the planning and execution of other related studies. A survey of consulting biostatisticians in the USA reported that researchers frequently ask biostatisticians to perform inappropriate statistical analyses and inappropriate reporting of data. 7 This implies that there is a need to enforce standardized reporting of the statistical analysis section in medical research, which can also help reviewers and investigators to improve the methodological standards of the study.

Biostatistical practice in medicine has been improving over the years due to continuous efforts in promoting awareness and involving expert services on biostatistics, epidemiology, and research design in clinical and translational research. 8–11 Despite these efforts, the quality of reporting of statistical analysis in research studies has often been suboptimal. 12 13 We noticed that none of the methods reporting documents were developed using evidence-based biostatistics (EBB) theory and practice. The EBB practice implies that the selection of statistical analysis methods for statistical analyses and the steps of results reporting and interpretation should be grounded based on the evidence generated in the scientific literature and according to the study objective type and design. 5 Previous works have not properly elucidated the importance of understanding EBB concepts and related reporting in the write-up of statistical analyses. As a result, reviewers sometimes ask to present data or execute analyses that do not match the study objective type. 14 We summarize the statistical analysis steps to be reported in the statistical analysis section based on review and thematic frameworks.

We identified articles describing statistical reporting problems in medicine using different search terms ( online supplemental table 1 ). Based on these studies, we prioritized commonly reported statistical errors in analytical strategies and developed essential components to be reported in the statistical analysis section of research grants and studies. We also clarified the purpose and the overall implication of reporting each step in statistical analyses through various examples.

Although biostatistical inputs are critical for the entire research study (online supplemental table 2), biostatistical consultations were mostly used for statistical analyses only, 15 even though the conduct of statistical analysis mismatched with the study objective and DGP was identified as the major problem in articles submitted to high-impact medical journals. 16 In addition, multivariable analyses were often inappropriately conducted and reported in published studies. 17 18 In light of these statistical errors, we describe the reporting of the following components in the statistical analysis section of the study.

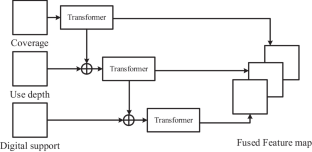

Step 1: specify study objective type and outcomes (overall approach)

The study objective type provides the role of important variables for a specified outcome in statistical analyses and the overall approach of the model building and model reporting steps in a study. In the statistical framework, the problems are classified into descriptive and inferential/analytical/confirmatory objectives. In the epidemiological framework, the analytical and prognostic problems are broadly classified into association, explanatory, and predictive objectives. 19 These study objectives ( figure 1 ) may be classified into six categories: (1) exploratory, (2) association, (3) causal, (4) intervention, (5) prediction and (6) clinical decision models in medical research. 20

Figure 1. Comparative assessments of developing and reporting of study objective types and models. Association measures include odds ratio, risk ratio, or hazard ratio. AUC, area under the curve; C, confounder; CI, confidence interval; E, exposure; HbA1C, hemoglobin A1c; M, mediator; MFT, model fit test; MST, model specification test; PI, predictive interval; R², coefficient of determination; X, independent variable; Y, outcome.

The exploratory objective type is a specific type of determinant study and is commonly known as risk factors or correlates study in medical research. In an exploratory study, all covariates are considered equally important for the outcome of interest in the study. The goal of the exploratory study is to present the results of a model which gives higher accuracy after satisfying all model-related assumptions. In the association study, the investigator identifies predefined exposures of interest for the outcome, and variables other than exposures are also important for the interpretation and considered as covariates. The goal of an association study is to present the adjusted association of exposure with outcome. 20 In the causal objective study, the investigator is interested in determining the impact of exposure(s) on outcome using the conceptual framework. In this study objective, all variables should have a predefined role (exposures, confounders, mediators, covariates, and predictors) in a conceptual framework. A study with a causal objective is known as an explanatory or a confirmatory study in medical research. The goal is to present the direct or indirect effects of exposure(s) on an outcome after assessing the model’s fitness in the conceptual framework. 19 21 The objective of an interventional study is to determine the effect of an intervention on outcomes and is often known as randomized or non-randomized clinical trials in medical research. In the intervention objective model, all variables other than the intervention are treated as nuisance variables for primary analyses. The goal is to present the direct effect of the intervention on the outcomes by eliminating biases. 22–24 In the predictive study, the goal is to determine an optimum set of variables that can predict the outcome, particularly in external settings. 
The clinical decision models are a special case of prognostic models in which high dimensional data at various levels are used for risk stratification, classification, and prediction. In this model, all variables are considered input features. The goal is to present a decision tool that has high accuracy in training, testing, and validation data sets. 20 25 Biostatisticians or applied researchers should properly discuss the intention of the study objective type before proceeding with statistical analyses. In addition, it would be a good idea to prepare a conceptual model framework regardless of study objective type to understand study concepts.

A study 26 showed a favorable effect of the beta-blocker intervention on survival outcome in patients with advanced human epidermal growth factor receptor (HER2)-negative breast cancer without adjusting for all the potential confounding effects (age or menopausal status and Eastern Cooperative Oncology Performance Status) in primary analyses or validation analyses or using a propensity score-adjusted analysis, which is an EBB preferred method for analyzing non-randomized studies. 27 Similarly, another study had the goal of developing a predictive model for prediction of Alzheimer’s disease progression. 28 However, this study did not internally or externally validate the performance of the model as per the requirement of a predictive objective study. In another study, 29 investigators were interested in determining an association between metabolic syndrome and hepatitis C virus. However, the authors did not clearly specify the outcome in the analysis and produced conflicting associations with different analyses. 30 Thus, the outcome should be clearly specified as per the study objective type.

Step 2: specify effect size measure according to study design (interpretation and practical value)

The study design provides information on the selection of study participants and the process of data collection conditioned on either exposure or outcome ( figure 2 ). The appropriate use of effect size measure, tabular presentation of results, and the level of evidence are mostly determined by the study design. 31 32 In cohort or clinical trial study designs, the participants are selected based on exposure status and are followed up for the development of the outcome. These study designs can provide multiple outcomes, produce incidence or incidence density, and are preferred to be analyzed with risk ratio (RR) or hazards models. In a case–control study, the selection of participants is conditioned on outcome status. This type of study can have only one outcome and is preferred to be analyzed with an odds ratio (OR) model. In a cross-sectional study design, there is no selection restriction on outcomes or exposures. All data are collected simultaneously and can be analyzed with a prevalence ratio model, which is mathematically equivalent to the RR model. 33 The reporting of effect size measure also depends on the study objective type. For example, predictive models typically require reporting of regression coefficients or weight of variables in the model instead of association measures, which are required in other objective types. There are agreements and disagreements between OR and RR measures. Due to the constancy and symmetricity properties of OR, some researchers prefer to use OR in studies with common events. Similarly, the collapsibility and interpretability properties of RR make it more appealing to use in studies with common events. 34 To avoid variable practice and interpretation issues with OR, it is recommended to use RR models in all studies except for case–control and nested case–control studies, where OR approximates RR and thus OR models should be used. Otherwise, investigators may report sufficient data to compute any ratio measure. 
Biostatisticians should educate investigators on the proper interpretation of ratio measures in the light of study design and their reporting. 34 35

Figure 2. Effect size according to study design.

Investigators sometimes either inappropriately label their study design 36 37 or report effect size measures not aligned with the study design, 38 39 leading to difficulty in results interpretation and evaluation of the level of evidence. The proper labeling of study design and the appropriate use of effect size measure have substantial implications for results interpretation, including the conduct of systematic review and meta-analysis. 40 A study 31 reviewed the frequency of reporting OR instead of RR in cohort studies and randomized clinical trials (RCTs) and found that one-third of the cohort studies used an OR model, whereas 5% of RCTs used an OR model. The majority of estimated ORs from these studies had a 20% or higher deviation from the corresponding RR.
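The gap between OR and RR discussed in this step is easy to see with a worked 2×2 table. The sketch below is illustrative only: the counts are hypothetical, and the function names are not taken from the article. It computes both measures and the relative deviation of OR from RR, once for a rare outcome and once for a common one.

```python
def risk_ratio(a, b, c, d):
    """RR from a 2x2 table.

    a, b: exposed participants with / without the outcome
    c, d: unexposed participants with / without the outcome
    """
    return (a / (a + b)) / (c / (c + d))


def odds_ratio(a, b, c, d):
    """OR from the same 2x2 table (cross-product ratio)."""
    return (a * d) / (b * c)


def or_deviation_from_rr(a, b, c, d):
    """Relative deviation of OR from RR, e.g. 0.20 means OR is 20% above RR."""
    rr = risk_ratio(a, b, c, d)
    return (odds_ratio(a, b, c, d) - rr) / rr


# Hypothetical counts. In both tables the exposed group has twice the risk.
rare_outcome = (10, 990, 5, 995)    # risks of 1.0% vs 0.5%
common_outcome = (60, 40, 30, 70)   # risks of 60% vs 30%

for label, table in [("rare", rare_outcome), ("common", common_outcome)]:
    print(
        f"{label:<7} RR={risk_ratio(*table):.2f} "
        f"OR={odds_ratio(*table):.2f} "
        f"deviation={or_deviation_from_rr(*table):.1%}"
    )
```

With the rare outcome the two measures nearly coincide, while with the common outcome the OR is far from the RR even though the underlying risks still differ by a factor of two.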

Step 3: specify study hypothesis, reporting of p values, and interval estimates (interpretation and decision)

The clinical hypothesis provides information for evaluating formal claims specified in the study objectives, while the statistical hypothesis provides information about the population parameters/statistics being used to test the formal claims. The inference about the study hypothesis is typically measured by p value and confidence interval (CI). A smaller p value indicates stronger evidence in the data against the null hypothesis. Since the p value is a conditional probability, computed assuming the null hypothesis is true, it cannot by itself establish acceptance or rejection of the null hypothesis. Therefore, multiple alternative strategies of p values have been proposed to strengthen the credibility of conclusions. 41 42 Adoption of these alternative strategies is only needed in the explanatory objective studies. Although exact p values are recommended to be reported in research studies, p values do not provide any information about the effect size. Compared with p values, the CI provides a range of plausible values for the effect size (under repeated sampling, 95% of such intervals would contain the true effect size) and can be used to determine whether the results are statistically significant or not. 43 Both p value and 95% CI provide complementary information and thus need to be specified in the statistical analysis section. 24 44
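As an illustration of reporting an effect size with both an interval estimate and an exact two-sided p value, the sketch below computes a risk ratio from a 2×2 table with a Wald-type 95% CI on the log scale. The Wald interval and the normal approximation are assumptions made for this example, not methods prescribed by the article, and the counts are hypothetical.

```python
import math
from statistics import NormalDist


def risk_ratio_inference(a, b, c, d, confidence=0.95):
    """Risk ratio with a Wald CI (log scale) and a two-sided p value.

    a, b: exposed participants with / without the outcome
    c, d: unexposed participants with / without the outcome
    """
    rr = (a / (a + b)) / (c / (c + d))
    # Large-sample standard error of log(RR).
    se_log_rr = math.sqrt(1 / a - 1 / (a + b) + 1 / c - 1 / (c + d))
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    lower = math.exp(math.log(rr) - z_crit * se_log_rr)
    upper = math.exp(math.log(rr) + z_crit * se_log_rr)
    # Two-sided test of H0: RR = 1, using the normal approximation.
    z = math.log(rr) / se_log_rr
    p_two_sided = 2 * (1 - NormalDist().cdf(abs(z)))
    return rr, (lower, upper), p_two_sided


# Hypothetical counts: 60/100 events among exposed, 30/100 among unexposed.
rr, (ci_low, ci_high), p_value = risk_ratio_inference(60, 40, 30, 70)
print(f"RR = {rr:.2f}, 95% CI {ci_low:.2f} to {ci_high:.2f}, two-sided p = {p_value:.5f}")
```

Reporting all three quantities together lets the reader judge the size of the effect, its precision, and the strength of evidence against the null, which the p value alone cannot convey.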

Researchers often test one or more comparisons or hypotheses. Accordingly, the side and the level of significance for considering results to be statistically significant may change. Furthermore, studies may include more than one primary outcome that requires an adjustment in the level of significance for multiplicity. All studies should provide the interval estimate of the effect size/regression coefficient in the primary analyses. Since the interpretation of data analysis depends on the study hypothesis, researchers are required to specify the level of significance along with the side (one-sided or two-sided) of the p value in the test for considering statistically significant results, adjustment of the level of significance due to multiple comparisons or multiplicity, and reporting of interval estimates of the effect size in the statistical analysis section. 45

A study 46 showed a significant effect of fluoxetine on relapse rates in obsessive-compulsive disorder based on a one-sided p value of 0.04. Clearly, there was no reason for using a one-sided p value as opposed to a two-sided p value. A review of the appropriate use of multiple test correction methods in multiarm clinical trials published in major medical journals in 2012 found that over 50% of the articles did not perform multiple-testing correction. 47 Similar to controlling a familywise error rate due to multiple comparisons, adjustment of the false discovery rate is also critical in studies involving multiple related outcomes. A review of RCTs for depression between 2007 and 2008 from six journals reported that only a small share of studies (5.8%) accounted for multiplicity in the analyses due to multiple outcomes. 48
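To show what a multiplicity adjustment looks like in practice, here is a minimal sketch of two widely used procedures: Holm's step-down method, which controls the familywise error rate, and the Benjamini-Hochberg method, which controls the false discovery rate. The article does not name a specific procedure, so these are illustrative choices, and the p values are hypothetical.

```python
def holm_adjust(p_values):
    """Holm step-down adjusted p values (familywise error rate control)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The smallest p value is multiplied by m, the next by m - 1, and so on.
        candidate = min(1.0, (m - rank) * p_values[idx])
        running_max = max(running_max, candidate)  # enforce monotonicity
        adjusted[idx] = running_max
    return adjusted


def benjamini_hochberg_adjust(p_values):
    """Benjamini-Hochberg adjusted p values (false discovery rate control)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p value down, scaling by m / rank.
    for rank in range(m - 1, -1, -1):
        idx = order[rank]
        candidate = p_values[idx] * m / (rank + 1)
        running_min = min(running_min, candidate)  # enforce monotonicity
        adjusted[idx] = running_min
    return adjusted


# Hypothetical raw p values for four outcomes in one study.
raw = [0.01, 0.04, 0.03, 0.20]
holm = holm_adjust(raw)
bh = benjamini_hochberg_adjust(raw)
for p, h, q in zip(raw, holm, bh):
    print(f"raw={p:.3f}  Holm={h:.3f}  BH={q:.3f}")
```

In this example three outcomes have raw p values below 0.05, but only one remains below 0.05 after either adjustment, which is why the statistical analysis section should state which adjustment, if any, was applied.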

Step 4: account for DGP in the statistical analysis (accuracy)

The study design also requires the specification of the selection of participants and outcome measurement processes in different design settings. We referred to this specific design feature as DGP. Understanding DGP helps in determining appropriate modeling of outcome distribution in statistical analyses and setting up model premises and units of analysis. 4 DGP ( figure 3 ) involves information on data generation and data measures, including the number of measurements after random selection, complex selection, consecutive selection, pragmatic selection, or systematic selection. Specifically, DGP depends on a sampling setting (participants are selected using survey sampling methods and one subject may represent multiple participants in the population), clustered setting (participants are clustered through a recruitment setting or hierarchical setting or multiple hospitals), pragmatic setting (participants are selected through mixed approaches), or systematic review setting (participants are selected from published studies). DGP also depends on the measurements of outcomes in an unpaired setting (measured on one occasion only in independent groups), paired setting (measured on more than one occasion or participants are matched on certain subject characteristics), or mixed setting (measured on more than one occasion but interested in comparing independent groups). It also involves information regarding outcomes or exposure generation processes using quantitative or categorical variables, quantitative values using labs or validated instruments, and self-reported or administered tests yielding a variety of data distributions, including individual distribution, mixed-type distribution, mixed distributions, and latent distributions. 
Due to different DGPs, study data may include messy or missing data, incomplete/partial measurements, time-varying measurements, surrogate measures, latent measures, imbalances, unknown confounders, instrument variables, correlated responses, various levels of clustering, qualitative data, or mixed data outcomes, competing events, individual and higher-level variables, etc. The performance of statistical analysis, appropriate estimation of standard errors of estimates and subsequently computation of p values, the generalizability of findings, and the graphical display of data rely on DGP. Accounting for DGP in the analyses requires proper communication between investigators and biostatisticians about each aspect of participant selection and data collection, including measurements, occasions of measurements, and instruments used in the research study.

Figure 3: Common features of the data generation process.

A study 49 used an RCT to compare the intake of fresh fruit and komatsuna juice with the intake of commercial vegetable juice on metabolic parameters in middle-aged men. The study was criticized for many reasons, but primarily for statistical methods that were not aligned with the study DGP. 50 Similarly, another study 51 reported that 80% of published studies using the Korean National Health and Nutrition Examination Survey did not incorporate the survey sampling structure in their statistical analyses, producing biased estimates and inappropriate findings. Likewise, another study 52 highlighted the need to maintain methodological standards when analyzing data from the National Inpatient Sample. A systematic review 53 of the top 25% of physiology journals found that over 50% of studies did not specify whether a paired or an unpaired t-test was performed, indicating poor transparency in reporting statistical analysis according to the data type. Another study 54 highlighted data display errors not aligned with DGP. As per DGP, delay in treatment initiation in patients with cancer, defined as the time from symptom onset to treatment initiation, should be analyzed in three components: patient/primary delay, secondary delay, and tertiary delay. 55 Similarly, the number of cancerous nodes should be analyzed with count data models. 56 However, several studies did not analyze such data according to DGP. 57 58
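The paired versus unpaired distinction matters because the two tests can give very different answers on the same numbers. The sketch below, using hypothetical repeated measurements on five participants, computes only the t statistics (not p values) with the standard library; the unpaired version uses Welch's form, which is an illustrative choice.

```python
import math
import statistics


def unpaired_t(x, y):
    """Welch's t statistic for two independent groups."""
    se = math.sqrt(statistics.variance(x) / len(x) + statistics.variance(y) / len(y))
    return (statistics.mean(x) - statistics.mean(y)) / se


def paired_t(before, after):
    """Paired t statistic computed from within-subject differences."""
    diffs = [a - b for a, b in zip(after, before)]
    se = statistics.stdev(diffs) / math.sqrt(len(diffs))
    return statistics.mean(diffs) / se


# Hypothetical measurements on the same five participants at two occasions.
before = [120, 135, 150, 128, 142]
after = [118, 131, 147, 125, 139]

t_ignoring_pairs = unpaired_t(after, before)  # treats the occasions as independent groups
t_respecting_pairs = paired_t(before, after)  # matches the paired DGP

print(f"unpaired t = {t_ignoring_pairs:.2f}, paired t = {t_respecting_pairs:.2f}")
```

Here the between-participant variation swamps the small, consistent within-participant change, so the unpaired statistic is far smaller in magnitude than the paired one. This is why the test used should always be stated.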

Step 5: apply EBB methods specific to study design features and DGP (efficiency and robustness)

The continuous growth in the development of robust statistical methods for dealing with a specific problem produced various methods to analyze specific data types. Since multiple methods are available for handling a specific problem yet with varying performances, heterogeneous practices among applied researchers have been noticed. Variable practices could also be due to a lack of consensus on statistical methods in literature, unawareness, and the unavailability of standardized statistical guidelines. 2 5 59 However, it becomes sometimes difficult to differentiate whether a specific method was used due to its robustness, lack of awareness, lack of accessibility of statistical software to apply an alternative appropriate method, intention to produce expected results, or ignorance of model diagnostics. To avoid heterogeneous practices, the selection of statistical methodology and their reporting at each stage of data analysis should be conducted using methods according to EBB practice. 5 Since it is hard for applied researchers to optimally select statistical methodology at each step, we encourage investigators to involve biostatisticians at the very early stage in basic, clinical, population, translational, and database research. We also appeal to biostatisticians to develop guidelines, checklists, and educational tools to promote the concept of EBB. As an effort, we developed the statistical analysis and methods in biomedical research (SAMBR) guidelines for applied researchers to use EBB methods for data analysis. 5 The EBB practice is essential for applying recent cutting-edge robust methodologies to yield accurate and unbiased results. The efficiency of statistical methodologies depends on the assumptions and DGP. Therefore, investigators may attempt to specify the choice of specific models in the primary analysis as per the EBB.

Although details of evidence-based preferred methods are provided in the SAMBR checklists for each study design/objective, 5 we have presented a simplified version of evidence-based preferred methods for common statistical analysis ( online supplemental table 3 ). Several examples are available in the literature where inefficient methods not according to EBB practice have been used. 31 57 60

Step 6: report variable selection method in the multivariable analysis according to study objective type (unbiased)

Multivariable analysis can be used for association, prediction or classification or risk stratification, adjustment, propensity score development, and effect size estimation. 61 Some biological, clinical, behavioral, and environmental factors may be directly associated with, or may influence the relationship between, exposure and outcome. Therefore, almost all health studies require multivariable analyses for accurate and unbiased interpretations of findings ( figure 1 ). Analysts should develop an adjusted model if the sample size permits. It is a misconception that the analysis of an RCT does not require adjusted analysis. Analysis of an RCT may require adjustment for prognostic variables. 23 The foremost step in model building is the entry of variables after finalizing the appropriate parametric or non-parametric regression model. In the exploratory model-building process, where no exposure is preferred, a backward automated approach that starts from all variables significant at the 25% level in the unadjusted analysis can be used for variable selection. 62 63 In the association model, covariates selected manually on the basis of their relevance should be included in a fully adjusted model. 63 In a causal model, clinically guided methods should be used for variable selection and adjustment. 20 In a non-randomized interventional model, efforts should be made to eliminate confounding effects through propensity score methods, and the final propensity score-adjusted multivariable model may adjust for any prognostic variables, while a randomized study should simply adjust for any prognostic variables. 27 Maintaining an adequate event per variable (EVR) is important to avoid overfitting in any type of modeling; therefore, screening of variables may be required in some association and explanatory studies. This may be accomplished using a backward stepwise method, which needs to be stated in the statistical analyses. 10 In a predictive study, a model with an optimum set of variables producing the highest accuracy should be used. The optimum set of variables may be screened with the random forest method, bootstrap, or machine learning methods. 64 65 Different methods of variable selection and adjustment may lead to different results. The screening process for variables and their adjustment in the final multivariable model should be clearly described in the statistical analysis section.
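As a rough illustration of the EVR check described above, the following sketch computes the events per variable for a binary outcome and the largest number of candidate predictors a given threshold would allow. The counts are hypothetical, and the default threshold of 10 is a commonly quoted rule of thumb assumed here for illustration; the text above does not specify a value.

```python
def events_per_variable(n_events, n_nonevents, n_candidate_predictors):
    """EVR for a binary outcome, using the smaller outcome group as the limiting count."""
    limiting_count = min(n_events, n_nonevents)
    return limiting_count / n_candidate_predictors


def max_predictors(n_events, n_nonevents, minimum_evr=10):
    """Largest number of candidate predictors that keeps EVR at or above minimum_evr.

    minimum_evr=10 is an assumed rule-of-thumb default, not a value from the source.
    """
    return min(n_events, n_nonevents) // minimum_evr


# Hypothetical study: 60 events, 340 non-events, 12 candidate predictors.
evr = events_per_variable(n_events=60, n_nonevents=340, n_candidate_predictors=12)
allowed = max_predictors(n_events=60, n_nonevents=340)

print(f"EVR = {evr:.1f}; predictors allowed at the assumed threshold = {allowed}")
```

In this made-up case the EVR falls below the assumed threshold, which is the situation where the screening of variables described above would need to be reported.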

A study 66 evaluating the effect of hydroxychloroquine (HDQ) showed unfavorable events (intubation or death) in patients who received HDQ compared with those who did not (hazard ratio (HR): 2.37, 95% CI 1.84 to 3.02) in an unadjusted analysis. However, the propensity score-adjusted analyses as appropriate with the interventional objective model showed no significant association between HDQ use and unfavorable events (HR: 1.04, 95% CI 0.82 to 1.32), which was also confirmed in multivariable and other propensity score-adjusted analyses. This study clearly suggests that results interpretation should be based on a multivariable analysis only in observational studies if feasible. A recent study 10 noted that approximately 6% of multivariable analyses based on either logistic or Cox regression used an inappropriate selection method of variables in medical research. This practice was more commonly noted in studies that did not involve an expert biostatistician. Another review 61 of 316 articles from high-impact Chinese medical journals revealed that 30.7% of articles did not report the selection of variables in multivariable models. Indeed, this inappropriate practice could have been identified more commonly if classified according to the study objective type. 18 In RCTs, it is uncommon to report an adjusted analysis based on prognostic variables, even though an adjusted analysis may produce an efficient estimate compared with an unadjusted analysis. A study assessing the effect of preemptive intervention on development outcomes showed a significant effect of an intervention on reducing autism spectrum disorder symptoms. 67 However, this study was criticized by Ware 68 for not reporting non-significant results in unadjusted analyses. If possible, unadjusted estimates should also be reported in any study, particularly in RCTs. 23 68

Step 7: provide evidence for exploring effect modifiers (applicability)

Any variable that modifies the effect of exposure on the outcome is called an effect modifier, modifier, or interacting variable. Exploring effect modifiers in multivariable analyses helps in (1) determining the applicability/generalizability of findings in the overall population or a specific subpopulation, (2) generating ideas for new hypotheses, (3) explaining uninterpretable findings between unadjusted and adjusted analyses, (4) guiding whether to present combined or separate models for each specific subpopulation, and (5) explaining heterogeneity in treatment effect. Often, investigators present adjusted stratified results according to the presence or absence of an effect modifier. If the exposure interacts with multiple variables statistically or conceptually in the model, then the stratified (subgroup) findings according to each effect modifier may be presented. Otherwise, stratified analysis substantially reduces the power of the study due to the lower sample size in each stratum and may produce significant results by inflating type I error. 69 Therefore, a multivariable analysis involving an interaction term, as opposed to a stratified analysis, may be presented in the presence of an effect modifier. 70 Sometimes, a quantitative variable may emerge as a potential effect modifier of the relationship between exposure and outcome. In such a situation, the quantitative variable should not be categorized unless a clinically meaningful threshold is available. In fact, the practice of categorizing quantitative variables should be avoided in the analysis unless a clinically meaningful cut-off is available or a hypothesis requires it. 71 In an exploratory objective type, any possible interaction may be obtained in a study; however, the interpretation should be guided by clinical implications. 
Similarly, some objective models may have more than one exposure or intervention and the association of each exposure according to the level of other exposure should be presented through adjusted analyses as suggested in the presence of interaction effects. 70
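A small numerical sketch may help show what an interaction term estimates. With a binary exposure and a binary modifier, the interaction coefficient in a saturated linear model equals the difference between the stratum-specific exposure effects. The records below are hypothetical, and the sketch gives only the point estimate; a regression model would be needed for standard errors and covariate adjustment.

```python
from statistics import mean

# Hypothetical records: (exposed, modifier_present, outcome).
records = [
    (0, 0, 10.0), (0, 0, 12.0),
    (1, 0, 13.0), (1, 0, 15.0),
    (0, 1, 11.0), (0, 1, 13.0),
    (1, 1, 20.0), (1, 1, 22.0),
]


def cell_mean(exposed, modifier):
    """Mean outcome in one exposure-by-modifier cell."""
    return mean(y for x, m, y in records if x == exposed and m == modifier)


# Exposure effect within each level of the modifier (the stratified view).
effect_without_modifier = cell_mean(1, 0) - cell_mean(0, 0)
effect_with_modifier = cell_mean(1, 1) - cell_mean(0, 1)

# Difference of the two effects: the interaction contrast.
interaction = effect_with_modifier - effect_without_modifier

print(effect_without_modifier, effect_with_modifier, interaction)
```

The stratified view reports the two effects separately, while the interaction term quantifies how much they differ in a single model that uses the whole sample.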

A review of 428 articles from MEDLINE on the quality of reporting from statistical analyses of three (linear, logistic, and Cox) commonly used regression models reported that only 18.5% of the published articles provided interaction analyses, 17 even though interaction analyses can provide a lot of useful information.

Step 8: assessment of assumptions, specifically the distribution of outcome, linearity, multicollinearity, sparsity, and overfitting (reliability)

The assessment and reporting of model diagnostics are important in assessing the efficiency, validity, and usefulness of the model. Model diagnostics include checking model-specific assumptions and assessing sparsity, linearity, distribution of outcome, multicollinearity, and overfitting. 61 72 Model-specific assumptions must be satisfied, such as normality of residuals, homoscedasticity, and independence of errors in linear regression, proportional hazards in Cox regression, the proportional odds assumption in ordinal logistic regression, and distribution fit in other types of continuous and count models. In addition, sparsity should be examined prior to selecting an appropriate model. Sparsity indicates many zero observations in the data set. 73 In the presence of sparsity, the effect size is difficult to interpret. Except for machine learning models, most parametric and semiparametric models require a linear relationship between the independent variables and a functional form of the outcome. Linearity should be assessed using a multivariable polynomial in all model objectives. 62 Similarly, an appropriate choice of outcome distribution is required for model building in all study objective models. Multicollinearity assessment is also useful in all objective models. Assessment of EVR in multivariable analysis can be used to avoid overfitting a multivariable model. 18
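Two of the diagnostics listed above can be sketched with the standard library alone. The example computes the variance inflation factor (VIF) for the special case of exactly two predictors, where it reduces to 1 / (1 - r²) with r the Pearson correlation, and the fraction of zero observations as a simple sparsity indicator. All data and function names are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cross / math.sqrt(ss_x * ss_y)


def vif_two_predictors(x1, x2):
    """VIF when the model has exactly two predictors: 1 / (1 - r^2)."""
    r = pearson_r(x1, x2)
    return 1.0 / (1.0 - r ** 2)


def zero_fraction(values):
    """Proportion of observations equal to zero (a simple sparsity indicator)."""
    return sum(1 for v in values if v == 0) / len(values)


# Hypothetical anthropometric predictors that move together.
waist_cm = [80, 85, 90, 95, 100, 105]
bmi = [22, 24, 25, 27, 29, 30]

# Hypothetical count outcome with many zeros.
admissions = [0, 0, 0, 2, 0, 5, 0, 0, 1, 0]

vif = vif_two_predictors(waist_cm, bmi)
sparsity = zero_fraction(admissions)

print(f"VIF = {vif:.1f}; zero fraction = {sparsity:.2f}")
```

A larger VIF indicates stronger collinearity between the predictors. With more than two predictors, each VIF would instead come from regressing one predictor on all the others.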

Some review studies highlighted that 73.8%–92% of the articles published in MEDLINE had not assessed the model diagnostics of the multivariable regression models. 17 61 72 Contrary to the monotonically, linearly increasing relationship between systolic blood pressure (SBP) and mortality established using the Framingham’s study, 74 Port et al 75 reported a non-linear relationship between SBP and all-cause mortality or cardiovascular deaths by reanalysis of the Framingham’s study data set. This study identified a different threshold for treating hypertension, indicating the role of linearity assessment in multivariable models. Although a non-Gaussian distribution model may be required for modeling patient delay outcome data in cancer, 55 a study analyzed patient delay data using an ordinary linear regression model. 57 An investigation of the development of predictive models and their reporting in medical journals identified that 53% of the articles had fewer EVR than the recommended EVR, indicating over half of the published articles may have an overfitting model. 18 Another study 76 attempted to identify the anthropometric variables associated with non-insulin-dependent diabetes and found that none of the anthropometric variables were significant after adjusting for waist circumference, age, and sex, indicating the presence of collinearity. A study reported detailed sparse data problems in published studies and potential solutions. 73

Step 9: report type of primary and sensitivity analyses (consistency)

Numerous considerations and assumptions are made throughout the research processes that require assessment, evaluation, and validation. Some assumptions, executions, and errors made at the beginning of the study data collection may not be fixable 13 ; however, additional information collected during the study and data processing, including data distribution obtained at the end of the study, may facilitate additional considerations that need to be verified in the statistical analyses. Consistencies in the research findings via modifications in the outcome or exposure definition, study population, accounting for missing data, model-related assumptions, variables and their forms, and accounting for adherence to protocol in the models can be evaluated and reported in research studies using sensitivity analyses. 77 The purpose and type of supporting analyses need to be specified clearly in the statistical analyses to differentiate the main findings from the supporting findings. Sensitivity analyses are different from secondary or interim or subgroup analyses. 78 Data analyses for secondary outcomes are often referred to as secondary analyses, while data analyses of an ongoing study are called interim analyses and data analyses according to groups based on patient characteristics are known as subgroup analyses.

Almost all studies require some form of sensitivity analysis to validate the findings under different conditions. However, it is often underutilized in medical journals. Only 18%–20.3% of studies reported some forms of sensitivity analyses. 77 78 A review of nutritional trials from high-quality journals reflected that 17% of the conclusions were reported inappropriately using findings from sensitivity analyses not based on the primary/main analyses. 77
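One simple form of sensitivity analysis varies the handling of missing outcomes and checks whether the primary conclusion holds. The sketch below uses hypothetical counts from a two-arm trial with a binary adverse outcome and compares a complete-case primary analysis with two extreme imputation scenarios. The scenario names and numbers are illustrative assumptions.

```python
def risk_difference(events_trt, n_trt, events_ctl, n_ctl):
    """Risk in the treatment arm minus risk in the control arm."""
    return events_trt / n_trt - events_ctl / n_ctl


# Hypothetical trial: observed events, participants with observed outcome, missing outcomes.
trt = {"events": 20, "observed": 90, "missing": 10}
ctl = {"events": 30, "observed": 92, "missing": 8}

# Primary analysis: complete cases only.
primary = risk_difference(trt["events"], trt["observed"], ctl["events"], ctl["observed"])

# Sensitivity analysis, scenario least favorable to treatment:
# every missing treated participant had the event, no missing control did.
worst_case = risk_difference(
    trt["events"] + trt["missing"], trt["observed"] + trt["missing"],
    ctl["events"], ctl["observed"] + ctl["missing"],
)

# Sensitivity analysis, scenario most favorable to treatment:
# no missing treated participant had the event, every missing control did.
best_case = risk_difference(
    trt["events"], trt["observed"] + trt["missing"],
    ctl["events"] + ctl["missing"], ctl["observed"] + ctl["missing"],
)

print(f"primary={primary:.3f}  worst case={worst_case:.3f}  best case={best_case:.3f}")
```

If the estimate changes direction or disappears under a plausible scenario, that should be reported alongside the primary analysis and clearly labeled as a sensitivity analysis.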

Step 10: provide methods for summarizing, displaying, and interpreting data (transparency and usability)

Data presentation includes data summary, data display, and data from statistical model analyses. The primary purpose of the data summary is to understand the distribution of outcome status and other characteristics in the total sample and by primary exposure status or outcome status. Column-wise data presentation should be preferred according to exposure status in all study designs, while row-wise data presentation for the outcome should be preferred in all study designs except for a case–control study. 24 32 Summary statistics should be used to provide maximum information on data distribution aligned with DGP and variable type. The purpose of presenting results from regression analyses or statistical models is primarily to convey the interpretation and implications of the findings. The results should be presented according to the study objective type. Accordingly, the reporting of unadjusted and adjusted associations of each factor with the outcome may be preferred in the determinant objective model, while unadjusted and adjusted effects of primary exposure on the outcome may be preferred in the explanatory objective model. In prognostic models, the final predictive models may be presented in such a way that users can use them to predict an outcome. In the exploratory objective model, a final multivariable model should be reported with R² or area under the curve (AUC). In the association and interventional models, the assessment of internal validation through various sensitivity and validation analyses is critically important. A model with better fit indices (in terms of R² or AUC, Akaike information criterion, Bayesian information criterion, fit index, root mean square error) should be finalized and reported in the causal model objective study. In the predictive objective type, the model performance in terms of R² or AUC in training and validation data sets needs to be reported ( figure 1 ). 20 21 Data display serves multiple purposes: showing data distribution using a bar diagram, histogram, frequency polygon, or box plot; comparisons using a cluster bar diagram, scatter dot plot, stacked bar diagram, or Kaplan-Meier plot; correlation or model assessment using a scatter plot or scatter matrix; clustering or patterns using a heatmap or line plots; the effect of predictors with fitted models using marginsplot; and comparative evaluation of effect sizes from regression models using a forest plot. Although the key purpose of data display is to highlight critical issues or findings in the study, data display should essentially follow DGP and variable types and should be user-friendly. 54 79 Data interpretation relies heavily on the effect size measure along with the study design and specified hypotheses. Sometimes, variables require standardization for descriptive comparison of effect sizes among exposures or for interpreting a small effect size, centering for interpreting the intercept or avoiding collinearity due to interaction terms, or transformation for meeting model-related assumptions. 80 Appropriate methods of data reporting and interpretation aligned with study design, study hypothesis, and effect size measure should be specified in the statistical analysis section of research studies.
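The centering and standardization mentioned above are simple transformations, sketched here with the standard library. The variable names and values are hypothetical; the sample standard deviation is used for standardization, which is one common convention.

```python
import statistics


def center(values):
    """Subtract the mean so that zero corresponds to an average participant."""
    m = statistics.mean(values)
    return [v - m for v in values]


def standardize(values):
    """Convert to z-scores: mean 0 and (sample) standard deviation 1."""
    m = statistics.mean(values)
    s = statistics.stdev(values)
    return [(v - m) / s for v in values]


# Hypothetical exposures measured on different scales.
age_years = [40, 50, 60, 70, 80]
sbp_mmhg = [110, 120, 130, 150, 160]

age_centered = center(age_years)  # intercept now refers to a 60-year-old
age_z = standardize(age_years)    # coefficients become "per 1 SD of age"
sbp_z = standardize(sbp_mmhg)     # comparable scale to age_z

print(age_centered)
print([round(z, 2) for z in age_z])
```

After standardization, a regression coefficient describes the change in outcome per one standard deviation of the exposure, which is what makes effect sizes descriptively comparable across variables with different units.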

Published articles from reputed journals inappropriately summarized a categorized variable with mean and range, 81 summarized a highly skewed variable with mean and standard deviation, 57 and treated a categorized variable as a continuous variable in regression analyses. 82 Similarly, numerous examples from published studies reporting inappropriate graphical display or inappropriate interpretation of data not aligned with DGP or variable types are illustrated in a book published by Bland and Peacock. 83 84 A study used qualitative data on MRI but inappropriately presented with a Box-Whisker plot. 81 Another study reported unusually high OR for an association between high breast parenchymal enhancement and breast cancer in both premenopausal and postmenopausal women. 85 This reporting makes suspicious findings and may include sparse data bias. 86 A poor tabular presentation without proper scaling or standardization of a variable, missing CI for some variables, missing unit and sample size, and inconsistent reporting of decimal places could be easily noticed in table 4 of a published study. 29 Some published predictive models 87 do not report intercept or baseline survival estimates to use their predictive models in clinical use. Although a direct comparison of effect sizes obtained from the same model may be avoided if the units are different among variables, 35 a study had an objective to compare effect sizes across variables but the authors performed comparisons without standardization of variables or using statistical tests. 88
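The problem with summarizing a highly skewed variable by mean and standard deviation can be seen in a short sketch. The length-of-stay values below are hypothetical, and the quartiles use the default method of Python's `statistics.quantiles`, so other software may give slightly different quartile values.

```python
import statistics


def summarize(values):
    """Return both mean/SD and median/quartile summaries for comparison."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    return {
        "mean": statistics.mean(values),
        "sd": statistics.stdev(values),
        "median": statistics.median(values),
        "q1": q1,
        "q3": q3,
    }


# Hypothetical length of stay in days, right-skewed by two very long stays.
length_of_stay = [1, 2, 2, 3, 3, 3, 4, 5, 30, 45]

summary = summarize(length_of_stay)
print(summary)
```

In this made-up sample the mean is pulled well above the median by two extreme values, and the standard deviation exceeds the mean. For such a variable the median with interquartile range describes a typical participant better.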

A sample for writing statistical analysis section in medical journals/research studies

Our primary study objective type was to develop a (select from figure 1 ) model to assess the relationship of risk factors (list critical variables or exposures) with outcomes (specify type from continuous/discrete/count/binary/polytomous/time-to-event). To address this objective, we conducted a (select from figure 2 or any other) study design to test the hypotheses of (equality or superiority or non-inferiority or equivalence or futility) or develop prediction. Accordingly, the other variables were adjusted or considered as (specify role of variables from confounders, covariates, or predictors or independent variables) as reflected in the conceptual framework. In the unadjusted or preliminary analyses as per the (select from figure 3 or any other design features) DGP, (specify EBB preferred tests from online supplemental table 3 or any other appropriate tests) were used for (specify variables and types) in unadjusted analyses. According to the EBB practice for the outcome (specify type) and DGP of (select from figure 3 or any other), we used (select from online supplemental table 1 or specify a multivariable approach) as the primary model in the multivariable analysis. We used (select from figure 1 ) variable selection method in the multivariable analysis and explored the interaction effects between (specify variables). The model diagnostics including (list all applicable, including model-related assumptions, linearity, or multicollinearity or overfitting or distribution of outcome or sparsity) were also assessed using (specify appropriate methods) respectively. In such exploration, we identified (specify diagnostic issues if any) and therefore the multivariable models were developed using (specify potential methods used to handle diagnostic issues). The other outcomes were analyzed with (list names of multivariable approaches with respective outcomes). 
All the models used the same procedure (or specify from figure 1 ) for variable selection, exploration of interaction effects, and model diagnostics using (specify statistical approaches) depending on the statistical models. As per the study design, hypothesis, and multivariable analysis, the results were summarized with effect size (select as appropriate or from figure 2 ) along with (specify 95% CI or other interval estimates) and considered statistically significant using (specify the side of p value or alternatives) at (specify the level of significance) due to (provide reasons for choosing a significance level). We presented unadjusted and/or adjusted estimates of primary outcome according to (list primary exposures or variables). Additional analyses were conducted for (specific reasons from step 9) using (specify methods) to validate findings obtained in the primary analyses. The data were summarized with (list summary measures and appropriate graphs from step 10), whereas the final multivariable model performance was summarized with (fit indices if applicable from step 10). We also used (list graphs) as appropriate with DGP (specify from figure 3 ) to present the critical findings or highlight (specify data issues) using (list graphs/methods) in the study. The exposures or variables were used in (specify the form of the variables) and therefore the effect or association of (list exposures or variables) on outcome should be interpreted in terms of changes in (specify interpretation unit) exposures/variables. List all other additional analyses if performed (with full details of all models in a supplementary file along with statistical codes if possible).

Concluding remarks

We highlighted 10 essential steps to be reported in the statistical analysis section of any analytical study ( figure 4 ). Adherence to minimum reporting of the steps specified in this report may enforce investigators to understand concepts and approach biostatisticians timely to apply these concepts in their study to improve the overall quality of methodological standards in grant proposals and research studies. The order of reporting information in statistical analyses specified in this report is not mandatory; however, clear reporting of analytical steps applicable to the specific study type should be mentioned somewhere in the manuscript. Since the entire approach of statistical analyses is dependent on the study objective type and EBB practice, proper execution and reporting of statistical models can be taught to the next generation of statisticians by the study objective type in statistical education courses. In fact, some disciplines ( figure 5 ) are strictly aligned with specific study objective types. Bioinformaticians are oriented in studying determinant and prognostic models toward precision medicine, while epidemiologists are oriented in studying association and causal models, particularly in population-based observational and pragmatic settings. Data scientists are heavily involved in prediction and classification models in personalized medicine. A common thing across disciplines is using biostatistical principles and computation tools to address any research question. Sometimes, one discipline expert does the part of others. 89 We strongly recommend using a team science approach that includes an epidemiologist, biostatistician, data scientist, and bioinformatician depending on the study objectives and needs. Clear reporting of data analyses as per the study objective type should be encouraged among all researchers to minimize heterogeneous practices and improve scientific quality and outcomes. 
In addition, we encourage investigators to strictly follow the transparent reporting and quality assessment guidelines appropriate to the study design ( https://www.equator-network.org/ ) to improve the overall quality of the study: STROBE (Strengthening the Reporting of Observational Studies in Epidemiology) for observational studies, CONSORT (Consolidated Standards of Reporting Trials) for clinical trials, STARD (Standards for Reporting Diagnostic Accuracy Studies) for diagnostic studies, TRIPOD (Transparent Reporting of a multivariable prediction model for Individual Prognosis OR Diagnosis) for prediction modeling, and ARRIVE (Animal Research: Reporting of In Vivo Experiments) for preclinical studies. The steps provided in this document for writing the statistical analysis section are essentially different from other guidance documents, including SAMBR. 5 SAMBR provides guidance for selecting evidence-based preferred methods of statistical analysis according to different study designs, while this report suggests the global reporting of essential information in the statistical analysis section according to study objective type. In this guidance report, our suggestions pertain strictly to the reporting of methods in the statistical analysis section and their implications for the interpretation of results. Our document does not provide guidance on the reporting of sample size or results, or on the statistical analysis section for meta-analysis. The examples and reviews reported in this study may be used to emphasize the concepts and related implications in medical research.

Figure 4: Summary of reporting steps, purpose, and evaluation measures in the statistical analysis section.

Figure 5: Role of interrelated disciplines according to study objective type.

Acknowledgments

The author would like to thank the reviewers for their careful review and insightful suggestions.

Contributors: AKD developed the concept and design and wrote the manuscript.

Funding: The authors have not declared a specific grant for this research from any funding agency in the public, commercial or not-for-profit sectors.

Competing interests: AKD is a Journal of Investigative Medicine Editorial Board member. No other competing interests declared.

Provenance and peer review: Commissioned; externally peer reviewed.

Supplemental material: This content has been supplied by the author(s). It has not been vetted by BMJ Publishing Group Limited (BMJ) and may not have been peer-reviewed. Any opinions or recommendations discussed are solely those of the author(s) and are not endorsed by BMJ. BMJ disclaims all liability and responsibility arising from any reliance placed on the content. Where the content includes any translated material, BMJ does not warrant the accuracy and reliability of the translations (including but not limited to local regulations, clinical guidelines, terminology, drug names and drug dosages), and is not responsible for any error and/or omissions arising from translation and adaptation or otherwise.

Data availability statement

Ethics statements, patient consent for publication.

Not required.

How to Write the Results/Findings Section in Research

What is the research paper Results section and what does it do?

The Results section of a scientific research paper represents the core findings of a study derived from the methods applied to gather and analyze information. It presents these findings in a logical sequence without bias or interpretation from the author, setting up the reader for later interpretation and evaluation in the Discussion section. A major purpose of the Results section is to break down the data into sentences that show its significance to the research question(s).

The Results section appears third in the section sequence in most scientific papers. It follows the presentation of the Methods and Materials and is presented before the Discussion section —although the Results and Discussion are presented together in many journals. This section answers the basic question “What did you find in your research?”

What is included in the Results section?

The Results section should include the findings of your study and ONLY the findings of your study. The findings include:

- Data presented in tables, charts, graphs, and other figures (may be placed into the text or on separate pages at the end of the manuscript)

- A contextual analysis of this data explaining its meaning in sentence form

- All data that corresponds to the central research question(s)

- All secondary findings (secondary outcomes, subgroup analyses, etc.)

If the scope of the study is broad, if you studied a variety of variables, or if the methodology yields a wide range of different results, present only those results that are most relevant to the research question stated in the Introduction section.

As a general rule, any information that does not present the direct findings or outcome of the study should be left out of this section. Unless the journal requests that authors combine the Results and Discussion sections, explanations and interpretations should be omitted from the Results.

How are the results organized?

The best way to organize your Results section is “logically.” One logical and clear method of organizing research results is to provide them alongside the research questions—within each research question, present the type of data that addresses that research question.

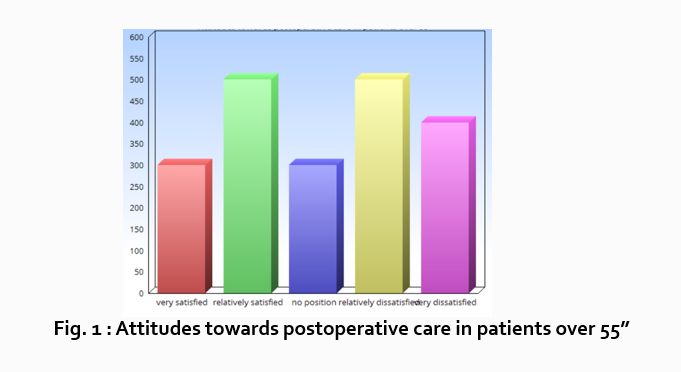

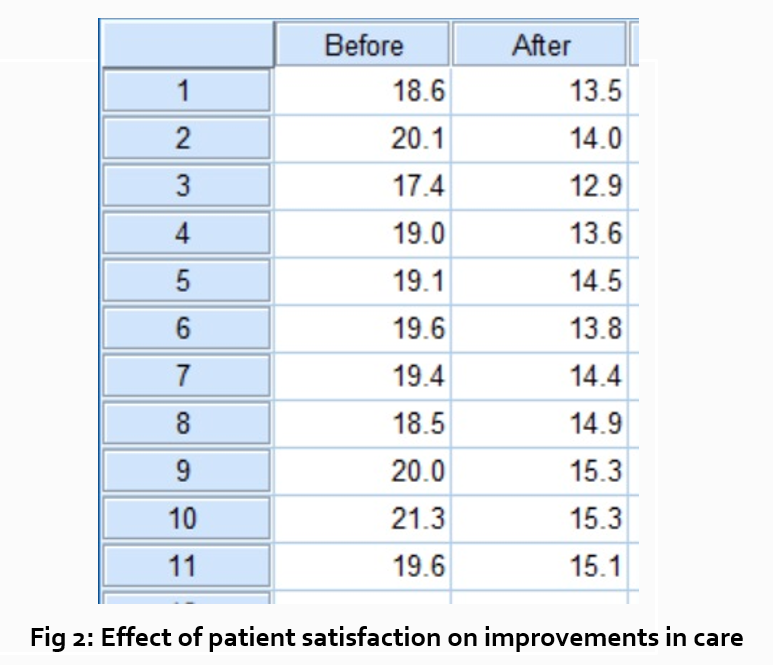

Let’s look at an example. Your research question is based on a survey among patients who were treated at a hospital and received postoperative care. Let’s say your first research question is:

“What do hospital patients over age 55 think about postoperative care?”

This can actually be represented as a heading within your Results section, though it might be presented as a statement rather than a question:

Attitudes towards postoperative care in patients over the age of 55

Now present the results that address this specific research question first. In this case, that might be a table summarizing data from a survey, including responses to Likert items. Tables can also present standard deviations, probabilities, correlation matrices, etc.

Following this, present a content analysis, in words, of one end of the spectrum of the survey or data table. In our example case, start with the POSITIVE survey responses regarding postoperative care, using descriptive phrases. For example:

“Sixty-five percent of patients over 55 responded positively to the question ‘Are you satisfied with your hospital’s postoperative care?’ (Fig. 2).”

Include other results such as subcategory analyses. The amount of textual description used will depend on how much interpretation of tables and figures is necessary and how many examples the reader needs in order to understand the significance of your research findings.

Next, present a content analysis of another part of the spectrum of the same research question, perhaps the NEGATIVE or NEUTRAL responses to the survey. For instance:

“As Figure 1 shows, 15 out of 60 patients in Group A responded negatively to Question 2.”
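The percentages quoted in sentences like the two above come from a simple tally of the survey responses. The following Python sketch shows one way to produce them. The response data, the 5-point scale, and the cut-offs for “negative”, “neutral”, and “positive” are all assumptions made for illustration, not values from a real survey.

```python
from collections import Counter

# Hypothetical 5-point Likert responses (1 = very dissatisfied ... 5 = very satisfied).
responses = [5, 4, 4, 2, 3, 5, 1, 4, 2, 5, 4, 3, 4, 5, 2, 4, 5, 4, 1, 4]


def summarize_likert(scores):
    """Return (count, percentage) for negative, neutral, and positive responses."""
    # Assumed cut-offs: 1-2 negative, 3 neutral, 4-5 positive.
    labels = Counter(
        "negative" if s <= 2 else "neutral" if s == 3 else "positive"
        for s in scores
    )
    total = len(scores)
    return {
        label: (labels[label], round(100 * labels[label] / total, 1))
        for label in ("negative", "neutral", "positive")
    }


summary = summarize_likert(responses)
for label, (count, pct) in summary.items():
    print(f"{label}: {count} of {len(responses)} ({pct}%)")
```

Keeping the raw counts next to the percentages makes it easy to write either form in the text (“15 out of 60” or “25%”) and to check that the two agree.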

After you have assessed the data in one figure and explained it sufficiently, move on to your next research question. For example:

“How does patient satisfaction correspond to in-hospital improvements made to postoperative care?”

This kind of data may be presented through a figure or set of figures (for instance, a paired t-test table).

Explain the data you present with a concise content analysis:

“The p-value for the comparison between the before and after groups of patients was .03 (Fig. 2), indicating a statistically significant difference in satisfaction after the improvements were made to postoperative care.”
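A paired comparison like this one rests on the difference between each patient’s before and after scores. As a minimal sketch, assuming hypothetical satisfaction scores for eight patients, the t statistic can be computed with Python’s standard library as the mean difference divided by its standard error. The data and the function name are illustrative only.

```python
import math
import statistics

# Hypothetical satisfaction scores (1-10) for the same eight patients,
# measured before and after the changes to postoperative care.
before = [5, 6, 4, 7, 5, 6, 5, 4]
after = [7, 6, 6, 8, 6, 7, 7, 5]


def paired_t_statistic(x, y):
    """Return (t, degrees of freedom, mean difference) for paired samples."""
    if len(x) != len(y):
        raise ValueError("paired samples must have the same length")
    diffs = [b - a for a, b in zip(x, y)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n - 1, mean_diff


t, df, mean_diff = paired_t_statistic(before, after)
print(f"mean change = {mean_diff:.2f}, t({df}) = {t:.2f}")
```

The p-value is then read from a t distribution with n − 1 degrees of freedom; statistical packages (for example, SciPy’s `scipy.stats.ttest_rel`) report it directly. Note that the p-value alone says only whether the difference is statistically significant, so the mean change is worth reporting alongside it.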

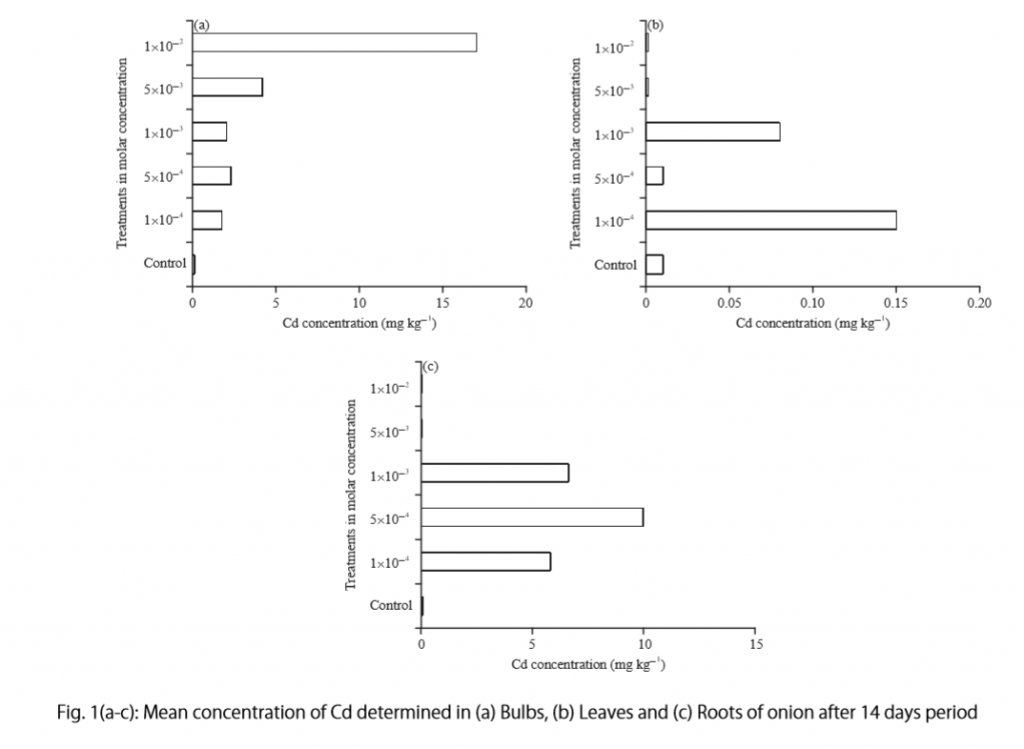

Let’s examine another example of a Results section from a study on plant tolerance to heavy metal stress. In the Introduction section, the aims of the study are presented as “determining the physiological and morphological responses of Allium cepa L. towards increased cadmium toxicity” and “evaluating its potential to accumulate the metal and its associated environmental consequences.” The Results section presents data showing how these aims are achieved in tables alongside a content analysis, beginning with an overview of the findings:

“Cadmium caused inhibition of root and leave elongation, with increasing effects at higher exposure doses (Fig. 1a-c).”

The figure containing this data is cited in parentheses. Note that the author has combined three graphs into a single figure. Splitting the data into graphs that each focus on a specific aspect makes it easier for the reader to assess the findings, while consolidating them into one figure saves space and makes the most relevant results easy to locate.

Following this overall summary, the relevant data in the tables is broken down into greater detail in text form in the Results section.

- “Results on the bio-accumulation of cadmium were found to be the highest (17.5 mg kg⁻¹) in the bulb, when the concentration of cadmium in the solution was 1×10⁻² M and lowest (0.11 mg kg⁻¹) in the leaves when the concentration was 1×10⁻³ M.”

Captioning and Referencing Tables and Figures

Tables and figures are central components of your Results section, and you need to think carefully about the most effective way to use graphs and tables to present your findings. Therefore, it is crucial to know how to write strong figure captions and to refer to them within the text of the Results section.

The most important advice one can give here as well as throughout the paper is to check the requirements and standards of the journal to which you are submitting your work. Every journal has its own design and layout standards, which you can find in the author instructions on the target journal’s website. Perusing a journal’s published articles will also give you an idea of the proper number, size, and complexity of your figures.

Regardless of which format you use, the figures should be placed in the order they are referenced in the Results section and be as clear and easy to understand as possible. If there are multiple variables being considered (within one or more research questions), it can be a good idea to split these up into separate figures. Subsequently, these can be referenced and analyzed under separate headings and paragraphs in the text.

To create a caption, consider the research question being asked and change it into a phrase. For instance, if one question is “Which color did participants choose?”, the caption might be “Color choice by participant group.” Or in our last research paper example, where the question was “What is the concentration of cadmium in different parts of the onion after 14 days?” the caption reads:

“Fig. 1(a-c): Mean concentration of Cd determined in (a) bulbs, (b) leaves, and (c) roots of onions after a 14-day period.”

Steps for Composing the Results Section

Because each study is unique, there is no one-size-fits-all approach when it comes to designing a strategy for structuring and writing the section of a research paper where findings are presented. The content and layout of this section will be determined by the specific area of research, the design of the study and its particular methodologies, and the guidelines of the target journal and its editors. However, the following steps can be used to compose the results of most scientific research studies and are essential for researchers who are new to preparing a manuscript for publication or who need a reminder of how to construct the Results section.

Step 1: Consult the guidelines or instructions that the target journal or publisher provides authors and read research papers it has published, especially those with similar topics, methods, or results to your study.

- The guidelines will generally outline specific requirements for the results or findings section, and the published articles will provide sound examples of successful approaches.

- Note length limitations and restrictions on content. For instance, while many journals require the Results and Discussion sections to be separate, others do not; qualitative research papers often include results and interpretations in the same section (“Results and Discussion”).

- Reading the aims and scope in the journal’s “guide for authors” section and understanding the interests of its readers will be invaluable in preparing to write the Results section.

Step 2: Consider your research results in relation to the journal’s requirements and catalogue your results.

- Focus on experimental results and other findings that are especially relevant to your research questions and objectives and include them even if they are unexpected or do not support your ideas and hypotheses.

- Catalogue your findings: use subheadings to streamline and clarify your report. This will help you avoid excessive and peripheral details as you write and also help your reader understand and remember your findings. Create appendices for material that might interest specialists but would prove too long or distracting for other readers.

- Decide how you will structure your results. You might match the order of the research questions and hypotheses to your results, or you could arrange them according to the order presented in the Methods section. A chronological order or even a hierarchy of importance or meaningful grouping of main themes or categories might prove effective. Consider your audience, evidence, and most importantly, the objectives of your research when choosing a structure for presenting your findings.

Step 3: Design figures and tables to present and illustrate your data.

- Tables and figures should be numbered according to the order in which they are mentioned in the main text of the paper.

- Information in figures should be relatively self-explanatory (with the aid of captions), and their design should include all definitions and other information necessary for readers to understand the findings without reading all of the text.

- Use tables and figures as a focal point to tell a clear and informative story about your research and avoid repeating information. But remember that while figures clarify and enhance the text, they cannot replace it.
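The numbering rule in the first point above can be checked mechanically once a draft exists. The following Python sketch scans a draft for figure and table references and reports whether they are first mentioned in the order 1, 2, 3, and so on. The reference pattern, the function names, and the sample draft text are assumptions for illustration; a real manuscript may use other citation forms (for example, “Figs. 1 and 2”) that this simple pattern does not fully handle.

```python
import re

# Matches "Fig. 2", "Figure 2", "Figs. 2", and "Table 3"; captures kind and number.
REFERENCE = re.compile(r"\b(Fig(?:ure)?s?\.?|Table)\s*(\d+)", re.IGNORECASE)


def first_mentions(text):
    """Return figure and table numbers in the order they are first mentioned."""
    order = {"figure": [], "table": []}
    for match in REFERENCE.finditer(text):
        kind = "table" if match.group(1).lower().startswith("table") else "figure"
        number = int(match.group(2))
        if number not in order[kind]:
            order[kind].append(number)
    return order


def out_of_order(numbers):
    """Return True if first mentions are not numbered 1, 2, 3, ... in sequence."""
    return numbers != list(range(1, len(numbers) + 1))


# Hypothetical draft text in which Fig. 2 is cited before Figure 1.
draft = (
    "Most patients responded positively (Fig. 2). "
    "As Figure 1 shows, 15 out of 60 patients responded negatively. "
    "Group means are listed in Table 1."
)
mentions = first_mentions(draft)
for kind, numbers in mentions.items():
    status = "renumber" if out_of_order(numbers) else "ok"
    print(f"{kind}s first mentioned in order {numbers}: {status}")
```

A check like this is only a convenience; the journal’s own rules on numbering and placement still take precedence.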

Step 4: Draft your Results section using the findings and figures you have organized.

- The goal is to communicate this complex information as clearly and precisely as possible; precise and compact phrases and sentences are most effective.

- In the opening paragraph of this section, restate your research questions or aims to focus the reader’s attention on what the results are trying to show. It is also a good idea to summarize key findings at the end of this section to create a logical transition to the interpretation and discussion that follows.

- Try to write in the past tense and the active voice to relay the findings since the research has already been done and the agent is usually clear. This will ensure that your explanations are also clear and logical.

- Make sure that any specialized terminology or abbreviation you have used here has been defined and clarified in the Introduction section.

Step 5: Review your draft; edit and revise until it reports results exactly as you would like to have them reported to your readers.

- Double-check the accuracy and consistency of all the data, as well as all of the visual elements included.

- Read your draft aloud to catch language errors (grammar, spelling, and mechanics), awkward phrases, and missing transitions.

- Ensure that your results are presented in the best order to focus on objectives and prepare readers for interpretations, evaluations, and recommendations in the Discussion section. Look back over the paper’s Introduction and background while anticipating the Discussion and Conclusion sections to ensure that the presentation of your results is consistent and effective.

- Consider seeking additional guidance on your paper. Find additional readers to look over your Results section and see if it can be improved in any way. Peers, professors, or qualified experts can provide valuable insights.


As the representation of your study’s data output, the Results section presents the core information in your research paper. By writing with clarity and conciseness and by highlighting and explaining the crucial findings of their study, authors increase the impact and effectiveness of their research manuscripts.
