An official website of the United States government

The .gov means it’s official. Federal government websites often end in .gov or .mil. Before sharing sensitive information, make sure you’re on a federal government site.

The site is secure. The https:// ensures that you are connecting to the official website and that any information you provide is encrypted and transmitted securely.

- Publications

- Account settings

Preview improvements coming to the PMC website in October 2024. Learn More or Try it out now .

- Advanced Search

- Journal List

- Can J Hosp Pharm

- v.68(4); Jul-Aug 2015

Creating a Data Analysis Plan: What to Consider When Choosing Statistics for a Study

There are three kinds of lies: lies, damned lies, and statistics. – Mark Twain 1

INTRODUCTION

Statistics represent an essential part of a study because, regardless of the study design, investigators need to summarize the collected information for interpretation and presentation to others. It is therefore important for us to heed Mr Twain’s concern when creating the data analysis plan. In fact, even before data collection begins, we need to have a clear analysis plan that will guide us from the initial stages of summarizing and describing the data through to testing our hypotheses.

The purpose of this article is to help you create a data analysis plan for a quantitative study. For those interested in conducting qualitative research, previous articles in this Research Primer series have provided information on the design and analysis of such studies. 2 , 3 Information in the current article is divided into 3 main sections: an overview of terms and concepts used in data analysis, a review of common methods used to summarize study data, and a process to help identify relevant statistical tests. My intention here is to introduce the main elements of data analysis and provide a place for you to start when planning this part of your study. Biostatistical experts, textbooks, statistical software packages, and other resources can certainly add more breadth and depth to this topic when you need additional information and advice.

TERMS AND CONCEPTS USED IN DATA ANALYSIS

When analyzing information from a quantitative study, we are often dealing with numbers; therefore, it is important to begin with an understanding of the source of the numbers. Let us start with the term variable , which defines a specific item of information collected in a study. Examples of variables include age, sex or gender, ethnicity, exercise frequency, weight, treatment group, and blood glucose. Each variable will have a group of categories, which are referred to as values , to help describe the characteristic of an individual study participant. For example, the variable “sex” would have values of “male” and “female”.

Although variables can be defined or grouped in various ways, I will focus on 2 methods at this introductory stage. First, variables can be defined according to the level of measurement. The categories in a nominal variable are names, for example, male and female for the variable “sex”; white, Aboriginal, black, Latin American, South Asian, and East Asian for the variable “ethnicity”; and intervention and control for the variable “treatment group”. Nominal variables with only 2 categories are also referred to as dichotomous variables because the study group can be divided into 2 subgroups based on information in the variable. For example, a study sample can be split into 2 groups (patients receiving the intervention and controls) using the dichotomous variable “treatment group”. An ordinal variable implies that the categories can be placed in a meaningful order, as would be the case for exercise frequency (never, sometimes, often, or always). Nominal-level and ordinal-level variables are also referred to as categorical variables, because each category in the variable can be completely separated from the others. The categories for an interval variable can be placed in a meaningful order, with the interval between consecutive categories also having meaning. Age, weight, and blood glucose can be considered as interval variables, but also as ratio variables, because the ratio between values has meaning (e.g., a 15-year-old is half the age of a 30-year-old). Interval-level and ratio-level variables are also referred to as continuous variables because of the underlying continuity among categories.

As we progress through the levels of measurement from nominal to ratio variables, we gather more information about the study participant. The amount of information that a variable provides will become important in the analysis stage, because we lose information when variables are reduced or aggregated—a common practice that is not recommended. 4 For example, if age is reduced from a ratio-level variable (measured in years) to an ordinal variable (categories of < 65 and ≥ 65 years) we lose the ability to make comparisons across the entire age range and introduce error into the data analysis. 4

A second method of defining variables is to consider them as either dependent or independent. As the terms imply, the value of a dependent variable depends on the value of other variables, whereas the value of an independent variable does not rely on other variables. In addition, an investigator can influence the value of an independent variable, such as treatment-group assignment. Independent variables are also referred to as predictors because we can use information from these variables to predict the value of a dependent variable. Building on the group of variables listed in the first paragraph of this section, blood glucose could be considered a dependent variable, because its value may depend on values of the independent variables age, sex, ethnicity, exercise frequency, weight, and treatment group.

Statistics are mathematical formulae that are used to organize and interpret the information that is collected through variables. There are 2 general categories of statistics, descriptive and inferential. Descriptive statistics are used to describe the collected information, such as the range of values, their average, and the most common category. Knowledge gained from descriptive statistics helps investigators learn more about the study sample. Inferential statistics are used to make comparisons and draw conclusions from the study data. Knowledge gained from inferential statistics allows investigators to make inferences and generalize beyond their study sample to other groups.

Before we move on to specific descriptive and inferential statistics, there are 2 more definitions to review. Parametric statistics are generally used when values in an interval-level or ratio-level variable are normally distributed (i.e., the entire group of values has a bell-shaped curve when plotted by frequency). These statistics are used because we can define parameters of the data, such as the centre and width of the normally distributed curve. In contrast, interval-level and ratio-level variables with values that are not normally distributed, as well as nominal-level and ordinal-level variables, are generally analyzed using nonparametric statistics.

METHODS FOR SUMMARIZING STUDY DATA: DESCRIPTIVE STATISTICS

The first step in a data analysis plan is to describe the data collected in the study. This can be done using figures to give a visual presentation of the data and statistics to generate numeric descriptions of the data.

Selection of an appropriate figure to represent a particular set of data depends on the measurement level of the variable. Data for nominal-level and ordinal-level variables may be interpreted using a pie graph or bar graph . Both options allow us to examine the relative number of participants within each category (by reporting the percentages within each category), whereas a bar graph can also be used to examine absolute numbers. For example, we could create a pie graph to illustrate the proportions of men and women in a study sample and a bar graph to illustrate the number of people who report exercising at each level of frequency (never, sometimes, often, or always).

Interval-level and ratio-level variables may also be interpreted using a pie graph or bar graph; however, these types of variables often have too many categories for such graphs to provide meaningful information. Instead, these variables may be better interpreted using a histogram . Unlike a bar graph, which displays the frequency for each distinct category, a histogram displays the frequency within a range of continuous categories. Information from this type of figure allows us to determine whether the data are normally distributed. In addition to pie graphs, bar graphs, and histograms, many other types of figures are available for the visual representation of data. Interested readers can find additional types of figures in the books recommended in the “Further Readings” section.

Figures are also useful for visualizing comparisons between variables or between subgroups within a variable (for example, the distribution of blood glucose according to sex). Box plots are useful for summarizing information for a variable that does not follow a normal distribution. The lower and upper limits of the box identify the interquartile range (or 25th and 75th percentiles), while the midline indicates the median value (or 50th percentile). Scatter plots provide information on how the categories for one continuous variable relate to categories in a second variable; they are often helpful in the analysis of correlations.

In addition to using figures to present a visual description of the data, investigators can use statistics to provide a numeric description. Regardless of the measurement level, we can find the mode by identifying the most frequent category within a variable. When summarizing nominal-level and ordinal-level variables, the simplest method is to report the proportion of participants within each category.

The choice of the most appropriate descriptive statistic for interval-level and ratio-level variables will depend on how the values are distributed. If the values are normally distributed, we can summarize the information using the parametric statistics of mean and standard deviation. The mean is the arithmetic average of all values within the variable, and the standard deviation tells us how widely the values are dispersed around the mean. When values of interval-level and ratio-level variables are not normally distributed, or we are summarizing information from an ordinal-level variable, it may be more appropriate to use the nonparametric statistics of median and range. The first step in identifying these descriptive statistics is to arrange study participants according to the variable categories from lowest value to highest value. The range is used to report the lowest and highest values. The median or 50th percentile is located by dividing the number of participants into 2 groups, such that half (50%) of the participants have values above the median and the other half (50%) have values below the median. Similarly, the 25th percentile is the value with 25% of the participants having values below and 75% of the participants having values above, and the 75th percentile is the value with 75% of participants having values below and 25% of participants having values above. Together, the 25th and 75th percentiles define the interquartile range .

PROCESS TO IDENTIFY RELEVANT STATISTICAL TESTS: INFERENTIAL STATISTICS

One caveat about the information provided in this section: selecting the most appropriate inferential statistic for a specific study should be a combination of following these suggestions, seeking advice from experts, and discussing with your co-investigators. My intention here is to give you a place to start a conversation with your colleagues about the options available as you develop your data analysis plan.

There are 3 key questions to consider when selecting an appropriate inferential statistic for a study: What is the research question? What is the study design? and What is the level of measurement? It is important for investigators to carefully consider these questions when developing the study protocol and creating the analysis plan. The figures that accompany these questions show decision trees that will help you to narrow down the list of inferential statistics that would be relevant to a particular study. Appendix 1 provides brief definitions of the inferential statistics named in these figures. Additional information, such as the formulae for various inferential statistics, can be obtained from textbooks, statistical software packages, and biostatisticians.

What Is the Research Question?

The first step in identifying relevant inferential statistics for a study is to consider the type of research question being asked. You can find more details about the different types of research questions in a previous article in this Research Primer series that covered questions and hypotheses. 5 A relational question seeks information about the relationship among variables; in this situation, investigators will be interested in determining whether there is an association ( Figure 1 ). A causal question seeks information about the effect of an intervention on an outcome; in this situation, the investigator will be interested in determining whether there is a difference ( Figure 2 ).

Decision tree to identify inferential statistics for an association.

Decision tree to identify inferential statistics for measuring a difference.

What Is the Study Design?

When considering a question of association, investigators will be interested in measuring the relationship between variables ( Figure 1 ). A study designed to determine whether there is consensus among different raters will be measuring agreement. For example, an investigator may be interested in determining whether 2 raters, using the same assessment tool, arrive at the same score. Correlation analyses examine the strength of a relationship or connection between 2 variables, like age and blood glucose. Regression analyses also examine the strength of a relationship or connection; however, in this type of analysis, one variable is considered an outcome (or dependent variable) and the other variable is considered a predictor (or independent variable). Regression analyses often consider the influence of multiple predictors on an outcome at the same time. For example, an investigator may be interested in examining the association between a treatment and blood glucose, while also considering other factors, like age, sex, ethnicity, exercise frequency, and weight.

When considering a question of difference, investigators must first determine how many groups they will be comparing. In some cases, investigators may be interested in comparing the characteristic of one group with that of an external reference group. For example, is the mean age of study participants similar to the mean age of all people in the target group? If more than one group is involved, then investigators must also determine whether there is an underlying connection between the sets of values (or samples ) to be compared. Samples are considered independent or unpaired when the information is taken from different groups. For example, we could use an unpaired t test to compare the mean age between 2 independent samples, such as the intervention and control groups in a study. Samples are considered related or paired if the information is taken from the same group of people, for example, measurement of blood glucose at the beginning and end of a study. Because blood glucose is measured in the same people at both time points, we could use a paired t test to determine whether there has been a significant change in blood glucose.

What Is the Level of Measurement?

As described in the first section of this article, variables can be grouped according to the level of measurement (nominal, ordinal, or interval). In most cases, the independent variable in an inferential statistic will be nominal; therefore, investigators need to know the level of measurement for the dependent variable before they can select the relevant inferential statistic. Two exceptions to this consideration are correlation analyses and regression analyses ( Figure 1 ). Because a correlation analysis measures the strength of association between 2 variables, we need to consider the level of measurement for both variables. Regression analyses can consider multiple independent variables, often with a variety of measurement levels. However, for these analyses, investigators still need to consider the level of measurement for the dependent variable.

Selection of inferential statistics to test interval-level variables must include consideration of how the data are distributed. An underlying assumption for parametric tests is that the data approximate a normal distribution. When the data are not normally distributed, information derived from a parametric test may be wrong. 6 When the assumption of normality is violated (for example, when the data are skewed), then investigators should use a nonparametric test. If the data are normally distributed, then investigators can use a parametric test.

ADDITIONAL CONSIDERATIONS

What is the level of significance.

An inferential statistic is used to calculate a p value, the probability of obtaining the observed data by chance. Investigators can then compare this p value against a prespecified level of significance, which is often chosen to be 0.05. This level of significance represents a 1 in 20 chance that the observation is wrong, which is considered an acceptable level of error.

What Are the Most Commonly Used Statistics?

In 1983, Emerson and Colditz 7 reported the first review of statistics used in original research articles published in the New England Journal of Medicine . This review of statistics used in the journal was updated in 1989 and 2005, 8 and this type of analysis has been replicated in many other journals. 9 – 13 Collectively, these reviews have identified 2 important observations. First, the overall sophistication of statistical methodology used and reported in studies has grown over time, with survival analyses and multivariable regression analyses becoming much more common. The second observation is that, despite this trend, 1 in 4 articles describe no statistical methods or report only simple descriptive statistics. When inferential statistics are used, the most common are t tests, contingency table tests (for example, χ 2 test and Fisher exact test), and simple correlation and regression analyses. This information is important for educators, investigators, reviewers, and readers because it suggests that a good foundational knowledge of descriptive statistics and common inferential statistics will enable us to correctly evaluate the majority of research articles. 11 – 13 However, to fully take advantage of all research published in high-impact journals, we need to become acquainted with some of the more complex methods, such as multivariable regression analyses. 8 , 13

What Are Some Additional Resources?

As an investigator and Associate Editor with CJHP , I have often relied on the advice of colleagues to help create my own analysis plans and review the plans of others. Biostatisticians have a wealth of knowledge in the field of statistical analysis and can provide advice on the correct selection, application, and interpretation of these methods. Colleagues who have “been there and done that” with their own data analysis plans are also valuable sources of information. Identify these individuals and consult with them early and often as you develop your analysis plan.

Another important resource to consider when creating your analysis plan is textbooks. Numerous statistical textbooks are available, differing in levels of complexity and scope. The titles listed in the “Further Reading” section are just a few suggestions. I encourage interested readers to look through these and other books to find resources that best fit their needs. However, one crucial book that I highly recommend to anyone wanting to be an investigator or peer reviewer is Lang and Secic’s How to Report Statistics in Medicine (see “Further Reading”). As the title implies, this book covers a wide range of statistics used in medical research and provides numerous examples of how to correctly report the results.

CONCLUSIONS

When it comes to creating an analysis plan for your project, I recommend following the sage advice of Douglas Adams in The Hitchhiker’s Guide to the Galaxy : Don’t panic! 14 Begin with simple methods to summarize and visualize your data, then use the key questions and decision trees provided in this article to identify relevant statistical tests. Information in this article will give you and your co-investigators a place to start discussing the elements necessary for developing an analysis plan. But do not stop there! Use advice from biostatisticians and more experienced colleagues, as well as information in textbooks, to help create your analysis plan and choose the most appropriate statistics for your study. Making careful, informed decisions about the statistics to use in your study should reduce the risk of confirming Mr Twain’s concern.

Appendix 1. Glossary of statistical terms * (part 1 of 2)

- 1-way ANOVA: Uses 1 variable to define the groups for comparing means. This is similar to the Student t test when comparing the means of 2 groups.

- Kruskall–Wallis 1-way ANOVA: Nonparametric alternative for the 1-way ANOVA. Used to determine the difference in medians between 3 or more groups.

- n -way ANOVA: Uses 2 or more variables to define groups when comparing means. Also called a “between-subjects factorial ANOVA”.

- Repeated-measures ANOVA: A method for analyzing whether the means of 3 or more measures from the same group of participants are different.

- Freidman ANOVA: Nonparametric alternative for the repeated-measures ANOVA. It is often used to compare rankings and preferences that are measured 3 or more times.

- Fisher exact: Variation of chi-square that accounts for cell counts < 5.

- McNemar: Variation of chi-square that tests statistical significance of changes in 2 paired measurements of dichotomous variables.

- Cochran Q: An extension of the McNemar test that provides a method for testing for differences between 3 or more matched sets of frequencies or proportions. Often used as a measure of heterogeneity in meta-analyses.

- 1-sample: Used to determine whether the mean of a sample is significantly different from a known or hypothesized value.

- Independent-samples t test (also referred to as the Student t test): Used when the independent variable is a nominal-level variable that identifies 2 groups and the dependent variable is an interval-level variable.

- Paired: Used to compare 2 pairs of scores between 2 groups (e.g., baseline and follow-up blood pressure in the intervention and control groups).

Lang TA, Secic M. How to report statistics in medicine: annotated guidelines for authors, editors, and reviewers. 2nd ed. Philadelphia (PA): American College of Physicians; 2006.

Norman GR, Streiner DL. PDQ statistics. 3rd ed. Hamilton (ON): B.C. Decker; 2003.

Plichta SB, Kelvin E. Munro’s statistical methods for health care research . 6th ed. Philadelphia (PA): Wolters Kluwer Health/ Lippincott, Williams & Wilkins; 2013.

This article is the 12th in the CJHP Research Primer Series, an initiative of the CJHP Editorial Board and the CSHP Research Committee. The planned 2-year series is intended to appeal to relatively inexperienced researchers, with the goal of building research capacity among practising pharmacists. The articles, presenting simple but rigorous guidance to encourage and support novice researchers, are being solicited from authors with appropriate expertise.

Previous articles in this series:

- Bond CM. The research jigsaw: how to get started. Can J Hosp Pharm . 2014;67(1):28–30.

- Tully MP. Research: articulating questions, generating hypotheses, and choosing study designs. Can J Hosp Pharm . 2014;67(1):31–4.

- Loewen P. Ethical issues in pharmacy practice research: an introductory guide. Can J Hosp Pharm. 2014;67(2):133–7.

- Tsuyuki RT. Designing pharmacy practice research trials. Can J Hosp Pharm . 2014;67(3):226–9.

- Bresee LC. An introduction to developing surveys for pharmacy practice research. Can J Hosp Pharm . 2014;67(4):286–91.

- Gamble JM. An introduction to the fundamentals of cohort and case–control studies. Can J Hosp Pharm . 2014;67(5):366–72.

- Austin Z, Sutton J. Qualitative research: getting started. C an J Hosp Pharm . 2014;67(6):436–40.

- Houle S. An introduction to the fundamentals of randomized controlled trials in pharmacy research. Can J Hosp Pharm . 2014; 68(1):28–32.

- Charrois TL. Systematic reviews: What do you need to know to get started? Can J Hosp Pharm . 2014;68(2):144–8.

- Sutton J, Austin Z. Qualitative research: data collection, analysis, and management. Can J Hosp Pharm . 2014;68(3):226–31.

- Cadarette SM, Wong L. An introduction to health care administrative data. Can J Hosp Pharm. 2014;68(3):232–7.

Competing interests: None declared.

Further Reading

- Devor J, Peck R. Statistics: the exploration and analysis of data. 7th ed. Boston (MA): Brooks/Cole Cengage Learning; 2012. [ Google Scholar ]

- Lang TA, Secic M. How to report statistics in medicine: annotated guidelines for authors, editors, and reviewers. 2nd ed. Philadelphia (PA): American College of Physicians; 2006. [ Google Scholar ]

- Mendenhall W, Beaver RJ, Beaver BM. Introduction to probability and statistics. 13th ed. Belmont (CA): Brooks/Cole Cengage Learning; 2009. [ Google Scholar ]

- Norman GR, Streiner DL. PDQ statistics. 3rd ed. Hamilton (ON): B.C. Decker; 2003. [ Google Scholar ]

- Plichta SB, Kelvin E. Munro’s statistical methods for health care research. 6th ed. Philadelphia (PA): Wolters Kluwer Health/Lippincott, Williams & Wilkins; 2013. [ Google Scholar ]

Customize Your Path

Filters Applied

Customize Your Experience.

Utilize the "Customize Your Path" feature to refine the information displayed in myRESEARCHpath based on your role, project inclusions, sponsor or funding, and management center.

Design the analysis plan

Need assistance with analysis planning?

Get help with analysis planning.

Contact the Biostatistics, Epidemiology, and Research Design (BERD) Methods Core:

- Submit a help request

- Top Courses

- Online Degrees

- Find your New Career

- Join for Free

What Is Data Analysis? (With Examples)

Data analysis is the practice of working with data to glean useful information, which can then be used to make informed decisions.

![research plan data analysis [Featured image] A female data analyst takes notes on her laptop at a standing desk in a modern office space](https://d3njjcbhbojbot.cloudfront.net/api/utilities/v1/imageproxy/https://images.ctfassets.net/wp1lcwdav1p1/2CUbULaq9mEfSSIq6lsCUu/b8ec58abf5106bf9bf75b17da09c39c0/What_is_data_analysis.png?w=1500&h=680&q=60&fit=fill&f=faces&fm=jpg&fl=progressive&auto=format%2Ccompress&dpr=1&w=1000)

"It is a capital mistake to theorize before one has data. Insensibly one begins to twist facts to suit theories, instead of theories to suit facts," Sherlock Holme's proclaims in Sir Arthur Conan Doyle's A Scandal in Bohemia.

This idea lies at the root of data analysis. When we can extract meaning from data, it empowers us to make better decisions. And we’re living in a time when we have more data than ever at our fingertips.

Companies are wisening up to the benefits of leveraging data. Data analysis can help a bank to personalize customer interactions, a health care system to predict future health needs, or an entertainment company to create the next big streaming hit.

The World Economic Forum Future of Jobs Report 2023 listed data analysts and scientists as one of the most in-demand jobs, alongside AI and machine learning specialists and big data specialists [ 1 ]. In this article, you'll learn more about the data analysis process, different types of data analysis, and recommended courses to help you get started in this exciting field.

Read more: How to Become a Data Analyst (with or Without a Degree)

Beginner-friendly data analysis courses

Interested in building your knowledge of data analysis today? Consider enrolling in one of these popular courses on Coursera:

In Google's Foundations: Data, Data, Everywhere course, you'll explore key data analysis concepts, tools, and jobs.

In Duke University's Data Analysis and Visualization course, you'll learn how to identify key components for data analytics projects, explore data visualization, and find out how to create a compelling data story.

Data analysis process

As the data available to companies continues to grow both in amount and complexity, so too does the need for an effective and efficient process by which to harness the value of that data. The data analysis process typically moves through several iterative phases. Let’s take a closer look at each.

Identify the business question you’d like to answer. What problem is the company trying to solve? What do you need to measure, and how will you measure it?

Collect the raw data sets you’ll need to help you answer the identified question. Data collection might come from internal sources, like a company’s client relationship management (CRM) software, or from secondary sources, like government records or social media application programming interfaces (APIs).

Clean the data to prepare it for analysis. This often involves purging duplicate and anomalous data, reconciling inconsistencies, standardizing data structure and format, and dealing with white spaces and other syntax errors.

Analyze the data. By manipulating the data using various data analysis techniques and tools, you can begin to find trends, correlations, outliers, and variations that tell a story. During this stage, you might use data mining to discover patterns within databases or data visualization software to help transform data into an easy-to-understand graphical format.

Interpret the results of your analysis to see how well the data answered your original question. What recommendations can you make based on the data? What are the limitations to your conclusions?

You can complete hands-on projects for your portfolio while practicing statistical analysis, data management, and programming with Meta's beginner-friendly Data Analyst Professional Certificate . Designed to prepare you for an entry-level role, this self-paced program can be completed in just 5 months.

Or, L earn more about data analysis in this lecture by Kevin, Director of Data Analytics at Google, from Google's Data Analytics Professional Certificate :

Read more: What Does a Data Analyst Do? A Career Guide

Types of data analysis (with examples)

Data can be used to answer questions and support decisions in many different ways. To identify the best way to analyze your date, it can help to familiarize yourself with the four types of data analysis commonly used in the field.

In this section, we’ll take a look at each of these data analysis methods, along with an example of how each might be applied in the real world.

Descriptive analysis

Descriptive analysis tells us what happened. This type of analysis helps describe or summarize quantitative data by presenting statistics. For example, descriptive statistical analysis could show the distribution of sales across a group of employees and the average sales figure per employee.

Descriptive analysis answers the question, “what happened?”

Diagnostic analysis

If the descriptive analysis determines the “what,” diagnostic analysis determines the “why.” Let’s say a descriptive analysis shows an unusual influx of patients in a hospital. Drilling into the data further might reveal that many of these patients shared symptoms of a particular virus. This diagnostic analysis can help you determine that an infectious agent—the “why”—led to the influx of patients.

Diagnostic analysis answers the question, “why did it happen?”

Predictive analysis

So far, we’ve looked at types of analysis that examine and draw conclusions about the past. Predictive analytics uses data to form projections about the future. Using predictive analysis, you might notice that a given product has had its best sales during the months of September and October each year, leading you to predict a similar high point during the upcoming year.

Predictive analysis answers the question, “what might happen in the future?”

Prescriptive analysis

Prescriptive analysis takes all the insights gathered from the first three types of analysis and uses them to form recommendations for how a company should act. Using our previous example, this type of analysis might suggest a market plan to build on the success of the high sales months and harness new growth opportunities in the slower months.

Prescriptive analysis answers the question, “what should we do about it?”

This last type is where the concept of data-driven decision-making comes into play.

Read more : Advanced Analytics: Definition, Benefits, and Use Cases

What is data-driven decision-making (DDDM)?

Data-driven decision-making, sometimes abbreviated to DDDM), can be defined as the process of making strategic business decisions based on facts, data, and metrics instead of intuition, emotion, or observation.

This might sound obvious, but in practice, not all organizations are as data-driven as they could be. According to global management consulting firm McKinsey Global Institute, data-driven companies are better at acquiring new customers, maintaining customer loyalty, and achieving above-average profitability [ 2 ].

Get started with Coursera

If you’re interested in a career in the high-growth field of data analytics, consider these top-rated courses on Coursera:

Begin building job-ready skills with the Google Data Analytics Professional Certificate . Prepare for an entry-level job as you learn from Google employees—no experience or degree required.

Practice working with data with Macquarie University's Excel Skills for Business Specialization . Learn how to use Microsoft Excel to analyze data and make data-informed business decisions.

Deepen your skill set with Google's Advanced Data Analytics Professional Certificate . In this advanced program, you'll continue exploring the concepts introduced in the beginner-level courses, plus learn Python, statistics, and Machine Learning concepts.

Frequently asked questions (FAQ)

Where is data analytics used .

Just about any business or organization can use data analytics to help inform their decisions and boost their performance. Some of the most successful companies across a range of industries — from Amazon and Netflix to Starbucks and General Electric — integrate data into their business plans to improve their overall business performance.

What are the top skills for a data analyst?

Data analysis makes use of a range of analysis tools and technologies. Some of the top skills for data analysts include SQL, data visualization, statistical programming languages (like R and Python), machine learning, and spreadsheets.

Read : 7 In-Demand Data Analyst Skills to Get Hired in 2022

What is a data analyst job salary?

Data from Glassdoor indicates that the average base salary for a data analyst in the United States is $75,349 as of March 2024 [ 3 ]. How much you make will depend on factors like your qualifications, experience, and location.

Do data analysts need to be good at math?

Data analytics tends to be less math-intensive than data science. While you probably won’t need to master any advanced mathematics, a foundation in basic math and statistical analysis can help set you up for success.

Learn more: Data Analyst vs. Data Scientist: What’s the Difference?

Article sources

World Economic Forum. " The Future of Jobs Report 2023 , https://www3.weforum.org/docs/WEF_Future_of_Jobs_2023.pdf." Accessed March 19, 2024.

McKinsey & Company. " Five facts: How customer analytics boosts corporate performance , https://www.mckinsey.com/business-functions/marketing-and-sales/our-insights/five-facts-how-customer-analytics-boosts-corporate-performance." Accessed March 19, 2024.

Glassdoor. " Data Analyst Salaries , https://www.glassdoor.com/Salaries/data-analyst-salary-SRCH_KO0,12.htm" Accessed March 19, 2024.

Keep reading

Coursera staff.

Editorial Team

Coursera’s editorial team is comprised of highly experienced professional editors, writers, and fact...

This content has been made available for informational purposes only. Learners are advised to conduct additional research to ensure that courses and other credentials pursued meet their personal, professional, and financial goals.

- Skip to main content

- Skip to primary sidebar

- Skip to footer

- QuestionPro

- Solutions Industries Gaming Automotive Sports and events Education Government Travel & Hospitality Financial Services Healthcare Cannabis Technology Use Case NPS+ Communities Audience Contactless surveys Mobile LivePolls Member Experience GDPR Positive People Science 360 Feedback Surveys

- Resources Blog eBooks Survey Templates Case Studies Training Help center

Home Market Research

Data Analysis in Research: Types & Methods

Content Index

Why analyze data in research?

Types of data in research, finding patterns in the qualitative data, methods used for data analysis in qualitative research, preparing data for analysis, methods used for data analysis in quantitative research, considerations in research data analysis, what is data analysis in research.

Definition of research in data analysis: According to LeCompte and Schensul, research data analysis is a process used by researchers to reduce data to a story and interpret it to derive insights. The data analysis process helps reduce a large chunk of data into smaller fragments, which makes sense.

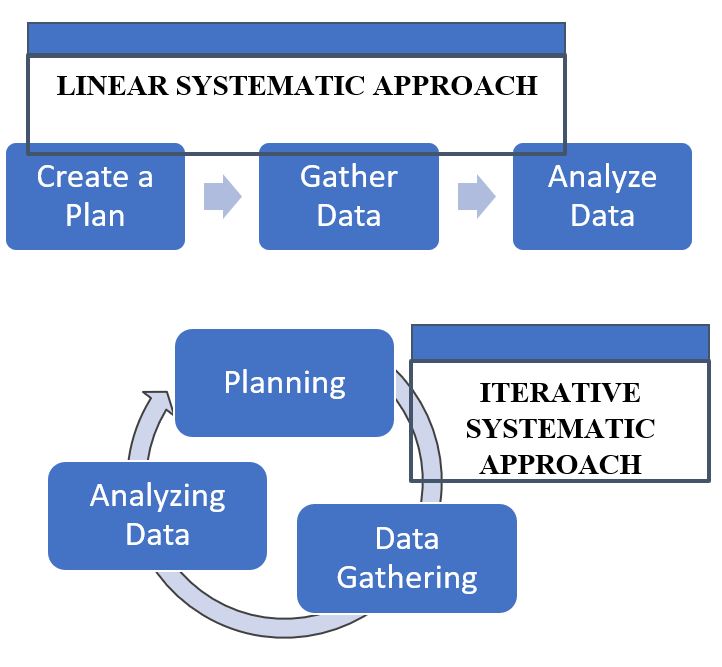

Three essential things occur during the data analysis process — the first is data organization . Summarization and categorization together contribute to becoming the second known method used for data reduction. It helps find patterns and themes in the data for easy identification and linking. The third and last way is data analysis – researchers do it in both top-down and bottom-up fashion.

LEARN ABOUT: Research Process Steps

On the other hand, Marshall and Rossman describe data analysis as a messy, ambiguous, and time-consuming but creative and fascinating process through which a mass of collected data is brought to order, structure and meaning.

We can say that “the data analysis and data interpretation is a process representing the application of deductive and inductive logic to the research and data analysis.”

Researchers rely heavily on data as they have a story to tell or research problems to solve. It starts with a question, and data is nothing but an answer to that question. But, what if there is no question to ask? Well! It is possible to explore data even without a problem – we call it ‘Data Mining’, which often reveals some interesting patterns within the data that are worth exploring.

Irrelevant to the type of data researchers explore, their mission and audiences’ vision guide them to find the patterns to shape the story they want to tell. One of the essential things expected from researchers while analyzing data is to stay open and remain unbiased toward unexpected patterns, expressions, and results. Remember, sometimes, data analysis tells the most unforeseen yet exciting stories that were not expected when initiating data analysis. Therefore, rely on the data you have at hand and enjoy the journey of exploratory research.

Create a Free Account

Every kind of data has a rare quality of describing things after assigning a specific value to it. For analysis, you need to organize these values, processed and presented in a given context, to make it useful. Data can be in different forms; here are the primary data types.

- Qualitative data: When the data presented has words and descriptions, then we call it qualitative data . Although you can observe this data, it is subjective and harder to analyze data in research, especially for comparison. Example: Quality data represents everything describing taste, experience, texture, or an opinion that is considered quality data. This type of data is usually collected through focus groups, personal qualitative interviews , qualitative observation or using open-ended questions in surveys.

- Quantitative data: Any data expressed in numbers of numerical figures are called quantitative data . This type of data can be distinguished into categories, grouped, measured, calculated, or ranked. Example: questions such as age, rank, cost, length, weight, scores, etc. everything comes under this type of data. You can present such data in graphical format, charts, or apply statistical analysis methods to this data. The (Outcomes Measurement Systems) OMS questionnaires in surveys are a significant source of collecting numeric data.

- Categorical data: It is data presented in groups. However, an item included in the categorical data cannot belong to more than one group. Example: A person responding to a survey by telling his living style, marital status, smoking habit, or drinking habit comes under the categorical data. A chi-square test is a standard method used to analyze this data.

Learn More : Examples of Qualitative Data in Education

Data analysis in qualitative research

Data analysis and qualitative data research work a little differently from the numerical data as the quality data is made up of words, descriptions, images, objects, and sometimes symbols. Getting insight from such complicated information is a complicated process. Hence it is typically used for exploratory research and data analysis .

Although there are several ways to find patterns in the textual information, a word-based method is the most relied and widely used global technique for research and data analysis. Notably, the data analysis process in qualitative research is manual. Here the researchers usually read the available data and find repetitive or commonly used words.

For example, while studying data collected from African countries to understand the most pressing issues people face, researchers might find “food” and “hunger” are the most commonly used words and will highlight them for further analysis.

LEARN ABOUT: Level of Analysis

The keyword context is another widely used word-based technique. In this method, the researcher tries to understand the concept by analyzing the context in which the participants use a particular keyword.

For example , researchers conducting research and data analysis for studying the concept of ‘diabetes’ amongst respondents might analyze the context of when and how the respondent has used or referred to the word ‘diabetes.’

The scrutiny-based technique is also one of the highly recommended text analysis methods used to identify a quality data pattern. Compare and contrast is the widely used method under this technique to differentiate how a specific text is similar or different from each other.

For example: To find out the “importance of resident doctor in a company,” the collected data is divided into people who think it is necessary to hire a resident doctor and those who think it is unnecessary. Compare and contrast is the best method that can be used to analyze the polls having single-answer questions types .

Metaphors can be used to reduce the data pile and find patterns in it so that it becomes easier to connect data with theory.

Variable Partitioning is another technique used to split variables so that researchers can find more coherent descriptions and explanations from the enormous data.

LEARN ABOUT: Qualitative Research Questions and Questionnaires

There are several techniques to analyze the data in qualitative research, but here are some commonly used methods,

- Content Analysis: It is widely accepted and the most frequently employed technique for data analysis in research methodology. It can be used to analyze the documented information from text, images, and sometimes from the physical items. It depends on the research questions to predict when and where to use this method.

- Narrative Analysis: This method is used to analyze content gathered from various sources such as personal interviews, field observation, and surveys . The majority of times, stories, or opinions shared by people are focused on finding answers to the research questions.

- Discourse Analysis: Similar to narrative analysis, discourse analysis is used to analyze the interactions with people. Nevertheless, this particular method considers the social context under which or within which the communication between the researcher and respondent takes place. In addition to that, discourse analysis also focuses on the lifestyle and day-to-day environment while deriving any conclusion.

- Grounded Theory: When you want to explain why a particular phenomenon happened, then using grounded theory for analyzing quality data is the best resort. Grounded theory is applied to study data about the host of similar cases occurring in different settings. When researchers are using this method, they might alter explanations or produce new ones until they arrive at some conclusion.

LEARN ABOUT: 12 Best Tools for Researchers

Data analysis in quantitative research

The first stage in research and data analysis is to make it for the analysis so that the nominal data can be converted into something meaningful. Data preparation consists of the below phases.

Phase I: Data Validation

Data validation is done to understand if the collected data sample is per the pre-set standards, or it is a biased data sample again divided into four different stages

- Fraud: To ensure an actual human being records each response to the survey or the questionnaire

- Screening: To make sure each participant or respondent is selected or chosen in compliance with the research criteria

- Procedure: To ensure ethical standards were maintained while collecting the data sample

- Completeness: To ensure that the respondent has answered all the questions in an online survey. Else, the interviewer had asked all the questions devised in the questionnaire.

Phase II: Data Editing

More often, an extensive research data sample comes loaded with errors. Respondents sometimes fill in some fields incorrectly or sometimes skip them accidentally. Data editing is a process wherein the researchers have to confirm that the provided data is free of such errors. They need to conduct necessary checks and outlier checks to edit the raw edit and make it ready for analysis.

Phase III: Data Coding

Out of all three, this is the most critical phase of data preparation associated with grouping and assigning values to the survey responses . If a survey is completed with a 1000 sample size, the researcher will create an age bracket to distinguish the respondents based on their age. Thus, it becomes easier to analyze small data buckets rather than deal with the massive data pile.

LEARN ABOUT: Steps in Qualitative Research

After the data is prepared for analysis, researchers are open to using different research and data analysis methods to derive meaningful insights. For sure, statistical analysis plans are the most favored to analyze numerical data. In statistical analysis, distinguishing between categorical data and numerical data is essential, as categorical data involves distinct categories or labels, while numerical data consists of measurable quantities. The method is again classified into two groups. First, ‘Descriptive Statistics’ used to describe data. Second, ‘Inferential statistics’ that helps in comparing the data .

Descriptive statistics

This method is used to describe the basic features of versatile types of data in research. It presents the data in such a meaningful way that pattern in the data starts making sense. Nevertheless, the descriptive analysis does not go beyond making conclusions. The conclusions are again based on the hypothesis researchers have formulated so far. Here are a few major types of descriptive analysis methods.

Measures of Frequency

- Count, Percent, Frequency

- It is used to denote home often a particular event occurs.

- Researchers use it when they want to showcase how often a response is given.

Measures of Central Tendency

- Mean, Median, Mode

- The method is widely used to demonstrate distribution by various points.

- Researchers use this method when they want to showcase the most commonly or averagely indicated response.

Measures of Dispersion or Variation

- Range, Variance, Standard deviation

- Here the field equals high/low points.

- Variance standard deviation = difference between the observed score and mean

- It is used to identify the spread of scores by stating intervals.

- Researchers use this method to showcase data spread out. It helps them identify the depth until which the data is spread out that it directly affects the mean.

Measures of Position

- Percentile ranks, Quartile ranks

- It relies on standardized scores helping researchers to identify the relationship between different scores.

- It is often used when researchers want to compare scores with the average count.

For quantitative research use of descriptive analysis often give absolute numbers, but the in-depth analysis is never sufficient to demonstrate the rationale behind those numbers. Nevertheless, it is necessary to think of the best method for research and data analysis suiting your survey questionnaire and what story researchers want to tell. For example, the mean is the best way to demonstrate the students’ average scores in schools. It is better to rely on the descriptive statistics when the researchers intend to keep the research or outcome limited to the provided sample without generalizing it. For example, when you want to compare average voting done in two different cities, differential statistics are enough.

Descriptive analysis is also called a ‘univariate analysis’ since it is commonly used to analyze a single variable.

Inferential statistics

Inferential statistics are used to make predictions about a larger population after research and data analysis of the representing population’s collected sample. For example, you can ask some odd 100 audiences at a movie theater if they like the movie they are watching. Researchers then use inferential statistics on the collected sample to reason that about 80-90% of people like the movie.

Here are two significant areas of inferential statistics.

- Estimating parameters: It takes statistics from the sample research data and demonstrates something about the population parameter.

- Hypothesis test: I t’s about sampling research data to answer the survey research questions. For example, researchers might be interested to understand if the new shade of lipstick recently launched is good or not, or if the multivitamin capsules help children to perform better at games.

These are sophisticated analysis methods used to showcase the relationship between different variables instead of describing a single variable. It is often used when researchers want something beyond absolute numbers to understand the relationship between variables.

Here are some of the commonly used methods for data analysis in research.

- Correlation: When researchers are not conducting experimental research or quasi-experimental research wherein the researchers are interested to understand the relationship between two or more variables, they opt for correlational research methods.

- Cross-tabulation: Also called contingency tables, cross-tabulation is used to analyze the relationship between multiple variables. Suppose provided data has age and gender categories presented in rows and columns. A two-dimensional cross-tabulation helps for seamless data analysis and research by showing the number of males and females in each age category.

- Regression analysis: For understanding the strong relationship between two variables, researchers do not look beyond the primary and commonly used regression analysis method, which is also a type of predictive analysis used. In this method, you have an essential factor called the dependent variable. You also have multiple independent variables in regression analysis. You undertake efforts to find out the impact of independent variables on the dependent variable. The values of both independent and dependent variables are assumed as being ascertained in an error-free random manner.

- Frequency tables: The statistical procedure is used for testing the degree to which two or more vary or differ in an experiment. A considerable degree of variation means research findings were significant. In many contexts, ANOVA testing and variance analysis are similar.

- Analysis of variance: The statistical procedure is used for testing the degree to which two or more vary or differ in an experiment. A considerable degree of variation means research findings were significant. In many contexts, ANOVA testing and variance analysis are similar.

- Researchers must have the necessary research skills to analyze and manipulation the data , Getting trained to demonstrate a high standard of research practice. Ideally, researchers must possess more than a basic understanding of the rationale of selecting one statistical method over the other to obtain better data insights.

- Usually, research and data analytics projects differ by scientific discipline; therefore, getting statistical advice at the beginning of analysis helps design a survey questionnaire, select data collection methods , and choose samples.

LEARN ABOUT: Best Data Collection Tools

- The primary aim of data research and analysis is to derive ultimate insights that are unbiased. Any mistake in or keeping a biased mind to collect data, selecting an analysis method, or choosing audience sample il to draw a biased inference.

- Irrelevant to the sophistication used in research data and analysis is enough to rectify the poorly defined objective outcome measurements. It does not matter if the design is at fault or intentions are not clear, but lack of clarity might mislead readers, so avoid the practice.

- The motive behind data analysis in research is to present accurate and reliable data. As far as possible, avoid statistical errors, and find a way to deal with everyday challenges like outliers, missing data, data altering, data mining , or developing graphical representation.

LEARN MORE: Descriptive Research vs Correlational Research The sheer amount of data generated daily is frightening. Especially when data analysis has taken center stage. in 2018. In last year, the total data supply amounted to 2.8 trillion gigabytes. Hence, it is clear that the enterprises willing to survive in the hypercompetitive world must possess an excellent capability to analyze complex research data, derive actionable insights, and adapt to the new market needs.

LEARN ABOUT: Average Order Value

QuestionPro is an online survey platform that empowers organizations in data analysis and research and provides them a medium to collect data by creating appealing surveys.

MORE LIKE THIS

Raked Weighting: A Key Tool for Accurate Survey Results

May 31, 2024

Top 8 Data Trends to Understand the Future of Data

May 30, 2024

Top 12 Interactive Presentation Software to Engage Your User

May 29, 2024

Trend Report: Guide for Market Dynamics & Strategic Analysis

Other categories.

- Academic Research

- Artificial Intelligence

- Assessments

- Brand Awareness

- Case Studies

- Communities

- Consumer Insights

- Customer effort score

- Customer Engagement

- Customer Experience

- Customer Loyalty

- Customer Research

- Customer Satisfaction

- Employee Benefits

- Employee Engagement

- Employee Retention

- Friday Five

- General Data Protection Regulation

- Insights Hub

- Life@QuestionPro

- Market Research

- Mobile diaries

- Mobile Surveys

- New Features

- Online Communities

- Question Types

- Questionnaire

- QuestionPro Products

- Release Notes

- Research Tools and Apps

- Revenue at Risk

- Survey Templates

- Training Tips

- Uncategorized

- Video Learning Series

- What’s Coming Up

- Workforce Intelligence

Data Analysis Plan: Ultimate Guide and Examples

Learn the post survey questions you need to ask attendees for valuable feedback.

Once you get survey feedback , you might think that the job is done. The next step, however, is to analyze those results. Creating a data analysis plan will help guide you through how to analyze the data and come to logical conclusions.

So, how do you create a data analysis plan? It starts with the goals you set for your survey in the first place. This guide will help you create a data analysis plan that will effectively utilize the data your respondents provided.

What can a data analysis plan do?

Think of data analysis plans as a guide to your organization and analysis, which will help you accomplish your ultimate survey goals. A good plan will make sure that you get answers to your top questions, such as “how do customers feel about this new product?” through specific survey questions. It will also separate respondents to see how opinions among various demographics may differ.

Creating a data analysis plan

Follow these steps to create your own data analysis plan.

Review your goals

When you plan a survey, you typically have specific goals in mind. That might be measuring customer sentiment, answering an academic question, or achieving another purpose.

If you’re beta testing a new product, your survey goal might be “find out how potential customers feel about the new product.” You probably came up with several topics you wanted to address, such as:

- What is the typical experience with the product?

- Which demographics are responding most positively? How well does this match with our idea of the target market?

- Are there any specific pain points that need to be corrected before the product launches?

- Are there any features that should be added before the product launches?

Use these objectives to organize your survey data.

Evaluate the results for your top questions

Your survey questions probably included at least one or two questions that directly relate to your primary goals. For example, in the beta testing example above, your top two questions might be:

- How would you rate your overall satisfaction with the product?

- Would you consider purchasing this product?

Those questions offer a general overview of how your customers feel. Whether their sentiments are generally positive, negative, or neutral, this is the main data your company needs. The next goal is to determine why the beta testers feel the way they do.

Assign questions to specific goals

Next, you’ll organize your survey questions and responses by which research question they answer. For example, you might assign questions to the “overall satisfaction” section, like:

- How would you describe your experience with the product?

- Did you encounter any problems while using the product?

- What were your favorite/least favorite features?

- How useful was the product in achieving your goals?

Under demographics, you’d include responses to questions like:

- Education level

This helps you determine which questions and answers will answer larger questions, such as “which demographics are most likely to have had a positive experience?”

Pay special attention to demographics

Demographics are particularly important to a data analysis plan. Of course you’ll want to know what kind of experience your product testers are having with the product—but you also want to know who your target market should be. Separating responses based on demographics can be especially illuminating.

For example, you might find that users aged 25 to 45 find the product easier to use, but people over 65 find it too difficult. If you want to target the over-65 demographic, you can use that group’s survey data to refine the product before it launches.

Other demographic segregation can be helpful, too. You might find that your product is popular with people from the tech industry, who have an easier time with a user interface, while those from other industries, like education, struggle to use the tool effectively. If you’re targeting the tech industry, you may not need to make adjustments—but if it’s a technological tool designed primarily for educators, you’ll want to make appropriate changes.

Similarly, factors like location, education level, income bracket, and other demographics can help you compare experiences between the groups. Depending on your ultimate survey goals, you may want to compare multiple demographic types to get accurate insight into your results.

Consider correlation vs. causation

When creating your data analysis plan, remember to consider the difference between correlation and causation. For instance, being over 65 might correlate with a difficult user experience, but the cause of the experience might be something else entirely. You may find that your respondents over 65 are primarily from a specific educational background, or have issues reading the text in your user interface. It’s important to consider all the different data points, and how they might have an effect on the overall results.

Moving on to analysis

Once you’ve assigned survey questions to the overall research questions they’re designed to answer, you can move on to the actual data analysis. Depending on your survey tool, you may already have software that can perform quantitative and/or qualitative analysis. Choose the analysis types that suit your questions and goals, then use your analytic software to evaluate the data and create graphs or reports with your survey results.

At the end of the process, you should be able to answer your major research questions.

Power your data analysis with Voiceform

Once you have established your survey goals, Voiceform can power your data collection and analysis. Our feature-rich survey platform offers an easy-to-use interface, multi-channel survey tools, multimedia question types, and powerful analytics. We can help you create and work through a data analysis plan. Find out more about the product, and book a free demo today !

We make collecting, sharing and analyzing data a breeze

Get started for free. Get instant access to Voiceform features that get you amazing data in minutes.

Writing the Data Analysis Plan

- First Online: 01 January 2010

Cite this chapter

- A. T. Panter 4

5826 Accesses

3 Altmetric

You and your project statistician have one major goal for your data analysis plan: You need to convince all the reviewers reading your proposal that you would know what to do with your data once your project is funded and your data are in hand. The data analytic plan is a signal to the reviewers about your ability to score, describe, and thoughtfully synthesize a large number of variables into appropriately-selected quantitative models once the data are collected. Reviewers respond very well to plans with a clear elucidation of the data analysis steps – in an appropriate order, with an appropriate level of detail and reference to relevant literatures, and with statistical models and methods for that map well into your proposed aims. A successful data analysis plan produces reviews that either include no comments about the data analysis plan or better yet, compliments it for being comprehensive and logical given your aims. This chapter offers practical advice about developing and writing a compelling, “bullet-proof” data analytic plan for your grant application.

This is a preview of subscription content, log in via an institution to check access.

Access this chapter

- Available as PDF

- Read on any device

- Instant download

- Own it forever

- Available as EPUB and PDF

- Compact, lightweight edition

- Dispatched in 3 to 5 business days

- Free shipping worldwide - see info

Tax calculation will be finalised at checkout

Purchases are for personal use only

Institutional subscriptions

Similar content being viewed by others

A Holistic Approach to Empirical Analysis: The Insignificance of P, Hypothesis Testing and Statistical Significance*

Meta-Analysis

Researchers’ data analysis choices: an excess of false positives?

Aiken, L. S. & West, S. G. (1991). Multiple regression: testing and interpreting interactions . Newbury Park, CA: Sage.

Google Scholar

Aiken, L. S., West, S. G., & Millsap, R. E. (2008). Doctoral training in statistics, measurement, and methodology in psychology: Replication and extension of Aiken, West, Sechrest and Reno’s (1990) survey of PhD programs in North America. American Psychologist , 63 , 32–50.

Article PubMed Google Scholar

Allison, P. D. (2003). Missing data techniques for structural equation modeling. Journal of Abnormal Psychology , 112 , 545–557.

American Psychological Association (APA) Task Force to Increase the Quantitative Pipeline (2009). Report of the task force to increase the quantitative pipeline . Washington, DC: American Psychological Association.

Bauer, D. & Curran, P. J. (2004). The integration of continuous and discrete latent variables: Potential problems and promising opportunities. Psychological Methods , 9 , 3–29.

Bollen, K. A. (1989). Structural equations with latent variables . New York: Wiley.

Bollen, K. A. & Curran, P. J. (2007). Latent curve models: A structural equation modeling approach . New York: Wiley.

Cohen, J., Cohen, P., West, S. G., & Aiken, L. S. (2003). Multiple correlation/regression for the behavioral sciences (3rd ed.). Mahwah, NJ: Erlbaum.

Curran, P. J., Bauer, D. J., & Willoughby, M. T. (2004). Testing main effects and interactions in hierarchical linear growth models. Psychological Methods , 9 , 220–237.

Embretson, S. E. & Reise, S. P. (2000). Item response theory for psychologists . Mahwah, NJ: Erlbaum.

Enders, C. K. (2006). Analyzing structural equation models with missing data. In G. R. Hancock & R. O. Mueller (Eds.), Structural equation modeling: A second course (pp. 313–342). Greenwich, CT: Information Age.

Hosmer, D. & Lemeshow, S. (1989). Applied logistic regression . New York: Wiley.

Hoyle, R. H. & Panter, A. T. (1995). Writing about structural equation models. In R. H. Hoyle (Ed.), Structural equation modeling: Concepts, issues, and applications (pp. 158–176). Thousand Oaks: Sage.

Kaplan, D. & Elliott, P. R. (1997). A didactic example of multilevel structural equation modeling applicable to the study of organizations. Structural Equation Modeling , 4 , 1–23.

Article Google Scholar

Lanza, S. T., Collins, L. M., Schafer, J. L., & Flaherty, B. P. (2005). Using data augmentation to obtain standard errors and conduct hypothesis tests in latent class and latent transition analysis. Psychological Methods , 10 , 84–100.

MacKinnon, D. P. (2008). Introduction to statistical mediation analysis . Mahwah, NJ: Erlbaum.

Maxwell, S. E. (2004). The persistence of underpowered studies in psychological research: Causes, consequences, and remedies. Psychological Methods , 9 , 147–163.

McCullagh, P. & Nelder, J. (1989). Generalized linear models . London: Chapman and Hall.

McDonald, R. P. & Ho, M. R. (2002). Principles and practices in reporting structural equation modeling analyses. Psychological Methods , 7 , 64–82.

Messick, S. (1989). Validity. In R. L. Linn (Ed.), Educational measurement (3rd ed., pp. 13–103). New York: Macmillan.

Muthén, B. O. (1994). Multilevel covariance structure analysis. Sociological Methods & Research , 22 , 376–398.

Muthén, B. (2008). Latent variable hybrids: overview of old and new models. In G. R. Hancock & K. M. Samuelsen (Eds.), Advances in latent variable mixture models (pp. 1–24). Charlotte, NC: Information Age.

Muthén, B. & Masyn, K. (2004). Discrete-time survival mixture analysis. Journal of Educational and Behavioral Statistics , 30 , 27–58.

Muthén, L. K. & Muthén, B. O. (2004). Mplus, statistical analysis with latent variables: User’s guide . Los Angeles, CA: Muthén &Muthén.

Peugh, J. L. & Enders, C. K. (2004). Missing data in educational research: a review of reporting practices and suggestions for improvement. Review of Educational Research , 74 , 525–556.

Preacher, K. J., Curran, P. J., & Bauer, D. J. (2006). Computational tools for probing interaction effects in multiple linear regression, multilevel modeling, and latent curve analysis. Journal of Educational and Behavioral Statistics , 31 , 437–448.

Preacher, K. J., Curran, P. J., & Bauer, D. J. (2003, September). Probing interactions in multiple linear regression, latent curve analysis, and hierarchical linear modeling: Interactive calculation tools for establishing simple intercepts, simple slopes, and regions of significance [Computer software]. Available from http://www.quantpsy.org .

Preacher, K. J., Rucker, D. D., & Hayes, A. F. (2007). Addressing moderated mediation hypotheses: Theory, methods, and prescriptions. Multivariate Behavioral Research , 42 , 185–227.

Raudenbush, S. W. & Bryk, A. S. (2002). Hierarchical linear models: Applications and data analysis methods (2nd ed.). Thousand Oaks, CA: Sage.

Radloff, L. (1977). The CES-D scale: A self-report depression scale for research in the general population. Applied Psychological Measurement , 1 , 385–401.

Rosenberg, M. (1965). Society and the adolescent self-image . Princeton, NJ: Princeton University Press.

Schafer. J. L. & Graham, J. W. (2002). Missing data: Our view of the state of the art. Psychological Methods , 7 , 147–177.

Schumacker, R. E. (2002). Latent variable interaction modeling. Structural Equation Modeling , 9 , 40–54.

Schumacker, R. E. & Lomax, R. G. (2004). A beginner’s guide to structural equation modeling . Mahwah, NJ: Erlbaum.

Selig, J. P. & Preacher, K. J. (2008, June). Monte Carlo method for assessing mediation: An interactive tool for creating confidence intervals for indirect effects [Computer software]. Available from http://www.quantpsy.org .

Singer, J. D. & Willett, J. B. (1991). Modeling the days of our lives: Using survival analysis when designing and analyzing longitudinal studies of duration and the timing of events. Psychological Bulletin , 110 , 268–290.

Singer, J. D. & Willett, J. B. (1993). It’s about time: Using discrete-time survival analysis to study duration and the timing of events. Journal of Educational Statistics , 18 , 155–195.

Singer, J. D. & Willett, J. B. (2003). Applied longitudinal data analysis: Modeling change and event occurrence . New York: Oxford University.

Book Google Scholar

Vandenberg, R. J. & Lance, C. E. (2000). A review and synthesis of the measurement invariance literature: Suggestions, practices, and recommendations for organizational research. Organizational Research Methods , 3 , 4–69.

Wirth, R. J. & Edwards, M. C. (2007). Item factor analysis: Current approaches and future directions. Psychological Methods , 12 , 58–79.

Article PubMed CAS Google Scholar

Download references

Author information

Authors and affiliations.

L. L. Thurstone Psychometric Laboratory, Department of Psychology, University of North Carolina, Chapel Hill, NC, USA

A. T. Panter

You can also search for this author in PubMed Google Scholar

Corresponding author

Correspondence to A. T. Panter .

Editor information

Editors and affiliations.

National Institute of Mental Health, Executive Blvd. 6001, Bethesda, 20892-9641, Maryland, USA

Willo Pequegnat

Ellen Stover

Delafield Place, N.W. 1413, Washington, 20011, District of Columbia, USA

Cheryl Anne Boyce

Rights and permissions

Reprints and permissions

Copyright information

© 2010 Springer Science+Business Media, LLC

About this chapter

Panter, A.T. (2010). Writing the Data Analysis Plan. In: Pequegnat, W., Stover, E., Boyce, C. (eds) How to Write a Successful Research Grant Application. Springer, Boston, MA. https://doi.org/10.1007/978-1-4419-1454-5_22

Download citation

DOI : https://doi.org/10.1007/978-1-4419-1454-5_22

Published : 20 August 2010

Publisher Name : Springer, Boston, MA

Print ISBN : 978-1-4419-1453-8

Online ISBN : 978-1-4419-1454-5

eBook Packages : Medicine Medicine (R0)

Share this chapter

Anyone you share the following link with will be able to read this content:

Sorry, a shareable link is not currently available for this article.

Provided by the Springer Nature SharedIt content-sharing initiative

- Publish with us

Policies and ethics

- Find a journal

- Track your research

Want to create or adapt books like this? Learn more about how Pressbooks supports open publishing practices.

2.3 Data management and analysis

Learning objectives.

Learners will be able to…

- Define and construct a data analysis plan

- Define key quantitative data management terms—variable name, data dictionary, and observations/cases

- Differentiate between univariate and bivariate quantitative analysis

- Explain when we might use quantitative bivariate analysis in social work research

- Identify how your qualitative research question, research aim, and type of data may influence your choice of analytic methods

- Outline the steps you will take in preparation for conducting qualitative data analysis

After you have your raw data, whether this is secondary data or data you collected yourself, you will need to analyze it. While the specific steps to follow in quantitative or qualitative data analysis are beyond the scope of this chapter, we are going to address some basic concepts in this section to help you create a data analysis plan. A data analysis plan is an ordered outline that includes your research question, a description of the data you are going to use to answer it, and the exact step-by-step analyses that you plan to run to answer your research question. If you look back at Table 2.1, you will see that creating a data analysis plan is a part of the study design process. The data analysis plan flows from the research question, is integral to the study design, and should be well conceptualized prior to beginning data collection. In this section, we will walk through the basics of quantitative and qualitative data analysis to help you understand the fundamentals of creating a data analysis plan.

Quantitative Data: Management

When considering what data you might want to collect as part of your project, there are two important considerations that can create dilemmas for researchers. You might only get one chance to interact with your participants, so you must think comprehensively in your planning phase about what information you need and collect as much relevant data as possible. At the same time, though, especially when collecting sensitive information, you need to consider how onerous the data collection is for participants and whether you really need them to share that information. Just because something is interesting to us doesn’t mean it’s related enough to our research question to chase it down. Work with your research team and/or faculty early in your project to talk through these issues before you get to this point. And if you’re using secondary data, make sure you have access to all the information you need in that data before you use it.

Once you’ve collected your quantitative data, you need to make sure it is well-organized in a database in a way that’s actually usable. “Database” can be kind of a scary word, but really, it can be as simple as an Excel spreadsheet or a data file in whatever program you’re using to analyze your data. You may want to avoid Excel and use a formal database such as Microsoft Access or MySQL if you’ve got a large or complicated data set. But if your data set is smaller and you plan to keep your analyses simple, you can definitely get away with Excel. A typical data set is organized with variables as columns and observations/cases as rows. For example, let’s say we did a survey on ice cream preferences and collected the following information in Table 2.3:

There are a few key data management terms to understand:

- Variable name : Just what it sounds like—the name of your variable. Make sure this is something useful, short and, if you’re using something other than Excel, all one word. Most statistical programs will automatically rename variables for you if they aren’t one word, but the names can be a little ridiculous and long.

- Observations/cases : The rows in your data set. In social work, these are often your study participants (people), but can be anything from census tracts to black bears to trains. When we talk about sample size, we’re talking about the number of observations/cases. In our mini data set, each person is an observation/case.

- Data dictionary (also called a code book or metadata) : This is the document where you list your variable names, what the variables actually measure or represent, what each of the values of the variable mean if the meaning isn’t obvious (i.e., if there are numbers assigned to gender), the level of measurement and anything special to know about the variables (for instance, the source if you mashed two data sets together). If you’re using secondary data, the researchers sharing the data should make the data dictionary available.