Conducting Risk-Benefit Assessments and Determining Level of IRB Review

Regulatory background.

Investigators should understand the concept of minimizing risk when designing research and conduct a risk-benefit assessment to determine the level of IRB review of the research. In the protocol application the Investigator should:

- Assess potential risks and discomforts associated with each intervention or research procedure;

- Estimate the probability that a given harm may occur and its severity;

- Explain measures that will be taken to prevent and minimize potential risks and discomforts;

- Describe the benefits that may accrue directly to subjects; and

- Discuss the potential societal benefits that may be expected from the research.

Risks to subjects who participate in research should be justified by the anticipated benefits to the subject or society. This requirement is found in all codes of research ethics, and is a central requirement in the Federal regulations (45 CFR 46.111 and 21 CFR 56.111). Two of the required criteria for granting IRB approval of the research are:

- Risks to subjects are minimized by using procedures which are consistent with sound research design and which do not unnecessarily expose subjects to risk, and whenever appropriate, by using procedures already being performed on the subjects for diagnostic or treatment purposes.

- Risks to subjects are reasonable in relation to anticipated benefits, if any, to subjects, and the importance of the knowledge that may reasonably be expected to result. In evaluating risks and benefits, the IRB Committee will consider only those risks and benefits that may result from the research, as distinguished from risks and benefits of therapies subjects would receive even if not participating in the research.

Definitions

Benefit: A helpful or good effect, something intended to help, promote or enhance well-being; an advantage.

Risk: The probability of harm or injury (physical, psychological, social, or economic) occurring as a result of participation in a research study. Both the probability and magnitude of possible harm may vary from minimal to significant.

Minimal Risk: A risk is minimal when “the probability and magnitude of harm or discomfort anticipated in the proposed research are not greater in and of themselves than those ordinarily encountered in daily life of the general population or during the performance of routine physical or psychological examinations or tests.” Examples of procedures that typically are considered no more than minimal risk include collection of blood or saliva, moderate exercise, medical record chart reviews, quality of life questionnaires, and focus groups. See Expedited review categories for a complete listing.

Minimal Risk for Research involving Prisoners: The definition of minimal risk for research involving prisoners differs somewhat from that given for non-institutionalized adults. For research involving prisoners, minimal risk is "the probability and magnitude of physical or psychological harm that is normally encountered in the daily lives, or in the routine medical, dental or psychological examinations of healthy persons."

Privacy: Privacy is about people and their sense of being in control of others' access to them or to information about themselves.

Confidentiality: Confidentiality is about how identifiable, private information that has been disclosed to others is used and stored. People share private information in the context of research with the expectation that it be kept confidential and will not be divulged except in ways that have been agreed upon.

Types of Risks to Research Subjects

Physical Harms: Medical research often involves exposure to pain, discomfort, or injury from invasive medical procedures, or harm from possible side effects of drugs, devices or new procedures. All of these should be considered "risks" for purposes of IRB review.

- Some medical research is designed only to measure the effects of therapeutic or diagnostic procedures applied in the course of caring for an illness. Such research may not entail any significant risks beyond those presented by medically indicated interventions.

- Research designed to evaluate new drugs, devices or procedures typically presents more than minimal risk and may involve unforeseeable risks that could cause serious or disabling injuries.

Psychological Harms: Participation in research may result in undesired changes in thought processes and emotion (e.g., episodes of depression, confusion, feelings of stress, guilt, and loss of self-esteem). Most psychological risks are minimal or transitory, but some research has the potential for causing serious psychological harm.

- Stress and feelings of guilt or embarrassment may arise from thinking or talking about one's own behavior or attitudes on sensitive topics such as drug use, sexual preferences, selfishness, and violence.

- Stress may be induced when the researchers manipulate the subjects' environment to observe their behaviors and reactions. The possibility of psychological harm is heightened when behavioral research involves an element of deception.

Social and Economic Harms: Some losses of privacy and breaches of confidentiality may result in embarrassment within one's business or social group, loss of employment, or criminal prosecution.

- Areas of particular sensitivity involve information regarding alcohol or drug abuse, mental illness, illegal activities, and sexual behavior.

- Some social and behavioral research may yield information about individuals that could be considered stigmatizing to individual subjects or groups of subjects (e.g., as actual or potential carriers of a gene, or as individuals prone to alcoholism). Confidentiality safeguards must be strong in these instances.

- Participation in research may result in additional actual costs to individuals. Any anticipated costs to research participants should be described to prospective subjects during the consent process.

Privacy Risks: Loss of privacy in the research context usually involves either covert observation or participant observation of behavior that the subjects consider private. It can also involve access and use of private information about the subjects. The IRB must make two determinations:

- Is the loss of privacy involved acceptable in light of the subjects' reasonable expectations of privacy in the situation under study; and

- Is the research question of sufficient importance to justify the intrusion?

Breach of Confidentiality Risks: Absolute confidentiality cannot be guaranteed, so a breach of confidentiality is always a potential risk of participation in research. A breach of confidentiality is sometimes confused with loss of privacy, but it is a different risk. Loss of privacy concerns access to private information about a person or to a person's body or behavior without consent; confidentiality of data concerns safeguarding information that has been given voluntarily by one person to another. It is important to recognize that a breach of confidentiality may result in psychological harm to individuals (embarrassment, guilt, stress, etc.) or in social harm.

Conducting Risk-Benefit Assessments

Role of the Investigator: When designing research studies, investigators are responsible for conducting an initial risk-benefit assessment using the steps outlined in the diagram below.

Role of the IRB: The IRB ultimately is responsible for evaluating the potential risks and weighing the probability of the risk occurring and the magnitude of harm that may result. It must then judge whether the anticipated benefit, either of new knowledge or of improved health for the research subjects, justifies asking any person to undertake the risks. The IRB cannot approve research in which the risks are judged unreasonable in relation to the anticipated benefits. The IRB must:

- Identify the risks associated with the research, as distinguished from the risks of therapies the subjects would receive even if not participating in research;

- Determine that the risks will be minimized to the extent possible;

- Identify the probable benefits to be derived from the research;

- Determine that the risks are reasonable in relation to the benefits to subjects, if any, and the importance of the knowledge to be gained; and

- Assure that potential subjects will be provided with an accurate and fair description (during consent) of the risks or discomforts and the anticipated benefits.

Diagram 1: Steps for Conducting a Risk-Benefit Assessment

Ways to Minimize Risk

- Provide complete information in the protocol regarding the experimental design and the scientific rationale underlying the proposed research, including the results of previous animal and human studies.

- Assemble a research team with sufficient expertise and experience to conduct the research.

- Ensure that the projected sample size is sufficient to yield useful results.

- Collect data from conventional (standard) procedures to avoid unnecessary risk, particularly for invasive or risky procedures (e.g., spinal taps, cardiac catheterization).

- Incorporate adequate safeguards into the research design, such as an appropriate data safety monitoring plan and the presence of trained personnel who can respond to emergencies.

- Store data in such a way that it is impossible to connect research data directly to the individuals from whom, or about whom, the data were collected; limit access to key codes and store them separately from the data.

- Incorporate procedures to protect the confidentiality of the data (e.g., encryption, codes, and passwords) and follow UCLA IRB guidelines on Data Security in Research.
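The data-storage points in the list above (coding the data and keeping the key codes separate) can be sketched as follows. This is a minimal illustration, not a procedure prescribed by the IRB: the helper name `pseudonymize`, the field names, and the code format are all assumptions made for the example.

```python
import secrets


def pseudonymize(records, id_field="name"):
    """Split records into coded research data and a separate key table.

    Hypothetical helper: `id_field` names the direct identifier to strip.
    """
    coded_data = []  # research data, carries only a random study code
    key_table = {}   # code -> identifier; store separately, restrict access
    for record in records:
        code = secrets.token_hex(8)   # random code, not derived from identity
        while code in key_table:      # guard against an (unlikely) collision
            code = secrets.token_hex(8)
        key_table[code] = record[id_field]
        coded = {k: v for k, v in record.items() if k != id_field}
        coded["study_code"] = code
        coded_data.append(coded)
    return coded_data, key_table


# Made-up example records.
records = [
    {"name": "Participant A", "score": 12},
    {"name": "Participant B", "score": 17},
]
coded_data, key_table = pseudonymize(records)
```

In such a scheme the analysis team would work only with `coded_data`, while `key_table` is kept in a separate, access-controlled location.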

Levels of IRB Review

Exempt research.

Although the category is called "exempt," this type of research does require IRB review and registration. The exempt registration process is much less rigorous than an expedited or full-committee review. To qualify, research must fall into one of the eight (8) federally-defined exempt categories. These categories present the lowest amount of risk to potential subjects because, generally speaking, they involve either collection of anonymous or publicly-available data, or conduct of the least potentially-harmful research experiments. For additional information see OHRPP Exempt Guidance.

- Anonymous surveys or interviews

- Passive observation of public behavior without collection of identifiers

- Retrospective chart reviews with no recording of identifiers

- Analyses of discarded pathological specimens without identifiers

Expedited Research

To qualify for an expedited review, research must be no more than minimal risk and fall into one of the nine (9) federally-defined expedited categories. These categories involve collection of samples and data in a manner that is not anonymous and that involves no more than minimal risk to subjects. For additional information see OHRPP Expedited Guidance.

- Surveys and interviews with collection of identifiers

- Collection of biological specimens (e.g., hair, saliva) for research by noninvasive means

- Collection of blood samples from healthy volunteers

- Studies of existing pathological specimens with identifiers

Full Board Research

Proposed human subject research that does not fall into either the exempt or expedited review categories must be submitted for full committee review. This is the most rigorous level of review and, accordingly, is used for research projects that present greater than minimal risk to subjects. The majority of biomedical protocols submitted to the IRB require full Committee review. For additional information see OHRPP Full Board Guidance.

- Clinical investigations of drugs and devices

- Studies involving invasive medical procedures or diagnostics

- Longitudinal interviews about illegal behavior or drug abuse

- Treatment interventions for suicidal ideation and behavior
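The three review levels described above can be summarized as a simple decision rule. The sketch below is a simplification for orientation only: the function name and boolean inputs are assumptions, and whether a study fits a federally defined category is a determination made through the IRB process, not by code.

```python
def review_level(minimal_risk: bool,
                 fits_exempt_category: bool,
                 fits_expedited_category: bool) -> str:
    """Return the review level implied by the criteria in this section."""
    if fits_exempt_category:
        return "exempt"
    if minimal_risk and fits_expedited_category:
        return "expedited"
    # Anything that is neither exempt nor expedited goes to the full committee.
    return "full board"


# Hypothetical examples
print(review_level(True, True, False))    # anonymous survey
print(review_level(True, False, True))    # interviews with identifiers
print(review_level(False, False, False))  # clinical investigation of a drug
```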

Regulations and References

- DHHS 45 CFR 46.110

- DHHS 45 CFR 46.111(a)(1-2)

- FDA 21 CFR 56.110

- FDA 21 CFR 56.111(a)(1-2)

- OHRP IRB Guidebook, Chapter 3: Basic IRB Review, Section A, Risk/Benefit Analysis

- Open access

- Published: 20 April 2012

The risk-benefit task of research ethics committees: An evaluation of current approaches and the need to incorporate decision studies methods

Rosemarie D L C Bernabe, Ghislaine J M W van Thiel, Jan A M Raaijmakers & Johannes J M van Delden

BMC Medical Ethics volume 13, Article number: 6 (2012)


Research ethics committees (RECs) are tasked to assess the risks and the benefits of a trial. Currently, two procedure-level approaches are predominant, the Net Risk Test and the Component Analysis.

By looking at decision studies, we see that both procedure-level approaches conflate the various risk-benefit tasks, i.e., risk-benefit assessment, risk-benefit evaluation, risk treatment, and decision making. This conflation makes the RECs’ risk-benefit task confusing, if not impossible. We further realize that RECs are not meant to do all the risk-benefit tasks; instead, RECs are meant to evaluate risks and benefits, appraise risk treatment suggestions, and make the final decision.

As such, research ethics would benefit from looking beyond the procedure-level approaches and allowing disciplines like decision studies to be involved in the discourse on RECs’ risk-benefit task.


Research ethics committees (RECs) are tasked to do a risk-benefit assessment of proposed research with human subjects for at least two reasons: to verify the scientific/social validity of the research, since unscientific research is also unethical research; and to ensure that the risks that the participants are exposed to are necessary, justified, and minimized [ 1 ].

Since 1979, specifically through the Belmont Report, a “systematic, nonarbitrary analysis of risks and benefits” has been called for, though commentaries about the lack of a generally acknowledged, suitable risk-benefit assessment method continue to the present [ 1 ]. The US National Bioethics Advisory Commission (US-NBAC), for example, stated the following in its 2001 report on Ethical and Policy Issues in Research Involving Human Participants:

"An IRB’s assessment of risks and potential benefits is central to determining that a research study is ethically acceptable and would protect participants, which is not an easy task, because there are no clear criteria for IRBs to use in judging whether the risks of research are reasonable in relation to what might be gained by the research participant or society [ 2 ]."

Note: An institutional review board (IRB) is synonymous with an ethics committee. For consistency’s sake, we shall use REC throughout this paper.

The lack of universally accepted risk-benefit assessment criteria does not mean that the research ethics literature says nothing about the matter. Within this same 2001 report, the US-NBAC recommended Weijer and Miller’s Component Analysis to RECs in evaluating clinical research. As a reaction to Weijer and P. Miller, Wendler and F. Miller proposed the Net Risk Test. For convenience’s sake, we shall use the term “procedure-level approaches” [ 3 ] to refer to the models of Weijer et al. and Wendler et al.

In spite of their ideological differences, both procedure-level approaches are procedural in the sense that both approaches propose a step-by-step process in doing the risk-benefit assessment. In this paper, we shall not tackle their differences; rather, we are more interested in their similarities. We are of the position that both approaches fall short of providing an evaluation procedure that is systematic and nonarbitrary precisely because they conflate the various risk-benefit tasks, i.e., risk-benefit analysis, risk-benefit evaluation, risk treatment, and decision making [ 4 – 6 ]. As such, we recommend clarifying what these individual tasks refer to, and to whom these tasks must go. Lastly, we shall assert that RECs would benefit by looking into the current inputs of decision studies on the various risk-benefit tasks.

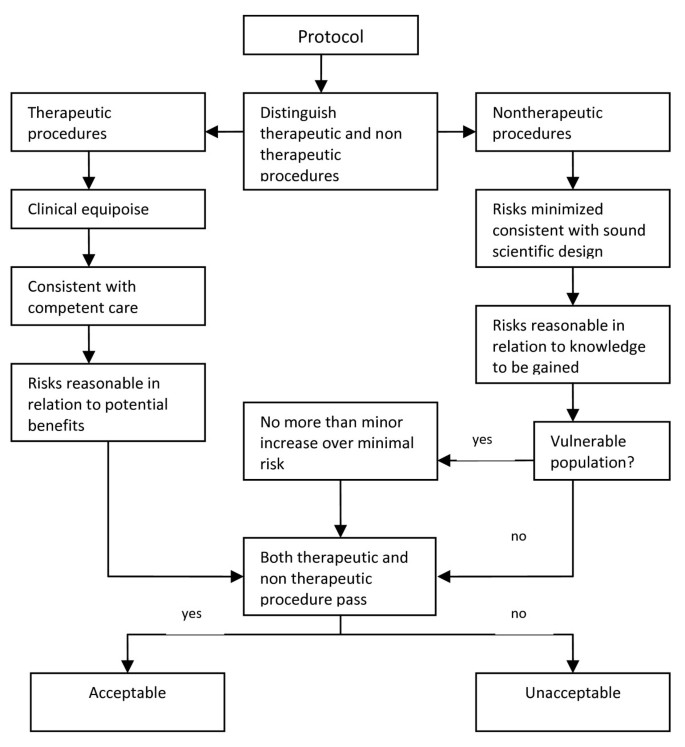

The procedure-level approaches

Charles Weijer and Paul Miller’s Component Analysis (Figure 1 ) requires research protocol procedures or “components” to be evaluated separately, since the probable benefits of one component must not be used to justify the risks that another component poses [ 2 ]. In this system, RECs would need to make a distinction between procedures in the protocol that are with and those that are without therapeutic warrant since therapeutic procedures would need to be analyzed differently compared to those that are non-therapeutic. It works on the assumption that a therapeutic warrant, that is, the reasonable belief that participants may directly benefit from a procedure, would justify more risks for the participants [ 7 ]. As such, therapeutic procedures ought to be evaluated based on the following conditions, in chronological order: that clinical equipoise exists, that is, that there is an “honest professional disagreement in the community of expert practitioners as to the preferred treatment” [ 8 ]; the “procedure is consistent with competent care; and risk is reasonable in relation to potential benefits to subjects” [ 7 ]. Non-therapeutic procedures, on the other hand, would need to be evaluated on the following conditions: the “risks are minimized and are consistent with sound scientific design; risks are reasonable in relation to knowledge to be gained; and if vulnerable population is involved, (there must be) no more than minor increase over minimal risk” [ 7 ]. Lastly, the REC would need to determine if both therapeutic and non-therapeutic procedures are acceptable [ 7 ]. If all components “pass”, then the “research risks are reasonable in relation to anticipated benefits” [ 7 ].

Figure 1: Component Analysis [ 7 , 9 ].
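The Component Analysis described above can be read as a per-component checklist. The following is a minimal sketch of that reading, not Weijer and Miller's own formalization: the class, field, and function names are assumptions, and every boolean stands for a judgment the REC would have to make.

```python
from dataclasses import dataclass


@dataclass
class Component:
    name: str
    therapeutic: bool
    # Checks for components with therapeutic warrant
    clinical_equipoise: bool = False
    consistent_with_competent_care: bool = False
    risk_reasonable_for_subject_benefit: bool = False
    # Checks for components without therapeutic warrant
    risks_minimized_and_sound_design: bool = False
    risk_reasonable_for_knowledge: bool = False
    vulnerable_population: bool = False
    at_most_minor_increase_over_minimal_risk: bool = False


def component_passes(c: Component) -> bool:
    """Apply the therapeutic or the non-therapeutic conditions to one component."""
    if c.therapeutic:
        return (c.clinical_equipoise
                and c.consistent_with_competent_care
                and c.risk_reasonable_for_subject_benefit)
    passes = c.risks_minimized_and_sound_design and c.risk_reasonable_for_knowledge
    if c.vulnerable_population:
        passes = passes and c.at_most_minor_increase_over_minimal_risk
    return passes


def protocol_acceptable(components) -> bool:
    """Benefits of one component never offset another: every component must pass."""
    return all(component_passes(c) for c in components)


# Hypothetical protocol with one therapeutic and one non-therapeutic component.
protocol = [
    Component("study drug administration", therapeutic=True,
              clinical_equipoise=True, consistent_with_competent_care=True,
              risk_reasonable_for_subject_benefit=True),
    Component("extra research blood draw", therapeutic=False,
              risks_minimized_and_sound_design=True,
              risk_reasonable_for_knowledge=True),
]
print(protocol_acceptable(protocol))
```

Because each component is judged on its own conditions, a single failing component makes the whole protocol fail, which mirrors the requirement that components be evaluated separately.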

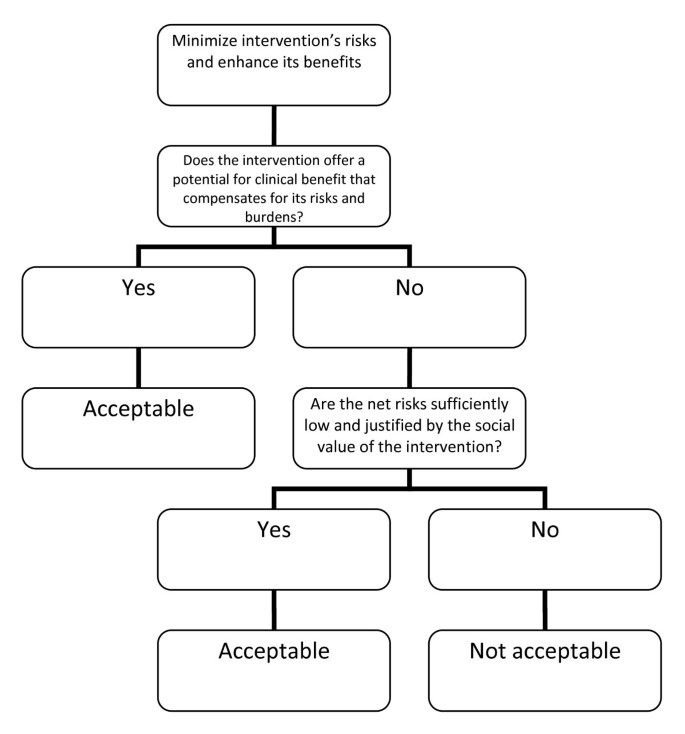

David Wendler and Franklin Miller, on the other hand, developed the Net Risk Test (Figure 2) as a reaction to the Component Analysis. This system requires RECs to first “minimize the risks of all interventions included in the study” [ 10 ]. The REC then ought to review the remaining risks by looking at each intervention in the study and evaluating whether the intervention “offers a potential for clinical benefit that compensates for its risks and burdens” [ 10 ]. If an intervention does offer a potential benefit that can compensate for the risks, then the intervention is acceptable; otherwise, the REC would need to determine whether the net risk is “sufficiently low and justified by the social value of the intervention” [ 10 ]. By net risk, they refer to the “risks of harm that are not, or not entirely, offset or outweighed by the potential clinical benefits for participants” [ 11 ]. If the net risks are sufficiently low and are justified by the social value of the intervention, then the intervention is acceptable; otherwise, it is not. Lastly, the REC would need to “calculate the cumulative net risks of all the interventions…and ensure that, taken together, the cumulative net risks are not excessive” [ 10 ].

Figure 2: The Net Risk Test [ 10 ].
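The decision flow of the Net Risk Test, as summarized above, can be sketched in a few lines. This is an illustrative reading under stated assumptions, not Wendler and Miller's own specification: the names are hypothetical, each boolean records an REC judgment, and treating risk minimization as a precondition flag is a modeling choice made for this example.

```python
from dataclasses import dataclass


@dataclass
class Intervention:
    name: str
    risks_minimized: bool                  # the REC has minimized this intervention's risks
    benefit_compensates_risks: bool        # potential clinical benefit offsets risks and burdens
    net_risk_low_and_justified: bool = False  # only consulted when a net risk remains


def intervention_acceptable(i: Intervention) -> bool:
    if not i.risks_minimized:
        return False
    if i.benefit_compensates_risks:
        return True
    # A net risk remains: it must be sufficiently low and justified by social value.
    return i.net_risk_low_and_justified


def study_acceptable(interventions, cumulative_net_risk_excessive: bool) -> bool:
    """All interventions must be acceptable and cumulative net risk must not be excessive."""
    return (all(intervention_acceptable(i) for i in interventions)
            and not cumulative_net_risk_excessive)


# Hypothetical study with two interventions.
study = [
    Intervention("investigational drug", True, benefit_compensates_risks=True),
    Intervention("research-only MRI", True, benefit_compensates_risks=False,
                 net_risk_low_and_justified=True),
]
print(study_acceptable(study, cumulative_net_risk_excessive=False))
```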

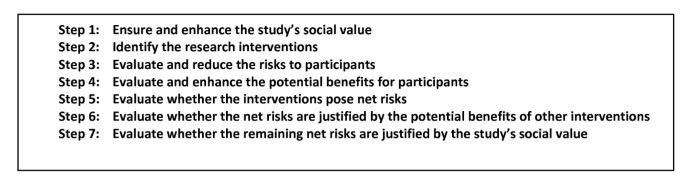

Recently, Rid and Wendler elaborated the Net Risk Test through a seven-step framework (see Figure 3) that is meant to offer a chronological, “systematic and comprehensive guidance” for the risk-benefit evaluations of RECs [ 11 ]. As Figure 3 shows, most of the steps are the same as those of the previously explained Net Risk Test; the main addition of the framework is the first step, which is to ensure and enhance the study’s social value. By this first step, Rid and Wendler mean that RECs, at the start of their risk-benefit evaluation, ought to “ensure the study methods are sound”; “ensure that the study passes a minimum threshold of social value”; and “enhance the knowledge to be gained from the study” [ 11 ]. Only after the social value of the study has been identified, evaluated, and enhanced can the RECs identify the individual interventions and then go through the other steps, i.e., the steps discussed earlier for the Net Risk Test.

Figure 3: Seven-step framework for risk-benefit evaluations in biomedical research [ 11 ].

The procedure-level approaches and the conflation of risk-benefit analysis, risk-benefit evaluation, risk treatment, and decision making

These procedure-level approaches may be credited for providing some form of a framework for the risk-benefit assessment tasks of RECs. They have also provided RECs with a framework that includes and puts into perspective certain ethical concepts that may or may not have been considered in REC evaluations, but are now procedurally necessary concepts. Weijer and Miller, for example, made it necessary for RECs to always consider therapeutic warrant, equipoise, and minimal risk when evaluating the risk-benefit balance of a study. Wendler and Miller, on the other hand, provided RECs with the concept of net risk. In spite of these contributions, these approaches presuppose (maybe unwittingly) that risk-benefit analysis, risk-benefit evaluation, risk treatment, and decision making can all be conflated. This, in our view, is a major error that ought to be corrected, since from it flow other problems that unavoidably make the procedures unsystematic and arbitrary. To substantiate our view, we first have to make a necessary detour by discussing the distinction between risk-benefit analysis, risk-benefit evaluation, risk treatment, and decision making [ 4 , 5 ]. We shall then show how the conflation is present in the procedure-level approaches and how such a conflation leads to difficult problems.

Distinction between risk-benefit analysis, risk-benefit evaluation, risk treatment, and decision making

Decisions on benefits and risks in fact involve four activities: risk-benefit analysis, risk-benefit evaluation, risk treatment, and decision making [ 4 – 6 ]. In the current debate, these terms are used as if they are interchangeable. Because these four activities make four different demands, the problem is not merely one of terminological preference; that is, it cannot be solved by simply “agreeing” to use one term over another. In risk studies, the risk-benefit task concretely demands four separate activities [ 4 , 6 ]. Hence, these terms are not interchangeable, and their order must be chronological. The distinctions among these tasks and the necessity of their chronological ordering are as follows.

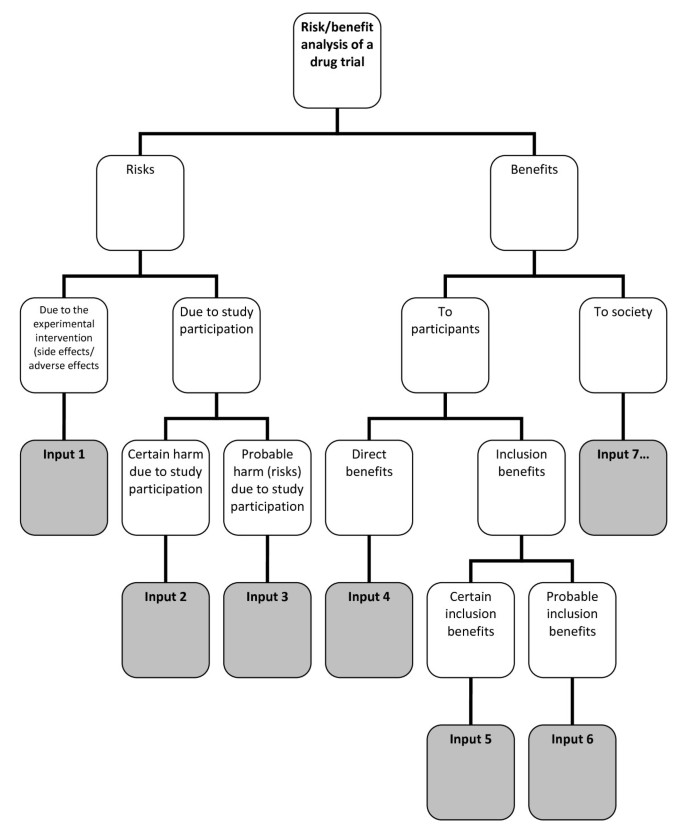

Risk-benefit analysis refers to the “systematic use of information to identify initiating events, causes, and consequences of these initiating events, and express risk (and benefit)” [ 4 ]. Thus, risk-benefit analysis refers to (1) gathering risk and benefit events, causes, and consequences; and (2) presenting this wealth of information in a systematic and comprehensive way, in accordance with the purpose for which such information is systematized in the first place. There are a number of risk analysis methods such as fault tree analysis, event tree analysis, Bayesian networks, Monte Carlo simulation, and others [ 4 ]. The multi criteria decision analysis (MCDA) method, mentioned by the EU Committee for Medicinal Products for Human Use (CHMP) in the Reflection Paper on Benefit Risk Assessment Methods in the Context of the Evaluation of Marketing Authorization Applications of Medicinal Products for Human Use [ 12 ], proposes the use of a value tree in analyzing the risk-benefit balance of a drug, for example. Adjusted to drug trials, a risk-benefit analysis value tree could look like Figure 4.

Figure 4: Risk-benefit analysis value tree.

In this value tree (Figure 4 ), we used King and Churchill’s typology of harms and benefits [ 1 ]. From each of the branches, the risk analyst would fill in information about a specific study. Of course, there could be more than one input under each category, depending on the nature of the drug trial being analyzed. Also, this value tree serves as an example; this is not the only way that benefits and risks may be analyzed within the context of drug trials. The best way to analyze risks and benefits within this context is something that ought to be further discussed and developed. Our aim is simply to show that a method such as a value tree is capable of encapsulating and framing the multidimensional nature of the causes and consequences of the benefits and risks of a study within one “tree.” This provides a functional risk-benefit picture from which the risks and the benefits may be evaluated, i.e., risk-benefit evaluation.

Risk-benefit evaluation refers to the “process of comparing risk (and benefit) against given risk (and benefit) criteria to determine the significance of the risk (and the benefit)” [ 4 ]. There are a number of methods to evaluate benefits and risks. Within the MCDA model, for example, the “identification of the risk-benefit criteria; assessment of the performance of each option against the criteria; the assignment of weight to each criterion; and the calculation of the weighted scores at each level and the calculation of the overall weighted scores” [ 13 ] would constitute risk evaluation. The multiattribute utility theory (MAUT) is yet another example of an evaluation method. The MAUT is a theory that is basically “concerned with making tradeoffs among different goals” [ 14 ]. This theory factors in human values, values defined as “the functions to use to assign utilities to outcomes” [ 14 ]. Starting from the value tree “inputs,” the evaluators would need to assign a weight to each input. The purpose of plugging in weights is to establish the importance of each input, according to the evaluators. This is tantamount to establishing criteria, or identifying and making explicit the evaluators’ definition of acceptable risk. Next, the evaluators would need to plug in numerical values as the utility values of the options being evaluated. Each utility value would be multiplied by the corresponding weight, and the resulting products, when summed, would constitute the total utility value. To illustrate, if an REC wishes to evaluate a psychotropic study drug against the standard drug, it may come up with a MAUT chart like Table 1.

Just like the value tree, our purpose is not to endorse only one way of doing the evaluation. Our purpose is merely to illustrate that such a decision study tool is capable of explicitly showing the following: (a) the inputs that the evaluators think must play a role in the evaluation; (b) the values of the evaluators, through the scores they have provided; (c) the importance they give to each of the factors/inputs, through the weights that they have provided; (d) how the things compared (in this case, the study drug and the standard drug) fare given a, b, and c; and (e) a global perspective of what a, b, c, and d amount to, i.e., through the total utility value.
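The MAUT arithmetic described above (each utility value multiplied by its weight, with the products summed into a total utility value) can be sketched as follows. The criteria, weights, and scores below are invented for illustration and are not the values of the paper's Table 1; the function name `total_utility` is likewise an assumption.

```python
def total_utility(weights, scores):
    """Sum of weight * utility over all criteria (the MAUT total utility value)."""
    if weights.keys() != scores.keys():
        raise ValueError("weights and scores must cover the same criteria")
    return sum(weights[c] * scores[c] for c in weights)


# Hypothetical importance weights (item c) chosen by the evaluators.
weights = {"efficacy": 5, "side_effects": 3, "participant_burden": 2}

# Hypothetical utility scores on a 0-10 scale (item b); higher is better.
study_drug = {"efficacy": 8, "side_effects": 4, "participant_burden": 6}
standard_drug = {"efficacy": 6, "side_effects": 7, "participant_burden": 6}

for name, scores in [("study drug", study_drug), ("standard drug", standard_drug)]:
    print(name, total_utility(weights, scores))
```

The per-criterion products show how each option fares on each input (item d), and the totals give the global perspective (item e).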

In the risk-benefit literature in research ethics, we find statements that such an algorithm is undesirable because it “yields one and only one verdict about the risk-benefit profile of each possible protocol” [ 11 ]. On this issue, CHMP’s Reflection is instructive. The scores in quantitative evaluations are valuable not because of some absolute value, but because these scores can

"…focus the discussion by highlighting the divergences between the assessors and stakeholders concerning choice for weights. The benefit of such analysis methods is that the degree and nature of these divergences can be assessed, even in advance of any compound’s review. The same method might be used with the weights (e.g., of different stakeholders) and make both the differences and the consequences of those differences more explicit. If the analyses agree, decision-makers can be more comfortable with a decision. If the analyses disagree, exact sources of the differences in view will be identified, and this will help focus the discussion on those topics [ 12 ]."

Thus, the scores are meant to allow the evaluators to know each other’s values, similarities, differences, and divergences. The divergences and differences could aid in focusing the REC discussion and in identifying problem areas in a deliberate, transparent, coherent, and less intuitive manner [ 15 ].
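How explicit weights expose divergences between assessors can be illustrated with a small sketch. All names and numbers here are hypothetical: two assessors score the same options identically but weigh the criteria differently, and the code reports where their weights diverge and whether their preferred option changes.

```python
def weighted_total(weights, scores):
    """Sum of weight * score over all criteria."""
    return sum(weights[c] * scores[c] for c in weights)


def preferred_option(weights, options):
    """Name of the option with the highest weighted total."""
    return max(options, key=lambda name: weighted_total(weights, options[name]))


def weight_divergence(weights_a, weights_b):
    """Criteria on which two assessors disagree, with the signed difference (a minus b)."""
    return {c: weights_a[c] - weights_b[c]
            for c in weights_a if weights_a[c] != weights_b[c]}


# Hypothetical shared scores (0-10, higher is better).
options = {
    "study drug": {"efficacy": 8, "side_effects": 4, "burden": 6},
    "standard drug": {"efficacy": 6, "side_effects": 7, "burden": 6},
}
# Hypothetical weights from two assessors.
clinician = {"efficacy": 5, "side_effects": 3, "burden": 2}
lay_member = {"efficacy": 3, "side_effects": 5, "burden": 2}

print(preferred_option(clinician, options))
print(preferred_option(lay_member, options))
print(weight_divergence(clinician, lay_member))
```

When the preferred options differ, the divergence table points the discussion at the specific criteria (here efficacy and side effects) rather than at the overall verdict.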

Risk-benefit analysis and evaluation together constitute risk-benefit assessment [ 4 ] .

Once risks and benefits have been evaluated against the evaluators’ given criteria, risk evaluation allows evaluators to decide “which risks need treatment and which do not” [ 6 ]. In decision studies, amplifying benefits and modifying risks are possible only after a global understanding of them has been achieved through risk assessment. Thus, after risk-benefit assessment comes risk treatment. By risk treatment, we refer to the “process of selection and implementation of measures to modify risk…measures may include avoiding, optimizing, transferring, or retaining risk” [ 4 ]. In terms of trials, risk treatment would refer to enhancing the trial’s social value, reducing the risks to the participants, and enhancing the participants’ benefits [ 11 ]. REC members who are used to minimizing risk immediately after its identification may be concerned that this process requires them to suspend such a move until risk evaluation is done, a procedure that may be counter-intuitive for some. However, the process of “immediately cutting the risks” has also passed through the process of evaluation, although intuitively and implicitly. An REC member who says that the risks of a certain procedure may be minimized or that the risks are unnecessary given the research question has already implicitly gone through a personal evaluation of what is and what is not necessary in such a clinical trial.

After investigating the possibilities to modify the risks and amplify the benefits, the decision makers would then have to finally decide whether the risks of the trial are justified given the benefits. By decision making, we refer to the final discussion of the REC on whether benefits truly outweigh risks, i.e., given all the information provided, are the risks of the trial ethically acceptable due to the merits of the probable benefits?

It is important to note that in the risk literature [ 4 , 13 ], the CHMP Reflection [ 12 ], and the CIOMS report [ 16 ], the risk-benefit tasks are assumed to be done interdependently and that the tasks are reflective of various values, interests, and ethical perspectives. At least for marketing authorization and marketed drug evaluation purposes, the sponsor and/or the investigator are assumed to be responsible for risk-benefit assessment and to a certain extent, the proposal of risk treatment measures. It makes a lot of sense that the sponsor ought to be responsible for risk analysis precisely because in this task, “experts on the systems and activities being studied are usually necessary to carry out the analysis” [ 4 ]. The regulatory authorities, on the other hand, are expected to provide guidelines for the risk-benefit analysis criteria. They also ought to provide their own version of risk-benefit evaluation to determine areas of divergences and differences, to extensively discuss risk treatment measures and options, and finally to deliberate and decide based on all these inputs.

Conflation of the various risk-benefit tasks by the procedure-level approaches

At the most superficial level, we notice that Wendler and Rid used the terms “risk-benefit assessment” and “risk-benefit evaluation” interchangeably to refer to the one and the same Net Risk Test [ 11 , 17 ]. Nevertheless, it could be argued that this is just a matter of misuse of terms, and that such does not substantially affect the approach that is proposed. Thus, we would need to look deeper into the Net Risk Test to justify our claim that it conflates the various risk-benefit tasks.

In the latest seven-step framework of the Net Risk Test, what ought to be a framework for risk-benefit evaluation by RECs ended up incorporating aspects of risk-benefit assessment, risk treatment, and decision making. The first step, ensuring and enhancing the study’s social value, is risk treatment. The second step, identifying the research interventions, is risk analysis. The third and fourth steps, which are the evaluation and reduction of risks to participants and the evaluation and enhancement of potential benefits to participants, both fall into risk-benefit evaluation and risk treatment. It is worth noting that in the Net Risk Test, the evaluation and treatment of risks and benefits are not preceded by the identification of these risks and benefits; instead, what precedes the third and fourth steps is the identification of research interventions, a necessary but incomplete step in risk-benefit analysis. The fifth step, the evaluation of whether the interventions pose net risks, is risk-benefit evaluation. The sixth step, which is to evaluate whether the net risks are justified by the potential benefits of other interventions, is decision making. The last step, which is to evaluate whether the remaining net risks are justified by the study’s social value, is also decision making. Thus, the Net Risk Test in principle encompasses all the risk-benefit tasks without taking into account the distinctions among them, their chronological order, or the division of labor they involve.
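The step-by-step classification above can be laid out as a small lookup table, which makes the overlap between tasks easy to see. The following is only an illustrative sketch: the step labels are paraphrased from the paragraph above, and the names `NET_RISK_TEST_STEPS` and `steps_by_task` are hypothetical, not part of the Net Risk Test itself.

```python
from collections import defaultdict

# Each entry pairs a (paraphrased) Net Risk Test step with the
# risk-benefit task(s) that the preceding paragraph assigns to it.
NET_RISK_TEST_STEPS = [
    ("Ensure and enhance the study's social value",
     ["risk treatment"]),
    ("Identify the research interventions",
     ["risk analysis"]),
    ("Evaluate and reduce risks to participants",
     ["risk-benefit evaluation", "risk treatment"]),
    ("Evaluate and enhance potential benefits to participants",
     ["risk-benefit evaluation", "risk treatment"]),
    ("Evaluate whether the interventions pose net risks",
     ["risk-benefit evaluation"]),
    ("Evaluate whether net risks are justified by the potential "
     "benefits of other interventions",
     ["decision making"]),
    ("Evaluate whether remaining net risks are justified by the "
     "study's social value",
     ["decision making"]),
]


def steps_by_task(steps):
    """Group step numbers (1-based) under each task they touch."""
    grouped = defaultdict(list)
    for number, (_label, tasks) in enumerate(steps, start=1):
        for task in tasks:
            grouped[task].append(number)
    return dict(grouped)


if __name__ == "__main__":
    for task, numbers in steps_by_task(NET_RISK_TEST_STEPS).items():
        print(f"{task}: steps {numbers}")
```

Grouping the steps this way shows that risk treatment is spread over the first, third, and fourth steps, while risk analysis appears only once, which is the interleaving the paragraph describes.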

The Component Analysis commits the same conflation as the Net Risk Test. In the process of classifying procedures as either therapeutic or non-therapeutic, the REC members would first need to identify the procedures to assess, i.e., risk analysis. The REC members would then need to evaluate therapeutic procedures differently from non-therapeutic procedures. Therapeutic procedures have to be evaluated on whether clinical equipoise exists and whether the procedure is consistent with competent care. These two criteria may be considered ethical principles that ought to be present in the deliberation towards decision making. Thus, these are decision making tasks. Next, the REC members would need to determine whether the therapeutic procedure is reasonable in relation to the potential benefits to subjects. Since REC members need to answer questions of “reasonability,” this is a decision making task that presupposes risk-benefit evaluation. Non-therapeutic procedures, on the other hand, require the assessor to evaluate whether risks are minimized and whether they are consistent with sound scientific design. This is risk treatment. Next, the assessor would need to verify whether the risk of the non-therapeutic procedure is reasonable in relation to the knowledge to be gained. Again, this is a decision making task that presupposes risk-benefit evaluation. In cases where vulnerable patients are involved, the REC members would need to verify that no more than a minor increase over minimal risk is involved; this is a discussion that is likely to be present in the deliberation towards decision making, which also presupposes risk-benefit evaluation. Lastly, the assessor would need to decide whether both therapeutic and non-therapeutic procedures pass. This is decision making. Hence, again, what we have is a system that touches on each of the risk-benefit tasks without making a distinction among them.

Since the risk-benefit tasks are conflated, the various tasks are necessarily simplified and confused. We have seen that the various risk-benefit tasks are resource intensive (since various experts must be involved), necessarily complex (since a drug trial is rarely simple), and time consuming. This is the reason why they are done separately. To conflate the various tasks into one system that ought to be accomplished within the few hours that the REC convenes is to demand the impossible. Precisely because of this conflation, plus the consideration that all the risk-benefit tasks ought to be done within the time restrictions of an REC, both procedure-level approaches cursorily and confusedly “accomplish” the various tasks. As such, we cannot expect the procedure-level approaches to have the same level of robustness, transparency, explicitness, and coherence as the various approaches of decision studies. Neither of the procedure-level approaches could have the robustness that the value-tree had, for example, in expressing and illustrating the relations between the nature, causes, consequences, and uncertainties of both risk and benefit components. Nor is either transparent, explicit, and rigorous enough to capture the acceptable risk definitions and the various weights and scores, reflective of the various values and ethical dispositions, that the MAUT method provided. The two procedure-level approaches simply do not require evaluators to be explicit about their evaluative values. Though risk treatment is largely present in both procedure-level approaches, risk treatment, at least in the Net Risk Test, is sometimes confounded with risk evaluation. In the procedure-level approaches, RECs would also not have the benefit of systematically focusing the discussion on divergences and differences that a good risk evaluation makes possible.

Lastly, because of the conflation and confusion of the various risk-benefit tasks, REC members are left to their own devices and intuition to decide what is important to discuss and what is not, and eventually, to decide whether the risks are justifiable relative to the benefits. Such a “procedure” could be categorized as a “taking into account and bearing in mind” process, a process that Dowie rightly criticized as vague, general, and plainly intuitive [ 15 ].
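To make concrete what explicit “weights and scores” look like, here is a minimal sketch of an additive multi-attribute utility calculation, the simplest form a MAUT-style evaluation can take. Everything in it is an assumption for illustration: the attribute names, the weights, the 0-to-1 scoring scale (higher meaning more favourable), and the helper names `weighted_utility` and `divergences` are hypothetical and are not taken from the paper or from any specific MAUT implementation.

```python
def weighted_utility(scores, weights):
    """Additive multi-attribute utility: weight-normalised sum of scores.

    Both arguments map attribute name -> number; scores are assumed to
    lie on a 0-1 scale where higher means more favourable.
    """
    if set(scores) != set(weights):
        raise ValueError("scores and weights must cover the same attributes")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(weights[a] * scores[a] for a in scores) / total_weight


def divergences(scores_a, scores_b):
    """Per-attribute score differences, largest absolute gap first."""
    gaps = [(attr, scores_a[attr] - scores_b[attr]) for attr in scores_a]
    return sorted(gaps, key=lambda item: abs(item[1]), reverse=True)


# Hypothetical value weights that evaluators have stated explicitly.
weights = {
    "expected_benefit": 0.5,
    "serious_harm_avoided": 0.3,
    "burden_avoided": 0.2,
}

# Hypothetical scores from two evaluators of the same trial.
sponsor_scores = {
    "expected_benefit": 0.8,
    "serious_harm_avoided": 0.6,
    "burden_avoided": 0.9,
}
rec_scores = {
    "expected_benefit": 0.6,
    "serious_harm_avoided": 0.3,
    "burden_avoided": 0.9,
}

if __name__ == "__main__":
    print("sponsor utility:", round(weighted_utility(sponsor_scores, weights), 2))
    print("REC utility:", round(weighted_utility(rec_scores, weights), 2))
    for attr, gap in divergences(sponsor_scores, rec_scores):
        print(f"{attr}: gap {gap:+.2f}")
```

The point of the sketch is not the arithmetic but the explicitness: once weights and scores are written down, the attributes on which two evaluations diverge most can be listed and discussed first, rather than left to intuition.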

Recommendations

We have seen that the methods from decision studies are more robust, transparent, and coherent than either of the procedure-level approaches. This is not surprising, since decision studies have been used in many fields for quite some time. The robustness of the decision studies methods stems from the clear distinction between risk-benefit analysis, risk-benefit evaluation, risk treatment, and decision making. In decision studies, each of the risk-benefit tasks is a system in itself that ought not to be conflated with the others. In addition, in contrast to “taking into account and bearing in mind” processes, decision studies encourage the exposure of beliefs and values [ 15 ] precisely because it is from this explicitness that discussions can be defined and ordered. As such, we recommend the following:

RECs should make clear what their task is. RECs do not have the time and are not in the best position to do risk analyses. As such, risk analysis must be a task for the sponsor. As regards risk evaluation, RECs ought to provide their own risk-benefit evaluation to pair with the sponsor’s/investigator’s evaluation since this is the best way to systematically point out areas of divergence/convergence. These areas would aid in putting order in REC discussions. The evaluation of risk treatment suggestions and possibly coming up with a revised or different risk treatment appraisal ought to also form part of REC discussions. Lastly, it is obviously the REC’s task to make the final decision on whether the risks of the trial are justified given the benefits.

Precisely because such a clarification of tasks is so essential if the REC is to function efficiently, RECs must look into how decision studies may be incorporated into their risk-benefit tasks. This is something we will do in our next article. For now, it is imperative to lay the theoretical groundwork for the urgency of such incorporation.

The procedure-level approaches emphasize the role of various ethical concepts, such as net risk, minimal risk, and clinical equipoise, in the risk-benefit task of RECs. These are legitimate concerns; nevertheless, RECs must know when these concepts play a role in the various risk-benefit tasks. Minimal risk, for example, is a concept that ought to be present in risk treatment and/or deliberation towards final decision making.

Both the Net Risk Test and the Component Analysis conflate risk-benefit analysis, risk-benefit evaluation, risk treatment, and decision making. This makes the risk-benefit task of RECs confusing, if not impossible. It is necessary to distinguish between these four tasks if RECs are to be clear about what their task truly is. By looking at decision studies, we realize that RECs ought to evaluate risks and benefits, appraise risk treatment suggestions, and make the final decision. Further clarification and elaboration of these tasks would require research ethicists to look beyond the procedure-level approaches. It further requires research ethicists to allow decision studies discourses into the current discussion on the risk-benefit tasks of RECs. Admittedly, this would take a lot of time and research effort. Nevertheless, the discussion on the REC’s risk-benefit task would be more fruitful and democratic if research ethics opened its doors to other disciplines that could truly help clarify risk-benefit task distinctions.

References

1. King NM, Churchill LR: Assessing and comparing potential benefits and risks of harm. The Oxford textbook of clinical research ethics. Edited by: Emanuel E, Grady C, Crouch RA, Lie RA, Miller FG, Wendler D. 2008, Oxford University Press, New York, 514-26.

2. US National Bioethics Advisory Commission: Ethical and Policy issues in Research Involving Human Participants. 2001

3. Westra AE, de Beaufort ID: The merits of procedure-level risk-benefit assessment. IRB: Ethics and Human Research. 2011

4. Aven T: Risk analysis: assessing uncertainties beyond expected values and probabilities. 2008, Wiley, Chichester

5. Vose D: Risk analysis: a quantitative guide. 2008, John Wiley & Sons, Chichester, 3rd edition

6. European Network and Information Security Agency. Risk assessment. European Network and Information Security Agency. 2012, Available from: http://www.enisa.europa.eu/act/rm/cr/risk-management-inventory/rm-process/risk-assessment

7. Miller P, Weijer C: Evaluating benefits and harms in clinical research. Principles of Health Care Ethics, Second Edition. Edited by: Ashcroft RE, Dawson A, Draper H, McMillan JR. 2007, Wiley & Sons

8. Weijer C, Miller PB: When are research risks reasonable in relation to anticipated benefits?. Nat Med. 2004, 10 (6): 570-573. 10.1038/nm0604-570.

9. Weijer C: When are research risks reasonable in relation to anticipated benefits?. J Law Med Ethics. 2000, 28: 344-361. 10.1111/j.1748-720X.2000.tb00686.x.

10. Wendler D, Miller FG: Assessing research risks systematically: the net risks test. J Med Ethics. 2007, 33 (8): 481-486. 10.1136/jme.2005.014043.

11. Rid A, Wendler D: A framework for risk-benefit evaluations in biomedical research. Kennedy Inst Ethics J. 2011 June, 21 (2): 141-179. 10.1353/ken.2011.0007.

12. European Medicines Agency, Committee for Medicinal Products for Human Use. Reflection paper on benefit-risk assessment methods in the context of the evaluation of marketing authorization applications of medicinal products for human use. 2008

13. Mussen F, Salek S, Walker S: Benefit-risk appraisal of medicines. 2009, John Wiley & Sons, Chichester

14. Baron J: Thinking and deciding. 2008, Cambridge University Press, New York, 4th edition

15. Dowie J: Decision analysis: the ethical approach to most health decision making. Principles of Health Care Ethics. Edited by: Ashcroft RE, Dawson A, Draper H, McMillan JR. 2007, John Wiley & Sons, 577-83.

16. Council for International Organizations of Medical Sciences, Working Group IV. Benefit-risk balance for marketed drugs: evaluating safety signals. 1998

17. Rid A, Wendler D: Risk-benefit assessment in medical research – critical review and open questions. Law, Probability and Risk. 2010, 9: 151-177. 10.1093/lpr/mgq006.

Pre-publication history

The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1472-6939/13/6/prepub

Acknowledgements

This study was performed in the context of the Escher project (T6-202), a project of the Dutch Top Institute Pharma, Leiden, The Netherlands.

Author information

Authors and affiliations.

Julius Center for Health Sciences and Primary Care, Utrecht University Medical Center, Heidelberglaan 100, Utrecht, 3584CX, The Netherlands

Rosemarie D L C Bernabe, Ghislaine J M W van Thiel & Johannes J M van Delden

GlaxoSmithKline, Huis ter Heideweg 62, Zeist, 3705, LZ, The Netherlands

Jan A M Raaijmakers

Corresponding author

Correspondence to Rosemarie D L C Bernabe.

Additional information

Competing interests.

RB’s PhD project is funded by the Dutch Top Institute Pharma. JR works for and holds stocks in GlaxoSmithKline. JvD and GvT have no competing interests to declare.

Authors’ contributions

All authors were involved in the design of the manuscript. RB did the research and wrote the draft and final manuscript; GvT commented on the drafts, wrote parts of the manuscript, and approved the final version; JR commented on the drafts and approved the final version of the manuscript; JvD commented on the drafts and approved the final version of the manuscript. All authors read and approved the final manuscript.

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License ( http://creativecommons.org/licenses/by/2.0 ), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article.

Bernabe, R.D.L.C., van Thiel, G.J.M.W., Raaijmakers, J.A.M. et al. The risk-benefit task of research ethics committees: An evaluation of current approaches and the need to incorporate decision studies methods. BMC Med Ethics 13, 6 (2012). https://doi.org/10.1186/1472-6939-13-6

Received: 05 April 2012

Accepted: 11 April 2012

Published: 20 April 2012

DOI: https://doi.org/10.1186/1472-6939-13-6

- Risk benefit assessment

- Ethics committee

- Decision theory

- Net risk test

- Component analysis

BMC Medical Ethics

ISSN: 1472-6939

Risk-Benefit Assessment in Research Ethics

Introduction.

What is research ethics?

Research ethics deals with acceptable norms in the design and application of a research study. It governs the standards of conduct of investigators in scientific research in order to uphold important principles including autonomy, justice and beneficence (WHO).

What is Risk-Benefit Assessment?

Since the Belmont Report, most biomedical research guidelines have required some form of risk-benefit assessment so that research is carried out scientifically and ethically. The principles underlying this assessment are beneficence and non-maleficence.

The justification for risk-benefit assessment is twofold: “to verify the scientific/social validity of the research since an unscientific research is also an unethical research; and to ensure that the risks that the participants are exposed to are necessary, justified, and minimized.” (Bernabe et al., 2012)

Therefore, participation in research trials is most defensible when participants derive a health benefit, or something else of value, from it, or when it significantly improves generalizable knowledge without inducing unreasonable and unjust harm.

Assigned Reading

Rid, A., & Wendler, D. (2011). A framework for risk-benefit evaluations in biomedical research. Kennedy Institute of Ethics Journal, 21(2), 141–179. https://doi.org/10.1353/ken.2011.0007

Thesis: In their paper, Rid and Wendler (2011) note the importance of risk-benefit assessment and highlight the paucity of comprehensive and concrete guidance for performing these assessments. This has resulted in rather unsystematic methods, often based largely on intuition, and in disparities in assessment across research studies. To address this gap, the authors designed the first comprehensive step-by-step framework to guide risk-benefit assessments in research ethics. Their framework is based on extant guidelines and regulations, other relevant literature, and normative analysis.

Discussion Questions

The following questions were considered by seminar participants prior to the discussion:

- What were some of the strengths of the framework?

- What were some of the weaknesses or more vague elements of the framework?

Reflection Points

1. Evaluation of the requirement that studies meet a minimum threshold of “social value” – social value is a normatively laden concept (what one person thinks of as contributing to social value, another might think of as subtracting from it). It is also vague and may be too easy to apply (one can hand-wave that every study has social value of some sort).

2. “Enhancement” as an idea in their framework (the requirement to “enhance” the social value of the study and to “enhance” potential benefits to participants). What is the normative justification for this? Why do researchers have an obligation to do this? Or IRB members? Also, consider potential unintended consequences of this – it may negatively affect some of the science in the study, may impose costs on researchers or society, etc.

3. Evaluation of “clinical” benefit to participants. Is this biased against non-clinical studies? Is this too narrow (it excludes benefits of a psychological or other nature, such as a fulfilled desire to be altruistic)? On the other hand, is it too broad or hand-waving (“benefit” from talking to researchers about feelings)? Does it violate equipoise or invite the therapeutic misconception?

4. Steps 5, 6, and 7 (the “weighing steps”) – steps where the identified risks (and their likelihood), clinical benefits, net risks, and social value are weighed to establish whether the assessment is favorable toward allowing the study. How exactly is this weighing done? Ideas of an “informed and impartial social arbitrator” and an “informed clinician” are introduced to help, but these constructs are vague and can allow for the introduction of bias.

5. Lack of context – the framework avoids questions such as whether the study is therapeutic or non-therapeutic, whether the study involves people who are dying, etc.

6. May be too biased towards approval, since it primes people to consider “social value” in the first and last steps, and social value is easy to come by.

References and Additional Resources

Bernabe, R.D.L.C., van Thiel, G.J.M.W., Raaijmakers, J.A.M. et al. (2012). The risk-benefit task of research ethics committees: An evaluation of current approaches and the need to incorporate decision studies methods. BMC Med Ethics, 13, 6. https://doi.org/10.1186/1472-6939-13-6

Abdalla M.E. (2017) Ethical Issues Involved with the Analysis of Risks and Benefits. In: Silverman H. (eds) Research Ethics in the Arab Region. Research Ethics Forum, vol 5. Springer, Cham. https://doi.org/10.1007/978-3-319-65266-5_8

Rid, A., & Wendler, D. (2010). Risk-benefit assessment in medical research – critical review and open questions. Law, Probability and Risk, 9, 151–177. https://doi.org/10.1093/lpr/mgq006

Risk Assessment and Risk-Benefit Assessment

Jinyao Chen & Lishi Zhang

First Online: 14 July 2022

The framework of risk analysis has become the principal procedure for dealing with food safety issues. Risk analysis consists of three components: risk management, risk assessment, and risk communication. In the case of food safety, risk assessment is defined as the scientific evaluation of the possibility and consequences of adverse health outcomes resulting from exposure to food-borne hazards. Risk assessment is a scientifically based process consisting of the following steps: hazard identification, hazard characterization, exposure assessment, and risk characterization. The procedures for assessing chemical hazards and microbiological hazards differ somewhat; this chapter focuses on chemical hazards. Positive and adverse effects may also be induced concurrently by a single food item (e.g., fish or whole grain products) or even by a single food component (e.g., folic acid or phytosterols), in which case risk-benefit assessment should be adopted. The principles and main steps of risk-benefit assessment are the same as those of risk assessment. Risk-benefit assessment comprises three parts: risk assessment, benefit assessment, and risk-benefit comparison. Of these, risk-benefit comparison is the trickiest, since it usually requires a common metric of the health outcome. Risk-benefit assessment is a valuable approach to systematically integrating the current evidence to provide the best science-based answers to complicated questions in the areas of food and nutrition, especially in evaluating nutrient fortification policy, developing a tolerable upper intake level for a nutrient, and recommending a particular dietary pattern.
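The role of a common metric in the risk-benefit comparison step can be sketched in a few lines. The example below assumes disability-adjusted life years (DALYs) as the shared unit, which is one commonly used choice and not necessarily the one the chapter adopts; the effect names and all numbers are invented purely for illustration, and `HealthEffect` and `net_dalys` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HealthEffect:
    """One health effect of a food item, expressed in a common metric.

    dalys_per_year > 0 means healthy life lost (a risk);
    dalys_per_year < 0 means healthy life gained (a benefit).
    """
    name: str
    dalys_per_year: float


def net_dalys(effects):
    """Sum all effects; a negative total means benefits outweigh risks."""
    return sum(effect.dalys_per_year for effect in effects)


# Invented figures for a single hypothetical food item.
effects = [
    HealthEffect("hypothetical beneficial effect", -120.0),
    HealthEffect("hypothetical adverse effect", 45.0),
]

if __name__ == "__main__":
    total = net_dalys(effects)
    verdict = "net benefit" if total < 0 else "net risk or neutral"
    print(f"net DALYs per year: {total} ({verdict})")
```

Only once both the beneficial and the adverse effect are converted into the same unit can they be added; that conversion, rather than the final sum, is where most of the difficulty in a risk-benefit comparison lies.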


Krul L, Kremer BHA, Luijckx NBL, Leeman WR. Quantifiable risk-benefit assessment of micronutrients: from theory to practice. Crit Rev Food Sci Nutr. 2017;57(17):3729–46.

Cohen JT, Bellinger DC, Connor WE, Kris-Etherton PM, Lawrence RS, Savitz DA, Shaywitz BA, Teutsch SM, Gray GM. A quantitative risk-benefit analysis of changes in population fish consumption. Am J Prev Med. 2005;29:325–34.

Institute of Medicine (IoM). Sea food choices. In: Balancing benefits and risks. Washington, D.C: National Academy Press; 2007.

Ginsberg GL, Toal BF. Quantitative approach for incorporating methylmercury risks and omega-3 fatty acid benefits in developing species specific fish consumption advice. Environ Health Perspect. 2009;117:267–75.

Gao YX, Zhang HX, Li JG, Zhang L, Yu XW, He JL, Shang XH, Zhao YF, Wu YN. The benefit risk assessment of consumption of marine species based on benefit-risk analysis for foods (BRAFO)-tiered approach. Biomed Environ Sci. 2015;28(4):243–52.

CAS PubMed Google Scholar

Hoekstra J, Hart A, Boobis A, Claupein E, Cockburn A, Hunt A, Knudsen I, Richardson D, Schilter B, Schutte K, Torgerson PR, Verhagen H, Watzl B, Chiodini A. BRAFO tiered approach for benefit–risk assessment of foods. Food Chem Toxicol. 2012;50(Suppl 4):S684–98.

van den Berg M, Kypke K, Kotz A, Tritscher A, Lee SY, Magulova K, Fiedler H, Malisch R. WHO/UNEP global surveys of PCDDs, PCDFs, PCBs and DDTs in human milk and benefit-risk evaluation of breastfeeding. Arch Toxicol. 2017;91(1):83–96.


Author information

Authors and affiliations.

Department of Nutrition and Food Safety, West China School of Public Health, Sichuan University, Chengdu, China

Jinyao Chen & Lishi Zhang


Editor information

Editors and affiliations.

Lishi Zhang


Copyright information

© 2022 Springer Nature Singapore Pte Ltd.

About this chapter

Chen, J., Zhang, L. (2022). Risk Assessment and Risk-Benefit Assessment. In: Zhang, L. (eds) Nutritional Toxicology. Springer, Singapore. https://doi.org/10.1007/978-981-19-0872-9_10


DOI : https://doi.org/10.1007/978-981-19-0872-9_10

Published : 14 July 2022

Publisher Name : Springer, Singapore

Print ISBN : 978-981-19-0870-5

Online ISBN : 978-981-19-0872-9

eBook Packages : Biomedical and Life Sciences Biomedical and Life Sciences (R0)


A, Simplified Geneva risk score. The cumulative incidence was 1.2% (95% CI, 0.3%-2.2%) for patients at low risk and 2.6% (95% CI, 1.5%-3.6%) for patients at high risk (log-rank P = .09). B, Original Geneva risk score. The cumulative incidence was 1.1% (95% CI, 0.1%-2.0%) for patients at low risk and 2.6% (95% CI, 1.5%-3.6%) for patients at high risk (log-rank P = .07). C, Padua score. The cumulative incidence was 1.4% (95% CI, 0.5%-2.3%) for patients at low risk and 2.8% (95% CI, 1.5%-4.0%) for patients at high risk (log-rank P = .08). D, IMPROVE (International Medical Prevention Registry on Venous Thromboembolism) score. The cumulative incidence was 1.8% (95% CI, 0.9%-2.6%) for patients at low risk and 2.7% (95% CI, 1.1%-4.3%) for patients at high risk (log-rank P = .26).

The area under the ROC curve was 58.1% for the simplified Geneva score (95% CI, 55.4%-60.7%), 53.8% (95% CI, 51.1%-56.5%) for the original Geneva score, 56.5% (95% CI, 53.7%-59.1%) for the Padua score, and 55.0% (95% CI, 52.3%-57.7%) for the IMPROVE (International Medical Prevention Registry on Venous Thromboembolism) score.
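For readers less familiar with the statistic, the area under the ROC curve can be read as the probability that a randomly chosen patient with the event has a higher score than a randomly chosen patient without it, with ties counted as one half. The Python sketch below illustrates that definition only. The function name `auc_from_scores` and the toy scores are hypothetical and are not taken from the study data or its analysis code.

```python
from itertools import product


def auc_from_scores(scores_events, scores_nonevents):
    """Area under the ROC curve in its pairwise (Mann-Whitney) form.

    Each event/non-event pair counts 1 if the event has the higher score,
    0.5 if the two scores tie, and 0 otherwise.
    """
    if not scores_events or not scores_nonevents:
        raise ValueError("both groups must contain at least one score")
    wins = 0.0
    for event_score, nonevent_score in product(scores_events, scores_nonevents):
        if event_score > nonevent_score:
            wins += 1.0
        elif event_score == nonevent_score:
            wins += 0.5
    return wins / (len(scores_events) * len(scores_nonevents))


# Hypothetical risk scores for illustration only (not study data).
events = [3, 5, 2]
nonevents = [1, 2, 4, 0]
auc = auc_from_scores(events, nonevents)
print(f"AUC = {auc:.3f}")
```

On this scale 0.5 corresponds to chance and 1.0 to perfect separation, so the values of 53.8% to 58.1% reported above sit only slightly above chance.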

eMethods. Variable Definitions

eFigure. Flow Chart

eTable 1. Venous Thromboembolism Risk Assessment Models

eTable 2. Venous Thromboembolism Events in Low- and High-Risk Patients According to the Four Risk Assessment Models

eTable 3. Discrimination and Goodness of Fit of Each Risk Assessment Model to Predict Hospital-Acquired Venous Thromboembolism

eTable 4. Venous Thromboembolism Events in Patients Without Pharmacological Thromboprophylaxis According to the Four Risk Assessment Models

eTable 5. Predictive Accuracy of Risk Assessment Models for Hospital-Acquired Venous Thromboembolism in Patients Without Pharmacological Thromboprophylaxis

eTable 6. Venous Thromboembolism Events in Low- and High-Risk Patients According to the Four Risk Assessment Models, Stratified by Antiplatelet Treatment During Hospitalization

eTable 7. Predictive Accuracy of Risk Assessment Models for Hospital-Acquired Venous Thromboembolism, Stratified by Antiplatelet Treatment During Hospitalization

eTable 8. Sensitivity Analysis Investigating the Discriminative Performance of Risk Assessment Models With Different Outcome Scenarios Among Patients Lost to Follow-up

eTable 9. Demographics, Predictors and Outcomes of Participants in the RISE Study and the Derivation Cohorts of the Four Risk Assessment Models

eReferences.

Data Sharing Statement

- VTE Risk Assessment Models for Acutely Ill Medical Patients JAMA Network Open Invited Commentary May 10, 2024 Lara N. Roberts, MBBS, MD(Res); Roopen Arya, BMBCh(Oxon), MA, PhD


Häfliger E, Kopp B, Darbellay Farhoumand P, et al. Risk Assessment Models for Venous Thromboembolism in Medical Inpatients. JAMA Netw Open. 2024;7(5):e249980. doi:10.1001/jamanetworkopen.2024.9980


© 2024


Risk Assessment Models for Venous Thromboembolism in Medical Inpatients

- 1 Department of General Internal Medicine, Inselspital, Bern University Hospital, University of Bern, Bern, Switzerland

- 2 Division of General Internal Medicine, Department of Medicine, Geneva University Hospitals, Geneva, Switzerland

- 3 Division of Internal Medicine, Department of Medicine, Lausanne University Hospital, Lausanne, Switzerland

- 4 CTU Bern, University of Bern, Bern, Switzerland


Question: What is the prognostic performance of the simplified Geneva score and other validated risk assessment models (RAMs) in predicting venous thromboembolism (VTE) in medical inpatients?

Findings: In this cohort study providing a head-to-head comparison of validated RAMs among 1352 medical inpatients, the sensitivity of RAMs for predicting 90-day VTE ranged from 39.3% to 82.1%, and their specificity ranged from 34.3% to 70.4%. Discrimination was poor, with an area under the receiver operating characteristic curve of less than 60% for all RAMs.

Meaning: This study suggests that the accuracy and prognostic performance of the simplified Geneva score and other validated RAMs in predicting VTE are limited and that their clinical usefulness is therefore questionable.
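As a reminder of how the sensitivity and specificity quoted above are defined, here is a minimal Python sketch for a dichotomized risk score (high vs low risk) compared with the observed outcome. The function name `sensitivity_specificity` and the 2x2 counts are hypothetical illustrations, not figures from the study.

```python
def sensitivity_specificity(tp, fn, tn, fp):
    """Return (sensitivity, specificity) from 2x2 counts.

    tp: patients with the event who were classified as high risk
    fn: patients with the event who were classified as low risk
    tn: patients without the event who were classified as low risk
    fp: patients without the event who were classified as high risk
    """
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one patient with and one without the event")
    sensitivity = tp / (tp + fn)  # share of events flagged as high risk
    specificity = tn / (tn + fp)  # share of non-events flagged as low risk
    return sensitivity, specificity


# Hypothetical counts for illustration only (not study data).
sens, spec = sensitivity_specificity(tp=20, fn=5, tn=300, fp=700)
print(f"sensitivity = {sens:.1%}, specificity = {spec:.1%}")
```

A model can trade one quantity for the other by moving its high-risk cutoff, which is why the two are reported together.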

Importance: Thromboprophylaxis is recommended for medical inpatients at risk of venous thromboembolism (VTE). Risk assessment models (RAMs) have been developed to stratify VTE risk, but a prospective head-to-head comparison of validated RAMs is lacking.

Objectives: To prospectively validate an easy-to-use RAM, the simplified Geneva score, and compare its prognostic performance with previously validated RAMs.