We will keep fighting for all libraries - stand with us!

Internet Archive Audio

- This Just In

- Grateful Dead

- Old Time Radio

- 78 RPMs and Cylinder Recordings

- Audio Books & Poetry

- Computers, Technology and Science

- Music, Arts & Culture

- News & Public Affairs

- Spirituality & Religion

- Radio News Archive

- Flickr Commons

- Occupy Wall Street Flickr

- NASA Images

- Solar System Collection

- Ames Research Center

- All Software

- Old School Emulation

- MS-DOS Games

- Historical Software

- Classic PC Games

- Software Library

- Kodi Archive and Support File

- Vintage Software

- CD-ROM Software

- CD-ROM Software Library

- Software Sites

- Tucows Software Library

- Shareware CD-ROMs

- Software Capsules Compilation

- CD-ROM Images

- ZX Spectrum

- DOOM Level CD

- Smithsonian Libraries

- FEDLINK (US)

- Lincoln Collection

- American Libraries

- Canadian Libraries

- Universal Library

- Project Gutenberg

- Children's Library

- Biodiversity Heritage Library

- Books by Language

- Additional Collections

- Prelinger Archives

- Democracy Now!

- Occupy Wall Street

- TV NSA Clip Library

- Animation & Cartoons

- Arts & Music

- Computers & Technology

- Cultural & Academic Films

- Ephemeral Films

- Sports Videos

- Videogame Videos

- Youth Media

Search the history of over 866 billion web pages on the Internet.

Mobile Apps

- Wayback Machine (iOS)

- Wayback Machine (Android)

Browser Extensions

Archive-it subscription.

- Explore the Collections

- Build Collections

Save Page Now

Capture a web page as it appears now for use as a trusted citation in the future.

Please enter a valid web address

- Donate Donate icon An illustration of a heart shape

The art of problem solving 7th edition

Bookreader item preview, share or embed this item, flag this item for.

- Graphic Violence

- Explicit Sexual Content

- Hate Speech

- Misinformation/Disinformation

- Marketing/Phishing/Advertising

- Misleading/Inaccurate/Missing Metadata

plus-circle Add Review comment Reviews

200 Previews

10 Favorites

DOWNLOAD OPTIONS

No suitable files to display here.

PDF access not available for this item.

IN COLLECTIONS

Uploaded by station02.cebu on September 12, 2023

About the Art of Problem Solving Initiative

Founded in 2004, the Art of Problem Solving Initiative, Inc. was created by people who love math and love teaching to help students access the study of advanced mathematics.

The Initiative began by running the USA Mathematical Talent Search , a nationwide math contest sponsored by the National Security Agency which continues to run to this day. In each round, this contest gives students a full month to work on five challenging proof-based problems. Students then get individual feedback on their work, including comments on their mathematical reasoning and on their proof-writing skills. By taking away the usual time pressures seen in many math contests, students have the opportunity to go more deeply and to explore.

For many years, the Initiative also ran the Local Programs Initiative which provided fiscal sponsorship to math circles and clubs across the country.

Now, the main project of the Initiative is Bridge to Enter Advanced Mathematics (BEAM) , a project to help underserved students find a realistic pathway towards becoming scientists, mathematicians, engineers, and programmers. During the summer after 7th grade, students are invited to participate in a free three-week residential program on a college campus where they gain the academic and social/emotional preparation to succeed in future programs for advanced study. They then receive academic advising throughout 8th grade and high school to help them and their families find the best opportunities for their educations.

The Art of Problem Solving Initiative receives support from Art of Problem Solving (AoPS) , which develops resources for high-performing middle and high school students including the largest online community of avid math students in the English-speaking world. The AoPS online school has trained many winners of major national mathematics competitions, including several gold medalists at the International Math Olympiad, Davidson Fellows, and winners of the Intel and Siemens Talent Search competitions.

The Art of Problem Solving Initiative is a 501(c)(3) charitable organization. It continues to be led by expert mathematicians and educators to this day. Please consider joining our contributors by donating to support advanced math education.

Financial Information

The Art of Problem Solving Initiative, Inc. makes its financial statements available for public inspection in the interest of greater transparency. Please see below to learn more about the organization.

- Tax return (Form 990)

- Audited financial statements

- Tax return (Form 990) (Note that due to a change in fiscal year these cover a shortened period.)

An improved fruit fly optimization algorithm with Q-learning for solving distributed permutation flow shop scheduling problems

- Original Article

- Open access

- Published: 25 May 2024

Cite this article

You have full access to this open access article

- Cai Zhao 1 ,

- Lianghong Wu ORCID: orcid.org/0009-0000-9773-2280 2 ,

- Cili Zuo 2 &

- Hongqiang Zhang 2

The distributed permutation flow shop scheduling problem (DPFSP) is one of the hottest issues in the context of economic globalization. In this paper, a Q-learning enhanced fruit fly optimization algorithm (QFOA) is proposed to solve the DPFSP with the goal of minimizing the makespan. First, a hybrid strategy is used to cooperatively initialize the position of the fruit fly in the solution space and the boundary properties are used to improve the operation efficiency of QFOA. Second, the neighborhood structure based on problem knowledge is designed in the smell stage to generate neighborhood solutions, and the Q-learning method is conducive to the selection of high-quality neighborhood structures. Moreover, a local search algorithm based on key factories is designed to improve the solution accuracy by processing sequences of subjobs from key factories. Finally, the proposed QFOA is compared with the state-of-the-art algorithms for solving 720 well-known large-scale benchmark instances. The experimental results demonstrate the most outstanding performance of QFOA.

Avoid common mistakes on your manuscript.

Introduction

Due to the rapid development of the global economy and the increasing market demand, the traditional single-factory production model has been challenged and can no longer cope with the current changes. Distributed manufacturing has solved the above problems well, and it has now become a major trend [ 46 , 51 ]. Its advantages lie in strengthening the connections between various enterprises, cooperating with multiple factories, allocating resources well, greatly reducing production time [ 6 , 30 ]. In this way, it can better cope with the current situation, so distributed manufacturing is becoming more and more common and gradually occupying a dominant position, while the traditional single-factory production model is gradually withdrawing from the historical stage. In this context, research on distributed scheduling is particularly necessary as it directly affects the future development of the entire enterprise. In recent years, research on distributed scheduling has become more and more diversified, such as distributed hybrid flow shop scheduling [ 25 ], distributed blocking flow shop scheduling [ 53 ], distributed no-wait flow shop scheduling [ 31 ], distributed permutation flow shop scheduling [ 1 ], etc. At the same time, the goals are also becoming more complex, from single goals to multiple goals. More complex than a single factory, multi-factory production processing must consider at least two sub-problems: factory allocation and job scheduling order. Therefore, this brings great challenges to traditional algorithm design. Permutation constraints are one of the most important constraints in flow shop scheduling. Because only the first machine is sorted for jobs and no consideration is given to subsequent machines, it is suitable for sustainable large-scale production and has received widespread attention. The single-factory flow shop problem has been proven to be NP-hard [ 2 , 42 , 55 , 56 ]. As an extension of this problem, the distributed permutation flow shop scheduling problem (DPFSP) is inherently more complex and more in line with the current environment. Therefore, research on DPFSP problems has strong engineering value [ 19 , 36 ].

The fruit fly optimisation algorithm (FOA) is a highly competitive algorithm proposed by Pan [ 23 ]. Wang et al. [ 41 ] compared FOA with over ten heuristic algorithms on benchmark problems. Their findings validated that FOA exhibits strong optimization capabilities, requires fewer parameters, features simple operations and is easy to implement. Currently, FOA has found successful applications in various domains, such as power load forecasting, parameter identification and scheduling field. Ibrahim et al. [ 13 ] designed a wind-driven variant of FOA for parameter identification in photovoltaic cell models. Saminathan et al. [ 29 ] integrated the whale optimization algorithm with FOA to address energy-saving problems with delays. Zhang et al. [ 50 ] designed a problem-specific initialization method, four neighborhood structures and a local search structure in the sniffing phase, and proposed a discrete FOA algorithm for the scheduling problem of distributed manufacturing systems. The experimental results showed the benefit of the multi-neighborhood strategy for expanding the global search space. Huang et al. [ 12 ] proposed a FOA algorithm based on the elimination mechanism to maintain population diversity by eliminating and generating some drosophila with poor performance, and used it for the traveler problem. Shao et al. [ 32 ] designed a new FOA algorithm to solve blocked flow shop problems. Zheng et al. [ 57 ] proposed an enhance FOA to solve unrelated parallel machine scheduling problems.

With the rapid rise of artificial intelligence, which improves great convenience for all walks of life. The Q-learning, as the most typical algorithm of reinforcement learning (RL), originates from dynamic programming. It makes the best decision at every step to make the whole process optimal. Due to its simple operation and high efficiency, it is increasingly favored by researchers [ 3 ]. Below are some related literature on the use of Q-learning in various fields. Chen et al. [ 4 ] applied the Q-leaning algorithm to the parameter tuning of swarm intelligence optimization algorithms to better solve the assembly workshop problem. Similarly, Wang et al. [ 38 ] also studied and designed a dual Q-learning for the assembly flowshop problem to improve its adaptability to the environment. Hsieh and Su [ 11 ] designed a complementary solution to the economic dispatch problem with the goal of reducing energy consumption by combining the Q-learning with intelligent optimization algorithms. Zhang et al. [ 49 ] designed a new Q-learning model and proposed a method to solve scheduling problems in power systems. Shen et al. [ 33 ] also solved this type of problem well by designing a meme algorithm based on Q-learning for dynamic software project scheduling. From the above literature, it is learnt that Q-learning generally uses the greedy strategy e -greedy for the problem solving process, which selects the corresponding action with the largest reward value in each selection, but it does not consider from the global perspective, and it sets the Q-value as an equal value or a random value during initialisation, i.e., it learns in the environment without a priori knowledge, which leads to uncertainty in the learning effect.

Q-learning belongs to unsupervised learning, which selects the optimal action from the action set according to the current state and information feedback in the environment. Therefore, Q-learning is embedded into FOA to jointly solve the actual manufacturing decision-making problem, and the neighborhood structure is designed according to the properties of DPFSP. The Q-learning dynamically selects the most suitable neighborhood structure during the population evolution process based on the current state and historical information feedback. Furthermore, the “Sigmoid” function is introduced as a dynamic selection strategy instead of the greedy strategy of Q-learning, which is used to improve the evolutionary ability of the population.

In view of the engineering value and practicality of DPFSP, this paper attempts to address the DPFSP with the objective of minimizing makespan, a QFOA is proposed by combining the advantages of FOA and Q-learning and the properties of DPFSP.

Firstly, a hybrid strategy is used to cooperatively initialize the fruit fly population, and the boundary properties are used to improve the operational efficiency of QFOA [ 10 ].

Multiple neighbourhood structures are designed based on the problem attributes in the smell phase, and the mechanism of Q-learning is assembled to select the most appropriate neighbourhood structure. Notably, the "Sigmoid" function is introduced as a dynamic selection strategy, replacing the greedy strategy of Q-learning to further improve the algorithm’s ability to find the optimal.

A simulated annealing acceptance criterion is embedded in the visual phase to avoid premature convergence.

The remaining structure of the paper is as follows. In Sect. “ Literature review ” reviews the solution methods for DPFSP. The mathematical model of the DPFSP is described in Sect. “ Problem description ”. Section “ QFOA for DPFSP with minimizing makespan ” gives the details of QFOA solving for DPFSPs. Section “ Experimental analysis ” introduces parameter calibration and performance evaluation for QFOA. Section “ Conclusion ” concludes and looks forward to further research.

Literature review

Distributed manufacturing systems are widely used as society grows fast and cooperation between companies is enhanced. DPFSP has more engineering and academic value as a generalisation of shop flow scheduling. In the decade or so since the concept of DPFSP was proposed, it has received much attention, with a variety of solution methods and goals changing from single to multiple. Below we review and summarize the relevant literature.

Related scheduling problems and solutions

In the decade or so since the concept of DPFSP was proposed [ 20 ], it has received much attention, with various solution methods and goals changing from single to multiple. For the makespan (completion time) criterion, Li et al. [ 17 ] used the distributed iterative greedy method to guide the iterative search of three bee colonies, so as to design an efficient artificial bee colony algorithm to solve DPFSP. Zhao et al. [ 54 ] designed a memory discrete differential evolution algorithm, guided by an enhanced NEH (Nawaz-Enscore-Ham) algorithm and a knowledge-based crossover strategy to guide population evolution. Ren et al. [ 26 ] used the Q-learning as the theme framework while combining other strategies to solve this problem. Yang et al. [ 44 ] added optimization for proposed an enhanced distribution estimation algorithm, using a four-dimensional matrix based on sequence relationships to learn and accumulate the position information of high-quality solutions, thereby guiding global search. Fernandez-Viagas et al. [ 7 ] conducted in depth research on the DPFSP problem and proposed two properties of lower and upper bounds for the makespan, based on which they designed a bounded-search iterated greedy algorithm. Ruiz et al. [ 27 ] designed an iterative greedy algorithm to solve DPFSP.

For the optimization objective of minimizing total flow time, Fernandez-Viagas et al. [ 8 ] proposed an evolutionary algorithm and 18 constructive heuristic methods based on analysis of problem attributes, assignment rules, and acceleration processes. Pan et al. [ 22 ] designed four meta-heuristic algorithms to optimize DPFSP’s total flow time based on several high-performance algorithms as the main framework. For multi-objective DPFSP, Jing et al. [ 15 ] designed an iterated greedy algorithm to solve for total weighted earliness and tardiness as objectives. Fu et al. [ 9 ] used a brainstorming algorithm to solve DPFSP with the goal of total delay time and total energy consumption. Wang et al. [ 37 ] performed energy-saving processing on DPFSP and proposed a collaborative algorithm to minimize multiple objectives. Qian et al. [ 24 ] used the cross entropy method to learn from the better scheme and generate solutions, and proposed a super heuristic cross entropy algorithm to solve the DPFSP with the goal of completion time and total energy consumption.

In recent years, as the complexity of the DPFSP problem has increased, constraints have become more and more numerous, such as no-waiting, no-idle, batch flow and blocking. Many meta-heuristic methods have been proposed to solve DPFSP with various constraints. A hyper-heuristic-based memetic algorithm was developed to minimize the makespan of the assembly DPFSP by Song et al. [ 34 ]. To solve the problem of scheduling idle flow shops with multiple objectives, Zhao et al. [ 52 ] developed a discrete Jaya algorithm. Dong et al. [ 5 ] used iterative greedy algorithm to solve the assembly DPFSP. Zhu et al. [ 58 ] designed a new FOA algorithm to solve no waiting DPFSP and proposed a new initialization method to construct the initial solutions. The blocking DPFSP was solved using differential evolution by Zhang et al. [ 48 ]. An algorithm called iterative cocktail greedy was explored by Lin et al. [ 18 ] for the no-wait DPFSP. Komaki et al. [ 16 ] also studied no-wait DPFSP and designed a meta-heuristic algorithm with variable neighborhood search. Wang et al. [ 39 ] performed energy-saving processing on DPFSP and proposed a collaborative algorithm to minimize multiple objectives.

In summary, a brief review of the problems related to DPFSP and the solution methods has been made. Most of the solution methods for DPFSP use meta-heuristic algorithms, which are effective, but it is difficult to find a satisfactory solution quickly in a given short time, especially for complex large-scale problems, and the disadvantage is more obvious. Given the engineering and academic value of DPFSP, it is also of extraordinary significance to develop effective algorithms.

Research gap

DPFSP as an extension of PFSP is more in line with the current manufacturing environment than it is. Meanwhile, DPFSP is one of the most common production modes used by companies in the manufacturing process, which has been widely used in welding, medical and automotive fields. Therefore, studying DPFSP has significant engineering value.

Through the review of the related problems of DPFSP and the solution methods in Sect. “ Related scheduling problems and solutions , it can be found that up to now, various methods have been used to solve DPFSP, and many aspects are mature. However, the schemes given are generally acceptable for small and medium-sized instances, but not ideal for large-scale instances. Not only is it time-consuming and inefficient, but the results obtained are often significantly different from the currently obtained optimal solutions. According to the literature [ 44 ], it takes about 70 days to solve a large-scale instance of 500*20, which is generally not accepted. However, the possible reason is that when designing the solution method, the properties of the problem are not fully considered, so the effect is not very obvious when solving large-scale instances. Therefore, the purpose of this paper is to combine the characteristics of the problem to develop an effective algorithm so that in solving large-scale problems, a satisfactory solution can be quickly found in a short time.

In recent years, Q-learning is getting more and more attention from scholars due to its simplicity and high efficiency, and has been successfully applied in many fields, especially in solving complex large-scale problems. And there are related literatures using Q-learning combined with meta-heuristics to solve distributed flow shop problems, but it is only used to adjust the algorithm parameters, and for other aspects of the research is relatively small at present. Meanwhile, this gives us ideas to combine the advantages of meta-heuristic algorithms with Q-learning and consider the properties of the problem to design effective operators to solve the DPFSP.

To sum up, the above analyses provide a preliminary basis for the combination of Q-learning and meta-heuristic algorithms. To this end, an enhanced FOA algorithm (QFOA) based on the Q-learning mechanism is proposed to solve the DPFSP with the objective of the makespan. According to the properties of the problem, four neighbourhood perturbation operators are designed. The mechanism of Q-learning is assembled to select the most appropriate neighbourhood structure. Notably, the “Sigmoid” function is introduced as a dynamic selection strategy, replacing the greedy strategy of Q-learning to further improve the algorithm’s ability to find the optimal.

Problem description

According to the description of literature [ 54 ], the DPFSP can be described as: n jobs \( \{j_1,j_2,\ldots ,j_n \}\) needs to be in f factories processing \( F=\{F_1,F_2,\ldots ,F_f \}\) . All factories have exactly the same assembly line, which contains m processing machines \( M=\{M_1,M_2, \ldots ,M_m \}\) , and in each factory, represents the processing time of job j on machine i , and in different factories, after the job sequence is arranged on the first machine, the subsequent processing sequence is the same, that is, it presents the characteristics of replacement pipeline. There is no difference in job processing time among different factories. It is worth noting that a job can only be processed by one factory, and the processing process cannot be interrupted. Below are the meanings represented by each symbol in this article.

The classical DPFSP minimizes the makespan as the scheduling objective and is usually represented as \(DP|prmu|C_{max}\) where distributed factories are represented as DP; Permutation features are represented as prmu; the makespan is expressed as \(C_{max}\) .

Symbol meaning :

i : denotes the index of the machine, where \(i=1,2,\ldots ,m\) .

j : denotes the index of the job, where \(j=1,2,\ldots ,n\) .

F : denotes the number of factories.

f : denotes the index of the factory, where \(f=1,2,\ldots ,l\) .

n : denotes the number of jobs.

m : denotes the number of machines.

\(C_{max}\) : Indicates the completion time of all assignments.

\(P_{j,i}\) :denotes the processing time of job j in machine i .

\(I_{i,k,f}\) :denotes the idle time.

\(W_{i,k,f}\) :denotes the completion time of the k th job in factory f on machine i .

Psize :Population size.

\(\alpha \) :learning rate.

\(\gamma \) :discount factor.

\(T_P\) :annealing factor.

Decision variable

\(X_{i,k,f}\) :the decision variable with a value of 1 if job j is assigned to the kth position in factory f and 0 otherwise.

Objective :

Constraint :

where Eq. ( 1 ) indicates the minimize makespan. The constraint set is Eqs. ( 2 )–( 9 ), Eqs. ( 2 ) and ( 3 ) for all jobs can only occur in this way and each job must be processed in one factory. Equation ( 4 ) represents the idle time constraint. Equation ( 5 ) denotes the completion time for each factory. Equation ( 6 ) denotes the completion time of the entire problem, and Eqs. ( 7 ) and ( 8 ) indicate that the machine idle time after the completion of the current job and before the next processed job is greater than or equal to 0. Equation ( 9 ) is the range of values for the binary take variables.

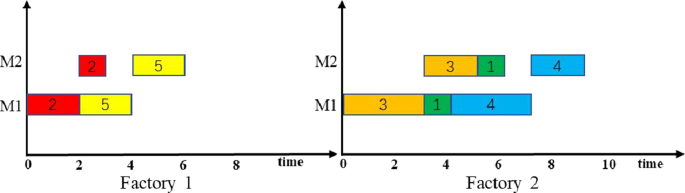

In order to understand this scheduling process more clearly, an example of 5 jobs, 2 machines, and 2 factories is given below. Table 1 shows the processing time of each job. Figure 1 shows the gantt chart of the example.

Example Gantt chart

As can be seen from Fig. 1 , Factory 1: The makespan \(C_{max} \) of the job sequence 2, 5 is 6. Factory 2: The makespan \(C_{max}\) of the job sequence 3, 1, 4 is 9. So, the makespan in the example is 9.

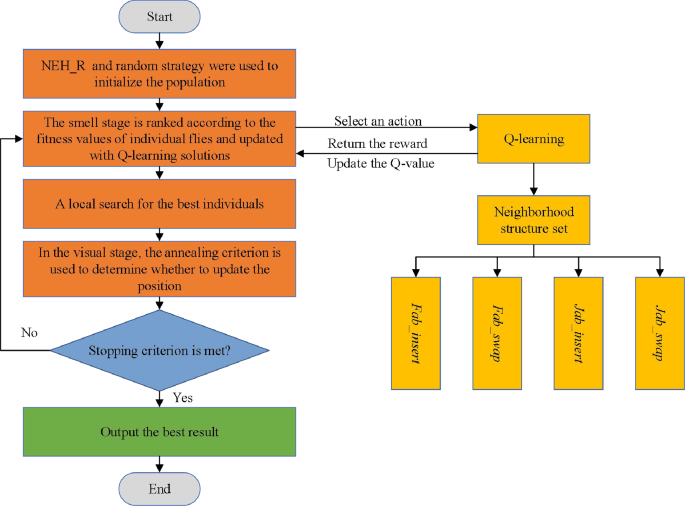

QFOA for DPFSP with minimizing makespan

Due to the excellent performance of FOA, it has been combined with other methods by researchers to solve discrete problems. We combine FOA and Q-learning together to propose a new hybrid algorithm, QFOA, which aims to minimize makespan by selecting high-quality neighborhood structures during the iterative process. It is worth noting that this paper makes corresponding adjustments to Q-learning algorithm and introduces "Sigmoid" function as a dynamic selection strategy instead of greedy strategy to improve the probability of the algorithm jumping out of the local optimal. The detailed details of the QFOA include the population initialization, the design of the smell and visual phases, and the local search composition.The detailed QFOA process is shown in Fig. 2 .

QFOA frame diagram

Representation of solutions

For this study, we adopted a representation method based on job sequences to represent a complete solution in the form of two-dimensional vectors. Thus, a solution can be represented as \({\pi = \{ {\pi _1},{\pi _2},...,{\pi _f}\} }\) , \({\pi _l} = \{ {\pi _{l,1}},{\pi _{l,2}},...,{\pi _{l.{n_l}}}\} \) , \(l = 1,2,...,f\) . where \(n_l\) indicates the number of jobs assigned to factory \(F_l\) . Based on the example in Fig. 1 above, the solution is represented as, \(\pi = \{ {\pi _1},{\pi _2}\}\) , \({\pi _1} = \{ 2,5\}\) , \({\pi _2} = \{3,1,4\}\) , \(n_1\) = 2, \(n_2\) = 3.

Initialization

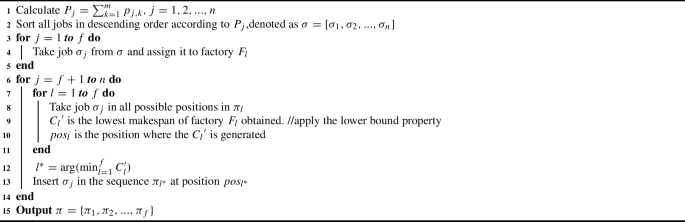

Excellent initial population helps to improve the rate of convergence and accuracy of the algorithm. NEH [ 21 ] can quickly obtain high-quality solutions. When it was first proposed, it was used to solve the PFSP. The NEH mechanism is described as follows: first, a sequence of jobs is sorted in descending order to obtain a seed sequence, second, the two longest jobs are found, and finally, the remaining jobs are inserted one by one into the two jobs taken out to obtain the minimum makespan. Here the NEH algorithm is extended to multiple factories for initialisation of the population. Inspired by literature [ 32 ], this paper designs a new NEH heuristic method (NEH_R), moreover, considering population diversity, the NEH_R method is used to initialize 10% of the individuals, and the remaining is generated randomly. The detailed steps of NEH_R are as follows.

The jobs are sorted in non-increasing order according to the total processing time to generate the seed sequence \(\sigma = [{\sigma _1},{\sigma _2},...,{\sigma _n}]\) , where \({\sigma _j} \in j,j = 1,2,....n\) . Then, we will evenly distribute the first f jobs ( \({\sigma _1},{\sigma _2},...,{\sigma _f}\) ) to f factories. Next, test all possible positions of all factories one by one for the remaining jobs \({\sigma _{f + 1}},{\sigma _{f + 2}},...,{\sigma _n}\) , and finally insert the job into the best position.

It is worth noting that the boundary property in the literature [ 10 ] is used in the process of insertion to save time and improve efficiency, and NEH_R pseudo-code Algorithm 1. To illustrate the NEH_R process more clearly, an example of a 5 jobs, 2 machines, and 2 factories is given for illustration, and Fig. 3 shows the exact scheduling process.

NEH_R Process

Example of initializing population process NEH_R

Smell phase

During the smell search phase, individual fruit fly within the population are updated mainly by neighborhood search. Fruit flies search for food sources, and once the best food source is found, all fruit flies will concentrate and fly here. Therefore, we can find that the generation method of neighborhood solutions is crucial for the algorithm. According to existing references, there is currently no neighborhood structure that is suitable for solving all problems. Considering the problem structure, we propose four types of neighborhood operators to generate neighborhood solutions. And combined with the mechanism of Q-learning, the most suitable operator is selected for each individual with different adaptations to generate better individuals.

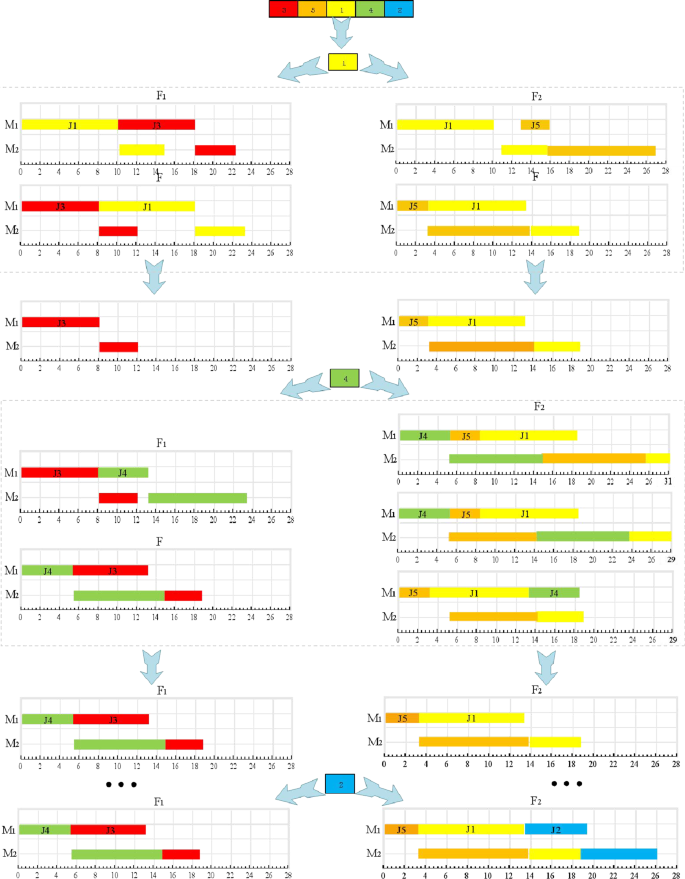

Neighborhood structure

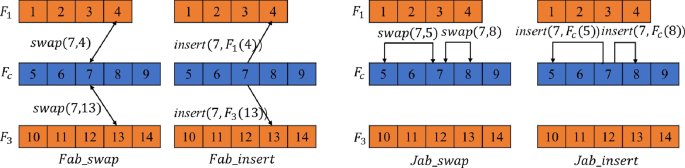

Given the specificity of minimizing \(C_{max}\) in a distributed environment, it is assumed in the design of the neighborhood that the solution’s are not optimized if the schedule of the largest factory (denoted as key factory \(F_c\) ) is not changed. Therefore for the design of the neighborhood structure are operated based on the key factory. In QFOA, four neighborhood operators are introduced to generate neighborhood solutions to improve the algorithm’s ability to search for solutions. One is based on factory assignment, including \({N_1}\) : insertion of key factories with other factories \(Fab\_insert\) ); \({N_2}\) : swap of key factories with other factories ( \(Fab\_swap\) ); the second is based on jobs sequence assignment, including \({N_3}\) : insertion within key factories ( \(Jab\_insert\) ); \({N_4}\) : swap within key factories ( \(Jab\_swap\) ). Figure 4 shows a detailed example to illustrate the process.

Four types of neighborhood structures

\(Fab\_swap\) : Randomly select a job n from the key factory \({F_c}\) , and another job \(n'\) from other factories. Swap jobs n and \(n'\) to generate \(f-1\) neighborhood solutions and select the best one.

\(Fab\_insert\) : A job n is randomly selected from the key factory \({F_c}\) , and another job \(n'\) is randomly selected from other factories. The job n is inserted before the location of \(n'\) to generate \(f-1\) neighborhood solutions and select the best one.

\(Jab\_insert\) : Randomly select l jobs from the key factory without duplication, and insert one of them before the position of \(l-1\) jobs. This generates \(l-1\) neighborhood solutions and selects the best one.

\(Jab\_swap\) :Randomly select l jobs from the key factory without duplication, and swap one of them with \(l-1\) jobs. This generates \(l-1\) neighborhood solutions and selects the best one.

Combining with Q-learning

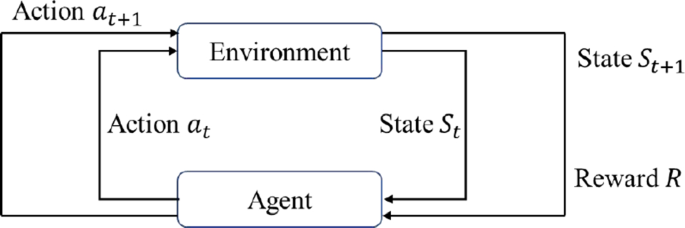

Reinforcement learning (RL) is a decision-making method that involves agents interacting with environments through states, actions, and rewards to achieve optimal outcomes [ 3 ]. The basic idea is that the agent obtains the current state \(S_t\) and selects an appropriate action at from the action set at time t to execute. The environment then provides a reward R (positive or negative) for executing a certain action. Subsequently, the agent performs a new action \(a_{t+1}\) based on the new state \(S_{t+1}\) and the reward R fed back by the environment according to a certain strategy. A detailed diagram of this principle is shown in Fig. 5 .

Model of RL

Q-Learning is a value-based algorithm in RL that uses a Q-value to represent the expected gain of taking an action in a given state [ 14 , 43 ]. The Q-value, denoted as \(Q(S_t,a_t)\) , is based on the agent’s action \({a_t}({a_t} \in A)\) at state \({S_t}({S_t} \in S)\) and the reward R provided by the environment. The algorithm constructs a Q-table to store the Q-value for each State and Action pair and selects the action with the maximum gain based on the Q-value. The Q-value is updated using equation ( 10 ).

where \(a_t\) , \(S_t\) , \(a_{t+1}\) and \(S_{t+1}\) respectively refer to the actions and states at time t and \(t+1\) ; \(\alpha \) are learning rate and \(\gamma \) discount factor respectively.

Q-learning algorithms for problem solving generally make use of the greedy strategy e -greedy for selection, which selects the reward in each choice The greedy strategy e -greedy is used in the problem solving process of Q-learning algorithms. Since the Q-value is set to be equal or random in the initialization process, the learning process is carried out in an environment with no prior knowledge. At the same time, the learning rate is not dynamically adjusted after initialization, so the decision space of the learning process is large, the learning speed is slow, the learning effect is uncertain, and the action with the largest Q-value is selected every time, which may lead to the failure of finding the optimal solution. In this paper, we introduce the “Sigmoid” function as a dynamic selection strategy, so that the improved Q-learning algorithm selects the actions randomly in the early stage, and then dynamically selects the optimal actions in the later stage. This improves the phenomenon that the greedy strategy may fall into the local optimum in the early stage and may still select the second best action in the later stage of the learning process.

This paper introduces “Sigmoid” as an action selection strategy, and its expression is as follows:

According to Eq. ( 11 ), when the Q-value of the initial state is 0, in the early stage of selection, each action has the same selection probability and is more random. When the learning reaches a certain degree, the reward value of each action begins to stand out, and the probability P increases with the new Q-value. As the system changes, it will gradually tilt towards the action of selecting the high reward value.

In this paper, three kinds of selection strategies are discussed, which are “completely random” strategy, “semi-random” strategy and greedy strategy.

Introduce the parameter \(\psi \) ,where \(\psi \) is a random number between [0, 1], the choice of parameter \(\psi \) will be corrected below, there are the following choices.

When \(\psi \) =0, when \(P > \psi \) , the greedy strategy is implemented.

When \(\psi \) =1, when \(P < \psi \) , a “completely random” strategy is implemented, i.e., the actions are chosen randomly throughout the selection process.

when \(\psi \in (0,1)\) , then the implementation of “semi-random” strategy, that is, the introduction of a “Sigmoid” function as a dynamic selection strategy, when \(P \ge \psi \) , the algorithm performs a greedy strategy to select the action with the largest value of the current reward; when \(P < \psi \) , the algorithm adopts a “completely random” strategy to select actions randomly.

In the initialization phase of the QFOA algorithm, the Q-value is set to 0, and the “Sigmoid” function is used as a dynamic selection strategy, so that the improved algorithm selects actions randomly in the early stage, and then dynamically changes between randomly selecting actions and selecting the action with the highest reward value in the later stage, in order to improve the ability of population evolution. In this paper, four kinds of neighbourhood perturbation operators are used as the action set, and the completion time of the job is the state set, and the Q-learning algorithm selects the appropriate action according to the current state, and then provides feedback on the result of the execution, and updates the Q-table according to the current searching state, in order to select the next perturbation operator in a reasonable way. The specific design mainly consists of four parts: state set, action set, reward function and selection strategy.

(1) State set

In QFOA, the definition of the state space is the key to the selection of actions. At different times, the set of states is different, which has a great influence on the selection of subsequent actions. the selection of the subsequent action has a great influence. In this paper, the state space S = \(\{ 0,1\} \) is represented in binary according to whether the quality of the population is improved after executing the action, and the state is s = 1 if the quality of the solution is improved, and s = 0 otherwise.

(2) Action set

In scheduling, Agent will choose the action according to the current state. In QFOA,four kinds of neighbourhood perturbation operators \(({N_1-N_4})\) are defined in as action sets.

(3) Reward function

After taking action, individual fruit fly can receive a reward R . In QFOA, the reward R is calculated based on the makespan, i.e.

where \(f_{new}\) and \(f_{old}\) represent the old and new individual fitness values.

(4) Selection strategy

A dynamic selection strategy for improving the Q-learning algorithm is proposed, and the introduction of the “Sigmoid” function as a probability function for selection is introduced in the context of the objectives of this paper.

(5) Update the Q-value in the Q-table according to Equation ( 10 ). At the completion of each iteration, update so individuals and states. Table 2 shows the Q-table.

Visual stage

In the traditional FOA algorithm, the fruit fly population flies to a new position if it is better than the original central position; otherwise, it stays at the current central position. However, if the central position is not updated for a long time, the search process will stagnate. In fact, those abandoned locations already have some search information close to the food source, and further search around them may yield better results. Therefore, we adopt a new location update strategy in QFOA. Specifically, if the new location is better than the original central location of the current fruit fly population, all individual fruit flies fly towards that location. Otherwise, an acceptance criterion of a class of simulated annealing [ 28 ] is used to decide whether to accept the new position as the central position. First, generate a random number \(rand \in [0,1]\) that follows a uniform distribution, and if \(rand \in [0,1]\) is smaller than the acceptance probability \(\eta \) , the position is accepted as the central position. The acceptance probability \(\eta \) is calculated as follows.

where \({C'}_{\max }\) is the current maximum completion time of the fruit fly and Temp is the temperature coefficient, calculated as follows.

where \(T_p\) is the temperature constant, the value of reference [ 32 ], \(T_p\) is 0.6.

It can be seen from Eq. ( 13 ) that \(\eta \) is close to 1 when \({C'}_{\max }\) is close to \({C}_{best}\) , and the opposite is close to 0 and is discarded. The above reception strategy can maintain the new location in the current search area, while also avoiding the algorithm from falling into local optima by accepting some poor new locations.

Local search

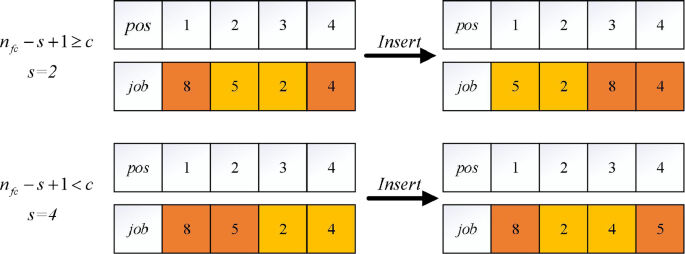

For DPFSP, the most commonly used local search operations are insertion and exchange [ 1 , 58 ], and these two methods are often accomplished by moving a job. However, for our problem, it has been experimentally proven that the solutions generated by local search methods that move a single job often do not show significant improvement in the later stages of the algorithm. The reason for this result is that moving a job often destroys the constructed job blocks, which carry more sequence features than a single job, and Zhang et al. [ 48 ] have proven that making full use of information can significantly enhance the caliber of the solutions.

Therefore, we design a local search algorithm based on job blocks (LsBlock). Its operating object is the key factory. We remove the job block \(\delta \) consisting of c ( \(c \in [1,3]\) ) consecutive jobs from the critical factory ( \(F_c\) ) and then reinsert the job blockinto the optimal position. Figure 6 provides an example for detailed explanation.To provide a solution \({\pi = \{ {\pi _1},{\pi _2},...,{\pi _f}\} }\) , we first remove a job block \(\delta \) with a length of c starting from s from the key factory. In Fig. 6 , \(n_{fc}\) represents the number of jobs in the factory \(F_c\) , here \(n_{fc}\) = 4, the starting job sequence is \(\{8,5,2,4\}\) , the deleted job block is \(\delta \) = \(\{ 5,2\}\) , the remaining job sequence is \(\{8,4\}\) , after testing, inserting the job block \(\delta \) = \(\{5,2\}\) before the job sequence \(\{8,4\}\) has the best effect, thus forming a new job sequence \(\{5,2,8,4\}\) . By deleting job blocks, the sequence information of job blocks can be fully utilized, making it more suitable for solving DPFSP. LsBlock pseudo code is shown in algorithm 2 .

Process of forming and inserting a job block with c = 2

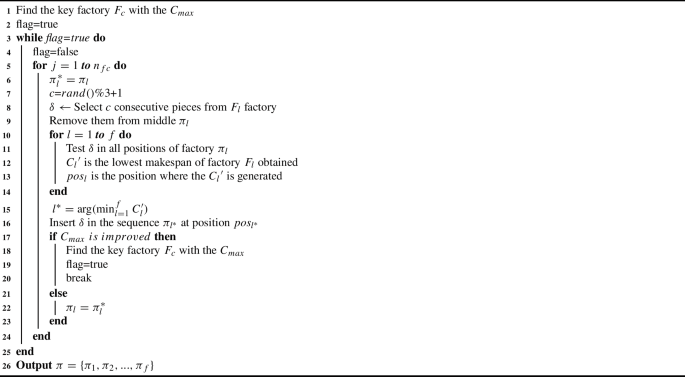

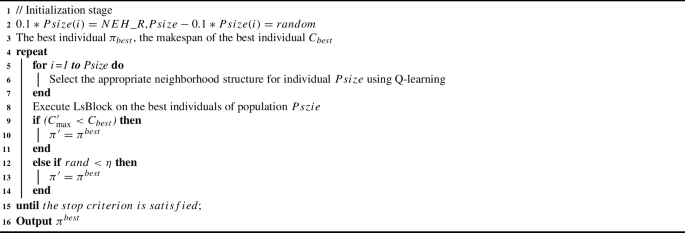

The detailed details of the QFOA algorithm include the population initialization, the design of the smell and visual phases, and the local search composition. the pseudo-code of the QFOA algorithm is shown in Algorithm 3.

QFOA( Psize , \(\alpha \) , \(\gamma \) )

Experimental analysis

The experiment runs on Windows 10 64-bit OS, Intel Core i7-4790 CPU at 3.6 GHz, 8.0 GB RAM, and MATLAB R2019b.

To verify the effectiveness of QFOA, 720 large-scale test instances against DPFSP proposed by Naderi and Ruiz [ 20 ] are used in this paper to test the effectiveness of the algorithm. These 720 arithmetic instances are composed of test cases with 120 PFSPs proposed by Taillard [ 35 ] and 6 distributed scenarios ( \(f \in \{2,3,4,5,6,7\} \) ). Each scenario considers 120 test instances from Taillard. Each scale contains 10 different use instances, so that each scenario consists of 12 groups of use instances of different scales ( \(n \times m:\{ 20,50,100\} \times \{ 5,10,20\},\{ 200\} \times \{ 10,20\},\{ 500\} \times \{ 20\} \) ).

In order to better validate the performance of QFOA, the QFOA algorithm was analysed in terms of stability, accuracy, convergence, variability, and composition, and so I haverelative percentage deviation (RPD) [ 22 , 54 ] has been taken as an evaluation metric in order to describe the problem more accurately and its equation is shown below:

where \(C_i\) denotes the \(C_{max}\) obtained by a particular algorithm at the ith time, and denotes \(C_{best}\) the optimal solution of the algorithm instance.

We set the termination criterion for all algorithms to the maximum CPU runtime, i.e.: \(T = C \times m \times n\times f(ms)\) and C is set to 5,15,30 levels. Each algorithm is run independently 10 times.

Parameter analysis

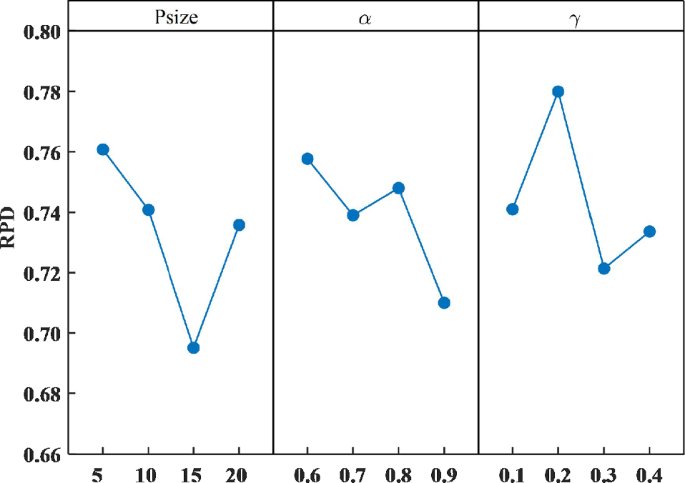

The parameters of QFOA algorithm are \( \alpha \) learning rate, \(\gamma \) discount factor and Psize population size. The parameter settings are as follows: There are a total of \(4 \times 4 \times 4 = 64\) different combinations of configurations of these three parameters. This is because the calibration algorithm using the same instances can lead to overfitting results. To calibrate the parameters of QFOA, this chapter regenerates a new set of test instances using the same generation method as the Tarllard instance [ 35 ], which contains 20 instances of different sizes, i.e. \(\{ 40,80,120,60,200\} \times \{ 5 \times 10 \times 15 \times 20\} \) . The machining time of the job on each machine is randomly generated in the interval [1, 99] according to a uniform distribution. In this chapter, three different distributed machining scenarios are considered in the parameter calibration phase, i.e.: \(f \in \{2,4,6\} \) . Therefore, we use 60 test instances for the parameter configuration of QFOA. Each configuration is executed 5 times on each instance. The termination criterion for all algorithms is the maximum CPU runtime, i.e., \(T = C \times m \times n\times f(ms)\) , where C is 10. RPD is the response value, and ANOVA is used to analyze the experimental results. Table 3 shows the ANOVA results, and Fig. 7 shows the main effect plots of all parameters.

Parameter main effect plot

Table 3 shows that all three parameters have an impact on QFOA’s performance, as their P-values are less than 0.05 confidence level. The largest F-ratio indicates the greatest impact. More specifically, from Table 3 , the F-ratio obtained from the 3 parameters is shown in descending order as \( \alpha \) , Psize and \( \gamma \) . In other words, the impact of the algorithm is ranked in this order. The variation curves of the parameters are then analysed in conjunction with Fig. 7 , so that the most appropriate parameters can be selected. Firstly, the trend of changes in the parameter main effect graph is worth paying attention to. When the learning rate is \( \alpha \) = 0.6, the performance of the algorithm is the worst because the individual learning rate is low, which affects the performance of the algorithm. When the learning rate is \( \alpha \) = 0.9, the performance of the algorithm is optimal, but considering that if the learning rate is too high in the later stage of the algorithm, it will lead to a decrease in diversity and fall into local optimality, so we choose a learning rate of \( \alpha \) = 0.7. Secondly, for population size Psize, the trend changes as the number of individuals increases, and RPD value first decreases and then increases. The reason for the increase is that when population size is large, most of time is spent on selecting neighborhood structures, So that Psize = 15 is selected. Finally, for discount factor \(\gamma \) , we adopt the same method to combine Fig. 7 and select points with lowest change rate to determine values of \(\gamma \) , that is \(\gamma \) =0.3. In summary, parameter selection is as follows: \( \alpha \) = 0.7, \(\gamma \) = 0.3 and Psize = 15.

Algorithm analysis and comparison

To verify the performance of QFOA, QFOA is combined with 7 advanced intelligent algorithms to solve the 720 large-scale test cases described above. The comparison algorithms are HDDE [ 40 ], MDDE [ 54 ], DDE [ 48 ], CDE [ 47 ], IG [ 27 ], DABC [ 45 ] and DFFO [ 10 ]. The setting of termination condition T and evaluation standard RPD is the same as above.

Experimental comparative analysis when C = 5

Table 4 records the average RPD values of each algorithm under \(T = 5 \times m \times n \times f (ms)\) , and the statistical results are based on three key points: factory scale ( f ), job scale ( n ), and machine scale ( m ). From Table 4 , it can be seen that no matter how many factories f and machines there are, the results of QFOA are better than those of other algorithms. It is worth noting that in Table 4 , the average RPD values of QFOA for f = 2, 3, 4, 5, 6 and 7 are 0.149, 0.172, 0.176, 0.205, 0.292 and 0.240 respectively. The second-ranked is MDDE, which obtained 0.210( f = 2), 0.190( f = 3), 0.210( f = 4), 0.290( f = 5), 0.340( f = 6) and 0.310( f = 7) respectively. In general, QFOA has obvious advantages compared with other algorithms.

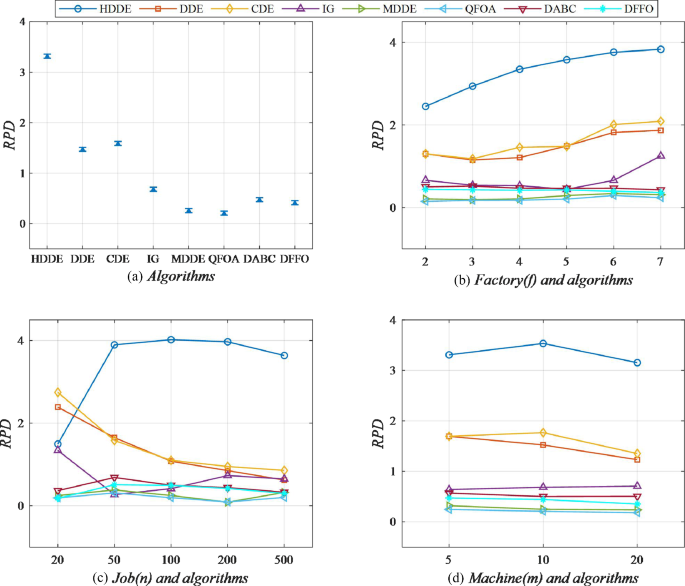

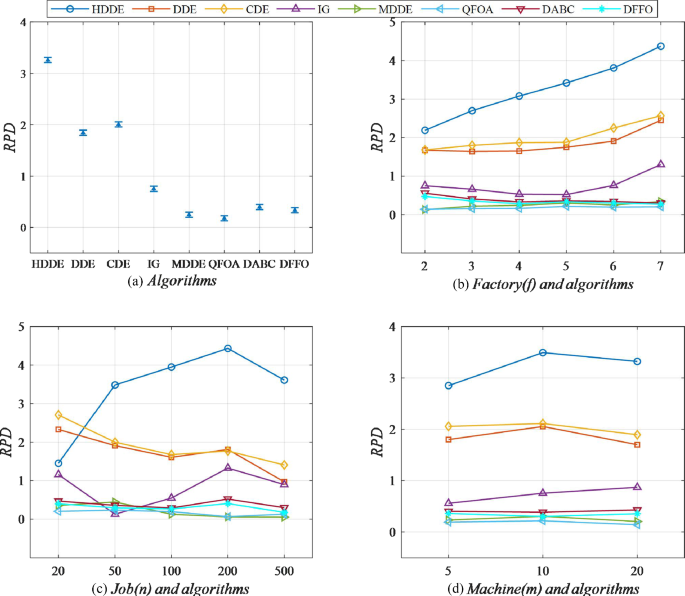

Average scatter plot and interaction plot when \(C=5\)

In order to show more clearly, the data in Table 4 is statistically analyzed, and Fig. 8 shows the average scatter plot and interaction plot of the algorithm with f , m , and n . As shown in Fig. 8 a, the average scatter plot of the algorithm, where QFOA performs the best with the smallest RPD value of 0.206, followed by MDDE with an RPD value of 0.258, and the remaining algorithms’ RPD values are ranked as DFFO, DABC, IG, DDE, CDE and HDDE have the worst effect. Figure 8 b is the interaction diagram of the algorithm with f . The size of f has a greater impact on the performance of HDDE, CDE, DDE and IG, but has little impact on other algorithms. Figure 8 c is the interaction diagram of the algorithm with n . For n = 50 and n = 200, IG and MDDE are slightly better than QFOA. HDDE, DDE, CDE and IG are greatly affected by the size of the job, and have a relatively small impact on the remaining algorithms such as QFOA and MDDE. Figure 8 d tells us that considering different m , QFOA is statistically superior to other algorithms. And except for QFOA and MDDE, all other algorithms are affected by how many m values are taken.

Experimental comparative analysis when C = 15

Table 5 records the average RPD values of all algorithms under \(T = 15 \times m \times n \times f (ms)\) , and the statistical results are based on three key points: factory scale ( f ), job scale ( n ), and machine scale ( m ). From Table 5 , it can be seen that no matter how many f and m there are, the results of QFOA are better than those of other algorithms. It is worth noting that in Table 5 , the average RPD values of QFOA for f = 2, 3, 4, 5, 6 and 7 are 0.146, 0.153, 0.187, 0.182, 0.248 and 0.245 respectively. The algorithm after QFOA is MDDE, which obtained 0.300( f =2), 0.180( f = 3), 0.290( f = 4), 0.250( f = 5), 0.290( f = 6) and 0.390( f = 7) respectively. In general, QFOA has obvious advantages compared with other algorithms.

Average scatter plot and interaction plot when \(C=15\)

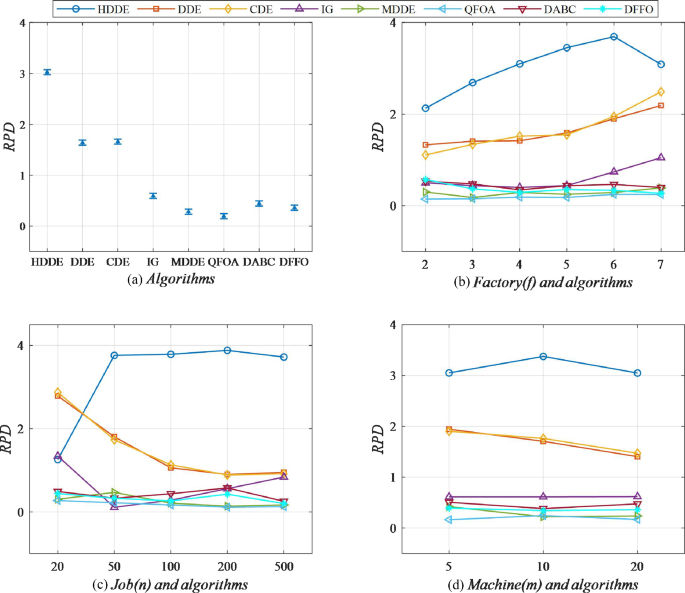

Similarly, the data in Table 5 is statistically analyzed, and Fig. 9 shows the average scatter plot and interaction plot of the algorithm with m , n and f . As shown in Fig. 9 a, the average scatter plot of the algorithm, where QFOA performs the best with the smallest RPD value of 0.194, followed by MDDE with an RPD value of 0.283, and the remaining algorithms’ RPD values are ranked as DFFO, DABC, IG, DDE, CDE and HDDE are also the two worst performing. Figure 9 b–d are the interaction diagrams of the algorithm with m , n and f , respectively. Their development trends are similar to C = 5.

Experimental comparative analysis when C = 30

Table 6 records the average RPD values of all algorithms under \(T = 30 \times m \times n \times f (ms)\) , where the statistical perspective is the same as C = 10 and C = 20, respectively, based on three key points: factory scale ( f ), job scale ( n ), and machine scale ( m ). From Table 6 , it can be seen that no matter how many m and f are, QFOA is the best. In Table 6 , the average RPD values of QFOA for f = 2, 3, 4, 5, 6 and 7 are 0.140, 0.157, 0.161, 0.215, 0.195 and 0.203 respectively. The algorithm after QFOA is MDDE, which obtained 0.130 ( f = 2), 0.220 ( f = 3), 0.240 ( f = 4), 0.300 ( f = 5), 0.250 ( f = 6) and 0.240 ( f = 7) respectively. In general, QFOA has obvious advantages compared with other algorithms.

Average scatter plot and interaction plot when \(C=30\)

Similarly, the data in Table 6 is statistically analyzed in the same way as Tables 4 and 5 , and Fig. 10 shows the average scatter plot and interaction plot of the algorithm with m , n , and f . As shown in Fig. 10 a, the average scatter plot of the algorithm, where QFOA performs the best with the smallest RPD value of 0.179, followed by MDDE with an RPD value of 0.247, and the remaining algorithms’ RPD values are ranked as, DFFO, DABC, IG, DDE, CDE and HDDE. Figure 10 b–d are the interaction diagrams of the algorithm with m , n , and f , respectively. Their development trends are similar to C = 5 and C = 15.

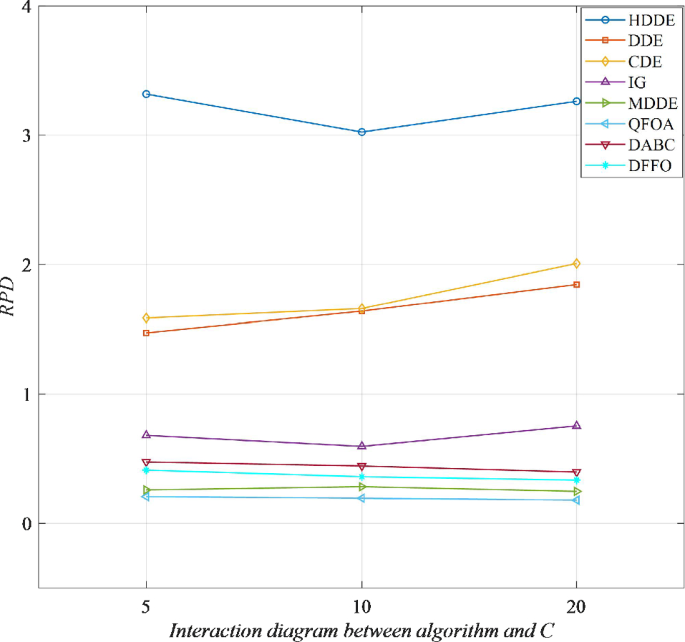

Also the effect of different stopping times of C on different algorithms is given in Fig. 11 . It can be seen from Fig. 11 that QFOA outperforms the other compared algorithms for different values of C . It is worth noting that MDDE and DFFO also work well, followed by DABC, IG, DDE, CDE and HDDE, which are shown by the above analysis to be the best performance of QFOA.

Interaction diagram of algorithm and C

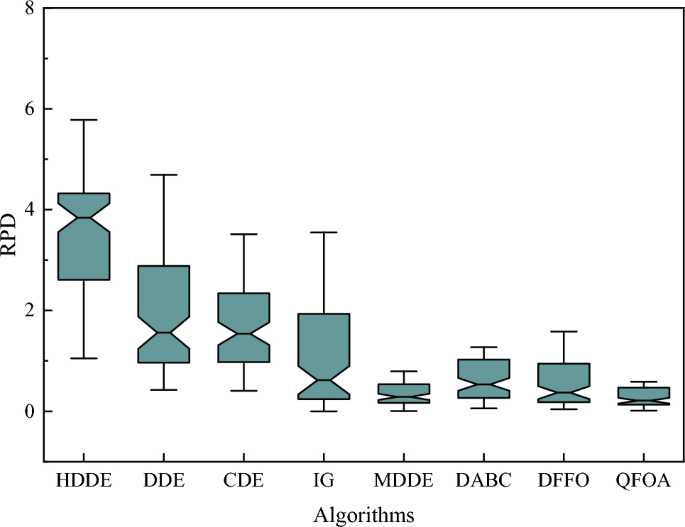

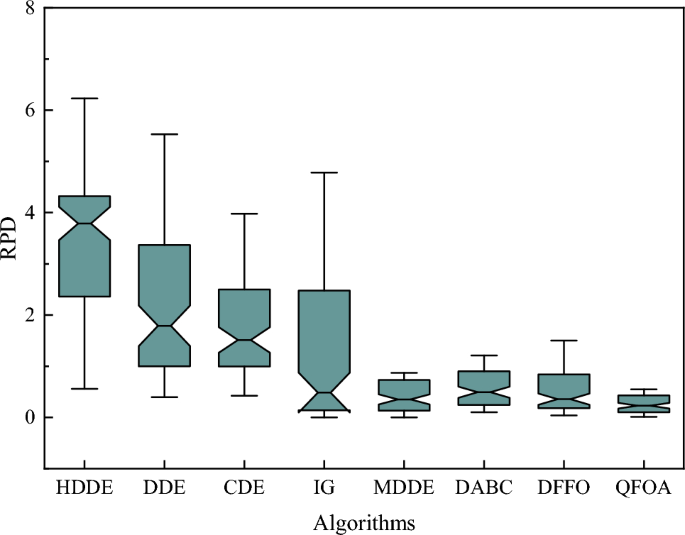

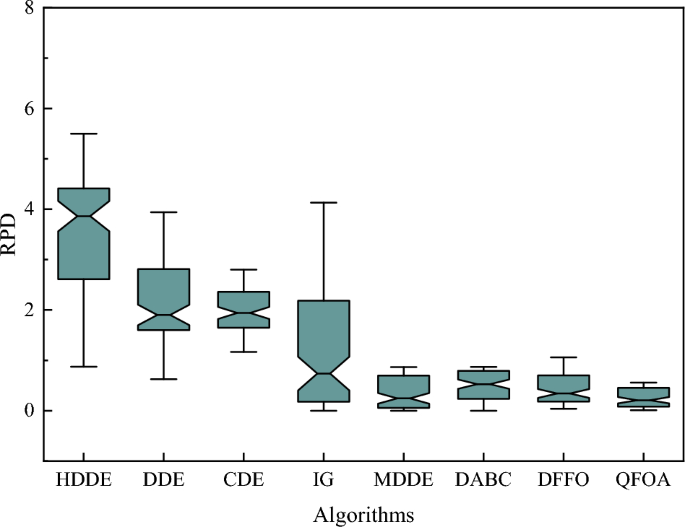

To further analyze the stability of algorithms such as QFOA and MDDE, we learned from literature [ 54 ] about the meaning represented by box plots. In summary, the narrower the box plot, the better the stability of the algorithm, and the closer to the bottom, the better the performance. Therefore, we statistically explain the RPD values obtained by algorithms such as QFOA and MDDE when solving large-scale examples from the perspective of box plots, thereby verifying the stability of QFOA.

Figures 12 , 13 and 14 are box plots of algorithm and RPD values for different C values. We can clearly understand that the box plot of the QFOA algorithm is the narrowest under different C values, followed by MDDE, DFFO, DABC, IG, CDE, DDE and HDDE. Among them, HDDE has the worst effect when C is 5, 15 and 30. On the contrary, QFOA has the narrowest box plot when C is 5, 15 and 30, indicating that QFOA is the most stable. Secondly, both MDDE and QFOA’s boxes are closest to the bottom but QFOA’s median line is lower, indicating that its performance is optimal. This verifies the stability of QFOA.

Box plot of QFOA and comparison algorithms when C = 5

Box plot of QFOA and comparison algorithms when C = 15

Box plot of QFOA and comparison algorithms when C = 30

Differential analysis

In order to illustrate the difference between QFOA and other advanced comparison algorithms, We use the RPD values obtained by solving 720 large-scale test instances with algorithms such as QFOA, and use two commonly used test methods, Mann–Whitney U and Friedman, to test the differences between QFOA and algorithms such as MDDE and DABC.

Mann–Whitney U test

The Mann–Whitney U test was mainly used to detect the difference between the two samples by first making the assumption that the difference between the two samples is not significant and then checking whether the P-value is less than 0.05, and less than 0.05 rejecting the original assumption that there is a difference between the samples. The results of our comparison using this method are shown in Table 7 , from which it can be seen that all P-value are less than 0.05 regardless of whether the time step C is 5, 15 or 30, indicating that there are significant differences between QFOA and the other algorithms.

Friedman test

Friedman test is also often used to test the differences between samples. First, assume that the samples being tested are equivalent. If the P-value < 0.05, reject the null hypothesis, indicating that there is a difference. Based on this, we conducted a differential test on QFOA and algorithms such as MDDE and DFFO to see if the null hypothesis was rejected. The test results are shown in Table 8 .

In Table 8 , statistical explanations are given from the total number( N ), mean, standard deviation(Std), average ranks, maximum (Max) and minimum (Min) values. Among them, when C = 5, QFOA’s mean = 0.205, Std = 0.135, Max = 0.586 and Min = 0.000. Followed by DFFO, MDDE, DABC, IG, DDE, CDE and HDDE. In summary of the above four points, QFOA is the smallest. This shows that QFOA is better than comparison algorithms such as MDDE. As for P-value = 0.000 < 0.005 rejecting the null hypothesis, it indicates the difference between QFOA and other algorithms. As for C = 15 and C = 30, it is the same as when C = 5.

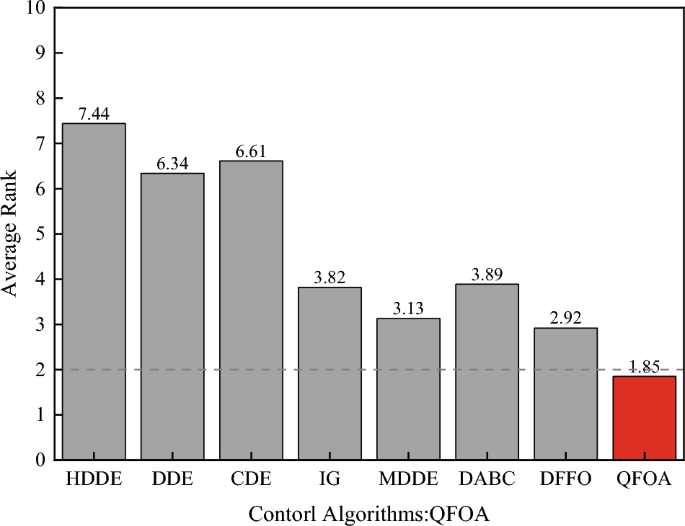

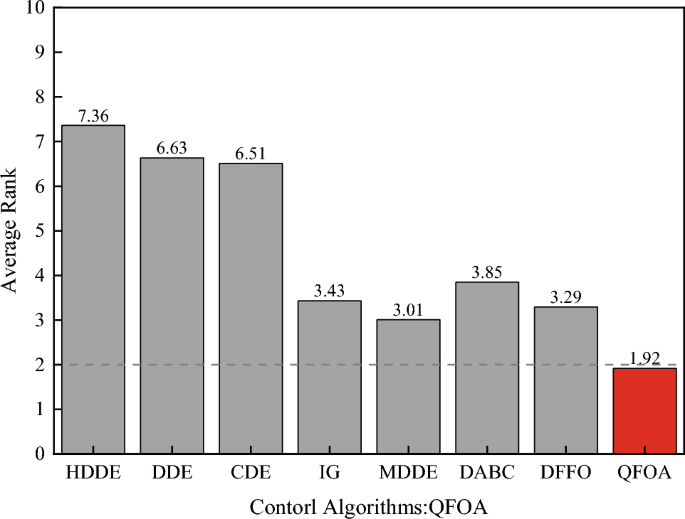

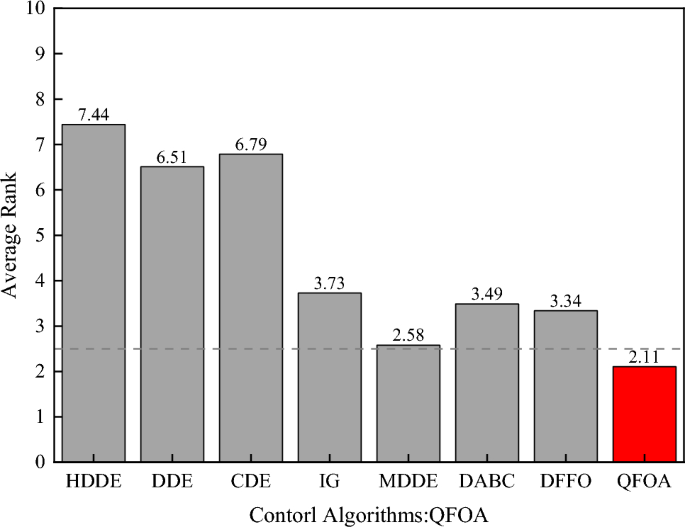

Furthermore, under different conditions of C = 5, 15 and 30, Bonferroni Dunn test was performed by controlling the QFOA algorithm and comparing it with algorithms such as MDDE and DFFO. The smaller the rank value of QFOA, the higher the ranking. The results are shown in Figs. 15 , 16 and 17 respectively. When C = 5, it can be seen from Fig. 15 that QFOA (average rank = 1.85) ranks first and DFFO (average rank = 2.92) ranks second. This shows the superiority of the QFOA algorithm. When C = 15 is the same as when C = 5, it can be seen from Fig. 16 that QFOA (average rank = 1.92) ranks first and MDDE (average rank = 3.01) ranks second. When C = 30, it can be seen from Fig. 17 that QFOA (average rank = 2.11) ranks first and MDDE (average rank = 2.58) ranks second. In summary, there are not only significant differences between QFOA and algorithms such as MDDE and DFFO but also their performance is the most stable and optimal.

The results of the Bonferroni Dunn experiment at \(C=5\)

The results of the Bonferroni Dunn experiment at \(C=15\)

The results of the Bonferroni Dunn experiment at \(C=30\)

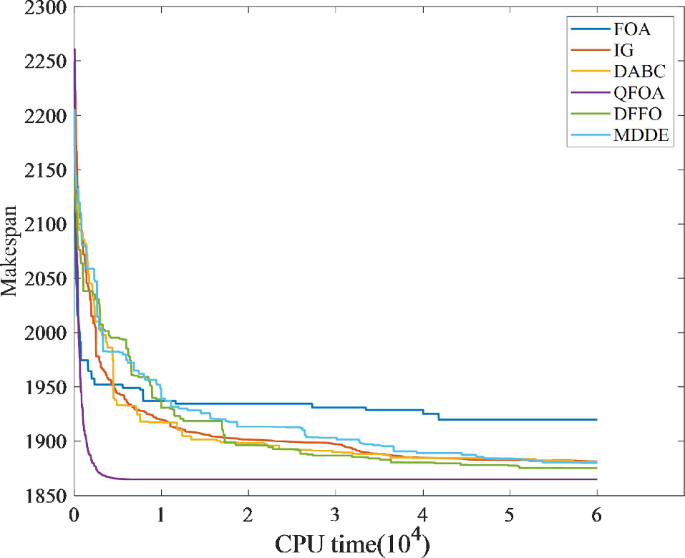

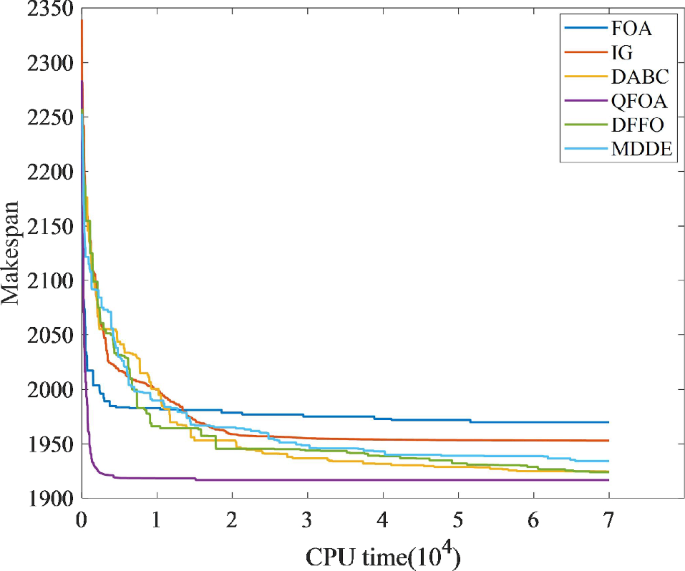

Convergence analysis

This section mainly discusses the convergence of QFOA, the evolution curves of two typical cases are shown in Figs. 18 and 19 . The sizes of the four typical cases are \(100 \times 20 \times 6\) and \(200 \times 10 \times 7\) respectively. Only the six algorithms with the best performance, QFOA, MDDE, DFFO, DABC, IG and FOA are used for convergence analysis experiments. The algorithm termination criterion is \(T=5\times m\times n \times f(ms)\) .

\(100 \times 20 \times 6\) convergence curve

\(200 \times 10 \times 7\) convergence curve

Figure 18 , it can be observed that for instances \(100 \times 20 \times 6\) , QFOA, FOA, DFFO, MDDE, IG and DABC, the disparities in initial solutions are minimal. With increasing runtime, all algorithms exhibit a noticeable downward trend. Particularly, the convergence curve of QFOA remains significantly lower compared to DFFO, MDDE, IG and DABC, achieving rapid convergence by 3000 ms . Conversely, FOA swiftly falls into local optima, necessitating a longer time to converge. Similarly, DFFO, MDDE, IG and DABC also exhibit extended convergence times. In summary, QFOA outperforms DFFO and MDDE, among other comparative algorithms, in both convergence speed and accuracy. Similarly, Fig. 19 shows the convergence curve of example \(200 \times 10 \times 7\) , and the analysis scheme is similar to Fig. 18 , obtaining the same results. From these two different scale examples, it can be seen that QFOA is also optimal in terms of convergence.

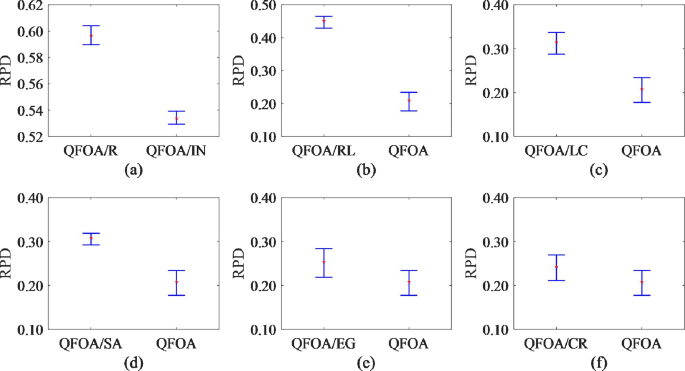

Component analysis

In this section, we mainly discuss the contribution of the proposed strategy to the QFOA algorithm. QFOA is mainly composed of the initialization method, the smell phase combined with Q-learning and the visual phase with the addition of an annealing mechanism. This paper verifies the contribution of these strategies through for sets of experiments.

QFOA/R: Random initialization of fruit fly population center position.

QFOA/IN: Initialize the central position of fruit fly population using NEH_R.

QFOA/RL: Q-learning algorithm selection strategy is removed and random selection strategy is used instead.

QFOA/LC: The operations based on job blocks in the local search are removed and replaced by the operations of a single job.

QFOA/SA: Remove the simulated annealing criterion.

QFOA/CR:Using Q-learning algorithm, the strategy selection decision is complete ly random.

QFOA/EG: The Q-learning algorithm is used to choose the greedy strategy.

95% confidence intervals for QFOA and its variants

Each algorithm is run 10 times independently on each arithmetic case with a stopping criterion of \(T=5\times m\times n \times f(ms)\) . The test arithmetic cases and evaluation metrics are the same as in Sect. “ Algorithm analysis and comparison ”. The results of QFOA as well as the variants are given in Table 9 .

From Table 9 , the results of QFOA/IN outperform QFOA/R illustrating that the improved NEH initialization rule helps to improve the performance of QFOA. The results of QFOA are superior to QFOA/LC, indicating that the designed local search method based on job blocks performs better than a single job. The performance of QFOA outperforms QFOA/RL illustrating that the inclusion of the Q-learning algorithm facilitates the selection of a high-quality neighborhood structure and ensures the feasibility of the solution. By comparing QFOA/CR, QFOA/EG and QFOA, we can see that the result of QFOA/EG is relatively poor. By adopting greedy strategy, QFOA/EG falls into local optimal and never jumps out of local optimal. The effect of QFOA/CR algorithm is better than that of QFOA/EG. The reason is that QFOA/CR adopts the “completely random” strategy by chance, and every selection is random. However, after multiple implementation comparisons, it can obtain relatively better results, and QFOA is the most effective. Using the “semi-random” strategy, which changes with iteration and jumps out of the local optimal strategy, relatively good results can be obtained and the speed is fast. Similarly, the performance of QFOA is also better than that of QFOA/SA, indicating that adding an annealing mechanism has a high probability of avoiding premature convergence in the QFOA.

To verify that the above findings are statistically significant, this section uses ANOVA to test the variance of the experimental results for the seven algorithms in Table 9 . Figure 20 shows the 95% confidence intervals for each variant. Figure 20 , it can be seen that the interval between QFOA and its variants does not overlap, indicating that QFOA has differences with other variants and has the best effect. To further illustrate, the effectiveness of the improved initialisation method can be seen from the comparison of QFOA/R and QFOA/IN in Fig. 20 a. And the comparison of QFOA/IN with QFOA can indirectly reflect the importance of initialisation diversity. Therefore, we use the NEH_R method to initialize 10% of individuals. From Fig. 20 b, we can see that QFOA results are better than QFOA/LC, which demonstrates that local search based on job blocks is better than single job, which is caused by the fact that moving a job tends to destroy constructed blocks of jobs that carry more sequence features than single job, especially when all the jobs are essentially sorted out by the later stages of the algorithm. From the comparison between QFOA/RL and QFOA in Fig. 20 c, the effectiveness of introducing the Q-learning algorithm is verified. From the comparison between QFOA/SA and QFOA in Fig. 20 d, the effectiveness of introducing the annealing algorithm is verified. Figure 20 e and Fig. 20 f show that the effect of QFOA is superior to that of QFOA/EG and QFOA/CR, because the “Sigmoid” function is introduced as a dynamic selection strategy, enabling the algorithm to randomly select actions in the early stage and dynamically select the optimal actions in the later stage. Jumping out of local optimal can get relatively good results and fast speed. From this, it can be seen that the strategies adopted by QFOA at each stage ensure QFOA’s ability to solve the problem being sought.

Experimental summary

This section focuses on summarising the above experiments. QFOA is tested against state-of-the-art algorithms such as MDDE, DABC and DFFO in terms of algorithmic accuracy and stability (Sect. “ Algorithm analysis and comparison ), variance (Sect. “ Differential analysis ”), convergence (Sect. “ Convergence analysis ”) and composition (Sect. “ Component analysis ”) in order to illustrate the effectiveness of QFOA.

Firstly, it can be learnt from Sect. “ Algorithm analysis and comparison that QFOA outperforms algorithms such as MDDE, DFFO and DABC in solving large-scale examples when C = 5, 15 and 30.

Secondly, in Sect. “ Differential analysis ”, two tests, Mann - Whitney U and Friedman , are employed to demonstrate that QFOA is significantly different from algorithms such as MDDE, DFFO and DABC, and then, in Sect. “ Convergence analysis ”, the algorithms are tested for convergence, and QFOA is fast and efficient in solving large-scale examples. The reasons for this phenomenon are as follows: (1) The initial solution NEH_R produces a better initial solution for subsequent iterations; (2) The combination with the Q-learning is proposed so that it can choose the best way to generate candidate solutions in each iteration; (3) A new local search method LsBlock is designed. LsBock inserts job blocks to retain more sequence information.

Finally, in Sect. “ Component analysis ”, seven QFOA variants are designed to investigate the contribution of the three suggested strategies: QFOA/R, QFOA/IN, QFOA/NR, QFOA/RL and QFOA/SA. From the comparison between QFOA/R and QFOA/IN, the effectiveness of the improved initialization method can be seen. From the comparison between QFOA/IN and QFOA, the importance of initialization diversity is indirectly reflected. Therefore, the NEH_R method is used to initialise 10% of the individuals. The effectiveness of the local search approach based on job blocks is illustrated by comparing QFOA/LC and QFOA. The effectiveness of introducing the Q-learning is verified by comparing QFOA/RL with QFOA. From the comparison between QFOA/SA and QFOA, the effectiveness of introducing the annealing algorithm is verified. Through the comparison of QFOA/EG, QFOA/CR and QFOA, the effectiveness of introducing “Sigmoid” function as a dynamic selection strategy is verified.

In summary, QFOA compares favourably with seven algorithms such as MDDE, DFFO, HDDE, DDE, CDE, IG and DABC in terms of convergence speed, accuracy and stability. Especially when solving real production scheduling problems, all algorithms under the same stopping criterion, the experimental results show that QFOA’s performance is at least 28.1% better than the other algorithms. Thus, the effectiveness of QFOA algorithm is verified.

In this paper, a QFOA algorithm is proposed to solve the DPFSP problem. In QFOA, NEH_R is used for population initialization. In the smell phase, four neighborhoods are designed and combined with the Q-learning algorithm to quickly select high-quality neighborhoods to update the solution and enhance the development ability of the algorithm. It is noteworthy that the “Sigmoid” function is introduced as a dynamic selection strategy to replace the greedy strategy of Q-learning algorithm to improve the evolutionary ability of the population. In addition, a local search method is designed for the characteristics of the DPFSP problem. In the visual phase, an annealing mechanism is introduced to avoid falling into local optima. Finally, the proposed QFOA is compared with the state-of-the-art algorithms for solving 720 well-known large-scale benchmark instances. Experimental results show that the performance of QFOA has an at least 28.1% improvement in solving large-scale instances compared to other algorithms.

Data availability

The dataset used and/or analyzed during the current research period can be obtained from corresponding author reasonable request.

Ali A, Gajpal Y, Elmekkawy TY (2020) Distributed permutation flowshop scheduling problem with total completion time objective. Opsearch 58:425–447

Article MathSciNet Google Scholar

Alireza G, Ali A, Mostafa H (2023) Efficient multi-objective meta-heuristic algorithms for energy-aware non-permutation flow shop scheduling problem. Expert Syst Appl 213:119077

Article Google Scholar

Babu KS, Vemuru S (2019) Spectrum signals handoff in lte cognitive radio networks using reinforcement learning. Traitement du Signal 36:119–125

Chen RH, Yang B, Li S (2020) A self-learning genetic algorithm based on reinforcement learning for flexible job-shop scheduling problem. Comput Ind Eng 149:106778

Dong H, Wang ZB (2022) Distributed assembly replacement flow shop scheduling based on iiga. Manuf Technol Mach Tools 11:169–176

Google Scholar

Du SL, Zhou WJ, Wu DK (2023) An effective discrete monarch butterfly optimization algorithm for distributed blocking flow shop scheduling with an assembly machine. Expert Syst Appl 225:120113

Fernandez-Viagas V, Framinan J (2015) A bounded-search iterated greedy algorithm for the distributed permutation flowshop scheduling problem. Int J Prod Res 53:1111–1123

Fernandez-Viagas V, Perez-Gonzalez P, Framinan J (2018) The distributed permutation flow shop to minimise the total flowtime. Comput Ind Eng 118:464–477

Fu Y, Zhou M, Guo X (2021) Stochastic multi-objective integrated disassembly-reprocessing-reassembly scheduling via fruit fly optimization algorithm. J Clean Prod 278:123364

Guo HW, Sang HY, Zhang XJ (2023) An effective fruit fly optimization algorithm for the distributed permutation flowshop scheduling problem with total flowtime. Eng Appl Artif Intell 123:106347

Hsieh YZ, Su MC (2016) A q-learning-based swarm optimization algorithm for economic dispatch problem. Neural Comput Appl 27:2333–2350

Huang L, Wang G, Bai T (2017) An improved fruit fly optimization algorithm for solving traveling salesman problem. Front Inform Technol Electron Eng 18:1525–1533

Ibrahim IA (2022) A hybrid wind driven-based fruit fly optimization algorithm for identifying the parameters of a double-diode photovoltaic cell model considering degradation effects. Sustain Energy Technol Assess 50:101685

Jiang L, Huang H, Ding Z (2020) Path planning for intelligent robots based on deep q-learning with experience replay and heuristic knowledge. IEEE/CAA J Autom Sin 7:1179–1189

Jing X, Pan Q, Gao L (2020) An effective iterated greedy algorithm for the distributed permutation flowshop scheduling with due windows. Appl Soft Comput 96:106629

Komaki M, Malakooti B (2017) General variable neighborhood search algorithm to minimize makespan of the distributed no-wait flow shop scheduling problem. Prod Eng Res Dev 11:315–329

Li JQ, Bai SC, Duan PY (2019) An improved artificial bee colony algorithm for addressing distributed flow shop with distance coefficient in a prefabricated system. Int J Prod Res 57:6922–6942

Lin SW, Ying KC (2016) Minimizing makespan for solving the distributed no-wait flowshop scheduling problem. Comput Ind Eng 99:202–209

Meng T, Pan QK, Wang L (2019) A distributed permutation flow shop scheduling problem with the customer order constraint. Knowl-Based Syst 184:104894

Naderi B, Ruiz R (2010) The distributed permutation flowshop scheduling problem. Comput Oper Res 37:754–768

Nawaz M, Enscore E, Ham I (1983) A heuristic algorithm for the m-machine, n-job flow-shop sequencing problem. Omega 11:91–95

Pan Q, Gao L, Wang L (2019) Effective heuristics and metaheuristics to minimize total flowtime for the distributed permutation flowshop problem. Expert Syst Appl 124:309–324

Pan WT (2012) A new fruit fly optimization algorithm: taking the financial distress model as an example. Knowl-Based Syst 26:69–74

Qian B, She MZ, Hu R (2021) The hyperheuristic cross-entropy algorithm solves the fuzzy distributed pipeline green scheduling problem. Control Decis Mak 36:1387–1396

Qin H, Li T, Teng Y (2021) Integrated production and distribution scheduling in distributed hybrid fow shops. Memetic Comput 13:185–202

Ren JF, Ye CM, Li Y (2021) A new solution to distributed permutation flow shop scheduling problem based on nash q-learning. Adv Prod Eng Manag 13:136–146

Ruiz R, Pan Q, Naderi B (2019) Iterated greedy methods for the distributed permutation flowshop scheduling problem. Omega 83:213–222

Ruiz R, Stutzle T (2007) A simple and effective iterated greedy algorithm for the permutation flowshop scheduling problem. Eur J Oper Res 177:2023–2049

Saminathan K, Thangavel R (2022) Energy efficient and delay aware clustering in mobile adhoc network: a hybrid fruit fly optimization algorithm and whale optimization algorithm approach. Concurr Comput Pract Exp 11:6867

Sang HY, Pan QK, Li JQ (2019) Effective invasive weed optimization algorithms for distributed assembly permutation flow shop problem with total flowtime criterion. Swarm Evol Comput 44:64–73

Shao W, Pi D, Shao Z (2017) Optimization of makespan for the distributed no-wait flow shop scheduling problem with iterated greedy algorithms. Knowl-Based Syst 137:163–181

Shao Z, Pi D, Shao W (2019) Hybrid enhanced discrete fruit fly optimization algorithm for scheduling blocking flow-shop in distributed environment. Expert Syst Appl 145:113147

Shen XN, Minku LL, Marturi N (2018) A q-learning-based memetic algorithm for multi-objective dynamic software project scheduling. Inf Sci 428:1–29

Song HB, Yang YH, Lin J (2023) An effective hyper heuristic-based memetic algorithm for the distributed assembly permutation flow-shop scheduling problem. Appl Soft Comput 135:110022

Taillard E (1993) Benchmarks for basic scheduling problems. Eur J Oper Res 64:278–285

Tasgetiren MF, Pan QK, Kizilay D (2019) A variable block insertion heuristic for permutation flow shops with makespan criterion. In: 2017 IEEE congress on evolutionary computation, p 12

Wang G, Gao L, Li X (2020) Energy-efficient distributed permutation flow shop scheduling problem using a multi-objective whale swarm algorithm. Swarm Evol Comput 57:100716

Wang HX, Sarker BR, Li J (2021) Adaptive scheduling for assembly job shop with uncertain assembly times based on dual q-learning. Int J Prod Res 59:5867–5883

Wang JJ, Wang L (2018) A knowledge-based cooperative algorithm for energy efficient scheduling of distributed flow-shop. IEEE Trans Syst Man Cybern Syst 50:1–15

Wang L, Pan QK, Suganthan PN (2010) A novel hybrid discrete differential evolution algorithm for blocking flow shop scheduling problems. Comput Oper Res 37:509–520

Wang L, Xiong YN, Li SW (2019) New fruit fly optimization algorithm with joint search strategies for function optimization problems. Knowl-Based Syst 176:77–96

Wang Z, Deng QW, Zhang LK (2023) Joint optimization of integrated mixed maintenance and distributed two-stage hybrid flow-shop production for multi-site maintenance requirements. Expert Syst Appl 215:119422

Watkins C, Dayan P (1992) Q-learning. Mach Learn 8:279–292

Yang XL, Hu R, Qian B (2019) Enhanced distribution estimation algorithm solves for distributed displacement flow workshop scheduling. Control Theory Appl 36:803–815

Yang Y, Zhang F, Huang J (2023) Acceleration-based artificial bee colony optimizer for a distributed permutation flowshop scheduling problem with sequence-dependent setup times. Appl Soft Comput 135:110029

Yang YH, Li X (2022) A knowledge-driven constructive heuristic algorithm for the distributed assembly blocking flow shop scheduling problem. Expert Syst Appl 202:117269

Zhang G, Xing K (2019) Differential evolution metaheuristics for distributed limited-buffer flow shop scheduling with makespan criterion. Comput Oper Res 108:33–43

Zhang GH, Xing KY, Cao F (2018) Discrete differential evolution algorithm for distributed blocking flow shop scheduling with makespan criterion. Eng Appl Artif Intell 76:96–107

Zhang QC, Lin M, Yang LT (2019) Energy-efficient scheduling for realtime systems based on deep q-learning model. IEEE Trans Sustain Comput 4:132–141

Zhang X, Liu X, Tang S (2019) Solving scheduling problem in a distributed manufacturing system using a discrete fruit fly optimization algorithm. Energies 12:3260

Zhang XH, Liu XH, Cichon A (2022) Scheduling of energy-efficient distributed blocking flowshop using pareto-based estimation of distribution algorithm. Expert Syst Appl 200:116910

Zhao F, Ma R, Wang L (2021) A self-learning discrete jaya algorithm for multi objective energy-efficient distributed no-idle flow-shop scheduling problem in heterogeneous factory system. IEEE Trans Cybern 52:20

Zhao F, Zhao L, Wang L (2020) An ensemble discrete differential evolution for the distributed blocking flowshop scheduling with minimizing makespan criterion. Expert Syst Appl 160:1136781–11367821

Zhao FQ, Hu XT, Wang L (2022) A memetic discrete differential evolution algorithm for the distributed permutation flow shop scheduling problem. Complex Intell Syst 8:141–161

Zhao FQ, Qin S, Zhang Y (2019) A hybrid bio-geography based optimization with variable neighborhood search mechanism for no-wait flow shop scheduling problem. Expert Syst Appl 126:321–339

Zhao FQ, Xue FL, Zhang Y (2019) A discrete gravitational search algorithm for the blocking flow shop problem with total flow time minimization. Appl Intell 49:3362–3382

Zheng X, Wang L (2016) A two-stage adaptive fruit fly optimization algorithm for unrelated parallel machine scheduling problem with additional resource constraints. Expert Syst Appl 65:28–39

Zhu NN, Zhao FQ, Wang L (2022) A discrete learning fruit fly algorithm based on knowledge for the distributed no-wait flow shop scheduling with due windows. Expert Syst Appl 198:116921

Download references

Acknowledgements

This research was supported by National Natural Science Foundation of China (grant nos. 62373146), Natural Science Foundation of Hunan Province (grant nos.2022JJ30265), Young Talent of Lifting Engineering for Science and Technology in Hunan Province (2022TJ-Q03), the Outstanding Youth Project of Education Department of Hunan Province (22B0476) and the Key Project of Education Department of Hunan Province of china (23A0382).

Author information

Authors and affiliations.

School of Mechanical Engineering, Hunan University of Science and Technology, Xiangtan, 411100, Hunan, China

School of Information and Electrical Engineering, Hunan University of Science and Technology, Xiangtan, 411100, Hunan, China

Lianghong Wu, Cili Zuo & Hongqiang Zhang

You can also search for this author in PubMed Google Scholar

Contributions

Cai Zhao: Conceptualization, Methodology, Software. Lianghong Wu: Data curation, Writing-Original Draft, Supervision. Cili Zuo: Visualization. Hongqiang Zhang: Supervision

Corresponding author

Correspondence to Lianghong Wu .

Ethics declarations

Conflict of interest.

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .

Reprints and permissions

About this article

Zhao, C., Wu, L., Zuo, C. et al. An improved fruit fly optimization algorithm with Q-learning for solving distributed permutation flow shop scheduling problems. Complex Intell. Syst. (2024). https://doi.org/10.1007/s40747-024-01482-4

Download citation

Received : 12 January 2024

Accepted : 01 May 2024

Published : 25 May 2024

DOI : https://doi.org/10.1007/s40747-024-01482-4

Share this article

Anyone you share the following link with will be able to read this content:

Sorry, a shareable link is not currently available for this article.

Provided by the Springer Nature SharedIt content-sharing initiative

- Fruit fly optimization algorithm

- Distributed permutation flow-shop scheduling

- Find a journal