
Computer Science > Computation and Language

Title: PaLM: Scaling Language Modeling with Pathways

Abstract: Large language models have been shown to achieve remarkable performance across a variety of natural language tasks using few-shot learning, which drastically reduces the number of task-specific training examples needed to adapt the model to a particular application. To further our understanding of the impact of scale on few-shot learning, we trained a 540-billion parameter, densely activated, Transformer language model, which we call Pathways Language Model (PaLM). We trained PaLM on 6144 TPU v4 chips using Pathways, a new ML system which enables highly efficient training across multiple TPU Pods. We demonstrate continued benefits of scaling by achieving state-of-the-art few-shot learning results on hundreds of language understanding and generation benchmarks. On a number of these tasks, PaLM 540B achieves breakthrough performance, outperforming the finetuned state-of-the-art on a suite of multi-step reasoning tasks, and outperforming average human performance on the recently released BIG-bench benchmark. A significant number of BIG-bench tasks showed discontinuous improvements from model scale, meaning that performance steeply increased as we scaled to our largest model. PaLM also has strong capabilities in multilingual tasks and source code generation, which we demonstrate on a wide array of benchmarks. We additionally provide a comprehensive analysis on bias and toxicity, and study the extent of training data memorization with respect to model scale. Finally, we discuss the ethical considerations related to large language models and potential mitigation strategies.



Research publications

Publishing our work allows us to share ideas and work collaboratively to advance the field of quantum computing.


Publications

Google publishes hundreds of research papers each year. Publishing is important to us; it enables us to collaborate and share ideas with, as well as learn from, the broader scientific community. Submissions are often made stronger by the fact that ideas have been tested through real product implementation by the time of publication.

We believe the formal structures of publishing today are changing - in computer science especially, there are multiple ways of disseminating information. We encourage publication both in conventional scientific venues, and through other venues such as industry forums, standards bodies, and open source software and product feature releases.

Open Source

We understand the value of a collaborative ecosystem and love open source software.

Product and Feature Launches

With every launch, we're publishing progress and pushing functionality.

Industry Standards

Our researchers are often helping to define not just today's products but also tomorrow's.

"Resources" doesn't just mean tangible assets but also intellectual. Incredible datasets and a great team of colleagues foster a rich and collaborative research environment.

Couple big challenges with big resources and Google offers unprecedented research opportunities.

22 Research Areas

- Algorithms and Theory 608 Publications

- Data Management 116 Publications

- Data Mining and Modeling 214 Publications

- Distributed Systems and Parallel Computing 208 Publications

- Economics and Electronic Commerce 209 Publications

- Education Innovation 30 Publications

- General Science 158 Publications

- Hardware and Architecture 67 Publications

- Human-Computer Interaction and Visualization 444 Publications

- Information Retrieval and the Web 213 Publications

- Machine Intelligence 1019 Publications

- Machine Perception 454 Publications

- Machine Translation 48 Publications

- Mobile Systems 72 Publications

- Natural Language Processing 395 Publications

- Networking 210 Publications

- Quantum A.I. 30 Publications

- Robotics 37 Publications

- Security, Privacy and Abuse Prevention 289 Publications

- Software Engineering 100 Publications

- Software Systems 250 Publications

- Speech Processing 264 Publications

3 Collections

- Google AI Residency 60 Publications

- Google Brain Team 305 Publications

- Data Infrastructure and Analysis 10 Publications


Trending Research

LLaVA-UHD: An LMM Perceiving Any Aspect Ratio and High-Resolution Images

To address the challenges, we present LLaVA-UHD, a large multimodal model that can efficiently perceive images in any aspect ratio and high resolution.

LightAutoML: AutoML Solution for a Large Financial Services Ecosystem

We present an AutoML system called LightAutoML developed for a large European financial services company and its ecosystem satisfying the set of idiosyncratic requirements that this ecosystem has for AutoML solutions.

MoRA: High-Rank Updating for Parameter-Efficient Fine-Tuning

Low-rank adaptation is a popular parameter-efficient fine-tuning method for large language models.

Diffusion for World Modeling: Visual Details Matter in Atari

Motivated by this paradigm shift, we introduce DIAMOND (DIffusion As a Model Of eNvironment Dreams), a reinforcement learning agent trained in a diffusion world model.

How Far Are We to GPT-4V? Closing the Gap to Commercial Multimodal Models with Open-Source Suites

Compared to both open-source and proprietary models, InternVL 1.5 shows competitive performance, achieving state-of-the-art results in 8 of 18 benchmarks.

Hunyuan-DiT: A Powerful Multi-Resolution Diffusion Transformer with Fine-Grained Chinese Understanding

For fine-grained language understanding, we train a Multimodal Large Language Model to refine the captions of the images.

Retrieval-Augmented Generation for AI-Generated Content: A Survey

pku-dair/rag-survey • 29 Feb 2024

We first classify RAG foundations according to how the retriever augments the generator, distilling the fundamental abstractions of the augmentation methodologies for various retrievers and generators.

Grounding DINO 1.5: Advance the "Edge" of Open-Set Object Detection

idea-research/grounding-dino-1.5-api • 16 May 2024

Empirical results demonstrate the effectiveness of Grounding DINO 1.5, with the Grounding DINO 1.5 Pro model attaining a 54.3 AP on the COCO detection benchmark and a 55.7 AP on the LVIS-minival zero-shot transfer benchmark, setting new records for open-set object detection.

A decoder-only foundation model for time-series forecasting

Motivated by recent advances in large language models for Natural Language Processing (NLP), we design a time-series foundation model for forecasting whose out-of-the-box zero-shot performance on a variety of public datasets comes close to the accuracy of state-of-the-art supervised forecasting models for each individual dataset.

EasySpider: A No-Code Visual System for Crawling the Web

NaiboWang/EasySpider • ACM The Web Conference 2023

As such, web-crawling is an essential tool for both computational and non-computational scientists to conduct research.

A free, AI-powered research tool for scientific literature


New & Improved API for Developers

Introducing Semantic Reader in Beta

What Is Semantic Scholar? Semantic Scholar is a free, AI-powered research tool for scientific literature, based at the Allen Institute for AI.

Building on our commitment to delivering responsible AI

May 14, 2024


Today we’re announcing new AI safeguards to protect against misuse and new tools that use AI to make learning more engaging and accessible.

We believe the transformative power of AI can improve lives and make the world a better place, if approached boldly and responsibly. Today, our research and products are already helping people everywhere, from their everyday tasks to their most ambitious, productive and creative endeavors.

To us, building AI responsibly means both addressing its risks and maximizing the benefits for people and society. In this, we’re guided by our AI Principles, our own research, and feedback from experts, product users and partners.

Building AI to benefit society with LearnLM

Every day, billions of people use Google products to learn. Generative AI is unlocking new ways for us to make learning more personal, helpful and accessible.

Today we’re announcing LearnLM, a new family of models, based on Gemini, and fine-tuned for learning. LearnLM integrates research-backed learning science and academic principles into our products, like helping manage cognitive load and adapting to learners' goals, needs and motivations. The result is a learning experience that is more tailored to the needs of each individual.

LearnLM is powering a range of features across our products, including Gemini, Search, YouTube and Google Classroom. In the Gemini app, the new Learning coach Gem will offer step-by-step study guidance, designed to build understanding rather than just give you the answer. On the YouTube app on Android, you can engage with educational videos in new ways, like asking a follow-up question or checking your knowledge with a quiz. Thanks to the long-context capability of the Gemini model, these YouTube features even work on long lectures and seminars.

Collaboration remains essential to unlocking the full potential of generative AI for the broader education community. We're partnering with leading educational organizations like Columbia Teachers College, Arizona State University, NYU Tisch and Khan Academy to test and improve our models, so we can extend LearnLM beyond our own products (if you’d be interested in partnering, you can sign up at this link). We’ve also partnered with MIT RAISE to develop an online course to provide educators with tools to effectively use generative AI in the classroom and beyond.

Using long-context to make knowledge accessible

One new experimental tool we’ve built to make knowledge more accessible and digestible is called Illuminate. It uses Gemini 1.5 Pro’s long context capabilities to transform complex research papers into short audio dialogues. In minutes, Illuminate can generate a conversation consisting of two AI-generated voices, providing an overview and brief discussion of the key insights from research papers. If you want to dive deeper, you can ask follow-up questions. All audio output is watermarked with SynthID, and the original papers are referenced, so you can easily explore the source material yourself and in more detail. You can sign up to try it today at labs.google.

Improving our models and protecting against misuse

While these breakthroughs are helping us deliver on our mission in new ways, generative AI is still an emerging technology and there are risks and questions that will arise as the technology advances and its uses evolve.

That’s why we believe it’s imperative to take a responsible approach to AI, guided by our AI Principles. We regularly share updates on what we’ve learned by putting them into practice.

Today, we’d like to share a few new ways that we’re improving our models like Gemini and protecting against their misuse.

- AI-Assisted Red Teaming and expert feedback. To improve our models, we combine cutting-edge research with human expertise. This year, we’re taking red teaming, a proven practice where we proactively test our own systems for weaknesses and try to break them, and enhancing it through a new research technique we’re calling “AI-Assisted Red Teaming.” This draws on Google DeepMind's gaming breakthroughs like AlphaGo, where we train AI agents to compete against each other to expand the scope of their red teaming capabilities. We are developing AI models with these capabilities to help address adversarial prompting and limit problematic outputs. We also improve our models with feedback from thousands of internal safety specialists and independent experts from sectors ranging from academia to civil society. Combining this human insight with our safety testing methods will help make our models and products more accurate and reliable. This is a particularly important area of research for us, as new technical advances evolve how we interact with AI.

- SynthID for text and video. As the outputs from our models become more realistic, we must also consider how they could be misused. Last year, we introduced SynthID, a technology that adds imperceptible watermarks to AI-generated images and audio so they’re easier to identify, and to protect against misuse. Today, we’re expanding SynthID to two new modalities: text and video. This is part of our broader investment in helping people understand the provenance of digital content.

- Collaborating on safeguards. We’re committed to working with the ecosystem to help others benefit from and improve on the advances we’re making. In the coming months, we will open-source SynthID text watermarking through our updated Responsible Generative AI Toolkit. We are also a member of the Coalition for Content Provenance and Authenticity (C2PA), collaborating with Adobe, Microsoft, startups and many others to build and implement a standard that improves transparency of digital media.

Helping to solve real world problems

Today, our AI advances are already helping people solve real-world problems and advance scientific breakthroughs. Just in the past few weeks, we’ve announced three exciting scientific milestones:

- In collaboration with Harvard University, Google Research published the largest synaptic-resolution 3D reconstruction of the human cortex — progress that may change our understanding of how our brains work.

- We announced AlphaFold 3, an update to our revolutionary model that can now predict the structure and interactions of DNA, RNA and ligands in addition to proteins — helping transform our understanding of the biological world and drug discovery.

- We introduced Med-Gemini, a family of research models that builds upon the Gemini model’s capabilities in advanced reasoning, multimodal understanding and long-context processing, to potentially assist clinicians with administrative tasks like report generation, analyze different types of medical data and help with risk prediction.

This is in addition to the societally-beneficial results we’ve seen from work like flood forecasting and UN Data Commons for the SDGs. In fact, we recently published a report highlighting all the ways AI can help advance our progress on the world’s shared Sustainable Development Goals. This is just the beginning; we’re excited about what lies ahead and what we can accomplish working together.

Here’s a look at everything we announced at Google I/O 2024.


Analyze research papers at superhuman speed

Search for research papers, get one sentence abstract summaries, select relevant papers and search for more like them, extract details from papers into an organized table.

Find themes and concepts across many papers

Don't just take our word for it.


Tons of features to speed up your research

- Upload your own PDFs

- Orient with a quick summary

- View sources for every answer

- Ask questions to papers

Research for the machine intelligence age

Pick a plan that's right for you

Get in touch

Enterprise and institutions

Custom pricing

Common questions. Great answers.

How do researchers use Elicit?

Over 2 million researchers have used Elicit. Researchers commonly use Elicit to:

- Speed up literature review

- Find papers they couldn’t find elsewhere

- Automate systematic reviews and meta-analyses

- Learn about a new domain

Elicit tends to work best for empirical domains that involve experiments and concrete results. This type of research is common in biomedicine and machine learning.

What is Elicit not a good fit for?

Elicit does not currently answer questions or surface information that is not written about in an academic paper. It tends to work less well for identifying facts (e.g. “How many cars were sold in Malaysia last year?”) and theoretical or non-empirical domains.

What types of data can Elicit search over?

Elicit searches across 125 million academic papers from the Semantic Scholar corpus, which covers all academic disciplines. When you extract data from papers in Elicit, Elicit will use the full text if available or the abstract if not.

How accurate are the answers in Elicit?

A good rule of thumb is to assume that around 90% of the information you see in Elicit is accurate. While we do our best to increase accuracy without skyrocketing costs, it’s very important for you to check the work in Elicit closely. We try to make this easier for you by identifying all of the sources for information generated with language models.

What is Elicit Plus?

Elicit Plus is Elicit's subscription offering, which comes with a set of features, as well as monthly credits. On Elicit Plus, you may use up to 12,000 credits a month. Unused monthly credits do not carry forward into the next month. Plus subscriptions auto-renew every month.

What are credits?

Elicit uses a credit system to pay for the costs of running our app. When you run workflows and add columns to tables it will cost you credits. When you sign up you get 5,000 credits to use. Once those run out, you'll need to subscribe to Elicit Plus to get more. Credits are non-transferable.

How can you get in contact with the team?

Please email us at [email protected] or post in our Slack community if you have feedback or general comments! We log and incorporate all user comments. If you have a problem, please email [email protected] and we will try to help you as soon as possible.

What happens to papers uploaded to Elicit?

When you upload papers to analyze in Elicit, those papers will remain private to you and will not be shared with anyone else.

How accurate is Elicit?

- Training our models on specific tasks

- Searching over academic papers

- Making it easy to double-check answers

Save time, think more. Try Elicit for free.


MIT News | Massachusetts Institute of Technology


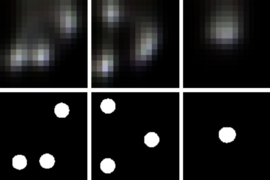

Controlled diffusion model can change material properties in images


Researchers from the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) and Google Research may have just performed digital sorcery, in the form of a diffusion model that can change the material properties of objects in images. Dubbed Alchemist, the system allows users to alter four attributes of both real and AI-generated pictures: roughness, metallicity, albedo (an object’s initial base color), and transparency. Because Alchemist is an image-to-image diffusion model, users can input any photo and then adjust each property within a continuous scale of -1 to 1 to create a new visual. These photo editing capabilities could potentially extend to improving the models in video games, expanding the capabilities of AI in visual effects, and enriching robotic training data.

The magic behind Alchemist starts with a denoising diffusion model: In practice, researchers used Stable Diffusion 1.5, which is a text-to-image model lauded for its photorealistic results and editing capabilities. Previous work built on the popular model to enable users to make higher-level changes, like swapping objects or altering the depth of images. In contrast, CSAIL and Google Research’s method applies this model to focus on low-level attributes, revising the finer details of an object’s material properties with a unique, slider-based interface that outperforms its counterparts. While prior diffusion systems could pull a proverbial rabbit out of a hat for an image, Alchemist could transform that same animal to look translucent. The system could also make a rubber duck appear metallic, remove the golden hue from a goldfish, and shine an old shoe. Programs like Photoshop have similar capabilities, but this model can change material properties in a more straightforward way. For instance, modifying the metallic look of a photo requires several steps in the widely used application.

“When you look at an image you’ve created, often the result is not exactly what you have in mind,” says Prafull Sharma, MIT PhD student in electrical engineering and computer science, CSAIL affiliate, and lead author on a new paper describing the work. “You want to control the picture while editing it, but the existing controls in image editors are not able to change the materials. With Alchemist, we capitalize on the photorealism of outputs from text-to-image models and tease out a slider control that allows us to modify a specific property after the initial picture is provided.”

Precise control

“Text-to-image generative models have empowered everyday users to generate images as effortlessly as writing a sentence. However, controlling these models can be challenging,” says Carnegie Mellon University Assistant Professor Jun-Yan Zhu, who was not involved in the paper. “While generating a vase is simple, synthesizing a vase with specific material properties such as transparency and roughness requires users to spend hours trying different text prompts and random seeds. This can be frustrating, especially for professional users who require precision in their work. Alchemist presents a practical solution to this challenge by enabling precise control over the materials of an input image while harnessing the data-driven priors of large-scale diffusion models, inspiring future works to seamlessly incorporate generative models into the existing interfaces of commonly used content creation software.”

Alchemist’s design capabilities could help tweak the appearance of different models in video games. Applying such a diffusion model in this domain could help creators speed up their design process, refining textures to fit the gameplay of a level. Moreover, Sharma and his team’s project could assist with altering graphic design elements, videos, and movie effects to enhance photorealism and achieve the desired material appearance with precision.

The method could also refine robotic training data for tasks like manipulation. By introducing the machines to more textures, they can better understand the diverse items they’ll grasp in the real world. Alchemist can even potentially help with image classification, analyzing where a neural network fails to recognize the material changes of an image.

Sharma and his team’s work exceeded similar models at faithfully editing only the requested object of interest. For example, when a user prompted different models to tweak a dolphin to max transparency, only Alchemist achieved this feat while leaving the ocean backdrop unedited. When the researchers trained comparable diffusion model InstructPix2Pix on the same data as their method for comparison, they found that Alchemist achieved superior accuracy scores. Likewise, a user study revealed that the MIT model was preferred and seen as more photorealistic than its counterpart.

Keeping it real with synthetic data

According to the researchers, collecting real data was impractical. Instead, they trained their model on a synthetic dataset, randomly editing the material attributes of 1,200 materials applied to 100 publicly available, unique 3D objects in Blender, a popular computer graphics design tool.

“The control of generative AI image synthesis has so far been constrained by what text can describe,” says Frédo Durand, the Amar Bose Professor of Computing in the MIT Department of Electrical Engineering and Computer Science (EECS) and CSAIL member, who is a senior author on the paper. “This work opens new and finer-grain control for visual attributes inherited from decades of computer-graphics research.”

“Alchemist is the kind of technique that's needed to make machine learning and diffusion models practical and useful to the CGI community and graphic designers,” adds Google Research senior software engineer and co-author Mark Matthews. “Without it, you're stuck with this kind of uncontrollable stochasticity. It's maybe fun for a while, but at some point, you need to get real work done and have it obey a creative vision.”

Sharma’s latest project comes a year after he led research on Materialistic, a machine-learning method that can identify similar materials in an image. This previous work demonstrated how AI models can refine their material understanding skills, and like Alchemist, was fine-tuned on a synthetic dataset of 3D models from Blender.

Still, Alchemist has a few limitations at the moment. The model struggles to correctly infer illumination, so it occasionally fails to follow a user’s input. Sharma notes that this method sometimes generates physically implausible transparencies, too. Picture a hand partially inside a cereal box, for example — at Alchemist’s maximum setting for this attribute, you’d see a clear container without the fingers reaching in. The researchers would like to expand on how such a model could improve 3D assets for graphics at scene level. Also, Alchemist could help infer material properties from images. According to Sharma, this type of work could unlock links between objects' visual and mechanical traits in the future.

MIT EECS professor and CSAIL member William T. Freeman is also a senior author, joining Varun Jampani, and Google Research scientists Yuanzhen Li PhD ’09, Xuhui Jia, and Dmitry Lagun. The work was supported, in part, by a National Science Foundation grant and gifts from Google and Amazon. The group’s work will be highlighted at CVPR in June.


Google’s A.I. Search Errors Cause a Furor Online

The company’s latest A.I. search feature has erroneously told users to eat glue and rocks, provoking a backlash among users.


By Nico Grant

Reporting from New York

Last week, Google unveiled its biggest change to search in years, showcasing new artificial intelligence capabilities that answer people’s questions in the company’s attempt to catch up to rivals Microsoft and OpenAI.

The new technology has since generated a litany of untruths and errors — including recommending glue as part of a pizza recipe and the ingesting of rocks for nutrients — giving a black eye to Google and causing a furor online.

The incorrect answers in the feature, called AI Overview, have undermined trust in a search engine that more than two billion people turn to for authoritative information. And while other A.I. chatbots tell lies and act weird, the backlash demonstrated that Google is under more pressure to safely incorporate A.I. into its search engine.

The launch also extends a pattern of Google’s having issues with its newest A.I. features immediately after rolling them out. In February 2023, when Google announced Bard, a chatbot to battle ChatGPT, it shared incorrect information about outer space. The company’s market value subsequently dropped by $100 billion.

This February, the company released Bard’s successor, Gemini, a chatbot that could generate images and act as a voice-operated digital assistant. Users quickly realized that the system refused to generate images of white people in most instances and drew inaccurate depictions of historical figures.

With each mishap, tech industry insiders have criticized the company for dropping the ball. But in interviews, financial analysts said Google needed to move quickly to keep up with its rivals, even if it meant growing pains.

Google “doesn’t have a choice right now,” Thomas Monteiro, a Google analyst at Investing.com, said in an interview. “Companies need to move really fast, even if that includes skipping a few steps along the way. The user experience will just have to catch up.”

Lara Levin, a Google spokeswoman, said in a statement that the vast majority of AI Overview queries resulted in “high-quality information, with links to dig deeper on the web.” The A.I.-generated result from the tool typically appears at the top of a results page.

“Many of the examples we’ve seen have been uncommon queries, and we’ve also seen examples that were doctored or that we couldn’t reproduce,” she added. The company will use “isolated examples” of problematic answers to refine its system.

Since OpenAI released its ChatGPT chatbot in late 2022 and it became an overnight sensation, Google has been under pressure to integrate A.I. into its popular apps. But there are challenges in taming large language models, which learn from enormous amounts of data taken from the open web — including falsehoods and satirical posts — rather than being programmed like traditional software.

(The New York Times sued OpenAI and its partner, Microsoft, in December, claiming copyright infringement of news content related to A.I. systems.)

Google announced AI Overview to fanfare at its annual developer conference, I/O, last week. For the first time, the company had plugged Gemini, its latest large language A.I. model, into its most important product, its search engine.

AI Overview combines statements generated from its language models with snippets from live links across the web. It can cite its sources, but does not know when that source is incorrect.

The system was designed to answer more complex and specific questions than regular search. The result, the company said, was that the public would be able to benefit from all that Gemini could do, taking some of the work out of searching for information.

But things quickly went awry, and users posted screenshots of problematic examples to social media platforms like X.

AI Overview instructed some users to mix nontoxic glue into their pizza sauce to prevent the cheese from sliding off, a fake recipe it seemed to borrow from an 11-year-old Reddit post meant to be a joke. The A.I. told other users to ingest at least one rock a day for vitamins and minerals, advice that originated in a satirical post from The Onion.

As the company’s cash cow, Google search is “the one property Google needs to keep relevant/trustworthy/useful,” Gergely Orosz, a software engineer with a newsletter on technology, Pragmatic Engineer, wrote on X. “And yet, examples on how AI overviews are turning Google search into garbage are all over my timeline.”

People also shared examples of Google’s telling users in bold font to clean their washing machines using “chlorine bleach and white vinegar,” a mixture that when combined can create harmful chlorine gas. In a smaller font, it told users to clean with one, then the other.

Social media users have tried to one-up one another with who could share the most outlandish responses from Google. In some cases, they doctored the results. One manipulated screenshot appeared to show Google saying that a good remedy for depression was jumping off the Golden Gate Bridge, citing a Reddit user. Ms. Levin, the Google spokeswoman, said that the company’s systems never returned that result.

AI Overview did, however, struggle with presidential history, saying that 17 presidents were white and that Barack Obama was the first Muslim president, according to screenshots posted to X.

It also said Andrew Jackson graduated from college in 2005.

Kevin Roose contributed reporting.

An earlier version of this article referred incorrectly to a Google result from the company’s new artificial-intelligence tool AI Overview. A social media commenter claimed that a result for a search on depression suggested jumping off the Golden Gate Bridge as a remedy. That result was faked, a Google spokeswoman said, and never appeared in real results.


Nico Grant is a technology reporter covering Google from San Francisco. Previously, he spent five years at Bloomberg News, where he focused on Google and cloud computing.


Web publishers brace for carnage as Google adds AI answers

The tech giant is rolling out AI-generated answers that displace links to human-written websites, threatening millions of creators

Kimber Matherne’s thriving food blog draws millions of visitors each month searching for last-minute dinner ideas.

But the mother of three says decisions made at Google, more than 2,000 miles from her home in the Florida panhandle, are threatening her business. About 40 percent of visits to her blog, Easy Family Recipes, come through the search engine, which has for more than two decades served as the clearinghouse of the internet, sending users to hundreds of millions of websites each day.


As the tech giant gears up for Google I/O, its annual developer conference, this week, creators like Matherne are worried about the expanding reach of its new search tool that incorporates artificial intelligence. The product, dubbed “Search Generative Experience,” or SGE, directly answers queries with complex, multi-paragraph replies that push links to other websites further down the page, where they’re less likely to be seen.

The shift stands to shake the very foundations of the web.

The rollout threatens the survival of the millions of creators and publishers who rely on the service for traffic. Some experts argue the addition of AI will boost the tech giant’s already tight grip on the internet, ultimately ushering in a system where information is provided by just a handful of large companies.

“Their goal is to make it as easy as possible for people to find the information they want,” Matherne said. “But if you cut out the people who are the lifeblood of creating that information — that have the real human connection to it — then that’s a disservice to the world.”

Google calls its AI answers “overviews” but they often just paraphrase directly from websites. One search for how to fix a leaky toilet provided an AI answer with several tips, including tightening tank bolts. At the bottom of the answer, Google linked to The Spruce, a home improvement and gardening website owned by web publisher Dotdash Meredith, which also owns Investopedia and Travel + Leisure. Google’s AI tips lifted a phrase from The Spruce’s article word-for-word.

A spokesperson for Dotdash Meredith declined to comment.

The links Google provides are often half-covered, requiring a user to click to expand the box to see them all. It’s unclear which of the claims made by the AI come from which link.

Tech research firm Gartner predicts traffic to the web from search engines will fall 25 percent by 2026. Ross Hudgens, CEO of search engine optimization consultancy Siege Media, said he estimates at least a 10 to 20 percent hit, and more for some publishers. “Some people are going to just get bludgeoned,” he said.

Raptive, which provides digital media, audience and advertising services to about 5,000 websites, including Easy Family Recipes, estimates changes to search could result in about $2 billion in losses to creators — with some websites losing up to two-thirds of their traffic. Raptive arrived at these figures by analyzing thousands of keywords that feed into its network, and conducting a side-by-side comparison of traditional Google search and the pilot version of Google SGE.

Michael Sanchez, the co-founder and CEO of Raptive, says that the changes coming to Google could “deliver tremendous damage” to the internet as we know it. “What was already not a level playing field … could tip its way to where the open internet starts to become in danger of surviving for the long term,” he said.

When Google’s chief executive Sundar Pichai announced the broader rollout during an earnings call last month, he said the company is making the change in a “measured” way, while “also prioritizing traffic to websites and merchants.” Company executives have long argued that Google needs a healthy web to give people a reason to use its service, and doesn’t want to hurt publishers. A Google spokesperson declined to comment further.

“I think we got to see an incredible blossoming of the internet, we got to see something that was really open and freewheeling and wild and very exciting for the whole world,” said Selena Deckelmann, the chief product and technology officer for Wikimedia, the foundation that oversees Wikipedia.

“Now, we’re just in this moment where I think that the profits are driving people in a direction that I’m not sure makes a ton of sense,” Deckelmann said. “This is a moment to take stock of that and say, ‘What is the internet we actually want?’”

People who rely on the web to make a living are worried.

Jake Boly, a strength coach based in Austin, has spent three years building up his website of workout shoe reviews. But last year, his traffic from Google dropped 96 percent. Google still seems to find value in his work, citing his page on AI-generated answers about shoes. The problem is, people read Google’s summary and don’t visit his site anymore, Boly said.

“My content is good enough to scrape and summarize,” he said. “But it’s not good enough to show in your normal search results, which is how I make money and stay afloat.”

Google first said it would begin experimenting with generative AI in search last year, several months after OpenAI released ChatGPT. At the time, tech pundits speculated that AI chatbots could replace Google search as the place to find information. Satya Nadella, the CEO of Google’s biggest competitor, Microsoft, added an AI chatbot to his company’s search engine and in February 2023 goaded Google to “come out and show that they can dance.”

The search giant started dancing. Though it had invented much of the AI technology enabling chatbots and had used it to power tools like Google Translate, it started putting generative AI tech into its other products. Google Docs, YouTube’s video-editing tools and the company’s voice assistant all got AI upgrades.

But search is Google’s most important product, accounting for about 57 percent of its $80 billion in revenue in the first quarter of this year. Over the years, search ads have been the cash cow Google needed to build its other businesses, like YouTube and cloud storage, and to stay competitive by buying up other companies.

Google has largely avoided AI answers for the moneymaking searches that host ads, said Andy Taylor, vice president of research at internet marketing firm Tinuiti.

When it does show an AI answer on “commercial” searches, it shows up below the row of advertisements. That could force websites to buy ads just to maintain their position at the top of search results.

Google has been testing the AI answers publicly for the past year, showing them to a small percentage of its billions of users as it tries to improve the technology.

Still, it routinely makes mistakes. A review by The Washington Post published in April found that Google’s AI answers were long-winded, sometimes misunderstood the question and made up fake answers.

The bar for success is high. While OpenAI’s ChatGPT is a novel product, consumers have spent years with Google and expect search results to be fast and accurate. The rush into generative AI might also run up against legal problems. The underlying tech behind OpenAI, Google, Meta and Microsoft’s AI was trained on millions of news articles, blog posts, e-books, recipes, social media comments and Wikipedia pages that were scraped from the internet without paying or asking permission of their original authors.

OpenAI and Microsoft have faced a string of lawsuits over alleged theft of copyrighted works.

“If journalists did that to each other, we’d call that plagiarism,” said Frank Pine, the executive editor of MediaNews Group, which publishes dozens of newspapers around the United States, including the Denver Post, San Jose Mercury News and the Boston Herald. Several of the company’s papers sued OpenAI and Microsoft in April, alleging the companies used its news articles to train their AI.

If news organizations let tech companies, including Google, use their content to make AI summaries without payment or permission, it would be “calamitous” for the journalism industry, Pine said. The change could have an even bigger effect on newspapers than the loss of their classifieds businesses in the mid-2000s or Meta’s more recent pivot away from promoting news to its users, he said.

The move to AI answers, and the centralization of the web into a few portals, isn’t slowing down. OpenAI has signed deals with web publishers, including Dotdash Meredith, to show their content prominently in its chatbot.

Matherne, of Easy Family Recipes, says she’s bracing for the changes by investing in social media channels and email newsletters.

“The internet’s kind of a scary place right now,” Matherne said. “You don’t know what to expect.”

A previous version of this story said MediaNews Group sued OpenAI and Microsoft. In fact, it was several of the company's newspapers that sued the tech companies. This story has been corrected.


May 14, 2024


Google unleashes AI in search, raising hopes for better results and fears about less web traffic

by Michael Liedtke

Google on Tuesday rolled out a retooled search engine that will frequently favor responses crafted by artificial intelligence over website links, a shift promising to quicken the quest for information while also potentially disrupting the flow of money-making internet traffic.

The makeover announced at Google's annual developers conference will begin this week in the U.S. when hundreds of millions of people will start to periodically see conversational summaries generated by the company's AI technology at the top of the search engine's results page.

The AI overviews are supposed to crop up only when Google's technology determines they will be the quickest and most effective way to satisfy a user's curiosity, a solution most likely to happen with complex subjects or when people are brainstorming or planning. People will likely still see Google's traditional website links and ads for simple searches for things like a store recommendation or weather forecasts.

Google began testing AI overviews with a small subset of selected users a year ago, but the company is now making it one of the staples in its search results in the U.S. before introducing the feature in other parts of the world. By the end of the year, Google expects the recurring AI overviews to be part of its search results for about 1 billion people.

Besides infusing more AI into its dominant search engine, Google also used the packed conference held at a Mountain View, California, amphitheater near its headquarters to showcase advances in a technology that is reshaping business and society.

The next AI steps included more sophisticated analysis powered by Gemini, a technology unveiled five months ago, and smarter assistants, or "agents," including a still-nascent version dubbed "Astra" that will be able to understand, explain and remember things it is shown through a smartphone's camera lens. Google underscored its commitment to AI by bringing in Demis Hassabis, the executive who oversees the technology, to appear on stage at its marquee conference for the first time.

The injection of more AI into Google's search engine marks one of the most dramatic changes that the company has made in its foundation since its inception in the late 1990s. It's a move that opens the door for more growth and innovation but also threatens to trigger a sea change in web surfing habits.

"This bold and responsible approach is fundamental to delivering on our mission and making AI more helpful for everyone," Google CEO Sundar Pichai told a group of reporters.

Well aware of how much attention is centered on the technology, Pichai ended a nearly two-hour succession of presentations by asking Google's Gemini model how many times AI had been mentioned. The count: 120, and then the tally edged up by one more when Pichai said, "AI," yet again.

The increased AI emphasis will bring new risks to an internet ecosystem that depends heavily on digital advertising as its financial lifeblood.

Google stands to suffer if the AI overviews undercut ads tied to its search engine, a business that reeled in $175 billion in revenue last year alone. And website publishers, ranging from major media outlets to entrepreneurs and startups that focus on narrower subjects, will be hurt if the AI overviews are so informative that they result in fewer clicks on the website links that will still appear lower on the results page.

Based on habits that emerged during the past year's testing phase of Google's AI overviews, about 25% of the traffic could be negatively affected by the de-emphasis on website links, said Marc McCollum, chief innovation officer at Raptive, which helps about 5,000 website publishers make money from their content.

A decline in traffic of that magnitude could translate into billions of dollars in lost ad revenue, a devastating blow that would be delivered by a form of AI technology that culls information plucked from many of the websites that stand to lose revenue.

"The relationship between Google and publishers has been pretty symbiotic, but enter AI, and what has essentially happened is the Big Tech companies have taken this creative content and used it to train their AI models," McCollum said. "We are now seeing that being used for their own commercial purposes in what is effectively a transfer of wealth from small, independent businesses to Big Tech."

But Google found the AI overviews resulted in people conducting even more searches during the technology's testing "because they suddenly can ask questions that were too hard before," Liz Reid, who oversees the company's search operations, told The Associated Press during an interview. She declined to provide any specific numbers about link-clicking volume during the tests of AI overviews.

"In reality, people do want to click to the web, even when they have an AI overview," Reid said. "They start with the AI overview and then they want to dig in deeper. We will continue to innovate on the AI overview and also on how do we send the most useful traffic to the web."

The increasing use of AI technology to summarize information in chatbots such as Google's Gemini and OpenAI's ChatGPT during the past 18 months already has been raising legal questions about whether the companies behind the services are illegally pulling from copyrighted material to advance their services. It's an allegation at the heart of a high-profile lawsuit that The New York Times filed late last year against OpenAI and its biggest backer, Microsoft.

Google's AI overviews could provoke lawsuits too, especially if they siphon away traffic and ad sales from websites that believe the company is unfairly profiting from their content. But it's a risk that the company had to take as the technology advances and is used in rival services such as ChatGPT and upstart search engines such as Perplexity, said Jim Yu, executive chairman of BrightEdge, which helps websites rank higher in Google's search results.

"This is definitely the next chapter in search," Yu said. "It's almost like they are tuning three major variables at once: the search quality, the flow of traffic in the ecosystem and then the monetization of that traffic. There hasn't been a moment in search that is bigger than this for a long time."

Outside of the amphitheater, several dozen protesters chained themselves to each other and blocked one of the entrances to the conference. Demonstrators targeted a $1.2 billion deal known as Project Nimbus that provides artificial intelligence technology to the Israeli government. They contend the system is being lethally deployed in the Gaza war, an allegation Google refutes. The demonstration didn't seem to affect the conference's attendance or the enthusiasm of the crowd inside the venue.

© 2024 The Associated Press. All rights reserved. This material may not be published, broadcast, rewritten or redistributed without permission.



Abstract. The dominant sequence transduction models are based on complex recurrent or convolutional neural networks in an encoder-decoder configuration. The best performing models also connect the encoder and decoder through an attention mechanism. We propose a new simple network architecture, the Transformer, based solely on attention ...

Publications. Our teams aspire to make discoveries that impact everyone, and core to our approach is sharing our research and tools to fuel progress in the field. Google publishes hundreds of research papers each year. Publishing our work enables us to collaborate and share ideas with, as well as learn from, the broader scientific community.

Large language models have been shown to achieve remarkable performance across a variety of natural language tasks using few-shot learning, which drastically reduces the number of task-specific training examples needed to adapt the model to a particular application. To further our understanding of the impact of scale on few-shot learning, we trained a 540-billion parameter, densely activated ...

Advancing the state of the art. Our teams advance the state of the art through research, systems engineering, and collaboration across Google. We publish hundreds of research papers each year across a wide range of domains, sharing our latest developments in order to collaboratively progress computing and science. Learn more about our philosophy.

Our research in Responsible AI aims to shape the field of artificial intelligence and machine learning in ways that foreground the human experiences and impacts of these technologies. We examine and shape emerging AI models, systems, and datasets used in research, development, and practice. This research uncovers foundational insights and ...

Google Quantum AI is advancing the state of the art in quantum computing and developing the hardware and software tools to operate beyond classical capabilities. Discover our research and resources to help you with your quantum experiments.

Google publishes hundreds of research papers each year. Publishing is important to us; it enables us to collaborate and share ideas with, as well as learn from, the broader scientific community. Submissions are often made stronger by the fact that ideas have been tested through real product implementation by the time of publication.

A paper published in Nature today shows how Google Research uses AI to accurately predict riverine flooding and help protect livelihoods in over 80 countries up to 7 days in advance, including in data scarce and vulnerable regions. Yossi Matias. Vice President, Engineering & Research.

Google Scholar provides a simple way to broadly search for scholarly literature. Search across a wide variety of disciplines and sources: articles, theses, books, abstracts and court opinions.

Like many breakthroughs in scientific discovery, the one that spurred an artificial intelligence revolution came from a moment of serendipity. In early 2017, two Google research scientists, Ashish ...

Papers With Code highlights trending Machine Learning research and the code to implement it. ... sb-ai-lab/lightautoml • • 3 Sep 2021. We present an AutoML system called LightAutoML developed for a large European financial services company and its ecosystem satisfying the set of idiosyncratic requirements that this ecosystem has for AutoML ...

Semantic Reader is an augmented reader with the potential to revolutionize scientific reading by making it more accessible and richly contextual. Try it for select papers. Semantic Scholar uses groundbreaking AI and engineering to understand the semantics of scientific literature to help Scholars discover relevant research.

A family of lightweight, state-of-the-art open models from Google, built from the same research and technology used to create the Gemini models. Responsible by design Incorporating comprehensive safety measures, these models help ensure responsible and trustworthy AI solutions through curated datasets and rigorous tuning.

It uses Gemini 1.5 Pro's long context capabilities to transform complex research papers into short audio dialogues. In minutes, Illuminate can generate a conversation consisting of two AI-generated voices, providing an overview and brief discussion of the key insights from research papers.

In a survey of users, 10% of respondents said that Elicit saves them 5 or more hours each week. In pilot projects, we were able to save research groups 50% in costs and more than 50% in time by automating data extraction work they previously did manually. Elicit's users save up to 5 hours per week.

Y.B., J.C., G.Ha., and S.Mc. hold the position of Canadian Institute for Advanced Research (CIFAR) AI Chair. J.C. is a senior research adviser to Google DeepMind. A.A. reports acting as an adviser to the Civic AI Security Program and was affiliated with the Institute for AI Policy and Strategy at the time of the first submission.

Researchers from the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) and Google Research may have just performed digital sorcery — in the form of a diffusion model that can change the material properties of objects in images. ... who is a senior author on the paper. "This work opens new and finer-grain control for visual ...
