Fundamentals of Data Representation: ASCII and Unicode

ASCII

ASCII is normally stored using 8 bits (1 byte) per character. However, the 8th bit was traditionally reserved as a parity (check) bit, meaning that only 7 bits are available to encode each character. This gives ASCII the ability to store a total of 2^7 = 128 different values.

ASCII values can take many forms:

- Letters (capitals and lower case are separate)

- Punctuation (?/|\$ etc.)

- Non-printing commands (enter, escape, backspace)

Take a look at your keyboard and see how many different keys you have. The number should be 104 for a Windows keyboard, or 101 for a traditional keyboard. Allowing for the shifted values (a, A; b, B etc.) and recognising that some keys have repeated functionality (two shift keys, the num pad), we have roughly 128 functions that a keyboard can perform.

If you look carefully at the ASCII representation of each character you might notice some patterns. For example:

As you can see, a = 97, b = 98, c = 99. This means that if we are told what value a character is we can easily work out the value of subsequent or prior characters.
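The pattern can be checked with a few lines of Python. This is only a small sketch: `ord` and `chr` are Python built-ins, while `shift_letter` is a helper name made up for this illustration.

```python
# Consecutive letters have consecutive ASCII values.
for letter in "abc":
    print(letter, ord(letter))


def shift_letter(ch, offset):
    """Return the character `offset` places after `ch` in the ASCII table.

    Hypothetical helper for illustration; it does no range checking.
    """
    return chr(ord(ch) + offset)


# Knowing that 'a' is 97, we can work out later or earlier letters.
print(shift_letter("a", 3))
print(shift_letter("z", -1))
```

Because the capitals are laid out in the same way starting at 65, the same helper works for upper case letters too.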

For the Latin alphabet ASCII is generally fine, but what if you wanted to write something in Mandarin, or Hindi? We need another coding scheme!


Delve into the fascinating world of ASCII in computer science, a key component in data representation and communication between devices. Unearth the origins and purpose of ASCII code, discovering it as one of the most basic elements of programming and digital technology. Through relevant examples, you'll grasp the concept of ASCII and its application. Embrace the details of the ASCII table, learning to navigate it and identifying commonly-used characters. Guide yourself through the ASCII encoding process with a beginner-friendly, step-by-step approach. Finally, explore the limitations of ASCII, understanding its inherent challenges and how these influence data representation. This article is an essential exploration into the world of ASCII, offering you comprehensive knowledge of this fundamental part of computer science.


Understanding ASCII in Computer Science

ASCII, which stands for American Standard Code for Information Interchange, is a character encoding standard used to represent text in computers and other devices that use text. This system primarily includes printable characters such as letters of the English alphabet (upper and lower case), numbers, and punctuation marks. Each ASCII character is assigned a unique number between 0 and 127.

What is ASCII code?

ASCII code is a numeric representation of characters and it's vital to the functioning of modern-day computers. It essentially provides a way to standardise text, allowing computers from different manufacturers or with different software to exchange and read information seamlessly.

- ASCII includes 128 characters, including 32 control codes, 95 printable characters, and a DEL character.

- The first 32 characters, from 0 to 31, are known as control characters.

- The next 95 characters, from 32 to 126, are printable characters, and 127 is the DEL control character.

For example, the ASCII value for the uppercase letter 'A' is 65, and the lowercase letter 'a' is 97. ASCII value for number '0' is 48 and so on.

Explanation of ASCII with relevant examples

So in computer's language or in ASCII, "COMPUTER" is "67, 79, 77, 80, 85, 84, 69, 82". This format enables your computer to understand and process the text information in a standardised way.
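As a quick check of the example above, the following sketch converts text to its ASCII numbers and back. The function names `to_ascii_codes` and `from_ascii_codes` are invented for this illustration; `str.encode("ascii")` is the standard Python call that does the actual work.

```python
def to_ascii_codes(text):
    """Return the list of ASCII numbers for each character in `text`.

    Raises UnicodeEncodeError if `text` contains a non-ASCII character.
    """
    return list(text.encode("ascii"))


def from_ascii_codes(codes):
    """Rebuild the text from a list of ASCII numbers."""
    return bytes(codes).decode("ascii")


codes = to_ascii_codes("COMPUTER")
print(codes)                    # the numbers quoted in the text above
print(from_ascii_codes(codes))  # back to the original word
```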

Discovering ASCII Characters

Navigating the ASCII Table

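The ASCII table is split into two core sections. The first part, containing numbers from 0 to 31, is designated for control characters. These are non-printable characters that are utilised to control hardware devices. They include characters like 'Start of Heading' (SOH), 'End of Transmission' (EOT), and 'Escape' (ESC). The second part, from 32 to 126, holds the printable characters: letters, numerals, punctuation, and special characters. The final value, 127, is the DEL control character.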

Commonly-used ASCII Characters

The space character, for example, represented as 32 in the ASCII table, is one of the most commonly used ASCII characters. Additionally, uppercase and lowercase English letters (ranging from 65 to 90 and 97 to 122 respectively) are frequently used.

The newline character represented as '\n' with ASCII value 10 and carriage return character represented as '\r' with ASCII value 13 are often used in text editing to control the cursor movement.

- The uppercase letters A to Z have ASCII values from 65 to 90

- The lowercase letters a to z have ASCII values from 97 to 122

- The numbers 0 to 9 have ASCII values from 48 to 57

- Common punctuation marks like comma (,), full stop (.), question mark (?), and exclamation mark (!) have ASCII values 44, 46, 63, and 33 respectively

It's also important to note the '@' symbol which, as a well-known symbol in email addresses, holds an ASCII value of 64. Additionally, "%", often used in programming, has an ASCII value of 37.

The ASCII Encoding Process

Step-by-step Guide to ASCII Encoding

- The first step in ASCII encoding is capturing the input – that's every key press on your keyboard.

- Each key, when pressed, sends a signal to the computer, which is translated into the unique ASCII value of the corresponding character.

- The computer processes this ASCII value through its basic I/O system.

- The ASCII values of the character keys are usually used to display the characters on the screen. Any control key press results in the corresponding control command. For instance, a newline ('\n') command would move the cursor to the next line.

- The ASCII encoded characters can be stored or manipulated in various ways, depending on the program being run.

Limitations of ASCII

Inherent Limitations in ASCII Characters

Firstly, ASCII only supports 128 standard characters, which include a range of English letters, numbers, punctuation marks, and a set of control characters. This makes it markedly restricted when it comes to expressing the vast array of symbols, letters, and characters needed for the majority of non-English languages.

For instance, in ASCII, there's no representation for the multiplication symbol (×), division sign (÷), or other common mathematical symbols like \( \pi \) or \( \sqrt[2]{a} \) or more abstract scientific symbols and characters.

How ASCII Limitations Influence Data Representation

For example, the word 'café' would be inaccurately represented in ASCII as 'caf' without the accent or by replacing the é with an e, which becomes 'cafe'. The same would occur with the German word 'Frühstück', which would incorrectly be represented as 'Frhstck'.
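The effect can be reproduced with Python's built-in ASCII codec. This is a minimal sketch: `fits_in_ascii` and `drop_non_ascii` are names made up for this example, and the `errors="ignore"` option is just one of several ways a program might choose to handle characters that ASCII cannot represent.

```python
def fits_in_ascii(text):
    """Return True if every character in `text` has an ASCII code."""
    try:
        text.encode("ascii")
        return True
    except UnicodeEncodeError:
        return False


def drop_non_ascii(text):
    """Silently discard characters that ASCII cannot represent."""
    return text.encode("ascii", errors="ignore").decode("ascii")


# The accented letters are written as escapes so the example does not
# depend on how this file itself is encoded.
cafe = "caf\u00e9"                  # café
fruehstueck = "Fr\u00fchst\u00fcck"  # Frühstück

print(fits_in_ascii(cafe))
print(drop_non_ascii(cafe))
print(drop_non_ascii(fruehstueck))
```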

For instance, the formula for the volume of a sphere, \( \frac{4}{3} \times \pi \times r^3 \), would have to be represented as "4/3 * PI * r^3" in ASCII, which is not as easy to read or interpret.

What is ASCII - Key takeaways

ASCII (American Standard Code for Information Interchange) is a character encoding standard used to represent text in computers and other devices, assigning a unique number between 0 and 127 to each ASCII character.

ASCII code is a numeric representation of characters vital to the functioning of modern computers, enabling standardisation of text and seamless information exchange between different devices.

ASCII includes 128 characters: 32 control codes, 95 printable characters, and a DEL character. Numbers 0 to 31 represent control characters, numbers 32 to 126 signify printable characters, and 127 is DEL.

The ASCII value for uppercase 'A' is 65, lowercase 'a' is 97, and for the number '0' is 48.

The ASCII table splits into two sections: the first for control characters (numbers 0 to 31), which are non-printable characters utilised to control hardware devices, and the second for printable characters (numbers 32 to 126), which include letters, numerals, punctuation, and special characters. Number 127 is the DEL control character.

Flashcards in What is ASCII

What does ASCII stand for in Computer Science?

ASCII stands for American Standard Code for Information Interchange.

How many characters does ASCII include and how are they distributed?

ASCII includes 128 characters: 32 control codes, 95 printable characters, and a DEL character.

What is the primary function of ASCII code in computers?

ASCII code provides a way to standardise text, allowing computers from different manufacturers or with different software to exchange and read information seamlessly.

When typing the word 'COMPUTER' on a computer, how is it being interpreted in ASCII?

In ASCII, 'COMPUTER' is interpreted as "67, 79, 77, 80, 85, 84, 69, 82".

What are ASCII characters in the context of computer science?

ASCII characters are the building blocks used to standardise text in computers, making them indispensable to modern computer operations.

How is the ASCII table structured?

The ASCII table is split into two parts - the first part (0-31) is for non-printable control characters, and the second part (32-126) is for printable characters including letters, numerals, and punctuation. The last value (127) is the DEL control character.


You may ask why I always put 8 binary digits there. Well, the smallest unit in the computer's memory to store data is called a BYTE, which consists of 8 BITS. One byte allows up to 256 different combinations of data representation (2^8 = 256), that is, the values 0 to 255. What happens when we have numbers greater than 255? The computer simply uses more bytes to hold the value; 2 bytes can hold up to 65,536 different values (2^16), and so forth.

Not only does the computer not understand the (decimal) numbers you use, it doesn't even understand letters like "ABCDEFG...". The fact is, it doesn't care. Whatever letters you input into the computer, the computer just saves them there and delivers them to you when you instruct it to. It saves these letters in the same binary format as digits, according to a pattern. On PCs (including DOS, Windows 95/98/NT, and UNIX), the pattern is called ASCII (pronounced ask-ee), which stands for American Standard Code for Information Interchange.

In this format, the letter "A" is represented by "0100 0001", or, most often, referred to as decimal 65 in the ASCII table. When performing a comparison of characters, the computer actually looks up the associated ASCII codes and compares the ASCII values instead of the characters. Therefore the letter "B", which has an ASCII value of 66, is greater than the letter "A", with an ASCII value of 65.
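To see that character comparison really is a comparison of codes, here is a short sketch. It is written in Python rather than C++ purely to keep it brief; `compare_chars` is a name invented for this illustration.

```python
def compare_chars(left, right):
    """Compare two single characters by their ASCII values.

    Returns -1, 0 or 1, in the style of C's strcmp.
    """
    a, b = ord(left), ord(right)
    return (a > b) - (a < b)


print(format(ord("A"), "08b"))  # the bit pattern stored for "A"
print(compare_chars("B", "A"))  # positive: 66 is greater than 65
print(compare_chars("a", "B"))  # positive: 97 is greater than 66

# A consequence: all capitals sort before all lower case letters.
print(sorted(["b", "A", "a", "B"]))
```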

The computer stores data in different formats or types. The number 10 can be stored as a numeric value, as in "10 dollars", or as characters, as in the address "10 Main Street". So how can the computer tell? Once again the computer doesn't care; it is your responsibility to ensure that you get the correct data out of it. (For illustration, character 10 and numeric 10 are represented by 0011-0001-0011-0000 and 0000-1010 respectively; you can see how different they are.) Different programming languages have different data types, although the fundamental ones are usually very similar.
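The two bit patterns quoted above can be reproduced as follows. This is a sketch in Python rather than C++; `bits_of_text` and `bits_of_number` are illustrative names, and the numeric version assumes the value fits in a single byte.

```python
def bits_of_text(text):
    """Bit pattern of `text` stored as ASCII characters, one byte each."""
    return "-".join(
        format(byte, "08b")[:4] + "-" + format(byte, "08b")[4:]
        for byte in text.encode("ascii")
    )


def bits_of_number(value):
    """Bit pattern of a small non-negative integer stored in one byte."""
    if not 0 <= value <= 255:
        raise ValueError("this sketch only handles one-byte values")
    bits = format(value, "08b")
    return bits[:4] + "-" + bits[4:]


print(bits_of_text("10"))   # two characters: '1' then '0'
print(bits_of_number(10))   # the number ten
```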

C++ has many data types. The following are some basic data types you will be facing in these chapters. Note that there are more complicated data types. You can even create your own data types. Some of these will be discussed later in the tutorial.

char is basically used to store alphanumerics (numbers are stored in character form). Recall that characters are stored in their ASCII representation on PCs. ASCII -128 to -1 do not exist, so char accommodates data from ASCII 0 (null zero) to ASCII 127 (DEL key). The original C++ does not have a String data type (but string is available through the inclusion of a library, to be discussed later). A string can be stored as a one-dimensional array (list) with a "null zero" (ASCII 0) stored in the last "cell" in the array. Unsigned char effectively accommodates the use of Extended ASCII characters, which represent most special characters like the copyright sign ©, the registered trademark sign ®, etc., plus some European letters. Both char and unsigned char are stored internally as integers so they can effectively be compared (to be greater or less than).

Whenever you write a char (letter) in your program you must include it in single quotes. When you write strings (words or sentences) you must include them in double quotes. Otherwise C++ will treat these letters/words/sentences as tokens (to be discussed in Chapter 4). Remember in C/C++, A, 'A', "A" are all different. The first A (without quotes) means a variable or constant (discussed in Chapter 4), the second 'A' (in single quotes) means a character A which occupies one byte of memory. The third "A" (in double quotes) means a string containing the letter A followed by a null character, which occupies 2 bytes of memory (it will use more memory if stored in a variable/constant of bigger size). See these examples: letter = 'A'; cout << 'A'; cout << "10 Main Street";

int (integer) represents whole numbers, i.e. numbers without a fractional part. Since int has a relatively small range (up to 32767 when an int is 16 bits wide), whenever you need to store a value that has the possibility of going beyond this limit, long int should be used instead. The beauty of using int is that since it has no fractional part, its value is exact and calculations with int are extremely accurate. However, note that dividing an int by another may result in truncation, e.g. int 10 / int 3 will result in 3, not 3.3333 (more on this will be discussed later).

float, on the other hand, contains fractions. However, real fractional numbers are not possible in computers since they are discrete machines (they can only handle the numbers 0 and 1, not 1.5 nor 1.75 or anything in between 0 and 1). No matter how many digits your calculator can show, you cannot produce a result of 2/3 without rounding, truncating, or approximating. Mathematicians always write 2/3 instead of 0.66666... when they need the EXACT value.

Since the computer cannot produce real fractions, the issue of significant digits comes into sight. For most applications a certain number of significant digits is all you need. For example, when you talk about money, $99.99 is no different from $99.988888888888 (rounded to the nearest cent); when you talk about the wealth of Bill Gates, it makes little sense to say $56,123,456,789.95 instead of just saying approximately $56 billion (these figures are not real, I have no idea how much money Bill has, although I wish he would give me the roundings). float has only about 6 significant digits, so for some applications it may not be sufficient, especially in scientific calculations, in which case you may want to use double or even long double to handle the numbers.

There is also another problem in using float/double. Since numbers are represented internally as binary values, whenever a fractional number is calculated or translated to/from binary there will be a rounding/truncation error. So if you have a float 0, add 0.01 to it 100 times, then subtract 1.00 from it, you will not get 0 as you should; rather, you will get a value close to zero, but not really zero. Using double or long double will reduce the error but will not eliminate it. However, as I mentioned earlier, the error may not matter in real life; it just means you need to exercise caution when programming with floating point numbers.
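The add-0.01-a-hundred-times experiment can be sketched as below. Note the assumptions: this is Python rather than C++, and a Python float is a double-precision value (comparable to a C++ double, not a C++ float), so the leftover error will be smaller than the one a C++ float would show. `accumulate` is a name made up for this sketch.

```python
from decimal import Decimal


def accumulate(step, times):
    """Add `step` to a running total `times` times, using binary floats."""
    total = 0.0
    for _ in range(times):
        total += step
    return total


# With binary floating point the result is very close to zero,
# but it should not be expected to be exactly zero.
drift = accumulate(0.01, 100) - 1.0
print(drift)

# Decimal arithmetic stores 0.01 exactly, so nothing is left over.
exact = sum([Decimal("0.01")] * 100) - Decimal("1.00")
print(exact)
```

The decimal version is shown only for contrast; it trades speed for exactness and is one of several ways to avoid the accumulated error when handling values such as money.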

There is another C++ data type I haven't included here — bool (boolean) data type which can only store a value of either 0 (false) or 1 (true). I will be using int (integer) to handle logical comparisons which poses more challenge and variety of use.

Escape Sequences are not data types but I feel I had better discuss them here. I mentioned earlier that you have to include a null zero at the end of a "string" when using an array of char to represent a string. The easiest way to do this is to write the escape sequence '\0', which is understood by C++ as null zero. The following are Escape Sequences in C++:

Earlier I said you can create your own data types. Here I will show you how. In fact, not only can you create new data types, you can also create an alias of an existing data type. For example, suppose you are writing a program which deals with dollar values. Since dollar values have fractional parts you have to use either the float or double data type (e.g. assign the float data type to salary by writing float salary). You can create an alias MONEY of the same data type and write MONEY salary. You do this by adding the following type definition into your program:

typedef double MONEY;

You can also create new data types. I will discuss more on this when we come to Arrays in Chapter 10. But the following illustrates how you create a new data type of array from a base data type:

Data Representation 5.4. Text


There are several different ways in which computers use bits to store text. In this section, we will look at some common ones and then look at the pros and cons of each representation.

We saw earlier that 64 unique patterns can be made using 6 dots in Braille. A dot corresponds to a bit, because both dots and bits have 2 different possible values.

Count how many different characters that you could type into a text editor using your keyboard. (Don’t forget to count both of the symbols that share the number keys, and the symbols to the side that are for punctuation!)

The collective name for upper case letters, lower case letters, numbers, and symbols is characters e.g. a, D, 1, h, 6, *, ], and ~ are all characters. Importantly, a space is also a character.

If you counted correctly, you should find that there were more than 64 characters, and you might have found up to around 95. Because 6 bits can only represent 64 characters, we will need more than 6 bits; it turns out that we need at least 7 bits to represent all of these characters as this gives 128 possible patterns. This is exactly what the ASCII representation for text does.

In the previous section, we explained what happens when the number of dots was increased by 1 (remember that a dot in Braille is effectively a bit). Can you explain how we knew that if 6 bits is enough to represent 64 characters, then 7 bits must be enough to represent 128 characters?

Each pattern in ASCII is usually stored in 8 bits, with one wasted bit, rather than 7 bits. However, the left-most bit in each 8-bit pattern is a 0, meaning there are still only 128 possible patterns. Where possible, we prefer to deal with full bytes (8 bits) on a computer, which is why ASCII has an extra wasted bit.
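The counting argument can be checked with a few lines of Python. This is just a sketch; `pattern_count` is a name invented for this example.

```python
def pattern_count(bits):
    """Number of different patterns that `bits` bits can make."""
    return 2 ** bits


# Each extra bit doubles the number of patterns.
for bits in (6, 7, 8):
    print(bits, "bits give", pattern_count(bits), "patterns")

# Every ASCII number (0 to 127) written as 8 bits starts with a 0,
# which is the "wasted" left-most bit.
all_start_with_zero = all(
    format(number, "08b").startswith("0") for number in range(128)
)
print(all_start_with_zero)
```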

Here is a table that shows the patterns of bits that ASCII uses for each of the characters.

For example, the letter "c" (lower case) in the table has the pattern "01100011" (the 0 at the front is just extra padding to make it up to 8 bits). The letter "o" has the pattern "01101111". You could write a word out using this code, and if you give it to someone else, they should be able to decode it exactly.

Computers can represent pieces of text with sequences of these patterns, much like Braille does. For example, the word "computers" (all lower case) would be 01100011 01101111 01101101 01110000 01110101 01110100 01100101 01110010 01110011. This is because "c" is "01100011", "o" is "01101111", and so on. Have a look at the ASCII table above to check if we are right!
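If you would like to check patterns like these without looking each one up by hand, the sketch below converts text to 8-bit ASCII patterns and back again. The function names `text_to_bits` and `bits_to_text` are made up for this example.

```python
def text_to_bits(text):
    """Write each character of `text` as its 8-bit ASCII pattern."""
    return " ".join(format(byte, "08b") for byte in text.encode("ascii"))


def bits_to_text(bits):
    """Decode space-separated bit patterns back into text."""
    return bytes(int(pattern, 2) for pattern in bits.split()).decode("ascii")


encoded = text_to_bits("computers")
print(encoded)
print(bits_to_text(encoded))
```

Decoding with `int(pattern, 2)` also accepts 7-bit patterns, because a missing leading 0 does not change the number.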

The name "ASCII" stands for "American Standard Code for Information Interchange", which was a particular way of assigning bit patterns to the characters on a keyboard. The ASCII system even includes "characters" for ringing a bell (useful for getting attention on old telegraph systems), deleting the previous character (kind of an early "undo"), and "end of transmission" (to let the receiver know that the message was finished). These days those characters are rarely used, but the codes for them still exist (they are the missing patterns in the table above). Nowadays ASCII has been supplanted by a code called "UTF-8", which happens to be the same as ASCII if the extra left-hand bit is a 0, but opens up a huge range of characters if the left-hand bit is a 1.

Have a go at the following ASCII exercises:

- How would you represent "science" in ASCII? (ignore the " marks)

- How would you represent "Wellington" in ASCII? (note that it starts with an upper case "W")

- How would you represent "358" in ASCII? (it is three characters, even though it looks like a number)

- How would you represent "Hello, how are you?" in ASCII? (look for the comma, question mark, and space characters in the ASCII table)

Be sure to have a go at all of them before checking the answer!

These are the answers.

- "science" = 01110011 01100011 01101001 01100101 01101110 01100011 01100101

- "Wellington" = 01010111 01100101 01101100 01101100 01101001 01101110 01100111 01110100 01101111 01101110

- "358" = 00110011 00110101 00111000

- "Hello, how are you?" = 01001000 01100101 01101100 01101100 01101111 00101100 00100000 01101000 01101111 01110111 00100000 01100001 01110010 01100101 00100000 01111001 01101111 01110101 00111111

Note that the text "358" is treated as 3 characters in ASCII, which may be confusing, as the text "358" is different to the number 358! You may have encountered this distinction in a spreadsheet e.g. if a cell starts with an inverted comma in Excel, it is treated as text rather than a number. One place this comes up is with phone numbers; if you type 027555555 into a spreadsheet as a number, it will come up as 27555555, but as text the 0 can be displayed. In fact, phone numbers aren't really just numbers, because a leading zero can be important and they can contain other characters – for example, +64 3 555 1234 extn. 1234.

ASCII usage in practice

ASCII was first used commercially in 1963, and despite the big changes in computers since then, it is still the basis of how English text is stored on computers. ASCII assigned a different pattern of bits to each of the characters, along with a few other "control" characters, such as delete or backspace.

English text can easily be represented using ASCII, but what about languages such as Chinese where there are thousands of different characters? Unsurprisingly, the 128 patterns aren’t nearly enough to represent such languages. Because of this, ASCII is not so useful in practice, and is no longer used widely. In the next sections, we will look at Unicode and its representations. These solve the problem of being unable to represent non-English characters.

There are several other codes that were popular before ASCII, including the Baudot code and EBCDIC. A widely used variant of the Baudot code was the "Murray code", named after New Zealand born inventor Donald Murray. One of Murray's significant improvements was to introduce the idea of "control characters", such as the carriage return (new line). The "control" key still exists on modern keyboards.

In practice, we need to be able to represent more than just English characters. To solve this problem, we use a standard called Unicode. Unicode is a character set with around 120,000 different characters, in many different languages, current and historic. Each character has a unique number assigned to it, making it easy to identify.

Unicode itself is not a representation – it is a character set. In order to represent Unicode characters as bits, a Unicode encoding scheme is used. The Unicode encoding scheme tells us how each number (which corresponds to a Unicode character) should be represented with a pattern of bits.
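You can look up Unicode numbers yourself with a couple of lines of Python, whose built-in `ord` and `chr` functions work on Unicode characters. The names `unicode_number` and `unicode_character` below are just wrappers invented for this sketch.

```python
def unicode_number(character):
    """Return the Unicode number assigned to a single character."""
    return ord(character)


def unicode_character(number):
    """Return the character that a Unicode number is assigned to."""
    return chr(number)


# ASCII characters keep the same numbers in Unicode.
print(unicode_number("H"))

# Non-English characters simply have larger numbers.
print(unicode_number("犬"))
print(unicode_character(35987))
```

Note that this only gives the number of each character; how that number is turned into bits depends on the encoding scheme.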


The most widely used Unicode encoding schemes are called UTF-8, UTF-16, and UTF-32; you may have seen these names in email headers or describing a text file. Some of the Unicode encoding schemes are fixed length, and some are variable length. Fixed length means that each character is represented using the same number of bits. Variable length means that some characters are represented with fewer bits than others. It's better to be variable length, as this will ensure that the most commonly used characters are represented with fewer bits than the uncommonly used characters. Of course, what might be the most commonly used character in English is not necessarily the most commonly used character in Japanese. You may be wondering why we need so many encoding schemes for Unicode. It turns out that some are better for English language text, and some are better for Asian language text.

The remainder of the text representation section will look at some of these Unicode encoding schemes so that you understand how to use them, and why some of them are better than others in certain situations.

UTF-32 is a fixed length Unicode encoding scheme. The representation for each character is simply its number converted to a 32 bit binary number. Leading zeroes are used if there are not enough bits (just like how you can represent 254 as a 4 digit decimal number – 0254). 32 bits is a nice round number on a computer, often referred to as a word (which is a bit confusing, since we can use UTF-32 characters to represent English words!)

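For example, the character H in UTF-32 would be:

00000000 00000000 00000000 01001000

The character $ in UTF-32 would be:

00000000 00000000 00000000 00100100

And the character 犬 (dog in Chinese) in UTF-32 would be:

00000000 00000000 01110010 10101100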
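UTF-32 patterns like these can be produced with a short Python sketch. `utf32_bits` is a name invented for this example; the codec name "utf-32-be" is Python's big-endian UTF-32 encoder, chosen here because it writes the bytes left to right without adding a byte order mark.

```python
def utf32_bits(character):
    """Return the 32-bit UTF-32 pattern for one character, in 8-bit groups."""
    encoded = character.encode("utf-32-be")
    return " ".join(format(byte, "08b") for byte in encoded)


def number_as_32_bits(character):
    """The same pattern, made directly from the Unicode number."""
    bits = format(ord(character), "032b")
    return " ".join(bits[i:i + 8] for i in range(0, 32, 8))


for character in ("H", "$", "犬"):
    print(character, utf32_bits(character))
```

The second function is there to show that the encoding really is just the character's number padded out to 32 bits.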


- Represent each character in your name using UTF-32.

- Check how many bits your representation required, and explain why it had this many (remember that each character should have required 32 bits)

- Explain how you knew how to represent each character. Even if you used the interactive, you should still be able to explain it in terms of binary numbers.

ASCII actually took the same approach. Each ASCII character has a number between 0 and 127, and the representation for the character is the number converted to an 8 bit binary number (with a leading 0). ASCII is also a fixed length encoding scheme – every character in ASCII is represented using 8 bits.

In practice, UTF-32 is rarely used – you can see that it's pretty wasteful of space. UTF-8 and UTF-16 are both variable length encoding schemes, and very widely used. We will look at them next.

What is the largest number that can be represented with 32 bits? (In both decimal and binary).

The largest number in Unicode that has a character assigned to it is not actually the largest possible 32 bit number – it is 00000000 00010000 11111111 11111111. What is this number in decimal?

Most numbers that can be made using 32 bits do not have a Unicode character attached to them – there is a lot of wasted space. There are good reasons for this, but if you had a shorter number that could represent any character, what is the minimum number of bits you would need, given that there are currently around 120,000 Unicode characters?

The largest number that can be represented using 32 bits is 4,294,967,295 (around 4.3 billion). You might have seen this number before – it is the largest unsigned integer that a 32 bit computer can easily represent in programming languages such as C.

The decimal number for the largest character is 1,114,111.

You can represent all current characters with 17 bits. With 16 bits there are only 65,536 different patterns (the largest number is 65,535), which is not enough. If we go up to 17 bits, that gives 131,072 patterns, which is larger than 120,000. Therefore, we need 17 bits.
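These three answers can be checked with a few lines of Python. This is only a sketch; `bits_needed` is a helper name invented here, and the figure of 120,000 characters is the approximate count used in the text.

```python
def bits_needed(how_many):
    """Smallest number of bits that gives at least `how_many` patterns."""
    return max(1, (how_many - 1).bit_length())


# Largest number that fits in 32 bits.
largest_32_bit = 2 ** 32 - 1
print(largest_32_bit, format(largest_32_bit, "b"))

# Largest Unicode number with a character assigned to it.
largest_unicode = int("00000000000100001111111111111111", 2)
print(largest_unicode)

# Bits required for roughly 120,000 characters.
print(bits_needed(120_000))
```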

UTF-8 is a variable length encoding scheme for Unicode. Characters with a lower Unicode number require fewer bits for their representation than those with a higher Unicode number. UTF-8 representations contain either 8, 16, 24, or 32 bits. Remembering that a byte is 8 bits, these are 1, 2, 3, and 4 bytes.

For example, the character H in UTF-8 would be:

01001000

The character ǿ in UTF-8 would be:

11000111 10111111

And the character 犬 (dog in Chinese) in UTF-8 would be:

11100111 10001010 10101100


How does UTF-8 work?

So how does UTF-8 actually work? Use the following process to do what the interactive is doing and convert characters to UTF-8 yourself.

Step 1. Lookup the Unicode number of your character.

Step 2. Convert the Unicode number to a binary number, using as few bits as necessary. Look back to the section on binary numbers if you cannot remember how to convert a number to binary.

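Step 3. Count how many bits are in the binary number, and choose the correct pattern to use, based on how many bits there were: 0xxxxxxx for 7 or fewer bits, 110xxxxx 10xxxxxx for 8 to 11 bits, 1110xxxx 10xxxxxx 10xxxxxx for 12 to 16 bits, and 11110xxx 10xxxxxx 10xxxxxx 10xxxxxx for 17 to 21 bits. Step 4 will explain how to use the pattern.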

Step 4. Replace the x's in the pattern with the bits of the binary number you converted in Step 2. If there are more x's than bits, replace extra left-most x's with 0's.

For example, if you wanted to find out the representation for 貓 (cat in Chinese), the steps you would take would be as follows.

Step 1. Determine that the Unicode number for 貓 is 35987 .

Step 2. Convert 35987 to binary – giving 10001100 10010011 .

Step 3. Count that there are 16 bits, and therefore the third pattern 1110xxxx 10xxxxxx 10xxxxxx should be used.

Step 4. Substitute the bits into the pattern to replace the x's – 11101000 10110010 10010011 .

Therefore, the representation for 貓 is 11101000 10110010 10010011 using UTF-8.
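The four steps can also be written as a short Python function. This is a minimal sketch rather than a full encoder: the function names are made up for illustration, the four patterns are the standard UTF-8 byte layouts (1 to 4 bytes), and special cases such as invalid code points are not handled. Python's built-in `str.encode` is used only to check the result.

```python
def utf8_bits(char):
    """Return the UTF-8 representation of one character as a string of bits."""
    code_point = ord(char)          # Step 1: look up the Unicode number
    bits = format(code_point, "b")  # Step 2: binary, using as few bits as necessary

    # Step 3: choose the pattern based on how many bits there are.
    if len(bits) <= 7:
        pattern = "0xxxxxxx"
    elif len(bits) <= 11:
        pattern = "110xxxxx 10xxxxxx"
    elif len(bits) <= 16:
        pattern = "1110xxxx 10xxxxxx 10xxxxxx"
    else:
        pattern = "11110xxx 10xxxxxx 10xxxxxx 10xxxxxx"

    # Step 4: pad on the left with 0s, then replace the x's from left to right.
    padded = iter(bits.zfill(pattern.count("x")))
    return "".join(next(padded) if c == "x" else c for c in pattern)


def builtin_bits(char):
    """Same output format, but produced by Python's own UTF-8 encoder."""
    return " ".join(format(byte, "08b") for byte in char.encode("utf-8"))


print(utf8_bits("貓"))
print(utf8_bits("貓") == builtin_bits("貓"))
```

Comparing against the built-in encoder for a handful of characters of different sizes is a quick way to convince yourself that the by-hand process and the real encoding agree.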

Just like UTF-8, UTF-16 is a variable length encoding scheme for Unicode. Because it is far more complex than UTF-8, we won't explain how it works here.

However, it is worth representing some text that is in English and some text that is in Japanese with UTF-16, and comparing the representations to what you get with UTF-8.

We have looked at ASCII, UTF-32, UTF-8, and UTF-16.

The following table summarises what we have said so far about each representation.

In order to compare and evaluate them, we need to decide what it means for a representation to be "good". Two useful criteria are:

- Can represent all characters, regardless of language.

- Represents a piece of text using as few bits as possible.

We know that UTF-8, UTF-16, and UTF-32 can represent all characters, but ASCII can only represent English. Therefore, ASCII fails the first criterion. But for the second criterion, it isn't so simple.

To explore the second criterion, find some samples of English text and Asian text (forums or a translation site are a good place to look), and see how long your various samples are when encoded with UTF-8, UTF-16, and UTF-32.


As a general rule, UTF-8 is better for English text, and UTF-16 is better for Asian text. UTF-32 always requires 32 bits for each character, so is unpopular in practice.
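The general rule can be tried out with a small Python sketch. The function name and the sample strings are chosen here purely for illustration; the "-le" codec variants are used so that Python does not add a byte order mark, which would otherwise inflate the UTF-16 and UTF-32 counts.

```python
def encoding_lengths(text):
    """Return the number of bits needed to store text in each encoding."""
    return {
        "UTF-8": len(text.encode("utf-8")) * 8,
        "UTF-16": len(text.encode("utf-16-le")) * 8,
        "UTF-32": len(text.encode("utf-32-le")) * 8,
    }


english = encoding_lengths("hello")
japanese = encoding_lengths("こんにちは")

print("English: ", english)
print("Japanese:", japanese)
```

For these two five-character samples, UTF-8 is the shortest for the English word and UTF-16 is the shortest for the Japanese word, while UTF-32 needs the same number of bits for both.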

Those cute little characters that you might use in your social media statuses, texts, and so on, are called "emojis", and each one of them has its own Unicode value. Japanese mobile operators were the first to use emojis, but their recent popularity has resulted in many becoming part of the Unicode Standard and today there are well over 1000 different emojis included. What is interesting to notice is that a single emoji will look very different across different platforms, i.e. 😆 ("smiling face with open mouth and tightly-closed eyes") in my tweet will look very different to what it does on your iPhone. This is because the Unicode Consortium only provides the character codes for each emoji and the end vendors determine what that emoji will look like, e.g. for Apple devices the "Apple Color Emoji" typeface is used (there are rules around this to make sure there is consistency across each system).

There are messages hidden in this video using a 5-bit representation. See if you can find them! Start by reading the explanation below to ensure you understand what we mean by a 5-bit representation.

If you only wanted to represent the 26 letters of the alphabet, and weren’t worried about upper case or lower case, you could get away with using just 5 bits, which allows for up to 32 different patterns.

You might have exchanged notes which used 1 for "a", 2 for "b", 3 for "c", all the way up to 26 for "z". We can convert those numbers into 5 digit binary numbers. In fact, you will also get the same 5 bits for each letter by looking at the last 5 bits for it in the ASCII table (and it doesn't matter whether you look at the upper case or the lower case letter).

Represent the word "water" with bits using this system. Check the below panel once you think you have it.
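The 5-bit system described above can be sketched in Python as follows. The function names are hypothetical; the second function uses the shortcut mentioned in the text, keeping only the last 5 bits of each letter's ASCII code.

```python
def five_bit(word):
    """Encode letters as 5-bit numbers, with a = 1, b = 2, ..., z = 26."""
    return " ".join(format(ord(ch) - ord("a") + 1, "05b") for ch in word.lower())


def five_bit_from_ascii(word):
    """Keep only the last 5 bits of each letter's ASCII code."""
    return " ".join(format(ord(ch) & 0b11111, "05b") for ch in word)


print(five_bit("water"))
# Upper case and lower case give the same 5 bits.
print(five_bit_from_ascii("water") == five_bit_from_ascii("WATER"))
```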

Now, have a go at decoding the music video!

Data Representation: Characters

Understanding Data Representation: Characters

Basics of Characters in Data Representation:

- Characters are the smallest readable unit in text, including alphabets, numbers, spaces, symbols etc.

- In computer systems, each character is represented by a unique binary code.

- The system by which characters are converted to binary code is known as a character set.

ASCII and Unicode:

- ASCII (American Standard Code for Information Interchange) and Unicode are two popular character sets.

- ASCII uses 7 bits to represent each character, leading to a total of 128 possible characters (including some non-printable control characters).

- As a more modern and comprehensive system, Unicode can represent over a million characters, covering virtually all writing systems in use today.

- Unicode is backward compatible with ASCII, meaning ASCII values represent the same characters in Unicode.
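The backward compatibility mentioned in the last point can be illustrated with a short Python sketch. The sample text is arbitrary; `ord` returns a character's Unicode number, and for ASCII characters this is the same as the ASCII code.

```python
text = "Hello"

# Unicode numbers of the characters; all are below 128, i.e. in the ASCII range.
code_points = [ord(ch) for ch in text]
print(code_points)

# Encoding the same text as ASCII and as UTF-8 gives identical bytes.
ascii_bytes = text.encode("ascii")
utf8_bytes = text.encode("utf-8")
print(ascii_bytes == utf8_bytes)

# A character outside the ASCII range cannot be encoded as ASCII at all.
try:
    "犬".encode("ascii")
    ascii_can_encode_dog = True
except UnicodeEncodeError:
    ascii_can_encode_dog = False
print(ascii_can_encode_dog)
```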

Importance of Character Sets:

- Having a standard system for representing characters is important for interoperability, ensuring different systems can read and display the same characters in the same way.

- This is especially important in programming and when transmitting data over networks.

Understanding Binary Representations:

- Each character in a character set is represented by a unique binary number. E.g., in ASCII, the capital letter “A” is represented by the binary number 1000001.

- Different types of data (e.g., characters, integers, floating-point values) are stored in different ways, but ultimately all data in a computer is stored as binary.

Characters in Programming:

- In many programming languages, such as C and Java, single characters are written within single quotes, e.g., ‘a’, ‘1’, ‘$’.

- A series of characters, also known as a string, is written within double quotes, e.g., “Hello, world!”.

- String manipulation is a key part of many programming tasks, and understanding how characters are represented is essential for manipulating strings effectively.

Not Just Text:

- It’s important to understand that computers interpret everything — not just letters and numbers, but also images, sounds, and more — as binary data.

- Understanding the binary representation of characters is a foundational part of understanding how data is stored and manipulated in a computer system.

Data Representation in Computer: Number Systems, Characters, Audio, Image and Video


What is Data Representation in Computer?

A computer uses a fixed number of bits to represent a piece of data which could be a number, a character, image, sound, video, etc. Data representation is the method used internally to represent data in a computer. Let us see how various types of data can be represented in computer memory.

Before discussing data representation of numbers, let us see what a number system is.

Number Systems

A number system is a technique for representing numbers in a computer system's architecture. Every value that you save to or read from computer memory uses a defined number system.

A number is a mathematical object used to count, label, and measure. A number system is a systematic way to represent numbers. The number system we use in our day-to-day life is the decimal number system that uses 10 symbols or digits.

The number 289 is pronounced as two hundred and eighty-nine and it consists of the symbols 2, 8, and 9. Similarly, there are other number systems. Each has its own symbols and method for constructing a number.

A number system has a unique base, which depends upon the number of symbols. The number of symbols used in a number system is called the base or radix of a number system.

Let us discuss some of the number systems. Computer architecture supports the following number systems:

- Binary Number System

- Octal Number System

- Decimal Number System

- Hexadecimal Number System

The binary number system has only two digits: 0 and 1. Every number (value) is represented using 0 and 1 in this number system. The base of the binary number system is 2 because it has only two digits.

The octal number system has eight (8) digits, from 0 to 7. Every number (value) is represented using 0, 1, 2, 3, 4, 5, 6 and 7 in this number system. The base of the octal number system is 8 because it has 8 digits.

The decimal number system has ten (10) digits, from 0 to 9. Every number (value) is represented using 0, 1, 2, 3, 4, 5, 6, 7, 8 and 9 in this number system. The base of the decimal number system is 10 because it has 10 digits.

The hexadecimal number system has sixteen (16) alphanumeric values, from 0 to 9 and A to F. Every number (value) is represented using 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, A, B, C, D, E and F in this number system. The base of the hexadecimal number system is 16 because it has 16 alphanumeric values.

Here A is 10, B is 11, C is 12, D is 13, E is 14 and F is 15.
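To see the four number systems side by side, here is a small Python sketch that writes the number 289 (the example used earlier) in each base. The helper `to_decimal` and the name `DIGITS` are made up for illustration; the helper rebuilds a value digit by digit to show that only the base changes between systems.

```python
DIGITS = "0123456789ABCDEF"  # symbols for bases up to 16


def to_decimal(text, base):
    """Convert a string of digits in the given base to an integer."""
    value = 0
    for symbol in text.upper():
        # Shift the running value one place to the left, then add the new digit.
        value = value * base + DIGITS.index(symbol)
    return value


number = 289
binary = format(number, "b")
octal = format(number, "o")
hexadecimal = format(number, "X")

print(binary, octal, number, hexadecimal)
print(to_decimal(binary, 2), to_decimal(octal, 8), to_decimal(hexadecimal, 16))
```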

Data Representation of Characters

There are different methods to represent characters. Some of them are discussed below:

The code called ASCII (pronounced "AS-key"), which stands for American Standard Code for Information Interchange, uses 7 bits to represent each character in computer memory. The ASCII representation has been adopted as a standard by the U.S. government and is widely accepted.

A unique integer number is assigned to each character. This number called ASCII code of that character is converted into binary for storing in memory. For example, the ASCII code of A is 65, its binary equivalent in 7-bit is 1000001.

Since there are exactly 128 unique combinations of 7 bits, this 7-bit code can represent only 128 characters. Another version is ASCII-8, also called extended ASCII, which uses 8 bits for each character and can represent 256 different characters.

For example, the letter A is represented by 01000001, B by 01000010 and so on. ASCII code is enough to represent all of the standard keyboard characters.
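The 7-bit and 8-bit forms quoted above can be reproduced with a short Python sketch. The variable names are illustrative only; `ord` gives the character's code and `format` writes it as a fixed-width binary string.

```python
for letter in "AB":
    code = ord(letter)
    # Show the code, then its 7-bit and 8-bit binary forms.
    print(letter, code, format(code, "07b"), format(code, "08b"))

seven_bit_a = format(ord("A"), "07b")
eight_bit_a = format(ord("A"), "08b")
eight_bit_b = format(ord("B"), "08b")

# Number of distinct codes available with 7 bits and with 8 bits.
print(2**7, 2**8)
```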

EBCDIC stands for Extended Binary Coded Decimal Interchange Code. This is similar to ASCII and is an 8-bit code used in computers manufactured by International Business Machines (IBM). It is capable of encoding 256 characters.

If ASCII-coded data is to be used in a computer that uses EBCDIC representation, it is necessary to transform ASCII code to EBCDIC code. Similarly, if EBCDIC coded data is to be used in an ASCII computer, EBCDIC code has to be transformed to ASCII.

ISCII stands for Indian Standard Code for Information Interchange or Indian Script Code for Information Interchange. It is an encoding scheme for representing various writing systems of India. ISCII uses 8-bits for data representation.

It was developed by a standardization committee under the Department of Electronics during 1986-88 and adopted by the Bureau of Indian Standards (BIS). Nowadays ISCII has been replaced by Unicode.

Using 8-bit ASCII we can represent only 256 characters. This cannot represent all characters of the written languages of the world and other symbols. Unicode was developed to resolve this problem. It aims to provide a standard character encoding scheme, which is universal and efficient.

It provides a unique number for every character, no matter what the language or platform is. Unicode originally used 16 bits, which can represent up to 65,536 characters. It is maintained by a non-profit organization called the Unicode Consortium.

The Consortium first published version 1.0.0 in 1991 and continues to develop standards based on that original work. Nowadays Unicode uses more than 16 bits and hence it can represent more characters. Unicode can represent characters in almost all written languages of the world.

Data Representation of Audio, Image and Video

In most cases, we may have to represent and process data other than numbers and characters. This may include audio data, images, and videos. We can see that like numbers and characters, the audio, image, and video data also carry information.

We will see different file formats for storing sound, image, and video.

Multimedia data such as audio, image, and video are stored in different types of files. The variety of file formats is due to the fact that there are quite a few approaches to compressing the data and a number of different ways of packaging the data.

For example, an image is most popularly stored in the Joint Photographic Experts Group (JPEG) file format. An image file consists of two parts: header information and image data. Information such as the name of the file, size, modified date, file format, etc. is stored in the header part.

The intensity value of all pixels is stored in the data part of the file. The data can be stored uncompressed or compressed to reduce the file size. Normally, the image data is stored in compressed form. Let us understand what compression is.

Take a simple example of a pure black image of size 400 × 400 pixels. We can repeat the information black, black, …, black in all 160,000 (400 × 400) pixels. This is the uncompressed form, while in the compressed form black is stored only once and information to repeat it 160,000 times is also stored.
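The idea of storing a value once together with a repeat count is commonly called run-length encoding. The text does not name the technique, so treat the Python sketch below as one simple illustration of it; the function names are made up, and real formats such as JPEG use considerably more elaborate methods.

```python
def run_length_encode(pixels):
    """Collapse consecutive repeated values into [value, count] pairs."""
    runs = []
    for pixel in pixels:
        if runs and runs[-1][0] == pixel:
            runs[-1][1] += 1         # same value as the previous pixel: extend the run
        else:
            runs.append([pixel, 1])  # a new value starts a new run
    return runs


def run_length_decode(runs):
    """Expand [value, count] pairs back into the full list of pixels."""
    return [value for value, count in runs for _ in range(count)]


image = ["black"] * (400 * 400)  # uncompressed: one entry per pixel
encoded = run_length_encode(image)

print(len(image), "pixels stored as", encoded)
print(run_length_decode(encoded) == image)
```

An image with many colour changes would compress far less well with this scheme, which is one reason several different compression techniques exist.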

Numerous such techniques are used to achieve compression. Depending on the application, images are stored in various file formats such as bitmap file format (BMP), Tagged Image File Format (TIFF), Graphics Interchange Format (GIF), and Portable Network Graphics (PNG).

What we said about the header file information and compression is also applicable for audio and video files. Digital audio data can be stored in different file formats like WAV, MP3, MIDI, AIFF, etc. An audio file describes a format, sometimes referred to as the ‘container format’, for storing digital audio data.

For example, the WAV file format typically contains uncompressed sound and MP3 files typically contain compressed audio data. Synthesized music data is stored in MIDI (Musical Instrument Digital Interface) files.

Similarly, video is also stored in different file formats such as AVI (Audio Video Interleave), a file format designed to store both audio and video data in a standard package that allows synchronous audio with video playback, as well as MP4, MPEG-2, WMV, etc.


Data Representation

Data in a computer is stored in the form of "bits." A bit is something that can be either zero or one. This web page shows eight interpretations of the same 32 bits. You can edit any of the interpretations, and the others will change to match it. For a more detailed explanation, see the rest of this page.

About the Representations

In a computer, items of data are represented in the form of bits , that is, as zeros and ones. More accurately, they are stored using physical components that can be in two states, such as a wire that can be at high voltage or low voltage, or a capacitor that can either be charged or not. These components represent bits, with one state used to mean "zero" and the other to mean "one." To be stored in a computer, a data item must be coded as a sequence of such zeros and ones. But a given sequence of zeros and ones has no built-in meaning; it only gets meaning from how it is used to represent data.

The table at the right shows some possible interpretations of four bits. This web page shows some possible interpretations of 32 bits. Here is more information about the eight interpretations:

- 32 Bits — The "32 Bits" input box shows each of the 32 bits as a zero or one. You can type anywhere from 1 to 32 zeros and ones in the input box; the bits you enter will be padded on the left with zeros to bring the total up to 32. You can think of this as a 32-bit "base-2 number" or "binary number," but really, saying that it is a "number" adds a level of interpretation that is not built into the bits themselves. (As an example, the base-2 number 1011 represents the integer 1 × 2^3 + 0 × 2^2 + 1 × 2^1 + 1 × 2^0. This is similar to the way the base-10 number 3475 represents 3 × 10^3 + 4 × 10^2 + 7 × 10^1 + 5 × 10^0.) In a modern computer's memory, the bits would be stored in four groups of eight bits each. Eight bits make up a "byte," so we are looking at four bytes of memory.

- Graphical — Instead of representing a bit as a zero or one, we can represent a bit as a square that is colored white (for zero) or black (for one). The 32 bits are shown here as a grid of such squares. You can click on a square to change its color. The squares are arranged in four rows of eight, so each row represents one byte. Note that there are two ways that the four bytes could be arranged in memory: with the high-order (leftmost) byte first or with the low order byte first. These two byte orders are referred to as "big endian" and "little endian." The big-endian byte order is used here.

- Hexadecimal — Hexadecimal notation uses the characters 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, A, B, C, D, E, and F. (Lower case letters are also OK when entering the value into the "Hexadecimal" input box.) Each of these characters corresponds to a group of four bits, so a 32-bit value corresponds to 8 hexadecimal characters. A string of hexadecimal characters can also be interpreted as a base-16 number, and the individual characters 0 through F correspond to the ordinary base-10 numbers 0 through 15.

- Unsigned Decimal — We usually represent integers as "decimal," or "base-10," numbers, rather than binary (base-2) or hexadecimal (base-16). Using just 4 bits, we could represent integers in the range 0 to 15. Using 32 bits, we can represent positive integers from 0 up to 2^32 minus 1. In terms of base-10 numbers, that means from 0 to 4,294,967,295. The "Unsigned Decimal" input box shows the base-10 equivalent of the 32-bit binary number. You can enter the digits 0 through 9 in this box (but no commas).

- Signed Decimal — Often, we want to use negative as well as positive integers. When we only have a certain number of bits to work with, we can use half of the available values to represent negative integers and half to represent positive integers and zero. In the signed decimal interpretation of 32-bit values, a bit-pattern which has a 1 in the first (leftmost) position represents a negative number. The representation used for negative numbers (called the two's complement representation) is not the most obvious. If we had only four bits to work with, we could represent signed decimal values from -8 to 7, as shown in the table. With 32 bits, the legal signed decimal values are -2,147,483,648 to 2,147,483,647. When entering values, you can type the digits 0 through 9, with an optional plus or minus sign. Note that for the integers 0 through 2,147,483,647, the signed decimal and unsigned decimal interpretations are identical.

- Real Number — A real number can have a decimal point, with an integer part before the decimal point and a fractional part after the decimal point. Examples are: 3.141592654, -1.25, 17.0, and -0.000012334. Real numbers can be written using scientific notation, such as 1.23 × 10^-7. In the "Real Number" input box, this would be written 1.23e-7. These examples use base 10; the base 2 versions would use only zeros and ones. A real number can have infinitely many digits after the decimal point. When we are limited to 32 bits, most real numbers can only be represented approximately, with just 7 or 8 significant digits. The encoding that is used for real numbers is IEEE 754. The first (leftmost) bit is a sign bit, which tells whether the number is positive or negative. The next eight bits encode the exponent, for scientific notation. The remaining 23 bits encode the significant bits of the number, referred to as the "mantissa." There are special bit patterns that represent positive and negative infinity. And there are many bit patterns that represent so-called NaN, or "not-a-number" values. The encoding is quite complicated! For the "Real Number" input box, you can type integers, decimal numbers, and scientific notation. You can also enter the special values infinity, -infinity, and nan. (There are many different not-a-number values, but in this web app they are all shown as "NaN".) When you leave the input box, your input will be converted into a standard form. For example, if you enter 3.141592654, it will be changed to 3.1415927, since your input has more significant digits than can be represented in a 32-bit number. And 17.42e100 will change to infinity, since the number you entered is too big for a 32-bit number. (Note, by the way, that real numbers on a computer are more properly referred to as "floating point" numbers.)

- 8-bit Characters — Text is another kind of data that has to be represented in binary form to be stored on a computer. ASCII code uses seven bits per character, to represent the English alphabet, digits, punctuation, and certain "unprintable" characters that don't have a visual representation. ASCII can be extended to eight bits in various ways, allowing for 256 possible characters, numbered from 0 to 255. The "8-bit Characters" input box uses the first 256 characters of the 16-bit character set that is actually used in web apps. If you type characters outside that range into the input box, you'll get an error. The unprintable characters are represented using a notation such as for the character with code number 26. In fact, you can enter any character in this format, but when you leave the input box, it will be converted to a single character. For example, will show up as © and as A. The binary number consisting of 32 zeros shows up in this box as .

- 16-bit Characters — Unicode text encoding allows the use of 16 bits for each character, with 65536 possible characters, allowing it to represent text from all of the world's languages as well as mathematical and other symbols. (Even 65536 characters is not enough — some characters are actually represented using two or three 16-bit numbers, but that's not supported in this web app.) As with 8-bit characters, notations such as can be used in the "16-bit Characters" input box.

As a final remark, note that you can't really ask for something like "the binary representation of 17", any more than you can ask for the meaning of the binary number "11000100110111". You can type "17" into six of the seven input boxes in this web app, and you will get five different binary representations! The meaning of "17" depends on the interpretation.
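The point that the same bits mean different things under different interpretations can be sketched with Python's standard `struct` module. The bit pattern below is an arbitrary example chosen for illustration, big-endian byte order is assumed (as in the graphical view described above), and Latin-1 is used to stand in for the 8-bit character interpretation.

```python
import struct

# One arbitrary 32-bit pattern, written as four bytes.
bits = "11000010 00101000 00000000 00000000".replace(" ", "")
raw = int(bits, 2).to_bytes(4, byteorder="big")

hex_text = raw.hex().upper()             # hexadecimal
unsigned = struct.unpack(">I", raw)[0]   # unsigned decimal
signed = struct.unpack(">i", raw)[0]     # signed decimal (two's complement)
real = struct.unpack(">f", raw)[0]       # real number (IEEE 754, 32 bits)
chars = raw.decode("latin-1")            # four 8-bit characters

print(hex_text)
print(unsigned)
print(signed)
print(real)
print(repr(chars))
```

None of these outputs is "the" value of the bits; each one comes from choosing a particular way to read them.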

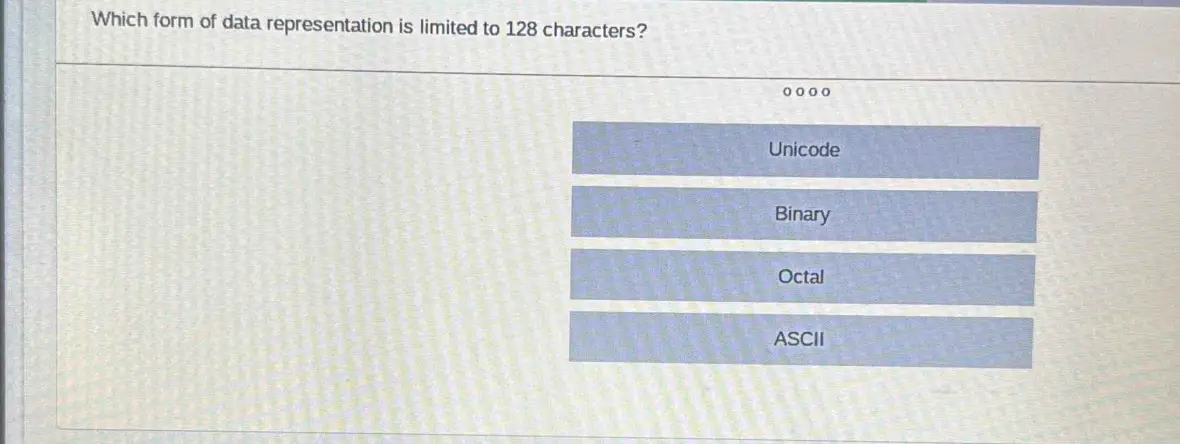

Which form of data representation is limited to 128 characters?

- Unicode

- Binary

- Octal

- ASCII



ASCII is the form of data representation that is limited to 128 characters. It uses 7 bits for each character, allowing for a total of 128 unique characters to be represented. Unicode, binary, and octal are not limited to 128 characters.
