
Title: Understanding and Creating Art with AI: Review and Outlook

Abstract: Technologies related to artificial intelligence (AI) have a strong impact on research and creative practices in the visual arts. The growing number of research initiatives and creative applications that emerge at the intersection of AI and art motivates us to examine and discuss the creative and explorative potential of AI technologies in the context of art. This paper provides an integrated review of two facets of AI and art: 1) AI used for art analysis and applied to digitized artwork collections; 2) AI used for creative purposes and for generating novel artworks. In the context of AI-related research for art understanding, we present a comprehensive overview of artwork datasets and recent works that address a variety of tasks such as classification, object detection, similarity retrieval, multimodal representations, and computational aesthetics. In relation to the role of AI in creating art, we address various practical and theoretical aspects of AI Art and consolidate related works that deal with those topics in detail. Finally, we provide a concise outlook on the future progression and potential impact of AI technologies on our understanding and creation of art.



Generative artificial intelligence, human creativity, and art

Competing Interest: The authors declare no competing interest.


Eric Zhou, Dokyun Lee, Generative artificial intelligence, human creativity, and art, PNAS Nexus , Volume 3, Issue 3, March 2024, pgae052, https://doi.org/10.1093/pnasnexus/pgae052


Recent artificial intelligence (AI) tools have demonstrated the ability to produce outputs traditionally considered creative. One such system is text-to-image generative AI (e.g. Midjourney, Stable Diffusion, DALL-E), which automates humans’ artistic execution to generate digital artworks. Utilizing a dataset of over 4 million artworks from more than 50,000 unique users, our research shows that over time, text-to-image AI significantly enhances human creative productivity by 25% and increases the value as measured by the likelihood of receiving a favorite per view by 50%. While peak artwork Content Novelty, defined as focal subject matter and relations, increases over time, average Content Novelty declines, suggesting an expanding but inefficient idea space. Additionally, there is a consistent reduction in both peak and average Visual Novelty, captured by pixel-level stylistic elements. Importantly, AI-assisted artists who can successfully explore more novel ideas, regardless of their prior originality, may produce artworks that their peers evaluate more favorably. Lastly, AI adoption decreased value capture (favorites earned) concentration among adopters. The results suggest that ideation and filtering are likely necessary skills in the text-to-image process, thus giving rise to “generative synesthesia”—the harmonious blending of human exploration and AI exploitation to discover new creative workflows.

We investigate the implications of incorporating text-to-image generative artificial intelligence (AI) into the human creative workflow. We find that generative AI significantly boosts artists’ productivity and leads to more favorable evaluations from their peers. While average novelty in artwork content and visual elements declines, peak Content Novelty increases, indicating a propensity for idea exploration. The artists who successfully explore novel ideas and filter model outputs for coherence benefit the most from AI tools, underscoring the pivotal role of human ideation and artistic filtering in determining an artist’s success with generative AI tools.

Recently, artificial intelligence (AI) has shown that it can feasibly produce outputs that society would traditionally judge as creative. Specifically, generative algorithms have been leveraged to automatically generate creative artifacts such as music ( 1 ), digital artworks ( 2 , 3 ), and stories ( 4 ). Such generative models allow humans to engage directly in the creative process through text-to-image systems (e.g. Midjourney, Stable Diffusion, DALL-E) based on the latent diffusion model ( 5 ) or by participating in an open dialog with transformer-based language models (e.g. ChatGPT, Bard, Claude). Generative AI is projected to become capable of automating even more creative tasks traditionally reserved for humans and to generate significant economic value in the years to come ( 6 ).

Many such generative algorithms were released in the past year, and their diffusion into creative domains has concerned many artistic communities that perceive generative AI as a threat that could substitute for the natural human ability to be creative. Text-to-image generative AI has emerged as a candidate system that automates elements of humans’ creative process in producing high-quality digital artworks. Remarkably, an artwork created by Midjourney bested human artists in an art competition, while another artist refused to accept the top prize in a photo competition after winning, citing ethical concerns. Artists have filed lawsuits against the founding companies of some of the most prominent text-to-image generators, arguing that generative AI steals from the works upon which the models are trained and infringes on artists’ copyrights. This has ignited a broader debate regarding the originality of AI-generated content and the extent to which it may replace human creativity, a faculty that many consider unique to humans. While generative AI has demonstrated the capability to automatically create new digital artifacts, there remains a significant knowledge gap regarding its impact on productivity in artistic endeavors, which lack well-defined objectives, and its long-run implications for human creativity more broadly. In particular, if humans increasingly rely on generative AI for content creation, creative fields may become saturated with generic content, potentially stifling exploration of new creative frontiers. Given that generative algorithms will remain a mainstay in creative domains as they continue to mature, it is critical to understand how generative AI is affecting creative production, the evaluation of creative artifacts, and human creativity more broadly. To this end, our research questions are threefold:

How does the adoption of generative AI affect humans’ creative production?

Is generative AI enabling humans to produce more creative content?

When and for whom does the adoption of generative AI lead to more creative and valuable artifacts?

Our analyses of over 53,000 artists and 5,800 known AI adopters on one of the largest art-sharing platforms reveal that creative productivity and artwork value, measured as favorites per view, significantly increased with the adoption of text-to-image systems.

We then focus our analysis on creative novelty. In a simplified view of creative novelty in art, humans can inject creativity into an artifact through two main channels: Contents and Visuals . These concepts are rooted in the classical philosophy of symbolism in art, which holds that the content of an artwork relates to its meaning or subject matter, whereas visuals are simply the physical elements used to convey that content ( 7 ). In our setting, Contents concern the focal object(s) and relations depicted in an artifact, whereas Visuals concern the pixel-level stylistic elements of an artifact. Accordingly, Content and Visual Novelty are measured as the pairwise cosine distance between artifacts in the corresponding feature space (see Materials and methods for details on feature extraction and how novelty is measured).

Our analyses reveal that over time, adopters’ artworks exhibit decreasing novelty in terms of both Content and Visual features. However, maximum Content Novelty increases, suggesting an expanding yet inefficient idea space. At the individual level, artists who harness generative AI while successfully exploring more innovative ideas, irrespective of their prior originality, may earn more favorable evaluations from their peers. In addition, the adoption of generative AI leads to a less concentrated distribution of favorites earned among adopters.

We present results from three analyses. Using an event study difference-in-differences approach ( 8 ), we first estimate the causal impact of adopting generative AI on creative productivity, artwork value measured as favorites per view, and artifact novelty with respect to Content and Visual features. Then, using a two-way fixed effects model, we offer correlational evidence regarding how humans’ originality prior to adopting generative AI may influence postadoption gains in artwork value when artists successfully explore the creative space. Lastly, we show how adoption of generative AI may lead to a more dispersed distribution of favorites across users on the platform.
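The event-study idea behind the first analysis can be illustrated with a single adoption cohort. The sketch below is a hypothetical illustration, not the estimator of ( 8 ): with staggered adoption and heterogeneous effects, a dedicated estimator is needed. It assumes group mean outcomes are already indexed by event time (months relative to adoption) and reports, for each month, the treated-control gap minus the gap in a reference month (here month -1). The function name and the numbers are made up.

```python
def event_study_effects(treated_means, control_means, ref=-1):
    """Single-cohort event-study contrasts on group means (illustrative only).

    treated_means, control_means: dicts mapping event time (months relative to
    adoption) to the mean outcome of each group in that month.
    Returns {event_time: effect}, where each effect is the treated-control gap
    in that month minus the gap in the reference month `ref`.
    """
    base_gap = treated_means[ref] - control_means[ref]
    return {
        k: (treated_means[k] - control_means[k]) - base_gap
        for k in sorted(treated_means)
        if k != ref
    }


# Hypothetical log-productivity means: flat before adoption, rising after.
treated = {-2: 1.0, -1: 1.0, 0: 1.5, 1: 2.0}
control = {-2: 1.0, -1: 1.0, 0: 1.0, 1: 1.0}
print(event_study_effects(treated, control))  # {-2: 0.0, 0: 0.5, 1: 1.0}
```

Pre-adoption contrasts near zero (month -2 here) are what a parallel-trends check looks for; the post-adoption values trace the dynamic effect month by month.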

Creative productivity

We define creative productivity as the log of the number of artifacts that a user posts in a month. Figure 1a reveals that upon adoption, artists experience a 50% increase in productivity on average, which then doubles in the subsequent month. For the average user, this translates to approximately 7 additional artifacts published in the adoption month and 15 artifacts in the following month. Beyond the adoption month, user productivity gradually stabilizes to a level that still exceeds preadoption volume. By automating the execution stage of the creative process, adopters can experience prolonged productivity gains compared to their nonadopter counterparts.
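If the reported percentages come from a coefficient on the log outcome, the usual conversion from a log-point coefficient beta to a relative change is exp(beta) - 1. The sketch below assumes that conversion (the paper does not state it here) and also backs out the baseline monthly volume implied by pairing "7 additional artifacts" with a 50% increase; both function names are hypothetical.

```python
import math


def pct_change_from_log_coef(beta):
    """Relative change implied by a coefficient on a log-transformed outcome."""
    return math.exp(beta) - 1.0


def implied_baseline(extra_posts, pct_change):
    """Baseline monthly posts implied by an absolute gain and its relative gain."""
    return extra_posts / pct_change


beta_adoption = math.log(1.5)  # coefficient that corresponds to a 50% increase
print(round(pct_change_from_log_coef(beta_adoption), 3))  # 0.5
baseline = implied_baseline(7, 0.5)
print(baseline)  # 14.0
# A doubling (+100%) of that baseline adds about 14 posts, close to the ~15 reported.
print(baseline * pct_change_from_log_coef(math.log(2.0)))
```

Reading a coefficient of about 0.41 directly as "41%" would understate the effect, and the gap grows for larger coefficients.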

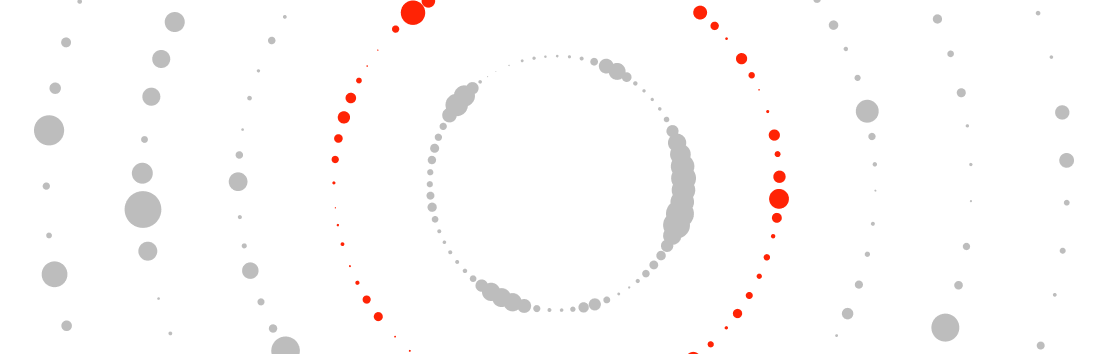

Causal effect of adopting generative AI on a) creative productivity as the log of monthly posts; b) creative value as number of favorites per view; c) mean Content Novelty; d) maximum Content Novelty; e) mean Visual Novelty; f) maximum Visual Novelty. The error bars represent 95% CI.

Creative value

If users are becoming more productive, what of the quality of the artifacts they are producing? We next examine how adopters’ artifacts are evaluated by their peers over time. In the literature, creative Value is intended to measure some aspect of utility, performance and/or attractiveness of an artifact, subject to temporal and cultural contexts ( 9 ). Given this subjectivity, we measure Value as the number of favorites an artwork receives per view after 2 weeks, reflecting its overall performance and contextual relevance within the community. This metric also hints at the artwork’s broader popularity within the cultural climate, suggesting a looser definition of Value based on cultural trends. Throughout the paper, the term “Value” will refer to these two notions.

Figure 1b reveals an initial, nonsignificant upward trend in the Value of artworks produced by AI adopters. After 3 months, however, AI adopters consistently produce artworks judged significantly more valuable than those of nonadopters. This translates to a 50% increase in artwork favorability by the sixth month, rising from the preadoption average of 2% to a steady 3% rate of earning a favorite per view.
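A per-artwork Value score under this definition might be computed as below. This is a sketch that assumes timestamped view and favorite events are available; the paper does not say how the 2-week counts were collected, and the names are hypothetical. The second helper reproduces the arithmetic above: moving from 2% to 3% favorites per view is a 50% relative increase.

```python
from datetime import datetime, timedelta


def favorites_per_view(posted_at, view_times, favorite_times,
                       window=timedelta(days=14)):
    """Favorites per view counted within `window` of posting (2 weeks by default)."""
    cutoff = posted_at + window
    views = sum(1 for t in view_times if t <= cutoff)
    favorites = sum(1 for t in favorite_times if t <= cutoff)
    return favorites / views if views else 0.0


def relative_change(before, after):
    """Relative change from `before` to `after`, e.g. 0.5 for +50%."""
    return (after - before) / before


posted = datetime(2023, 1, 1)
views = [posted + timedelta(hours=h) for h in range(100)]  # 100 early views
views += [posted + timedelta(days=20)] * 50                # late views, ignored
favs = [posted + timedelta(days=1)] * 3 + [posted + timedelta(days=30)] * 5
print(favorites_per_view(posted, views, favs))  # 0.03
print(round(relative_change(0.02, 0.03), 6))    # 0.5
```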

Content Novelty

Figure 1c shows that average Content Novelty decreases over time among adopters, meaning that the focal objects and themes within new artworks produced by AI adopters become progressively more alike compared with control units. Intuitively, adopters’ ideas become more similar over time. In practice, many publicly available fine-tuned checkpoints and adapters are refined to enable text-to-image models to produce specific content consistently. Figure 1d, however, reveals that maximum Content Novelty increases and is marginally statistically significant within the first several months after adoption. This suggests two possibilities: either a subset of adopters is exploring new ideas at the creative frontier, or the adopter population as a whole is driving the exploration and expansion of the universe of artifacts.

Visual Novelty

The result shown in Fig. 1e highlights that average Visual Novelty decreases over time among adopters compared with nonadopters. The same holds for maximum Visual Novelty, shown in Fig. 1f. This suggests that adopters may be gravitating toward a preferred visual style, with relatively minor deviations from it. This tendency could be influenced by the nature of text-to-image workflows, where prompt engineering tends to follow a formulaic approach to generate consistent, high-quality images in a specific style. As with content, publicly available fine-tuned checkpoints and adapters for these models may be designed to capture specific visual elements that users can sample from to maintain a particular, consistent visual style. In effect, AI may be pushing artists toward visual homogeneity.

Role of human creativity in AI-assisted value capture

Although aggregate trends suggest that the novelty of ideas and aesthetic features is sharply declining over time with generative AI, are there individual-level differences that enable certain artists to produce more creative artworks? Specifically, how does humans’ baseline novelty, in the absence of AI tools, correlate with their ability to explore novel ideas with generative AI and produce valuable artifacts? To examine this heterogeneity, we assign each user to quartiles based on their average Content and Visual Novelty without AI assistance, capturing each user’s baseline novelty. We then employ a two-way fixed effects model to examine the interaction between adoption, pretreatment novelty quartiles, and posttreatment changes in novelty. Each point in Fig. 2a and b represents the estimated impact of increasing mean Content (left) or Visual (right) Novelty on Value, based on artists’ prior novelty as denoted along the horizontal axis. Intuitively, these estimates quantify the degree to which artists can successfully navigate the creative space, based on their prior originality in both ideation and visuals, to earn more favorable evaluations from peers. Refer to SI Appendix, Section 2B for estimation details.
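The core mechanics of a two-way fixed effects regression can be sketched for the simplest case: one regressor on a balanced panel, where subtracting unit and period means (and adding back the grand mean) is equivalent to including both sets of dummies. This is a hypothetical illustration only; the paper's specification additionally interacts adoption, pretreatment novelty quartiles, and posttreatment novelty changes (SI Appendix, Section 2B), and standard errors are omitted here.

```python
from collections import defaultdict


def two_way_demean(rows, col):
    """Within-transform column `col` of (unit, period, x, y) rows: subtract unit
    and period means and add back the grand mean (valid for balanced panels)."""
    by_unit, by_period = defaultdict(list), defaultdict(list)
    for row in rows:
        by_unit[row[0]].append(row[col])
        by_period[row[1]].append(row[col])
    unit_mean = {u: sum(v) / len(v) for u, v in by_unit.items()}
    period_mean = {t: sum(v) / len(v) for t, v in by_period.items()}
    grand_mean = sum(row[col] for row in rows) / len(rows)
    return [row[col] - unit_mean[row[0]] - period_mean[row[1]] + grand_mean
            for row in rows]


def twfe_slope(rows):
    """OLS slope of y on x after removing unit and period fixed effects."""
    x = two_way_demean(rows, 2)
    y = two_way_demean(rows, 3)
    return sum(a * b for a, b in zip(x, y)) / sum(a * a for a in x)


# Simulated panel: y = 2x + unit effect + period effect, so the slope is 2.
alpha = {"A": 1.0, "B": -2.0, "C": 0.5}
gamma = {0: 0.0, 1: 3.0, 2: -1.0}
xs = {"A": [0.0, 1.0, 4.0], "B": [2.0, 0.0, 1.0], "C": [3.0, 5.0, 0.0]}
rows = [(u, t, xs[u][t], 2.0 * xs[u][t] + alpha[u] + gamma[t])
        for u in xs for t in range(3)]
print(round(twfe_slope(rows), 6))  # 2.0
```

The unit and period effects drop out exactly under the transformation, which is why the recovered slope matches the simulated one.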

Figure 2. Estimated effect of increases in mean Content and Visual Novelty on Value postadoption based on a) average Content Novelty quartiles prior to treatment; b) average Visual Novelty quartiles prior to treatment. Each point shows the estimated effect on Value of postadoption novelty increases, given creativity levels prior to treatment. The error bars represent 95% CI.

Figure 2a presents correlational evidence that users, regardless of their proficiency in generating novel ideas, might be able to realize significant gains in Value if they can successfully produce more novel content with generative AI. The lowest quartile of content creators may also experience marginally significant gains. However, those same users who benefit from expressing more novel ideas may also face penalties for producing more divergent visuals.

Next, Fig. 2b suggests that users who were proficient in creating exceedingly novel visual features before adopting generative AI may garner the largest Value gains from successfully introducing more novel ideas. Less proficient users may also experience weaker, marginally significant Value gains. In general, more novel ideas are linked to improved Value capture. Conversely, users capable of producing the most novel visual features may face penalties for pushing the boundaries of pixel-level aesthetics with generative AI. This finding might be attributed to the contextual nature of Value, implying an “acceptable range” of novelty: artists already skilled at producing highly novel pixel-level features may exceed the limit of what can be considered coherent.

Despite penalties for pushing visual boundaries, the gains from exploring creative ideas with AI outweigh the losses from visual divergence. Unique concepts take priority over novel aesthetics, as shown by the larger Value gains for artists who were already adept at Visual Novelty before using AI. This suggests users who naturally lean toward visual exploration may benefit more from generative AI tools to explore the idea space.

Lastly, we estimate Generalized Random Forests ( 10 ) configured to choose splits that maximize heterogeneity in Value gains among adopters for each postadoption period. For each trained model, we extract feature importance weights quantified by the SHAP (SHapley Additive exPlanations) method ( 11 ). This method uses ideas from cooperative game theory to approximate the predictive signal of covariates, accounting for linear and nonlinear interactions through Monte Carlo sampling. Intuitively, a feature of greater importance indicates potentially greater impacts on treatment effect heterogeneity among adopters.
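The Shapley attribution behind SHAP can be made concrete on a toy value function of two novelty features. The sketch computes exact Shapley values by averaging each feature's marginal contribution over all orderings, plus a Monte Carlo approximation that samples random orderings. It is not the SHAP library or the authors' GRF pipeline; the feature names and the value function (with a built-in interaction term) are hypothetical.

```python
import itertools
import math
import random


def _accumulate(order, value, phi):
    """Add each player's marginal contribution along one ordering to `phi`."""
    coalition = frozenset()
    for player in order:
        extended = coalition | {player}
        phi[player] += value(extended) - value(coalition)
        coalition = extended


def exact_shapley(players, value):
    """Exact Shapley values: average marginal contribution over all orderings."""
    phi = {p: 0.0 for p in players}
    for order in itertools.permutations(players):
        _accumulate(order, value, phi)
    n_orders = math.factorial(len(players))
    return {p: total / n_orders for p, total in phi.items()}


def monte_carlo_shapley(players, value, n_samples=1000, seed=0):
    """Approximate Shapley values by sampling random orderings."""
    rng = random.Random(seed)
    phi = {p: 0.0 for p in players}
    order = list(players)
    for _ in range(n_samples):
        rng.shuffle(order)
        _accumulate(order, value, phi)
    return {p: total / n_samples for p, total in phi.items()}


FEATURES = ["content", "visual", "other"]


def toy_value(coalition):
    """Hypothetical model output when only the features in `coalition` are known."""
    has_content = "content" in coalition
    has_visual = "visual" in coalition
    return 3.0 * has_content + 1.0 * has_visual + 2.0 * (has_content and has_visual)


print(exact_shapley(FEATURES, toy_value))
# {'content': 4.0, 'visual': 2.0, 'other': 0.0}
```

The interaction bonus (2.0) is split equally between the two features, the feature that never changes the output gets 0, and the attributions sum to the full-coalition value of 6.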

Figure 3 offers correlational evidence that Content Novelty significantly increases model performance within several months of adoption, whereas Visual Novelty remains marginally impactful until the last observation period. This suggests that Content Novelty plays a more significant role in predicting posttreatment variations in Value gains compared to Visual Novelty. In summary, these findings illustrate that content is king in the text-to-image creative paradigm.

Figure 3. SHAP values measuring importance of mean Content and Visual Novelty on Value gains.

Platform-level value capture

One question remains: do individual-level differences among adopters result in a greater concentration of value among fewer users at the platform level? Specifically, are more favorites being captured by fewer users, or is generative AI promoting less concentrated value capture? To address these questions, we calculate the Gini coefficients of favorites received for never-treated, not-yet-treated, and treated units, and conduct permutation tests with 10,000 iterations to evaluate whether adoption of generative AI may lead to a less concentrated distribution of favorites among users. The Gini coefficient is a common measure of aggregate inequality: a coefficient of 0 indicates that all users earn an equal share of favorites, and a coefficient of 1 indicates that a single user captures all favorites. Thus, higher values of the Gini coefficient indicate a greater concentration of favorites among fewer users. Figure 4 depicts the differences in cumulative distributions, as well as the Gini coefficients of both control groups and the treated group, relative to a state of perfect equality.
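A minimal version of the Gini computation and the permutation test might look like this. It assumes a two-sided test on the difference in Gini coefficients between treated and control users, with group labels reshuffled in each iteration; the paper does not spell out its exact test statistic, and the function names and data are hypothetical. With n users, the largest value this estimator can take is (n - 1)/n, which approaches the limit of 1 described above as n grows.

```python
import random


def gini(values):
    """Gini coefficient of non-negative values (0 = perfect equality)."""
    xs = sorted(values)
    n = len(xs)
    total = sum(xs)
    if n == 0 or total == 0:
        return 0.0
    # Rank-weighted sum over ascending values, ranks starting at 1.
    weighted = sum((rank + 1) * x for rank, x in enumerate(xs))
    return (2.0 * weighted) / (n * total) - (n + 1.0) / n


def gini_permutation_test(treated, control, n_iter=10_000, seed=0):
    """Two-sided permutation test on gini(treated) - gini(control).

    Group labels are shuffled n_iter times; the p-value uses the +1
    correction so it is never exactly zero.
    """
    rng = random.Random(seed)
    observed = gini(treated) - gini(control)
    pooled = list(treated) + list(control)
    n_treated = len(treated)
    extreme = 0
    for _ in range(n_iter):
        rng.shuffle(pooled)
        diff = gini(pooled[:n_treated]) - gini(pooled[n_treated:])
        if abs(diff) >= abs(observed):
            extreme += 1
    return observed, (extreme + 1) / (n_iter + 1)


treated = [5.0] * 30               # favorites spread evenly across adopters
control = [0.0] * 27 + [50.0] * 3  # favorites concentrated in three users
diff, p = gini_permutation_test(treated, control)
print(round(diff, 3))  # -0.9
print(p < 0.01)        # True
```

A negative difference corresponds to the pattern reported here: favorites are less concentrated among treated users than among controls.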

Figure 4. Gini coefficients of treated units vs. never-treated and not-yet-treated units.

First, observe that platform-level favorites are predominantly captured by a small portion of users, reflecting an aggregate concentration of favorites. Second, this concentration is more pronounced among not-yet-treated units than among never-treated units. Third, despite this aggregate concentration, favorites captured among AI adopters are more evenly distributed than among either never-treated or not-yet-treated control units. The permutation tests in Table 1, where column D shows the difference between the treated coefficient and each control group’s coefficient, show that the differences between the treated group and both the never-treated and not-yet-treated groups are statistically significant. This suggests that generative AI may lead to a broader allocation of favorites earned (value capture from peer feedback), particularly among control units who eventually become adopters.

Table 1. Permutation tests for statistical significance.

Column D denotes the difference in Gini coefficients relative to the treated population.

To reinforce the validity of our causal estimates, we employ the generalized synthetic control method (GSCM) ( 12 ). GSCM allows us to relax the parallel trends assumption by constructing synthetic control units that closely match the pretreatment characteristics of the treated units while also accounting for unobservable factors that may influence treatment outcomes. In addition, we conduct permutation tests to evaluate the robustness of our estimates to potential measurement errors in treatment time identification and to control group contamination. Our results remain consistent when using GSCM and in the presence of substantial measurement error.

Because adopting generative AI is subject to selection issues, one concern is that an artist who experiences renewed interest in creating artworks, and is thus more “inspired,” is also more likely to experiment with text-to-image AI tools and explore the creative space as they ramp up production. In this case, unobservable characteristics such as a renewed interest in creating art or a “spark of inspiration” might correlate with adoption of AI tools and drive the main effects, rather than the AI tools themselves. We therefore perform a series of falsification tests, which provide evidence that unobservable characteristics correlated with users’ productivity or “interest” shocks and with selection into treatment are not driving the estimated effects. For a comprehensive overview of all robustness checks and sensitivity analyses, please refer to SI Appendix, Section 3.

The rapid adoption of generative AI technologies brings exceptional benefits as well as risks. Current research demonstrates that humans, when assisted by generative AI, can significantly increase productivity in coding ( 13 ), ideation ( 14 ), and written assignments ( 15 ), while raising concerns regarding potential disinformation ( 16 ) and stagnation of knowledge creation ( 17 ). Our research focuses on how generative AI is affecting, and potentially coevolving with, human creative workflows. In our setting, human creativity is embodied in the prompts themselves, whereas in written assignments, generative AI is primarily used to source ideas that are subsequently evaluated by humans, representing a different paradigm shift in the creative process.

Within the first few months post-adoption, text-to-image generative AI can help individuals produce nearly double the volume of creative artifacts that are also evaluated 50% more favorably by their peers over time. Moreover, we observe that peak Content Novelty increases over time, while average Content and Visual Novelty diminish. This implies that the universe of creative possibilities is expanding but with some inefficiencies.

Our results hint that the widespread adoption of generative AI technologies in creative fields could lead to a long-run equilibrium where in aggregate, many artifacts converge to the same types of content or visual features. Creative domains may be inundated with generic content as exploration of the creative space diminishes. Without establishing new frontiers for creative exploration, AI systems trained on outdated knowledge banks run the risk of perpetuating the generation of generic content at a mass scale in a self-reinforcing cycle ( 17 ). Before we reach that point, technology firms and policy makers pioneering the future of generative AI must be sensitive to the potential consequences of such technologies in creative fields and society more broadly.

Encouragingly, humans assisted by generative AI who can successfully explore more novel ideas may be able to push the creative frontier, produce meaningful content, and be evaluated favorably by their peers. With respect to traditional theories of creativity, one particularly useful framework for understanding these results is the theory of blind variation and selective retention (BVSR) which posits that creativity is a process of generating new ideas (variation) and consequently selecting the most promising ones (retention) ( 18 ). The blindness feature suggests that variation is not guided by any specific goal but can also involve evaluating outputs against selection criteria in a genetic algorithm framework ( 19 ).

Because we do not directly observe users’ process, this discussion is speculative, but it suggests that a text-to-image creative workflow resembles a BVSR genetic process. First, humans manipulate and mutate known creative elements through prompt engineering, which requires deconstructing an idea into atomic components, primarily distinct words and phrases, to compose abstract ideas or meanings. Then, the algorithm automates the visual realization of an idea, allowing humans to rapidly sample ideas from their creative space and simply evaluate the output against selection criteria. The selection criteria vary with humans’ ability to make sense of model outputs and to curate those that best align with individual or peer preferences, which directly affects how their work is evaluated by peers. Satisfactory outputs contribute to the genetic evolution of future ideas, prompts, and image refinements.
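To make the analogy concrete, here is a toy blind-variation, selective-retention loop over prompt elements. Everything in it is hypothetical: the vocabulary, the mutation operator (swap one word at random, without regard to any goal), and the critic that stands in for a human's selection criteria. Image rendering is abstracted away entirely, so this illustrates only the variation/retention structure, not any real text-to-image workflow or the authors' method.

```python
import random

# Hypothetical prompt vocabulary standing in for "known creative elements".
VOCAB = ["portrait", "forest", "neon", "watercolor", "castle", "robot", "sunset", "baroque"]


def mutate(prompt, rng):
    """Blind variation: replace one randomly chosen element with a random word."""
    child = list(prompt)
    child[rng.randrange(len(child))] = rng.choice(VOCAB)
    return child


def toy_critic(prompt, preferred=frozenset({"neon", "castle", "sunset"})):
    """Hypothetical selection criterion: number of distinct preferred elements."""
    return len(set(prompt) & preferred)


def bvsr(initial, score, generations=20, offspring=8, keep=3, seed=0):
    """Generate variants of retained prompts, then keep only the best `keep`."""
    rng = random.Random(seed)
    population = [list(initial)]
    for _ in range(generations):
        variants = [mutate(rng.choice(population), rng) for _ in range(offspring)]
        pool = population + variants
        pool.sort(key=score, reverse=True)  # selective retention
        population = pool[:keep]
    return population[0]


start = ["portrait", "forest", "robot"]
best = bvsr(start, toy_critic)
print(best, toy_critic(best))
```

Because retained prompts survive into the next round, the best score never decreases, even though the variants themselves are generated without any steering toward the critic's preferences.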

Although we observe only the published artworks, it is plausible that many more unobserved iterations of ideation, prompt engineering, filtering, and refinement have occurred, especially given the documented increase in creative productivity. Thus, individuals with less refined artistic filters may also be less discerning when filtering artworks for quality, which could lead to a flood of less refined content on platforms. In contrast, artists who prioritize coherence and quality may publish only artworks that are likely to be evaluated favorably.

The results provide some evidence in this direction, indicating that humans who excel at producing novel ideas before adopting generative AI are evaluated most favorably after adoption if they successfully explore the idea space. This implies that the abilities to manipulate novel concepts and to curate artworks for coherence are relevant skills when using text-to-image AI. This aligns with prior research suggesting that creative individuals are particularly adept at discerning which ideas are most meaningful ( 20 ), reflecting a refined sensitivity to the artistic coherence of artifacts ( 21 ). Furthermore, all artists, regardless of their ability to produce novel visual features without generative AI, appear to be evaluated more favorably if they can capably explore more novel ideas. This finding hints at the importance of humans’ baseline ideation and filtering abilities as focal expressions of creativity in a text-to-image paradigm. Finally, generative AI appears to promote a more even distribution of platform-level favorites among adopters, signaling a potential step toward a more democratized, inclusive creative domain for artists empowered by AI tools.

In summary, our findings emphasize that humans’ ideation proficiency and a refined artistic filter, rather than pure mechanical skill, may become the focal skills required in a future human–AI cocreative process as generative AI becomes more mainstream in creative endeavors. We term this phenomenon, in which AI-assisted artistic creation is driven by ideas and filtering, “generative synesthesia”: the harmonization of human exploration and AI exploitation to discover new creative workflows. This paradigm shift may allow creatives to focus on what ideas they are representing rather than how they represent them, opening new opportunities for creative exploration. While concerns about automation loom, society must consider a future in which generative AI is not a source of human stagnation, but rather of symphonic collaboration and human enrichment.

Identifying AI adopters

Platform-level policy commonly encourages users to disclose their use of AI assistance through tags associated with their artworks. We therefore employ a rule-based classification scheme. As a first pass, any artwork published before the release of the original DALL-E in January 2021 is automatically labeled as non-AI-generated. Then, for all artworks published after January 2021, we examine the post-level title and tags provided by the publishing user, using simple keyword matching (AI-generated, Stable Diffusion, Midjourney, DALL-E, etc.) on each post to identify the artworks for which a user employed AI tools. As a second pass, we track artwork posts published in AI art communities, which may not include explicit tags denoting AI assistance, and label all of these artworks as AI-generated. Finally, we assign adoption timing based on each user’s first known AI-generated post (SI Appendix, Fig. S2).
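The two-pass rule can be sketched as follows. The keyword tuple is an illustrative subset of the list described above, the community identifier "ai-art" is hypothetical, and the cutoff date is an assumption (the text gives only "January 2021"). Matching is case-insensitive substring search over the title and tags.

```python
from dataclasses import dataclass, field
from datetime import date

AI_KEYWORDS = ("ai-generated", "stable diffusion", "midjourney", "dall-e")  # illustrative subset
AI_COMMUNITIES = frozenset({"ai-art"})  # hypothetical community identifiers
CUTOFF = date(2021, 1, 1)               # assumed stand-in for "January 2021"


@dataclass
class Post:
    user: str
    published: date
    title: str
    tags: list = field(default_factory=list)
    communities: set = field(default_factory=set)


def is_ai_generated(post):
    """First pass: date cutoff plus keyword match; second pass: AI art communities."""
    if post.published < CUTOFF:
        return False
    text = " ".join([post.title, *post.tags]).lower()
    if any(keyword in text for keyword in AI_KEYWORDS):
        return True
    return bool(post.communities & AI_COMMUNITIES)


def adoption_dates(posts):
    """Map each adopter to the date of their first known AI-generated post."""
    first = {}
    for post in posts:
        if is_ai_generated(post) and (post.user not in first or post.published < first[post.user]):
            first[post.user] = post.published
    return first


posts = [
    Post("alice", date(2020, 6, 1), "Castle study", ["ai-generated"]),  # before cutoff
    Post("alice", date(2022, 6, 1), "Neon city", ["Stable Diffusion"]),
    Post("bob", date(2022, 5, 1), "Untitled", communities={"ai-art"}),
    Post("carol", date(2022, 7, 1), "Oil painting", ["traditional"]),
]
print(adoption_dates(posts))
# {'alice': datetime.date(2022, 6, 1), 'bob': datetime.date(2022, 5, 1)}
```

Substring matching is crude (a tag such as "not ai-generated" would still match), which is one reason robustness to measurement error in treatment timing matters.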

Measuring creative novelty

To measure the two types of novelty, we borrow the idea of conceptual spaces, which can be understood as geometric representations that capture particular attributes of artifacts along various dimensions (9, 22). This definition naturally aligns with the concept of embeddings, such as word2vec (23), which capture the relative features of objects in a vector space. The concept extends to text passages and images, so that measuring the distance between vector representations captures whether an artifact deviates from or converges toward a reference object in the space.

Using embeddings, we apply the following algorithm. First, take all artifacts published before April 1, 2022 as the baseline set of artworks. We use this cutoff because nearly all adoption occurs after May 2022, so all artifacts in later periods are compared to non-AI-generated works in the baseline period, and because it provides an adequate number of pretreatment and posttreatment observations (on average 3 and 7, respectively) for the majority of our causal sample. Then, take all artifacts published in the following month and measure the pairwise cosine distance between those artifacts and the baseline set, recovering the mean, minimum, and maximum distance for each artifact. Add that month’s artifacts to the baseline set, so that all future artworks are compared to all prior artworks, which captures the time-varying nature of novelty. Repeat for all remaining months. Because of computational constraints, we apply this approach to all adopters’ artworks and to a random sample of 10,000 control users.
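The rolling-baseline procedure can be sketched in NumPy. This is a minimal illustration under stated assumptions: every artifact has already been mapped to an embedding vector (BLIP-2 descriptions embedded with a BERT-based model for content, DINOv2 features for visuals), artifacts are already grouped by calendar month, and the function names are hypothetical.

```python
import numpy as np


def cosine_distances(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pairwise cosine distance between rows of a (m, d) and rows of b (n, d)."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return 1.0 - a @ b.T


def monthly_novelty(baseline, months):
    """baseline: (n, d) embeddings of artworks published before the cutoff.
    months: list of (m_k, d) arrays, one per subsequent month, in time order.
    Returns one (m_k, 3) array per month holding the mean, min and max
    distance of each artifact to everything published before it."""
    reference = np.asarray(baseline, dtype=float)
    results = []
    for batch in months:
        batch = np.asarray(batch, dtype=float)
        d = cosine_distances(batch, reference)  # shape (m_k, n)
        results.append(np.stack([d.mean(axis=1), d.min(axis=1), d.max(axis=1)], axis=1))
        # Grow the reference set so later months are compared to all prior work.
        reference = np.vstack([reference, batch])
    return results
```

The per-pair distance matrix grows with the reference set, which is one reason such a computation may need to be restricted to a sample of users.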

Content feature extraction

To describe the focal objects and object relationships in an artifact, we use the state-of-the-art multimodal model BLIP-2 (24), which takes an image as input and produces a text description of its content. A key feature of this approach is the availability of controlled text-generation hyperparameters, which allow us to generate stable descriptions that are systematically similar in structure. Having been trained on 129M images and human-annotated data, BLIP-2 can maintain consistent focus and regularity while avoiding the noise that differences between individual human describers would add.

Given the generated descriptions, we then use a pretrained text embedding model based on BERT (25), which has demonstrated state-of-the-art performance on semantic similarity benchmarks while remaining highly efficient, to compute high-dimensional vector representations of each description. We then apply the algorithm described above to measure Content Novelty.

Visual feature extraction

To capture visual features of each artifact at the pixel level, we use a more flexible approach: the self-supervised visual representation learning algorithm DINOv2 (26), which overcomes a limitation of standard image-text pretraining, namely that visual features may not be explicitly described in text. Because we are dealing with creative concepts, this approach is particularly suitable: it robustly identifies object parts in an image and extracts low-level pixel features while still generalizing well. We compute vector representations of each image and apply the algorithm described above to obtain measures of Visual Novelty.

An AI-generated picture won an art prize. Artists are not happy.

Artist wins photography contest after submitting AI-generated image, then forfeits prize.

The current legal cases against generative AI are just the beginning.

The authors acknowledge the valuable contributions from their Business Insights through Text Lab (BITLAB) research assistants Animikh Aich, Aditya Bala, Amrutha Karthikeyan, Audrey Mao, and Esha Vaishnav in helping to prepare the data for analysis. Furthermore, the authors are grateful for Stefano Puntoni, Alex Burnap, Mi Zhou, Gregory Sun, our audiences at the Wharton Business & Generative AI Workshop (23/9/8), INFORMS Workshop on Data Science (23/10/14), INFORMS Annual Meeting (23/10/15) and seminar participants at the University of Wisconsin-Milwaukee (23/9/22), University of Texas-Dallas (23/10/6), and MIT Initiative on the Digital Economy (23/11/29) for their insightful comments and feedback.

Supplementary material is available at PNAS Nexus online.

The authors declare no funding.

D.L. and E.Z. designed the research and wrote the paper. E.Z. analyzed data and performed research with guidance from D.L.

A preprint of this article is available at SSRN.

Replication archive with code is available at Open Science Framework at https://osf.io/jfzyp/ . Data have been anonymized for the privacy of the users.

Dong H-W, Hsiao W-Y, Yang L-C, Yang Y-H. 2018. MuseGAN: multi-track sequential generative adversarial networks for symbolic music generation and accompaniment. In: Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence and Thirtieth Innovative Applications of Artificial Intelligence Conference and Eighth AAAI Symposium on Educational Advances in Artificial Intelligence. p. 34–41.

Tan WR, Chan CS, Aguirre H, Tanaka K. 2017. ArtGAN: artwork synthesis with conditional categorical GANs. In: 2017 IEEE International Conference on Image Processing (ICIP). IEEE. p. 3760–3764.

Elgammal A, Liu B, Elhoseiny M, Mazzone M. 2017. CAN: creative adversarial networks, generating “art” by learning about styles and deviating from style norms, arXiv, arXiv:1706.07068, preprint: not peer reviewed.

Brown TB, et al. 2020. Language models are few-shot learners. Adv Neural Inf Process Syst. 33:1877–1901.

Rombach R, Blattmann A, Lorenz D, Esser P, Ommer B. 2022. High-resolution image synthesis with latent diffusion models. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. p. 10684–10695.

Huang S, Grady P, GPT-3. 2022. Generative AI: a creative new world. Sequoia Capital US/Europe. https://www.sequoiacap.com/article/generative-ai-a-creative-new-world/

Wollheim R. 1970. Nelson Goodman’s languages of art. J Philos. 67(16):531–539.


Callaway B, Sant’Anna PHC. 2021. Difference-in-differences with multiple time periods. J Econom. 225(2):200–230.

Boden MA. 1998. Creativity and artificial intelligence. Artif Intell. 103(1–2):347–356.

Athey S, Tibshirani J, Wager S. 2019. Generalized random forests. Ann Statist. 47(2):1148–1178.

Lundberg S, Lee S-I. 2017. A unified approach to interpreting model predictions. In: Proceedings of the 31st International Conference on Neural Information Processing Systems. p. 4768–4777.

Xu Y. 2017. Generalized synthetic control method: causal inference with interactive fixed effects models. Polit Anal. 25(1):57–76.

Peng S, Kalliamvakou E, Cihon P, Demirer M. 2023. The impact of AI on developer productivity: evidence from GitHub Copilot, arXiv, arXiv:2302.06590, preprint: not peer reviewed.

Noy S, Zhang W. 2023. Experimental evidence on the productivity effects of generative artificial intelligence. Science. 381(6654):187–192.

Dell’Acqua F, et al. 2023. Navigating the jagged technological frontier: field experimental evidence of the effects of AI on knowledge worker productivity and quality. Harvard Business School Technology & Operations Mgt. Unit Working Paper, (24-013).

Spitale G, Biller-Andorno N, Germani F. 2023. AI model GPT-3 (dis)informs us better than humans. Sci Adv. 9(26):eadh1850.

Burtch G, Lee D, Chen Z. 2023. The consequences of generative AI for UGC and online community engagement. Available at SSRN 4521754.

Campbell DT. 1960. Blind variation and selective retentions in creative thought as in other knowledge processes. Psychol Rev. 67(6):380–400.

Simonton DK. 1999. Creativity as blind variation and selective retention: is the creative process Darwinian? Psychol Inq. 10(4):309–328.

Silvia PJ. 2008. Discernment and creativity: how well can people identify their most creative ideas? Psychol Aesthet Creat Arts. 2(3):139–146.

Ivcevic Z, Mayer JD. 2009. Mapping dimensions of creativity in the life-space. Creat Res J. 21(2–3):152–165.

McGregor S, Wiggins G, Purver M. 2014. Computational creativity: a philosophical approach, and an approach to philosophy. In: Proceedings of the Fifth International Conference on Computational Creativity. p. 254–262.

Mikolov T, Chen K, Corrado G, Dean J. 2013. Efficient estimation of word representations in vector space, arXiv, arXiv:1301.3781, preprint: not peer reviewed.

Li J, Li D, Savarese S, Hoi S. 2023. Bootstrapping language-image pre-training with frozen image encoders and large language models, arXiv, arXiv:2301.12597, preprint: not peer reviewed.

Reimers N, Gurevych I. 2019. Sentence-BERT: sentence embeddings using Siamese BERT-networks, arXiv, arXiv:1908.10084, preprint: not peer reviewed.

Oquab M, et al. 2023. DINOv2: learning robust visual features without supervision, arXiv, arXiv:2304.07193, preprint: not peer reviewed.


- Online ISSN 2752-6542

- Copyright © 2024 National Academy of Sciences


Artificial intelligence in the creative industries: a review

- Open access

- Published: 02 July 2021

- Volume 55 , pages 589–656, ( 2022 )


- Nantheera Anantrasirichai ORCID: orcid.org/0000-0002-2122-5781 1 &

- David Bull 1


This paper reviews the current state of the art in artificial intelligence (AI) technologies and applications in the context of the creative industries. A brief background of AI, and specifically machine learning (ML) algorithms, is provided including convolutional neural networks (CNNs), generative adversarial networks (GANs), recurrent neural networks (RNNs) and deep Reinforcement Learning (DRL). We categorize creative applications into five groups, related to how AI technologies are used: (i) content creation, (ii) information analysis, (iii) content enhancement and post production workflows, (iv) information extraction and enhancement, and (v) data compression. We critically examine the successes and limitations of this rapidly advancing technology in each of these areas. We further differentiate between the use of AI as a creative tool and its potential as a creator in its own right. We foresee that, in the near future, ML-based AI will be adopted widely as a tool or collaborative assistant for creativity. In contrast, we observe that the successes of ML in domains with fewer constraints, where AI is the ‘creator’, remain modest. The potential of AI (or its developers) to win awards for its original creations in competition with human creatives is also limited, based on contemporary technologies. We therefore conclude that, in the context of creative industries, maximum benefit from AI will be derived where its focus is human-centric—where it is designed to augment, rather than replace, human creativity.


1 Introduction

The aim of new technologies is normally to make a specific process easier, more accurate, faster or cheaper. In some cases they also enable us to perform tasks or create things that were previously impossible. Over recent years, one of the most rapidly advancing scientific techniques for practical purposes has been Artificial Intelligence (AI). AI techniques enable machines to perform tasks that typically require some degree of human-like intelligence. With recent developments in high-performance computing and increased data storage capacities, AI technologies have been empowered and are increasingly being adopted across numerous applications, ranging from simple daily tasks, intelligent assistants and finance to highly specific command and control operations and national security. AI can, for example, help smart devices or computers to understand text and read it out loud, hear voices and respond, view images and recognize objects in them, and even predict what may happen next after a series of events. At higher levels, AI has been used to analyze human and social activity by observing people’s conversations and actions. It has also been used to understand socially relevant problems such as homelessness and to predict natural events. Governments across the world have recognized AI’s potential as a major driver of economic growth and social progress (Hall and Pesenti 2018; NSTC 2016). This potential, however, comes with concerns over the wider social impact of AI technologies, which must be taken into account when designing and deploying these tools.

Processes associated with the creative sector demand significantly different levels of innovation and skill sets compared to routine behaviours. While AI accomplishments rely heavily on conformity of data, creativity often exploits the human imagination to drive original ideas which may not follow general rules. Creatives have a lifetime of experiences to build on, enabling them to think ‘outside the box’ and ask ‘What if’ questions that cannot readily be addressed by constrained learning systems.

There have been many studies over several decades into the possibility of applying AI in the creative sector. One of the limitations in the past was the readiness of the technology itself, and another was the belief that AI could attempt to replicate human creative behaviour (Rowe and Partridge 1993 ). A recent survey by Adobe Footnote 1 revealed that three quarters of artists in the US, UK, Germany and Japan would consider using AI tools as assistants, in areas such as image search, editing, and other ‘non-creative’ tasks. This indicates a general acceptance of AI as a tool across the community and reflects a general awareness of the state of the art, since most AI technologies have been developed to operate in closed domains where they can assist and support humans rather than replace them. Better collaboration between humans and AI technologies can thus maximize the benefits of the synergy. All that said, the first painting created solely by AI was auctioned for $432,500 in 2018. Footnote 2

Applications of AI in the creative industries have dramatically increased in the last five years. Based on analysis of data from arXiv Footnote 3 and Gateway to Research, Footnote 4 Davies et al. (2020) revealed that the growth rate of research publications on AI (relevant to the creative industries) exceeds 500% in many countries (in Taiwan the growth rate is 1490%), and that most of these publications relate to image-based data. Analysis of company usage from the Crunchbase database Footnote 5 indicates that AI is used more in games and immersive applications, advertising and marketing than in other creative applications. Caramiaux et al. (2019) recently reviewed AI in the current media and creative industries across three areas: creation, production and consumption. They provide details of AI/ML-based research and development, as well as emerging challenges and trends.

In this paper, we review how AI and its technologies are, or could be, used in applications relevant to creative industries. We first provide an overview of AI and current technologies (Sect. 2), followed by a selection of creative domain applications (Sect. 3). We group these into subsections Footnote 6 covering: (i) content creation, where AI is employed to generate original work; (ii) information analysis, where statistics of data are used to improve productivity; (iii) content enhancement and post production workflows, used to improve the quality of creative work; (iv) information extraction and enhancement, where AI assists in interpretation, clarifies semantic meaning, and creates new ways to exhibit hidden information; and (v) data compression, where AI helps reduce the size of the data while preserving its quality. Finally, we discuss challenges and the future potential of AI associated with the creative industries in Sect. 4.

2 An introduction to artificial intelligence

Artificial intelligence (AI) embodies a set of codes, techniques, algorithms and data that enables a computer system to develop and emulate human-like behaviour and hence make decisions similar to (or in some cases, better than) humans (Russell and Norvig 2020). When a machine exhibits full human intelligence, it is often referred to as ‘general AI’ or ‘strong AI’ (Bostrom 2014). However, currently reported technologies are normally restricted to operating in a limited domain on specific tasks; this is called ‘narrow AI’ or ‘weak AI’. In the past, most AI technologies were model-driven: the nature of the application was studied and a mathematical model was formulated to describe it. Statistical learning is also data-dependent, but relies on rule-based programming (James et al. 2013). Previous generations of AI (from the mid-1950s until the late 1980s; Haugeland 1985) were based on symbolic AI, following the assumption that humans use symbols to represent things and problems. Symbolic AI is intended to produce general, human-like intelligence in a machine (Honavar 1995), whereas most modern research is directed at specific sub-problems.

2.1 Machine learning, neurons and artificial neural networks

The main class of algorithms in use today is based on machine learning (ML), which is data-driven. ML employs computational methods to ‘learn’ information directly from large amounts of example data without relying on a predetermined equation or model (Mitchell 1997). These algorithms adaptively converge to an optimum solution and generally improve their performance as the number of samples available for learning increases. Several types of learning algorithms exist, including supervised learning, unsupervised learning and reinforcement learning. Supervised learning algorithms build a mathematical model from a set of data that contains both the inputs and the desired outputs (each output usually representing a classification of the associated input vector), while unsupervised learning algorithms model problems using unlabeled data. Self-supervised learning is a form of unsupervised learning in which the data themselves provide the structure used to build a loss function. Semi-supervised learning uses a limited set of labeled data to label a (usually larger) set of unlabeled data; both datasets are then combined to create a new model. Reinforcement learning methods learn from trial and error and are effectively self-supervised (Russell and Norvig 2020).
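The semi-supervised procedure described above (often called self-training or pseudo-labeling) can be sketched in a few lines. This is a minimal illustration, assuming a nearest-centroid classifier chosen only to keep the example short; any supervised model could take its place, and the function names are hypothetical.

```python
import numpy as np


def fit_centroids(X, y):
    """'Train' a nearest-centroid model: one mean vector per class."""
    classes = np.unique(y)
    return classes, np.stack([X[y == c].mean(axis=0) for c in classes])


def predict(model, X):
    """Assign each row of X to the class with the closest centroid."""
    classes, centroids = model
    sq_dist = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return classes[sq_dist.argmin(axis=1)]


def self_train(X_lab, y_lab, X_unlab):
    model = fit_centroids(X_lab, y_lab)       # 1. learn from the small labeled set
    pseudo = predict(model, X_unlab)          # 2. label the larger unlabeled set
    X_all = np.vstack([X_lab, X_unlab])       # 3. combine both datasets
    y_all = np.concatenate([y_lab, pseudo])
    return fit_centroids(X_all, y_all)        # 4. fit a new model on everything
```

In practice, only confidently predicted pseudo-labels are often kept, because wrong labels on the unlabeled set are otherwise reinforced in the new model.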

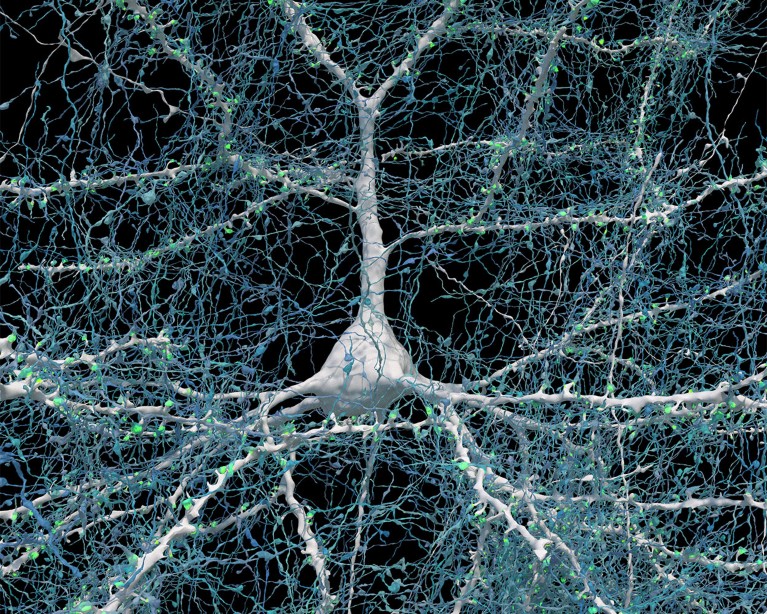

Modern ML methods have their roots in the early computational model of a neuron proposed by Warren McCulloch (neuroscientist) and Walter Pitts (logician) in 1943, shown in Fig. 1a. In their model, the artificial neuron receives one or more inputs, each independently weighted. The neuron sums these weighted inputs and passes the result through a non-linear function known as an activation function, representing the neuron’s action potential, which is then transmitted along its axon to other neurons. The multi-layer perceptron (MLP) is a basic form of artificial neural network (ANN) that gained popularity in the 1980s. It connects its neural units in a multi-layered architecture, typically one input layer, one hidden layer and one output layer (Fig. 1b). These layers are generally fully connected to adjacent layers (i.e., each neuron in one layer is connected to all neurons in the next layer). The disadvantage of this approach is that the total number of parameters can be very large, which makes such networks prone to overfitting.
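The neuron model and the fully connected MLP described above can be illustrated with NumPy. This is a sketch, not a reconstruction of the 1943 formulation: the step activation, the hypothetical AND weights and the tanh hidden layer are choices made for the example.

```python
import numpy as np


def step(z):
    """Binary threshold activation: fire (1.0) if the input reaches the threshold."""
    return 1.0 if z >= 0 else 0.0


def neuron(x, w, b, activation=step):
    """Weight each input independently, sum, add a bias, apply the activation."""
    return activation(np.dot(w, x) + b)


# Hypothetical weights: a threshold unit that computes logical AND of two binary inputs.
W_AND, B_AND = np.array([1.0, 1.0]), -1.5


def mlp_forward(x, W1, b1, W2, b2):
    """One hidden layer; every unit is connected to every unit in the next layer."""
    h = np.tanh(W1 @ x + b1)  # hidden layer with a non-linear activation
    return W2 @ h + b2        # linear output layer


def count_parameters(layer_sizes):
    """Weights plus biases of a fully connected network with the given layer sizes."""
    return sum(m * n + n for m, n in zip(layer_sizes[:-1], layer_sizes[1:]))
```

`count_parameters` makes the overfitting concern concrete: a single hidden layer of 512 units between a 784-pixel input (a 28 × 28 image) and 10 outputs already has 407,050 trainable parameters.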

For training, the MLP (and most supervised ANNs) uses error backpropagation to compute the gradient of a loss function. This loss function maps the event values from multiple inputs into one real number representing the cost of that event. The goal of training is therefore to minimize the loss function over multiple presentations of the input dataset. The backpropagation algorithm was originally introduced in the 1970s, but rose to prominence after Rumelhart et al. (1986) described several neural networks in which backpropagation worked far faster than earlier approaches, making ANNs applicable to practical problems.
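A minimal NumPy sketch of backpropagation for a one-hidden-layer MLP follows. The tanh hidden layer, sigmoid output, mean-squared-error loss and learning-rate values are assumptions made for illustration, not choices taken from the text. The backward pass applies the chain rule layer by layer, and plain gradient descent then reduces the loss over repeated presentations of the dataset.

```python
import numpy as np


def forward_backward(params, X, y):
    """Return (loss, grads) for one tanh hidden layer, a sigmoid output and MSE loss.
    X: (n, d_in) inputs; y: (n, 1) targets; grads are ordered like params."""
    W1, b1, W2, b2 = params
    # Forward pass
    h = np.tanh(X @ W1 + b1)
    out = 1.0 / (1.0 + np.exp(-(h @ W2 + b2)))
    loss = np.mean((out - y) ** 2)  # a single real number summarising the cost
    # Backward pass: propagate the error from the loss back to every weight
    d_out = 2.0 * (out - y) / X.shape[0]
    d_z2 = d_out * out * (1.0 - out)  # derivative of the sigmoid
    dW2, db2 = h.T @ d_z2, d_z2.sum(axis=0)
    d_z1 = (d_z2 @ W2.T) * (1.0 - h ** 2)  # derivative of tanh
    dW1, db1 = X.T @ d_z1, d_z1.sum(axis=0)
    return loss, [dW1, db1, dW2, db2]


def train(X, y, hidden=8, lr=0.5, epochs=2000, seed=0):
    """Plain gradient descent; returns the final parameters and the loss history."""
    rng = np.random.default_rng(seed)
    params = [rng.normal(scale=0.5, size=(X.shape[1], hidden)), np.zeros(hidden),
              rng.normal(scale=0.5, size=(hidden, 1)), np.zeros(1)]
    history = []
    for _ in range(epochs):
        loss, grads = forward_backward(params, X, y)
        params = [p - lr * g for p, g in zip(params, grads)]  # step against the gradient
        history.append(loss)
    return params, history
```

Comparing the analytic gradients against finite differences of the loss is a common way to check an implementation of this kind before training with it.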

a Basic neural network unit by McCulloch and Pitts. b Basic multi-layer perceptron (MLP)

2.2 An introduction to deep neural networks

Deep learning is a subset of ML that employs deep artificial neural networks (DNNs). The word ‘deep’ means that there are multiple hidden layers of neuron collections that have learnable weights and biases. When the data being processed occupy multiple dimensions (images, for example), convolutional neural networks (CNNs) are often employed. CNNs are a (loosely) biologically inspired architecture in which units are tiled so that their receptive fields overlap, giving a better representation of the original inputs.

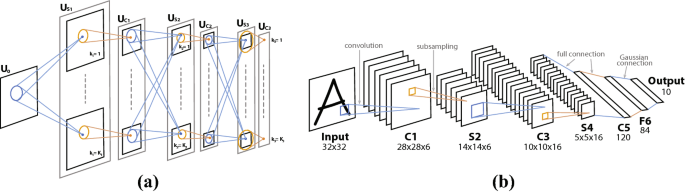

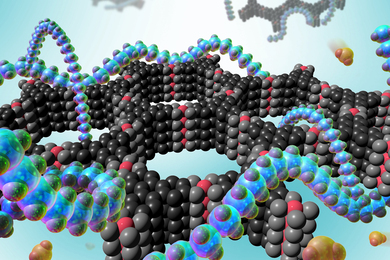

The first CNN was designed by Fukushima (1980) as a tool for visual pattern recognition (Fig. 2a). This so-called Neocognitron was a hierarchical architecture with multiple convolutional and pooling layers. LeCun et al. (1989) applied the standard backpropagation algorithm to a deep neural network with the purpose of recognizing handwritten ZIP codes; at that time, training the network took 3 days. LeCun et al. (1998) proposed LeNet5 (Fig. 2b), one of the earliest CNNs, which could outperform other models for handwritten character recognition. The deep learning breakthrough occurred in the 2000s, driven by the availability of graphics processing units (GPUs) that could dramatically accelerate training. Since around 2012, CNNs have represented the state of the art for complex problems such as image classification and recognition, having won several major international competitions.

a Neocognitron (Fukushima 1980), where \(U_s\) and \(U_c\) learn simple and complex features, respectively. b LeNet5 (LeCun et al. 1998), consisting of two sets of convolutional and average pooling layers, followed by a flattening convolutional layer, then two fully-connected layers and finally a softmax classifier

A CNN learns its filter values from the task at hand. Generally, the CNN learns to detect edges from the raw pixels in the first layer, then uses those edges to detect simple shapes in the next layer, building complexity through subsequent layers. The higher layers produce high-level features with more semantically relevant meaning. This means that the algorithms can exploit both low-level features and a higher-level understanding of what the data represent. Deep learning has therefore emerged as a powerful tool to find patterns, analyze information, and predict future events. There is no fixed limit on the number of layers in a deep network, but most current networks contain between 10 and 100 layers.

Goodfellow et al. (2014) proposed an alternative form of architecture referred to as a Generative Adversarial Network (GAN). A GAN consists of two competing modules: the first (the generator) creates images, and the second (the discriminator) checks whether a received image is real or was created by the generator. This competition pushes the generator to produce images that are very similar to real ones. Because of their strong performance, GAN technologies have become very popular and have been applied to numerous applications, including those related to creative practice.
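The adversarial game can be written compactly as the two-player value function given by Goodfellow et al. (2014). Here \(p_{\text{data}}\) is the distribution of real images, \(p_z\) is the noise distribution fed to the generator \(G\), and the discriminator \(D\) outputs the probability that its input is real:

\[
\min_G \max_D V(D,G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
\]

For a fixed generator whose outputs follow a distribution \(p_g\), the best discriminator is \(D^*(x) = p_{\text{data}}(x) / \left(p_{\text{data}}(x) + p_g(x)\right)\), so the game reaches its equilibrium when \(p_g = p_{\text{data}}\) and \(D^*(x) = 1/2\) everywhere: the generated images can no longer be told apart from real ones.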

While many types of machine learning algorithms exist, because of their prominence and performance, in this paper we place emphasis on deep learning methods. We will describe various applications relevant to the creative industries and critically review the methodologies that achieve, or have the potential to achieve, good performance.

2.3 Current AI technologies

This section presents state-of-the-art AI methods relevant to the creative industries. Readers who prefer to focus on the applications may skip to Sect. 3.

2.3.1 AI and the need for data

An AI system effectively combines a computational architecture and a learning strategy with a data environment in which it learns. Training databases are thus a critical component in optimizing the performance of ML processes and hence a significant proportion of the value of an AI system resides in them. A well-designed training database with appropriate size and coverage can help significantly with model generalization and avoiding problems of overfitting.

In order to learn without being explicitly programmed, ML systems must be trained using data having statistics and characteristics typical of the particular application domain under consideration. This is true regardless of training method (see Sect. 2.1). Good datasets typically contain large numbers of examples with a statistical distribution matched to this domain. This is crucial because it enables the network to estimate gradients in the data (error) domain, allowing it to converge to an optimum solution and form robust decision boundaries between its classes. After training, the network will then be able to reliably match new, unseen information to the right answer when deployed.

The reliability of training dataset labels is key to achieving high performance in supervised deep learning. These datasets must comprise: i) data that are statistically similar to the inputs the models will receive in real situations and ii) ground truth annotations that tell the machine what the desired outputs are. For example, in segmentation applications, the dataset would comprise the images and the corresponding segmentation maps indicating homogeneous or semantically meaningful regions in each image. Similarly, for object recognition, the dataset would also include the original images, while the ground truth would be the object categories, e.g., car, house, human, types of animal, etc.

Some labeled datasets are freely available for public use, Footnote 7 but these are limited, especially in applications where data are difficult to collect and label. One of the largest, ImageNet, contains over 14 million images labeled into 22,000 classes. Care must be taken when collecting or using data to avoid imbalance and bias: skewed class distributions in which the majority of data instances belong to a small number of classes while other classes are sparsely populated. For instance, in colorization, blue may appear more often because it is the color of the sky, while pink flowers are much rarer. This imbalance causes ML algorithms to develop a bias towards classes with a greater number of instances, so they preferentially predict majority-class data. Features of minority classes are treated as noise and are often ignored.

Numerous approaches have been introduced to create balanced distributions, and these can be divided into two major groups: modification of the learning algorithm, and data manipulation techniques (He and Garcia 2009). Zhang et al. (2016) solve the class-imbalance problem by re-weighting the loss of each pixel at train time based on the pixel color rarity. Recently, Lehtinen et al. (2018) introduced an innovative approach to learning via their Noise2Noise network, which demonstrates that it is possible to train a network without clean data if the corrupted data comply with certain statistical assumptions. However, this technique needs further testing and refinement to cope with real-world noisy data. Typical data manipulation techniques include downsampling majority classes, oversampling minority classes, or both. Two primary techniques are used to expand, adjust and rebalance the number of samples in the dataset and, in turn, to improve ML performance and model generalization: data augmentation and data synthesis. These are discussed further below.
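Loss re-weighting by class rarity, the algorithm-level approach mentioned above, can be sketched in NumPy. This loosely follows the idea attributed to Zhang et al. (2016): the empirical class distribution is mixed with a uniform distribution, inverted, and normalized so that the expected weight is 1. The mixing value `lam = 0.5` and the absence of any smoothing of the distribution are simplifying assumptions, and the function names are hypothetical.

```python
import numpy as np


def rarity_weights(labels, num_classes, lam=0.5):
    """Per-class loss weights that grow as a class becomes rarer."""
    # Empirical class distribution p estimated from the training labels.
    p = np.bincount(labels, minlength=num_classes) / labels.size
    # Mix with a uniform prior (avoids infinite weights), then invert.
    w = 1.0 / ((1.0 - lam) * p + lam / num_classes)
    # Normalise so the expected weight under p equals 1.
    return w / np.sum(p * w)


def weighted_cross_entropy(probs, labels, weights):
    """probs: (n, Q) predicted class probabilities; labels: (n,) integer classes."""
    picked = probs[np.arange(labels.size), labels]
    return np.mean(weights[labels] * -np.log(picked))
```

With a 90/10 class split, for example, each minority-class sample ends up weighted about 2.3 times as heavily as a majority-class sample, so errors on rare classes are no longer drowned out.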

2.3.1.1 Data augmentation

Data augmentation techniques are frequently used to increase the volume and diversity of a training dataset without the need to collect new data. Instead, existing data are used to generate more samples through transformations such as cropping, flipping, translating, rotating and scaling (Anantrasirichai et al. 2018; Krizhevsky et al. 2012). This can increase the representation of minority classes and also help to avoid overfitting, which occurs when a model memorizes the full dataset instead of learning only the main concepts that underlie the problem. GANs (see Sect. 2.3.3) have recently been employed with success to enlarge training sets, the most popular network currently being CycleGAN (Zhu et al. 2017). The original CycleGAN mapped one input to only one output, causing inefficiencies when dataset diversity is required. Huang et al. (2018) improved CycleGAN with a structure-aware network to augment training data for vehicle detection. A slightly modified CycleGAN architecture has also been trained to transform contrast CT (computed tomography) images into non-contrast images (Sandfort et al. 2019). A CycleGAN-based technique has also been used for emotion classification, to amplify cases of extremely rare emotions such as disgust (Zhu et al. 2018). IBM Research introduced a Balancing GAN (Mariani et al. 2018), where the model learns useful features from majority classes and uses these to generate images for minority classes that avoid features close to those of majority cases. An extensive survey of data augmentation techniques can be found in Shorten and Khoshgoftaar (2019).
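Classical (non-GAN) augmentation can be sketched with NumPy alone. This is an illustrative sketch, assuming square (H, W, C) images; the particular choices (horizontal flip, rotation by multiples of 90 degrees, translation implemented as reflect-padding followed by a crop back to the original size) and the function names are assumptions. The second function uses augmentation to oversample a minority class.

```python
import numpy as np


def augment(image, rng, max_shift=4):
    """Return a randomly flipped, rotated and translated copy of a square (H, W, C) image."""
    out = image[:, ::-1] if rng.random() < 0.5 else image   # horizontal flip
    out = np.rot90(out, k=int(rng.integers(4)))              # rotate by 0/90/180/270 degrees
    # Random translation: reflect-pad, then crop back to the original size.
    p = max_shift
    padded = np.pad(out, ((p, p), (p, p), (0, 0)), mode="reflect")
    dy, dx = rng.integers(0, 2 * p + 1, size=2)
    return padded[dy:dy + out.shape[0], dx:dx + out.shape[1]].copy()


def oversample(images, labels, minority, factor, rng):
    """Append `factor` augmented copies of every image labeled `minority`
    (assumes at least one such image exists)."""
    idx = np.flatnonzero(labels == minority)
    new = [augment(images[i], rng) for i in idx for _ in range(factor)]
    return (np.concatenate([images, np.stack(new)]),
            np.concatenate([labels, np.full(len(new), minority)]))
```

For example, with 8 majority and 2 minority images, `factor=3` adds 6 new minority samples, so both classes then have 8 examples.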

2.3.1.2 Data synthesis

Scientific or parametric models can be exploited to generate synthetic data in applications where it is difficult to collect real data and where data augmentation techniques cannot increase variety in the dataset. Examples include signs of disease (Alsaih et al. 2017) and geological events that rarely happen (Anantrasirichai et al. 2019). In the case of creative processes, problems are often ill-posed, as ground truth data or ideal outputs are not available. Examples include post-production operations such as deblurring, denoising and contrast enhancement. Synthetic data are often created by degrading the clean data. Su et al. (2017) applied synthetic motion blur to sharp video frames to train a deblurring model. LLNet (Lore et al. 2017) enhances low-light images and is trained using a dataset generated with synthetic noise and intensity adjustment, while LLCNN (Tao et al. 2017) employs a gamma adjustment technique.
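Creating training pairs by degrading clean data can be sketched as follows. This is an illustration in the spirit of the methods cited above, not a reproduction of any of them: the gamma range, the noise level, the horizontal box-filter blur and the function names are all assumptions. Images are taken to be float arrays with values in [0, 1].

```python
import numpy as np


def synthesize_low_light(clean, rng, gamma_range=(2.0, 3.5), noise_std=0.03):
    """Create a (degraded, clean) pair: darken with a random gamma, then add Gaussian noise."""
    gamma = rng.uniform(*gamma_range)
    dark = clean ** gamma  # gamma > 1 pushes values in [0, 1] towards 0
    noisy = dark + rng.normal(0.0, noise_std, size=clean.shape)
    return np.clip(noisy, 0.0, 1.0), clean


def synthesize_motion_blur(clean, length=7):
    """Create a (blurred, clean) pair with a horizontal box-filter motion blur.
    Pixels near the left/right borders are darkened by the implicit zero padding."""
    kernel = np.ones(length) / length
    blurred = np.apply_along_axis(lambda row: np.convolve(row, kernel, mode="same"), 1, clean)
    return blurred, clean
```

A restoration network is then trained to map the first element of each pair back to the second, which supplies the missing ground truth for an otherwise ill-posed problem.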

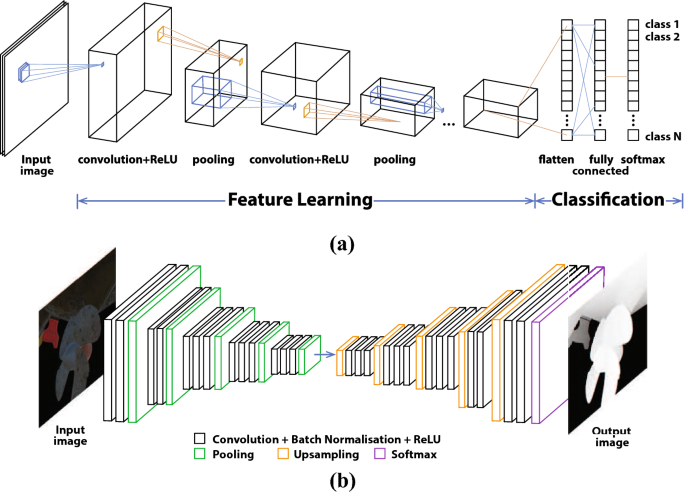

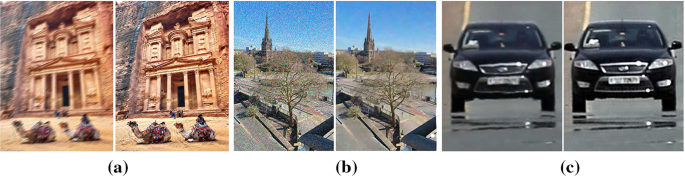

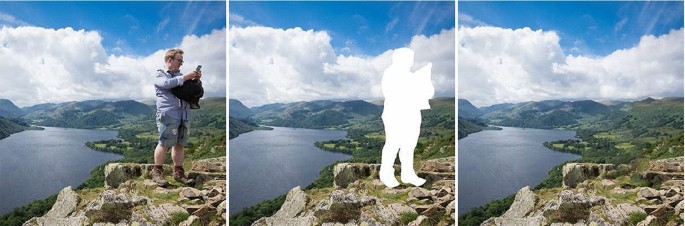

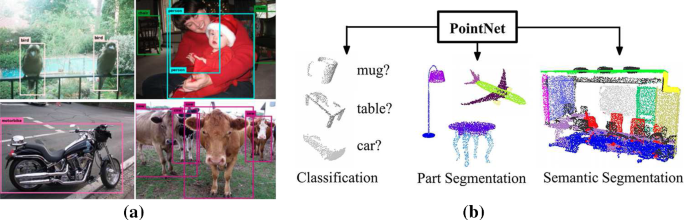

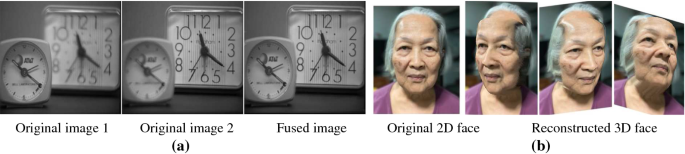

CNN architectures for (a) object recognition (adapted from Footnote 8) and (b) semantic segmentation (Footnote 9)

2.3.2 Convolutional neural networks (CNNs)

2.3.2.1 Basic CNNs

Convolutional neural networks (CNNs) are a class of deep feed-forward ANNs. They comprise a series of convolutional layers designed to take advantage of 2D structures, such as those found in images. These locally connected layers apply convolution operations between a kernel of predefined size and an internal signal; the output of each convolutional layer is the input signal modified by a convolution filter. During training, the filter weights are adjusted according to a loss function that measures the mismatch between the network output and the ground truth values or labels. Commonly used loss functions include \(\ell_1\), \(\ell_2\), SSIM (Tao et al. 2017) and perceptual loss (Johnson et al. 2016). These errors are backpropagated over many forward and backward iterations, and the filter weights are adjusted based on estimated gradients of the local error surface. This in turn determines which features are detected, associating them with the characteristics of the training data. The early layers in a CNN extract low-level features conceptually similar to the visual basis functions found in the primary visual cortex (Matsugu et al. 2003).
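As an illustration of what a single convolutional layer computes, the sketch below slides a kernel over a small image (stride 1, no padding) and scores outputs with the \(\ell_1\) and \(\ell_2\) losses mentioned above. Like most deep learning frameworks, it does not flip the kernel, so strictly it computes a cross-correlation. The Sobel-like kernel is hand-picked here, whereas a trained CNN would learn its weights through backpropagation.

```python
def conv2d(signal, kernel):
    """'Valid' 2D convolution as used in CNN layers: stride 1, no padding,
    no kernel flip (i.e. cross-correlation)."""
    kh, kw = len(kernel), len(kernel[0])
    oh = len(signal) - kh + 1
    ow = len(signal[0]) - kw + 1
    return [[sum(kernel[i][j] * signal[r + i][c + j]
                 for i in range(kh) for j in range(kw))
             for c in range(ow)]
            for r in range(oh)]


def l1_loss(pred, target):
    """Mean absolute error between two equally sized 2D maps."""
    diffs = [abs(p - t) for pr, tr in zip(pred, target) for p, t in zip(pr, tr)]
    return sum(diffs) / len(diffs)


def l2_loss(pred, target):
    """Mean squared error between two equally sized 2D maps."""
    diffs = [(p - t) ** 2 for pr, tr in zip(pred, target) for p, t in zip(pr, tr)]
    return sum(diffs) / len(diffs)


# A vertical edge: dark on the left, bright on the right.
image = [[0, 0, 0, 1, 1] for _ in range(3)]
# Horizontal-gradient (Sobel-like) kernel that responds to vertical edges.
kernel = [[-1, 0, 1],
          [-2, 0, 2],
          [-1, 0, 1]]
feature_map = conv2d(image, kernel)
```

The response is zero over the flat region and non-zero where the window straddles the edge, the kind of low-level feature that early CNN layers tend to learn.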

The most common CNN architecture (Fig. 3a, Footnote 8) has the outputs from its convolution layers connected to a pooling layer, which combines the outputs of neuron clusters into a single neuron. Activation functions such as tanh (the hyperbolic tangent) or ReLU (Rectified Linear Unit) are then applied to introduce non-linearity into the network (Agostinelli et al. 2015). This structure is repeated with similar or different kernel sizes. As a result, the CNN learns to detect edges from the raw pixels in the first layer, then combines these to detect simple shapes in the next layer. The higher layers produce higher-level features with more semantic meaning. The last few layers form the classification part of the network. These consist of fully connected layers (i.e. each neuron is connected to all the activation outputs of the previous layer) and a softmax layer, which models the output class as a probability distribution: it exponentiates the outputs and normalises them so that they lie between 0 and 1 and sum to 1 (hence its alternative name, the normalised exponential function).
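The activation, pooling and softmax stages can be sketched in plain Python as below. This is a minimal illustration assuming non-overlapping 2 \(\times\) 2 max pooling; the feature-map values and logits are made up.

```python
import math


def relu(fmap):
    """Rectified Linear Unit applied element-wise: negative values become 0."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Non-overlapping size x size max pooling (stride = size): each
    cluster of outputs is reduced to its maximum."""
    return [[max(fmap[r + i][c + j] for i in range(size) for j in range(size))
             for c in range(0, len(fmap[0]) - size + 1, size)]
            for r in range(0, len(fmap) - size + 1, size)]


def softmax(logits):
    """Normalised exponential: outputs lie in (0, 1) and sum to 1."""
    m = max(logits)  # subtracting the max avoids overflow in exp
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


fmap = [[-1.0, 2.0, 0.5, -3.0],
        [4.0, -2.0, 1.0, 0.0],
        [0.0, 1.0, -1.0, 2.0],
        [3.0, -4.0, 5.0, 1.0]]
pooled = max_pool(relu(fmap))      # 4 x 4 map reduced to 2 x 2
probs = softmax([2.0, 1.0, 0.1])   # class scores turned into probabilities
```

For max pooling, applying ReLU before or after pooling gives the same result, since both operations are monotonic.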

VGG (Simonyan and Zisserman 2015) is one of the most common backbone networks and comes in two depths: VGG-16 and VGG-19, with 16 and 19 weight layers respectively. The networks incorporate a series of convolution blocks (each comprising convolutional layers with ReLU activations and a max-pooling layer), followed by three fully connected layers. VGG employs very small receptive fields (3 \(\times\) 3 with a stride of 1), which allow deeper architectures than those of older networks. DeepArt (Gatys et al. 2016) employs a VGG network without the fully connected layers and demonstrates that the higher layers of the VGG network can represent the content of an artwork. The pre-trained VGG network is widely used to provide a measure of perceptual loss (and style loss) during the training of other networks (Johnson et al. 2016).
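The layer counts behind the names VGG-16 and VGG-19, and the reason small kernels permit greater depth, can be checked with a little arithmetic. The sketch below encodes the convolutional configurations from Simonyan and Zisserman (2015) as lists (the encoding itself is our own) and, following the argument in that paper, compares a stack of three 3 \(\times\) 3 layers with a single 7 \(\times\) 7 layer of the same receptive field. The channel count `C = 64` is an arbitrary example.

```python
# Convolutional layer widths of VGG-16 and VGG-19; "M" marks a 2 x 2
# max-pooling layer. Both networks end with three fully connected layers.
VGG_CONFIGS = {
    "VGG-16": [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
               512, 512, 512, "M", 512, 512, 512, "M"],
    "VGG-19": [64, 64, "M", 128, 128, "M", 256, 256, 256, 256, "M",
               512, 512, 512, 512, "M", 512, 512, 512, 512, "M"],
}
NUM_FC_LAYERS = 3


def weight_layers(config):
    """Count layers with learnable weights (convolutional + fully connected)."""
    return sum(1 for v in config if v != "M") + NUM_FC_LAYERS


def stacked_receptive_field(num_layers, kernel=3):
    """Receptive field of num_layers stacked stride-1 convolutions."""
    return 1 + num_layers * (kernel - 1)


def conv_params(kernel, channels):
    """Weights of one kernel x kernel conv with equal in/out channels (no bias)."""
    return kernel * kernel * channels * channels


C = 64
three_3x3 = 3 * conv_params(3, C)  # receptive field 7, three non-linearities
one_7x7 = conv_params(7, C)        # receptive field 7, one non-linearity
```

Three stacked 3 \(\times\) 3 layers need \(27C^2\) weights against \(49C^2\) for one 7 \(\times\) 7 layer, while adding two extra non-linearities.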

2.3.2.2 CNNs with reconstruction

The basic CNN structure described in the previous section is sometimes called an ‘encoder’, because the network learns a representation of the data that usually has fewer dimensions than the input. In other words, it compresses the input into a code, or latent-space representation. In contrast, some architectures omit pooling layers in order to create dense features in an output of the same size as the input.
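The effect of pooling on the size of the code can be worked out with the standard output-size formula for convolution and pooling layers, \(\lfloor (W + 2P - K)/S \rfloor + 1\) for input width \(W\), kernel size \(K\), stride \(S\) and padding \(P\). The stage layout in `encoder_sizes` (a padded 3 \(\times\) 3 convolution followed by 2 \(\times\) 2 max pooling) is a hypothetical example.

```python
def conv_output_size(size, kernel, stride=1, padding=0):
    """Spatial size after a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


def encoder_sizes(size, num_stages):
    """Track the feature-map width through hypothetical encoder stages,
    each a 'same'-padded 3x3 conv followed by 2x2 max pooling (stride 2)."""
    sizes = [size]
    for _ in range(num_stages):
        size = conv_output_size(size, kernel=3, padding=1)  # width preserved
        size = conv_output_size(size, kernel=2, stride=2)   # width halved
        sizes.append(size)
    return sizes


widths = encoder_sizes(256, 4)
```

Dropping the pooling step keeps every stage at the input width, which is how dense, full-resolution outputs are obtained.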

Alternatively, the feature map can be enlarged to the size of the input via deconvolutional layers, also called transposed convolution layers (Fig. 3b, Footnote 9). This structure is often referred to as a ‘decoder’, as it generates the output from the code produced by the encoder. Encoder-decoder architectures combine the two. Autoencoders are a special case of encoder-decoder models in which the network is trained to reconstruct its input, so the input and output are the same size. Encoder-decoder models are well suited to creative applications such as style transfer (Zhang et al. 2016), image restoration (Nah et al. 2017; Yang and Sun 2018; Zhang et al. 2017), contrast enhancement (Lore et al. 2017; Tao et al. 2017), colorization (Zhang et al. 2016) and super-resolution (Shi et al. 2016).
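A transposed convolution can be understood as scattering a scaled copy of the kernel for every input value, with copies spaced `stride` positions apart and overlaps summed. The 1D sketch below is a minimal illustration without padding; the kernels are hand-picked, whereas in a decoder they would be learned.

```python
def transposed_conv1d(x, kernel, stride=2):
    """1D transposed convolution without padding.
    Each input value adds a scaled copy of the kernel to the output,
    copies start `stride` positions apart, and overlapping parts are summed.
    Output length = (len(x) - 1) * stride + len(kernel)."""
    out = [0.0] * ((len(x) - 1) * stride + len(kernel))
    for i, v in enumerate(x):
        for k, w in enumerate(kernel):
            out[i * stride + k] += v * w
    return out


code = [1.0, 2.0, 3.0]  # a low-resolution feature, e.g. from an encoder
repeated = transposed_conv1d(code, kernel=[1.0, 1.0])
smooth = transposed_conv1d(code, kernel=[0.5, 1.0, 0.5])
```

With the kernel `[1.0, 1.0]` and stride 2, the layer simply repeats each value (nearest-neighbour upsampling); the kernel `[0.5, 1.0, 0.5]` instead interpolates linearly between neighbouring inputs, apart from the borders.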

Some architectures also add skip connections or a bridge section (Long et al. 2015) so that local and global features, as well as semantics, are connected and captured, improving pixel-wise accuracy. These techniques are widely used in object detection (Anantrasirichai and Bull 2019) and object tracking (Redmon and Farhadi 2018). U-Net (Ronneberger et al. 2015) is perhaps the most popular network of this kind, even though it was originally developed for biomedical image segmentation. It consists of a contracting path (encoder) and an expansive path (decoder), which give it its U-shaped architecture. The contracting path repeatedly applies two 3 \(\times\) 3 convolutions, each followed by a ReLU, and a max-pooling layer. Each step in the expansive path consists of a transposed convolution for upsampling, concatenation with the features of corresponding resolution from the contracting path, and two 3 \(\times\) 3 convolutions, each followed by a ReLU.
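The bookkeeping of a U-Net can be traced by following feature-map sizes and channel counts through both paths. The sketch assumes ‘same’ padding, so skip features can be concatenated directly (the original U-Net used unpadded convolutions and cropped the skip features instead), and uses the channel widths 64 to 1024 of the original design. The function name and the input size of 256 are hypothetical, and the final 1 \(\times\) 1 output convolution is omitted.

```python
def unet_shapes(size=256, base=64, depth=4):
    """Return (stage, width, channels) tuples for a U-Net-style network."""
    skips = []
    stages = []
    ch = base
    # Contracting path: two 3x3 convs + ReLU keep the width,
    # 2x2 max pooling halves it, and the next stage doubles the channels.
    for _ in range(depth):
        stages.append(("down", size, ch))
        skips.append((size, ch))
        size //= 2
        ch *= 2
    stages.append(("bottom", size, ch))
    # Expansive path: the transposed conv doubles the width and halves the
    # channels; the matching skip features are concatenated channel-wise;
    # two 3x3 convs + ReLU then reduce the channels again.
    for skip_size, skip_ch in reversed(skips):
        size *= 2
        ch //= 2
        assert size == skip_size, "skip and decoder features must align"
        stages.append(("concat", size, ch + skip_ch))
        stages.append(("up", size, ch))
    return stages


shapes = unet_shapes()
```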

2.3.2.3 Advanced CNNs

Some architectures introduce modified convolution operations for specific applications. For example, dilated convolution (Yu and Koltun 2016), also called atrous convolution, enlarges the receptive field to support both local and global feature extraction. The kernel is applied to the input with a defined spacing between its values. For example, a 3 \(\times\) 3 kernel with a dilation rate of 2 has the same receptive field as a 5 \(\times\) 5 kernel but uses only 9 parameters instead of 25. In the creative sector, dilated convolution has been used for colorization by Zhang et al. (2016). ResNet is an architecture developed for residual learning, comprising several residual blocks (He et al. 2016). A single residual block has two convolution layers and a skip connection between the input and the output of the last convolution layer. This mitigates the problem of vanishing gradients, enabling very deep CNN architectures. Residual learning has become an important part of the state of the art in many applications, such as contrast enhancement (Tao et al. 2017), colorization (Huang et al. 2017), SR (Dai et al. 2019; Zhang et al. 2018a), object recognition (He et al. 2016) and denoising (Zhang et al. 2017).
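Both ideas in this paragraph reduce to short computations. The sketch below gives the span of a dilated kernel, \(k + (k-1)(d-1)\) for \(k\) taps and dilation rate \(d\), a 1D dilated convolution, and the residual connection \(y = F(x) + x\). The function `f` passed to `residual_block` is a hypothetical stand-in for the block's two convolution layers.

```python
def effective_kernel_size(k, dilation):
    """Span covered by a k-tap kernel whose taps are `dilation` apart."""
    return k + (k - 1) * (dilation - 1)


def dilated_conv1d(x, kernel, dilation):
    """'Valid' 1D dilated convolution (cross-correlation), stride 1."""
    span = effective_kernel_size(len(kernel), dilation)
    return [sum(w * x[i + k * dilation] for k, w in enumerate(kernel))
            for i in range(len(x) - span + 1)]


def residual_block(x, f):
    """Residual learning: output f(x) + x. The layers in f only have to model
    the residual, and the identity path lets gradients flow straight through."""
    return [fx + xi for fx, xi in zip(f(x), x)]


signal = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
# Three taps with dilation 2 read positions i, i + 2 and i + 4 (span of 5).
dilated = dilated_conv1d(signal, [1.0, 1.0, 1.0], dilation=2)
# If f outputs zeros, the block reduces to the identity mapping.
identity = residual_block(signal, lambda v: [0.0] * len(v))
```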

Traditional convolution operations are performed on a regular grid, which limits their effectiveness in applications where objects and their locations do not conform to that grid. Deformable convolution (Dai et al. 2017) was therefore proposed to allow the region of support of the convolution operation to take on any shape, instead of only the traditional square. It has been used in object detection and SR (Wang et al. 2019a). 3D deformable kernels have also been proposed for denoising video content, as they cope better with large motions, producing cleaner and sharper sequences (Xiangyu Xu 2019).
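The core of deformable convolution is that each kernel tap samples the input at its regular grid position plus a learned, possibly fractional, offset, using bilinear interpolation. The minimal sketch below computes one output value of a 3 \(\times\) 3 deformable convolution; the offsets are passed in by hand, whereas in the method of Dai et al. (2017) they are predicted by an additional convolutional layer. The function names are hypothetical.

```python
import math


def bilinear(img, y, x):
    """Sample img at fractional (y, x); positions outside the image read as 0."""
    y0, x0 = math.floor(y), math.floor(x)
    dy, dx = y - y0, x - x0

    def px(r, c):
        inside = 0 <= r < len(img) and 0 <= c < len(img[0])
        return img[r][c] if inside else 0.0

    return ((1 - dy) * (1 - dx) * px(y0, x0) + (1 - dy) * dx * px(y0, x0 + 1)
            + dy * (1 - dx) * px(y0 + 1, x0) + dy * dx * px(y0 + 1, x0 + 1))


def deformable_conv_at(img, kernel, center, offsets):
    """One output of a 3x3 deformable convolution at `center`.
    offsets[k] = (dy, dx) shifts the k-th tap of the regular 3x3 grid."""
    cy, cx = center
    out = 0.0
    k = 0
    for i in (-1, 0, 1):
        for j in (-1, 0, 1):
            oy, ox = offsets[k]
            out += kernel[i + 1][j + 1] * bilinear(img, cy + i + oy, cx + j + ox)
            k += 1
    return out


img = [[4 * r + c for c in range(4)] for r in range(4)]
mean_kernel = [[1 / 9] * 3 for _ in range(3)]
regular = deformable_conv_at(img, mean_kernel, (1, 1), [(0.0, 0.0)] * 9)
shifted = deformable_conv_at(img, mean_kernel, (1, 1), [(0.0, 0.5)] * 9)
```

With zero offsets the result equals an ordinary convolution; shifting every tap by half a pixel moves the sampling grid, which is what lets the region of support adapt to the object.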

Capsule networks were developed to address some of the deficiencies of traditional CNNs (Sabour et al. 2017). They are better able to model hierarchical relationships: each capsule, a group of neurons, outputs a vector that expresses both the likelihood that its feature is present and the feature's properties, e.g. orientation or size. This can improve object recognition performance. Capsule networks have been extended to other applications that deal with complex data, including multi-label text classification (Zhao et al. 2019), slot filling and intent detection (Zhang et al. 2019a), polyphonic sound event detection (Vesperini et al. 2019) and sign language recognition (Jalal et al. 2018).
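In the capsule network of Sabour et al. (2017), a capsule's output vector is passed through a ‘squash’ non-linearity so that its length can be read as a probability while its direction keeps the feature's properties. A minimal sketch of that function, with hypothetical input vectors:

```python
import math


def squash(s):
    """Capsule squash non-linearity: v = (|s|^2 / (1 + |s|^2)) * s / |s|.
    Keeps the vector's direction and maps its length into [0, 1)."""
    norm_sq = sum(c * c for c in s)
    if norm_sq == 0.0:
        return [0.0] * len(s)
    scale = norm_sq / (1.0 + norm_sq) / math.sqrt(norm_sq)
    return [scale * c for c in s]


def length(v):
    """Euclidean length, read as the probability that the feature is present."""
    return math.sqrt(sum(c * c for c in v))


strong = squash([3.0, 4.0])  # long input vector: length close to 1
weak = squash([0.1, 0.0])    # short input vector: length close to 0
```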

2.3.3 Generative adversarial networks (GANs)

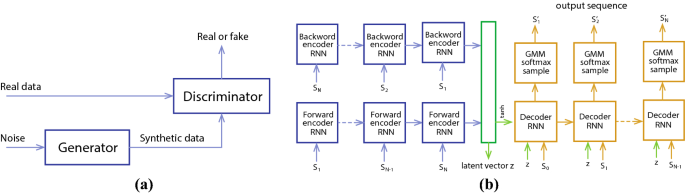

The generative adversarial network (GAN) is a recent algorithmic innovation that employs two neural networks, a generator and a discriminator, and pits one against the other in order to generate new, synthetic instances of data that can pass for real data. The general GAN architecture is shown in Fig. 4a. The generator produces new candidates intended to increase the error rate of the discriminator, while the discriminator learns to tell real samples from synthesized ones; this continues until the discriminator can no longer tell whether the candidates are real or synthesized. The generator is typically a deconvolutional neural network, and the discriminator is a CNN. Recent successful applications of GANs include SR (Ledig et al. 2017), inpainting (Yu et al. 2019), contrast enhancement (Kuang et al. 2019) and compression (Ma et al. 2019a).
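The adversarial game can be expressed through the two losses below, computed from the discriminator's probability outputs. This is a minimal sketch using binary cross-entropy and the commonly used non-saturating generator loss; the probabilities are hypothetical rather than produced by real networks.

```python
import math


def bce(p, label):
    """Binary cross-entropy for one predicted probability p and a 0/1 label."""
    eps = 1e-12  # avoids log(0)
    return -(label * math.log(p + eps) + (1 - label) * math.log(1 - p + eps))


def discriminator_loss(d_real, d_fake):
    """D should output 1 for real samples and 0 for generated ones."""
    real_term = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: G is rewarded when D calls its samples real."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)


confident_d = discriminator_loss(d_real=[0.99], d_fake=[0.01])
fooled_d = discriminator_loss(d_real=[0.5], d_fake=[0.5])
```

When the discriminator can no longer tell real from generated samples and outputs 0.5 everywhere, its loss is \(2\ln 2\) and the generator's is \(\ln 2\).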

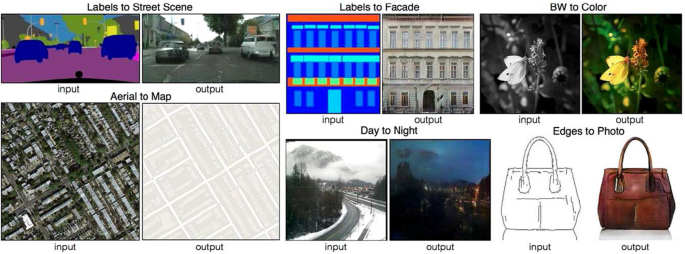

GANs have a reputation for being difficult to train, since the two models are trained simultaneously to find a Nash equilibrium but each model updates its cost (or error) independently. Failures often occur when the discriminator cannot provide feedback informative enough for the generator to make progress, leading to vanishing gradients. The Wasserstein loss is designed to mitigate this (Arjovsky et al. 2017; Frogner et al. 2015). A specific condition or characteristic, such as a label associated with an image, can be supplied to the generative model in addition to a generic noise sample, creating what is referred to as a conditional GAN (cGAN) (Mirza and Osindero 2014). This improved GAN has been used in several applications, including pix2pix (Isola et al. 2017) and deblurring (Kupyn et al. 2018).
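The two remedies in this paragraph can be sketched as follows. In the Wasserstein formulation, the critic outputs unbounded scores rather than probabilities; the Lipschitz constraint it also requires (weight clipping or a gradient penalty) is not shown. For the cGAN, the sketch uses one common way of conditioning, appending a one-hot label to the noise vector; other conditioning schemes exist, and all names here are illustrative.

```python
def critic_loss(c_real, c_fake):
    """WGAN critic loss: minimising mean(fake) - mean(real) widens the score gap."""
    return sum(c_fake) / len(c_fake) - sum(c_real) / len(c_real)


def wgan_generator_loss(c_fake):
    """The generator tries to raise the critic's score for its samples."""
    return -sum(c_fake) / len(c_fake)


def conditional_input(noise, label, num_classes):
    """cGAN input: the noise vector followed by a one-hot class label,
    telling the generator which class to produce."""
    one_hot = [1.0 if k == label else 0.0 for k in range(num_classes)]
    return noise + one_hot


z = conditional_input([0.1, -0.3], label=2, num_classes=4)
```

Because the critic's scores are not squashed through a sigmoid, their difference keeps providing a useful gradient even when real and generated samples are easy to separate.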

In principle, the generator in a GAN does not learn to create new content; it only tries to make its output look like the real data. To produce creative works of art, Elgammal et al. (2017) therefore proposed the Creative Adversarial Network (CAN), which includes an additional signal in the generator to prevent it from generating content that is too similar to existing examples. As with traditional CNNs, a perceptual loss based on VGG16 (Johnson et al. 2016) has become common in applications where the generated images must have the same semantics as the input (Antic 2020; Ledig et al. 2017).
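A sketch in the spirit of CAN's additional signal: the generator is penalized when a style classifier confidently assigns its output to one known style. The loss below is the cross-entropy between a uniform target over \(K\) styles and the classifier's prediction; it is a simplified assumption for illustration, not the exact formulation of Elgammal et al. (2017), and the probabilities are hypothetical.

```python
import math


def style_ambiguity_loss(style_probs):
    """Cross-entropy between a uniform target over K styles and the
    predicted style distribution for a generated image."""
    k = len(style_probs)
    return -sum(math.log(p) / k for p in style_probs)


ambiguous = style_ambiguity_loss([0.25, 0.25, 0.25, 0.25])
derivative = style_ambiguity_loss([0.97, 0.01, 0.01, 0.01])
```

The loss reaches its minimum, \(\log K\), only when no style is preferred, pushing generated images away from any single established style.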

Most GAN-based methods are currently limited to the generation of relatively small square images, e.g. 256 \(\times\) 256 pixels (Zhang et al. 2017). The highest resolution achieved up to the time of this review is 1024 \(\times\) 1024 pixels, by NVIDIA Research. The team introduced the progressive growing of GANs (Karras et al. 2018) and showed that their method can generate near-realistic 1024 \(\times\) 1024-pixel portrait images (trained for 14 days). However, the problem of obvious artefacts at transition areas between foreground and background persists.
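Progressive growing trains at a low resolution first and repeatedly doubles it, blending each new layer in gradually. The sketch below lists the resolutions visited (assuming a 4 \(\times\) 4 starting point, as in Karras et al. 2018) and shows the fade-in blend controlled by a weight `alpha`; the pixel values are hypothetical.

```python
def resolution_schedule(start=4, final=1024):
    """Resolutions visited when training starts small and doubles each phase."""
    res = [start]
    while res[-1] < final:
        res.append(res[-1] * 2)
    return res


def fade_in(upsampled_old, new_output, alpha):
    """Blend a newly added higher-resolution layer in smoothly:
    alpha rises from 0 (old path only) to 1 (new layer only)."""
    return [(1 - alpha) * a + alpha * b for a, b in zip(upsampled_old, new_output)]


schedule = resolution_schedule()
blended = fade_in([1.0, 1.0], [3.0, 5.0], alpha=0.25)
```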

Another form of deep generative model is the Variational Autoencoder (VAE). A VAE is an autoencoder whose encoding distribution is regularised to ensure that the latent space has good properties to support the generative process. New data are then generated by sampling from this latent distribution and passing the samples through the decoder. Compared with GANs, VAEs are more stable during training, while GANs are better at producing realistic images. Recently, DeepMind (Google) included vector quantization (VQ) within a VAE to learn a discrete latent representation (Razavi et al. 2019). Its image generation performance is competitive with their BigGAN (Brock et al. 2019), with a greater capacity for generating a diverse range of images. There have also been many attempts to merge GANs and VAEs so that the end-to-end network benefits from both good samples and a good representation, for example using a VAE as the generator of a GAN (Bhattacharyya et al. 2019; Wan et al. 2017). However, these hybrids have not yet demonstrated significant improvement in overall performance (Rosca et al. 2019), and they remain an ongoing research topic.
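Three ingredients mentioned above can be sketched in a few lines: the reparameterization used to sample the latent code, the Kullback-Leibler term that regularises the latent distribution towards a standard normal, and the nearest-neighbour codebook lookup at the heart of vector quantization. This is a minimal illustration on plain lists with hypothetical values, not DeepMind's implementation.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """z = mu + sigma * eps with eps ~ N(0, 1); keeps sampling differentiable
    with respect to mu and log_var."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, I)), the term regularising the latent space."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))


def vector_quantize(z, codebook):
    """VQ-VAE-style lookup: replace z by its nearest codebook vector."""
    def dist2(e):
        return sum((a - b) ** 2 for a, b in zip(z, e))
    index = min(range(len(codebook)), key=lambda k: dist2(codebook[k]))
    return index, codebook[index]


rng = random.Random(0)
mu, log_var = [0.5, -1.0], [0.0, -2.0]
z = reparameterize(mu, log_var, rng)
kl = kl_to_standard_normal(mu, log_var)
codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
index, quantized = vector_quantize([0.9, 0.1], codebook)
```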

A review of recent state-of-the-art GAN models and applications can be found in Foster (2019).

Architectures of (a) a GAN and (b) an RNN for drawing sketches (Ha and Eck 2018)

2.3.4 Recurrent neural networks (RNNs)

Recurrent neural networks (RNNs) have been widely employed for sequential recognition; they are suited to this because they incorporate at least one feedback connection. The most commonly used type of RNN is the Long Short-Term Memory (LSTM) network (Hochreiter and Schmidhuber 1997), as it mitigates the vanishing gradient problem observed in traditional RNNs. It does this by retaining sufficient context information from time series data in its memory cell. Deep RNNs use their internal state to process variable-length input sequences, combining multiple levels of representation. This makes them amenable to tasks such as speech recognition (Graves et al. 2013), handwriting recognition (Doetsch et al. 2014) and music generation (Briot et al. 2020). RNNs are also employed in image and video processing applications, where recurrence is applied to convolutional encoders for tasks such as drawing sketches (Ha and Eck 2018) and deblurring videos (Zhang et al. 2018). VINet (Kim et al. 2019) employs an encoder-decoder model with an RNN to estimate optical flow, processing multiple input frames concatenated with the previous inpainting results. An example network using an RNN is illustrated in Fig. 4b.
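The memory cell that lets an LSTM carry context across time steps can be seen in a single-unit sketch. The equations follow the widely used LSTM formulation with input, forget and output gates; the parameter names and the constant weights in `params` are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell. p holds input weights (w_*),
    recurrent weights (u_*) and biases (b_*) for the input (i), forget (f)
    and output (o) gates and the candidate value (g)."""
    i = sigmoid(p["w_i"] * x + p["u_i"] * h_prev + p["b_i"])
    f = sigmoid(p["w_f"] * x + p["u_f"] * h_prev + p["b_f"])
    o = sigmoid(p["w_o"] * x + p["u_o"] * h_prev + p["b_o"])
    g = math.tanh(p["w_g"] * x + p["u_g"] * h_prev + p["b_g"])
    c = f * c_prev + i * g    # memory cell: keep part of the old state, add new
    h = o * math.tanh(c)      # hidden state passed to the next step / output
    return h, c


def run_lstm(xs, p):
    """Process a sequence of any length, reusing the same weights at each step."""
    h, c = 0.0, 0.0
    for x in xs:
        h, c = lstm_step(x, h, c, p)
    return h, c


params = {f"{kind}_{gate}": 0.5 for kind in ("w", "u", "b") for gate in "ifog"}
h, c = run_lstm([1.0, -0.5, 0.25], params)
```

A forget gate close to 1 together with an input gate close to 0 leaves the cell state almost unchanged from one step to the next, which is how context survives over long sequences.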