
Creating a Good Research Question


Successful translation of research begins with a strong question. How do you get started? How do good research questions evolve? And where do you find inspiration to generate good questions in the first place? It’s helpful to understand existing frameworks, guidelines, and standards, as well as hear from researchers who utilize these strategies in their own work.

In the fall and winter of 2020, Naomi Fisher, MD, conducted 10 interviews with clinical and translational researchers at Harvard University and affiliated academic healthcare centers to capture their experiences developing good research questions. The researchers featured in this project represent various specialties and every career stage. Below you will find clips from their interviews and additional resources that highlight how to get started, as well as helpful frameworks and factors to consider. Additionally, visit the Advice & Growth section to hear candid advice and explore the Process in Practice section to hear how researchers have applied these recommendations to their published research.

- Naomi Fisher, MD, is associate professor of medicine at Harvard Medical School (HMS) and clinical staff at Brigham and Women’s Hospital (BWH). Fisher is founder and director of Hypertension Services and the Hypertension Specialty Clinic at BWH, where she is a renowned endocrinologist. She serves as a faculty director for the communication-related Boundary-Crossing Skills for Research Careers webinar sessions and the Writing and Communication Center.

- Christopher Gibbons, MD, is associate professor of neurology at HMS and clinical staff at Beth Israel Deaconess Medical Center (BIDMC) and Joslin Diabetes Center. Gibbons’ research focuses on peripheral and autonomic neuropathies.

- Clare Tempany-Afdhal, MD, is professor of radiology at HMS and the Ferenc Jolesz Chair of Research, Radiology at BWH. Her major areas of research are MR imaging of the pelvis and image-guided therapy.

- David Sykes, MD, PhD, is assistant professor of medicine at Massachusetts General Hospital (MGH) and principal investigator at the Sykes Lab at MGH. His special interest is rare hematologic conditions.

- Elliot Israel, MD, is professor of medicine at HMS, director of the Respiratory Therapy Department, director of clinical research in the Pulmonary and Critical Care Medical Division, and associate physician at BWH. Israel’s research interests include therapeutic interventions to alter asthmatic airway hyperactivity and the role of arachidonic acid metabolites in airway narrowing.

- Jonathan Williams, MD, MMSc, is assistant professor of medicine at HMS and associate physician at BWH. He focuses on endocrinology, specifically on unravelling the intricate relationship between genetics and environment in susceptibility to cardiometabolic disease.

- Junichi Tokuda, PhD, is associate professor of radiology at HMS and a research scientist in the Department of Radiology at BWH. Tokuda is particularly interested in technologies to support image-guided “closed-loop” interventions. He also serves as principal investigator on several projects funded by the National Institutes of Health and industry.

- Osama Rahma, MD, is assistant professor of medicine at HMS and clinical staff in medical oncology at Dana-Farber Cancer Institute (DFCI). Rahma is currently a principal investigator at the Center for Immuno-Oncology and the Gastroenterology Cancer Center at DFCI. His research focuses on drug development of combination immunotherapies.

- Sharmila Dorbala, MD, MPH, is professor of radiology at HMS and clinical staff at BWH in cardiovascular medicine and radiology. She is also the president of the American Society of Nuclear Medicine. Dorbala specializes in using nuclear medicine for cardiovascular discoveries.

- Subha Ramani, PhD, MBBS, MMed, is associate professor of medicine at HMS and associate physician in the Division of General Internal Medicine and Primary Care at BWH. Ramani’s scholarly interests focus on innovative approaches to teaching, learning, and assessment of clinical trainees, faculty development in teaching, and qualitative research methods in medical education.

- Ursula Kaiser, MD, is professor at HMS, chief of the Division of Endocrinology, Diabetes and Hypertension, and senior physician at BWH. Kaiser’s research focuses on understanding the molecular mechanisms by which pulsatile gonadotropin-releasing hormone regulates the expression of the luteinizing hormone and follicle-stimulating hormone genes.

Insights on Creating a Good Research Question


Start Successfully: Build the Foundation of a Good Research Question

Start Successfully Resources

Ideation in Device Development: Finding Clinical Need (Josh Tolkoff, MS). A lecture explaining the critical importance of identifying a compelling clinical need before embarking on a research project.

Radical Innovation (Jeff Karp, PhD). This ThinkResearch podcast episode focuses on one researcher’s approach of using radical simplicity to break down big problems and questions.

Using Healthcare Data: How Can Researchers Come Up with Interesting Questions? (Anupam Jena, MD, PhD). Another ThinkResearch podcast episode, on discovering good research questions through a backward design approach: analyzing big data and allowing the research question to unfold from the findings.

Important Factors: Consider Feasibility and Novelty

Refining Your Research Question


Frameworks and Structure: Evaluate Research Questions Using Tools and Techniques

Frameworks and Structure Resources

Designing Clinical Research (Hulley et al.). A comprehensive and practical guide to clinical research, including the FINER framework for evaluating research questions.

Translational Medicine Library Guide (Queen’s University Library). An introduction to popular frameworks for research questions, including FINER and PICO.

Asking a Good T3/T4 Question (Niteesh K. Choudhry, MD, PhD). This video shows the PICO framework in practice as workshop participants propose research questions that compare interventions.

Introduction to Designing & Conducting Mixed Methods Research. An online course that provides a deeper dive into mixed methods research questions and methodologies.

Network and Support: Find the Collaborators and Stakeholders to Help Evaluate Research Questions

Network & Support Resource

Bench-to-bedside, Bedside-to-bench (Christopher Gibbons, MD). In this lecture, Gibbons shares his experience of bringing research from bench to bedside, and from bedside to bench. His talk highlights how research questions form and evolve based on clinical need.


Writing Strong Research Questions | Criteria & Examples

Published on 30 October 2022 by Shona McCombes. Revised on 12 December 2023.

A research question pinpoints exactly what you want to find out in your work. A good research question is essential to guide your research paper , dissertation , or thesis .

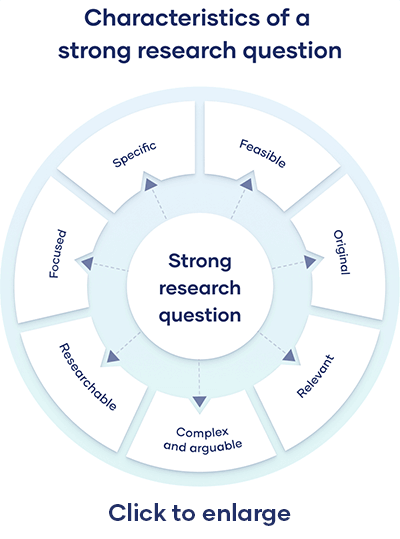

All research questions should be:

- Focused on a single problem or issue

- Researchable using primary and/or secondary sources

- Feasible to answer within the timeframe and practical constraints

- Specific enough to answer thoroughly

- Complex enough to develop the answer over the space of a paper or thesis

- Relevant to your field of study and/or society more broadly


You can follow these steps to develop a strong research question:

- Choose your topic

- Do some preliminary reading about the current state of the field

- Narrow your focus to a specific niche

- Identify the research problem that you will address

The way you frame your question depends on what your research aims to achieve. The table below shows some examples of how you might formulate questions for different purposes.

Using your research problem to develop your research question

Note that while most research questions can be answered with various types of research, the way you frame your question should help determine your choices.


Research questions anchor your whole project, so it’s important to spend some time refining them. The criteria below can help you evaluate the strength of your research question.

- Focused and researchable

- Feasible and specific

- Complex and arguable

- Relevant and original

The way you present your research problem in your introduction varies depending on the nature of your research paper. A research paper that presents a sustained argument will usually encapsulate this argument in a thesis statement.

A research paper designed to present the results of empirical research tends to present a research question that it seeks to answer. It may also include a hypothesis – a prediction that will be confirmed or disproved by your research.

As you cannot possibly read every source related to your topic, it’s important to evaluate sources to assess their relevance. Use preliminary evaluation to determine whether a source is worth examining in more depth.

This involves:

- Reading abstracts, prefaces, introductions, and conclusions

- Looking at the table of contents to determine the scope of the work

- Consulting the index for key terms or the names of important scholars

An essay isn’t just a loose collection of facts and ideas. Instead, it should be centred on an overarching argument (summarised in your thesis statement) that every part of the essay relates to.

The way you structure your essay is crucial to presenting your argument coherently. A well-structured essay helps your reader follow the logic of your ideas and understand your overall point.

A research hypothesis is your proposed answer to your research question. The research hypothesis usually includes an explanation (‘x affects y because …’).

A statistical hypothesis, on the other hand, is a mathematical statement about a population parameter. Statistical hypotheses always come in pairs: the null and alternative hypotheses. In a well-designed study , the statistical hypotheses correspond logically to the research hypothesis.
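To make the null/alternative pair concrete, here is a minimal sketch of a two-sided permutation test in Python. The null hypothesis is that the group labels are exchangeable (no difference between the groups); the alternative is that the group means differ in either direction. The function name, the exam-score data and the choice of a permutation test rather than, say, a t-test are illustrative assumptions, not part of the article.

```python
import random


def _mean(values):
    return sum(values) / len(values)


def permutation_test(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in means.

    H0 (null): group labels are exchangeable, i.e. no difference between groups.
    H1 (alternative): the group means differ (in either direction).
    Returns the observed difference in means and a Monte Carlo p-value.
    """
    rng = random.Random(seed)
    observed = _mean(group_a) - _mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # randomly relabel observations, as H0 allows
        diff = _mean(pooled[:n_a]) - _mean(pooled[n_a:])
        if abs(diff) >= abs(observed):
            at_least_as_extreme += 1
    # Add-one correction keeps the Monte Carlo p-value strictly above zero.
    p_value = (at_least_as_extreme + 1) / (n_permutations + 1)
    return observed, p_value


if __name__ == "__main__":
    # Hypothetical exam scores for students reporting good vs. poor sleep quality.
    good_sleep = [78, 82, 85, 88, 90, 84]
    poor_sleep = [70, 72, 68, 75, 71, 73]
    diff, p = permutation_test(good_sleep, poor_sleep)
    print(f"difference in means = {diff:.1f}, p = {p:.4f}")
```

With these hypothetical scores, every value in the first group exceeds every value in the second, so almost no random relabelling produces a difference as large as the observed one and the p-value is small; the data would lead you to reject the null in favour of the alternative.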

Cite this Scribbr article


McCombes, S. (2023, December 12). Writing Strong Research Questions | Criteria & Examples. Scribbr. Retrieved 22 April 2024, from https://www.scribbr.co.uk/the-research-process/research-question/


Research Question Examples

25+ Practical Examples & Ideas To Help You Get Started

By: Derek Jansen (MBA) | October 2023

A well-crafted research question (or set of questions) sets the stage for a robust study and meaningful insights. But, if you’re new to research, it’s not always clear what exactly constitutes a good research question. In this post, we’ll provide you with clear examples of quality research questions across various disciplines, so that you can approach your research project with confidence!

Research Question Examples

- Psychology research questions

- Business research questions

- Education research questions

- Healthcare research questions

- Computer science research questions

Examples: Psychology

Let’s start by looking at some examples of research questions that you might encounter within the discipline of psychology.

How does sleep quality affect academic performance in university students?

This question is specific to a population (university students) and looks at a direct relationship between sleep and academic performance, both of which are quantifiable and measurable variables.

What factors contribute to the onset of anxiety disorders in adolescents?

The question narrows down the age group and focuses on identifying multiple contributing factors. It could be approached in various ways methodologically, both qualitatively and quantitatively.

Do mindfulness techniques improve emotional well-being?

This is a focused research question aiming to evaluate the effectiveness of a specific intervention.

How does early childhood trauma impact adult relationships?

This research question targets a clear cause-and-effect relationship over a long timescale, making it focused but comprehensive.

Is there a correlation between screen time and depression in teenagers?

This research question focuses on an in-demand current issue and a specific demographic, allowing for a focused investigation. The key variables are clearly stated within the question and can be measured and analysed (i.e., high feasibility).

Examples: Business/Management

Next, let’s look at some examples of well-articulated research questions within the business and management realm.

How do leadership styles impact employee retention?

This is an example of a strong research question because it directly looks at the effect of one variable (leadership styles) on another (employee retention), allowing for a strongly aligned methodological approach.

What role does corporate social responsibility play in consumer choice?

Current and precise, this research question can reveal how social concerns are influencing buying behaviour by way of a qualitative exploration.

Does remote work increase or decrease productivity in tech companies?

Focused on a particular industry and a hot topic, this research question could yield timely, actionable insights that would have high practical value in the real world.

How do economic downturns affect small businesses in the homebuilding industry?

Vital for policy-making, this highly specific research question aims to uncover the challenges faced by small businesses within a certain industry.

Which employee benefits have the greatest impact on job satisfaction?

By being straightforward and specific, answering this research question could provide tangible insights to employers.

Examples: Education

Next, let’s look at some potential research questions within the education, training and development domain.

How does class size affect students’ academic performance in primary schools?

This example research question targets two clearly defined variables, which can be measured and analysed relatively easily.

Do online courses result in better retention of material than traditional courses?

Timely, specific and focused, answering this research question can help inform educational policy and personal choices about learning formats.

What impact do US public school lunches have on student health?

Targeting a specific, well-defined context, the research could lead to direct changes in public health policies.

To what degree does parental involvement improve academic outcomes in secondary education in the Midwest?

This research question focuses on a specific context (secondary education in the Midwest) and has clearly defined constructs.

What are the negative effects of standardised tests on student learning within Oklahoma primary schools?

This research question has a clear focus (negative outcomes) and is narrowed into a very specific context.


Examples: Healthcare

Shifting to a different field, let’s look at some examples of research questions within the healthcare space.

What are the most effective treatments for chronic back pain amongst UK senior males?

Specific and solution-oriented, this research question focuses on clear variables and a well-defined context (senior males within the UK).

How do different healthcare policies affect patient satisfaction in public hospitals in South Africa?

This question has clearly defined variables and is narrowly focused in terms of context.

Which factors contribute to obesity rates in urban areas within California?

This question is focused yet broad, aiming to reveal several contributing factors for targeted interventions.

Does telemedicine provide the same perceived quality of care as in-person visits for diabetes patients?

Ideal for a qualitative study, this research question explores a single construct (perceived quality of care) within a well-defined sample (diabetes patients).

Which lifestyle factors have the greatest effect on the risk of heart disease?

This research question aims to uncover modifiable factors, offering preventive health recommendations.

Examples: Computer Science

Last but certainly not least, let’s look at a few examples of research questions within the computer science world.

What are the perceived risks of cloud-based storage systems?

Highly relevant in our digital age, this research question would align well with a qualitative interview approach to better understand what users feel the key risks of cloud storage are.

Which factors affect the energy efficiency of data centres in Ohio?

With a clear focus, this research question lays a firm foundation for a quantitative study.

How do TikTok algorithms impact user behaviour amongst new graduates?

While this research question is more open-ended, it could form the basis for a qualitative investigation.

What are the perceived risks and benefits of open-source software within the web design industry?

Practical and straightforward, the results could guide both developers and end-users in their choices.

Remember, these are just examples…

In this post, we’ve tried to provide a wide range of research question examples to help you get a feel for what research questions look like in practice. That said, it’s important to remember that these are just examples and don’t necessarily equate to good research topics. If you’re still trying to find a topic, check out our topic megalist for inspiration.


Developing a Research Question

17 Developing a Researchable Research Question

After thinking about what topics interest you, identifying a topic that is both empirical and sociological, and deciding whether your research will be exploratory, descriptive, or explanatory, the next step is to form a research question about your topic. For many researchers, forming hypotheses comes after developing a research question. For now, however, we will focus on research questions alone.

So then, what makes a good research question? Let us first consider some practical aspects. A good research question is one that:

- You are interested in;

- You have the resources (money, technology, assistance, etc.) to answer;

- You have access to the data needed to answer (human, animal, or numerical/file data);

- Is operationalized appropriately;

- Has a specific objective (anything from explaining something to describing something).

A good research question also has some specific characteristics, as follows:

- It is generally written in the form of a question;

- It is also well focused;

- It cannot be answered with a simple yes or no;

- It should have more than one plausible answer;

- It considers relationships amongst multiple concepts.

Generally speaking, your research question will guide whether your research project is best approached with a quantitative, qualitative, mixed methods, or other [1] approach. Table 3.2 provides some examples of problematic research questions, along with suggestions for how to improve each one.

In Chapter 8 , we look at designing survey questions, which are not to be confused with research questions.

Text Attributions

- This chapter has been adapted from Chapter 4.4 in Principles of Sociological Inquiry, which was adapted by the Saylor Academy without attribution to the original authors or publisher, as requested by the licensor. © Creative Commons Attribution-NonCommercial-ShareAlike 3.0 License.

- We will look at “other” methods, such as unobtrusive methods, in Chapters

An Introduction to Research Methods in Sociology Copyright © 2019 by Valerie A. Sheppard is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted.


Open Access

Ten simple rules for good research practice


Affiliations Center for Reproducible Science, University of Zurich, Zurich, Switzerland, Epidemiology, Biostatistics and Prevention Institute, University of Zurich, Zurich, Switzerland

Affiliation Department of Clinical Research, University Hospital Basel, University of Basel, Basel, Switzerland

Affiliation Human Neuroscience Platform, Fondation Campus Biotech Geneva, Geneva, Switzerland

Affiliation Department of Environmental Sciences, Zoology, University of Basel, Basel, Switzerland

Affiliation Institute of Social and Preventive Medicine, University of Bern, Bern, Switzerland

Affiliation SIB Training Group, SIB Swiss Institute of Bioinformatics, Lausanne, Switzerland

Affiliations Department of Clinical Research, University Hospital Basel, University of Basel, Basel, Switzerland, Meta-Research Innovation Center at Stanford (METRICS), Stanford University, Stanford, California, United States of America, Meta-Research Innovation Center Berlin (METRIC-B), Berlin Institute of Health, Berlin, Germany

Affiliation Applied Face Cognition Lab, University of Lausanne, Lausanne, Switzerland

Affiliation Faculty of Psychology, UniDistance Suisse, Brig, Switzerland

Affiliation Statistical Consultant, Edinburgh, United Kingdom

- Simon Schwab,

- Perrine Janiaud,

- Michael Dayan,

- Valentin Amrhein,

- Radoslaw Panczak,

- Patricia M. Palagi,

- Lars G. Hemkens,

- Meike Ramon,

- Nicolas Rothen,

Published: June 23, 2022

- https://doi.org/10.1371/journal.pcbi.1010139


Citation: Schwab S, Janiaud P, Dayan M, Amrhein V, Panczak R, Palagi PM, et al. (2022) Ten simple rules for good research practice. PLoS Comput Biol 18(6): e1010139. https://doi.org/10.1371/journal.pcbi.1010139

Copyright: © 2022 Schwab et al. This is an open access article distributed under the terms of the Creative Commons Attribution License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Funding: S.S. received funding from SfwF (Stiftung für wissenschaftliche Forschung an der Universität Zürich; grant no. STWF-19-007). The funder had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Competing interests: The authors have declared that no competing interests exist.

This is a PLOS Computational Biology Methods paper.

Introduction

The lack of research reproducibility has caused growing concern across various scientific fields [ 1 – 5 ]. Today, there is widespread agreement, within and outside academia, that scientific research is suffering from a reproducibility crisis [ 6 , 7 ]. Researchers reach different conclusions—even when the same data have been processed—simply due to varied analytical procedures [ 8 , 9 ]. As we continue to recognize this problematic situation, some major causes of irreproducible research have been identified. This, in turn, provides the foundation for improvement by identifying and advocating for good research practices (GRPs). Indeed, powerful solutions are available, for example, preregistration of study protocols and statistical analysis plans, sharing of data and analysis code, and adherence to reporting guidelines. Although these and other best practices may facilitate reproducible research and increase trust in science, it remains the responsibility of researchers themselves to actively integrate them into their everyday research practices.

Whereas specialized training is ubiquitous, cross-disciplinary courses focusing on best practices to enhance the quality of research are lacking at universities and are urgently needed. The intersections between disciplines offer a space for peer evaluation, mutual learning, and sharing of best practices. In medical research, interdisciplinary work is inevitable. For example, conducting clinical trials requires experts with diverse backgrounds, including clinical medicine, pharmacology, biostatistics, evidence synthesis, nursing, and implementation science. Bringing researchers with diverse backgrounds and levels of experience together to exchange knowledge and learn about problems and solutions adds value and improves the quality of research.

The present selection of rules was based on our experiences with teaching GRP courses at the University of Zurich, our course participants’ feedback, and the views of a cross-disciplinary group of experts from within the Swiss Reproducibility Network (www.swissrn.org). The list is neither exhaustive, nor does it aim to address and systematically summarize the wide spectrum of issues including research ethics and legal aspects (e.g., related to misconduct, conflicts of interests, and scientific integrity). Instead, we focused on practical advice at the different stages of everyday research: from planning and execution to reporting of research. For a more comprehensive overview of GRPs, we point to the United Kingdom’s Medical Research Council’s guidelines [ 10 ] and the Swedish Research Council’s report [ 11 ]. While the discussion of the rules may predominantly focus on clinical research, much applies, in principle, to basic biomedical research and research in other domains as well.

The 10 proposed rules can serve multiple purposes: an introduction for researchers to relevant concepts to improve research quality, a primer for early-career researchers who participate in our GRP courses, or a starting point for lecturers who plan a GRP course at their own institutions. The 10 rules are grouped according to planning (5 rules), execution (3 rules), and reporting of research (2 rules); see Fig 1 . These principles can (and should) be implemented as a habit in everyday research, just like toothbrushing.


GRP, good research practices.

https://doi.org/10.1371/journal.pcbi.1010139.g001

Research planning

Rule 1: Specify your research question

Coming up with a research question is not always simple and may take time. A successful study requires a narrow and clear research question. In evidence-based research, prior studies are assessed in a systematic and transparent way to identify a research gap for a new study that answers a question that matters [ 12 ]. Papers that provide a comprehensive overview of the current state of research in the field, such as systematic reviews, are particularly helpful. Perspective papers may also be useful; for example, there is a paper titled “SARS-CoV-2 and COVID-19: The most important research questions.” However, a systematic assessment of research gaps deserves more attention than opinion-based publications.

In the next step, a vague research question should be further developed and refined. In clinical research and evidence-based medicine, an approach called population, intervention, comparator, outcome, and time frame (PICOT) provides a set of criteria that can help frame a research question [ 13 ]. From a well-developed research question, subsequent steps will follow, which may include the exact definition of the population, the outcome, the data to be collected, and the required sample size. It may be useful to find out whether other researchers also find the idea interesting and whether it promises a valuable contribution to the field. However, actively involving the public or patients can be a more effective way to determine which research questions matter.
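As a minimal sketch of how PICOT structures a question, the Python dataclass below holds the five elements and refuses to phrase a question until all of them are filled in. The class name, the sentence template and the asthma example are hypothetical illustrations, not a standard defined in [ 13 ].

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class PICOT:
    """The five PICOT elements of a (hypothetical) clinical research question."""
    population: str
    intervention: str
    comparator: str
    outcome: str
    time_frame: str

    def missing(self) -> list[str]:
        """Names of PICOT elements that are still blank."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]

    def as_question(self) -> str:
        """Phrase the elements as one question; fail if any element is blank."""
        gaps = self.missing()
        if gaps:
            raise ValueError(f"incomplete PICOT elements: {', '.join(gaps)}")
        # Illustrative template only; real questions may need different wording.
        return (
            f"In {self.population}, does {self.intervention}, "
            f"compared with {self.comparator}, change {self.outcome} "
            f"over {self.time_frame}?"
        )


if __name__ == "__main__":
    # Hypothetical example; the elements are illustrative, not from the article.
    q = PICOT(
        population="adults with mild asthma",
        intervention="a daily inhaled corticosteroid",
        comparator="as-needed bronchodilator use",
        outcome="the rate of exacerbations",
        time_frame="12 months",
    )
    print(q.as_question())
```

The point of the design is that a blank element (for example, no comparator) is reported explicitly instead of silently producing a vague question.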

The level of detail in a research question also depends on whether the planned research is confirmatory or exploratory. In contrast to confirmatory research, exploratory research does not require a well-defined hypothesis from the start. Some examples of exploratory experiments are those based on omics and multi-omics experiments (genomics, bulk RNA-Seq, single-cell, etc.) in systems biology and connectomics and whole-brain analyses in brain imaging. Both exploration and confirmation are needed in science, and it is helpful to understand their strengths and limitations [ 14 , 15 ].

Rule 2: Write and register a study protocol

In clinical research, registration of clinical trials has been standard practice since the late 1990s and is now a legal requirement in many countries. Such studies require a study protocol to be registered, for example, with ClinicalTrials.gov, the European Clinical Trials Register, or the World Health Organization’s International Clinical Trials Registry Platform. A similar effort has been made for the registration of systematic reviews (PROSPERO). Study registration has also been proposed for observational studies [ 16 ] and more recently in preclinical animal research [ 17 ] and is now being advocated across disciplines under the term “preregistration” [ 18 , 19 ].

Study protocols typically document at minimum the research question and hypothesis, a description of the population, the targeted sample size, the inclusion/exclusion criteria, the study design, the data collection, the data processing and transformation, and the planned statistical analyses. The registration of study protocols reduces publication bias and hindsight bias and can safeguard honest research and minimize research waste [ 20 – 22 ]. Registration ensures that studies can be scrutinized by comparing the reported research with what was actually planned and written in the protocol, and any discrepancies may indicate serious problems (e.g., outcome switching).
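One way to picture the scrutiny that registration enables is a direct comparison of the outcomes planned in the protocol with those reported in the paper. The sketch below is a hypothetical Python helper (the `Outcomes` type, the function name and the example outcomes are all assumptions); it flags a changed primary outcome and any secondary outcomes that were dropped or added, the kind of discrepancy that may signal outcome switching. Real registry comparisons also involve timing, measurement and analysis details that this toy check ignores.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcomes:
    """Outcomes as registered in a protocol or as reported in a paper (hypothetical type)."""
    primary: str
    secondary: frozenset[str] = frozenset()


def outcome_discrepancies(planned: Outcomes, reported: Outcomes) -> list[str]:
    """List differences between registered and reported outcomes."""
    issues = []
    if planned.primary != reported.primary:
        issues.append(f"primary outcome changed: {planned.primary!r} -> {reported.primary!r}")
    # Registered secondary outcomes that do not appear among the reported ones.
    for name in sorted(planned.secondary - reported.secondary):
        issues.append(f"registered secondary outcome missing from report: {name!r}")
    # Reported secondary outcomes that were never registered.
    for name in sorted(reported.secondary - planned.secondary):
        issues.append(f"unregistered secondary outcome reported: {name!r}")
    return issues


if __name__ == "__main__":
    # Hypothetical trial in which the primary and a secondary outcome were swapped.
    planned = Outcomes("FEV1 at 12 weeks", frozenset({"exacerbations", "quality of life"}))
    reported = Outcomes("exacerbations", frozenset({"FEV1 at 12 weeks", "quality of life"}))
    for issue in outcome_discrepancies(planned, reported):
        print(issue)
```

An empty list does not prove a study is sound; it only means the reported outcomes match the registered ones.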

Note that registration does not mean that researchers have no flexibility to adapt the plan as needed. Indeed, new or more appropriate procedures may become available or known only after registration of a study. Therefore, a more detailed statistical analysis plan can be added to the protocol as an amendment before the data are observed or unblinded [ 23 , 24 ]. Likewise, registration does not preclude conducting exploratory data analyses; however, these must be clearly reported as such.

To go even further, registered reports are a novel article type that incentivizes high-quality research, irrespective of the ultimate study outcome [ 25 , 26 ]. With registered reports, peer reviewers evaluate the study before anyone knows its results, and they play a more active role because they can still influence the design and analysis of the study. Journals from various disciplines increasingly support registered reports [ 27 ].

Naturally, preregistration and registered reports also have their limitations and may not be appropriate in a purely hypothesis-generating (exploratory) framework. Reports of exploratory studies should not be forced into a confirmatory framework; appropriately rigorous reporting alternatives have been suggested and are starting to be implemented [ 28 , 29 ].

Rule 3: Justify your sample size

Early-career researchers in our GRP courses often identify sample size as an issue in their research. For example, they say that they work with a low number of samples because cells grow slowly, or that they have a limited number of patient tumor samples because the disease is rare. But if your sample size is too small, your study has a high risk of producing a false negative result (type II error). In other words, you are unlikely to detect an effect even if there truly is one.

Unfortunately, there is more bad news with small studies. When an effect from a small study is selected for drawing conclusions because it was statistically significant, low power increases the probability that the effect size is overestimated [ 30 , 31 ]. The reason is that, with low power, studies that happen to find larger (overestimated) effects due to sampling variation are much more likely to be statistically significant than those that happen to find smaller (more realistic) effects [ 30 , 32 , 33 ]. Thus, in such situations, effect sizes are often overestimated. The term “small-study effect” was introduced for the phenomenon that small studies in meta-analyses often report more extreme results [ 34 ]. In any case, an underpowered study is a problematic study, no matter the outcome.
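The selection effect described above can be illustrated with a small simulation. This is a minimal sketch, not taken from the cited studies: it assumes a hypothetical true standardized effect of 0.3, two groups of 15 with a known standard deviation of 1, and draws each study's estimate directly from its normal sampling distribution. Averaging only the statistically significant estimates shows how strongly they overstate the true effect.

```python
import random
from statistics import NormalDist, fmean


def winners_curse(true_effect=0.3, n_per_group=15, n_studies=5000,
                  alpha=0.05, seed=1):
    """Simulate many identical small two-group studies (hypothetical settings)
    and compare the mean estimate over all studies with the mean estimate
    over the statistically significant ones."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    # Standard error of a difference in means when the SD is 1 in both groups.
    se = (2 / n_per_group) ** 0.5
    # Each study's estimate is drawn from its sampling distribution N(true_effect, se).
    estimates = [rng.gauss(true_effect, se) for _ in range(n_studies)]
    significant = [est for est in estimates if abs(est / se) > z_crit]
    power = len(significant) / n_studies
    return power, fmean(estimates), fmean(significant)


power, mean_all, mean_sig = winners_curse()
print(f"power ≈ {power:.2f}, mean of all estimates ≈ {mean_all:.2f}, "
      f"mean of significant estimates ≈ {mean_sig:.2f}")
```

With these settings the power is low (roughly 13%), the average over all studies stays close to 0.3, and the average over significant studies is more than twice as large.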

In conclusion, small sample sizes can undermine research, but when is a study too small? For one study, a total of 50 patients may be fine, but another may require 1,000 patients. Determining how large a study needs to be requires an appropriate sample size calculation, which ensures that enough data are collected to achieve sufficient statistical power (the probability of rejecting the null hypothesis when it is in fact false).

Low-powered studies can be avoided by performing a sample size calculation to determine the required sample size of the study. This requires specifying a primary outcome variable and the magnitude of the effect you are interested in (among other factors); in clinical research, this is often the minimal clinically relevant difference. The statistical power is often set at 80% or higher. A comprehensive list of packages for sample size calculation is available [ 35 ], among them the R package “pwr” [ 36 ]. There are also many online calculators available, for example, the University of Zurich’s “SampleSizeR” [ 37 ].
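To make the ingredients of such a calculation concrete, here is a minimal sketch using the common normal-approximation formula for comparing two means, n per group = 2((z₁₋α/₂ + z₁₋β)/d)², where d is the standardized effect size (Cohen's d). The function name is illustrative, and the formula assumes equal group sizes and a two-sided test; exact t-based tools give slightly larger numbers (for d = 0.5 and 80% power, `pwr.t.test` in the R package pwr reports about 64 per group).

```python
import math
from statistics import NormalDist


def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate sample size per group for a two-sided, two-sample comparison
    of means, where effect_size is the standardized difference (Cohen's d)."""
    z = NormalDist().inv_cdf
    n = 2 * ((z(1 - alpha / 2) + z(power)) / effect_size) ** 2
    return math.ceil(n)  # always round up to a whole participant


for d in (0.2, 0.5, 0.8):
    print(d, n_per_group(d))  # 393, 63, and 25 per group
```

The quadratic dependence on d is the key point: halving the effect size of interest roughly quadruples the required sample size.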

A worthwhile alternative for planning the sample size, one that puts less emphasis on null hypothesis testing, is based on the desired precision of the study; for example, one can calculate the sample size necessary to obtain a confidence interval of a desired width for the targeted effect [ 38 – 40 ]. A general framework for sample size justification beyond a calculation-only approach has been proposed [ 41 ]. It is also worth mentioning that some study types have other requirements or need specific methods. In diagnostic testing, one would need to determine the anticipated minimal sensitivity or specificity; in prognostic research, the number of parameters that can be used to fit a prediction model given a fixed sample size should be specified. Designs can also be so complex that a simulation (Monte Carlo method) may be required.
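A precision-based calculation can be sketched in a few lines. This minimal example assumes the simplest case: a confidence interval for a single mean, a known standard deviation, and the normal approximation, so that the full interval width is 2·z·σ/√n. The standard deviation and target width in the example are hypothetical values chosen only for illustration.

```python
import math
from statistics import NormalDist


def n_for_ci_width(sd, width, level=0.95):
    """Sample size so that a normal-approximation CI for a single mean has
    (approximately) the requested total width, assuming a known SD."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    # Solve width = 2 * z * sd / sqrt(n) for n and round up.
    return math.ceil((2 * z * sd / width) ** 2)


print(n_for_ci_width(sd=10, width=5))    # 62
print(n_for_ci_width(sd=10, width=2.5))  # 246
```

Halving the target width roughly quadruples the required sample size, which is the same trade-off as in power-based planning.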

Sample size calculations should be done under different assumptions, and the largest estimated sample size is often a safer bet than the one from a best-case scenario. The calculated sample size should further be adjusted to allow for possible missing data. Because sample size is difficult to calculate accurately, researchers should strongly consider consulting a statistician early in the study design process.
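Both recommendations can be combined in a short sketch: compute the sample size for several plausible effect sizes, keep the largest, and then inflate it for the expected proportion of missing outcome data. The effect sizes and the 15% dropout rate are hypothetical, and the per-group formula is the same normal approximation for two means assumed above (redefined here so the block runs on its own).

```python
import math
from statistics import NormalDist


def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Normal-approximation sample size per group for two means (Cohen's d)."""
    z = NormalDist().inv_cdf
    return math.ceil(2 * ((z(1 - alpha / 2) + z(power)) / effect_size) ** 2)


def planned_n(scenarios, dropout):
    """Largest n over plausible effect sizes, then inflated so that roughly
    n remain after the expected proportion `dropout` is lost."""
    worst_case = max(n_per_group(d) for d in scenarios)
    return worst_case, math.ceil(worst_case / (1 - dropout))


print(planned_n(scenarios=[0.4, 0.5, 0.6], dropout=0.15))  # (99, 117)
```

Dividing by (1 − dropout) rather than multiplying by (1 + dropout) ensures that the expected number of complete cases still reaches the calculated target.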

Rule 4: Write a data management plan

In 2020, 2 Coronavirus Disease 2019 (COVID-19) papers in leading medical journals were retracted after major concerns about the data were raised [ 42 ]. Today, raw data are more often recognized as a key outcome of research along with the paper. Therefore, it is important to develop a strategy for the life cycle of data, including suitable infrastructure for long-term storage.

The data life cycle is described in a data management plan: a document that describes what data will be collected and how the data will be organized, stored, handled, and protected during and after the end of the research project. Several funders require a data management plan in grant submissions, and publishers like PLOS encourage authors to do so as well. The Wellcome Trust provides guidance in the development of a data management plan, including real examples from neuroimaging, genomics, and social sciences [ 43 ]. However, projects do not always allocate funding and resources to the actual implementation of the data management plan.

The Findable, Accessible, Interoperable, and Reusable (FAIR) data principles promote maximal use of data and enable machines to access and reuse data with minimal human intervention [ 44 ]. FAIR principles require the data to be retained, preserved, and shared preferably with an immutable unique identifier and a clear usage license. Appropriate metadata will help other researchers (or machines) to discover, process, and understand the data. However, requesting researchers to fully comply with the FAIR data principles in every detail is an ambitious goal.

Multidisciplinary data repositories that support FAIR are, for example, Dryad ( https://datadryad.org/ ), EUDAT ( www.eudat.eu ), OSF ( https://osf.io/ ), and Zenodo ( https://zenodo.org/ ). A number of institutional and field-specific repositories may also be suitable. However, sometimes, authors may not be able to make their data publicly available for legal or ethical reasons. In such cases, a data user agreement can indicate the conditions required to access the data. Journals highlight what are acceptable and what are unacceptable data access restrictions and often require a data availability statement.

Organizing the study artifacts in a structured way greatly facilitates the reuse of data and code within and outside the lab, enhancing collaborations and maximizing the research investment. Support and courses for data management plans are sometimes available at universities. Another 10 simple rules paper for creating a good data management plan is dedicated to this topic [ 45 ].

Rule 5: Reduce bias

Bias is a distorted view in favor of or against a particular idea. In statistics, bias is a systematic deviation of a statistical estimate from the (true) quantity it estimates. Bias can invalidate our conclusions, and the more bias there is, the less valid they are. For example, in clinical studies, bias may mislead us into reaching a causal conclusion that the difference in the outcomes was due to the intervention or the exposure. This is a big concern, and, therefore, the risk of bias is assessed in clinical trials [ 46 ] as well as in observational studies [ 47 , 48 ].

There are many different forms of bias that can occur in a study, and they may overlap (e.g., allocation bias and confounding bias) [ 49 ]. Bias can occur at different stages, for example, immortal time bias in the design of the study, information bias in the execution of the study, and publication bias in the reporting of research. Understanding bias helps us researchers remain vigilant about potential sources of bias when peer reviewing others’ work and designing our own studies. We summarized some common types of bias and some preventive steps in Table 1, but many other forms of bias exist; for a comprehensive overview, see the University of Oxford’s Catalogue of Bias [ 50 ].

Table 1. Common types of bias and preventive steps. https://doi.org/10.1371/journal.pcbi.1010139.t001

Here are some noteworthy examples of study bias from the literature. An example of information bias was observed after an alleged association between the measles, mumps, and rubella (MMR) vaccine and autism was reported in 1998. Recall bias (a subtype of information bias) emerged when parents of autistic children recalled the onset of autism after an MMR vaccination more often than parents of similar children who were diagnosed before the media coverage of that controversial and since retracted study [ 51 ]. A study from 2001 showed better survival for Academy Award-winning actors, but this was due to immortal time bias, which favors the treatment or exposure group [ 52 , 53 ]. A study that systematically investigated self-reports in the context of musculoskeletal symptoms found the presence of information bias: participants with little computer time overestimated their computer usage, and participants with a lot of computer time underestimated it [ 54 ].

Information bias can be mitigated by using objective rather than subjective measurements. Standard operating procedures (SOPs) and electronic lab notebooks additionally help researchers follow well-designed protocols for data collection and handling [ 55 ]. Even when bias cannot be fully mitigated, complete descriptions of data and methods at least allow the risk of bias to be assessed.

Research execution

Rule 6: Avoid questionable research practices

Questionable research practices (QRPs) can lead to exaggerated findings and false conclusions and thus to irreproducible research. Often, QRPs are used with no bad intentions. This becomes evident when methods sections explicitly describe such procedures, for example, increasing the number of samples until a statistically significant result that supports the hypothesis is reached. Therefore, it is important that researchers know about QRPs in order to recognize and avoid them.

Several QRPs have been described [ 56 , 57 ]. Among them are low statistical power, pseudoreplication, repeated inspection of data, p-hacking [ 58 ], selective reporting, and hypothesizing after the results are known (HARKing).
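Why repeated inspection of data is questionable can be shown with a short simulation. This minimal sketch assumes a hypothetical design in which both groups come from the same normal distribution (so the null hypothesis is true), data arrive in batches of 10 per group up to 100 per group, and a z-test with known SD of 1 is used. Testing after every batch and stopping at the first p < 0.05 inflates the false positive rate well beyond the nominal 5%.

```python
import random
from statistics import NormalDist


def false_positive_rate(optional_stopping, n_sims=2000, batch=10,
                        max_per_group=100, alpha=0.05, seed=42):
    """Share of simulated experiments declared 'significant' when H0 is true.

    Both groups are drawn from N(0, 1), so every rejection is a false positive.
    With optional_stopping=True, a test is run after every batch and data
    collection stops at the first p < alpha; otherwise a single test is run
    at the planned sample size.
    """
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    false_positives = 0
    for _ in range(n_sims):
        sum_a = sum_b = 0.0
        n = 0
        while n < max_per_group:
            sum_a += sum(rng.gauss(0, 1) for _ in range(batch))
            sum_b += sum(rng.gauss(0, 1) for _ in range(batch))
            n += batch
            if optional_stopping or n == max_per_group:
                # z-test for a difference in means with known SD = 1 per group
                z = (sum_a - sum_b) / n / (2 / n) ** 0.5
                if abs(z) > z_crit:
                    false_positives += 1
                    break
    return false_positives / n_sims


print("planned single test:", false_positive_rate(optional_stopping=False))
print("test after every batch:", false_positive_rate(optional_stopping=True))
```

The single planned test stays near 5%, whereas peeking after each of the 10 batches yields a false positive rate several times higher.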

The first 2 QRPs, low statistical power and pseudoreplication, can be prevented by proper planning and designing of studies, including sample size calculation and appropriate statistical methodology to avoid treating data as independent when in fact they are not. Statistical power is not equal to reproducibility, but statistical power is a precondition of reproducibility as the lack thereof can result in false negative as well as false positive findings (see Rule 3 ).
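The consequence of treating dependent data as independent can also be simulated. This sketch assumes a hypothetical experiment with 10 animals per group and 10 cells measured per animal, where each animal contributes its own random offset (so cells from the same animal are correlated) and there is no true treatment effect. Pooling all cells as if they were independent produces far too many false positives; analyzing one mean per animal, the independent experimental unit, keeps the rate near 5%. The t critical value is hard-coded for 18 degrees of freedom.

```python
import random
from statistics import NormalDist, fmean, stdev

ANIMALS_PER_GROUP = 10
CELLS_PER_ANIMAL = 10
# Two-sided 5% critical value of Student's t with 18 df (10 + 10 - 2 animals);
# only valid for ANIMALS_PER_GROUP = 10.
T_CRIT_18_DF = 2.1009


def simulate_group(rng):
    """One group of animals with a random offset per animal; no treatment effect."""
    animals = []
    for _ in range(ANIMALS_PER_GROUP):
        offset = rng.gauss(0, 1)  # between-animal variation (SD 1)
        animals.append([offset + rng.gauss(0, 1) for _ in range(CELLS_PER_ANIMAL)])
    return animals


def two_sample_stat(a, b):
    """Difference in means divided by its standard error (equals the pooled
    t statistic when both groups have the same size)."""
    se = (stdev(a) ** 2 / len(a) + stdev(b) ** 2 / len(b)) ** 0.5
    return (fmean(a) - fmean(b)) / se


def false_positive_rates(n_sims=1000, seed=7):
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(0.975)
    naive_hits = animal_hits = 0
    for _ in range(n_sims):
        g1, g2 = simulate_group(rng), simulate_group(rng)
        # Pseudoreplication: all 100 cells per group treated as independent.
        cells1 = [x for animal in g1 for x in animal]
        cells2 = [x for animal in g2 for x in animal]
        if abs(two_sample_stat(cells1, cells2)) > z_crit:
            naive_hits += 1
        # Independent unit = animal: one summary value per animal.
        means1 = [fmean(animal) for animal in g1]
        means2 = [fmean(animal) for animal in g2]
        if abs(two_sample_stat(means1, means2)) > T_CRIT_18_DF:
            animal_hits += 1
    return naive_hits / n_sims, animal_hits / n_sims


naive, animal_level = false_positive_rates()
print(f"cells as independent: {naive:.2f}, animal-level analysis: {animal_level:.2f}")
```

Averaging per animal is only one option; mixed-effects models are a common alternative when the within-animal structure is of interest.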

In fact, many QRPs can be avoided with a study protocol and a statistical analysis plan. Preregistration, as described in Rule 2, is considered best practice for this purpose. However, many of these issues are also rooted in institutional incentives and rewards. Both funding and promotion are often tied to the quantity rather than the quality of research output. At universities, few or no rewards are still given for writing and registering protocols, sharing data, publishing negative findings, and conducting replication studies. Thus, a wider “culture change” is needed.

Rule 7: Be cautious with interpretations of statistical significance

It would help if more researchers were familiar with correct interpretations and possible misinterpretations of statistical tests, p-values, confidence intervals, and statistical power [ 59 , 60 ]. A statistically significant p-value does not necessarily mean that there is a clinically or biologically relevant effect. Specifically, the traditional dichotomization into statistically significant (p < 0.05) versus statistically nonsignificant (p ≥ 0.05) results is seldom appropriate, can lead to cherry-picking of results, and may eventually corrupt science [ 61 ]. We instead recommend reporting exact p-values and interpreting them in a graded way, in terms of the compatibility of the null hypothesis with the data [ 62 , 63 ]. Moreover, a p-value around 0.05 (e.g., 0.047 or 0.055) provides only little information, as is best illustrated by the associated replication power: the probability that a hypothetical replication study of the same design will lead to a statistically significant result is only 50% [ 64 ], and it is even lower in the presence of publication bias and regression to the mean (the phenomenon that effect estimates in replication studies are often smaller than the estimates in the original study) [ 65 ]. Claims of novel discoveries should therefore be based on a smaller p-value threshold (e.g., p < 0.005) [ 66 ], although the appropriate threshold depends on the discipline (genome-wide screenings and studies in particle physics often apply much lower thresholds).
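The 50% figure for replication power follows from a simple calculation, sketched here under stated assumptions: the true effect equals the original estimate, and the replication has the same design and hence the same standard error, so its z-statistic is distributed as N(z_obs, 1). The function name is illustrative, and publication bias and regression to the mean are deliberately ignored, which is why real replication rates tend to be lower.

```python
from statistics import NormalDist


def replication_power(p_original, alpha=0.05):
    """Probability that an exact replication is significant (two-sided, level
    alpha), assuming the true effect equals the original estimate and the
    replication has the same standard error as the original study."""
    nd = NormalDist()
    z_obs = nd.inv_cdf(1 - p_original / 2)
    z_crit = nd.inv_cdf(1 - alpha / 2)
    # Replication z ~ N(z_obs, 1): probability of landing beyond either critical value.
    return nd.cdf(z_obs - z_crit) + nd.cdf(-z_obs - z_crit)


for p in (0.05, 0.01, 0.005, 0.001):
    print(p, round(replication_power(p), 2))
```

Under these assumptions, an original p-value of 0.05 gives a replication power of about 0.50, while p = 0.005 gives about 0.80.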

Generally, there is often too much emphasis on p-values. A statistical index such as the p-value is just the final product of an analysis, the tip of the iceberg [ 67 ]. Statistical analyses often include many complex stages, from data processing, cleaning, transformation, addressing missing data, and modeling to statistical inference. Errors and pitfalls can creep in at any stage, and even a tiny error can have a big impact on the result [ 68 ]. Also, when many hypothesis tests are conducted (multiple testing), false positive rates may need to be controlled to protect against wrong conclusions, although adjustments for multiple testing are debated [ 69 – 71 ].
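Two widely used multiple-testing adjustments are easy to sketch: the Bonferroni correction, which controls the family-wise error rate, and the Benjamini-Hochberg step-up procedure, which controls the false discovery rate. The p-values below are hypothetical, and the implementation is a plain illustration rather than a replacement for established statistical software.

```python
def bonferroni(pvals, alpha=0.05):
    """Reject H0_i when p_i <= alpha / m (controls the family-wise error rate)."""
    m = len(pvals)
    return [p <= alpha / m for p in pvals]


def benjamini_hochberg(pvals, q=0.05):
    """Step-up procedure controlling the false discovery rate at level q
    (valid for independent or positively dependent tests)."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    # Largest rank k whose sorted p-value satisfies p_(k) <= k / m * q
    k_max = 0
    for rank, i in enumerate(order, start=1):
        if pvals[i] <= rank / m * q:
            k_max = rank
    rejected = [False] * m
    for rank, i in enumerate(order, start=1):
        rejected[i] = rank <= k_max
    return rejected


pvals = [0.001, 0.008, 0.039, 0.041, 0.042, 0.060, 0.074, 0.205, 0.212, 0.216]
print("unadjusted:", sum(p < 0.05 for p in pvals))    # 5
print("Bonferroni:", sum(bonferroni(pvals)))           # 1
print("BH (FDR 5%):", sum(benjamini_hochberg(pvals)))  # 2
```

The three counts show how the choice of error rate to control, and whether to control one at all, changes which findings are reported as discoveries.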

Thus, a p-value alone is not a measure of how credible a scientific finding is [ 72 ]. Instead, the quality of the research must be considered, including the study design, the quality of the measurement, and the validity of the assumptions that underlie the data analysis [ 60 , 73 ]. Frameworks exist that help to systematically and transparently assess the certainty in evidence; the most established and widely used one is Grading of Recommendations, Assessment, Development and Evaluations (GRADE; www.gradeworkinggroup.org ) [ 74 ].

Training in basic statistics, statistical programming, and reproducible analyses, as well as better involvement of data professionals in academia, is necessary. University departments sometimes have statisticians who can support researchers. Importantly, statisticians need to be involved early in the process and on an equal footing, not just at the end of a project to perform the final data analysis.

Rule 8: Make your research open

In reality, science often lacks transparency. Open science makes the process of producing evidence and claims transparent and accessible to others [ 75 ]. Several universities and research funders have already implemented open science roadmaps to advocate free and public science as well as open access to scientific knowledge, with the aim of further developing the credibility of research. Open research allows more eyes to see and critique it, a principle similar to “Linus’s law” in software development, which states that if enough people test a piece of software, most bugs will be discovered.

As science often progresses incrementally, writing and sharing a study protocol and making data and methods readily available is crucial to facilitate knowledge building. The Open Science Framework (osf.io) is a free and open-source project management tool that supports researchers throughout the entire project life cycle. OSF enables preregistration of study protocols and sharing of documents, data, analysis code, supplementary materials, and preprints.

To facilitate reproducibility, a research paper can link to data and analysis code deposited on OSF. Computational notebooks are now readily available that unite data processing, data transformations, statistical analyses, figures and tables in a single document (e.g., R Markdown, Jupyter); see also the 10 simple rules for reproducible computational research [ 76 ]. Making both data and code open thus minimizes waste of funding resources and accelerates science.

Open science can also advance researchers’ careers, especially for early-career researchers. The increased visibility, retrievability, and citations of datasets can all help with career building [ 77 ]. Therefore, institutions should provide necessary training, and hiring committees and journals should align their core values with open science, to attract researchers who aim for transparent and credible research [ 78 ].

Research reporting

Rule 9: Report all findings

Publication bias occurs when the outcome of a study influences the decision whether to publish it. Researchers, reviewers, and publishers often find nonsignificant study results uninteresting or not worth publishing. As a consequence, outcomes and analyses are only selectively reported in the literature [ 79 ], a phenomenon also known as the file drawer effect [ 80 ].

The extent of publication bias in the literature is illustrated by the overwhelming frequency of statistically significant findings [ 81 ]. A study extracted p-values from MEDLINE and PubMed Central and showed that 96% of the records reported at least 1 statistically significant p-value [ 82 ], which seems implausible in the real world. Another study plotted the distribution of more than 1 million z-values from MEDLINE, revealing a huge gap between −2 and 2 [ 83 ]. Positive studies (i.e., statistically significant, perceived as striking, or showing a beneficial effect) were 4 times more likely to be published than negative studies [ 84 ].

Often a statistically nonsignificant result is interpreted as a “null” finding. But a nonsignificant finding does not necessarily mean a null effect; absence of evidence is not evidence of absence [ 85 ]. An individual study may be underpowered and thus yield a nonsignificant finding, whereas the cumulative evidence from multiple studies in a meta-analysis may be sufficient. Another argument is that a confidence interval that contains the null value often also contains non-null values that may be of high practical importance. Only if all the values inside the interval are deemed unimportant from a practical perspective may it be fair to describe a result as a null finding [ 61 ]. We should thus never report “no difference” or “no association” just because a p-value is larger than 0.05 or, equivalently, because a confidence interval includes the “null” [ 61 ].
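The difference between a nonsignificant result and a genuine null finding can be made concrete with a hypothetical example. This sketch assumes normally distributed estimates with known standard errors (Wald intervals) and a hypothetical smallest practically important difference of 5 units. Both results are nonsignificant, but only the second has a confidence interval that rules out practically important values.

```python
from statistics import NormalDist


def summarize(estimate, se, important, level=0.95):
    """Wald CI and two-sided p-value for an estimate with standard error se,
    plus a check against a hypothetical smallest important effect size."""
    nd = NormalDist()
    z = nd.inv_cdf(0.5 + level / 2)
    low, high = estimate - z * se, estimate + z * se
    p = 2 * (1 - nd.cdf(abs(estimate / se)))
    return {
        "ci": (round(low, 2), round(high, 2)),
        "p": round(p, 3),
        "includes_null": low <= 0 <= high,
        # True only if every value in the CI is smaller in magnitude than `important`
        "all_values_unimportant": -important < low and high < important,
    }


imprecise = summarize(estimate=3.0, se=2.0, important=5.0)
precise = summarize(estimate=0.2, se=0.5, important=5.0)
print(imprecise)  # CI about (-0.92, 6.92): includes 0 and also 5
print(precise)    # CI about (-0.78, 1.18): all values below 5 in magnitude
```

Only in the second case would describing the result as a null finding be defensible; in the first, the data are compatible with both no effect and a practically important one.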

On the other hand, studies sometimes report statistically nonsignificant results with “spin” to claim that the experimental treatment is beneficial, often by focusing their conclusions on statistically significant differences on secondary outcomes despite a statistically nonsignificant difference for the primary outcome [ 86 , 87 ].

Findings that are not published have a tremendous impact on the research ecosystem: they distort our knowledge of the scientific landscape, perpetuate misconceptions, and jeopardize both the judgment of researchers and public trust in science. In clinical research, publication bias can mislead care decisions and harm patients, for example, when treatments appear useful although studies that were not published, and are therefore unknown to physicians, found only minimal or even no benefit [ 88 ]. Moreover, publication bias also directly affects the formulation and proliferation of scientific theories, which are taught to students and early-career researchers, thereby perpetuating biased research at its core. Modeling studies have shown that unless a sufficient proportion of negative studies are published, a false claim can become an accepted fact [ 89 ], and that false positive rates influence trustworthiness in a given field [ 90 ].

In sum, negative findings are undervalued. They need to be more consistently reported at the study level or be systematically investigated at the systematic review level. Researchers have their share of responsibilities, but there is clearly a lack of incentives from promotion and tenure committees, journals, and funders.

Rule 10: Follow reporting guidelines

Study reports need to faithfully describe the aim of the study and what was done, including potential deviations from the original protocol, as well as what was found. Yet, there is ample evidence of discrepancies between protocols and research reports, and of insufficient quality of reporting [ 79 , 91 – 95 ]. Reporting deficiencies threaten our ability to clearly communicate findings, replicate studies, make informed decisions, and build on existing evidence, wasting time and resources invested in the research [ 96 ].

Reporting guidelines aim to provide the minimum information needed on key design features and analysis decisions, ensuring that findings can be adequately used and studies replicated. In 2008, the Enhancing the QUAlity and Transparency Of Health Research (EQUATOR) network was initiated to provide reporting guidelines for a variety of study designs along with guidelines for education and training on how to enhance quality and transparency of health research. Currently, there are 468 reporting guidelines listed in the network; see the most prominent guidelines in Table 2 . Furthermore, following the ICMJE recommendations, medical journals are increasingly endorsing reporting guidelines [ 97 ], in some cases making it mandatory to submit the appropriate reporting checklist along with the manuscript.

Table 2. Prominent reporting guidelines. https://doi.org/10.1371/journal.pcbi.1010139.t002

The use of reporting guidelines and journal endorsement has led to a positive impact on the quality and transparency of research reporting, but improvement is still needed to maximize the value of research [ 98 , 99 ].

Conclusions

Originally, this paper targeted early-career researchers; however, throughout the development of the rules, it became clear that the present recommendations can serve all researchers irrespective of their seniority. We focused on practical guidelines for planning, conducting, and reporting of research. Others have aligned GRP with similar topics [ 100 , 101 ]. Even though we provide 10 simple rules, the word “simple” should not be taken lightly. Putting the rules into practice usually requires effort and time, especially at the beginning of a research project. However, time can also be redeemed, for example, when certain choices can be justified to reviewers by providing a study protocol or when data can be quickly reanalyzed by using computational notebooks and dynamic reports.

Researchers have field-specific research skills, but sometimes are not aware of best practices in other fields that can be useful. Universities should offer cross-disciplinary GRP courses across faculties to train the next generation of scientists. Such courses are an important building block to improve the reproducibility of science.

Acknowledgments

This article was written along the Good Research Practice (GRP) courses at the University of Zurich provided by the Center of Reproducible Science ( www.crs.uzh.ch ). All materials from the course are available at https://osf.io/t9rqm/ . We appreciated the discussion, development, and refinement of this article within the working group “training” of the SwissRN ( www.swissrn.org ). We are grateful to Philip Bourne for a lot of valuable comments on the earlier versions of the manuscript.


- 35. Zhang EZ, Zhang HG. CRAN task view: Clinical trial design, monitoring, and analysis. 20 Jun 2021 [cited 3 Mar 2022]. Available: https://CRAN.R-project.org/view=ClinicalTrials.

- 36. Champely S. pwr: Basic Functions for Power Analysis. 2020. Available: https://CRAN.R-project.org/package=pwr .

- 43. Outputs Management Plan—Grant Funding. In: Wellcome [Internet]. [cited 13 Feb 2022]. Available: https://wellcome.org/grant-funding/guidance/how-complete-outputs-management-plan .

- 62. Cox DR, Donnelly CA. Principles of Applied Statistics. Cambridge University Press; 2011. Available: https://play.google.com/store/books/details?id=BMLMGr4kbQYC .

- 74. GRADE approach. [cited 3 Mar 2022]. Available: https://training.cochrane.org/grade-approach .

Researching

The qualities of a good research question.

Research = the physical process of gathering information + the mental process of deriving the answer to your question from the information you gathered. Research writing = the process of sharing the answer to your research question along with the evidence on which your answer is based, the sources you used, and your own reasoning and explanation.

- Questions that are purely values-based (such as “Should assisted suicide be legal?”) cannot be answered objectively because the answer varies depending on one’s values. Be wary of questions that include “should” or “ought” because those words often (although not always) indicate a values-based question. However, note that most values-based questions can be turned into research questions by judicious reframing. For instance, you could reframe “Should assisted suicide be legal?” as “What are the ethical implications of legalizing assisted suicide?” Using a “what are” frame turns a values-based question into a legitimate research question by moving it out of the world of debate and into the world of investigation.

- The question, “Does carbon-based life exist outside of Earth’s solar system?” is a perfectly good research question in the sense that it is not values-based and therefore could be answered in an objective way, IF it were possible to collect data about the presence of life outside of Earth’s solar system. That is not yet possible with current technology; therefore, this is not (yet) a research question because it’s not (now) possible to obtain the data that would be needed to answer it.

- If the answer to the question is readily available in a good encyclopedia, textbook, or reference book, then it is a homework question, not a research question. It was probably a research question in the past, but if the answer is so thoroughly known that you can easily look it up and find it, then it is no longer an open question. However, it is important to remember that as new information becomes available, homework questions can sometimes be reopened as research questions. Equally important, a question may have been answered for one population or circumstance, but not for all populations or all circumstances.

- Composition II. Authored by: Janet Zepernick. Provided by: Pittsburg State University. Located at: http://www.pittstate.edu/. Project: Kaleidoscope Open Course Initiative. License: CC BY: Attribution


What Makes a Good Research Question

Published by Dr. David Banner on November 4, 2022

Last Updated on: 4th March 2024, 06:04 am

Creating a good research question is vital to successfully completing your dissertation. Here are some tips that will help you formulate a good research question.

These are the three most important qualities of a good research question:

#1: Open-Ended (Not Yes/No)

You do NOT want a question that can be answered with a simple “yes” or “no.” That is a dead end for a question. There needs to be something beyond a simple “yes” or “no,” or the research has nowhere to go!

#2: Addresses a Gap in Literature

Second, you ideally want a question that fits into a niche not yet addressed in peer-reviewed research but still worthy of scholarly study. If you wish to address a topic that has been researched before, you may use different subjects or time periods of study; this is called a replication and is acceptable in most universities.

#3: Holds Your Interest

This last point is especially crucial for dissertation research. You will be thinking about and studying a particular question for at least a year, so you want it to be something that you are REALLY interested in learning about. This will hold your interest throughout the process. The research question is really the heart of the research process. A good research question will hold your interest and contribute to the body of scholarly knowledge about a subject.

How Do You Find a Good Research Question?

Look to Your Interests

Problems that can use research are everywhere. Where do your interests lie? Pick an area that you are excited about. It needs to engage your interest and, ideally, your passion.

Identify the Type of Research

There are basically two kinds of research: applied research and basic research. Applied research is meant to inform decision-making about practical problems, while basic research can advance theoretical conceptualizations about a particular topic. Both are useful, but chances are you will find an applied research topic.

Review the Existing Literature


Start your search by looking at what other scholars have studied. Look at dissertation abstracts in the library and see if anything grabs your interest. But self-enlightenment is not the goal of research. Gathering information about a certain topic is fine, but it doesn’t lead to new knowledge. The same is true for simply comparing two sets of data, which you can do in the library (e.g., comparing the numbers of men and women employed over a 100-year span).

Chapter 2 of a dissertation proposal is usually called the literature review, and it needs to be done early on. This is where you discover what has been studied in your chosen area of interest. If you find a topic that grabs your interest, think through the feasibility of the project it implies.

You want something that is doable in a reasonable amount of time. A project that is too ambitious can lead to frustration and heartache. Remember: you want a question that leads you to new research, but a topic that is too big can wait until you complete the PhD!

Develop Your Research Question

A statement of the research question needs to be precise. You need to say exactly what you mean. You cannot assume that others will be able to read your mind. If you cannot state the problem clearly and succinctly, then your data gathering might be sloppy, too.

Develop Your Problem Statement

Occasionally a researcher talks about a problem, but never states exactly what the problem is – avoid this at all costs. Be sure to edit your work.

You may wish to subdivide the problem into sub-problems, so that the sub-problems add up to the totality of the problem. But the sub-problems need to be small in number (ideally, 2 to 5 will do). Having too many sub-problems is not helpful in designing a research project. If you come up with too many sub-problems, see if any are just procedural issues rather than real sub-problems.

Final Thoughts

Remember, you need to find a question that really energizes you and, ideally, one that fills a gap in the existing research in this area. Make sure the problem statement is concise and doable; the scope of the problem needs to be something that you can do in a reasonable amount of time. And, above all, keep in mind that your job is to increase the body of knowledge in this field. You are providing fertile ground for future research. Get going!

Dr. David Banner

David Banner is the author of 6 books, 40 journal articles, and 35 conference papers on transformational leadership. He received his PhD in Policy and Organizational Behavior from the Kellogg Graduate School of Management, Northwestern University, in Illinois. He worked for the DePaul College of Commerce, the University of the Pacific School of Business, and the University of New Brunswick (Canada) School of Management; he was tenured at all three universities and was voted “Outstanding Professor” at all three. He also worked at Viterbo University in La Crosse, WI, where he was the Director of the values-based MBA program (2003-07); he designed the program, recruited students, mentored faculty, set up an Advisory Board, and got the program accredited. He also worked for 16 years as a faculty mentor for Leadership and Organizational Change PhD students (2005-21), during which he graduated 82 PhDs in his roles as Committee Chair, Committee Member, and URR (University Research Reviewer). Mentoring PhD students gives him the most joy and satisfaction. He offers his services to help people complete their PhDs, find good academic jobs, get published in peer-reviewed journals, and find their place in the academic environment.



J Indian Assoc Pediatr Surg, v.24(1); Jan-Mar 2019

Formulation of Research Question – Stepwise Approach

Simmi K. Ratan

Department of Pediatric Surgery, Maulana Azad Medical College, New Delhi, India

1 Department of Community Medicine, North Delhi Municipal Corporation Medical College, New Delhi, India

2 Department of Pediatric Surgery, Batra Hospital and Research Centre, New Delhi, India

Formulation of a research question (RQ) is essential before starting any research. An RQ aims to explore an existing uncertainty in an area of concern and points to a need for deliberate investigation. It is, therefore, pertinent to formulate a good RQ. The present paper discusses the process of formulating an RQ using a stepwise approach. The characteristics of a good RQ are expressed by the acronym “FINERMAPS,” expanded as feasible, interesting, novel, ethical, relevant, manageable, appropriate, potential value, publishability, and systematic. An RQ can take different formats depending on the aspect to be evaluated. Accordingly, there are different types of RQ, such as those based on the existence of a phenomenon, description and classification, composition, relationship, comparison, and causality. To develop an RQ, one needs to begin by identifying the subject of interest and then doing preliminary research on that subject. The researcher then defines what still needs to be known about that subject and assesses the implied questions. After narrowing the focus and scope of the research subject, the researcher frames an RQ and then evaluates it. Thus, the path from conception to formulation of an RQ is a very systematic process that has to be performed meticulously, as research guided by such a question can have a wider impact in social and health research by leading to the formulation of policies that benefit the larger population.

INTRODUCTION

A good research question (RQ) forms the backbone of good research, which in turn is vital in unraveling the mysteries of nature and giving insight into a problem.[ 1 , 2 , 3 , 4 ] An RQ identifies the problem to be studied and guides the methodology. It leads to the building up of an appropriate hypothesis (Hs). Hence, an RQ aims to explore an existing uncertainty in an area of concern and points to a need for deliberate investigation. A good RQ helps support a focused, arguable thesis and the construction of a logical argument. Formulating a good RQ is therefore undoubtedly one of the first critical steps in the research process, especially in social and health research, which involves the systematic generation of knowledge that can be used to promote, restore, maintain, and/or protect the health of individuals and populations.[ 1 , 3 , 4 ] Broadly, research can be classified as action, applied, basic, clinical, empirical, administrative, theoretical, qualitative, or quantitative, depending on its purpose.[ 2 ]

Research plays an important role in developing clinical practices and instituting new health policies. Because an important goal of research is generating new claims, it needs a logical, scientific approach.[ 1 ]

CHARACTERISTICS OF GOOD RESEARCH QUESTION

“The most successful research topics are narrowly focused and carefully defined but are important parts of a broad-ranging, complex problem.”

A good RQ is an asset as it:

- Details the problem statement

- Further describes and refines the issue under study

- Adds focus to the problem statement

- Guides data collection and analysis

- Sets context of research.

Hence, while writing an RQ, it is important to check whether it is relevant to the existing time frame and conditions, for example, a question on the impact of the “odd-even” vehicle formula on the level of particulate air pollution in various districts of Delhi.

A good RQ is represented by the acronym FINERMAPS:[ 5 ]

- Feasible

- Interesting

- Novel

- Ethical

- Relevant

- Manageable

- Appropriate

- Potential value and publishability

- Systematic.

Feasible (F): Feasibility means that the RQ is within the ability of the investigator to carry out. It should be backed by an appropriate number of subjects and an appropriate methodology, as well as by the time and funds needed to reach conclusions. One needs to be realistic about the scope and scale of the project. One has to have access to the people, equipment, documents, statistics, etc. One should be able to relate the concepts of the RQ to observations, phenomena, indicators, or variables that one can access. One should be confident that data collection and the rest of the project can be completed within the limited time and resources available to the investigator. Sometimes, an RQ appears feasible, but once fieldwork or the study gets started, it proves otherwise. In this situation, it is important to write up the problems honestly and to reflect on what has been learned. Discussing the question with more experienced colleagues or the supervisor helps in developing a contingency plan that anticipates possible problems while working on an RQ and identifies possible solutions.

Interesting (I): It is essential that one has a real, grounded interest in one's RQ and can explore this interest and back it up with academic and intellectual debate. This interest will motivate one to keep going with the RQ.

Novel (N): The question should not simply copy questions investigated by other workers but should leave scope for new investigation. It may aim at confirming or refuting already established findings, establishing new facts, or finding new aspects of established facts. It should show the imagination of the researcher. Above all, the question has to be simple and clear. A complex question can hide unclear thinking and lead to a confused research process. A very elaborate RQ, or a question that is not differentiated into parts, may hide concepts that are contradictory or irrelevant. The question needs to be clear and thought through. Having one key question with several subcomponents will guide your research.

Ethical (E): This is the foremost requirement of any RQ, and it is mandatory to obtain clearance from the appropriate authorities before starting research on the question. Further, the RQ should be framed so that the research minimizes the risk of harm to participants, protects their privacy, maintains their confidentiality, and gives them the right to withdraw. It should also guide the researcher in avoiding deceptive practices.

Relevant (R): The question should be of academic and intellectual interest to people in the field you have chosen to study. It should preferably arise from issues raised in the current situation, the literature, or practice. It should establish a clear purpose for the research in relation to the chosen field. For example, filling a gap in knowledge, analyzing academic assumptions or professional practice, monitoring a development in practice, comparing different approaches, or testing theories within a specific population are all relevant purposes for an RQ.

Manageable (M): This is similar in essence to feasibility but mainly means that the proposed research can be managed by the researcher.

Appropriate (A): RQ should be appropriate logically and scientifically for the community and institution.

Potential value and publishability (P): The study can make a significant health impact on clinical and community practices. Research should also aim for a significant economic impact by reducing unnecessary or excessive costs. Furthermore, the proposed study should exist within a clinical, consumer, or policy-making context that is amenable to evidence-based change. Above all, a good RQ must address a topic that has clear implications for resolving important dilemmas in health and health-care decisions made by one or more stakeholder groups.

Systematic (S): Research is structured, with specified steps taken in a specified sequence in accordance with a well-defined set of rules, though this does not rule out creative thinking.

Example of RQ: Would topical application of oil to the skin as a barrier reduce hypothermia in preterm infants? This question fulfills the criteria of a good RQ, that is, it is feasible, interesting, novel, ethical, and relevant.

Types of research question

An RQ can take different formats depending on the aspect to be evaluated.[ 6 ] For example:

- Existence: This type is designed to establish the existence of a particular phenomenon or to rule out a rival explanation, for example, can neonates perceive pain?

- Description and classification: This type of question encompasses a statement of uniqueness, for example, what are the characteristics and types of neuropathic bladder?

- Composition: This type calls for breaking down a whole into its components, for example, what are the stages of reflux nephropathy?

- Relationship: This type evaluates the relation between variables, for example, the association between tumor rupture and recurrence rates in Wilms tumor.

- Descriptive-comparative: The researcher is expected to ensure that everything is the same between groups except the issue in question, for example, are germ cell tumors occurring in the gonads more aggressive than those occurring in extragonadal sites?

- Causality: Does deletion of p53 lead to a worse outcome in patients with neuroblastoma?

- Causality-comparative: Such questions frequently aim to compare the effects of two rival treatments, for example, does adding surgical resection improve survival in children with neuroblastoma compared with chemotherapy alone?

- Causality-comparative interactions: Does immunotherapy lead to better survival than chemotherapy in stage IV-S neuroblastoma in the setting of an adverse genetic profile, but not without it? (Does X cause more change in Y than Z does under certain conditions and not under others?)

How to develop a research question

- Begin by identifying a broader subject of interest that lends itself to investigation, for example, hormone levels in patients with hypospadias.

- Do preliminary research on the general topic to find out what research has already been done and what literature already exists.[ 7 ] One should therefore begin with “information gaps.” What do you already know about the problem? (e.g., studies reporting testosterone levels in patients with hypospadias)

- What do you still need to know? (e.g., levels of other reproductive hormones in patients with hypospadias)

- What are the implied questions? The need to know about a problem will lead to a few implied questions. Each general question should lead to more specific questions (e.g., how hormone levels in isolated hypospadias differ from those in the normal population).