What Is Apache Kafka?

Originally developed at LinkedIn as a publish-subscribe messaging system to handle massive amounts of data, Apache Kafka® is today an open-source distributed event streaming platform used by over 80% of the Fortune 100.

This beginner’s Kafka tutorial introduces Kafka, its benefits and use cases, and how to get started from the ground up. It includes a look at Kafka architecture, core concepts, and the connector ecosystem.

Introduction

Apache Kafka is an event streaming platform used to collect, process, store, and integrate data at scale. It has numerous use cases including distributed streaming, stream processing, data integration, and pub/sub messaging.

To make complete sense of what Kafka does, we first need to look at what an event streaming platform is and how it works. So before delving into Kafka architecture or its core components, let's discuss what an event is. This will help explain how Kafka stores events, how to get events in and out of the system, and how to analyze event streams.

What Is an Event?

An event is any type of action, incident, or change that's identified or recorded by software or applications. Examples include a payment, a website click, or a temperature reading, each recorded along with a description of what happened.

In other words, an event is a combination of notification—the element of when-ness that can be used to trigger some other activity—and state. That state is usually fairly small, say less than a megabyte or so, and is normally represented in some structured format, say in JSON or an object serialized with Apache Avro™ or Protocol Buffers.

Kafka and Events – Key/Value Pairs

Kafka is based on the abstraction of a distributed commit log. By splitting a log into partitions, Kafka is able to scale out across machines. Kafka models events as key/value pairs. Internally, keys and values are just sequences of bytes, but externally, in your programming language of choice, they are often structured objects represented in your language’s type system. Kafka calls the translation between language types and internal bytes serialization and deserialization. The serialized format is usually JSON, JSON Schema, Avro, or Protobuf.
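To make the bytes-in, bytes-out idea concrete, here is a minimal sketch that serializes a key and a value to JSON bytes and back. The event contents and the helper names `serialize` and `deserialize` are invented for illustration; real applications would normally use the serializers that ship with their Kafka client library.

```python
import json

def serialize(obj) -> bytes:
    """Turn a Python object into the raw bytes a broker would store."""
    return json.dumps(obj, sort_keys=True).encode("utf-8")

def deserialize(raw: bytes):
    """Turn stored bytes back into a Python object."""
    return json.loads(raw.decode("utf-8"))

# Hypothetical event: the key identifies an entity, the value carries state.
key = serialize("customer-42")
value = serialize({"type": "payment", "amount_cents": 1999})

print(key)                 # raw bytes, as the broker would see them
print(deserialize(value))  # structured object, as the application sees it
```

The broker never inspects these bytes; only producers and consumers need to agree on the format.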

Values are typically the serialized representation of an application domain object or some form of raw message input, like the output of a sensor.

Keys can also be complex domain objects but are often primitive types like strings or integers. The key part of a Kafka event is not necessarily a unique identifier for the event, like the primary key of a row in a relational database would be. It is more likely the identifier of some entity in the system, like a user, order, or a particular connected device.

This may not sound so significant now, but we’ll see later on that keys are crucial for how Kafka deals with things like parallelization and data locality.

Why Kafka? Benefits and Use Cases

Kafka is used by over 100,000 organizations across the world and is backed by a thriving community of professional developers, who are constantly advancing the state of the art in stream processing together. Due to Kafka's high throughput, fault tolerance, resilience, and scalability, there are numerous use cases across almost every industry, from banking and fraud detection to transportation and IoT. We typically see Kafka used for purposes like those below.

Data Integration

Kafka can connect to nearly any other data source in traditional enterprise information systems, modern databases, or in the cloud. It forms an efficient point of integration with built-in data connectors, without hiding logic or routing inside brittle, centralized infrastructure.

Metrics and Monitoring

Kafka is often used for monitoring operational data. This involves aggregating statistics from distributed applications to produce centralized feeds with real-time metrics.

Log Aggregation

A modern system is typically a distributed system, and logging data must be centralized from the various components of the system to one place. Kafka often serves as a single source of truth by centralizing data across all sources, regardless of form or volume.

Stream Processing

Performing real-time computations on event streams is a core competency of Kafka. From real-time data processing to dataflow programming, Kafka ingests, stores, and processes streams of data as it's being generated, at any scale.

Publish-Subscribe Messaging

As a distributed pub/sub messaging system, Kafka works well as a modernized version of the traditional message broker. Any time a process that generates events must be decoupled from the process or from processes receiving the events, Kafka is a scalable and flexible way to get the job done.

Kafka Architecture – Fundamental Concepts

Kafka Topics

Events have a tendency to proliferate (just think of the events that happened to you this morning), so we’ll need a system for organizing them. Kafka’s most fundamental unit of organization is the topic, which is something like a table in a relational database. As a developer using Kafka, the topic is the abstraction you probably think the most about. You create different topics to hold different kinds of events and different topics to hold filtered and transformed versions of the same kind of event.

A topic is a log of events. Logs are easy to understand, because they are simple data structures with well-known semantics. First, they are append only: When you write a new message into a log, it always goes on the end. Second, they can only be read by seeking an arbitrary offset in the log, then by scanning sequential log entries. Third, events in the log are immutable—once something has happened, it is exceedingly difficult to make it un-happen. The simple semantics of a log make it feasible for Kafka to deliver high levels of sustained throughput in and out of topics, and also make it easier to reason about the replication of topics, which we’ll cover more later.
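The log semantics described above fit in a few lines. This is a toy in-memory model, not how Kafka stores data on disk; the class name `Log` and its methods are hypothetical.

```python
class Log:
    """Toy append-only log: events only go on the end and are never changed."""

    def __init__(self):
        self._entries = []

    def append(self, event) -> int:
        """Add an event at the end and return the offset it was written at."""
        self._entries.append(event)
        return len(self._entries) - 1

    def read(self, offset: int, max_events: int = 10) -> list:
        """Seek to an offset, then scan forward sequentially."""
        return self._entries[offset:offset + max_events]

log = Log()
for event in ["created", "paid", "shipped"]:
    log.append(event)

print(log.read(1))  # everything from offset 1 onward
```

Note that there is no update or delete method: the only write operation is `append`, which is what makes the structure easy to reason about and to replicate.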

Logs are also fundamentally durable things. Traditional enterprise messaging systems have topics and queues, which store messages temporarily to buffer them between source and destination.

Since Kafka topics are logs, there is nothing inherently temporary about the data in them. Every topic can be configured to expire data after it has reached a certain age (or the topic overall has reached a certain size), from as short as seconds to as long as years or even to retain messages indefinitely. The logs that underlie Kafka topics are files stored on disk. When you write an event to a topic, it is as durable as it would be if you had written it to any database you ever trusted.
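As a rough illustration of age-based and size-based expiry, the sketch below trims a list of timestamped entries. It is a simplification under stated assumptions: the function `apply_retention` and its parameters are invented here, and it drops individual entries, whereas a real broker manages retention on larger chunks of the log.

```python
def apply_retention(entries, now, max_age_s=None, max_bytes=None):
    """Return the entries that survive retention.

    entries: list of (timestamp_s, payload_bytes), oldest first.
    Passing neither limit keeps everything (indefinite retention).
    """
    kept = list(entries)
    if max_age_s is not None:
        # Age limit: keep only entries that are young enough.
        kept = [(ts, p) for ts, p in kept if now - ts <= max_age_s]
    if max_bytes is not None:
        # Size limit: drop from the oldest end until the total fits.
        while kept and sum(len(p) for _, p in kept) > max_bytes:
            kept.pop(0)
    return kept

entries = [(0, b"aaaa"), (50, b"bbbb"), (90, b"cccc")]
print(apply_retention(entries, now=100, max_age_s=60))
```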

The simplicity of the log and the immutability of the contents in it are key to Kafka’s success as a critical component in modern data infrastructure—but they are only the beginning.

Kafka Partitioning

If a topic were constrained to live entirely on one machine, that would place a pretty radical limit on the ability of Kafka to scale. It could manage many topics across many machines—Kafka is a distributed system, after all—but no one topic could ever get too big or aspire to accommodate too many reads and writes. Fortunately, Kafka does not leave us without options here: It gives us the ability to partition topics.

Partitioning takes the single topic log and breaks it into multiple logs, each of which can live on a separate node in the Kafka cluster. This way, the work of storing messages, writing new messages, and processing existing messages can be split among many nodes in the cluster.

How Kafka Partitioning Works

Having broken a topic up into partitions, we need a way of deciding which messages to write to which partitions. Typically, if a message has no key, subsequent messages will be distributed round-robin among all the topic’s partitions. In this case, all partitions get an even share of the data, but we don’t preserve any kind of ordering of the input messages. If the message does have a key, then the destination partition will be computed from a hash of the key. This allows Kafka to guarantee that messages having the same key always land in the same partition, and therefore are always in order.
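The two routing rules can be sketched as follows. This is an illustrative model only: it uses CRC32 from the standard library as a stand-in hash, which is an assumption made for the example and not the hash function Kafka clients actually use, and the class name `Partitioner` is hypothetical.

```python
import itertools
import zlib

class Partitioner:
    """Toy partitioner: hash keyed messages, round-robin unkeyed ones."""

    def __init__(self, num_partitions: int):
        self.num_partitions = num_partitions
        self._round_robin = itertools.cycle(range(num_partitions))

    def partition_for(self, key):
        """key is bytes, or None for a message without a key."""
        if key is None:
            # No key: spread messages evenly, with no ordering guarantee.
            return next(self._round_robin)
        # Same key always hashes to the same partition, preserving order per key.
        return zlib.crc32(key) % self.num_partitions

partitioner = Partitioner(num_partitions=3)
first = partitioner.partition_for(b"customer-42")
second = partitioner.partition_for(b"customer-42")
print(first == second)  # the same key maps to the same partition
```

One consequence worth noting: because the partition depends on the partition count, changing the number of partitions changes where existing keys land.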

For example, if you are producing events that are all associated with the same customer, using the customer ID as the key guarantees that all of the events from a given customer will always arrive in order. This creates the possibility that a very active key will create a larger and more active partition, but this risk is small in practice and is manageable when it presents itself. It is often worth it in order to preserve the ordering of events for each key.

Kafka Brokers

So far we have talked about events, topics, and partitions, but as of yet, we have not been too explicit about the actual computers in the picture. From a physical infrastructure standpoint, Kafka is composed of a network of machines called brokers. In a contemporary deployment, these may not be separate physical servers but containers running on pods running on virtualized servers running on actual processors in a physical datacenter somewhere. However they are deployed, they are independent machines each running the Kafka broker process. Each broker hosts some set of partitions and handles incoming requests to write new events to those partitions or read events from them. Brokers also handle replication of partitions between each other.

Replication

It would not do if we stored each partition on only one broker. Whether brokers are bare metal servers or managed containers, they and their underlying storage are susceptible to failure, so we need to copy partition data to several other brokers to keep it safe. Those copies are called follower replicas, whereas the main partition is called the leader replica. In general, reading and writing are done to the leader. When you produce data to the leader, the leader and the followers work together to replicate those new writes to the followers.

This happens automatically, and while you can tune some settings in the producer to produce varying levels of durability guarantees, this is not usually a process you have to think about as a developer building systems on Kafka. All you really need to know as a developer is that your data is safe, and that if one node in the cluster dies, another will take over its role.
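The leader and follower roles can be pictured with a small in-memory model. This is a deliberately simplified sketch: the class `ReplicatedPartition` is hypothetical, writes are copied to followers synchronously, and the new leader is simply the next surviving replica, whereas a real cluster coordinates replication and leader election with considerably more care.

```python
class ReplicatedPartition:
    """Toy model: one leader plus followers, each holding a copy of the log."""

    def __init__(self, broker_ids):
        self.replicas = {broker: [] for broker in broker_ids}
        self.leader = broker_ids[0]

    def produce(self, event):
        """Write to the leader, then copy the write to every follower."""
        self.replicas[self.leader].append(event)
        for broker, log in self.replicas.items():
            if broker != self.leader:
                log.append(event)

    def fail(self, broker):
        """Remove a broker; if it was the leader, promote a surviving replica."""
        del self.replicas[broker]
        if not self.replicas:
            raise RuntimeError("all replicas lost")
        if broker == self.leader:
            self.leader = next(iter(self.replicas))

    def read(self):
        """Reads are served by the current leader."""
        return list(self.replicas[self.leader])

partition = ReplicatedPartition([1, 2, 3])
partition.produce("a")
partition.produce("b")
partition.fail(1)                        # the leader dies
print(partition.leader, partition.read())  # a follower has taken over with the data intact
```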

Client Applications

Now let’s get outside of the Kafka cluster itself to the applications that use Kafka: the producers and consumers. These are client applications that contain your code, putting messages into topics and reading messages from topics. Every component of the Kafka platform that is not a Kafka broker is, at bottom, either a producer or a consumer or both. Producing and consuming are how you interface with a cluster.

Kafka Producers

The API surface of the producer library is fairly lightweight: In Java, there is a class called KafkaProducer that you use to connect to the cluster. You give this class a map of configuration parameters, including the address of some brokers in the cluster, any appropriate security configuration, and other settings that determine the network behavior of the producer. There is another class called ProducerRecord that you use to hold the key-value pair you want to send to the cluster.

To a first-order approximation, this is all the API surface area there is to producing messages. Under the covers, the library is managing connection pools, network buffering, waiting for brokers to acknowledge messages, retransmitting messages when necessary, and a host of other details no application programmer need concern herself with.

Kafka Consumers

Using the consumer API is similar in principle to the producer. You use a class called KafkaConsumer to connect to the cluster (passing a configuration map to specify the address of the cluster, security, and other parameters). Then you use that connection to subscribe to one or more topics. When messages are available on those topics, they come back in a collection called ConsumerRecords, which contains individual instances of messages in the form of ConsumerRecord objects. A ConsumerRecord object represents the key/value pair of a single Kafka message.

KafkaConsumer manages connection pooling and the network protocol just like KafkaProducer does, but there is a much bigger story on the read side than just the network plumbing. First of all, Kafka is different from legacy message queues in that reading a message does not destroy it; it is still there to be read by any other consumer that might be interested in it. In fact, it’s perfectly normal in Kafka for many consumers to read from one topic. This one small fact has a positively disproportionate impact on the kinds of software architectures that emerge around Kafka, which is a topic covered very well elsewhere.
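The point that reading does not destroy a message can be shown with a toy model in which each consumer only tracks its own position. The `Consumer` class below is a hypothetical stand-in, not the KafkaConsumer API, and a plain list stands in for a topic.

```python
class Consumer:
    """Toy consumer that remembers its own offset in a shared log."""

    def __init__(self, log):
        self._log = log
        self.offset = 0

    def poll(self, max_events=10):
        """Return the next batch and advance only this consumer's offset."""
        batch = self._log[self.offset:self.offset + max_events]
        self.offset += len(batch)
        return batch

topic = ["e0", "e1", "e2"]   # a shared list stands in for a topic
billing = Consumer(topic)
analytics = Consumer(topic)

first = billing.poll()       # billing reads everything
second = analytics.poll(2)   # analytics reads the same events independently
print(first, second, topic)  # the topic itself is unchanged
```

Because the position lives with the consumer rather than in the message, adding another reader never affects the existing ones.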

Also, consumers need to be able to handle the scenario in which the rate of message consumption from a topic combined with the computational cost of processing a single message are together too high for a single instance of the application to keep up. That is, consumers need to scale. In Kafka, scaling consumer groups is more or less automatic.
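One way to picture how a consumer group shares work is to deal partitions out to group members in turn. The function below is a simplified sketch in the spirit of a round-robin assignment; the name `assign_partitions` is invented, and real clients offer several assignment strategies with more bookkeeping than shown here.

```python
def assign_partitions(partitions, members):
    """Spread partitions across group members round-robin.

    Each partition goes to exactly one member, so adding members
    (up to the number of partitions) spreads the load further.
    """
    assignment = {member: [] for member in members}
    for i, partition in enumerate(sorted(partitions)):
        assignment[members[i % len(members)]].append(partition)
    return assignment

print(assign_partitions(range(6), ["a", "b"]))
print(assign_partitions(range(6), ["a", "b", "c"]))  # after a third instance joins
```

The sketch also shows the limit of this kind of scaling: with more members than partitions, the extra members receive nothing to do.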

Kafka Components and Ecosystem

If all you had were brokers managing partitioned, replicated topics with an ever-growing collection of producers and consumers writing and reading events, you would actually have a pretty useful system. However, the experience of the Kafka community is that certain patterns will emerge that will encourage you and your fellow developers to build the same bits of functionality over and over again around core Kafka.

You will end up building common layers of application functionality to repeat certain undifferentiated tasks. This is code that does important work but is not tied in any way to the business you’re actually in. It doesn’t contribute value directly to your customers. It’s infrastructure, and it should be provided by the community or by an infrastructure vendor.

It can be tempting to write this code yourself, but you should not. Kafka Connect, the Confluent Schema Registry, Kafka Streams, and ksqlDB are examples of this kind of infrastructure code. We’ll take a look at each of them in turn.

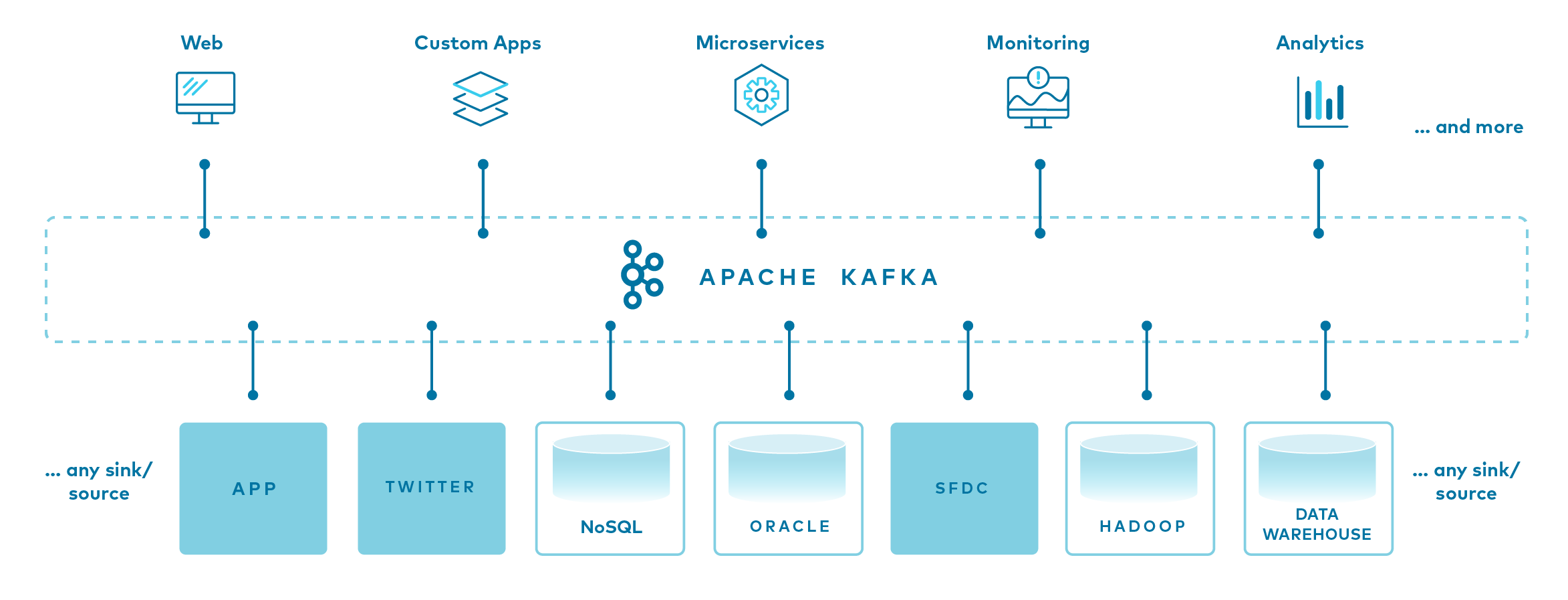

Kafka Connect

In the world of information storage and retrieval, some systems are not Kafka. Sometimes you would like the data in those other systems to get into Kafka topics, and sometimes you would like data in Kafka topics to get into those systems. As Apache Kafka's integration API, this is exactly what Kafka Connect does.

What Does Kafka Connect Do?

On the one hand, Kafka Connect is an ecosystem of pluggable connectors, and on the other, a client application. As a client application, Connect is a server process that runs on hardware independent of the Kafka brokers themselves. It is scalable and fault tolerant, meaning you can run not just one single Connect worker but a cluster of Connect workers that share the load of moving data in and out of Kafka from and to external systems. Kafka Connect also abstracts the business of code away from the user and instead requires only JSON configuration to run. Streaming data from Kafka to Elasticsearch, for example, takes a connector configuration rather than custom code.
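As a hedged illustration of what such a JSON configuration might look like, the sketch below builds a payload for an Elasticsearch sink connector. The connector name, topic, and URL are placeholders invented for this example, and the property names reflect the Confluent Elasticsearch sink connector as commonly documented; check them against the connector's own documentation before use.

```python
import json

# Placeholder values: the name, topic, and URL are not from this article.
connector = {
    "name": "example-elasticsearch-sink",
    "config": {
        "connector.class": "io.confluent.connect.elasticsearch.ElasticsearchSinkConnector",
        "topics": "example_topic",
        "connection.url": "http://localhost:9200",
        "key.ignore": "true",
        "tasks.max": "1",
    },
}

# This JSON document is what would be submitted to a Connect worker.
payload = json.dumps(connector, indent=2)
print(payload)
```

A document of this shape is typically sent to a Connect worker's REST interface, after which the worker cluster runs and supervises the connector.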

Benefits of Kafka Connect

One of the primary advantages of Kafka Connect is its large ecosystem of connectors. Writing the code that moves data to a cloud blob store, or writes to Elasticsearch, or inserts records into a relational database is code that is unlikely to vary from one business to the next. Likewise, reading from a relational database, Salesforce, or a legacy HDFS filesystem is the same operation no matter what sort of application does it. You can definitely write this code, but spending your time doing that doesn’t add any kind of unique value to your customers or make your business more uniquely competitive.

All of these are examples of Kafka connectors available in the Confluent Hub, a curated collection of connectors of all sorts and, most importantly, all licenses and levels of support. Some are commercially licensed and some can be used for free. Confluent Hub lets you search for source and sink connectors of all kinds and clearly shows the license of each connector. Of course, connectors need not come from the Hub and can be found on GitHub or elsewhere in the marketplace. And if after all that you still can’t find a connector that does what you need, you can write your own using a fairly simple API.

Now, it might seem straightforward to build this kind of functionality on your own: If an external source system is easy to read from, it would be easy enough to read from it and produce to a destination topic. If an external sink system is easy to write to, it would again be easy enough to consume from a topic and write to that system. But any number of complexities arise, including how to handle failover, horizontally scale, manage commonplace transformation operations on inbound or outbound data, distribute common connector code, configure and operate this through a standard interface, and more.

Connect seems deceptively simple on its surface, but it is in fact a complex distributed system and plugin ecosystem in its own right. And if that plugin ecosystem happens not to have what you need, the open-source Connect framework makes it simple to build your own connector and inherit all the scalability and fault tolerance properties Connect offers.

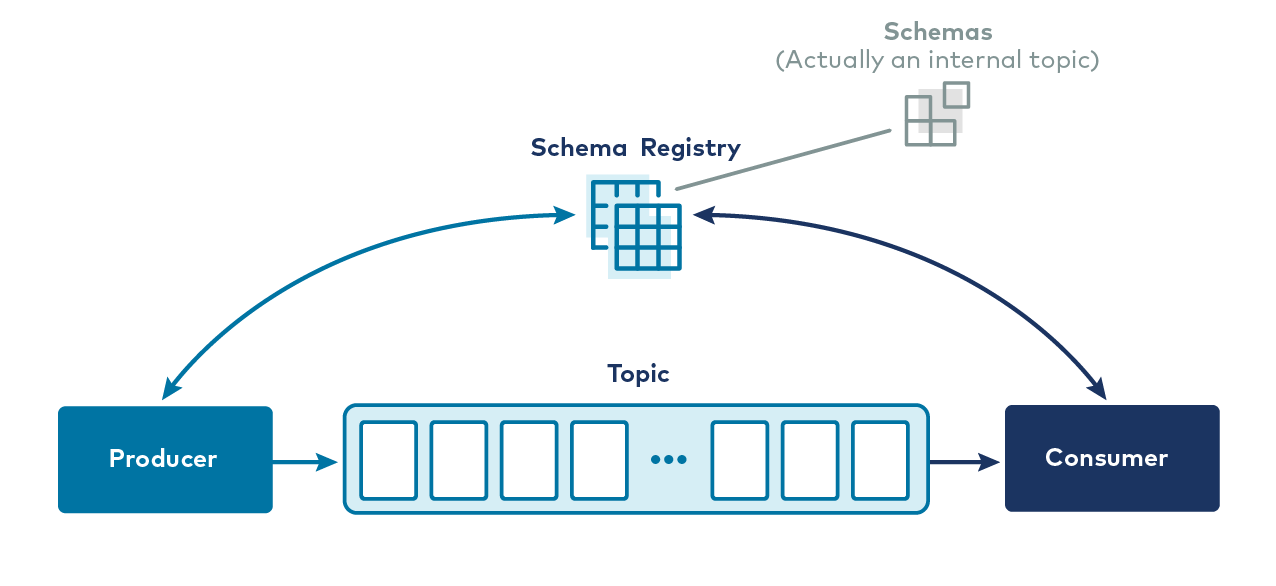

Schema Registry

Once applications are busily producing messages to Kafka and consuming messages from it, two things will happen. First, new consumers of existing topics will emerge. These are brand new applications—perhaps written by the team that wrote the original producer of the messages, perhaps by another team—and will need to understand the format of the messages in the topic. Second, the format of those messages will evolve as the business evolves. Order objects gain a new status field, usernames split into first and last name from full name, and so on. The schema of our domain objects is a constantly moving target, and we must have a way of agreeing on the schema of messages in any given topic.

Confluent Schema Registry exists to solve this problem.

What Is Schema Registry?

Schema Registry is a standalone server process that runs on a machine external to the Kafka brokers. Its job is to maintain a database of all of the schemas that have been written into topics in the cluster for which it is responsible. That “database” is persisted in an internal Kafka topic and cached in the Schema Registry for low-latency access. Schema Registry can be run in a redundant, high-availability configuration, so it remains up if one instance fails.

Schema Registry is also an API that allows producers and consumers to predict whether the message they are about to produce or consume is compatible with previous versions. When a producer is configured to use the Schema Registry, it calls an API at the Schema Registry REST endpoint and presents the schema of the new message. If it is the same as the last message produced, then the produce may succeed. If it is different from the last message but matches the compatibility rules defined for the topic, the produce may still succeed. But if it is different in a way that violates the compatibility rules, the produce will fail in a way that the application code can detect.

Likewise on the consume side, if a consumer reads a message that has an incompatible schema from the version the consumer code expects, Schema Registry will tell it not to consume the message. Schema Registry doesn’t fully automate the problem of schema evolution—that is a challenge in any system regardless of the tooling—but it does make a difficult problem much easier by keeping runtime failures from happening when possible.
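To give a feel for what a compatibility rule checks, here is a much-simplified sketch of one such rule: a new schema is accepted only if every field it adds carries a default and no shared field changes type. The dictionary schema format and the function `is_backward_compatible` are invented for illustration; Schema Registry's actual rules for Avro, Protobuf, and JSON Schema are richer than this.

```python
def is_backward_compatible(old, new):
    """Can code using the `new` schema read data written with `old`?

    Schemas are dicts of field name -> {"type": ..., optional "default": ...}.
    """
    for name, spec in new.items():
        if name not in old:
            # A field missing from old data needs a default to fall back on.
            if "default" not in spec:
                return False
        elif old[name]["type"] != spec["type"]:
            # Changing a field's type breaks readers in this simplified model.
            return False
    return True

order_v1 = {"id": {"type": "string"}}
order_v2 = {"id": {"type": "string"}, "status": {"type": "string", "default": "NEW"}}
order_v3 = {"id": {"type": "string"}, "status": {"type": "string"}}

print(is_backward_compatible(order_v1, order_v2))  # added field has a default
print(is_backward_compatible(order_v1, order_v3))  # added field has no default
```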

Looking at what we’ve covered so far, we’ve got a system for storing events durably, the ability to write and read those events, a data integration framework, and even a tool for managing evolving schemas. What remains is the purely computational side of stream processing.

Kafka Streams

In a growing Kafka-based application, consumers tend to grow in complexity. What might have started as a simple stateless transformation (e.g., masking out personally identifying information or changing the format of a message to conform with internal schema requirements) soon evolves into complex aggregation, enrichment, and more. If you recall the consumer code we looked at up above, there isn’t a lot of support in that API for operations like those: You’re going to have to build a lot of framework code to handle time windows, late-arriving messages, lookup tables, aggregation by key, and more. And once you’ve got that, recall that operations like aggregation and enrichment are typically stateful.

That “state” is going to be memory in your program’s heap, which means it’s a fault tolerance liability. If your stream processing application goes down, its state goes with it, unless you’ve devised a scheme to persist that state somewhere. That sort of thing is fiendishly complex to write and debug at scale and really does nothing to directly make your users’ lives better. This is why Apache Kafka provides a stream processing API. This is why we have Kafka Streams.

What Is Kafka Streams?

Kafka Streams is a Java API that gives you easy access to all of the computational primitives of stream processing: filtering, grouping, aggregating, joining, and more, keeping you from having to write framework code on top of the consumer API to do all those things. It also provides support for the potentially large amounts of state that result from stream processing computations. If you’re grouping events in a high-throughput topic by a field with many unique values, then computing a rollup over that group every hour, you might need to use a lot of memory.

Indeed, for high-volume topics and complex stream processing topologies, it’s not at all difficult to imagine that you’d need to deploy a cluster of machines sharing the stream processing workload like a regular consumer group would. The Streams API solves both problems by handling all of the distributed state problems for you: It persists state to local disk and to internal topics in the Kafka cluster, and it automatically reassigns state between nodes in a stream processing cluster when adding or removing stream processing nodes to the cluster.
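The kind of stateful computation discussed here, a per-key rollup over fixed time windows, can be sketched in plain Python. This is not the Kafka Streams API (which is a Java library); the function `windowed_counts` is hypothetical and keeps its state in an ordinary dictionary, which is exactly the in-memory state that a stream processing library would persist and redistribute for you.

```python
from collections import defaultdict

def windowed_counts(events, window_s):
    """Count events per (key, window start) over fixed, non-overlapping windows.

    events: iterable of (timestamp_s, key).
    """
    counts = defaultdict(int)
    for ts, key in events:
        # Align each timestamp to the start of the window it falls in.
        window_start = ts - (ts % window_s)
        counts[(key, window_start)] += 1
    return dict(counts)

events = [(0, "a"), (10, "a"), (3599, "b"), (3600, "a")]
print(windowed_counts(events, window_s=3600))  # hourly rollup per key
```

With many distinct keys and long windows, the `counts` dictionary grows large, and it disappears if the process dies, which is the problem the preceding paragraphs describe.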

In a typical microservice, stream processing is a thing the application does in addition to other functions. For example, a shipment notification service might combine shipment events with events in a product information changelog containing customer records to produce shipment notification objects, which other services might turn into emails and text messages. But that shipment notification service might also be obligated to expose a REST API for synchronous key lookups by the mobile app or web front end when rendering views that show the status of a given shipment.

The service is reacting to events—and in this case, joining three streams together, and perhaps doing other windowed computations on the joined result—but it is also servicing HTTP requests against its REST endpoint, perhaps using the Spring Framework or Micronaut or some other Java API in common use. Because Kafka Streams is a Java library and not a set of dedicated infrastructure components that do stream processing and only stream processing, it’s trivial to stand up services that use other frameworks to accomplish other ends (like REST endpoints) and sophisticated, scalable, fault-tolerant stream processing.


Apache Kafka

Jul 11, 2014


Apache Kafka: A high-throughput distributed messaging system. Presentation by Johan Lundahl.


Presentation Transcript


Agenda

- Kafka overview
- Main concepts and comparisons to other messaging systems
- Features, strengths and tradeoffs
- Message format and broker concepts
- Partitioning, keyed messages, replication
- Producer / Consumer APIs
- Operation considerations
- Kafka ecosystem

If time permits:

- Kafka as a real-time processing backbone
- Brief intro to Storm
- Kafka-Storm wordcount demo

What is Apache Kafka?

- Distributed, high-throughput, pub-sub messaging system
- Fast, scalable, durable
- Main use cases: log aggregation, real-time processing, monitoring, queueing
- Originally developed by LinkedIn
- Implemented in Scala/Java
- Top level Apache project since 2012: http://kafka.apache.org/

Comparison to other messaging systems

- Traditional: JMS, xxxMQ/AMQP
- New gen: Kestrel, Scribe, Flume, Kafka
- Message queues: low throughput, low latency
- Log aggregators: high throughput, high latency

[Diagram: RabbitMQ, JMS, Flume, Hedwig, Kafka, ActiveMQ, Scribe, batch jobs, Qpid and Kestrel placed between the message queue and log aggregator ends of the spectrum.]

Kafka concepts

[Diagram: producers (a service and frontends) push messages for Topic1, Topic2 and Topic3 to the Kafka broker; consumers (data warehouse, batch processing, monitoring, stream processing) pull the topics they need.]

Distributed model

[Diagram: several producers (with producer persistence annotated as KAFKA-156) publish partitioned data to a set of brokers with intra-cluster replication, coordinated through Zookeeper; consumer groups for Topic1 and Topic2 receive ordered subscriptions.]

Performance factors

- Broker doesn’t track consumer state
- Everything is distributed
- Zero-copy (sendfile) reads/writes
- Usage of page cache backed by sequential disk allocation
- Like a distributed commit log
- Low overhead protocol
- Message batching (producer and consumer)
- Compression (end to end)
- Configurable ack levels

From: http://queue.acm.org/detail.cfm?id=1563874

Kafka features and strengths

- Simple model, focused on high throughput and durability
- O(1) time persistence on disk
- Horizontally scalable by design (broker and consumers)
- Push - pull => consumer burst tolerance
- Replay messages
- Multiple independent subscribers per topic
- Configurable batching, compression, serialization
- Online upgrades

Tradeoffs

- Not optimized for millisecond latencies
- Have not beaten CAP
- Simple messaging system, no processing
- Zookeeper becomes a bottleneck when using too many topics/partitions (>>10000)
- Not designed for very large payloads (full HD movie etc.)
- Helps to know your data in advance

Message/Log Format

[Diagram: each message is laid out as four fields in order: length, version, checksum, payload.]
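A framing with those four fields (length, version, checksum, payload) could be sketched like this. The field widths (4-byte length, 1-byte version, 4-byte CRC32) and the helper names are assumptions chosen for the example, not a statement of Kafka's actual wire format, which has changed across versions.

```python
import struct
import zlib

def encode_message(payload: bytes, version: int = 0) -> bytes:
    """Frame a payload as: length | version | checksum | payload."""
    checksum = zlib.crc32(payload)
    body = struct.pack(">BI", version, checksum) + payload
    # The length prefix counts everything that follows it.
    return struct.pack(">I", len(body)) + body

def decode_message(raw: bytes):
    """Parse one framed message and verify its checksum."""
    (length,) = struct.unpack_from(">I", raw, 0)
    version, checksum = struct.unpack_from(">BI", raw, 4)
    payload = raw[9:4 + length]
    if zlib.crc32(payload) != checksum:
        raise ValueError("checksum mismatch")
    return version, payload

raw = encode_message(b"hello")
print(len(raw), decode_message(raw))
```

The length prefix lets a reader scan a file of back-to-back messages sequentially, and the checksum lets it detect corruption without understanding the payload.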

Log based queue (Simplified model)

- Producer API used directly by application or through one of the contributed implementations, e.g. log4j/logback appender
- Batching
- Compression
- Serialization

[Diagram: Producer1 and Producer2 append Message1 through Message10 to the Topic1 and Topic2 logs on a broker; Consumer1, Consumer2 and the Consumer3 instances of ConsumerGroup1 read from those logs.]

Partitioning

[Diagram: producers write to the partitions of Topic1 and Topic2 on a broker; consumers in Group1, Group2 and Group3 read from the partitions. One surplus consumer is labeled "No partition for this guy".]

Keyed messages

- #partitions = 3
- Target partition: hash(key) % #partitions

[Diagram: a producer's messages (Message1 through Message18) distributed across the Topic1 partitions on BrokerId=1, BrokerId=2 and BrokerId=3.]

Intra cluster replication

- Replication factor = 3
- Follower fails:
  - Follower dropped from ISR
  - When follower comes online again: fetch data from leader, then ISR gets updated
- Leader fails:
  - Detected via Zookeeper from ISR
  - New leader gets elected
- 3 commit modes:

[Diagram: a producer writes Message1 through Message10 to the Topic1 leader on Broker2; followers on Broker1 and Broker3 hold the same messages as in-sync replicas, and acks flow back.]

Producer API

…or for log aggregation:

Configuration parameters:

- ProducerType (sync/async)
- CompressionCodec (none/snappy/gzip)
- BatchSize
- EnqueueSize/Time
- Encoder/Serializer
- Partitioner
- #Retries
- MaxMessageSize
- …

Consumer API(s)

- High-level (consumer group, auto-commit)
- Low-level (simple consumer, manual commit)

Broker Protips

- Reasonable number of partitions – will affect performance
- Reasonable number of topics – will affect performance
- Performance decrease with larger Zookeeper ensembles
- Disk flush rate settings
- message.max.bytes – max accept size, should be smaller than the heap
- socket.request.max.bytes – max fetch size, should be smaller than the heap
- log.retention.bytes – don’t want to run out of disk space…
- Keep Zookeeper logs under control for same reason as above
- Kafka brokers have been tested on Linux and Solaris

Operating Kafka

- Zookeeper usage
  - Producer load balancing
  - Broker ISR
  - Consumer tracking
- Monitoring
  - JMX
  - Audit trail/console in the making
- Distribution tools:
  - Controlled shutdown tool
  - Preferred replica leader election tool
  - List topic tool
  - Create topic tool
  - Add partition tool
  - Reassign partitions tool
  - MirrorMaker

Multi-datacenter replication

Ecosystem

Producers:

- Java, Scala, Log4j (in standard dist)
- Logback: logback-kafka
- Udp-kafka-bridge
- Python: kafka-python, pykafka, samsa, pykafkap, brod
- Go: Sarama, kafka.go
- C: librdkafka
- C/C++: libkafka
- Clojure: clj-kafka, kafka-clj
- Ruby: Poseidon, kafka-rb, em-kafka
- PHP: kafka-php(1), kafka-php(2), log4php
- Node.js: Prozess, node-kafka, franz-kafka
- Erlang: erlkafka

Consumers:

- Java, Scala (in standard dist)
- Python: kafka-python, samsa, brod
- Go: Sarama, nuance, kafka.go
- C/C++: libkafka
- Clojure: clj-kafka, kafka-clj
- Ruby: Poseidon, kafka-rb, Kafkaesque
- Jruby::Kafka
- PHP: kafka-php(1), kafka-php(2)
- Node.js: Prozess, node-kafka, franz-kafka
- Erlang: erlkafka, kafka-erlang

Common integration points:

- Stream processing: Storm (a stream-processing framework); Samza (a YARN-based stream processing framework)
- Hadoop integration: Camus (LinkedIn's Kafka=>HDFS pipeline; this one is used for all data at LinkedIn, and works great); Kafka Hadoop Loader (a different take on Hadoop loading functionality from what is included in the main distribution)
- AWS integration: automated AWS deployment; Kafka->S3 mirroring
- Logging: klogd (a Python syslog publisher); klogd2 (a Java syslog publisher); Tail2Kafka (a simple log tailing utility); Fluentd plugin (integration with Fluentd); Flume Kafka plugin (integration with Flume); remote log viewer; LogStash integration (integration with LogStash and Fluentd); official logstash integration
- Metrics: Mozilla Metrics Service (a Kafka and Protocol Buffers based metrics and logging system); Ganglia integration
- Packaging and deployment: RPM packaging; Debian packaging (https://github.com/tomdz/kafka-deb-packaging); Puppet integration; Dropwizard packaging
- Misc.: Kafka Mirror (an alternative to the built-in mirroring tool); Ruby demo app; Apache Camel integration; Infobright integration

What’s in the future?
• Topic and transient consumer garbage collection (KAFKA-560/KAFKA-559)
• Producer side persistence (KAFKA-156/KAFKA-789)
• Exact mirroring (KAFKA-658)
• Quotas (KAFKA-656)
• YARN integration (KAFKA-949)
• RESTful proxy (KAFKA-639)
• New build system? (KAFKA-855)
• More tooling (Console, Audit trail) (KAFKA-266/KAFKA-260)
• Client API rewrite (Proposal)
• Application level security (Proposal)

Stream processing

Kafka as a processing pipeline backbone

[Diagram: Producers, Process1 and Process2 stages, Kafka topic1, Kafka topic2, System1, System2]

What is Storm?
• Distributed real-time computation system with design goals:
  • Guaranteed processing
  • No orphaned tasks
  • Horizontally scalable
  • Fault tolerant
  • Fast
• Use cases: Stream processing, DRPC, Continuous computation
• 4 basic concepts: streams, spouts, bolts, topologies
• In Apache incubator
• Implemented in Clojure

Streams

An [infinite] sequence of tuples, for example (timestamp, sessionid, exceptionstacktrace):
(t1,s1,e1) (t2,s1,e2) (t3,s3) (t4,s2,e2)

Spouts

A source of streams. Connects to queues, logs, API calls, event data. Some features, such as transactional topologies (which give exactly-once messaging semantics), are only possible using the Kafka-TransactionalSpout-consumer.

Bolts
• Filters
• Transformations
• Apply functions
• Aggregations
• Access DB, APIs etc.
• Emitting new streams
• Trident = a high level abstraction on top of Storm

[Diagram: input tuples (t3,s3) (t4,s2,e2) (t5,s4) pass through a bolt, which emits (t1,s1,h1) (t2,s1,h2)]

Topologies

[Diagram: tuples flowing through a network of spouts and bolts]
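The stream, spout, bolt, and topology concepts above can be sketched with plain Python generators. This is only an illustration of the dataflow idea, not Storm's API: the function names are hypothetical, the spout is finite rather than infinite, and the sample tuples follow the (timestamp, sessionid, exceptionstacktrace) shape used on the slides.

```python
def exception_spout():
    """Spout (hypothetical): a source of (timestamp, session_id, exception) tuples."""
    yield from [
        ("t1", "s1", "e1"),
        ("t2", "s1", "e2"),
        ("t3", "s3", None),   # a tuple that carries no exception
        ("t4", "s2", "e2"),
    ]


def filter_bolt(stream):
    """Bolt: drop tuples that carry no exception."""
    return (t for t in stream if t[2] is not None)


def count_bolt(stream):
    """Bolt: aggregate the number of exceptions per session."""
    counts = {}
    for _, session_id, _ in stream:
        counts[session_id] = counts.get(session_id, 0) + 1
    return counts


# Topology: spout -> filter bolt -> counting bolt.
result = count_bolt(filter_bolt(exception_spout()))
print(result)  # {'s1': 2, 's2': 1}
```

In a real Storm deployment each spout and bolt would run as parallel tasks across the cluster; the chained calls here only show how tuples move from one stage to the next.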

Storm cluster
• Deploy Topology
• Compare with Hadoop: Nimbus (JobTracker), Supervisors (TaskTrackers)
• Zookeeper
• Mesos/YARN


Apache Kafka PPT

Title: The Power of Apache Kafka: A Comprehensive Guide

Introduction

- Begin by defining distributed streaming platforms and their importance in the modern data landscape.

- Handling massive data volumes

- Real-time data processing

- Fault tolerance and reliability needs

What is Apache Kafka?

- A high-throughput, distributed, publish-subscribe messaging system.

- Designed to handle real-time data streams from diverse sources.

- Built for scalability and reliability to handle massive datasets.

Key Concepts

- Topics: Logical categories for organizing data streams.

- Partitions: Topics are divided into partitions for scalability and distribution.

- Producers: Applications that send messages to Kafka topics.

- Consumers: Applications that read and process messages from Kafka topics.

- Brokers: Kafka servers that manage data storage and replication.

- ZooKeeper: Coordination service for Kafka clusters (note that newer Kafka versions can run in KRaft mode, which removes the dependency on ZooKeeper).

Use Cases

- Real-time Analytics: Processing data streams for immediate insights.

- Microservices Communication: Enabling reliable communication between distributed services.

- Activity Tracking: Monitoring website clicks, user behavior, etc.

- Log Aggregation: Centralizing logs from different systems.

- Messaging: Replacing traditional message queues with a more scalable solution.

Architecture

- Components: Visually illustrate producers, consumers, brokers, topics, and partitions.

- Data Flow: Describe the message journey to clarify interactions.

- Replication: Show how replication ensures high availability and fault tolerance.

Benefits of Kafka

- Scalability: Handles massive data volumes with ease.

- Reliability: Replicated data and fault tolerance guard against data loss when brokers fail.

- Performance: Low latency and high throughput for demanding applications.

- Flexibility: Supports a wide range of use cases and programming languages.

Getting Started

- Installation and Configuration: Basic setup steps.

- Simple Producer/Consumer Example: Demonstrate sending and receiving messages.

Additional Considerations

- Integration with Other Systems: Spark, Flink, Hadoop, etc.

- Advanced Concepts: Kafka Streams, Kafka Connect, KSQL.

- Security: Authentication, authorization, and encryption.

Slide 1: Title Slide

- Title: The Power of Apache Kafka: A Comprehensive Guide

- Your Name/Company

Slide 2: Introduction

- Define distributed streaming platforms and their importance.

- Mention key challenges of handling large, real-time datasets.

Slide 3: Apache Kafka Overview

- Brief definition of Kafka.

- Highlight core features.

Slides 4-6: Key Concepts

- Dedicated slides to Topics, Partitions, Producers, Consumers, and Brokers.

- Use simple diagrams where possible.

Slide 7: Use Cases

- 3-4 strong use case examples, with visuals if possible

Slide 8: Kafka Architecture

- Key components diagram.

- Arrows to show data flow.

Slides 9-10: Benefits

- Bullet points outlining the primary benefits of Kafka.

Slides 11-12: Getting Started

- Overview of installation (download link).

- Simple code example of a producer and consumer.

Slide 13: Conclusion

- Summarize Kafka’s strengths

- End with a call to action (explore further, try it out).

Important Notes:

- Tailor to Audience: Adjust technical depth based on audience knowledge.

- Visuals: Make liberal use of diagrams and illustrations.

- Practice: Rehearse for confidence and timing.

Apache Kafka Architecture and Its Components-The A-Z Guide

Apache Kafka Architecture with Diagram - Explore the Event Driven Architecture of Kafka Cluster, its features and the role of various components.

A detailed introduction to Apache Kafka Architecture, one of the most popular messaging systems for distributed applications.

The first COVID-19 cases were reported in the United States in January 2020. By the end of the year, over 200,000 cases were reported per day, which climbed to 250,000 cases in early 2021. Responding to a pandemic on such a large scale involves technical and public health challenges. One of the challenges was keeping track of the data coming in from many data streams in multiple formats. The CELR (COVID Electronic Lab Reporting) program of the Centers for Disease Control and Prevention (CDC) was established to validate, transform, and aggregate laboratory testing data submitted by various public health departments and other partners. Kafka Streams and Kafka Connect were used to keep track of the threat of the COVID-19 virus and analyze the data for a more thorough response on local, state, and federal levels.

Kafka is an integral part of Netflix’s real-time monitoring and event-processing pipeline. The Keystone Data Pipeline of Netflix processes over 500 billion events a day. These events include error logs, data on user viewing activities, and troubleshooting events, among other valuable datasets.

At LinkedIn, Kafka is the backbone behind various products, including LinkedIn Newsfeed and LinkedIn Today. Spotify uses Kafka as part of its log delivery system.

Kafka is used by thousands of companies today, including over 60% of the Fortune 100, among them Box, Goldman Sachs, Target, Cisco, and Intuit. Apache Kafka is one of the most popular open-source distributed streaming platforms for processing large volumes of streaming data from real-time applications.

Table of Contents

- Why Is Apache Kafka So Popular?

- Apache Kafka Architecture - Overview of Kafka Components

- Apache Kafka Event-Driven Workflow Orchestration

- The Role of ZooKeeper in Apache Kafka Architecture

- Drawbacks of Apache Kafka

- Apache Kafka Use Cases

So why is Kafka so popular, and what makes it the choice of so many companies?

Scalability: The scalability of a system is determined by how well it can maintain its performance when exposed to changes in application and processing demands. Apache Kafka has a distributed architecture capable of handling incoming messages with higher volume and velocity. As a result, a Kafka cluster can be scaled out by adding brokers without downtime.

High Throughput: A single Kafka broker can handle hundreds of thousands of reads and writes per second, so a cluster keeps up with messages arriving at high volume, high velocity, or both.

Low Latency: Latency refers to the amount of time taken for a system to process a single event. Kafka offers a very low latency, which is as low as ten milliseconds.

Fault Tolerance: By using replication, Kafka can handle failures at nodes in a cluster without any data loss. Running processes, too, can remain undisturbed. The replication factor determines the number of replicas for a partition. For a replication factor of ‘n,’ Kafka guarantees a fault tolerance for up to n-1 servers in the Kafka cluster.

Reliability: Apache Kafka is a distributed platform with very high fault tolerance, making it a very reliable system to use.

Durability: Kafka persists messages to disk and replicates them across brokers in the cluster, so data remains durable even if an individual broker fails.

- Ability to handle real-time data: Kafka supports real-time data handling and is an excellent choice when data has to be processed in real-time.

Apache Kafka Architecture Explained- Overview of Kafka Components

Let’s look in detail at the architecture of Apache Kafka and the relationship between the various architectural components to develop a deeper understanding of Kafka for distributed streaming. But before delving into the components of Apache Kafka, it is crucial to grasp the concept of a Kafka cluster first.

What is a Kafka Cluster?

A Kafka cluster is a distributed system composed of multiple Kafka brokers working together to handle the storage and processing of real-time streaming data. It provides fault tolerance, scalability, and high availability for efficient data streaming and messaging in large-scale applications.

Apache Kafka Components and Its Architectural Concepts

Kafka Topics

A stream of messages that are a part of a specific category or feed name is referred to as a Kafka topic. In Kafka, data is stored in the form of topics. Producers write their data to topics, and consumers read the data from these topics.

Brokers

A Kafka cluster comprises one or more servers that are known as brokers. In Kafka, a broker works as a container that can hold multiple topics with different partitions. A unique integer ID is used to identify brokers in the Kafka cluster. Connection with any one of the Kafka brokers in the cluster implies a connection with the whole cluster. If there is more than one broker in a cluster, an individual broker need not hold all the data associated with a particular topic.

Consumers and Consumer Groups

Consumers read data from the Kafka cluster. The data to be read by the consumers has to be pulled from the broker when the consumer is ready to receive the message. A consumer group in Kafka refers to a number of consumers that pull data from the same topic or same set of topics.

Producers

Producers in Kafka publish messages to one or more topics. They send data to the Kafka cluster. Whenever a Kafka producer publishes a message to Kafka, the broker receives the message and appends it to a particular partition. Producers can choose which partition each message is published to.

Partitions

Topics in Kafka are divided into a configurable number of parts, which are known as partitions. Partitions allow several consumers to read data from a particular topic in parallel. Records within a partition are kept in order. The number of partitions is specified when configuring a topic; it can be increased later on, although doing so changes which partition a given key maps to. The partitions comprising a topic are distributed across servers in the Kafka cluster. Each server in the cluster handles the data and requests for its share of partitions. Messages can be sent to the broker along with a key. The key is used to determine which partition that particular message will go to: all messages which have the same key go to the same partition. If the key is not specified, the partition is chosen in a round-robin fashion (newer clients instead stick to one partition per batch to improve batching).
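The key-to-partition rule described above can be sketched in a few lines. This is a minimal illustration, not Kafka's partitioner: the class name is hypothetical, and CRC32 is used as a stand-in hash (the Java client's default partitioner uses a different hash function).

```python
import itertools
import zlib
from typing import Optional


class SketchPartitioner:
    """Toy partitioner: keyed records hash to a partition, keyless ones rotate."""

    def __init__(self, num_partitions: int) -> None:
        self.num_partitions = num_partitions
        self._round_robin = itertools.cycle(range(num_partitions))

    def partition_for(self, key: Optional[bytes]) -> int:
        if key is None:
            # No key: spread records evenly across the partitions.
            return next(self._round_robin)
        # Same key -> same hash -> same partition (CRC32 is a stand-in hash).
        return zlib.crc32(key) % self.num_partitions


partitioner = SketchPartitioner(num_partitions=3)
keyed = [partitioner.partition_for(b"user-42") for _ in range(4)]
keyless = [partitioner.partition_for(None) for _ in range(6)]
print(keyed)    # four identical partition numbers
print(keyless)  # [0, 1, 2, 0, 1, 2]
```

Because the partition is the hash modulo the partition count, changing the number of partitions changes where existing keys land, which is why partition counts are usually chosen with some headroom.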

Partition Offset

Messages or records in Kafka are assigned to a partition. To specify the position of the records within the partition, each record is provided with an offset. A record can be uniquely identified within its partition using the offset value associated with it. A partition offset carries meaning only within that particular partition. Older records will have lower offset values since records are added to the ends of partitions.
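As a rough sketch of how offsets behave, the snippet below models a partition as an append-only list. The `PartitionLog` class is a hypothetical stand-in for illustration and is not part of any Kafka client.

```python
class PartitionLog:
    """Append-only list standing in for a single partition."""

    def __init__(self) -> None:
        self._records = []

    def append(self, value: str) -> int:
        # The offset is the record's position in the log; it only ever grows.
        self._records.append(value)
        return len(self._records) - 1

    def read(self, offset: int) -> str:
        return self._records[offset]


p0, p1 = PartitionLog(), PartitionLog()
first = p0.append("page_view")   # offset 0 in partition 0
second = p0.append("search")     # offset 1 in partition 0
other = p1.append("click")       # offset 0 again, but in partition 1
print(first, second, other)      # 0 1 0
print(p0.read(1))                # search
```

Offset 0 exists in both partitions, which is why a record is identified by the pair (partition, offset) rather than by the offset alone.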

Replicas

Replicas are like backups for partitions in Kafka. They are used to ensure that there is no data loss in the event of a failure or a planned shutdown. Partitions of a topic are published across multiple servers in a Kafka cluster. Copies of the partition are known as replicas.

Leader and Follower

Every partition in Kafka will have one server that plays the role of a leader for that particular partition. By default, the leader is responsible for performing all the read and write tasks for the partition. Each partition can have zero or more followers. The duty of the follower is to replicate the data of the leader. In the event of a failure in the leader for a particular partition, one of the follower nodes can take on the role of the leader.

Kafka Producers

In Kafka, the producers send data directly to the broker that plays the role of leader for a given partition. In order to help the producer send the messages directly, the nodes of the Kafka cluster answer requests for metadata on which servers are alive and the current status of the leaders of partitions of a topic so that the producer can direct its requests accordingly. The client decides which partition it publishes its messages to. This can either be done arbitrarily or by making use of a partitioning key, where all messages containing the same partition key will be sent to the same partition.

Messages in Kafka are sent in the form of batches, known as record batches. The producers accumulate messages in memory and send a batch either once a fixed number of messages has accumulated or once a fixed latency bound has elapsed, whichever comes first.

Kafka Brokers

In Kafka, a cluster usually contains multiple nodes, known as brokers, to balance the load. The brokers store the partition data, while the cluster state (in ZooKeeper-based deployments) is maintained by ZooKeeper. One Kafka broker is able to handle hundreds of thousands of reads and writes per second. For one particular partition, one broker serves as the leader. The leader may have one or more followers, and the data on the leader is replicated across the followers for that particular partition. The role of leader for partitions is distributed across brokers in the cluster.

The nodes in a cluster have to send messages called heartbeat messages to ZooKeeper to keep it informed that they are alive. The followers have to stay caught up with the data that is in the leader. The leader keeps track of the followers that are “in sync” with it. If a follower is no longer alive or does not stay caught up with the leader, it is removed from the list of in-sync replicas (ISRs) associated with that particular leader. If the leader dies, a new leader is selected from among the in-sync followers. The election of the new leader is coordinated by the controller broker, which is itself elected through ZooKeeper.
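The in-sync replica bookkeeping described above can be sketched as follows. This is a simplified model under stated assumptions: the `PartitionState` class and its method names are hypothetical, and the "first surviving in-sync replica wins" rule is an illustrative choice rather than Kafka's exact election logic.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class PartitionState:
    leader: int
    replicas: List[int]                          # broker IDs holding a copy
    isr: Set[int] = field(default_factory=set)   # in-sync replicas

    def drop_follower(self, broker_id: int) -> None:
        # A follower that dies or falls behind is removed from the ISR.
        if broker_id != self.leader:
            self.isr.discard(broker_id)

    def fail_leader(self) -> int:
        # Remove the failed leader, then promote a surviving in-sync replica.
        self.isr.discard(self.leader)
        candidates = [b for b in self.replicas if b in self.isr]
        if not candidates:
            raise RuntimeError("no in-sync replica available")
        self.leader = candidates[0]
        return self.leader


state = PartitionState(leader=1, replicas=[1, 2, 3], isr={1, 2, 3})
state.drop_follower(2)               # broker 2 falls behind
new_leader = state.fail_leader()     # broker 1 dies
print(new_leader, sorted(state.isr))  # 3 [3]
```

Broker 2 is skipped even though it is still alive, because only replicas that were caught up with the old leader are safe to promote without losing acknowledged records.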

Kafka Consumers

In Kafka, the consumer has to issue requests to the brokers indicating the partitions it wants to consume. The consumer is required to specify its offset in the request and receives a chunk of log beginning from the offset position from the broker. Since the consumer has control over this position, it can re-consume data if required. Records remain in the log for a configurable time period which is known as the retention period. The consumer may re-consume the data as long as the data is present in the log.

In Kafka, the consumers work on a pull-based approach. This means that data is not immediately pushed onto the consumers from the brokers. The consumers have to send requests to the brokers to indicate that they are ready to consume the data. A pull-based system ensures that the consumer does not get overwhelmed with messages and can fall behind and catch up when it can. A pull-based system can also allow aggressive batching of data sent to the consumer since the consumer will pull all available messages after its current position in the log. In this manner, batching is performed without any unnecessary latency.
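A minimal sketch of the pull model, assuming an in-memory stand-in for the broker: the `Broker` and `PullConsumer` classes below are hypothetical and only mimic the idea of fetching from an offset that the consumer controls.

```python
class Broker:
    """In-memory stand-in for one partition's log on a broker."""

    def __init__(self, records):
        self._log = list(records)

    def fetch(self, offset, max_records):
        # Return up to max_records starting at the requested offset.
        return self._log[offset:offset + max_records]


class PullConsumer:
    def __init__(self, broker, offset=0):
        self.broker = broker
        self.offset = offset  # the consumer, not the broker, owns this position

    def poll(self, max_records=2):
        batch = self.broker.fetch(self.offset, max_records)
        self.offset += len(batch)
        return batch

    def seek(self, offset):
        # Rewind (or skip ahead) to re-consume data still held in the log.
        self.offset = offset


broker = Broker(["e0", "e1", "e2", "e3", "e4"])
consumer = PullConsumer(broker)
first_batch = consumer.poll()             # ['e0', 'e1']
rest = consumer.poll(max_records=10)      # ['e2', 'e3', 'e4']
consumer.seek(1)
replayed = consumer.poll(max_records=2)   # ['e1', 'e2']
print(first_batch, rest, replayed)
```

The second `poll` shows the batching benefit: a consumer that has fallen behind picks up everything available after its position in a single request.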

End to End Batch Compression

To efficiently handle large volumes of data, Kafka performs compression of messages. Efficient compression involves compressing multiple messages together instead of compressing individual messages. Because Apache Kafka supports an efficient batching format, a batch of messages can be compressed together and sent to the server in this format. The batch of messages is written to the broker in compressed form and remains compressed in the log until it is fetched and decompressed by the consumer.
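The benefit of compressing a batch rather than individual messages is easy to see with a small experiment. The sketch below uses Python's `zlib` as a stand-in codec and made-up sample messages; Kafka itself offers its own set of codecs, configured on the producer.

```python
import json
import zlib

# 100 similar JSON messages, as an activity stream might produce (sample data).
messages = [
    json.dumps({"user_id": i, "event": "page_view", "page": "/home"}).encode()
    for i in range(100)
]

# Compressing each message on its own cannot exploit repetition across messages.
individually = sum(len(zlib.compress(m)) for m in messages)

# Compressing the whole batch lets the codec share the repeated field names.
batch = zlib.compress(b"\n".join(messages))

# The consumer side decompresses the batch and splits it back into messages.
restored = zlib.decompress(batch).split(b"\n")

print(individually, len(batch))
```

The batch comes out much smaller than the sum of the individually compressed messages because field names and repeated values are encoded once rather than per message.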

The Role of ZooKeeper in Apache Kafka Architecture

Apache ZooKeeper is an Apache project that acts as a centralized service for maintaining configuration data and providing flexible yet robust synchronization for distributed systems. ZooKeeper is used to manage and coordinate Kafka brokers in the cluster. It maintains a list of the brokers and manages them using this list. It is used to notify the producer and consumer about the presence of new brokers or about the failure of brokers in the Kafka cluster. Using this information, the producer and consumer can make a decision and accordingly coordinate with some other broker in the cluster. ZooKeeper is also used to store information about the Kafka cluster and the various details regarding the consumer clients. ZooKeeper also takes part in choosing a leader for the partitions: in the event of a failure in the leader node, the controller broker, which is elected through ZooKeeper, coordinates the leader election and chooses the next leader for a partition.

Up until Kafka 2.8.0, it was not possible to run a Kafka cluster without ZooKeeper. The 2.8.0 release introduced, as an early-access feature, an alternative mode in which a Kafka cluster runs without ZooKeeper and instead uses an internal implementation of the Raft consensus algorithm (KRaft). The changes are outlined in KIP-500 (Kafka Improvement Proposal 500). The goal is to move topic metadata and configurations out of ZooKeeper and into a new internal topic, named @metadata, which is managed by an internal Raft quorum of controllers and replicated to all brokers in the cluster.

Achieving Performance Tuning in Apache Kafka

Optimum performance involves the consideration of two key measures: latency and throughput. Latency refers to the time taken to process one event, so lower latency means better performance. Throughput denotes the number of events that can be processed in a specific amount of time, so the goal is always higher throughput. Many systems optimize one and end up compromising the other; Kafka exposes tuning parameters that let you balance the two.

Tuning Apache Kafka for optimal performance involves:

Tuning Kafka Producer: Data that the producers publish to the brokers is stored in a batch and sent only when the batch is ready. To tune the producers, two parameters are taken into consideration -

Batch Size: The batch size has to be decided based on the nature of the volume of messages sent by the producer. Producers which send messages frequently will work better with larger batch sizes so that throughput can be maximized without compromising heavily on the latency. In cases where the producers do not send messages frequently, smaller batch size is preferred. In such cases, if the batch size is very large, it may never get full or take a long time to fill up. This will increase the latency and hence, compromise performance.

Linger Time: The linger time is added to create a delay to allow more records to be filled up in the batch so that larger batches can be sent. A longer linger time allows more messages to be sent in one batch but can result in compromising latency. On the other hand, a reduced linger time results in fewer messages getting sent faster, and as a result, there is lower latency but reduced throughput too.
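The interplay between batch size and linger time can be sketched with a small simulation. The `BatchAccumulator` class is hypothetical and simplifies in two ways that should be treated as assumptions: it counts records rather than bytes (the producer's `batch.size` setting is expressed in bytes), and it takes explicit timestamps instead of reading a clock.

```python
class BatchAccumulator:
    """Flushes a batch when it is full or when the linger time has elapsed."""

    def __init__(self, batch_size: int, linger_ms: int) -> None:
        self.batch_size = batch_size   # max records per batch (simplification)
        self.linger_ms = linger_ms     # max time the first record may wait
        self._pending = []
        self._first_ts = None
        self.sent = []                 # list of (send_time_ms, batch)

    def _flush(self, now_ms: int) -> None:
        self.sent.append((now_ms, self._pending))
        self._pending, self._first_ts = [], None

    def tick(self, now_ms: int) -> None:
        # Called as time passes: flush if the oldest record has waited long enough.
        if self._pending and now_ms - self._first_ts >= self.linger_ms:
            self._flush(now_ms)

    def add(self, record: str, now_ms: int) -> None:
        self.tick(now_ms)
        if not self._pending:
            self._first_ts = now_ms
        self._pending.append(record)
        if len(self._pending) >= self.batch_size:
            self._flush(now_ms)


acc = BatchAccumulator(batch_size=3, linger_ms=10)
for t, rec in [(0, "a"), (1, "b"), (2, "c"), (5, "d")]:
    acc.add(rec, now_ms=t)
acc.tick(now_ms=15)
print(acc.sent)  # [(2, ['a', 'b', 'c']), (15, ['d'])]
```

The first batch leaves as soon as it is full, while the lone record "d" waits out the full linger time: a busy producer is bounded by batch size, a quiet one by linger time.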

Tuning Kafka Brokers: Every partition has a leader associated with it and zero or more followers for the leader. While the Kafka cluster is running, due to failures in some of the brokers or due to reallocation of partitions, an imbalance may occur among the brokers in the cluster. Some brokers might be overworked compared to others. In such cases, it is important to monitor the brokers and ensure that the workload is balanced across the various brokers present in the cluster.

Tuning Kafka Consumers: While tuning consumers, it is important to keep in mind that a consumer can read from many partitions, but within a consumer group each partition is read by only one consumer. A good practice to follow is to keep the number of consumers equal to or lower than the partition count. If there are fewer consumers than partitions, the number of partitions should ideally be an exact multiple of the number of consumers so that the load is spread evenly. More consumers than partitions will result in some consumers remaining idle.
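The effect of the consumer-to-partition ratio can be illustrated with a simple round-robin assignment. The `assign_partitions` function is a hypothetical sketch; Kafka's consumer group protocol supports several assignment strategies, and this shows only the basic idea that each partition goes to exactly one consumer in the group.

```python
def assign_partitions(partitions, consumers):
    """Round-robin assignment: each partition goes to exactly one consumer."""
    assignment = {c: [] for c in consumers}
    for i, p in enumerate(partitions):
        assignment[consumers[i % len(consumers)]].append(p)
    return assignment


group = ["c1", "c2", "c3"]
balanced = assign_partitions([0, 1, 2, 3, 4, 5], group)   # 6 partitions
uneven = assign_partitions([0, 1, 2, 3], group)           # 4 partitions
oversized = assign_partitions([0, 1], group)              # 2 partitions

print(balanced)   # {'c1': [0, 3], 'c2': [1, 4], 'c3': [2, 5]}
print(uneven)     # {'c1': [0, 3], 'c2': [1], 'c3': [2]}
print(oversized)  # {'c1': [0], 'c2': [1], 'c3': []}
```

With six partitions the load divides evenly, with four one consumer does double the work, and with two the third consumer sits idle.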

Drawbacks of Apache Kafka

We have already seen some interesting reasons that make Apache Kafka a popular tool for distributed streaming, but like every other big data tool, there are a few downsides to using Apache Kafka:

Modifying messages after they have been written to Kafka results in performance issues. Kafka is well-suited for cases where the message does not have to be changed.

Topic selection is limited: producers must name an exact topic, and wildcard-style selection is available only to consumers that subscribe by pattern.

Certain message paradigms such as point-to-point queues and request/reply are not supported by Kafka out of the box.

Large messages require compression and decompression of messages. This results in an effect on the throughput and performance of Kafka.

Apache Kafka Use Cases

Message Broker

Kafka serves as an excellent replacement for traditional message brokers. Compared to traditional message brokers, Apache Kafka provides better throughput and is capable of handling a larger volume of messages. Kafka can be used as a publish-subscribe messaging service and is a good tool for large-scale message processing applications.

Tracking Website Activities

The activity associated with a website, including page views, searches, and other actions that users take, is published to central topics, with one topic per activity type. This data can then be used for real-time processing, real-time monitoring, and loading into the Hadoop ecosystem for later processing. Website activity usually involves a very high volume of data, as several messages are generated for each page view by a single user.

Monitoring Metrics

Kafka finds applications in monitoring the metrics associated with operational data. Statistics from distributed applications are consolidated into centralized feeds to monitor their metrics.

Stream Processing

A widespread use case for Kafka is to process data in processing pipelines, where raw data is consumed from topics and then further processed or transformed into a new topic or topics, which are consumed in turn for another round of processing. These processing pipelines create channels of real-time data. From Kafka version 0.10.0.0 onwards, a powerful stream processing library known as Kafka Streams is available in Kafka to process data in this way.
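The consume-transform-produce pattern can be sketched with plain Python structures. Everything here is an assumption for illustration: topics are modelled as in-memory lists, the topic names and log lines are made up, and the helper functions are hypothetical rather than part of Kafka Streams.

```python
from collections import defaultdict

topics = defaultdict(list)  # topic name -> list of records (in-memory stand-in)


def produce(topic, record):
    topics[topic].append(record)


def process(source, sink, transform):
    """Consume every record from `source`, transform it, and produce to `sink`."""
    for record in topics[source]:
        result = transform(record)
        if result is not None:  # None means "filter this record out"
            produce(sink, result)


for line in ["GET /home 200", "GET /cart 500", "POST /pay 500"]:
    produce("raw-logs", line)

# Stage 1: parse raw lines into structured records.
process("raw-logs", "parsed-logs",
        lambda line: dict(zip(("method", "path", "status"), line.split())))

# Stage 2: keep only the paths that returned a server error.
process("parsed-logs", "errors",
        lambda r: r["path"] if r["status"] == "500" else None)

print(topics["errors"])  # ['/cart', '/pay']
```

Each stage reads one topic and writes another, so stages can be developed, scaled, and restarted independently, which is the property that makes Kafka useful as a pipeline backbone.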

Event Sourcing

Event sourcing refers to an application design that involves logging state changes as a sequence of records ordered based on time. Kafka’s ability to store large logs makes it a great choice for event sourcing applications.
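A small sketch of the idea: state is never stored directly but is rebuilt by replaying the ordered event log. The event shapes, account names, and `replay` function below are made up for illustration.

```python
# Time-ordered log of state changes (sample data).
events = [
    {"type": "deposited", "account": "acc-1", "amount": 100},
    {"type": "withdrawn", "account": "acc-1", "amount": 30},
    {"type": "deposited", "account": "acc-2", "amount": 50},
    {"type": "deposited", "account": "acc-1", "amount": 5},
]


def replay(log, upto=None):
    """Fold the event log (optionally only its first `upto` events) into balances."""
    balances = {}
    for event in log[:upto]:
        sign = 1 if event["type"] == "deposited" else -1
        account = event["account"]
        balances[account] = balances.get(account, 0) + sign * event["amount"]
    return balances


print(replay(events))          # {'acc-1': 75, 'acc-2': 50}
print(replay(events, upto=2))  # {'acc-1': 70}
```

Replaying only a prefix of the log reconstructs the state as of an earlier point in time, which is one reason a durable, ordered log suits this design.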

Kafka can be used as an external commit-log for a distributed application.

Kafka’s replication feature can help replicate data between multiple nodes and allow re-syncing in failed nodes for data restoration when required. In addition, Kafka can also be used as a centralized repository for log files from multiple data sources and in cases where there is distributed data consumption. In such cases, data can be collected from physical log files of servers and from numerous sources and made available in a single location.

To explore the powerful and versatile streaming workloads that Apache Kafka supports, it is necessary to have some hands-on experience working with the architecture in real life. Working on real-time Apache Kafka projects is an excellent way to build your big data skills and experience ahead of your next big data job interview.

Kafka Basics on Confluent Platform

Apache Kafka® is an open-source, distributed, event streaming platform capable of handling large volumes of real-time data. You use Kafka to build real-time streaming applications. Confluent is a commercial, global corporation that specializes in providing businesses with real-time access to data. Confluent was founded by the creators of Kafka, and its product line includes proprietary products based on open-source Kafka. This topic describes Kafka use cases, the relationship between Confluent and Kafka, and key differences between the Confluent products.

How Kafka relates to Confluent

Confluent products are built on the open-source software framework of Kafka to provide customers with reliable ways to stream data in real time. Confluent provides the features and know-how that enhance your ability to reliably stream data. If you’re already using Kafka, Confluent products support any producer or consumer code you’ve already written with the Kafka Java libraries. Whether you’re already using Kafka or just getting started with streaming data, Confluent provides features not found in Kafka. These include non-Java libraries for client development and server processes that help you stream data more efficiently in a production environment, such as Confluent Schema Registry, ksqlDB, and Confluent Hub. Confluent offers Confluent Cloud, a data-streaming service, and Confluent Platform, software you download and manage yourself.

Kafka use cases

Consider an application that uses Kafka topics as a backend to store and retrieve posts, likes, and comments from a popular social media site. The application incorporates producers and consumers that subscribe to those Kafka topics. When a user of the application publishes a post, likes something, or comments, the Kafka producer code in the application sends that data to the associated topic. When the user navigates to a particular page in the application, a Kafka consumer reads from the associated backend topic and the application renders data on the user’s device. For more information, see Use cases in the Apache Kafka Docs hosted by Confluent.
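
The flow in this example can be pictured with a toy in-memory model. This is a sketch only and is not the Kafka client API: `InMemoryTopics`, `produce`, and `consume` are hypothetical names, and a real application would use a Kafka client library talking to brokers.

```python
from collections import defaultdict


class InMemoryTopics:
    """Toy stand-in for a set of Kafka topics: one append-only list per topic."""

    def __init__(self):
        self._logs = defaultdict(list)

    def produce(self, topic, value):
        """Append a record to the topic and return its offset."""
        self._logs[topic].append(value)
        return len(self._logs[topic]) - 1

    def consume(self, topic, offset=0):
        """Return all records in the topic starting at `offset`."""
        return list(self._logs[topic][offset:])


topics = InMemoryTopics()

# Producer side: user actions are sent to the associated topic.
topics.produce("posts", {"user": "ana", "text": "Hello, streams"})
topics.produce("likes", {"user": "ben", "post_id": 0})
topics.produce("comments", {"user": "ben", "post_id": 0, "text": "Nice"})

# Consumer side: rendering a page reads from the backing topic.
page_posts = topics.consume("posts")
```

Reading does not remove records from the log, so several consumers can read the same topic independently, each tracking its own offset.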

Confluent Platform

Confluent Platform is software you download and manage yourself. Any Kafka use cases are also Confluent Platform use cases. Confluent Platform is a specialized distribution of Kafka that includes additional features and APIs. Many of the commercial Confluent Platform features are built into the brokers as a function of Confluent Server.

The fundamental capabilities, concepts, design ethos, and ways of working that you already know from using Kafka also apply to Confluent Platform. By definition, Confluent Platform ships with all of the basic Kafka command utilities and APIs used in development, along with several additional CLIs to support Confluent-specific features. To learn more about Confluent Platform, see What is Confluent Platform?

Confluent Platform releases include the latest stable version of Apache Kafka, so when you install Confluent Platform you are also installing Kafka. To view a mapping of Confluent Platform releases to Kafka versions, see Supported Versions and Interoperability for Confluent Platform.

Ready to get started?

- Download Confluent Platform, the self-managed, enterprise-grade distribution of Apache Kafka, and get started using the Confluent Platform quick start.

Confluent Cloud

Confluent Cloud provides Kafka as a cloud service, so you no longer need to install, upgrade, or patch Kafka server components. You also get access to a cloud-native design, which offers Infinite Storage, elastic scaling, and an uptime guarantee. If you’re coming to Confluent Cloud from open source Kafka, you can use data-streaming features only available from Confluent, including non-Java client libraries and proxies for Kafka producers and consumers, tools for monitoring and observability, an intuitive browser-based user interface, and enterprise-grade security and data governance features.

Confluent Cloud includes different types of server processes for streaming data in a production environment. In addition to brokers and topics, Confluent Cloud provides implementations of Kafka Connect, Schema Registry, and ksqlDB.

- Sign up for Confluent Cloud, the fully managed cloud-native service for Apache Kafka®, and get started for free using the Cloud quick start.

What’s next

- An overview of options for How to run Confluent Platform

- Instructions on how to set up Confluent Enterprise deployments on a single laptop or machine that models production-style configurations, such as multi-broker or multi-cluster, including discussion of replication factors for topics

- Kafka Commands Primer, a commands cheat sheet that also helps clarify how Apache Kafka® utilities might fit into a development or administrator workflow

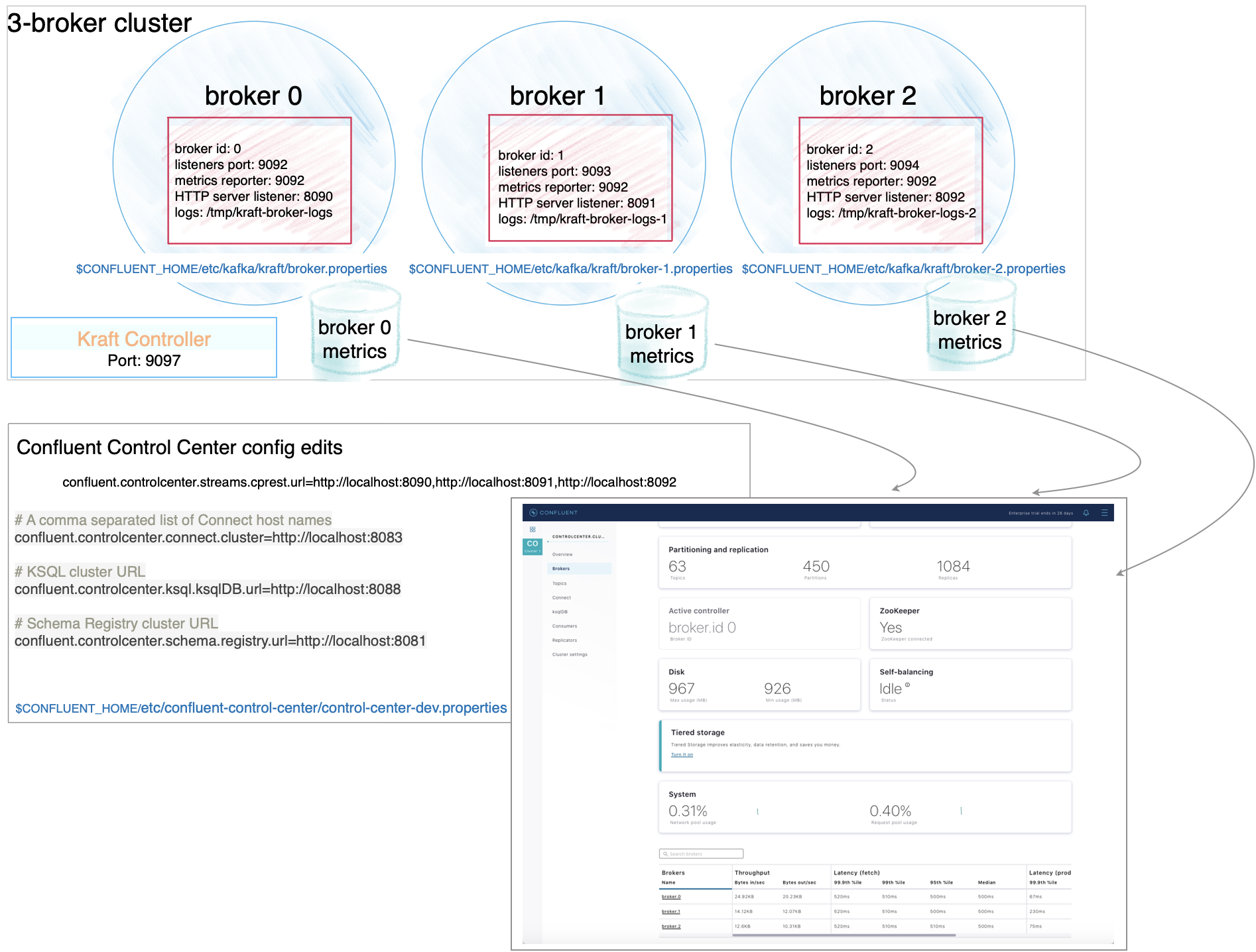

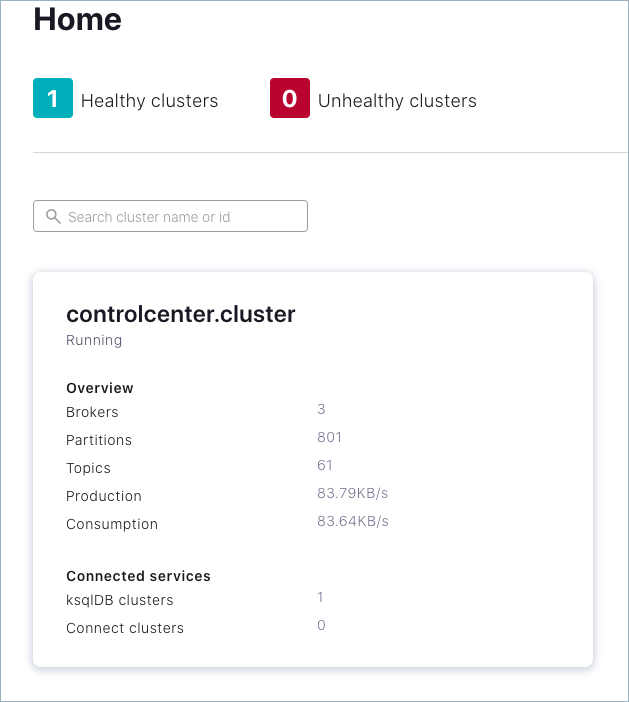

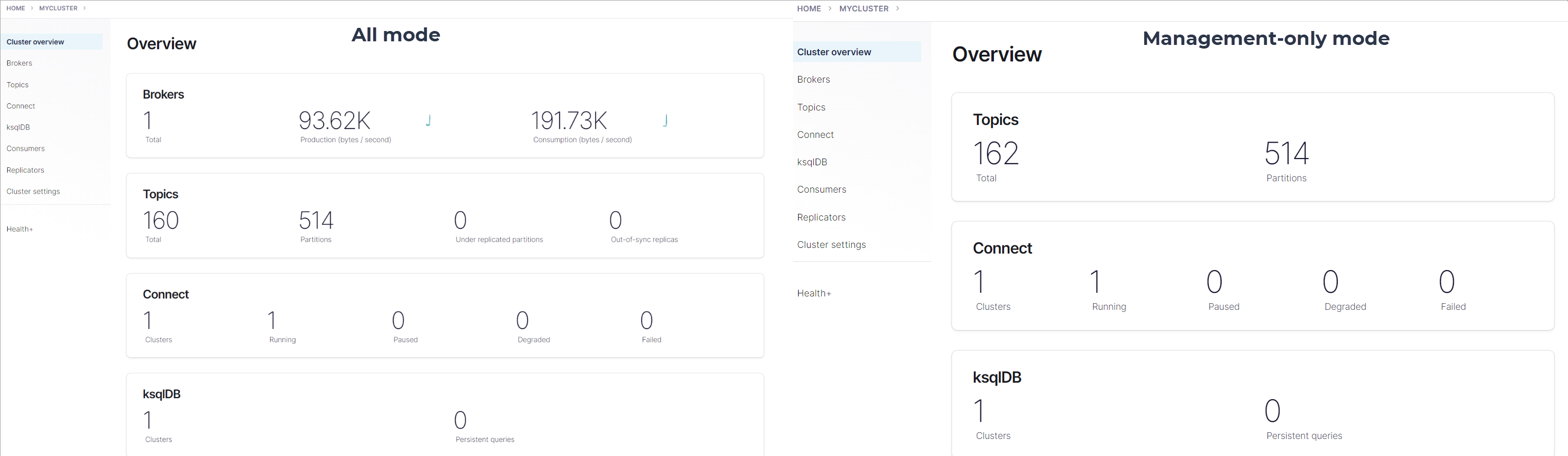

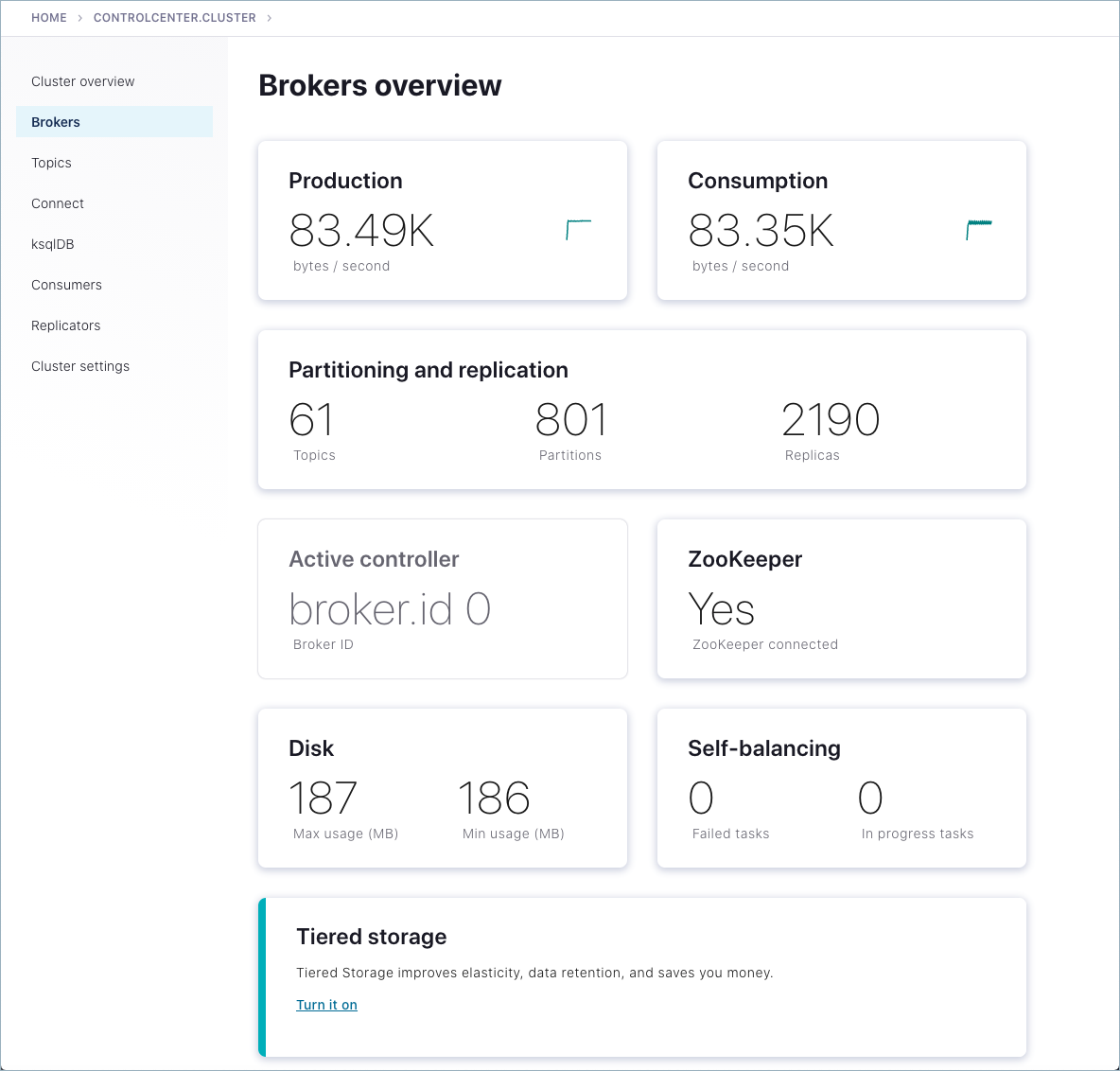

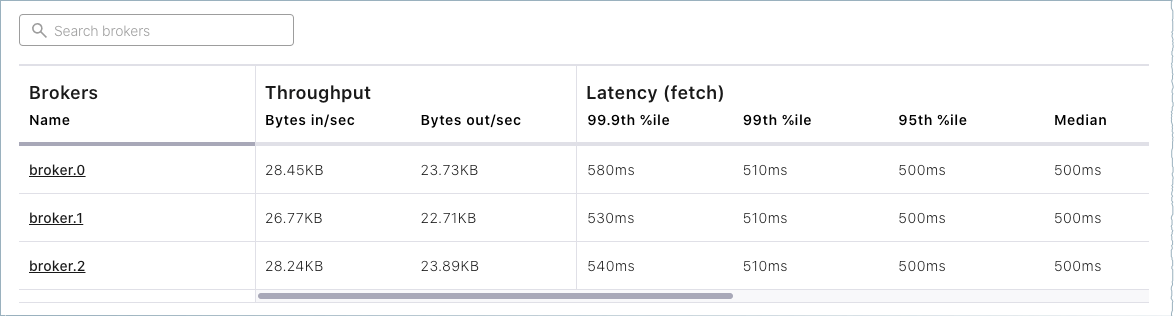

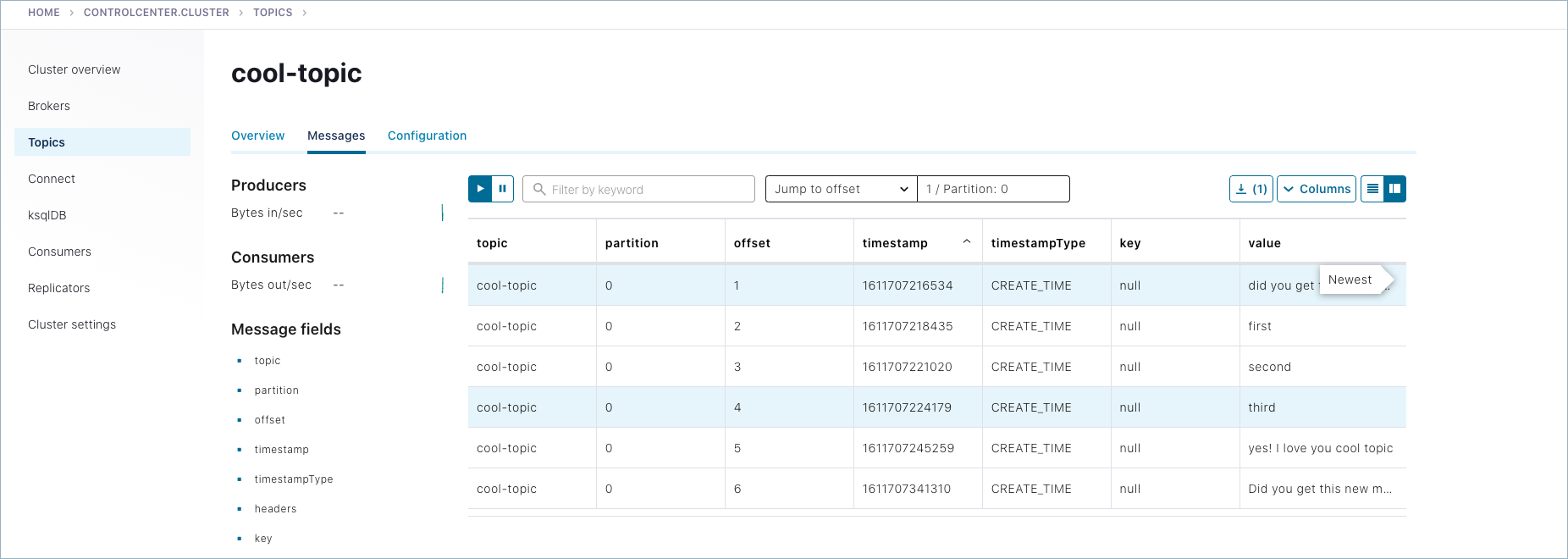

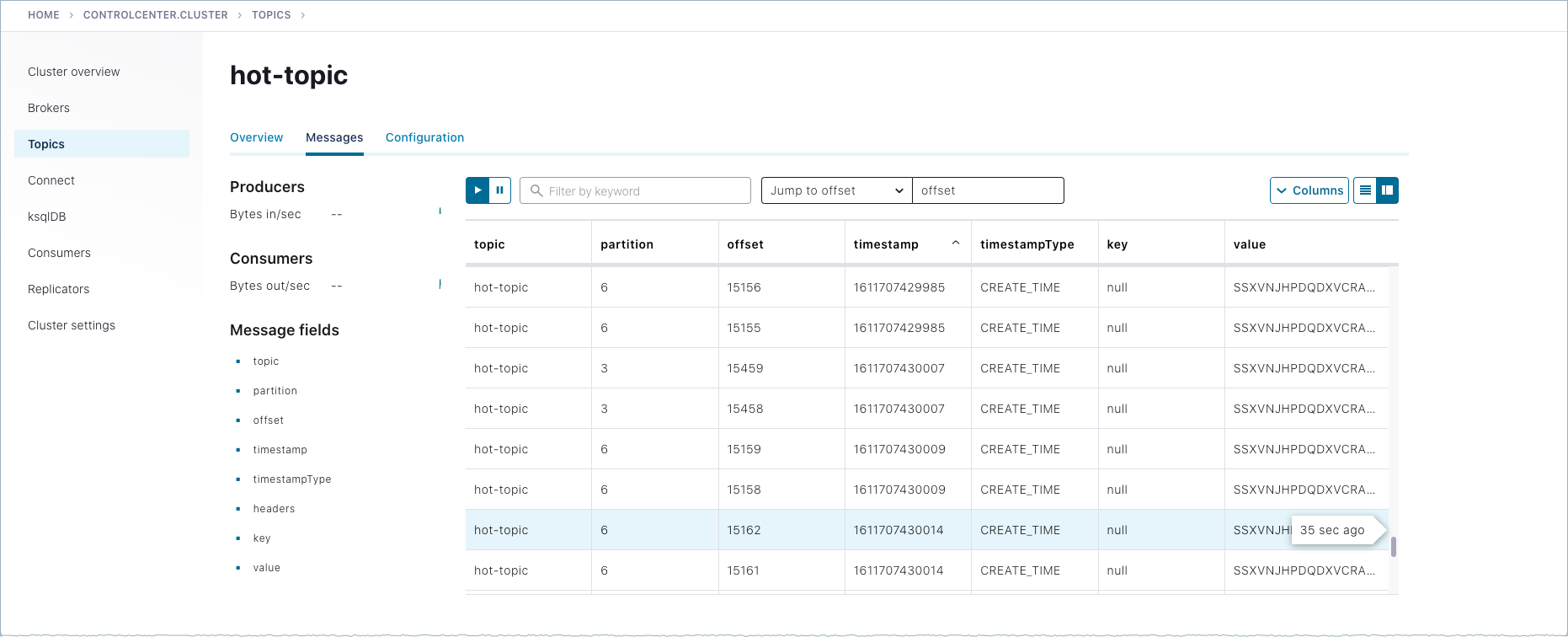

- Explanation of how to configure listeners, Metrics Reporter, and REST endpoints on a multi-broker setup so that all of the brokers and other components show up on Confluent Control Center. Brief introduction to using Control Center to verify topics and messages you create with Kafka commands.

- Code Examples and Demo Apps

How to Run Confluent Platform

You have several options for running Confluent Platform (and Kafka), depending on your use cases and goals.

Quick Start

Developers who want to get familiar with the platform can start with the Quick Start for Confluent Platform. This quick start shows you how to run Confluent Platform using Docker in a single-broker, single-cluster development environment with topic replication factors set to 1.

If you want both an introduction to using Confluent Platform and an understanding of how to configure your clusters, a suggested learning progression is:

- Follow the steps for a local install as shown in the Quick Start for Confluent Platform and run a default single-broker cluster. Experiment with the features as shown in the workflow for that tutorial.

- Return to this page and walk through the steps to configure and run a multi-broker cluster.

Multi-node production-ready deployments

Operators and developers who want to set up production-ready deployments can follow the workflows for Install Confluent Platform On-Premises or Ansible Playbooks.

Single machine, multi-broker and multi-cluster configurations

To bridge the gap between the developer environment quick starts and full-scale, multi-node deployments, you can start by pioneering multi-broker clusters and multi-cluster setups on a single machine, like your laptop.

Trying out these different setups is a great way to learn your way around the configuration files for Kafka broker and Control Center, and experiment locally with more sophisticated deployments. These setups more closely resemble real-world configurations and support data sharing and other scenarios for Confluent Platform specific features like Replicator, Self-Balancing, Cluster Linking, and multi-cluster Schema Registry.

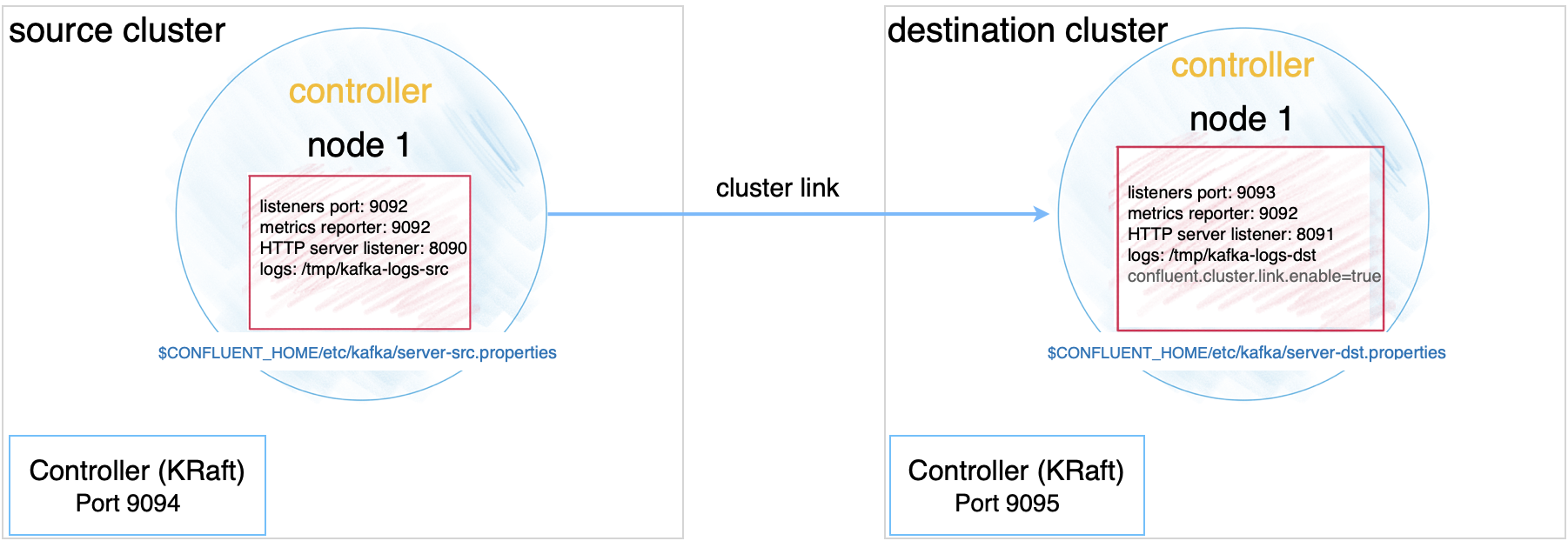

- For a single cluster with multiple brokers, you must configure and start a single ZooKeeper or KRaft controller, and as many brokers as you want to run in the cluster. A detailed example of how to run this with ZooKeeper is provided in the Run a multi-broker cluster section that follows.

- For a multi-cluster deployment, you should have a dedicated controller for each cluster, and a Kafka server properties file for each broker. To learn more about multi-cluster setups, see Run multiple clusters.

Does all this run on my laptop?

Yes, these examples show you how to run all clusters and brokers on a single laptop or machine.

That said, you can apply what you learn in this topic to create similar deployments on your favorite cloud provider, using multiple virtual hosts. Use these examples as stepping stones to more complex deployments and feature integrations.

KRaft and ZooKeeper

As of Confluent Platform 7.5, ZooKeeper is deprecated for new deployments. Confluent recommends KRaft mode for new deployments. To learn more about running Kafka in KRaft mode, see KRaft Overview, the KRaft steps in the Platform Quick Start, and Settings for other components.

The following tutorial on how to run a multi-broker cluster provides examples for both KRaft mode and ZooKeeper mode.

For KRaft, the examples show an isolated mode configuration for a multi-broker cluster managed by a single controller. This maps to the deprecated ZooKeeper configuration, which uses one ZooKeeper and multiple brokers in a single cluster. To learn more about KRaft, see KRaft Overview and KRaft mode under Configure Confluent Platform for production.

In addition to some other differences noted in the steps below, note that:

- For KRaft mode, you will use $CONFLUENT_HOME/etc/kafka/kraft/broker.properties and $CONFLUENT_HOME/etc/kafka/kraft/controller.properties.

- For ZooKeeper mode, you will use $CONFLUENT_HOME/etc/kafka/server.properties and $CONFLUENT_HOME/etc/kafka/zookeeper.properties.

Run a multi-broker cluster

To run a single cluster with multiple brokers (3 brokers, for this example) you need:

- 1 controller properties file (KRaft mode) or 1 ZooKeeper properties file (ZooKeeper mode)

- 3 Kafka broker properties files with unique broker IDs, listener ports (to surface details for all brokers on Control Center), and log file directories.

- Control Center properties file with the REST endpoints for controlcenter.cluster mapped to your brokers.

- Metrics Reporter JAR file installed and enabled on the brokers. (If you start Confluent Platform as described below, from $CONFLUENT_HOME/bin/, the Metrics Reporter is automatically installed on the broker. Otherwise, you would need to add the path to the Metrics Reporter JAR file to your CLASSPATH.)

- Properties files for any other Confluent Platform components you want to run, with default settings to start with.

All of this is described in detail below.
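
The requirement that each broker has its own ID, listener port, and log directory can be checked mechanically. The sketch below is one way to do that in Python; `find_conflicts` is a hypothetical helper, and the IDs, ports, and directories are made-up values for a KRaft-mode cluster (in ZooKeeper mode the ID property is `broker.id` rather than `node.id`).

```python
def find_conflicts(brokers, keys=("node.id", "listeners", "log.dirs")):
    """Return the setting names whose values are repeated across brokers."""
    conflicts = []
    for key in keys:
        values = [props[key] for props in brokers]
        if len(set(values)) != len(values):
            conflicts.append(key)
    return conflicts


# Hypothetical settings for three brokers sharing one machine.
brokers = [
    {"node.id": "2", "listeners": "PLAINTEXT://:9092", "log.dirs": "/tmp/broker-0-logs"},
    {"node.id": "3", "listeners": "PLAINTEXT://:9093", "log.dirs": "/tmp/broker-1-logs"},
    {"node.id": "4", "listeners": "PLAINTEXT://:9094", "log.dirs": "/tmp/broker-2-logs"},
]

conflicts = find_conflicts(brokers)  # empty list when every value is unique
```

On a single machine, a repeated port or log directory would make the brokers collide, so a check like this is cheap insurance before starting them.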

Configure replication factors

The broker.properties (KRaft) and server.properties (ZooKeeper) files that ship with Confluent Platform have replication factors set to 1 on several system topics to support development test environments and Quick Start for Confluent Platform scenarios. For real-world scenarios, however, a replication factor greater than 1 is preferable to support fail-over and auto-balancing capabilities on both system and user-created topics.

For the purposes of this example, set the replication factors to 2, which is one less than the number of brokers (3). When you create your topics, make sure that they also have the needed replication factor, depending on the number of brokers.

In KRaft mode, update the replication configurations in $CONFLUENT_HOME/etc/kafka/kraft/broker.properties.

In ZooKeeper mode, update the replication configurations in $CONFLUENT_HOME/etc/kafka/server.properties.

When you complete these steps, your file should show the following configs:

- offsets.topic.replication.factor=2

- transaction.state.log.replication.factor=2

- confluent.license.topic.replication.factor=2

- confluent.metadata.topic.replication.factor=2

- confluent.balancer.topic.replication.factor=2
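
One way to apply this change is sketched below in Python. It assumes each setting appears as an uncommented `key=value` line whose key ends in `.replication.factor`; `set_replication_factors` is a hypothetical helper, and the sample text is a made-up excerpt, not the shipped file.

```python
import re

# Matches uncommented lines such as "offsets.topic.replication.factor=1".
_FACTOR_LINE = re.compile(r"^([\w.]+\.replication\.factor=)\d+$", re.MULTILINE)


def set_replication_factors(properties_text, factor):
    """Rewrite every uncommented `*.replication.factor=N` line to use `factor`."""
    return _FACTOR_LINE.sub(lambda m: f"{m.group(1)}{factor}", properties_text)


sample = (
    "offsets.topic.replication.factor=1\n"
    "transaction.state.log.replication.factor=1\n"
    "#confluent.balancer.topic.replication.factor=1\n"
    "num.partitions=1\n"
)
updated = set_replication_factors(sample, 2)
```

Commented-out lines and unrelated settings such as `num.partitions` are left untouched, so any property that is still commented out in your file would need to be uncommented separately.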

Configuration snapshot preview: Basic configuration for a three-broker cluster

The following table shows a summary of the configurations to specify for each of these files, as a reference to check against if needed. The steps in the next sections guide you through a quick way to set up these files, using the existing broker.properties file (KRaft) or server.properties file (ZooKeeper) as a basis for your specialized ones.

Ready to get started? Skip to Configure the servers.

In server.properties and other configuration files, properties that are commented out or not listed at all take their default values. For example, the commented out line for listeners on broker 0 has the effect of setting a single listener to PLAINTEXT://:9092.
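
The fallback to defaults can be illustrated with a short Python sketch. The `effective_config` function is a hypothetical helper, and `DEFAULTS` holds only the single default mentioned above rather than the full set of broker defaults.

```python
# Illustrative subset of defaults; only the value named in the text is included.
DEFAULTS = {"listeners": "PLAINTEXT://:9092"}


def effective_config(properties_text, defaults):
    """Overlay uncommented `key=value` lines on top of the default values."""
    config = dict(defaults)
    for line in properties_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # blank and commented-out lines change nothing
        key, _, value = line.partition("=")
        config[key.strip()] = value.strip()
    return config


broker_0 = effective_config("#listeners=PLAINTEXT://:9092\nbroker.id=0\n", DEFAULTS)
broker_1 = effective_config("listeners=PLAINTEXT://:9093\nbroker.id=1\n", DEFAULTS)
```

Here `broker_0` ends up with the default listener because its line is commented out, while `broker_1` overrides it explicitly.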

Configure the servers

In KRaft mode, start with the broker.properties file you updated in the previous sections with regard to replication factors and enabling Self-Balancing Clusters. You will make a few more changes to this file, then use it as the basis for the other servers.

Update the node ID, controller quorum voters, and port for the first broker, and then add the REST endpoint listener configuration for this broker at the end of the file.

Copy the properties file for the first broker to use as a basis for the other two.

Update the node ID, listener, and data directories for broker-1, and then update the REST endpoint listener for this broker.

Update the node ID, listener, controller, and data directories for broker-2, and then update the REST endpoint listener for this broker.

Finally, update the controller node ID, quorum voters, and port.
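
The copy-then-edit pattern in these steps can be sketched in Python as follows. This is an illustration under stated assumptions: `derive_properties` is a hypothetical helper, and every ID, port, and directory shown is a made-up value rather than the one used by the tutorial. The property names `node.id`, `controller.quorum.voters`, `listeners`, and `log.dirs` are standard Kafka settings.

```python
def derive_properties(base_text, overrides):
    """Copy `base_text`, replacing overridden keys and appending new ones."""
    remaining = dict(overrides)
    out = []
    for line in base_text.splitlines():
        key = line.split("=", 1)[0].strip()
        is_setting = "=" in line and not line.lstrip().startswith("#")
        if is_setting and key in remaining:
            out.append(f"{key}={remaining.pop(key)}")
        else:
            out.append(line)
    # Keys that were not present in the base file go at the end.
    out.extend(f"{key}={value}" for key, value in remaining.items())
    return "\n".join(out) + "\n"


# Hypothetical first-broker file.
broker_0 = (
    "node.id=2\n"
    "controller.quorum.voters=1@localhost:9093\n"
    "listeners=PLAINTEXT://:9092\n"
    "log.dirs=/tmp/broker-0-logs\n"
)

# Derive the second broker's file by overriding only what must differ.
broker_1 = derive_properties(
    broker_0,
    {"node.id": "3", "listeners": "PLAINTEXT://:9094", "log.dirs": "/tmp/broker-1-logs"},
)
```

Settings that are shared by all brokers, such as the controller quorum voters in this sketch, carry over unchanged from the base file.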

In ZooKeeper mode, start with the server.properties file you updated in the previous sections with regard to replication factors and enabling Self-Balancing. You will make a few more changes to this file, then use it as the basis for the other servers.

Uncomment the listener, and then add the REST endpoint listener configuration at the end of the file.
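
Uncommenting a property can be sketched in Python as shown below. The `uncomment_property` function is a hypothetical helper, and the sample text is a made-up excerpt of a server.properties file; the REST endpoint setting is not covered by this sketch.

```python
def uncomment_property(properties_text, key):
    """Remove the leading '#' from the first commented-out line that sets `key`."""
    lines = properties_text.splitlines()
    for i, line in enumerate(lines):
        stripped = line.lstrip()
        if stripped.startswith("#") and stripped[1:].lstrip().startswith(key + "="):
            lines[i] = stripped[1:].lstrip()
            break
    return "\n".join(lines) + "\n"


sample = (
    "broker.id=0\n"
    "#listeners=PLAINTEXT://:9092\n"
    "#advertised.listeners=PLAINTEXT://your.host.name:9092\n"
)
updated = uncomment_property(sample, "listeners")
```

The match is anchored on the full key, so the similarly named `advertised.listeners` line stays commented out.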