deep learning Recently Published Documents

Synergic Deep Learning for Smart Health Diagnosis of COVID-19 for Connected Living and Smart Cities

The COVID-19 pandemic has caused significant loss of life and economic damage worldwide. To prevent and control COVID-19, a range of smart, complex, spatially heterogeneous control solutions and strategies have been deployed. Early classification of 2019 novel coronavirus disease (COVID-19) is needed to treat and control the disease. Because no precise automated toolkits exist, secondary diagnosis models are required. Recent findings obtained using radiological imaging techniques show that the images hold noticeable details regarding the COVID-19 virus. Applying recent artificial intelligence (AI) and deep learning (DL) approaches to radiological images helps detect the disease accurately. This article introduces a new synergic deep learning (SDL)-based smart health diagnosis of COVID-19 using chest X-ray images. The SDL uses dual deep convolutional neural networks (DCNNs) that learn mutually from one another. In particular, the image representations learned by both DCNNs are provided as input to a synergic network, which has a fully connected structure and predicts whether a pair of input images belongs to the same class. In addition, the proposed SDL model uses a fuzzy bilateral filtering (FBF) model to pre-process the input image. The integration of FBF and SDL resulted in effective classification of COVID-19. To evaluate the classification performance of the SDL model, a detailed set of simulations was carried out, confirming the effective performance of the FBF-SDL model over the compared methods.

A deep learning approach for remote heart rate estimation

Weakly supervised spatial deep learning for earth image segmentation based on imperfect polyline labels.

In recent years, deep learning has achieved tremendous success in image segmentation for computer vision applications. The performance of these models heavily relies on the availability of large-scale high-quality training labels (e.g., PASCAL VOC 2012). Unfortunately, such large-scale high-quality training data are often unavailable in many real-world spatial or spatiotemporal problems in earth science and remote sensing (e.g., mapping the nationwide river streams for water resource management). Although extensive efforts have been made to reduce the reliance on labeled data (e.g., semi-supervised or unsupervised learning, few-shot learning), the complex nature of geographic data such as spatial heterogeneity still requires sufficient training labels when transferring a pre-trained model from one region to another. On the other hand, it is often much easier to collect lower-quality training labels with imperfect alignment with earth imagery pixels (e.g., through interpreting coarse imagery by non-expert volunteers). However, directly training a deep neural network on imperfect labels with geometric annotation errors could significantly impact model performance. Existing research that overcomes imperfect training labels either focuses on errors in label class semantics or characterizes label location errors at the pixel level. These methods do not fully incorporate the geometric properties of label location errors in the vector representation. To fill the gap, this article proposes a weakly supervised learning framework to simultaneously update deep learning model parameters and infer hidden true vector label locations. Specifically, we model label location errors in the vector representation to partially preserve geometric properties (e.g., spatial contiguity within line segments). Evaluations on real-world datasets in the National Hydrography Dataset (NHD) refinement application illustrate that the proposed framework outperforms baseline methods in classification accuracy.

Prediction of Failure Categories in Plastic Extrusion Process with Deep Learning

Hyperparameters tuning of Faster R-CNN deep learning transfer for persistent object detection in radar images

A comparative study of automated legal text classification using random forests and deep learning

A semi-supervised deep learning approach for vessel trajectory classification based on AIS data

An improved approach towards more robust deep learning models for chemical kinetics

Power system transient security assessment based on deep learning considering partial observability

A multi-attention collaborative deep learning approach for blood pressure prediction

We develop a deep learning model based on Long Short-term Memory (LSTM) to predict blood pressure based on a unique data set collected from physical examination centers capturing comprehensive multi-year physical examination and lab results. In the Multi-attention Collaborative Deep Learning model (MAC-LSTM) we developed for this type of data, we incorporate three types of attention to generate more explainable and accurate results. In addition, we leverage information from similar users to enhance the predictive power of the model due to the challenges with short examination history. Our model significantly reduces predictive errors compared to several state-of-the-art baseline models. Experimental results not only demonstrate our model’s superiority but also provide us with new insights about factors influencing blood pressure. Our data is collected in a natural setting instead of a setting designed specifically to study blood pressure, and the physical examination items used to predict blood pressure are common items included in regular physical examinations for all the users. Therefore, our blood pressure prediction results can be easily used in an alert system for patients and doctors to plan prevention or intervention. The same approach can be used to predict other health-related indexes such as BMI.

Google Research, 2022 & beyond: Algorithms for efficient deep learning

February 7, 2023

Posted by Sanjiv Kumar, VP and Google Fellow, Google Research

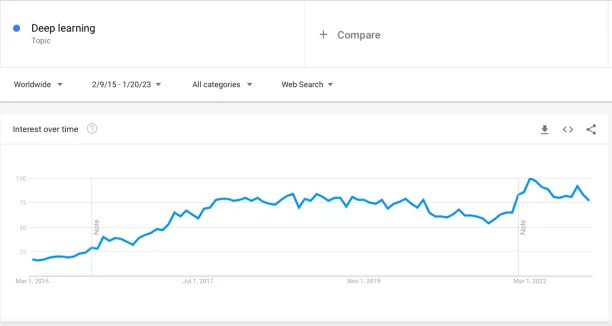

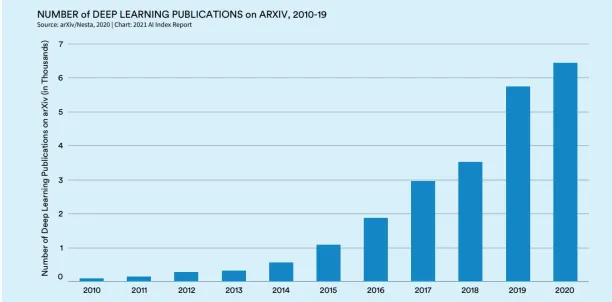

The explosion in deep learning a decade ago was catapulted in part by the convergence of new algorithms and architectures, a marked increase in data, and access to greater compute. In the last 10 years, AI and ML models have become bigger and more sophisticated — they’re deeper, more complex, with more parameters, and trained on much more data, resulting in some of the most transformative outcomes in the history of machine learning.

As these models increasingly find themselves deployed in production and business applications, their efficiency and cost have gone from a minor consideration to a primary constraint. In response, Google has continued to invest heavily in ML efficiency, taking on the biggest challenges in (a) efficient architectures, (b) training efficiency, (c) data efficiency, and (d) inference efficiency. Beyond efficiency, there are a number of other challenges around factuality, security, privacy and freshness in these models. Below, we highlight an array of works that demonstrate Google Research’s efforts in developing new algorithms to address the above challenges.

Efficient architectures

A fundamental question is “Are there better ways of parameterizing a model to allow for greater efficiency?” In 2022, we focused on new techniques for infusing external knowledge by augmenting models via retrieved context; mixture of experts; and making transformers (which lie at the heart of most large ML models) more efficient.

Context-augmented models

In the quest for higher quality and efficiency, neural models can be augmented with external context from large databases or trainable memory. By leveraging retrieved context, a neural network may not have to memorize the huge amount of world knowledge within its internal parameters, leading to better parameter efficiency, interpretability and factuality.

In “Decoupled Context Processing for Context Augmented Language Modeling”, we explored a simple architecture for incorporating external context into language models based on a decoupled encoder-decoder architecture. This led to significant computational savings while giving competitive results on auto-regressive language modeling and open domain question answering tasks. Pre-trained large language models (LLMs), however, absorb a significant amount of information through self-supervision on large training sets, and it is unclear precisely how the “world knowledge” of such models interacts with the presented context. With knowledge aware fine-tuning (KAFT), we strengthen both controllability and robustness of LLMs by incorporating counterfactual and irrelevant contexts into standard supervised datasets.

One of the questions in the quest for a modular deep network is how a database of concepts with corresponding computational modules could be designed. We proposed a theoretical architecture that would “remember events” in the form of sketches stored in an external LSH table with pointers to modules that process such sketches.

Another challenge in context-augmented models is fast retrieval of information from a large database on accelerators. We have developed a TPU-based similarity search algorithm that aligns with the performance model of TPUs and gives analytical guarantees on expected recall, achieving peak performance. Search algorithms typically involve a large number of hyperparameters and design choices that make it hard to tune them on new tasks. We have proposed a new constrained optimization algorithm for automating hyperparameter tuning. Fixing the desired cost or recall as input, the proposed algorithm generates tunings that empirically are very close to the speed-recall Pareto frontier and give leading performance on standard benchmarks.

Mixture-of-experts models

Mixture-of-experts (MoE) models have proven to be an effective means of increasing neural network model capacity without overly increasing their computational cost. The basic idea of MoEs is to construct a network from a number of expert sub-networks, where each input is processed by a suitable subset of experts. Thus, compared to a standard neural network, MoEs invoke only a small portion of the overall model, resulting in high efficiency as shown in language model applications such as GLaM.

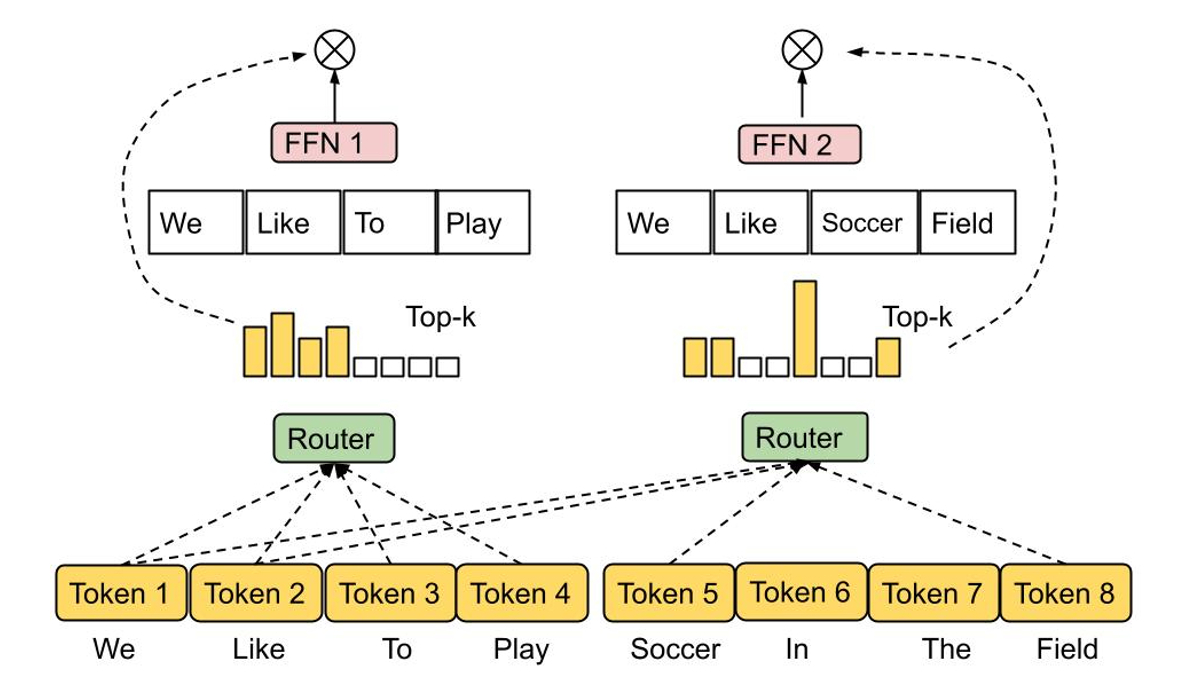

The decision of which experts should be active for a given input is determined by a routing function, the design of which is challenging, since one would like to prevent both under- and over-utilization of each expert. In a recent work, we proposed Expert Choice Routing, a new routing mechanism that, instead of assigning each input token to the top-k experts, assigns each expert to the top-k tokens. This automatically ensures load-balancing of experts while also naturally allowing for an input token to be handled by multiple experts.
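The contrast between the two routing directions can be sketched in a few lines. This is a minimal illustration only: the function names, the toy affinity matrix `scores`, and the `capacity` parameter are assumptions made for the example, not the implementation from the Expert Choice Routing work.

```python
def token_choice(scores, k):
    """Conventional routing: each token picks its top-k experts.

    scores[t][e] is a (hypothetical) affinity of token t for expert e.
    Returns {expert: [token indices]}.
    """
    num_experts = len(scores[0])
    assignment = {e: [] for e in range(num_experts)}
    for t, row in enumerate(scores):
        top = sorted(range(num_experts), key=lambda e: row[e], reverse=True)[:k]
        for e in top:
            assignment[e].append(t)
    return assignment


def expert_choice(scores, capacity):
    """Expert-choice routing: each expert picks its top-`capacity` tokens."""
    num_tokens = len(scores)
    num_experts = len(scores[0])
    assignment = {}
    for e in range(num_experts):
        ranked = sorted(range(num_tokens), key=lambda t: scores[t][e], reverse=True)
        assignment[e] = sorted(ranked[:capacity])
    return assignment


# Toy affinities: every token prefers expert 0.
scores = [
    [0.9, 0.1],
    [0.8, 0.2],
    [0.7, 0.3],
    [0.6, 0.4],
]
tc = token_choice(scores, k=1)         # expert 0 gets all tokens, expert 1 none
ec = expert_choice(scores, capacity=2)  # each expert gets exactly 2 tokens
```

In the toy input, token-choice routing overloads one expert, while expert-choice routing gives every expert the same load by construction. Nothing in `expert_choice` prevents a token from being selected by several experts, or by none.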

Efficient transformers

Transformers are popular sequence-to-sequence models that have shown remarkable success in a range of challenging problems from vision to natural language understanding. A central component of such models is the attention layer, which identifies the similarity between “queries” and “keys”, and uses these to construct a suitable weighted combination of “values”. While effective, attention mechanisms have poor (i.e., quadratic) scaling with sequence length.
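As a rough sketch of the attention computation described above, the following pure-Python function scores each query against every key, turns the scores into weights with a softmax, and returns the weighted combination of values. The division by the square root of the key dimension is the commonly used scaled dot-product form and is an assumption here, since the text does not specify the similarity function.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Dense attention: every query is compared with every key.

    With n queries and n keys this performs n * n dot products,
    which is the quadratic scaling mentioned in the text.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs


# Two identical keys receive equal weight, so the output is the mean of the values.
result = attention(
    queries=[[1.0, 0.0]],
    keys=[[1.0, 0.0], [1.0, 0.0]],
    values=[[2.0], [4.0]],
)
```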

As the scale of transformers continues to grow, it is interesting to study if there are any naturally occurring structures or patterns in the learned models that may help us decipher how they work. Towards that, we studied the learned embeddings in intermediate MLP layers, revealing that they are very sparse; for example, T5-Large models have <1% nonzero entries. Sparsity further suggests that we can potentially reduce FLOPs without affecting model performance.

We recently proposed Treeformer, an alternative to standard attention computation that relies on decision trees. Intuitively, this quickly identifies a small subset of keys that are relevant for a query and only performs the attention operation on this set. Empirically, the Treeformer can lead to a 30x reduction in FLOPs for the attention layer. We also introduced Sequential Attention, a differentiable feature selection method that combines attention with a greedy algorithm. This technique has strong provable guarantees for linear models and scales seamlessly to large embedding models.

Another way to make transformers efficient is by making the softmax computations faster in the attention layer. Building on our previous work on low-rank approximation of the softmax kernel, we proposed a new class of random features that provides the first “positive and bounded” random feature approximation of the softmax kernel and is computationally linear in the sequence length. We also proposed the first approach for incorporating various attention masking mechanisms, such as causal and relative position encoding, in a scalable manner (i.e., sub-quadratic with relation to the input sequence length).

Training efficiency

Efficient optimization methods are the cornerstone of modern ML applications and are particularly crucial in large scale settings. In such settings, even first order adaptive methods like Adam are often expensive, and training stability becomes challenging. In addition, these approaches are often agnostic to the architecture of the neural network, thereby ignoring the rich structure of the architecture, which leads to inefficient training. This motivates new techniques to more efficiently and effectively optimize modern neural network models. We are developing new architecture-aware training techniques, e.g., for training transformer networks, including new scale-invariant transformer networks and novel clipping methods that, when combined with vanilla stochastic gradient descent (SGD), result in faster training. Using this approach, for the first time, we were able to effectively train BERT using simple SGD without the need for adaptivity.

Moreover, with LocoProp we proposed a new method that achieves performance similar to that of a second-order optimizer while using the same computational and memory resources as a first-order optimizer. LocoProp takes a modular view of neural networks by decomposing them into a composition of layers. Each layer is then allowed to have its own loss function as well as output target and weight regularizer. With this setup, after a suitable forward-backward pass, LocoProp proceeds to perform parallel updates to each layer’s “local loss”. In fact, these updates can be shown to resemble those of higher-order optimizers, both theoretically and empirically. On a deep autoencoder benchmark, LocoProp achieves performance comparable to that of higher-order optimizers while being significantly faster.

One key assumption in optimizers like SGD is that each data point is sampled independently and identically from a distribution. This is unfortunately hard to satisfy in practical settings such as reinforcement learning, where the model (or agent) has to learn from data generated based on its own predictions. We proposed a new algorithmic approach named SGD with reverse experience replay, which finds optimal solutions in several settings like linear dynamical systems, non-linear dynamical systems, and Q-learning for reinforcement learning. Furthermore, an enhanced version of this method, IER, turns out to be the state of the art and is the most stable experience replay technique on a variety of popular RL benchmarks.
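To give some intuition for why replay order matters, here is a toy sketch that applies the same temporal-difference update to one stored episode in forward and in reverse order. It is only an illustration: a tabular TD(0) value update stands in for Q-learning, and the chain environment, `td_pass`, and its parameters are assumptions made for the example rather than the algorithm from the referenced work.

```python
def td_pass(transitions, values, alpha=1.0, gamma=0.9):
    """Apply one TD(0) update per stored transition, in the order given.

    Each transition is (state, next_state, reward, done).
    """
    for state, next_state, reward, done in transitions:
        bootstrap = 0.0 if done else gamma * values[next_state]
        target = reward + bootstrap
        values[state] += alpha * (target - values[state])
    return values


# A 4-state chain 0 -> 1 -> 2 -> 3 with a single reward on the final step.
episode = [
    (0, 1, 0.0, False),
    (1, 2, 0.0, False),
    (2, 3, 1.0, True),
]

forward = td_pass(episode, [0.0] * 4)
backward = td_pass(list(reversed(episode)), [0.0] * 4)
```

Replaying in forward order, only the state next to the reward is updated in this single pass. Replaying in reverse, the reward signal reaches the first state in the same pass, because each update bootstraps from a value that has just been refreshed.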

Data efficiency

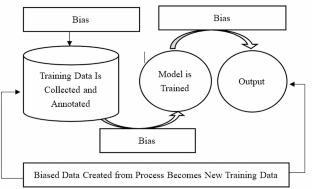

For many tasks, deep neural networks heavily rely on large datasets. In addition to the storage costs and potential security/privacy concerns that come along with large datasets, training modern deep neural networks on such datasets incurs high computational costs. One promising way to solve this problem is with data subset selection, where the learner aims to find the most informative subset from a large number of training samples to approximate (or even improve upon) training with the entire training set.

We analyzed a subset selection framework designed to work with arbitrary model families in a practical batch setting. In such a setting, a learner can sample examples one at a time, accessing both the context and true label, but in order to limit overhead costs, is only able to update its state (i.e., further train model weights) once a large enough batch of examples is selected. We developed an algorithm, called IWeS, that selects examples by importance sampling where the sampling probability assigned to each example is based on the entropy of models trained on previously selected batches. We provide a theoretical analysis, proving generalization and sampling rate bounds.
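The general shape of entropy-driven importance sampling can be sketched as follows. The text does not give the exact sampling probability used by IWeS, so the normalized-entropy rule, the probability floor `min_prob`, and all names below are assumptions chosen for illustration.

```python
import math
import random


def entropy(probs):
    """Shannon entropy (in nats) of a discrete distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def select_batch(pool, predict, batch_size, rng, min_prob=1e-3):
    """Stream over `pool` and keep uncertain examples with higher probability.

    `predict(x)` returns class probabilities from a model trained on
    previously selected batches (hypothetical interface). Each kept example
    carries an importance weight 1 / p_keep to correct for the biased sampling.
    """
    selected = []
    for x in pool:
        probs = predict(x)
        # Assumed rule: entropy normalized to [0, 1], with a small floor.
        p_keep = max(entropy(probs) / math.log(len(probs)), min_prob)
        if rng.random() < p_keep:
            selected.append((x, 1.0 / p_keep))
        if len(selected) == batch_size:
            break
    return selected


def toy_predict(x):
    """Stand-in model: maximally uncertain on 'hard', fully confident on 'easy'."""
    return [0.5, 0.5] if x == "hard" else [1.0, 0.0]


batch = select_batch(
    ["hard", "easy", "hard", "hard"], toy_predict, batch_size=2, rng=random.Random(0)
)
```

Only once a full batch has been collected would the learner retrain and recompute the sampling probabilities, matching the batch setting described above.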

Another concern with training large networks is that they can be highly sensitive to distribution shifts between training data and data seen at deployment time, especially when working with limited amounts of training data that might not cover all deployment-time scenarios. A recent line of work has hypothesized “extreme simplicity bias” as the key issue behind this brittleness of neural networks. Our latest work makes this hypothesis actionable, leading to two new complementary approaches, DAFT and FRR, that when combined provide significantly more robust neural networks. In particular, these two approaches use adversarial fine-tuning along with inverse feature predictions to make the learned network robust.

Inference efficiency

Increasing the size of neural networks has proven surprisingly effective in improving their predictive accuracy. However, it is challenging to realize these gains in the real world, as the inference costs of large models may be prohibitively high for deployment. This motivates strategies to improve the serving efficiency, without sacrificing accuracy. In 2022, we studied different strategies to achieve this, notably those based on knowledge distillation and adaptive computation.

Distillation

Distillation is a simple yet effective method for model compression, which greatly expands the potential applicability of large neural models. Distillation has proved widely effective in a range of practical applications, such as ads recommendation. Most use-cases of distillation involve a direct application of the basic recipe to the given domain, with limited understanding of when and why this ought to work. Our research this year has looked at tailoring distillation to specific settings and formally studying the factors that govern the success of distillation.
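For readers unfamiliar with the basic recipe, a commonly used formulation trains the student to match the teacher's temperature-softened output distribution. The sketch below computes that soft-label cross-entropy for a single example; the formulation and the `temperature` value are assumptions drawn from common practice, since the text does not define the recipe.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax of logits divided by a temperature (higher means softer)."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Cross-entropy between softened teacher and student distributions."""
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    return -sum(t * math.log(s) for t, s in zip(teacher, student))


teacher_logits = [2.0, 0.0, -1.0]
matched = distillation_loss([2.0, 0.0, -1.0], teacher_logits)
mismatched = distillation_loss([-1.0, 0.0, 2.0], teacher_logits)
```

The loss is smallest when the student reproduces the teacher's distribution, so `matched` is lower than `mismatched`. In practice this term is often mixed with the usual loss on ground-truth labels.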

On the algorithmic side, by carefully modeling the noise in the teacher labels, we developed a principled approach to reweight the training examples, and a robust method to sample a subset of data to have the teacher label. In “Teacher Guided Training”, we presented a new distillation framework: rather than passively using the teacher to annotate a fixed dataset, we actively use the teacher to guide the selection of informative samples to annotate. This makes the distillation process shine in limited data or long-tail settings.

We also researched new recipes for distillation from a cross-encoder (e.g., BERT) to a factorized dual-encoder, an important setting for the task of scoring the relevance of a [query, document] pair. We studied the reasons for the performance gap between cross- and dual-encoders, noting that this can be the result of generalization rather than capacity limitation in dual-encoders. The careful construction of the loss function for distillation can mitigate this and reduce the gap between cross- and dual-encoder performance. Subsequently, in EmbedDistill, we looked at further improving dual-encoder distillation by matching embeddings from the teacher model. This strategy can also be used to distill from a large to small dual-encoder model, wherein inheriting and freezing the teacher’s document embeddings can prove highly effective.

On the theoretical side, we provided a new perspective on distillation through the lens of supervision complexity, a measure of how well the student can predict the teacher labels. Drawing on neural tangent kernel (NTK) theory, this offers conceptual insights, such as the fact that a capacity gap may affect distillation because such teachers’ labels may appear akin to purely random labels to the student. We further demonstrated that distillation can cause the student to underfit points the teacher model finds “hard” to model. Intuitively, this may help the student focus its limited capacity on those samples that it can reasonably model.

Adaptive computation

While distillation is an effective means of reducing inference cost, it does so uniformly across all samples. Intuitively however, some “easy” samples may inherently require less compute than the “hard” samples. The goal of adaptive compute is to design mechanisms that enable such sample-dependent computation.

Confident Adaptive Language Modeling (CALM) introduced a controlled early-exit functionality to Transformer-based text generators such as T5. In this form of adaptive computation, the model dynamically modifies the number of transformer layers that it uses per decoding step. The early-exit gates use a confidence measure with a decision threshold that is calibrated to satisfy statistical performance guarantees. In this way, the model needs to compute the full stack of decoder layers for only the most challenging predictions. Easier predictions only require computing a few decoder layers. In practice, the model uses about a third of the layers for prediction on average, yielding 2–3x speed-ups while preserving the same level of generation quality.
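The control flow of confidence-based early exit can be sketched as below. The scalar "hidden state", the toy layers, and the `confidence` function are placeholders assumed for illustration; the threshold calibration that gives CALM its statistical guarantees is not shown.

```python
def decode_step(hidden, layers, confidence, threshold):
    """Run decoder layers in order, stopping once the gate is confident.

    Returns the final hidden state and the number of layers actually used.
    """
    used = 0
    for layer in layers:
        hidden = layer(hidden)
        used += 1
        if confidence(hidden) >= threshold:
            break
    return hidden, used


# Toy stand-ins: each of 6 "layers" adds a fixed amount of evidence,
# and confidence is read directly off the scalar hidden state.
layers = [lambda h: h + 0.25] * 6


def confidence(h):
    return h


_, easy_layers = decode_step(0.5, layers, confidence, threshold=0.9)
_, hard_layers = decode_step(0.0, layers, confidence, threshold=0.9)
```

The "easy" input exits after fewer layers than the "hard" one, which is the source of the average speed-up. A real decoder would also need to handle the hidden states of skipped layers for later decoding steps.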

One popular adaptive compute mechanism is a cascade of two or more base models. A key issue in using cascades is deciding whether to simply use the current model’s predictions, or whether to defer prediction to a downstream model. Learning when to defer requires designing a suitable loss function, which can leverage appropriate signals to act as supervision for the deferral decision. We formally studied existing loss functions for this goal, demonstrating that they may underfit the training sample owing to an implicit application of label smoothing. We showed that one can mitigate this with post-hoc training of a deferral rule, which does not require modifying the model internals in any way.
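One very simple form of post-hoc deferral rule is a confidence threshold fitted on held-out predictions of the small model, leaving both models untouched. The sketch below is an assumption-laden illustration of that idea: the threshold-fitting criterion, the function names, and the toy validation data are hypothetical and are not the method analyzed in the referenced work.

```python
def fit_deferral_threshold(confidences, small_is_correct):
    """Pick threshold t so that 'defer iff confidence < t' best matches
    'defer iff the small model was wrong' on held-out data."""
    candidates = sorted(set(confidences)) + [float("inf")]
    best_t, best_err = None, None
    for t in candidates:
        err = sum(
            (c < t) != (not ok) for c, ok in zip(confidences, small_is_correct)
        )
        if best_err is None or err < best_err:
            best_t, best_err = t, err
    return best_t


def cascade_predict(x, small_model, large_model, threshold):
    """Use the small model unless its top probability falls below the threshold."""
    probs = small_model(x)
    if max(probs) < threshold:
        return large_model(x), "large"
    return max(range(len(probs)), key=lambda i: probs[i]), "small"


# Hypothetical held-out statistics for the small model.
val_confidences = [0.95, 0.9, 0.6, 0.55, 0.8]
val_correct = [True, True, False, False, True]
threshold = fit_deferral_threshold(val_confidences, val_correct)

confident = cascade_predict("a", lambda x: [0.9, 0.1], lambda x: 1, threshold)
unsure = cascade_predict("b", lambda x: [0.7, 0.3], lambda x: 1, threshold)
```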

For retrieval applications, standard semantic search techniques use a fixed representation for each embedding generated by a large model. That is, irrespective of downstream task and its associated compute environment or constraints, the representation size and capability is mostly fixed. Matryoshka representation learning (MRL) introduces flexibility to adapt representations according to the deployment environment. That is, it forces representations to have a natural ordering within their coordinates such that for resource constrained environments, we can use only the top few coordinates of the representation, while for richer and precision-critical settings, we can use more coordinates of the representation. When combined with standard approximate nearest neighbor search techniques like ScaNN, MRL is able to provide up to 16x lower compute with the same recall and accuracy metrics.
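At retrieval time, the practical consequence is that a search can be run on a prefix of each embedding. The sketch below uses brute-force inner-product search as a stand-in for an approximate nearest neighbor library, and the toy vectors are assumptions; it shows only the truncation step, not how such ordered representations are trained.

```python
def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def nearest(query, database, dims):
    """Index of the best match using only the first `dims` coordinates."""
    q = query[:dims]
    return max(range(len(database)), key=lambda i: dot(q, database[i][:dims]))


# Toy 4-dimensional embeddings whose leading coordinates carry most of the signal.
database = [
    [1.0, 0.0, 0.1, 0.0],
    [0.0, 1.0, 0.0, 0.1],
    [0.9, 0.1, -0.3, 0.2],
]
query = [1.0, 0.0, 0.2, 0.0]

cheap = nearest(query, database, dims=2)  # half the multiplications
full = nearest(query, database, dims=4)
```

In this toy case both searches return the same neighbor, so the cheaper prefix search loses nothing. Whether that holds on real data depends on the representations having been trained to support truncation.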

Concluding thoughts

Large ML models are showing transformational outcomes in several domains, but efficiency in both training and inference is emerging as a critical need to make these models practical in the real world. Google Research has been investing significantly in making large ML models efficient by developing new foundational techniques. This is an ongoing effort and over the next several months we will continue to explore core challenges to make ML models even more robust and efficient.

Acknowledgements

The work in efficient deep learning is a collaboration among many researchers from Google Research, including Amr Ahmed, Ehsan Amid, Rohan Anil, Mohammad Hossein Bateni, Gantavya Bhatt, Srinadh Bhojanapalli, Zhifeng Chen, Felix Chern, Gui Citovsky, Andrew Dai, Andy Davis, Zihao Deng, Giulia DeSalvo, Nan Du, Avi Dubey, Matthew Fahrbach, Ruiqi Guo, Blake Hechtman, Yanping Huang, Prateek Jain, Wittawat Jitkrittum, Seungyeon Kim, Ravi Kumar, Aditya Kusupati, James Laudon, Quoc Le, Daliang Li, Zonglin Li, Lovish Madaan, David Majnemer, Aditya Menon, Don Metzler, Vahab Mirrokni, Vaishnavh Nagarajan, Harikrishna Narasimhan, Rina Panigrahy, Srikumar Ramalingam, Ankit Singh Rawat, Sashank Reddi, Aniket Rege, Afshin Rostamizadeh, Tal Schuster, Si Si, Apurv Suman, Phil Sun, Erik Vee, Ke Ye, Chong You, Felix Yu, Manzil Zaheer, and Yanqi Zhou.

Google Research, 2022 & beyond

This was the fourth blog post in the “Google Research, 2022 & Beyond” series.

- Review Article

- Open access

- Published: 05 April 2022

Recent advances and applications of deep learning methods in materials science

- Kamal Choudhary ORCID: orcid.org/0000-0001-9737-8074 1 , 2 , 3 ,

- Brian DeCost ORCID: orcid.org/0000-0002-3459-5888 4 ,

- Chi Chen ORCID: orcid.org/0000-0001-8008-7043 5 ,

- Anubhav Jain ORCID: orcid.org/0000-0001-5893-9967 6 ,

- Francesca Tavazza ORCID: orcid.org/0000-0002-5602-180X 1 ,

- Ryan Cohn ORCID: orcid.org/0000-0002-7898-0059 7 ,

- Cheol Woo Park 8 ,

- Alok Choudhary 9 ,

- Ankit Agrawal 9 ,

- Simon J. L. Billinge ORCID: orcid.org/0000-0002-9734-4998 10 ,

- Elizabeth Holm 7 ,

- Shyue Ping Ong ORCID: orcid.org/0000-0001-5726-2587 5 &

- Chris Wolverton ORCID: orcid.org/0000-0003-2248-474X 8

npj Computational Materials volume 8, Article number: 59 (2022)

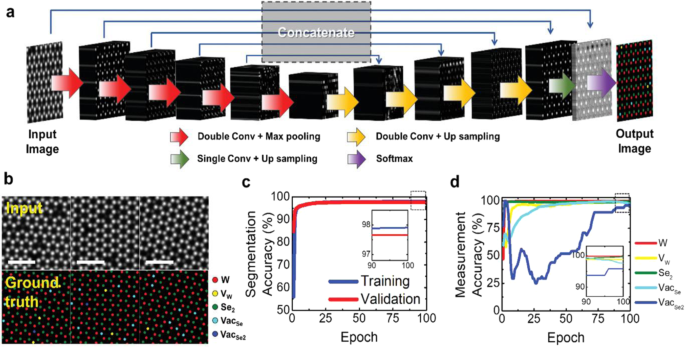

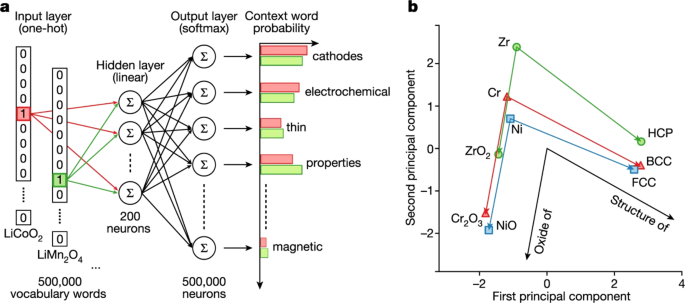

- Atomistic models

- Computational methods

Deep learning (DL) is one of the fastest-growing topics in materials data science, with rapidly emerging applications spanning atomistic, image-based, spectral, and textual data modalities. DL allows analysis of unstructured data and automated identification of features. The recent development of large materials databases has fueled the application of DL methods in atomistic prediction in particular. In contrast, advances in image and spectral data have largely leveraged synthetic data enabled by high-quality forward models as well as by generative unsupervised DL methods. In this article, we present a high-level overview of deep learning methods followed by a detailed discussion of recent developments of deep learning in atomistic simulation, materials imaging, spectral analysis, and natural language processing. For each modality we discuss applications involving both theoretical and experimental data, typical modeling approaches with their strengths and limitations, and relevant publicly available software and datasets. We conclude the review with a discussion of recent cross-cutting work related to uncertainty quantification in this field and a brief perspective on limitations, challenges, and potential growth areas for DL methods in materials science.

Introduction

“Processing-structure-property-performance” is the key mantra in Materials Science and Engineering (MSE) 1 . The length and time scales of material structures and phenomena vary significantly among these four elements, adding further complexity 2 . For instance, structural information can range from detailed knowledge of atomic coordinates of elements to the microscale spatial distribution of phases (microstructure), to fragment connectivity (mesoscale), to images and spectra. Establishing linkages between the above components is a challenging task.

Both experimental and computational techniques are useful to identify such relationships. Due to rapid growth in automation in experimental equipment and immense expansion of computational resources, the size of public materials datasets has seen exponential growth. Several large experimental and computational datasets 3 , 4 , 5 , 6 , 7 , 8 , 9 , 10 have been developed through the Materials Genome Initiative (MGI) 11 and the increasing adoption of Findable, Accessible, Interoperable, Reusable (FAIR) 12 principles. Such an outburst of data requires automated analysis which can be facilitated by machine learning (ML) techniques 13 , 14 , 15 , 16 , 17 , 18 , 19 , 20 .

Deep learning (DL) 21 , 22 is a specialized branch of machine learning (ML). Originally inspired by biological models of computation and cognition in the human brain 23 , 24 , one of DL’s major strengths is its potential to extract higher-level features from the raw input data.

DL applications are rapidly replacing conventional systems in many aspects of our daily lives, for example, in image and speech recognition, web search, fraud detection, email/spam filtering, financial risk modeling, and so on. DL techniques have been proven to provide exciting new capabilities in numerous fields (such as playing Go 25 , self-driving cars 26 , navigation, chip design, particle physics, protein science, drug discovery, astrophysics, object recognition 27 , etc).

Recently DL methods have been outperforming other machine learning techniques in numerous scientific fields, such as chemistry, physics, biology, and materials science 20 , 28 , 29 , 30 , 31 , 32 . DL applications in MSE are still relatively new, and the field has not fully explored its potential, implications, and limitations. DL provides new approaches for investigating material phenomena and has pushed materials scientists to expand their traditional toolset.

DL methods have been shown to act as a complementary approach to physics-based methods for materials design. While large datasets are often viewed as a prerequisite for successful DL applications, techniques such as transfer learning, multi-fidelity modelling, and active learning can often make DL feasible for small datasets as well 33 , 34 , 35 , 36 .

Traditionally, materials have been designed experimentally using trial and error methods with a strong dose of chemical intuition. In addition to being a very costly and time-consuming approach, the number of material combinations is so huge that it is intractable to study experimentally, leading to the need for empirical formulation and computational methods. While computational approaches (such as density functional theory, molecular dynamics, Monte Carlo, phase-field, finite elements) are much faster and cheaper than experiments, they are still limited by length and time scale constraints, which in turn limits their respective domains of applicability. DL methods can offer substantial speedups compared to conventional scientific computing, and, for some applications, are reaching an accuracy level comparable to physics-based or computational models.

Moreover, entering a new domain of materials science and performing cutting-edge research requires years of education, training, and the development of specialized skills and intuition. Fortunately, we now live in an era of increasingly open data and computational resources. Mature, well-documented DL libraries make DL research much more easily accessible to newcomers than almost any other research field. Testing and benchmarking methodologies such as underfitting/overfitting/cross-validation 15 , 16 , 37 are common knowledge, and standards for measuring model performance are well established in the community.

Despite their many advantages, DL methods have disadvantages too, the most significant one being their black-box nature 38 which may hinder physical insights into the phenomena under examination. Evaluating and increasing the interpretability and explainability of DL models remains an active field of research. Generally a DL model has a few thousand to millions of parameters, making model interpretation and direct generation of scientific insight difficult.

Although there are several good recent reviews of ML applications in MSE 15 , 16 , 17 , 19 , 39 , 40 , 41 , 42 , 43 , 44 , 45 , 46 , 47 , 48 , 49 , DL for materials has been advancing rapidly, warranting a dedicated review to cover the explosion of research in this field. This article discusses some of the basic principles in DL methods and highlights major trends among the recent advances in DL applications for materials science. As the tools and datasets for DL applications in materials keep evolving, we provide a github repository ( https://github.com/deepmaterials/dlmatreview ) that can be updated as new resources are made publicly available.

General machine learning concepts

It is beyond the scope of this article to give a detailed hands-on introduction to deep learning. There are many resources for this purpose, for example, the free online book “Neural Networks and Deep Learning” by Michael Nielsen ( http://neuralnetworksanddeeplearning.com ), Deep Learning by Goodfellow et al. 21 , and multiple online courses at Coursera, Udemy, and so on. Rather, this article aims to introduce materials scientists to the types of problems that are amenable to DL, and to present some of the basic concepts, jargon, and materials-specific databases and software (at the time of writing) as an on-ramp for getting started. With this in mind, we begin with a very basic introduction to deep learning.

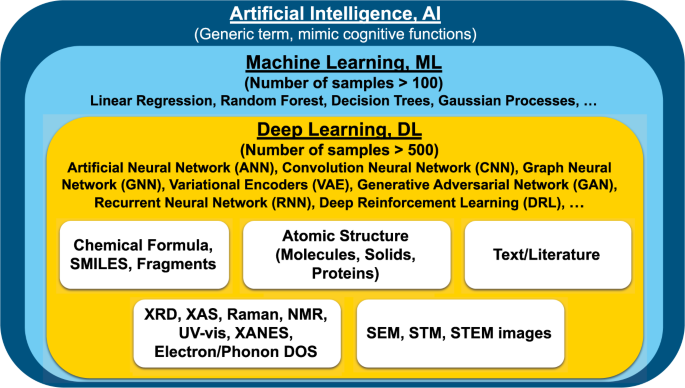

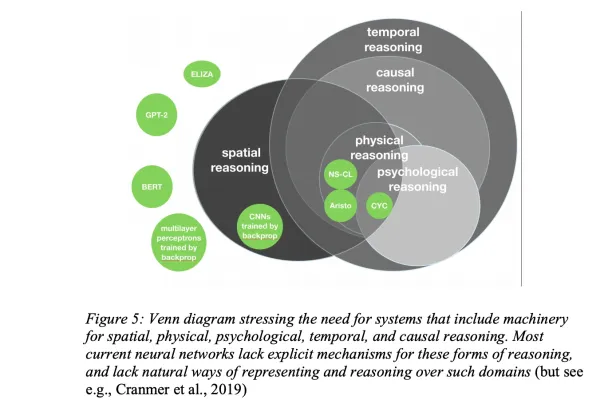

Artificial intelligence (AI) 13 is the development of machines and algorithms that mimic human intelligence, for example, by optimizing actions to achieve certain goals. Machine learning (ML) is a subset of AI that provides the ability to learn a task from data without being explicitly programmed for it, for example, playing chess or making social network recommendations. DL, in turn, is the subset of ML that takes inspiration from biological brains and uses multilayer neural networks to solve ML tasks. A schematic of the AI-ML-DL context and some of the key application areas of DL in the materials science and engineering field are shown in Fig. 1 .

Deep learning is considered a part of machine learning, which is contained in an umbrella term artificial intelligence.

Some of the commonly used ML technologies are linear regression, decision trees, and random forest in which generalized models are trained to learn coefficients/weights/parameters for a given dataset (usually structured i.e., on a grid or a spreadsheet).

Applying traditional ML techniques to unstructured data (such as pixels or features from an image, sounds, text, and graphs) is challenging because users have to first extract generalized meaningful representations or features themselves (such as calculating pair-distribution for an atomic structure) and then train the ML models. Hence, the process becomes time-consuming, brittle, and not easily scalable. Here, deep learning (DL) techniques become more important.

DL methods are based on artificial neural networks and allied techniques. According to the “universal approximation theorem” 50 , 51 , neural networks can approximate any function to arbitrary accuracy. However, it is important to note that the theorem doesn’t guarantee that the functions can be learnt easily 52 .

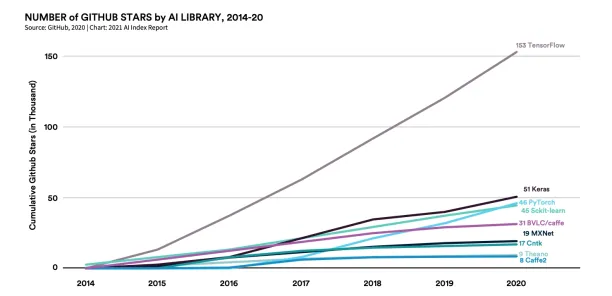

Neural networks

A perceptron or a single artificial neuron 53 is the building block of artificial neural networks (ANNs) and performs forward propagation of information. For a set of inputs [x_1, x_2, ..., x_m] to the perceptron, we assign floating-point weights [w_1, w_2, ..., w_m] (and a bias that shifts the weighted sum), multiply each input by its corresponding weight, and sum the products. Some of the common software packages for training NNs are PyTorch 54 , Tensorflow 55 , and MXNet 56 . Please note that certain commercial equipment, instruments, or materials are identified in this paper in order to specify the experimental procedure adequately. Such identification is not intended to imply recommendation or endorsement by NIST, nor is it intended to imply that the materials or equipment identified are necessarily the best available for the purpose.

Activation function

Activation functions (such as sigmoid, hyperbolic tangent (tanh), rectified linear unit (ReLU), leaky ReLU, Swish) are the critical nonlinear components that enable neural networks to compose many small building blocks to learn complex nonlinear functions. For example, the sigmoid activation maps real numbers to the range (0, 1); this activation function is often used in the last layer of binary classifiers to model probabilities. The choice of activation function can affect training efficiency as well as final accuracy 57 .
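The weighted sum and activation described above can be sketched in a few lines. This is a minimal illustration using NumPy, not the implementation of any particular framework; the function names and the numerical values of the inputs, weights, and bias are assumptions chosen for the example.

```python
import numpy as np

def sigmoid(z):
    """Map real numbers to the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def relu(z):
    """Rectified linear unit: zero for negative inputs, identity otherwise."""
    return np.maximum(0.0, z)

def perceptron(x, w, b, activation=sigmoid):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    return activation(np.dot(w, x) + b)

# Illustrative (hypothetical) values for the inputs, weights, and bias.
x = np.array([0.5, -1.0, 2.0])
w = np.array([0.1, 0.4, -0.2])
b = 0.3

y_sigmoid = perceptron(x, w, b)                 # lies strictly between 0 and 1
y_relu = perceptron(x, w, b, activation=relu)   # zero, since w.x + b is negative here
```

Swapping the `activation` argument is enough to compare how different nonlinearities transform the same pre-activation value.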

Loss function, gradient descent, and normalization

The weight matrices of a neural network are initialized randomly or obtained from a pre-trained model. These weight matrices are multiplied with the input matrix (or output from a previous layer) and subjected to a nonlinear activation function to yield updated representations, which are often referred to as activations or feature maps. The loss function (also known as an objective function or empirical risk) is calculated by comparing the output of the neural network and the known target value data. Typically, network weights are iteratively updated via stochastic gradient descent algorithms to minimize the loss function until the desired accuracy is achieved. Most modern deep learning frameworks facilitate this by using reverse-mode automatic differentiation 58 to obtain the partial derivatives of the loss function with respect to each network parameter through recursive application of the chain rule. Colloquially, this is also known as back-propagation.

Common gradient descent algorithms include stochastic gradient descent (SGD), Adam, Adagrad, etc. The learning rate is an important parameter in gradient descent. Except for SGD, these methods adapt the learning rate during training. Depending on the objective, such as classification or regression, different loss functions such as binary cross entropy (BCE), negative log likelihood (NLL), or mean squared error (MSE) are used.
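To make the loss-minimization loop concrete, the following sketch fits a linear model with plain gradient descent on the MSE loss. It is a simplified illustration: the gradients are written by hand rather than obtained by automatic differentiation, the whole dataset is used at every step (full-batch rather than stochastic), and the toy data, learning rate, and step count are assumptions.

```python
import numpy as np

def mse_loss(y_pred, y_true):
    """Mean squared error between predictions and targets."""
    return float(np.mean((y_pred - y_true) ** 2))

def gradient_descent(X, y, lr=0.1, n_steps=500):
    """Fit y ~ X @ w + b by full-batch gradient descent on the MSE loss."""
    n_samples, n_features = X.shape
    w = np.zeros(n_features)
    b = 0.0
    for _ in range(n_steps):
        residual = X @ w + b - y
        grad_w = 2.0 * X.T @ residual / n_samples   # d(loss)/dw
        grad_b = 2.0 * residual.mean()              # d(loss)/db
        w -= lr * grad_w                            # step against the gradient
        b -= lr * grad_b
    return w, b

# Noiseless toy regression problem with known coefficients (illustrative only).
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))
y = X @ np.array([1.5, -2.0]) + 0.5

w_fit, b_fit = gradient_descent(X, y)
final_loss = mse_loss(X @ w_fit + b_fit, y)
```

Adaptive methods such as Adam replace the fixed `lr` with per-parameter step sizes but keep the same overall loop structure.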

The inputs of a neural network are generally scaled i.e., normalized to have zero mean and unit standard deviation. Scaling is also applied to the input of hidden layers (using batch or layer normalization) to improve the stability of ANNs.
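Input scaling can be sketched as follows. The helper name `standardize` and the small constant `eps` (added to avoid division by zero for constant features) are assumptions for this example; in practice the mean and standard deviation are usually computed on the training set only and reused for validation and test data.

```python
import numpy as np

def standardize(X, eps=1e-8):
    """Scale each feature (column) to zero mean and unit standard deviation."""
    mean = X.mean(axis=0)
    std = X.std(axis=0)
    return (X - mean) / (std + eps), mean, std

# Two features on very different scales (illustrative values).
X_raw = np.array([[1.0, 10.0],
                  [2.0, 20.0],
                  [3.0, 30.0]])
X_scaled, feature_mean, feature_std = standardize(X_raw)
```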

Epoch and mini-batches

A single pass of the entire training data is called an epoch, and multiple epochs are performed until the weights converge. In DL, datasets are usually large and computing gradients for the entire dataset and network becomes challenging. Hence, the forward passes are done with small subsets of the training data called mini-batches.
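The relationship between epochs and mini-batches can be illustrated with a small generator. This is a sketch under assumed names (`iterate_minibatches`) and toy data; reshuffling the data at the start of each epoch is a common convention rather than something prescribed by the text.

```python
import numpy as np

def iterate_minibatches(X, y, batch_size, rng):
    """Yield shuffled mini-batches that together cover the data once (one epoch)."""
    order = rng.permutation(len(X))
    for start in range(0, len(X), batch_size):
        idx = order[start:start + batch_size]
        yield X[idx], y[idx]

rng = np.random.default_rng(0)
X = np.arange(20, dtype=float).reshape(10, 2)   # 10 samples, 2 features
y = np.arange(10, dtype=float)

n_epochs = 3
batches_per_epoch = []
for epoch in range(n_epochs):
    batches = list(iterate_minibatches(X, y, batch_size=4, rng=rng))
    batches_per_epoch.append(len(batches))      # 10 samples -> batches of 4, 4, 2
    # A training loop would run a forward pass, a backward pass, and a
    # weight update for each (X_batch, y_batch) pair here.
```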

Underfitting, overfitting, regularization, and early stopping

During an ML training, the dataset is split into training, validation, and test sets. The test set is never used during the training process. A model is said to be underfitting if the model performs poorly on the training set and lacks the capacity to fully learn the training data. A model is said to overfit if the model performs too well on the training data but does not perform well on the validation data. Overfitting is controlled with regularization techniques such as L2 regularization, dropout, and early stopping 37 .

Regularization discourages the model from simply memorizing the training data, resulting in a model that is more generalizable. Overfitting models are often characterized by neurons that have weights with large magnitudes. L2 regularization reduces the possibility of overfitting by adding a term to the loss function that penalizes large weight values, keeping the values of the weights and biases small during training. Another popular regularization technique is dropout 59 , in which we randomly set the activations of an NN layer to zero during training. Similar to bagging 60 , dropout has the effect of training a collection of randomly chosen models, which prevents co-adaptation among the neurons and consequently reduces the likelihood of overfitting. In early stopping, training is halted before the model overfits, i.e., when accuracy on the validation set flattens or decreases.
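The three regularization techniques described above can each be sketched in a few lines. The helper names are hypothetical, and two details are assumptions not stated in the text: the dropout shown is the "inverted" variant that rescales surviving activations, and early stopping is expressed with a `patience` parameter counting epochs without improvement in validation loss.

```python
import numpy as np

def l2_penalty(weights, lam):
    """Extra loss term: lam times the sum of squared weights."""
    return lam * sum(float(np.sum(w ** 2)) for w in weights)

def dropout(activations, p_drop, rng, training=True):
    """Inverted dropout: zero a random fraction of activations during training
    and rescale the rest so the expected activation is unchanged."""
    if not training or p_drop == 0.0:
        return activations
    keep = rng.random(activations.shape) >= p_drop
    return activations * keep / (1.0 - p_drop)

def early_stopping_epoch(val_losses, patience):
    """Return the epoch index at which training stops: the first epoch at which
    the validation loss has failed to improve for `patience` epochs in a row."""
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best, wait = loss, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return len(val_losses) - 1

penalty = l2_penalty([np.array([1.0, 2.0]), np.array([[3.0]])], lam=0.1)
dropped = dropout(np.ones(1000), p_drop=0.5, rng=np.random.default_rng(0))
stop_at = early_stopping_epoch([1.0, 0.8, 0.7, 0.75, 0.72, 0.9], patience=2)
```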

Convolutional neural networks

Convolutional neural networks (CNN) 61 can be viewed as a regularized version of multilayer perceptrons with a strong inductive bias for learning translation-invariant image representations. There are four main components in CNNs: (a) learnable convolution filterbanks, (b) nonlinear activations, (c) spatial coarsening (via pooling or strided convolution), (d) a prediction module, often consisting of fully connected layers that operate on a global instance representation.

In CNNs we use convolution functions with multiple kernels or filters with trainable and shared weights or parameters, instead of general matrix multiplication. These filters/kernels are matrices with a relatively small number of rows and columns that convolve over the input to automatically extract high-level local features in the form of feature maps. The filters slide/convolve (element-wise multiply) across the input with a fixed number of strides to produce the feature map and the information thus learnt is passed to the hidden/fully connected layers. Depending on the input data, these filters can be one, two, or three-dimensional.

Similar to the fully connected NNs, nonlinearities such as ReLU are then applied, which allow the network to deal with nonlinear and complicated data. The pooling operation downsamples each feature map obtained after convolution, reducing its dimension while providing a degree of spatial invariance. These downsampling/pooling operations can be of different types such as maximum-pooling, minimum-pooling, average pooling, and sum pooling. After one or more convolutional and pooling layers, the outputs are usually reduced to a one-dimensional global representation. CNNs are especially popular for image data.
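The convolution, nonlinearity, and pooling steps can be sketched with explicit loops for a single-channel 2D input. This is an illustration only: the function names, the toy image, and the fixed (non-learned) kernel are assumptions, and, as in most DL libraries, the "convolution" is implemented as a cross-correlation without kernel flipping.

```python
import numpy as np

def conv2d(image, kernel, stride=1):
    """Slide a kernel over a 2D input (no padding) and sum element-wise products."""
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i * stride:i * stride + kh, j * stride:j * stride + kw]
            out[i, j] = np.sum(patch * kernel)
    return out

def max_pool2d(fmap, size=2):
    """Downsample by taking the maximum over non-overlapping size x size windows."""
    h, w = fmap.shape[0] // size, fmap.shape[1] // size
    trimmed = fmap[:h * size, :w * size]      # drop rows/columns that do not fit
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))

image = np.arange(36, dtype=float).reshape(6, 6)    # toy 6x6 "image"
kernel = np.array([[-1.0, 0.0],
                   [0.0, 1.0]])                     # fixed diagonal-difference filter

feature_map = np.maximum(0.0, conv2d(image, kernel))  # convolution followed by ReLU
pooled = max_pool2d(feature_map, size=2)              # spatial coarsening
flattened = pooled.ravel()                            # 1D representation for dense layers
```

In a trained CNN the kernel entries would be learnable parameters shared across all positions of the input.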

Graph neural networks

Graphs and their variants.

Classical CNNs as described above are based on a regular grid Euclidean data (such as 2D grid in images). However, real-life data structures, such as social networks, segments of images, word vectors, recommender systems, and atomic/molecular structures, are usually non-Euclidean. In such cases, graph-based non-Euclidean data structures become especially important.

Mathematically, a graph G is defined as a set of nodes/vertices V , a set of edges/links E , and node features X : G = ( V , E , X ) 62 , 63 , 64 and can be used to represent non-Euclidean data. An edge is formed between a pair of nodes and contains the relation information between the nodes. Each node and edge can have attributes/features associated with it. An adjacency matrix A is a square matrix whose entries indicate whether each pair of nodes is connected (1) or not (0). A graph can be of various types such as: undirected/directed, weighted/unweighted, homogeneous/heterogeneous, static/dynamic.

An undirected graph captures symmetric relations between nodes, while a directed one captures asymmetric relations such that A i j ≠ A j i . In a weighted graph, each edge is associated with a scalar weight rather than just 1s and 0s. In a homogeneous graph, all the nodes represent instances of the same type, and all the edges capture relations of the same type while in a heterogeneous graph, the nodes and edges can be of different types. Heterogeneous graphs provide an easy interface for managing nodes and edges of different types as well as their associated features. When input features or graph topology vary with time, they are called dynamic graphs otherwise they are considered static. If a node is connected to another node more than once it is termed a multi-graph.
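The adjacency-matrix view of undirected, directed, and weighted graphs can be sketched as follows. The helper `adjacency_matrix` and the four-node example graph are hypothetical and chosen only to illustrate the symmetry properties mentioned above.

```python
import numpy as np

def adjacency_matrix(n_nodes, edges, directed=False, weights=None):
    """Build an adjacency matrix from a list of (i, j) node-index pairs."""
    A = np.zeros((n_nodes, n_nodes))
    for k, (i, j) in enumerate(edges):
        w = 1.0 if weights is None else weights[k]
        A[i, j] = w
        if not directed:
            A[j, i] = w          # symmetric relation for undirected graphs
    return A

edges = [(0, 1), (1, 2), (2, 0), (2, 3)]

A_undirected = adjacency_matrix(4, edges)
A_directed = adjacency_matrix(4, edges, directed=True)
A_weighted = adjacency_matrix(4, edges, weights=[0.5, 1.2, 2.0, 0.1])
```

For the undirected graph `A` equals its transpose, whereas the directed version has entries with `A[i, j] != A[j, i]`.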

Types of GNNs

At present, GNNs are probably the most popular AI method for predicting various materials properties based on structural information 33 , 65 , 66 , 67 , 68 , 69 . Graph neural networks (GNNs) are DL methods that operate on the graph domain and can capture the dependence of graphs via message passing between the nodes and edges of graphs. There are two key steps in GNN training: (a) we first aggregate information from neighbors and (b) update the nodes and/or edges. Importantly, aggregation is permutation invariant. Similar to the fully connected NNs, the input node features X (with embedding matrix) are multiplied with the adjacency matrix and the weight matrices and then passed through a nonlinear activation function to provide outputs for the next layer. This method is called the propagation rule.
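One concrete instance of such a propagation rule is a GCN-style layer. The sketch below is an assumption-laden illustration rather than the rule used by any specific model in this review: it adds self-loops, applies symmetric degree normalization to the adjacency matrix, and uses ReLU as the activation. The three-node graph and random features are toy values.

```python
import numpy as np

def gcn_layer(A, H, W):
    """One propagation step: aggregate neighbor features, transform, activate."""
    A_hat = A + np.eye(A.shape[0])              # self-loops keep each node's own features
    deg = A_hat.sum(axis=1)
    D_inv_sqrt = np.diag(deg ** -0.5)
    A_norm = D_inv_sqrt @ A_hat @ D_inv_sqrt    # symmetric degree normalization
    return np.maximum(0.0, A_norm @ H @ W)      # aggregate, apply weights, then ReLU

rng = np.random.default_rng(0)
A = np.array([[0.0, 1.0, 0.0],
              [1.0, 0.0, 1.0],
              [0.0, 1.0, 0.0]])                 # three-node path graph
H = rng.normal(size=(3, 4))                     # node features: 3 nodes, 4 features
W = rng.normal(size=(4, 2))                     # weight matrix (random here, learned in practice)

H_next = gcn_layer(A, H, W)                     # updated node features, shape (3, 2)
graph_readout = H_next.sum(axis=0)              # sum pooling over nodes
```

Relabeling the nodes permutes the rows of `H_next` in the same way, so a sum (or mean) readout over nodes does not depend on the node ordering.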

Based on the propagation rule and aggregation methodology, there could be different variants of GNNs such as Graph convolutional network (GCN) 70 , Graph attention network (GAT) 71 , Relational-GCN 72 , graph recurrent network (GRN) 73 , Graph isomorphism network (GIN) 74 , and Line graph neural network (LGNN) 75 . Graph convolutional neural networks are the most popular GNNs.

Sequence-to-sequence models

Traditionally, learning from sequential inputs such as text involves generating a fixed-length input from the data. For example, the “bag-of-words” approach simply counts the number of instances of each word in a document and produces a fixed-length vector that is the size of the overall vocabulary.
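A bag-of-words vector can be sketched with the standard library alone. The vocabulary and sentences are made-up examples, and the whitespace tokenization with lowercasing is a simplifying assumption.

```python
from collections import Counter

def bag_of_words(document, vocabulary):
    """Count occurrences of each vocabulary word; word order is discarded."""
    counts = Counter(document.lower().split())
    return [counts[word] for word in vocabulary]

vocabulary = ["band", "gap", "of", "silicon", "the"]

vec_a = bag_of_words("The band gap of silicon", vocabulary)
vec_b = bag_of_words("silicon of gap band the", vocabulary)   # same words, scrambled
vec_c = bag_of_words("the the band", vocabulary)
```

Because `vec_a` and `vec_b` are identical, the representation cannot distinguish a sentence from a scrambled copy of it, which is the limitation that sequence models address.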

In contrast, sequence-to-sequence models can take into account sequential/contextual information about each word and produce outputs of arbitrary length. For example, in named entity recognition (NER), an input sequence of words (e.g., a chemical abstract) is mapped to an output sequence of “entities” or categories where every word in the sequence is assigned a category.

An early form of sequence-to-sequence model is the recurrent neural network, or RNN. Unlike the fully connected NN architecture, where there is no connection between hidden nodes in the same layer, but only between nodes in adjacent layers, RNN has feedback connections. Each hidden layer can be unfolded and processed similarly to traditional NNs sharing the same weight matrices. There are multiple types of RNNs, of which the most common ones are: gated recurrent unit recurrent neural network (GRURNN), long short-term memory (LSTM) network, and clockwork RNN (CW-RNN) 76 .

However, all such RNNs suffer from some drawbacks, including: (i) difficulty of parallelization and therefore difficulty in training on large datasets and (ii) difficulty in preserving long-range contextual information due to the “vanishing gradient” problem. Nevertheless, as we will later describe, LSTMs have been successfully applied to various NER problems in the materials domain.

More recently, sequence-to-sequence models based on a “transformer” architecture, such as Google’s Bidirectional Encoder Representations from Transformers (BERT) model 77 , have helped address some of the issues of traditional RNNs. Rather than passing a state vector that is iterated word-by-word, such models use an attention mechanism to allow access to all previous words simultaneously without explicit time steps. This mechanism facilitates parallelization and also better preserves long-term context.
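The attention mechanism can be illustrated with single-head scaled dot-product self-attention, the form commonly used in transformer models. This is a minimal sketch with random projection matrices standing in for learned parameters; it omits multi-head structure, masking, and positional encodings, and all names and dimensions are assumptions.

```python
import numpy as np

def softmax(z, axis=-1):
    """Numerically stable softmax along the given axis."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, Wq, Wk, Wv):
    """Each token builds its output as a weighted mix of all tokens' values."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[-1])     # pairwise token-token similarity
    weights = softmax(scores, axis=-1)          # one attention distribution per token
    return weights @ V, weights

rng = np.random.default_rng(0)
seq_len, d_model = 4, 8
X = rng.normal(size=(seq_len, d_model))         # toy token embeddings
Wq = rng.normal(size=(d_model, d_model))
Wk = rng.normal(size=(d_model, d_model))
Wv = rng.normal(size=(d_model, d_model))

context, attn_weights = self_attention(X, Wq, Wk, Wv)
```

All rows of `attn_weights` are computed in one matrix product, with no step-by-step recurrence, which is what makes the computation easy to parallelize.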

Generative models

While the above DL frameworks are based on supervised machine learning (i.e., we know the target or ground truth data such as in classification and regression) and are discriminative (i.e., learn differentiating features between various datasets), many AI tasks are based on unsupervised learning (such as clustering) and are generative (i.e., aim to learn underlying distributions) 78 .

Generative models are used to (a) generate data samples similar to the training set with variations, i.e., for augmentation and for synthetic data, (b) learn good generalized latent features, and (c) guide mixed reality applications such as virtual try-on. There are various types of generative models, of which the most common are: (a) variational autoencoders (VAE), which explicitly define and learn the likelihood of data, and (b) generative adversarial networks (GAN), which learn to directly generate samples from the model’s distribution, without defining any density function.

A VAE model has two components: namely encoder and decoder. A VAE’s encoder takes input from a target distribution and compresses it into a low-dimensional latent space. Then the decoder takes that latent space representation and reproduces the original image. Once the network is trained, we can generate latent space representations of various images, and interpolate between these before forwarding them through the decoder which produces new images. A VAE is similar to a principal component analysis (PCA) but instead of linear data assumption in PCA, VAEs work in nonlinear domain. A GAN model also has two components: namely generator, and discriminator. GAN’s generator generates fake/synthetic data that could fool the discriminator. Its discriminator tries to distinguish fake data from real ones. This process is also termed as “min-max two-player game.” We note that VAE models learn the hidden state distributions during the training process, while GAN’s hidden state distributions are predefined. Rather GAN generators serve to generate images that could fool the discriminator. These techniques are widely used for images and spectra and have also been recently applied to atomic structures.
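The min-max two-player game between the generator and the discriminator is commonly written as the value function below. The notation is an assumption introduced here for illustration: G is the generator, D the discriminator, p_data the distribution of real samples, and p_z the prior over the generator's input noise z.

```latex
\min_{G} \max_{D} \; V(D, G) =
  \mathbb{E}_{x \sim p_{\mathrm{data}}(x)} \left[ \log D(x) \right]
  + \mathbb{E}_{z \sim p_{z}(z)} \left[ \log \left( 1 - D(G(z)) \right) \right]
```

The discriminator is trained to increase V by assigning high probability to real samples and low probability to generated ones, while the generator is trained to decrease V by producing samples that the discriminator classifies as real.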

Deep reinforcement learning

Reinforcement learning (RL) deals with tasks in which a computational agent learns to make decisions by trial and error. Deep RL incorporates DL into the RL framework, allowing agents to make decisions from unstructured input data 79 . In traditional RL, a Markov decision process (MDP) is used, in which an agent at every timestep takes an action, receives a scalar reward, and transitions to the next state according to the system dynamics, with the aim of learning a policy that maximizes returns. However, in deep RL, the states are high-dimensional (such as continuous images or spectra) and act as an input to DL methods. DRL architectures can be either model-based or model-free.
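The "return" that an RL agent tries to maximize is usually defined as a discounted sum of the scalar rewards collected along a trajectory. The sketch below assumes a discount factor `gamma`, which is a standard ingredient of MDPs but is not introduced in the text; the reward values are illustrative.

```python
def discounted_return(rewards, gamma=0.99):
    """Sum of rewards, with the reward at step t weighted by gamma**t."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g        # accumulate from the last step backwards
    return g

episode_rewards = [1.0, 1.0, 1.0]
g_half = discounted_return(episode_rewards, gamma=0.5)        # 1 + 0.5 + 0.25
g_undiscounted = discounted_return(episode_rewards, gamma=1.0)
```

A policy is then judged by the expected value of this quantity over the trajectories it produces.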

Scientific machine learning

The nascent field of scientific machine learning (SciML) 80 is creating new opportunities across all paradigms of machine learning, and deep learning in particular. SciML is focused on creating ML systems that incorporate scientific knowledge and physical principles, either directly in the specific form of the model or indirectly through the optimization algorithms used for training. This offers potential improvements in sample and training complexity, robustness (particularly under extrapolation), and model interpretability. One prominent theme can be found in ref. 57 . Such implementations usually involve applying multiple physics-based constraints while training a DL model 81 , 82 , 83 . One of the key challenges of universal function approximation is that an NN can quickly learn spurious features in the data that have nothing to do with the features a researcher is actually interested in. In this sense, physics-based regularization can assist. Physics-based deep learning can also aid in inverse design problems, a challenging but important task 84 , 85 . On the flip side, deep learning using graph neural networks and symbolic regression (stochastically building symbolic expressions) has even been used to “discover” symbolic equations from data that capture known (and unknown) physics behind the data 86 , i.e., to deep learn a physics model rather than to use a physics model to constrain DL.

Overview of applications

Some aspects of successful DL application that require materials-science-specific considerations are:

acquiring large, balanced, and diverse datasets (often on the order of 10,000 data points or more),

determining an appropriate DL approach and suitable vector or graph representation of the input samples, and

selecting appropriate performance metrics relevant to scientific goals.

In the following sections we discuss some of the key areas of materials science in which DL has been applied, with available links to repositories and datasets that help in the reproducibility and extensibility of the work. In this review we categorize materials science applications at a high level by the type of input data considered: (1) atomistic, (2) stoichiometric, (3) spectral, (4) image, and (5) text. We summarize prevailing machine learning tasks and their impact on materials research and development within each broad materials data modality.

Applications in atomistic representations

In this section, we provide a few examples of solving materials science problems with DL methods trained on atomistic data. The atomic structure of material usually consists of atomic coordinates and atomic composition information of material. An arbitrary number of atoms and types of elements in a system poses a challenge to apply traditional ML algorithms for atomistic predictions. DL-based methods are an obvious strategy to tackle this problem. There have been several previous attempts to represent crystals and molecules using fixed-size descriptors such as Coulomb matrix 87 , 88 , 89 , classical force field inspired descriptors (CFID) 90 , 91 , 92 , pair-distribution function (PRDF), Voronoi tessellation 93 , 94 , 95 . Recently graph neural network methods have been shown to surpass previous hand-crafted feature set 28 .
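As an example of a fixed-size hand-crafted descriptor, the Coulomb matrix can be sketched as follows. The formula used is the commonly cited definition (diagonal entries 0.5 Z_i^2.4, off-diagonal entries Z_i Z_j / |R_i - R_j|); zero-padding to a chosen `max_atoms` is one usual way of obtaining a fixed size. The function name, the use of atomic units, and the H2 geometry are assumptions for illustration.

```python
import numpy as np

def coulomb_matrix(Z, R, max_atoms):
    """Coulomb matrix of a molecule, zero-padded to max_atoms x max_atoms."""
    Z = np.asarray(Z, dtype=float)      # nuclear charges
    R = np.asarray(R, dtype=float)      # Cartesian coordinates, one row per atom
    n = len(Z)
    C = np.zeros((max_atoms, max_atoms))
    for i in range(n):
        for j in range(n):
            if i == j:
                C[i, j] = 0.5 * Z[i] ** 2.4
            else:
                C[i, j] = Z[i] * Z[j] / np.linalg.norm(R[i] - R[j])
    return C

# H2 molecule with an assumed bond length of 1.4 bohr, padded to 3 atoms.
C_h2 = coulomb_matrix(Z=[1, 1],
                      R=[[0.0, 0.0, 0.0], [0.0, 0.0, 1.4]],
                      max_atoms=3)
descriptor = C_h2.ravel()               # flat fixed-length vector for a conventional ML model
```

The padding makes molecules of different sizes comparable, but the result still depends on the atom ordering, which is one of the limitations that graph-based representations avoid.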

DL for atomistic materials applications includes: (a) force-field development, (b) direct property predictions, and (c) materials screening. In addition to the above points, we also discuss some of the recent generative adversarial network approaches and methods complementary to atomistic approaches.

Databases and software libraries

In Table 1 we provide some of the datasets commonly used for atomistic DL models for molecules, solids, and proteins. We note that the computational methods used for different datasets are different and many of them are continuously evolving. Generally it takes years to generate such databases using conventional methods such as density functional theory; in contrast, DL methods can be used to make predictions with much reduced computational cost and reasonable accuracy.

In Table 1 we also provide DL software packages used for atomistic materials design. The types of models include general property (GP) predictors and interatomic force fields (FF). The models have been demonstrated in molecules (Mol), solid-state materials (Sol), or proteins (Prot). For some force fields, high-performance large-scale implementations (LSI) that leverage parallel computing exist. Some of these methods mainly use interatomic distances to build graphs while others use distances as well as bond-angle information. Recently, including bond angles within GNNs has been shown to drastically improve the performance with comparable computational timings.

Force-field development

The first application is the development of DL-based force fields (FF) 96 , 97 /interatomic potentials. Major advantages of such models are that they are very fast at making predictions (on the order of hundreds to thousands of times faster 64 ) and that they ease the otherwise painstaking development of FFs; the disadvantage is that they still require a large training dataset generated with computationally expensive methods.

Models such as the Behler-Parrinello neural network (BPNN) and its variants 98 , 99 are used for developing interatomic potentials that can be used beyond 0 K and to study time-dependent behavior using molecular dynamics simulations, such as for nanoparticles 100 . Such FF models have been developed for molecular systems, such as water, methane, and other organic molecules 99 , 101 as well as solids such as silicon 98 , sodium 102 , graphite 103 , and titania (TiO2) 104 .

While the above works are mainly based on NNs, there has also been the development of graph neural network force-field (GNNFF) framework 105 , 106 that bypasses both computational bottlenecks. GNNFF can predict atomic forces directly using automatically extracted structural features that are not only translationally invariant, but rotationally-covariant to the coordinate space of the atomic positions, i.e., the features and hence the predicted force vectors rotate the same way as the rotation of coordinates. In addition to the development of pure NN-based FFs, there have also been recent developments of combining traditional FFs such as bond-order potentials with NNs and ReaxFF with message passing neural network (MPNN) that can help mitigate the NNs issue for extrapolation 82 , 107 .

Direct property prediction from atomistic configurations

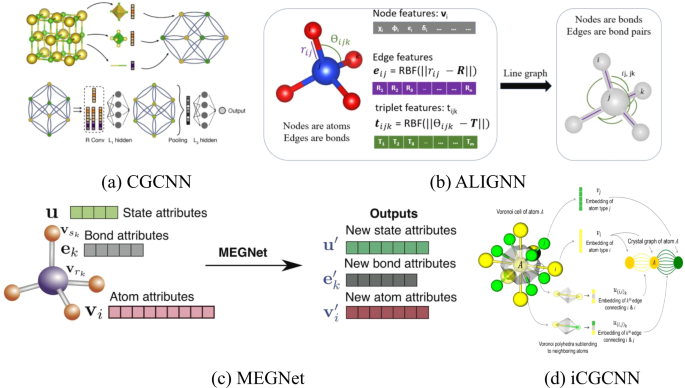

DL methods can be used to establish a structure-property relationship between atomic structures and their properties with high accuracy 28 , 108 . Models such as SchNet, crystal graph convolutional neural network (CGCNN), improved crystal graph convolutional neural network (iCGCNN), directional message passing neural network (DimeNet), atomistic line graph neural network (ALIGNN) and materials graph neural network (MEGNet) shown in Table 1 have been used to predict up to 50 properties of crystalline and molecular materials. These property datasets are usually obtained from ab-initio calculations. A schematic of such models is shown in Fig. 2 . While SchNet, CGCNN, and MEGNet are primarily based on atomic distances, the iCGCNN, DimeNet, and ALIGNN models capture many-body interactions using GCNN.

a CGCNN model in which crystals are converted to graphs with nodes representing atoms in the unit cell and edges representing atom connections. Nodes and edges are characterized by vectors corresponding to the atoms and bonds in the crystal, respectively [Reprinted with permission from ref. 67 Copyright 2019 American Physical Society], b ALIGNN 65 model in which the convolution layer alternates between message passing on the bond graph and its bond-angle line graph. c MEGNet in which the initial graph is represented by the set of atomic attributes, bond attributes and global state attributes [Reprinted with permission from ref. 33 Copyright 2019 American Chemical Society] model, d iCGCNN model in which multiple edges connect a node to neighboring nodes to show the number of Voronoi neighbors [Reprinted with permission from ref. 122 Copyright 2019 American Physical Society].

Some of these properties include formation energies, electronic bandgaps, solar-cell efficiency, topological spin-orbit spillage, dielectric constants, piezoelectric constants, 2D exfoliation energies, electric field gradients, elastic modulus, Seebeck coefficients, power factors, carrier effective masses, highest occupied molecular orbital, lowest unoccupied molecular orbital, energy gap, zero-point vibrational energy, dipole moment, isotropic polarizability, electronic spatial extent, internal energy.

For instance, the current state-of-the-art mean absolute error for formation energy for solids at 0 K is 0.022 eV/atom as obtained by the ALIGNN model 65 . DL is also heavily used for predicting the catalytic behavior of materials, for example in the Open Catalyst Project 109 , which is driven by DL methods for materials design. There is an ongoing effort to continuously improve the models. Usually energy-based models such as formation and total energies are more accurate than electronic property-based models such as bandgaps and power factors.

In addition to molecules and solids, property prediction models have also been used for bio-materials such as proteins, which can be viewed as large molecules. There have been several efforts for predicting protein-based properties, such as binding affinity 66 and docking predictions 110 .

There have also been several applications for identifying reasonable chemical space using DL methods such as autoencoders 111 and reinforcement learning 112 , 113 , 114 for inverse materials design. Inverse materials design with techniques such as GAN deals with finding chemical compounds with suitable properties and acts as a complement to forward prediction models. While such concepts have been widely applied to molecular systems 115 , recently these methods have been applied to solids as well 116 , 117 , 118 , 119 , 120 .

Fast materials screening

DFT-based high-throughput methods are usually limited to a few thousand compounds and take a long time for calculations; DL-based methods can aid this process and allow much faster predictions. DL-based property prediction models mentioned above can be used for pre-screening chemical compounds. Hence, DL-based tools can be viewed as a pre-screening tool for traditional methods such as DFT. For example, Xie et al. used a CGCNN model to screen stable perovskite materials 67 as well as for hierarchical visualization of materials space 121 . Park et al. 122 used iCGCNN to screen ThCr2Si2-type materials. Lugier et al. used DL methods to predict thermoelectric properties 123 . Rosen et al. 124 used graph neural network models to predict the bandgaps of metal-organic frameworks. DL for molecular materials has been used to predict technologically important properties such as aqueous solubility 125 and toxicity 126 .

It should be noted that the full atomistic representations and the associated DL models are only possible if the crystal structure and atom positions are available. In practice, the precise atom positions are only available from DFT structural relaxations or experiments, and are one of the goals for materials discovery instead of the starting point. Hence, alternative methods have been proposed to bypass the necessity for atom positions in building DL models. For example, Jain and Bligaard 127 proposed atomic position-independent descriptors and used a CNN model to learn the energies of crystals. Such descriptors include information based only on the symmetry (e.g., space group and Wyckoff position). In principle, the method can be applied universally in all crystals. Nevertheless, the model errors tend to be much higher than graph-based models. A similar coarse-grained representation using Wyckoff positions was also used by Goodall et al. 128 . Alternatively, Zuo et al. 129 started from hypothetical structures without precise atom positions, and used a Bayesian optimization method coupled with a MEGNet energy model as an energy evaluator to perform direct structural relaxation. Applying the Bayesian optimization with symmetry relaxation (BOWSR) algorithm successfully discovered the hard materials ReWB (Pca2_1) and MoWC2 (P6_3/mmc), which were then experimentally synthesized.

Applications in chemical formula and segment representations

One of the earliest applications for DL included SMILES for molecules, elemental fractions and chemical descriptors for solids, and sequence of protein names as descriptors. Such descriptors lack explicit inclusion of atomic structure information but are still useful for various pre-screening applications for both theoretical and experimental data.

SMILES and fragment representation

The simplified molecular-input line-entry system (SMILES) is a method to represent the elements and bonding of molecular structures using short American Standard Code for Information Interchange (ASCII) strings. SMILES can express structural differences, including the chirality of compounds, making it more useful than a simple chemical formula. A SMILES string is a simple grid-like (1-D grid) structure that can also represent molecular sequences such as DNA, macromolecules/polymers, and protein sequences 130 , 131 . In addition to the chemical constituents given in the chemical formula, bonds (such as double and triple bonds) are represented by special symbols (such as ’=’ and ’#’). A branch point is indicated by a left-hand bracket “(”, while the right-hand bracket “)” indicates that all the atoms in that branch have been taken into account. SMILES strings are converted into a distributed representation termed a SMILES feature matrix (a sparse matrix), to which DL can then be applied much as it is to image data. The length of the SMILES matrix is generally kept fixed (such as 400) during training and, in addition to the SMILES characters, multiple elemental and bonding attributes (such as chirality and aromaticity) can be used. Key DL tasks for molecules include (a) novel molecule design and (b) molecule screening.
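A fixed-length SMILES feature matrix of the kind described above can be sketched as a character-level one-hot encoding. This is a minimal illustration under stated assumptions: the names `build_vocab` and `one_hot_smiles` are hypothetical, the tokenization is per character (so two-letter atoms such as Cl or Br would be split, which real pipelines usually avoid), and no extra elemental or bonding attributes are appended.

```python
from typing import Dict, List

MAX_LEN = 400  # fixed matrix length, following the example value in the text


def build_vocab(smiles_list: List[str]) -> Dict[str, int]:
    """Map every character seen in the dataset to a column index."""
    chars = sorted({ch for s in smiles_list for ch in s})
    return {ch: i for i, ch in enumerate(chars)}


def one_hot_smiles(
    smiles: str, vocab: Dict[str, int], max_len: int = MAX_LEN
) -> List[List[int]]:
    """Return a max_len x len(vocab) matrix with a single 1 in each occupied row.

    Rows beyond the end of the string stay all-zero (padding).
    """
    if len(smiles) > max_len:
        raise ValueError("SMILES string longer than max_len")
    matrix = [[0] * len(vocab) for _ in range(max_len)]
    for row, ch in enumerate(smiles):
        matrix[row][vocab[ch]] = 1
    return matrix


# Ethanol, formaldehyde, hydrogen cyanide, isopropanol.
dataset = ["CCO", "C=O", "C#N", "CC(C)O"]
vocab = build_vocab(dataset)
features = one_hot_smiles("CC(C)O", vocab)
print(len(features), len(features[0]), sum(map(sum, features)))
```

Because most entries are zero, such matrices are normally stored in a sparse format; the dense nested list here is only for readability.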

Novel molecules with target properties can be designed using VAE-, GAN-, and RNN-based methods 132 , 133 , 134 . These DL-generated molecules might not be physically valid, but the goal is to train the model to learn the patterns in SMILES strings such that the output resembles valid molecules. Chemical intuition can then be used to screen the molecules further. DL for SMILES can also be used for molecular screening, such as predicting molecular toxicity. Some of the common SMILES datasets are ZINC 135 , Tox21 136 , and PubChem 137 .
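Since generated strings are not guaranteed to be valid, a cheap syntactic filter is often a useful first pass before any chemical screening. The sketch below is a deliberately simplified assumption: `looks_well_formed` is a hypothetical helper that only checks that branch brackets balance and that single-digit ring-closure labels come in pairs. It does not check valence, and it would misread digits inside square-bracket atoms (isotopes, charges) or two-digit `%nn` ring closures, so a full cheminformatics toolkit would still be needed for real validation.

```python
def looks_well_formed(smiles: str) -> bool:
    """Cheap syntactic check: balanced '(' ')' and paired single-digit ring closures.

    This does NOT verify valence or chemistry; it only rejects obviously broken strings.
    """
    depth = 0
    open_rings = set()
    for ch in smiles:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
            if depth < 0:  # closing a branch that was never opened
                return False
        elif ch.isdigit():
            # A ring-closure digit opens a ring on first use and closes it on the next.
            open_rings ^= {ch}
    return bool(smiles) and depth == 0 and not open_rings


generated = ["c1ccccc1", "CC(C)O", "CC(C", "C1CC", "CC)C("]
print([s for s in generated if looks_well_formed(s)])
```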

Because it is difficult to enforce the generation of valid molecular structures from SMILES, fragment-based models such as DeepFrag and DeepFrag-K 138 , 139 have been developed. In fragment-based models, a fragment is removed from a ligand/receptor complex and a DL model is then trained to predict the most suitable fragment substituent. A set of useful tools for SMILES and fragment representations is provided in Table 2 .

Chemical formula representation

There are several ways of using chemical formula-based representations for building ML/DL models, beginning with a simple vector of raw elemental fractions 140 , 141 or of weight percentages of alloying compositions 142 , 143 , 144 , 145 , as well as more sophisticated hand-crafted descriptors or physical attributes that add known chemistry knowledge (e.g., electronegativity, valency, etc. of constituent elements) to the feature representations 146 , 147 , 148 , 149 , 150 , 151 . Statistical and mathematical operations such as average, max, min, median, mode, and exponentiation can be carried out on elemental properties of the constituent elements to obtain a set of descriptors for a given compound. The number of such composition-based features can range from a few dozen to a few hundred. One commonly used representation that has been shown to work for a variety of use-cases is the materials agnostic platform for informatics and exploration (MagPie) 150 . All these composition-based representations can be used with traditional ML methods such as Random Forest as well as with DL.
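The two simplest composition-based representations mentioned above, raw elemental fractions and statistics over an elemental property, can be sketched as follows. This is an illustration in the spirit of such descriptors, not the MagPie feature set itself: the helper names are hypothetical, the formula parser handles only flat formulas (no brackets or hydrates), and the property table is a small illustrative subset of Pauling electronegativities.

```python
import re
from typing import Dict

# Pauling electronegativities for a handful of elements (illustrative subset only).
ELECTRONEGATIVITY: Dict[str, float] = {
    "H": 2.20, "O": 3.44, "Na": 0.93, "Cl": 3.16, "Ti": 1.54, "Si": 1.90,
}


def parse_formula(formula: str) -> Dict[str, float]:
    """Parse a flat formula such as 'TiO2' into element counts."""
    counts: Dict[str, float] = {}
    for symbol, amount in re.findall(r"([A-Z][a-z]?)(\d*\.?\d*)", formula):
        counts[symbol] = counts.get(symbol, 0.0) + (float(amount) if amount else 1.0)
    return counts


def elemental_fractions(formula: str) -> Dict[str, float]:
    """Normalize element counts so that the fractions sum to one."""
    counts = parse_formula(formula)
    total = sum(counts.values())
    return {el: n / total for el, n in counts.items()}


def composition_descriptors(formula: str, prop: Dict[str, float]) -> Dict[str, float]:
    """Fraction-weighted mean plus min, max and range of one elemental property."""
    fractions = elemental_fractions(formula)
    values = [prop[el] for el in fractions]
    mean = sum(frac * prop[el] for el, frac in fractions.items())
    return {"mean": mean, "min": min(values), "max": max(values),
            "range": max(values) - min(values)}


print(elemental_fractions("NaCl"))
desc = composition_descriptors("TiO2", ELECTRONEGATIVITY)
print(desc)
```

Repeating `composition_descriptors` over many elemental properties and concatenating the results gives a feature vector of the size range quoted above.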

It is relevant to note that ElemNet 141 , which is a 17-layer neural network composed of fully connected layers and uses only raw elemental fractions as input, was found to significantly outperform traditional ML methods such as Random Forest, even when they were allowed to use more sophisticated physical attributes based on MagPie as input. Although no periodic table information was provided to the model, it was found to self-learn some interesting chemistry, like groups (element similarity) and charge balance (element interaction). It was also able to predict phase diagrams on unseen materials systems, underscoring the power of DL for representation learning directly from raw inputs without explicit feature extraction. Further increasing the depth of the network was found to adversely affect the model accuracy due to the vanishing gradient problem. To address this issue, Jha et al. 152 developed IRNet, which uses individual residual learning to allow a smoother flow of gradients and enable deeper learning for cases where big data is available. IRNet models were tested on a variety of big and small materials datasets, such as OQMD, AFLOW, Materials Project, JARVIS, using different vector-based materials representations (element fractions, MagPie, structural) and were found to not only successfully alleviate the vanishing gradient problem and enable deeper learning, but also lead to significantly better model accuracy as compared to plain deep neural networks and traditional ML techniques for a given input materials representation in the presence of big data 153 . Further, graph-based methods such as Roost 154 have also been developed which can outperform many similar techniques.
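The residual idea behind such deeper networks can be illustrated with a single fully connected layer wrapped in an identity shortcut. The sketch below is a generic residual block written in plain Python for clarity; it is an assumption about the general mechanism only and does not reproduce the actual IRNet layer design, whose details are not given here. All names are hypothetical.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def dense(x: Vector, w: Matrix, b: Vector) -> Vector:
    """Fully connected layer: y_j = sum_i x_i * w[i][j] + b_j."""
    return [sum(xi * w[i][j] for i, xi in enumerate(x)) + b[j] for j in range(len(b))]


def relu(x: Vector) -> Vector:
    return [max(0.0, v) for v in x]


def residual_block(x: Vector, w: Matrix, b: Vector) -> Vector:
    """One layer with a skip connection: output = relu(dense(x)) + x.

    Input and output widths must match so the identity shortcut can be added.
    """
    return [h + xi for h, xi in zip(relu(dense(x, w, b)), x)]


width = 4
x = [0.5, -1.0, 2.0, 0.0]
zero_w = [[0.0] * width for _ in range(width)]
zero_b = [0.0] * width
identity_w = [[1.0 if i == j else 0.0 for j in range(width)] for i in range(width)]

# With all-zero weights the block reduces to the identity: the input still passes through.
print(residual_block(x, zero_w, zero_b))
print(residual_block(x, identity_w, zero_b))
```

The zero-weight case shows why shortcuts help: the signal (and, in training, the gradient) has a direct path through the block even when the learned transformation contributes nothing.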

Such methods have been used for the diverse DFT datasets mentioned above in Table 1 as well as for experimental datasets such as SuperCon 155 , 156 for quick pre-screening applications. In terms of applications, they have been used to predict properties such as formation energy 141 , bandgap and magnetization 152 , superconducting temperature 156 , and bulk and shear moduli 153 . They have also been used for transfer learning across datasets for enhanced predictive accuracy on small data 34 , even for different source and target properties 157 , which is especially useful for building predictive models of target properties for which big source datasets may not be readily available.

Libraries of such descriptors have been developed, such as MatMiner 151 and DScribe 158 . Some examples of such models are given in Table 2 . Such representations are especially useful for experimental datasets, such as those for superconducting materials, where the atomic structure is not tabulated. However, these representations cannot distinguish different polymorphs of a system with different point groups and space groups. It has recently been shown that although composition-based representations can help build ML/DL models that predict some properties, such as formation energy, with remarkable accuracy, this does not necessarily translate to accurate predictions of other properties such as stability, when compared to DFT’s own accuracy 159 .

Spectral models

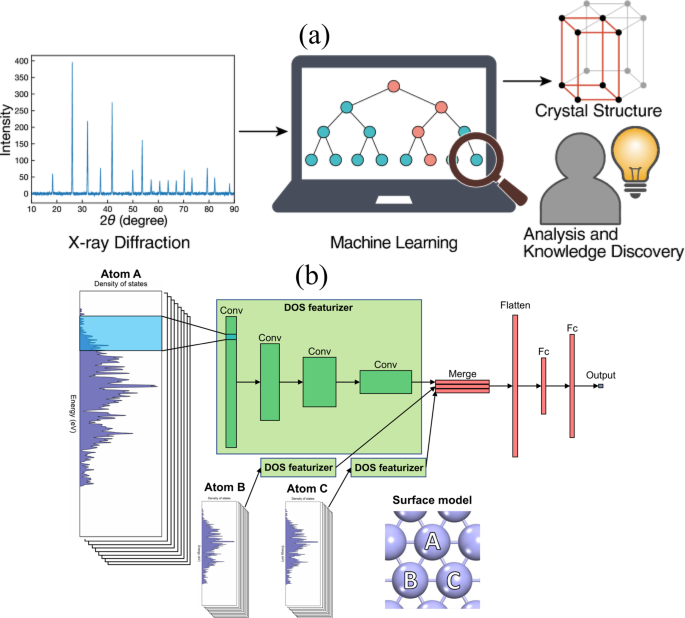

When electromagnetic radiation hits a material, the interaction between the radiation and matter, measured as a function of the wavelength or frequency of the radiation, produces a spectroscopic signal. By studying spectroscopy, researchers can gain insights into the material’s compositional, structural, and dynamic properties. Spectroscopic techniques are foundational in materials characterization. For instance, X-ray diffraction (XRD) has been used to characterize the crystal structure of materials for more than a century. Spectroscopic analysis can involve fitting quantitative physical models (for example, Rietveld refinement) or more empirical approaches such as fitting linear combinations of reference spectra, as is done with X-ray absorption near-edge spectroscopy (XANES). Both approaches require a high degree of researcher expertise through careful design of experiments; specification, revision, and iterative fitting of physical models; or the availability of template spectra of known materials. In recent years, with the advances in high-throughput experiments and computational data, spectroscopic data has multiplied, giving researchers opportunities to learn from the data and potentially displace the conventional methods of analyzing such data. This section covers emerging DL applications in various modes of spectroscopic data analysis, aiming to offer practical examples and insights. Some of the applications are shown in Fig. 3 .
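As a concrete instance of the empirical approach, a linear combination fit with two reference spectra has a closed-form least-squares solution. The sketch below assumes the model mixture ≈ x·A + (1 − x)·B on a shared energy grid; the function name and the toy spectra are hypothetical, and real fits typically involve more references, energy alignment, and normalization steps that are omitted here.

```python
from typing import Sequence


def fit_two_component_fraction(
    mixture: Sequence[float], ref_a: Sequence[float], ref_b: Sequence[float]
) -> float:
    """Least-squares fraction x of ref_a in the model  mixture ~ x*ref_a + (1-x)*ref_b.

    Closed form: x = sum((m - b)*(a - b)) / sum((a - b)**2), clipped to [0, 1].
    """
    num = sum((m - b) * (a - b) for m, a, b in zip(mixture, ref_a, ref_b))
    den = sum((a - b) ** 2 for a, b in zip(ref_a, ref_b))
    if den == 0.0:
        raise ValueError("reference spectra are identical; fraction is undetermined")
    return min(1.0, max(0.0, num / den))


# Toy reference spectra on a common grid (made-up numbers).
ref_a = [0.0, 1.0, 3.0, 1.0, 0.0]
ref_b = [2.0, 1.0, 0.0, 1.0, 2.0]
mixture = [0.3 * a + 0.7 * b for a, b in zip(ref_a, ref_b)]

fraction_a = fit_two_component_fraction(mixture, ref_a, ref_b)
print(round(fraction_a, 6))
```

The need for trusted reference spectra is visible in the function signature itself, which is the dependency on templates of known materials noted above.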

a Predicting structure information from X-ray diffraction 374 . Reprinted according to the terms of the CC-BY license 374 . Copyright 2020. b Predicting catalysis properties from computational electronic density of states data. Reprinted according to the terms of the CC-BY license 202 . Copyright 2021.

Currently, large-scale and element-diverse spectral data mainly exist in computational databases. For example, in ref. 160 , the authors calculated the infrared spectra, piezoelectric tensor, Born effective charge tensor, and dielectric response as a part of the JARVIS-DFT DFPT database. The Materials Project has established the largest computational X-ray absorption database (XASDb), covering the K-edge X-ray absorption near-edge structure (XANES) 161 , 162 and the L-edge XANES 163 of a large number of material structures. The database currently hosts more than 400,000 K-edge XANES site-wise spectra and 90,000 L-edge XANES site-wise spectra of many compounds in the Materials Project. There are considerably fewer experimental XAS spectra, on the order of hundreds, as seen in the EELSDb and the XASLib. Collecting large experimental spectra databases that cover a wide range of elements is a challenging task. Collective efforts have focused on curating data extracted from different sources, as in the RRUFF Raman, XRD and chemistry database 164 , the open Raman database 165 , and the SOP spectra library 166 . However, data consistency is not guaranteed. It is also now possible for contributors to share experimental data in a Materials Project curated database, MPContribs 167 . This database is supported by the US Department of Energy (DOE), providing some expectation of persistence. Entries can be kept private or published and are linked to the main Materials Project computational databases. There is an ongoing effort to capture data from DOE-funded synchrotron light sources ( https://lightsources.materialsproject.org/ ) into MPContribs in the future.

Recent advances in sources, detectors, and experimental instrumentation have made high-throughput measurements of experimental spectra possible, giving rise to new possibilities for spectral data generation and modeling. Examples include the HTEM database 10 , which contains 50,000 optical absorption spectra, and the UV-Vis database of 180,000 samples from the Joint Center for Artificial Photosynthesis. Some common spectral databases are listed in Table 3 . Cloud-based software-as-a-service platforms for high-throughput data analysis are beginning to appear, for example, pair-distribution function (PDF) in the cloud ( https://pdfitc.org ) 168 ; these are backed by structured databases in which data can be kept private or made public. This transition to the cloud from data analysis software installed and run locally on a user’s computer will facilitate the sharing and reuse of data by the community.

Applications

Due to the widespread deployment of XRD across many materials technologies, XRD spectra became one of the first test grounds for DL models. Phase identification from XRD can be mapped into a classification task (assuming all phases are known) or an unsupervised clustering task. Unlike the traditional analysis of XRD data, where the spectra are treated as convolved, discrete peak positions and intensities, DL methods treat the data as a continuous pattern similar to an image. Unfortunately, large collections of experimental XRD data gathered in one place are not readily available at the moment. Nevertheless, the availability of extensive, high-quality crystal structure data makes creating simulated XRD patterns trivial.
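To illustrate how a continuous, image-like pattern arises from discrete peaks, the sketch below places reflections of a cubic cell using Bragg's law and broadens them with Gaussians. It is a simplified assumption-laden example: the lattice parameter, the list of reflections, and the relative intensities are made-up placeholders (real intensities require structure factors and other corrections), and `cubic_two_theta` and `simulate_pattern` are hypothetical helper names.

```python
import math
from typing import Dict, List, Tuple

CU_K_ALPHA = 1.5406  # Cu K-alpha1 wavelength in angstrom


def cubic_two_theta(
    a: float, hkl: Tuple[int, int, int], wavelength: float = CU_K_ALPHA
) -> float:
    """Bragg angle 2-theta (degrees) for plane (hkl) of a cubic cell with edge a (angstrom)."""
    h, k, ell = hkl
    d = a / math.sqrt(h * h + k * k + ell * ell)  # interplanar spacing, cubic lattice
    s = wavelength / (2.0 * d)                    # Bragg's law: lambda = 2 d sin(theta)
    if s > 1.0:
        raise ValueError("reflection not reachable at this wavelength")
    return 2.0 * math.degrees(math.asin(s))


def simulate_pattern(
    peaks: Dict[float, float], grid: List[float], width: float = 0.1
) -> List[float]:
    """Sum of Gaussians centred at each 2-theta, scaled by its relative intensity."""
    return [
        sum(i * math.exp(-0.5 * ((x - p) / width) ** 2) for p, i in peaks.items())
        for x in grid
    ]


a = 5.64  # hypothetical cubic lattice parameter in angstrom
# Placeholder relative intensities, not computed from structure factors.
reflections = {(1, 1, 1): 0.1, (2, 0, 0): 1.0, (2, 2, 0): 0.6}
peaks = {cubic_two_theta(a, hkl): inten for hkl, inten in reflections.items()}

grid = [10.0 + 0.02 * i for i in range(3501)]  # 10 to 80 degrees 2-theta
pattern = simulate_pattern(peaks, grid)
strongest = grid[pattern.index(max(pattern))]
print(round(strongest, 2))
```

The resulting `pattern` is a fixed-length 1-D array, which is the form in which such data are usually fed to a CNN.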

Park et al. 169 calculated 150,000 XRD patterns from the Inorganic Crystal Structure Database (ICSD) 170 and then used CNN models to predict structural information from the simulated XRD patterns. The accuracies of the CNN models reached 81.14%, 83.83%, and 94.99% for space-group, extinction-group, and crystal-system classifications, respectively.

Liu et al. 95 obtained similar accuracies by using a CNN for classifying atomic pair-distribution function (PDF) data into space groups. The PDF is obtained by Fourier transforming XRD data into real space and is particularly useful for studying the local and nanoscale structure of materials. In the case of the PDF, models were trained, validated, and tested on simulated data from the ICSD. Even so, the trained model showed excellent performance when given experimental data, something that can be a challenge for XRD data because of the different resolutions and line-shapes of the diffraction data, which depend on the specifics of the sample and experimental conditions. The PDF seems to be more robust against these aspects.
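The Fourier relationship mentioned above is commonly written as G(r) = (2/π) ∫ F(Q) sin(Qr) dQ, with the reduced structure function F(Q) = Q[S(Q) − 1]. The sketch below evaluates this sine transform numerically with the trapezoidal rule. It is a bare illustration under stated assumptions: normalization conventions vary between codes, the data corrections needed to obtain S(Q) from a measured pattern are omitted, and the input is a synthetic single-frequency signal rather than real data. The function name is hypothetical.

```python
import math
from typing import List


def pdf_from_reduced_structure_function(
    q: List[float], f: List[float], r: List[float]
) -> List[float]:
    """G(r) = (2/pi) * integral of F(Q) sin(Q r) dQ, with F(Q) = Q [S(Q) - 1].

    Uses the trapezoidal rule on the (possibly non-uniform) Q grid.
    """
    g = []
    for ri in r:
        integrand = [fi * math.sin(qi * ri) for qi, fi in zip(q, f)]
        area = sum(
            0.5 * (integrand[i] + integrand[i + 1]) * (q[i + 1] - q[i])
            for i in range(len(q) - 1)
        )
        g.append(2.0 / math.pi * area)
    return g


# Synthetic input: one interatomic distance r0 gives F(Q) proportional to sin(Q * r0).
r0 = 2.5                                          # angstrom, made-up distance
q_grid = [0.05 * i for i in range(501)]           # 0 to 25 inverse angstrom
f_q = [math.sin(qi * r0) for qi in q_grid]
r_grid = [0.5 + 0.05 * i for i in range(91)]      # 0.5 to 5 angstrom

g_r = pdf_from_reduced_structure_function(q_grid, f_q, r_grid)
r_peak = r_grid[g_r.index(max(g_r))]
print(round(r_peak, 2))
```

The transform recovers a real-space peak at the input distance, with a width set by the finite Q range rather than by instrumental line-shape.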

Similarly, Zaloga et al. 171 used the ICSD for XRD pattern generation and CNN models to classify crystals. The models achieved 90.02% and 79.82% accuracy for crystal systems and space groups, respectively.

It should be noted that the ICSD contains many duplicates, and such duplicates should be filtered out to avoid information leakage. There is also a large difference in the number of structures represented in each space group (the label) in the database, which creates class-imbalance and data normalization challenges.
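Both precautions can be sketched in a few lines: duplicates are dropped before any train/test split, and the remaining label imbalance is summarized as inverse-frequency class weights, which is one common (but not the only) way to compensate for it. The structure fingerprints below are hypothetical string keys; how to decide that two database entries describe the same structure is a separate problem that this sketch does not address.

```python
from collections import Counter
from typing import Dict, List, Tuple

Entry = Tuple[str, int]  # (hypothetical structure fingerprint, space-group number)


def drop_duplicates(entries: List[Entry]) -> List[Entry]:
    """Keep the first entry for every fingerprint, preserving order."""
    seen = set()
    unique = []
    for fingerprint, label in entries:
        if fingerprint not in seen:
            seen.add(fingerprint)
            unique.append((fingerprint, label))
    return unique


def inverse_frequency_weights(labels: List[int]) -> Dict[int, float]:
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {label: n / (k * c) for label, c in counts.items()}


entries = [("fp-a", 225), ("fp-a", 225), ("fp-b", 225), ("fp-c", 62), ("fp-d", 225)]
unique_entries = drop_duplicates(entries)  # do this BEFORE splitting into train/test
weights = inverse_frequency_weights([label for _, label in unique_entries])
print(len(unique_entries), weights)
```

Deduplicating first matters because a duplicate that lands on both sides of the split lets the model be tested on a structure it has effectively already seen.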

Lee et al. 172 developed a CNN model for phase identification from samples consisting of a mixture of several phases in a limited chemical space relevant for battery materials. The training data are mixed patterns consisting of 1,785,405 synthetic XRD patterns from the Sr-Li-Al-O phase space. The resulting CNN can not only identify the phases but also predict the fraction of each compound in the mixture. A similar CNN was utilized by Wang et al. 173 for fast identification of metal-organic frameworks (MOFs), where experimental spectral noise was extracted and then added to the theoretical XRD patterns to augment the training data.
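Synthetic mixture data of this kind can be sketched as random convex combinations of single-phase patterns, with the mixing fractions kept as regression targets, followed by a noise step for augmentation. The code below is an illustrative assumption rather than the procedure of either study: the single-phase patterns are toy arrays, the fractions are drawn by normalizing uniform random numbers, and plain Gaussian noise stands in for noise extracted from experiments.

```python
import random
from typing import List, Sequence, Tuple


def mix_patterns(
    patterns: Sequence[Sequence[float]], rng: random.Random
) -> Tuple[List[float], List[float]]:
    """Return (mixed pattern, phase fractions) for a random convex combination."""
    raw = [rng.random() for _ in patterns]
    total = sum(raw)
    fractions = [w / total for w in raw]
    mixed = [
        sum(f * p[i] for f, p in zip(fractions, patterns))
        for i in range(len(patterns[0]))
    ]
    return mixed, fractions


def add_noise(pattern: Sequence[float], sigma: float, rng: random.Random) -> List[float]:
    """Add zero-mean Gaussian noise and clip at zero (intensities are non-negative)."""
    return [max(0.0, v + rng.gauss(0.0, sigma)) for v in pattern]


rng = random.Random(0)  # fixed seed so the sketch is reproducible
# Toy single-phase patterns on a shared 2-theta grid (made-up numbers).
phase_patterns = [
    [0.0, 1.0, 0.0, 0.0, 0.2],
    [0.0, 0.0, 0.8, 0.0, 0.0],
    [0.5, 0.0, 0.0, 1.0, 0.0],
]

mixed, fractions = mix_patterns(phase_patterns, rng)
noisy = add_noise(mixed, sigma=0.01, rng=rng)
print([round(f, 3) for f in fractions])
```

Each call yields one training example: `noisy` is the network input, the set of phases with non-zero fraction is the classification target, and `fractions` is the target for predicting the composition of the mixture.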