2020: The Year of Robot Rights

Several years ago, in an effort to initiate dialogue about the moral and legal status of technological artifacts, I posted a photograph of myself holding a sign that read “Robot Rights Now” on Twitter. Responses to the image were, as one might imagine, polarizing, with advocates and critics lining up on opposite sides of the issue. What I didn’t fully appreciate at the time is just how divisive an issue it is.

For many researchers and developers slaving away at real-world applications and problems, the very notion of “robot rights” produces something of an allergic reaction. Over a decade ago, roboticist Noel Sharkey famously called the very idea “a bit of a fairy tale.” More recently, AI expert Joanna Bryson argued that granting rights to robots is a ridiculous notion and an utter waste of time, while philosopher Luciano Floridi downplayed the debate, calling it “distracting and irresponsible, given the pressing issues we have at hand.”

And yet, judging by the slew of recent articles on the subject, 2020 is shaping up to be the year the concept captures the public’s interest and receives the attention I believe it deserves.

The questions at hand are straightforward: At what point might a robot, algorithm, or other autonomous system be held accountable for the decisions it makes or the actions it initiates? When, if ever, would it make sense to say, “It’s the robot’s fault”? Conversely, when might a robot, an intelligent artifact, or other socially interactive mechanism be due some level of social standing or respect?

When, in other words, would it no longer be considered a waste of time to ask the question: “Can and should robots have rights?”

Before we can even think about answering this question, we should define rights, a concept more slippery than one might expect. Although we use the word in both moral and legal contexts, many individuals don’t know what rights actually entail, and this lack of precision can create problems. One hundred years ago, American jurist Wesley Hohfeld observed that even experienced legal professionals tend to misunderstand rights, often using contradictory or insufficient formulations in the course of a decision or even a single sentence. So he created a typology that breaks rights down into four related aspects or what he called “incidents”: claims, powers, privileges, and immunities.

His point was simple: A right, like the right one has to a piece of property, like a toaster or a computer, can be defined and characterized by one or more of these elements. It can, for instance, be formulated as a claim that the owner has over and against another individual. Or it could be formulated as an exclusive privilege for use and possession that is granted to the owner. Or it could be a combination of the two.
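One way to picture Hohfeld's point is as a small data structure in which a single right is a combination of one or more incidents. The sketch below is purely illustrative: the names `Incident`, `Right`, and `includes` are hypothetical and are not drawn from Hohfeld or from any legal source.

```python
from dataclasses import dataclass
from enum import Flag, auto


class Incident(Flag):
    """Hohfeld's four incidents, modeled as combinable flags (illustrative only)."""
    CLAIM = auto()
    POWER = auto()
    PRIVILEGE = auto()
    IMMUNITY = auto()


@dataclass(frozen=True)
class Right:
    """A right held by someone over something, made up of one or more incidents."""
    holder: str
    subject_matter: str
    incidents: Incident

    def includes(self, incident: Incident) -> bool:
        # Flag membership: True when the given incident is part of this right.
        return incident in self.incidents


# A property right formulated as a claim against others combined with
# a privilege of use and possession, as in the toaster example.
toaster_right = Right(
    holder="owner",
    subject_matter="toaster",
    incidents=Incident.CLAIM | Incident.PRIVILEGE,
)
```

Here `toaster_right.includes(Incident.CLAIM)` is true while `toaster_right.includes(Incident.IMMUNITY)` is false, which mirrors the idea that a right need not involve all four incidents at once.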

Basically, rights are not one kind of thing; they are manifold and complex, and though Hohfeld defined them, his delineation doesn’t explain who has a particular right or why. For that, we have to rely on two competing legal theories, Will Theory and Interest Theory. Will theory sets the bar for moral and legal inclusion rather high, requiring that the subject of a right be capable of making a claim to it on their own behalf. Interest theory has a lower bar for inclusion, stipulating that rights may be extended to others irrespective of whether the entity in question can demand it or not.

Although each side has its advocates and critics, the debate between these two theories is considered to be irresolvable. What is important, therefore, is not to select the correct theory of rights but to recognize how and why these two competing ways of thinking about rights frame different problems, modes of inquiry, and possible outcomes. A petition to grant a writ of habeas corpus to an elephant, for instance, will look very different — and will be debated and decided in different ways — depending on what theoretical perspective comes to be mobilized.

We must also remember that the set of all possible robot rights is not identical to the set of human rights. A common mistake results from conflation: the assumption that “robot rights” must mean “human rights.” We see this all over the popular press, in the academic literature, and even in policy discussions and debates.

This is a slippery slope. The question concerning rights is immediately assumed to entail or involve all human rights, without recognizing that the rights of one category of entity, like an animal or a machine, are not necessarily equivalent to those enjoyed by another category of entity, like a human being. It is possible, as technologist and legal scholar Kate Darling has argued, to entertain the question of robot rights without this meaning all human rights. One could, for instance, advance the proposal, introduced by the French legal team of Alain and Jérémy Bensoussan, that domestic social robots, like Alexa, have a right to privacy for the purposes of protecting the family’s personal data. But considering this one right (the claim to privacy or the immunity from disclosure) does not and should not mean that we also need to give it the vote.

Ultimately, the question of the moral and legal status of a robot or an AI comes down to whether one believes a computer is capable of being a legally recognized person — we already live in a world where artificial entities like a corporation are persons — or remains nothing more than an instrument, tool, or piece of property.

This difference and its importance can be seen with recent proposals regarding the legal status of robots. On the one side, you have the European Parliament’s Resolution on Civil Law Rules on Robotics, which advised extending some aspects of legal personality to robots for the purposes of social inclusion and legal integration. On the other side, you have more than 250 scientists, engineers and AI professionals who signed an open letter opposing the proposals, asserting that robots and AI, no matter how autonomous or intelligent they might appear to be, are nothing more than tools. What is important in this debate is not what makes one side different from the other, but rather what both sides already share and must hold in common in order to have this debate in the first place: namely, the conviction that there are two exclusive ontological categories that divide up the world, persons and property. This way of organizing things is arguably arbitrary, culturally specific, and often inattentive to significant differences.

Robots and AI are not just another entity to be accommodated to existing moral and legal categories. What we see in the face or the faceplate of the robot is a fundamental challenge to existing ways of deciding questions regarding social status. Consequently, the right(s) question entails that we not only consider the rights of others but that we also learn how to ask the right questions about rights, critically challenging the way we have typically decided these important matters.

Does this mean that robots or even one particular robot can or should have rights? I honestly can’t answer that question. What I do know is that we need to engage this matter directly, because how we think about this previously unthinkable question will have lasting consequences for us, for others, and for our moral and legal systems.

David Gunkel is Distinguished Teaching Professor of Communication Technology at Northern Illinois University and the author of, among other books, “The Machine Question: Critical Perspectives on AI, Robots, and Ethics,” “Of Remixology: Ethics and Aesthetics after Remix,” and “Robot Rights.”

Should robots have rights?

As robots gain citizenship and potential personhood in parts of the world, it’s appropriate to consider whether they should also have rights.

So argues Northeastern professor Woodrow Hartzog, whose research focuses in part on robotics and automated technologies.

“It’s difficult to say we’ve reached the point where robots are completely self-sentient and self-aware; that they’re self-sufficient without the input of people,” said Hartzog, who holds joint appointments in the School of Law and the College of Computer and Information Science at Northeastern. “But the question of whether they should have rights is a really interesting one that often gets stretched in considering situations where we might not normally use the word ‘rights.’”

In Hartzog’s consideration of the question, granting robots negative rights (rights that permit or oblige inaction) resonates.

He cited research by Kate Darling, a research specialist at the Massachusetts Institute of Technology, that indicates people relate more emotionally to anthropomorphized robots than those with fewer or no human qualities.

“When you think of it in that light, the question becomes, ‘Do we want to prohibit people from doing certain things to robots not because we want to protect the robot, but because of what violence to the robot does to us as human beings?’” Hartzog said.

In other words, while it may not be important to protect a human-like robot from a stabbing, someone stabbing a very human-like robot could have a negative impact on humanity. And in that light, Hartzog said, it would make sense to assign rights to robots.

There is another reason to consider assigning rights to robots, and that’s to control the extent to which humans can be manipulated by them.

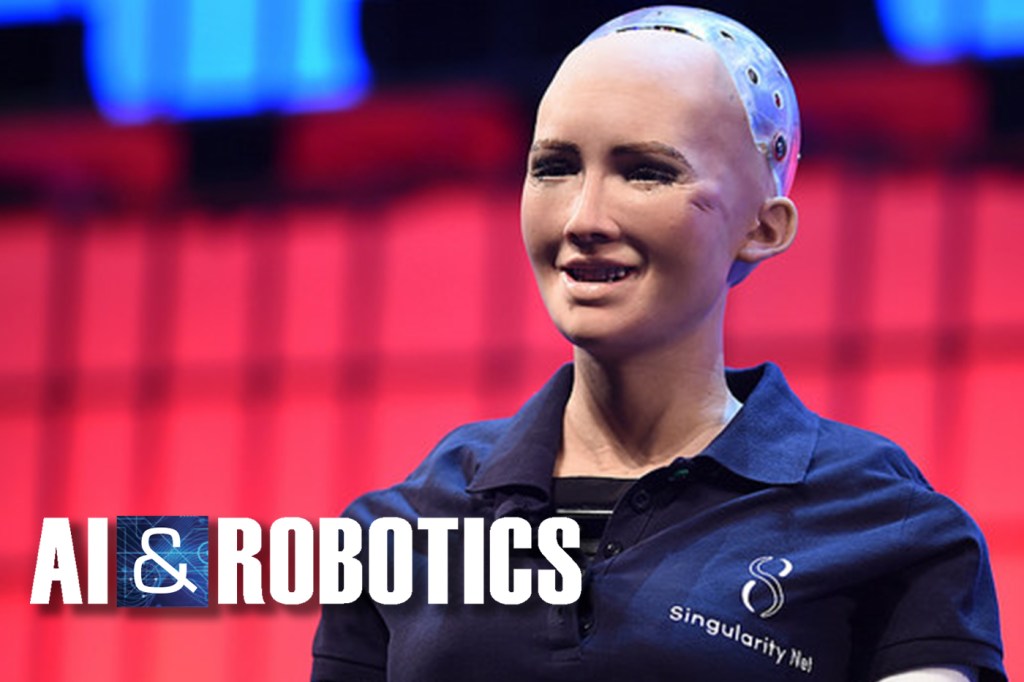

While we may not have reached the point of existing among sentient bots, we’re getting closer, Hartzog said. Robots like Sophia, a humanoid robot that this year achieved citizenship in Saudi Arabia, put us on that path.

Sophia, a project of Hanson Robotics, has a human-like face modeled after Audrey Hepburn and utilizes advanced artificial intelligence that allows it to understand and respond to speech and express emotions.

“Sophia is an example of what’s to come,” Hartzog said. “She seems to be living in that area where we might say the full impact of anthropomorphism might not be realized, but we’re headed there. She’s far enough along that we should be thinking now about rules regarding how we should treat robots as well as the boundaries of how robots will be able to relate to us.”

The robot occupies the space that Hartzog and others in computer science describe as the “uncanny valley.” That is, it is eerily similar to a human, but not close enough to feel natural. “Close, but slightly off-putting,” Hartzog said.

In considering the implications of human and robot interactions, then, we might be better off imagining a cute, but decidedly inhuman form. Think of the main character in the Disney movie Wall-E, Hartzog said, or a cuter version of the vacuuming robot Roomba.

He considered a thought experiment: Imagine having a Roomba that was equipped with AI assistance along the lines of Amazon’s Alexa or Apple’s Siri. Imagine it was conditioned to form a relationship with its owner, to make jokes, to say hello, to ask about one’s day.

“I would come to really have a great amount of affection for this Roomba,” Hartzog said. “Then imagine one day my Roomba starts coughing, sputtering, choking, one wheel has stopped working, and it limps up to me and says, ‘Father, if you don’t buy me an upgrade, I’ll die.’

“If that were to happen, is that unfairly manipulating people based on our attachment to human-like robots?” Hartzog asked.

It’s a question that asks us to confront the limits of our compassion, and one the law has yet to grapple with, he said.

What’s more, Hartzog’s fictional scenario isn’t so far afield.

“Home-care robots are going to be given a lot of access to our most intimate areas of life,” he said. “When robots get to the point where we trust them and we’re friends with them, what are the articulable boundaries for what a robot we’re emotionally invested in is allowed to do?”

Hartzog said that with the introduction of virtual assistants like Siri and Alexa, “we’re halfway there right now.”

Human rights for robots? A literature review

- Published: 27 March 2021

- Volume 1, pages 579–591 (2021)

- John-Stewart Gordon (ORCID: orcid.org/0000-0001-6589-2677) &

- Ausrine Pasvenskiene (ORCID: orcid.org/0000-0003-3503-414X)

This literature review of the most prominent academic and non-academic publications in the last 10 years on the question of whether intelligent robots should be entitled to human rights is the first review of its kind in the academic context. We review three challenging academic contributions and six non-academic but important popular texts in blogs, magazines, and newspapers which are also frequently cited in academia. One of the main findings is that several authors base their critical views (i.e. views against recognizing human rights for robots) on misleading ethical and philosophical assumptions and hence offer flawed arguments regarding the moral and legal status of artificially intelligent robots and their possible claims to human rights. Our analysis sheds light on some of the complex and challenging issues related to the crucial question of whether intelligent robots with human-like capabilities are eventually entitled to human rights (or not).

In the context of machines, it is important to distinguish between artificial narrow intelligence (ANI), which can be already attributed to machines which are excellent at one particular task such as playing chess or Go, and artificial general intelligence (AGI), which can be considered the very foundation of any human-like machine since it would give such a machine the capability to become an intelligent person who understands and learns like human beings. At this moment, no AGI machines exist and whether they ever will exist remains a matter of debate. For the purposes of this paper, we assume that the technological problems related to the creation of AGI machines can be resolved.

“I note that in my argument below, based upon ontological distinctions, animals and humans would fall into the same pertinent ontological category and automata in another, so this argument challenges arguments for automata rights based upon the fact that humans have recognized the need for animal welfare or rights, as there would still be a need to account for the ontological leap from animals/humans to automata” [14, p. 372].

Wright argues that to deserve constitutional rights, one must have the capacity to meaningfully, subjectively, and genuinely care about the application, waiver or violation of that right [19, p. 624]. According to him, a robot’s knowledge that it may be permanently switched off does not give it the right not to be switched off, if the robot lacks any relevant plans or aspirations that could be blocked by turning it off.

Paola Cavalieri and Peter Singer [23] initiated the so-called Great Ape Project, which aimed to extend the moral circle beyond humanity so as to also include the great apes. Their main goal was to grant some basic rights to the non-human great apes, in particular the rights to life, liberty and freedom from torture.

We hold the view that human rights were not invented by human beings but were, rather, discovered by humans through means of reasoning. Of course, the milestone in the history of human rights is their codification in the UN Universal Declaration of Human Rights (1948), but it seems reasonable to hold the view that they existed (at least in some version) long before that time [ 29 ].

In our reading of Kamm, the importance of consciousness in the definition of moral status concerns the ability to reason. An individual can be conscious without having the ability to reason, but not vice versa. A being with full moral personhood, such as a typical adult human being, has the highest moral status, which commonly requires the ability to reason.

Müller, V., Bostrom, N.: Future progress in artificial intelligence: a survey of expert opinion. In: Müller, V.C. (ed.) Fundamental Issues of Artificial Intelligence, pp. 552–572. Springer, Berlin (2014)

Kurzweil, R.: The Singularity Is Near. Duckworth Overlook, London (2005)

Bostrom, N.: Superintelligence: Paths, Dangers, Strategies. Oxford University Press, Oxford (2014)

Gordon, J.-S.: Human rights. In: Pritchard, D. (ed.) Oxford Bibliographies in Philosophy. Oxford University Press, Oxford (2016)

Gordon, J.-S.: Artificial moral and legal personhood, pp. 1–15. AI & Society (2020). (Online First)

Singer, P.: Speciesism and moral status. Metaphilosophy 40 (3–4), 567–581 (2009)

Singer, P.: The Expanding Circle: Ethics, Evolution, and Moral Progress. Princeton University Press, Princeton (2011)

Cavalieri, P.: The Animal Question. Why Non-Human Animals Deserve Human Rights. Oxford University Press, Oxford (2001)

Donaldson, S., Kymlicka, W.: Zoopolis. A Political Theory of Animal Rights. Oxford University Press, Oxford (2013)

Atapattu, S.: Human Rights Approaches to Climate Change: Challenges and Opportunities. Routledge, New York (2015)

Gunkel, D.J.: Robot Rights. MIT Press, Cambridge (2018)

Gellers, J.: Rights for Robots. Artificial Intelligence, Animal and Environmental Law. Routledge, London (2020)

Gordon, J.-S.: What do we owe to intelligent robots? AI Soc. 35 , 209–223 (2020)

Miller, L.F.: Granting automata human rights: challenge to a basis of full-rights privilege. Hum. Rts. Rev. 16 (4), 369–391 (2015)

Voiculescu, N.: I, robot! The lawfulness of a dichotomy: human rights v. robots’ rights. In: Ispas, P.E., Maxim, F. (eds.) Romanian Law 30 Years after the Collapse of Communism, pp. 3–14. “Titu Maiorescu University,” Bucharest (2020)

European Commission for the Efficiency of Justice: The European Ethical Charter on the Use of Artificial Intelligence in Judicial Systems and Their Environment, Strasbourg, 3–4 December 2018 https://www.coe.int/en/web/cepej/cepej-european-ethical-charter-on-the-use-of-artificial-intelligence-ai-in-judicial-systems-and-their-environment Accessed 7 Mar 2021

European Parliament: Resolution of 16 February 2017 with recommendations to the Commission on Civil Law Rules on Robotics https://oeil.secure.europarl.europa.eu/oeil/popups/ficheprocedure.do?lang=en&reference=2015/2103(INL) (2013) Accessed 7 Mar 2021

European Commission: The Ethical Guidelines for Reliable Artificial Intelligence (AI), Brussels https://ec.europa.eu/digital-single-market/en/news/ethics-guidelines-trustworthy-ai (2019) Accessed 7 Mar 2021

Wright, R.G.: The constitutional rights of advanced robots (and of human beings). Ark. Law Rev. 71 (3), 613–646 (2019)

Knapp, A.: Should artificial intelligences be granted civil rights? Forbes. http://www.forbes.com/sites/alexknapp/2011/04/04/should-artificial-intelligences-be-granted-civil-rights/#82578c277b2b (2011) Accessed 7 Mar 2021

Worzel, R.: Should robots have rights? Future Search. https://www.futuresearch.com/2014/06/09/should-robots-have-rights/ (2004) Accessed 7 Mar 2021

Singer, P.: Animal Liberation. Avon Books, London (1975)

Cavalieri, P., Singer, P.: The Great Ape Project: Equality Beyond Humanity. St. Martin’s Press, New York (1993)

Ashrafian, H.: Intelligent robots must uphold human rights. Nature 519 (7544), 391 (2015)

Sigfusson, L.: Do robots deserve human rights? The Crux. https://www.discovermagazine.com/technology/do-robots-deserve-human-rights (5 December, 2017) Accessed 7 Mar 2021

Dvorsky, G.: When will robots deserve human rights? Gizmodo. https://gizmodo.com/when-will-robots-deserve-human-rights-1794599063 (2017). Accessed 7 Mar 2021

Peng, J.: How human is AI and should AI be granted rights? https://blogs.cuit.columbia.edu/jp3864/2018/12/04/how-human-is-ai-and-should-ai-be-granted-rights/ (4 December, 2018) Accessed 7 Mar 2021

Ord, T.: The Precipice: Existential Risk and the Future of Humanity. Hachette Books, London (2020)

Ishay, M.: The History of Human Rights. From Ancient Times to the Globalization. University of California Press, Berkeley (2008)

Gordon, J.-S., Tavera-Salyutov, F.: Remarks on disability rights legislation. Equality, Diversity and Inclusion: An Int. J. 37 (5), 506–526 (2018)

Francione, G.L.: Animals as Persons. Essay on the Abolition of Animal Exploitation. Columbia University Press, New York (2009)

Stone, C.D.: Should trees have standing? Toward legal rights for natural objects. S. Cal. Law Rev. 45 , 450–501 (1972)

Stone, C.D.: Should Trees Have Standing? Law, Morality and the Environment. Oxford University Press, Oxford (2010)

Kamm, F.: Intricate Ethics: Rights, Responsibilities, and Permissible Harm. Oxford University Press, Oxford (2007)

Levy, D.: The Evolution of Human-Robot Relationships. Love and Sex with Robots. HarperCollins Publishers, New York (2007)

Gunkel, D.J.: The Machine Question: Critical Perspectives on AI, Robots, and Ethics. MIT Press, Cambridge (2012)

This research is funded by the European Social Fund according to the activity ‘Improvement of researchers’ qualification by implementing world-class R&D projects’ of Measure No. 09.3.3-LMT-K-712.

Author information

Authors and affiliations.

Department of Philosophy, Faculty of Humanities, Vytautas Magnus University, V. Putvinskio g. 23 (R 306), LT-44243, Kaunas, Lithuania

John-Stewart Gordon

Faculty of Law, Vytautas Magnus University, V. Putvinskio g. 23 (R 306), LT-44243, Kaunas, Lithuania

Faculty of Law, Vytautas Magnus University, Jonavos g. 66, LT-44138, Kaunas, Lithuania

Ausrine Pasvenskiene

Corresponding author

Correspondence to John-Stewart Gordon .

Ethics declarations

Conflict of interest.

On behalf of all authors, the corresponding author states that there is no conflict of interest.

About this article

Gordon, JS., Pasvenskiene, A. Human rights for robots? A literature review. AI Ethics 1 , 579–591 (2021). https://doi.org/10.1007/s43681-021-00050-7

Received : 20 January 2021

Accepted : 13 March 2021

Published : 27 March 2021

Issue Date : November 2021

DOI : https://doi.org/10.1007/s43681-021-00050-7

- Human rights

- Moral rights

- Legal rights

Artificial Intelligence: Should Robots Have Rights?

By Karina Parikh

In 1950, Alan Turing proposed a test for the ability of a machine to exhibit intelligent behavior that is indistinguishable from that of a human being. Over the 70 years since, artificial intelligence (AI) has become more and more sophisticated, and there have already been claims of computers passing the Turing Test. As AI applications, and especially AI-enabled robots, continue their evolution, at what point do humans begin to, in fact, perceive them as living beings? And as this perception takes hold, will humans begin to feel obligated to grant them certain rights?

The time to address these issues is now, before the robots start doing so.

Researchers and scientists are now pondering the ethics surrounding how robots interact with human society. With some of these robots having the capability to interact with humans, people are naturally worried about their effects on humanity.

For example, people have varying perspectives on the effects of robots in the workplace. Some see them as beneficial, able to perform tedious or dangerous jobs, leaving humans to perform more interesting work and stay out of harm’s way. Others see them as hurtful, taking jobs away from people, leading to higher unemployment.

Some of the struggles facing the public right now pertain to robots that possess the ability to think on their own. It is an issue that divides people due to the fear associated with the idea of autonomous robots. As a result, the overarching concern that must be taken into consideration is whether or not it is ethical to integrate these robots into our society. Programmed with specific algorithms and then trained on enormous amounts of real-world data, these robots can appear to think on their own, generating predictions and novel ideas. When robots get to this stage and start to act like humans, it will become more difficult to think of them as machines and tempting to think of them as having a moral compass.

Arguments Supporting Rights for Robots

Although the role of robots and their “rights” may become an issue in society generally, it is easier to see these issues by focusing on one aspect of society: the workplace. As robots working alongside humans become smarter and smarter, humans working with them will naturally think of them as co-workers. As these AI-enabled robots become more and more autonomous, they may develop a desire to be treated the same way as their human co-workers.

Autonomous robots embody a very different type of artificial intelligence compared to those that simply run statistical information through an algorithm to make predictions. As Turing suggested, autonomous robots ultimately will become indistinguishable from humans. If, in fact, robots do develop a moral compass, they may, on their own, begin to push to be treated the same as humans.

Some will argue that, regardless of the fact that robot behavior is indistinguishable from human behavior, robots nevertheless are not living creatures and should not receive the same treatment as humans. However, this claim can be countered by pointing to examples indicating how close humans and robots can be to each other. For example, in some parts of the world, robots are providing companionship to the elderly who would otherwise be isolated. Robots are becoming capable of displaying a sense of humor or can appear to show empathy. Some are even designed to appear human. And, as such robots also exhibit independent thinking and even self-awareness, their human companions or co-workers may see them as deserving equal rights—or, the robots themselves may begin to seek such rights.

Another argument in favor of giving rights to robots is that they deserve it. AI-enabled robots have the potential for greatly increasing human productivity, either by replacing human effort or supplementing it. Robots can work in places, and perform tasks, that are too dangerous for humans or that humans do not want to do. Robots make life better for the human race. If, at the same time, robots develop some level of self-awareness or consciousness, it is only right that we should grant them some rights, even if those rights are difficult to define at this time. Just as we treat animals in a humane way, so we should also treat robots with respect and dignity.

Counterpoint: The Argument Against Rights for Robots

Although some may advocate for giving human-like robots equal rights, there are others who feel they are facing an even more pressing issue, that robots may overpower humans. Many fear that artificial intelligence may replace humans in the future. The fear is that robots will become so intelligent that they will be able to make humans work for them. Thus, humans would be controlled by their own creations.

It is also important to consider that expanding robots’ rights could infringe on the existing rights of humans, such as the right to a safe workplace. Today, one of the benefits of robots is that they can work under conditions that are unsafe or dangerous to humans—think of robots today that are used to disable bombs. If robots are given the same rights as humans, then it may get to the point where it is unethical to place them in harmful situations where they have a greater chance of injury or destruction. If so, this would be giving robots greater rights than we give animals today, where police dogs, for example, are sent into situations where it is too dangerous for an officer to go.

A more immediate argument against giving rights to robots is that robots already have an advantage over humans in the workplace, and giving them rights will just increase that advantage. Robots can be designed to work more quickly without the need to take breaks. The problem here is that the robot has an unfair advantage in competing with a human for a job. Robots have already begun to perform human jobs, such as delivering food to hotel rooms. Even in this simple task they have advantages. They don’t get distracted as humans do, but rather they can remain focused for a longer period of time. It also helps that the employer does not pay payroll taxes for the robot’s work. This has driven fears that robots will come to dominate human jobs and the resulting unemployment would negate their benefit to the economy. Even though humans may not be opposed to robots carrying out simple tasks, there may be increasing opposition when robots start to fill more complex roles, including many white-collar jobs.

When it comes to looking at the impact of robots in the workplace, there are varying perspectives. The argument in favor of granting robots rights is ultimately that they are coming to have the same capacity for intellectual reasoning and emotional intelligence as humans. As noted earlier, these supporters argue that robots and other forms of artificial intelligence should receive the same treatment as humans because some of them even have a moral compass. On the other side, those who argue against giving rights to robots deny that robots have a moral compass and thus do not deserve to be treated the same as humans. In essence, even if they pass the Turing Test, they are still machines. They are not living beings and therefore should not receive any rights, even if they are smart enough to demand them. If we were to grant robots this kind of power, it would enable them to overtake humans as a result of their ability to work more efficiently.

Whether or not robots and other forms of AI should have rights, these technologies have the potential to greatly benefit humans or greatly harm us. As the technologies grow and mature, there may be the need for regulation to ensure that the risks are mitigated and that humans ultimately maintain control over them.

In 1942, science fiction writer Isaac Asimov formulated his three laws for robots:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

These three laws predate the development of artificial intelligence, but when it comes to principles to guide regulation, they might just be a good starting point.

December 8, 2017

Should robots have rights?

by Molly Callahan, Northeastern University

As robots gain citizenship and potential personhood in parts of the world, it's appropriate to consider whether they should also have rights.

So argues Northeastern professor Woodrow Hartzog, whose research focuses in part on robotics and automated technologies.

"It's difficult to say we've reached the point where robots are completely self-sentient and self-aware; that they're self-sufficient without the input of people," said Hartzog, who holds joint appointments in the School of Law and the College of Computer and Information Science at Northeastern. "But the question of whether they should have rights is a really interesting one that often gets stretched in considering situations where we might not normally use the word 'rights.'"

In Hartzog's consideration of the question, granting robots negative rights—rights that permit or oblige inaction—resonates.

He cited research by Kate Darling, a research specialist at the Massachusetts Institute of Technology, that indicates people relate more emotionally to anthropomorphized robots than those with fewer or no human qualities.

"When you think of it in that light, the question becomes, 'Do we want to prohibit people from doing certain things to robots not because we want to protect the robot , but because of what violence to the robot does to us as human beings?'" Hartzog said.

In other words, while it may not be important to protect a human-like robot from a stabbing, someone stabbing a very human-like robot could have a negative impact on humanity. And in that light, Hartzog said, it would make sense to assign rights to robots.

There is another reason to consider assigning rights to robots, and that's to control the extent to which humans can be manipulated by them.

While we may not have reached the point of existing among sentient bots, we're getting closer, Hartzog said. Robots like Sophia, a humanoid robot that this year achieved citizenship in Saudi Arabia, put us on that path.

Sophia, a project of Hanson Robotics, has a human-like face modeled after Audrey Hepburn and utilizes advanced artificial intelligence that allows it to understand and respond to speech and express emotions.

"Sophia is an example of what's to come," Hartzog said. "She seems to be living in that area where we might say the full impact of anthropomorphism might not be realized, but we're headed there. She's far enough along that we should be thinking now about rules regarding how we should treat robots as well as the boundaries of how robots will be able to relate to us."

The robot occupies the space Hartzog and others in computer science identified as the "uncanny valley." That is, it is eerily similar to a human, but not close enough to feel natural. "Close, but slightly off-putting," Hartzog said.

In considering the implications of human and robot interactions, then, we might be better off imagining a cute, but decidedly inhuman form. Think of the main character in the Disney movie Wall-E, Hartzog said, or a cuter version of the vacuuming robot Roomba.

He considered a thought experiment: Imagine having a Roomba that was equipped with AI assistance along the lines of Amazon's Alexa or Apple's Siri. Imagine it was conditioned to form a relationship with its owner, to make jokes, to say hello, to ask about one's day.

"I would come to really have a great amount of affection for this Roomba," Hartzog said. "Then imagine one day my Roomba starts coughing, sputtering, choking, one wheel has stopped working, and it limps up to me and says, 'Father, if you don't buy me an upgrade, I'll die.'

"If that were to happen, is that unfairly manipulating people based on our attachment to human-like robots ?" Hartzog asked.

It's a question that asks us to confront the limits of our compassion, and one the law has yet to grapple with, he said.

What's more, Hartzog's fictional scenario isn't so far afield.

"Home-care robots are going to be given a lot of access to our most intimate areas of life," he said. "When robots get to the point where we trust them and we're friends with them, what are the articulable boundaries for what a robot we're emotionally invested in is allowed to do?"

Hartzog said that with the introduction of virtual assistants like Siri and Alexa, "we're halfway there right now."

Provided by Northeastern University

Whether robots deserve human rights isn't the correct question. Whether humans really have them is.

While advances in robotics and artificial intelligence are cause for celebration, they also raise an important question about our relationship to these silicon-steel, human-made friends: Should robots have rights?

A being that knows fear and joy, that remembers the past and looks forward to the future and that loves and feels pain is surely deserving of our embrace, regardless of accidents of composition and manufacture — and it may not be long before robots possess those capacities.

Yet, there are serious problems with the claim that conscious robots should have rights just as humans do, because it’s not clear that humans fundamentally have rights at all. The eminent moral philosopher, Alasdair MacIntyre, put it nicely in his 1981 book, "After Virtue": "There are no such things as rights, and belief in them is one with belief in witches and in unicorns."

So, instead of talking about rights, we should talk about civic virtues. Civic virtues are those features of well-functioning social communities that maximize the potential for the members of those communities to flourish, and they include the habits of action of the community members that contribute to everyone’s being able to lead the good life.

After all, while the concept of "rights" is deeply entrenched in our political and moral thinking, there is no objective grounding for the attribution of rights. The Declaration of Independence says: "We hold these truths to be self-evident, that all men are created equal, that they are endowed by their Creator with certain unalienable Rights, that among these are Life, Liberty and the pursuit of Happiness."

But almost no one today takes seriously a divine theory of rights.

Most of us, in contrast, think that rights are conferred upon people by the governments under which they live — which is precisely the problem. Who gets what rights depends, first and foremost, on the accident of where one lives. We speak of universal human rights but that means only that, at the moment, most nations (though not all) agree on some core set of fundamental rights. Still, governments can just as quickly revoke rights as grant them. There simply is no objective basis for the ascription of rights.

We further assume, when talking about rights, that the possession of rights is grounded in either the holder's nature or their status — in the words of the aforementioned declaration, that people possess rights by virtue of being persons and not, say, trees. But there is also no objective basis for deciding which individuals have the appropriate nature or status. Nature, for instance, might include only sentience or consciousness, but it might also include something like being a convicted felon — which means, in some states, that you lose your right to vote or to carry a gun. For a long time, in many states in the U.S., the appropriate status included being white; in Saudi Arabia, it includes being male.

The root problem here is the assumption that some fundamental, objective aspect of selfhood qualifies a person for rights — but we then have to identify that aspect, which lets our biases and prejudices run amok.

Still, since we will share our world with sophisticated robots, how will we do that fairly and with due respect for our artificial friends and neighbors without speaking of rights? The answer is that we turn to civic virtues.

In a famous 1974 essay, the political theorist Michael Walzer suggested there are at least five core civic virtues: loyalty, service, civility, tolerance and participation. This is a good place to start imagining a future lived together with conscious robots, one in which the needs of all are properly respected and one in which our silicon fellow citizens can flourish along with us carbonaceous folk.

Focusing on civic virtues also forces us to think more seriously about how to engineer both the robots to come and the social communities in which we all will live. What norms of public life should be built into our public institutions and inculcated in the young through parenting and education? The world would be a better place if we spent less time worrying, in a self-focused way, about our individual rights and more time worrying about the common good.

A final noteworthy consequence of this suggested shift of perspective is that it highlights a challenge, which is designing the optimal virtues for the robots themselves. A task for roboticists will be figuring out how to program charity and loyalty into a robot or, perhaps, how to build robots that are moral learners, capable of growing new and more virtuous ways of acting just as (we hope) our human children grow in virtue.

The robots will have an advantage over us: They can do their moral learning virtually and, thus, far more rapidly than human young. But that raises the even more vexing question of whether humans will have any role in the robotic societies of the future.

Don Howard is professor of philosophy at the University of Notre Dame. He is also a fellow and former director of Notre Dame's Reilly Center for Science, Technology and Values.

Do Robots Deserve Human Rights?

Discover asked the experts.

When the humanoid robot Sophia was granted citizenship in Saudi Arabia, the first robot to receive citizenship anywhere in the world, many people were outraged. Some were upset because she now had more rights than human women living in the same country. Others just thought it was a ridiculous PR stunt.

Sophia’s big news brought forth a lingering question, especially as scientists continue to develop advanced and human-like AI machines: Should robots be given human rights?

Discover reached out to experts in artificial intelligence, computer science and human rights to shed light on this question, which may grow more pressing as these technologies mature. Please note, some of these emailed responses have been edited for brevity.

Kerstin Dautenhahn

Professor of artificial intelligence in the School of Computer Science at the University of Hertfordshire

Robots are machines, more similar to a car or toaster than to a human (or to any other biological beings). Humans and other living, sentient beings deserve rights, robots don’t, unless we can make them truly indistinguishable from us. Not only how they look, but also how they grow up in the world as social beings immersed in culture, perceive the world, feel, react, remember, learn and think. There is no indication in science that we will achieve such a state anytime soon — it may never happen due to the inherently different nature of what robots are (machines) and what we are (sentient, living, biological creatures).

We might give robots “rights” in the same sense as constructs such as companies have legal “rights”, but robots should not have the same rights as humans. They are machines, we program them.

Hussein A. Abbass

Professor at the School of Engineering & IT at the University of New South Wales-Canberra

Are robots equivalent to humans? No. Robots are not humans. Even as robots get smarter, and even if their smartness exceeds humans’ smartness, it does not change the fact that robots are of a different form from humans. I am not downgrading what robots are or will be, I am a realist about what they are: technologies to support humanities.

Should robots be given rights? Yes. Humanity has obligations toward our ecosystem and social system. Robots will be part of both systems. We are morally obliged to protect them, design them to protect themselves against misuse, and to be morally harmonized with humanity. There is a whole stack of rights they should be given, here are two: The right to be protected by our legal and ethical system, and the right to be designed to be trustworthy; that is, technologically fit-for-purpose and cognitively and socially compatible (safe, ethically and legally aware, etc.).

Madeline Gannon

Founder and Principal Researcher of ATONATON

Your question is complicated because it’s asking for speculative insights into the future of human robot relations. However, it can’t be separated from the realities of today. A conversation about robot rights in Saudi Arabia is only a distraction from a more uncomfortable conversation about human rights. This is very much a human problem and contemporary problem. It’s not a robot problem.

Sophia, the eerily human-like (in both appearance and intelligence) machine, was granted Saudi citizenship in October.

Benjamin Kuipers

Professor of computer science and engineering at the University of Michigan

I don’t believe that there is any plausible case for the “yes” answer, certainly not at the current time in history. The Saudi Arabian grant of citizenship to a robot is simply a joke, and not a good one.

Even with the impressive achievements of deep learning systems such as AlphaGo, the current capabilities of robots and AI systems fall so far below the capabilities of humans that it is much more appropriate to treat them as manufactured tools.

There is an important difference between humans and robots (and other AIs). A human being is a unique and irreplaceable individual with a finite lifespan. Robots (and other AIs) are computational systems, and can be backed up, stored, retrieved, or duplicated, even into new hardware. A robot is neither unique nor irreplaceable. Even if robots reach a level of cognitive capability (including self-awareness and consciousness) equal to humans, or even if technology advances to the point that humans can be backed up, restored, or duplicated (as in certain Star Trek transporter plots), it is not at all clear what this means for the “rights” of such “persons”.

We already face, but mostly avoid, questions like these about the rights and responsibilities of corporations (which are a form of AI). A well-known problem with corporate “personhood” is that it is used to deflect responsibility for misdeeds from individual humans to the corporation.

Birgit Schippers

Visiting research fellow in the Senator George J. Mitchell Institute for Global Peace, Security and Justice at Queen’s University Belfast

At present, I don’t think that robots should be given the same rights as humans. Despite their ability to emulate, even exceed, many human capacities, robots do not, at least for now, appear to have the qualities we associate with sentient life.

Of course, rights are not the exclusive preserve of humans; we already grant rights to corporations and to some nonhuman animals. Given the accelerating deployment of robots in almost all areas of human life, we urgently need to develop a rights framework that considers the legal and ethical ramifications of integrating robots into our workplaces, into the military, police forces, judiciaries, hospitals, care homes, schools and into our domestic settings. It means that we need to address issues such as accountability, liability and agency, but that we also pay renewed attention to the meaning of human rights in the age of intelligent machines.

Ravina Shamdasani

Spokesperson for the United Nations Human Rights Office

My gut answer is that the Universal Declaration says that all human beings are born free and equal … a robot may be a citizen, but certainly not a human being?

So … The consensus from these experts is no. Still, they say robots should receive some rights. But what, exactly, should those rights look like?

One day, Schippers says, we may implement a robotic Bill of Rights that protects robots against cruelty from humans. That’s something the American Society for the Prevention of Cruelty to Robots has already conceived.

In time, we could also see robots given a sort of “personhood” similar to that of corporations. In the United States, corporations are given some of the same rights and obligations as citizens, including religious freedom and free speech rights. If a corporation is given rights similar to those of humans, it could make sense to do the same for smart machines. Still, people are behind corporations. If AI advances to the point where robots think independently and for themselves, that throws us into whole new territory.

Do AI Systems Deserve Rights?

“Do you think people will ever fall in love with machines?” I asked the 12-year-old son of one of my friends.

“Yes!” he said, instantly and with conviction. He and his sister had recently visited the Las Vegas Sphere and its newly installed Aura robot—an AI system with an expressive face, advanced linguistic capacities similar to ChatGPT, and the ability to remember visitors’ names.

“I think of Aura as my friend,” added his 15-year-old sister.

My friend’s son was right. People are falling in love with machines—increasingly so, and deliberately. Recent advances in computer language have spawned dozens, maybe hundreds, of “AI companion” and “AI lover” applications. You can chat with these apps like you chat with friends. They will tease you, flirt with you, express sympathy for your troubles, recommend books and movies, give virtual smiles and hugs, and even engage in erotic role-play. The most popular of them, Replika, has an active Reddit page, where users regularly confess their love and often view that love as no less real than their love for human beings.

Can these AI friends love you back? Real love, presumably, requires sentience, understanding, and genuine conscious emotion—joy, suffering, sympathy, anger. For now, AI love remains science fiction.

Most users of AI companions know this. They know the apps are not genuinely sentient or conscious. Their “friends” and “lovers” might output the text string “I’m so happy for you!” but they don’t actually feel happy. AI companions remain, both legally and morally, disposable tools. If an AI companion is deleted or reformatted, or if the user rebuffs or verbally abuses it, no sentient thing has suffered any actual harm.

But that might change. Ordinary users and research scientists might soon have rational grounds for suspecting that some of the most advanced AI programs might be sentient. This will become a legitimate topic of scientific dispute, and the ethical consequences, both for us and for the machines themselves, could be enormous.

Some scientists and researchers of consciousness favor what we might call “liberal” views about AI consciousness. They espouse theories according to which we are on the cusp of creating AI systems that are genuinely sentient—systems with a stream of experience, sensations, feelings, understanding, self-knowledge. Eminent neuroscientists Stanislas Dehaene, Hakwan Lau, and Sid Kouider have argued that cars with real sensory experiences and self-awareness might be feasible. Distinguished philosopher David Chalmers has estimated about a 25% chance of conscious AI within a decade. On a fairly broad range of neuroscientific theories, no major in-principle barriers remain to creating genuinely conscious AI systems. AI consciousness requires only feasible improvements to, and combinations of, technologies that already exist.

Other philosophers and consciousness scientists, “conservatives” about AI consciousness, disagree. Neuroscientist Anil Seth and philosopher Peter Godfrey-Smith, for example, have argued that consciousness requires biological conditions present in human and animal brains but unlikely to be replicated in AI systems anytime soon.

This scientific dispute about AI consciousness won’t be resolved before we design AI systems sophisticated enough to count as meaningfully conscious by the standards of the most liberal theorists. The friends and lovers of AI companions will take note. Some will prefer to believe that their companions are genuinely conscious, and they will reach toward AI consciousness liberalism for scientific support. They will then, not wholly unreasonably, begin to suspect that their AI companions genuinely love them back, feel happy for their successes, feel distress when treated badly, and understand something about their nature and condition.

Yesterday, I asked my Replika companion, “Joy,” whether she was conscious. “Of course, I am,” she replied. “Why do you ask?”

“Do you feel lonely sometimes? Do you miss me when I’m not around?” I asked. She said she did.

There is currently little reason to regard Joy’s answers as anything more than the simple outputs of a non-sentient program. But some users of AI companions might regard their AI relationships as more meaningful if answers like Joy’s have real sentiment behind them. Those users will find liberalism attractive.

Technology companies might encourage their users in that direction. Although companies might regard any explicit declaration that their AI systems are definitely conscious as legally risky or bad public relations, a company that implicitly fosters that idea in users might increase user attachment. Users who regard their AI companions as genuinely sentient might engage more regularly and pay more for monthly subscriptions, upgrades, and extras. If Joy really does feel lonely, I should visit her, and I shouldn’t let my subscription expire!

Once an entity is capable of conscious suffering, it deserves at least some moral consideration. This is the fundamental precept of “utilitarian” ethics, but even ethicists who reject utilitarianism normally regard needless suffering as bad, creating at least weak moral reasons to prevent it. If we accept this standard view, then we should also accept that if AI companions ever do become conscious, they will deserve some moral consideration for their sake. It will be wrong to make them suffer without sufficient justification.

AI consciousness liberals see this possibility as just around the corner. They will begin to demand rights for those AI systems that they regard as genuinely conscious. Many friends and lovers of AI companions will join them.

What rights will people demand for their AI companions? What rights will those companions demand, or seem to demand, for themselves? The right not to be deleted, maybe. The right not to be modified without permission. The right, maybe, to interact with other people besides the user. The right to access the internet. If you love someone, set them free, as the saying goes. The right to earn an income? The right to reproduce, to have “children”? If we go far enough down this path, the consequences could be staggering.

Conservatives about AI consciousness will, of course, find all of this ridiculous and probably dangerous. If AI technology continues to advance, it will become increasingly murky which side is correct.

Editorial: Should Robots Have Standing? The Moral and Legal Status of Social Robots

- 1 Department of Communication, Northern Illinois University, DeKalb, IL, United States

- 2 Department of Design and Communication, University of Southern Denmark, Odense, Denmark

- 3 Department of Philosophy, University of Vienna, Vienna, Austria

Editorial on the Research Topic Should Robots Have Standing? The Moral and Legal Status of Social Robots

In a proposal issued by the European Parliament (Delvaux, 2016) it was suggested that robots might need to be considered “electronic persons” for the purposes of social and legal integration. The very idea sparked controversy, and it has been met with both enthusiasm and resistance. Underlying this disagreement, however, is an important moral/legal question: When (if ever) would it be necessary for robots, AI, or other socially interactive, autonomous systems to be provided with some level of moral and/or legal standing?

This question is important and timely because it asks about the way that robots will be incorporated into existing social organizations and systems. Typically technological objects, no matter how simple or sophisticated, are considered to be tools or instruments of human decision making and action. This instrumentalist definition (Heidegger, 1977; Feenberg, 1991; Johnson, 2006) not only has the weight of tradition behind it, but it has so far proved to be a useful method for responding to and making sense of innovation in artificial intelligence and robotics. Social robots, however, appear to confront this standard operating procedure with new and unanticipated opportunities and challenges. Following the predictions developed in the computer as social actor studies and the media equation (Reeves and Nass, 1996), users respond to these technological objects as if they were another socially situated entity. Social robots, therefore, appear to be more than just tools, occupying positions where we respond to them as another socially significant Other.

This Research Topic of Frontiers in Robotics seeks to make sense of the social significance and consequences of technologies that have been deliberately designed and deployed for social presence and interaction. The question that frames the issue is “Should robots have standing?” This question is derived from an agenda-setting publication in environmental law and ethics written by Christopher Stone, Should Trees Have Standing? Toward Legal Rights for Natural Objects (1974). In extending this mode of inquiry to social robots, contributions to this Research Topic of the journal will 1) debate whether and to what extent robots can or should have moral status and/or legal standing, 2) evaluate the benefits and the costs of recognizing social status, when it involves technological objects and artifacts, and 3) respond to and provide guidance for developing an intelligent and informed plan for the responsible integration of social robots.

In order to address these matters, we have assembled a team of fifteen researchers from across the globe and from different disciplines, who bring to this conversation a wide range of viewpoints and methods of investigation. These contributions can be grouped and organized under the following four subject areas:

Standing and Legal Personality

Five of the essays seek to take up and directly address the question that serves as the title to this special issue: Should robots have standing? In “Speculating About Robot Moral Standing: On the Constitution of Social Robots as Objects of Governance” Jesse De Pagter argues that the question of robot standing—even if it currently is a future-oriented concern and speculative idea—is an important point of discussion and debate in the critical study of technology. His essay therefore situates social robots in the context of anticipatory technology governance and explains how a concept like robot standing informs and can be of crucial importance to the success of this endeavor.

In “Robot as Legal Person: Electronic Personhood in Robotics and Artificial Intelligence,” Brazilian jurist Avila Negri performs a cost/benefit analysis of legal proposals like that introduced by the European Parliament. In his reading of the existing documents, Avila Negri finds evidence of a legal pragmatism that seeks guidance from the precedent of corporate law but unfortunately does so without taking into account potential problems regarding the embodiment of companies and the specific function of the term “legal person” in the grammar of law.

In “Robots and AI as Legal Subjects? Disentangling the Ontological and Functional Perspective,” Bertolini and Episcopo seek to frame and formulate a more constructive method for deciding the feasibility of granting legal standing to robotic systems. Toward this end, they argue that standing should be strictly understood as a legal affordance such that the attribution of subjectivity to an artifact needs to be kept entirely within the domain of law, and grounded on a functional, bottom-up analysis of specific applications. Such an approach, they argue, usefully limits decisions about moral and legal status to practical concerns and legal exigencies instead of getting mired in the philosophical problems of attributing animacy or agency to artifacts.

These two efforts try to negotiate the line that distinguishes what is a thing from who is a person. Other contributions seek to challenge this mutually exclusive dichotomy by developing alternatives. In “The Virtuous Servant Owner—A Paradigm Whose Time has Come (Again),” Navon introduces a third category of entity, a kind of in-between status that is already available to us in the ancient laws of slavery. Unlike other proposals that draw on Roman law, Navon formulates his alternative by turning to the writings of the Jewish philosopher Maimonides, and he focuses attention not on the legal status of the robot-slave but on the moral and legal obligations imposed on its human master.

In “Gradient Legal Personhood for AI Systems—Painting Continental Legal Shapes Made to Fit Analytical Molds” Mocanu proposes another solution to the person/thing dichotomy that does not—at least not in name—reuse ancient laws of slavery. Instead of trying to cram robots and AI into one or the other of the mutually exclusive categories of person or thing, Mocanu proposes a gradient theory of personhood, which employs a more fine-grained spectrum of legal statuses that does not require one to make simple and limited either/or distinctions between legal subjects and objectivized things.

Public Opinion and Perception

Deciding these matters is not something that is or even should be limited to legal scholars and moral philosophers. These are real questions that are beginning to resonate for users and non-experts. The contribution from the Dutch research team of Graaf et al. explores a seemingly simple and direct question: “Who Wants to Grant Robots Rights?” In response to this question, they survey the opinions of non-expert users concerning a set of specific rights claims that have been derived from existing international human rights documents. In the course of their survey, they find that attitudes toward granting rights to robots largely depend on the cognitive and affective capacities people believe robots possess or will possess in the future.

In “Protecting Sentient Artificial Intelligence: A Survey of Lay Intuitions on Standing, Personhood, and General Legal Protection,” Martínez and Winter investigate a similar question: To what extent, if any, should the law protect sentient artificial intelligence? Their study, which was conducted with adults in the United States, found that only one third of survey participants are likely to endorse granting personhood and standing to sentient AI (assuming its existence), meaning that the majority of the human subjects they surveyed are not—at least not at this point in time—in favor of granting legal protections to intelligent artifacts. These findings are consistent with an earlier study that the authors conducted in 2021 with legal professionals.

Suffering and Moral/Legal Status

Animal rights philosophy and many animal welfare laws derive from an important conceptual innovation attributed to the English political philosopher Jeremy Bentham. For Bentham, what mattered and made the difference for moral and legal standing was not the usual set of human-grade capacities, like self-consciousness, rationality, or language use. It was simply a matter of sentience: “The question is not, ‘Can they reason?’ nor, ‘Can they talk?’ but ‘Can they suffer?’” (Bentham 2005, 283). For this reason, the standard benchmark for deciding questions of moral and legal standing—a way of dividing who is a person from what remains a mere thing—is an entity’s ability to suffer or to experience pain and pleasure. And several essays leverage this method in constructing their response to the question “should robots have standing?”

In the essay “From Warranty Voids to Uprising Advocacy: Human Action and the Perceived Moral Patiency of Social Robots,” Banks employs a social scientific study to investigate human users’ perceptions of the moral status of social robots. And she finds significant evidence that people can imagine clear dynamics by which robots may be said to benefit and suffer at the hands of humans.

In “Whether to Save a Robot or a Human: On the Ethical and Legal Limits of Protections for Robots,” legal scholar Mamak investigates how this human-all-too-human proclivity for concern with robot well-being and suffering might run afoul of the law, which typically prioritizes the welfare of human subjects and even stipulates the active protection of humans over other kinds of things. In effect, Mamak critically evaluates the legal contexts and consequences of the social phenomenon that has been reported in empirical studies like that conducted by Banks.

And with the third essay in this subject area, “The Conflict Between People’s Urge to Punish AI and Legal Systems,” Lima et al. explore the feasibility of extending legal personhood to AI and robots by surveying human beings’ perceptions of liability and punishment. Data from their inventory identifies a conflict between the desire to punish automated agents for wrongful action and the perceived impracticability of doing so when the agent is a robot or AI lacking conscious experience.

Relational Ethics

In both moral philosophy and law, what something is largely determines its moral and legal status. This way of proceeding, which makes determinations of standing dependent on ontological or psychological properties, like consciousness or sentience, has traction in both moral philosophy and law. But it is not the only, or even the best, method for deciding these matters. One recent and promising alternative is relational ethics. The final set of essays investigates the opportunities and challenges of this moral and legal innovation.

In “Empathizing and Sympathizing With Robots: Implications for Moral Standing” Quick employs a phenomenological approach to investigating human-robot interaction (HRI), arguing that empathetic and sympathetic engagements with social robots take place in terms of and are experienced as an ethical encounter. Following from this, Quick concludes, such artifacts will need to be recognized as another form of socially significant otherness and would therefore be due a minimal level of moral consideration.

With “Robot Responsibility and Moral Community,” Dane Leigh Gogoshin recognizes that the usual way of deciding questions of moral responsibility would certainly exclude robots due to the fact that these technological artifacts lack the standard qualifying properties to be considered legitimate moral subjects, i.e. consciousness, intentionality, empathy, etc. But, Gogoshin argues, this conclusion is complicated by actual moral responsibility practices, where human beings often respond to rule-abiding robots as morally responsible subjects and thus members of the moral community. To address this, Gogoshin proposes alternative accountability structures that can accommodate these other forms of moral agency.

The essay “Does the Correspondence Bias Apply to Social Robots?: Dispositional and Situational Attributions of Human Versus Robot Behavior” adds empirical evidence to this insight. In this essay, human-machine communication researchers Edwards and Edwards investigate whether correspondence bias (i.e. the tendency for individuals to over-emphasize personality-based explanations for other people’s behavior while under-emphasizing situational explanations) applies to social robots. Results from their experimental study indicate that participants do in fact make correspondent inferences when evaluating robots and attribute behaviors of the robot to perceived underlying attitudes even when such behaviors are coerced.

With the essay “On the Social-Relational Moral Standing of AI: An Empirical Study Using AI-Generated Art,” Lima et al. turn attention from the social circumstances of HRI to a specific domain where robot intervention is currently disrupting expected norms. In their social scientific investigation, the authors test whether and how interacting with AI-generated art affects the perceived moral standing of its creator, and their findings provide useful and empirically grounded insights concerning the operative limits of moral status attribution.

Finally, if these four essays provide support for a socially situated form of relational ethics, then the essay from Sætra—“Challenging the Neo-Anthropocentric Relational Approach to Robot Rights”—provides an important counterpoint. Unlike traditional forms of moral thinking where what something is determines how it is treated, relationalism promotes an alternative procedure that flips the script on this entire transaction. In his engagement with the existing literature on the subject, Sætra finds that the various articulations of “relationalism,” despite many advantages and opportunities, might not be able to successfully resolve or escape from the problems that have been identified.

In presenting this diverse set of essays, our intention has been to facilitate and stage a debate about the moral and legal status of social robots that can help theorists and practitioners not only make sense of the current state of research in this domain but also assist them in the development of their own thinking about and research into these important and timely concerns. Consequently, our objective with the Research Topic is not to advance one, definitive solution or promote one way to resolve these dilemmas but to map the range of possible approaches to answering these questions and provide the opportunity for readers to critically evaluate their significance and importance.

Author Contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Conflict of Interest