XGBoost: A Scalable Tree Boosting System

XGBoost is an optimized distributed gradient boosting system designed to be highly efficient, flexible and portable. It implements machine learning algorithms under the Gradient Boosting framework. XGBoost provides parallel tree boosting (also known as GBDT or GBM) that solves many data science problems in a fast and accurate way. The same code runs on major distributed environments (Hadoop, SGE, MPI) and can solve problems beyond billions of examples. The most recent version integrates naturally with dataflow frameworks (e.g. Flink and Spark).

Reference Paper

- Tianqi Chen and Carlos Guestrin. XGBoost: A Scalable Tree Boosting System. Preprint.

Technical Highlights

- Sparsity-aware tree learning to optimize for sparse data.

- Distributed weighted quantile sketch for finding quantiles and approximate tree learning.

- Cache-aware learning algorithm.

- Out-of-core computation system for training when the data does not fit into memory.

- XGBoost is one of the most frequently used packages in winning solutions to machine learning challenges.

- XGBoost can solve billion scale problems with few resources and is widely adopted in industry.

- See the XGBoost Resources Page for a complete list of use cases of XGBoost, including machine learning challenge winning solutions, data science tutorials and industry adoptions.

Acknowledgement

The XGBoost open source project is actively developed by amazing contributors from the DMLC/XGBoost community.

This work was supported in part by ONR (PECASE) N000141010672, NSF IIS 1258741 and the TerraSwarm Research Center sponsored by MARCO and DARPA.

- Tutorial on Tree Boosting [Slides]

- XGBoost Main Project Repo for Python, R, Java, Scala and the distributed version.

- XGBoost Julia Package

- XGBoost Resources for all resources, including challenge-winning solutions and tutorials.


XGBoost: Everything You Need to Know

XGBoost is a popular gradient-boosting library that supports GPU training, distributed computing, and parallelization. It’s precise, it adapts well to many types of data and problems, it has excellent documentation, and overall it’s very easy to use.

At the moment it’s a de facto standard for getting accurate results from predictive modeling on structured (tabular) data with machine learning. It’s also one of the fastest gradient-boosting libraries available for R, Python, and C++, while delivering very high accuracy.

We’re going to explore how to use the model while using Neptune to present and detail some best practices for ML project management in general.

If you’re not sure that XGBoost is a great choice for you, follow along with the tutorial until the end, and then you’ll be able to make a fully informed decision.

Ensemble algorithms – explanation

Ensemble learning combines several learners (models) to improve overall performance, increasing predictiveness and accuracy in machine learning and predictive modeling.

Technically speaking, the power of ensemble models is simple: they can combine thousands of smaller learners trained on subsets of the original data. This leads to some interesting observations:

- The variance of the general model decreases significantly thanks to bagging

- The bias also decreases due to boosting

- And overall predictive power improves because of stacking

Types of ensemble methods

Ensemble methods can be classified into two groups based on how the sub-learners are generated:

- Sequential ensemble methods – learners are generated sequentially. These methods exploit the dependency between base learners: each learner influences the next one, so the overall behavior of the ensemble builds up from one learner to the next. A popular example of sequential ensemble algorithms is AdaBoost.

- Parallel ensemble methods – learners are generated in parallel. The base learners are created independently, which lets these methods exploit their independence and reduce error by averaging the results. An example implementing this approach is Random Forest.

Ensemble methods can use homogeneous learners (learners from the same family) or heterogeneous learners (learners of different types, as accurate and diverse as possible).

Homogeneous and heterogeneous ML algorithms

Generally speaking, homogeneous ensemble methods have a single-type base learning algorithm. The training data is diversified by assigning weights to training samples, but they usually leverage a single type of base learner.

Heterogeneous ensembles on the other hand consist of members having different base learning algorithms which can be combined and used simultaneously to form the predictive model.

A general rule of thumb:

- Homogeneous ensembles use the same feature selection with a variety of data and distribute the dataset over several nodes.

- Heterogeneous ensembles use different feature selection methods with the same data.

Homogeneous Ensembles:

- Ensemble algorithms that use bagging, like bagged decision tree classifiers

- Random Forests, Randomized Decision Trees

Heterogeneous Ensembles (built by combining different learners, such as):

- Support Vector Machines (SVM)

- Artificial Neural Networks (ANN)

- Memory-Based Learning methods

- Bagged and boosted decision trees like XGBoost

Important characteristics of ensemble algorithms

Bagging

Bagging decreases overall variance by averaging the performance of multiple estimates. It trains different learners on several subsets of the original dataset, each drawn randomly with replacement, which is the core idea of bootstrap aggregation. Bagging normally uses a voting mechanism for classification (as in Random Forest) and averaging for regression.
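As a minimal sketch of bootstrap aggregation for regression, the NumPy code below fits one high-variance base learner on each bootstrap resample and averages the predictions. A degree-6 polynomial is used as the base learner purely for illustration. The function name and the toy data are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data: a noisy sine curve
x = np.linspace(-1, 1, 40)
y = np.sin(3 * x) + rng.normal(scale=0.3, size=x.size)

def bagged_poly_predict(x, y, x_new, n_models=50, degree=6):
    """Bagging: fit one model per bootstrap sample, then average the predictions."""
    preds = []
    for _ in range(n_models):
        idx = rng.integers(0, len(x), len(x))       # draw len(x) indices with replacement
        coefs = np.polyfit(x[idx], y[idx], degree)  # high-variance base learner
        preds.append(np.polyval(coefs, x_new))
    return np.mean(preds, axis=0)                   # averaging, as used for regression

print(bagged_poly_predict(x, y, np.array([0.0, 0.5])))
```

For classification, the averaging step would be replaced by a majority vote over the base learners' predicted labels.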

Note: Remember that some learners are stable and less sensitive to training perturbations. Combining such learners doesn’t help the general model improve its generalization performance.

Boosting

This technique fits weak learners (learners that have poor predictive power and do only slightly better than random guessing) to a specifically weighted version of the original dataset. Higher weights are given to samples that were misclassified earlier.

Learner predictions are then combined with weighted voting in the case of classification, or with a weighted sum for regression.

Well-known boosting algorithms

AdaBoost stands for Adaptive Boosting. The logic implemented in the algorithm is:

- In the first round, the classifier (learner) is trained with equal weights on all training samples.

- In subsequent boosting rounds, the adaptive process increasingly weighs data points that were misclassified by the learners in previous rounds and decreases the weights of correctly classified ones.
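The reweighting logic above can be sketched in a few lines of NumPy. This sketch assumes the classic discrete AdaBoost formulation with labels in {-1, +1}. In that formulation, a learner with weighted error ε gets a vote weight α = ½·ln((1 − ε)/ε). The function name is hypothetical.

```python
import numpy as np

def adaboost_reweight(weights, y_true, y_pred):
    """One discrete AdaBoost round (labels in {-1, +1}).

    Returns the learner's vote weight alpha and the renormalized sample weights.
    """
    misclassified = y_true != y_pred
    err = np.sum(weights[misclassified]) / np.sum(weights)   # weighted error
    err = np.clip(err, 1e-10, 1 - 1e-10)                      # avoid log(0) / division by 0
    alpha = 0.5 * np.log((1 - err) / err)
    # Misclassified samples are scaled by e^alpha (> 1), correct ones by e^-alpha (< 1)
    new_weights = weights * np.exp(-alpha * y_true * y_pred)
    return alpha, new_weights / new_weights.sum()

y_true = np.array([1, 1, -1, -1, 1])
y_pred = np.array([1, -1, -1, -1, 1])   # one mistake, at index 1
w0 = np.full(5, 1 / 5)                  # first round: equal weights
alpha, w1 = adaboost_reweight(w0, y_true, y_pred)
print(alpha, w1)
```

Here one out of five equally weighted samples is misclassified, so ε = 0.2 and α = ½·ln 4 ≈ 0.693. After renormalization, the misclassified sample's weight rises from 0.2 to 0.5, and each correctly classified sample drops to 0.125.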


Gradient Boosting

Gradient Boosting requires a differentiable loss function and uses weak learners to minimize it. At each boosting stage, a new learner is fitted to reduce the loss given the current model, by following the negative gradient of the loss. Boosting algorithms can be used either for classification or regression.

What is XGBoost architecture?

XGBoost stands for Extreme Gradient Boosting. It’s a parallelized and carefully optimized version of the gradient boosting algorithm. Boosting rounds run one after another, but XGBoost parallelizes the work inside each round (such as searching for the best split across features), which hugely improves training time.

Instead of training a single best possible model on the data (as in traditional methods), we train many models, each one focusing on the errors left by the ones before it. We then combine their predictions into a single, stronger model.

In many cases, XGBoost is better than standard gradient boosting implementations. The Python implementation gives access to a vast number of inner parameters to tweak for better precision and accuracy.

Some important features of XGBoost are:

- Parallelization: The model is implemented to train with multiple CPU cores.

- Regularization: XGBoost includes several regularization penalties (such as L1 and L2 penalties on the leaf weights) to avoid overfitting, which helps the trained model generalize adequately.

- Non-linearity: XGBoost can detect and learn from non-linear data patterns.

- Cross-validation: Built-in and comes out-of-the-box.

- Scalability: XGBoost can run distributed on clusters and frameworks like Hadoop and Spark, so you can process enormous amounts of data. It’s also available for many programming languages like C++, Java, Python, and Julia.

How does the XGBoost algorithm work?

- Consider a function or estimate $F(x)$. To start, we build a sequence of estimates derived from the gradients of the loss function. The update below is a particular form of gradient descent:

$$F_m(x) = F_{m-1}(x) + \rho_m h_m(x), \qquad h_m(x) \approx -\left[\frac{\partial L(y, F(x))}{\partial F(x)}\right]_{F = F_{m-1}}$$

$L$ represents the loss function to minimize, and its negative gradient gives the direction in which the loss decreases. $\rho_m$ is the step size fitted to the loss function; it’s equivalent to the learning rate in gradient descent. The weak learner $h_m$ is expected to approximate the negative gradient of the loss suitably.

- To iterate over the model and find the optimal definition, we express the whole procedure as a sequence $F_0, F_1, \dots, F_M$ and look for an effective function that converges to the minimum of the loss. The loss serves as an error measure that helps us decrease the error and keep the performance over time. For a gradient boosting regressor, a common choice is the squared-error loss, whose negative gradient is simply the residual:

$$L(y, F(x)) = \frac{1}{2}\left(y - F(x)\right)^2, \qquad -\frac{\partial L(y, F(x))}{\partial F(x)} = y - F(x)$$
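The gradient boosting procedure described above can be sketched from scratch in NumPy for the squared-error loss. This is a simplified illustration, not XGBoost’s actual algorithm. The weak learners are hypothetical one-split regression stumps on a single feature. The step size ρ is also kept fixed as a shrinkage factor instead of being fitted by line search at each step.

```python
import numpy as np

def fit_stump(x, r):
    """Fit a one-split regression stump h(x) to targets r on a 1-D feature x."""
    best = None
    for t in np.unique(x)[:-1]:                # thresholds leaving both sides non-empty
        left, right = r[x <= t], r[x > t]
        sse = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if best is None or sse < best[0]:
            best = (sse, t, left.mean(), right.mean())
    _, t, left_value, right_value = best
    return lambda z: np.where(z <= t, left_value, right_value)

def gradient_boost(x, y, n_rounds=50, rho=0.1):
    """Gradient boosting for L(y, F) = 0.5 * (y - F)^2 with a fixed step size rho."""
    f0 = y.mean()                              # F_0: constant initial estimate
    F = np.full(y.shape, f0)
    learners = []
    for _ in range(n_rounds):
        residual = y - F                       # negative gradient of the squared loss
        h = fit_stump(x, residual)             # weak learner h_m approximates it
        F = F + rho * h(x)                     # F_m = F_{m-1} + rho * h_m
        learners.append(h)
    return lambda z: f0 + rho * sum(h(z) for h in learners)

x = np.linspace(0, 6, 60)
y = np.sin(x)
model = gradient_boost(x, y)
print("training MSE:", np.mean((y - model(x)) ** 2), "vs. variance of y:", np.var(y))
```

Because each stump is a least-squares fit to the current residuals, every round with 0 < ρ < 2 can only lower the training loss. This is why the final training MSE ends up below the variance of y, which is the loss of the constant starting estimate F_0.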

For an in-depth look at the mathematics behind Gradient Boosting, I recommend checking this post by Krishna Kumar Mahto.

Other Gradient Boosting methods

Gradient Boosting Machine (GBM)

GBM combines predictions from multiple decision trees, and all the weak learners are decision trees. The key idea with this algorithm is that every node of those trees takes a different subset of features to select the best split. As it’s a Boosting algorithm, each new tree learns from the errors made in the previous ones.

Useful reference -> Understanding Gradient Boosting Machines

Light Gradient Boosting Machine (LightGBM)

LightGBM can handle huge amounts of data. It’s one of the fastest algorithms for both training and prediction. It generalizes well and scales well to large numbers of cores, and it’s open source, so you can use it in your projects for free.

Useful reference -> Understanding LightGBM Parameters (and How to Tune Them)

Categorical Boosting (CatBoost)

This particular set of Gradient Boosting variants has specific abilities to handle categorical variables and data in general. The CatBoost object can handle categorical variables or numeric variables, as well as datasets with mixed types. That’s not all. It can also use unlabelled examples and explore the effect of kernel size on speed during training.

Useful reference -> CatBoost: A machine learning library to handle categorical (CAT) data automatically

Integrate XGBoost with Neptune

Start creating your project.

Install the required Neptune client libraries:

Install the Neptune notebook plugin to save all your work and link it with the Neptune UI platform:

Enable Jupyter integration:

Register on the Neptune platform, get your API key, and connect your notebook with your Neptune session:

To complete the setup, import the Neptune client library in your notebook and initialize the connection calling the neptune.init() method:

Get your dataset ready to use

To illustrate some of the work we can do with XGBoost, I picked a dataset with factors that help predict exam scores for school students. The dataset is based on data from students who studied at different school levels for four to six months, including their weekly number of study hours and how well they did.

The dataset helps determine how much influence the quality of studying has on students’ exam scores. It contains the results obtained in three exams, along with several social, economic, and financial factors.

The dataset is presented with the following features:

- Gender

- Race / Ethnicity

- Parental level of Education

- Lunch

- Test preparation course

We can take a closer look at the distributions regarding ethnicity and gender:

- Math, Reading and Writing Scores by Ethnicity:

Group C stands out on all scoring metrics. This suggests, a priori, that there will be differences in study performance related to race and ethnic origin.

- Math, Reading and Writing Scores by Gender:

By contrast, gender doesn’t influence the overall scores much in any of the three disciplines. It seems that gender is not a decisive factor.

Sample your data

To sample the data, we’ll use the DMatrix object from the XGBoost Python library. The process of splitting the data into subsets is called data segregation. DMatrix is an internal data structure optimized for both memory efficiency and training speed. We’ll split the data into training and testing subsets and then start training.

Transform Categorical variables to Numeric:

All the features are still registered as categorical values. To train the model, we need to map each category to a numerical code, transforming the 5 feature columns accordingly.

- First, convert object data types to string using pandas:

- Transform the Gender into 0/1 values:

- Transform the parental level of education:

- Transform the lunch values:

- Transform the test preparation course:
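The transformation steps above can be sketched with pandas as follows. The column names and category labels are assumptions based on the feature list, and the three rows are hypothetical toy data. Mapping categories to alphabetical integer codes with `cat.codes` is one simple choice among several; an explicit ordinal mapping could suit the parental education levels better.

```python
import pandas as pd

# Hypothetical toy rows; column names and category labels are assumed
df = pd.DataFrame({
    "gender": ["female", "male", "female"],
    "race/ethnicity": ["group B", "group C", "group A"],
    "parental level of education": ["bachelor's degree", "some college", "high school"],
    "lunch": ["standard", "free/reduced", "standard"],
    "test preparation course": ["none", "completed", "none"],
    "math score": [70, 65, 88],
    "reading score": [75, 60, 90],
    "writing score": [72, 58, 91],
})
feature_cols = ["gender", "race/ethnicity", "parental level of education",
                "lunch", "test preparation course"]

# 1. Convert object columns to strings
df[feature_cols] = df[feature_cols].astype(str)

# 2. Gender -> 0/1
df["gender"] = df["gender"].map({"female": 0, "male": 1})

# 3-5. Remaining categorical columns -> integer codes (categories sorted alphabetically)
for col in feature_cols[1:]:
    df[col] = df[col].astype("category").cat.codes

print(df[feature_cols])
```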

Next, we separate the data features (the columns we’ll use for our predictions) from the data targets (the last 3 columns, representing the Math, Reading, and Writing scores obtained by these students).

Connect your script to Neptune

- Start by creating a new project and give it a name and a meaningful description.

- Connect your script using your Neptune credentials.

- Head over to your Jupyter notebook and enable the Neptune extensions to get access to your project.

Note: I highly encourage you to take a look at the new documentation released for the new Neptune Python API. It’s well-structured and very intuitive.

Train the model

Once we’ve correctly segregated our training and testing samples, we’re ready to start fitting the model to the data. During training, we’ll log all evaluation results as they are produced, to get a real-time understanding of our model’s performance.

To start logging with Neptune, we create an experiment and a list of hyperparameters that will define each model version.

Start the training process using the parameters we set up earlier and the DMatrix data. We also add the neptune_callback() function, which automates all the work needed to monitor the loss and evaluation metrics in real time.

Once your project is connected to the platform, Neptune tracks and monitors the current state of your hardware resources, like the CPU, memory, GPU, and GPU memory. It’s very convenient to keep a close eye on your resources while training.

Let’s take a closer look and try to explain the XGBRegressor hyperparameters:

- learning_rate: Scales the contribution (weight) of each new estimator added to the model. It’s usually kept small so the model learns gradually over many boosting rounds, at the cost of needing more rounds.

- max_depth: The maximum depth of each estimator (trees in this case). Tune this parameter carefully, because large values can cause the model to overfit.

- alpha (reg_alpha in XGBRegressor): An L1 regularization penalty on the leaf weights that helps avoid overfitting.

- n_estimators: The number of estimators (trees) the model is built from.

- num_boost_round: The number of boosting rounds when training with xgb.train(). It plays the same role as n_estimators in the scikit-learn wrapper. Keep in mind that it should be re-tuned each time you update another parameter, especially the learning rate.

If you head back to Neptune, under All metadata you’ll see the model registered and all the initial parameters logged.

Once training is launched, you can monitor the training and evaluation metric logs by clicking on the current experiment and heading to the ‘Charts’ section. Neptune’s intuitive UI and its integrations with a plethora of ML algorithms and frameworks let you quickly iterate on and improve different versions of your model.

Using Neptune Callback, we can access even more information about our models, like the feature importance graph and the structure of the inner estimators.

Feature importance graph

The XGBRegressor ranks the features used for the prediction by importance. A benefit of using gradient boosting is that after the boosted trees are constructed, it’s relatively straightforward to retrieve importance scores for each attribute. These scores can be plotted as a feature importance graph, showing how much each feature contributes to the boosted trees (for example, how often it’s used for splits, or the average gain those splits bring).

This article explains how to compute importance scores for each feature using the GBM package in R -> Gradient Boosted Feature Selection

We can see that Race/Ethnicity and parental level of education are the principal factors in a student’s success.

Versioning your model

You can store multiple versions of your model in a binary format in Neptune. Neptune automatically saves the current version of the model once training is finished, so you can always go back and load previous model versions to compare them.

Under ‘All Metadata -> Artifacts’, you’ll find all the relevant metadata that’s been stored.

Collaborate and share work with your team

The platform also lets you cross-compare all your experiments in a seamless manner. Simply check the experiments you want and a specific view will appear that shows all required information.

You can share any Neptune experiment by just sending a link.

Note: Using the team plan, you can share all your work and projects with your teammates.

How to hyper-tune the XGBRegressor

The most thorough way of dealing with parameter tuning, when time and resources are not an issue, is to run a gigantic Grid Search over all the parameters and wait for the algorithm to output the optimal solution. This works well if you’re working with a small or intermediate dataset. But for bigger datasets, this approach can very quickly turn into a nightmare and consume too much time and too many resources.

Tips for hyper-tuning XGBoost when dealing with huge datasets

A well-known saying among data scientists goes like this: “You can make your model do wonders if your data has some signal, and if it doesn’t have a signal, it doesn’t.”

The most straightforward approach I suggest when you have vast amounts of training data is to manually research the features that have significant predictive impact.

- First, try to reduce your features. 100 or 200 features is a lot, so try to narrow the scope of feature selection. You could also rely on SelectKBest to select the top performers. It scores each feature against the target with a chosen criterion and keeps the K highest-scoring ones.

- Bad performance can also be related to the quality of your testing dataset. The test data might represent a completely different subset of data compared to your training data. Therefore, you should try cross-validation so that your R-squared estimate is reliable.

- Finally, if you see that your hyperparameter tuning is still having minimal impact, try switching to simpler regression methods like Linear, Lasso, or Elastic Net regression instead of sticking to XGBRegressor.
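The SelectKBest idea from the first tip can be sketched with scikit-learn. Here the univariate F-test `f_regression` is the assumed scoring criterion, and k=10 is arbitrary. The synthetic data has 200 features, of which only columns 3 and 17 carry signal.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_regression

rng = np.random.default_rng(0)
X = rng.normal(size=(300, 200))                          # 200 candidate features
y = 4 * X[:, 3] - 3 * X[:, 17] + rng.normal(size=300)    # only 2 carry signal

# Score each feature with a univariate F-test against y and keep the top k
selector = SelectKBest(score_func=f_regression, k=10)
X_reduced = selector.fit_transform(X, y)

kept = selector.get_support(indices=True)
print(X_reduced.shape, kept)
```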

Since the data for our example isn’t that big, we can afford the first option, an exhaustive Grid Search, which is also the most reliable tuning option to demonstrate here. Keep in mind that if you know which hyperparameters have more impact on the data, you’ll have a much smaller scope of work.

Grid Search method

Fortunately, XGBoost implements the scikit-learn API, so it’s very easy to use Grid Search to find the optimal hyperparameters for the model, starting from the original set of hyperparameters.

Let’s create a range of values that each hyperparameter can take:

Configure your GridSearchCV. Since we’re predicting exam scores with XGBRegressor, a regression metric such as R-squared is an appropriate scoring choice (ROC AUC only applies to classifiers). Compare the results across 10-fold cross-validation.

Launch training:

The processing steps will look something like this:

The best estimator can be accessed through the best_estimator_ attribute.

XGBoost pros and cons

Advantages

- Gradient Boosting comes with an easy-to-read and interpretable algorithm, making most of its predictions easy to handle.

- Boosting is a resilient and robust method that prevents and curbs overfitting quite easily.

- XGBoost performs very well on small and medium-sized data, data with subgroups, and structured datasets with not too many features.

- It’s a great approach because the large majority of real-world problems involve classification and regression, two tasks where XGBoost is the reigning king.

Disadvantages

- XGBoost does not perform as well on unstructured data, such as images or raw text.

- A common thing often forgotten is that Gradient Boosting is very sensitive to outliers, since every learner is forced to fix the errors of its predecessors.

- The overall method is hard to scale across boosting rounds. Each estimator bases its corrections on the previous predictors, so the rounds must run sequentially and can’t be parallelized with each other.

We’ve covered many aspects of Gradient Boosting, starting from a theoretical point of view to a more practical path. Now you can see how easy it is to add experiment tracking and model management to your XGBoost training and hyper-tuning with Neptune.

As always, I’ll leave you with some useful references below, so you can expand your pool of knowledge even more and improve your coding skills.

Stay tuned for more content!

References:

- Hyperparameter tuning in XGBoost

- How to Organize Your ML Development in an Efficient Way

- Introduction to XGBoost with an Implementation in an iOS Application

- Demystifying Maths of Gradient Boosting


XGBoost: Powering Machine Learning with Gradient Boosting

- April 23, 2023

- Neural Ninja


I. INTRODUCTION

Picture a group of friends trying to decide where to go for dinner. Everyone throws out suggestions, but no one can agree. So, they decide to vote, and the place with the most votes wins. The decision-making process was not only fast but also took everyone’s opinion into account. XGBoost, or Extreme Gradient Boosting, is a machine learning algorithm that works a bit like this voting system among friends. It combines many simple models to create a single, more powerful, and more accurate one. In machine learning lingo, we call this an ‘ensemble method’.

Welcome to our article on XGBoost, a much-loved algorithm in the data science community and a winner of many Kaggle competitions. We’ll explore how XGBoost takes the idea of ‘ensemble learning’ to a new level, making it a powerful tool for a variety of machine learning tasks. By the end of this article, you’ll understand what XGBoost is, how it works, and why it’s a game-changer in machine learning.

II. BACKGROUND INFORMATION

To understand the power of XGBoost, we need to go back a bit and refresh our memory about a related concept – Gradient Boosting Machines, or GBMs. Like our group of friends deciding on a dinner place, GBMs also use a ‘voting system’ among many models to make a final decision. They build many weak learners (simple models), combine their outcomes, and use a technique called ‘boosting’ to turn this group of weak learners into a single strong one. The ‘gradient’ in Gradient Boosting refers to a way of minimizing errors or, in our analogy, making sure everyone gets the most enjoyable dining experience.

Next, let’s talk about the concept of ‘ensemble learning’ and ‘boosting’. Think about an orchestra. Each musician is good, but when they all play together, they create something much more impressive. Similarly, in machine learning, we can combine many models to get a better one. This is called ‘ensemble learning’. ‘Boosting’ is one way to do ensemble learning. It builds models sequentially, with each new model trying to correct the errors made by the previous ones.

Now, imagine if our group of friends had a super-organized friend who not only conducted the vote but also ensured it happened super-fast, with everyone’s preferences taken into account. That’s what XGBoost does. It builds on the idea of gradient boosting but does it faster and more efficiently. Its name, XGBoost, stands for ‘Extreme Gradient Boosting’, reflecting its speed and performance capabilities.

III. HOW XGBOOST WORKS

Description of Gradient Boosting Concept

XGBoost stands for Extreme Gradient Boosting. To understand it, we first need to grasp the concept of gradient boosting. Imagine you’re building a model car. You start with a basic structure, but it’s not good enough. So, you keep adding small improvements. Maybe you adjust the wheels for better balance or tweak the design for better aerodynamics. Each little enhancement gets you closer to your ideal model car. This process is much like gradient boosting in machine learning. You begin with a simple model (often just a constant guess, such as the average of the target values), then iteratively add new models to correct the errors made by the existing set of models.

Explanation of How XGBoost Enhances Gradient Boosting

Let’s continue with our model car analogy. Suppose you’re not just building a single model car, but a whole series of them. To speed up the process and ensure all cars are top-notch, you decide to create an assembly line. You add stations to make certain improvements, ensure that errors made early in the line get corrected later on, and regularly check the cars’ performance. This improved, efficient system is what XGBoost brings to gradient boosting.

XGBoost uses more sophisticated techniques compared to regular gradient boosting, such as:

- Regularization: It’s a technique that prevents your model from getting overly complicated and overfitting the data. It’s like a quality check in our assembly line that stops us from adding too many unnecessary features to our model cars.

- Parallel Processing: It makes building models faster, just like an assembly line speeds up car production.

- Tree Pruning: This technique stops adding improvements (or ‘branches’ to the decision tree models) when they no longer significantly help, preventing the wastage of resources.

- Handling Missing Values: XGBoost has a built-in method to handle missing data, just like an experienced craftsperson who can work around missing parts.

Differences between Gradient Boosting Machines and XGBoost

The primary differences between traditional Gradient Boosting Machines and XGBoost are based on efficiency, speed, and accuracy. While both involve creating a series of models that learn from their predecessors’ mistakes, XGBoost incorporates several tweaks and optimizations that make it faster and more accurate, such as regularization and tree pruning.
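The regularization and pruning that set XGBoost apart can be made concrete with the split-gain formula from the XGBoost paper. Take a node with gradient sum G and Hessian sum H. Its optimal leaf weight is w* = −G/(H + λ). A split’s gain is ½[G_L²/(H_L + λ) + G_R²/(H_R + λ) − (G_L + G_R)²/(H_L + H_R + λ)] − γ, where λ is the L2 penalty and γ is the penalty per added leaf. A split whose gain is negative is pruned. The sketch below evaluates these formulas directly; the function names and numbers are illustrative.

```python
def leaf_weight(G, H, lam):
    """Optimal leaf value w* = -G / (H + lambda)."""
    return -G / (H + lam)

def split_gain(G_L, H_L, G_R, H_R, lam, gamma):
    """Regularized gain of splitting a node into left and right children."""
    def score(G, H):
        return G * G / (H + lam)
    return 0.5 * (score(G_L, H_L) + score(G_R, H_R) - score(G_L + G_R, H_L + H_R)) - gamma

# Squared loss: gradient g_i = F(x_i) - y_i, Hessian h_i = 1.
# Left child: two samples with residual +2  -> G_L = -4, H_L = 2
# Right child: two samples with residual -1 -> G_R = 2,  H_R = 2
print(leaf_weight(-4, 2, lam=1.0))                  # 1.333..., shrunk from the mean residual 2
print(split_gain(-4, 2, 2, 2, lam=1.0, gamma=0.0))  # 2.933...: keep the split
print(split_gain(-4, 2, 2, 2, lam=1.0, gamma=3.0))  # -0.0666...: prune it
```

Raising λ shrinks leaf values toward zero, and raising γ makes the model demand more gain before it accepts a new split. Both knobs limit the tree’s complexity.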

IV. UNDERSTANDING XGBOOST

Mathematical Insights into XGBoost

While we won’t go deep into the mathematical details, it’s important to understand that XGBoost’s power comes from optimization. Optimization is like finding the best way to arrange your assembly line or the quickest route to school. In XGBoost, optimization involves finding the best set of model improvements to reduce errors.

XGBoost uses a process called gradient descent for this. Imagine you’re blindfolded and standing on a hill, trying to find your way down. You might feel with your feet which way the ground slopes downwards and take a step in that direction. Repeating this process gets you to the bottom of the hill. This is essentially what gradient descent does: it steps in the direction that most quickly reduces errors.
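The hill-walking picture translates almost literally into code. This generic one-dimensional gradient descent sketch is not XGBoost’s internals, which apply the same idea to whole functions rather than to a single number. It minimizes the hypothetical function f(x) = (x − 3)², whose slope is 2(x − 3).

```python
def gradient_descent(grad, x0, lr=0.1, steps=100):
    """Repeatedly step against the slope, starting from x0."""
    x = x0
    for _ in range(steps):
        x = x - lr * grad(x)   # move downhill by an amount proportional to the slope
    return x

# f(x) = (x - 3)^2 has slope 2 * (x - 3) and its minimum at x = 3
x_min = gradient_descent(lambda x: 2 * (x - 3), x0=10.0)
print(round(x_min, 4))  # 3.0
```

Each step shrinks the distance to the minimum by a factor of 1 − 2·lr = 0.8, so after 100 steps the starting gap of 7 has essentially vanished.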

Interpretation and Implications of XGBoost

Interpreting XGBoost involves understanding how different features contribute to predictions. XGBoost provides importance scores for features in your model. Going back to our car analogy, it’s like identifying which improvements (like aerodynamic design or wheel adjustments) have the most impact on the car’s performance.

Implications of using XGBoost are mainly around its performance and efficiency. It’s known for providing highly accurate models quickly, even with large datasets or many features. However, like all models, it’s not a one-size-fits-all solution. Understanding where it shines and where it doesn’t is crucial (and we’ll cover this later in the article).

V. KEY CONCEPTS IN XGBoost

- XGBoost: XGBoost, short for “Extreme Gradient Boosting,” is like a team of miners, each equipped with a magical pickaxe that can learn from the mistakes of the miner before them. Every time a miner makes a mistake, their pickaxe adjusts itself to do better next time. This is essentially what XGBoost does. It builds many small and simple models (the miners) in a sequential way, with each new model learning from the errors of its predecessors. This sequential learning process is what makes XGBoost a part of the ‘boosting’ family of machine learning algorithms.

- Regularization: Think of Regularization as a coach who keeps the team of miners from overthinking their strategy. It does this by adding a penalty to miners (models) that are too complex. The training error, in turn, punishes models that are too simple. Together, these keep the models from fitting the data too perfectly (overfitting) or too loosely (underfitting), so the predictions become more reliable and generalizable.

- Gradient Boosting: This is the key method that XGBoost uses to learn from mistakes. Just like when you’re going down a hill and you use the slope to guide you to the bottom, gradient boosting uses the ‘gradient’ (or slope) of the error to guide the learning process. It’s called ‘boosting’ because each new model gives a ‘boost’ to the previous models by correcting their mistakes.

- Overfitting: This happens when our team of miners (models) is too focused on the details of the rocks they’ve already mined (training data) and can’t adapt when they encounter new kinds of rocks (test data). In machine learning terms, a model overfits when it performs well on the training data but poorly on new, unseen data.

- Tree Pruning: In XGBoost, tree pruning is like a gardener who trims the branches of a tree to make sure it doesn’t grow too wildly. Similarly, XGBoost ‘prunes’ or cuts back the extra ‘branches’ (split points) of its decision trees to prevent them from becoming too complex and overfitting the data.

VI. REAL-WORLD EXAMPLE OF XGBOOST

Let’s imagine a real-world problem where we could use XGBoost. Let’s say we work for a streaming service like Netflix and want to predict whether a user will like a certain movie or not based on their past viewing history and the characteristics of the movie. This is a classic example of a binary classification problem (the user either likes or dislikes a movie), and XGBoost can be a great tool to tackle this.

To implement XGBoost, we would first gather our data. In this case, the data could include the user’s age, location, gender, past viewing history, and the genre, director, and actors of the movie.

We then pre-process our data by cleaning it (removing any errors or irrelevant information), encoding categorical variables (like genre or location) into a format that the algorithm can understand, and normalizing numerical variables (like age) to ensure that they’re on a similar scale. Tree-based models like XGBoost don’t strictly require this last step.

Once our data is ready, we can apply the XGBoost algorithm. This would involve training our XGBoost model on a portion of our data, tuning the model’s hyperparameters to find the most effective combination, and then testing the model on the remaining data to see how well it can predict user preferences.

In terms of outcomes, if our XGBoost model is effective, it should be able to accurately predict whether a user will like a movie or not, which could lead to more personalized recommendations and a better user experience.

In the real world, XGBoost has been used for many such classification and regression tasks, ranging from predicting customer churn and credit card fraud detection to natural disaster prediction and healthcare diagnostics. It’s particularly popular in machine learning competitions due to its flexibility, speed, and performance.

Remember that while XGBoost can often provide powerful predictions, it’s not a magic solution and might not always be the best tool for every problem. It’s important to understand the strengths and limitations of XGBoost (and any machine learning algorithm) before applying it.

VII. INTRODUCTION TO DATASET

The dataset we’ll be using for our exploration of XGBoost is called the Iris dataset, a classic dataset in the field of machine learning. The Iris dataset is so named because it contains information about different species of the Iris flower. The dataset was first introduced by the British statistician and biologist Ronald Fisher in his 1936 paper titled “The Use of Multiple Measurements in Taxonomic Problems”.

This dataset comprises 150 instances, each representing an Iris flower. Each instance includes four features:

- Sepal Length (cm)

- Sepal Width (cm)

- Petal Length (cm)

- Petal Width (cm)

The dataset also includes a target variable, which is the specific species of Iris that the instance represents. There are three possible species: Setosa, Versicolor, or Virginica.

With these details, each flower in the dataset is described using the four features and labeled with its species. Our goal will be to train an XGBoost model using these features to predict the species of Iris flower.

Let’s delve into the implementation and see XGBoost in action.

VIII. APPLYING XGBOOST

Here is a practical implementation of XGBoost on the Iris dataset.

We’ll discuss the interpretation of these evaluation results in the next section.

This wraps up the basic application of the XGBoost model on the Iris dataset. This, of course, is just the tip of the iceberg. There are many more parameters and options you can experiment with to tweak the performance of your XGBoost model. But this gives you a starting point to explore the vast and powerful world of XGBoost.


IX. INTERPRETING XGBOOST RESULTS

Before we dive into the ocean of interpretation, let’s ensure we have our safety gear on. In other words, let’s understand the basic tools we will be using: the Classification Report and the Confusion Matrix.

The Classification Report provides key metrics in evaluating the performance of your classification model. It includes terms like Precision, Recall, and F1-Score.

- Precision : Imagine you’re playing a game of darts. Precision is hitting the bullseye consistently, even if you don’t throw all your darts. In our case, it is the fraction of instances our XGBoost model classified as positive that are actually positive: TP / (TP + FP). Here TP counts true positives and FP counts false positives.

- Recall : Going back to darts, recall is throwing all your darts and hitting the board every time, even if it’s not always the bullseye. In classification, it is the fraction of actual positive instances that the model correctly identifies: TP / (TP + FN). Here FN counts false negatives.

- F1-Score : This is a blend of precision and recall. If you’re good at hitting the bullseye (precision) and good at hitting the board with all your darts (recall), you’re overall a great dart player! Formally, the F1-Score is the harmonic mean of precision and recall, 2 × Precision × Recall / (Precision + Recall). It is high only when both are high.
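The three definitions above reduce to simple counts of true positives (TP), false positives (FP) and false negatives (FN). Here is a minimal plain-Python sketch. Its labels are hypothetical, chosen so that precision and recall differ.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Binary precision, recall and F1 for the given positive label."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if p == positive and t == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if p == positive and t != positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if p != positive and t == positive)
    precision = tp / (tp + fp) if tp + fp else 0.0  # of predicted positives, how many are right
    recall = tp / (tp + fn) if tp + fn else 0.0     # of actual positives, how many were found
    # Harmonic mean: pulled toward the weaker of the two scores
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Hypothetical labels: 1 = positive class
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 0, 1]
p, r, f = precision_recall_f1(y_true, y_pred)
print(p, r, f)  # precision = 2/3, recall = 1/2, F1 = 4/7
```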

Now, the Confusion Matrix is a table that describes the performance of your classification model. Picture a small 3×3 square grid, like tic-tac-toe, but for machine learning!

Let’s look at the results now:

Classification Report:

Here, each row corresponds to a class (0, 1, 2). For each class, we’ve achieved a perfect precision, recall, and F1-Score of 1.00. This tells us our model is performing exceptionally well on all classes. In the dart game, we’re hitting the bullseye every time!

Confusion Matrix:

The diagonal line from top left to bottom right [10, 9, 11] shows the number of correct predictions made by our model for each class. The zeros in all other positions mean our model didn’t misclassify any instance. In our dart game, this is the equivalent of hitting the bullseye with every dart, and not hitting outside of it, not even once!
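The report and the matrix are directly linked. Each class's precision is its diagonal entry divided by its column sum. Its recall is the diagonal entry divided by its row sum. The sketch below recomputes the classification report from the confusion matrix described above (diagonal 10, 9, 11; zeros elsewhere). Rows are taken as true labels and columns as predictions, which follows scikit-learn's convention and is an assumption here. For a diagonal matrix the orientation does not matter.

```python
# Confusion matrix described above: rows = true class, columns = predicted class
cm = [
    [10, 0, 0],
    [0, 9, 0],
    [0, 0, 11],
]

def per_class_report(cm):
    """(class, precision, recall, F1, support) for each class of a square confusion matrix."""
    n = len(cm)
    report = []
    for k in range(n):
        tp = cm[k][k]
        predicted_k = sum(cm[i][k] for i in range(n))  # column sum
        actual_k = sum(cm[k])                          # row sum, i.e. the support
        precision = tp / predicted_k if predicted_k else 0.0
        recall = tp / actual_k if actual_k else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report.append((k, round(precision, 2), round(recall, 2), round(f1, 2), actual_k))
    return report

for row in per_class_report(cm):
    print(row)
# (0, 1.0, 1.0, 1.0, 10)
# (1, 1.0, 1.0, 1.0, 9)
# (2, 1.0, 1.0, 1.0, 11)
```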

X. COMPARING XGBOOST WITH OTHER BOOSTING ALGORITHMS

Boosting is like a community garden where everyone plants together to create a blooming array of plants. Gradient Boosting Machine (GBM), AdaBoost, LightGBM, and CatBoost are all community members, each with their unique gardening style!

Let’s break it down:

- GBM : It’s like a patient gardener, carefully growing each plant one at a time. Each new plant is grown to correct the mistakes of the collective garden.

- AdaBoost : It’s the attentive gardener, who pays more attention to the plants that aren’t growing well. Each plant is weighted based on its performance, and the garden grows focusing more on the underperforming plants.

- LightGBM : It’s the fast gardener, growing its trees leaf-wise (vertically) rather than level by level. It always chooses the leaf with the maximum delta loss to grow next. This makes it faster and more efficient, but it can overfit on smaller datasets.

- CatBoost : It’s the detailed gardener who can handle categorical features well. It reduces the need for extensive preprocessing like one-hot encoding.
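To make the AdaBoost description concrete, here is a minimal sketch of one round of the classic discrete AdaBoost update for binary problems. The article does not say which AdaBoost variant it means, so this choice is an assumption. The learner's vote weight grows as its weighted error shrinks, and misclassified samples are up-weighted for the next learner.

```python
import math

def adaboost_round(weights, correct):
    """One discrete AdaBoost round. Assumes 0 < weighted error < 1.
    Returns the learner's vote weight alpha and the renormalized sample weights."""
    err = sum(w for w, ok in zip(weights, correct) if not ok) / sum(weights)
    alpha = 0.5 * math.log((1 - err) / err)  # more accurate learners get a larger say
    # Shrink weights of correctly classified samples, grow weights of mistakes
    new = [w * math.exp(-alpha if ok else alpha) for w, ok in zip(weights, correct)]
    total = sum(new)
    return alpha, [w / total for w in new]

# Hypothetical round: 5 equally weighted samples, the weak learner misses sample 3
alpha, w = adaboost_round([0.2] * 5, [True, True, False, True, True])
print(round(alpha, 4), [round(x, 3) for x in w])  # 0.6931 [0.125, 0.125, 0.5, 0.125, 0.125]
```

After the update, the single misclassified sample carries half of the total weight. This is the "pays more attention to the underperforming plants" behaviour described above.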

XGBoost, on the other hand, is an efficient and versatile gardener. It has an extra regularization term in its objective function, which helps prevent overfitting and makes our garden (model) generalize well to new data. It also parallelizes the construction of each tree, making it faster.

When it comes to comparing their results, they all can do well given the right circumstances. However, in general, XGBoost and LightGBM are often top contenders due to their speed and performance.

Remember, no gardener is better than all the others. They each have their strengths and weaknesses, and which one you choose depends on the type of garden (data) you have.

XI. LIMITATIONS AND ADVANTAGES OF XGBOOST

Just like a supercar, XGBoost is a powerful machine, but it’s not without its quirks. Let’s first take a look at what makes it a champion on the race track of machine learning:

- Speed and Performance: XGBoost is known for its speed and strong model performance. It’s like a cheetah that can swiftly sprint towards its target. This is because XGBoost is designed for computational efficiency, with its core algorithm written in C++, and it offers parallel processing. Together these often make it faster than traditional gradient boosting implementations.

- Regularization: XGBoost has an additional regularization term in its cost function, which helps prevent overfitting. It’s like a safety belt that keeps our model from getting too wild and complex. This helps XGBoost generalize better than gradient boosting without such a penalty.

- Handling Missing Values: XGBoost has an in-built routine to handle missing values, making it as smart as a detective who can find clues even when some are missing.

- Tree Pruning: A traditional GBM stops splitting a node as soon as it encounters a split with negative loss reduction, which makes it a greedy algorithm. XGBoost instead grows each tree up to the specified ‘max_depth’ and then prunes it backward, removing splits that do not give a positive gain. This means it’s more like a wise gardener, knowing when to cut back the tree to avoid unnecessary complexity.
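Here is a minimal sketch of backward pruning on a hand-built tree. It is a simplification, not XGBoost's internal code, and the tree, its gains and its weights are hypothetical. A split is collapsed only when both of its children are leaves and its gain is below `gamma`. As a result, a weak split can survive if a strong split sits beneath it, which a greedy stop-early rule would never discover.

```python
def prune(node, gamma):
    """Bottom-up pruning: prune both subtrees first, then collapse this split into a
    leaf if both children are now leaves and the split's gain is below gamma."""
    if "leaf" in node:
        return node
    left = prune(node["left"], gamma)
    right = prune(node["right"], gamma)
    if "leaf" in left and "leaf" in right and node["gain"] < gamma:
        return {"leaf": node["weight"]}  # the node's own weight becomes the leaf value
    return {**node, "left": left, "right": right}

def count_leaves(node):
    if "leaf" in node:
        return 1
    return count_leaves(node["left"]) + count_leaves(node["right"])

# Hypothetical tree grown to max_depth = 2; gains and weights are made up
tree = {
    "gain": 0.5, "weight": 0.0,  # weak root split...
    "left": {"gain": 0.4, "weight": -1.0,
             "left": {"leaf": -1.2}, "right": {"leaf": -0.8}},
    "right": {"gain": 2.5, "weight": 1.0,  # ...but a strong split beneath it
              "left": {"leaf": 0.5}, "right": {"leaf": 1.6}},
}
pruned = prune(tree, gamma=1.0)
print(count_leaves(tree), "->", count_leaves(pruned))  # 4 -> 3
```

The root's gain (0.5) is below `gamma`, yet it is kept because its right child's split is worth keeping. Only the weak left split is removed.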

But no tool is perfect, and XGBoost is no exception. Now, let’s look at the challenges or limitations one might face when using XGBoost:

- Tuning Parameters: XGBoost requires careful tuning of parameters. It’s a bit like tuning a guitar – you need to find the right notes (parameters) for the best music (performance). While this provides flexibility, it can be time-consuming.

- Difficulty Interpreting: XGBoost models can sometimes be difficult to interpret. While individual trees are interpretable, when we combine them all in an ensemble model, it becomes like trying to hear individual voices in a choir – pretty challenging!

- Computational Power: While XGBoost is faster than other gradient-boosting algorithms, it can still be computationally intensive for very large datasets or complex models. It’s a bit like a high-performance car – it can go fast, but it’s going to need a lot of fuel.

XII. CONCLUSION

We’ve traveled a great distance, from understanding the basics of XGBoost to exploring its powerful features, and finally, discussing its strengths and limitations. Just like a race car driver, you now know the ins and outs of this powerful machine-learning algorithm.

We’ve seen how XGBoost, with its speed, performance, regularization, and smart handling of missing values, stands out from other machine-learning algorithms. At the same time, we’ve also recognized the challenges it presents, like the necessity of careful parameter tuning, potential difficulty in interpreting, and its demand for computational power.

Remember, no algorithm is perfect, and the best one depends on the problem at hand. But with the knowledge you’ve gained from this article, you’re well-equipped to decide when to use XGBoost and how to handle it responsibly.

Next in our series, we will introduce another promising algorithm: LightGBM. LightGBM, like a lightweight boxer, is fast and effective, and it will be interesting to see how it compares to XGBoost, our heavyweight champion. So, buckle up and stay tuned for the upcoming ride!

QUIZ: Test Your Knowledge!


1 . Question

What does XGBoost stand for?

- A. Extreme Gradient Boosting

- B. Extreme Gradient Boost

- C. Extra Gradient Boosting

- D. Extra Gradient Boost

2 . Question

Which concept is related to Gradient Boosting Machines (GBMs)?

- A. Ensemble Learning

- B. Regularization

- C. Boosting

3 . Question

What does the ‘gradient’ in Gradient Boosting refer to?

- A. A way of minimizing errors

- B. A way of maximizing errors

- C. A way of avoiding errors

- D. A way of ignoring errors

4 . Question

What technique does XGBoost use to prevent overfitting?

- A. Regularization

- B. Parallel Processing

- C. Tree Pruning

- D. Handling Missing Values

5 . Question

What does XGBoost have a built-in method to handle?

- A. Incorrect Predictions

- B. Missing Data

- C. Outliers

6 . Question

Which algorithm is known for providing highly accurate models quickly?

- B. AdaBoost

- C. LightGBM

- D. CatBoost

7 . Question

What does the F1-Score measure in classification models?

- A. Precision

- B. Recall

- C. Overall Accuracy

- D. Blend of Precision and Recall

8 . Question

Which boosting algorithm grows plants in a vertical fashion?

9 . Question

What is one of the advantages of XGBoost over other algorithms?

- A. Difficulty in Interpretation

- B. Slow Speed

- C. Regularization

- D. High Computational Power

10 . Question

What is a limitation of XGBoost in terms of computational resources?

- A. Slow Speed

- B. High Computational Power

- C. Low Performance

- D. Easy Interpretation

11 . Question

Which feature does XGBoost have a built-in routine to handle?

- A. Outliers

- B. Missing Values

- D. Incorrect Predictions

12 . Question

What is the main strength of XGBoost in terms of model performance?

- B. Overfitting

- C. Parallel Processing

- D. Superior Speed and Performance

13 . Question

Which algorithm is known for being a heavyweight champion in machine learning?

- A. LightGBM

- B. CatBoost

- C. AdaBoost

- D. XGBoost

14 . Question

What is one of the challenges of using XGBoost?

- A. Low Performance

- B. Easy Interpretation

- C. Tuning Parameters

- D. Fast Speed

15 . Question

How does XGBoost improve the efficiency of model training?

- By simplifying the data structure

- By reducing the size of the input data

- By parallel processing of trees

- By limiting the number of features

16 . Question

What is a key feature of XGBoost that helps with large datasets?

- Sparse-aware implementation for handling missing data

- Single-threaded execution

- Reduced precision in calculations

- Minimal hyperparameter tuning

17 . Question

In XGBoost, what role does the ‘learning rate’ parameter play?

- It determines the maximum depth of trees

- It controls the impact of each tree on the final outcome

- It sets the number of trees in the model

- It adjusts the model's bias

18 . Question

Which of the following is a common application of XGBoost?

- Real-time streaming data analysis

- Classification and regression in supervised learning

- Unsupervised clustering tasks

- Real-time anomaly detection in networks


© Let’s Data Science


Title: XGBoost: A Scalable Tree Boosting System

Abstract: Tree boosting is a highly effective and widely used machine learning method. In this paper, we describe a scalable end-to-end tree boosting system called XGBoost, which is used widely by data scientists to achieve state-of-the-art results on many machine learning challenges. We propose a novel sparsity-aware algorithm for sparse data and weighted quantile sketch for approximate tree learning. More importantly, we provide insights on cache access patterns, data compression and sharding to build a scalable tree boosting system. By combining these insights, XGBoost scales beyond billions of examples using far fewer resources than existing systems.



XGBoost is an optimized distributed gradient boosting library designed for efficient and scalable training of machine learning models. It is an ensemble learning method that combines the predictions of multiple weak models to produce a stronger prediction. XGBoost stands for “Extreme Gradient Boosting”. It has become one of the most popular and widely used machine learning algorithms because it handles large datasets and achieves state-of-the-art performance in many machine learning tasks, such as classification and regression.

One of the key features of XGBoost is its efficient handling of missing values, which allows it to handle real-world data with missing values without requiring significant pre-processing. Additionally, XGBoost has built-in support for parallel processing, making it possible to train models on large datasets in a reasonable amount of time.

XGBoost can be used in a variety of applications, including Kaggle competitions, recommendation systems, and click-through rate prediction, among others. It is also highly customizable and allows for fine-tuning of various model parameters to optimize performance.

XGBoost stands for Extreme Gradient Boosting and was proposed by researchers at the University of Washington. It is a library written in C++ that optimizes the training of gradient boosting models.

Before understanding XGBoost, we first need to understand trees, especially the decision tree:

Decision Tree:

A Decision tree is a flowchart-like tree structure, where each internal node denotes a test on an attribute, each branch represents an outcome of the test, and each leaf node (terminal node) holds a class label.

A tree can be “learned” by splitting the source set into subsets based on an attribute value test. This process is repeated on each derived subset in a recursive manner called recursive partitioning. The recursion stops when all samples in the subset at a node have the same value of the target variable, or when splitting no longer adds value to the predictions.
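Recursive partitioning can be sketched in a few lines for a regression tree on a single numeric feature. This is a simplified illustration: it uses one feature, a squared-error split criterion, and a depth limit of our choosing. The data are hypothetical.

```python
def sse(values):
    """Sum of squared errors around the mean (0 for an empty list)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)

def best_split(xs, ys):
    """Return (threshold, SSE) of the split x <= threshold that most reduces SSE,
    or (None, SSE of the node) if no split improves on leaving it unsplit."""
    best = (None, sse(ys))
    for t in sorted(set(xs))[:-1]:  # the largest value would leave the right side empty
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        total = sse(left) + sse(right)
        if total < best[1]:
            best = (t, total)
    return best

def build_tree(xs, ys, depth=0, max_depth=2):
    """Split recursively until the node is pure, no split helps, or max_depth is hit."""
    threshold, _ = best_split(xs, ys)
    if threshold is None or depth == max_depth:
        return sum(ys) / len(ys)  # leaf: predict the mean target
    left = [(x, y) for x, y in zip(xs, ys) if x <= threshold]
    right = [(x, y) for x, y in zip(xs, ys) if x > threshold]
    return {
        "threshold": threshold,
        "left": build_tree([x for x, _ in left], [y for _, y in left], depth + 1, max_depth),
        "right": build_tree([x for x, _ in right], [y for _, y in right], depth + 1, max_depth),
    }

# Hypothetical data: the target jumps between x = 3 and x = 4
xs = [1, 2, 3, 4, 5, 6]
ys = [5.0, 5.5, 5.2, 9.0, 9.4, 8.8]
tree = build_tree(xs, ys)
print(tree["threshold"])  # 3
```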

A Bagging classifier is an ensemble meta-estimator that fits base classifiers, each on a random subset of the original dataset. It then aggregates their individual predictions (either by voting or by averaging) to form a final prediction. Such a meta-estimator is typically used to reduce the variance of a black-box estimator (e.g., a decision tree): it introduces randomization into the estimator's construction procedure and then makes an ensemble out of it. Each base classifier is trained in parallel on its own training set, generated by randomly drawing, with replacement, N examples from the original training dataset, where N is the size of the original training set. The training sets of the base classifiers are independent of each other. Some of the original examples may be repeated in a resulting training set, while others may be left out.

Bagging reduces overfitting (variance) by averaging or voting. This can slightly increase bias, but the increase is usually outweighed by the reduction in variance.

Bagging classifier
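The two ingredients of bagging, bootstrap sampling and aggregation, fit in a short plain-Python sketch. The sample size, seed and base-model predictions are hypothetical. The sketch also illustrates the remark that some rows repeat while others are left out: for large N, a bootstrap sample contains on average only about 63.2% (1 − 1/e) of the distinct original rows.

```python
import random
from collections import Counter

def bootstrap_indices(n, rng):
    """Draw n row indices uniformly at random, with replacement, from range(n)."""
    return [rng.randrange(n) for _ in range(n)]

def aggregate_votes(predictions):
    """Classification: majority vote over the base models' predicted labels."""
    return Counter(predictions).most_common(1)[0][0]

def aggregate_mean(predictions):
    """Regression: average of the base models' numeric predictions."""
    return sum(predictions) / len(predictions)

rng = random.Random(0)  # fixed seed for reproducibility
n = 10_000
sample = bootstrap_indices(n, rng)
unique_fraction = len(set(sample)) / n
print(f"distinct rows in one bootstrap sample: {unique_fraction:.3f}")  # close to 0.632

# Hypothetical outputs of five base models for one example
print(aggregate_votes(["cat", "dog", "cat", "cat", "dog"]))  # cat
print(aggregate_mean([2.0, 3.0, 4.0]))                     # 3.0
```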

Random Forest:

Every decision tree has high variance. When we combine many trees in parallel, however, the resulting variance is low: each tree is trained on its own sample of the data, so the output no longer depends on a single tree. In a classification problem, the final output is taken by majority voting; in a regression problem, the final output is the mean of all the outputs. This part is called Aggregation.

The basic idea behind this is to combine multiple decision trees in determining the final output rather than relying on individual decision trees. Random Forest has multiple decision trees as base learning models. We randomly perform row sampling and feature sampling from the dataset forming sample datasets for every model. This part is called Bootstrap.

Boosting is an ensemble modelling technique that attempts to build a strong classifier from a number of weak classifiers by combining weak models in series. First, a model is built from the training data. Then a second model is built that tries to correct the errors of the first model. This procedure continues, adding models until either the complete training dataset is predicted correctly or the maximum number of models has been added.

Gradient Boosting

Gradient Boosting is a popular boosting algorithm. In gradient boosting, each predictor corrects its predecessor’s error. In contrast to AdaBoost, the weights of the training instances are not tweaked; instead, each predictor is trained using the residual errors of its predecessor as labels.
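Training on residuals is easy to see in code. The sketch below is plain gradient boosting for squared error, using one-split trees (stumps) on a single feature; this deliberately simplified setup uses hypothetical data, number of trees and learning rate. For the loss $\frac{1}{2}(y - F(x))^2$, the residual $y - F(x)$ is exactly the negative gradient with respect to the prediction $F(x)$.

```python
def fit_stump(xs, targets):
    """Fit a one-split regression tree: pick the threshold minimizing squared error,
    predicting the mean target on each side."""
    best = None
    for t in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, targets) if x <= t]
        right = [r for x, r in zip(xs, targets) if x > t]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        err = sum((r - left_mean) ** 2 for r in left) + sum((r - right_mean) ** 2 for r in right)
        if best is None or err < best[0]:
            best = (err, t, left_mean, right_mean)
    _, t, left_mean, right_mean = best
    return lambda x: left_mean if x <= t else right_mean

def gradient_boost(xs, ys, n_trees=50, learning_rate=0.3):
    """Each new stump is trained on the residuals of the current ensemble."""
    base = sum(ys) / len(ys)  # initial model: predict the mean
    stumps = []

    def predict(x):
        return base + learning_rate * sum(stump(x) for stump in stumps)

    for _ in range(n_trees):
        residuals = [y - predict(x) for x, y in zip(xs, ys)]  # = negative gradients
        stumps.append(fit_stump(xs, residuals))
    return predict

# Hypothetical step-shaped data
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 1.1, 3.0, 3.2, 2.9, 5.0, 5.1]
model = gradient_boost(xs, ys)
mean_y = sum(ys) / len(ys)
baseline_mse = sum((y - mean_y) ** 2 for y in ys) / len(ys)
train_mse = sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(ys)
print(f"MSE of the mean alone: {baseline_mse:.3f}, after boosting: {train_mse:.4f}")
```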

Gradient Boosted Trees is the variant of this technique whose base learner is CART (Classification and Regression Trees).

XGBoost

XGBoost is an implementation of gradient boosted decision trees. XGBoost models have featured in many winning solutions of Kaggle competitions.

In this algorithm, decision trees are created sequentially. Unlike AdaBoost, XGBoost does not reweight the training instances. Instead, each new tree is fit using the gradient and Hessian of the loss with respect to the current ensemble's predictions, so the instances the ensemble currently predicts poorly have the most influence on the next tree. The individual trees are then added together to give a strong and more precise model. It can work on regression, classification, ranking, and user-defined prediction problems.

Mathematics behind XGBoost

Before beginning with mathematics about Gradient Boosting, Here’s a simple example of a CART that classifies whether someone will like a hypothetical computer game X. The example of tree is below:

The prediction scores of each individual decision tree then sum up to get If you look at the example, an important fact is that the two trees try to complement each other. Mathematically, we can write our model in the form

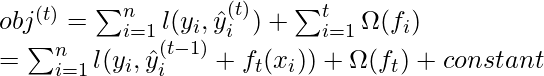

where, K is the number of trees, f is the functional space of F, F is the set of possible CARTs. The objective function for the above model is given by:

where, first term is the loss function and the second is the regularization parameter. Now, Instead of learning the tree all at once which makes the optimization harder, we apply the additive stretegy, minimize the loss what we have learned and add a new tree which can be summarised below:

The objective function of the above model can be defined as:

Now, let’s apply taylor series expansion upto second order:

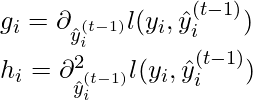

where g_i and h_i can be defined as:

Simplifying and removing the constant:
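For two common losses, $g_i$ and $h_i$ have simple closed forms. This is a minimal sketch with hypothetical example predictions. The squared-error form assumes the loss is written as $\frac{1}{2}(y_i - \hat{y}_i)^2$. The logistic form assumes labels in {0, 1} and a raw score (margin) passed through a sigmoid.

```python
import math

def squared_error_grad_hess(y, y_hat):
    """Loss l = 1/2 * (y - y_hat)^2, so g = y_hat - y and h = 1."""
    return y_hat - y, 1.0

def logistic_grad_hess(y, margin):
    """Log loss on a raw score: p = sigmoid(margin), y in {0, 1}, so g = p - y and h = p * (1 - p)."""
    p = 1.0 / (1.0 + math.exp(-margin))
    return p - y, p * (1.0 - p)

# Hypothetical previous-round predictions y_hat^(t-1)
print(squared_error_grad_hess(y=3.0, y_hat=2.5))  # (-0.5, 1.0)
print(logistic_grad_hess(y=1, margin=0.0))        # (-0.5, 0.25)
```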

Now, we define the regularization term, but first we need to define the model:

Here, w is the vector of scores on leaves of tree, q is the function assigning each data point to the corresponding leaf, and T is the number of leaves. The regularization term is then defined by:

Now, our objective function becomes:

![Rendered by QuickLaTeX.com obj^{(t)} \approx \sum_{i=1}^n [g_i w_{q(x_i)} + \frac{1}{2} h_i w_{q(x_i)}^2] + \gamma T + \frac{1}{2}\lambda \sum_{j=1}^T w_j^2\\ = \sum^T_{j=1} [(\sum_{i\in I_j} g_i) w_j + \frac{1}{2} (\sum_{i\in I_j} h_i + \lambda) w_j^2 ] + \gamma T](https://www.geeksforgeeks.org/wp-content/ql-cache/quicklatex.com-6b829944377420f6f97bf496b0ff365e_l3.png)

Now, we simplify the above expression:

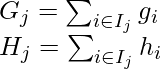

where,

where, \gamma is pruning parameter, i.e the least information gain to perform split.
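The two closed-form results translate directly into code. The sketch below uses hypothetical gradient and Hessian sums for a two-leaf tree and shows how a larger $\lambda$ shrinks the leaf weights toward zero.

```python
def leaf_weight(G, H, lam):
    """Optimal leaf weight w_j* = -G_j / (H_j + lambda)."""
    return -G / (H + lam)

def structure_score(leaves, lam, gamma):
    """obj* = -1/2 * sum_j G_j^2 / (H_j + lambda) + gamma * T (lower is better).
    `leaves` is a list of (G_j, H_j) pairs, so T = len(leaves)."""
    return -0.5 * sum(G * G / (H + lam) for G, H in leaves) + gamma * len(leaves)

# Hypothetical sums for two leaves (with squared error, h_i = 1, so H_j is the leaf size)
leaves = [(-6.0, 3.0), (4.0, 2.0)]
for lam in (0.0, 1.0):
    weights = [leaf_weight(G, H, lam) for G, H in leaves]
    print(f"lambda={lam}: weights={weights}, obj*={structure_score(leaves, lam, gamma=0.5):.4f}")
# lambda=0.0 gives weights [2.0, -2.0]; lambda=1.0 gives [1.5, -1.333...]
```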

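Now, we measure how good a tree is. Since we can’t directly optimize over all possible trees, we optimize one level of the tree at a time: specifically, we try to split a leaf into two leaves, and the score it gains is

$$\text{Gain} = \frac{1}{2} \left[ \frac{G_L^2}{H_L + \lambda} + \frac{G_R^2}{H_R + \lambda} - \frac{(G_L + G_R)^2}{H_L + H_R + \lambda} \right] - \gamma$$

where $G_L, H_L$ and $G_R, H_R$ are the sums of $g_i$ and $h_i$ over the instances in the new left and right leaves.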

Calculation of Information Gain

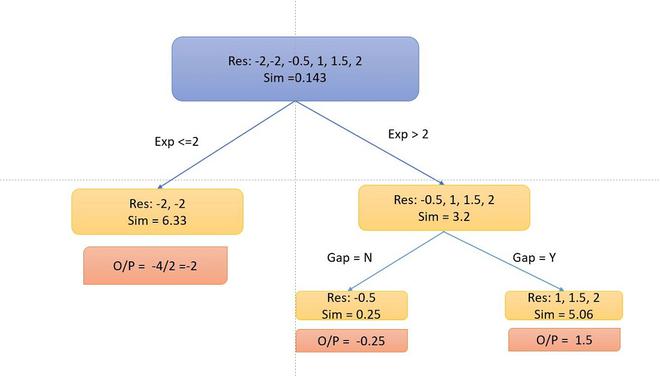
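Once the gradient and Hessian sums are known, evaluating the gain formula for a candidate split is a one-liner. In this minimal sketch, the sums, $\lambda$ and $\gamma$ are hypothetical. A split is worth making only when its gain is positive.

```python
def split_gain(GL, HL, GR, HR, lam, gamma):
    """Gain = 1/2 [GL^2/(HL+lam) + GR^2/(HR+lam) - (GL+GR)^2/(HL+HR+lam)] - gamma."""
    def score(G, H):
        return G * G / (H + lam)
    return 0.5 * (score(GL, HL) + score(GR, HR) - score(GL + GR, HL + HR)) - gamma

# Hypothetical candidate splits (lambda = 1, gamma = 0.5)
useful = split_gain(GL=-6.0, HL=3.0, GR=4.0, HR=2.0, lam=1.0, gamma=0.5)
useless = split_gain(GL=-1.0, HL=2.0, GR=-1.0, HR=3.0, lam=1.0, gamma=0.5)
print(round(useful, 4), round(useless, 4))  # 6.3333 -0.5417
```

The first candidate separates instances whose gradients point in opposite directions, so it scores well. The second splits instances with similar gradients and cannot pay for the $\gamma$ penalty.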

Let’s consider an example dataset:

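- Now, let’s consider the decision tree: we will split the data on experience <= 2 versus experience > 2.

- Now, let’s calculate the similarity scores of the left and right sides. Since this is a regression problem, the similarity score is:

$$\text{Similarity} = \frac{\left(\sum \text{residuals}\right)^2}{\text{number of residuals} + \lambda}$$

where $\lambda$ is a regularization hyperparameter,

and for a classification problem:

$$\text{Similarity} = \frac{\left(\sum \text{residuals}\right)^2}{\sum \left[p_i (1 - p_i)\right] + \lambda}$$

where $p_i$ is the previously predicted probability for instance $i$.

- Now, the information gain from this split is:

$$\text{Gain} = \text{Similarity}_{\text{left}} + \text{Similarity}_{\text{right}} - \text{Similarity}_{\text{root}}$$

Similarly, we can try multiple splits, calculate the information gain of each, and take the split with the highest gain. For now, let’s take this split. Next, we check whether each of the resulting nodes should be split further.

- Now, as you can see, I didn’t split the left side, because its information gain becomes negative. So, we only perform the split on the right side.

- To calculate the new output for a data point, we first follow the decision tree to the leaf it falls into and take that leaf’s output value. For regression, this is the sum of its residuals divided by the number of residuals plus $\lambda$. We multiply the output value by a learning rate $\alpha$ (let’s take 0.5) and add the result to the previous prediction (the base prediction, for the first tree). For data point 1: output = 6 + 0.5 * (-2) = 5. The table is updated with these new predictions in the same way.

- Similarly, the algorithm produces more decision trees and combines them additively to generate better estimates.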
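The steps above can be sketched in plain Python: similarity scores, the gain of a split, the leaf output values, and the learning-rate update. The residuals are hypothetical (not the example dataset), and $\lambda = 1$ is an assumed value. With squared error, $g_i = -r_i$ and $h_i = 1$. The similarity score is then $G^2/(H + \lambda)$, and this gain is the bracketed term of the general gain formula, i.e. without its factor $\frac{1}{2}$ and the $\gamma$ term.

```python
def similarity(residuals, lam):
    """Regression similarity score: (sum of residuals)^2 / (number of residuals + lambda)."""
    return sum(residuals) ** 2 / (len(residuals) + lam)

def output_value(residuals, lam):
    """Leaf output for regression: sum of residuals / (number of residuals + lambda)."""
    return sum(residuals) / (len(residuals) + lam)

lam = 1.0  # assumed value of the lambda hyperparameter
root = [-4.0, -2.0, 1.0, 2.0, 3.0]  # hypothetical residuals (target - previous prediction)
left, right = root[:2], root[2:]     # a candidate split sending the first two rows left

gain = similarity(left, lam) + similarity(right, lam) - similarity(root, lam)
print("gain:", gain)  # 12.0 + 9.0 - 0.0 = 21.0
print("leaf outputs:", output_value(left, lam), output_value(right, lam))  # -2.0 1.5

# Prediction update from the text: previous prediction + learning rate * leaf output
previous, learning_rate, leaf_output = 6.0, 0.5, -2.0
print(previous + learning_rate * leaf_output)  # 5.0
```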

Optimization and Improvement

System Optimization:

- Regularization : Since the ensembling of decisions, trees can sometimes lead to very complex. XGBoost uses both Lasso and Ridge Regression regularization to penalize the highly complex model.

- Parallelization and Cache block : In, XGboost, we cannot train multiple trees parallel, but it can generate the different nodes of tree parallel. For that, data needs to be sorted in order. In order to reduce the cost of sorting, it stores the data in blocks. It stored the data in the compressed column format, with each column sorted by the corresponding feature value. This switch improves algorithmic performance by offsetting any parallelization overheads in computation.

- Tree Pruning: XGBoost uses max_depth parameter as specified the stopping criteria for the splitting of the branch, and starts pruning trees backward. This depth-first approach improves computational performance significantly.

- Cache Awareness and Out-of-Core Computation: The algorithm is designed to use hardware resources efficiently. Cache awareness is achieved by allocating internal buffers in each thread to store gradient statistics. Further enhancements such as out-of-core computing make use of available disk space when handling big data frames that do not fit into memory. In out-of-core computation, XGBoost compresses the data to reduce its size.

- Sparsity Awareness: XGBoost can handle sparse data that may result from preprocessing steps or missing values. It incorporates a special split-finding algorithm that can handle different types of sparsity patterns.

- Weighted Quantile Sketch: XGBoost has a built-in distributed weighted quantile sketch algorithm that makes it easier to find optimal split points effectively in weighted datasets.

- Cross-validation: The XGBoost implementation comes with a built-in cross-validation method. This helps prevent overfitting, especially when the dataset is not very large.
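Several of these optimizations meet in split finding. The sketch below loosely follows the sparsity-aware split-finding idea described in the XGBoost paper: it sorts the non-missing values of one feature once (as a pre-sorted column block would), scans them linearly to score every threshold with a gain regularized by lambda (`lam`) and a minimum-gain penalty gamma (`gamma`), and tries sending the missing values to each side to learn a default direction. It is a single-feature, single-threaded illustration in pure Python, not XGBoost's implementation; the function names and data are hypothetical.

```python
import math


def split_score(g_left, h_left, g_right, h_right, lam, gamma):
    """Regularized gain: 1/2 [G_L^2/(H_L+lam) + G_R^2/(H_R+lam) - G^2/(H+lam)] - gamma."""
    def term(g, h):
        return g * g / (h + lam)
    return 0.5 * (term(g_left, h_left) + term(g_right, h_right)
                  - term(g_left + g_right, h_left + h_right)) - gamma


def sparsity_aware_split(values, grad, hess, lam=1.0, gamma=0.0):
    """Best 'x < threshold' split on one feature whose missing entries are None.

    Returns (threshold, missing_goes_left, gain).
    """
    g_total, h_total = sum(grad), sum(hess)  # totals include the missing rows
    # Sort the non-missing entries once, as a pre-sorted column block would be;
    # both scans below are then linear in the number of present values.
    present = sorted((v, g, h) for v, g, h in zip(values, grad, hess) if v is not None)
    best = (None, False, -math.inf)

    # Scan 1: missing rows go right, so accumulate the left side in ascending order.
    g_left = h_left = 0.0
    for (v, g, h), (v_next, _, _) in zip(present, present[1:]):
        g_left += g
        h_left += h
        if v == v_next:
            continue  # no threshold fits between equal values
        score = split_score(g_left, h_left, g_total - g_left, h_total - h_left, lam, gamma)
        if score > best[2]:
            best = ((v + v_next) / 2, False, score)

    # Scan 2: missing rows go left, so accumulate the right side in descending order.
    g_right = h_right = 0.0
    for (v, g, h), (v_prev, _, _) in zip(reversed(present), reversed(present[:-1])):
        g_right += g
        h_right += h
        if v == v_prev:
            continue
        score = split_score(g_total - g_right, h_total - h_right, g_right, h_right, lam, gamma)
        if score > best[2]:
            best = ((v_prev + v) / 2, True, score)

    return best


# Hypothetical feature with two missing entries; gradients/hessians as for squared error.
values = [1.0, 2.0, 3.0, 4.0, None, None]
grad = [-1.0, -1.0, 1.0, 1.0, -1.0, -1.0]
hess = [1.0] * 6
print(sparsity_aware_split(values, grad, hess))
```

Here the missing rows have the same gradient sign as the small values, so the sketch learns to send them left. If the best gain is not positive (for example, with a large `gamma`), the node would be left as a leaf, mirroring the gain-based check used when pruning.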

Advantages and Disadvantages:

Advantages of XGBoost:

- Performance: XGBoost has a strong track record of producing high-quality results in various machine learning tasks, especially in Kaggle competitions, where it has been a popular choice for winning solutions.

- Scalability: XGBoost is designed for efficient and scalable training of machine learning models, making it suitable for large datasets.

- Customizability: XGBoost has a wide range of hyperparameters that can be adjusted to optimize performance, making it highly customizable.

- Handling of Missing Values: XGBoost has built-in support for handling missing values, making it easy to work with real-world data that often has missing values.

- Interpretability: Unlike some machine learning algorithms that can be difficult to interpret, XGBoost provides feature importances, allowing for a better understanding of which variables are most important in making predictions.

Disadvantages of XGBoost:

- Computational Complexity: XGBoost can be computationally intensive, especially when training large models, making it less suitable for resource-constrained systems.

- Overfitting: XGBoost can be prone to overfitting, especially when trained on small datasets or when too many trees are used in the model.

- Hyperparameter Tuning: XGBoost has many hyperparameters that can be adjusted, making it important to properly tune the parameters to optimize performance. However, finding the optimal set of parameters can be time-consuming and requires expertise.

- Memory Requirements: XGBoost can be memory-intensive, especially when working with large datasets, making it less suitable for systems with limited memory resources.




XGBoost’s Assumptions

Posted on November 15, 2022 by finnstats in R bloggers

The post XGBoost’s assumptions appeared first on finnstats.


Before we dive into XGBoost’s assumptions, we will first provide an overview of the algorithm.

Extreme Gradient Boosting, often known as XGBoost, is a supervised learning technique that belongs to the family of machine learning algorithms known as gradient-boosted decision trees (GBDT).

Boosting to XGBoost

Boosting is the process of combining a group of weak learners into a strong learner in order to lower the number of training errors.

Boosting improves model performance by addressing the bias-variance trade-off.

Various boosting algorithms exist, including XGBoost, Gradient Boosting, AdaBoost (Adaptive Boosting), and others.

Let’s now enter XGBoost.

As previously mentioned, XGBoost is an extension of gradient-boosted decision trees (GBDT) that is renowned for its speed and performance.

It makes predictions by combining a number of simpler, weaker decision-tree models that are built sequentially.

Each decision tree makes its prediction by evaluating a series of if-then-else true/false questions about the features, aiming to reach a sound decision with as few questions as possible.

It is made up of three components:

- a loss function to be optimized.

- a weak learner that makes predictions.

- an additive model that adds weak learners so that the combined model makes fewer mistakes.
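These three components can be seen in a minimal gradient boosting loop. The sketch below is plain gradient boosting, not XGBoost itself (no second-order statistics or regularization): it assumes squared-error loss, uses a depth-1 regression tree (a stump) as the weak learner, and adds each new stump, scaled by a learning rate, to the running model. All names and the toy data are hypothetical.

```python
# Plain gradient boosting with squared-error loss and decision stumps (toy sketch).

def squared_error(y, predictions):
    """Loss function to be optimized: mean squared error."""
    return sum((yi - pi) ** 2 for yi, pi in zip(y, predictions)) / len(y)


def fit_stump(x, residuals):
    """Weak learner: one threshold on one feature, each leaf predicting the mean residual.

    Assumes x contains at least two distinct values.
    """
    best = None
    for t in sorted(set(x))[:-1]:
        left = [r for xi, r in zip(x, residuals) if xi <= t]
        right = [r for xi, r in zip(x, residuals) if xi > t]
        left_value, right_value = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - left_value) ** 2 for r in left) + sum((r - right_value) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, left_value, right_value)
    _, t, left_value, right_value = best
    return lambda xi: left_value if xi <= t else right_value


def gradient_boost(x, y, n_rounds=20, learning_rate=0.3):
    """Additive model: base prediction plus the learning-rate-scaled sum of stumps."""
    base = sum(y) / len(y)
    trees = []

    def predict(xi):
        return base + learning_rate * sum(tree(xi) for tree in trees)

    for _ in range(n_rounds):
        # For squared error, the negative gradient is proportional to the residual y - F(x).
        residuals = [yi - predict(xi) for xi, yi in zip(x, y)]
        trees.append(fit_stump(x, residuals))
    return predict


x = [1, 2, 3, 4, 5, 6]
y = [5.0, 6.0, 7.0, 10.0, 11.0, 12.0]
for n in (0, 1, 5, 20):
    model = gradient_boost(x, y, n_rounds=n)
    print(n, round(squared_error(y, [model(xi) for xi in x]), 4))
```

With squared-error loss and a learning rate between 0 and 1, each added stump lowers the training loss, which is the additive-training behavior XGBoost builds on.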


Features of XGBoost

XGBoost has three main features:

1. Gradient Tree Boosting

The tree ensemble model is trained additively: decision trees are added one at a time in a sequential, iterative procedure.

A fixed number of trees is added, and the loss function value should decrease with each iteration.

2. Regularized Learning

Regularized learning adds a penalty on model complexity to the loss function, which helps smooth the final learned weights and prevent overfitting.
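For reference, the regularized objective given in the XGBoost paper adds a complexity penalty \Omega for each tree f_k to the training loss l, where T is the number of leaves in a tree and w is its vector of leaf weights:

$$\mathcal{L}(\phi) = \sum_{i} l(\hat{y}_i, y_i) + \sum_{k} \Omega(f_k), \qquad \Omega(f) = \gamma T + \frac{1}{2}\lambda \lVert w \rVert^2$$

Larger \gamma and \lambda push toward trees with fewer leaves and smaller leaf weights. The library also exposes an optional L1 penalty on the leaf weights through its alpha parameter.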

3. Shrinkage and Feature Subsampling

These two methods help prevent overfitting even further.

Shrinkage lessens the degree to which each tree influences the model as a whole and creates space for potential future tree improvements.

You may have seen feature (column) subsampling in the Random Forest algorithm. Besides helping to prevent overfitting, subsampling the columns of the data also speeds up the concurrent computations of the parallel algorithm.
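Both ideas are small mechanically. The sketch below, assuming features are sampled once per tree without replacement, shows per-tree column sampling and a shrunken update. eta and colsample_bytree are the names XGBoost uses for these settings; the functions and feature names are hypothetical.

```python
import random


def column_subsets(feature_names, n_trees, colsample_bytree, seed=0):
    """Column subsampling: draw a random subset of features for each tree."""
    rng = random.Random(seed)
    k = max(1, round(colsample_bytree * len(feature_names)))
    return [sorted(rng.sample(feature_names, k)) for _ in range(n_trees)]


def shrunken_update(prediction, tree_output, eta):
    """Shrinkage: scale a new tree's output by eta before adding it."""
    return prediction + eta * tree_output


features = ["age", "income", "tenure", "region"]
for i, cols in enumerate(column_subsets(features, n_trees=3, colsample_bytree=0.5)):
    print(f"tree {i} sees {cols}")

print(shrunken_update(10.0, tree_output=4.0, eta=0.25))  # 11.0
```

With eta = 0.25, a tree that outputs 4.0 moves the prediction by only 1.0, leaving room for later trees to correct it.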

XGBoost Hyperparameters

Four groups of XGBoost hyperparameters are distinguished:

- General parameters

- Booster parameters

- Learning task parameters

- Command line parameters

Before starting the XGBoost model, general parameters, booster parameters, and task parameters are set. Only the console version of XGBoost uses the command line parameters.
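As an illustration, the groups might be filled in as below. The parameter names are real XGBoost parameters set to example values (for eta, max_depth, gamma, lambda, alpha, subsample and colsample_bytree these are the documented defaults), but the grouping dictionary, the helper function and the data file name are hypothetical. The command-line group is left out of the merged dictionary because library APIs such as the Python package take the number of rounds as an argument (num_boost_round in xgboost.train) rather than as a parameter.

```python
# Hypothetical grouping of XGBoost parameters, mirroring the four groups above.
PARAM_GROUPS = {
    "general": {"booster": "gbtree", "nthread": 4},
    "booster": {
        "eta": 0.3, "max_depth": 6, "gamma": 0.0, "lambda": 1.0, "alpha": 0.0,
        "subsample": 1.0, "colsample_bytree": 1.0,
    },
    "learning_task": {"objective": "reg:squarederror", "eval_metric": "rmse", "seed": 0},
    # Only the command-line version reads these.
    "command_line": {"num_round": 100, "data": "train.txt", "task": "train"},
}


def library_params(groups):
    """Merge the groups a library API accepts; command-line parameters are CLI-only."""
    params = {}
    for name in ("general", "booster", "learning_task"):
        params.update(groups[name])
    return params


print(sorted(library_params(PARAM_GROUPS)))
```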

Improper parameter tuning can easily lead to overfitting, yet tuning the parameters of an XGBoost model is challenging.


What assumptions Underlie XGBoost?

The XGBoost’s major assumptions are:

XGBoost may assume that the integer values used to encode an input variable have an ordinal relationship.

XGBoost assumes your data may be incomplete (i.e., it can deal with missing values).

The algorithm tolerates missing values by default because it does not assume that all values are present.

With tree-based algorithms, how to handle missing values is learned during the training phase. This then results in the following:

XGBoost handles sparse data.

Categorical variables must be transformed into numeric variables, because XGBoost works only with numeric vectors.

A dense data frame with few zeros in the matrix is therefore transformed into a very sparse matrix with many zeros, for example by one-hot encoding its categorical variables.

This means that variables can be fed into XGBoost in the form of a sparse matrix.
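In Python, the same idea can be sketched with a small one-hot encoder that emits compressed sparse row (CSR) arrays: each category becomes its own 0/1 column, so no ordinal relationship is implied, and a missing value simply stores no entry. The function name is hypothetical. Arrays in this layout can be passed to scipy.sparse.csr_matrix((data, indices, indptr), shape=...), and xgboost.DMatrix accepts SciPy sparse matrices, treating entries that are not stored as missing. (The R xgboost tutorials typically use Matrix::sparse.model.matrix for this step.)

```python
def one_hot_csr(column):
    """One-hot encode a categorical column as CSR arrays; None is stored as no entry."""
    categories = sorted({v for v in column if v is not None})
    col_index = {c: j for j, c in enumerate(categories)}
    data, indices, indptr = [], [], [0]
    for value in column:
        if value is not None:
            data.append(1.0)  # the single non-zero entry of this row
            indices.append(col_index[value])
        indptr.append(len(data))  # row i spans data[indptr[i]:indptr[i + 1]]
    return categories, data, indices, indptr


categories, data, indices, indptr = one_hot_csr(["red", "green", "red", "blue", None])
print(categories)  # ['blue', 'green', 'red']
print(indices)     # [2, 1, 2, 0]
print(indptr)      # [0, 1, 2, 3, 4, 4]
```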


You now know how boosting and XGBoost relate to one another, as well as some of XGBoost’s features and how it reduces overfitting and the loss function value.


How-To Geek

6 Ways to Create More Interactive PowerPoint Presentations

Engage your audience with cool, actionable features.

Quick Links

- Add a QR code

- Embed Microsoft Forms (Education or Business Only)

- Embed a Live Web Page

- Add Links and Menus

- Add Clickable Images to Give More Info

- Add a Countdown Timer

We've all been to a presentation where the speaker bores you to death with a mundane PowerPoint presentation. Actually, the speaker could have kept you much more engaged by adding some interactive features to their slideshow. Let's look into some of these options.

1. Add a QR code

Adding a QR code can be particularly useful if you want to direct your audience to an online form, website, or video.

Some websites have in-built ways to create a QR code. For example, on Microsoft Forms, when you click "Collect Responses," you'll see the QR code option via the icon highlighted in the screenshot below. You can either right-click the QR code to copy and paste it into your presentation, or click "Download" to save it to your device and insert the QR code as a picture.

In fact, you can easily add a QR code to take your viewer to any website. On Microsoft Edge, right-click anywhere on a web page where there isn't already a link, and left-click "Create QR Code For This Page."

You can also create QR codes in other browsers, such as Chrome.

You can then copy or download the QR code to use wherever you like in your presentation.

2. Embed Microsoft Forms (Education or Business Only)

If you plan to send your PPT presentation to others (for example, if you're a trainer sending a step-by-step instructional presentation, a teacher sending an independent learning task to your students, or a campaigner for your local councilor sending a persuasive PPT to constituents), you might want to embed a quiz, questionnaire, poll, or feedback survey in your presentation.

In PowerPoint, open the "Insert" tab on the ribbon, and in the Forms group, click "Forms". If you cannot see this option, you can add new buttons to the ribbon.

As at April 2024, this feature is only available for those using their work or school account. We're using a Microsoft 365 Personal account in the screenshot below, which is why the Forms icon is grayed out.

Then, a sidebar will appear on the right-hand side of your screen, where you can either choose a form you have already created or opt to craft a new form.

Now, you can share your PPT presentation with others, who can click the fields and submit their responses when they view the presentation.

3. Embed a Live Web Page

You could always screenshot a web page and paste that into your PPT, but that's not a very interactive addition to your presentation. Instead, you can embed a live web page into your PPT so that people with access to your presentation can interact actively with its contents.

To do this, we will need to add an add-in to our PPT account.

Add-ins are not always reliable or secure. Before installing an add-in to your Microsoft account, check that the author is a reputable company, and type the add-in's name into a search engine to read reviews and other users' experiences.

To embed a web page, add the Web Viewer add-in (this is an add-in created by Microsoft).

Go to the relevant slide and open the Web Viewer add-in. Then, copy and paste the secure URL into the field box, and remove https:// from the start of the address. In our example, we will add a selector wheel to our slide. Click "Preview" to see a sample of the web page's appearance in your presentation.

This is how ours will look.

When you or someone with access to your presentation views the slideshow, this web page will be live and interactive.

4. Add Links and Menus

As well as moving from one slide to the next through a keyboard action or mouse click, you can create links within your presentation to direct the audience to specific locations.

To create a link, right-click the outline of the clickable object, and click "Link."

In the Insert Hyperlink dialog box, click "Place In This Document," choose the landing destination, and click "OK."

What's more, to make it clear that an object is clickable, you can use action buttons. Open the "Insert" tab on the ribbon, click "Shape," and then choose an appropriate action button. Usefully, PPT will automatically prompt you to add a link to these shapes.

You might also want a menu that displays on every slide. Once you have created the menu, add the links using the method outlined above. Then, select all the items, press Ctrl+C (copy), and then use Ctrl+V to paste them in your other slides.

5. Add Clickable Images to Give More Info