Research Design 101

Everything You Need To Get Started (With Examples)

By: Derek Jansen (MBA) | Reviewers: Eunice Rautenbach (DTech) & Kerryn Warren (PhD) | April 2023

Navigating the world of research can be daunting, especially if you’re a first-time researcher. One concept you’re bound to run into fairly early in your research journey is that of “research design”. Here, we’ll guide you through the basics using practical examples, so that you can approach your research with confidence.

Overview: Research Design 101

- What is research design?

- Research design types for quantitative studies

- Video explainer: quantitative research design

- Research design types for qualitative studies

- Video explainer: qualitative research design

- How to choose a research design

- Key takeaways

Research design refers to the overall plan, structure or strategy that guides a research project, from its conception to the final data analysis. A good research design serves as the blueprint for how you, as the researcher, will collect and analyse data while ensuring consistency, reliability and validity throughout your study.

Understanding different types of research designs is essential, as it helps ensure that your approach is suitable given your research aims, objectives and questions, as well as the resources you have available to you. Without a clear big-picture view of how you’ll design your research, you run the risk of making misaligned choices in terms of your methodology – especially your sampling, data collection and data analysis decisions.

The problem with defining research design…

One of the reasons students struggle with a clear definition of research design is because the term is used very loosely across the internet, and even within academia.

Some sources claim that the three research design types are qualitative, quantitative and mixed methods, which isn’t quite accurate (these just refer to the type of data that you’ll collect and analyse). Other sources state that research design refers to the sum of all your design choices, suggesting it’s more like a research methodology. Others run off on other less common tangents. No wonder there’s confusion!

In this article, we’ll clear up the confusion. We’ll explain the most common research design types for both qualitative and quantitative research projects, whether that is for a full dissertation or thesis, or a smaller research paper or article.

Research Design: Quantitative Studies

Quantitative research involves collecting and analysing data in a numerical form. Broadly speaking, there are four types of quantitative research designs: descriptive, correlational, experimental, and quasi-experimental.

Descriptive Research Design

As the name suggests, descriptive research design focuses on describing existing conditions, behaviours, or characteristics by systematically gathering information without manipulating any variables. In other words, there is no intervention on the researcher’s part – only data collection.

For example, if you’re studying smartphone addiction among adolescents in your community, you could deploy a survey to a sample of teens asking them to rate their agreement with certain statements that relate to smartphone addiction. The collected data would then provide insight regarding how widespread the issue may be – in other words, it would describe the situation.

The key defining attribute of this type of research design is that it purely describes the situation. In other words, descriptive research design does not explore potential relationships between different variables or the causes that may underlie those relationships. Therefore, descriptive research is useful for generating insight into a research problem by describing its characteristics. By doing so, it can provide valuable insights and is often used as a precursor to other research design types.

Correlational Research Design

Correlational design is a popular choice for researchers aiming to identify and measure the relationship between two or more variables without manipulating them. In other words, this type of research design is useful when you want to know whether a change in one thing tends to be accompanied by a change in another thing.

For example, if you wanted to explore the relationship between exercise frequency and overall health, you could use a correlational design to help you achieve this. In this case, you might gather data on participants’ exercise habits, as well as records of their health indicators like blood pressure, heart rate, or body mass index. Thereafter, you’d use a statistical test to assess whether there’s a relationship between the two variables (exercise frequency and health).
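To make the "statistical test" step a little more concrete, here is a minimal sketch of one common choice, the Pearson correlation coefficient, written in plain Python. The data values and variable names are hypothetical and exist only to illustrate the idea; a real study would use your own measurements and, most likely, a statistics package that also reports a p-value.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of cross-products and sums of squared deviations from the means
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a variable does not vary")
    return sxy / sqrt(sxx * syy)

# Hypothetical data: weekly exercise sessions and resting heart rate (bpm)
exercise_sessions = [0, 1, 2, 3, 4, 5, 6]
resting_heart_rate = [78, 76, 74, 71, 70, 66, 65]

r = pearson_r(exercise_sessions, resting_heart_rate)
print(f"r = {r:.2f}")
```

A value of r close to -1 or +1 suggests a strong linear relationship, while a value near 0 suggests little or none. Either way, the coefficient only describes an association between the two variables.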

As you can see, correlational research design is useful when you want to explore potential relationships between variables that cannot be manipulated or controlled for ethical, practical, or logistical reasons. It is particularly helpful in terms of developing predictions, and given that it doesn’t involve the manipulation of variables, it can be implemented at a large scale more easily than experimental designs (which we’ll look at next).

That said, it’s important to keep in mind that correlational research design has limitations – most notably that it cannot be used to establish causality. In other words, correlation does not equal causation. To establish causality, you’ll need to move into the realm of experimental design, coming up next…


Experimental Research Design

Experimental research design is used to determine if there is a causal relationship between two or more variables. With this type of research design, you, as the researcher, manipulate one variable (the independent variable) and measure its effect on another (the dependent variable), while holding other variables constant. Doing so allows you to observe the effect of the former on the latter and draw conclusions about potential causality.

For example, if you wanted to measure if/how different types of fertiliser affect plant growth, you could set up several groups of plants, with each group receiving a different type of fertiliser, as well as one with no fertiliser at all. You could then measure how much each plant group grew (on average) over time and compare the results from the different groups to see which fertiliser was most effective.

Overall, experimental research design provides researchers with a powerful way to identify and measure causal relationships (and the direction of causality) between variables. However, developing a rigorous experimental design can be challenging as it’s not always easy to control all the variables in a study. This often results in smaller sample sizes, which can reduce the statistical power and generalisability of the results.

Moreover, experimental research design requires random assignment. This means that the researcher needs to assign participants to different groups or conditions in a way that each participant has an equal chance of being assigned to any group (note that this is not the same as random sampling). Doing so helps reduce the potential for bias and confounding variables. This need for random assignment can lead to ethics-related issues. For example, withholding a potentially beneficial medical treatment from a control group may be considered unethical in certain situations.
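As a rough illustration of random assignment, the sketch below shuffles a list of participants and deals them into groups in turn, so that every participant has the same chance of landing in any group. The participant IDs, group names and the `randomly_assign` helper are all hypothetical; this is one simple way to do it, not the only one.

```python
import random

def randomly_assign(participants, groups, seed=None):
    """Shuffle participants, then deal them round-robin into the groups."""
    rng = random.Random(seed)  # a fixed seed makes the allocation reproducible
    shuffled = list(participants)
    rng.shuffle(shuffled)
    allocation = {group: [] for group in groups}
    for index, person in enumerate(shuffled):
        allocation[groups[index % len(groups)]].append(person)
    return allocation

# Hypothetical participant IDs P01..P12 and three conditions
participant_ids = [f"P{n:02d}" for n in range(1, 13)]
allocation = randomly_assign(
    participant_ids, ["control", "treatment_a", "treatment_b"], seed=42
)
for group, members in allocation.items():
    print(group, members)
```

Recording the seed alongside your data means the allocation can be reproduced and audited later, which helps when you describe your procedure in the methodology chapter.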

Quasi-Experimental Research Design

Quasi-experimental research design is used when the research aims involve identifying causal relations, but one cannot (or doesn’t want to) randomly assign participants to different groups (for practical or ethical reasons). Instead, with a quasi-experimental research design, the researcher relies on existing groups or pre-existing conditions to form groups for comparison.

For example, if you were studying the effects of a new teaching method on student achievement in a particular school district, you may be unable to randomly assign students to either group and instead have to choose classes or schools that already use different teaching methods. This way, you still achieve separate groups, without having to assign participants to specific groups yourself.

Naturally, quasi-experimental research designs have limitations when compared to experimental designs. Given that participant assignment is not random, it’s more difficult to confidently establish causality between variables, and, as a researcher, you have less control over other variables that may impact findings.

All that said, quasi-experimental designs can still be valuable in research contexts where random assignment is not possible and can often be undertaken on a much larger scale than experimental research, thus increasing the statistical power of the results. What’s important is that you, as the researcher, understand the limitations of the design and conduct your quasi-experiment as rigorously as possible, paying careful attention to any potential confounding variables.

Research Design: Qualitative Studies

There are many different research design types when it comes to qualitative studies, but here we’ll narrow our focus to explore the “Big 4”. Specifically, we’ll look at phenomenological design, grounded theory design, ethnographic design, and case study design.

Phenomenological Research Design

Phenomenological design involves exploring the meaning of lived experiences and how they are perceived by individuals. This type of research design seeks to understand people’s perspectives, emotions, and behaviours in specific situations. Here, the aim for researchers is to uncover the essence of human experience without making any assumptions or imposing preconceived ideas on their subjects.

For example, you could adopt a phenomenological design to study why cancer survivors have such varied perceptions of their lives after overcoming their disease. This could be achieved by interviewing survivors and then analysing the data using a qualitative analysis method such as thematic analysis to identify commonalities and differences.

Phenomenological research design typically involves in-depth interviews or open-ended questionnaires to collect rich, detailed data about participants’ subjective experiences. This richness is one of the key strengths of phenomenological research design but, naturally, it also has limitations. These include potential biases in data collection and interpretation and the lack of generalisability of findings to broader populations.

Grounded Theory Research Design

Grounded theory (also referred to as “GT”) aims to develop theories by continuously and iteratively analysing and comparing data collected from a relatively large number of participants in a study. It takes an inductive (bottom-up) approach, with a focus on letting the data “speak for itself”, without being influenced by preexisting theories or the researcher’s preconceptions.

As an example, let’s assume your research aims involved understanding how people cope with chronic pain from a specific medical condition, with a view to developing a theory around this. In this case, grounded theory design would allow you to explore this concept thoroughly without preconceptions about what coping mechanisms might exist. You may find that some patients prefer cognitive-behavioural therapy (CBT) while others prefer to rely on herbal remedies. Based on multiple, iterative rounds of analysis, you could then develop a theory in this regard, derived directly from the data (as opposed to other preexisting theories and models).

Grounded theory typically involves collecting data through interviews or observations and then analysing it to identify patterns and themes that emerge from the data. These emerging ideas are then validated by collecting more data until a saturation point is reached (i.e., no new information can be squeezed from the data). From that base, a theory can then be developed.

As you can see, grounded theory is ideally suited to studies where the research aims involve theory generation, especially in under-researched areas. Keep in mind though that this type of research design can be quite time-intensive, given the need for multiple rounds of data collection and analysis.

Ethnographic Research Design

Ethnographic design involves observing and studying a culture-sharing group of people in their natural setting to gain insight into their behaviours, beliefs, and values. The focus here is on observing participants in their natural environment (as opposed to a controlled environment). This typically involves the researcher spending an extended period of time with the participants in their environment, carefully observing and taking field notes.

All of this is not to say that ethnographic research design relies purely on observation. On the contrary, this design typically also involves in-depth interviews to explore participants’ views, beliefs, etc. However, unobtrusive observation is a core component of the ethnographic approach.

As an example, an ethnographer may study how different communities celebrate traditional festivals or how individuals from different generations interact with technology differently. This may involve a lengthy period of observation, combined with in-depth interviews to further explore specific areas of interest that emerge as a result of the observations that the researcher has made.

As you can probably imagine, ethnographic research design has the ability to provide rich, contextually embedded insights into the socio-cultural dynamics of human behaviour within a natural, uncontrived setting. Naturally, however, it does come with its own set of challenges, including researcher bias (since the researcher can become quite immersed in the group), participant confidentiality and, predictably, ethical complexities. All of these need to be carefully managed if you choose to adopt this type of research design.

Case Study Design

With case study research design, you, as the researcher, investigate a single individual (or a single group of individuals) to gain an in-depth understanding of their experiences, behaviours or outcomes. Unlike other research designs that are aimed at larger sample sizes, case studies offer a deep dive into the specific circumstances surrounding a person, group of people, event or phenomenon, generally within a bounded setting or context.

As an example, a case study design could be used to explore the factors influencing the success of a specific small business. This would involve diving deeply into the organisation to explore and understand what makes it tick – from marketing to HR to finance. In terms of data collection, this could include interviews with staff and management, review of policy documents and financial statements, surveying customers, etc.

While the above example is focused squarely on one organisation, it’s worth noting that case study research designs can have different variations, including single-case, multiple-case and longitudinal designs. As you can see in the example, a single-case design involves intensely examining a single entity to understand its unique characteristics and complexities. Conversely, in a multiple-case design, multiple cases are compared and contrasted to identify patterns and commonalities. Lastly, in a longitudinal case design, a single case or multiple cases are studied over an extended period of time to understand how factors develop over time.

As you can see, a case study research design is particularly useful where a deep and contextualised understanding of a specific phenomenon or issue is desired. However, this strength is also its weakness. In other words, you can’t generalise the findings from a case study to the broader population. So, keep this in mind if you’re considering going the case study route.

How To Choose A Research Design

Having worked through all of these potential research designs, you’d be forgiven for feeling a little overwhelmed and wondering, “But how do I decide which research design to use?”. While we could write an entire post covering that alone, here are a few factors to consider that will help you choose a suitable research design for your study.

Data type: The first determining factor is naturally the type of data you plan to be collecting – i.e., qualitative or quantitative. This may sound obvious, but we have to be clear about this – don’t try to use a quantitative research design on qualitative data (or vice versa)!

Research aim(s) and question(s): As with all methodological decisions, your research aim and research questions will heavily influence your research design. For example, if your research aims involve developing a theory from qualitative data, grounded theory would be a strong option. Similarly, if your research aims involve identifying and measuring causal relationships between variables, one of the experimental designs would likely be a better option.

Time: It’s essential that you consider any time constraints you have, as this will impact the type of research design you can choose. For example, if you’ve only got a month to complete your project, a lengthy design such as ethnography wouldn’t be a good fit.

Resources: Take into account the resources realistically available to you, as these need to factor into your research design choice. For example, if you require highly specialised lab equipment to execute an experimental design, you need to be sure that you’ll have access to that before you make a decision.

Keep in mind that when it comes to research, it’s important to manage your risks and play as conservatively as possible. If your entire project relies on you achieving a huge sample, having access to niche equipment or holding interviews with very difficult-to-reach participants, you’re creating risks that could kill your project. So, be sure to think through your choices carefully and make sure that you have backup plans for any existential risks. Remember that a relatively simple methodology executed well will typically earn better marks than a highly complex methodology executed poorly.

Recap: Key Takeaways

We’ve covered a lot of ground here. Let’s recap by looking at the key takeaways:

- Research design refers to the overall plan, structure or strategy that guides a research project, from its conception to the final analysis of data.

- Research designs for quantitative studies include descriptive, correlational, experimental and quasi-experimental designs.

- Research designs for qualitative studies include phenomenological, grounded theory, ethnographic and case study designs.

- When choosing a research design, you need to consider a variety of factors, including the type of data you’ll be working with, your research aims and questions, your time and the resources available to you.




Research Design | Step-by-Step Guide with Examples

Published on 5 May 2022 by Shona McCombes. Revised on 20 March 2023.

A research design is a strategy for answering your research question using empirical data. Creating a research design means making decisions about:

- Your overall aims and approach

- The type of research design you’ll use

- Your sampling methods or criteria for selecting subjects

- Your data collection methods

- The procedures you’ll follow to collect data

- Your data analysis methods

A well-planned research design helps ensure that your methods match your research aims and that you use the right kind of analysis for your data.

Table of contents

- Step 1: Consider your aims and approach

- Step 2: Choose a type of research design

- Step 3: Identify your population and sampling method

- Step 4: Choose your data collection methods

- Step 5: Plan your data collection procedures

- Step 6: Decide on your data analysis strategies

- Frequently asked questions

Before you can start designing your research, you should already have a clear idea of the research question you want to investigate.

There are many different ways you could go about answering this question. Your research design choices should be driven by your aims and priorities – start by thinking carefully about what you want to achieve.

The first choice you need to make is whether you’ll take a qualitative or quantitative approach.

Qualitative research designs tend to be more flexible and inductive, allowing you to adjust your approach based on what you find throughout the research process.

Quantitative research designs tend to be more fixed and deductive, with variables and hypotheses clearly defined in advance of data collection.

It’s also possible to use a mixed methods design that integrates aspects of both approaches. By combining qualitative and quantitative insights, you can gain a more complete picture of the problem you’re studying and strengthen the credibility of your conclusions.

Practical and ethical considerations when designing research

As well as scientific considerations, you need to think practically when designing your research. If your research involves people or animals, you also need to consider research ethics.

- How much time do you have to collect data and write up the research?

- Will you be able to gain access to the data you need (e.g., by travelling to a specific location or contacting specific people)?

- Do you have the necessary research skills (e.g., statistical analysis or interview techniques)?

- Will you need ethical approval?

At each stage of the research design process, make sure that your choices are practically feasible.


Within both qualitative and quantitative approaches, there are several types of research design to choose from. Each type provides a framework for the overall shape of your research.

Types of quantitative research designs

Quantitative designs can be split into four main types. Experimental and quasi-experimental designs allow you to test cause-and-effect relationships, while descriptive and correlational designs allow you to measure variables and describe relationships between them.

With descriptive and correlational designs, you can get a clear picture of characteristics, trends, and relationships as they exist in the real world. However, you can’t draw conclusions about cause and effect (because correlation doesn’t imply causation).

Experiments are the strongest way to test cause-and-effect relationships without the risk of other variables influencing the results. However, their controlled conditions may not always reflect how things work in the real world. They’re often also more difficult and expensive to implement.

Types of qualitative research designs

Qualitative designs are less strictly defined. This approach is about gaining a rich, detailed understanding of a specific context or phenomenon, and you can often be more creative and flexible in designing your research.

The table below shows some common types of qualitative design. They often have similar approaches in terms of data collection, but focus on different aspects when analysing the data.

Your research design should clearly define who or what your research will focus on, and how you’ll go about choosing your participants or subjects.

In research, a population is the entire group that you want to draw conclusions about, while a sample is the smaller group of individuals you’ll actually collect data from.

Defining the population

A population can be made up of anything you want to study – plants, animals, organisations, texts, countries, etc. In the social sciences, it most often refers to a group of people.

For example, will you focus on people from a specific demographic, region, or background? Are you interested in people with a certain job or medical condition, or users of a particular product?

The more precisely you define your population, the easier it will be to gather a representative sample.

Sampling methods

Even with a narrowly defined population, it’s rarely possible to collect data from every individual. Instead, you’ll collect data from a sample.

To select a sample, there are two main approaches: probability sampling and non-probability sampling. The sampling method you use affects how confidently you can generalise your results to the population as a whole.

Probability sampling is the most statistically valid option, but it’s often difficult to achieve unless you’re dealing with a very small and accessible population.

For practical reasons, many studies use non-probability sampling, but it’s important to be aware of the limitations and carefully consider potential biases. You should always make an effort to gather a sample that’s as representative as possible of the population.
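To illustrate the probability sampling idea, here is a minimal sketch of the simplest variant, a simple random sample, where every member of the sampling frame has an equal chance of selection. The student IDs, the sample size of 100 and the `simple_random_sample` helper are hypothetical and only serve as an example.

```python
import random

def simple_random_sample(sampling_frame, sample_size, seed=None):
    """Draw a simple random sample without replacement from a sampling frame."""
    if sample_size > len(sampling_frame):
        raise ValueError("sample size cannot exceed the size of the sampling frame")
    rng = random.Random(seed)  # seeding lets you reproduce the same draw later
    return rng.sample(list(sampling_frame), sample_size)

# Hypothetical sampling frame: a list of 500 student IDs
student_ids = [f"S{n:04d}" for n in range(1, 501)]
sample = simple_random_sample(student_ids, 100, seed=2023)
print(len(sample), sample[:5])
```

Note that this only works if you actually have a complete list of the population to draw from, which is one reason probability sampling is often hard to achieve in practice.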

Case selection in qualitative research

In some types of qualitative designs, sampling may not be relevant.

For example, in an ethnography or a case study, your aim is to deeply understand a specific context, not to generalise to a population. Instead of sampling, you may simply aim to collect as much data as possible about the context you are studying.

In these types of design, you still have to carefully consider your choice of case or community. You should have a clear rationale for why this particular case is suitable for answering your research question.

For example, you might choose a case study that reveals an unusual or neglected aspect of your research problem, or you might choose several very similar or very different cases in order to compare them.

Data collection methods are ways of directly measuring variables and gathering information. They allow you to gain first-hand knowledge and original insights into your research problem.

You can choose just one data collection method, or use several methods in the same study.

Survey methods

Surveys allow you to collect data about opinions, behaviours, experiences, and characteristics by asking people directly. There are two main survey methods to choose from: questionnaires and interviews.

Observation methods

Observations allow you to collect data unobtrusively, observing characteristics, behaviours, or social interactions without relying on self-reporting.

Observations may be conducted in real time, taking notes as you observe, or you might make audiovisual recordings for later analysis. They can be qualitative or quantitative.

Other methods of data collection

There are many other ways you might collect data depending on your field and topic.

If you’re not sure which methods will work best for your research design, try reading some papers in your field to see what data collection methods they used.

Secondary data

If you don’t have the time or resources to collect data from the population you’re interested in, you can also choose to use secondary data that other researchers already collected – for example, datasets from government surveys or previous studies on your topic.

With this raw data, you can do your own analysis to answer new research questions that weren’t addressed by the original study.

Using secondary data can expand the scope of your research, as you may be able to access much larger and more varied samples than you could collect yourself.

However, it also means you don’t have any control over which variables to measure or how to measure them, so the conclusions you can draw may be limited.

As well as deciding on your methods, you need to plan exactly how you’ll use these methods to collect data that’s consistent, accurate, and unbiased.

Planning systematic procedures is especially important in quantitative research, where you need to precisely define your variables and ensure your measurements are reliable and valid.

Operationalisation

Some variables, like height or age, are easily measured. But often you’ll be dealing with more abstract concepts, like satisfaction, anxiety, or competence. Operationalisation means turning these fuzzy ideas into measurable indicators.

If you’re using observations, which events or actions will you count?

If you’re using surveys, which questions will you ask and what range of responses will be offered?

You may also choose to use or adapt existing materials designed to measure the concept you’re interested in – for example, questionnaires or inventories whose reliability and validity have already been established.
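As a small sketch of what operationalisation can look like for survey data, the code below turns answers on a five-point agreement scale into a single numeric score for an abstract concept such as satisfaction. The item names, the response labels and the choice to average the items are all assumptions made for illustration; an established questionnaire will come with its own scoring instructions.

```python
# Assumed five-point agreement scale mapped to numeric values
LIKERT_SCALE = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}

def composite_score(responses, reverse_coded=()):
    """Average one participant's item responses into a score from 1 to 5."""
    scores = []
    for item, answer in responses.items():
        value = LIKERT_SCALE[answer.lower()]
        if item in reverse_coded:
            value = 6 - value  # flip negatively worded items (1 <-> 5, 2 <-> 4)
        scores.append(value)
    return sum(scores) / len(scores)

# Hypothetical responses; "q3" stands for a negatively worded item
responses = {"q1": "Agree", "q2": "Strongly agree", "q3": "Disagree"}
score = composite_score(responses, reverse_coded={"q3"})
print(round(score, 2))
```

Reverse-coding matters whenever some statements are worded negatively, since otherwise agreement with those items would wrongly push the score in the same direction as the positively worded ones.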

Reliability and validity

Reliability means your results can be consistently reproduced, while validity means that you’re actually measuring the concept you’re interested in.

For valid and reliable results, your measurement materials should be thoroughly researched and carefully designed. Plan your procedures to make sure you carry out the same steps in the same way for each participant.

If you’re developing a new questionnaire or other instrument to measure a specific concept, running a pilot study allows you to check its validity and reliability in advance.

Sampling procedures

As well as choosing an appropriate sampling method, you need a concrete plan for how you’ll actually contact and recruit your selected sample.

That means making decisions about things like:

- How many participants do you need for an adequate sample size?

- What inclusion and exclusion criteria will you use to identify eligible participants?

- How will you contact your sample – by mail, online, by phone, or in person?

If you’re using a probability sampling method, it’s important that everyone who is randomly selected actually participates in the study. How will you ensure a high response rate?

If you’re using a non-probability method, how will you avoid bias and ensure a representative sample?

Data management

It’s also important to create a data management plan for organising and storing your data.

Will you need to transcribe interviews or perform data entry for observations? You should anonymise and safeguard any sensitive data, and make sure it’s backed up regularly.

Keeping your data well organised will save time when it comes to analysing them. It can also help other researchers validate and add to your findings.

On their own, raw data can’t answer your research question. The last step of designing your research is planning how you’ll analyse the data.

Quantitative data analysis

In quantitative research, you’ll most likely use some form of statistical analysis . With statistics, you can summarise your sample data, make estimates, and test hypotheses.

Using descriptive statistics , you can summarise your sample data in terms of:

- The distribution of the data (e.g., the frequency of each score on a test)

- The central tendency of the data (e.g., the mean to describe the average score)

- The variability of the data (e.g., the standard deviation to describe how spread out the scores are)

The specific calculations you can do depend on the level of measurement of your variables.
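
The three kinds of summary listed above can be sketched with Python's standard library. The scores are invented for illustration, and the standard deviation shown is the sample version (dividing by n − 1), which is one common convention.

```python
import statistics
from collections import Counter

scores = [4, 7, 7, 8, 5, 7, 9, 6, 8, 7]  # hypothetical test scores

distribution = Counter(scores)        # frequency of each score
mean_score = statistics.mean(scores)  # central tendency
spread = statistics.stdev(scores)     # variability (sample standard deviation)

for score in sorted(distribution):
    print(score, distribution[score])
print("mean:", mean_score)
print("standard deviation:", round(spread, 2))
```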

Using inferential statistics , you can:

- Make estimates about the population based on your sample data.

- Test hypotheses about a relationship between variables.

Regression and correlation tests look for associations between two or more variables, while comparison tests (such as t tests and ANOVAs ) look for differences in the outcomes of different groups.

Your choice of statistical test depends on various aspects of your research design, including the types of variables you’re dealing with and the distribution of your data.

Qualitative data analysis

In qualitative research, your data will usually be very dense with information and ideas. Instead of summing it up in numbers, you’ll need to comb through the data in detail, interpret its meanings, identify patterns, and extract the parts that are most relevant to your research question.

Two of the most common approaches to doing this are thematic analysis and discourse analysis .

There are many other ways of analysing qualitative data depending on the aims of your research. To get a sense of potential approaches, try reading some qualitative research papers in your field.

A sample is a subset of individuals from a larger population. Sampling means selecting the group that you will actually collect data from in your research.

For example, if you are researching the opinions of students in your university, you could survey a sample of 100 students.

Statistical sampling allows you to test a hypothesis about the characteristics of a population. There are various sampling methods you can use to ensure that your sample is representative of the population as a whole.

Operationalisation means turning abstract conceptual ideas into measurable observations.

For example, the concept of social anxiety isn’t directly observable, but it can be operationally defined in terms of self-rating scores, behavioural avoidance of crowded places, or physical anxiety symptoms in social situations.

Before collecting data , it’s important to consider how you will operationalise the variables that you want to measure.

The research methods you use depend on the type of data you need to answer your research question .

- If you want to measure something or test a hypothesis , use quantitative methods . If you want to explore ideas, thoughts, and meanings, use qualitative methods .

- If you want to analyse a large amount of readily available data, use secondary data. If you want data specific to your purposes with control over how they are generated, collect primary data.

- If you want to establish cause-and-effect relationships between variables , use experimental methods. If you want to understand the characteristics of a research subject, use descriptive methods.

McCombes, S. (2023, March 20). Research Design | Step-by-Step Guide with Examples. Scribbr. Retrieved 22 April 2024, from https://www.scribbr.co.uk/research-methods/research-design/

Shona McCombes

Skills for Learning : Research Skills

Data analysis is an ongoing process that should occur throughout your research project. Suitable data-analysis methods must be selected when you write your research proposal. The nature of your data (i.e. quantitative or qualitative) will be influenced by your research design and purpose. The data will also influence the analysis methods selected.

We run interactive workshops to help you develop skills related to doing research, such as data analysis, writing literature reviews and preparing for dissertations. Find out more on the Skills for Learning Workshops page.

We have online academic skills modules within MyBeckett for all levels of university study. These modules will help your academic development and support your success at LBU. You can work through the modules at your own pace, revisiting them as required. Find out more from our FAQ What academic skills modules are available?

Quantitative data analysis

Broadly speaking, 'statistics' refers to methods, tools and techniques used to collect, organise and interpret data. The goal of statistics is to gain understanding from data. Therefore, you need to know how to:

- Produce data – for example, by handing out a questionnaire or doing an experiment.

- Organise, summarise, present and analyse data.

- Draw valid conclusions from findings.

There are a number of statistical methods you can use to analyse data. Choosing an appropriate statistical method should follow naturally, however, from your research design. Therefore, you should think about data analysis at the early stages of your study design. You may need to consult a statistician for help with this.

Tips for working with statistical data

- Plan so that the data you get has a good chance of successfully tackling the research problem. This will involve reading literature on your subject, as well as on what makes a good study.

- To reach useful conclusions, you need to reduce uncertainties or 'noise'. Thus, you will need a sufficiently large data sample. A large sample will improve precision. However, this must be balanced against the 'costs' (time and money) of collection.

- Consider the logistics. Will there be problems in obtaining sufficient high-quality data? Think about accuracy, trustworthiness and completeness.

- Statistics are based on random samples. Consider whether your sample will be suited to this sort of analysis. Might there be biases to think about?

- How will you deal with missing values (any data that is not recorded for some reason)? These can result from gaps in a record or whole records being missed out.

- When analysing data, start by looking at each variable separately. Conduct initial/exploratory data analysis using graphical displays. Do this before looking at variables in conjunction or anything more complicated. This process can help locate errors in the data and also gives you a 'feel' for the data.

- Look out for patterns of 'missingness'. They are likely to alert you if there’s a problem. If the 'missingness' is not random, then it will have an impact on the results.

- Be vigilant and think through what you are doing at all times. Think critically. Statistics are not just mathematical tricks that a computer sorts out. Rather, analysing statistical data is a process that the human mind must interpret!
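
The tips on missing values and 'missingness' can be made concrete with a short Python sketch. The records and variable names are hypothetical, and `None` is assumed to mark a value that was not recorded. Counting gaps per variable, and then per subgroup, is one simple way to see whether missing values cluster somewhere.

```python
from collections import Counter

# Hypothetical survey records; None marks a value that was not recorded.
records = [
    {"age": 21, "income": 30000, "group": "A"},
    {"age": 34, "income": None, "group": "B"},
    {"age": None, "income": None, "group": "B"},
    {"age": 45, "income": 52000, "group": "A"},
    {"age": 29, "income": None, "group": "B"},
]


def missing_by_variable(rows):
    """Count how many records lack a value for each variable."""
    counts = Counter()
    for row in rows:
        for name, value in row.items():
            if value is None:
                counts[name] += 1
    return counts


def missing_by_group(rows, variable, group_key):
    """Count missing values of one variable within each group."""
    counts = Counter()
    for row in rows:
        if row[variable] is None:
            counts[row[group_key]] += 1
    return counts


print(missing_by_variable(records))
print(missing_by_group(records, "income", "group"))
```

In this invented data set every missing income falls in group B, which is the kind of non-random pattern that should prompt a closer look before analysis.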

Top tips! Try inventing or generating the sort of data you might get and see if you can analyse it. Make sure that your process works before gathering actual data. Think what the output of an analytic procedure will look like before doing it for real.

(Note: it is actually difficult to generate realistic data. There are fraud-detection methods in place to identify data that has been fabricated. So, remember to get rid of your practice data before analysing the real stuff!)
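
One possible way to generate practice data is sketched below in Python. The function name, the normal distribution and its parameters are assumptions chosen for illustration; real data will rarely be this tidy. A fixed seed makes the practice run repeatable.

```python
import random
import statistics


def make_practice_scores(n, mean, sd, seed=0):
    """Generate n made-up scores from a normal distribution (practice only)."""
    rng = random.Random(seed)  # seeded so the same practice data can be recreated
    return [round(rng.gauss(mean, sd), 1) for _ in range(n)]


practice = make_practice_scores(n=50, mean=60, sd=10)

# Run the planned analysis on the practice data to check that the process works.
print("mean:", round(statistics.mean(practice), 1))
print("standard deviation:", round(statistics.stdev(practice), 1))
```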

Statistical software packages

Software packages can be used to analyse and present data. The most widely used ones are SPSS and NVivo.

SPSS is a statistical-analysis and data-management package for quantitative data analysis. Click on ‘ How do I install SPSS? ’ to learn how to download SPSS to your personal device. SPSS can perform a wide variety of statistical procedures. Some examples are:

- Data management (e.g. creating subsets of data or transforming data).

- Summarising, describing or presenting data (e.g. mean, median and frequency).

- Looking at the distribution of data (e.g. standard deviation).

- Comparing groups for significant differences using parametric (e.g. t-test) and non-parametric (e.g. Chi-square) tests.

- Identifying significant relationships between variables (e.g. correlation).

NVivo can be used for qualitative data analysis. It is suitable for use with a wide range of methodologies. Click on ‘ How do I access NVivo ’ to learn how to download NVivo to your personal device. NVivo supports grounded theory, survey data, case studies, focus groups, phenomenology, field research and action research.

- Process data such as interview transcripts, literature or media extracts, and historical documents.

- Code data on screen and explore all coding and documents interactively.

- Rearrange, restructure, extend and edit text, coding and coding relationships.

- Search imported text for words, phrases or patterns, and automatically code the results.

Qualitative data analysis

Miles and Huberman (1994) point out that there are diverse approaches to qualitative research and analysis. They suggest, however, that it is possible to identify 'a fairly classic set of analytic moves arranged in sequence'. This involves:

- Affixing codes to a set of field notes drawn from observation or interviews.

- Noting reflections or other remarks in the margins.

- Sorting/sifting through these materials to identify: a) similar phrases, relationships between variables, patterns and themes and b) distinct differences between subgroups and common sequences.

- Isolating these patterns/processes and commonalties/differences. Then, taking them out to the field in the next wave of data collection.

- Highlighting generalisations and relating them to your original research themes.

- Taking the generalisations and analysing them in relation to theoretical perspectives.

(Miles and Huberman, 1994.)

Patterns and generalisations are usually arrived at through a process of analytic induction (see the last two points above). Qualitative analysis rarely involves statistical analysis of relationships between variables. Qualitative analysis aims to gain in-depth understanding of concepts, opinions or experiences.

Presenting information

There are a number of different ways of presenting and communicating information. The particular format you use is dependent upon the type of data generated from the methods you have employed.

Here are some appropriate ways of presenting information for different types of data:

Bar charts: These may be useful for comparing relative sizes. However, they tend to use a large amount of ink to display a relatively small amount of information. Consider a simple line chart as an alternative.

Pie charts: These have the benefit of indicating that the data must add up to 100%. However, they make it difficult for viewers to distinguish relative sizes, especially if two slices have a difference of less than 10%.

Other examples of presenting data in graphical form include line charts and scatter plots .

Qualitative data is more likely to be presented in text form. For example, using quotations from interviews or field diaries.

- Plan ahead, thinking carefully about how you will analyse and present your data.

- Think through possible restrictions to resources you may encounter and plan accordingly.

- Find out about the different IT packages available for analysing your data and select the most appropriate.

- If necessary, allow time to attend an introductory course on a particular computer package. You can book SPSS and NVivo workshops via MyHub .

- Code your data appropriately, assigning conceptual or numerical codes as suitable.

- Organise your data so it can be analysed and presented easily.

- Choose the most suitable way of presenting your information, according to the type of data collected. This will allow your information to be understood and interpreted better.

Primary, secondary and tertiary sources

Information sources are sometimes categorised as primary, secondary or tertiary sources depending on whether or not they are ‘original’ materials or data. For some research projects, you may need to use primary sources as well as secondary or tertiary sources. However the distinction between primary and secondary sources is not always clear and depends on the context. For example, a newspaper article might usually be categorised as a secondary source. But it could also be regarded as a primary source if it were an article giving a first-hand account of a historical event written close to the time it occurred.

- Primary sources

- Secondary sources

- Tertiary sources

- Grey literature

Primary sources are original sources of information that provide first-hand accounts of what is being experienced or researched. They enable you to get as close to the actual event or research as possible. They are useful for getting the most contemporary information about a topic.

Examples include diary entries, newspaper articles, census data, journal articles with original reports of research, letters, email or other correspondence, original manuscripts and archives, interviews, research data and reports, statistics, autobiographies, exhibitions, films, and artists' writings.

Some information will be available on an Open Access basis, freely accessible online. However, many academic sources are paywalled, and you may need to login as a Leeds Beckett student to access them. Where Leeds Beckett does not have access to a source, you can use our Request It! Service .

Secondary sources interpret, evaluate or analyse primary sources. They're useful for providing background information on a topic, or for looking back at an event from a current perspective. The majority of your literature searching will probably be done to find secondary sources on your topic.

Examples include journal articles which review or interpret original findings, popular magazine articles commenting on more serious research, textbooks and biographies.

The term tertiary sources isn't used a great deal. There's overlap between what might be considered a secondary source and a tertiary source. One definition is that a tertiary source brings together secondary sources.

Examples include almanacs, fact books, bibliographies, dictionaries and encyclopaedias, directories, indexes and abstracts. They can be useful for introductory information or an overview of a topic in the early stages of research.

Depending on your subject of study, grey literature may be another source you need to use. Grey literature includes technical or research reports, theses and dissertations, conference papers, government documents, white papers, and so on.

Artificial intelligence tools

Before using any generative artificial intelligence or paraphrasing tools in your assessments, you should check if this is permitted on your course.

If their use is permitted on your course, you must acknowledge any use of generative artificial intelligence tools such as ChatGPT or paraphrasing tools (e.g., Grammarly, Quillbot, etc.), even if you have only used them to generate ideas for your assessments or for proofreading.

Data Analysis in Research: Types & Methods

Content Index

- What is data analysis in research?

- Why analyze data in research?

- Types of data in research

- Finding patterns in the qualitative data

- Methods used for data analysis in qualitative research

- Preparing data for analysis

- Methods used for data analysis in quantitative research

- Considerations in research data analysis

What is data analysis in research?

Definition of data analysis in research: According to LeCompte and Schensul, research data analysis is a process used by researchers to reduce data to a story and interpret it to derive insights. The data analysis process helps reduce a large chunk of data into smaller fragments that make sense.

Three essential things occur during the data analysis process. The first is data organization. The second is data reduction through summarization and categorization, which helps find patterns and themes in the data for easy identification and linking. The third is the data analysis itself, which researchers do in both top-down and bottom-up fashion.

On the other hand, Marshall and Rossman describe data analysis as a messy, ambiguous, and time-consuming but creative and fascinating process through which a mass of collected data is brought to order, structure and meaning.

We can say that “the data analysis and data interpretation is a process representing the application of deductive and inductive logic to the research and data analysis.”

Why analyze data in research?

Researchers rely heavily on data as they have a story to tell or research problems to solve. It starts with a question, and data is nothing but an answer to that question. But what if there is no question to ask? It is possible to explore data even without a problem. We call this ‘data mining’, and it often reveals interesting patterns within the data that are worth exploring.

Irrespective of the type of data researchers explore, their mission and their audience’s vision guide them to find the patterns that shape the story they want to tell. One of the essential things expected from researchers while analyzing data is to stay open and remain unbiased toward unexpected patterns, expressions, and results. Remember that data analysis sometimes tells the most unforeseen yet exciting stories, ones that were not expected when the analysis began. Therefore, rely on the data you have at hand and enjoy the journey of exploratory research.

Types of data in research

Every kind of data describes something once a specific value has been assigned to it. For analysis, you need to organize these values, then process and present them in a given context, to make them useful. Data can take different forms; here are the primary data types.

- Qualitative data: When the data presented has words and descriptions, we call it qualitative data. Although you can observe this data, it is subjective and harder to analyze in research, especially for comparison. Example: anything describing taste, experience, texture, or an opinion is considered qualitative data. This type of data is usually collected through focus groups, personal qualitative interviews, qualitative observation, or open-ended questions in surveys.

- Quantitative data: Any data expressed in numbers or numerical figures is called quantitative data. This type of data can be distinguished into categories, grouped, measured, calculated, or ranked. Example: answers to questions about age, rank, cost, length, weight, scores, etc. all come under this type of data. You can present such data in graphs or charts, or apply statistical analysis methods to it. Outcomes Measurement Systems (OMS) questionnaires in surveys are a significant source of numeric data.

- Categorical data: This is data presented in groups. An item included in categorical data cannot belong to more than one group. Example: a person responding to a survey by describing their lifestyle, marital status, smoking habit, or drinking habit provides categorical data. A chi-square test is a standard method used to analyze this data.

Data analysis in qualitative research

Data analysis in qualitative research works a little differently from the analysis of numerical data, as qualitative data is made up of words, descriptions, images, objects, and sometimes symbols. Getting insight from such complicated information is a complicated process. Hence it is typically used for exploratory research and data analysis.

Finding patterns in the qualitative data

Although there are several ways to find patterns in textual information, the word-based method is the most relied-upon and widely used technique for research and data analysis. Notably, the data analysis process in qualitative research is manual. Here the researchers usually read the available data and find repetitive or commonly used words.

For example, while studying data collected from African countries to understand the most pressing issues people face, researchers might find “food” and “hunger” are the most commonly used words and will highlight them for further analysis.
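
Although the article describes this step as manual, a first pass over the text can be sketched in Python. The responses and the stop-word list below are invented for illustration; a real study would need a fuller stop-word list and would still require the researcher to read the surrounding text.

```python
import re
from collections import Counter

# Hypothetical, deliberately short list of words to ignore.
STOP_WORDS = {"the", "a", "is", "and", "we", "to", "of", "for", "in", "our", "there"}


def word_frequencies(responses):
    """Count how often each non-stop word appears across all responses."""
    counts = Counter()
    for text in responses:
        words = re.findall(r"[a-z']+", text.lower())
        counts.update(w for w in words if w not in STOP_WORDS)
    return counts


# Hypothetical open-ended survey responses.
responses = [
    "Food is scarce and hunger is rising in our village.",
    "We walk far to find food for the children.",
    "Hunger keeps the children out of school.",
]

frequencies = word_frequencies(responses)
print(frequencies.most_common(3))
```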

The keyword context is another widely used word-based technique. In this method, the researcher tries to understand the concept by analyzing the context in which the participants use a particular keyword.

For example , researchers conducting research and data analysis for studying the concept of ‘diabetes’ amongst respondents might analyze the context of when and how the respondent has used or referred to the word ‘diabetes.’
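
A keyword-in-context listing can be sketched as follows. The transcript is invented, and the three-word window is an arbitrary assumption; the point is only to show each use of the keyword alongside its neighbouring words.

```python
def keyword_in_context(text, keyword, window=3):
    """Return each occurrence of keyword with `window` words on either side."""
    words = text.split()
    hits = []
    for i, word in enumerate(words):
        # Strip surrounding punctuation before comparing, ignoring case.
        if word.strip(".,;:!?\"'").lower() == keyword.lower():
            left = " ".join(words[max(0, i - window):i])
            right = " ".join(words[i + 1:i + 1 + window])
            hits.append((left, word, right))
    return hits


# Hypothetical interview excerpt.
transcript = (
    "My mother had diabetes, so I watch my sugar. "
    "The doctor said diabetes runs in families."
)

for left, word, right in keyword_in_context(transcript, "diabetes"):
    print(f"{left} [{word}] {right}")
```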

The scrutiny-based technique is also one of the highly recommended text analysis methods used to identify patterns in qualitative data. Compare and contrast is the most widely used method under this technique; it identifies how pieces of text are similar to or different from each other.

For example: To find out the “importance of resident doctor in a company,” the collected data is divided into people who think it is necessary to hire a resident doctor and those who think it is unnecessary. Compare and contrast is the best method for analyzing polls with single-answer question types.

Metaphors can be used to reduce the data pile and find patterns in it so that it becomes easier to connect data with theory.

Variable partitioning is another technique used to split variables so that researchers can find more coherent descriptions and explanations in an enormous amount of data.

Methods used for data analysis in qualitative research

There are several techniques to analyze the data in qualitative research, but here are some commonly used methods:

- Content Analysis: It is widely accepted and the most frequently employed technique for data analysis in research methodology. It can be used to analyze documented information from text, images, and sometimes physical items. Whether and where to use this method depends on the research questions.

- Narrative Analysis: This method is used to analyze content gathered from various sources such as personal interviews, field observation, and surveys. Most of the time, the stories or opinions shared by people are examined to find answers to the research questions.

- Discourse Analysis: Similar to narrative analysis, discourse analysis is used to analyze interactions with people. However, this method considers the social context within which the communication between the researcher and respondent takes place. In addition, discourse analysis also takes lifestyle and day-to-day environment into account when deriving any conclusion.

- Grounded Theory: When you want to explain why a particular phenomenon happened, grounded theory is the best resort for analyzing qualitative data. Grounded theory is applied to study data about a host of similar cases occurring in different settings. When researchers use this method, they might alter explanations or produce new ones until they arrive at some conclusion.

Data analysis in quantitative research

Preparing data for analysis

The first stage in research and data analysis is to prepare the data for analysis so that the nominal data can be converted into something meaningful. Data preparation consists of the phases below.

Phase I: Data Validation

Data validation is done to check whether the collected data sample meets the pre-set standards or is a biased data sample. It is divided into four stages:

- Fraud: To ensure an actual human being records each response to the survey or the questionnaire

- Screening: To make sure each participant or respondent is selected or chosen in compliance with the research criteria

- Procedure: To ensure ethical standards were maintained while collecting the data sample

- Completeness: To ensure that the respondent has answered all the questions in an online survey or, in an interview, that the interviewer has asked all the questions devised in the questionnaire.
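
Two of these stages, screening and completeness, lend themselves to automated checks. The sketch below is a minimal Python illustration; the question IDs, the age criterion and the response layout are all hypothetical. Fraud and procedure checks usually need human review and are not covered.

```python
REQUIRED_QUESTIONS = ("q1", "q2", "q3")  # hypothetical question IDs
MIN_AGE = 18                             # hypothetical screening criterion


def validate(response):
    """Return a list of validation problems; an empty list means the response passes."""
    problems = []

    # Screening: was the respondent eligible under the research criteria?
    if response.get("age") is None or response["age"] < MIN_AGE:
        problems.append("screening: respondent does not meet the age criterion")

    # Completeness: has every required question been answered?
    missing = [q for q in REQUIRED_QUESTIONS if response.get(q) in (None, "")]
    if missing:
        problems.append("completeness: unanswered " + ", ".join(missing))

    return problems


print(validate({"age": 25, "q1": "yes", "q2": "no", "q3": "yes"}))
print(validate({"age": 16, "q1": "yes", "q2": "", "q3": "yes"}))
```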

Phase II: Data Editing

More often than not, an extensive research data sample comes loaded with errors. Respondents sometimes fill in some fields incorrectly or skip them accidentally. Data editing is a process wherein the researchers confirm that the provided data is free of such errors. They need to conduct the necessary checks, including outlier checks, to edit the raw data and make it ready for analysis.
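
The article does not say how outlier checks should be done. One common convention, assumed here, is the interquartile range (IQR) rule, which flags values lying more than 1.5 IQRs outside the middle half of the data. The ages below are invented, with one obvious data-entry error.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Flag values more than k interquartile ranges outside the quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical respondent ages; 230 is probably a typing error for 23.
ages = [23, 25, 31, 28, 27, 230, 26, 24, 29, 30]
print(iqr_outliers(ages))
```

A flagged value is a prompt to go back to the source record, not an automatic reason to delete it.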

Phase III: Data Coding

Out of all three, this is the most critical phase of data preparation. It involves grouping and assigning values to the survey responses. If a survey is completed with a sample size of 1,000, the researcher might create age brackets to distinguish the respondents based on their age. It then becomes easier to analyze small data buckets than to deal with the massive data pile.
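
The age-bracket example can be sketched in a few lines of Python. The bracket boundaries and the ages are hypothetical; the idea is simply to map each raw value to a code and then count the codes.

```python
from collections import Counter

# Hypothetical brackets: (label, lowest age, highest age), both inclusive.
AGE_BRACKETS = [
    ("18-24", 18, 24),
    ("25-34", 25, 34),
    ("35-49", 35, 49),
    ("50+", 50, 200),
]


def code_age(age):
    """Return the bracket label for an age, or 'out of range' if none applies."""
    for label, low, high in AGE_BRACKETS:
        if low <= age <= high:
            return label
    return "out of range"


ages = [19, 23, 31, 47, 52, 28, 66, 35]  # hypothetical respondents
bucket_counts = Counter(code_age(a) for a in ages)
print(bucket_counts)
```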

Methods used for data analysis in quantitative research

After the data is prepared for analysis, researchers can use different research and data analysis methods to derive meaningful insights. Statistical analysis is the most favored way to analyze numerical data. In statistical analysis, distinguishing between categorical data and numerical data is essential, as categorical data involves distinct categories or labels, while numerical data consists of measurable quantities. Statistical methods are classified into two groups: first, ‘descriptive statistics’, used to describe data; second, ‘inferential statistics’, which help in comparing the data.

Descriptive statistics

This method is used to describe the basic features of various types of data in research. It presents the data in such a meaningful way that patterns in the data start making sense. However, descriptive analysis does not support conclusions beyond the data at hand. Any conclusions still rest on the hypotheses researchers have formulated so far. Here are a few major types of descriptive analysis methods.

Measures of Frequency

- Count, Percent, Frequency

- It is used to denote how often a particular event occurs.

- Researchers use it when they want to showcase how often a response is given.

Measures of Central Tendency

- Mean, Median, Mode

- The method is widely used to summarize a distribution by its central point.

- Researchers use this method when they want to showcase the most common or the average response.

Measures of Dispersion or Variation

- Range, Variance, Standard deviation

- Range = the difference between the highest and lowest points.

- Variance and standard deviation = measures based on the difference between each observed score and the mean.

- It is used to identify the spread of scores by stating intervals.

- Researchers use this method to showcase how spread out the data is. It helps them identify how far the data is spread out and whether that spread directly affects the mean.

Measures of Position

- Percentile ranks, Quartile ranks

- It relies on standardized scores helping researchers to identify the relationship between different scores.

- It is often used when researchers want to compare scores with the average count.
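
The measures of dispersion and position above can be sketched with Python's standard library. The scores are invented, and `percentile_rank` is a hypothetical helper using one common definition (the percentage of scores at or below a given score); other definitions exist.

```python
import statistics

scores = [55, 60, 62, 65, 70, 71, 75, 80, 88, 94]  # hypothetical exam scores

# Measures of dispersion.
score_range = max(scores) - min(scores)
variance = statistics.variance(scores)  # sample variance
std_dev = statistics.stdev(scores)      # square root of the sample variance

# Measures of position: the three cut points that split the data into quarters.
quartiles = statistics.quantiles(scores, n=4)


def percentile_rank(values, score):
    """Percentage of values at or below the given score."""
    at_or_below = sum(1 for v in values if v <= score)
    return 100 * at_or_below / len(values)


print("range:", score_range)
print("variance:", round(variance, 2), "standard deviation:", round(std_dev, 2))
print("quartiles:", quartiles)
print("percentile rank of 75:", percentile_rank(scores, 75))
```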

In quantitative research, descriptive analysis often gives absolute numbers, but those numbers alone are never sufficient to demonstrate the rationale behind them. Nevertheless, it is necessary to think of the best method for research and data analysis to suit your survey questionnaire and the story you want to tell. For example, the mean is the best way to demonstrate the students’ average scores in schools. It is better to rely on descriptive statistics when the researchers intend to keep the research or outcome limited to the provided sample without generalizing it. For example, when you want to compare average voting in two different cities, descriptive statistics are enough.

Descriptive analysis is also called a ‘univariate analysis’ since it is commonly used to analyze a single variable.

Inferential statistics

Inferential statistics are used to make predictions about a larger population after research and data analysis of a sample collected from that population. For example, you can ask some 100-odd audience members at a movie theater if they like the movie they are watching. Researchers then use inferential statistics on the collected sample to reason that about 80-90% of people like the movie.

Here are two significant areas of inferential statistics.

- Estimating parameters: It takes statistics from the sample research data and demonstrates something about the population parameter.

- Hypothesis test: It’s about sampling research data to answer the survey research questions. For example, researchers might be interested to understand if the new shade of lipstick recently launched is good or not, or if the multivitamin capsules help children to perform better at games.
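
To illustrate parameter estimation with the movie theater example, the sketch below computes an approximate 95% confidence interval for a proportion. The figure of 85 positive answers out of 100 is hypothetical, and the normal-approximation (Wald) interval is an assumption chosen for simplicity; it becomes unreliable for small samples or proportions near 0 or 1.

```python
import math


def proportion_interval(successes, n, z=1.96):
    """Approximate confidence interval for a proportion (normal approximation)."""
    p = successes / n
    margin = z * math.sqrt(p * (1 - p) / n)
    return p - margin, p + margin


# Hypothetical sample: 85 of 100 audience members say they like the movie.
low, high = proportion_interval(successes=85, n=100)
print(f"Estimated share who like the movie: {low:.0%} to {high:.0%}")
```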

These are sophisticated analysis methods used to showcase the relationship between different variables instead of describing a single variable. They are often used when researchers want something beyond absolute numbers to understand the relationship between variables.

Here are some of the commonly used methods for data analysis in research.

- Correlation: When researchers are not conducting experimental or quasi-experimental research but are interested in understanding the relationship between two or more variables, they opt for correlational research methods.

- Cross-tabulation: Also called contingency tables, cross-tabulation is used to analyze the relationship between multiple variables. Suppose provided data has age and gender categories presented in rows and columns. A two-dimensional cross-tabulation helps for seamless data analysis and research by showing the number of males and females in each age category.

- Regression analysis: To understand the strength of the relationship between variables, researchers commonly turn to regression analysis, which is also a type of predictive analysis. In this method, you have an essential factor called the dependent variable, and one or more independent variables. You undertake efforts to find out the impact of the independent variables on the dependent variable. The values of both independent and dependent variables are assumed to have been ascertained in an error-free, random manner.

- Frequency tables: This procedure lists how often each value or category of a variable occurs in the data, which makes it easy to see which responses are common and which are rare.

- Analysis of variance: This statistical procedure tests the degree to which two or more groups vary or differ in an experiment. A considerable degree of variation between groups suggests that the research findings are significant. In many contexts, the terms ANOVA testing and variance analysis are used interchangeably.
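To make a few of the methods above concrete, here is a minimal sketch in plain Python. The survey responses, the study-hours figures, and the function names (`cross_tab`, `pearson_r`, `linear_fit`) are all made up for illustration; in practice you would more likely rely on a statistics package than on hand-written formulas.

```python
from collections import Counter
from math import sqrt

# Hypothetical survey responses: (age group, gender)
responses = [
    ("18-29", "female"), ("18-29", "male"), ("18-29", "female"),
    ("30-44", "male"), ("30-44", "male"), ("30-44", "female"),
]

def cross_tab(rows):
    """Cross-tabulation: count responses in each (age group, gender) cell."""
    return Counter(rows)

def pearson_r(xs, ys):
    """Correlation: Pearson coefficient between two equal-length samples."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

def linear_fit(xs, ys):
    """Regression: least-squares slope and intercept for y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    return slope, mean_y - slope * mean_x

table = cross_tab(responses)
print(table[("18-29", "female")])  # females in the 18-29 age group

# Hypothetical data: independent variable (hours) and dependent variable (score)
hours_studied = [1, 2, 3, 4, 5]
test_scores = [52, 55, 61, 64, 70]
print(round(pearson_r(hours_studied, test_scores), 3))
print(linear_fit(hours_studied, test_scores))
```

A correlation close to 1 or -1 indicates a strong linear relationship, while the fitted slope estimates how much the dependent variable changes per unit of the independent variable.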

- Researchers must have the necessary research skills to analyze and manipulate the data, and should be trained to demonstrate a high standard of research practice. Ideally, researchers must possess more than a basic understanding of the rationale for selecting one statistical method over another to obtain better data insights.

- Usually, research and data analytics projects differ by scientific discipline; therefore, getting statistical advice at the beginning of analysis helps design a survey questionnaire, select data collection methods, and choose samples.

- The primary aim of data research and analysis is to derive ultimate insights that are unbiased. Any mistake or bias in collecting data, selecting an analysis method, or choosing an audience sample is likely to lead to a biased inference.

- No amount of sophistication in research data and analysis is enough to rectify poorly defined objective outcome measurements. Whether the design is at fault or the intentions are unclear, a lack of clarity might mislead readers, so avoid the practice.

- The motive behind data analysis in research is to present accurate and reliable data. As far as possible, avoid statistical errors, and find a way to deal with everyday challenges like outliers, missing data, data altering, data mining, or developing graphical representations.

The sheer amount of data generated daily is frightening, especially as data analysis took center stage in 2018. In the last year, the total data supply amounted to 2.8 trillion gigabytes. Hence, it is clear that enterprises willing to survive in the hypercompetitive world must possess an excellent capability to analyze complex research data, derive actionable insights, and adapt to new market needs.

QuestionPro is an online survey platform that empowers organizations in data analysis and research and provides them a medium to collect data by creating appealing surveys.

What is Project Analysis and Why it is Important?

By Aastha Shaw Jan 25, 2022

What do you do to check if a project is going on track?

You analyze it!

Consistent project analysis helps you make the right choices at the right time, leading you towards a more successful outcome and the highest possible ROI.

Here we will talk about project analysis, its importance, the different types of project analysis, and lastly, how you can implement it using the right tools.

What is Project Analysis?

When executing a project, you need to analyze it periodically. Failing to do so would mean unexpected challenges, overlooked critical information, or flaws in the work process that manifest as the project unfolds.

This is why you need project analysis.

Project analysis means assessing every expense or problem related to a project before working on it and evaluating the outcome once the work is done.

Importance of Project Analysis

A study conducted last year stated that over two-thirds of all projects were not completed on time and went over the budget.

What separates the failed two-thirds of the projects from the successful one-third?

Regular analysis.

Project analysis lets you see the present problems and prepare for and avoid future problems. This ensures smooth project execution and timely project delivery.

For efficient project analysis, it is equally important to have the right tool that will help you monitor and analyze your project from its initiation to completion.

Types of project analysis:

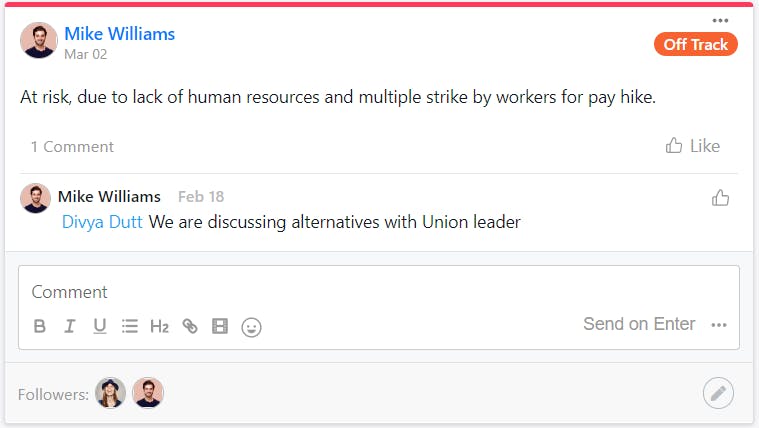

A. Ongoing Project Risk Analysis

What happens if your key teammate gets injured during a project and needs to take a month off? Or your equipment malfunctions, which stops the work while the labor charges keep adding up? Or a natural calamity takes place?

All of these can affect the project timeline and cost.

Risk analysis ensures that the least number of surprises occur during your project. It helps you predict the uncertainties in the projects and minimize the occurrence and impact of these uncertainties.

How to do project risk analysis?

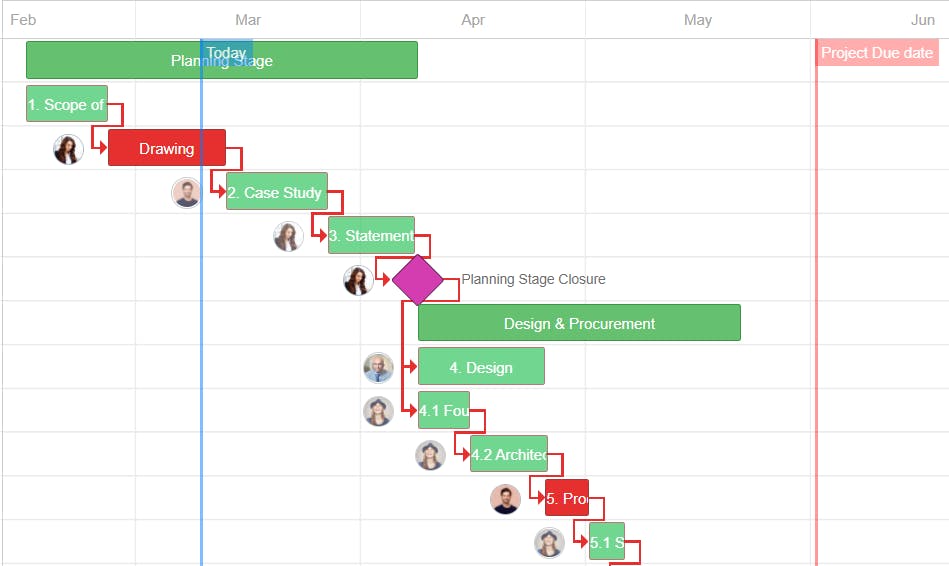

1. Define Critical Path:

Each project consists of dependent tasks, which rely on one or more other tasks being completed in a particular order.

This is where understanding the longest chain of dependencies or the project's critical path becomes very important. Any delay in the critical path would ultimately lead to missed deadlines.

You can use project management software that helps you map your project plan and highlight its critical path. (In the image below, you would see the critical path highlighted in red)
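If you want to see the idea without a tool, the longest chain of dependencies can be worked out with a few lines of code. The sketch below is only an illustration: the task names and durations are hypothetical, and it assumes the dependencies contain no cycles.

```python
# Hypothetical tasks: name -> (duration in days, list of prerequisite tasks)
tasks = {
    "design":     (3, []),
    "procure":    (5, ["design"]),
    "build":      (4, ["procure"]),
    "write_docs": (2, ["design"]),
    "release":    (1, ["build", "write_docs"]),
}

def critical_path(tasks):
    """Return (total_duration, path) for the longest dependency chain."""
    finish = {}  # earliest finish time per task
    prev = {}    # predecessor on the longest chain leading into each task

    def earliest_finish(name):
        if name in finish:
            return finish[name]
        duration, deps = tasks[name]
        start = 0
        prev[name] = None
        for dep in deps:
            dep_finish = earliest_finish(dep)
            if dep_finish > start:
                # This prerequisite finishes latest so far, so it drives the start.
                start = dep_finish
                prev[name] = dep
        finish[name] = start + duration
        return finish[name]

    # The task that finishes last marks the end of the critical path.
    last = max(tasks, key=earliest_finish)
    path = []
    node = last
    while node is not None:
        path.append(node)
        node = prev[node]
    return finish[last], path[::-1]

total_days, path = critical_path(tasks)
print(total_days, path)
```

Here the documentation task is not on the critical path, because the release has to wait for the longer design, procure, build chain anyway.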

2. Streamline communication channels:

You don't want to be spending most of your time coordinating with the management, sales team, clients, and vendors. So, it's essential to keep communication and information flowing in one tool.

Establishing a good communication channel instead of checking on everyone for updates will help you better track task progress and save time. With a proper communication channel in place, task assignees can keep you updated in real-time and let you know about any delays, problems, or requirements easily.

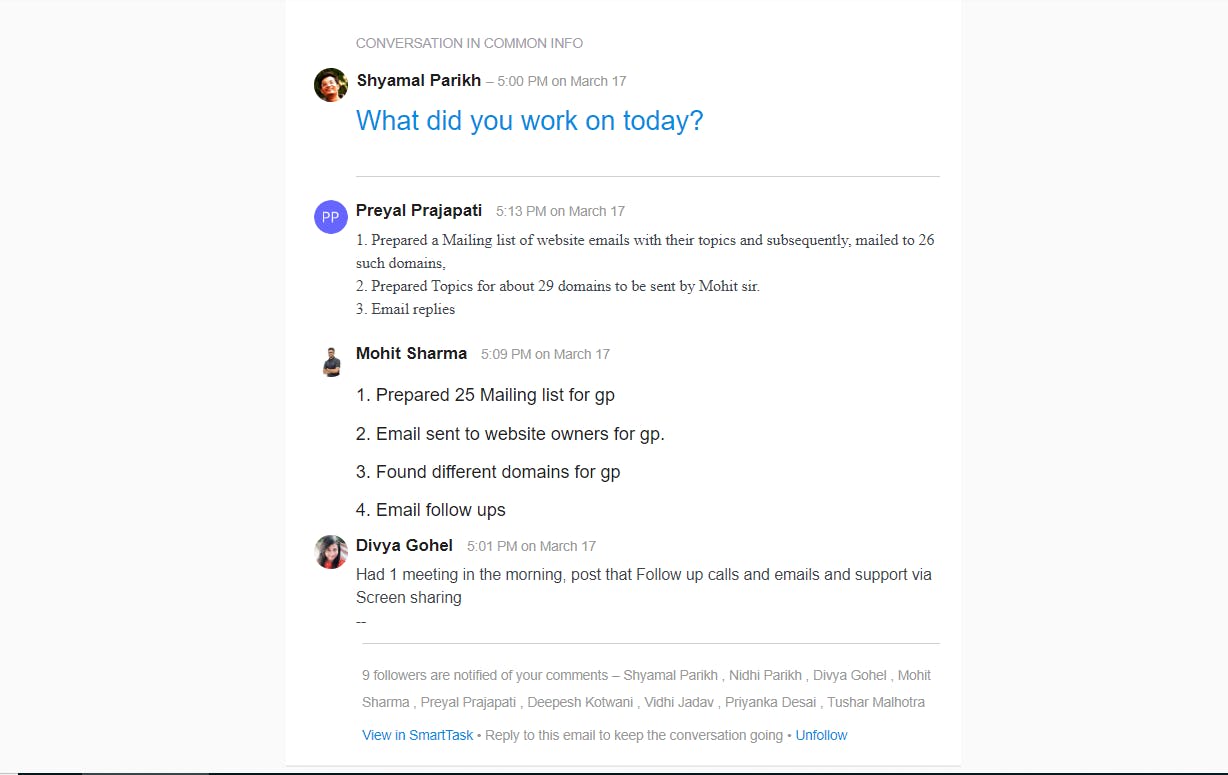

Thus, it is critical to have a project management tool that allows real-time collaboration & communication via Chat, Task Comments, and Video conferencing.

3. Regularly monitor risks:

Once you have defined the project's critical path and streamlined the communication channel, you need to focus on how each task is panning out.

You would not want to be unaware of situations such as delays in the shipment of raw material on which a lot of your project tasks depend.

This is why we recommend tracking and analyzing the project's progress every week. (In SmartTask, there's a News Feed view that would allow you to share the project status with the team and for team members to share their thoughts on the status update.)

Say today is Saturday, and you are going through last week's progress. You identify that one procurement item might become a bottleneck and affect the critical path.

Spotting this early helps you take the necessary actions immediately and save your project from major setbacks.

4. Determine their impact on the project:

Every risk has an impact, some more than others.

You can evaluate the impact of the risks by looking at:

- How much will the task be delayed?

- Would it affect the critical path?

If the delay does affect the critical path, can we have the procurement team expedite the delivery? Or is it a lost cause?
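One rough way to answer both questions is to recompute the project's end date with the delay applied and compare it with the original plan. The following is a sketch under assumptions: the tasks, durations, and the helper names `project_duration` and `delay_impact` are hypothetical, and the dependencies are assumed to be free of cycles.

```python
# Hypothetical tasks: name -> (duration in days, list of prerequisite tasks)
tasks = {
    "design":     (3, []),
    "procure":    (5, ["design"]),
    "build":      (4, ["procure"]),
    "write_docs": (2, ["design"]),
    "release":    (1, ["build", "write_docs"]),
}

def project_duration(tasks):
    """Earliest finish of the whole project (length of the longest chain)."""
    finish = {}

    def earliest_finish(name):
        if name not in finish:
            duration, deps = tasks[name]
            finish[name] = duration + max(
                (earliest_finish(dep) for dep in deps), default=0
            )
        return finish[name]

    return max(earliest_finish(name) for name in tasks)

def delay_impact(tasks, task, delay_days):
    """Days the project end date slips if `task` takes `delay_days` longer."""
    duration, deps = tasks[task]
    delayed = {**tasks, task: (duration + delay_days, deps)}
    return project_duration(delayed) - project_duration(tasks)

print(delay_impact(tasks, "procure", 2))     # task on the critical path
print(delay_impact(tasks, "write_docs", 2))  # task with spare time (slack)
```

A delay on a critical-path task moves the end date day for day, while a task with slack can absorb some delay before the project is affected.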

5. Prepare a contingency plan to treat those risks:

A contingency plan helps you to be prepared for future challenges and reduce the biggest risks to manageable levels. It is a course of action that enables you to deal with a situation that might happen.

In the above example, we tried to expedite the raw material delivery; however, it is clear now that the item won't be delivered on time.

Now, as a project manager, it's time to re-evaluate the project plan and see if you can save the project from overshooting the timeline.

There are two ways to deal with this:

- Allocating additional resources to tasks dependent on raw material shipments and attempting to complete tasks ahead of schedule. This would speed up your work and allow you to get back on track.

- Executing dependent processes in parallel. Some tasks can be executed in parallel, but we usually avoid doing this to be safe and to complete each task carefully. With good planning and the required resources, however, you can execute dependent tasks in parallel to complete the project on time and with the same quality.

Your decision would be based on the trade-offs you are ready to make. So, it's wise to seek your team's and management's feedback to get better insights and make the best decisions.

6. Regularly update the team on the project’s progress:

It’s important to keep everyone updated on the project progress and all the important decisions taken. With SmartTask’s News Feed feature you can update your teammates and project stakeholders about everything related to your project in real-time.

On the feed update itself, they can share their feedback, mention others, and share important files.

With SmartTask, you can record all your project history in one place and easily access it when needed. It also helps you track your projects to identify any potential problems and bottlenecks so that you can deal with them on time.

B. Project Cost Analysis

Suppose you land a software project. You roughly forecast the project timeline, resources, budget, etc., and reach a tentative project cost estimate at the project proposal time.

But moving forward without a thorough project cost analysis leads to budget overruns, missed deadlines, and a miserably failed project.

Therefore it's critical to conduct a proper project cost analysis to develop a strategic plan to avoid repetitive cost overruns and save your project from sinking.

How to do project cost analysis?

1. Determine the project goal:

Before you start working on project costing, your team needs to have a clear idea of the final goal and requirements of the project.

This is where a clearly defined client requirement document helps.

The client's requirement document will help your team divide the project into milestones and adequately define all the resources needed for timely project delivery.

2. Draw up a project plan:

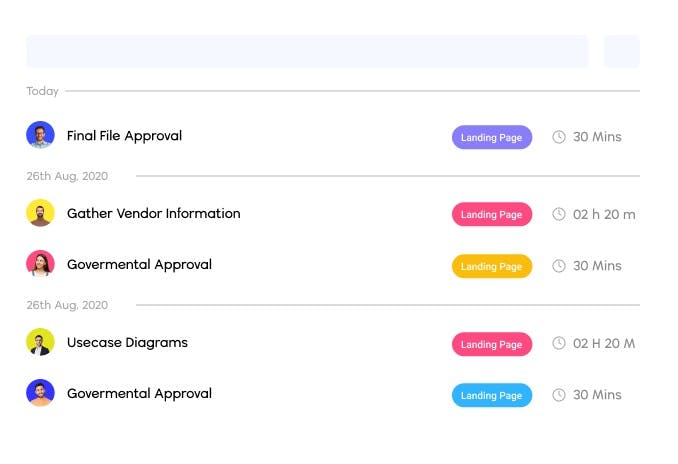

Once the milestones are set, utilize project management software to map out tasks within those milestones with their delivery timelines and expectations.

You can use task dependencies to better map out the project plan and provide that clear understanding of the critical path to your team.

Lastly, assign each task to a suitable person. A good collaboration software would notify each team member when a new task is assigned.

3. Set Time Estimates:

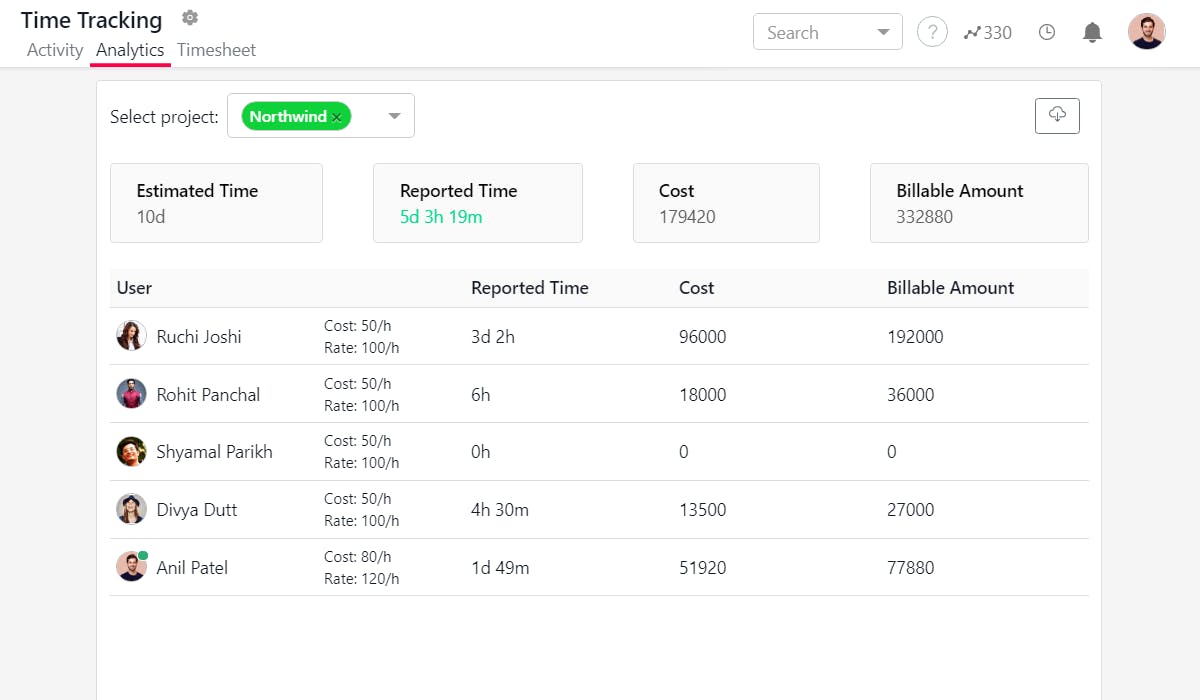

One of the most crucial cost factors of any project is the resources needed to accomplish this project.

Make sure that the time to be spent on a task is set as a time estimate. (Note: The time estimate, meaning the effort needed to accomplish a task, and the task timeline are independent of each other.)

4. Define Resource Cost:

A good resource management software allows you to define the cost per hour and Billing rate for each resource.

So, along with the fixed cost, assignee, time estimates, and efforts needed to accomplish each task in the project, you also get a clear idea of the cost per resource. And in turn, you can set and track the total cost of the project better.
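As a rough illustration of how these pieces add up, the sketch below computes the cost per resource and the total project cost from time estimates, hourly costs, and fixed costs. All names, rates, and hours are hypothetical, and the formula (estimate × cost per hour + fixed cost) is an assumption for illustration rather than how any particular tool calculates it.

```python
from dataclasses import dataclass

@dataclass
class Task:
    name: str
    assignee: str
    estimate_hours: float    # time estimate (effort), not calendar duration
    fixed_cost: float = 0.0  # e.g. licences or materials

# Hypothetical hourly cost per resource
cost_per_hour = {"asha": 40.0, "ben": 55.0}

tasks = [
    Task("API design", "asha", 10),
    Task("Backend build", "ben", 30, fixed_cost=200.0),
    Task("Testing", "asha", 12),
]

def task_cost(task):
    """Effort cost for the assignee plus any fixed cost of the task."""
    return task.estimate_hours * cost_per_hour[task.assignee] + task.fixed_cost

def cost_by_resource(tasks):
    """Total cost attributed to each assignee."""
    totals = {}
    for task in tasks:
        totals[task.assignee] = totals.get(task.assignee, 0.0) + task_cost(task)
    return totals

total_cost = sum(task_cost(task) for task in tasks)
print(cost_by_resource(tasks))
print(total_cost)
```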

5. Set a Factor of Safety:

While you understand the project's total cost, it's essential to consider contingency funds if things don't go as planned.

Government compliance requirements, taxes, and client or vendor delays may delay the project and increase the cost of delivery.

It's prudent to consider all these unknown factors and add them to the project cost as a Factor of Safety.

While each project is unique and Factor of Safety (FOS) percentages can differ, we recommend an FOS of no less than 20%.
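The arithmetic is simple enough to sketch. The function name `budget_with_fos` and the cost figures are hypothetical, and applying the FOS as a percentage on top of the estimated cost is an assumption about how the buffer is added.

```python
def budget_with_fos(base_cost, fos=0.20):
    """Add a Factor of Safety (contingency buffer) on top of the estimated cost."""
    if fos < 0:
        raise ValueError("fos must be non-negative")
    return base_cost * (1 + fos)

# Hypothetical estimate of 10,000 with the suggested minimum of 20%
print(budget_with_fos(10_000))
# A riskier project might justify a larger buffer, for example 30%
print(budget_with_fos(10_000, fos=0.30))
```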

C. Workload Analysis

Suppose your team has two software engineers who are working on a project. You divide the tasks equally amongst them.

After they start working, you see that while Engineer A has completed his tasks, Engineer B has not started his work.

But when you dig deeper, you see that Engineer A didn't have much on his plate, whereas Engineer B was overloaded with tasks from more than three projects, causing the delay.

To avoid such situations, you need a workload analysis tool that forecasts each team member's workload and shows whether they are overloaded or under-utilized.
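The core of such a check can be sketched in a few lines: add up each person's estimated hours across all projects and compare the total with their capacity. The assignments, the 40-hour weekly capacity, and the 50% under-utilization threshold below are hypothetical values chosen for illustration.

```python
from collections import defaultdict

# Hypothetical assignments: (person, project, estimated hours this week)
assignments = [
    ("engineer_a", "project_x", 12),
    ("engineer_b", "project_x", 12),
    ("engineer_b", "project_y", 20),
    ("engineer_b", "project_z", 15),
    ("engineer_b", "project_w", 10),
]

def workload_status(assignments, capacity_hours=40, low_threshold=0.5):
    """Classify each person as overloaded, under-utilized, or balanced."""
    hours = defaultdict(float)
    for person, _project, estimate in assignments:
        hours[person] += estimate  # total across all projects

    status = {}
    for person, total in hours.items():
        if total > capacity_hours:
            status[person] = "overloaded"
        elif total < capacity_hours * low_threshold:
            status[person] = "under-utilized"
        else:
            status[person] = "balanced"
    return status

print(workload_status(assignments))
```

Looking at one project alone, both engineers appear to have the same load; only the total across projects reveals the imbalance.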

How to do workload analysis?

1. Define effort required in each project: