What Is Statistical Analysis?

Statistical analysis is a technique we use to find patterns in data and make inferences about those patterns to describe variability in the results of a data set or an experiment.

In its simplest form, statistical analysis answers questions about:

- Quantification — how big/small/tall/wide is it?

- Variability — growth, increase, decline

- The confidence level of these variabilities

What Are the 2 Types of Statistical Analysis?

- Descriptive Statistics: Descriptive statistical analysis describes the quality of the data by summarizing large data sets into single measures.

- Inferential Statistics: Inferential statistical analysis allows you to draw conclusions from your sample data set and make predictions about a population using statistical tests.

What’s the Purpose of Statistical Analysis?

Using statistical analysis, you can summarize the typical value in your data by calculating your data set’s mean or median. You can also measure how far the data points vary from the mean by calculating the standard deviation. Furthermore, to check the validity of your conclusions, you can use hypothesis testing and its p-value, which tells you how likely it is that variability at least as large as what you observed could have occurred by chance.


Statistical Analysis Methods

There are two major types of statistical data analysis: descriptive and inferential.

Descriptive Statistical Analysis

Descriptive statistical analysis describes the quality of the data by summarizing large data sets into single measures.

Within the descriptive analysis branch, there are two main types: measures of central tendency (mean, median and mode) and measures of dispersion or variation (such as variance, standard deviation and range).

For example, you can calculate the average exam result in a class using a measure of central tendency, in particular the mean. In that case, you’d sum all student results and divide by the number of results. You can also measure the data set’s spread by calculating the variance. To calculate the variance, subtract the mean from each exam result, square each difference, add the squares together and divide by the number of results. Dividing by the number of results gives the population variance; when the results are a sample from a larger group, it is common to divide by one less than the number of results instead.
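As a minimal sketch of the exam example, the snippet below computes the mean and both versions of the variance with Python's standard `statistics` module. The five exam results are made-up numbers chosen only for illustration.

```python
from statistics import mean, pvariance, variance

# Hypothetical exam results for a small class (illustrative numbers only).
exam_results = [72, 85, 90, 66, 78]

# Mean: sum of all results divided by the number of results.
class_mean = mean(exam_results)

# Population variance: average squared distance from the mean (divide by n).
pop_var = pvariance(exam_results)

# Sample variance: same sum of squares, divided by n - 1 instead of n.
sample_var = variance(exam_results)

# The population variance again, spelled out step by step.
manual_var = sum((x - class_mean) ** 2 for x in exam_results) / len(exam_results)

print(class_mean, pop_var, sample_var, manual_var)
```

The step-by-step line mirrors the recipe in the paragraph above, and it should agree with `pvariance` up to floating-point rounding.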

Inferential Statistics

On the other hand, inferential statistical analysis allows you to draw conclusions from your sample data set and make predictions about a population using statistical tests.

There are two main types of inferential statistical analysis: hypothesis testing and regression analysis. We use hypothesis testing to test and validate assumptions in order to draw conclusions about a population from the sample data. Popular tests include the Z-test, F-test and ANOVA, and confidence intervals are often reported alongside them. On the other hand, regression analysis primarily estimates the relationship between a dependent variable and one or more independent variables. There are numerous types of regression analysis but the most popular ones include linear and logistic regression.

Statistical Analysis Steps

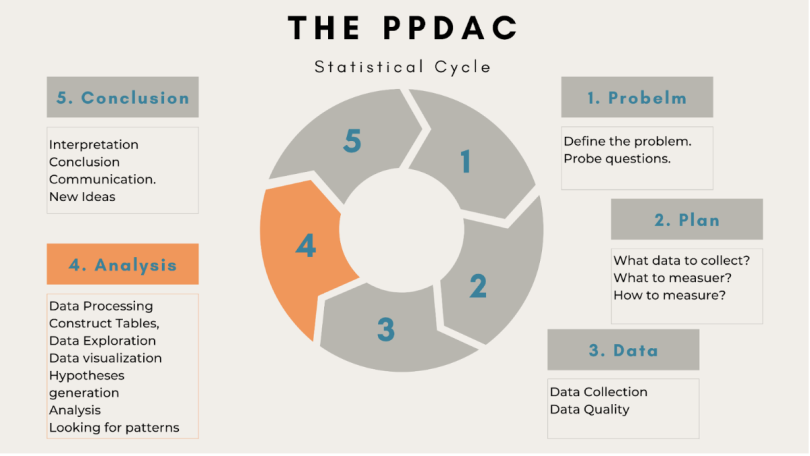

In the era of big data and data science, there is a rising demand for a more problem-driven approach. As a result, we must approach statistical analysis holistically. We may divide the entire process into five different and significant stages by using the well-known PPDAC model of statistics: Problem, Plan, Data, Analysis and Conclusion.

1. Problem

In the first stage, you define the problem you want to tackle and explore questions about the problem.

2. Plan

Next is the planning phase. You can check whether data is available or if you need to collect data for your problem. You also determine what to measure and how to measure it.

3. Data

The third stage involves data collection, understanding the data and checking its quality.

4. Analysis

Statistical data analysis is the fourth stage. Here you process and explore the data with the help of tables, graphs and other data visualizations. You also develop and scrutinize your hypothesis in this stage of analysis.

5. Conclusion

The final step involves interpretations and conclusions from your analysis. It also covers generating new ideas for the next iteration. Thus, statistical analysis is not a one-time event but an iterative process.

Statistical Analysis Uses

Statistical analysis is useful for research and decision making because it allows us to understand the world around us and draw conclusions by testing our assumptions. Statistical analysis is important for various applications, including:

- Statistical quality control and analysis in product development

- Clinical trials

- Customer satisfaction surveys and customer experience research

- Marketing operations management

- Process improvement and optimization

- Training needs


Benefits of Statistical Analysis

Here are some of the reasons why statistical analysis is widespread in many applications and why it’s necessary:

Understand Data

Statistical analysis gives you a better understanding of the data and what they mean. These types of analyses provide information that would otherwise be difficult to obtain by merely looking at the numbers without considering their relationship.

Find Causal Relationships

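Statistical analysis can help you investigate possible causation, for example when you’re looking for a relationship between two variables in an experiment. A relationship alone does not prove that one variable causes the other, so causal claims usually depend on how the experiment was designed.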

Make Data-Informed Decisions

Businesses are constantly looking to find ways to improve their services and products. Statistical analysis allows you to make data-informed decisions about your business or future actions by helping you identify trends in your data, whether positive or negative.

Determine Probability

Statistical analysis is an approach to understanding how the probability of certain events affects the outcome of an experiment. It helps scientists and engineers decide how much confidence they can have in the results of their research, how to interpret their data and what questions they can feasibly answer.


What Are the Risks of Statistical Analysis?

Statistical analysis can be valuable and effective, but it’s an imperfect approach. Even if the analyst or researcher performs a thorough statistical analysis, there may still be known or unknown problems that can affect the results. Statistical analysis is also not a one-size-fits-all process. If you want to get good results, you need to know what you’re doing, and it can take a lot of time to figure out which type of statistical analysis will work best for your situation.

Thus, you should remember that conclusions drawn from statistical analysis don’t always guarantee correct results. This can be dangerous when making business decisions. In marketing, for example, we may come to the wrong conclusion about a product. The conclusions we draw from statistical data analysis are approximations, because testing for all factors affecting an observation is impossible.


What Is Statistical Analysis? Types, Methods and Examples

Statistical analysis is the process of collecting and analyzing data in order to discern patterns and trends. By relying on numerical analysis, it helps reduce bias when evaluating data. This technique is useful for interpreting research results, developing statistical models, and planning surveys and studies.

Statistical analysis is also a scientific tool in AI and ML that helps collect and analyze large amounts of data to identify common patterns and trends and convert them into meaningful information. In simple words, statistical analysis is a data analysis tool that helps draw meaningful conclusions from raw and unstructured data.

The conclusions drawn using statistical analysis facilitate decision-making and help businesses make future predictions on the basis of past trends. It can be defined as a science of collecting and analyzing data to identify trends and patterns and presenting them. Statistical analysis involves working with numbers and is used by businesses and other institutions to derive meaningful information from data.

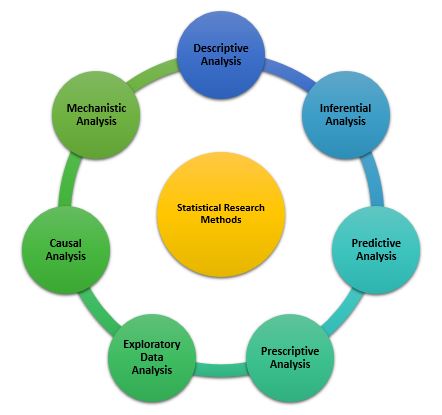

Given below are the 6 types of statistical analysis:

Descriptive Analysis

Descriptive statistical analysis involves collecting, interpreting, analyzing, and summarizing data to present them in the form of charts, graphs, and tables. Rather than drawing conclusions, it simply makes the complex data easy to read and understand.

Inferential Analysis

The inferential statistical analysis focuses on drawing meaningful conclusions on the basis of the data analyzed. It studies the relationship between different variables or makes predictions for the whole population.

Predictive Analysis

Predictive statistical analysis is a type of statistical analysis that analyzes data to derive past trends and predict future events on the basis of them. It uses machine learning algorithms, data mining, data modelling, and artificial intelligence to conduct the statistical analysis of data.

Prescriptive Analysis

The prescriptive analysis conducts the analysis of data and prescribes the best course of action based on the results. It is a type of statistical analysis that helps you make an informed decision.

Exploratory Data Analysis

Exploratory analysis is similar to inferential analysis, but the difference is that it involves exploring the unknown data associations. It analyzes the potential relationships within the data.

Causal Analysis

The causal statistical analysis focuses on determining the cause and effect relationship between different variables within the raw data. In simple words, it determines why something happens and its effect on other variables. This methodology can be used by businesses to determine the reason for failure.

Statistical analysis filters out unnecessary information and catalogs important data in an uncomplicated manner, making the monumental work of organizing inputs far more manageable. Once the data has been collected, statistical analysis may be utilized for a variety of purposes. Some of them are listed below:

- The statistical analysis aids in summarizing enormous amounts of data into clearly digestible chunks.

- The statistical analysis aids in the effective design of laboratory, field, and survey investigations.

- Statistical analysis may help with solid and efficient planning in any subject of study.

- Statistical analysis aids in establishing broad generalizations and forecasting how much of something will occur under particular conditions.

- Statistical methods, which are effective tools for interpreting numerical data, are applied in practically every field of study. Statistical approaches have been created and are increasingly applied in physical and biological sciences, such as genetics.

- Statistical approaches are used in the job of a businessman, a manufacturer, and a researcher. Statistics departments can be found in banks, insurance businesses, and government agencies.

- A modern administrator, whether in the public or commercial sector, relies on statistical data to make correct decisions.

- Politicians can utilize statistics to support and validate their claims while also explaining the issues they address.


Statistical analysis can be called a boon to mankind and has many benefits for both individuals and organizations. Given below are some of the reasons why you should consider investing in statistical analysis:

- It can help you determine the monthly, quarterly and yearly figures for sales, profits, and costs, making it easier to make decisions.

- It can help you make informed and correct decisions.

- It can help you identify the problem or cause of the failure and make corrections. For example, it can identify the reason for an increase in total costs and help you cut the wasteful expenses.

- It can help you conduct market analysis and make an effective marketing and sales strategy.

- It helps improve the efficiency of different processes.

Given below are the 5 steps to conduct a statistical analysis that you should follow:

- Step 1: Identify and describe the nature of the data that you are supposed to analyze.

- Step 2: The next step is to establish a relation between the data analyzed and the sample population to which the data belongs.

- Step 3: The third step is to create a model that clearly presents and summarizes the relationship between the population and the data.

- Step 4: Prove if the model is valid or not.

- Step 5: Use predictive analysis to predict future trends and events likely to happen.

Although there are various methods used to perform data analysis, given below are the 5 most used and popular methods of statistical analysis:

Mean

The mean, or average, is one of the most popular methods of statistical analysis. The mean indicates the overall trend of the data and is very simple to calculate: sum the numbers in the data set and then divide the total by the number of data points. Despite the ease of calculation and its benefits, it is not advisable to rely on the mean as the only statistical indicator, as doing so can result in inaccurate decision making.

Standard Deviation

Standard deviation is another very widely used statistical tool or method. It analyzes the deviation of different data points from the mean of the entire data set. It determines how data of the data set is spread around the mean. You can use it to decide whether the research outcomes can be generalized or not.

Regression

Regression is a statistical tool that helps describe the relationship between a dependent variable and one or more independent variables. On its own it shows association rather than proof of cause and effect. It is generally used to predict future trends and events.

Hypothesis Testing

Hypothesis testing can be used to test the validity of a conclusion or argument against a data set. The hypothesis is an assumption made at the beginning of the research, and the analysis results either support it or lead you to reject it.

Sample Size Determination

Sample size determination, together with data sampling, is a technique used to draw a sample that is representative of the entire population. This method is used when the population is too large to measure in full. You can choose from among various data sampling techniques such as snowball sampling, convenience sampling, and random sampling. Of these, random sampling is the one designed to give every member of the population an equal chance of being selected.
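A small sketch of simple random sampling is given below, using only Python's standard library. The population of 10,000 heights, the sample size of 100, and the seed are all assumptions made for illustration.

```python
import random
from statistics import mean

random.seed(42)  # fixed seed so the sketch is repeatable

# Hypothetical population: 10,000 heights in cm (simulated, not real data).
population = [random.gauss(170, 10) for _ in range(10_000)]

# Simple random sample without replacement: every member is equally likely.
sample = random.sample(population, k=100)

population_mean = mean(population)
sample_mean = mean(sample)

print(round(population_mean, 2), round(sample_mean, 2))
```

With a random sample of this size, the sample mean usually lands close to the population mean, which is the reason a well-drawn sample can stand in for the whole population.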

Not everyone can perform very complex statistical calculations accurately, which makes manual statistical analysis a time-consuming and costly process. Statistical software has therefore become a very important tool for companies performing data analysis. Such software, in some cases with the help of Artificial Intelligence and Machine Learning, can perform complex calculations, identify trends and patterns, and create charts, graphs, and tables accurately within minutes.

Look at the standard deviation sample calculation given below to understand more about statistical analysis.

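The measurements of 5 pizza bases in cm are as follows: 9, 2, 5, 4 and 12.

Calculation of mean = (9+2+5+4+12)/5 = 32/5 = 6.4

Squared deviations from the mean = (9-6.4)², (2-6.4)², (5-6.4)², (4-6.4)², (12-6.4)² = 6.76, 19.36, 1.96, 5.76, 31.36

Mean of the squared deviations = (6.76+19.36+1.96+5.76+31.36)/5 = 65.2/5 = 13.04

Variance = 13.04 (dividing by n = 5 gives the population variance; dividing by n - 1 = 4 would give the sample variance, 16.3)

Standard deviation = √13.04 = 3.611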
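The calculation above can be checked with a few lines of Python. This is only a sketch that reuses the five values from the mean calculation (9, 2, 5, 4, 12); the variable names are illustrative.

```python
import math

# The five values used in the mean calculation above.
values = [9, 2, 5, 4, 12]
n = len(values)

mean_value = sum(values) / n

# Squared distance of each value from the mean.
squared_devs = [(x - mean_value) ** 2 for x in values]

# Dividing by n matches the worked example (population variance).
variance_n = sum(squared_devs) / n

# Dividing by n - 1 gives the sample variance instead.
variance_n_minus_1 = sum(squared_devs) / (n - 1)

std_n = math.sqrt(variance_n)

print(mean_value, round(variance_n, 2), round(std_n, 3), round(variance_n_minus_1, 2))
```

The two variances differ noticeably here because the data set is so small; with larger data sets the gap between dividing by n and by n - 1 shrinks.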

A Statistical Analyst's career path is determined by the industry in which they work. Anyone interested in becoming a Data Analyst may usually enter the profession and qualify for entry-level Data Analyst positions right out of high school or a certificate program — potentially with a Bachelor's degree in statistics, computer science, or mathematics. Some people go into data analysis from a similar sector such as business, economics, or even the social sciences, usually by updating their skills mid-career with a statistical analytics course.

Working as a Statistical Analyst is also a great way to get started in the normally more complex area of data science. A Data Scientist is generally a more senior role than a Data Analyst since it is more strategic in nature and necessitates a more highly developed set of technical abilities, such as knowledge of multiple statistical tools, programming languages, and predictive analytics models.

Aspiring Data Scientists and Statistical Analysts generally begin their careers by learning a programming language such as R or a query language such as SQL. Following that, they learn how to create databases, do basic analysis, and make visuals using applications such as Tableau. Not every Statistical Analyst will need to know how to do all of these things, but if you want to advance in your profession, you should be able to do them all.

Based on your industry and the sort of work you do, you may opt to study Python or R, become an expert at data cleaning, or focus on developing complicated statistical models.

You could also learn a little bit of everything, which might help you take on a leadership role and advance to the position of Senior Data Analyst. A Senior Statistical Analyst with vast and deep knowledge might take on a leadership role leading a team of other Statistical Analysts. Statistical Analysts with extra skill training may be able to advance to Data Scientists or other more senior data analytics positions.


Hope this article assisted you in understanding the importance of statistical analysis in every sphere of life. Artificial Intelligence (AI) can help you perform statistical analysis and data analysis very effectively and efficiently.


Statistical Methods for Data Analysis: a Comprehensive Guide

In today’s data-driven world, understanding statistical methods for data analysis is like having a superpower.

Whether you’re a student, a professional, or just a curious mind, diving into the realm of data can unlock insights and decisions that propel success.

Statistical methods for data analysis are the tools and techniques used to collect, analyze, interpret, and present data in a meaningful way.

From businesses optimizing operations to researchers uncovering new discoveries, these methods are foundational to making informed decisions based on data.

In this blog post, we’ll embark on a journey through the fascinating world of statistical analysis, exploring its key concepts, methodologies, and applications.

Introduction to Statistical Methods

At its core, statistical methods are the backbone of data analysis, helping us make sense of numbers and patterns in the world around us.

Whether you’re looking at sales figures, medical research, or even your fitness tracker’s data, statistical methods are what turn raw data into useful insights.

But before we dive into complex formulas and tests, let’s start with the basics.

Data comes in two main types: qualitative and quantitative data.

Quantitative data is all about numbers and quantities (like your height or the number of steps you walked today), while qualitative data deals with categories and qualities (like your favorite color or the breed of your dog).

And when we talk about measuring these data points, we use different scales like nominal, ordinal, interval, and ratio.

These scales help us understand the nature of our data—whether we’re ranking it (ordinal), simply categorizing it (nominal), or measuring it with a true zero point (ratio).

In a nutshell, statistical methods start with understanding the type and scale of your data.

This foundational knowledge sets the stage for everything from summarizing your data to making complex predictions.

Descriptive Statistics: Simplifying Data

Imagine you’re at a party and you meet a bunch of new people.

When you go home, your roommate asks, “So, what were they like?” You could describe each person in detail, but instead, you give a summary: “Most were college students, around 20-25 years old, pretty fun crowd!”

That’s essentially what descriptive statistics does for data.

It summarizes and describes the main features of a collection of data in an easy-to-understand way. Let’s break this down further.

The Basics: Mean, Median, and Mode

- Mean is just a fancy term for the average. If you add up everyone’s age at the party and divide by the number of people, you’ve got your mean age.

- Median is the middle number in a sorted list. If you line up everyone from the youngest to the oldest and pick the person in the middle, their age is your median. This is super handy when someone’s age is way off the chart (like if your grandma crashed the party), because one extreme value barely moves the median, while it can drag the mean a long way.

- Mode is the most common age at the party. If you notice a lot of people are 22, then 22 is your mode. It’s like the age that wins the popularity contest.
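Here’s a quick sketch of those three measures in Python, using the standard `statistics` module. The guest ages (and grandma’s age of 80) are invented for the example.

```python
from statistics import mean, median, mode

# Hypothetical ages of the party guests.
ages = [21, 22, 22, 23, 24]

mean_age = mean(ages)      # add everything up, divide by the head count
median_age = median(ages)  # middle value once the ages are sorted
mode_age = mode(ages)      # the most common age

# Now grandma (age 80) crashes the party.
ages_with_grandma = ages + [80]
mean_with_grandma = mean(ages_with_grandma)
median_with_grandma = median(ages_with_grandma)

print(mean_age, median_age, mode_age)
print(mean_with_grandma, median_with_grandma)
```

Notice how one guest pulls the mean up by almost ten years while the median hardly moves. That’s the outlier effect described in the median bullet.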

Spreading the News: Range, Variance, and Standard Deviation

- Range gives you an idea of how spread out the ages are. It’s the difference between the oldest and the youngest. A small range means everyone’s around the same age, while a big range means a wider variety.

- Variance is a bit more complex. It measures how much the ages differ from the average age. A higher variance means ages are more spread out.

- Standard Deviation is the square root of variance. It’s like variance but back on a scale that makes sense. It tells you, on average, how far each person’s age is from the mean age.
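The same idea works for spread. The sketch below uses made-up guest ages and treats the guests as the whole population, so it divides by the number of guests (`pvariance` and `pstdev`).

```python
from statistics import pstdev, pvariance

# Hypothetical ages of the party guests.
ages = [20, 22, 22, 23, 25]

# Range: oldest minus youngest.
age_range = max(ages) - min(ages)

# Variance: average squared distance from the mean age.
age_variance = pvariance(ages)

# Standard deviation: square root of the variance, back in years.
age_std = pstdev(ages)

print(age_range, round(age_variance, 2), round(age_std, 2))
```

If the guests were only a sample of a bigger crowd, `variance` and `stdev` (which divide by one less than the number of guests) would be the usual choice instead.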

Picture Perfect: Graphical Representations

- Histograms are like bar charts showing how many people fall into different age groups. They give you a quick glance at how ages are distributed.

- Bar Charts are great for comparing different categories, like how many men vs. women were at the party.

- Box Plots (or box-and-whisker plots) show you the median, the range, and if there are any outliers (like grandma).

- Scatter Plots are used when you want to see if there’s a relationship between two things, like if bringing more snacks means people stay longer at the party.

Why Descriptive Statistics Matter

Descriptive statistics are your first step in data analysis.

They help you understand your data at a glance and prepare you for deeper analysis.

Without them, you’re like someone trying to guess what a party was like without any context.

Whether you’re looking at survey responses, test scores, or party attendees, descriptive statistics give you the tools to summarize and describe your data in a way that’s easy to grasp.


Remember, the goal of descriptive statistics is to simplify the complex.

Inferential Statistics: Beyond the Basics

Let’s keep the party analogy rolling, but this time, imagine you couldn’t attend the party yourself.

You’re curious if the party was as fun as everyone said it would be.

Instead of asking every single attendee, you decide to ask a few friends who went.

Based on their experiences, you try to infer what the entire party was like.

This is essentially what inferential statistics does with data.

It allows you to make predictions or draw conclusions about a larger group (the population) based on a smaller group (a sample). Let’s dive into how this works.

Probability

Inferential statistics is all about playing the odds.

When you make an inference, you’re saying, “Based on my sample, there’s a certain probability that my conclusion about the whole population is correct.”

It’s like betting on whether the party was fun, based on a few friends’ opinions.

The Central Limit Theorem (CLT)

The Central Limit Theorem is the superhero of statistics.

It tells us that if you take many samples from a population and each sample is large enough, the sample means (averages) will form an approximately normal distribution (a bell curve), almost regardless of what the population distribution looks like (as long as its variance is finite).

This is crucial because it allows us to use sample data to make inferences about the population mean with a known level of uncertainty.
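You can watch the Central Limit Theorem at work with a small simulation. The sketch below is only an illustration: it assumes a skewed exponential population with mean 1, a sample size of 50, and 2,000 repeated samples, all chosen arbitrarily.

```python
import random
from statistics import mean, stdev

random.seed(0)  # fixed seed so the sketch is repeatable

def sample_mean(n):
    # Draw n values from a skewed (exponential) population with mean 1.
    return mean([random.expovariate(1.0) for _ in range(n)])

sample_size = 50
means = [sample_mean(sample_size) for _ in range(2000)]

center = mean(means)   # should sit near the population mean, 1
spread = stdev(means)  # should sit near 1 / sqrt(50), about 0.141

# Share of sample means within 1.96 standard deviations of the center;
# for a bell curve this is about 95%.
share_within = sum(abs(m - center) <= 1.96 * spread for m in means) / len(means)

print(round(center, 3), round(spread, 3), round(share_within, 3))
```

Even though individual values from this population are far from bell-shaped, the sample means cluster symmetrically around 1, and roughly 95% of them fall within 1.96 standard deviations of the center.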

Confidence Intervals

Imagine you’re pretty sure the party was fun, but you want to know how fun.

A confidence interval gives you a range of values within which you believe the true mean fun level of the party lies.

It’s like saying, “I’m 95% confident the party’s fun rating was between 7 and 9 out of 10.”
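Here’s a rough sketch of how such an interval could be computed. The fun ratings are invented, and the code uses the normal approximation (a multiplier of about 1.96) to keep things in the standard library. With only 12 ratings, a t-based interval would be the more careful choice and would come out slightly wider.

```python
import math
from statistics import NormalDist, mean, stdev

# Hypothetical fun ratings (out of 10) from 12 friends who went.
fun_ratings = [7, 8, 9, 8, 7, 9, 8, 8, 7, 9, 8, 8]

n = len(fun_ratings)
sample_mean = mean(fun_ratings)

# Standard error: sample standard deviation divided by sqrt(n).
standard_error = stdev(fun_ratings) / math.sqrt(n)

# Multiplier for a 95% interval under the normal approximation (about 1.96).
z = NormalDist().inv_cdf(0.975)

lower = sample_mean - z * standard_error
upper = sample_mean + z * standard_error

print(round(lower, 2), round(upper, 2))
```

The interval gets narrower as you ask more friends, because the standard error shrinks with the square root of the sample size.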

Hypothesis Testing

This is where you get to be a bit of a detective. You start with a hypothesis (a guess) about the population.

For example, your null hypothesis might be “the party was average fun.” Then you use your sample data to test this hypothesis.

If the data strongly suggests otherwise, you might reject the null hypothesis and accept the alternative hypothesis, which could be “the party was super fun.”

The p-value tells you how likely it is that you would see data at least as extreme as yours by random chance if the null hypothesis were true.

A low p-value (typically less than 0.05) indicates that your findings are statistically significant, meaning they would be unlikely if the null hypothesis were true.

It’s like saying, “If the party really was just average, it would be really unusual for my friends to rave about it this much, so the party probably was fun.”
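To make this concrete, here’s a minimal sketch of a two-sided one-sample z-test. Everything in it is an assumption for illustration: the ratings are invented, “average fun” is taken to mean a rating of 5, and the population standard deviation is assumed to be known and equal to 2.

```python
import math
from statistics import NormalDist, mean

# Hypothetical fun ratings (out of 10) from 8 friends.
ratings = [7, 6, 8, 5, 9, 7, 6, 8]

null_mean = 5.0   # null hypothesis: the party was average fun (assumed value)
assumed_sd = 2.0  # population standard deviation, assumed known for a z-test

# How many standard errors the sample mean sits away from the null mean.
z = (mean(ratings) - null_mean) / (assumed_sd / math.sqrt(len(ratings)))

# Two-sided p-value: chance of a result at least this extreme if the null is true.
p_value = 2 * (1 - NormalDist().cdf(abs(z)))

reject_null = p_value < 0.05

print(round(z, 3), round(p_value, 4), reject_null)
```

When the population standard deviation isn’t known, which is the usual situation, a t-test is the more common tool.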

Why Inferential Statistics Matter

Inferential statistics let us go beyond just describing our data.

They allow us to make educated guesses about a larger population based on a sample.

This is incredibly useful in almost every field—science, business, public health, and yes, even planning your next party.

By using probability, the Central Limit Theorem, confidence intervals, hypothesis testing, and p-values, we can make informed decisions without needing to ask every single person in the population.

It saves time, resources, and helps us understand the world more scientifically.

Remember, while inferential statistics gives us powerful tools for making predictions, those predictions come with a level of uncertainty.

Being a good data scientist means understanding and communicating that uncertainty clearly.

So next time you hear about a party you missed, use inferential statistics to figure out just how much FOMO (fear of missing out) you should really feel!

Common Statistical Tests: Choosing Your Data’s Best Friend

Alright, now that we’ve covered the basics of descriptive and inferential statistics, it’s time to talk about how we actually apply these concepts to make sense of data.

It’s like deciding on the best way to find out who was the life of the party.

You have several tools (tests) at your disposal, and choosing the right one depends on what you’re trying to find out and the type of data you have.

Let’s explore some of the most common statistical tests and when to use them.

T-Tests: Comparing Averages

Imagine you want to know if the average fun level was higher at this year’s party compared to last year’s.

A t-test helps you compare the means (averages) of two groups to see if they’re statistically different.

There are a couple of flavors:

- Independent t-test: Use this when comparing two different groups, like this year’s party vs. last year’s party.

- Paired t-test: Use this when comparing the same group at two different times or under two different conditions, like if you measured everyone’s fun level before and after the party.
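As a sketch of the independent case, the code below computes Welch’s t statistic by hand for two made-up sets of ratings. Since the standard library has no t distribution, it estimates a p-value with a permutation test instead, which is a different technique from the classic t-test; a library such as SciPy (`scipy.stats.ttest_ind`) would give the t-based p-value.

```python
import math
import random
from statistics import mean, variance

# Hypothetical fun ratings from two different groups of guests.
this_year = [8, 9, 7, 9, 8, 10, 9, 8]
last_year = [6, 7, 7, 5, 8, 6, 7, 6]

def welch_t(a, b):
    # Difference in means divided by its estimated standard error.
    se = math.sqrt(variance(a) / len(a) + variance(b) / len(b))
    return (mean(a) - mean(b)) / se

def permutation_p_value(a, b, rounds=5000, seed=1):
    # Shuffle the group labels many times and count how often the shuffled
    # difference in means is at least as large as the observed one.
    rng = random.Random(seed)
    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(rounds):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:len(a)]) - mean(pooled[len(a):]))
        if diff >= observed:
            hits += 1
    return hits / rounds

t_stat = welch_t(this_year, last_year)
p_value = permutation_p_value(this_year, last_year)

print(round(t_stat, 3), p_value)
```

A large t statistic together with a small p-value suggests the gap between the two years is bigger than random shuffling would usually produce.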

ANOVA: When Three’s Not a Crowd

But what if you had three or more parties to compare? That’s where ANOVA (Analysis of Variance) comes in handy.

It lets you compare the means across multiple groups at once to see if at least one of them is significantly different.

It’s like comparing the fun levels across several years’ parties to see if one year stood out.

Chi-Square Test: Categorically Speaking

Now, let’s say you’re interested in whether the type of music (pop, rock, electronic) affects party attendance.

Since you’re dealing with categories (types of music) and counts (number of attendees), you’ll use the Chi-Square test.

It’s great for seeing if there’s a relationship between two categorical variables.
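Here is a small sketch of how the Chi-Square statistic is built from a table of counts: for every cell, compare the observed count with the count you would expect if the two variables were unrelated. The attendance numbers and the function name `chi_square_statistic` are assumptions for illustration. A library routine such as `scipy.stats.chi2_contingency` would additionally report the p-value.

```python
def chi_square_statistic(table):
    """Return (chi-square statistic, degrees of freedom) for a table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Count expected in this cell if music type and attendance were unrelated.
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, dof

# Hypothetical counts. Rows: pop, rock, electronic. Columns: attended, skipped.
observed = [
    [30, 10],
    [20, 20],
    [10, 30],
]

chi2, dof = chi_square_statistic(observed)
print(chi2, dof)  # 20.0 2
```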

Correlation and Regression: Finding Relationships

What if you suspect that the amount of snacks available at the party affects how long guests stay? To explore this, you’d use:

- Correlation analysis to see if there’s a relationship between two continuous variables (like snacks and party duration). It tells you how closely related two things are.

- Regression analysis goes a step further by not only showing if there’s a relationship but also how one variable predicts the other. It’s like saying, “For every extra bag of chips, guests stay an average of 10 minutes longer.”
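For a feel of what correlation analysis computes, the sketch below calculates Pearson's correlation coefficient by hand for a made-up snacks-versus-duration data set. The name `pearson_r` and all the numbers are hypothetical. A value near +1 or -1 signals a strong linear relationship, and a value near 0 signals little or none.

```python
import math

def pearson_r(xs, ys):
    """Pearson's correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    covariation = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariation / (spread_x * spread_y)

# Hypothetical data: bags of snacks vs. average minutes guests stayed.
snack_bags = [1, 2, 3, 4, 5]
minutes_stayed = [65, 70, 85, 90, 100]

r = pearson_r(snack_bags, minutes_stayed)
print(round(r, 3))  # 0.988
```

Keep in mind that a high correlation on its own does not show that the snacks caused the longer stays.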

Non-parametric Tests: When Assumptions Don’t Hold

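Most of the tests mentioned above (t-tests, ANOVA, Pearson correlation, and linear regression) are parametric: they assume your data is roughly normally distributed and meets other criteria, such as similar variance across groups.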

But what if your data doesn’t play by these rules?

Enter non-parametric tests, like the Mann-Whitney U test (for comparing two groups when you can’t use a t-test) or the Kruskal-Wallis test (like ANOVA but for non-normal distributions).

Picking the Right Test

Choosing the right statistical test is crucial and depends on:

- The type of data you have (categorical vs. continuous).

- Whether you’re comparing groups or looking for relationships.

- The distribution of your data (normal vs. non-normal).
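The three questions above can be sketched as a tiny decision helper. This is only a rough rule of thumb built from the tests named in this article, not a complete decision procedure. The function name `suggest_test` and its parameters are hypothetical.

```python
def suggest_test(goal, data_type, groups=2, normal=True, paired=False):
    """Suggest a test name from the checklist above.

    goal:      "compare" (groups) or "relate" (relationships)
    data_type: "categorical" or "continuous"
    """
    if data_type == "categorical":
        return "Chi-Square test"
    if goal == "relate":
        return "Pearson correlation / regression" if normal else "Spearman's rank correlation"
    if groups >= 3:
        return "ANOVA" if normal else "Kruskal-Wallis test"
    if paired:
        return "Paired t-test" if normal else "Wilcoxon signed-rank test"
    return "Independent t-test" if normal else "Mann-Whitney U test"

print(suggest_test("compare", "continuous"))                          # Independent t-test
print(suggest_test("compare", "continuous", groups=3, normal=False))  # Kruskal-Wallis test
print(suggest_test("relate", "categorical"))                          # Chi-Square test
```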

Why These Tests Matter

Just like you’d pick the right tool for a job, selecting the appropriate statistical test helps you make valid and reliable conclusions about your data.

Whether you’re trying to prove a point, make a decision, or just understand the world a bit better, these tests are your gateway to insights.

By mastering these tests, you become a detective in the world of data, ready to uncover the truth behind the numbers!

Regression Analysis: Predicting the Future

Ever wondered if you could predict how much fun you’re going to have at a party based on the number of friends going, or how the amount of snacks available might affect the overall party vibe?

That’s where regression analysis comes into play, acting like a crystal ball for your data.

What is Regression Analysis?

Regression analysis is a powerful statistical method that allows you to examine the relationship between two or more variables of interest.

Think of it as detective work, where you’re trying to figure out if, how, and to what extent certain factors (like snacks and music volume) predict an outcome (like the fun level at a party).

The Two Main Characters: Independent and Dependent Variables

- Independent Variable(s): These are the predictors or factors that you suspect might influence the outcome. For example, the quantity of snacks.

- Dependent Variable: This is the outcome you’re interested in predicting. In our case, it could be the fun level of the party.

Linear Regression: The Straight Line Relationship

The most basic form of regression analysis is linear regression.

It predicts the outcome based on a linear relationship between the independent and dependent variables.

If you plot this on a graph, you’d ideally see a straight line where, as the amount of snacks increases, so does the fun level (hopefully!).

- Simple Linear Regression involves just one independent variable. It’s like saying, “Let’s see if just the number of snacks can predict the fun level.”

- Multiple Linear Regression takes it up a notch by including more than one independent variable. Now, you’re looking at whether the quantity of snacks, type of music, and number of guests together can predict the fun level.
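As a minimal sketch of simple linear regression, the code below fits a straight line by ordinary least squares using plain Python. The data and the name `fit_line` are invented for illustration. Multiple linear regression follows the same idea with several predictors, but is usually left to a statistics library.

```python
def fit_line(xs, ys):
    """Least-squares slope and intercept for one predictor."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data: number of snacks vs. fun level.
snacks = [1, 2, 3, 4, 5]
fun = [2, 4, 5, 4, 5]

slope, intercept = fit_line(snacks, fun)
predicted_fun = intercept + slope * 6  # predicted fun level with 6 snacks

print(round(slope, 2), round(intercept, 2))  # 0.6 2.2
print(round(predicted_fun, 2))               # 5.8
```

Here the slope says that, in this toy data set, each extra snack goes with about 0.6 more points of fun.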

Logistic Regression: When Outcomes are Either/Or

Not all predictions are about numbers.

Sometimes, you just want to know if something will happen or not—will the party be a hit or a flop?

Logistic regression is used for these binary outcomes.

Instead of predicting a precise fun level, it predicts the probability of the party being a hit based on the same predictors (snacks, music, guests).
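To show how a logistic regression model turns predictors into a probability, here is a sketch of the prediction step only. The coefficients below are hypothetical placeholders, not fitted values. In a real analysis they would be estimated from data by a statistics library. The function name `hit_probability` is also made up.

```python
import math

def hit_probability(snacks, guests, intercept=-4.0, b_snacks=0.5, b_guests=0.1):
    """Probability that the party is a hit, given hypothetical coefficients."""
    # Linear score, exactly as in linear regression.
    score = intercept + b_snacks * snacks + b_guests * guests
    # The logistic (sigmoid) function squeezes any score into the 0-1 range.
    return 1 / (1 + math.exp(-score))

p_small = hit_probability(snacks=4, guests=20)
p_big = hit_probability(snacks=8, guests=30)

print(round(p_small, 2))  # 0.5
print(round(p_big, 2))    # 0.95
```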

Making Sense of the Results

- Coefficients: In regression analysis, each predictor has a coefficient, telling you how much the dependent variable is expected to change when that predictor changes by one unit, all else being equal.

- R-squared: This value tells you how much of the variation in your dependent variable can be explained by the independent variables. A higher R-squared means a closer fit between your model and the data, although it tends to rise whenever you add predictors, so it should not be the only thing you check.
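R-squared can be computed directly from its definition: one minus the share of variation the model fails to explain. In this sketch the actual fun levels are invented, and the predictions come from a hypothetical fitted line, fun = 2.2 + 0.6 × snacks. The name `r_squared` is our own.

```python
def r_squared(actual, predicted):
    """Share of the variation in `actual` that the predictions account for."""
    mean_actual = sum(actual) / len(actual)
    ss_residual = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_total = sum((a - mean_actual) ** 2 for a in actual)
    return 1 - ss_residual / ss_total

actual_fun = [2, 4, 5, 4, 5]
predicted_fun = [2.8, 3.4, 4.0, 4.6, 5.2]  # from the hypothetical line above

r2 = r_squared(actual_fun, predicted_fun)
print(round(r2, 2))  # 0.6
```

An R-squared of 0.6 would mean the model accounts for about 60% of the variation in fun levels in this toy data set.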

Why Regression Analysis Rocks

Regression analysis is like having a superpower. It helps you understand which factors matter most, which can be ignored, and how different factors come together to influence the outcome.

This insight is invaluable whether you’re planning a party, running a business, or conducting scientific research.

Bringing It All Together

Imagine you’ve gathered data on several parties, including the number of guests, type of music, and amount of snacks, along with a fun level rating for each.

By running a regression analysis, you can start to predict future parties’ success, tailoring your planning to maximize fun.

It’s a practical tool for making informed decisions based on past data, helping you throw legendary parties, optimize business strategies, or understand complex relationships in your research.

In essence, regression analysis helps turn your data into actionable insights, guiding you towards smarter decisions and better predictions.

So next time you’re knee-deep in data, remember: regression analysis might just be the key to unlocking its secrets.

Non-parametric Methods: Playing By Different Rules

So far, we’ve talked a lot about statistical methods that rely on certain assumptions about your data, like it being normally distributed (forming that classic bell curve) or having a specific scale of measurement.

But what happens when your data doesn’t fit these molds?

Maybe the scores from your last party’s karaoke contest are all over the place, or you’re trying to compare the popularity of various party games but only have rankings, not scores.

This is where non-parametric methods come to the rescue.

Breaking Free from Assumptions

Non-parametric methods are the rebels of the statistical world.

They don’t assume your data follows a normal distribution or that it meets strict requirements regarding measurement scales.

These methods are perfect for dealing with ordinal data (like rankings), nominal data (like categories), or when your data is skewed or has outliers that would throw off other tests.

When to Use Non-parametric Methods?

- Your data is not normally distributed, and transformations don’t help.

- You have ordinal data (like survey responses that range from “Strongly Disagree” to “Strongly Agree”).

- You’re dealing with ranks or categories rather than precise measurements.

- Your sample size is small, making it hard to meet the assumptions required for parametric tests.

Some Popular Non-parametric Tests

- Mann-Whitney U Test: Think of it as the non-parametric counterpart to the independent samples t-test. Use this when you want to compare the differences between two independent groups on a ranking or ordinal scale.

- Kruskal-Wallis Test: This is your go-to when you have three or more groups to compare, and it’s similar to an ANOVA but for ranked/ordinal data or when your data doesn’t meet ANOVA’s assumptions.

- Spearman’s Rank Correlation: When you want to see if there’s a relationship between two sets of rankings, Spearman’s got your back. It’s like Pearson’s correlation for continuous data but designed for ranks.

- Wilcoxon Signed-Rank Test: Use this for comparing two related samples when you can’t use the paired t-test, typically because the differences between pairs are not normally distributed.
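Two of the tests above are simple enough to sketch in plain Python, which also shows how they rely on order rather than exact values. The data and the helper names (`mann_whitney_u`, `average_ranks`, `spearman_rho`) are invented for illustration. For p-values, SciPy offers `scipy.stats.mannwhitneyu`, `scipy.stats.kruskal`, `scipy.stats.spearmanr`, and `scipy.stats.wilcoxon`.

```python
def mann_whitney_u(a, b):
    """U statistic for sample a: pairs where a's value beats b's (ties count half)."""
    return sum(1.0 if x > y else 0.5 if x == y else 0.0 for x in a for y in b)

def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their positions."""
    ordered = sorted(values)
    return [ordered.index(v) + (ordered.count(v) + 1) / 2 for v in values]

def spearman_rho(xs, ys):
    """Spearman's rho: Pearson's correlation computed on the ranks."""
    rx, ry = average_ranks(xs), average_ranks(ys)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(rx, ry))
    spread_x = sum((x - mean_x) ** 2 for x in rx) ** 0.5
    spread_y = sum((y - mean_y) ** 2 for y in ry) ** 0.5
    return cov / (spread_x * spread_y)

# Hypothetical fun ratings from two different parties.
this_year = [7, 8, 6, 9, 8]
last_year = [5, 6, 7, 5, 6]
u_stat = mann_whitney_u(this_year, last_year)

# Hypothetical rankings of five party games by two groups of guests.
group_a_ranking = [1, 2, 3, 4, 5]
group_b_ranking = [2, 1, 4, 3, 5]
rho = spearman_rho(group_a_ranking, group_b_ranking)

print(u_stat)         # 22.5
print(round(rho, 2))  # 0.8
```

With 5 × 5 = 25 possible pairs, a U of 22.5 means this year's ratings came out ahead in almost every pairing.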

The Beauty of Flexibility

The real charm of non-parametric methods is their flexibility.

They let you work with data that’s not textbook perfect, which is often the case in the real world.

Whether you’re analyzing customer satisfaction surveys, comparing the effectiveness of different marketing strategies, or just trying to figure out if people prefer pizza or tacos at parties, non-parametric tests provide a robust way to get meaningful insights.

Keeping It Real

It’s important to remember that while non-parametric methods are incredibly useful, they also come with their own limitations.

They often have less statistical power than parametric tests when the parametric assumptions actually hold, meaning you might need a larger effect or a larger sample to detect a significant result.

Plus, because they often work with ranks rather than actual values, some information about your data might get lost in translation.

Non-parametric methods are your statistical toolbox’s Swiss Army knife, ready to tackle data that doesn’t fit into the neat categories required by more traditional tests.

They remind us that in the world of data analysis, there’s more than one way to uncover insights and make informed decisions.

So, the next time you’re faced with skewed distributions or rankings instead of scores, remember that non-parametric methods have got you covered, offering a way to navigate the complexities of real-world data.

Data Cleaning and Preparation: The Unsung Heroes of Data Analysis

Before any party can start, there’s always a bit of housecleaning to do—sweeping the floors, arranging the furniture, and maybe even hiding those laundry piles you’ve been ignoring all week.

Similarly, in the world of data analysis, before we can dive into the fun stuff like statistical tests and predictive modeling, we need to roll up our sleeves and get our data nice and tidy.

This process of data cleaning and preparation might not be the most glamorous part of data science, but it’s absolutely critical.

Let’s break down what this involves and why it’s so important.

Why Clean and Prepare Data?

Imagine trying to analyze party RSVPs when half the responses are “yes,” a quarter are “Y,” and the rest are a creative mix of “yup,” “sure,” and “why not?”

Without standardization, it’s hard to get a clear picture of how many guests to expect.

The same goes for any data set. Cleaning ensures that your data is consistent, accurate, and ready for analysis.

Preparation involves transforming this clean data into a format that’s useful for your specific analysis needs.

The Steps to Sparkling Clean Data

- Dealing with Missing Values: Sometimes, data is incomplete. Maybe a survey respondent skipped a question, or a sensor failed to record a reading. You’ll need to decide whether to fill in these gaps (imputation), ignore them, or drop the observations altogether.

- Identifying and Handling Outliers: Outliers are data points that are significantly different from the rest. They might be errors, or they might be valuable insights. The challenge is determining which is which and deciding how to handle them—remove, adjust, or analyze separately.

- Correcting Inconsistencies: This is like making sure all your RSVPs are in the same format. It could involve standardizing text entries, correcting typos, or converting all measurements to the same units.

- Formatting Data: Your analysis might require data in a specific format. This could mean transforming data types (e.g., converting dates into a uniform format) or restructuring data tables to make them easier to work with.

- Reducing Dimensionality: Sometimes, your data set might have more information than you actually need. Reducing dimensionality (through methods like Principal Component Analysis) can help simplify your data while keeping most of the valuable information.

- Creating New Variables: You might need to derive new variables from your existing ones to better capture the relationships in your data. For example, turning raw survey responses into a numerical satisfaction score.
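A few of the steps above can be sketched with nothing but the Python standard library. Everything here is an illustrative assumption: the RSVP vocabulary, the choice of median imputation, the 1.5 × IQR rule for flagging outliers, and the helper names. Treating every unrecognized answer as "no" is a deliberate simplification.

```python
import statistics

YES_WORDS = {"yes", "y", "yup", "sure", "why not?"}

def standardize_rsvp(raw):
    """Map free-text RSVP answers onto 'yes', 'no', or None (missing)."""
    if raw is None or not raw.strip():
        return None
    return "yes" if raw.strip().lower() in YES_WORDS else "no"

def impute_median(values):
    """Fill missing values (None) with the median of the known values."""
    known = [v for v in values if v is not None]
    median = statistics.median(known)
    return [median if v is None else v for v in values]

def iqr_outliers(values):
    """Flag values more than 1.5 * IQR outside the middle 50% of the data."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return [v for v in values if v < low or v > high]

rsvps = ["yes", "Y", " yup ", "Sure", "why not?", "no", ""]
clean_rsvps = [standardize_rsvp(r) for r in rsvps]

guest_ages = [25, 31, None, 28, 27, 95, 30]
filled_ages = impute_median(guest_ages)
suspicious = iqr_outliers(filled_ages)

print(clean_rsvps)  # ['yes', 'yes', 'yes', 'yes', 'yes', 'no', None]
print(suspicious)   # [95]
```

Whether the 95-year-old guest is a typo or simply the life of the party is still a judgment call. The code only flags the value for a closer look.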

The Tools of the Trade

There are many tools available to help with data cleaning and preparation, ranging from spreadsheet software like Excel to programming languages like Python and R.

These tools offer functions and libraries specifically designed to make data cleaning as painless as possible.

Why It Matters

Skipping the data cleaning and preparation stage is like trying to cook without prepping your ingredients first.

Sure, you might end up with something edible, but it’s not going to be as good as it could have been.

Clean and well-prepared data leads to more accurate, reliable, and meaningful analysis results.

It’s the foundation upon which all good data analysis is built.

Data cleaning and preparation might not be the flashiest part of data science, but it’s where all successful data analysis projects begin.

By taking the time to thoroughly clean and prepare your data, you’re setting yourself up for clearer insights, better decisions, and, ultimately, more impactful outcomes.

Software Tools for Statistical Analysis: Your Digital Assistants

Diving into the world of data without the right tools can feel like trying to cook a gourmet meal without a kitchen.

Just as you need pots, pans, and a stove to create a culinary masterpiece, you need the right software tools to analyze data and uncover the insights hidden within.

These digital assistants range from user-friendly applications for beginners to powerful suites for the pros.

Let’s take a closer look at some of the most popular software tools for statistical analysis.

R and RStudio: The Dynamic Duo

- R is like the Swiss Army knife of statistical analysis. It’s a programming language designed specifically for data analysis, graphics, and statistical modeling. Think of R as the kitchen where you’ll be cooking up your data analysis.

- RStudio is an integrated development environment (IDE) for R. It’s like having the best kitchen setup with organized countertops (your coding space) and all your tools and ingredients within reach (packages and datasets).

Why They Rock:

R is incredibly powerful and can handle almost any data analysis task you throw at it, from the basics to the most advanced statistical models.

Plus, there’s a vast community of users, which means a wealth of tutorials, forums, and free packages to add on.

Python with pandas and scipy: The Versatile Virtuoso

- Python is not just a general-purpose programming language; with the right libraries, it becomes an excellent tool for data analysis. It’s like a kitchen that’s not only great for baking but also equipped for gourmet cooking.

- pandas is a library that provides easy-to-use data structures and data analysis tools for Python. Imagine it as your sous-chef, helping you to slice and dice data with ease.

- scipy is another library used for scientific and technical computing. It’s like having a set of precision knives for the more intricate tasks.

Why They Rock: Python is known for its readability and simplicity, making it accessible for beginners. When combined with pandas and scipy, it becomes a powerhouse for data manipulation, analysis, and visualization.

SPSS: The Point-and-Click Professional

SPSS (Statistical Package for the Social Sciences) is a software package used for interactive, or batched, statistical analysis. Long produced by SPSS Inc., it was acquired by IBM in 2009.

Why It Rocks: SPSS is particularly user-friendly with its point-and-click interface, making it a favorite among non-programmers and researchers in the social sciences. It’s like having a kitchen gadget that does the job with the push of a button—no manual setup required.

SAS: The Corporate Chef

SAS (Statistical Analysis System) is a software suite developed for advanced analytics, multivariate analysis, business intelligence, data management, and predictive analytics.

Why It Rocks: SAS is a powerhouse in the corporate world, known for its stability, deep analytical capabilities, and support for large data sets. It’s like the industrial kitchen used by professional chefs to serve hundreds of guests.

Excel: The Accessible Apprentice

Excel might not be specialized statistical software, but it’s widely accessible and capable of handling basic statistical analyses. Think of Excel as the microwave in your kitchen: it might not be fancy, but it gets the job done for quick and simple tasks.

Why It Rocks: Almost everyone has access to Excel and knows the basics, making it a great starting point for those new to data analysis. Plus, with add-ons like the Analysis ToolPak, Excel’s capabilities can be extended further into statistical territory.

Choosing Your Tool

Selecting the right software tool for statistical analysis is like choosing the right kitchen for your cooking style—it depends on your needs, expertise, and the complexity of your recipes (data).

Whether you’re a coding chef ready to tackle R or Python, or someone who prefers the straightforwardness of SPSS or Excel, there’s a tool out there that’s perfect for your data analysis kitchen.

Ethical Considerations

Embarking on a data analysis journey is like setting sail on the vast ocean of information.

Just as a captain needs a compass to navigate the seas safely and responsibly, a data analyst requires a strong sense of ethics to guide their exploration of data.

Ethical considerations in data analysis are the moral compass that ensures we respect privacy, consent, and integrity while uncovering the truths hidden within data. Let’s delve into why ethics are so crucial and what principles you should keep in mind.

Respect for Privacy

Imagine you’ve found a diary filled with personal secrets.

Reading it without permission would be a breach of privacy.

Similarly, when you’re handling data, especially personal or sensitive information, it’s essential to ensure that privacy is protected.

This means not only securing data against unauthorized access but also anonymizing data to prevent individuals from being identified.
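One common first step toward this, sketched below, is to replace direct identifiers with keyed hashes before analysis. Strictly speaking this is pseudonymization rather than full anonymization, since other columns may still identify someone. The key, the field names, and the function name `pseudonymize` are all hypothetical.

```python
import hashlib
import hmac

def pseudonymize(identifier, secret_key):
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    normalized = identifier.strip().lower().encode("utf-8")
    return hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()

# Hypothetical key: in practice, keep it secret and out of the data set.
secret_key = b"replace-with-a-real-secret"

guests = [
    {"email": "ana@example.com", "fun_level": 8},
    {"email": "ben@example.com", "fun_level": 6},
]

# Keep the measurement, drop the email, and use a shortened digest as the ID.
safe_guests = [
    {"guest_id": pseudonymize(g["email"], secret_key)[:12], "fun_level": g["fun_level"]}
    for g in guests
]
print(safe_guests)
```

The same email always maps to the same ID, so you can still link records belonging to one guest without storing who that guest is.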

Informed Consent

Before you can set sail, you need the ship owner’s permission.

In the world of data, this translates to informed consent. Participants should be fully aware of what their data will be used for and voluntarily agree to participate.

This is particularly important in research or when collecting data directly from individuals. It’s like asking for permission before you start the journey.

Data Integrity

Maintaining data integrity is like keeping the ship’s log accurate and unaltered during your voyage.

It involves ensuring the data is not corrupted or modified inappropriately and that any data analysis is conducted accurately and reliably.

Tampering with data or cherry-picking results to fit a narrative is not just unethical—it’s like falsifying the ship’s log, leading to mistrust and potentially dangerous outcomes.

Avoiding Bias

The sea is vast, and your compass must be calibrated correctly to avoid going off course. Similarly, guarding against bias in data analysis helps keep your findings valid and trustworthy.

This means being aware of and actively addressing any personal, cultural, or statistical biases that might skew your analysis.

It’s about striving for objectivity and ensuring your journey is guided by truth, not preconceived notions.

Transparency and Accountability

A trustworthy captain is open about their navigational choices and ready to take responsibility for them.

In data analysis, this translates to transparency about your methods and accountability for your conclusions.

Sharing your methodologies, data sources, and any limitations of your analysis helps build trust and allows others to verify or challenge your findings.

Ethical Use of Findings

Finally, just as a captain must consider the impact of their journey on the wider world, you must consider how your data analysis will be used.

This means thinking about the potential consequences of your findings and striving to ensure they are used to benefit, not harm, society.

It’s about being mindful of the broader implications of your work and using data for good.

Navigating with a Moral Compass

In the realm of data analysis, ethical considerations form the moral compass that guides us through complex moral waters.

They ensure that our work respects individuals’ rights, contributes positively to society, and upholds the highest standards of integrity and professionalism.

Just as a captain navigates the seas with respect for the ocean and its dangers, a data analyst must navigate the world of data with a deep commitment to ethical principles.

This commitment ensures that the insights gained from data analysis serve to enlighten and improve, rather than exploit or harm.

Conclusion and Key Takeaways

And there you have it—a whirlwind tour through the fascinating landscape of statistical methods for data analysis.

From the grounding principles of descriptive and inferential statistics to the nuanced details of regression analysis and beyond, we’ve explored the tools and ethical considerations that guide us in turning raw data into meaningful insights.

The Takeaway

Think of data analysis as embarking on a grand adventure, one where numbers and facts are your map and compass.

Just as every explorer needs to understand the terrain, every aspiring data analyst must grasp these foundational concepts.

Whether it’s summarizing data sets with descriptive statistics, making predictions with inferential statistics, choosing the right statistical test, or navigating the ethical considerations that ensure our analyses benefit society, each aspect is a crucial step on your journey.

The Importance of Preparation

Remember, the key to a successful voyage is preparation.

Cleaning and preparing your data sets the stage for a smooth journey, while choosing the right software tools ensures you have the best equipment at your disposal.

And just as every responsible navigator respects the sea, every data analyst must navigate the ethical dimensions of their work with care and integrity.

Charting Your Course

As you embark on your own data analysis adventures, remember that the path you chart is unique to you.

Your questions will guide your journey, your curiosity will fuel your exploration, and the insights you gain will be your treasure.

The world of data is vast and full of mysteries waiting to be uncovered. With the tools and principles we’ve discussed, you’re well-equipped to start uncovering those mysteries, one data set at a time.

The Journey Ahead

The journey of statistical methods for data analysis is ongoing, and the landscape is ever-evolving.

As new methods emerge and our understanding deepens, there will always be new horizons to explore and new insights to discover.

But the fundamentals we’ve covered will remain your steadfast guide, helping you navigate the challenges and opportunities that lie ahead.

So set your sights on the questions that spark your curiosity, arm yourself with the tools of the trade, and embark on your data analysis journey with confidence.

About The Author

Silvia Valcheva

Silvia Valcheva is a digital marketer with over a decade of experience creating content for the tech industry. She has a strong passion for writing about emerging software and technologies such as big data, AI (Artificial Intelligence), IoT (Internet of Things), process automation, etc.


Your Modern Business Guide To Data Analysis Methods And Techniques

Table of Contents

1) What Is Data Analysis?

2) Why Is Data Analysis Important?

3) What Is The Data Analysis Process?

4) Types Of Data Analysis Methods

5) Top Data Analysis Techniques To Apply

6) Quality Criteria For Data Analysis

7) Data Analysis Limitations & Barriers

8) Data Analysis Skills

9) Data Analysis In The Big Data Environment

In our data-rich age, understanding how to analyze and extract true meaning from our business’s digital insights is one of the primary drivers of success.

Despite the colossal volume of data we create every day, a mere 0.5% is actually analyzed and used for data discovery, improvement, and intelligence. While that may not seem like much, considering the amount of digital information we have at our fingertips, half a percent still accounts for a vast amount of data.

With so much data and so little time, knowing how to collect, curate, organize, and make sense of all of this potentially business-boosting information can be a minefield – but online data analysis is the solution.

In science, data analysis uses a more complex approach with advanced techniques to explore and experiment with data. On the other hand, in a business context, data is used to make data-driven decisions that will enable the company to improve its overall performance. In this post, we will cover the analysis of data from an organizational point of view while still going through the scientific and statistical foundations that are fundamental to understanding the basics of data analysis.

To put all of that into perspective, we will answer a host of important analytical questions, explore analytical methods and techniques, while demonstrating how to perform analysis in the real world with a 17-step blueprint for success.

What Is Data Analysis?

Data analysis is the process of collecting, modeling, and analyzing data using various statistical and logical methods and techniques. Businesses rely on analytics processes and tools to extract insights that support strategic and operational decision-making.

All these various methods are largely based on two core areas: quantitative and qualitative research.

Gaining a better understanding of different techniques and methods in quantitative research as well as qualitative insights will give your analysis efforts a more clearly defined direction, so it’s worth taking the time to allow this particular knowledge to sink in. Additionally, you will be able to create a comprehensive analytical report that strengthens your analysis.

Apart from qualitative and quantitative categories, there are also other types of data that you should be aware of before diving into complex data analysis processes. These categories include:

- Big data: Refers to massive data sets that need to be analyzed using advanced software to reveal patterns and trends. It is considered to be one of the best analytical assets as it provides larger volumes of data at a faster rate.

- Metadata: Put simply, metadata is data that provides insights about other data. It summarizes key information about specific data, which makes that data easier to find and reuse later.

- Real-time data: As its name suggests, real-time data is presented as soon as it is acquired. From an organizational perspective, this is the most valuable data as it can help you make important decisions based on the latest developments. Our guide on real-time analytics will tell you more about the topic.

- Machine data: This is more complex data that is generated solely by machines such as phones, computers, or even websites and embedded systems, without prior human interaction.

Why Is Data Analysis Important?

Before we go into detail about the categories of analysis along with its methods and techniques, you must understand the potential that analyzing data can bring to your organization.

- Informed decision-making: From a management perspective, you can benefit from analyzing your data as it helps you make decisions based on facts and not simple intuition. For instance, you can understand where to invest your capital, detect growth opportunities, predict your income, or tackle uncommon situations before they become problems. Through this, you can extract relevant insights from all areas in your organization and, with the help of dashboard software, present the data in a professional and interactive way to different stakeholders.

- Reduce costs: Another great benefit is cost reduction. With the help of advanced technologies such as predictive analytics, businesses can spot improvement opportunities, trends, and patterns in their data and plan their strategies accordingly. In time, this will help you avoid wasting money and resources on the wrong strategies. On top of that, by predicting different scenarios such as sales and demand, you can also anticipate production and supply needs.

- Target customers better: Customers are arguably the most crucial element in any business. By using analytics to get a 360° view of all aspects related to your customers, you can understand which channels they use to communicate with you, their demographics, interests, habits, purchasing behaviors, and more. In the long run, this will make your marketing strategies more successful, allow you to identify new potential customers, and help you avoid wasting resources on targeting the wrong people or sending the wrong message. You can also track customer satisfaction by analyzing your clients’ reviews or your customer service department’s performance.

What Is The Data Analysis Process?

When we talk about analyzing data, there is an order to follow to reach the conclusions you need. The analysis process consists of 5 key stages. We will cover each of them in more detail later in the post, but to provide the context needed to understand what comes next, here is a rundown of the 5 essential steps of data analysis.

- Identify: Before you get your hands dirty with data, you first need to identify why you need it in the first place. The identification is the stage in which you establish the questions you will need to answer. For example, what is the customer's perception of our brand? Or what type of packaging is more engaging to our potential customers? Once the questions are outlined you are ready for the next step.

- Collect: As its name suggests, this is the stage where you start collecting the needed data. Here, you define which sources of data you will use and how you will use them. The collection of data can come in different forms such as internal or external sources, surveys, interviews, questionnaires, and focus groups, among others. An important note here is that the way you collect the data will be different in a quantitative and qualitative scenario.

- Clean: Once you have the necessary data, it is time to clean it and leave it ready for analysis. Not all the data you collect will be useful. When collecting large amounts of data in different formats, you will very likely end up with duplicate or badly formatted records. To avoid this, before you start working with your data, make sure to remove any stray white spaces, duplicate records, and formatting errors. This way you avoid hurting your analysis with bad-quality data.

- Analyze: With the help of various techniques such as statistical analysis, regressions, neural networks, text analysis, and more, you can start analyzing and manipulating your data to extract relevant conclusions. At this stage, you find trends, correlations, variations, and patterns that can help you answer the questions you first thought of in the identify stage. Various technologies in the market assist researchers and average users with the management of their data. Some of them include business intelligence and visualization software, predictive analytics, and data mining, among others.

- Interpret: Last but not least you have one of the most important steps: it is time to interpret your results. This stage is where the researcher comes up with courses of action based on the findings. For example, here you would understand if your clients prefer packaging that is red or green, plastic or paper, etc. Additionally, at this stage, you can also find some limitations and work on them.
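The Clean step is the easiest of the five to illustrate in code. Below is a minimal Python sketch that trims stray white space and drops duplicate records. The survey rows and the function name `clean_records` are hypothetical, and lowercasing every field is an assumption that will not suit every data set.

```python
def clean_records(rows):
    """Trim and collapse white space, lowercase fields, and drop duplicate rows."""
    seen = set()
    cleaned = []
    for row in rows:
        # " ".join(field.split()) removes leading, trailing, and repeated spaces.
        normalized = tuple(" ".join(field.split()).lower() for field in row)
        if normalized not in seen:
            seen.add(normalized)
            cleaned.append(normalized)
    return cleaned

# Hypothetical survey answers: (respondent, preferred packaging color).
raw_rows = [
    ("  Alice ", "Red"),
    ("alice", "red "),
    ("Bob", "Green"),
]

cleaned_rows = clean_records(raw_rows)
print(cleaned_rows)  # [('alice', 'red'), ('bob', 'green')]
```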

Now that you have a basic understanding of the key data analysis steps, let’s look at the top 17 essential methods.

17 Essential Types Of Data Analysis Methods

Before diving into the 17 essential types of methods, it is important to quickly go over the main analysis categories. Moving from descriptive up to prescriptive analysis, the complexity and effort of data evaluation increase, but so does the added value for the company.

a) Descriptive analysis - What happened.

The descriptive analysis method is the starting point for any analytic reflection, and it aims to answer the question of what happened? It does this by ordering, manipulating, and interpreting raw data from various sources to turn it into valuable insights for your organization.

Performing descriptive analysis is essential, as it enables us to present our insights in a meaningful way. Although it is relevant to mention that this analysis on its own will not allow you to predict future outcomes or tell you the answer to questions like why something happened, it will leave your data organized and ready to conduct further investigations.
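
As a small illustration of what a descriptive summary can look like, the sketch below condenses a week of order counts into a few single measures with Python's built-in `statistics` module. The numbers are invented for the example.

```python
import statistics

# Hypothetical daily order counts for one week.
daily_orders = [120, 135, 128, 150, 142, 90, 85]

summary = {
    "mean": statistics.mean(daily_orders),
    "median": statistics.median(daily_orders),
    "range": max(daily_orders) - min(daily_orders),
    "stdev": statistics.stdev(daily_orders),  # sample standard deviation
}
print(summary)
```

These figures tell you what happened during the week, but not why orders dropped on the last two days. That question belongs to the next categories.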

b) Exploratory analysis - How to explore data relationships.

As its name suggests, the main aim of the exploratory analysis is to explore. Prior to it, there is still no notion of the relationship between the data and the variables. Once the data is investigated, exploratory analysis helps you to find connections and generate hypotheses and solutions for specific problems. A typical area of application for it is data mining.

c) Diagnostic analysis - Why it happened.

Diagnostic data analytics empowers analysts and executives by helping them gain a firm contextual understanding of why something happened. If you know why something happened as well as how it happened, you will be able to pinpoint the exact ways of tackling the issue or challenge.

Designed to provide direct and actionable answers to specific questions, this is one of the most important methods in research, and it also serves key organizational functions such as retail analytics.

d) Predictive analysis - What will happen.

The predictive method allows you to look into the future to answer the question: what will happen? In order to do this, it uses the results of the previously mentioned descriptive, exploratory, and diagnostic analysis, in addition to machine learning (ML) and artificial intelligence (AI). Through this, you can uncover future trends, potential problems or inefficiencies, connections, and causalities in your data.

With predictive analysis, you can unfold and develop initiatives that will not only enhance your various operational processes but also help you gain an all-important edge over the competition. If you understand why a trend, pattern, or event happened through data, you will be able to develop an informed projection of how things may unfold in particular areas of the business.

e) Prescriptive analysis - How will it happen.

Prescriptive analysis is another of the most effective types of analysis methods in research. Prescriptive data techniques cross over from predictive analysis in that they revolve around using patterns or trends to develop responsive, practical business strategies.

By drilling down into prescriptive analysis, you will play an active role in the data consumption process by taking well-arranged sets of visual data and using them as a powerful fix to emerging issues in a number of key areas, including marketing, sales, customer experience, HR, fulfillment, finance, logistics analytics, and others.

As mentioned at the beginning of the post, data analysis methods can be divided into two big categories: quantitative and qualitative. Each of these categories holds a powerful analytical value that changes depending on the scenario and type of data you are working with. Below, we will discuss 17 methods that are divided into qualitative and quantitative approaches.

Without further ado, here are the 17 essential types of data analysis methods with some use cases in the business world:

A. Quantitative Methods

To put it simply, quantitative analysis refers to all methods that use numerical data or data that can be turned into numbers (e.g. categorical variables like gender or age group) to extract valuable insights. It is used to draw conclusions about relationships and differences, and to test hypotheses. Below we discuss some of the key quantitative methods.

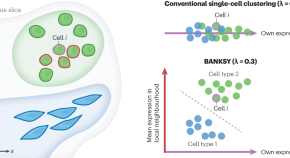

1. Cluster analysis

Cluster analysis is the action of grouping a set of data elements so that the elements in a group are more similar (in a particular sense) to each other than to those in other groups, hence the term ‘cluster.’ Since there is no target variable when clustering, the method is often used to find hidden patterns in the data. The approach is also used to provide additional context to a trend or dataset.

Let's look at it from an organizational perspective. In a perfect world, marketers would be able to analyze each customer separately and give them the best personalized service, but let's face it, with a large customer base, there is simply not enough time to do that. That's where clustering comes in. By grouping customers into clusters based on demographics, purchasing behaviors, monetary value, or any other factor that might be relevant for your company, you will be able to optimize your efforts and give your customers the best experience based on their needs.
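
One common way to form such groups is k-means clustering. The text above does not name a specific algorithm, so treat the following as a minimal sketch of one option rather than the method. The customer data, the choice of two clusters, and the starting centroids are assumptions made for the example.

```python
import math


def k_means(points, centroids, iterations=10):
    """Plain k-means: assign each point to its nearest centroid, then update."""
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for point in points:
            # Assign the point to the closest centroid (Euclidean distance).
            nearest = min(
                range(len(centroids)),
                key=lambda i: math.dist(point, centroids[i]),
            )
            clusters[nearest].append(point)
        # Move each centroid to the mean of its assigned points.
        centroids = [
            tuple(sum(axis) / len(cluster) for axis in zip(*cluster))
            if cluster else centroid
            for cluster, centroid in zip(clusters, centroids)
        ]
    return centroids, clusters


# Hypothetical customers: (orders per year, average basket value).
customers = [(2, 20), (3, 25), (1, 22), (20, 200), (22, 210), (19, 190)]
# Assumed starting centroids: one occasional and one frequent buyer.
centroids, clusters = k_means(customers, [customers[0], customers[3]])
for centroid, members in zip(centroids, clusters):
    print(centroid, members)
```

With this toy data, the occasional low-spend buyers and the frequent high-spend buyers end up in separate clusters, which you could then address with different campaigns.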

2. Cohort analysis

This type of data analysis approach uses historical data to examine and compare the behavior of a specific segment of users, which can then be grouped with others with similar characteristics. By using this methodology, it's possible to gain a wealth of insight into consumer needs or a firm understanding of a broader target group.

Cohort analysis can be really useful for performing analysis in marketing as it will allow you to understand the impact of your campaigns on specific groups of customers. To exemplify, imagine you send an email campaign encouraging customers to sign up for your site. For this, you create two versions of the campaign with different designs, CTAs, and ad content. Later on, you can use cohort analysis to track the performance of the campaign for a longer period of time and understand which type of content is driving your customers to sign up, repurchase, or engage in other ways.
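
To show the mechanics, here is a minimal sketch that groups users into cohorts by signup week and measures how many of them are still active in later weeks. The event log, its field order, and the weekly granularity are hypothetical.

```python
from collections import defaultdict

# Hypothetical activity log: (user_id, signup_week, active_week).
events = [
    ("u1", 1, 1), ("u1", 1, 2), ("u1", 1, 3),
    ("u2", 1, 1), ("u2", 1, 2),
    ("u3", 2, 2), ("u3", 2, 3),
    ("u4", 2, 2),
]

cohort_users = defaultdict(set)  # signup_week -> users in that cohort
active_users = defaultdict(set)  # (signup_week, weeks since signup) -> active users
for user, signup_week, active_week in events:
    cohort_users[signup_week].add(user)
    active_users[(signup_week, active_week - signup_week)].add(user)

# Retention = share of the cohort still active N weeks after signing up.
retention = {
    key: len(users) / len(cohort_users[key[0]])
    for key, users in sorted(active_users.items())
}
for (cohort, offset), rate in retention.items():
    print(f"cohort week {cohort}, +{offset} weeks: {rate:.0%}")
```

If each cohort received a different version of the campaign, comparing the rows of this table would hint at which version keeps customers engaged for longer.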

A useful tool to start performing cohort analysis is Google Analytics. You can learn more about the benefits and limitations of using cohorts in GA in this useful guide. In the image below, you can see an example of how a cohort is visualized in this tool. The segments (device traffic) are divided into date cohorts (usage of devices) and then analyzed week by week to extract insights into performance.

3. Regression analysis

Regression uses historical data to understand how a dependent variable's value is affected when one (simple linear regression) or more independent variables (multiple regression) change or stay the same. By understanding each variable's relationship and how it developed in the past, you can anticipate possible outcomes and make better decisions in the future.

Let's break it down with an example. Imagine you did a regression analysis of your sales in 2019 and discovered that variables like product quality, store design, customer service, marketing campaigns, and sales channels affected the overall result. Now you want to use regression to analyze which of these variables changed or if any new ones appeared during 2020. For example, you couldn’t sell as much in your physical store due to COVID lockdowns. Therefore, your sales could’ve either dropped in general or increased in your online channels. Through this, you can understand which independent variables affected the overall performance of your dependent variable, annual sales.
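
For the simplest case, one independent variable, the fit can be sketched in a few lines with ordinary least squares. The spend and sales figures below are invented, and `fit_line` is a hypothetical helper name.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-deviations and sum of squared deviations of x.
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    slope = s_xy / s_xx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: monthly marketing spend vs. sales (both in thousands).
spend = [1, 2, 3, 4, 5]
sales = [12, 14, 16, 18, 20]

slope, intercept = fit_line(spend, sales)
forecast = intercept + slope * 6  # projected sales for a spend of 6
print(slope, intercept, forecast)
```

The slope estimates how much the dependent variable (sales) moves for each extra unit of the independent variable (spend). Real data is rarely this tidy, and a multiple regression with several independent variables would normally be run with a statistics library.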

If you want to go deeper into this type of analysis, check out this article and learn more about how you can benefit from regression.

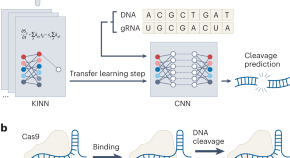

4. Neural networks

The neural network forms the basis for the intelligent algorithms of machine learning. It is a form of analytics that attempts, with minimal intervention, to understand how the human brain would generate insights and predict values. Neural networks learn from each and every data transaction, meaning that they evolve and advance over time.
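
To give a feel for how such a model learns from each data point, here is a sketch of a perceptron, the simplest single-neuron building block of a neural network. It is far smaller than anything used in practice, and the scenario (a customer buys only if they both opened an email and visited the pricing page) is a made-up assumption.

```python
def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum of the inputs is positive, else 0."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=10, learning_rate=1):
    """Nudge the weights after every sample the neuron gets wrong."""
    weights = [0] * len(samples[0][0])
    bias = 0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [
                w + learning_rate * error * x for w, x in zip(weights, inputs)
            ]
            bias += learning_rate * error
    return weights, bias


# Hypothetical samples: ((opened_email, visited_pricing_page), bought).
samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(samples)
predictions = [predict(weights, bias, inputs) for inputs, _ in samples]
print(weights, bias, predictions)
```

Each wrong prediction adjusts the weights slightly, which is the sense in which the model evolves as it sees more data.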

A typical area of application for neural networks is predictive analytics. There are BI reporting tools that have this feature implemented within them, such as the Predictive Analytics Tool from datapine. This tool enables users to quickly and easily generate all kinds of predictions. All you have to do is select the data to be processed based on your KPIs, and the software automatically calculates forecasts based on historical and current data. Thanks to its user-friendly interface, anyone in your organization can manage it; there’s no need to be an advanced scientist.

Here is an example of how you can use the predictive analysis tool from datapine:


5. Factor analysis

Factor analysis, also called “dimension reduction,” is a type of data analysis used to describe variability among observed, correlated variables in terms of a potentially lower number of unobserved variables called factors. The aim here is to uncover independent latent variables, which makes it an ideal method for streamlining specific segments.

A good way to understand this data analysis method is a customer evaluation of a product. The initial assessment is based on different variables like color, shape, wearability, current trends, materials, comfort, the place where they bought the product, and frequency of usage. The list can be endless, depending on what you want to track. In this case, factor analysis comes into the picture by summarizing all of these variables into homogeneous groups, for example, by grouping the variables color, materials, quality, and trends into a broader latent variable of design.

If you want to start analyzing data using factor analysis, we recommend you take a look at this practical guide from UCLA.

6. Data mining

Data mining is an umbrella term for methods of data analysis that engineer metrics and insights for additional value, direction, and context. By using exploratory statistical evaluation, data mining aims to identify dependencies, relations, patterns, and trends to generate advanced knowledge. When considering how to analyze data, adopting a data mining mindset is essential to success. As such, it’s an area that is worth exploring in greater detail.

An excellent use case of data mining is datapine intelligent data alerts. With the help of artificial intelligence and machine learning, they provide automated signals based on particular commands or occurrences within a dataset. For example, if you’re monitoring supply chain KPIs, you could set an intelligent alarm to trigger when invalid or low-quality data appears. By doing so, you will be able to drill down deep into the issue and fix it swiftly and effectively.

In the following picture, you can see how the intelligent alarms from datapine work. By setting up ranges on daily orders, sessions, and revenues, the alarms will notify you if the goal was not completed or if it exceeded expectations.

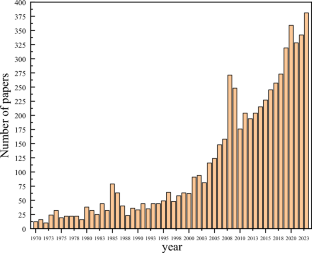
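
The range-based part of this idea can be sketched with a simple rule check. This is not how datapine implements its alerts, and it involves no AI. The KPI names, the ranges, and the `check_alerts` helper are hypothetical.

```python
# Hypothetical expected ranges per KPI: (lower bound, upper bound).
expected_ranges = {
    "daily_orders": (100, 200),
    "sessions": (1000, 5000),
    "revenue": (2000.0, 8000.0),
}


def check_alerts(metrics, ranges):
    """Return a message for every KPI that falls outside its expected range."""
    alerts = []
    for name, value in metrics.items():
        low, high = ranges[name]
        if value < low:
            alerts.append(f"{name} below target: {value} < {low}")
        elif value > high:
            alerts.append(f"{name} above expectations: {value} > {high}")
    return alerts


# Hypothetical figures for today.
today = {"daily_orders": 80, "sessions": 3200, "revenue": 9100.0}
alerts = check_alerts(today, expected_ranges)
for message in alerts:
    print(message)
```

Here the orders fall short of the goal and revenue exceeds expectations, so both would trigger a notification while sessions stay silent.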

7. Time series analysis

As its name suggests, time series analysis is used to analyze a set of data points collected over a specified period of time. Although analysts use this method to monitor the data points at regular intervals rather than just intermittently, time series analysis is not used solely for the purpose of collecting data over time. Instead, it allows researchers to understand whether variables changed during the study, how the different variables depend on each other, and how the end result was reached.

In a business context, this method is used to understand the causes of different trends and patterns to extract valuable insights. Another way of using this method is with the help of time series forecasting. Powered by predictive technologies, businesses can analyze various data sets over a period of time and forecast different future events.

A great use case to put time series analysis into perspective is seasonality effects on sales. By using time series forecasting to analyze sales data of a specific product over time, you can understand if sales rise over a specific period of time (e.g. swimwear during summertime, or candy during Halloween). These insights allow you to predict demand and prepare production accordingly.
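
A basic way to spot this kind of seasonality is to compare each calendar month's average with the overall average. The sketch below does that for invented swimwear sales. The figures and the choice of four sample months per year are assumptions for illustration.

```python
from collections import defaultdict

# Hypothetical swimwear sales for four sample months: (year, month, units).
sales = [
    (2022, 1, 20), (2022, 4, 40), (2022, 7, 120), (2022, 10, 30),
    (2023, 1, 24), (2023, 4, 44), (2023, 7, 130), (2023, 10, 34),
]

by_month = defaultdict(list)
for _, month, units in sales:
    by_month[month].append(units)

overall_mean = sum(units for _, _, units in sales) / len(sales)

# Seasonal index: a month's average relative to the overall average.
# Values above 1 mean the month sells better than a typical month.
seasonal_index = {
    month: (sum(values) / len(values)) / overall_mean
    for month, values in sorted(by_month.items())
}
peak_month = max(seasonal_index, key=seasonal_index.get)
print(seasonal_index, peak_month)
```

In this toy data, July sells more than twice as much as an average month, which is the signal you would use to plan production ahead of summer.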

8. Decision Trees

Decision tree analysis aims to act as a support tool to make smart and strategic decisions. By visually displaying potential outcomes, consequences, and costs in a tree-like model, researchers and company users can easily evaluate all factors involved and choose the best course of action. Decision trees are helpful for analyzing quantitative data, and they allow for an improved decision-making process by helping you spot improvement opportunities, reduce costs, and enhance operational efficiency and production.

But how does a decision tree actually work? This method works like a flowchart that starts with the main decision that you need to make and branches out based on the different outcomes and consequences of each decision. Each outcome will outline its own consequences, costs, and gains and, at the end of the analysis, you can compare each of them and make the smartest decision.
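
One way to compare the branches is by expected value. The sketch below assumes you can estimate a probability and a payoff for each outcome, which the description above does not require. The options and numbers are invented.

```python
# Hypothetical decision: each option branches into (probability, payoff) outcomes.
options = {
    "launch new product": [(0.6, 500_000), (0.4, -200_000)],
    "improve existing product": [(0.9, 150_000), (0.1, -20_000)],
    "do nothing": [(1.0, 0)],
}


def expected_value(outcomes):
    """Probability-weighted sum of the payoffs along one branch."""
    return sum(probability * payoff for probability, payoff in outcomes)


expected = {name: expected_value(outcomes) for name, outcomes in options.items()}
best_option = max(expected, key=expected.get)
for name, value in expected.items():
    print(f"{name}: {value:,.0f}")
print("Best option:", best_option)
```

Expected value is only one possible criterion. A risk-averse company might still prefer the safer branch even if its expected payoff is lower.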