Creating and Scoring Essay Tests


- M.Ed., Curriculum and Instruction, University of Florida

- B.A., History, University of Florida

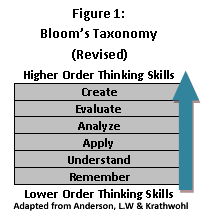

Essay tests are useful for teachers when they want students to select, organize, analyze, synthesize, and/or evaluate information. In other words, they rely on the upper levels of Bloom's Taxonomy. There are two types of essay questions: restricted and extended response.

- Restricted Response - These essay questions limit what the student will discuss in the essay based on the wording of the question. For example, "State the main differences between John Adams' and Thomas Jefferson's beliefs about federalism," is a restricted response question. The wording of the question itself tells the student what to write about.

- Extended Response - These allow students to select what they wish to include in order to answer the question. For example, "In Of Mice and Men, was George's killing of Lennie justified? Explain your answer." The student is given the overall topic, but they are free to use their own judgment and integrate outside information to support their opinion.

Student Skills Required for Essay Tests

Before expecting students to perform well on either type of essay question, we must make sure that they have the required skills to excel. Following are four skills that students should have learned and practiced before taking essay exams:

- The ability to select appropriate material from the information learned in order to best answer the question.

- The ability to organize that material in an effective manner.

- The ability to show how ideas relate and interact in a specific context.

- The ability to write effectively in both sentences and paragraphs.

Constructing an Effective Essay Question

Following are a few tips to help in the construction of effective essay questions:

- Begin with the lesson objectives in mind. Know exactly what you want the student to demonstrate by answering the essay question.

- Decide if your goal requires a restricted or extended response. In general, if you wish to see if the student can synthesize and organize the information that they learned, then restricted response is the way to go. However, if you wish them to judge or evaluate something using the information taught during class, then you will want to use the extended response.

- If you are including more than one essay, be cognizant of time constraints. You do not want to punish students because they ran out of time on the test.

- Write the question in a novel or interesting manner to help motivate the student.

- State the number of points that the essay is worth. You can also give students a time guideline to help them pace themselves as they work through the exam.

- If your essay item is part of a larger objective test, make sure that it is the last item on the exam.
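The advice on time constraints and time guidelines can be made concrete by budgeting minutes in proportion to each essay's point value. This is a minimal sketch under assumptions: the `EssayItem` class, the `time_guidelines` helper, the 50-minute period, and the 5-minute reserve are all hypothetical, and proportional budgeting is one reasonable convention rather than a rule from this article.

```python
from dataclasses import dataclass


@dataclass
class EssayItem:
    label: str
    points: int


def time_guidelines(items, total_minutes, reserve_minutes=5):
    """Split the testing period across essay items in proportion to points.

    reserve_minutes is held back for reading directions and checking work;
    the default of 5 is an assumption, not a rule from the text.
    """
    usable = total_minutes - reserve_minutes
    if usable <= 0:
        raise ValueError("no time left after the reserve")
    total_points = sum(item.points for item in items)
    # Integer division rounds down, so the guidelines never exceed usable time.
    return {item.label: usable * item.points // total_points for item in items}


exam = [EssayItem("Essay 1", 10), EssayItem("Essay 2", 10), EssayItem("Essay 3", 5)]
print(time_guidelines(exam, total_minutes=50))
# {'Essay 1': 18, 'Essay 2': 18, 'Essay 3': 9}
```

If an item's guideline looks too short for a thoughtful answer, that suggests dropping an essay rather than penalizing students who run out of time.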

Scoring the Essay Item

One of the downfalls of essay tests is that they lack reliability. Even when teachers grade essays with a well-constructed rubric, they still make subjective decisions. Therefore, it is important to be as reliable as possible when scoring your essay items. Here are a few tips to help improve reliability in grading:

- Determine whether you will use a holistic or analytic scoring system before you write your rubric. With the holistic system, you evaluate each answer as a whole, rating papers against each other. With the analytic system, you list specific pieces of information and award points for their inclusion.

- Prepare the essay rubric in advance. Determine what you are looking for and how many points you will be assigning for each aspect of the question.

- Avoid looking at names. Some teachers have students write numbers instead of names on their essays to help with this.

- Score one item at a time. This helps ensure that you use the same thinking and standards for all students.

- Avoid interruptions when scoring a specific question. Again, consistency will be increased if you grade the same item on all the papers in one sitting.

- If an important decision like an award or scholarship is based on the score for the essay, obtain two or more independent readers.

- Beware of negative influences that can affect essay scoring. These include handwriting and writing style bias, the length of the response, and the inclusion of irrelevant material.

- Review papers that are on the borderline a second time before assigning a final grade.
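Several of these tips (an analytic rubric prepared in advance, two or more independent readers, and a second look at borderline papers) can be supported by a little bookkeeping. The sketch below assumes an analytic rubric; the criteria, point values, the 2-point disagreement threshold, and the grade cutoffs are hypothetical. Whether an answer meets a criterion remains the reader's judgment; the code only totals and flags.

```python
from statistics import mean

# Hypothetical analytic rubric: each criterion is a specific piece of
# information the answer should include, paired with the points it earns.
RUBRIC = {
    "states a main difference": 4,
    "supports it with evidence": 4,
    "organizes the answer clearly": 2,
}


def analytic_score(criteria_met):
    """Award points only for the rubric criteria a reader found in the answer."""
    met = set(criteria_met)
    unknown = met - set(RUBRIC)
    if unknown:
        raise KeyError(f"not in rubric: {sorted(unknown)}")
    return sum(RUBRIC[criterion] for criterion in met)


def combine_readers(scores, max_gap=2):
    """Average independent readers' scores and flag large disagreements."""
    return mean(scores), max(scores) - min(scores) > max_gap


def is_borderline(score, cutoffs, margin=1):
    """True if the score lies within `margin` points of any grade cutoff."""
    return any(abs(score - cut) <= margin for cut in cutoffs)


reader_a = analytic_score({"states a main difference", "supports it with evidence"})
reader_b = analytic_score(RUBRIC)  # this reader credited every criterion
final, needs_discussion = combine_readers([reader_a, reader_b])
print(reader_a, reader_b, final, needs_discussion)
print("review again" if is_borderline(final, cutoffs=[9, 7, 5]) else "final")
```

Here the two readers differ by exactly the allowed gap, so no discussion is flagged, but the averaged score sits on a cutoff and is marked for a second reading.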


Improving Your Test Questions


- Choosing between Objective and Subjective Test Items

- Multiple-Choice Test Items

- True-False Test Items

- Matching Test Items

- Completion Test Items

- Essay Test Items

- Problem Solving Test Items

- Performance Test Items

- Two Methods for Assessing Test Item Quality

- Assistance Offered by The Center for Innovation in Teaching and Learning (CITL)

- References for Further Reading

I. Choosing Between Objective and Subjective Test Items

There are two general categories of test items: (1) objective items, which require students to select the correct response from several alternatives or to supply a word or short phrase to answer a question or complete a statement; and (2) subjective or essay items, which permit the student to organize and present an original answer. Objective items include multiple-choice, true-false, matching, and completion items, while subjective items include short-answer essay, extended-response essay, problem solving, and performance test items. For some instructional purposes, one item type may prove more efficient and appropriate than the other. To begin our discussion of the relative merits of each type of test item, test your knowledge of these two item types by answering the following questions.

Quiz Answers

1. Sax, G., & Collet, L. S. (1968). An empirical comparison of the effects of recall and multiple-choice tests on student achievement. Journal of Educational Measurement, 5(2), 169–173. doi:10.1111/j.1745-3984.1968.tb00622.x

Paterson, D. G. (1926). Do new and old type examinations measure different mental functions? School and Society, 24, 246–248.

When to Use Essay or Objective Tests

Essay tests are especially appropriate when:

- the group to be tested is small and the test is not to be reused.

- you wish to encourage and reward the development of student skill in writing.

- you are more interested in exploring the student's attitudes than in measuring his/her achievement.

- you are more confident of your ability as a critical and fair reader than as an imaginative writer of good objective test items.

Objective tests are especially appropriate when:

- the group to be tested is large and the test may be reused.

- highly reliable test scores must be obtained as efficiently as possible.

- impartiality of evaluation, absolute fairness, and freedom from possible test scoring influences (e.g., fatigue, lack of anonymity) are essential.

- you are more confident of your ability to express objective test items clearly than of your ability to judge essay test answers correctly.

- there is more pressure for speedy reporting of scores than for speedy test preparation.

Either essay or objective tests can be used to:

- measure almost any important educational achievement a written test can measure.

- test understanding and ability to apply principles.

- test ability to think critically.

- test ability to solve problems.

- test ability to select relevant facts and principles and to integrate them toward the solution of complex problems.

In addition to the preceding suggestions, it is important to realize that certain item types are better suited than others for measuring particular learning objectives. For example, learning objectives requiring the student to demonstrate or to show may be better measured by performance test items, whereas objectives requiring the student to explain or to describe may be better measured by essay test items. Matching learning objective expectations with certain item types can help you select an appropriate kind of test item for your classroom exam and provide a higher degree of test validity (i.e., testing what is supposed to be tested). To further illustrate, several sample learning objectives and appropriate test items are provided on the following page.

After you have decided to use an objective exam, an essay exam, or a combination of both, the next step is to select the kind(s) of objective or essay items you wish to include. To help you make that choice, the different kinds of objective and essay items are presented in the following section. Each kind is briefly described and compared with the others in terms of its advantages and limitations, followed by a set of general suggestions for constructing it.

II. Suggestions for Using and Writing Test Items

Multiple-Choice Test Items

The multiple-choice item consists of two parts: (a) the stem, which identifies the question or problem, and (b) the response alternatives. Students are asked to select the one alternative that best completes the statement or answers the question.

Advantages in Using Multiple-Choice Items

Multiple-choice items can provide...

- versatility in measuring all levels of cognitive ability.

- highly reliable test scores.

- scoring efficiency and accuracy.

- objective measurement of student achievement or ability.

- a wide sampling of content or objectives.

- a reduced guessing factor when compared to true-false items.

- different response alternatives which can provide diagnostic feedback.

Limitations in Using Multiple-Choice Items

Multiple-choice items...

- are difficult and time consuming to construct.

- can lead an instructor to favor simple recall of facts.

- place a high degree of dependence on the student's reading ability and instructor's writing ability.

Suggestions For Writing Multiple-Choice Test Items

Item alternatives.

13. Use at least four alternatives for each item to lower the probability of getting the item correct by guessing.

14. Randomly distribute the correct response among the alternative positions throughout the test, so that each of alternatives a, b, c, d, and e serves as the correct response in approximately the same proportion of items.

15. Use the alternatives "none of the above" and "all of the above" sparingly. When used, such alternatives should occasionally be used as the correct response.
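Suggestions 13 and 14 can be checked with a short script. This hypothetical sketch (the function names are illustrative, and Python's `random` module is an implementation choice, not something the source prescribes) builds an answer key in which each letter is the correct response about equally often, in random order, and reports the chance of guessing one item correctly, which is 1 divided by the number of alternatives.

```python
import random
from collections import Counter


def balanced_answer_key(num_items, num_alternatives=5, seed=None):
    """Random correct-answer positions in which each letter appears as the
    correct response about equally often (counts differ by at most one)."""
    if not 2 <= num_alternatives <= 5:
        raise ValueError("this sketch supports 2 to 5 alternatives (a-e)")
    rng = random.Random(seed)
    letters = list("abcde"[:num_alternatives])
    rng.shuffle(letters)  # so leftover items do not always favor 'a'
    key = [letters[i % num_alternatives] for i in range(num_items)]
    rng.shuffle(key)  # random order throughout the test
    return key


def chance_of_guessing(num_alternatives):
    """Probability of answering one item correctly by blind guessing."""
    return 1 / num_alternatives


key = balanced_answer_key(20, seed=1)
print("".join(key))
print(sorted(Counter(key).items()))
for k in (2, 3, 4, 5):
    print(f"{k} alternatives: {chance_of_guessing(k):.0%} chance per item")
```

Moving from two alternatives to four halves the per-item guessing chance, from 50% to 25%.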

True-False Test Items

A true-false item can be written in one of three forms: simple, complex, or compound. Answers can consist of only two choices (simple), more than two choices (complex), or two choices plus a conditional completion response (compound).

Advantages in Using True-False Items

True-False items can provide...

- the widest sampling of content or objectives per unit of testing time.

- an objective measurement of student achievement or ability.

Limitations In Using True-False Items

True-false items...

- incorporate an extremely high guessing factor. For simple true-false items, each student has a 50/50 chance of correctly answering the item without any knowledge of the item's content.

- can often lead an instructor to write ambiguous statements due to the difficulty of writing statements which are unequivocally true or false.

- do not discriminate between students of varying ability as well as other item types.

- can often include more irrelevant clues than do other item types.

- can often lead an instructor to favor testing of trivial knowledge.
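The 50/50 guessing factor adds up across a whole test. As a rough illustration, assuming every answer is an independent blind guess (real students usually know some items), the binomial distribution gives the chance of reaching a passing mark by guessing alone. The 10-item quiz and the passing mark of 7 correct are hypothetical.

```python
from math import comb


def prob_at_least(num_items, min_correct, p=0.5):
    """Probability of at least `min_correct` right answers out of `num_items`
    when every answer is an independent guess with success probability p."""
    return sum(
        comb(num_items, k) * p**k * (1 - p) ** (num_items - k)
        for k in range(min_correct, num_items + 1)
    )


# A 10-item simple true-false quiz with a passing mark of 7 correct.
print(round(prob_at_least(10, 7), 4))          # 0.1719
# The same quiz written as four-alternative multiple-choice items.
print(round(prob_at_least(10, 7, p=0.25), 4))  # 0.0035
```

Under these assumptions, roughly one blind guesser in six would pass the true-false version, compared with well under 1 in 100 for the four-alternative version.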

Suggestions For Writing True-False Test Items

Matching Test Items

In general, matching items consist of a column of stimuli presented on the left side of the exam page and a column of responses placed on the right side of the page. Students are required to match each stimulus with its associated response.

Advantages in Using Matching Items

Matching items...

- require short periods of reading and response time, allowing you to cover more content.

- provide objective measurement of student achievement or ability.

- provide highly reliable test scores.

- provide scoring efficiency and accuracy.

Limitations in Using Matching Items

Matching items...

- have difficulty measuring learning objectives requiring more than simple recall of information.

- are difficult to construct due to the problem of selecting a common set of stimuli and responses.

Suggestions for Writing Matching Test Items

5. Keep matching items brief, limiting the list of stimuli to under 10.

6. Include more responses than stimuli to help prevent answering through the process of elimination.

7. When possible, reduce the amount of reading time by including only short phrases or single words in the response list.
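Suggestion 6 can be made concrete. Under the simplifying assumptions that each response is used at most once and that a student fills in unknown matches at random, the expected number of matches gained by guessing is the number of unknown stimuli divided by the number of responses still unused. With equal-length lists, a student who knows all but one match gets the last one free; two extra responses cut that to one chance in three. The function name is hypothetical.

```python
from fractions import Fraction


def expected_free_matches(num_stimuli, num_responses, known):
    """Expected number of correct matches a student gains by guessing the
    stimuli they do not know, after setting aside the responses they do know.

    Assumes each response is used at most once and the leftover responses
    are assigned at random.
    """
    if not 0 <= known <= num_stimuli <= num_responses:
        raise ValueError("need 0 <= known <= stimuli <= responses")
    unknown = num_stimuli - known
    if unknown == 0:
        return Fraction(0)
    remaining_responses = num_responses - known
    # Each unknown stimulus is equally likely to receive any leftover response.
    return Fraction(unknown, remaining_responses)


# A student who knows 4 of 5 matches.
print(expected_free_matches(5, 5, known=4))  # 1   (equal lists: last match is free)
print(expected_free_matches(5, 7, known=4))  # 1/3 (two extra responses)
```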

Completion Test Items

The completion item requires the student to answer a question or to finish an incomplete statement by filling in a blank with the correct word or phrase. For example:

Sample Completion Item

According to Freud, personality is made up of three major systems, the _________, the ________ and the ________.

Advantages in Using Completion Items

Completion items...

- can provide a wide sampling of content.

- can efficiently measure lower levels of cognitive ability.

- can minimize guessing as compared to multiple-choice or true-false items.

- can usually provide an objective measure of student achievement or ability.

Limitations in Using Completion Items

Completion items...

- are difficult to construct so that the desired response is clearly indicated.

- are more time consuming to score when compared to multiple-choice or true-false items.

- are more difficult to score since more than one answer may have to be considered correct if the item was not properly prepared.

Suggestions for Writing Completion Test Items

7. Avoid lifting statements directly from the text, lecture or other sources.

8. Limit the required response to a single word or phrase.
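Completion items are easier to score consistently when the key lists every acceptable answer before grading begins. A minimal sketch, assuming a hypothetical key format of one set of accepted answers per blank and assuming the sets for different blanks do not overlap. For the Freud item, the key is the id, the ego, and the superego, in any order; note that "super ego" written with a space earns nothing unless the key is extended to accept it.

```python
def normalize(answer):
    """Compare answers without regard to case or extra whitespace."""
    return " ".join(answer.lower().split())


def score_blanks(responses, key, order_matters=False):
    """Count correct blanks on one completion item.

    key: one set of acceptable answers per blank. When order does not matter,
    each blank of the key can be credited only once. Assumes the sets for
    different blanks do not overlap.
    """
    responses = [normalize(r) for r in responses]
    key = [{normalize(a) for a in accepted} for accepted in key]
    if order_matters:
        return sum(r in accepted for r, accepted in zip(responses, key))
    unused = list(key)
    score = 0
    for r in responses:
        for i, accepted in enumerate(unused):
            if r in accepted:
                score += 1
                del unused[i]  # safe: the loop stops right after deleting
                break
    return score


# Key for the Freud item: the id, the ego, and the superego, in any order.
FREUD_KEY = [{"id"}, {"ego"}, {"superego", "super-ego"}]

print(score_blanks(["Ego", " id", "Super-Ego"], FREUD_KEY))  # 3
print(score_blanks(["id", "id", "super ego"], FREUD_KEY))    # 1
```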

Essay Test Items

The essay test is probably the most popular of all types of teacher-made tests. In general, a classroom essay test consists of a small number of questions, each of which requires the student to demonstrate his/her ability to (a) recall factual knowledge, (b) organize this knowledge, and (c) present the knowledge in a logical, integrated answer to the question. An essay test item can be classified as either an extended-response essay item or a short-answer essay item; the latter calls for a more restricted or limited answer in terms of form or scope. An example of each type of essay item follows.

Sample Extended-Response Essay Item

Explain the difference between the S-R (Stimulus-Response) and the S-O-R (Stimulus-Organism-Response) theories of personality. Include in your answer (a) brief descriptions of both theories, (b) supporters of both theories and (c) research methods used to study each of the two theories. (10 pts. 20 minutes)

Sample Short-Answer Essay Item

Identify research methods used to study the S-R (Stimulus-Response) and S-O-R (Stimulus-Organism-Response) theories of personality. (5 pts. 10 minutes)

Advantages In Using Essay Items

Essay items...

- are easier and less time consuming to construct than are most other item types.

- provide a means for testing students' ability to compose an answer and present it in a logical manner.

- can efficiently measure higher order cognitive objectives (e.g., analysis, synthesis, evaluation).

Limitations In Using Essay Items

Essay items...

- cannot measure a large amount of content or objectives.

- generally provide low test and test scorer reliability.

- require an extensive amount of instructor's time to read and grade.

- generally do not provide an objective measure of student achievement or ability (subject to bias on the part of the grader).

Suggestions for Writing Essay Test Items

4. Ask questions that will elicit responses on which experts could agree that one answer is better than another.

5. Avoid giving the student a choice among optional items, because this greatly reduces the reliability of the test.

6. It is generally recommended for classroom examinations to administer several short-answer items rather than only one or two extended-response items.

Suggestions for Scoring Essay Items

Example Essay Item and Grading Models

"Americans are a mixed-up people with no sense of ethical values. Everyone knows that baseball is far less necessary than food and steel, yet they pay ball players a lot more than farmers and steelworkers."

WHY? Use 3-4 sentences to indicate how an economist would explain the above situation.

Analytical Scoring

Global Quality

Assign scores or grades based on the overall quality of the written response as compared to an ideal answer. Alternatively, compare the overall quality of each response to other student responses by sorting the papers into three stacks.

Then read and sort each stack again, dividing each into three more stacks.

In total, nine discriminations can be used to assign test grades in this manner. The number of stacks or discriminations can vary to meet your needs.
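The two-pass sort amounts to a simple ordered scale: the first sort picks one of three stacks and the second sort picks a position within that stack, giving 3 × 3 = 9 ordered categories. The sketch below (hypothetical names; the sorting judgments themselves come from the reader, not the code) converts those two decisions into a score, and the `stacks` parameter reflects the note that the number of stacks can vary.

```python
def global_quality_score(first_sort, second_sort, stacks=3):
    """Turn two successive sorts into one ordered category.

    first_sort: stack chosen on the first reading (0 = best stack).
    second_sort: position within that stack on the re-read (0 = best).
    Returns stacks * stacks for the very best papers down to 1 for the weakest.
    """
    for choice in (first_sort, second_sort):
        if not 0 <= choice < stacks:
            raise ValueError(f"sort choices must be 0 to {stacks - 1}")
    return stacks * stacks - (stacks * first_sort + second_sort)


# The reader's two decisions for each paper (the judgments are human, not computed).
judgments = {"paper 07": (0, 1), "paper 12": (1, 0), "paper 03": (2, 2)}
scores = {paper: global_quality_score(*choice) for paper, choice in judgments.items()}
print(scores)  # {'paper 07': 8, 'paper 12': 6, 'paper 03': 1}
```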

- Try not to let factors that are irrelevant to the learning outcomes being measured affect your grading (e.g., handwriting, spelling, neatness).

- Read and grade all class answers to one item before going on to the next item.

- Read and grade the answers without looking at the students' names to avoid possible preferential treatment.

- Occasionally shuffle papers during the reading of answers to help avoid systematic order effects (e.g., if Sally's "B" work always follows Jim's "A" work, it may look more like "C" work).

- When possible, ask another instructor to read and grade your students' responses.
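Three of these suggestions (grade one item across all papers, read without names, and shuffle papers between readings) can be combined in a small bookkeeping script. This is a sketch under assumptions: the three-digit code numbers and the fresh shuffle for every item are illustrative choices rather than a prescribed procedure, and the student names are placeholders taken from the example above.

```python
import random


def anonymize(names, seed=None):
    """Assign each student a random three-digit code so papers can be read
    without names. Returns code -> name for matching grades back afterward."""
    rng = random.Random(seed)
    codes = rng.sample(range(100, 1000), len(names))
    return dict(zip(codes, names))


def reading_orders(codes, num_items, seed=None):
    """One independently shuffled reading order per essay item, so no paper
    is consistently read right after the same neighbor."""
    rng = random.Random(seed)
    orders = []
    for _ in range(num_items):
        order = list(codes)
        rng.shuffle(order)
        orders.append(order)
    return orders


roster = anonymize(["Sally", "Jim", "Ana", "Wei"], seed=7)
for item, order in enumerate(reading_orders(roster, num_items=3, seed=7), start=1):
    # Grade every paper on this item before moving to the next item.
    print(f"Item {item}: read papers in the order {order}")
```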

Problem Solving Test Items

Another form of subjective test item is the problem solving or computational exam question. Such items present the student with a problem situation or task and require a demonstration of work procedures and a correct solution, or just a correct solution. This kind of item is classified as subjective because of the procedures used to score responses: instructors can assign full or partial credit to either correct or incorrect solutions, depending on the quality and kind of work procedures presented. An example of a problem solving test item follows.

Example Problem Solving Test Item

It was calculated that 75 men could complete a strip on a new highway in 70 days. When work was scheduled to commence, it was found necessary to send 25 men on another road project. How many days longer will it take to complete the strip? Show your work for full or partial credit.
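Working the problem in advance yields an answer key like the following. It assumes every man works at the same constant rate and that the 25 men leave before any work on the strip begins.

$$
\begin{aligned}
\text{total work} &= 75 \text{ men} \times 70 \text{ days} = 5250 \text{ man-days} \\
\text{days needed} &= \frac{5250 \text{ man-days}}{(75 - 25) \text{ men}} = 105 \text{ days} \\
\text{additional time} &= 105 - 70 = 35 \text{ days}
\end{aligned}
$$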

Advantages In Using Problem Solving Items

Problem solving items...

- minimize guessing by requiring the students to provide an original response rather than to select from several alternatives.

- are easier to construct than are multiple-choice or matching items.

- can most appropriately measure learning objectives which focus on the ability to apply skills or knowledge in the solution of problems.

- can measure an extensive amount of content or objectives.

Limitations in Using Problem Solving Items

Problem solving items...

- require an extensive amount of instructor time to read and grade.

- generally do not provide an objective measure of student achievement or ability (subject to bias on the part of the grader when partial credit is given).

Suggestions For Writing Problem Solving Test Items

6. Ask questions that elicit responses on which experts could agree that one solution and one or more work procedures are better than others.

7. Work through each problem before classroom administration to double-check accuracy.
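Because partial credit is where grader bias can creep in, writing down the credit for each step before grading helps keep it consistent across papers. The sketch below is one hypothetical encoding of such a key for the highway item: the step names, the point weights (2, 2, and 1), and the choice to carry a student's arithmetic error forward so that one slip costs points only once are all assumptions, not rules from the source.

```python
from math import isclose

# Hypothetical key for the highway item. Each step computes its value from
# the previous step's value and carries a point weight.
STEPS = [
    ("man_days", lambda prev: 75 * 70, 2),            # 5250 man-days of work
    ("days_with_50_men", lambda prev: prev / 50, 2),  # 105 days
    ("extra_days", lambda prev: prev - 70, 1),        # 35 days longer
]


def partial_credit(work):
    """Score a student's shown work, carrying arithmetic errors forward.

    work: step name -> number the student wrote (omit steps not shown).
    A step earns its points if it follows correctly from the student's own
    previous value, or from the correct value when that step was not shown.
    """
    earned = 0
    student_prev = correct_prev = None
    for name, compute, points in STEPS:
        basis = student_prev if student_prev is not None else correct_prev
        value = work.get(name)
        if value is not None and isclose(value, compute(basis)):
            earned += points
        student_prev, correct_prev = value, compute(correct_prev)
    possible = sum(points for _, _, points in STEPS)
    return earned, possible


print(partial_credit({"man_days": 5250, "days_with_50_men": 105, "extra_days": 35}))  # (5, 5)
print(partial_credit({"man_days": 5350, "days_with_50_men": 107, "extra_days": 37}))  # (3, 5)
print(partial_credit({"extra_days": 35}))                                             # (1, 5)
```

The second paper contains one multiplication slip but a correct method afterward, so it loses only the points for that step.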

Performance Test Items

A performance test item is designed to assess the ability of a student to perform correctly in a simulated situation (i.e., a situation in which the student will ultimately be expected to apply his/her learning). The concept of simulation is central in performance testing: a performance test simulates, to some degree, a real-life situation in order to accomplish the assessment. In theory, a performance test could be constructed for any skill and real-life situation. In practice, most performance tests have been developed to assess vocational, managerial, administrative, leadership, communication, interpersonal, and physical education skills in various simulated situations. An illustrative example of a performance test item is provided below.

Sample Performance Test Item

Assume that some of the instructional objectives of an urban planning course include the development of the student's ability to effectively use the principles covered in the course in various "real life" situations common for an urban planning professional. A performance test item could measure this development by presenting the student with a specific situation which represents a "real life" situation. For example,

An urban planning board makes a last minute request for the professional to act as consultant and critique a written proposal which is to be considered in a board meeting that very evening. The professional arrives before the meeting and has one hour to analyze the written proposal and prepare his critique. The critique presentation is then made verbally during the board meeting; reactions of members of the board or the audience include requests for explanation of specific points or informed attacks on the positions taken by the professional.

The performance test designed to simulate this situation would require the student being tested to role-play the professional's part, while students or faculty act out the other roles. Various aspects of the "professional's" performance would then be observed and rated by several judges with the necessary background. The ratings could then be used both to provide the student with a diagnosis of his/her strengths and weaknesses and to contribute to an overall summary evaluation of the student's abilities.
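Turning several judges' ratings into both a diagnosis and a summary can be sketched as follows. The 1-to-5 scale, the aspect names, and the strength and weakness thresholds are hypothetical; in practice they would come from the rating form the judges were trained to use.

```python
from statistics import mean

# Hypothetical ratings on a 1-to-5 scale from three judges for one student's
# role-play. The aspect names are illustrative, not from the source.
RATINGS = {
    "analysis of the proposal": [4, 5, 4],
    "clarity of the critique": [3, 3, 4],
    "handling questions from the board": [2, 3, 2],
}


def summarize(ratings, strength_at=4.0, weakness_below=3.0):
    """Average each aspect across judges, label strengths and weaknesses
    for the diagnosis, and compute an overall mean for the summary."""
    per_aspect = {aspect: mean(scores) for aspect, scores in ratings.items()}
    strengths = [a for a, m in per_aspect.items() if m >= strength_at]
    weaknesses = [a for a, m in per_aspect.items() if m < weakness_below]
    overall = mean(list(per_aspect.values()))
    return per_aspect, strengths, weaknesses, overall


per_aspect, strengths, weaknesses, overall = summarize(RATINGS)
print("Strengths:", strengths)
print("Weaknesses:", weaknesses)
print(f"Overall: {overall:.2f}")
```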

Advantages In Using Performance Test Items

Performance test items...

- can most appropriately measure learning objectives which focus on the ability of the students to apply skills or knowledge in real life situations.

- usually provide a degree of test validity not possible with standard paper and pencil test items.

- are useful for measuring learning objectives in the psychomotor domain.

Limitations In Using Performance Test Items

Performance test items...

- are difficult and time consuming to construct.

- are primarily used for testing students individually and not for testing groups. Consequently, they are relatively costly, time consuming, and inconvenient forms of testing.

- generally do not provide an objective measure of student achievement or ability (subject to bias on the part of the observer/grader).

Suggestions For Writing Performance Test Items

- Prepare items that elicit the type of behavior you want to measure.

- Clearly identify and explain the simulated situation to the student.

- Make the simulated situation as "life-like" as possible.

- Provide directions which clearly inform the students of the type of response called for.

- When appropriate, clearly state time and activity limitations in the directions.

- Adequately train the observer(s)/scorer(s) to ensure that they are fair in scoring the appropriate behaviors.

III. TWO METHODS FOR ASSESSING TEST ITEM QUALITY

This section presents two methods for collecting feedback on the quality of your test items. The two methods include using self-review checklists and student evaluation of test item quality. You can use the information gathered from either method to identify strengths and weaknesses in your item writing.

Checklist for Evaluating Test Items

EVALUATE YOUR TEST ITEMS BY CHECKING THE SUGGESTIONS WHICH YOU FEEL YOU HAVE FOLLOWED.

Grading Essay Test Items

Student Evaluation of Test Item Quality

Using ICES Questionnaire Items to Assess Your Test Item Quality

The following set of ICES (Instructor and Course Evaluation System) questionnaire items can be used to assess the quality of your test items. The items are presented with their original ICES catalogue number. You are encouraged to include one or more of the items on the ICES evaluation form in order to collect student opinion of your item writing quality.

IV. ASSISTANCE OFFERED BY THE CENTER FOR INNOVATION IN TEACHING AND LEARNING (CITL)

The information on this page is intended for self-instruction. However, CITL staff members will consult with faculty who wish to analyze and improve their test item writing. The staff can also consult with faculty about other instructional problems. Instructors wishing to acquire CITL assistance can contact [email protected].


Center for Innovation in Teaching & Learning

249 Armory Building 505 East Armory Avenue Champaign, IL 61820

217 333-1462

Email: [email protected]


Assessment: Test construction basics


The following information provides some general guidelines to assist with test development and is meant to be applicable across disciplines.

Make sure you are familiar with Bloom’s Taxonomy, as it is referenced frequently.

General Tips

Start with your learning outcomes. Choose objective and subjective assessments that match your learning outcomes and the level of complexity of the learning outcome.

Use a test blueprint. A test blueprint is a rubric, document, or table that lists the learning outcomes to be tested, the level of complexity, and the weight for each learning outcome (see sample). A blueprint will make writing the test easier and contribute immensely to test validity. Bloom’s taxonomy can be very useful for this activity. Share the blueprint with your students to help them prepare for the test.
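As a rough illustration of how blueprint weights turn into question counts, the sketch below allocates a fixed number of items across outcomes in proportion to their weights. The outcomes, Bloom levels, and percentages are hypothetical, and the largest-remainder rounding rule is an assumption chosen so that the counts always add up to the intended test length; rounding by hand works just as well for a single test.

```python
from dataclasses import dataclass


@dataclass
class BlueprintRow:
    outcome: str      # learning outcome to be tested
    bloom_level: str  # level of complexity (Bloom's taxonomy)
    weight: int       # share of the test, in percentage points


def items_per_outcome(rows, total_items):
    """Split total_items across outcomes in proportion to their weights.

    Largest-remainder rounding: take the floor of each share, then give any
    leftover items to the rows with the largest fractional parts.
    """
    total_weight = sum(r.weight for r in rows)
    raw = [r.weight * total_items / total_weight for r in rows]
    counts = [int(x) for x in raw]  # floor, since every share is non-negative
    leftover = total_items - sum(counts)
    by_remainder = sorted(range(len(rows)), key=lambda i: raw[i] - counts[i], reverse=True)
    for i in by_remainder[:leftover]:
        counts[i] += 1
    return {r.outcome: c for r, c in zip(rows, counts)}


# Hypothetical blueprint for one unit.
blueprint = [
    BlueprintRow("Define key terms", "Remember", 20),
    BlueprintRow("Explain core processes", "Understand", 30),
    BlueprintRow("Apply procedures to new cases", "Apply", 30),
    BlueprintRow("Critique a proposed solution", "Evaluate", 20),
]

print(items_per_outcome(blueprint, 40))
# {'Define key terms': 8, 'Explain core processes': 12, 'Apply procedures to new cases': 12, 'Critique a proposed solution': 8}
```

With a 37-item test the shares no longer divide evenly, and the leftover item goes to the outcome with the largest fractional share.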

Let your students know what to expect on the test. Be explicit; otherwise students may make incorrect assumptions about the test.

Word questions clearly and simply. Avoid complex questions, double negatives, and idiomatic language that may be difficult for students, especially multilingual students, to understand.

Have a colleague or instructional assistant read through (or even take) your exam. This will help ensure your questions and exam are clear and unambiguous. This also contributes to the reliability and validity of the test.

Assess the length of the exam. Unless your goal is to assess students’ ability to work within time constraints, design your exam so that students can comfortably complete it in the allocated time. A good guideline is to take the exam yourself and time it, then triple the amount of time it took you to complete the exam, or adjust accordingly.
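The timing guideline is simple arithmetic, but a small helper makes the "adjust accordingly" step explicit. In this sketch the multiplier of three comes from the guideline above; rounding up to the nearest five minutes and checking against a class period are assumptions added for illustration.

```python
import math


def recommended_minutes(instructor_minutes, multiplier=3, round_to=5):
    """Apply the 'time yourself, then triple it' guideline, rounded up to round_to minutes."""
    raw = instructor_minutes * multiplier
    return math.ceil(raw / round_to) * round_to


def fits_in_period(instructor_minutes, period_minutes, multiplier=3):
    """True if the recommended time fits inside the scheduled class period."""
    return recommended_minutes(instructor_minutes, multiplier) <= period_minutes


print(recommended_minutes(17))   # 17 * 3 = 51, rounded up to 55
print(fits_in_period(17, 50))    # False: shorten the exam or lengthen the slot
print(fits_in_period(15, 50))    # True: 45 minutes fits
```

Lowering `multiplier` (for example, for a short recall quiz) is one way to "adjust accordingly."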

Write your exam key prior to students taking the exam. The point value you assign to each question should align with the level of difficulty and the importance of the skill being assessed. Writing the exam key enables you to see how the questions align with instructional activities. You should be able to easily answer all the questions. Decide if you will give partial credit to multi-step questions and determine the number of steps that will be assigned credit. Doing this in advance helps ensure the test is reliable and valid.

Design your exam so that students in your class have an equal opportunity to fully demonstrate their learning. Use different types of questions, reduce or eliminate time pressure, allow memory aids when appropriate, and make your questions fair. An exam that is too easy or too demanding will not accurately measure your students’ understanding of the material.

Characteristics of test questions, and how to choose which to use

Including a variety of question types in an exam enables the test designer to better leverage the strengths and overcome the weaknesses of any individual question type. Multiple choice questions are popular for their versatility and efficiency, but many other question types can add value to a test. Some points to consider when deciding which, when, and how often to use a particular question type include:

- Workload: Some questions require more front-end workload (i.e., time-consuming to write), while others require more back-end workload (i.e., time-consuming to mark).

- Depth of knowledge: Some question types are better at tapping higher-order thinking skills, such as analyzing or synthesizing, while others are better for surface level recall.

- Processing speed: Some question types are more easily processed and can be more quickly answered. This can impact the timing of the test and the distribution of students’ effort across different knowledge domains.

All test items should:

- Assess achievement of learning outcomes for the unit and/or course

- Measure important concepts and their relationship to that unit and/or course

- Align with your teaching and learning activities and the emphasis placed on concepts and tasks

- Measure the appropriate level of knowledge

- Vary in levels of difficulty (some factual recall and demonstration of knowledge, some application and analysis, and some evaluation and creation)

Two important characteristics of tests are:

- Reliability – to be reliable, the test needs to be consistent and free from errors.

- Validity – to be valid, the test needs to measure what it is supposed to measure.

There are two general categories for test items:

1. Objective items – students select the correct response from several alternatives or supply a word or short phrase answer. These items are easier to write for lower-order Bloom’s levels (recall and comprehension), although they can also be designed to test higher-order thinking (apply and analyze).

Objective test items include:

- Multiple choice

- Completion/Fill-in-the-blank

Objective test items are best used when:

- The group tested is large; objective tests are fast and easy to score.

- The test will be reused (must be stored securely).

- Highly reliable scores on a broad range of learning goals must be obtained as efficiently as possible.

- Fairness and freedom from possible test scoring influences are essential.

2. Subjective or essay items – students present an original answer. These items are easier to use for higher-order Bloom’s levels (apply, analyze, synthesize, create, evaluate).

Subjective test items include:

- Short answer essay

- Extended response essay

- Problem solving

- Performance test items (these can be graded as complete/incomplete, performed/not performed)

Subjective test items are best used when:

- The group to be tested is small or there is a method in place to minimize marking load.

- The test is not going to be reused (but could be built upon).

- The development of students’ writing skills is a learning outcome for the course.

- Student attitudes, critical thinking, and perceptions are as, or more, important than measuring achievement.

Objective and subjective test items are both suitable for measuring most learning outcomes and are often used in combination. Both types can be used to test comprehension, application of concepts, problem solving, and ability to think critically. However, certain types of test items are better suited than others to measure learning outcomes. For example, learning outcomes that require a student to ‘demonstrate’ may be better measured by a performance test item, whereas an outcome requiring the student to ‘evaluate’ may be better measured by an essay or short answer test item.

Bloom's Taxonomy

Bloom’s Taxonomy by Vanderbilt University Center for Teaching, licensed under CC-BY 2.0.

Common question types

- Multiple choice

- True/false

- Completion/fill-in-the-blank

- Short answer

Tips to reduce cheating

- Use randomized questions

- Use question pools

- Use calculated formula questions

- Use a range of different types of questions

- Avoid publisher test banks

- Do not re-use old tests

- Minimize use of multiple choice questions

- Have students “show their work” (for online courses they can scan/upload their work)

- Remind students of academic integrity guidelines, policies and consequences

- Have students sign an academic honesty form at the beginning of the assessment
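Randomized questions and question pools can be combined: draw a few interchangeable questions from each pool, then shuffle the order. If your quiz tool offers question pools, it does this for you; the sketch below only shows the underlying idea. The pool names, question IDs, and the choice to seed the generator with the exam and student IDs (so each student's form can be reproduced for regrading) are all hypothetical.

```python
import random


def build_form(pools, picks, student_id, exam_id="unit-3-quiz"):
    """Draw a per-student form: picks[pool] questions from each pool, then shuffle.

    Seeding the generator with the exam and student IDs makes each student's
    form reproducible while (usually) differing between students.
    """
    rng = random.Random(f"{exam_id}:{student_id}")
    form = []
    for pool_name, questions in pools.items():
        form.extend(rng.sample(questions, picks[pool_name]))
    rng.shuffle(form)
    return form


# Hypothetical pools of interchangeable questions for each learning outcome.
pools = {
    "terms": ["T1", "T2", "T3", "T4", "T5"],
    "processes": ["P1", "P2", "P3", "P4"],
    "application": ["A1", "A2", "A3", "A4", "A5", "A6"],
}
picks = {"terms": 2, "processes": 1, "application": 2}

print(build_form(pools, picks, student_id="s001"))
```

Every student still gets the same blueprint (two term questions, one process question, two application questions), which keeps forms comparable while making answer sharing less useful.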

Additional considerations for constructing effective tests

Prepare new or revised tests each time you teach a course. A past test will not reflect the changes in how you presented the material and the topics you emphasized. Writing questions at the end of each unit is one way to make sure your test reflects the learning outcomes and teaching activities for the unit.

Be cautious about using item banks from textbook publishers. The items may be poorly written, may focus on trivial topics, and may not reflect the learning outcomes for your course.

Make your tests cumulative. Cumulative tests require students to review material they have already studied and provide additional opportunity to include higher-order thinking questions, thus improving retention and learning.


- Last Updated: Nov 10, 2023 11:13 AM

- URL: https://camosun.libguides.com/AFL


Constructing tests

Whether you use low-stakes assessments, such as practice quizzes, or high-stakes assessments, such as midterms and finals, the careful design of your tests and quizzes can provide you with better information on what and how much students have learned, as well as whether they are able to apply what they have learned.

On this page, you can explore strategies for:

- Designing your test or quiz

- Creating multiple choice questions

- Creating essay and short answer questions

- Helping students succeed on your test/quiz

- Promoting academic integrity

- Assessing your assessment

Designing your test or quiz

Tests and quizzes can help instructors work toward a number of different goals. For example, a frequent cadence of quizzes can help motivate students, give you insight into students’ progress, and identify aspects of the course you might need to adjust.

Understanding what you want to accomplish with the test or quiz will help guide your decision-making about things like length, format, level of detail expected from students, and the time frame for providing feedback to the students. Regardless of what type of test or quiz you develop, it is good to:

- Align your test/quiz with your course learning outcomes and objectives. For example, if your course goals focus primarily on building students’ synthesis skills, make sure your test or quiz asks students to demonstrate their ability to connect concepts.

- Design questions that allow students to demonstrate their level of learning. To determine which concepts you might need to reinforce, create questions that give you insight into a student’s level of competency. For example, if you want students to understand a 4-step process, develop questions that show you which of the steps they grasp and which they need more help understanding.

- Develop questions that map to what you have explored and discussed in class. If you are using publisher-provided question banks or assessment tools, be sure to review and select questions carefully to ensure alignment with what students have encountered in class or in your assignments.

- Incorporate Universal Design for Learning (UDL) principles into your test or quiz design. UDL embraces a commitment to offering students multiple means of demonstrating their understanding. Consider offering practice tests/quizzes and creating tests/quizzes with different types of questions (e.g., multiple choice, written, diagram-based). Also, offer options in the test/quiz itself. For example, give students a choice of questions to answer or let them choose between writing or speaking their essay response. Valuing different communication modes helps create an inclusive environment that supports all students.

Creating multiple choice questions

While it is not advisable to rely solely on multiple choice questions to gauge student learning, they are often necessary in large-enrollment courses. And multiple choice questions can add value to any course by providing instructors with quick insight into whether students have a basic understanding of key information. They are also a great way to incorporate more formative assessment into your teaching.

Creating effective multiple choice questions can be difficult, especially if you want students to go beyond simply recalling information. But it is possible to develop multiple choice questions that require higher-order thinking. Here are some strategies for writing effective multiple choice questions:

- Design questions that ask students to evaluate information (e.g., use an example, case study, or real-world dataset).

- Make sure the answer options are consistent in length and detail. If the correct answer is noticeably different, students may end up choosing the right answer for the wrong reasons.

- Design questions focused on your learning goals. Avoid writing “gotcha” questions – the goal is not to trip students up, but to assess their learning and progress toward your learning outcomes.

- Test drive your questions with a colleague or teaching assistant to determine if your intention is clear.

Creating essay and short answer questions

Essay and short answer questions that require students to compose written responses of several sentences or paragraphs offer instructors insights into students’ ability to reason, synthesize, and evaluate information. Here are some strategies for writing effective essay questions:

- Signal the type of thinking you expect students to demonstrate. Use specific words and phrases (e.g., identify, compare, critique) that tell students how to go about responding to the question.

- Write questions that can be reasonably answered in the time allotted. Consider offering students some guideposts on how much effort they should give to a question (e.g., “Write no more than 2 short paragraphs” or “Each short answer question is worth 20 points/10% of the test”).

- Share your grading criteria before the test or quiz. Rubrics are a great way to help students prepare for an essay- or short answer-based test.

Strategies for grading essay and short answer questions

Although essay and short paragraph questions are more labor-intensive to grade than multiple-choice questions, the pay-off is often greater – they provide more insight into students’ critical thinking skills. Here are some strategies to help streamline the essay/short answer grading process:

- Develop a rubric to keep you focused on your core criteria for success. Identify the point value/percentage associated with each criterion to streamline scoring.

- Grade all student responses to the same question before moving to the next question. For example, if your test has two essay questions, grade all essay #1 responses first. Then grade all essay #2 responses. This promotes grading equity and may provide a more holistic view of how the class as a whole answered each question.

- Focus on assessing students’ ideas and arguments. Few people write beautifully in a timed test. Unless it is key to your learning outcomes, don’t grade on grammar or polish.
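The first two strategies above can be sketched together: a rubric with a point value per criterion, and a grading order that works through every response to one question before moving to the next. The criteria, point values, performance levels, and student names below are hypothetical.

```python
# Hypothetical rubric: criterion -> maximum points.
RUBRIC = {"thesis": 4, "evidence": 8, "analysis": 6, "organization": 2}

# Hypothetical performance levels, as a fraction of each criterion's points.
LEVELS = {"exemplary": 1.0, "proficient": 0.75, "developing": 0.5, "missing": 0.0}


def score_response(ratings):
    """Total points for one response, given a level for every rubric criterion."""
    unrated = set(RUBRIC) - set(ratings)
    if unrated:
        raise ValueError(f"unrated criteria: {sorted(unrated)}")
    return sum(RUBRIC[c] * LEVELS[ratings[c]] for c in RUBRIC)


def grading_order(student_ids, question_ids):
    """(question, student) pairs: all responses to one question before the next question."""
    return [(q, s) for q in question_ids for s in student_ids]


print(score_response({"thesis": "exemplary", "evidence": "proficient",
                      "analysis": "developing", "organization": "exemplary"}))
# 15.0 (out of 20)
print(grading_order(["ana", "ben"], ["essay1", "essay2"]))
# [('essay1', 'ana'), ('essay1', 'ben'), ('essay2', 'ana'), ('essay2', 'ben')]
```

Raising an error for an unrated criterion is a design choice that keeps a grader from silently skipping part of the rubric.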

Helping students succeed

While important in university settings, tests aren’t commonly found outside classroom settings. Think about your own work – how often are you expected to sit down, turn over a sheet of paper, and demonstrate the isolated skill or understanding listed on the paper in less than an hour? Sound stressful? It is! And sometimes that stress can be compounded by students’ lives beyond the classroom.

“Giving a traditional test feels fair from the vantage point of an instructor….The students take it at the same time and work in the same room. But their lives outside the test spill into it. Some students might have to find child care to take an evening exam. Others…have ADHD and are under additional stress in a traditional testing environment. So test conditions can be inequitable. They are also artificial: People are rarely required to solve problems under similar pressure, without outside resources, after they graduate. They rarely have to wait for a professor to grade them to understand their own performance.” (“What fixing a snowblower taught one professor about teaching,” Chronicle of Higher Education)

Many students understandably experience stress, anxiety, and apprehension about taking tests and that can affect their performance. Here are some strategies for reducing stress in testing environments:

- Set expectations. In your syllabus and on the first day of class, share your course learning outcomes and clearly define what constitutes cheating. As the quarter progresses, talk with students about the focus, time, location, and format of each test or quiz.

- Provide study questions or low-/no-stakes practice tests that scaffold to the actual test. Practice questions should ask students to engage in the same kind of thinking you will be assessing on the actual test or quiz.

- Co-create questions with your students. Ask students (individually or in small groups) to develop and answer potential test questions. Collect these and use them to create the questions for the actual test.

- Develop relevant tests/quiz questions that assess skills and knowledge you explored during lecture or discussion.

- Share examples of successful answers and provide explanations of why those answers were successful.

Promoting academic integrity

The primary goal of a test or quiz is to provide insight into a student’s understanding or ability. If a student cheats, the instructor has no opportunity to assess learning and help the student grow. There are a variety of strategies instructors can employ to create a culture of academic integrity. Here are a few specifically related to developing tests and quizzes:

- Avoid high-stakes tests. High-stakes tests (e.g., a test worth more than 25% of the course grade) make it hard for a student to rebound from a mistake. If students worry that a disastrous exam will make it impossible to pass the course, they are more likely to cheat. Opt for assessments that provide students opportunities to grow and rebound from missteps (e.g., more low- or no-stakes assignments).

- Require students to reference specific materials or assignments in their answers. Asking students to draw on specific materials in their answers makes it harder for students to copy/paste answers from other sources.

- Require students to explain or justify their answers. If it isn’t feasible to do this during the test, consider developing a post-test assignment that asks learners to justify their test answers. Instructors need not spend a lot of time grading these; just spot check them to get a sense of whether learners are thinking for themselves.

- Prompt students to apply their learning through questions built around discrete scenarios, real-world problems, or unique datasets.

- Use question banks and randomize questions when building your quiz or test.

- Include a certification question. Ask students to acknowledge their academic integrity responsibilities at the beginning of a quiz/exam with a question like this one: “The work I submit is my own work. I will not consult with, discuss the contents of this quiz/test with, or show the quiz/test to anyone else, including other students. I understand that doing so is a violation of UW’s academic integrity policy and may subject me to disciplinary action, including suspension and dismissal.”

- Allow learners to work together. Ask learners to collaborate to answer quiz/exam questions. By helping each other, learners engage with the course’s content and develop valuable collaboration skills.

Assessing your assessment

Observation and iteration are key parts of a reflective teaching practice. Take time after you’ve graded a test or quiz to examine its effectiveness and identify ways to improve it. Start by asking yourself some basic questions:

- Did the test/quiz assess what I wanted it to assess? Based on students’ performance on your questions, are you confident that students grasp the concepts and skills you designed the test/quiz to measure?

- Did the test align with my course goals and learning objectives? Does student performance indicate that they have made progress toward your learning goals? If not, you may need to revise your questions.

- Were there particular questions that many students missed? If so, reconsider how you’ve worded the question or examine whether the question asked students about something you didn’t discuss (or didn’t discuss enough) in class.

- Were students able to finish the test or quiz in the time allotted? If not, reconsider the number and difficulty of your questions.

CAVEON SECURITY INSIGHTS BLOG

The World's Only Test Security Blog

Pull up a chair among Caveon's experts in psychometrics, psychology, data science, test security, law, education, and oh-so-many other fields and join in the conversation about all things test security.

Constructing Test Items (Guidelines & 7 Common Item Types)

Posted by Erika Johnson

December 7, 2023 at 4:16 PM


Introduction

Let's say you have been given the task of building an examination for your organization.

After spending two weeks panicking about how you would do this (and definitely not procrastinating on the work that must be done), you are finally ready to begin the test development process.

But you can't help but ask yourself:

- Where in the world do you begin?

- Why do you need to create this exam?

- And while you know you need to construct test items, which item types are the best fit for your exam?

- Who is your audience?

- How do you determine all that?

Luckily for you, Caveon has an amazing team of experts on hand to help you every step of the way: Caveon Secure Exam Development (C-SEDs). Whether you work with our team or try your hand at test development yourself, here's some information on item best practices to help guide you on your way.

Table of Contents

- The Benefits of Identifying Your Exam’s Purpose

- What Is a Minimally Qualified Candidate (MQC)?

- Common Exam Types

- Common Item Types

- General Guidelines for Constructing Test Items

- Conclusion & More Resources

Determine Your Purpose for Testing: Why and Who

First things first.

Before creating your test, you need to determine your purpose:

- Why you are testing your candidates, and

- Who exactly will be taking your exam

Assessing your testing program's purpose (the "why" and "who" of your exam) is the first vital step of the development process. You do not want to test just to test; you want to scope out the reason for your exam. Ask yourself:

- Why is this exam important to your organization?

- What are you trying to achieve with having your test takers sit for it?

Consider the following:

- Is your organization interested in testing to see what was learned at the end of a course presented to students?

- Are you looking to assess if an applicant for a job has the necessary knowledge to perform the role?

- Are candidates trying to obtain certification within a certain field?

The Benefits of Identifying Your Exam's Purpose

Learning the purpose of your exam will help you come up with a plan on how best to set up your exam—which exam type to use, which type of exam items will best measure the skills of your candidates (we will discuss this in a minute), etc.

Determining your test's purpose will also help you identify your testing audience, which will ensure your exam is testing your examinees at the right level.

Whether they are students still in school, individuals looking to qualify for a position, or experts looking to get certification in a certain product or field, it’s important to make sure your exam is actually testing at the appropriate level.

For example, your test scores will not be valid if your items are too easy or too hard, so keeping the minimally qualified candidate (MQC) in mind during all of the steps of the exam development process will ensure you are capturing valid test results overall.

What Is the MQC?

MQC is the acronym for “minimally qualified candidate.”

The MQC is a conceptualization of the assessment candidate who possesses the minimum knowledge, skills, experience, and competence to just meet the expectations of a credentialed individual.

If the credential is entry level, the expectations of the MQC will be less than if the credential is designated at an intermediate or expert level.

Think of an ability continuum that goes from low ability to high ability. Somewhere along that ability continuum, a cut point will be set. Those candidates who score below that cut point are not qualified and will fail the test. Those candidates who score above that cut point are qualified and will pass.

The minimally qualified candidate, though, should just barely make the cut. It’s important to focus on the word “qualified,” because even though this candidate will likely gain more expertise over time, they are still deemed to have the requisite knowledge and abilities to perform the job or understand the subject.

Factors to Consider when Constructing Your Test

Now that you’ve determined the purpose of your exam and identified the audience, it’s time to decide on the exam type and which item types to use that will be most appropriate to measure the skills of your test takers.

First up, your exam type.

The type of exam you choose depends on what you are trying to test and the kind of tool you are using to deliver your exam.

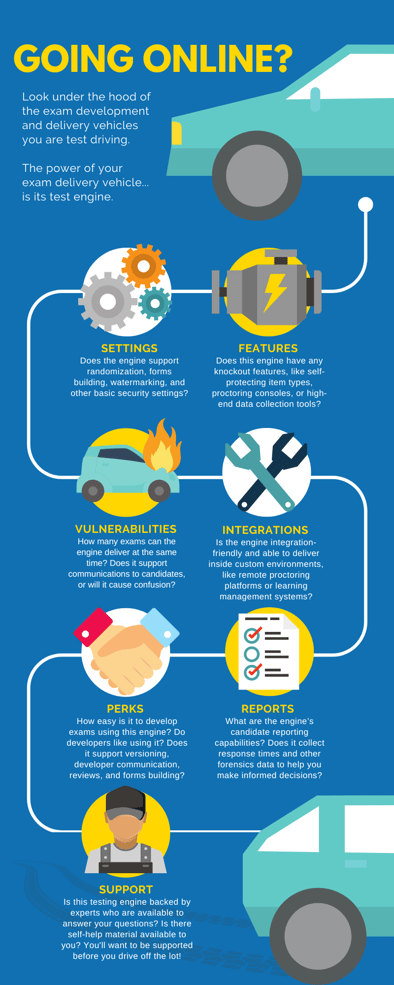

You should always make sure the software you use to develop and deliver your exam is thoroughly vetted—here's an outline of some of the most important things to look for in your testing engine:

Next up, the type of exam and items you choose.

The type of exam and type(s) of items you choose depend on your measurement goals and what you are trying to assess. It is essential to take all of this into consideration before moving forward with development.

Here are some common exam types to consider:

Fixed-Form Exam

Fixed-form delivery is a method of testing where every test taker receives the same items. An organization can have more than one fixed-item form in rotation, using the same items that are randomized on each live form. Additionally, forms can be made using a larger item bank and published with a fixed set of items equated to a comparable difficulty and content area match.
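A minimal sketch of the second arrangement described above: several fixed forms that contain the same items in different orders, rotated across candidates. The item names, the number of forms, and the round-robin assignment rule are assumptions for illustration; equating forms built from a larger bank (the third arrangement) is not shown.

```python
import random


def make_fixed_forms(items, n_forms, seed=2024):
    """Build n_forms fixed forms that share the same items in different orders.

    Every candidate assigned to a given form sees exactly the same sequence.
    """
    rng = random.Random(seed)
    forms = {}
    for k in range(n_forms):
        order = list(items)
        rng.shuffle(order)
        forms[f"Form {chr(ord('A') + k)}"] = order
    return forms


def assign_form(forms, candidate_number):
    """Rotate forms across candidates (A, B, C, A, ...)."""
    names = sorted(forms)
    return names[candidate_number % len(names)]


items = [f"Q{i}" for i in range(1, 11)]
forms = make_fixed_forms(items, n_forms=3)
print(assign_form(forms, 0), assign_form(forms, 4))  # Form A Form B
```

Because the seed is fixed, the forms are generated once and stay the same for every administration, which is what distinguishes this from per-candidate randomization.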

Computer Adaptive Testing (CAT)

A CAT exam is a test that adapts to the candidate's ability in real time by selecting different questions from the bank in order to provide a more accurate measurement of their ability level on a common scale. Every time a test taker answers an item, the computer re-estimates the test taker’s ability based on all the previous answers and the difficulty of those items. The computer then selects as the next item one that the test taker should have about a 50% chance of answering correctly.
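The CAT loop described above (answer, re-estimate ability, pick the item closest to a 50% chance) can be sketched in a few dozen lines. The description does not name a measurement model, so this sketch assumes the Rasch (one-parameter logistic) model, under which a 50% chance means the item's difficulty equals the current ability estimate, and it assumes an expected-a-posteriori (EAP) estimate with a standard-normal prior for the re-estimation step. The item bank and simulated test taker are hypothetical; operational CATs typically add stopping rules, content balancing, and item-exposure control.

```python
import math


def p_correct(theta, b):
    """Rasch (one-parameter logistic) probability of a correct answer."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def estimate_ability(responses, lo=-4.0, hi=4.0, steps=161):
    """Expected-a-posteriori (EAP) ability estimate on a grid.

    responses: list of (difficulty, answered_correctly) pairs so far.
    The standard-normal prior keeps the estimate finite even when every
    answer so far is right (or every answer is wrong).
    """
    grid = [lo + (hi - lo) * i / (steps - 1) for i in range(steps)]
    weights = []
    for t in grid:
        w = math.exp(-t * t / 2)  # unnormalized N(0, 1) prior
        for b, correct in responses:
            p = p_correct(t, b)
            w *= p if correct else 1.0 - p
        weights.append(w)
    return sum(t * w for t, w in zip(grid, weights)) / sum(weights)


def next_item(theta, bank, used):
    """Unused item whose chance of a correct answer is closest to 50%."""
    candidates = [item for item in bank if item not in used]
    return min(candidates, key=lambda item: abs(p_correct(theta, bank[item]) - 0.5))


def run_cat(bank, answer, n_items):
    """Administer n_items adaptively; answer(item) returns True if correct."""
    theta, used, responses = 0.0, [], []
    for _ in range(n_items):
        item = next_item(theta, bank, used)
        used.append(item)
        responses.append((bank[item], answer(item)))
        theta = estimate_ability(responses)  # re-estimate after every answer
    return theta, used


# Hypothetical item bank: item id -> difficulty on the ability scale.
bank = {"i1": -2.0, "i2": -1.0, "i3": -0.5, "i4": 0.0, "i5": 0.5, "i6": 1.0, "i7": 2.0}

# Simulated test taker who answers every item of difficulty 0.5 or below correctly.
theta, used = run_cat(bank, answer=lambda item: bank[item] <= 0.5, n_items=4)
print(round(theta, 2), used)
```

Starting from an estimate of 0, the first item chosen is the one with difficulty 0; a correct answer pushes the estimate up, so the next item is harder, and a wrong answer pulls it back down.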

Linear on the Fly Testing (LOFT)

A LOFT exam is a test where the items are drawn from an item bank pool and presented on the exam in a way that each person sees a different set of items. The difficulty of the overall test is controlled to be equal for all examinees. LOFT exams utilize automated item generation (AIG) to create large item banks.
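One simple way to picture "a different set of items, with difficulty controlled" is rejection sampling: keep drawing random item sets until one has a mean difficulty close to a target. This is an assumption for illustration only; real LOFT assembly usually uses more sophisticated methods and also balances content areas. The bank, target, tolerance, and examinee IDs below are hypothetical.

```python
import random
from statistics import mean


def loft_form(bank, n_items, target, tolerance, examinee_id, max_tries=10_000):
    """Draw a different item set per examinee whose mean difficulty is near target.

    Rejection sampling: keep drawing random sets until one falls within the
    tolerance. Seeding with the examinee ID makes each form reproducible.
    """
    rng = random.Random(f"loft:{examinee_id}")
    item_ids = list(bank)
    for _ in range(max_tries):
        form = rng.sample(item_ids, n_items)
        if abs(mean(bank[i] for i in form) - target) <= tolerance:
            return form
    raise RuntimeError("No form within tolerance; widen it or enlarge the bank.")


# Hypothetical 40-item bank with difficulties between -2.0 and 2.0.
bank = {f"item{i:02d}": (i % 9 - 4) * 0.5 for i in range(40)}

form_a = loft_form(bank, n_items=10, target=0.0, tolerance=0.1, examinee_id="A17")
form_b = loft_form(bank, n_items=10, target=0.0, tolerance=0.1, examinee_id="B42")
print(sorted(form_a))
print(sorted(form_b))
```

The two examinees will usually see different items, yet both forms have nearly the same average difficulty, which is the property the paragraph above describes.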

The above three exam types can be used with any standard item type.

Before moving on, however, there is another more innovative exam type to consider if your delivery method allows for it:

Performance-Based Testing

A performance-based assessment measures the test taker's ability to apply the skills and knowledge learned beyond typical methods of study and/or learned through research and experience. For example, a test taker in a medical field may be asked to draw blood from a patient to show they can competently perform the task. Or a test taker wanting to become a chef may be asked to prepare a specific dish to ensure they can execute it properly.

Once you've decided on the type of exam you'll use, it's time to choose your item types.

There are many different item types to choose from (you can check out a few of our favorites in this article.)

While utilizing more item types on your exam won’t ensure you have more valid test results, it’s important to know what’s available in order to decide on the best item format for your program.

Here are a few of the most common items to consider when constructing your test:

Multiple-Choice

A multiple-choice item is a question where a candidate is asked to select the correct response from a choice of four (or more) options.

Multiple Response

A multiple response item is an item where a candidate is asked to select more than one response from a select pool of options (e.g., “choose two,” “choose three”).

Short Answer

Short answer items ask a test taker to synthesize, analyze, and evaluate information, and then to present it coherently in written form.

Matching

A matching item requires test takers to connect a definition/description/scenario to its associated correct keyword or response.

Build List

A build list item challenges a candidate’s ability to identify and order the steps/tasks needed to perform a process or procedure.

Discrete Option Multiple Choice™ (DOMC)

DOMC™ is known as the “multiple-choice item makeover.” Instead of showing all the answer options, DOMC options are randomly presented one at a time. For each option, the test taker chooses “yes” or “no.” When the question is answered correctly or incorrectly, the next question is presented. DOMC has been used by award-winning testing programs to prevent cheating and test theft. You can learn more about the DOMC item type in this white paper.
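A minimal sketch of how a single DOMC item could play out, based only on the description above. The scoring rules are a simplified assumption, not Caveon's implementation: with one correct option, "yes" to the key ends the item as correct, "no" to the key or "yes" to a distractor ends it as incorrect, and "no" to a distractor moves on to the next option. The question and options are hypothetical.

```python
import random


def administer_domc(options, key, says_yes, rng):
    """Simplified DOMC item with a single correct option.

    Options appear one at a time in random order and the test taker answers
    yes or no to each. Returns (answered_correctly, options_shown).
    """
    order = list(options)
    rng.shuffle(order)
    shown = []
    for option in order:
        shown.append(option)
        endorsed = says_yes(option)
        if option == key:
            return endorsed, shown  # "yes" to the key is correct; "no" is incorrect
        if endorsed:
            return False, shown     # "yes" to a distractor is incorrect
        # "no" to a distractor: present the next option
    raise ValueError("key must be one of the options")


# Hypothetical item: "What is the capital of France?"
options = ["Lyon", "Paris", "Marseille", "Nice"]
rng = random.Random(7)
print(administer_domc(options, "Paris", lambda o: o == "Paris", rng))
```

Because the item can end before every option is shown, a test taker often sees only part of the option set, which is one reason the format is described as resistant to item theft.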

SmartItem™

A self-protecting item, otherwise known as a SmartItem, employs a proprietary technology resistant to cheating and theft. A SmartItem contains multiple variations, all of which work together to cover an entire learning objective. Each time the item is administered, the computer generates a random variation. SmartItem technology has numerous benefits, including curbing item development costs and mitigating the effects of testwiseness. You can learn more about the SmartItem in this infographic and this white paper.

What Are the General Guidelines for Constructing Test Items?

Regardless of the exam type and item types you choose, focusing on some best practice guidelines can set up your exam for success in the long run.

There are many guidelines for creating tests (see this handy guide, for example), but this list sticks to the most important points. Little things can really make a difference when developing a valid and reliable exam!

Institute Fairness

Although you want to ensure that your items are difficult enough that not everyone gets them correct, you never want to trick your test takers! Keeping your wording clear and making sure your questions are direct and not ambiguous is very important. For example, asking a question such as “What is the most important ingredient to include when baking chocolate chip cookies?” does not set your test taker up for success. One person may argue that sugar is the most important, while another test taker may say that the chocolate chips are the most necessary ingredient. A better way to ask this question would be “What is an ingredient found in chocolate chip cookies?” or “Place the following steps in the proper order when baking chocolate chip cookies.”

Stick to the Topic at Hand

When creating your items, ensuring that each item aligns with the objective being tested is very important. If the objective asks the test taker to identify genres of music from the 1990s, and your item is asking the test taker to identify different wind instruments, your item is not aligning with the objective.

Ensure Item Relevancy

Your items should be relevant to the task that you are trying to test. Coming up with ideas to write about can be difficult, but avoid asking your test takers to identify trivial facts about your objective just to find something to write about. If your objective asks the test taker to know the main female characters in the popular TV show Friends, asking the test taker what color Rachel's skirt was in episode 3 is not an essential fact that anyone would need to recall to fully understand the objective.

Gauge Item Difficulty

As discussed above, remembering your audience when writing your test items can make or break your exam. To put it into perspective, if you are writing a math exam for a fourth-grade class, but you write all of your items on advanced trigonometry, you have clearly not met the difficulty level for the test taker.

Inspect Your Options

When writing your options, keep these points in mind:

- Always make sure your correct option is 100% correct, and your incorrect options are 100% incorrect. By using partially correct or partially incorrect options, you will confuse your candidate. Doing this could keep a truly qualified candidate from answering the item correctly.

- Make sure your distractors are plausible. If your correct response logically answers the question being asked, but your distractors are made up or even silly, it will be very easy for any test taker to figure out which option is correct. Thus, your exam will not properly discriminate between qualified and unqualified candidates.

- Try to make your options parallel to one another. Wording your options similarly and keeping them approximately the same length prevents any one option from standing out, which helps reduce the effect of testwiseness.

Constructing test items and creating entire examinations is no easy undertaking.

This article will hopefully help you identify your specific purpose for testing and determine the exam and item types you can use to best measure the skills of your test takers.

We’ve also gone over general best practices to consider when constructing items, and we’ve sprinkled helpful resources throughout to help you on your exam development journey.

(Note: This article helps you tackle the first step of the 8-step assessment process: Planning & Developing Test Specifications.)

To learn more about creating your exam, including how to increase its usable lifespan, review our ultimate guide on secure exam creation and our workbook on evaluating your testing engine, leveraging secure item types, and increasing the number of items on your tests.

And if you need help constructing your exam and/or items, our award-winning exam development team is here to help!

Erika Johnson

Erika is an Exam Development Manager in Caveon’s C-SEDs group. With almost 20 years in the testing industry, nine of which have been with Caveon, Erika is a veteran of both exam development and test security. Erika has extensive experience working with new, innovative test designs, and she knows how to best keep an exam secure and valid.

About Caveon

For more than 18 years, Caveon Test Security has driven the discussion and practice of exam security in the testing industry. Today, as the recognized leader in the field, we have expanded our offerings to encompass innovative solutions and technologies that provide comprehensive protection: Solutions designed to detect, deter, and even prevent test fraud.


Best Practices for Designing and Grading Exams

Adapted from CRLT Occasional Paper #24: M.E. Piontek (2008), Center for Research on Learning and Teaching.

The most obvious function of assessment methods (such as exams, quizzes, papers, and presentations) is to enable instructors to make judgments about the quality of student learning (i.e., assign grades). However, the method of assessment also can have a direct impact on the quality of student learning. Students assume that the focus of exams and assignments reflects the educational goals most valued by an instructor, and they direct their learning and studying accordingly (McKeachie & Svinicki, 2006). General grading systems can have an impact as well. For example, a strict bell curve (i.e., norm-referenced grading) has the potential to dampen motivation and cooperation in a classroom, while a system that strictly rewards proficiency (i.e., criterion-referenced grading) could be perceived as contributing to grade inflation. Given the importance of assessment in how faculty and students interact around learning, how can instructors develop exams that provide useful and relevant data about their students' learning and also direct students to spend their time on the important aspects of a course or course unit? How do grading practices further influence this process?
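To make the contrast between the two grading systems concrete, here is a minimal Python sketch. The grade cutoffs, the curve proportions, and the function names are all hypothetical, chosen only for illustration; they are not recommendations from the source.

```python
from typing import List, Sequence

# HYPOTHETICAL criterion-referenced cutoffs: minimum percentage for each grade.
CRITERION_CUTOFFS = [(90, "A"), (80, "B"), (70, "C"), (60, "D")]

# HYPOTHETICAL curve: cumulative share of the class, counted from the top,
# that receives each grade (top 10% A, next 20% B, next 40% C,
# next 20% D, bottom 10% F).
NORM_SHARES = [(0.10, "A"), (0.30, "B"), (0.70, "C"), (0.90, "D")]


def criterion_grade(score: float) -> str:
    """Criterion-referenced: the grade depends only on the student's own score."""
    for cutoff, grade in CRITERION_CUTOFFS:
        if score >= cutoff:
            return grade
    return "F"


def norm_grades(scores: Sequence[float]) -> List[str]:
    """Norm-referenced: the grade depends only on the student's rank in the class."""
    n = len(scores)
    # Rank students from highest to lowest score. sorted() is stable, so
    # tied scores keep their input order (a simplification for this sketch).
    ranked = sorted(range(n), key=lambda i: scores[i], reverse=True)
    grades = ["F"] * n
    for position, student in enumerate(ranked, start=1):
        fraction_from_top = position / n
        for share, grade in NORM_SHARES:
            if fraction_from_top <= share:
                grades[student] = grade
                break
    return grades


if __name__ == "__main__":
    # Every student in this hypothetical class scores 90% or higher.
    scores = [95, 93, 91, 90, 92, 94, 96, 97, 98, 99]
    print([criterion_grade(s) for s in scores])  # every student receives an "A"
    print(norm_grades(scores))  # the same scores are spread from "A" to "F"
```

With fixed cutoffs, every student clears the 90% bar and receives an A, which is the pattern that can be read as grade inflation. On the curve, the same scores still produce the full range of grades, so one student can move up only if another moves down, which suggests why a strict curve may discourage cooperation.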

Guidelines for Designing Valid and Reliable Exams

Ideally, effective exams have four characteristics. They are:

- Valid (providing useful information about the concepts they were designed to test),

- Reliable (allowing consistent measurement and discriminating between different levels of performance),

- Recognizable (instruction has prepared students for the assessment), and

- Realistic (concerning time and effort required to complete the assignment) (Svinicki, 1999).

Most importantly, exams and assignments should focus on the most important content and behaviors emphasized during the course (or particular section of the course). What are the primary ideas, issues, and skills you hope students learn during a particular course/unit/module? These are the learning outcomes you wish to measure. For example, if your learning outcome involves memorization, then you should assess for memorization or classification; if you hope students will develop problem-solving capacities, your exams should focus on assessing students' application and analysis skills. As a general rule, assessments that focus too heavily on details (e.g., isolated facts, figures, etc.) "will probably lead to better student retention of the footnotes at the cost of the main points" (Halpern & Hakel, 2003, p. 40). As noted in Table 1, each type of exam item may be better suited to measuring some learning outcomes than others, and each has its advantages and disadvantages in terms of ease of design, implementation, and scoring.

Table 1: Advantages and Disadvantages of Commonly Used Types of Achievement Test Items

Adapted from Table 10.1 of Worthen, et al., 1993, p. 261.

General Guidelines for Developing Multiple-Choice and Essay Questions

The following sections highlight general guidelines for developing multiple-choice and essay questions, which are often used in college-level assessment because they readily lend themselves to measuring higher-order thinking skills (e.g., application, justification, inference, analysis, and evaluation). Yet instructors often struggle to create, implement, and score these types of questions (McMillan, 2001; Worthen, et al., 1993).

Multiple-choice questions have a number of advantages. First, they can measure various kinds of knowledge, including students' understanding of terminology, facts, principles, methods, and procedures, as well as their ability to apply, interpret, and justify. When carefully designed, multiple-choice items also can assess higher-order thinking skills.