Role assignments

In Reporting Services, role assignments determine access to stored items and to the report server itself. A role assignment has the following parts:

- A securable item for which you want to control access. Examples of securable items include folders, reports, and resources.

- A user or group account that Windows security or another authentication mechanism can authenticate.

- A role definition, which defines a set of permissible tasks.

Predefined role definitions include:

- Content Manager

- Report Builder

- System Administrator

- System User

Role assignments are inherited within the folder hierarchy and are automatically inherited by contained items such as:

- Shared data sources

You can override inherited security by defining role assignments for individual items. At least one role assignment must secure all parts of the folder hierarchy. You can't create an unsecured item or manipulate settings in a way that produces an unsecured item.
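To make the inheritance and override rules concrete, here is a minimal Python sketch of a hypothetical in-memory model (not the Reporting Services API). Each item either carries its own role assignments or inherits them from the nearest ancestor that does; an item with no secured ancestor raises an error, mirroring the rule that no item may be left unsecured. The `Item` class and the `Domain\FinanceUsers` account are illustrative placeholders.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Item:
    """One folder, report, or resource in a hypothetical model of the folder hierarchy."""

    path: str
    parent: Item | None = None
    # Item-specific role assignments (principal -> role names).
    # None means the item inherits security from its parent.
    assignments: dict[str, set[str]] | None = None

    def effective_assignments(self) -> dict[str, set[str]]:
        """Return the assignments of the nearest item (self included) that defines its own."""
        node = self
        while node is not None:
            if node.assignments is not None:
                return node.assignments
            node = node.parent
        # Reporting Services never lets an item end up without any role assignment.
        raise ValueError(f"{self.path} has no role assignment in its hierarchy")


home = Item("/", assignments={r"BUILTIN\Administrators": {"Content Manager"}})
sales = Item("/Sales", parent=home)  # inherits from Home
finance = Item("/Finance", parent=home, assignments={r"Domain\FinanceUsers": {"Browser"}})
budget = Item("/Finance/Budget", parent=finance)  # inherits the Finance override

print(sales.effective_assignments())
print(budget.effective_assignments())
```

Overriding security on `/Finance` changes what `/Finance/Budget` sees, while `/Sales` still resolves to the Home folder's assignments.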

The following diagram illustrates a role assignment that maps a group and a specific user to the Publisher role for Folder B.

System-level and item-level role assignments

Role-based security in Reporting Services is organized into the following levels:

Item-level role assignments control access to items in the report server folder hierarchy such as:

- report models

- shared data sources

- other resources

Item-level role assignments are defined when you create a role assignment on a specific item, or on the Home folder.

System role assignments authorize operations that are scoped to the server as a whole. For example, the ability to manage jobs is a system-level operation. A system role assignment isn't the equivalent of a system administrator. It doesn't confer advanced permissions that grant full control of a report server.

A system role assignment doesn't authorize access to items in the folder hierarchy. System and item security are mutually exclusive. Sometimes, you might need to create both a system-level and item-level role assignment to provide sufficient access for a user or group.
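The separation between the two scopes can be sketched as follows, assuming a simplified model in which each role maps to a set of task names. The task sets are illustrative subsets, not the complete predefined role definitions, and `is_authorized` is a hypothetical helper: a server-wide task is checked only against system role assignments, and every other task only against item role assignments.

```python
from __future__ import annotations

# Illustrative subsets only; the predefined roles contain more tasks than shown here.
SYSTEM_ROLE_TASKS = {
    "System Administrator": {"Manage jobs", "Manage roles"},
    "System User": {"View shared schedules"},
}
ITEM_ROLE_TASKS = {
    "Browser": {"View reports", "View folders"},
    "Content Manager": {"View reports", "View folders", "Manage folders"},
}
# Any task granted by a system role is treated as a server-wide (system-level) task.
SYSTEM_TASKS = set().union(*SYSTEM_ROLE_TASKS.values())


def is_authorized(system_roles: set[str], item_roles: set[str], task: str) -> bool:
    """Check a task against exactly one scope; the two scopes never consult each other."""
    if task in SYSTEM_TASKS:
        roles, role_tasks = system_roles, SYSTEM_ROLE_TASKS
    else:
        roles, role_tasks = item_roles, ITEM_ROLE_TASKS
    return any(task in role_tasks.get(role, set()) for role in roles)


# A system administrator with no item-level assignment can manage jobs...
print(is_authorized({"System Administrator"}, set(), "Manage jobs"))
# ...but cannot view reports until an item-level assignment is added as well.
print(is_authorized({"System Administrator"}, set(), "View reports"))
print(is_authorized({"System Administrator"}, {"Browser"}, "View reports"))
```

This is why the same user or group may need both a system-level and an item-level assignment.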

Users and groups in role assignments

The users or group accounts that you specify in role assignments are domain accounts. The report server references, but doesn't create or manage, users and groups from a Microsoft Windows domain (or another security model if you're using a custom security extension).

Of all the role assignments that apply to any given item, no two can specify the same user or group. If a user account is also a member of a group account, and you have role assignments for both, the combined set of tasks from both role assignments is available to the user.
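The combination rule can be illustrated with a small Python sketch. It assumes a hypothetical model in which an item's role assignments are a dictionary keyed by principal (which also enforces the one-assignment-per-principal rule) and group membership is supplied as a plain mapping; the role-to-task sets are illustrative subsets only.

```python
from __future__ import annotations

# Illustrative subsets of predefined role tasks.
ROLE_TASKS = {
    "Browser": {"View reports", "View folders"},
    "Publisher": {"Manage reports", "Manage folders"},
}


def effective_tasks(
    item_assignments: dict[str, set[str]],
    user: str,
    group_membership: dict[str, set[str]],
) -> set[str]:
    """Union the tasks of every role assignment that names the user or one of the user's groups.

    Keying item_assignments by principal means a principal can appear only once per item.
    """
    principals = {user} | group_membership.get(user, set())
    tasks: set[str] = set()
    for principal, roles in item_assignments.items():
        if principal in principals:
            for role in roles:
                tasks |= ROLE_TASKS.get(role, set())
    return tasks


folder = {r"Domain\Analysts": {"Browser"}, r"Domain\jsmith": {"Publisher"}}
membership = {r"Domain\jsmith": {r"Domain\Analysts"}}
print(sorted(effective_tasks(folder, r"Domain\jsmith", membership)))
```

Here `Domain\jsmith` receives the Browser tasks through the group and the Publisher tasks through the direct assignment.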

When you add a user to a group that already has a role assignment, you must reset Internet Information Services (IIS) for the new role assignment to take effect.

Predefined role assignments

By default, Reporting Services implements predefined role assignments that allow local administrators to manage the report server. You can add other role assignments to grant access to other users.

For more information about the predefined role assignments that provide default security, see Predefined roles.

Related content

- Create, delete, or modify a role (Management Studio)

- Modify or delete a role assignment (SSRS web portal)

- Set permissions for report server items on a SharePoint site (Reporting Services in SharePoint integrated mode)

- Grant permissions on a native mode report server

SQL Server Reporting Services Report Manager Site Permissions Error After Installation

By: Dallas Snider

After a successful installation of SQL Server Reporting Services 2012 on a personal computer such as a laptop, you may receive a permissions error when accessing the Report Manager site for the first time. The message typically states the following: "User DOMAIN_NAME\userID does not have required permissions. Verify that sufficient permissions have been granted and Windows User Account Control (UAC) restrictions have been addressed." This error can occur even though you, as the user, installer, and database administrator, have full administrative rights on your computer.

Depending on your situation, one of two methods will typically resolve this problem. We will start with the easier solution before moving to the more complicated one. The goal of both is to get the Site Settings link to display in the top-right corner, as shown below. Note that Site Settings is not displayed in the image above that contains the error message.

1. The first method involves starting Internet Explorer by using the Run as administrator option as shown below.

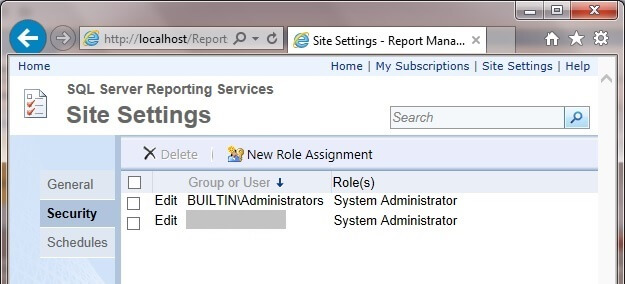

2. If Site Settings is not displayed, skip to step 8. If Site Settings is displayed in the top-right corner, click Site Settings, click the Security tab on the left, and then click New Role Assignment.

3. On the New System Role Assignment page, enter your group or user name, select the System Administrator checkbox, and then click OK.

4. After you click OK, the system role assignments are shown.

5. Next, click Home in the top-right corner, and then click Folder Settings.

6. Click New Role Assignment. Add your group or user name, choose the roles you want to assign, and then click OK.

7. If you have made it this far, the problem should be resolved. Close Internet Explorer, reopen it normally (without Run as administrator), and navigate to your local Report Manager site.

8. The second method involves temporarily changing the User Account Control (UAC) settings.

9. Change the setting from the default level, as shown above, to "Never notify", as shown below. After clicking OK and rebooting your computer, repeat steps 1 through 7 above. Once these changes succeed, set the User Account Control settings back to the default level and reboot.

- Read through our Reporting Services Tutorial.

- Read through our Report Builder Tutorial.

Managing SSRS security and using PowerShell automation scripts

So much has changed with Reporting Services 2016, but in terms of security it’s the same under the hood, and that’s not necessarily a bad thing. SSRS has long had a robust folder & item level security model with the ability to inherit permissions from parent folders, much like SharePoint and Windows in general.

Managing this security model, however, can become difficult as the use of SSRS expands over years & even versions. Five folders & 40 reports quickly become 30 folders, 200 reports and many different business units, or even clients, in the same environment. Once you introduce processes to move databases down to non-production environments, it quickly becomes difficult to maintain security, let alone implement any changes or improvements. I want to outline some tips that have helped me over the years and some PowerShell scripts that will save you hours of clicking!

Best Practices & tips

AD Groups reduce maintenance

It might be an obvious one, but it’s a basic rule in my opinion. Wherever possible, grant security in SSRS (& your database too) to AD groups and fill those groups with the relevant users. This gives you a single place to add/remove people, whether that’s a quarterly task or once every decade. Using AD groups may add an extra step when checking “who has access to what”, but it makes finding & maintaining those users significantly easier.

Keep permissions to a minimum

Reporting Services has several “out of the box” roles to choose from. If none of those fits the bill, or a user needs slightly more than Browser access (e.g. View data sources), don’t just bump them up to full Content Manager.

By connecting to your Reporting Services instance via Management Studio (SSMS), you can view the built-in security roles. From here you can add permissions to an existing role, or create a whole new role if only a subset of users needs the extra permissions.
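One way to reason about "minimum permissions" is to pick the role with the fewest tasks that still covers what the user needs. The Python sketch below assumes illustrative task sets (not the complete built-in definitions) and a hypothetical custom role, `Browser Plus`, that adds View data sources to Browser's tasks; with such a role in place, the user no longer needs Content Manager.

```python
from __future__ import annotations

# Illustrative task sets. "Browser Plus" is a hypothetical custom role created in SSMS
# by copying Browser's tasks and adding "View data sources".
ROLES = {
    "Browser": {"View reports", "View folders", "View resources"},
    "Browser Plus": {"View reports", "View folders", "View resources", "View data sources"},
    "Content Manager": {
        "View reports", "View folders", "View resources", "View data sources",
        "Manage reports", "Manage folders", "Manage data sources",
        "Set security for individual items",
    },
}


def least_privileged_role(required: set[str], roles: dict[str, set[str]]) -> str | None:
    """Return the role with the fewest tasks that still covers every required task."""
    candidates = [(len(tasks), name) for name, tasks in roles.items() if required <= tasks]
    return min(candidates)[1] if candidates else None


print(least_privileged_role({"View reports"}, ROLES))                       # Browser
print(least_privileged_role({"View reports", "View data sources"}, ROLES))  # Browser Plus
```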

Some further reading on SSRS roles: Role Definitions – Predefined Roles

Clean up default Permissions

You may have noticed that by default, BUILTIN\Administrators is added as a Content Manager on the Home folder (and every folder that inherits from it!). This is great for initial setup: it allows the server admin(s) to access Report Manager & get started without any security prerequisites.

Beyond “Day 1” setup this should be removed. In the vast majority of implementations, the server admin will not be the Reporting Services admin, or there will always be people in one group who shouldn’t be in the other.

If you leave this in place, you are giving everyone who has administrator rights on the SSRS server full Content Manager access. It is best removed at first implementation, before your instance grows, folders get unique permissions and it’s no longer a single click to fix (though I’ll give you a fast way to fix it later!).

Plan your Security Model

When implementing Reporting Services from scratch, or any new technology/app, it can be too easy to just use a select few “service accounts” for multiple functions & tasks. Usually it’s a case of “whatever gets this fixed/online the fastest”. Using a single AD account for each function within SSRS is good practice & minimises security risk.

An example of accounts used in a production environment:

- Domain\DataAccess for stored credentials in data sources. This account doesn’t need any access in SSRS or any server permissions. It may be granted db_datareader, or more, on the databases its data sources need to access.

- Domain\Deploy would be used to deploy content to Reporting Services. This would only need the Publisher role in SSRS. It could also be a group of senior developers or a dev manager.

- Domain\Service is the account Reporting Services would run under. This would need the RSExecRole on the ReportServer database (this is granted during installation/configuration). This account would have no data access or Reporting Services access.

- Domain\rsAdmins is an AD group with the admins who manage content & permissions. Generally, this group would not need data access.

Now, this level of separation isn’t always possible, and in some smaller organizations a single person covers most of these functions, so don’t take the above as a hard requirement. Using separate domain accounts reduces the risk of a single point of failure caused by password lockouts & resets or compromised accounts.

PowerShell Automation

There’s a great deal of automation that can be achieved with PowerShell in Reporting Services. I’ve detailed a few scripts below specific to this security topic, but there’s an abundance of content out there for many other tasks, such as deploying reports, folders, data sources etc. Although I’ve focused primarily on native mode Reporting Services, there are also scripts that work with SharePoint integrated mode.

Development environment security

Unlike your production environment you may want to simplify your dev environment’s security to make it easier for developers to deploy & test without running into permissions issues. This is a good place to utilize Reporting Services’ inherit functionality. Setting all folders to “Revert to Parent Security” makes it easy to add/remove permissions to the whole environment from the top level folder.

If you ever need to copy down your production database, this can be a mammoth task to update. This is where PowerShell comes in handy. The following simple script will set all subfolders in an SSRS environment to Revert to Parent Security.

You may need to adjust the .asmx file for different versions of SSRS though this should work just fine in 2012 onwards.
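Against a real server, a script like this would typically enumerate folders with the `ListChildren` method of the `ReportService2010.asmx` SOAP endpoint and call `InheritParentSecurity` on each folder that has its own security. The Python sketch below leaves the web service out and shows only the traversal logic over a hypothetical in-memory folder tree; the `Folder` class and the account names are assumptions for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Iterator


@dataclass
class Folder:
    """Hypothetical in-memory stand-in for a report server folder."""

    path: str
    inherit_parent: bool = True
    # Only meaningful when inherit_parent is False.
    assignments: dict[str, set[str]] = field(default_factory=dict)
    children: list[Folder] = field(default_factory=list)


def walk(folder: Folder) -> Iterator[Folder]:
    """Yield a folder and all of its descendants, depth first."""
    yield folder
    for child in folder.children:
        yield from walk(child)


def revert_to_parent_security(root: Folder) -> list[str]:
    """Make every folder below root inherit its parent's security; return the paths changed."""
    reverted = []
    for folder in walk(root):
        if folder is root:
            continue  # Home has no parent, so it must keep its own assignments.
        if not folder.inherit_parent:
            folder.inherit_parent = True
            folder.assignments.clear()
            reverted.append(folder.path)
    return reverted


b = Folder("/A/B")
a = Folder("/A", inherit_parent=False, assignments={r"Domain\Deploy": {"Publisher"}}, children=[b])
c = Folder("/C", inherit_parent=False, assignments={r"Domain\rsAdmins": {"Content Manager"}})
home = Folder("/", inherit_parent=False, assignments={r"Domain\rsAdmins": {"Content Manager"}}, children=[a, c])

print(revert_to_parent_security(home))  # ['/A', '/C']
```

After this runs, permissions for the whole environment are controlled from the top-level folder alone, and a second run changes nothing.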

Security auditing

If you’re inheriting an existing environment or even want to overhaul/audit your current security, the following PowerShell script will allow you to quickly output every folder’s security to csv allowing you to analyse erroneous permissions without searching through folders in Report Manager.
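As a rough sketch of the output side, assume each folder's security has already been fetched (for example with the `GetPolicies` method of `ReportService2010.asmx`, which also reports whether the item inherits its parent's policies). The rows could then be written with Python's standard `csv` module; the `(path, inherits, assignments)` tuple layout and the column names are hypothetical.

```python
import csv
import io


def write_security_audit(folders, stream):
    """Write one CSV row per (folder, principal) pair.

    folders is an iterable of (path, inherits, assignments) tuples, where assignments
    maps each principal to its role names and inherits says whether the folder
    uses its parent's security.
    """
    writer = csv.writer(stream)
    writer.writerow(["Path", "Principal", "Roles", "Inherited"])
    for path, inherits, assignments in folders:
        for principal in sorted(assignments):
            roles = ";".join(sorted(assignments[principal]))
            writer.writerow([path, principal, roles, "Yes" if inherits else "No"])


folders = [
    ("/", False, {r"BUILTIN\Administrators": {"Content Manager"}}),
    ("/Sales", True, {r"BUILTIN\Administrators": {"Content Manager"}}),
    ("/Finance", False, {r"Domain\FinanceUsers": {"Browser"}, r"Domain\rsAdmins": {"Content Manager"}}),
]
buffer = io.StringIO()
write_security_audit(folders, buffer)
print(buffer.getvalue())
```

To write to a file instead of a buffer, open it with `newline=""`, as the `csv` module documentation recommends, and pass the file object as `stream`.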

Targeted Changes

Following a security review, you may want to add or remove a single AD account/group across every folder in your environment. There may be many occasions that call for such a blanket change. Again, this would normally be a laboriously manual task without PowerShell. These little snippets show how it can be done & you can always edit these to target a specific folder (& all its sub-folders).
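A minimal sketch of the "add everywhere" idea, assuming a hypothetical mapping from folder path to that folder's own role assignments (`None` for folders that inherit). Only folders with their own security are changed, because inheriting folders pick up the change from their parent. The top-level folder is skipped on purpose, so folders that inherit directly from it are left unchanged too. `Domain\Auditors` is a placeholder group.

```python
from __future__ import annotations


def add_principal_everywhere(
    folders: dict[str, dict[str, set[str]] | None],
    principal: str,
    roles: set[str],
    root: str = "/",
) -> list[str]:
    """Grant roles to principal on every folder that has its own security, except root.

    folders maps each path to its item-specific assignments, or to None when the
    folder inherits; inheriting folders pick up the change from their parent.
    """
    changed = []
    for path, assignments in folders.items():
        if path == root or assignments is None:
            continue
        assignments.setdefault(principal, set()).update(roles)
        changed.append(path)
    return changed


folders = {
    "/": {r"Domain\rsAdmins": {"Content Manager"}},
    "/Sales": None,
    "/Finance": {r"Domain\FinanceUsers": {"Browser"}},
    "/Finance/Budget": {r"Domain\FinanceUsers": {"Browser"}},
}
print(add_principal_everywhere(folders, r"Domain\Auditors", {"Browser"}))  # ['/Finance', '/Finance/Budget']
```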

You can then use the following script to remove a user/group or reverse the change made in the last script.
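The reverse operation can be sketched the same way, using a hypothetical path-to-assignments mapping (`None` for inheriting folders). The sketch also guards against leaving a folder with no role assignment at all, which Reporting Services does not allow; such folders are reported rather than changed. The example removes the default `BUILTIN\Administrators` assignment. Because the top-level folder is skipped, the Home folder's own assignment would still need to be handled separately.

```python
from __future__ import annotations


def remove_principal_everywhere(
    folders: dict[str, dict[str, set[str]] | None],
    principal: str,
    root: str = "/",
) -> tuple[list[str], list[str]]:
    """Remove principal from every folder with its own security, except root.

    Returns (changed, skipped). A folder is skipped rather than changed when the
    principal is its only assignment, because an item must never be left unsecured.
    """
    changed, skipped = [], []
    for path, assignments in folders.items():
        if path == root or assignments is None or principal not in assignments:
            continue
        if len(assignments) == 1:
            skipped.append(path)
            continue
        del assignments[principal]
        changed.append(path)
    return changed, skipped


folders = {
    "/": {r"BUILTIN\Administrators": {"Content Manager"}},
    "/Sales": {r"BUILTIN\Administrators": {"Content Manager"}, r"Domain\rsAdmins": {"Content Manager"}},
    "/Legacy": {r"BUILTIN\Administrators": {"Content Manager"}},
    "/Sales/EMEA": None,
}
print(remove_principal_everywhere(folders, r"BUILTIN\Administrators"))  # (['/Sales'], ['/Legacy'])
```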

NOTE: The above targeted scripts won’t add or remove users or groups from the top-level folder. This can easily be added, though I’ve omitted it to reduce the risk of removing an admin user/group from the entire site. In the case of adding users, I’ve worked with RS instances where multiple clients share a single instance and only admin accounts have access to the top-level “Home” folder.

Love PowerShell!

I hope I’ve provided a few examples of security practices in SSRS and some basic PowerShell scripts to automate administration of security in Reporting Services. You can build upon these scripts to do more advanced tasks, such as setting instance-wide security from an input file (good for refreshing other environments from production backups).

I know there is a lot of good work going into PowerShell for DBA tasks over at dbatools.io that shows PowerShell is something you want on your tool belt!

Microsoft also put together a bunch of PowerShell scripts for Reporting Services late last year. You can find the article: Community contributions to the PowerShell scripts for Reporting Services & the scripts are on GitHub here: ReportingServicesTools

For SSRS documentation, consider ApexSQL Doc, a tool that documents reports (*.rdl), shared datasets (*.rsd), shared data sources (*.rds) and projects (*.rptproj) from the file system and web services (native and SharePoint) in different output formats.

- SSRS Roles: Role Definitions – Predefined Roles

- DBATools page

- Microsoft SSRS PowerShell Tools: Community contributions to the PowerShell scripts for Reporting Services

- GitHub link: Reporting Services Powershell Tools

Microsoft Dynamics GP | Managing SSRS Security Roles

Article by: Eduardo Haro – Business Analyst

When people use SSRS for their reporting needs, many times, we get requests on how to set up or manage security roles. We have put together this quick guide detailing the steps on how to edit the security role of either an entire folder or a single report.

1. Within SSRS, click the Site Settings icon in the top-right corner of the page.

2. Navigate to the Security tab and click New Role Assignment.

3. Enter the group name or user name in the format “Domain\username” or “Domain\group name”, and then click OK.

4. Navigate to the SSRS report or SSRS folder whose security role needs to change.

5. To change a single report, hover over the report and click the yellow arrow to the right of the report name. To apply the same security settings to all reports in a folder, click the folder settings icon instead and skip to step 7.

6. Click Manage.

7. Navigate to the Security tab and click Edit Item Security.

8. Enter the group name or user name, in the format “Domain\username” or “Domain\group name”, of the users who should be granted access. Select the desired security role from the options shown, and then click OK.
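Both steps expect the account in `Domain\name` form. If you prepare these names in a script (for example, to feed an automated role assignment), a quick shape check like the following Python sketch can catch typos early. `split_account` is a hypothetical helper; it checks only the format, not whether the account actually exists in the domain.

```python
from __future__ import annotations


def split_account(value: str) -> tuple[str, str]:
    """Split a 'Domain\\name' string into (domain, name).

    Only the shape is checked; whether the account exists is for the report
    server (and the domain) to decide.
    """
    domain, sep, name = value.strip().partition("\\")
    if not sep or not domain or not name or "\\" in name:
        raise ValueError(f"expected Domain\\name, got {value!r}")
    return domain, name


print(split_account(r"Domain\Report Viewers"))  # ('Domain', 'Report Viewers')
```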

SSRS Security Configuration

Report Manager can be used to perform the following tasks:

- View, search, and subscribe to reports

- Create and manage folders, linked reports, report history, schedules, data source connections, and subscriptions

- Set properties and report parameters

- Manage role definitions and assignments that control user access to reports and folders

You can access items that are stored in a report server by navigating the folder hierarchy and clicking on items that you want to view or update, provided that you have permissions to do that. The ability to perform a task in Report Manager depends on the user role assignment. A user who is assigned to a role that has full permissions, such as a report server administrator, has access to the complete set of application menus and pages. A user assigned to a role that has permissions to view and run reports, on the other hand, sees only the menus and pages that support those activities.

Users can be assigned to multiple roles. Each user can have different role assignments for different report servers, or even for the various reports and folders stored on a single server. For more information, refer to the Microsoft Report Manager help.

Provided that the suggested security settings are applied and the recommended path for accessing reports is used, an end user (i.e., a report viewer) will access Published Reports and Dashboards from IFS EE and not from Report Manager. A report viewer will therefore not have access to these folders in Report Manager, so any change to these folders will only affect the Report Publisher user group.

Typically, three user groups use the External Report Integration within IFS Business Reporting & Analysis: Report Administrators, Report Publishers and Report Viewers. A Report Administrator has overall permission and can administer and manage everything related to Reporting Services. Report Publishers are power users who create reports that are viewed by other users. This user group stores reports in the Report Manager Published Reports and Dashboards folders, and also has permission to configure and manage these folders. Report Viewers are end users who have access to view published reports and dashboards. This user category can also work with ad hoc reporting in Report Builder, create their own reports, and save them in their personal My Reports folders, to which only they have access. A Report Viewer can manage their own My Reports folder but has no permission to administer any of the other report folders in Report Manager.

In Report Manager, you define which user group should have access to each folder. Access can be given to a single user or to a user group with multiple users.

Note: Windows user groups cannot be used with the IFS-provided SQL Server Reporting Services extensions. Rights to Reporting Server content should be granted to each user individually (Reporting Server user groups can be granted to a user).

It is advisable to give access on Report Manager folders to user groups instead of single users, since that simplifies Report Manager folder administration. The user group must be a valid domain account on the network, and it is recommended to create user groups for Report Administrators, Report Publishers and Report Viewers. For information on how to create user groups, check your Windows security documentation. The user groups will then be connected to the Report Manager folders, as further described in Configuration of Report Services.

Add domain users to the user groups according to the functionality that they need to access.

Reporting Services installs with predefined roles that you can use to grant access to report server operations. Each predefined role describes a collection of related tasks. Use SQL Server Management Studio to view the set of tasks that each role supports. We recommend that the predefined roles and their related tasks are kept unchanged. Refer to the Microsoft documentation for more information on the predefined roles in Reporting Services.

The predefined roles that will be used in IFS Reporting are: Content Manager, Publisher, Browser and Report Builder. In addition, we need a new role with permission only to view reports. This is described in the next section.

For IFS Reporting we need one additional role in the Reporting Server. This new role should only have access to view reports and should be attached to the Report Viewer user group for the Dashboards and Published Reports folders. This is further described in the Configuration Report Manager page.

To create a new role:

- Open SQL Server Management Studio and connect to your server.

- Expand the Report Server node.

- Expand the Security folder.

- Right-click on Roles and then click New Role

- Type the name Viewer for the role.

- Type a description, e.g., May view reports

- Select only the task View Reports

The site setting security page controls access to the report server site. System role assignments exist outside of the scope of the report server namespace or folder hierarchy. Operations that are supported through system role assignments include creating and using shared schedules, using Report Builder, and setting default values for some server features.

A default system role assignment is created when the report server is installed. This system role assignment grants local system administrators permission to manage the report server environment. All other users who require access to Report Builder must also have a system role assignment. This means that all user groups (Report Administrators, Report Publishers and Report Viewers) must be assigned to a system role to be able to use Report Builder. A system administrator must perform the steps below.

Edit site security settings for user groups

1. Click the Site Settings link and select Security.

2. Click New Role Assignment.

3. Enter group ReportAdministrator and select the System Administrator role.

4. Repeat steps 2-3 for ReportPublisher and ReportViewer, but select the System User role for these user groups.
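Since every group that needs Report Builder must hold a system role, a simple check over the intended assignments can flag any group that was missed. This Python sketch uses the group names from the steps above; `groups_without_system_role` is a hypothetical helper, not part of Reporting Services.

```python
from __future__ import annotations

# Intended system role assignments, using the group names from the steps above.
SYSTEM_ROLE_ASSIGNMENTS = {
    "ReportAdministrator": {"System Administrator"},
    "ReportPublisher": {"System User"},
    "ReportViewer": {"System User"},
}
REPORTING_GROUPS = {"ReportAdministrator", "ReportPublisher", "ReportViewer"}


def groups_without_system_role(assignments: dict[str, set[str]], groups: set[str]) -> list[str]:
    """List the groups that cannot use Report Builder because they hold no system role."""
    return sorted(g for g in groups if not assignments.get(g))


print(groups_without_system_role(SYSTEM_ROLE_ASSIGNMENTS, REPORTING_GROUPS))  # []
```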

The contents page in Report Manager shows the items that you have permission to view. Depending on the permissions you have, you may also be able to move, delete, and add items.

The security settings on the root folder, Home, are inherited by subfolders. During Reporting Services installation, the Administrator is given the Content Manager role.

If you have created the user groups according to the security description, update the security settings on the Home folder as follows:

To add a New Role Assignment

1. In the root folder, click Folder Settings.

2. Click New Role Assignment to open the New Role Assignment page, which is used to create additional role assignments for the current folder.

3. Type the name of a group account for which the role assignment is being created, e.g., Report Viewer. The group must be a valid Windows domain account. Enter the account in this format: <domain>\<account>

4. Select the role(s) that the user group should have. For example, for Report Publisher select Publisher, Browser and Report Builder.

5. Repeat steps 2-4 for each user group.

Edit Folders in Report Manager

The role assignments for the newly created folders are inherited from the parent level, which means that Report Publishers are connected to the Publisher, Browser and Report Builder roles, and Report Viewers are connected to the Browser role. However, the role assignment for Report Viewers should be changed from Browser to Viewer, because Report Viewers should access Published Reports and Dashboards from IFS Applications and not from Report Manager.

Edit Role Assignment for the New Folders

1. In the Dashboards folder, click Folder Settings and then open the Security page.

2. Click Edit Item Security. A message states that the item security is inherited from a parent item and asks whether you want to apply other security settings. Click OK.

3. Click the Edit link for the Report Viewer user group.

4. Deselect the Browser role and select the Viewer role instead.

5. Click Apply.

6. Repeat steps 1-5 for the Published Reports folder.
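The effect of these steps can be sketched in Python with a hypothetical model: breaking inheritance gives the folder its own copy of the parent's assignments, which can then be edited without touching the Home folder. The folder paths `/Dashboards` and `/Published Reports`, the group keys, and the helper `edit_item_security` are assumptions for illustration.

```python
import copy

# Home folder assignments from the New Role Assignment steps (group names are placeholders).
home = {
    "ReportPublisher": {"Publisher", "Browser", "Report Builder"},
    "ReportViewer": {"Browser"},
}
# None means the folder inherits its security from Home.
folders = {"/": home, "/Dashboards": None, "/Published Reports": None}


def edit_item_security(folders, path, parent_assignments):
    """Break inheritance: give the folder its own copy of the parent's assignments."""
    folders[path] = copy.deepcopy(parent_assignments)
    return folders[path]


for path in ("/Dashboards", "/Published Reports"):
    local = edit_item_security(folders, path, home)
    # Report Viewers get the custom Viewer role instead of Browser on this folder only.
    local["ReportViewer"].discard("Browser")
    local["ReportViewer"].add("Viewer")

print(folders["/Dashboards"]["ReportViewer"])  # {'Viewer'}
print(home["ReportViewer"])                    # {'Browser'}
```

The deep copy matters: without it, changing the Report Viewer roles on one folder would also change the Home folder's assignments.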

Microsoft fabric updates blog.

Microsoft Fabric May 2024 Update

- Monthly Update

Welcome to the May 2024 update.

Here are a few, select highlights of the many we have for Fabric. You can now ask Copilot questions about data in your model, Model Explorer and authoring calculation groups in Power BI desktop is now generally available, and Real-Time Intelligence provides a complete end-to-end solution for ingesting, processing, analyzing, visualizing, monitoring, and acting on events.

There is much more to explore, please continue to read on.

Microsoft Build Announcements

At Microsoft Build 2024, we are thrilled to announce a huge array of innovations coming to the Microsoft Fabric platform that will make Microsoft Fabric’s capabilities even more robust and customizable to meet the unique needs of each organization. To learn more about these changes, read the “Unlock real-time insights with AI-powered analytics in Microsoft Fabric” announcement blog by Arun Ulag.

Fabric Roadmap Update

Last October at the Microsoft Power Platform Community Conference, we announced the release of the Microsoft Fabric Roadmap. Today we have updated that roadmap to include the next semester of Fabric innovations. As promised, we have merged Power BI into this roadmap to give you a single, unified roadmap for all of Microsoft Fabric. You can find the Fabric Roadmap at https://aka.ms/FabricRoadmap.

We will continue to evolve the roadmap over the coming year and would love to hear your recommendations for ways we can make this experience better for you. Please submit suggestions at https://aka.ms/FabricIdeas.

Earn a discount on your Microsoft Fabric certification exam!

We’d like to thank the thousands of you who completed the Fabric AI Skills Challenge and earned a free voucher for Exam DP-600 which leads to the Fabric Analytics Engineer Associate certification.

If you earned a free voucher, you can find redemption instructions in your email. We recommend that you schedule your exam now, before your discount voucher expires on June 24th. All exams must be scheduled and completed by this date.

If you need a little more help with exam prep, visit the Fabric Career Hub which has expert-led training, exam crams, practice tests and more.

Missed the Fabric AI Skills Challenge? We have you covered. For a limited time, you could earn a 50% exam discount by taking the Fabric 30 Days to Learn It Challenge.

Modern Tooltip now on by Default

Power BI tooltips are evolving to become more capable. To lay the groundwork, we are making the modern tooltip the new default, a feature many users may already recognize from its preview. This change is more than an upgrade; it is the first step in a series of improvements to tooltip management and customization. As we prepare for the general availability of the modern tooltip, this is an excellent opportunity to become familiar with its features and capabilities.

Discover the full potential of the new tooltip feature by visiting our dedicated blog. Dive into the details and explore the comprehensive vision we’ve crafted for tooltips, designed to enhance your Power BI experience.

We’ve listened to our community’s feedback on improving our tabular visuals (Table and Matrix), and we’re excited to begin their transformation. Drawing inspiration from the familiar PivotTable in Excel, we aim to build new features and capabilities on a stronger foundation. In our May update, we’re introducing ‘Layouts for Matrix’. You can now choose a compact, outline, or tabular layout to change how the components of a matrix are arranged, much as you would in Excel.

As an extension of the new layout options, report creators can now craft custom layout patterns by repeating row headers. This control, inspired by Excel’s PivotTable layout, lets you build a matrix that closely resembles the look and feel of a table, adding flexibility and bringing a touch of Excel’s intuitive design to Power BI’s matrix visuals. Repeating row headers is available only for the Outline and Tabular layouts.

To further align with Excel’s functionality, report creators now have the option to insert blank rows within the matrix. This feature separates higher-level row header categories, significantly improving the readability of the report. It brings a new level of clarity and organization to Power BI’s matrix visuals and opens a path for future enhancements to totals/subtotals and row/column headers.
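To make the difference between these options concrete, here is a small Python sketch that mimics how a tabular layout arranges a two-level row hierarchy, with and without repeated row headers and blank rows between groups. It is purely illustrative: the function name, the sample data, and the assumption that rows arrive sorted by the outer header are ours, and in Power BI these are format settings rather than code.

```python
from typing import List, Optional, Tuple

# Hypothetical sample rows: (region, product, sales), sorted by region.
Row = Tuple[str, str, int]
Cell = Tuple[Optional[str], Optional[str], Optional[int]]


def tabular_layout(rows: List[Row], repeat_headers: bool, blank_rows: bool) -> List[Cell]:
    """Arrange a two-level row hierarchy roughly the way a tabular layout does.

    repeat_headers: show the outer (region) header on every row instead of
                    only on the first row of its group.
    blank_rows:     insert an empty row between outer groups.
    """
    out: List[Cell] = []
    previous_region: Optional[str] = None
    for region, product, sales in rows:
        starts_new_group = region != previous_region
        if blank_rows and starts_new_group and previous_region is not None:
            out.append((None, None, None))  # separator row between groups
        show_region = repeat_headers or starts_new_group
        out.append((region if show_region else "", product, sales))
        previous_region = region
    return out


rows = [("East", "A", 10), ("East", "B", 5), ("West", "A", 7)]
for line in tabular_layout(rows, repeat_headers=True, blank_rows=True):
    print(line)
```

Without repeated headers, the region appears only on the first row of each group, which is what makes the default matrix look less like a flat table.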

We understand your eagerness to dig deeper into the matrix layouts and see how these enhancements deliver the features our community has requested most. Find out more and join the conversation in our dedicated blog, where we unravel the details and share the community-driven vision behind these improvements.

Following last month’s introduction of the initial line enhancements, May brings a groundbreaking set of line capabilities that are set to transform your Power BI experience:

- Hide/Show lines: Gain control over the visibility of your lines for a cleaner, more focused report.

- Customized line pattern: Tailor the pattern of your lines to match the style and context of your data.

- Auto-scaled line pattern: Ensure your line patterns scale with your data, maintaining consistency and clarity.

- Line dash cap: Customize the end caps of your custom dashed lines for a polished, professional look.

- Line upgrades across other line types: Experience improvements in reference lines, forecast lines, leader lines, small multiple gridlines, and the new card’s divider line.

These enhancements are not to be missed. We recommend visiting our dedicated blog for an in-depth exploration of all the new capabilities added to lines, keeping you informed and up to date.

This May release, we’re excited to introduce on-object formatting support for Small multiples, Waterfall, and Matrix visuals. This feature lets users interact directly with these visuals for a more intuitive and efficient formatting experience. After double-clicking one of these visuals, users can right-click the specific component they want to format to bring up a convenient mini-toolbar. This streamlined approach saves time and makes it easier to customize and refine reports.

We’re also thrilled to announce a significant enhancement to the mobile reporting experience with the introduction of the pane manager for the mobile layout view. This innovative feature empowers users to effortlessly open and close panels via a dedicated menu, streamlining the design process of mobile reports.

We recently announced a public preview for folders in workspaces, allowing you to create a hierarchical structure for organizing and managing your items. In the latest Desktop release, you can now publish your reports to specific folders in your workspace.

When you publish a report, you can choose the specific workspace and folder for your report. The interface is simple and easy to understand, making it easier than ever to organize your Power BI content from Desktop.

To publish reports to specific folders in the service, make sure the “Publish dialogs support folder selection” setting is enabled in the Preview features tab in the Options menu.

Learn more about folders in workspaces.

We’re excited to preview a new capability for Power BI Copilot allowing you to ask questions about the data in your model! You could already ask questions about the data present in the visuals on your report pages – and now you can go deeper by getting answers directly from the underlying model. Just ask questions about your data, and if the answer isn’t already on your report, Copilot will then query your model for the data instead and return the answer to your question in the form of a visual!

We’re starting this capability off in both Edit and View modes in the Power BI service. Because this is a preview feature, you’ll need to enable it via the preview toggle in the Copilot pane. You can learn more about the details of the feature in our announcement post.

We are excited to announce the general availability of DAX query view. DAX query view is the fourth view in Power BI Desktop, and it lets you run DAX queries on your semantic model.

DAX query view comes with several ways to help you be as productive as possible with DAX queries.

- Quick queries. Have the DAX query written for you from the context menu of tables, columns, or measures in the Data pane of DAX query view. Get the top 100 rows of a table, statistics of a column, or the DAX formula of a measure to edit and validate in just a couple of clicks!

- DirectQuery model authors can also use DAX query view. View the data in your tables whenever you want!

- Create and edit measures. Edit one or multiple measures at once. Make changes and see them in action in a DAX query, then update the model when you are ready. All in DAX query view!

- See the DAX query of visuals. Investigate a visual’s DAX query in DAX query view: go to the Performance Analyzer pane and choose “Run in DAX query view”.

- Write DAX queries. Create DAX queries with IntelliSense, formatting, commenting/uncommenting, and syntax highlighting, plus professional code editing features such as “Change all occurrences”, block folding to expand and collapse sections, and expanded find and replace options with regex.

Learn more about DAX query view with these resources:

- Deep dive blog: https://powerbi.microsoft.com/blog/deep-dive-into-dax-query-view-and-writing-dax-queries/

- Learn more: https://learn.microsoft.com/power-bi/transform-model/dax-query-view

- Video: https://youtu.be/oPGGYLKhTOA?si=YKUp1j8GoHHsqdZo

DAX query view includes an inline Fabric Copilot to write and explain DAX queries, which remains in public preview. This month we have made the following updates.

1. Run the DAX query before you keep it. Previously, the Run button was disabled until the generated DAX query was accepted or Copilot was closed. Now you can run the DAX query and then decide to Keep or Discard it.

2. Build the DAX query conversationally. Previously, the generated DAX query was not taken into account when you typed additional prompts; you had to keep the DAX query, select it again, and then use Copilot again to adjust it. Now you can simply refine it by typing additional prompts.

3. Syntax checks on the generated DAX query. Previously, there was no syntax check before the generated DAX query was returned. Now the syntax is checked, and if it is invalid the prompt is automatically retried once. If the retry is also invalid, the generated DAX query is returned with a note that there is an issue, giving you the option to rephrase your request or fix the generated query.

4. Inspire buttons to get you started with Copilot. Previously, nothing happened until you entered a prompt. Now you can click any of these buttons to quickly see what you can do with Copilot!

Learn more about DAX queries with Copilot with these resources:

- Deep dive blog: https://powerbi.microsoft.com/en-us/blog/deep-dive-into-dax-query-view-with-copilot/

- Learn more: https://learn.microsoft.com/en-us/dax/dax-copilot

- Video: https://www.youtube.com/watch?v=0kE3TE34oLM

We are excited to introduce you to the redesigned ‘Manage relationships’ dialog in Power BI Desktop! To open this dialog simply select the ‘Manage relationships’ button in the modeling ribbon.

Once opened, you’ll find a comprehensive view of all your relationships, along with their key properties, all in one convenient location. From here you can create new relationships or edit an existing one.

Additionally, you have the option to filter and focus on specific relationships in your model based on cardinality and cross filter direction.

Learn more about creating and managing relationships in Power BI Desktop in our documentation.

Ever since we released composite models on Power BI semantic models and Analysis Services, you have been asking us to support the refresh of calculated columns and tables in the Service. This month, we have enabled the refresh of calculated columns and tables in the Service for any DirectQuery source that uses single sign-on authentication. This includes the sources you use when working with composite models on Power BI semantic models and Analysis Services.

Previously, the refresh of a semantic model that uses a DirectQuery source with single-sign-on authentication failed with one of the following error messages: “Refresh is not supported for datasets with a calculated table or calculated column that depends on a table which references Analysis Services using DirectQuery.” or “Refresh over a dataset with a calculated table or a calculated column which references a Direct Query data source is not supported.”

Starting today, you can successfully refresh calculated tables and calculated columns in a semantic model in the Service using specific credentials, as long as you:

- Use a shareable cloud connection and assign it to the semantic model’s DirectQuery source, and/or

- Enable granular access control for all data connection types.

Here’s how to do this:

1. Create and publish your semantic model that uses a single sign-on DirectQuery source. This can be a composite model, but it doesn’t have to be.

2. In the semantic model settings, under Gateway and cloud connections, map each single sign-on DirectQuery connection to a specific connection. If you don’t have a specific connection yet, select ‘Create a connection’ to create it.

3. If you are creating a new connection, fill out the connection details and click Create, making sure to select ‘Use SSO via Azure AD for DirectQuery queries’.

4. Finally, select the connection for each single sign-on DirectQuery source and select Apply.

5. Either refresh the semantic model manually or schedule a refresh to confirm that the refresh now succeeds. Congratulations, you have set up refresh for semantic models with a single sign-on DirectQuery connection that use calculated columns or calculated tables!
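The manual refresh in the last step can also be triggered from a script through the Power BI REST API, where a semantic model is still called a dataset. A minimal sketch, assuming you already hold a Microsoft Entra access token for the Power BI REST API (acquiring it is not shown) and that the workspace and dataset IDs below are placeholders you replace. The request is built but not sent.

```python
import json
import urllib.request

POWER_BI_API = "https://api.powerbi.com/v1.0/myorg"


def build_refresh_request(workspace_id: str, dataset_id: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a POST request that queues a semantic model refresh."""
    url = f"{POWER_BI_API}/groups/{workspace_id}/datasets/{dataset_id}/refreshes"
    # NoNotification: do not send a completion/failure email for this refresh.
    body = json.dumps({"notifyOption": "NoNotification"}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",  # Microsoft Entra access token
            "Content-Type": "application/json",
        },
    )


# Placeholder IDs and token; replace them with real values before sending.
request = build_refresh_request("<workspace-id>", "<dataset-id>", "<access-token>")
# urllib.request.urlopen(request)  # uncomment to actually queue the refresh
```

When sent, the service is expected to answer with HTTP 202 Accepted once the refresh is queued; the refresh history in the Service then shows whether it succeeded.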

We are excited to announce the general availability of Model Explorer in the Model view of Power BI, including the authoring of calculation groups. Model Explorer makes semantic modeling easier with an at-a-glance tree view that shows item counts, search, and in-context paths for editing semantic model items. Top-level semantic model properties are also available, as is the option to quickly create relationships in the properties pane. Additionally, the Data pane has been restyled with Fluent UI, which is also used in Office and Teams.

Authoring calculation groups, a popular community request from the Ideas forum, is also included in Model Explorer. Calculation groups significantly reduce the number of redundant measures by letting you define DAX formulas as calculation items that can be applied to existing measures. For example, define a year-over-year, prior-month, conversion, or any other calculation your report needs once, as a calculation item, and reuse it with your existing measures. This reduces the number of measures you need to create and keeps the business logic simpler to maintain.
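The reuse idea behind calculation groups can be shown with a small Python analogy: each base measure and each calculation item is written once, and any item can be applied to any measure. This is not DAX and not how the engine evaluates models; the data, names, and the `evaluate` helper are hypothetical. In the model itself, a calculation item is written in DAX and refers to the measure it modifies with `SELECTEDMEASURE()`.

```python
from typing import Callable, Dict

# Hypothetical yearly fact data standing in for a semantic model.
DATA = {
    2023: {"sales": 100.0, "cost": 60.0},
    2024: {"sales": 120.0, "cost": 66.0},
}

Measure = Callable[[int], float]            # evaluates a measure for a year
CalcItem = Callable[[Measure, int], float]  # rewrites how any measure is evaluated

# Base measures, each written once.
measures: Dict[str, Measure] = {
    "Sales": lambda year: DATA[year]["sales"],
    "Cost": lambda year: DATA[year]["cost"],
}

# Calculation items, each written once and applicable to every measure.
calc_items: Dict[str, CalcItem] = {
    "Current": lambda m, year: m(year),
    "Prior year": lambda m, year: m(year - 1),
    "YoY %": lambda m, year: (m(year) - m(year - 1)) / m(year - 1),
}


def evaluate(measure: str, item: str, year: int) -> float:
    """Apply one calculation item to one base measure."""
    return calc_items[item](measures[measure], year)


# 2 measures + 3 items cover 2 x 3 = 6 combinations without 6 separate measures.
for m in measures:
    for item in calc_items:
        print(m, item, evaluate(m, item, 2024))
```

Adding a fourth calculation item makes it available to every measure at once, which is where the maintenance savings come from.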

Available in both Power BI Desktop and when editing a semantic model in the workspace, take your semantic model authoring to the next level today!

Learn more about Model Explorer and authoring calculation groups with these resources:

- Use Model explorer in Power BI (preview) – Power BI | Microsoft Learn

- Create calculation groups in Power BI (preview) – Power BI | Microsoft Learn

Data connectivity

We’re happy to announce that the Oracle database connector has been enhanced this month with the addition of Single Sign-On support in the Power BI service with Microsoft Entra ID authentication.

Microsoft Entra ID SSO enables single sign-on to access data sources that rely on Microsoft Entra ID based authentication. When you configure Microsoft Entra SSO for an applicable data source, queries run under the Microsoft Entra identity of the user that interacts with the Power BI report.

We’re pleased to announce the new and updated connectors in this release:

- [New] OneStream: The OneStream Power BI Connector enables you to seamlessly connect Power BI to your OneStream applications by simply logging in with your OneStream credentials. The connector uses your OneStream security, so you can access only the data your permissions within the OneStream application allow. Use the connector to pull cube and relational data along with metadata members, including all their properties. Visit OneStream Power BI Connector to learn more. Find this connector in the Other category.

- [New] Zendesk Data : A new connector developed by the Zendesk team that aims to go beyond the functionality of the existing Zendesk legacy connector created by Microsoft. Learn more about what this new connector brings.

- [New] CCH Tagetik

- [Update] Azure Databricks

Are you interested in creating your own connector and publishing it for your customers? Learn more about the Power Query SDK and the Connector Certification program.

Last May, we announced the integration between Power BI and OneDrive and SharePoint. Previously, this capability was limited to only reports with data in import mode. We’re excited to announce that you can now seamlessly view Power BI reports with live connected data directly in OneDrive and SharePoint!

When working on Power BI Desktop with a report live connected to a semantic model in the service, you can easily share a link to collaborate with others on your team and allow them to quickly view the report in their browser. We’ve made it easier than ever to access the latest data updates without ever leaving your familiar OneDrive and SharePoint environments. This integration streamlines your workflows and allows you to access reports within the platforms you already use. With collaboration at the heart of this improvement, teams can work together more effectively to make informed decisions by leveraging live connected semantic models without being limited to data only in import mode.

Using OneDrive and SharePoint lets you take advantage of built-in version control, always have your files available in the cloud, and use familiar, simple sharing.

You told us that you appreciate the ability to limit the image view to only those who have permission to view the report, but you also asked for changes to the “Public snapshot” mode.

To address some of the feedback we got from you, we have made a few more changes in this area.

- Add-ins that were saved as “Public snapshot” can be printed, and you no longer need to go through all the slides and load each add-in for a permission check before the public image becomes visible.

- You can use the “Show as saved image” on add-ins that were saved as “Public snapshot”. This will replace the entire add-in with an image representation of it, so the load time might be faster when you are presenting your presentation.

Many of us keep presentations open for a long time, which might cause the data in the presentation to become outdated.

To make sure your slides show the data you need, we added a new notification that tells you when more up-to-date data exists in Power BI and offers you the option to refresh and get the latest data from Power BI.

Developers

Direct Lake semantic models are now supported in Fabric Git Integration, enabling streamlined version control, enhanced collaboration among developers, and the establishment of CI/CD pipelines for your semantic models using Direct Lake.

Learn more about version control, testing, and deployment of Power BI content in our Power BI implementation planning documentation: https://learn.microsoft.com/power-bi/guidance/powerbi-implementation-planning-content-lifecycle-management-overview

Visualizations

Editor’s pick of the quarter:

- Animator for Power BI

- Innofalls Charts

- SuperTables

- Sankey Diagram for Power BI by ChartExpo

- Dynamic KPI Card by Sereviso

- Shielded HTML Viewer

- Text search slicer

New visuals in AppSource

- Mapa Polski – Województwa, Powiaty, Gminy

- Workstream Income Statement Table

- Gas Detection Chart

- Seasonality Chart

- PlanIn BI – Data Refresh Service

- Chart Flare

- PictoBar

- ProgBar

- Counter Calendar Donut Chart image

Financial Reporting Matrix by Profitbase

Making financial statements with a proper layout has just become easier with the latest version of the Financial Reporting Matrix.

Users can now specify which rows should be classified as cost rows, which makes it easier to get the conditional formatting of variances right.

Select a row and tick “is cost” to tag the row as a cost row. The tag can be used in conditional formatting so that a positive variance on an expense row counts as bad for the result, while a positive variance on an income row counts as good.
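The cost-row rule boils down to flipping the sign of a variance before judging it. A minimal Python sketch of that logic follows; it is our illustration, not Profitbase’s implementation, and `variance_effect` is a hypothetical name.

```python
def variance_effect(actual: float, budget: float, is_cost: bool) -> str:
    """Classify a variance as good, bad, or neutral for the bottom line."""
    variance = actual - budget
    if variance == 0:
        return "neutral"
    # Income rows: actual above budget helps the result.
    # Cost rows ("is cost" ticked): actual above budget hurts it, so flip the test.
    improves_result = (variance < 0) if is_cost else (variance > 0)
    return "good" if improves_result else "bad"


print(variance_effect(120, 100, is_cost=False))  # income over budget -> good
print(variance_effect(120, 100, is_cost=True))   # expense over budget -> bad
```

A conditional formatting rule can then color the variance cell by this result instead of by the raw sign.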

The new version also offers more flexibility in measure placement and column subtotals.

Measures can be placed either:

- Default (below column headers)

- Above column headers

- Conditionally hide columns

- + much more

Highlighted new features:

- Measure placement – In rows

- Select Column Subtotals

- New Format Pane design

- Row Options

Get the visual from AppSource and find more videos here!

Horizon Chart by Powerviz

A Horizon Chart is an advanced visual for time-series data that reveals trends and anomalies. It displays stacked data layers, allowing users to compare multiple categories while keeping the data clear. Horizon Charts are particularly useful for monitoring and analyzing complex data over time, which makes this a valuable visual for data analysis and decision-making.
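Horizon charts generally get their compactness by slicing each value into fixed-height bands and drawing those bands on top of each other, with darker shades for higher bands. A minimal sketch of that generic band decomposition, assuming equal band heights and clipping anything above the top band; the function name and parameters are hypothetical, and this is the textbook technique rather than Powerviz’s implementation.

```python
from typing import List


def horizon_bands(value: float, band_height: float, num_bands: int) -> List[float]:
    """Split one value into stacked horizon-chart layers.

    Layer i holds the part of |value| between i*band_height and
    (i+1)*band_height, so the full range fits in one band's vertical space.
    Negative values are split by magnitude; the caller draws them in a
    different color (or mirrored). Values above the top band are clipped.
    """
    if band_height <= 0 or num_bands <= 0:
        raise ValueError("band_height and num_bands must be positive")
    magnitude = abs(value)
    return [
        float(min(max(magnitude - i * band_height, 0.0), band_height))
        for i in range(num_bands)
    ]


print(horizon_bands(25, band_height=10, num_bands=3))
print(horizon_bands(-7, band_height=10, num_bands=3))
```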

Key Features:

- Horizon Styles: Choose Natural, Linear, or Step with adjustable scaling.

- Layer: Layer data by range or custom criteria. Display positive and negative values together or separately on top.

- Reference Line: Highlight patterns with X-axis lines and labels.

- Colors: Apply 30+ color palettes and use FX rules for dynamic coloring.

- Ranking: Filter Top/Bottom N values, with “Others”.

- Gridline: Add gridlines to the X and Y axis.

- Custom Tooltip: Add highest, lowest, mean, and median points without additional DAX.

- Themes: Save designs and share seamlessly with JSON files.

Other features included are ranking, annotation, grid view, show condition, and accessibility support.

Business Use Cases: Time-Series Data Comparison, Environmental Monitoring, Anomaly Detection

🔗 Try Horizon Chart for FREE from AppSource

📊 Check out all features of the visual: Demo file

📃 Step-by-step instructions: Documentation

💡 YouTube Video: Video Link

📍 Learn more about visuals: https://powerviz.ai/

✅ Follow Powerviz : https://lnkd.in/gN_9Sa6U

Exciting news! Thanks to your valuable feedback, we’ve enhanced our Milestone Trend Analysis Chart even further. We’re thrilled to announce that you can now switch between horizontal and vertical orientations, catering to your preferred visualization style.

The Milestone Trend Analysis (MTA) Chart remains your go-to tool for swiftly identifying deadline trends, empowering you to take timely corrective actions. With this update, we aim to enhance deadline awareness among project participants and stakeholders alike.

With our latest version, you can seamlessly switch between horizontal and vertical views within the familiar Power BI interface. There is no need to adapt to a new user interface; you get the same ease of use with added flexibility. Plus, the chart continues to support features like themes, interactive selection, and tooltips.

What’s more, ours is the only Microsoft Certified Milestone Trend Analysis Chart for Power BI, ensuring reliability and compatibility with the platform.

Ready to experience the enhanced Milestone Trend Analysis Chart? Download it from AppSource today and explore its capabilities with your own data – try for free!

We welcome any questions or feedback at our website: https://visuals.novasilva.com/ . Try it out and elevate your project management insights now!

Sunburst Chart by Powerviz

Powerviz’s Sunburst Chart is an interactive tool for hierarchical data visualization. With this chart, you can easily visualize multiple columns in a hierarchy and uncover valuable insights. The concentric circle design helps in displaying part-to-whole relationships.

- Arc Customization: Customize shapes and patterns.

- Color Scheme: Accessible palettes with 30+ options.

- Centre Circle: Design an inner circle with layers. Add text, measure, icons, and images.

- Conditional Formatting: Easily identify outliers based on measure or category rules.

- Labels: Smart data labels for readability.

- Image Labels: Add an image as an outer label.

- Interactivity: Zoom, drill down, cross-filtering, and tooltip features.

Other features included are annotation, grid view, show condition, and accessibility support.
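The part-to-whole layout of a sunburst comes from giving every node an angular span proportional to its share of its parent, with children filling their parent’s arc on the next ring out. A minimal sketch of that general technique, assuming a hierarchy stored as nested dictionaries with numeric leaves; the names are hypothetical and this is not Powerviz’s code.

```python
from typing import Dict, List, Tuple

# A node is either a leaf value (number) or a dict of child name -> node.
Tree = Dict[str, object]
Arc = Tuple[Tuple[str, ...], int, float, float]  # (path, ring, start deg, end deg)


def node_total(node: object) -> float:
    """Sum of all leaf values under a node."""
    if isinstance(node, dict):
        return sum(node_total(child) for child in node.values())
    return float(node)


def sunburst_arcs(tree: Tree, start: float = 0.0, end: float = 360.0,
                  ring: int = 0, path: Tuple[str, ...] = ()) -> List[Arc]:
    """Give each node an angular span proportional to its share of its parent."""
    arcs: List[Arc] = []
    total = node_total(tree)
    angle = start
    for name, child in tree.items():
        span = (end - start) * node_total(child) / total if total else 0.0
        arcs.append((path + (name,), ring, angle, angle + span))
        if isinstance(child, dict):
            # Children exactly fill their parent's arc on the next ring out.
            arcs.extend(sunburst_arcs(child, angle, angle + span, ring + 1, path + (name,)))
        angle += span
    return arcs


sales = {"A": {"A1": 30, "A2": 10}, "B": 60}
for arc in sunburst_arcs(sales):
    print(arc)
```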

Business Use Cases:

- Sales and Marketing: Market share analysis and customer segmentation.

- Finance: Department budgets and expenditures distribution.

- Operations: Supply chain management.

- Education: Course structure, curriculum creation.

- Human Resources: Organization structure, employee demographics.

🔗 Try Sunburst Chart for FREE from AppSource

Stacked Bar Chart with Line by JTA

Clustered bar chart with the possibility to stack one of the bars

Stacked Bar Chart with Line by JTA seamlessly merges the simplicity of a traditional bar chart with the versatility of a stacked bar, revolutionizing the way you showcase multiple datasets in a single, cohesive display.

Unlocking a new dimension of insight, our visual features a dynamic line that provides a snapshot of data trends at a glance. Navigate through your data effortlessly with multiple configurations, gaining a swift and comprehensive understanding of your information.

Tailor your visual experience with an array of functionalities and customization options, enabling you to effortlessly compare a primary metric with the performance of an entire set. The flexibility to customize the visual according to your unique preferences empowers you to harness the full potential of your data.

Features of Stacked Bar Chart with Line:

- Stack the second bar

- Format the Axis and Gridlines

- Add a legend

- Format the colors and text

- Add a line chart

- Format the line

- Add marks to the line

- Format the labels for bars and line

If you liked what you saw, you can try it for yourself and find more information here. Also, if you want to download it, you can find the visual package on AppSource.

We have added an exciting new feature to our Combo PRO, Combo Bar PRO, and Timeline PRO visuals: Legend field support. The Legend field makes it easy to visually split series values into smaller segments, without the need to use measures or create separate series. Simply add a column with category names that are adjacent to the series values, and the visual will do the following:

- Display separate segments as a stack or cluster, showing how each segment contributed to the total Series value.

- Create legend items for each segment to quickly show/hide them without filtering.

- Apply custom fill colors to each segment.

- Show each segment value in the tooltip
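Conceptually, the Legend field groups each series value by its x-axis category and its legend category, and the segments of one category add up to that category’s total. A minimal Python sketch of that grouping, with hypothetical data and function names; it illustrates the idea, not ZoomCharts’ implementation.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def split_by_legend(rows: Iterable[Tuple[str, str, float]]) -> Dict[str, Dict[str, float]]:
    """Group (x category, legend category, value) rows into per-x segments."""
    segments: Dict[str, Dict[str, float]] = defaultdict(dict)
    for x, legend, value in rows:
        segments[x][legend] = segments[x].get(legend, 0.0) + value
    return dict(segments)


rows = [
    ("Jan", "Online", 10),
    ("Jan", "Store", 5),
    ("Feb", "Online", 7),
    ("Jan", "Online", 1),
]
result = split_by_legend(rows)
for month, parts in result.items():
    # Stacked segments plus the total they contribute to.
    print(month, parts, "total:", sum(parts.values()))
```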

Read more about the Legend field on our blog article

Drill Down Combo PRO is made for creators who want to build visually stunning and user-friendly reports. Cross-chart filtering and intuitive drill down interactions make data exploration easy and fun for any user. Furthermore, you can choose between three chart types – columns, lines, or areas; and feature up to 25 different series in the same visual and configure each series independently.

📊 Get Drill Down Combo PRO on AppSource

🌐 Visit Drill Down Combo PRO product page

Documentation | ZoomCharts Website | Follow ZoomCharts on LinkedIn

We are thrilled to announce that Fabric Core REST APIs are now generally available! This marks a significant milestone in the evolution of Microsoft Fabric, a platform that has been meticulously designed to empower developers and businesses alike with a comprehensive suite of tools and services.

The Core REST APIs are the backbone of Microsoft Fabric, providing the essential building blocks for a wide range of functionality within the platform. They are designed to improve efficiency, reduce manual effort, increase accuracy, and shorten processing times. These APIs help you scale operations more easily as the volume of work grows, automate repeatable processes consistently, and integrate with other systems and applications, providing a streamlined and efficient data pipeline.

The Microsoft Fabric Core APIs encompass a range of functionality, including:

- Workspace management: APIs to manage workspaces, including permissions.

- Item management: APIs for creating, reading, updating, and deleting items, with partial support for data source discovery and granular permissions management planned for the near future.

- Job and tenant management: APIs to manage jobs, tenants, and users within the platform.
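List endpoints in the Fabric REST APIs return results in pages; each page carries its items in `value` and, when more results exist, a `continuationUri` pointing at the next page. A minimal sketch of following those pages, assuming the base URL `https://api.fabric.microsoft.com/v1` and an access token you obtain separately; the helper names are hypothetical, and the fetch function is injected so the paging logic can be tried without a network.

```python
import json
import urllib.request
from typing import Callable, Dict, Iterator, Optional

FABRIC_API = "https://api.fabric.microsoft.com/v1"


def http_get_json(url: str, token: str) -> Dict:
    """GET a URL with a bearer token and decode the JSON body."""
    req = urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def iterate_pages(first_url: str, fetch: Callable[[str], Dict],
                  items_key: str = "value") -> Iterator[Dict]:
    """Yield every item from a paginated list endpoint, following continuationUri."""
    url: Optional[str] = first_url
    while url:
        page = fetch(url)
        yield from page.get(items_key, [])
        url = page.get("continuationUri")  # absent or null on the last page


# Example (needs a real token):
# for ws in iterate_pages(f"{FABRIC_API}/workspaces", lambda u: http_get_json(u, token)):
#     print(ws["id"], ws["displayName"])
```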

These APIs adhere to industry standards and best practices, ensuring a unified developer experience that is both coherent and easy to use.

For developers looking to dive into the details of the Microsoft Fabric Core APIs, comprehensive documentation is available. This includes guidelines on API usage, examples, and articles managed in a centralized repository for ease of access and discoverability. The documentation is continuously updated to reflect the latest features and improvements, ensuring that developers have the most current information at their fingertips. See the Microsoft Fabric REST API documentation.

We’re excited to share an important update we made to the Fabric Admin APIs. This enhancement is designed to simplify your automation experience. Now, you can manage both Power BI and the new Fabric items (previously referred to as artifacts) using the same set of APIs. Before this enhancement, you had to navigate using two different APIs—one for Power BI items and another for new Fabric items. That’s no longer the case.

The APIs we’ve updated include GetItem, ListItems, GetItemAccessDetails, and GetAccessEntities. These enhancements mean you can now query and manage all your items through a single API call, regardless of whether they’re Fabric types or Power BI types. We hope this update makes your work more straightforward and helps you accomplish your tasks more efficiently.

We’re thrilled to announce the public preview of the Microsoft Fabric workload development kit. This feature now extends to additional workloads and offers a robust developer toolkit for designing, developing, and interoperating with Microsoft Fabric using frontend SDKs and backend REST APIs. Introducing the Microsoft Fabric Workload Development Kit.
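Because ListItems now covers both Power BI and Fabric item types, one admin call can feed an inventory of everything in the tenant. A minimal sketch that builds the request URL and tallies items by type, assuming the admin endpoint `https://api.fabric.microsoft.com/v1/admin/items` accepts optional `type` and `workspaceId` query parameters and returns items with a `type` property; the helper names are hypothetical, so verify the parameter and field names against the Fabric admin API reference.

```python
from collections import Counter
from typing import Dict, Iterable, Optional
from urllib.parse import urlencode

# Assumed admin ListItems endpoint; check the Fabric admin API reference.
ADMIN_ITEMS_URL = "https://api.fabric.microsoft.com/v1/admin/items"


def admin_list_items_url(item_type: Optional[str] = None,
                         workspace_id: Optional[str] = None) -> str:
    """Build a ListItems URL with optional (assumed) filter parameters."""
    params: Dict[str, str] = {}
    if item_type:
        params["type"] = item_type
    if workspace_id:
        params["workspaceId"] = workspace_id
    return f"{ADMIN_ITEMS_URL}?{urlencode(params)}" if params else ADMIN_ITEMS_URL


def count_by_type(items: Iterable[Dict]) -> Counter:
    """Tally Power BI and Fabric items together by their type."""
    return Counter(item.get("type", "Unknown") for item in items)


print(admin_list_items_url("Report"))
print(count_by_type([{"type": "Report"}, {"type": "Lakehouse"}, {"type": "Report"}]))
```

The admin response is assumed to list items under `itemEntities` (rather than `value`) and to page with a continuation token like the other Fabric list endpoints; confirm this before wiring it into automation.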

The Microsoft Fabric platform now provides a mechanism for ISVs and developers to integrate their new and existing applications natively into Fabric’s workload hub. This integration provides the ability to add net new capabilities to Fabric in a consistent experience without leaving their Fabric workspace, thereby accelerating data driven outcomes from Microsoft Fabric.

By downloading and leveraging the development kit, ISVs and software developers can build and scale existing and new applications on Microsoft Fabric and offer them via the Azure Marketplace without ever leaving the Fabric environment.

The development kit provides a comprehensive guide and sample code for creating custom item types that can be added to the Fabric workspace. These item types can leverage the Fabric frontend SDKs and backend REST APIs to interact with other Fabric capabilities, such as data ingestion, transformation, orchestration, visualization, and collaboration. You can also embed your own data application into the Fabric item editor using the Fabric native experience components, such as the header, toolbar, navigation pane, and status bar. This way, you can offer consistent and seamless user experience across different Fabric workloads.

This is a call to action for ISVs, software developers, and system integrators. Let’s leverage this opportunity to create more integrated and seamless experiences for our users.

We’re excited about this journey and look forward to seeing the innovative workloads from our developer community.

We are proud to announce the public preview of external data sharing. Sharing data across organizations has become a standard part of day-to-day business for many of our customers. External data sharing, built on top of OneLake shortcuts, enables seamless, in-place sharing of data, allowing you to maintain a single copy of data even when sharing data across tenant boundaries. Whether you’re sharing data with customers, manufacturers, suppliers, consultants, or partners, the applications are endless.

How external data sharing works

Sharing data across tenants is as simple as any other share operation in Fabric. To share data, navigate to the item to be shared, open its context menu, and then click External data share. Select the folder or table you want to share and click Save and continue. Enter the recipient’s email address and an optional message, and then click Send.

The data consumer will receive an email containing a share link. They can click on the link to accept the share and access the data within their own tenant.

Click here for more details about external data sharing.

Following the release of OneLake data access roles in public preview, the OneLake team is excited to announce the availability of APIs for managing data access roles. These APIs can be used to programmatically manage granular data access for your lakehouses. Manage all aspects of role management such as creating new roles, editing existing ones, or changing memberships in a programmatic way.
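As a rough illustration of programmatic role management, the sketch below builds the JSON body for a role that grants read access to specific lakehouse paths. The endpoint (`PUT https://api.fabric.microsoft.com/v1/workspaces/{workspaceId}/items/{itemId}/dataAccessRoles`) and the field names (`decisionRules`, `permission`, `attributeName`, `attributeValueIncludedIn`, `members`, `microsoftEntraMembers`) reflect our understanding of the preview API and are assumptions to verify against the OneLake data access roles reference, including whether the call replaces or merges existing roles. The helper names are hypothetical.

```python
import json
from typing import Dict, List


def build_read_role(name: str, paths: List[str], entra_members: List[Dict[str, str]]) -> Dict:
    """Build one data access role granting Read on the given lakehouse paths (assumed schema)."""
    return {
        "name": name,
        "decisionRules": [
            {
                "effect": "Permit",
                "permission": [
                    {"attributeName": "Path", "attributeValueIncludedIn": paths},
                    {"attributeName": "Action", "attributeValueIncludedIn": ["Read"]},
                ],
            }
        ],
        # Members identified by Microsoft Entra tenant and object IDs.
        "members": {"microsoftEntraMembers": entra_members},
    }


def roles_request_body(roles: List[Dict]) -> bytes:
    """Serialize the role list as the (assumed) PUT request body."""
    return json.dumps({"value": roles}).encode("utf-8")


role = build_read_role(
    "SalesReaders",
    ["Tables/sales"],
    [{"tenantId": "<tenant-id>", "objectId": "<group-object-id>"}],
)
print(roles_request_body([role]).decode("utf-8"))
```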

Do you have data stored on-premises or behind a firewall that you want to access and analyze with Microsoft Fabric? With OneLake shortcuts, you can bring on-premises or network-restricted data into OneLake without any data movement or duplication. Simply install the Fabric on-premises data gateway and create a shortcut to your S3-compatible, Amazon S3, or Google Cloud Storage data source. Then use any of Fabric's powerful analytics engines and OneLake open APIs to explore, transform, and visualize your data in the cloud.

Try it out today and unlock the full potential of your data with OneLake shortcuts!

Data Warehouse

We are excited to announce Copilot for Data Warehouse in public preview! Copilot for Data Warehouse is an AI assistant that helps developers generate insights through T-SQL exploratory analysis. Copilot is contextualized to your warehouse's schema. With this feature, data engineers and data analysts can use Copilot to:

- Generate T-SQL queries for data analysis.

- Explain and add in-line code comments for existing T-SQL queries.

- Fix broken T-SQL code.

- Receive answers regarding general data warehousing tasks and operations.

There are 3 areas where Copilot is surfaced in the Data Warehouse SQL Query Editor:

- Code completions when writing a T-SQL query.

- Chat panel to interact with the Copilot in natural language.

- Quick action buttons to fix and explain T-SQL queries.

Learn more about Copilot for Data Warehouse: aka.ms/data-warehouse-copilot-docs. Copilot for Data Warehouse is currently only available in the Warehouse. Copilot in the SQL analytics endpoint is coming soon.

Unlocking Insights through Time: Time travel in Data warehouse (public preview)

As data volumes continue to grow in today's rapidly evolving world of Artificial Intelligence, it is crucial to reflect on historical data, which empowers businesses to derive valuable insights and make well-informed decisions for the future. Until now, preserving multiple historical versions of data not only incurred significant costs but also made it harder to uphold data integrity and degraded query performance. So, we are thrilled to announce the ability to query historical data through time travel at the T-SQL statement level, which helps unlock the evolution of data over time.

The Fabric warehouse retains historical versions of tables for seven calendar days. This retention allows you to query the tables as they existed at any point within the retention timeframe. The time travel clause can be included in any top-level SELECT statement. For complex queries that involve multiple tables, joins, stored procedures, or views, the timestamp is specified just once for the entire query instead of once for each table. This ensures the entire query is executed with reference to the specified timestamp, maintaining the data's uniformity and integrity throughout the query execution.
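The following is a minimal Python sketch of how a client might attach the time travel clause to a query and check the seven-day window before sending it. It assumes the statement-level hint is written as `OPTION (FOR TIMESTAMP AS OF '...')` with a UTC timestamp in `yyyy-MM-ddTHH:mm:ss[.fff]` form. That is our understanding of the T-SQL syntax, not something stated above. The helper name `time_travel_query` and the table `dbo.Sales` are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Retention stated in the announcement; approximated here as 7 x 24 hours.
RETENTION = timedelta(days=7)


def time_travel_query(select_sql: str, as_of: datetime, now: Optional[datetime] = None) -> str:
    """Append a statement-level time travel hint to a top-level SELECT.

    ASSUMPTION: the warehouse interprets the timestamp as UTC in the form
    yyyy-MM-ddTHH:mm:ss[.fff]; check the T-SQL reference before relying on it.
    """
    if as_of.tzinfo is None:
        raise ValueError("as_of must be timezone-aware")
    now = now or datetime.now(timezone.utc)
    as_of_utc = as_of.astimezone(timezone.utc)
    if not (now - RETENTION <= as_of_utc <= now):
        raise ValueError("timestamp is outside the seven-day retention window")
    # Keep millisecond precision (.fff) by truncating microseconds.
    stamp = as_of_utc.strftime("%Y-%m-%dT%H:%M:%S") + f".{as_of_utc.microsecond // 1000:03d}"
    body = select_sql.strip().rstrip(";")
    # One OPTION clause covers every table, join, and view in the statement.
    return f"{body}\nOPTION (FOR TIMESTAMP AS OF '{stamp}');"


if __name__ == "__main__":
    now = datetime(2024, 5, 21, 12, 0, tzinfo=timezone.utc)
    print(time_travel_query("SELECT * FROM dbo.Sales", now - timedelta(days=2), now=now))
    # SELECT * FROM dbo.Sales
    # OPTION (FOR TIMESTAMP AS OF '2024-05-19T12:00:00.000');
```

The hint is appended once to the whole statement, so the same timestamp applies to every object the query touches. That is the consistency property described above.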

From historical trend analysis and forecasting to compliance management, stable reporting and real-time decision support, the benefits of time travel extend across multiple business operations. Embrace the capability of time travel to navigate the data-driven landscape and gain a competitive edge in today’s fast-paced world of Artificial Intelligence.

We are excited to announce not one but two new enhancements to the Copy Into feature for Fabric Warehouse: Copy Into with Entra ID Authentication and Copy Into for Firewall-Enabled Storage!

Entra ID Authentication

When authenticating to storage accounts in your environment, Copy Into now uses the executing user's Entra ID by default. This means you can rely on Access Control Lists (ACLs) and role-based access control (RBAC) to authenticate to your storage accounts when using Copy Into. Currently, only organizational accounts are supported.

How to Use Entra ID Authentication

- Ensure your Entra ID organizational account has access to the underlying storage and can execute the Copy Into statement on your Fabric Warehouse.

- Run your Copy Into statement without specifying any credentials; the Entra ID organizational account will be used as the default authentication mechanism.
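The Entra ID account is the default, so the statement simply omits a `CREDENTIAL` clause. The Python helper below is a hypothetical illustration that builds such a statement. The table name and storage URL are placeholders. `FILE_TYPE` is limited to CSV and PARQUET, which are the formats we understand Copy Into to accept in Fabric Warehouse. The table name is inserted verbatim, so it should come from trusted input.

```python
def copy_into_statement(table: str, source_url: str, file_type: str = "PARQUET") -> str:
    """Build a COPY INTO statement with no CREDENTIAL clause.

    With no credential specified, the executing user's Entra ID
    organizational account is used to authenticate to the storage account.
    """
    file_type = file_type.upper()
    if file_type not in {"CSV", "PARQUET"}:
        raise ValueError("FILE_TYPE must be CSV or PARQUET")
    # Escape single quotes so the URL is a valid T-SQL string literal.
    url = source_url.replace("'", "''")
    # `table` is used as-is: pass only trusted, already-valid identifiers.
    return (
        f"COPY INTO {table}\n"
        f"FROM '{url}'\n"
        f"WITH (FILE_TYPE = '{file_type}');"
    )


if __name__ == "__main__":
    print(copy_into_statement(
        "dbo.Sales",
        "https://<account>.blob.core.windows.net/<container>/sales/*.parquet",
    ))
```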

Copy Into for firewall-enabled storage

Copy Into for firewall-enabled storage leverages the trusted workspace access functionality (see "Trusted workspace access in Microsoft Fabric (preview)" on Microsoft Learn) to establish a secure and seamless connection between Fabric and your storage accounts. Secure access can be enabled for both Blob Storage and ADLS Gen2 storage accounts. Secure access with Copy Into is available for warehouses in workspaces with Fabric capacities (F64 or higher).

To learn more about Copy Into, refer to "COPY INTO (Transact-SQL) – Azure Synapse Analytics and Microsoft Fabric" on Microsoft Learn.

We are excited to announce the launch of our new feature, Just in Time Database Attachment, which significantly improves your first-use experience, for example when you connect to a Data Warehouse or SQL analytics endpoint or simply open an item. These actions trigger the workspace resource assignment process. Among other steps, this process attaches all the necessary metadata for your items (data warehouses and SQL analytics endpoints), which can take a long time, particularly in workspaces that contain many items.

This feature attaches the database you need during the activation process of your workspace, so you can execute queries immediately and avoid unnecessary delays. All other databases are attached asynchronously in the background while you run queries, ensuring a smooth and efficient experience.
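The behavior described above follows an ordering pattern: wait only for the database the user asked for, and attach the rest concurrently. The Python asyncio sketch below illustrates that pattern with simulated attach operations. It is a conceptual model with hypothetical names (`attach`, `activate_workspace`, the database names), not Fabric's implementation.

```python
import asyncio
from typing import List


async def attach(db: str, attached: List[str]) -> None:
    """Stand-in for attaching one database's metadata (simulated delay)."""
    await asyncio.sleep(0.01)
    attached.append(db)


async def activate_workspace(requested: str, all_dbs: List[str], attached: List[str]) -> List[asyncio.Task]:
    """Attach the requested database first, then schedule the rest in the background."""
    await attach(requested, attached)  # the user can query this one as soon as we return
    return [asyncio.create_task(attach(db, attached)) for db in all_dbs if db != requested]


async def main() -> List[str]:
    attached: List[str] = []
    background = await activate_workspace("SalesWarehouse", ["HR", "SalesWarehouse", "Finance"], attached)
    print("ready to query:", attached)  # only the requested database so far
    await asyncio.gather(*background)   # the others finish attaching in the background
    return attached


if __name__ == "__main__":
    print(asyncio.run(main()))
```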

Data Engineering

We are advancing Fabric Runtime 1.3 from an Experimental Public Preview to a full Public Preview. Our Apache Spark-based big data execution engine, optimized for both data engineering and science workflows, has been updated and fully integrated into the Fabric platform.

The enhancements in Fabric Runtime 1.3 include the incorporation of Delta Lake 3.1, compatibility with Python 3.11, support for Starter Pools, integration with Environment and library management capabilities. Additionally, Fabric Runtime now enriches the data science experience by supporting the R language and integrating Copilot.

We are pleased to share that the Native Execution Engine for Fabric Runtime 1.2 is now available in public preview. The Native Execution Engine can greatly enhance the performance of your Spark jobs and queries. The engine has been rewritten in C++, operates in columnar mode, and uses vectorized processing. It offers superior query performance across data processing, ETL, data science, and interactive queries, all directly on your data lake. Overall, Fabric Spark delivers a 4x speed-up on the total execution time of all 99 queries in the TPC-DS 1TB benchmark when compared against Apache Spark. This engine is fully compatible with Apache Spark™ APIs (including the Spark SQL API).

It is seamless to use, with no code changes required: activate it and go. Enable it in your environment for your notebooks and Spark Job Definitions (SJDs).

This feature is in public preview. At this stage of the preview, there is no additional cost associated with using it.
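The 4x figure is a ratio of total execution times across all 99 queries, so long-running queries weigh more heavily than short ones. The Python below uses made-up timings for three queries (not benchmark data). It shows how that aggregate ratio is computed and how it can differ from the average of per-query speed-ups.

```python
from typing import Sequence


def aggregate_speedup(baseline_s: Sequence[float], candidate_s: Sequence[float]) -> float:
    """Speed-up on the sum of execution times: total baseline time / total candidate time."""
    if not baseline_s or len(baseline_s) != len(candidate_s):
        raise ValueError("need two equally sized, non-empty timing lists")
    return sum(baseline_s) / sum(candidate_s)


def mean_per_query_speedup(baseline_s: Sequence[float], candidate_s: Sequence[float]) -> float:
    """Average of per-query ratios, a different metric that weights every query equally."""
    if not baseline_s or len(baseline_s) != len(candidate_s):
        raise ValueError("need two equally sized, non-empty timing lists")
    return sum(b / c for b, c in zip(baseline_s, candidate_s)) / len(baseline_s)


# Made-up timings (seconds) for three queries, for illustration only.
spark = [10.0, 20.0, 30.0]
native = [2.0, 5.0, 8.0]
print(aggregate_speedup(spark, native))       # 4.0
print(mean_per_query_speedup(spark, native))  # 4.25
```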

We are excited to announce the Spark Monitoring Run Series Analysis features. They let you analyze run-duration trends and compare performance in two cases: recurring run instances of a pipeline Spark activity, and repeated Spark runs of the same notebook or Spark Job Definition.

- Run Series Comparison: Users can compare the duration of a Notebook run with that of previous runs and evaluate the input and output data to understand the reasons behind prolonged run durations.

- Outlier Detection and Analysis: The system can detect outliers in the run series and analyze them to pinpoint potential contributing factors.

- Detailed Run Instance Analysis: Clicking on a specific run instance provides detailed information on time distribution, which can be used to identify performance enhancement opportunities.

- Configuration Insights: Users can view the Spark configuration used for each run, including auto-tuned configurations for Spark SQL queries in auto-tune enabled Notebook runs.

You can access the new feature from the item’s recent runs panel and Spark application monitoring page.
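The announcement doesn't say how outliers are detected. For illustration only, here is one common technique: the modified z-score based on the median absolute deviation (Iglewicz and Hoaglin), applied to a hypothetical series of run durations. It is not necessarily what Fabric uses. The 3.5 threshold is the conventional cut-off for this score.

```python
from statistics import median
from typing import List, Sequence


def duration_outliers(durations_s: Sequence[float], threshold: float = 3.5) -> List[int]:
    """Return indices of runs whose modified z-score exceeds `threshold`.

    Uses the median and the median absolute deviation (MAD). Unlike a
    mean/stddev, these are not dragged around by a single very slow run.
    """
    if len(durations_s) < 3:
        return []  # too few runs to call anything an outlier
    med = median(durations_s)
    mad = median([abs(d - med) for d in durations_s])
    if mad == 0:
        # Most runs took exactly the median time; flag anything that differs.
        return [i for i, d in enumerate(durations_s) if d != med]
    return [i for i, d in enumerate(durations_s) if abs(0.6745 * (d - med) / mad) > threshold]


# Hypothetical durations (seconds) of seven runs of the same notebook.
runs = [120, 118, 125, 122, 119, 410, 121]
print(duration_outliers(runs))  # [5]
```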

We are excited to announce that Notebook now supports tagging others in comments, just like the familiar functionality in Office products!

When you select a section of code in a cell, you can add a comment with your insights and tag one or more teammates to collaborate or brainstorm on the specifics. This intuitive enhancement is designed to amplify collaboration in your daily development work.

Moreover, when you tag someone who doesn't have permission to the notebook, you can configure their permissions on the spot, so your code assets stay well managed.

We are thrilled to unveil a significant enhancement to the Fabric notebook ribbon, designed to elevate your data science and engineering workflows.

In the new version, you will find the new Session connect control on the Home tab, and now you can start a standard session without needing to run a code cell.

You can also easily spin up a high concurrency session and share it across multiple notebooks to improve compute resource utilization. You can attach to or leave a high concurrency session with a single click.

The “View session information” option takes you to the session information dialog, where you can find detailed session information and configure the session timeout. The diagnostic information is especially helpful when you need support for notebook issues.

Now you can easily access the powerful “Data Wrangler” from the Home tab of the new ribbon! You can explore your data with Data Wrangler's low-code experience, and both pandas DataFrames and Spark DataFrames are supported.

We recently made some changes to the Fabric notebook metadata to ensure compliance and consistency:

Notebook file content:

- The keyword “trident” has been replaced with “dependencies” in the notebook content. This adjustment ensures consistency and compliance.

Notebook Git format:

- The preface of the notebook has been modified from “# Synapse Analytics notebook source” to “# Fabric notebook source”.

- Additionally, the keyword “synapse” has been updated to “dependencies” in the Git repo.

If your workspace is connected to Git, these changes will appear as ‘uncommitted’ one time. No action is needed for these changes, and nothing will break within the Fabric platform. If you have any further questions, feel free to share them with us.
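Nothing needs to change in Fabric itself. If you keep your own scripts that read notebook sources from the Git repo and key off the first line, a check like the hypothetical Python helper below accepts both the old and the new preface during the transition. The function name and its return labels are illustrative only.

```python
OLD_PREFACE = "# Synapse Analytics notebook source"
NEW_PREFACE = "# Fabric notebook source"


def notebook_source_flavor(text: str) -> str:
    """Classify a Git-exported notebook source file by its first line."""
    lines = text.lstrip("\ufeff").splitlines()  # tolerate a UTF-8 byte-order mark
    first = lines[0].strip() if lines else ""
    if first == NEW_PREFACE:
        return "fabric"
    if first == OLD_PREFACE:
        return "synapse (pre-change)"
    return "unknown"


if __name__ == "__main__":
    print(notebook_source_flavor("# Fabric notebook source\n"))
```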

We are thrilled to announce that the environment is now a generally available item in Microsoft Fabric. As part of this GA release, we have shipped a few new Environment features.

- Git support

The environment now supports Git. You can check the environment in to your Git repo and edit it locally through its YAML representations and custom library files. After syncing your local changes to the Fabric portal, you can publish them manually or through the REST API.

- Deployment pipeline

Deploying environments from one workspace to another is supported. Now, you can deploy the code items and their dependent environments together from development to test and even production.
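For the REST API publish path mentioned under Git support, the Python sketch below builds a publish request using only the standard library, without sending it. The endpoint path `/workspaces/{workspaceId}/environments/{environmentId}/staging/publish` is our assumption of the Fabric REST API shape. This post does not give it. Verify the path and the required token scopes in the API reference; the helper name is hypothetical.

```python
import urllib.request

# ASSUMPTION: base URL and publish path reflect our understanding of the
# Fabric REST API for environments; confirm them in the API reference.
FABRIC_API = "https://api.fabric.microsoft.com/v1"


def build_publish_request(workspace_id: str, environment_id: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a request that publishes an environment's staged changes."""
    url = f"{FABRIC_API}/workspaces/{workspace_id}/environments/{environment_id}/staging/publish"
    return urllib.request.Request(
        url,
        method="POST",
        headers={"Authorization": f"Bearer {token}"},  # Microsoft Entra access token
    )


if __name__ == "__main__":
    req = build_publish_request("<workspace-id>", "<environment-id>", "<token>")
    print(req.get_method(), req.full_url)
    # To actually send it: urllib.request.urlopen(req)
```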