Collaborative Active Learning pp 33–52

Active Learning: An Integrative Review

- Gillian Kidman 4 &

- Minh Nguyet Nguyen 4

- First Online: 10 December 2022

This chapter provides an integrative review of active learning in higher education over the past two decades. The research during this time was scrutinised, revealing four methodological approaches (students’ behaviour and how they engage in their studies; activities, tasks, and strategies developed or used to generate, nurture, or promote active learning; the theoretical approach to active learning; and the impact of the physical learning environment). Creating an Active Learning Framework of student engagement facilitated a deeper analysis of the 30 articles against four engagement indicators (reflective and integrative learning, learning strategies, quantitative reasoning, and collaborative learning). An adjacency analysis of the methodological approaches and engagement indicators revealed that active learning is a maturing research area in which the researched areas show high-influence relationships. However, the research focuses on the student undertaking active learning and the materials used. The lecturer/tutor, their identity as a facilitator of active learning, and their role as a learner in this area have received no research attention.

* Denotes that the paper is included in the integrative review

* Beckerson, W. C., Anderson, J. O., Perpich, J. D., & Yoder-Himes, D. (2020). An introvert’s perspective: Analyzing the impact of active learning on multiple levels of class social personalities in an upper level biology course. Journal of College Science Teaching, 49 (3), 47–57.

* Brewe, E., Dou, R., & Shand, R. (2018). Costs of success: Financial implications of implementation of active learning in introductory physics courses for students and administrators. Physical Review. Physics Education Research, 14 (1), 010109. https://doi.org/10.1103/PhysRevPhysEducRes.14.010109

* Bucklin, B. A., Asdigian, N. L., Hawkins, J. L., & Klein, U. (2021). Making it stick: Use of active learning strategies in continuing medical education. BMC Medical Education, 21 (1), 44–44. https://doi.org/10.1186/s12909-020-02447-0

* Chan, K., Cheung, G., Wan, K., Brown, I., & Luk, G. (2015). Synthesizing technology adoption and learners’ approaches towards active learning in higher education. Electronic Journal of e-Learning, 13 (6), 431–440.

* Cooper, K. M., Downing, V. R., & Brownell, S. E. (2018). The influence of active learning practices on student anxiety in large-enrollment college science classrooms. International Journal of STEM Education, 5 (1), 1–18. https://doi.org/10.1186/s40594-018-0123-6

* Damaskou, E., & Petratos, P. (2018). Management strategies for active learning in AACSB accredited STEM discipline of CIS: Evidence from traditional and novel didactic methods in higher edu. International Journal for Business Education, 158 , 41–56.

* Daouk, Z., Bahous, R., & Bacha, N. N. (2016). Perceptions on the effectiveness of active learning strategies. Journal of Applied Research in Higher Education, 8 (3), 360–375. https://doi.org/10.1108/JARHE-05-2015-0037

* Das Neves, R. M., Lima, R. M., & Mesquita, D. (2021). Teacher competences for active learning in engineering education. Sustainability (Basel, Switzerland), 13 (16), 9231. https://doi.org/10.3390/su13169231

* Fields, L., Trostian, B., Moroney, T., & Dean, B. A. (2021). Active learning pedagogy transformation: A whole-of-school approach to person-centred teaching and nursing graduates. Nurse Education in Practice, 53 , 103051–103051. https://doi.org/10.1016/j.nepr.2021.103051

* Gahl, M. K., Gale, A., Kaestner, A., Yoshina, A., Paglione, E., & Bergman, G. (2021). Perspectives on facilitating dynamic ecology courses online using active learning. Ecology and Evolution, 11 (8), 3473–3480. https://doi.org/10.1002/ece3.6953

* Ghilay, Y., & Ghilay, R. (2015). TBAL: Technology-based active learning in higher education. Journal of education and learning, 4 (4). https://doi.org/10.5539/jel.v4n4p10

* Grossman, G. D., & Simon, T. N. (2020). Student perceptions of open educational resources video-based active learning in university-level biology classes: A multi-class evaluation. Journal of College Science Teaching, 49 (6), 36–44.

* Hartikainen, S., Rintala, H., Pylväs, L., & Nokelainen, P. (2019). The concept of active learning and the measurement of learning outcomes: A review of research in engineering higher education. Education Sciences, 9 (4), 276. https://doi.org/10.3390/educsci9040276

* Holec, V., & Marynowski, R. (2020). Does it matter where you teach? Insights from a quasi-experimental study of student engagement in an active learning classroom. Teaching and Learning Inquiry, 8 (2), 140–163. https://doi.org/10.20343/teachlearninqu.8.2.10

* Hyun, J., Ediger, R., & Lee, D. (2017). Students’ satisfaction on their Learning process in active learning and traditional classrooms. International Journal of Teaching and Learning in Higher Education, 29 (1), 108–118.

* Ito, H., & Kawazoe, N. (2015). Active learning for creating innovators: Employability skills beyond industrial needs. International Journal of Higher Education, 4 (2). https://doi.org/10.5430/ijhe.v4n2p81

* Kressler, B., & Kressler, J. (2020). Diverse student perceptions of active learning in a large enrollment STEM course. The Journal of Scholarship of Teaching and Learning, 20 (1). https://doi.org/10.14434/josotl.v20i1.24688

* Lim, J., Ko, H., Yang, J. W., Kim, S., Lee, S., Chun, M.-S., Ihm, J., & Park, J. (2019). Active learning through discussion: ICAP framework for education in health professions. BMC Medical Education, 19 (1), 477–477. https://doi.org/10.1186/s12909-019-1901-7

* Linsey, J., Talley, A., White, C., Jensen, D., & Wood, K. (2009). From tootsie rolls to broken bones: An innovative approach for active learning in mechanics of materials. Advances in Engineering Education, 1 (3), 1–22.

* Ludwig, J. (2021). An experiment in active learning: The effects of teams. International Journal of Educational Methodology, 7 (2), 353–360. https://doi.org/10.12973/ijem.7.2.353

* MacVaugh, J., & Norton, M. (2012). Introducing sustainability into business education contexts using active learning. International Journal of Sustainability in Higher Education, 13 (1), 72–87. https://doi.org/10.1108/14676371211190326

* Mangram, J. A., Haddix, M., Ochanji, M. K., & Masingila, J. (2015). Active learning strategies for complementing the lecture teaching methods in large classes in higher education. Journal of Instructional Research, 4 , 57–68.

* Pundak, D., Herscovitz, O., & Shacham, M. (2010). Attitudes of face-to-face and e-learning instructors toward “Active Learning”. The European Journal of Open, Distance and E-Learning, 13 .

* Rose, S., Hamill, R., Caruso, A., & Appelbaum, N. P. (2021). Applying the plan-do-study-act cycle in medical education to refine an antibiotics therapy active learning session. BMC Medical Education, 21 (1), 1–459. https://doi.org/10.1186/s12909-021-02886-3

* Stewart, D. W., Brown, S. D., Clavier, C. W., & Wyatt, J. (2011). Active-learning processes used in US pharmacy education. American Journal of Pharmaceutical Education, 75 (4), 68–68. https://doi.org/10.5688/ajpe75468

* Tirado-Olivares, S., Cózar-Gutiérrez, R., García-Olivares, R., & González-Calero, J. A. (2021). Active learning in history teaching in higher education: The effect of inquiry-based learning and a student response system-based formative assessment in teacher training. Australasian Journal of Educational Technology, 37 (5), 61–76. https://doi.org/10.14742/ajet.7087

* Torres, V., Sampaio, C. A., & Caldeira, A. P. (2019). Incoming medical students and their perception on the transition towards an active learning. Interface (Botucatu, Brazil), 23 . https://doi.org/10.1590/Interface.170471

* Van Amburgh, J. A., Devlin, J. W., Kirwin, J. L., & Qualters, D. M. (2007). A tool for measuring active learning in the classroom. American Journal of Pharmaceutical Education, 71 (5), 85. https://doi.org/10.5688/aj710585

* Walters, K. (2014). Sharing classroom research and the scholarship of teaching: Student resistance to active learning may not be as pervasive as is commonly believed. Nursing Education Perspectives, 35 (5), 342–343. https://doi.org/10.5480/11-691.1

* William, C. B., Jennifer, O. A., John, D. P., & Debbie, Y.-H. (2020). An introvert’s perspective: Analyzing the impact of active learning on multiple levels of class social personalities in an upper level biology course. Journal of College Science Teaching, 49 (3), 47–57.

Braun, V., & Clarke, V. (2013). Successful qualitative research: A practical guide for beginners . SAGE.

Bonwell, C., & Eison, J. (1991). Active learning: Creating excitement in the classroom AEHE-ERIC higher education ( Report No. 1 ). Jossey-Bass.

Cooper, H. M. (1984). The integrative research review: A systematic approach . SAGE Publications.

Coughlan, M., & Cronin, P. (2017). Doing a literature review in nursing, health and social care (2nd ed.) (p. 11), Beverly Hills. SAGE Publications.

Denney, A. S., & Tewksbury, R. (2013). How to write a literature review. Journal of Criminal Justice Education, 24 (2), 218–234. https://doi.org/10.1080/10511253.2012.730617

Freeman, S., Eddy, S., McDonough, M., Smith, K., Okoroafor, N., Jordt, H., & Wenderoth, M. (2014). Active learning increases student performance in science, engineering, and mathematics. Proceedings of the National Academy of Sciences of the United States of America, 111 (23), 8410–8415.

Hake, R. R. (1998). Interactive-engagement vs. traditional methods: A six-thousand-student survey of mechanics test data for introductory physics courses. American Journal of Physics, 66, 64. https://doi.org/10.1119/1.18809

Keathley-Herring, H., Van Aken, E., Gonzalez-Aleu, F., et al. (2016). Assessing the maturity of a research area: Bibliometric review and proposed framework. Scientometrics, 109 , 927–951. https://doi.org/10.1007/s11192-016-2096-x

Landscape design validation. (2009). Before drawing look at adjacency . July 26 post. https://ldvalidate.wordpress.com/2009/07/26/before-drawing-look-at-adjacency/

National Survey of Student Engagement (NSSE). (2020). Engagement indicators & high-impact practices . https://nsse.indiana.edu/nsse/survey-instruments/engagement-indicators.html

Russell, C. L. (2005). An overview of the integrative research review. Progress in Transplantation, 15 (1), 8–13. https://doi.org/10.7182/prtr.15.1.0n13660r26g725kj

Toronto, C. E. (2020). Overview of the integrative review. In C. E. Toronto & R. Remington (Eds.), A step-by-step guide to conducting an integrative review (1st ed.). Springer.

Author information

Authors and affiliations.

School of Curriculum, Teaching and Inclusive Education, Monash University, Melbourne, Australia

Gillian Kidman & Minh Nguyet Nguyen

Corresponding author

Correspondence to Gillian Kidman .

Editor information

Editors and affiliations.

Monash University Malaysia, Subang Jaya, Malaysia

Chan Chang-Tik

Monash University, Clayton, VIC, Australia

Gillian Kidman

Universiti Malaya, Kuala Lumpur, Malaysia

Meng Yew Tee

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this chapter

Cite this chapter.

Kidman, G., Nguyen, M.N. (2022). Active Learning: An Integrative Review. In: Chang-Tik, C., Kidman, G., Tee, M.Y. (eds) Collaborative Active Learning. Palgrave Macmillan, Singapore. https://doi.org/10.1007/978-981-19-4383-6_2

DOI : https://doi.org/10.1007/978-981-19-4383-6_2

Published : 10 December 2022

Publisher Name : Palgrave Macmillan, Singapore

Print ISBN : 978-981-19-4382-9

Online ISBN : 978-981-19-4383-6

eBook Packages : Education Education (R0)

- Open access

- Published: 15 March 2021

Instructor strategies to aid implementation of active learning: a systematic literature review

- Kevin A. Nguyen 1 ,

- Maura Borrego 2 ,

- Cynthia J. Finelli ORCID: orcid.org/0000-0001-9148-1492 3 ,

- Matt DeMonbrun 4 ,

- Caroline Crockett 3 ,

- Sneha Tharayil 2 ,

- Prateek Shekhar 5 ,

- Cynthia Waters 6 &

- Robyn Rosenberg 7

International Journal of STEM Education volume 8, Article number: 9 (2021)

Despite the evidence supporting the effectiveness of active learning in undergraduate STEM courses, the adoption of active learning has been slow. One barrier to adoption is instructors’ concerns about students’ affective and behavioral responses to active learning, especially student resistance. Numerous education researchers have documented their use of active learning in STEM classrooms. However, there is no research yet that systematically analyzes these studies for strategies to aid implementation of active learning and address students’ affective and behavioral responses. In this paper, we conduct a systematic literature review and identify 29 journal articles and conference papers that studied active learning, reported affective and behavioral student responses, and recommended at least one strategy for implementing active learning. We ask: (1) What are the characteristics of studies that examine affective and behavioral outcomes of active learning and provide instructor strategies? (2) What instructor strategies to aid implementation of active learning do the authors of these studies provide?

In our review, we noted that most active learning activities involved in-class problem solving within a traditional lecture-based course (N = 21). We found mostly positive affective and behavioral outcomes for students’ self-reports of learning, participation in the activities, and course satisfaction (N = 23). From our analysis of the 29 studies, we identified eight strategies to aid implementation of active learning based on three categories. Explanation strategies included providing students with clarifications and reasons for using active learning. Facilitation strategies entailed working with students and ensuring that the activity functions as intended. Planning strategies involved working outside of the class to improve the active learning experience.

To increase the adoption of active learning and address students’ responses to active learning, this study provides strategies to support instructors. The eight strategies are listed with evidence from numerous studies within our review on affective and behavioral responses to active learning. Future work should examine instructor strategies and their connection with other affective outcomes, such as identity, interests, and emotions.

Introduction

Prior reviews have established the effectiveness of active learning in undergraduate science, technology, engineering, and math (STEM) courses (e.g., Freeman et al., 2014; Lund & Stains, 2015; Theobald et al., 2020). In this review, we define active learning as classroom-based activities designed to engage students in their learning through answering questions, solving problems, discussing content, or teaching others, individually or in groups (Prince & Felder, 2007; Smith, Sheppard, Johnson, & Johnson, 2005), and this definition is inclusive of research-based instructional strategies (RBIS, e.g., Dancy, Henderson, & Turpen, 2016) and evidence-based instructional practices (EBIPs, e.g., Stains & Vickrey, 2017). Past studies show that students perceive active learning as benefitting their learning (Machemer & Crawford, 2007; Patrick, Howell, & Wischusen, 2016) and increasing their self-efficacy (Stump, Husman, & Corby, 2014). Furthermore, the use of active learning in STEM fields has been linked to improvements in student retention and learning, particularly among students from some underrepresented groups (Chi & Wylie, 2014; Freeman et al., 2014; Prince, 2004).

Despite the overwhelming evidence in support of active learning (e.g., Freeman et al., 2014), prior research has found that traditional teaching methods such as lecturing are still the dominant mode of instruction in undergraduate STEM courses, and low adoption rates of active learning in undergraduate STEM courses remain a problem (Hora & Ferrare, 2013; Stains et al., 2018). There are several reasons for these low adoption rates. Some instructors feel unconvinced that the effort required to implement active learning is worthwhile, and as many as 75% of instructors who have attempted specific types of active learning abandon the practice altogether (Froyd, Borrego, Cutler, Henderson, & Prince, 2013).

When asked directly about the barriers to adopting active learning, instructors cite a common set of concerns including the lack of preparation or class time (Finelli, Daly, & Richardson, 2014; Froyd et al., 2013; Henderson & Dancy, 2007). Among these concerns, student resistance to active learning is a potential explanation for the low rates of instructor persistence with active learning, and this negative response to active learning has gained increased attention from the academic community (e.g., Owens et al., 2020). Of course, students can exhibit both positive and negative responses to active learning (Carlson & Winquist, 2011; Henderson, Khan, & Dancy, 2018; Oakley, Hanna, Kuzmyn, & Felder, 2007), but due to the barrier student resistance can present to instructors, we focus here on negative student responses. Student resistance to active learning may manifest, for example, as lack of student participation and engagement with in-class activities, declining attendance, or poor course evaluations and enrollments (Tolman, Kremling, & Tagg, 2016; Winkler & Rybnikova, 2019).

We define student resistance to active learning (SRAL) as a negative affective or behavioral student response to active learning (DeMonbrun et al., 2017; Weimer, 2002; Winkler & Rybnikova, 2019). The affective domain, as it relates to active learning, encompasses not only student satisfaction and perceptions of learning but also motivation-related constructs such as value, self-efficacy, and belonging. The behavioral domain relates to participation, putting forth a good effort, and attending class. The affective and behavioral domains differ from much of the prior research on active learning, which centers on measuring cognitive gains in student learning and for which systematic reviews are readily available (e.g., Freeman et al., 2014; Theobald et al., 2020). Schmidt, Rosenberg, and Beymer (2018) explain the relationship between affective, cognitive, and behavioral domains, asserting all three types of engagement are necessary for science learning, and conclude that “students are unlikely to exert a high degree of behavioral engagement during science learning tasks if they do not also engage deeply with the content affectively and cognitively” (p. 35). Thus, SRAL, along with negative affective and behavioral student response more broadly, is a critical but underexplored component of STEM learning.

Recent research on student affective and behavioral responses to active learning has uncovered mechanisms of student resistance. Deslauriers, McCarty, Miller, Callaghan, and Kestin’s (2019) interviews of physics students revealed that the additional effort required by the novel format of an interactive lecture was the primary source of student resistance. Owens et al. (2020) identified a similar source of student resistance to their carefully designed biology active learning intervention: students were concerned about the additional effort required and the unfamiliar student-centered format. Deslauriers et al. (2019) and Owens et al. (2020) go a step further in citing self-efficacy (Bandura, 1982), mindset (Dweck & Leggett, 1988), and student engagement (Kuh, 2005) literature to explain student resistance. Similarly, Shekhar et al.’s (2020) review framed negative student responses to active learning in terms of expectancy-value theory (Wigfield & Eccles, 2000); students reacted negatively when they did not find active learning useful or worth the time and effort, or when they did not feel competent enough to complete the activities. Shekhar et al. (2020) also applied expectancy violation theory from physics education research (Gaffney, Gaffney, & Beichner, 2010) to explain how students’ initial expectations of a traditional course produced discomfort during active learning activities. To address both theories of student resistance, Shekhar et al. (2020) suggested that instructors provide scaffolding (Vygotsky, 1978) and support for self-directed learning activities. So, while framing the research as SRAL is relatively new, ideas about working with students to actively engage them in their learning are not. Prior literature on active learning in STEM undergraduate settings includes clues and evidence about strategies instructors can employ to reduce SRAL, even if they are not necessarily framed by the authors as such.

Interest in student affective and behavioral responses to active learning, including SRAL, is a relatively new development. But, given the discipline-based educational research (DBER) knowledge base around RBIS and EBIP adoption, we need not reinvent the wheel. In this paper, we conduct a systematic review. Systematic reviews are designed to methodically gather and synthesize results from multiple studies to provide a clear overview of a topic, presenting what is known and what is not known (Borrego, Foster, & Froyd, 2014). Such clarity informs decisions when designing or funding future research, interventions, and programs. Relevant studies for this paper are scattered across STEM disciplines and in DBER and general education venues, which include journals and conference proceedings. Quantitative, qualitative, and mixed methods approaches have been used to understand student affective and behavioral responses to active learning. Thus, a systematic review is appropriate for this topic given the long history of research on the development of RBIS, EBIPs, and active learning in STEM education; the distribution of primary studies across fields and formats; and the different methods taken to evaluate students’ affective and behavioral responses.

Specifically, we conducted a systematic review to address two interrelated research questions. (1) What are the characteristics of studies that examine affective and behavioral outcomes of active learning and provide instructor strategies? (2) What instructor strategies to aid implementation of active learning do the authors of these studies provide? These two questions are linked by our goal of sharing instructor strategies that can either reduce SRAL or encourage positive student affective and behavioral responses. Therefore, the instructor strategies in this review are only from studies that present empirical data of affective and behavioral student response to active learning. The strategies we identify in this review will not be surprising to highly experienced teaching and learning practitioners or researchers. However, this review does provide an important link between these strategies and student resistance, which remains one of the most feared barriers to instructor adoption of RBIS, EBIPs, and other forms of active learning.

Conceptual framework: instructor strategies to reduce resistance

Recent research has identified specific instructor strategies that correlate with reduced SRAL and positive student response in undergraduate STEM education (Finelli et al., 2018; Nguyen et al., 2017; Tharayil et al., 2018). For example, Deslauriers et al. (2019) suggested that physics students perceive the additional effort required by active learning to be evidence of less effective learning. To address this, the authors included a 20-min lecture about active learning in a subsequent course offering. By the end of that course, 65% of students reported increased enthusiasm for active learning, and 75% said the lecture intervention positively impacted their attitudes toward active learning. Explaining how active learning activities contribute to student learning is just one of many strategies instructors can employ to reduce SRAL (Tharayil et al., 2018).

DeMonbrun et al. (2017) provided a conceptual framework for differentiating instructor strategies, which includes not only explanation strategies (e.g., Deslauriers et al., 2019; Tharayil et al., 2018) but also facilitation strategies. Explanation strategies involve describing the purpose (such as how the activity relates to students’ learning) and expectations of the activity to students. Typically, instructors use explanation strategies before the in-class activity has begun. Facilitation strategies include promoting engagement and keeping the activity running smoothly once the activity has already begun, and some specific strategies include walking around the classroom or directly encouraging students. We use the existing categories of explanation and facilitation as a conceptual framework to guide our analysis and systematic review.

As a conceptual framework, explanation and facilitation strategies describe ways to aid the implementation of RBIS, EBIP, and other types of active learning. In fact, the work on these types of instructor strategies is related to higher education faculty development, implementation, and institutional change research perspectives (e.g., Borrego, Cutler, Prince, Henderson, & Froyd, 2013; Henderson, Beach, & Finkelstein, 2011; Kezar, Gehrke, & Elrod, 2015). As such, the specific types of strategies reviewed here are geared to assist instructors in moving toward more student-centered teaching methods by addressing their concerns of student resistance.

SRAL is a particular negative form of affective or behavioral student response (DeMonbrun et al., 2017; Weimer, 2002; Winkler & Rybnikova, 2019). Affective and behavioral student responses are conceptualized at the reactionary level (Kirkpatrick, 1976) of outcomes, which consists of how students feel (affective) and how they conduct themselves within the course (behavioral). Although affective and behavioral student responses to active learning are less frequently reported than cognitive outcomes, prior research suggests a few conceptual constructs within these outcomes.

Affective outcomes consist of students’ feelings, preferences, and satisfaction with the course. Affective outcomes also include students’ self-reports of whether they thought they learned more (or less) during active learning instruction. Some relevant affective outcomes include students’ perceived value or utility of active learning (Shekhar et al., 2020; Wigfield & Eccles, 2000), their positivity toward or enjoyment of the activities (DeMonbrun et al., 2017; Finelli et al., 2018), and their self-efficacy or confidence with doing the in-class activity (Bandura, 1982).

In contrast, students’ behavioral responses to active learning consist of their actions and practices during active learning. This includes students’ attendance in the class, their participation, engagement, and effort with the activity, and students’ distraction or off-task behavior (e.g., checking their phones, leaving to use the restroom) during the activity (DeMonbrun et al., 2017; Finelli et al., 2018; Winkler & Rybnikova, 2019).

We conceptualize negative or low scores in either affective or behavioral student outcomes as an indicator of SRAL (DeMonbrun et al., 2017; Nguyen et al., 2017). For example, a low score in reported course satisfaction would be an example of SRAL. This paper aims to synthesize instructor strategies to aid implementation of active learning from studies that either address SRAL and its negative or low scores or relate instructor strategies to positive or high scores. Therefore, we also conceptualize positive student affective and behavioral outcomes as the absence of SRAL. For ease of categorization in this review, we summarize studies’ affective and behavioral outcomes on active learning as positive, mostly positive, mixed/neutral, mostly negative, or negative.
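The five-level outcome summary can be written down as a small data structure. The following is a minimal Python sketch, not the coding scheme used in the review: the names `Outcome` and `indicates_sral` are hypothetical, treating "mostly negative" as an indicator of SRAL and "mostly positive" as its absence is an assumption, and the mixed/neutral level is deliberately left undetermined because the text does not say how it relates to SRAL.

```python
from enum import Enum
from typing import Optional


class Outcome(Enum):
    """The five summary labels named in the text."""
    POSITIVE = "positive"
    MOSTLY_POSITIVE = "mostly positive"
    MIXED_NEUTRAL = "mixed/neutral"
    MOSTLY_NEGATIVE = "mostly negative"
    NEGATIVE = "negative"


def indicates_sral(outcome: Outcome) -> Optional[bool]:
    """Return True if the outcome is read as an indicator of SRAL.

    Assumption: the 'mostly' levels follow their parent level.
    Returns None for mixed/neutral, which the text leaves open.
    """
    if outcome in (Outcome.NEGATIVE, Outcome.MOSTLY_NEGATIVE):
        return True
    if outcome in (Outcome.POSITIVE, Outcome.MOSTLY_POSITIVE):
        return False
    return None
```

Returning `None` rather than forcing a binary answer keeps the sketch from asserting more than the definitions state.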

We conducted a systematic literature review (Borrego et al., 2014; Gough, Oliver, & Thomas, 2017; Petticrew & Roberts, 2006) to identify primary research studies that describe active learning interventions in undergraduate STEM courses, recommend one or more strategies to aid implementation of active learning, and report student response outcomes to active learning.

A systematic review was warranted due to the popularity of active learning and the publication of numerous papers on the topic. Multiple STEM disciplines and research audiences have published journal articles and conference papers on the topic of active learning in the undergraduate STEM classroom. However, it was not immediately clear which studies addressed active learning, affective and behavioral student responses, and strategies to aid implementation of active learning. We used the systematic review process to efficiently gather results of multiple types of studies and create a clear overview of our topic.

Definitions

For clarity, we define several terms in this review. Researchers refer to us, the authors of this manuscript. Authors and instructors wrote the primary studies we reviewed, and we refer to these primary studies as “studies” consistently throughout. We use the term activity or activities to refer to the specific in-class active learning tasks assigned to students. Strategies refer to the instructor strategies used to aid implementation of active learning and address student resistance to active learning (SRAL). Student response includes affective and behavioral responses and outcomes related to active learning. SRAL is an acronym for student resistance to active learning, defined here as a negative affective or behavioral student response. Categories or category refer to a grouping of strategies to aid implementation of active learning, such as explanation or facilitation. Excerpts are quotes from studies, and these excerpts are used as codes and examples of specific strategies.

Study timeline, data collection, and sample selection

From 2015 to 2016, we worked with a research librarian to locate relevant studies and conduct a keyword search within six databases: two multidisciplinary databases (Web of Science and Academic Search Complete), two major engineering and technology indexes (Compendex and Inspec), and two popular education databases (Education Source and Education Resource Information Center). We created inclusion criteria that listed both search strings and study requirements:

1. Studies must include an in-class active learning intervention. This does not include laboratory classes. The corresponding search string was:

“active learning” or “peer-to-peer” or “small group work” or “problem based learning” or “problem-based learning” or “problem-oriented learning” or “project-based learning” or “project based learning” or “peer instruction” or “inquiry learning” or “cooperative learning” or “collaborative learning” or “student response system” or “personal response system” or “just-in-time teaching” or “just in time teaching” or clickers

2. Studies must include empirical evidence addressing student response to the active learning intervention. The corresponding search string was:

“affective outcome” or “affective response” or “class evaluation” or “course evaluation” or “student attitudes” or “student behaviors” or “student evaluation” or “student feedback” or “student perception” or “student resistance” or “student response”

3. Studies must describe a STEM course, as defined by the topic of the course, rather than by the department of the course or the major of the students enrolled (e.g., a business class for mathematics majors would not be included, but a mathematics class for business majors would).

4. Studies must be conducted in undergraduate courses and must not include K-12, vocational, or graduate education.

5. Studies must be in English and published between 1990 and 2015 as journal articles or conference papers.
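As an illustration only, the two search strings above can be assembled programmatically. The term lists are copied from the text; how the two groups were joined in each database is not stated, so combining them with AND is an assumption, and the helper names `or_group` and `build_query` are hypothetical.

```python
# Term lists copied from the two search strings given in the text.
ACTIVE_LEARNING_TERMS = [
    "active learning", "peer-to-peer", "small group work",
    "problem based learning", "problem-based learning",
    "problem-oriented learning", "project-based learning",
    "project based learning", "peer instruction", "inquiry learning",
    "cooperative learning", "collaborative learning",
    "student response system", "personal response system",
    "just-in-time teaching", "just in time teaching", "clickers",
]
STUDENT_RESPONSE_TERMS = [
    "affective outcome", "affective response", "class evaluation",
    "course evaluation", "student attitudes", "student behaviors",
    "student evaluation", "student feedback", "student perception",
    "student resistance", "student response",
]


def or_group(terms):
    """Join terms with OR, quoting multi-word or hyphenated phrases."""
    quoted = [f'"{t}"' if (" " in t or "-" in t) else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(*term_lists):
    """Combine OR-groups into one query string.

    ASSUMPTION: groups are joined with AND; the source does not say how
    the two search strings were combined in each database.
    """
    return " AND ".join(or_group(terms) for terms in term_lists)


query = build_query(ACTIVE_LEARNING_TERMS, STUDENT_RESPONSE_TERMS)
print(query)
```

Real database interfaces differ in their field tags and operator syntax, so a string like this would normally need adapting for each of the six databases.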

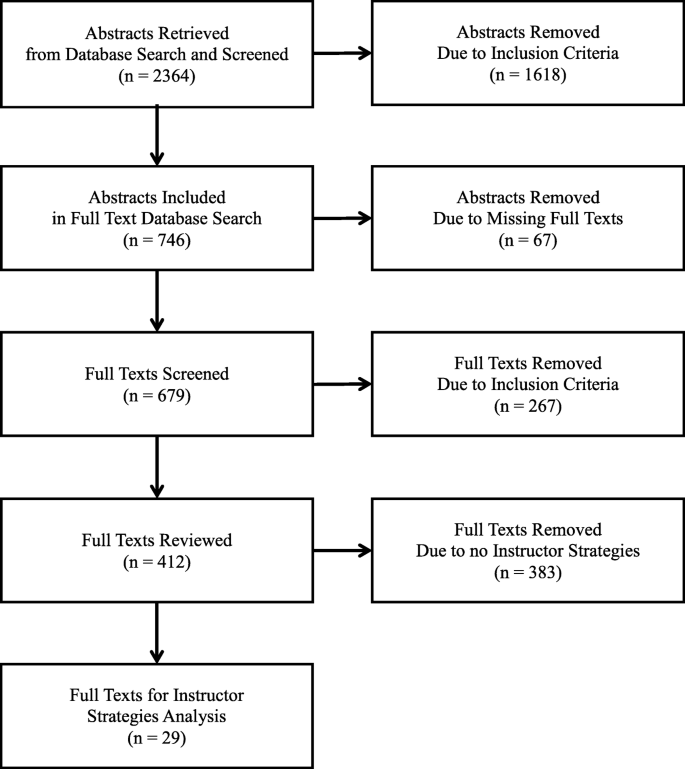

In addition to searching the six databases, we emailed solicitations to U.S. National Science Foundation Improving Undergraduate STEM Education (NSF IUSE) grantees. Between the database searches and email solicitation, we identified 2364 studies after removing duplicates. Most studies were from the database search, as we received just 92 studies from email solicitation (Fig. 1 ).

Fig. 1 PRISMA screening overview, styled after Liberati et al. (2009) and Passow and Passow (2017)

Next, we followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines for screening studies against our inclusion criteria (Borrego et al., 2014; Petticrew & Roberts, 2006). From 2016 to 2018, a team of seven researchers conducted two rounds of review in Refworks: the first round with only titles and abstracts and the second round with the full text. In both rounds, two researchers independently decided whether each study should be retained based on the inclusion criteria listed above. At the abstract review stage, if the independent coders disagreed, we passed the study on to the full-text screening round. We screened a total of 2364 abstracts, and only 746 studies passed the first round of title and abstract screening (see the PRISMA flow chart in Fig. 1). If the independent coders still disagreed at the full-text screening round, the seven researchers met and discussed the study, clarified the inclusion criteria as needed to resolve potential future disagreements, and, when necessary, took a majority vote (4 out of the 7 researchers) on the inclusion of the study. Because of the high number of coders, full consensus among all 7 was unusual, so a majority vote was used to finalize the inclusion of certain studies. We resolved these disagreements on a rolling basis; depending on the round (abstract or full text), we disagreed about 10–15% of the time on the inclusion of a study. In both rounds of screening, studies were often excluded because they did not gather novel empirical data or evidence (inclusion criterion #2) or were not in an undergraduate STEM course (inclusion criteria #3 and #4). Only 412 studies met all our final inclusion criteria.
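The two-round decision rule described above can be sketched as follows. This is a simplified reading of the text, not the team's actual tooling: the function names are hypothetical, and the sketch assumes that every full-text disagreement ends in a vote, whereas the text says a vote was taken only when discussion did not settle the matter.

```python
def abstract_round(vote_a: bool, vote_b: bool) -> bool:
    """Title/abstract round with two independent coders.

    A study moves on if both coders include it, and also if they disagree
    (disagreements were passed on to full-text screening).
    """
    return vote_a or vote_b


def full_text_round(vote_a: bool, vote_b: bool, team_votes=None) -> bool:
    """Full-text round: agreement decides; otherwise the team of 7 votes.

    ASSUMPTION: every disagreement goes to a vote. team_votes is a list of
    7 booleans, one per researcher; at least 4 True votes include the study.
    """
    if vote_a == vote_b:
        return vote_a
    if team_votes is None or len(team_votes) != 7:
        raise ValueError("a disagreement needs votes from all 7 researchers")
    return sum(team_votes) >= 4


# Hypothetical example: the coders disagree, the team votes 4 to 3 to include.
print(full_text_round(True, False, [True, True, True, True, False, False, False]))
```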

Coding procedure

From 2017 to 2018, a team of five researchers then coded these 412 studies for detailed information. To quickly gather information about all 412 studies and to answer the first part of our research question (What are the characteristics of studies that examine affective and behavioral outcomes of active learning and provide instructor strategies?), we developed an online coding form using Google Forms and Google Sheets. The five researchers piloted and refined the coding form over three rounds of pair coding, and 19 studies were used to test and revise early versions of the coding form. The final coding form (Borrego et al., 2018) used a mix of multiple choice and free response items regarding study characteristics (bibliographic information, type of publication, location of study), course characteristics (discipline, course level, number of students sampled, and type of active learning), methodology (main type of evidence collected, sample size, and analysis methods), study findings (types of student responses and outcomes), and strategy reported (if the study explicitly mentioned using strategies to aid implementation of active learning).

In the end, only 29 studies explicitly described strategies to aid implementation of active learning (Fig. 1), and we used these 29 studies as the dataset for this study. The main difference between these 29 studies and the other 383 studies was that these 29 studies explicitly described the ways authors implemented active learning in their courses to address SRAL or promote positive student outcomes. Although some readers who are experienced active learning instructors or educational researchers may view pedagogies and strategies as integrated, we found that most papers described active learning methods in terms of student tasks, while advice on strategies, if included, tended to appear separately. We chose not to overinterpret passing mentions of how active learning was implemented as strategies recommended by the authors.
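The screening funnel can be checked with a few lines of arithmetic. The stage counts below are the ones reported in the text; the stage labels and the `funnel` helper are illustrative.

```python
# Counts reported in the text for each screening stage.
STAGES = [
    ("records after removing duplicates", 2364),
    ("passed title and abstract screening", 746),
    ("met all inclusion criteria", 412),
    ("explicitly described strategies", 29),
]


def funnel(stages):
    """Return (stage name, kept, excluded at this step, share kept) per step."""
    rows = []
    for (_, before), (name, after) in zip(stages, stages[1:]):
        rows.append((name, after, before - after, after / before))
    return rows


for name, kept, excluded, share in funnel(STAGES):
    print(f"{name}: kept {kept}, excluded {excluded} ({share:.1%} retained)")
```

The last step reproduces the 383 studies that met the inclusion criteria but did not explicitly describe strategies.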

Analysis procedure for coding strategies

To answer our second research question (What instructor strategies to aid implementation of active learning do the authors of these studies provide?), we closely reviewed the 29 studies to analyze the strategies in more detail. We used Boyatzis’s ( 1998 ) thematic analysis technique to compile all mentions of instructor strategies to aid implementation of active learning and categorize these excerpts into certain strategies. This technique uses both deductive and inductive coding processes (Creswell & Creswell, 2017 ; Jesiek, Mazzurco, Buswell, & Thompson, 2018 ).

In 2018, three researchers reread the 29 studies, independently marking excerpts related to strategies. We found a total of 126 excerpts. The number of excerpts within each study ranged from 1 to 14 (M = 4, SD = 3). We then pasted each excerpt into its own row in a Google Sheet. We examined the entire spreadsheet as a team and grouped similar excerpts together using a deductive coding process. We used the explanation and facilitation conceptual framework (DeMonbrun et al., 2017) and placed each excerpt into one of its two categories. We also assigned each excerpt a specific strategy from the framework (e.g., describing the purpose of the activity, or encouraging students).

However, there were multiple excerpts that did not easily match either category; we set these aside for the inductive coding process. We then reviewed all excerpts without a category and suggested the creation of a new third category, called planning . We based this new category on the idea that the existing explanation and facilitation conceptual framework did not capture strategies that occurred outside of the classroom. We discuss the specific strategies within the planning category in the Results. With a new category in hand, we created a preliminary codebook consisting of explanation, facilitation, and planning categories, and their respective specific strategies.

We then passed the spreadsheet and preliminary codebook to another researcher who had not previously seen the excerpts. This second researcher looked through all the excerpts and assigned categories and strategies, without being able to see the suggestions of the initial three researchers. The second researcher also created their own new strategies and codes, especially when a specific strategy was not present in the preliminary codebook. All of their new strategies and codes were created within the planning category. The second researcher's assigned categories and strategies agreed with the initial coding for 71% of the excerpts. A researcher from the initial strategies coding met with the second researcher and discussed all disagreements. The high rate of disagreement (29%) arose from the specific strategies within the new third category, planning. Since the second researcher created new planning strategies, these assigned codes counted as disagreements by default. The two researchers resolved the disagreements by finalizing a codebook with the full, combined list of planning strategies and the previous explanation and facilitation strategies. Finally, they coded the excerpts once more with the final codebook, this time working together in the same coding sessions. Any disagreements were immediately resolved through discussion and updating of final strategy codes. In the end, all 126 excerpts were coded and kept.
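The agreement figure above is a simple percent agreement. A minimal sketch of that calculation follows, assuming that an excerpt counts as agreed only when both the category and the specific strategy match. The five coded excerpts are invented for illustration and are not the study's data.

```python
def percent_agreement(codes_a, codes_b):
    """Share of items (in percent) on which two coders assigned the same code."""
    if len(codes_a) != len(codes_b):
        raise ValueError("both coders must code the same excerpts")
    if not codes_a:
        raise ValueError("no excerpts to compare")
    matches = sum(a == b for a, b in zip(codes_a, codes_b))
    return 100 * matches / len(codes_a)


# HYPOTHETICAL codes: (category, strategy) per excerpt, same excerpt order.
initial_coders = [
    ("explanation", "explain the purpose"),
    ("facilitation", "approach students"),
    ("planning", "align the course"),
    ("planning", "review student feedback"),
    ("facilitation", "encourage students"),
]
second_coder = [
    ("explanation", "explain the purpose"),
    ("facilitation", "approach students"),
    ("planning", "grade participation"),  # newly created code, so a mismatch
    ("planning", "review student feedback"),
    ("facilitation", "encourage students"),
]

print(percent_agreement(initial_coders, second_coder))
```

Percent agreement does not correct for chance agreement; the text reports only this raw figure, so the sketch does the same.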

Characteristics of the primary studies

To answer our first research question (What are the characteristics of studies that examine affective and behavioral outcomes of active learning and provide instructor strategies?), we report the results from our coding and systematic review process. We discuss characteristics of studies within our dataset below and in Table 1 .

Type of publication and research audience

Of the 29 studies, 11 studies were published in conference proceedings, while the remaining 18 studies were journal articles. Examples of journals included the European Journal of Engineering Education , Journal of College Science Teaching , and PRIMUS (Problems, Resources, and Issues in Mathematics Undergraduate Studies).

In terms of research audiences and perspectives, both US and international views were represented. Eighteen studies were from North America, two were from Australia, three were from Asia, and six were from Europe. For more details about the type of research publications, full bibliographic information for all 29 studies is included in the Appendix.

Types of courses sampled

Studies sampled different types of undergraduate STEM courses. In terms of course year, the largest share of studies sampled first-year courses (13 studies). All four course years were represented (4 second-year, 3 third-year, 2 fourth-year, 7 not reported). With regard to course discipline or major, all major STEM education disciplines were represented. Fourteen studies were conducted in engineering courses, and most major engineering subdisciplines were represented, such as electrical and computer engineering (4 studies), mechanical engineering (3 studies), general engineering courses (3 studies), chemical engineering (2 studies), and civil engineering (1 study). Thirteen studies were conducted in science courses (3 physics/astronomy, 7 biology, 3 chemistry), and 2 studies were conducted in mathematics or statistics courses.

For teaching methods, most studies sampled traditional courses that were primarily lecture-based but included some in-class activities. The most common activity was giving class time for students to do problem solving (PS) (21 studies). Students were instructed to do problem solving either in groups (16 studies) or individually (5 studies), and sometimes both in the same course. Project or problem-based learning (PBL) was the second most frequently reported activity with 8 studies, and the implementation of this teaching method ranged from end-of-term final projects to an entire project or problem-based course. Clickers (4 studies) and class discussions (4 studies) tied as the third most common activity.

Research design, methods, and outcomes

The 29 studies used quantitative (10 studies), qualitative (6 studies), or mixed methods (13 studies) research designs. Most studies contained self-made instructor surveys (IS) as their main source of evidence (20 studies). In contrast, only 2 studies used survey instruments with evidence of validity (IEV). Other forms of data collection included using institutions’ end of course evaluations (EOC) (10 studies), observations (5 studies), and interviews (4 studies).

Studies reported a variety of different measures for researching students’ affective and behavioral responses to active learning. The most common measure was students’ self-reports of learning (an affective outcome); twenty-one studies measured whether students thought they learned more or less due to the active learning intervention. Other common measures included whether students participated in the activities (16 studies, participation), whether they enjoyed the activities (15 studies, enjoyment), and if students were satisfied with the overall course experience (13 studies, course satisfaction). Most studies included more than one measure. Some studies also measured course attendance (4 studies) and students’ self-efficacy with the activities and relevant STEM disciplines (4 studies).

We found that 23 of the 29 studies reported positive or mostly positive outcomes for their students’ affective and behavioral responses to active learning. Only 5 studies reported mixed/neutral study outcomes, and only one study reported negative student response to active learning. We discuss the implications of this lack of negative study outcomes and reports of SRAL in our dataset in the “Discussion” section.

To answer our second research question (What instructor strategies to aid implementation of active learning do the authors of these studies provide?), we provide descriptions, categories, and excerpts of specific strategies found within our systematic literature review.

Explanation strategies

Explanation strategies provide students with clarifications and reasons for using active learning (DeMonbrun et al., 2017 ). Within the explanation category, we identified two specific strategies: establish expectations and explain the purpose .

Establish expectations

Establishing expectations means setting the tone and routine for active learning at both the course and in-class activity level. Instructors can discuss expectations at the beginning of the semester, at the start of a class session, or right before the activity.

For establishing expectations at the beginning of the semester, studies provided specific ways to ensure students became familiar with active learning as early as possible. These included “introduc[ing] collaborative learning at the beginning of the academic term” (Herkert, 1997, p. 450) and making sure that “project instructions and the data were posted fairly early in the semester, and the students were made aware that the project was an important part of their assessment” (Krishnan & Nalim, 2009, p. 5).

McClanahan and McClanahan ( 2002 ) described the importance of explaining how the course will use active learning and purposely using the syllabus to do this:

Set the stage. Create the expectation that students will actively participate in this class. One way to accomplish that is to include a statement in your syllabus about your teaching strategies. For example: I will be using a variety of teaching strategies in this class. Some of these activities may require that you interact with me or other students in class. I hope you will find these methods interesting and engaging and that they enable you to be more successful in this course . In the syllabus, describe the specific learning activities you plan to conduct. These descriptions let the students know what to expect from you as well as what you expect from them (emphasis added, p. 93).

Early on, students see that the course is interactive, and they also see the activities required to be successful in the course.

These studies and excerpts demonstrate the importance of explaining to students how in-class activities relate to course expectations. Instructors using active learning should start the semester with clear expectations for how students should engage with activities.

Explain the purpose

Explaining the purpose includes offering students reasons why certain activities are being used and convincing them of the importance of participating.

One way that studies explained the purpose of the activities was by leveraging and showing assessment data on active learning. For example, Lenz ( 2015 ) dedicated class time to show current students comments from previous students:

I spend the first few weeks reminding them of the research and of the payoff that they will garner and being a very enthusiastic supporter of the [active learning teaching] method. I show them comments I have received from previous classes and I spend a lot of time selling the method (p. 294).

Providing current students with comments from previous semesters may help them see the value of active learning. Lake (2001) also used data from prior course offerings to show students “the positive academic performance results seen in the previous use of active learning” on the first day of class (p. 899).

However, sharing the effectiveness of the activities does not have to be constrained to the beginning of the course. Autin et al. ( 2013 ) used mid-semester test data and comparisons to sell the continued use of active learning to their students. They said to students:

Based on your reflections, I can see that many of you are not comfortable with the format of this class. Many of you said that you would learn better from a traditional lecture. However, this class, as a whole, performed better on the test than my other [lecture] section did. Something seems to be working here (p. 946).

Showing students comparisons between active learning and traditional lecture classes is a powerful way to explain how active learning benefits them.

Explaining the purpose of the activities by sharing course data with students appears to be a useful strategy, as it tells students why active learning is being used and convinces students that active learning is making a difference.

Facilitation strategies

Facilitation strategies ensure the continued engagement in the class activities once they have begun, and many of the specific strategies within this category involve working directly with students. We identified two strategies within the facilitation category: approach students and encourage students .

Approach students

Approaching students means engaging with students during the activity. This includes physical proximity and monitoring students, walking around the classroom, and providing students with additional feedback, clarifications, or questions about the activity.

Several studies described how instructors circulated around the classroom to check on the progress of students during an activity. Lenz ( 2015 ) stated this plainly in her study, “While the students work on these problems I walk around the room, listening to their discussions” (p. 284). Armbruster et al. ( 2009 ) described this strategy and noted positive student engagement, “During each group-work exercise the instructor would move throughout the classroom to monitor group progress, and it was rare to find a group that was not seriously engaged in the exercise” (p. 209). Haseeb ( 2011 ) combined moving around the room and approaching students with questions, and they stated, “The instructor moves around from one discussion group to another and listens to their discussions, ask[ing] provoking questions” (p. 276). Certain group-based activities worked better with this strategy, as McClanahan and McClanahan ( 2002 ) explained:

Breaking the class into smaller working groups frees the professor to walk around and interact with students more personally. He or she can respond to student questions, ask additional questions, or chat informally with students about the class (p. 94).

Approaching students not only helps facilitate the activity but also gives the instructor a chance to work with students more closely and receive feedback. Instructors walking around the classroom ensure that both the students and the instructor continue to engage with and participate in the activity.

Encourage students

Encouraging students includes creating a supportive classroom environment, motivating students to do the activity, building respect and rapport with students, demonstrating care, and having a positive demeanor toward students’ success.

Ramsier et al. ( 2003 ) provided a detailed explanation of the importance of building a supportive classroom environment:

Most of this success lies in the process of negotiation and the building of mutual respect within the class, and requires motivation, energy and enthusiasm on behalf of the instructor… Negotiation is the key to making all of this work, and building a sense of community and shared ownership. Learning students’ names is a challenge but a necessary part of our approach. Listening to student needs and wants with regard to test and homework due dates…projects and activities, etc. goes a long way to build the type of relationships within the class that we need in order to maintain and encourage performance (pp. 16–18).

Here, the authors described a few specific strategies for supporting a positive demeanor, such as learning students’ names and listening to student needs and wants, which helped maintain student performance in an active learning classroom.

Another way to build a supportive classroom environment was for instructors to appear more approachable. For example, Bullard and Felder (2007) worked to “give the students a sense of their instructors as somewhat normal and approachable human beings and to help them start to develop a sense of community” (p. 5). As instructors and students become more comfortable working with each other, instructors can work toward easing “frustration and strong emotion among students and step by step develop the students’ acceptance [of active learning]” (Harun, Yusof, Jamaludin, & Hassan, 2012, p. 234). In all, encouraging students and creating a supportive environment appear to be useful strategies to aid implementation of active learning.

Planning strategies

The planning category encompasses strategies that occur outside of class time, distinguishing it from the explanation and facilitation categories. Four strategies fall into this category: design appropriate activities , create group policies , align the course , and review student feedback .

Design appropriate activities

Many studies took into consideration the design of appropriate or suitable activities for their courses. This meant making sure the activity was suitable in terms of time, difficulty, and constraints of the course. Activities were designed to strike a balance between being too difficult and too simple, to be engaging, and to provide opportunities for students to participate.

Li et al. ( 2009 ) explained the importance of outside-of-class planning and considering appropriate projects: “The selection of the projects takes place in pre-course planning. The subjects for projects should be significant and manageable” (p. 491). Haseeb ( 2011 ) further emphasized a balance in design by discussing problems (within problem-based learning) between two parameters, “the problem is deliberately designed to be open-ended and vague in terms of technical details” (p. 275). Armbruster et al. ( 2009 ) expanded on the idea of balanced activities by connecting it to group-work and positive outcomes, and they stated, “The group exercises that elicited the most animated student participation were those that were sufficiently challenging that very few students could solve the problem individually, but at least 50% or more of the groups could solve the problem by working as a team” (p. 209).

Instructors should consider the design of activities outside of class time. Activities should be appropriately challenging but achievable for students, so that students remain engaged and participate in the activity during class time.

Create group policies

Creating group policies means considering rules when using group activities. This strategy is unique in that it directly addresses a specific subset of activities, group work. These policies included setting team sizes and assigning specific roles to group members.

Studies outlined a few specific approaches for assigning groups. For example, Ramsier et al. ( 2003 ) recommended frequently changing and randomizing groups: “When students enter the room on these days they sit in randomized groups of 3 to 4 students. Randomization helps to build a learning community atmosphere and eliminates cliques” (p. 4). Another strategy in combination with frequent changing of groups was to not allow students to select their own groups. Lehtovuori et al. ( 2013 ) used this to avoid problems of freeriding and group dysfunction:

For example, group division is an issue to be aware of...An easy and safe solution is to draw lots to assign the groups and to change them often. This way nobody needs to suffer from a dysfunctional group for too long. Popular practice that students self-organize into groups is not the best solution from the point of view of learning and teaching. Sometimes friendly relationships can complicate fair division of responsibility and work load in the group (p. 9).

Here, Lehtovuori et al. ( 2013 ) considered different types of group policies and concluded that frequently changing groups worked best for students. Kovac ( 1999 ) also described changing groups but assigned specific roles to individuals:

Students were divided into groups of four and assigned specific roles: manager, spokesperson, recorder, and strategy analyst. The roles were rotated from week to week. To alleviate complaints from students that they were "stuck in a bad group for the entire semester," the groups were changed after each of the two in-class exams (p. 121).

The use of four specific group roles is a potential group policy, and Kovac ( 1999 ) continued the trend of changing group members often.

Overall, these studies describe the importance of thinking about ways to implement group-based activities before enacting them during class, and they suggest that groups should be reconstituted frequently. Instructors using group activities should consider whether to use specific group member policies before implementing the activity in the classroom.

Align the course

Aligning the course emphasizes the importance of purposely connecting multiple parts of the course together. This strategy involves planning to ensure students are graded on their participation in the activities as well as considering the timing of the activities with respect to other aspects of the course.

Li et al. ( 2009 ) described aligning classroom tasks by discussing the importance of timing, and they wrote, “The coordination between the class lectures and the project phases is very important. If the project is assigned near the directly related lectures, students can instantiate class concepts almost immediately in the project and can apply the project experience in class” (p. 491).

Krishnan and Nalim ( 2009 ) aligned class activities with grades to motivate students and encourage participation: “The project was a component of the course counting for typically 10-15% of the total points for the course grade. Since the students were told about the project and that it carried a significant portion of their grade, they took the project seriously” (p. 4). McClanahan and McClanahan ( 2002 ) expanded on the idea of using grades to emphasize the importance of active learning to students:

Develop a grading policy that supports active learning. Active learning experiences that are important enough to do are important enough to be included as part of a student's grade…The class syllabus should describe your grading policy for active learning experiences and how those grades factor into the student's final grade. Clarify with the students that these points are not extra credit. These activities, just like exams, will be counted when grades are determined (p. 93).

Here, they suggest a clear grading policy that includes how activities will be assessed as part of students’ final grades.

de Justo and Delgado ( 2014 ) connected grading and assessment to learning and further suggested that reliance on exams may negatively impact student engagement:

Particular attention should be given to alignment between the course learning outcomes and assessment tasks. The tendency among faculty members to rely primarily on written examinations for assessment purposes should be overcome, because it may negatively affect students’ engagement in the course activities (p. 8).

Instructors should consider their overall assessment strategies, as overreliance on written exams could mean that students engage less with the activities.

When planning to use active learning, instructors should consider how activities are aligned with course content and students’ grades. Instructors should decide before active learning implementation whether class participation and engagement will be reflected in student grades and in the course syllabus.

Review student feedback

Reviewing student feedback includes both soliciting feedback about the activity and using that feedback to improve the course. This strategy can be an iterative process that occurs over several course offerings.

Many studies utilized student feedback to continuously revise and improve the course. For example, Metzger ( 2015 ) commented that “gathering and reviewing feedback from students can inform revisions of course design, implementation, and assessment strategies” (p. 8). Rockland et al. ( 2013 ) further described changing and improving the course in response to student feedback, “As a result of these discussions, the author made three changes to the course. This is the process of continuous improvement within a course” (p. 6).

Herkert ( 1997 ) also demonstrated the use of student feedback for improving the course over time: “Indeed, the [collaborative] learning techniques described herein have only gradually evolved over the past decade through a process of trial and error, supported by discussion with colleagues in various academic fields and helpful feedback from my students” (p. 459).

In addition to incorporating student feedback, McClanahan and McClanahan ( 2002 ) commented on how student feedback builds a stronger partnership with students, “Using student feedback to make improvements in the learning experience reinforces the notion that your class is a partnership and that you value your students’ ideas as a means to strengthen that partnership and create more successful learning” (p. 94). Making students aware that the instructor is soliciting and using feedback can help encourage and build rapport with students.

Instructors should review student feedback for continual and iterative course improvement. Much of the student feedback review occurs outside of class time, and it appears useful for instructors to solicit student feedback to guide changes to the course and build student rapport.

Summary of strategies

We list the appearance of strategies within studies in Table 1 in short-hand form. No study included all eight strategies. The studies that included the most strategies were Bullard and Felder (2007) (7 strategies), Armbruster et al. (2009) (5 strategies), and Lenz (2015) (5 strategies). However, these three studies were exemplars, as most studies included only one or two strategies.

Table 2 presents a summary list of specific strategies, their categories, and descriptions. We also note the number of unique studies ( N ) and excerpts ( n ) that included the specific strategies. In total, there were eight specific strategies within three categories. Most strategies fell under the planning category ( N = 26), with align the course being the most reported strategy ( N = 14). Approaching students ( N = 13) and reviewing student feedback ( N = 11) were the second and third most common strategies, respectively. Overall, we present eight strategies to aid implementation of active learning.
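The difference between the number of unique studies (N) and the number of excerpts (n) for a strategy can be made concrete with a small tally. The rows below are invented for illustration and do not reproduce the study's spreadsheet; the `tally` helper is hypothetical.

```python
from collections import defaultdict

# HYPOTHETICAL coded rows: (study_id, category, strategy), one per excerpt.
coded_excerpts = [
    ("study_A", "planning", "align the course"),
    ("study_A", "planning", "align the course"),
    ("study_B", "planning", "align the course"),
    ("study_B", "facilitation", "approach students"),
    ("study_C", "facilitation", "approach students"),
]


def tally(rows):
    """Count unique studies (N) and excerpts (n) for each strategy."""
    studies = defaultdict(set)
    excerpts = defaultdict(int)
    for study_id, _category, strategy in rows:
        studies[strategy].add(study_id)  # a study counts once per strategy
        excerpts[strategy] += 1          # every excerpt counts
    return {s: {"N": len(studies[s]), "n": excerpts[s]} for s in excerpts}


summary = tally(coded_excerpts)
for strategy, counts in summary.items():
    print(strategy, counts)
```

Because one study can contribute several excerpts to the same strategy, n is always at least as large as N.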

Characteristics of the active learning studies

To address our first research question (What are the characteristics of studies that examine affective and behavioral outcomes of active learning and provide instructor strategies?), we discuss the different ways studies reported research on active learning.

Limitations and gaps within the final sample

First, we must discuss the gaps within our final sample of 29 studies. We excluded numerous active learning studies ( N = 383) that did not discuss or reflect upon the efficacy of their strategies to aid implementation of active learning. We also began this systematic literature review in 2015 and did not finish our coding and analysis of 2364 abstracts and 746 full-texts until 2018. We acknowledge that there have been multiple studies published on active learning since 2015. Acknowledging these limitations, we discuss our results and analysis in the context of the 29 studies in our dataset, which were published from 1990 to 2015.

Our final sample included only 2 studies that sampled mathematics and statistics courses. There was also a lack of studies outside of first-year courses. Much of the active learning research literature introduces interventions in first-year (cornerstone) or fourth-year (capstone) courses, but we found within our dataset a tendency to oversample first-year courses. However, all four course-years were represented, as well as all major STEM disciplines, with the most common STEM disciplines being engineering (14 studies) and biology (7 studies).

Thirteen studies implemented course-based active learning interventions, such as project-based learning (8 studies), inquiry-based learning (3 studies), or a flipped classroom (2 studies). Only one study, Lenz ( 2015 ), used a previously published active learning intervention, which was Process-Oriented Guided Inquiry Learning (POGIL). Other examples of published active learning programs include the Student-Centered Active Learning Environment for Upside-down Pedagogies (SCALE-UP, Gaffney et al., 2010 ) and Chemistry, Life, the Universe, and Everything (CLUE, Cooper & Klymkowsky, 2013 ), but these were not included in our sample of 29 studies.

In contrast, most of the active learning interventions involved adding in-class problem solving (either with individual students or groups of students) to a traditional lecture course (21 studies). For some instructors attempting to adopt active learning, using this smaller active learning intervention (in-class problem solving) may be a good starting point.

Despite the variety of quantitative, qualitative, and mixed-methods research designs, most studies used either self-made instructor surveys (20 studies) or their institution’s course evaluations (10 studies). The variation between so many different versions of instructor surveys and course evaluations made it difficult to compare data or attempt a quantitative meta-analysis. Further, only 2 studies used instruments with evidence of validity. However, that trend may change as there are more examples of instruments with evidence of validity, such as the Student Response to Instructional Practices (StRIP, DeMonbrun et al., 2017 ), the Biology Interest Questionnaire (BIQ, Knekta, Rowland, Corwin, & Eddy, 2020 ), and the Pedagogical Expectancy Violation Assessment (PEVA, Gaffney et al., 2010 ).

We were also concerned about the use of institutional course evaluations (10 studies) as evidence of students’ satisfaction and affective responses to active learning. Course evaluations capture more than just students’ responses to active learning, as the scores are biased toward the instructors’ gender (Mitchell & Martin, 2018 ) and race (Daniel, 2019 ), and they are strongly correlated with students’ expected grade in the class (Nguyen et al., 2017 ). Despite these limitations, we kept course evaluations in our keyword search and inclusion criteria, because they relate to instructors’ concerns about student resistance to active learning, and these scores continue to be used for important instructor reappointment, tenure, and promotion decisions (DeMonbrun et al., 2017 ).

In addition to students’ satisfaction, there were other measures related to students’ affective and behavioral responses to active learning. The most common measure was students’ self-reports of whether they thought they learned more or less (21 studies). Other important affective outcomes included enjoyment (13 studies) and self-efficacy (4 studies). The most common behavioral measure was students’ participation (16 studies). However, missing from this sample were other affective outcomes, such as students’ identities, beliefs, emotions, values, and buy-in.

Positive outcomes for using active learning

Twenty-three of the 29 studies reported positive or mostly positive outcomes for their active learning intervention. At the start of this paper, we acknowledged that much of the existing research suggested the widespread positive benefits of using active learning in undergraduate STEM courses. However, many of these benefits were centered on students’ cognitive learning outcomes (e.g., Theobald et al., 2020 ) and not students’ affective and behavioral responses to active learning. Here, we show positive affective and behavioral outcomes in terms of students’ self-reports of learning, enjoyment, self-efficacy, attendance, participation, and course satisfaction.

Due to the lack of mixed/neutral or negative affective outcomes, it is important to acknowledge potential publication bias within our dataset. Authors may be hesitant to report negative outcomes of active learning interventions. It could also be the case that negative or non-significant outcomes are not easily published in undergraduate STEM education venues. These factors could help explain the lack of mixed/neutral or negative study outcomes in our dataset.

Strategies to aid implementation of active learning

We aimed to answer the question: what instructor strategies to aid implementation of active learning do the authors of these studies provide? We addressed this question by providing instructors and readers a summary of actionable strategies they can take back to their own classrooms. Here, we discuss the range of strategies found within our systematic literature review.

Supporting instructors with actionable strategies

We identified eight specific strategies across three major categories: explanation, facilitation, and planning. Each strategy appeared in at least seven studies (Table 2 ), and each strategy was written to be actionable and practical.

Strategies in the explanation category emphasized the importance of establishing expectations and explaining the purpose of active learning to students. The facilitation category focused on approaching and encouraging students once activities were underway. Strategies in the planning category highlighted the importance of working outside of class time to thoughtfully design appropriate activities, create policies for group work, align various components of the course, and review student feedback to iteratively improve the course.

However, as we note in the “Introduction” section, these strategies are not entirely new, and the strategies will not be surprising to experienced researchers and educators. Even so, there has yet to be a systematic review that compiles these instructor strategies in relation to students’ affective and behavioral responses to active learning. For example, the “explain the purpose” strategy is similar to the productive framing (e.g., Hutchison & Hammer, 2010 ) of the activity for students. “Design appropriate activities” and “align various components of the course” relate to Vygotsky’s ( 1978 ) theories of scaffolding for students (Shekhar et al., 2020 ). “Review student feedback” and “approaching students” relate to ideas on formative assessment (e.g., Pellegrino, DiBello, & Brophy, 2014 ) or revising the course materials in relation to students’ ongoing needs.

We also acknowledge that we do not have an exhaustive list of specific strategies to aid implementation of active learning. More work needs to be done measuring and observing these strategies in action and testing the use of these strategies against certain outcomes. Some of this work of measuring instructor strategies has already begun (e.g., DeMonbrun et al., 2017 ; Finelli et al., 2018 ; Tharayil et al., 2018 ), but further testing and analysis would benefit the active learning community. We hope that our framework of explanation, facilitation, and planning strategies provides a guide for instructors adopting active learning. Since these strategies are compiled from the undergraduate STEM education literature and research on affective and behavioral responses to active learning, instructors have compelling reason to use these strategies to aid implementation of active learning.

One way to apply these strategies is to consider the various aspects of instruction and their sequence. That is, planning strategies would be most applicable during the phase of work that occurs prior to classroom instruction, the explanation strategies would be more useful when introducing students to active learning activities, while facilitation strategies would be best enacted while students are already working and engaged in the assigned activities. Of course, these strategies may also be used in conjunction with each other and are not strictly limited to these phases. For example, one plausible approach could be using the planning strategies of design and alignment as areas of emphasis during explanation. Overall, we hope that this framework of strategies supports instructors’ adoption and sustained use of active learning.

Creation of the planning category

At the start of this paper, we presented a conceptual framework for strategies consisting of only explanation and facilitation categories (DeMonbrun et al., 2017 ). One of the major contributions of this paper is the addition of a third category, which we call the planning category, to the existing conceptual framework. The planning strategies were common throughout the systematic literature review, and many studies emphasized the need to consider how much time and effort is needed when adding active learning to the course. Although students may not see this preparation, and we did not see this type of strategy initially, explicitly adding the planning category acknowledges the work instructors do outside of the classroom.

The planning strategies also highlight the need for instructors to not only think about implementing active learning before they enter the class, but to revise their implementation after the class is over. Instructors should refine their use of active learning through feedback, reflection, and practice over multiple course offerings. We hope this persistence can lead to long-term adoption of active learning.

Despite our review ending in 2015, most of STEM instruction remains didactic (Laursen, 2019 ; Stains et al., 2018 ), and there has not been a long-term sustained adoption of active learning. In a push to increase the adoption of active learning within undergraduate STEM courses, we hope this study provided support and actionable strategies for instructors who are considering active learning but are concerned about student resistance to active learning.

We identified eight specific strategies to aid implementation of active learning based on three categories. The three categories of strategies were explanation, facilitation, and planning. In this review, we created the third category, planning, and we suggested that this category should be considered first when implementing active learning in the course. Instructors should then focus on explaining and facilitating their activity in the classroom. The eight specific strategies provided here can be incorporated into faculty professional development programs and readily adopted by instructors wanting to implement active learning in their STEM courses.

There remains important future work in active learning research, and we noted these gaps within our review. It would be useful to specifically review and measure instructor strategies in action and compare their use against other affective outcomes, such as identity, interest, and emotions.

There has yet to be a study that compiles and synthesizes strategies reported from multiple active learning studies, and we hope that this paper filled this important gap. The strategies identified in this review can help instructors persist beyond awkward initial implementations, avoid some problems altogether, and most importantly address student resistance to active learning. Further, the planning strategies emphasize that the use of active learning can be improved over time, which may help instructors have more realistic expectations for the first or second time they implement a new activity. There are many benefits to introducing active learning in the classroom, and we hope that these benefits are shared among more STEM instructors and students.

Availability of data and materials

Journal articles and conference proceedings which make up this review can be found through reverse citation lookup. See the Appendix for the references of all primary studies within this systematic review. We used the following databases to find studies within the review: Web of Science, Academic Search Complete, Compendex, Inspec, Education Source, and Education Resource Information Center. More details and keyword search strings are provided in the “Methods” section.

Abbreviations

Science, technology, engineering, and mathematics

Student resistance to active learning

Instrument with evidence of validity

Instructor surveys

Preferred Reporting Items for Systematic Reviews and Meta-Analyses

Problem solving

Problem or project-based learning

End of course evaluations

Armbruster, P., Patel, M., Johnson, E., & Weiss, M. (2009). Active learning and student-centered pedagogy improve student attitudes and performance in introductory biology. CBE Life Sciences Education , 8 (3), 203–213. https://doi.org/10.1187/cbe.09-03-0025 .

Autin, M., Bateiha, S., & Marchionda, H. (2013). Power through struggle in introductory statistics. PRIMUS , 23 (10), 935–948. https://doi.org/10.1080/10511970.2013.820810 .

Bandura, A. (1982). Self-efficacy mechanism in human agency. American Psychologist , 37 (2), 122. https://psycnet.apa.org/doi/10.1037/0003-066X.37.2.122 .