
- Published: 23 February 2023

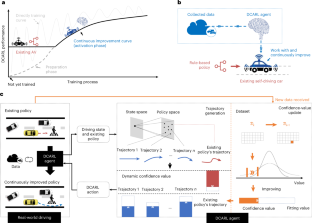

Continuous improvement of self-driving cars using dynamic confidence-aware reinforcement learning

- Zhong Cao¹ (ORCID: orcid.org/0000-0002-2243-5705)

- Kun Jiang¹

- Weitao Zhou¹ (ORCID: orcid.org/0000-0002-1266-3843)

- Shaobing Xu¹

- Huei Peng²

- Diange Yang¹ (ORCID: orcid.org/0000-0003-0825-5609)

Nature Machine Intelligence volume 5, pages 145–158 (2023)


- Mechanical engineering

Today’s self-driving vehicles have achieved impressive driving capabilities, but their performance in long-tail cases remains uncertain. Training a reinforcement-learning-based self-driving algorithm with more data does not always lead to better performance, which is a safety concern. Here we present a dynamic confidence-aware reinforcement learning (DCARL) technology for guaranteed continuous improvement. Continuous improvement means that more training always improves, or at least maintains, the current performance. Our technique improves performance using the data collected during driving and does not need a lengthy pre-training phase. We evaluate the proposed technology both in simulation and on an experimental vehicle. The results show that the proposed DCARL method enables continuous improvement in various cases while matching or outperforming the default self-driving policy at every stage. This technology was demonstrated and evaluated on the vehicle at the 2022 Beijing Winter Olympic Games.
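The abstract states the "improve or maintain" property but not the mechanism. One common way to obtain that kind of behaviour is to let a learned policy override a default policy only when a lower confidence bound on the learned action's return exceeds the default policy's value estimate. The Python sketch below illustrates that idea. It is **not** the DCARL algorithm; the authors' implementation is in the repository listed under Code availability. All names here are hypothetical illustrations: `bootstrap_lower_bound`, `select_action`, the inputs `rl_returns` and `default_value`, and the choice of a percentile bootstrap (see Efron & Tibshirani in the reference list).

```python
import random
import statistics
from typing import Sequence, TypeVar

Action = TypeVar("Action")


def bootstrap_lower_bound(returns: Sequence[float], alpha: float = 0.05,
                          n_resamples: int = 1000, seed: int = 0) -> float:
    """Percentile-bootstrap lower bound on the mean of the observed returns."""
    if not returns:
        raise ValueError("need at least one observed return")
    rng = random.Random(seed)  # fixed seed keeps the decision reproducible
    n = len(returns)
    # Resample the returns with replacement and record each resample's mean.
    means = sorted(
        statistics.fmean(rng.choices(returns, k=n)) for _ in range(n_resamples)
    )
    # The alpha-quantile of the resampled means, clamped to a valid index.
    idx = min(int(alpha * n_resamples), n_resamples - 1)
    return means[idx]


def select_action(rl_action: Action, rl_returns: Sequence[float],
                  default_action: Action, default_value: float,
                  alpha: float = 0.05, min_samples: int = 2) -> Action:
    """Trust the learned action only when its lower bound beats the default."""
    if len(rl_returns) < min_samples:
        return default_action  # too little evidence: keep the default behaviour
    if bootstrap_lower_bound(rl_returns, alpha) >= default_value:
        return rl_action
    return default_action


if __name__ == "__main__":
    # Hypothetical returns observed when the learned policy acted in one situation.
    observed = [1.2, 1.0, 1.1, 0.9, 1.3, 1.1]
    print(select_action("overtake", observed, "follow", default_value=0.5))  # overtake
    print(select_action("overtake", observed, "follow", default_value=2.0))  # follow
```

The fallback is the design choice that matters. With little data, or with a lower bound below the default policy's value, the sketch keeps the default action. Additional data can therefore only widen the set of situations where the learned action is used. This is a per-decision heuristic under the stated assumptions, not a formal guarantee.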


Data availability

The Supplementary Software file contains the minimum data needed to run and render the results for all three experiments. These data are also available in a public repository at https://github.com/zhcao92/DCARL (ref. 37).

Code availability

The source code of the self-driving experiments is available at https://github.com/zhcao92/DCARL (ref. 38). It contains the proposed DCARL planning algorithms as well as the perception, localization and control algorithms used in our self-driving cars.

Sutton, R. S. & Barto, A. G. Reinforcement Learning: An Introduction (MIT Press, 2018).

Silver, D. et al. A general reinforcement learning algorithm that masters chess, shogi, and Go through self-play. Science 362, 1140–1144 (2018).


Silver, D. et al. Mastering the game of Go with deep neural networks and tree search. Nature 529, 484–489 (2016).


Mnih, V. et al. Human-level control through deep reinforcement learning. Nature 518, 529–533 (2015).

Ye, F., Zhang, S., Wang, P. & Chan, C.-Y. A survey of deep reinforcement learning algorithms for motion planning and control of autonomous vehicles. In 2021 IEEE Intelligent Vehicles Symposium (IV) 1073–1080 (IEEE, 2021).

Zhu, Z. & Zhao, H. A survey of deep RL and IL for autonomous driving policy learning. IEEE Trans. Intell. Transp. Syst. 23, 14043–14065 (2022).

Aradi, S. Survey of deep reinforcement learning for motion planning of autonomous vehicles. IEEE Trans. Intell. Transp. Syst. 23, 740–759 (2022).

Cao, Z. et al. Highway exiting planner for automated vehicles using reinforcement learning. IEEE Trans. Intell. Transp. Syst. 22, 990–1000 (2020).

Stilgoe, J. Self-driving cars will take a while to get right. Nat. Mach. Intell. 1, 202–203 (2019).

Kalra, N. & Paddock, S. M. Driving to safety: How many miles of driving would it take to demonstrate autonomous vehicle reliability? Transp. Res. Part A 94, 182–193 (2016).


Disengagement reports. California DMV https://www.dmv.ca.gov/portal/vehicle-industry-services/autonomous-vehicles/disengagement-reports/ (2021).

Li, G. et al. Decision making of autonomous vehicles in lane change scenarios: deep reinforcement learning approaches with risk awareness. Transp. Res. Part C 134, 103452 (2022).

Shu, H., Liu, T., Mu, X. & Cao, D. Driving tasks transfer using deep reinforcement learning for decision-making of autonomous vehicles in unsignalized intersection. IEEE Trans. Veh. Technol. 71, 41–52 (2021).

Pek, C., Manzinger, S., Koschi, M. & Althoff, M. Using online verification to prevent autonomous vehicles from causing accidents. Nat. Mach. Intell. 2, 518–528 (2020).

Xu, S., Peng, H., Lu, P., Zhu, M. & Tang, Y. Design and experiments of safeguard protected preview lane keeping control for autonomous vehicles. IEEE Access 8, 29944–29953 (2020).

Yang, J., Zhang, J., Xi, M., Lei, Y. & Sun, Y. A deep reinforcement learning algorithm suitable for autonomous vehicles: double bootstrapped soft-actor-critic-discrete. IEEE Trans. Cogn. Dev. Syst. https://doi.org/10.1109/TCDS.2021.3092715 (2021).

Schwall, M., Daniel, T., Victor, T., Favaro, F. & Hohnhold, H. Waymo public road safety performance data. Preprint at arXiv https://doi.org/10.48550/arXiv.2011.00038 (2020).

Fan, H. et al. Baidu Apollo EM motion planner. Preprint at arXiv https://doi.org/10.48550/arXiv.1807.08048 (2018).

Kato, S. et al. Autoware on board: enabling autonomous vehicles with embedded systems. In 2018 ACM/IEEE 9th International Conference on Cyber-Physical Systems 287–296 (IEEE, 2018).

Cao, Z., Xu, S., Peng, H., Yang, D. & Zidek, R. Confidence-aware reinforcement learning for self-driving cars. IEEE Trans. Intell. Transp. Syst. 23, 7419–7430 (2022).

Thomas, P. S. et al. Preventing undesirable behavior of intelligent machines. Science 366, 999–1004 (2019).

Levine, S., Kumar, A., Tucker, G. & Fu, J. Offline reinforcement learning: tutorial, review, and perspectives on open problems. Preprint at arXiv https://doi.org/10.48550/arXiv.2005.01643 (2020).

García, J. & Fernández, F. A comprehensive survey on safe reinforcement learning. J. Mach. Learn. Res. 16, 1437–1480 (2015).


Achiam, J., Held, D., Tamar, A. & Abbeel, P. Constrained policy optimization. In International Conference on Machine Learning 22–31 (JMLR, 2017).

Berkenkamp, F., Turchetta, M., Schoellig, A. & Krause, A. Safe model-based reinforcement learning with stability guarantees. Adv. Neural Inf. Process. Syst. 30, 908–919 (2017).

Ghadirzadeh, A., Maki, A., Kragic, D. & Björkman, M. Deep predictive policy training using reinforcement learning. In 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems 2351–2358 (IEEE, 2017).

Abbeel, P. & Ng, A. Y. Apprenticeship learning via inverse reinforcement learning. In Proc. Twenty-first International Conference on Machine Learning 1 (Association for Computing Machinery, 2004).

Abbeel, P. & Ng, A. Y. Exploration and apprenticeship learning in reinforcement learning. In Proc. 22nd International Conference on Machine Learning 1–8 (Association for Computing Machinery, 2005).

Ross, S., Gordon, G. & Bagnell, D. A reduction of imitation learning and structured prediction to no-regret online learning. In Gordon, G., Dunson, D. & Dudík, M. (eds) Proc. Fourteenth International Conference on Artificial Intelligence and Statistics 627–635 (JMLR, 2011).

Zhang, J. & Cho, K. Query-efficient imitation learning for end-to-end autonomous driving. In Thirty-First AAAI Conference on Artificial Intelligence (AAAI) 2891–2897 (AAAI Press, 2017).

Bicer, Y., Alizadeh, A., Ure, N. K., Erdogan, A. & Kizilirmak, O. Sample efficient interactive end-to-end deep learning for self-driving cars with selective multi-class safe dataset aggregation. In 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems 2629–2634 (IEEE, 2019).

Alshiekh, M. et al. Safe reinforcement learning via shielding. In Proc. Thirty-Second AAAI Conference on Artificial Intelligence Vol. 32, 2669–2678 (AAAI Press, 2018).

Brun, W., Keren, G., Kirkeboen, G. & Montgomery, H. Perspectives on Thinking, Judging, and Decision Making (Universitetsforlaget, 2011).

Dabney, W. et al. A distributional code for value in dopamine-based reinforcement learning. Nature 577, 671–675 (2020).

Cao, Z. et al. A geometry-driven car-following distance estimation algorithm robust to road slopes. Transp. Res. Part C 102, 274–288 (2019).

Xu, S. et al. System and experiments of model-driven motion planning and control for autonomous vehicles. IEEE Trans. Syst. Man. Cybern. Syst. 52, 5975–5988 (2022).

Cao, Z. Codes and data for dynamic confidence-aware reinforcement learning. DCARL. Zenodo https://zenodo.org/badge/latestdoi/578512035 (2022).

Kochenderfer, M. J. Decision Making Under Uncertainty: Theory and Application (MIT Press, 2015).

Ivanovic, B. et al. Heterogeneous-agent trajectory forecasting incorporating class uncertainty. In 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) 12196–12203 (IEEE, 2022).

Yang, Y., Zha, K., Chen, Y., Wang, H. & Katabi, D. Delving into deep imbalanced regression. In International Conference on Machine Learning 11842–11851 (PMLR, 2021).

Efron, B. & Tibshirani, R. J. An Introduction to the Bootstrap (CRC Press, 1994).

Dosovitskiy, A., Ros, G., Codevilla, F., Lopez, A. & Koltun, V. CARLA: An open urban driving simulator. In Proceedings of the 1st Annual Conference on Robot Learning 1–16 (PMLR, 2017).


Acknowledgements

This work is supported by the National Natural Science Foundation of China (NSFC) (U1864203 (D.Y.), 52102460 (Z.C.), 61903220 (K.J.)), China Postdoctoral Science Foundation (2021M701883 (Z.C.)) and Beijing Municipal Science and Technology Commission (Z221100008122011 (D.Y.)). It is also funded by the Tsinghua University-Toyota Joint Center (D.Y.).

Author information

Authors and affiliations

School of Vehicle and Mobility, Tsinghua University, Beijing, China

Zhong Cao, Kun Jiang, Weitao Zhou, Shaobing Xu & Diange Yang

Mechanical Engineering, University of Michigan, Ann Arbor, MI, USA

Huei Peng

Contributions

Z.C., S.X., D.Y. and H.P. developed the performance improvement technique, which can outperform the existing self-driving policy. Z.C. and W.Z. developed the continuous improvement technique using the worst confidence value. Z.C., S.X. and W.Z. designed the whole self-driving platform in the real world. Z.C., K.J. and D.Y. designed and conducted the experiments and collected the data.

Corresponding author

Correspondence to Diange Yang.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks Ali Alizadeh and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Essential supplementary description for the proposed technology, detailed setting and results for the experiments, descriptions of the data file and vehicles.

Supplementary Video 1

Evaluation results on running self-driving vehicle.

Supplementary Video 2

Continuous performance improvement using confidence value.

Supplementary Video 3

Comparing with classical value-based RL agent.

Supplementary Software

Software to run and render the results of experiments 1 to 3.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.


About this article

Cite this article

Cao, Z., Jiang, K., Zhou, W. et al. Continuous improvement of self-driving cars using dynamic confidence-aware reinforcement learning. Nat Mach Intell 5, 145–158 (2023). https://doi.org/10.1038/s42256-023-00610-y


Received: 14 May 2022

Accepted: 04 January 2023

Published: 23 February 2023

Issue Date: February 2023

DOI: https://doi.org/10.1038/s42256-023-00610-y


This article is cited by

Stable training via elastic adaptive deep reinforcement learning for autonomous navigation of intelligent vehicles.

- Yujiao Zhao

- Xinping Yan

Communications Engineering (2024)

Novel multiple access protocols against Q-learning-based tunnel monitoring using flying ad hoc networks

- Bakri Hossain Awaji

- M. M. Kamruzzaman

- Udayakumar Allimuthu

Wireless Networks (2024)


Self-Driving Cars


Computer Science > Computer Vision and Pattern Recognition

Title: End to End Learning for Self-Driving Cars

Abstract: We trained a convolutional neural network (CNN) to map raw pixels from a single front-facing camera directly to steering commands. This end-to-end approach proved surprisingly powerful. With minimum training data from humans the system learns to drive in traffic on local roads with or without lane markings and on highways. It also operates in areas with unclear visual guidance such as in parking lots and on unpaved roads. The system automatically learns internal representations of the necessary processing steps such as detecting useful road features with only the human steering angle as the training signal. We never explicitly trained it to detect, for example, the outline of roads. Compared to explicit decomposition of the problem, such as lane marking detection, path planning, and control, our end-to-end system optimizes all processing steps simultaneously. We argue that this will eventually lead to better performance and smaller systems. Better performance will result because the internal components self-optimize to maximize overall system performance, instead of optimizing human-selected intermediate criteria, e.g., lane detection. Such criteria understandably are selected for ease of human interpretation which doesn't automatically guarantee maximum system performance. Smaller networks are possible because the system learns to solve the problem with the minimal number of processing steps. We used an NVIDIA DevBox and Torch 7 for training and an NVIDIA DRIVE(TM) PX self-driving car computer also running Torch 7 for determining where to drive. The system operates at 30 frames per second (FPS).
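The abstract describes learning a direct mapping from raw pixels to a steering command, with the human steering angle as the only training signal. The Python sketch below illustrates that end-to-end training signal under strong simplifying assumptions. A linear model stands in for the paper's CNN. The 4-pixel "frames" and steering values are invented toy data. `predict` and `train` are hypothetical names, not part of the NVIDIA system.

```python
import random
from typing import List, Sequence, Tuple

# Hypothetical frame format: a flattened, normalised grayscale image.
Frame = Sequence[float]


def predict(weights: Sequence[float], bias: float, frame: Frame) -> float:
    """Map raw pixel values directly to a steering angle (linear stand-in for a CNN)."""
    return bias + sum(w * p for w, p in zip(weights, frame))


def train(frames: Sequence[Frame], steering: Sequence[float], lr: float = 0.1,
          epochs: int = 200, seed: int = 0) -> Tuple[List[float], float]:
    """Fit the model by SGD on squared error; the human steering angle is the only label."""
    rng = random.Random(seed)
    weights = [0.0] * len(frames[0])
    bias = 0.0
    order = list(range(len(frames)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            error = predict(weights, bias, frames[i]) - steering[i]
            # d/dw of 0.5 * error**2 is error * pixel; d/db is error.
            for j, p in enumerate(frames[i]):
                weights[j] -= lr * error * p
            bias -= lr * error
    return weights, bias


if __name__ == "__main__":
    # Toy 4-pixel "frames": road brighter on the left, on the right, or centred.
    frames = [[1.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0], [0.5, 0.5, 0.5, 0.5]]
    steering = [-0.3, 0.3, 0.0]  # hypothetical recorded human steering angles
    w, b = train(frames, steering)
    for frame, target in zip(frames, steering):
        print(f"target {target:+.2f}  predicted {predict(w, b, frame):+.2f}")
```

No hand-designed intermediate stage, such as a lane detector, sits between input and output. The only supervision is the recorded steering angle. With real camera frames a convolutional network would replace `predict`, but the loop keeps the same shape: predict, compare with the human angle, and step along the squared-error gradient.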


Abstract: A self-driving car is a car capable of sensing its surroundings and moving on its own through traffic and other obstacles with minimal or no human input. This is the current upcoming ...

Abstract. Autonomous driving is expected to revolutionize road traffic attenuating current externalities, especially accidents and congestion. Carmakers, researchers and administrations have been working on autonomous driving for years and significant progress has been made. However, the doubts and challenges to overcome are still huge, as the ...

This paper highlighted the research advances made in autonomous driving using six requirements as parameters for the successful deployment of autonomous cars and discussed the future research challenges. The core requirements are fault tolerance, strict latency, architecture, resource management, and security.

In this paper, we survey research on self-driving cars published in the literature, focusing on self-driving cars developed since the DARPA challenges, which are equipped with an autonomy system that can be categorized as SAE level 3 or higher (SAE, 2018). The architecture of the autonomy system of self-driving cars ...

... Some recent research has started to train an RL-based self-driving policy in specific scenarios 5,6,7,8. ...

Abstract: We survey research on self-driving cars published in the literature, focusing on autonomous cars developed since the DARPA challenges, which are equipped with an autonomy system that can be categorized as SAE level 3 or higher. The architecture of the autonomy system of self-driving cars is typically organized into the perception system and the decision-making system.

Capable of identifying suitable parking spaces and taking control of some or all of the functions to set the gear selector, steer, accelerate and brake in order to park the vehicle. Uses radar-based parking sensors and cameras and control mechanisms for braking, steering and acceleration (where supported). 1-2. BSD.

Abstract. This paper presents a scientometric and bibliometric analysis of research and innovation on self-driving cars. Through an examination of quantitative empirical evidence, we explore the importance of Artificial Intelligence (AI) as machine learning, deep learning and data mining on self-driving car research and development as measured by patents and papers.

A self-driving car may use one or more Offline Maps for localization, such as occupancy grid maps, remission maps or landmark maps. We survey the literature on methods for generating Offline Maps in Section 3.2.

Self-driving cars are coming closer and closer to being fact not fiction, but are we ready for them? In this research we analyze the current status in place for self-driving cars. We address the gaps that need to be filled, and we identify the questions that need to be answered before having self-driving cars on the road becomes a reality. Towards this, we discuss four issues with self-driving ...

Many recent technological advances have helped to pave the way forward for fully autonomous vehicles. This special issue explores three aspects of the self-driving car revolution: a historical perspective with a focus on perception for autonomous vehicles, how government policy will impact self-driving cars technically and commercially, and how cloud-based infrastructure plays a role in the ...

from work. Research showed that car current modal share enjoyed by rail (and buses) in the UK in favour to personal self-driving car. The lower travel and concomitant energy use and carbon emissions, could increase by 5% from mid-level automation to up to 60% for a high penetration of self-driving cars in the USA.*


Introduction: A self-driving car (autonomous vehicle) is a car that does not require a human operator but relies mostly on sensors and artificial intelligence to operate itself. The car is able to sense its surroundings using technology such as radar, laser and computer vision. According to science ...

This paper presents an analysis of research and innovation on self-driving cars. Through an examination of quantitative evidence, the importance of Artificial Intelligence (AI) as machine learning, deep learning and data mining on self-driving car research and development is discussed. With the immense growth in the rate of

In this paper, they demonstrated visual ego-motion estimation for a car equipped with a multi-camera system with minimal field of- ... "Self-driving car ISEAUTO for research and education", 2018 19th International Conference on Research and Education in Mechatronics (REM), June 7–8, 2018, Delft, The Netherlands.

The research paper "Self Driving Cars: A Peep into the Future" was presented by T. Banerjee, S. Bose, A. Chakraborty, T. Samadder Bhaskar Kumar and T.K. Rana. This research paper describes the design of an embedded controller for self-driving, electric, impact-resistant and directional GSM vehicles. The vehicle's position, starting point, and ...