MBA Knowledge Base


Case Study: Quality Management System at Coca Cola Company

Coca-Cola’s history can be traced back to Asa Candler, who bought the formula for the drink from a pharmacist named John Stith Pemberton. Two years later, Candler founded his business and started producing soft drinks based on that formula. From then on, the company grew to become the biggest producer of soft drinks, with more than five hundred brands sold and consumed in more than two hundred countries worldwide.

Although the company is said to be the biggest bottler of soft drinks, it does little bottling itself. Instead, the Coca-Cola Company manufactures a syrup concentrate, which it sells to bottlers all over the world. Under this franchise system, these smaller firms bottle the drinks according to the company’s standards and guidelines. Although franchising is the primary method of distribution, the parent company also has a key bottler of its own in America, Coca-Cola Refreshments.

In addition to its main soft drinks, the company also produces diet soft drinks. These are variations of the original drinks with reduced sugar content and fewer calories. In 1963, saccharin replaced sugar in these drinks so that they could appeal to health-conscious consumers. A major concern was competition between the company’s own products, which saw sales of some products dwindle in favor of others.

Coca-Cola started diversifying its products during the Second World War, when ‘Fanta’ was introduced. During the war, the head of Coca-Cola’s operation in Nazi Germany decided to launch a new soft drink, because promoting an American brand in Germany was no longer acceptable. He therefore chose a new name, and ‘Fanta’ was born. The creation was successful and production continued even after the war. ‘Sprite’ followed later.

In the 1990s, health concerns among consumers of soft drinks pushed manufacturers to consider reducing the energy content of their products. ‘Minute Maid’ juices, ‘PowerAde’ sports drinks and a few flavored tea variants were Coca-Cola’s initial responses to this new interest. Although most of these products were well received, some did not perform as well. One example was Coca-Cola C2, a lower-sugar, lower-calorie cola launched in 2004.

The Coca-Cola Company has been successful for more than a century. This can be attributed partly to the nature of its products, since soft drinks have enduring appeal. In addition, Coca-Cola has one of the best marketing and public relations programs in the world, and its products are advertised in virtually every corner of the globe. This success has enabled the company to sponsor a wide range of sports, including soccer, baseball, ice hockey, athletics and basketball.

The Quality Management System at Coca Cola

It is very important that every Coca-Cola product meets a high quality standard so that each one is exactly the same. This matters because the company wants to meet customer requirements and expectations, and with such a global brand it is vital that these checks stay consistent. The standardized bottle of Coca-Cola has elements that must be checked on the production line to make sure quality is being met; the most common checks cover ingredients, packaging and distribution. Much of the testing takes place during the production process, where machines and a small team of employees monitor progress. Responsibility for quality is shared by all of Coca-Cola’s staff, from hygiene operators to those checking product and packaging quality. These constant checks require staff to be on the lookout for problems and to take responsibility for them, so that quality is maintained.

Coca-Cola uses inspection throughout its production process, especially when testing the Coca-Cola formula, to ensure that each product meets specific requirements. Inspection normally refers to sampling products after production so that corrective action can be taken to maintain quality. Coca-Cola has built this method into its organisational structure because it helps catch mistakes and maintain high quality standards, reducing the chance of a product recall. It is also easy to implement and cost-effective.
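The text describes inspection only in general terms, as sampling product after production. A common way to make that concrete is a single acceptance sampling plan: draw n units from a lot and accept the lot if no more than c of them are defective. The sketch below is an illustration under assumptions, not Coca-Cola’s procedure; the plan values (n = 50, c = 1) and the binomial model are hypothetical choices.

```python
from math import comb


def accept_lot(defects_found: int, c: int) -> bool:
    """Single sampling plan: accept the lot if the sample holds at most c defectives."""
    return defects_found <= c


def prob_accept(n: int, c: int, p: float) -> float:
    """Chance of accepting a lot whose true defect rate is p.

    Assumes each sampled unit is defective independently with probability p,
    so the number of defectives in the sample is binomial(n, p).
    """
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(c + 1))


# Hypothetical plan: sample 50 bottles per lot, accept if 1 or fewer are defective.
SAMPLE_SIZE, ACCEPT_NUMBER = 50, 1

print(accept_lot(0, ACCEPT_NUMBER))  # True
print(accept_lot(2, ACCEPT_NUMBER))  # False
for rate in (0.01, 0.05):
    print(rate, round(prob_accept(SAMPLE_SIZE, ACCEPT_NUMBER, rate), 3))
```

Evaluating prob_accept over a range of defect rates traces the plan’s operating characteristic curve, which shows how well the plan screens out poor lots; larger samples make that curve steeper.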

Coca-Cola uses both Quality Control (QC) and Quality Assurance (QA) throughout its production process. QC focuses mainly on the production line itself, whereas QA covers the entire operations process and related functions, so that potential problems are addressed quickly. In both, state-of-the-art computers check every stage of production: the consistency of the formula, the forming of the bottle (blowing), the fill level of each bottle and its labeling. This increases the speed of production and of quality checks, which helps ensure that product demand is met. QC and QA help reduce the risk of defective products reaching a customer because problems are found and resolved during production; for example, bottles considered defective are placed in a waiting area for inspection. QA also covers the quality of goods supplied to Coca-Cola, for example sugar, which is supplied by Tate & Lyle; Coca-Cola states that it has never had a problem with its suppliers. QA can also involve training staff so that employees understand how to operate machinery, and Coca-Cola ensures that all staff are trained before they start work so that they can operate machinery efficiently. Machinery is also under constant maintenance by highly skilled engineers, which helps Coca-Cola maintain high output.
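The routing described here, where bottles that fail an automated check go to a waiting area instead of continuing down the line, can be sketched as follows. This is a hypothetical illustration: the `Bottle` fields, the 500 ml target and the ±5 ml tolerance are assumptions, since the source does not give Coca-Cola’s actual specifications or software.

```python
from dataclasses import dataclass


@dataclass
class Bottle:
    bottle_id: int
    fill_ml: float
    label_ok: bool
    blow_ok: bool  # bottle formed correctly in the blowing step


# Hypothetical specification for a 500 ml bottle (assumed values).
TARGET_ML = 500.0
TOLERANCE_ML = 5.0


def defects(b: Bottle) -> list[str]:
    """Return the names of the checks this bottle fails (empty if it passes)."""
    found = []
    if abs(b.fill_ml - TARGET_ML) > TOLERANCE_ML:
        found.append("fill level")
    if not b.label_ok:
        found.append("label")
    if not b.blow_ok:
        found.append("blowing")
    return found


def route(bottles: list[Bottle]) -> tuple[list[Bottle], list[Bottle]]:
    """Split bottles into those that continue down the line and those sent to the hold area."""
    main_line, hold_area = [], []
    for b in bottles:
        (hold_area if defects(b) else main_line).append(b)
    return main_line, hold_area


batch = [Bottle(1, 500.2, True, True), Bottle(2, 489.0, True, True), Bottle(3, 501.0, False, True)]
ok, held = route(batch)
print([b.bottle_id for b in ok])    # [1]
print([b.bottle_id for b in held])  # [2, 3]
```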

Every bottle is also checked for the correct fill level and the correct label by a computer that every bottle passes through during production, and any faulty products are taken off the main production line. If the quality control measures find an error, the line is frozen and everything produced since the last good check is held. The bottling plant also tracks the utilization of each production line using a scorecard system, which shows the percentage of each line’s capacity being used and allows managers to increase a line’s production levels if necessary.
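Two mechanisms in this paragraph lend themselves to a short sketch. One reading of freezing the line back to the last good check is that every unit produced after the most recent passing check is held for review, and a utilization scorecard can be expressed as actual output divided by rated capacity. Both the hold rule and the utilization formula are assumptions for illustration, since the source does not define either precisely.

```python
def units_to_hold(passed_checks: list[int], current_unit: int) -> range:
    """Units produced after the most recent passing check, up to the current unit.

    passed_checks holds the unit numbers at which a quality check passed
    (hypothetical bookkeeping; the plant's real records are not described).
    """
    last_good = max(passed_checks, default=0)
    return range(last_good + 1, current_unit + 1)


def utilization_pct(actual_output: int, rated_output: int) -> float:
    """Scorecard-style utilization: actual output as a percentage of rated capacity."""
    if rated_output <= 0:
        raise ValueError("rated_output must be positive")
    return 100.0 * actual_output / rated_output


# Checks passed at units 1000, 2000 and 3000; a fault is found at unit 3450.
held = units_to_hold([1000, 2000, 3000], current_unit=3450)
print(held.start, held.stop - 1, len(held))  # 3001 3450 450
print(utilization_pct(36_000, 48_000))  # 75.0
```

Returning a range rather than a list keeps the hold rule cheap even on long runs, and it makes the size of the quarantined batch (len(held)) available directly.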

Coca-Cola also uses Total Quality Management (TQM), which involves managing quality at every level of the organisation, including suppliers, production and customers. This helps Coca-Cola retain or regain competitiveness and increase customer satisfaction, and the company uses the method to continuously improve the quality of its products. Teamwork is very important: Coca-Cola ensures that every member of staff is involved in the production process, so that each employee understands their job and role, which improves morale and motivation and, overall, increases productivity. TQM practices can also increase customer involvement, as many organisations, including Coca-Cola, welcome feedback and information from their consumers. Overall, by reducing waste and costs, TQM gives Coca-Cola a competitive advantage.

The Production Process

Before production starts on a line, cleaning tasks are performed to rinse internal pipelines, machines and equipment. This is often done during a changeover between products, for example from Coke to Diet Coke, so that no residue from the previous product affects the taste. The check serves both hygiene and product quality, and once it is complete, production can begin.

Coca-Cola uses a database system called Questar to perform checks on the line. For example, all materials are coded, and each line is issued with a bill of materials before the process starts, which ensures that the correct materials are put on the line. This check is designed to eliminate problems on the production line and is audited regularly; without the system, product quality could not be assessed at this level. Other quality checks on the line include packaging and carbonation, which are monitored by an operator who records the values to make sure they meet standards.
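The bill-of-materials check attributed to Questar can be illustrated as a comparison between what the line was issued and what has actually been staged on it. Nothing here reflects Questar’s real data model; the material codes and the `check_materials` function are hypothetical.

```python
def check_materials(bom: dict[str, int], staged: dict[str, int]) -> list[str]:
    """Compare materials staged on a line with the bill of materials issued for it.

    Both arguments map a material code to a quantity. Returns a list of problems;
    an empty list means the staged materials match the bill of materials.
    """
    problems = []
    for code, required in bom.items():
        have = staged.get(code, 0)
        if have < required:
            problems.append(f"short {required - have} of {code}")
    for code in staged:
        if code not in bom:
            problems.append(f"unexpected material {code}")
    return problems


# Hypothetical codes: the line is set up for Coke, but Diet Coke labels were staged.
bom = {"SYRUP-COKE": 10, "LABEL-COKE-500": 5}
staged = {"SYRUP-COKE": 10, "LABEL-DIET-500": 5}
for problem in check_materials(bom, staged):
    print(problem)
# short 5 of LABEL-COKE-500
# unexpected material LABEL-DIET-500
```

Flagging unexpected materials as well as shortages is what catches a changeover mistake like this one, where the quantities look right but the wrong item is on the line.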

To test product quality further, lab technicians carry out more than 2,000 spot checks a day to ensure quality and consistency. These can take place before or during production, and may involve taking a sample of bottles off the production line. Quality tests include CO2 and sugar values, microbiological testing, packaging quality and cap tightness. The tests also generate ideas for improvement in line with total quality management.

For example, Coca-Cola improved its production process at the wrapping stage at the end of the line, where a machine makes revolutions around the products, wrapping them in plastic until the contents are secure. One initiative reduced the wrap by one revolution. This change did not affect the quality of the packaging or the product itself, and it saved large amounts of money on packaging costs. Continuous improvement can also help the company meet the environmental and social responsibilities it has to abide by. Opportunities for continuous improvement are sometimes easy to identify yet can lead to big changes within the organisation; the idea is to reveal opportunities to change the way something is performed, so any source of waste, scrap or rework is a potential improvement project.
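The lab spot checks mentioned above (CO2 and sugar values, cap tightness) amount to comparing sample measurements against specification limits. The sketch below shows that comparison; the limits, the units and the measurement names are placeholders chosen for illustration, not Coca-Cola’s specifications.

```python
# Placeholder specification limits (low, high); real values are not given in the source.
SPEC_LIMITS: dict[str, tuple[float, float]] = {
    "co2_volumes": (3.5, 4.0),
    "sugar_brix": (10.5, 11.0),
    "cap_torque_nm": (1.2, 2.0),
}


def spot_check(sample: dict[str, float]) -> dict[str, bool]:
    """Pass/fail for each specified measurement present in one sampled bottle."""
    return {
        test: low <= sample[test] <= high
        for test, (low, high) in SPEC_LIMITS.items()
        if test in sample
    }


def failed_tests(sample: dict[str, float]) -> list[str]:
    """Names of the measurements that fall outside their limits."""
    return [test for test, ok in spot_check(sample).items() if not ok]


bottle = {"co2_volumes": 3.8, "sugar_brix": 11.4, "cap_torque_nm": 1.6}
print(spot_check(bottle))    # {'co2_volumes': True, 'sugar_brix': False, 'cap_torque_nm': True}
print(failed_tests(bottle))  # ['sugar_brix']
```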

The success of this system can be measured by assessing the consistency of product quality. Coca-Cola states that ‘Our Company’s Global Product Quality Index rating has consistently reached averages near 94 since 2007, with a 94.3 in 2010, while our Company Global Package Quality Index has steadily increased since 2007 to a 92.6 rating in 2010, our highest value to date’. These figures are a strong indication that the quality system is working well throughout the organisation, and the rising index suggests that the consistency of the products is being recognized by consumers.


Total quality management: three case studies from around the world

With organisations to run and big orders to fill, it’s easy to see how some CEOs inadvertently sacrifice quality for quantity. By integrating a system of total quality management, it’s possible to have both.


There are few boardrooms in the world whose inhabitants don’t salivate at the thought of engaging in a little aggressive expansion. After all, there’s little room in a contemporary, fast-paced business environment for any firm whose leaders don’t subscribe to ambitions of bigger factories, healthier accounts and stronger turnarounds. Yet too often such tales of excess go hand-in-hand with complaints of a severe drop in quality.

Food and entertainment markets are riddled with cautionary tales, but service sectors such as health and education aren’t immune to the disappointing by-products of unsustainable growth either. As always, the first step in avoiding a catastrophic loss of quality is good management.

There are plenty of methods and models for managing the quality of a company’s goods or services. Yet very few of those models take into account the widely held belief that any company is only as strong as its weakest link. With that in mind, management consultant W. Edwards Deming developed an entirely new set of methods with which to address quality.

Deming, whose managerial work revolutionised the titanic Japanese manufacturing industry, perceived quality management to be more of a philosophy than anything else. Top-to-bottom improvement, he reckoned, required uninterrupted participation of all key employees and stakeholders. Thus, the total quality management (TQM) approach was born.

All in

Similar to the Six Sigma improvement process, TQM aims to ensure long-term success by enforcing all-encompassing internal guidelines and process standards that reduce errors. Through serious, in-depth auditing, as well as some well-orchestrated soul-searching, TQM helps firms meet stakeholder needs and expectations efficiently and effectively, without forsaking ethical values.

By reframing the way employees think about the company’s goals and processes, TQM allows CEOs to make sure certain things are done right from day one. According to Teresa Whitacre of ASQ (the American Society for Quality), proper quality management also boosts a company’s profitability.

“Total quality management allows the company to look at their management system as a whole entity — not just an output of the quality department,” she says. “Total quality means the organisation looks at all inputs, human resources, engineering, production, service, distribution, sales, finance, all functions, and their impact on the quality of all products or services of the organisation. TQM can improve a company’s processes and bottom line.”

Embracing the entire process sees companies strive to improve in several core areas, including customer focus, total employee involvement, process-centred thinking, systematic approaches, good communication and leadership, and integrated systems. Yet Whitacre is quick to point out that companies stand to gain very little from TQM unless they’re willing to go all-in.

“Companies need to consider the inputs of each department and determine which inputs relate to its governance system. Then, the company needs to look at the same inputs and determine if those inputs are yielding the desired results,” she says. “For example, ISO 9001 requires management reviews occur at least annually. Aside from minimum standard requirements, the company is free to review what they feel is best for them. While implementing TQM, they can add to their management review the most critical metrics for their business, such as customer complaints, returns, cost of products, and more.”

The customer knows best: AtlantiCare

TQM isn’t an easy management strategy to introduce into a business; in fact, many attempts tend to fall flat. More often than not, it’s because firms maintain natural barriers to full involvement. Middle managers, for example, tend to complain that their authority is being challenged when boots on the ground are encouraged to speak up in the early stages of TQM. Yet in a culture of constant quality enhancement, the views of any given workforce are invaluable.

AtlantiCare in numbers

5,000 Employees

$280m Revenue before quality improvement strategy was implemented

$650m Revenue after quality improvement strategy

One firm that’s proven the merit of TQM is New Jersey-based healthcare provider AtlantiCare. Managing 5,000 employees at 25 locations, AtlantiCare is a serious business that has boasted a respectable turnaround for nearly two decades. Yet in order to increase that margin further still, managers wanted to implement improvements across the board. Because patient satisfaction is the single most important aspect of the healthcare industry, a renewed campaign of TQM proved a natural fit. The firm chose to adopt a ‘plan-do-check-act’ cycle, which revealed gaps in staff communication that were leading to longer patient waiting times and more complaints. To tackle this, managers explored a lateral method of internal communications: instead of information trickling down from top to bottom, all of the company’s employees were given freedom to provide vital feedback at each and every level.

AtlantiCare decided to ensure all new employees understood this quality culture from the outset. At orientation, staff now receive a crash course in the company’s performance excellence framework – a management system that organises the firm’s processes into five key areas: quality, customer service, people and workplace, growth and financial performance. As employees rise through the ranks, this emphasis on improvement follows, so managers can operate within the company’s tight-loose-tight process management style.

After creating benchmark goals for employees to achieve at all levels – including better engagement at the point of delivery, increasing clinical communication and identifying and prioritising service opportunities – AtlantiCare was able to thrive. The number of repeat customers at the firm tripled, and its market share hit a six-year high. Profits unsurprisingly followed. The firm’s revenues shot up from $280m to $650m after implementing the quality improvement strategies, and the number of patients being serviced dwarfed state numbers.

Hitting the right notes: Santa Cruz Guitar Co

For companies further removed from the long-term satisfaction of customers, it’s easier to let quality control slide. Yet there are plenty of ways in which growing manufacturers can pursue both quality and sales volumes simultaneously. Artisan instrument makers the Santa Cruz Guitar Co (SCGC) are a salient example. Although the California-based company is still a small-scale manufacturing operation, SCGC has grown in recent years from a basement operation to a serious business.

SCGC in numbers

14 Craftsmen employed by SCGC

800 Custom guitars produced each year

Owner Dan Roberts now employs 14 expert craftsmen, who create over 800 custom guitars each year. In order to ensure the continued quality of his instruments, Roberts has created an environment that improves with each sale. To keep things efficient (as TQM must), the shop floor is divided into six workstations in which guitars are partially assembled and then moved to the next station. Each bench is manned by a senior craftsman, and no guitar leaves that builder’s station until he is 100 percent happy with its quality. This production process is akin to a traditional assembly line; however, unlike in a traditional, top-to-bottom factory, Roberts is intimately involved in all phases of instrument construction.

Using this doting method of quality management, it’s difficult to see how customers wouldn’t be satisfied with the artisans’ work. Yet even if there were issues, Roberts and other senior managers also spend much of their days personally answering web queries about the instruments. According to the managers, customers tend to be pleasantly surprised to find the company’s senior leaders are the ones answering their technical questions and concerns. While Roberts has no intention of taking his manufacturing company to industrial heights, the quality of his instruments and high levels of customer satisfaction speak for themselves; the company currently has a lengthy backlog of orders.

A quality education: Ramaiah Institute of Management Studies

Although it may appear easier to find success with TQM at a boutique-sized endeavour, the philosophy’s principles hold true in virtually every sector. Educational institutions, for example, have used quality management in much the same way – albeit to tackle decidedly different problems.

The global financial crisis hit higher education harder than many might have expected, and nowhere have the odds stacked higher than in India. The nation plays home to one of the world’s fastest-growing markets for business education. Yet over recent years, the relevance of business education in India has come into question. A report by one recruiter recently asserted just one in four Indian MBAs were adequately prepared for the business world.

RIMS in numbers

9% Increase in test scores post total quality management strategy

22% Increase in number of recruiters hiring from the school

$20,000 Increase in the salary offered to graduates

$50,000 Rise in placement revenue

At the Ramaiah Institute of Management Studies (RIMS) in Bangalore, recruiters and accreditation bodies specifically called into question the quality of students’ education. Although the relatively small school had always struggled to compete with India’s renowned Xavier Labour Relations Institute, the faculty finally began to notice clear obstacles to graduates’ success. The RIMS board decided it was time for a serious reassessment of quality management.

The school nominated Chief Academic Advisor Dr Krishnamurthy to head a volunteer team that would audit, analyse and implement process changes that would improve quality throughout (all in a particularly academic fashion). The team was tasked with looking at three key dimensions: assurance of learning, research and productivity, and quality of placements. Each member underwent extensive training to learn about action plans, quality auditing skills and continuous improvement tools – such as the ‘plan-do-study-act’ cycle.

Once faculty members were trained, the team’s first task was to identify the school’s key stakeholders, processes and their importance at the institute. Unsurprisingly, the most vital processes were identified as student intake, research, knowledge dissemination, outcomes evaluation and recruiter acceptance. From there, Krishnamurthy’s team used a fishbone diagram to help identify potential root causes of the issues plaguing these vital processes. To illustrate just how bad things were at the school, the team selected control groups and administered domain-based knowledge tests.

The deficits were disappointing. A RIMS student’s knowledge base was rated at just 36 percent, while students at Harvard rated 95 percent. Likewise, students’ critical thinking abilities rated nine percent, versus 93 percent at MIT. Worse yet, the mean salary of graduating students was $36,000, versus $150,000 for students from Kellogg. Krishnamurthy’s team had their work cut out.

To tackle these issues, Krishnamurthy created an employability team, developed strategic architecture and designed pilot studies to improve the school’s curriculum and make it more competitive. In order to do so, he needed absolutely every employee and student on board – and there was some resistance at the outset. Yet the educator asserted it didn’t actually take long to convince the school’s stakeholders that the changes were extremely beneficial.

“Once students started seeing the results, buy-in became complete and unconditional,” he says. Acceptance was also achieved by maintaining clearer communication with stakeholders: the school started to provide them with detailed plans and projections. It then introduced a variety of new methods, such as incorporating case studies into the curriculum, which increased general test scores by almost 10 percent. Administrators also introduced a mandate requiring students to be certified in English by the British Council, which raised scores from 42 percent to 51 percent.
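Because the English-certification result is itself a percentage, the change from 42 percent to 51 percent can be read two ways: as an absolute gain in percentage points or as a relative improvement. A short worked calculation separates the two; the helper name `score_change` is illustrative.

```python
def score_change(before_pct: float, after_pct: float) -> tuple[float, float]:
    """Return (absolute change in percentage points, relative change in percent)."""
    points = after_pct - before_pct
    relative = 100.0 * points / before_pct
    return points, relative


points, relative = score_change(42, 51)
print(f"{points:.0f} percentage points, {relative:.1f}% relative")
# 9 percentage points, 21.4% relative
```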

As test scores improved, the perceived quality of RIMS skyrocketed. The number of top 100 businesses recruiting from the school shot up by 22 percent, while the average salary offers graduates were receiving increased by $20,000. Placement revenue rose by an impressive $50,000, and RIMS has since climbed domestic and international education tables.

No matter the business, total quality management can and will work. Yet this philosophical take on quality control will only impact firms that are in it for the long haul. Every employee must be in tune with the company’s ideologies and desires to improve, and customer satisfaction must reign supreme.


Academia.edu no longer supports Internet Explorer.

To browse Academia.edu and the wider internet faster and more securely, please take a few seconds to upgrade your browser .

Enter the email address you signed up with and we'll email you a reset link.

- We're Hiring!

- Help Center

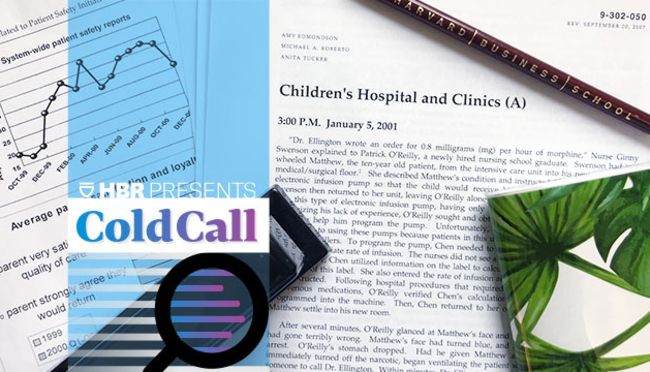

A CASE STUDY OF QUALITY CONTROL CHARTS IN A MANUFACTURING INDUSTRY

Related Papers

Eng Mohamed Hamdy

– Most of the modern industrial processes are naturally multivariate. Multivariate control charts are supplanted univariate control charts, as it takes into account the relationship between variables and identifies the real process changes, which are undetectable by univariate control charts. In practice, the basic assumption that the measurements are independently and identically distributed about a target value is not always valid. Violation of this assumption increases the False Alarm Rate (FAR) and deteriorates the separation of assignable causes from common causes. This paper presents the application of Multivariate Statistical Process Control (MSPC) charts (e. g., Hotelling , MEWMA) to monitor the flare making process in a straight fluorescent light bulb industry. Furthermore, it develops the appropriate procedure for monitoring a multivariate autocorrelated data variable (i. e., dynamic behavior) by using Autoregressive Integrated Moving Average (ARIMA) models. Univariate SPC charts and decomposition approach are used to identify the out-of-control signals that are generated from multivariate SPC charts. Software packages such as Minitab 17 and Statgraphics Centurion XVI are used to construct the control charts.

Jonathan Quiroz

cristian palacio

Statistical quality control (control estadístico de la calidad)

Abidemi Adeniyi

This project aims to provide a stock price prediction system that uses an artificial neural network to forecast future stock prices on the Nigerian Stock Exchange. The study attempts to reduce the burden of analyzing large amounts of data in order to predict stock prices. It examines the problem areas of stock market prediction and devises a method for addressing them.

Seyed Taghi Akhavan Niaki

In this paper, two control charts based on the generalized linear test (GLT) and a contingency table are proposed for Phase II monitoring of multivariate categorical processes. The performance of the proposed methods is compared with that of the exponentially weighted moving average generalized likelihood ratio test (EWMA-GLRT) control chart proposed in the literature. The results show that the proposed control charts perform better under moderate and large shifts. In addition, a new scheme is proposed to identify the parameter responsible for an out-of-control signal, and the performance of this diagnostic procedure is evaluated through simulation experiments.

OLUMA URGESSA

Statistical Quality Control, 6th Edition

Regiz Faria

Anwar Shaker

sanika kamtekar


- 11 Apr 2023

- Cold Call Podcast

A Rose by Any Other Name: Supply Chains and Carbon Emissions in the Flower Industry

Headquartered in Kitengela, Kenya, Sian Flowers exports roses to Europe. Because cut flowers have a limited shelf life and consumers want them to retain their appearance for as long as possible, Sian and its distributors used international air cargo to transport them to Amsterdam, where they were sold at auction and trucked to markets across Europe. But when the Covid-19 pandemic caused huge increases in shipping costs, Sian launched experiments to ship roses by ocean using refrigerated containers. The company reduced its costs and cut its carbon emissions, but is a flower that travels halfway around the world truly a “low-carbon rose”? Harvard Business School professors Willy Shih and Mike Toffel debate these questions and more in their case, “Sian Flowers: Fresher by Sea?”

- 17 Sep 2019

How a New Leader Broke Through a Culture of Accuse, Blame, and Criticize

Children’s Hospital & Clinics COO Julie Morath sets out to change the culture by instituting a policy of blameless reporting, which encourages employees to report anything that goes wrong or seems substandard, without fear of reprisal. Professor Amy Edmondson discusses getting an organization into the “High Performance Zone.”

- 27 Feb 2019

- Research & Ideas

The Hidden Cost of a Product Recall

Product failures create managerial challenges for companies but market opportunities for competitors, says Ariel Dora Stern. The stakes have only grown higher.

- 31 Mar 2018

- Working Paper Summaries

Expected Stock Returns Worldwide: A Log-Linear Present-Value Approach

Over the last 20 years, shortcomings of classical asset-pricing models have motivated research in developing alternative methods for measuring ex ante expected stock returns. This study evaluates the main paradigms for deriving firm-level expected return proxies (ERPs) and proposes a new framework for estimating them.

- 26 Apr 2017

Assessing the Quality of Quality Assessment: The Role of Scheduling

Accurate inspections enable companies to assess the quality, safety, and environmental practices of their business partners, and enable regulators to protect consumers, workers, and the environment. This study finds that inspectors are less stringent later in their workday and after visiting workplaces with fewer problems. Managers and regulators can improve inspection accuracy by mitigating these biases and their consequences.

- 23 Sep 2013

Status: When and Why It Matters

Status plays a key role in everything from the things we buy to the partnerships we make. Professor Daniel Malter explores when status matters most.

- 16 May 2011

What Loyalty? High-End Customers are First to Flee

Companies offering top-drawer customer service might have a nasty surprise awaiting them when a new competitor comes to town. Their best customers might be the first to defect. Research by Harvard Business School's Ryan W. Buell, Dennis Campbell, and Frances X. Frei. Key concepts include:

- Companies that offer high levels of customer service can't expect too much loyalty if a new competitor offers even better service.

- High-end businesses must avoid complacency and continue to proactively increase relative service levels when they're faced with even the potential threat of increased service competition.

- Even though high-end customers can be fickle, a company that sustains a superior service position in its local market can attract and retain customers who are more valuable over time.

- Firms rated lower in service quality are more or less immune from the high-end challenger.

- 08 Dec 2008

Thinking Twice About Supply-Chain Layoffs

Cutting the wrong employees can be counterproductive for retailers, according to research from Zeynep Ton. One suggestion: Pay special attention to staff who handle mundane tasks such as stocking and labeling. Your customers do.

- 01 Dec 2006

- What Do You Think?

How Important Is Quality of Labor? And How Is It Achieved?

A new book by Gregory Clark identifies "labor quality" as the major enticement for capital flows that lead to economic prosperity. By defining labor quality in terms of discipline and attitudes toward work, this argument minimizes the long-term threat of outsourcing to developed economies. By understanding labor quality, can we better confront anxieties about outsourcing and immigration?

- 20 Sep 2004

How Consumers Value Global Brands

What do consumers expect of global brands? Does it hurt to be an American brand? This Harvard Business Review excerpt co-written by HBS professor John A. Quelch identifies the three characteristics consumers look for to make purchase decisions.


Pharma Quality Control Case Studies

BIOCAD’s Quest for a Reliable Microbiological Quantitative Reference Material

How a Top 5 Pharma Company Protects Production and Increases Productivity Using BACT/ALERT® 3D

How Thalgo Increased Productivity With CHEMUNEX®

How Shiseido Increased the Efficiency of Microbiological Controls With CHEMUNEX®

How did L’Oréal Optimize Microbial Testing With the CHEMUNEX® System?

How did Cosmebac Improve Microbiological Testing Process With CHEMUNEX®?

Statistical quality control: A case study research

Case Study: Nestle Nordic Quality management system audits

Posted: 6 November 2020 | Intertek

Nestlé is the world’s leading nutrition, health, and wellness company, with over 280,000 employees and over 450 factories globally.

The Challenge

Prior to obtaining ISO 9001 certification with Intertek, Nestlé used its own proprietary quality management system. However, in 2017, Nestlé’s global operations decided that the entire company would convert to ISO standards, because certification to an international standard looked better from the customers' perspective. Since then, Nestlé has been audited to ISO 9001, ISO 14001, and ISO 45001, integrated into one management system.


Study Quality Assessment Tools

In 2013, NHLBI developed a set of tailored quality assessment tools to assist reviewers in focusing on concepts that are key to a study’s internal validity. The tools were specific to certain study designs and tested for potential flaws in study methods or implementation. Experts used the tools during the systematic evidence review process to update existing clinical guidelines, such as those on cholesterol, blood pressure, and obesity. Their findings are outlined in the following reports:

- Assessing Cardiovascular Risk: Systematic Evidence Review from the Risk Assessment Work Group

- Management of Blood Cholesterol in Adults: Systematic Evidence Review from the Cholesterol Expert Panel

- Management of Blood Pressure in Adults: Systematic Evidence Review from the Blood Pressure Expert Panel

- Managing Overweight and Obesity in Adults: Systematic Evidence Review from the Obesity Expert Panel

While these tools have not been independently published and would not be considered standardized, they may be useful to the research community. These reports describe how experts used the tools for the project. Researchers may want to use the tools for their own projects; however, they would need to determine their own parameters for making judgements. Details about the design and application of the tools are included in Appendix A of the reports.

Quality Assessment of Controlled Intervention Studies - Study Quality Assessment Tools

*CD, cannot determine; NA, not applicable; NR, not reported

Guidance for Assessing the Quality of Controlled Intervention Studies

The guidance document below is organized by question number from the tool for quality assessment of controlled intervention studies.

Question 1. Described as randomized

Was the study described as randomized? A study does not satisfy quality criteria as randomized simply because the authors call it randomized; however, being described as randomized is a first step in determining whether a study is randomized.

Questions 2 and 3. Treatment allocation–two interrelated pieces

Adequate randomization: Randomization is adequate if it occurred according to the play of chance (e.g., a computer-generated sequence in more recent studies, or a random number table in older studies).

Inadequate randomization: Randomization is inadequate if there is a preset plan (e.g., alternation, where every other subject is assigned to the treatment arm, or another method of allocation such as time or day of hospital admission or clinic visit, ZIP Code, phone number, etc.). In fact, this is not randomization at all; it is another method of assignment to groups. If assignment is not by the play of chance, then the answer to this question is no. Some tricky scenarios need to be read carefully and considered for the role of chance in assignment. For example, randomization may occur at the site level, where all individuals at a particular site are assigned to receive treatment or no treatment. This scenario is used for group-randomized trials, which can be truly randomized but often are "quasi-experimental" studies with comparison groups rather than true control groups. (Few, if any, group-randomized trials are anticipated for this evidence review.)
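The contrast between allocation by the play of chance and allocation by a preset plan can be sketched in Python. This is a minimal illustration only, assuming simple (unrestricted) randomization to two arms driven by a seeded pseudorandom generator; the function names, arm labels, and seed are hypothetical and are not part of the NHLBI tool.

```python
import random


def random_allocation(n_participants: int, seed: int) -> list[str]:
    """Adequate: each assignment is decided by the play of chance.

    Simple (unrestricted) randomization with a seeded generator, so the
    sequence can be reproduced for auditing.
    """
    rng = random.Random(seed)
    return [rng.choice(["treatment", "control"]) for _ in range(n_participants)]


def alternating_allocation(n_participants: int) -> list[str]:
    """Inadequate: a preset plan (every other subject), not randomization."""
    return ["treatment" if i % 2 == 0 else "control" for i in range(n_participants)]


if __name__ == "__main__":
    print(random_allocation(8, seed=2024))
    # Alternation is fully predictable: anyone who knows the last assignment
    # can guess the next one, which also defeats allocation concealment.
    print(alternating_allocation(8))
```

Seeding makes the sequence reproducible for an audit trail; the sequence still has to be hidden from the people enrolling participants, which is the separate allocation-concealment question.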

Allocation concealment: This means that one does not know in advance, or cannot guess accurately, to what group the next person eligible for randomization will be assigned. Methods include sequentially numbered opaque sealed envelopes, numbered or coded containers, central randomization by a coordinating center, computer-generated randomization that is not revealed ahead of time, etc.

Questions 4 and 5. Blinding

Blinding means that one does not know to which group (intervention or control) the participant is assigned. It is also sometimes called "masking." The reviewer assessed whether each of the following was blinded to knowledge of treatment assignment: (1) the person assessing the primary outcome(s) for the study (e.g., taking measurements such as blood pressure, examining health records for events such as myocardial infarction, reviewing and interpreting test results such as x-ray or cardiac catheterization findings); (2) the person receiving the intervention (e.g., the patient or other study participant); and (3) the person providing the intervention (e.g., the physician, nurse, pharmacist, dietitian, or behavioral interventionist).

Generally, placebo-controlled medication studies are blinded to patient, provider, and outcome assessors; behavioral, lifestyle, and surgical studies are examples of studies that are frequently blinded only to the outcome assessors, because blinding the persons providing and receiving the interventions is difficult in these situations. Sometimes the individual providing the intervention is the same person performing the outcome assessment; this was noted when it occurred.

Question 6. Similarity of groups at baseline

This question relates to whether the intervention and control groups have similar baseline characteristics on average, especially those characteristics that may affect the intervention or outcomes. The point of randomized trials is to create groups that are as similar as possible except for the intervention(s) being studied, in order to compare the effects of the interventions between groups. When reviewers abstracted baseline characteristics, they noted when there was a significant difference between groups. Baseline characteristics for intervention groups are usually presented in a table in the article (often Table 1).

Groups can differ at baseline without raising red flags if: (1) the differences would not be expected to have any bearing on the interventions and outcomes; or (2) the differences are not statistically significant. When concerned about baseline differences between groups, reviewers recorded them in the comments section and considered them in their overall determination of the study quality.

Questions 7 and 8. Dropout

"Dropouts" in a clinical trial are individuals for whom there are no end point measurements, often because they dropped out of the study and were lost to followup.

Generally, an acceptable overall dropout rate is 20 percent or less of the participants who were randomized or allocated into each group. An acceptable differential dropout rate is an absolute difference between groups of at most 15 percentage points (calculated as the dropout rate of one group minus the dropout rate of the other group). However, these are general rates. Lower overall dropout rates are expected in shorter studies, whereas higher overall dropout rates may be acceptable for studies of longer duration. For example, a 6-month study of weight loss interventions should be expected to have nearly 100 percent followup (almost no dropouts, because nearly everybody gets their weight measured regardless of whether they actually received the intervention), whereas a 20-25 percent dropout rate may be acceptable in a 10-year study testing the effects of intensive blood pressure lowering on heart attacks, especially if the dropout rate was similar between groups. The panels for the NHLBI systematic reviews may set different dropout caps.

Conversely, differential dropout rates are not flexible; there should be a 15 percentage point cap. If there is a differential dropout rate of 15 percentage points or higher between arms, then there is a serious potential for bias. This constitutes a fatal flaw, resulting in a poor quality rating for the study.
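The dropout arithmetic can be expressed as a small Python check. This is a hedged sketch, assuming two arms and counting as a dropout everyone randomized who lacks an end point measurement; the class, the function names, and the example counts are hypothetical. It treats a differential of 15 percentage points or more as a fatal flaw, following the wording of the fatal-flaw sentence, and treats the 20 percent overall cap only as the general guide that review panels may change.

```python
from dataclasses import dataclass


@dataclass
class Arm:
    name: str
    randomized: int      # participants randomized or allocated to this arm
    with_endpoint: int   # participants who have end point measurements


def dropout_rate(arm: Arm) -> float:
    """Percent of the arm's randomized participants with no end point measurement."""
    return 100.0 * (arm.randomized - arm.with_endpoint) / arm.randomized


def screen_dropout(a: Arm, b: Arm, overall_cap: float = 20.0,
                   differential_cap: float = 15.0) -> dict:
    rate_a, rate_b = dropout_rate(a), dropout_rate(b)
    # Differential dropout: absolute difference in percentage points.
    differential = abs(rate_a - rate_b)
    return {
        "rates": {a.name: rate_a, b.name: rate_b},
        "differential_points": differential,
        # General guide only; longer studies may tolerate more overall dropout.
        "overall_above_cap": max(rate_a, rate_b) > overall_cap,
        # Not flexible: at or above the cap is treated as a fatal flaw.
        "differential_fatal": differential >= differential_cap,
    }


if __name__ == "__main__":
    # Hypothetical counts, for illustration only.
    print(screen_dropout(Arm("intervention", 200, 150), Arm("control", 200, 184)))
```

With these hypothetical counts the intervention arm loses 25 percent and the control arm 8 percent, a 17-point differential, so both checks are flagged.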

Question 9. Adherence

Did participants in each treatment group adhere to the protocols for assigned interventions? For example, if Group 1 was assigned to 10 mg/day of Drug A, did most of them take 10 mg/day of Drug A? Another example is a study evaluating the difference between a 30-pound weight loss and a 10-pound weight loss on specific clinical outcomes (e.g., heart attacks), but the 30-pound weight loss group did not achieve its intended weight loss target (e.g., the group only lost 14 pounds on average). A third example is whether a large percentage of participants assigned to one group "crossed over" and got the intervention provided to the other group. A final example is when one group that was assigned to receive a particular drug at a particular dose had a large percentage of participants who did not end up taking the drug or the dose as designed in the protocol.

Question 10. Avoid other interventions

Changes that occur in the study outcomes being assessed should be attributable to the interventions being compared in the study. If study participants receive interventions that are not part of the study protocol and could affect the outcomes being assessed, and they receive these interventions differentially, then there is cause for concern because these interventions could bias results. The following scenario is another example of how bias can occur. In a study comparing two different dietary interventions on serum cholesterol, one group had a significantly higher percentage of participants taking statin drugs than the other group. In this situation, it would be impossible to know if a difference in outcome was due to the dietary intervention or the drugs.

Question 11. Outcome measures assessment

What tools or methods were used to measure the outcomes in the study? Were the tools and methods accurate and reliable (for example, have they been validated, or are they objective)? This is important as it indicates the confidence you can have in the reported outcomes. Perhaps even more important is ascertaining that outcomes were assessed in the same manner within and between groups. One example of differing methods is self-report of dietary salt intake versus urine testing for sodium content (a more reliable and valid assessment method). Another example is using blood pressure (BP) measurements taken by practitioners who use their usual methods versus BP measurements taken by individuals trained in a standard approach. Such an approach may include using the same instrument each time and taking an individual's BP multiple times. In each of these cases, the answer to this assessment question would be "no" for the former scenario and "yes" for the latter. In addition, a study in which an intervention group was seen more frequently than the control group, enabling more opportunities to report clinical events, would not be considered reliable and valid.

Question 12. Power calculation

Generally, a study's methods section will address the sample size needed to detect differences in primary outcomes. The current standard is at least 80 percent power to detect a clinically relevant difference in an outcome using a two-sided alpha of 0.05. Often, however, older studies will not report on power.
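The 80 percent power, two-sided alpha of 0.05 standard can be checked with a back-of-the-envelope sample-size calculation. The Python sketch below assumes a comparison of two means with equal arm sizes and a known common standard deviation, and uses the normal-approximation formula n = 2 * (z_(1-alpha/2) + z_(power))^2 * sigma^2 / delta^2 per arm; `n_per_group`, `delta`, and `sigma` are illustrative names, not anything the guidance defines.

```python
import math
from statistics import NormalDist


def n_per_group(delta: float, sigma: float, alpha: float = 0.05,
                power: float = 0.80) -> int:
    """Approximate participants needed per arm to detect a mean difference.

    delta: clinically relevant difference between arm means
    sigma: common standard deviation of the outcome
    Normal approximation for a two-sided test with equal arm sizes.
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = z.inv_cdf(power)           # about 0.84 for 80 percent power
    n = 2 * (z_alpha + z_beta) ** 2 * sigma ** 2 / delta ** 2
    return math.ceil(n)  # round up to whole participants


if __name__ == "__main__":
    print(n_per_group(delta=0.5, sigma=1.0))
```

For a clinically relevant difference of half a standard deviation (delta = 0.5, sigma = 1.0) this gives 63 participants per arm; a calculation based on the t distribution gives a slightly larger number.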

Question 13. Prespecified outcomes

Investigators should prespecify outcomes reported in a study for hypothesis testing–which is the reason for conducting an RCT. Without prespecified outcomes, the study may be reporting ad hoc analyses, simply looking for differences supporting desired findings. Investigators also should prespecify subgroups being examined. Most RCTs conduct numerous post hoc analyses as a way of exploring findings and generating additional hypotheses. The intent of this question is to give more weight to reports that are not simply exploratory in nature.

Question 14. Intention-to-treat analysis

Intention-to-treat (ITT) means everybody who was randomized is analyzed according to the original group to which they were assigned. This is an extremely important concept because conducting an ITT analysis preserves the whole reason for doing a randomized trial; that is, to compare groups that differ only in the intervention being tested. When the ITT philosophy is not followed, groups being compared may no longer be the same. In this situation, the study would likely be rated poor. However, if an investigator used another type of analysis that could be viewed as valid, this would be explained in the "other" box on the quality assessment form. Some researchers use a completers analysis (an analysis of only the participants who completed the intervention and the study), which introduces significant potential for bias. Characteristics of participants who do not complete the study are unlikely to be the same as those who do. The likely impact of participants withdrawing from a study treatment must be considered carefully. ITT analysis provides a more conservative (potentially less biased) estimate of effectiveness.
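The gap between an ITT analysis and a completers-only analysis can be seen on a toy data set. In the Python sketch below the records are hypothetical, the outcome (for example, change in weight) is assumed to be measured for everyone whether or not they finished the intervention, and the function names are illustrative.

```python
from statistics import mean

# Hypothetical records: (assigned_arm, completed_intervention, outcome).
# The outcome is assumed to be measured for every randomized participant.
records = [
    ("intervention", True, -6.0),
    ("intervention", True, -5.0),
    ("intervention", False, -1.0),
    ("intervention", False, 0.0),
    ("control", True, -2.0),
    ("control", True, -1.0),
    ("control", True, -1.0),
    ("control", False, 0.0),
]


def itt_means(rows):
    """Intention-to-treat: everyone is analyzed in the arm they were assigned to."""
    arms = {arm for arm, _, _ in rows}
    return {arm: mean(y for a, _, y in rows if a == arm) for arm in arms}


def completers_means(rows):
    """Completers-only: silently drops anyone who did not finish the intervention."""
    return itt_means([row for row in rows if row[1]])


if __name__ == "__main__":
    print("ITT:", itt_means(records))
    print("Completers:", completers_means(records))
```

On these records ITT gives arm means of -3.0 (intervention) and -1.0 (control), a difference of -2.0, while the completers analysis gives -5.5 and about -1.33, inflating the apparent effect to about -4.2 because the intervention participants who stopped early, and who fared worse, are excluded.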

General Guidance for Determining the Overall Quality Rating of Controlled Intervention Studies

The questions on the assessment tool were designed to help reviewers focus on the key concepts for evaluating a study's internal validity. They are not intended to create a list that is simply tallied up to arrive at a summary judgment of quality.

Internal validity is the extent to which the results (effects) reported in a study can truly be attributed to the intervention being evaluated and not to flaws in the design or conduct of the study; in other words, the ability of the study to support causal conclusions about the effects of the intervention being tested. Such flaws can increase the risk of bias. Critical appraisal involves considering the potential for allocation bias, measurement bias, or confounding (a mixture of exposures whose effects cannot be teased apart from one another). Examples of confounding include co-interventions, differences in patient characteristics at baseline, and other issues addressed in the questions above. A high risk of bias translates to a rating of poor quality; a low risk of bias translates to a rating of good quality.

Fatal flaws: If a study has a "fatal flaw," then risk of bias is significant, and the study is of poor quality. Examples of fatal flaws in RCTs include high dropout rates, high differential dropout rates, and the absence of an ITT analysis or the use of another unsuitable statistical analysis (e.g., a completers-only analysis).
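The first two fatal flaws are easy to quantify from the counts randomized and completed in each arm. The minimal sketch below computes overall and differential dropout and compares them with cutoffs. The 20 percent overall and 15 percentage-point differential defaults are assumptions chosen for illustration; reviewers should substitute whatever thresholds their own protocol specifies. All names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class DropoutSummary:
    per_arm: Dict[str, float]  # proportion of randomized participants lost, by arm
    overall: float             # proportion lost across all arms combined
    differential: float        # largest minus smallest per-arm dropout


def summarize_dropout(randomized: Dict[str, int], completed: Dict[str, int]) -> DropoutSummary:
    for arm, n in randomized.items():
        if not 0 <= completed[arm] <= n:
            raise ValueError(f"invalid completed count for arm {arm!r}")
    per_arm = {arm: 1 - completed[arm] / randomized[arm] for arm in randomized}
    overall = 1 - sum(completed.values()) / sum(randomized.values())
    differential = max(per_arm.values()) - min(per_arm.values())
    return DropoutSummary(per_arm, overall, differential)


def dropout_flags(summary: DropoutSummary,
                  max_overall: float = 0.20,       # assumed cutoff; replace as needed
                  max_differential: float = 0.15,  # assumed cutoff; replace as needed
                  ) -> List[str]:
    """Return human-readable warnings for dropout above the chosen cutoffs."""
    flags = []
    if summary.overall > max_overall:
        flags.append(f"high overall dropout: {summary.overall:.0%}")
    if summary.differential > max_differential:
        flags.append(f"high differential dropout: {summary.differential:.0%}")
    return flags


if __name__ == "__main__":
    # Hypothetical two-arm trial.
    s = summarize_dropout({"treatment": 100, "control": 100},
                          {"treatment": 70, "control": 88})
    print(dropout_flags(s))
```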

Generally, when evaluating a study, one will not see a "fatal flaw"; however, one will find some risk of bias. During training, reviewers were instructed to look for the potential for bias in studies by focusing on the concepts underlying the questions in the tool. For any box checked "no," reviewers were told to ask: "What is the potential risk of bias that may be introduced by this flaw?" That is, does this factor cause one to doubt the results that were reported in the study?

NHLBI staff provided reviewers with background reading on critical appraisal, while emphasizing that the best approach is to work through the questions in the tool to determine the potential for bias in a study. The staff also emphasized that each study has specific nuances; therefore, reviewers should familiarize themselves with the key concepts.

Quality Assessment of Systematic Reviews and Meta-Analyses - Study Quality Assessment Tools

Guidance for Quality Assessment Tool for Systematic Reviews and Meta-Analyses

A systematic review is a study that attempts to answer a question by synthesizing the results of primary studies while using strategies to limit bias and random error.[424] These strategies include a comprehensive search of all potentially relevant articles and the use of explicit, reproducible criteria in the selection of articles included in the review. Research designs and study characteristics are appraised, data are synthesized, and results are interpreted using a predefined systematic approach that adheres to evidence-based methodological principles.

Systematic reviews can be qualitative or quantitative. A qualitative systematic review summarizes the results of the primary studies but does not combine the results statistically. A quantitative systematic review, or meta-analysis, is a type of systematic review that employs statistical techniques to combine the results of the different studies into a single pooled estimate of effect, often given as an odds ratio. The guidance document below is organized by question number from the tool for quality assessment of systematic reviews and meta-analyses.
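To illustrate what combining results "into a single pooled estimate" can look like, here is a minimal fixed-effect, inverse-variance pooling of odds ratios from 2×2 tables. This is one common technique among several (random-effects and Mantel-Haenszel methods are others). The function names, table layout, and study data are assumptions, and the sketch does not handle zero cells.

```python
import math
from typing import List, Tuple

# Each study is a 2x2 table: (events_treated, non_events_treated,
#                              events_control, non_events_control).
Table = Tuple[int, int, int, int]


def log_odds_ratio(table: Table) -> Tuple[float, float]:
    """Return the log odds ratio and its approximate (Woolf) variance."""
    a, b, c, d = table
    if min(table) == 0:
        raise ValueError("zero cell: a continuity correction would be needed")
    log_or = math.log((a * d) / (b * c))
    variance = 1 / a + 1 / b + 1 / c + 1 / d
    return log_or, variance


def pooled_odds_ratio(tables: List[Table], z: float = 1.96) -> Tuple[float, float, float]:
    """Fixed-effect inverse-variance pooled OR with an approximate 95% CI."""
    estimates = [log_odds_ratio(t) for t in tables]
    weights = [1 / var for _, var in estimates]  # more precise studies weigh more
    pooled_log = sum(w * y for w, (y, _) in zip(weights, estimates)) / sum(weights)
    se = math.sqrt(1 / sum(weights))
    return (math.exp(pooled_log),
            math.exp(pooled_log - z * se),
            math.exp(pooled_log + z * se))


if __name__ == "__main__":
    # Hypothetical studies, not data from any real review.
    studies = [(20, 80, 10, 90), (15, 35, 12, 38), (30, 170, 25, 175)]
    or_, low, high = pooled_odds_ratio(studies)
    print(f"pooled OR {or_:.2f} (95% CI {low:.2f} to {high:.2f})")
```

Pooling happens on the log scale because log odds ratios are approximately normally distributed; the result is exponentiated back to an odds ratio for reporting.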

Question 1. Focused question

The review should be based on a question that is clearly stated and well-formulated. An example would be a question that uses the PICO (population, intervention, comparator, outcome) format, with all components clearly described.

Question 2. Eligibility criteria

The eligibility criteria used to determine whether studies were included or excluded should be clearly specified and predefined. It should be clear to the reader why studies were included or excluded.

Question 3. Literature search

The search strategy should employ a comprehensive, systematic approach in order to capture as much of the evidence pertaining to the question of interest as possible. At a minimum, a comprehensive review has the following attributes:

- Electronic searches should be conducted using multiple scientific literature databases, such as MEDLINE, EMBASE, Cochrane Central Register of Controlled Trials, PsychLit, and others as appropriate for the subject matter.

- Manual searches of references found in articles and textbooks should supplement the electronic searches.

Additional search strategies that may be used to improve the yield include the following:

- Studies published in other countries

- Studies published in languages other than English

- Consultation with experts in the field to identify studies and articles that may have been missed

- Search of grey literature, including technical reports and other papers from government agencies or scientific groups or committees; presentations and posters from scientific meetings, conference proceedings, unpublished manuscripts; and others. Searching the grey literature is important (whenever feasible) because sometimes only positive studies with significant findings are published in the peer-reviewed literature, which can bias the results of a review.

The review should describe the literature search strategy clearly enough that others could reproduce it and obtain similar results.
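Writing the search down as explicit, structured logic is one way to make it reproducible. The sketch below assembles a generic Boolean query by joining synonyms with OR inside each concept and joining the concepts with AND. The concept names and terms are hypothetical, and real databases such as MEDLINE or EMBASE each have their own field tags and controlled vocabularies, which this sketch does not model.

```python
from typing import Dict, List


def build_boolean_query(concepts: Dict[str, List[str]]) -> str:
    """Combine synonyms with OR inside each concept, then join concepts with AND.

    Multi-word terms are wrapped in double quotes so they are searched as phrases.
    Concepts with no terms are skipped.
    """
    groups = []
    for terms in concepts.values():
        if not terms:
            continue
        quoted = [f'"{t}"' if " " in t else t for t in terms]
        groups.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(groups)


if __name__ == "__main__":
    # Hypothetical PICO-style concepts for illustration only.
    query = build_boolean_query({
        "population": ["hypertension", "high blood pressure"],
        "intervention": ["sodium restriction", "low-salt diet"],
    })
    print(query)
    # (hypertension OR "high blood pressure") AND ("sodium restriction" OR "low-salt diet")
```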

Question 4. Dual review for determining which studies to include and exclude

Titles, abstracts, and full-text articles (when indicated) should be reviewed by two independent reviewers to determine which studies to include in and exclude from the review. Disagreements should be resolved through discussion and consensus or by a third party, and the review should clearly state the process, including the methods used for settling disagreements.
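Agreement between the two screeners can be summarized before disagreements are resolved. Cohen's kappa is one common chance-corrected agreement statistic; the tool does not require it, and the include/exclude decisions below are hypothetical. The sketch also lists the records on which the reviewers disagreed, which are the ones that would go to discussion or a third party.

```python
from collections import Counter
from typing import List, Sequence


def cohens_kappa(rater_a: Sequence[str], rater_b: Sequence[str]) -> float:
    """Chance-corrected agreement between two raters on the same records."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must rate the same, non-empty set of records")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected if each rater labeled records independently at their own rates.
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / n ** 2
    if expected == 1:
        return 1.0  # both raters used one identical label throughout (convention here)
    return (observed - expected) / (1 - expected)


def disagreements(rater_a: Sequence[str], rater_b: Sequence[str]) -> List[int]:
    """Indices of records needing discussion or third-party adjudication."""
    return [i for i, (a, b) in enumerate(zip(rater_a, rater_b)) if a != b]


if __name__ == "__main__":
    # Hypothetical screening decisions: "I" = include, "E" = exclude.
    reviewer_1 = list("IIEEIEEEIE")
    reviewer_2 = list("IEEEIEIEIE")
    print(f"kappa = {cohens_kappa(reviewer_1, reviewer_2):.2f}")    # kappa = 0.58
    print("disagreements at", disagreements(reviewer_1, reviewer_2))  # [1, 6]
```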

Question 5. Quality appraisal for internal validity

Each included study should be appraised for internal validity (study quality assessment) using a standardized approach for rating the quality of the individual studies. Ideally, at least two independent reviewers should appraise each study for internal validity. However, there is no single, commonly accepted, standardized tool for rating the quality of studies. Therefore, reviewers looked for an assessment of the quality of each included study and a clear description of the process used.

Question 6. List and describe included studies

All included studies should be listed in the review, along with descriptions of their key characteristics, presented in either narrative or table format.

Question 7. Publication bias

Publication bias is a term used when studies with positive results have a higher likelihood of being published, being published rapidly, being published in higher impact journals, being published in English, being published more than once, or being cited by others.[425,426] Publication bias can be linked to favorable or unfavorable treatment of research findings due to investigators, editors, industry, commercial interests, or peer reviewers. To minimize the potential for publication bias, researchers can conduct a comprehensive literature search that includes the strategies discussed in Question 3.

A funnel plot (a scatter plot of each component study's effect estimate against a measure of its precision, such as its standard error or sample size) is a commonly used graphical method for detecting publication bias. If there is no significant publication bias, the graph looks like a symmetrical inverted funnel.

The review should assess and clearly describe the likelihood of publication bias.

Question 8. Heterogeneity

Heterogeneity is used to describe important differences in studies included in a meta-analysis that may make it inappropriate to combine the studies.[427] Heterogeneity can be clinical (e.g., important differences between study participants, baseline disease severity, and interventions); methodological (e.g., important differences in the design and conduct of the study); or statistical (e.g., important differences in the quantitative results or reported effects).

Researchers usually assess clinical or methodological heterogeneity qualitatively by determining whether it makes sense to combine studies. For example:

- Should a study evaluating the effects of an intervention on cardiovascular disease (CVD) risk that involves elderly male smokers with hypertension be combined with a study that involves healthy adults ages 18 to 40? (Clinical Heterogeneity)

- Should a study that uses a randomized controlled trial (RCT) design be combined with a study that uses a case-control study design? (Methodological Heterogeneity)

Statistical heterogeneity describes the degree of variation in the effect estimates from a set of studies; it is assessed quantitatively. The two most common methods used to assess statistical heterogeneity are the Q test (also known as the χ² or chi-square test) and the I² statistic.
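Both measures can be computed from each study's effect estimate and its variance. Cochran's Q is the weighted sum of squared deviations of the study effects from the fixed-effect pooled estimate, Q = Σ wᵢ(yᵢ − ȳ)² with wᵢ = 1/vᵢ, and is compared against a chi-square distribution with k − 1 degrees of freedom (that comparison is not computed here). I² = max(0, (Q − df)/Q) estimates the share of total variation due to between-study differences rather than chance. The function name and inputs below are hypothetical.

```python
from typing import List, Tuple


def heterogeneity(effects: List[float], variances: List[float]) -> Tuple[float, float]:
    """Return Cochran's Q and I^2 (as a proportion) for a set of study effects.

    effects are on an additive scale (e.g., log odds ratios); variances are their
    within-study variances. Uses fixed-effect inverse-variance weights.
    """
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need matching effects and variances for 2+ studies")
    weights = [1 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    # I^2 = (Q - df) / Q, truncated at zero; defined as 0 when Q is 0.
    i_squared = max(0.0, (q - df) / q) if q > 0 else 0.0
    return q, i_squared


if __name__ == "__main__":
    # Two hypothetical studies with precise but very different effects.
    q, i2 = heterogeneity([0.0, 2.0], [0.25, 0.25])
    print(f"Q = {q:.1f}, I^2 = {i2:.0%}")  # Q = 8.0, I^2 = 88%
```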

Reviewers examined studies to determine whether an assessment for heterogeneity was conducted and clearly described. If the studies are found to be heterogeneous, the investigators should explore and explain the causes of the heterogeneity, and determine what influence, if any, the study differences had on overall study results.

Quality Assessment Tool for Observational Cohort and Cross-Sectional Studies - Study Quality Assessment Tools

Guidance for Assessing the Quality of Observational Cohort and Cross-Sectional Studies

The guidance document below is organized by question number from the tool for quality assessment of observational cohort and cross-sectional studies.

Question 1. Research question

Did the authors describe their goal in conducting this research? Is it easy to understand what they were looking to find? This is important for any type of scientific paper. Higher quality scientific research explicitly defines a research question.

Questions 2 and 3. Study population

Did the authors describe the group of people from which the study participants were selected or recruited, using demographics, location, and time period? If you were to conduct this study again, would you know whom to recruit, from where, and from what time period? Was the cohort population free of the outcomes of interest at the time of recruitment?

An example would be men over 40 years old with type 2 diabetes who began seeking medical care at Phoenix Good Samaritan Hospital between January 1, 1990 and December 31, 1994. In this example, the population is clearly described as: (1) who (men over 40 years old with type 2 diabetes); (2) where (Phoenix Good Samaritan Hospital); and (3) when (between January 1, 1990 and December 31, 1994). Another example is women aged 34 to 59 years in 1980 who were in the nursing profession and had no known coronary disease, stroke, cancer, hypercholesterolemia, or diabetes, and who were recruited from the 11 most populous States, with contact information obtained from State nursing boards.
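Criteria described this explicitly can be written down as a rule that another team could apply unchanged. The sketch encodes the first example population (who, where, when) as a predicate. The record fields, and the assumption that age is measured at the first visit, are hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class PatientRecord:
    # Hypothetical fields a chart review might capture.
    sex: str
    age: int                  # assumed to be age at first visit
    has_type2_diabetes: bool
    hospital: str
    first_visit: date


STUDY_HOSPITAL = "Phoenix Good Samaritan Hospital"
ENROLLMENT_START = date(1990, 1, 1)
ENROLLMENT_END = date(1994, 12, 31)


def is_eligible(record: PatientRecord) -> bool:
    """Apply the who / where / when criteria from the first example population."""
    who = record.sex == "male" and record.age > 40 and record.has_type2_diabetes
    where = record.hospital == STUDY_HOSPITAL
    when = ENROLLMENT_START <= record.first_visit <= ENROLLMENT_END
    return who and where and when


if __name__ == "__main__":
    candidate = PatientRecord("male", 52, True, STUDY_HOSPITAL, date(1992, 5, 1))
    print(is_eligible(candidate))
```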