
A case study of using artificial neural networks for classifying cause of death from verbal autopsy


Andrew Boulle, Daniel Chandramohan, Peter Weller, A case study of using artificial neural networks for classifying cause of death from verbal autopsy, International Journal of Epidemiology , Volume 30, Issue 3, June 2001, Pages 515–520, https://doi.org/10.1093/ije/30.3.515


Background Artificial neural networks (ANN) are gaining prominence as a method of classification in a wide range of disciplines. In this study ANN is applied to data from a verbal autopsy study as a means of classifying cause of death.

Methods A simulated ANN was trained on a subset of verbal autopsy data, and the performance was tested on the remaining data. The performance of the ANN models was compared to two other classification methods (physician review and logistic regression) which have been tested on the same verbal autopsy data.

Results Artificial neural network models were as accurate as or better than the other techniques in estimating the cause-specific mortality fraction (CSMF). They estimated the CSMF within 10% of true value in 8 out of 16 causes of death. Their sensitivity and specificity compared favourably with that of data-derived algorithms based on logistic regression models.

Conclusions Cross-validation is crucial in preventing the over-fitting of the ANN models to the training data. Artificial neural network models are a potentially useful technique for classifying causes of death from verbal autopsies. Large training data sets are needed to improve the performance of data-derived algorithms, in particular ANN models.

KEY MESSAGES

Artificial neural networks have potential for classifying causes of death from verbal autopsies.

Large datasets are needed to train neural networks and for validating their performance.

Generalizability of neural network models to various settings needs further evaluation.

In many countries routine vital statistics are of poor quality, and often incomplete or unavailable. In countries where vital registration and routine health information systems are weak, the application of verbal autopsy (VA) in demographic surveillance systems or cross-sectional surveys has been suggested for assessing cause-specific burden of mortality. The technique involves taking an interviewer-led account, from the caretakers of the deceased, of the symptoms and signs that were present preceding the death. Traditionally the information obtained from caretakers is analysed by physicians and a cause(s) of death is reached if a majority of physicians on a panel agree on a cause(s). The accuracy of physician reviews has been tested in several settings using causes of death assigned from hospital records as the ‘gold standard’. Although physician reviews of VA gave robust estimates of cause-specific mortality fractions (CSMF) of several causes of death, the sensitivity, specificity and predictive values varied between causes of death and between populations, 1,2 and the results had poor repeatability. 3

Arguments to introduce opinion-based and/or data-derived algorithm methods of assigning cause of death from VA data are based on both the quest for accuracy and consistency, as well as the logistical difficulties in getting together a panel of physicians to review what are often large numbers of records. However, physician review performed better than set diagnostic criteria (opinion-based or data-derived) given in an algorithm to assign a cause of adult death. 4 One promising approach to diagnose disease status has been artificial neural networks (ANN) which apply non-linear statistics to pattern recognition. For example, ANN predicted outcomes in cancer patients better than a logistic regression model. 5 Duh et al. speculate that ANN will prove useful in epidemiological problems that require pattern recognition and complex classification techniques. 6 In this report, we compare the performance of ANN and logistic regression models and physician review for reaching causes of adult death from VA.

An overview of neural networks

Although often referred to as black boxes, neural networks can in fact easily be understood by those versed in regression analysis techniques. In essence, they are complex non-linear modelling equations. The inputs, outputs and weights in a neural network are analogous to the input variables, outcome variables and coefficients in a regression analysis. The added complexity is largely the result of a layering of ‘nodes’ which provides a far more detailed map of the decision space. A single node neural network will produce a comparable output to logistic regression, where a function will combine the weights of the inputs to produce the output (Figure 1 ).
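The analogy between a single node and logistic regression can be illustrated with a minimal sketch. The symptom indicators, weights and bias below are invented for illustration and are not taken from the study; the weights play the role of log odds ratios and the bias the role of the regression intercept.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic (sigmoid) activation: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def single_node(inputs, weights, bias):
    """Output of one node: sigmoid of the weighted sum of inputs plus a bias.

    This has the same functional form as a logistic regression model.
    """
    z = bias + sum(w * x for w, x in zip(weights, inputs))
    return sigmoid(z)


# Hypothetical example: three binary symptom indicators (1 = present).
symptoms = [1, 0, 1]
weights = [1.2, -0.4, 0.8]  # illustrative values, analogous to log odds ratios
bias = -1.5                 # illustrative value, analogous to the intercept

probability = single_node(symptoms, weights, bias)
print(round(probability, 4))
```

Taking the logit of the node output recovers the linear predictor, which is why the input weights of such a node can be compared directly with regression coefficients.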

Combining these nodes into multiple layers adds to the complexity of the model and hence the discriminatory power. In so doing, a number of elements, each receiving all of the inputs and producing an output, have these outputs sent as inputs to a further element(s). The architecture is called a multi-layer perceptron (Figure 2 ).
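A forward pass through such an architecture might be sketched as follows, assuming a single hidden layer and a sigmoid activation at every node. The function names and all weight values are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic activation."""
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs, weight_rows, biases):
    """Each node in the layer receives all inputs and produces one output."""
    return [
        sigmoid(b + sum(w * x for w, x in zip(row, inputs)))
        for row, b in zip(weight_rows, biases)
    ]


def mlp_forward(inputs, hidden_weights, hidden_biases, output_weights, output_bias):
    """Multi-layer perceptron with one hidden layer and one output node.

    The hidden-layer outputs are passed on as inputs to the output node.
    """
    hidden = layer(inputs, hidden_weights, hidden_biases)
    return layer(hidden, [output_weights], [output_bias])[0]


# Hypothetical network: 3 inputs, 2 hidden nodes, 1 output.
x = [1, 0, 1]
hidden_w = [[0.5, -0.3, 0.8], [-0.6, 0.9, 0.1]]
hidden_b = [0.0, 0.2]
out_w = [1.5, -1.0]
out_b = -0.2

print(mlp_forward(x, hidden_w, hidden_b, out_w, out_b))
```

With no hidden nodes the same code reduces to the single-node case, which is one way to see the relationship with logistic regression.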

The study population and field procedures of the VA data used in this analysis are described elsewhere. 1 In brief, data were collected at three sites (a regional hospital in Ethiopia, and two rural hospitals in Tanzania and Ghana). Adults dying at these hospitals who lived within a 60-km radius of the institution were included in the study. A VA questionnaire was administered by interviewers with at least 12 years of formal education. The reference diagnoses (gold standard) were obtained from a combination of hospital records and death certificates by one of the authors (DC) together with a local physician in each site. A panel of three physicians reviewed the VA data and reached a cause of death if any two agreed on a cause (physician review).

The method used to derive algorithms from the data using logistic regression models has been described elsewhere. 4 Each subject was randomly assigned to the train dataset (n = 410) or test dataset (n = 386), such that the number of deaths due to each cause (gold standard) was the same in both datasets. If a cause of death had an odd number of subjects, the extra subject was included in the train dataset. Symptoms (including signs) with odds ratio (OR) ≥2 or ≤0.5 in univariate analyses were included in a logistic model, and those symptoms that were not statistically significant ( P > 0.1) were then dropped from the model in a backward stepwise manner. Coefficients of each symptom remaining in the model were summed to obtain a score for each subject, i.e. Score = $b_1 x_1 + b_2 x_2 + \dots$, where $b_i$ is the log OR of symptom $x_i$ in the model. A cut-off score was identified for each cause of death (16 primary causes of adult death were included) that gave the estimated number of deaths closest to the true number of cause-specific deaths, such that the sensitivity was at least 50%.
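The scoring and cut-off rule described above could be sketched roughly as follows. This is an illustration under stated assumptions, not the procedure used in the original logistic regression study: the function names, the candidate cut-offs (every observed score) and the toy data are all hypothetical.

```python
def symptom_score(symptoms, log_odds_ratios):
    """Score = b1*x1 + b2*x2 + ..., with x_i = 1 if symptom i is present."""
    return sum(b * x for b, x in zip(log_odds_ratios, symptoms))


def choose_cutoff(scores, is_case, min_sensitivity=0.5):
    """Pick the cut-off whose estimated number of deaths is closest to the
    true number, among cut-offs with sensitivity of at least min_sensitivity.

    Returns None if no candidate cut-off reaches the required sensitivity.
    """
    true_n = sum(is_case)
    best = None  # (gap between estimated and true count, cut-off)
    for cutoff in sorted(set(scores)):
        predicted = [s >= cutoff for s in scores]
        estimated_n = sum(predicted)
        true_positives = sum(1 for p, c in zip(predicted, is_case) if p and c)
        sensitivity = true_positives / true_n if true_n else 0.0
        if sensitivity < min_sensitivity:
            continue
        gap = abs(estimated_n - true_n)
        if best is None or gap < best[0]:
            best = (gap, cutoff)
    return None if best is None else best[1]


# Toy data (invented): six subjects, three of whom died of the cause in question.
scores = [0.2, 1.5, 2.0, 0.1, 1.0, 3.0]
is_case = [0, 1, 1, 0, 0, 1]
print(choose_cutoff(scores, is_case))
```

In this toy example a cut-off of 1.5 classifies exactly three subjects as cases, matching the true count while keeping the sensitivity above the 50% floor.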

We used the same train and test datasets used by Quigley et al. for training and testing an ANN. The data were ported to Microsoft Excel™ and analysed using NeuroSolutions 3.0™ (Lefebvre WC. NeuroSolution Version 3.020, Neurodimension Inc.1994. [ www.nd.com ]). All models were multi-layer perceptrons with a single hidden layer and trained with static backpropagation. The number of nodes in the hidden layer was varied according to the number of inputs and network performance. A learning rate of 0.7 was used throughout with the momentum learning rule. A sigmoid activation function was used for all processing elements.
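For readers unfamiliar with the momentum learning rule, one weight update can be sketched as below. The learning rate of 0.7 is the value reported above; the momentum coefficient of 0.9 is an assumption made for illustration, since the value used is not stated, and the gradients are invented. This is the textbook form of the rule and may differ in detail from the NeuroSolutions implementation.

```python
def momentum_step(weights, gradients, velocity, learning_rate=0.7, momentum=0.9):
    """One gradient-descent update with momentum.

    Each weight change is the current gradient step plus a fraction
    (momentum) of the previous change, which smooths the descent.
    momentum=0.9 is an assumed value, not one reported in the study.
    """
    new_velocity = [
        momentum * v - learning_rate * g for v, g in zip(velocity, gradients)
    ]
    new_weights = [w + v for w, v in zip(weights, new_velocity)]
    return new_weights, new_velocity


# Two consecutive updates with the same (invented) error gradients.
weights = [0.5, -0.5]
velocity = [0.0, 0.0]
gradients = [0.1, -0.2]

weights, velocity = momentum_step(weights, gradients, velocity)
first_step = list(weights)
weights, velocity = momentum_step(weights, gradients, velocity)
print(first_step, weights)
```

The second update is larger than the first because the previous change is carried forward, which is the intended effect of the momentum term when successive gradients point the same way.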

Model inputs were based on those used in the logistic regression study, with further variables added to improve discrimination in instances when they improved the model performance. Sensitivity analysis provided the basis for evaluating the role of the inputs in the models.
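One simple way to approximate a sensitivity analysis for binary inputs is to flip each input in turn and record the average change in the model output. The sketch below takes that approach; it is an assumption about the general idea, not a description of the sensitivity analysis implemented in the software used here, and the stand-in model is hypothetical.

```python
def input_sensitivity(model, records):
    """Mean absolute change in model output when each binary input is flipped.

    model   : any callable mapping a list of 0/1 inputs to a number
    records : list of input vectors (lists of 0/1 values)
    Returns one sensitivity value per input position.
    """
    n_inputs = len(records[0])
    sensitivities = []
    for i in range(n_inputs):
        total_change = 0.0
        for record in records:
            flipped = list(record)
            flipped[i] = 1 - flipped[i]
            total_change += abs(model(flipped) - model(record))
        sensitivities.append(total_change / len(records))
    return sensitivities


# Hypothetical stand-in for a trained network: a simple linear function.
def toy_model(x):
    return 0.5 * x[0] + 0.1 * x[1]


records = [[0, 0], [1, 0], [1, 1]]
print(input_sensitivity(toy_model, records))
```

Ranking inputs by these values gives an ordering that can be set beside the ordering of coefficients from a regression model.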

For each diagnosis, the first 100 records of the training subset were used in the first training run of each model as a cross-validation set to determine the optimal number of hidden nodes and the training point at which the cross-validation mean squared error reached a trough. Thereafter the full training set was used to train the network to this point.
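The selection step described above can be sketched as follows, assuming that the cross-validation mean squared error has been recorded after each training epoch for each candidate number of hidden nodes. The error curves are invented and the function names are hypothetical.

```python
def mean_squared_error(targets, outputs):
    """Average squared difference between target and network output."""
    return sum((t - o) ** 2 for t, o in zip(targets, outputs)) / len(targets)


def trough_epoch(cv_errors):
    """Return (epoch, error) at the lowest cross-validation error.

    Epochs are counted from 1; the earliest epoch wins if there are ties.
    """
    best_index = min(range(len(cv_errors)), key=lambda i: cv_errors[i])
    return best_index + 1, cv_errors[best_index]


def select_architecture(cv_curves):
    """Choose the number of hidden nodes whose trough is lowest.

    cv_curves maps number of hidden nodes -> list of per-epoch CV errors.
    Returns (hidden_nodes, stopping_epoch, cv_error).
    """
    best = None
    for n_hidden, errors in cv_curves.items():
        epoch, error = trough_epoch(errors)
        if best is None or error < best[2]:
            best = (n_hidden, epoch, error)
    return best


# Invented error curves: error falls, then rises as over-training sets in.
curves = {
    2: [0.30, 0.22, 0.20, 0.21, 0.25],
    4: [0.28, 0.19, 0.17, 0.18, 0.24],
    8: [0.27, 0.18, 0.19, 0.23, 0.30],
}
print(select_architecture(curves))
```

The full training set would then be used to train a network of the chosen size up to the chosen stopping point.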

The output weights were then adjusted by a variable factor until the CSMF was as close as possible to 100% of the expected value in testing runs on the training set. At this point the network was tested on the unseen data in the test subset.
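The exact adjustment procedure is not specified beyond the description above. One possible reading, sketched here purely as an assumption, is to scale the network outputs by a factor and choose the factor for which the number of records classified as positive on the training set is closest to the true number. The 0.5 decision threshold, the candidate factors and the outputs are all hypothetical.

```python
def estimated_cases(outputs, factor, threshold=0.5):
    """Number of records classified positive after scaling the outputs."""
    return sum(1 for o in outputs if o * factor >= threshold)


def calibrate_factor(outputs, true_cases, candidate_factors):
    """Pick the scaling factor whose estimated case count is closest to the
    true count on the training set (the first such factor if there are ties).
    """
    return min(
        candidate_factors,
        key=lambda f: abs(estimated_cases(outputs, f) - true_cases),
    )


# Invented training-set outputs for one cause of death with 3 true cases.
train_outputs = [0.9, 0.7, 0.45, 0.4, 0.2, 0.1]
factors = [0.8, 0.9, 1.0, 1.1, 1.2, 1.3]

best_factor = calibrate_factor(train_outputs, 3, factors)
print(best_factor, estimated_cases(train_outputs, best_factor))
```

Scaling the output in this way is equivalent to moving the decision threshold, which parallels the choice of a cut-off score in the logistic regression models.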

Weighted (by number of deaths) averages for sensitivity and specificity were calculated for each method. A summary measure for CSMF was calculated for each method by summing the absolute difference in observed and estimated number of cases for each cause of death, dividing by the total number of deaths, and converting to a percentage.
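The two summary calculations described above can be written out as a short sketch. The cause names and counts are invented for illustration and do not correspond to the study data.

```python
def csmf_summary_error(observed, estimated):
    """Sum of absolute differences between observed and estimated numbers of
    cases across causes, divided by total deaths, expressed as a percentage.
    """
    total_deaths = sum(observed.values())
    absolute_difference = sum(
        abs(observed[cause] - estimated.get(cause, 0)) for cause in observed
    )
    return 100.0 * absolute_difference / total_deaths


def weighted_average(values, deaths):
    """Average of a per-cause measure, weighted by number of deaths."""
    total_deaths = sum(deaths.values())
    return sum(values[cause] * deaths[cause] for cause in deaths) / total_deaths


# Invented example with three causes of death.
observed = {"malaria": 40, "tuberculosis": 30, "injuries": 30}
estimated = {"malaria": 44, "tuberculosis": 27, "injuries": 31}
sensitivity = {"malaria": 0.5, "tuberculosis": 0.6, "injuries": 0.8}

print(csmf_summary_error(observed, estimated))
print(weighted_average(sensitivity, observed))
```

Because the summary error is driven by absolute counts, an over-estimate of a few cases in a common cause contributes as much as the same over-estimate in a rare cause, even though the relative error in the rare cause is far larger.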

Table 1 shows the comparison of validity of the logistic regression models versus the ANN models for estimating CSMF by comparing estimated with observed number of cases as well as sensitivity and specificity.

The CSMF was estimated to within 10% of the true value in 8 out of 16 classes (causes of death) by the ANN. In a further six classes it was estimated to within 25% of the true value. In the remaining two classes the CSMF was extremely low (tetanus and rabies). The summary measure for CSMF favours those methods that are more accurate on the more frequently occurring classes and may mask poor performance on rare causes of death. In this measure however, calculated from the absolute number of over- or under-diagnosed cases, the neural network method performed better than logistic regression models (average error 11.27% versus 31.27%), and compared well with physician review (average error of 12.84%). In the assessment of chance agreement between ANN and gold-standard diagnoses, the kappa value was ≥0.5 for the following classes: rabies (0.86), injuries (0.76), tetanus (0.66), tuberculosis and AIDS (0.55), direct maternal causes (0.52), meningitis (0.50), and diarrhoea (0.50).
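For reference, the kappa statistic for a single cause of death can be computed from the 2 × 2 table of ANN classification against gold standard, as in the following sketch of Cohen's kappa. The counts are invented.

```python
def cohen_kappa(tp, fp, fn, tn):
    """Cohen's kappa for a 2x2 table of classifier versus gold standard.

    tp, fp, fn, tn: counts of true positives, false positives,
    false negatives and true negatives.
    Undefined (division by zero) when chance agreement equals 1.
    """
    n = tp + fp + fn + tn
    observed_agreement = (tp + tn) / n
    chance_agreement = (
        (tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)
    ) / (n * n)
    return (observed_agreement - chance_agreement) / (1 - chance_agreement)


# Invented counts: observed agreement 0.70, chance agreement 0.50.
print(cohen_kappa(tp=20, fp=5, fn=10, tn=15))
```

Kappa corrects observed agreement for the agreement expected by chance, so a model can estimate the CSMF well (false positives and false negatives cancelling out) while still having a modest kappa.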

There was a trade-off between specificity and sensitivity, and in some instances the neural network performed better than other techniques in one at the expense of the other. Compared to logistic regression, the networks performed better in both parameters for tuberculosis and AIDS, meningitis, cardiovascular disorders, diarrhoea, and tetanus. They produced a lower sensitivity for malaria (compared with logistic regression), but a higher specificity. The overall and disease-specific sensitivities and specificities compared favourably with logistic regression, but did not match the performance of physician review.

Accuracy of CSMF estimates

One of the most significant findings of this analysis is the relative accuracy in assessing the fraction of deaths that are due to specific causes, especially for the more frequently occurring classes. The accuracy in this estimate does not always correlate with the reliability estimated by the kappa statistic. Care was taken to find a weighting for the output that would lead to a correct CSMF in the training set. The choice of this weight is analogous to selecting the minimum total score at which a case is defined in the logistic regression models. This then led to surprisingly good estimates in the testing set. It is a feature of the train and test subsets, however, that the number of members in each class is similar. Manipulating either subset so that the CSMF differed, by randomly removing or adding records of the class in question, did not alter the accuracy of the CSMF estimates if the number of training examples for the class was not decreased in the training subset. With less frequently occurring classes such as pneumonia, decreasing the number of training examples in the training set reduced the accuracy of the CSMF estimate. This is essentially an issue of generalization, and it is to be expected that networks that are trained with fewer examples are less likely to be generalizable. It is suggested that it is for this reason that the CSMF estimates for the five most frequently occurring classes are all within 10% of the expected values. It would be expected, furthermore, that if the datasets were larger, the generalizability of the CSMF estimates for the less frequently occurring classes would improve.

At the stage of data analysis the question can be asked as to whether or not there is an output level above which class membership is reasonably certain, and below which misclassification is more likely to occur. Looking at the tuberculosis-AIDS model (n = 71), as well as the meningitis model (n = 32), and ranking the top 20 test outputs in descending order by value (reflecting the certainty of the classification), 13/20 of these outputs correctly predict the class membership in both instances. The sensitivities for the models overall were 66% and 56% respectively. The implication is that without a gold standard result for comparison, it would be difficult to delineate the true positives from the false positives even in the least equivocal outputs. This is in keeping with observations that different data-derived methods arrive at their estimates differently. One study to predict an acute abdomen diagnosis from surgical admission records demonstrated that data-derived methods with similar overall performance correlated poorly as to which of the records they were correctly predicting. 7
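The ranking exercise described above amounts to computing the proportion of true cases among the k highest network outputs. A minimal sketch, with invented outputs and a hypothetical function name:

```python
def precision_at_k(outputs, is_case, k=20):
    """Proportion of true cases among the k records with the highest outputs.

    outputs : network output for each test record
    is_case : 1 if the gold standard assigns the record to the class, else 0
    """
    ranked = sorted(zip(outputs, is_case), key=lambda pair: pair[0], reverse=True)
    top = ranked[:k]
    return sum(1 for _, case in top if case) / len(top)


# Invented example: the four highest outputs include three true cases.
outputs = [0.9, 0.8, 0.7, 0.6, 0.5]
is_case = [1, 0, 1, 1, 0]
print(precision_at_k(outputs, is_case, k=4))
```

In the study, 13 of the top 20 outputs were correct for both models, which corresponds to a value of 0.65 on this measure.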

Mechanisms of improved performance

A single layer neural network (i.e. a network with only inputs, and one processing element) is isomorphic with logistic regression. A network with no hidden nodes produced almost identical results when comparing the input weights to the log(OR) for the four inputs used in the regression model to predict malaria as the cause of death. In those instances where the performance of logistic regression and neural network models differs, it is of interest to know the mechanisms by which improvements are made. The results from this study indicate that the differences in performance of the neural networks are achieved both by improved fitting of those variables already known to be significantly predictive of class membership, through the modelling of interaction between them, and by additional discriminating power conferred by variables that are not significantly predictive on their own.

The first mechanism was borne out in one of the meningitis models in which the exact same inputs used in the logistic regression model were used in the neural network model with an improvement in performance. Exploring the sensitivity analysis for cardiovascular deaths (Table 2 ), the network outputs are surprisingly sensitive to the absence of a tuberculosis history, which was not strongly predictive by itself. Age above 45 years old was the seventh most predictive input in the regression model, whereas it was the input to which the neural network model was second most sensitive. In the case of meningitis, presence of continuous fever was more important in the regression model, whilst presence or absence of recent surgery and abdominal distension were more significant in the ANN model (Table 3 ). The network has mapped relationships between the inputs that were not predicted by the regression model.

Effect of size of dataset

Both data-derived methods stand to benefit from more training examples. In the regression models, some inputs not currently utilized may yield significant associations with outputs when larger datasets are used. With enough nodes and training time, it was possible in the course of this analysis to train a neural network to completely map the training set with 100% sensitivity and specificity. However, this level of sensitivity and specificity was not reproduced when these models were tested in the test dataset. What it did demonstrate is the ability of the method to map complex functions. The key point is one of generalizability. In the models presented above, training was stopped and the nodes limited to ensure that the generalizability was not compromised. With more training examples, it is likely that the networks would develop a better understanding of the relationships between inputs and outputs before over-training occurs. Arguably, the neural network models would stand to improve performance more than the regression models should larger training sets be available. However, further training may not achieve algorithms of sufficiently high sensitivity and specificity to obviate the need for algorithms with particular operating characteristics suitable for use in specific environments.

Physician review

Only 78% of the reference diagnoses were confirmed by laboratory tests. Since 22% of the reference diagnoses were based on hospital physicians' clinical judgement, it is not surprising that physician review of VA performed better than the other methods. Nevertheless, physician review remains the optimal method of analysis, as far as overall performance is concerned, for gathering cause-specific mortality data as good as the data produced by routine health information systems. 1 The technique by which physicians in this study came to their classification differed considerably, as they made extensive use of the open section of the questionnaire from which information was not coded for analysis by the other techniques. Interestingly though, other methods are able to come close if the CSMF is used as the outcome of choice, as indeed it often is. Thus algorithms based on ANN or logistic regression models have the potential to substitute for physician review of VA.

Limitations of the technique

At various points we have alluded to some of the difficulties and limitations of using neural networks for the analysis. These are summarized in Table 4 .

Even with sensitivity analysis, we had no way of working out which were going to be the most important inputs prior to creating a model and conducting a sensitivity analysis on it. There is some correlation with linearly predictive inputs that helps in the initial stages.

Determining the weighting for the output for providing the optimum estimate of the CSMF was time-consuming. The software provides an option for prioritizing sensitivity over specificity, but no way of balancing the number of false positives and false negatives that would give an accurate CSMF estimate.

Designing the optimal network topology requires building numerous networks in search of the one with the lowest mean squared error. The number of hidden nodes, inputs and training time all affect the performance of the network. Whilst training is relatively quick compared to the many hours it took to train ANN in the early days of their development, it is still time-consuming to build and train multiple networks for each model.

Cross-validation to prevent over-training required compromising the number of training examples to allow for a cross-validation dataset.

Sensitivity and specificity of the ANN algorithms were not high enough to be generalizable to a variety of settings. Furthermore, the accuracy of individual and summary estimates of CSMF obtained in this study could be due to the similarity in the CSMF between the training and test datasets. Thus large datasets from a variety of settings are needed to identify optimal algorithms for each site with different distributions of causes of death.

Classification software based on neural network simulations is an accessible tool which can be applied to VA data, potentially outperforming the other data-derived techniques already studied for this purpose. As with other data-derived techniques, over-fitting to the training data leading to a compromise in the generalizability of the models is a potential limitation of ANN. Increasing the number of training examples is likely to improve performance of neural networks for VA. However, ANN algorithms with particular operating characteristics would be site-specific. Thus optimal algorithms need to be identified for use in a variety of settings.

Table 1 Comparison of performance of physician review, logistic regression and neural network models

Table 2 Comparison of the most important inputs for two data-derived models for assigning cardiovascular deaths

Table 3 Comparison of the most important inputs for two data-derived models for assigning death due to meningitis

Table 4 Limitations of the artificial neural network technique

Figure 1 Schematic representation of a single node in a neural network

Figure 2 Schematic representation of multi-layer perceptron

London School of Hygiene & Tropical Medicine, Keppel Street, London WC1E, UK.

1 Chandramohan D, Maude H, Rodrigues L, Hayes R. Verbal autopsies for adult deaths: their development and validation in a multicentre study. Trop Med Int Health 1998;3:436–46.

2 Snow RW, Armstrong ARM, Forster D et al. Childhood deaths in Africa: uses and limitations of verbal autopsies. Lancet 1992;340:351–55.

3 Todd JE, De Francisco A, O'Dempsey TJD, Greenwood BM. The limitations of verbal autopsy in a malaria-endemic region. Ann Trop Paediatr 1994;14:31–36.

4 Quigley M, Chandramohan D, Rodrigues L. Diagnostic accuracy of physician review, expert algorithms and data-derived algorithms in adult verbal autopsies. Int J Epidemiol 1999;28:1081–87.

5 Jefferson MF, Pendleton N, Lucas SB, Horan MA. Comparison of a genetic algorithm neural network with logistic regression for predicting outcome after surgery for patients with nonsmall cell lung carcinoma. Cancer 1997;79:1338–42.

6 Duh MS, Walker AM, Pagano M, Kronlund K. Epidemiological interpretation of artificial neural networks. Am J Epidemiol 1998;147:1112–22.

7 Schwartz S et al. Connectionist, rule-based and Bayesian diagnostic decision aids: an empirical comparison. In: Hand DJ (ed.). Artificial Intelligence Frontiers in Statistics. London: Chapman and Hall, 1993, pp.264–77.


These computer science terms are often used interchangeably, but what differences make each a unique technology?

Technology is becoming more embedded in our daily lives by the minute. To keep up with the pace of consumer expectations, companies are relying more heavily on machine learning algorithms to make things easier. You can see its application in social media (through object recognition in photos) or in talking directly to devices (like Alexa or Siri).

While artificial intelligence (AI), machine learning (ML), deep learning and neural networks are related technologies, the terms are often used interchangeably, which frequently leads to confusion about their differences. This blog post clarifies some of the ambiguity.

The easiest way to think about AI, machine learning, deep learning and neural networks is to think of them as a series of AI systems from largest to smallest, each encompassing the next.

AI is the overarching system. Machine learning is a subset of AI. Deep learning is a subfield of machine learning, and neural networks make up the backbone of deep learning algorithms. It’s the number of node layers, or depth, of neural networks that distinguishes a single neural network from a deep learning algorithm, which must have more than three.

Artificial intelligence or AI, the broadest term of the three, is used to classify machines that mimic human intelligence and human cognitive functions like problem-solving and learning. AI uses predictions and automation to optimize and solve complex tasks that humans have historically done, such as facial and speech recognition, decision-making and translation.

Categories of AI

The three main categories of AI are:

- Artificial Narrow Intelligence (ANI)

- Artificial General Intelligence (AGI)

- Artificial Super Intelligence (ASI)

ANI is considered “weak” AI, whereas the other two types are classified as “strong” AI. We define weak AI by its ability to complete a specific task, like winning a chess game or identifying a particular individual in a series of photos. Natural language processing and computer vision, which let companies automate tasks and underpin chatbots and virtual assistants such as Siri and Alexa, are examples of ANI. Computer vision is a factor in the development of self-driving cars.

Stronger forms of AI, like AGI and ASI, incorporate human behaviors more prominently, such as the ability to interpret tone and emotion. Strong AI is defined by its ability compared to humans. AGI would perform on par with another human, while ASI—also known as superintelligence—would surpass a human’s intelligence and ability. Neither form of Strong AI exists yet, but research in this field is ongoing.

Using AI for business

An increasing number of businesses, about 35% globally, are using AI, and another 42% are exploring the technology. Generative AI, which uses powerful foundation models that train on large amounts of unlabeled data and can be adapted to new use cases, brings flexibility and scalability that are likely to accelerate the adoption of AI significantly. In early tests, IBM has seen generative AI bring time to value up to 70% faster than traditional AI.

Whether you use AI applications based on ML or foundation models, AI can give your business a competitive advantage. Integrating customized AI models into your workflows and systems, and automating functions such as customer service, supply chain management and cybersecurity, can help a business meet customers’ expectations, both today and as they increase in the future.

The key is identifying the right data sets from the start to help ensure that you use quality data to achieve the most substantial competitive advantage. You’ll also need to create a hybrid, AI-ready architecture that can successfully use data wherever it lives—on mainframes, data centers, in private and public clouds and at the edge.

Your AI must be trustworthy because anything less means risking damage to a company’s reputation and bringing regulatory fines. Misleading models and those containing bias or that hallucinate can come at a high cost to customers’ privacy, data rights and trust. Your AI must be explainable, fair and transparent.

Machine learning is a subset of AI that allows for optimization. When set up correctly, it helps you make predictions that minimize the errors that arise from merely guessing. For example, companies like Amazon use machine learning to recommend products to a specific customer based on what they’ve looked at and bought before.

Classic or “nondeep” machine learning depends on human intervention to allow a computer system to identify patterns, learn, perform specific tasks and provide accurate results. Human experts determine the hierarchy of features to understand the differences between data inputs, usually requiring more structured data to learn.

For example, let’s say I showed you a series of images of different types of fast food—“pizza,” “burger” and “taco.” A human expert working on those images would determine the characteristics distinguishing each picture as a specific fast food type. The bread in each food type might be a distinguishing feature. Alternatively, they might use labels, such as “pizza,” “burger” or “taco” to streamline the learning process through supervised learning.

While the subset of AI called deep machine learning can leverage labeled data sets to inform its algorithm in supervised learning, it doesn’t necessarily require a labeled data set. It can ingest unstructured data in its raw form (for example, text, images), and it can automatically determine the set of features that distinguish “pizza,” “burger” and “taco” from one another. As we generate more big data, data scientists use more machine learning. For a deeper dive into the differences between these approaches, check out Supervised versus Unsupervised Learning: What’s the Difference?

A third category of machine learning is reinforcement learning, where a computer learns by interacting with its surroundings and getting feedback (rewards or penalties) for its actions. And online learning is a type of ML where a data scientist updates the ML model as new data becomes available.


As our article on deep learning explains, deep learning is a subset of machine learning. The primary difference between machine learning and deep learning is how each algorithm learns and how much data each type of algorithm uses.

Deep learning automates much of the feature extraction piece of the process, eliminating some of the manual human intervention required. It also enables the use of large data sets, earning the title of scalable machine learning. That capability is exciting as we explore the use of unstructured data further, particularly since over 80% of an organization’s data is estimated to be unstructured.

Observing patterns in the data allows a deep-learning model to cluster inputs appropriately. Taking the same example from earlier, we might group pictures of pizzas, burgers and tacos into their respective categories based on the similarities or differences identified in the images. A deep-learning model requires more data points to improve accuracy, whereas a machine-learning model relies on less data given its underlying data structure. Enterprises generally use deep learning for more complex tasks, like virtual assistants or fraud detection.

Neural networks, also called artificial neural networks or simulated neural networks, are a subset of machine learning and are the backbone of deep learning algorithms. They are called “neural” because they mimic how neurons in the brain signal one another.

Neural networks are made up of node layers—an input layer, one or more hidden layers and an output layer. Each node is an artificial neuron that connects to the next, and each has a weight and threshold value. When one node’s output is above the threshold value, that node is activated and sends its data to the network’s next layer. If it’s below the threshold, no data passes along.
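The thresholding behaviour described above can be sketched as follows. This is a simplified illustration of the idea as stated, with invented weights and a hypothetical function name; trained networks in practice usually use smooth activation functions such as the sigmoid rather than a hard threshold.

```python
def threshold_node(inputs, weights, threshold):
    """Pass the weighted sum to the next layer only if it exceeds the threshold.

    Returns the weighted sum when the node is activated, otherwise 0.0
    (no signal is passed along).
    """
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return weighted_sum if weighted_sum > threshold else 0.0


# Invented weights: both inputs must be present for the node to fire.
print(threshold_node([1, 1], [0.6, 0.6], threshold=1.0))
print(threshold_node([1, 0], [0.6, 0.6], threshold=1.0))
```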

Training data teach neural networks and help improve their accuracy over time. Once the learning algorithms are fine-tuned, they become powerful computer science and AI tools because they allow us to quickly classify and cluster data. Using neural networks, speech and image recognition tasks can happen in minutes instead of the hours they take when done manually. Google’s search algorithm is a well-known example of a neural network.

As mentioned in the explanation of neural networks above, but worth noting more explicitly, the “deep” in deep learning refers to the depth of layers in a neural network. A neural network of more than three layers, including the inputs and the output, can be considered a deep-learning algorithm.

Most deep neural networks are feed-forward, meaning they only flow in one direction from input to output. However, you can also train your model through back-propagation, meaning moving in the opposite direction, from output to input. Back-propagation allows us to calculate and attribute the error that is associated with each neuron, allowing us to adjust and fit the algorithm appropriately.
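To make the two directions concrete, the sketch below runs a feed-forward pass and a back-propagation pass on a deliberately tiny network with one input, one hidden value and one output. The function names, the data point and the learning rate are hypothetical, and the squared-error loss is an assumption chosen for simplicity.

```python
def forward(x, w1, w2):
    """Feed-forward pass: input -> hidden value -> output."""
    h = w1 * x
    y = w2 * h
    return h, y


def backward(x, target, w1, w2):
    """Back-propagate the squared error 0.5 * (y - target)**2 to each weight."""
    h, y = forward(x, w1, w2)
    dloss_dy = y - target        # error at the output
    dloss_dw2 = dloss_dy * h     # share attributed to the output weight
    dloss_dh = dloss_dy * w2     # error passed back to the hidden node
    dloss_dw1 = dloss_dh * x     # share attributed to the hidden weight
    return dloss_dw1, dloss_dw2


# Hypothetical data point and starting weights.
x, target = 2.0, 1.0
w1, w2 = 0.5, 0.5
learning_rate = 0.05
losses = []
for _ in range(50):
    _, y = forward(x, w1, w2)
    losses.append(0.5 * (y - target) ** 2)
    g1, g2 = backward(x, target, w1, w2)
    w1 -= learning_rate * g1     # adjust each weight against its gradient
    w2 -= learning_rate * g2
```

The recorded `losses` shrink over the iterations, which is the "adjust and fit" step the paragraph refers to.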

While all these areas of AI can help streamline areas of your business and improve your customer experience, achieving AI goals can be challenging because you’ll first need to ensure that you have the right systems to construct learning algorithms to manage your data. Data management is more than merely building the models that you use for your business. You need a place to store your data and mechanisms for cleaning it and controlling for bias before you can start building anything.

At IBM we are combining the power of machine learning and artificial intelligence in our new studio for foundation models, generative AI and machine learning, watsonx.ai™.

A case study of using artificial neural networks for classifying cause of death from verbal autopsy

Affiliation.

- 1 London School of Hygiene and Tropical Medicine, London, UK.

- PMID: 11416074

- DOI: 10.1093/ije/30.3.515

Background: Artificial neural networks (ANN) are gaining prominence as a method of classification in a wide range of disciplines. In this study ANN is applied to data from a verbal autopsy study as a means of classifying cause of death.

Methods: A simulated ANN was trained on a subset of verbal autopsy data, and the performance was tested on the remaining data. The performance of the ANN models was compared to two other classification methods (physician review and logistic regression) which have been tested on the same verbal autopsy data.

Results: Artificial neural network models were as accurate as or better than the other techniques in estimating the cause-specific mortality fraction (CSMF). They estimated the CSMF within 10% of true value in 8 out of 16 causes of death. Their sensitivity and specificity compared favourably with that of data-derived algorithms based on logistic regression models.

Conclusions: Cross-validation is crucial in preventing the over-fitting of the ANN models to the training data. Artificial neural network models are a potentially useful technique for classifying causes of death from verbal autopsies. Large training data sets are needed to improve the performance of data-derived algorithms, in particular ANN models.

Publication types

- Comparative Study

- Multicenter Study

MeSH terms

- Autopsy / methods*

- Cause of Death*

- Classification / methods*

- Data Collection / methods

- Ethiopia / epidemiology

- Ghana / epidemiology

- Logistic Models

- Models, Statistical

- Neural Networks, Computer*

- Reproducibility of Results

- Sensitivity and Specificity

- Tanzania / epidemiology

- Open access

- Published: 21 May 2024

Strategies for multi-case physics-informed neural networks for tube flows: a study using 2D flow scenarios

- Hong Shen Wong 1 na1 ,

- Wei Xuan Chan 1 na1 ,

- Bing Huan Li 2 &

- Choon Hwai Yap 1

Scientific Reports volume 14, Article number: 11577 (2024)

- Biomedical engineering

- Computational models

- Machine learning

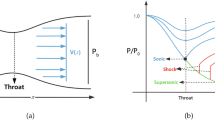

Fluid dynamics computations for tube-like geometries are crucial in biomedical evaluations of vascular and airway fluid dynamics. Physics-Informed Neural Networks (PINNs) have emerged as a promising alternative to traditional computational fluid dynamics (CFD) methods. However, vanilla PINNs often demand longer training times than conventional CFD methods for each specific flow scenario, limiting their widespread use. To address this, a multi-case PINN approach has been proposed, where varied geometry cases are parameterized and pre-trained on the PINN. This allows for quick generation of flow results in unseen geometries. In this study, we compare three network architectures to optimize the multi-case PINN through experiments on a series of idealized 2D stenotic tube flows. The evaluated architectures include the “Mixed Network”, treating case parameters as additional dimensions in the vanilla PINN architecture; the “Hypernetwork”, incorporating case parameters into a side network that computes weights in the main PINN network; and the “Modes” network, where case parameters input into a side network contribute to the final output via an inner product, similar to DeepONet. Results confirm the viability of the multi-case parametric PINN approach, with the Modes network exhibiting superior performance in terms of accuracy, convergence efficiency, and computational speed. To further enhance the multi-case PINN, we explored two strategies. First, incorporating coordinate parameters relevant to tube geometry, such as distance to wall and centerline distance, as inputs to PINN, significantly enhanced accuracy and reduced computational burden. Second, the addition of extra loss terms, enforcing zero derivatives of existing physics constraints in the PINN (similar to gPINN), improved the performance of the Mixed Network and Hypernetwork, but not that of the Modes network. In conclusion, our work identified strategies crucial for future scaling up to 3D, wider geometry ranges, and additional flow conditions, ultimately aiming towards clinical utility.

Introduction.

The simulation of fluid dynamics in tube-like structures is a critical aspect of biomedical computational engineering, with significant applications in vascular and airway fluid dynamics. Understanding disease severity 1 , perfusion and transport physiology 2 , and the biomechanical stimuli leading to the initiation and progression of diseases relies on accurate fluid dynamics computations 3 . Traditionally, this involves extracting anatomic geometry from medical imaging and performing computational fluid dynamics simulations, but this process, although efficient, still demands computational time ranging from hours to days 4 , and the procedure is repeated for anatomically similar geometries, leading to inefficient, repetitive computational expenditure. Hastening fluid dynamics simulations to enable real-time results can enhance clinical adoption and potentially generate improvements in disease evaluation and decision-making.

In recent years, physics-informed neural networks (PINNs) have gained attention for approximating the behavior of complex, non-linear physical systems. These networks incorporate the underlying physics and governing equations of a system, allowing them to approximate solutions with good accuracy 5 . However, vanilla PINN requires individual training for each new simulation case, such as with variations in geometry, viscosity or flow boundary conditions, causing it to be more time-consuming than traditional fluid dynamics simulations.

Several past studies have provided strategies for resolving this limitation. Kashefi et al. 6 proposed a physics-informed point-net to solve fluid dynamics PDEs that were trained on cases with varied geometry parameters, by incorporating latent variables calculated from point clouds representing various geometries. Ha et al. 7 developed a hypernetwork architecture, where a fully connected network was used to compute weights of the original neural network, and showed that this could retain the good performance of various convolutional and recurrent neural networks while reducing learnable parameters and thus computational time. Felipe et al. 8 developed the HyperPINN using a similar concept specifically for PINNs. Additionally, a few past studies have attempted to use parameterized geometry inputs in PINNs for solving fluid dynamics in tube-like structures of various geometries 9 , 10 , as demonstrated through 2D simulations.

In essence, by pre-training the PINN network for a variety of geometric and parametric cases (multi-case PINN), the network can be used to generate results quickly even for unseen cases, and can be much faster than traditional simulation approaches, where the transfer of results from one geometry to another is not possible. In developing the multi-case PINN, strategies and architectures proposed in the past for vanilla PINN are potentially useful. For example, Shazeer et al. used a “sparse hypernetwork” approach, where the hypernetwork supplies only a subset of the weights in the target network, thus achieving a significant reduction in memory and computational requirements without sacrificing performance 11 . A similar approach called DeepONet merges feature embeddings from two subnetworks, the branch and trunk nets, using an inner product 12 , 13 . Similar to hypernetworks, a second subnetwork in DeepONet can take specific case parameters as input, enhancing adaptability across diverse scenarios. Further, the gradient-enhanced PINN (gPINN) has previously been proposed to enhance performance with limited training samples, where additional loss functions imposed constraints on the gradient of the PDE residual loss terms with respect to the network inputs 14 .

Here, we investigate the relative performance of the various proposed networks for calculating fluid dynamics in tube-like structures. We used a range of 2D tube-like geometries with a narrowing in the middle as our test case and investigated the comparative performance of three common PINN network designs for doing so, where geometric case parameters were (1) directly used as additional dimensions in the inputs to vanilla PINN (“Mixed Network”), (2) input via a hypernetwork approach (“Hypernetwork”), or (3) input via a partial hypernetwork similar to DeepONet (“Modes Network”).

To enhance the performance of multi-case tube flow PINN, we further tested two strategies. First, in solving fluid dynamics in tube-like structures, tube-specific parameters, such as distance along the tube centerline and distance from tube walls are extracted for inputs into the PINN network. This is likely to enhance outcomes as such parameters have a direct influence on fluid dynamics. For example, locations with small distance-to-wall coordinates require low-velocity magnitude solutions, due to the physics of the no-slip boundary conditions, where fluid velocities close to the walls must take on the velocities of the walls. Further, the pressure of the fluid should typically decrease with increasing distance along the tube coordinates, due to flow energy losses. Additionally, we investigated enhancing our multi-case PINN with gPINN 14 .

Our PINN experiments are conducted in 2D tube-like flow scenarios with a narrowing in the middle. As such, they are not ready for clinical usage, but they can be used to inform future work on 3D multi-case PINN with more realistic geometries and flow rates.

Problem definition

In this study, we seek the steady-state incompressible flow solutions of a series of 2D tube-like channels with a narrowing in the middle, in the absence of body forces, where the geometric case parameter, \({\varvec{\lambda}}\) , describes the geometric shape of the narrowing. The governing equations for this problem are as follows:
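For a steady, incompressible flow without body forces, the continuity and Navier–Stokes equations take the following standard form. This is the textbook statement written with the symbols defined in the next paragraph; the arrangement of terms is an assumption.

```latex
\begin{aligned}
\nabla \cdot \mathbf{u} &= 0, \\
\left( \mathbf{u} \cdot \nabla \right) \mathbf{u} &= -\frac{1}{\rho} \nabla p + \nu \nabla^{2} \mathbf{u},
\end{aligned}
```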

with fluid density \(\rho = 1000\) kg/m³ and kinematic viscosity \({\upnu } = 1.85\) m³/s, where \(p = p\left( {\varvec{x}} \right)\) is the fluid pressure, \({\varvec{x}} = \left( {x,y} \right)\) are the spatial coordinates and \({\mathbf{u}} = {\mathbf{u}}\left( {{\varvec{x}},{\varvec{\lambda}}} \right) = \left[ {u\left( {{\varvec{x}},{\varvec{\lambda}}} \right),v\left( {{\varvec{x}},{\varvec{\lambda}}} \right)} \right]^{T}\) denotes the fluid velocity with components \(u\) and \(v\) in two dimensions across the fluid domain \({\varvec{\varOmega}}\) and the domain boundaries \({{\varvec{\Gamma}}}\). A parabolic velocity inlet profile is defined, with \(R\) as the radius of the inlet and \(u_{max} = 0.00925\) m/s prescribed. A zero-pressure condition is prescribed at the outlet. \({\varvec{\lambda}}\) is an \(n\)-dimensional parameter vector, consisting of two case parameters, \({\varvec{A}}\) and \({{\varvec{\upsigma}}}\), which describe the height (and thus severity) and length of the narrowing, respectively, given as:

where \(R\) is the radius of the channel at a specific location, and \({R}_{0}\) and \(\upmu\) are constants with values 0.05 m and 0.5 respectively. The Reynolds number of these flows is thus between 375 and 450.

Network architecture

In this study, we utilize PINN to solve the above physical PDE system. Predictions of \({\varvec{u}}\) and \(p\) are formulated as a constrained optimization problem and the network is trained (without labelled data) with the governing equations and given boundary conditions. The loss function \({\mathbf{\mathcal{L}}}\left( {\varvec{\theta}} \right)\) of the physics-constrained learning is formulated as,

where \({\varvec{W}}\) and \({\varvec{b}}\) are the weights and biases of the FCNN (see Eq. 11 ), \({\mathbf{\mathcal{L}}}_{physics}\) represents the loss function over the entire domain for the parameterized Continuity and Navier–Stokes equations, and \({\mathbf{\mathcal{L}}}_{BC}\) represents the boundary condition loss of the \({\varvec{u}}\) prediction. \(\omega_{physics}\) and \(\omega_{bc}\) are the weight parameters for the two terms. A value of 1 is used for both, as the loss terms are unit normalized. Loss terms can be expressed as:

where \({\varvec{N}}\) is the number of randomly selected collocation points in the domain or at the boundaries, and \(V_{kg}\), \(V_{m}\) and \(V_{s}\) are the unit normalizations of 1 kg, 0.1 m and 10.811 s, respectively, corresponding to the density \(\rho\), inlet tube diameter \(2R_{0}\) and inlet maximum velocity \(u_{max}\).
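The three normalization units can be checked with a few lines of arithmetic. The sketch below assumes that the time unit is obtained by dividing the inlet diameter by the inlet maximum velocity; the text lists the three units but does not state this derivation, so it is an inference. Variable names are illustrative.

```python
# Physical values quoted in the text.
rho = 1000.0      # fluid density, kg/m^3
R0 = 0.05         # inlet radius, m
u_max = 0.00925   # inlet maximum velocity, m/s

# Unit normalizations: mass, length (inlet diameter 2*R0) and time.
V_kg = 1.0
V_m = 2.0 * R0
V_s = V_m / u_max   # assumed: time to travel one diameter at u_max

# Quantities re-expressed in the normalized units.
rho_normalized = rho * V_m**3 / V_kg
u_max_normalized = u_max * V_s / V_m
diameter_normalized = 2.0 * R0 / V_m
```

Under this assumption `V_s` evaluates to about 10.811 s, and the density, inlet diameter and inlet maximum velocity all become 1 in normalized units, which is consistent with the statement that the loss terms are unit normalized.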

Training of the PINN was done using the Adam optimizer 15 on a single GPU (NVIDIA Quadro RTX 5000). A feedforward fully connected neural network (FCNN), \({\varvec{f}}\), was employed in this work, where the surrogate network model is built to approximate the solutions, \(\hat{\varvec{y}} = \left[ {u\left( {{\varvec{x}},{\varvec{\lambda}}} \right),v\left( {{\varvec{x}},{\varvec{\lambda}}} \right),p\left( {{\varvec{x}},{\varvec{\lambda}}} \right)} \right]^{T}\). In the FCNN, the output of the network (a series of fully connected layers), \(\hat{\varvec{y}}\left( {{\varvec{\psi}};{\varvec{\theta}}} \right)\), where \({\varvec{\psi}}\) represents the network inputs, was computed using trainable parameters \({\varvec{\theta}}\), consisting of the weights \({\varvec{W}}_{i}\) and biases \({\varvec{b}}_{i}\) of the \(i\)-th layer for \(n\) layers, according to the equation:

where \({\varvec{\varPhi}}_{i}\) represents the nodes of the \(i\)-th layer in the network. The Sigmoid Linear Unit (SiLU) function, \({\varvec{\alpha}}\), is used as the activation function, and partial differential operators are computed using automatic differentiation 16 . All networks and losses were constructed using NVIDIA’s Modulus framework v22.09 17 , and the code is available at https://github.com/WeiXuanChan/ModulusVascularFlow .
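The layer-by-layer computation described above can be sketched with NumPy. This is an illustration only, not the Modulus implementation: the layer sizes and random weights are hypothetical, and leaving the output layer linear is an assumption of the sketch.

```python
import numpy as np


def silu(z):
    """Sigmoid Linear Unit: z * sigmoid(z)."""
    return z / (1.0 + np.exp(-z))


def fcnn_forward(psi, weights, biases):
    """Propagate inputs psi through fully connected layers.

    Hidden layers apply SiLU; the last layer is left linear so that the
    outputs (u, v, p) are unbounded (an assumption of this sketch).
    """
    phi = psi
    for W, b in zip(weights[:-1], biases[:-1]):
        phi = silu(phi @ W + b)
    return phi @ weights[-1] + biases[-1]


# Hypothetical sizes: 2 inputs (x, y), two hidden layers of 8 nodes, 3 outputs.
rng = np.random.default_rng(0)
sizes = [2, 8, 8, 3]
weights = [rng.normal(size=(m, n)) for m, n in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(n) for n in sizes[1:]]

points = rng.uniform(size=(5, 2))              # five collocation points
y_hat = fcnn_forward(points, weights, biases)  # shape (5, 3): u, v, p
```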

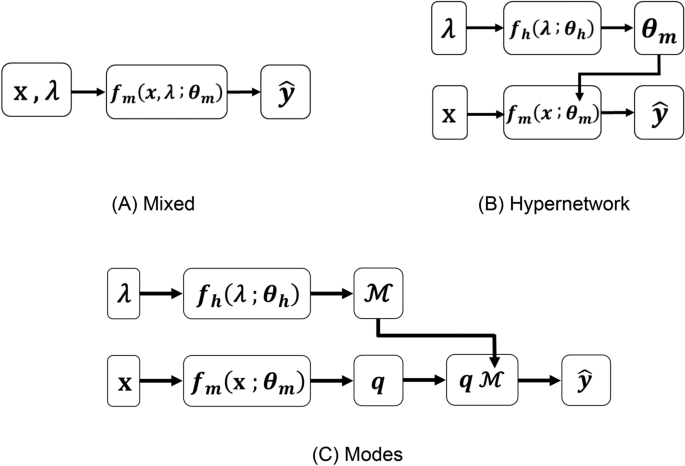

Mixed network, hypernetwork and modes network

Three network architectures are investigated, as shown in Fig. 1 . The number of learnable parameters in each NN architecture was kept approximately the same ( \(\pm 0.1\%\) difference) for comparison. In the Mixed Network approach, \({\varvec{\psi}}\) consists of both \({\varvec{x}}\) and \({\varvec{\lambda}}\), and only one main FCNN network, \({\varvec{f}}_{{\varvec{m}}}\), is used to compute the velocity and pressure outputs.

Schematic for the three different neural network architectures. ( A ) The “Mixed network”, where the main network, \({\varvec{f}}_{{\varvec{m}}}\), takes in both coordinate parameters, x, and case (geometric) parameters, λ, to compute output variables, \(\hat{\varvec{y}}\), and the trainable parameters, \({\varvec{\theta}}_{{\varvec{m}}}\), are optimized during training. ( B ) The “Hypernetwork”, where \({\varvec{f}}_{{\varvec{m}}}\) is coupled to a side hypernetwork, \({\varvec{f}}_{{\varvec{h}}}\), which takes λ as input and outputs \({\varvec{\theta}}_{{\varvec{m}}}\) for \({\varvec{f}}_{{\varvec{m}}}\), and the trainable parameters of the hypernetwork, \({\varvec{\theta}}_{{\varvec{h}}}\), are optimized during training. ( C ) The “Modes network”, where \({\varvec{f}}_{{\varvec{h}}}\) outputs a modes layer, \({\text{M}}\), that is multiplied by the mode weights, \({\varvec{q}}\), output by \({\varvec{f}}_{{\varvec{m}}}\), to give the output variables, \(\hat{\varvec{y}}\).

In the Hypernetwork approach, \({\varvec{x}}\) is input into the main FCNN network, \({\varvec{f}}_{{\varvec{m}}}\), while \({\varvec{\lambda}}\) is input into an FCNN hypernetwork, \({\varvec{f}}_{{\varvec{h}}}\), which is used to compute the weights and biases ( \({\varvec{\theta}}_{{\varvec{m}}}\) ) of \({\varvec{f}}_{{\varvec{m}}}\). This can be mathematically expressed as:

where \({\varvec{\theta}}_{{\varvec{h}}}\) are the trainable parameters of \({\varvec{f}}_{{\varvec{h}}}\) .
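A minimal NumPy sketch of the hypernetwork idea follows. It is not the authors' implementation: the helper names (`unpack`, `main_network`, `hypernetwork`), the layer sizes and the random initial values are hypothetical. The point it illustrates is that the main network holds no trainable parameters of its own; its weights and biases are produced from the case parameters by the side network.

```python
import numpy as np


def silu(z):
    return z / (1.0 + np.exp(-z))


def unpack(theta_m, sizes):
    """Split a flat parameter vector into per-layer weights and biases."""
    layers, start = [], 0
    for m, n in zip(sizes[:-1], sizes[1:]):
        W = theta_m[start:start + m * n].reshape(m, n)
        start += m * n
        b = theta_m[start:start + n]
        start += n
        layers.append((W, b))
    return layers


def main_network(x, theta_m, sizes):
    """f_m: maps coordinates x to (u, v, p) using parameters theta_m."""
    layers = unpack(theta_m, sizes)
    phi = x
    for W, b in layers[:-1]:
        phi = silu(phi @ W + b)
    W, b = layers[-1]
    return phi @ W + b


def hypernetwork(lam, theta_h):
    """f_h: maps case parameters lam to the main-network parameters."""
    W1, b1, W2, b2 = theta_h
    return silu(lam @ W1 + b1) @ W2 + b2


main_sizes = [2, 6, 3]   # hypothetical main network: (x, y) -> 6 -> (u, v, p)
n_theta_m = sum(m * n + n for m, n in zip(main_sizes[:-1], main_sizes[1:]))

rng = np.random.default_rng(0)
theta_h = (rng.normal(size=(2, 10)), np.zeros(10),
           0.1 * rng.normal(size=(10, n_theta_m)), np.zeros(n_theta_m))

lam = np.array([0.025, 0.134])           # one case: (A, sigma)
theta_m = hypernetwork(lam, theta_h)     # weights generated for this case
y_hat = main_network(rng.uniform(size=(4, 2)), theta_m, main_sizes)
```

Because the last layer of the side network must emit every weight and bias of the main network, its size grows with the main network, which is one plausible reason for the higher memory use reported later for this architecture.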

In the Modes Network, a hypernetwork, \({\varvec{f}}_{{\varvec{h}}}\) , outputs a series of modes, ℳ . Its inner product with the main network ( \({\varvec{f}}_{{\varvec{m}}}\) ) outputs, q , is taken as the final output of the network to approximate flow velocities and pressures, expressed as:

where \({\varvec{\theta}}_{{\varvec{h}}}\) and \({\varvec{\theta}}_{{\varvec{m}}}\) are, again, the trainable parameters of \({\varvec{f}}_{{\varvec{h}}}\) and \({\varvec{f}}_{{\varvec{m}}}\), respectively. This formulation was previously proposed as DeepONet 12 , 13 .
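The inner-product combination can be sketched as follows. The number of modes, the layer sizes and the helper names (`mlp`, `make_layers`) are hypothetical choices for illustration, not values from the study.

```python
import numpy as np


def silu(z):
    return z / (1.0 + np.exp(-z))


def mlp(inputs, layers):
    """Small fully connected network with SiLU on hidden layers."""
    phi = inputs
    for W, b in layers[:-1]:
        phi = silu(phi @ W + b)
    W, b = layers[-1]
    return phi @ W + b


def make_layers(sizes, rng):
    return [(rng.normal(size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]


n_modes, n_outputs = 5, 3                 # hypothetical sizes
rng = np.random.default_rng(0)
theta_m = make_layers([2, 16, n_modes], rng)              # main network f_m
theta_h = make_layers([2, 16, n_modes * n_outputs], rng)  # side network f_h

x = rng.uniform(size=(4, 2))              # four collocation points
lam = np.array([0.025, 0.134])            # one case: (A, sigma)

q = mlp(x, theta_m)                                  # mode weights, (4, 5)
M = mlp(lam, theta_h).reshape(n_modes, n_outputs)    # modes layer, (5, 3)
y_hat = q @ M                                        # (4, 3): u, v, p
```

Unlike the hypernetwork sketch, the side network here only has to produce `n_modes * n_outputs` numbers per case, regardless of how large the main network is.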

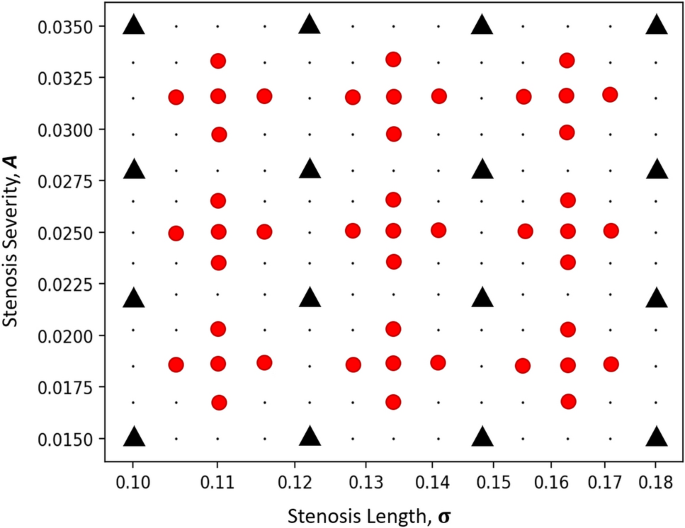

The NN architecture is trained for an arbitrary range of geometric parameters, \({\varvec{\lambda}} = \left\{ {{\varvec{A}},{{\varvec{\upsigma}}}} \right\}\) , where \({\varvec{A}}\) varies between 0.015 and 0.035 and \({{\varvec{\upsigma}}}\) varies between 0.1 and 0.18. A total of 16 regularly spaced ( A ) and logarithmically spaced ( \({{\varvec{\upsigma}}}\) ) combinations are selected, and the performance of the three NN architectures is evaluated for the 16 training cases, as well as an additional 45 untrained cases. This is illustrated in Fig. 2 .
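The training grid can be generated as below, assuming the 16 cases form a 4 × 4 grid (four values of each parameter). The text gives the total and the spacing type but not the split per parameter, so the grid shape is an assumption.

```python
import numpy as np

# Assumption: the 16 training cases form a 4 x 4 grid.
A_values = np.linspace(0.015, 0.035, 4)      # regularly spaced severity
sigma_values = np.geomspace(0.1, 0.18, 4)    # logarithmically spaced length

training_cases = [(A, sigma) for A in A_values for sigma in sigma_values]
```

With logarithmic spacing, consecutive values of the narrowing length differ by a constant ratio rather than a constant step.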

A batch size of 1000 was employed, with 3840 batch points in each training iteration, resulting in a total of 3.8 million spatial points per epoch. The individual batch points used within each training step are compiled in Table 1 .

Computational fluid dynamics and error analysis

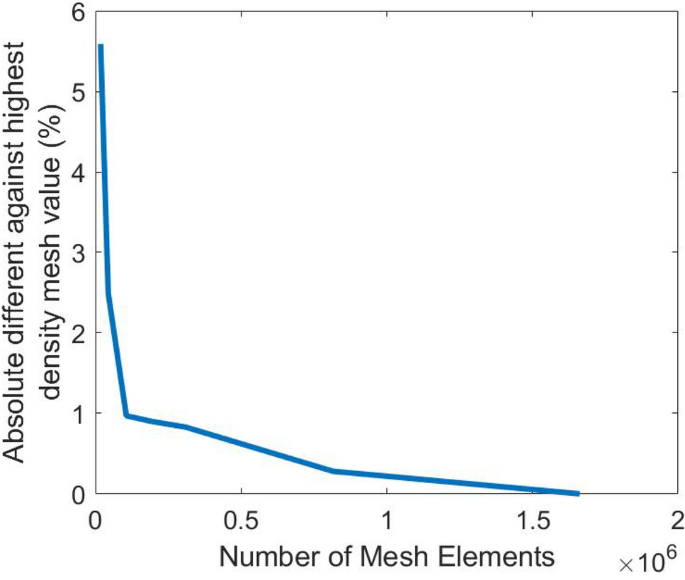

CFD ground truths of the training and prediction cases were generated using COMSOL Multiphysics v5.3 with the same boundary conditions set for the PINN. Mesh convergence was achieved by incrementally increasing the mesh density until the wall shear stress magnitude differed by approximately 0.5% from that of a finely resolved mesh, totalling around 1 million 2D triangular elements for each case model, as shown in Fig. 3 . Wall shear stress vector ( \(\overrightarrow {WSS}\) ) was calculated as:

where \(\mu\) is the shear viscosity of the fluid, \(\left( {\nabla \vec{v}} \right)\) is the velocity gradient tensor and \(\hat{n}\) is the unit surface normal vector. The accuracy of the PINN was quantified using the relative norm-2 error, \(\varepsilon\), expressed as a percentage difference:

Maximum wall shear stress obtained for a 2D tube flow with narrowing ( A = 0.035 and σ = 0.10) across various mesh densities. The values are presented as a percentage difference relative to the highest tested density.

This error was evaluated for the output variable \({\varvec{y}}\) on \({\varvec{N}}\) random collocation points.
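A relative norm-2 error of this kind can be computed as in the sketch below. The function name and the sample values are hypothetical; the definition used is the norm of the difference divided by the norm of the ground truth, expressed in percent.

```python
import numpy as np


def relative_l2_error(predicted, truth):
    """Relative norm-2 error between prediction and ground truth, in percent."""
    predicted = np.asarray(predicted, dtype=float)
    truth = np.asarray(truth, dtype=float)
    return 100.0 * np.linalg.norm(predicted - truth) / np.linalg.norm(truth)


# Hypothetical values: the same absolute error on a large and a small field.
u_true = np.array([1.0, 2.0, 3.0])
v_true = np.array([0.01, 0.02, 0.03])
absolute_error = 0.005

error_u = relative_l2_error(u_true + absolute_error, u_true)
error_v = relative_l2_error(v_true + absolute_error, v_true)
```

Because the error is normalized by the magnitude of the true field, an identical absolute error yields a much larger percentage for a field with small values.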

Tube-specific coordinate inputs, TSC

In the context of flows in a tube-like structure, we propose the inclusion of tube-specific coordinate parameters, referred to as TSCs. These additional variables, derived from the coordinates, are introduced as inputs into the PINN (as part of coordinate inputs, \(x\) , in Fig. 1 , with the same resolution as \(x\) ). Eight different TSCs were added: (1) “centerline distance”, \(c \in \left[ { - 1,1} \right]\), which increases linearly along the centerline from the inlet to the outlet, (2) “normalized width”, \(L_{n} \in \left[ { - 1,1} \right]\), which varies linearly across the channel width from the bottom wall to the top wall, (3) \(d_{sq} = 1 - L_{n}^{2}\), as well as multiplication combinations of the above variables, (4) \(c^{2}\), (5) \(L_{n}^{2}\), (6) \(c \times d_{sq}\), (7) \(c \times L_{n}\) and (8) \(L_{n} \times d_{sq}\).
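The eight TSC inputs listed above can be assembled from the two base coordinates as follows. The function name is hypothetical, and the sketch assumes that the centerline distance and normalized width have already been computed for each point and lie between -1 and 1; how they are extracted from the geometry is not covered here.

```python
import numpy as np


def tube_specific_coordinates(c, L_n):
    """Build the eight TSC inputs from centerline distance c and
    normalized width L_n, both assumed to lie in [-1, 1]."""
    c = np.asarray(c, dtype=float)
    L_n = np.asarray(L_n, dtype=float)
    d_sq = 1.0 - L_n**2          # 1 at mid-width, 0 at the walls
    return np.stack(
        [c, L_n, d_sq, c**2, L_n**2, c * d_sq, c * L_n, L_n * d_sq],
        axis=-1,
    )


# Three sample points: inlet at one wall, mid-tube at mid-width, outlet at the other wall.
features = tube_specific_coordinates(c=[-1.0, 0.0, 1.0], L_n=[-1.0, 0.0, 1.0])
```

The third feature vanishes at both walls, which mirrors the no-slip condition that velocities must go to zero there.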

Gradient-enhanced PINN, gPINN

In many clinical applications, obtaining patient-specific data is challenging, and the ability to train robustly with reduced cases would be beneficial. Therefore, we tested the use of gPINN, where the gradient of loss functions with respect to case inputs is added as additional loss functions for training. This aims to improve training robustness and reduce the number of training cases needed 14 . The addition of this derivative loss function, denoted as \({\mathbf{\mathcal{L}}}_{derivative}\), is hypothesized to enhance the sensitivity of the network to unseen cases close to the trained cases. This potentially allows for effective coverage of the entire case parameter space with fewer training cases. The approach involves additional loss functions:

where \(\omega_{derivative}\) is the weight parameter of the derivative loss function, \({\varvec{R}}_{{{\varvec{GE}}}}\) and \({\varvec{R}}_{{{\varvec{BC}}}}\) are the residual loss of the governing equation and boundary conditions, respectively, and N is the number of randomly selected collocation points in the domain.
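One plausible form of the derivative loss, consistent with the symbols defined above, penalizes the sensitivity of the residuals to the case parameters. It is given here as an assumption; details such as separate sums over domain and boundary points, or the unit normalization of each term, may differ in the authors' formulation.

```latex
\mathcal{L}_{derivative} = \omega_{derivative} \, \frac{1}{N} \sum_{i=1}^{N} \left( \left\| \frac{\partial \boldsymbol{R}_{GE}^{(i)}}{\partial \boldsymbol{\lambda}} \right\|^{2} + \left\| \frac{\partial \boldsymbol{R}_{BC}^{(i)}}{\partial \boldsymbol{\lambda}} \right\|^{2} \right)
```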

Advantages of tube-specific coordinate inputs

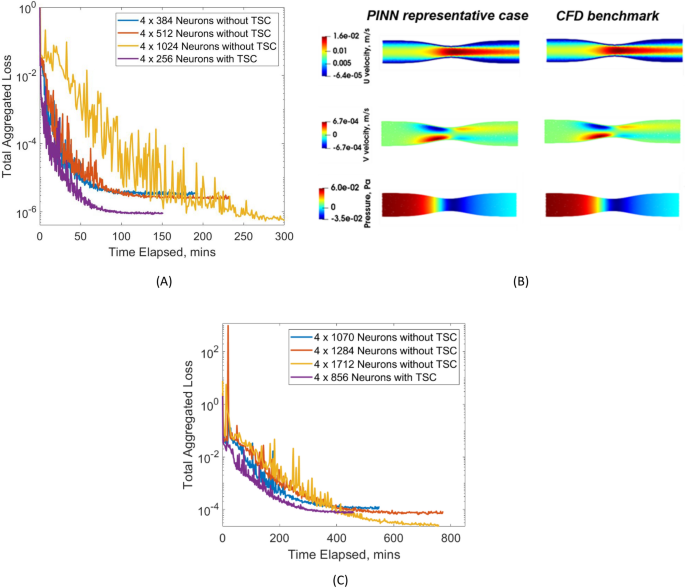

We first test the use of a vanilla FCNN on a single narrowing case, to assess the accuracy, sensitivity to network size, and utility of the TSC inputs. Results are shown in Table 2 and Fig. 4 for the single narrowing test case where A = 0.025, σ = 0.134. Figure 4 A illustrates the successful convergence of the loss function during the training process, while Table 2 shows that, in comparison with CFD results, errors in velocities and pressure are reasonably low. Figure 4 B further demonstrates a visual similarity between network outputs and CFD simulation results. It should be noted that absolute errors in the y-direction velocity are not higher than those of other outputs, but because errors are normalized by the root-mean-square of the truth values, and the truth flow field has very low y-direction velocities, the normalized y-direction velocity errors, \(\varepsilon_{v}\), were higher. Accuracy and training can likely be enhanced with dynamic adjustment of weightage for the different loss functions and adaptive activation functions 18 , 19 , but such further optimizations are not explored here.

( A ) Comparison of convergence for total aggregated loss plotted against time taken in minutes for training the narrowing case with A = 0.025 and σ = 0.134, using various neural network sizes as well as a smaller NN when employing “local coordinates inputs” (LCIs). ( B ) Illustration of the flow results for single-case training using 4 × 256 neurons with LCIs, showing a good match between predictions from the neural network and computational fluid dynamics (CFD) results. ( C ) Comparison of convergence for total aggregated loss plotted against time taken in minutes for multi-case training across a range of narrowing severity, A, and narrowing length, \({{\varvec{\upsigma}}}\), using the Mixed Network.

Previous studies have reported that accurate results are more difficult to obtain without the use of hard boundary constraints 9 , where the PINN outputs are multiplied by fixed functions to enforce no-slip flow conditions at boundaries. In our networks, no-slip boundary conditions are enforced as soft constraints in the form of loss functions, and reasonable accuracy is still achieved. We believe that this is due to our larger network size, enabled by randomly selecting smaller batches for processing from a significantly larger pool of random spatial points (1000 times the number of samples in a single batch). The sampling and batch sample selection are part of the NVIDIA Modulus framework. The soft constraint approach does not perform as well as the hard constraint approach, but hard boundary constraints are difficult to extend to Neumann constraints and to implement on complex geometry, and may pose difficulty for future scaling up.

As expected, Table 2 results demonstrate that increasing the network width while maintaining the same depth decreases errors significantly but, at the same time, increases requirements for GPU memory and computational time. Interestingly, incorporating TSC inputs leads to significant improvements in accuracy and a reduction of computational resources needed. The network incorporating TSC with a width of 256 produces a similar accuracy to the network without TSC with twice the width (512) and takes approximately 50% less time to train. The reduction in time is related to the reduced network size, such that the number of trainable parameters is reduced from 790,531 to 200,707. Further, the training convergence data show that, with the TSC, losses converge to lower values, and converge faster, than in the network without TSC with twice the network width.
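The two parameter counts can be reproduced with simple bookkeeping. The layout used below (four hidden layers, 2 or 10 inputs, 3 outputs, and no bias term on the first layer) is inferred so that the totals match the reported figures; it is not stated in the text, and the bias-free first layer in particular is a guess.

```python
def count_parameters(n_inputs, width, n_hidden=4, n_outputs=3):
    """Count weights and biases of a fully connected network.

    Assumption of this sketch: the first layer carries no bias term, which
    is what makes the totals match the counts quoted in the text.
    """
    first = n_inputs * width                            # input -> hidden 1
    hidden = (n_hidden - 1) * (width * width + width)   # hidden -> hidden
    last = width * n_outputs + n_outputs                # hidden -> outputs
    return first + hidden + last


without_tsc = count_parameters(n_inputs=2, width=512)   # x, y only
with_tsc = count_parameters(n_inputs=10, width=256)     # x, y plus 8 TSCs
```

Under these assumptions the counts come out to 790,531 and 200,707. The hidden-to-hidden layers dominate, so halving the width cuts the total to roughly a quarter even though the input layer grows.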

Next, using the Mixed Network architecture, we train 16 case geometries and evaluate accuracy on a validation set comprising 45 unseen case geometries, as depicted in Fig. 2 , and summarize our findings in Table 3 . Again, the network with TSC, having a smaller width of 856, demonstrates statistically comparable accuracy to the network without TSC with an approximately 50% greater width of 1284, despite having fewer than half the trainable parameters and an approximately 20% shorter training time.

The superior accuracy provided by TSCs suggests that flow dynamics in tube-like structures are strongly correlated to tube-specific coordinates, and the network does not naturally produce such parameters without deliberate input. Due to these observed advantages, we incorporated TSCs in all further multi-case PINN investigations.

Comparison of various multi-case PINN architectures

We conducted a comparative analysis to determine the best network architecture for multi-case PINN training for tube flows. We design the networks such that the number of trainable parameters is standardized across the three network architectures for a controlled comparison. Two experiments are conducted, where the trainable parameters are approximately 2.2 million and 0.8 million. The network size parameters are shown in Table 4 , while the results are shown in Table 5 . We investigated L2 errors for velocities, pressures, and wall shear stresses (WSS).

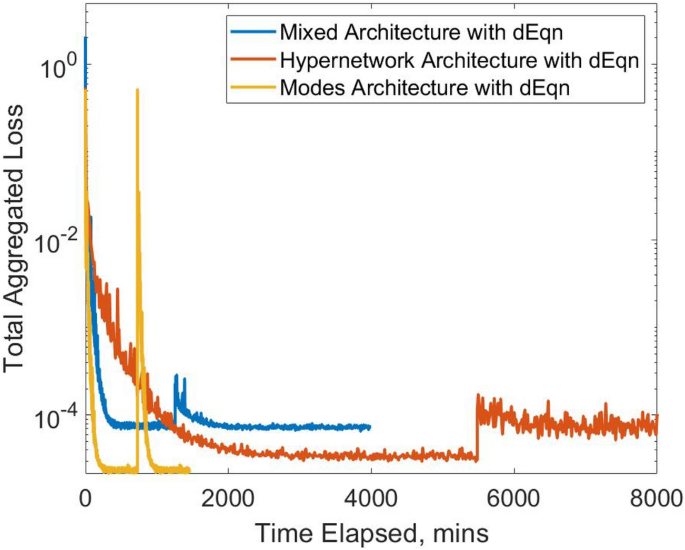

From Table 5 , it can be observed that with a larger network size (2.2 million trainable parameters), the Modes network has the lowest relative L2 errors, averaged across all testing cases, of between 0.4 and 2.1%, which is significantly more accurate than the Mixed network and the Hypernetwork. Results indicate that the percentage errors of the spanwise velocity, \(v\) , are higher than those in the streamwise velocity, \(u\) , due to the larger amplitude of \(u\) . As such, errors in WSS are aligned to errors in u rather than v. However, when a smaller network size (0.8 million trainable parameters) is used, the Hypernetwork displayed the highest accuracy, followed by the Mixed network and then the Modes network.

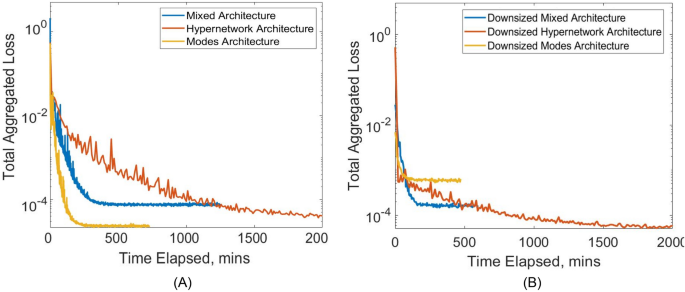

These results are also observable in Fig. 5 , which illustrates the convergence of loss functions during training of the various networks. Specifically, Fig. 5 A demonstrates that the Modes network exhibits the swiftest convergence with the lowest total aggregated loss. This is followed by the Hypernetwork, which has the next lowest converged loss but a much slower convergence rate. The Mixed network exhibits the highest converged loss but shows a moderate convergence speed. In contrast, Fig. 5 B demonstrates the convergence patterns when there is a smaller number of trainable parameters. Although the order in the speed of convergence remains consistent, the Modes Network now has the highest converged aggregated loss. This highlights the necessity of a sufficiently large network size for the effectiveness of the Modes Network.

( A ) Comparison of convergence for total aggregated loss plotted against time taken in minutes for multi-case training across a range of narrowing severity, A, and narrowing length, \({{\varvec{\upsigma}}}\), between the three different neural network architectures. ( B ) Repeat comparison with smaller NN sizes for each architecture, standardized to approximately 0.8 million trainable parameters, compared to 2.2 million trainable parameters in ( A ).

Another advantage of the Modes Network is that it requires the least GPU memory and training time (Table 5 ). Further, although the Hypernetwork was more accurate than the Mixed Network, the training time and GPU memory it required were several times those of the Mixed Network. The Hypernetwork consumes at least 13 times more memory than the Modes Network, and takes several times longer to converge.

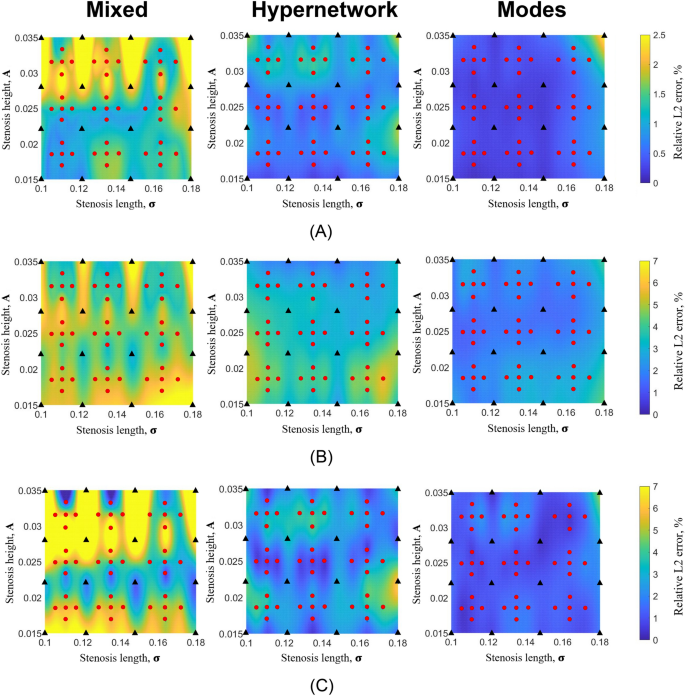

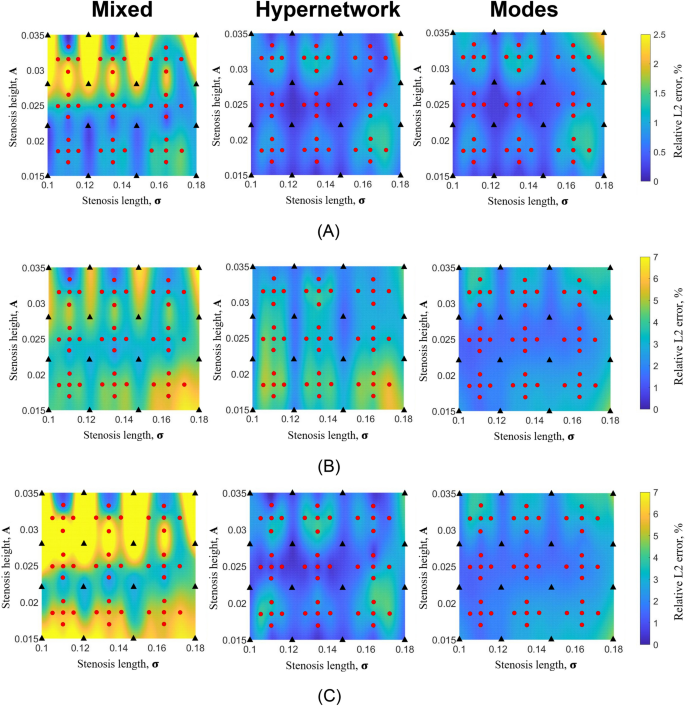

Figures 6 and 7 show the distribution of relative L2 errors across the geometric parameter space for the three networks. Training geometric cases are indicated as black triangles while testing cases are indicated as red dots. It can be observed that the regions of the geometric parameter space in between training cases have low errors, similar to those of the training cases, demonstrating that the multi-case PINN approach of training only in some cases is feasible and can ensure accuracy in unseen cases. The results further demonstrate that cases with larger A parameters tend to have larger errors. This is understandable as larger A corresponds to more severe narrowing and a flow field with higher spatial gradients.

Colour contour plots of relative L2 error of ( A ) U velocity, ( B ) V velocity and ( C ) pressure from multi-case training across a range of narrowing severity, A, and narrowing length, \({{\varvec{\upsigma}}}\), for the three different neural network architectures with 2.2 million trainable parameters.

Colour contour plots of the relative L2 error of ( A ) U velocity, ( B ) V velocity and ( C ) pressure from multi-case training across a range of narrowing severity, A, and narrowing length, \({{\varvec{\upsigma}}}\) , for the three different neural network architectures with a reduced number of hyperparameters (0.8 million). ( A ) U relative L2 error. ( B ) V relative L2 error. ( C ) P relative L2 error.

The results suggest that the Modes network has the potential to be the most effective and efficient network; however, a sufficiently large network size is necessary for accuracy.

Utilizing gradient-enhanced PINNs (gPINNs)

We test the approach of adding derivatives of governing and boundary equations with respect to case parameters as additional loss functions, and investigate enhancements to accuracy and training efficiency, using the networks with approximately 2.2 million trainable parameters. The networks are trained with the original loss functions until convergence before the new derivative loss function is added and the training restarted.
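The structure of such a gradient-enhanced loss can be sketched on a toy problem. The example below is an illustration under stated assumptions, not the implementation used in this study: a one-dimensional equation du/dx = a·u stands in for the governing equations, the trial solution u = exp(θ·a·x) stands in for the network, the derivative with respect to the case parameter `a` is taken by central finite differences where an automatic differentiation framework would normally be used, and the names `residual`, `gpinn_loss`, `weight` and `eps` are hypothetical.

```python
import math


def residual(x, a, theta):
    """Residual of the toy equation du/dx = a*u for the trial solution u = exp(theta*a*x)."""
    u = math.exp(theta * a * x)
    du_dx = theta * a * u
    return du_dx - a * u


def gpinn_loss(xs, a_values, theta, weight=0.1, eps=1e-5):
    """Mean squared residual plus a weighted mean squared d(residual)/d(a) term."""
    base = 0.0
    grad = 0.0
    for a in a_values:
        for x in xs:
            r = residual(x, a, theta)
            # Central finite difference in the case parameter (assumption:
            # automatic differentiation would supply this in practice).
            dr_da = (residual(x, a + eps, theta) - residual(x, a - eps, theta)) / (2.0 * eps)
            base += r ** 2
            grad += dr_da ** 2
    n = len(xs) * len(a_values)
    return base / n + weight * grad / n


xs = [i / 10 for i in range(11)]  # collocation points in x
a_values = [0.5, 1.0, 1.5]        # sampled case-parameter values

print(gpinn_loss(xs, a_values, theta=1.0))  # exact solution: both terms vanish
print(gpinn_loss(xs, a_values, theta=1.2))  # imperfect solution: both terms contribute
```

Setting `weight=0.0` recovers the original loss, which mirrors the two-stage schedule described above: train on the original terms first, then switch on the derivative term.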

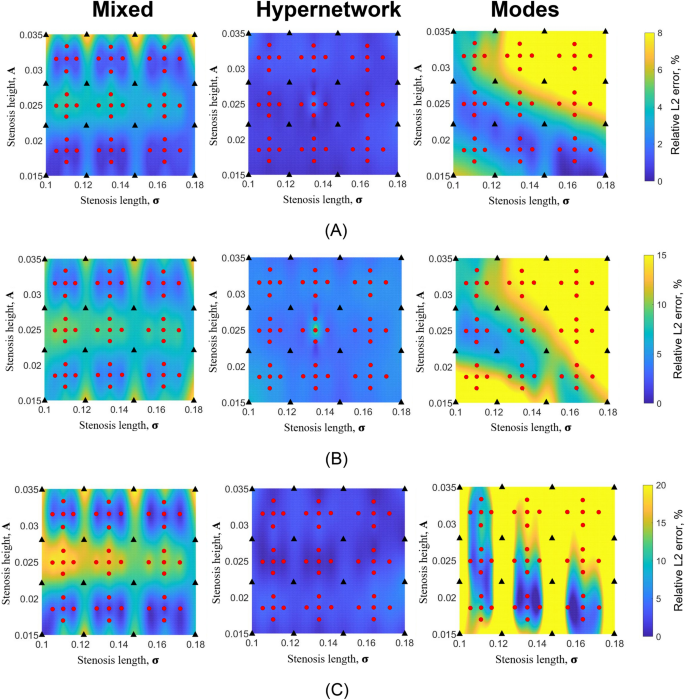

The convergence plot is illustrated in Fig. 9 , while the results are shown in Table 6 . Results in Fig. 8 indicate that this approach generally led to small improvements in velocity and pressure errors, most of which are statistically significant. The improvements are most evident for the Mixed Network, where all output variables improve significantly. This is followed by the Hypernetwork, where the streamwise velocity, \(u\) , and pressure errors improve significantly. However, for the Modes Network, error reduction is not evident, and the accuracy of the spanwise velocity, \(v\) , deteriorated. Imposing the additional loss functions roughly doubles the training time and increases GPU memory requirements by 3–4 times for the Mixed and Modes networks.

Colour contour plots of the relative L2 error of ( A ) U velocity, ( B ) V velocity and ( C ) pressure from multi-case training across a range of narrowing severity, A, and narrowing length, \({{\varvec{\upsigma}}}\) , for the three different neural network architectures with 2.2 million hyperparameters, after adding the derivatives of the governing equations and boundary conditions with respect to the case parameters as additional loss functions. ( A ) U relative L2 error. ( B ) V relative L2 error. ( C ) P relative L2 error.

In summary, the derivatives loss function yielded improvements for the Mixed Network and Hypernetwork but did not show improvements for the Modes Network.

In this study, we investigated three common training strategies for multi-case PINN applied to fluid flows in tube-like structures. Additionally, we investigated the use of gPINN and TSC to enhance these networks. While our algorithms are not ready for biomedical applications, they lay the groundwork for future work in scaling up to 3D complex geometries with more clinically relevant flows. If successful, this approach could offer substantial advantages over the traditional CFD approach.

Traditional CFD simulations are required for every new vascular or airway geometry encountered, and even though this is currently a well-optimized and efficient process, a minimum of several tens of minutes is required for meshing and simulating each case. Much of this simulation process is repetitive, such as when very similar geometries are encountered, yet the same full simulation is required for each such case, and transfer learning is not possible without machine learning. In contrast, multi-case PINN enables a single learning process for a range of geometries, avoiding redundant computations and potentially providing real-time results. Real-time capabilities could encourage clinical adoption, enhance clinical decision-making, and facilitate faster engineering computations, ultimately contributing to larger sample sizes for demonstrating the clinical impact of biomechanical factors.

Similar to previous investigations 9 , 10 , one key motivation for adopting multi-case PINN is its ability to pre-train on a small series of cases, allowing real-time results for unseen cases close to the trained cases. In its original form, PINN is case-specific, and the training time required for a single case far exceeds that required for a traditional CFD simulation of similar accuracy 20 . There is thus no reason to use PINN to solve such single cases unless inverse computing, such as matching certain observations in the flow field, is required 21 . At present, using our trained PINN to solve 2D tube flows yields only small advantages over conventional CFD of steady 2D flows, but once a 3D version of the multi-case PINN is available, this advantage could become more pronounced.

When comparing the Mixed, Modes and Hypernetworks, we designed our study to utilize various extents of hyperparameter networks, with the Hypernetwork approach representing the fullest extent, the Mixed Network representing the minimum extent, and the Modes Network falling in between. Results show that the Hypernetwork can yield better results than the Mixed Network when the number of trainable parameters is kept the same for both networks. This agrees with previous investigations of the Hypernetwork approach, which found that a smaller network can achieve the same accuracy 7 , 8 . However, the Hypernetwork approach requires a large amount of GPU memory, because the links between the hyperparameter network and the first few layers of the main PINN network result in a very deep network with many sequential layers, and the backward differentiation process via the chain rule requires the storage of many more parameters. The complexity of this network architecture also results in long training times and slower convergence.
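The general hypernetwork idea, in which one network generates the weights of another, can be sketched as follows. This is a generic illustration under stated assumptions rather than the architecture used in this study: the hypernetwork here is a single linear layer that generates every weight of a tiny one-hidden-layer main network, whereas the study links the hyperparameter network to the first few layers of a much larger PINN. All names and sizes (`HIDDEN`, `init_hypernetwork`, `generate_main_weights`, `main_network`) are hypothetical.

```python
import math
import random

HIDDEN = 4                   # hidden units in the main network (illustrative size)
N_MAIN = 4 * HIDDEN + 1      # W1 (2 x H), b1 (H), W2 (H) and b2 (1), flattened


def init_hypernetwork(seed=0):
    """Random linear hypernetwork: one row [w_A, w_sigma, bias] per main-network weight."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(N_MAIN)]


def generate_main_weights(hyper, a, sigma):
    """Map the case parameters (A, sigma) to the full weight vector of the main network."""
    return [row[0] * a + row[1] * sigma + row[2] for row in hyper]


def main_network(weights, x, y):
    """One-hidden-layer tanh network evaluated with externally supplied weights."""
    h = HIDDEN
    w1x, w1y = weights[0:h], weights[h:2 * h]
    b1, w2, b2 = weights[2 * h:3 * h], weights[3 * h:4 * h], weights[4 * h]
    hidden = [math.tanh(w1x[i] * x + w1y[i] * y + b1[i]) for i in range(h)]
    return sum(w2[i] * hidden[i] for i in range(h)) + b2


hyper = init_hypernetwork()
weights_case_1 = generate_main_weights(hyper, a=0.3, sigma=0.1)
weights_case_2 = generate_main_weights(hyper, a=0.5, sigma=0.1)
print(main_network(weights_case_1, x=0.0, y=0.2))
print(main_network(weights_case_2, x=0.0, y=0.2))
```

Only the hypernetwork rows are trainable in this sketch. Every gradient of the main network output must pass back through the generated weights, which gives a feel for why chaining the two networks deepens the computational graph.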

In comparison, the Modes Network reduces complexity, resulting in faster training times and faster convergence. This approach aligns with the “sparse hypernetwork” approach, where the hypernetwork supplies only a subset of the weights in the main network, which corroborates our observations of significantly reduced memory and computational requirements without sacrificing performance 11 . The good performance of the Modes Network suggests that the complexity of the Hypernetwork is excessive and is not needed to achieve the correct flow fields. The Modes Network also has similarities to reduced-order PINNs, such as that proposed by Buoso et al. 22 for simulations of cardiac myocardial biomechanics. Buoso et al. use shape modes as inputs to the PINN and utilize its outputs as weights for a set of motion modes, where all modes are pre-determined from statistical analysis of multiple traditional simulations. Our Modes Network similarly calculates a set of modes, \({\varvec{q}}\) in Eq. ( 14 ), and uses PINN outputs as weights for these modes to obtain flow field results. The difference, however, is that we determine these modes from the training itself, instead of pre-determining them through traditional CFD simulations.
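The reduced-order structure described here amounts to a dot product between a vector that depends only on the case parameters and a vector that depends only on the coordinates. The sketch below illustrates that structure under stated assumptions: Eq. ( 14 ) is not reproduced in this section, so the assignment of "modes" and "weights" to the two branches is left generic, and the hand-written functions `case_branch` and `coordinate_branch` are hypothetical stand-ins for trained networks.

```python
import math


def case_branch(a, sigma):
    """Stand-in for the network that maps case parameters to K = 3 coefficients."""
    return [1.0, a, a * sigma]


def coordinate_branch(x, y):
    """Stand-in for the network that maps coordinates to K = 3 spatial fields."""
    return [1.0 - y * y, math.exp(-x * x), x * y]


def modes_output(a, sigma, x, y):
    """Flow variable as a finite linear combination (dot product) of the two branches."""
    coeffs = case_branch(a, sigma)
    fields = coordinate_branch(x, y)
    return sum(c * f for c, f in zip(coeffs, fields))


print(modes_output(a=0.0, sigma=0.2, x=0.0, y=0.5))
print(modes_output(a=0.4, sigma=0.2, x=0.0, y=0.5))
```

Because the output is linear in the case-dependent coefficients, the two branches interact only through K scalar products, which is far cheaper than generating a full set of main-network weights for each case.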

Another important result here is the benefit of tube-specific coordinate inputs when simulating tube flows: they accelerate convergence, reduce computational costs, and improve accuracy. An explanation for this is that tube flow fields correlate strongly with the tube geometry, and thus with tube-specific coordinates, so providing such coordinates directly as inputs allows the PINN to find the solution more easily. For example, in laminar tubular flow, flow profiles are likely to approximate the parabolic flow profile, which is a square function of the y-coordinate, and as such multiplicative expressions are needed for the solution. By itself, a fully connected network can approximate squares and cross-multiplications of inputs, but this requires substantial complexity and is associated with approximation errors. Pre-computing these second-order terms as inputs to the network can reduce the modelling burden and approximation errors, thus leading to improved performance with smaller networks. The strategy is likely not limited to tube flows: for any non-tubular flow geometry, coordinate parameters relevant to that geometry are likely to improve PINN performance as well. In our experiments with the simple parameterized 2D narrowing geometries, tube-specific coordinates can be calculated easily; for more complex tube geometries, however, specific strategies to calculate these coordinates are needed. Such computations will likely need to take the form of an additional neural network, because derivatives of the coordinates will need to be computed in the multi-case PINN architecture.
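The argument about pre-computed second-order terms can be illustrated with a short sketch. It rests on assumptions that are not taken from this study: the narrowing is modelled as a hypothetical Gaussian reduction of the channel half-width controlled by `a` and `sigma`, the tube-specific coordinate is taken to be the wall-normalized transverse position `eta`, and the function names are illustrative.

```python
import math


def half_width(x, a, sigma, r0=1.0):
    """Hypothetical Gaussian narrowing: the half-width shrinks by the fraction `a` at x = 0."""
    return r0 * (1.0 - a * math.exp(-x * x / (2.0 * sigma * sigma)))


def tube_features(x, y, a, sigma):
    """Augment the raw coordinates with a wall-normalized coordinate and its square."""
    eta = y / half_width(x, a, sigma)  # 0 on the centreline, +/-1 on the walls
    return [x, y, eta, eta * eta]


def parabolic_profile(features, u_max=1.0):
    """Poiseuille-like profile, which is linear in the pre-computed eta**2 feature."""
    return u_max * (1.0 - features[3])


a, sigma = 0.5, 0.2
wall_y = half_width(0.0, a, sigma)  # wall position at the throat of the narrowing
print(parabolic_profile(tube_features(0.0, 0.0, a, sigma)))     # centreline
print(parabolic_profile(tube_features(0.0, wall_y, a, sigma)))  # wall
```

With `eta * eta` supplied as an input, a single linear layer can already represent the parabolic profile for every narrowing in the family, whereas a network given only `x` and `y` would have to learn both the wall shape and the squaring operation.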

Our investigation of the gPINN framework featuring the gradient of the loss functions shows its usefulness for the Mixed Network and Hypernetwork but not the Modes Network. In the Hypernetwork and Mixed Network, gPINN modifies the solution map, reducing loss residuals for cases close to the trained cases by enforcing a low gradient of loss across case parameters. In the Modes Network, solutions are modelled in reduced order, as they are expressed as a finite linear combination of solution modes, and consequently already exhibit smoothness across case parameters. This is a possible explanation for why the Modes Network does not respond to the gPINN strategy. Further, the Modes Network shows an excessive increase in losses when the loss function derivatives are added during training (Fig. 9 ), which may indicate an incompatibility of the reduced-order nature of the network with the gPINN framework, where there are excessive changes to the solution map in response to adjusting this new loss function.

Comparison of convergence of the total aggregated loss plotted against training time in minutes for multi-case training with approximately 2.2 million hyperparameters for the three different PINN methodologies. The derivative of the governing equations and boundary conditions (gPINN) is added as an additional loss term after the initial training has converged, and noticeable spikes in loss are observed.

The results suggest the feasibility of employing unsupervised PINN training through the multi-case PINN approach to generate real-time fluid dynamics results with reasonable accuracy compared to CFD results. Our findings suggest that the most effective strategy for multi-case PINN in tube-like structures is the Modes Network, particularly when combined with tube-specific coordinate inputs. This approach not only provides the best accuracy but also requires the least computational time and resources for training. It is important to note that our investigations are confined to time-independent 2D flows within a specific geometric parameter space of straight, symmetric channels without curvature and a limited range of Reynolds numbers. Despite these limitations, our results may serve as a foundation for future endeavors, scaling up to 3D simulations with time variability and exploring a broader spectrum of geometrical variation.

Pijls, N. H. J. et al. Measurement of fractional flow reserve to assess the functional severity of coronary-artery stenoses. N. Engl. J. Med. 334 , 1703–1708. https://doi.org/10.1056/nejm199606273342604 (1996).

Bordones, A. D. et al. Computational fluid dynamics modeling of the human pulmonary arteries with experimental validation. Ann. Biomed. Eng. 46 , 1309–1324. https://doi.org/10.1007/s10439-018-2047-1 (2018).

Zhou, M. et al. Wall shear stress and its role in atherosclerosis. Front. Cardiovasc. Med. 10 , 1083547 (2023).

Frieberg, P.A.-O. et al. Computational fluid dynamics support for Fontan planning in minutes, not hours: The next step in clinical pre-interventional simulations. J. Cardiovasc. Transl. Res. 15 (4), 708–720 (2022).

Raissi, M., Perdikaris, P. & Karniadakis, G. E. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 378 , 686–707. https://doi.org/10.1016/j.jcp.2018.10.045 (2019).

Kashefi, A. & Mukerji, T. Physics-informed PointNet: A deep learning solver for steady-state incompressible flows and thermal fields on multiple sets of irregular geometries. J. Comput. Phys. 468 , 111510. https://doi.org/10.1016/j.jcp.2022.111510 (2022).

Ha, D., Dai, A. & Le, Q. V. HyperNetworks. arxiv:1609.09106 (2016).

de Avila Belbute-Peres, F., Chen, Y.-f. & Sha, F. HyperPINN: Learning Parameterized Differential Equations with Physics-Informed Hypernetworks (Springer, 2021).

Sun, L., Gao, H., Pan, S. & Wang, J.-X. Surrogate modeling for fluid flows based on physics-constrained deep learning without simulation data. Comput. Methods Appl. Mech. Eng. 361 , 112732 (2019).

Oldenburg, J., Borowski, F., Öner, A., Schmitz, K.-P. & Stiehm, M. Geometry aware physics informed neural network surrogate for solving Navier-Stokes equation (GAPINN). Adv. Model. Simul. Eng. Sci. 9 , 8. https://doi.org/10.1186/s40323-022-00221-z (2022).

Shazeer, N. et al. Outrageously Large Neural Networks: The Sparsely-Gated Mixture-of-Experts Layer (Springer, 2017).

Lu, L., Jin, P., Pang, G., Zhang, Z. & Karniadakis, G. E. Learning nonlinear operators via DeepONet based on the universal approximation theorem of operators. Nat. Mach. Intell. 3 , 218–229. https://doi.org/10.1038/s42256-021-00302-5 (2021).

Wang, S., Wang, H. & Perdikaris, P. Learning the solution operator of parametric partial differential equations with physics-informed DeepONets. Sci. Adv. 7 , eabi8605. https://doi.org/10.1126/sciadv.abi8605 (2021).

Yu, J., Lu, L., Meng, X. & Karniadakis, G. E. Gradient-enhanced physics-informed neural networks for forward and inverse PDE problems. Comput. Methods Appl. Mech. Eng. 393 , 114823. https://doi.org/10.1016/j.cma.2022.114823 (2022).

Kingma, D. P. & Ba, J. Adam: A Method for Stochastic Optimization (Springer, 2017).

Baydin, A. G., Pearlmutter, B. A., Radul, A. A. & Siskind, J. M. Automatic Differentiation in Machine Learning: A Survey (Springer, 2018).

Hennigh, O. et al. 447–461 (Springer International Publishing, 2021).