Network Security: Recently Published Documents


A Survey on Ransomware Malware and Ransomware Detection Techniques

Abstract: Ransomware is a kind of malicious software (malware) that threatens to publish, or blocks access to, data or a computer system, usually by encrypting it, until the victim pays a ransom fee to the attacker. In many cases, the ransom demand comes with a deadline. If the victim does not pay in time, the data are lost permanently or the ransom increases. Nowadays, attackers use new techniques to carry out attacks more effectively. In this paper, we focus on ransomware network attacks and survey detection techniques for ransomware attacks. Various detection methods and approaches are available for identifying ransomware attacks. Keywords: Network Security, Malware, Ransomware, Ransomware Detection Techniques

Analysis and Evaluation of Wireless Network Security with the Penetration Testing Execution Standard (PTES)

The use of computer networks in an agency aims to facilitate communication and data transfer between devices. The network can use wireless media or LAN cable. At SMP XYZ, most of the computers still use the wireless network. Field findings showed that there was no user management in place. Therefore, an analysis and audit of the network security system is needed to ensure that the network security system at SMP XYZ is safe and running well. Such an analysis requires a benchmark against which the security of the wireless network can be measured. The benchmark used here is the Penetration Testing Execution Standard (PTES), one of the standards for analyzing or auditing a company's network security systems; in this case, it is applied to the wireless network security system. The analysis based on PTES found that SMP XYZ's wireless network still has many security holes that allow outsiders to gain illegal access, including vulnerabilities to WPA2 cracking, DoS, wireless router password cracking, and access point isolation. Network security at SMP XYZ therefore cannot yet be considered safe.

A Sensing Method of Network Security Situation Based on Markov Game Model

The sensing of network security situation (NSS) has become a hot issue. This paper first describes the basic principle of the Markov model and then the necessary and sufficient conditions for applying the Markov game model. Finally, taking the fuzzy comprehensive evaluation model as its theoretical basis, the paper analyzes the application fields of the Markov-game-based NSS sensing method in terms of network randomness, non-cooperation, and dynamic evolution. Evaluation results show that the Markov-game-based NSS sensing method is best suited to the financial field, followed by the education field. The model can also be used to evaluate how applicable different NSS sensing methods are to different industries. However, the weights of the various influencing factors differ across categories and sensing methods, and if a weight is set unreasonably, the calculation will be wrong and the results will be affected.
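The weight sensitivity described above can be seen in a minimal sketch of a fuzzy comprehensive evaluation using the common weighted-average operator, B = W · R, where W holds the factor weights and each row of R holds one factor's membership degrees over the evaluation grades. The factors, grades, weights, and membership values below are hypothetical and are not taken from the paper, and the paper's exact operator is not stated here.

```python
import numpy as np

def fuzzy_evaluate(weights, membership):
    """Weighted-average fuzzy comprehensive evaluation: B = W . R, normalised."""
    w = np.asarray(weights, dtype=float)
    r = np.asarray(membership, dtype=float)
    if not np.isclose(w.sum(), 1.0):
        raise ValueError("factor weights must sum to 1")
    b = w @ r            # aggregate membership in each evaluation grade
    return b / b.sum()   # normalise so the grade memberships sum to 1

# Hypothetical factors (rows): randomness, non-cooperation, dynamic evolution.
# Hypothetical grades (columns): poorly suited, suited, well suited.
R = [[0.1, 0.3, 0.6],
     [0.2, 0.5, 0.3],
     [0.1, 0.4, 0.5]]
grades = ["poorly suited", "suited", "well suited"]

B = fuzzy_evaluate([0.4, 0.3, 0.3], R)
print(grades[int(np.argmax(B))])      # well suited

# Shifting weight toward the second factor changes the verdict.
B_alt = fuzzy_evaluate([0.1, 0.8, 0.1], R)
print(grades[int(np.argmax(B_alt))])  # suited
```

With these hypothetical numbers, moving weight toward the second factor changes the winning grade, which is the kind of effect the abstract warns about when weights are set unreasonably.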

The Compound Prediction Analysis of Information Network Security Situation based on Support Vector Combined with BP Neural Network Learning Algorithm

In order to address the low security of data in network transmission and the inaccurate prediction of future security situations, this paper proposes an improved neural network learning algorithm. The algorithm makes up for the shortcomings of the standard neural network learning algorithm, eliminates redundant data by means of support vectors, and achieves effective clustering of information data. In addition, the improved algorithm uses the order of the data to optimize the "end" data in the standard neural network learning algorithm, so as to improve the accuracy and computational efficiency of network security situation prediction. MATLAB simulation results show that the data processing capacity of the support vector combined BP neural network is consistent with the actual security situation data requirements: the consistency can reach 98%, the consistency of the security situation results can reach 99%, the composite prediction time of the whole security situation is less than 25 s, the line segment slope change can reach 2.3%, and the slope change range can reach 1.2%, which is better than the BP neural network algorithm.

Network intrusion detection using oversampling technique and machine learning algorithms

The expeditious growth of the World Wide Web and the rampant flow of network traffic have resulted in a continuous increase in network security threats. Cyber attackers seek to exploit vulnerabilities in network architecture to steal valuable information or disrupt computer resources. A Network Intrusion Detection System (NIDS) is used to detect various attacks effectively, thus providing timely protection to network resources. To implement NIDS, a range of supervised and unsupervised machine learning approaches is applied to detect irregularities in network traffic and to address network security issues. Such NIDSs are trained using various datasets that include attack traces. However, because modern-day attacks keep advancing, these systems are unable to detect emerging threats. Therefore, NIDS needs to be trained and developed with a modern, comprehensive dataset that contains contemporary normal and attack activities. This paper presents a framework in which different machine learning classification schemes are employed to detect various categories of network attacks. Five machine learning algorithms are used for attack detection: Random Forest, Decision Tree, Logistic Regression, K-Nearest Neighbors, and Artificial Neural Networks. This study uses a dataset published by the University of New South Wales (UNSW-NB15), a relatively new dataset that contains a large amount of network traffic data with nine categories of network attacks. The results show that the classification models achieved the highest accuracy, 89.29%, with the Random Forest algorithm. Accuracy improved further when the Synthetic Minority Oversampling Technique (SMOTE) was applied to address the class imbalance problem. After applying SMOTE, the Random Forest classifier achieved an accuracy of 95.1% with 24 features selected by the Principal Component Analysis method.
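The core SMOTE step can be sketched as follows: for a randomly chosen minority-class sample, pick one of its k nearest minority-class neighbours and create a synthetic point at a random position on the line segment between them. This minimal NumPy sketch uses hypothetical toy data rather than UNSW-NB15, and it does not reproduce the paper's PCA feature selection or Random Forest setup. In practice, a maintained implementation such as `SMOTE` in the imbalanced-learn package is usually preferable, and oversampling should be applied to the training split only so that synthetic points do not leak into the test set.

```python
import numpy as np

def smote(X_min, n_new, k=5, seed=None):
    """Create n_new synthetic minority-class samples with the core SMOTE rule:
    pick a minority sample, pick one of its k nearest minority neighbours,
    and interpolate a random point on the segment between them."""
    rng = np.random.default_rng(seed)
    X_min = np.asarray(X_min, dtype=float)
    n = len(X_min)
    if n < 2:
        raise ValueError("need at least two minority samples")
    k = min(k, n - 1)
    # Pairwise Euclidean distances between minority samples only.
    dist = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=-1)
    np.fill_diagonal(dist, np.inf)            # a sample is not its own neighbour
    neighbours = np.argsort(dist, axis=1)[:, :k]
    base = rng.integers(0, n, size=n_new)     # randomly chosen minority samples
    pick = neighbours[base, rng.integers(0, k, size=n_new)]
    gap = rng.random((n_new, 1))              # interpolation factor in [0, 1)
    return X_min[base] + gap * (X_min[pick] - X_min[base])

# Hypothetical imbalanced toy data: 100 "normal" rows vs 10 "attack" rows.
rng = np.random.default_rng(0)
X_normal = rng.normal(0.0, 1.0, size=(100, 2))
X_attack = rng.normal(3.0, 0.5, size=(10, 2))

X_synth = smote(X_attack, n_new=90, k=3, seed=1)
X_attack_balanced = np.vstack([X_attack, X_synth])
print(len(X_normal), len(X_attack_balanced))  # 100 100
```

Because every synthetic point is a convex combination of two real minority samples, the new points stay inside the region the minority class already occupies instead of duplicating rows exactly.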

Cyber Attacks Visualization and Prediction in Complex Multi-Stage Network

In network security, various protocols exist, but these cannot be said to be secure. Moreover, training end users is not easy, and the process is time-consuming; it takes a long time for an individual to become a good cybersecurity professional. Many hackers and illegal agents try to exploit vulnerabilities through incremental penetrations that can compromise critical systems. The conventional tools available for this purpose are not sufficient. Risks are always present, and in dynamically evolving networks they are very likely to lead to serious incidents. This research proposes a model to visualize and predict cyber attacks in complex, multilayered networks. The calculation corresponds to the software vulnerabilities in the networks within a specific domain. All the available network security conditions and the possible places where an attacker can exploit the system are summarized.

Network Security Policy Automation

Network security policy automation enables enterprise security teams to keep pace with increasingly dynamic changes in on-premises and public/hybrid cloud environments. This chapter discusses the most common use cases for policy automation in the enterprise, and new automation methodologies to address them by taking the reader step-by-step through sample use cases. It also looks into how emerging automation solutions are using big data, artificial intelligence, and machine learning technologies to further accelerate network security policy automation and improve application and network security in the process.

Rule-Based Anomaly Detection Model with Stateful Correlation Enhancing Mobile Network Security

Research on network security technology of industrial control system.

Industrial control systems are becoming more and more closely connected to the Internet, and their network security has attracted much attention. Penetration testing is an active network intrusion detection technology that plays an indispensable role in protecting system security. This paper mainly introduces the principle of penetration testing, summarizes current cutting-edge penetration testing techniques, and looks ahead to their development.

Detection and Prevention of Malicious Activities in Vulnerable Network Security Using Deep Learning


Editors-in-Chief

Tyler Moore

About the journal

Journal of Cybersecurity publishes accessible articles describing original research in the inherently interdisciplinary world of computer, systems, and information security …


Call for Papers: Workshop on the Economics of Information Security

Journal of Cybersecurity is inviting submissions to a new special issue from the workshop on the economics of information security. Authors whose papers appeared at the workshop are invited to submit a revised version to the journal.

Call for Papers

Journal of Cybersecurity is soliciting papers for a special collection on the philosophy of information security. This collection will explore research at the intersection of philosophy, information security, and philosophy of science.


Submit your paper

Join the conversation moving the science of security forward. Visit our Instructions to Authors for more information about how to submit your manuscript.

High-Impact Research Collection

Explore a collection of recently published high-impact research in the Journal of Cybersecurity.



Read and Publish deals

Authors interested in publishing in Journal of Cybersecurity may be able to publish their paper Open Access using funds available through their institution’s agreement with OUP.



- Online ISSN 2057-2093

- Print ISSN 2057-2085

- Copyright © 2024 Oxford University Press


Oxford University Press is a department of the University of Oxford. It furthers the University's objective of excellence in research, scholarship, and education by publishing worldwide


- Open access

- Published: 10 August 2020

Using deep learning to solve computer security challenges: a survey

- Yoon-Ho Choi (1, 2)

- Peng Liu (1)

- Zitong Shang (1)

- Haizhou Wang (1)

- Zhilong Wang (1)

- Lan Zhang (1)

- Junwei Zhou (3)

- Qingtian Zou (1)

Cybersecurity, volume 3, Article number: 15 (2020)

21 Citations


Although using machine learning techniques to solve computer security challenges is not a new idea, the rapidly emerging Deep Learning technology has recently triggered a substantial amount of interest in the computer security community. This paper seeks to provide a dedicated review of the very recent research works on using Deep Learning techniques to solve computer security challenges. In particular, the review covers eight computer security problems being solved by applications of Deep Learning: security-oriented program analysis, defending return-oriented programming (ROP) attacks, achieving control-flow integrity (CFI), defending network attacks, malware classification, system-event-based anomaly detection, memory forensics, and fuzzing for software security.

Introduction

Using machine learning techniques to solve computer security challenges is not a new idea. For example, in 1998, Ghosh et al. (Ghosh et al. 1998) proposed training a (traditional) neural-network-based anomaly detection scheme (i.e., detecting anomalous and unknown intrusions against programs); in 2003, Hu et al. (Hu et al. 2003) and Heller et al. (Heller et al. 2003) applied Support Vector Machines to build anomaly detection schemes (e.g., detecting anomalous Windows registry accesses).

The machine-learning-based computer security research investigations during 1990-2010, however, have not been very impactful. For example, to the best of our knowledge, none of the machine learning applications proposed in ( Ghosh et al. 1998 ; Hu et al. 2003 ; Heller et al. 2003 ) has been incorporated into a widely deployed intrusion-detection commercial product.

As to why this research was not very impactful, researchers in the computer security community seem to hold different opinions, but the following remarks by Sommer and Paxson (Sommer and Paxson 2010) (in the context of intrusion detection) have resonated with many researchers:

Remark A: “It is crucial to have a clear picture of what problem a system targets: what specifically are the attacks to be detected? The more narrowly one can define the target activity, the better one can tailor a detector to its specifics and reduce the potential for misclassifications.” ( Sommer and Paxson 2010 )

Remark B: “If one cannot make a solid argument for the relation of the features to the attacks of interest, the resulting study risks foundering on serious flaws.” ( Sommer and Paxson 2010 )

These insightful remarks, though well aligned with the machine learning techniques used by security researchers during 1990-2010, could become a less significant concern with Deep Learning (DL), a rapidly emerging machine learning technology, due to the following observations. First, Remark A implies that even if the same machine learning method is used, one algorithm employing a cost function that is based on a more specifically defined target attack activity could perform substantially better than another algorithm deploying a less specifically defined cost function. This could be a less significant concern with DL, since a few recent studies have shown that even if the target attack activity is not narrowly defined, a DL model could still achieve very high classification accuracy. Second, Remark B implies that if feature engineering is not done properly, the trained machine learning models could be plagued by serious flaws. This could be a less significant concern with DL, since many deep learning neural networks require less feature engineering than conventional machine learning techniques.

As stated in the NSCAI Interim Report for Congress (2019), “DL is a statistical technique that exploits large quantities of data as training sets for a network with multiple hidden layers, called a deep neural network (DNN). A DNN is trained on a dataset, generating outputs, calculating errors, and adjusting its internal parameters. Then the process is repeated hundreds of thousands of times until the network achieves an acceptable level of performance. It has proven to be an effective technique for image classification, object detection, speech recognition, and natural language processing–problems that challenged researchers for decades. By learning from data, DNNs can solve some problems much more effectively, and also solve problems that were never solvable before.”

Now let’s take a high-level look at how DL could make it substantially easier to overcome the challenges identified by Sommer and Paxson ( Sommer and Paxson 2010 ). First, one major advantage of DL is that it makes learning algorithms less dependent on feature engineering. This characteristic of DL makes it easier to overcome the challenge indicated by Remark B. Second, another major advantage of DL is that it could achieve high classification accuracy with minimum domain knowledge. This characteristic of DL makes it easier to overcome the challenge indicated by Remark A.

Key observation. The above discussion indicates that DL could be a game changer in applying machine learning techniques to solving computer security challenges.

Motivated by this observation, this paper seeks to provide a dedicated review of the very recent research works on using Deep Learning techniques to solve computer security challenges. Since this paper aims to provide a dedicated review, non-deep-learning techniques and their security applications are out of its scope.

The remainder of the paper is organized as follows. In the “A four-phase workflow framework can summarize the existing works in a unified manner” section, we present a four-phase workflow framework, which we use to summarize the existing works in a unified manner. In the sections from “A closer look at applications of deep learning in solving security-oriented program analysis challenges” to “A closer look at applications of deep learning in security-oriented fuzzing”, we review, in turn, eight computer security problems being solved by applications of Deep Learning. In the “Discussion” section, we discuss certain similarities and dissimilarities among the existing works. In the “Further areas of investigation” section, we mention four further areas of investigation. In the “Conclusion” section, we conclude the paper.

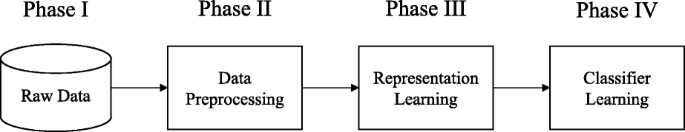

A four-phase workflow framework can summarize the existing works in a unified manner

We found that a four-phase workflow framework can provide a unified way to summarize all the research works surveyed by us. In particular, we found that each work surveyed by us employs a particular workflow when using machine learning techniques to solve a computer security challenge, and we found that each workflow consists of two or more phases. By “a unified way”, we mean that every workflow surveyed by us is essentially an instantiation of a common workflow pattern which is shown in Fig. 1 .

Overview of the four-phase workflow

Definitions of the four phases

The four phases, shown in Fig. 1, are defined as follows. To make the definitions of the four phases more tangible, we use a running example to illustrate each of the four phases.

Phase I. (Obtaining the raw data)

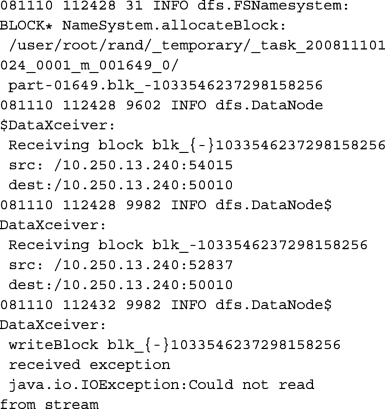

In this phase, certain raw data are collected. Running Example: When Deep Learning is used to detect suspicious events in a Hadoop distributed file system (HDFS), the raw data are usually the events (e.g., a block is allocated, read, written, replicated, or deleted) that have happened to each block. Since these events are recorded in Hadoop logs, the log files hold the raw data. Since each event is uniquely identified by a particular (block ID, timestamp) tuple, we can simply view the raw data as n event sequences, one per block, where n is the total number of blocks in the HDFS. For example, the raw data collected in Xu et al. (2009) consist of 11,197,954 events in total. Since 575,139 blocks were in the HDFS, there were 575,139 event sequences in the raw data, and on average each event sequence had about 19 events. One such event sequence is shown as follows:

Phase II. (Data preprocessing)

Both Phase II and Phase III aim to properly extract and represent the useful information held in the raw data collected in Phase I. Both Phase II and Phase III are closely related to feature engineering. A key difference between Phase II and Phase III is that Phase III is completely dedicated to representation learning, while Phase II is focused on all the information extraction and data processing operations that are not based on representation learning. Running Example: Let’s revisit the aforementioned HDFS. Each recorded event is described by unstructured text. In Phase II, the unstructured text is parsed to a data structure that shows the event type and a list of event variables in (name, value) pairs. Since there are 29 types of events in the HDFS, each event is represented by an integer from 1 to 29 according to its type. In this way, the aforementioned example event sequence can be transformed to:

22, 5, 5, 7
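Phases I and II of the running example can be sketched in a few lines: group parsed log records by block ID, order each block's events by timestamp, and replace each event type with its integer ID. This is a minimal sketch that assumes the unstructured log text has already been parsed into (block ID, timestamp, event type) records; the event-type names and the four-entry catalogue below are hypothetical stand-ins for the 29 HDFS event types.

```python
from collections import defaultdict

# Hypothetical subset of an event-type catalogue; the real HDFS data has 29 types.
EVENT_TYPES = ["Allocate block", "Receiving block", "Received block", "Deleting block"]
TYPE_ID = {name: i for i, name in enumerate(EVENT_TYPES, start=1)}  # ids start at 1

def build_event_sequences(records):
    """Group (block_id, timestamp, event_type) records into one integer-encoded
    event sequence per block, ordered by timestamp."""
    by_block = defaultdict(list)
    for block_id, timestamp, event_type in records:
        by_block[block_id].append((timestamp, TYPE_ID[event_type]))
    return {blk: [type_id for _, type_id in sorted(events)]
            for blk, events in by_block.items()}

# Hypothetical parsed records, deliberately out of timestamp order.
records = [
    ("blk_1", 3, "Receiving block"),
    ("blk_1", 1, "Allocate block"),
    ("blk_2", 2, "Allocate block"),
    ("blk_1", 5, "Receiving block"),
    ("blk_2", 4, "Deleting block"),
]
print(build_event_sequences(records))  # {'blk_1': [1, 2, 2], 'blk_2': [1, 4]}
```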

Phase III. (Representation learning)

As stated in Bengio et al. (2013), representation learning means “learning representations of the data that make it easier to extract useful information when building classifiers or other predictors.” Running Example: Let’s revisit the same HDFS. Although DeepLog (Du et al. 2017) directly employed one-hot vectors to represent the event types without representation learning, if we view an event type as a word in a structured language, we may use the word embedding technique to represent each event type. Note that word embedding is a representation learning technique.
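The difference between DeepLog's one-hot input and a learned embedding can be sketched in NumPy. The embedding size and the randomly initialised matrix below are placeholders: in real representation learning the matrix would be trained (for example, with a word-embedding method), so only the lookup mechanics are shown here.

```python
import numpy as np

NUM_TYPES = 29  # event types in the HDFS running example
EMBED_DIM = 8   # hypothetical embedding size

def one_hot(type_id, num_types=NUM_TYPES):
    """DeepLog-style input: a 29-dim vector with a single 1 (type ids start at 1)."""
    v = np.zeros(num_types)
    v[type_id - 1] = 1.0
    return v

# Placeholder for a learned embedding matrix (one row per event type).
rng = np.random.default_rng(0)
embedding = rng.normal(size=(NUM_TYPES, EMBED_DIM))

def embed(type_id):
    """Dense representation: row (type_id - 1) of the embedding matrix."""
    return embedding[type_id - 1]

seq = [22, 5, 5, 7]
X_onehot = np.stack([one_hot(t) for t in seq])  # shape (4, 29), sparse
X_dense = np.stack([embed(t) for t in seq])     # shape (4, 8), dense
```

Multiplying the one-hot matrix by the embedding matrix yields the same rows as the direct lookup, which is why an embedding layer can be viewed as a learned linear map applied to one-hot inputs.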

Phase IV. (Classifier learning)

This phase aims to build specific classifiers or other predictors through Deep Learning. Running Example: Let’s revisit the same HDFS. DeepLog ( Du et al. 2017 ) used Deep Learning to build a stacked LSTM neural network for anomaly detection. For example, let’s consider event sequence {22,5,5,5,11,9,11,9,11,9,26,26,26} in which each integer represents the event type of the corresponding event in the event sequence. Given a window size h = 4, the input sample and the output label pairs to train DeepLog will be: {22,5,5,5 → 11 }, {5,5,5,11 → 9 }, {5,5,11,9 → 11 }, and so forth. In the detection stage, DeepLog examines each individual event. It determines if an event is treated as normal or abnormal according to whether the event’s type is predicted by the LSTM neural network, given the history of event types. If the event’s type is among the top g predicted types, the event is treated as normal; otherwise, it is treated as abnormal.
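The windowing and top-g rule described above can be sketched as follows. DeepLog itself uses a stacked LSTM to rank the possible next event types; to keep the sketch dependency-free, a simple counting model (a hypothetical stand-in, not DeepLog's method) plays that role here, ranking next-event types by how often they followed the same window in the training sequences.

```python
from collections import Counter, defaultdict

def make_windows(seq, h=4):
    """Turn one event-type sequence into (history window, next event) pairs."""
    return [(tuple(seq[i:i + h]), seq[i + h]) for i in range(len(seq) - h)]

class NGramStandIn:
    """Hypothetical stand-in for DeepLog's stacked LSTM: ranks next-event types
    by how often they followed the same window in the training data."""
    def __init__(self, h=4):
        self.h = h
        self.counts = defaultdict(Counter)

    def fit(self, sequences):
        for seq in sequences:
            for window, nxt in make_windows(seq, self.h):
                self.counts[window][nxt] += 1
        return self

    def top_g(self, window, g):
        # An unseen window yields no candidates, so its next event is flagged.
        return [t for t, _ in self.counts.get(tuple(window), Counter()).most_common(g)]

def detect(model, seq, g=2):
    """Return (event index, is_abnormal) for every event that has a full history."""
    return [(i + model.h, actual not in model.top_g(window, g))
            for i, (window, actual) in enumerate(make_windows(seq, model.h))]

train = [[22, 5, 5, 5, 11, 9, 11, 9, 11, 9, 26, 26, 26]]
model = NGramStandIn(h=4).fit(train)
print(make_windows(train[0])[:3])
# [((22, 5, 5, 5), 11), ((5, 5, 5, 11), 9), ((5, 5, 11, 9), 11)]

test_seq = [22, 5, 5, 5, 11, 9, 11, 7]
print(detect(model, test_seq, g=2))
# [(4, False), (5, False), (6, False), (7, True)]
```

Replacing `NGramStandIn` with a trained sequence model only changes how `top_g` ranks candidates; the windowing and the normal/abnormal decision stay the same.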

Using the four-phase workflow framework to summarize some representative research works

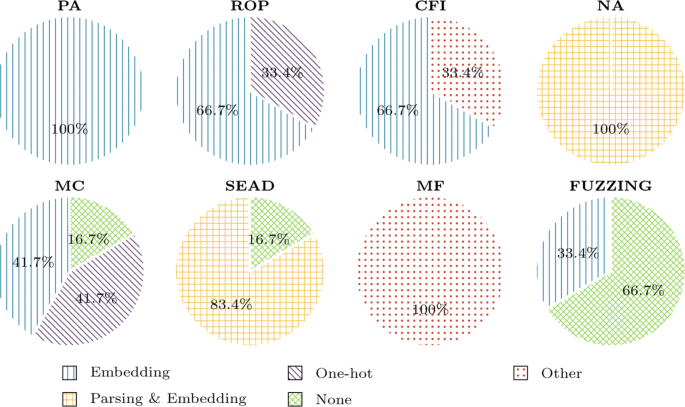

In this subsection, we use the four-phase workflow framework to summarize two representative works for each security problem. System security includes many research subtopics. However, not every research topic is suitable for deep-learning-based methods, owing to its intrinsic characteristics. Among the security research subjects that can be combined with deep learning, some have undergone intensive research in recent years, while others are just emerging. We notice that there are 5 mainstream research directions in system security. This paper mainly focuses on system security, so other mainstream research directions (e.g., deepfake) are out of scope. Therefore, we choose these 5 widely noticed research directions and 3 emerging research directions for our survey:

In security-oriented program analysis, malware classification (MC), system-event-based anomaly detection (SEAD), memory forensics (MF), and defending network attacks, deep learning based methods have already undergone intensive research.

In defending return-oriented programming (ROP) attacks, Control-flow integrity (CFI), and fuzzing, deep learning based methods are emerging research topics.

We select two representative works for each research topic in our survey. Our criteria for selecting papers mainly include: 1) Pioneer (one of the first papers in this field); 2) Top (published in a top conference or journal); 3) Novelty; 4) Citation (the paper is highly cited); 5) Effectiveness (the paper reports strong results); 6) Representative (the paper is a representative work for a branch of the research direction). Table 1 lists the reasons why we chose each paper, ordered by importance.

The summary is shown in Table 2. There are three columns in the table. In the first column, we list eight security problems: security-oriented program analysis, defending return-oriented programming (ROP) attacks, control-flow integrity (CFI), defending network attacks (NA), malware classification (MC), system-event-based anomaly detection (SEAD), memory forensics (MF), and fuzzing for software security. In the second column, we list two very recent representative works for each security problem. In the “Summary” column, we sequentially describe how the four phases are deployed in each work, and then list the evaluation results for each work in terms of accuracy (ACC), precision (PRC), recall (REC), F1 score (F1), false-positive rate (FPR), and false-negative rate (FNR).
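For reference, the six metrics listed above can be computed from the confusion-matrix counts of a binary detector, treating attacks or anomalies as the positive class. The counts in this sketch are hypothetical, and the function does not guard against zero denominators.

```python
def evaluation_metrics(tp, fp, tn, fn):
    """Compute ACC, PRC, REC, F1, FPR, and FNR from confusion-matrix counts."""
    acc = (tp + tn) / (tp + fp + tn + fn)
    prc = tp / (tp + fp)               # precision
    rec = tp / (tp + fn)               # recall (true-positive rate)
    f1 = 2 * prc * rec / (prc + rec)   # harmonic mean of precision and recall
    fpr = fp / (fp + tn)               # false-positive rate
    fnr = fn / (fn + tp)               # false-negative rate, equal to 1 - recall
    return {"ACC": acc, "PRC": prc, "REC": rec, "F1": f1, "FPR": fpr, "FNR": fnr}

# Hypothetical counts: 90 attacks caught, 10 missed, 5 false alarms, 895 correct normals.
metrics = evaluation_metrics(tp=90, fp=5, tn=895, fn=10)
print(metrics)
```

Reporting FPR alongside accuracy matters in security settings because, when normal traffic dominates, a detector can reach high accuracy while still raising many false alarms in absolute terms.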

Methodology for reviewing the existing works

Data representation (or feature engineering) plays an important role in solving security problems with Deep Learning. This is because data representation is a way to take advantage of human ingenuity and prior knowledge to extract and organize the discriminative information from the data. Much of the effort in deploying machine learning algorithms in the security domain actually goes into the design of preprocessing pipelines and data transformations that result in a representation of the data that supports effective machine learning.

In order to expand the scope and ease of applicability of machine learning in the security domain, it would be highly desirable to find a proper way to represent security data that can disentangle the different explanatory factors of variation behind the data, which may be more or less entangled and hidden. To let this survey adequately reflect the important role played by data representation, our review focuses on how the existing works answer the following three questions:

Question 1: Is Phase II pervasively done in the literature? When Phase II is skipped in a work, are there any particular reasons?

Question 2: Is Phase III employed in the literature? When Phase III is skipped in a work, are there any particular reasons?

Question 3: When solving different security problems, is there any commonality in terms of the (types of) classifiers learned in Phase IV? Among the works solving the same security problem, is there dissimilarity in terms of classifiers learned in Phase IV?

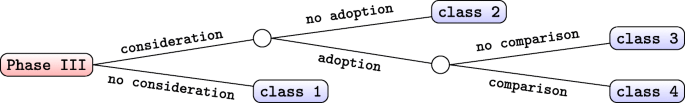

To group the Phase III methods used by different applications of Deep Learning to the same security problem, we introduce a classification tree, shown in Fig. 2. The classification tree categorizes the Phase III methods in our selected survey works into four classes. First, class 1 includes the Phase III methods that do not consider representation learning. Second, class 2 includes the Phase III methods that consider representation learning but do not adopt it. Third, class 3 includes the Phase III methods that consider and adopt representation learning but do not compare its performance with other methods. Finally, class 4 includes the Phase III methods that consider and adopt representation learning and compare its performance with other methods.

Classification tree for different Phase III methods. Here, consideration , adoption , and comparison indicate that a work considers Phase III, adopts Phase III and makes comparison with other methods, respectively
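The three-layer classification tree in Fig. 2 amounts to three yes/no questions asked in order, which can be sketched as a small function. The function and parameter names are hypothetical; the class numbering follows the definitions above.

```python
def phase3_class(considers: bool, adopts: bool, compares: bool) -> int:
    """Map a surveyed work to class 1-4 of the Phase III classification tree."""
    if not considers:
        return 1                   # representation learning not considered
    if not adopts:
        return 2                   # considered but not adopted
    return 4 if compares else 3    # adopted; class 4 also compares with other methods

print(phase3_class(True, True, True))    # 4
print(phase3_class(True, False, False))  # 2
```

Each later question is asked only when the previous answer is "yes", so a work that never considers representation learning lands in class 1 regardless of the other two flags.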

In the remainder of this paper, we take a closer look at how each of the eight security problems is being solved by applications of Deep Learning in the literature.

A closer look at applications of deep learning in solving security-oriented program analysis challenges

In recent years, security-oriented program analysis has been widely used in software security. For example, symbolic execution and taint analysis are used to discover, detect, and analyze vulnerabilities in programs. Control flow analysis, data flow analysis, and pointer/alias analysis are important components when enforcing many security strategies, such as control flow integrity, data flow integrity, and dangling pointer elimination. Reverse engineering is used by defenders and attackers alike to understand the logic of a program without source code.

In security-oriented program analysis, there are many open problems, such as precise pointer/alias analysis, accurate and complete reverse engineering, complex constraint solving, and program de-obfuscation. Some of these problems have been proven NP-hard in theory, and others still require a lot of human effort to solve. Both kinds require substantial domain knowledge and expert experience to develop better solutions. Essentially, the main challenge in solving them through traditional approaches lies in the sophisticated rules between features and labels, which may change in different contexts. Therefore, on the one hand, developing rules to solve the problems takes a large amount of human effort; on the other hand, even the most experienced expert cannot guarantee completeness. Fortunately, deep learning is good at finding relations between features and labels when given a large amount of training data. It can find these relations quickly and comprehensively if the training samples are representative and effectively encoded.

In this section, we review four very recent representative works that use Deep Learning for security-oriented program analysis. We observed that they focused on different goals. Shin et al. designed a model (Shin et al. 2015) to identify function boundaries. EKLAVYA (Chua et al. 2017) was developed to learn function types. Gemini (Xu et al. 2017) was proposed to detect similarity among functions. DEEPVSA (Guo et al. 2019) was designed to learn the memory region of an indirect addressing from the code sequence. Among these works, we select two representative works (Shin et al. 2015; Chua et al. 2017) and summarize the analysis results in Table 2 in detail.

Our review is centered around the three questions described in the “Methodology for reviewing the existing works” section. In the remainder of this section, we first provide a set of observations and then the indications. Finally, we provide some general remarks.

Key findings from a closer look

From a close look at the very recent applications using Deep Learning to solve security-oriented program analysis challenges, we made the following observations:

Observation 3.1: All of the works in our survey used binary files as their raw data. Phase II in our survey had one similar and straightforward goal: extracting code sequences from the binary. The difference among them was that the code sequence was extracted directly from the binary file when solving problems in static program analysis, while it was extracted from the program execution when solving problems in dynamic program analysis.

*Observation 3.2: Most data representation methods took domain knowledge into account.

Most data representation methods took domain knowledge into account, i.e., what kind of information they wanted to preserve when processing their data. Note that feature selection has a wide influence on Phase II and Phase III, for example on the embedding granularity and the choice of representation learning method. Gemini ( Xu et al. 2017 ) selected function-level features, whereas the other works in our survey selected instruction-level features. Specifically, all the works except Gemini ( Xu et al. 2017 ) vectorized the code sequence at the instruction level.
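To make instruction-level vectorization concrete, the sketch below maps each distinct instruction string to an integer ID, a common first step before an embedding layer or one-hot encoding. The toy x86 instructions, the function names, and the reserved `<unk>` ID are illustrative assumptions, not details taken from the surveyed works.

```python
from typing import Dict, List


def build_vocab(sequences: List[List[str]]) -> Dict[str, int]:
    """Assign a unique integer ID to every distinct instruction string.

    ID 0 is reserved for instructions never seen during training ("<unk>").
    """
    vocab: Dict[str, int] = {"<unk>": 0}
    for seq in sequences:
        for insn in seq:
            if insn not in vocab:
                vocab[insn] = len(vocab)
    return vocab


def vectorize(seq: List[str], vocab: Dict[str, int]) -> List[int]:
    """Map an instruction sequence to its instruction-level ID sequence."""
    return [vocab.get(insn, 0) for insn in seq]


train = [["push ebp", "mov ebp, esp", "ret"],
         ["push ebp", "xor eax, eax", "ret"]]
vocab = build_vocab(train)
print(vectorize(["push ebp", "nop", "ret"], vocab))  # [1, 0, 3]
```

A function-level approach such as Gemini's would instead produce one feature vector per function rather than one ID per instruction.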

Observation 3.3: To obtain data representations that support high performance, some works adopted representation learning.

For instance, DEEPVSA ( Guo et al. 2019 ) employed a representation learning method, a bi-directional LSTM, to learn data dependencies within instructions. EKLAVYA ( Chua et al. 2017 ) adopted a representation learning method, the word2vec technique, to extract inter-instruction information. It is worth noting that Gemini ( Xu et al. 2017 ) adopts the Structure2vec embedding network in its siamese architecture in Phase IV (see details in Observation 3.7). The Structure2vec embedding network learns information from an attributed control flow graph.
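As a rough illustration of how word2vec-style methods extract inter-instruction information, the sketch below generates the (center, context) training pairs that a skip-gram model learns from, treating each instruction as a "word". Only this data-preparation step is shown; training the embedding itself is omitted, and the window size and example sequence are arbitrary assumptions rather than EKLAVYA's actual settings.

```python
from typing import List, Tuple


def skipgram_pairs(seq: List[str], window: int = 1) -> List[Tuple[str, str]]:
    """Return (center, context) pairs for every position in an instruction sequence.

    Each instruction is paired with its neighbours up to `window` positions away,
    which is the co-occurrence signal a skip-gram word2vec model is trained on.
    """
    pairs = []
    for i, center in enumerate(seq):
        lo, hi = max(0, i - window), min(len(seq), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, seq[j]))
    return pairs


seq = ["push ebp", "mov ebp, esp", "ret"]
print(skipgram_pairs(seq))
# [('push ebp', 'mov ebp, esp'), ('mov ebp, esp', 'push ebp'),
#  ('mov ebp, esp', 'ret'), ('ret', 'mov ebp, esp')]
```

Instructions that repeatedly appear in similar contexts end up with similar embedding vectors once a model is trained on such pairs.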

Observation 3.4: According to our taxonomy, class 4 contained the largest share of the works in our survey.

To compare the works' Phase III, we introduced a three-layer classification tree, shown in Fig. 2, that groups the works into four categories. The tree classifies each surveyed work according to whether, when designing its framework, it considered representation learning, whether it adopted representation learning, and whether it compared its method with others’. According to our taxonomy, EKLAVYA ( Chua et al. 2017 ) and DEEPVSA ( Guo et al. 2019 ) were grouped into class 4, while Gemini’s work ( Xu et al. 2017 ) and Shin et al.’s work ( Shin et al. 2015 ) belonged to class 1 and class 2, respectively, as shown in Fig. 2 .
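The three yes/no questions of the classification tree can be written as a small decision function. The exact branch-to-class mapping below is an assumption inferred from the class assignments stated in the text (for example, Shin et al. considered but did not adopt representation learning and fall into class 2); in particular, class 3 (adopted but not compared) is not illustrated by any surveyed work here.

```python
def taxonomy_class(considered_rl: bool, adopted_rl: bool, compared: bool) -> int:
    """Place a work into one of four classes using the three-layer tree.

    Assumed mapping, inferred from the class assignments in the text:
      class 1: representation learning (RL) not considered
      class 2: RL considered but not adopted
      class 3: RL adopted, but not compared with other methods
      class 4: RL adopted and compared with other methods
    """
    if not considered_rl:
        return 1
    if not adopted_rl:
        return 2
    return 4 if compared else 3


# Flags for each work are read off the text's own class assignments.
works = {
    "Gemini": (False, False, False),
    "Shin et al.": (True, False, False),
    "EKLAVYA": (True, True, True),
    "DEEPVSA": (True, True, True),
}
for name, flags in works.items():
    print(name, taxonomy_class(*flags))
```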

Observation 3.5: All the works in our survey explained why they did or did not adopt a representation learning algorithm.

Two works in our survey adopted representation learning, for different reasons: to enhance the model’s ability to generalize ( Chua et al. 2017 ), and to learn the dependencies within instructions ( Guo et al. 2019 ). It is worth noting that Shin et al. did not adopt representation learning because they wanted to preserve an “attractive” feature of neural networks over other machine learning methods: simplicity. As they stated, “first, neural networks can learn directly from the original representation with minimal preprocessing (or “feature engineering”) needed.” and “second, neural networks can learn end-to-end, where each of its constituent stages are trained simultaneously in order to best solve the end goal.” Although Gemini ( Xu et al. 2017 ) did not adopt representation learning when processing its raw data, its Deep Learning model uses a siamese structure consisting of two graph embedding networks and one cosine function.
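The siamese idea can be sketched in a few lines: both inputs pass through the same embedding function, and the cosine of the angle between the two embeddings serves as the similarity score. Here `toy_embed` is a hypothetical stand-in that merely sums per-basic-block attribute vectors; it is not Structure2vec, and the attribute values are made up.

```python
import math
from typing import Callable, List, Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors, in [-1, 1]."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


def siamese_score(embed: Callable[..., Sequence[float]], func_a, func_b) -> float:
    # Both branches of a siamese network share one set of weights, so the same
    # embedding function is applied to each input before the cosine comparison.
    return cosine_similarity(embed(func_a), embed(func_b))


def toy_embed(blocks: List[List[float]]) -> List[float]:
    """Hypothetical stand-in for a graph embedding network: sum block attribute vectors."""
    return [float(sum(col)) for col in zip(*blocks)]


f1 = [[2, 0, 1], [1, 1, 0]]  # two basic blocks, three numeric attributes each
f2 = [[4, 0, 2], [2, 2, 0]]  # same structure, every attribute doubled
print(round(siamese_score(toy_embed, f1, f2), 6))  # 1.0
```

Because cosine similarity ignores vector length, the two toy functions score as identical even though their attribute values differ by a constant factor.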

*Observation 3.6: The analysis results showed that a suitable representation learning method could improve the accuracy of Deep Learning models.

DEEPVSA ( Guo et al. 2019 ) designed a series of experiments to evaluate the effectiveness of its representation learning method. Drawing on domain knowledge, EKLAVYA ( Chua et al. 2017 ) employed t-SNE plots and analogical reasoning to explain the effectiveness of its representation learning method intuitively.

Observation 3.7: Various Phase IV methods were used.

In Phase IV, Gemini ( Xu et al. 2017 ) adopted a siamese architecture consisting of two Structure2vec embedding networks and one cosine function. The siamese architecture takes two functions as input and produces a similarity score as output. The other three works ( Shin et al. 2015 ; Chua et al. 2017 ; Guo et al. 2019 ) adopted a bi-directional RNN, an RNN, and a bi-directional LSTM, respectively. Shin et al. adopted a bi-directional RNN because they wanted to combine both past and future information when making a prediction for the present instruction ( Shin et al. 2015 ). DEEPVSA ( Guo et al. 2019 ) adopted a bi-directional LSTM, a variant of the bi-directional RNN, so that its model could infer memory regions in both the forward and backward directions.
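To illustrate why a bi-directional RNN can use “future” context, the sketch below runs a one-unit Elman RNN over a toy numeric sequence in both directions and pairs the two hidden states at each position. The scalar weights and inputs are arbitrary assumptions; real models, including bi-directional LSTMs, use vector states, gated cells, and separate learned weights for each direction, whereas one shared weight pair is used here only to keep the sketch short.

```python
import math
from typing import List, Tuple


def rnn_pass(xs: List[float], w_x: float, w_h: float) -> List[float]:
    """Run a one-unit Elman RNN, h_t = tanh(w_x * x_t + w_h * h_{t-1}), over xs."""
    h, states = 0.0, []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h)
        states.append(h)
    return states


def birnn(xs: List[float], w_x: float = 0.5, w_h: float = 0.8) -> List[Tuple[float, float]]:
    """Pair each position's forward state (past context) with its backward state (future context)."""
    forward = rnn_pass(xs, w_x, w_h)
    backward = rnn_pass(xs[::-1], w_x, w_h)[::-1]  # run on the reversed input, then realign
    return list(zip(forward, backward))


a = birnn([1.0, 0.0, 0.0])
b = birnn([1.0, 0.0, 5.0])  # only the last input differs
print(a[0][0] == b[0][0])  # True: the forward state at position 0 cannot see later inputs
print(a[0][1] == b[0][1])  # False: the backward state at position 0 does see them
```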

The above observations suggest the following indications:

Indication 3.1: Phase III is not always necessary.

Not all authors regard representation learning as a good choice, even though some case experiments show that it can improve the final results. Some authors value the simplicity of Deep Learning methods more and believe that adopting representation learning weakens that simplicity.

Indication 3.2: Even though the ultimate objective of Phase III in the four surveyed works is to train a model with better accuracy, they have different specific motivations as described in Observation 3.5.

When authors choose representation learning, they usually try to convince readers of the effectiveness of their choice through empirical or theoretical analysis.

*Indication 3.3: Observation 3.7 indicates that authors usually draw on domain knowledge when designing the architecture of a Deep Learning model.

For instance, the works we reviewed commonly adopt a bi-directional RNN when their predictions partly depend on future information in the data sequence.

Despite the effectiveness and agility of deep learning-based methods, it remains challenging to develop a highly accurate scheme, because data in program analysis are hierarchically structured, noisy, and unbalanced. For instance, an instruction sequence, a typical data sample in program analysis, has a three-level hierarchy: sequence, instruction, and opcode/operand. To make things worse, each level may contain many different structures (e.g., one-operand and multi-operand instructions), which makes the training data harder to encode.
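The three-level hierarchy can be made explicit by parsing an instruction sequence into (opcode, operands) pairs. This minimal sketch assumes Intel-syntax x86 text in which no comma appears inside a memory operand; the varying operand counts it produces are exactly what makes fixed-size encoding awkward.

```python
from typing import List, Tuple

Instruction = Tuple[str, List[str]]  # (opcode, operands)


def parse_instruction(text: str) -> Instruction:
    """Split one assembly line such as 'mov eax, [ebx+4]' into opcode and operands."""
    opcode, _, rest = text.strip().partition(" ")
    operands = [op.strip() for op in rest.split(",")] if rest.strip() else []
    return opcode, operands


def parse_sequence(lines: List[str]) -> List[Instruction]:
    """Sequence level -> instruction level -> opcode/operand level."""
    return [parse_instruction(line) for line in lines]


seq = parse_sequence(["ret", "push ebp", "mov eax, [ebx+4]"])
print(seq)  # [('ret', []), ('push', ['ebp']), ('mov', ['eax', '[ebx+4]'])]
print([len(ops) for _, ops in seq])  # [0, 1, 2]
```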

A closer look at applications of deep learning in defending ROP attacks

Return-oriented programming (ROP) is one of the most dangerous code reuse attacks; it allows attackers to launch control-flow hijacking attacks without injecting any malicious code. Instead, it leverages particular instruction sequences (called “gadgets”) that exist widely in the program space to achieve Turing-complete attacks ( Shacham et al. 2007 ). Gadgets are instruction sequences that end with a RET instruction, so they can be chained together by placing their addresses as return addresses on the program stack. Many traditional techniques can be used to detect ROP attacks, such as control-flow integrity (CFI) ( Abadi et al. 2009 ), but many of them either have a low detection rate or a high runtime overhead. ROP payloads do not contain any code; in other words, analyzing a ROP payload without the context of the program’s memory dump is meaningless. Thus, the most popular way of detecting and preventing ROP attacks is control-flow integrity. The challenge after acquiring the instruction sequences is that it is hard to recognize whether the control flow is normal. Traditional methods use the control flow graph (CFG) to identify whether the control flow is normal, but attackers can design instruction sequences that follow the normal control flow defined by the CFG. In essence, it is very hard to design a CFG that excludes every possible combination of instructions that can be used to launch ROP attacks. Therefore, data-driven methods could help eliminate such problems.
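Because every gadget ends in RET (opcode 0xC3 on x86), finding candidate gadgets amounts to scanning backwards from each 0xC3 byte. The sketch below is a simplified version of that idea: it returns every byte window of up to `max_len` bytes ending in a RET, including windows that start in the middle of a longer instruction. It does not disassemble or validate the windows, nor does it handle other gadget terminators, both of which dedicated gadget finders do; `max_len` is an arbitrary assumption.

```python
from typing import List, Tuple

RET = 0xC3  # x86 near-return opcode


def candidate_gadgets(code: bytes, max_len: int = 4) -> List[Tuple[int, bytes]]:
    """Return (offset, bytes) for every window of at most max_len bytes ending in RET.

    Windows are not disassembled, so many candidates are not valid instruction streams.
    """
    found = []
    for i, byte in enumerate(code):
        if byte != RET:
            continue
        for start in range(max(0, i - max_len + 1), i + 1):
            found.append((start, code[start:i + 1]))
    return found


print(candidate_gadgets(bytes([0x58, 0xC3])))  # pop eax; ret
# [(0, b'X\xc3'), (1, b'\xc3')]
```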

In this section, we review three very recent representative works that use Deep Learning for defending against ROP attacks: ROPNN ( Li et al. 2018 ), HeNet ( Chen et al. 2018 ) and DeepCheck ( Zhang et al. 2019 ). ROPNN ( Li et al. 2018 ) aims to detect ROP attacks, HeNet ( Chen et al. 2018 ) aims to detect malware using CFI, and DeepCheck ( Zhang et al. 2019 ) aims to detect all kinds of code reuse attacks.

Specifically, ROPNN protects one program at a time, and its training data are generated from real-world programs along with their execution. First, after the memory dumps of the programs are created, it generates benign data by “chaining up” normally executed instruction sequences and malicious data by “chaining up” gadgets with the help of a gadget generation tool. Each data sample is a byte-level instruction sequence labeled as “benign” or “malicious”. Second, ROPNN is trained using both the malicious and the benign data. Third, the trained model is deployed to a target machine. After the protected program starts, the executed instruction sequences are traced and fed into the trained model, and the protected program is terminated once the model finds that an instruction sequence is likely to be malicious.

HeNet is also designed to protect a single program. Its malicious and benign data are generated by collecting trace data through Intel PT from malware and normal software, respectively. In addition, HeNet preprocesses its dataset and shapes each data sample into an image, so that it can apply transfer learning from a model pre-trained on ImageNet. HeNet is then trained and deployed on machines that support Intel PT, where it collects and classifies the program’s execution trace online.
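Shaping a byte trace into images can be sketched as slicing it into fixed-size chunks and laying each chunk out as a grid of grayscale pixels, one pixel per byte (0-255). Since HeNet's source code was not publicly available, the image side length and the zero-padding of the final partial image are assumptions of this sketch, not HeNet's actual preprocessing.

```python
from typing import List


def bytes_to_images(trace: bytes, side: int = 4) -> List[List[List[int]]]:
    """Slice a byte trace into side x side grayscale images, one pixel per byte (0-255).

    The final partial image, if any, is zero-padded.
    """
    size = side * side
    images = []
    for start in range(0, len(trace), size):
        chunk = trace[start:start + size].ljust(size, b"\x00")
        images.append([list(chunk[r * side:(r + 1) * side]) for r in range(side)])
    return images


imgs = bytes_to_images(bytes(range(20)), side=4)
print(len(imgs))  # 2
print(imgs[1])    # [[16, 17, 18, 19], [0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
```

Models pre-trained on ImageNet usually expect larger, three-channel inputs, so a real pipeline would also resize the grid and replicate the channel.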

The training data for DeepCheck are acquired from CFGs, which are constructed by disassembling the programs and using information from Intel PT. After the CFG for a protected program is constructed, the authors sample benign instruction sequences by chaining up basic blocks that are connected by edges, and malicious instruction sequences by chaining up basic blocks that are not. Although a CFG is needed during training, there is no need to construct one after the training phase. Once the model is deployed, instruction sequences are constructed by applying Intel PT to the protected program, and the trained model classifies them as malicious or benign.
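The sampling strategy described above can be sketched over a toy CFG given as an adjacency dictionary: benign samples follow CFG edges, while malicious samples only jump between blocks that are not connected by an edge. The block names, walk lengths, and uniform random choices are hypothetical; this is not DeepCheck's implementation, and, as with any such scheme, the malicious sequences it produces are not guaranteed to be executable.

```python
import random
from typing import Dict, List

Cfg = Dict[str, List[str]]  # basic block -> successor blocks (every block is a key)


def benign_walk(cfg: Cfg, start: str, length: int, rng: random.Random) -> List[str]:
    """Chain basic blocks by following CFG edges; stops early at a block with no successors."""
    path = [start]
    while len(path) < length and cfg.get(path[-1]):
        path.append(rng.choice(cfg[path[-1]]))
    return path


def malicious_walk(cfg: Cfg, start: str, length: int, rng: random.Random) -> List[str]:
    """Chain basic blocks by jumping only to blocks that are NOT successors in the CFG."""
    blocks = list(cfg)
    path = [start]
    while len(path) < length:
        illegal = [b for b in blocks if b not in cfg.get(path[-1], [])]
        if not illegal:
            break
        path.append(rng.choice(illegal))
    return path


cfg = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": []}
rng = random.Random(0)
print(benign_walk(cfg, "A", 3, rng), malicious_walk(cfg, "A", 4, rng))
```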

We observed that none of the works considered Phase III, so all of them belong to class 1 in our taxonomy, as shown in Fig. 2 . The analysis results of ROPNN ( Li et al. 2018 ) and HeNet ( Chen et al. 2018 ) are shown in Table 2 . We also observed that the three works had different goals.

From a close look at the very recent applications using Deep Learning for defending against return-oriented programming attacks, we observed the following:

Observation 4.1: All the works ( Li et al. 2018 ; Zhang et al. 2019 ; Chen et al. 2018 ) in this survey focused on data generation and acquisition.

In ROPNN ( Li et al. 2018 ), malicious samples (gadget chains) were generated using an automated gadget generator (i.e., ROPGadget ( Salwant 2015 )) and a CPU emulator (i.e., Unicorn ( Unicorn-The ultimate CPU emulator 2015 )). ROPGadget was used to extract instruction sequences that could serve as gadgets from a program, and Unicorn was used to validate those instruction sequences. The corresponding benign samples (gadget-chain-like instruction sequences) were generated by disassembling a set of programs. DeepCheck ( Zhang et al. 2019 ) builds on the key idea of control-flow integrity ( Abadi et al. 2009 ). It obtains the program’s run-time control flow through a newer feature of Intel CPUs (Intel Processor Trace) and compares it with the program’s control-flow graph (CFG) generated through static analysis. Benign instruction sequences are those within the program’s CFG, and malicious ones are those outside it. In HeNet ( Chen et al. 2018 ), the program’s execution trace was extracted in a way similar to DeepCheck. Then, each byte was transformed into a pixel with an intensity between 0 and 255. Known malware samples and benign software samples were used to generate malicious data and benign data, respectively.

Observation 4.2: None of the ROP works in this survey deployed Phase III.

Both ROPNN ( Li et al. 2018 ) and DeepCheck ( Zhang et al. 2019 ) used binary instruction sequences for training. In ROPNN ( Li et al. 2018 ), one byte was the basic element for data pre-processing: bytes were formed into one-hot matrices and flattened for a 1-dimensional convolutional layer. In DeepCheck ( Zhang et al. 2019 ), half a byte was the basic unit: each half-byte (4 bits) was transformed into decimal form, ranging from 0 to 15, as the basic element of the input vector, which was then fed into a fully-connected input layer. HeNet ( Chen et al. 2018 ), on the other hand, used a different kind of data. At the time this survey was drafted, the source code of HeNet was not publicly available, so the details of its data pre-processing could not be investigated. However, it is clear that HeNet used binary branch information collected from Intel PT rather than binary instructions. In HeNet, each byte was converted to a decimal number ranging from 0 to 255, and byte sequences were sliced and formed into image sequences (each pixel representing one byte) for a fully-connected input layer.
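The two byte-level encodings described above can be sketched as follows: a flattened one-hot vector with 256 positions per byte, and a sequence of half-byte values in the range 0-15. Emitting the high nibble before the low nibble is an assumption of this sketch, as are the function names; neither is taken from the ROPNN or DeepCheck code.

```python
from typing import List


def one_hot_bytes(seq: bytes) -> List[int]:
    """ROPNN-style input: one 256-wide one-hot row per byte, flattened into one vector."""
    flat: List[int] = []
    for b in seq:
        row = [0] * 256
        row[b] = 1
        flat.extend(row)
    return flat


def nibbles(seq: bytes) -> List[int]:
    """DeepCheck-style input: each half-byte as a decimal value 0-15 (high nibble first, assumed)."""
    out: List[int] = []
    for b in seq:
        out.extend((b >> 4, b & 0x0F))
    return out


sample = bytes([0x58, 0xC3])  # pop eax; ret
print(nibbles(sample))              # [5, 8, 12, 3]
print(len(one_hot_bytes(sample)))   # 512
```

The one-hot form makes every byte value equally distant from every other, while the nibble form is 64 times more compact per byte but imposes a numeric ordering on the values.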

Observation 4.3: Fully-connected neural network was widely used.

Only ROPNN ( Li et al. 2018 ) used a 1-dimensional convolutional neural network (CNN) to extract features. Both HeNet ( Chen et al. 2018 ) and DeepCheck ( Zhang et al. 2019 ) used a fully-connected neural network (FCN). None of the works used recurrent neural networks (RNNs) or their variants.

Indication 4.1: It seems that two of the most important factors in the ROP problem are feature selection and data generation.

All three works use very different methods to collect or generate data, and all the authors provide strong evidence and/or arguments to justify their approaches. ROPNN ( Li et al. 2018 ) was trained on malicious and benign instruction sequences. However, there is no clear boundary between benign instruction sequences and malicious gadget chains, a weakness that may impair performance when ROPNN is applied to real-world ROP attacks. As opposed to ROPNN, DeepCheck ( Zhang et al. 2019 ) uses the CFG to generate training basic-block sequences. However, since the malicious basic-block sequences are generated by randomly connecting nodes that have no edges between them, they are not guaranteed to be executable. HeNet ( Chen et al. 2018 ) generates its training data from malware. Technically, HeNet could be used to detect any binary exploit, but its experiment focuses on ROP attacks and achieves 100% accuracy. This suggests that the training data for the ROP problem need not come from ROP attacks to produce very impressive results.

Indication 4.2: Representation learning does not seem critical when solving ROP problems using Deep Learning.

Minimal processing of data in binary form seems to be enough to transform it into a representation suitable for neural networks. It is certainly also possible to represent the binary instructions at a higher level, such as opcodes, or to use embedding learning. However, as stated in ( Li et al. 2018 ), performance does not appear to change much by doing so. The only benefit of a higher-level input representation is the removal of irrelevant information, but neural networks by themselves seem good enough at extracting features.

Indication 4.3: The choice of neural network architecture does not have much influence on the effectiveness of defending against ROP attacks.

Both HeNet ( Chen et al. 2018 ) and DeepCheck ( Zhang et al. 2019 ) use standard DNNs and achieve comparable results on ROP problems. One can infer that the input data can be easily processed by neural networks and that, after proper pre-processing, the features can be easily detected.

It is not surprising that researchers are not very interested in representation learning for ROP problems, as stated in Observation 4.2. Since ROP attacks center on gadget chains, it is natural for researchers to choose gadgets directly as their training data. Such data are easy to map into a numerical representation with minimal processing; for example, a binary executable can be mapped to its hexadecimal ASCII representation, which could be a good representation for a neural network.

Instead, researchers focus more on data acquisition and generation. In ROP problems, the amount of data is very limited. Unlike malware and logs, ROP payloads normally contain only addresses rather than code, so they carry no information unless the instructions at the corresponding addresses are also provided. It is thus meaningless to collect the payloads alone. To the best of our knowledge, all previous works pick instruction sequences rather than payloads as their training data, even though such sequences are hard to collect.

Even though Deep Learning based methods no longer face the challenge of designing a very complex, fine-grained CFG, they suffer from a limited number of data sources. Deep Learning based methods generally require lots of training data. However, real-world malicious data for ROP attacks are very hard to find because, compared with benign data, malicious data need to be carefully crafted, and there is no existing database that collects all ROP attacks. Without a sufficiently representative training set, the accuracy of the trained model cannot be guaranteed.

A closer look at applications of deep learning in achieving CFI

The basic ideas of control-flow integrity (CFI) techniques, proposed by Abadi et al. in 2005 ( Abadi et al. 2009 ), date back to 2002, when Vladimir Kiriansky and his fellow researchers proposed program shepherding ( Kiriansky et al. 2002 ), a method that monitors a program’s execution flow while it runs by enforcing security policies. The goal of CFI is to detect and prevent control-flow hijacking attacks by restricting every critical control-flow transfer to the set of transfers that can appear in correct program executions, according to a pre-built CFG. Traditional CFI techniques typically combine knowledge gained from dynamic or static analysis of the target program with code instrumentation to ensure that the program stays on the correct track.
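The core CFI policy can be sketched as a set-membership check: an indirect control transfer from a source address is allowed only if the pre-built CFG lists the target as a legal destination. This toy monitor only illustrates the policy; real CFI implementations enforce it through code instrumentation at run time, and the class name and addresses below are hypothetical.

```python
from typing import Dict, Iterable, Optional, Set, Tuple

Edge = Tuple[int, int]  # (source address, target address) of an indirect transfer


class CfiMonitor:
    """Toy CFI check: allow an indirect transfer only if the pre-built CFG contains it."""

    def __init__(self, cfg_edges: Iterable[Edge]) -> None:
        self.allowed: Dict[int, Set[int]] = {}
        for src, dst in cfg_edges:
            self.allowed.setdefault(src, set()).add(dst)

    def check(self, src: int, dst: int) -> bool:
        return dst in self.allowed.get(src, set())

    def first_violation(self, trace: Iterable[Edge]) -> Optional[int]:
        """Index of the first transfer in the trace not permitted by the CFG, or None."""
        for i, (src, dst) in enumerate(trace):
            if not self.check(src, dst):
                return i
        return None


monitor = CfiMonitor({(0x401000, 0x402000), (0x401010, 0x403000)})
trace = [(0x401000, 0x402000), (0x401000, 0x403000)]  # second target is legal only from 0x401010
print(monitor.first_violation(trace))  # 1
```

The policy is only as precise as the CFG it is built from, which is why imprecise or incomplete CFGs are a recurring weakness of traditional CFI.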

However, traditional CFI has the following problems: (1) existing CFI implementations are not compatible with some important code features ( Xu et al. 2019 ); (2) CFGs generated by static, dynamic, or combined analysis cannot always be made precise and complete due to some open problems ( Horwitz 1997 ); and (3) there is always some compromise among accuracy, performance overhead, and other important properties ( Tan and Jaeger 2017 ; Wang and Liu 2019 ). Recent research has proposed applying Deep Learning to detecting control-flow violations, and its results show that, compared with traditional CFI implementations, security coverage and scalability were enhanced ( Yagemann et al. 2019 ). Therefore, we argue that Deep Learning is another approach that deserves more attention from CFI researchers who aim to achieve control-flow integrity more efficiently and accurately.

In this section, we review three very recent representative papers that use Deep Learning for achieving CFI. Two of them ( Yagemann et al. 2019 ; Phan et al. 2017 ) are already summarized phase by phase in Table 2 , and we refer interested readers to Table 2 for a concise overview of those two papers.

Our review is centered around the three questions described in Section 3 . In the remainder of this section, we first present a set of observations and then the indications drawn from them. Finally, we offer some general remarks.

From a close look at the very recent applications using Deep Learning for achieving control-flow integrity, we observed the following:

Observation 5.1: None of the related works realizes preventive prevention of control-flow violations.

After a thorough literature search, we observed that security researchers are quite behind the trend of applying Deep Learning techniques to security problems. We found only one paper that uses Deep Learning techniques to directly enhance the performance of CFI ( Yagemann et al. 2019 ). This paper leveraged Deep Learning to detect document malware by checking program execution traces generated by hardware. Specifically, CFI violations were checked offline. So far, no work has realized just-in-time checking of a program’s control flow.

To provide more insightful results, in this section we do not narrow our focus to CFI that detects attacks at run time; instead, we extend our scope to papers that make good use of control-flow-related data in combination with Deep Learning techniques ( Phan et al. 2017 ; Nguyen et al. 2018 ). In one work, researchers used a self-constructed instruction-level CFG to detect program defects ( Phan et al. 2017 ). In another, researchers used a lazy-binding CFG to detect sophisticated malware ( Nguyen et al. 2018 ).

Observation 5.2: Diverse raw data were used for evaluating CFI solutions.

In all surveyed papers, two kinds of control-flow-related data are used: program instruction sequences and CFGs. Barnum ( Yagemann et al. 2019 ) employed statically and dynamically generated instruction sequences acquired through program disassembly and Intel® Processor Trace. CNNoverCFG ( Phan et al. 2017 ) used a self-designed algorithm to construct an instruction-level control-flow graph. Minh Hai Nguyen et al. ( Nguyen et al. 2018 ) used their proposed lazy-binding CFG to reflect the behavior of malware DEC.

Observation 5.3: All the papers in our survey adopted Phase II.

All the related papers in our survey employed Phase II to process their raw data before passing them on to the later phases. In Barnum ( Yagemann et al. 2019 ), the instruction sequences from program run-time tracing were sliced into basic blocks, and each basic block was then assigned a unique basic-block ID (BBID). Finally, owing to the nature of control-flow hijacking attacks, the authors selected the sequences ending with an indirect branch instruction (e.g., an indirect call or jump, or a return) as the training data. In CNNoverCFG ( Phan et al. 2017 ), each instruction in the CFG was labeled with its attributes from multiple perspectives, such as its opcode, its operands, and the function it belongs to. The training data are sequences generated by traversing the attributed control-flow graph. Nguyen et al. ( Nguyen et al. 2018 ) converted the lazy-binding CFG to the corresponding adjacency matrix and treated the matrix as an image for their training data.
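The Barnum-style steps (assign each basic block a unique BBID, then keep only windows that end in a block terminated by an indirect branch) can be sketched as follows. The trace format, the labels used to mark indirect branches, and the window length are hypothetical simplifications; an Intel PT trace would first have to be decoded into basic blocks, which this sketch does not do.

```python
from typing import Dict, List, Tuple

# Hypothetical labels for basic blocks that end in an indirect branch.
INDIRECT = {"ret", "call_indirect", "jmp_indirect"}


def bbid_training_windows(trace: List[Tuple[int, str]], window: int) -> List[List[int]]:
    """Turn a trace of (block start address, terminator kind) into BBID windows.

    Each distinct block address gets a unique BBID in order of first appearance;
    only windows whose last block ends in an indirect branch are kept.
    """
    ids: Dict[int, int] = {}
    bbids = [ids.setdefault(addr, len(ids)) for addr, _ in trace]
    windows = []
    for i, (_, kind) in enumerate(trace):
        if kind in INDIRECT and i + 1 >= window:
            windows.append(bbids[i + 1 - window:i + 1])
    return windows


trace = [(0x10, "jmp"), (0x20, "call"), (0x30, "ret"), (0x10, "jmp"), (0x40, "jmp_indirect")]
print(bbid_training_windows(trace, 3))  # [[0, 1, 2], [2, 0, 3]]
```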

Observation 5.4: None of the papers in our survey adopted Phase III. Instead, they used numerical representations directly as their training data. Specifically, Barnum ( Yagemann et al. 2019 ) grouped the instructions into basic blocks and then represented each basic block by a uniquely assigned ID. In CNNoverCFG ( Phan et al. 2017 ), each instruction in the CFG was represented by a vector associated with its attributes. Nguyen et al. directly used the hashed value of the bit-string representation.

Observation 5.5: Various Phase IV models were used. Barnum ( Yagemann et al. 2019 ) used BBID sequences, which are sequence-type data, to monitor the execution flow of the target program. Therefore, its authors chose an LSTM architecture to better learn the relationships between instructions. In the other two papers ( Phan et al. 2017 ; Nguyen et al. 2018 ), the authors trained a directed graph-based CNN and a CNN to extract information from the control-flow graph and the image, respectively.

Indication 5.1: None of the existing works achieved just-in-time CFI violation detection.

It is still a challenge to tightly embed a Deep Learning model in program execution. All existing works adopted lazy checking, i.e., checking the program’s execution trace after the program has executed.

Indication 5.2: There is no consensus on how to generate malicious samples.

Data on control-flow hijacking attacks are hard to collect, so researchers must carefully craft malicious samples. It is not clear whether such “handcrafted” samples reflect the nature of control-flow hijacking attacks.

*Indication 5.3: The choice of methods in Phase II is based on researchers’ security domain knowledge.

The strength of using deep learning to solve CFI problems is that it avoids the complicated process of developing algorithms to build acceptable CFGs for the protected programs. Compared with traditional approaches, DL-based methods spare CFI designers from studying the language features of the targeted program and also avoid an open problem in control-flow analysis (pointer analysis). Therefore, DL-based CFI provides a more generalized, scalable, and secure solution. However, since using DL for CFI problems is still at an early stage, it remains unclear which kinds of control-flow-related data are most effective. Additionally, applying DL to real-time control-flow violation detection remains unexplored and needs further research.

A closer look at applications of deep learning in defending network attacks

Network security is becoming more and more important as we depend increasingly on networks in our daily lives, work, and research. Common network attack types include probe, denial of service (DoS), and remote-to-local (R2L) attacks. Traditionally, people have tried to detect these attacks using signatures, rules, and unsupervised anomaly detection algorithms. However, signature-based methods can easily be fooled by slightly changing the attack payload; rule-based methods need experts to update the rules regularly; and unsupervised anomaly detection algorithms tend to raise many false positives. Recently, researchers have been applying Deep Learning methods to network attack detection.

In this section, we review seven very recent representative works that use Deep Learning for defending against network attacks. Millar et al. (2018); Varenne et al. (2019); Ustebay et al. (2019) build neural networks for multi-class classification, whose class labels include one benign label and multiple malicious labels for different attack types. Zhang et al. (2019) ignore normal network activities and propose a parallel cross convolutional neural network (PCCN) to classify the type of malicious network activity. Yuan et al. (2017) apply Deep Learning to detecting a specific attack type, distributed denial of service (DDoS). Yin et al. (2017) and Faker and Dogdu (2019) explore both binary and multi-class classification of benign and malicious activities. Among these seven works, we select two representative works ( Millar et al. 2018 ; Zhang et al. 2019 ) and summarize the main aspects of their approaches: whether each of the four phases exists in their work and, if so, what exactly they do in that phase. We direct interested readers to Table 2 for a concise overview of these two works.

From a close look at the very recent applications using Deep Learning for solving network attack challenges, we observed the following:

Observation 6.1: All the seven works in our survey used public datasets, such as UNSW-NB15 ( Moustafa and Slay 2015 ) and CICIDS2017 ( IDS 2017 Datasets 2019 ).

The public datasets were all generated in test-bed environments, with simulated benign and attack activities in unbalanced proportions. For the attack activities, the dataset providers launched multiple types of attacks, and the amounts of malicious data for those attack types were also unbalanced.

Observation 6.2: The public datasets were provided in one of two data formats: PCAP and CSV.

One was raw PCAP or parsed CSV format, containing packet-level features; the other was also CSV format, containing flow-level features that summarize statistical information about many network packets. Of the seven works, ( Yuan et al. 2017 ; Varenne et al. 2019 ) used packet information as raw inputs, ( Yin et al. 2017 ; Zhang et al. 2019 ; Ustebay et al. 2019 ; Faker and Dogdu 2019 ) used flow information as raw inputs, and ( Millar et al. 2018 ) explored both cases.

Observation 6.3: To parse the raw inputs, preprocessing methods such as one-hot encoding of categorical text, normalization of numeric data, and removal of unused features or data samples were commonly used.

Commonly removed features include IP addresses and timestamps. Faker and Dogdu (2019) also removed port numbers from the features used. By doing this, they claimed, they could “avoid over-fitting and let the neural network learn characteristics of packets themselves”. One outlier was that, when using packet-level features in one experiment, ( Millar et al. 2018 ) blindly chose the first 50 bytes of each network packet, without any feature extraction, and fed them into the neural network.
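The three common preprocessing steps can be sketched on flow records represented as dictionaries: identifier fields such as IP addresses and timestamps are dropped simply by not selecting them, categorical fields are one-hot encoded against a fixed list of values, and numeric fields are min-max normalized. The field names and records are hypothetical, and for brevity the normalization statistics are computed on the same records; in practice they would be fitted on the training split only.

```python
from typing import Dict, List, Sequence


def preprocess(records: List[Dict[str, object]],
               categorical: Dict[str, Sequence[str]],
               numeric: Sequence[str]) -> List[List[float]]:
    """One-hot encode categorical fields, min-max normalize numeric ones.

    Any field not listed (e.g., IP addresses, timestamps) is discarded.
    """
    ranges = {}
    for f in numeric:
        values = [float(r[f]) for r in records]
        ranges[f] = (min(values), max(values))
    rows = []
    for r in records:
        row: List[float] = []
        for f, choices in categorical.items():
            row.extend(1.0 if r[f] == c else 0.0 for c in choices)
        for f in numeric:
            lo, hi = ranges[f]
            row.append((float(r[f]) - lo) / (hi - lo) if hi > lo else 0.0)
        rows.append(row)
    return rows


records = [
    {"src_ip": "10.0.0.1", "timestamp": 1, "proto": "tcp", "bytes": 100},
    {"src_ip": "10.0.0.2", "timestamp": 2, "proto": "udp", "bytes": 300},
]
print(preprocess(records, {"proto": ["tcp", "udp", "icmp"]}, ["bytes"]))
# [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
```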

Observation 6.4: Using image representation improved the performance of security solutions using Deep Learning.

After preprocessing the raw data, ( Zhang et al. 2019 ) transformed the data into an image representation, whereas ( Yuan et al. 2017 ; Varenne et al. 2019 ; Faker and Dogdu 2019 ; Ustebay et al. 2019 ; Yin et al. 2017 ) directly used the original vectors as input data. In addition, ( Millar et al. 2018 ) explored both cases and reported better performance using the image representation.

Observation 6.5: None of the seven surveyed works considered representation learning.

All seven surveyed works belonged to class 1 shown in Fig. 2 . They either fed the processed vectors directly into the neural networks or changed the representation without explanation. One work ( Millar et al. 2018 ) compared two different representations (vectors and images) of the same type of raw input. However, the other works applied different preprocessing methods in Phase II, and because these methods generated different feature spaces, their experimental results were difficult to compare.

Observation 6.6: Binary classification models showed better results in most experiments.

Among the seven surveyed works, ( Yuan et al. 2017 ) focused on one specific attack type and performed only binary classification of network traffic as benign or malicious. ( Millar et al. 2018 ; Ustebay et al. 2019 ; Zhang et al. 2019 ; Varenne et al. 2019 ) included more attack types and performed multi-class classification to identify the type of malicious activity, and ( Yin et al. 2017 ; Faker and Dogdu 2019 ) explored both cases. In multi-class classification, accuracy was good for some classes, while accuracy for the other classes, usually those with far fewer data samples, degraded by up to 20%.
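A single overall accuracy figure can hide exactly this kind of degradation on minority classes. The sketch below computes per-class recall (the fraction of each true class labeled correctly) on made-up labels; the class names and counts are purely illustrative and are not results from any surveyed work.

```python
from collections import Counter
from typing import Dict, List


def per_class_recall(y_true: List[str], y_pred: List[str]) -> Dict[str, float]:
    """Fraction of each true class that the model labeled correctly."""
    totals = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {c: hits[c] / n for c, n in totals.items()}


y_true = ["benign"] * 90 + ["dos"] * 8 + ["r2l"] * 2
y_pred = ["benign"] * 90 + ["dos"] * 8 + ["benign"] * 2  # both r2l samples missed
overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(overall)                           # 0.98
print(per_class_recall(y_true, y_pred))  # {'benign': 1.0, 'dos': 1.0, 'r2l': 0.0}
```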

Observation 6.7: Data representation influenced the choice of neural network model.

Indication 6.1: All works in our survey adopt some preprocessing in Phase II, because the raw data provided in the public datasets are either not ready for neural networks or of too low quality to be used directly as data samples.

Preprocessing methods can help increase neural network performance by improving the quality of the data samples. Furthermore, by reducing the feature space, pre-processing can also make neural network training and testing more efficient. Thus, Phase II should not be skipped; if it is, the neural network's performance is expected to drop considerably.

Indication 6.2: None of the seven surveyed works employs Phase III, and none of them explains why. Moreover, none of them takes representation learning into consideration.

Indication 6.3: Because no work uses representation learning, its effectiveness is not well studied.

Among the other factors, the choice of preprocessing method appears to have the largest impact, because it directly determines the data samples fed to the neural network.

Indication 6.4: There is no guarantee that CNN also works well on images converted from network features.

Some works that use an image data representation apply a CNN in Phase IV. CNNs have proven effective for image classification in recent years. Even so, there is no guarantee that they also work well on images converted from network features. In such images, neighboring pixels may simply reflect an arbitrary ordering of features rather than spatial structure.

From the observations and indications above, we present two recommendations. (1) Researchers can try to generate their own datasets for the specific network attacks they want to detect. As noted, public datasets are highly imbalanced across classes. Such imbalance is inherent to real-world network environments, in which normal activities are the majority, but it is detrimental to deep learning. Varenne et al. (2019) try to solve this problem by oversampling the malicious data, but it is better to start with a balanced dataset. (2) Representation learning should be taken into consideration. Possible ways to apply representation learning include: (a) applying the word2vec method to packet binaries and to categorical numbers and texts; (b) using K-means clustering to build one-hot vector representations instead of randomly encoding texts. We also suggest that any change of data representation be justified by explanations or comparison experiments.
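Random oversampling is one simple way to rebalance classes. The sketch below is a generic illustration, not necessarily the exact scheme used by Varenne et al. (2019). The function name and toy data are hypothetical. It only duplicates existing minority samples, so it adds no new information and can encourage overfitting. That is one reason starting from a balanced dataset is preferable.

```python
import random
from collections import Counter


def random_oversample(samples, labels, seed=0):
    """Duplicate randomly chosen samples of each smaller class until every
    class has as many samples as the largest class."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_x, out_y = list(samples), list(labels)
    for cls, n in counts.items():
        pool = [x for x, y in zip(samples, labels) if y == cls]
        for _ in range(target - n):
            out_x.append(rng.choice(pool))  # copy of an existing sample
            out_y.append(cls)
    return out_x, out_y


# Hypothetical data: 8 benign samples (ids 0-7), 2 attack samples (ids 8-9).
x, y = random_oversample(list(range(10)), ["benign"] * 8 + ["attack"] * 2)
print(Counter(y))  # Counter({'benign': 8, 'attack': 8})
```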

One critical challenge in this field is the lack of high-quality datasets suitable for deep learning. There is also no agreement on how to incorporate domain knowledge when training deep learning models for network security problems. Researchers have used different preprocessing methods, data representations, and model types. Few of them explain why those methods, representations, or models were chosen, especially the data representation.

A closer look at applications of deep learning in malware classification

The goal of malware classification is to identify malicious behaviors in software using static and dynamic features such as control-flow graphs and system API calls. Malware and benign programs can be collected from open datasets and online websites. Both industry and academia have proposed approaches that detect malware with static and dynamic analyses. Traditional methods such as behavior-based signatures, dynamic taint tracking, and static data flow analysis require experts to manually investigate unknown files. However, such hand-crafted signatures are not sufficiently effective, because attackers can rewrite and reorder the malware. Neural networks, by contrast, can automatically detect large numbers of malware variants with high classification accuracy.

In this section, we review twelve recent representative works that use Deep Learning for malware classification (De La Rosa et al. 2018; Saxe and Berlin 2015; Kolosnjaji et al. 2017; McLaughlin et al. 2017; Tobiyama et al. 2016; Dahl et al. 2013; Nix and Zhang 2017; Kalash et al. 2018; Cui et al. 2018; David and Netanyahu 2015; Rosenberg et al. 2018; Xu et al. 2018).

- De La Rosa et al. (2018) select three different kinds of static features to classify malware.
- Saxe and Berlin (2015), Kolosnjaji et al. (2017), and McLaughlin et al. (2017) also use static features from PE files to classify programs.
- Tobiyama et al. (2016) extract behavioral feature images using an RNN to represent the behaviors of the original programs.
- Dahl et al. (2013) transform malicious behaviors using representation learning without a neural network.
- Nix and Zhang (2017) explore RNN models with API call sequences as program features.
- Cui et al. (2018) and Kalash et al. (2018) skip Phase II by directly transforming the binary file into an image for classification.
- David and Netanyahu (2015) and Rosenberg et al. (2018) apply dynamic features to analyze malicious behavior.
- Xu et al. (2018) combine static and dynamic features to represent programs.

Among these works, we select two representative works (De La Rosa et al. 2018; Rosenberg et al. 2018) and identify the four phases of their approaches, as shown in Table 2.

From a close look at recent applications of Deep Learning to malware classification, we observed the following:

Observation 7.1: Features selected in malware classification were grouped into three categories: static features, dynamic features, and hybrid features.

Typical static features include metadata, PE import features, byte/entropy, string, and assembly opcode features derived from the PE files (Kolosnjaji et al. 2017; McLaughlin et al. 2017; Saxe and Berlin 2015). De La Rosa et al. (2018) used three kinds of static features: byte level, basic level (strings in the file, the metadata table, and the import table of the PE header), and assembly feature level. Some works directly used binary code as static features (Cui et al. 2018; Kalash et al. 2018).
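String features are commonly collected by scanning a binary for runs of printable characters, similar in spirit to the Unix `strings` utility. The minimal sketch below assumes a minimum run length of 4 and ASCII-only strings, so wide-character strings are ignored. The function name and sample bytes are hypothetical, and this is not the extractor of any surveyed work.

```python
import re


def extract_strings(data: bytes, min_len: int = 4):
    """Return runs of at least `min_len` printable ASCII characters (0x20-0x7e)."""
    pattern = re.compile(rb"[\x20-\x7e]{%d,}" % min_len)
    return [m.group().decode("ascii") for m in pattern.finditer(data)]


# Hypothetical fragment of a PE file.
blob = b"MZ\x90\x00\x03kernel32.dll\x00\x00GetProcAddress\x00ab\x00"
print(extract_strings(blob))  # ['kernel32.dll', 'GetProcAddress']
```

The extracted strings would still need to be vectorized, for example by hashing, before being fed to a model.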

Unlike static features, dynamic features were extracted by executing the files and recording their behaviors during execution. The behaviors of programs were recorded by a sandbox as dynamic features (David and Netanyahu 2015). These included API function calls and their parameters, files created or deleted, and websites and ports accessed. Tobiyama et al. (2016) extracted process behaviors, including operation names and their result codes. Dahl et al. (2013) chose the process memory, tri-grams of system API calls, and one corresponding input parameter as dynamic features. An API call sequence for an APK file was another representation of dynamic features (Nix and Zhang 2017; Rosenberg et al. 2018).
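Counting tri-grams of consecutive API calls, one of the dynamic features mentioned above, takes only a few lines. The trace below is hypothetical. In practice it would come from a sandbox, and the counts would still need to be vectorized in Phase II before being fed to a network.

```python
from collections import Counter


def api_ngrams(calls, n=3):
    """Count n-grams (tri-grams by default) of consecutive API calls in a trace."""
    return Counter(tuple(calls[i:i + n]) for i in range(len(calls) - n + 1))


# Hypothetical API call trace recorded during execution.
trace = ["NtOpenFile", "NtReadFile", "NtClose", "NtOpenFile", "NtReadFile", "NtClose"]
grams = api_ngrams(trace)
print(grams[("NtOpenFile", "NtReadFile", "NtClose")])  # 2
```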

Static and dynamic features were combined as hybrid features (Xu et al. 2018). For static features, Xu et al. (2018) used permissions, networks, calls, providers, and so on; for dynamic features, they used system call sequences.

Observation 7.2: In most works, Phase II was inevitable because extracted features needed to be vectorized for Deep Learning models.

The one-hot encoding approach was frequently used to vectorize features (Kolosnjaji et al. 2017; McLaughlin et al. 2017; Rosenberg et al. 2018; Tobiyama et al. 2016; Nix and Zhang 2017). Bag-of-words (BoW) and n-gram models were also considered to represent features (Nix and Zhang 2017). Some works borrowed the concept of word frequency from NLP to convert the sandbox file into fixed-size inputs (David and Netanyahu 2015). Hashing features into a fixed-size vector was used as an effective way to represent features (Saxe and Berlin 2015). De La Rosa et al. (2018) considered byte histograms from byte analysis and byte-entropy histograms computed with a sliding window. They also embedded strings by hashing the ASCII strings into a fixed-size feature vector. For assembly features, they extracted four levels of granularity:

- operation level (instruction-flow graph)
- block level (control-flow graph)
- function level (call graph)
- global level (summarized graphs)

Bigram, trigram, and four-gram vectors and n-gram graphs were used for the hybrid features (Xu et al. 2018).
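The byte-entropy histogram can be sketched as a joint histogram over pairs of (window entropy, byte value). The window size (1024), step (256), and 16x16 bin layout below are assumptions chosen for illustration, and the surveyed works may use different parameters. The function name is hypothetical.

```python
import math
from collections import Counter


def byte_entropy_histogram(data: bytes, window=1024, step=256, bins=16):
    """Flattened bins x bins histogram of (window-entropy bin, byte-value bin)
    pairs, normalized to sum to 1. Windows slide by `step`; bytes after the
    last full window are ignored, and inputs shorter than `window` form one window."""
    hist = [0] * (bins * bins)
    last_start = max(len(data) - window, 0)
    for start in range(0, last_start + 1, step):
        chunk = data[start:start + window]
        if not chunk:  # empty input
            break
        total = len(chunk)
        # Shannon entropy of the window in bits (0 to 8 for byte data).
        entropy = -sum(c / total * math.log2(c / total) for c in Counter(chunk).values())
        e_bin = min(int(entropy / 8 * bins), bins - 1)
        for b in chunk:  # iterating bytes yields ints 0..255
            hist[e_bin * bins + b * bins // 256] += 1
    total_count = sum(hist)
    return [h / total_count for h in hist] if total_count else [0.0] * len(hist)


# A constant (zero-entropy) input puts all mass in the first bin.
print(byte_entropy_histogram(bytes(1024))[0])  # 1.0
```

High-entropy regions, which often indicate packed or encrypted content, land in the upper entropy bins. This is why the joint histogram can be informative without parsing the file format.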

Observation 7.3: Most Phase III methods were classified into class 1.

Following the classification tree in Fig. 2, most works were classified into class 1, except two works (Dahl et al. 2013; Tobiyama et al. 2016) that belonged to class 3. To reduce the input dimension, Dahl et al. (2013) performed feature selection using mutual information and random projection. Tobiyama et al. (2016) generated behavioral feature images using an RNN.
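Random projection reduces dimensionality by multiplying each input vector by a random matrix. The sketch below uses a dense Gaussian matrix with entries drawn from N(0, 1/k), so squared Euclidean distances are preserved in expectation. This is a textbook construction and may differ from the one Dahl et al. (2013) used. The function name and parameters are hypothetical.

```python
import math
import random


def random_projection(vectors, k, seed=0):
    """Map d-dimensional vectors to k dimensions with a dense Gaussian random
    matrix whose entries are N(0, 1/k), so squared distances are preserved
    in expectation (Johnson-Lindenstrauss style)."""
    d = len(vectors[0])
    rng = random.Random(seed)
    scale = 1.0 / math.sqrt(k)
    matrix = [[rng.gauss(0.0, 1.0) * scale for _ in range(d)] for _ in range(k)]
    return [[sum(m * x for m, x in zip(row, vec)) for row in matrix] for vec in vectors]


# Hypothetical 1000-dimensional feature vectors reduced to 50 dimensions.
X = [[1.0] * 1000, [0.0] * 1000]
Z = random_projection(X, k=50)
print(len(Z[0]))  # 50
```

Unlike mutual-information selection, random projection ignores the labels. It is cheap to apply to very high-dimensional sparse inputs.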

Observation 7.4: After extracting features, two kinds of neural network architectures, i.e., one single neural network and multiple neural networks with a combined loss function, were used.

Hierarchical structures, such as convolutional layers, fully connected layers, and classification layers, were used to classify programs (McLaughlin et al. 2017; Dahl et al. 2013; Nix and Zhang 2017; Saxe and Berlin 2015; Tobiyama et al. 2016; Cui et al. 2018; Kalash et al. 2018). A deep stack of denoising autoencoders was also introduced to learn program behaviors (David and Netanyahu 2015). De La Rosa et al. (2018) trained three different models on different features to determine which static features are relevant for classification. Some works investigated LSTM models for sequential features (Nix and Zhang 2017; Rosenberg et al. 2018).

Kolosnjaji et al. (2017) used two networks with different input features for malware classification and combined their outputs through a dropout layer and an output layer. One network processed PE metadata and import features with feedforward neurons, while the other applied convolutional layers to opcode sequences. Xu et al. (2018) constructed several networks and combined them using a two-level multiple kernel learning algorithm.

Indication 7.1: Except for two works that transform binaries into images (Cui et al. 2018; Kalash et al. 2018), most surveyed works need to adapt methods to vectorize the extracted features.
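The binary-to-image conversion can be sketched by treating each byte as one grayscale pixel value (0 to 255) and folding the byte stream into rows of fixed width. The width of 256, the zero-padding of the last row, and the function name are assumptions. The surveyed works may choose the width differently and typically resize the result to the CNN's input size, which is not shown.

```python
def bytes_to_image(data: bytes, width: int = 256):
    """Fold a byte stream into rows of `width` grayscale pixels (one byte per
    pixel); the last row is zero-padded to full width."""
    rows = []
    for start in range(0, len(data), width):
        row = list(data[start:start + width])
        row.extend([0] * (width - len(row)))
        rows.append(row)
    return rows


print(bytes_to_image(b"\x01\x02\x03\x04\x05", width=2))  # [[1, 2], [3, 4], [5, 0]]
```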

The vectorization methods should not only preserve the syntactic and semantic information in the features, but also fit the input requirements of the Deep Learning model.

Indication 7.2: Only limited works have shown how to transform features using representation learning.

Some works assume that dynamic and static sequences, such as API calls and instructions, have syntactic and semantic structure similar to natural language. If so, representation learning techniques such as word2vec may be useful in malware detection. In addition, graph embedding is a potential method for transforming control-flow graphs, call graphs, and other graph representations.
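A first step toward applying word2vec to such sequences is data preparation: the skip-gram variant trains on (center, context) pairs drawn from a sliding window. The sketch below only generates those pairs, treating each API call as a word. The window size and the API call sequence are illustrative assumptions. Training the embedding itself, for example with a word2vec library, is not shown.

```python
def skipgram_pairs(sequence, window=2):
    """Generate (center, context) pairs for skip-gram training: every token
    within `window` positions of the center, excluding the center itself."""
    pairs = []
    for i, center in enumerate(sequence):
        for j in range(max(0, i - window), min(len(sequence), i + window + 1)):
            if j != i:
                pairs.append((center, sequence[j]))
    return pairs


# Hypothetical API call sequence treated as a "sentence".
calls = ["OpenProcess", "VirtualAllocEx", "WriteProcessMemory", "CreateRemoteThread"]
for pair in skipgram_pairs(calls, window=1):
    print(pair)
```

Calls that tend to appear in similar contexts then receive similar embedding vectors. This is the property that motivates using word2vec on program behavior.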

Several studies have applied Deep Learning to malware detection, but their methods and performance are hard to compare because of two uncertainties. First, a Deep Learning model is a black box: researchers cannot detail which features the model learned or explain why it works. Second, feature selection and representation affect the model's performance. Because the works do not use the same datasets, researchers cannot show that their approaches, including the selected features and the Deep Learning model, are better than others. Few researchers use open datasets because existing open malware datasets are outdated and limited. In addition, researchers need to crawl benign programs from app stores, so the raw programs differ from study to study.

A closer look at applications of Deep Learning in system-event-based anomaly detection

System logs record significant events at various critical points and can be used to debug a system's performance issues and failures. Moreover, log data are available in almost all computer systems and are a valuable resource for understanding system status. Anomaly detection based on system logs faces several challenges:

- Raw log data are unstructured, and their formats and semantics can vary significantly.
- Logs are produced by concurrently running tasks, and this concurrency makes it hard to apply workflow-based anomaly detection methods.
- Logs contain rich information of complex types, including text, real values, IP addresses, and timestamps, and the information contained in each log varies.
- Every system produces massive volumes of logs, and each anomaly event usually involves a large number of logs generated over a long period.

Recently, many researchers have employed deep learning techniques (Du et al. 2017; Meng et al. 2019; Das et al. 2018; Brown et al. 2018; Zhang et al. 2019; Bertero et al. 2017) to detect anomalous events in system logs and diagnose system failures. Because raw log data are unstructured, they usually must first be parsed into structured data. The parsed data can then be transformed into a representation that supports an effective deep learning model. Finally, anomalous events are detected by a deep-learning-based classifier or predictor.
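The parsing step can be illustrated with a deliberately simple, hypothetical regex-based parser. Tokens that look like variable parameters (IPv4 addresses, hex values, decimal numbers) are masked with `<*>`, and each distinct resulting template receives an integer event ID. Dedicated log parsers are considerably more robust. This sketch only shows the shape of the structured output, a sequence of event IDs, that later phases consume.

```python
import re

# Naive masking rules (an illustrative assumption): an IPv4 address with an
# optional :port, a hex literal, or a standalone decimal number is treated as a
# variable parameter. Digits glued to letters or underscores (e.g. "blk_123")
# are left untouched by the \b-based number rule.
VARIABLE_PATTERNS = [
    re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}(?::\d+)?\b"),
    re.compile(r"\b0x[0-9a-fA-F]+\b"),
    re.compile(r"\b\d+\b"),
]


def to_template(message):
    """Mask variable parameters so messages from the same print statement match."""
    for pattern in VARIABLE_PATTERNS:
        message = pattern.sub("<*>", message)
    return message


def parse_logs(messages):
    """Map each raw message to an integer event ID, one ID per distinct template."""
    template_ids = {}
    events = []
    for msg in messages:
        template = to_template(msg)
        events.append(template_ids.setdefault(template, len(template_ids)))
    return events, template_ids


# Hypothetical raw log messages.
logs = [
    "Connection from 10.0.0.5 closed after 120 ms",
    "Connection from 192.168.1.7 closed after 35 ms",
    "Disk quota exceeded for uid 1001",
]
events, templates = parse_logs(logs)
print(events)  # [0, 0, 1]
```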

In this section, we review six recent representative papers that use deep learning for system-event-based anomaly detection (Du et al. 2017; Meng et al. 2019; Das et al. 2018; Brown et al. 2018; Zhang et al. 2019; Bertero et al. 2017).

- DeepLog (Du et al. 2017) uses an LSTM to model the system log as a natural language sequence. It automatically learns log patterns from normal events and detects anomalies when log patterns deviate from the trained model.
- LogAnom (Meng et al. 2019) employs Word2vec to extract semantic and syntactic information from log templates, and it uses sequential and quantitative features simultaneously.
- Das et al. (2018) use an LSTM to predict node failures in supercomputing systems from HPC logs.
- Brown et al. (2018) present RNN language models augmented with attention for anomaly detection in system logs.
- LogRobust (Zhang et al. 2019) uses FastText to represent the semantic information of log events, which allows it to identify and handle unstable log events and sequences.
- Bertero et al. (2017) map log words to a high-dimensional metric space using Google's word2vec algorithm and use the resulting vectors as classification features.

Among these six papers, we select two representative works (Du et al. 2017; Meng et al. 2019) and summarize the four phases of their approaches. We direct interested readers to Table 2 for a concise overview of these two works.
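DeepLog's detection criterion can be sketched independently of the LSTM itself. The model takes a window of the h most recent log keys (event IDs) and outputs a probability distribution over the next key. The actual next key is treated as normal if it is among the g most likely candidates, and as anomalous otherwise. In the sketch, `toy_predict` is a hypothetical stand-in for the trained LSTM: a lookup table keyed on the last event. The values of h, g, and the event IDs are illustrative.

```python
def is_anomalous(next_key_probs, actual_key, g):
    """True if actual_key is not among the g most probable predicted next keys."""
    top_g = sorted(next_key_probs, key=next_key_probs.get, reverse=True)[:g]
    return actual_key not in top_g


def detect(keys, predict, h, g):
    """Return indices of keys flagged as anomalous, predicting each key from
    the h keys that precede it."""
    return [i for i in range(h, len(keys))
            if is_anomalous(predict(keys[i - h:i]), keys[i], g)]


# Hypothetical stand-in for a trained LSTM: next-key probabilities
# conditioned only on the most recent key in the window.
TOY_MODEL = {
    "E5": {"E11": 0.7, "E9": 0.2, "E26": 0.1},
    "E11": {"E9": 0.8, "E5": 0.2},
    "E9": {"E5": 0.9, "E26": 0.1},
}


def toy_predict(window):
    return TOY_MODEL.get(window[-1], {})


sequence = ["E5", "E11", "E9", "E5", "E26"]
print(detect(sequence, toy_predict, h=1, g=1))  # [4]
```

Raising g makes the detector more tolerant. With g=3, "E26" after "E5" falls inside the candidate set and is no longer flagged.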

From a close look at recent applications of deep learning to system-event-based anomaly detection, we observed the following:

Observation 8.1: Most of the surveyed works evaluated their performance on public datasets.

At the time of our survey, only two works (Das et al. 2018; Bertero et al. 2017) used private datasets.

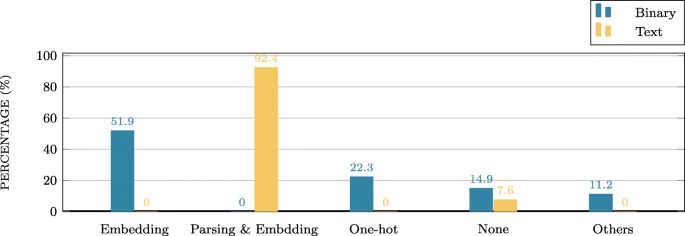

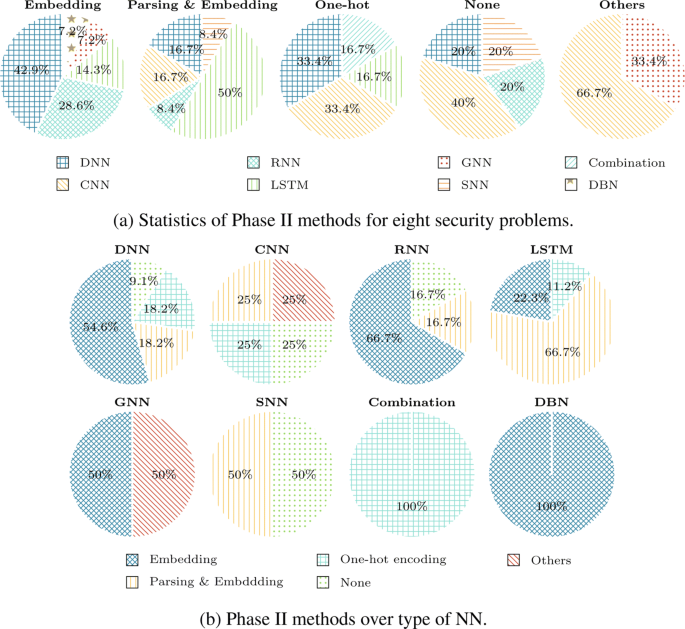

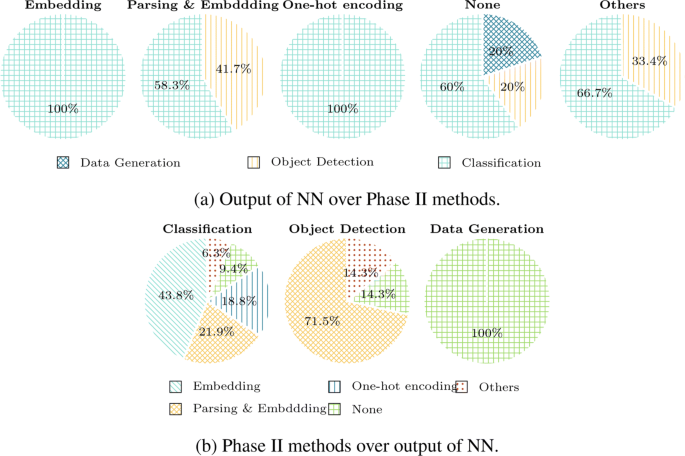

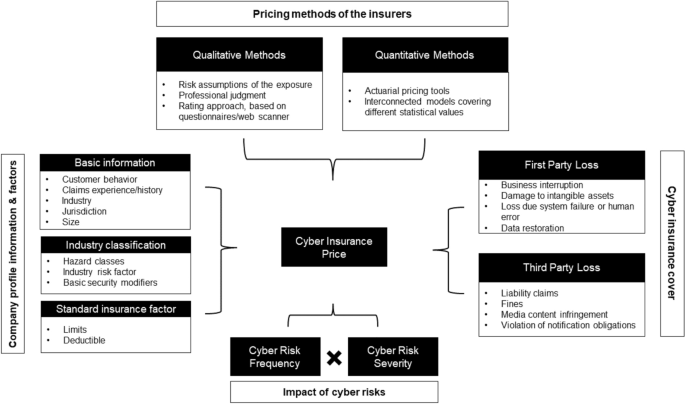

Observation 8.2: Most works in this survey adopted Phase II when parsing the raw log data.