
Introduction

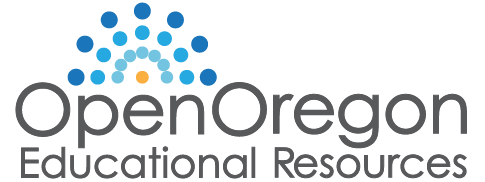

- Make an observation.

- Ask a question.

- Form a hypothesis, or testable explanation.

- Make a prediction based on the hypothesis.

- Test the prediction.

- Iterate: use the results to make new hypotheses or predictions.

Scientific method example: Failure to toast

1. Make an observation.

2. Ask a question.

3. Propose a hypothesis.

4. Make predictions.

5. Test the predictions.

- If the toaster does toast, then the hypothesis is supported—likely correct.

- If the toaster doesn't toast, then the hypothesis is not supported—likely wrong.

Logical possibility

Practical possibility

Building a body of evidence

6. Iterate.

- If the hypothesis was supported, we might do additional tests to confirm it, or revise it to be more specific. For instance, we might investigate why the outlet is broken.

- If the hypothesis was not supported, we would come up with a new hypothesis. For instance, the next hypothesis might be that there's a broken wire in the toaster.


1 Hypothesis Testing

Biology is a science, but what exactly is science? What does the study of biology share with other scientific disciplines? Science (from the Latin scientia, meaning “knowledge”) can be defined as knowledge about the natural world.

Biologists study the living world by posing questions about it and seeking science-based responses. This approach is common to other sciences as well and is often referred to as the scientific method. The scientific process was used even in ancient times, but it was first documented by England’s Sir Francis Bacon (1561–1626) (Figure 1), who set up inductive methods for scientific inquiry. The scientific method is not used exclusively by biologists; it can be applied to almost any problem as a logical problem-solving method.

The scientific process typically starts with an observation (often a problem to be solved) that leads to a question. Science is very good at answering questions about observations of the natural world, but it is very bad at answering purely moral questions, aesthetic questions, matters of personal opinion, or what can generally be categorized as spiritual questions. Science cannot investigate these areas because they lie outside the realm of material phenomena, the phenomena of matter and energy, and cannot be observed and measured.

Let’s think about a simple problem that starts with an observation and apply the scientific method to solve it. Imagine that one morning you wake up and flip the switch to turn on your bedside lamp, but the light won’t turn on. That is an observation that also describes a problem: the light won’t turn on. Naturally, your next question would be: “Why won’t the light turn on?”

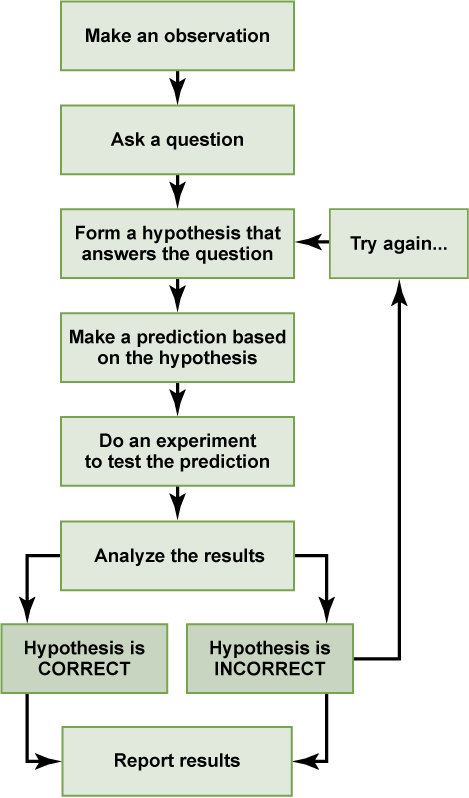

A hypothesis is a suggested explanation that can be tested. A hypothesis is NOT the question you are trying to answer; it is what you think the answer to the question will be and why. Several hypotheses may be proposed as answers to one question. For example, one hypothesis about the question “Why won’t the light turn on?” is “The light won’t turn on because the bulb is burned out.” There are also other possible answers to the question, so other hypotheses may be proposed, such as “The light won’t turn on because the lamp is unplugged” or “The light won’t turn on because the power is out.” A hypothesis should be based on credible background information. A hypothesis is NOT just a guess (not even an educated one), although it can be based on your prior experience (as in the example where the light won’t turn on). In general, hypotheses in biology should be based on a credible, referenced source of information.

A hypothesis must be testable to ensure that it is valid. For example, a hypothesis that depends on what a dog thinks is not testable, because we can’t tell what a dog thinks. It should also be falsifiable, meaning that it can be disproven by experimental results. An example of an unfalsifiable hypothesis is “Red is a better color than blue.” There is no experiment that might show this statement to be false. To test a hypothesis, a researcher will conduct one or more experiments designed to eliminate one or more of the hypotheses. This is important: a hypothesis can be disproven, or eliminated, but it can never be proven. If an experiment fails to disprove a hypothesis, then that explanation (the hypothesis) is supported as the answer to the question. However, that doesn’t mean that later on, we won’t find a better explanation or design a better experiment that will disprove the first hypothesis and lead to a better one.

A variable is any part of the experiment that can vary or change during the experiment. Typically, an experiment only tests one variable and all the other conditions in the experiment are held constant.

- The variable that is being changed or tested is known as the independent variable.

- The dependent variable is the thing (or things) that you are measuring as the outcome of your experiment.

- A constant is a condition that is the same between all of the tested groups.

- A confounding variable is a condition that is not held constant that could affect the experimental results.

Let’s start with the first hypothesis given above for the light bulb experiment: the bulb is burned out. When testing this hypothesis, the independent variable (the thing that you are testing) would be changing the light bulb and the dependent variable is whether or not the light turns on.

- HINT: You should be able to put your identified independent and dependent variables into the phrase “dependent depends on independent”. If you say “whether or not the light turns on depends on changing the light bulb” this makes sense and describes this experiment. In contrast, if you say “changing the light bulb depends on whether or not the light turns on” it doesn’t make sense.

It would be important to hold all the other aspects of the environment constant, for example not messing with the lamp cord or trying to turn the lamp on using a different light switch. If the entire house had lost power during the experiment because a car hit the power pole, that would be a confounding variable.

You may have learned that a hypothesis can be phrased as an “If…then…” statement. Simple hypotheses can be phrased that way (but they must always also include a “because”), but more complicated hypotheses may require several sentences. It is also very easy to get confused by trying to force your hypothesis into this format. Don’t worry about phrasing hypotheses as “if…then” statements; that is almost never done in experiments outside a classroom.

The results of your experiment are the data that you collect as the outcome. In the light experiment, your results are either that the light turns on or the light doesn’t turn on. Based on your results, you can make a conclusion. Your conclusion uses the results to answer your original question.

We can put the experiment with the light that won’t turn on into the figure above:

- Observation: the light won’t turn on.

- Question: why won’t the light turn on?

- Hypothesis: the lightbulb is burned out.

- Prediction: if I change the lightbulb (independent variable), then the light will turn on (dependent variable).

- Experiment: change the lightbulb while leaving all other variables the same.

- Analyze the results: the light didn’t turn on.

- Conclusion: The lightbulb isn’t burned out. The results do not support the hypothesis; time to develop a new one!

- Hypothesis 2: the lamp is unplugged.

- Prediction 2: if I plug in the lamp, then the light will turn on.

- Experiment: plug in the lamp.

- Analyze the results: the light turned on!

- Conclusion: The light wouldn’t turn on because the lamp was unplugged. The results support the hypothesis; it’s time to move on to the next experiment!
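The iterate step can be pictured as a loop. It tests hypotheses one at a time and stops at the first one whose prediction comes true. This is a toy Python illustration, not part of the original lesson. The function name `first_supported` and the hard-coded outcomes are hypothetical; they stand in for actually changing the bulb or plugging in the lamp.

```python
from typing import Callable, Optional

# A hypothesis is a description plus a (hypothetical) test that returns True
# when its prediction comes true, i.e. the light turns on after the change.
Hypothesis = tuple[str, Callable[[], bool]]


def first_supported(hypotheses: list[Hypothesis]) -> Optional[str]:
    """Test hypotheses in order and return the first one the results support."""
    for description, run_test in hypotheses:
        if run_test():
            return description  # supported, though never proven
        # Not supported: the hypothesis is eliminated; try the next one.
    return None  # all eliminated, so new hypotheses are needed


# Hard-coded outcomes that mirror the lamp example.
lamp_hypotheses: list[Hypothesis] = [
    ("the lightbulb is burned out", lambda: False),  # new bulb, still dark
    ("the lamp is unplugged", lambda: True),         # plugged in, light on
    ("the power is out", lambda: False),             # never reached
]

print(first_supported(lamp_hypotheses))
```

Note that a supported hypothesis ends the loop without being proven. A later, better experiment could still eliminate it.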

In practice, the scientific method is not as rigid and structured as it might at first appear. Sometimes an experiment leads to conclusions that favor a change in approach; often, an experiment brings entirely new scientific questions to the puzzle. Many times, science does not operate in a linear fashion; instead, scientists continually draw inferences and make generalizations, finding patterns as their research proceeds. Scientific reasoning is more complex than the scientific method alone suggests.

Control Groups

Another important aspect of designing an experiment is the presence of one or more control groups. A control group allows you to make a comparison that is important for interpreting your results. Control groups are samples that help you determine that differences between your experimental groups are due to your treatment rather than a different variable; they eliminate alternate explanations for your results (including experimental error and experimenter bias). They increase reliability, often through the comparison of control measurements with measurements of the experimental groups. Often, the control group is a sample that is treated the same way as your experimental sample except that it does not receive the independent variable. Therefore, if the results of the experimental group differ from those of the control group, the difference must be due to the change in the independent variable rather than some outside factor. It is common in complex experiments (such as those published in scientific journals) to have more control groups than experimental groups.

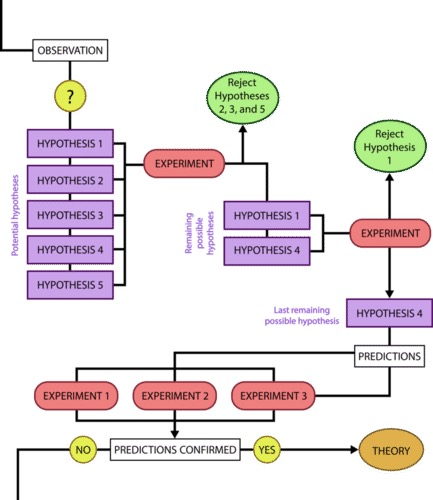

Question: Which fertilizer will produce the greatest number of tomatoes when applied to the plants?

Hypothesis: If I apply different brands of fertilizer to tomato plants, the most tomatoes will be produced from plants watered with Brand A because Brand A advertises that it produces twice as many tomatoes as other leading brands.

Experiment: Purchase 10 tomato plants of the same type from the same nursery. Pick plants that are similar in size and age. Divide the plants into two groups of 5. Apply Brand A to the first group and Brand B to the second group according to the instructions on the packages. After 10 weeks, count the number of tomatoes on each plant.

Independent Variable: Brand of fertilizer.

Dependent Variable: Number of tomatoes.

- The number of tomatoes produced depends on the brand of fertilizer applied to the plants.

Constants: amount of water, type of soil, size of pot, amount of light, type of tomato plant, length of time plants were grown.

Confounding variables: any of the above that are not held constant, plant health, diseases present in the soil or plant before it was purchased.

Results: Tomatoes fertilized with Brand A produced an average of 20 tomatoes per plant, while tomatoes fertilized with Brand B produced an average of 10 tomatoes per plant.

You’d want to use Brand A next time you grow tomatoes, right? But what if I told you that plants grown without fertilizer produced an average of 30 tomatoes per plant! Now what will you use on your tomatoes?

Results including control group: Tomatoes which received no fertilizer produced more tomatoes than either brand of fertilizer.

Conclusion: Although Brand A fertilizer produced more tomatoes than Brand B, neither fertilizer should be used because plants grown without fertilizer produced the most tomatoes!
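The comparison that changes the conclusion is each brand against the no-fertilizer control, not Brand A against Brand B. Below is a minimal Python sketch that uses only the three averages reported above. `effect_vs_control` is a hypothetical helper. It ignores plant-to-plant variability, so it shows the logic of the comparison rather than a statistical test.

```python
def effect_vs_control(averages: dict[str, float], control: str) -> dict[str, float]:
    """Difference between each treatment's average and the control's average."""
    baseline = averages[control]
    return {group: mean - baseline
            for group, mean in averages.items() if group != control}


# Average tomatoes per plant from the example above.
averages = {"Brand A": 20, "Brand B": 10, "No fertilizer": 30}
effects = effect_vs_control(averages, control="No fertilizer")
for group, diff in effects.items():
    print(f"{group}: {diff:+} tomatoes per plant relative to the control")
```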

More examples of control groups:

- You observe growth. Does this mean that your spinach is really contaminated? Consider an alternate explanation for growth: the swab, the water, or the plate is contaminated with bacteria. You could use a control group to determine which explanation is true. If you wet one of the swabs and wiped on a nutrient plate, do bacteria grow?

- You don’t observe growth. Does this mean that your spinach is really safe? Consider an alternate explanation for no growth: Salmonella isn’t able to grow on the type of nutrient you used in your plates. You could use a control group to determine which explanation is true. If you wipe a known sample of Salmonella bacteria on the plate, do bacteria grow?

- You see a reduction in disease symptoms: you might expect a reduction in disease symptoms purely because the person knows they are taking a drug and so believes they should be getting better. If the group treated with the real drug does not show a greater reduction in disease symptoms than the placebo group, the drug doesn’t really work. The placebo group sets a baseline against which the experimental group (treated with the drug) can be compared.

- You don’t see a reduction in disease symptoms: your drug doesn’t work. You don’t need an additional control group for comparison.

- You would want a “placebo feeder”. This would be the same type of feeder, but with no food in it. Birds might visit a feeder just because they are interested in it; an empty feeder would give a baseline level for bird visits.

- You would want a control group where you knew the enzyme would function. This would be a tube where you did not change the pH. You need this control group so you know your enzyme is working: if you didn’t see a reaction in any of the tubes with the pH adjusted, you wouldn’t know if it was because the enzyme wasn’t working at all or because the enzyme just didn’t work at any of your tested pH values.

- You would also want a control group where you knew the enzyme would not function (no enzyme added). You need the negative control group so you can ensure that there is no reaction taking place in the absence of enzyme: if the reaction proceeds without the enzyme, your results are meaningless.
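The two enzyme controls act as gatekeepers: they decide whether the pH tubes can be interpreted at all. Below is a minimal Python sketch of that decision logic. The function `interpret_enzyme_assay` is hypothetical, and so are its inputs (reaction seen or not in each tube); they simplify a real assay.

```python
def interpret_enzyme_assay(positive_control: bool, negative_control: bool,
                           tested: dict[float, bool]) -> str:
    """Interpret a pH experiment using positive and negative controls.

    positive_control: reaction seen in the tube with unchanged pH (should react)
    negative_control: reaction seen in the tube with no enzyme (should not react)
    tested: maps each adjusted pH to whether a reaction was seen
    """
    if negative_control:
        # Reaction without enzyme: something else drives it, results meaningless.
        return "invalid: reaction occurred without enzyme"
    if not positive_control:
        # Enzyme failed even at its normal pH, so the pH changes can't be blamed.
        return "inconclusive: enzyme inactive even at unchanged pH"
    active = sorted(ph for ph, reacted in tested.items() if reacted)
    if not active:
        return "enzyme works, but not at any tested pH"
    return f"enzyme active at pH {active}"


print(interpret_enzyme_assay(True, False, {4.0: False, 7.0: True, 10.0: False}))
```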

Text adapted from: OpenStax, Biology. OpenStax CNX. May 27, 2016 http://cnx.org/contents/[email protected]:RD6ERYiU@5/The-Process-of-Science.

MHCC Biology 112: Biology for Health Professions Copyright © 2019 by Lisa Bartee is licensed under a Creative Commons Attribution 4.0 International License, except where otherwise noted.


Mol Biol Cell, v.30(12); 2019 Jun 1

Empowering statistical methods for cellular and molecular biologists

Daniel A. Pollard

a Department of Biology, Western Washington University, Bellingham, WA 98225-9160

Thomas D. Pollard

b Departments of Molecular Cellular and Developmental Biology, Molecular Biophysics and Biochemistry, and Cell Biology, Yale University, New Haven, CT 06520-8103

Katherine S. Pollard

c Gladstone Institutes, Chan-Zuckerberg Biohub, and University of California, San Francisco, San Francisco, CA 94158


We provide guidelines for using statistical methods to analyze the types of experiments reported in cellular and molecular biology journals such as Molecular Biology of the Cell. Our aim is to help experimentalists use these methods skillfully, avoid mistakes, and extract the maximum amount of information from their laboratory work. We focus on comparing the average values of control and experimental samples. A Supplemental Tutorial provides examples of how to analyze experimental data using R software.

PERSPECTIVE

Our purpose is to help experimental biologists use statistical methods to extract useful information from their data, draw valid conclusions, and avoid common errors. Unfortunately, statistical analysis often comes last in the lab, leading to the observation by the famous 20th century statistician R. A. Fisher (Fisher, 1938):

“To consult [statistics] after an experiment is finished is often merely to […] conduct a post mortem examination. [You] can perhaps say what the experiment died of.”

To promote a more proactive approach to statistical analysis, we consider seven steps in the process. We offer advice on experimental design, assumptions for certain types of data, and decisions about when statistical tests are required. The article concludes with suggestions about how to present data, including the use of confidence intervals. We focus on comparisons of control and experimental samples, the most common application of statistics in cellular and molecular biology. The concepts are applicable to a wide variety of data, including measurements by any type of microscopic or biochemical assay. Following our guidelines will avoid the types of data handling mistakes that are troubling the research community (Vaux, 2012). Readers interested in more detail might consult a biostatistics book such as The Analysis of Biological Data, Second Edition (Whitlock and Schluter, 2014).

SEVEN STEPS

1. Decide what you aim to estimate from your experimental data.

Experimentalists typically make measurements to estimate a property or “parameter” of a population from which the data were drawn, such as a mean, rate, proportion, or correlation. One should be aware that the actual parameter has a fixed, unknown value in the population. Take the example of a population of cells, each dividing at its own rate. At a given point in time, the population has a true mean and variance of the cell division rate. Neither of these parameters is knowable. When one measures the rate in a sample of cells from this population, the sample mean and variance are estimates of the true population mean and variance (Box 1). Such estimates differ from the true parameter values for two reasons. First, systematic biases in the measurement methods can lead to inaccurate estimates. Such measurements may be precise but not accurate. Making measurements by independent methods can verify accurate methods and help identify biased methods. Second, the sample may not be representative of the population, either by chance or due to systematic bias in the sampling procedure. Estimates tend to be closer to the true values if more cells are measured, and they vary as the experiment is repeated. By accounting for this variability in the sample mean and variance, one can test a hypothesis about the true mean in the population or estimate its confidence interval.
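A small simulation can make the difference between a fixed population parameter and its sample estimates concrete. This Python sketch assumes a hypothetical population of 100,000 cells whose division rates are normally distributed (mean 0.5, SD 0.1, arbitrary units). It draws samples of increasing size and reports the estimates. The estimates scatter around the true values and tend to get closer as n grows.

```python
import random
import statistics

random.seed(42)  # fixed seed so the sketch is reproducible

# Hypothetical population: one division rate per cell (arbitrary units).
population = [random.gauss(0.5, 0.1) for _ in range(100_000)]
true_mean = statistics.fmean(population)
true_sd = statistics.pstdev(population)


def estimate(sample: list[float]) -> tuple[float, float]:
    """Sample mean and sample SD: estimates of the population parameters."""
    return statistics.fmean(sample), statistics.stdev(sample)


for n in (5, 20, 100, 1000):
    sample = random.sample(population, n)  # random cells, no systematic bias
    mean, sd = estimate(sample)
    print(f"n={n:4d}  mean={mean:.3f} (true {true_mean:.3f})  "
          f"sd={sd:.3f} (true {true_sd:.3f})")
```

A biased measurement method would shift every estimate in the same direction. Sampling more cells does not correct that kind of error.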

Box 1: Statistics describing normal distributions

The sample standard deviation (SD) is the square root of the variance of the measurements in a sample and describes the distribution of values around the mean.
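In symbols, take $n$ measurements $x_1, \dots, x_n$ with sample mean $\bar{x}$. The sample SD is conventionally written $s = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}(x_i-\bar{x})^2}$, with $n - 1$ rather than $n$ in the denominator. The Python sketch below computes it step by step and checks it against the standard library's `statistics.stdev`. The measurement values are made up.

```python
import math
import statistics


def sample_sd(values: list[float]) -> float:
    """Sample standard deviation with the n - 1 (Bessel) denominator."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two measurements")
    mean = sum(values) / n
    variance = sum((x - mean) ** 2 for x in values) / (n - 1)  # sample variance
    return math.sqrt(variance)


rates = [10.0, 12.0, 11.0, 13.0, 9.0]  # hypothetical measurements
print(sample_sd(rates), statistics.stdev(rates))
```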

Examples of distributions of measurements. (A) Normal distribution with vertical lines showing the mean = median = mode (dotted) and ±1, 2, and 3 standard deviations (SD or σ). The fractions of the distribution are ∼0.68 within ±1 SD and ∼0.95 within ±2 SD. (B) Histogram of approximately normally distributed data. (C) Histogram of a skewed distribution of data. (D) Histogram of the natural log transformation of the skewed data in C. (E) Histogram of exponentially distributed data. (F) Histogram of a bimodal distribution of data.
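The fractions for a normal distribution follow from its cumulative distribution: the share within ±k SD of the mean equals erf(k/√2). The short Python check below uses `math.erf` and gives about 0.683, 0.954, and 0.997 for k = 1, 2, and 3.

```python
import math


def fraction_within(k: float) -> float:
    """Fraction of a normal distribution lying within ±k SD of its mean."""
    return math.erf(k / math.sqrt(2))


for k in (1, 2, 3):
    print(f"within ±{k} SD: {fraction_within(k):.4f}")
```

These fractions hold only for normally distributed data. They do not apply to skewed, exponential, or bimodal data like those in panels C, E, and F.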

Box 2: Confidence intervals
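One common way to build a 95% confidence interval for a mean is mean ± t·SEM. Here SEM = SD/√n, and t is the 97.5th percentile of Student's t distribution with n − 1 degrees of freedom. The Python sketch below hard-codes a small table of t values. That is an assumption made to keep it dependency-free; a statistics package would normally supply them. The data are hypothetical.

```python
import math
import statistics

# 97.5th percentiles of Student's t for df = 1..10 (standard table values,
# rounded to three decimals); hard-coded to keep the sketch dependency-free.
T_975 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571,
         6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262, 10: 2.228}


def ci95_mean(values: list[float]) -> tuple[float, float]:
    """95% confidence interval for a population mean: mean ± t * SD / sqrt(n)."""
    n = len(values)
    if n - 1 not in T_975:
        raise ValueError("this sketch only tabulates t for 2 to 11 measurements")
    mean = statistics.fmean(values)
    sem = statistics.stdev(values) / math.sqrt(n)  # standard error of the mean
    half_width = T_975[n - 1] * sem
    return mean - half_width, mean + half_width


low, high = ci95_mean([10.0, 12.0, 11.0, 13.0, 9.0])  # hypothetical data
print(f"95% CI: {low:.2f} to {high:.2f}")
```

The "95%" describes the procedure rather than any single interval. Across many repetitions of the experiment, intervals built this way would contain the true mean about 95% of the time.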

2. Frame your biological and statistical hypotheses

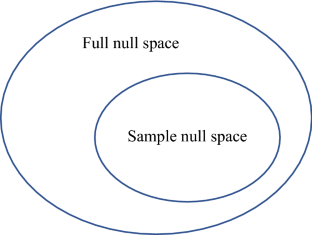

A critical step in designing a successful experiment is translating a biological hypothesis into null and alternative statistical hypotheses. Hypotheses in cellular and molecular biology are often framed as qualitative statements about the effect of a treatment (i.e., genotype or condition) relative to a control or prediction. For example, a biological hypothesis might be that the rate of contractile ring constriction depends on the concentration of myosin-II. Statistical hypothesis testing requires the articulation of a null hypothesis, which is typically framed as a concrete statement about no effect of a treatment or no deviation from a prediction. For example, a null hypothesis could be that the mean rate of contractile ring constriction is the same for cells depleted of myosin-II by RNA interference (RNAi) and for cells treated with a control RNAi molecule. Likewise, the alternative hypothesis is all outcomes other than the null hypothesis. For example, the mean rates of constriction are different under the two conditions. Most hypothesis testing allows for the effect of each treatment to be in either direction relative to a control or other treatments. These are referred to as two-sided hypotheses. Occasionally, the biological circumstances are such that the effect of a treatment could never be in one of the two possible directions, and therefore a one-sided hypothesis is used. The null hypothesis then is that the treatment either has no effect or an effect in the direction that is never expected. The section on hypothesis testing illustrates how this framework enables scientists to assess quantitatively whether their data support or refute the biological hypothesis.
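An exact permutation test makes the null and alternative hypotheses concrete. Here it is swapped in as an illustration because it needs no statistics library; it is not necessarily a test the authors use. Under the null hypothesis of no treatment effect, group labels are arbitrary, so every relabeling of the pooled values is equally likely. The sketch counts how often a relabeling gives a difference at least as extreme as the one observed. A one-sided count looks in one direction and a two-sided count in both. The integer values are hypothetical.

```python
from fractions import Fraction
from itertools import combinations


def mean_diff(treated: list[int], control: list[int]) -> Fraction:
    """Treated mean minus control mean, computed exactly."""
    return Fraction(sum(treated), len(treated)) - Fraction(sum(control), len(control))


def permutation_p_values(control: list[int], treated: list[int]) -> tuple[float, float]:
    """Exact permutation test of the null hypothesis that treatment has no effect.

    Returns (one_sided, two_sided) p-values; the one-sided alternative is
    'treated mean > control mean'.
    """
    pooled = control + treated
    observed = mean_diff(treated, control)
    one_sided = two_sided = total = 0
    for idx in combinations(range(len(pooled)), len(treated)):
        chosen = set(idx)
        t = [pooled[i] for i in idx]
        c = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        d = mean_diff(t, c)
        total += 1
        one_sided += d >= observed               # as extreme, in one direction
        two_sided += abs(d) >= abs(observed)     # as extreme, in either direction
    return one_sided / total, two_sided / total


# Hypothetical constriction rates (arbitrary integer units).
control = [1, 2, 3]
treated = [4, 5, 6]
print(permutation_p_values(control, treated))  # (0.05, 0.1)
```

With only 20 possible relabelings, the smallest achievable two-sided p-value here is 0.1. This shows how tiny samples can limit what a test is able to detect.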

3. Design your experiment

As indicated by Fisher’s admonition, one should build statistical analysis into the design of an experiment including the number of measurements, nature and number of variables measured, methods of data acquisition, biological and technical replication, and selection of an appropriate statistical test.

Nature and number of variables.

All variables that could influence responses and are measurable should be recorded and considered in statistical analyses. In addition to intentional treatments such as genotype or drug concentration, so-called nuisance variables (e.g., date of data collection, lot number of a reagent) can influence responses and, if not included, can obscure the effects of the treatments of interest.

Treatments and measured responses can either be numerical or categorical. Different statistical tools are required to evaluate numerical and categorical treatments and responses (Table 1 and Figure 2). Failing to make these distinctions may be the most common error in the analysis of data from experiments in cellular and molecular biology.

Matching types of data appropriately with commonly used statistical tests (Crawley, 2013; Whitlock and Schluter, 2014).

Decision tree to select an appropriate statistical test for association between a response and one or more treatments. Multiple treatments or a treatment and potential confounders can be tested using linear models (also known as ANCOVA) or generalized linear models (e.g., logistic regression for binary responses). Multiple treatments with repeated measurements on the same specimens, such as time courses, can be tested using mixed model regression. Questions in squares; answers on solid arrows; actions in ovals; tests in diamonds.
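The core logic of such a decision tree can be caricatured in a few lines. This Python sketch is a hypothetical, heavily simplified mapping from variable types to commonly used tests. It does not reproduce Figure 2 or Table 1. It also skips the assumption checks (normality, equal variances, independence) that a real choice depends on.

```python
def suggest_test(treatment: str, response: str, n_groups: int = 2) -> str:
    """Hypothetical, heavily simplified lookup from variable types to a common test.

    treatment, response: "categorical" or "numerical".
    n_groups: number of treatment categories (only used for categorical treatments).
    Assumption checks (normality, equal variances, independence) are not modeled.
    """
    kinds = {"categorical", "numerical"}
    if treatment not in kinds or response not in kinds:
        raise ValueError("treatment and response must be 'categorical' or 'numerical'")
    if treatment == "categorical" and response == "numerical":
        return "t test" if n_groups == 2 else "one-way ANOVA"
    if treatment == "categorical" and response == "categorical":
        return "chi-square or Fisher's exact test on counts"
    if treatment == "numerical" and response == "numerical":
        return "linear regression or correlation"
    # Numerical treatment with a categorical (e.g., binary) response.
    return "logistic regression"


print(suggest_test("categorical", "numerical"))    # e.g., wild type vs mutant rates
print(suggest_test("categorical", "categorical"))  # e.g., counts of cell-cycle stages
```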

A range of inhibitor concentrations or time after adding a drug are examples of numerical treatments. Examples of categorical treatments are comparing wild-type versus mutant cells or control cells versus cells depleted of an mRNA.

Continuous numerical responses are measured as precisely as possible, so every data point may have a unique value. Examples include concentrations, rates, lengths, and fluorescence intensities. Categorical responses are typically recorded as counts of observations for each category such as stages of the cell cycle (e.g., 42 interphase cells and eight mitotic cells). Proportions (e.g., 0.84 interphase cells and 0.16 mitotic cells) and percentages (e.g., 84% interphase cells and 16% mitotic cells) are also categorical responses but are often inappropriately treated as numerical responses in statistical tests. For example, many authors make the mistake of using a t test to compare proportions. They may think that proportions are numerical responses, because they are numbers, but they are not numerical responses. The decision tree in Figure 2 guides the experimentalist to the appropriate statistical test and Table 1 lists the assumptions for widely used statistical tests.
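For categorical responses, the natural data are counts, not proportions, and the usual route is a test on a table of counts. Below is a minimal Python sketch of Pearson's chi-square test for a 2 × 2 table. The control row reuses the 42 interphase / 8 mitotic example, and the treated row (30 / 20) is hypothetical. The sketch omits the continuity correction that some packages apply by default to 2 × 2 tables. When expected counts are small, Fisher's exact test is the usual alternative.

```python
import math


def chi_square_2x2(table: list[list[int]]) -> tuple[float, float]:
    """Pearson chi-square test (no continuity correction) for a 2x2 table of counts.

    Returns (statistic, p_value); with 1 degree of freedom,
    p = erfc(sqrt(chi2 / 2)).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total  # under independence
            chi2 += (observed - expected) ** 2 / expected
    return chi2, math.erfc(math.sqrt(chi2 / 2))


# Rows: conditions; columns: [interphase, mitotic] cell counts.
counts = [[42, 8],   # control, from the example above
          [30, 20]]  # treated, hypothetical
stat, p = chi_square_2x2(counts)
print(f"chi-square = {stat:.2f}, p = {p:.4f}")
```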

Often researchers must make choices with regard to the number and nature of the variables in their experiment to address their biological question. For example, color can be measured as a categorical variable or as a continuous numerical variable of wavelengths. Recording variables as continuous numerical variables is best, because they contain more information and subsequently can be converted into categorical variables, if the data appear to be strongly categorical. Furthermore, the choice of variable may be less clear with complicated experiments. For example, in the time-course experiments described in Figure 3, one study measured rates as a response variable (Figure 3A) and two others used time until an event (Figure 3, B and C). All could have treated the event as a categorical variable and used time as a treatment variable. It is often best to record the most direct observations (e.g., counts of cells with and without the event) and then subsequently to consider using response variables that involve calculations (e.g., rate of event or time until event).

Comparison of data presentation for three experiments on the constriction of cytokinetic contractile rings with several perturbations. (A) Rate of ring constriction in Caenorhabditis elegans embryos from Zhuravlev et al. (2017). Error bars represent SD; p values were obtained by an unpaired, two-tailed Student’s t test; n.s., p ≥ 0.05; *, p < 0.05; **, p < 0.01; ****, p < 0.0001. Sample sizes 10–12. (B) Time to complete ring constriction in Schizosaccharomyces pombe from Li et al. (2016). Error bars, SD; n ≥ 10 cells. *, p < 0.05 obtained with one-tailed t tests for two samples with unequal variance. (C) Kaplan-Meier outcomes plots comparing the times (relative to spindle pole body separation) of the onset of contractile ring constriction in populations of (○) wild-type and (⬤) blt1∆ fission yeast cells from Goss et al. (2014). A log-rank test determined that the curves differed with p < 0.0001.

Methods of data acquisition.

Common statistical tests (Table 1) assume randomization and exchangeability, meaning that all experimental units (e.g., cells) are equally likely to get each treatment and the data for one experimental unit is the same as that of any other receiving the same treatment. The challenge is to understand your experiment well enough to randomize treatments effectively across potential confounding variables. For example, it is unwise to image all mutant cells one week and all control cells the next week, because differences in the conditions during the experiment could have confounding effects difficult to separate from any differences between mutant and control cells. Randomly assigning mutants and controls to specific dates allows for date effects to be separated from the genotype effects that are of interest if both genotype and date are included in the statistical test as treatments. Many possible experimental designs effectively control for the effects of confounding variables such as randomized block designs and factorial designs. Planning ahead allows one to avoid the common mistake of failing to randomize batches of data acquisition across experimental conditions.
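A randomized block design treats each imaging date as a block and images every genotype within it. This Python sketch uses hypothetical dates, genotype labels, and a hypothetical `block_randomize` helper. It balances genotypes within each date and shuffles the imaging order. Date and genotype can then be entered as separate terms in the analysis.

```python
import random
from collections import Counter


def block_randomize(dates: list[str], genotypes: list[str],
                    per_genotype: int, seed: int = 0) -> dict[str, list[str]]:
    """Randomized block design: each date (block) images every genotype equally
    often, in a shuffled order, so date effects are not confounded with genotype."""
    rng = random.Random(seed)
    schedule: dict[str, list[str]] = {}
    for date in dates:
        slots = [g for g in genotypes for _ in range(per_genotype)]
        rng.shuffle(slots)  # random imaging order within each date
        schedule[date] = slots
    return schedule


plan = block_randomize(["week 1", "week 2"], ["wild type", "mutant"], per_genotype=3)
for date, order in plan.items():
    print(date, order, dict(Counter(order)))
```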

Many statistical tests further assume that observations are independent. When this is not the case, as with paired or repeated measurements on the same specimen, one should use methods that account for correlated observations, such as paired t tests or mixed model regression analysis with random effects (Whitlock and Schluter, 2014). Time-course studies are a common example of repeated measurements in molecular cell biology that require special handling of nonindependence with approaches such as mixed models.
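Ignoring pairing shows up directly in the test statistic. This Python sketch computes two statistics on the same hypothetical before/after measurements of four cells. The first is a paired t statistic on the within-cell differences. The second is an unpaired Welch t statistic. The paired analysis clearly detects the consistent within-cell change, while in the unpaired one it is swamped by between-cell variation. Converting either statistic to a p-value requires the t distribution from a statistics package. Time courses would call for the mixed models mentioned above, which this sketch does not attempt.

```python
import math
import statistics


def paired_t(before: list[float], after: list[float]) -> float:
    """Paired t statistic: analyze the within-specimen differences."""
    diffs = [a - b for b, a in zip(before, after)]
    return statistics.fmean(diffs) / (statistics.stdev(diffs) / math.sqrt(len(diffs)))


def welch_t(x: list[float], y: list[float]) -> float:
    """Unpaired (Welch) t statistic; treats the two lists as independent groups."""
    se = math.sqrt(statistics.variance(x) / len(x) + statistics.variance(y) / len(y))
    return (statistics.fmean(y) - statistics.fmean(x)) / se


# Hypothetical measurements on the same four cells before and after a treatment.
before = [10.0, 20.0, 30.0, 40.0]
after = [11.0, 21.5, 31.0, 41.5]
print(f"paired t = {paired_t(before, after):.2f}")    # about 8.66
print(f"unpaired t = {welch_t(before, after):.2f}")   # about 0.14
```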

Statistical test.

Having decided on the experimental variables and the method to collect the data, the next step is to select the appropriate statistical test. Statistical tests are available to evaluate the effect of treatments on responses for every type and combination of treatment and response variables (Figure 2 and Table 1). All statistical tests are based on certain assumptions (Table 1) that must be met to maintain their accuracy. Start by selecting a test appropriate for the experimental design under ideal circumstances. If the actual data collected do not meet these assumptions, one option is to change to an appropriate statistical test as discussed in Step 4 and illustrated in Example 1 of the Supplemental Tutorial. In addition to matching variables with types of tests, it is also important to make sure that the null and alternative hypotheses for a test will address your biological hypothesis.

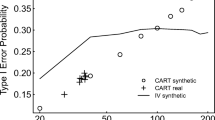

Most common statistical tests require predetermining an acceptable rate of false positives. For an individual test this is referred to as the type I error rate (α) and is typically set at α = 0.05, which means that a true null hypothesis is expected to be mistakenly rejected in about five out of 100 repetitions of the experiment, on average. The type I error rate is adjusted to a lower value when multiple tests are being performed to address a common biological question (Dudoit and van der Laan, 2008). Otherwise, lowering the type I error rate is not recommended, because it decreases the power of the test to detect small effects of treatments (see below).
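Suppose m independent tests each use α = 0.05 and all null hypotheses are true. The chance of at least one false positive is then $1-(1-\alpha)^m$, which grows quickly with m. Bonferroni correction, which tests each hypothesis at α/m, is one simple adjustment. It is used here only as an example and is not necessarily the procedure the cited reference recommends. A minimal Python sketch:

```python
def familywise_error(alpha: float, m: int) -> float:
    """Probability of at least one false positive among m independent tests
    when every null hypothesis is true."""
    return 1 - (1 - alpha) ** m


def bonferroni_alpha(alpha: float, m: int) -> float:
    """Per-test threshold that keeps the family-wise error rate at or below alpha."""
    return alpha / m


for m in (1, 5, 20):
    print(f"m={m:2d}  unadjusted FWER={familywise_error(0.05, m):.3f}  "
          f"Bonferroni per-test alpha={bonferroni_alpha(0.05, m):.4f}")
```

Bonferroni is conservative: it lowers power for each individual test, which is the trade-off the paragraph above warns about.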

Biological and technical replication.

Biological replicates (measurements on separate samples) are used for parameter estimates and statistical tests, because they allow one to describe variation in the population. Technical replicates (multiple measurements on the same sample) are used to improve estimation of the measurement for each biological replicate. Treating technical replicates as biological replicates is called pseudoreplication and often produces low estimates of variance and erroneous test results. The difference between technical and biological replicates depends on how one defines the population of interest. For example, measurements on cells within one culture flask are considered to be technical replicates, and each culture flask to be a biological replicate, if the population is all cells of this type and variability between flasks is biologically important. But in another study, cell to cell variability might be of primary interest, and measurements on separate cells within a flask could be considered biological replicates as long as one is cautious about making inferences beyond the population in that flask. Typically, one considers biological replicates to be the most independent samples.
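One simple way to avoid pseudoreplication is to average technical replicates before analysis; mixed models are another. This Python sketch uses made-up measurements from three flasks and treats the flask as the biological replicate. The pseudoreplicated SEM comes out noticeably smaller because it counts 12 correlated cells as 12 independent samples.

```python
import math
import statistics

# Hypothetical data: 3 culture flasks (biological replicates) with
# 4 cells measured per flask (technical replicates in this design).
flasks = {
    "flask 1": [1.0, 1.1, 0.9, 1.0],
    "flask 2": [1.5, 1.4, 1.6, 1.5],
    "flask 3": [0.6, 0.5, 0.7, 0.6],
}


def sem(values: list[float]) -> float:
    """Standard error of the mean: SD / sqrt(n)."""
    return statistics.stdev(values) / math.sqrt(len(values))


# Pseudoreplication: all 12 cells treated as independent samples (n = 12).
all_cells = [x for cells in flasks.values() for x in cells]
# Averaging technical replicates first leaves one value per flask (n = 3).
flask_means = [statistics.fmean(cells) for cells in flasks.values()]

print(f"pseudoreplicated SEM: {sem(all_cells):.3f} (n={len(all_cells)})")
print(f"per-flask SEM:        {sem(flask_means):.3f} (n={len(flask_means)})")
```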

The design should be balanced in the sense of collecting equal numbers of replicates for each treatment. Balanced designs are more robust to deviations from hypothesis test assumptions, such as equal variances in responses between treatments (Table 1).

Number of measurements.

Extensive replication of experiments (large numbers of observations) has bountiful virtues, including higher precision of parameter estimates, more power of statistical tests to detect small effects, and ability to verify the assumptions of statistical tests. However, time and reagents can be expensive in cellular and molecular biology experiments, so the numbers of measurements tend to be relatively small (<20). Fortunately, statistical analysis in experimental biology has two major advantages over observational biology. First, experimental conditions are often well controlled, for example using genetically identical organisms under laboratory conditions or administering a precise amount of a drug. This reduces the variation between samples and compensates to some extent for small sample sizes. Second, experimentalists can randomize the assignment of treatments to their specimens and therefore minimize the influence of confounding variables. Nonetheless, small numbers of observations make it difficult to verify important assumptions and can compromise the interpretation of an experiment.

Statistical power.

One can estimate the appropriate number of measurements required by calculating statistical power when designing each experiment. Statistical power is the probability of rejecting a truly false null hypothesis. A common target is 0.80 power ( Cohen, 1992 ). Three variables contribute to statistical power: number of measurements, variability of those measurements (SD), and effect size (mean difference in response between the control and the treated populations). A simple rule of thumb is that power decreases with the variability and increases with sample size and effect size as shown in Figure 4 . One can increase the power of an experiment by reducing measurement error (variance) or increasing the sample size. For the statistical tests in Table 1 , simple formulas are available in most statistical software packages (e.g., R [ www.r-project.org ], Stata [ www.stata.com ], SAS [ www.sas.com ], SPSS [ www.ibm.com/SPSS/Software ]) to compute power as a function of these three variables.
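As a rough illustration of how the three variables combine, the sketch below approximates the power of a two-sided, two-sample comparison with equal group sizes using the normal approximation to the t test, and inverts it to estimate the number of measurements per group. This is an assumption-laden sketch, not the formula used by any particular package: exact t-based calculations (for example, R's `power.t.test`) give slightly larger sample sizes, especially when n is small.

```python
from math import ceil, sqrt
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution

def approx_power(n_per_group, sd, delta, alpha=0.05):
    """Approximate power of a two-sided, two-sample test (equal n per group).

    Normal approximation to the t test; ignores the tiny probability of
    rejecting the null hypothesis in the wrong tail.
    """
    z_crit = Z.inv_cdf(1 - alpha / 2)
    se_diff = sd * sqrt(2 / n_per_group)  # SE of the difference in means
    return Z.cdf(abs(delta) / se_diff - z_crit)

def approx_n_per_group(sd, delta, alpha=0.05, power=0.80):
    """Smallest n per group reaching the target power (normal approximation)."""
    z_sum = Z.inv_cdf(1 - alpha / 2) + Z.inv_cdf(power)
    return ceil(2 * (z_sum * sd / delta) ** 2)

# Power rises with n and effect size and falls with SD.
for n in (5, 10, 20):
    print(n, round(approx_power(n, sd=1.0, delta=1.0), 3))
print("n per group for a 1-SD difference:", approx_n_per_group(sd=1.0, delta=1.0))
```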

Three graphs show factors affecting statistical power, the probability of rejecting a truly false null hypothesis in a two-sample t test. Power (A) increases with the number of measurements ( n ); (B) decreases as the SD (sd) increases; and (C) increases with the magnitude of the effect size (Δ), the difference between the control and the test samples, rising on both sides of a minimum at zero effect size. In each panel the other two variables are held constant.

Of course, one does not know the outcome of an experiment before it is done, but one may know the expected variability in the measurements from previous experiments, or one can run a pilot experiment on the control sample to estimate the variability in the measurements in a new system. Then one can design the experiment knowing roughly how many measurements will be required to detect a certain difference between the control and experimental samples. Alternatively, if the sample size is fixed, one can rearrange the power formula to compute the effect size one could detect at a given power and variability. If this effect size is not meaningful, proceeding is not advised. This strategy avoids performing a statistical “autopsy” after the experiment has failed to detect a significant difference.
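Rearranging the power calculation for a fixed sample size gives the smallest true difference that the experiment could detect. The sketch below again assumes the normal approximation for a two-sided, two-sample comparison with equal group sizes; the pilot SD and group size in the example are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def detectable_effect(n_per_group, sd, alpha=0.05, power=0.80):
    """Smallest true mean difference detectable with the given power
    (two-sided, two-sample comparison, normal approximation)."""
    z = NormalDist()
    z_sum = z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)
    return z_sum * sd * sqrt(2 / n_per_group)

# Hypothetical pilot: control SD of 0.4 units, 6 measurements per group.
print(round(detectable_effect(6, sd=0.4), 3))
```

If the printed difference is larger than any biologically meaningful effect, the planned experiment is unlikely to be informative at that sample size.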

4. Examine your data and finalize your analysis plan

Experimental data should not deviate strongly from the assumptions of the chosen statistical test ( Table 1 ), and the sample sizes should be large enough to evaluate if this is the case. Strong deviations from expectations will result in inaccurate test results. Even a very well-designed experiment may require adjustments to the data analysis plan, if the data do not conform to expectations and assumptions. See Examples 1, 2, and 4 in the Supplemental Tutorial.

For example, a t test calls for continuous numerical data and assumes that the responses have a normal distribution ( Figure 1, A and B ) with equal variances for both treatments. Samples from a population are never precisely normally distributed and rarely have identical variances. How can one tell whether the data are meeting or failing to meet the assumptions?

Find out whether the measurements are distributed normally by visualizing the unprocessed data. For numerical data this is best done by making a histogram with the range of values on the horizontal axis and the frequency (count) of each value on the vertical axis ( Figure 1B ). Most statistical tests are robust to small deviations from a perfect bell-shaped curve, so a visual inspection of the histogram is sufficient, and formal tests of normality are usually unnecessary. The main problem encountered at this point in experimental biology is that the number of measurements is too small to determine whether they are distributed normally.

Not all data are distributed normally. A common deviation is a skewed distribution, in which the values around the peak are distributed asymmetrically ( Figure 1C ). In many cases asymmetric distributions can be made symmetric by a transformation such as taking the log, square root, or reciprocal of the measurements for right-skewed data, and the exponential or square of the measurements for left-skewed data. For example, an experiment measuring the time between cell divisions might result in many values symmetrically distributed around the mean but a long right tail of much longer times from cells that rarely divide. A log transformation ( Figure 1D ) would bring the histogram of these data closer to a normal distribution and make more statistical tests applicable. See Example 2 in the Supplemental Tutorial for an example of a log transformation. Exponential ( Figure 1E ) and bimodal ( Figure 1F ) distributions are also common.
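The effect of a log transformation on right-skewed data can be checked numerically with a skewness statistic, which is near 0 for symmetric data and positive for a long right tail. In this sketch the "measurements" are hypothetical values simulated from a log-normal distribution, and the moment estimator of skewness is one common choice among several.

```python
import math
import random
from statistics import mean, pstdev

def skewness(values):
    """Moment estimator of skewness: ~0 for symmetric data,
    > 0 for a long right tail, < 0 for a long left tail."""
    m, s = mean(values), pstdev(values)
    return mean(((x - m) / s) ** 3 for x in values)

rng = random.Random(1)
# Hypothetical right-skewed measurements (log-normally distributed).
raw = [rng.lognormvariate(0.0, 1.0) for _ in range(2000)]
logged = [math.log(x) for x in raw]

print("skewness of raw values:  %.2f" % skewness(raw))
print("skewness of log(values): %.2f" % skewness(logged))
```

In practice a histogram of the transformed values is still the primary check; the skewness number simply quantifies what the histogram shows.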

One can evaluate whether variances differ between treatments by visual inspection of histograms of the data or calculating the variance and SD for each treatment. If the sample sizes are equal between treatments (i.e., balanced design), tests like the t test and analysis of variance (ANOVA) are robust to variances severalfold different from each other.

To determine whether the assumption of linearity in regression has been met, one can plot the residuals (i.e., the differences between observed responses and the responses predicted from the linear model) against the fitted values. The residuals should be scattered roughly uniformly about zero across the range of fitted values; systematic patterns, such as a U-shaped curve, suggest nonlinearity. When nonlinearity is observed, one can consider more complicated parametric models of the relationship between responses and treatments.
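A minimal sketch of the residual check, using hypothetical data that are truly curved (y = x²) but fitted with an ordinary least-squares straight line: the residuals are positive at both ends of the range and negative in the middle, the U-shaped pattern that signals nonlinearity.

```python
from statistics import mean

def fit_line(x, y):
    """Ordinary least-squares fit of y = a + b*x; returns (a, b)."""
    mx, my = mean(x), mean(y)
    b = (sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
         / sum((xi - mx) ** 2 for xi in x))
    return my - b * mx, b

# Hypothetical dose-response data that are actually curved.
x = list(range(11))
y = [xi ** 2 for xi in x]

a, b = fit_line(x, y)
fitted = [a + b * xi for xi in x]
residuals = [yi - fi for yi, fi in zip(y, fitted)]

# A table (or plot) of residuals against fitted values reveals the curvature.
for f, r in zip(fitted, residuals):
    print("fitted %6.1f   residual %6.1f" % (f, r))
```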

If the data do not meet the assumptions or sample sizes are too small to verify that the assumptions have been met, alternative tests are available. If the responses are not normally distributed (such as a bimodal distribution, Figure 1F ), the Mann-Whitney U test can replace the t test, and the Kruskal-Wallis test can replace ANOVA; these tests assume that the responses have similarly shaped distributions across treatments. However, relaxing the assumptions in such nonparametric tests reduces the power to detect the effects of treatments. If the data are not normally distributed but sample sizes are large ( N > 20), a permutation test is an alternative that can have better power than nonparametric tests. If the variances are not equal, one can use Welch’s unequal variance t test. See Supplemental Tutorial Example 1 for an example.
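A permutation test can be sketched directly: under the null hypothesis the treatment labels are exchangeable, so one shuffles the labels many times and asks how often a shuffled difference in means is at least as large as the observed one. The function name, the number of shuffles, and the "+1" convention (counting the observed split so that p is never exactly 0) are choices made for this sketch.

```python
import random
from statistics import mean

def permutation_test(a, b, n_perm=5000, seed=0):
    """Two-sided permutation test for a difference in means.

    Shuffles the treatment labels n_perm times and returns the proportion
    of shuffles whose |mean difference| is at least as large as observed,
    counting the observed labeling itself so that p is never exactly 0.
    """
    rng = random.Random(seed)
    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:len(a)]) - mean(pooled[len(a):]))
        if diff >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)
```

For example, `permutation_test(control, treated)` with two hypothetical lists of measurements returns an approximate two-sided p value; increasing `n_perm` reduces the Monte Carlo error of that estimate.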

Categorical tests typically assume only that sample sizes are large enough to avoid low expected numbers of observations in each category. It is important to confirm that this assumption has been met so that, if it has not, larger samples can be collected.
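Expected counts for a contingency table follow from the row and column totals (row total × column total / grand total). The sketch below computes them for a hypothetical 2 × 3 table and flags cells with expected counts below 5, a widely used rule of thumb rather than a requirement stated in this article.

```python
def expected_counts(table):
    """Expected counts under independence for a contingency table
    (rows = treatments, columns = response categories)."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand = sum(row_totals)
    return [[r * c / grand for c in col_totals] for r in row_totals]

# Hypothetical counts: 2 treatments x 3 response categories.
observed = [[18, 7, 2],
            [12, 9, 4]]
expected = expected_counts(observed)

# Rule of thumb (assumption): flag cells with expected count < 5.
low = [(i, j, round(e, 2))
       for i, row in enumerate(expected)
       for j, e in enumerate(row) if e < 5]
print(low)
```

Here both cells in the third response category fall below the threshold, suggesting that more observations (or combining sparse categories) would be needed.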

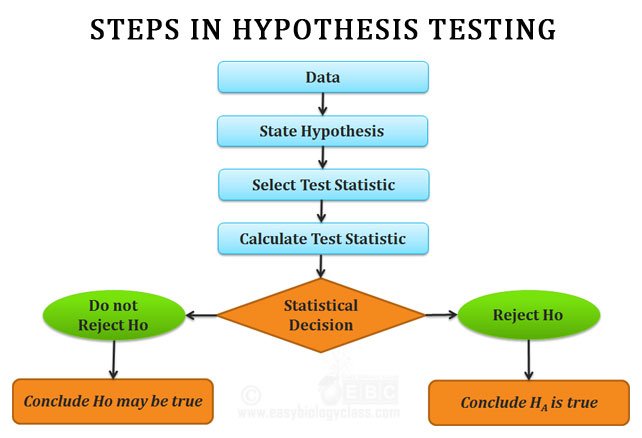

5. Perform a hypothesis test

A hypothesis test is done to determine the probability of observing the experimental data, if the null hypothesis is true. Such tests compare the properties of the experimental data with a theoretical distribution of outcomes expected when the null hypothesis is true. Note that different tests are required depending on whether the treatments and responses are categorical or numerical ( Table 1 ).

One example is the t test used for continuous numerical responses. In this case the properties of the data are summarized by a t statistic and compared with a t distribution ( Figure 5 ). The t distribution gives the probability of obtaining a given t statistic upon taking many random samples from a population where the null hypothesis is true. The shape of the distribution depends on the sample sizes.

Comparison of two t distributions with degrees of freedom of 3 (sample size 4) and 10 (sample size 11) with a normal distribution with a mean value of 0 and SD = 1. The vertical dashed lines are the 2.5th and 97.5th quantiles of the corresponding (same color) t distribution. The area below the left dashed line and above the right dashed line totals 5% of the total area under the curve. The t distribution is the theoretical probability of obtaining a given t statistic with many random samples from a population where the null hypothesis is true. The shape of the distribution depends on the sample size. The distribution is symmetric, centered on 0. The tails are thicker than those of a standard normal distribution, reflecting the higher chance of values away from the mean when both the mean and the variance are being estimated from a sample. The t distribution is a probability density function, so the total area under the curve is equal to 1. The area under the curve between two x-axis (t statistic) values can be calculated using integration. With large sample sizes the accuracy of estimates of the true variance in an experiment increases, and the t distribution converges on a standard normal distribution. To determine the probability of the observed statistic if the null hypothesis were true, one compares the t statistic from an experiment with the theoretical t distribution. For a one-sided test in the greater-than direction, the area above the observed t statistic is the p value. The 97.5th quantile has p = 0.025. For a one-sided test in the less-than direction, the area below the observed t statistic is the p value. The 2.5th quantile has p = 0.025 in this case. For a two-sided test, the p value is the sum of the area beyond the observed statistic and the area beyond the negative of the observed statistic. If this probability value ( p value) is low, the data are not likely under the null hypothesis.
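The thicker tails of the t distribution can be demonstrated by simulation. The sketch below repeatedly draws samples of size n from a standard normal population (so the null hypothesis of mean 0 is true), computes the one-sample t statistic, and records how often |t| exceeds 1.96, the cutoff that a standard normal exceeds only 5% of the time. The seed and number of simulations are arbitrary choices.

```python
import random
from math import sqrt

def tail_fraction(n, cutoff=1.96, n_sim=20000, seed=42):
    """Fraction of simulated one-sample t statistics with |t| > cutoff when
    samples of size n come from a standard normal population (null true)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sim):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        m = sum(sample) / n
        sd = sqrt(sum((x - m) ** 2 for x in sample) / (n - 1))
        if abs(m / (sd / sqrt(n))) > cutoff:
            hits += 1
    return hits / n_sim

for n in (4, 11, 30):
    print("n = %2d (df = %2d): P(|t| > 1.96) ~ %.3f" % (n, n - 1, tail_fraction(n)))
```

With 3 degrees of freedom roughly 14% of null t statistics exceed 1.96, and the fraction approaches 5% as the sample size grows, matching the convergence toward the standard normal distribution.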

If the null hypothesis for a t test is true (i.e., the means of the control and treated populations are the same), the most likely outcome is no difference ( t = 0). However, depending on the sample sizes and variances, other outcomes occur by chance. Comparing the t statistic from an experiment with the theoretical t distribution gives the probability of obtaining an outcome at least as extreme as the one observed if the null hypothesis were true. If this probability value ( p value ) is low, the data are unlikely under the null hypothesis, which counts as evidence against it.

One-sample t test.

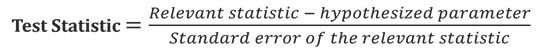

A useful way to think about this equation is that the numerator is the signal (the difference between the sample mean and µ 0 ) and the denominator is the noise (SEM or the variability of the samples). If the sample mean and µ 0 are the same, then t = 0. If the SEM is large relative to the difference in the numerator, t is also small. Small t statistic values are consistent with the null hypothesis of no difference between the true mean and the null value, while large t statistic values are less consistent with the null hypothesis. To see the signal over the noise, the variability must be small relative to the deviation of the sample mean from the null value.
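The signal-over-noise calculation for a one-sample t test, t = (sample mean − µ0)/SEM with SEM = SD/√N, takes only a few lines. The measurements and null value below are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev

def one_sample_t(data, mu0):
    """Return (t, df): t = (sample mean - mu0) / SEM, SEM = SD / sqrt(N), df = N - 1."""
    n = len(data)
    sem = stdev(data) / sqrt(n)   # the noise
    signal = mean(data) - mu0     # the signal
    return signal / sem, n - 1

# Hypothetical measurements tested against a null value of 5.0.
t, df = one_sample_t([5.1, 4.9, 5.3, 5.5, 5.2], mu0=5.0)
print(round(t, 3), df)  # t = 2.0 with df = 4
```

Here the sample mean exceeds the null value by 0.2 units and the SEM is 0.1 units, so the signal is twice the noise.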

Two-sample t test.

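For a two-sample t test comparing the sample means \(\bar{x}_1\) and \(\bar{x}_2\) of two treatments, the t statistic is

\[ t = \frac{\bar{x}_1 - \bar{x}_2}{s_p \sqrt{\frac{1}{N_1} + \frac{1}{N_2}}}, \]

where N 1 and N 2 are the numbers of measurements in each sample, and the pooled sample variance is

\[ s_p^2 = \frac{(N_1 - 1)\,s_1^2 + (N_2 - 1)\,s_2^2}{N_1 + N_2 - 2}, \]

with \(s_1^2\) and \(s_2^2\) the sample variances of the two treatments.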

Again, if the data are noisy, the large denominator weighs down any difference in the means and the t statistic is small.
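The pooled two-sample (Student's) t statistic can be sketched as follows, with the pooled variance weighting each sample variance by its degrees of freedom. The input lists in the usage example are hypothetical.

```python
from math import sqrt
from statistics import mean, variance

def pooled_t(x1, x2):
    """Student's two-sample t statistic with pooled variance; df = N1 + N2 - 2."""
    n1, n2 = len(x1), len(x2)
    sp2 = ((n1 - 1) * variance(x1) + (n2 - 1) * variance(x2)) / (n1 + n2 - 2)
    t = (mean(x1) - mean(x2)) / sqrt(sp2 * (1 / n1 + 1 / n2))
    return t, n1 + n2 - 2

print(pooled_t([1, 2, 3], [4, 5, 6]))     # same 3-unit difference, little noise
print(pooled_t([0, 2, 4], [3, 5, 7]))     # same difference, noisier data: smaller |t|
```

Both pairs of samples differ in mean by 3 units, but the noisier pair has a larger denominator and therefore a smaller |t|.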

Conversion of a t statistic to a p value.

One converts the test statistic (such as t from a two-sample t test) into the corresponding p value with conversion tables or the software noted in Table 1 . The p value is the probability of observing a test statistic at least as extreme as the measured t statistic if the null hypothesis is true. One assumes the null hypothesis is true and calculates the p value from the expected distribution of test statistic values.

In the case of a two-sample t test, under the null hypothesis, the t distribution is completely determined by the number of replicates for the two treatments (i.e., the degrees of freedom). For two-sided null hypotheses, values near 0 are very likely under the null hypothesis, while values far out in the positive and negative tails are unlikely. If one chooses a p value cutoff (α) of 0.05 (a false-positive outcome in five out of 100 trials in which the null hypothesis is true), the area under the curve in the extreme tails (i.e., where t statistic values result in rejecting the null hypothesis) is 0.025 in the left tail and 0.025 in the right tail. An observed test statistic that falls exactly at the threshold between failing to reject and rejecting the null hypothesis has a p value of 0.05, and any test statistic farther out in the tails has a smaller p value. The p value is calculated by integrating the t distribution from the measured t statistic to infinity in the nearest tail and then multiplying that probability by 2 to account for both tails ( Minitab Blog, 2019 ).
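For readers who want to see the conversion without a lookup table, the sketch below computes a two-sided p value from a t statistic and its degrees of freedom using only the Python standard library. Instead of integrating out to infinity, it integrates the t density from 0 to |t| with Simpson's rule and uses the symmetry of the distribution: the two tails together equal 1 minus twice that central area. This is an illustrative numerical sketch; statistical software uses more refined algorithms.

```python
from math import exp, lgamma, log, log1p, pi

def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = lgamma((df + 1) / 2) - lgamma(df / 2) - 0.5 * log(df * pi)
    return exp(log_norm - (df + 1) / 2 * log1p(x * x / df))

def two_sided_p(t, df, steps=2000):
    """Two-sided p value = 1 - (area between -|t| and |t|).

    The area from 0 to |t| is computed with Simpson's rule (steps must be
    even) and doubled, using the symmetry of the t distribution about 0.
    """
    b = abs(t)
    if b == 0.0:
        return 1.0
    h = b / steps
    total = t_pdf(0.0, df) + t_pdf(b, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_half = total * h / 3
    return max(0.0, 1.0 - 2.0 * central_half)

# 2.776 is the tabulated 97.5th percentile of t with 4 df, so p is about 0.05.
print(round(two_sided_p(2.776, 4), 4))
```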

If the p value is less than or equal to α, the null hypothesis is rejected because the data are improbable under the null hypothesis. Otherwise, the null hypothesis is not rejected. The following section discusses the interpretation of p values.

Note that t tests come with assumptions about the nature of the data, so one must choose an appropriate test ( Table 1 ). Beware that statistical software will default to certain t tests that may or may not be appropriate. For example, R’s t.test function defaults to Welch’s t test; the user can request Student’s t test (var.equal = TRUE) or use a separate function (wilcox.test) for the Mann-Whitney U test where these are more appropriate for the data ( Table 1 ). Furthermore, the software may not alert the user with an error message if categorical response data are incorrectly entered for a test that assumes continuous numerical response data.
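The difference between the Welch and Student versions of the test can be seen by computing both statistics side by side. In this sketch, which is not the code of any particular package, Welch's statistic uses separate variance estimates with Welch-Satterthwaite degrees of freedom, while Student's pools the variances. With equal group sizes the two t statistics coincide and only the degrees of freedom differ; with unequal group sizes and unequal variances the statistics themselves diverge. The sample values in the usage lines are hypothetical.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(x1, x2):
    """Welch's unequal-variance t statistic and Welch-Satterthwaite df."""
    v1, v2 = variance(x1) / len(x1), variance(x2) / len(x2)
    t = (mean(x1) - mean(x2)) / sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (len(x1) - 1) + v2 ** 2 / (len(x2) - 1))
    return t, df

def student_t(x1, x2):
    """Student's t statistic with pooled variance; df = N1 + N2 - 2."""
    n1, n2 = len(x1), len(x2)
    sp2 = ((n1 - 1) * variance(x1) + (n2 - 1) * variance(x2)) / (n1 + n2 - 2)
    return (mean(x1) - mean(x2)) / sqrt(sp2 * (1 / n1 + 1 / n2)), n1 + n2 - 2

# Hypothetical samples with unequal sizes and very different spreads.
low_var, high_var = [1, 2, 3], [2, 6, 10, 14, 18]
print("Welch:  ", welch_t(low_var, high_var))
print("Student:", student_t(low_var, high_var))
```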

Confidence intervals ( Box 2 ) are a second, equivalent way to summarize evidence for the null versus alternative hypothesis.

Comparing the outcomes of multiple treatments.

A common misconception is that a series of pairwise tests (e.g., t tests) comparing each of several treatments and a control is equivalent to a single integrated statistical analysis (e.g., ANOVA followed by a Tukey-Kramer post-hoc test). The key distinction between these approaches is that the series of pairwise tests is much more vulnerable to false positives, because the type I error rate accumulates across tests, while the integrated statistical analysis keeps the overall type I error rate at α = 0.05. For example, an experiment with three treatments and a control has six possible pairwise comparisons, and with each t test at α = 0.05 the total type I error across the tests can rise to as much as 0.3 (6 × 0.05). On the other hand, an ANOVA on the three treatments and control tests the null hypothesis that all treatments and the control have the same response with α = 0.05. If the test rejects that null, then one can run a Tukey-Kramer post-hoc analysis to determine which pairs differed significantly, all while keeping the overall type I error rate for the analysis at or below α = 0.05. A series of pairwise tests and a single integrated analysis typically give the same kind of information, but the integrated approach does so without exposure to high levels of false positives. See Figure 3A for an example where an integrated statistical analysis would have been helpful and Example 5 in the Supplemental Tutorial for how to perform the analysis.
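The growth of the familywise type I error with the number of pairwise comparisons can be computed directly. The sketch below reports, for k groups, the number of pairwise tests m = k(k − 1)/2, the upper bound m·α, and the value 1 − (1 − α)^m that would apply if the tests were independent. Pairwise tests that share groups are not independent, so the second figure is only an approximation.

```python
from math import comb

def pairwise_fwer(k_groups, alpha=0.05):
    """Familywise type I error for all pairwise tests among k groups.

    Returns (number of tests m, upper bound m*alpha capped at 1,
    1 - (1 - alpha)**m as if the tests were independent).
    """
    m = comb(k_groups, 2)
    return m, min(1.0, m * alpha), 1 - (1 - alpha) ** m

for k in (3, 4, 6):
    m, bound, indep = pairwise_fwer(k)
    print("k=%d: %2d tests, bound %.2f, independent approx %.3f" % (k, m, bound, indep))
```

For three treatments plus a control (k = 4), the bound is 0.30 and the independence approximation is about 0.26, both far above the nominal 0.05.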

6. Frame appropriate conclusions based on your statistical test

Assuming that one has chosen an appropriate statistical test and the data conform to the assumptions of that test, the statistical test will reject the null hypothesis that the control and treatments have the same responses if the p value is less than or equal to α.

Still, one must use judgment before concluding that two treatments are different or that any detected difference is meaningful in the biological context. One should be skeptical about small but statistically significant differences that are unlikely to impact function. Some statisticians believe that the widespread use of α = 0.05 has resulted in an excess of false positives in biology and the social sciences and recommend smaller cutoffs ( Benjamin et al. , 2018 ). Others have advocated for abandoning tests of statistical significance altogether ( McShane et al. , 2018 ; Amrhein et al. , 2019 ) in favor of a more nuanced approach that takes into account the collective knowledge about the system including statistical tests.

Likewise, a biologically interesting trend that is not statistically significant may warrant collecting more samples and further investigation, particularly when the statistical test is not well powered. Fortunately, rigorous methods exist to determine whether low statistical power (see Step 3) is the issue. Then a decision can be made about whether to repeat the experiment or accept the result and avoid wasting effort and reagents.

7. Choose the best way to illustrate your results for publication or presentation

The nature of the experiment and statistical test should guide the selection of an appropriate presentation. Some types of data are well displayed in a table rather than a figure, such as counts for a categorical treatment and categorical response (see Example 4 in the Supplemental Tutorial). Other types of data may require more sophisticated figures, such as the Kaplan-Meier plot of the cumulative probability of an event through time in Figure 3C .

The type of statistical test, and any transformations applied, must be specified when reporting results. Unfortunately, researchers often fail to provide sufficient detail (e.g., software options, test assumptions) for others to repeat the analysis. Many papers report p values that appear improbable based on simple inspection of the data and without specifying the statistical test used. Some report SEM without the number of measurements, so the actual variability is not revealed.

It is helpful to show raw data along with the results of a statistical test. Some formats used to present data provide much more information than others ( Figure 3 ). These figures display both the mean and the SD for each treatment as well as the p value from comparing treatments. Figure 3A includes the individual measurements so that the number and distribution of data points are available to show whether the assumptions of a test are met and to help with the interpretation of the experiment. Bar graphs ( Figure 3B ) do not include such raw data, but strip plots (see Figure 3A and Examples 1, 2, and 5 in the Supplemental Tutorial), histograms, and scatter plots do.

An alternative to indicating p values on a figure is to display 95% confidence intervals as error bars about the mean for each treatment (see Supplemental Tutorial Examples 1, 2, 3, and 5 for examples). When the 95% confidence intervals of two treatments do not overlap, we know that a t test would produce a significant result, and when the confidence interval for one treatment overlaps the mean of another treatment we know that a t test would produce a nonsignificant result. We do not recommend using SEM as error bars, because SEM fails to convey either true variation or statistical significance. Unfortunately, authors commonly use SEM for error bars without appreciating that it is not a measure of true variation and, at best, is difficult to interpret as a description of the significance of the differences of group means. Many ( Figure 3, A and C ) but not all ( Figure 3B ) papers explain their statistical methods clearly. Unfortunately, a substantial number of papers in Molecular Biology of the Cell and other journals include error bars without explaining what was measured.
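A 95% confidence interval for a mean is the mean ± t_crit × SEM, where t_crit is the 97.5th percentile of the t distribution with N − 1 degrees of freedom. To keep this sketch short, t_crit is passed in by the caller (taken from a table or statistical software, e.g., 2.776 for N = 5) rather than computed; the two data sets are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev

def ci95(data, t_crit):
    """95% confidence interval for the mean: mean +/- t_crit * SEM.

    t_crit must be the 97.5th percentile of t with len(data) - 1 df,
    supplied by the caller (e.g., 2.776 for N = 5).
    """
    m = mean(data)
    half_width = t_crit * stdev(data) / sqrt(len(data))
    return m - half_width, m + half_width

def overlaps(ci_a, ci_b):
    """True if two intervals (low, high) share any values."""
    return ci_a[0] <= ci_b[1] and ci_b[0] <= ci_a[1]

control = [5.1, 4.9, 5.3, 5.5, 5.2]   # hypothetical
treated = [6.0, 6.3, 5.8, 6.1, 6.3]   # hypothetical
ci_c, ci_t = ci95(control, 2.776), ci95(treated, 2.776)
print(ci_c, ci_t, "overlap:", overlaps(ci_c, ci_t))
```

These intervals do not overlap, which, by the reasoning in the paragraph above, implies that a t test comparing the two treatments would be significant.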

Cellular and molecular biologists can use statistics effectively when analyzing and presenting their data if they follow the seven steps described here. Doing so will help them avoid common mistakes ( Box 3 ). The Molecular Biology of the Cell website has advice about experimental design and statistical tests aligned with this perspective ( Box 4 ). Many institutions also have consultants available to offer advice about these basic matters or more advanced topics.

Box 3: Common mistakes to avoid

Not publishing raw data so analyses can be replicated.

Using proportions or percentages of categorical variables as continuous numerical variables in a t test or ANOVA.

Combining biological and technical replicates (pseudoreplication).

Ignoring nuisance treatment variables such as date of experiment.

Performing a hypothesis test without providing evidence that the data meet the assumptions of the test.

Performing multiple pairwise tests (e.g., t tests) instead of a single integrated test (e.g., ANOVA followed by a Tukey-Kramer post-hoc test).

Not reporting the details of the hypothesis test (name of test, test statistic, parameters, and p value).

Figures lacking interpretable information about the spread of the responses for each treatment.

Figures lacking interpretable information about the outcomes of the hypothesis tests.

Box 4: Molecular Biology of the Cell statistical checklist

Where appropriate, the following information is included in the Materials and Methods section:

- How the sample size was chosen to ensure adequate power to detect a prespecified effect size.

- Inclusion/exclusion criteria if samples or animals were excluded from the analysis.

- Description of a method of randomization to determine how samples/animals were allocated to experimental groups and processed.

- The extent of blinding if the investigator was blinded to the group allocation during the experiment and/or when assessing the outcome.

- a. Do the data meet the assumptions of the tests (e.g., normal distribution)?

- b. Is there an estimate of variation within each group of data?

- c. Is the variance similar between the groups that are being statistically compared?

Source: www.ascb.org/files/mboc-checklist.pdf

STATISTICS TUTORIAL

The Supplemental Materials online provide a tutorial as both a pdf file and a Jupyter notebook (.ipynb) file to practice analyzing data. The Supplemental Tutorial uses free R statistical software ( www.r-project.org/ ) to analyze five data sets (provided as Excel files). Each example uses a different statistical test: Welch’s t test for unequal variances; Student’s t test on log transformed responses; logistic regression for categorical response and two treatment variables; chi-square contingency test on combined response and combined treatment groups; and ANOVA with Tukey-Kramer post-hoc analysis.

Supplementary Material

Acknowledgments.

Research reported in this publication was supported by National Science Foundation Award MCB-1518314 to D.A.P., the National Institute of General Medical Sciences of the National Institutes of Health under awards no. R01GM026132 and no. R01GM026338 to T.D.P., and the Gladstone Institutes to K.S.P. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Science Foundation or the National Institutes of Health. We thank Alex Epstein and Samantha Dundon for their suggestions on the text.

DOI: http://www.molbiolcell.org/cgi/doi/10.1091/mbc.E15-02-0076

- Amrhein V, Greenland S, McShane B (2019). Scientists rise up against statistical significance. Nature, 305–307.

- Atay O, Skotheim JM (2014). Modularity and predictability in cell signaling and decision making. Mol Biol Cell, 3445–3450.

- Bartolini F, Andres-Delgado L, Qu X, Nik S, Ramalingam N, Kremer L, Alonso MA, Gundersen GG (2016). An mDia1-INF2 formin activation cascade facilitated by IQGAP1 regulates stable microtubules in migrating cells. Mol Biol Cell, 1797–1808.

- Benjamin DJ, Berger JO, Johannesson M, Nosek BA, Wagenmakers E-J, Berk R, Bollen KA, Brembs B, Brown L, Camerer C, et al. (2018). Redefine statistical significance. Nat Hum Behav, 6–10.

- Cohen J (1992). A power primer. Psychol Bull, 155–159.

- Crawley MJ (2013). The R Book, 2nd ed. New York: John Wiley & Sons.

- Dudoit S, van der Laan MJ (2008). Multiple Testing Procedures with Applications to Genomics. In Springer Series in Statistics. New York: Springer Science & Business Media, LLC, 1–590.

- Fisher RA (1938). Presidential address to the first Indian Statistical Congress. Sankhya, 14–17.

- Goss JW, Kim S, Bledsoe H, Pollard TD (2014). Characterization of the roles of Blt1p in fission yeast cytokinesis. Mol Biol Cell, 1946–1957.

- Kumfer KT, Cook SJ, Squirrell JM, Eliceiri KW, Peel N, O’Connell KF, White JG (2010). CGEF-1 and CHIN-1 regulate CDC-42 activity during asymmetric division in the Caenorhabditis elegans embryo. Mol Biol Cell, 266–277.

- Li Y, Christensen JR, Homa KE, Hocky GM, Fok A, Sees JA, Voth GA, Kovar DR (2016). The F-actin bundler α-actinin Ain1 is tailored for ring assembly and constriction during cytokinesis in fission yeast. Mol Biol Cell, 1821–1833.

- McShane BB, Gal D, Gelman A, Robert C, Tackett JL (2018). Abandon statistical significance. arXiv:1709.07588v2 [stat.ME].

- Minitab Blog (2019). http://blog.minitab.com/blog/adventures-in-statistics-2/understanding-t-tests-t-values-and-t-distributions

- Nowotarski SH, McKeon N, Moser RJ, Peifer M (2014). The actin regulators Enabled and Diaphanous direct distinct protrusive behaviors in different tissues during Drosophila development. Mol Biol Cell, 3147–3165.

- Plooster M, Menon S, Winkle CC, Urbina FL, Monkiewicz C, Phend KD, Weinberg RJ, Gupton SL (2017). TRIM9-dependent ubiquitination of DCC constrains kinase signaling, exocytosis, and axon branching. Mol Biol Cell, 2374–2385.

- Spencer AK, Schaumberg AJ, Zallen JA (2017). Scaling of cytoskeletal organization with cell size in Drosophila. Mol Biol Cell, 1519–1529.

- Vaux DL (2012). Research methods: know when your numbers are significant. Nature, 180–181.

- Whitlock MC, Schluter D (2014). The Analysis of Biological Data, 2nd ed. Englewood, CO: Roberts & Company Publishers.

- Zhuravlev Y, Hirsch SM, Jordan SN, Dumont J, Shirasu-Hiza M, Canman JC (2017). CYK-4 regulates Rac, but not Rho, during cytokinesis. Mol Biol Cell, 1258–1270.


1.4: Basic Concepts of Hypothesis Testing


- John H. McDonald

- University of Delaware


Learning Objectives

- One of the main goals of statistical hypothesis testing is to estimate the \(P\) value, which is the probability of obtaining the observed results, or something more extreme, if the null hypothesis were true. If the observed results are unlikely under the null hypothesis, reject the null hypothesis.

- Alternatives to this "frequentist" approach to statistics include Bayesian statistics and estimation of effect sizes and confidence intervals.

Introduction

There are different ways of doing statistics. The technique used by the vast majority of biologists, and the technique that most of this handbook describes, is sometimes called "frequentist" or "classical" statistics. It involves testing a null hypothesis by comparing the data you observe in your experiment with the predictions of a null hypothesis. You estimate what the probability would be of obtaining the observed results, or something more extreme, if the null hypothesis were true. If this estimated probability (the \(P\) value) is small enough (below the significance level), then you conclude that it is unlikely that the null hypothesis is true; you reject the null hypothesis and accept an alternative hypothesis.

Many statisticians harshly criticize frequentist statistics, but their criticisms haven't had much effect on the way most biologists do statistics. Here I will outline some of the key concepts used in frequentist statistics, then briefly describe some of the alternatives.

Null Hypothesis

The null hypothesis is a statement that you want to test. In general, the null hypothesis is that things are the same as each other, or the same as a theoretical expectation. For example, if you measure the size of the feet of male and female chickens, the null hypothesis could be that the average foot size in male chickens is the same as the average foot size in female chickens. If you count the number of male and female chickens born to a set of hens, the null hypothesis could be that the ratio of males to females is equal to a theoretical expectation of a \(1:1\) ratio.

The alternative hypothesis is that things are different from each other, or different from a theoretical expectation.

For example, one alternative hypothesis would be that male chickens have a different average foot size than female chickens; another would be that the sex ratio is different from \(1:1\).

Usually, the null hypothesis is boring and the alternative hypothesis is interesting. For example, let's say you feed chocolate to a bunch of chickens, then look at the sex ratio in their offspring. If you get more females than males, it would be a tremendously exciting discovery: it would be a fundamental discovery about the mechanism of sex determination, female chickens are more valuable than male chickens in egg-laying breeds, and you'd be able to publish your result in Science or Nature . Lots of people have spent a lot of time and money trying to change the sex ratio in chickens, and if you're successful, you'll be rich and famous. But if the chocolate doesn't change the sex ratio, it would be an extremely boring result, and you'd have a hard time getting it published in the Eastern Delaware Journal of Chickenology . It's therefore tempting to look for patterns in your data that support the exciting alternative hypothesis. For example, you might look at \(48\) offspring of chocolate-fed chickens and see \(31\) females and only \(17\) males. This looks promising, but before you get all happy and start buying formal wear for the Nobel Prize ceremony, you need to ask "What's the probability of getting a deviation from the null expectation that large, just by chance, if the boring null hypothesis is really true?" Only when that probability is low can you reject the null hypothesis. The goal of statistical hypothesis testing is to estimate the probability of getting your observed results under the null hypothesis.
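The question "what's the probability of a deviation that large, just by chance?" can be answered for the chocolate-fed chickens with an exact binomial calculation. The sketch below assumes a null hypothesis of a 1:1 sex ratio (probability 0.5 that each offspring is male) and computes the probability of 17 or fewer males among 48 offspring; because this null distribution is symmetric, doubling that tail gives a two-tailed P value that also counts equally extreme excesses of males.

```python
from math import comb

def binomial_tail(k, n, p=0.5):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k + 1))

# 17 males among 48 offspring; null hypothesis: 1:1 sex ratio.
one_tailed = binomial_tail(17, 48)
two_tailed = min(1.0, 2 * one_tailed)  # symmetric null, so double one tail
print("one-tailed P = %.3f, two-tailed P = %.3f" % (one_tailed, two_tailed))
```

The one-tailed probability is about 0.03, so the two-tailed P value is about 0.06: suggestive, but not below the conventional 0.05 significance level.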

Biological vs. Statistical Null Hypotheses

It is important to distinguish between biological null and alternative hypotheses and statistical null and alternative hypotheses. "Sexual selection by females has caused male chickens to evolve bigger feet than females" is a biological alternative hypothesis; it says something about biological processes, in this case sexual selection. "Male chickens have a different average foot size than females" is a statistical alternative hypothesis; it says something about the numbers, but nothing about what caused those numbers to be different. The biological null and alternative hypotheses are the first that you should think of, as they describe something interesting about biology; they are two possible answers to the biological question you are interested in ("What affects foot size in chickens?"). The statistical null and alternative hypotheses are statements about the data that should follow from the biological hypotheses: if sexual selection favors bigger feet in male chickens (a biological hypothesis), then the average foot size in male chickens should be larger than the average in females (a statistical hypothesis). If you reject the statistical null hypothesis, you then have to decide whether that's enough evidence that you can reject your biological null hypothesis. For example, if you don't find a significant difference in foot size between male and female chickens, you could conclude "There is no significant evidence that sexual selection has caused male chickens to have bigger feet." If you do find a statistically significant difference in foot size, that might not be enough for you to conclude that sexual selection caused the bigger feet; it might be that males eat more, or that the bigger feet are a developmental byproduct of the roosters' combs, or that males run around more and the exercise makes their feet bigger. 
When there are multiple biological interpretations of a statistical result, you need to think of additional experiments to test the different possibilities.

Testing the Null Hypothesis

The primary goal of a statistical test is to determine whether an observed data set is so different from what you would expect under the null hypothesis that you should reject the null hypothesis. For example, let's say you are studying sex determination in chickens. For breeds of chickens that are bred to lay lots of eggs, female chicks are more valuable than male chicks, so if you could figure out a way to manipulate the sex ratio, you could make a lot of chicken farmers very happy. You've fed chocolate to a bunch of female chickens (in birds, unlike mammals, the female parent determines the sex of the offspring), and you get \(25\) female chicks and \(23\) male chicks. Anyone would look at those numbers and see that they could easily result from chance; there would be no reason to reject the null hypothesis of a \(1:1\) ratio of females to males. If you got \(47\) females and \(1\) male, most people would look at those numbers and see that they would be extremely unlikely to happen due to luck, if the null hypothesis were true; you would reject the null hypothesis and conclude that chocolate really changed the sex ratio. However, what if you had \(31\) females and \(17\) males? That's definitely more females than males, but is it really so unlikely to occur due to chance that you can reject the null hypothesis? To answer that, you need more than common sense: you need to calculate the probability of getting a deviation that large due to chance.

In the figure above, I used the BINOMDIST function of Excel to calculate the probability of getting each possible number of males, from \(0\) to \(48\), under the null hypothesis that \(0.5\) are male. As you can see, the probability of getting \(17\) males out of \(48\) total chickens is about \(0.015\). That seems like a pretty small probability, doesn't it? However, that's the probability of getting exactly \(17\) males. What you want to know is the probability of getting \(17\) or fewer males. If you were going to accept \(17\) males as evidence that the sex ratio was biased, you would also have accepted \(16\), or \(15\), or \(14\),… males as evidence for a biased sex ratio. You therefore need to add together the probabilities of all these outcomes. The probability of getting \(17\) or fewer males out of \(48\), under the null hypothesis, is \(0.030\). That means that if you had an infinite number of chickens, half males and half females, and you took a bunch of random samples of \(48\) chickens, \(3.0\%\) of the samples would have \(17\) or fewer males.
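The handbook uses Excel's BINOMDIST for these numbers (with its cumulative argument set to FALSE for "exactly" and TRUE for "or fewer"). As a rough sketch of the same arithmetic outside Excel, the two probabilities can be computed with Python's standard-library `math.comb`, assuming the null probability that a chick is male is exactly \(0.5\). The function names `binom_pmf` and `binom_cdf` are illustrative, not from the original text.

```python
from math import comb

def binom_pmf(k, n, p):
    """Probability of exactly k males among n chicks, each male with probability p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def binom_cdf(k, n, p):
    """Probability of k or fewer males: the pmf summed over 0, 1, ..., k."""
    return sum(binom_pmf(i, n, p) for i in range(k + 1))

n_chicks, n_males, p_male = 48, 17, 0.5

exactly_17 = binom_pmf(n_males, n_chicks, p_male)
at_most_17 = binom_cdf(n_males, n_chicks, p_male)

print(f"P(exactly 17 males)  = {exactly_17:.3f}")  # 0.015
print(f"P(17 or fewer males) = {at_most_17:.3f}")  # 0.030
```

The gap between the two printed values is the point of the paragraph: the single outcome "exactly 17" is only part of the evidence you would have counted as a biased sex ratio.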

This number, \(0.030\), is the \(P\) value. It is defined as the probability of getting the observed result, or a more extreme result, if the null hypothesis is true. So "\(P=0.030\)" is a shorthand way of saying "The probability of getting \(17\) or fewer male chickens out of \(48\) total chickens, IF the null hypothesis is true that \(50\%\) of chickens are male, is \(0.030\)."
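The "infinite number of chickens" interpretation can be checked by simulation. This is a minimal Monte Carlo sketch, not part of the handbook's method: it assumes each chick is independently male with probability \(0.5\), draws many samples of \(48\), and counts how often a sample has \(17\) or fewer males. The function name, seed, and number of samples are arbitrary choices for illustration.

```python
import random

def simulate_p_value(n_chicks=48, observed_males=17, n_samples=200_000, seed=2024):
    """Estimate P(observed_males or fewer males) when the population is exactly half male."""
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    as_extreme = 0
    for _ in range(n_samples):
        # Each of the n_chicks random bits is one chick: 1 = male, 0 = female,
        # and each bit is 1 with probability 0.5.
        males = bin(rng.getrandbits(n_chicks)).count("1")
        if males <= observed_males:
            as_extreme += 1
    return as_extreme / n_samples

estimate = simulate_p_value()
print(f"Fraction of null samples with 17 or fewer males: {estimate:.3f}")
```

With this many simulated samples, the printed fraction should land close to the exact \(P\) value of \(0.030\); more samples bring it closer.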

False Positives vs. False Negatives

After you do a statistical test, you will either reject the null hypothesis or fail to reject it. Rejecting the null hypothesis means that you conclude that the null hypothesis is not true; in our chicken sex example, you would conclude that the true proportion of male chicks, if you gave chocolate to an infinite number of chicken mothers, would be less than \(50\%\).

When you reject a null hypothesis, there's a chance that you're making a mistake. The null hypothesis might really be true, and it may be that your experimental results deviate from the null hypothesis purely as a result of chance. In a sample of \(48\) chickens, it's possible to get \(17\) male chickens purely by chance; it's even possible (although extremely unlikely) to get \(0\) male and \(48\) female chickens purely by chance, even though the true proportion is \(50\%\) males. This is why we never say we "prove" something in science; there's always a chance, however minuscule, that our data are fooling us and deviate from the null hypothesis purely due to chance. When your data fool you into rejecting the null hypothesis even though it's true, it's called a "false positive," or a "Type I error." So another way of defining the \(P\) value is the probability of getting a false positive like the one you've observed, if the null hypothesis is true.

Another way your data can fool you is when you don't reject the null hypothesis, even though it's not true. If the true proportion of female chicks is \(51\%\), the null hypothesis of a \(50\%\) proportion is not true, but you're unlikely to get a significant difference from the null hypothesis unless you have a huge sample size. Failing to reject the null hypothesis, even though it's not true, is a "false negative" or "Type II error." This is why we never say that our data show the null hypothesis to be true; all we can say is that we haven't rejected the null hypothesis.
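To put a rough number on "unless you have a huge sample size," here is a sketch that approximates the chance of detecting a true \(51\%\) female proportion with a two-tailed test at the \(0.05\) level. It swaps the exact binomial calculation for the normal approximation to the binomial (via Python's `statistics.NormalDist`), and the sample sizes are hypothetical, chosen only to show the trend.

```python
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()  # mean 0, standard deviation 1

def approx_power(n, true_p, null_p=0.5, alpha=0.05):
    """Approximate chance that a two-tailed test of null_p rejects the null
    when the true proportion is true_p (normal approximation to the binomial)."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    se_null = sqrt(null_p * (1 - null_p) / n)    # spread of the sample proportion under the null
    se_true = sqrt(true_p * (1 - true_p) / n)    # spread when true_p is the real proportion
    upper = null_p + z_crit * se_null            # reject if the sample proportion is above this...
    lower = null_p - z_crit * se_null            # ...or below this
    return ((1 - STD_NORMAL.cdf((upper - true_p) / se_true))
            + STD_NORMAL.cdf((lower - true_p) / se_true))

for n in (48, 1_000, 20_000):
    power = approx_power(n, true_p=0.51)
    print(f"n = {n:>6}: chance of detecting 51% females = {power:.2f}, "
          f"chance of a false negative = {1 - power:.2f}")
```

With \(48\) chicks the chance of detecting the difference is barely above the \(5\%\) false-positive rate, so a false negative is almost guaranteed; only with sample sizes in the tens of thousands does detection become likely.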

Significance Levels

Does a probability of \(0.030\) mean that you should reject the null hypothesis, and conclude that chocolate really caused a change in the sex ratio? The convention in most biological research is to use a significance level of \(0.05\). This means that if the \(P\) value is less than \(0.05\), you reject the null hypothesis; if \(P\) is greater than or equal to \(0.05\), you don't reject the null hypothesis. There is nothing mathematically magic about \(0.05\); it was chosen rather arbitrarily during the early days of statistics, and people could have agreed upon \(0.04\), or \(0.025\), or \(0.071\) as the conventional significance level.

The significance level (also known as the "critical value" or "alpha") you should use depends on the costs of different kinds of errors. With a significance level of \(0.05\), you have a \(5\%\) chance of rejecting the null hypothesis, even if it is true. If you try \(100\) different treatments on your chickens, and none of them really change the sex ratio, you should expect about \(5\%\) of your experiments to give you data that are significantly different from a \(1:1\) sex ratio, just by chance. In other words, about \(5\%\) of your experiments will give you a false positive. If you use a higher significance level than the conventional \(0.05\), such as \(0.10\), you will increase your chance of a false positive to \(0.10\) (therefore increasing your chance of an embarrassingly wrong conclusion), but you will also decrease your chance of a false negative (increasing your chance of detecting a subtle effect). If you use a lower significance level than the conventional \(0.05\), such as \(0.01\), you decrease your chance of an embarrassing false positive, but you also make it less likely that you'll detect a real deviation from the null hypothesis if there is one.
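The trade-off between false positives and false negatives can be illustrated with a small simulation. This sketch assumes a continuous measurement, such as the difference in average foot size between male and female chickens, with a known standard deviation, tested with a two-sided z-test; the sample size, standard deviation, and effect size are hypothetical. A continuous test is used because its false-positive rate matches alpha; with discrete counts such as \(48\) chicks, an exact test cannot hit \(5\%\) exactly and its realized false-positive rate is somewhat lower.

```python
import math
import random

def two_sided_p(z):
    """P(|Z| >= |z|) for a standard normal Z."""
    return math.erfc(abs(z) / math.sqrt(2))

def rejection_rate(true_diff, alpha, n=20, sigma=1.0, trials=20_000, seed=7):
    """Fraction of simulated experiments in which a two-sided z-test rejects
    'no difference between the two group means' at significance level alpha."""
    rng = random.Random(seed)      # same seed -> same simulated data for every alpha
    se = sigma * math.sqrt(2 / n)  # standard error of a difference between two means of size n
    rejections = 0
    for _ in range(trials):
        observed_diff = rng.gauss(true_diff, se)  # simulated difference in sample means
        if two_sided_p(observed_diff / se) < alpha:
            rejections += 1
    return rejections / trials

for alpha in (0.01, 0.05, 0.10):
    false_pos = rejection_rate(true_diff=0.0, alpha=alpha)  # the null hypothesis is true
    power = rejection_rate(true_diff=0.5, alpha=alpha)      # a real but modest difference
    print(f"alpha = {alpha:.2f}: false positives = {false_pos:.3f}, "
          f"false negatives = {1 - power:.3f}")
```

Reusing the same random seed for every alpha means each higher significance level rejects a superset of the experiments rejected at a lower one, so the false-positive column rises and the false-negative column falls as alpha increases.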

The relative costs of false positives and false negatives, and thus the best significance level to use, will be different for different experiments. If you are screening a bunch of potential sex-ratio-changing treatments and get a false positive, it wouldn't be a big deal; you'd just run a few more tests on that treatment until you were convinced the initial result was a false positive. The cost of a false negative, however, would be that you would miss out on a tremendously valuable discovery. You might therefore set your significance level to \(0.10\) or more for your initial tests. On the other hand, once your sex-ratio-changing treatment is undergoing final trials before being sold to farmers, a false positive could be very expensive; you'd want to be very confident that it really worked. Otherwise, if you sell the chicken farmers a sex-ratio treatment that turns out to not really work (it was a false positive), they'll sue the pants off of you. Therefore, you might want to set your significance level to \(0.01\), or even lower, for your final tests.

The significance level you choose should also depend on how likely you think it is that your alternative hypothesis will be true, a prediction that you make before you do the experiment. This is the foundation of Bayesian statistics, as explained below.

You must choose your significance level before you collect the data, of course. If you choose to use a different significance level than the conventional \(0.05\), people will be skeptical; you must be able to justify your choice. Throughout this handbook, I will always use \(P< 0.05\) as the significance level. If you are doing an experiment where the cost of a false positive is a lot greater or smaller than the cost of a false negative, or an experiment where you think it is unlikely that the alternative hypothesis will be true, you should consider using a different significance level.

One-tailed vs. Two-tailed Probabilities

The probability that was calculated above, \(0.030\), is the probability of getting \(17\) or fewer males out of \(48\). It would be significant, using the conventional \(P<0.05\) criterion. However, what about the probability of getting \(17\) or fewer females? If your null hypothesis is "The proportion of males is \(0.5\) or more" and your alternative hypothesis is "The proportion of males is less than \(0.5\)," then you would use the \(P=0.03\) value found by adding the probabilities of getting \(17\) or fewer males. This is called a one-tailed probability, because you are adding the probabilities in only one tail of the distribution shown in the figure. However, if your null hypothesis is "The proportion of males is \(0.5\)", then your alternative hypothesis is "The proportion of males is different from \(0.5\)." In that case, you should add the probability of getting \(17\) or fewer females to the probability of getting \(17\) or fewer males. This is called a two-tailed probability. If you do that with the chicken result, you get \(P=0.06\), which is not quite significant.
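Both probabilities can be sketched with exact binomial sums, assuming a null proportion of males of exactly \(0.5\). The helper names are illustrative. The two-tailed line follows the paragraph literally: "\(17\) or fewer females" is the same event as "\(31\) or more males," so that upper tail is added to the lower one (this form assumes the observed count is below the expected \(24\)).

```python
from math import comb

def binom_cdf(k, n, p=0.5):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))

def binom_sf(k, n, p=0.5):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return 1 - binom_cdf(k - 1, n, p)

n, males = 48, 17

# One-tailed: the alternative is "fewer than half are male", so only the low tail counts.
one_tailed = binom_cdf(males, n)

# Two-tailed: add "17 or fewer females" (that is, 31 or more males) to "17 or fewer males".
two_tailed = binom_cdf(males, n) + binom_sf(n - males, n)

print(f"one-tailed P = {one_tailed:.3f}")  # 0.030
print(f"two-tailed P = {two_tailed:.3f}")  # 0.059
```

Because the null distribution is symmetric when the proportion is \(0.5\), the two-tailed value is simply twice the one-tailed value, which rounds to the \(P=0.06\) quoted in the text.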

You should decide whether to use the one-tailed or two-tailed probability before you collect your data, of course. A one-tailed probability is more powerful, in the sense of having a lower chance of false negatives, but you should only use a one-tailed probability if you really, truly have a firm prediction about which direction of deviation you would consider interesting. In the chicken example, you might be tempted to use a one-tailed probability, because you're only looking for treatments that decrease the proportion of worthless male chickens. But if you accidentally found a treatment that produced \(87\%\) male chickens, would you really publish the result as "The treatment did not cause a significant decrease in the proportion of male chickens"? I hope not. You'd realize that this unexpected result, even though it wasn't what you and your farmer friends wanted, would be very interesting to other people; by leading to discoveries about the fundamental biology of sex determination in chickens, it might even help you produce more female chickens someday. Any time a deviation in either direction would be interesting, you should use the two-tailed probability. In addition, people are skeptical of one-tailed probabilities, especially if a one-tailed probability is significant and a two-tailed probability would not be significant (as in our chocolate-eating chicken example). Unless you provide a very convincing explanation, people may think you decided to use the one-tailed probability after you saw that the two-tailed probability wasn't quite significant, which would be cheating. It may be easier to always use two-tailed probabilities. For this handbook, I will always use two-tailed probabilities, unless I make it very clear that only one direction of deviation from the null hypothesis would be interesting.
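The \(87\%\) male scenario shows why a one-tailed test in the "wrong" direction is misleading. This sketch assumes hypothetical data of \(42\) males out of \(48\) chicks (\(87.5\%\)) and a null proportion of \(0.5\); the numbers are chosen only to match the paragraph's example.

```python
from math import comb

def binom_cdf(k, n, p=0.5):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))

n, males = 48, 42  # hypothetical: 42 of 48 chicks (87.5%) are male

# One-tailed test that only looks for a DECREASE in males: low tail only.
p_decrease_only = binom_cdf(males, n)

# Two-tailed test: a deviation this large in either direction.
# 42 males is 18 above the expected 24, so the mirror-image outcome is 6 males.
upper_tail = 1 - binom_cdf(males - 1, n)  # P(42 or more males)
lower_tail = binom_cdf(n - males, n)      # P(6 or fewer males)
p_two_tailed = upper_tail + lower_tail

print(f"one-tailed (decrease only) P = {p_decrease_only:.4f}")
print(f"two-tailed P = {p_two_tailed:.1e}")
```

The one-tailed probability is essentially \(1\), which would report "no significant decrease," while the two-tailed probability is tiny, correctly flagging a large and interesting deviation.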

Reporting your results