How to Create a Data Analysis Plan: A Detailed Guide

by Barche Blaise | Aug 12, 2020 | Writing

If a good research question is a story, then a roadmap is vital for telling it well. We advise every student or researcher to write their own data analysis plan before seeking any advice. In this blog article, we explore how to create a data analysis plan: its content and its structure.

A data analysis plan serves as a roadmap for how the collected data will be organised and analysed. A good plan:

- Clearly states the research objectives and hypotheses

- Identifies the dataset to be used

- Specifies the inclusion and exclusion criteria

- Clearly states the research variables

- States the statistical test for each hypothesis and the software for statistical analysis

- Includes shell tables

1. Stating research question(s), objectives and hypotheses:

All research objectives or goals must be clearly stated. They must be Specific, Measurable, Attainable, Realistic and Time-bound (SMART). Hypotheses are testable statements, usually derived from personal experience or previous literature. They lay the foundation for the statistical methods that will be used to generalize results from the study sample to the entire population.

2. The dataset:

The dataset to be used for statistical analysis must be described and its important aspects outlined. These include: the owner of the dataset, how to get access to it, how it was checked for quality control, and the program in which it is stored (Excel, Epi Info, SQL, Microsoft Access, etc.).

3. Inclusion and exclusion criteria:

These criteria determine which records in the dataset will be used for data analysis. They also guide the choice of variables included in the main analysis.
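Writing the criteria as explicit rules makes the analysis set reproducible. The following is a minimal Python sketch. It assumes a small hypothetical dataset stored as dictionaries and made-up criteria: adults aged 18 or older who consented are included, and records with a missing outcome are excluded. Neither the field names nor the cut-offs come from this article.

```python
# Hypothetical records; the fields and cut-offs below are illustrative assumptions.
records = [
    {"id": 1, "age": 34, "consented": True, "outcome": 1},
    {"id": 2, "age": 16, "consented": True, "outcome": 0},
    {"id": 3, "age": 52, "consented": False, "outcome": 1},
    {"id": 4, "age": 47, "consented": True, "outcome": None},
    {"id": 5, "age": 29, "consented": True, "outcome": 0},
]


def meets_inclusion(rec):
    """Inclusion criterion (assumed): adults aged 18 or older who gave consent."""
    return rec["age"] >= 18 and rec["consented"]


def meets_exclusion(rec):
    """Exclusion criterion (assumed): the outcome was not recorded."""
    return rec["outcome"] is None


analysis_set = [r for r in records if meets_inclusion(r) and not meets_exclusion(r)]
excluded_ids = [r["id"] for r in records if r not in analysis_set]

print("analysed:", [r["id"] for r in analysis_set])  # analysed: [1, 5]
print("excluded:", excluded_ids)                      # excluded: [2, 3, 4]
```

Keeping the excluded identifiers alongside the analysis set makes it straightforward to report how many records each criterion removed.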

4. Variables:

Every variable collected in the study should be clearly stated. They should be presented based on the level of measurement (ordinal/nominal or ratio/interval levels), or the role the variable plays in the study (independent/predictors or dependent/outcome variables). The variable types should also be outlined. The variable type in conjunction with the research hypothesis forms the basis for selecting the appropriate statistical tests for inferential statistics. A good data analysis plan should summarize the variables as demonstrated in Figure 1 below.
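The variable summary can also be kept as a machine-readable codebook. The minimal Python sketch below records each variable's level of measurement and role. The variable names are hypothetical and use the disease "X" example from the shell-table section. The helper `variable_type` is an illustrative assumption that groups nominal and ordinal variables as categorical and interval and ratio variables as continuous.

```python
from dataclasses import dataclass


@dataclass
class Variable:
    name: str
    level: str  # level of measurement: "nominal", "ordinal", "interval" or "ratio"
    role: str   # "dependent" (outcome) or "independent" (predictor)


def variable_type(v):
    """Group nominal/ordinal as categorical and interval/ratio as continuous."""
    return "categorical" if v.level in {"nominal", "ordinal"} else "continuous"


# Hypothetical codebook for a study of disease "X"
codebook = [
    Variable("disease_x", "nominal", "dependent"),
    Variable("sex", "nominal", "independent"),
    Variable("exercise_frequency", "ordinal", "independent"),
    Variable("age_years", "ratio", "independent"),
]

for v in codebook:
    print(f"{v.name:<20} {v.level:<8} {variable_type(v):<12} {v.role}")
```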

5. Statistical software:

There are many software packages for data analysis. Common examples are SPSS, Epi Info, SAS, Stata and Microsoft Excel. Include the version number, year of release and author/manufacturer. Beginners tend to try several packages and end up mastering none. It is better to select one and master it, because almost all statistical packages perform equally well for the basic analyses and for most of the advanced analyses needed for a student thesis. This is what we recommend to all our students at CRENC before they begin writing their results section.

6. Selecting the appropriate statistical method to test hypotheses:

Depending on the research question, the hypothesis and the type of variable, several statistical methods may be appropriate. This part of the data analysis plan should state which method will be used to test each hypothesis and why. It should also state the level of statistical significance (alpha), which is the threshold against which p values are compared. Alpha is often, but not always, set at 0.05. Figures 2a and 2b present decision trees for some common statistical tests based on the variable type and research question.

A good analysis plan should also clearly describe how missing data will be handled (for example, complete-case analysis or imputation).
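Planning how to handle missing data starts with knowing how much is missing. The minimal Python sketch below assumes a hypothetical dataset in which `None` marks a missing value. It reports the amount of missing data for each variable and lists the complete cases, which are the rows a complete-case (listwise-deletion) analysis would keep. The function names are illustrative, not part of any package.

```python
# Hypothetical dataset in which None marks a missing value
data = {
    "age_years": [34, 41, None, 58, 23],
    "sex": ["F", "M", "F", None, None],
    "disease_x": [1, 0, 0, 1, 0],
}


def missing_summary(columns):
    """Return {variable: (number missing, percent missing)}."""
    summary = {}
    for name, values in columns.items():
        n_missing = sum(v is None for v in values)
        summary[name] = (n_missing, round(100 * n_missing / len(values), 1))
    return summary


def complete_cases(columns):
    """Indices of rows with no missing value in any variable."""
    n_rows = len(next(iter(columns.values())))
    return [i for i in range(n_rows)
            if all(col[i] is not None for col in columns.values())]


print(missing_summary(data))
# {'age_years': (1, 20.0), 'sex': (2, 40.0), 'disease_x': (0, 0.0)}
print(complete_cases(data))  # [0, 1]
```

In this toy example, a complete-case analysis would keep only 2 of 5 rows. Losses on that scale are why the plan should justify its missing-data strategy in advance.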

7. Creating shell tables:

Data analysis involves three levels of analysis: univariable, bivariable and multivariable, in increasing order of complexity. Shell tables are empty tables with row and column headings but no numbers. They should be created in anticipation of the results from each of these levels of analysis. Read our blog article on how to present tables and figures for more details. Suppose you carry out a study of the prevalence of a certain disease “X” in a population and the factors associated with it. The shell tables could then be represented as in Tables 1, 2 and 3 below.

Table 1: Example of a shell table from univariate analysis

Table 2: Example of a shell table from bivariate analysis

Table 3: Example of a shell table from multivariate analysis

aOR = adjusted odds ratio
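A shell table can also be generated programmatically so that its layout is fixed before any data are analysed. The sketch below is a minimal Python illustration. The factor names and column headings are hypothetical placeholders, and the layout only loosely resembles a bivariable table for disease “X”.

```python
def shell_table(row_labels, headers, placeholder="..."):
    """Return a header row followed by one row per factor with empty cells."""
    return [headers] + [[label] + [placeholder] * (len(headers) - 1) for label in row_labels]


def render(table):
    """Format the table as fixed-width text columns separated by '|'."""
    widths = [max(len(row[i]) for row in table) for i in range(len(table[0]))]
    return "\n".join(
        " | ".join(cell.ljust(width) for cell, width in zip(row, widths))
        for row in table
    )


# Hypothetical factors; replace with the variables in your own codebook
factors = ["Sex: male", "Sex: female", "Exercise: never", "Exercise: sometimes",
           "Exercise: often", "Exercise: always"]
headers = ["Factor", "Disease X present, n (%)", "Disease X absent, n (%)", "p value"]
print(render(shell_table(factors, headers)))
```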

Now that you have learned how to create a data analysis plan, these are the takeaway points. It should clearly state the:

- Research question, objectives, and hypotheses

- Dataset to be used

- Variable types and their role

- Statistical software and statistical methods

- Shell tables for univariate, bivariate and multivariate analysis

Further readings

Creating a Data Analysis Plan: What to Consider When Choosing Statistics for a Study https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4552232/pdf/cjhp-68-311.pdf

Creating an Analysis Plan: https://www.cdc.gov/globalhealth/healthprotection/fetp/training_modules/9/creating-analysis-plan_pw_final_09242013.pdf

Data Analysis Plan: https://www.statisticssolutions.com/dissertation-consulting-services/data-analysis-plan-2/


Dr Barche is a physician and holds a Masters in Public Health. He is a senior fellow at CRENC with interests in Data Science and Data Analysis.



Can J Hosp Pharm. v.68(4); Jul-Aug 2015

Creating a Data Analysis Plan: What to Consider When Choosing Statistics for a Study

There are three kinds of lies: lies, damned lies, and statistics. – Mark Twain 1

INTRODUCTION

Statistics represent an essential part of a study because, regardless of the study design, investigators need to summarize the collected information for interpretation and presentation to others. It is therefore important for us to heed Mr Twain’s concern when creating the data analysis plan. In fact, even before data collection begins, we need to have a clear analysis plan that will guide us from the initial stages of summarizing and describing the data through to testing our hypotheses.

The purpose of this article is to help you create a data analysis plan for a quantitative study. For those interested in conducting qualitative research, previous articles in this Research Primer series have provided information on the design and analysis of such studies. 2 , 3 Information in the current article is divided into 3 main sections: an overview of terms and concepts used in data analysis, a review of common methods used to summarize study data, and a process to help identify relevant statistical tests. My intention here is to introduce the main elements of data analysis and provide a place for you to start when planning this part of your study. Biostatistical experts, textbooks, statistical software packages, and other resources can certainly add more breadth and depth to this topic when you need additional information and advice.

TERMS AND CONCEPTS USED IN DATA ANALYSIS

When analyzing information from a quantitative study, we are often dealing with numbers; therefore, it is important to begin with an understanding of the source of the numbers. Let us start with the term variable, which defines a specific item of information collected in a study. Examples of variables include age, sex or gender, ethnicity, exercise frequency, weight, treatment group, and blood glucose. Each variable will have a group of categories, which are referred to as values, to help describe the characteristic of an individual study participant. For example, the variable “sex” would have values of “male” and “female”.

Although variables can be defined or grouped in various ways, I will focus on 2 methods at this introductory stage. First, variables can be defined according to the level of measurement. The categories in a nominal variable are names, for example, male and female for the variable “sex”; white, Aboriginal, black, Latin American, South Asian, and East Asian for the variable “ethnicity”; and intervention and control for the variable “treatment group”. Nominal variables with only 2 categories are also referred to as dichotomous variables because the study group can be divided into 2 subgroups based on information in the variable. For example, a study sample can be split into 2 groups (patients receiving the intervention and controls) using the dichotomous variable “treatment group”. An ordinal variable implies that the categories can be placed in a meaningful order, as would be the case for exercise frequency (never, sometimes, often, or always). Nominal-level and ordinal-level variables are also referred to as categorical variables, because each category in the variable can be completely separated from the others. The categories for an interval variable can be placed in a meaningful order, with the interval between consecutive categories also having meaning. Age, weight, and blood glucose can be considered as interval variables, but also as ratio variables, because the ratio between values has meaning (e.g., a 15-year-old is half the age of a 30-year-old). Interval-level and ratio-level variables are also referred to as continuous variables because of the underlying continuity among categories.

As we progress through the levels of measurement from nominal to ratio variables, we gather more information about the study participant. The amount of information that a variable provides will become important in the analysis stage, because we lose information when variables are reduced or aggregated—a common practice that is not recommended. 4 For example, if age is reduced from a ratio-level variable (measured in years) to an ordinal variable (categories of < 65 and ≥ 65 years) we lose the ability to make comparisons across the entire age range and introduce error into the data analysis. 4
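A short Python illustration of the information lost uses the same < 65 / ≥ 65 cut-off and a few hypothetical ages:

```python
# Hypothetical ages (years)
ages = [64, 66, 90]


def age_group(age):
    """Reduce a ratio-level age to the ordinal categories < 65 and >= 65."""
    return ">= 65" if age >= 65 else "< 65"


print([age_group(a) for a in ages])      # ['< 65', '>= 65', '>= 65']
# 66 and 90 (24 years apart) become indistinguishable ...
print(age_group(66) == age_group(90))    # True
# ... while 64 and 66 (2 years apart) end up in different categories
print(age_group(64) == age_group(66))    # False
```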

A second method of defining variables is to consider them as either dependent or independent. As the terms imply, the value of a dependent variable depends on the value of other variables, whereas the value of an independent variable does not rely on other variables. In addition, an investigator can influence the value of an independent variable, such as treatment-group assignment. Independent variables are also referred to as predictors because we can use information from these variables to predict the value of a dependent variable. Building on the group of variables listed in the first paragraph of this section, blood glucose could be considered a dependent variable, because its value may depend on values of the independent variables age, sex, ethnicity, exercise frequency, weight, and treatment group.

Statistics are mathematical formulae that are used to organize and interpret the information that is collected through variables. There are 2 general categories of statistics, descriptive and inferential. Descriptive statistics are used to describe the collected information, such as the range of values, their average, and the most common category. Knowledge gained from descriptive statistics helps investigators learn more about the study sample. Inferential statistics are used to make comparisons and draw conclusions from the study data. Knowledge gained from inferential statistics allows investigators to make inferences and generalize beyond their study sample to other groups.

Before we move on to specific descriptive and inferential statistics, there are 2 more definitions to review. Parametric statistics are generally used when values in an interval-level or ratio-level variable are normally distributed (i.e., the entire group of values has a bell-shaped curve when plotted by frequency). These statistics are used because we can define parameters of the data, such as the centre and width of the normally distributed curve. In contrast, interval-level and ratio-level variables with values that are not normally distributed, as well as nominal-level and ordinal-level variables, are generally analyzed using nonparametric statistics.

METHODS FOR SUMMARIZING STUDY DATA: DESCRIPTIVE STATISTICS

The first step in a data analysis plan is to describe the data collected in the study. This can be done using figures to give a visual presentation of the data and statistics to generate numeric descriptions of the data.

Selection of an appropriate figure to represent a particular set of data depends on the measurement level of the variable. Data for nominal-level and ordinal-level variables may be interpreted using a pie graph or bar graph. Both options allow us to examine the relative number of participants within each category (by reporting the percentages within each category), whereas a bar graph can also be used to examine absolute numbers. For example, we could create a pie graph to illustrate the proportions of men and women in a study sample and a bar graph to illustrate the number of people who report exercising at each level of frequency (never, sometimes, often, or always).

Interval-level and ratio-level variables may also be interpreted using a pie graph or bar graph; however, these types of variables often have too many categories for such graphs to provide meaningful information. Instead, these variables may be better interpreted using a histogram. Unlike a bar graph, which displays the frequency for each distinct category, a histogram displays the frequency within a range of continuous categories. Information from this type of figure allows us to determine whether the data are normally distributed. In addition to pie graphs, bar graphs, and histograms, many other types of figures are available for the visual representation of data. Interested readers can find additional types of figures in the books recommended in the “Further Reading” section.

Figures are also useful for visualizing comparisons between variables or between subgroups within a variable (for example, the distribution of blood glucose according to sex). Box plots are useful for summarizing information for a variable that does not follow a normal distribution. The lower and upper limits of the box identify the interquartile range (or 25th and 75th percentiles), while the midline indicates the median value (or 50th percentile). Scatter plots provide information on how the categories for one continuous variable relate to categories in a second variable; they are often helpful in the analysis of correlations.

In addition to using figures to present a visual description of the data, investigators can use statistics to provide a numeric description. Regardless of the measurement level, we can find the mode by identifying the most frequent category within a variable. When summarizing nominal-level and ordinal-level variables, the simplest method is to report the proportion of participants within each category.
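For a categorical variable, the mode and the proportion in each category take only a few lines of Python. The sketch below assumes hypothetical responses for the ordinal variable "exercise frequency". It reports the categories in their natural order rather than by frequency.

```python
from collections import Counter

# Hypothetical responses for the ordinal variable "exercise frequency"
exercise = ["never", "sometimes", "often", "sometimes", "always",
            "sometimes", "never", "often", "sometimes", "never"]

counts = Counter(exercise)
n = len(exercise)
mode, mode_count = counts.most_common(1)[0]  # most frequent category

# Report each category in its meaningful (ordinal) order with its percentage
for category in ["never", "sometimes", "often", "always"]:
    print(f"{category:<10} {counts[category]:>2}  ({100 * counts[category] / n:.0f}%)")
print("mode:", mode)  # mode: sometimes
```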

The choice of the most appropriate descriptive statistic for interval-level and ratio-level variables will depend on how the values are distributed. If the values are normally distributed, we can summarize the information using the parametric statistics of mean and standard deviation. The mean is the arithmetic average of all values within the variable, and the standard deviation tells us how widely the values are dispersed around the mean. When values of interval-level and ratio-level variables are not normally distributed, or we are summarizing information from an ordinal-level variable, it may be more appropriate to use the nonparametric statistics of median and range. The first step in identifying these descriptive statistics is to arrange study participants according to the variable categories from lowest value to highest value. The range is used to report the lowest and highest values. The median or 50th percentile is located by dividing the number of participants into 2 groups, such that half (50%) of the participants have values above the median and the other half (50%) have values below the median. Similarly, the 25th percentile is the value with 25% of the participants having values below and 75% of the participants having values above, and the 75th percentile is the value with 75% of participants having values below and 25% of participants having values above. Together, the 25th and 75th percentiles define the interquartile range.
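Python's standard `statistics` module can compute both kinds of summary. The sketch below uses hypothetical blood glucose values, one of them unusually high. Note that `statistics.quantiles` uses its "exclusive" method by default, and other packages may define percentiles differently. Quartiles can therefore differ slightly between software.

```python
import statistics

# Hypothetical fasting blood glucose values (mmol/L); note the high value of 9.9
glucose = [4.8, 5.1, 5.4, 5.6, 5.9, 6.2, 6.8, 7.5, 9.9]

# Parametric summary, appropriate if the values are roughly normally distributed
mean = statistics.mean(glucose)
sd = statistics.stdev(glucose)  # sample standard deviation

# Nonparametric summary: median, range and interquartile range
median = statistics.median(glucose)
lowest, highest = min(glucose), max(glucose)
q1, _, q3 = statistics.quantiles(glucose, n=4)  # 25th, 50th and 75th percentiles

print(f"mean (SD): {mean:.2f} ({sd:.2f})")
print(f"median (IQR): {median:.2f} ({q1:.2f} to {q3:.2f}); range {lowest} to {highest}")
```

The single high value pulls the mean above the median. That kind of skew suggests the median and interquartile range may be the better summary here.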

PROCESS TO IDENTIFY RELEVANT STATISTICAL TESTS: INFERENTIAL STATISTICS

One caveat about the information provided in this section: selecting the most appropriate inferential statistic for a specific study should be a combination of following these suggestions, seeking advice from experts, and discussing with your co-investigators. My intention here is to give you a place to start a conversation with your colleagues about the options available as you develop your data analysis plan.

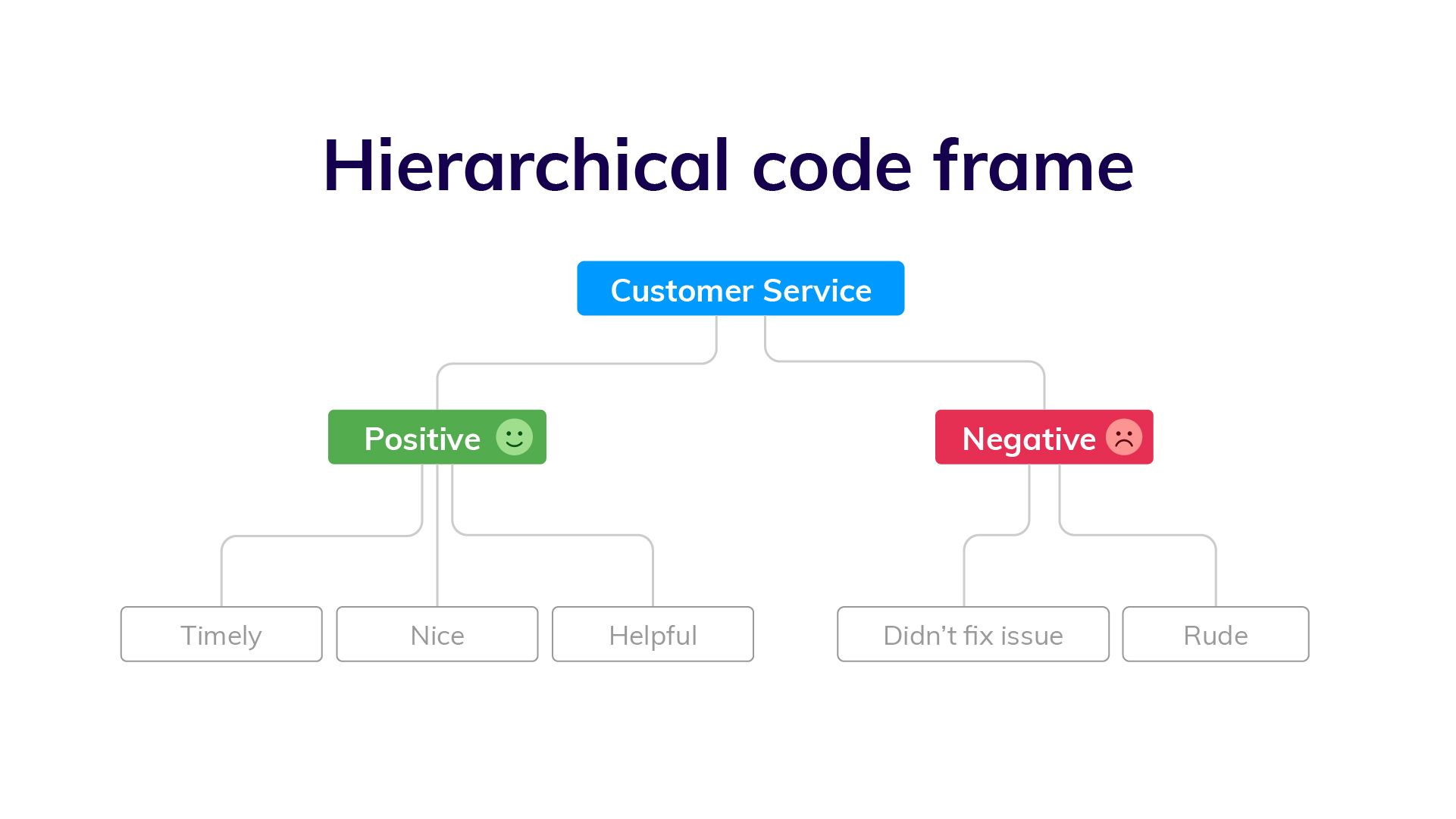

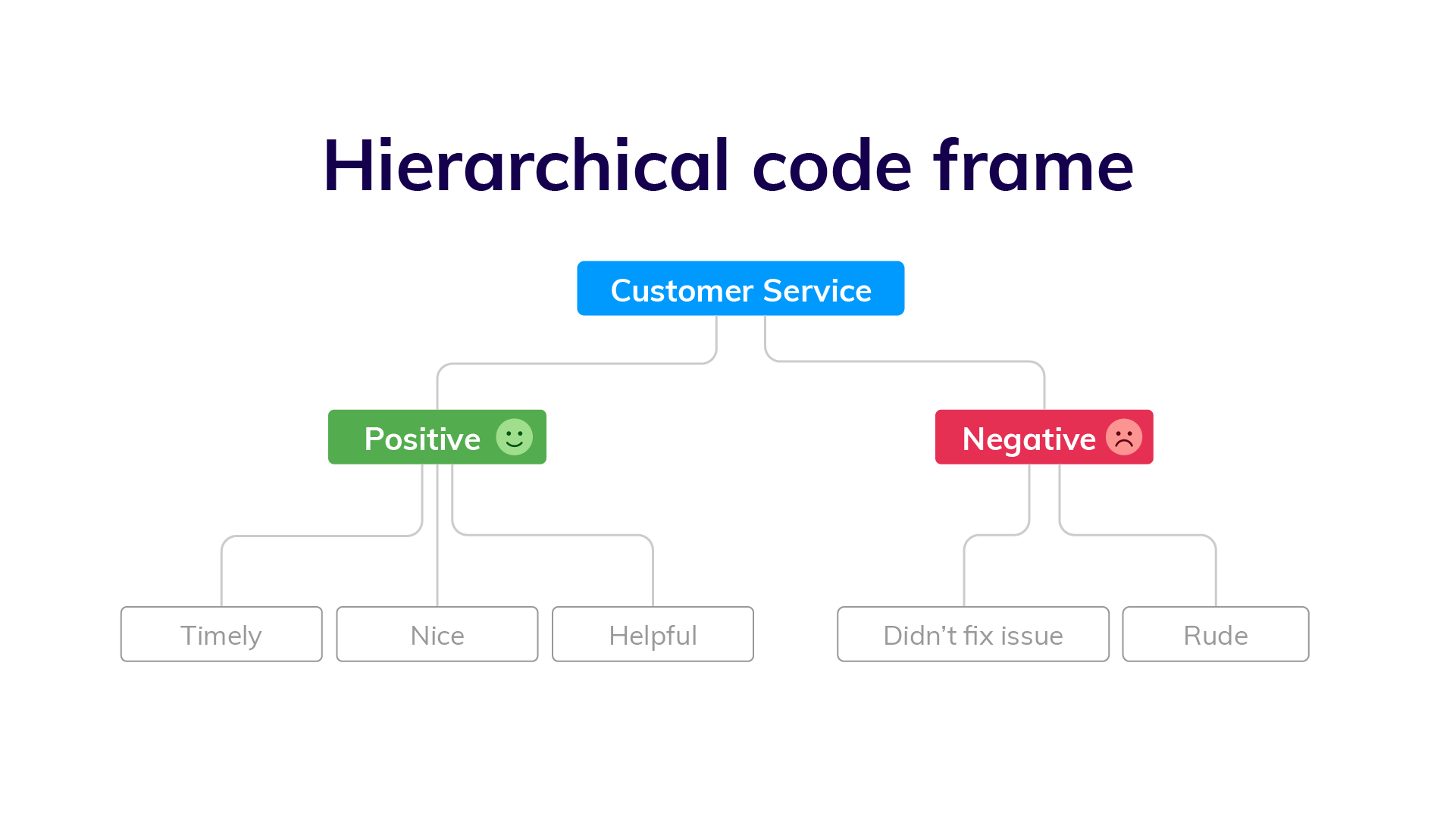

There are 3 key questions to consider when selecting an appropriate inferential statistic for a study: What is the research question? What is the study design? and What is the level of measurement? It is important for investigators to carefully consider these questions when developing the study protocol and creating the analysis plan. The figures that accompany these questions show decision trees that will help you to narrow down the list of inferential statistics that would be relevant to a particular study. Appendix 1 provides brief definitions of the inferential statistics named in these figures. Additional information, such as the formulae for various inferential statistics, can be obtained from textbooks, statistical software packages, and biostatisticians.

What Is the Research Question?

The first step in identifying relevant inferential statistics for a study is to consider the type of research question being asked. You can find more details about the different types of research questions in a previous article in this Research Primer series that covered questions and hypotheses. 5 A relational question seeks information about the relationship among variables; in this situation, investigators will be interested in determining whether there is an association (Figure 1). A causal question seeks information about the effect of an intervention on an outcome; in this situation, the investigator will be interested in determining whether there is a difference (Figure 2).

Figure 1. Decision tree to identify inferential statistics for an association.

Figure 2. Decision tree to identify inferential statistics for measuring a difference.

What Is the Study Design?

When considering a question of association, investigators will be interested in measuring the relationship between variables (Figure 1). A study designed to determine whether there is consensus among different raters will be measuring agreement. For example, an investigator may be interested in determining whether 2 raters, using the same assessment tool, arrive at the same score. Correlation analyses examine the strength of a relationship or connection between 2 variables, like age and blood glucose. Regression analyses also examine the strength of a relationship or connection; however, in this type of analysis, one variable is considered an outcome (or dependent variable) and the other variable is considered a predictor (or independent variable). Regression analyses often consider the influence of multiple predictors on an outcome at the same time. For example, an investigator may be interested in examining the association between a treatment and blood glucose, while also considering other factors, like age, sex, ethnicity, exercise frequency, and weight.
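The difference between correlation and regression can be seen in a minimal Python sketch using hypothetical age and blood glucose values. Pearson's correlation is symmetric: swapping the variables gives the same coefficient. Simple least-squares regression instead treats glucose as the outcome and age as the predictor. Python 3.10+ provides `statistics.correlation` and `statistics.linear_regression`. The formulas are written out here only to make the calculation visible.

```python
import math

# Hypothetical paired observations for 6 participants
age = [25, 32, 41, 50, 58, 67]
glucose = [4.9, 5.2, 5.1, 5.8, 6.0, 6.6]


def pearson_r(x, y):
    """Pearson correlation: covariance scaled by both standard deviations."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def simple_regression(x, y):
    """Least-squares slope and intercept, with y as outcome and x as predictor."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    slope = (sum((a - mx) * (b - my) for a, b in zip(x, y))
             / sum((a - mx) ** 2 for a in x))
    return slope, my - slope * mx


r = pearson_r(age, glucose)
slope, intercept = simple_regression(age, glucose)
print(f"r = {r:.2f}; glucose = {intercept:.2f} + {slope:.3f} x age")
```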

When considering a question of difference, investigators must first determine how many groups they will be comparing. In some cases, investigators may be interested in comparing the characteristic of one group with that of an external reference group. For example, is the mean age of study participants similar to the mean age of all people in the target group? If more than one group is involved, then investigators must also determine whether there is an underlying connection between the sets of values (or samples) to be compared. Samples are considered independent or unpaired when the information is taken from different groups. For example, we could use an unpaired t test to compare the mean age between 2 independent samples, such as the intervention and control groups in a study. Samples are considered related or paired if the information is taken from the same group of people, for example, measurement of blood glucose at the beginning and end of a study. Because blood glucose is measured in the same people at both time points, we could use a paired t test to determine whether there has been a significant change in blood glucose.
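The two t statistics differ in how they treat the samples. The minimal Python sketch below uses hypothetical baseline and end-of-study blood glucose values for the same 5 participants. The unpaired version pools the variance of 2 independent groups. The paired version works on each participant's change. Only the statistic and degrees of freedom are computed. SciPy's `scipy.stats.ttest_ind` and `scipy.stats.ttest_rel` also return p values.

```python
import math
import statistics


def unpaired_t(a, b):
    """Student t statistic for 2 independent samples (pooled variance)."""
    na, nb = len(a), len(b)
    pooled = ((na - 1) * statistics.variance(a)
              + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(pooled * (1 / na + 1 / nb))
    return t, na + nb - 2  # statistic and degrees of freedom


def paired_t(before, after):
    """Paired t statistic: a 1-sample t test on within-person differences."""
    diffs = [y - x for x, y in zip(before, after)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Hypothetical blood glucose (mmol/L) measured in the same 5 people at 2 time points
baseline = [7.2, 6.8, 8.1, 7.5, 6.9]
follow_up = [6.5, 6.6, 7.4, 7.0, 6.8]

print("unpaired (wrong for these data):", unpaired_t(baseline, follow_up))
print("paired:", paired_t(baseline, follow_up))
```

In this example, the paired analysis gives a much larger absolute t statistic. Removing between-person variation often has that effect, but it is not guaranteed for every dataset.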

What Is the Level of Measurement?

As described in the first section of this article, variables can be grouped according to the level of measurement (nominal, ordinal, or interval). In most cases, the independent variable in an inferential statistic will be nominal; therefore, investigators need to know the level of measurement for the dependent variable before they can select the relevant inferential statistic. Two exceptions to this consideration are correlation analyses and regression analyses (Figure 1). Because a correlation analysis measures the strength of association between 2 variables, we need to consider the level of measurement for both variables. Regression analyses can consider multiple independent variables, often with a variety of measurement levels. However, for these analyses, investigators still need to consider the level of measurement for the dependent variable.

Selection of inferential statistics to test interval-level variables must include consideration of how the data are distributed. An underlying assumption for parametric tests is that the data approximate a normal distribution. When the data are not normally distributed, information derived from a parametric test may be wrong. 6 When the assumption of normality is violated (for example, when the data are skewed), then investigators should use a nonparametric test. If the data are normally distributed, then investigators can use a parametric test.
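The key questions for a question of difference can be combined into a simple lookup: how many groups, whether the samples are paired, the level of measurement, and normality. The Python sketch below is only an approximation under stated assumptions. It uses commonly taught test pairings and does not reproduce the article's decision tree. Some of its tests (Mann-Whitney U, Wilcoxon signed-rank, chi-square goodness of fit) are not named in the glossary excerpt here. Like the caveat at the start of this section, it is a starting point for discussion with a biostatistician, not a rule.

```python
# Commonly taught pairings (assumed); keys are (design, kind of outcome)
TESTS = {
    ("1 group", "parametric"): "1-sample t test",
    ("1 group", "nonparametric"): "Wilcoxon signed-rank test",
    ("1 group", "nominal"): "chi-square goodness-of-fit test",
    ("2 independent", "parametric"): "independent-samples t test",
    ("2 independent", "nonparametric"): "Mann-Whitney U test",
    ("2 independent", "nominal"): "chi-square test (Fisher exact if expected counts < 5)",
    ("2 paired", "parametric"): "paired t test",
    ("2 paired", "nonparametric"): "Wilcoxon signed-rank test",
    ("2 paired", "nominal"): "McNemar test",  # dichotomous outcome
    ("3+ independent", "parametric"): "1-way ANOVA",
    ("3+ independent", "nonparametric"): "Kruskal-Wallis test",
    ("3+ independent", "nominal"): "chi-square test",
    ("3+ paired", "parametric"): "repeated-measures ANOVA",
    ("3+ paired", "nonparametric"): "Friedman test",
    ("3+ paired", "nominal"): "Cochran Q test",  # dichotomous outcome
}


def suggest_difference_test(n_groups, paired, outcome_level, normal=False):
    """Suggest a common test for a question of difference.

    n_groups: 1 means comparison with an external reference value
    paired: True if the samples are related (same participants measured again)
    outcome_level: level of measurement of the dependent variable
    normal: whether an interval/ratio outcome is approximately normally distributed
    """
    if outcome_level == "ratio":
        outcome_level = "interval"  # treated alike for test selection
    if outcome_level not in ("nominal", "ordinal", "interval"):
        raise ValueError(f"unknown level of measurement: {outcome_level!r}")
    if n_groups < 1:
        raise ValueError("n_groups must be at least 1")

    if outcome_level == "interval" and normal:
        kind = "parametric"
    elif outcome_level == "nominal":
        kind = "nominal"
    else:
        kind = "nonparametric"  # ordinal, or interval data that are not normal

    if n_groups == 1:
        design = "1 group"
    else:
        design = ("2" if n_groups == 2 else "3+") + (" paired" if paired else " independent")
    return TESTS[(design, kind)]


print(suggest_difference_test(2, paired=True, outcome_level="interval", normal=True))
```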

ADDITIONAL CONSIDERATIONS

What Is the Level of Significance?

An inferential statistic is used to calculate a p value. This is the probability of obtaining results at least as extreme as those observed if there were truly no difference or association (the null hypothesis). Investigators can then compare this p value against a prespecified level of significance, which is often chosen to be 0.05. A level of 0.05 means accepting a 1 in 20 chance of concluding that a difference or association exists when in fact it does not (a type I error). This is considered an acceptable level of error.
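A p value is computed under the assumption that the null hypothesis is true. A permutation test makes that assumption concrete. This technique is not discussed in the article and is chosen here only because its logic is easy to follow. If group membership makes no difference, the group labels can be shuffled freely. The p value is then the share of shuffles that produce a difference in means at least as large as the one observed. The sketch below uses hypothetical systolic blood pressure values, and the function name is illustrative.

```python
import random


def permutation_p_value(a, b, n_resamples=10_000, seed=1):
    """Two-sided permutation p value for a difference in means.

    Under the null hypothesis the group labels carry no information, so the
    labels are shuffled repeatedly and we count how often the shuffled
    difference is at least as extreme as the observed one.
    """
    na, nb = len(a), len(b)

    def scaled_diff(x, y):
        # |mean(x) - mean(y)| multiplied by na * nb; exact for integer data
        return abs(sum(x) * nb - sum(y) * na)

    observed = scaled_diff(a, b)
    pooled = list(a) + list(b)
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        if scaled_diff(pooled[:na], pooled[na:]) >= observed:
            extreme += 1
    # Adding 1 to numerator and denominator counts the observed arrangement itself
    return (extreme + 1) / (n_resamples + 1)


alpha = 0.05  # prespecified in the analysis plan, before the data are seen

# Hypothetical systolic blood pressure (mmHg) in 2 independent groups
control = [128, 135, 122, 140, 131, 126]
treated = [118, 121, 125, 115, 130, 119]
p = permutation_p_value(control, treated)
print(f"p = {p:.3f}:", "significant" if p < alpha else "not significant")
```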

What Are the Most Commonly Used Statistics?

In 1983, Emerson and Colditz 7 reported the first review of statistics used in original research articles published in the New England Journal of Medicine. This review of statistics used in the journal was updated in 1989 and 2005, 8 and this type of analysis has been replicated in many other journals. 9 – 13 Collectively, these reviews have identified 2 important observations. First, the overall sophistication of statistical methodology used and reported in studies has grown over time, with survival analyses and multivariable regression analyses becoming much more common. The second observation is that, despite this trend, 1 in 4 articles describe no statistical methods or report only simple descriptive statistics. When inferential statistics are used, the most common are t tests, contingency table tests (for example, χ² test and Fisher exact test), and simple correlation and regression analyses. This information is important for educators, investigators, reviewers, and readers because it suggests that a good foundational knowledge of descriptive statistics and common inferential statistics will enable us to correctly evaluate the majority of research articles. 11 – 13 However, to fully take advantage of all research published in high-impact journals, we need to become acquainted with some of the more complex methods, such as multivariable regression analyses. 8 , 13

What Are Some Additional Resources?

As an investigator and Associate Editor with CJHP, I have often relied on the advice of colleagues to help create my own analysis plans and review the plans of others. Biostatisticians have a wealth of knowledge in the field of statistical analysis and can provide advice on the correct selection, application, and interpretation of these methods. Colleagues who have “been there and done that” with their own data analysis plans are also valuable sources of information. Identify these individuals and consult with them early and often as you develop your analysis plan.

Another important resource to consider when creating your analysis plan is textbooks. Numerous statistical textbooks are available, differing in levels of complexity and scope. The titles listed in the “Further Reading” section are just a few suggestions. I encourage interested readers to look through these and other books to find resources that best fit their needs. However, one crucial book that I highly recommend to anyone wanting to be an investigator or peer reviewer is Lang and Secic’s How to Report Statistics in Medicine (see “Further Reading”). As the title implies, this book covers a wide range of statistics used in medical research and provides numerous examples of how to correctly report the results.

CONCLUSIONS

When it comes to creating an analysis plan for your project, I recommend following the sage advice of Douglas Adams in The Hitchhiker’s Guide to the Galaxy: Don’t panic! 14 Begin with simple methods to summarize and visualize your data, then use the key questions and decision trees provided in this article to identify relevant statistical tests. Information in this article will give you and your co-investigators a place to start discussing the elements necessary for developing an analysis plan. But do not stop there! Use advice from biostatisticians and more experienced colleagues, as well as information in textbooks, to help create your analysis plan and choose the most appropriate statistics for your study. Making careful, informed decisions about the statistics to use in your study should reduce the risk of confirming Mr Twain’s concern.

Appendix 1. Glossary of statistical terms * (part 1 of 2)

- 1-way ANOVA: Uses 1 variable to define the groups for comparing means. This is similar to the Student t test when comparing the means of 2 groups.

- Kruskal–Wallis 1-way ANOVA: Nonparametric alternative to the 1-way ANOVA. Used to determine whether there is a difference in medians between 3 or more groups.

- n -way ANOVA: Uses 2 or more variables to define groups when comparing means. Also called a “between-subjects factorial ANOVA”.

- Repeated-measures ANOVA: A method for analyzing whether the means of 3 or more measures from the same group of participants are different.

- Friedman ANOVA: Nonparametric alternative to the repeated-measures ANOVA. It is often used to compare rankings and preferences that are measured 3 or more times.

- Fisher exact: Alternative to chi-square for small samples, used when expected cell counts are < 5.

- McNemar: Variation of chi-square that tests the statistical significance of changes in 2 paired measurements of dichotomous variables.

- Cochran Q: An extension of the McNemar test that provides a method for testing for differences between 3 or more matched sets of frequencies or proportions. A different statistic with the same name (Cochran's Q) is used as a measure of heterogeneity in meta-analyses.

- 1-sample t test: Used to determine whether the mean of a sample is significantly different from a known or hypothesized value.

- Independent-samples t test (also referred to as the Student t test): Used when the independent variable is a nominal-level variable that identifies 2 groups and the dependent variable is an interval-level variable.

- Paired t test: Used to compare 2 related measurements taken from the same participants or matched pairs (e.g., baseline and follow-up blood pressure in the same patients).
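The glossary notes that a 1-way ANOVA comparing 2 groups is similar to the Student t test. In fact, for 2 groups the ANOVA F statistic equals the square of the pooled-variance t statistic. The minimal Python sketch below computes the F statistic from its between-group and within-group sums of squares, using hypothetical blood glucose values. It stops at the statistic; `scipy.stats.f_oneway` also returns the p value.

```python
import statistics


def one_way_anova_f(*groups):
    """Return the 1-way ANOVA F statistic and its degrees of freedom."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n
    # Between-group sum of squares: how far each group mean lies from the grand mean
    ss_between = sum(len(g) * (statistics.mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: spread of values around their own group mean
    ss_within = sum(sum((x - statistics.mean(g)) ** 2 for x in g) for g in groups)
    df_between, df_within = k - 1, n - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


# Hypothetical blood glucose values (mmol/L) in 3 independent groups
group_a = [5.1, 5.5, 6.0, 5.8]
group_b = [6.2, 6.9, 6.4, 7.1]
group_c = [5.9, 6.1, 5.7, 6.3]
f, df1, df2 = one_way_anova_f(group_a, group_b, group_c)
print(f"F({df1}, {df2}) = {f:.2f}")
```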

Lang TA, Secic M. How to report statistics in medicine: annotated guidelines for authors, editors, and reviewers. 2nd ed. Philadelphia (PA): American College of Physicians; 2006.

Norman GR, Streiner DL. PDQ statistics. 3rd ed. Hamilton (ON): B.C. Decker; 2003.

Plichta SB, Kelvin E. Munro’s statistical methods for health care research. 6th ed. Philadelphia (PA): Wolters Kluwer Health/Lippincott, Williams & Wilkins; 2013.

This article is the 12th in the CJHP Research Primer Series, an initiative of the CJHP Editorial Board and the CSHP Research Committee. The planned 2-year series is intended to appeal to relatively inexperienced researchers, with the goal of building research capacity among practising pharmacists. The articles, presenting simple but rigorous guidance to encourage and support novice researchers, are being solicited from authors with appropriate expertise.

Previous articles in this series:

- Bond CM. The research jigsaw: how to get started. Can J Hosp Pharm. 2014;67(1):28–30.

- Tully MP. Research: articulating questions, generating hypotheses, and choosing study designs. Can J Hosp Pharm. 2014;67(1):31–4.

- Loewen P. Ethical issues in pharmacy practice research: an introductory guide. Can J Hosp Pharm. 2014;67(2):133–7.

- Tsuyuki RT. Designing pharmacy practice research trials. Can J Hosp Pharm. 2014;67(3):226–9.

- Bresee LC. An introduction to developing surveys for pharmacy practice research. Can J Hosp Pharm. 2014;67(4):286–91.

- Gamble JM. An introduction to the fundamentals of cohort and case–control studies. Can J Hosp Pharm. 2014;67(5):366–72.

- Austin Z, Sutton J. Qualitative research: getting started. Can J Hosp Pharm. 2014;67(6):436–40.

- Houle S. An introduction to the fundamentals of randomized controlled trials in pharmacy research. Can J Hosp Pharm. 2015;68(1):28–32.

- Charrois TL. Systematic reviews: What do you need to know to get started? Can J Hosp Pharm. 2015;68(2):144–8.

- Sutton J, Austin Z. Qualitative research: data collection, analysis, and management. Can J Hosp Pharm. 2015;68(3):226–31.

- Cadarette SM, Wong L. An introduction to health care administrative data. Can J Hosp Pharm. 2015;68(3):232–7.

Competing interests: None declared.



Guide to the statistical analysis plan

Affiliations.

- 1 Department of Anesthesiology and Critical Care Medicine, The Children's Hospital of Philadelphia, University of Pennsylvania, Perelman School of Medicine, Philadelphia, Pennsylvania.

- 2 Department of Epidemiology and Biostatistics, Dornsife School of Public Health, Drexel University, Philadelphia, Pennsylvania.

- PMID: 30609103

- DOI: 10.1111/pan.13576

Biomedical research has been beset by the problem of study findings that are not reproducible. With the advent of large databases and powerful statistical software, it has become easier to find associations and form conclusions from data without forming an a priori hypothesis. This approach may yield associations without clinical relevance, false positive findings, or biased results due to "fishing" for the desired results. To improve reproducibility, transparency, and validity among clinical trials, the National Institutes of Health recently updated its grant application requirements, which mandate registration of clinical trials and submission of the original statistical analysis plan (SAP) along with the research protocol. Many leading journals also require the SAP as part of the submission package. The goal of this article and the companion article detailing the SAP of an actual research study is to provide a practical guide to writing an effective SAP. We describe the what, why, when, where, and who of an SAP, and highlight its key contents.
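The abstract's warning about "fishing" can be quantified with a short calculation. The minimal Python sketch below assumes m independent tests, each run at a significance level of 0.05, with every null hypothesis true. It computes the chance of at least one false positive, 1 - (1 - alpha)^m. It also computes the Bonferroni per-test threshold, alpha / m. Bonferroni is one common correction and appears here only as an illustration, not as the article's prescription.

```python
def familywise_error_rate(m: int, alpha: float = 0.05) -> float:
    """Probability of at least one false positive across m independent tests
    when every null hypothesis is true."""
    return 1 - (1 - alpha) ** m


def bonferroni_alpha(m: int, alpha: float = 0.05) -> float:
    """Per-test threshold that keeps the familywise error rate at or below alpha."""
    return alpha / m


# Illustrative numbers of unplanned tests run on the same data set.
for m in (1, 5, 20):
    print(f"{m:>2} tests: P(at least one false positive) = {familywise_error_rate(m):.2f}")
print(f"Bonferroni per-test alpha for 20 tests: {bonferroni_alpha(20):.4f}")
```

Pre-specifying the hypotheses and the number of comparisons in an SAP is what makes such an adjustment possible in the first place.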

Keywords: SAP; reproducibility; research methodology; statistical analysis; transparency; validity.

© 2019 John Wiley & Sons Ltd.

MeSH terms

- Biomedical Research / standards*

- Data Interpretation, Statistical

- Databases, Factual

- Reproducibility of Results

- Research Design

- Statistics as Topic / standards*


Data Analysis in Research: Types & Methods

Content Index

- What is data analysis in research?

- Why analyze data in research?

- Types of data in research

- Finding patterns in the qualitative data

- Methods used for data analysis in qualitative research

- Preparing data for analysis

- Methods used for data analysis in quantitative research

- Considerations in research data analysis

Definition of data analysis in research: According to LeCompte and Schensul, research data analysis is a process researchers use to reduce data to a story and interpret it to derive insights. The data analysis process helps reduce a large chunk of data into smaller fragments that make sense.

Three essential things happen during the data analysis process. The first is data organization. The second is data reduction through summarization and categorization, which helps researchers find patterns and themes in the data so they can be easily identified and linked. The third is data analysis itself, which researchers carry out in both a top-down and a bottom-up fashion.


On the other hand, Marshall and Rossman describe data analysis as a messy, ambiguous, and time-consuming but creative and fascinating process through which a mass of collected data is brought to order, structure and meaning.

We can say that data analysis and data interpretation together represent the application of deductive and inductive logic to research.

Researchers rely heavily on data because they have a story to tell or research problems to solve. Analysis starts with a question, and the data provide the answer to that question. But what if there is no question to ask? It is still possible to explore data without a predefined problem. This is called data mining, and it often reveals interesting patterns within the data that are worth exploring.

Regardless of the type of data researchers explore, their mission and their audience's vision guide them to find the patterns that shape the story they want to tell. One of the most important things expected of researchers while analyzing data is to stay open and unbiased toward unexpected patterns, expressions, and results. Remember, data analysis sometimes tells the most unforeseen yet exciting stories, ones that were not expected when the analysis began. Therefore, rely on the data you have at hand and enjoy the journey of exploratory research.


Every kind of data describes things once a specific value has been assigned to it. For analysis, you need to organize these values, then process and present them in a given context to make them useful. Data can take different forms; here are the primary data types.

- Qualitative data: When the data consist of words and descriptions, we call them qualitative data. Although you can observe this data, it is subjective and harder to analyze, especially for comparison. Example: anything describing taste, experience, texture, or an opinion is considered qualitative data. This type of data is usually collected through focus groups, personal qualitative interviews, qualitative observation, or open-ended questions in surveys.

- Quantitative data: Any data expressed in numbers or numerical figures are called quantitative data. This type of data can be distinguished into categories, grouped, measured, calculated, or ranked. Example: answers to questions about age, rank, cost, length, weight, scores, etc. all come under this type of data. You can present such data in graphs and charts or apply statistical analysis methods to it. Outcomes Measurement Systems (OMS) questionnaires in surveys are a significant source of numeric data.

- Categorical data: Data presented in groups, where an item cannot belong to more than one group. Example: a survey respondent's lifestyle, marital status, smoking habit, or drinking habit comes under categorical data. The chi-square test is a standard method for analyzing this data.
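To make the chi-square test mentioned above concrete, here is a minimal Python sketch of Pearson's chi-square test of independence on a cross-tabulation. The counts (smoking status by marital status) are invented for illustration. The sketch omits Yates's continuity correction, which some software applies to 2 x 2 tables by default. It only derives a p-value for the 1-degree-of-freedom case, where a closed form exists.

```python
import math


def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for a contingency
    table given as a list of rows of observed counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n  # count expected if independent
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df


def p_value_one_df(stat):
    """Upper-tail p-value for a chi-square statistic with exactly 1 degree of
    freedom, using the fact that chi-square(1) is a squared standard normal."""
    return math.erfc(math.sqrt(stat / 2))


# Hypothetical counts: rows = smoker / non-smoker, columns = married / single.
observed = [[20, 30],
            [40, 10]]
stat, df = chi_square_independence(observed)
print(f"chi2 = {stat:.2f}, df = {df}")
if df == 1:
    print(f"p = {p_value_one_df(stat):.5f}")
```

For larger tables, a statistics package is the practical way to obtain the p-value; the statistic itself is computed the same way.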


Data analysis in qualitative research

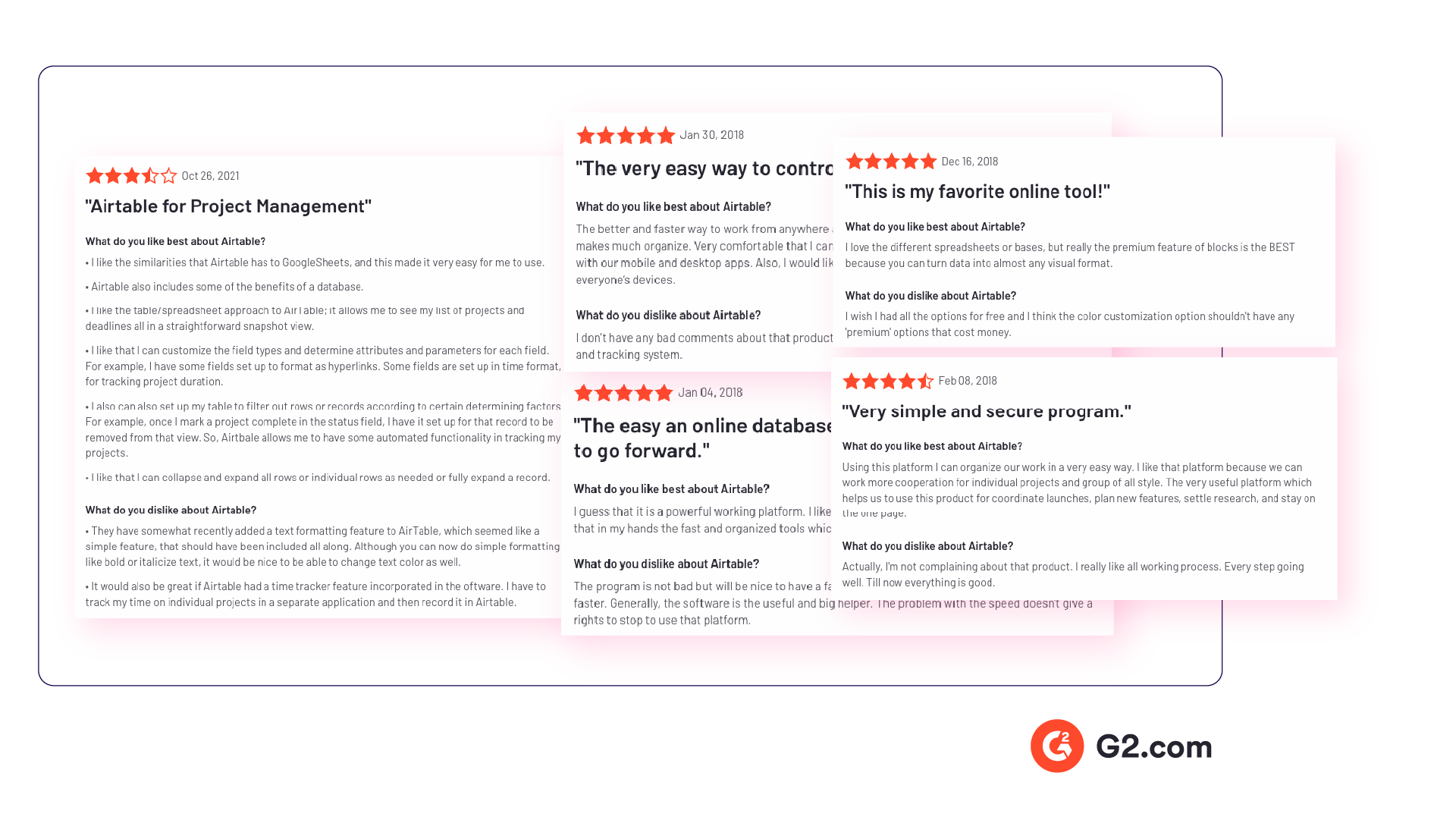

Data analysis in qualitative research works a little differently from the analysis of numerical data, because qualitative data are made up of words, descriptions, images, objects, and sometimes symbols. Getting insight from such complex information is a complicated process, so qualitative analysis is typically used for exploratory research.

Although there are several ways to find patterns in textual information, a word-based method is the most relied-upon and widely used technique for research and data analysis. Notably, the data analysis process in qualitative research is often manual: researchers usually read the available data and find repetitive or commonly used words.

For example, while studying data collected from African countries to understand the most pressing issues people face, researchers might find “food” and “hunger” are the most commonly used words and will highlight them for further analysis.
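Where responses are available as text, the counting step of the word-based method can be sketched in Python. This is a minimal example; the responses and the short stop-word list are hypothetical, and real projects usually use a fuller stop-word list. The count only flags candidate themes; deciding what "food" or "hunger" means in context still needs a human reader.

```python
import re
from collections import Counter

# A small, hypothetical stop-word list of words too common to be informative.
STOP_WORDS = {"the", "a", "and", "of", "is", "in", "to", "we", "are", "too"}


def top_words(responses, n=3):
    """Return the n most frequent non-stop-words across free-text responses."""
    counts = Counter()
    for text in responses:
        words = re.findall(r"[a-z']+", text.lower())
        counts.update(w for w in words if w not in STOP_WORDS)
    return counts.most_common(n)


responses = [
    "Food prices are too high and hunger is common",
    "Hunger in the dry season is the biggest problem",
    "We need clean water and food",
]
print(top_words(responses, 2))
```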


The keyword context is another widely used word-based technique. In this method, the researcher tries to understand the concept by analyzing the context in which the participants use a particular keyword.

For example, researchers studying the concept of ‘diabetes’ among respondents might analyze the context of when and how each respondent has used or referred to the word ‘diabetes.’
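A keyword-in-context (KWIC) listing is one simple way to support this technique. The Python sketch below pulls each occurrence of a keyword out with a few words on either side so the researcher can read how it is used. The answers and the window width of three words are illustrative assumptions.

```python
import re


def keyword_in_context(texts, keyword, width=3):
    """Return (left context, keyword, right context) for each occurrence of
    keyword, keeping up to `width` words on each side."""
    hits = []
    for text in texts:
        words = re.findall(r"[\w']+", text.lower())
        for i, word in enumerate(words):
            if word == keyword:
                left = " ".join(words[max(0, i - width):i])
                right = " ".join(words[i + 1:i + 1 + width])
                hits.append((left, word, right))
    return hits


# Hypothetical open-ended answers.
answers = [
    "My mother has diabetes so we cook without sugar",
    "I worry about diabetes because I rarely exercise",
]
for left, kw, right in keyword_in_context(answers, "diabetes"):
    print(f"{left:>20} [{kw}] {right}")
```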

The scrutiny-based technique is another highly recommended text analysis method for identifying patterns in qualitative data. Compare and contrast is the most widely used method under this technique; it shows how specific texts are similar to or different from each other.

For example, to find out how important it is to have a resident doctor in a company, the collected data are divided into people who think it is necessary to hire a resident doctor and those who think it is unnecessary. Compare and contrast is well suited to analyzing polls with single-answer question types.

Metaphors can be used to reduce the data pile and find patterns in it so that it becomes easier to connect data with theory.

Variable partitioning is another technique used to split variables so that researchers can find more coherent descriptions and explanations in large volumes of data.


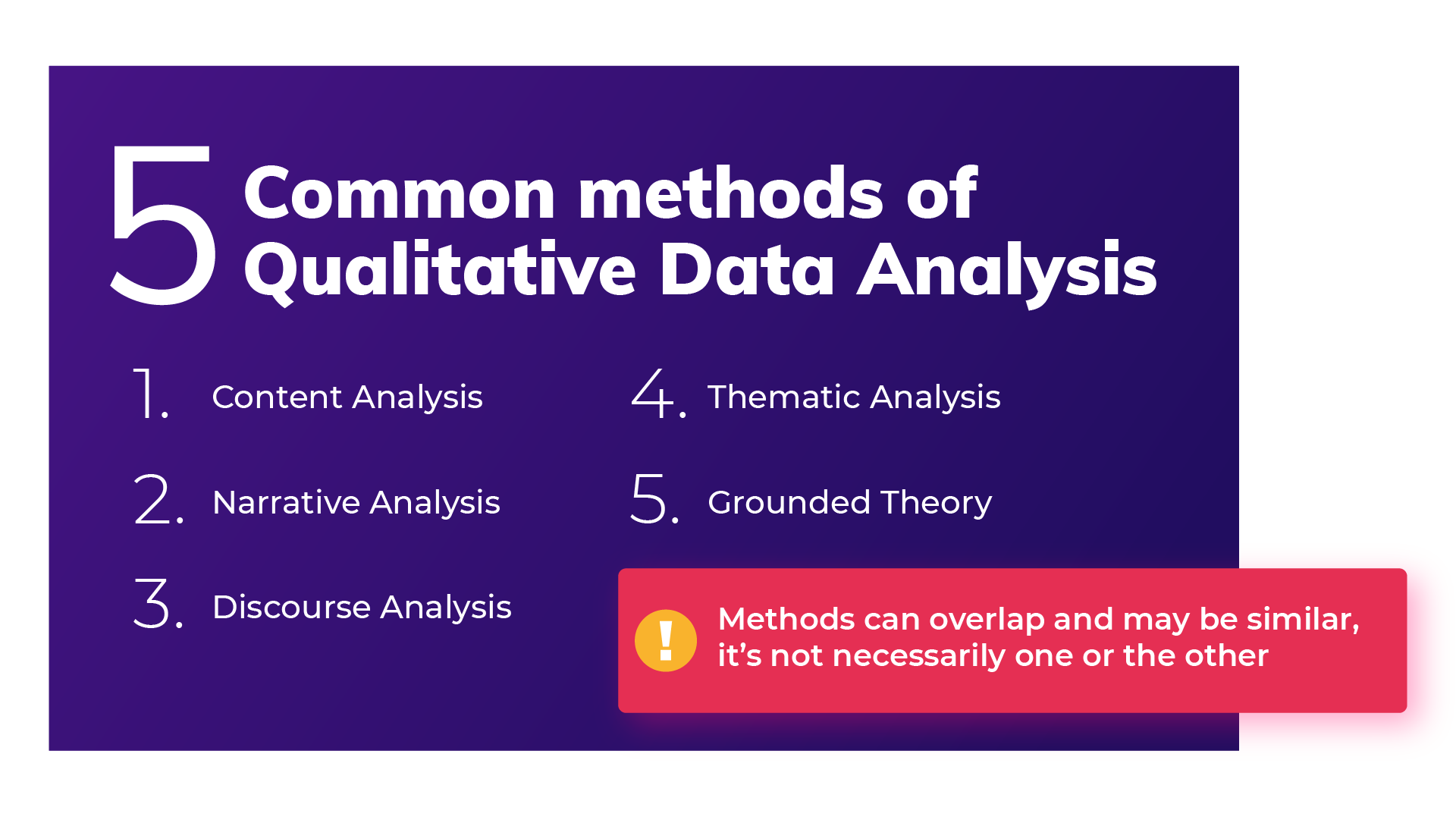

There are several techniques to analyze data in qualitative research, but here are some commonly used methods:

- Content Analysis: This is a widely accepted and the most frequently employed technique for data analysis in research methodology. It can be used to analyze documented information from text, images, and sometimes physical items. The research questions determine when and where to use this method.

- Narrative Analysis: This method is used to analyze content gathered from various sources such as personal interviews, field observation, and surveys. Most of the time, the stories or opinions people share are used to find answers to the research questions.

- Discourse Analysis: Similar to narrative analysis, discourse analysis is used to analyze interactions with people. However, this method also considers the social context under which, or within which, the communication between the researcher and respondent takes place. In addition, discourse analysis focuses on the respondent's lifestyle and day-to-day environment when deriving any conclusion.

- Grounded Theory: When you want to explain why a particular phenomenon happened, grounded theory is a strong choice for analyzing qualitative data. Grounded theory is applied to study data about a host of similar cases occurring in different settings. When using this method, researchers might alter explanations or produce new ones until they arrive at a conclusion.


Data analysis in quantitative research

The first stage in research and data analysis is to prepare the data so that raw data can be converted into something meaningful. Data preparation consists of the following phases.

Phase I: Data Validation

Data validation checks whether the collected data sample meets the pre-set standards or is a biased sample. It is divided into four stages:

- Fraud: To ensure an actual human being records each response to the survey or the questionnaire

- Screening: To make sure each participant or respondent is selected or chosen in compliance with the research criteria

- Procedure: To ensure ethical standards were maintained while collecting the data sample

- Completeness: To ensure that the respondent has answered all the questions in an online survey or, in an interviewer-administered survey, that the interviewer asked all the questions in the questionnaire.
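Two of the four validation stages, screening and completeness, lend themselves to simple automated checks. The Python sketch below shows one way to write them. The field names, the required questions, and the minimum-age criterion are hypothetical. Fraud and procedure checks usually need information beyond the responses themselves, so they are not shown.

```python
# Hypothetical survey records; None marks an unanswered question.
REQUIRED_QUESTIONS = ["q1", "q2", "q3"]
MIN_AGE = 18  # assumed eligibility criterion, for illustration only

records = [
    {"id": 1, "age": 34, "q1": "yes", "q2": 4, "q3": "daily"},
    {"id": 2, "age": 16, "q1": "no", "q2": 2, "q3": "weekly"},
    {"id": 3, "age": 45, "q1": "yes", "q2": None, "q3": "never"},
]


def passes_screening(record):
    """Screening: was the respondent eligible under the research criteria?"""
    return record.get("age") is not None and record["age"] >= MIN_AGE


def is_complete(record):
    """Completeness: did the respondent answer every required question?"""
    return all(record.get(q) not in (None, "") for q in REQUIRED_QUESTIONS)


valid = [r["id"] for r in records if passes_screening(r) and is_complete(r)]
print("records passing screening and completeness:", valid)
```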

Phase II: Data Editing

Large research data samples often come loaded with errors. Respondents sometimes fill in fields incorrectly or skip them accidentally. Data editing is the process in which researchers confirm that the data are free of such errors. They need to conduct the necessary checks, including outlier checks, to edit the raw data and make it ready for analysis.
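Two common editing checks can be sketched in Python: a range check for impossible values and an outlier check. The data are invented. The outlier rule used here is Tukey's fences (1.5 times the interquartile range), which is one widely used rule of thumb rather than the only option. Different quartile conventions can move the fences slightly. Flagged values are meant for review, not automatic deletion.

```python
import statistics

# Hypothetical self-reported hours of exercise per week.
hours = [3, 5, -1, 2, 40, 4, 3, 6]

# Range check: values outside what is possible are treated as entry errors.
VALID_RANGE = (0, 168)  # a week has 168 hours
entry_errors = [h for h in hours if not VALID_RANGE[0] <= h <= VALID_RANGE[1]]
plausible = [h for h in hours if VALID_RANGE[0] <= h <= VALID_RANGE[1]]

# Outlier check with Tukey's fences; statistics.quantiles sorts the data itself.
q1, _, q3 = statistics.quantiles(plausible, n=4)
iqr = q3 - q1
low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
outliers = [h for h in plausible if h < low or h > high]

print("entry errors:", entry_errors)
print("outliers to review:", outliers)
```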

Phase III: Data Coding

Of the three phases, this is the most critical; it involves grouping and assigning values to the survey responses. For example, if a survey is completed by 1,000 respondents, the researcher might create age brackets to group respondents by age. It is easier to analyze small data buckets than to deal with a massive data pile.
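Coding exact ages into brackets can be sketched in Python with the standard `bisect` module. The bracket boundaries and labels below are hypothetical. The sketch assumes all respondents are adults who passed screening, so any age below 25 falls into the first bracket.

```python
from bisect import bisect_right

# Hypothetical brackets: BOUNDARIES holds the lower bound of every bracket
# after the first, in ascending order.
BOUNDARIES = [25, 35, 45, 55]
LABELS = ["18-24", "25-34", "35-44", "45-54", "55+"]


def age_bracket(age: int) -> str:
    """Map an exact age to its bracket label (assumes age >= 18)."""
    return LABELS[bisect_right(BOUNDARIES, age)]


ages = [19, 25, 34, 47, 62]
print([age_bracket(a) for a in ages])
```

Using `bisect_right` means a boundary age such as 25 falls into the bracket that starts at that age.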


After the data are prepared, researchers can use different research and data analysis methods to derive meaningful insights. Statistical analysis is the most favored way to analyze numerical data. In statistical analysis, distinguishing between categorical and numerical data is essential: categorical data involve distinct categories or labels, while numerical data consist of measurable quantities. Statistical methods fall into two groups: descriptive statistics, which describe the data, and inferential statistics, which help compare the data and draw conclusions beyond the sample.

Descriptive statistics

This method is used to describe the basic features of various types of data in research. It presents the data in a meaningful way so that patterns in the data start to make sense. However, descriptive analysis does not support conclusions beyond the data at hand; any conclusions drawn are based on the hypotheses researchers have formulated so far. Here are a few major types of descriptive analysis methods.

Measures of Frequency

- Count, Percent, Frequency

- It is used to denote how often a particular event occurs.

- Researchers use it when they want to showcase how often a response is given.
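A frequency table with counts and percentages is a few lines of Python with `collections.Counter`. The responses below are hypothetical answers to a single-choice question.

```python
from collections import Counter

# Hypothetical answers to a single-choice survey question.
answers = ["Agree", "Neutral", "Agree", "Disagree", "Agree", "Neutral"]

counts = Counter(answers)
total = len(answers)
percentages = {response: 100 * count / total for response, count in counts.items()}

# Print the table from most to least frequent response.
for response, count in counts.most_common():
    print(f"{response:<10} {count:>3} {percentages[response]:5.1f}%")
```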

Measures of Central Tendency

- Mean, Median, Mode

- These measures are widely used to describe where the center of a distribution lies.

- Researchers use this method when they want to show the most common or the average response.
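Python's standard `statistics` module computes the three measures of central tendency directly. The scores below are hypothetical.

```python
import statistics

# Hypothetical test scores for one class.
scores = [62, 70, 70, 75, 81, 88, 95]

mean = statistics.mean(scores)      # arithmetic average
median = statistics.median(scores)  # middle value of the sorted scores
mode = statistics.mode(scores)      # most frequent score

print("mean:", round(mean, 2))
print("median:", median)
print("mode:", mode)
```

With a skewed distribution the mean and median can differ noticeably, which is one reason to report more than one measure.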

Measures of Dispersion or Variation

- Range, Variance, Standard deviation

- The range equals the difference between the highest and lowest points.

- Variance and standard deviation are based on the differences between the observed scores and the mean.

- It is used to identify the spread of scores by stating intervals.

- Researchers use this method to show how spread out the data are. It helps them see how widely the data are spread and whether that spread is large enough to directly affect the mean.
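The same `statistics` module covers the measures of dispersion. The small data set below is invented so the arithmetic is easy to follow: its mean is 5, and the squared deviations from the mean sum to 32. The sketch shows both the population variance (dividing by n) and the sample variance (dividing by n - 1), since software reports either depending on the function used.

```python
import statistics

# Hypothetical data chosen so the arithmetic is easy to check by hand.
data = [2, 4, 4, 4, 5, 5, 7, 9]

data_range = max(data) - min(data)      # highest minus lowest value
pop_var = statistics.pvariance(data)    # mean squared deviation from the mean (divides by n)
sample_var = statistics.variance(data)  # divides by n - 1 instead of n
sample_sd = statistics.stdev(data)      # square root of the sample variance

print(data_range, pop_var, round(sample_var, 3), round(sample_sd, 3))
```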

Measures of Position

- Percentile ranks, Quartile ranks

- These measures rely on standardized scores, helping researchers identify the relationship between different scores.

- It is often used when researchers want to compare scores with the average count.
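Measures of position can be sketched in Python as a standardized (z) score, a percentile rank, and quartile cut points. The data are hypothetical. Percentile rank has several conventions; the one assumed here counts the share of scores strictly below a value. `statistics.quantiles` uses its default "exclusive" method, and other software may place the quartiles slightly differently.

```python
import statistics


def z_score(x, data):
    """Standardized score: how many (population) standard deviations x lies
    above or below the mean."""
    return (x - statistics.mean(data)) / statistics.pstdev(data)


def percentile_rank(x, data):
    """Percentage of scores strictly below x (one of several conventions)."""
    return 100 * sum(1 for v in data if v < x) / len(data)


scores = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical
print("z-score of 9:", z_score(9, scores))
print("percentile rank of 7:", percentile_rank(7, scores))
print("quartile cut points:", statistics.quantiles(scores, n=4))
```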

In quantitative research, descriptive analysis often gives absolute numbers, but those numbers alone are never sufficient to explain the rationale behind them. It is therefore necessary to choose the research and data analysis method that best suits your survey questionnaire and the story you want to tell. For example, the mean is a natural way to report students' average scores in schools. It is better to rely on descriptive statistics when researchers intend to keep the research or outcome limited to the sample without generalizing it. For example, when you want to compare average voting in two different cities, descriptive statistics are enough.

Descriptive analysis is also called a ‘univariate analysis’ since it is commonly used to analyze a single variable.

Inferential statistics

Inferential statistics are used to make predictions about a larger population after analyzing a representative sample collected from that population. For example, you could ask about 100 people in a movie theater whether they like the movie they are watching. Researchers can then use inferential statistics on that sample to estimate that about 80 to 90% of all viewers like the movie.
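The movie example can be made concrete with a confidence interval for a proportion. The Python sketch below uses the normal-approximation (Wald) interval. It assumes a hypothetical 85 of 100 viewers said they liked the movie. The Wald interval is a simple textbook choice that behaves poorly for small samples or proportions near 0 or 1; alternatives such as the Wilson interval are often preferred.

```python
import math


def proportion_ci(successes, n, z=1.96):
    """Approximate 95% confidence interval for a population proportion
    (normal-approximation, or Wald, interval)."""
    p_hat = successes / n
    se = math.sqrt(p_hat * (1 - p_hat) / n)  # standard error of the sample proportion
    return p_hat - z * se, p_hat + z * se


# Hypothetical: 85 of 100 surveyed viewers say they like the movie.
low, high = proportion_ci(85, 100)
print(f"estimated share of all viewers who like the movie: {low:.0%} to {high:.0%}")
```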

Here are two significant areas of inferential statistics.

- Estimating parameters: This uses statistics from the sample data to estimate a population parameter.

- Hypothesis test: This uses sample data to answer the research questions. For example, researchers might want to know whether a newly launched shade of lipstick is well received, or whether multivitamin capsules help children perform better at games.
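The multivitamin example can be framed as a two-proportion z-test. The Python sketch below uses a pooled standard error and the normal approximation. The counts are invented: 30 of 50 children taking the multivitamin reach a target score, versus 20 of 50 on a placebo. The normal approximation assumes reasonably large counts in every group.

```python
import math


def two_proportion_z_test(x1, n1, x2, n2):
    """Two-sided z-test for a difference between two proportions (pooled SE)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)  # common proportion under the null hypothesis
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided standard normal tail area
    return z, p_value


# Hypothetical trial: multivitamin group vs placebo group.
z, p = two_proportion_z_test(30, 50, 20, 50)
print(f"z = {z:.2f}, p = {p:.4f}")
```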

More sophisticated inferential methods show the relationship between different variables instead of describing a single variable. They are often used when researchers want to go beyond absolute numbers to understand how variables relate to each other.

Here are some of the commonly used methods for data analysis in research.

- Correlation: When researchers are not conducting experimental or quasi-experimental research but want to understand the relationship between two or more variables, they opt for correlational research methods.

- Cross-tabulation: Also called contingency tables, cross-tabulation is used to analyze the relationship between multiple variables. Suppose the data have age and gender categories presented in rows and columns. A two-dimensional cross-tabulation simplifies analysis by showing the number of males and females in each age category.

- Regression analysis: Regression analysis is a primary and commonly used method for understanding the relationship between variables, and it is also a type of predictive analysis. In this method, you have an essential factor called the dependent variable, along with one or more independent variables. You try to find out the impact of the independent variables on the dependent variable. The values of both the independent and dependent variables are assumed to be ascertained in an error-free, random manner.

- Frequency tables: A frequency table lists each value or category of a variable together with the number, and often the percentage, of observations that fall into it.

- Analysis of variance: This statistical procedure tests the degree to which the means of two or more groups differ in an experiment. A large difference between groups, relative to the variation within groups, indicates a statistically significant finding. Analysis of variance is commonly abbreviated as ANOVA.
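Two of the methods above can be sketched in a few lines of Python: an ordinary least-squares line with one independent variable, and the one-way ANOVA F statistic. All data are invented. The ANOVA function stops at the F statistic; turning it into a p-value requires the F distribution, for example through a statistics package.

```python
from statistics import mean


def least_squares_line(x, y):
    """Ordinary least-squares slope and intercept for one independent variable."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return slope, my - slope * mx


def one_way_anova_f(*groups):
    """F statistic: between-group mean square divided by within-group mean square."""
    values = [v for g in groups for v in g]
    grand = mean(values)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    df_between = len(groups) - 1
    df_within = len(values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)


# Hypothetical data: hours studied (independent) and test score band (dependent).
slope, intercept = least_squares_line([1, 2, 3, 4, 5], [2, 4, 5, 4, 5])
print(f"predicted score = {intercept:.2f} + {slope:.2f} * hours")

# Hypothetical scores under three teaching methods.
print("F =", one_way_anova_f([4, 5, 6], [6, 7, 8], [8, 9, 10]))
```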

Considerations in research data analysis

- Researchers must have the necessary skills to analyze and manipulate the data, and should be trained to demonstrate a high standard of research practice. Ideally, researchers should have more than a basic understanding of the rationale for selecting one statistical method over another to obtain better data insights.

- Research and data analytics projects usually differ by scientific discipline; therefore, getting statistical advice at the beginning of the project helps in designing the survey questionnaire, selecting data collection methods, and choosing samples.


- The primary aim of data research and analysis is to derive unbiased insights. Any mistake or bias in collecting data, selecting an analysis method, or choosing an audience sample will lead to a biased inference.

- No amount of sophistication in research data and analysis is enough to rectify poorly defined objectives or outcome measurements. Whether the design is at fault or the intentions are unclear, a lack of clarity can mislead readers, so avoid it.

- The purpose of data analysis in research is to present accurate and reliable data. As far as possible, avoid statistical errors, and find ways to deal with everyday challenges such as outliers, missing data, data alteration, data mining, and graphical representation.

The sheer amount of data generated daily is frightening, especially since data analysis took center stage in 2018. Over the last year, the total data supply amounted to 2.8 trillion gigabytes. Clearly, enterprises that want to survive in a hypercompetitive world must be able to analyze complex research data, derive actionable insights, and adapt to new market needs.


QuestionPro is an online survey platform that empowers organizations in data analysis and research and provides them a medium to collect data by creating appealing surveys.


2.3 Data management and analysis

Learning objectives.

Learners will be able to…

- Define and construct a data analysis plan

- Define key quantitative data management terms—variable name, data dictionary, and observations/cases

- Differentiate between univariate and bivariate quantitative analysis

- Explain when we might use quantitative bivariate analysis in social work research

- Identify how your qualitative research question, research aim, and type of data may influence your choice of analytic methods

- Outline the steps you will take in preparation for conducting qualitative data analysis

After you have your raw data, whether this is secondary data or data you collected yourself, you will need to analyze it. While the specific steps to follow in quantitative or qualitative data analysis are beyond the scope of this chapter, we are going to address some basic concepts in this section to help you create a data analysis plan. A data analysis plan is an ordered outline that includes your research question, a description of the data you are going to use to answer it, and the exact step-by-step analyses that you plan to run to answer your research question. If you look back at Table 2.1, you will see that creating a data analysis plan is a part of the study design process. The data analysis plan flows from the research question, is integral to the study design, and should be well conceptualized prior to beginning data collection. In this section, we will walk through the basics of quantitative and qualitative data analysis to help you understand the fundamentals of creating a data analysis plan.

Quantitative Data: Management

When considering what data you might want to collect as part of your project, there are two important considerations that can create dilemmas for researchers. You might only get one chance to interact with your participants, so you must think comprehensively in your planning phase about what information you need and collect as much relevant data as possible. At the same time, though, especially when collecting sensitive information, you need to consider how onerous the data collection is for participants and whether you really need them to share that information. Just because something is interesting to us doesn’t mean it’s related enough to our research question to chase it down. Work with your research team and/or faculty early in your project to talk through these issues before you get to this point. And if you’re using secondary data, make sure you have access to all the information you need in that data before you use it.

Once you’ve collected your quantitative data, you need to make sure it is well-organized in a database in a way that’s actually usable. “Database” can be kind of a scary word, but really, it can be as simple as an Excel spreadsheet or a data file in whatever program you’re using to analyze your data. You may want to avoid Excel and use a formal database such as Microsoft Access or MySQL if you’ve got a large or complicated data set. But if your data set is smaller and you plan to keep your analyses simple, you can definitely get away with Excel. A typical data set is organized with variables as columns and observations/cases as rows. For example, let’s say we did a survey on ice cream preferences and collected the following information in Table 2.3:

There are a few key data management terms to understand:

- Variable name: Exactly what it sounds like, the name of your variable. Make sure this is something useful, short and, if you’re using something other than Excel, all one word. Most statistical programs will automatically rename variables for you if they aren’t one word, but the names can be a little ridiculous and long.

- Observations/cases: The rows in your data set. In social work, these are often your study participants (people), but can be anything from census tracts to black bears to trains. When we talk about sample size, we’re talking about the number of observations/cases. In our mini data set, each person is an observation/case.

- Data dictionary (also called a code book or metadata): This is the document where you list your variable names, what the variables actually measure or represent, what each of the values of the variable means if the meaning isn’t obvious (e.g., if there are numbers assigned to gender), the level of measurement, and anything special to know about the variables (for instance, the source if you mashed two data sets together). If you’re using secondary data, the researchers sharing the data should make the data dictionary available.
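The "variables as columns, observations/cases as rows" layout can be sketched in Python with the standard `csv` module. The variable names and values below are hypothetical and are not the contents of Table 2.3. The names follow the advice above: short, meaningful, and one word each, using underscores instead of spaces.

```python
import csv
import io

# Hypothetical mini data set: one row per respondent (case), one column per variable.
rows = [
    {"id": 1, "age": 24, "fav_flavor": "chocolate", "scoops_per_week": 3},
    {"id": 2, "age": 31, "fav_flavor": "vanilla", "scoops_per_week": 1},
    {"id": 3, "age": 45, "fav_flavor": "strawberry", "scoops_per_week": 2},
]

# Write to an in-memory buffer; swapping in open("survey.csv", "w", newline="")
# would produce a file that Excel, JASP, or SPSS can import.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=list(rows[0]))
writer.writeheader()  # first row holds the variable names
writer.writerows(rows)
print(buffer.getvalue())
```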

Let’s take that mini data set we’ve got up above and we’ll show you what your data dictionary might look like in Table 2.4.
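A data dictionary can also live alongside the data in machine-readable form. The Python sketch below stores each variable's meaning, level of measurement, and numeric codes, then uses the dictionary to decode stored values. The entries are hypothetical and are not the contents of Table 2.4.

```python
# Hypothetical data dictionary; labels, levels, and codes are illustrative.
DATA_DICTIONARY = {
    "id": {"label": "Respondent identifier", "level": "nominal"},
    "age": {"label": "Age in years", "level": "ratio"},
    "fav_flavor": {
        "label": "Favorite ice cream flavor",
        "level": "nominal",
        "values": {1: "chocolate", 2: "vanilla", 3: "strawberry"},
    },
}


def decode(variable, code):
    """Translate a stored code into its meaning; values without a code list
    (such as age) are returned unchanged."""
    return DATA_DICTIONARY[variable].get("values", {}).get(code, code)


print(decode("fav_flavor", 2))
print(decode("age", 37))
```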

Quantitative Data: Univariate Analysis

As part of planning for your research, you should come up with a data analysis plan. Remember, a data analysis plan is an ordered outline that includes your research question, a description of the data you are going to use to answer it, and the exact step-by-step analyses that you plan to run to answer your research question. A basic data analysis plan might look something like what you see in Table 2.5. Don’t panic if you don’t yet understand some of the statistical terms in the plan; we’re going to delve into some of them in this section, and others will be covered in more depth in your statistics courses. Note here also that this is what operationalizing your variables and moving through your research with them looks like on a basic level. We will cover operationalization in more depth in Chapter 10.

An important point to remember is that you should never get stuck on using a particular statistical method because you or one of your co-researchers thinks it’s cool or it’s the hot thing in your field right now. You should certainly go into your data analysis plan with ideas, but in the end, you need to let your research question guide what statistical tests you plan to use. Be prepared to be flexible if your plan doesn’t pan out because the data is behaving in unexpected ways.

You’ll notice that the first step in the quantitative data analysis plan is univariate and descriptive statistics. Univariate data analysis is a quantitative method in which a variable is examined individually to determine its distribution, or the way the scores are distributed across the levels, or values, of that variable. When we talk about levels, what we are talking about are the possible values of the variable, like a participant’s age, income or gender. (Note that this is different from levels of measurement, which will be discussed in Chapter 11, but the level of measurement of your variables absolutely affects what kinds of analyses you can do with them.) Univariate analysis is non-relational, which just means that we’re not looking into how our variables relate to each other. Instead, we’re looking at variables in isolation to try to understand them better. For this reason, univariate analysis is used for descriptive research questions.

So when do you use univariate data analysis? Always! It should be the first thing you do with your quantitative data, whether you are planning to move on to more sophisticated statistical analyses or are conducting a study to describe a new phenomenon. You need to understand what the values of each variable look like—what if one of your variables has a lot of missing data because participants didn’t answer that question on your survey? What if there isn’t much variation in the gender of your sample? These are things you’ll learn through univariate analysis.
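A first univariate pass that surfaces both problems mentioned above, missing answers and little variation, can be sketched in Python. The survey values are hypothetical, with `None` standing in for an unanswered question.

```python
from collections import Counter

# Hypothetical survey data; None marks an unanswered question.
data = {
    "gender": ["woman", "woman", "woman", None, "woman", "man"],
    "income": [32000, None, None, 41000, None, 28000],
}


def univariate_summary(values):
    """Count answered and missing values and tabulate the answered ones."""
    answered = [v for v in values if v is not None]
    return {
        "n": len(answered),
        "missing": len(values) - len(answered),
        "distribution": Counter(answered),
    }


for variable, values in data.items():
    s = univariate_summary(values)
    print(f"{variable}: n = {s['n']}, missing = {s['missing']} "
          f"({s['missing'] / len(values):.0%}), distribution = {dict(s['distribution'])}")
```

Here the summary would flag that half of the income answers are missing and that almost all respondents share one gender, both of which limit later analyses.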

Quantitative Data: Bivariate Analysis

Did you know that ice cream causes shark attacks? It’s true! When ice cream sales go up in the summer, so does the rate of shark attacks. So you’d better put down that ice cream cone, unless you want to make yourself look more delicious to a shark.

Ok, so it’s quite obviously not true that ice cream causes shark attacks. But if you looked at these two variables and how they’re related, you’d notice that during times of the year with high ice cream sales, there are also the most shark attacks. This is a classic example of the difference between correlation and causation. Despite the fact that the conclusion we drew about causation was wrong, it’s nonetheless true that these two variables appear related, and researchers figured that out through the use of bivariate analysis.

Bivariate analysis consists of a group of statistical techniques that examine the association between two variables. We could look at how anti-depressant medications and appetite are related, whether there is a relation between having a pet and emotional well-being, or if a policy-maker’s level of education is related to how they vote on bills related to environmental issues.
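The ice cream and shark attack example can be reproduced with the Pearson correlation coefficient, one of the most basic bivariate statistics. The monthly figures below are invented. Both series peak in summer, so the correlation is strong even though neither causes the other; a third variable, warm weather, drives both. In Python 3.10 and later, `statistics.correlation` gives the same coefficient. It is written out here so the formula is visible.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Invented monthly figures, January to December.
ice_cream_sales = [20, 25, 40, 60, 80, 95, 100, 90, 65, 40, 25, 20]
shark_attacks = [1, 1, 2, 3, 5, 6, 7, 6, 4, 2, 1, 1]
print(f"r = {pearson_r(ice_cream_sales, shark_attacks):.2f}")
```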

Bivariate analysis forms the foundation of multivariate analysis, which we don’t get to in this book. All you really need to know here is that there are steps beyond bivariate analysis, which you’ve undoubtedly seen in scholarly literature already! But before we can move forward with multivariate analysis, we need to understand the associations between the variables in our study.

Throughout your PhD program, you will learn much more about quantitative data analysis techniques, including more sophisticated multivariate analysis methods. Hopefully this section has provided you with some initial insights into how data is analyzed, and the importance of creating a data analysis plan prior to collecting data. Next, we will discuss some basic strategies for creating a qualitative data analysis plan.

Resources for Quantitative Data Analysis

While you are affiliated with a university, it is likely that you will have access to some kind of commercial statistics software. Examples in the previous section use SPSS, the most common package our authoring team has seen in social work education. Like its competitors SAS and Stata, SPSS is expensive, and your license to the software must be renewed every year (like a subscription). Even if you are able to install commercial statistics software on your computer, the program will stop working once your license expires. We believe that forcing students to learn software they will never use is wasteful and contributes to the (in many cases accurate) perception among students that research class is unrelated to real-world practice. SPSS is more accessible because of its graphical user interface and does not require researchers to learn basic computer programming, but it would be prohibitively costly for a student who wanted to use it to analyze practice data at their agency after graduation.

Instead, we suggest getting familiar with JASP Statistics, a free and open-source alternative to SPSS developed and supported by the University of Amsterdam. Its user interface is similar to that of SPSS, so it should be similarly easy to learn. Moreover, usability improvements over SPSS, such as generating APA-formatted tables, make it a compelling option. While a great many of our students will rely on statistical analyses of their programs and practices in reports to funders, it is unlikely that any will use SPSS. Browse JASP’s how-to guide or consult the textbook Learning Statistics with JASP: A Tutorial for Psychology Students and Other Beginners, written by Danielle J. Navarro, David R. Foxcroft, and Thomas J. Faulkenberry.

Another open-source statistics package is R (also known as The R Project for Statistical Computing). R uses a command-line interface, so you will need some coding knowledge to use it. Luckily, R is one of the most widely used statistics programs in the world, and support communities and guides for using R are plentiful online. For beginning researchers, consult the textbook Learning Statistics with R: A tutorial for psychology students and other beginners by Danielle J. Navarro.

While dedicated statistics software is sometimes needed to perform advanced statistical tests, most univariate and bivariate tests can be performed in spreadsheet software like Microsoft Excel, Google Sheets, or the free and open-source LibreOffice Calc. Microsoft offers the Analysis ToolPak, an Excel add-in for more complex data analysis. For more information on using spreadsheet software to perform statistics, see the open textbook Collaborative Statistics Using Spreadsheets by Susan Dean, Irene Mary Duranczyk, Barbara Illowsky, Suzanne Loch, and Janet Stottlemyer.

Statistical analysis is performed in just about every discipline, and as a result, there are a lot of openly licensed, free resources to assist you with your data analysis. We have endeavored to provide you with the basics in the past few chapters, but ultimately, you will likely need additional support in completing quantitative data analysis from an instructor, textbook, or other resource. Browse the Open Textbook Library for statistics resources or look for video tutorials from reputable instructors, like this video textbook on statistics by Bryan Koenig.

Qualitative Data: Management

Qualitative research often involves human participants, and qualitative data can include recordings or transcripts of their words, photographs or images, or diaries and documents. Because qualitative data are so personal, there is a risk that individuals, communities, and places could be recognized from sensitive information. If you choose this methodology for your research, you should familiarize yourself with the policies, procedures, and rules that ensure the safety and security of data during documentation and dissemination.

In any research involving primary data, a researcher is not only entrusted with upholding the privacy of their participants but is also accountable to them, which puts confidentiality and human subjects’ protection at the center of qualitative data management. Data such as audiotapes, videotapes, transcripts, notes, and other records should be stored and secured in locations where only authorized persons have access to them.

Sometimes in qualitative research, you will learn intimate details about people’s lives. Often, qualitative data contain personal identifiers. A helpful practice for protecting participants’ confidentiality is to replace personal information in transcripts with pseudonyms or descriptive language (e.g., “[the participant’s sister]” instead of the sister’s name). Once audio and video recordings have been accurately transcribed and the personal identifiers in the transcripts de-identified, the original recordings should be destroyed.
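If transcripts are stored as text files, part of this replacement can be scripted. The sketch below is a minimal illustration under assumed names: the replacements mapping and the deidentify function are hypothetical, and the research team would build the mapping while reading the transcripts. Automated replacement will miss nicknames, misspellings, and indirect identifiers (such as a distinctive job title in a small town), so it supplements a careful manual read rather than replacing it.

```python
import re

# Hypothetical mapping from identifiers found in transcripts to neutral descriptions.
replacements = {
    "Maria": "[the participant's sister]",
    "Riverside Clinic": "[a local health clinic]",
}


def deidentify(text, replacements):
    """Replace each identifier (matched as whole words, case-sensitive) with its description."""
    # Handle longer identifiers first so a full name is replaced before any part of it.
    for identifier in sorted(replacements, key=len, reverse=True):
        pattern = r"\b" + re.escape(identifier) + r"\b"
        description = replacements[identifier]
        # A function replacement inserts the description literally (no escape processing).
        text = re.sub(pattern, lambda _match, d=description: d, text)
    return text


print(deidentify("Maria drove me to Riverside Clinic every week.", replacements))
```

Matching whole words means that a different name containing the same letters, such as "Mariana", is left untouched.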

Qualitative Data: Analysis

There are many different types of qualitative data, including transcripts of interviews and focus groups, observational data, documents and other artifacts, and more. Your qualitative data analysis plan should be anchored in the type of data collected and the purpose of your study. Qualitative research can serve a range of purposes. Below is a brief list of general purposes we might consider when using a qualitative approach.

- Are you trying to understand how a particular group is affected by an issue?

- Are you trying to uncover how people arrive at a decision in a given situation?

- Are you trying to examine different points of view on the impact of a recent event?

- Are you trying to summarize how people understand or make sense of a condition?

- Are you trying to describe the needs of your target population?

If you don’t see the general aim of your research question reflected in one of these areas, don’t fret! This is only a small sampling of what you might be trying to accomplish with your qualitative study. Whatever your aim, you need to have a plan for what you will do once you have collected your data.

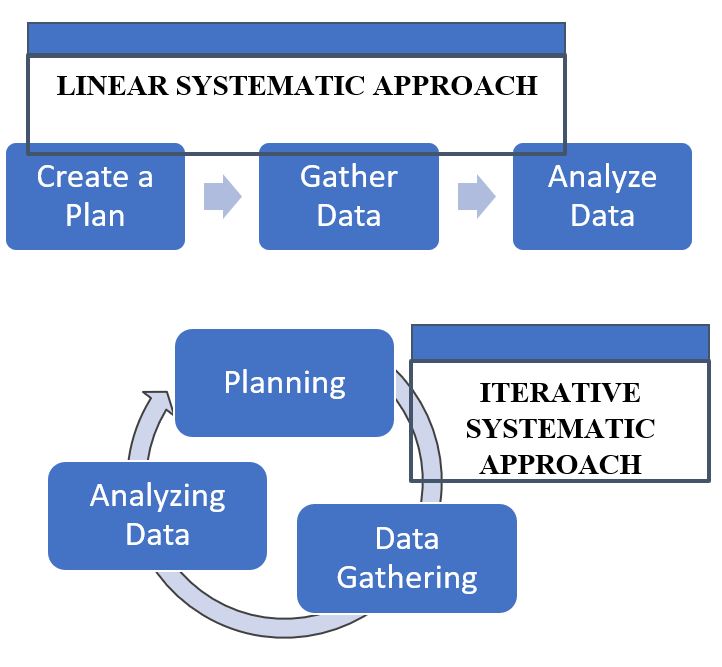

Iterative or Linear

Some qualitative research is linear, meaning it follows more of a traditionally quantitative process: create a plan, gather data, and analyze data; each step is completed before we proceed to the next. You can think of this like how information is presented in this book. We discuss each topic, one after another.

However, qualitative research is often iterative, or evolving in cycles. An iterative approach means that once we begin collecting data, we also begin analyzing data as it comes in. This early and ongoing analysis of our (incomplete) data then shapes our continued planning, data gathering, and future analysis. Again, coming back to this book, while it may be written linearly, we hope that you engage with it iteratively as you design and conduct your own research. By this we mean that you will revisit previous sections to understand how they fit together, and that you are in a continuous process of building and revising how you think about the concepts you are learning.

As you may have guessed, there are benefits and challenges to both linear and iterative approaches. A linear approach is much more straightforward, with each step fairly well defined. However, being more defined and rigid also presents certain challenges. A linear approach assumes that we know what we need to ask or look for at the very beginning of data collection, which often is not the case. Figure 2.1 contrasts the two approaches.

With iterative research, we have more flexibility to adapt our approach as we learn new things. We still need to keep our approach systematic and organized, however, so that our work doesn’t become a free-for-all. As we adapt, we do not want to stray too far from the original premise of our study. It’s also important to remember that with an iterative approach we may raise ethical concerns if our work extends beyond the original boundaries of our informed consent and institutional review board agreement (IRB; see Chapter 3 for more on IRBs). If you feel that you need to modify your original research plan in a significant way as you learn more about the topic, you can submit an addendum to modify your original IRB application. Make sure to keep detailed notes of the decisions that you are making and what is informing these choices. This helps to support transparency and your credibility throughout the research process.

Acquainting yourself with your data

As you begin your analysis, you need to get to know your data. This often means reading through your data before any attempt at breaking it apart and labeling it. In fact, you might read through it a couple of times. This gives you a more comprehensive feel for each piece of data and for the data as a whole before you start to break it down into smaller units or deconstruct it. This is especially important if others assisted us in the data collection process. We often gather data as part of a team, and everyone involved in the analysis needs to be very familiar with all of the data.

Capturing your emerging understanding of the data

As you review your data, you will start to develop and refine your understanding of what the data mean. Coding is the part of the qualitative data analysis process where we begin to interpret and assign meaning to the data. It represents one of the first steps as we begin to filter the data through our own subjective lens as the researcher. This understanding of the data should be dynamic and flexible, but you want to have a way to capture it as it evolves. You may include this as part of your qualitative codebook, where you track the main ideas that are emerging and what they mean. Table 2.6 is an example of how your thinking might change about a code and how you can go about capturing it.
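One way to capture an evolving code is to keep every version of its definition, along with the date and the reason for the change, rather than overwriting it. The Python sketch below models a single codebook entry this way. The class name, the code "family support", and the dates are hypothetical. A spreadsheet with the same columns (code, date, definition, reason for change) would serve the same purpose.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date


@dataclass
class CodeEntry:
    """One code in a qualitative codebook, keeping every version of its definition."""
    name: str
    # Each revision is stored as (date, definition, reason for the change).
    history: list[tuple[date, str, str]] = field(default_factory=list)

    def revise(self, when: date, definition: str, reason: str) -> None:
        """Record a new definition without discarding earlier ones."""
        self.history.append((when, definition, reason))

    @property
    def current_definition(self) -> str | None:
        """The most recent definition, or None if the code has not been defined yet."""
        return self.history[-1][1] if self.history else None


# Hypothetical example of a code whose meaning shifts during analysis.
support = CodeEntry("family support")
support.revise(date(2024, 3, 1), "Family members help the participant.",
               "Initial definition after the first transcript")
support.revise(date(2024, 3, 15),
               "Practical or emotional help from family, including help the participant asks for.",
               "Later interviews showed participants actively seeking help")
codebook = {support.name: support}
print(codebook["family support"].current_definition)
```

Keeping the full history, rather than only the latest definition, also produces the kind of record of analytic decisions that supports transparency.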

There are a variety of different approaches to qualitative analysis, including thematic analysis, content analysis, grounded theory, phenomenology, photovoice, and more. The specific steps you will take to code your qualitative data, and to generate themes from these codes, will vary based on the analytic strategy you are employing. In designing your qualitative study, you would identify an analytical approach as you plan out your project. The one you select would depend on the type of data you have and what you want to accomplish with it. In Chapter 19, we will go into more detail about various types of qualitative data analysis. Each qualitative approach has specific techniques and methods that take substantial study and practice to master.

Key Takeaways

- Getting organized at the beginning of your project with a data analysis plan will help keep you on track. Data analysis plans should include your research question, a description of your data, and a step-by-step outline of what you’re going to do with it. [chapter 14.1]

- Be flexible with your data analysis plan—sometimes data surprises us and we have to adjust the statistical tests we are using. [chapter 14.1]

- Always make a data dictionary or, if using secondary data, get a copy of the data dictionary so you (or someone else) can understand the basics of your data. [chapter 14.1]

- Bivariate analysis is a group of statistical techniques that examine the relationship between two variables. [chapter 15.1]

- You need to conduct bivariate analyses before you can begin to draw conclusions from your data, including in future multivariate analyses. [chapter 15.1]

- There are a lot of high-quality, free online resources for learning and performing statistical analysis.

- Qualitative research analysis requires preparation and careful planning. You will need to take time to familiarize yourself with the data in a general sense before you begin analyzing. [chapter 19.3]

- The specific steps you will take to code your qualitative data and generate final themes will depend on the qualitative analytic approach you select.

TRACK 1 (IF YOU ARE CREATING A RESEARCH PROPOSAL FOR THIS CLASS)

- Make a data analysis plan for your project. Remember this should include your research question, a description of the data you will use, and a step-by-step outline of what you’re going to do with your data once you have it, including statistical tests (non-relational and relational) that you plan to use. You can do this exercise whether you’re using quantitative or qualitative data! The same principles apply.

- Make a data dictionary for the data you are proposing to collect as part of your study. You can use the example above as a template.
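If you will manage your data in a programming language, the data dictionary can also be written as a data structure and used to check each observation (row) as it is entered. The sketch below assumes a hypothetical three-variable survey with -99 as the missing-data code. The variable names, codes, and the check_record function are illustrations, not a required format.

```python
# Hypothetical data dictionary: each key is a variable name.
DATA_DICTIONARY = {
    "id": {"label": "Participant ID", "type": int},
    "age": {"label": "Age in years", "type": int, "range": (18, 99), "missing": -99},
    "has_pet": {"label": "Owns a pet", "type": int, "values": {0: "No", 1: "Yes"}, "missing": -99},
}


def check_record(record, dictionary=DATA_DICTIONARY):
    """Return a list of problems found in one observation (row) of the data set."""
    problems = []
    for name, spec in dictionary.items():
        if name not in record:
            problems.append(f"{name}: not in record")
            continue
        value = record[name]
        if "missing" in spec and value == spec["missing"]:
            continue  # documented missing-data code, not an error
        if not isinstance(value, spec["type"]):
            problems.append(f"{name}: expected {spec['type'].__name__}")
        elif "values" in spec and value not in spec["values"]:
            problems.append(f"{name}: {value} is not a valid code")
        elif "range" in spec and not spec["range"][0] <= value <= spec["range"][1]:
            problems.append(f"{name}: {value} is outside {spec['range']}")
    # Flag variables that were collected but never documented.
    for name in record:
        if name not in dictionary:
            problems.append(f"{name}: not in data dictionary")
    return problems


print(check_record({"id": 1, "age": 34, "has_pet": 1}))   # a clean row
print(check_record({"id": 2, "age": 150, "has_pet": 2}))  # two problems
```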

TRACK 2 (IF YOU AREN’T CREATING A RESEARCH PROPOSAL FOR THIS CLASS)

You are researching the impact of your city’s recent harm reduction interventions for intravenous drug users (e.g., sterile injection kits, monitored use, overdose prevention, naloxone provision, etc.).

- Make a draft quantitative data analysis plan for your project. Remember this should include your research question, a description of the data you will use, and a step-by-step outline of what you’re going to do with your data once you have it, including statistical tests (non-relational and relational) that you plan to use. It’s okay if you don’t yet have a complete idea of the types of statistical analyses you might use.

Data analysis plan: An ordered outline that includes your research question, a description of the data you are going to use to answer it, and the exact analyses, step-by-step, that you plan to run to answer your research question.

Variable name: The name of your variable.

Observations: The rows in your data set. In social work, these are often your study participants (people), but can be anything from census tracts to black bears to trains.