- Open access

- Published: 14 December 2020

A comprehensive performance analysis of Apache Hadoop and Apache Spark for large scale data sets using HiBench

- N. Ahmed ORCID: orcid.org/0000-0001-5663-0042 1 ,

- Andre L. C. Barczak ORCID: orcid.org/0000-0001-7648-285X 1 ,

- Teo Susnjak ORCID: orcid.org/0000-0001-9416-1435 1 &

- Mohammed A. Rashid ORCID: orcid.org/0000-0002-0844-5819 2

Journal of Big Data volume 7, Article number: 110 (2020)

Big Data analytics for storing, processing, and analyzing large-scale datasets has become an essential tool for the industry. The advent of distributed computing frameworks such as Hadoop and Spark offers efficient solutions to analyze vast amounts of data. Owing to its application programming interface (API) availability and its performance, Spark has become very popular, even more popular than the MapReduce framework. Both frameworks have more than 150 parameters, and the combination of these parameters has a massive impact on cluster performance. The default system parameters help system administrators deploy their applications without much effort and measure their specific cluster performance with factory-set parameters. However, an open question remains: can new parameter selection improve cluster performance for large datasets? In this regard, this study investigates the most impactful parameters, in the resource utilization, input splits, and shuffle categories, to compare the performance of Hadoop and Spark on a cluster implemented in our laboratory. We used a trial-and-error approach for tuning these parameters based on a large number of experiments. For the comparative analysis of the frameworks, we selected two workloads: WordCount and TeraSort. Performance is evaluated on three criteria: execution time, throughput, and speedup. Our experimental results revealed that the performance of both systems depends heavily on input data size and correct parameter selection. The analysis of the results shows that Spark performs better than Hadoop when data sets are small, achieving up to two times speedup in WordCount workloads and up to 14 times in TeraSort workloads when default parameter values are reconfigured.

Introduction

Hadoop [ 1 ] has become a very popular platform in the IT industry and academia for its ability to handle large amounts of data, along with extensive processing and analysis facilities. Different users produce these large datasets, and most of the data are unstructured, increasing the requirements for memory and I/O. Besides, the advent of many new applications and technologies has brought much larger volumes of complex data, including social media (e.g., Facebook, Twitter, YouTube), online shopping, machine data, system data, and browsing history [ 2 ]. Storing, processing, and analyzing this massive amount of digital data is a challenging task for management.

Conventional database management tools are unable to handle this type of data [ 3 ]. Big data technologies, tools, and procedures allow organizations to capture, quickly process, and analyze large quantities of data and extract appropriate information at a reasonable cost.

Several solutions are available to handle these problems [ 4 ]. Distributed computing is one possible solution, considered the most efficient and fault-tolerant method for companies to store and process massive amounts of data. Among this new group of tools, MapReduce and Spark are the most commonly used cluster computing tools. They provide users with various functions through simple application programming interfaces (APIs). MapReduce is a distributed computing framework for parallel processing, designed to write, read, and process large amounts of data [ 1 , 5 , 6 ]. This data processing framework comprises three stages: the Map phase, the Shuffle phase, and the Reduce phase. In this technique, large files are divided into several small blocks of equal size and distributed across the cluster for storage. MapReduce and the Hadoop Distributed File System (HDFS) are core parts of the Hadoop system, so computing and storage work together across all nodes that compose a cluster of computers [ 7 ].

Apache Spark is an open-source cluster-computing framework [ 8 ]. Its design builds on Hadoop, and its purpose is to provide a programming model that “fits a wider class of applications than MapReduce while maintaining the automatic fault tolerance” [ 9 ]. It is not only an alternative to the Hadoop framework; it also provides various functions to process real-time streaming data. Apart from the map and reduce functions, Spark also supports MLlib, GraphX, and Spark Streaming for big data analysis. Hadoop MapReduce processing is slow because it requires accessing disks for reads and writes. Spark, on the other hand, stores data in memory, reducing the read/write cycle [ 1 ]. In this paper, we address the above-mentioned critical challenges. To our knowledge, none of the previous works have addressed those challenges. Our work will help system administrators and researchers understand system behavior when processing large-scale data sets. The main contributions of this paper are as follows:

We introduced a comprehensive empirical performance analysis of the MapReduce and Spark frameworks by correlating resource utilization, split size, and shuffle behavior parameters. To our knowledge, few previous studies have presented information on this. With this in mind, we focused on a comprehensive study of the impact of various parameters on a large data set rather than on a large number of workloads.

We carried out a comprehensive comparison between Hadoop and Spark in which large-scale datasets (600 GB) are used for the first time. The experiments present the various aspects of cluster performance overhead. We applied two HiBench workloads to test the efficiency of the system under MapReduce and Spark while repeatedly varying the data sets.

We selected several parameters covering different aspects of system behavior. Multiple parameters are used to tune job performance. The results of the analysis will facilitate job performance tuning and enhance the freedom to modify the ideal parameters to enhance job efficiency.

We measured the scalability of the experiment by repeating each run three times and taking the average execution time for each job. Besides, we investigated the system execution time, maximum sustainable throughput, and speedup.

We used a real cluster capable of handling large scale data set (600 GB) with benchmarking tools for a comprehensive evaluation of MapReduce and Spark.

The remainder of the paper is organized as follows: the “Related work” section presents a critical review of related research works and then describes the Hadoop and Spark systems. The difference between Hadoop and Spark is explained in the “Difference between Hadoop and Spark” section. The experimental setup is presented in the “Experimental setup” section. In the “The parameters of interest and tuning approach” section, we explain the chosen parameters and tuning approach. The “Results and discussion” section presents the performance analysis of the results, and finally, we conclude in the “Conclusion” section.

Related work

Shi et al. [ 10 ] proposed two profiling tools to quantify the performance of the MapReduce and Spark frameworks based on a micro-benchmark experiment. The comparative study between these frameworks is conducted with batch and iterative jobs. In their work, the authors consider three components: shuffle, execution model, and caching. The workloads WordCount, k-means, Sort, Linear Regression, and PageRank are chosen to evaluate the system behavior in terms of CPU-bound, disk-bound, and network-bound characteristics [ 11 ]. They disabled the map and reduce functions for all workloads apart from Sort. For Sort, up to 60 map tasks are configured, and the reduce tasks are configured to 120. The map output buffer is allocated 550 MB to avoid additional spills when sorting the map output. Spark intermediate data are stored on 8 disks, where each worker is configured with four threads. The authors claim that Spark is faster than MapReduce when WordCount runs with different data sets (1 GB, 40 GB, and 200 GB). For TeraSort, the sort-by-key() function is used. They found that Spark is faster than MapReduce when the data set is small (1 GB), but MapReduce is nearly two times faster than Spark when the data set is larger (40 GB or 100 GB). Besides, Spark is one and a half times faster than MapReduce with machine learning workloads such as K-means and Linear Regression. It is claimed that in subsequent iterations, Spark is five times faster than MapReduce due to RDD caching, and Spark-GraphX is four times faster than MapReduce.

Li et al. [ 12 ] proposed a Spark benchmarking suite [ 13 ], which significantly enhances the optimization of workload configuration. This work identified the distinct features of each benchmark application regarding resource consumption, data flow, and communication pattern that can impact the job execution time. The applications are characterized based on extensive experiments using synthetic data sets. Ten different workloads (Logistic Regression, Support Vector Machine, Matrix Factorization, Page Rank, Triangle Count, SVD++, Hive, RDD Relation, Twitter, and PageView) are used with different input data sizes. An eleven-node virtual cluster is used to analyze the performance of the workloads. The workload analysis covers CPU utilization, memory, disk, and network input/output consumption at the time of job execution. They found that most of the workloads, except logistic regression, spend more than 50% of their execution time in MapShuffle-Tasks. They concluded that the job execution time could be reduced by increasing task parallelism to fully leverage the CPU.

Thiruvathukal et al. [ 14 ] considered the importance and implications of languages such as Python and Scala built on the Java Virtual Machine (JVM) to investigate how the individual language affects the systems’ overall performance. This work proposed a comprehensive benchmarking test for Message Passing Interface (MPI) and cloud-based applications considering typical parallel analysis. The proposed benchmark techniques are designed to emulate a typical image analysis. They presented one mid-size (Argonne Leadership Computing Facility) cluster with 126 nodes, which runs on COOLEY [ 14 ], and a large-scale supercomputer (Cray XC40 supercomputer) cluster with a single node, which runs on THETA [ 14 ]. Notably, they increased the values of some important Spark parameters (Spark driver memory and executor memory) according to the machine resources. They recommended the COOLEY and THETA frameworks as beneficial for immediate research work and high-performance computing (HPC) environments.

Marcue et al. [ 15 ] present a comparative analysis of the Spark and Flink frameworks for large-scale data analysis. This work proposed a new methodology for benchmarking iterative workloads (K-Means and Page Rank) and batch processing workloads (WordCount, Grep, and TeraSort). They considered the four most important parameters that impact scalability, resource consumption, and execution time. Spark and Flink were deployed on Grid 5000 [ 16 ] using a cluster of up to 100 nodes. They reported that the configuration of Spark parameters (i.e., parallelism and partitions) is sensitive and depends on the data sets, while Flink is highly memory-oriented.

Samadi et al. [ 7 ] investigated the criteria for comparing the performance of the Hadoop and Spark frameworks. In their work, for an impartial comparison, the input data size and configuration remained the same. Their experiment used eight benchmarks of the HiBench suite [ 13 ]. The input data was generated automatically for every case and size, and the computation was performed several times to determine the execution time and throughput. When they deployed the microbenchmarks (Sort and TeraSort) on both systems, Spark showed higher processor involvement in I/O, while Hadoop mostly processed user tasks. On the other hand, Spark’s performance was excellent when dealing with small input sizes, such as micro and web search (Page Rank) workloads. Finally, they concluded that Spark is faster and very strong for processing data in memory, while Hadoop MapReduce performs its map and reduce functions on disk.

In another paper, Samadi et al. [ 9 ] proposed a virtual machine based on Hadoop and Spark to gain the benefits of virtualization. This virtual machine’s main advantage is that it can perform all operations even if the hardware fails. In this deployment, they used the CentOS operating system to build a Hadoop cluster in pseudo-distributed mode with various workloads. In their experiments, they deployed the Hadoop machine on a single workstation and all other demos on its JVM. To justify the big data framework, they presented the results of a Hadoop deployment on Amazon Elastic Computing (EC2). They concluded that Hadoop is a better choice because Spark requires more memory resources than Hadoop. Finally, they suggested that the cluster configuration is essential to reduce job execution time, and that the cluster parameter configuration must align with the Mappers and Reducers.

The computational frameworks Apache Hadoop and Apache Spark were investigated by [ 17 ]. In this investigation, an Apache web server log file was used to evaluate the two frameworks’ comparative performance. The experiments used Okeanos’s virtualized computing resources, an Infrastructure as a Service (IaaS) offering developed by the Greek Research and Technology Network [ 17 ]. The authors proposed a number of applications and conducted several experiments to determine each application’s execution time, varying the input files and the number of slave nodes. They found that the execution time is proportional to the input data size. They concluded that the performance of Spark is much better than that of Hadoop in most cases.

Satish and Rohan [ 18 ] presented a comparative performance study between Hadoop MapReduce and Spark based on the K-means algorithm. In this study, they used a specific data set that supports this algorithm and considered both single and double nodes when gathering each experiment’s execution time. They concluded that Spark is up to three times faster than MapReduce, though Spark performance heavily depends on sufficient memory size [ 19 ].

Lin et al. [ 20 ] proposed a unified cloud platform, including batch processing ability over standalone log analysis tools. This investigation considered four different frameworks: Hadoop, Spark, and the data warehouse analysis tools Hive and Shark. They implemented two machine learning algorithms (K-means and PageRank) on these frameworks with six nodes to validate the cloud platform, using different data sizes as inputs. In the case of K-means, as the data size increased and exceeded the memory size, the scheduling latency and overall Spark performance degraded. However, the overall performance was still six times higher than Hadoop on average. On the other hand, Shark shows significant performance improvement when running queries directly from disk.

Petridis et al. [ 21 ] have investigated the most important Spark parameters shown in Table 4 and given a guideline to the developers and system administrators to select the correct parameter values by replacing the default parameter values based on trial-and-error methodology. Three types of case studies with different categories such as Shuffle Behavior, Compression and Serialization, and Memory Management parameters were performed in this study. They have highlighted the impact of memory allocation and serialization when the number of cores and default parallelism values change. Therefore, there are 12 parameters chosen with three benchmarking applications: sort-by-key, shuffling, and k-means. The sort-by-key experiments used both 1 million and 1 billion key-values of lengths 10 and 90 bytes and the optimal degree of partition is set to 640. The Hash performance is increased to 127 s, which is 30 s faster than the default parameter, and shuffle.file.buffer is increased by 140 s. The rest of the parameters do not play any important role in improving the performance. For another Shuffling experiment, they used a 400 GB dataset. The Hash shuffle performance is degraded by 200 s, and Tungsten-Sort speed is increased by 90 s. By decreasing the buffer size from 32 to 15 KB, the system performance was degraded by about 135s, which is more than 10% from the primary selection. For K-means, they used two sizes of data input (100 MB and 200 MB). They have not found significant k-means performance improvement by changing the parameters. Therefore, they have concluded that based on their methodology, the speedup achievement is tenfold. However, the main challenges of tuning Hadoop and Spark configuration parameters are due to the complicated behavior of distributed large scale systems while the parameter selection is not always trivial for the system administrators. Inappropriate combination of parameter values can affect the overall system performance. 
The published literature in Table 1 presents some empirical studies. None of these studies have considered larger data sizes (600 GB), more parameters, and real clusters. In our study, we chose a conventional trial-and-error approach [ 21 ], larger data set, and 18 important parameters (listed in Tables 3 and 4 ) from resource utilization, input splits, and shuffle category.

Difference between Hadoop and Spark

Hadoop [ 22 ] is a very popular and useful open-source software framework that enables distributed storage, including the capability of storing large datasets across clusters. It is designed so that it can scale from a single server to thousands of nodes. Hadoop processes large data concurrently and produces fast results. The core parts of Hadoop are the Hadoop Distributed File System (HDFS) and MapReduce.

HDFS [ 23 ] splits files into small blocks and saves them on different nodes. There are two kinds of nodes in HDFS: data-nodes (workers) and name-nodes (master nodes) [ 24 , 25 ]. All operations, including delete, read, and write, are based on these two types of nodes. The workflow of HDFS is as follows: first, the client asks the name-node for access permission. If the request is accepted, the name-node translates the file name into a list of HDFS block IDs, together with the data-nodes that store the blocks of that file. The ID list is then sent back to the client, and users can perform further operations based on it.

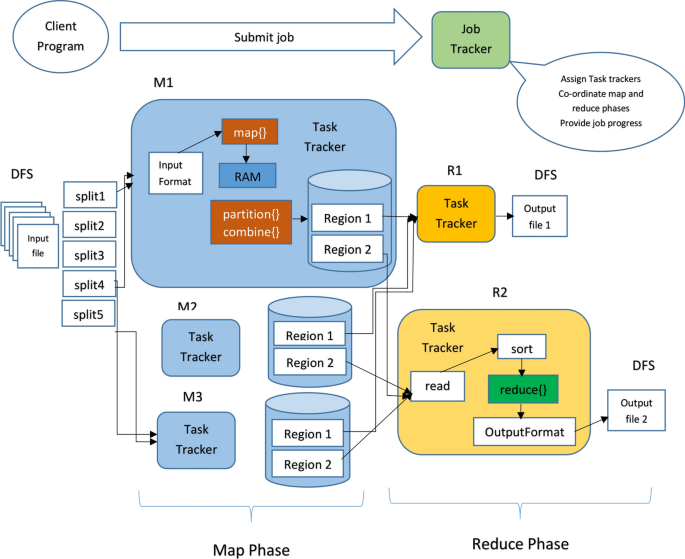

MapReduce [ 26 ] is a computing framework that includes two operations: Mappers and Reducers. The mappers process files based on the map function and transform them into new key-value pairs [ 27 ]. Next, the new key-value pairs are assigned to different partitions and sorted by key. The combiner is optional and can be regarded as a local reduce operation, which aggregates the values with the same key in advance to reduce the I/O pressure. Finally, the partitioner divides the intermediate key-value pairs into different pieces and transfers them to a reducer. MapReduce needs to implement one further operation: shuffle. Shuffle means transferring the mapper output data to the proper reducer. After the shuffle process is finished, the reducer starts some copy threads (Fetcher) and obtains the output files of the map task through HTTP [ 28 ]. The next step is merging the output into different final files, which then serve as the reducer input data. After that, the reducer processes the data based on the reduce function and writes the output back to HDFS. Figure 1 depicts the Hadoop MapReduce architecture.

Hadoop MapReduce architecture [ 1 ]
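The map, combine, partition, shuffle, and reduce steps described above can be illustrated with a minimal in-memory sketch. This is not Hadoop code: the function names, the CRC32-based partitioner, and the two-reducer default are assumptions chosen for illustration, and everything runs in a single Python process rather than across nodes.

```python
from collections import Counter, defaultdict
import zlib


def map_phase(split):
    """Mapper: emit a (word, 1) pair for every word in one input split."""
    return [(word, 1) for word in split.split()]


def combine(pairs):
    """Optional combiner: a local reduce that pre-sums counts per key."""
    local = Counter()
    for key, value in pairs:
        local[key] += value
    return list(local.items())


def partition(key, num_reducers):
    """Partitioner: pick the reducer for a key with a deterministic hash."""
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def shuffle(mapper_outputs, num_reducers):
    """Shuffle: route every pair to its reducer and group values by key."""
    buckets = [defaultdict(list) for _ in range(num_reducers)]
    for pairs in mapper_outputs:
        for key, value in pairs:
            buckets[partition(key, num_reducers)][key].append(value)
    return buckets


def reduce_phase(bucket):
    """Reducer: visit keys in sorted order and sum their values."""
    return {key: sum(bucket[key]) for key in sorted(bucket)}


def word_count(splits, num_reducers=2):
    """Run all phases over a list of text splits and merge reducer outputs."""
    mapper_outputs = [combine(map_phase(s)) for s in splits]
    result = {}
    for bucket in shuffle(mapper_outputs, num_reducers):
        result.update(reduce_phase(bucket))
    return result


if __name__ == "__main__":
    print(word_count(["spark hadoop spark", "hadoop yarn"]))
```

Because the combiner pre-aggregates each mapper's output, fewer pairs cross the shuffle boundary, which is the I/O saving the text attributes to it.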

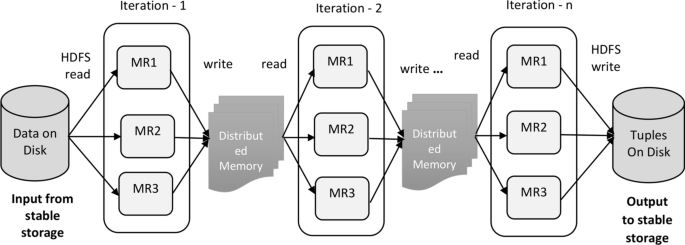

Spark became an open-source project in 2010, after Zaharia developed it at UC Berkeley’s AMPLab in 2009 [ 4 , 29 ]. Spark offers numerous advantages for developers building big data applications. Spark introduced two important concepts: Resilient Distributed Datasets (RDD) and the Directed Acyclic Graph (DAG). These two techniques work together and can make Spark up to tens of times faster than Hadoop under certain circumstances, even though it usually only achieves a performance two to three times faster than MapReduce. An RDD supports multiple data sources, has a fault tolerance mechanism, can be cached, and supports parallel operations. Besides, it can represent a single dataset with multiple partitions. When Spark runs on a Hadoop cluster, RDDs are created on HDFS in many formats supported by Hadoop, such as text and sequence files. The DAG scheduler [ 30 ] expresses the dependencies of RDDs. Each Spark job creates a DAG, and the scheduler divides the graph into stages of tasks, which are then launched on the cluster. The DAG is created in both the map and reduce stages to express the dependencies fully. Figure 2 illustrates the iterative operation on RDD. Theoretically, limited Spark memory causes the performance to slow down.

Spark workflow [ 31 ]

Experimental setup

Cluster architecture

In the last couple of years, different research groups have made many proposals about the suitability of the Hadoop and Spark frameworks when various types of data of different sizes are used as input in different clusters. Therefore, it becomes necessary to study the performance of the frameworks and understand the influence of various parameters. For the experiments, we present our cluster performance based on MapReduce and Spark using the HiBench suite [ 23 , 23 ]. In particular, we selected two HiBench workloads out of thirteen standard workloads to represent the two types of jobs, namely WordCount (aggregation job) [ 32 ] and TeraSort (shuffle job) [ 33 ], with large datasets. We selected these workloads because of their complex characteristics, in order to study cluster performance by correlating the MapReduce and Spark functions with a combination of groups of parameters.

Hardware and software specification

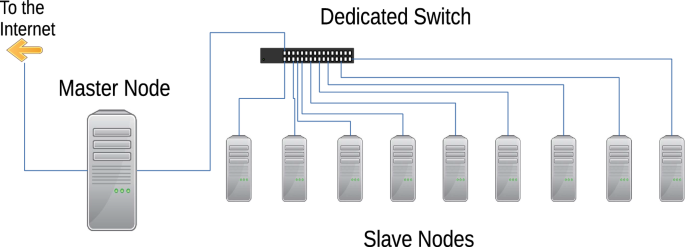

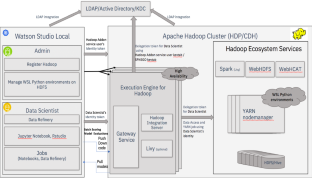

The experiments were deployed on our own cluster. The cluster is configured with one master and nine slave nodes, as presented in Fig. 3 . The cluster has 80 CPU cores and 60 TB of local storage. The implemented hardware is suitable for handling various difficult situations in Spark and MapReduce.

Hadoop cluster nodes

The detailed Hadoop cluster and software specifications are presented in Table 2 . All our jobs run on Spark and MapReduce. We selected YARN as the resource manager, which helps us monitor each worker node’s status and track the details of each job with its history. We used Apache Ambari to monitor and profile the selected workloads running on Spark and MapReduce. It supports most of the Hadoop components, including HDFS, MapReduce, Hive, Pig, HBase, ZooKeeper, Sqoop, and HCatalog [ 34 ]. Besides, Ambari lets the user control the Hadoop cluster in three respects: provisioning, management, and monitoring.

As stated above, in this study we chose two workloads for the experiments [ 32 , 33 ]:

WordCount : The WordCount workload is map-dependent, and it counts the number of occurrences of each distinct word in a text or sequence file. The input data is produced by RandomTextWriter . The map function splits the input into words and emits them as intermediate key-value pairs for the reduce function [ 35 ]. The reduce function adds up the intermediate results, generating the final word count.

TeraSort : The TeraSort package was released by Hadoop in 2008 [ 36 ] to measure cluster performance. The input data is generated by the TeraGen function, which is implemented in Java. The TeraSort function does the sorting using MapReduce, and the TeraValidate function is used to validate the sorted output. For both workloads, we used up to 600 GB of synthetic input data generated using a string concatenation technique.
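The generate, sort, and validate pipeline described for TeraSort can be sketched in a few lines. This is a toy illustration, not the Hadoop implementation: the function names mirror the tool names only for readability, and the 10-byte random keys, the sampled split points, and the four-reducer default are assumptions made for the example.

```python
import bisect
import random


def tera_gen(num_records, key_len=10, seed=0):
    """Generate random fixed-length byte keys (toy stand-in for TeraGen)."""
    rng = random.Random(seed)
    return [bytes(rng.randrange(256) for _ in range(key_len))
            for _ in range(num_records)]


def split_points(records, num_reducers, sample_size=100, seed=0):
    """Sample keys and pick num_reducers - 1 range boundaries."""
    rng = random.Random(seed)
    sample = sorted(rng.sample(records, min(sample_size, len(records))))
    step = len(sample) / num_reducers
    return [sample[int(step * i)] for i in range(1, num_reducers)]


def tera_sort(records, num_reducers=4):
    """Range-partition the keys, sort each partition, concatenate in order."""
    bounds = split_points(records, num_reducers)
    partitions = [[] for _ in range(num_reducers)]
    for key in records:
        # bisect_right maps a key to the index of the key range it falls in.
        partitions[bisect.bisect_right(bounds, key)].append(key)
    output = []
    for part in partitions:  # each "reducer" sorts only its own key range
        output.extend(sorted(part))
    return output


def tera_validate(output):
    """Check that the output is globally sorted (toy stand-in for TeraValidate)."""
    return all(a <= b for a, b in zip(output, output[1:]))


if __name__ == "__main__":
    records = tera_gen(1000)
    print(tera_validate(tera_sort(records)))
```

Because the partitions cover disjoint, ordered key ranges, sorting each one independently and concatenating them yields a globally sorted result, which is why every record has to cross the shuffle and why the text classifies TeraSort as a shuffle job.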

The parameters of interest and tuning approach

Tuning parameters in Apache Hadoop and Apache Spark is a challenging task. We want to find out which parameters have an important impact on system performance. The configuration of the parameters needs to be investigated according to workload, data size, and cluster architecture. We conducted a number of experiments using Apache Hadoop and Apache Spark with different parameter settings. For these experiments, we chose the core MapReduce and Spark parameter settings from the resource utilization, input splits, and shuffle groups. The selected parameters with their respective tuned values in the MapReduce and Spark categories are shown in Tables 3 and 4 .

Results and discussion

In this section, the results obtained after running the jobs are evaluated. We have used synthetic input data and used the same parameter configuration for a realistic comparison. Each test was repeated three times, and the average runtime was plotted in each graph. For both frameworks, we show the execution time, throughput, and speedup to compare the two frameworks and visualize the effects of changing the default parameters.
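The three metrics can be written down as small helper functions. This is a sketch under stated assumptions: the section does not give explicit formulas, so throughput is taken as input size divided by execution time (with 1 GB = 1024 MB) and speedup as the MapReduce runtime divided by the Spark runtime. The runtimes in the usage lines are made-up illustrative numbers, not measurements from the experiments.

```python
from statistics import mean


def average_runtime(runs_s):
    """Mean execution time in seconds over repeated runs of one job."""
    return mean(runs_s)


def throughput_mb_per_s(data_size_gb, runtime_s):
    """Throughput in MB/s, assuming 1 GB = 1024 MB."""
    return data_size_gb * 1024 / runtime_s


def speedup(mapreduce_runtime_s, spark_runtime_s):
    """Speedup of Spark over MapReduce as a ratio of execution times."""
    return mapreduce_runtime_s / spark_runtime_s


if __name__ == "__main__":
    # Hypothetical runtimes (seconds) for three repetitions of one job.
    mr = average_runtime([1200, 1180, 1220])
    sp = average_runtime([610, 590, 600])
    print(mr, sp, speedup(mr, sp), throughput_mb_per_s(100, sp))
```

A speedup above 1 under this definition means Spark finished faster than MapReduce on the same input.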

Execution time

The execution time is affected by the input data size, the number of active nodes, and the application type. For a fair comparative analysis, we fixed the following parameters: the number of executors at 50, executor memory at 8 GB, and executor cores at 4.
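One way to pin these executor settings is through the standard Spark properties `spark.executor.instances`, `spark.executor.memory`, and `spark.executor.cores`. The sketch below only builds a `spark-submit` argument list; the helper name and the application file `wordcount.py` are hypothetical, and the text does not say how the authors actually applied the values.

```python
# Executor settings held constant across runs (values taken from the text).
FIXED_EXECUTOR_CONF = {
    "spark.executor.instances": "50",
    "spark.executor.memory": "8g",
    "spark.executor.cores": "4",
}


def spark_submit_args(conf, app, master="yarn"):
    """Build a spark-submit argument list with one --conf flag per property."""
    args = ["spark-submit", "--master", master]
    for key, value in sorted(conf.items()):
        args += ["--conf", f"{key}={value}"]
    return args + [app]


if __name__ == "__main__":
    # "wordcount.py" is a placeholder application name.
    print(" ".join(spark_submit_args(FIXED_EXECUTOR_CONF, "wordcount.py")))
```

Keeping the fixed settings in one dictionary makes it harder to change an executor value by accident while sweeping the split and shuffle parameters.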

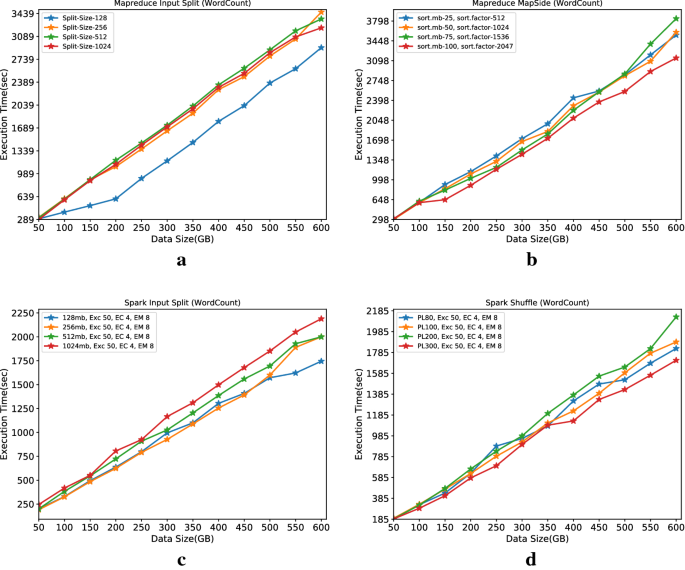

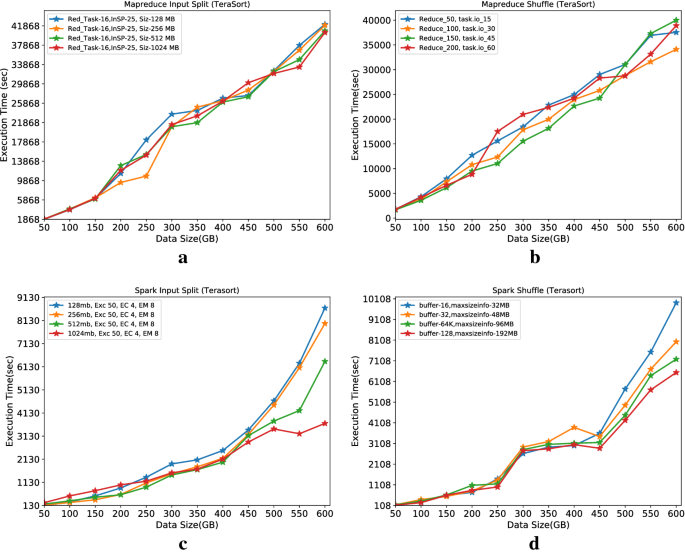

Figure 4a, b show how MapReduce and Spark execution time depend on the size of the datasets and on the different input split and shuffle parameters. The MapReduce WordCount workload with the default input split size (128 MB) and shuffle parameters ( sort.mb 100, sort.factor 2047) obtained better execution times across all data sizes than the other parameter settings. Hadoop Map and Reduce functions behave better here because of their faster execution time and because the container initialization overhead can be overlooked for specific workload types. This result suggests that the default parameters are more suitable for our cluster when using data sizes from 50 to 600 GB.

The performance of the WordCount application with a varied number of input splits and shuffle tasks

In Fig. 4c, the default input split size of Spark is 128 MB. As mentioned previously, the number of executors, executor memory, and executor cores are fixed. From Fig. 4c, we see that the input split size of 256 MB outperforms the default setup for data sizes up to 450 GB, whereas the default split size (128 MB) is more efficient when the data size is larger than 450 GB. Notably, the default parameter shows better execution performance when the data set reaches 500 GB or above. The new parameter values can improve the processing efficiency by 2.2% over the default value (128 MB). Table 5 presents the experimental data of the WordCount workload for MapReduce and Spark as the default parameters are changed.

For the Spark shuffle parameter, we chose the default serializer (JavaSerializer) because of its simplicity and the easy control it offers over serialization performance [ 37 ]. In this category, the serializer is PL100 object [ 37 ]. We can see from Fig. 4d that the improvement rate increases significantly when we set the PL value to 300. The best performance is achieved for sizes larger than 400 GB. Also, when tuning the PL value to 300, the system achieves a 3% improvement for the rest of the data sizes. Consequently, we can conclude that input splits can be considered an important factor in enhancing the efficiency of Spark WordCount jobs when executing small datasets.

Figure 5a compares MapReduce TeraSort workloads based on input splits, including the default parameters. In this analysis, we kept the ( Red_Task and InSp ) values fixed with the default split size of 128 MB. We then changed the parameter values and tested whether the split size affects the runtime. For this purpose, we selected three different sizes: 256 MB, 512 MB, and 1024 MB. We observed that with a split size of 256 MB, the execution performance increases by around 2% for datasets of up to 300 GB. In contrast, when the data sizes are larger than 300 GB, the default size outperforms the 512 MB split size. Moreover, we noticed that the improvement rates are similar when the data sizes are smaller than 200 GB.
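To see why the split size matters, it helps to estimate how many input splits (and hence map tasks) each setting produces. The arithmetic below is a rough sketch: it assumes a single splittable input, 1 GB = 1024 MB, and one map task per split, and it ignores per-file boundaries, so the real task counts on the cluster may differ.

```python
import math


def num_splits(data_size_gb, split_size_mb):
    """Approximate number of input splits, assuming one map task per split."""
    return math.ceil(data_size_gb * 1024 / split_size_mb)


if __name__ == "__main__":
    for split_mb in (128, 256, 512, 1024):
        print(split_mb, num_splits(600, split_mb))
```

Under these assumptions, 600 GB yields 4800 splits at 128 MB and 600 splits at 1024 MB. Larger splits mean fewer tasks to schedule and initialize but more data handled per task.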

The performance of the TeraSort application with a varied number of input splits and shuffle tasks

Figure 5b illustrates the execution performance with the MapReduce shuffle parameters for the TeraSort workload. We observed that the average execution time behaves linearly for sizes up to 450 GB when the parameters are changed to ( Reduce_150 and task.io_45 ), as compared to the default configuration ( Reduce_100 and task.io_30 ). Besides, we noticed that the default configuration outperforms all other settings when the data sizes are larger than 450 GB. So, we can conclude that by changing the shuffle values, the system execution performance improves by 1%. In general, it is very unlikely that the default size has optimum performance for larger data sizes.

Figure 5c illustrates the execution performance of the Spark input split parameter for the TeraSort workload. The Spark executor memory, number of executors, and executor cores are fixed while the block size is changed to measure the execution performance. Apart from the default block size (128 MB), three other block sizes (256 MB, 512 MB, and 1024 MB) are considered. Our results revealed that the 512 MB and 1024 MB block sizes give better runtimes for data sizes up to 500 GB. We also observed a significant performance improvement of 4% with the 1024 MB block size when the data size is larger than 500 GB. Thus, we can conclude that increasing the input split block size for large-scale data can increase Spark performance.

Figure 5d shows Spark shuffle behaviour performance for TeraSort workloads. We included two important default parameters ( buffer = 32, spark.reducer.maxSizeInFlight = 48 MB) in our analysis. We found that when the buffer and maxSizeInFlight are increased by 128 and 192, the execution performance increases proportionally for data sizes up to 600 GB. Our results show that the default and tested values perform equally for data sizes up to 200 GB. The possible reason for this performance improvement is the larger number of splits size for different executors. Table 6 presents the experimental data of the TeraSort workload for MapReduce and Spark as the default parameters are changed.
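The two shuffle settings can be written as Spark properties. In the sketch below, the defaults (`32k` and `48m`) are Spark's documented defaults for `spark.shuffle.file.buffer` and `spark.reducer.maxSizeInFlight`. Mapping the text's "buffer" to `spark.shuffle.file.buffer`, and reading the quoted 128 and 192 as the new settings (`128k` and `192m`), are assumptions; the helper name is hypothetical.

```python
# Spark's documented defaults for the two shuffle properties.
DEFAULT_SHUFFLE_CONF = {
    "spark.shuffle.file.buffer": "32k",
    "spark.reducer.maxSizeInFlight": "48m",
}

# Assumed reading of the tuned values quoted in the text (128 and 192).
TUNED_SHUFFLE_CONF = {
    "spark.shuffle.file.buffer": "128k",
    "spark.reducer.maxSizeInFlight": "192m",
}


def to_spark_defaults(conf):
    """Render properties as 'key value' lines, the spark-defaults.conf layout."""
    return "\n".join(f"{key} {value}" for key, value in sorted(conf.items()))


if __name__ == "__main__":
    print(to_spark_defaults(TUNED_SHUFFLE_CONF))
```

A larger file buffer reduces the number of disk writes while shuffle files are created, and a larger in-flight size lets each reduce task fetch more map output at once, at the cost of more memory per task.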

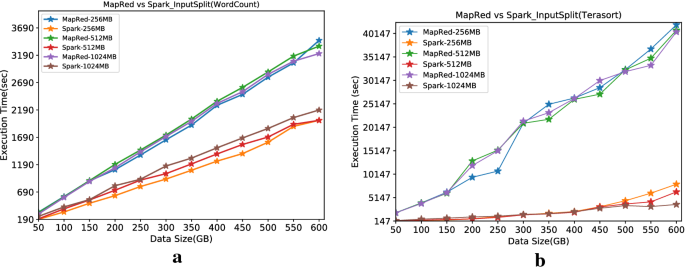

Figure 6a illustrates the comparison between Spark and MapReduce for WordCount and TeraSort workloads after applying the different input splits. We observed that Spark executes WordCount workloads more than two times faster when data sizes are larger than 300 GB. For smaller data sizes, the performance gap is around ten times. Figure 6 shows a TeraSort workload for MapReduce and Spark. We can see that Spark execution time is linear and grows proportionally as the data size increases. Also, we noticed that the runtime of MapReduce jobs is not as linear in relation to the data size as that of Spark jobs. A possible reason is unavoidable job activity on the cluster, combined with the dataset being larger than the available RAM. So, we conclude that MapReduce has slower data sharing capabilities and takes longer for read-write operations than Spark [ 4 ].

The comparison of Hadoop and Spark with WordCount and TeraSort workload with varied input splits and shuffle tasks

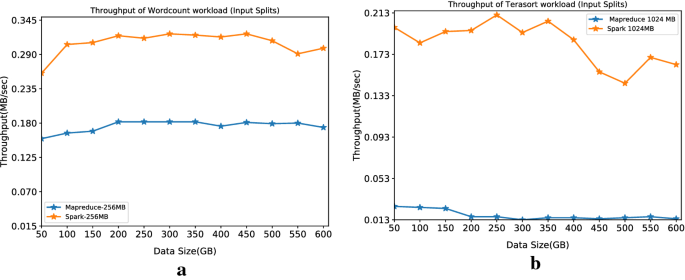

The throughput metrics are all in MB per second. For this analysis, we only considered the best results from each category. We have observed that MapReduce throughput for the TeraSort workload decreases slightly once the data size exceeds 200 GB. For the WordCount workload, by contrast, the MapReduce throughput is almost linear. For the Spark TeraSort workload, the throughput is not constant, whereas for the WordCount workload it is almost constant. In this analysis, the main focus was to present the throughput difference between the WordCount and TeraSort workloads for MapReduce and Spark. We found that throughput for the WordCount workload remains almost stable for most of the data sizes and that, for the TeraSort workload, MapReduce remains more stable than Spark (see Fig. 7 ).

Throughput of WordCount and TeraSort workload

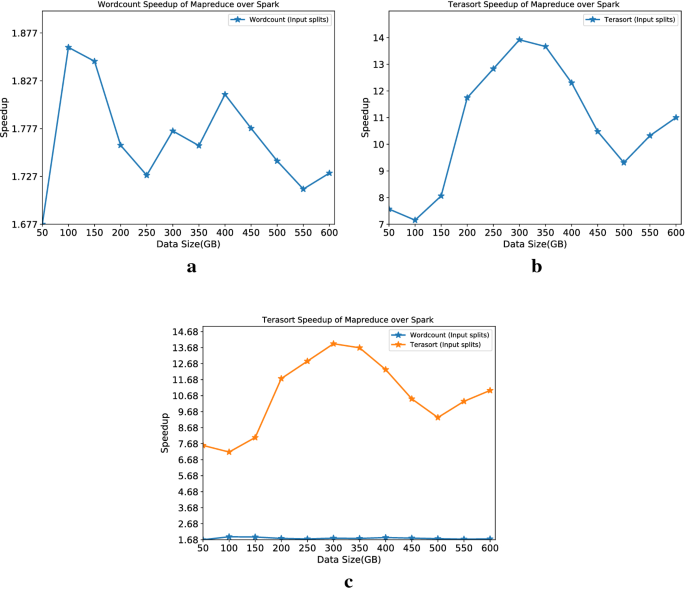
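The throughput values discussed above are reported in MB per second. A minimal sketch of that calculation follows, assuming throughput is simply the input data size divided by the job's execution time; the function name and the sample numbers are hypothetical and are not measurements from this study.

```python
MB_PER_GB = 1024


def throughput_mb_per_s(data_size_gb: float, execution_time_s: float) -> float:
    """Throughput in MB/s, assuming input size divided by execution time."""
    if execution_time_s <= 0:
        raise ValueError("execution time must be positive")
    return data_size_gb * MB_PER_GB / execution_time_s


if __name__ == "__main__":
    # Hypothetical example: a 600 GB job that takes 6000 seconds.
    print(round(throughput_mb_per_s(600, 6000), 1), "MB/s")
```

With this definition, a constant throughput across data sizes corresponds to a runtime that grows linearly with the input.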

Figure 8 a–c shows Spark's speedup compared to MapReduce. Figure 8 a, b depict the speedup for the individual workloads. The best results from each category are used to compute the speedup. From these figures, we can see that as the data size increases, the WordCount speedup decreases with some non-linearity. We can also see that the TeraSort speedup decreases when data sizes exceed 300 GB. Notably, as the data size increases beyond 500 GB, the speedup starts to increase for both workloads. Figure 8 c illustrates the speedup comparison between the workloads. It can be seen that the TeraSort workload outperforms the WordCount workload and achieves a maximum speedup of around 14 times. The literature reports that Spark is up to ten times faster than Hadoop under certain circumstances, while under normal conditions it achieves a performance only two to three times faster than MapReduce [ 38 ]. However, this study found that Spark performance degrades when the input data size is large.

Spark over MapReduce speedup on input splits and shuffle
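For reference, the speedup reported above can be sketched as the ratio of the MapReduce runtime to the Spark runtime for the same workload and data size. This is an assumed definition, and the function name and the timings in the example are hypothetical rather than values from the study.

```python
def speedup(mapreduce_time_s: float, spark_time_s: float) -> float:
    """Speedup of Spark over MapReduce, assuming ratio of execution times."""
    if mapreduce_time_s <= 0 or spark_time_s <= 0:
        raise ValueError("execution times must be positive")
    return mapreduce_time_s / spark_time_s


if __name__ == "__main__":
    # Hypothetical timings: MapReduce 1400 s, Spark 100 s.
    print(speedup(1400, 100), "times faster")
```

A value above 1 means Spark finished sooner; a value that falls as the data size grows corresponds to the shrinking advantage described in the text.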

This article presented an empirical performance analysis of Hadoop and Spark based on large-scale datasets. We executed WordCount and TeraSort workloads with 18 different parameter values in place of the default set-up. To investigate the execution performance, we used a trial-and-error approach for tuning these parameters, performing a number of experiments on a nine-node cluster with datasets of up to 600 GB. Our experimental results confirm that the performance of both Hadoop and Spark depends heavily on input data size and on correct parameter selection and tuning. We found that Spark performs better than Hadoop, by up to two times with the WordCount workload and up to 14 times with the TeraSort workload, when the default parameters are tuned with new values. Furthermore, the throughput and speedup results show that Spark is more stable and faster than Hadoop because Spark processes data in memory instead of storing it on disk for the map and reduce functions. We also found that Spark performance degraded when the input data was larger.

In future work, we plan to add and investigate 15 HiBench workloads and to consider more parameters under resource utilization, parallelization, and other aspects, including practical datasets. The main focus will be to analyze job performance based on auto-tuning techniques for MapReduce and Spark when several parameter configurations replace the default values.

Availability of data and materials

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Apache Hadoop Documentation 2014. http://hadoop.apache.org/ . Accessed 15 July 2020.

Verma A, Mansuri AH, Jain N. Big data management processing with hadoop mapreduce and spark technology: A comparison. In: 2016 symposium on colossal data analysis and networking (CDAN). New York: IEEE; 2016. p. 1–4.

Management Association IR. Big Data: concepts, methodologies, tools, and applications. Hershey: IGI Global; 2016.

Zaharia M, Chowdhury M, Das T, Dave A, Ma J, Mccauley M, Franklin M, Shenker S, Stoica I. Fast and interactive analytics over hadoop data with spark. Usenix Login. 2012;37:45–51.

Dean J, Ghemawat S. Mapreduce: simplified data processing on large clusters. Commun ACM. 2008;51(1):107–13.

Wang G, Butt AR, Pandey P, Gupta K. Using realistic simulation for performance analysis of mapreduce setups. In: Proceedings of the 1st ACM workshop on large-scale system and application performance; 2009. p. 19–26.

Samadi Y, Zbakh M, Tadonki C. Comparative study between hadoop and spark based on hibench benchmarks. In: 2016 2nd international conference on cloud computing technologies and applications (CloudTech). New York: IEEE; 2016. p. 267–75.

Ahmadvand H, Goudarzi M, Foroutan F. Gapprox: using gallup approach for approximation in big data processing. J Big Data. 2019;6(1):20.

Samadi Y, Zbakh M, Tadonki C. Performance comparison between hadoop and spark frameworks using hibench benchmarks. Concurr Comput Pract Exp. 2018;30(12):4367.

Shi J, Qiu Y, Minhas UF, Jiao L, Wang C, Reinwald B, Özcan F. Clash of the titans: mapreduce vs. spark for large scale data analytics. Proc VLDB Endow. 2015;8(13):2110–211.

Veiga J, Expósito RR, Pardo XC, Taboada GL, Touriño J. Performance evaluation of big data frameworks for large-scale data analytics. In: 2016 IEEE international conference on Big Data (Big Data). New York: IEEE; 2016. p. 424–31.

Li M, Tan J, Wang Y, Zhang L, Salapura V. Sparkbench: a comprehensive benchmarking suite for in memory data analytic platform spark. In: Proceedings of the 12th ACM international conference on computing frontiers; 2015. p. 1–8.

Wang L, Zhan J, Luo C, Zhu Y, Yang Q, He Y, Gao W, Jia Z, Shi Y, Zhang S. Bigdatabench: a big data benchmark suite from internet services. In: 2014 IEEE 20th international symposium on high performance computer architecture (HPCA). New York: IEEE; 2014. p. 488–99.

Thiruvathukal GK, Christensen C, Jin X, Tessier F, Vishwanath V. A benchmarking study to evaluate apache spark on large-scale supercomputers. 2019; arXiv preprint arXiv:1904.11812 .

Marcu O-C, Costan A, Antoniu G, Pérez-Hernández MS. Spark versus flink: Understanding performance in big data analytics frameworks. In: 2016 IEEE international conference on cluster computing (CLUSTER). New York: IEEE; 2016. p. 433–42.

Bolze R, Cappello F, Caron E, Daydé M, Desprez F, Jeannot E, Jégou Y, Lanteri S, Leduc J, Melab N, et al. Grid’5000: a large scale and highly reconfigurable experimental grid testbed. Int J High Perform Comput Appl. 2006;20(4):481–94.

Mavridis I, Karatza E. Log file analysis in cloud with apache hadoop and apache spark 2015.

Gopalani S, Arora R. Comparing apache spark and map reduce with performance analysis using k-means. Int J Comput Appl. 2015;113(1):8–11.

Gu L, Li H. Memory or time: Performance evaluation for iterative operation on hadoop and spark. In: 2013 IEEE 10th international conference on high performance computing and communications & 2013 IEEE international conference on embedded and ubiquitous computing. New York: IEEE; 2013. p. 721–7.

Lin X, Wang P, Wu B. Log analysis in cloud computing environment with hadoop and spark. In: 2013 5th IEEE international conference on broadband network & multimedia technology. New York: IEEE; 2013. p. 273–6.

Petridis P, Gounaris A, Torres J. Spark parameter tuning via trial-and-error. In: INNS conference on big data. Berlin: Springer; 2016. p. 226–37.

Landset S, Khoshgoftaar TM, Richter AN, Hasanin T. A survey of open source tools for machine learning with big data in the hadoop ecosystem. J Big Data. 2015;2(1):24.

HiBench Benchmark Suite. https://github.com/intel-hadoop/HiBench . Accessed 15 July 2020.

Shvachko K, Kuang H, Radia S, Chansler R. The hadoop distributed file system. In: 2010 IEEE 26th symposium on mass storage systems and technologies (MSST). New York: IEEE; 2010. p. 1–10.

Luo M, Yokota H. Comparing hadoop and fat-btree based access method for small file i/o applications. In: International conference on web-age information management. Berlin: Springer; 2010. p. 182–93.

Taylor RC. An overview of the hadoop/mapreduce/hbase framework and its current applications in bioinformatics. BMC Bioinform. 2010;11:1.

Vohra D. Practical Hadoop ecosystem: a definitive guide to hadoop-related frameworks and tools. California: Apress; 2016.

Lee K-H, Lee Y-J, Choi H, Chung YD, Moon B. Parallel data processing with mapreduce: a survey. ACM SIGMOD Record. 2012;40(4):11–20.

Zaharia M, Chowdhury M, Franklin MJ, Shenker S, Stoica I. Spark: cluster computing with working sets. HotCloud. 2010;10:95.

Kannan P. Beyond hadoop mapreduce apache tez and apache spark. San Jose State University; 2015. http://www.sjsu.edu/people/robert.chun/courses/CS259Fall2013/s3/F.pdf . Accessed 15 July 2020.

Spark Core Programming. https://www.tutorialspoint.com/apache_spark/apache_spark_rdd.htm . Accessed 15 July 2020.

Huang S, Huang J, Dai J, Xie T, Huang B. The hibench benchmark suite: Characterization of the mapreduce-based data analysis. In: 2010 IEEE 26th international conference on data engineering workshops (ICDEW 2010). New York: IEEE; 2010. p. 41–51.

Chen C-O, Zhuo Y-Q, Yeh C-C, Lin C-M, Liao S-W. Machine learning-based configuration parameter tuning on hadoop system. In: 2015 IEEE international congress on big data. New York: IEEE; 2015. p. 386–92.

Ambari. https://ambari.apache.org/ . Accessed 15 July 2020.

Xiang L-H, Miao L, Zhang D-F, Chen F-P. Benefit of compression in hadoop: A case study of improving io performance on hadoop. In: Proceedings of the 6th international asia conference on industrial engineering and management innovation. Berlin: Springer; 2016. p. 879–90.

O’Malley O. Terabyte sort on apache hadoop. Report, Yahoo!; 2008. http://sortbenchmark.org/YahooHadoop.pdf . Accessed 15 July 2020.

Apache Tuning Spark 1.1.1. https://spark.apache.org/docs/1.1.1/tuning.html . Accessed 15 July 2020.

Rathore MM, Son H, Ahmad A, Paul A, Jeon G. Real-time big data stream processing using gpu with spark over hadoop ecosystem. Int J Parallel Progr. 2018;46(3):630–46.

Acknowledgements

The authors acknowledge Sibgat Bazai for his valuable suggestions.

This work was not funded.

Author information

Authors and affiliations.

School of Natural and Computational Sciences, Massey University, Albany, Auckland, 0745, New Zealand

N. Ahmed, Andre L. C. Barczak & Teo Susnjak

Department of Mechanical and Electrical Engineering, Massey University, Auckland, 0745, New Zealand

Mohammed A. Rashid

Contributions

NA was the main contributor to this work. He carried out the initial literature review, data collection, and experiments, prepared the results, and drafted the manuscript. ALCB and TS deployed and configured the physical Hadoop cluster. ALCB also worked closely with NA on reviewing, analysis, and manuscript preparation. TS and MAR helped to improve the final paper. All authors read and approved the final manuscript.

Corresponding author

Correspondence to N. Ahmed .

Ethics declarations

Ethics approval and consent to participate.

Not applicable.

Consent for publication

Competing interests.

The authors declare that they have no competing interests.

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .

About this article

Cite this article.

Ahmed, N., Barczak, A.L.C., Susnjak, T. et al. A comprehensive performance analysis of Apache Hadoop and Apache Spark for large scale data sets using HiBench. J Big Data 7 , 110 (2020). https://doi.org/10.1186/s40537-020-00388-5

Received : 30 July 2020

Accepted : 26 November 2020

Published : 14 December 2020

DOI : https://doi.org/10.1186/s40537-020-00388-5

Investigating the performance of Hadoop and Spark platforms on machine learning algorithms

- Published: 13 May 2020

- Volume 77 , pages 1273–1300, ( 2021 )

- Ali Mostafaeipour 1 ,

- Amir Jahangard Rafsanjani 2 ,

- Mohammad Ahmadi 2 &

- Joshuva Arockia Dhanraj ORCID: orcid.org/0000-0001-5048-7775 3

1641 Accesses

52 Citations

One of the most challenging issues in the big data research area is the inability to process a large volume of information in a reasonable time. Hadoop and Spark are two frameworks for distributed data processing. Hadoop is a very popular, general-purpose platform for big data processing. Because of its in-memory programming model, Spark, an open-source framework, is well suited to iterative algorithms. In this paper, the Hadoop and Spark frameworks are evaluated and compared in terms of runtime, memory and network usage, and central processor efficiency. To this end, the K-nearest neighbor (KNN) algorithm is implemented on datasets of different sizes within both frameworks. The results show that the KNN algorithm runs 4 to 4.5 times faster on Spark than on Hadoop. Evaluations show that Hadoop uses more resources, including central processor and network. It is concluded that Spark uses the CPU more effectively than Hadoop. On the other hand, Hadoop uses less memory than Spark.

Author information

Authors and affiliations.

Industrial Engineering Department, Yazd University, Yazd, Iran

Ali Mostafaeipour

Computer Engineering Department, Yazd University, Yazd, Iran

Amir Jahangard Rafsanjani & Mohammad Ahmadi

Centre for Automation and Robotics (ANRO), Department of Mechanical Engineering, Hindustan Institute of Technology and Science, Chennai, 603103, India

Joshuva Arockia Dhanraj

Corresponding author

Correspondence to Joshuva Arockia Dhanraj .

About this article

Mostafaeipour, A., Jahangard Rafsanjani, A., Ahmadi, M. et al. Investigating the performance of Hadoop and Spark platforms on machine learning algorithms. J Supercomput 77 , 1273–1300 (2021). https://doi.org/10.1007/s11227-020-03328-5

Published : 13 May 2020

Issue Date : February 2021

DOI : https://doi.org/10.1007/s11227-020-03328-5

TechRepublic

8 Best Data Science Tools and Software

Apache Spark and Hadoop, Microsoft Power BI, Jupyter Notebook and Alteryx are among the top data science tools for finding business insights. Compare their features, pros and cons.

EU’s AI Act: Europe’s New Rules for Artificial Intelligence

Europe's AI legislation, adopted March 13, attempts to strike a tricky balance between promoting innovation and protecting citizens' rights.

10 Best Predictive Analytics Tools and Software for 2024

Tableau, TIBCO Data Science, IBM and Sisense are among the best software for predictive analytics. Explore their features, pricing, pros and cons to find the best option for your organization.

Tableau Review: Features, Pricing, Pros and Cons

Tableau has three pricing tiers that cater to all kinds of data teams, with capabilities like accelerators and real-time analytics. And if Tableau doesn’t meet your needs, it has a few alternatives worth noting.

Top 6 Enterprise Data Storage Solutions for 2024

Amazon, IDrive, IBM, Google, NetApp and Wasabi offer some of the top enterprise data storage solutions. Explore their features and benefits, and find the right solution for your organization's needs.

Latest Articles

What is the EU’s AI Office? New Body Formed to Oversee the Rollout of General Purpose Models and AI Act

The AI Office will be responsible for enforcing the rules of the AI Act, ensuring its implementation across Member States, funding AI and robotics innovation and more.

Top Tech Conferences & Events to Add to Your Calendar in 2024

A great way to stay current with the latest technology trends and innovations is by attending conferences. Read and bookmark our 2024 tech events guide.

What is Data Science? Benefits, Techniques and Use Cases

Data science involves extracting valuable insights from complex datasets. While this process can be technically challenging and time-consuming, it can lead to better business decision-making.

Gartner’s 7 Predictions for the Future of Australian & Global Cloud Computing

An explosion in AI computing, a big shift in workloads to the cloud, and difficulties in gaining value from hybrid cloud strategies are among the trends Australian cloud professionals will see to 2028.

OpenAI Adds PwC as Its First Resale Partner for the ChatGPT Enterprise Tier

PwC employees have 100,000 ChatGPT Enterprise seats. Plus, OpenAI forms a new safety and security committee in their quest for more powerful AI, and seals media deals.

What Is Contact Management? Importance, Benefits and Tools

Contact management ensures accurate, organized and accessible information for effective communication and relationship building.

How to Use Tableau: A Step-by-Step Tutorial for Beginners

Learn how to use Tableau with this guide. From creating visualizations to analyzing data, this guide will help you master the essentials of Tableau.

HubSpot CRM vs. Mailchimp (2024): Which Tool Is Right for You?

HubSpot and Mailchimp can do a lot of the same things. In most cases, though, one will likely be a better choice than the other for a given use case.

Top 5 Cloud Trends U.K. Businesses Should Watch in 2024

TechRepublic identified the top five emerging cloud technology trends that businesses in the U.K. should be aware of this year.

Pipedrive vs. monday.com (2024): CRM Comparison

Find out which CRM platform is best for your business by comparing Pipedrive and Monday.com. Learn about their features, pricing and more.

Celoxis: Project Management Software Is Changing Due to Complexity and New Ways of Working

More remote work and a focus on resource planning are two trends driving changes in project management software in APAC and around the globe. Celoxis’ Ratnakar Gore explains how PM vendors are responding to fast-paced change.

SAP vs. Oracle (2024): Which ERP Solution Is Best for You?

Explore the key differences between SAP and Oracle with this in-depth comparison to determine which one is the right choice for your business needs.

How to Create Effective CRM Strategy in 8 Steps

Learn how to create an effective CRM strategy that will help you build stronger customer relationships, improve sales and increase customer satisfaction.

CISOs in Australia Urged to Take a Closer Look at Data Breach Risks

A leading cyber expert in Australia has warned CISOs and other IT leaders their organisations and careers could be at stake if they do not understand data risk and data governance practices.

Snowflake Arctic, a New AI LLM for Enterprise Tasks, is Coming to APAC

Data cloud company Snowflake’s Arctic is promising to provide APAC businesses with a true open source large language model they can use to train their own custom enterprise LLMs and inference more economically.

Big Data And Hadoop: A Review Paper. Rahul Beakta. CSE Deptt., Baddi University of Emerging Sciences & Technology, Baddi, India. Abstract: In this world of information ...

Hadoop is a framework designed to process large data sets, providing high performance and fault tolerance while scaling from a single server to thousands of machines. In this paper we present a detailed review of big data and Hadoop, along with a comparison of various big data tools and technologies.

Context: In recent years, the valuable knowledge that can be retrieved from petabyte scale datasets - known as Big Data - led to the development of solutions to process information based on parallel and distributed computing. Lately, Apache Hadoop has attracted strong attention due to its applicability to Big Data processing. Problem: The support of Hadoop by the research community has ...

According to a report by International Data Corporation (IDC), a research company, between 2012 and 2020 the amount of information in the digital universe ... In 2010, Apache Hadoop defined big data as "datasets, which could not be captured, managed, and processed by general computers within an acceptable scope" (p.173, Chen et ...

In this review paper, a summary is delivered on Big Data, Hadoop and applications in Data Mining. The 4 V's of Big Data have been discussed. The summary to big data encounters is assumed and ...

capabilities for storing and processing large volumes of data. Hadoop clusters make distributed computing readily accessible to the Java community and MPI clusters provide high parallel efficiency for compute intensive workloads. Bringing the big data and big compute communities together is an active area of research.

Yassine Benlachmi and others, "Big data and Spark: Comparison with Hadoop". Published Jul 1, 2020.

We are living in the 21st century, the world of Big Data. Big Data is a huge collection of structured, semi-structured and unstructured data that cannot be processed with existing data processing systems. To overcome this problem, Hadoop, an open source framework, came into existence. With the help of Hadoop, users can run their applications on Hadoop clusters. This ...

Hadoop is an open source software project that enables the distributed processing of large data sets across clusters of commodity servers, designed to scale up from a single server to thousands of machines, with a very high degree of fault tolerance. The term 'Big Data' describes innovative techniques and technologies to capture, store, distribute, manage and analyze petabyte- or larger-sized ...

Big Data Analytics Overview with Hadoop and Spark. Msis Harrison Carranza, PhD Aparicio Carranza. Published 2017. Computer Science, Engineering, Environmental Science. TLDR. This work shall describe how Apache Hadoop and Spark function across various operating systems as well as how they are used for the analysis of large and diverse datasets.

Big Data analytics for storing, processing, and analyzing large-scale datasets has become an essential tool for the industry. The advent of distributed computing frameworks such as Hadoop and Spark offers efficient solutions to analyze vast amounts of data. Due to the application programming interface (API) availability and its performance, Spark becomes very popular, even more popular than ...

Big Data is data whose scale, diversity, and complexity require new architectures, techniques, algorithms, and analytics to manage it and to extract value and hidden knowledge from it. Hadoop is the core platform for structuring Big Data, and solves the problem of making it useful for analytics purposes.

2018. TLDR. This paper provides a short review of the literature about research issues of traditional data warehouses; a Hadoop-based architecture and a conceptual data model for designing a medical Big Data warehouse are given, along with implementation detail of a big data warehouse based on the proposed architecture and data model in the Apache Hadoop ...

data sets. Users specify a map function that processes a key/value pair to generate a set of intermediate key/value pairs, and a reduce function that merges all intermediate values associated with the same intermediate key. Many real world tasks are expressible in this model, as shown in the paper. Programs written in this functional style are ...
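The map and reduce roles described in this excerpt can be illustrated with a minimal, single-process sketch in Python. It is not Hadoop's API: the names `map_words`, `reduce_counts`, and `run_mapreduce` and the sample documents are assumptions made for illustration, and the in-memory grouping only stands in for the shuffle that a real cluster performs across machines.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, Iterator, List, Tuple


def map_words(key: str, value: str) -> Iterator[Tuple[str, int]]:
    """Map: emit an intermediate (word, 1) pair for every word in the text."""
    for word in value.lower().split():
        yield word, 1


def reduce_counts(key: str, values: Iterable[int]) -> int:
    """Reduce: merge all intermediate values that share the same key."""
    return sum(values)


def run_mapreduce(
    records: Iterable[Tuple[str, str]],
    map_fn: Callable[[str, str], Iterator[Tuple[str, int]]],
    reduce_fn: Callable[[str, Iterable[int]], int],
) -> Dict[str, int]:
    """Hypothetical driver: map every record, group by key, then reduce."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in records:
        for out_key, out_value in map_fn(key, value):
            # Grouping by intermediate key plays the role of the shuffle phase.
            groups[out_key].append(out_value)
    return {key: reduce_fn(key, values) for key, values in groups.items()}


# Illustrative input: (document name, document text) pairs.
documents = [
    ("doc1", "big data big clusters"),
    ("doc2", "data on clusters"),
]
word_counts = run_mapreduce(documents, map_words, reduce_counts)
print(word_counts)
```

Because each call to the map function depends only on its own record, and each call to the reduce function depends only on the values for one key, both phases could in principle be distributed across machines, which is the property the excerpt attributes to programs written in this style.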

Sources of big data can be classified into: (1) various transactions, (2) enterprise data, (3) public data, (4) social media, and (5) sensor data. Table 1.1 illustrates the difference between traditional data and big data. Big Data Workflow: The big data workflow consists of the following steps, as illustrated in Fig. 1.1. These steps are defined as:

The Internet of Things (IoT) is a communication paradigm and a collection of heterogeneous interconnected devices. It produces large-scale, distributed, and diverse data called big data. Big Data Management (BDM) in IoT is used for knowledge discovery and intelligent decision-making and is one of the most significant research challenges today. There are several mechanisms and technologies for ...

This paper introduces advanced data processing tools that solve extreme-scale problems efficiently using the powerful MapReduce framework, which nevertheless has some data processing issues. Apache Spark, a faster tool, was therefore recently introduced to overcome the limitations of MapReduce.

One of the most challenging issues in the big data research area is the inability to process a large volume of information in a reasonable time. Hadoop and Spark are two frameworks for distributed data processing. Hadoop is a very popular and general platform for big data processing. Because of the in-memory programming model, Spark as an open-source framework is suitable for processing ...

Big Data and Hadoop Technology Solutions with Cloudera Manager. Dr. Urmila R. Pol. Department of Computer Science, Shivaji University, Kolhapur, India. Abstract: Big data represents a new era in ...

We live in an on-demand, on-command digital universe, with data proliferating from institutions, individuals and machines at a very high rate. This data is categorized as "Big Data" due to its sheer Volume, Variety and Velocity. Most of this data is unstructured, quasi-structured or semi-structured, and it is heterogeneous in nature. The volume and the heterogeneity of data with the speed it is ...

Research on Security Mechanism of Hadoop Big Data Platform. CIUP '22: Proceedings of the 2022 International Conference on Computational Infrastructure and Urban Planning. As a virtualized resource realization mode, the Hadoop cloud platform has become an open-source cloud computing architecture and big data analysis platform.

During the COVID-19 pandemic, Apache Hadoop and its MapReduce were proposed as an inexpensive and flexible processing and analysis solution for big data processing during the unprecedented data ...

J. Wang, 2023. Research on Intelligent Data Analysis Techniques for Big Data. Information recording material, 02:79-81. J Z. A. A. Al_Bairmani and A. A. Ismael, 2021. Using logistic regression model to study the most important factors which affects diabetes for the elderly in the city of h, Journal of Physics: Conference Series, vol ...

Apache Spark and Hadoop, Microsoft Power BI, Jupyter Notebook and Alteryx are among the top data science tools for finding business insights. Compare their features, pros and cons. By Aminu ...

the data of Big Data is stored on the cloud, security and privacy of the cloud is the main issue [9]. For security of the cloud, encryption, decryption, compression and authentication are used. To secure from na ...

The Apache Hadoop platform is used to handle, store, manage, and distribute big data across many server nodes. Here are different tools that run on top of the Apache Hadoop stack to provide ...

The CRISP-DM (Cross-Industry Standard Process for Data Mining) framework is a widely used methodology for data mining and big data analytics projects. It describes commonly used approaches that ...