How-To Geek

What Is JSON and How Do You Use It?



JSON (JavaScript Object Notation) is a standardized format for representing structured data. Although JSON grew out of the JavaScript programming language, it's now a ubiquitous method of data exchange between systems. Most modern-day APIs accept JSON requests and issue JSON responses, so it's useful to have a good working knowledge of the format and its features.

In this article, we'll explain what JSON is, how it expresses different data types, and the ways you can produce and consume it in popular programming languages. We'll also cover some of JSON's limitations and the alternatives that have emerged.

JSON was originally devised by Douglas Crockford as a stateless format for communicating data between browsers and servers. Back in the early 2000s, websites were beginning to asynchronously fetch extra data from their server after the initial page load. As a text-based format derived from JavaScript, JSON made it simpler to fetch and consume data within these applications. The specification was eventually standardized as ECMA-404 in 2013.

JSON is always transmitted as a string. These strings can be decoded into a range of basic data types, including numbers, booleans, arrays, and objects. This means object hierarchies and relationships can be preserved during transmission, then reassembled on the receiving end in a way that's appropriate for the programming environment.

This is a JSON representation of a blog post:

```json
{
    "id": 1001,
    "title": "What is JSON?",
    "author": {
        "name": "James Walker"
    },
    "tags": ["api", "json", "programming"],
    "published": false,
    "publishedTimestamp": null
}
```

This example demonstrates all the JSON data types. It also illustrates the concision of JSON-formatted data, one of the characteristics that's made it so appealing for use in APIs. In addition, JSON is relatively easy to read as-is, unlike more verbose formats such as XML.

Six types of data can be natively represented in JSON:

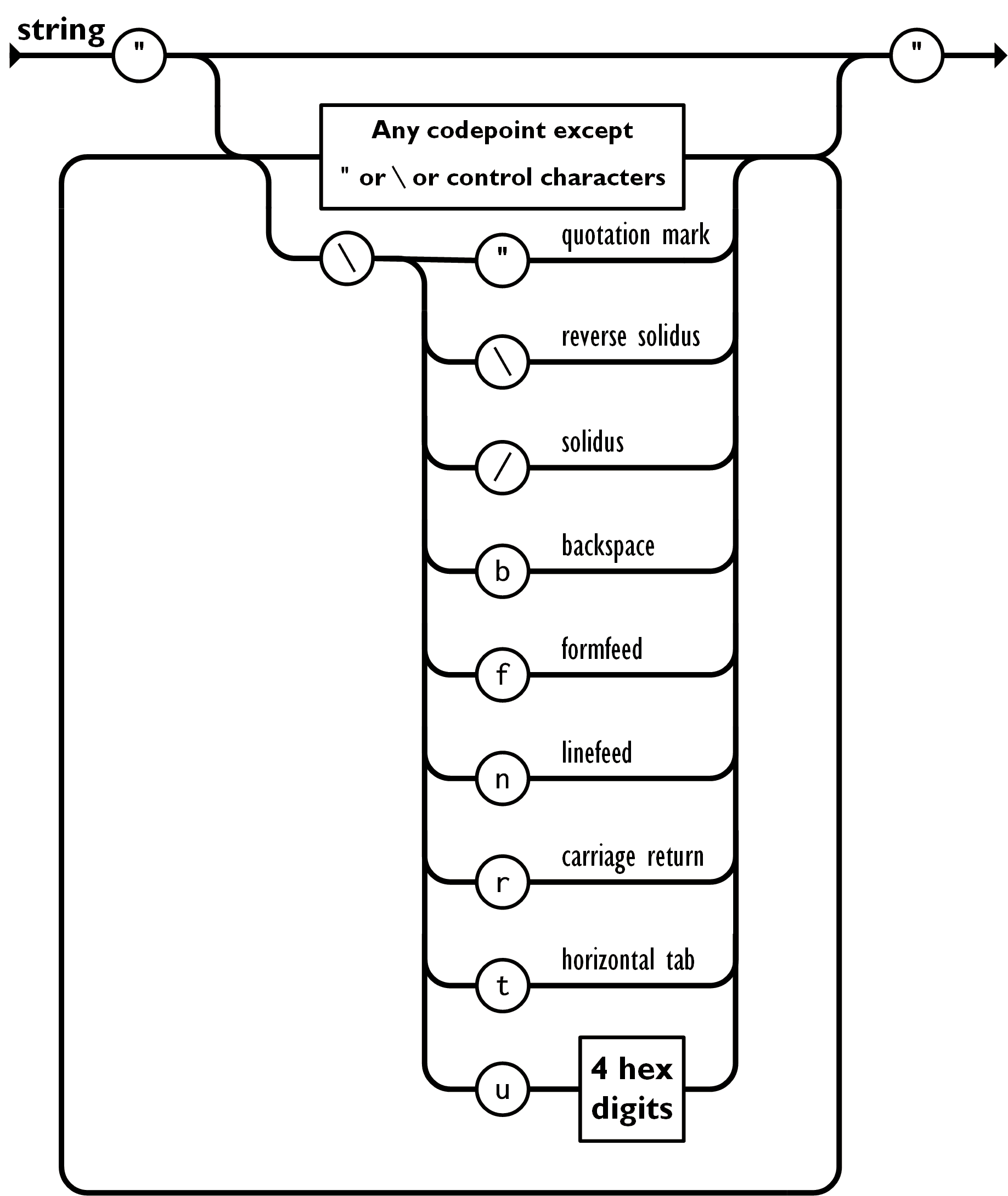

- Strings - Strings are written between double quotation marks; characters may be escaped using backslashes.

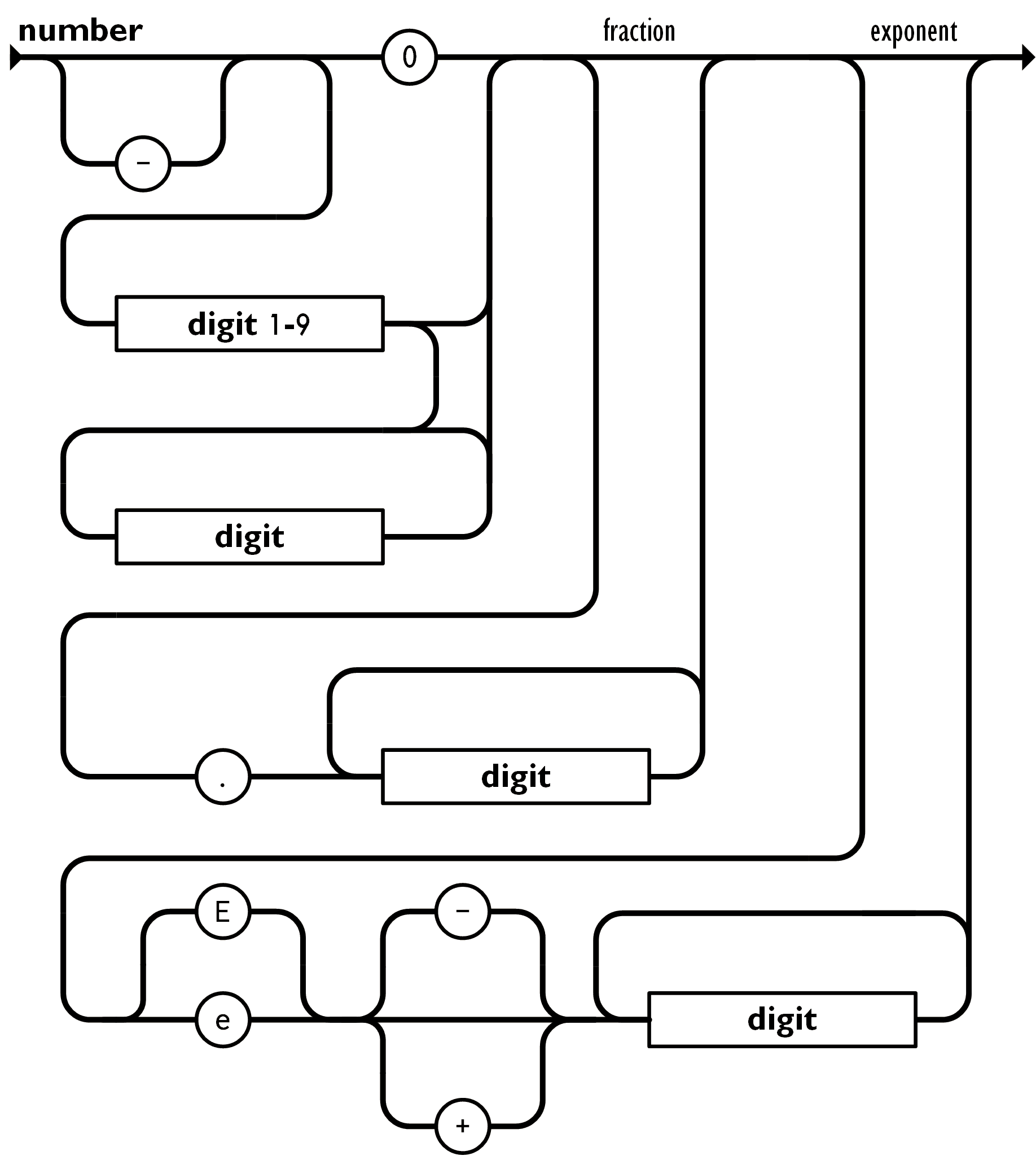

- Numbers - Numbers are written as digits without quotation marks. You can include a fractional component to denote a float. Most JSON parsing implementations assume an integer when there's no decimal point present.

- Booleans - The literal values true and false are supported.

- Null - The null literal value can be used to signify an empty or omitted value.

- Arrays - An array is a simple list denoted by square brackets. Each element in the list is separated by a comma. Arrays can contain any number of items and they can use all the supported data types.

- Objects - Objects are delimited by curly brackets. They're a collection of key-value pairs where the keys are strings wrapped in double quotation marks, and each key is separated from its value by a colon. Each key has a value that can take any of the available data types. You can nest objects to create cascading hierarchies. Key-value pairs are separated by commas, with no comma after the last pair.

JSON parsers automatically convert these data types into structures appropriate to their language. You don't need to manually cast numeric values to integers, for example. Parsing the entire JSON string is sufficient to map values back to their original data format.
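To illustrate, the JavaScript sketch below parses a small, made-up document (the keys are hypothetical) with the built-in JSON.parse() and checks what each value becomes. No casting is needed anywhere.

```javascript
// A hypothetical JSON document containing each of the six JSON data types
const text = '{"count": 42, "ratio": 0.5, "name": "post", "draft": true, "tags": ["a", "b"], "meta": {"x": 1}, "deleted": null}';

const data = JSON.parse(text);

// Numbers, strings, and booleans become JavaScript primitives
console.log(typeof data.count); // number
console.log(typeof data.ratio); // number
console.log(typeof data.name);  // string
console.log(typeof data.draft); // boolean

// Arrays become Array instances; objects become plain objects
console.log(Array.isArray(data.tags)); // true
console.log(typeof data.meta);         // object

// JSON null becomes JavaScript null
console.log(data.deleted === null);    // true
```

JavaScript has a single number type, so 42 and 0.5 both become number. Python's json module, by contrast, returns an int for 42 and a float for 0.5.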

JSON has certain rules that need to be respected when you encode your data. Strings that don't adhere to the syntax won't be parseable by consumers.

It's particularly important to pay attention to quotation marks around strings and object keys. You must also separate the entries in an object or array with commas. JSON doesn't allow a trailing comma after the last entry, though; unintentionally including one is a common cause of validation errors. Most text editors will highlight syntax problems for you, helping to uncover mistakes.
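The sketch below feeds a few deliberately broken strings to JavaScript's JSON.parse(), which throws a SyntaxError for anything that isn't valid JSON. The sample strings and the isValidJson helper are made up for illustration. Exact error messages vary between JavaScript engines, so the code only reports whether each string parsed.

```javascript
// Hypothetical inputs: one valid document and three common mistakes
const samples = {
  valid: '{"id": 1, "tags": ["a", "b"]}',
  trailingComma: '{"id": 1, "tags": ["a", "b"],}',
  singleQuotes: "{'id': 1}",
  unquotedKey: "{id: 1}",
};

// Returns true if JSON.parse accepts the string, false if it throws a SyntaxError
function isValidJson(text) {
  try {
    JSON.parse(text);
    return true;
  } catch (err) {
    if (err instanceof SyntaxError) return false;
    throw err; // anything other than a parse error is unexpected
  }
}

for (const [label, text] of Object.entries(samples)) {
  console.log(`${label}: ${isValidJson(text) ? "valid" : "invalid"}`);
}
```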

Despite these common pitfalls, JSON is one of the easiest data formats to write by hand. Most people find the syntax quick and convenient once they gain familiarity with it. Overall, JSON tends to be less error-prone than XML, where mismatched opening and closing tags, invalid schema declarations, and character encoding problems often cause issues.

The .json extension is normally used when JSON is saved to a file. JSON content has the standardized MIME type application/json, although other types are sometimes used for compatibility reasons. Nowadays you should rely on application/json in your HTTP headers.

Most APIs that use JSON will encapsulate everything in a top-level object:

```json
{
    "error": 1000
}
```

This isn't required, though. A literal value such as a string, a number, a boolean, or null is also valid as the top-level node in a file, so a document containing nothing but one of these values is valid JSON too.

They'll decode to their respective scalars in your programming language.
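As a quick check in JavaScript, the sketch below uses JSON.parse() on a few standalone scalar documents. The values are chosen for illustration.

```javascript
// Each string is a complete JSON document whose top-level value is a scalar
const documents = ["1001", '"What is JSON?"', "true", "null"];

for (const doc of documents) {
  const value = JSON.parse(doc);
  // typeof null is "object" in JavaScript, so null is reported by name instead
  console.log(doc, "->", value === null ? "null" : typeof value);
}
// 1001 -> number
// "What is JSON?" -> string
// true -> boolean
// null -> null
```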

Most programming languages have built-in JSON support. Here's how to interact with JSON data in a few popular environments.

In JavaScript, the JSON.stringify() and JSON.parse() methods are used to encode and decode JSON strings:

```javascript
const post = {
    id: 1001,
    title: "What Is JSON?",
    author: {
        name: "James Walker"
    }
};

const encodedJson = JSON.stringify(post);

// {"id":1001,"title":"What Is JSON?","author":{"name":"James Walker"}}
console.log(encodedJson);

const decodedJson = JSON.parse(encodedJson);

// James Walker
console.log(decodedJson.author.name);
```

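The equivalent functions in PHP are json_encode() and json_decode():

```php
<?php

$post = [
    "id" => 1001,
    "title" => "What Is JSON?",
    "author" => [
        "id" => 1,
        "name" => "James Walker"
    ]
];

$encodedJson = json_encode($post);

// {"id":1001,"title":"What Is JSON?","author":{"id":1,"name":"James Walker"}}
echo $encodedJson;

// Passing true returns associative arrays instead of objects
$decodedJson = json_decode($encodedJson, true);

// James Walker
echo $decodedJson["author"]["name"];
```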

Python provides json.dumps() and json.loads() to serialize and deserialize respectively:

```python
import json

post = {
    "id": 1001,
    "title": "What Is JSON?",
    "author": {
        "name": "James Walker"
    }
}

encodedJson = json.dumps(post)

# {"id": 1001, "title": "What Is JSON?", "author": {"name": "James Walker"}}
print(encodedJson)

decodedJson = json.loads(encodedJson)

# James Walker
print(decodedJson["author"]["name"])
```

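Ruby offers JSON.generate and JSON.parse:

```ruby
require "json"

post = {
    "id" => 1001,
    "title" => "What Is JSON?",
    "author" => {
        "name" => "James Walker"
    }
}

encodedJson = JSON.generate(post)

# {"id":1001,"title":"What Is JSON?","author":{"name":"James Walker"}}
puts encodedJson

decodedJson = JSON.parse(encodedJson)

# James Walker
puts decodedJson["author"]["name"]
```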

JSON is a lightweight format that's focused on conveying the values within your data structure. This makes it quick to parse and easy to work with but means there are drawbacks that can cause frustration. Here are some of the biggest problems.

No Comments

JSON data can't include comments. The lack of annotations reduces clarity and forces you to put documentation elsewhere. This can make JSON unsuitable for situations such as config files, where modifications are infrequent and the purposes of fields could be unclear.

No Schemas

The JSON format itself doesn't let you define a schema for your data. There's no way to enforce that a particular field is a required integer, for example. This can lead to unintentionally malformed data structures.

No References

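Fields can't reference other values in the data structure. This often causes repetition that increases file size. Returning to the blog post example from earlier, you could have a list of blog posts as follows:

```json
[
    {
        "id": 1001,
        "title": "What is JSON?",
        "author": {
            "name": "James Walker"
        }
    },
    {
        "id": 1002,
        "title": "What is SaaS?",
        "author": {
            "name": "James Walker"
        }
    }
]
```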

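Both posts have the same author, but the information associated with that object has had to be duplicated. In an ideal world, JSON parser implementations would be able to produce the structure shown above from input similar to the following:

```json
{
    "posts": [
        {
            "id": 1001,
            "title": "What is JSON?",
            "author": "{{ .authors.james }}"
        },
        {
            "id": 1002,
            "title": "What is SaaS?",
            "author": "{{ .authors.james }}"
        }
    ],
    "authors": {
        "james": {
            "name": "James Walker"
        }
    }
}
```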

This is not currently possible with standard JSON.
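A common workaround is to store an identifier instead of the duplicated object and resolve it in code after parsing. The sketch below does this in JavaScript. The authorId field and the authors lookup table are a hypothetical convention, not part of any standard.

```javascript
// Hypothetical document: posts refer to authors by key instead of repeating them
const text = JSON.stringify({
  posts: [
    { id: 1001, title: "What is JSON?", authorId: "james" },
    { id: 1002, title: "What is SaaS?", authorId: "james" },
  ],
  authors: {
    james: { name: "James Walker" },
  },
});

const data = JSON.parse(text);

// Replace each authorId with the matching author object after parsing
const posts = data.posts.map(({ authorId, ...post }) => ({
  ...post,
  author: data.authors[authorId],
}));

console.log(posts[1].author.name); // James Walker
```

Both posts now share a single author object in memory. However, the convention only works if your own code applies it on both the producing and the consuming side.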

No Advanced Data Types

The six supported data types omit many common kinds of value. JSON can't natively store dates, times, or geolocation points, so you need to decide on your own format for this information.

This causes inconvenient discrepancies and edge cases. If your application handles timestamps as formatted date strings, but an external API presents time as a number of seconds past the Unix epoch, you'll need to remember when to use each of the formats.
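One way to cope is to normalize everything at the boundary. The sketch below assumes your own code stores ISO 8601 strings and that a hypothetical external API sends Unix timestamps in seconds. It relies only on JavaScript's built-in Date, and the createdAt field name is illustrative.

```javascript
// Hypothetical payload from an external API that uses Unix time in seconds
const external = JSON.parse('{"createdAt": 1640995200}');

// Date works in milliseconds, so multiply seconds by 1000
const created = new Date(external.createdAt * 1000);

// toISOString() always produces UTC
const isoString = created.toISOString();
console.log(isoString); // 2022-01-01T00:00:00.000Z

// Going the other way: ISO string back to whole seconds since the epoch
const seconds = Math.floor(Date.parse(isoString) / 1000);
console.log(seconds); // 1640995200

// JSON.stringify() serializes Date objects via toJSON(), which returns the ISO string
console.log(JSON.stringify({ createdAt: created })); // {"createdAt":"2022-01-01T00:00:00.000Z"}
```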

YAML is the leading JSON alternative. It's a superset of the format that has a more human-readable presentation, custom data types, and support for references. It's intended to address most of the usability challenges associated with JSON.

YAML has seen wide adoption for config files and within DevOps, IaC, and observability tools. It's less frequently used as a data exchange format for APIs. YAML's relative complexity means it's less approachable to newcomers. Small syntax errors can cause confusing parsing failures.

Protocol buffers (protobufs) are another emerging JSON contender designed to serialize structured data. Protobufs have data type declarations, required fields, and support for most major programming languages. The system is gaining popularity as a more efficient way of transmitting data over networks.

JSON is a text-based data representation format that can encode six different data types. JSON has become a staple of the software development ecosystem; it's supported by all major programming languages and has become the default choice for most REST APIs developed over the past couple of decades.

While JSON's simplicity is part of its popularity, it also imposes limitations on what you can achieve with the format. The lack of support for schemas, comments, object references, and custom data types means some applications will find they outgrow what's possible with JSON. Younger alternatives such as YAML and Protobuf have helped to address these challenges, while XML remains a contender for applications that want to define a data schema and don't mind the verbosity.

JSON for Beginners – JavaScript Object Notation Explained in Plain English

Many software applications need to exchange data between a client and server.

For a long time, XML was the preferred data format for exchanging information between the two. Then, in the early 2000s, JSON was introduced as an alternative data format for information exchange.

In this article, you will learn all about JSON. You'll understand what it is, how to use it, and we'll clarify a few misconceptions. So, without any further delay, let's get to know JSON.

What is JSON?

JSON (JavaScript Object Notation) is a text-based data exchange format. It is a collection of key-value pairs where the key must be a string type, and the value can be of any of the following types:

- Number

- String

- Boolean

- Array

- Object

- null

A couple of important rules to note:

- In the JSON data format, the keys must be enclosed in double quotes.

- The key and value must be separated by a colon (:) symbol.

- There can be multiple key-value pairs. Two key-value pairs must be separated by a comma (,) symbol.

- No comments (// or /* */) are allowed in JSON data. (But you can get around that, if you're curious.)

Here is how some simple JSON data looks:

JSON data most commonly comes in one of two formats:

- A collection of key-value pairs enclosed by a pair of curly braces {...}.

- An ordered list of values, often objects made of key-value pairs, separated by commas (,) and enclosed by a pair of square brackets [...].
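The two shapes can be compared with a short JavaScript sketch. The names and ages are made-up sample data.

```javascript
// A single object: key-value pairs inside curly braces
const single = JSON.parse('{"name": "Alex", "age": 30}');

// An array: an ordered list of values (here, objects) inside square brackets
const list = JSON.parse('[{"name": "Alex", "age": 30}, {"name": "Sam", "age": 25}]');

console.log(Array.isArray(single)); // false
console.log(Array.isArray(list));   // true
console.log(list[1].name);          // Sam
```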

If you are coming from a JavaScript background, you may feel that the JSON format and JavaScript objects (and arrays of objects) are very similar. But they are not. We will see the differences in detail soon.

The structure of the JSON format was derived from the JavaScript object syntax. That's the only relationship between the JSON data format and JavaScript objects.

JSON is a programming language-independent format. We can use the JSON data format in Python, Java, PHP, and many other programming languages.

JSON Data Format Examples

You can save JSON data in a file with the extension of .json . Let's create an employee.json file with attributes (represented by keys and values) of an employee.

The above JSON data shows the attributes of an employee. The attributes are:

- name : the name of the employee. The value is of String type. So, it is enclosed with double quotes.

- id : a unique identifier of an employee. It is a String type again.

- role : the roles an employee plays in the organization. There could be multiple roles played by an employee. So Array is the preferred data type.

- age : the current age of the employee. It is a Number .

- doj : the date the employee joined the company. As it is a date, it must be enclosed within double-quotes and treated like a String .

- married : whether the employee is married, either true or false. So the value is of Boolean type.

- address : the address of the employee. An address can have multiple parts like street, city, country, zip, and many more. So, we can treat the address value as an Object representation (with key-value pairs).

- referred-by : the id of the employee who referred this employee to the organization. If this employee joined through a referral, this attribute has a value. Otherwise, its value is null.

Now let's create a collection of employees as JSON data. To do that, we need to keep multiple employee records inside the square brackets [...].

Did you notice the referred-by attribute value for the second employee, Bob Washington? It is null . It means he was not referred by any of the employees.
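A collection with the attributes described above might look like the following when loaded in JavaScript. The attribute names and Bob Washington's null referral come from the text. Every other value, including the first employee, is invented for illustration.

```javascript
// Hypothetical contents of an employees.json file, held in a string for this sketch
const employeesJson = `[
  {
    "name": "Jane Doe",
    "id": "E101",
    "role": ["Developer", "Mentor"],
    "age": 34,
    "doj": "2019-04-01",
    "married": true,
    "address": { "street": "1 Main St", "city": "Springfield", "country": "USA", "zip": "12345" },
    "referred-by": "E042"
  },
  {
    "name": "Bob Washington",
    "id": "E102",
    "role": ["Tester"],
    "age": 29,
    "doj": "2021-09-15",
    "married": false,
    "address": { "street": "2 Oak Ave", "city": "Springfield", "country": "USA", "zip": "12345" },
    "referred-by": null
  }
]`;

const employees = JSON.parse(employeesJson);

// A key containing a hyphen must be read with bracket notation
for (const employee of employees) {
  const referrer = employee["referred-by"];
  console.log(`${employee.name}: ${referrer === null ? "not referred" : "referred by " + referrer}`);
}
// Jane Doe: referred by E042
// Bob Washington: not referred
```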

How to Use JSON Data as a String Value

We have seen how to format JSON data inside a JSON file. Alternatively, we can use JSON data as a string value and assign it to a variable. As JSON is a text-based format, it can be handled as a string in most programming languages.

Let's take an example to understand how we can do it in JavaScript. You can enclose the entire JSON data as a string within a single quote '...' .

If you want to keep the JSON formatting intact, you can create the JSON data with the help of template literals.

It is also useful when you want to build JSON data using dynamic values.
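The three approaches might look like this in JavaScript. The data is hypothetical. One caveat: interpolating raw values into a template literal does not escape them, so a value containing a double quote would produce invalid JSON. Building an object and calling JSON.stringify() avoids that problem.

```javascript
// 1. The entire JSON data as a single-quoted string
const single = '{"name": "Alex", "age": 30}';

// 2. A template literal keeps the line breaks and indentation intact
const formatted = `{
  "name": "Alex",
  "age": 30
}`;

// 3. Dynamic values inserted into a template literal.
// The values are not escaped, so this only works for values without quotes or backslashes.
const userName = "Alex";
const userAge = 30;
const dynamic = `{"name": "${userName}", "age": ${userAge}}`;

// Safer: build an object and let JSON.stringify() handle quoting and escaping
const safe = JSON.stringify({ name: 'Alex "AJ" Smith', age: userAge });

console.log(JSON.parse(single).name);   // Alex
console.log(JSON.parse(formatted).age); // 30
console.log(JSON.parse(dynamic).name);  // Alex
console.log(safe);                      // {"name":"Alex \"AJ\" Smith","age":30}
```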

JavaScript Objects and JSON are NOT the Same

The JSON data format is derived from the JavaScript object structure. But the similarity ends there.

Objects in JavaScript:

- Can have methods, and JSON can't.

- The keys can be without quotes.

- Comments are allowed.

- Are specific to JavaScript, whereas JSON is a language-independent text format.
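A quick way to see the difference is to serialize a JavaScript object that uses features JSON lacks. The user object below is hypothetical. The behavior is standard JSON.stringify() behavior: the method is left out, and the unquoted key is written back with double quotes.

```javascript
// A JavaScript object with an unquoted key, a comment, and a method
const user = {
  name: "Alex", // keys need no quotes, and comments are allowed here
  greet() {
    return `Hi, ${this.name}`;
  },
};

console.log(user.greet());         // Hi, Alex

// JSON cannot hold functions, so JSON.stringify() leaves the method out
// and writes the key in double quotes
console.log(JSON.stringify(user)); // {"name":"Alex"}
```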

Here's a Twitter thread that explains the differences with a few examples.

JavaScript Object and JSON(JavaScript Object Notation) are NOT the same. We often think they are similar. That's NOT TRUE 👀 Let's Understand 🔥 A Thread 🧵 👇 — Tapas Adhikary (@tapasadhikary) November 24, 2021

How to Convert JSON to a JavaScript Object, and vice-versa

JavaScript has two built-in methods to convert JSON data into a JavaScript object and vice-versa.

How to Convert JSON Data to a JavaScript Object

To convert JSON data into a JavaScript object, use the JSON.parse() method. It parses a valid JSON string into a JavaScript object.
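JSON.parse() also accepts an optional second argument, a reviver function. The reviver is called for every key-value pair and can transform values as they are parsed. The sketch below uses one to turn a hypothetical joined field into a Date object, since JSON itself has no date type.

```javascript
const text = '{"name": "Alex", "joined": "2021-11-24T00:00:00.000Z"}';

// Basic usage: JSON text in, JavaScript object out
const plain = JSON.parse(text);
console.log(plain.name);          // Alex
console.log(typeof plain.joined); // string

// With a reviver: the function's return value replaces each parsed value
const revived = JSON.parse(text, (key, value) =>
  key === "joined" ? new Date(value) : value
);
console.log(revived.joined instanceof Date); // true
```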

How to Convert a JavaScript Object to JSON Data

To convert a JavaScript object into JSON data, use the JSON.stringify() method.
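JSON.stringify() also takes an optional second argument, a replacer. The replacer can be an array of keys to keep or a function that can rewrite or drop values. The employee object below is hypothetical.

```javascript
const employee = { name: "Alex", age: 30, password: "hunter2" };

// Default: every enumerable property, compact output
console.log(JSON.stringify(employee)); // {"name":"Alex","age":30,"password":"hunter2"}

// Replacer array: only the listed keys are serialized
console.log(JSON.stringify(employee, ["name", "age"])); // {"name":"Alex","age":30}

// Replacer function: returning undefined drops a property
const redacted = JSON.stringify(employee, (key, value) =>
  key === "password" ? undefined : value
);
console.log(redacted); // {"name":"Alex","age":30}
```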

Did you notice the JSON term we used to invoke the parse() and stringify() methods above? That's a built-in JavaScript object named JSON, which simply groups these utility methods (it could have been named JSONUtil as well). It isn't the JSON data format itself, so please don't confuse the two.

How to Handle JSON Errors like "Unexpected token u in JSON at position 1"?

While handling JSON, it is very common to run into this kind of error when parsing JSON data into a JavaScript object.

Whenever you encounter this error, check the validity of your JSON data. You have probably made a small mistake that is causing it. You can validate the format of your JSON data using a JSON linter.
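A defensive pattern is to wrap JSON.parse() in try...catch, so a malformed payload produces a handled error instead of crashing the program. The safeParse helper below is hypothetical. One common source of the "Unexpected token u" message is passing undefined: JSON.parse() converts it to the string "undefined", whose first character u is not valid JSON. The exact wording of the message varies by engine and version.

```javascript
// Hypothetical helper: returns { ok, value } or { ok, error } instead of throwing
function safeParse(text) {
  try {
    return { ok: true, value: JSON.parse(text) };
  } catch (error) {
    if (error instanceof SyntaxError) {
      return { ok: false, error: error.message };
    }
    throw error; // not a parse error, so let it propagate
  }
}

console.log(safeParse('{"id": 1}'));    // { ok: true, value: { id: 1 } }
console.log(safeParse(undefined).ok);   // false
console.log(safeParse("{'id': 1}").ok); // false
```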


Learn to code for free. freeCodeCamp's open source curriculum has helped more than 40,000 people get jobs as developers. Get started


What is JSON


JSON, short for JavaScript Object Notation, is a lightweight data-interchange format used for transmitting and storing data. It has become a standard format for web-based APIs due to its simplicity and ease of use.

What is JSON?

JSON is a text-based data format that is easy for humans to read and write, as well as parse and generate programmatically. It is based on a subset of JavaScript’s object literal syntax but is language-independent, making it widely adopted in various programming languages beyond JavaScript.

JSON Structure

Data Representation : JSON represents data in key-value pairs. Each key is a string enclosed in double quotes, followed by a colon, and then its corresponding value. Values can be strings, numbers, arrays, objects, booleans, or null.

Why do we use JSON?

- Lightweight and Human-Readable : JSON’s syntax is simple and human-readable, making it easy for both developers and machines to understand and work with.

- Data Interchange Format : JSON is commonly used for transmitting data between a server and a client in web applications. It’s often used in APIs to send and receive structured data.

- Language Independence : JSON is language-independent, meaning it can be used with any programming language that has JSON parsing capabilities.

- Supported Data Types : JSON supports various data types such as strings, numbers, booleans, arrays, objects, and null values, making it versatile for representing complex data structures.

- Compatibility : Most modern programming languages provide built-in support for JSON parsing and serialization, making it easy to work with JSON data in different environments.

- Web APIs : JSON is widely used in web APIs to format data responses sent from a server to a client or vice versa. APIs often return JSON-formatted data for easy consumption by front-end applications.

- Configuration Files : JSON is used in configuration files for web applications, software settings, and data storage due to its readability and ease of editing.

- Data Storage : JSON is also used for storing and exchanging data in NoSQL databases like MongoDB, as it aligns well with document-based data structures.

JSON Data Types

Converting a JSON Text to a JavaScript Object

In JavaScript, you can parse a JSON text into a JavaScript object using the JSON.parse() method:

JavaScript Object:

JSON vs XML

JSON is a versatile and widely adopted data format that plays a crucial role in modern web development, especially in building APIs and handling data interchange between different systems. Its simplicity, readability, and compatibility with various programming languages make it a preferred choice for developers working with data-driven applications.


JSON (JavaScript Object Notation) is a lightweight data-interchange format. It is easy for humans to read and write. It is easy for machines to parse and generate. It is based on a subset of the JavaScript Programming Language Standard ECMA-262 3rd Edition - December 1999. JSON is a text format that is completely language independent but uses conventions that are familiar to programmers of the C-family of languages, including C, C++, C#, Java, JavaScript, Perl, Python, and many others. These properties make JSON an ideal data-interchange language.

JSON is built on two structures:

- A collection of name/value pairs. In various languages, this is realized as an object , record, struct, dictionary, hash table, keyed list, or associative array.

- An ordered list of values. In most languages, this is realized as an array , vector, list, or sequence.

These are universal data structures. Virtually all modern programming languages support them in one form or another. It makes sense that a data format that is interchangeable with programming languages also be based on these structures.

In JSON, they take on these forms:

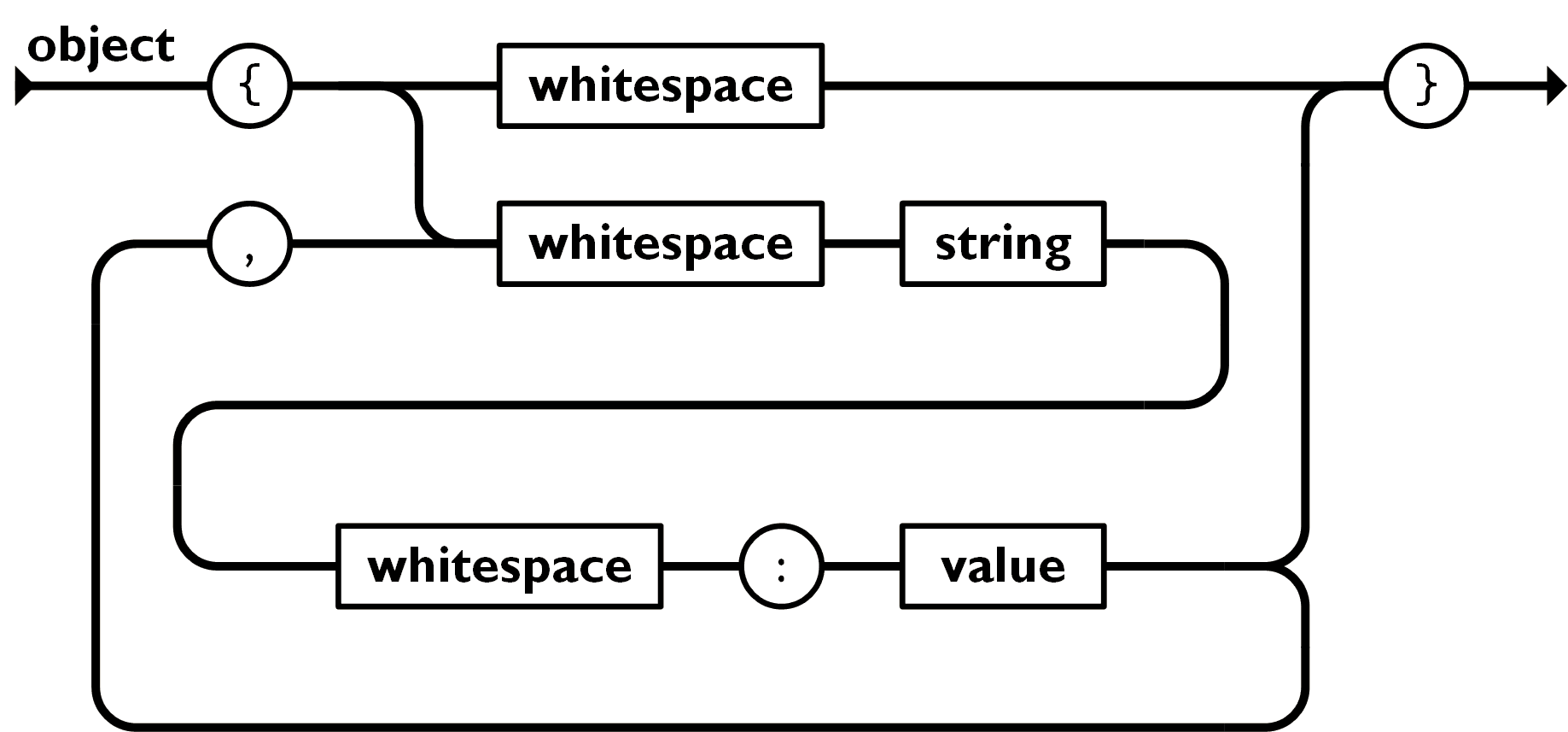

An object is an unordered set of name/value pairs. An object begins with { (left brace) and ends with } (right brace). Each name is followed by : (colon) and the name/value pairs are separated by , (comma).

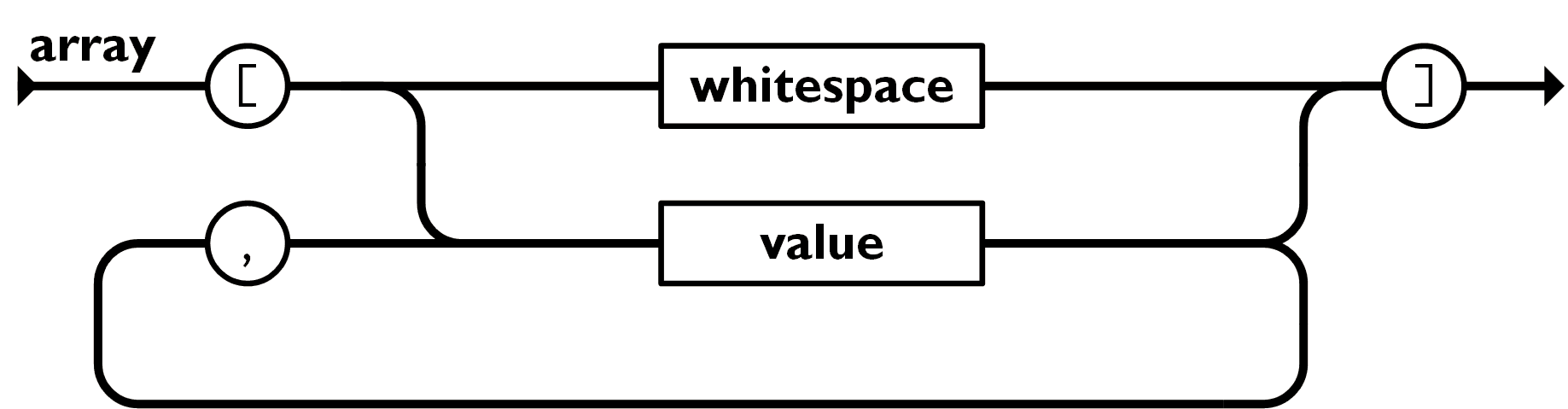

An array is an ordered collection of values. An array begins with [ (left bracket) and ends with ] (right bracket). Values are separated by , (comma).

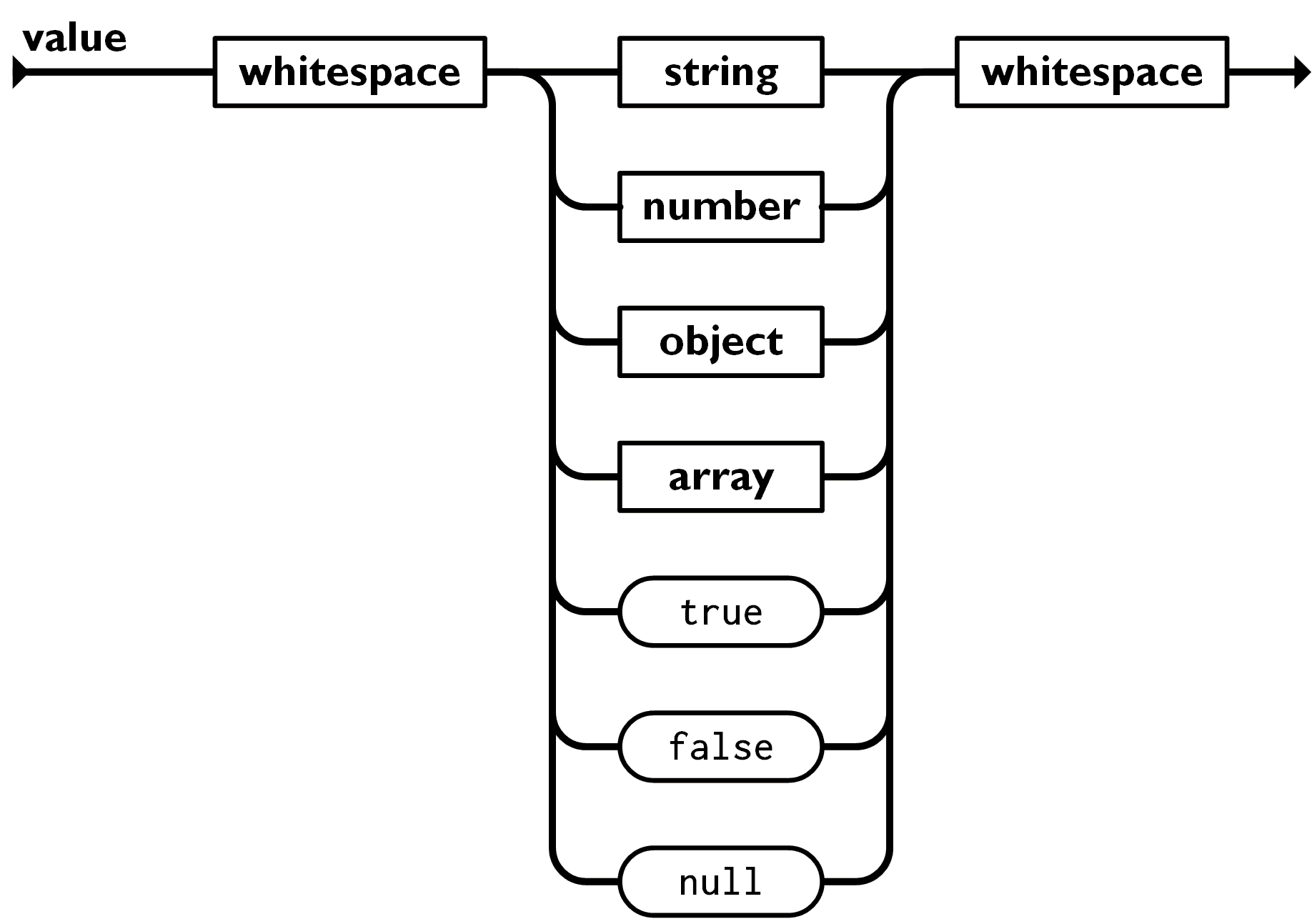

A value can be a string in double quotes, or a number, or true or false or null, or an object or an array. These structures can be nested.

A string is a sequence of zero or more Unicode characters, wrapped in double quotes, using backslash escapes. A character is represented as a single character string. A string is very much like a C or Java string.

A number is very much like a C or Java number, except that the octal and hexadecimal formats are not used.

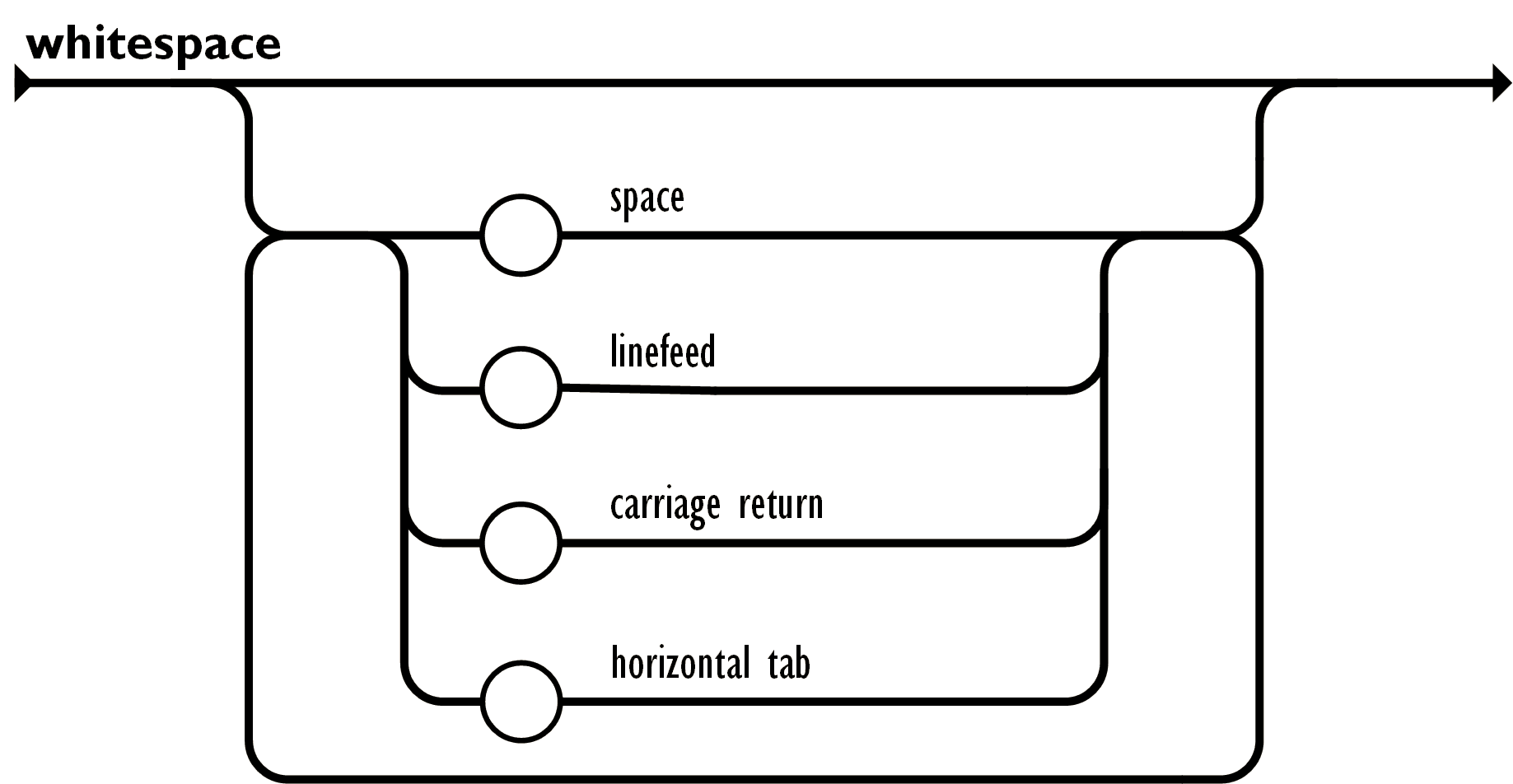

Whitespace can be inserted between any pair of tokens. Excepting a few encoding details, that completely describes the language.

```
json
    element

value
    object
    array
    string
    number
    "true"
    "false"
    "null"

object
    '{' ws '}'
    '{' members '}'

members
    member
    member ',' members

member
    ws string ws ':' element

array
    '[' ws ']'
    '[' elements ']'

elements
    element
    element ',' elements

element
    ws value ws

string
    '"' characters '"'

characters
    ""
    character characters

character
    '0020' . '10FFFF' - '"' - '\'
    '\' escape

escape
    '"'
    '\'
    '/'
    'b'
    'f'
    'n'
    'r'
    't'
    'u' hex hex hex hex

hex
    digit
    'A' . 'F'
    'a' . 'f'

number
    integer fraction exponent

integer
    digit
    onenine digits
    '-' digit
    '-' onenine digits

digits
    digit
    digit digits

digit
    '0'
    onenine

onenine
    '1' . '9'

fraction
    ""
    '.' digits

exponent
    ""
    'E' sign digits
    'e' sign digits

sign
    ""
    '+'
    '-'

ws
    ""
    '0020' ws
    '000A' ws
    '000D' ws
    '0009' ws
```
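As a small worked example of reading the grammar, the number rule can be transcribed into a regular expression: an optional minus sign, an integer part with no leading zeros, an optional fraction, and an optional exponent. This JavaScript transcription is an illustration, not part of the specification.

```javascript
// integer:  '-'? then '0' or onenine digits*
// fraction: optional '.' digits
// exponent: optional ('E' | 'e') sign digits, where sign may be empty
const JSON_NUMBER = /^-?(?:0|[1-9][0-9]*)(?:\.[0-9]+)?(?:[eE][+-]?[0-9]+)?$/;

function isJsonNumber(text) {
  return JSON_NUMBER.test(text);
}

// Valid per the grammar
for (const text of ["0", "-0", "-12", "3.14", "1e10", "2.5E-3"]) {
  console.log(text, isJsonNumber(text)); // true for each
}

// Invalid: leading zero, missing integer or fraction digits, plus sign, hex
for (const text of ["01", ".5", "1.", "+1", "0x1F"]) {
  console.log(text, isJsonNumber(text)); // false for each
}
```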

JSON libraries and tools are available for a wide range of languages, including:

- ActionScript3

- GNATCOLL.JSON

- JSON for ASP

- JSON ASP utility class

- JSON_checker

- json-parser

- M's JSON parser

- ThorsSerializer

- JSON for Modern C++

- ArduinoJson

- JSON library for IoT

- JSON Support in Qt

- JsonWax for Qt

- Qentem-Engine

- JSON for .NET

- Manatee Json

- FastJsonParser

- Liersch.Json

- Liersch.JsonSerialization

- JSON Essentials

- Redvers COBOL JSON Interface

- SerializeJSON

- vibe.data.json

- json library

- Delphi Web Utils

- JSON Delphi Library

- JSON in TermL

- json-fortran

- package json

- RJson package

- json package

- json-taglib

- json-simple

- google-gson

- FOSS Nova JSON

- Corn CONVERTER

- Apache johnzon

- Common Lisp JSON

- JSON Modules

- netdata-json

- Module json

- NSJSONSerialization

- json-framework

- JSON Photoshop Scripting

- picolisp-json

- Public.Parser.JSON

- Public.Parser.JSON2

- The Python Standard Library

- metamagic.json

- json-parsing

- JSON Utilities

- json-stream

- JSON-struct

- .NET-JSON-Transformer


What is JSON? The universal data format

JSON is the leading data interchange format for web applications and more. Here’s what you need to know about JavaScript Object Notation.

Contributor, InfoWorld


JSON, or JavaScript Object Notation, is a format used to represent data. It was introduced in the early 2000s as part of JavaScript and gradually expanded to become the most common medium for describing and exchanging text-based data. Today, JSON is the universal standard of data exchange. It is found in every area of programming, including front-end and server-side development, systems, middleware, and databases.

This article introduces you to JSON. You'll get an overview of the technology, find out how it compares to similar standards like XML, YAML, and CSV, and see examples of JSON in a variety of programs and use cases.

JSON was initially developed as a format for communicating between JavaScript clients and back-end servers. It quickly gained popularity as a human-readable format that front-end programmers could use to communicate with the back end using a terse, standardized format. Developers also discovered that JSON was very flexible: you could add, remove, and update fields ad hoc. (That flexibility came at the cost of safety, which was later addressed with JSON Schema.)

In a curious turn, JSON was popularized by the AJAX revolution. This is strange, given that the X in AJAX stands for XML, but it was JSON that made AJAX really shine. Using REST as the convention for APIs and JSON as the medium for exchange proved a potent combination for balancing simplicity, flexibility, and consistency.

Next, JSON spread from front-end JavaScript to client-server communication, and from there to system config files, back-end languages, and all the way to databases. JSON even helped spur the NoSQL movement that revolutionized data storage. It turned out that database administrators also enjoyed JSON's flexibility and ease of programming.

Today, document-oriented data stores like MongoDB provide an API that works with JSON-like data structures. In an interview in early 2022, MongoDB CTO Mark Porter noted that, from his perspective, JSON is still pushing the frontier of data. Not bad for a data format that started with a humble curly brace and a colon.

No matter what type of program or use case they're working on, software developers need a way to describe and exchange data. This need is found in databases, business logic, user interfaces, and in all systems communication. There are many approaches to structuring data for exchange. The two broad camps are binary and text-based data. JSON is a text-based format, so it is readable by both people and machines.

JSON is a wildly successful way of formatting data for several reasons. First, it's native to JavaScript, and its syntax can be used inside JavaScript programs as object literals. You can also use JSON with other programming languages, so it's useful for data exchange between heterogeneous systems. Finally, it is human readable. As a way of describing data structures, JSON is an incredibly versatile tool. It is also fairly painless to use, especially when compared to other formats.

When you enter your username and password into a form on a web page, you are interacting with an object with two fields: username and password. As an example, consider the login page in Figure 1.

Figure 1. A simple login page.

Listing 1 shows this page described using JSON.

Listing 1. JSON for a login page

Everything inside of the braces or squiggly brackets ({...}) belongs to the same object. An object, in this case, refers in the most general sense to a “single thing.” Inside the braces are the properties that belong to the thing. Each property has two parts: a name and a value, separated by a colon. These are known as the keys and values. In Listing 1, "username" is a key and "Bilbo Baggins" is a value.

The key takeaway here is that JSON does everything necessary to handle the need (in this case, holding the information in the form) without a lot of extra information. You can glance at this JSON file and understand it. That is why we say that JSON is concise. Conciseness also makes JSON an excellent format for sending over the wire.

JSON was created as an alternative to XML, which was once the dominant format for data exchange. The login form in Listing 2 is described using XML.

Listing 2. Login form in XML

Yikes! Just looking at this form is tiring. Imagine having to create and parse it in code. In contrast, using JSON in JavaScript is dead simple. Try it out. Hit F12 in your browser to open a JavaScript console, then paste in the JSON shown in Listing 3.

Listing 3. Using JSON in JavaScript
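A sketch in the same spirit can be pasted into a browser console or run with Node.js. The username value comes from the description of Listing 1. The password value is a made-up placeholder.

```javascript
// A login form's data as JSON text (the password is a placeholder)
const loginJson = '{"username": "Bilbo Baggins", "password": "example-password"}';

// Parse it into an ordinary JavaScript object
const login = JSON.parse(loginJson);
console.log(login.username); // Bilbo Baggins

// And turn it back into JSON text to send over the wire
console.log(JSON.stringify(login)); // {"username":"Bilbo Baggins","password":"example-password"}
```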

XML is hard to read and leaves much to be desired in terms of coding agility. JSON was created to resolve these issues. It's no wonder it has more or less supplanted XML.

Two data formats sometimes compared to JSON are YAML and CSV. The two formats are on opposite ends of the temporal spectrum. CSV is an ancient, pre-digital format that eventually found its way to being used in computers. YAML was inspired by JSON and is something of its conceptual descendant.

CSV is a simple list of values, with each entry denoted by a comma or other separator character, with an optional first row of header fields. It is rather limited as a medium of exchange and programming structure, but it is still useful for outputting large amounts of data to disk. And, of course, CSV's organization of tabular data is perfect for things like spreadsheets.
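To make the contrast concrete, the sketch below flattens a JSON array of flat records into CSV, using the first record's keys as the header row. It is a simplified, hypothetical converter. It does not handle nested objects or arrays, which CSV cannot express directly.

```javascript
// Quote a field if it contains a comma, a double quote, or a line break,
// doubling any embedded quotes (the common CSV convention)
function csvField(value) {
  const text = value === null || value === undefined ? "" : String(value);
  return /[",\r\n]/.test(text) ? `"${text.replace(/"/g, '""')}"` : text;
}

// Convert an array of flat objects to CSV text with a header row
function jsonToCsv(records) {
  const headers = Object.keys(records[0]);
  const rows = records.map((record) => headers.map((h) => csvField(record[h])).join(","));
  return [headers.map(csvField).join(","), ...rows].join("\n");
}

const records = JSON.parse('[{"name": "Alex", "city": "Austin, TX"}, {"name": "Sam", "city": "Boston"}]');
console.log(jsonToCsv(records));
// name,city
// Alex,"Austin, TX"
// Sam,Boston
```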

YAML is actually a superset of JSON, meaning it will support anything JSON supports. But YAML also supports a more stripped-down syntax, intended to be even more concise than JSON. For example, YAML uses indentation for hierarchy, forgoing the braces. Although YAML is sometimes used as a data exchange format, its biggest use case is in configuration files.

So far, you've only seen examples of JSON used with shallow (or simple) objects. That just means every field on the object holds the value of a primitive. JSON is also capable of modeling complex, deeply nested data structures. It has no syntax for references, though, so object graphs with shared or circular references have to be built in code after parsing, for example in JavaScript. In this section, you'll see examples of complex modeling via nesting, object references, and arrays.

JSON with nested objects

Listing 4 shows how to define nested JSON objects.

Listing 4. Nested JSON

The bestfriend property in Listing 4 refers to another object, which is defined inline as a JSON literal.
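A nested structure of this kind might look like the following in JavaScript. The Pippin and Merry names echo the article's examples, while the fields are illustrative rather than a reproduction of Listing 4.

```javascript
// A JSON string in which the bestfriend value is itself an object
const pippinJson = '{"name": "Pippin", "bestfriend": {"name": "Merry"}}';

const pippin = JSON.parse(pippinJson);

// Chain the dot operator to reach into the nested object
console.log(pippin.bestfriend.name); // Merry
```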

JSON with object references

Now consider Listing 5, where instead of holding a name in the bestfriend property, we hold a reference to the actual object.

Listing 5. An object reference

In Listing 5, we put a reference to the merry object on the bestfriend property. We can then obtain the actual merry object from the pippin object via the bestfriend property, and read the name from the merry object with the name property. This is called traversing the object graph, and it is done using the dot operator.
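It's worth knowing what happens when such references are serialized. In the JavaScript sketch below, which uses hypothetical objects, a shared reference is written out as a separate copy. A circular reference makes JSON.stringify() throw a TypeError, because JSON text has no way to express cycles.

```javascript
const merry = { name: "Merry" };
const pippin = { name: "Pippin", bestfriend: merry };

// Traversing the object graph with the dot operator
console.log(pippin.bestfriend.name); // Merry

// Serializing copies the referenced object inline; the link itself is lost
const copy = JSON.parse(JSON.stringify(pippin));
console.log(copy.bestfriend === merry); // false

// A circular reference cannot be represented in JSON at all
merry.bestfriend = pippin;
try {
  JSON.stringify(pippin);
} catch (err) {
  console.log(err instanceof TypeError); // true
}
```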

JSON with arrays

Another type of structure that JSON properties can have is arrays. These look just like JavaScript arrays and are denoted with square brackets, as shown in Listing 6.

Listing 6. An array property

Of course, arrays may hold other objects as well. With nested objects and arrays, JSON can model a wide range of complex data structures.

Parsing and generating JSON means reading it and creating it, respectively. You’ve seen JSON.stringify() in action already. That is the built-in mechanism for JavaScript programs to take an in-memory object representation and turn it into a JSON string. To go in the other direction, that is, to take a JSON string and turn it into an in-memory object, you use JSON.parse().

In some other languages, such as Java, it’s common to use a third-party library for parsing and generating. There are numerous Java libraries, but the most popular are Jackson and Gson. These libraries are more complex than stringify and parse in JavaScript, but they also offer advanced capabilities such as mapping to and from custom types and dealing with other data formats.

JavaScript code commonly sends JSON to servers and receives JSON back, for example with the built-in fetch() API. When doing so, the response's json() method can parse the body for you, as shown in Listing 7.

Listing 7. Parsing a JSON response with fetch()
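A minimal sketch of this pattern follows. The helper name getJson and the URL are hypothetical. The code assumes a runtime with the Fetch API, such as a modern browser or Node.js 18 and later. The response's json() method reads the body, parses it as JSON, and returns a promise:

```javascript
// Hypothetical helper: fetch a URL and parse the response body as JSON.
async function getJson(url) {
  const response = await fetch(url);
  if (!response.ok) {
    throw new Error(`Request failed with status ${response.status}`);
  }
  // json() reads the whole body and parses it as JSON.
  return response.json();
}

// Usage (placeholder URL):
// getJson("https://api.example.com/posts/1001").then((data) => console.log(data.title));
```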

Once you turn JSON into an in-memory data structure, be it JavaScript or another language, you can employ the APIs for manipulating the structure. For example, in JavaScript, the JSON parsed in Listing 7 would be accessed like any other JavaScript object, perhaps by looping over Object.keys(data) or by accessing known properties on the data object.
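For example, assume data holds the parsed object below, with hypothetical values. You can read a known property directly, or drive a loop with Object.keys() or Object.entries(). A plain object has no keys property of its own:

```javascript
// Hypothetical parsed data, as JSON.parse() or response.json() would return it.
const data = JSON.parse('{"id": 1001, "title": "What is JSON?", "published": false}');

// Read a known property directly.
console.log(data.title); // What is JSON?

// Object.keys() lists the object's own property names.
for (const key of Object.keys(data)) {
  console.log(key);
}

// Object.entries() yields [key, value] pairs.
for (const [key, value] of Object.entries(data)) {
  console.log(`${key} = ${value}`);
}
```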

JavaScript and JSON are incredibly flexible, but sometimes you need more structure than they provide. In a language like Java, strong typing and abstract types (like interfaces) help structure large-scale programs. In SQL stores, a schema provides a similar structure. If you need more structure in your JSON documents, you can use JSON Schema to explicitly define the characteristics of your JSON objects. Once defined, you can use the schema to validate object instances and ensure that they conform to the schema.
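The following sketch makes the idea concrete. It defines a small schema with three real JSON Schema keywords (type, required, and properties). It also defines a deliberately tiny, hypothetical checker named checkSubset that understands only those keywords. This is a sketch of what validation does, not a conforming JSON Schema validator. For real use, a full validator library is the safer choice:

```javascript
// A JSON Schema (written as a JS object) using three real keywords:
// "type", "required" and "properties". The field names are hypothetical.
const postSchema = {
  type: "object",
  required: ["id", "title"],
  properties: {
    id: { type: "integer" },
    title: { type: "string" },
    tags: { type: "array" },
  },
};

// Name the JSON type of a parsed value; typeof says "object" for null and arrays.
function jsonType(value) {
  if (value === null) return "null";
  if (Array.isArray(value)) return "array";
  return typeof value;
}

// Hypothetical toy checker: understands only type, required and properties.
// Returns a list of error messages, empty when the instance conforms.
function checkSubset(schema, instance, path = "$") {
  const errors = [];
  if (schema.type !== undefined) {
    const ok =
      schema.type === "integer"
        ? Number.isInteger(instance)
        : schema.type === jsonType(instance);
    if (!ok) {
      errors.push(`${path}: expected ${schema.type}, got ${jsonType(instance)}`);
      return errors;
    }
  }
  if (jsonType(instance) === "object") {
    for (const key of schema.required ?? []) {
      if (!Object.prototype.hasOwnProperty.call(instance, key)) {
        errors.push(`${path}: missing "${key}"`);
      }
    }
    for (const [key, subschema] of Object.entries(schema.properties ?? {})) {
      if (Object.prototype.hasOwnProperty.call(instance, key)) {
        errors.push(...checkSubset(subschema, instance[key], `${path}.${key}`));
      }
    }
  }
  return errors;
}

console.log(checkSubset(postSchema, JSON.parse('{"id": 1001, "title": "What is JSON?"}'))); // []
// Logs two errors: the missing "title" and the string-typed id.
console.log(checkSubset(postSchema, JSON.parse('{"id": "1001"}')));
```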

Another issue is dealing with machine-generated JSON that is minified and hard to read. Fortunately, this problem is easy to solve. Just jump over to the JSON Formatter & Validator (I like this tool but there are others), paste in your JSON, and hit the Process button. You'll see a human-readable version that you can use. Most IDEs also have a built-in formatter that can format your JSON.
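You can also pretty-print JSON without any external tool, because JSON.stringify() accepts an indent as its third argument. Here is a minimal sketch with hypothetical data:

```javascript
// Hypothetical minified JSON, as a machine might produce it.
const minified = '{"id":1001,"author":{"name":"James Walker"},"tags":["api","json"]}';

// Parse, then re-serialize with a 2-space indent for human readers.
const pretty = JSON.stringify(JSON.parse(minified), null, 2);
console.log(pretty);

// Omitting the indent argument minifies it again.
const minifiedAgain = JSON.stringify(JSON.parse(pretty));
console.log(minifiedAgain === minified); // true
```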

TypeScript lets you define types and interfaces, so there are times when using JSON with TypeScript is useful. An interface or class, like a schema, outlines the acceptable properties of an instance of a given type. In plain JavaScript there's no way to restrict properties and their types: a class is more like a suggestion, and nothing stops a program from adding or changing properties later. TypeScript checks, at compile time, that your code uses only the properties and types you declared. Those checks don't exist at runtime, though. JSON.parse() returns a value of type any, so data arriving from outside still needs runtime validation (for example, against a JSON Schema) if you can't trust its shape.

JSON is one of the most essential technologies used in the modern software landscape. It is crucial to JavaScript but also used as a common mode of interaction between a wide range of technologies. Fortunately, the very thing that makes JSON so useful makes it relatively easy to understand. It is a concise and readable format for representing textual data.


Matthew Tyson is a founder of Dark Horse Group, Inc. He believes in people-first technology. When not playing guitar, Matt explores the backcountry and the philosophical hinterlands. He has written for JavaWorld and InfoWorld since 2007.

Copyright © 2022 IDG Communications, Inc.

A beginner's guide to JSON, the data format for the internet

When APIs send data, chances are they send it as JSON objects. Here's a primer on why JSON is how networked applications send data.

As the web grows in popularity and power, so does the amount of data stored and transferred between systems, many of which know nothing about each other. From early on, the format that this data was transferred in mattered, and like the web, the best formats were open standards that anyone could use and contribute to. XML gained early popularity, as it looked like HTML, the foundation of the web. But it was clunky and confusing.

That’s where JSON (JavaScript Object Notation) comes in. If you’ve consumed an API in the last five to ten years, you’ve probably seen JSON data. While the format was first developed in the early 2000s, the first standards were published in 2006. Understanding what JSON is and how it works is a foundational skill for any web developer.

In this article, we'll cover the basics of what JSON looks like and how to use it in your web applications. We'll also cover signed JSON (JWS and JWT) and competing data formats.

What JSON looks like

JSON is a human-readable format for storing and transmitting data. As the name implies, it was originally developed for JavaScript, but can be used in any language and is very popular in web applications. The basic structure is built from one or more keys and values:

You'll often see a collection of key:value pairs enclosed in curly braces described as a JSON object. While the key is any string, the value can be a string, number, array, another object, or one of the literals true, false, and null. For example, the following is valid JSON:

JSON doesn't have to contain key:value pairs, because the specification allows any value to appear on its own, without a key. However, almost all of the JSON that you see will be objects containing key:value pairs.
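For example, each string below is a complete JSON text on its own and parses with JSON.parse(). The original RFC 4627 required the top-level value to be an object or an array, so very old parsers may reject bare scalars. RFC 7159 and ECMA-404 allow any value:

```javascript
// Each string is a complete JSON text even though none contains a key.
const texts = ["42", '"hello"', "true", "null", "[1, 2, 3]"];
const values = texts.map((text) => JSON.parse(text));

values.forEach((value, i) => console.log(texts[i], "->", value));
```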

Using JSON in API calls

One of the most common uses for JSON is in APIs, in both requests and responses. It is more compact than XML and easy to consume in web browsers, because JavaScript can turn a JSON string into a usable value with a single call to JSON.parse().

JSON.parse(string) takes a string of valid JSON and returns a JavaScript object. For example, it can be called on the body of an API response to give you a usable object. The inverse of this function is JSON.stringify(object) which takes a JavaScript object and returns a string of JSON, which can then be transmitted in an API request or response.

Neither REST nor GraphQL, two very popular API styles, requires JSON. However, JSON is often used with both, particularly with GraphQL, where it is the most common serialization format because it is small and text-based. If necessary, it compresses very well with GZIP.
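As a rough illustration of that last point, the following Node.js sketch gzips a hypothetical, repetitive JSON payload with the built-in zlib module. It then checks that decompression restores the identical text. The exact sizes depend on the data, so none are claimed here:

```javascript
const zlib = require("node:zlib");

// Hypothetical payload: JSON arrays of records repeat the same keys over and over.
const records = Array.from({ length: 200 }, (_, i) => ({ id: i, status: "active", role: "user" }));
const json = JSON.stringify(records);

// Compress as a server might when the client sends Accept-Encoding: gzip.
const gzipped = zlib.gzipSync(json);
console.log(`raw: ${Buffer.byteLength(json)} bytes, gzip: ${gzipped.length} bytes`);

// Decompressing restores exactly the same JSON text.
const restored = zlib.gunzipSync(gzipped).toString("utf8");
console.log(restored === json); // true
```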

GraphQL queries aren't written in JSON. Instead, they use a syntax that resembles it, like this:

The server returns the requested data, and if the response uses JSON, its shape will closely match the query:
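Over HTTP, GraphQL clients commonly send the query text inside an ordinary JSON request body, in a query field with an optional variables object. The following sketch works under that assumption. The query, its fields, and the response values are hypothetical:

```javascript
// Hypothetical GraphQL query: this text is GraphQL syntax, not JSON.
const query = `query GetPost($id: ID!) {
  post(id: $id) {
    title
    author { name }
  }
}`;

// The HTTP request body that carries it is plain JSON.
const body = JSON.stringify({ query, variables: { id: "1001" } });
// e.g. fetch(url, { method: "POST", headers: { "Content-Type": "application/json" }, body })

// A hypothetical JSON response; its "data" mirrors the query's shape.
const result = JSON.parse('{"data":{"post":{"title":"What is JSON?","author":{"name":"James Walker"}}}}');
console.log(result.data.post.author.name); // James Walker
```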

Using JSON files in JavaScript

In some cases, you may want to load JSON from a file, such as for configuration files or mock data. In pure JavaScript, it currently isn't possible to import a JSON file, although a proposal has been created to allow this. Importing JSON is, however, a very common feature in bundlers and compilers, like webpack and Babel. In the meantime, you can get equivalent functionality by exporting, from a JavaScript file, a JavaScript object identical to your desired JSON.

export const data = {"foo": "bar"}

This object is now stored in the constant data and is accessible throughout your application via import or require statements. Note that modifying the object won't write the changes back to the file. Also note that every module that imports data receives the same object, not a copy, so a change made in one module is visible in the others.
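Outside a bundler, Node.js code can also read a JSON file at runtime and parse it. In this minimal sketch, the file name config.json and its contents are hypothetical. The file is first written to the operating system's temporary directory so the example is self-contained. The sketch also shows that changing the parsed object leaves the file untouched until you write it back:

```javascript
const fs = require("node:fs");
const os = require("node:os");
const path = require("node:path");

// Write a hypothetical config file so the example is self-contained.
const file = path.join(os.tmpdir(), "config.json");
fs.writeFileSync(file, JSON.stringify({ foo: "bar", retries: 3 }, null, 2));

// Read the file as text and parse it into an ordinary object.
const data = JSON.parse(fs.readFileSync(file, "utf8"));
console.log(data.foo); // bar

// Modifying the object does not change the file...
data.foo = "baz";
console.log(JSON.parse(fs.readFileSync(file, "utf8")).foo); // bar

// ...until you serialize it and write it back explicitly.
fs.writeFileSync(file, JSON.stringify(data, null, 2));
```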

Accessing and modifying JavaScript objects

Once you have a variable containing your data (data, in this example), you can access a key's value with either data.key or data["key"]. Square brackets must be used for array indexing. If that value were an array, data.key[0] would work, but data.key.0 wouldn't.

Modification works the same way. Set data.key = "foo", and that key now has the value "foo". However, every intermediate object in the chain must already exist. For example, if data.key.foo is undefined, setting data.key.foo.bar throws a TypeError. You would first have to set data.key.foo to an object.
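The rules above are shown in a short sketch with hypothetical data:

```javascript
// Hypothetical parsed data.
const data = JSON.parse('{"key": ["first", "second"], "user": {"name": "Ada"}}');

// Dot notation and bracket notation read the same property.
console.log(data.user.name === data["user"]["name"]); // true

// Array elements need brackets: data.key[0] works, data.key.0 is a syntax error.
console.log(data.key[0]); // first

// Assignment replaces an existing value or adds a new key.
data.user.name = "Grace";
data.user.role = "admin";

// Every object along the chain must already exist.
try {
  data.settings.theme = "dark"; // data.settings is undefined
} catch (err) {
  console.log(err instanceof TypeError); // true
}

// Create the missing object first, then assign into it.
data.settings = {};
data.settings.theme = "dark";
console.log(JSON.stringify(data.settings)); // {"theme":"dark"}
```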

Comparison to YAML and XML

JSON isn’t the only web-friendly data standard out there. The major competitor for JSON in APIs is XML. Instead of the following JSON:

in XML, you’d instead have:

JSON was standardized much later than XML. The XML specification arrived in 1998, whereas Ecma International standardized JSON in 2013. XML was extremely popular and appears in names such as AJAX (Asynchronous JavaScript and XML) and the XMLHttpRequest API in JavaScript.

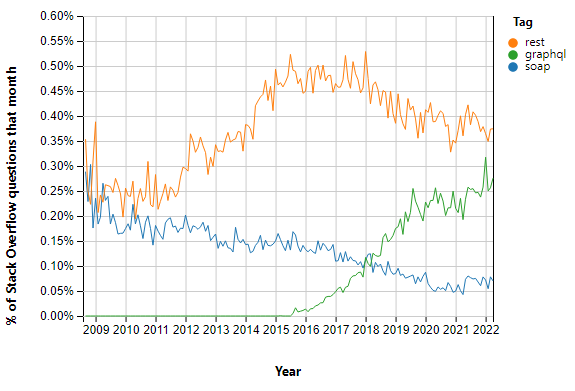

XML is also used by a major API standard, the Simple Object Access Protocol (SOAP). SOAP can be significantly more verbose than REST and GraphQL. Part of that comes from XML itself, and part comes from the extra information the standard includes, such as declaring the XML namespaces used in the envelope. This verbosity may be one reason SOAP usage has declined for years.

Another alternative is YAML, which is much closer to JSON in length than XML is. Here is the same example in YAML:

However, unlike XML, YAML doesn't really compete with JSON as an API data format. Instead, it's primarily used for configuration files. Kubernetes, for example, primarily uses YAML to configure infrastructure. YAML offers features that JSON doesn't have, such as comments. Unlike JSON and XML, YAML has no built-in parser in browsers, so you would need to add a parser library if you want to use YAML for data interchange.

Signed JSON

While JSON is often transmitted as clear text, it can also be used to secure data transfers. A JSON Web Signature (JWS) carries content that is signed using either a shared secret or a public/private key pair. A JWS is composed of a header, a payload, and a signature.

The header specifies the signing algorithm being used and, optionally, the type of token. The only required field is alg, which names the signing algorithm. Many other keys can be included, such as typ, which declares the type of the token.

The payload of a JWS is the information being transmitted. It doesn't need to be JSON, though it commonly is.

The signature is computed by applying the algorithm specified in the header to the base64url-encoded header and payload, joined by a dot. The final JWS (in its compact form) is the base64url-encoded header, payload, and signature, joined by dots. For example:

eyJ0eXAiOiJKV1QiLA0KICJhbGciOiJIUzI1NiJ9.eyJpc3MiOiJqb2UiLA0KICJleHAiOjEzMDA4MTkzODAsDQogImh0dHA6Ly9leGFtcGxlLmNvbS9pc19yb290Ijp0cnVlfQ.dBjftJeZ4CVP-mB92K27uhbUJU1p1r_wW1gFWFOEjXk
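To see that structure, you can split the example token on its dots and base64url-decode the first two segments. This Node.js sketch only decodes the token and does not verify the signature. The base64url encoding in Buffer requires a reasonably recent Node.js:

```javascript
// The example JWS from above (header.payload.signature).
const token =
  "eyJ0eXAiOiJKV1QiLA0KICJhbGciOiJIUzI1NiJ9" +
  ".eyJpc3MiOiJqb2UiLA0KICJleHAiOjEzMDA4MTkzODAsDQogImh0dHA6Ly9leGFtcGxlLmNvbS9pc19yb290Ijp0cnVlfQ" +
  ".dBjftJeZ4CVP-mB92K27uhbUJU1p1r_wW1gFWFOEjXk";

const [headerPart, payloadPart, signaturePart] = token.split(".");

// base64url-decode a segment and parse the resulting text as JSON.
const decode = (segment) => JSON.parse(Buffer.from(segment, "base64url").toString("utf8"));

const header = decode(headerPart);
const payload = decode(payloadPart);
console.log(header.typ, header.alg); // JWT HS256
console.log(payload.iss, payload.exp); // joe 1300819380

// The signature segment is raw HMAC bytes (32 for SHA-256), not JSON.
console.log(Buffer.from(signaturePart, "base64url").length); // 32
```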

JSON Web Tokens (JWT) are a common application of JWS. They are particularly useful for authorization. When a user logs in to a website, they are given a JWT, and each subsequent request includes this token as a bearer token in the Authorization header.

To create a JWT from a JWS, you'll need to configure each section specifically. In the header, the typ key, which is optional, should be set to JWT. For the alg key, conforming JWT implementations must support HS256 (HMAC SHA-256) and none (an unsecured token with no signature at all), so those two are always available. Support for additional algorithms is recommended but not required.

The payload contains a series of keys called claims, which are pieces of information about a subject. Because JWTs are most commonly used for authentication, the subject is usually a user. When JWTs are used for exchanging information, however, the subject could be anything.

The signature is then constructed in the same way as all other JWSs.
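Putting the pieces together, here is a minimal Node.js sketch of issuing and checking an HS256 token with the built-in crypto module. The helper names signJwt and verifyJwt, the claims, and the secret are hypothetical. The verifier always uses HS256 and ignores the header's alg value, so it never accepts alg none. It does not check exp or other claims, which a real implementation must do, so a maintained JWT library is usually the better choice in production:

```javascript
const crypto = require("node:crypto");

// base64url-encode a value's JSON text.
const encode = (value) => Buffer.from(JSON.stringify(value)).toString("base64url");

// Hypothetical helper: build an HS256-signed JWT from a claims object.
function signJwt(claims, secret) {
  const signingInput = `${encode({ alg: "HS256", typ: "JWT" })}.${encode(claims)}`;
  const signature = crypto.createHmac("sha256", secret).update(signingInput).digest("base64url");
  return `${signingInput}.${signature}`;
}

// Hypothetical helper: recompute the HMAC over header.payload and compare
// in constant time. Always uses HS256; does NOT check exp or other claims.
function verifyJwt(token, secret) {
  const parts = token.split(".");
  if (parts.length !== 3) return false;
  const [header, payload, signature] = parts;
  const expected = crypto.createHmac("sha256", secret).update(`${header}.${payload}`).digest();
  const actual = Buffer.from(signature, "base64url");
  return actual.length === expected.length && crypto.timingSafeEqual(actual, expected);
}

const secret = "replace-with-a-long-random-secret";
const token = signJwt({ sub: "user-42", exp: Math.floor(Date.now() / 1000) + 3600 }, secret);

console.log(verifyJwt(token, secret)); // true
console.log(verifyJwt(token, "wrong-secret")); // false
```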

Compared with Security Assertion Markup Language (SAML) tokens, a similar standard that uses XML, JWTs are smaller. They are also easier to parse: both kinds of token are often handled in the browser, where JavaScript is the primary language and can parse JSON natively.

JSON has come to be one of the most popular standards for data interchange, being easy for humans to read while being lightweight to ensure small transmission size. Its success also stems from how closely it resembles JavaScript object literals, which makes it simple to process in web frontends. However, JSON isn't the solution for everything, and alternate standards like YAML are more popular for things like configuration files, so it's important to consider your purpose before choosing.


The JSON namespace object contains static methods for parsing values from and converting values to JavaScript Object Notation ( JSON ).

Description

Unlike most global objects, JSON is not a constructor. You cannot use it with the new operator or invoke the JSON object as a function. All properties and methods of JSON are static (just like the Math object).

JavaScript and JSON differences

JSON is a syntax for serializing objects, arrays, numbers, strings, booleans, and null . It is based upon JavaScript syntax, but is distinct from JavaScript: most of JavaScript is not JSON. For example:

Property names must be double-quoted strings; trailing commas are forbidden.

Leading zeros are prohibited. A decimal point must be followed by at least one digit. NaN and Infinity are unsupported.

Any JSON text is a valid JavaScript expression, but only after the JSON superset revision. Before the revision, U+2028 LINE SEPARATOR and U+2029 PARAGRAPH SEPARATOR are allowed in string literals and property keys in JSON; but the same use in JavaScript string literals is a SyntaxError .

Other differences include allowing only double-quoted strings and no support for undefined or comments. For those who wish to use a more human-friendly configuration format based on JSON, there is JSON5 , used by the Babel compiler, and the more commonly used YAML .
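A quick way to see these differences is to feed JavaScript-style literals to JSON.parse(). Each string below resembles JavaScript object-literal syntax, but JSON.parse() rejects every one with a SyntaxError:

```javascript
// Each string resembles JavaScript object-literal syntax but is not valid JSON.
const invalid = [
  "{'name': 'Ada'}", // single-quoted strings
  '{name: "Ada"}', // unquoted property name
  '{"name": "Ada",}', // trailing comma
  '{"n": 007}', // leading zeros
  '{"n": 1.}', // no digit after the decimal point
  '{"n": NaN}', // NaN is not a JSON value
  '{"n": 1} // note', // comments are not allowed
];

for (const text of invalid) {
  try {
    JSON.parse(text);
    console.log("accepted:", text);
  } catch (err) {
    console.log(`${err.name}: ${text}`); // SyntaxError for every entry
  }
}

// The strict JSON spelling of the first example parses fine.
console.log(JSON.parse('{"name": "Ada"}').name); // Ada
```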

The same text may represent different values in JavaScript object literals vs. JSON as well. For more information, see Object literal syntax vs. JSON .

Full JSON grammar

Valid JSON syntax is formally defined by the following grammar, expressed in ABNF , and copied from IETF JSON standard (RFC) :

Insignificant whitespace may be present anywhere except within a JSONNumber (numbers must contain no whitespace) or JSONString (where it is interpreted as the corresponding character in the string, or would cause an error). The tab character ( U+0009 ), carriage return ( U+000D ), line feed ( U+000A ), and space ( U+0020 ) characters are the only valid whitespace characters.
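Here is a small sketch of these whitespace rules with JSON.parse():

```javascript
// Tab, line feed, carriage return and space may surround any token.
const spaced = ' \t{\r\n  "a" : [ 1 ,\t2 ]\n} ';
console.log(JSON.parse(spaced).a); // [ 1, 2 ]

// Rejected: two values separated by whitespace, whitespace inside a number,
// and Unicode spaces such as U+00A0 NO-BREAK SPACE, which are not JSON whitespace.
const rejected = ["1 2", "- 1", "\u00A01"];
for (const text of rejected) {
  try {
    JSON.parse(text);
    console.log("accepted:", JSON.stringify(text));
  } catch (err) {
    console.log(err.name, JSON.stringify(text)); // SyntaxError
  }
}
```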

Static properties

The initial value of the @@toStringTag property is the string "JSON" . This property is used in Object.prototype.toString() .

Static methods

Tests whether a value is an object returned by JSON.rawJSON() .

Parse a piece of string text as JSON, optionally transforming the produced value and its properties, and return the value.

Creates a "raw JSON" object containing a piece of JSON text. When serialized to JSON, the raw JSON object is treated as if it is already a piece of JSON. This text is required to be valid JSON.

Return a JSON string corresponding to the specified value, optionally including only certain properties or replacing property values in a user-defined manner.

Example JSON

You can use the JSON.parse() method to convert the above JSON string into a JavaScript object:

Lossless number serialization

JSON can contain number literals of arbitrary precision. However, it is not possible to represent all JSON numbers exactly in JavaScript, because JavaScript uses floating point representation which has a fixed precision. For example, 12345678901234567890 === 12345678901234567000 in JavaScript because they have the same floating point representation. This means there is no JavaScript number that corresponds precisely to the 12345678901234567890 JSON number.
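The precision loss is easy to reproduce. In this sketch, BigInt keeps the exact value only when it is built from the original digits, not from the already-rounded Number:

```javascript
// Both literals round to the same IEEE 754 double, so they compare equal.
console.log(12345678901234567890 === 12345678901234567000); // true

// JSON.parse() produces a Number, so the extra digits are lost.
const parsed = JSON.parse("12345678901234567890");
console.log(parsed); // 12345678901234567000
console.log(Number.isSafeInteger(parsed)); // false (larger than 2 ** 53 - 1)

// BigInt is exact only when built from the original digits.
const exact = BigInt("12345678901234567890");
console.log(exact === 12345678901234567890n); // true
console.log(BigInt(parsed) === exact); // false: parsed was already rounded
```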

Let's assume you have an exact representation of some number (either via BigInt or a custom library):

You want to serialize it and then parse to the same exact number. There are several difficulties:

- On the serialization side, in order to obtain a number in JSON, you have to pass a number to JSON.stringify , either via the replacer function or via the toJSON method. But, in either case, you have already lost precision during number conversion. If you pass a string to JSON.stringify , it will be serialized as a string, not a number.

- On the parsing side, not all numbers can be represented exactly. For example, JSON.parse("12345678901234567890") returns 12345678901234567000 because the number is rounded to the nearest representable number. Even if you use a reviver function, the number will already be rounded before the reviver function is called.

There are, in general, two ways to ensure that numbers are losslessly converted to JSON and parsed back: one involves a JSON number, another involves a JSON string. JSON is a communication format , so if you use JSON, you are likely communicating with another system (HTTP request, storing in database, etc.). The best solution to choose depends on the recipient system.

Using JSON strings

If the recipient system does not have the same JSON-handling capabilities as JavaScript and does not support high-precision numbers, you may want to serialize the number as a string and then handle it as a string on the recipient side. This is also the only option in older JavaScript.

To specify how custom data types (including BigInt ) should be serialized to JSON, either add a toJSON method to your data type, or use the replacer function of JSON.stringify() .

In either case, the JSON text will look like {"gross_gdp":"12345678901234567890"} , where the value is a string, not a number. Then, on the recipient side, you can parse the JSON and handle the string.
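Here is a minimal sketch of the replacer approach, using the gross_gdp key from the example above. The reviver on the parsing side is one possible way for a JavaScript recipient to turn the string back into a BigInt:

```javascript
const gdp = 12345678901234567890n; // exact value held as a BigInt

// JSON.stringify() throws a TypeError on BigInt values, so a replacer
// converts them to strings first.
const text = JSON.stringify({ gross_gdp: gdp }, (key, value) =>
  typeof value === "bigint" ? value.toString() : value,
);
console.log(text); // {"gross_gdp":"12345678901234567890"}

// A JavaScript recipient can turn the string back into a BigInt with a reviver.
const received = JSON.parse(text, (key, value) =>
  key === "gross_gdp" ? BigInt(value) : value,
);
console.log(received.gross_gdp === gdp); // true
```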

Using JSON numbers

If the recipient of this message natively supports high precision numbers (such as Python integers), passing numbers as JSON numbers is obviously better, because they can directly parse to the high precision type instead of parsing a string from JSON, and then parsing a number from the string. In JavaScript, you can serialize arbitrary data types to JSON numbers without producing a number value first (resulting in loss of precision) by using JSON.rawJSON() to precisely specify what the JSON source text should be.

The text passed to JSON.rawJSON is treated as if it is already a piece of JSON, so it won't be serialized again as a string. Therefore, the JSON text will look like {"gross_gdp":12345678901234567890} , where the value is a number. This JSON can then be parsed by the recipient without any extra processing, provided that the recipient system does not have the same precision limitations as JavaScript.

When parsing JSON containing high-precision numbers in JavaScript, take extra care because when JSON.parse() invokes the reviver function, the value you receive is already parsed (and has lost precision). You can use the context.source parameter of the JSON.parse() reviver function to re-parse the number yourself.
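The following sketch covers both halves. It serializes with JSON.rawJSON() and re-parses through the reviver's context.source. Both features are recent additions to JavaScript, so the code feature-detects JSON.rawJSON() and does nothing on engines that lack it:

```javascript
const gdp = 12345678901234567890n; // exact value held as a BigInt
let text = null;
let back = null;

// JSON.rawJSON() and the reviver's context argument are recent additions,
// so feature-detect before relying on them.
if (typeof JSON.rawJSON === "function") {
  // Serialize: the replacer emits the digits verbatim as a JSON number.
  text = JSON.stringify({ gross_gdp: gdp }, (key, value) =>
    typeof value === "bigint" ? JSON.rawJSON(value.toString()) : value,
  );
  console.log(text); // {"gross_gdp":12345678901234567890}

  // Parse: context.source holds the original source text of a primitive value.
  back = JSON.parse(text, (key, value, context) =>
    key === "gross_gdp" ? BigInt(context.source) : value,
  );
  console.log(back.gross_gdp === gdp); // true
}
```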


A Beginner's Guide to JSON with Examples

Syntax and data types, json strings, json numbers, json booleans, json objects, json arrays, nesting objects & arrays, transforming json data in javascript, json vs xml, json resources, further reading.

JSON (short for JavaScript Object Notation) is a popular format for storing and exchanging data. As the name suggests, JSON was derived from JavaScript, but it was later embraced by other programming languages.

JSON files end with a .json extension, but JSON data doesn't have to be stored in a file. You can also define a JSON object or array inside JavaScript or HTML files.

In a nutshell, JSON is lightweight, human-readable, and needs less formatting, which makes it a good alternative to XML.

JSON data is stored as key-value pairs, similar to JavaScript object properties, and is structured with commas, curly braces, and square brackets. A key-value pair consists of a key, called a name (in double quotes), followed by a colon (:), followed by a value (string values are also in double quotes):

Multiple key-value pairs are separated by a comma:

JSON keys are strings, always on the left of the colon, and must be wrapped in double quotes. Within each object, keys should be unique and can contain whitespace, as in "author name": "John Doe".

Avoid whitespace in keys, because it makes them harder to access in code. In JavaScript, for example, such a key can't be read with dot notation and needs bracket notation instead (obj["author name"]). Use an underscore instead, as in "author_name": "John Doe".

JSON values must be one of the following data types:

- String

- Number

- Boolean (true or false)

- Null

- Object

- Array

Note: Unlike JavaScript, JSON values cannot be a function, a date or undefined .

String values in JSON are a set of characters wrapped in double-quotes:

A number value in JSON must be an integer or a floating-point:

Boolean values are simple true or false in JSON:

Null values in JSON are written as the literal null:

JSON objects are wrapped in curly braces. Inside the object, we can list any number of key-value pairs, separated by commas:

JSON arrays are wrapped in square brackets. Inside an array, we can list any number of values, such as objects, separated by commas:

In the above JSON array, there are three objects. Each object is a record of a person (with name, gender, and age).

JSON can store nested objects and arrays as values assigned to keys. It is very helpful for storing different sets of data in one file:

The JSON format is syntactically similar to the way we create JavaScript objects. Therefore, it is easier to convert JSON data into JavaScript native objects.
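After parsing, each JSON type maps to a native JavaScript value. This sketch uses a hypothetical record and reports the type of each property. Note that typeof alone can't tell objects, arrays, and null apart:

```javascript
// A hypothetical record that uses every JSON value type.
const record = JSON.parse(`{
  "name": "John Doe",
  "age": 30,
  "height": 1.8,
  "verified": true,
  "nickname": null,
  "address": { "city": "London" },
  "skills": ["json", "javascript"]
}`);

// typeof reports "object" for null, objects and arrays alike,
// so null and arrays need their own checks.
const types = Object.entries(record).map(([key, value]) => {
  const type = value === null ? "null" : Array.isArray(value) ? "array" : typeof value;
  return `${key}: ${type}`;
});

console.log(types.join("\n"));
```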

JavaScript built-in JSON object provides two important methods for encoding and decoding JSON data: parse() and stringify() .

JSON.parse() takes a JSON string as input and converts it into JavaScript object:

JSON.stringify() does the opposite. It takes a JavaScript object as input and transforms it into a string that represents it in JSON:
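JSON has no function, date, or undefined values, so JSON.stringify() has to handle them somehow. This sketch shows the standard behavior. Functions and undefined are omitted from objects and become null inside arrays. A Date is converted to an ISO 8601 string through its toJSON() method and does not come back as a Date:

```javascript
const input = {
  title: "JSON basics",
  created: new Date(0), // Date has a toJSON() method
  format() { return this.title; }, // functions are not JSON values
  draft: undefined, // neither is undefined
  list: [undefined, () => 1, 7],
};

const text = JSON.stringify(input);
console.log(text);
// {"title":"JSON basics","created":"1970-01-01T00:00:00.000Z","list":[null,null,7]}

// Parsing does not restore the Date: it comes back as a string.
console.log(typeof JSON.parse(text).created); // string
```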

A few years back, XML (Extensible Markup Language) was a popular choice for storing and sharing data over the network. But that is no longer the case.

JSON has emerged as a popular alternative to XML for the following reasons:

- Less verbose: XML uses many more words than required, which makes it time-consuming to read and write.

- Lightweight & faster: XML must be parsed by an XML parser, but JSON can be parsed using JavaScript built-in functions. Parsing large XML files is slow and requires a lot of memory.

- More data types: XML has no native array type (lists are expressed as repeated elements), whereas arrays are built into JSON and used extensively.

Let us see an example of an XML document and then the corresponding document written in JSON:

databases.xml

databases.json

As you can see above, the XML structure is not intuitive, making it hard to represent in code. On the other hand, the JSON structure is much more compact and intuitive, making it easy to read and map directly to domain objects in any programming language.

There are many useful resources available online for free to learn and work with JSON:

- Introducing JSON — Learn the JSON language supported features.

- JSONLint — A JSON validator that you can use to verify if the JSON string is valid.

- JSON.dev — A little tool for viewing, parsing, validating, minifying, and formatting JSON.

- JSON Schema — Annotate and validate JSON documents according to your own specific format.

A few more articles related to JSON that you might be interested in:

- How to read and write JSON files in Node.js

- Reading and Writing JSON Files in Java

- How to read and write JSON using Jackson in Java

- How to read and write JSON using JSON.simple in Java

- Understanding JSON.parse() and JSON.stringify()

- Processing JSON Data in Spring Boot

- Export PostgreSQL Table Data as JSON


Build more. Break less. Empower others.

JSON Schema enables the confident and reliable use of the JSON data format.


Why JSON Schema?

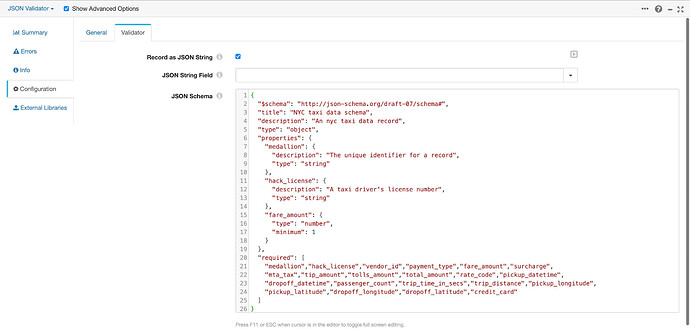

While JSON is probably the most popular format for exchanging data, JSON Schema is the vocabulary that enables JSON data consistency, validity, and interoperability at scale.

Streamline testing and validation

Simplify your validation logic to reduce your code’s complexity and save time on development. Define constraints for your data structures to catch and prevent errors, inconsistencies, and invalid data.

Exchange data seamlessly

Establish a common language for data exchange, no matter the scale or complexity of your project. Define precise validation rules for your data structures to create shared understanding and increase interoperability across different systems and platforms.

Document your data

Create a clear, standardized representation of your data to improve understanding and collaboration among developers, stakeholders, and collaborators.

Vibrant tooling ecosystem

Adopt JSON Schema with an expansive range of community-driven tools, libraries, and frameworks across many programming languages.

Start learning JSON Schema

Explore the JSON Schema ecosystem.

Discover JSON Schema tooling to help your organization leverage the benefits of JSON Schema. JSON Schema is much more than a specification. It also has a vibrant ecosystem of validators, generators, linters, and other utilities built by its community.



json: JSON encoder and decoder

Source code: Lib/json/__init__.py

JSON (JavaScript Object Notation) , specified by RFC 7159 (which obsoletes RFC 4627 ) and by ECMA-404 , is a lightweight data interchange format inspired by JavaScript object literal syntax (although it is not a strict subset of JavaScript [ 1 ] ).

Be cautious when parsing JSON data from untrusted sources. A malicious JSON string may cause the decoder to consume considerable CPU and memory resources. Limiting the size of data to be parsed is recommended.

json exposes an API familiar to users of the standard library marshal and pickle modules.

Encoding basic Python object hierarchies:

Compact encoding:

Pretty printing:

Decoding JSON:

Specializing JSON object decoding:

Extending JSONEncoder:

Using json.tool from the shell to validate and pretty-print:

See Command Line Interface for detailed documentation.

JSON is a subset of YAML 1.2. The JSON produced by this module’s default settings (in particular, the default separators value) is also a subset of YAML 1.0 and 1.1. This module can thus also be used as a YAML serializer.

This module’s encoders and decoders preserve input and output order by default. Order is only lost if the underlying containers are unordered.

Basic Usage

Serialize obj as a JSON formatted stream to fp (a .write() -supporting file-like object ) using this conversion table .

If skipkeys is true (default: False ), then dict keys that are not of a basic type ( str , int , float , bool , None ) will be skipped instead of raising a TypeError .

The json module always produces str objects, not bytes objects. Therefore, fp.write() must support str input.

If ensure_ascii is true (the default), the output is guaranteed to have all incoming non-ASCII characters escaped. If ensure_ascii is false, these characters will be output as-is.

If check_circular is false (default: True ), then the circular reference check for container types will be skipped and a circular reference will result in a RecursionError (or worse).

If allow_nan is false (default: True ), then it will be a ValueError to serialize out of range float values ( nan , inf , -inf ) in strict compliance with the JSON specification. If allow_nan is true, their JavaScript equivalents ( NaN , Infinity , -Infinity ) will be used.

If indent is a non-negative integer or string, then JSON array elements and object members will be pretty-printed with that indent level. An indent level of 0, negative, or "" will only insert newlines. None (the default) selects the most compact representation. Using a positive integer indent indents that many spaces per level. If indent is a string (such as "\t" ), that string is used to indent each level.

Changed in version 3.2: Allow strings for indent in addition to integers.

If specified, separators should be an (item_separator, key_separator) tuple. The default is (', ', ': ') if indent is None and (',', ': ') otherwise. To get the most compact JSON representation, you should specify (',', ':') to eliminate whitespace.

Changed in version 3.4: Use (',', ': ') as default if indent is not None .

If specified, default should be a function that gets called for objects that can’t otherwise be serialized. It should return a JSON encodable version of the object or raise a TypeError . If not specified, TypeError is raised.

If sort_keys is true (default: False ), then the output of dictionaries will be sorted by key.

To use a custom JSONEncoder subclass (e.g. one that overrides the default() method to serialize additional types), specify it with the cls kwarg; otherwise JSONEncoder is used.

Changed in version 3.6: All optional parameters are now keyword-only .

Unlike pickle and marshal , JSON is not a framed protocol, so trying to serialize multiple objects with repeated calls to dump() using the same fp will result in an invalid JSON file.

Serialize obj to a JSON formatted str using this conversion table . The arguments have the same meaning as in dump() .

Keys in key/value pairs of JSON are always of the type str . When a dictionary is converted into JSON, all the keys of the dictionary are coerced to strings. As a result of this, if a dictionary is converted into JSON and then back into a dictionary, the dictionary may not equal the original one. That is, loads(dumps(x)) != x if x has non-string keys.

json.load() deserializes fp (a .read()-supporting text file or binary file containing a JSON document) to a Python object, using the standard JSON-to-Python conversions (object to dict, array to list, and so on).

object_hook is an optional function that will be called with the result of any object literal decoded (a dict). The return value of object_hook will be used instead of the dict. This feature can be used to implement custom decoders (e.g. JSON-RPC class hinting).

object_pairs_hook is an optional function that will be called with the result of any object literal decoded with an ordered list of pairs. The return value of object_pairs_hook will be used instead of the dict. This feature can be used to implement custom decoders. If object_hook is also defined, the object_pairs_hook takes priority.

Changed in version 3.1: Added support for object_pairs_hook .
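A sketch of both hooks. The "__complex__" marker and the as_complex function are an illustrative convention, not part of JSON or this module.

```python
import json

def as_complex(dct):
    # Hypothetical convention: {"__complex__": true, "real": ..., "imag": ...}
    if dct.get("__complex__"):
        return complex(dct["real"], dct["imag"])
    return dct

value = json.loads('{"__complex__": true, "real": 1, "imag": 2}',
                   object_hook=as_complex)

# object_pairs_hook receives the key/value pairs as an ordered list of tuples
pairs = json.loads('{"b": 1, "a": 2}', object_pairs_hook=lambda p: p)
```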

parse_float, if specified, will be called with the string of every JSON float to be decoded. By default, this is equivalent to float(num_str). This can be used to select another datatype or parser for JSON floats (e.g. decimal.Decimal).

parse_int, if specified, will be called with the string of every JSON int to be decoded. By default, this is equivalent to int(num_str). This can be used to select another datatype or parser for JSON integers (e.g. float).

Changed in version 3.11: The default parse_int of int() now limits the maximum length of the integer string via the interpreter’s integer string conversion length limitation to help avoid denial of service attacks.
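An illustrative use of parse_float and parse_int; the price document is made-up sample data.

```python
import json
from decimal import Decimal

text = '{"price": 19.99, "qty": 3}'

# Keep the exact decimal digits instead of a binary float
exact = json.loads(text, parse_float=Decimal)

# Turn every JSON integer into a Python float
as_floats = json.loads(text, parse_int=float)
```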

parse_constant, if specified, will be called with one of the following strings: '-Infinity', 'Infinity', 'NaN'. This can be used to raise an exception if invalid JSON numbers are encountered.

Changed in version 3.1: parse_constant doesn’t get called on ‘null’, ‘true’, ‘false’ anymore.
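A sketch of a parse_constant hook that rejects the non-standard constants; reject_constant is a hypothetical name. As the 3.1 change notes, null, true and false never reach the hook.

```python
import json

def reject_constant(name):
    # Called with '-Infinity', 'Infinity' or 'NaN'
    raise ValueError(f"invalid JSON number: {name}")

try:
    json.loads("[1.0, NaN]", parse_constant=reject_constant)
except ValueError as exc:
    rejected = str(exc)

# Ordinary literals are unaffected by the hook
normal = json.loads("[null, true, false]", parse_constant=reject_constant)
```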

To use a custom JSONDecoder subclass, specify it with the cls kwarg; otherwise JSONDecoder is used. Additional keyword arguments will be passed to the constructor of the class.

If the data being deserialized is not a valid JSON document, a JSONDecodeError will be raised.

Changed in version 3.6: fp can now be a binary file. The input encoding should be UTF-8, UTF-16 or UTF-32.

json.loads() deserializes s (a str, bytes or bytearray instance containing a JSON document) to a Python object, using the same JSON-to-Python conversions as load().

The other arguments have the same meaning as in load().

Changed in version 3.6: s can now be of type bytes or bytearray . The input encoding should be UTF-8, UTF-16 or UTF-32.
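A short sketch of the bytes and binary-file inputs; io.BytesIO stands in for a file opened in binary mode.

```python
import io
import json

# UTF-8 bytes and UTF-16 bytes (Python's "utf-16" codec adds a BOM) are detected automatically
from_utf8 = json.loads(b'{"a": 1}')
from_utf16 = json.loads('{"a": 1}'.encode("utf-16"))

# load() accepts a binary file object as well as a text one
from_file = json.load(io.BytesIO(b"[1, 2, 3]"))
```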

Changed in version 3.9: The keyword argument encoding has been removed.

Encoders and Decoders

json.JSONDecoder is a simple JSON decoder.

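By default, it performs the following translations when decoding: JSON objects become dict, arrays become list, strings become str, integer and real numbers become int and float, true and false become True and False, and null becomes None.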

It also understands NaN, Infinity, and -Infinity as their corresponding float values, which is outside the JSON spec.

object_hook, if specified, will be called with the result of every JSON object decoded and its return value will be used in place of the given dict. This can be used to provide custom deserializations (e.g. to support JSON-RPC class hinting).

object_pairs_hook, if specified, will be called with the result of every JSON object decoded with an ordered list of pairs. The return value of object_pairs_hook will be used instead of the dict. This feature can be used to implement custom decoders. If object_hook is also defined, the object_pairs_hook takes priority.

If strict is false (True is the default), then control characters will be allowed inside strings. Control characters in this context are those with character codes in the 0–31 range, including '\t' (tab), '\n', '\r' and '\0'.
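A sketch of the strict flag using a document that contains a literal tab character inside a string.

```python
import json

doc = '"tab\there"'  # the \t is a real tab character inside the JSON string

try:
    json.loads(doc)
    strict_ok = True
except json.JSONDecodeError:
    strict_ok = False  # default strict=True rejects raw control characters

# strict is forwarded to JSONDecoder by loads()
lenient = json.loads(doc, strict=False)
```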

Changed in version 3.6: All parameters are now keyword-only .

decode(s) returns the Python representation of s (a str instance containing a JSON document).

JSONDecodeError will be raised if the given JSON document is not valid.

raw_decode(s) decodes a JSON document from s (a str beginning with a JSON document) and returns a 2-tuple of the Python representation and the index in s where the document ended.

This can be used to decode a JSON document from a string that may have extraneous data at the end.
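A sketch of raw_decode(), plus a hypothetical iter_documents() helper that uses its optional idx argument to walk through several concatenated documents. The helper skips whitespace itself, because raw_decode() does not accept leading whitespace.

```python
import json

decoder = json.JSONDecoder()
source = '{"a": 1} trailing text'
obj, end = decoder.raw_decode(source)
rest = source[end:]  # whatever follows the first document

def iter_documents(text):
    """Hypothetical helper: yield each JSON document in a concatenated string."""
    dec = json.JSONDecoder()
    idx = 0
    while idx < len(text):
        # Skip whitespace between documents
        while idx < len(text) and text[idx].isspace():
            idx += 1
        if idx == len(text):
            break
        doc, idx = dec.raw_decode(text, idx)
        yield doc
```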

json.JSONEncoder is an extensible JSON encoder for Python data structures.

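By default, it supports the following objects and types: dict (encoded as a JSON object); list and tuple (array); str (string); int, float, and int- and float-derived Enums (number); True and False (true and false); and None (null).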

Changed in version 3.4: Added support for int- and float-derived Enum classes.

To extend this to recognize other objects, subclass it and implement a default() method that returns a serializable object for o if possible; otherwise, it should call the superclass implementation (to raise TypeError).

If skipkeys is false (the default), a TypeError will be raised when trying to encode keys that are not str, int, float or None. If skipkeys is true, such items are simply skipped.
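A sketch of skipkeys with a tuple key, which JSON cannot represent; the data is arbitrary.

```python
import json

data = {"ok": 1, (1, 2): "tuple key"}

raised = False
try:
    json.dumps(data)
except TypeError:
    raised = True  # tuple keys are rejected by default

skipped = json.dumps(data, skipkeys=True)  # the tuple-keyed item is dropped
```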

If check_circular is true (the default), then lists, dicts, and custom encoded objects will be checked for circular references during encoding to prevent an infinite recursion (which would cause a RecursionError). Otherwise, no such check takes place.
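An illustration of the default circular-reference check on a list that contains itself.

```python
import json

loop = []
loop.append(loop)  # the list now contains itself

try:
    json.dumps(loop)
except ValueError as exc:
    circular_error = str(exc)
```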

If allow_nan is true (the default), then NaN, Infinity, and -Infinity will be encoded as such. This behavior is not JSON specification compliant, but is consistent with most JavaScript-based encoders and decoders. Otherwise, it will be a ValueError to encode such floats.
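A sketch contrasting the default allow_nan=True with allow_nan=False; the values list is arbitrary.

```python
import json

values = [1.5, float("inf"), float("nan")]

lenient = json.dumps(values)  # non-standard tokens are written out

strict_rejected = False
try:
    json.dumps(values, allow_nan=False)
except ValueError:
    strict_rejected = True
```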

If sort_keys is true (default: False), then the output of dictionaries will be sorted by key; this is useful for regression tests to ensure that JSON serializations can be compared on a day-to-day basis.

default(o): Implement this method in a subclass such that it returns a serializable object for o, or calls the base implementation (to raise a TypeError).

For example, to support arbitrary iterators, you could implement default() like this:
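A runnable version of that idea, wrapped in a subclass; the IterableEncoder name is hypothetical.

```python
import json

class IterableEncoder(json.JSONEncoder):
    def default(self, o):
        try:
            iterable = iter(o)
        except TypeError:
            pass
        else:
            return list(iterable)
        # Let the base class default method raise the TypeError
        return super().default(o)

# A generator is not natively serializable, so default() turns it into a list
encoded = json.dumps({"squares": (n * n for n in range(4))}, cls=IterableEncoder)
```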

encode(o) returns a JSON string representation of a Python data structure, o. For example:
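A minimal example calling encode() on a default JSONEncoder instance:

```python
import json

text = json.JSONEncoder().encode({"foo": ["bar", "baz"]})
```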

iterencode(o) encodes the given object, o, and yields each string representation as available. For example:
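A sketch of streaming output with iterencode(); io.StringIO stands in for a real socket or file, and big_object is sample data.

```python
import io
import json

big_object = {"rows": list(range(5))}
sink = io.StringIO()  # stand-in for a socket or file

for chunk in json.JSONEncoder().iterencode(big_object):
    sink.write(chunk)  # each chunk is a str fragment of the final output
```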

Exceptions

json.JSONDecodeError(msg, doc, pos) is a subclass of ValueError with the following additional attributes:

- msg: The unformatted error message.
- doc: The JSON document being parsed.
- pos: The start index of doc where parsing failed.
- lineno: The line corresponding to pos.
- colno: The column corresponding to pos.

Added in version 3.5.
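A sketch that triggers a JSONDecodeError and reads its attributes; the malformed document is a made-up example.

```python
import json

try:
    json.loads('{"a": }')  # a value is missing after the colon
except json.JSONDecodeError as err:
    details = (err.msg, err.pos, err.lineno, err.colno)
    is_value_error = isinstance(err, ValueError)
```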

Standard Compliance and Interoperability

The JSON format is specified by RFC 7159 and by ECMA-404. This section details this module’s level of compliance with the RFC. For simplicity, JSONEncoder and JSONDecoder subclasses, and parameters other than those explicitly mentioned, are not considered.

This module does not comply with the RFC in a strict fashion, implementing some extensions that are valid JavaScript but not valid JSON. In particular:

- Infinite and NaN number values are accepted and output;
- Repeated names within an object are accepted, and only the value of the last name-value pair is used.

Since the RFC permits RFC-compliant parsers to accept input texts that are not RFC-compliant, this module’s deserializer is technically RFC-compliant under default settings.

Character Encodings

The RFC requires that JSON be represented using either UTF-8, UTF-16, or UTF-32, with UTF-8 being the recommended default for maximum interoperability.

As permitted, though not required, by the RFC, this module’s serializer sets ensure_ascii=True by default, thus escaping the output so that the resulting strings only contain ASCII characters.
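A quick comparison of the default ensure_ascii=True with ensure_ascii=False:

```python
import json

escaped = json.dumps("café")                        # non-ASCII escaped as \u00e9
unescaped = json.dumps("café", ensure_ascii=False)  # characters kept as-is
```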

Other than the ensure_ascii parameter, this module is defined strictly in terms of conversion between Python objects and Unicode strings, and thus does not otherwise directly address the issue of character encodings.

The RFC prohibits adding a byte order mark (BOM) to the start of a JSON text, and this module’s serializer does not add a BOM to its output. The RFC permits, but does not require, JSON deserializers to ignore an initial BOM in their input. This module’s deserializer raises a ValueError when an initial BOM is present.
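A sketch of the BOM behavior for str input, and the usual workaround of decoding the bytes with the utf-8-sig codec before parsing.

```python
import json

raw = b'\xef\xbb\xbf{"a": 1}'  # UTF-8 bytes with a leading BOM

text_with_bom = raw.decode("utf-8")  # the BOM survives as '\ufeff'
bom_rejected = False
try:
    json.loads(text_with_bom)
except json.JSONDecodeError:
    bom_rejected = True

# The utf-8-sig codec strips the BOM before parsing
clean = json.loads(raw.decode("utf-8-sig"))
```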

The RFC does not explicitly forbid JSON strings which contain byte sequences that don’t correspond to valid Unicode characters (e.g. unpaired UTF-16 surrogates), but it does note that they may cause interoperability problems. By default, this module accepts and outputs (when present in the original str) code points for such sequences.

Infinite and NaN Number Values

The RFC does not permit the representation of infinite or NaN number values. Despite that, by default, this module accepts and outputs Infinity, -Infinity, and NaN as if they were valid JSON number literal values:
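A minimal illustration with default settings:

```python
import json
import math

dumped = json.dumps([float("inf"), float("-inf")])
loaded = json.loads("[-Infinity, NaN]")
```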

In the serializer, the allow_nan parameter can be used to alter this behavior. In the deserializer, the parse_constant parameter can be used to alter this behavior.

Repeated Names Within an Object

The RFC specifies that the names within a JSON object should be unique, but does not mandate how repeated names in JSON objects should be handled. By default, this module does not raise an exception; instead, it ignores all but the last name-value pair for a given name:
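A minimal illustration:

```python
import json

weird = json.loads('{"x": 1, "x": 2, "x": 3}')  # only the last "x" survives
```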

The object_pairs_hook parameter can be used to alter this behavior.
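A sketch of an object_pairs_hook that rejects repeated names instead; reject_duplicates is a hypothetical helper, not part of the module.

```python
import json

def reject_duplicates(pairs):
    # Hypothetical hook: fail loudly instead of keeping the last value
    result = {}
    for key, value in pairs:
        if key in result:
            raise ValueError(f"duplicate key: {key!r}")
        result[key] = value
    return result

ok = json.loads('{"a": 1, "b": 2}', object_pairs_hook=reject_duplicates)

try:
    json.loads('{"x": 1, "x": 2}', object_pairs_hook=reject_duplicates)
except ValueError as exc:
    duplicate_error = str(exc)
```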

Top-level Non-Object, Non-Array Values

The old version of JSON specified by the obsolete RFC 4627 required that the top-level value of a JSON text must be either a JSON object or array (Python dict or list), and could not be a JSON null, boolean, number, or string value. RFC 7159 removed that restriction, and this module does not and has never implemented that restriction in either its serializer or its deserializer.

Regardless, for maximum interoperability, you may wish to voluntarily adhere to the restriction yourself.
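A sketch of the current behavior, plus a hypothetical dumps_container_only() wrapper for anyone who wants to enforce the old restriction on output.

```python
import json

# Top-level scalars are accepted by the deserializer
scalars = [json.loads("42"), json.loads('"hi"'), json.loads("null")]

def dumps_container_only(obj, **kwargs):
    # Hypothetical helper enforcing the old RFC 4627 top-level restriction
    if not isinstance(obj, (dict, list)):
        raise TypeError("top-level value must be a dict or list")
    return json.dumps(obj, **kwargs)
```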

Implementation Limitations

Some JSON deserializer implementations may set limits on:

- the size of accepted JSON texts
- the maximum level of nesting of JSON objects and arrays
- the range and precision of JSON numbers
- the content and maximum length of JSON strings

This module does not impose any such limits beyond those of the relevant Python datatypes themselves or the Python interpreter itself.

When serializing to JSON, beware any such limitations in applications that may consume your JSON. In particular, it is common for JSON numbers to be deserialized into IEEE 754 double precision numbers and thus subject to that representation’s range and precision limitations. This is especially relevant when serializing Python int values of extremely large magnitude, or when serializing instances of “exotic” numerical types such as decimal.Decimal.
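A sketch of the precision issue, using parse_int=float to stand in for a consumer that parses every number as an IEEE 754 double; that simulation is an assumption, not how any particular consumer works.

```python
import json

big = 2**53 + 1
text = json.dumps(big)  # Python writes every digit

# Python itself round-trips the value exactly
exact = json.loads(text)

# A double-based consumer (simulated) rounds it to the nearest double, 2**53
as_double = json.loads(text, parse_int=float)
```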

Command Line Interface

Source code: Lib/json/tool.py

The json.tool module provides a simple command line interface to validate and pretty-print JSON objects.

If the optional infile and outfile arguments are not specified, sys.stdin and sys.stdout will be used respectively:
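A sketch that drives the command line interface from Python with subprocess, equivalent to piping text into python -m json.tool from a shell; the sample inputs are arbitrary.

```python
import json
import subprocess
import sys

# Valid input: pretty-printed to stdout (4-space indent by default)
result = subprocess.run(
    [sys.executable, "-m", "json.tool"],
    input='{"json": "obj"}',
    capture_output=True,
    text=True,
)
parsed = json.loads(result.stdout)

# Invalid input: non-zero exit status, error message on stderr
bad = subprocess.run(
    [sys.executable, "-m", "json.tool"],
    input="{1.2:3.4}",
    capture_output=True,
    text=True,
)
```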

Changed in version 3.5: The output is now in the same order as the input. Use the --sort-keys option to sort the output of dictionaries alphabetically by key.

Command line options

infile: The JSON file to be validated or pretty-printed. If infile is not specified, read from sys.stdin.

outfile: Write the output of the infile to the given outfile. Otherwise, write it to sys.stdout.

--sort-keys: Sort the output of dictionaries alphabetically by key.

--no-ensure-ascii: Disable escaping of non-ASCII characters; see json.dumps() for more information.

Added in version 3.9.

--json-lines: Parse every input line as a separate JSON object.

Added in version 3.8.

--indent, --tab, --no-indent, --compact: Mutually exclusive options for whitespace control.

-h, --help: Show the help message.


JSON document representation

JSON documents consist of fields, which are name-value pair objects. The fields can be in any order, and be nested or arranged in arrays. Db2® can work with JSON documents in either their original JSON format or in the binary-encoded format called BSON (Binary JSON).

For more information about JSON documents, see JSON documents.

JSON data must be provided in Unicode and use UTF-8 encoding. Data in the BSON format must use the little-endian format internally.
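To illustrate what little-endian means here, the sketch below hand-encodes a one-field document following the public BSON specification. The bson_int32_document helper is hypothetical and is not how Db2 or SYSIBM.JSON_TO_BSON is implemented; it only shows that the length prefix and integer values are stored least-significant byte first.

```python
import struct

def bson_int32_document(key: str, value: int) -> bytes:
    # Hypothetical minimal encoder for {key: int32} per the BSON spec (not Db2's code)
    # Element: type byte 0x10 (int32), NUL-terminated name, little-endian int32 value
    element = b"\x10" + key.encode("utf-8") + b"\x00" + struct.pack("<i", value)
    # Total length counts the 4-byte prefix, the element, and the trailing NUL
    total_length = 4 + len(element) + 1
    return struct.pack("<i", total_length) + element + b"\x00"

encoded = bson_int32_document("a", 1)
```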

For your convenience, the SYSIBM.JSON_TO_BSON and SYSIBM.BSON_TO_JSON conversion functions are provided. You can run these functions to convert a file from one format to the other, as needed.