RSMT 3501: Introduction to Research Methods

This course will provide an opportunity for participants to establish or advance their understanding of research through critical exploration of research language, ethics, and approaches. The course introduces the language of research, ethical principles and challenges, and the elements of the research process within quantitative, qualitative, and mixed methods approaches. Participants will use these theoretical underpinnings to begin to critically review literature relevant to their field or interests and determine how research findings are useful in forming their understanding of their work, social, local and global environment.

Online, Paced

- 60 credits of coursework

Credit will only be granted for one of HEAL 350, HLTH 3501 or RSMT 3501.

Learning outcomes

- Understand research terminology

- Be aware of the ethical principles of research, ethical challenges and approval processes

- Describe quantitative, qualitative and mixed methods approaches to research

- Identify the components of a literature review process

- Critically analyze published research

Course topics

- Module 1: Foundations

- Module 2: Quantitative Research

- Module 3: Qualitative Research

- Module 4: Mixed Methods Research

Required text and materials

The following materials are required for this course:

- Creswell, J. W. (2023). Research design: Qualitative, quantitative, and mixed methods approaches (6th ed.). Sage Publications. Type: Textbook. ISBN: 9781071817940

- Thompson Rivers University Library. (2011). APA Citation Style - Quick Guide (6th ed.). Retrieved from https://tru.ca/__shared/assets/apastyle31967.pdf

Optional materials

Students are advised to have access to a print dictionary of epidemiology, research, or statistics, or to an online glossary.

A highly recommended dictionary is: Porta, M. (2014). A dictionary of epidemiology (6th ed.). New York, NY: Oxford University Press.

Assessments

To successfully complete this course, students must achieve a passing grade of 50% or higher on the overall course and 50% or higher on the mandatory final project.

Tri-council Ethics Certification

As part of the module on Research Ethics, students will work through the Tri-council Research Ethics Certification online program that will provide them with a certificate of completion. Students will need to submit a copy of this certification as part of their final project to complete the course.

Open Learning Faculty Member Information

An Open Learning Faculty Member is available to assist students. Students will receive the necessary contact information at the start of the course.


This course is currently unavailable.

Please continue to check here for availability.

This course is not currently available for registration.

Registrations will open as follows:

- March (for May course start dates)

- July (for September course start dates)

- November (for January course start dates)

Understanding Research Methods


Ongoing (every four weeks)

10-15 hours

This MOOC is about demystifying research and research methods. It will outline the fundamentals of doing research, aimed primarily, but not exclusively, at the postgraduate level.


About this course

The course will appeal to those of you who require an understanding of research approaches and skills, and importantly an ability to deploy them in your studies or in your professional lives. In particular, this course will aid those of you who have to conduct research as part of your postgraduate studies.


Course Info

Instructors

- Prof. Jesper B. Sorensen

- Prof. Lotte Bailyn

Departments

- Sloan School of Management

As Taught In

- Social Science


Doctoral Seminar in Research Methods I


Research Basics: an open academic research skills course

- Lesson 1: Using Library Tools

- Lesson 2: Smart searching

- Lesson 3: Managing information overload

- Assessment - Module 1

- Lesson 1: The ABCs of scholarly sources

- Lesson 2: Additional ways of identifying scholarly sources

- Lesson 3: Verifying online sources

- Assessment - Module 2

- Lesson 1: Creating citations

- Lesson 2: Citing and paraphrasing

- Lesson 3: Works cited, bibliographies, and notes

- Assessment - Module 3


Welcome to the ever-expanding universe of scholarly research!

There's a lot of digital content out there, and we want to help you get a handle on it. Where do you start? What do you do? How do you use it? Don’t worry, this course has you covered.

This introductory program was created by JSTOR to help you get familiar with basic research concepts needed for success in school. The course contains three modules, each made up of three short lessons and three sets of practice quizzes. The topics covered are subjects that will help you prepare for college-level research. Each module ends with an assessment to test your knowledge.

The JSTOR librarians who helped create the course hope you learn from the experience and feel ready to research when you’ve finished this program. Select Module 1: Effective Searching to begin the course. Good luck!


- Last Updated: Apr 24, 2024 6:38 AM

- URL: https://guides.jstor.org/researchbasics

JSTOR is part of ITHAKA, a not-for-profit organization helping the academic community use digital technologies to preserve the scholarly record and to advance research and teaching in sustainable ways.

©2000-2024 ITHAKA. All Rights Reserved. JSTOR®, the JSTOR logo, JPASS®, Artstor® and ITHAKA® are registered trademarks of ITHAKA.


Graduate research methods in social work

(2 reviews)

Matt DeCarlo, La Salle University

Cory Cummings, Nazareth University

Kate Agnelli, Virginia Commonwealth University

Copyright Year: 2021

ISBN 13: 9781949373219

Publisher: Open Social Work Education

Language: English


Reviewed by Laura Montero, Full-time Lecturer and Course Lead, Metropolitan State University of Denver on 12/23/23

Comprehensiveness rating: 4

Graduate Research Methods in Social Work by DeCarlo, et al., is a comprehensive and well-structured guide that serves as an invaluable resource for graduate students delving into the intricate world of social work research. The book is divided into five distinct parts, each carefully curated to provide a step-by-step approach to mastering research methods in the field. Topics covered include an intro to basic research concepts, conceptualization, quantitative & qualitative approaches, as well as research in practice. At 800+ pages, however, the text could be received by students as a bit overwhelming.

Content Accuracy rating: 5

Content appears consistent and reliable when compared to similar textbooks in this topic.

Relevance/Longevity rating: 5

The book's well-structured content begins with fundamental concepts, such as the scientific method and evidence-based practice, guiding readers through the initiation of research projects with attention to ethical considerations. It seamlessly transitions to detailed explorations of both quantitative and qualitative methods, covering topics like sampling, measurement, survey design, and various qualitative data collection approaches. Throughout, the authors emphasize ethical responsibilities, cultural respectfulness, and critical thinking. These are crucial concepts we cover in social work and I was pleased to see these being integrated throughout.

Clarity rating: 5

The level of the language used is appropriate for graduate-level study.

Consistency rating: 5

Book appears to be consistent in the tone and terminology used.

Modularity rating: 4

The images and videos included help to break up large text blocks.

Organization/Structure/Flow rating: 5

Topics covered are well-organized and comprehensive. I appreciate the thorough preamble the authors include to situate the role of the social worker within a research context.

Interface rating: 4

When downloaded as a PDF, the book does not begin until page 30+, so students may find it difficult to scroll that far to reach the content they are searching for. Also, making the Table of Contents clickable would help in navigating this very long textbook.

Grammatical Errors rating: 5

I did not find any grammatical errors or typos in the pages reviewed.

Cultural Relevance rating: 5

I appreciate the efforts made to integrate diverse perspectives, voices, and images into the text. The discussion around ethics and cultural considerations in research was nuanced and comprehensive as well.

Overall, the content of the book aligns with established principles of social work research, providing accurate and up-to-date information in a format that is accessible to graduate students and educators in the field.

Reviewed by Elisa Maroney, Professor, Western Oregon University on 1/2/22

Comprehensiveness rating: 5

With well over 800 pages, this text is beyond comprehensive!

I perused the entire text, but my focus was on "Part 4: Using qualitative methods." This section seems accurate.

As mentioned above, my primary focus was on the qualitative methods section. This section is relevant to the students I teach in interpreting studies (not a social sciences discipline).

This book is well-written and clear.

Navigating this text is easy because the formatting is consistent.

Modularity rating: 5

My favorite part of this text is that it can be easily customized, so that I can use the sections on qualitative methods.

The text is well-organized and easy to find and link to related sections in the book.

Interface rating: 5

There are no distracting or confusing features. The book is long; being able to customize makes it easier to navigate.

I did not notice grammatical errors.

The authors offer resources for Afrocentricity for social work practice (among others, including those related to Feminist and Queer methodologies). These are relevant to the field of interpreting studies.

I look forward to adopting this text in my qualitative methods course for graduate students in interpreting studies.

Table of Contents

- 1. Science and social work

- 2. Starting your research project

- 3. Searching the literature

- 4. Critical information literacy

- 5. Writing your literature review

- 6. Research ethics

- 7. Theory and paradigm

- 8. Reasoning and causality

- 9. Writing your research question

- 10. Quantitative sampling

- 11. Quantitative measurement

- 12. Survey design

- 13. Experimental design

- 14. Univariate analysis

- 15. Bivariate analysis

- 16. Reporting quantitative results

- 17. Qualitative data and sampling

- 18. Qualitative data collection

- 19. A survey of approaches to qualitative data analysis

- 20. Quality in qualitative studies: Rigor in research design

- 21. Qualitative research dissemination

- 22. A survey of qualitative designs

- 23. Program evaluation

- 24. Sharing and consuming research


About the Book

We designed our book to help graduate social work students through every step of the research process, from conceptualization to dissemination. Our textbook centers cultural humility, information literacy, pragmatism, and an equal emphasis on quantitative and qualitative methods. It includes extensive content on literature reviews, cultural bias and respectfulness, and qualitative methods, in contrast to traditionally used commercial textbooks in social work research.

Our author team spans across academic, public, and nonprofit social work research. We love research, and we endeavored through our book to make research more engaging, less painful, and easier to understand. Our textbook exercises direct students to apply content as they are reading the book to an original research project. By breaking it down step-by-step, writing in approachable language, as well as using stories from our life, practice, and research experience, our textbook helps professors overcome students’ research methods anxiety and antipathy.

If you decide to adopt our resource, we ask that you complete this short Adopter’s Survey that helps us keep track of our community impact. You can also contact [email protected] for a student workbook, homework assignments, slideshows, a draft bank of quiz questions, and a course calendar.

About the Contributors

Matt DeCarlo, PhD, MSW is an assistant professor in the Department of Social Work at La Salle University. He is the co-founder of Open Social Work (formerly Open Social Work Education), a collaborative project focusing on open education, open science, and open access in social work and higher education. His first open textbook, Scientific Inquiry in Social Work, was the first developed for social work education, and is now in use on over 60 campuses, mostly in the United States. He is a former OER Research Fellow with the OpenEd Group. Prior to his work in OER, Dr. DeCarlo received his PhD from Virginia Commonwealth University and has published on disability policy.

Cory Cummings, Ph.D., LCSW is an assistant professor in the Department of Social Work at Nazareth University. He has practice experience in community mental health, including clinical practice and administration. In addition, Dr. Cummings has volunteered at safety net mental health services agencies and provided support services for individuals and families affected by HIV. In his current position, Dr. Cummings teaches in the BSW and MSW programs, specifically in the Clinical Practice with Children and Families concentration. Courses that he teaches include research, social work practice, and clinical field seminar. His scholarship focuses on promoting health equity for individuals experiencing symptoms of severe mental illness and improving opportunities to increase quality of life. Dr. Cummings received his PhD from Virginia Commonwealth University.

Kate Agnelli, MSW, is an adjunct professor at VCU’s School of Social Work, teaching master's-level classes on research methods, public policy, and social justice. She also works as a senior legislative analyst with the Joint Legislative Audit and Review Commission (JLARC), a policy research organization reporting to the Virginia General Assembly. Before working for JLARC, Ms. Agnelli worked for several years in government and nonprofit research and program evaluation. In addition, she has several publications in peer-reviewed journals, has presented at national social work conferences, and has served as a reviewer for Social Work Education. She received her MSW from Virginia Commonwealth University.


Qualitative Research Methods

This course is a part of Methods and Statistics in Social Sciences, a 5-course Specialization series from Coursera.


University of Amsterdam


What People Are Saying

According to other learners, here's what you need to know

qualitative research methods in 24 reviews

A good overview of qualitative research methods from a sociological and anthropological perspective.

Of course, the subject is too wide to fit in a month-long mooc but I felt it was a good primer and touched about key aspects of Qualitative Research Methods, with good references and sign-posting to further in-depth study on the specific topics.

I enjoyed a lot doing field work and analyzing results from all over the world Good Course and provide me basic understanding of Qualitative Research Methods.I will be great if course PDF Transcript will be provided as in Quantitative course instead of Presentation Slides.

Great course with a solid introduction to qualitative research methods.

I was impressed by Dr Moerman's passion to qualitative research methods (though sometimes his vivid gesticulation and jumping distracted me from the material).

unique course on qualitative research methods, Very vague, abstract ideas in the lectures.

I recommend it for those at undergraduate/early stages of post-graduate studies who want to get a leg up in qualitative research methods.

It's full of all the basic knowledge one needs to know about qualitative research methods to begin working with them.

I found this to be an excellent course for not only developing my knowledge of qualitative research methods, but also expanding it.

Excellent introduction to qualitative research methods.

Clear videos's, structure is missing sometimes, but great examples Engaging and broad-based course on qualitative research methods.

I now have an appreciation for Qualitative Research Methods instead of looking down my nose at them.

I found this a very useful way of getting a basic introduction to qualitative research methods, without just sitting down with some text books.

I have learned lot of things about Qualitative Research Methods.

very good course in 10 reviews

Very good course and very worthwhile your time.

Overall, a very good course.

Very good course.

This is a very good course that is helpful both to UG students and experts in this topic.

A very good course, although I think there are a couple of improvements that can be made.

Very very good course, I really enjoyed it and learned a lot.

It's really a very good course.

Wonderful lectures and good experience Well-curated program Very Good course.

Very Good Course Excellent Learning A special shout-out to the professor, Gerben Moerman, to his passion, his enthusiasm and his teaching methodology.

really enjoyed this course in 7 reviews

I really enjoyed this course, I would have liked a bit more explanation for certain concepts and perhaps some reading that were shorter, like articles (the suggested readings were mostly books).

I really enjoyed this course.

It surely does help in understanding the qualitative research methods Excellent course and the Professor Moerman is amazing , his energy and dedication are inspiring , I really enjoyed this course.

I am a healthcare provider and during the COVID 19 stress attending this course was my I really, really enjoyed this course.

gerben moerman in 11 reviews

Thank you so much Dr. Gerben Moerman This course is an excellent opportunity to gain knowledge on Qualitative Research.

Prof. Gerben Moerman is one of the most excited teachers you'll ever 'watch' during any lesson, which makes it even more fun to watch all the lessons.

Gerben Moerman is really good instructor and also I'd never bored while watching his videos.

A truly wonderful course thanks to Gerben Moerman's intelligence, competence and enthusiasm.

I am from technical background but Dr. Gerben Moerman taught in such an interesting way it's really very helpful to me.

Best course on Qualitative Research.Improved knowledge about the concepts and its application in different steps of research.Thanks to Dr.Gerben Moerman for his matchless delivering of concepts.

Es un excelente curso, realmente lo disfruté Thank you very much to Gerben Moerman, lecturer of The University of Amsterdam, for providing me with good guidance for my future research.

Enormous, ocean of thanks to Dr.Gerben Moerman - the manner of presenting the material makes the effect of life presence and all explanations are absolutely clear and fabulous - there is no other course on any other MOOC course!

I am looking forward to any other courses with Dr.Gerben Moerman!

I would also like to express my gratitude to Gerben Moerman for his excellent presentation through out the time.

so much in 8 reviews

Definitely made me realize that there is so much to learn and experience in the world of rigorous qualitative research that one cannot simply "pick up" when working in research-related roles in the industry.

Thank you so much for this wonderful lecture!

The lecture videos are interesting, engaging, and informative, and the assignments are so much fun to complete!

The lecturer does a great job at communicating complex concepts in an effective and attractive way (so much energy!).

Very well organised course useful material well educated ProfessorVery interesting approach for assigments Despite it is so much theoretical it provides the suitable knowledge to undestood the not only the learning process but the aspects of society.

I learned so much about differente theories and practice methods.

Thanks so much I prefer the method where there are questions in between the lectures, and a quiz every week.

An overview of related careers and their average salaries in the US. Bars indicate income percentile.

Associate Graduate Faculty, Qualitative Research $43k

IUU - Healthcare Moderator - Qualitative Research, Oncology $49k

Assistant Research Technologist/Research Associate $53k

Research Associate/ Clinical Research coordinator $55k

Qualitative Market Researcher $61k

Research Associate- Process Research 1 $72k

Research Coordinator, Research Computing Services $73k

Research Associate, Economic Research Department $76k

Biotech Market Researcher, qualitative and quantitative $83k

Senior Research Associate in Research & Development $88k

Research Physicist, Milliken Research $101k

Research Engineer, Research and Development Division $114k


- J Med Internet Res

- v.22(4); 2020 Apr

Massive Open Online Course Evaluation Methods: Systematic Review

Abrar Alturkistani

1 Global Digital Health Unit, Imperial College London, London, United Kingdom

2 Digitally Enabled PrevenTative Health Research Group, Department of Paediatrics, University of Oxford, Oxford, United Kingdom

Kimberley Foley

Terese Stenfors

3 Department of Learning, Informatics, Management and Ethics, Karolinska Institutet, Stockholm, Sweden

Elizabeth R Blum

Michelle Helena van Velthoven

Edward Meinert

Associated Data

Preferred Reporting Items for Systematic Reviews and Meta-Analyses 2009 checklist.

Search strategy.

Data abstraction form.

Quality assessment results of the Randomized Controlled Trial [20] using the Cochrane Collaboration Risk of Bias Tool.

Quality assessment results of cross-sectional studies using the NIH - National Heart, Lung and Blood Institute quality assessment tool.

Quality assessment results for the quasi experimental study using the Cochrane Collaboration Risk of Bias Tool for Before-After (Pre-Post) Studies With No Control Group.

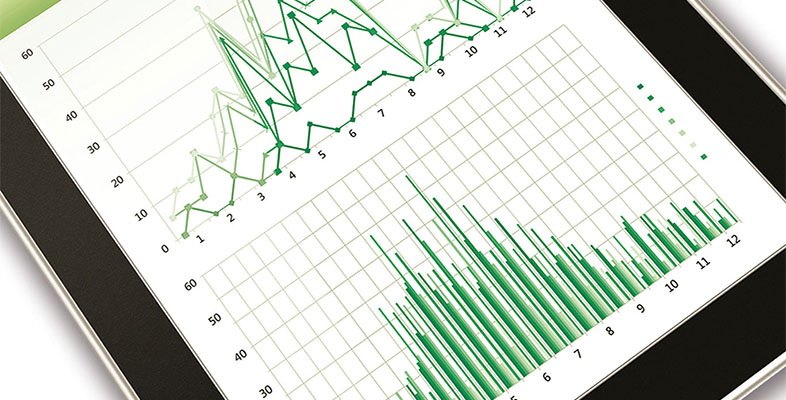

Background

Massive open online courses (MOOCs) have the potential to make a broader educational impact because many learners undertake these courses. Despite their reach, there is a lack of knowledge about which methods are used for evaluating these courses.

Objective

The aim of this review was to identify current MOOC evaluation methods to inform future study designs.

Methods

We systematically searched the following databases for studies published from January 2008 to October 2018: (1) Scopus, (2) Education Resources Information Center, (3) IEEE (Institute of Electrical and Electronics Engineers) Xplore, (4) PubMed, (5) Web of Science, (6) British Education Index, and (7) Google Scholar search engine. Two reviewers independently screened the abstracts and titles of the studies. Published studies in the English language that evaluated MOOCs were included. The study design of the evaluations, the underlying motivation for the evaluation studies, data collection, and data analysis methods were quantitatively and qualitatively analyzed. The quality of the included studies was appraised using the Cochrane Collaboration Risk of Bias Tool for randomized controlled trials (RCTs) and the National Institutes of Health—National Heart, Lung, and Blood Institute quality assessment tool for cohort observational studies and for before-after (pre-post) studies with no control group.

Results

The initial search resulted in 3275 studies, and 33 eligible studies were included in this review. In total, 16 studies used a quantitative study design, 11 used a qualitative design, and 6 used a mixed methods study design. In all, 16 studies evaluated learner characteristics and behavior, and 20 studies evaluated learning outcomes and experiences. A total of 12 studies used 1 data source, 11 used 2 data sources, 7 used 3 data sources, 2 used 4 data sources, and 1 used 5 data sources. Overall, 3 studies used more than 3 data sources in their evaluation. In terms of the data analysis methods, quantitative methods were most prominent with descriptive and inferential statistics, which were the top 2 preferred methods. In all, 26 studies with a cross-sectional design had a low-quality assessment, whereas RCTs and quasi-experimental studies received a high-quality assessment.
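The reported tallies can be cross-checked with a few lines of arithmetic. The sketch below is illustrative only and is not part of the review: it assumes the data-source counts are 12, 11, 7, 2, and 1 studies for 1 through 5 data sources respectively (a reading chosen because it sums to the 33 included studies), and the names `design_counts`, `source_counts`, and `check_tallies` are hypothetical.

```python
# Tallies as read from the Results paragraph (assumed reading; see lead-in).
TOTAL_INCLUDED = 33

# Study design -> number of studies
design_counts = {"quantitative": 16, "qualitative": 11, "mixed methods": 6}

# Number of data sources used -> number of studies (assumed mapping)
source_counts = {1: 12, 2: 11, 3: 7, 4: 2, 5: 1}


def check_tallies(total, designs, sources):
    """Return simple consistency checks for the reported counts."""
    return {
        # Do the study designs account for every included study?
        "designs_sum_to_total": sum(designs.values()) == total,
        # Do the data-source counts account for every included study?
        "sources_sum_to_total": sum(sources.values()) == total,
        # How many studies used more than 3 data sources?
        "more_than_three_sources": sum(
            n for k, n in sources.items() if k > 3
        ),
    }


result = check_tallies(TOTAL_INCLUDED, design_counts, source_counts)
print(result)
```

Under these assumptions both sets of counts sum to the 33 included studies, and the number of studies with more than 3 data sources matches the 3 stated in the text.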

Conclusions

The MOOC evaluation data collection and data analysis methods should be determined carefully on the basis of the aim of the evaluation. The MOOC evaluations are subject to bias, which could be reduced using pre-MOOC measures for comparison or by controlling for confounding variables. Future MOOC evaluations should consider using more diverse data sources and data analysis methods.

International Registered Report Identifier (IRRID)

RR2-10.2196/12087

Introduction

Massive open online courses (MOOCs) are free Web-based open courses available to anyone everywhere and have the potential to revolutionize education by increasing the accessibility and reach of education to large numbers of people [ 1 ]. However, questions remain regarding the quality of education provided through MOOCs [ 1 ]. One way to ensure the quality of MOOCs is through the evaluation of the course in a systematic way with the goal of improvement over time [ 2 ]. Although research about MOOCs has increased in recent years, there is limited research on the evaluation of MOOCs [ 3 ]. In addition, there is a need for effective evaluation methods for appraising the effectiveness and success of the courses.

Evaluation of courses to assess their success and effectiveness and to advise on course improvements is a long-studied approach in the field of education [ 4 - 6 ]. However, owing to the differences between teaching in MOOCs and in traditional, face-to-face classrooms, it is not possible to adopt the same traditional evaluation methods [ 7 , 8 ]. For example, MOOCs generally have no restrictions on entrance, withdrawal, or the submission of assignments and assessments [ 7 ]. The methods used in Web-based education or e-learning are not always applicable to MOOCs because Web-based or e-learning courses are often provided as a part of university or higher education curricula, which differ from MOOCs in terms of student expectations [ 8 ]. It is not suitable to directly compare MOOCs with higher education courses by using traditional evaluation standards and criteria [ 8 ].

Despite the limitations in MOOC evaluation methods, several reviews have been conducted on MOOC-related research methods, without specifically focusing on MOOC evaluations. Two recent systematic reviews were published synthesizing MOOC research methods and topics [ 9 , 10 ]. Zhu et al [ 9 ] and Bozkurt et al [ 11 ] recommended further research on the methodological approaches for MOOC evaluation. This research found little focus on the quality of the techniques and methodologies used [ 11 ]. In addition, a large number of studies on MOOCs examine general pedagogical aspects of the course without evaluating the course itself. Although the general evaluation of MOOC education and pedagogy is useful, it is essential that courses are also evaluated [ 12 ].

To address the gaps in MOOC evaluation methods in the literature, this systematic review aimed to identify and analyze current MOOC evaluation methods. The objective of this review was to inform future MOOC evaluation methodology.

This review explored the following research question: What methods have been used to evaluate MOOCs? [ 13 ]. This systematic review was conducted according to the Cochrane guidelines [ 14 ] and reported according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses guidelines ( Multimedia Appendix 1 ) [ 15 ]. As the review only used publicly available information, an ethics review board approval was not required. The review was executed in accordance with the protocol published by Foley et al [ 13 ].

Eligibility Criteria

Eligible studies focused on the evaluation of MOOCs with reference to the course design, materials, or topics. The evaluation used the following population, intervention, comparator, outcome (PICO) framework for inclusion in the study:

- Population: learners in any geographic area who have participated in MOOCs [ 13 ].

- Intervention: MOOC evaluation methods. This is intended to be broad to include qualitative, quantitative, and mixed methods [ 13 ].

- Comparator: studies did not need to include a comparator for inclusion in this systematic review [ 13 ].

- Outcome: learner-focused outcomes such as attitudes, cognitive changes, learner satisfaction, etc, will be assessed [ 13 ].

Further to the abovementioned PICO framework, we used the following inclusion and exclusion criteria.

Inclusion Criteria

- Studies with a primary focus on MOOC evaluation and studies that have applied or reviewed MOOC evaluation methods (quantitative, qualitative, or mixed methods) [ 13 ].

- Studies published from 2008 to 2018 [ 13 ].

- All types of MOOCs, for example, extended MOOCs, connectivist MOOCs, language MOOCs, or hybrid MOOCs.

Exclusion Criteria

- Studies not in the English language [ 13 ].

- Studies that primarily focused on e-learning or blended learning instead of MOOCs [ 13 ].

- Studies that focused only on understanding MOOC learners such as their behaviors or motivation to join MOOCs, without referring to the MOOC.

- Studies that focused on machine learning or predictive models to predict learner behavior.

Search Strategy

We searched the following databases for potentially relevant literature from January 2008 to October 2018: (1) Scopus, (2) Education Resources Information Center, (3) IEEE (Institute of Electrical and Electronic Engineers) Xplore, (4) Medical Literature Analysis and Retrieval System Online/PubMed, (5) Web of Science, (6) British Education Index, and (7) Google Scholar search engine. The first search was performed in Scopus. The search words and terms for Scopus were as follows: (mooc* OR “massive open online course” OR coursera OR edx OR odl OR udacity OR futurelearn AND evaluat* OR measur* OR compar* OR analys* OR report* OR assess* AND knowledge OR “applicable knowledge” OR retent* OR impact OR quality OR improv* OR environment OR effect “learning outcome” OR learning). The asterisks after the search terms allow all terms beginning with the same root word to be included in the search. The search terms were then adjusted for each database. The complete search strategy for each database can be found in the protocol by Foley et al [ 13 ] and in Multimedia Appendix 2 . In addition, we scanned the reference lists of included studies.
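The effect of the truncation asterisk described above can be illustrated with a minimal sketch. It assumes that truncation behaves like "root followed by any word characters"; real databases such as Scopus apply their own matching rules, and the helper names `truncation_to_regex` and `matches_any` are hypothetical, introduced only for this illustration.

```python
import re

def truncation_to_regex(term: str) -> re.Pattern:
    """Translate a search term with an optional trailing '*' into a whole-word regex."""
    if term.endswith("*"):
        # 'evaluat*' -> the root followed by any number of word characters
        body = re.escape(term[:-1]) + r"\w*"
    else:
        body = re.escape(term)
    return re.compile(r"\b" + body + r"\b", re.IGNORECASE)

def matches_any(text: str, terms: list[str]) -> bool:
    """Return True if at least one term (OR logic) matches the text."""
    return any(truncation_to_regex(t).search(text) for t in terms)

# A subset of the terms listed in the Scopus search string
mooc_terms = ["mooc*", "massive open online course", "coursera", "edx"]
evaluation_terms = ["evaluat*", "measur*", "compar*", "analys*", "report*", "assess*"]

# Invented title, used only to exercise the matching logic
title = "Evaluating learner engagement in a Coursera MOOC"
is_candidate = matches_any(title, mooc_terms) and matches_any(title, evaluation_terms)
print(is_candidate)
```

Because the pattern is anchored at a word boundary, `evaluat*` matches "evaluating" and "evaluation" but not "devaluation", which is the behavior the truncation operator is meant to provide.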

Selection of Studies

Two reviewers (AA and CL) independently screened the titles and abstracts of the articles for eligibility. Selected studies were identified for full-text reading. Disagreements between the reviewers were resolved by discussions with a third reviewer (EM). Few studies (<10) were discussed with a third reviewer.

Data Extraction

The following information was extracted from each included study using a data abstraction form ( Multimedia Appendix 2 ): (1) article title, country of the first author, and year of publication; (2) study aims; (3) evaluation: evaluation method, study design, evaluation type (evaluation of a single MOOC, multiple MOOCs, or review of a method), data collection methods, data analysis methods, and number of participants; and (4) outcome measures of the study: learner-focused outcomes and other outcomes. The studies were classified as quantitative, mixed methods, or qualitative based on the methods used. Studies were considered as mixed methods if they used a combination of qualitative and quantitative techniques, methods, approaches, concepts, or language in the same study [ 16 ].

Assessment of Methodological Quality

The Cochrane Collaboration Risk of Bias Tool for randomized controlled trials (RCTs) [ 17 ] and the National Institutes of Health—National Heart, Lung, and Blood Institute quality assessment tool for cohort observational studies and for before-after (pre-post) studies with no control group [ 18 ] were used to assess the methodological quality of the included studies depending on their study design.

Data Synthesis

We summarized the data graphically and descriptively. The evaluation results were reported according to the design thinking approach for evaluations that follows the subsequent order: (1) problem framing, (2) data collection, (3) analysis, and (4) interpretation [ 19 ].

Problem Framing

The evaluation-focused categories in the problem framing section were determined through discussions among the primary authors to summarize study aims and objectives. The 3 categories used in the evaluation-focused categories were defined as follows:

- The learner-focused evaluation seeks to gain insight into the learner characteristics and behavior, including metrics such as completion and participation rates, satisfaction rates, their learning experiences, and outcomes.

- Teaching-focused evaluation studies aim to analyze pedagogical practices so as to improve teaching.

- MOOC-focused evaluation studies aim to better understand the efficacy of the learning platform to improve the overall impact of these courses.

Further to the evaluation-focused categories, the subcategories were generated by conducting a thematic analysis of the MOOC evaluation studies’ aims and objectives. The themes resulted through an iterative process where study aims were coded and then consolidated into themes by the first author. The themes were then discussed with and reviewed by the second author until an agreement was reached.

Data Collection, Analysis, and Interpretation

The categories reported in the data collection sections were all representations of what the studies reported to be the data collection method. The categorization of the learner-focused parameters was done based on how the authors identified the outcomes. For example, if the authors mentioned that the reported outcome was measuring learners’ attitudes to evaluate overall MOOC experience, the parameter was recorded in the learner experience category. Similarly, if the authors mentioned that the reported outcome was evaluating what students gained from the course, the parameter was recorded as longer term learner outcomes.

In this section, we have described the search results and the methodological quality assessment results. We have then described the study findings using the following categories for MOOC evaluation: research design, aim, data collection methods, data analysis methods, and analysis and interpretation.

Search Results

There were 3275 records identified in the literature search and 2499 records remained after duplicates were removed. Records were screened twice before full-text reading. In the first screening (n=2499), all articles that did not focus on MOOCs specifically were removed ( Figure 1 ). In the second screening (n=906), all articles that did not focus on MOOC learners or MOOC evaluation methods were removed ( Figure 1 ). This was followed by full-text reading of 154 studies ( Figure 1 ). An additional 5 studies were identified by searching the bibliographies of the included studies. In total, 33 publications were included in this review. There were 31 cross-sectional studies, 1 randomized trial, and 1 quasi-experimental study. The completed data abstraction forms of the included studies are in Multimedia Appendix 3 .

Figure 1. A Preferred Reporting Items for Systematic Reviews and Meta-Analyses flowchart of the literature search.
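The screening flow reported in the Search Results paragraph can be checked with simple arithmetic. The sketch below uses only the record counts stated in the text; the variable names are illustrative. The number of studies excluded at full-text reading is not stated, and it is not derived here because the text does not say whether all 5 studies found through bibliographies were included.

```python
# Record counts reported in the Search Results paragraph
identified = 3275
after_deduplication = 2499    # records entering the first screening
after_first_screening = 906   # records entering the second screening
full_text_read = 154
included = 33

stages = [
    ("duplicates removed", identified, after_deduplication),
    ("removed in first screening", after_deduplication, after_first_screening),
    ("removed in second screening", after_first_screening, full_text_read),
]

# Number of records dropped at each stage
exclusions = {label: before - after for label, before, after in stages}

for label, count in exclusions.items():
    print(f"{label}: {count}")
print(f"included share of identified records: {included / identified:.1%}")
```

Under these figures, 776 duplicates were removed, 1593 records were removed in the first screening, and 752 in the second, so about 1% of the identified records ended up in the review.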

Methodological Quality

The RCT included in this study [ 20 ] received a low risk-of-bias classification ( Multimedia Appendix 4 ).

Of the 31 cross-sectional studies, 26 received poor ratings because of a high risk of bias ( Multimedia Appendix 5 ). The remaining 5 studies received a fair rating because of a higher consideration for possible bias. In total, 2 studies that were able to measure exposure before outcomes such as studies that performed pretests and posttests [ 21 , 22 ], 3 studies that accounted for confounding variables [ 21 - 23 ], 2 studies that used validated exposure [ 24 , 25 ], and 2 studies that used outcome measures [ 23 , 25 ] received a better quality rating.

A quality assessment of the quasi-experimental study using longitudinal pretests and posttests [ 26 ] is included in Multimedia Appendix 6 .

Massive Open Online Course Evaluation Research Design

In total, 16 studies used a quantitative study design, 11 studies used a qualitative study design, and 6 studies used a mixed methods study design. There was 1 RCT [ 20 ] and 1 quasi-experimental study [ 26 ]. In total, 4 studies evaluated more than 1 MOOC [ 27 - 30 ]. In all, 2 studies evaluated 2 runs of the same MOOC [ 31 , 32 ], and 1 study evaluated 3 parts of the same MOOC, run twice for consecutive years [ 33 ].

In total, 6 studies used a comparator in their methods. One study compared precourse and postcourse surveys by performing a chi-square test of changes in confidence, attitudes, and knowledge [ 34 ]. Another compared the average assignment and final essay scores of MOOC learners with those of face-to-face learners using 2-independent-sample t tests but did not include any pretest, posttest, or survey results [ 35 ]. In all, 4 studies conducted pretest and posttest analyses [ 20 - 22 , 26 ]. Hossain et al [ 20 ] used an RCT design and calculated the mean between-group differences in knowledge, confidence, and satisfaction, comparing MOOC learners with other Web-based learners. Colvin et al [ 21 ] calculated the normalized gain between pretest and posttest scores and applied Item Response Theory to weekly performance, comparing the results with those of on-campus learners. Rubio et al [ 26 ] compared the pretest and posttest means of comprehensibility scores in a MOOC with those of face-to-face learners. Konstan et al [ 22 ] calculated knowledge test gains by performing a paired t test of average knowledge gains, compared these gains with those of face-to-face learners, and, comparing 2 learner groups, calculated the average normalized learning gains among all learners.
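Two of the pretest and posttest calculations mentioned above can be sketched as follows. This is an illustration under stated assumptions, not the method of any cited study: the normalized gain uses the common definition (posttest minus pretest, divided by the maximum possible improvement), which the review does not spell out, and the scores are invented.

```python
import math
from statistics import mean, stdev

def normalized_gain(pre: float, post: float, max_score: float = 100.0) -> float:
    """Fraction of the possible improvement that was achieved (assumed definition)."""
    if pre >= max_score:
        raise ValueError("pretest score leaves no room for improvement")
    return (post - pre) / (max_score - pre)

def paired_t_statistic(pre_scores: list[float], post_scores: list[float]) -> float:
    """Paired t statistic: mean of the differences divided by its standard error."""
    if len(pre_scores) != len(post_scores):
        raise ValueError("paired samples must have the same length")
    diffs = [post - pre for pre, post in zip(pre_scores, post_scores)]
    standard_error = stdev(diffs) / math.sqrt(len(diffs))
    return mean(diffs) / standard_error

# Invented scores for five learners, matched by position
pre = [40.0, 55.0, 60.0, 35.0, 70.0]
post = [60.0, 65.0, 75.0, 50.0, 80.0]

gains = [normalized_gain(a, b) for a, b in zip(pre, post)]
t_stat = paired_t_statistic(pre, post)
print(f"mean normalized gain: {mean(gains):.3f}")
print(f"paired t statistic (df={len(pre) - 1}): {t_stat:.3f}")
```

The paired test only makes sense when each pretest score is matched to the posttest score of the same learner; the sketch returns the statistic alone, and a p value would additionally require the t distribution with n - 1 degrees of freedom.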

Aim of Massive Open Online Course Evaluations

The aim or objective of MOOC evaluations included in this review can be categorized into learner-focused, teaching-focused, and MOOC-focused evaluation aims ( Table 1 ). In all, 16 studies evaluated learner characteristics and behavior and 20 studies evaluated learning outcomes and experiences. One of the least studied aspects of MOOC evaluation is pedagogical practices, which were only evaluated by 2 studies [ 36 , 37 ].

Table 1. The aim of the massive open online course (MOOC) evaluations for the included studies.

Massive Open Online Course Evaluation Data Collection Methods

In all, 12 studies used 1 data source [ 20 , 24 , 25 , 27 , 28 , 31 , 32 , 38 , 43 , 47 ], 11 studies used 2 data sources [ 21 , 26 , 29 , 30 , 33 , 34 , 36 , 40 , 42 , 45 , 51 ], 7 studies used 3 data sources [ 22 , 35 , 39 , 41 , 44 , 46 , 48 ], 2 studies used 4 data sources [ 23 , 37 ], and 1 study used 5 data sources [ 52 ]. The most used data sources were surveys followed by learning management system (LMS), quizzes, and interviews ( Table 2 ). “Other” data sources that are referred to in Table 2 include data collected from social media posts [ 37 ], registration forms [ 30 , 44 ], online focus groups [ 37 ], and homework performance data [ 21 ]. These data sources were used to collect data on different aspects of the evaluation.

Table 2. Studies using different data sources (N=33).

In total, 8 studies collected data through interviews and had a population size ranging from 2 to 44 [ 23 , 37 , 39 , 42 , 43 , 49 , 51 , 52 ]. In total, 20 studies that collected data through surveys had a population size ranging from 25 to 10,392 [ 22 - 41 , 44 - 46 , 51 , 52 ]. In all, 18 studies that collected data through the LMS [ 22 , 24 , 26 , 29 - 31 , 33 - 35 , 39 - 42 , 44 - 46 , 48 , 52 ] had a population size made of participants or data points (eg, discussion posts) ranging from 59 to 209,871. Nine studies used quiz data [ 20 - 22 , 26 , 33 , 35 , 41 , 47 , 52 ]. Studies that used quiz data had a population size of 48 [ 20 ], 53 [ 47 ], 136 [ 41 ], 1080 [ 21 ], and 5255 [ 22 ]. Other data sources used did not have a clearly reported sample size for a particular source.

Table 3 shows the various data collection methods and their uses. Pre-MOOC surveys or pretests could be used for baseline data such as learner expectations [ 22 , 36 , 50 ] or learner baseline test scores [ 20 - 22 , 26 , 33 ], which then allows test scores to be compared with post-MOOC survey and quiz data [ 20 - 22 , 27 , 33 ]. Table 3 explains how studies collected data to meet the aims of their evaluation. In general, surveys were used to collect demographic data, learner experience, and learner perceptions and reactions, whereas LMS data were used for tracking learner completion of the MOOCs.

Table 3. Data collection methods and their uses in massive open online course evaluations.

Massive Open Online Course Evaluation Analysis and Interpretation

In terms of the data analysis methods, quantitative methods were the only type of method used in 16 studies with descriptive and inferential statistics, the top 2 preferred methods. Qualitative analysis methods such as thematic analyses, which can include grounded theory [ 49 ], focused coding [ 38 , 39 ], and content analysis [ 25 , 50 ], were mainly used in qualitative studies.

A summary of the parameters, indicators, and data analysis used for the MOOC evaluation can be found in Table 4 . Most notably, inferential statistics were used to analyze learning outcomes ( Table 4 ), such as the comparison of means or the use of regression methods to analyze quiz or test grades. The studies also used these outcomes as a measure of the overall effectiveness of a MOOC. Table 4 shows how the data collection method uses mentioned in Table 3 were measured and analyzed. In general, studies focused on measuring learner engagement and learners’ behavior–related indicators. Studies referred to learning in different ways, such as learning, learning performance, learning outcome, or gain in comprehensibility, depending on the learning material of the course. Other studies considered learning outcomes such as knowledge retention or what students took away from the course. There was a consensus that learner engagement can be measured by measuring the various learner activities in the course, whereas learner behavior was a more general term used by studies to describe the different MOOC evaluation measures. For teaching-focused evaluation, both Mackness et al [ 37 ] and Singh et al [ 36 ] used learner parameters to reflect on and analyze pedagogical practices.

Table 4. Data collection method uses mentioned earlier and how they were analyzed in massive open online course evaluations.

This study aimed to review current MOOC evaluation methods to understand the methods that have been used in published MOOC studies and subsequently to inform future designs of MOOC evaluation methods. Owing to the diversity of MOOC topics and learners, it is not possible to propose a single evaluation method for all MOOCs. Researchers aiming to evaluate a MOOC should choose a method based on the aims of their evaluation or the parameters they would like to measure. In general, data collection methods were similar in most evaluations, such as the use of interviews or survey data, and the analysis methods were highly heterogeneous among studies.

The cross-sectional study design was used in 31 of the 33 included studies. The cross-sectional study design is used when the aim is to investigate the factors affecting outcomes for a population at a given time point [ 53 ]. For the MOOC evaluation, this is particularly useful for observing the population of learners and for understanding the factors affecting the success and impact of a MOOC. Cross-sectional studies are relatively inexpensive to conduct and can assess many outcomes and factors at the same time. However, cross-sectional study designs are subject to nonresponse bias, which means that studies are only representative of those who participated, who may differ from the rest of the population [ 53 ].

One of the most effective methods of evaluation used in MOOCs was the use of baseline data to compare outcomes. Studies that did pretests and posttests had a lower likelihood of bias in their outcomes owing to the measurement of exposure before the measurement of outcome [ 18 ]. Even when studies used pre- and postcourse surveys or tests, they were not longitudinal in design, as such a design requires a follow-up of the same individuals and requires observing them at multiple time points [ 53 ]. Therefore, the use of pre- and postsurveys or tests without linking the individuals may simply represent a difference in the groups studied rather than changes in learning or learner outcomes. The advantages of this method are that it can reduce bias, and quasi-experimental studies are known as strong methods. However, the disadvantage is that although this method may work for assessing learning, such as memorizing information, it may not work for assessing skill development or the application of skills.
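The difference between linked and unlinked pre- and postcourse comparisons can be shown with a small sketch. The learner IDs and scores below are invented, and the dictionary layout is only an assumption about how survey exports might be keyed.

```python
from statistics import mean

# Hypothetical survey exports keyed by an anonymized learner ID
pre_scores = {"a1": 50, "b2": 40, "c3": 70, "d4": 30}
post_scores = {"a1": 65, "c3": 80, "e5": 90}  # b2 and d4 dropped out; e5 skipped the pretest

# Unlinked comparison: the two means describe different groups of respondents
unlinked_difference = mean(list(post_scores.values())) - mean(list(pre_scores.values()))

# Linked comparison: only learners observed at both time points
matched_ids = sorted(pre_scores.keys() & post_scores.keys())
linked_gains = [post_scores[i] - pre_scores[i] for i in matched_ids]
linked_mean_gain = mean(linked_gains)

print(f"unlinked difference in means: {unlinked_difference:.1f}")
print(f"mean gain among {len(matched_ids)} matched learners: {linked_mean_gain:.1f}")
```

In this invented data the unlinked difference (about 30.8 points) is much larger than the mean gain among matched learners (12.5 points), because dropout and late entry changed who answered each survey.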

Understanding the aim behind the evaluation of MOOCs is critically important in designing MOOC evaluation methods as it influences the performance indicators and parameters to be evaluated. More importantly, motivation for the evaluation determines the data methods that will be used. One reason for the inability to conclude a standardized evaluation method from this review is that studies differ in the aspects and purposes of why they are conducting the evaluation. For example, not all studies perform evaluations of MOOCs to evaluate overall effectiveness, which is an important aspect to consider if MOOCs are to be adopted more formally in higher education [ 54 ]. The variability in the motivation of MOOC evaluations may also explain the high variability in the outcomes measured and reported.

Data Collection Methodology

In all, 12 studies used 1 data source and 11 studies used 2 data sources ( Table 3 ), which is consistent with previous findings [ 10 ]. The results of this study also show that there is high flexibility in data collection methods for MOOC evaluations, from survey data to LMS data to more distinct methods such as online focus groups [ 37 ]. The number of participants in the studies varied widely. This is due to the difference in the data collection methods used. For example, studies with data captured through the LMS, which is capable of capturing data from all of the learners who joined the course, had the highest number of learners. In contrast, studies that used more time-consuming methods, such as surveys or interviews, generally had a lower number of participants. It is important to note that a MOOC evaluation is not necessarily improved by increasing the number of data sources but rather by conducting a meaningful analysis of the available data. Some studies preferred multiple methods of evaluation and assessment of learning. One paper argued that this allows the learning of the diverse MOOC population to be evaluated more effectively [ 22 ]. Studies should use the data collection methods best suited to answering their research aims and questions.

Analysis and Interpretation

In total, 16 of 33 studies used only quantitative methods for analysis ( Table 4 ), which is in line with general MOOC research, which has been dominated by quantitative methods [ 10 , 55 ]. Studies used statistical methods such as descriptive and inferential statistics for data analysis and interpretation of results. The availability of data from sources such as the LMS may have encouraged the use of descriptive statistical methods [ 10 ]. However, 17 of the 33 included studies used some form of qualitative data analysis, either through a qualitative study design or through a mixed methods study design ( Table 4 ). This may be explained by the recent (2016-2017) rise in the use of qualitative methods in MOOC research [ 10 ].

Although inferential statistics can help create better outcomes from studies, this is not always possible. For example, one study [ 36 ] mentioned a high variation between pre- and postcourse survey participant numbers and another [ 29 ] mentioned a small sample size as reasons for not using inferential statistical methods. It should be noted that using data from multiple sources and having a large sample size does not guarantee the quality of the evaluation methods.

In MOOC research, qualitative data can be useful to understand the meaning of different behaviors as quantitative data, oftentimes, cannot answer why things happened [ 56 ].

Thematic and sentiment data analysis methods seek to represent qualitative data in a systematic way. The thematic analysis seeks to organize information into themes to find patterns [ 57 ]. This is especially useful for generalizing data for a subsequent analysis. For instance, Singh et al [ 36 ], Draffan et al [ 34 ], and Shapiro et al [ 23 ] all used a thematic analysis to simplify heterogeneous responses from interviewees and participants to understand what students enjoy about the MOOCs. Focused coding and grounded theory use similar approaches to grouping qualitative data into themes based on conceptual similarity and to developing analytic narratives. Liu et al [ 38 ] used focused coding to group data from course surveys into positive and negative aspects of MOOCs for future MOOC improvement [ 7 ]. Sentiment analysis and social network analysis are both qualitative analysis strategies with a greater focus on opinion-rich data [ 58 ]. These are important strategies used in understanding the opinions of learners and converting subjective feelings of learners into data that can be analyzed and interpreted.
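Once responses have been coded by a reviewer, grouping the codes into themes is largely a bookkeeping step, which the following sketch illustrates. The codebook, the codes, and the responses are all invented; assigning codes to free text remains a human judgment that this code does not attempt.

```python
from collections import Counter

# Hypothetical codebook mapping focused codes to broader themes
code_to_theme = {
    "clear videos": "course materials",
    "useful readings": "course materials",
    "slow forum replies": "interaction",
    "helpful peers": "interaction",
    "too many quizzes": "workload",
}

# Hypothetical coded survey responses: the codes a reviewer assigned to each response
coded_responses = [
    ["clear videos", "helpful peers"],
    ["too many quizzes"],
    ["useful readings", "clear videos"],
    ["slow forum replies"],
]

# Count each theme at most once per response, so the totals are numbers of respondents
theme_counts = Counter(
    theme
    for codes in coded_responses
    for theme in {code_to_theme[code] for code in codes}
)

for theme, count in theme_counts.most_common():
    print(f"{theme}: {count} of {len(coded_responses)} responses")
```

Counting themes per response rather than per code avoids overweighting respondents who mention the same theme several times.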

Outcome Measures

The outcome measures reported greatly varied among studies, which is expected, as identifying the right outcome measures is an inherent challenge in educational research, including more traditional classroom-based studies [ 7 ].

The choice of evaluation methods is highly dependent on the aim of the evaluation and the size of the MOOCs. For quantitative measures, such as completion and participation rates, metrics can be easily collected through the MOOC platform. However, these metrics alone may be insufficient to explain why students fail to complete the course, which is needed for future improvement. Although it may be difficult to represent the problem holistically using qualitative methods, they can be useful in providing insights from individuals who participated in the MOOCs. Mixed methods studies combine the 2 modalities to better understand the metrics generated and produce greater insights for future improvement of the MOOCs.

Learning outcomes were mostly analyzed by inferential statistical methods owing to the use of pretest and posttest methods and the calculation of gains in learning. This method may be most suited for MOOCs that require knowledge retention. Learning parameters also involved many comparisons, either with pre-MOOC measures, with other learners, or both. Social interactions were studied in 2 of the MOOC evaluations using social network analysis methods. Although the MOOC completion rate has often been cited as a parameter for MOOC success, studies have started to move away from using completion rates alone. For example, studies looked at the completion of different steps of the MOOCs or at overall completion. The learning outcomes reported in this review should be used with caution, as only a few of them have been validated or assessed for their reliability.
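The distinction between step-level and overall completion can be made concrete with a short sketch. The data layout (a set of completed step numbers per learner) and all values are hypothetical and do not correspond to any particular LMS export format.

```python
# Hypothetical LMS export: learner ID -> set of completed step numbers
TOTAL_STEPS = 4
completed_steps = {
    "a1": {1, 2, 3, 4},
    "b2": {1, 2},
    "c3": {1},
    "d4": {1, 2, 3, 4},
    "e5": set(),
}
enrolled = len(completed_steps)

# Share of enrolled learners who completed each individual step
step_completion_rate = {
    step: sum(step in done for done in completed_steps.values()) / enrolled
    for step in range(1, TOTAL_STEPS + 1)
}

# Share of enrolled learners who completed every step
overall_completion_rate = (
    sum(len(done) == TOTAL_STEPS for done in completed_steps.values()) / enrolled
)

for step, rate in step_completion_rate.items():
    print(f"step {step}: {rate:.0%}")
print(f"overall completion: {overall_completion_rate:.0%}")
```

The step-level rates show where learners stop (here, mostly after steps 1 and 2), which a single overall completion rate cannot reveal.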

In total, 26 studies with a cross-sectional design had a low-quality assessment, whereas RCTs and quasi-experimental studies received a high-quality assessment. Having a high level of bias affects the generalizability of studies, which is a common problem in most research using data from MOOCs [ 30 , 59 ]. The prevalence of a high risk of bias in current MOOC evaluations calls for a closer look at the sources of bias and the methods that can be used to reduce them. The use of nonvalidated, self-reported data sources and the lack of longitudinal data also increase the risk of bias in these studies [ 56 ]. However, because most MOOCs struggle with learner retention and MOOC completion rates [ 54 ], it is understandable that studies are not able to collect longitudinal data.

Future Directions

The scarcity of studies focusing on the evaluation of the effectiveness of particular MOOCs relative to the number of available studies on MOOCs raises some questions. For example, many studies that were excluded from this review studied MOOC learners or aspects of the MOOCs without conducting an evaluation of course success or effectiveness. As shown in this review, there is a diverse range of evaluation methods, and the quality of these evaluation studies can be as diverse. The motivation of the evaluation exercise should be the basis of the evaluation study design to design effective quantitative or qualitative data collection strategies. The development of general guidance, standardized performance indicators, and an evaluation framework using a design thinking approach can allow these MOOC evaluation exercises to yield data of better quality and precision and allow improved evaluation outcomes. To provide a comprehensive evaluation of MOOCs, studies should try to use a framework to be able to systematically review all of the aspects of the course.

In general, the adoption of a mixed methods analysis considering both quantitative and qualitative data can be more useful for evaluating the overall quality of MOOCs. Although it is useful to have quantitative data such as learner participation and dropout rates, qualitative data gathered through interviews and opinion mining provide valuable insights into the reasons behind the success or failure of a MOOC. Studies of MOOC evaluations should aim to use data collection and analysis methods that can minimize the risk of bias and provide objective results. Whenever possible, studies should use comparison methods, such as the use of pretests and posttests or a comparison with other types of learners, as a control measure. In addition, learner persistence is an important indicator for MOOC evaluation that needs to be addressed in future research.

Strengths and Limitations

To our knowledge, this is the first study to systematically review the evaluation methods of MOOCs. The findings of this review can serve future MOOC evaluators with recommendations on their evaluation methods to facilitate better study designs and maximize the impact of these Web-based platforms. However, as a lot of MOOCs are not necessarily provided by universities and systematically evaluated and published, the scope of this review can only reflect a small part of MOOC evaluation studies.

There is no one way of completing a MOOC evaluation, but there are considerations that should be taken into account in every evaluation. First, because MOOCs are very large, there is a tendency to use quantitative methods using aggregate-level data. However, aggregate-level data do not always tell why things are happening. Qualitative data could further help interpret the results by exploring why things are happening. Evaluations lacked longitudinal data and very few accounted for confounding variables owing to data collection challenges associated with MOOCs such as not having longitudinal data or not having enough data sources. Future studies could help identify how these challenges could be overcome or minimized.

LMS data may not yield useful findings at the individual level, but they should still be considered and used in MOOC evaluations. Big data in the form of learning analytics can help with decision making, predicting learner behavior, and providing a more comprehensive picture of the phenomena studied [ 60 ]. Studies should still consider using LMS data, as they can provide a valuable addition to the research, but researchers need to be careful about the depth of the findings that can be concluded from LMS-only datasets.

The use of qualitative data could help enhance the findings from the studies by explaining the phenomena. Both quantitative and qualitative methods could play a key role in MOOC evaluations.

Current MOOC evaluations are subject to many sources of bias because the courses are open and available to a very large and diverse group of participants. However, methods are available to reduce these sources of bias. Studies could use a comparator, such as pretest scores or other types of learners, to calculate relative changes in learning. In addition, studies could control for confounding variables to reduce bias.
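As a minimal sketch of the pretest comparator idea, assuming each learner has a pretest and a posttest score on the same scale, the change in learning could be summarized as below. The function name `learning_change`, the scores, and the choice to report a normalized gain alongside the relative change are illustrative assumptions; the review does not prescribe a specific formula.

```python
from statistics import mean


def learning_change(pre, post, max_score):
    """Summarize change between paired pretest and posttest scores.

    Hypothetical helper for illustration only; not taken from the review.
    """
    if len(pre) != len(post) or not pre:
        raise ValueError("pre and post must be non-empty and the same length")

    pre_mean = mean(pre)
    post_mean = mean(post)
    absolute = post_mean - pre_mean

    # Relative change: gain expressed as a fraction of the pretest mean.
    relative = absolute / pre_mean if pre_mean else float("nan")

    # Normalized gain: gain as a fraction of the improvement still possible.
    headroom = max_score - pre_mean
    normalized = absolute / headroom if headroom else float("nan")

    return {"absolute": absolute, "relative": relative, "normalized": normalized}


# Hypothetical scores out of 100 for four learners (not real study data).
pre_scores = [40, 50, 60, 50]
post_scores = [60, 70, 80, 70]
result = learning_change(pre_scores, post_scores, max_score=100)
print(result)
```

Reporting the change relative to a baseline, instead of the posttest score alone, is what lets an evaluation separate what learners gained from what they already knew on entry.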

This review has provided an in-depth view of how MOOCs can be evaluated and explored the methodological approaches used. Exploring MOOC methodological approaches has been identified as an area for future research [10]. The review also provided recommendations for future MOOC evaluations and for future research in this area to help improve the quality and reliability of the studies. MOOC evaluations could contribute to the development and improvement of these courses.

Acknowledgments

The authors would like to thank the medical librarian, Rebecca Jones, for her guidance in the search methods and for reviewing the search strategy used in this protocol. This work was funded by the European Institute of Technology and Innovation Health (Grant No. 18654).


Authors' Contributions: AA and CL completed the screening of articles and data analysis. AA and CL completed the first draft of the manuscript. All authors reviewed and edited the manuscript for content and clarity. EM was the guarantor.

Conflicts of Interest: None declared.


D849 Introduction to quantitative and qualitative research methods


© 2021. All rights reserved. The Open University is incorporated by Royal Charter (RC 000391), an exempt charity in England & Wales and a charity registered in Scotland (SC 038302). The Open University is authorised and regulated by the Financial Conduct Authority in relation to its secondary activity of credit broking.


NURS 7950 - Theoretical Foundations and Research Methods for Advanced Practice Nursing


Faculty of Arts and Social Sciences

Decolonial approaches to research methods in psychology

Are you a doctoral student or a member of academic staff interested in exploring decolonial principles in your work?

Join us for an engaging workshop that introduces decolonial approaches to research methods in Psychology. This event will help participants understand key concepts like colonisation, colonialism, and coloniality, and explore how these ideas impact research practices.




Quantitative and qualitative research in finance

Introduction

This free course, Quantitative and qualitative research in finance, provides you with a good sense of the guiding ideas behind qualitative and quantitative research, of what they involve in practical terms, and of what they can produce. It outlines some of the key features both in terms of how the data are produced and how they are analysed. It also considers some ethical aspects of research that you should have in mind.

This OpenLearn course is an adapted extract from the Open University course B860 Research methods for finance.


Caregiving While Black: A Novel, Online Culturally Tailored Psychoeducation Course for Black Dementia Caregivers


Karah Alexander, Nkosi Cave, Sloan Oliver, Stephanie Bennett, Melinda Higgins, Ken Hepburn, Carolyn Clevenger, Fayron Epps, Caregiving While Black: A Novel, Online Culturally Tailored Psychoeducation Course for Black Dementia Caregivers, The Gerontologist, Volume 64, Issue 6, June 2024, gnae009, https://doi.org/10.1093/geront/gnae009


Psychoeducation interventions using distance learning modalities to engage caregivers in active learning environments have demonstrated benefits in enhancing caregiving mastery. However, few of these programs have been specifically adapted to develop mastery in Black caregivers.

A multimethod approach was carried out to assess Caregiving While Black (CWB), including pre–post surveys and in-depth interviews. This psychoeducation course addresses the cultural realities of caring for a person living with dementia as a Black American. Caregivers engaged in online asynchronous education related to healthcare navigation, home life management, and self-care. Primary (caregiving mastery) and secondary outcomes (anxiety, depression, perceived stress, burden, perceived ability to manage behavioral and psychological symptoms) were assessed at baseline and post-course (10 weeks).

Thirty-two Black caregivers from across the United States completed the course within the allotted time frame. Paired-samples t test analyses revealed significant reductions in caregiver burden and role strain. Caregiver mastery from baseline to completion increased by 0.45 points with an effect size of 0.26 (Cohen’s d). Twenty-nine caregivers participated in an optional post-course interview, and thematic analysis led to the construction of 5 overarching themes: Comfortability with a Culturally Tailored Course; Experiences Navigating the Course Platform; Utility of Course Resources; Time as a Barrier and Facilitator; Familial and Community Engagement.
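To illustrate how a paired pre–post comparison and an effect size of this kind can be computed, here is a minimal sketch using only the Python standard library. The scores are hypothetical and are not the study's data. The abstract does not say which variant of Cohen's d was used, so the sketch assumes the paired-samples form, d_z (mean difference divided by the standard deviation of the differences); the function name `paired_summary` is likewise an assumption.

```python
import math
from statistics import mean, stdev


def paired_summary(baseline, followup):
    """Paired-samples t statistic and Cohen's d_z for pre-post scores.

    Illustrative helper; assumes d_z = mean(diff) / sd(diff).
    """
    if len(baseline) != len(followup) or len(baseline) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")

    # Per-participant change from baseline to follow-up.
    diffs = [post - pre for pre, post in zip(baseline, followup)]
    n = len(diffs)
    mean_diff = mean(diffs)
    sd_diff = stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd_diff == 0:
        raise ValueError("all differences are identical; t and d are undefined")

    t_stat = mean_diff / (sd_diff / math.sqrt(n))
    d_z = mean_diff / sd_diff
    return {"n": n, "mean_diff": mean_diff, "t": t_stat, "df": n - 1, "d_z": d_z}


# Hypothetical mastery scores for five caregivers (not real study data).
baseline = [3, 4, 2, 5, 3]
followup = [4, 4, 3, 6, 5]
summary = paired_summary(baseline, followup)
print(summary)
```

The t statistic would then be compared against a t distribution with n − 1 degrees of freedom to obtain a p value. Other conventions for paired data, such as dividing by the average of the two standard deviations, give different values of d, so the variant should be reported alongside the number.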

Pilot findings convey a need to continue creating and receiving feedback on culturally tailored psychoeducation programs for dementia caregivers. The next steps include applying results to fuel the success of the next iteration of CWB.

- psychoeducation

- african american

- mastery learning


- Online ISSN 1758-5341

- Copyright © 2024 The Gerontological Society of America


Oxford University Press is a department of the University of Oxford. It furthers the University's objective of excellence in research, scholarship, and education by publishing worldwide.

- Copyright © 2024 Oxford University Press


There are 4 modules in this course. This MOOC is about demystifying research and research methods. It will outline the fundamentals of doing research, aimed primarily, but not exclusively, at the postgraduate level. It places the student experience at the centre of our endeavours by engaging learners in a range of robust and challenging ...
