Critical Thinking and Transferability [a visual guide for writing literature reviews] : A Review of the Literature

This meta-literature review includes an actual literature review as well as margin notes identifying important concepts and structural elements that are usually found in literature reviews.

Usage metrics

- Research article

- Open access

- Published: 17 January 2020

The TRANSFER Approach for assessing the transferability of systematic review findings

- Heather Munthe-Kaas 1 ,

- Heid Nøkleby 1 ,

- Simon Lewin 1 , 2 &

- Claire Glenton 1 , 3

BMC Medical Research Methodology volume 20 , Article number: 11 ( 2020 ) Cite this article

14k Accesses

30 Citations

36 Altmetric

Metrics details

Systematic reviews are a key input to health and social welfare decisions. Studies included in systematic reviews often vary with respect to contextual factors that may impact on how transferable review findings are to the review context. However, many review authors do not consider the transferability of review findings until the end of the review process, for example when assessing confidence in the evidence using GRADE or GRADE-CERQual. This paper describes the TRANSFER Approach, a novel approach for supporting collaboration between review authors and stakeholders from the beginning of the review process to systematically and transparently consider factors that may influence the transferability of systematic review findings.

We developed the TRANSFER Approach in three stages: (1) discussions with stakeholders to identify current practices and needs regarding the use of methods to consider transferability, (2) systematic search for and mapping of 25 existing checklists related to transferability, and (3) using the results of stage two to develop a structured conversation format which was applied in three systematic review processes.

None of the identified existing checklists related to transferability provided detailed guidance for review authors on how to assess transferability in systematic reviews, in collaboration with decision makers. The content analysis uncovered seven categories of factors to consider when discussing transferability. We used these to develop a structured conversation guide for discussing potential transferability factors with stakeholders at the beginning of the review process. In response to feedback and trial and error, the TRANSFER Approach has developed, expanding beyond the initial conversation guide, and is now made up of seven stages which are described in this article.

Conclusions

The TRANSFER Approach supports review authors in collaborating with decision makers to ensure an informed consideration, from the beginning of the review process, of the transferability of the review findings to the review context. Further testing of TRANSFER is needed.

Peer Review reports

Evidence-informed decision making has become a common ideal within healthcare, and increasingly also within social welfare. Consequently, systematic reviews of research evidence (sometimes called evidence syntheses) have become an expected basis for practice guidelines and policy decisions in these sectors. Methods for evidence synthesis have matured, and there is now an increasing focus on considering the transferability of evidence to end users’ settings (context) in order to make systematic reviews more useful in decision making [ 1 , 2 , 3 , 4 ]. End users can include individual, or groups of, decision makers who commission or use the findings from a systematic review, such as policymakers, health/welfare systems managers, and policy analysts [ 3 ]. The term stakeholders in this paper may also refer to potential stakeholders, or those individuals who have knowledge of, or experience with, the intervention being reviewed and whose input may be considered valuable where the review includes a wide range of contexts, not all of which are well understood by the review team.

Concerns regarding the interaction between context and the effect of interventions are not new: the realist approach to systematic reviews emerged in order to address this issue [ 5 ]. However, while there appears to be an increasing amount of interest, and literature, related to context and its role in systematic reviews, it has been noted that “the importance of context in principle has not yet been translated into widespread good practice” within systematic reviews [ 6 ]. Context has been defined in a number of different ways, with the common characteristic being a set of factors external to an intervention (but which may interact with the intervention) that may influence the effects of the intervention [ 6 , 7 , 8 , 9 ]. Within the TRANSFER Approach, and this paper, “context” refers to the multi-level environment (not just the physical setting) in which an intervention is developed, implemented and assessed: the circumstances that interact, influence and even modify the implementation of an intervention and its effects.

Responding to an identified need from end users

We began this project in response to concerns from end users regarding the relevance of the systematic reviews they had commissioned from us. Many of our systematic reviews deal with questions within the field of social welfare and health systems policy and practice. Interventions in this area tend to be complex in a number of ways – for example, they may include multiple components and be context-dependent [ 10 ]. Commissioners have at times expressed frustration with reviews that (a) did not completely address the question in which they were originally interested, or (b) included few studies that came from seemingly very different settings. In one case, the commissioners wished to limit the review to only include primary studies from their own geographical area (Scandinavia) because of doubts regarding the relevance of studies coming from other settings despite the fact that there was no clear evidence that this intervention would have different effects across settings. Although we regularly engage in dialogue with stakeholders (including commissioners, decision makers, clients/patients) at the beginning of each review process, including a discussion of the review question and context, these discussions have varied in how structured and systematic they have been, and the degree to which they have influenced the final review question and inclusion criteria.

For the purpose of this paper, we will define stakeholders as anyone who has an interest in the findings from a systematic review, including client/patients, practitioners, policy/decision makers, commissioners of systematic reviews and other end users. Furthermore, we will define transferability as an assessment of the degree to which the context of the review question and the context of studies contributing data to the review finding differ according to a priori identified characteristics (transfer factors). This is similar to the definition proposed by Wang and colleagues (2006) whereby transferability is the extent to which the measured effectiveness of an applicable intervention could be achieved in another setting ([ 11 ] p. 77). Other terms related to transferability include applicability, generalizability, transportability and relevance and are discussed at length elsewhere [ 12 , 13 , 14 ].

Context matters

Context is important for making decisions about the feasibility and acceptability of an intervention. Systematic reviews typically include studies from many contexts and then draw conclusions, for example about the effects of an intervention, based on the total body of evidence. When context – including that of both the contributing studies and the end user – is not considered, there can be serious, costly and potentially even fatal consequences.

The case of antenatal corticosteroids for women at risk of pre-term birth illustrates the importance of context: a Cochrane review published in 2006 concluded that “A single course of antenatal corticosteroids should be considered routine for preterm delivery with few exceptions” [ 15 ]. However, a large multi-site cluster randomized implementation trial looking at interventions to increase antenatal corticosteroid use in six low- and middle-income countries, and published in 2015, showed contrasting results. The trial found that: “Despite increased use of antenatal corticosteroids in low-birthweight infants in the intervention groups, neonatal mortality did not decrease in this group, and increased in the population overall” [ 16 ]. The trial authors concluded that “the beneficial effects of antenatal corticosteroids in preterm neonates seen in the efficacy trials when given in hospitals with newborn intensive care were not confirmed in our study in low-income and middle-income countries” and hypothesized that this could be due to, among other things, a lack of neonatal intensive care for the majority of preterm/small babies in the study settings [ 16 ]. While there are multiple possible explanations for these two contrasting conclusions (see Vogel 2017 [ 17 ];), the issue of context seems to be critical: “It seems reasonable to assume that the level of maternal and newborn care provided reflected the best available at the time the studies were conducted, including the accuracy of gestational age estimation for recruited women. Comparatively, no placebo-controlled efficacy trials of ACS have been conducted in low-income countries, where the rates of maternal and newborn mortality and morbidity are higher, and the level of health and human resources available to manage pregnant women and preterm infants substantially lower” [ 17 ]. The results from the Althabe (2015) trial highlighted that (in retrospect) the lack of efficacy trials of ACS from low-resource settings was a major limitation of the evidence base.

An updated version of the Cochrane review was published in 2017, and includes a discussion on the importance of context when interpreting the results: “The issue of generalisability of the current evidence has also been highlighted in the recent cluster-randomised trial (Althabe [2015]). This trial suggested harms from better compliance with antenatal corticosteroid administration in women at risk of delivering preterm in communities of low-resource settings” [ 18 ]. The WHO guidelines on interventions to improve preterm birth outcomes (2015) also include a number of issues to be considered before recommendations in the guideline are applied, that were developed by the Guideline Development Group and informed by both the Roberts (2006) review and the Althabe (2015) trial [ 19 ]. This example illustrates the importance of considering and discussing context when interpreting the findings of systematic reviews and using these findings to inform decision making.

Considering context – current approaches

Studies included in a systematic review may vary considerably in terms of who was involved, where the studies took place and when they were conducted; or according to broader factors such as the political environment, organization of the health or social welfare system, or organization of the society or family. These factors may impact how transferable the studies are to the context specified in the review, and how transferable the review findings are to the end users’ context [ 20 ]. Transferability is often assessed by end users based on the information provided in a systematic review, and tools such as the one proposed by Schloemer and Schröeder-Bäck (2018) can assist them in doing so [ 21 ]. However, review authors can also assist in making such assessments by addressing issues related to context in a systematic review.

There are currently two main approaches for review authors to address issues related to context and the relevance of primary studies to a context specified in the review. One approach to responding to stakeholders’ questions about transferability is to highlight these concerns in the final review product or summaries of the review findings. Cochrane recommends that review authors “describe the relevance of the evidence to the review question” [ 22 ] in the review section entitled Overall completeness and applicability of evidence , which is written at the end of the review process. Consideration of issues related to applicability (transferability) is thus only done at a late stage of the review process. SUPPORT summaries are an example of a product intended to present summaries of review findings [ 23 ] and were originally designed to present the results of systematic reviews to decision makers in low and middle income countries. The summaries examine explicitly whether there are differences between the studies included in the review that is the focus of the summary and low- and middle-income settings [ 23 ]. These summaries have been received positively by decision makers, particularly this section on the relevance of the review findings [ 23 ]. In evaluations of other, similar products, such as Evidence Aid summaries for decision makers in emergency contexts, and evidence summaries created by The National Institute for Health and Care Excellence (NICE) [ 24 , 25 , 26 , 27 ], content related to context and applicability were reported as being especially valuable [ 28 , 29 ].

While these products are useful, the authors of such review summaries would be better able to summarize issues related to context and applicability if these assessments were already present in the systematic review being summarized rather than needing to be made post hoc by the summary authors. However, many reviews often only include relatively superficial discussions of context, relevance or applicability, and do not present systematic assessments of how these factors could influence the transferability of findings.

There are potential challenges related to considering issues related to context and relevance after the review is finished, or even after the analysis is concluded. Firstly, if review authors have not considered factors related to context at the review protocol stage, they may not have defined potential subgroup analyses and explanatory factors which could be used to explain heterogeneity of results from a meta-analysis. Secondly, relevant contextual information that could inform the review authors’ discussion of relevance may not have been extracted from included primary studies. To date, though, there is little guidance for a review author on how to systematically or transparently consider applicability of the evidence to the review context [ 30 ]. Not surprisingly, a review of 98 systematic reviews showed that only one in ten review teams discussed the applicability of results [ 31 ].

The second approach, which also comes late in the review process, is to consider relevance as part of an overall assessment of confidence in review findings. The Grading of Recommendations Assessment, Development and Evaluation (GRADE) Approach for effectiveness evidence and the corresponding GRADE-CERQual approach for qualitative evidence [ 32 , 33 ] both support review authors in making judgments about how confident they are that the review finding is “true” (GRADE: “the true effect lies within a particular range or on one side of a threshold”; GRADE-CERQual: “the review finding a reasonable representation of the phenomenon of interest” [ 33 , 34 ]). GRADE and GRADE-CERQual involve an assessment of a number of domains or components, including methodological strengths and weaknesses of the evidence base, and heterogeneity or coherence, among others [ 32 , 33 ]. However, the domain related to relevance of the evidence base to the review context (GRADE indirectness domain, GRADE-CERQual relevance component) appears to be of special concern for decision makers [ 3 , 35 ]. Too often these assessments of indirectness or relevance that the review team makes may be relatively crude – for example, based on the age of participants or the countries where the studies were carried out, features that are usually easy to assess but not necessarily the most important. This may be due to a lack of guidance for review authors on which factors to consider and how to assess them.

Furthermore, many review authors only first begin to consider indirectness and relevance once the review findings have been developed. An earlier systematic and transparent consideration of transferability could influence many stages of the systematic review process and, in collaboration with stakeholders, could lead to a more thoughtful assessment of the GRADE indirectness domain and GRADE-CERQual relevance component. In Table 1 we describe a scenario where issues related to transferability are not adequately considered during the review process.

By engaging with stakeholders at an early stage of planning the review, review authors could ascertain what factors stakeholders judge to be important for their context and use this knowledge throughout the review process. Previous research indicates that decision makers’ perceptions of the relevance of the results and its applicability to policy facilitates the ultimate use of findings from a review [ 3 , 23 ]. These decision makers explicitly stated that summaries of reviews should include sections on relevance, impact and applicability for decision making [ 3 , 23 ]. Stakeholders are not the only source for identifying transferability factors, as other systematic reviews, implementation studies and qualitative studies may also provide relevant information regarding transferability of findings to specific contexts. However, this paper and the TRANSFER Approach focus on stakeholders specifically as it is our experience that stakeholders are often an underused resource for identifying and discussing transferability.

Working toward collaboration

Involving stakeholders in systematic review processes has long been advocated by research institutions and stakeholders alike as a necessary step in producing relevant and timely systematic reviews [ 36 , 37 , 38 ]. Dialogue with stakeholders is key for (a) defining a clear review question, (b) developing a common understanding of, for instance, the population, intervention, comparison and outcomes of interest, (c) understanding the review context, and (d) increasing acceptance among stakeholders of evidence-informed practice and of systematic reviews as methods for producing evidence [ 38 ]. Stakeholders themselves have indicated that improved collaboration with researchers could facilitate the (increased) use of review findings in decision making [ 3 ]. However, in practice, few review teams actively seek collaboration with relevant stakeholders [ 39 ]. This could be due to time or resource constraints or access issues [ 40 ]. There is currently work underway looking at how to identify and engage relevant stakeholders in the systematic review process (for example, Haddaway 2017 [ 41 ];).

For those review teams who do seek collaboration, there is little guidance available on how to collaborate in a structured manner, and we are not aware of any guidance specifically focussed on considering transferability of review findings [ 42 ]. We are unaware of any guidance intended to support systematic review authors in considering transferability of review findings from the beginning of the review process (i.e. before the findings have been developed). The guidance that is available either focuses on a narrow subset of research questions (e.g. healthcare), is intended to be used at the end of a review process [ 12 , 43 ], focuses on primary research rather than systematic reviews [ 44 ], or is theoretical in nature without any concrete stepwise guidance for review authors on how to consider and assess transferability [ 21 ]. Previous work has pointed out that stakeholders “need systematic and practically relevant knowledge on transferability. This may be supported through more practical tools, useful information about transferability, and close collaboration between research, policy, and practice” [ 21 ]. Other studies have also discussed the need for such practical tools, including more guidance for review authors that focuses on methods for (1) collaborating with end users to develop more precise and relevant review questions and identify a priori factors related to the transferability of review findings, and (2) systematically and transparently assessing the transferability of review findings to the review context, or a specific stakeholders’ context, as part of the review process [ 12 , 45 , 46 ].

The aim of the TRANSFER Approach is to support review authors in developing systematic reviews that are more useful for decision makers. TRANSFER provides guidance for review authors on how to consider and assess the transferability of review findings by collaborating with stakeholders to (a) define the review question, (b) identify factors a priori which may influence the transferability of review findings, and (c) define the characteristics of the context specified in the review with respect to the identified transferability factors.

The aim of this paper is to describe the development and application of the TRANSFER Approach, a novel approach for supporting collaboration between review authors and stakeholders from the beginning of the review process to systematically and transparently consider factors that may influence the transferability of systematic review findings.

We developed the TRANSFER Approach in three stages. In the first stage we held informal discussions with stakeholders to ascertain the usefulness of, guidance on assessing and considering the transferability of review findings. An email invitation to participate in a focus group discussion was sent to nine representatives from five Norwegian directorates that regularly commission systematic reviews from the Norwegian Institute of Public Health. In the email we described that the aim of the discussion would be to discuss the possible usefulness of a tool to assess applicability of systematic review findings to the Norwegian context. Four representatives attended the meeting from three directorates. The agenda for the discussion was a brief introduction to the terms and concepts, “transferability” and “applicability”, followed by an overview of the TRANSFER Approach as a method for addressing transferability and applicability. Finally we undertook an exercise to brainstorm transferability factors that may influence the transferability of a specific intervention to the Norwegian context. Participants provided verbal consent to participate in the discussion. We did not use a structured conversation guide. We took notes from the meeting, and collated the transferability issues that were discussed. We also collated responses regarding the usefulness of using time to discuss transferability with review authors during a project as simple yes or no responses (as well as any details provided with responses).

In the second stage we conducted a systematic mapping to uncover any existing checklists or other guidance for assessing the transferability of review findings, and conducted a content analysis of the identified checklists. We began by consulting systematic review authors in our network in March 2016 to get suggestions as to existing checklists or tools to assess transferability. In June 2016 we designed and conducted a systematic search of eight databases using search terms such as terms “transferability”, “applicability”, “generalizability”, etc. and “checklist”, “guideline”, “tool”, “criteria”, etc. We also conducted a grey literature search and searched the EQUATOR repository of checklists for relevant documents. Documents were included if they described a checklist or tool to assess transferability (or other related terms such as e.g., applicability, generalizability, etc.). We had no limitations related to publication type/status, language or date of publication. Documents that discussed transferability at a theoretical level or assessed the transferability of guidelines to local contexts were not included. The methods and results of this work are described in detail elsewhere [ 30 ]. The output from this stage was a list of transferability factors, which became the basis for the initial version of a ‘conversation guide’ for use with stakeholders in identifying and prioritizing factors related to transferability.

In the third stage, we undertook meetings with stakeholders to explore the use of a structured conversation guide (based on results of the second stage) to discuss the transferability of review findings. We used the draft guide in meetings with stakeholders in three separate systematic review processes. We became aware of redundancies in the conversation guide through these meetings, and also of confusing language in the conversation guide. Based on this feedback and our notes from these meetings we then revised the conversation guide. The result of this process was a refined conversation guide as well as guidance for review authors on how to improve collaboration with stakeholders to consider transferability, and guidance on how to assess and present assessments of transferability.

In this section we begin by presenting the results of the exploratory work around transferability, including the discussions with stakeholders, and experiences of using a structured conversation guide in meeting with stakeholders. We then present the TRANSFER Approach that we subsequently developed including the purpose of the TRANSFER Approach, how to use TRANSFER, and a worked example of TRANSFER in action.

Findings of the exploratory work to develop the TRANSFER Approach

Discussions with stakeholders.

The majority of the 3 h discussion with stakeholders was spent on the exercise. We described for participants a systematic review that had recently been commissioned (by one of the directorates represented) on the effect of supported employment interventions for disabled people on employment outcomes. The participants brainstormed the potential differences between the Norwegian context and other contexts and how these differences might influence how the review findings could be used in the Norwegian context. The participants identified a number of issues related to the population (e.g., proportion of immigrants, education level, etc.), the intervention (the length of the intervention, etc.), the social setting (e.g., work culture, union culture, rural versus urban, etc.) and the comparison interventions (e.g., components of interventions given as part of “usual services”). After the exercise was completed, the participants debriefed on the usefulness of such an approach for thinking about the transferability of review findings at the beginning of the review process, in a meeting setting with review authors. All participants agreed that the discussion was (a) useful, and (b) worth a 2 to 3 h meeting at the beginning of the review process. There was discussion regarding the terminology, however, related to transferability, specifically who is responsible for determining transferability. One participant felt that the “applicability” of review findings should be determined by stakeholders, including decision makers, while “transferability” was a question that can be assessed by review authors. There was no consensus among participants regarding the most appropriate terms to use. We believe that opinions expressed within this discussion may be related to language, for instance, how the Norwegian terms for ‘applicability’ and ‘transferability’ are used and interpreted. The main findings from the focus group discussion were that stakeholders considered meeting with review authors early in the review process to discuss transferability factors to be a good use of time and resources.

Systematic mapping and content analysis of existing checklists

We identified 25 existing checklists that assess transferability or related concepts. Only four of these were intended for use in the context of a systematic review [ 14 , 43 , 45 , 47 ]. We did not identify any existing tools that covered our specific aims. Our analysis of the existing checklists identified seven overarching categories of factors related to transferability in the included checklists: population, intervention, implementation context (immediate), comparison condition, outcomes, environmental context, and researcher conduct [ 30 ]. The results of this mapping are reported elsewhere [ 30 ].

Using a structured conversation guide to discuss transferability

Both the review authors and stakeholders involved in the three systematic review processes where an early version of the conversation guide was piloted were favorable to the idea of using a structured approach to discussing transferability. The initial conversation guide that was used in meetings with the stakeholders was found to be too long and repetitive to use easily. The guide was subsequently refined to be shorter and to better reflect the natural patterns of discussion with stakeholders around a systematic review question (i.e. population, intervention, comparison, outcome).

The TRANSFER Approach: purpose

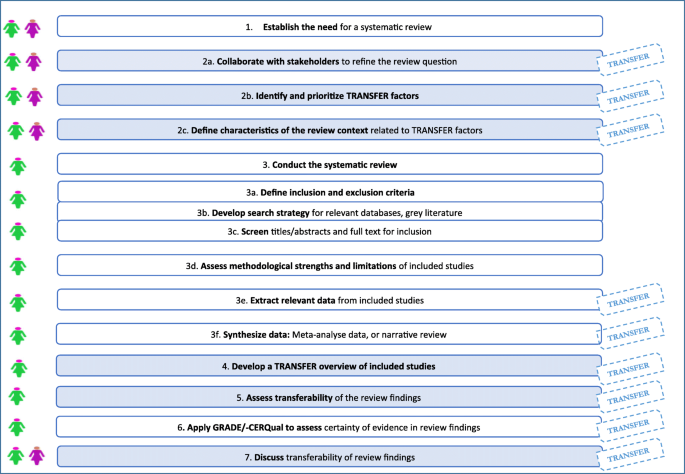

The exploratory work described above resulted in the TRANSFER Approach. The TRANSFER Approach aims to support review authors in systematically and transparently considering transferability of review findings from the beginning of the review process. It does this by providing review authors with structured guidance on how to collaborate with stakeholders to identify transferability factors, and how to assess the transferability of the review findings to the review context or other local contexts (see Fig. 1 ).

TRANSFER diagram

The TRANSFER Approach is intended for use in all types of reviews. However, as of now, it has only been tested in reviews of effectiveness related to population level interventions.

How to use TRANSFER in a systematic review

The TRANSFER Approach is divided into seven stages that mirror the systematic review process. Table 2 outlines the stages of the TRANSFER Approach and the corresponding guidance and templates that support review authors in considering transferability at each stage (see Table 3 ). During these seven stages, review authors make use of the two main components of the TRANSFER Approach: (1) guidance for review authors on how to consider and assess transferability of review findings (including templates), and (2) a Conversation Guide to use with stakeholders in identifying and prioritizing factors related to transferability.

Once systematic review authors have gone through the seven stages outlined in Table 3 , they come up with assessments of concern regarding each transferability factor. This assessment should be expressed as no, minor, moderate or serious concerns regarding the influence of each transferability factor for an individual review finding. This assessment is made for each individual review finding because TRANSFER assessments are intended to support GRADE/−CERQual assessments of indirectness /relevance, and the GRADE/−CERQual approaches require the review author to make assessments for each individual outcome (for effectiveness reviews) or review finding (for qualitative evidence syntheses). Assessments must be done for each review finding individually because assessments may vary across outcomes. One transferability factor may affect a number of review findings (e.g., years of experience of mentors in a mentoring program), in the same way that one risk of bias factor (e.g., selection bias as a consequence of inadequate concealment of allocations before assignment) may affect multiple review findings. However, it is also the case that one transferability factor can affect these review findings differently (e.g., average education level of the population may influence on finding and not another) in the same way that one risk of bias factor may affect review findings differently (e.g., detection bias, due to lack of blinding of outcome assessment, may be less important for objective finding, such as death). An overall TRANSFER assessment of transferability is then made by the review authors (also expressed as no, minor, moderate or serious concerns), based on the assessment(s) for each transferability factor(s). Review authors should then provide an explanation for the overall TRANSFER assessment and an indication of how each transferability factor may influence the finding (e.g. direction and/or size of the effect estimate). Guidance on making assessments is discussed in greater detail below. In this paper, we have, for simplicity, described transferability factors as individual and mutually exclusive constructs. Through our experience in applying TRANSFER, however, we have seen that transferability factors can influence and amplify each other. While the current paper does not address these potential interactions, other review authors will need to consider when transferability factors influence each other or when one factor amplifies the influence of another factor, for example, primary care health facilities in rural settings may both have both fewer resources and poorer access to referral centres, both of which may interact to negatively impact on health outcomes.

TRANSFER in action

In the following section we present the stages of the TRANSFER Approach using a worked example. The scenario is based on a real review [ 48 ]. However, the TRANSFER Approach was not available when this review started and thus the conversation with decision makers was conducted post hoc. Furthermore, while the TRANSFER factors are those that the stakeholders identified, details related to both the review finding and the assessment of transferability were adapted for the purposes of this worked example in order to illustrate how TRANSFER could be applied to a review process.” The scenario focuses on a situation where a review is commissioned and the stakeholders’ context is known. In the case where the decision makers and/or their context is not well understood to the review team, the review team can still engage potential stakeholders with knowledge/experience related to the intervention being reviewed and the relevant contexts. .

Stage 1: Establish the need for a systematic review

Either stakeholders (in commissioning a review) or a review team (if initiating a review themselves) can establish the need for a systematic review (see example provided in Table 4 ). The process of defining the review question and context begins only after some need for a systematic review is established.

Stage 2a: Collaborate with stakeholders to refine the review question

After defining the need for a systematic review, the review team, together with stakeholders need to meet to refine the review question (see example provided in Table 5 ). Part of this discussion will need to focus on establishing the type of review question being asked, and the corresponding review methodology that will be used (e.g., a review to examine intervention effectiveness or a qualitative evidence synthesis to examine barriers and facilitators to implementing an intervention). The group will then need to define the review question including, for example, the population, intervention, comparison and outcomes. A secondary objective of this discussion is to ensure common understanding of the review question, including how the systematic review is intended to be used. During this meeting the review team and stakeholders can discuss and agree upon, for example, the type of population and intervention(s) they are interested in, the comparison(s) they think are the most relevant, and the outcomes they think are the most important. By using a structured template to guide this discussion, the review team can be sure they cover all topics and questions in a systematic fashion. We have developed and used a basic template for reviews of intervention effectiveness that review authors can use to lead this type of discussion with stakeholders (see Appendix 1 ). Future work will involve adapting this template to different types of review questions and processes.

In some situations, such as in the example we provide, the scope of the review is broader (in this case, global) than the actual context specified in the review (in this case, Norway). The review may therefore include a broader set of interventions, population groups, or settings than the decision making context. Where the review scope is broader than the context specified in the review, a secondary review question can be added – for example, How do the results from this review transfer to a pre-specified context? Alternatively, where the context specified in the review context is the same as the end users’ context, such a secondary question would be unnecessary. When the review context or the local context is defined at a country level, the review authors and stakeholders will likely be aware of heterogeneity within that context (e.g., states, neighbourhoods, etc.). However, it is still often possible (and necessary) to ascertain and describe a national context. We need to further explore how decision makers apply review findings to the multitude of local contexts within, for example, their national context. Finally, in a global review initiated by a review team rather than commissioned for a specific context, a secondary question on the transferability of the review findings to a pre-specified context is unlikely to be needed.

Stage 2b. Identify and prioritize TRANSFER factors

In the scenario discussed in Table 6 , stakeholders are invited to identify transferability factors through a structured discussion using the TRANSFER Conversation Guide (see Appendix 2 ). The identified factors are essentially hypotheses which need to be tested later in the review process. The aim of the type of consultation described above is to gather input from stakeholders regarding which contextual factors are believed to influence how/whether an intervention works. Where the review is initiated by the review team, the same process would be used, but with experts and people who are thought to represent stakeholders, rather than actual commissioners.

The review authors may identify and use an existing logic model describing how the intervention under review works or another framework to initiate the discussion on transferability, for example to identify components of the intervention that could be especially susceptible to transferability factors or to highlight at what point in the course of the intervention transferability may become an issue [ 49 , 50 ]. More work is needed to examine how logic models can be used at the beginning of the systematic review in order to identify potential transferability factors.

During this stage, the group may identify multiple transferability factors. However, we suggest that the review team, together with stakeholders, prioritize these factors and only include the most important three to five factors in order to keep data extraction and subgroup analyses manageable. Limiting the number of factors to be examined is based on our experience of piloting the framework in systematic reviews, as well as on guidance for conducting and reporting subgroup analyses [ 51 ]. Guidance on prioritizing transferability factors is still to be developed.

In accordance with guidance for conducting subgroup analyses in effectiveness reviews, the review team should search for evidence to support the hypotheses that these factors influence transferability, and indicate what effect they are hypothesised to have on the review outcomes [ 51 ]. We do not yet know how best to do this in an efficient way. To date, the search for evidence to support hypothetical transferability factors has involved a grey literature search of key terms related to the identified TRANSFER factors together with key terms related to the intervention, as well as searching Epistemonikos for qualitative systematic reviews on the intervention being studied. Other approaches, however, may include searching databases such as Epistemonikos for systematic reviews related to the hypotheses, and/or focused searches of databases of primary studies such as MEDLINE, EMBASE, etc. Assistance of an information specialist may be helpful in designing these searches and it may be possible to focus down on specific contexts, which would reduce the number of records that need to be searched. The efforts made will need to be calibrated to the resources available and the approach used should be described clearly to enhance transparency. In the case where no evidence is available for a transferability factor that stakeholders believe to be important, the review team will need to decide whether or not to include that transferability factor (depending, for example, on how many other factors have been identified), and provide justification for its inclusion in the protocol. The identified factors should be included in the review protocol as the basis for potential subgroup analyses. Such subgroup analyses will assist the review team in determining whether or not, or to what extent, differences with respect to the identified factor influence the effect of the intervention. This is discussed in more detail under Stage 4. In qualitative evidence syntheses, the review team may predefine subgroups according to transferability factors and contrast and compare perceptions/experiences/barriers/facilitators of different groups of participants according to the transferability factors.

Stage 2c: Define characteristics of the review context related to TRANSFER factors

In an intervention effectiveness review, the review context is typically defined in the review question according to inclusion criteria related to the population, intervention, comparison and outcomes (see example provided in Table 7 ). We recommend that this be extended to include the transferability factors identified in Stage 2, so that an assessment of transferability can be made later in the review process. If the review context does not include details related to the transferability factors, the review authors will be unable to assess whether or not the included studies are transferable to the review context. In this stage the review team works with the stakeholders to specify how the identified transferability factors manifest themselves in the context specified in the review (e.g., global context and Norwegian context).

In cases where the review context is global, it may be challenging to specify characteristics of the global context for each transferability factor. In that case, the focus may be on assessing whether a sufficiently wide range of contexts are represented with respect to each transferability factor. Using the example above, the stakeholders and review team could decide that the transferability of the review findings would be strengthened if studies represented a range of usual housing services conditions in terms of quality and comprehensiveness, or if studies from both warm and cold climate settings are included.

Stage 3: conduct the systematic review

Several stages of the systematic review process may be influenced by discussions with stakeholders that took place in Stage 2 and the transferability factors that have been identified (see example in Table 8 ). These include defining the inclusion criteria, developing the search strategy and developing the data extraction form. In addition to standard data extraction fields, the review authors will need to extract data related to the identified transferability factors. This is done in a systematic manner where review authors also note where the information is not reported. For some transferability factors, such as environmental context, additional information may be identified through external sources. For other types of factors it may be necessary to contact study authors for further information.

Stage 4: compare the included studies to the context specified in the review (global and/or local) with respect to TRANSFER factors

This stage is about organizing the included studies according to their characteristics related to the identified transferability factors. The review authors should record these characteristics in a table – this makes it easy to get an overview of the contexts of the studies included in the review (see example in Table 9 ). There are many ways to organize and present such an overview. In the scenario above, the review authors created simple dichotomous subcategories for each transferability factor, which was related to the local context specified in the secondary review question.

Stage 5: assess the transferability of review findings

Review authors should assess the transferability of a review finding to the review context, and in some cases may also consider a local context (see example in Table 10 ). When a review context is global, the review team may have fewer concerns regarding transferability if the data come from studies from a range of contexts, and the results from the individual studies are consistent. If there is an aspect of context for which there is no evidence, this can be highlighted in the discussion.

In summary, when assessing transferability to a secondary context, the review team may:

Consider conducting a subgroup, or regression, analysis for each transferability factor to explore the extent to which this is likely to influence the transferability of the review finding. The review team should follow standards for conducting subgroup analyses [ 51 , 53 , 54 ].

Interpret the results of the subgroup or regression analysis for each transferability factor and record whether they have no, minor, moderate or serious concerns regarding the transferability of the review finding to the local context.

Make an overall assessment (no, minor, moderate or serious concerns) regarding the transferability of the review finding based on the concerns identified for each individual transferability factor. At the time of publication, we are developing more examples for review authors and guidance on how to make this overall assessment.

The overall TRANSFER assessment involves subjective judgements and it is therefore important for review authors to be consistent and transparent in how they make these assessments (see Appendix 4 ).

Stage 6: Apply GRADE for effectiveness or GRADE-CERQual to assess certainty/confidence in review findings

TRANSFER assessments can be used alone to present assessments of the transferability of a review finding in cases where the review authors have chosen not to assess certainty in the evidence. However, we propose that TRANSFER assessments can also be used to support indirectness assessments in GRADE (see example in Table 11 ). Similar to how the Risk of Bias tool or other critical appraisal tools support the assessment of Risk of Bias in GRADE, the TRANSFER Approach can be used to increase the transparency of judgements made for the indirectness domain [ 55 ]. The advantages to using the TRANSFER Approach to support this assessment are:

Factors that may influence transferability are carefully considered a priori, in collaboration with stakeholders;

The GRADE table is supported by a transparent and systematic assessment of these transferability factors for each outcome, and the evidence available for these;

Stakeholders in other contexts are able to clearly see the basis for the indirectness assessment, make an informed decision regarding whether the indirectness assessment would change for their context, and make their own assessment of transferability related to these factors. In some cases the transferability factors identified and assessed in the systematic review may differ from factors which may be considered important to other stakeholders adapting the review findings to their local context (e.g., in the scenario described above, stakeholders using the review findings in a low income, warmer country with a less comprehensive welfare system).

Future work will be needed to develop methods of communicating the transferability assessment, how it is expressed in relation to a GRADE assessment and how to ensure that a clear distinction is made between TRANSFER assessments for a global context and, where relevant, a pre-specified local context.

Stage 7: Discuss transferability of review findings

In some instances it will be possible to discuss the transferability of the review findings with stakeholders prior to publication of the systematic review in order to ensure that the review team has adequately considered the TRANSFER factors as they relate to the context specified in the review (see example in Table 12 ). In many cases this will not be possible, and any input from stakeholders will be post-publication, if at all.

To our knowledge, the TRANSFER Approach is the first attempt to consider the transferability of review findings to the context(s) specified in the review) in a systematic and transparent way from the beginning of the review process through to supporting assessments of certainty and confidence in the evidence for a review finding. Furthermore, it is the only known framework that gives clear guidance on how to collaborate with stakeholders to assess transferability. This guidance can be used in systematic reviews of effectiveness and qualitative evidence syntheses and could be applied to any kind of decision making [ 43 ].

The framework is under development and more user testing is needed to refine the conversation guide, transferability assessment methods, and presentation. Furthermore, it has not yet been applied in a qualitative evidence synthesis, and further guidance may be needed in order to support that process.

Using TRANSFER in a systematic review

We have divided the framework into seven stages, and have provided guidance and templates for review authors for each stage. The first two stages are intended to support the development of the protocol, while stages three through seven are intended to be incorporated into the systematic review process.

The experience of review teams in the three reviews where TRANSFER has been applied (at the time this article is published) has uncovered potential challenges when applying TRANSFER. One challenge is related to reporting: the detail in which interventions, context and population characteristics are reported in primary studies is not always sufficient enough for the purpose of TRANSFER, as has been noted by others [ 56 , 57 ]. With the availability of tools such as the TIDieR checklist and a number of CONSORT extensions, we hope that this improves and that the information that review authors seek is more readily available [ 58 , 59 , 60 ].

Our experience thus far has been that details concerning many of the TRANSFER factors prioritized by the stakeholders are not reported in the studies included in systematic reviews. In one systematic review on the effect of digital couples therapy compared to in-person therapy or no therapy, digital competence was identified as a TRANSFER factor [ 61 ]. The individual studies did not report this, so the review team examined national statistics for each of the studies included and reported this in the data extraction form [ 61 ]. The review team was unable to conduct a subgroup analysis for the TRANSFER factor. However, by comparing Norway’s national level of digital competence to that of the countries where the included studies were conducted, the authors were able to discuss transferability with respect to digital competence in the discussion section of the review [ 61 ]. They concluded that since the level of digital competence was similar in the countries of the included studies and Norway, the review authors had few concerns that this would be likely to influence the transferability of the review findings [ 61 ]. Without having identified this with stakeholders at the beginning of the process, there likely would have been no discussion of transferability, specifically the importance of digital competence in the population. Thus, even when it is not possible to do a subgroup analysis using TRANSFER factors, or even extract data related to these factors, the act of identifying these factors can contribute meaningfully to subsequent discussions of transferability.

Using TRANSFER in a qualitative evidence synthesis

Although we have not yet used TRANSFER as part of a qualitative evidence synthesis, we believe that the process would be similar to that described above. The overall TRANSFER assessment could inform the GRADE-CERQual component relevance . A research agenda is in place to examine this further.

TRANSFER for decision making

he TRANSFER Approach has two important potential impacts for stakeholders, especially decision makers: an assessment of transferability of review findings, and a close(r) collaboration review authors in refining the systematic review question and scope. A TRANSFER assessment provides stakeholders with (a) an overall assessment of the transferability of the review finding to the context(s) of interest in the review, and details regarding (b) whether and how the studies contributing data to the review finding differ from the context(s) of interest in the review, and (c) how any differences between the contexts of the included studies and the context(s) of interest in the review could influence the transferability of the review finding(s) to the context(s) of interest in the review (e.g. direction or size of effect). The TRANSFER assessment can also be used by stakeholders from other contexts to make an assessment of the transferability of the review findings to their own local context. Linked to this, TRANSFER assessments provide systematic and transparent support for assessments of the indirectness domain within GRADE and the relevance component within GRADE-CERQual. TRANSFER is a work in progress, and there are numerous avenues which need to be further investigated (see Table 13 ).

The TRANSFER Approach also supports a closer collaboration between review authors and stakeholders early in the review process, which may result in more relevant and precise review questions, greater consideration of issues important to the decision maker, and better buy-in from stakeholders in the use of systematic reviews in evidence-based decision making [ 2 ].

The TRANSFER Approach is intended to support review authors in collaborating with stakeholders to ensure that review questions are framed in a way that is most relevant for decision making and to systematically and transparently consider transferability of review findings. Many review authors already consider issues related to the transferability of findings, especially review authors applying the GRADE for effectiveness ( indirectness domain) or GRADE-CERQual ( relevance domain) approaches, and many review authors may engage with stakeholders. However current approaches to considering and assessing transferability appear to be ad hoc at best. Consequently, it often remains unclear to stakeholders how issues related to transferability were considered by review authors. By collaborating with stakeholders early in the systematic review process, reviews authors can ensure more precise and relevant review questions and an informed consideration of issues related to the transferability of the review findings. The TRANSFER Approach may therefore help to ensure that systematic reviews are relevant to and useful for decision making.

Availability of data and materials

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Abbreviations

The Grading of Recommendations Assessment, Development and Evaluation approach

The Confidence in Evidence from Reviews of Qualitative research approach

The National Institute for Health and Care Excellence

Moher D, Glasziou P, Chalmers I, Nasser M, Bossuyt P, Korevaar D, Graham I, Ravaud P, Boutron I. Increasing value and reducing waste in biomedical research: who’s listening? Lancet. 2016;387:1573–86.

PubMed Google Scholar

Oliver K, Innvar S, Lorenc T, Woodman J, Thomas J. A systematic review of barriers to and facilitators of the use of evidence by policymakers. BMC Health Serv Res. 2014;14:2.

PubMed PubMed Central Google Scholar

Tricco A, Cardoso R, Thomas S, Motiwala S, Sullivan S, Kealey M, Hemmelgarn B, Ouimet M, Hillmer M, Perrier L, et al. Barriers and facilitators to uptake of systematic reviews by policy makers and health care managers: a scoping review. Implement Sci. 2016;11:4.

Wallace J, Byrne C, Clarke M. Improving the uptake of systematic reviews: a systematic review of intervention effectiveness and relevance. BMJ Open. 2014;4:e005834.

Pawson R, Tilley N. Realist Evaluation. London: Sage; 1997.

Google Scholar

Craig P, Di Ruggiero E, Frohlich K, Mykhalovskiy E, White M, On behalf of the Canadian Institutes of Health Research (CIHR)-National Institute for Health Research (NIHR) Context Guidance Authors Group: Taking account of context in population health intervention research: guidance for producers, users and funders of research. In. Southamptom, UK: NIHR Evaluation, Trials and Studies Coordinating Centre; 2018.

Damschroder L, Aron D, Keith R, Krish S, Alexander J, Lowery J. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;7:50.

Moore G, Audrey S, Barker M, Bond L, Bonell C, et al. Process evaluation of complex interventions: Medical Research Council Guidance. Bmj. 2015;350:h1258.

Pfadenhauer L, Gerhardus A, Mozygemba K, Lysdahl K, Booth A, et al. Making sense of complexity in context and implementation: The context and implementation of Complex Interventions (CICI) Framework. Implement Sci. 2017;12(1):21.

Lewin S, Hendry M, Chandler J, Oxman A, Michie S, Shepperd S, Reeves B, Tugwell P, Hannes K, Rehfuess E, et al. Assessing the complexity of interventions within systematic reviews: development, content and use of a new tool ( iCAT_SR). BMC Med Res Methodol. 2017;17:76.

Wang S, Moss JR, Hiller JE. Applicability and transferability of interventions in evidence-based public health. Health Promot Int. 2006;21(1):76–83.

Burford B, Lewin S, Welch V, Rehfuess E, Waters E. Assessing the applicability of findings in systematic reviews of complex interventions can enhance the utility of reviews for decision making. J Clin Epidemiol. 2013;66(11):1251–61.

Cambon L, Minary L, Ridde V, Alla F. Transferability of interventions in health education: a review. BMC Public Health. 2012;12:497.

Schunemann H, Tugwell P, Reeves B, Akl E, Santesso N, Spencer F, Shea B, Wells G, Helfand M. Non-randomized studies as a source of complementary, sequential or replacement evidence for randomized controlled trials in systematic reviews on the effects of interventions. Res Synth Methods. 2013;4:49–62.

Roberts D, Dalziel S. Antenatal corticosteroids for accelerating fetal lung maturation for women at risk of preterm birth. Cochrane Database Syst Rev. 2006;19:3.

Althabe F, Belizán J, McClure E, Hemingway-Foday J, Berrueta M, Mazzoni A, et al. A population-based, multifaceted strategy to implement antenatal corticosteroid treatment versus standard care for the reduction of neonatal mortality due to preterm birth in low-income and middle-income countries: the ACT cluster-randomised trial. Lancet. 2015;385(9968):629–39.

Vogel J, Oladapo O, Pileggi-Castro C, et al. Antenatal corticosteroids for women at risk of imminent preterm birth in low-resource countries: the case for equipoise and the need for efficacy trials. BMJ Glob Health. 2017;2:e000398.

Roberts D, Brown J, Medley N, Dalziel S. Antenatal corticosteroids for accelerating fetal lung maturation for women at risk of preterm birth. Cochrane Database Syst Rev. 2017;21:3.

Organization. WH. WHO recommendations on interventions to improve preterm birth outcomes. 2015.

Guyatt G, Oxman A, Kunz R, Woodcock J, Brozek J, Helfand M, Alonso-Coello P, Falck-Ytter Y, Jaeschke R, Vist G, et al. GRADE guidelines: 8. Rating the quality of evidence—indirectness. J Clin Epidemiol. 2011;64(12):1303–10.

Schloemer T, Schröeder-Bäck P. Criteria for evaluating transferability of health interventions: a systematic review and thematic synthesis. Implement Sci. 2018;13:88.

Higgins JPT, Green S, editors. Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 [updated March 2011]. The Cochrane Collaboration; 2011. Available from www.handbook.cochrane.org .

Rosenbaum S, Glenton C, Wiysonge C, Abalos E, Migini L, Young T, Althabe F, Ciapponi A, Marti S, Meng Q, et al. Evidence summaries tailored to health policy-makers in low- and middle-income countries. Bull World Health Organ. 2011;89(1):54–61.

Evidence Aid. Evidence Aid Resources. 2017. http://www.evidenceaid.org/ . Accessed 23 June 2017.

National Institute for Health and Care Excellence. Evidence summaries: process guide. National Institute for Health and Care Excellence 2017; 2017. https://www.nice.org.uk/process/pmg31/chapter/introduction . Accessed 23 June 2017.

Lavis J. How can we suppot the use of systematic reviews in policymakign? PLoS Med. 2009;6:e1000141.

Robeson P, Dobbins M, DeCorby K, Tirilis D. Facilitating access to pre-processed research evidence in public health. BMC Public Health. 2010;10:95.

Turner T, Green S, Harris C. Supporting evidence-based health care in crises: what information do humanitarian organisations need? Disaster Med Public Health Prep. 2011;5(1):69–72.

Lavis J, Wilson M, Grimshaw J, Haynes R, Ouimet M, Raina P, Gruen R, Graham I. Supporting the use of health technology assessments in policy making about health systems. Int J Technol Assess Health Care. 2010;26(4):405–14.

Munthe-Kaas H, Nøkleby H, Nguyen L. Systematic mapping of checklists for assessing transferability. Syst Rev. 2019;8(1):22.

Ahmad N, Boutron I, Dechartres A, Durieux P, Ravaud P. Applicability and generalisability of the results of systematic reviews to public health practice and policy: a systematic review. Trials. 2010;11(1):20.

Guyatt G, Oxman A, Kunz R, Vist G, Falck-Ytter Y, Schunemann H, Group FtGW. What is “quality of evidence” and why is it important to clinicians? Bmj. 2008;336:995–8.

Lewin S, Glenton C, Munthe-Kaas H, Carlsen B, Colvin C, Gülmezoglu M, Noyes J, Booth A, Garside R, Rashidian A. Using qualitative evidence in decision making for health and social interventions: an approach to assess confidence in findings from qualitative evidence syntheses (GRADE-CERQual). PLoS Med. 2015;12(10):e1001895.

Hultcrantz M, Rind D, Akl EA, Treweek S, Mustafa RA, Iorio A, Alper BS, Meerpohl JJ, Murad MH, Ansari MT, et al. The GRADE working group clarifies the construct of certainty of evidence. J Clin Epidemiol. 2017;87:4.

Wallace J, Nwosu B, Clarke M. Barriers to the uptake of evidence from systematic reviews and meta-analyses: a systematic review of decision makers’ perceptions. BMJ Open. 2012;2:e001220.

Sakala C, Gyte G, Henderson S, Nieilson J, Horey D. Consumer-professional partnership to improve research: the experience of the Cochrane Collaboration's pregnancy and childbirth group. Birth Issues Perinat Care. 2001;28(2):133–7.

CAS Google Scholar

Wale J, Colombi C, Belizan M, Nadel J. Internation health consumers in the Cochrane collaboration: fifteen years on. J Ambul Care Manage. 2010;33(3):182–9.

Cottrell E, Whitlock E, Kato E, Uhl S, Belinson S, Chang C, Hoomans T, Meltzer D, Noorani H, Robinson K, et al. Defining the benefits of stakeholder engagement in systematic reviews. In: Research White Papers. Rockville: Agency for Healthcare Research and Quality; 2014.

Boote J, Baird W, Sutton A. Public involvement in the systematic review process in health and social care: a narrative review of case examples. Health Policy. 2011;102(2–3):105–16.

Kreis J, Puhan M, Schunemann H, Dickersin K. Consumer involvement in systematic reviews of comparative effectiveness research. Health Expect. 2013;16(4):323–37.

Haddaway N, Kohl C, Rebelo da Silva N, Sciemann J, Spök A, Stewart R, Sweet J, Wilhelm R. A framework for stakeholder engagement during systematic reviews and maps in environmental management. Environ Evid. 2017;6:11.

Pollock A, Campbell P, Baer G, Choo P, Morris J, Forster A. User involvement in a Cochrane systematic review: using structured methods to enhance the clinical relevance, usefulness and usability of a systematic review update. Syst Rev. 2015;4:55.

Atkins D, Chang S, Gartlehner G, Buckley DI, Whitlock EP, Berliner E, Matchar DB. Assessing the applicability of studies when comparing medical interventions. In: Methods Guide for Effectiveness and Comparative Effectiveness Reviews. Rockville: Agency for Healthcare Research and Quality (US); 2010. Available from: http://www.ncbi.nlm.nih.gov/books/NBK53480/ .

Craig P, Di Ruggiero E, Frohlich K, et al. Chapter 3, taking account of context in the population health intervention research process. In: Taking account of context in population health intervention research: guidance for producers, users and funders of research. NIHR Journals Library: Southampton; 2018.

Gruen R, Morris P, McDonald E, Bailie R. Making systematic reviews more useful for policy-makers. Bull World Health Organ. 2005;83(6):480–1.

Burchett HED, Blanchard L, Kneale D, Thomas H. Assessing the applicability of public health intervention evaluations from one setting to another: a methodological study of the usability and usefulness of assessment tools and frameworks. Health Res Policy Syst. 2018;16:88.

Taylor B, Dempster M, Donnelly M. Grading gems: appraising the quality of research for social work and social care. Br J Soc Work. 2007;37:335–54.

Munthe-Kaas H, Berg R, Blaasvær N. Effectiveness of interventions to reduce homelessness. A systematic review. Oslo: Norwegian Institute of Public Health; 2016.

Anderson L, Petticrew M, Rehfuess E, Armstrong R, Ueffing E, Baker P, Francis D, Tugwell P. Using logic models to capture complexity in systematic reviews. Res Synth Methods. 2011;2(1):33–42.

Kneale D, Thomas J, Harris K. Developing and optimising the use of logic models in systematic reviews: exploring practice and good practice in the use of programme theory in reviews. PLoS One. 2015;10(11):e0142187.

Oxman A, Guyatt GH. A consumer's guide to subgroup analyses. Ann Intern Med. 1992;116:78–84.

CAS PubMed Google Scholar

Dyb E, Johannessen K. Bostedsløse i Norge 2012 - en kartlegging. Norsk Institutt for by- og regionforskning: Oslo; 2013.

Sun X, Ioannidis J, Agoritsas T, Alba A, Guyatt G. How to use a subgroup analysis: Users’ guides to the medical literature. JAMA. 2014;311(4):405–11.

Sun X, Briel M, Walter S, Guyatt G. Is a subgroup effect believable? Updating criteria to evaluate the credibility of subgroup analyses. BMJ Open. 2010;340:c117.

Higgins J, Altman D, Gøtzsche P, Jüni P, Moher D, Oxman A, Savović J, Schulz K, Weeks L, Sterne J, et al. The Cochrane Collaboration's tool for assessing risk of bias in randomised trials. Bmj. 2011;343:d5928.

Glasziou P, Chalmers I, Altman D, Bastian H, Boutron I, Brice A, Jamtvedt G, Garmer A, Ghersi D, Groves T, et al. Taking healthcare interventions from trial to practice. Bmj. 2010;13(341):c3852.

Harper R, Lewin S, Lenton C, Peña-Rosas J. Completeness of reporting of setting and health worker cadre among trials on antenatal iron and folic acid supplementation in pregnancy: An assessment based on two Cochrane reviews. Syst Rev. 2013;2:42.

Hoffman T, Glasziou P, Milne R, Moher D, Altman D, Barbour V, Macdonald H, Johnston M, Lamb S, Dixon-Woods M, et al. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. Bmj. 2014;348:g1687.

Montgomery P, Grant S, Mayo-Wilson E, Macdonald G, Michie S, Hopewell S, Moher D. Reporting randomised trials of social and psychological interventions: the CONSORT-SPI 2018 extension. Trials. 2018;19(1):407.

Zwarenstein M, Treweek S, Gagnier J, Altman D, Tunis S, Haynes B, Oxman A, Moher D. Improving the reporting of pragmatic trials: an extension of the CONSORT statement. Bmj. 2008;11(337):a2390.

Nøkleby H, Flodgren G, Munthe-Kaas H, Said M. Digital interventions for couples with relationship problems: a systematic review. Oslo: Norwegian Institute of Public Health; 2018.

Cochrane Effective Practice and Organisation of Care (EPOC). Synthesizing results when it does not make sense to do a meta-analysis. EPOC Resources for review authors, 2017. epoc.cochrane.org/resources/epoc-resources-review-authors (accessed 02 January 2020).

Download references

Acknowledgements

We would like to acknowledge the intellectual support and guidance of Rigmor Berg from the Norwegian Institute of Public Health, as well as researchers from the Division for Health Services and representatives from various Norwegian welfare directorates who participated in the focus group for stakeholders. We would also like to acknowledge Eva Rehfuess who identified the example used under “Context matters” and Josh Vogel for his assistance regarding the example. We would like to thank Sarah Rosenbaum from the Norwegian Institute of Public Health for designing the diagram in Fig. 1 .

The authors’ research time was funded by the Norwegian Institute of Public Health. The authors also received funding from the Campbell Collaboration Methods Grant to support the development of the TRANSFER Approach. SL receives additional funding from the South African Medical Research Council. The funding bodies played no role in the design of the study, the collection, analysis, or interpretation of data or in writing the manuscript.

Author information

Authors and affiliations.

Norwegian Institute of Public Health, Oslo, Norway

Heather Munthe-Kaas, Heid Nøkleby, Simon Lewin & Claire Glenton

Health Systems Research Unit, South African Medical Research Council, Cape Town, South Africa

Simon Lewin

Cochrane Norway, Oslo, Norway

Claire Glenton

You can also search for this author in PubMed Google Scholar

Contributions

HMK and HN developed the framework and wrote the manuscript. CG and SL provided guidance on the development of the framework and gave feedback on the manuscript. All authors have read and approved the manuscript.

Corresponding author

Correspondence to Heather Munthe-Kaas .

Ethics declarations

Ethics approval and consent to participate.

Not applicable. This study did not undertake any formal data collection involving humans or animals. Participants in the informal focus group discussion provided verbal consent to participate. According to the Norwegian Centre for Research Data (nsd.no) online portal for determining registration of studies, this study did not warrant application for review by an ethical committee https://nsd.no/personvernombud/en/notify/index.html .

Consent for publication

Not applicable.

Competing interests

HMK, CG and SL are co-authors of the GRADE-CERQual approach and lead co-ordinators of the GRADE-CERQual coordinating group. HN has no competing interests.

Additional information

Publisher’s note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

TRANSFER characteristics of context

Transfer table of included studies, transfer assessment, rights and permissions.

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License ( http://creativecommons.org/licenses/by/4.0/ ), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated.

Reprints and permissions

About this article

Cite this article.

Munthe-Kaas, H., Nøkleby, H., Lewin, S. et al. The TRANSFER Approach for assessing the transferability of systematic review findings. BMC Med Res Methodol 20 , 11 (2020). https://doi.org/10.1186/s12874-019-0834-5

Download citation

Received : 30 November 2018

Accepted : 12 September 2019

Published : 17 January 2020

DOI : https://doi.org/10.1186/s12874-019-0834-5

Share this article

Anyone you share the following link with will be able to read this content:

Sorry, a shareable link is not currently available for this article.

Provided by the Springer Nature SharedIt content-sharing initiative

- Transferability

- Applicability

- Indirectness

- Systematic review methodology

- GRADE-CERQual

- Stakeholder engagement

BMC Medical Research Methodology

ISSN: 1471-2288

- General enquiries: [email protected]

Advertisement

To Clarity and Beyond: Situating Higher-Order, Critical, and Critical-Analytic Thinking in the Literature on Learning from Multiple Texts

- REVIEW ARTICLE

- Published: 24 March 2023

- Volume 35 , article number 40 , ( 2023 )

Cite this article

- Alexandra List ORCID: orcid.org/0000-0003-1125-9811 1 &

- Yuting Sun 2

1069 Accesses

5 Citations

1 Altmetric

Explore all metrics

For this systematic review, learning from multiple texts served as the specific context for investigating the constructs of higher-order (HOT), critical (CT), and critical-analytic (CAT) thinking. Examining the manifestations of HOT, CT, and CAT within the specific context of learning from multiple texts allowed us to clarify and disentangle these valued modes of thought. We begin by identifying the mental activities underlying the processes and outcomes of learning from multiple texts. We then juxtapose these mental activities with definitions of HOT, CT, and CAT drawn from the literature. Through this juxtaposition, we define HOT as multi-componential, including evaluation; CT as requiring both evaluation and its justification or substantiation; and CAT as considering the extent to which evaluation and justification may be consistently and systematically applied. We further generate a number of insights, described in the final section of this article. These include the frequent manifestations of HOT, CT, and CAT within the context of students learning from multiple texts and the co-occurring demand for these valued modes of thinking. We propose an additional mode of valued thought, that we refer to as devising , when learners synthetically and systematically use knowledge and strategies gained within one multiple text learning situation to produce an original product or solution in another novel learning situation. We consider such devising to demand HOT, CT, and CAT.

This is a preview of subscription content, log in via an institution to check access.

Access this article

Price includes VAT (Russian Federation)

Instant access to the full article PDF.

Rent this article via DeepDyve

Institutional subscriptions

Similar content being viewed by others

Promoting Integration of Multiple Texts: a Review of Instructional Approaches and Practices

Situating Higher-Order, Critical, and Critical-Analytic Thinking in Problem- and Project-Based Learning Environments: A Systematic Review

Reasoning beyond history: examining students’ strategy use when completing a multiple text task addressing a controversial topic in education

Data availability.

Data available upon request.

Although comprehension has been referred to as the result of both bottom-up (or passive) and top-down (i.e., purposeful and active) knowledge activation processes (Kurby et al., 2005 ; Wolfe & Goldman, 2005 ), we refer here to top-down processes or students’ deliberate engagement of prior knowledge, as reflective of HOT (Richter & Maier, 2017 ).

Bloom et al. ( 1956 ), in their original introduction of this taxonomy, did not refer to higher vis-à-vis lower levels.

This study was beyond the scope of our review as it was a dissertation; however, serves as an illustrative example here.

The multiple text literature can also benefit from considering objectives specified within Marzano and Kendall ( 2008 ) self-system. These include asking students to consider the importance of tasks to them , their efficacy for task completion, emotional responses to tasks or texts, as well as overall motivation. As suggested by a recent review from Anmarkrud et al. ( 2021 ), rarely have these self-system components been examined in the literature on learning from multiple texts, with interest constituting the motivational construct most analyzed. Recent work has started to look at the role of epistemic emotions in learning from multiple texts (Danielson et al., 2022 ; Muis et al., 2015 ).

This paper was excluded from our review as it did not have a learning outcome.

Kammerer et al. ( 2013 ) were unique in asking students to apprise their certainty in a solution to a medical controversy, described across multiple texts. We considered students’ certainty appraisals to reflect metacognition; however this was a mental activity not included among the multiple text outcomes we coded for (i.e., and was placed into the Other category), as Kammerer et al. ( 2013 ) were unique in including this as an assessment.

*Indicate references included in the review

Adams, N. E. (2015). Bloom’s Taxonomy of cognitive learning objectives. Journal of the Medical Library Association, 103 (3), 152–153. https://doi.org/10.3163/1536-5050.103.3.010

Article Google Scholar

Afflerbach, P., Cho, B.-Y., & Kim, J.-Y. (2011). The assessment of higher order thinking in reading. In G. Schraw & D. R. Robinson (Eds.), Assessment of higher order thinking skills (pp. 185–217). IAP Information Age Publishing.

Google Scholar

Afflerbach, P., Cho, B.-Y., & Kim, J.-Y. (2015). Conceptualizing and assessing higher-order thinking in reading. Theory into Practice, 54 (3), 203–212. https://doi.org/10.1080/00405841.2015.1044367

Alexander, P. A. (2014). Thinking critically and analytically about critical-analytic thinking: An introduction. Educational Psychology Review, 26 , 469–476. https://doi.org/10.1007/s10648-014-9283-1

Alexander, P. A., Dinsmore, D. L., Fox, E., Grossnickle, E. M., Loughlin, S. M., Maggioni, L., Parkinson, M. M., & Winters, F. I. (2011). Higher order thinking and knowledge: Domain-general and domain-specific trends and future directions. In G. Schraw & D. R. Robinson (Eds.), Assessment of higher order thinking skills (pp. 47–88). IAP Information Age Publishing.