
2.1: Types of Data Representation


Two common types of graphic displays are bar charts and histograms. Both bar charts and histograms use vertical or horizontal bars to represent the number of data points in each category or interval. The main graphical difference is that a bar chart has spaces between the bars and a histogram has no spaces between the bars. Why does this subtle difference exist and what does it imply about graphic displays in general?

Displaying Data

It is often easier for people to interpret relative sizes of data when that data is displayed graphically. Note that a categorical variable is a variable that can take on one of a limited number of values, and a quantitative variable is a variable that takes on numerical values that represent a measurable quantity. Examples of categorical variables are TV stations, the state someone lives in, and eye color, while examples of quantitative variables are the height of students or the population of a city. There are a few common ways of displaying data graphically that you should be familiar with.

A pie chart shows the relative proportions of data in different categories. Pie charts are excellent ways of displaying categorical data with easily separable groups. The following pie chart shows six categories labeled A−F. The size of each pie slice is determined by the central angle. Since there are 360° in a circle, the size of the central angle θ_A of category A can be found by:

θ_A = (number of data points in category A ÷ total number of data points) × 360°

CK-12 Foundation - https://www.flickr.com/photos/slgc/16173880801 - CCSA

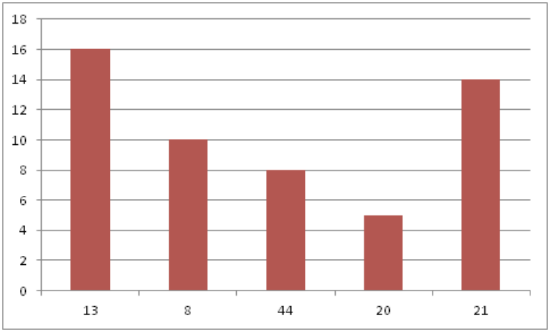

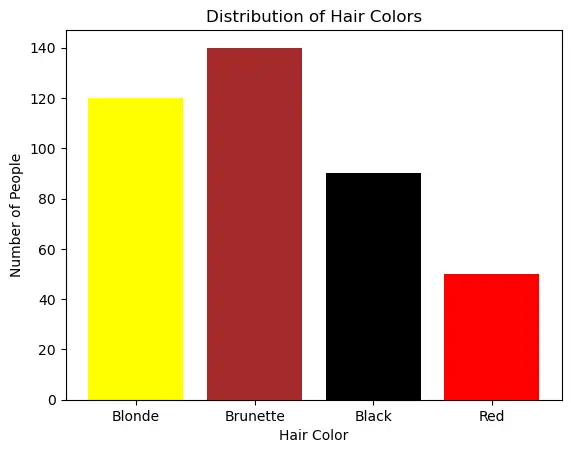

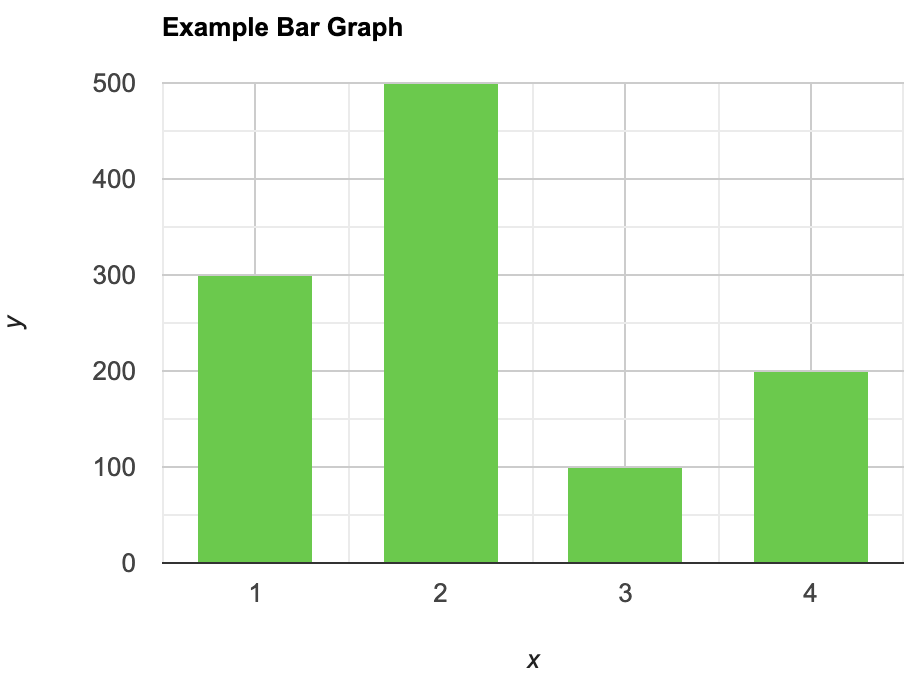

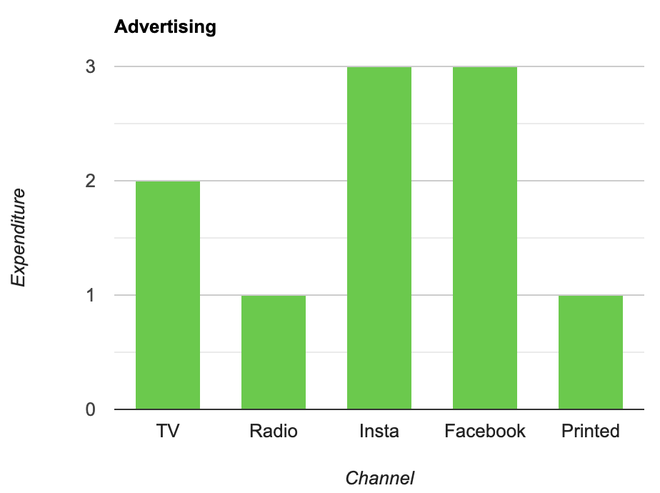

A bar chart displays frequencies of categories of data. The bar chart below has 5 categories, and shows the TV channel preferences for 53 adults. The horizontal axis could have also been labeled News, Sports, Local News, Comedy, Action Movies. The reason why the bars are separated by spaces is to emphasize the fact that they are categories and not continuous numbers. For example, just because you split your time between channel 8 and channel 44 does not mean on average you watch channel 26. Categories can be numbers so you need to be very careful.


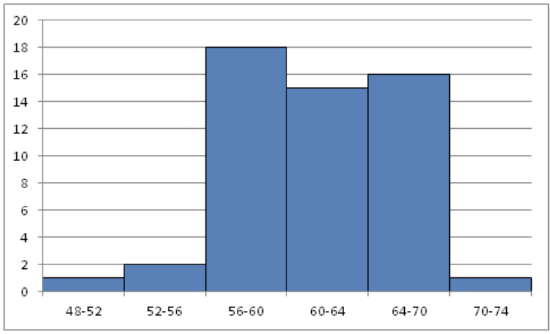

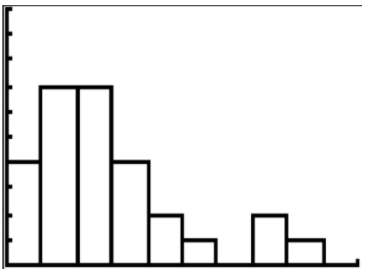

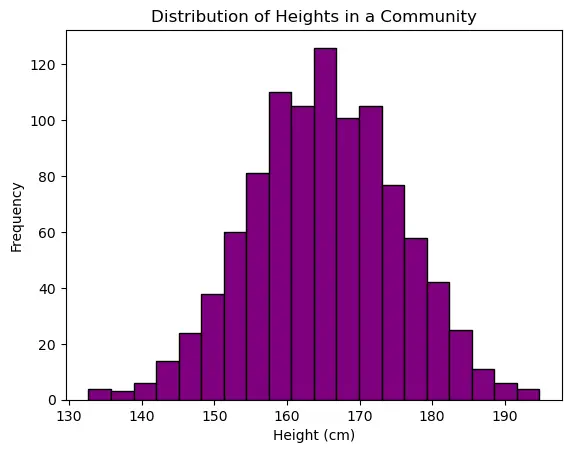

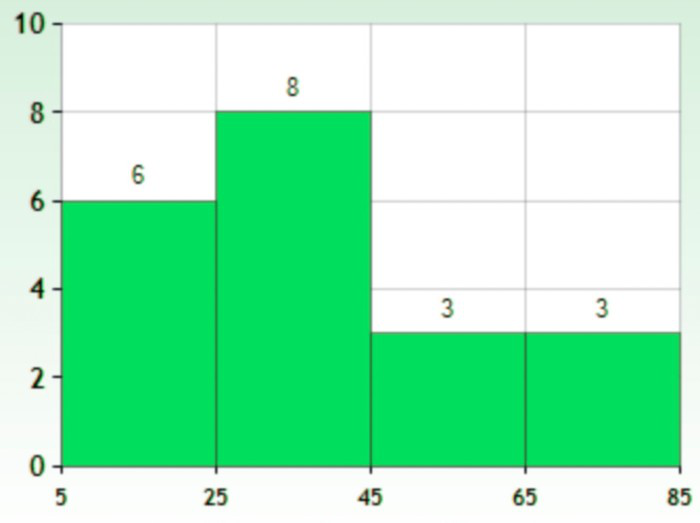

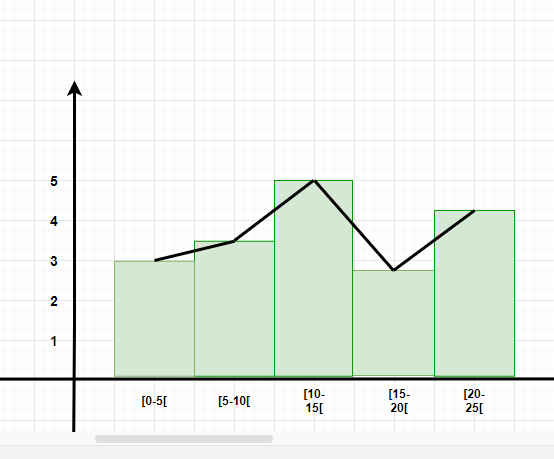

A histogram displays frequencies of quantitative data that has been sorted into intervals. The following is a histogram that shows the heights of a class of 53 students. Notice the largest category is 56-60 inches with 18 people.

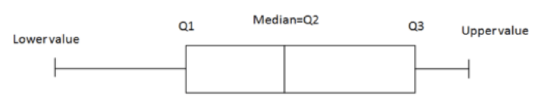

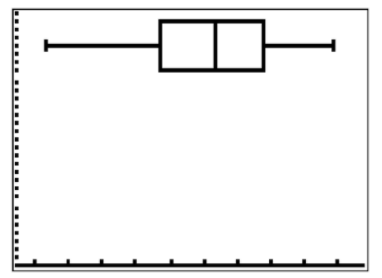

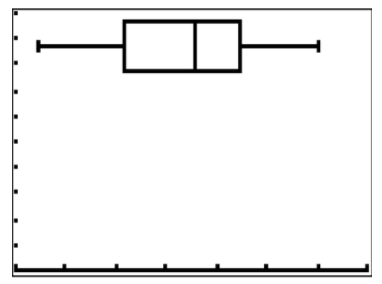

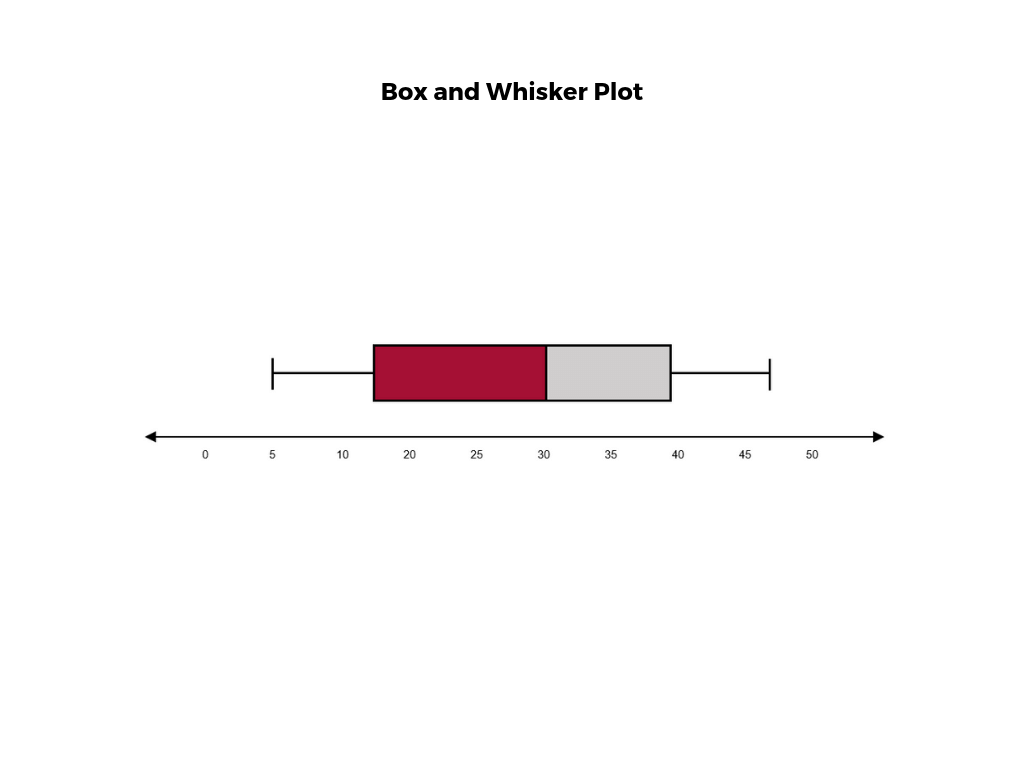

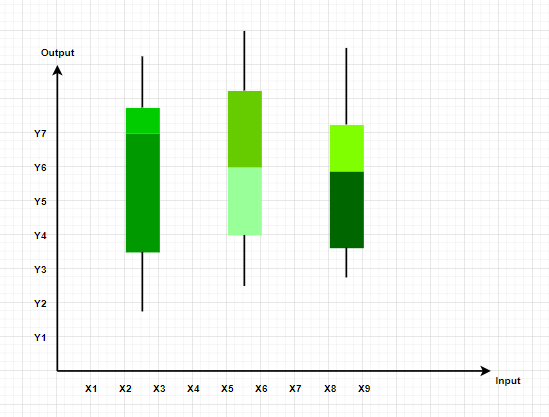

A boxplot (also known as a box-and-whisker plot) is another way to display quantitative data. It displays the five-number summary (minimum, Q1, median, Q3, maximum). The box can be displayed either vertically or horizontally, depending on the labeling of the axis. The box does not need to be perfectly symmetrical because it represents data that might not be perfectly symmetrical.

Earlier, you were asked about the difference between histograms and bar charts. Bar charts have spaces between the bars and histograms do not because bar charts graph categorical variables while histograms graph quantitative variables. Omitting the spaces in a bar chart would be misleading because you would run the risk of implying a spectrum from one side of the chart to the other. Note that in the bar chart where TV stations were shown, the station numbers were not listed horizontally in order by size. This was to emphasize the fact that the stations were categories.

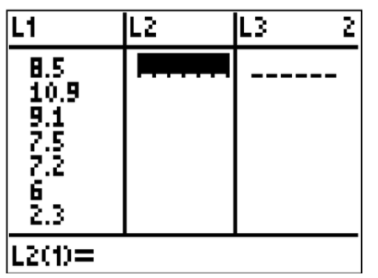

Create a boxplot of the following numbers in your calculator.

8.5, 10.9, 9.1, 7.5, 7.2, 6, 2.3, 5.5

Enter the data into L1 by going into the Stat menu.

CK-12 Foundation - CCSA

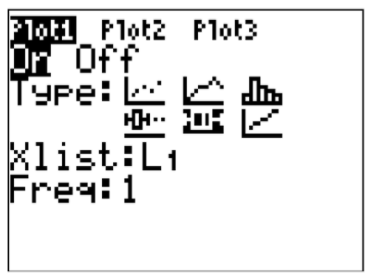

Then turn the statplot on and choose boxplot.

Use Zoomstat to automatically center the window on the boxplot.

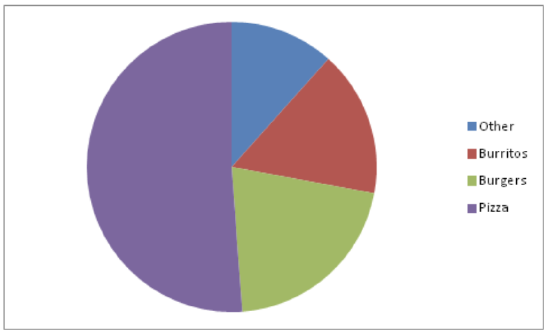
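For readers without a graphing calculator, the five-number summary behind the boxplot can be sketched in Python. This is a minimal sketch, not the calculator's own routine: the function name `five_number_summary` is hypothetical, and it assumes the median-of-halves convention for quartiles, which other software may not share.

```python
from statistics import median

def five_number_summary(values):
    """Return (minimum, Q1, median, Q3, maximum).

    Assumption: Q1 and Q3 are the medians of the lower and upper halves
    of the sorted data, excluding the middle value when the count is odd.
    """
    data = sorted(values)
    n = len(data)
    half = n // 2
    lower = data[:half]        # values below the median position
    upper = data[n - half:]    # values above the median position
    return (data[0], median(lower), median(data), median(upper), data[-1])

numbers = [8.5, 10.9, 9.1, 7.5, 7.2, 6, 2.3, 5.5]
summary = five_number_summary(numbers)
print(summary)
```

The five values returned are the positions of the left whisker end, the left edge of the box, the line inside the box, the right edge of the box, and the right whisker end.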

Create a pie chart to represent the preferences of 43 hungry students.

- Other – 5

- Burritos – 7

- Burgers – 9

- Pizza – 22

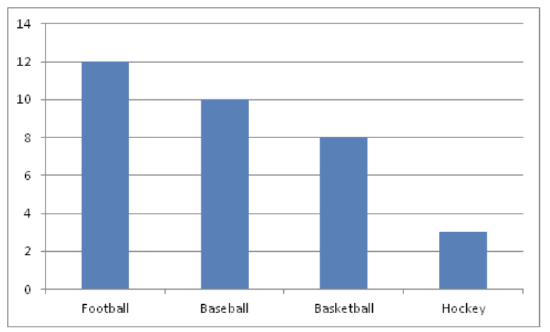
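The slice sizes for this pie chart follow from the central-angle rule: each category's share of the total, multiplied by 360°. The sketch below applies that rule to the food preferences listed above; the function name `central_angles` is a hypothetical helper introduced only for illustration.

```python
def central_angles(counts):
    """Map each category to its central angle in degrees."""
    total = sum(counts.values())
    return {category: count / total * 360 for category, count in counts.items()}

# Counts taken from the food-preference list above (43 students in total).
preferences = {"Other": 5, "Burritos": 7, "Burgers": 9, "Pizza": 22}
angles = central_angles(preferences)

for category, angle in angles.items():
    print(f"{category}: {angle:.1f} degrees")
```

Because pizza accounts for more than half of the 43 students, its slice covers more than half of the circle.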

Create a bar chart representing the preference for sports of a group of 33 people.

- Football – 12

- Baseball – 10

- Basketball – 8

- Hockey – 3

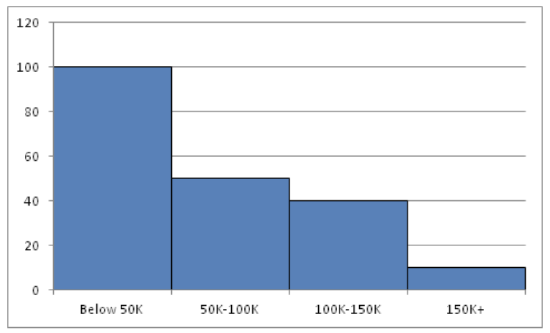
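As a rough text-only stand-in for the bar chart, the sketch below prints one bar per sport using the counts listed above. The helper name `text_bar_chart` is hypothetical; each category gets its own separate line, which echoes the point that bars in a bar chart stand for separate categories.

```python
# Counts taken from the sports-preference list above.
sports = {"Football": 12, "Baseball": 10, "Basketball": 8, "Hockey": 3}

def text_bar_chart(counts, symbol="#"):
    """Return one line per category; the bar length equals the count."""
    width = max(len(name) for name in counts)
    return [
        f"{name:<{width}} | {symbol * count} {count}"
        for name, count in counts.items()
    ]

for line in text_bar_chart(sports):
    print(line)
```

Since the categories have no numerical order, the rows could be rearranged (for example, sorted by count) without changing what the chart says.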

Create a histogram for the income distribution of 200 million people.

- Below $50,000 is 100 million people

- Between $50,000 and $100,000 is 50 million people

- Between $100,000 and $150,000 is 40 million people

- Above $150,000 is 10 million people

1. What types of graphs show categorical data?

2. What types of graphs show quantitative data?

A math class of 30 students had the following grades:

3. Create a bar chart for this data.

4. Create a pie chart for this data.

5. Which graph do you think makes a better visual representation of the data?

A set of 20 exam scores is 67, 94, 88, 76, 85, 93, 55, 87, 80, 81, 80, 61, 90, 84, 75, 93, 75, 68, 100, 98

6. Create a histogram for this data. Use your best judgment to decide what the intervals should be.

7. Find the five number summary for this data.

8. Use the five number summary to create a boxplot for this data.

9. Describe the data shown in the boxplot below.

10. Describe the data shown in the histogram below.

A math class of 30 students has the following eye colors:

11. Create a bar chart for this data.

12. Create a pie chart for this data.

13. Which graph do you think makes a better visual representation of the data?

14. Suppose you have data that shows the breakdown of registered Republicans by state. What types of graphs could you use to display this data?

15. From which types of graphs could you obtain information about the spread of the data? Note that spread is a measure of how spread out all of the data is.

Review (Answers)

To see the Review answers, open this PDF file and look for section 15.4.


Types of Data in Statistics – A Comprehensive Guide

- September 15, 2023

Statistics is a domain that revolves around the collection, analysis, interpretation, presentation, and organization of data. To appropriately utilize statistical methods and produce meaningful results, understanding the types of data is crucial.

In this Blog post we will learn

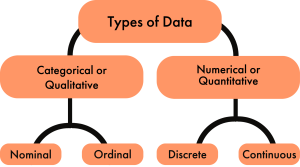

- Qualitative Data (Categorical Data) 1.1. Nominal Data: 1.2. Ordinal Data:

- Quantitative Data (Numerical Data) 2.1. Discrete Data: 2.2. Continuous Data:

- Time-Series Data:

Let’s explore the different types of data in statistics, supplemented with examples and visualization methods using Python.

1. Qualitative Data (Categorical Data)

Qualitative data, often called categorical data, can be divided into categories but cannot be measured or quantified numerically.

1.1. Nominal Data:

Nominal data is a type of categorical data that represents categories or labels without any inherent order, ranking, or numerical significance. In other words, nominal data classifies items into distinct groups or classes based on some qualitative characteristic, but the categories have no natural or meaningful order associated with them.

Key Characteristics

No Quantitative Meaning: Unlike ordinal, interval, or ratio data, nominal data does not imply any quantitative or numerical meaning. The categories are purely qualitative and serve as labels for grouping.

Arbitrary Assignment: The assignment of items to categories in nominal data is often arbitrary and based on some subjective or contextual criteria. For example, assigning items to categories like “red,” “blue,” or “green” for colors is arbitrary.

No Mathematical Operations: Arithmetic operations like addition, subtraction, or multiplication are not meaningful with nominal data because there is no numerical significance to the categories.

Examples of nominal data include:

- Gender categories (e.g., “male,” “female,” “other”).

- Marital status (e.g., “single,” “married,” “divorced,” “widowed”).

- Types of animals (e.g., “cat,” “dog,” “horse,” “bird”).

- Ethnicity or race (e.g., “Caucasian,” “African American,” “Asian,” “Hispanic”).
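Since arithmetic is not meaningful for nominal data, the usual summaries are frequency counts and the mode. A minimal sketch using Python's `collections.Counter` is shown here; the `pets` sample is made up for illustration.

```python
from collections import Counter

# Made-up sample of nominal observations (labels with no inherent order).
pets = ["cat", "dog", "dog", "bird", "cat", "dog", "horse"]

counts = Counter(pets)                        # frequency of each category
mode, mode_count = counts.most_common(1)[0]   # most frequent category

print(counts)
print(f"Most common category: {mode} ({mode_count} of {len(pets)})")
```

Note that nothing here adds or averages the labels themselves; only the counts are numbers.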

1.2. Ordinal Data:

Ordinal data is a type of categorical data that represents values with a meaningful order or ranking but does not have a consistent or evenly spaced numerical difference between the values. In other words, ordinal data has categories that can be ordered or ranked, but the intervals between the categories are not uniform or measurable.

Non-Numeric Labels: The categories in ordinal data are typically represented by non-numeric labels or symbols, such as “low,” “medium,” and “high” for levels of satisfaction or “small,” “medium,” and “large” for T-shirt sizes.

No Fixed Intervals: Unlike interval or ratio data, where the intervals between values have a consistent meaning and can be measured, ordinal data does not have fixed or uniform intervals. In other words, you cannot say that the difference between “low” and “medium” is the same as the difference between “medium” and “high.”

Limited Arithmetic Operations: Arithmetic operations like addition and subtraction are not meaningful with ordinal data because the intervals between categories are not quantifiable. However, some basic operations like counting frequencies, calculating medians, or finding modes can still be performed.

Examples of ordinal data include:

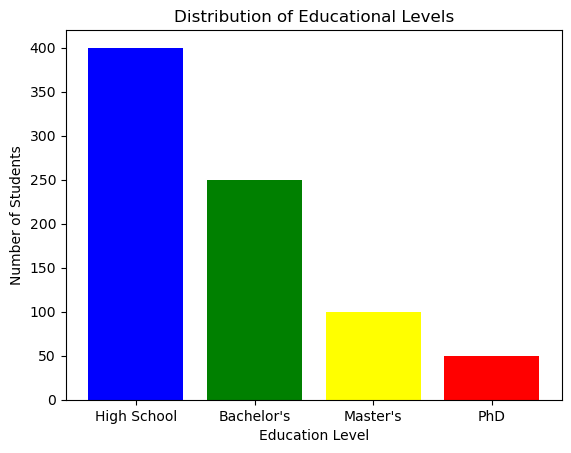

- Educational attainment levels (e.g., “high school,” “bachelor’s degree,” “master’s degree”).

- Customer satisfaction ratings (e.g., “very dissatisfied,” “somewhat dissatisfied,” “neutral,” “satisfied,” “very satisfied”).

- Likert scale responses (e.g., “strongly disagree,” “disagree,” “neutral,” “agree,” “strongly agree”).
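Because ordinal categories can be ranked, a median can be found by position even though the gaps between categories are not measurable. The sketch below is one way to do this under stated assumptions: the names `LEVELS`, `RANK`, and `ordinal_median` are hypothetical, the responses are made up, and `statistics.median_low` is used so the result is always an actual category rather than an average of two ranks.

```python
from statistics import median_low

# Categories listed from lowest to highest; the order carries the meaning.
LEVELS = [
    "very dissatisfied",
    "somewhat dissatisfied",
    "neutral",
    "satisfied",
    "very satisfied",
]
RANK = {label: position for position, label in enumerate(LEVELS)}

def ordinal_median(responses):
    """Return the middle response by rank (the lower middle one for even counts)."""
    ranks = [RANK[response] for response in responses]
    return LEVELS[median_low(ranks)]

# Made-up survey responses.
responses = ["satisfied", "neutral", "very satisfied", "satisfied", "somewhat dissatisfied"]
print(ordinal_median(responses))
```

The ranks 0 to 4 are used only for ordering; treating them as evenly spaced numbers (for example, to compute a mean) would assume intervals that ordinal data does not have.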

2. Quantitative Data (Numerical Data)

Quantitative data represents quantities and can be measured.

2.1. Discrete Data:

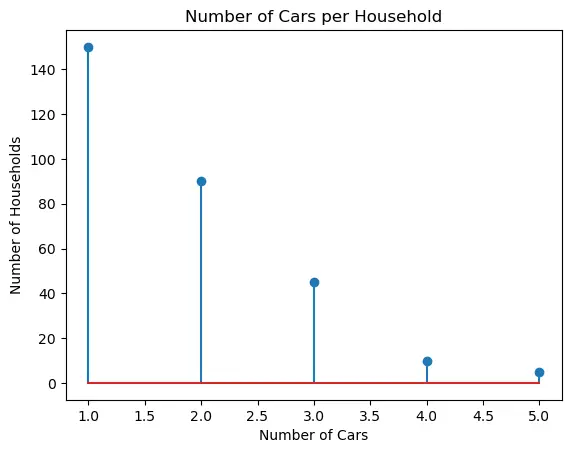

Discrete data consists of distinct, separate values. These values are typically counted and are often whole numbers, although they don’t have to be limited to integers. Discrete data can only take on specific, countable values, with no possible values in between.

Key characteristics of discrete data include:

a. Countable Values: Discrete data represents individual, separate items or categories that can be counted or enumerated. For example, the number of students in a classroom, the number of cars in a parking lot, or the number of pets in a household are all discrete data.

b. Distinct Categories: Each value in discrete data represents a distinct category or class. These categories are often non-overlapping, meaning that an item can belong to one category only, with no intermediate values.

c. Gaps between Values: There are gaps or spaces between the values in discrete data. For example, if you are counting the number of people in a household, you can have values like 1, 2, 3, and so on, but you can’t have values like 1.5 or 2.75.

d. Often Represented Graphically with Bar Charts: Discrete data is commonly visualized using bar charts or histograms, where each category is represented by a separate bar, and the height of the bar corresponds to the frequency or count of that category.

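Examples of discrete data include:

- The number of children in a family.

- The number of defects in a batch of products.

- The number of goals scored by a soccer team in a season.

- The number of days in a week on which some event occurs (the day names themselves, such as Monday and Tuesday, are categorical labels rather than discrete values).

- The number of cars of each type (sedan, SUV, truck) in a parking lot; the types themselves are nominal categories.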

2.2. Continuous Data:

Continuous data is a type of quantitative data that can take on an infinite number of values within a given range. It represents measurements that can be expressed with a high level of precision and are typically numeric in nature. Unlike discrete data, which consists of distinct, separate values, continuous data can have values at any point along a continuous scale.

Precision: Continuous data is often associated with high precision, meaning that measurements can be made with great detail. For example, temperature, height, and weight can be measured to multiple decimal places.

No Gaps or Discontinuities: There are no gaps, spaces, or jumps between values in continuous data. You can have values that are very close to each other without any distinct categories or separations.

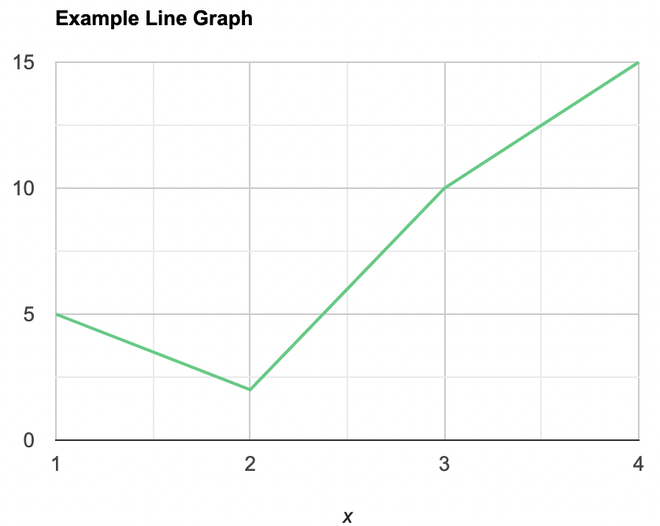

Graphical Representation: Continuous data is commonly visualized using histograms, line charts, or scatter plots. In a line chart, data points are connected with lines to show the continuous nature of the data.

Examples of continuous data include:

- Temperature readings, such as 20.5°C or 72.3°F.

- Height measurements, like 175.2 cm or 5.8 feet.

- Weight measurements, such as 68.7 kg or 151.3 pounds.

- Time intervals, like 3.45 seconds or 1.25 hours.

- Age of individuals, which can include decimals (e.g., 27.5 years).
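Continuous measurements rarely repeat exactly, so they are usually grouped into intervals before being counted. The following is a minimal sketch of that grouping step; the function name `bin_counts`, the interval width of 5 cm, and the `heights_cm` sample are all assumptions made for illustration.

```python
from collections import Counter

def bin_counts(values, start, width):
    """Count values per interval [low, low + width), with intervals starting at `start`."""
    counts = Counter()
    for value in values:
        k = int((value - start) // width)   # index of the interval containing value
        low = start + k * width
        counts[(low, low + width)] += 1
    return dict(sorted(counts.items()))

# Made-up height measurements in centimetres.
heights_cm = [175.2, 168.4, 181.0, 172.9, 169.5, 177.3, 160.1, 174.8]

for (low, high), n in bin_counts(heights_cm, start=160, width=5).items():
    print(f"{low}-{high} cm: {'#' * n}")
```

Each interval includes its lower bound and excludes its upper bound, so every measurement falls into exactly one interval.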

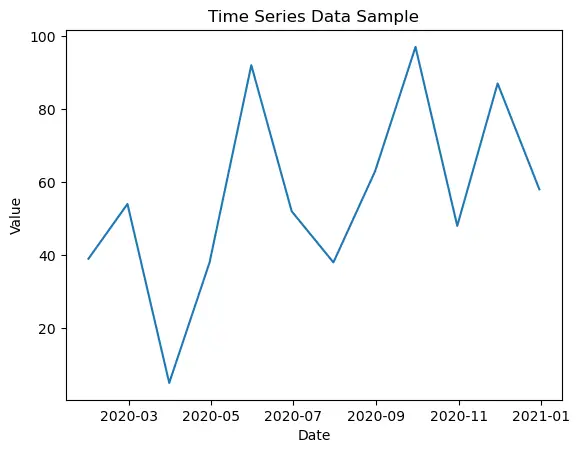

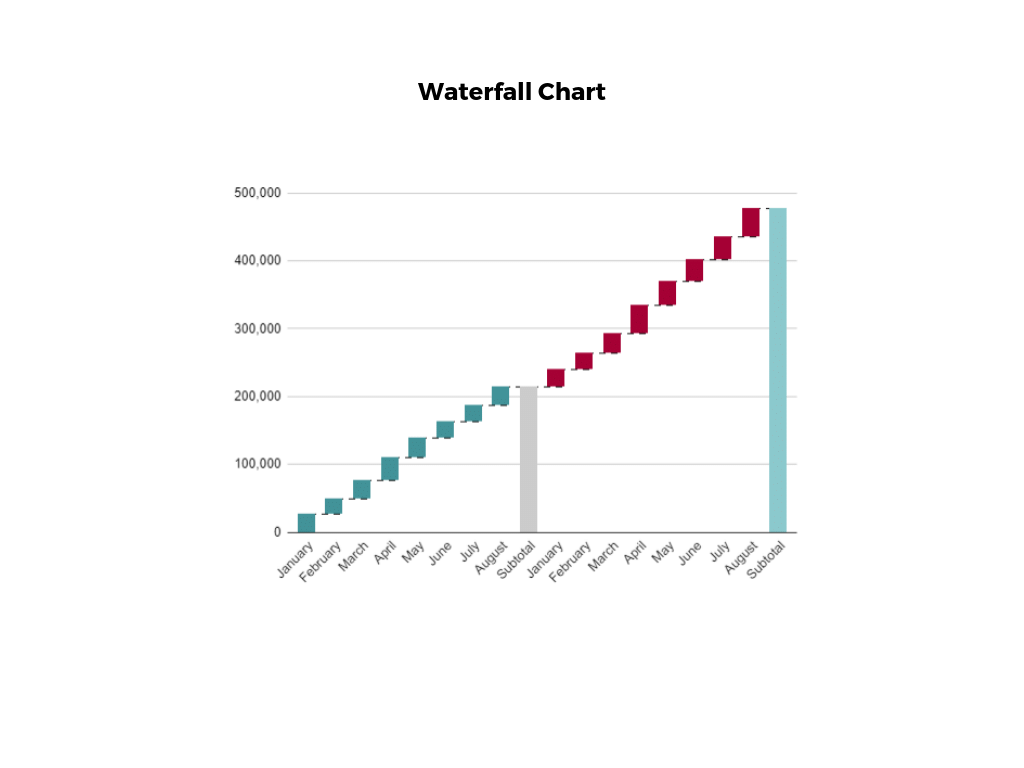

3. Time-Series Data:

Time-series data is a type of data that is collected or recorded over a sequence of points in time, often at equally spaced intervals. It represents how a particular variable or set of variables changes over time. Each data point in a time series is associated with a specific timestamp, which can be regular (e.g., hourly, daily, monthly) or irregular (e.g., timestamps recorded at random intervals).

Equally Spaced or Irregular Intervals: Time series can have equally spaced intervals, such as daily stock prices, or irregular intervals, like timestamped customer orders. The choice of interval depends on the nature of the data and the context of the analysis.

Seasonality and Trends: Time-series data often exhibits seasonality, which refers to repeating patterns or cycles, and trends, which represent long-term changes or movements in the data. Understanding these patterns is crucial for forecasting and decision-making.

Noise and Variability: Time series may contain noise or random fluctuations that make it challenging to discern underlying patterns. Statistical techniques are often used to filter out noise and identify meaningful patterns.

Applications: Time-series data is widely used in various fields, including finance (stock prices, economic indicators), meteorology (weather data), epidemiology (disease outbreaks), and manufacturing (production processes), among others. It is valuable for making predictions, monitoring trends, and understanding the dynamics of processes over time.

Visualization : Line charts are most suitable for time-series data.
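One simple technique for reducing noise in an equally spaced time series is a moving average. The sketch below is only one of many possible smoothing methods and is not prescribed by the text; the function name `moving_average`, the window size, and the `daily_values` series are assumptions for illustration.

```python
def moving_average(series, window):
    """Average each run of `window` consecutive observations."""
    if window < 1 or window > len(series):
        raise ValueError("window must be between 1 and len(series)")
    return [
        sum(series[i:i + window]) / window
        for i in range(len(series) - window + 1)
    ]

# Made-up, equally spaced daily observations.
daily_values = [10, 12, 9, 14, 13, 15, 11, 16]
smoothed = moving_average(daily_values, window=3)
print(smoothed)
```

A larger window smooths more aggressively but returns fewer points and responds more slowly to genuine changes in the trend.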

4. Conclusion

Understanding the types of data is crucial as each type requires different methods of analysis. For instance, you wouldn’t use the same statistical test for nominal data as you would for continuous data. By categorizing your data correctly, you can apply the most suitable statistical tools and draw accurate conclusions.


Categorical Representation


- Arash Shaban-Nejad


Categorization ; Categorical analysis

The origin of the term “categories” is the Greek word “Κατηγορίαι” (Katēgoriai), which refers to the manuscript written by Aristotle, wherein he defined ten fundamental modes (categories) of being (things), namely substance, quantity, quality, relative (relation), somewhere (location), sometime (when), being-in-a-position, having (state), acting, or being affected (Ackrill 1975). The word “representation,” as defined by the Oxford English Dictionary, means “the action or fact of expressing or denoting [a thing] symbolically.” Categorical representation can be described as the process of expressing things in different modes and layers of abstraction based on similarities and differences in their attributes and relations. Categorical representation has been a subject of study in knowledge representation, mathematics, cognitive science, linguistics, philosophy, psychology, art, and so forth. Members of a category have common...


Ackrill, J. L. (1975). Aristotle: Categories and de interpretatione (Clarendon Aristotle Series). USA: Oxford University Press.


Berg-Cross, G. (2006). Developing knowledge for intelligent agents: Exploring parallels in ontological analysis and epigenetic robotics. NIST PerMIS conferences 2006.

Harnad, S. (1987). Category induction and representation. In S. Harnad (Ed.), Categorical perception: The groundwork of cognition. New York: Cambridge University Press. Chapter 18.

Harnad, S. (1996). The origin of words: A psychophysical hypothesis. In B. Velichkovsky & D. Rumbaugh (Eds.), Communicating meaning: Evolution and development of language (pp. 27–44). New Jersey: Erlbaum.

Harnad, S. (2005). To cognize is to categorize: Cognition is categorization. In H. Cohen & C. Lefebvre (Eds.), Handbook of categorization in cognitive science (pp. 19–43). Amsterdam: Elsevier.

Thagard, P., & Toombs, E. (2005). Atoms, categorization and conceptual change. In H. Cohen & C. Lefebvre (Eds.), Handbook of categorization in cognitive science (pp. 243–254). Amsterdam: Elsevier.


Author information

Authors and affiliations.

McGill Clinical & Health Informatics, Department of Epidemiology, Biostatistics and Occupational Health, McGill University, 1140 Pine Avenue West, H3A 1A3, Montreal, QC, Canada

Arash Shaban-Nejad


Corresponding author

Correspondence to Arash Shaban-Nejad.

Editor information

Editors and affiliations.

Faculty of Economics and Behavioral Sciences, Department of Education, University of Freiburg, 79085, Freiburg, Germany

Norbert M. Seel


Copyright information

© 2012 Springer Science+Business Media, LLC

About this entry

Cite this entry.

Shaban-Nejad, A. (2012). Categorical Representation. In: Seel, N.M. (eds) Encyclopedia of the Sciences of Learning. Springer, Boston, MA. https://doi.org/10.1007/978-1-4419-1428-6_529


DOI : https://doi.org/10.1007/978-1-4419-1428-6_529

Publisher Name : Springer, Boston, MA

Print ISBN : 978-1-4419-1427-9

Online ISBN : 978-1-4419-1428-6

eBook Packages : Humanities, Social Sciences and Law


CHAPTER 4: DATA MEASUREMENT

4-3: Types of Data and Appropriate Representations

Introduction

Graphs and charts can be effective visual tools because they present information quickly and easily. Graphs and charts condense large amounts of information into easy-to-understand formats that clearly and effectively communicate important points. Graphs are commonly used by print and electronic media as they quickly convey information in a small space. Statistics are often presented visually as they can effectively facilitate understanding of the data. Different types of graphs and charts are used to represent different types of data.

Types of Data

There are four types of data used in statistics: nominal data, ordinal data, discrete data, and continuous data. Nominal and ordinal data fall under the umbrella of categorical (qualitative) data, while discrete data and continuous data fall under the umbrella of quantitative data.

Qualitative Data

Categorical or qualitative data sorts observations into categories. Categorical data is defined in terms of natural language specifications. For example, name, sex, and country of origin are categories that represent qualitative data. There are two subcategories of qualitative data: nominal data and ordinal data.

Nominal Data

Ordinal Data

When the categories have a natural order, the categories are said to be ordinal. Ordinal data can be ordered, but the differences between categories cannot be measured. For example, education level (H.S. diploma; 1 year certificate; 2 year degree; 4 year degree; masters degree; doctorate degree), satisfaction rating (extremely dislike; dislike; neutral; like; extremely like), etc. are categories that have a natural order to them. Categorical data is commonly used for collecting demographic information (age group, sex, race, etc.). This is particularly prevalent in marketing and insurance sectors, but it is also used by governments (e.g. the census), and is commonly used when conducting customer satisfaction surveys. Ordinal data is commonly represented using a bar graph.

Quantitative Data

Quantitative data has two subcategories, discrete data and continuous data.

Discrete Data

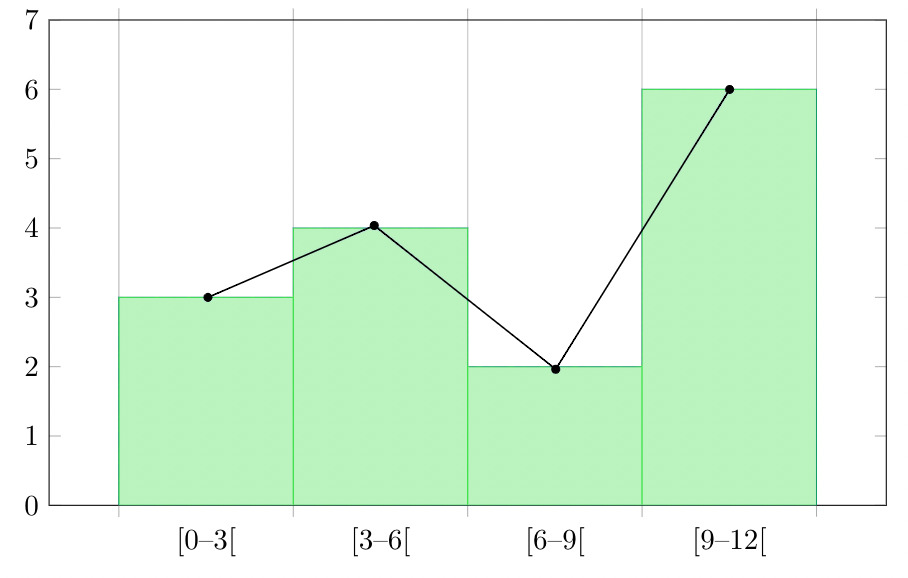

The data is discrete when the numbers do not touch each other on a real number line (e.g., 0, 1, 2, 3, 4…). Discrete data consists of whole numerical values, typically shown as counts, and contains only a finite number of possible values within a given range. Examples include the number of visits to the doctor, the number of students in a class, etc. Discrete data is typically represented by a histogram.

Continuous Data

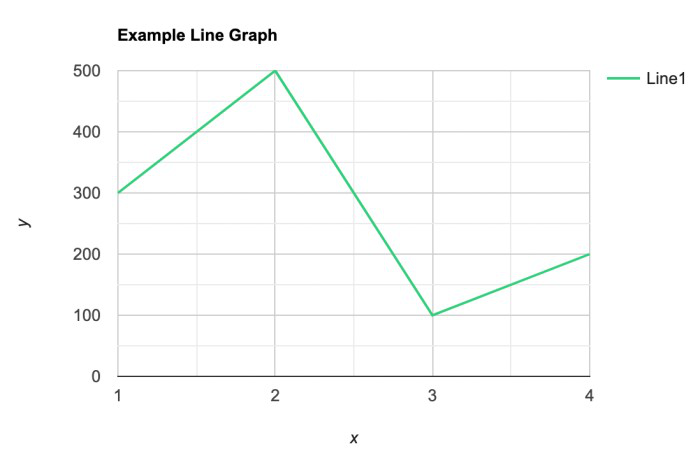

The data is continuous when it has an infinite number of possible values that can be selected within certain limits (i.e., the numbers run into each other on a real number line). Continuous data is data that can be measured or calculated. Examples of continuous data are temperature, time, height, etc. Continuous data is typically represented by a line graph.

Explore 1 – Types of data

Classify the data into qualitative or quantitative, then into a subcategory of nominal, ordinal, discrete or continuous.

- weight

Weight is a number that is measured and has order. It can also take on any number. So, weight is quantitative: continuous.

- egg size (small, medium, large, extra large)

Egg size is typically small, medium, large, or extra large that has a natural order. So, egg size is qualitative: ordinal.

- number of miles driven to work

Number of miles is a number that is measured and has order. It can also take on any number. So, number of miles is quantitative: continuous.

- body temperature

Body temperature is a number that is measured and has order. It can also take on any number. So, temperature is quantitative: continuous.

- basketball team jersey number

Jersey numbers have no order and are numbers that are not measured. So, jersey number is qualitative: nominal.

- U.S. shoe size

Shoe size is a number. It is calculated based on a formula that includes the measure of your foot length. However, it has only whole or half numbers (e.g., 8 or 9.5). Shoe size has a natural order but has a finite number of options (e.g., half or whole numbers). So, shoe size is quantitative: discrete.

- military rank

Military rank is not numerical but is categorical with a natural order. So, military rank is qualitative: ordinal.

- university GPA

University GPA is a weighted average that is calculated, so it is quantitative: continuous.

Practice Exercises

- year of birth

- levels of fluency (language)

- height of players on a team

- dose of medicine

- political party

- course letter grades

- Quantitative: discrete

- Qualitative: ordinal

- Quantitative: continuous

- Qualitative: nominal

Types of Graphs and Charts

The type of graph or chart used to visualize data is determined by the type of data being represented. A pie chart or bar chart is typically used for nominal data and a bar chart for ordinal data. For quantitative data, we typically use a histogram for discrete data and a line graph for continuous data.
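The pairings in the paragraph above can be written down as a small lookup table. This is only a sketch of this chapter's rule of thumb, not a general standard; the names `TYPICAL_CHARTS`, `PARENT_TYPE`, and `describe` are hypothetical.

```python
# Pairings as stated in this chapter; other references may recommend other charts.
TYPICAL_CHARTS = {
    "nominal": ["pie chart", "bar chart"],
    "ordinal": ["bar chart"],
    "discrete": ["histogram"],
    "continuous": ["line graph"],
}

# Each subtype belongs to one of the two broad kinds of data.
PARENT_TYPE = {
    "nominal": "qualitative",
    "ordinal": "qualitative",
    "discrete": "quantitative",
    "continuous": "quantitative",
}

def describe(subtype):
    """Return a one-line summary such as 'qualitative: ordinal -> bar chart'."""
    key = subtype.lower()
    if key not in TYPICAL_CHARTS:
        raise ValueError(f"unknown data type: {subtype!r}")
    charts = " or ".join(TYPICAL_CHARTS[key])
    return f"{PARENT_TYPE[key]}: {key} -> {charts}"

for name in TYPICAL_CHARTS:
    print(describe(name))
```

For example, `describe("ordinal")` corresponds to the classification of egg size or military rank in Explore 1.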

A pie chart is a circular graphic which is divided into slices to illustrate numerical proportion. Pie charts are widely used in the business world and the mass media. The size of each slice is determined by the percentage represented by a category compared to the whole (i.e., the entire dataset). The percentage in each category adds to 100% or the whole.

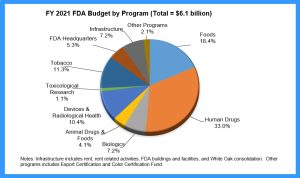

Explore 2 – Pie Charts

The pie chart shows the distribution of the Food and Drug Administration’s Budget of different programs for the fiscal year 2021. The total budget was $6.1 billion. [1]

- How many categories are shown in the pie chart?

If we count the number of slices, there are 10 categories shown.

- What do the percentages represent?

The percentages show the percent of the $6.1 billion FDA budget that was spent on each category.

- Why is it vital to show the total budget on the chart?

Without the total budget we would be unable to calculate the amount spent on each category.

- Is there a limit to the number of categories that can be shown on a pie chart?

Yes. If the slices are too small to see, another method of representing the data should be used. Ideally, a pie chart should show no more than 5 or 6 categories.

- What does the largest slice represent?

The percentage of the total budget spent on human drugs.

- What does the smallest slice represent?

The percentage of the total budget spent on toxicological research.

- How could this pie chart be improved?

The slices could be ordered around the circle by size, and the 3-D look could be eliminated to avoid the distorted perspective and to make the graph clearer.

- Is this an appropriate use of a pie chart?

The chart is showing a comparison of all categories the budget went towards so it is appropriate.

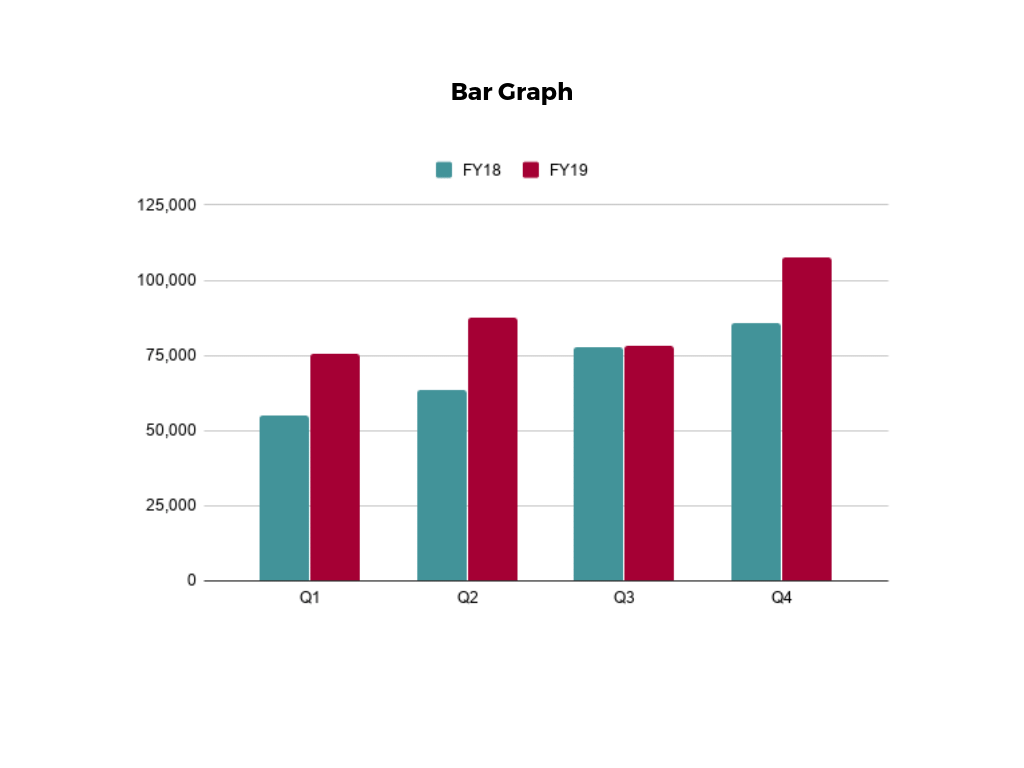

Bar graphs are used to represent categorical data. Each category is represented as a bar, drawn either vertically or horizontally. The length of a bar represents the measured value or percentage of its category, and there is equal space between each pair of consecutive bars. Bar graphs have the advantage of being easy to read and offer direct comparison of categories.

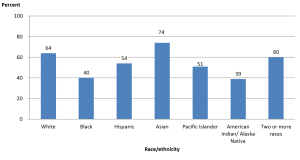

Explore 3 – Bar Graphs

Graduation rates within 6 years from the first institution attended for first-time, full-time bachelor’s degree-seeking students at 4-year postsecondary institutions, by race/ethnicity: cohort entry year 2010.

- How many categories are represented in the bar graph and what do they represent?

There are 7 categories representing the race/ethnicity of the students.

- What do the numbers above each bar represent and why may they be necessary?

The rounded percent of the category. They are necessary because it is very difficult to tell from the vertical scale the height of each bar.

- What does the tallest bar represent?

The percent of Asian students who graduated within 6 years from their first institution.

- What does the shortest bar represent?

The percent of American Indian or Alaska Native students who graduated within 6 years from their first institution.

- Is this an appropriate use of a bar graph?

Yes. The data is qualitative: nominal; there is no order within the categories.

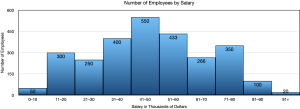

Histograms are used to represent quantitative data that is discrete. A histogram divides up the range of possible values in a data set into classes or intervals. For each class, a rectangle is constructed with a base length equal to the range of values in that specific class and a height that, for classes of equal width, equals the number of observations falling into that class. A histogram has an appearance similar to a vertical bar chart, but there are no gaps between the bars. The bars are ordered along the axis from the smallest to the largest possible value. Consequently, the bars cannot be reordered. Histograms are often used to illustrate the major features of the distribution of the data in a convenient form. They are also useful when dealing with large data sets (greater than 100 observations). They can help detect any unusual observations (outliers) or any gaps in the data.

Histograms may look similar to bar charts but they are really completely different. Histograms plot quantitative data with ranges of the data grouped into classes or intervals while bar charts plot categorical data. Histograms are used to show distributions while bar charts are used to compare categories. Bars can be reordered in bar charts but not in histograms. The bars of bar charts have the same width. The widths of the bars in a histogram need not be the same as long as the total area of all bars is one hundred percent if percentages are used or the total count, if counts are used. Therefore, values in bar graphs are given by the length of the bar while values in histograms are given by areas.
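When class widths differ, the statement that histogram values are given by areas means each bar's height should be the count divided by the class width. The sketch below illustrates this under stated assumptions: the function name `bar_heights` is hypothetical and the three classes are made up.

```python
def bar_heights(classes):
    """Given (low, high, count) classes, return (low, high, height) tuples.

    The height is count / class width, so that width * height (the bar's
    area) equals the count for that class.
    """
    return [(low, high, count / (high - low)) for low, high, count in classes]

# Made-up classes with unequal widths: two of width 10 and one of width 30.
classes = [(0, 10, 20), (10, 20, 30), (20, 50, 30)]
heights = bar_heights(classes)

total_area = sum((high - low) * height for low, high, height in heights)

for low, high, height in heights:
    print(f"{low}-{high}: height {height}")
print(f"Total area: {total_area}")
```

The second and third classes hold the same number of observations, yet the third bar is one third as tall because it is three times as wide. The total area equals the total count of 80.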

Explore 4 – Histograms

Reading data from a table can be less than enlightening and certainly doesn’t inspire much interest. Graphing the same data in a histogram gives a graphical representation where certain features are automatically highlighted.

- What do you notice about the bars of this histogram compared to the bars of a bar graph?

The bars touch in a histogram but not in a bar chart. This is because the data is ordered along the axis.

- What do the numbers above the bars represent?

The number of employees whose salary lands in each class.

- State a feature of the graph that is very obvious to you.

Answers may vary. Very few employees make less than $10,000 or more than $91,000. $41,000 – $50,000 is the most common salary.

Line graphs are used when the data is quantitative and continuous. The axis acts as a real number line where every possible value is located. Line graphs are typically used to show how data values change over time.

Explore 5 – Line Graphs

Here is an example of a line graph.

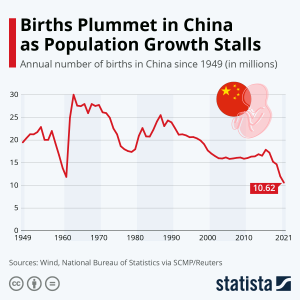

- What does this line graph represent?

Solution: The number of annual births in China from 1949 to 2021.

- What do the numbers on the vertical axis represent?

Solution: The number of births in millions.

- What do the numbers on the horizontal axis represent?

Solution: The year.

- Is this an appropriate use of a line graph?

Solution: Yes. The time scale in years is continuous and a line graph is appropriate for continuous data.

- Does a line graph highlight anything that a histogram may not?

Solution: Yes. The trend in data over time. In this graph the trend of annual births is decreasing.

Infographics are often used by media outlets who are trying to tell a specific (often biased) story. They often combine charts or graphs with narrative and statistics.

Explore 6 – Infographics

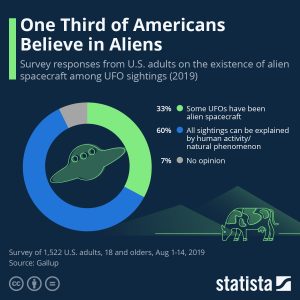

Solution: Since it is circular and based on percentages in each category, it is based on a pie chart.

- How many categories are represented?

Solution: There are three categories.

- What story is the infographic trying to tell?

Solution: About one third of Americans believe in aliens.

- How was the data gathered?

Solution: A survey of 1522 U.S. adults.

- What does the largest blue area on the chart represent?

Solution: The percentage of those surveyed that believe that all sightings can be explained by human activity or natural phenomena.

- What does the smallest grey area on the chart represent?

Solution: The percentage of those surveyed that have no opinion on UFO sightings.

- Robert is involved in a group project for a class. The group has collected data to show the amount of time spent performing different tasks on a cell phone. The categories include making calls, Internet, text, music, videos, social media, email, games, and photos. What type of graph or chart should be used to display the average time spent per day on any of these tasks? Explain your reasoning.

- A marketing firm wants to show what fraction of the overall market uses a particular Internet browser. What type of graph or chart should be used to display this information? Explain your reasoning.

- The data is categorical so a bar graph should be used.

- The data is categorical. If there are not too many categories (browser used) then a pie chart would work since fraction of the market is used. Alternatively, a bar chart could be used showing the fraction or percent as the height of each bar.

- Name three (3) differences between a bar graph and a histogram.

- A bar graph is used for qualitative data while a histogram is used for quantitative data.

- In a bar graph the categories can be reordered. In a histogram the categories cannot be reordered.

- In a bar graph the bars do not touch. In a histogram the bars touch.

- A teacher wants to show their class the results of a midterm exam, without exposing any student names. What type of graph or chart should be used to display the scores earned on the midterm? Explain your reasoning.

- A pizza company wants to display a graphic of the five favorite pizzas of their customers on the company website. What type of graph or chart should be used to display this information? Explain your reasoning.

- Maria is keeping track of her daughter’s height by measuring her height on her birthday each year and recording it in a spreadsheet. What type of graph or chart should be used to display this information? Explain your reasoning.

- Midterm scores may be quantitative, as either raw scores or percentages, in which case a histogram should be used to show the number of students scoring in each score (or percentage) interval. If the midterm results are letter grades, the data is qualitative but ordered. In this case, a pie chart could be used to show the percent of students with each letter grade, but it would be very busy. A better option would be a bar graph showing the number of students at each letter grade.

- An infographic. This is categorical data so a (pizza) pie chart would be a good option or a bar chart.

- A line graph since the data is collected over time and time is continuous.

Perspectives

- Mike has collected data for a school project from a survey that asked, “What is your favorite pizza? ”. He surveyed 200 people and discovered that there were only 9 pizzas that were on the favorites list. In his report, he plans to show his data in a (pizza) pie chart. Is this the correct chart to use for his purpose? Explain your reasoning.

- Sarah is keeping track of the value of her car every year. She started when she first bought the car new and looks up its value every year. She figures that when the car’s value drops to $5000, it is time for an upgrade. What type of graph or chart should be used to display this information? Explain your reasoning.

- The Earth’s atmosphere is made up of 77% Nitrogen, 21% Oxygen, and 2% other gases. What type of graph or chart should be used to display this data? Explain your reasoning.

- A pie chart could be used but with 9 categories there may be too many slices for the chart to be clear. A bar graph may be better due to the number of categories.

- A line graph since time is continuous and she will be able to see the trend in car value over time.

- The data is qualitative: nominal and has percentages that add to 100% so a pie chart would work well with only 3 categories. Alternatively, a bar chart would work.

Skills Exercises

- phone number

- https://www.fda.gov/about-fda/fda-basics/fact-sheet-fda-glance ↵

able to be put into categories

data that can be given labels and put into categories

qualitative data that can be put into labelled categories that have no order and no overlap

having nothing in common; no overlap

the number of times a data value has been recorded

a number or ratio expressed as a fraction of 100

a circular graphic which is divided into slices representing the number or percentage in each category

qualitative data that has a natural order

a graph where each category is represented by a vertical or horizontal bar that measures a frequency or percentage of the whole

expressed using a number or numbers

data that involves numerical values with order

data that is measured using whole numbers with only a finite number of possibilities

a graph similar in appearance to a vertical bar graph but with no gaps between the bars, ordered bars, and a base length equal to the range of values in a specific class

data that has an infinite number of possible values that can be selected within certain limits

use arithmetic and the order of operations

a graph used for continuous data that uses an axis as a real number line where every possible value is located

a graphic showing a combination of graphs, charts, and statistics

Numeracy Copyright © 2023 by Utah Valley University is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.



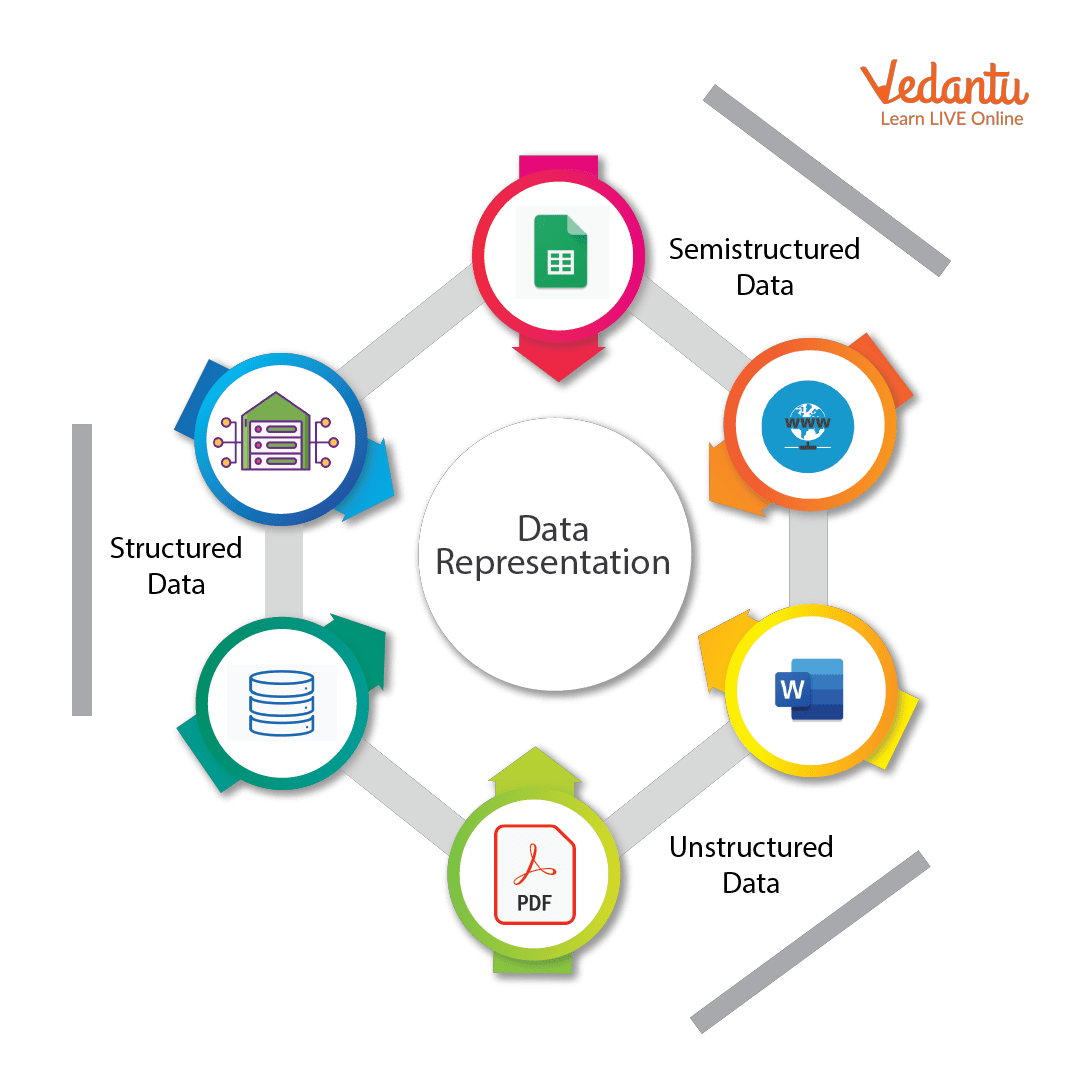

Data Representation


Course Computer Architecture

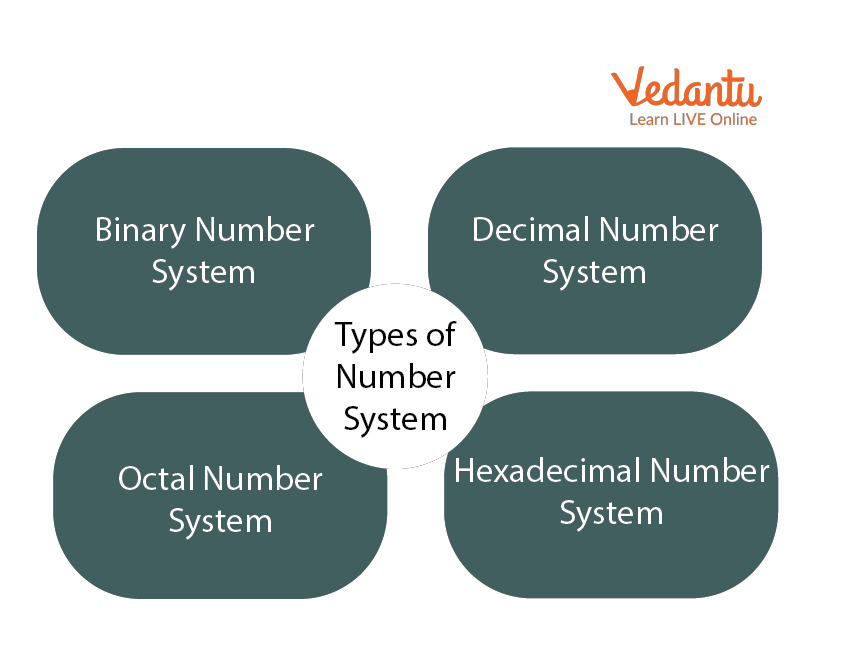

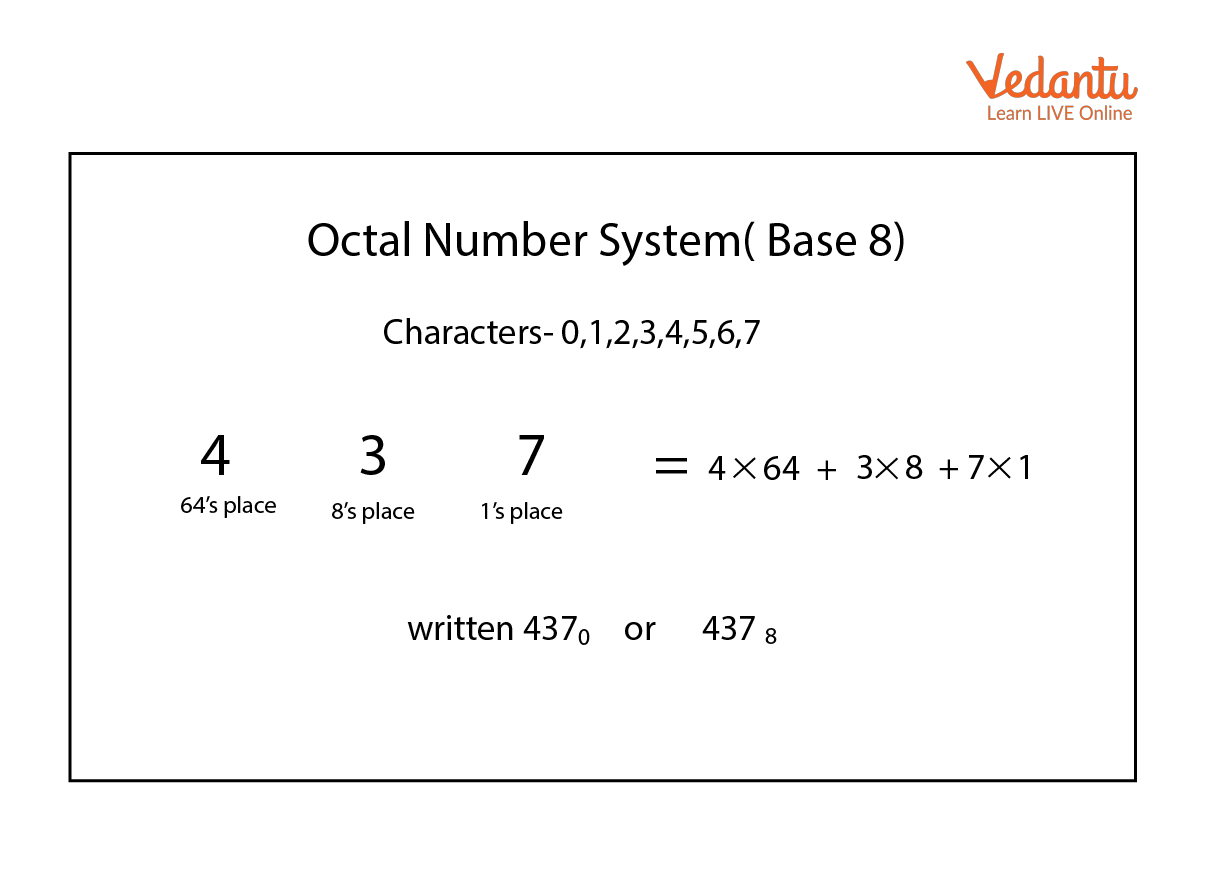

Digital computers store and process information in binary form because digital logic has only two values: "1" and "0", also described as "True or False" or "ON or OFF". This system is called radix 2. Humans generally work in radix 10, i.e. decimal. As a matter of convenience there are other representations as well, such as Octal (radix 8), Hexadecimal (radix 16) and Binary Coded Decimal (BCD).

Every CPU has a width measured in bits, such as an 8-bit CPU, a 16-bit CPU, a 32-bit CPU, etc. Similarly, each memory location can store a fixed number of bits, called the memory width. Given the size of the CPU and memory, it is up to the programmer to handle data representation. Most readers will know that 4 bits form a nibble and 8 bits form a byte. The word length is defined by the Instruction Set Architecture of the CPU and may be equal to the width of the CPU.

The memory simply stores information as a binary pattern of 1's and 0's. What the content of a memory location means is a matter of interpretation. If the CPU is in the Fetch cycle, it interprets the fetched memory content as an instruction and decodes it based on the instruction format. In the Execute cycle, the information from memory is treated as data. As ordinary users of a computer, we think computers handle English or other alphabets, special characters or numbers. A programmer considers memory content to be the data types of the programming language in use. Recall figures 1.2 and 1.3 of chapter 1: conversion happens between the computer's user interface and the internal representation and storage.

- Data Representation in Computers

Information handled by a computer is classified as instruction and data. A broad overview of the internal representation of the information is illustrated in figure 3.1. No matter whether it is data in a numeric or non-numeric form or integer, everything is internally represented in Binary. It is up to the programmer to handle the interpretation of the binary pattern and this interpretation is called Data Representation . These data representation schemes are all standardized by international organizations.

Choice of Data representation to be used in a computer is decided by

- The number types to be represented (integer, real, signed, unsigned, etc.)

- Range of values likely to be represented (maximum and minimum to be represented)

- The Precision of the numbers i.e. maximum accuracy of representation (floating point single precision, double precision etc)

- If non-numeric i.e. character, character representation standard to be chosen. ASCII, EBCDIC, UTF are examples of character representation standards.

- The hardware support in terms of word width, instruction.

Before we go into the details, let us take an example of interpretation. Say a byte in Memory has value "0011 0001". Although there exists a possibility of so many interpretations as in figure 3.2, the program has only one interpretation as decided by the programmer and declared in the program.
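As a rough illustration of how one bit pattern admits several readings, the following C++ sketch interprets the byte "0011 0001" three ways. The three readings chosen here (unsigned integer, ASCII character, packed BCD) are assumptions for illustration and may not match the set shown in figure 3.2; `Readings` and `interpret` are hypothetical names.

```cpp
#include <cstdint>

// Three readings of the same byte; none is "the" meaning until the program picks one.
struct Readings {
    unsigned as_unsigned;  // plain binary integer
    char as_ascii;         // character code (assumes an ASCII-compatible charset)
    unsigned as_bcd;       // two packed BCD digits, one per nibble
};

Readings interpret(std::uint8_t byte) {
    unsigned high = byte >> 4;   // upper nibble
    unsigned low = byte & 0x0F;  // lower nibble
    return Readings{byte, static_cast<char>(byte), high * 10 + low};
}
```

For the byte 0x31 this gives the integer 49, the character '1', and the BCD value 31. Which one applies is decided by the declaration in the program, not by the memory itself.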

- Fixed point Number Representation

Fixed point numbers are also known as whole numbers or Integers. The number of bits used in representing the integer also implies the maximum number that can be represented in the system hardware. However for the efficiency of storage and operations, one may choose to represent the integer with one Byte, two Bytes, Four bytes or more. This space allocation is translated from the definition used by the programmer while defining a variable as integer short or long and the Instruction Set Architecture.

In addition to the bit length definition for integers, we also have a choice to represent them as below:

- Unsigned Integer : A positive number including zero can be represented in this format. All the allotted bits are utilised in defining the number. So if 8 bits are used to represent the unsigned integer, 2^8 = 256 distinct values can be represented, i.e. "0" to "255". If 16 bits are used, 2^16 = 65,536 values can be represented, i.e. "0" to "65535".

- Signed Integer : In this format negative numbers, zero, and positive numbers can be represented. A sign bit indicates the magnitude direction as positive or negative. There are three possible representations for signed integers: Sign Magnitude format, 1's Complement format and 2's Complement format.
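The unsigned range can be checked with a one-line calculation. This is a minimal sketch; `unsigned_max` is a hypothetical helper and is only valid for widths from 1 to 63 bits as written.

```cpp
#include <cstdint>

// Largest value an n-bit unsigned integer can hold: 2^n - 1 (valid for 1 <= n <= 63 here).
constexpr std::uint64_t unsigned_max(unsigned n) {
    return (std::uint64_t{1} << n) - 1;
}
```

`unsigned_max(8)` is 255 and `unsigned_max(16)` is 65535, matching the ranges quoted above.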

Signed Integer – Sign Magnitude format: Most Significant Bit (MSB) is reserved for indicating the direction of the magnitude (value). A "0" on MSB means a positive number and a "1" on MSB means a negative number. If n bits are used for representation, n-1 bits indicate the absolute value of the number. Examples for n=8:


0010 1111 = + 47 Decimal (Positive number)

1010 1111 = - 47 Decimal (Negative Number)

0111 1110 = +126 (Positive number)

1111 1110 = -126 (Negative Number)

0000 0000 = + 0 (Positive Number)

1000 0000 = - 0 (Negative Number)

Although this method is easy to understand, Sign Magnitude representation has several shortcomings like

- Zero can be represented in two ways causing redundancy and confusion.

- The total range for magnitude representation is limited to 2^(n-1) values, although n bits are used.

- The separate sign bit makes the addition and subtraction more complicated. Also, comparing two numbers is not straightforward.
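The n=8 examples above can be reproduced with a small decoder. This is a sketch only; `from_sign_magnitude` is a hypothetical name.

```cpp
#include <cstdint>

// Decode an 8-bit sign-magnitude pattern: bit 7 is the sign, bits 6..0 the magnitude.
int from_sign_magnitude(std::uint8_t bits) {
    int magnitude = bits & 0x7F;
    bool negative = (bits & 0x80) != 0;
    return negative ? -magnitude : magnitude;
}
```

Both 0000 0000 and 1000 0000 decode to zero, which is the redundancy listed among the shortcomings.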

Signed Integer – 1’s Complement format: In this format too, the MSB is reserved as the sign bit. The difference is in how negative numbers are represented: the bits of the corresponding positive number are inverted, hence the name 1’s Complement form. Positive numbers are represented as they are in binary. Some examples:

1101 0000 = - 47 Decimal (Negative Number)

1000 0001 = -126 (Negative Number)

1111 1111 = - 0 (Negative Number)
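A minimal C++ sketch of the 1's complement encoding for 8 bits follows. `to_ones_complement` is a hypothetical helper, and it assumes the input lies in the range -127 to 127.

```cpp
#include <cstdint>

// Encode a value in the range -127..127 as an 8-bit 1's complement pattern.
std::uint8_t to_ones_complement(int value) {
    if (value >= 0) {
        return static_cast<std::uint8_t>(value);  // positives are plain binary
    }
    // Negatives: invert every bit of the magnitude, then keep the low 8 bits.
    return static_cast<std::uint8_t>(~static_cast<unsigned>(-value));
}
```

Encoding -47 gives 1101 0000 and encoding -126 gives 1000 0001, as in the examples above.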

- Converting a given binary number to its 2's complement form

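Step 1. -x = x' + 1, where x' is the one's complement of x.

Step 2. To extend the data width of the number, fill the added high-order bits with copies of the MSB (sign extension).

Example: -47 decimal in an 8-bit representation

0010 1111 = + 47 Decimal

1101 0000 = 1's complement of 47 (every bit inverted)

1101 0001 = - 47 Decimal in 2's complement (1's complement plus 1)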

In 2’s complement, zero is not represented redundantly: there is only one way of representing zero. The complexity of arithmetic operations is also reduced in 2’s complement representation, because subtraction is done as addition.
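The two steps (invert and add one, then sign-extend) can be sketched in C++ as below. The function names are hypothetical and the widths are fixed at 8 and 16 bits for simplicity.

```cpp
#include <cstdint>

// Negate an 8-bit pattern the two-step way: invert the bits (x'), then add 1.
std::uint8_t twos_complement_negate(std::uint8_t x) {
    std::uint8_t inverted = static_cast<std::uint8_t>(~x);  // one's complement x'
    return static_cast<std::uint8_t>(inverted + 1);          // -x = x' + 1
}

// Sign-extend an 8-bit two's complement pattern to 16 bits by copying the MSB.
std::uint16_t sign_extend_8_to_16(std::uint8_t x) {
    return (x & 0x80) ? static_cast<std::uint16_t>(0xFF00 | x) : x;
}
```

Negating 0010 1111 (+47) yields 1101 0001 (-47), and negating zero yields zero again, so there is only one zero pattern.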

More exercises on number conversion are left to the self-interest of readers.

- Floating Point Number system

The largest whole number that can be represented with n bits is 2^n - 1. In the scientific world, we come across numbers such as the mass of an electron, 9.10939 x 10^-31 kg, and the velocity of light, 2.99792458 x 10^8 m/s. Imagine writing such a number on a piece of paper without an exponent and converting it into binary for computer representation. You would surely be tired! It makes no sense to write a number in a form that is neither readable nor processable. Hence we write such large or small numbers using an exponent and a mantissa. This is called Floating Point representation or real number representation. The real number system has infinitely many values between 0 and 1.

Representation in computer

Unlike the two's complement representation for integer numbers, a Floating Point number uses Sign and Magnitude representation for the mantissa, while the exponent is stored as a separate field (in IEEE 754, as a biased value). In the number 9.10939 x 10^31, in decimal form, +31 is the Exponent and 9.10939 is known as the Fraction. Mantissa, Significand and Fraction are used synonymously. In the computer, the representation is binary and the binary point is not fixed. For example, the number 23.345 can be written as 2.3345 x 10^1 or 0.23345 x 10^2 or 2334.5 x 10^-2. The representation 2.3345 x 10^1 is said to be in normalised form.

Floating-point numbers usually use multiple words in memory, as we need to allot a sign bit, a few bits for the exponent and many bits for the mantissa. There are standards for such allocation, which we will see shortly.

- IEEE 754 Floating Point Representation

The IEEE 754 standard defines two widely used formats, Single Precision and Double Precision. These formats enable portability among different computers. Figure 3.3 shows Single Precision while figure 3.4 shows Double Precision. Single Precision uses a 32-bit format while Double Precision uses a 64-bit word length. As the name suggests, Double Precision can represent fractions with greater accuracy. In both cases, the MSB is the sign bit for the mantissa, followed by the Exponent and then the Mantissa. The exponent has no separate sign bit; it is stored as a biased value (bias 127 in Single Precision, 1023 in Double Precision).

In Single Precision, the 8-bit exponent field covers exponents from -126 to +127 for normalised numbers. As a result of arithmetic operations, the resulting exponent may not fit in this range. This situation is called overflow when the exponent is too large and underflow when it is too small. The Double Precision format has 11 bits for the exponent, covering exponents from -1022 to +1023 for normalised numbers. The programmer has to choose between a Single Precision and a Double Precision declaration based on knowledge of the data being handled.
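As a rough sketch of the Single Precision layout, the following C++ splits a `float` into its sign, exponent and fraction fields. It assumes the platform's `float` is IEEE 754 single precision (checked at compile time); `FloatFields` and `split_float` are hypothetical names.

```cpp
#include <cstdint>
#include <cstring>
#include <limits>

static_assert(std::numeric_limits<float>::is_iec559 && sizeof(float) == 4,
              "this sketch assumes float is IEEE 754 single precision");

struct FloatFields {
    unsigned sign;           // 1 bit
    unsigned biased_exp;     // 8 bits, stored with a bias of 127
    std::uint32_t fraction;  // 23 bits
};

FloatFields split_float(float f) {
    std::uint32_t bits;
    std::memcpy(&bits, &f, sizeof bits);  // copy the raw bit pattern
    FloatFields out;
    out.sign = static_cast<unsigned>(bits >> 31);
    out.biased_exp = static_cast<unsigned>((bits >> 23) & 0xFF);
    out.fraction = bits & 0x7FFFFF;
    return out;
}
```

For 1.0f the stored exponent field is 127, which is a true exponent of 0 after subtracting the bias. For -0.15625f (that is, -1.25 x 2^-3) the sign is 1, the stored exponent is 124 and the fraction field is 0x200000.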

Floating Point operations on a regular CPU are very slow. Generally, a special-purpose processor known as a co-processor is used, working in tandem with the main CPU. The programmer should use the float declaration only if the data is in real number form. Float declarations are not to be used liberally.

- Decimal Numbers Representation

Decimal numbers (radix 10) are represented and processed in the system with the support of additional hardware. We deal with numbers in decimal format in everyday life. Some machines implement decimal arithmetic too, like floating-point arithmetic hardware. In such a case, the CPU uses decimal numbers in BCD (binary coded decimal) form and does BCD arithmetic operation. BCD operates on radix 10. This hardware operates without conversion to pure binary. It uses a nibble to represent a number in packed BCD form. BCD operations require not only special hardware but also decimal instruction set.
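Packed BCD stores one decimal digit per nibble. The sketch below packs a two-digit value into a byte and unpacks it again; it assumes values from 0 to 99, and the function names are hypothetical.

```cpp
#include <cstdint>

// Pack a value 0..99 into one byte: tens digit in the high nibble, ones in the low.
std::uint8_t to_packed_bcd(unsigned value) {
    return static_cast<std::uint8_t>(((value / 10) << 4) | (value % 10));
}

// Reverse the packing: read each nibble as a decimal digit.
unsigned from_packed_bcd(std::uint8_t bcd) {
    return (bcd >> 4) * 10u + (bcd & 0x0Fu);
}
```

Decimal 47 becomes the byte 0100 0111 (0x47), whereas its pure binary form would be 0010 1111 (0x2F).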

- Exceptions and Error Detection

All of us know that when we do arithmetic operations, we get answers which have more digits than the operands (Ex: 8 x 2= 16). This happens in computer arithmetic operations too. When the result size exceeds the allotted size of the variable or the register, it becomes an error and exception. The exception conditions associated with numbers and number operations are Overflow, Underflow, Truncation, Rounding and Multiple Precision . These are detected by the associated hardware in arithmetic Unit. These exceptions apply to both Fixed Point and Floating Point operations. Each of these exceptional conditions has a flag bit assigned in the Processor Status Word (PSW). We may discuss more in detail in the later chapters.
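In hardware the overflow condition is reported through a flag bit. In software the same condition can be checked by doing the arithmetic in a wider type, as in this minimal sketch for signed 8-bit addition. `add_overflows_int8` is a hypothetical helper, not a description of how any particular processor sets its flag.

```cpp
#include <cstdint>
#include <limits>

// True when a + b would not fit in a signed 8-bit result.
bool add_overflows_int8(std::int8_t a, std::int8_t b) {
    int wide = static_cast<int>(a) + static_cast<int>(b);  // exact in a wider type
    return wide > std::numeric_limits<std::int8_t>::max() ||
           wide < std::numeric_limits<std::int8_t>::min();
}
```

Adding 100 and 100 overflows an 8-bit signed register (the true sum is 200, above 127), while 100 plus 27 fits exactly.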

- Character Representation

Another data type is non-numeric and consists largely of character sets. We use a human-understandable character set to communicate with the computer, for both input and output. Standard character sets like EBCDIC and ASCII are chosen to represent alphabets, numbers and special characters. Nowadays the Unicode standard is also in use, covering non-English languages such as Chinese, Hindi and Spanish. These codes are available on the internet. Interested readers may access them and learn more.



2: Graphical Representations of Data


In this chapter, you will study numerical and graphical ways to describe and display your data. This area of statistics is called "Descriptive Statistics." You will learn how to calculate, and even more importantly, how to interpret these measurements and graphs.

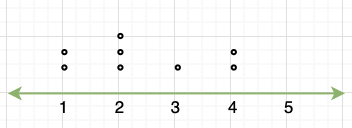

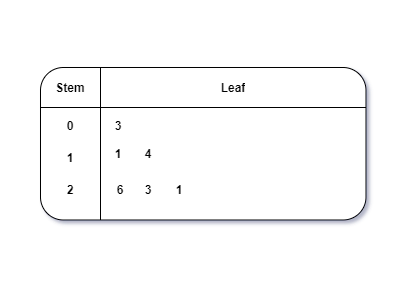

- 2.1: Introduction In this chapter, you will study numerical and graphical ways to describe and display your data. This area of statistics is called "Descriptive Statistics." You will learn how to calculate, and even more importantly, how to interpret these measurements and graphs. In this chapter, we will briefly look at stem-and-leaf plots, line graphs, and bar graphs, as well as frequency polygons, and time series graphs. Our emphasis will be on histograms and box plots.

- 2.2: Stem-and-Leaf Graphs (Stemplots), Line Graphs, and Bar Graphs A stem-and-leaf plot is a way to plot data and look at the distribution, where all data values within a class are visible. The advantage in a stem-and-leaf plot is that all values are listed, unlike a histogram, which gives classes of data values. A line graph is often used to represent a set of data values in which a quantity varies with time. These graphs are useful for finding trends. A bar graph is a chart that uses either horizontal or vertical bars to show comparisons among categories.

- 2.3: Histograms, Frequency Polygons, and Time Series Graphs A histogram is a graphic version of a frequency distribution. The graph consists of bars of equal width drawn adjacent to each other. The horizontal scale represents classes of quantitative data values and the vertical scale represents frequencies. The heights of the bars correspond to frequency values. Histograms are typically used for large, continuous, quantitative data sets. A frequency polygon can also be used when graphing large data sets with data points that repeat.

- 2.4: Using Excel to Create Graphs Using technology to create graphs will make the graphs faster to create, more precise, and give the ability to use larger amounts of data. This section focuses on using Excel to create graphs.

- 2.5: Graphs that Deceive It's common to see graphs displayed in a misleading manner in social media and other instances. This could be done purposefully to make a point, or it could be accidental. Either way, it's important to recognize these instances to ensure you are not misled.

- 2.E: Graphical Representations of Data (Exercises) These are homework exercises to accompany the Textmap created for "Introductory Statistics" by OpenStax.

Contributors and Attributions

Barbara Illowsky and Susan Dean (De Anza College) with many other contributing authors. Content produced by OpenStax College is licensed under a Creative Commons Attribution License 4.0 license. Download for free at http://cnx.org/contents/[email protected] .

- Data representation

Bytes of memory

- Abstract machine

Unsigned integer representation

Signed integer representation, pointer representation, array representation, compiler layout, array access performance, collection representation.

- Consequences of size and alignment rules

Uninitialized objects

Pointer arithmetic, undefined behavior.

- Computer arithmetic

Arena allocation

This course is about learning how computers work, from the perspective of systems software: what makes programs work fast or slow, and how properties of the machines we program impact the programs we write. We want to communicate ideas, tools, and an experimental approach.

The course divides into six units:

- Data representation

- Assembly & machine programming

- Storage & caching

- Kernel programming

- Process management

- Concurrency

The first unit, data representation , is all about how different forms of data can be represented in terms the computer can understand.

Computer memory is kind of like a Lite Brite.

A Lite Brite is a big black backlit pegboard coupled with a supply of colored pegs, in a limited set of colors. You can plug in the pegs to make all kinds of designs. A computer’s memory is like a vast pegboard where each slot holds one of 256 different colors. The colors are numbered 0 through 255, so each slot holds one byte. (A byte is a number between 0 and 255, inclusive.)

A slot of computer memory is identified by its address. On a computer with M bytes of memory, and therefore M slots, you can think of the address as a number between 0 and M-1. My laptop has 16 gibibytes of memory, so M = 16×2^30 = 2^34 = 17,179,869,184 = 0x4'0000'0000, a very large number!

The problem of data representation is the problem of representing all the concepts we might want to use in programming—integers, fractions, real numbers, sets, pictures, texts, buildings, animal species, relationships—using the limited medium of addresses and bytes.

Powers of ten and powers of two. Digital computers love the number two and all powers of two. The electronics of digital computers are based on the bit, the smallest unit of storage, which is a base-two digit: either 0 or 1. More complicated objects are represented by collections of bits. This choice has many scale and error-correction advantages. It also refracts upwards to larger choices, and even into terminology. Memory chips, for example, have capacities based on large powers of two, such as 2^30 bytes. Since 2^10 = 1024 is pretty close to 1,000, 2^20 = 1,048,576 is pretty close to a million, and 2^30 = 1,073,741,824 is pretty close to a billion, it’s common to refer to 2^30 bytes of memory as “a gigabyte,” even though that actually means 10^9 = 1,000,000,000 bytes. But for greater precision, there are terms that explicitly signal the use of powers of two. 2^30 bytes is a gibibyte: the “-bi-” component means “binary.”

Virtual memory. Modern computers actually abstract their memory spaces using a technique called virtual memory . The lowest-level kind of address, called a physical address , really does take on values between 0 and M −1. However, even on a 16GiB machine like my laptop, the addresses we see in programs can take on values like 0x7ffe'ea2c'aa67 that are much larger than M −1 = 0x3'ffff'ffff . The addresses used in programs are called virtual addresses . They’re incredibly useful for protection: since different running programs have logically independent address spaces, it’s much less likely that a bug in one program will crash the whole machine. We’ll learn about virtual memory in much more depth in the kernel unit ; the distinction between virtual and physical addresses is not as critical for data representation.

Most programming languages prevent their users from directly accessing memory. But not C and C++! These languages let you access any byte of memory with a valid address. This is powerful; it is also very dangerous. But it lets us get a hands-on view of how computers really work.

C++ programs accomplish their work by constructing, examining, and modifying objects . An object is a region of data storage that contains a value, such as the integer 12. (The standard specifically says “a region of data storage in the execution environment, the contents of which can represent values”.) Memory is called “memory” because it remembers object values.

In this unit, we often use functions called hexdump to examine memory. These functions are defined in hexdump.cc . hexdump_object(x) prints out the bytes of memory that comprise an object named x , while hexdump(ptr, size) prints out the size bytes of memory starting at a pointer ptr .

For example, in datarep1/add.cc , we might use hexdump_object to examine the memory used to represent some integers:

This display reports that a , b , and c are each four bytes long; that a , b , and c are located at different, nonoverlapping addresses (the long hex number in the first column); and shows us how the numbers 1, 2, and 3 are represented in terms of bytes. (More on that later.)
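The course's `hexdump.cc` is not reproduced here. As a rough stand-in, the following self-contained sketch copies out and prints the bytes of an object. `bytes_of` and `print_bytes` are hypothetical names, the output format is an assumption rather than the real `hexdump_object` format, and the helpers are only meant for trivially copyable types such as `int`.

```cpp
#include <cstddef>
#include <cstdio>
#include <cstring>
#include <vector>

// Copy out the bytes that make up an object (hypothetical helper, not the course's hexdump).
template <typename T>
std::vector<unsigned char> bytes_of(const T& object) {
    std::vector<unsigned char> bytes(sizeof(T));
    std::memcpy(bytes.data(), &object, sizeof(T));
    return bytes;
}

// Print an object's address followed by its bytes in hex.
template <typename T>
void print_bytes(const T& object) {
    std::printf("%p ", static_cast<const void*>(&object));
    for (unsigned char b : bytes_of(object)) {
        std::printf(" %02x", static_cast<unsigned>(b));
    }
    std::printf("\n");
}
```

Calling `print_bytes(a)` for an `int a = 1` prints `sizeof(int)` bytes, exactly one of which is `01`. Which position it occupies depends on the machine's byte order.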

The compiler, hardware, and standard together define how objects of different types map to bytes. Each object uses a contiguous range of addresses (and thus bytes), and objects never overlap (objects that are active simultaneously are always stored in distinct address ranges).

Since C and C++ are designed to help software interface with hardware devices, their standards are transparent about how objects are stored. A C++ program can ask how big an object is using the sizeof keyword. sizeof(T) returns the number of bytes in the representation of an object of type T, and sizeof(x) returns the size of object x. The result of sizeof is a value of type size_t, which is an unsigned integer type large enough to hold any representable size. On 64-bit architectures, such as x86-64 (our focus in this course), size_t can hold numbers between 0 and 2^64 - 1.
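A few uses of `sizeof` are sketched below. The `Pair` struct and the constant names are hypothetical, and only the properties guaranteed by the language are asserted, since exact sizes of types like `int` vary by platform.

```cpp
#include <cstddef>

// sizeof works on types and on objects; both forms yield a std::size_t.
struct Pair {
    int first;
    int second;
};

constexpr std::size_t char_size = sizeof(char);     // always 1 by definition
constexpr std::size_t pair_size = sizeof(Pair);     // at least two ints
constexpr std::size_t array_size = sizeof(int[4]);  // exactly 4 * sizeof(int)

static_assert(char_size == 1, "a char is one byte");
static_assert(array_size == 4 * sizeof(int), "array elements are contiguous");
static_assert(pair_size >= 2 * sizeof(int), "a struct is at least as big as its members");
```

Because `sizeof` is evaluated at compile time, these checks cost nothing when the program runs.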

Qualitatively different objects may have the same data representation. For example, the following three objects have the same data representation on x86-64, which you can verify using hexdump :

In C and C++, you can’t reliably tell the type of an object by looking at the contents of its memory. That’s why tricks like our different addf*.cc functions work.

An object can have many names. For example, here, local and *ptr refer to the same object:

The different names for an object are sometimes called aliases .
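Aliasing can be illustrated with a minimal sketch like the following. It reuses the names `local` and `ptr` from the text, but the function `alias_demo` and the values are assumptions, not the course's original listing.

```cpp
// `local` and `*ptr` name the same object, so a write through one is visible through the other.
int alias_demo() {
    int local = 1;
    int* ptr = &local;  // ptr holds the address of local
    *ptr = 2;           // modifies local through its alias
    return local;       // now 2
}
```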

There are five objects here:

- ch1 , a global variable

- ch2 , a constant (non-modifiable) global variable

- ch3 , a local variable

- ch4 , a local variable

- the anonymous storage allocated by new char and accessed by *ch4

Each object has a lifetime , which is called storage duration by the standard. There are three different kinds of lifetime.

- static lifetime: The object lasts as long as the program runs. ( ch1 , ch2 )

- automatic lifetime: The compiler allocates and destroys the object automatically as the program runs, based on the object’s scope (the region of the program in which it is meaningful). ( ch3 , ch4 )

- dynamic lifetime: The programmer allocates and destroys the object explicitly. (the anonymous storage accessed by *ch4 )
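The three kinds of lifetime can be sketched with declarations like these. The variable names follow the list of five objects above, but the initial values and the function `lifetimes_demo` are assumptions made for illustration.

```cpp
char ch1 = 'A';        // global variable: static lifetime
const char ch2 = 'B';  // constant global variable: static lifetime

char lifetimes_demo() {
    char ch3 = 'C';             // local variable: automatic lifetime
    char* ch4 = new char{'D'};  // ch4 itself is automatic; the char it points to is dynamic
    char result = *ch4;
    delete ch4;                 // dynamic objects must be destroyed explicitly
    (void) ch3;                 // silence unused-variable warnings
    return result;
}
```

Forgetting the `delete` would leak the dynamically allocated `char`, and using `*ch4` after the `delete` would be a use-after-free.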

Objects with dynamic lifetime aren’t easy to use correctly. Dynamic lifetime causes many serious problems in C programs, including memory leaks, use-after-free, double-free, and so forth. Those serious problems cause undefined behavior and play a “disastrously central role” in “our ongoing computer security nightmare” . But dynamic lifetime is critically important. Only with dynamic lifetime can you construct an object whose size isn’t known at compile time, or construct an object that outlives the function that created it.

The compiler and operating system work together to put objects at different addresses. A program’s address space (which is the range of addresses accessible to a program) divides into regions called segments . Objects with different lifetimes are placed into different segments. The most important segments are:

- Code (also known as text or read-only data ). Contains instructions and constant global objects. Unmodifiable; static lifetime.

- Data . Contains non-constant global objects. Modifiable; static lifetime.

- Heap . Modifiable; dynamic lifetime.

- Stack . Modifiable; automatic lifetime.

The compiler decides on a segment for each object based on its lifetime. The final compiler phase, which is called the linker , then groups all the program’s objects by segment (so, for instance, global variables from different compiler runs are grouped together into a single segment). Finally, when a program runs, the operating system loads the segments into memory. (The stack and heap segments grow on demand.)

We can use a program to investigate where objects with different lifetimes are stored. (See cs61-lectures/datarep2/mexplore0.cc .) This shows address ranges like this:

Constant global data and global data have the same lifetime, but are stored in different segments. The operating system uses different segments so it can prevent the program from modifying constants. It marks the code segment, which contains functions (instructions) and constant global data, as read-only, and any attempt to modify code-segment memory causes a crash (a “Segmentation violation”).

An executable is normally at least as big as the static-lifetime data (the code and data segments together). Since all that data must be in memory for the entire lifetime of the program, it’s written to disk and then loaded by the OS before the program starts running. There is an exception, however: the “bss” segment is used to hold modifiable static-lifetime data with initial value zero. Such data is common, since all static-lifetime data is initialized to zero unless otherwise specified in the program text. Rather than storing a bunch of zeros in the object files and executable, the compiler and linker simply track the location and size of all zero-initialized global data. The operating system sets this memory to zero during the program load process. Clearing memory is faster than loading data from disk, so this optimization saves both time (the program loads faster) and space (the executable is smaller).

Abstract machine and hardware

Programming involves turning an idea into hardware instructions. This transformation happens in multiple steps, some you control and some controlled by other programs.

First you have an idea, like “I want to make a flappy bird iPhone game.” The computer can’t (yet) understand that idea. So you transform the idea into a program, written in some programming language. This process is called programming.

A C++ program actually runs on an abstract machine. The behavior of this machine is defined by the C++ standard, a technical document. This document is supposed to be so precisely written as to have an exact mathematical meaning, defining exactly how every C++ program behaves. But the document can’t run programs!

C++ programs run on hardware (mostly), and the hardware determines what behavior we see. Mapping abstract machine behavior to instructions on real hardware is the task of the C++ compiler (and the standard library and operating system). A C++ compiler is correct if and only if it translates each correct program to instructions that simulate the expected behavior of the abstract machine.

This same rough series of transformations happens for any programming language, although some languages use interpreters rather than compilers.

A bit is the fundamental unit of digital information: it’s either 0 or 1.

C++ manages memory in units of bytes: 8 contiguous bits that together can represent numbers between 0 and 255. C’s unit for a byte is char: the abstract machine says a byte is stored in char. That means an unsigned char holds values in the inclusive range [0, 255].

The C++ standard actually doesn’t require that a byte hold 8 bits, and on some crazy machines from decades ago, bytes could hold nine bits! (!?)

But larger numbers, such as 258, don’t fit in a single byte. To represent such numbers, we must use multiple bytes. The abstract machine doesn’t specify exactly how this is done; it’s the compiler and hardware’s job to implement a choice. But modern computers always use place–value notation, just like in decimal numbers. In decimal, the number 258 is written with three digits, the meanings of which are determined both by the digit and by their place in the overall number:

\[ 258 = 2\times10^2 + 5\times10^1 + 8\times10^0 \]

The computer uses base 256 instead of base 10. Two adjacent bytes can represent numbers between 0 and \(255\times256+255 = 65535 = 2^{16}-1\), inclusive. A number larger than this would take three or more bytes.

\[ 258 = 1\times256^1 + 2\times256^0 \]
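The base-256 expansion can be computed the same way decimal digits are, by repeated division. The helper `base256_digits` below is a hypothetical name used only for this sketch.

```cpp
#include <cassert>
#include <vector>

// Return the base-256 digits (bytes) of n, most significant first.
std::vector<unsigned> base256_digits(unsigned long n) {
    std::vector<unsigned> digits;
    do {
        // The remainder is the current least significant digit.
        digits.insert(digits.begin(), static_cast<unsigned>(n % 256));
        n /= 256;
    } while (n != 0);
    return digits;
}
```

For 258 this yields the digits 1 and 2, matching the equation above; for 65535 it yields 255 and 255, the largest two-byte value.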

On x86-64, the ones place, the least significant byte, is on the left, at the lowest address in the contiguous two-byte range used to represent the integer. This is the opposite of how decimal numbers are written: decimal numbers put the most significant digit on the left. The representation choice of putting the least-significant byte in the lowest address is called little-endian representation. x86-64 uses little-endian representation.

Some computers actually store multi-byte integers the other way, with the most significant byte stored in the lowest address; that’s called big-endian representation. The Internet’s fundamental protocols, such as IP and TCP, also use big-endian order for multi-byte integers, so big-endian is also called “network” byte order.
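A program can discover its own machine's byte order by copying an integer's bytes into a byte array and looking at the first one. The sketch below does that, and then uses the answer to produce a 16-bit value whose in-memory bytes are in network order. Both function names are hypothetical; real network code would normally use the platform's own conversion routines instead.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

// Report whether this machine stores the least significant byte
// of a multi-byte integer at the lowest address.
bool host_is_little_endian() {
    std::uint16_t value = 258;  // 0x0102: high byte 1, low byte 2
    unsigned char bytes[sizeof(value)];
    std::memcpy(bytes, &value, sizeof(value));
    return bytes[0] == 2;
}

// Return a value whose bytes in memory are in big-endian ("network") order.
std::uint16_t to_network_order(std::uint16_t host_value) {
    if (host_is_little_endian()) {
        // Swap the two bytes; the cast discards bits above the low 16.
        return static_cast<std::uint16_t>((host_value << 8) | (host_value >> 8));
    }
    return host_value;  // already big-endian
}
```

On x86-64, `host_is_little_endian()` returns true, so `to_network_order(258)` swaps the bytes. On either kind of machine the result's first byte in memory is 1 and its second is 2.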

The C++ standard defines five fundamental unsigned integer types, along with relationships among their sizes. Here they are, along with their actual sizes and ranges on x86-64:

Other architectures and operating systems implement different ranges for these types. For instance, on IA32 machines like Intel’s Pentium (the 32-bit processors that predated x86-64), sizeof(long) was 4, not 8.
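Because the sizes vary by platform, the most reliable way to learn them is to ask the compiler. The sketch below prints the size and maximum value of each of the five unsigned types; `report` and `report_unsigned_types` are illustrative names, and the output depends on the machine and compiler used.

```cpp
#include <cassert>
#include <cstdio>
#include <limits>

// Print one type's size in bytes and its largest representable value.
template <typename T>
void report(const char* name) {
    std::printf("%-20s size %zu, max %llu\n", name, sizeof(T),
                static_cast<unsigned long long>(std::numeric_limits<T>::max()));
}

// Report on the five fundamental unsigned integer types.
void report_unsigned_types() {
    report<unsigned char>("unsigned char");
    report<unsigned short>("unsigned short");
    report<unsigned int>("unsigned int");
    report<unsigned long>("unsigned long");
    report<unsigned long long>("unsigned long long");
}
```

Whatever the platform, the sizes never decrease from one line of this report to the next, and `sizeof(unsigned char)` is always 1.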

Note that all values of a smaller unsigned integer type can fit in any larger unsigned integer type. When a value of a larger unsigned integer type is placed in a smaller unsigned integer object, however, not every value fits; for instance, the unsigned short value 258 doesn’t fit in an unsigned char x. When this occurs, the C++ abstract machine requires that the smaller object’s value equals the least-significant bits of the larger value (so x will equal 2).
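The narrowing rule is easy to check. This sketch assumes 8-bit bytes, as on x86-64; `narrow` is a hypothetical helper name.

```cpp
#include <cassert>

// Store a wider unsigned value in an unsigned char. Only the
// least-significant 8 bits survive (assuming 8-bit bytes).
unsigned char narrow(unsigned short wide) {
    return static_cast<unsigned char>(wide);
}
```

Here `narrow(258)` is 2, because 258 is 0x0102 and only the low byte 0x02 is kept. Equivalently, the result is the original value modulo 256.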

In addition to these types, whose sizes can vary, C++ has integer types whose sizes are fixed. uint8_t, uint16_t, uint32_t, and uint64_t define 8-bit, 16-bit, 32-bit, and 64-bit unsigned integers, respectively; on x86-64, these correspond to unsigned char, unsigned short, unsigned int, and unsigned long.

This general procedure is used to represent a multi-byte integer in memory.

- Write the large integer in hexadecimal format, including all leading zeros required by the type size. For example, the unsigned value 65534 would be written 0x0000FFFE. There will be twice as many hexadecimal digits as sizeof(TYPE).

- Divide the integer into its component bytes, which are its digits in base 256. In our example, they are, from most to least significant, 0x00, 0x00, 0xFF, and 0xFE.

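In little-endian representation, the bytes are stored in memory from least to most significant. If our example were stored at address 0x30, the byte at 0x30 would be 0xFE, the byte at 0x31 would be 0xFF, and the bytes at 0x32 and 0x33 would both be 0x00.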

In big-endian representation, the bytes are stored in the reverse order.
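The procedure above can be sketched in code for a 32-bit value. The two function names are hypothetical; each returns the bytes in the order they would appear at increasing memory addresses.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Bytes of a 32-bit value as little-endian memory would hold them:
// least significant byte first (lowest address).
std::vector<std::uint8_t> little_endian_bytes(std::uint32_t value) {
    std::vector<std::uint8_t> bytes;
    for (int i = 0; i < 4; ++i) {
        // Shift the i-th base-256 digit into the low byte and keep it.
        bytes.push_back(static_cast<std::uint8_t>(value >> (8 * i)));
    }
    return bytes;
}

// Big-endian order is the same bytes, reversed.
std::vector<std::uint8_t> big_endian_bytes(std::uint32_t value) {
    std::vector<std::uint8_t> bytes = little_endian_bytes(value);
    return std::vector<std::uint8_t>(bytes.rbegin(), bytes.rend());
}
```

For 65534 (0x0000FFFE), the little-endian sequence is 0xFE, 0xFF, 0x00, 0x00 and the big-endian sequence is 0x00, 0x00, 0xFF, 0xFE.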

Computers are often fastest at dealing with fixed-length numbers, rather than variable-length numbers, and processor internals are organized around a fixed word size. A word is the natural unit of data used by a processor design. In most modern processors, this natural unit is 8 bytes or 64 bits, because this is the power-of-two number of bytes big enough to hold those processors’ memory addresses. Many older processors could access less memory and had correspondingly smaller word sizes, such as 4 bytes (32 bits).

The best representation for signed integers (and the choice made by x86-64, and by the C++20 abstract machine) is two’s complement. Two’s complement representation is based on this principle: Addition and subtraction of signed integers shall use the same instructions as addition and subtraction of unsigned integers.

To see what this means, let’s think about what -x should mean when x is an unsigned integer. Wait, negative unsigned?! This isn’t an oxymoron because C++ uses modular arithmetic for unsigned integers: the result of an arithmetic operation on unsigned values is always taken modulo \(2^B\), where \(B\) is the number of bits in the unsigned value type. Thus, on x86-64, arithmetic on the 32-bit type unsigned is taken modulo \(2^{32}\).

-x is simply the number that, when added to x, yields 0 (mod \(2^B\)). For example, when unsigned x = 0xFFFFFFFFU, then -x == 1U, since x + -x equals zero (mod \(2^{32}\)).

To obtain -x, we flip all the bits in x (an operation written ~x) and then add 1. To see why, consider the bit representations. What is x + (~x + 1)? Well, \((\sim x)_i\) (the \(i\)th bit of ~x) is 1 whenever \(x_i\) is 0, and vice versa. That means that every bit of x + ~x is 1 (there are no carries), and x + ~x is the largest unsigned integer, with value \(2^B - 1\). If we add 1 to this, we get \(2^B\). Which is 0 (mod \(2^B\))! The highest “carry” bit is dropped, leaving zero.
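The flip-and-add-one rule can be checked against the built-in negation operator. This is a small sketch for 32-bit unsigned values; `negate_by_flipping` is a hypothetical name.

```cpp
#include <cassert>
#include <cstdint>

// Negate an unsigned 32-bit value by flipping every bit and adding 1.
// The conversion back to uint32_t keeps the result modulo 2^32.
std::uint32_t negate_by_flipping(std::uint32_t x) {
    return static_cast<std::uint32_t>(~x + 1u);
}
```

With x equal to 0xFFFFFFFF the function returns 1, and for any x the sum of x and `negate_by_flipping(x)`, truncated to 32 bits, is 0.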

Two’s complement arithmetic uses half of the unsigned integer representations for negative numbers. A two’s-complement signed integer with B bits has the following values:

- If the most-significant bit is 1, the represented number is negative. Specifically, the represented number is –(~x + 1), where the outer negative sign is mathematical negation (not computer arithmetic).

- If every bit is 0, the represented number is 0.

- If the most-significant bit is 0 but some other bit is 1, the represented number is positive.

The most significant bit is also called the sign bit, because if it is 1, then the represented value depends on the signedness of the type (and that value is negative for signed types).

Another way to think about two’s-complement is that, for \(B\)-bit integers, the most-significant bit has place value \(2^{B-1}\) in unsigned arithmetic and \(-2^{B-1}\) in signed arithmetic. All other bits have the same place values in both kinds of arithmetic.
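The place-value view translates directly into code: sum the place values of the set bits, giving the top bit a negative weight. The function `signed_value` is a hypothetical helper, and this sketch assumes the bit width is between 1 and 32.

```cpp
#include <cassert>

// Interpret the low `bits` bits of `pattern` as a two's-complement
// integer by summing place values. Assumes 1 <= bits <= 32.
long long signed_value(unsigned long pattern, int bits) {
    long long value = 0;
    for (int i = 0; i < bits; ++i) {
        if ((pattern >> i) & 1) {
            long long place = 1LL << i;
            // The most-significant bit has a negative place value.
            value += (i == bits - 1) ? -place : place;
        }
    }
    return value;
}
```

In 4-bit arithmetic, `signed_value(0b1100, 4)` is -8 + 4 = -4, while the same pattern read as unsigned is 8 + 4 = 12.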

The two’s-complement bit pattern for x + y is the same whether x and y are considered as signed or unsigned values. For example, in 4-bit arithmetic, 5 has representation 0b0101, while the representation 0b1100 represents 12 if unsigned and –4 if signed (~0b1100 + 1 = 0b0011 + 1 == 4). Let’s add those bit patterns and see what we get: 0b0101 + 0b1100 = 0b10001, and dropping the carry out of the fourth bit leaves 0b0001.

Note that this is the right answer for both signed and unsigned arithmetic: 5 + 12 = 17 = 1 (mod 16), and 5 + -4 = 1.

Subtraction and multiplication also produce the same results for unsigned arithmetic and signed two’s-complement arithmetic. (For instance, 5 * 12 = 60 = 12 (mod 16), and 5 * -4 = -20 = -4 (mod 16).) This is not true of division. (Consider dividing the 4-bit representation 0b1110 by 2. In signed arithmetic, 0b1110 represents -2, so 0b1110/2 == 0b1111 (-1); but in unsigned arithmetic, 0b1110 is 14, so 0b1110/2 == 0b0111 (7).) And, of course, it is not true of comparison. In signed 4-bit arithmetic, 0b1110 < 0, but in unsigned 4-bit arithmetic, 0b1110 > 0. This means that a C compiler for a two’s-complement machine can use a single add instruction for either signed or unsigned numbers, but it must generate different instruction patterns for signed and unsigned division (or less-than, or greater-than).
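The 4-bit examples above can be simulated by masking ordinary integers to their low four bits. All the helper names here are hypothetical, and the sketch models a 4-bit machine rather than using any real 4-bit type.

```cpp
#include <cassert>

// Keep only the low 4 bits, simulating a 4-bit register.
unsigned wrap4(unsigned x) { return x & 0xF; }

// Read a 4-bit pattern as a signed two's-complement value in [-8, 7].
int as_signed4(unsigned pattern) {
    pattern &= 0xF;
    return (pattern & 0x8) ? static_cast<int>(pattern) - 16
                           : static_cast<int>(pattern);
}

// Encode a signed value as a 4-bit pattern.
unsigned from_signed4(int value) { return static_cast<unsigned>(value) & 0xF; }

// Addition and multiplication: one routine serves both interpretations.
unsigned add4(unsigned a, unsigned b) { return wrap4(a + b); }
unsigned mul4(unsigned a, unsigned b) { return wrap4(a * b); }

// Division needs separate unsigned and signed routines.
unsigned udiv4(unsigned a, unsigned b) { return wrap4(wrap4(a) / wrap4(b)); }
unsigned sdiv4(unsigned a, unsigned b) {
    return from_signed4(as_signed4(a) / as_signed4(b));
}
```

`add4(0b0101, 0b1100)` gives 0b0001 and `mul4(0b0101, 0b1100)` gives 0b1100, correct under both readings. By contrast, `udiv4(0b1110, 2)` gives 0b0111 while `sdiv4(0b1110, 2)` gives 0b1111.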

There are a couple of quirks with C signed arithmetic. First, in two’s complement, there are more negative numbers than positive numbers. A representation whose sign bit is 1, but whose every other bit is 0, has no positive counterpart at the same bit width: for this number, -x == x. (In 4-bit arithmetic, -0b1000 == ~0b1000 + 1 == 0b0111 + 1 == 0b1000.) Second, and far worse, is that arithmetic overflow on signed integers is undefined behavior.
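Because signed overflow is undefined, a program that might overflow has to test before it adds rather than after. The sketch below shows one common way to do that for int, along with a check of the asymmetry between the negative and positive ranges. Both function names are hypothetical.

```cpp
#include <cassert>
#include <limits>

// True if int has exactly one more negative value than positive values,
// as two's complement requires. Computed without overflowing.
bool one_extra_negative() {
    return -(std::numeric_limits<int>::min() + 1) == std::numeric_limits<int>::max();
}

// Return true if a + b would overflow int. The comparison is rearranged
// so that no intermediate result can itself overflow.
bool add_would_overflow(int a, int b) {
    if (b > 0) {
        return a > std::numeric_limits<int>::max() - b;
    }
    return a < std::numeric_limits<int>::min() - b;
}
```

A caller would check `add_would_overflow(a, b)` first and only compute `a + b` when it returns false.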

The C++ abstract machine requires that signed integers have the same sizes as their unsigned counterparts.

We distinguish pointers, which are concepts in the C abstract machine, from addresses, which are hardware concepts. A pointer combines an address and a type.

The memory representation of a pointer is the same as the representation of its address value. The size of that integer is the machine’s word size; for example, on x86-64, a pointer occupies 8 bytes, and a pointer to an object located at address 0x400abc would be stored, from lowest to highest address, as the bytes 0xbc, 0x0a, 0x40, 0x00, 0x00, 0x00, 0x00, 0x00.

The C++ abstract machine defines an unsigned integer type uintptr_t that can hold any address. (You have to #include <inttypes.h> or <cinttypes> to get the definition.) On most machines, including x86-64, uintptr_t is the same as unsigned long. Cast a pointer to an integer address value with syntax like (uintptr_t) ptr; cast back to a pointer with syntax like (T*) addr. Casts between pointer types and uintptr_t are information preserving, so this assertion will never fail:

Since it is a 64-bit architecture, the size of an x86-64 address is 64 bits (8 bytes). That’s also the size of x86-64 pointers.
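A minimal sketch of the pointer-to-integer round trip follows. It uses `reinterpret_cast`, the C++ spelling of the casts described above, and the helper name `round_trip` is made up for this example.

```cpp
#include <cassert>
#include <cstdint>

// Convert a pointer to its integer address value, then back to a pointer.
int* round_trip(int* ptr) {
    std::uintptr_t addr = reinterpret_cast<std::uintptr_t>(ptr);
    return reinterpret_cast<int*>(addr);
}
```

For any valid `int x`, `round_trip(&x) == &x` holds, and dereferencing the result reads `x` itself.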

To represent an array of integers, C++ and C allocate the integers next to each other in memory, in sequential addresses, with no gaps or overlaps. Here, we put the integers 0, 1, and 258 next to each other, starting at address 1008:
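The no-gaps layout can be confirmed by measuring how far apart neighboring elements are. In this sketch `byte_distance` is a hypothetical helper; it compares addresses as char pointers so that the difference is counted in bytes.

```cpp
#include <cassert>
#include <cstddef>

// Distance in bytes between two elements of the same int array.
std::ptrdiff_t byte_distance(const int* from, const int* to) {
    return reinterpret_cast<const char*>(to) - reinterpret_cast<const char*>(from);
}
```

For `int a[3] = {0, 1, 258}`, the distance from `&a[0]` to `&a[1]` is exactly `sizeof(int)`, and the whole array occupies `3 * sizeof(int)` bytes. On x86-64, where int is 4 bytes, consecutive elements therefore start 4 bytes apart.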

Say that you have an array of N integers, and you access each of those integers in order, accessing each integer exactly once. Does the order matter?

Computer memory is random-access memory (RAM), which means that a program can access any bytes of memory in any order—it’s not, for example, required to read memory in ascending order by address. But if we run experiments, we can see that even in RAM, different access orders have very different performance characteristics.

Our arraysum program sums up all the integers in an array of N integers, using an access order based on its arguments, and prints the resulting delay. Here’s the result of a couple experiments on accessing 10,000,000 items in three orders, “up” order (sequential: elements 0, 1, 2, 3, …), “down” order (reverse sequential: N−1, N−2, N−3, …), and “random” order (as it sounds).

Wow! Down order is just a bit slower than up, but random order seems about 40 times slower. Why?

Random order is defeating many of the internal architectural optimizations that make memory access fast on modern machines. Sequential order, since it’s more predictable, is much easier to optimize.
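An experiment of this kind can be sketched as follows. This is not the course's arraysum program: the function names, the single-character mode codes ('u', 'd', 'r'), and the fixed random seed are all assumptions made for illustration. The sketch also reads an index array as it goes, which adds memory traffic of its own.

```cpp
#include <algorithm>
#include <cassert>
#include <chrono>
#include <cstddef>
#include <numeric>
#include <random>
#include <vector>

// Build the sequence of indexes to visit: 'u' = up, 'd' = down, 'r' = random.
std::vector<std::size_t> access_order(std::size_t n, char mode) {
    std::vector<std::size_t> order(n);
    std::iota(order.begin(), order.end(), std::size_t{0});  // 0, 1, ..., n-1
    if (mode == 'd') {
        std::reverse(order.begin(), order.end());
    } else if (mode == 'r') {
        std::mt19937 rng(61);  // fixed seed so runs are repeatable
        std::shuffle(order.begin(), order.end(), rng);
    }
    return order;
}

// Sum `data` in the given order; store the elapsed time in *seconds.
unsigned long sum_in_order(const std::vector<int>& data,
                           const std::vector<std::size_t>& order,
                           double* seconds) {
    auto start = std::chrono::steady_clock::now();
    unsigned long sum = 0;
    for (std::size_t i : order) {
        sum += static_cast<unsigned long>(data[i]);
    }
    auto end = std::chrono::steady_clock::now();
    *seconds = std::chrono::duration<double>(end - start).count();
    return sum;
}
```

Every order visits each element exactly once, so the sums agree; only the elapsed time differs. The size of the difference depends on the machine, the array size, and compiler optimization settings.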

Foreshadowing. This part of the lecture is a teaser for the Storage unit, where we cover access patterns and caching, including the processor caches that explain this phenomenon, in much more depth.

The C++ programming language offers several collection mechanisms for grouping subobjects together into new kinds of object. The collections are arrays, structs, and unions. (Classes are a kind of struct. All library types, such as vectors, lists, and hash tables, use combinations of these collection types.) The abstract machine defines how subobjects are laid out inside a collection. This is important, because it lets C/C++ programs exchange messages with hardware and even with programs written in other languages: messages can be exchanged only when both parties agree on layout.