What Does a Data Researcher Do?

Find out what a Data Researcher does, how to get this job, salary information, and what it takes to succeed as a Data Researcher.

The Data Researcher plays an integral role in gathering, analyzing, and interpreting information to help companies make informed decisions. This position involves sifting through vast amounts of data to identify trends, patterns, and insights that can influence strategic planning and operational efficiency. By employing a variety of tools and methodologies, the Data Researcher contributes to the development of a knowledge base that supports business objectives across different departments. Their work enables organizations to better understand their market, optimize processes, and anticipate future trends, ensuring that data-driven decisions pave the way for innovation and growth.

Data Researcher Job Duties

- Collect and compile data from various primary and secondary sources, ensuring accuracy and relevance to research objectives.

- Design and implement data collection tools, such as surveys and questionnaires, tailored to specific research needs.

- Perform statistical analysis of collected data using software tools like SPSS, R, or Python to identify trends, correlations, and patterns.

- Develop and maintain databases for storing research data, ensuring data integrity, security, and accessibility.

- Write comprehensive research reports that summarize findings, methodologies, and statistical analyses, making recommendations based on data insights.

- Collaborate with cross-functional teams, including data scientists, analysts, and subject matter experts, to refine research questions and methodologies.

- Present research findings to stakeholders through presentations, visualizations, and dashboards, translating complex data into understandable insights.

- Evaluate and integrate new data sources and research tools into existing workflows to enhance data quality and research outcomes.

Data Researcher Salary & Outlook

Salaries for Data Researchers vary based on factors such as industry (tech, finance, healthcare), company size, years of experience, and expertise in specific data analysis tools (e.g., Python, R, SQL). Specialization in high-demand areas like machine learning or big data analytics can also significantly impact earnings.

- Median Annual Salary: $69,825 ($33.57/hour)

- Top 10% Annual Salary: $155,700 ($74.86/hour)
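The hourly figures above are consistent with dividing each annual salary by a 2,080-hour work year (40 hours a week for 52 weeks). The source does not state that divisor, so treat it as an assumption. A minimal sketch of the conversion, with `hourly_rate` as a hypothetical helper name:

```python
# Assumption (not stated in the source): a full-time year is
# 40 hours/week * 52 weeks = 2,080 paid hours.
HOURS_PER_YEAR = 40 * 52


def hourly_rate(annual_salary: float, hours_per_year: int = HOURS_PER_YEAR) -> float:
    """Convert an annual salary to an hourly rate, rounded to cents."""
    return round(annual_salary / hours_per_year, 2)


median_hourly = hourly_rate(69_825)       # median annual salary from the list above
top_decile_hourly = hourly_rate(155_700)  # top 10% annual salary from the list above
print(median_hourly, top_decile_hourly)
```

Under that assumption, the results match the hourly rates quoted in the list.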

The employment of data researchers is expected to grow much faster than average over the next decade.

This surge is driven by the exponential increase in data generation and the need for sophisticated analysis to derive actionable insights across industries, including healthcare, finance, and technology. Data Researchers are crucial for harnessing this data for predictive analytics, enhancing decision-making, and creating competitive advantages.

Data Researcher Job Requirements

Education: A Data Researcher typically holds a Bachelor’s, Master’s, or Doctoral Degree in fields such as Data Science, Statistics, Computer Science, or a related area. Coursework often includes statistics, programming, data analysis, and machine learning. Advanced degrees may focus on specialized research methods, big data analytics, and project management. Academic projects and internships complement formal education, providing practical experience in data collection, analysis, and interpretation.

Experience: Data Researchers typically come with a blend of on-the-job experience and participation in specialized training programs. Ideal candidates have a solid background in data analysis and research methodologies and are adept at using various data research tools. Experience in specific industries can be beneficial, depending on the research focus. Continuous professional development through workshops and courses is common, ensuring they stay updated with the latest data research techniques and technologies. Hands-on experience, coupled with ongoing training, shapes their ability to deliver insightful data-driven outcomes.

Certifications & Licenses: Certifications and licenses are not typically required for the role of a Data Researcher.

Data Researcher Skills

Statistical Analysis: Advanced statistical methods are employed by data researchers to identify patterns, trends, and correlations within complex datasets. These techniques facilitate the translation of raw data into actionable insights, which are instrumental in guiding decision-making and predicting future outcomes across various industries.
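As one small illustration of what "identifying correlations" can mean in practice, the sketch below computes a Pearson correlation coefficient in plain Python. The function name `pearson_r` and the sample figures are hypothetical and invented for illustration; in day-to-day work a researcher would more likely rely on a statistics package such as R or a Python library.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of products of deviations from the means (unnormalized covariance).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Sums of squared deviations (unnormalized variances).
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical data, invented for illustration: monthly ad spend vs. units sold.
ad_spend = [10, 20, 30, 40, 50]
units_sold = [12, 24, 33, 46, 55]
r = pearson_r(ad_spend, units_sold)
print(f"r = {r:.3f}")
```

A value of `r` close to 1 indicates a strong positive linear relationship between the two series, a value close to -1 a strong negative one, and a value near 0 little linear relationship.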

Data Mining: Researchers extract patterns and knowledge from large datasets using techniques like clustering, classification, and association analysis. This skill, which combines statistical, computational, and analytical abilities, is pivotal in uncovering hidden insights that drive strategic business decisions.

Machine Learning: By leveraging algorithms and statistical models, data researchers can predict outcomes and inform strategic decisions. This area requires a combination of programming expertise, statistical knowledge, and an understanding of complex data patterns for effective interpretation and communication of findings.

Data Visualization: The creation of compelling visual representations of complex datasets allows stakeholders to quickly understand intricate patterns, trends, and insights, aiding in informed decision-making. A keen eye for design and proficiency in visualization tools and software are necessary to make data accessible and engaging for diverse audiences.

Survey Design: A meticulous blend of psychological insight and statistical acumen is required to craft questions that accurately capture respondent experiences and perceptions. This skill is crucial for transforming subjective feedback into quantifiable trends, providing meaningful insights from raw data.

Database Management: The organization, storage, and retrieval of large data sets are streamlined through sophisticated software tools, ensuring data integrity, security, and accessibility. This capability is essential for conducting thorough analyses and deriving actionable insights, supporting the seamless flow of information for research purposes.

Data Researcher Work Environment

A Data Researcher typically operates in a modern office setting, often with the option for remote work, reflecting the digital nature of their role. Their workspace is usually equipped with dual monitors, ergonomic seating, and quiet zones to facilitate concentration on complex data analysis tasks. The use of advanced statistical software and database tools is commonplace, necessitating a high level of proficiency in technology.

Work hours can be flexible, accommodating project deadlines rather than a strict nine-to-five schedule. This flexibility supports a healthy work-life balance, allowing for adjustments as needed. Dress codes tend to be business casual, leaning towards comfort and practicality.

The culture within data research teams emphasizes collaboration and continuous learning, with opportunities for professional development through workshops and conferences. Interaction with colleagues, both in person and virtually, is frequent for project discussions, though the job also requires significant independent work. The environment is generally low in noise, prioritizing a focused atmosphere conducive to detailed analysis.

Advancement Prospects

Data Researchers have a plethora of advancement options, ranging from specialized roles like Data Analysts to leadership positions such as Chief Data Officer (CDO). Progressing typically involves deepening expertise in data modeling, statistical analysis, and machine learning.

To climb the ladder, mastering industry-specific data tools and programming languages (e.g., Python, R) is crucial. Gaining experience in big data technologies like Hadoop or Spark can also set a foundation for roles in data architecture or engineering.

For those inclined towards leadership, developing project management skills and an understanding of business strategy is essential. This can lead to positions where one oversees data governance, strategy, and analytics teams.

Engagement in significant projects and contributions to data-driven decision-making processes can highlight a Data Researcher’s potential for higher responsibilities. Showcasing the ability to translate complex data insights into actionable business strategies is key to advancing in this field.


What is Data Science?

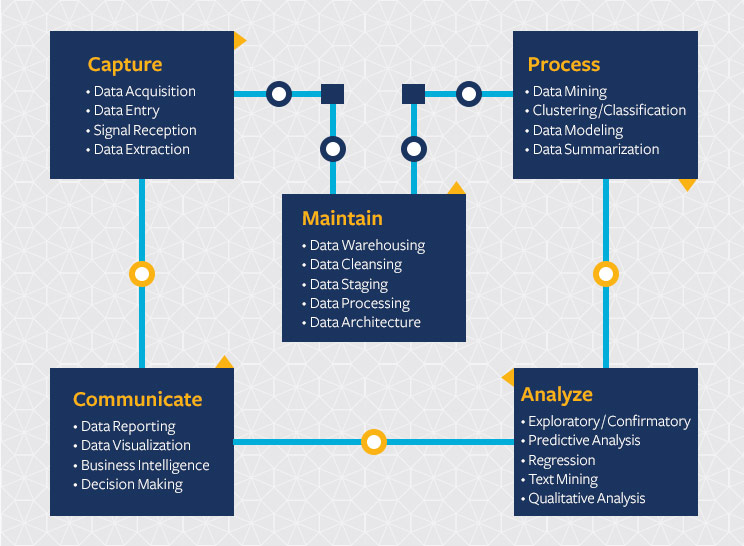

Data science continues to evolve as one of the most promising and in-demand career paths for skilled professionals. Today, successful data professionals understand they must advance past the traditional skills of analyzing large amounts of data, data mining, and programming. To uncover useful intelligence for their organizations, data scientists must master the full spectrum of the data science life cycle and possess a level of flexibility and understanding to maximize returns at each phase of the process.

The Data Science Life Cycle

The term “data scientist” was coined when companies first realized the need for data professionals skilled in organizing and analyzing massive amounts of data. Ten years after the widespread business adoption of the internet, Hal Varian, Google’s chief economist, first dean of the UC Berkeley School of Information (I School), and UC Berkeley emeritus professor of information sciences, business, and economics, predicted the importance of adapting to technology’s influence and reconfiguration of different industries.

“The ability to take data — to be able to understand it, to process it, to extract value from it, to visualize it, to communicate it — that’s going to be a hugely important skill in the next decades.”

– Hal Varian, chief economist at Google and UC Berkeley professor of information sciences, business, and economics 1

Today, effective data scientists masterfully identify relevant questions, collect data from a multitude of different data sources, organize the information, translate results into solutions, and communicate their findings in a way that positively affects business decisions. These skills are now required in almost all industries, which means data scientists have become increasingly valuable to companies.


What Does a Data Scientist Do?

Data scientists have become assets across the globe and are present in almost all organizations. These professionals are well-rounded, analytical individuals with high-level technical skills who can build complex quantitative algorithms to organize and synthesize large amounts of information used to answer questions and drive strategy in their organizations. They also have the communication and leadership experience to deliver tangible results to various stakeholders across an organization or business.

Data scientists are typically curious and result-oriented, with exceptional industry-specific knowledge and communication skills that allow them to explain highly technical results to their non-technical counterparts. They possess a strong quantitative background in statistics and linear algebra as well as programming knowledge with focuses in data warehousing, mining, and modeling to build and analyze algorithms.

They also use key technical tools and skills, including:

- Apache Hadoop

- Apache Spark

- NoSQL databases

- Cloud computing

- iPython notebooks

Why Become a Data Scientist?

As increasing amounts of data become more accessible, large tech companies are no longer the only ones in need of data scientists. There’s now a demand for qualified data science professionals across organizations, big and small.

With the power to shape decisions, solve real-world challenges, and make a meaningful impact in diverse sectors, data science professionals have the opportunity to pursue various career paths.

- Work from the comfort of your home

- Gain new skills as data uses continue to grow


Where Do You Fit in Data Science?

Data is everywhere and expansive. Various terms related to mining, cleaning, analyzing, and interpreting data are often used interchangeably, but the roles typically involve different skill sets. The complexity of the data analyzed also differs.

Data Scientist

Data scientists examine which questions need answering and where to find the related data. They have business acumen and analytical skills as well as the ability to mine, clean, and present data. Businesses use data scientists to source, manage, and analyze large amounts of unstructured data. Data scientists also leverage machine learning techniques to model information and interpret results effectively, a skill that differentiates them from data analysts. Results are then synthesized and communicated to key stakeholders to drive strategic decision making in the organization.

Skills needed: Programming skills (SAS, R, Python), statistical and mathematical skills, storytelling and data visualization, Hadoop, SQL, machine learning

Data Analyst

Data analysts bridge the gap between data scientists and business analysts. They’re provided with the questions that need answering from an organization and then organize and analyze data to find results that align with high-level business strategy. Data analysts are responsible for translating technical analysis to qualitative action items and effectively communicating their findings to diverse stakeholders.

Skills needed: Programming skills (SAS, R, Python), statistical and mathematical skills, data wrangling, data visualization

Data Engineer

Data engineers manage exponentially growing and rapidly changing data. They focus on developing, deploying, managing, and optimizing data pipelines and infrastructure to transform and transfer data to data scientists and data analysts for querying.

Skills needed: Programming languages (Java, Scala), NoSQL databases (MongoDB, Cassandra DB), frameworks (Apache Hadoop)

Data Science Career Outlook and Salary Opportunities

Data science professionals are rewarded for their highly technical skill set with competitive salaries and great job opportunities at big and small companies in most industries. Data science professionals with the appropriate experience and education have the opportunity to make their mark in some of the most forward-thinking companies in the world.

Gaining specialized skills within the data science field can distinguish data scientists even further. For example, machine learning experts use high-level programming skills to create algorithms that continuously gather data and adjust their learning to improve prediction performance.


1 Hal Varian on How the Web Challenges Managers. (2009). McKinsey. Retrieved December 2023.


Data Researcher Job Description

Data Researcher Duties & Responsibilities

To write an effective data researcher job description, begin by listing detailed duties, responsibilities and expectations. We have included data researcher job description templates that you can modify and use.


Data Researcher Qualifications

Qualifications for a job description may include education, certification, and experience.

Licensing or Certifications for Data Researcher

List any licenses or certifications required by the position: AAPCA, AHIMA, PPBC

Education for Data Researcher

Typically a job would require a certain level of education.

Employers hiring for the data researcher job most commonly prefer candidates with a relevant degree, such as a Bachelor's or Master's degree in Computer Science, Economics, Statistics, Business, Mathematics, Finance, Communication, Physics, or Education.


Data Researcher Examples


- Perform primary and secondary health plan research, including extensive communication with payers via telephone

- Collaboration with team for plan changes and formulary updates

- Work with other departments on the facilitation of different data sets

- Support sales in the delivery of data, reports, and ad hoc research assignments

- Desktop and telephone research for aircraft and company profile information

- Work with HPC facility operators and application scientists running computations at the Berkeley Lab to understand and analyze available data sources and necessary analyses

- Collect, manipulate, and analyze data related to security and HPC systems-behavior related research questions in scientific computing environments

- Write scientific research papers suitable for submission to peer-reviewed computer science venues

- Enable science by helping to create better understanding of the computational behavior of software running on HPC systems, allowing scientists to understand and use computing systems more efficiently

- Work closely with researchers and application scientists throughout the DOE Office of Science community, with faculty and students from universities throughout the world, with staff in Integrated Data Frameworks group at LBNL, where this position is housed

- Publications in top-tier conferences/journals

- Demonstrated ability to work on applied research problems in interdisciplinary environments

- Proven hands-on technical skills, including an analytical package (R, Matlab, SAS, Weka) and big data processing tools (Hadoop, Java)

- Ability to work with a team with proven verbal and written communications and organizational skills

- Basic knowledge in information retrieval, data mining, machine learning, statistical analysis and modeling

- This is a one-year contract position starting at the beginning of 2016

- Create and implement algorithms for predictive modeling, graph analysis and text mining

- Deliver reliable, readable and maintainable code

- Work with team to develop innovative approaches for data analysis, machine learning, data mining and natural language processing

- Manage small R&D projects and drive innovation and hacking

- Responsible for project architecture and survey design based on analyst objectives, hypotheses, usage intentions, and serving client needs that the research will address

- Analyze and interpret primary research data

- Support written research attributed to study findings

- Provides advice on data interpretation to ensure usage is accurate and defensible

- Under supervision, performs tasks in support of the process for ancillary services

- Track the key industry metrics reported by the power plants, energy companies, coal mines, and gas pipelines that we cover

- Deep knowledge of information retrieval, data mining, machine learning, statistical analysis and modeling

- 2 years proven search experience in a search firm, start-up or corporate environment

- Specific expertise in manufacturing, engineering, supply chain, and operations searches preferred

- Great software system and organizational skills, utilizing data systems-oriented research

- ATS/RTS experience with sourcing, tracking, and managing of leads and candidate flow

- Proficiency in passive candidate sourcing methods, including social media, boolean, and internet research

- Accurately enter all publisher information into the company database

- Contact publishers for delivery information either by telephone, email, fax or post

- Send orders to the publishers via various methods, including EDI, email and post

- Source publishers and titles from the internet and databases

- Maintain system information

- Verify information received and offer alternate solutions for customers

- Ability to understand and retain high volumes of different procedures

- Contribute to effective team working both internal and external

- Develop and maintain excellent working relationships with all internal departments and teams

- Ensure that all relevant company and departmental Health, Safety and Environmental policies and procedures are adhered to at all times

- Ability to work in a team environment, manage multiple projects and provide strong consultative skills throughout the search process

- Working knowledge of various social media and analytics tools (such as Radian6, Talkwalker, and the like) and the ability to learn proprietary tools

- You recently earned your Master's degree with a strong focus on statistics

- Initial research experience is a plus

- You have a basic knowledge of R and an interest in deepening it further

- You are familiar with survey methodology and multivariate statistics

- Contact publishers and update the database with new edition information

- Place orders for new editions when due

- Respond to queries & claims via email & phone

- Ensure accurate records are regularly maintained using IT and manual systems, as

- Take ownership and carry out analysis to resolve outstanding coding queries where standard approaches have failed

- To implement initiatives that will positively impact the quality of the data that we supply to clients

- To ensure that the success of the initiatives is quantified through metrics published on a weekly basis

- Ensure that all queries are handled efficiently and effectively

- Build and maintain a good working relationship with internal stakeholders

- Create & maintain a high level of product knowledge – awareness of changes in the market and new product launches for key manufacturers

- The incumbent will proactively identify and utilize the most current resources and technology available to evaluate our donor pool and analyze and track trends

- Proven ability to create and maintain strategic recruiting plans with clearly defined objectives, desired outcomes, and a calendar of events

- You have a strong interest in data science in a market research environment

- Curious and willing to keep learning

- You draw your energy from complex challenges

- Experience in data mining (cluster analysis, text mining, time series analysis)

- We expect you to provide input and ideas for new collection methods and product improvements related to the covered content sets

- Understand the data management process and work effectively within it

- Build a knowledge base for products across manufacturers and retailers to enable the coding functions to code more effectively and to prevent partially coded items

- Work closely with other Operational departments regarding improving data quality of existing retailer data

- Where required contact manufacturers or make retailer store visits to source missing product information

- Work in Madrid and then travel within Spain (Barcelona and Malaga – subject to change) with the team to set up data collection projects in field locations

- Work one-on-one with participants to ensure project objectives are met

- Be accountable for specific processes including securing testing materials, handling logistics, troubleshooting technical or process issues and providing daily report

- Work closely with team to follow protocol and continually refine participant experience

- Be a quick learner and grasp complex project details and own specific aspects of the project

- Degree in Computer Science, Cognitive Science, Artificial Intelligence, Cognitive Psychology, Information Science, Neuroscience, Psychology, Neurology, or related discipline with a focus on design, development, application, and evaluation of machine learning and advanced data collection, statistics and analysis methods

- 5-7 years of experience in a healthcare analysis capacity, working with healthcare data, is required

- Ability to query large data sets in either Access or SAS

- Superior communication skills on technical subjects

- PhD in Computer Science, Physics, and/or Industrial Engineering with a research/publication track record, or MS in Computer Science, Physics, and/or Industrial Engineering with 3 or more years of industry-related experience

- Creativity, initiative, enthusiasm, and results orientation to drive your inventions towards new products and services


Data Researcher Job Description, Key Duties and Responsibilities

This post discusses the job description of a data researcher to help you understand what they do. It presents the key duties, tasks, and responsibilities that commonly make up the data researcher work description in most organizations.

This post also provides the major requirements most recruiters will want prospective candidates for the data researcher job to meet to qualify for the position.

Please, continue reading to increase your knowledge of the data researcher career:

What Does a Data Researcher Do?

The data researcher is responsible for the research and management of data relevant to an organization, ensuring quality, completeness, and integrity, in order to enhance day-to-day operations, improve accuracy and efficiency in data processes, or aid decision-making.

They work for educational institutions, technology companies, institutes for research, etc.

They are responsible for the design, collection, analysis and review of research and evaluation data, as well as the dissemination of statistics that are objective, accurate, and timely.

The data researcher job description also entails developing spreadsheets and databases to support project activities, and providing quantitative and qualitative analysis and interpretation of findings to aid decision-making.

It also involves working with large amounts of data and developing actionable intelligence from the analysis.

Data researchers also provide support to the sales team and the sales process by developing analyses for sales pitches and press releases.

They are also responsible for carrying out ad hoc analyses in response to client requests. They also help to identify new opportunities for market research by performing analysis and developing data-driven models.

The data researcher work description also involves developing and applying statistical models for estimation and prediction of corporate performance across various sectors.

Data Researcher Job Description Example/Sample/Template

Data researchers perform various functions, which primarily involve researching and identifying entities (companies, brands, people, etc.), beliefs, systems, processes, events etc., and actions related to them from different content sources, and processing collected data in an accurate manner using technical knowledge.

They are also responsible for the quality, accuracy, and completeness of the data.

The primary duties, tasks, and responsibilities that make up the data researcher job description are listed below:

- Responsible for modeling information from the media using internally developed tools

- Take part in product planning meetings and provide statistically backed guidance on product development

- Work collaboratively with the Data Strategy team where applicable, to develop an engagement plan for researchers

- Contribute to the development of training materials and strategies related to the organization’s projects

- Work together with other researchers to collect, process, and understand data that can facilitate clients’ decisions

- Responsible for the design, validation, and visualization of data-driven models, including machine learning approaches, to understand social and behavioral phenomena in support of the institution’s mission

- Responsible for collecting and analyzing text and other data from websites and other sources using APIs and custom code

- Responsible for creating structured datasets from large, unstructured data that describe people, activities, and behavior; social networks, communication, and other social phenomena of interest

- Work together with experts to pioneer new approaches to research by integrating diverse data sources

- Responsible for analyzing and interpreting experimental data

- Responsible for communicating findings to project teams and other technical and non-technical stakeholders.

Data Researcher Job Description for Resume

You can use the sample data researcher job description above in making the professional or job experience section of your resume.

If you have worked before as a data researcher or are presently working in that role and are preparing a resume for a new job, it will be beneficial to add the professional experience section to your resume.

This section helps you show the recruiter that you have been successful performing the duties of a data researcher.

You can easily and quickly create this section by adopting the data researcher duties and responsibilities shown in the sample job description above.

Data Researcher Requirements – Skills, Knowledge, and Abilities for Career Success

If you are seeking to work as a data researcher, recruiters will generally ask you to meet certain requirements to qualify for the position.

This is to prove that you will be effective in carrying out the obligations, purpose, and objectives of the data researcher role if employed by the organization.

Shown below are the major requirements that applicants for the data researcher position are commonly expected to meet:

- Education: Employers require at least a Bachelor’s degree in Accounting, Finance, Economics, Mathematics, Statistics, Computer Science, Operations Research, or a related field. However, an MS, PhD, or other advanced graduate degree is preferred

- Knowledge: To work as a data researcher, applicants must possess an understanding of the industry they are seeking to work in. In addition to industry knowledge, employers also look out for candidates with hands-on experience compiling and analyzing complex, high volume, and dimensional datasets

- Employers also require a solid and proven knowledge of research methodologies, web and social media applications, and familiarity with web searching tools to source information

- It is also beneficial that applicants have a working knowledge of SQL, R, Perl, Python, Java, or other languages appropriate for large-scale data analysis, as well as experience working with relational and/or NoSQL databases (e.g., MySQL, HBase, Cassandra, Neo4J, etc.)

- Collaborative skills: The data researcher often works with other researchers, sales teams, and product teams to understand the project at hand and provide relevant information. So it is crucial that they have the ability to work in a team-oriented environment, as well as with people from diverse backgrounds

- Interpersonal skills: It is also essential that they have the necessary skills to build rapport, establish meaningful relationships, manage, and build a team

- Computer skills: Data researchers utilize the computer as a primary tool; hence, they must be proficient in the use of Microsoft Office applications, including Excel, PowerPoint, and Word. They must also be familiar with Google Documents, data visualization software (e.g., plot.ly or Tableau), and statistical analysis software applications

- Attention to detail: The data researcher is responsible for the accuracy, quality, and integrity of data. Therefore, it is crucial that they pay attention to detail and have a drive for excellence

- Communication skills: After performing data analysis, it is the responsibility of the data researcher to convey and explain findings to varying audiences with a technical or non-technical background. In view of this, it is important that they can draft clear and concise documentation, reports, and specifications, as well as communicate verbally to the relevant parties.

If you are a recruiter or HR manager needing to hire for the data researcher position in your organization, you will need to make a description of the vacant role so that individuals who are interested in the job will know the duties and responsibilities they will be assigned to perform if hired.

The data researcher job description example above will help you in writing the perfect description of the role for your organization.

The information on this page is also helpful to individuals interested in the data researcher career to learn about the kind of duties commonly assigned to the role. This will enable them to decide whether it’s the career they would like to pursue.

Did this post increase your knowledge of what data researchers do? Please leave a comment in the box below. You may also share your job description if you work as a data researcher.

Data Analyst

What Is a Data Analyst? How to Become One, Salary, Skills.

Data analysts solve measurable business problems with the help of computer programming and data analytics practices. Here’s what to know about a data analyst’s needed skills, salary and how to become one.

What Is a Data Analyst?

Data analysts conduct statistical analysis on structured data to uncover relevant business conclusions. They primarily focus on converting tangible, readily available data into actionable insights and answers.

What Do Data Analysts Do?

Data analysts acquire and organize cleaned data to search for applicable patterns and trends. They utilize data analysis techniques, programming languages and data visualization tools to conduct analysis and display their findings. Unlike data scientists, data analysts usually don’t work with raw data or machine learning models and don’t conduct largely hypothetical analysis.

Data Analyst Responsibilities

- Gather, reorganize and clean data as necessary from primary and secondary sources.

- Analyze and interpret patterns and trends in structured data sets.

- Extract actionable business insights and present findings to other professionals.

- Communicate with various parties to identify data information needs.

Day-to-Day Responsibilities of Data Analysts

- Use analytics platforms like KNIME to aggregate and clean collected data.

- Use programming languages like Python to manage data structures and conduct data mining operations.

- Create conclusion charts and graphs with visualization tools like Tableau.

- Collaborate with software developers to optimize data collection and analysis systems.

Data Analysts Within a Company

Data analysts are usually part of a data science team within a company. They frequently collaborate with business intelligence analysts, data engineers, data scientists and software developers to accomplish their work.

Importance of Data Analysts

The insights that data analysts uncover through their work can be used to alleviate workflow roadblocks or to eventually make impactful business decisions. Specific business problems or optimization issues that relate to data may take longer to solve without data analysts.

What Skills Are Needed to Be a Data Analyst?

Qualifications to be a data analyst.

- Internship and/or on-the-job training experience in data science or data analytics.

- Ability to organize, clean and interpret large sets of data.

- Ability to conduct statistical and regression analysis to track and identify trends.

- Proficiency in Python or R for data analysis purposes.

Data Analyst Prerequisites

- Bachelor’s degree in computer science, information systems, statistics or a similar field.

Data Analyst Hard Skills

- Expertise in data analysis, cleaning and preparation.

- Knowledge of big data tools and databases.

- Knowledge of cloud computing technologies.

- Experience with data analysis tools and techniques.

- Experience with data visualization tools.

- Knowledge of machine learning technologies.

- Expertise in programming languages (Java, Python, R, Scala, SQL)

- Experience with statistics, mathematics and related analysis.

Data Analyst Soft Skills

- Collaboration.

- Critical thinking skills.

- Problem-solving skills.

- Verbal and written communication skills.

Tools and Programs Data Analysts Use

- Google Sheets

- Jupyter Notebook

- Microsoft Excel

- Microsoft Power BI

How to Become a Data Analyst

Data analyst education and experience.

Data analyst candidates are often expected to have a bachelor’s degree in computer science, information systems, statistics or a similar field.

Candidates will often need to obtain applicable data science or analytics experience through an internship, on-the-job training and/or work experience. Knowledge in the areas of data analysis and tools, data visualization, programming languages (Java, Python, R, Scala, SQL), statistics, big data and effective communication are also recommended.

Data Analyst Certificates and Courses

- 21 Python Data Science Courses and Bootcamps to Know

- Data Analytics Accelerator

- Data Analytics Bootcamp

- Intro to Data Analytics Webinar

- Learning Python for Data Analysis and Visualization

Data Analyst Career Path

After gaining experience as a data analyst, professionals can move into a data scientist, data analytics consultant or specialist role like marketing analyst, operations analyst or systems analyst. From here, professionals may progress into management and leadership roles like senior data analyst, analytics manager, director of analytics or chief data officer.

Data Analyst Salary and Job Outlook

Data analyst jobs, which the U.S. Bureau of Labor Statistics groups under operations research analyst jobs, are projected to grow 23 percent by 2031.

The full compensation package for a data analyst depends on a variety of factors, including but not limited to the candidate’s experience and geographic location. See below for detailed information on the average data analyst salary.

Expand Your Data Analyst Career Opportunities

Invest in your skillset by taking expert-led data science courses from Udemy.

Regardless of your industry or role, fluency in the language of data analytics will allow you to contribute to data driven decision making.

Data Analyst Certifications + Programs

Give your resume a boost with in-demand data science certifications available from Udacity.

Whether you have coded before or are brand new to the world of programming, this course will put you on the fast track to building confidence with this intuitive, object-oriented language. Learn programming fundamentals and build a custom application. Graduate with the ability to start applying Python within high-growth fields like analytics, data science, and web development.

What you'll accomplish

This is a beginner-friendly program with no prerequisites, although some students may have coded previously. First-time programmers will have access to pre-course preparatory lessons and additional resources to boost their confidence with key concepts and set up their development environments. Throughout this expert-designed program, you’ll:

- Learn object-oriented programming fundamentals and Python basics that get you coding from day one.

- Build a Python program and add on increased complexity throughout the course.

- Troubleshoot Python code and practice common debugging techniques.

- Push your skills to the next level by adding scripting, modules, and APIs to your Python toolkit.

- Explore introductory data science and web development as potential career directions for Python programmers.

- Demonstrate your Python skills by creating apps that pull in data with Pandas or integrate functionality from APIs with Flask.

Why General Assembly

Since 2011, General Assembly has graduated more than 40,000 students worldwide from its full-time and part-time courses. During the 2020 hiring shutdown, GA's students, instructors, and career coaches never lost focus, and the KPMG-validated numbers in their Outcomes report reflect it. For students who graduated in 2020, the peak of the pandemic, 74.4% of those who participated in GA's full-time Career Services program landed jobs within six months of graduation. General Assembly is proud of the relentless dedication of its grads and teams and is glad to see those numbers rising.

Your next step? Submit an application to talk to the General Assembly Admissions team

Note: reviews are referenced from Career Karma - https://careerkarma.com/schools/general-assembly

General Assembly’s Data Analytics Immersive is designed for you to harness Excel, SQL, and Tableau to tell compelling stories with a data driven strategy. This program was created for analysts, digital marketers, sales managers, product managers, and data novices looking to learn the essentials of data analysis.

You will learn to use industry tools, Excel, and SQL to analyze large real world data sets and create data dashboards and visualizations to share your findings. The Data Analytics Accelerator culminates in a.

Throughout this expert-designed program, you’ll:

- Use Excel, SQL, and Tableau to collect, clean, and analyze large data sets.

- Present data-driven insights to key stakeholders using data visualization and dashboards.

- Tell compelling stories with your data.

- Graduate with a professional portfolio of projects that includes a capstone project applying rigorous data analysis techniques to solve a real-world problem.

General Assembly’s Data Analytics Immersive is a transformative course designed for you to get the necessary skills for a data analyst role in three months.

The Data Analytics bootcamp is led by instructors who are expert practitioners in their field, supported by career coaches who work with you from day one, and enhanced by a career services team that is constantly in talks with employers about their tech hiring needs.

As a graduate, you’ll have a portfolio of projects that show your knowledge of data analytics skills, as well as experience with visualization tools and frameworks that employers demand. Throughout this expert-designed program, you’ll:

- Acquire, analyze, and visualize data sets in real time.

- Master industry-standard tools like SQL, Excel, Tableau, PowerBI, and Python.

- Turn data into stories that can influence and inform important decisions.

- Ask the right questions and answer them with data-informed insights.

- Demonstrate what you’ve learned with a solid professional portfolio.

Careers Related to Data Analyst

Data analyst jobs, companies hiring data analysts, most common skills for data analyst, related data science careers.

30 Data Researcher Interview Questions and Answers

Common Data Researcher interview questions, how to answer them, and example answers from a certified career coach.

In today’s data-driven world, the role of a Data Researcher has become increasingly vital for organizations seeking to make informed decisions and gain valuable insights. Your meticulous approach to collecting, analyzing, and interpreting data has brought you this far in your career journey – but now it’s time to impress potential employers during the interview process.

To help you confidently navigate through your upcoming interview, we’ve compiled a list of common Data Researcher interview questions that will showcase your expertise and problem-solving skills. Along with these questions, we’ll provide guidance on how to answer them effectively, ensuring you stand out as a top candidate in this competitive field.

1. What experience do you have in data collection and analysis?

Hiring managers want to gauge your ability to navigate the world of data, from gathering raw information to drawing actionable insights. Your experience in data collection and analysis will demonstrate your ability to tackle complex challenges, use various tools and methodologies, and ultimately contribute to the company’s decision-making process and strategic planning. The more proficient you are in these areas, the more valuable you become as a data researcher.

Example: “During my time as a research assistant at XYZ University, I was responsible for collecting and analyzing data for various projects. One of the most significant projects involved conducting surveys to assess student satisfaction with campus facilities. I designed questionnaires, distributed them both online and in person, and collected responses from over 1,000 students.

After gathering the data, I used statistical software like SPSS and Excel to clean, organize, and analyze it. Through descriptive statistics and regression analysis, I identified trends and correlations that helped inform recommendations for facility improvements. My findings were presented to university administrators, who implemented several changes based on our insights. This experience not only honed my skills in data collection and analysis but also demonstrated the real-world impact that well-executed research can have on decision-making processes.”

2. Can you describe a project where you had to collect, clean, and analyze large datasets?

Diving into the nitty-gritty of data is at the core of a data researcher’s job. By asking about a specific project, interviewers want to gauge your hands-on experience and expertise in handling large datasets, as well as your ability to identify and fix data inconsistencies. This question also helps them understand your thought process, problem-solving skills, and how you approach complex tasks that are critical to the role.

Example: “During my previous role as a data researcher, I was assigned to a project that aimed to identify trends and patterns in customer behavior for an e-commerce company. The dataset provided consisted of millions of transaction records spanning over two years, which included information on customer demographics, purchase history, and browsing behavior.

The first step involved cleaning the data by identifying and addressing missing values, inconsistencies, and duplicate entries. This process required close attention to detail and the use of various data cleansing techniques, such as imputation and outlier detection. Once the data was cleaned, I used Python libraries like Pandas and NumPy to manipulate and analyze the dataset efficiently.

Through my analysis, I was able to uncover valuable insights into customer preferences, seasonal trends, and potential areas for improvement in the company’s marketing strategy. These findings were then presented to the management team, who utilized the information to make informed decisions about future campaigns and promotions. Ultimately, this project not only honed my skills in handling large datasets but also demonstrated the value of data-driven decision-making in achieving business goals.”
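To make the cleaning step in this answer concrete, here is a minimal sketch of removing duplicate entries and imputing missing values. It uses plain Python rather than Pandas so it runs without extra packages, and the record layout, field names, and choice of median imputation are illustrative assumptions, not details from the project described.

```python
from statistics import median

# Hypothetical transaction records; field names are illustrative only.
records = [
    {"order_id": 1, "customer": "a", "amount": 20.0},
    {"order_id": 2, "customer": "b", "amount": None},   # missing value
    {"order_id": 2, "customer": "b", "amount": None},   # duplicate entry
    {"order_id": 3, "customer": "c", "amount": 35.0},
    {"order_id": 4, "customer": "d", "amount": 25.0},
]

def drop_duplicates(rows, key):
    """Keep the first row seen for each value of `key`."""
    seen, unique = set(), []
    for row in rows:
        if row[key] not in seen:
            seen.add(row[key])
            unique.append(row)
    return unique

def impute_median(rows, field):
    """Replace missing values of `field` with the median of the observed values."""
    observed = [r[field] for r in rows if r[field] is not None]
    fill = median(observed)
    return [{**r, field: fill if r[field] is None else r[field]} for r in rows]

deduped = drop_duplicates(records, "order_id")
cleaned = impute_median(deduped, "amount")
```

In an interview, it helps to explain why you chose a particular imputation rule. The median is used here only because it is less sensitive to extreme values than the mean.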

3. Which programming languages are you proficient in for data manipulation and analysis?

As a data researcher, your potential employer wants to ensure you possess the technical skills necessary to work with large datasets and extract valuable insights from them. Proficiency in programming languages such as Python, R, and SQL is essential for tasks like data cleaning, transformation, and analysis. By asking this question, the interviewer is assessing your ability to effectively use these languages to support the company’s research goals and data-driven decision making.

Example: “I am proficient in Python and R for data manipulation and analysis. In my previous role as a data researcher, I primarily used Python along with libraries such as Pandas, NumPy, and Matplotlib to clean, analyze, and visualize large datasets. This allowed me to efficiently process the data and extract valuable insights for the team.

I also have experience working with R, particularly when it comes to statistical modeling and hypothesis testing. Utilizing packages like dplyr, ggplot2, and tidyr, I’ve been able to perform advanced analyses and create compelling visualizations that effectively communicate our findings to stakeholders. My proficiency in both Python and R has enabled me to tackle various data-related challenges and contribute significantly to the success of my projects.”

4. Are you familiar with any data visualization tools? If so, which ones?

Data visualization is a vital skill for a data researcher, as it allows you to present complex information in a visually appealing and easily digestible format. Interviewers ask about your familiarity with data visualization tools to assess your ability to transform raw data into meaningful insights that can be easily understood by non-experts. Showcasing your knowledge of various data visualization tools demonstrates your versatility and adaptability in using the best tool for the job and effectively communicating your findings to different audiences.

Example: “Yes, I am familiar with several data visualization tools that help in presenting complex data in a more understandable and visually appealing manner. Some of the tools I have experience with include Tableau, Microsoft Power BI, and Google Data Studio.

Tableau is my go-to tool for creating interactive dashboards and visualizations, as it offers a wide range of customization options and supports various data sources. Power BI is another powerful tool I’ve used, particularly when working within the Microsoft ecosystem, as it integrates seamlessly with other Microsoft applications like Excel and SharePoint. Lastly, Google Data Studio has been useful for creating real-time reports and sharing them easily with team members, especially when working with Google Analytics or Google Sheets data.”

5. How do you ensure the accuracy and reliability of the data you collect and analyze?

Accuracy and reliability are the cornerstones of quality data research, and interviewers want to ensure you prioritize these aspects in your work. By asking this question, they are looking to gauge your understanding of essential data verification techniques, your attention to detail, and your commitment to delivering accurate, trustworthy insights that can drive informed decision-making within the organization.

Example: “To ensure the accuracy and reliability of the data I collect and analyze, I follow a systematic approach that includes verifying data sources, cross-checking information, and using appropriate analytical methods. First, I make sure to use reputable and reliable sources for data collection, such as government databases, industry reports, or peer-reviewed research articles. This helps establish a solid foundation for my analysis.

Once I have collected the data, I perform thorough cross-checks by comparing it with other relevant sources to identify any discrepancies or inconsistencies. If there are any doubts about the data’s validity, I investigate further to resolve the issue before proceeding with the analysis. Additionally, I maintain clear documentation of my data collection process, including source citations and notes on any assumptions made during the analysis.

When analyzing the data, I choose appropriate statistical techniques and tools based on the nature of the data and the research question at hand. This ensures that the results obtained from the analysis are accurate and meaningful. Finally, I always review my findings critically and seek feedback from colleagues or subject matter experts to validate my conclusions and ensure their reliability.”

6. Describe your experience using APIs to gather data.

Employers want to know if you have the technical skills and experience required to efficiently gather and manipulate data from various sources. APIs, or Application Programming Interfaces, are an essential way to access and collect data from different platforms, websites, or databases. Your familiarity with APIs demonstrates your ability to work with complex data sets and adapt to various data sources, which is a valuable skill in the world of data research.

Example: “During my previous role as a data researcher, I frequently used APIs to gather and analyze data from various sources. One notable project involved collecting social media data for sentiment analysis. To achieve this, I utilized the Twitter API to access tweets containing specific keywords related to our client’s products.

I started by familiarizing myself with the API documentation and setting up authentication using OAuth. Once connected, I wrote Python scripts utilizing the Tweepy library to extract relevant tweet data such as text, timestamp, user information, and engagement metrics. This allowed me to efficiently collect large volumes of data while adhering to the API rate limits.

The gathered data was then cleaned, preprocessed, and analyzed to identify trends and sentiments that helped inform our client’s marketing strategy. The use of APIs in this project not only streamlined the data collection process but also ensured we had access to real-time, accurate information directly from the source.”
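The idea of paging through an API while respecting its rate limits can be sketched generically. The example below does not call Twitter or Tweepy. `fetch_page`, the `next_cursor` field, and the limit of two requests per 900-second window are all hypothetical stand-ins chosen for illustration.

```python
import time

def collect_pages(fetch_page, max_requests_per_window, window_seconds, sleep=time.sleep):
    """Call fetch_page(cursor) until no next cursor is returned,
    pausing after every `max_requests_per_window` requests."""
    items, cursor, requests_made = [], None, 0
    while True:
        if requests_made and requests_made % max_requests_per_window == 0:
            sleep(window_seconds)  # wait out the rate-limit window
        page = fetch_page(cursor)
        requests_made += 1
        items.extend(page["items"])
        cursor = page.get("next_cursor")
        if cursor is None:
            return items

# Stand-in for a real API call: three pages of fake results.
FAKE_PAGES = {
    None: {"items": ["t1", "t2"], "next_cursor": "p2"},
    "p2": {"items": ["t3"], "next_cursor": "p3"},
    "p3": {"items": ["t4"], "next_cursor": None},
}

pauses = []  # record pauses instead of actually sleeping
results = collect_pages(FAKE_PAGES.__getitem__, max_requests_per_window=2,
                        window_seconds=900, sleep=pauses.append)
```

Passing the sleep function in as a parameter keeps the sketch testable. A real script would use the default `time.sleep` and the limits published in the API's documentation.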

7. Have you ever worked with unstructured data? If so, how did you handle it?

Data researchers often face the challenge of working with unstructured data, which requires creativity, flexibility, and problem-solving skills. By asking this question, interviewers want to know if you have experience dealing with this common issue and gauge your ability to transform messy information into valuable insights. Your response will demonstrate your analytical skills, adaptability, and resourcefulness in tackling real-world data problems.

Example: “Yes, I have worked with unstructured data in a previous project where we were analyzing customer feedback from various sources like social media, emails, and online reviews. The challenge was to extract valuable insights from this raw, unorganized information.

To handle the unstructured data, I first used natural language processing (NLP) techniques to preprocess the text: tokenizing it, removing stop words, and stemming the remaining words. Then, I applied topic modeling algorithms such as Latent Dirichlet Allocation (LDA) to identify common themes and patterns within the data. This allowed us to group similar feedback together and gain a better understanding of our customers’ concerns and preferences. Ultimately, these insights helped inform our marketing strategies and improve overall customer satisfaction.”
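The preprocessing described in this answer can be sketched in a few lines. This is a simplified illustration: the stop-word list is tiny, `crude_stem` is a hypothetical stand-in for a real stemmer, and the topic-modeling step is not shown. The resulting term counts are the kind of input a topic model such as LDA would start from.

```python
import re
from collections import Counter

# Tiny illustrative stop-word list; real projects use a fuller one.
STOP_WORDS = {"the", "a", "is", "was", "and", "to", "it", "but", "very"}

def crude_stem(token):
    """Strip a few common suffixes. A stand-in for a real stemmer."""
    for suffix in ("ing", "ed", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token

def preprocess(text):
    tokens = re.findall(r"[a-z]+", text.lower())          # tokenize
    tokens = [t for t in tokens if t not in STOP_WORDS]   # drop stop words
    return [crude_stem(t) for t in tokens]                # stem

feedback = [
    "The delivery was delayed and the package arrived damaged.",
    "Shipping is very slow but the support team helped.",
]
term_counts = Counter(tok for doc in feedback for tok in preprocess(doc))
```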

8. What methods do you use to validate the quality of the data you work with?

Accuracy and reliability are paramount in the world of data research. Interviewers want to ensure that you have a strong understanding of data validation techniques and that you actively employ them in your work. This question helps them gauge your attention to detail, your commitment to maintaining data integrity, and your ability to recognize and address potential errors or inaccuracies in the data you handle.

Example: “To validate the quality of data, I employ a combination of techniques to ensure its accuracy and reliability. First, I perform an initial assessment by checking for any missing values, inconsistencies, or outliers in the dataset. This helps me identify potential issues that may require further investigation.

Once I have a general understanding of the data’s structure, I cross-check it by comparing it with other reputable sources or benchmarking it against industry standards. This step allows me to confirm the credibility of the information and detect any discrepancies that might impact my analysis.

Furthermore, I collaborate with colleagues or subject matter experts to review the data and provide their insights on its validity. Their expertise can help uncover hidden patterns or trends that might not be immediately apparent, ensuring a comprehensive evaluation of the data’s quality. Through this multi-faceted approach, I can confidently work with data that is accurate, reliable, and relevant to the research objectives.”

9. Can you explain the difference between supervised and unsupervised machine learning algorithms?

When considering a data researcher candidate, interviewers want to know whether you possess a solid understanding of the different machine learning approaches. This question is asked to explore your familiarity with the concepts and their applications, as well as your ability to explain complex ideas clearly. Supervised and unsupervised algorithms form the basis of many data-driven solutions, so it’s essential for a data researcher to be well-versed in their differences and use cases.

Example: “Supervised machine learning algorithms involve training a model using labeled data, where the input-output relationship is already known. The algorithm learns from this data and then applies that knowledge to make predictions on new, unseen data. Common supervised learning techniques include regression for continuous outputs and classification for categorical outputs.

On the other hand, unsupervised machine learning algorithms work with unlabeled data, meaning there’s no predefined output or target variable. Instead, these algorithms aim to identify patterns, relationships, or structures within the data itself. Clustering and dimensionality reduction are common unsupervised learning techniques used to group similar data points together or reduce the complexity of high-dimensional data, respectively.

Both supervised and unsupervised learning have their unique applications in data research, depending on the problem at hand and the availability of labeled data.”
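A small example can make the contrast easier to explain in an interview. The sketch below uses made-up one-dimensional data: a nearest-centroid classifier stands in for supervised learning, and a two-cluster k-means stands in for unsupervised learning. Both are deliberately simplified illustrations rather than production implementations.

```python
from statistics import mean

# Supervised: labels are known, so we learn one centroid per label.
labeled = [(1.0, "low"), (1.5, "low"), (8.0, "high"), (9.0, "high")]

def fit_centroids(samples):
    groups = {}
    for value, label in samples:
        groups.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in groups.items()}

def predict(centroids, value):
    """Return the label whose centroid is closest to `value`."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))

centroids = fit_centroids(labeled)
prediction = predict(centroids, 7.0)

# Unsupervised: no labels, so k-means has to discover the two groups itself.
def two_means(values, iterations=10):
    centers = [min(values), max(values)]
    for _ in range(iterations):
        clusters = [[], []]
        for v in values:
            nearest = 0 if abs(v - centers[0]) <= abs(v - centers[1]) else 1
            clusters[nearest].append(v)
        centers = [mean(c) if c else centers[i] for i, c in enumerate(clusters)]
    return clusters

clusters = two_means([1.0, 1.5, 8.0, 9.0, 1.2, 8.4])
```

The supervised half can name its output ("low" or "high") because the training data carried those names. The unsupervised half can only report that two groups exist.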

10. Describe a situation where you had to deal with missing or incomplete data. How did you handle it?

Data researchers often face the challenge of working with imperfect information. Interviewers want to know if you possess the analytical skills, creativity, and resourcefulness to handle these situations effectively. Your response to this question demonstrates your ability to fill in the gaps, make informed assumptions, and ultimately deliver accurate and meaningful insights, even when the data isn’t perfect.

Example: “During a previous project, I was tasked with analyzing customer satisfaction data to identify trends and areas for improvement. While reviewing the dataset, I noticed that some survey responses were incomplete or missing entirely. To address this issue, I first assessed the extent of the missing data and determined whether it would significantly impact the analysis.

Since the missing data represented a small percentage of the total responses, I decided to proceed with caution by using statistical imputation techniques to estimate the missing values. This involved identifying patterns in the available data and making educated assumptions based on those patterns. Additionally, I made sure to document my methodology and assumptions thoroughly so that other team members could understand the approach taken and its potential limitations.

After completing the analysis, I presented my findings to the team, highlighting the areas where we had estimated data and discussing the possible implications on our conclusions. This transparency allowed us to make informed decisions while acknowledging the limitations of the dataset.”
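The approach in this answer (check how much is missing, impute only if the share is small, and keep track of what was estimated) might look like the following sketch. The 10 percent threshold, the use of mean imputation, and the survey ratings are illustrative assumptions.

```python
from statistics import mean

def impute_if_sparse(values, max_missing_share=0.1):
    """Mean-impute None entries only when few values are missing.
    Returns (filled_values, imputed_flags, missing_share)."""
    missing_share = sum(v is None for v in values) / len(values)
    if missing_share > max_missing_share:
        raise ValueError(f"{missing_share:.0%} missing; imputation not advisable")
    fill = mean(v for v in values if v is not None)
    filled = [fill if v is None else v for v in values]
    flags = [v is None for v in values]  # True where a value was estimated
    return filled, flags, missing_share

scores = [4, 5, None, 3, 4, 5, 4, 3, 5, 3]  # hypothetical survey ratings
filled, flags, share = impute_if_sparse(scores)
```

Returning the flags alongside the filled values makes it straightforward to highlight estimated data points when presenting results.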

11. What is your approach to handling outliers in a dataset?

When working with datasets, outliers can have a significant impact on the overall analysis and conclusions. Interviewers want to know how you approach these unusual data points because it demonstrates your critical thinking skills and understanding of data quality. They’re interested in seeing whether you have the ability to recognize, manage, and communicate the potential effects of outliers in your research and analysis.

Example: “When handling outliers in a dataset, my first step is to identify and understand the nature of these data points. I begin by visualizing the data using appropriate plots, such as box plots or scatter plots, which can help me spot any potential outliers. Once identified, I investigate whether these outliers are due to errors in data collection, entry, or processing, or if they represent genuine observations.

If the outliers result from errors, I take corrective measures, such as fixing data entry mistakes or addressing issues with data collection methods. However, if the outliers are valid observations, I consider their impact on the analysis. In some cases, removing them might be necessary to prevent distortion of results, especially when using techniques sensitive to extreme values. Alternatively, if the outliers provide valuable insights or are essential for the research question, I may choose to keep them and use robust statistical methods that minimize their influence on the overall analysis. This approach ensures that the final conclusions drawn from the dataset are both accurate and meaningful.”
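Box plots flag outliers with the interquartile range (IQR) rule, and the same rule is easy to apply in code. The sketch below uses Python's standard `statistics.quantiles`. The multiplier of 1.5 is the conventional default, and the order values are made up for illustration.

```python
from statistics import quantiles

def iqr_outliers(values, k=1.5):
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(values, n=4)
    spread = q3 - q1
    low, high = q1 - k * spread, q3 + k * spread
    return [v for v in values if v < low or v > high]

order_values = [12, 14, 15, 15, 16, 18, 19, 20, 95]
flagged = iqr_outliers(order_values)
```

Flagging is only the first step. Whether a flagged value is an error or a genuine observation still has to be investigated, as the answer describes.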

12. Explain the concept of data normalization and why it’s important.

Data normalization is a key concept for any data researcher, and interviewers want to ensure you understand its importance. Normalization is the process of organizing data in a database to minimize redundancy and improve data integrity. By demonstrating your knowledge of data normalization, you show that you can maintain and work with clean, high-quality data, leading to more accurate and reliable analysis and insights for the organization.

Example: “Data normalization is a process used in database design to organize data and reduce redundancy, ensuring that each piece of information is stored only once. This is achieved by dividing larger tables into smaller ones and defining relationships between them using primary and foreign keys. The main goal of normalization is to eliminate anomalies, such as insertion, update, and deletion anomalies, which can lead to inconsistencies and inaccuracies in the data.

Normalization is important for several reasons. First, it improves the efficiency of data storage by reducing duplicate data, saving space, and making the database easier to maintain. Second, it enhances data integrity: because each fact is stored in one place, a change made there is seen by every record that references it. Finally, normalized databases produce consistent query and reporting results, although heavily normalized schemas can require more joins at read time. In summary, data normalization plays a critical role in maintaining accurate, consistent, and easily accessible data within a database system.”
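A short example can back up this explanation. The sketch below uses Python's built-in `sqlite3` module with a hypothetical two-table schema (the table and column names are assumptions for illustration). Customer details live in one table and orders reference them by key, so an update to the email is made once.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        email       TEXT NOT NULL
    );
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
        total       REAL NOT NULL
    );
    INSERT INTO customers VALUES (1, 'old@example.com');
    INSERT INTO orders VALUES (100, 1, 25.0), (101, 1, 40.0);
""")

# The email is stored once, so a single UPDATE is seen by every order.
conn.execute("UPDATE customers SET email = 'new@example.com' WHERE customer_id = 1")
rows = conn.execute("""
    SELECT o.order_id, c.email
    FROM orders AS o JOIN customers AS c USING (customer_id)
    ORDER BY o.order_id
""").fetchall()
```

Had the email been copied into every order row instead, the same change would have required updating each row, which is the update anomaly normalization is meant to prevent.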

13. Do you have experience working with relational databases? If so, which ones?

Your ability to navigate relational databases is essential for a data researcher, as these databases are often used to store, organize, and analyze large amounts of information. By asking this question, interviewers seek to gauge your familiarity with specific tools and platforms, as well as your experience in handling complex data sets. It’s a way to assess your technical skills and determine if you can efficiently manage the data-driven tasks required for the role.

Example: “Yes, I have extensive experience working with relational databases throughout my career as a data researcher. My primary expertise lies in using MySQL and PostgreSQL for managing and organizing large datasets. In my previous role at XYZ Company, I was responsible for designing and maintaining the database schema using MySQL, which involved creating tables, defining relationships between them, and optimizing queries to ensure efficient data retrieval.

I have also worked on projects that utilized PostgreSQL, where I gained experience in advanced features such as full-text search, JSON support, and spatial data processing. This allowed me to develop a deeper understanding of different database systems and their unique capabilities, enabling me to choose the most suitable solution based on project requirements.”

14. What statistical techniques do you commonly use in your data analysis projects?

Your ability to select and apply appropriate statistical techniques is critical to a data researcher role. Employers want to ensure that you have a deep understanding of various statistical methods and can effectively use them to analyze and interpret data, leading to meaningful insights and informed decisions for the organization. Demonstrating your expertise and experience with statistical techniques will signal that you are a capable data researcher who can contribute to the success of the company’s data-driven projects.

Example: “As a data researcher, I employ various statistical techniques depending on the nature of the project and the specific research question. Two commonly used methods in my work are regression analysis and hypothesis testing.

Regression analysis allows me to model relationships between variables and identify trends or patterns within the data. This technique is particularly useful when trying to predict outcomes based on certain input factors or understand how changes in one variable might impact another. For example, I’ve used multiple linear regression to analyze the relationship between sales performance and marketing spend across different channels.

Hypothesis testing is another essential tool for making informed decisions based on sample data. It helps me determine whether observed differences or correlations are statistically significant or simply due to chance. One common test I use is the t-test, which compares means between two groups. In a recent project, I employed an independent samples t-test to evaluate if there was a significant difference in customer satisfaction scores between two product versions.

These techniques, along with others like ANOVA, chi-square tests, and cluster analysis, enable me to extract valuable insights from data and support evidence-based decision-making processes.”

15. How do you stay up-to-date on the latest trends and developments in data research?

Staying current in the field of data research is essential to maintaining a competitive edge in the job market and delivering the most valuable insights to your employer. By asking this question, interviewers want to gauge your commitment to professional growth and your ability to adapt to an ever-evolving landscape, while also ensuring that you’re well-informed about new methodologies, tools, and technologies that could benefit the organization.

Example: “To stay up-to-date on the latest trends and developments in data research, I actively engage with various sources of information. First, I subscribe to industry-leading journals and publications such as Harvard Business Review, Data Science Central, and Journal of Big Data, which provide valuable insights into new methodologies, tools, and best practices.

I also participate in online forums and communities where professionals discuss current challenges, share experiences, and exchange ideas. This helps me gain practical knowledge from my peers and learn about real-world applications of emerging technologies. Additionally, I attend webinars, conferences, and workshops whenever possible to network with other professionals and hear directly from experts in the field.

This combination of staying informed through reputable publications, engaging with professional communities, and attending events allows me to continuously expand my knowledge and ensure that my skills remain relevant in the ever-evolving world of data research.”

16. Describe a time when you had to present complex data findings to a non-technical audience. How did you make the information accessible and understandable?

Data researchers must be able to effectively convey their findings to people who may not have a strong background in data analysis. This question seeks to evaluate your ability to break down complex information and present it in a way that is easy for others to comprehend. The interviewer wants to know if you can adapt your communication style to suit different audiences and ensure that your insights make a meaningful impact on decision-making processes.

Example: “I once worked on a project where I had to analyze customer behavior data for an e-commerce company and present the findings to the marketing team, who were not well-versed in technical data analysis. My goal was to help them understand the key insights so they could make informed decisions about their marketing strategies.

To make the information accessible and understandable, I started by identifying the most important takeaways from my analysis that would be relevant to the marketing team’s objectives. Then, I used visual aids like charts and graphs to represent the data in a more digestible format. For example, I created a heatmap to show the peak hours of website traffic and a bar chart to display the most popular product categories among different age groups.

During the presentation, I focused on explaining the context and significance of each finding rather than diving into complex statistical methods. I also encouraged questions throughout the session to ensure everyone understood the key points. This approach allowed me to effectively communicate the insights from the data analysis, enabling the marketing team to develop targeted campaigns based on the findings.”

17. Can you provide an example of a project where you used predictive analytics to solve a problem or answer a question?

Employers are interested in your ability to leverage data and predictive analytics to draw meaningful insights and inform decision-making. By asking for a specific example, they want to gauge your experience and expertise in using these tools, as well as your thought process and problem-solving skills. Sharing a successful project demonstrates your aptitude for data-driven analysis and your ability to apply it in real-world scenarios.

Example: “Certainly! I recently worked on a project for an e-commerce company that wanted to optimize its marketing efforts by targeting customers who were most likely to make repeat purchases. My role was to analyze the historical transaction data and develop a predictive model to identify these high-value customers.

I started by cleaning and preprocessing the data, resolving inconsistencies and handling missing values. Next, I performed exploratory data analysis to understand patterns and correlations between various customer attributes and their purchasing behavior. Based on my findings, I selected relevant features such as average order value, frequency of purchases, and time since last purchase to build the predictive model.

I experimented with several machine learning algorithms, including logistic regression, decision trees, and random forests, to find the best-performing model. After fine-tuning the parameters and validating the model using cross-validation techniques, I settled on a random forest classifier due to its superior performance in terms of accuracy and precision.

The final model successfully identified customers with a high likelihood of making repeat purchases, enabling the marketing team to focus their efforts on this segment and ultimately increase the company’s revenue through targeted campaigns. This project showcased the power of predictive analytics in driving informed business decisions and achieving desired outcomes.”
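
The feature selection step in an answer like this is easy to illustrate. The following is a rough sketch under stated assumptions: the transaction records, the field layout, and the function name build_features are all hypothetical. It derives the three features named above (average order value, purchase frequency, and time since last purchase) from a raw transaction log using only the standard library.

```python
from collections import defaultdict
from datetime import date

# Hypothetical transaction log: (customer_id, order_date, order_value).
transactions = [
    ("c1", date(2024, 1, 5), 40.0),
    ("c1", date(2024, 2, 10), 60.0),
    ("c2", date(2023, 11, 20), 25.0),
]


def build_features(rows, as_of):
    """Aggregate raw transactions into one feature row per customer."""
    by_customer = defaultdict(list)
    for customer_id, order_date, value in rows:
        by_customer[customer_id].append((order_date, value))

    features = {}
    for customer_id, orders in by_customer.items():
        values = [value for _, value in orders]
        last_purchase = max(order_date for order_date, _ in orders)
        features[customer_id] = {
            "avg_order_value": sum(values) / len(values),
            "purchase_count": len(orders),
            "days_since_last": (as_of - last_purchase).days,
        }
    return features


features = build_features(transactions, as_of=date(2024, 3, 1))
```

A table like this, one row per customer, is the usual input to a classifier such as a random forest. The model fitting and cross-validation themselves are left out here because they depend on a machine learning library.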

18. What is your experience with web scraping tools and techniques?

Employers want to gauge your proficiency in using web scraping tools and techniques because it’s a vital skill for a data researcher. Web scraping is the process of extracting data from websites, and this information is often used for analysis, research, or generating insights. Your familiarity with various web scraping methods and tools demonstrates your ability to efficiently gather the necessary data to support the organization’s objectives.

Example: “Throughout my career as a data researcher, I have gained extensive experience with various web scraping tools and techniques. My primary go-to tool is Python’s Beautiful Soup library, which allows me to efficiently extract information from HTML and XML documents. In combination with the requests library, I can automate the process of fetching web pages and parsing their content.

When dealing with more complex websites that rely on JavaScript for rendering content, I utilize Selenium WebDriver. This enables me to interact with dynamic elements on the page and retrieve data that might not be accessible using traditional methods. Additionally, I check each site’s robots.txt file and terms of service before scraping, and I rate-limit my requests so that I do not overload servers or trigger IP blocking.

My expertise in web scraping has allowed me to gather valuable insights and support data-driven decision-making across various projects, ultimately contributing to the achievement of overall business goals.”
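
A short demonstration of parsing and rate limiting can back up an answer like this. The sketch below is a simplified illustration, not the Beautiful Soup workflow described above: to stay self-contained it swaps in the standard library's html.parser and parses an inline HTML string instead of fetching a live page. The class name LinkExtractor, the helper throttled, and the sample markup are hypothetical.

```python
import time
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects the href attribute of every <a> tag it encounters."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def throttled(items, delay_seconds):
    """Yield items with a pause between them: a very simple rate limiter."""
    for index, item in enumerate(items):
        if index:
            time.sleep(delay_seconds)
        yield item


# Stand-in for a fetched page (hypothetical markup).
sample_html = (
    '<ul><li><a href="/reports/1">One</a></li>'
    '<li><a href="/reports/2">Two</a></li></ul>'
)

parser = LinkExtractor()
parser.feed(sample_html)
links = parser.links
```

In a real scraper, the loop that requests each page would iterate over throttled(urls, delay_seconds) so that consecutive requests are spaced out. The right delay depends on the target site's published limits.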

19. How do you prioritize multiple data research projects with competing deadlines?

Time management is a critical skill in any workplace, but it is especially important for data researchers who often juggle multiple projects with varying deadlines. By asking this question, interviewers want to assess your ability to effectively prioritize tasks, manage your workload, and ensure timely delivery of accurate and insightful research findings that can drive business decisions.

Example: “When faced with multiple data research projects and competing deadlines, I prioritize them based on a combination of factors such as urgency, project complexity, and stakeholder expectations. First, I assess the urgency of each project by considering its deadline and the potential consequences if it is not completed on time. Projects with tighter deadlines or higher stakes naturally take precedence.

After evaluating urgency, I consider the complexity of each project and estimate the time and resources required to complete it. This helps me allocate my time effectively and identify any potential bottlenecks that may arise during the research process. Additionally, I maintain open communication with stakeholders to understand their expectations and keep them informed about progress and any changes in priorities.

This approach allows me to efficiently manage multiple projects while ensuring that I meet deadlines and deliver high-quality results for all stakeholders involved.”

20. Have you ever encountered ethical concerns while conducting data research? If so, how did you address them?

Ethics plays a significant role in data research, which involves handling sensitive information, maintaining privacy, and ensuring unbiased analysis. Interviewers ask this question to gauge your awareness of ethical considerations and your ability to navigate potential dilemmas. Demonstrating how you’ve dealt with ethical concerns in the past showcases your commitment to integrity and responsible research practices, which are essential qualities for any data researcher.

Example: “Yes, I have encountered ethical concerns during my time as a data researcher. One specific instance involved working on a project that required collecting sensitive personal information from participants. To address these concerns, I ensured that our research team adhered to strict privacy and confidentiality protocols.

We obtained informed consent from all participants before collecting any data, clearly explaining the purpose of the study and how their information would be used. Additionally, we anonymized the collected data by removing personally identifiable information and assigning unique identifiers to each participant. This allowed us to analyze the data without compromising individual privacy. Furthermore, we implemented stringent security measures to protect the stored data from unauthorized access.

Throughout the entire process, open communication with stakeholders and maintaining transparency about our methods were key in addressing ethical concerns. This approach not only helped maintain the integrity of our research but also built trust among participants and other parties involved in the project.”
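
It can help to show how replacing personal details with unique identifiers works in practice. The following is a minimal sketch under stated assumptions: the field names, the sample records, and the placeholder key are hypothetical, and a real project would load the key from secure storage. It drops direct identifiers and substitutes a keyed hash so that the same participant always maps to the same ID.

```python
import hashlib
import hmac

# Hypothetical placeholder; a real key would come from secure storage.
SECRET_KEY = b"replace-with-a-securely-stored-key"

# Fields treated as direct identifiers in this sketch (an assumption).
PII_FIELDS = {"name", "email"}


def pseudonymize(record, key=SECRET_KEY):
    """Drop direct identifiers and add a stable, keyed participant ID."""
    normalized_email = record["email"].lower().encode("utf-8")
    token = hmac.new(key, normalized_email, hashlib.sha256).hexdigest()[:16]
    cleaned = {k: v for k, v in record.items() if k not in PII_FIELDS}
    cleaned["participant_id"] = token
    return cleaned


# Made-up survey responses from the same participant.
participants = [
    {"name": "Ada Smith", "email": "ada@example.com", "age_group": "25-34", "score": 4},
    {"name": "Ada Smith", "email": "ADA@example.com", "age_group": "25-34", "score": 5},
]

cleaned = [pseudonymize(p) for p in participants]
```

Strictly speaking, this is pseudonymization rather than full anonymization: anyone holding the key can recompute the IDs, and the remaining fields may still identify someone in a small sample. Both points are worth acknowledging if the interviewer probes further.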

21. What steps do you take to protect sensitive data during your research process?