Scientific Research and Big Data

Big Data promises to revolutionise the production of knowledge within and beyond science, by enabling novel, highly efficient ways to plan, conduct, disseminate and assess research. The last few decades have witnessed the creation of novel ways to produce, store, and analyse data, culminating in the emergence of the field of data science, which brings together computational, algorithmic, statistical and mathematical techniques towards extrapolating knowledge from big data. At the same time, the Open Data movement—emerging from policy trends such as the push for Open Government and Open Science—has encouraged the sharing and interlinking of heterogeneous research data via large digital infrastructures. The availability of vast amounts of data in machine-readable formats provides an incentive to create efficient procedures to collect, organise, visualise and model these data. These infrastructures, in turn, serve as platforms for the development of artificial intelligence, with an eye to increasing the reliability, speed and transparency of processes of knowledge creation. Researchers across all disciplines see the newfound ability to link and cross-reference data from diverse sources as improving the accuracy and predictive power of scientific findings and helping to identify future directions of inquiry, thus ultimately providing a novel starting point for empirical investigation. As exemplified by the rise of dedicated funding, training programmes and publication venues, big data are widely viewed as ushering in a new way of performing research and challenging existing understandings of what counts as scientific knowledge.

This entry explores these claims in relation to the use of big data within scientific research, and with an emphasis on the philosophical issues emerging from such use. To this aim, the entry discusses how the emergence of big data—and related technologies, institutions and norms—informs the analysis of the following themes:

- how statistics, formal and computational models help to extrapolate patterns from data, and with which consequences;

- the role of critical scrutiny (human intelligence) in machine learning, and its relation to the intelligibility of research processes;

- the nature of data as research components;

- the relation between data and evidence, and the role of data as source of empirical insight;

- the view of knowledge as theory-centric;

- understandings of the relation between prediction and causality;

- the separation of fact and value; and

- the risks and ethics of data science.

These are areas where attention to research practices revolving around big data can benefit philosophy, and particularly work in the epistemology and methodology of science. This entry doesn’t cover the vast scholarship in the history and social studies of science that has emerged in recent years on this topic, though references to some of that literature can be found when conceptually relevant. Complementing historical and social scientific work in data studies, the philosophical analysis of data practices can also elicit significant challenges to the hype surrounding data science and foster a critical understanding of the role of data-fuelled artificial intelligence in research.

1. What Are Big Data?
2. Extrapolating Data Patterns: The Role of Statistics and Software
3. Human and Artificial Intelligence
4. The Nature of (Big) Data
5. Big Data and Evidence
6. Big Data, Knowledge and Inquiry
7. Big Data Between Causation and Prediction
8. The Fact/Value Distinction
9. Big Data Risks and the Ethics of Data Science
10. Conclusion: Big Data and Good Science

- Other Internet Resources

- Related Entries

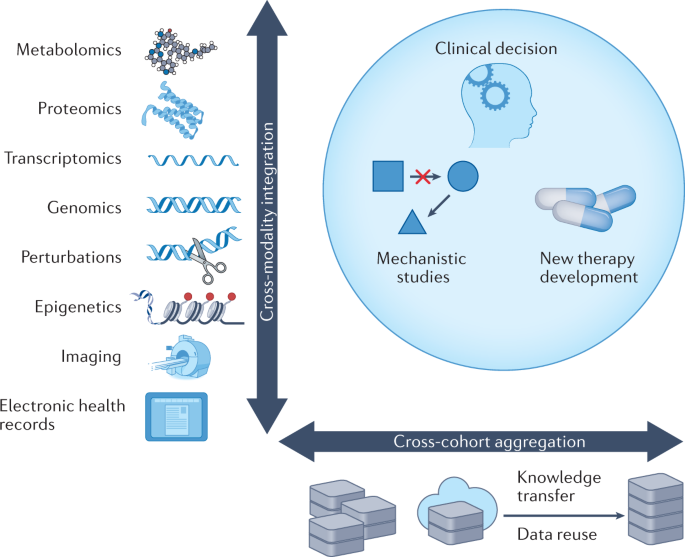

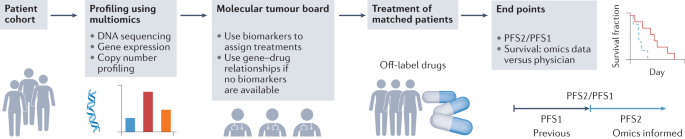

We are witnessing a progressive “datafication” of social life. Human activities and interactions with the environment are being monitored and recorded with increasing effectiveness, generating an enormous digital footprint. The resulting “big data” are a treasure trove for research, with ever more sophisticated computational tools being developed to extract knowledge from such data. One example is the use of different types of data acquired from cancer patients, including genomic sequences, physiological measurements and individual responses to treatment, to improve diagnosis and treatment. Another example is the integration of data on traffic flow, environmental and geographical conditions, and human behaviour to produce safety measures for driverless vehicles, so that when confronted with unforeseen events (such as a child suddenly darting into the street on a very cold day), the data can be promptly analysed to identify and generate an appropriate response (the car swerving enough to avoid the child while also minimising the risk of skidding on ice and damaging other vehicles). Yet another instance is the understanding of the nutritional status and needs of a particular population that can be extracted from combining data on food consumption generated by commercial services (e.g., supermarkets, social media and restaurants) with data coming from public health and social services, such as blood test results and hospital intakes linked to malnutrition. In each of these cases, the availability of data and related analytic tools is creating novel opportunities for research and for the development of new forms of inquiry, which are widely perceived as having a transformative effect on science as a whole.

A useful starting point in reflecting on the significance of such cases for a philosophical understanding of research is to consider what the term “big data” actually refers to within contemporary scientific discourse. There are multiple ways to define big data (Kitchin 2014, Kitchin & McArdle 2016). Perhaps the most straightforward characterisation is as large datasets that are produced in a digital form and can be analysed through computational tools. Hence the two features most commonly associated with Big Data are volume and velocity. Volume refers to the size of the files used to archive and spread data. Velocity refers to the pressing speed with which data is generated and processed. The body of digital data created by research is growing at breakneck pace and in ways that are arguably impossible for the human cognitive system to grasp and thus require some form of automated analysis.

Volume and velocity are also, however, the most disputed features of big data. What may be perceived as “large volume” or “high velocity” depends on rapidly evolving technologies to generate, store, disseminate and visualise the data. This is exemplified by the high-throughput production, storage and dissemination of genomic sequencing and gene expression data, where both data volume and velocity have dramatically increased within the last two decades. Similarly, current understandings of big data as “anything that cannot be easily captured in an Excel spreadsheet” are bound to shift rapidly as new analytic software becomes established, and the very idea of using spreadsheets to capture data becomes a thing of the past. Moreover, data size and speed do not take account of the diversity of data types used by researchers, which may include data that are not generated in digital formats or whose format is not computationally tractable, and which underscores the importance of data provenance (that is, the conditions under which data were generated and disseminated) to processes of inference and interpretation. And as discussed below, the emphasis on physical features of data obscures the continuing dependence of data interpretation on circumstances of data use, including specific queries, values, skills and research situations.

An alternative is to define big data not by reference to their physical attributes, but rather by virtue of what can and cannot be done with them. In this view, big data is a heterogeneous ensemble of data collected from a variety of different sources, typically (but not always) in digital formats suitable for algorithmic processing, in order to generate new knowledge. For example, boyd and Crawford (2012: 663) identify big data with “the capacity to search, aggregate and cross-reference large datasets”, while O’Malley and Soyer (2012) focus on the ability to interrogate and interrelate diverse types of data, with the aim to be able to consult them as a single body of evidence. The examples of transformative “big data research” given above are all easily fitted into this view: it is not the mere fact that lots of data are available that makes a difference in those cases, but rather the fact that lots of data can be mobilised from a wide variety of sources (medical records, environmental surveys, weather measurements, consumer behaviour). This account makes sense of other characteristic “v-words” that have been associated with big data, including:

- Variety in the formats and purposes of data, which may include objects as different as samples of animal tissue, free-text observations, humidity measurements, GPS coordinates, and the results of blood tests;

- Veracity, understood as the extent to which the quality and reliability of big data can be guaranteed. Data with high volume, velocity and variety are at significant risk of containing inaccuracies, errors and unaccounted-for bias. In the absence of appropriate validation and quality checks, this could result in a misleading or outright incorrect evidence base for knowledge claims (Floridi & Illari 2014; Cai & Zhu 2015; Leonelli 2017);

- Validity, which indicates the selection of appropriate data with respect to the intended use. The choice of a specific dataset as evidence base requires adequate and explicit justification, including recourse to relevant background knowledge to ground the identification of what counts as data in that context (e.g., Loettgers 2009, Bogen 2010);

- Volatility, i.e., the extent to which data can be relied upon to remain available, accessible and re-interpretable despite changes in archival technologies. This is significant given the tendency of formats and tools used to generate and analyse data to become obsolete, and the efforts required to update data infrastructures so as to guarantee data access in the long term (Bowker 2006; Edwards 2010; Lagoze 2014; Borgman 2015);

- Value, i.e., the multifaceted forms of significance attributed to big data by different sections of society, which depend as much on the intended use of the data as on historical, social and geographical circumstances (Leonelli 2016, D’Ignazio and Klein 2020). Alongside scientific value, researchers may impute financial, ethical, reputational and even affective value to data. The institutions involved in governing and funding research also have ways of valuing data, which may not always overlap with the priorities of researchers (Tempini 2017).

This list of features, though not exhaustive, highlights how big data is not simply “a lot of data”. The epistemic power of big data lies in their capacity to bridge between different research communities, methodological approaches and theoretical frameworks that are difficult to link due to conceptual fragmentation, social barriers and technical difficulties (Leonelli 2019a). And indeed, appeals to big data often emerge from situations of inquiry that are at once technically, conceptually and socially challenging, and where existing methods and resources have proved insufficient or inadequate (Sterner & Franz 2017; Sterner, Franz, & Witteveen 2020).

This understanding of big data is rooted in a long history of researchers grappling with large and complex datasets, as exemplified by fields like astronomy, meteorology, taxonomy and demography (see the collections assembled by Daston 2017; Aronova et al. 2017; Porter & Chadarevian 2018; as well as Aronova et al. 2010, Sepkoski 2013, Stevens 2016, Strasser 2019 among others). Similarly, biomedical research—and particularly subfields such as epidemiology, pharmacology and public health—has an extensive tradition of tackling data of high volume, velocity, variety and volatility, whose validity, veracity and value are regularly negotiated and contested by patients, governments, funders, pharmaceutical companies, insurers and public institutions (Bauer 2008). Throughout the twentieth century, these efforts spurred the development of techniques, institutions and instruments to collect, order, visualise and analyse data, such as: standard classification systems and formats; guidelines, tools and legislation for the management and security of sensitive data; and infrastructures to integrate and sustain data collections over long periods of time (Daston 2017).

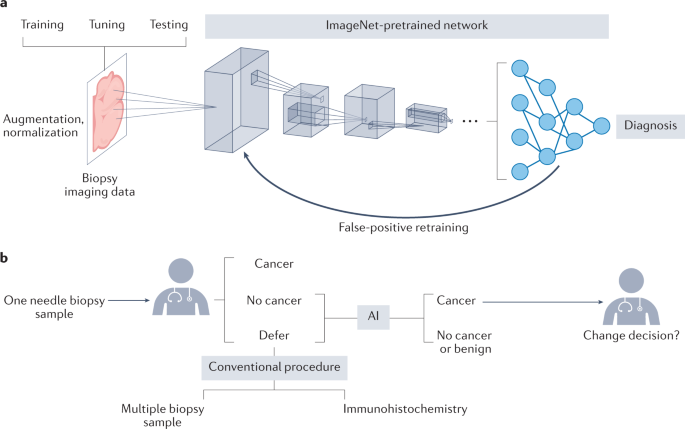

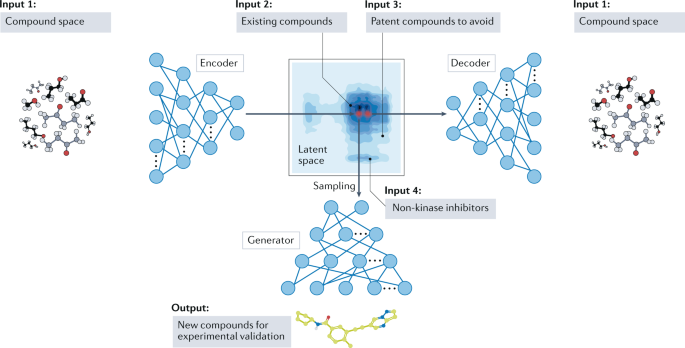

This work culminated in the application of computational technologies, modelling tools and statistical methods to big data (Porter 1995; Humphreys 2004; Edwards 2010), increasingly pushing the boundaries of data analytics thanks to supervised learning, model fitting, deep neural networks, search and optimisation methods, complex data visualisations and various other tools now associated with artificial intelligence. Many of these tools are based on algorithms whose functioning and results are tested against specific data samples (a process called “training”). These algorithms are programmed to “learn” from each interaction with novel data: in other words, they have the capacity to change themselves in response to new information being inputted into the system, thus becoming more attuned to the phenomena they are analysing and improving their ability to predict future behaviour. The scope and extent of such changes is shaped by the assumptions used to build the algorithms and the capability of related software and hardware to identify, access and process information of relevance to the learning in question. There is however a degree of unpredictability and opacity to these systems, which can evolve to the point of defying human understanding (more on this below).
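The idea of an algorithm that changes itself in response to each new observation can be illustrated with a minimal sketch. The example is not drawn from the literature cited here: it assumes a one-parameter linear model, the least-mean-squares update rule, a hypothetical learning rate of 0.1 and synthetic noise-free data, and all function names are illustrative.

```python
import random


def online_update(weight, x, y, learning_rate=0.1):
    """Adjust the weight after seeing one (x, y) pair (least-mean-squares rule)."""
    prediction = weight * x
    error = y - prediction
    # Move the weight in the direction that shrinks the squared error.
    return weight + learning_rate * error * x


def train_online(stream, initial_weight=0.0, learning_rate=0.1):
    """Feed observations one at a time; return the weight after each step."""
    weight = initial_weight
    history = []
    for x, y in stream:
        weight = online_update(weight, x, y, learning_rate)
        history.append(weight)
    return history


def synthetic_stream(n, true_slope=3.0, seed=0):
    """Hypothetical noise-free data: y = true_slope * x, with x drawn from [0, 1)."""
    rng = random.Random(seed)
    for _ in range(n):
        x = rng.random()
        yield x, true_slope * x


if __name__ == "__main__":
    history = train_online(synthetic_stream(500))
    print("weight after 10 observations:", round(history[9], 3))
    print("weight after 500 observations:", round(history[-1], 3))
```

Even in this toy case, what the system can learn is fixed in advance by choices made by its designer: the form of the model, the update rule and the learning rate. This is one small-scale instance of how the assumptions built into an algorithm shape the scope of the changes it can undergo.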

New institutions, communication platforms and regulatory frameworks also emerged to assemble, prepare and maintain data for such uses (Kitchin 2014), such as various forms of digital data infrastructures, organisations aiming to coordinate and improve the global data landscape (e.g., the Research Data Alliance), and novel measures for data protection, like the General Data Protection Regulation adopted by the European Union in 2016 and applicable since 2018. Together, these techniques and institutions afford the opportunity to assemble and interpret data at a much broader scale, while also promising to deliver finer levels of granularity in data analysis. [1] They increase the scope of any investigation by making it possible for researchers to link their own findings to those of countless others across the world, both within and beyond the academic sphere. By enhancing the mobility of data, they facilitate their repurposing for a variety of goals that may have been unforeseeable when the data were originally generated. And by transforming the role of data within research, they heighten their status as valuable research outputs in and of themselves. These technological and methodological developments have significant implications for philosophical conceptualisations of data, inferential processes and scientific knowledge, as well as for how research is conducted, organised, governed and assessed. It is to these philosophical concerns that I now turn.

Big data are often associated with the idea of data-driven research, where learning happens through the accumulation of data and the application of methods to extract meaningful patterns from those data. Within data-driven inquiry, researchers are expected to use data as their starting point for inductive inference, without relying on theoretical preconceptions—a situation described by advocates as “the end of theory”, in contrast to theory-driven approaches where research consists of testing a hypothesis (Anderson 2008, Hey et al. 2009). In principle at least, big data constitute the largest pool of data ever assembled and thus a strong starting point to search for correlations (Mayer-Schönberger & Cukier 2013). Crucial to the credibility of the data-driven approach is the efficacy of the methods used to extrapolate patterns from data and evaluate whether or not such patterns are meaningful, and what “meaning” may involve in the first place. Hence, some philosophers and data scholars have argued that

the most important and distinctive characteristic of Big Data [is] its use of statistical methods and computational means of analysis, (Symons & Alvarado 2016: 4)

such as for instance machine learning tools, deep neural networks and other “intelligent” practices of data handling.

The emphasis on statistics as key adjudicator of validity and reliability of patterns extracted from data is not novel. Exponents of logical empiricism looked for logically watertight methods to secure and justify inference from data, and their efforts to develop a theory of probability proceeded in parallel with the entrenchment of statistical reasoning in the sciences in the first half of the twentieth century (Romeijn 2017). In the early 1960s, Patrick Suppes offered a seminal link between statistical methods and the philosophy of science through his work on the production and interpretation of data models. As a philosopher deeply embedded in experimental practice, Suppes was interested in the means and motivations of key statistical procedures for data analysis such as data reduction and curve fitting. He argued that once data are adequately prepared for statistical modelling, all the concerns and choices that motivated data processing become irrelevant to their analysis and interpretation. This inspired him to differentiate between models of theory, models of experiment and models of data, noting that such different components of inquiry are governed by different logics and cannot be compared in a straightforward way. For instance,

the precise definition of models of the data for any given experiment requires that there be a theory of the data in the sense of the experimental procedure, as well as in the ordinary sense of the empirical theory of the phenomena being studied. (Suppes 1962: 253)

Suppes viewed data models as necessarily statistical: that is, as objects

designed to incorporate all the information about the experiment which can be used in statistical tests of the adequacy of the theory. (Suppes 1962: 258)

His formal definition of data models reflects this decision, with statistical requirements such as homogeneity, stationarity and order identified as the ultimate criteria to identify a data model Z and evaluate its adequacy:

\(Z\) is an \(N\)-fold model of the data for experiment \(\mathcal{Y}\) if and only if there is a set \(Y\) and a probability measure \(P\) on subsets of \(Y\) such that \(\mathcal{Y} = \langle Y, P\rangle\) is a model of the theory of the experiment, \(Z\) is an \(N\)-tuple of elements of \(Y\), and \(Z\) satisfies the statistical tests of homogeneity, stationarity and order. (1962: 259)

This analysis of data models portrayed statistical methods as key conduits between data and theory, and hence as crucial components of inferential reasoning.

The focus on statistics as entry point to discussions of inference from data was widely promoted in subsequent philosophical work. Prominent examples include Deborah Mayo, who in her book Error and the Growth of Experimental Knowledge asked:

What should be included in data models? The overriding constraint is the need for data models that permit the statistical assessment of fit (between prediction and actual data); (Mayo 1996: 136)

and Bas van Fraassen, who also embraced the idea of data models as “summarizing relative frequencies found in data” (Van Fraassen 2008: 167). Closely related is the emphasis on statistics as means to detect error within datasets in relation to specific hypotheses, most prominently endorsed by the error-statistical approach to inference championed by Mayo and Aris Spanos (Mayo & Spanos 2009a). This approach aligns with the emphasis on computational methods for data analysis within big data research, and supports the idea that the better the inferential tools and methods, the better the chance to extract reliable knowledge from data.

When it comes to addressing methodological challenges arising from the computational analysis of big data, however, statistical expertise needs to be complemented by computational savvy in the training and application of algorithms associated with artificial intelligence, including machine learning but also other mathematical procedures for operating upon data (Bringsjord & Govindarajulu 2018). Consider for instance the problem of overfitting, i.e., the mistaken identification of patterns in a dataset, which can be greatly amplified by the training techniques employed by machine learning algorithms. There is no guarantee that an algorithm trained to successfully extrapolate patterns from a given dataset will be as successful when applied to other data. Common approaches to this problem involve the re-ordering and partitioning of both data and training methods, so that it is possible to compare the application of the same algorithms to different subsets of the data (“cross-validation”), combine predictions arising from differently trained algorithms (“ensembling”) or use hyperparameters (parameters whose value is set prior to data training) to prepare the data for analysis.
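To make the logic of cross-validation concrete, the following sketch compares two ways of fitting the same data: a straight line and a "memoriser" that simply returns the value of the nearest training point. Everything here is an illustrative assumption rather than a method taken from the literature under discussion: the synthetic dataset, the choice of five folds, and all function names.

```python
import random


def fit_line(points):
    """Ordinary least squares for y = a + b * x; returns a prediction function."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: intercept + slope * x


def fit_memoriser(points):
    """1-nearest-neighbour: reproduces the training data exactly."""
    return lambda x: min(points, key=lambda p: abs(p[0] - x))[1]


def mean_squared_error(model, points):
    return sum((model(x) - y) ** 2 for x, y in points) / len(points)


def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1 and split them into k disjoint folds."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def cross_validated_error(fit, points, k=5, seed=0):
    """Average held-out error over k train/test partitions."""
    folds = k_fold_indices(len(points), k, seed)
    errors = []
    for held_out in folds:
        held = set(held_out)
        train = [p for i, p in enumerate(points) if i not in held]
        test = [points[i] for i in held_out]
        errors.append(mean_squared_error(fit(train), test))
    return sum(errors) / k


def noisy_line(n=60, seed=1):
    """Hypothetical data: y = 2x plus Gaussian noise."""
    rng = random.Random(seed)
    return [(i / 10, 2 * (i / 10) + rng.gauss(0, 1)) for i in range(n)]


if __name__ == "__main__":
    data = noisy_line()
    for name, fit in [("line", fit_line), ("memoriser", fit_memoriser)]:
        train_err = mean_squared_error(fit(data), data)
        cv_err = cross_validated_error(fit, data)
        print(f"{name}: training error {train_err:.2f}, cross-validated error {cv_err:.2f}")
```

The memoriser always achieves zero error on the data it was trained on, since it has fitted the noise as well as the signal. Only the error on held-out folds reveals how well either model extends to data it has not seen, which is the comparison that cross-validation is designed to make possible.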

Handling these issues, in turn, requires

familiarity with the mathematical operations in question, their implementations in code, and the hardware architectures underlying such implementations. (Lowrie 2017: 3)

For instance, machine learning

aims to build programs that develop their own analytic or descriptive approaches to a body of data, rather than employing ready-made solutions such as rule-based deduction or the regressions of more traditional statistics. (Lowrie 2017: 4)

In other words, statistics and mathematics need to be complemented by expertise in programming and computer engineering. The ensemble of skills thus construed results in a specific epistemological approach to research, which is broadly characterised by an emphasis on the means of inquiry as the most significant driver of research goals and outputs. This approach, which Sabina Leonelli characterised as data-centric, involves “focusing more on the processes through which research is carried out than on its ultimate outcomes” (Leonelli 2016: 170). In this view, procedures, techniques, methods, software and hardware are the prime motors of inquiry and the chief influence on its outcomes. Focusing more specifically on computational systems, John Symons and Jack Horner argued that much of big data research consists of software-intensive science rather than data-driven research: that is, science that depends on software for its design, development, deployment and use, and thus encompasses procedures, types of reasoning and errors that are unique to software, such as for example the problems generated by attempts to map real-world quantities to discrete-state machines, or approximating numerical operations (Symons & Horner 2014: 473). Software-intensive science is arguably supported by an algorithmic rationality focused on the feasibility, practicality and efficiency of algorithms, which is typically assessed by reference to concrete situations of inquiry (Lowrie 2017).

Algorithms are enormously varied in their mathematical structures and underpinning conceptual commitments, and more philosophical work needs to be carried out on the specifics of computational tools and software used in data science and related applications—with emerging work in philosophy of computer science providing an excellent way forward (Turner & Angius 2019). Nevertheless, it is clear that whether or not a given algorithm successfully applies to the data at hand depends on factors that cannot be controlled through statistical or even computational methods: for instance, the size, structure and format of the data, the nature of the classifiers used to partition the data, the complexity of decision boundaries and the very goals of the investigation.

In a forceful critique informed by the philosophy of mathematics, Christian Calude and Giuseppe Longo argued that there is a fundamental problem with the assumption that more data will necessarily yield more information:

very large databases have to contain arbitrary correlations. These correlations appear only due to the size, not the nature, of data. (Calude & Longo 2017: 595)

They conclude that big data analysis is by definition unable to distinguish spurious from meaningful correlations and is therefore a threat to scientific research. A related worry, sometimes dubbed “the curse of dimensionality” by data scientists, concerns the extent to which the analysis of a given dataset can be scaled up in complexity and in the number of variables being considered. It is well known that the more dimensions one considers in classifying samples, for example, the larger the dataset required to generalise accurately over those dimensions. This demonstrates the continuing, tight dependence between the volume and quality of data on the one hand, and the type and breadth of research questions for which data need to serve as evidence on the other hand.
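The worry that correlations can arise from size alone can be illustrated with a simple simulation. This is a multiple-comparisons sketch under stated assumptions, not a reconstruction of Calude and Longo's mathematical argument: the columns are independent Gaussian noise, the threshold of 0.5 for a "strong" correlation is arbitrary, and all names are hypothetical.

```python
import random
from itertools import combinations


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def noise_columns(n_columns, n_rows, seed=0):
    """Columns of independent Gaussian noise: no column is related to any other."""
    rng = random.Random(seed)
    return [[rng.gauss(0, 1) for _ in range(n_rows)] for _ in range(n_columns)]


def strong_pairs(columns, threshold=0.5):
    """Pairs of columns whose sample correlation exceeds the threshold in absolute value."""
    return [
        (i, j)
        for (i, a), (j, b) in combinations(enumerate(columns), 2)
        if abs(pearson(a, b)) > threshold
    ]


if __name__ == "__main__":
    columns = noise_columns(n_columns=200, n_rows=20)
    for size in (10, 50, 200):
        subset = columns[:size]
        print(size, "variables:", len(strong_pairs(subset)), "strong correlations")
```

Because the number of variable pairs grows quadratically with the number of variables, the count of strong correlations among unrelated columns can only stay the same or rise as more columns are added. None of these correlations reflects anything about the "nature" of the data, since by construction there is nothing to find.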

Determining the fit between inferential methods and data requires high levels of expertise and contextual judgement (a situation known within machine learning as the “no free lunch theorem”). Indeed, overreliance on software for inference and data modelling can yield highly problematic results. Symons and Horner note that the use of complex software in big data analysis makes margins of error unknowable, because there is no clear way to test them statistically (Symons & Horner 2014: 473). The path complexity of programs with high conditionality imposes limits on standard error correction techniques. As a consequence, there is no effective method for characterising the error distribution in the software except by testing all paths in the code, which is unrealistic and intractable in the vast majority of cases due to the complexity of the code.
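The intractability of testing every path can be seen from a back-of-the-envelope calculation. The sketch below assumes the simplest possible case, a program made of independent two-way branches executed in sequence, and a hypothetical testing rate of one million paths per second; neither figure comes from Symons and Horner.

```python
from itertools import product


def enumerate_paths(n_branches):
    """List every route through n independent, sequential if/else statements."""
    return list(product((False, True), repeat=n_branches))


def path_count(n_branches):
    """Each two-way branch doubles the number of distinct execution paths."""
    return 2 ** n_branches


def years_to_test_all(n_branches, tests_per_second=1_000_000):
    """Time needed to run one test per path at a hypothetical testing rate."""
    seconds = path_count(n_branches) / tests_per_second
    return seconds / (365 * 24 * 60 * 60)


if __name__ == "__main__":
    for n in (10, 50, 100):
        print(n, "branches:", path_count(n), "paths,",
              f"{years_to_test_all(n):.3g} years to test exhaustively")
```

Explicit enumeration is feasible for a handful of branches but not for the hundreds or thousands found in real analytic software, where loops and interacting conditions make the growth even steeper than in this simplified case.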

Rather than acting as a substitute, the effective and responsible use of artificial intelligence tools in big data analysis requires the strategic exercise of human intelligence—but for this to happen, AI systems applied to big data need to be accessible to scrutiny and modification. Whether or not this is the case, and who is best qualified to exercise such scrutiny, is under dispute. Thomas Nickles argued that the increasingly complex and distributed algorithms used for data analysis follow in the footsteps of long-standing scientific attempts to transcend the limits of human cognition. The resulting epistemic systems may no longer be intelligible to humans: an “alien intelligence” within which “human abilities are no longer the ultimate criteria of epistemic success” (Nickles forthcoming). Such unbound cognition holds the promise of enabling powerful inferential reasoning from previously unimaginable volumes of data. The difficulties in contextualising and scrutinising such reasoning, however, cast doubt on the reliability of the results. It is not only machine learning algorithms that are becoming increasingly inaccessible to evaluation: beyond the complexities of programming code, computational data analysis requires a whole ecosystem of classifications, models, networks and inference tools which typically have different histories and purposes, and whose relation to each other—and effects when they are used together—are far from understood and may well be untraceable.

This raises the question of whether the knowledge produced by such data analytic systems is at all intelligible to humans, and if so, what forms of intelligibility it yields. It is certainly the case that deriving knowledge from big data may not involve an increase in human understanding, especially if understanding is construed as an epistemic skill (de Regt 2017). This may not be a problem to those who await the rise of a new species of intelligent machines, who may master new cognitive tools in a way that humans cannot. But as Nickles, Nicholas Rescher (1984), Werner Callebaut (2012) and others pointed out, even in that case “we would not have arrived at perspective-free science” (Nickles forthcoming). While the human histories and assumptions interwoven into these systems may be hard to disentangle, they still affect their outcomes; and whether or not these processes of inquiry are open to critical scrutiny, their telos, implications and significance for life on the planet arguably should be. As argued by Dan McQuillan (2018), the increasing automation of big data analytics may foster acceptance of a Neoplatonist machinic metaphysics, within which mathematical structures “uncovered” by AI would trump any appeal to human experience. Luciano Floridi echoes this intuition in his analysis of what he calls the infosphere:

The great opportunities offered by Information and Communication Technologies come with a huge intellectual responsibility to understand them and take advantage of them in the right way. (2014: vii)

These considerations parallel Paul Humphreys’s long-standing critique of computer simulations as epistemically opaque (Humphreys 2004, 2009)—and particularly his definition of what he calls essential epistemic opacity:

A process is essentially epistemically opaque to X if and only if it is impossible, given the nature of X, for X to know all of the epistemically relevant elements of the process. (Humphreys 2009: 618)

Different facets of the general problem of epistemic opacity are stressed within the vast philosophical scholarship on the role of modelling, computing and simulations in the sciences: the implications of lacking experimental access to the concrete parts of the world being modelled, for instance (Morgan 2005; Parker 2009; Radder 2009); the difficulties in testing the reliability of computational methods used within simulations (Winsberg 2010; Morrison 2015); the relation between opacity and justification (Durán & Formanek 2018); the forms of black-boxing associated with mechanistic reasoning implemented in computational analysis (Craver and Darden 2013; Bechtel 2016); and the debate over the intrinsic limits of computational approaches and related expertise (Collins 1990; Dreyfus 1992). Roman Frigg and Julian Reiss argued that such issues do not constitute fundamental challenges to the nature of inquiry and modelling, and in fact exist in a continuum with traditional methodological issues well-known within the sciences (Frigg & Reiss 2009). Whether or not one agrees with this position (Humphreys 2009; Beisbart 2012), big data analysis is clearly pushing computational and statistical methods to their limit, thus highlighting the boundaries to what even technologically augmented human beings are capable of knowing and understanding.

Research on big data analysis thus sheds light on elements of the research process that cannot be fully controlled, rationalised or even considered through recourse to formal tools.

One such element is the work required to present empirical data in a machine-readable format that is compatible with the software and analytic tools at hand. Data need to be selected, cleaned and prepared to be subjected to statistical and computational analysis. The processes involved in separating data from noise, clustering data so that it is tractable, and integrating data of different formats turn out to be highly sophisticated and theoretically structured, as demonstrated for instance by James McAllister’s (1997, 2007, 2011) and Uljana Feest’s (2011) work on data patterns, Marcel Boumans’s and Leonelli’s comparison of clustering principles across fields (forthcoming), and James Griesemer’s (forthcoming) and Mary Morgan’s (forthcoming) analyses of the peculiarities of datasets. Suppes was so concerned by what he called the “bewildering complexity” of data production and processing activities, that he worried that philosophers would not appreciate the ways in which statistics can and does help scientists to abstract data away from such complexity. He described the large group of research components and activities used to prepare data for modelling as “pragmatic aspects” encompassing “every intuitive consideration of experimental design that involved no formal statistics” (Suppes 1962: 258), and positioned them as the lowest step of his hierarchy of models—at the opposite end of its pinnacle, which are models of theory. Despite recent efforts to rehabilitate the methodology of inductive-statistical modelling and inference (Mayo & Spanos 2009b), this approach has been shared by many philosophers who regard processes of data production and processing as so chaotic as to defy systematic analysis. This explains why data have received so little consideration in philosophy of science when compared to models and theory.

The question of how data are defined and identified, however, is crucial for understanding the role of big data in scientific research. Let us now consider two philosophical views—the representational view and the relational view—that are both compatible with the emergence of big data, and yet place emphasis on different aspects of that phenomenon, with significant implications for understanding the role of data within inferential reasoning and, as we shall see in the next section, as evidence. The representational view construes data as reliable representations of reality which are produced via the interaction between humans and the world. The interactions that generate data can take place in any social setting regardless of research purposes. Examples range from a biologist measuring the circumference of a cell in the lab and noting the result in an Excel file, to a teacher counting the number of students in her class and transcribing it in the class register. What counts as data in these interactions are the objects created in the process of description and/or measurement of the world. These objects can be digital (the Excel file) or physical (the class register) and form a footprint of a specific interaction with the natural world. This footprint—“trace” or “mark”, in the words of Ian Hacking (1992) and Hans-Jörg Rheinberger (2011), respectively—constitutes a crucial reference point for analytic study and for the extraction of new insights. This is the reason why data form a legitimate foundation for empirical knowledge: the production of data is equivalent to “capturing” features of the world that can be used for systematic study. According to the representational view, data are objects with fixed and unchangeable content, whose meaning, in virtue of being representations of reality, needs to be investigated and revealed step-by-step through adequate inferential methods. The data documenting cell shape can be modelled to test the relevance of shape to the elasticity, permeability and resilience of cells, producing an evidence base to understand cell-to-cell signalling and development. The data produced counting students in class can be aggregated with similar data collected in other schools, producing an evidence base to evaluate the density of students in the area and their school attendance frequency.

This reflects the intuition that data, especially when they come in the form of numerical measurements or images such as photographs, somehow mirror the phenomena that they are created to document, thus providing a snapshot of those phenomena that is amenable to study under the controlled conditions of research. It also reflects the idea of data as “raw” products of research, which are as close as it gets to unmediated knowledge of reality. This makes sense of the truth-value sometimes assigned to data as irrefutable sources of evidence—the Popperian idea that if data are found to support a given claim, then that claim is corroborated as true at least as long as no other data are found to disprove it. Data in this view represent an objective foundation for the acquisition of knowledge and this very objectivity—the ability to derive knowledge from human experience while transcending it—is what makes knowledge empirical. This position is well-aligned with the idea that big data is valuable to science because it facilitates the (broadly understood) inductive accumulation of knowledge: gathering data collected via reliable methods produces a mountain of facts ready to be analysed and, the more facts are produced and connected with each other, the more knowledge can be extracted.

Philosophers have long acknowledged that data do not speak for themselves and different types of data require different tools for analysis and preparation to be interpreted (Bogen 2009 [2013]). According to the representational view, there are correct and incorrect ways of interpreting data, which those responsible for data analysis need to uncover. But what is a “correct” interpretation in the realm of big data, where data are consistently treated as mobile entities that can, at least in principle, be reused in countless different ways and towards different objectives? Perhaps more than at any other time in the history of science, the current mobilisation and re-use of big data highlights the degree to which data interpretation—and with it, whatever data is taken to represent—may differ depending on the conceptual, material and social conditions of inquiry. The analysis of how big data travels across contexts shows that the expectations and abilities of those involved determine not only the way data are interpreted, but also what is regarded as “data” in the first place (Leonelli & Tempini forthcoming). The representational view of data as objects with fixed and contextually independent meaning is at odds with these observations.

An alternative approach is to embrace these findings and abandon the idea of data as fixed representations of reality altogether. Within the relational view, data are objects that are treated as potential or actual evidence for scientific claims in ways that can, at least in principle, be scrutinised and accounted for (Leonelli 2016). The meaning assigned to data depends on their provenance, their physical features and what these features are taken to represent, and the motivations and instruments used to visualise them and to defend specific interpretations. The reliability of data thus depends on the credibility and strictness of the processes used to produce and analyse them. The presentation of data; the way they are identified, selected, and included (or excluded) in databases; and the information provided to users to re-contextualise them are fundamental to producing knowledge and significantly influence its content. For instance, changes in data format—as most obviously involved in digitisation, data compression or archival procedures—can have a significant impact on where, when and by whom the data are used as a source of knowledge.

This framework acknowledges that any object can be used as a datum, or stop being used as such, depending on the circumstances—a consideration familiar to big data analysts used to pick and mix data coming from a vast variety of sources. The relational view also explains how, depending on the research perspective interpreting it, the same dataset may be used to represent different aspects of the world (“phenomena” as famously characterised by James Bogen and James Woodward, 1988). When considering the full cycle of scientific inquiry from the viewpoint of data production and analysis, it is at the stage of data modelling that a specific representational value is attributed to data (Leonelli 2019b).

The relational view of data encourages attention to the history of data, highlighting their continual evolution and sometimes radical alteration, and the impact of this feature on the power of data to confirm or refute hypotheses. It explains the critical importance of documenting data management and transformation processes, especially with big data that transit far and wide over digital channels and are grouped and interpreted in different ways and formats. It also explains the increasing recognition of the expertise of those who produce, curate, and analyse data as indispensable to the effective interpretation of big data within and beyond the sciences; and the inextricable link between social and ethical concerns around the potential impact of data sharing and scientific concerns around the quality, validity, and security of data (boyd & Crawford 2012; Tempini & Leonelli, 2018).

Depending on which view on data one takes, expectations around what big data can do for science will vary dramatically. The representational view accommodates the idea of big data as providing the most comprehensive, reliable and generative knowledge base ever witnessed in the history of science, by virtue of its sheer size and heterogeneity. The relational view makes no such commitment, focusing instead on what inferences are being drawn from such data at any given point, how and why.

One thing that the representational and relational views agree on is the key epistemic role of data as empirical evidence for knowledge claims or interventions. While there is a large philosophical literature on the nature of evidence (e.g., Achinstein 2001; Reiss 2015; Kelly 2016), the relation between data and evidence has received less attention. This is arguably due to an implicit acceptance, by many philosophers, of the representational view of data. Within the representational view, the identification of what counts as data is prior to the study of what those data can be evidence for: in other words, data are “givens”, as the etymology of the word indicates, and inferential methods are responsible for determining whether and how the data available to investigators can be used as evidence, and for what. The focus of philosophical attention is thus on formal methods to single out errors and misleading interpretations, and the probabilistic and/or explanatory relation between what is unproblematically taken to be a body of evidence and a given hypothesis. Hence much of the expansive philosophical work on evidence avoids the term “data” altogether. Peter Achinstein’s seminal work is a case in point: it discusses observed facts and experimental results, and whether and under which conditions scientists would have reasons to believe such facts, but it makes no mention of data and related processing practices (Achinstein 2001).

By contrast, within the relational view an object can only be identified as datum when it is viewed as having value as evidence. Evidence becomes a category of data identification, rather than a category of data use as in the representational view (Canali 2019). Evidence is thus constitutive of the very notion of data and cannot be disentangled from it. This involves accepting that the conditions under which a given object can serve as evidence, and thus be viewed as datum, may change; and that should this evidential role stop altogether, the object would revert to an ordinary, non-datum item. For example, the photograph of a plant taken by a tourist in a remote region may become relevant as evidence for an inquiry into the morphology of plants from that particular locality; yet most photographs of plants are never considered as evidence for an inquiry into the features and functioning of the world, and of those that are, many may subsequently be discarded as uninteresting or no longer pertinent to the questions being asked.

This view accounts for the mobility and repurposing that characterises big data use, and for the possibility that objects that were not originally generated in order to serve as evidence may be subsequently adopted as such. Consider Mayo and Spanos’s “minimal scientific principle for evidence”, which they define as follows:

Data x₀ provide poor evidence for H if they result from a method or procedure that has little or no ability of finding flaws in H, even if H is false. (Mayo & Spanos 2009b)

This principle is compatible with the relational view of data since it incorporates cases where the methods used to generate and process data may not have been geared towards the testing of a hypothesis H: all it asks is that such methods can be made relevant to the testing of H, at the point in which data are used as evidence for H (I shall come back to the role of hypotheses in the handling of evidence in the next section).

The relational view also highlights the relevance of practices of data formatting and manipulation to the treatment of data as evidence, thus taking attention away from the characteristics of the data objects alone and focusing instead on the agency attached to and enabled by those characteristics. Nora Boyd has provided a way to conceptualise data processing as an integral part of inferential processes, and thus of how we should understand evidence. To this aim she introduced the notion of “line of evidence”, which she defines as:

a sequence of empirical results including the records of data collection and all subsequent products of data processing generated on the way to some final empirical constraint. (Boyd 2018: 406)

She thus proposes a conception of evidence that embraces both data and the way in which data are handled, and indeed emphasises the importance of auxiliary information used when assessing data for interpretation, which includes

the metadata regarding the provenance of the data records and the processing workflow that transforms them. (2018: 407)

As she concludes,

together, a line of evidence and its associated metadata compose what I am calling an “enriched line of evidence”. The evidential corpus is then to be made up of many such enriched lines of evidence. (2018: 407)

The relational view thus fosters a functional and contextualist approach to evidence as the manner through which one or more objects are used as warrant for particular knowledge items (which can be propositional claims, but also actions such as specific decisions or modes of conduct/ways of operating). This chimes with the contextual view of evidence defended by Reiss (2015), John Norton’s work on the multiple, tangled lines of inferential reasoning underpinning appeals to induction (2003), and Hasok Chang’s emphasis on the epistemic activities required to ground evidential claims (2012). Building on these ideas and on Stephen Toulmin’s seminal work on research schemas (1958), Alison Wylie has gone one step further in evaluating the inferential scaffolding that researchers (and particularly archaeologists, who so often are called to re-evaluate the same data as evidence for new claims; Wylie 2017) need to make sense of their data, interpret them in ways that are robust to potential challenges, and modify interpretations in the face of new findings. This analysis enabled Wylie to formulate a set of conditions for robust evidential reasoning, which include epistemic security in the chain of evidence, causal anchoring and causal independence of the data used as evidence, as well as the explicit articulation of the grounds for calibration of the instruments and methods involved (Chapman & Wylie 2016; Wylie forthcoming). A similar conclusion is reached by Jessey Wright’s evaluation of the diverse data analysis techniques that neuroscientists use to make sense of functional magnetic resonance imaging of the brain (fMRI scans):

different data analysis techniques reveal different patterns in the data. Through the use of multiple data analysis techniques, researchers can produce results that are locally robust. (Wright 2017: 1179)

Wylie’s and Wright’s analyses exemplify how a relational approach to data fosters a normative understanding of “good evidence” which is anchored in situated judgement—the arguably human prerogative to contextualise and assess the significance of evidential claims. The advantages of this view of evidence are eloquently expressed by Nancy Cartwright’s critique of both philosophical theories and policy approaches that do not recognise the local and contextual nature of evidential reasoning. As she notes,

we need a concept that can give guidance about what is relevant to consider in deciding on the probability of the hypothesis, not one that requires that we already know significant facts about the probability of the hypothesis on various pieces of evidence. (Cartwright 2013: 6)

Thus she argues for a notion of evidence that is not too restrictive, takes account of the difficulties in combining and selecting evidence, and allows for contextual judgement on what types of evidence are best suited to the inquiry at hand (Cartwright 2013, 2019). Reiss’s proposal of a pragmatic theory of evidence similarly aims to

[take] scientific practice [...] seriously, both in terms of its greater use of knowledge about the conditions under which science is practised and in terms of its goal to develop insights that are relevant to practising scientists. (Reiss 2015: 361)

A better characterisation of the relation between data and evidence, predicated on the study of how data are processed and aggregated, may go a long way towards addressing these demands. As aptly argued by James Woodward, the evidential relationship between data and claims is not “a purely formal, logical, or a priori matter” (Woodward 2000: S172–173). This again sits uneasily with the expectation that big data analysis may automate scientific discovery and make human judgement redundant.

Let us now return to the idea of data-driven inquiry, often suggested as a counterpoint to hypothesis-driven science (e.g., Hey et al. 2009). Kevin Elliott and colleagues have offered a brief history of hypothesis-driven inquiry (Elliott et al. 2016), emphasising how scientific institutions (including funding programmes and publication venues) have pushed researchers towards a Popperian conceptualisation of inquiry as the formulation and testing of a strong hypothesis. Big data analysis clearly points to a different and arguably Baconian understanding of the role of hypothesis in science. Theoretical expectations are no longer seen as driving the process of inquiry and empirical input is recognised as primary in determining the direction of research and the phenomena—and related hypotheses—considered by researchers.

The emphasis on data as a central component of research poses a significant challenge to one of the best-established philosophical views on scientific knowledge. According to this view, which I shall label the theory-centric view of science, scientific knowledge consists of justified true beliefs about the world. These beliefs are obtained through empirical methods aiming to test the validity and reliability of statements that describe or explain aspects of reality. Hence scientific knowledge is conceptualised as inherently propositional: what counts as an output are claims published in books and journals, which are also typically presented as solutions to hypothesis-driven inquiry. This view acknowledges the significance of methods, data, models, instruments and materials within scientific investigations, but ultimately regards them as means towards one end: the achievement of true claims about the world. Reichenbach’s seminal distinction between contexts of discovery and justification exemplifies this position (Reichenbach 1938). Theory-centrism recognises research components such as data and related practical skills as essential to discovery, and more specifically to the messy, irrational part of scientific work that involves value judgements, trial-and-error, intuition and exploration and within which the very phenomena to be investigated may not have been stabilised. The justification of claims, by contrast, involves the rational reconstruction of the research that has been performed, so that it conforms to established norms of inferential reasoning. Importantly, within the context of justification, only data that support the claims of interest are explicitly reported and discussed: everything else—including the vast majority of data produced in the course of inquiry—is lost to the chaotic context of discovery. [ 2 ]

Much recent philosophy of science, and particularly work on modelling and experimentation, has challenged theory-centrism by highlighting the role of models, methods and modes of intervention as research outputs rather than simple tools, and stressing the importance of expanding philosophical understandings of scientific knowledge to include these elements alongside propositional claims. The rise of big data offers another opportunity to reframe understandings of scientific knowledge as not necessarily centred on theories and to include non-propositional components—thus, in Cartwright’s paraphrase of Gilbert Ryle’s famous distinction, refocusing on knowing-how over knowing-that (Cartwright 2019). One way to construe data-centric methods is indeed to embrace a conception of knowledge as ability, such as promoted by early pragmatists like John Dewey and more recently reprised by Chang, who specifically highlighted it as the broader category within which the understanding of knowledge-as-information needs to be placed (Chang 2017).

Another way to interpret the rise of big data is as a vindication of inductivism in the face of the barrage of philosophical criticism levelled against theory-free reasoning over the centuries. For instance, Jon Williamson (2004: 88) has argued that advances in automation, combined with the emergence of big data, lend plausibility to inductivist philosophy of science. Wolfgang Pietsch agrees with this view and has provided a sophisticated framework to understand just what kind of inductive reasoning is instigated by big data and related machine learning methods such as decision trees (Pietsch 2015). Following John Stuart Mill, he calls this approach variational induction and presents it as common to both big data approaches and exploratory experimentation, though the former can handle a much larger number of variables (Pietsch 2015: 913). Pietsch concludes that the problem of theory-ladenness in machine learning can be addressed by determining under which theoretical assumptions variational induction works (2015: 910ff).

Others are less inclined to see theory-ladenness as a problem that can be mitigated by data-intensive methods, and rather see it as a constitutive part of the process of empirical inquiry. Harking back to the extensive literature on perspectivism and experimentation (Gooding 1990; Giere 2006; Radder 2006; Massimi 2012), Werner Callebaut has forcefully argued that the most sophisticated and standardised measurements embody a specific theoretical perspective, and this is no less true of big data (Callebaut 2012). Elliott and colleagues emphasise that conceptualising big data analysis as atheoretical risks encouraging unsophisticated attitudes to empirical investigation as a

“fishing expedition”, having a high probability of leading to nonsense results or spurious correlations, being reliant on scientists who do not have adequate expertise in data analysis, and yielding data biased by the mode of collection. (Elliott et al. 2016: 880)
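The worry about spurious correlations can be made concrete with a small simulation. What follows is a minimal sketch under stated assumptions and is not drawn from Elliott and colleagues: the function names, sample sizes and random seed are hypothetical choices made purely for illustration. It generates an outcome and a large number of candidate variables that are all pure noise, and then reports the strongest correlation that a search across the candidates turns up.

```python
import random
import statistics


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def strongest_chance_correlation(n_samples=20, n_variables=200, seed=0):
    """Largest |r| between a noise outcome and many unrelated noise variables.

    All parameters are hypothetical illustration values, not taken from any
    study discussed in the text.
    """
    rng = random.Random(seed)
    outcome = [rng.gauss(0, 1) for _ in range(n_samples)]
    best = 0.0
    for _ in range(n_variables):
        # Each candidate is independent noise, so any correlation is spurious.
        candidate = [rng.gauss(0, 1) for _ in range(n_samples)]
        best = max(best, abs(pearson(outcome, candidate)))
    return best


if __name__ == "__main__":
    print(round(strongest_chance_correlation(), 2))
```

Because every variable is noise by construction, any sizeable correlation found in this way reflects the number of comparisons made and not any structure in the world, which is the sense in which an unguided search can yield nonsense results.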

To address related worries in genetic analysis, Ken Waters has provided the useful characterisation of “theory-informed” inquiry (Waters 2007), which can be invoked to stress how theory informs the methods used to extract meaningful patterns from big data, and yet does not necessarily determine either the starting point or the outcomes of data-intensive science. This does not resolve the question of what role theory actually plays. Rob Kitchin (2014) has proposed to see big data as linked to a new mode of hypothesis generation within a hypothetico-deductive framework. Leonelli is more sceptical of attempts to match big data approaches, which are many and diverse, with a specific type of inferential logic. She rather focused on the extent to which the theoretical apparatus at work within big data analysis rests on conceptual decisions about how to order and classify data—and proposed that such decisions can give rise to a particular form of theorization, which she calls classificatory theory (Leonelli 2016).

These disagreements point to big data as eliciting diverse understandings of the nature of knowledge and inquiry, and the complex iterations through which different inferential methods build on each other. Again, in the words of Elliott and colleagues,

attempting to draw a sharp distinction between hypothesis-driven and data-intensive science is misleading; these modes of research are not in fact orthogonal and often intertwine in actual scientific practice. (Elliott et al. 2016: 881, see also O’Malley et al. 2009, Elliott 2012)

Another epistemological debate strongly linked to reflection on big data concerns the specific kinds of knowledge emerging from data-centric forms of inquiry, and particularly the relation between predictive and causal knowledge.

Big data science is widely seen as revolutionary in the scale and power of predictions that it can support. Unsurprisingly perhaps, a philosophically sophisticated defence of this position comes from the philosophy of mathematics, where Marco Panza, Domenico Napoletani and Daniele Struppa argued for big data science as occasioning a momentous shift in the predictive knowledge that mathematical analysis can yield, and thus its role within broader processes of knowledge production. The whole point of big data analysis, they posit, is its disregard for causal knowledge:

answers are found through a process of automatic fitting of the data to models that do not carry any structural understanding beyond the actual solution of the problem itself. (Napoletani, Panza, & Struppa 2014: 486)

This view differs from simplistic popular discourse on “the death of theory” (Anderson 2008) and the “power of correlations” (Mayer-Schönberger & Cukier 2013) insofar as it does not side-step the constraints associated with knowledge and generalisations that can be extracted from big data analysis. Napoletani, Panza and Struppa recognise that there are inescapable tensions around the ability of mathematical reasoning to overdetermine empirical input, to the point of providing a justification for any and every possible interpretation of the data. In their words,

the problem arises of how we can gain meaningful understanding of historical phenomena, given the tremendous potential variability of their developmental processes. (Napoletani et al. 2014: 487)

Their solution is to clarify that understanding phenomena is not the goal of predictive reasoning, which is rather a form of agnostic science: “the possibility of forecasting and analysing without a structured and general understanding” (Napoletani et al. 2011: 12). The opacity of algorithmic rationality thus becomes its key virtue and the reason for the extraordinary epistemic success of forecasting grounded on big data. While “the phenomenon may forever remain hidden to our understanding” (ibid.: 5), the application of mathematical models and algorithms to big data can still provide meaningful and reliable answers to well-specified problems—similarly to what has been argued in the case of false models (Wimsatt 2007). Examples include the use of “forcing” methods such as regularisation or diffusion geometry to facilitate the extraction of useful insights from messy datasets.

This view is at odds with accounts that posit scientific understanding as a key aim of science (de Regt 2017), and the intuition that what researchers are ultimately interested in is

whether the opaque data-model generated by machine-learning technologies count as explanations for the relationships found between input and output. (Boon 2020: 44)

Within the philosophy of biology, for example, it is well recognised that big data facilitates effective extraction of patterns and trends, and that being able to model and predict how an organism or ecosystem may behave in the future is of great importance, particularly within more applied fields such as biomedicine or conservation science. At the same time, researchers are interested in understanding the reasons for observed correlations, and typically use predictive patterns as heuristics to explore, develop and verify causal claims about the structure and functioning of entities and processes. Emanuele Ratti (2015) has argued that big data mining within genome-wide association studies often used in cancer genomics can actually underpin mechanistic reasoning, for instance by supporting eliminative inference to develop mechanistic hypotheses and by helping to explore and evaluate generalisations used to analyse the data. In a similar vein, Pietsch (2016) proposed to use variational induction as a method to establish what counts as causal relationships among big data patterns, by focusing on which analytic strategies allow for reliable prediction and effective manipulation of a phenomenon.

Through the study of data sourcing and processing in epidemiology, Stefano Canali has instead highlighted the difficulties of deriving mechanistic claims from big data analysis, particularly where data are varied and embody incompatible perspectives and methodological approaches (Canali 2016, 2019). Relatedly, the semantic and logistical challenges of organising big data give reason to doubt the reliability of causal claims extracted from such data. In terms of logistics, having a lot of data is not the same as having all of them, and cultivating illusions of comprehensiveness is a risky and potentially misleading strategy, particularly given the challenges encountered in developing and applying curatorial standards for data other than the high-throughput results of “omics” approaches (see also the next section). The constant worry about the partiality and reliability of data is reflected in the care put by database curators in enabling database users to assess such properties; and in the importance given by researchers themselves, particularly in the biological and environmental sciences, to evaluating the quality of data found on the internet (Leonelli 2014, Fleming et al. 2017). In terms of semantics, we are back to the role of data classifications as theoretical scaffolding for big data analysis that we discussed in the previous section. Taxonomic efforts to order and visualise data inform causal reasoning extracted from such data (Sterner & Franz 2017), and can themselves constitute a bottom-up method—grounded in comparative reasoning—for assigning meaning to data models, particularly in situations where a full-blown theory or explanation for the phenomenon under investigation is not available (Sterner 2014).

It is no coincidence that much philosophical work on the relation between causal and predictive knowledge extracted from big data comes from the philosophy of the life sciences, where the absence of axiomatized theories has elicited sophisticated views on the diversity of forms and functions of theory within inferential reasoning. Moreover, biological data are heterogeneous both in their content and in their format; are curated and re-purposed to address the needs of highly disparate and fragmented epistemic communities; and present curators with specific challenges to do with tracking complex, diverse and evolving organismal structures and behaviours, whose relation to an ever-changing environment is hard to pinpoint with any stability (e.g., Shavit & Griesemer 2009). Hence in this domain, some of the core methods and epistemic concerns of experimental research—including exploratory experimentation, sampling and the search for causal mechanisms—remain crucial parts of data-centric inquiry.

At the start of this entry I listed “value” as a major characteristic of big data and pointed to the crucial role of valuing procedures in identifying, processing, modelling and interpreting data as evidence. Identifying and negotiating different forms of data value is an unavoidable part of big data analysis, since these valuation practices determine which data is made available to whom, under which conditions and for which purposes. What researchers choose to consider as reliable data (and data sources) is closely intertwined not only with their research goals and interpretive methods, but also with their approach to data production, packaging, storage and sharing. Thus, researchers need to consider what value their data may have for future research by themselves and others, and how to enhance that value—such as through decisions around which data to make public, how, when and in which format; or, whenever dealing with data already in the public domain (such as personal data on social media), decisions around whether the data should be shared and used at all, and how.

No matter how one conceptualises value practices, it is clear that their key role in data management and analysis prevents facile distinctions between values and “facts” (understood as propositional claims for which data provide evidential warrant). For example, consider a researcher who values both openness—and related practices of widespread data sharing—and scientific rigour—which requires a strict monitoring of the credibility and validity of conditions under which data are interpreted. The scale and manner of big data mobilisation and analysis create tensions between these two values. While the commitment to openness may prompt interest in data sharing, the commitment to rigour may hamper it, since once data are freely circulated online it becomes very difficult to retain control over how they are interpreted, by whom and with which knowledge, skills and tools. How a researcher responds to this conflict affects which data are made available for big data analysis, and under which conditions. Similarly, the extent to which diverse datasets may be triangulated and compared depends on the intellectual property regimes under which the data—and related analytic tools—have been produced. Privately owned data are often unavailable to publicly funded researchers; and many algorithms, cloud systems and computing facilities used in big data analytics are only accessible to those with enough resources to buy relevant access and training. Whatever claims result from big data analysis are, therefore, strongly dependent on social, financial and cultural constraints that condition the data pool and its analysis.

This prominent role of values in shaping data-related epistemic practices is not surprising given existing philosophical critiques of the fact/value distinction (e.g., Douglas 2009), and the existing literature on values in science—such as Helen Longino’s seminal distinction between constitutive and contextual values, as presented in her 1990 book Science as Social Knowledge —may well apply in this case too. Similarly, it is well-established that the technological and social conditions of research strongly condition its design and outcomes. What is particularly worrying in the case of big data is the temptation, prompted by hyped expectations around the power of data analytics, to hide or side-line the valuing choices that underpin the methods, infrastructures and algorithms used for big data extraction.

Consider the use of high-throughput data production tools, which enable researchers to easily generate a large volume of data in formats already geared to computational analysis. Just as in the case of other technologies, researchers have a strong incentive to adopt such tools for data generation; and may do so even in cases where such tools are not good or even appropriate means to pursue the investigation. Ulrich Krohs uses the term convenience experimentation to refer to experimental designs that are adopted not because they are the most appropriate ways of pursuing a given investigation, but because they are easily and widely available and usable, and thus “convenient” means for researchers to pursue their goals (Krohs 2012).

Appeals to convenience can extend to other aspects of data-intensive analysis. Not all data are equally easy to digitally collect, disseminate and link through existing algorithms, which makes some data types and formats more convenient than others for computational analysis. For example, research databases often display the outputs of well-resourced labs within research traditions which deal with “tractable” data formats (such as “omics”). And indeed, the existing distribution of resources, infrastructure and skills determines high levels of inequality in the production, dissemination and use of big data for research. Big players with large financial and technical resources are leading the development and uptake of data analytics tools, leaving much publicly funded research around the world at the receiving end of innovation in this area. Contrary to popular depictions of the data revolution as harbinger of transparency, democracy and social equality, the digital divide between those who can access and use data technologies, and those who cannot, continues to widen. A result of such divides is the scarcity of data relating to certain subgroups and geographical locations, which again limits the comprehensiveness of available data resources.

In the vast ecosystem of big data infrastructures, it is difficult to keep track of such distortions and assess their significance for data interpretation, especially in situations where heterogeneous data sources structured through appeal to different values are mashed together. Thus, the systematic aggregation of convenient datasets and analytic tools over others often results in a big data pool where the relevant sources and forms of bias are impossible to locate and account for (Pasquale 2015; O’Neill 2016; Zuboff 2017; Leonelli 2019a). In such a landscape, arguments for a separation between fact and value—and even a clear distinction between the role of epistemic and non-epistemic values in knowledge production—become very difficult to maintain without discrediting the whole edifice of big data science. Given the extent to which this approach has penetrated research in all domains, it is arguably impossible, however, to critique the value-laden structure of big data science without calling into question the legitimacy of science itself. A more constructive approach is to embrace the extent to which big data science is anchored in human choices, interests and values, and ascertain how this affects philosophical views on knowledge, truth and method.

In closing, it is important to consider at least some of the risks and related ethical questions raised by research with big data. As already mentioned in the previous section, reliance on big data collected by powerful institutions or corporations raises significant social concerns. Contrary to the view that sees big and open data as harbingers of democratic social participation in research, the way that scientific research is governed and financed is not challenged by big data. Rather, the increasing commodification and large value attributed to certain kinds of data (e.g., personal data) is associated with an increase in inequality of power and visibility between different nations, segments of the population and scientific communities (O’Neill 2016; Zuboff 2017; D’Ignazio and Klein 2020). The gap between those who can not only access data but also use it, and those who cannot, is widening, leading from a state of digital divide to a condition of “data divide” (Bezuidenout et al. 2017).

Moreover, the privatisation of data has serious implications for the world of research and the knowledge it produces. Firstly, it affects which data are disseminated, and with which expectations. Corporations usually only release data that they regard as having lesser commercial value and that they need public sector assistance to interpret. This introduces a further distortion in the sources and types of data that are accessible online, while more expensive and complex data are kept secret. Many of the ways in which citizens (researchers included) are encouraged to interact with databases and data interpretation sites tend to foster participation that generates further commercial value. Sociologists have recently described this type of social participation as a form of exploitation (Prainsack & Buyx 2017; Srnicek 2017). In turn, these ways of exploiting data strengthen their economic value over their scientific value. When it comes to the commerce of personal data between companies working in analysis, the value of the data as commercial products (which includes the evaluation of the speed and efficiency with which access to certain data can help develop new products) often has priority over scientific issues such as the representativeness and reliability of the data and of the ways they were analysed. This can result in decisions that are scientifically problematic, or in a simple lack of interest in investigating the consequences of the assumptions made and the processes used. This lack of interest easily translates into ignorance of discrimination, inequality and potential errors in the data considered. This type of ignorance is highly strategic and economically productive, since it enables the use of data without concerns over social and scientific implications. In this scenario the evaluation of the quality of data shrinks to an evaluation of their usefulness towards short-term analyses or forecasting required by the client.
There are no incentives in this system to encourage evaluation of the long-term implications of data analysis. The risk here is that the commerce of data is accompanied by an increasing divergence between data and their context. Interest in the history of the transit of data, the plurality of their emotional or scientific value and the re-evaluation of their origins tends to disappear over time, to be replaced by the increasing hold of the financial value of data.

The multiplicity of data sources and tools for aggregation also creates risks. The complexity of the data landscape is making it harder to identify which parts of the infrastructure require updating or have been put in doubt by new scientific developments. The situation worsens when considering the number of databases that populate every area of scientific research, each containing assumptions that influence the circulation and interoperability of data and that often are not updated in a reliable and regular way. To give an idea of the numbers involved, the prestigious scientific journal Nucleic Acids Research publishes a special issue every year on new databases relevant to molecular biology, which included 56 new infrastructures in 2015, 62 in 2016, 54 in 2017 and 82 in 2018. These are just a small proportion of the hundreds of databases that are developed each year in the life sciences sector alone. The fact that these databases rely on short-term funding means that a growing percentage of resources remain available to consult online even though they are long dead. This condition is not always visible to users of the databases, who trust them without checking whether they are actively maintained. At what point do these infrastructures become obsolete? What are the risks involved in weaving an ever more extensive tapestry of infrastructures that depend on each other, given the disparity in the ways they are managed and the challenges in identifying and comparing their prerequisite conditions and the theories and scaffolding used to build them? One of these risks is rampant conservatism: the insistence on recycling old data whose features and management elements become increasingly murky as time goes by, instead of encouraging the production of new data with features that specifically respond to the requirements and the circumstances of their users.
In disciplines such as biology and medicine, which study living beings that are by definition continually evolving and developing, such trust in old data is particularly alarming. It is not the case, for example, that data collected on fungi ten, twenty or even a hundred years ago is reliable to explain the behaviour of the same species of fungi now or in the future (Leonelli 2018).

Researchers of what Luciano Floridi calls the infosphere (the way in which the introduction of digital technologies is changing the world) are becoming aware of the destructive potential of big data and the urgent need to focus efforts for management and use of data in active and thoughtful ways towards the improvement of the human condition. In Floridi’s own words:

ICT yields great opportunity which, however, entails the enormous intellectual responsibility of understanding this technology to use it in the most appropriate way. (Floridi 2014: vii; see also British Academy & Royal Society 2017)

In light of these findings, it is essential that ethical and social issues are seen as a core part of the technical and scientific requirements associated with data management and analysis. The ethical management of data is not achieved exclusively by regulating the commerce of research and the management of personal data, or by introducing monitoring of research financing, even though these are important strategies. To guarantee that big data are used in the most scientifically and socially forward-thinking way, it is necessary to transcend the concept of ethics as something external and alien to research. An analysis of the ethical implications of data science should become a basic component of the background and activity of those who take care of data and the methods used to view and analyse it. Ethical evaluations and choices are hidden in every aspect of data management, including those choices that may seem purely technical.