- Library databases

- Library website

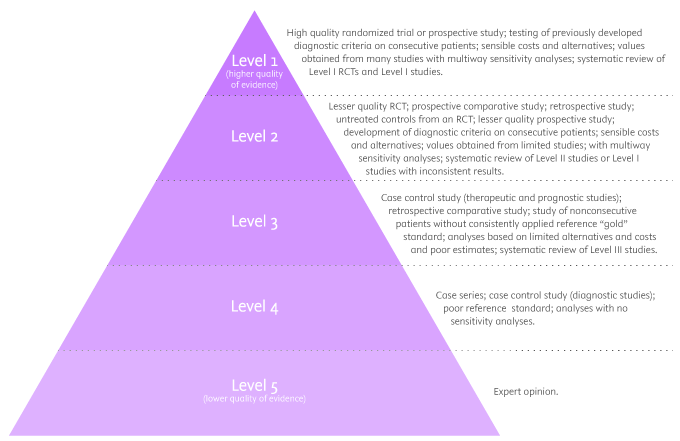

Evidence-Based Research: Levels of Evidence Pyramid

Introduction

One way to organize the different types of evidence involved in evidence-based practice research is the levels of evidence pyramid. The pyramid includes a variety of evidence types and levels.

- systematic reviews

- critically-appraised topics

- critically-appraised individual articles

- randomized controlled trials

- cohort studies

- case-controlled studies, case series, and case reports

- Background information, expert opinion

Levels of evidence pyramid

The levels of evidence pyramid provides a way to visualize both the quality of evidence and the amount of evidence available. For example, systematic reviews are at the top of the pyramid, meaning they are both the highest level of evidence and the least common. As you go down the pyramid, the amount of evidence will increase as the quality of the evidence decreases.
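As a rough illustration of how the pyramid orders evidence, the tiers listed in the introduction can be modelled as an ordered enumeration. This is only a sketch: the names `EvidenceLevel`, `is_filtered`, `rank_by_evidence`, and the sample titles are hypothetical and not part of any library database.

```python
from enum import IntEnum


class EvidenceLevel(IntEnum):
    """Pyramid tiers, numbered from the top (1) down to the base (7)."""
    SYSTEMATIC_REVIEW = 1
    CRITICALLY_APPRAISED_TOPIC = 2
    CRITICALLY_APPRAISED_ARTICLE = 3
    RANDOMIZED_CONTROLLED_TRIAL = 4
    COHORT_STUDY = 5
    CASE_CONTROL_SERIES_OR_REPORT = 6
    BACKGROUND_OR_EXPERT_OPINION = 7


def is_filtered(level: EvidenceLevel) -> bool:
    """The top three tiers are the filtered (pre-appraised) resources."""
    return level <= EvidenceLevel.CRITICALLY_APPRAISED_ARTICLE


def rank_by_evidence(results):
    """Sort (title, level) pairs so the highest tier comes first."""
    return sorted(results, key=lambda pair: pair[1])


# Hypothetical search results, for illustration only.
sample_results = [
    ("Textbook chapter", EvidenceLevel.BACKGROUND_OR_EXPERT_OPINION),
    ("Cohort study", EvidenceLevel.COHORT_STUDY),
    ("Systematic review", EvidenceLevel.SYSTEMATIC_REVIEW),
]

for title, level in rank_by_evidence(sample_results):
    print(level.value, title, "filtered" if is_filtered(level) else "unfiltered")
```

Sorting by tier reflects only the quality ordering. It says nothing about how much evidence exists at each tier, which the pyramid's shape conveys separately.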


EBM Pyramid and EBM Page Generator, copyright 2006 Trustees of Dartmouth College and Yale University. All Rights Reserved. Produced by Jan Glover, David Izzo, Karen Odato and Lei Wang.

Filtered Resources

Filtered resources appraise the quality of studies and often make recommendations for practice. The main types of filtered resources in evidence-based practice are:

- Systematic reviews

- Critically-appraised topics

- Critically-appraised individual articles

Scroll down the page to the Systematic reviews, Critically-appraised topics, and Critically-appraised individual articles sections for links to resources where you can find each of these types of filtered information.

Systematic reviews

Authors of a systematic review ask a specific clinical question, perform a comprehensive literature review, eliminate the poorly done studies, and attempt to make practice recommendations based on the well-done studies. Systematic reviews include only experimental, or quantitative, studies, and often include only randomized controlled trials.

You can find systematic reviews in these filtered databases:

- Cochrane Database of Systematic Reviews Cochrane systematic reviews are considered the gold standard for systematic reviews. This database contains both systematic reviews and review protocols. To find only systematic reviews, select Cochrane Reviews in the Document Type box.

- JBI EBP Database (formerly Joanna Briggs Institute EBP Database) This database includes systematic reviews, evidence summaries, and best practice information sheets. To find only systematic reviews, click on Limits and then select Systematic Reviews in the Publication Types box. To see how to use the limit and find full text, please see our Joanna Briggs Institute Search Help page.

You can also find systematic reviews in this unfiltered database:

To learn more about finding systematic reviews, please see our guide:

- Filtered Resources: Systematic Reviews

Critically-appraised topics

Authors of critically-appraised topics evaluate and synthesize multiple research studies. Critically-appraised topics are like short systematic reviews focused on a particular topic.

You can find critically-appraised topics in these resources:

- Annual Reviews This collection offers comprehensive, timely collections of critical reviews written by leading scientists. To find reviews on your topic, use the search box in the upper-right corner.

- Guideline Central This free database offers quick-reference guideline summaries. It is organized by a new non-profit initiative that aims to fill the gap left by the sudden closure of AHRQ’s National Guideline Clearinghouse (NGC).

- JBI EBP Database (formerly Joanna Briggs Institute EBP Database) To find critically-appraised topics in JBI, click on Limits and then select Evidence Summaries from the Publication Types box. To see how to use the limit and find full text, please see our Joanna Briggs Institute Search Help page.

- National Institute for Health and Care Excellence (NICE) Evidence-based recommendations for health and care in England.

To learn more about finding critically-appraised topics, please see our guide:

- Filtered Resources: Critically-Appraised Topics

Critically-appraised individual articles

Authors of critically-appraised individual articles evaluate and synopsize individual research studies.

You can find critically-appraised individual articles in these resources:

- EvidenceAlerts Quality articles from over 120 clinical journals are selected by research staff and then rated for clinical relevance and interest by an international group of physicians. Note: You must create a free account to search EvidenceAlerts.

- ACP Journal Club This journal publishes reviews of research on the care of adults and adolescents. You can either browse this journal or use the Search within this publication feature.

- Evidence-Based Nursing This journal reviews research studies that are relevant to best nursing practice. You can either browse individual issues or use the search box in the upper-right corner.

To learn more about finding critically-appraised individual articles, please see our guide:

- Filtered Resources: Critically-Appraised Individual Articles

Unfiltered resources

You may not always be able to find information on your topic in the filtered literature. When this happens, you'll need to search the primary or unfiltered literature. Keep in mind that with unfiltered resources, you take on the role of reviewing what you find to make sure it is valid and reliable.

Note: You can also find systematic reviews and other filtered resources in these unfiltered databases.

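The Levels of Evidence Pyramid includes unfiltered study types in this order of evidence from higher to lower:

- Randomized controlled trials

- Cohort studies

- Case-controlled studies, case series, and case reports

You can search for each of these types of evidence in the following databases:

- TRIP database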

Background information & expert opinion

Background information and expert opinions are not necessarily backed by research studies. They include point-of-care resources, textbooks, conference proceedings, etc.

- Family Physicians Inquiries Network: Clinical Inquiries Provide the ideal answers to clinical questions using a structured search, critical appraisal, authoritative recommendations, clinical perspective, and rigorous peer review. Clinical Inquiries deliver best evidence for point-of-care use.

- Harrison, T. R., & Fauci, A. S. (2009). Harrison's Manual of Medicine. New York: McGraw-Hill Professional. Contains the clinical portions of Harrison's Principles of Internal Medicine.

- Lippincott manual of nursing practice (8th ed.). (2006). Philadelphia, PA: Lippincott Williams & Wilkins. Provides background information on clinical nursing practice.

- Medscape: Drugs & Diseases An open-access, point-of-care medical reference that includes clinical information from top physicians and pharmacists in the United States and worldwide.

- Virginia Henderson Global Nursing e-Repository An open-access repository that contains works by nurses and is sponsored by Sigma Theta Tau International, the Honor Society of Nursing. Note: This resource contains both expert opinion and evidence-based practice articles.


Walden University is a member of Adtalem Global Education, Inc. www.adtalem.com Walden University is certified to operate by SCHEV © 2024 Walden University LLC. All rights reserved.

Systematic Reviews


The evidence pyramid is often used to illustrate the development of evidence. At the base of the pyramid are animal research and laboratory studies; this is where ideas are first developed. As you progress up the pyramid, the amount of information available decreases in volume but increases in relevance to the clinical setting.

Meta Analysis – systematic review that uses quantitative methods to synthesize and summarize the results.

Systematic Review – summary of the medical literature that uses explicit methods to perform a comprehensive literature search and critical appraisal of individual studies and that uses appropriate statistical techniques to combine these valid studies.

Randomized Controlled Trial – Participants are randomly allocated into an experimental group or a control group and followed over time for the variables/outcomes of interest.

Cohort Study – Involves identification of two groups (cohorts) of patients, one which received the exposure of interest, and one which did not, and following these cohorts forward for the outcome of interest.

Case Control Study – study which involves identifying patients who have the outcome of interest (cases) and patients without the same outcome (controls), and looking back to see if they had the exposure of interest.

Case Series – report on a series of patients with an outcome of interest. No control group is involved.

- Levels of Evidence from The Centre for Evidence-Based Medicine

- The JBI Model of Evidence Based Healthcare

- How to Use the Evidence: Assessment and Application of Scientific Evidence From the National Health and Medical Research Council (NHMRC) of Australia. Book must be downloaded; not available to read online.

When searching for evidence to answer clinical questions, aim to identify the highest level of available evidence. Evidence hierarchies can help you strategically identify which resources to use for finding evidence, as well as which search results are most likely to be "best".

Image source: Evidence-Based Practice: Study Design from Duke University Medical Center Library & Archives. This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

The hierarchy of evidence (also known as the evidence-based pyramid) is depicted as a triangular representation of the levels of evidence with the strongest evidence at the top which progresses down through evidence with decreasing strength. At the top of the pyramid are research syntheses, such as Meta-Analyses and Systematic Reviews, the strongest forms of evidence. Below research syntheses are primary research studies progressing from experimental studies, such as Randomized Controlled Trials, to observational studies, such as Cohort Studies, Case-Control Studies, Cross-Sectional Studies, Case Series, and Case Reports. Non-Human Animal Studies and Laboratory Studies occupy the lowest level of evidence at the base of the pyramid.

- Finding Evidence-Based Answers to Clinical Questions – Quickly & Effectively A tip sheet from the health sciences librarians at UC Davis Libraries to help you get started with selecting resources for finding evidence, based on type of question.


- Last Updated: May 1, 2024 4:09 PM

- URL: https://guides.library.ucdavis.edu/systematic-reviews


Levels of evidence in research


Level of evidence hierarchy

When carrying out a project, you might have noticed while searching for information that different types of scientific results are given different levels of credibility. For example, basing an argument on a systematic review is not the same as basing it on an expert opinion. It’s almost common sense that the former will offer more reliable results than the latter, which ultimately derives from a personal opinion.

In the medical and health care area, for example, it is very important that professionals not only have access to information but also have instruments to determine which evidence is stronger and more trustworthy, building up the confidence to diagnose and treat their patients.

5 levels of evidence

With physicians, as well as scientists in other fields of study, increasingly needing to know which kind of research offers the best clinical evidence, experts decided to rank this evidence to help them identify the best sources of information to answer their questions. The criteria for ranking evidence are based on the design, methodology, validity and applicability of the different types of studies. The outcome is called “levels of evidence” or “levels of evidence hierarchy”. By organizing a well-defined hierarchy of evidence, academic experts aimed to help scientists feel confident in using findings from high-ranked evidence in their own work or practice. For physicians, whose daily activity depends on available clinical evidence to support decision-making, this helps them know which evidence to trust the most.

So, by now you know that research can be graded according to the evidential strength determined by different study designs. But how many grades are there? Which evidence should be high-ranked and low-ranked?

There are five levels of evidence in the hierarchy of evidence, ranging from 1 (or in some cases A) for strong, high-quality evidence to 5 (or E) for evidence whose effectiveness is not established, as you can see in the pyramidal scheme below:

Level 1: (higher quality of evidence) – High-quality randomized trial or prospective study; testing of previously developed diagnostic criteria on consecutive patients; sensible costs and alternatives; values obtained from many studies with multiway sensitivity analyses; systematic review of Level I RCTs and Level I studies.

Level 2: Lesser quality RCT; prospective comparative study; retrospective study; untreated controls from an RCT; lesser quality prospective study; development of diagnostic criteria on consecutive patients; sensible costs and alternatives; values obtained from limited studies; with multiway sensitivity analyses; systematic review of Level II studies or Level I studies with inconsistent results.

Level 3: Case-control study (therapeutic and prognostic studies); retrospective comparative study; study of nonconsecutive patients without consistently applied reference “gold” standard; analyses based on limited alternatives and costs and poor estimates; systematic review of Level III studies.

Level 4: Case series; case-control study (diagnostic studies); poor reference standard; analyses with no sensitivity analyses.

Level 5: (lower quality of evidence) – Expert opinion.

By looking at the pyramid, you can roughly distinguish what type of research gives you the highest quality of evidence and which gives you the lowest. Basically, level 1 and level 2 are filtered information – that means an author has gathered evidence from well-designed studies, with credible results, and has produced findings and conclusions appraised by renowned experts, who consider them valid and strong enough to serve researchers and scientists. Levels 3, 4 and 5 include evidence coming from unfiltered information. Because this evidence hasn’t been appraised by experts, it might be questionable, but not necessarily false or wrong.

Examples of levels of evidence

As you move up the pyramid, you will surely find higher-quality evidence. However, you will notice there is also less research available. So, if there are no resources for you available at the top, you may have to start moving down in order to find the answers you are looking for.

- Systematic Reviews: Exhaustive summaries of all the existing literature about a certain topic. When drafting a systematic review, authors are expected to deliver a critical assessment and evaluation of all this literature rather than a simple list. Researchers who produce systematic reviews have their own criteria to locate, assemble and evaluate a body of literature.

- Meta-Analysis: Uses quantitative methods to synthesize a combination of results from independent studies. Normally, they function as an overview of clinical trials. Read more: Systematic review vs meta-analysis.

- Critically Appraised Topic: Evaluation of several research studies.

- Critically Appraised Article: Evaluation of individual research studies.

- Randomized Controlled Trial: a clinical trial in which participants or subjects (people that agree to participate in the trial) are randomly divided into groups. Placebo (control) is given to one of the groups whereas the other is treated with medication. This kind of research is key to learning about a treatment’s effectiveness.

- Cohort studies: A longitudinal study design, in which one or more samples called cohorts (individuals sharing a defining characteristic, like a disease) are exposed to an event and monitored prospectively and evaluated in predefined time intervals. They are commonly used to correlate diseases with risk factors and health outcomes.

- Case-Control Study: Selects patients with an outcome of interest (cases) and looks for an exposure factor of interest.

- Background Information/Expert Opinion: Information you can find in encyclopedias, textbooks and handbooks. This kind of evidence just serves as a good foundation for further research – or clinical practice – for it is usually too generalized.

Of course, level A and/or 1 evidence is recommended for more accurate results, but that doesn’t mean that all other study designs are unhelpful or useless. It all depends on your research question. Focusing once more on the healthcare and medical field, see how different study designs, not necessarily located at the tip of the pyramid, fit particular questions:

- Questions concerning therapy: “Which is the most efficient treatment for my patient?” >> RCT | Cohort studies | Case-Control | Case Studies

- Questions concerning diagnosis: “Which diagnostic method should I use?” >> Prospective blind comparison

- Questions concerning prognosis: “How will the patient’s disease develop over time?” >> Cohort Studies | Case Studies

- Questions concerning etiology: “What are the causes for this disease?” >> RCT | Cohort Studies | Case Studies

- Questions concerning costs: “What is the most cost-effective but safe option for my patient?” >> Economic evaluation

- Questions concerning meaning/quality of life: “What’s the quality of life of my patient going to be like?” >> Qualitative study


Evidence-Based Practice: Types of Evidence


Types of Research

Once you have your focused question, it's time to decide on the type of evidence you need to answer it. Understanding the types of research will help guide you to proper evidence that will support your question.

Evidence Based Pyramid

Hierarchy of evidence and research designs

As you move up the pyramid, the study designs are more rigorous and are less biased.

What type of study should you use?

Question definitions:

Intervention/Therapy: Questions addressing the treatment of an illness or disability.

Etiology: Questions addressing the causes or origins of disease (i.e., factors that produce or predispose toward a certain disease or disorder).

Diagnosis: Questions addressing the act or process of identifying or determining the nature and cause of a disease or injury through evaluation.

Prognosis/Prediction: Questions addressing the prediction of the course of a disease.

The type of question you have will often lead you to the type of research that will best answer the question:

Intervention/Prevention: RCT > Cohort Study > Case Control > Case Series

Therapy: RCT > Cohort > Case Control > Case Series

Prognosis/Prediction: Cohort Study > Case Control > Case Series

Diagnosis/Diagnostic: Prospective, blind comparison to Gold Standard

Etiology: RCT > Cohort Study > Case Control > Case Series
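The preference orders above lend themselves to a simple lookup. The sketch below assumes a hypothetical helper, `best_available_design`, that returns the highest-ranked design actually found for a given question type. The dictionary only restates the list above and is not an official classification.

```python
# Preference order per question type, restating the list above
# (most preferred design first).
PREFERRED_DESIGNS = {
    "intervention/prevention": ["RCT", "cohort study", "case control", "case series"],
    "therapy": ["RCT", "cohort study", "case control", "case series"],
    "prognosis/prediction": ["cohort study", "case control", "case series"],
    "diagnosis/diagnostic": ["prospective, blind comparison to gold standard"],
    "etiology": ["RCT", "cohort study", "case control", "case series"],
}


def best_available_design(question_type, available_designs):
    """Return the most preferred design present in available_designs, or None."""
    for design in PREFERRED_DESIGNS[question_type.lower()]:
        if design in available_designs:
            return design
    return None


# Example: a prognosis question where no cohort study was found.
print(best_available_design("Prognosis/Prediction", {"case series", "case control"}))
```

An unknown question type raises `KeyError` here. That is a deliberate choice for the sketch, so that a misspelled category is not silently treated as "no evidence found".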

Definitions

CEBM study design tree

The type of study can generally be worked out by looking at three issues:

Q1. What was the aim of the study?

- To simply describe a population (PO questions): descriptive

- To quantify the relationship between factors (PICO questions): analytic

Q2. If analytic, was the intervention randomly allocated?

- Yes? RCT

- No? Observational study

For observational study the main types will then depend on the timing of the measurement of outcome, so our third question is:

Q3. When were the outcomes determined?

- Some time after the exposure or intervention? cohort study (‘prospective study’)

- At the same time as the exposure or intervention? cross sectional study or survey

- Before the exposure was determined? case-control study (‘retrospective study’ based on recall of the exposure)

from Centre for Evidence-Based Medicine https://www.cebm.net/2014/04/study-designs/
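The three questions in the design tree can be sketched as a small decision function. The function name `classify_study` and the argument values (`"describe"`, `"quantify"`, `"after"`, `"same_time"`, `"before"`) are illustrative assumptions and are not taken from the CEBM source.

```python
def classify_study(aim, randomly_allocated=None, outcome_timing=None):
    """Walk the three questions of the study design tree.

    aim: "describe" (PO questions) or "quantify" (PICO questions).
    randomly_allocated: True or False; required for analytic studies.
    outcome_timing: "after", "same_time" or "before", relative to the
        exposure; required for observational studies.
    """
    # Q1: What was the aim of the study?
    if aim == "describe":
        return "descriptive study"
    if aim != "quantify":
        raise ValueError(f"unknown aim: {aim!r}")

    # Q2: If analytic, was the intervention randomly allocated?
    if randomly_allocated is None:
        raise ValueError("randomly_allocated is required for analytic studies")
    if randomly_allocated:
        return "randomised controlled trial"

    # Q3: For observational studies, when were the outcomes determined?
    timing_to_design = {
        "after": "cohort study",
        "same_time": "cross-sectional study or survey",
        "before": "case-control study",
    }
    if outcome_timing not in timing_to_design:
        raise ValueError(f"unknown outcome timing: {outcome_timing!r}")
    return timing_to_design[outcome_timing]


print(classify_study("quantify", randomly_allocated=False, outcome_timing="before"))
```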


- Last Updated: Apr 26, 2024 9:08 AM


- What is the best evidence and how to find it

Why is research evidence better than expert opinion alone?

In a broad sense, research evidence can be any systematic observation in order to establish facts and reach conclusions. Anything not fulfilling this definition is typically classified as “expert opinion”, the basis of which includes experience with patients, an understanding of biology, knowledge of pre-clinical research, as well as of the results of studies. Using expert opinion as the only basis to make decisions has proved problematic because in practice doctors often introduce new treatments too quickly before they have been shown to work, or they are too slow to introduce proven treatments.

However, clinical experience is key to interpreting research evidence and applying it in practice, and to formulating recommendations, for instance in the context of clinical guidelines. In other words, research evidence is necessary but not sufficient to make good health decisions.

Which studies are more reliable?

Not all evidence is equally reliable.

Any study design, qualitative or quantitative, where data is collected from individuals or groups of people is usually called a primary study. There are many types of primary study designs, but for each type of health question there is one that provides more reliable information.

For treatment decisions, there is consensus that the most reliable primary study is the randomised controlled trial (RCT). In this type of study, patients are randomly assigned to have either the treatment being tested or a comparison treatment (sometimes called the control treatment). Random really means random. The decision to put someone into one group or another is made like tossing a coin: heads they go into one group, tails they go into the other.

The control treatment might be a different type of treatment or a dummy treatment that shouldn't have any effect (a placebo). Researchers then compare the effects of the different treatments.
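The coin-toss idea can be sketched in a few lines. This is a minimal illustration of simple randomisation, assuming a hypothetical `allocate` helper and made-up participant labels. It is not a description of how any particular trial assigns patients.

```python
import random


def allocate(participants, seed=None):
    """Assign each participant to an arm by an independent fair coin toss."""
    rng = random.Random(seed)  # a seed makes the allocation reproducible
    groups = {"treatment": [], "control": []}
    for person in participants:
        arm = "treatment" if rng.random() < 0.5 else "control"
        groups[arm].append(person)
    return groups


# Hypothetical participant labels, for illustration only.
people = [f"P{i}" for i in range(10)]
groups = allocate(people, seed=42)
print(len(groups["treatment"]), "treated;", len(groups["control"]), "controls")
```

Because each toss is independent, the two groups can end up unequal in size, especially in small samples.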

Large randomised trials are expensive and take time. In addition sometimes it may be unethical to undertake a study in which some people were randomly assigned not to have a treatment. For example, it wouldn't be right to give oxygen to some children having an asthma attack and not give it to others. In cases like this, other primary study designs may be the best choice.

Laboratory studies are another type of study. Newspapers often have stories of studies showing how a drug cured cancer in mice. But just because a treatment works for animals in laboratory experiments, this doesn't mean it will work for humans. In fact, most drugs that have been shown to cure cancer in mice do not work for people.

Very rarely we cannot base our health decisions on the results of studies. Sometimes the research hasn't been done because doctors are used to treating a condition in a way that seems to work. This is often true of treatments for broken bones and operations. But just because there's no research for a treatment doesn't mean it doesn't work. It just means that no one can say for sure.

Why we shouldn’t read studies

An enormous amount of effort is required to be able to identify and summarise everything we know with regard to any given health intervention. The amount of data has soared dramatically. A conservative estimate is that there are more than 35,000 medical journals and almost 20 million research articles published every year. On the other hand, up to half of existing data might be unpublished.

How can anyone keep up with all this? And how can you tell if the research is good or not? Each primary study is only one piece of a jigsaw that may take years to finish. Rarely does any one piece of research answer either a doctor's, or a patient's questions.

Even though reading large numbers of studies is impractical, high-quality primary studies, especially RCTs, constitute the foundations of what we know, and they are the best way of advancing knowledge. Any effort to support or promote the conduct of sound, transparent, and independent trials that are fully and clearly published is worth endorsing. A prominent project in this regard is the AllTrials initiative.

Why we should read systematic reviews

Most of the time a single study doesn't tell us enough. The best answers are found by combining the results of many studies.

A systematic review is a type of research that looks at the results from all of the good-quality studies. It puts together the results of these individual studies into one summary. This gives an estimate of a treatment's risks and benefits. Sometimes these reviews include a statistical analysis, called a meta-analysis, which combines the results of several studies to give a treatment effect.
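To make "combining the results of several studies" concrete, here is a sketch of one common approach, fixed-effect inverse-variance pooling. The text does not say which method a given review uses, so treat the choice of method, the function name `pool_fixed_effect`, and the numbers as assumptions. Each study's effect estimate is weighted by the inverse of its squared standard error, so more precise studies count for more.

```python
import math


def pool_fixed_effect(effects, std_errors):
    """Pool study effects with inverse-variance weights (fixed-effect model).

    Returns (pooled_effect, pooled_standard_error).
    """
    if not effects or len(effects) != len(std_errors):
        raise ValueError("need one standard error per effect estimate")
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, effects)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


# Made-up effect estimates and standard errors from three studies.
pooled, pooled_se = pool_fixed_effect([0.30, 0.10, 0.25], [0.10, 0.20, 0.15])
print(f"pooled effect {pooled:.3f}, standard error {pooled_se:.3f}")
```

A fixed-effect model assumes that all studies estimate the same underlying effect. Reviews that expect real differences between studies often use a random-effects model instead, which is not shown here.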

Systematic reviews are increasingly being used for decision making because they reduce the probability of being misled by looking at one piece of the jigsaw. By being systematic they are also more transparent, and have become the gold standard approach to synthesise the ever-expanding and conflicting biomedical literature.

Systematic reviews are not foolproof. Their findings are only as good as the studies that they include and the methods they employ. But the best reviews clearly state whether the studies they include are good quality or not.

Three reasons why we shouldn’t read (most) systematic reviews

First, systematic reviews have proliferated over time. From 11 per day in 2010, they skyrocketed up to 40 per day or more in 2015.[1][2] Some have described this production as having reached epidemic proportions where the large majority of produced systematic reviews and meta-analyses are unnecessary, misleading, and/or conflicted.[3][4] So, finding more than one systematic review for a question is the rule more than the exception, and it is not unusual to find several dozen for the hottest questions.

Second, most systematic reviews address a narrow question. It is difficult to put them in the context of all of the available alternatives for an individual case. Reading multiple reviews to assess all of the alternatives is impractical, even more so when we consider that they are typically difficult to read for the average clinician, who will need to solve several questions each day.[5]

Third, systematic reviews do not tell you what to do, or what is advisable for a given patient or situation. Indeed, good systematic reviews explicitly avoid making recommendations.

So, even though systematic reviews play a key role in any evidence-based decision-making process, most of them are low-quality or outdated, and they rarely provide all the information needed to make decisions in the real world.

How to find the best available evidence?

Considering the massive amount of information available, we can quickly discard periodically reviewing our favourite journals as a means of sourcing the best available evidence.

The traditional approach to search for evidence has been using major databases, such as PubMed or EMBASE. These constitute comprehensive sources including millions of relevant, but also irrelevant articles. Even though in the past they were the preferred approach to searching for evidence, information overload has made them impractical, and most clinicians would fail to find the best available evidence in this way, however hard they tried.

Another popular approach is simply searching in Google. Unfortunately, because of its lack of transparency, Google is not a reliable way to filter current best evidence from unsubstantiated or non-scientifically supervised sources.[6]

Three alternatives to access the best evidence

Alternative 1 - Pick the best systematic review

Mastering the art of identifying, appraising, and applying high-quality systematic reviews into practice can be very rewarding. It is not easy, but once mastered it gives a view of the bigger picture: of what is known, and what is not known.

The best single source of highest-quality systematic reviews is produced by an international organisation called the Cochrane Collaboration, named after a well-known researcher.[4] They can be accessed at The Cochrane Library.

Unfortunately, Cochrane reviews do not cover all of the existing questions and they are not always up to date. Also, there might be non-Cochrane reviews out-performing Cochrane reviews.

There are many resources that facilitate access to systematic reviews (and other resources), such as Trip database, PubMed Health, ACCESSSS, or Epistemonikos (the Cochrane Collaboration maintains a comprehensive list of these resources).

Epistemonikos database is innovative both in simultaneously searching multiple resources and in indexing and interlinking relevant evidence. For example, Epistemonikos connects systematic reviews and their included studies, and thus allows clustering of systematic reviews based on the primary studies they have in common. Epistemonikos is also unique in offering an appreciable multilingual user interface, multilingual search, and translation of abstracts in more than nine languages.[6] This database includes several tools to compare systematic reviews, including the matrix of evidence, a dynamic table showing all of the systematic reviews, and the primary studies included in those reviews.
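The idea behind a matrix of evidence can be illustrated with a minimal sketch. It is not Epistemonikos code and does not use its data or API: the review and trial identifiers are invented, and the Jaccard overlap and the 0.5 threshold are assumptions chosen only to show how reviews might be clustered by the primary studies they share.

```python
from itertools import combinations

# Hypothetical data: each systematic review maps to the set of primary
# studies it includes. All identifiers are invented for illustration.
reviews = {
    "review_A": {"trial_1", "trial_2", "trial_3"},
    "review_B": {"trial_2", "trial_3", "trial_4"},
    "review_C": {"trial_9"},
}

def evidence_matrix(reviews):
    """Return (sorted study ids, rows); each row marks which studies a review includes."""
    studies = sorted(set().union(*reviews.values()))
    rows = {name: [s in included for s in studies] for name, included in reviews.items()}
    return studies, rows

def overlap(a, b):
    """Jaccard overlap: shared studies divided by all studies in either review."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def related_pairs(reviews, threshold=0.5):
    """Pairs of reviews whose included studies overlap at or above the threshold."""
    return [
        (x, y)
        for x, y in combinations(sorted(reviews), 2)
        if overlap(reviews[x], reviews[y]) >= threshold
    ]

studies, rows = evidence_matrix(reviews)
for name, row in rows.items():
    print(name, ["x" if cell else "." for cell in row])
print(related_pairs(reviews))
```

In this toy data set, review_A and review_B share two of their four distinct trials, so they are the only pair reported as related.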

Additionally, Epistemonikos partnered with Cochrane, and during 2017 a combined search in both the Cochrane Library and Epistemonikos was released.

Alternative 2 - Read trustworthy guidelines

Although systematic reviews can provide a synthesis of the benefits and harms of the interventions, they do not integrate these factors with patients’ values and preferences or resource considerations to provide a suggested course of action. Also, to fully address the questions, clinicians would need to integrate the information of several systematic reviews covering all the relevant alternatives and outcomes. Most clinicians will likely prefer guidance rather than interpreting systematic reviews themselves.

Trustworthy guidelines, especially if developed with high standards, such as the Grading of Recommendations, Assessment, Development, and Evaluation (GRADE) approach, offer systematic and transparent guidance in moving from evidence to recommendations.[7]

Many online guideline websites promote themselves as “evidence based”, but few have explicit links to research findings.[8] If they don’t have in-line references to relevant research findings, dismiss them. If they do, you can judge the strength of their commitment to evidence by checking whether statements are based on high-quality versus low-quality evidence, using alternative 1 explained above.

Unfortunately, most guidelines have serious limitations or are outdated.[9][10] The exercise of locating and appraising the best guideline is time consuming. This is particularly challenging for generalists addressing questions from different conditions or diseases.

Alternative 3 - Use point-of-care tools

Point-of-care tools, such as BMJ Best Practice, have been developed as a response to the genuine need to summarise the ever-expanding biomedical literature on an ever-increasing number of alternatives in order to make evidence-based decisions. In this competitive market, the more successful products have been those delivering innovative, user-friendly interfaces that improve the retrieval, synthesis, organisation, and application of evidence-based content in many different areas of clinical practice.

However, point-of-care tools face the same problem as guidelines: it is impossible to keep up with new evidence without compromising quality. Clinicians should become familiar with the point-of-care information resource they prefer or can access, and examine its in-line references to relevant research findings. They can judge the strength of its commitment to evidence by checking whether statements are based on high-quality versus low-quality evidence, using alternative 1 explained above. Comprehensiveness, use of the GRADE approach, and independence are other characteristics to bear in mind when selecting among point-of-care information summaries.

A comprehensive list of these resources can be found in a study by Kwag et al.

Finding the best available evidence is more challenging than it was in the dawn of the evidence-based movement, and the main cause is the exponential growth of evidence-based information, in any of the flavours described above.

However, with a little bit of patience and practice, the busy clinician will discover evidence-based practice is far easier than it was 5 or 10 years ago. We are entering a stage where information is flowing between the different systems, technology is being harnessed for good, and the different players are starting to generate alliances.

The early adopters will surely enjoy the first experiments of living systematic reviews (high-quality, up-to-date online summaries of health research that are updated as new research becomes available), living guidelines, and rapid reviews tied to rapid recommendations, just to mention a few.[13][14][15]

It is unlikely that the picture of countless low-quality studies and reviews will change in the foreseeable future. However, it would not be a surprise if, in 3 to 5 years, separating the wheat from the chaff becomes trivial. Maybe the promise of evidence-based medicine of more effective, safer medical intervention resulting in better health outcomes for patients could be fulfilled.

Author: Gabriel Rada

Competing interests: Gabriel Rada is the co-founder and chairman of Epistemonikos database, part of the team that founded and maintains PDQ-Evidence, and an editor of the Cochrane Collaboration.

1. Bastian H, Glasziou P, Chalmers I. Seventy-five trials and eleven systematic reviews a day: how will we ever keep up? PLoS Med. 2010 Sep 21;7(9):e1000326. doi: 10.1371/journal.pmed.1000326

2. Epistemonikos database [filter= systematic review; year=2015]. A Free, Relational, Collaborative, Multilingual Database of Health Evidence. https://www.epistemonikos.org/en/search?&q=*&classification=systematic-review&year_start=2015&year_end=2015&fl=14542 Accessed 5 Jan 2017.

3. Ioannidis JP. The Mass Production of Redundant, Misleading, and Conflicted Systematic Reviews and Meta-analyses. Milbank Q. 2016 Sep;94(3):485-514. doi: 10.1111/1468-0009.12210.

4. Page MJ, Shamseer L, Altman DG, et al. Epidemiology and reporting characteristics of systematic reviews of biomedical research: a cross-sectional study. PLoS Med. 2016;13(5):e1002028.

5. Del Fiol G, Workman TE, Gorman PN. Clinical questions raised by clinicians at the point of care: a systematic review. JAMA Intern Med. 2014 May;174(5):710-8. doi: 10.1001/jamainternmed.2014.368.

6. Agoritsas T, Vandvik P, Neumann I, Rochwerg B, Jaeschke R, Hayward R, et al. Chapter 5: finding current best evidence. In: Users' guides to the medical literature: a manual for evidence-based clinical practice. Chicago: McGraw-Hill, 2014.

7. Guyatt GH, Oxman AD, Vist GE, et al. GRADE: An emerging consensus on rating quality of evidence and strength of recommendations. BMJ. 2008;336(7650):924-926. doi: 10.1136/bmj.39489.470347

8. Neumann I, Santesso N, Akl EA, Rind DM, Vandvik PO, Alonso-Coello P, Agoritsas T, Mustafa RA, Alexander PE, Schünemann H, Guyatt GH. A guide for health professionals to interpret and use recommendations in guidelines developed with the GRADE approach. J Clin Epidemiol. 2016 Apr;72:45-55. doi: 10.1016/j.jclinepi.2015.11.017

9. Alonso-Coello P, Irfan A, Solà I, Gich I, Delgado-Noguera M, Rigau D, Tort S, Bonfill X, Burgers J, Schunemann H. The quality of clinical practice guidelines over the last two decades: a systematic review of guideline appraisal studies. Qual Saf Health Care. 2010 Dec;19(6):e58. doi: 10.1136/qshc.2010.042077

10. Martínez García L, Sanabria AJ, García Alvarez E, Trujillo-Martín MM, Etxeandia-Ikobaltzeta I, Kotzeva A, Rigau D, Louro-González A, Barajas-Nava L, Díaz Del Campo P, Estrada MD, Solà I, Gracia J, Salcedo-Fernandez F, Lawson J, Haynes RB, Alonso-Coello P; Updating Guidelines Working Group. The validity of recommendations from clinical guidelines: a survival analysis. CMAJ. 2014 Nov 4;186(16):1211-9. doi: 10.1503/cmaj.140547

11. Kwag KH, González-Lorenzo M, Banzi R, Bonovas S, Moja L. Providing Doctors With High-Quality Information: An Updated Evaluation of Web-Based Point-of-Care Information Summaries. J Med Internet Res. 2016 Jan 19;18(1):e15. doi: 10.2196/jmir.5234

12. Banzi R, Cinquini M, Liberati A, Moschetti I, Pecoraro V, Tagliabue L, Moja L. Speed of updating online evidence based point of care summaries: prospective cohort analysis. BMJ. 2011 Sep 23;343:d5856. doi: 10.1136/bmj.d5856

13. Elliott JH, Turner T, Clavisi O, Thomas J, Higgins JP, Mavergames C, Gruen RL. Living systematic reviews: an emerging opportunity to narrow the evidence-practice gap. PLoS Med. 2014 Feb 18;11(2):e1001603. doi: 10.1371/journal.pmed.1001603

14. Vandvik PO, Brandt L, Alonso-Coello P, Treweek S, Akl EA, Kristiansen A, Fog-Heen A, Agoritsas T, Montori VM, Guyatt G. Creating clinical practice guidelines we can trust, use, and share: a new era is imminent. Chest. 2013 Aug;144(2):381-9. doi: 10.1378/chest.13-0746

15. Vandvik PO, Otto CM, Siemieniuk RA, Bagur R, Guyatt GH, Lytvyn L, Whitlock R, Vartdal T, Brieger D, Aertgeerts B, Price S, Foroutan F, Shapiro M, Mertz R, Spencer FA. Transcatheter or surgical aortic valve replacement for patients with severe, symptomatic, aortic stenosis at low to intermediate surgical risk: a clinical practice guideline. BMJ. 2016 Sep 28;354:i5085. doi: 10.1136/bmj.i5085

Research Guide for Masters and Doctoral Nursing Students

Types of Evidence-Based Articles

In order to reduce bias in research, health sciences professionals have developed standards for determining which types of articles and research demonstrate strong evidence in their methodology.

Typically, these types of studies build upon each other: what starts as a Case Control Study may move through other phases of research and eventually be incorporated into a Systematic Review with Meta-Analysis. In other cases, factors such as divergent research methods prevent a problem in health sciences from being properly examined with a Meta-Analysis.

How do I know?

Depending on the search tool you use, there are a few ways to tell...

Firstly, many high-evidence article types will explicitly state what type of an article it is in the title.

Secondly, if you cannot figure out the article type, sometimes that will be revealed in the abstract of the article. Look for words like "systematic analysis" to indicate high levels of evidence.

In PubMed, you can see it by clicking on "Publication Type/MeSH Terms".

In CINAHL, you can see it by looking at the Publication Type.
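The same Publication Type information can also be used to narrow a PubMed search. The sketch below only builds a search string and a request URL for the NCBI E-utilities ESearch endpoint; it does not send anything. The topic is an invented example, and the publication-type name must match one that PubMed actually indexes (such as "Systematic Review", "Meta-Analysis", or "Randomized Controlled Trial").

```python
from urllib.parse import urlencode

# Base URL of the NCBI E-utilities ESearch endpoint.
ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"

def pubmed_term(topic, publication_type):
    """Combine a topic with a PubMed Publication Type filter."""
    return f'({topic}) AND "{publication_type}"[Publication Type]'

def esearch_url(term, retmax=20):
    """Build (but do not send) an ESearch request URL for PubMed."""
    return ESEARCH_URL + "?" + urlencode({"db": "pubmed", "term": term, "retmax": retmax})

# Hypothetical topic, chosen only for illustration.
term = pubmed_term("low back pain yoga", "Systematic Review")
print(term)
print(esearch_url(term))
```

The printed search string can be pasted directly into the PubMed search box.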

Levels of Evidence

- The Pyramid

- Non-Research Information

- Observational Studies

- Experimental Studies

- Critical Analysis

- Non-Evidence-based Expert Opinion - Commentary statements, speeches, or editorials written by prominent experts asserting ideas that are reached by conjecture, casual observation, emotion, religious belief, or ego.

- Non-EBP guidelines - practice guidelines that exist because of eminence, authority, eloquence, providence, or diffidence based approaches to healthcare.

- News Articles - brief summaries of research or medical opinions written by journalists for the general public.

- Editorials - Opinions asserted by experts, lay-people, non-experts, or anyone else in a news outlet, magazine, or academic journal.

- Commentary - similar to an editorial, but it may be identified as a commentary, which can be an invited informal and non-reviewed short article pertaining to a particular concept or idea.

Let's Talk about Review Articles

Review articles are common in health literature. They are typically overviews of literature found on topics, but do not go so far as to meet the methodological requirements for a Systematic Review.

These articles may contain some critical analysis, but will not have the rigorous criteria that a Systematic Review does. They can be used to demonstrate evidence, although they do not make a very strong case because they are secondary articles rather than original observational or experimental research.

- Example: a patient enters the ER with some of the key symptoms for Mononucleosis, but complains of nausea and stomach pain. Upon further testing, the doctor concludes that the Mono infection has affected the liver.

- Example: A pediatrician notices that children in a specific city jurisdiction are being diagnosed with lead poisoning. Upon further examination for the cause, she finds out that the city's dated and crumbling lead pipe plumbing infrastructure is affecting the water quality, leading to this high incidence.

- Example: A group of doctors studied the long term health effects of people who smoked with particular frequencies alongside people who did not smoke at all. From this study doctors concluded that smoking poses significant health hazards such as increased risks of heart disease, cancers, and lung diseases.

- Example: Researchers investigate the difference between yoga and acupuncture as relief for lower back pain. Since the activity involved in the trial cannot be disguised (as one might with a placebo), randomization cannot be a part of the research study.

- Example: Researchers need to test the effects of a new drug for Parkinson's, so they recruit subjects for the study. A control group would be given the drugs standard for Parkinson's treatment, and the experimental group would have the trial drug. Participants would not know which treatment they receive during the trial.

- Example: After thorough testing and experimentation, researchers, doctors, and product developers created and started using less-invasive oxygen monitoring devices to improve recovery times after surgeries. These are now standard equipment.

- Example: Researchers want to look at the literature on mammograms for breast cancer screening to figure out, based on that literature, when someone should start getting regular mammograms as a preventive measure. They conduct a thorough literature search that consults multiple research databases and sources of literature, documenting every step, and sort through and select articles for inclusion based on predefined criteria. They then qualitatively analyze the results of the selected articles to determine what type of recommendation for routine mammogram screening for breast cancer they should provide.

Many Systematic Reviews contain a Meta-Analysis and will say so, usually in the title.

Example: Researchers want to know what the rate of depression is in overweight women of Latin American heritage and examine self-reported sociocultural factors involved in their mental health. They conduct a literature search, exactly like researchers might do for a Systematic Review (see above) and do a quantitative analysis of the data using advanced statistical methods to synthesize conclusions from the numbers aggregated from a variety of studies.

Qualitative vs Quantitative Methods

Sources: Qualitative Vs Quantitative from Maricopa Community Colleges; Helpful Definitions from Simmons College Libraries

- Last Updated: May 2, 2024 1:46 PM

- URL: https://guides.library.uwm.edu/gradnursing

Evidence-Based Nursing Research Guide: Evidence Levels & Types

Evidence Pyramid

Depending on their purpose, design, and mode of reporting or dissemination, health-related research studies can be ranked according to the strength of evidence they provide, with the sources of strongest evidence at the top, and the weakest at the bottom:

Secondary Sources: studies of studies

Systematic Review

- Identifies, appraises, and synthesizes all empirical evidence that meets pre-specified eligibility criteria

- Methods section outlines a detailed search strategy used to identify and appraise articles

- May include a meta-analysis, but not required (see Meta-Analysis below)

Meta-Analysis

- A subset of systematic reviews: uses quantitative methods to combine the results of independent studies and synthesize the summaries and conclusions

- Methods section outlines a detailed search strategy used to identify and appraise articles; often surveys clinical trials

- Can be conducted independently, or as a part of a systematic review

- All meta-analyses are systematic reviews, but not all systematic reviews are meta-analyses

Evidence-Based Guideline

- Provides a brief summary of evidence for a general clinical question or condition

- Produced by professional health care organizations, practices, and agencies that systematically gather, appraise, and combine the evidence

- Click on the 'Evidence-Based Care Sheets' link located at the top of the CINAHL screen to find short overviews of evidence-based care recommendations covering 140 or more health care topics.

Meta-Synthesis or Qualitative Synthesis (Systematic Review of Qualitative or Descriptive Studies)

- A systematic review of qualitative or descriptive studies; low strength level

Primary Sources: original studies

Randomized Controlled Trial

- Experiment where individuals are randomly assigned to an experimental or control group to test the value or efficiency of a treatment or intervention

Non-Randomized Controlled Clinical Trial (Quasi-Experimental)

- Involves one or more test treatments, at least one control treatment, specified outcome measures for evaluating the studied intervention, and a bias-free method for assigning patients to the test treatment

Case-Control or Case-Comparison Study (Non-Experimental)

- Individuals with a particular condition or disease (the cases) are selected for comparison with individuals who do not have the condition or disease (the controls)

Cohort Study (Non-Experimental)

- Identifies subsets (cohorts) of a defined population

- Cohorts may or may not be exposed to factors that researchers hypothesize will influence the probability that participants will have a particular disease or other outcome

- Researchers follow cohorts in an attempt to determine distinguishing subgroup characteristics
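As a rough illustration of how this ranking might be applied to a reading list, the sketch below sorts articles by study design. The order simply follows the order in which this guide lists the designs, which is an assumption made for illustration: other pyramids order some designs differently, and meta-synthesis is left out because the guide describes it as low strength without giving it a clear position. The article titles are invented.

```python
# Designs in the order this guide lists them, strongest first. This order is
# an assumption for illustration; it is not an official scoring system.
PYRAMID_ORDER = [
    "systematic review",
    "meta-analysis",
    "evidence-based guideline",
    "randomized controlled trial",
    "non-randomized controlled trial",
    "case-control study",
    "cohort study",
]
RANK = {design: position for position, design in enumerate(PYRAMID_ORDER)}

def sort_by_evidence(articles):
    """Sort (title, design) pairs strongest-first; unknown designs go last."""
    return sorted(articles, key=lambda item: RANK.get(item[1].lower(), len(PYRAMID_ORDER)))

# Hypothetical reading list.
reading_list = [
    ("Hypothetical article 1", "Cohort study"),
    ("Hypothetical article 2", "Systematic review"),
    ("Hypothetical article 3", "Editorial"),
    ("Hypothetical article 4", "Randomized controlled trial"),
]
for title, design in sort_by_evidence(reading_list):
    print(f"{design}: {title}")
```

Designs that are not in the list, such as editorials, are placed last rather than rejected, so nothing silently drops out of the reading list.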

Further Reading

- Levels of Evidence - EBP Toolkit Winona State University

- Levels of Evidence Northern Virginia Community College

- Types of Evidence University of Missouri - St Louis

- Last Updated: Apr 9, 2024 2:36 PM

- URL: https://libguides.depaul.edu/ebp

Finding Types of Research

Throughout your schooling, you may need to find different types of evidence and research to support your course work. This guide provides a high-level overview of evidence-based practice as well as the different types of research and study designs. Each page of this guide offers an overview and search tips for finding articles that fit that study design.

Note! If you need help finding a specific type of study, visit the Get Research Help guide to contact the librarians.

What is Evidence-Based Practice?

One of the requirements for your coursework is to find articles that support evidence-based practice. But what exactly is evidence-based practice? Evidence-based practice is a method that uses relevant and current evidence to plan, implement and evaluate patient care. This definition is included in the video below, which explains all the steps of evidence-based practice in greater detail.

- Video - Evidence-based practice: What it is and what it is not. Medcom (Producer), & Cobb, D. (Director). (2017). Evidence-based practice: What it is and what it is not [Streaming Video]. United States of America: Producer. Retrieved from Alexander Street Press Nursing Education Collection

Quantitative and Qualitative Studies

Research is broken down into two different types: quantitative and qualitative. Quantitative studies are all about measurement. They will report statistics of things that can be physically measured like blood pressure, weight and oxygen saturation. Qualitative studies, on the other hand, are about people's experiences and how they feel about something. This type of information cannot be measured using statistics. Both of these types of studies report original research and are considered single studies. Watch the video below for more information.

Study Designs

Some research study types that you will encounter include:

- Case-Control Studies

- Cohort Studies

- Cross-Sectional Studies

Studies that Synthesize Other Studies

Sometimes, a research study will look at the results of many studies and look for trends and draw conclusions. These types of studies include:

- Meta Analyses

Tip! How do you determine the research article's study type or level of evidence? First, look at the article abstract. Most of the time the abstract will have a methodology section, which should tell you what type of study design the researchers are using. If it is not in the abstract, look for the methodology section of the article. It should tell you all about what type of study the researcher is doing and the steps they used to carry out the study.

Read the book below to learn how to read a clinical paper, including the types of study designs you will encounter.

Chamberlain College of Nursing is owned and operated by Chamberlain University LLC. In certain states, Chamberlain operates as Chamberlain College of Nursing pending state authorization for Chamberlain University.

- Volume 21, Issue 4

- New evidence pyramid

- M Hassan Murad

- Mouaz Alsawas

- Fares Alahdab (http://orcid.org/0000-0001-5481-696X)

- Rochester, Minnesota, USA

- Correspondence to: Dr M Hassan Murad, Evidence-based Practice Center, Mayo Clinic, Rochester, MN 55905, USA; murad.mohammad{at}mayo.edu

https://doi.org/10.1136/ebmed-2016-110401

The first and earliest principle of evidence-based medicine indicated that a hierarchy of evidence exists. Not all evidence is the same. This principle became well known in the early 1990s as practising physicians learnt basic clinical epidemiology skills and started to appraise and apply evidence to their practice. Since evidence was described as a hierarchy, a compelling rationale for a pyramid was made. Evidence-based healthcare practitioners became familiar with this pyramid when reading the literature, applying evidence or teaching students.

Various versions of the evidence pyramid have been described, but all of them focused on showing weaker study designs at the bottom (basic science and case series), followed by case–control and cohort studies in the middle, then randomised controlled trials (RCTs), and at the very top, systematic reviews and meta-analysis. This description is intuitive and likely correct in many instances. The placement of systematic reviews at the top had undergone several alterations in interpretations, but was still thought of as an item in a hierarchy. 1 Most versions of the pyramid clearly represented a hierarchy of internal validity (risk of bias). Some versions incorporated external validity (applicability) in the pyramid by either placing N-of-1 trials above RCTs (because their results are most applicable to individual patients 2 ) or by separating internal and external validity. 3

Another version (the 6S pyramid) was also developed to describe the sources of evidence that can be used by evidence-based medicine (EBM) practitioners for answering foreground questions, showing a hierarchy ranging from studies, synopses, synthesis, synopses of synthesis, summaries and systems. 4 This hierarchy may imply some sort of increasing validity and applicability although its main purpose is to emphasise that the lower sources of evidence in the hierarchy are least preferred in practice because they require more expertise and time to identify, appraise and apply.

The traditional pyramid was deemed too simplistic at times, thus the importance of leaving room for argument and counterargument for the methodological merit of different designs has been emphasised. 5 Other barriers challenged the placement of systematic reviews and meta-analyses at the top of the pyramid. For instance, heterogeneity (clinical, methodological or statistical) is an inherent limitation of meta-analyses that can be minimised or explained but never eliminated. 6 The methodological intricacies and dilemmas of systematic reviews could potentially result in uncertainty and error. 7 One evaluation of 163 meta-analyses demonstrated that the estimation of treatment outcomes differed substantially depending on the analytical strategy being used. 7 Therefore, we suggest, in this perspective, two visual modifications to the pyramid to illustrate two contemporary methodological principles (figure 1). We provide the rationale and an example for each modification.

Figure 1. The proposed new evidence-based medicine pyramid. (A) The traditional pyramid. (B) Revising the pyramid: (1) lines separating the study designs become wavy (Grading of Recommendations Assessment, Development and Evaluation), (2) systematic reviews are ‘chopped off’ the pyramid. (C) The revised pyramid: systematic reviews are a lens through which evidence is viewed (applied).

Rationale for modification 1

In the early 2000s, the Grading of Recommendations Assessment, Development and Evaluation (GRADE) Working Group developed a framework in which the certainty in evidence was based on numerous factors and not solely on study design, which challenges the pyramid concept. 8 Study design alone appears to be insufficient as a surrogate for risk of bias. Certain methodological limitations of a study, imprecision, inconsistency and indirectness are factors independent of study design and can affect the quality of evidence derived from any study design. For example, a meta-analysis of RCTs evaluating intensive glycaemic control in non-critically ill hospitalised patients showed a non-significant reduction in mortality (relative risk of 0.95 (95% CI 0.72 to 1.25) 9 ). Allocation concealment and blinding were not adequate in most trials. The quality of this evidence is rated down due to the methodological limitations of the trials and imprecision (wide CI that includes substantial benefit and harm). Hence, despite there being five RCTs, such evidence should not be rated high in any pyramid. The quality of evidence can also be rated up. For example, we are quite certain about the benefits of hip replacement in a patient with disabling hip osteoarthritis. Although not tested in RCTs, the quality of this evidence is rated up despite the study design (non-randomised observational studies). 10
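The logic of rating evidence down or up can be sketched in a few lines. This is a deliberately simplified illustration and not the GRADE method itself: it assumes that randomised evidence starts at "high" and observational evidence at "low", that each concern or strength moves the rating by exactly one level, and that the hip replacement evidence is rated up by two levels (the text above only says it is rated up).

```python
LEVELS = ["very low", "low", "moderate", "high"]

def grade_certainty(randomised, rate_down=0, rate_up=0):
    """Simplified GRADE-style rating.

    Start at 'high' for randomised trials and 'low' for observational
    studies, then move one level per concern (down) or strength (up),
    staying within the four levels.
    """
    start = LEVELS.index("high" if randomised else "low")
    final = max(0, min(len(LEVELS) - 1, start - rate_down + rate_up))
    return LEVELS[final]

def ci_crosses_no_effect(lower, upper, null_value=1.0):
    """True when a confidence interval for a ratio includes the null value."""
    return lower <= null_value <= upper

# Glycaemic control example: RR 0.95 (95% CI 0.72 to 1.25), rated down once
# for methodological limitations and once for imprecision (an assumption).
print(ci_crosses_no_effect(0.72, 1.25))
print(grade_certainty(randomised=True, rate_down=2))

# Hip replacement example: observational evidence rated up.
# Two levels is an assumption made for this sketch.
print(grade_certainty(randomised=False, rate_up=2))
```

Under these assumptions, the five RCTs end up at "low" certainty while the observational hip replacement evidence ends up at "high", which is the point of the first modification: study design sets the starting point, not the final rating.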

Rationale for modification 2

Another challenge to the notion of having systematic reviews on the top of the evidence pyramid relates to the framework presented in the Journal of the American Medical Association User's Guide on systematic reviews and meta-analysis. The Guide presented a two-step approach in which the credibility of the process of a systematic review is evaluated first (comprehensive literature search, rigorous study selection process, etc). If the systematic review was deemed sufficiently credible, then a second step takes place in which we evaluate the certainty in evidence based on the GRADE approach. 11 In other words, a meta-analysis of well-conducted RCTs at low risk of bias cannot be equated with a meta-analysis of observational studies at higher risk of bias. For example, a meta-analysis of 112 surgical case series showed that in patients with thoracic aortic transection, the mortality rate was significantly lower in patients who underwent endovascular repair, followed by open repair and non-operative management (9%, 19% and 46%, respectively, p<0.01). Clearly, this meta-analysis should not be on top of the pyramid similar to a meta-analysis of RCTs. After all, the evidence still consists of non-randomised studies and is likely subject to numerous confounders.

Therefore, the second modification to the pyramid is to remove systematic reviews from the top of the pyramid and use them as a lens through which other types of studies should be seen (ie, appraised and applied). The systematic review (the process of selecting the studies) and meta-analysis (the statistical aggregation that produces a single effect size) are tools to consume and apply the evidence by stakeholders.
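To make "statistical aggregation that produces a single effect size" concrete, here is a minimal sketch of one common approach, fixed-effect inverse-variance pooling of relative risks on the log scale. The article does not say which model any of the cited meta-analyses used, so the method is an assumption, and the three studies are invented numbers. The sketch also ignores heterogeneity and risk of bias, which is exactly why a pooled estimate alone does not settle how certain the evidence is.

```python
import math

def log_rr_and_variance(events_t, total_t, events_c, total_c):
    """Log relative risk and its approximate variance for one study."""
    log_rr = math.log((events_t / total_t) / (events_c / total_c))
    variance = 1 / events_t - 1 / total_t + 1 / events_c - 1 / total_c
    return log_rr, variance

def pooled_relative_risk(studies):
    """Fixed-effect inverse-variance pooling; returns (RR, lower, upper) for a 95% CI."""
    weights, weighted = [], []
    for study in studies:
        log_rr, variance = log_rr_and_variance(*study)
        weights.append(1 / variance)
        weighted.append(log_rr / variance)
    pooled = sum(weighted) / sum(weights)
    se = math.sqrt(1 / sum(weights))
    return tuple(math.exp(v) for v in (pooled, pooled - 1.96 * se, pooled + 1.96 * se))

# Hypothetical studies: (events in treatment arm, treatment arm size,
#                        events in control arm, control arm size).
studies = [(10, 100, 20, 100), (15, 150, 30, 150), (8, 80, 16, 80)]
rr, lower, upper = pooled_relative_risk(studies)
print(f"Pooled RR {rr:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

Each study is weighted by the inverse of its variance, so larger studies with more events pull the pooled estimate harder than small ones.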

Implications and limitations

Changing how systematic reviews and meta-analyses are perceived by stakeholders (patients, clinicians and stakeholders) has important implications. For example, the American Heart Association considers evidence derived from meta-analyses to have a level ‘A’ (ie, warrants the most confidence). Re-evaluation of evidence using GRADE shows that level ‘A’ evidence could have been high, moderate, low or of very low quality. 12 The quality of evidence drives the strength of recommendation, which is one of the last translational steps of research, most proximal to patient care.

One of the limitations of all ‘pyramids’ and depictions of evidence hierarchy relates to the underpinning of such schemas. The construct of internal validity may have varying definitions, or be understood differently among evidence consumers. A limitation of considering systematic reviews and meta-analyses as tools to consume evidence is that doing so may undermine their role in new discovery (eg, identifying a new side effect that was not demonstrated in individual studies 13 ).

This pyramid can also be used as a teaching tool. EBM teachers can compare it to the existing pyramids to explain how certainty in the evidence (also called quality of evidence) is evaluated. It can be used to teach how evidence-based practitioners can appraise and apply systematic reviews in practice, and to demonstrate the evolution in EBM thinking and the modern understanding of certainty in evidence.

- Leibovici L

- Agoritsas T,

- Vandvik P,

- Neumann I, et al

- Resources for Evidence-Based Practice: The 6S Pyramid. Secondary Resources for Evidence-Based Practice: The 6S Pyramid Feb 18, 2016 4:58 PM. http://hsl.mcmaster.libguides.com/ebm

- Vandenbroucke JP

- Berlin JA,

- Dechartres A,

- Altman DG,

- Trinquart L, et al

- Guyatt GH,

- Vist GE, et al

- Coburn JA,

- Coto-Yglesias F, et al

- Sultan S, et al

- Montori VM,

- Ioannidis JP, et al

- Altayar O,

- Bennett M, et al

- Nissen SE,

Contributors MHM conceived the idea and drafted the manuscript. FA helped draft the manuscript and designed the new pyramid. MA and NA helped draft the manuscript.

Competing interests None declared.

Provenance and peer review Not commissioned; externally peer reviewed.

Linked Articles

- Editorial: Pyramids are guides not rules: the evolution of the evidence pyramid. Terrence Shaneyfelt. BMJ Evidence-Based Medicine 2016; 21: 121-122. Published Online First: 12 Jul 2016. doi: 10.1136/ebmed-2016-110498

- Perspective: EBHC pyramid 5.0 for accessing preappraised evidence and guidance. Brian S Alper, R Brian Haynes. BMJ Evidence-Based Medicine 2016; 21: 123-125. Published Online First: 20 Jun 2016. doi: 10.1136/ebmed-2016-110447

Evidence – Definition, Types and Example

Definition:

Evidence is any information or data that supports or refutes a claim, hypothesis, or argument. It is the basis for making decisions, drawing conclusions, and establishing the truth or validity of a statement.

Types of Evidence

The main types of evidence are as follows:

Empirical Evidence

This type of evidence comes from direct observation or measurement, and is usually based on data collected through scientific or other systematic methods.

Expert Testimony

This is evidence provided by individuals who have specialized knowledge or expertise in a particular area, and can provide insight into the validity or reliability of a claim.

Personal Experience

This type of evidence comes from firsthand accounts of events or situations, and can be useful in providing context or a sense of perspective.

Statistical Evidence

This type of evidence involves the use of numbers and data to support a claim, and can include things like surveys, polls, and other types of quantitative analysis.

Analogical Evidence

This involves making comparisons between similar situations or cases, and can be used to draw conclusions about the validity or applicability of a claim.

Documentary Evidence

This includes written or recorded materials, such as contracts, emails, or other types of documents, that can provide support for a claim.

Circumstantial Evidence

This type of evidence supports a claim by inference from indirect facts rather than by direct observation, and can be used when direct evidence is not available.

Examples of Evidence

Here are some examples of different types of evidence that could be used to support a claim or argument:

- Empirical evidence: A study of a new drug, based on clinical trials and medical data, showing its effectiveness in treating a particular disease.

- Expert testimony: A doctor testifying in court about a patient’s medical condition or injuries.

- Personal experience: A patient sharing their experience with a particular medical treatment or therapy.

- Statistical evidence: A study showing that a particular type of cancer is more common in certain demographics or geographic areas.

- Analogical evidence: Comparing the benefits of a healthy diet and exercise to maintaining a car with regular oil changes and maintenance.

- Documentary evidence: A contract showing that two parties agreed to a particular set of terms and conditions.

- Circumstantial evidence: The presence of a suspect’s DNA at a crime scene, which suggests their involvement in the crime.

Applications of Evidence

Here are some applications of evidence:

- Law: In the legal system, evidence is used to establish facts and to prove or disprove a case. Lawyers use different types of evidence, such as witness testimony, physical evidence, and documentary evidence, to present their arguments and persuade judges and juries.

- Science: Evidence is the foundation of scientific inquiry. Scientists use evidence to support or refute hypotheses and theories, and to advance knowledge in their fields. The scientific method relies on evidence-based observations, experiments, and data analysis.

- Medicine: Evidence-based medicine (EBM) is a medical approach that emphasizes the use of scientific evidence to inform clinical decision-making. EBM relies on clinical trials, systematic reviews, and meta-analyses to determine the best treatments for patients.

- Public policy: Evidence is crucial in informing public policy decisions. Policymakers rely on research studies, evaluations, and other forms of evidence to develop and implement policies that are effective, efficient, and equitable.

- Business: Evidence-based decision-making is becoming increasingly important in the business world. Companies use data analytics, market research, and other forms of evidence to make strategic decisions, evaluate performance, and optimize operations.

Purpose of Evidence

The purpose of evidence is to support or prove a claim or argument. Evidence can take many forms, including statistics, examples, anecdotes, expert opinions, and research studies. The use of evidence is important in fields such as science, law, and journalism to ensure that claims are backed up by factual information and to make decisions based on reliable information. Evidence can also be used to challenge or question existing beliefs and assumptions, and to uncover new knowledge and insights. Overall, the purpose of evidence is to provide a foundation for understanding and decision-making that is grounded in empirical facts and data.

Characteristics of Evidence

Some characteristics of evidence are as follows:

- Relevance: Evidence must be relevant to the claim or argument it is intended to support. It should directly address the issue at hand and not be tangential or unrelated.

- Reliability: Evidence should come from a trustworthy and reliable source. The credibility of the source should be established, and the information should be accurate and free from bias.

- Sufficiency: Evidence should be sufficient to support the claim or argument. It should provide enough information to make a strong case, but not be overly repetitive or redundant.

- Validity: Evidence should be based on sound reasoning and logic. It should be based on established principles or theories, and should be consistent with other evidence and observations.

- Timeliness: Evidence should be current and up-to-date. It should reflect the most recent developments or research in the field.

- Accessibility: Evidence should be easily accessible to others who may want to review or evaluate it. It should be clear and easy to understand, and should be presented in a way that is appropriate for the intended audience.

Advantages of Evidence

The use of evidence has several advantages, including:

- Supports informed decision-making: Evidence-based decision-making enables individuals or organizations to make informed choices based on reliable information rather than assumptions or opinions.

- Enhances credibility: The use of evidence can enhance the credibility of claims or arguments by providing factual support.

- Promotes transparency: The use of evidence promotes transparency in decision-making processes by providing a clear and objective basis for decisions.

- Facilitates evaluation: Evidence-based decision-making enables the evaluation of the effectiveness of policies, programs, and interventions.

- Provides insights: The use of evidence can provide new insights and perspectives on complex issues, enabling individuals or organizations to approach problems from different angles.

- Enhances problem-solving: Evidence-based decision-making can help individuals or organizations to identify the root causes of problems and develop more effective solutions.

Limitations of Evidence

Some limitations of evidence are as follows:

- Limited availability: Evidence may not always be available or accessible, particularly in areas where research is limited or where data collection is difficult.

- Interpretation challenges: Evidence can be open to interpretation, and individuals may interpret the same evidence differently based on their biases, experiences, or values.

- Time-consuming: Gathering and evaluating evidence can be time-consuming and require significant resources, which may not always be feasible in certain contexts.

- May not apply universally: Evidence may be context-specific and may not apply universally to other situations or populations.

- Potential for bias: Even well-designed studies or research can be influenced by biases, such as selection bias, measurement bias, or publication bias.

- Ethical concerns: Evidence may raise ethical concerns, such as the use of personal data or the potential harm to research participants.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

What this handout is about

This handout will provide a broad overview of gathering and using evidence. It will help you decide what counts as evidence, put evidence to work in your writing, and determine whether you have enough evidence. It will also offer links to additional resources.

Introduction

Many papers that you write in college will require you to make an argument; this means that you must take a position on the subject you are discussing and support that position with evidence. It’s important that you use the right kind of evidence, that you use it effectively, and that you have an appropriate amount of it. If, for example, your philosophy professor objected that you used a survey of public opinion as your primary evidence in your ethics paper, you need to find out more about what philosophers count as good evidence. If your instructor has told you that you need more analysis, suggested that you’re “just listing” points or giving a “laundry list,” or asked you how certain points are related to your argument, it may mean that you can do more to fully incorporate your evidence into your argument. Comments like “for example?,” “proof?,” “go deeper,” or “expand” in the margins of your graded paper suggest that you may need more evidence. Let’s take a look at each of these issues: understanding what counts as evidence, using evidence in your argument, and deciding whether you need more evidence.

What counts as evidence?

Before you begin gathering information for possible use as evidence in your argument, you need to be sure that you understand the purpose of your assignment. If you are working on a project for a class, look carefully at the assignment prompt. It may give you clues about what sorts of evidence you will need. Does the instructor mention any particular books you should use in writing your paper or the names of any authors who have written about your topic? How long should your paper be (longer works may require more, or more varied, evidence)? What themes or topics come up in the text of the prompt? Our handout on understanding writing assignments can help you interpret your assignment. It’s also a good idea to think over what has been said about the assignment in class and to talk with your instructor if you need clarification or guidance.

What matters to instructors?

Instructors in different academic fields expect different kinds of arguments and evidence. Your chemistry paper might include graphs, charts, statistics, and other quantitative data as evidence, whereas your English paper might include passages from a novel, examples of recurring symbols, or discussions of characterization in the novel. Consider what kinds of sources and evidence you have seen in course readings and lectures. You may wish to see whether the Writing Center has a handout regarding the specific academic field you’re working in, for example literature, sociology, or history.

What are primary and secondary sources?