80+ Rewards and Recognition Speech Examples for Inspiration

Discover impactful rewards and recognition speech examples. Inspire your team with words of appreciation. Elevate your recognition game today!

Jan 25th 2024 • 26 min read

In today's competitive corporate landscape, where employee motivation and engagement are crucial for success, rewards and recognition speeches have emerged as powerful tools to inspire employees and show appreciation for their efforts. Whether it's to celebrate milestones, acknowledge outstanding performance, or simply boost morale, a well-crafted rewards and recognition speech can leave a lasting impact on the recipients.

If you're searching for the perfect blend of words to uplift and motivate your team, look no further. In this blog, we will delve into the art of rewards and recognition speeches, exploring examples that encapsulate the essence of appreciation and inspire employees to reach new heights of success.

Whether you're a team leader, manager, or someone looking to express your appreciation to a colleague, our blog will provide you with a treasure trove of rewards and recognition speech examples that are sure to captivate and inspire. So, grab a cup of coffee, sit back, and let us guide you through the world of appreciation and recognition in the workplace.

What Is A Rewards and Recognition Speech?

A rewards and recognition speech is a formal address given to acknowledge and appreciate individuals or groups for their exceptional achievements or contributions. It serves as a platform to publicly recognize the efforts and accomplishments of deserving individuals, boosting morale and fostering a positive work culture. This type of speech is commonly delivered during award ceremonies, employee appreciation events, or annual gatherings where appreciation and recognition are key objectives.

A well-crafted rewards and recognition speech celebrates the recipients' accomplishments, highlights their impact on the organization, and inspires others to strive for similar success. In essence, it is an opportunity to acknowledge, motivate, and express gratitude towards individuals who have made a significant difference in their field or organization.


How Rewards and Recognition Impact Employee Motivation and Engagement

Employee motivation and engagement are crucial factors in determining the success of a company. One effective way to enhance motivation and engagement is through rewards and recognition. By acknowledging and appreciating employees' efforts and accomplishments, organizations can create a positive work environment that encourages productivity and fosters loyalty. We will explore how rewards and recognition can impact employee motivation and engagement.

1. Increased Job Satisfaction

Rewarding and recognizing employees for their hard work not only boosts their confidence but also increases their overall job satisfaction. When employees feel valued and appreciated, they are more likely to enjoy their work and feel a sense of fulfillment in their roles. This satisfaction translates into higher motivation and engagement, as employees are more committed to their tasks and strive to exceed expectations.

2. Improved Performance

Rewards and recognition serve as powerful motivators that drive employees to perform at their best. When employees know that their efforts will be acknowledged and rewarded, they are more likely to go the extra mile and demonstrate exceptional performance. As a result, organizations witness improved productivity, increased efficiency, and higher quality outputs. By recognizing and rewarding outstanding performance, companies can create a culture of excellence and continuous improvement.

3. Enhanced Employee Morale

Recognition plays a significant role in boosting employee morale. When employees receive acknowledgment for their achievements, it reinforces their belief in their capabilities and contributions. This positive reinforcement not only motivates employees to continue performing well but also creates a supportive and encouraging work environment. High employee morale leads to increased job satisfaction, lower turnover rates, and a stronger sense of belonging within the organization.

4. Strengthened Employee Engagement

Rewards and recognition contribute to higher levels of employee engagement. Engaged employees are those who are fully committed to their work and actively contribute to the success of the organization. When employees feel recognized and valued, they develop a stronger emotional connection to their work and the company's goals. This emotional investment drives their engagement, leading to increased productivity, creativity, and innovation.

5. Retention and Attraction of Talent

An effective rewards and recognition program can significantly impact employee retention and attraction. Recognized and rewarded employees are more likely to remain loyal to their organization and less likely to seek employment elsewhere. In addition, a positive work culture that emphasizes rewards and recognition becomes an attractive selling point for potential candidates. By showcasing a commitment to employee motivation and engagement, organizations can attract top talent, reduce turnover costs, and maintain a highly skilled workforce.

Rewards and recognition have a profound impact on employee motivation and engagement. By implementing a comprehensive program that appreciates and acknowledges employees' efforts, organizations can create a work environment that fosters satisfaction, productivity, and loyalty. Investing in rewards and recognition not only benefits individual employees but also contributes to the long-term success of the organization as a whole.

80 Rewards and Recognition Speech Examples

1. Celebrating Team Milestones

Recognizing and rewarding the achievements of individual team members or the entire team when they reach significant milestones, such as completing a project, meeting a target, or reaching a certain number of sales.

2. Employee of the Month

Recognizing outstanding employees by selecting one as the Employee of the Month, based on their exceptional performance, dedication, and positive impact on the organization.

3. Sales Contest Winners

Acknowledging the top performers in sales contests and rewarding them with incentives, such as cash bonuses, gift cards, or extra vacation days.

4. Most Improved Employee

Recognizing employees who have shown significant improvement in their performance, skills, or productivity, and highlighting their dedication to personal growth and development.

5. Customer Service Heroes

Acknowledging employees who have gone above and beyond to provide exceptional customer service, resolving challenging situations, and ensuring customer satisfaction.

6. Leadership Excellence

Recognizing managers or team leaders who have demonstrated exceptional leadership skills, inspiring and motivating their team members to achieve outstanding results.

7. Innovation Champions

Celebrating employees who have introduced innovative ideas, processes, or solutions that have had a positive impact on the organization, encouraging a culture of creativity and continuous improvement.

8. Outstanding Team Player

Recognizing individuals who consistently contribute to the success of their team, displaying a collaborative mindset, and supporting their colleagues in achieving common goals.

9. Safety Initiatives

Acknowledging employees who have taken proactive measures to ensure a safe working environment, promoting safety protocols, and reducing accidents or injuries.

10. Excellence in Problem-Solving

Recognizing employees who have demonstrated exceptional problem-solving skills, showcasing their ability to analyze complex situations and find effective solutions.

11. Mentorship and Coaching

Celebrating individuals who have dedicated their time and expertise to mentor and coach their colleagues, supporting their professional growth and development.

12. Going the Extra Mile

Recognizing employees who consistently go above and beyond their regular duties, displaying exceptional commitment and dedication to their work.

13. Team Building Champions

Acknowledging individuals who have organized and led successful team-building activities, fostering a positive team spirit and enhancing collaboration within the organization.

14. Employee Wellness Advocates

Recognizing employees who have actively promoted and contributed to the well-being of their colleagues, encouraging a healthy work-life balance and creating a positive work environment.

15. Community Service

Celebrating employees who have actively participated in community service initiatives, volunteering their time and skills to make a positive impact on society.

16. Outstanding Project Management

Recognizing individuals who have demonstrated exceptional project management skills, successfully leading and delivering complex projects on time and within budget.

17. Customer Appreciation

Acknowledging employees who have received positive feedback or testimonials from customers, highlighting their exceptional service and dedication to customer satisfaction.

18. Quality Excellence

Recognizing employees who consistently deliver high-quality work, ensuring that the organization maintains its standards of excellence and customer satisfaction.

19. Team Spirit

Celebrating the unity and camaraderie within a team, acknowledging their strong bond and collaborative efforts in achieving shared goals.

20. Creativity and Innovation

Recognizing employees who have shown creativity and innovative thinking in their work, introducing new ideas, and driving positive change within the organization.

21. Initiative and Proactivity

Acknowledging employees who take the initiative and demonstrate a proactive approach to their work, identifying opportunities for improvement and taking action to implement them.

22. Cross-Functional Collaboration

Celebrating individuals who have successfully collaborated with colleagues from different departments or teams, fostering a culture of teamwork and achieving synergy in their projects.

23. Learning and Development Champions

Recognizing employees who have shown a commitment to their own learning and development, actively seeking opportunities to acquire new skills and knowledge.

24. Outstanding Customer Retention

Acknowledging employees who have played a crucial role in ensuring customer loyalty and retention, consistently delivering exceptional service and building strong relationships with customers.

25. Adaptability and Flexibility

Celebrating employees who have demonstrated adaptability and flexibility in their work, successfully navigating through change and embracing new challenges.

26. Continuous Improvement

Recognizing individuals who consistently seek ways to improve processes, systems, or workflows, contributing to the organization's overall efficiency and effectiveness.

27. Employee Engagement Advocates

Acknowledging employees who have actively promoted employee engagement initiatives, creating a positive and motivating work environment.

28. Exceptional Time Management

Recognizing employees who have demonstrated exceptional time management skills, effectively prioritizing tasks and meeting deadlines.

29. Resilience and Perseverance

Celebrating individuals who have shown resilience and perseverance in the face of challenges or setbacks, inspiring others to overcome obstacles and achieve success.

30. Teamwork in Crisis

Acknowledging the teamwork and collaboration displayed by employees during a crisis or challenging situation, highlighting their ability to work together under pressure.

31. Leadership in Diversity and Inclusion

Recognizing leaders who have actively promoted diversity and inclusion within the organization, fostering an inclusive and equitable work environment.

32. Outstanding Problem-Solving

Celebrating employees who consistently demonstrate exceptional problem-solving skills, showcasing their ability to analyze complex situations and find innovative solutions.

33. Excellence in Customer Retention

Recognizing employees who have played a crucial role in ensuring customer loyalty and satisfaction, consistently delivering exceptional service and building strong relationships.

34. Inspirational Leadership

Acknowledging leaders who have inspired and motivated their team members to achieve outstanding results, displaying exceptional leadership qualities.

35. Customer Service Excellence

Celebrating employees who consistently provide exceptional customer service, going above and beyond to meet customer needs and exceed expectations.

36. Collaboration and Teamwork

Recognizing individuals or teams who have demonstrated outstanding collaboration and teamwork, achieving common goals through effective communication and cooperation.

37. Employee Empowerment

Acknowledging employees who have actively empowered their colleagues, fostering a culture of autonomy, trust, and accountability within the organization.

38. Sales Achievement Awards

Celebrating top performers in sales, acknowledging their exceptional sales skills, and their contribution to the organization's growth and success.

39. Learning and Development Pioneers

Recognizing employees who have taken the initiative in their own learning and development, actively seeking opportunities to acquire new skills and knowledge.

40. Innovation and Creativity

Celebrating individuals who have introduced innovative ideas, processes, or solutions that have had a positive impact on the organization, encouraging a culture of creativity and continuous improvement.

41. Leadership in Crisis

Acknowledging leaders who have displayed exceptional leadership skills during a crisis or challenging situation, guiding their team members and making effective decisions under pressure.

42. Outstanding Customer Service

Recognizing employees who consistently provide exceptional customer service, demonstrating a commitment to customer satisfaction and building strong customer relationships.

43. Collaboration Across Departments

Celebrating individuals or teams who have successfully collaborated with colleagues from different departments, fostering cross-functional synergy and achieving shared goals.

44. Employee Growth and Development

Acknowledging employees who have shown dedication to their own growth and development, actively seeking opportunities to enhance their skills and knowledge.

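45. Quality Excellence

Recognizing employees who consistently deliver high-quality work, ensuring that the organization maintains its standards of excellence and customer satisfaction.

46. Resilience and Adaptability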

Celebrating individuals who have demonstrated resilience and adaptability in the face of challenges or change, inspiring others to overcome obstacles and embrace new opportunities.

47. Leadership in Employee Engagement

Acknowledging leaders who have actively promoted employee engagement initiatives, creating a positive and motivating work environment.

48. Outstanding Problem-Solving

Recognizing employees who consistently demonstrate exceptional problem-solving skills, showcasing their ability to analyze complex situations and find innovative solutions.

49. Customer Appreciation

Celebrating employees who have received positive feedback or testimonials from customers, highlighting their exceptional service and commitment to customer satisfaction.

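50. Teamwork in Crisis

Acknowledging the teamwork and collaboration displayed by employees during a crisis or challenging situation, highlighting their ability to work together under pressure.

51. Leadership in Diversity and Inclusion

Recognizing leaders who have actively promoted diversity and inclusion within the organization, fostering an inclusive and equitable work environment.

52. Inspirational Leadership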

Celebrating leaders who have inspired and motivated their team members to achieve outstanding results, displaying exceptional leadership qualities.

53. Exceptional Time Management

Acknowledging employees who have demonstrated exceptional time management skills, effectively prioritizing tasks and meeting deadlines.

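54. Continuous Improvement

Recognizing individuals who consistently seek ways to improve processes, systems, or workflows, contributing to the organization's overall efficiency and effectiveness.

55. Employee Empowerment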

Celebrating employees who have actively empowered their colleagues, fostering a culture of autonomy, trust, and accountability within the organization.

56. Sales Achievement Awards

Recognizing top performers in sales, acknowledging their exceptional sales skills, and their contribution to the organization's growth and success.

57. Learning and Development Pioneers

Celebrating employees who have taken the initiative in their own learning and development, actively seeking opportunities to acquire new skills and knowledge.

58. Innovation and Creativity

Acknowledging individuals who have introduced innovative ideas, processes, or solutions that have had a positive impact on the organization, encouraging a culture of creativity and continuous improvement.

59. Leadership in Crisis

Recognizing leaders who have displayed exceptional leadership skills during a crisis or challenging situation, guiding their team members and making effective decisions under pressure.

60. Outstanding Customer Service

Celebrating employees who consistently provide exceptional customer service, demonstrating a commitment to customer satisfaction and building strong customer relationships.

61. Collaboration Across Departments

Recognizing individuals or teams who have successfully collaborated with colleagues from different departments, fostering cross-functional synergy and achieving shared goals.

62. Employee Growth and Development

Celebrating employees who have shown dedication to their own growth and development, actively seeking opportunities to enhance their skills and knowledge.

63. Quality Excellence

Acknowledging employees who consistently deliver high-quality work, ensuring that the organization maintains its standards of excellence and customer satisfaction.

64. Resilience and Adaptability

Recognizing individuals who have demonstrated resilience and adaptability in the face of challenges or change, inspiring others to overcome obstacles and embrace new opportunities.

65. Leadership in Employee Engagement

Celebrating leaders who have actively promoted employee engagement initiatives, creating a positive and motivating work environment.

66. Outstanding Problem-Solving

Acknowledging employees who consistently demonstrate exceptional problem-solving skills, showcasing their ability to analyze complex situations and find innovative solutions.

67. Customer Appreciation

Recognizing employees who have received positive feedback or testimonials from customers, highlighting their exceptional service and commitment to customer satisfaction.

68. Teamwork in Crisis

Celebrating the teamwork and collaboration displayed by employees during a crisis or challenging situation, highlighting their ability to work together under pressure.

69. Leadership in Diversity and Inclusion

Acknowledging leaders who have actively promoted diversity and inclusion within the organization, fostering an inclusive and equitable work environment.

70. Inspirational Leadership

Recognizing leaders who have inspired and motivated their team members to achieve outstanding results, displaying exceptional leadership qualities.

71. Exceptional Time Management

Celebrating employees who have demonstrated exceptional time management skills, effectively prioritizing tasks and meeting deadlines.

72. Continuous Improvement

Acknowledging individuals who consistently seek ways to improve processes, systems, or workflows, contributing to the organization's overall efficiency and effectiveness.

73. Employee Empowerment

Recognizing employees who have actively empowered their colleagues, fostering a culture of autonomy, trust, and accountability within the organization.

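74. Sales Achievement Awards

Celebrating top performers in sales, acknowledging their exceptional sales skills, and their contribution to the organization's growth and success.

75. Learning and Development Pioneers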

Acknowledging employees who have taken the initiative in their own learning and development, actively seeking opportunities to acquire new skills and knowledge.

76. Innovation and Creativity

Recognizing individuals who have introduced innovative ideas, processes, or solutions that have had a positive impact on the organization, encouraging a culture of creativity and continuous improvement.

77. Leadership in Crisis

Celebrating leaders who have displayed exceptional leadership skills during a crisis or challenging situation, guiding their team members and making effective decisions under pressure.

78. Outstanding Customer Service

Acknowledging employees who consistently provide exceptional customer service, demonstrating a commitment to customer satisfaction and building strong customer relationships.

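79. Collaboration Across Departments

Recognizing individuals or teams who have successfully collaborated with colleagues from different departments, fostering cross-functional synergy and achieving shared goals.

80. Employee Growth and Development

Celebrating employees who have shown dedication to their own growth and development, actively seeking opportunities to enhance their skills and knowledge.

The Importance of a Rewards and Recognition Speech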

In the business world, rewards and recognition play a crucial role in motivating employees and fostering a positive company culture. While giving a gift with a note may be a thoughtful gesture, delivering a rewards and recognition speech adds a personal touch and amplifies the impact of the recognition. This is especially significant for major employee rewards, such as a 10-year anniversary or other significant recognition events.

1. Personal Connection and Appreciation

A rewards and recognition speech allows the business owner to personally connect with the employee and express gratitude for their dedication and achievements. By taking the time to deliver a speech, the business owner demonstrates that they genuinely value and appreciate the employee's contributions. This personal touch fosters a deeper sense of connection and appreciation within the company culture.

2. Public Acknowledgment and Inspiration

When a rewards and recognition speech is delivered in a public setting, such as a company-wide event or meeting, it not only acknowledges the efforts of the individual employee but also inspires and motivates others. Seeing their colleagues being recognized and appreciated encourages other employees to strive for excellence and contribute to the success of the company. It creates a positive competitive environment where employees are motivated to perform their best.

3. Reinforcement of Company Values

A rewards and recognition speech provides an opportunity for the business owner to reinforce the company's values and goals. By highlighting the employee's achievements and how they align with the company's mission, vision, and values, the speech emphasizes the importance of these core principles. This reinforcement helps to solidify a positive company culture that is built on shared values and a sense of purpose.

4. Celebration and Team Building

Delivering a rewards and recognition speech creates a celebratory atmosphere that brings employees together as a team. It showcases the collective achievements of the company and encourages a sense of camaraderie and unity. Celebrating accomplishments through a speech allows employees to feel proud of their individual and team successes, which further strengthens the bonds within the organization.

5. Emotional Connection and Employee Engagement

A rewards and recognition speech taps into the emotional aspect of recognition. It goes beyond a simple gift and note, as it allows the business owner to communicate genuine appreciation and admiration for the employee's contributions. This emotional connection enhances employee engagement and makes employees feel valued and invested in the company's success. Engaged employees are more likely to be loyal, productive, and committed to the organization.

Delivering a rewards and recognition speech is a powerful way for business owners to show appreciation and reinforce a positive company culture. It establishes a personal connection, inspires others, reinforces company values, builds team spirit, and fosters employee engagement. By recognizing and celebrating employees through a speech, business owners can create a work environment that thrives on recognition, motivation, and a shared sense of purpose.

How To Implement A Successful Rewards and Recognition Program

Creating and implementing a rewards and recognition program in a company can have numerous benefits, such as increasing employee motivation, improving performance, and enhancing employee satisfaction. It is essential to approach the implementation strategically to ensure its effectiveness. Here are some effective strategies for implementing a successful rewards and recognition program:

1. Define Clear Objectives and Goals

Before designing your rewards and recognition program, it is crucial to define clear objectives and goals. What do you want to achieve with the program? Are you aiming to boost employee morale, increase productivity, or enhance teamwork? Clearly defining your objectives will help you tailor the program to meet specific needs and ensure that it aligns with the company's overall goals.

2. Involve Employees in the Process

To make your rewards and recognition program truly effective, involve employees in the process. Conduct surveys or focus groups to gather their input and preferences. By involving employees, you can ensure that the program resonates with them, making it more meaningful and valuable. Involving employees in the decision-making process can foster a sense of ownership and engagement.

3. Develop a Variety of Recognition Initiatives

To cater to the diverse needs and preferences of your employees, it is essential to develop a variety of recognition initiatives. Consider implementing both formal and informal recognition programs. Formal recognition may include annual awards ceremonies or performance-based bonuses, while informal recognition can involve small gestures like personalized thank-you notes or shout-outs during team meetings. By offering a range of initiatives, you can ensure that different types of accomplishments are acknowledged and valued.

4. Make the Program Transparent and Equitable

Transparency and equity are crucial in a rewards and recognition program. Clearly communicate the criteria for receiving recognition and the rewards associated with it. Ensure that the criteria are fair, consistent, and unbiased. This transparency will promote a sense of fairness and prevent any perception of favoritism or inequality within the organization.

5. Create a Culture of Appreciation

Implementing a rewards and recognition program is not enough; it must be supported by a culture of appreciation. Encourage managers and leaders to regularly acknowledge and appreciate their team members' efforts. Foster a work environment where recognition is not limited to the formal program but becomes a natural part of everyday interactions. This culture of appreciation will amplify the impact of the formal program and create a positive and motivating work atmosphere.

6. Evaluate and Refine

Continuous evaluation and refinement are essential for the long-term success of a rewards and recognition program. Regularly collect feedback from employees and managers to identify areas of improvement. Analyze the effectiveness of different initiatives and adjust them as necessary. By regularly evaluating and refining the program, you can ensure that it remains relevant, impactful, and aligned with the evolving needs of the organization.

Implementing a rewards and recognition program requires thoughtful planning and execution. By following these strategies, you can create a program that not only rewards and recognizes employees' contributions but also inspires and motivates them to achieve their best.

10 Reasons for Rewards and Recognition & How To Determine Who To Reward

1. Boost Employee Morale

Rewarding and recognizing employees for their hard work can significantly boost morale. It shows employees that their efforts are valued and appreciated, which in turn motivates them to continue performing at their best.

2. Improve Employee Engagement

When employees feel recognized and rewarded, they are more likely to be engaged in their work. Engaged employees are more productive, creative, and willing to go above and beyond to achieve company goals.

3. Increase Employee Retention

Recognizing and rewarding employees for their contributions can help increase employee retention. Employees who feel valued are more likely to stay with the company, reducing turnover rates and the associated costs of hiring and training new employees.

4. Foster a Positive Work Culture

Implementing a rewards and recognition program can help foster a positive work culture. When employees see their peers being acknowledged for their achievements, it creates a supportive and collaborative environment where everyone strives for success.

5. Reinforce Desired Behaviors

Rewards and recognition can be used to reinforce desired behaviors and values within the organization. Publicly acknowledging and rewarding employees who exemplify these behaviors encourages others to follow suit.

6. Encourage Continuous Improvement

Recognizing employees for their good work encourages a culture of continuous improvement. It motivates employees to seek out opportunities to enhance their skills and knowledge, leading to personal and professional growth.

7. Enhance Team Collaboration

Rewarding and recognizing the efforts of individuals within a team can strengthen team collaboration. It fosters a sense of camaraderie and encourages teamwork, as employees understand the importance of supporting one another to achieve common goals.

8. Increase Customer Satisfaction

When employees feel recognized and appreciated, they are more likely to provide excellent customer service. Happy and engaged employees create positive interactions with customers, leading to increased customer satisfaction and loyalty.

9. Drive Innovation

Rewards and recognition can also drive innovation within an organization. When employees are acknowledged for their innovative ideas or problem-solving skills, it fosters a culture of creativity and encourages others to think outside the box.

10. Attract Top Talent

A well-established rewards and recognition program can help attract top talent to the company. A company that showcases its commitment to valuing and rewarding its employees becomes an attractive proposition for potential candidates.

How To Determine Who To Reward as a Business Owner

1. Performance Metrics

Use performance metrics such as sales targets, customer satisfaction ratings, or project completion rates to identify employees who have consistently exceeded expectations.

2. Peer Feedback

Seek feedback from colleagues and team members to identify individuals who have made significant contributions to the team or have gone above and beyond their assigned duties.

3. Customer Feedback

Consider customer feedback when determining who to reward. Look for employees who have received positive feedback or have gone the extra mile to ensure customer satisfaction.

4. Quality of Work

Consider the quality of work produced by employees. Reward those who consistently deliver high-quality work and show attention to detail.

5. Leadership and Initiative

Identify employees who display leadership qualities and take initiative in solving problems or improving processes. These individuals often have a positive impact on the team and deserve recognition.

6. Innovation and Creativity

Recognize employees who have demonstrated innovation and creativity in their work. These individuals contribute fresh ideas and solutions that drive the company forward.

7. Collaboration and Teamwork

Acknowledge employees who excel at collaboration and teamwork. These individuals build strong relationships with their colleagues and contribute to a positive and productive work environment.

8. Longevity and Seniority

Consider rewarding employees based on their longevity and seniority within the company. This recognizes their loyalty and commitment to the organization over the years.

9. Going Above and Beyond

Identify employees who consistently go above and beyond their job responsibilities. Reward those who have taken on additional tasks, volunteered for extra projects, or contributed to the company's success in exceptional ways.

10. Personal Development and Growth

Recognize employees who actively seek opportunities for personal development and growth. Reward those who have acquired new skills or certifications that benefit both themselves and the company.

By considering these factors, business owners can fairly determine who to reward and ensure that recognition is given to those who truly deserve it.

Potential Challenges To Avoid When Implementing A Rewards and Recognition Program

1. Lack of clarity and consistency in criteria

The success of a rewards and recognition program depends on clearly defined and consistent criteria for determining who is eligible for recognition and what types of rewards are available. Failing to establish and communicate these criteria can lead to confusion and dissatisfaction among employees. It is essential to ensure that the criteria are fair, transparent, and aligned with organizational goals.

2. Inadequate communication and feedback

Effective communication is crucial when implementing a rewards and recognition program. Employees need to understand the purpose of the program, how it works, and what is expected of them to be eligible for recognition. Regular feedback is also vital to ensure that employees understand why they are being recognized and to reinforce positive behaviors. Without proper communication and feedback, employees may feel undervalued or uncertain about the program's objectives.

3. Limited variety and personalization of rewards

Offering a limited range of rewards or failing to personalize them to individual preferences can diminish the impact of a rewards and recognition program. Different employees may value different types of rewards, whether it's financial incentives, professional development opportunities, or public recognition. It is important to consider individual preferences and offer a variety of rewards that align with employees' needs and aspirations.

4. Lack of alignment with organizational values

A rewards and recognition program should align with the core values and goals of an organization. If the program does not reflect the organization's values or reinforce behaviors that contribute to its success, it may be perceived as inauthentic or disconnected from the broader objectives. It is essential to design a program that supports the desired culture and drives employee engagement and performance in a way that aligns with the organization's mission and values.

5. Failure to recognize team efforts

While recognizing individual achievements is important, it is equally crucial to acknowledge and reward team accomplishments. Neglecting to recognize the contributions of teams can create a sense of competition and undermine collaboration, which are essential for overall organizational success. Incorporate team-based rewards and recognition initiatives to foster a sense of camaraderie and motivate collective efforts.

6. Inconsistent and infrequent recognition

Recognition should be timely and consistent to be effective. Delayed or infrequent recognition can diminish its impact and may lead to a decrease in employee motivation. Establish a regular cadence for recognition and ensure that it is provided promptly when deserved. Consistency in recognizing achievements will help reinforce positive behaviors and maintain employee engagement.

7. Lack of management support and involvement

The success of a rewards and recognition program relies heavily on the support and involvement of management. If leaders do not actively participate or demonstrate enthusiasm for the program, employees may perceive it as insignificant or insincere. It is crucial to engage managers at all levels and empower them to recognize and reward employees' achievements. Managers should serve as role models and champions of the program to foster a culture of appreciation and recognition.

Implementing a rewards and recognition program can be a powerful tool for motivating employees, increasing engagement, and driving organizational success. By addressing and avoiding these potential challenges and pitfalls, organizations can create a program that effectively recognizes and rewards employees for their contributions and accomplishments.

Best Practices for Implementing A Rewards and Recognition Program

Implementing a rewards and recognition program is a crucial step in fostering employee engagement, motivation, and loyalty within an organization. It requires careful planning and execution to ensure its effectiveness. We will explore the best practices for implementing a successful rewards and recognition program.

1. Clearly Define Program Objectives

Before implementing a rewards and recognition program, it is essential to define clear objectives. This involves identifying the behaviors, achievements, or contributions that will be rewarded, as well as the desired outcomes of the program. By clearly defining program objectives, organizations can align the program with their overall business goals and ensure its relevance and effectiveness.

2. Align Rewards with Employee Preferences

To ensure the success of a rewards and recognition program, it is important to align the rewards with the preferences and aspirations of employees. Conducting surveys or focus groups can help gather employee feedback and identify the types of rewards that would motivate and resonate with them the most. This could include monetary incentives, non-monetary rewards, or a combination of both.

3. Make the Recognition Timely and Specific

Recognition should be timely and specific to have a lasting impact on employee motivation and morale. Recognize and reward employees promptly after they have demonstrated the desired behaviors or accomplishments, and name the particular actions or contributions that earned the recognition. This helps reinforce desired behaviors and demonstrates the value placed on those actions.

4. Foster a Culture of Peer-to-Peer Recognition

In addition to formal recognition from managers or supervisors, organizations should encourage peer-to-peer recognition. This creates a positive and inclusive work environment where employees feel valued and appreciated by their colleagues. Implementing a platform or system for employees to easily recognize and appreciate each other's efforts can enhance teamwork, collaboration, and overall employee satisfaction.

5. Communicate and Promote the Program

Effective communication and promotion of the rewards and recognition program are essential for its success. Organizations should clearly communicate the program's objectives, eligibility criteria, and rewards to all employees. This can be done through email announcements, intranet postings, or even in-person meetings. Regular reminders and updates about the program can help maintain awareness and encourage participation.

6. Ensure Fairness and Transparency

A successful rewards and recognition program should be perceived as fair and transparent by employees. The criteria for eligibility and selection of recipients should be clearly communicated and consistently applied. To build trust and credibility, it is important to ensure that the program is free from favoritism or bias. Regular evaluations of the program's effectiveness and fairness can help identify any areas for improvement.

7. Measure and Track Results

To evaluate the effectiveness of a rewards and recognition program, it is important to measure and track its results. This can be done through employee surveys, performance metrics, or feedback sessions. By analyzing the data, organizations can identify any gaps or areas for improvement and make necessary adjustments to enhance the program's impact.

By following these best practices, organizations can implement a rewards and recognition program that effectively motivates and engages employees. This, in turn, leads to increased productivity, employee satisfaction, and overall organizational success. Implementing a well-designed program that aligns with the organization's goals and employee preferences is crucial for achieving these desired outcomes.

Find Meaningful Corporate Gifts for Employees With Ease with Giftpack

In today's world, where connections are made across borders and cultures, the act of gift-giving has evolved into a meaningful gesture that transcends mere material objects. Giftpack, a pioneering platform in the realm of corporate gifting, understands the importance of personalized and impactful gifts that can forge and strengthen relationships.

Simplifying the Corporate Gifting Process

The traditional approach to corporate gifting often involves hours of deliberation, browsing through countless options, and struggling to find the perfect gift that truly resonates with the recipient. Giftpack recognizes this challenge and aims to simplify the corporate gifting process for individuals and businesses alike. By leveraging the power of technology and their custom AI algorithm, Giftpack offers a streamlined and efficient solution that takes the guesswork out of gift selection.

Customization at its Best

One of the key features that sets Giftpack apart is their ability to create highly customized scenario swag box options for each recipient. They achieve this by carefully considering the individual's basic demographics, preferences, social media activity, and digital footprint. This comprehensive approach ensures that every gift is tailored to the recipient's unique personality and tastes, enhancing the overall impact and meaning behind the gesture.

A Vast Catalog of Global Gifts

Giftpack boasts an extensive catalog of over 3.5 million products from around the world, with new additions constantly being made. This vast selection allows Giftpack to cater to a wide range of preferences and interests, ensuring that there is something for everyone. Whether the recipient is an employee, a customer, a VIP client, a friend, or a family member, Giftpack has the ability to curate the most fitting gifts that will leave a lasting impression.

User-Friendly Platform and Global Delivery

Giftpack understands the importance of convenience and accessibility, which is why they have developed a user-friendly platform that is intuitive and easy to navigate. This ensures a seamless experience for both individuals and businesses, saving them time and effort in the gift selection process. Giftpack offers global delivery, allowing gifts to be sent to recipients anywhere in the world. This global reach further reinforces their commitment to connecting people through personalized gifting.

Meaningful Connections Across the Globe

At its core, Giftpack's mission is to foster meaningful connections through the power of personalized gifting. By taking into account the recipient's individuality and preferences, Giftpack ensures that each gift is a reflection of thoughtfulness and care. Whether it's strengthening relationships with employees, delighting customers, or expressing gratitude to valued clients, Giftpack enables individuals and businesses to make a lasting impact on those who matter most.

In a world where personalization and meaningful connections are highly valued, Giftpack stands out as a trailblazer in revolutionizing the corporate gifting landscape. With their innovative approach, vast catalog of global gifts, user-friendly platform, and commitment to personalized experiences, Giftpack is transforming the way we think about rewards and recognition.

About Giftpack

Giftpack's AI-powered solution simplifies the corporate gifting process and amplifies the impact of personalized gifts. We're crafting memorable touchpoints by sending personalized gifts selected out of a curated pool of 3 million options with just one click. Our AI technology efficiently analyzes each recipient's social media, cultural background, and digital footprint to customize gift options at scale. We take care of generating, ordering, and shipping gifts worldwide. We're transforming the way people build authentic business relationships by sending smarter gifts faster with gifting CRM.

How To Give a Speech of Recognition

- By: Amy Boone

Your boss sends you an email to say she’ll be dropping by your office later that afternoon to talk about the employee of the year award. You start to get excited, thinking about how hard you’ve worked and how nice it would be to get recognized. However, when your boss arrives, she says, “I know you are a great speaker, so I’d like you to be the one to present the employee of the year award at our company banquet in December. I’ll already be speaking during the ceremony a lot, so I’d like to feature other employees, and I think you’d be great at it.” Your excitement falters. So you aren’t up for the award after all. You are just delivering a speech of recognition. But, hey, at least your presentation skills got your boss’s attention.

Sometimes you aren’t the main event or keynote. Like in the scenario above, sometimes you’ll be asked to give another type of speech. But presenting someone else with an award or honor is still an important task. Here are 3 tips for delivering a great speech of recognition.

1. Get the Audience’s Attention

Many times a speech of recognition follows something else. It could be dinner, or another award presentation, or a longer message. At any rate, part of your job is to transition from whatever has been happening to the award presentation. That means you’ll need to get the audience’s attention. This can be done many ways, but here are two of our favorites.

- Take the stage and wait a few moments. Dr. Alex Lickerman says when we use silence strategically, it makes us appear more powerful and charismatic. Both of these can get the attention of our audience. So when you pause, people will try to figure out why. And so your silence effectively captures the attention of the crowd.

- Start with a story. Whenever a speaker begins a story, the audience tends to perk up. It’s human nature to not want to miss a story that is being told. And don’t announce that you are going to tell a story. Just jump right in. This is called a jump start, and it’s a great way to capture attention fast.

2. Know the Recipient

If the whole purpose of the speech of recognition is to shine the spotlight on someone else’s achievements, it helps to know that someone else. You can only give a great speech honoring them if you know how they would like to be honored. For example, you wouldn’t want to lightly roast someone who may be offended or who dislikes being the center of attention. So do a little research. Ask the recipient’s family and friends what makes him/her feel special. Get to know the personality of the recipient a little bit. Is this person more reserved or someone who is boisterous and loves to joke? Match the tone and content of your speech of recognition to the recipient’s personality. And aim to meet all 5 of what Marc Junele calls the characteristics of effective praise. Make it personal, appropriate, specific, timely, and authentic.

3. Show, Don’t Tell

No one wants to sit and listen to a long list of qualifications. So instead of telling why the recipient deserves the award or honor, show it. Use a story to illustrate or paint a mental picture during your speech of recognition. One of the best examples of this I’ve seen happened during the National Funeral Service honoring former President Ronald Reagan. During his eulogy, former President George H. W. Bush said he learned a lot about decency from Reagan. He then told the story of the time he went to visit Reagan after he had been shot. When he entered the hospital room, he found Reagan in his hospital gown, on the floor wiping up water that he had spilled because he was worried his nurse would get blamed for it and get in trouble. It’s one thing to say someone is humble and decent. It’s quite another to show it with a story.

While you are preparing your speech to recognize someone else, remember this: every chance you get to stand up and present is a chance to hone your speaking skills, whether you are the keynote or not.

© 2006-2024 Ethos3 – An Award Winning Presentation Design and Training Company ALL RIGHTS RESERVED

Use These Employee Appreciation Speech Examples In 2024 To Show Your Team You Care

The simple act of saying “thank you” does wonders.

Yet sometimes, those two words alone don’t seem to suffice. Sometimes your team made such a difference, and your gratitude is so profound, that a pat on the back just isn’t enough.

Because appreciation is more than saying thank you. It’s about demonstrating that your team is truly seen and heard by thanking them for specific actions. It’s about showing that you understand and empathize with the struggles your team faces every day. And it’s about purpose too. True appreciation connects your team’s efforts back to a grand vision and mission.

Let’s set aside the standard definition of appreciation for a second and take a look at the financial definition.

According to Investopedia,

“Appreciation is an increase in the value of an asset over time.”

In the workplace, appreciation increases the value of your most important assets, your employees, over time.

So it’s time to diversify your portfolio of reliable tips and go-to words of wisdom for expressing your undying appreciation. After all, you diversify your portfolio of investments, and really, workplace appreciation is an investment.

Here are some ways appreciation enhances employee relations:

- Appreciation makes employees stick around. In fact, statistics suggest that a lack of appreciation is the main driver of employee turnover, which costs companies an average of about $15,000 per worker.

- Appreciation reinforces employees’ understanding of their roles and expectations, which drives engagement and performance.

- Appreciation builds a strong company culture that is magnetic to both current and prospective employees.

- Appreciation might generate positive long-term mental effects for both the giver and the receiver.

- Appreciation motivates employees. One experiment showed that a few simple words of appreciation compelled employees to make more fundraising calls.

Some of the most successful entrepreneurs in American business built companies, and lasting legacies, by developing employees through the simple act of appreciation.

Charles M. Schwab, the steel executive who led Bethlehem Steel, once said:

“I consider my ability to arouse enthusiasm among my people the greatest asset I possess, and the way to develop the best that is in a person is by appreciation and encouragement. There is nothing else that so kills the ambitions of a person as criticism from superiors. I never criticize anyone. I believe in giving a person incentive to work. So I am anxious to praise but loath to find fault. If I like anything, I am hearty in my appreciation and lavish in my praise.”

Boost your ability to arouse enthusiasm by learning how to deliver employee appreciation speeches that make an impact. Once you master the habits and rules below, sincere appreciation will flow from you like sweet poetry. Your employees are going to love it!

The Employee Appreciation Speech Checklist

Planning employee appreciation speeches can be fast and easy when you follow a go-to “recipe” that works every time. From a simple thank you to a heartfelt work anniversary speech, it all has a template.

Maritz® studies human behavior and highlights relevant findings that could impact the workplace. They developed the Maritz Recognition Model to help everyone deliver the best appreciation possible. The model asserts that effective recognition speeches touch on three critical elements: the behavior, the effect, and the thank you.

Here’s a summary of the model, distilled into a checklist for your employee appreciation speeches:

- Talk about the behavior(s). While most employee appreciation speeches revolve around the vague acknowledgment of “hard work and dedication,” it’s best to call out specific actions and accomplishments so employees will know what they did well, feel proud, and get inspired to repeat the action. Relay an anecdote about one specific behavior to hook your audience and then expand the speech to cover everyone. You can even include appreciation stories from other managers or employees in your speech.

- Talk about the effect(s) of the behavior(s). What positive effect did the employee behaviors have on your company’s mission? If you don’t have any statistics to share, simply discuss how you expect the behaviors to advance your mission.

- Deliver the “thank you” with heartfelt emotion. Infusing speeches with emotion will help employees feel your appreciation in addition to hearing it. To pinpoint the emotional core of your speech, set the “speech” part aside and casually consider why you’re grateful for your employees. Write down everything that comes to mind. Which aspects made you tear up? Which gave you goosebumps? Follow those points to find the particular emotional way you want to deliver your “thank you” to the team.

Tips and tricks:

- Keep a gratitude journal (online or offline). Record moments of workplace gratitude and employee acts you appreciate. This practice will make you feel good, and it also provides plenty of fodder for appreciation speeches or employee appreciation day.

- Make mini-speeches a habit. Try to deliver words of recognition to employees every single day. As you perfect small-scale appreciation speeches, the longer ones will also feel more natural.

- When speaking, pause frequently to let your words sink in.

- Reinforce your words with body language: make eye contact, control jittery gestures, act out verbs, match your facial expression to your words, and move around the stage.

- Vary your pace. Don’t drone on at the same speed. Speak quickly and then switch to speaking slowly.

- Vary your volume. Raise your voice on key points and closings.

Employee Appreciation Speech Scripts

Build on these customizable scripts to deliver employee appreciation speeches and casual meeting shout-outs every chance you get. Each script follows the 3-step approach we discussed above. Once you get the hang of appreciation speech basics, you’ll be able to pull inspirational monologues from your hat at a moment’s notice.

Swipe the examples below, but remember to infuse each speech with your own unique perspectives, personality, and heartfelt emotions.

All-Purpose Appreciation Speech

Greet your audience.

I feel so lucky to work with you all. In fact, [insert playful aside: e.g. My wife doesn’t understand how I don’t hate Mondays. It drives her nuts!]

Thanks to you, I feel lucky to come to work every day.

Talk about behaviors you appreciate.

Everyone here is [insert applicable team soft skills: e.g. positive, inspiring, creative, and intelligent]. I’m constantly amazed by the incredible work you do.

Let’s just look at the past few months, for example. [Insert bullet points of specific accomplishments from every department].

- Finance launched an amazing new online payroll system.

- Business Development doubled their sales last quarter.

- Human Resources trained us all in emotional intelligence.

Talk about the effects of the behaviors.

These accomplishments aren’t just nice bullet points for my next presentation. Each department’s efforts have deep and lasting impacts on our business. [Explain the effects of each highlighted accomplishment].

- The new payroll system is going to save us at least $20,000 on staff hours and paper.

- Revenue from those doubled sales will go into our core investments, including a new training program.

- And I can already see the effects of that emotional intelligence training each time I’m in a meeting and a potential argument is resolved before it starts.

Say thank you.

I can’t thank you enough for everything you do for this company and for me. Knowing I have your support and dedication makes me a better, happier person both at work and at home.

Formal Appreciation Speech

Greet your audience by explaining why you were excited to come to work today.

I was not thrilled when my alarm went off this morning, but I must admit, I’m luckier than most people. As I got out of bed and thought about doing [insert daily workplace activities that inspire you], I felt excitement instead of dread. It’s an incredible feeling, looking forward to work every day, and for that, I have each and every one of you to thank.

Just last week, [insert specific anecdote: e.g. I remembered, ironically, that I forgot to create a real-time engagement plan for TECHLO’s giant conference next month. As you all know, they’re one of our biggest clients, so needless to say, I was panicking. Then I sat down for my one-on-one with MEGAN, worried that I didn’t even have time for our meeting, and what did she say? She wanted to remind me that we had committed to submit a promotional plan by the end of the week. She had some ideas for the TECHLO conference, so she went ahead and created a draft.]

[Insert the outcome of the anecdote: e.g. Her initiative dazzled me, and it saved my life! We met our deadline and also blew TECHLO away. In fact, they asked us to plan a similar initiative for their upcoming mid-year conference.]

[Insert a short thank-you paragraph tying everything together: e.g. And you know what, it was hard for me to pick just one example to discuss tonight. You all do so many things that blow me away every day. Thank you for everything. Thank you for making each day of work something we can all be proud of.]

Tip! Encourage your entire team to join in on the appreciation with CareCards! This digital appreciation board allows you to recognize your colleague with a dedicated space full of personalized well wishes, thank-yous, and anything else you want to shout them out with! To explore Caroo’s CareCard program, take this 60-second tour!

Visionary Appreciation Speech

Greet your audience by explaining why you do what you do.

Here at [company name] we [insert core competency: e.g. build nonprofit websites], but we really [insert the big-picture outcome of your work: e.g. change the world by helping amazing nonprofits live up to their inspiring visions.]

I want to emphasize the “we” here. This company would be nothing without your work.

Talk about behaviors and explain how each works toward your mission.

Have you guys ever thought about that? How what you do [recap the big-picture outcome at your work: e.g. changes the world by helping amazing nonprofits live up to their inspiring visions]?

[Insert specific examples of recent work and highlight the associated outcomes: e.g. Let’s explore in terms of the websites we launched recently. I know every single person here played a role in developing each of these websites, and you should all be proud.]

- The launch of foodangel.org means that at least 500 homeless people in the greater metro area will eat dinner tonight.

- The launch of happyup.org means thousands of depressed teenagers will get mental health counseling.

Now if that’s not [recap the big-picture outcome], then I don’t know what is.

Thank you for joining me on the mission to [big-picture outcome]. With any other team, all we’re trying to do might just not be possible, but you all make me realize we can do anything together.

Casual Appreciation Speech

Greet your audience by discussing what upcoming work-related items you are most excited about.

I’ve been thinking nonstop about [insert upcoming initiative: e.g. our upcoming gallery opening]. This [initiative] is the direct result of your amazing work. To me, this [initiative] represents [insert what the initiative means to you: e.g. our true debut into the budding arts culture of our city.]

You’ve all been pulling out all the stops, [insert specific example: e.g. staying late, making 1,000 phone calls a day, and ironing out all the details.]

Because of your hard work, I’m absolutely confident the [initiative] will [insert key performance indicator: e.g. sell out on opening night.]

Thank you, not just for making this [initiative] happen, but also for making the journey such a positive and rewarding experience.

Funny Appreciation Speech

Greet your audience by telling an inside joke.

I want to thank you all for the good times, especially [insert inside joke: e.g. that time we put a glitter bomb in Jeff’s office.]

Talk about behaviors you appreciate and highlight comical outcomes.

But seriously, you guys keep me sane. For example [insert comical examples: e.g.]:

- The Operations team handled the merger so beautifully, I only had to pull out half my hair.

- The Marketing team landed a new client, and now we can pay you all for another year.

- And thanks to the Web team’s redesign of our website, I actually know what we do here.

Talk about the real effects of the behaviors.

But for real for real, all your work this year has put us on a new level. [Insert outcomes: e.g. We have an amazing roster of clients, a growing staff, and an incredible strategic plan that makes me feel unqualified to work here.] You guys made all this happen.

So thank you. This is when I would usually tell a joke to deflect my emotions, but for once in my life, I actually don’t want to hide. I want you all to know how much I appreciate all you do.

That was hard; I’m going to sit down now.

Appreciation Speech for Employee of the Month

Greet your audience by giving a shout-out to the employee of the month.

Shout out to [insert employee’s name] for being such a reliable member of our team. Your work ethic and outstanding performance are an inspiration to all of us! Keep up the amazing work!

Talk about behaviors you appreciate in them and highlight their best traits.

It’s not only essential to work diligently, but it is likewise crucial to be kind while you’re at it–and you’ve done both wonderfully!

Talk about the effects of their behaviors on the success of the company.

You bring optimism, happiness, and an all-around positive attitude to this team.

Thank you for being you!

Appreciation Speech for Good Work

Greet your audience with a round of applause to thank them for their hard work.

You always put in 100% and we see it. Proud of you, team!

Talk about behaviors you appreciate in your team members.

You work diligently, you foster a positive team environment, and you achieve or exceed your goals.

Talk about the effects of your team’s behaviors on the company.

Your dedication to the team is commendable, as is your readiness to do whatever needs to be done for the company – even if it’s not technically part of your job description. Thank you.

No matter the situation, you always rise to the occasion! Thank you for your unwavering dedication; it doesn’t go unnoticed.

People Also Ask These Questions:

Q: How can I show that I appreciate my employees?

- A: An appreciation speech is a great first step to showing your employees that you care. The SnackNation team also recommends pairing your words of appreciation with a thoughtful act or activity for employees to enjoy. We’ve researched, interviewed, and tested all the best peer-to-peer recognition platforms, office-wide games, celebration events, and personalized rewards to bring you the top 39 recognition and appreciation ideas to start building a culture of acknowledgment in your office.

Q: What should I do after giving an appreciation speech?

- A: In order to drive home the point of your employee appreciation speech, it can be effective to reward your employees for their excellent work. Rewards are a powerful tool used for employee engagement and appreciation. Recognizing your employees effectively is crucial for retaining top talent and keeping employees happy. To make your search easier, we sought out the top 121 creative ways that companies can reward their employees that you can easily implement at your office.

Q: Why should I give an employee appreciation speech?

- A: Appreciation and employee motivation are closely linked. A simple gesture, such as an employee appreciation gift, can have a positive effect on your company culture. When employees are motivated to work, they are more productive. For more ideas to motivate your team, we’ve interviewed leading employee recognition and engagement experts to curate a list of the 22 best tips here!

We hope adapting these tips and scripts will help you articulate the appreciation we know you already feel!


Employee Recognition & Appreciation Resources:

- 39 Impactful Employee Appreciation & Recognition Ideas [Updated]

- 12 Effective Tools & Strategies to Improve Teamwork in the Workplace

- Your Employee Referral Program Guide: The Benefits, How-Tos, Incentives & Tools

- 21 Unforgettable Work Anniversary Ideas [Updated]

- 15 Ideas to Revolutionize Your Employee of the Month Program

- 16 Awesome Employee Perks Your Team Will Love

- 71 Employee Recognition Quotes Every Manager Should Know

- How to Retain Employees: 18 Practical Takeaways from 7 Case Studies

- Boost Your Employee Recognition Skills and Words (Templates Included)




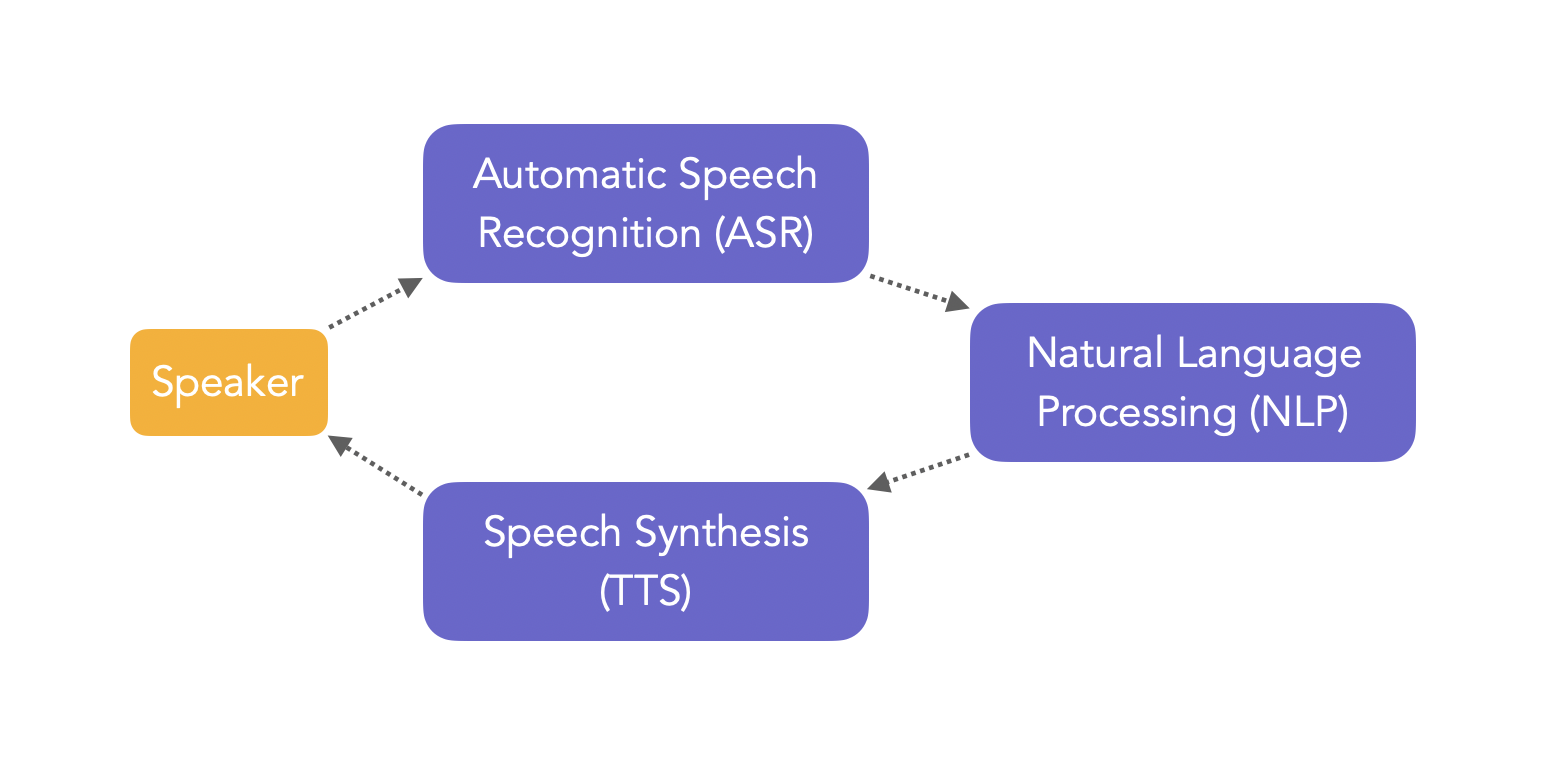

Speech recognition, also known as automatic speech recognition (ASR), computer speech recognition or speech-to-text, is a capability that enables a program to process human speech into a written format.

While speech recognition is commonly confused with voice recognition, speech recognition focuses on the translation of speech from a verbal format to a text one whereas voice recognition just seeks to identify an individual user’s voice.

IBM has had a prominent role within speech recognition since its inception, releasing “Shoebox” in 1962. This machine had the ability to recognize 16 different words, advancing the initial work from Bell Labs from the 1950s. However, IBM didn’t stop there, but continued to innovate over the years, launching the VoiceType Simply Speaking application in 1996. This speech recognition software had a 42,000-word vocabulary, supported English and Spanish, and included a spelling dictionary of 100,000 words.

While speech technology had a limited vocabulary in the early days, it is utilized in a wide range of industries today, such as automotive, technology, and healthcare. Its adoption has only continued to accelerate in recent years due to advancements in deep learning and big data. Research shows that this market is expected to be worth USD 24.9 billion by 2025.


Many speech recognition applications and devices are available, but the more advanced solutions use AI and machine learning. They integrate grammar, syntax, structure, and composition of audio and voice signals to understand and process human speech. Ideally, they learn as they go, evolving responses with each interaction.

The best kind of systems also allow organizations to customize and adapt the technology to their specific requirements — everything from language and nuances of speech to brand recognition. For example:

- Language weighting: Improve precision by weighting specific words that are spoken frequently (such as product names or industry jargon), beyond terms already in the base vocabulary.

- Speaker labeling: Output a transcription that cites or tags each speaker’s contributions to a multi-participant conversation.

- Acoustics training: Attend to the acoustical side of the business. Train the system to adapt to an acoustic environment (like the ambient noise in a call center) and speaker styles (like voice pitch, volume and pace).

- Profanity filtering: Use filters to identify certain words or phrases and sanitize speech output.
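As a rough illustration of the profanity-filtering item above, the sketch below masks blocklisted words in a finished transcript. The function name `mask_profanity` and the blocklist contents are hypothetical, chosen only for this example; a production recognizer would more likely apply such a filter inside its own output pipeline rather than as a separate text pass.

```python
import re


def mask_profanity(transcript: str, blocklist: set[str]) -> str:
    """Return the transcript with every blocklisted word replaced by asterisks.

    Matching is case-insensitive and works on whole words only, so a
    blocklisted word embedded in a longer word is left untouched.
    """
    def mask(match: re.Match) -> str:
        word = match.group(0)
        # Keep the word length so the transcript layout is preserved.
        return "*" * len(word) if word.lower() in blocklist else word

    # Treat runs of letters and apostrophes as words; punctuation is kept as-is.
    return re.sub(r"[A-Za-z']+", mask, transcript)


if __name__ == "__main__":
    # Hypothetical blocklist used only for demonstration.
    print(mask_profanity("Well darn, the call dropped again", {"darn"}))
```

The blocklist is passed in as a parameter so that, in the spirit of the customization described above, each organization could supply its own list.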

Meanwhile, speech recognition continues to advance. Companies such as IBM are making inroads in several areas to improve human and machine interaction.

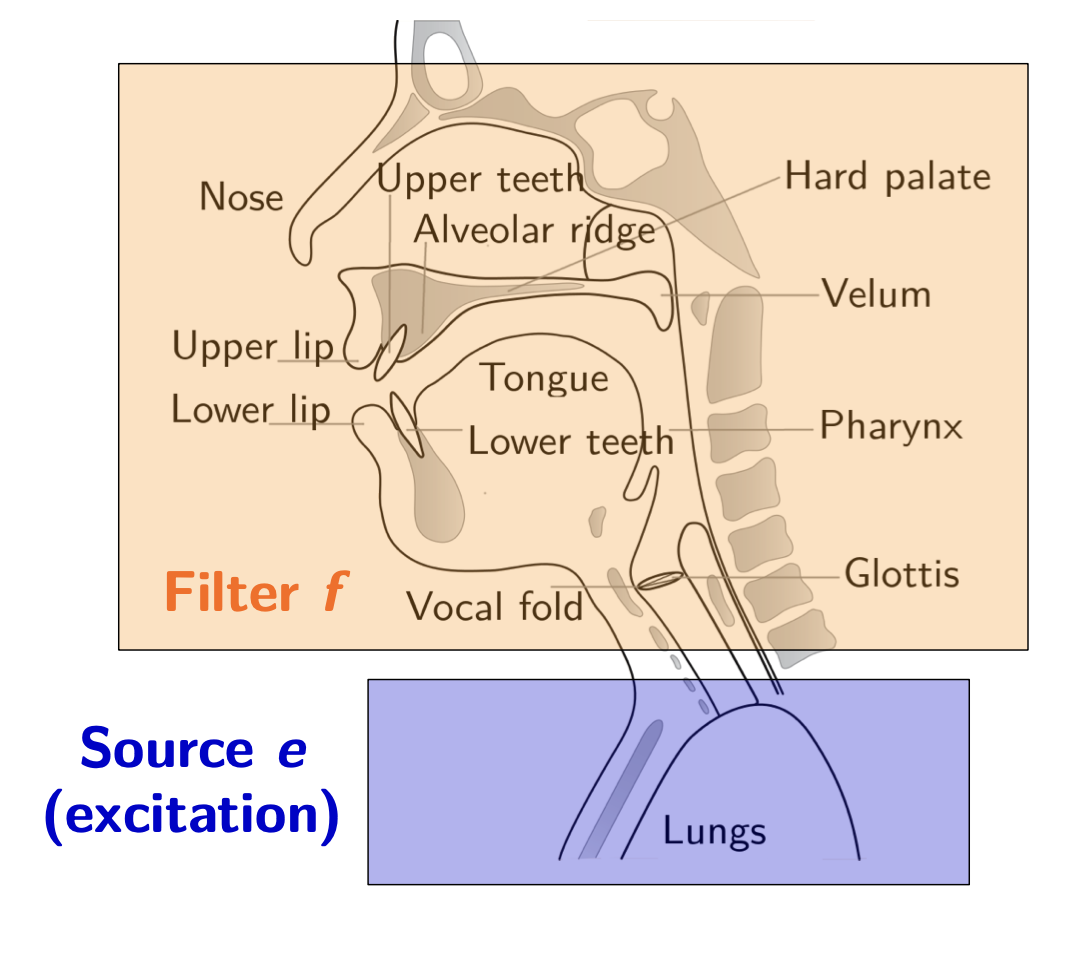

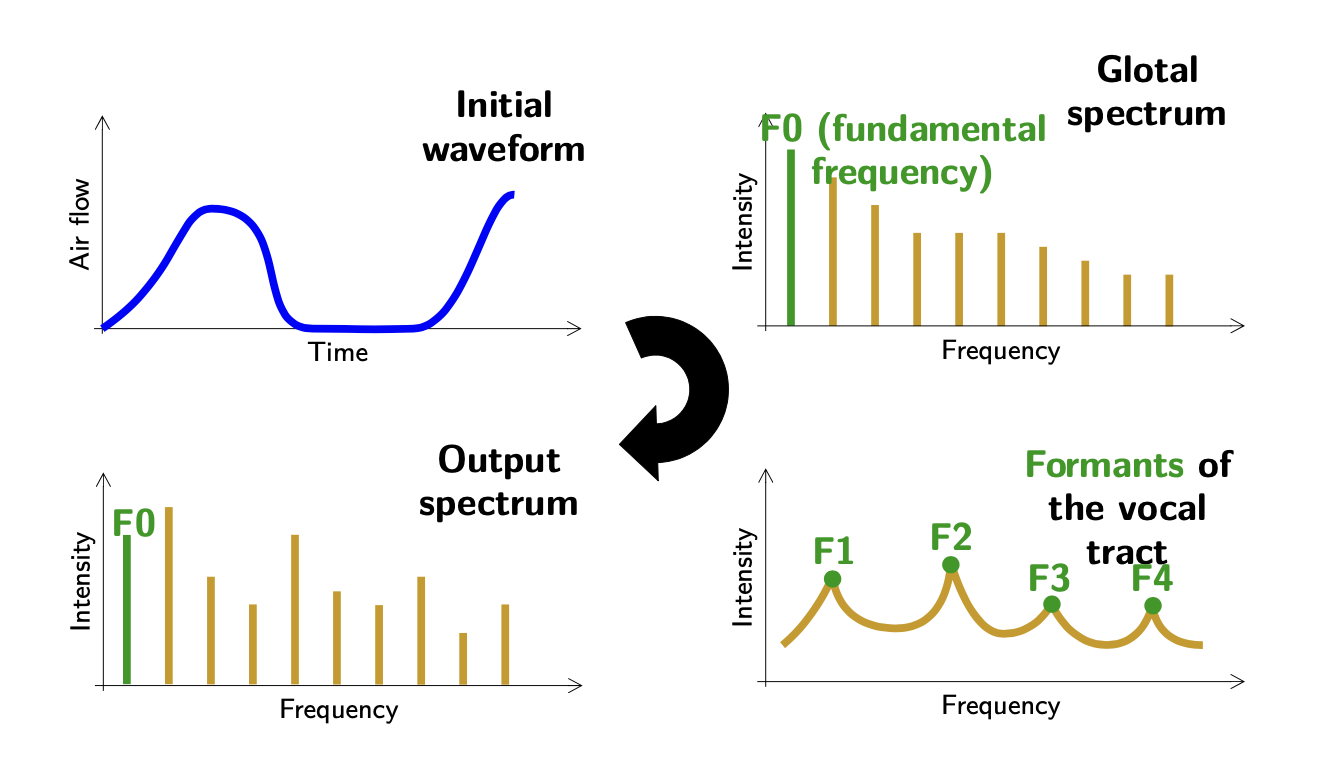

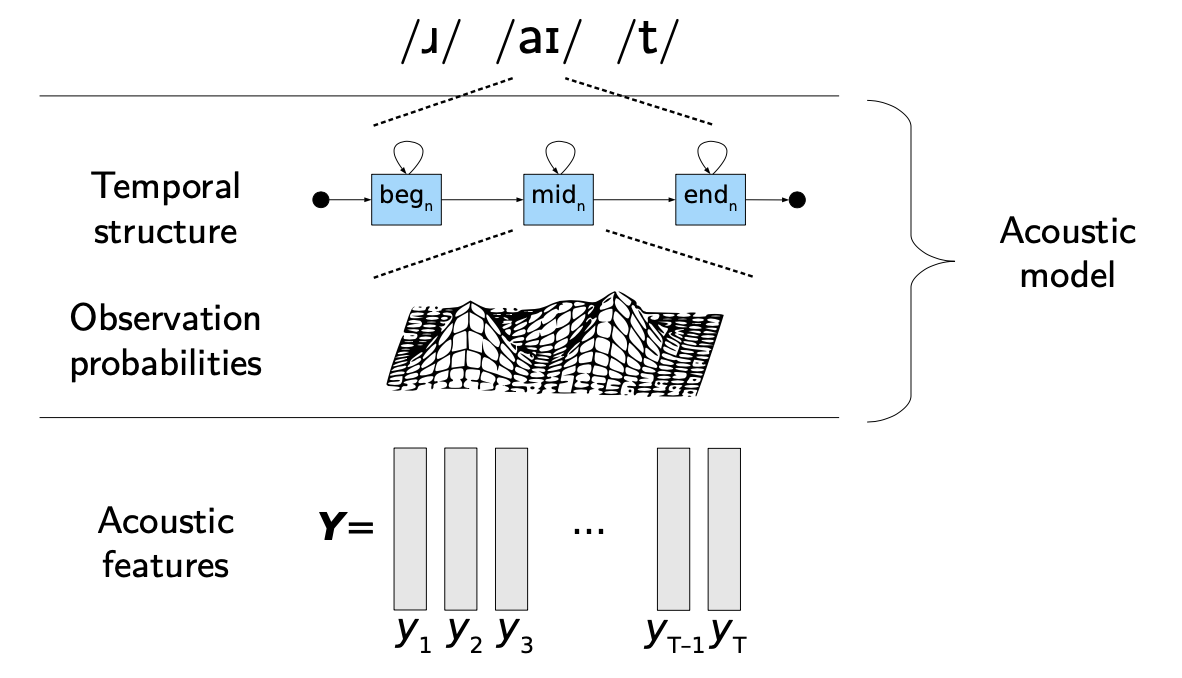

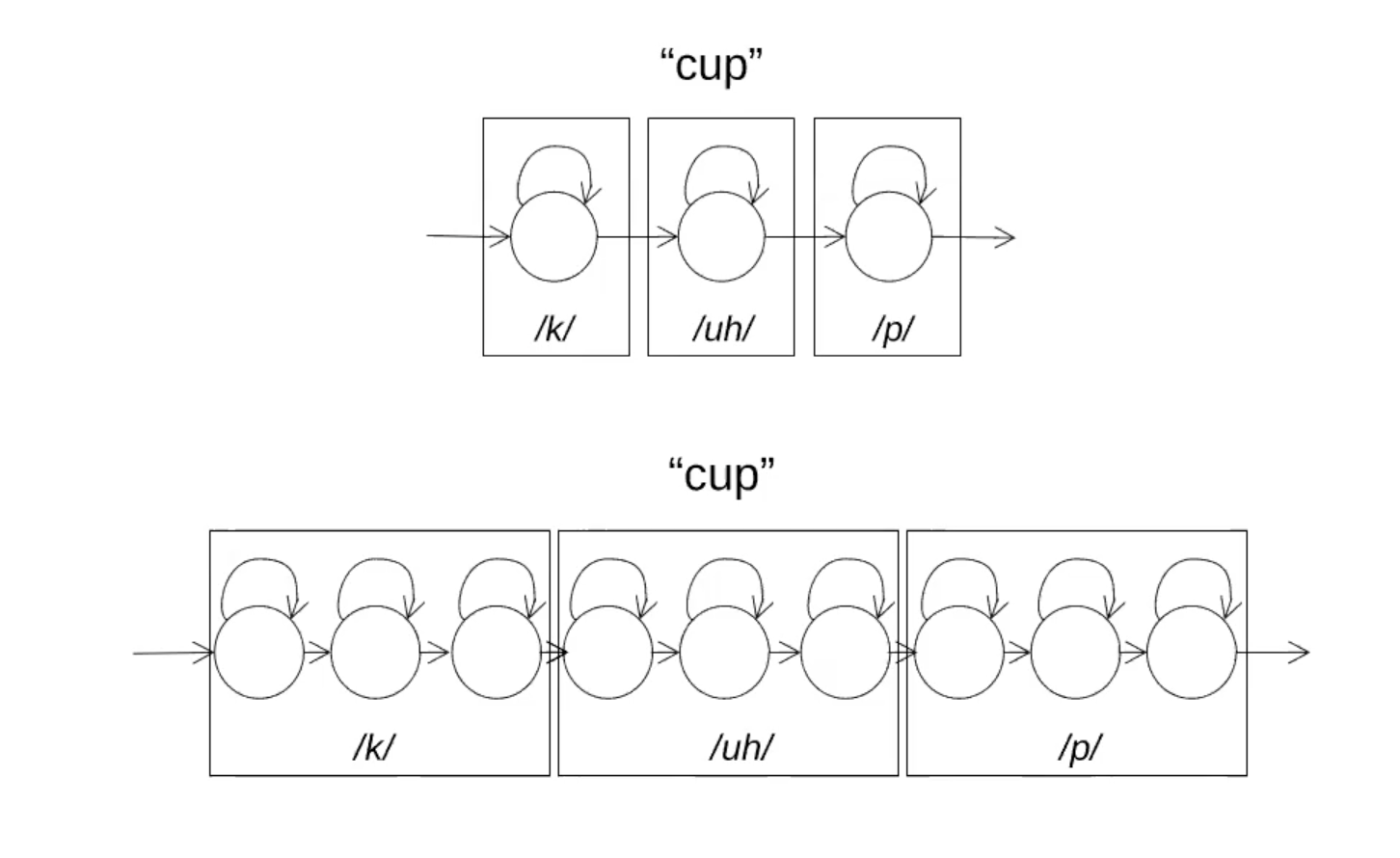

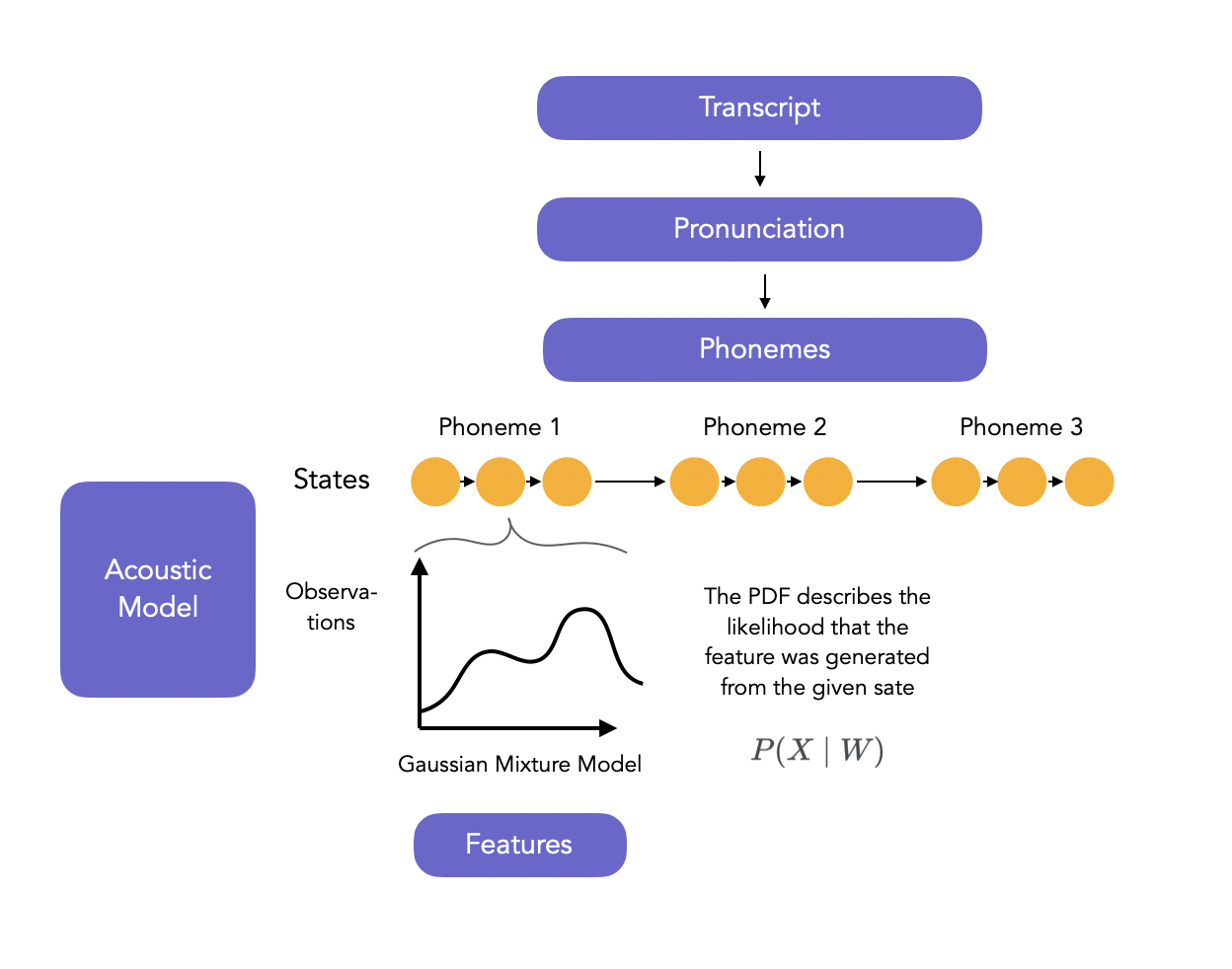

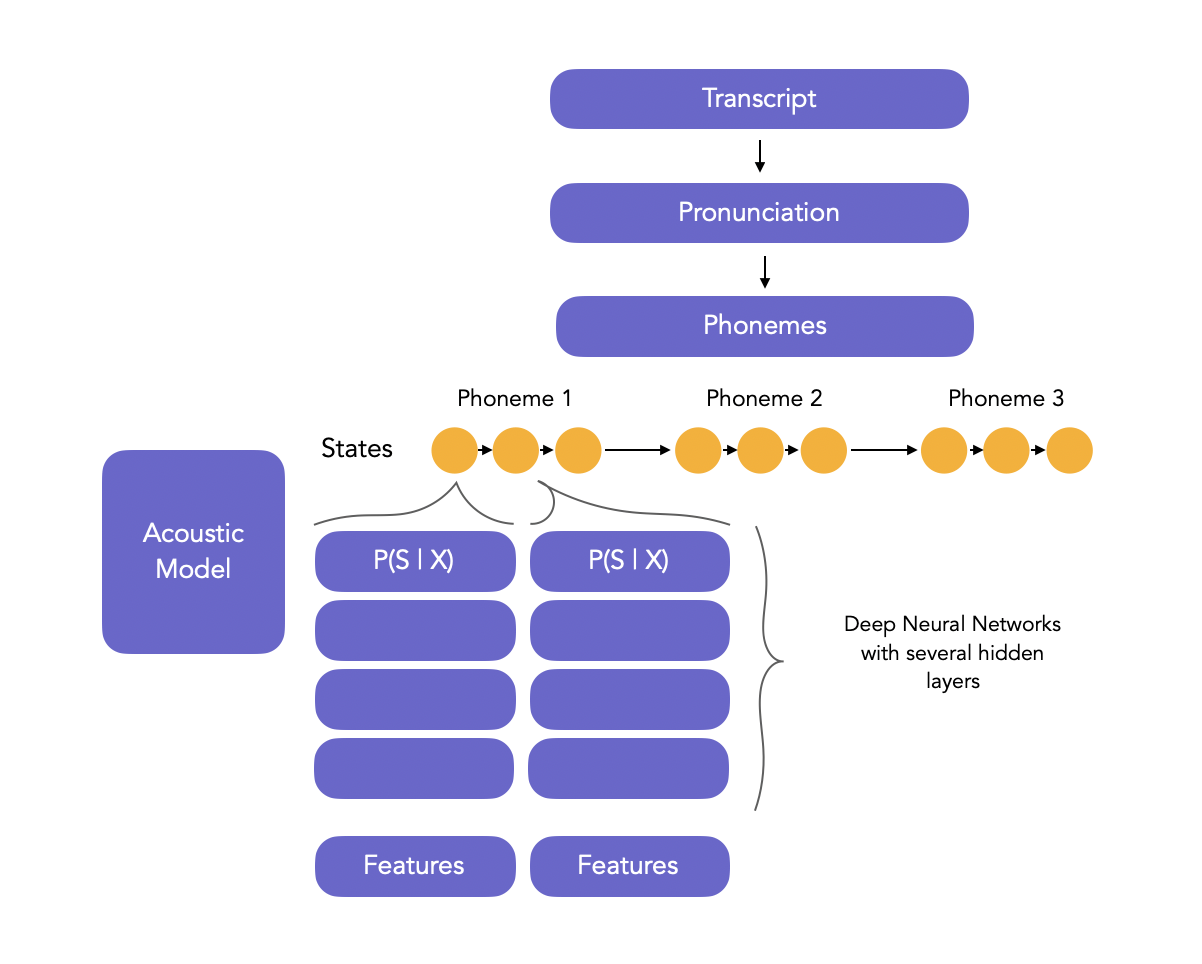

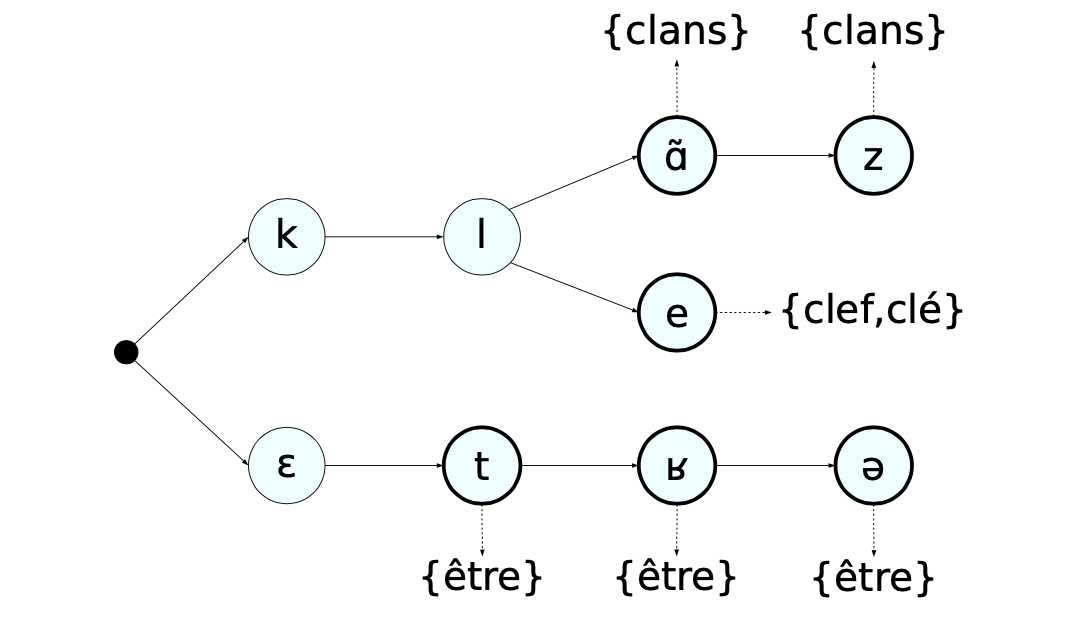

The vagaries of human speech have made development challenging. It’s considered to be one of the most complex areas of computer science – involving linguistics, mathematics and statistics. Speech recognizers are made up of a few components, such as the speech input, feature extraction, feature vectors, a decoder, and a word output. The decoder leverages acoustic models, a pronunciation dictionary, and language models to determine the appropriate output.

Speech recognition technology is evaluated on its accuracy, measured as word error rate (WER), and on its speed. A number of factors can impact word error rate, such as pronunciation, accent, pitch, volume, and background noise. Reaching human parity, meaning an error rate on par with that of two humans speaking, has long been the goal of speech recognition systems. Research from Lippmann estimates the human word error rate to be around 4 percent, but it’s been difficult to replicate the results from this paper.
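To make the metric concrete, here is a minimal sketch of how word error rate is commonly computed: the word-level edit distance (substitutions, deletions, and insertions) between a reference transcript and the recognizer's output, divided by the number of words in the reference. The function name and the whitespace tokenization are assumptions for illustration; real evaluations usually normalize case and punctuation first.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Compute WER = (substitutions + deletions + insertions) / reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words (classic Levenshtein dynamic programming).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from output
                curr[j - 1] + 1,     # insertion: extra word in output
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One substituted word out of four reference words.
    print(word_error_rate("please order the pizza", "please order a pizza"))  # 0.25
```

Because insertions count as errors, WER can exceed 1.0 when the output is much longer than the reference.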

Various algorithms and computation techniques are used to convert speech into text and improve the accuracy of transcription. Below are brief explanations of some of the most commonly used methods:

- Natural language processing (NLP): While NLP isn’t necessarily a specific algorithm used in speech recognition, it is the area of artificial intelligence which focuses on the interaction between humans and machines through language, via speech and text. Many mobile devices incorporate speech recognition into their systems to conduct voice search (e.g. Siri) or provide more accessibility around texting.

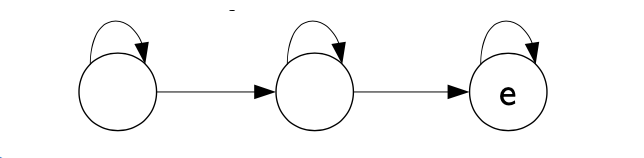

- Hidden Markov models (HMM): Hidden Markov models build on the Markov chain model, which stipulates that the probability of a given state hinges on the current state, not its prior states. While a Markov chain model is useful for observable events, such as text inputs, hidden Markov models allow us to incorporate hidden events, such as part-of-speech tags, into a probabilistic model. They are utilized as sequence models within speech recognition, assigning labels to each unit (i.e. words, syllables, sentences, etc.) in the sequence. These labels create a mapping with the provided input, allowing it to determine the most appropriate label sequence.

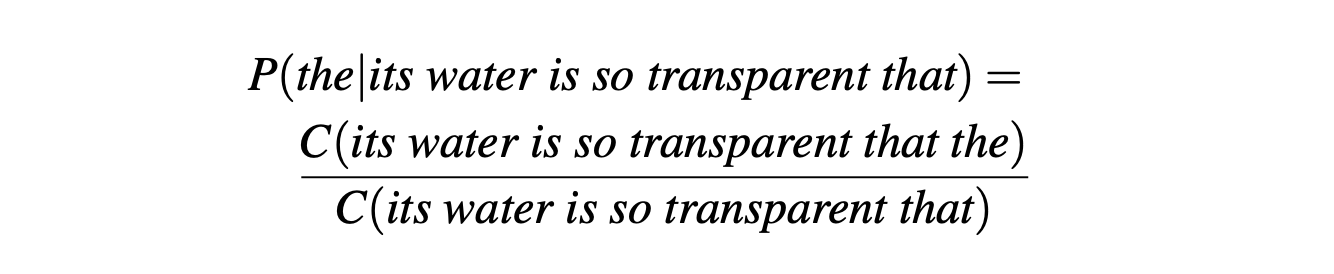

- N-grams: This is the simplest type of language model (LM), which assigns probabilities to sentences or phrases. An N-gram is a sequence of N words. For example, “order the pizza” is a trigram or 3-gram and “please order the pizza” is a 4-gram. Grammar and the probability of certain word sequences are used to improve recognition and accuracy.

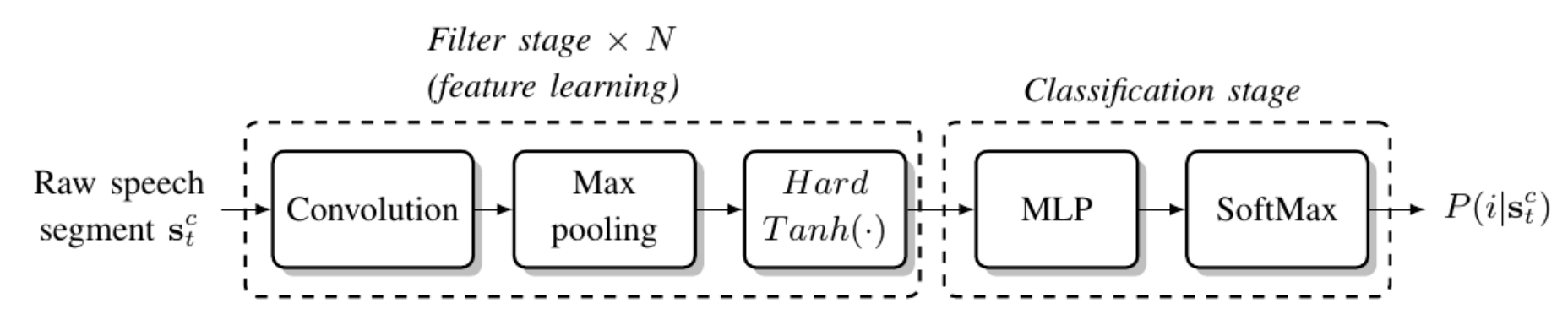

- Neural networks: Primarily leveraged for deep learning algorithms, neural networks process training data by mimicking the interconnectivity of the human brain through layers of nodes. Each node is made up of inputs, weights, a bias (or threshold) and an output. If that output value exceeds a given threshold, it “fires” or activates the node, passing data to the next layer in the network. Neural networks learn this mapping function through supervised learning, adjusting based on the loss function through the process of gradient descent. While neural networks tend to be more accurate and can accept more data, this comes at a performance efficiency cost as they tend to be slower to train compared to traditional language models.

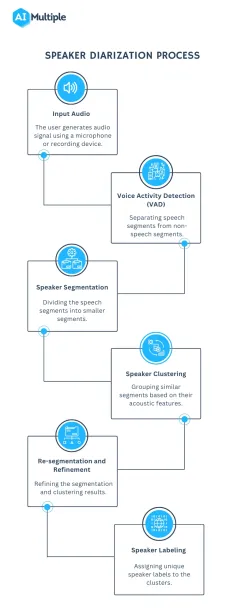

- Speaker Diarization (SD): Speaker diarization algorithms identify and segment speech by speaker identity. This helps programs better distinguish individuals in a conversation and is frequently applied at call centers to distinguish customers from sales agents.
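The N-gram idea from the list above can be sketched with a tiny bigram (2-gram) model. This is an illustrative toy, not how any particular product works: the three-sentence corpus, the function names, and the `<s>` / `</s>` boundary markers are assumptions made for the example, and the probabilities are plain unsmoothed counts, so any unseen word pair scores zero (real systems apply smoothing).

```python
from collections import Counter


def train_bigram_model(sentences):
    """Count how often each word follows each context word in the corpus."""
    context_counts = Counter()
    bigram_counts = Counter()
    for sentence in sentences:
        # Boundary markers let the model learn which words start and end sentences.
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        context_counts.update(words[:-1])
        bigram_counts.update(zip(words, words[1:]))
    return context_counts, bigram_counts


def sentence_probability(sentence, context_counts, bigram_counts):
    """Multiply the maximum-likelihood estimates P(word | previous word)."""
    words = ["<s>"] + sentence.lower().split() + ["</s>"]
    probability = 1.0
    for previous, word in zip(words, words[1:]):
        if context_counts[previous] == 0:
            return 0.0  # context never seen in training
        probability *= bigram_counts[(previous, word)] / context_counts[previous]
    return probability


if __name__ == "__main__":
    corpus = [
        "please order the pizza",
        "order the pizza",
        "please order the salad",
    ]
    contexts, bigrams = train_bigram_model(corpus)
    # A recognizer could use such scores to prefer the likelier of two
    # similar-sounding candidate transcriptions.
    print(sentence_probability("order the pizza", contexts, bigrams))
    print(sentence_probability("order the pisa", contexts, bigrams))
```

With this corpus the first candidate receives a non-zero score while the second, which contains a word pair never seen in training, scores zero, which is the sense in which word-sequence probabilities help improve recognition.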

A wide range of industries are utilizing different applications of speech technology today, helping businesses and consumers save time and even lives. Some examples include:

Automotive: Speech recognizers improve driver safety by enabling voice-activated navigation systems and search capabilities in car radios.