CERN Accelerating science

The birth of the Web

Tim Berners-Lee, a British scientist, invented the World Wide Web (WWW) in 1989, while working at CERN. The web was originally conceived and developed to meet the demand for automated information-sharing between scientists in universities and institutes around the world.

The first website at CERN, and in the world, was dedicated to the World Wide Web project itself and was hosted on Berners-Lee's NeXT computer. In 2013, CERN launched a project to restore this first-ever website: info.cern.ch.

On 30 April 1993, CERN put the World Wide Web software in the public domain. Later, CERN made a release available with an open licence, a surer way to maximise its dissemination. These actions allowed the web to flourish.

What's the Difference Between the Internet and the World Wide Web?

The Internet has become so ubiquitous it's hard to imagine life without it. It's equally hard to imagine a world where "www" isn't the prefix of many of our online activities. But just because the Internet and the World Wide Web are firmly intertwined with each other, it doesn't mean they're synonymous.

Let's go back to when it all began. President Dwight D. Eisenhower started the Advanced Research Projects Agency (ARPA) in 1958 to increase U.S. technological advancements in the shadow of Sputnik's launch. On October 29, 1969, the first ARPANET network connection between two computers was launched, and promptly crashed. But happily, the second time around was much more successful and the Internet was born. More and more computers were added to this ever-increasing network and the megalith we know today as the Internet began to form. For more information about ARPA, see How ARPANET Works.

But the creation of the World Wide Web didn't come until decades later, with the help of a man named Tim Berners-Lee. In 1990, he developed the backbone of the World Wide Web: the hypertext transfer protocol (HTTP). People quickly developed browsers that supported the use of HTTP, and with that the popularity of computers skyrocketed. In the 20 years during which ARPANET ruled the Internet, the worldwide network grew from four computers to more than 300,000. By 1992, only two years after HTTP was developed, more than a million computers were connected [source: Computer History Museum].

You might be wondering at this point what exactly HTTP is. It's simply the widely used set of rules for how files and other information are transferred between computers. So what Berners-Lee did, in essence, was determine how computers would communicate with one another. For instance, HTTP would've come into play if you clicked the source link in the last paragraph or if you typed the https://www.howstuffworks.com URL (uniform resource locator) into your browser to get to our home page. But don't get this confused with Web page markup languages like HTML and XHTML. We use those to describe what's on a page, not to communicate between sites or identify a Web page's location.
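To make the "set of rules" idea concrete, here is a minimal sketch of the text a browser might send when asked for a URL. The function name `build_get_request` is hypothetical, and the request is deliberately stripped down; real browsers send many more headers, and an https URL would also be wrapped in an encrypted connection that this sketch ignores.

```python
from urllib.parse import urlsplit


def build_get_request(url: str) -> str:
    """Return the text of a minimal HTTP/1.1 GET request for `url`.

    Illustrative only: nothing is sent over the network.
    """
    parts = urlsplit(url)
    path = parts.path or "/"          # an empty path means the site root
    if parts.query:
        path += "?" + parts.query
    lines = [
        f"GET {path} HTTP/1.1",       # request line: method, target, version
        f"Host: {parts.hostname}",    # which site on the server is wanted
        "Connection: close",
        "",                           # blank line marks the end of the headers
        "",
    ]
    return "\r\n".join(lines)


print(build_get_request("https://www.howstuffworks.com"))
```

The URL only says where the page lives; the request text is what HTTP actually specifies. The HTML that comes back in the response is a separate matter again.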

To learn more about the dawn of the Internet age, visit How did the Internet start? For our purposes, we're set to explore the fundamental difference between the Internet and the World Wide Web, and why it's so easy for us to link them together in our minds.

Internet vs. World Wide Web

To answer this question, let's look at each element. And since the Internet seems to be the more easily understood component, let's start there.

Simply, the Internet is a network of networks, and there are all kinds of networks in all kinds of sizes. You may have a computer network at your work, at your school or even one at your house. These networks are often connected to each other in different configurations, which is how you get groupings such as local area networks (LANs) and regional networks. Your cell phone is also on a network that is considered part of the Internet, as are many of your other electronic devices. And all these separate networks, added together, are what constitute the Internet. Even satellites are connected to the Internet.

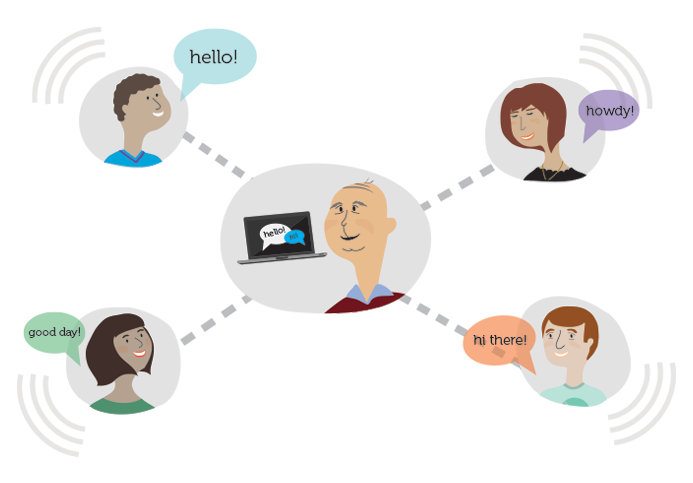

The World Wide Web, on the other hand, is the system we use to access the Internet. The Web isn't the only system out there, but it's the most popular and widely used. (Examples of ways to access the Internet without using HTTP include e-mail and instant messaging.) As mentioned earlier, the World Wide Web makes use of hypertext to access the various forms of information available on the world's different networks. This allows people all over the world to share knowledge and opinions. We typically access the Web through browsers, like Internet Explorer, Chrome and Mozilla Firefox. By using browsers like these, you can visit various Web sites and view other online content.

So another way to think about it is to say the Internet is composed of the machines, hardware and data; and the World Wide Web is what brings this technology to life.

There are several groups of people who work to keep everything standardized and running smoothly across the Internet and the World Wide Web. If you want to learn more about what it takes to keep systems compatible, a good place to start is the World Wide Web Consortium (W3C). W3C's aim is to help develop standards and guidelines for the Web. The group is run by Tim Berners-Lee who, if you remember, is the person who invented the World Wide Web. You can also try the Internet Society, which was founded in 1992 to provide leadership in many aspects of Internet-related information, initiatives and activities.

Lots More Information

- Berners-Lee, Tim. "Frequently Asked Questions." W3C. (6/27/2008) http://www.w3.org/People/Berners-Lee/FAQ.html

- "Brief Timeline of the Internet." Webopedia Internet.com. 5/24/2007. (6/23/2008) http://www.webopedia.com/quick_ref/timeline.asp

- Gralla, Preston. "How the Internet Works." Que. 8/1999. (6/27/2008)

- Haynal, Russ. "Internet: The Big Picture." (6/23/2008) http://navigators.com/internet_architecture.html

- "Internet History." Computer History Museum. 2006. (6/23/2008) http://www.computerhistory.org/internet_history/

- Strickland, Jonathan. "How ARPANET Works." HowStuffWorks.com. (6/23/2008). https://computer.howstuffworks.com/arpanet.htm

- Strickland, Jonathan. "How did the Internet start?" HowStuffWorks.com. (6/23/2008). https://computer.howstuffworks.com/internet-start.htm

- "The Difference Between the Internet and the World Wide Web." Webopedia Internet.com. 2/29/2008. (6/23/2008) http://www.webopedia.com/didyouknow/internet/2002/web_vs_internet.asp

- Tyson, Jeff. "How Internet Infrastructure Works." HowStuffWorks.com. (6/23/2008). https://computer.howstuffworks.com/internet-infrastructure.htm

- "What is HTTP?" WhatIs.com. 7/27/2006. (6/27/2008) http://searchwindevelopment.techtarget.com/sDefinition/0,,sid8_gci214004,00.html

- "What is World Wide Web." WhatIs.com. 3/6/2007. (6/27/2008) http://searchcrm.techtarget.com/sDefinition/0,,sid11_gci213391,00.html

- "Who owns the Internet." Webopedia Internet.com. 2/29/2008. (6/23/2008) http://www.webopedia.com/DidYouKnow/Internet/2002/WhoOwnstheInternet.asp

Funding a Revolution: Government Support for Computing Research (1999)

Chapter 7: Development of the Internet and the World Wide Web

The recent growth of the Internet and the World Wide Web makes it appear that the world is witnessing the arrival of a completely new technology. In fact, the Web—now considered to be a major driver of the way society accesses and views information—is the result of numerous projects in computer networking, mostly funded by the federal government, carried out over the last 40 years. The projects produced communications protocols that define the format of network messages, prototype networks, and application programs such as browsers. This research capitalized on the ubiquity of the nation's telephone network, which provided the underlying physical infrastructure upon which the Internet was built.

This chapter traces the development of the Internet, 1 one aspect of the broader field of data networking. The chapter is not intended to be comprehensive; rather, it focuses on the federal role in both funding research and supporting the deployment of networking infrastructure. This history is divided into four distinct periods. Before 1970, individual researchers developed the underlying technologies, including queuing theory, packet switching, and routing. During the 1970s, experimental networks, notably the ARPANET, were constructed. These networks were primarily research tools, not service providers. Most were federally funded, because, with a few exceptions, industry had not yet realized the potential of the technology. During the 1980s, networks were widely deployed, initially to support scientific research. As their potential to improve personal communications and collaboration became apparent, additional academic disciplines and industry began to use the technology. In this era, the National Science Foundation (NSF) was the major supporter of networking, primarily through the NSFNET, which evolved into the Internet. Most recently, in the early 1990s, the invention of the Web made it much easier for users to publish and access information, thereby setting off the rapid growth of the Internet. The final section of the chapter summarizes the lessons to be learned from history.

By focusing on the Internet, this chapter does not address the full scope of computer networking activities that were under way between 1960 and 1995. It specifically ignores other networking activities of a more proprietary nature. In the mid-1980s, for example, hundreds of thousands of workers at IBM were using electronic networks (such as the VNET) for worldwide e-mail and file transfers; banks were performing electronic funds transfer; Compuserve had a worldwide network; Digital Equipment Corporation (DEC) had value-added networking services; and a VNET-based academic network known as BITNET had been established. These were proprietary systems that, for the most part, owed little to academic research, and indeed were to a large extent invisible to the academic computer networking community. By the late 1980s, IBM's proprietary SNA data networking business unit already had several billions of dollars of annual revenue for networking hardware, software, and services. The success of such networks in many ways limited the interest of companies like IBM and Compuserve in the Internet. The success of the Internet can therefore, in many ways, be seen as the success of an open system and open architecture in the face of proprietary competition.

Early Steps: 1960-1970

Approximately 15 years after the first computers became operational, researchers began to realize that an interconnected network of computers could provide services that transcended the capabilities of a single system. At this time, computers were becoming increasingly powerful, and a number of scientists were beginning to consider applications that went far beyond simple numerical calculation. Perhaps the most compelling early description of these opportunities was presented by J.C.R. Licklider (1960), who argued that, within a few years, computers would become sufficiently powerful to cooperate with humans in solving scientific and technical problems. Licklider, a psychologist at the Massachusetts Institute of Technology (MIT), would begin realizing his vision when he became director of the Information Processing Techniques Office (IPTO) at the Advanced Research Projects Agency (ARPA) in 1962. Licklider remained at ARPA until 1964 (and returned for a second tour in 1974-1975), and he convinced his successors, Ivan Sutherland and Robert Taylor, of the importance of attacking difficult, long-term problems.

Taylor, who became IPTO director in 1966, worried about the duplication of expensive computing resources at the various sites with ARPA contracts. He proposed a networking experiment in which users at one site accessed computers at another site, and he co-authored, with Licklider, a paper describing both how this might be done and some of the potential consequences (Licklider and Taylor, 1968). Taylor was a psychologist, not a computer scientist, and so he recruited Larry Roberts of MIT's Lincoln Laboratory to move to ARPA and oversee the development of the new network. As a result of these efforts, ARPA became the primary supporter of projects in networking during this period.

In contrast to the NSF, which awarded grants to individual researchers, ARPA issued research contracts. The IPTO program managers, typically recruited from academia for 2-year tours, had considerable latitude in defining projects and identifying academic and industrial groups to carry them out. In many cases, they worked closely with the researchers they sponsored, providing intellectual leadership as well as financial support. A strength of the ARPA style was that it not only produced artifacts that furthered its missions but also built and trained a community of researchers. In addition to holding regular meetings of principal investigators, Taylor started the "ARPA games," meetings that brought together the graduate students involved in programs. This innovation helped build the community that would lead the expansion of the field and growth of the Internet during the 1980s.

During the 1960s, a number of researchers began to investigate the technologies that would form the basis for computer networking. Most of this early networking research concentrated on packet switching, a technique of breaking up a conversation into small, independent units, each of which carries the address of its destination and is routed through the network independently. Specialized computers at the branching points in the network can vary the route taken by packets on a moment-to-moment basis in response to network congestion or link failure.
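The idea of independent, individually addressed units can be sketched in a few lines. This is a toy illustration, not a description of any real protocol: the `Packet` class, the `packetize` and `reassemble` helpers, and the use of a sequence number to restore order are all assumptions made for the example.

```python
import random
from dataclasses import dataclass


@dataclass
class Packet:
    destination: str   # every packet carries its destination address
    sequence: int      # assumed field: position of this chunk in the message
    payload: str


def packetize(message: str, destination: str, size: int = 8) -> list[Packet]:
    """Break a message into small units that can travel independently."""
    return [
        Packet(destination, seq, message[start:start + size])
        for seq, start in enumerate(range(0, len(message), size))
    ]


def reassemble(packets: list[Packet]) -> str:
    """Rebuild the message regardless of the order in which packets arrived."""
    ordered = sorted(packets, key=lambda p: p.sequence)
    return "".join(p.payload for p in ordered)


packets = packetize("Packets may take different routes.", "host-b")
random.shuffle(packets)          # simulate packets arriving out of order
print(reassemble(packets))
```

Because each unit names its own destination, the switching computers can send successive packets along different paths, and the receiver only has to put them back in order.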

One of the earliest pioneers of packet switching was Paul Baran of the RAND Corporation, who was interested in methods of organizing networks to withstand nuclear attack. (His research interest is the likely source of a widespread myth concerning the ARPANET's original purpose [Hafner and Lyon, 1996]). Baran proposed a richly interconnected set of network nodes, with no centralized control system—both properties of today's Internet. Similar work was under way in the United Kingdom, where Donald Davies and Roger Scantlebury of the National Physical Laboratory (NPL) coined the term "packet."

Of course, the United States already had an extensive communications network, the public switched telephone network (PSTN), in which digital switches and transmission lines were deployed as early as 1962. But the telephone network did not figure prominently in early computer networking. Computer scientists working to interconnect their systems spoke a different language than did the engineers and scientists working in traditional voice telecommunications. They read different journals, attended different conferences, and used different terminology. Moreover, data traffic was (and is) substantially different from voice traffic. In the PSTN, a continuous connection, or circuit, is set up at the beginning of a call and maintained for the duration. Computers, on the other hand, communicate in bursts, and unless a number of "calls" can be combined on a single transmission path, line and switching capacity is wasted. Telecommunications engineers were primarily interested in improving the voice network and were skeptical of alternative technologies. As a result, although telephone lines were used to provide point-to-point communication in the ARPANET, the switching infrastructure of the PSTN was not used. According to Taylor, some Bell Laboratories engineers stated flatly in 1967 that "packet switching wouldn't work." 2

At the first Association for Computing Machinery (ACM) Symposium on Operating System Principles in 1967, Lawrence Roberts, then an IPTO program manager, presented an initial design for the packet-switched network that was to become the ARPANET (Roberts, 1967). In addition, Roger Scantlebury presented the NPL work (Davies et al., 1967), citing Baran's earlier RAND report. The reaction was positive, and Roberts issued a request for quotation (RFQ) for the construction of a four-node network.

From the more than 100 respondents to the RFQ, Roberts selected Bolt, Beranek, and Newman (BBN) of Cambridge, Massachusetts; familiar names such as IBM Corporation and Control Data Corporation chose not to bid. The contract to produce the hardware and software was issued in December 1968. The BBN group was led by Frank Heart, and many of the scientists and engineers who would make major contributions to networking in future years participated. Robert Kahn, who with Vinton Cerf would later develop the Transmission Control Protocol/Internet Protocol (TCP/IP) suite used to control the transmission of packets in the network, helped develop the network architecture. The network hardware consisted of a rugged military version of a Honeywell Corporation minicomputer that connected a site's computers to the communication lines. These interface message processors (IMPs)—each the size of a large refrigerator and painted battleship gray—were highly sought after by DARPA-sponsored researchers, who viewed possession of an IMP as evidence they had joined the inner circle of networking research.

The first ARPANET node was installed in September 1969 at Leonard Kleinrock's Network Measurement Center at the University of California at Los Angeles (UCLA). Kleinrock (1964) had published some of the earliest theoretical work on packet switching, and so this site was an appropriate choice. The second node was installed a month later at Stanford Research Institute (SRI) in Menlo Park, California, using Douglas Engelbart's On Line System (known as NLS) as the host. SRI also operated the Network Information Center (NIC), which maintained operational and standards information for the network. Two more nodes were soon installed at the University of California at Santa Barbara, where Glen Culler and Burton Fried had developed an interactive system for mathematics education, and the University of Utah, which had one of the first computer graphics groups.

Initially, the ARPANET was primarily a vehicle for experimentation rather than a service, because the protocols for host-to-host communication were still being developed. The first such protocol, the Network Control Protocol (NCP), was completed by the Network Working Group (NWG) led by Stephen Crocker in December 1970 and remained in use until 1983, when it was replaced by TCP/IP.

Expansion of the Arpanet: 1970-1980

Initially conceived as a means of sharing expensive computing resources among ARPA research contractors, the ARPANET evolved in a number of unanticipated directions during the 1970s. Although a few experiments in resource sharing were carried out, and the Telnet protocol was developed to allow a user on one machine to log onto another machine over the network, other applications became more popular.

The first of these applications was enabled by the File Transfer Protocol (FTP), developed in 1971 by a group led by Abhay Bhushan of MIT (Bhushan, 1972). This protocol enabled a user on one system to connect to another system for the purpose of either sending or retrieving a particular file. The concept of an anonymous user was quickly added, with constrained access privileges, to allow users to connect to a system and browse the available files. Using Telnet, a user could read the remote files but could not do anything with them. With FTP, users could now move files to their own machines and work with them as local files. This capability spawned several new areas of activity, including distributed client-server computing and network-connected file systems.

Occasionally in computing, a "killer application" appears that becomes far more popular than its developers expected. When personal computers (PCs) became available in the 1980s, the spreadsheet (initially VisiCalc) was the application that accelerated the adoption of the new hardware by businesses. For the newly minted ARPANET, the killer application was electronic mail, or e-mail. The first e-mail program was developed in 1972 by Ray Tomlinson of BBN. Tomlinson had built an earlier e-mail system for communication between users on BBN's Tenex time-sharing system, and it was a simple exercise to modify this system to work over the network. By combining the immediacy of the telephone with the precision of written communication, e-mail became an instant hit. Tomlinson's syntax (user@domain) remains in use today.

Telnet, FTP, and e-mail were examples of the leverage that research typically provided in early network development. As each new capability was added, the efficiency and speed with which knowledge could be disseminated improved. E-mail and FTP made it possible for geographically distributed researchers to collaborate and share results much more effectively. These programs were also among the first networking applications that were valuable not only to computer scientists, but also to scholars in other disciplines.

From Arpanet to Internet

Although the ARPANET was ARPA's largest networking effort, it was by no means the only one. The agency also supported research on terrestrial packet radio and packet satellite networks. In 1973, Robert Kahn and Vinton Cerf began to consider ways to interconnect these networks, which had quite different bandwidth, delay, and error properties than did the telephone lines of the ARPANET. The result was TCP/IP, first described in 1973 at an International Network Working Group meeting in England. Unlike NCP, which enabled the hosts of a single network to communicate, TCP/IP was designed to interconnect multiple networks to form an Internet. This protocol suite defined the packet format and a flow-control and error-recovery mechanism to allow the hosts to recover gracefully from network errors. It also specified an addressing mechanism that could support an Internet comprising up to 4 billion hosts.
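The figure of roughly 4 billion hosts follows from the 32-bit width of an IPv4 address. The short sketch below works through that arithmetic using Python's standard `ipaddress` module; the sample address comes from a range reserved for documentation and is not a real host.

```python
import ipaddress

ADDRESS_BITS = 32                       # width of an IPv4 address
total_addresses = 2 ** ADDRESS_BITS
print(total_addresses)                  # 4294967296, i.e. about 4 billion

# A dotted-quad address is a 32-bit integer written one byte at a time.
addr = ipaddress.IPv4Address("192.0.2.1")    # documentation-range example
as_int = int(addr)
print(as_int)
print(ipaddress.IPv4Address(as_int))    # converts back to dotted-quad form
```

Not every one of those values is usable as a host address in practice, so 4 billion is an upper bound rather than an exact capacity.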

The work necessary to transform TCP/IP from a concept into a useful system was performed under ARPA contract by groups at Stanford University, BBN, and University College London. Although TCP/IP has evolved over the years, it is still in use today as the Internet's basic packet transport protocol.

By 1975, the ARPANET had grown from its original four nodes to nearly 100 nodes. Around this time, two phenomena—the development of local area networks (LANs) and the integration of networking into operating systems—contributed to a rapid increase in the size of the network.

Local Area Networks

While ARPANET researchers were experimenting with dedicated telephone lines for packet transmission, researchers at the University of Hawaii, led by Norman Abramson, were trying a different approach, also with ARPA funding. Like the ARPANET group, they wanted to provide remote access to their main computer system, but instead of a network of telephone lines, they used a shared radio network. It was shared in the sense that all stations used the same channel to reach the central station. This approach had a potential drawback: if two stations attempted to transmit at the same time, then their transmissions would interfere with each other, and neither one would be received. But such interruptions were unlikely because the data were typed on keyboards, which sent very short pulses to the computer, leaving ample time between pulses during which the channel was clear to receive keystrokes from a different user.

Abramson's system, known as Aloha, generated considerable interest in using a shared transmission medium, and several projects were initiated to build on the idea. Two of the best-known projects were the Atlantic Packet Satellite Experiment and Ethernet. The packet satellite network demonstrated that the protocols developed in Aloha for handling contention between simultaneous users, combined with more traditional reservation schemes, resulted in efficient use of the available bandwidth. However, the long latency inherent in satellite communications limited the usefulness of this approach.

Ethernet, developed by a group led by Robert Metcalfe at Xerox Corporation's Palo Alto Research Center (PARC), is one of the few examples of a networking technology that was not directly funded by the government. This experiment demonstrated that using coaxial cable as a shared medium resulted in an efficient network. Unlike the Aloha system, in which transmitters could not receive any signals, Ethernet stations could detect that collisions had occurred, stop transmitting immediately, and retry a short time later (at random). This approach improved the efficiency of the Aloha technique and made it practical for actual use. Shared-media LANs became the dominant form of computer-to-computer communication within a building or local area, although variations from IBM (Token Ring) and others also captured part of this emerging market.
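The detect, stop, and retry-at-random behavior can be illustrated with a small slotted simulation. This is a sketch under stated assumptions rather than a model of the PARC hardware: time is divided into discrete slots, every station has exactly one frame to send, and the retry window doubles after each collision (a refinement borrowed from later Ethernet practice, not something the text above describes). The function name `simulate` is hypothetical.

```python
import random


def simulate(num_stations: int, seed: int = 0, max_slots: int = 10_000) -> dict[int, int]:
    """Return the slot in which each station finally sent its frame."""
    rng = random.Random(seed)
    next_try = {s: 0 for s in range(num_stations)}    # everyone starts at slot 0
    collisions = {s: 0 for s in range(num_stations)}
    sent_at: dict[int, int] = {}

    for slot in range(max_slots):
        if not next_try:
            break                                     # all frames delivered
        senders = [s for s, t in next_try.items() if t == slot]
        if len(senders) == 1:
            # Only one station on the cable: the frame gets through.
            station = senders[0]
            sent_at[station] = slot
            del next_try[station]
        elif len(senders) > 1:
            # Collision detected: each sender stops and picks a random delay.
            for s in senders:
                collisions[s] += 1
                window = 2 ** min(collisions[s], 10)  # assumed doubling window
                next_try[s] = slot + 1 + rng.randrange(window)
    return sent_at


print(simulate(5))
```

Since at most one frame succeeds per slot, every station ends up with a distinct sending slot, and the random delays are what break the tie among stations that collided.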

Ethernet was initially used to connect a network of approximately 100 of PARC's Alto PCs, using the center's time-sharing system as a gateway to the ARPANET. Initially, many believed that the small size and limited performance of PCs would preclude their use as network hosts, but, with DARPA funding, David Clark's group at MIT, which had received several Altos from PARC, built an efficient TCP implementation for that system and, later, for the IBM PC. The proliferation of PCs connected by LANs in the 1980s dramatically increased the size of the Internet.

Integrated Networking

Until the 1970s, academic computer science research groups used a variety of computers and operating systems, many of them constructed by the researchers themselves. Most were time-sharing systems that supported a number of simultaneous users. By 1970, many groups had settled on the Digital Equipment Corporation (DEC) PDP-10 computer and the Tenex operating system developed at BBN. This standardization enabled researchers at different sites to share software, including networking software.

By the late 1970s, the Unix operating system, originally developed at Bell Labs, had become the system of choice for researchers, because it ran on DEC's inexpensive (relative to other systems) VAX line of computers. During the late 1970s and early 1980s, an ARPA-funded project at the University of California at Berkeley (UC-Berkeley) produced a version of Unix (the Berkeley Software Distribution, or BSD) that included tightly integrated networking capabilities. The BSD was rapidly adopted by the research community because the availability of source code made it a useful experimental tool. In addition, it ran on both VAX machines and the personal workstations provided by the fledgling Sun Microsystems, Inc., several of whose founders came from the Berkeley group. The TCP/IP suite was now available on most of the computing platforms used by the research community.

Standards and Management

Unlike the various telecommunications networks, the Internet has no owner. It is a federation of commercial service providers, local educational networks, and private corporate networks, exchanging packets using TCP/IP and other, more specialized protocols. To become part of the Internet, a user need only connect a computer to a port on a service provider's router, obtain an IP address, and begin communicating. To add an entire network to the Internet is a bit trickier, but not extraordinarily so, as demonstrated by the tens of thousands of networks with tens of millions of hosts that constitute the Internet today.

The primary technical problem in the Internet is the standardization of its protocols. Today, this is accomplished by the Internet Engineering Task Force (IETF), a voluntary group interested in maintaining and expanding the scope of the Internet. Although this group has undergone many changes in name and makeup over the years, it traces its roots directly to Stephen Crocker's NWG, which defined the first ARPANET protocol in 1969. The NWG defined the system of requests for comments (RFCs) that are still used to specify protocols and discuss other engineering issues. Today's RFCs are still formatted as they were in 1969, eschewing the decorative fonts and styles that pervade today's Web.

Joining the IETF is a simple matter of asking to be placed on its mailing list, attending thrice-yearly meetings, and participating in the work. This grassroots group is far less formal than organizations such as the International Telecommunication Union, which defines telephony standards through the work of members who are essentially representatives of various governments. The open approach to Internet standards reflects the academic roots of the network.

Closing the Decade

The 1970s were a time of intensive research in networking. Much of the technology used today was developed during this period. Several networks other than ARPANET were assembled, primarily for use by computer scientists in support of their own research. Most of the work was funded by ARPA, although the NSF provided educational support for many researchers and was beginning to consider establishing a large-scale academic network.

During this period, ARPA pursued high-risk research with the potential for high payoffs. Its work was largely ignored by AT&T, and the major computer companies, notably IBM and DEC, began to offer proprietary networking solutions that competed with, rather than applied, the ARPA-developed technologies. 3 Yet the technologies developed under ARPA contract ultimately resulted in today's Internet. It is debatable whether a more risk-averse organization lacking the hands-on program management style of ARPA could have produced the same result.

Operation of the ARPANET was transferred to the Defense Communications Agency in 1975. By the end of the decade, the ARPANET had matured sufficiently to provide services. It remained in operation until 1989, when it was superseded by subsequent networks. The stage was now set for the Internet, which was first used by scientists, then by academics in many disciplines, and finally by the world at large.

The NSFNET Years: 1980-1990

During the late 1970s, several networks were constructed to serve the needs of particular research communities. These networks, typically funded by the federal agency that was the primary supporter of the research area, included MFENet, which the Department of Energy established to give its magnetic fusion energy researchers access to supercomputers, and NASA's Space Physics Analysis Network (SPAN). The NSF began supporting network infrastructure with the establishment of CSNET, which was intended to link university computer science departments with the ARPANET. The CSNET had one notable property that the ARPANET lacked: it was open to all computer science researchers, whereas only ARPA contractors could use the ARPANET. An NSF grant to plan the CSNET was issued to Larry Landweber at the University of Wisconsin in 1980.

The CSNET was used throughout the 1980s, but as it and other regional networks began to demonstrate their usefulness, the NSF launched a much more ambitious effort, the NSFNET. From the start, the NSFNET was designed to be a network of networks (an "internet") with a high-speed backbone connecting NSF's five supercomputer centers and the National Center for Atmospheric Research. To oversee the new network, the NSF hired Dennis Jennings from Trinity College, Dublin. In the early 1980s, Jennings had been responsible for the Irish Higher Education Authority network (HEANet), and so he was well-qualified for the task. One of Jennings' first decisions was to select TCP/IP as the primary protocol suite for the NSFNET.

Because the NSFNET was to be an internet (the beginning of today's Internet), specialized computers called routers were needed to pass traffic between networks at the points where the networks met. Today, routers are the primary products of multibillion-dollar companies (e.g., Cisco Systems Incorporated, Bay Networks), but in 1985, few commercial products were available. The NSF chose the "Fuzzball" router designed by David Mills at the University of Delaware (Mills, 1988). Working with ARPA support, Mills improved the protocols used by the routers to communicate the network topology among themselves, a critical function in a large-scale network.

Another technology required for the rapidly growing Internet was the Domain Name System (DNS). Developed by Paul Mockapetris at the University of Southern California's Information Sciences Institute, the DNS provides for hierarchical naming of hosts. An administrative entity, such as a university department, can assign host names as it wishes. It also has a domain name, issued by the higher-level authority of which it is a part. (Thus, a host named xyz in the computer science department at UC-Berkeley would be named xyz.cs.berkeley.edu.) Servers located throughout the Internet provide translation between the host names used by human users and the IP addresses used by the Internet protocols. The name-distribution scheme has allowed the Internet to grow much more rapidly than would be possible with centralized administration.
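The hierarchical naming described above can be illustrated with a short Python sketch. It is only a toy model: the function names `domain_chain` and `toy_resolve`, the table `TOY_RECORDS`, and the address in it (taken from a range reserved for documentation) are assumptions made for illustration, and a real resolver would query DNS servers rather than a local dictionary.

```python
def domain_chain(hostname):
    """Return the enclosing domains of a host name, most general first."""
    labels = hostname.rstrip(".").split(".")
    # Walk from the rightmost label (the top-level domain) to the full name.
    return [".".join(labels[i:]) for i in range(len(labels) - 1, -1, -1)]


# Hypothetical name-to-address table standing in for the distributed servers.
TOY_RECORDS = {"xyz.cs.berkeley.edu": "192.0.2.10"}


def toy_resolve(hostname):
    """Look up an address in the toy table, ignoring case and a trailing dot."""
    return TOY_RECORDS.get(hostname.rstrip(".").lower())


if __name__ == "__main__":
    # Each entry is administered by the authority named by the entry before it.
    for domain in domain_chain("xyz.cs.berkeley.edu"):
        print(domain)
    print(toy_resolve("xyz.cs.berkeley.edu"))
```

Each name in the chain is assigned by the authority for the name before it, which is why no single central registry has to know about every host.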

Jennings left the NSF in 1986. He was succeeded by Stephen Wolff, who oversaw the deployment and growth of the NSFNET. During Wolff's tenure, the speed of the backbone, originally 56 kilobits per second, was increased 1,000-fold, and a large number of academic and regional networks were connected to the NSFNET. The NSF also began to expand the reach of the NSFNET beyond its supercomputing centers through its Connections program, which targeted the research and education community. In response to the Connections solicitation, the NSF received innovative proposals from what would become two of the major regional networks: SURANET and NYSERNET. These groups proposed to develop regional networks with a single connection to the NSFNET, instead of connecting each institution independently.

Hence, the NSFNET evolved into a three-tiered structure in which individual institutions connected to regional networks that were, in turn, connected to the backbone of the NSFNET. The NSF agreed to provide seed funding for connecting regional networks to the NSFNET, with the expectation that, as a critical mass was reached, the private sector would take over the management and operating costs of the Internet. This decision helped guide the Internet toward self-sufficiency and eventual commercialization (Computer Science and Telecommunications Board, 1994).

As the NSFNET expanded, opportunities for privatization grew. Wolff saw that commercial interests had to participate and provide financial support if the network were to continue to expand and evolve into a large, single internet. The NSF had already (in 1987) contracted with Merit Computer Network Incorporated at the University of Michigan to manage the backbone. Merit later formed a consortium with IBM and MCI Communications Corporation called Advanced Network and Services (ANS) to oversee upgrades to the NSFNET. Instead of reworking the existing backbone, ANS added a new, privately owned backbone for commercial services in 1991. 4

Emergence of the Web: 1990 to the Present

By the early 1990s, the Internet was international in scope, and its operation had largely been transferred from the NSF to commercial providers. Public access to the Internet expanded rapidly thanks to the ubiquitous nature of the analog telephone network and the availability of modems for connecting computers to this network. Digital transmission became possible throughout the telephone network with the deployment of optical fiber, and the telephone companies leased their broadband digital facilities for connecting routers and regional networks to the developers of the computer network. In April 1995, all commercialization restrictions on the Internet were lifted. Although still primarily used by academics and businesses, the Internet was growing, with the number of hosts reaching 250,000. Then the invention of the Web catapulted the Internet to mass popularity almost overnight.

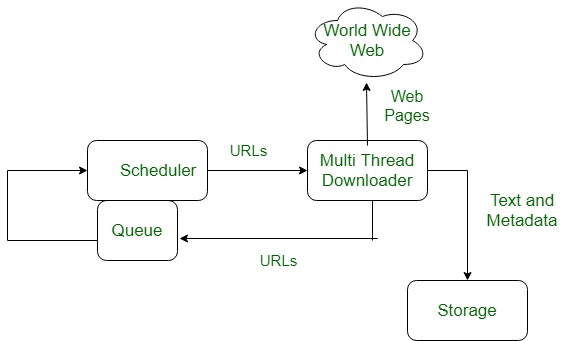

The idea for the Web was simple: provide a common format for documents stored on server computers, and give each document a unique name that can be used by a browser program to locate and retrieve the document. Because the unique names (called universal resource locators, or URLs) are long, including the DNS name of the host on which they are stored, URLs would be represented as shorter hypertext links in other documents. When the user of a browser clicks a mouse on a link, the browser retrieves and displays the document named by the URL.
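As a rough illustration of these two ideas, the Python sketch below splits a URL into its parts (including the DNS host name) and collects the short link text that stands in for each URL in an HTML document. The helper names `describe_url` and `LinkCollector` and the sample document are assumptions made for this example; the URL is used purely as sample input.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


def describe_url(url):
    """Split a URL into scheme, DNS host name, and document path."""
    parts = urlsplit(url)
    return {"scheme": parts.scheme, "host": parts.hostname, "path": parts.path or "/"}


class LinkCollector(HTMLParser):
    """Collect (link text, URL) pairs from the <a href="..."> tags in a document."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text).strip(), self._href))
            self._href = None


# Sample input for illustration only.
EXAMPLE_URL = "http://info.cern.ch/hypertext/WWW/TheProject.html"
EXAMPLE_DOC = f'<p>Read about <a href="{EXAMPLE_URL}">the project</a>.</p>'

if __name__ == "__main__":
    print(describe_url(EXAMPLE_URL))
    collector = LinkCollector()
    collector.feed(EXAMPLE_DOC)
    print(collector.links)
```

The reader sees only the short link text; the browser uses the full URL behind it to locate the host and request the document.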

This idea was implemented by Timothy Berners-Lee and Robert Cailliau at CERN, the high-energy physics laboratory in Geneva, Switzerland, funded by the governments of participating European nations. Berners-Lee and Cailliau proposed to develop a system of links between different sources of information. Certain parts of a file would be made into nodes, which, when called up, would link the user to other, related files. The pair devised a document format called Hypertext Markup Language (HTML), a variant of the Standard Generalized Markup Language used in the publishing industry since the 1950s. It was released at CERN in May 1991. In July 1992, a new Internet protocol, the Hypertext Transfer Protocol (HTTP), was introduced to improve the efficiency of document retrieval. Although the Web was originally intended to improve communications within the physics community at CERN, it, like e-mail 20 years earlier, rapidly became the new killer application for the Internet.

The idea of hypertext was not new. One of the first demonstrations of a hypertext system, in which a user could click a mouse on a highlighted word in a document and immediately access a different part of the document (or, in fact, another document entirely), occurred at the 1968 Fall Joint Computer Conference in San Francisco. At this conference, Douglas Engelbart of SRI gave a stunning demonstration of his NLS (Engelbart, 1986), which provided many of the capabilities of today's Web browsers, albeit limited to a single computer. Engelbart's Augment project was supported by funding from NASA and ARPA. Engelbart was awarded the Association for Computing Machinery's 1997 A. M. Turing Award for this work. Although it never became commercially successful, the mouse-driven user interface inspired researchers at Xerox PARC, who were developing personal computing technology.

Widespread use of the Web, which now accounts for the largest volume of Internet traffic, was accelerated by the development in 1993 of the Mosaic graphical browser. This innovation, by Marc Andreessen at the NSF-funded National Center for Supercomputing Applications, enabled the use of hyperlinks to video, audio, and graphics, as well as text. More important, it provided an effective interface that allowed users to point-and-click on a menu or fill in a blank to search for information.

The development of the Internet and the World Wide Web has had a tremendous impact on the U.S. economy and society more broadly. By January 1998, almost 30 million host computers were connected to the Internet (Zakon, 1998), and more than 58 million users in the United States and Canada were estimated to be online (Nielsen Media Research, 1997). Numerous companies now sell Internet products worth billions of dollars. Cisco Systems, a leader in network routing technology, for example, reported sales of $8.5 billion in 1998. Netscape Communications Corporation, which commercialized the Mosaic browser, had sales exceeding $530 million in 1997. 5 Microsoft Corporation also entered the market for Web browsers and now competes head-to-head with Netscape. A multitude of other companies offer hardware and software for Internet-based systems.

The Internet has also paved the way for a host of services. Companies like Yahoo! and InfoSeek provide portals to the Internet and have attracted considerable attention from Wall Street investors. Other companies, like Amazon.com and Barnes & Noble, have established online stores. Amazon had online sales of almost $150 million for books in 1997. 6 Electronic commerce, more broadly, is taking hold in many types of organizations, from PC manufacturers to retailers to travel agencies. Although estimates of the value of these services vary widely, they all reflect a growing sector of the economy that is wholly dependent on the Internet. Internet retailing could reach $7 billion by the year 2000, and online sales of travel services are expected to approach $8 billion around the turn of the century. Forrester Research estimates that businesses will buy and sell $327 billion worth of goods over the Internet by the year 2002 (Blane, 1997).

The Web has been likened to the world's largest library—with the books piled in the middle of the floor. Search engines, which are programs that follow the Web's hypertext links and index the material they discover, have improved the organization somewhat but are difficult to use, frequently deluging the user with irrelevant information. Although developments in computing and networking over the last 40 years have realized some of the potential described by visionaries such as Licklider and Engelbart, the field continues to offer many opportunities for innovation.

Lessons from History

The development of the Internet demonstrates that federal support for research, applied at the right place and right time, can be extremely effective. DARPA's support gave visibility to the work of individual researchers on packet switching and resulted in the development of the first large-scale packet-switched network. Continued support for experimentation led to the development of networking protocols and applications, such as e-mail, that were used on the ARPANET and, subsequently, the Internet.

By bringing together a diverse mix of researchers from different institutions, such federal programs helped the Internet gain widespread acceptance and established it as a dominant mode of internetworking. Government programs such as ARPANET and NSFNET created a large enough base of users to make the Internet more attractive in many applications than proprietary networking systems being offered by a number of vendors. Though a number of companies continue to sell proprietary systems for wide area networking, some of which are based on packet-switched technology, these systems have not achieved the ubiquity of the Internet and are used mainly within private industry.

Research in packet switching evolved in unexpected directions and had unanticipated consequences. It was originally pursued to make more-efficient use of limited computing capabilities and later seen as a means of linking the research and education communities. The most notable result, however, was the Internet, which has dramatically improved communication across society, changing the way people work, play, and shop. Although DARPA and the NSF were successful in creating an expansive packet-switched network to facilitate communication among researchers, it took the invention of the Web and its browsers to make the Internet more broadly accessible and useful to society.

The widespread adoption of Internet technology has created a number of new companies in industries that did not exist 20 years ago, and most companies that did exist 20 years ago are incorporating Internet technology into their business operations. Companies such as Cisco Systems, Netscape Communications, Yahoo!, and Amazon.com are built on Internet technologies and their applications and generate billions of dollars annually in combined sales revenues. Electronic commerce is also maturing into an established means of conducting business.

The complementary missions and operating styles of federal agencies are important to the development and implementation of new technologies. Whereas DARPA supported early research on packet switching and development of the ARPANET, it was not prepared to support an operational network, nor did it expand its network beyond DARPA-supported research institutions. With its charter to support research and education, the NSF both supported an operational network and greatly expanded its reach, effectively building the infrastructure for the Internet.

The past 50 years have witnessed a revolution in computing and related communications technologies. The contributions of industry and university researchers to this revolution are manifest; less widely recognized is the major role the federal government played in launching the computing revolution and sustaining its momentum. Funding a Revolution examines the history of computing since World War II to elucidate the federal government's role in funding computing research, supporting the education of computer scientists and engineers, and equipping university research labs. It reviews the economic rationale for government support of research, characterizes federal support for computing research, and summarizes key historical advances in which government-sponsored research played an important role.

Funding a Revolution contains a series of case studies in relational databases, the Internet, theoretical computer science, artificial intelligence, and virtual reality that demonstrate the complex interactions among government, universities, and industry that have driven the field. It offers a series of lessons that identify factors contributing to the success of the nation's computing enterprise and the government's role within it.



The World Wide Web from its origins


Science inspired the World Wide Web, and the Web has responded by changing science.

'Information Management: A Proposal'. That was the bland title of a document written in March 1989 by a then little-known computer scientist called Tim Berners-Lee, who was working at CERN, Europe’s particle physics laboratory, near Geneva. His proposal, modestly called the World Wide Web, has achieved far more than anyone expected at the time.

In fact, the Web was invented to deal with a specific problem. In the late 1980s, CERN was planning one of the most ambitious scientific projects ever, the Large Hadron Collider*, or LHC. As the first few lines of the original proposal put it, 'Many of the discussions of the future at CERN and the LHC end with the question "Yes, but how will we ever keep track of such a large project?" This proposal provides an answer to such questions.'

The Web, as everyone now knows, has many more uses than the original idea of linking electronic documents about particle physics in laboratories around the world. But among all the changes it has brought about, from personal social networks to political campaigning, it has also transformed the business of doing science itself, as the man who invented it hoped it would.

It allows journals to be published online and links to be made from one paper to another. It also permits professional scientists to recruit thousands of amateurs to give them a hand. One project of this type, called GalaxyZoo, used these unpaid workers to classify one million images of galaxies into various types (spiral, elliptical and irregular). This project, which was intended to help astronomers understand how galaxies evolve, was so successful that a successor has now been launched, to classify the brightest quarter of a million of them in finer detail. People working for a more modest project called Herbaria@home examine scanned images of handwritten notes about old plants stored in British museums. This will allow them to track the changes in the distribution of species in response to climate change.

Another new scientific application of the Web is to use it as an experimental laboratory. It is allowing social scientists, in particular, to do things that were previously impossible. In one project, scientists made observations about the sizes of human social networks using data from Facebook. A second investigation of these networks, produced by Bernardo Huberman of HP Labs, Hewlett-Packard's research arm in Palo Alto, California, looked at Twitter, a social networking website that allows people to post short messages to long lists of friends.

At first glance, the networks seemed enormous - the 300,000 Twitterers sampled had 80 friends each, on average (those on Facebook had 120), but some listed up to 1,000. Closer statistical inspection, however, revealed that the majority of the messages were directed at a few specific friends. This showed that an individual's active social network is far smaller than his 'clan'. Dr Huberman has also helped uncover several laws of web surfing, including the number of times an average person will go from web page to web page on a given site before giving up, and the details of the 'winner takes all' phenomenon, whereby a few sites on a given subject attract most of the attention, and the rest get very little.

Scientists have been good at using the Web to carry out research. However, they have not been so effective at employing the latest web-based social-networking tools to open up scientific discussion and encourage more effective collaboration. Journalists are now used to having their articles commented on by dozens of readers. Indeed, many bloggers develop and refine their essays as a result of these comments.

Yet although people have tried to have scientific research reviewed in the same way, most researchers only accept reviews from a few anonymous experts. When Nature, one of the world's most respected scientific journals, experimented with open peer review in 2006, the results were disappointing. Only 5% of the authors it spoke to agreed to have their article posted for review on the Web - and their instinct turned out to be right, because almost half of the papers attracted no comments. Michael Nielsen, an expert on quantum computers, belongs to a new wave of scientists who want to change this. He thinks the reason for the lack of comments is that potential reviewers lack incentive.

adapted from The Economist

* The Large Hadron Collider (LHC) is the world's largest particle accelerator and collides particle beams. It provides information on fundamental questions of physics.

Questions 1-6

Do the following statements agree with the information given in the reading passage?

Write

TRUE if the statement agrees with the information

FALSE if the statement contradicts the information

NOT GIVEN if there is no information on this

1 Tim Berners-Lee was famous for his research in physics before he invented the World Wide Web. Answer: FALSE

2 The original intention of the Web was to help manage one extremely complex project. Answer: TRUE

3 Tim Berners-Lee has also been active in politics. Answer: NOT GIVEN

4 The Web has allowed professional and amateur scientists to work together. Answer: TRUE

5 The second galaxy project aims to examine more galaxies than the first. Answer: FALSE

6 Herbaria@home’s work will help to reduce the effects of climate change. Answer: NOT GIVEN

Questions 7-10

Complete the notes below.

Choose NO MORE THAN TWO WORDS from the passage for each answer.

Social networks and Internet use

Web used by social scientists (including Dr Huberman) to investigate the 7 ______ of social networks. Answer: sizes

Most 8 ______ intended for limited number of people - not everyone on list. Answer: messages

Dr Huberman has also investigated:

- 9 ______ to discover how long people will spend on a particular website. Answer: web surfing

- why a small number of sites get much more 10 ______ than others on same subject. Answer: attention

Questions 11-13

Answer the questions below.

Choose NO MORE THAN TWO WORDS from the passage for each answer.

11 Whose writing improves as a result of feedback received from readers? Answer: bloggers

12 What type of writing is not reviewed extensively on the Web? Answer: scientific research

13 Which publication invited authors to publish their articles on the World Wide Web? Answer: Nature


Internet vs. World Wide Web

The World Wide Web (WWW) is one set of software services running on the Internet. The Internet itself is a global, interconnected network of computing devices. This network supports a wide variety of interactions and communications between its devices. The World Wide Web is a subset of these interactions and supports websites and URIs.


The Internet is a huge network that is accessible from almost anywhere in the world. It is composed of sub-networks, each comprising a number of computers that transmit data in packets. Data transmission across these networks follows a common set of rules known as the Internet Protocol (IP). The sub-networks range from defense networks to academic networks to commercial networks to individual PCs. The Internet essentially provides information and services in the form of e-mail, chat and file transfers. It also provides access to the World Wide Web and its interlinked web pages.

The Internet and the World Wide Web (the Web), though the terms are often used interchangeably, are not synonymous. The Internet is the hardware part: it is a collection of computer networks connected through copper wires, fiber-optic cables or wireless connections. The World Wide Web can be termed the software part: it is a collection of web pages connected through hyperlinks and URLs. In short, the World Wide Web is one of the services provided over the Internet. Other services over the Internet include e-mail, chat and file transfer services. All of these services can be provided to consumers by businesses or governments, or by individuals creating their own networks or platforms.

Another way to differentiate between the two is by the protocols, the sets of rules, that govern each. The Internet is governed by the Internet Protocol, which deals with the transmission of data in packets, whereas the World Wide Web is governed by the Hypertext Transfer Protocol (HTTP), which deals with the linking and retrieval of files, documents and other resources of the World Wide Web.
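To make the layering concrete, the sketch below builds the text of a minimal HTTP request in Python. It only constructs the bytes a browser might send; nothing is transmitted, and the lower layers (TCP and IP) that would carry those bytes in packets are deliberately left out. The function name `build_get_request` and the example URL are assumptions made for illustration, and the sketch assumes the default port.

```python
from urllib.parse import urlsplit


def build_get_request(url):
    """Return the bytes of a minimal HTTP/1.1 GET request for the given URL."""
    parts = urlsplit(url)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    lines = [
        f"GET {path} HTTP/1.1",      # which document is wanted
        f"Host: {parts.hostname}",   # which server name the request is for
        "Connection: close",         # ask the server to close after replying
        "",                          # blank line ends the header section
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


if __name__ == "__main__":
    # Hypothetical URL used only as sample input.
    print(build_get_request("http://example.org/docs/page.html").decode("ascii"))
```

HTTP describes what is being asked for; how the resulting bytes are split into packets and routed is left entirely to the Internet protocols underneath.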

The Advanced Research Projects Agency (ARPA), created by the US in 1958 as a reply to the USSR’s launch of Sputnik, led to the creation of a department called the Information Processing Technology Office (IPTO), which started the Semi Automatic Ground Environment (SAGE) that linked all the radar systems of the US together. With tremendous research happening across the world, the University of California in Los Angeles (UCLA) got the ARPANET, a smaller version of the Internet, in 1969. Since then the Internet has taken huge strides in terms of technology and connectivity to reach its current position. In 1978, the International Packet Switched Service (IPSS) was created in Europe by the British Post Office in collaboration with Tymnet and Western Union International, and this network slowly spread its wings to the US and Australia. In 1983, the first Wide Area Network (WAN) was created by the National Science Foundation (NSF) of the US, called the NSFnet. All these sub-networks merged together after 1985 with new definitions of the Transmission Control Protocol and the Internet Protocol (TCP/IP) for optimization of resources.

The Web was invented by Sir Tim Berners-Lee. In March 1989, Berners-Lee wrote a proposal that described the Web as an elaborate information management system. With help from Robert Cailliau, he published a more formal proposal for the World Wide Web on November 12, 1990. By Christmas 1990, Berners-Lee had built all the tools necessary for a working Web: the first web browser (which was a web editor as well), the first web server, and the first Web pages, which described the project itself. On August 6, 1991, he posted a short summary of the World Wide Web project on the alt.hypertext newsgroup. This date also marked the debut of the Web as a publicly available service on the Internet.

Berners-Lee's breakthrough was to marry hypertext to the Internet. In his book Weaving The Web, he explains that he had repeatedly suggested that a marriage between the two technologies was possible to members of both technical communities, but when no one took up his invitation, he finally tackled the project himself. In the process, he developed a system of globally unique identifiers for resources on the Web and elsewhere: the Uniform Resource Identifier.

The World Wide Web had a number of differences from other hypertext systems that were then available. The Web required only unidirectional links rather than bidirectional ones. This made it possible for someone to link to another resource without action by the owner of that resource. It also significantly reduced the difficulty of implementing web servers and browsers (in comparison to earlier systems), but in turn presented the chronic problem of link rot. Unlike predecessors such as HyperCard, the World Wide Web was non-proprietary, making it possible to develop servers and clients independently and to add extensions without licensing restrictions.
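The trade-off between unidirectional links and link rot can be shown with a toy model in Python. Here the "web" is just a dictionary mapping each page to the pages it links to; the page addresses, the name `TOY_WEB`, and the function `dangling_links` are all hypothetical and exist only for this sketch.

```python
def dangling_links(pages):
    """Return (source, target) pairs whose target page no longer exists.

    `pages` maps each page's address to the list of addresses it links to.
    Because links are one-way, a target keeps no record of who points at it.
    """
    return sorted(
        (source, target)
        for source, links in pages.items()
        for target in links
        if target not in pages
    )


# Hypothetical three-page web used only for illustration.
TOY_WEB = {
    "a.example/index": ["b.example/paper", "c.example/notes"],
    "b.example/paper": ["c.example/notes"],
    "c.example/notes": [],
}

if __name__ == "__main__":
    print(dangling_links(TOY_WEB))   # every target exists, so nothing dangles
    del TOY_WEB["c.example/notes"]   # the owner removes a page without telling anyone
    print(dangling_links(TOY_WEB))   # both links to the removed page are now broken
```

Nothing in the model lets the owner of the removed page notify the pages that linked to it, which is exactly why linking is easy and why broken links accumulate.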

For more details see The History of the Internet and The History of the World Wide Web.

Internet of Things

In recent years, the phrase Internet of Things (IoT) has been used to denote a subset of the Internet that connects physical devices, such as home appliances, vehicles, and industrial sensors. Historically the devices connected to the Internet have been computers, cell phones and tablets. With the Internet of Things, other devices like refrigerators, HVAC systems, light bulbs, cars, thermostats, video cameras, and locks can also connect to the Internet. This allows better monitoring and more control of the physical world through the Internet.


"Internet vs World Wide Web." Diffen.com. Diffen LLC, n.d. Web. 23 Oct 2024.


Assignment on World Wide Web

Web Site:

A web site is a collection of web pages, images, videos or other digital assets that is hosted on one or several web servers and is usually accessible via the Internet, a cell phone network or a LAN. A web page is a document, typically written in HTML, that is almost always accessible via HTTP, a protocol that transfers information from the web server for display in the user’s web browser. All publicly accessible websites are seen collectively as constituting the “World Wide Web”.

The pages of a website can usually be accessed from a common root URL called the homepage, and usually reside on the same physical server. The URLs of the pages organize them into a hierarchy, although the hyperlinks between them control how the reader perceives the overall structure and how the traffic flows between the different parts of the site. Some websites require a subscription to access some or all of their content. Examples of subscription sites include many business sites, parts of many news sites, academic journal sites, gaming sites, message boards, Web-based e-mail services, social networking websites and sites providing real-time stock market data.

Organized by function, a website may be:
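The way page URLs imply a hierarchy can be sketched in Python by grouping URL paths into a nested dictionary. The function name `build_site_tree` and the example addresses are assumptions made for illustration; the sketch looks only at the path of each URL and ignores the hyperlinks between pages.

```python
from urllib.parse import urlsplit


def build_site_tree(urls):
    """Group page URLs into a nested dict that mirrors their path segments."""
    tree = {}
    for url in urls:
        node = tree
        for segment in urlsplit(url).path.strip("/").split("/"):
            if segment:  # the homepage ("/") contributes no segment
                node = node.setdefault(segment, {})
    return tree


if __name__ == "__main__":
    # Hypothetical site used only as sample input.
    pages = [
        "http://example.org/",
        "http://example.org/news/2024/launch.html",
        "http://example.org/news/archive.html",
        "http://example.org/about.html",
    ]
    print(build_site_tree(pages))
```

The resulting tree reflects how the site is filed, which may differ from how visitors actually move through it by following links.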

» A personal website

» A commercial website

» A government website

» A non-profit organization website

It could be the work of an individual, a business or other organization and is typically dedicated to some particular topic or purpose.

Web The New Arena:

Life was just going on fine, when along came the Internet and just about everything changed. You just have to look back over the past five or six years and think of how different things were before that. Today, everyone’s young, smart and online. Oh yes, we are well into the Net Age. Whether you are working in a high-tech corporation or setting up your home office, trying to learn a thing or two at college or having a whale of a time at school, life’s on the Internet.

Content Is The King:

No matter how great a site looks, no amount of design ever makes up for poor content. This is a fact that many web authors lose sight of. That’s why we have so many sites around us that offer the visitor the same old thing: a bit of this, a bit of that. A really good site must have solid, unique content. That’s why, as experts recommend, we start with strategy and purpose first, not with design. First off, you must be quite clear about the purpose of your site. This holds true for any type of web site, whether it’s a personal web site, a small business setup, a hobbyist’s page, an e-commerce site or anything else. A web site without purpose just takes up space and pleases no one but its own author. So unless you are just using your site for storage, start by putting down your purpose, your objectives and your message.

Types Of Web Site:

There are many varieties of web sites, each specializing in a particular type of content or use, and they may be arbitrarily classified in any number of ways. A few such classifications might include:

Affiliated Sites: Enabled portal that renders not only its custom CMS but also syndicated content from other content providers for an agreed fee. There are usually three relationship tiers: affiliate agencies (e.g. Commission Junction), advertisers (e.g. eBay) and consumers (e.g. Yahoo).

Archive Site: Used to preserve valuable electronic content threatened with extinction. Two examples are the Internet Archive, which since 1996 has preserved billions of old (and new) web pages, and Google Groups, which in early 2005 was archiving over 845,000,000 messages posted to Usenet news/discussion groups.

Blog Site: Sites generally used to post online diaries, which may include discussion forums (e.g. Blogger, Xanga).

Content Site: Sites whose business is the creation and distribution of original content (e.g. Slant, About.com).

Corporate Site: Used to provide background information about a business, organization or service.

E-Commerce Site: A site for purchasing goods, such as Amazon.com.

Community Site: A site where persons with similar interests communicate with each other usually by chat or message boards such as MySpace.

Database Site: A site whose main use is the search and display of a specific database’s content such as the Internet Movie Database or the Political Graveyard.

Development Site: A site whose purpose is to provide information and resources related to software development, web design and the like.

Directory Site: A site that contains varied contents which are divided into categories and subcategories, such as the Yahoo! Directory, the Google Directory and the Open Directory Project.

Download Site: Strictly used for downloading electronic content such as software, game demos or computer wallpaper.

Employment Site: Allows employers to post job requirements for a position or positions and prospective employees to fill out an application.

Erotica Websites: Shows sexual videos and images.

Fan Site: A web site created and maintained by fans of and for a particular celebrity, as opposed to a web site created, maintained and controlled by a celebrity through their own paid webmaster. May also be known as a Shrine in the case of certain subjects such as anime and manga characters.

Game Site: A site that is itself a game or “playground” where many people come to play such as MSN Games, POGO.com and Newgrounds.com.

Gripe Site: A site devoted to the critique of a person, place, corporation, government or institution.

Humor Site: Satirizes, parodies or otherwise exists solely to amuse.

Information Site: Contains content that is intended to inform visitors but not necessarily for commercial purposes such as: RateMyProfessors.com, free internet lexicon and encyclopedia. Most government, educational and non-profit institutions have an informational site.

Java Applet Site: Contains software to run over the web as a web application.

Mirror (Computing) Site: A complete reproduction of a website.

News Site: Similar to an information site but dedicated to dispensing news and commentary.

Personal Homepage: Run by an individual or a small group (such as a family) that contains information or any content that the individual wishes to include.

Political Site: A site on which people may voice political views.

Pornography (porn) Site: Site that shows pornographic images and videos.

Rating Site: Site on which people can praise or disparage what is featured.

Review Site: Site on which people can post reviews of products or services.

Search Engine Site: A site that provides general information and is intended as a gateway or lookup for other sites. A pure example is Google and the most widely known extended type is Yahoo!.

Shock Site: Includes images or other material that is intended to be offensive to most viewers (e.g. Rotten.com).

Phish Site: Website created to fraudulently acquire sensitive information such as passwords and credit card details by masquerading as a trustworthy person or business (such as the Social Security Administration or PayPal) in an electronic communication.

Warez: A site filled with illegal downloads.

Web Portal: A site that provides a starting point or a gateway to other resources on the internet or an intranet.

Wiki Site: A site which users collaboratively edit (such as Wikipedia).

World Wide Web:

The letters “www” are commonly found at the beginning of Web addresses because of the long-standing practice of naming Internet hosts (servers) according to the services they provide. So, for example, the host name for a Web server is often “www”; for an FTP server, “ftp”; and for a USENET news server, “news” or “nntp” (after the news protocol NNTP). These host names appear as DNS subdomain names, as in www.example.com. This use of such prefixes is not required by any technical standard; indeed, the first web server was at “nxoc01.cern.ch” [15], and even today many web sites exist without a “www” prefix. The “www” prefix has no meaning in the way the main web site is shown; it is simply one choice for a web site’s subdomain name. Some web browsers will automatically try adding “www.” to the beginning, and possibly “.com” to the end, of typed URLs if no host is found without them. Internet Explorer, Mozilla Firefox, Safari and Opera will also prefix “http://www.” and append “.com” to the address bar contents if the Control and Enter keys are pressed simultaneously. For example, entering “example” in the address bar and then pressing either just Enter or Control+Enter will usually resolve to “http://www.example.com”, depending on the exact browser version and its settings.

The World Wide Web (commonly shortened to the web) is a system of interlinked hypertext documents accessed via the Internet. With a web browser, a user views web pages that contain text, images, videos and other multimedia and navigates between them using hyperlinks. The World Wide Web was created in 1989 by Sir Tim Berners-Lee, working at CERN in Geneva, Switzerland. Since then, Berners-Lee has played an active role in guiding the development of web standards (such as the markup languages in which web pages are composed), and in recent years has advocated his vision of a Semantic Web. Robert Cailliau, also at CERN, was an early evangelist for the project.
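The address-bar behaviour described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the actual logic of any browser: the function names `candidate_hosts` and `complete_address`, and the `host_exists` callback standing in for a real host lookup, are all assumptions made for this example.

```python
def candidate_hosts(typed):
    """Host names a browser might try, in order, for what the user typed."""
    word = typed.strip().lower()
    candidates = [word]
    if not word.startswith("www."):
        candidates.append("www." + word)
    if "." not in word:
        # A bare word gets both the "www." prefix and the ".com" suffix.
        candidates.append("www." + word + ".com")
    return candidates


def complete_address(typed, host_exists, ctrl_enter=False):
    """Turn address-bar input into a URL, or None if no host is found.

    host_exists is a hypothetical callback that reports whether a host
    name can be found; a real browser would ask DNS instead.
    """
    word = typed.strip().lower()
    if ctrl_enter:
        # Control+Enter: wrap the input without checking anything first.
        return "http://www." + word + ".com"
    for host in candidate_hosts(word):
        if host_exists(host):
            return "http://" + host
    return None


# Pretend only this one host exists.
known_hosts = {"www.example.com"}
url = complete_address("example", lambda host: host in known_hosts)
```

With the pretend host table above, typing “example” and pressing Enter falls through the first two candidates and settles on the third, which matches the outcome of the Control+Enter shortcut.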

How The Web Works:

Viewing a web page on the World Wide Web normally begins either by typing the URL of the page into a web browser or by following a hyperlink to that page or resource. The web browser then initiates a series of communication messages behind the scenes in order to fetch and display it.

First the server-name portion of the URL is resolved into an IP address using the global, distributed Internet database known as the domain name system or DNS. This IP address is necessary to contact and send data packets to the web server.
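The name-resolution step can be tried out with Python's standard library. The sketch below pulls the server name out of a URL and asks the operating system's resolver for its IP addresses; the helper names `host_from_url` and `resolve_host` are made up for this example, and a real browser adds caching and other refinements on top.

```python
import socket
from urllib.parse import urlsplit


def host_from_url(url):
    """Extract the server-name portion of a URL."""
    return urlsplit(url).hostname


def resolve_host(hostname):
    """Return the distinct IP addresses the system resolver reports."""
    infos = socket.getaddrinfo(hostname, None, proto=socket.IPPROTO_TCP)
    addresses = []
    for _family, _type, _proto, _canonname, sockaddr in infos:
        ip = sockaddr[0]  # sockaddr starts with the address for IPv4 and IPv6
        if ip not in addresses:
            addresses.append(ip)
    return addresses


server_name = host_from_url("http://www.example.com/index.html")
```

Calling `resolve_host(server_name)` on a machine with a working Internet connection returns whatever addresses DNS currently holds for that name, so the result can differ from one network to another.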

The browser then requests the resource by sending an HTTP request to the web server at that particular address. In the case of a typical web page, the HTML text of the page is requested first and parsed immediately by the web browser, which will then make additional requests for images and any other files that form a part of the page. Statistics measuring a website’s popularity are usually based on the number of ‘page views’ or associated server ‘hits’, or requests, which take place.

Having received the required files from the web server, the browser renders the page onto the screen as specified by its HTML, CSS and other web languages. Any images and other resources are incorporated to produce the on-screen web page that the user sees.
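To show what such a request looks like on the wire, here is a minimal Python sketch that builds the text of an HTTP/1.1 GET request for a URL. The function name `build_get_request` is an assumption for this example, and real browsers send many more headers than the two shown here.

```python
from urllib.parse import urlsplit


def build_get_request(url):
    """Return the text of a minimal HTTP/1.1 GET request for url."""
    parts = urlsplit(url)
    path = parts.path or "/"  # an empty path means the homepage
    if parts.query:
        path += "?" + parts.query
    host = parts.hostname
    if parts.port:
        host += ":" + str(parts.port)
    lines = [
        "GET " + path + " HTTP/1.1",
        "Host: " + host,        # tells the server which site is wanted
        "Connection: close",    # ask the server to close after replying
        "",                     # a blank line ends the header section
        "",
    ]
    return "\r\n".join(lines)


request_text = build_get_request("http://www.example.com/news/index.html")
```

The server's reply to this message carries the HTML text of the page, after which the browser repeats the same kind of request for each image or other file the page needs.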
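Before it can render the page, the browser has to work out which extra files the HTML refers to. The Python sketch below does a simplified version of that scan using the standard `html.parser` module. The class name `ResourceCollector`, the helper `find_resources` and the sample page are made up for illustration, and only three kinds of tag are handled, far fewer than a real browser looks at.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class ResourceCollector(HTMLParser):
    """Collect the URLs of images, scripts and stylesheets in a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.resources = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag in ("img", "script") and attributes.get("src"):
            reference = attributes["src"]
        elif (tag == "link" and attributes.get("rel") == "stylesheet"
              and attributes.get("href")):
            reference = attributes["href"]
        else:
            return
        # Relative references are resolved against the page's own URL.
        self.resources.append(urljoin(self.base_url, reference))


def find_resources(html, base_url):
    collector = ResourceCollector(base_url)
    collector.feed(html)
    return collector.resources


sample_page = (
    '<html><head><link rel="stylesheet" href="/style.css"></head>'
    '<body><img src="logo.png"><script src="app.js"></script></body></html>'
)
needed = find_resources(sample_page, "http://www.example.com/news/index.html")
```

Each address in `needed` would be fetched with its own HTTP request, and the page is drawn once those files have arrived.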


Writing Web Site:

Writing for the web is in many ways different from writing for print. For one, the reader’s purpose in reading may be different. His attention span is different. The reading experience online and the way the reader’s eye moves across a page are different. With a printed page, there is only one sort of navigation: turn the page. But on a web page, there can be dozens and dozens of options all visible at once. And there’s your reader, finger poised over the mouse button, ready to be interactive. On a web page, interactivity is important because readers want to do something. All this means that information has to be tailored and arranged specially for online reading. Writing for the web skillfully involves learning how to keep in mind new online reading habits and patterns. It means being able to put forward information in a way that draws the reader in quickly and keeps him at the website, or at least gives him what he wants so that he comes back again and again.

Web Site Styles:

A static web site is one that has web pages stored on the server in the same form as the user will view them. They are edited using three broad categories of software:

Text editors such as Notepad or TextEdit, where the HTML is manipulated directly within the editor program.

Editors such as Microsoft FrontPage and Macromedia Dreamweaver, where the site is edited using a GUI and the underlying HTML is generated automatically by the editor software.

Template-based editors such as Rapidweaver and iWeb, which allow users to quickly create and upload websites to a web server without having to know anything about HTML, as they just pick a suitable template from a palette and add pictures and text to it in a DTP-like fashion without ever having to see any HTML code.

A dynamic website is one that has frequently changing information or collates information on the fly each time a page is requested. For example, it would call various bits of information from a database and put them together in a pre-defined format to present the reader with a coherent page. It interacts with users in a variety of ways, including by reading cookies, recognizing the user’s previous history, session variables, server-side variables etc., or by using direct interaction (form elements, mouseovers, etc.). A site can display the current state of a dialog between users, monitor a changing situation or provide information in some way personalized to the requirements of the individual user.
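The difference between the two styles can be seen in a small Python sketch of the dynamic case: each time the page is requested, rows are read from a database and dropped into a fixed template. Everything here is an assumption made for illustration, including the `news` table, the `PAGE` template and the function name `render_news_page`; a real site would normally use a web framework rather than code like this.

```python
import sqlite3
from html import escape
from string import Template

# The pre-defined format every generated page follows.
PAGE = Template("<html><body><h1>$title</h1><ul>$items</ul></body></html>")


def render_news_page(connection):
    """Build the page from whatever is in the database right now."""
    rows = connection.execute(
        "SELECT headline FROM news ORDER BY id"
    ).fetchall()
    # escape() keeps stored text from being read as HTML markup.
    items = "".join("<li>" + escape(headline) + "</li>" for (headline,) in rows)
    return PAGE.substitute(title="Latest news", items=items)


# A throwaway in-memory database standing in for the site's real one.
connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE news (id INTEGER PRIMARY KEY, headline TEXT)")
connection.executemany(
    "INSERT INTO news (headline) VALUES (?)",
    [("Site launched",), ("New gallery added",)],
)
first_view = render_news_page(connection)

# New information appears on the next request without editing any HTML file.
connection.execute("INSERT INTO news (headline) VALUES (?)", ("Forum opened",))
second_view = render_news_page(connection)
```

A static site would instead store `first_view` as a file and serve it unchanged until someone edited it by hand.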

What makes the World Wide Web so exciting is the limitless ways in which information and content can be put up. Using color, pictures, sounds, movie clips, animation and interactivity, you can make sure your site is compelling enough to draw visitors again and again, which is what every website wants. Naturally, this makes the site’s design, its layout, navigation and general look and feel, an important aspect to work on. What usually tends to happen is that people over-design a website, filling it with bright, startling colors, a feast of different fonts and too many pictures for its own good. On many resource sites, design is a term that is used rather loosely; sometimes it can include usability issues, navigation, browser compatibility and so on. If your page is about a region-specific topic, then make sure you include that.