35 Higher-Order Thinking Questions

Higher-order thinking questions are questions designed to stimulate thinking that requires a strong mastery of knowledge and the ability to manipulate information.

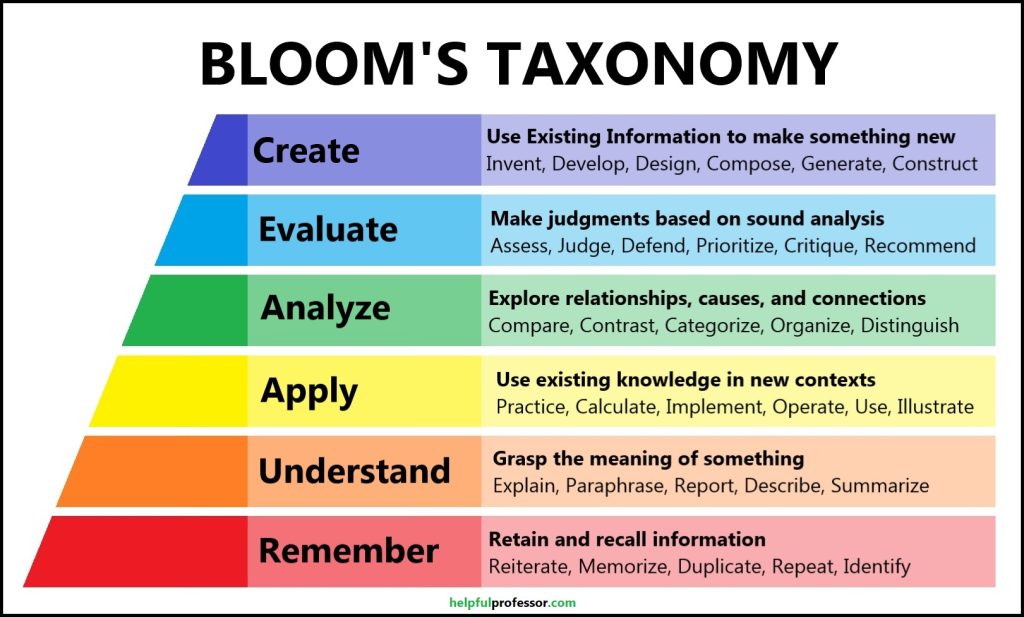

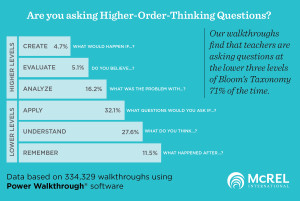

Higher-order thinking generally involves the top three levels of Bloom’s Taxonomy: analysis, evaluation, and creation.

The term “higher-order” is used because these forms of thinking require strong command of information and the ability to work with it to develop complex understanding (Stanley, 2021).

Generally, a higher-order thinking question will be open-ended and require the student to demonstrate their ability to analyze and evaluate information.

Higher-Order Thinking Questions

Below are some useful questions for stimulating higher-order thinking.

Questions for Teachers to Ask Students

- Encourage compare and contrast: How would you compare and contrast these two concepts/ideas?

- Seek alternatives: Can you provide an alternative solution to this problem?

- Apply an ethical lens: What ethical considerations are involved in this situation or decision?

- Categorize and classify: How would you categorize or classify these items based on their shared characteristics?

- Sort by priority: How would you prioritize these tasks, and what factors did you consider?

- Real-world connections: How can you apply this concept to a real-world situation?

- Rephrase and reframe: How would you rephrase this question or problem from a different perspective?

- Identify trends: Can you identify any trends or developments that may influence this issue in the future?

- Seek solutions: How would you design a solution to address this challenge?

- Use evidence: What evidence supports your point of view or conclusion?

- Find relationships: Can you explain the relationship between these two events or phenomena?

- Change a variable: How would this situation change if we altered this variable or factor?

- Compare to prior knowledge: In what ways does this concept challenge your previous understanding or beliefs?

- Identify connections: Can you explain how these two seemingly unrelated ideas are connected or interdependent?

- Recontextualize: How would you adapt this solution to work in a different context or environment?

- Identify consequences: What are the potential consequences of this decision or action?

- Evaluate: What criteria would you use to evaluate the effectiveness or success of this approach?

- Interdisciplinary connections: How can you apply principles from another discipline to enhance your understanding of this topic?

- Distil key factors: What factors may have contributed to this outcome or result, and how might they be addressed?

- Identify bias: Can you identify any biases or assumptions in this argument?

- Find weaknesses: How would you argue against your own position or point of view?

- Steelman: What are the strongest criticisms of your position, and how would you respond to them?

- Make judgments about best practices: Can you develop a set of guidelines or best practices based on this information?

- Seek next steps: What questions would you ask to further investigate or explore this topic?

- Reflect on process: What did you learn about how you approached this task, and what would you change next time to improve?

Questions for Students to Ask Themselves

- K-W-L: What do I already know about this topic, what do I still need to learn, and what have I learned today?

- Compare and contrast with prior knowledge: How does this new information relate to what I already know?

- Identify assumptions: What assumptions am I making, and are they justified?

- Organize: How can I organize this information in a way that makes sense to me?

- Identify trends: What patterns or connections can I identify between these concepts or ideas?

- Think from another perspective: Am I considering multiple perspectives or viewpoints in my analysis?

- Brainstorm implications: What are the potential implications of my conclusions or decisions?

- Hypothesize: How can I use my current knowledge to predict or hypothesize about future events?

- Identify inconsistency: Can I recognize any logical fallacies or inconsistencies in my reasoning?

- Seek new strategies: What strategies can I employ to improve my understanding and retention of this material?

Higher-Order Thinking vs Lower-Order Thinking

Benefits of Higher-Order Thinking

Higher-order thinking offers numerous benefits to learners, including:

- Enhanced problem-solving skills: Higher-order thinking develops a student’s ability to tackle complex problems by breaking them down, analyzing different aspects, and putting the information back together to find new solutions. This ability is highly valued in 21st-century workplaces (Saifer, 2018).

- Critical thinking and reasoning: Students who engage in higher-order thinking are better equipped to evaluate information, question assumptions, and identify biases. This improves their media literacy and enables them to form independent conclusions rather than being easily swayed by flawed information (Richland & Simms, 2015).

- Creativity and innovation: Higher-order thinking fosters creativity by encouraging students to think beyond the obvious, explore alternative perspectives, and find new ways to approach common problems. This creative thinking is valuable in many academic and professional fields, including STEM and the arts.

- Deeper understanding and retention: Lower-order thinking prioritizes memorization, but because the information is not sufficiently contextualized or learned through knowledge construction, it tends to be forgotten over time. Higher-order thinking, on the other hand, promotes a deeper understanding of subjects, which leads to better long-term retention of knowledge and a greater ability to manipulate information (Ghanizadeh, Al-Hoorie & Jahedizadeh, 2020).

- Greater self-awareness and metacognition: Higher-order thinking fosters self-reflection and metacognition. Students who have learned skills like critique, identifying flaws and biases, and logical analysis can apply those skills to their own thinking and reflect on how to improve their own rational meaning-making.

How to Stimulate Higher-Order Thinking in the Classroom

- Cultivate inquisitive minds: Encourage students to ask questions regularly. Create a classroom culture where questioning is encouraged and there are “no wrong questions.” Encourage questions that delve deeper into subjects, challenge assumptions, or stimulate further curiosity. This fosters critical thinking by prompting students to peel back the layers of knowledge on any topic (Yen & Halili, 2015).

- Tackle real-life challenges: Create lesson plans that root the learning content in real-world situations (i.e., situated learning). Require students to apply their knowledge and skills to new situations rather than only completing worksheets. By addressing genuine issues that are, ideally, relevant to their lives, students can start to work with and manipulate the knowledge they have gained in the classroom (Saifer, 2018).

- Encourage collaboration and active learning: Promote group discussions, debates, and cooperative problem-solving activities. Group work supports higher-order thinking because it exposes students to diverse perspectives and new ways of doing things. By seeing their peers’ thought processes, students can refine their own (Ghanizadeh, Al-Hoorie & Jahedizadeh, 2020).

- Reflect and build self-awareness: Nurture the habit of self-reflection in students. Here, we’re referring to metacognition, or ‘thinking about thinking’. Metacognition encourages students to evaluate how they went about learning and to continually improve their learning process. It plays a vital role in helping students recognize their strengths and weaknesses and refine their learning strategies (Yen & Halili, 2015).

- Interweave interdisciplinary connections: Combine ideas, concepts, and techniques from various disciplines to encourage a comprehensive understanding of complex subjects. One discipline may shed light on the topic in a way that another discipline is completely blind to. By establishing connections between different fields, students can sharpen their analytical and creative thinking abilities (Richland & Simms, 2015).

Higher-Order Thinking on Bloom’s Taxonomy

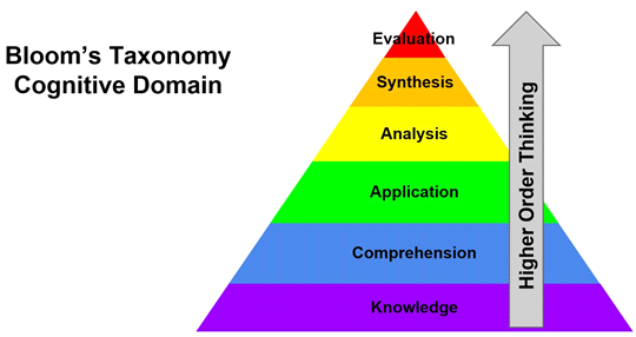

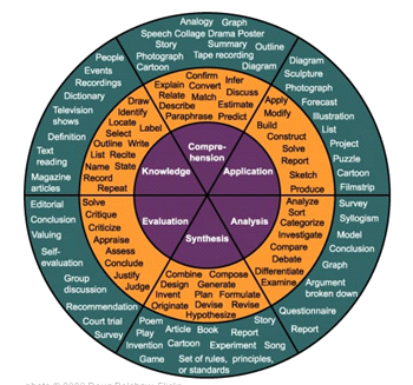

Higher- and lower-order thinking skills are most famously presented in Bloom’s Taxonomy.

This taxonomy is used to categorize levels of understanding, starting from shallow knowledge and ending with deep understanding.

The image above illustrates the Bloom’s Taxonomy hierarchy of knowledge.

As shown in the above image, Bloom distils six forms of knowledge and understanding. The bottom three (remember, understand, and apply) relate to lower-order thinking that doesn’t require deep knowledge. The top three (analyze, evaluate, and create) represent higher-order thinking.

Each is explained below:

1. Remembering (Lower-Order)

Definition: This is the most fundamental level of understanding, which involves remembering basic information about a subject. At this level, students can define concepts, list facts, repeat key arguments, memorize details, or recall information.

Example Question: “What is 5×5?”

2. Understanding (Lower-Order)

Definition: Understanding means being able to explain. This can involve explaining the meaning of a concept or an idea. It sits above remembering because it requires people to know why, but it does not yet reach the level of analysis or critique.

Example Question: “Can you show me in a drawing what 5×5 looks like?”

3. Applying (Middle-Order)

Definition: Applying refers to the ability to use information to do work. Ideally, it will occur in situations other than the situation in which it was learned. This represents a deeper level of understanding.

Example Question: “If you buy five chocolates worth $5 each, how much will you have to pay?”

4. Analyzing (Higher-Order)

Definition: This is generally considered to be the first layer of higher-order thinking. It involves conducting an analysis independently. This includes the ability to make connections between ideas, explore the logic of an argument, and compare various concepts.

Example Question: “Based on what you’ve learned, can you identify five key themes?”

5. Evaluating (Higher-Order)

Definition: Evaluating means determining the correctness, morality, or rationality of a perspective. At this level, students can identify the merits of an argument or point of view and weigh the relative strengths of each point. It requires analysis, but steps up to making judgments about what you’re seeing.

Example Question: “Based on all the information you’ve gathered, what do you think is the most ethical course of action?”

6. Creating (Higher-Order)

Definition: The final level of Bloom’s taxonomy is when students can create knowledge by building on what they already know. This may include, for example, formulating a hypothesis and then testing it through rigorous experimentation.

Example Question: “Now that you’ve mastered accounting, could you make an app that helps an everyday person manage their bookkeeping?”

Higher-order thinking is a necessary skill for the 21st century. It promotes the thinking skills that many high-paying jobs require and allows people to think critically, be more media literate, and reach better solutions to problems in both their personal and professional lives. By encouraging this sort of thinking in school, educators can help their students achieve better grades now and lead better lives in the future.

Ghanizadeh, A., Al-Hoorie, A. H., & Jahedizadeh, S. (2020). Higher order thinking skills in the language classroom: A concise guide. New York: Springer International Publishing.

Richland, L. E., & Simms, N. (2015). Analogy, higher order thinking, and education. Wiley Interdisciplinary Reviews: Cognitive Science, 6(2), 177-192. https://doi.org/10.1002/wcs.1336

Saifer, S. (2018). HOT skills: Developing higher-order thinking in young learners. London: Redleaf Press.

Stanley, T. (2021). Promoting rigor through higher level questioning: Practical strategies for developing students’ critical thinking. New York: Taylor & Francis.

Yen, T. S., & Halili, S. H. (2015). Effective teaching of higher order thinking (HOT) in education. The Online Journal of Distance Education and e-Learning, 3(2), 41-47.

Chris Drew (PhD)

Dr. Chris Drew is the founder of the Helpful Professor. He holds a PhD in education and has published over 20 articles in scholarly journals. He is the former editor of the Journal of Learning Development in Higher Education.


50+ Higher-Order Thinking Questions To Challenge Your Students

50+ lower-order thinking questions, too!

Want to help your students make strong connections with the material? Ensure you’re using all six levels of cognitive thinking. This means asking lower-order thinking questions as well as higher-order thinking questions. Learn more about each here, and find plenty of examples for each.

What are lower-order and higher-order thinking questions?

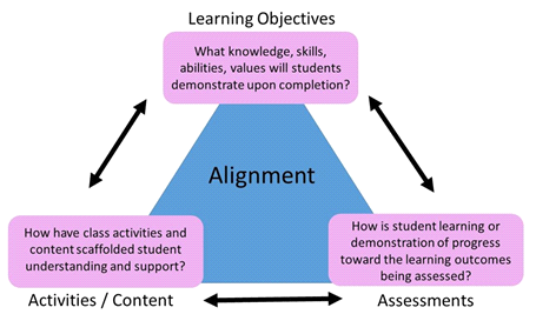

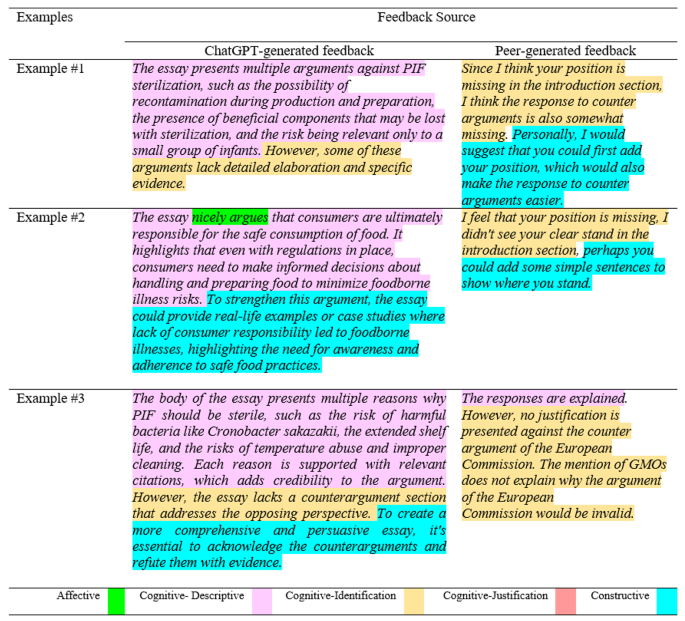

Source: University of Michigan

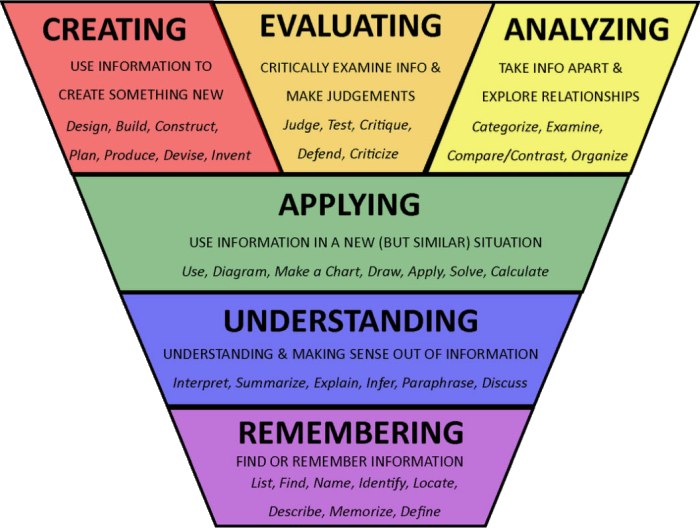

Bloom’s Taxonomy is a way of classifying cognitive thinking skills. The six main categories—remember, understand, apply, analyze, evaluate, create—are broken into lower-order thinking skills (LOTS) and higher-order thinking skills (HOTS). LOTS includes remember, understand, and apply. HOTS covers analyze, evaluate, and create.

While both LOTS and HOTS have value, higher-order thinking questions urge students to develop deeper connections with information. They also encourage kids to think critically and develop problem-solving skills. That’s why teachers like to emphasize them in the classroom.

New to higher-order thinking? Learn all about it here. Then use these lower- and higher-order thinking questions to inspire your students to examine subject material on a variety of levels.

Remember (LOTS)

- Who are the main characters?

- When did the event take place?

- What is the setting of the story?

- Where would you find _________?

- How do you __________?

- What is __________?

- How do you define _________?

- How do you spell ________?

- What are the characteristics of _______?

- List the _________ in proper order.

- Name all the ____________.

- Describe the __________.

- Who was involved in the event or situation?

- How many _________ are there?

- What happened first? Next? Last?

Understand (LOTS)

- Can you explain why ___________?

- What is the difference between _________ and __________?

- How would you rephrase __________?

- What is the main idea?

- Why did the character/person ____________?

- What’s happening in this illustration?

- Retell the story in your own words.

- Describe an event from start to finish.

- What is the climax of the story?

- Who are the protagonists and antagonists?

- What does ___________ mean?

- What is the relationship between __________ and ___________?

- Provide more information about ____________.

- Why does __________ equal ___________?

- Explain why _________ causes __________.

Apply (LOTS)

- How do you solve ___________?

- What method can you use to __________?

- What methods or approaches won’t work?

- Provide examples of _____________.

- How can you demonstrate your ability to __________?

- How would you use ___________?

- Use what you know to __________.

- How many ways are there to solve this problem?

- What can you learn from ___________?

- How can you use ________ in daily life?

- Provide facts to prove that __________.

- Organize the information to show __________.

- How would this person/character react if ________?

- Predict what would happen if __________.

- How would you find out _________?

Analyze (HOTS)

- What facts does the author offer to support their opinion?

- What are some problems with the author’s point of view?

- Compare and contrast two main characters or points of view.

- Discuss the pros and cons of _________.

- How would you classify or sort ___________?

- What are the advantages and disadvantages of _______?

- How is _______ connected to __________?

- What caused __________?

- What are the effects of ___________?

- How would you prioritize these facts or tasks?

- How do you explain _______?

- Using the information in a chart/graph, what conclusions can you draw?

- What does the data show or fail to show?

- What was a character’s motivation for a specific action?

- What is the theme of _________?

- Why do you think _______?

- What is the purpose of _________?

- What was the turning point?

Evaluate (HOTS)

- Is _________ better or worse than _________?

- What are the best parts of __________?

- How will you know if __________ is successful?

- Are the stated facts proven by evidence?

- Is the source reliable?

- What makes a point of view valid?

- Did the character/person make a good decision? Why or why not?

- Which _______ is the best, and why?

- What are the biases or assumptions in an argument?

- What is the value of _________?

- Is _________ morally or ethically acceptable?

- Does __________ apply to all people equally?

- How can you disprove __________?

- Does __________ meet the specified criteria?

- What could be improved about _________?

- Do you agree with ___________?

- Does the conclusion include all pertinent data?

- Does ________ really mean ___________?

Create (HOTS)

- How can you verify ____________?

- Design an experiment to __________.

- Defend your opinion on ___________.

- How can you solve this problem?

- Rewrite a story with a better ending.

- How can you persuade someone to __________?

- Make a plan to complete a task or project.

- How would you improve __________?

- What changes would you make to ___________ and why?

- How would you teach someone to _________?

- What would happen if _________?

- What alternative can you suggest for _________?

- What solutions do you recommend?

- How would you do things differently?

- What are the next steps?

- What factors would need to change in order for __________?

- Invent a _________ to __________.

- What is your theory about __________?

What are your favorite higher-order thinking questions? Come share in the WeAreTeachers HELPLINE group on Facebook.


Copyright © 2023. All rights reserved. 5335 Gate Parkway, Jacksonville, FL 32256

Higher Order Thinking: Bloom’s Taxonomy

Many students start college using the study strategies they used in high school, which is understandable—the strategies worked in the past, so why wouldn’t they work now? As you may have already figured out, college is different. Classes may be more rigorous (yet may seem less structured), your reading load may be heavier, and your professors may be less accessible. For these reasons and others, you’ll likely find that your old study habits aren’t as effective as they used to be. Part of the reason for this is that you may not be approaching the material in the same way as your professors. In this handout, we provide information on Bloom’s Taxonomy—a way of thinking about your schoolwork that can change the way you study and learn to better align with how your professors think (and how they grade).

Why higher order thinking leads to effective study

Most students report that high school was largely about remembering and understanding large amounts of content and then demonstrating this comprehension periodically on tests and exams. Bloom’s Taxonomy is a framework that starts with these two levels of thinking as important bases for pushing our brains to five other higher order levels of thinking—helping us move beyond remembering and recalling information and move deeper into application, analysis, synthesis, evaluation, and creation—the levels of thinking that your professors have in mind when they are designing exams and paper assignments. Because it is in these higher levels of thinking that our brains truly and deeply learn information, it’s important that you integrate higher order thinking into your study habits.

The following categories can help you assess your comprehension of readings, lecture notes, and other course materials. By creating and answering questions from a variety of categories, you can better anticipate and prepare for all types of exam questions. As you learn and study, start by asking yourself questions and using study methods from the level of remembering. Then, move progressively through the levels to push your understanding deeper—making your studying more meaningful and improving your long-term retention.

Level 1: Remember

This level helps us recall foundational or factual information: names, dates, formulas, definitions, components, or methods.

Level 2: Understand

Understanding means that we can explain main ideas and concepts and make meaning by interpreting, classifying, summarizing, inferring, comparing, and explaining.

Level 3: Apply

Application allows us to recognize or use concepts in real-world situations and to address when, where, or how to employ methods and ideas.

Level 4: Analyze

Analysis means breaking a topic or idea into components or examining a subject from different perspectives. It helps us see how the “whole” is created from the “parts.” It’s easy to miss the big picture by getting stuck at a lower level of thinking and simply remembering individual facts without seeing how they are connected. Analysis helps reveal the connections between facts.

Level 5: Synthesize

Synthesizing means considering individual elements together for the purpose of drawing conclusions, identifying themes, or determining common elements. Here you want to shift from “parts” to “whole.”

Level 6: Evaluate

Evaluating means making judgments about something based on criteria and standards. This requires checking and critiquing an argument or concept to form an opinion about its value. Often there is not a clear or correct answer to this type of question. Rather, it’s about making a judgment and supporting it with reasons and evidence.

Level 7: Create

Creating involves putting elements together to form a coherent or functional whole. Creating includes reorganizing elements into a new pattern or structure through planning. This is the highest and most advanced level of Bloom’s Taxonomy.

Pairing Bloom’s Taxonomy with other effective study strategies

While higher order thinking is an excellent way to approach learning new information and studying, you should pair it with other effective study strategies. Check out some of these links to read up on other tools and strategies you can try:

- Study Smarter, Not Harder

- Simple Study Template

- Using Concept Maps

- Group Study

- Evidence-Based Study Strategies Video

- Memory Tips Video

- All of our resources

Other UNC resources

If you’d like some individual assistance using higher order questions (or with anything regarding your academic success), check out some of your UNC resources:

- Academic Coaching: Make an appointment with an academic coach at the Learning Center to discuss your study habits one-on-one.

- Office Hours: Make an appointment with your professor or TA to discuss course material and how to be successful in the class.

Works consulted

Anderson, L. W., Krathwohl, D. R., Airasian, P. W., Cruikshank, K. A., Mayer, R. E., Pintrich, P. R., & Wittrock, M. C. (2001). A taxonomy of learning, teaching, and assessing: A revision of Bloom’s taxonomy of educational objectives. New York, NY: Longman.

“Bloom’s Taxonomy.” University of Waterloo. Retrieved from https://uwaterloo.ca/centre-for-teaching-excellence/teaching-resources/teaching-tips/planning-courses-and-assignments/course-design/blooms-taxonomy

“Bloom’s Taxonomy.” Retrieved from http://www.bloomstaxonomy.org/Blooms%20Taxonomy%20questions.pdf

Overbaugh, R., and Schultz, L. (n.d.). “Image of two versions of Bloom’s Taxonomy.” Norfolk, VA: Old Dominion University. Retrieved from https://www.odu.edu/content/dam/odu/col-dept/teaching-learning/docs/blooms-taxonomy-handout.pdf


Higher Order Thinking Questions for Your Next Lesson


Higher order thinking questions help students explore and express rigor in their application of knowledge. There are five main areas of higher order thinking that promote rigor:

- Higher Level Thinking

- Engagement

- Deep Inquiry

- Demonstration

- Quality Over Quantity

Each of these areas encourages students to move beyond rote knowledge and expand their thinking process. Let’s explore each in more depth.

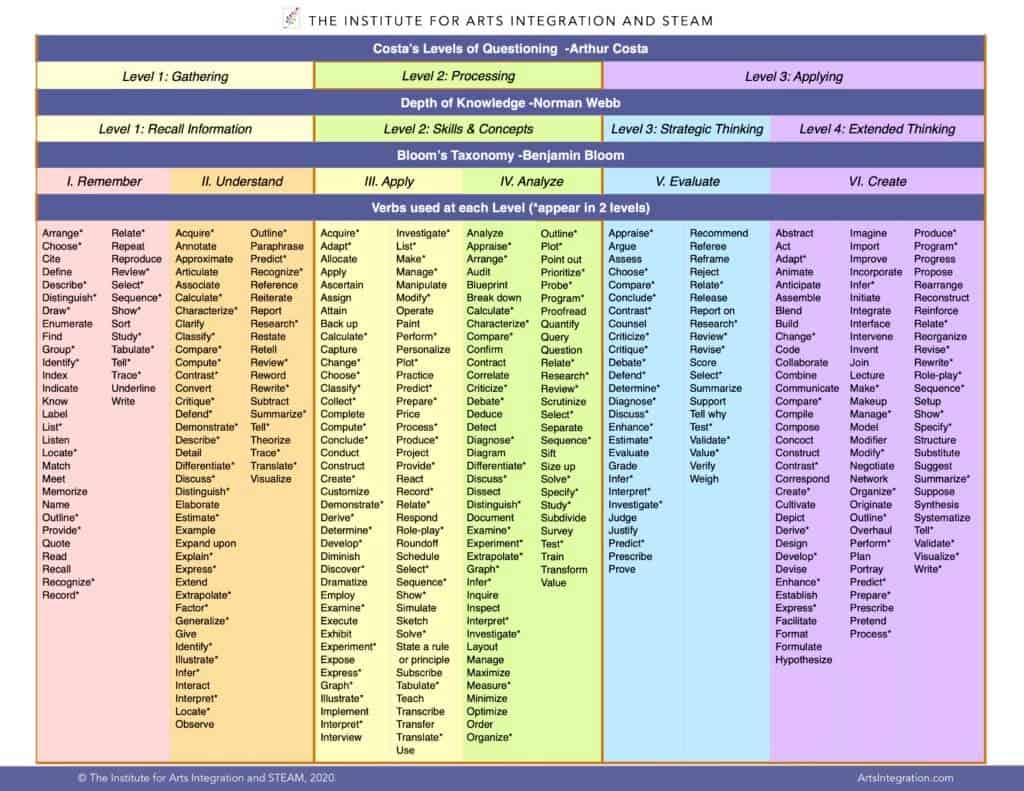

Higher Level Thinking

Higher level thinking is simply taking our students to the next level by pushing for more than simple recall or comprehension. There are many resources for higher level thinking. Costa’s Levels of Questioning, Bloom’s Taxonomy, and Webb’s Depth of Knowledge are three common references for building higher level thinking. The Higher Order Thinking Chart can help you organize these methods and see how to apply them in your own lessons.


All three of these methodologies provide building blocks for increasing the level of thinking. Creating opportunities for students to work at the recalling and remembering level is relatively simple because we are only asking students to identify or recall information. Moving to the higher levels, however, is a little more difficult. A basic list of higher order thinking questions can get you started, but let’s look at how to do this specifically within the STEAM areas.

Science

Webb (2002) offers the following activities for each Depth of Knowledge (DOK) level in science.

DOK Level 1

Recall or recognize a fact, term, or property. Represent in words or diagrams a scientific concept or relationship. Provide or recognize a standard scientific representation for a simple phenomenon. Perform a routine procedure such as measuring length.

DOK Level 2

Specify and explain the relationship between facts, terms, properties, or variables. Describe and explain examples and non-examples of science concepts. Select a procedure according to specified criteria, and perform it. Formulate a routine problem given data and conditions. Organize, represent and interpret data.

DOK Level 3

Identify research questions and design investigations for a scientific problem. Solve non-routine problems. Develop a scientific model for a complex situation. Form conclusions from experimental data.

DOK Level 4

Based on provided data from a complex experiment that is novel to the student, deduce the fundamental relationship between several controlled variables. Conduct an investigation, from specifying a problem to designing and carrying out an experiment, analyzing its data, and forming conclusions.

Technology

The SBBC Department of Instructional Technology has developed a comprehensive chart of both teacher-directed and student-directed activities pushing students to higher-level thinking skills.

Engineering

Engineering standards are embedded within the Next Generation Science Standards and are engineered with higher-level thinking in mind. The objectives of secondary engineering education already align with the Depth of Knowledge levels:

- Defining and delimiting engineering problems involves stating the problem as clearly as possible in terms of criteria for success, and constraints or limits.

- Designing solutions to engineering problems begins with generating a number of different possible solutions, then evaluating them to see which best meet the criteria and constraints of the problem.

- Optimizing the design solution involves a process in which solutions are systematically tested, and the final design is improved by trading off less important features for those more important.

Arts

Like many of the other STEAM subjects, the arts push students to higher levels due to the nature of artistic creation. Gerald Aungst designed a helpful reference chart providing concrete examples of how each of the arts can use the higher levels of Depth of Knowledge.

Mathematics

The Kentucky Department of Education has a great resource using Webb’s Depth of Knowledge for building higher-level thinking in Mathematics. Examples include:

DOK Level 1

Identify a diagonal in a geometric figure. Multiply two numbers. Find the area of a rectangle. Convert scientific notation to decimal form. Measure an angle.

DOK Level 2

Classify quadrilaterals. Compare two sets of data using the mean, median, and mode of each set. Determine a strategy to estimate the number of jellybeans in a jar. Extend a geometric pattern. Organize a set of data and construct an appropriate display.

DOK Level 3

Write a mathematical rule for a non-routine pattern. Explain how changes in the dimensions affect the area and perimeter/circumference of geometric figures. Determine the equations and solve and interpret a system of equations for a given problem. Provide a mathematical justification when a situation has more than one possible outcome. Interpret information from a series of data displays.

DOK Level 4

Collect data over time taking into consideration a number of variables and analyze the results. Model a social studies situation with many alternatives and select one approach to solve with a mathematical model. Develop a rule for a complex pattern and find a phenomenon that exhibits that behavior. Complete a unit of formal geometric constructions, such as nine-point circles or the Euler line. Construct a non-Euclidean geometry.

Engagement

Often confused with fun, engagement is the presence of all student minds hard at work. It means ensuring that all student voices are heard and that all students are part of the learning process.

The Glossary of Education Reform defines engagement as “the degree of attention, curiosity, interest, optimism, and passion that students show when they are learning or being taught, which extends to the level of motivation they have to learn and progress in their education.” The words that stand out most to me are curiosity and interest. If we foster curiosity, then attention, optimism, and passion will follow.

STEAM content areas inherently encourage engagement, and below is a compilation of resources that build Rigor through Engagement in STEAM classrooms.

Science provides many opportunities for engagement through experimentation and labs. But remember to use focused note-taking strategies for engagement while presenting information before the hands-on experiments. Life Sciences Education offers biology-specific strategies for student engagement in Structure Matters: Twenty-One Teaching Strategies to Promote Student Engagement and Cultivate Classroom Equity.

Technology, in and of itself, fosters engagement. There are so many tools available for our students and teachers. Thomas Murray presents a list of ways to use technology as a tool for engagement in the classroom.

Teach Engineering has a great chart of engagement activities to use in the engineering classroom. Nova Teachers also provides a long list of engaging, student-centered activities for engineering in Classroom Activities.

The Perpich Professional Development and Resource Center has documented engaging activities for six arts disciplines spanning kindergarten to high school.

Edutopia provides insight into motivating students in mathematics in 9 Strategies for Motivating Students in Mathematics. The ASCD provides a quick-start guide to Common Core math in Unlocking Engagement through Mathematical Discourse.

Inquiry and curiosity, the original purpose of education, are often pushed aside in favor of test prep that emphasizes breadth over depth. Rigor encourages curiosity, and curiosity spawns inquiry, allowing for a more in-depth look at topics and content.

Merriam-Webster defines inquiry as a request for information, an official effort to collect and examine information about something, and the act of asking questions in order to collect information. Translating this into the classroom may seem easy, but there is more to inquiry than simply getting students to ask questions. Thirteen.org offers comprehensive overviews of bringing the inquiry process into the classroom.

One key piece of the inquiry process is in asking effective questions. How do you do that?

1. Identify your essential question.

First, we must identify the “big idea.” What is the larger question around the piece of art your students are engaging with? It’s time to think beyond your lesson plan! Is the true, essential objective of your lesson that students demonstrate, through identification and the development of a matching product, that they know Georges Seurat painted “A Sunday on La Grande Jatte” using a technique called pointillism? Or is there something bigger? The essential questions for National Core Visual Arts Standard 1.2 read:

“How does knowing the contexts, histories, and traditions of art forms help us create works of art and design? Why do artists follow or break from established tradition? How do artists determine what resources are needed to formulate artistic investigation?”

Outlining some big ideas and essential questions for your content area that encourage creative, artful thinking can serve to guide you this year.

2. Build an effective questioning toolkit.

This is a great time to look at the essential questions built right into the National Core Arts Standards and to begin developing lines of effective questioning that help students meet those standards. What kinds of questions will you ask to encourage inquiry around a piece of art, music, theatre, or dance? How will you guide students to the big idea with smaller questions?

3. Give wait time.

When time is at a premium, it’s easy to forget to do this. However, giving students moments of thoughtful silence to formulate their own observations, ideas, hypotheses, and opinions is crucial to developing artistic minds. Every student should have time to think individually before discussion, so that they all have something to share. Challenge yourself to give your students just a little bit longer this year!

4. Allow opportunities for all students to engage.

This might mean giving students time to turn and talk with a partner. It might mean instituting a “no hands up” policy that lets you choose who will respond, which gives students the opportunity to keep thinking while others respond. Encouraging discussion among all students is difficult to do within time constraints, but it is vitally important to ensure that every child is thinking critically and artfully.

5. Dig deeper.

Follow up on student responses in a way that encourages deeper thinking. Ask students to explain their thinking using support and evidence from the piece of art. This is a standard and a skill that crosses all curricular lines, so by encouraging it, we are addressing standards in every content area. What better use of time is there than this?

Demonstration

Actions speak louder than words in all areas of life, and education is no different. Being able to recall and regurgitate rote information was helpful in the pre-Google era, but now we need our students to show us they understand, not just tell us.

The foundation of Demonstration is the age-old mantra: don’t tell me… show me. If, beyond showing, we have students demonstrate through real-world application, we can engage them even more and provide a rigorous platform for their knowledge.

Through experiments, science naturally promotes demonstration. However, if we involve real-world applications, it becomes exciting and engaging. Have students solve realistic problems with limited resources, or propose solutions to issues on a global scale.

Technology offers many opportunities for students to demonstrate their knowledge through real-life application. Using Project-Based Learning in the technology classroom creates engaging lessons with rigorous application of demonstration. The following sites offer ideas for demonstration through technology projects:

Bringing Real-World Project Management into Technology Lessons

Top 10 Innovative Projects

20 Ideas for Engaging Projects

Engineering uses the design process to build and create, which is innately demonstration. However, if you have students determine their own projects, they will be engaged and excited about demonstrating their knowledge. Have students journal the issues they encounter for one week. Then have them choose one and build or create a product that helps solve it. Check out these sites for engaging engineering projects:

100 Engineering Projects for Kids

Purdue EPICS High School Projects

Hands-On Engineering STEM Projects

The arts are built on creation, but often it is the teacher who demonstrates while the students mimic the process. We teach a dance or a piece of music, and the students copy it. Instead, have students demonstrate their knowledge of skills by doing the creating themselves:

High School Art Lessons

Arts Integration Lessons

Artsonia Art Lessons

Math is an area where it is difficult to step away from the traditional methods of instruction. The teacher demonstrates, the class practices as a whole, and then practices individually. What would happen if we taught math through projects and allowed students to demonstrate what they know? Here are a couple of sites that bring demonstration into the math classroom:

NASA’s Exploring Math

Authentic Assessment Examples

Demonstration is a great way to bring engagement through rigor into the classroom. Don’t be afraid to share objectives, standards, and goals with students so that they can determine how best to demonstrate their knowledge.

Rigor does not mean more; it means better. Students don’t need more work; they need better work. Furthermore, they need exciting work that makes them want to work.

When teachers are asked why they provide curriculum, units, lesson plans, and homework, the answer that comes back is often “I’m not sure.”

Here are some helpful ways to focus on the quality of instruction, rather than simply the quantity of what’s provided:

When mapping out your semester or yearly curriculum, work backwards. Take a look at the standards you plan to cover, and think project-based when you ask yourself how students should demonstrate the standard(s). Keep the big picture in mind when you finally create the project. As you design the major units needed to accomplish the big picture, continue to ask why.

Continue working backward as you move into the larger units of your curriculum. What important information do students need to know or understand in order to achieve the big picture? How and what will students do in order to prove they have learned?

As you begin designing the day-to-day lessons, keep asking why. For everything from the opening activity to the exit slip, make sure you are asking why. Don’t do something just because you think you are supposed to; be sure each and every activity or task has a purpose. Not only you, but also your students, should know why the activities are being completed and what the overall purpose is.

Homework is probably the largest area where quality over quantity needs to be investigated. Why do we give homework? Because we are supposed to, because our teachers gave us homework, because it helps. But does it really? Alfie Kohn presented eight conclusions in his 2006 book The Homework Myth:

- At best, most homework studies show only an association, not a causal relationship.

- Do we really know how much homework kids do?

- Homework studies confuse grades and test scores with learning.

- Homework matters less the longer you look.

- Even where they do exist, positive effects are often quite small.

- There is no evidence of any academic benefit from homework in elementary school.

- The results of national and international exams raise further doubts about homework’s role.

- Incidental research raises further doubts about homework.

So as you assign homework, keep asking yourself why. If you are assigning it because you think you have to, then stop.

As you continue to work through lesson planning, curriculum design, and providing high-quality instruction, keep in mind these higher-order thinking questions and examples. The more we engage students in rigorous and purposeful content that encourages inquiry and critical thinking, the better prepared they will be for the 21st century.


Copyright 2010-2024 The Vision Board, LLC | All Rights Reserved


After reading The Diary of Anne Frank, a student is asked, “Who is Anne Frank?” To answer the question, the student simply recalls the information he or she memorized from the reading.

With the implementation of Common Core, students are expected to become critical thinkers instead of just recalling facts and ideas from text. In order for students to reach this potential and be prepared for success, educators must engage students during instruction by asking higher-order questions.

Higher-order Questions (HOQ)

Higher-order questions are those that students cannot answer just by simple recollection or by reading the information “verbatim” from the text. Higher-order questions put advanced cognitive demands on students. They encourage students to think beyond literal questions.

Higher-order questions promote critical thinking skills because they expect students to apply, analyze, synthesize, and evaluate information instead of simply recalling facts. For instance:

- Application questions require students to transfer knowledge learned in one context to another.

- Analysis questions expect students to break the whole into its component parts (for example, analyzing mood, setting, and characters), express opinions, make inferences, and draw conclusions.

- Synthesis questions have students use old ideas to create new ones, drawing on information from a variety of sources.

- Evaluation questions require students to make judgments, explain the reasons for those judgments, compare and contrast information, and develop reasoning using evidence from the text.

Higher-order Questions Research

According to research, teachers who effectively use a variety of higher-order questions can overcome the brain’s natural tendency to develop mental routines and patterns that limit information, a process called neural pruning. As a result, students may become more open-minded, which strengthens the brain.

According to an article in Educational Leadership (March 1997), researchers Thomas Cardellichio and Wendy Field found that higher-order questions increase neural branching, the opposite of neural pruning. They also identified seven types of questions through which teachers can promote neural branching:

- Hypothetical thinking. This form of thinking is used to create new information. It causes a person to develop an answer based on generalizations related to the situation. These questions follow general forms such as What if this happened? and What if this were not true?

- Reversal thinking. This type of thinking expects students to turn a question around and look for opposite ideas. For example: What happens if I reverse the addends in a math problem? What caused this? How does it change if I go backward?

- Application of different symbol systems. This way of thinking applies a symbol system to a situation in which it is not usually used, such as writing a math equation to show how animals interact.

- Analogy. This type of thinking compares seemingly unrelated situations, such as asking how the Pythagorean Theorem is related to cooking. These questions typically ask, How is this like ___?

- Analysis of point of view. This way of thinking requires students to consider and question other people’s perspectives, beliefs, or opinions in order to extend their own thinking. For instance, a teacher may ask a student, What else could account for this? or How many other ways could someone look at this?

- Completion. This form of thinking requires students to finish a project or situation that would normally be complete but has been left unfinished. For example, a teacher might remove the end of a story and have students create their own ending.

- Web analysis. With web analysis, students must synthesize how events are related in complex ways instead of relying on the brain’s natural tendency to form a simple pattern. Questions such as How extensive were the effects of _____? or Track the relationship of events following from ___ are examples of web analysis questions.

The researchers concluded that this type of questioning can lead to better critical thinking skills. “They can analyze, synthesize, evaluate, and interpret the text they are reading at complex levels. They can process text at deep levels, make judgments, and detect shades of meaning. They can make critical interpretations and demonstrate high levels of insight and sophistication in their thinking. They are able to make inferences, draw relevant and insightful conclusions, use their knowledge in new situations, and relate their thinking to other situations and to their own background knowledge. These students fare well on standardized tests and are considered to be advanced. They will indeed be prepared to function as outstanding workers and contributors in a fast-paced workplace where the emphasis is on using information rather than just knowing facts.”

Higher-order Questions and Explicit Direct Instruction

The Explicit Direct Instruction (EDI) model incorporates a variety of higher-order questions in order to encourage and increase critical thinking skills.

The LEARNING OBJECTIVE component is the only EDI component in which questions are asked at a low level of Bloom’s Taxonomy. This is because the content in this portion of the lesson is not at a high level, and the students have not yet been taught the high-level content. Typically, students are asked, “What are we doing today?” or “What is our Learning Objective?”

The CONCEPT DEVELOPMENT component includes a variety of higher-order concept-related questions because the content is at a high level. Here is a list of higher-order questions that are asked during this EDI component:

- In your own words, what is (insert the concept being taught)?

- Which is an example of ________? Why?

- What is the difference between the example and the non-example?

- Why is this an example of ______?

- Give me an example of ______.

- Draw an example of ______.

- Match the examples to the definition of ______.

- Which picture/poster shows an example of _______?

The SKILL DEVELOPMENT component asks higher-level thinking-process questions after modeling the skill.

- How did I know how to (insert skill modeled)?

- How did I know that this was the correct answer?

- What did I use to ensure that I knew how to find the _____?

- How did I know how to interpret the answer?

The GUIDED PRACTICE component asks higher-level process questions that require students to show their thought process when performing the skill.

- How did you know how to __________?

- How did you know that this was the correct answer?

- What did you use to ensure that you knew how to find the _____?

- How did you know how to interpret the answer?

- Which step was most difficult for you? Why?

The RELEVANCE component includes higher-level evaluation questions.

- Does anyone have any other reason as to why this is important?

- Which reason is the most relevant to you? Why?

The CLOSURE component includes high-level questions such as:

- What did you learn today?

- How did the lesson meet the Learning Objective?

- How will this lesson benefit you in the future?

If higher-order questions promote critical thinking skills, as research shows, then higher-order questions should be included throughout instruction. The EDI model offers a good way to do just that!

Cardellichio, Thomas, and Wendy Field. “Seven Strategies That Encourage Neural Branching.” Educational Leadership, March 1997.

How do you incorporate higher-order questions during instruction?

Additional Resources:

- Educeri; Ready Made Lessons with Higher-Order Questions

- The Secret to Differentiation with EDI: Making Better Decisions at Choice Points

- How to Differentiate and Scaffold – after teaching including RTI

- How to Differentiate and Scaffold – Before the Lesson

- How to Differentiate and Scaffold – while teaching

- Differentiation Strategies: Teaching Grade-Level Content to ALL Students

- Explicit Direct Instruction (EDI) Resource Page

Author: Patricia Bogdanovich

Patricia has held various positions with DataWORKS since 2002. She currently works as a Curriculum Specialist. Patricia helped develop and create many of the early resources and workshops designed by DataWORKS, and she is an expert in analysis of standards. Patricia plans to blog about curriculum and assessments for CCSS and NGSS, classroom strategies, and news and research from the world of education.


How to Lead Students to Engage in Higher Order Thinking

Asking students a series of essential questions at the start of a course signals that deep engagement is a requirement.

I teach multigrade, theme-based courses like Spirituality in Literature and The Natural World in Literature to high school sophomores, juniors, and seniors. And like most English language arts teachers, I’ve taught courses built around the organizing principles of genre (Introduction to Drama), time period and geography (American Literature From 1950), and even assessment instrument (A.P. Literature).

No matter what conceptual framework guides the course I’m teaching, though, I begin and anchor it with what I call a thinking inventory.

Thinking Inventories and Essential Questions

Essential questions, a staple of project-based learning, call on students’ higher order thinking and connect their lived experience with important texts and ideas. A thinking inventory is a carefully curated set of about 10 essential questions of various types, and completing one is the first thing I ask students to do in every course I teach.

Although a thinking inventory is made up of questions, it’s more than a questionnaire. When we say we’re “taking inventory”—whether we’re in a warehouse or a relationship—we mean we’re taking stock of where things stand at a given moment in time, with the understanding that those things are fluid and provisional. With a thinking inventory, we’re taking stock of students’ thinking, experiences, and sense-making at the beginning of the course.

A well-designed thinking inventory formalizes the essential questions of any course and serves as a touchpoint for both teacher and students throughout that course. For a teacher, writing a course’s thinking inventory can help separate the essential from the nonessential when planning. And starting your class with a thinking inventory signals to students that higher order thinking is both required and valued.

How to Design an Effective Thinking Inventory

I tell students the thinking inventory is a document we’ll be living with—revisiting and referring to often—and that they should spend time mulling their answers before writing them down. The inventory should include a variety of essential questions, including ones that invite students to share relevant experiences.

I may ask students about their current knowledge base or life experience (What’s the best example of empathy you’ve ever witnessed?). I may ask them to make predictions or imagine scenarios (How will an American Literature course in 100 years look different from today’s American Literature course?). Or I may ask perennial questions (To what extent is it possible for human beings to change fundamentally?).

Here are a few of the questions I asked students to address at the start of a course called The Outsider in Literature:

- Who is the most visionary person you know? How do you know they’re visionary? Is there anything about them you want to emulate? Anything about them that frightens you?

- What are the risks of rebelling? Of not rebelling? Explain.

- What would happen if there were no outsiders? How would the world, and your world, be different?

- Do you think there are any ongoing conflicts between groups that are intractable—that will likely never be resolved? What is the root of the intractability? What would need to happen in order to resolve the conflict? Be specific.

- Who is the most deviant, threatening outsider you can think of? Tell us what makes them threatening.

- To what extent do you think that teenagers, as a group, are (by definition) outsiders?

How I Use Thinking Inventories

On the first day of class, I give students the inventory for homework. Because I expect well-thought-out answers and generative thinking, I assign it in chunks over two nights, and we spend at least the second and third class meetings discussing their answers.

Throughout the course, I use the inventory both implicitly and explicitly. I purposefully weave inventory questions into discussions and student writing prompts. More explicitly, I use inventory questions as a framework for pre- and post-reading activities, and as prompts for reading responses, formal writing, and journaling.

The inventory functions as a kind of time stamp that documents each student’s habits of mind, opinions, and ways of framing experience at the start of the year or semester. At the midpoint and at the end of the course, I have students return to their inventory, choose a question they’d now answer differently, and reflect on why and how their thinking has changed.

The Inventory as a Bridge Between Students and Content

By including a variety of essential questions (practical and experiential, conceptual and theoretical) and making a course’s aims explicit, the inventory invites all students into the conversation and the material from day one. It gives a deep thinker with slower processing speed or attention-deficit/hyperactivity disorder, for example, time to orient themselves to the course’s core questions. Meanwhile, the inventory challenges students who see themselves as high achievers to respond authentically to thorny questions that have no right answers.

In addition, using a thinking inventory models how to ask good questions; gives introverts and anxious students an entry point because cold calling becomes warmer (I can ask, “What did you say on your inventory?”); and cultivates a community of learners connected by real, worthwhile inquiry and communal discourse.

Recently, a student reflecting on his inventory at the end of a course wrote that he was taken aback by how intolerant of “loser characters” he’d seemed just a few months prior on his inventory. He noted that he’d been through some upheaval since then. And he ended his paper with the observation that empathy—for people and characters—grows “when you know their backstory.”


Center for Excellence in Teaching and Learning

Critical Thinking and Other Higher-Order Thinking Skills

Critical thinking is a higher-order thinking skill. Higher-order thinking skills go beyond basic observation of facts and memorization. They are what we are talking about when we want our students to be evaluative, creative and innovative.

When most people think of critical thinking, they assume that their words (or the words of others) are supposed to be “criticized” and torn apart in argument, when in fact the term simply means that the thinking is criteria-based. These criteria require that we distinguish fact from fiction; synthesize and evaluate information; and clearly communicate, solve problems, and discover truths.

Why is Critical Thinking important in teaching?

According to Paul and Elder (2007), “Much of our thinking, left to itself, is biased, distorted, partial, uninformed or down-right prejudiced. Yet the quality of our life and that of which we produce, make, or build depends precisely on the quality of our thought.” Critical thinking is therefore the foundation of a strong education.

Using Bloom’s Taxonomy of thinking skills, the goal is to move students from lower- to higher-order thinking:

- from knowledge (information gathering) to comprehension (confirming)

- from application (making use of knowledge) to analysis (taking information apart)

- from evaluation (judging the outcome) to synthesis (putting information together) and creative generation

This provides students with the skills and motivation to become innovative producers of goods, services, and ideas. This does not have to be a linear process but can move back and forth, and skip steps.

How do I incorporate critical thinking into my course?

The place to begin, and most obvious space to embed critical thinking in a syllabus, is with student-learning objectives/outcomes. A well-designed course aligns everything else—all the activities, assignments, and assessments—with those core learning outcomes.

Learning outcomes contain an action (verb) and an object (noun), and often start with “Students will….” Bloom’s Taxonomy can help you choose appropriate verbs to clearly state what you want students to be able to do when they exit the course, and at what level.

- Students will define the principal components of the water cycle. (This is an example of a lower-order thinking skill.)

- Students will evaluate how increased/decreased global temperatures will affect the components of the water cycle. (This is an example of a higher-order thinking skill.)

Both of the above examples are about the water cycle, and both require the foundational knowledge that forms the “facts” of what makes up the water cycle, but the second objective goes beyond facts to an actual understanding, application, and evaluation of the water cycle.

Using a tool such as Bloom’s Taxonomy to set learning outcomes helps to prevent vague, non-evaluative expectations. It forces us to think about what we mean when we say, “Students will learn…” What is learning; how do we know they are learning?

The Best Resources For Helping Teachers Use Bloom’s Taxonomy In The Classroom by Larry Ferlazzo

Consider designing class activities, assignments, and assessments—as well as student-learning outcomes—using Bloom’s Taxonomy as a guide.

The Socratic style of questioning encourages critical thinking. Socratic questioning “is systematic method of disciplined questioning that can be used to explore complex ideas, to get to the truth of things, to open up issues and problems, to uncover assumptions, to analyze concepts, to distinguish what we know from what we don’t know, and to follow out logical implications of thought” (Paul and Elder 2007).

Socratic questioning is most frequently employed in the form of scheduled discussions about assigned material, but it can be used on a daily basis by incorporating the questioning process into your daily interactions with students.

In teaching, Paul and Elder (2007) identify at least two fundamental purposes of Socratic questioning:

- To deeply explore student thinking, helping students begin to distinguish what they do and do not know or understand, and to develop intellectual humility in the process

- To foster students’ abilities to ask probing questions, helping students acquire the powerful tools of dialog, so that they can use these tools in everyday life (in questioning themselves and others)

How do I assess the development of critical thinking in my students?

If the course is carefully designed around student-learning outcomes, and some of those outcomes have a strong critical-thinking component, then the final assessment of your students’ success at achieving the outcomes will be evidence of their ability to think critically. For example, a multiple-choice exam might suffice to assess lower-order levels of “knowing,” while a project or demonstration might be required to evaluate synthesis of knowledge or creation of new understanding.

Critical thinking is not an “add on,” but an integral part of a course.

- Make critical thinking deliberate and intentional in your courses—have it in mind as you design or redesign all facets of the course

- Many students are unfamiliar with this approach and are more comfortable with a simple quest for correct answers, so take some class time to talk with students about the need to think critically and creatively in your course; identify what critical thinking entails, what it looks like, and how it will be assessed.

Additional Resources

- Barell, John. Teaching for Thoughtfulness: Classroom Strategies to Enhance Intellectual Development. Longman, 1991.

- Brookfield, Stephen D. Teaching for Critical Thinking: Tools and Techniques to Help Students Question Their Assumptions. Jossey-Bass, 2012.

- Elder, Linda, and Richard Paul. 30 Days to Better Thinking and Better Living through Critical Thinking. FT Press, 2012.

- Fasko, Jr., Daniel, ed. Critical Thinking and Reasoning: Current Research, Theory, and Practice. Hampton Press, 2003.

- Fisher, Alec. Critical Thinking: An Introduction. Cambridge University Press, 2011.

- Paul, Richard, and Linda Elder. Critical Thinking: Learn the Tools the Best Thinkers Use. Pearson Prentice Hall, 2006.

- Faculty Focus article, A Syllabus Tip: Embed Big Questions

- The Critical Thinking Community

- The Critical Thinking Community’s The Thinker’s Guides Series and The Art of Socratic Questioning

Consult with our CETL Professionals

Consultation services are available to all UConn faculty at all campuses at no charge.

Chap. 2: Critical Thinking

Critical Thinking and Higher-Order Levels of Cognition (Thinking)

Critical Thinking in College

Most of the reading and writing that you will do in college will require you to move beyond remembering and understanding material. You will be required to apply what you learn, create something new (e.g., an essay or project), and analyze and evaluate texts or information. All of these activities are higher-order levels of thinking that require students to move beyond the lower two levels: remembering and understanding.

To view a clearer image of the chart above, go to this website: Bloom’s Taxonomy

Using Bloom’s Taxonomy for Effective Learning

Adapted from the article written by Beth Lewis

Bloom’s Taxonomy is a widely accepted hierarchical framework that teachers use to guide their students through the cognitive learning process. In other words, teachers use this framework to focus on higher-order thinking skills.

You can think of Bloom’s Taxonomy as a pyramid, with simple knowledge-based recall questions at the base. Building up from this foundation, you can ask your students increasingly challenging questions to test their comprehension of the material.

By asking these critical-thinking or higher-order questions, you help students develop all levels of thinking. Students can improve their attention to detail, as well as their comprehension and problem-solving skills.

There are six levels in the framework. Here is a brief look at each of them, with a few examples of the questions you might ask at each level.

- Remember: Recognizing and recalling facts. At this level, students are asked questions to see whether they have remembered key information from a lesson and/or reading assignment. (What is… Where is… How would you describe?)

- Understand: Understanding what the facts mean. At this level, students are asked to interpret the facts they have learned. (What is the main idea… How would you summarize?)

- Apply: Applying facts, rules, concepts, and ideas. Questions at this level ask students to apply or use the knowledge learned during the lesson. (How would you use… How would you solve it?)

- Analyze: Breaking information down into its component parts. At the analysis level, students are required to go beyond knowledge and see whether they can analyze a problem. (What is the theme… How would you classify?)

- Evaluate: Judging the value of information and ideas. At this level, students are expected to assess the information they have learned and come to a conclusion about it. (What is your opinion of… How would you evaluate… How would you select… What data was used?)

- Create: Combining parts to make a new whole. The highest level of critical thinking involves creating new or original work.

Lewis, Beth. “Using Bloom’s Taxonomy for Effective Learning.” ThoughtCo, Feb. 11, 2020, thoughtco.com/blooms-taxonomy-the-incredible-teaching-tool-2081869.

Bloom’s Taxonomy Question Stems For Use In Assessment [With 100+ Examples]

This comprehensive list of pre-created Bloom’s taxonomy question stems helps ensure that students are critically engaging with course material.

Jacob Rutka

![Bloom’s Taxonomy Question Stems For Use In Assessment [With 100+ Examples]](https://tophat.com/wp-content/uploads/bloomsstem-blog.png)

One of the most powerful aspects of Bloom’s Taxonomy is that it offers you, as an educator, the ability to construct a curriculum to assess objective learning outcomes, including advanced educational objectives like critical thinking. Pre-created Bloom’s Taxonomy questions can also make planning discussions, learning activities, and formative assessments much easier.

For readers unfamiliar with it, Bloom’s Taxonomy consists of a series of hierarchical levels (normally arranged in a pyramid) that build on each other and progress toward higher-order thinking skills. Each level is associated with verbs, such as “demonstrate” or “design,” that describe measurable actions and so give greater insight into student learning.

Bloom’s Taxonomy (1956)

The original Bloom’s Taxonomy framework consists of six levels that build on each other as the learning experience progresses. It was developed in 1956 by Benjamin Bloom, an American educational psychologist. Below are descriptions of each level:

- Knowledge: Identification and recall of course concepts learned

- Comprehension: Ability to grasp the meaning of the material

- Application: Demonstrating a grasp of the material at this level by solving problems and creating projects

- Analysis: Finding patterns and trends in the course material

- Synthesis: The combining of ideas or concepts to form a working theory

- Evaluation: Making judgments based on the information students have learned as well as their own insights

Revised Bloom’s Taxonomy (2001)

A group of educational researchers and cognitive psychologists developed a revised Bloom’s Taxonomy framework in 2001 to make it more action-oriented: each level is named with a verb rather than a noun, so students work their way through a series of verbs to meet learning objectives. Below are descriptions of each of the levels in the revised Bloom’s Taxonomy:

- Remember: To retrieve relevant facts and concepts from memory.

- Understand: To summarize or restate the information in one’s own words.

- Apply: The ability to use learned material in new and concrete situations.

- Analyze: Understanding the underlying structure of knowledge to be able to distinguish between fact and opinion.

- Evaluate: Making judgments about the value of ideas, theories, items and materials.

- Create: Reorganizing concepts into new structures or patterns through generating, producing or planning.

Bloom’s Taxonomy questions are a great way to build and design curriculum and lesson plans. They encourage the development of higher-order thinking and prompt students to engage in metacognition by thinking about and reflecting on their own learning. The Ultimate Guide to Bloom’s Taxonomy Question Stems contains more than 100 example questions, including higher-order thinking questions, across all levels of Bloom’s Taxonomy.

Examples of Bloom’s Taxonomy Question Stems

Bloom’s Taxonomy (1956) question samples:

- Knowledge: How many…? Who was it that…? Can you name the…?

- Comprehension: Can you write in your own words…? Can you write a brief outline…? What do you think could have happened next…?

- Application: Choose the best statements that apply… Judge the effects of… What would result …?

- Analysis: Which events could have happened…? If … happened, how might the ending have been different? How was this similar to…?

- Synthesis: Can you design a … to achieve …? Write a poem, song or creative presentation about…? Can you see a possible solution to…?

- Evaluation: What criteria would you use to assess…? What data was used to evaluate…? How could you verify…?

Revised Bloom’s Taxonomy (2001) question samples:

- Remember: Who…? What…? Where…? How…?

- Understand: How would you generalize…? How would you express…? What information can you infer from…?

- Apply: How would you demonstrate…? How would you present…? Draw a story map…

- Analyze: How can you sort the different parts…? What can you infer about…? What ideas validate…? How would you categorize…?

- Evaluate: What criteria would you use to assess…? What sources could you use to verify…? What information would you use to prioritize…? What are the possible outcomes for…?

- Create: What would happen if…? List the ways you can…? Can you brainstorm a better solution for…?

Additional Bloom’s Taxonomy Example Questions

Bloom’s Taxonomy is a framework used in education to categorize levels of cognitive learning. Here are two example questions for each of the six levels, starting from the lowest level (Remember) and ending with the highest level (Create):

- Remember (Knowledge): What are the four primary states of matter? Can you list the main events of the American Civil War?

- Understand (Comprehension): How would you explain the concept of supply and demand to someone who is new to economics? Can you summarize the main idea of the research article you just read?

- Apply (Application): Given a real-world scenario, how would you use the Pythagorean theorem to solve a practical problem? Can you demonstrate how to conduct a chemical titration in a laboratory setting?

- Analyze (Analysis): What are the key factors contributing to the decline of a particular species in an ecosystem? How do the social and economic factors influence voting patterns in a specific region?

- Evaluate (Evaluation): Compare and contrast the strengths and weaknesses of two different programming languages for a specific project. Assess the effectiveness of a marketing campaign, providing recommendations for improvement.

- Create (Synthesis): Design a new and innovative product that addresses a common problem in society. Develop a comprehensive lesson plan that incorporates various teaching methods to enhance student engagement in a particular subject.

Higher-Level Thinking Questions

Higher-level thinking questions are designed to encourage critical thinking, analysis, and synthesis of information. Here are eight examples of higher-level thinking questions that can be used in higher education:

- Critical Analysis (Analysis): “What are the ethical implications of the decision made by the characters in the novel, and how do they reflect broader societal values?”

- Problem-Solving (Application): “Given the current environmental challenges, how can we develop sustainable energy solutions that balance economic and ecological concerns?”

- Evaluation of Evidence (Evaluation): “Based on the data presented in this research paper, do you think the study’s conclusions are valid? Why or why not?”

- Comparative Analysis (Analysis): “Compare and contrast the economic policies of two different countries and their impact on income inequality.”

- Hypothetical Scenario (Synthesis): “Imagine you are the CEO of a multinational corporation. How would you navigate the challenges of globalization and cultural diversity in your company’s workforce?”

- Ethical Dilemma (Evaluation): “In a medical emergency with limited resources, how should healthcare professionals prioritize patients, and what ethical principles should guide their decisions?”

- Interdisciplinary Connection (Synthesis): “How can principles from psychology and sociology be integrated to address the mental health needs of a diverse student population in higher education institutions?”

- Creative Problem-Solving (Synthesis): “Propose a novel solution to reduce urban congestion while promoting eco-friendly transportation options. What are the potential benefits and challenges of your solution?”

These questions encourage students to go beyond simple recall of facts and engage in critical thinking, analysis, synthesis, and ethical considerations. They are often used to stimulate class discussions, research projects, and written assignments in higher education settings.

Higher Level Thinking: Synthesis in Bloom's Taxonomy

Putting the Parts Together to Create New Meaning

- M.Ed., Curriculum and Instruction, University of Florida