- Open access

- Published: 01 September 2021

Perspectives in modeling and model validation during analytical quality by design chromatographic method evaluation: a case study

- Yongzhi Dong (ORCID: orcid.org/0000-0003-4268-5952)

- Zhimin Liu

- Charles Li

- Emily Pinter

- Alan Potts

- Tanya Tadey

- William Weiser

AAPS Open, volume 7, Article number: 3 (2021)


Design of experiments (DOE)-based analytical quality by design (AQbD) method evaluation, development, and validation is gaining momentum and has the potential to create robust chromatographic methods through deeper understanding and control of variability. In this paper, a case study is used to explore the pros, cons, and pitfalls of using various chromatographic responses as modeling targets during a DOE-based AQbD approach. The case study involves evaluation of a reverse phase gradient HPLC method by a modified circumscribed central composite (CCC) response surface DOE.

Solid models were produced for most responses and their validation was assessed with graphical and numeric statistics as well as chromatographic mechanistic understanding. The five most relevant responses with valid models were selected for multiple responses method optimization and the final optimized method was chosen based on the Method Operable Design Region (MODR). The final method has a much larger MODR than the original method and is thus more robust.

This study showcases how to use AQbD to gain deep method understanding and make informed decisions on method suitability. Discoveries and discussions in this case study may contribute to continuous improvement of AQbD chromatography practices in the pharmaceutical industry.

Introduction

Drug development using a quality by design (QbD) approach is an essential part of the Pharmaceutical cGMP Initiative for the twenty-first century (FDA Pharmaceutical cGMPs For The 21st Century: A Risk-Based Approach. 2004) established by the FDA. This initiative seeks to address unmet patient needs, the unsustainable rise of healthcare costs, and the reluctance to adopt new technology in pharmaceutical development and manufacturing. These issues were the result of old regulations that were very rigid and made continuous improvement of previously approved drugs both challenging and costly. The International Council for Harmonization of Technical Requirements for Pharmaceuticals for Human Use (ICH) embraced this initiative and began issuing QbD-relevant quality guidelines in 2005. The final versions of ICH Q8–Q12 (ICH Q8 (R2) 2009; ICH Q9 2005; ICH Q10 2008; ICH Q11 2012; ICH Q12 2019) have been adopted by all ICH members. The in-progress version of ICH Q14 (ICH Q14 2018) will offer AQbD guidelines for analytical procedures and promote the use of QbD principles to achieve a greater understanding and control of testing methods and reduction of result variability.

Product development using a QbD approach emphasizes understanding of product and process variability, as well as control of process variability. It relies on analytical methods to measure, understand, and control the critical quality attributes (CQA) of raw materials and intermediates to optimize critical process parameters and realize the Quality Target Product Profile (ICH Q8 (R2) 2009 ). Nevertheless, part of the variability reported by an analytical test can originate from the variability of the analytical measurement itself. This can be seen from Eq. 1 .

The reported variability is the sum of intrinsic product variability and extrinsic analytical measurement variability (NIST/SEMATECH e-Handbook of statistical methods 2012a, 2012b, 2012c, 2012d). The measurement variability can be minimized by applying QbD principles, concepts, and tools during method development to assure that the quality and reliability of the analytical method meet the target measurement uncertainty (TMU) (EURACHEM/CITAC 2015). High-quality analytical data truthfully reveal product CQAs and thus enable robust, informed decisions regarding drug development, manufacturing, and quality control.
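Eq. 1 is not reproduced in this text. The statement above matches the usual additivity of variances for independent sources of variation. A sketch of that relationship follows; the symbols are assumptions chosen for illustration and are not necessarily the authors' notation.

```latex
\sigma^{2}_{\text{reported}} = \sigma^{2}_{\text{product}} + \sigma^{2}_{\text{measurement}}
```

Under this reading, reducing the measurement term through better method design brings the reported variability closer to the true product variability.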

ICH Q14 introduces the AQbD concepts, using a rational, systematic, and holistic approach to build quality into analytical methods. The Method Operable Design Region (MODR) (Borman et al. 2007 ) is a multidimensional space based on the critical method parameters and settings that provide suitable method performance. This approach begins with a predefined analytical target profile (ATP) (Schweitzer et al. 2010 ), which defines the method’s intended purpose and commands analytical technique selection and all other method development activities. This involves understanding of the method and control of the method variability based on sound science and risk management. It is generally agreed upon that systematic AQbD method development should include the following six consecutive steps (Tang 2011 ):

1. ATP determination

2. Analytical technique selection

3. Method risk assessment

4. MODR establishment

5. Method control strategy

6. Continuous method improvement through a life cycle approach

A multivariate MODR allows freedom to make method changes and maintain the method validation (Chatterjee S 2012). Changing method conditions within an approved MODR does not impact the results and offers an advantage for continuous improvement without submission of supplemental regulatory documentation. Establishment of the MODR is facilitated by multivariate design of experiments (DOE) (Volta e Sousa et al. 2021). Typically, three types of DOE may be involved in AQbD. The screening DOE further consolidates the potential critical method parameters determined from the risk assessment. The optimization DOE builds mathematical models and selects the appropriate critical method parameter settings to reach the target mean responses. Finally, the robustness DOE further narrows down the critical method parameter settings to establish the MODR, within which the target mean responses are consistently realized. Based on this AQbD framework, it is clear that DOE models are essential to understanding and controlling method variability to build robustness into analytical methods. Although extensive case studies regarding AQbD have been published (Grangeia et al. 2020), systematic and in-depth discussion of the fundamental AQbD modeling is still largely lacking. Methodical evaluation of the pros, cons, and pitfalls of using various chromatographic responses as modeling targets is even rarer (Debrus et al. 2013) (Orlandini et al. 2013) (Bezerraa et al. 2019). The purpose of this case study is to investigate relevant topics such as data analysis and modeling principles, statistical and scientific validation of DOE models, method robustness evaluation and optimization by Monte Carlo simulation (Chatterjee S 2012), multiple responses method optimization (Leardi 2009), and MODR establishment. Discoveries and discussions in this case study may contribute to continuous improvement of chromatographic AQbD practices in the pharmaceutical industry.

Methods/experimental

Materials and methods.

C111229929-C, a third-generation novel synthetic tetracycline-class antibiotic currently under phase 1 clinical trial, was provided by KBP Biosciences. A reverse phase HPLC purity and impurities method was also provided for evaluation and optimization using AQbD. The method was developed using a one-factor-at-a-time (OFAT) approach and used a Waters XBridge C18 column (4.6 × 150 mm, 3.5 μm) and a UV detector. Mobile phase A was composed of ammonium acetate/ethylenediaminetetraacetic acid (EDTA) buffer at pH 8.8 and mobile phase B was composed of 70:30 (v/v) acetonitrile/EDTA buffer at pH 8.5. Existing data from forced degradation and 24-month stability studies demonstrated that the method was capable of separating all six specified impurities/degradants with ≥ 1.5 resolution.

A 1.0 mg/mL C111229929-C solution was prepared by dissolving the aged C111229929-C stability sample in 10 mM HCl in methanol and was used as the method evaluation sample. An Agilent 1290 UPLC equipped with a diode array detector (DAD) was used. In-house 18.2 MΩ Milli-Q water was used for solution preparations. All other reagents were of ACS equivalent or higher grade. Waters Empower® 3 was used as the Chromatographic Data System. Fusion QbD v 9.9.0 software was used for DOE design, data analysis, modeling, Monte Carlo simulation, and multiple-response mean and robustness optimization. Empower® 3 and Fusion QbD were fully integrated and validated.

A method risk assessment was performed through review of the literature and existing validation and stability data to establish priorities for method inputs and responses. Based on the risk assessment, four method parameters with the highest risk priority numbers were selected as critical method parameters. Method evaluation and optimization were performed by a modified circumscribed central composite (CCC) response surface DOE design with five levels per parameter, for a total of 30 runs. The modification consisted of extra duplicate runs at three factorial points. Together with the triplicate runs at the center point, the modified design contained a total of nine replicate runs. See Table 1 for the detailed design matrix. A full quadratic model for the four-factor five-level CCC design has a total of fourteen potential terms. They include four main linear terms (A, B, C, D), four quadratic terms (A², B², C², D²), and six two-way interaction terms (A*B, A*C, A*D, B*C, B*D, and C*D).
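The run and term counts above can be checked by enumerating the design in coded units. The following is a minimal sketch, assuming a rotatable axial distance of 2 for the star points (the text does not state the value used) and using only the replicate counts given above; which factorial points were duplicated is not specified here, so only the totals are tallied.

```python
from itertools import combinations, product

factors = ["A", "B", "C", "D"]
alpha = 2.0  # ASSUMPTION: rotatable axial distance, (2**4) ** 0.25

# 2^4 factorial corner points at coded levels -1 / +1
factorial = [tuple(float(v) for v in p)
             for p in product((-1, 1), repeat=len(factors))]

# 2 * 4 star (axial) points: +/- alpha on one axis, 0 on the others
star = []
for i in range(len(factors)):
    for level in (-alpha, alpha):
        point = [0.0] * len(factors)
        point[i] = level
        star.append(tuple(point))

center = [(0.0, 0.0, 0.0, 0.0)]
unique_points = factorial + star + center

# Replicate bookkeeping as described in the text
extra_center_runs = 2     # triplicate center point = 1 unique + 2 extra runs
extra_factorial_runs = 3  # one duplicate at each of three factorial points
total_runs = len(unique_points) + extra_center_runs + extra_factorial_runs

# Distinct coded levels seen by the first factor
levels_per_factor = sorted({p[0] for p in unique_points})

# Candidate terms of the full quadratic model (intercept excluded)
linear = list(factors)
quadratic = [f"{f}^2" for f in factors]
interactions = [f"{a}*{b}" for a, b in combinations(factors, 2)]
model_terms = linear + quadratic + interactions

print(len(unique_points), total_runs, levels_per_factor, len(model_terms))
```

The enumeration gives 25 unique conditions, 30 runs, five coded levels per factor, and fourteen candidate model terms, in agreement with the counts stated in the text.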

Pre-runs executed at selected star (extreme) points verified that all expected analytes eluted within the 35 min run time. This mitigated the risk of any non-eluting peaks during the full DOE study, as a single unusable run may raise questions regarding the validity of the entire study. Based on the pre-runs, the concentration of the stock EDTA solution was decreased four-fold to mitigate inaccurate in-line mixing of mobile phase B caused by low volumes of a high concentration stock. The final ranges and levels for each of the four selected method parameters are also listed in Table 1 .

Each unique DOE run in Table 1 is a different method. As there were 25 unique running conditions, there were 25 different methods in this DOE study. The G-Efficiency and the average predicted variance (NIST/SEMATECH e-Handbook of statistical methods 2012a , 2012b , 2012c , 2012d ) (Myers and Montgomery 1995 ) of the design were 86.8% and 10.6%, respectively, meeting their respective design goals of ≥ 50% and ≤ 25%. Some of the major advantages of this modified CCC design include the following:

- Established quadratic effects

- Robust models that minimize effects of potential missing data

- Good coverage of the design space by including the interior design points

- Low predictive variances of the design points

- Low model term coefficient estimation errors

The design also allows for implementation of a sequential approach, where trials from previously conducted factorial experiments can be augmented to form the CCC design. When there is little understanding about the method and critical method parameters, such as when developing a new method from scratch, direct application of an optimizing CCC design is generally not recommended. However, there was sufficient previous knowledge regarding this specific method, justifying the direct approach.

DOE data analysis and modeling principles

DOE software is one of the most important tools to facilitate efficient and effective AQbD chromatographic method development, validation, and transfer. Fusion QbD software was employed for DOE design and data analysis. Mathematical modeling of the physicochemical chromatographic separation process is essential for DOE to develop robust chromatographic methods through three phases: chemistry screening, mean optimization, and robustness optimization. The primary method parameters affecting separation (e.g., column packing, mobile phase pH, mobile phase organic modifier) are statistically determined with models during chemistry screening. The secondary method parameters affecting separation (e.g., column temperature, flow rate, gradient slope settings) are optimized during mean optimization using models to identify the method most capable of reaching all selected method response goals on average. During robustness optimization, robustness models for selected method responses are created with Monte Carlo simulation and used to further optimize method parameters such that all method responses consistently reach their goals, as reflected by a process capability value of ≥ 1.33, which is the established standard for a robust process (NIST/SEMATECH e-Handbook of statistical methods 2012a , 2012b , 2012c , 2012d ).

Models are critical to the AQbD approach and must be validated both statistically and scientifically. Statistical validation is performed using various statistical tests such as residual randomness and normality (NIST/SEMATECH e-Handbook of statistical methods 2012a , 2012b , 2012c , 2012d ), regression R-squared, adjusted regression R-squared, and prediction R-squared. Scientific validation is achieved by checking the terms in a statistical model against the relevant established scientific principles, which is described as mechanistic understanding in the relevant literature (ICH Q8 (R2) 2009 ).
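The R-squared and adjusted R-squared statistics mentioned above have standard textbook definitions, sketched below. This is an illustration only and is not Fusion QbD's implementation; the function names and the example numbers are hypothetical.

```python
def r_squared(observed, predicted):
    """Fraction of the response variation explained by the model."""
    mean_obs = sum(observed) / len(observed)
    ss_total = sum((y - mean_obs) ** 2 for y in observed)
    ss_resid = sum((y - y_hat) ** 2 for y, y_hat in zip(observed, predicted))
    return 1.0 - ss_resid / ss_total


def adjusted_r_squared(r2, n_runs, n_terms):
    """Penalize R-squared for the number of model terms (intercept excluded)."""
    return 1.0 - (1.0 - r2) * (n_runs - 1) / (n_runs - n_terms - 1)


# Hypothetical example: a two-term model fitted to 30 runs
r2 = 0.90
print(round(adjusted_r_squared(r2, n_runs=30, n_terms=2), 4))
```

Because the penalty grows with the number of terms, a large gap between the two statistics is a common warning sign of overfitting.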

Fusion uses data transformation analysis to decide whether data transformation is necessary before modeling, and then uses analysis of variance (ANOVA) and regression to generate method response models. ANOVA provides objective and statistical rationale for each consecutive modeling decision. Model residual plots are fundamental tools for validating the final method response models. When a model fits the DOE data well, the response residuals should be randomly distributed, without any defined structure, and normally distributed. A valid method response model provides the deepest understanding of how a method response, such as resolution, is affected by critical method parameters.

Since Fusion relies on models for chemistry screening, mean optimization, and robustness optimization, it is critical to holistically evaluate each method response model from all relevant model regression statistics to assure model validity before multiple method response optimization. Inappropriate models will lead to poor prediction and non-robust methods. This paper will describe the holistic evaluation approach used to develop a robust chromatographic method with Fusion QbD.

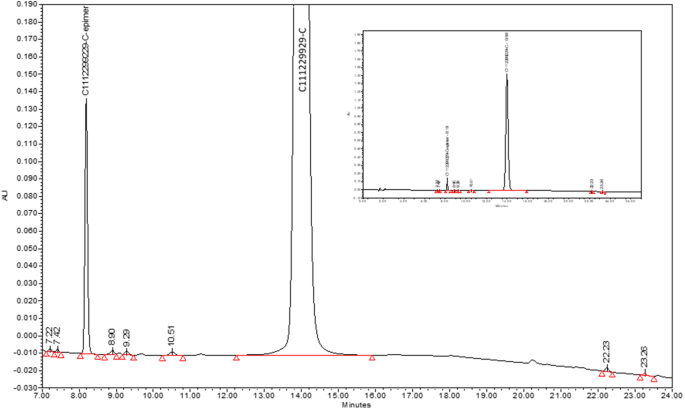

Representative chromatogram under nominal conditions

Careful planning and pre-runs executed at select star points allowed for successful execution of the DOE with all expected peaks eluting within the run time for all 30 runs. A representative chromatogram at the nominal conditions is shown in Fig. 1. The API peak (C111229929-C) and the Epimer peak (C111229929-C-epimer) can be seen, as well as seven minor impurity peaks, among which impurity 2 and impurity 3 elute at 8.90 and 10.51 min, respectively. The inset shows the full-scale chromatogram.


Results for statistical validation of the DOE models

ANOVA and regression data analysis revealed many DOE models for various peak responses. The major numeric regression statistics of the peak response models are summarized in Table 2 .

MSR (mean square regression), MSR adjusted, and MS-LOF (mean square lack of fit) are major numeric statistics for validating a DOE model. A model is statistically significant when the MSR ≥ the MSR significance threshold, which is the 0.0500 probability value for statistical significance. The lack of fit of a model is not statistically significant when the MS-LOF ≤ the MS-LOF significance threshold, which is also the 0.0500 probability value for statistical significance. The MSR adjusted statistic is the MSR adjusted with the number of terms in the model to assure a new term improves the model fit more than expected by chance alone. For a valid model, the MSR adjusted is always smaller than the MSR and the difference is usually very small, unless too many terms are used in the model or the sample size is too small.

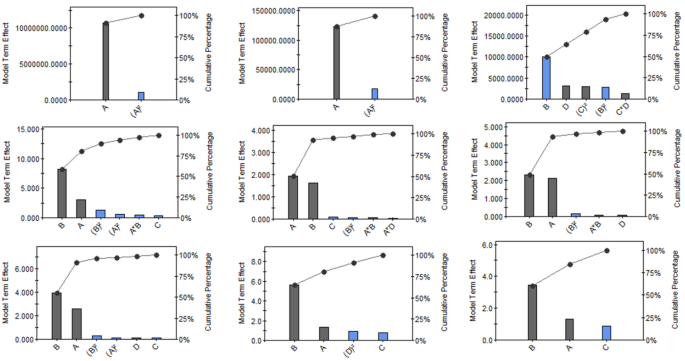

Model Term Ranking Pareto Charts for scientific validation of DOE models

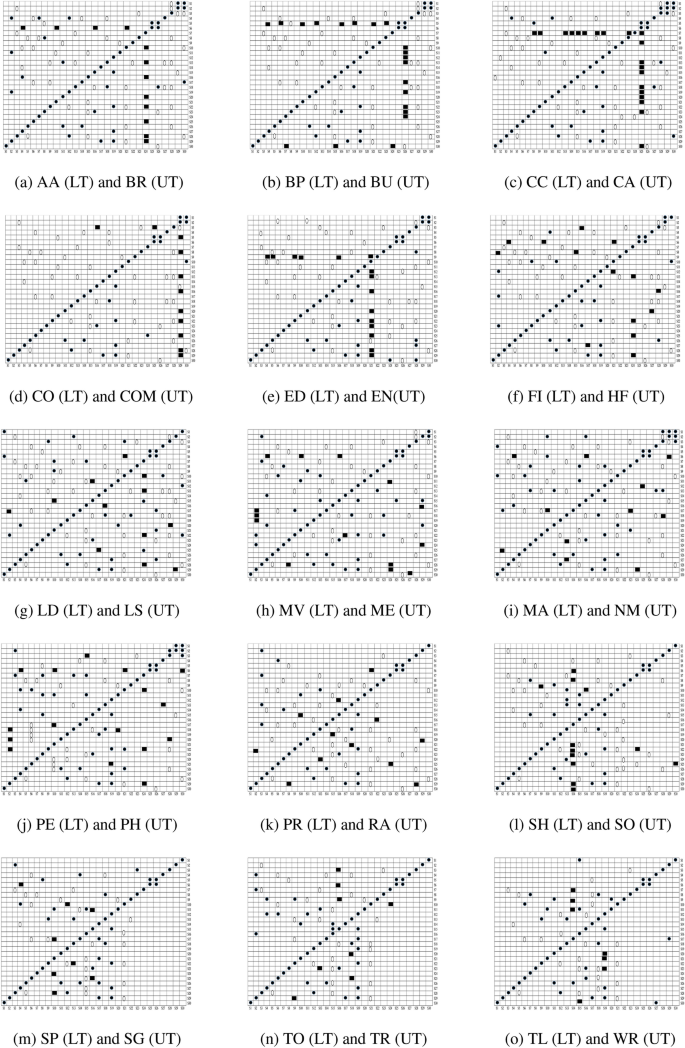

DOE models are calculated from standardized variable level settings. Scientific validation of a DOE model through mechanistic understanding can be challenging when data transformation before modeling ostensibly inverts the positive and negative nature of the model term effect. To overcome this challenge, Model Term Ranking Pareto Charts that provide the detailed effects of each term in a model were employed. See Fig. 2 for details.
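The apparent sign inversion can be illustrated with a small numeric sketch. The data below are hypothetical (a retention time that falls as flow rate rises); the point is only that a reciprocal transformation of the response flips the sign of the fitted slope while the underlying physical effect is unchanged.

```python
def slope(x, y):
    """Ordinary least-squares slope of y against x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    return sxy / sxx


flow = [0.7, 0.8, 0.9, 1.0, 1.1]      # mL/min
rt = [10.0 / f for f in flow]         # HYPOTHETICAL retention times, min

slope_raw = slope(flow, rt)                           # negative
slope_reciprocal = slope(flow, [1.0 / t for t in rt]) # positive

print(slope_raw < 0, slope_reciprocal > 0)
```

This is why the effect direction should be read from a chart of the actual effects and not from the sign of a coefficient fitted to transformed data.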

Model Term Ranking Pareto Charts. Top row from left to right: API area (default), Epimer area (default), API plate count. Middle row from left to right: API RT, Epimer RT, Impurity 2 RT. Bottom row from left to right: Impurity 3 RT, # of peaks, # of peaks with ≥ 1.5 resolution

The chart presents all terms of a model in descending order (left to right) based on the absolute magnitude of their effects. The primary y-axis (model term effect) gives the absolute magnitude of individual model terms, while the secondary y-axis (cumulative percentage) gives the cumulative relative percentage effects of all model terms. Blue bars correspond to terms with a positive effect, while gray bars correspond to those with a negative effect. The Model Term Ranking Pareto Charts for all models are summarized in Fig. 2, except the two “customer” peak area models with a single term and the two Cpk models.

AQbD relies on models for efficient and effective chemistry screening, mean optimization, and robustness optimization of chromatographic methods. It is critical to “validate” the models both statistically and scientifically, as inappropriate models may lead to impractical methods. As such, this section will discuss statistical and scientific validation of the DOE models. After the models were fully validated for all selected individual method responses, the method MODR was substantiated by balancing and compromising among the most important method responses.

Statistical validation of the DOE models

As shown in Table 2, the MSR values ranged from 0.7928 to 0.9999. All MSR values were much higher than their respective MSR thresholds, which ranged from 0.0006 to 0.0711, indicating that all models were statistically significant and explained the corresponding chromatographic response data. The MSR adjusted values were all smaller than their respective MSR values, and the differences between the two were always very small (the largest difference was 0.0195 for the API plate count model), indicating that there was no overfitting for the models. There was slight lack of fit for the two customer models due to very low pure errors, and the MS-LOF could not be calculated for the two Cpk models because the Monte Carlo simulation gives essentially zero pure error. For all other models, the MS-LOF was ≤ the MS-LOF significance threshold, indicating that the lack of fit was not statistically significant.

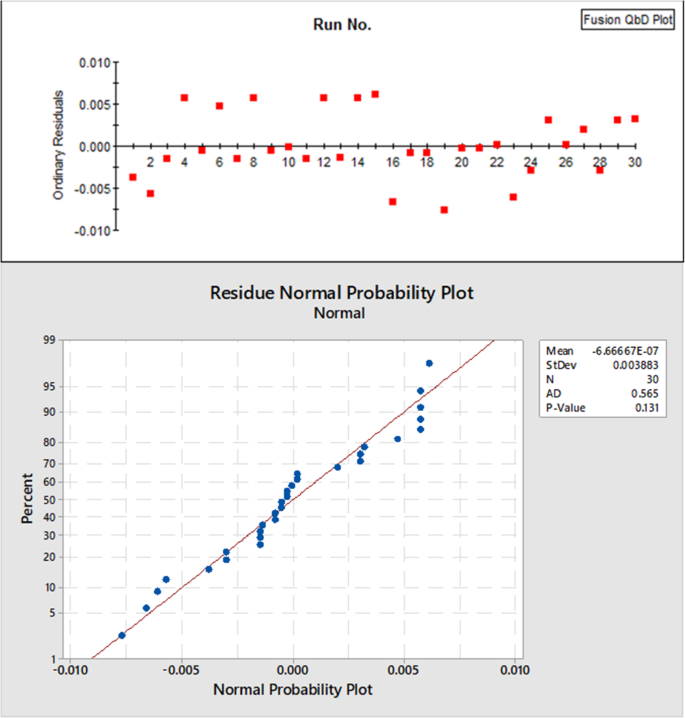

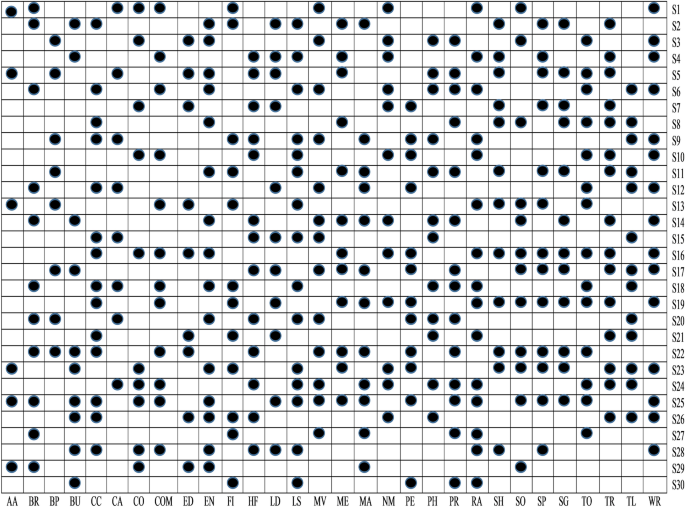

In addition to the above numeric statistical validation, various model residual plots were employed for graphical statistical model validation. The parameter–residual plots and the run number-residual plots for all models showed no defined structure, indicating random residual distribution. The normal probability plots showed all residual points lay in a nearly straight line for each single model, indicating normal residual distribution for all models. The randomly and normally distributed residuals provided the primary graphical statistical validation of the DOE models. See Fig. 3 for representative residuals plots for the “# of Peaks” model.
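The idea behind a normal probability plot can be sketched numerically: sort the residuals, pair them with theoretical normal quantiles, and check how close the pairs fall to a straight line. The sketch below uses the correlation of those pairs as a rough straightness measure. It is not the procedure used by Fusion QbD; the plotting positions and the example residuals are assumptions.

```python
from math import sqrt
from statistics import NormalDist


def normal_scores(n):
    """Standard-normal quantiles at plotting positions (i - 0.5) / n."""
    std_normal = NormalDist()
    return [std_normal.inv_cdf((i - 0.5) / n) for i in range(1, n + 1)]


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def probability_plot_correlation(residuals):
    """Correlation between sorted residuals and normal scores."""
    ordered = sorted(residuals)
    return pearson(normal_scores(len(ordered)), ordered)


# HYPOTHETICAL residuals for 30 runs
well_behaved = [0.8 * q for q in normal_scores(30)]  # ideal normal shape
one_outlier = [0.0] * 29 + [10.0]                    # strongly non-normal

print(probability_plot_correlation(well_behaved) >
      probability_plot_correlation(one_outlier))
```

A value close to 1 corresponds to points lying on a nearly straight line in the plot; a single dominant outlier pulls the value down sharply.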

Representative residuals plots for the “# of Peaks” model. Upper: run number vs. residuals plot; lower: residuals normal probability plot

Scientific validation of the DOE models

With all models statistically validated, the discussions below will focus on scientific validation of the models by mechanistic understanding.

Peak area models for API and Epimer peaks

Peak areas and peak heights have been used for chromatographic quantification. However, peak area was chosen as the preferred approach as it is less sensitive to peak distortions such as broadening, fronting, and tailing, which can cause significant variation in analyte quantitation. To use peak area to reliably quantify the analyte within the MODR of a robust chromatographic method, the peak area must remain stable with consistent analyte injections.

Peak area models can be critical to method development and validation with a multivariate DOE approach. Solid peak area models were revealed for the API and Epimer peaks in this study. See the “API (default)” and “Epimer (default)” rows in Table 2 for the detailed model regression statistics. See Fig. 2 for the Model Term Ranking Pareto Charts. See Eqs. 2 and 3 below for the detailed models.

Although a full quadratic model for the four-factor five-level CCC design has a total of fourteen potential terms, multivariate regression analyses revealed that only two of the fourteen terms are statistically significant for both the API and Epimer peak area models. In addition, the two models contain the same two terms, flow rate and flow rate squared, indicating that the other three parameters (final percentage strong solvent, oven temperature, and EDTA concentration) have no significant effect on peak area for either peak.

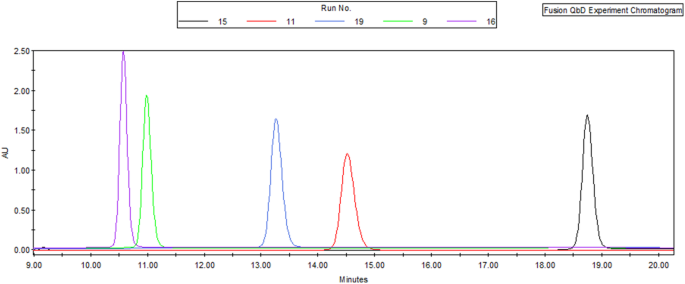

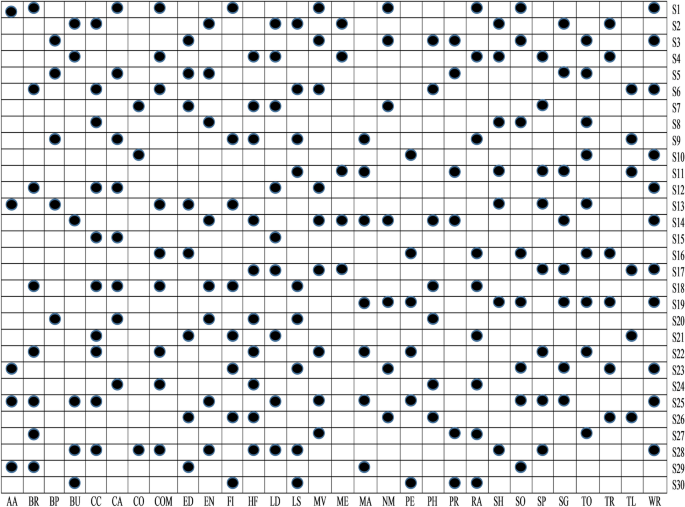

Oven temperature and EDTA concentration had a negligible effect on peak area and thus were not significant terms in the peak area models. The percentage of strong solvent was also not a significant term in the peak area models: as seen in Fig. 4, it influenced peak height almost as much as flow rate did, but it did not influence peak area. It was hypothesized that the two flow rate terms in the model consisted of a strong negative first order term and a weak positive second order term, but more investigation was needed.

Effects of final percentage of strong solvent and flow rate on the API peak area and peak height: run 15 (black) = 31%/0.9 mL/min; run 11 (red) = 33%/1.0 mL/min; run 19 (blue) = 35%/0.9 mL/min; run 9 (green) = 37%/1.0 mL/min; run 16 (purple) = 39%/0.9 mL/min

Peak purity and peak integration are the primary factors affecting peak area. Partial or total peak overlap (resolution < 1.5) due to analyte co-elution can impact the peak purity resulting in inaccurate integration of both peaks. Peak integration may also be affected by unstable baseline and/or peak fronting and tailing due to uncertainty in determining peak start and end points. In this DOE study, the API and Epimer peaks were consistently well-resolved (resolution ≥ 2.0) and were also significantly higher than the limit of quantitation, contributing to the strong peak area models. In contrast, no appropriate peak area models could be developed for other impurity peaks as they were either not properly resolved or were too close to the limit of quantitation. For peaks with resolution ≤ 1.0 there will likely never be an area model with reliable predictivity as the peak area cannot be consistently and accurately measured.

The importance of a mechanistic understanding of the DOE models for AQbD has been extensively discussed. The API and Epimer peak area models were very similar in that they both contained a strong negative first order flow rate term and a weak positive second order flow rate term.

The strong negative first order term can be explained by the exposure time of the analyte molecules to the detector. The UV detector used in the LC method is non-destructive and concentration sensitive. Analyte molecules send signals to the detector when exposed to UV light while flowing through the fixed length detecting window in a band. As the molecules are not degraded by the UV light, the slower the flow rate, the longer the analyte molecules are exposed to the UV light, allowing for increased signal to the detector and thus increased analyte peak area. Simple direct linear regression of the peak area against inverse flow rate confirmed both the API and Epimer peak areas were proportional to the inverse flow rate, with R² values ≥ 0.99 (data not included).
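The regression against inverse flow rate can be sketched with synthetic data. The flow rate levels below match the 0.7–1.1 mL/min range of this study, but the peak areas are hypothetical values generated from an exact inverse relationship, so the sketch only illustrates why 1/F is the more natural regressor; it does not reproduce the study data.

```python
def fit_line(x, y):
    """Least-squares line y = slope * x + intercept, plus R-squared."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_total = sum((b - mean_y) ** 2 for b in y)
    ss_resid = sum((b - (slope * a + intercept)) ** 2 for a, b in zip(x, y))
    return slope, intercept, 1.0 - ss_resid / ss_total


flow_rates = [0.7, 0.8, 0.9, 1.0, 1.1]   # mL/min
k = 900.0                                 # HYPOTHETICAL proportionality constant
areas = [k / f for f in flow_rates]       # HYPOTHETICAL peak areas

# Regress area on flow rate and on inverse flow rate
_, _, r2_linear = fit_line(flow_rates, areas)
slope_inv, intercept_inv, r2_inverse = fit_line(
    [1.0 / f for f in flow_rates], areas)

print(r2_inverse > r2_linear)
```

With the inverse regressor the fit is a straight line through the origin with a single term, which mirrors the single-term customer models discussed in this section.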

As there was no obvious mechanistic explanation of the weak positive second order term in the models, more investigation was needed. Multivariate DOE customer models were pursued. The acquired customer models, listed in Eqs. 4 and 5 , used inverse flow rate “1/A” in place of the flow rate “A” for all pertinent terms among the fourteen terms. The major model regression statistics of the customer models are summarized in the “API (customer)” and “Epimer (customer)” rows in Table 2 . Both customer models contain a single inverse flow rate term, confirming the negative effect of flow rate on peak area for both peaks. The customer models in Eqs. 4 and 5 provide more intuitive understanding of the flow rate effects on peak area than the “default” models in Eqs. 2 and 3 . The weak positive second order flow rate term in Eqs. 2 and 3 contributes less than 15% effect to the peak area and is very challenging to explain mechanistically. This kind of model term replacing technique may be of general value when using DOE to explore and discover new scientific theory, including new chromatographic theory.

Additionally, the peak area models in Eqs. 2 – 5 revealed that the pump flow rate must be very consistent among all injections during a quantitative chromatographic sequence. Currently, the best-in-industry flow rate precision for a binary UPLC pump is “< 0.05% RSD or < 0.01 min SD” (Thermo Fisher Scientific, Vanquish Pump Specification. 2021 ).

API peak plate count model

Column plate count is potentially useful in DOE modeling as it is a key parameter used in all modes of chromatography for measuring and controlling column efficiency to assure separation of the analytes. The equation for plate count ( N ) is shown below. It is calculated using peak retention time ( t r ) and peak width at half height ( w 1/2 ) to mitigate any baseline effects and provide a more reliable response for modeling-based QbD chromatographic method development.
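The equation referred to above is not reproduced in this text. The standard half-height plate count formula, which uses the same two quantities, is given here for reference; it is assumed to be the form the authors intended.

```latex
N = 5.54 \left( \frac{t_r}{w_{1/2}} \right)^{2}
```

The constant 5.54 is $8 \ln 2$, which follows from the relationship between the width at half height and the standard deviation of a Gaussian peak.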

The peak plate count model for the API peak can be seen in Eq. 6 . It was developed by reducing the fourteen terms. The major model quality attributes are summarized in Table 2 .

The flow rate was not a critical factor in the plate count model. This seemingly goes against the Van Deemter equation (van Deemter et al. 1956 ), which states that flow rate directly affects column plate height and thus plate count. However, the missing flow rate term can be rationalized by the LC column that was used. According to the Van Deemter equation, plate height for the 150 × 4.6 mm, 3.5 μm column will remain flat at a minimum level within the 0.7–1.1 mL/min flow rate range used in this DOE study (Altiero 2018 ). As plate count is inversely proportional to the plate height, it will also remain flat at a maximal level within the 0.7–1.1 mL/min flow rate range.
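For reference, the general form of the Van Deemter relationship invoked above can be written as follows. Lowercase coefficients $a$, $b$, and $c$ are used here to avoid confusion with the DOE factor labels A to D; $u$ is the mobile phase linear velocity and $L$ is the column length. No coefficient values are given in the text, so none are assumed.

```latex
H = a + \frac{b}{u} + c\,u, \qquad
u_{\text{opt}} = \sqrt{\frac{b}{c}}, \qquad
H_{\min} = a + 2\sqrt{b\,c}, \qquad
N = \frac{L}{H}
```

Near $u_{\text{opt}}$ the derivative $dH/du$ is zero, so small changes in flow rate change $H$, and therefore $N$, only slightly. This is consistent with the absence of a flow rate term in the plate count model if the studied range lies close to the optimum.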

The most dominant parameter in the API plate count model was the final percentage of strong solvent. Its two terms, B and B², provided more than 60% positive effects to the plate count response (see the Model Term Ranking Pareto Chart in Fig. 2) and could be easily explained by the inverse relationship between plate count and peak width when the gradient slope is increased.

Retention time models

Retention time (RT) and peak width are the primary attributes for a chromatographic peak. They are used to calculate secondary attributes such as resolution, plate count, and tailing. These peak attributes together define the overall quality of separation and subsequently quantification of the analytes. RT is determined using all data points on a peak and is thus a more reliable measurand than peak width, which uses only some data points on a peak. Peak width cannot be measured with the same accuracy as RT, especially for minor peaks, due to uncertainty in the determination of peak start, end, and height. Consequently, RT is the most reliably measured peak attribute.

The reliability of the RT measurement was confirmed in this DOE study. As listed in Table 2 , well-fitted RT models were acquired for the major API and Epimer peaks as well as the minor impurity 2 and impurity 3 peaks. The retention time models are listed in Eqs. 7 – 10 ( note : reciprocal square for the Epimer and impurity 2, and reciprocal for impurity 3 retention time data transformation before modeling inverted the positive and negative nature of the model term effect in Eqs. 8 – 10 , see the Model Term Ranking Pareto Charts in Fig. 2 for the actual effect). The four models shared three common terms: flow rate, final percentage of strong solvent, and the square of final percentage of strong solvent. These three terms contributed more than 90% of the effect in all four RT models. Furthermore, in all four models the flow rate and final percentage of strong solvent terms consistently produced a negative effect on RT, whereas the square of the final percentage of strong solvent term consistently produced positive effects. While the scientific rationale for the negative effects of the first two terms is well-established, the rationale for the positive effects of the third term lies beyond the scope of this study.

As RT is typically the most reliably measured peak response, it produces the most reliable models. One potential shortcoming of RT modeling-based method optimization is that the resolution of two neighboring peaks is affected not only by the retention time, but also by peak width and peak shape, such as peak fronting and tailing.
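The dependence of resolution on peak width can be illustrated with the common baseline-width resolution formula, Rs = 2(t2 − t1)/(w1 + w2). The sketch below is an illustration with hypothetical retention times and widths, not values from this study.

```python
def resolution(rt_first, rt_second, width_first, width_second):
    """Baseline-width resolution: Rs = 2 * (t2 - t1) / (w1 + w2)."""
    return 2.0 * (rt_second - rt_first) / (width_first + width_second)


# HYPOTHETICAL neighboring peaks with identical retention times in both cases
rs_narrow = resolution(10.0, 10.5, 0.2, 0.2)  # narrow peaks
rs_wide = resolution(10.0, 10.5, 0.4, 0.4)    # same RTs, peaks twice as wide

print(rs_narrow, rs_wide)
```

Both cases have the same retention times, yet only the narrow-peak case exceeds a resolution of 1.5. An RT model alone cannot distinguish between them.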

Peak number models

A representative analytical sample is critical for AQbD to use DOE to develop a chromatographic method capable of resolving all potential related substances. Multivariate DOE chromatography of a forced degradation sample may contain many minor peaks, which may elute in different orders across the different runs of the study, making tracking of the individual peaks nearly impossible. One way to solve this problem is to focus on the number of peaks observed, instead of tracking of individual peaks. Furthermore, to avoid an impractical method with too many partially resolved peaks, the number of peaks with ≥ 1.5 resolution could be an alternative response for modeling.

Excellent models were acquired for both the number of peaks response and the number of peaks with ≥ 1.5 resolution response in this DOE study. See Table 2 for the major model statistics, Fig. 2 for the Model Term Ranking Pareto Charts, and Eqs. 11 and 12 for the detailed models (note: reciprocal square data transformation before modeling reversed the positive and negative nature of the model term effect in Eqs. 11–12; see the Model Term Ranking Pareto Charts in Fig. 2 for the actual effect). Of the 14 terms, only four were statistically significant for the peak number model and only three were statistically significant for the resolved peak number model. Additionally, it is notable that the two models share three common terms (final percentage of strong solvent (B), flow rate (A), and oven temperature (C)) and that the order of impact for the three terms is maintained as (B) > (A) > (C), as seen in the Model Term Ranking Pareto Charts. The models indicated that within the evaluated ranges the final percentage of strong solvent and flow rate have negative effects on the overall separation, while column temperature has a positive effect. These observations align well with chromatographic scientific principles.

Challenges and solutions to peak resolution modeling

No appropriate model was found for the API peak resolution response in this study, possibly due to the very high pure experimental error (34.2%) estimated from the replicate runs. With this elevated level of resolution measurement error, only large effects of the experiment variables would be discernible from an analysis of the resolution data. There are many potential reasons for the high pure experimental error: (1) error in determining the resolution value in each DOE run, especially with small or tailing reference impurity peaks; (2) the use of different reference peaks to calculate the resolution when the elution order shifts between DOE runs; and (3) insufficient re-equilibration of the column between different conditions. (Column equilibration is mentioned here hypothetically, only to stress the importance of column conditioning during DOE in general. Because Fusion QbD automatically inserts conditioning runs into the DOE sequence where needed, this was not found to be an issue in this case study.) The respective solutions to these challenges are (1) when reference materials are available, make a synthetic method-development sample composed of each analyte at a concentration at least ten times the limit of quantitation; (2) keep the concentrations of the analytes in the synthetic sample at distinguishably different levels so that the peaks can be tracked by size; and (3) allow enough time for the column to be sufficiently re-equilibrated between different conditions.

Method robustness evaluation and optimization by Monte Carlo simulation

The robustness of a method is a measure of its capacity to remain unaffected by small but deliberate variations in method parameters. It provides an indication of the method’s reliability during normal usage. Traditionally, robustness was demonstrated for critical method responses by running system suitability checks in which selected method parameters were changed one factor at a time (OFAT). In comparison, the AQbD approach quantifies method robustness with process robustness indices, such as Cp and Cpk, through a multivariate robustness DOE in which the critical method parameters are systematically varied simultaneously. Process robustness indices are standard statistical process control metrics widely used to quantify and evaluate process and product variations. In this AQbD case study, method capability indices were calculated to compare the variability of a chromatographic method response to its specification limits. The comparison is made by forming the ratio between the spread of the response specifications and the spread of the response values, measured as six times the standard deviation of the response. The spread of the response values is obtained from tens of thousands of virtual Monte Carlo simulation runs of the corresponding response model, with all critical method parameters varied randomly and simultaneously around their set points according to specified distributions. A method with a process capability of ≥ 1.33 is considered robust, as it will fail to meet the response specifications only about 63 times out of a million runs, and is thus capable of providing much more reliable measurements for informed decisions on drug development, manufacturing, and quality control. Due to its intrinsic advantages over the OFAT approach, multivariate DOE robustness evaluation was recommended to replace the OFAT approach in the latest regulatory guidelines (FDA Guidance for industry-analytical procedures and methods validation for drugs and biologics. 2015).
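The Monte Carlo calculation described above can be sketched as follows. This is a minimal illustration under stated assumptions: the response model, its coefficients, the normal drift distributions, and the specification limits are all hypothetical and are not the models or settings from this study or from Fusion QbD.

```python
import random
import statistics

def response_model(flow, pct_b, temp):
    """Hypothetical fitted model for one method response. The coefficients
    are invented for illustration only."""
    return 2.0 - 1.5 * (flow - 0.80) - 0.08 * (pct_b - 34.0) + 0.02 * (temp - 30.0)

def simulate_capability(n_runs, lsl, usl, seed=0):
    """Estimate Cp and Cpk of the response by Monte Carlo simulation."""
    rng = random.Random(seed)
    values = []
    for _ in range(n_runs):
        # All parameters drift randomly and simultaneously around their
        # set points (assumed normal distributions).
        flow = rng.gauss(0.80, 0.01)   # mL/min
        pct_b = rng.gauss(34.0, 0.3)   # final % strong solvent
        temp = rng.gauss(30.0, 0.5)    # oven temperature, deg C
        values.append(response_model(flow, pct_b, temp))
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)
    cp = (usl - lsl) / (6.0 * sd)                    # specification spread / response spread
    cpk = min(usl - mean, mean - lsl) / (3.0 * sd)   # also penalizes an off-center mean
    return cp, cpk

cp, cpk = simulate_capability(n_runs=20000, lsl=1.85, usl=2.15)
print(f"Cp = {cp:.2f}, Cpk = {cpk:.2f}, robust = {cpk >= 1.33}")
```

Cpk is used for the robustness decision here because, unlike Cp, it drops when the simulated response mean sits closer to one specification limit than the other.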

In this DOE study, solid Cpk models were produced for the “API Plate Count” and “Number of Peaks ≥ 1.5 USP Resolution” responses. See Table 2 for the detailed model regression statistics.

Multiple responses method optimization

Once models have been established for selected individual method responses, overall method evaluation and optimization can be performed. This is usually substantiated by balancing and compromising among multiple method responses. Three principles must be followed in selecting method responses to be included in the final optimization: (1) the selected response is critical to achieve the goal (see Table 4 ); (2) a response is included only when its model is of sufficiently high quality to meet the goals of validation; and (3) the total number of responses included should be kept to a minimum.

Following the above three principles, five method responses were selected for the overall method evaluation and optimization. The best overall answer search identified a new optimized method with the four critical method parameters set at the values listed in Table 3 . The cumulative desirability for the five method response goals reached the maximum value of 1.0, as did the desirability for each individual goal, as listed in Table 4 .
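For readers unfamiliar with desirability scoring, the sketch below shows one common formulation: a Derringer-Suich style desirability for a "maximize" goal, combined across responses by a geometric mean. The source does not state which desirability function the software uses, so this form, the goal limits, and the predicted values are all assumptions for illustration.

```python
import math

def desirability_maximize(y, low, target, weight=1.0):
    """Desirability of a 'maximize' goal: 0 at or below `low`,
    1 at or above `target`, and a power-law ramp in between."""
    if y <= low:
        return 0.0
    if y >= target:
        return 1.0
    return ((y - low) / (target - low)) ** weight

def overall_desirability(ds):
    """Geometric mean of the individual desirabilities; any zero gives zero."""
    if any(d == 0.0 for d in ds):
        return 0.0
    return math.exp(sum(math.log(d) for d in ds) / len(ds))

# Hypothetical (predicted value, lower limit, target) triples for three goals.
goals_met = [(16, 10, 15), (12, 8, 12), (9000, 5000, 8000)]
overall_all_met = overall_desirability(
    [desirability_maximize(y, low, target) for y, low, target in goals_met]
)

# One goal only partly met (13 peaks against a target of 15).
overall_partial = overall_desirability([desirability_maximize(13, 10, 15), 1.0, 1.0])

print(f"all goals met: {overall_all_met:.3f}, one goal partly met: {overall_partial:.3f}")
```

Under this formulation a cumulative desirability of 1.0 is only possible when every individual goal also scores 1.0, which is consistent with the result reported above.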

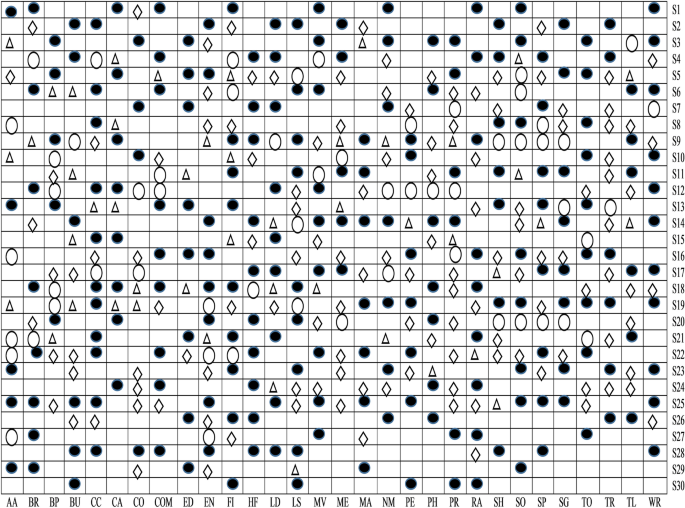

Method Operable Design Region (MODR)

The critical method parameter settings in Table 3 define a single method that can simultaneously fulfill all five targeted method goals listed in Table 4 to the best extent possible. However, the actual operational values of the four critical parameters may drift around their set points during routine method execution. Based on the models, contour plots for each method response can be created to reveal how the response value changes as the method parameters drift. Furthermore, overlaying the contour plots of all selected method responses reveals the MODR, as shown in Figs. 4 , 5 , and 6 . Note that for each response, a single unique color is used to shade the region of the graph where the response fails its criterion; thus, the criteria for all responses are met in the unshaded area.
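The overlay idea can be sketched as a grid scan: evaluate every response model at each combination of parameter values and keep the points where all criteria pass. The two models, their coefficients, and the acceptance limits below are hypothetical stand-ins, not the fitted models from this study.

```python
def predicted_peak_count(flow, pct_b):
    """Hypothetical model: fewer peaks at higher flow rate and higher final %B."""
    return 14.0 - 6.0 * (flow - 0.78) - 0.8 * (pct_b - 34.0)

def predicted_resolved_peaks(flow, pct_b):
    """Hypothetical model for the number of peaks with >= 1.5 resolution."""
    return 11.0 - 8.0 * (flow - 0.78) - 1.0 * (pct_b - 34.0)

# Each criterion pairs a response model with its assumed minimum acceptable value.
CRITERIA = [
    (predicted_peak_count, 13.0),
    (predicted_resolved_peaks, 10.0),
]

def in_modr(flow, pct_b):
    """True when every response meets its criterion (the unshaded region)."""
    return all(model(flow, pct_b) >= minimum for model, minimum in CRITERIA)

flows = [0.70 + 0.02 * i for i in range(11)]   # 0.70 to 0.90 mL/min
pct_bs = [33.0 + 0.5 * i for i in range(7)]    # 33.0 to 36.0 %

# Text rendering of the overlay: '.' passes all criteria, '#' fails at least one.
for pct_b in pct_bs:
    row = "".join("." if in_modr(flow, pct_b) else "#" for flow in flows)
    print(f"{pct_b:4.1f}%  {row}")
```

A set point surrounded by passing grid points in every direction tolerates parameter drift; a set point next to a failing point is on the edge of failure.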

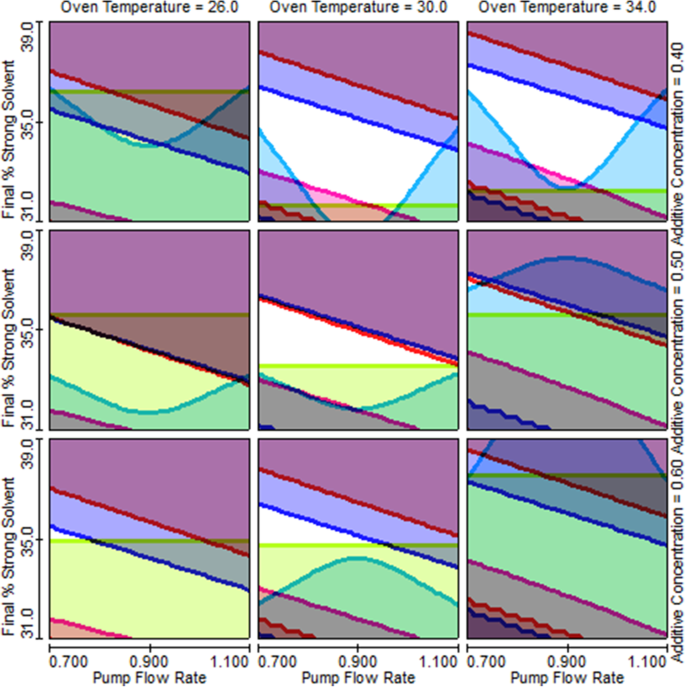

Trellis overlay graph shows how the size of the MODR (unshaded area) changes as the four method parameters change

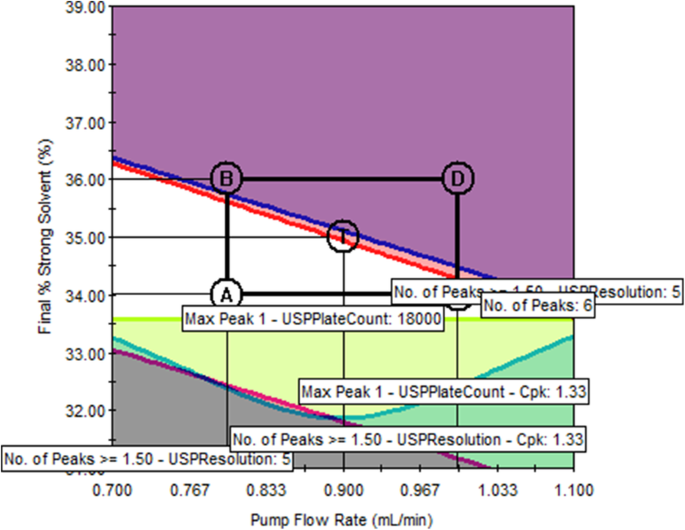

Single overlay graph shows the original as-is method at point T is not robust (pump flow rate = 0.90 mL/min; final % strong solvent = 35%; oven temperature = 30 °C; EDTA concentration = 0.50 mM)

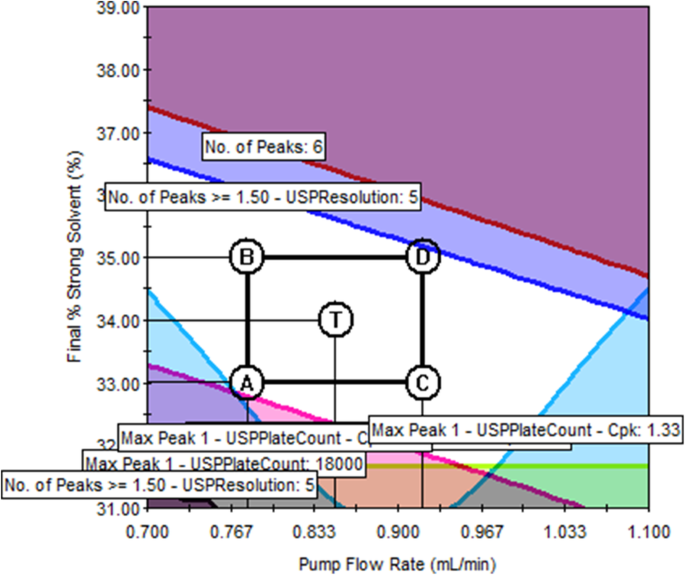

The Trellis overlay graph in Fig. 5 reveals the MODR from the perspectives of all four critical method parameters, among which flow rate and final percentage of strong solvent change continuously while oven temperature and EDTA additive concentration were each set at three different levels. Figure 5 clearly demonstrates how the size of the MODR changes with the four method parameters. The single overlay graph in Fig. 6 shows that the original as-is method (represented by the center point T) is on the edge of failure for two method responses, number of peaks (red) and number of peaks ≥ 1.5 resolution (blue), indicating that the original method is not robust. Conversely, point T in the single overlay graph in Fig. 7 is at the center of a relatively large unshaded area, indicating that the optimized method is much more robust than the original method.

Single overlay graph shows a much more robust method at point T (pump flow rate = 0.78 mL/min; final % strong solvent = 34.2%; oven temperature = 30.8 °C; EDTA concentration = 0.42 mM)

Through the collaboration of regulatory authorities and the industry, AQbD is the new paradigm to develop robust chromatographic methods in the pharmaceutical industry. It uses a systematic approach to understand and control variability and build robustness into chromatographic methods. This ensures that analytical results are always close to the product true value and meet the target measurement uncertainty, thus enabling informed decisions on drug development, manufacturing, and quality control.

Multivariate DOE modeling plays an essential role in AQbD and has the potential to elevate chromatographic methods to a robustness level rarely achievable via the traditional OFAT approach. However, as demonstrated in this case study, chromatography science was still the foundation for prioritizing method inputs and responses for the most appropriate DOE design and modeling, and provided further scientific validation to the statistically validated DOE models. Once models were fully validated for all selected individual method responses, the MODR was substantiated by balancing and compromising among the most important method responses.

Developing a MODR is critical for labs that transfer in externally sourced chromatographic methods. In this case study, method evaluation using AQbD produced objective data that enabled a deeper understanding of method variability, upon which a more robust method with a much larger MODR was proposed. The in-depth method variability understanding through AQbD also paved the way for establishing a much more effective method control strategy. Method development and validation from a multivariate data driven exercise led to better and more informed decisions regarding the suitability of the method.

Availability of data and materials

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Abbreviations

AQbD: Analytical quality by design

DOE: Design of experiments

CCC: Circumscribed central composite

MODR: Method Operable Design Region

QbD: Quality by design

ICH: The International Council for Harmonization of Technical Requirements for Pharmaceuticals for Human Use

CQAs: Critical quality attributes

TMU: Target measurement uncertainty

ATP: Analytical target profile

ANOVA: Analysis of variance

EDTA: Ethylenediaminetetraacetic acid

MSR: Mean square regression

MSLOF: Mean square lack of fit

OFAT: One factor at a time

Altiero P (2018) Why they matter, an introduction to chromatography equations. Slide 21. https://www.agilent.com/cs/library/eseminars/public/Agilent_Webinar_Why_They_Matter_An_Intro_Chromatography_Equations_Nov262018.pdf . Accessed 13 May 2021

Bezerra MA, Ferreira SLC, Novaes CG, dos Santos AMP, Valasques GS, da Mata Cerqueira UMF, et al (2019) Simultaneous optimization of multiple responses and its application in Analytical Chemistry – a review. Talanta 194:941–959

Borman P, Chatfield M, Nethercote P, Thompson D, Truman K (2007) The application of quality by design to analytical methods. Pharm Technol 31(12):142–152

Chatterjee S, CMC Lead for QbD, ONDQA/CDER/FDA. Design space considerations, AAPS Annual Meeting, 2012.

Debrus B, Guillarme D, Rudaz S (2013) Improved quality-by-design compliant methodology for method development in reversed-phase liquid chromatography. J Pharm Biomed Anal 84:215–223

EURACHEM / CITAC. Setting and using target uncertainty in chemical measurement. 2015.

FDA Guidance for industry-analytical procedures and methods validation for drugs and biologics. 2015.

FDA pharmaceutical cGMPs for the 21st century — a risk-based approach. 2004.

Grangeia HB, Silva C, Simões SP, Reis MS (2020) Quality by design in pharmaceutical manufacturing: a systematic review of current status, challenges and future perspectives. Eur J Pharm Biopharm 147:19–37

ICH Q10 - Pharmaceutical quality system. 2008.

ICH Q11 - Development and manufacturing of drug substances (chemical entities and biotechnological/biological entities). 2012.

ICH Q12 - Technical and regulatory considerations for pharmaceutical product lifecycle management. 2019.

ICH Q14 - Analytical procedure development and revision of Q2(R1) analytical validation - final concept paper. 2018.

ICH Q8 (R2) - Pharmaceutical development. 2009.

ICH Q9 - Quality risk management. 2005.

Leardi R (2009) Experimental design in chemistry: a tutorial. Anal Chim Acta 652(1–2):161–172

Myers RH, Montgomery DC (1995) Response surface methodology: process and product optimization using designed experiments, 2nd edition. John Wiley & Sons, New York, pp 366–404

NIST/SEMATECH e-Handbook of statistical methods. 2012a. http://www.itl.nist.gov/div898/handbook/ppc/section1/ppc133.htm . Accessed May 13, 2021.

NIST/SEMATECH e-Handbook of statistical methods. 2012b. https://www.itl.nist.gov/div898/handbook/pmc/section1/pmc16.htm . Accessed May 13, 2021.

NIST/SEMATECH e-Handbook of statistical methods. 2012c. https://www.itl.nist.gov/div898/handbook/pmd/section4/pmd44.htm . Accessed May 13, 2021.

NIST/SEMATECH e-Handbook of statistical methods. 2012d. https://www.itl.nist.gov/div898/handbook/pri/section5/pri52.htm . Accessed May 13, 2021.

Orlandini S, Pinzauti S, Furlanetto S (2013) Application of quality by design to the development of analytical separation methods. Anal Bioanal Chem 2:443–450

Schweitzer M, Pohl M, Hanna-Brown M, Nethercote P, Borman P, Hansen P, Smith K et al (2010) Implications and opportunities for applying QbD principles to analytical measurements. Pharm Technol 34(2):52–59

Tang YB, FDA/CDER/ONDQA (2011) Quality by design approaches to analytical methods -- FDA perspective. AAPS, Washington DC

Thermo Fisher Scientific, Vanquish pump specification. 2021. https://assets.thermofisher.com/TFS-Assets/CMD/Specification-Sheets/ps-73056-vanquish-pumps-ps73056-en.pdf . Accessed May 22, 2021.

van Deemter JJ, Zuiderweg FJ, Klinkenberg A. Longitudinal diffusion and resistance to mass transfer as causes of non ideality in chromatography. 1956.

Volta e Sousa L, Gonçalves R, Menezes JC, Ramos A (2021) Analytical method lifecycle management in pharmaceutical industry: a review. AAPS PharmSciTech 22(3):128–141. https://doi.org/10.1208/s12249-021-01960-9

Acknowledgements

The authors would like to thank KBP Biosciences for reviewing and giving permission to publish this case study. They would also like to thank Thermo Fisher Scientific and S Matrix for the Fusion QbD software, Lynette Bueno Perez for solution preparations, Dr. Michael Goedecke for statistical review, and both Barry Gujral and Francis Vazquez for their overall support.

Not applicable; authors contributed case studies based on existing company knowledge and experience.

Author information

Authors and affiliations

Thermo Fisher Scientific Inc., Durham, NC, USA

Yongzhi Dong, Zhimin Liu, Charles Li, Emily Pinter, Alan Potts, Tanya Tadey & William Weiser

Contributions

YD designed the study and performed the data analysis. ZL was the primary scientist that executed the study. CL, EP, AP, TT, and WW contributed ideas and information to the study and reviewed and approved the manuscript. The authors read and approved the final manuscript.

Corresponding author

Correspondence to Yongzhi Dong .

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .

About this article

Cite this article

Dong, Y., Liu, Z., Li, C. et al. Perspectives in modeling and model validation during analytical quality by design chromatographic method evaluation: a case study. AAPS Open 7, 3 (2021). https://doi.org/10.1186/s41120-021-00037-y

Received: 27 May 2021

Accepted: 29 July 2021

Published: 01 September 2021

DOI: https://doi.org/10.1186/s41120-021-00037-y

- Statistical model validation

- Scientific model validation

- Multiple responses optimization

A Step-by-Step Guide to Analytical Method Development and Validation

Method development and validation are essential components of drug development and of chemistry, manufacturing, and controls (CMC). The goal of method development and validation is to ensure that the methods used to measure the identity, purity, potency, and stability of drugs are accurate, precise, and reliable. Analytical methods are critical tools for ensuring the quality, safety, and efficacy of pharmaceutical products in the drug development process. Analytical development services performed at Emery Pharma are outlined below.

Analytical Method Development Overview:

Analytical method development is the process of selecting and optimizing analytical methods to measure a specific attribute of a drug substance or drug product. This process involves a systematic approach to evaluating and selecting suitable methods that are sensitive, specific, and robust, and can be used to measure the target attribute within acceptable limits of accuracy and precision.

Method Validation Overview:

Method validation is the process of demonstrating that an analytical method is suitable for its intended use, and that it is capable of producing reliable and consistent results over time. The validation process involves a set of procedures and tests designed to evaluate the performance characteristics of the method.

Components of method validation include:

- Specificity

- Limit of detection (LOD)

- Limit of quantification (LOQ)

Depending on the attribute being assayed, we use state-of-the-art instrumentation such as HPLC (with UV-Vis/DAD, IR, CAD, etc. detectors), LC-MS, HRMS, MS/MS, GC-FID/MS, NMR, plate readers, etc.

At Emery Pharma, we follow a prescribed set of key steps per regulatory (FDA, EMA, etc.) guidance, as well as instructions from the International Council for Harmonisation of Technical Requirements for Pharmaceuticals for Human Use (ICH) for any analytical method development and validation.

Step 1: Define the Analytical Method Objectives

The first step in analytical method development and validation is to define the analytical method objectives, including the attribute to be measured, the acceptance criteria, and the intended use of the method. This step involves understanding the critical quality attributes (CQAs) of the drug product or drug substance and selecting appropriate analytical methods to measure them.

For example, assessing the impurity profile of a small-molecule drug substance requires suitable HPLC-based methods, whereas assessing host cell proteins (the impurity equivalent) in a biologic drug substance requires ligand binding assays (LBAs) such as ELISA for an overview and LC-HRMS-based analysis for a thorough understanding.

Step 2: Conduct a Literature Review

Next, a literature review is conducted to identify existing methods and establish a baseline for the method development process. This step involves reviewing scientific literature, regulatory guidance, and industry standards to determine the current state of the art and identify potential methods that may be suitable for the intended purpose.

At Emery Pharma, we have worked on, and have existing programs for, virtually all types of drug modalities, so we also have many validated internal methods to draw on.

Step 3: Develop a Method Plan

The next step is to develop a method plan that outlines the methodology, instrumentation, and experimental design for method development and validation. The plan includes the selection of suitable reference standards, the establishment of performance characteristics, and the development of protocols for analytical method validation.

Step 4: Optimize the Method

Next, the analytical method is optimized to ensure that it is sensitive, specific, and robust. This step involves evaluating various parameters, such as sample preparation, column selection, detector selection, mobile phase composition, and gradient conditions, to optimize the method performance.

Step 5: Validate the Method

The critical next step is to validate the analytical method to ensure that it meets the performance characteristics established in the method plan. This step involves evaluating the method's accuracy, precision, specificity, linearity, range, LOD, LOQ, ruggedness, and robustness.
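As one concrete illustration of these characteristics, the sketch below estimates LOD and LOQ from a calibration curve using the relations given in ICH Q2, LOD = 3.3σ/S and LOQ = 10σ/S, where S is the calibration slope and σ is taken here as the residual standard deviation of the regression. The concentrations and peak areas are invented for illustration, and this is only one of several accepted ways to estimate these limits.

```python
import math

def linear_fit(x, y):
    """Ordinary least-squares line; returns slope, intercept, residual SD."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    residual_ss = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    residual_sd = math.sqrt(residual_ss / (n - 2))
    return slope, intercept, residual_sd

# Hypothetical calibration data: concentration (ug/mL) versus peak area.
conc = [0.5, 1.0, 2.0, 4.0, 8.0]
area = [10.4, 19.8, 40.5, 79.6, 160.2]

slope, intercept, sigma = linear_fit(conc, area)
lod = 3.3 * sigma / slope   # limit of detection
loq = 10.0 * sigma / slope  # limit of quantification

print(f"slope = {slope:.3f}, LOD = {lod:.3f} ug/mL, LOQ = {loq:.3f} ug/mL")
```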

Depending on the stage of development, validation may be performed under Research and Development (R&D). However, most regulatory submissions require that method validation be conducted per 21 CFR Part 58 on Good Laboratory Practices (GLP). To that end, Emery Pharma has an in-house Quality Assurance department that ensures compliance and can host regulators and auditors.

Step 6: (Optional) Transfer the Method

In some instances, e.g., clinical trials with multiple international sites, the validated method may need to be transferred to another qualified laboratory. We routinely help our clients bring several parallel sites up to speed on newly validated methods, and we support them by training analysts on the method, documenting the method transfer process, and conducting ongoing monitoring and maintenance of the method.

Step 7: Sample Analysis

The final step of the analytical method development and validation process is to develop a protocol and initiate sample analysis.

At Emery Pharma, depending on the stage of development, sample analysis is conducted under R&D or in compliance with 21 CFR Parts 210 and 211 for current Good Manufacturing Practice (cGMP). We have a wide array of qualified instrumentation that can be deployed for cGMP sample analysis, which is overseen by our Quality Assurance Director for compliance and proper reporting.

Let us be a part of your success story

Emery Pharma has decades of experience in analytical method development and validation. We strive to implement procedures that help to ensure new drugs are manufactured to the highest quality standards and are safe and effective for patient use.

- Open access

- Published: 10 May 2024

PermDroid a framework developed using proposed feature selection approach and machine learning techniques for Android malware detection

- Arvind Mahindru 1 ,

- Himani Arora 2 ,

- Abhinav Kumar 3 ,

- Sachin Kumar Gupta 4 , 5 ,

- Shubham Mahajan 6 ,

- Seifedine Kadry 6 , 7 , 8 , 9 &

- Jungeun Kim 10

Scientific Reports volume 14, Article number: 10724 (2024)

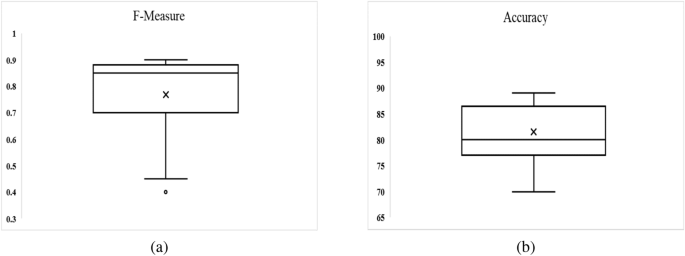

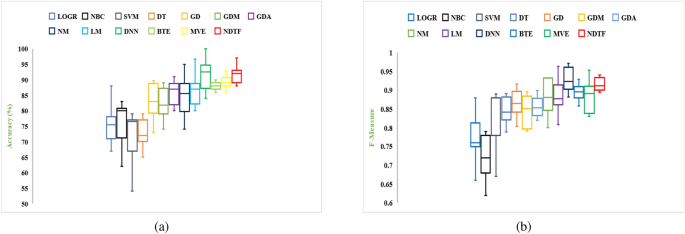

- Electrical and electronic engineering

- Engineering

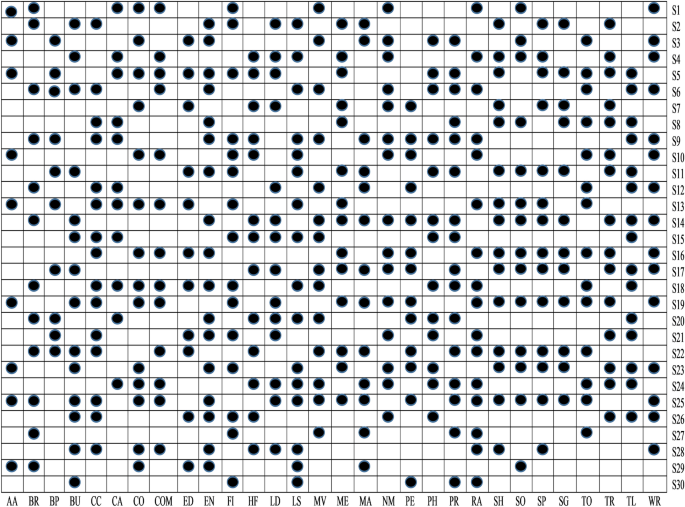

Developing an Android malware detection framework that can identify malware in real-world apps is a difficult challenge for academicians and researchers. The vulnerability lies in the permission model of Android, which has led various researchers to develop Android malware detection models using a permission or a set of permissions. In previous studies, academicians and researchers used all extracted features, which overburdened the resulting malware detection models. However, the effectiveness of a machine learning model depends on relevant features, which have excellent discriminative power and help reduce misclassification errors. This research paper proposes a feature selection framework that helps in selecting the relevant features. In the first stage of the proposed framework, the t-test and univariate logistic regression are applied to our collected feature data set to assess each feature’s capacity for detecting malware. In the second stage, multivariate linear regression stepwise forward selection and correlation analysis are applied to evaluate the correctness of the features selected in the first stage. The resulting features are then used as input in the development of malware detection models using three ensemble methods and a neural network with six different machine-learning algorithms. The performance of the developed models is compared using two performance parameters: F-measure and Accuracy. The experiment is performed using half a million different Android apps. The empirical findings reveal that the malware detection model developed using features selected by the proposed feature selection framework achieved a higher detection rate than the model developed using the full set of extracted features. Further, when compared with previously developed frameworks or methodologies, the experimental results indicate that the model developed in this study achieved an accuracy of 98.8%.

Similar content being viewed by others

Evaluation and classification of obfuscated Android malware through deep learning using ensemble voting mechanism

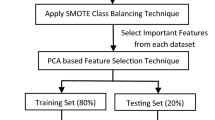

A study of dealing class imbalance problem with machine learning methods for code smell severity detection using PCA-based feature selection technique

AndroMalPack: enhancing the ML-based malware classification by detection and removal of repacked apps for Android systems

Introduction

Nowadays, smartphones can do much of the work that computers do. By the end of 2023, there were projected to be around 6.64 billion smartphone users worldwide ( https://www.bankmycell.com/blog/how-many-phones-are-in-the-world ). According to the report ( https://www.statista.com/statistics/272307/market-share-forecast-for-smartphone-operating-systems/ ), at the end of 2023 the Android operating system held 86.2% of the total segment. The main reason for its popularity is that its code is open source, which attracts developers to build Android apps on a daily basis. In addition, it provides many valuable services such as process management, security configuration, and many more. The free apps offered in its official store are the second factor in its popularity. According to data from the end of March 2023 ( https://www.appbrain.com/stats/number-of-android-apps ), there were 2.6 billion apps in the Google Play store.

Nonetheless, the popularity of the Android operating system has led to enormous security challenges. On a daily basis, cyber-criminals create new malware apps and inject them into the Google Play store ( https://play.google.com/store?hl=en ) and third-party app stores. Using these malware-infected apps, cyber-criminals steal sensitive information from the user’s phone and use it for their own benefit. Google has developed Google Bouncer ( https://krebsonsecurity.com/tag/google-bouncer/ ) and Google Play Protect ( https://www.android.com/play-protect/ ) for Android to deal with this unwanted malware, but both have failed to detect malware-infected apps 1 , 2 , 3 . According to the report published by Kaspersky Security Network, 6,463,414 instances of mobile malware had been detected by the end of 2022 ( https://securelist.com/it-threat-evolution-in-q1-2022-mobile-statistics/106589/ ). Malware is a serious problem for the Android platform because it spreads through these apps. The challenge from the defender’s perspective is how to detect malware and improve detection performance. A traditional signature-based detection approach detects only known malware whose definition is already available to it. Signature-based detection approaches are unable to detect unknown malware because of the limited number of signatures in their databases. Hence, the solution is to develop a machine learning-based approach that dynamically learns the behavior of malware, helps humans defend against malware attacks, and enhances mobile security.

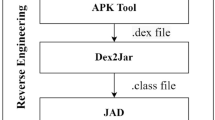

Researchers and academicians have proposed different methods for analyzing and detecting Android malware. Some are based on static analysis, for example, ANASTASIA 4 , DREBIN 5 , Droiddetector 6 and DroidDet 7 . Others are based on dynamic analysis, for example, IntelliDroid 8 , DroidScribe 9 , StormDroid 10 and MamaDroid 11 . However, the main constraints of these approaches lie in their implementation and time consumption, because the models are developed with a large number of features. Other academicians and researchers 3 , 12 , 13 , 14 , 15 , 16 , 17 , 18 , 19 have proposed malware detection frameworks developed using only relevant features. These have limitations too: they applied only previously proposed feature selection techniques in their work.

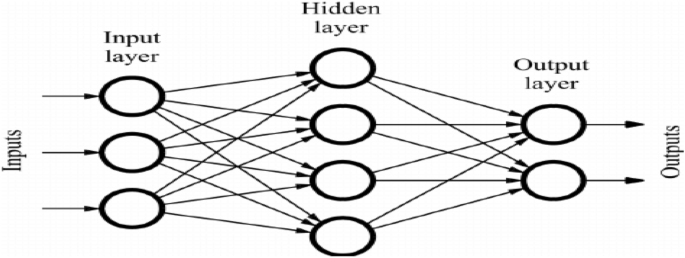

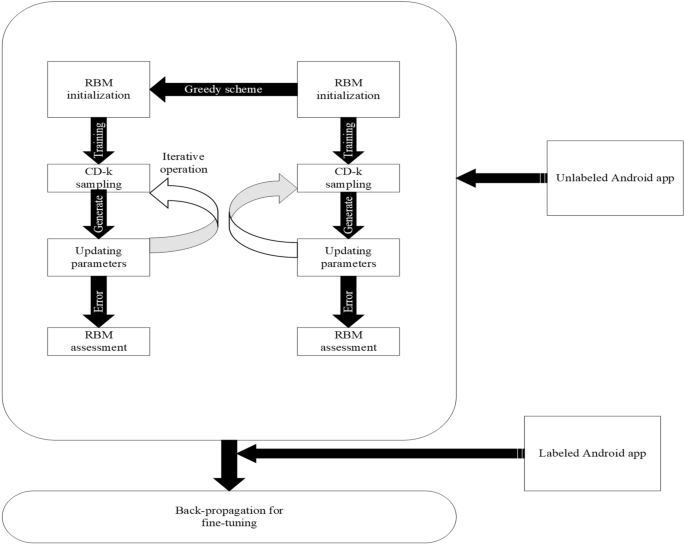

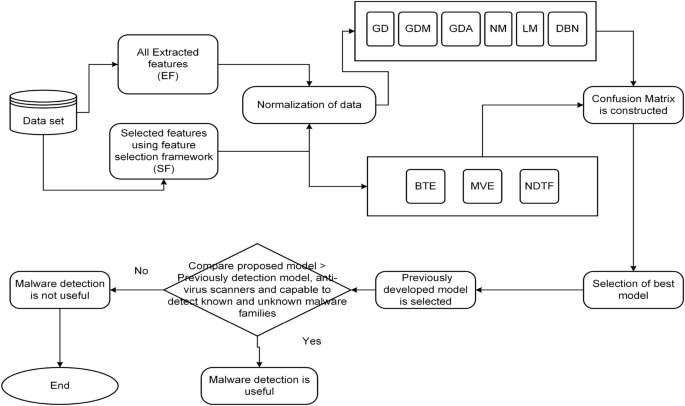

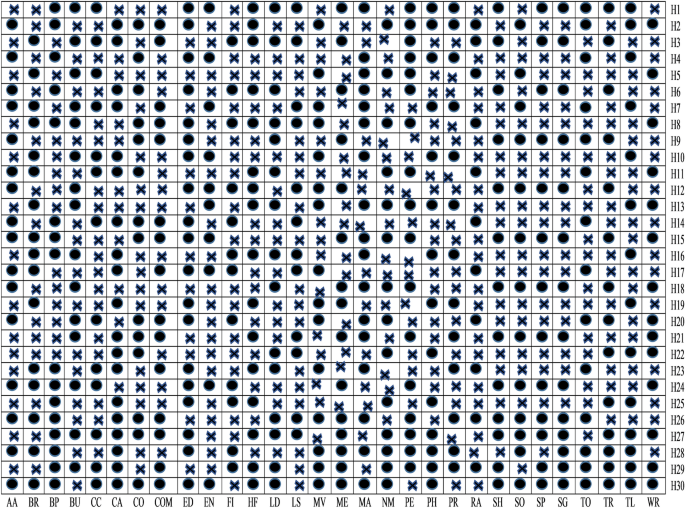

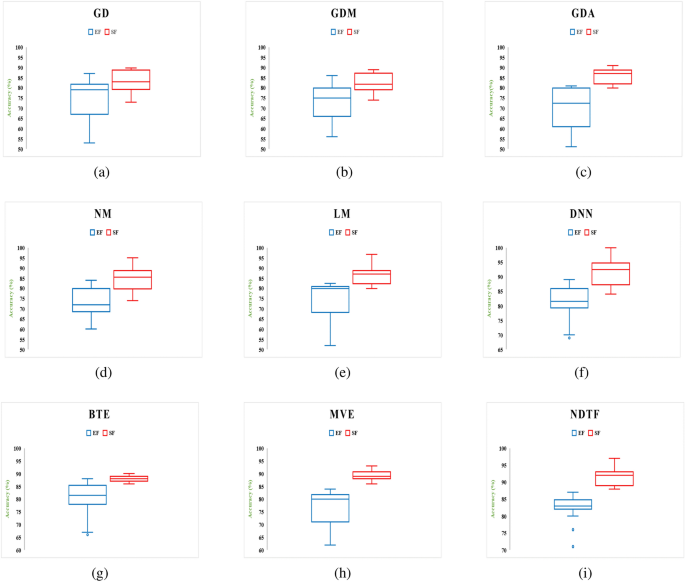

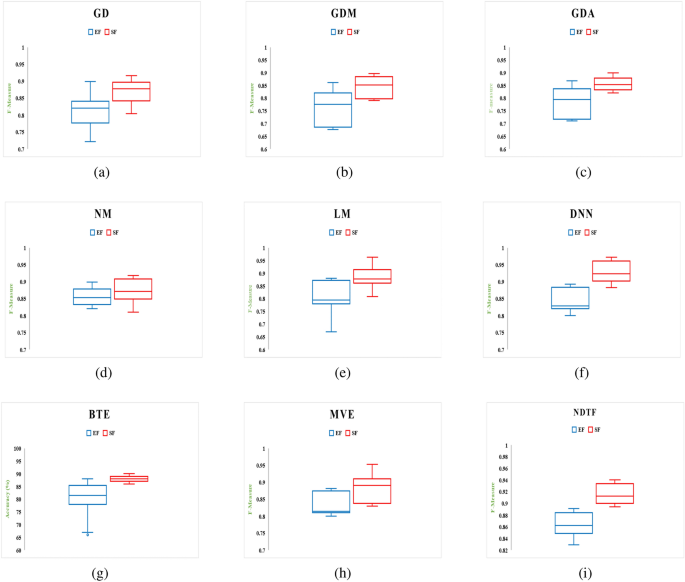

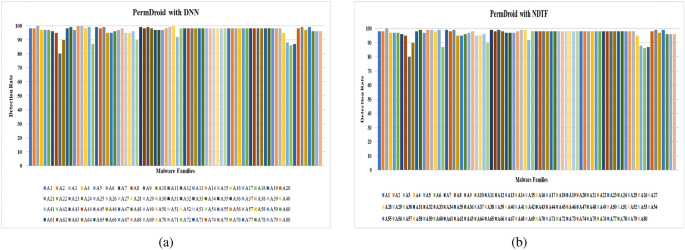

To overcome these limitations, this research paper proposes a feature selection framework. It helps in evaluating appropriate feature sets with the goal of removing redundant features, and it enhances the effectiveness of the trained machine-learning model. Further, a framework named PermDroid is developed using the selected significant features. The proposed framework is based on the principle of an artificial neural network with six different machine learning techniques, i.e., Gradient descent with momentum (GDM), Gradient descent method with adaptive learning rate (GDA), Levenberg Marquardt (LM), Quasi-Newton (NM), Gradient descent (GD), and Deep Neural Network (DNN). These machine learning algorithms were chosen on the basis of their performance in the literature 20 . In addition, three different ensemble techniques with three dissimilar combination rules are proposed in this research work to develop an effective malware detection framework. F-measure and Accuracy are used as the performance parameters. From the literature review 21 , 22 , 23 , it is noticed that a number of authors have concentrated on improving the performance of malware detection models. However, their studies had a key flaw: they used only a small amount of data to develop and test the models. To address this issue, this study takes into account 500,000 unique Android apps from various categories.
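The two performance parameters can be computed from a confusion matrix as sketched below, using the standard definitions of accuracy and of the F-measure as the harmonic mean of precision and recall. The counts are hypothetical and are not results from this study.

```python
def accuracy(tp, tn, fp, fn):
    """Fraction of all apps (malware and benign) that are classified correctly."""
    return (tp + tn) / (tp + tn + fp + fn)

def f_measure(tp, fp, fn):
    """Harmonic mean of precision and recall for the malware class."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

# Hypothetical counts: malware is the positive class.
tp, tn, fp, fn = 90, 95, 5, 10

acc = accuracy(tp, tn, fp, fn)
f1 = f_measure(tp, fp, fn)
print(f"Accuracy = {acc:.3f}, F-measure = {f1:.3f}")
```

Reporting both is useful because accuracy alone can look high on an imbalanced data set even when many malware apps are missed, whereas the F-measure reflects both missed malware and false alarms.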

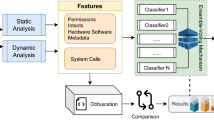

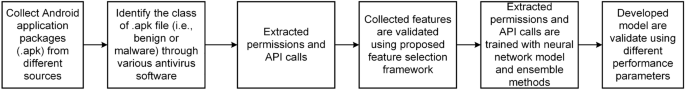

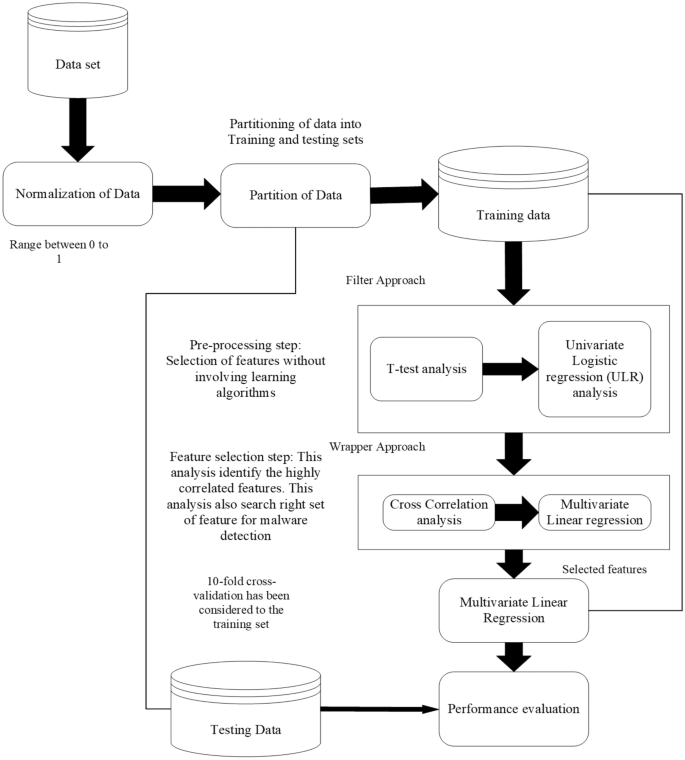

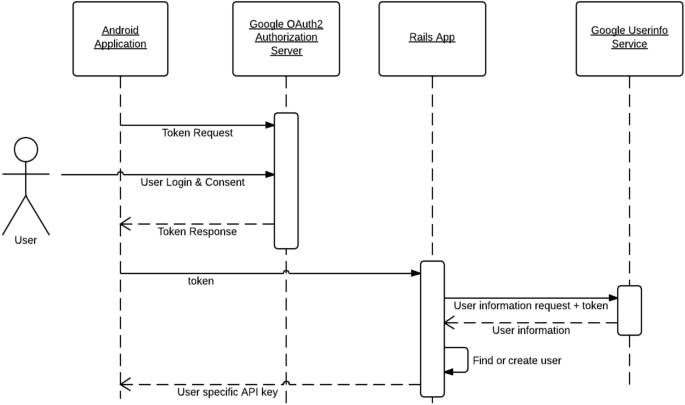

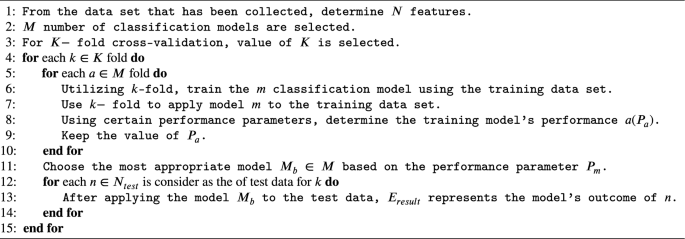

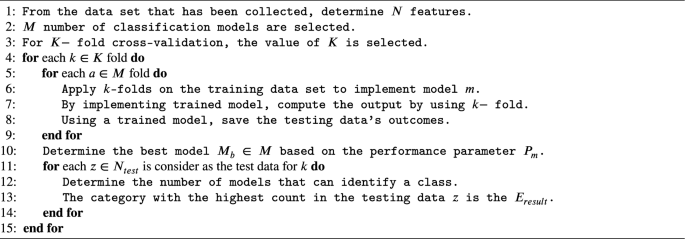

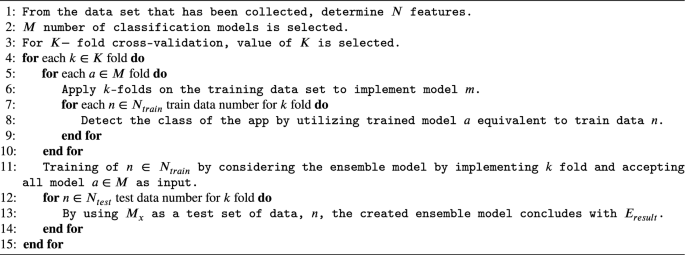

Steps followed in developing the Android malware detection framework.

The method for developing a reliable malware detection model is shown in Fig. 1 . First, Android application packages (.apk) are collected from various repositories (mentioned in “ Creation of experimental data set and extraction of features ” section). Next, anti-virus software is used to identify the class of each .apk file (mentioned in “ Creation of experimental data set and extraction of features ” section). Then, features (such as API calls and permissions) are extracted from the .apk files using techniques described in the literature (mentioned in subsection 3.4). A feature selection framework is then applied to evaluate the extracted features (discussed in “ Proposed feature selection validation method ” section). After that, models are developed with an artificial neural network using six different machine-learning techniques and three different ensemble models, with the selected feature sets as input. Finally, the developed models are evaluated using F-measure and Accuracy. The following are the novel and distinctive contributions of this paper:

To develop an efficient malware detection model, half a million unique apps were collected from different sources. Unique features were then extracted by performing dynamic analysis.

The methodology presented in this paper is based on feature selection, which helps determine the significant features used to develop malware detection models.

We propose three different ensemble techniques that are based on the principle of a heterogeneous approach.

Six different machine learning algorithms that are based on the principle of Artificial Neural Network (ANN) are trained using the relevant features.

Compared with previously developed frameworks and with anti-virus software available in the market, the proposed Android malware detection framework can detect malware-infected apps in less time.

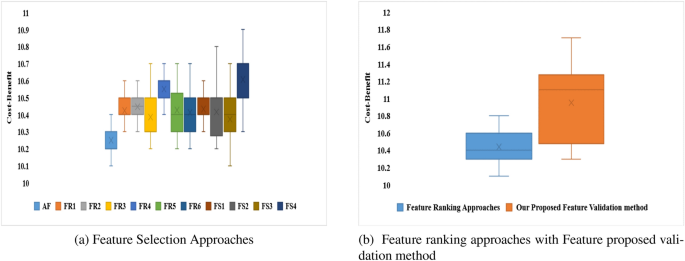

A cost-benefit analysis shows that the proposed Android malware detection framework is more effective in identifying malware-infected apps from the real world.

The remaining sections of this research paper are arranged as follows: “ Related work ” section presents the literature survey on Android malware detection as well as the creation of research questions. “ Research methodology ” section gives an overview of the research methodology used to create the Android malware detection framework. Different machine learning and ensemble techniques are addressed in “ Machine learning technique ” section. The proposed feature selection validation technique is discussed in “Proposed feature selection validation method” section. The experimental results are presented in “ Experimental setup and results ” section. Threats to validity are presented in “ Threats to validity ” section. Conclusion and the future scope are discussed in “ Conclusion and future work ” section.

Related work

Exploiting vulnerabilities to acquire higher privileges on Android platforms is common these days. Cybercriminals have been targeting Android devices since 2008. From the perspective of Android security, an exploit app can help cybercriminals bypass security mechanisms and gain more access to users’ devices. With these privileges, cybercriminals may exploit user data, for example by selling personal information for monetary gain. This subsection addresses the detection approaches used by researchers in the past that are based on Artificial Neural Networks (ANN) and feature selection techniques.

Androguard ( https://code.google.com/archive/p/androguard/ ) is a static analysis tool that detects malware on Android devices using signatures. It identifies only malware that is already known and whose definition is in the Androguard database; it cannot identify unknown malware. Andromaly 23 is a dynamic analysis tool that uses machine learning. It monitors CPU utilization, data transfer, the number of active processes, and battery usage in real time. It was tested on a few types of simulated malware samples, but not on real-world applications. Badhani et al. 24 developed a malware detection methodology that uses the semantics of the code in the form of code graphs collected from Android apps. Faruki et al. 21 introduced AndroSimilar, which generates signatures from the extracted features and uses them to develop a malware detection model.

Aurasium 25 takes control of an app’s execution by enforcing arbitrary security rules in real time. It repackages Android apps with security policy code and informs users of any privacy breaches. Aurasium has the problem of not being able to detect malicious behavior if an app’s signature changes. The authors performed dynamic analysis of Android apps and used call-centric features. They tested their method on over 2900 Android malware samples and found it effective at detecting malware activity. Andrubis 26 is a web-based malware evaluation method: users submit apps via a web service, and after examining an app’s activity, the service reports whether the app is benign or malicious. Ikram et al. 27 suggested an approach named DaDiDroid, based on weighted directed graphs of API calls, to detect malware-infected apps. The experiment was carried out with 43,262 benign and 20,431 malware-infected apps, achieving a 91% accuracy rate. Shen et al. 28 developed an Android malware detection technique based on the information flow analysis principle. They implemented N-gram analysis to determine common and unique behavioral patterns present in the complex flow. The experiment was carried out on 8,598 different Android apps with an accuracy of 82.0 percent. Yang et al. 29 proposed an approach named EnMobile that is based on the principle of entity characterization of the behavior of the Android app. The experiment was carried out on 6,614 different Android apps, and the empirical results show that their approach outperformed four state-of-the-art approaches, namely Drebin, Apposcopy, AppContext, and MUDFLOW, in terms of recall and precision.

CrowDroid 34 , which is built on a behavior-based malware detection method, comprises two components: a remote server and a crowdsourcing app that must be installed on users’ mobile devices. The crowdsourcing app sends behavioral data to the remote server in the form of a log file. The authors then applied a 2-means clustering approach to decide whether an app belongs to the malicious or the benign class. However, the CrowDroid app constantly depletes the device’s resources. Yuan et al. 52 proposed a machine learning approach named Droid-Sec that used 200 extracted static and dynamic features to develop an Android malware detection model. Their empirical results suggest that the model built with the deep learning technique achieved a 96% accuracy rate. TaintDroid 30 tracks privacy-sensitive data leakage in Android apps from third-party developers. Every time sensitive data leaves the smartphone, TaintDroid records the label of the data, the app linked with the data, and the data’s destination address.

Zhang et al. 53 proposed a malware detection technique based on the weighted contextual API dependency graph principle. An experiment on 13,500 benign samples and 2,200 malware samples achieved a false-positive rate of 5.15%, which the authors consider acceptable for vetting purposes.

AndroTaint 54 works on the principle of dynamic analysis. The extracted features are used to classify an Android app as dangerous, harmful, benign, or aggressive using a novel unsupervised and supervised anomaly detection method. Researchers have used numerous classification methods in the past, such as Random forest 55 , J48 55 , Simple logistic 55 , Naïve Bayes 55 , Support Vector Machine 56 , 57 , K-star 55 , Decision tree 23 , Logistic regression 23 and k-means 23 , to identify Android malware with higher accuracy. DroidDetector 6 , Droid-Sec 52 , and Deep4MalDroid 58 rely on deep learning to identify Android malware. Table 1 summarizes some of the existing malware detection frameworks for Android.

The artificial neural network (ANN) technique is used to identify malware on Android devices

Nix and Zhang 59 developed a deep learning algorithm using a convolutional neural network (CNN) with API calls as features. They utilized the principle of Long Short-Term Memory (LSTM) and combined knowledge from its sequences. McLaughlin et al. 60 implemented deep learning using a CNN and considered raw opcodes as features to identify malware among real-world Android apps. Recently, researchers 6 , 58 used network parameters to identify malware-infected apps. Nauman et al. 61 implemented connected, recurrent, and convolutional neural networks, as well as DBN (Deep Belief Networks), to identify malware-infected Android apps. Xiao et al. 62 presented a technique based on back-propagation neural networks on Markov chains, with system calls as features. They treated the system call sequence as a homogeneous stationary Markov chain and employed a neural network to detect malware-infected apps. Martinelli et al. 63 implemented a deep learning algorithm using a CNN and considered system calls as features. They performed an experiment on a collection of 7100 real-world Android apps and identified that 3000 apps belong to distinct malware families. Xiao et al. 64 suggested an approach based on LSTM (Long Short-Term Memory) that considers the system call sequence as a feature. They trained two LSTM models on the system call sequences of benign and malware apps, respectively, and then computed a similarity score. Dimjašević et al. 65 evaluated several techniques for detecting malware apps at the repository level. The techniques track system calls while the app runs in a sandbox environment. They performed an experiment on 12,000 apps and were able to identify 96% of the malware-infected apps.

Using feature selection approaches to detect Android malware

Table 2 shows the literature review for malware detection done by implementing feature selection techniques. Mas’ud et al. 66 proposed a functional solution that detects malware on smartphones and addresses the limitations of the mobile device environment. They implemented chi-square and information gain as feature selection techniques to select the best features from the extracted dataset. With the selected features, they employed K-Nearest Neighbour (KNN), Naïve Bayes (NB), Decision Tree (J48), Random Forest (RF), and Multi-Layer Perceptron (MLP) techniques to identify malware-infected apps. Mahindru and Sangal 3 developed a framework that is based on feature selection approaches and uses distinct semi-supervised, unsupervised, supervised, and ensemble techniques in parallel; it identified 98.8% of malware-infected apps. Yerima et al. 67 suggested an effective technique to detect malware on smartphones. They implemented mutual information as a feature selection approach to select the best features from the collected code and app characteristics that indicate malicious activity. To detect malware apps from the wild, they trained a Bayesian classifier on the selected features and achieved an accuracy of 92.1%. Mahindru and Sangal 15 suggested a framework named “PerbDroid” that is built on feature selection approaches with deep learning as the classifier. In total, 200,000 Android apps were tested, with a detection rate of 97.8%. Andromaly 23 works on the principle of a Host-based Malware Detection System that monitors features related to memory, hardware, and power events. After selecting the best features with feature selection techniques, the authors employed distinct classification algorithms such as decision tree (J48), K-Means, Bayesian network, Histogram, Logistic Regression, and Naïve Bayes (NB) to detect malware-infected apps.
Authors 14 suggested a malware detection model based on semi-supervised machine learning approaches. They examined the proposed method on over 200,000 Android apps and found it to be 97.8% accurate. Narudin et al. 68 proposed a malware detection approach that considers network traffic as a feature. They applied random forest, multi-layer perceptron, K-Nearest Neighbor (KNN), J48, and Bayes network machine learning classifiers, of which the K-Nearest Neighbor classifier attained an 84.57% true-positive rate for detection of the latest Android malware. Wang et al. 69 employed three different feature ranking techniques, i.e., t -test, mutual information, and correlation coefficient, on 310,926 benign and 4,868 malware apps using permissions, and detected 74.03% of unknown malware. Previous researchers implemented feature ranking approaches only to select significant sets of features. Authors 13 developed a framework named “DeepDroid” based on a deep learning algorithm. They used six different feature ranking algorithms on the extracted feature dataset to select significant features. The tests involved 20,000 malware-infected apps and 100,000 benign ones. The framework built with Principal Component Analysis (PCA) achieved a detection rate of 94%. Researchers 70 , 71 , 72 , 73 have also implemented feature selection techniques in other fields to select significant features for developing models.
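
Several of the works surveyed above rank features by mutual information (or the closely related information gain) before training a classifier. As a rough illustration of that idea only, and not a reproduction of any cited method, the sketch below scores discrete features against the class label. The permission names and the toy data are assumptions chosen for the example.

```python
import math
from collections import Counter


def mutual_information(feature, labels):
    """Mutual information (in bits) between two equal-length discrete sequences."""
    n = len(labels)
    joint = Counter(zip(feature, labels))
    f_counts = Counter(feature)
    l_counts = Counter(labels)
    mi = 0.0
    for (x, y), count in joint.items():
        p_xy = count / n
        p_x = f_counts[x] / n
        p_y = l_counts[y] / n
        mi += p_xy * math.log2(p_xy / (p_x * p_y))
    return mi


def rank_features(columns, labels):
    """Score every feature column and return (names sorted by score, scores)."""
    scores = {name: mutual_information(col, labels) for name, col in columns.items()}
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked, scores


# Toy data, purely illustrative: 1 = permission requested / app is malware.
labels = [1, 1, 0, 0]
columns = {
    "SEND_SMS": [1, 1, 0, 0],   # perfectly aligned with the label
    "INTERNET": [1, 0, 1, 0],   # independent of the label
}
ranked, scores = rank_features(columns, labels)
print(ranked, scores)
```

A feature that is independent of the class scores zero, so a threshold on the score (or a fixed top-k cut) gives a simple filter. Which cut-off to use is a design choice that this sketch leaves open.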

Research questions

To identify malware-infected apps, and considering the gaps present in the literature, the following research questions are addressed in this research work:

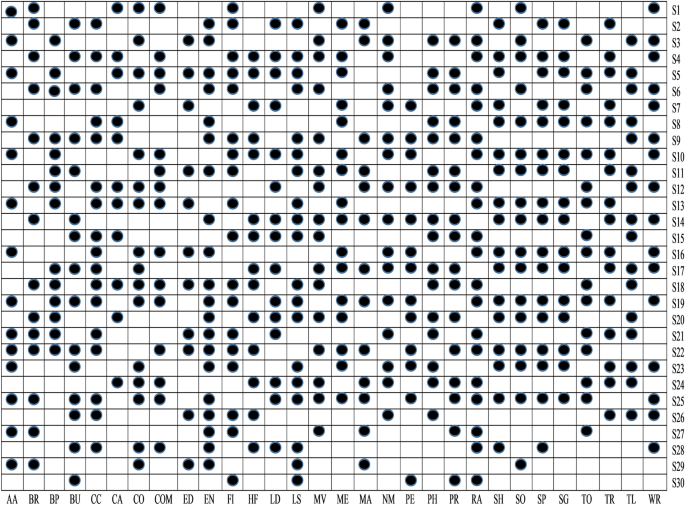

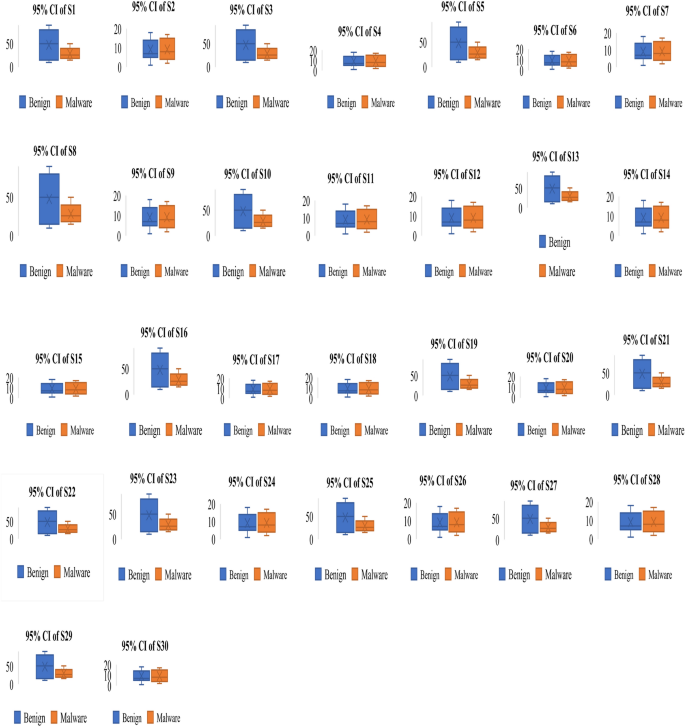

RQ1 Does the filtering approach help to identify whether an app is benign or malware-infected (first phase of the proposed feature selection framework)? To determine the statistical significance between malicious and benign apps, the t -test is used. After determining the significant features, a binary ULR investigation is applied to select more appropriate features. For analysis, all thirty feature data sets (shown in Table 5 ) are assigned as null hypotheses.

RQ2 Do the feature sets used in existing and presented work show a high correlation with each other? To answer this question, both positive and negative correlations are examined to analyze the sets of features, which helps in improving the detection rate.
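
The two checks named in RQ1 and RQ2 can be sketched with the standard library alone. The example below assumes Welch's unequal-variance form of the t -test, which the text does not specify, and uses the Pearson coefficient for the correlation check. It does not cover the binary ULR step, and all sample values are hypothetical.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic and approximate degrees of freedom for two samples.

    Each sample needs at least two values and non-zero variance;
    constant features should be dropped before calling this.
    """
    va = variance(a) / len(a)
    vb = variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    # Welch-Satterthwaite approximation of the degrees of freedom.
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sx = math.sqrt(sum((xi - mx) ** 2 for xi in x))
    sy = math.sqrt(sum((yi - my) ** 2 for yi in y))
    return cov / (sx * sy)


# Hypothetical values of one feature in malware and benign apps.
malware = [5, 6, 7, 8]
benign = [1, 2, 3, 4]
t_stat, dof = welch_t(malware, benign)
print(round(t_stat, 3), round(dof, 1))

# Hypothetical pair of features measured on the same four apps.
print(pearson([1, 2, 3, 4], [2, 4, 6, 8]))   # strongly positive
print(pearson([1, 2, 3, 4], [8, 6, 4, 2]))   # strongly negative
```

Turning the statistic into a p-value requires the t distribution, which the standard library does not provide. In practice a statistics package such as SciPy (`scipy.stats.ttest_ind` with `equal_var=False`) returns both the statistic and the p-value.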