eTable 1. Characteristics of Delphi panellists

eTable 2. Characteristics of Consensus Meeting panellists

eTable 3. Fillable Checklist: CONSORT-Outcomes (for combined completion of CONSORT 2010 and CONSORT-Outcomes 2022 items)

eTable 4. Fillable Checklist: CONSORT-Outcomes 2022 Extension items only (for separate completion of CONSORT 2010 and CONSORT-Outcomes 2022 items)

eTable 5. Key users and groups, proposed actions, and potential benefits for supporting implementation and adherence to CONSORT-Outcomes

eTable 6. CONSORT-Outcomes items and five optional additional items for outcome reporting in trial reports and related documents

eAppendix 1. Development of CONSORT-Outcomes, including patient/public engagement

eAppendix 2. Detailed search strategy for scoping review

eAppendix 3. Acknowledgement of Contributors to the Development of CONSORT-Outcomes (and SPIRIT-Outcomes)

eFigure. Flow of candidate items through development of CONSORT-Outcomes

eReferences

Butcher NJ, Monsour A, Mew EJ, et al. Guidelines for Reporting Outcomes in Trial Reports: The CONSORT-Outcomes 2022 Extension. JAMA. 2022;328(22):2252–2264. doi:10.1001/jama.2022.21022

Guidelines for Reporting Outcomes in Trial Reports: The CONSORT-Outcomes 2022 Extension

- 1 Child Health Evaluative Sciences, The Hospital for Sick Children Research Institute, Toronto, Ontario, Canada

- 2 Department of Psychiatry, University of Toronto, Toronto, Ontario, Canada

- 3 Department of Chronic Disease Epidemiology, School of Public Health, Yale University, New Haven, Connecticut

- 4 Department of Medicine, Women’s College Research Institute, University of Toronto, Toronto, Ontario, Canada

- 5 Centre for Journalology, Clinical Epidemiology Program, Ottawa Hospital Research Institute, Ottawa, Ontario, Canada

- 6 School of Epidemiology and Public Health, University of Ottawa, Ottawa, Ontario, Canada

- 7 Department of Epidemiology, Gillings School of Global Public Health, University of North Carolina, Chapel Hill

- 8 Amsterdam University Medical Centers, Vrije Universiteit, Department of Epidemiology and Data Science, Amsterdam, the Netherlands

- 9 Department of Methodology, Amsterdam Public Health Research Institute, Amsterdam, the Netherlands

- 10 public panel member, Toronto, Ontario, Canada

- 11 Clinical Epidemiology Program, Ottawa Hospital Research Institute, Ottawa, Ontario, Canada

- 12 Department of Medicine, University of Ottawa, Ottawa, Ontario, Canada

- 13 Department of Pharmacology and Therapeutics, University of Manitoba, Winnipeg, Canada

- 14 Children’s Hospital Research Institute of Manitoba, Winnipeg, Canada

- 15 Health Research Methods, Evidence, and Impact, McMaster University, Hamilton, Ontario, Canada

- 16 NHMRC Clinical Trials Centre, University of Sydney, Sydney, New South Wales, Australia

- 17 patient panel member, Ottawa, Ontario, Canada

- 18 MRC-NIHR Trials Methodology Research Partnership, Department of Health Data Science, University of Liverpool, Liverpool, England

- 19 Cundill Centre for Child and Youth Depression, Centre for Addiction and Mental Health, Toronto, Ontario, Canada

- 20 Department of Psychiatry, The Hospital for Sick Children, Toronto, Ontario, Canada

- 21 Bruyère Research Institute, Ottawa, Ontario, Canada

- 22 Ottawa Hospital Research Institute, Ottawa, Ontario, Canada

- 23 Department of Medicine, Feinberg School of Medicine, Northwestern University, Chicago, Illinois

- 24 Departments of Pediatrics and Psychiatry, Faculty of Medicine and Dentistry, University of Alberta, Edmonton, Canada

- 25 Clinical Trials Ontario, Toronto, Canada

- 26 Department of Public Health Sciences, Queen’s University, Kingston, Ontario, Canada

- 27 Institute of Health Policy, Management, and Evaluation, University of Toronto, Toronto, Ontario, Canada

- 28 Division of Neonatology, The Hospital for Sick Children, Toronto, Ontario, Canada

Question: What outcome-specific information should be included in a published clinical trial report?

Findings: Using an evidence-based and international consensus–based approach that applied methods from the Enhancing the Quality and Transparency of Health Research (EQUATOR) methodological framework, 17 outcome-specific reporting items were identified.

Meaning: Inclusion of these items in clinical trial reports may enhance trial utility, replicability, and transparency and may help limit selective nonreporting of trial results.

Importance: Clinicians, patients, and policy makers rely on published results from clinical trials to help make evidence-informed decisions. To critically evaluate and use trial results, readers require complete and transparent information regarding what was planned, done, and found. Specific and harmonized guidance as to what outcome-specific information should be reported in publications of clinical trials is needed to reduce deficient reporting practices that obscure issues with outcome selection, assessment, and analysis.

Objective: To develop harmonized, evidence- and consensus-based standards for reporting outcomes in clinical trial reports through integration with the Consolidated Standards of Reporting Trials (CONSORT) 2010 statement.

Evidence Review: Using the Enhancing the Quality and Transparency of Health Research (EQUATOR) methodological framework, the CONSORT-Outcomes 2022 extension of the CONSORT 2010 statement was developed by (1) generation and evaluation of candidate outcome reporting items via consultation with experts and a scoping review of existing guidance for reporting trial outcomes (published within the 10 years prior to March 19, 2018) identified through expert solicitation, electronic database searches of MEDLINE and the Cochrane Methodology Register, gray literature searches, and reference list searches; (2) a 3-round international Delphi voting process (November 2018-February 2019) completed by 124 panelists from 22 countries to rate and identify additional items; and (3) an in-person consensus meeting (April 9-10, 2019) attended by 25 panelists to identify essential items for the reporting of outcomes in clinical trial reports.

Findings: The scoping review and consultation with experts identified 128 recommendations relevant to reporting outcomes in trial reports, the majority (83%) of which were not included in the CONSORT 2010 statement. All recommendations were consolidated into 64 items for Delphi voting; after the Delphi survey process, 30 items met criteria for further evaluation at the consensus meeting and possible inclusion in the CONSORT-Outcomes 2022 extension. The discussions during and after the consensus meeting yielded 17 items that elaborate on the CONSORT 2010 statement checklist items and are related to completely defining and justifying the trial outcomes, including how and when they were assessed (CONSORT 2010 statement checklist item 6a), defining and justifying the target difference between treatment groups during sample size calculations (CONSORT 2010 statement checklist item 7a), describing the statistical methods used to compare groups for the primary and secondary outcomes (CONSORT 2010 statement checklist item 12a), and describing the prespecified analyses and any outcome analyses not prespecified (CONSORT 2010 statement checklist item 18).

Conclusions and Relevance: This CONSORT-Outcomes 2022 extension of the CONSORT 2010 statement provides 17 outcome-specific items that should be addressed in all published clinical trial reports and may help increase trial utility, replicability, and transparency and may minimize the risk of selective nonreporting of trial results.

Well-designed and properly conducted randomized clinical trials (RCTs) are the gold standard for producing primary evidence that informs evidence-based clinical decision-making. In RCTs, trial outcomes are used to assess the intervention effects on participants. 1 The Consolidated Standards of Reporting Trials (CONSORT) 2010 statement provided 25 reporting items for inclusion in published RCT reports. 2,3

Fully reporting trial outcomes is important for replicating results, knowledge synthesis efforts, and preventing selective nonreporting of results. A scoping review revealed diverse and inconsistent recommendations on how to report trial outcomes in published reports by academic, regulatory, and other key sources. 4 Insufficient outcome reporting remains common across academic journals and disciplines; key information about outcome selection, definition, assessment, analysis, and changes from the prespecified outcomes (ie, from the trial protocol or the trial registry) is often poorly reported. 5-9 Such avoidable reporting issues have been shown to affect the conclusions drawn from systematic reviews and meta-analyses, 10 contributing to research waste. 11

Although calls for improved reporting of trial outcomes have been made, 5,12 what constitutes useful, complete reporting of trial outcomes to knowledge users such as trialists, systematic reviewers, journal editors, clinicians, patients, and the public is unclear. 4 Two extensions (for harms in 2004 and for patient-reported outcomes in 2013) 13,14 of the CONSORT statement relevant to the reporting of specific types of trial outcomes exist; however, no standard reporting guideline for essential outcome-specific information applicable to all outcome types, populations, and trial designs is available. 4

The aim of the CONSORT-Outcomes 2022 extension was to develop harmonized, evidence- and consensus-based outcome reporting standards for clinical trial reports.

The CONSORT-Outcomes 2022 extension was developed as part of the Instrument for Reporting Planned Endpoints in Clinical Trials (InsPECT) project 15 in accordance with the Enhancing the Quality and Transparency of Health Research (EQUATOR) methodological framework for reporting guideline development. 16 Ethics approval was not required as determined by the research ethics committee at The Hospital for Sick Children. The development 15 of the CONSORT-Outcomes 2022 extension occurred in parallel with the Standard Protocol Items: Recommendations for Interventional Trials (SPIRIT)–Outcomes 2022 extension for clinical trial protocols. 17

First, we created an initial list of recommendations relevant to reporting outcomes for RCTs that were synthesized from consultation with experts and a scoping review of existing guidance for reporting trial outcomes (published within the 10 years prior to March 19, 2018) identified through expert solicitation, electronic database searches of MEDLINE and the Cochrane Methodology Register, gray literature searches, and reference list searches as described. 4,18 Second, a 3-round international Delphi voting process took place from November 2018 to February 2019 to identify additional items and assess the importance of each item, which was completed by 124 panelists from 22 countries (eTable 1 in the Supplement). Third, an in-person expert consensus meeting was held (April 9-10, 2019), which was attended by 25 panelists from 4 countries, including a patient partner and a public partner, to identify the set of essential items relevant to reporting outcomes for trial reports and establish dissemination activities. Selection and wording of the items were finalized at a postconsensus meeting by executive panel members and via email with consensus meeting panelists.

The detailed methods describing development of the CONSORT-Outcomes 2022 extension appear in eAppendix 1 in the Supplement, including the number of items evaluated at each phase and the process toward the final set of included items (eFigure in the Supplement). The scoping review trial protocol and findings have been published 4,18 and appear in eAppendix 1 in the Supplement, and the search strategy appears in eAppendix 2 in the Supplement. The self-reported characteristics of the Delphi voting panelists and the consensus meeting panelists appear in eTables 1-2 in the Supplement. Details regarding the patient and public partner involvement appear in eAppendix 1 in the Supplement.

In addition to the inclusion of the CONSORT 2010 statement checklist items, the CONSORT-Outcomes 2022 extension recommends that a minimum of 17 outcome-specific reporting items be included in clinical trial reports, regardless of trial design or population. The scoping review and consultation with experts identified 128 recommendations relevant to reporting outcomes in trial reports, the majority (83%) of which were not included in the CONSORT 2010 statement. All recommendations were consolidated into 64 items for Delphi voting; after the Delphi survey process, 30 items met the criteria for further evaluation at the consensus meeting and possible inclusion in the CONSORT-Outcomes 2022 extension. The CONSORT 2010 statement checklist items and the 17 items added by the CONSORT-Outcomes 2022 extension appear in Table 1. 19

A fillable version of the checklist appears in eTables 3-4 in the Supplement and on the CONSORT website. 20 When using the updated checklist, users should refer to definitions of key terms in the glossary 21-38 (Box) because variations in the terminology and definitions exist across disciplines and geographic areas. The 5 core elements of a defined outcome (with examples) appear in Table 2. 39,40

Glossary of Terms Used in the CONSORT-Outcomes 2022 Extension

Composite outcome: A composite outcome consists of ≥2 component outcomes (eg, proportion of participants who died or experienced a nonfatal stroke). Participants who have experienced any of the events specified by the components are considered to have experienced the composite outcome. 21,22

CONSORT 2010: Consolidated Standards of Reporting Trials (CONSORT) statement that was published in 2010. 2,3

CONSORT-Outcomes 2022 extension: Additional essential checklist items describing outcome-related content that are not covered by the CONSORT 2010 statement.

Construct validity: The degree to which the scores reported in a trial are consistent with the hypotheses (eg, with regard to internal relationships, the relationships of the scores to other instruments, or relevant between-group differences) based on the assumption that the instrument validly measures the domain to be measured. 30

Content validity: The degree to which the content of the study instrument is an adequate reflection of the domain to be measured. 30

Criterion validity: The degree to which the scores of a study instrument are an adequate reflection of a gold standard. 30

Cross-cultural validity: The degree to which the performance of the items on a translated or culturally adapted study instrument are an adequate reflection of the performance of the items using the original version of the instrument. 30

Minimal important change: The smallest within-patient change that is considered important by patients, clinicians, or relevant others. 4,5 The change may be in a score or unit of measure (continuous or ordinal measurements) or in frequency (dichotomous outcomes). This term is often used interchangeably in health literature with the term minimal important difference. In the CONSORT-Outcomes 2022 extension, the minimal important change conceptually refers to important intrapatient change (item 6a.3) and the minimal important difference refers to the important between-group difference. Minor variants of the term, such as minimum instead of minimal, or the addition of the adjective clinically or clinical are common (eg, the minimum clinically important change). 23

Minimal important difference: The smallest between-group difference that is considered important by patients, clinicians, or relevant others. 24-27 The difference may be in a score or unit of measure (continuous or ordinal measurements) or in frequency (dichotomous outcomes). Minor variants of the term, such as minimum instead of minimal, or the addition of the adjective clinically or clinical are common (eg, the minimum clinically important difference). 23

Outcome: Refers to what is being assessed to examine the effect of exposure to a health intervention. 1 The 5 core elements of a defined outcome appear in Table 2.

Primary outcome: The planned outcome that is most directly related to the primary objective of the trial. 28 It is typically the outcome used in the sample size calculation for trials with the primary objective of assessing efficacy or effectiveness. 29 Many trials have 1 primary outcome, but some have >1. The term primary end point is sometimes used in the medical literature when referring to the primary outcome. 4

Reliability: The degree to which the measurement is free from error. Specifically, the extent to which scores have not changed for participants and are the same for repeated measures under several conditions (eg, using different sets of items from the same rating scale for internal consistency; over time or test-retest; by different persons on the same occasion or interrater; or by the same persons, such as raters or responders, on different occasions or intrarater). 30

Responsiveness: The ability of a study instrument to accurately detect and measure change in the outcome domain over time. 31,32 Distinct from an instrument’s construct validity and criterion validity, which refer to the validity of a single score, responsiveness refers to the validity of a change score (ie, longitudinal validity). 30

Secondary outcomes: The outcomes prespecified in the trial protocol to assess any additional effects of the intervention. 28

Smallest worthwhile effect: The smallest beneficial effect of an intervention that justifies the costs, potential harms, and inconvenience of the interventions as determined by patients. 33

SPIRIT 2013: Standard Protocol Items: Recommendations for Interventional Trials (SPIRIT) statement that was published in 2013. 35,36

SPIRIT-Outcomes 2022 extension: Additional essential checklist items describing outcome-related trial protocol content that are not covered by the SPIRIT 2013 statement. 17

Structural validity: The degree to which the scores of a study instrument (eg, a patient questionnaire or a clinical rating scale) are an adequate reflection of the dimensionality of the domain to be measured. 30

Study instrument: The scale or tool used to make an assessment. A study instrument may be a questionnaire, a clinical rating scale, a laboratory test, a score obtained through a physical examination or an observation of an image, or a response to a single question. 34

Target difference: The value that is used in sample size calculations as the difference sought to be detected on the primary outcome between intervention groups and that should be considered realistic or important (such as the minimal important difference or the smallest worthwhile effect) by ≥1 key stakeholder groups. 37,38

Validity: The degree to which a study instrument measures the domain it purports to measure. 30
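To illustrate how the target difference defined in the glossary enters a sample size calculation, the following Python sketch uses the standard normal-approximation formula for comparing the means of 2 equally sized groups on a continuous outcome. It is not part of the CONSORT-Outcomes 2022 extension; the function name, the default significance level and power, and the example values are assumptions chosen only for illustration.

```python
import math
from statistics import NormalDist


def n_per_group(target_difference, sd, alpha=0.05, power=0.80):
    """Approximate participants needed per group (illustrative only).

    Assumes a 2-group parallel trial, a continuous primary outcome,
    equal allocation, a common standard deviation, and a 2-sided test
    based on the normal approximation.
    """
    if target_difference == 0:
        raise ValueError("target_difference must be nonzero")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # 2-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired power
    n = 2 * ((z_alpha + z_beta) * sd / target_difference) ** 2
    return math.ceil(n)


# Hypothetical example: target difference of 5 points, SD of 10 points.
print(n_per_group(target_difference=5, sd=10))
```

Under these assumptions, halving the target difference roughly quadruples the required sample size, which is one reason the choice of target difference needs to be defined and justified in the trial report.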

Application of these new checklist items from the CONSORT-Outcomes 2022 extension, in conjunction with the CONSORT 2010 statement, ensures trial outcomes will be comprehensively defined and reported. The estimated list of key users, their proposed actions, and the consequential potential benefits of implementing the 17 CONSORT-Outcomes 2022 extension checklist items appears in eTable 5 in the Supplement and was generated from the consensus meeting’s knowledge translation session. Examination and application of these outcome reporting recommendations may be helpful for trial authors, journal editors, peer reviewers, systematic reviewers, patients, the public, and trial participants (eTable 5 in the Supplement).

This report contains a brief explanation of the 17 checklist items generated from the CONSORT-Outcomes 2022 extension. Guidance on how to report the existing checklist items can be found in the CONSORT 2010 statement, 2 in Table 1, and in an explanatory guideline report. 41 Additional items that may be useful to include in some trial reports or in associated trial documents (eg, the statistical analysis plan or in a clinical study report 42) appear in eTable 6 in the Supplement, but were not considered essential reporting items for all trial reports.

Item 6a.1 expands on CONSORT 2010 statement checklist item 6a to explicitly ask for reporting on the rationale underlying the selection of the outcome domain for use as the primary outcome. At a broad conceptual level, the outcome’s domain refers to the name or concept used to describe an outcome (eg, pain). 10,39 The word domain can be closely linked to and sometimes used equivalently with the terms construct and attribute in the literature. 40 Even though a complete outcome definition is expected to be provided in the trial report (as recommended by CONSORT 2010 statement checklist item 6a), 2,40 the rationale for the choice of the outcome domain for the trial’s primary outcome is also essential to communicate because it underpins the purpose of the proposed trial.

Important aspects for the rationale may include (1) the importance of the outcome domain to the individuals involved in the trial (eg, patients, the public, clinicians, policy makers, funders, or health payers), (2) the expected effect of the intervention on the outcome domain, and (3) the ability to assess it accurately, safely, and feasibly during the trial. It also should be reported whether the selected outcome domain originated from a core outcome set (ie, an agreed standardized set of outcomes that should be measured in all trials for a specific clinical area). 43

This item expands on CONSORT 2010 statement checklist item 6a that recommends completely defining prespecified primary and secondary outcomes, and provides specific recommendations that mirror SPIRIT 2013 statement checklist item 12 (and its explanatory text for defining trial outcomes). 35 CONSORT-Outcomes 2022 extension checklist item 6a.2 recommends describing each element of an outcome including its measurement variable, specific analysis metric, method of aggregation, and time point. Registers such as ClinicalTrials.gov already require that trials define their outcomes using this framework. 10 , 35 , 39 Failure to clearly report each element of the outcomes from a trial enables undetectable multiple testing, data cherry-picking, and selective nonreporting of results in the trial report compared with what was planned. 10 , 44
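As an illustration only, the elements of an outcome named in this item can be held in a small record so that an incomplete definition is easy to spot. The following Python sketch is not part of the CONSORT-Outcomes guidance; the class name, field names, and example values are hypothetical.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class OutcomeDefinition:
    """Hypothetical record of the elements of one trial outcome."""
    domain: str
    measurement_variable: str
    analysis_metric: str
    aggregation_method: str
    time_point: str


def missing_elements(outcome):
    """Return the names of elements that were left blank."""
    return [f.name for f in fields(outcome) if not getattr(outcome, f.name).strip()]


# Illustrative values only; the time point has been left undefined on purpose.
primary = OutcomeDefinition(
    domain="pain",
    measurement_variable="0-10 numeric rating scale score",
    analysis_metric="change from baseline",
    aggregation_method="mean",
    time_point="",
)

print(missing_elements(primary))  # ['time_point']
```

A check of this kind only confirms that each element has been stated; it cannot judge whether the stated definition is clinically sensible.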

This item expands on CONSORT-Outcomes 2022 extension checklist item 6a.2. In cases in which the participant-level analysis metric for the primary outcome represents intraindividual change from an earlier value (such as those measured at baseline), a definition and justification of what is considered the minimal important change (MIC) for the relevant study instrument should be provided. In the CONSORT-Outcomes 2022 extension, the MIC was defined as the smallest within-patient change that is considered important by patients, clinicians, or relevant others (common alternative terminologies appear in the Box ). 24 , 25 , 31 The MIC is important to report for all trials that use a within-participant change metric, such as those that plan to analyze the proportion of participants showing a change larger than the MIC value in each treatment group (eg, to define the proportion who improved) 45 or in n-of-1 trial designs. 46

Describing the MIC will facilitate understanding of the trial results and their clinical relevance by patients, clinicians, and policy makers. Users with trial knowledge may be interested in the MIC itself as a benchmark or, alternatively, in a value larger than the known MIC. Describing the justification for the selected MIC is important because there can be numerous MICs available for the same study instrument, with varying clinical relevance and methodological quality depending on how and in whom they were determined. 47 - 50 If the MIC is unknown for the study instrument with respect to the trial’s population and setting, this should be reported.
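The responder-type analysis mentioned above (the proportion of participants in each group whose change reaches the MIC) can be sketched as follows. This is a minimal illustration under stated assumptions: the function name, the data, and the MIC of 2 points are hypothetical, and whether a change exactly equal to the MIC counts as a response is a choice the trial would need to prespecify.

```python
def proportion_improved(baseline, follow_up, mic, higher_is_better=True):
    """Share of participants whose within-person change reaches the MIC.

    Assumption: a change equal to the MIC counts as improved (>=).
    """
    if len(baseline) != len(follow_up) or not baseline:
        raise ValueError("baseline and follow_up must be non-empty and paired")
    changes = [after - before for before, after in zip(baseline, follow_up)]
    if not higher_is_better:
        # For scales on which lower scores are better, a decrease is an improvement.
        changes = [-c for c in changes]
    return sum(1 for c in changes if c >= mic) / len(changes)


# Hypothetical pain scores (lower is better) and a hypothetical MIC of 2 points.
intervention = proportion_improved([7, 6, 8, 5], [4, 5, 5, 5], mic=2, higher_is_better=False)
control = proportion_improved([7, 6, 8, 5], [6, 6, 5, 4], mic=2, higher_is_better=False)

print(intervention, control)  # 0.5 0.25
```

Because the result depends directly on the MIC value supplied, reporting which MIC was used and why it was chosen is what allows readers to interpret these proportions.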

This item expands on CONSORT-Outcomes 2022 extension checklist item 6a.2 to prompt authors, if applicable, to describe the prespecified cutoff values used to convert any outcome data collected on a continuous (or ordinal) scale into a categorical variable for their analyses. 10 , 35 Providing an explanation of the rationale for the choice of the cutoff value is recommended; it is not unusual for different trials to apply different cutoff values. The cutoff values selected are most useful when they have clear clinical relevance. 51 Reporting this information will help avoid multiple testing (known as “p-hacking”), data cherry-picking, and selective nonreporting of results in the trial report. 10 , 44 , 52
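To show why the cutoff value needs to be prespecified and reported, the following sketch applies 2 different cutoffs to the same continuous measurements. The data, the cutoff values, and the function name are hypothetical, and the direction of the comparison (at or above the cutoff) is an assumption.

```python
def proportion_meeting_cutoff(values, cutoff):
    """Share of values at or above the cutoff (the direction is an assumption)."""
    if not values:
        raise ValueError("no values supplied")
    return sum(1 for v in values if v >= cutoff) / len(values)


# Hypothetical systolic blood pressure readings in mm Hg.
systolic = [128, 140, 152, 135, 131, 139]

prespecified = proportion_meeting_cutoff(systolic, 140)  # 2 of 6 readings
alternative = proportion_meeting_cutoff(systolic, 130)   # 5 of 6 readings

print(round(prespecified, 2), round(alternative, 2))
```

The same readings yield very different proportions under the 2 cutoffs, which is why an unreported or post hoc choice of cutoff makes results difficult to appraise.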

This item expands on CONSORT-Outcomes 2022 extension checklist item 6a.2 regarding the time point to prompt authors, if applicable, to specify the time point used in the main analysis if outcome assessments were performed at multiple time points after randomization (eg, trial assessed blood pressure daily for 12 weeks after randomization). Specifying the preplanned time points of assessment used for the analyses will help limit the possibility of unplanned analyses of multiple assessment time points and the selective nonreporting of time points that did not yield large or significant results. 35 , 39

Providing a rationale for the choice of time point is encouraged (eg, based on the expected clinical trajectory after the intervention or the duration of treatment needed to achieve a clinically meaningful exposure to treatment). The length of follow-up should be appropriate to the management decision the trial is designed to inform. 53

A composite outcome consists of 2 or more component outcomes that may be related. Participants who have experienced any 1 of the defined component outcomes comprising the composite outcome are considered to have experienced the composite outcome. 21 , 22 When used, composite outcomes should be prespecified, justified, and fully defined, 51 which includes a complete definition of each individual component outcome and a description of how those will be combined (eg, what analytic steps define the occurrence of the composite outcome).

However, composite outcomes can be difficult to interpret even when sufficiently reported. For example, a composite outcome can disguise treatment effects when the effects on the component outcomes go in opposite directions or when the component outcomes have different effect levels (eg, combining death and disability), furthering the need for quality reporting for every component. 22 , 54
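The "any 1 of the components" rule described above, reported together with the count for every component, might be expressed as in the following sketch. The component names and participant data are hypothetical, and a real trial would also need to define each component and its assessment window.

```python
def summarize_composite(participants, components):
    """Count events for each component and for the composite (any component)."""
    counts = {
        name: sum(1 for p in participants if p[name]) for name in components
    }
    # A participant has the composite outcome if any one component occurred.
    counts["composite"] = sum(
        1 for p in participants if any(p[name] for name in components)
    )
    return counts


# Hypothetical event indicators for 4 participants in one treatment group.
group = [
    {"death": False, "mi": True, "stroke": False},
    {"death": False, "mi": False, "stroke": False},
    {"death": True, "mi": False, "stroke": False},
    {"death": False, "mi": True, "stroke": True},
]

print(summarize_composite(group, ["death", "mi", "stroke"]))
# {'death': 1, 'mi': 2, 'stroke': 1, 'composite': 3}
```

The composite count (3) is smaller than the sum of the component counts (4) because one participant had 2 component events; this is one reason the component results need to be shown alongside the composite.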

Any outcomes that were not prespecified in the trial protocol or trial registry that were measured during the trial should be clearly identified and labeled. Outcomes that were not prespecified can result from the addition of an entirely new outcome domain that was not initially planned (eg, the unplanned inclusion and analysis of change in frequency of cardiovascular hospital admissions obtained from a hospital database). In addition, outcomes that differ from the prespecified outcomes in measurement variable, analysis metric, method of aggregation, or analysis time point are not prespecified. For example, if the trial reports on treatment success rates at 12 months instead of the prespecified time point of 6 months in the trial protocol, the 12-month rate should be identified as an outcome that was not prespecified with the specific change explained. Many reasons exist for changes in outcome data (eg, screening, diagnostic, and surveillance procedures may change; coding systems may change; or new adverse effect data may emerge). For fundamental changes to the primary outcome, investigators should report details (eg, the nature and timing of the change, motivation, whether the impetus arose from internal or external data sources, and who proposed and who approved these changes).

The addition of undeclared outcomes in trial reports is a major concern. 7 , 55 Among 67 trials published in 5 high-impact CONSORT-endorsing journals, there were 365 outcomes added (a mean of 5 undeclared outcomes per trial). 7 Less than 15% of the added outcomes were described as not being prespecified. 7 Determining whether reported outcomes match those in trial protocols or trial registries should not be left for readers to check for themselves, which is an onerous process (estimated review time of 1-7 hours per trial), 7 and is impossible in some cases (such as when the trial protocol is not publicly available). There can be good reasons to change study outcomes while a trial is ongoing, and authors should disclose these changes (CONSORT 2010 statement checklist item 6b) to avoid any potential appearance of reporting bias.
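A comparison of reported outcomes against those prespecified in the protocol or registry could be sketched as follows, using the 6-month vs 12-month example from this item. This is an illustration rather than a validated tool; the dictionary layout, element names, and outcome definitions are hypothetical.

```python
ELEMENTS = ("measurement_variable", "analysis_metric", "aggregation_method", "time_point")


def changed_elements(prespecified, reported):
    """For each reported outcome, list what differs from the prespecified version.

    An empty list means the outcome matches what was prespecified.
    """
    labels = {}
    for name, definition in reported.items():
        planned = prespecified.get(name)
        if planned is None:
            # The outcome does not appear in the protocol or registry at all.
            labels[name] = ["outcome domain"]
        else:
            labels[name] = [e for e in ELEMENTS if definition[e] != planned[e]]
    return labels


protocol = {
    "treatment success": {
        "measurement_variable": "clinician-rated remission",
        "analysis_metric": "end value",
        "aggregation_method": "proportion",
        "time_point": "6 months",
    },
}
report = {
    "treatment success": {**protocol["treatment success"], "time_point": "12 months"},
    "cardiovascular hospital admissions": {
        "measurement_variable": "admissions in hospital database",
        "analysis_metric": "change from baseline",
        "aggregation_method": "mean",
        "time_point": "12 months",
    },
}

print(changed_elements(protocol, report))
# {'treatment success': ['time_point'], 'cardiovascular hospital admissions': ['outcome domain']}
```

Any outcome with a non-empty list would be labeled as not prespecified in the trial report, with the specific change explained.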

The information needed to provide a sufficient description of the study instrument should be enough to allow replication of the trial and interpretability of the results (eg, specify the version of the rating scale used, the mode of administration, and the make and model of the relevant laboratory instrument). 35 It is essential to summarize and provide references to empirical evidence that demonstrated sufficient reliability (eg, test-retest, interrater or intrarater reliability, and internal consistency), validity (eg, content, construct, criterion, cross-cultural, and structural validity), and ability to detect change in the health outcome being assessed (ie, responsiveness) as appropriate for the type of study instrument, and that allows comparison with a population similar to the study sample. Such evidence may be drawn from high-quality primary studies of measurement properties, from systematic reviews of measurement properties of study instruments, and from core outcome sets. Diagnostic test accuracy is relevant to report when the defined outcome relates to the presence or absence of a condition before and after treatment. 56

CONSORT-Outcomes 2022 extension checklist item 6a.8 also recommends describing relevant measurement properties in a population similar to the study sample (or at least not substantively different) because measurement properties of study instruments cannot be presumed to be generalizable between different populations (eg, between different age groups). 57 If measurement properties of the study instrument were unknown for the population used, this can be stated with a rationale for why it was considered appropriate or necessary to use this instrument.

This information is critical to report because the quality and interpretation of the trial data rest on these measurement properties. For example, study instruments with poor content validity would not accurately reflect the domain that was intended to be measured, and study instruments with low interrater reliability would undermine the trial’s statistical power 35 if the low reliability was not anticipated and accounted for in the planned power calculations. 30 - 32

Substantially different responses, and therefore different trial results, can be obtained for many types of outcomes (eg, behavioral, psychological outcomes), depending on who is assessing the outcome of interest. This variability may result from differences in assessors’ training or experience, different perspectives, or patient recall. 58 , 59 Assessments of a clinical outcome reported by a clinician, a patient, or a nonclinician observer or through a performance-based assessment are correspondingly classified by the US Food and Drug Administration as clinician-reported, patient-reported, observer-reported, and performance outcomes. 60

For outcomes that could be assessed by various people, an explanation for the choice of outcome assessor made in the context of the trial should be provided. For outcomes that are not influenced by the outcome assessor (eg, plasma cholesterol levels), this information is less relevant. Professional qualifications or any trial-specific training necessary for trial personnel to function as outcome assessors is often relevant to describe 35 (eg, when using the second edition of the Wechsler Abbreviated Scale of Intelligence, an assessor with a PhD or PsyD and ≥5 years of experience with the relevant patient population and ≥15 prior administrations using this instrument or similar IQ assessments might be required). Details regarding blinding of an assessor to the patient’s treatment assignment and emerging trial results are covered in CONSORT 2010 statement checklist item 11a.

Providing a description of any of the processes used to promote outcome data quality during and after data collection in a trial provides transparency and facilitates appraisal of the quality of the trial’s data. For example, subjective outcome assessments may have been performed in duplicate (eg, pathology assessments) or a central adjudication committee may have been used to ensure independent and accurate outcome assessments. Other common examples include verifying the data are in the proper format (eg, integer), the data are within an expected range of values, and the data are reviewed with independent source document verification (eg, by an external trial monitor). 35 The trial report should include a full description or a brief summary with reference to where the complete information can be found (eg, an open access trial protocol).
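The format and range checks given as examples above might look like the following sketch. It assumes that raw entries arrive as text from a data capture form and that the instrument is scored in whole numbers from 0 to 10; the function name and data are hypothetical.

```python
def check_entry(raw, low, high):
    """Return a list of problems for one raw entry expected to be an integer in [low, high]."""
    try:
        value = int(raw)
    except (TypeError, ValueError):
        return ["not an integer"]
    if not low <= value <= high:
        return [f"outside expected range {low}-{high}"]
    return []


# Hypothetical raw entries for a 0-10 scale, keyed by participant identifier.
entries = {"P01": "7", "P02": "11", "P03": "seven", "P04": "3"}

flagged = {}
for participant_id, raw in entries.items():
    problems = check_entry(raw, 0, 10)
    if problems:
        flagged[participant_id] = problems

print(flagged)
# {'P02': ['outside expected range 0-10'], 'P03': ['not an integer']}
```

Automated checks such as these complement, but do not replace, processes such as duplicate assessment, central adjudication, and independent source document verification.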

This item expands on CONSORT 2010 statement checklist item 7a for reporting how sample size was determined to prompt authors to report the target difference used to inform the trial’s sample size calculation. The target difference is the value used in sample size calculations as the difference sought to be detected in the primary outcome between the intervention groups at the specific time point for the analysis that should be considered realistic or important by 1 or more key stakeholder groups. 37 The Difference Elicitation in Trials project has published extensive evidence-based guidance on selecting a target difference for a trial, sample size calculation, and reporting. 37 , 38 The target difference may be the minimal important difference (MID; the smallest difference between patients perceived as important) 24 , 26 , 27 or the smallest worthwhile effect (the smallest beneficial effect of an intervention that justifies the costs, harms, and inconvenience of the interventions as determined by patients). 33 Because there can be different pragmatic or clinical factors informing the selected target difference (eg, the availability of a credible MID for the study instrument used to assess the primary outcome), 47 and numerous different options available (eg, 1 of several MIDs or values based on pilot studies), 47 it is important to explain why the chosen target difference was selected. 23 , 48 , 49
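To illustrate how strongly the target difference drives the sample size, the following sketch uses a common textbook approximation for comparing 2 means with equal allocation: the number per group is 2 times the squared sum of the standard normal quantiles for the 2-sided significance level and the power, multiplied by the squared ratio of the SD to the target difference. This approximation is an assumption made for illustration and is not prescribed by the CONSORT-Outcomes guidance; the function name and all values are hypothetical.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(target_difference, sd, alpha=0.05, power=0.80):
    """Approximate participants per group for a 2-group comparison of means.

    Assumes equal allocation, a common SD, a 2-sided test, and a normal
    approximation; no allowance is made for attrition.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    return ceil(2 * ((z_alpha + z_beta) * sd / target_difference) ** 2)


# Hypothetical SD of 10 points; halving the target difference roughly quadruples n.
print(n_per_group(target_difference=5, sd=10))    # 63
print(n_per_group(target_difference=2.5, sd=10))  # 252
```

Because halving the target difference roughly quadruples the required sample size, readers need to know which target difference was used and why it was chosen.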

This item extends CONSORT 2010 statement checklist item 12a to prompt authors to describe any statistical methods used to account for multiplicity relating to the analysis or interpretation of the results. Outcome multiplicity issues are common in trials and deserve particular attention when there are coprimary outcomes, multiple possible time points resulting from the repeated assessment of a single outcome, multiple planned analyses of a single outcome (eg, interim or subgroup analysis, multigroup trials), or numerous secondary outcomes for analysis. 61

The methods used to account for such forms of multiplicity include statistical methods (eg, family-wise error rate approaches) or descriptive approaches (eg, noting that the analyses are exploratory, placing the results in the context of the expected number of false-positive outcomes). 61 , 62 Such information may be briefly described in the text of the report or described in more detail in the statistical analysis plan. 63 Authors may report if no methods were used to account for multiplicity (eg, not applicable or were not considered necessary).
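One example of a family-wise error rate approach is the Holm step-down procedure, sketched below. It is shown only as an illustration of what such a method does; the guidance does not recommend a particular method, and the P values are hypothetical.

```python
def holm_adjust(p_values):
    """Return Holm step-down adjusted P values in the original order."""
    m = len(p_values)
    # Indices of the P values from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # The smallest P value is multiplied by m, the next by m - 1, and so on.
        candidate = min(1.0, (m - rank) * p_values[i])
        # Adjusted values are forced to be non-decreasing in the sorted order.
        running_max = max(running_max, candidate)
        adjusted[i] = running_max
    return adjusted


# Hypothetical unadjusted P values for 3 secondary outcomes.
raw = [0.01, 0.04, 0.03]
adjusted = holm_adjust(raw)
print([round(p, 3) for p in adjusted])
```

With these inputs the adjusted values are approximately 0.03, 0.06, and 0.06, so only the first outcome would remain below a .05 threshold after adjustment.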

This item extends CONSORT 2010 statement checklist item 12a to recommend that authors (1) state and justify any criteria applied for excluding certain outcome data from the analysis or (2) report that no outcome data were excluded. This is in reference to explicitly and intentionally excluded outcome data, such as in the instance of too many missing items from a participant’s completed questionnaire, or through other well-justified exclusion of outliers for a particular outcome. This helps the reader to interpret the reported results. This information may be presented in the CONSORT flow diagram where the reasons for outcome data exclusion are stated for each outcome by treatment group.

The occurrence of missing participant outcome data in trials is common, and in many cases, this missingness is not random, meaning it is related to either allocation to a treatment group, patient-specific (prognostic) factors, or the occurrence of a specific health outcome. 64 , 65 When there are missing data, CONSORT-Outcomes 2022 extension checklist item 12a.3 recommends describing (1) any methods used to assess or identify the pattern of missingness and (2) any methods used to handle missing outcomes or entire assessments (the choice of which is informed by the identified pattern of missingness) in the statistical analysis (eg, multiple imputation, complete case analysis, likelihood-based methods, and inverse-probability weighting).

It is critical to provide information about the patterns and handling of any missing data because missing data can lead to reduced power of the trial, affect its conclusions, and affect whether trials are at low or high risk of bias, depending on the pattern of missingness. 66 , 67 A lack of clarity about the magnitude of the missingness and about how missing data were handled in the analysis makes it impossible for meta-analysts to accurately extract sample sizes needed to weight studies in their pooled estimates and prevents accurate assessment of any risk of bias arising from missing data in the reported results. 67 , 68 This checklist item is not applicable if there is a complete data set, and it may be unimportant if the amount of missing data can be considered negligible.

Patterns of missingness (also referred to as missing data mechanisms) include missing completely at random, missing at random, and missing not at random and require description in trials to help readers and meta-analysts determine which patterns are present in data sets. 69 Some of the missing data may still be able to be measured (eg, via concerted follow-up efforts with a subset of the trial participants with missing data) to help distinguish between missing at random and missing not at random. 70 The pattern of missingness relates to the choice of the methods used to handle missing outcomes or entire assessments (eg, multiple imputation and maximum likelihood analyses assume the data are at least missing at random) and is essential to report. Any sensitivity analyses that were conducted to assess the robustness of the trial results (eg, using different methods to handle missing data) should be reported. 35 , 64
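A simple first step toward describing missing outcome data is to tabulate how much is missing in each treatment group, as in the following sketch. The record layout, field names, and data are hypothetical, and a tabulation like this cannot by itself establish the missing data mechanism.

```python
def missingness_by_group(records, outcome):
    """Count randomized participants and missing outcome values per group.

    Assumption: a missing value is stored as None (or the key is absent).
    """
    summary = {}
    for record in records:
        counts = summary.setdefault(record["group"], {"randomized": 0, "missing": 0})
        counts["randomized"] += 1
        if record.get(outcome) is None:
            counts["missing"] += 1
    for counts in summary.values():
        counts["proportion_missing"] = counts["missing"] / counts["randomized"]
    return summary


# Hypothetical participant records with a questionnaire score as the outcome.
records = [
    {"group": "intervention", "score": 12},
    {"group": "intervention", "score": None},
    {"group": "intervention", "score": 9},
    {"group": "control", "score": None},
    {"group": "control", "score": None},
    {"group": "control", "score": 14},
    {"group": "control", "score": 11},
]

for group, counts in missingness_by_group(records, "score").items():
    print(group, counts["missing"], "of", counts["randomized"], "missing")
```

A marked difference in missingness between groups would be one signal that the data may not be missing completely at random, which in turn bears on the choice and reporting of the method used to handle the missing data.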

Trial outcome data can be analyzed in many ways that can lead to different results. The general reporting principle described in the CONSORT 2010 statement was to “describe statistical methods with enough detail to enable a knowledgeable reader with access to the original data to verify the reported results.” 2 Item 12a.4 extends CONSORT 2010 statement checklist item 12a to recommend that authors report the definition of the outcome analysis population used for each analysis as it relates to nonadherence to the trial protocol. For each analysis, information on whether the investigators included all participants who were randomized to the group to which they were originally allocated (ie, intention-to-treat analysis) has been widely recognized to be particularly important to the critical appraisal and interpretation of trial findings. 2 , 35

Because amounts of missing data may vary among different outcomes and the reasons data are missing may also vary, CONSORT-Outcomes 2022 extension checklist item 12a.4 specifies reporting the definition of the outcome analysis population used in the statistical analyses. For example, a complete data set may be available to analyze the outcome of mortality but not for patient-reported outcomes within the same trial. In another example, analysis of harms might be restricted to participants who received the trial intervention so the absence or occurrence of harm was not attributed to a treatment that was never received. 35

This item expands on CONSORT 2010 statement checklist item 17a on outcomes and estimation to remind authors to ensure that they have reported the results for all outcome analyses that were prespecified in the trial protocol or statistical analysis plan. 68 Although this is expected to be standard practice, 2 the information available in the trial report is often insufficient regarding prespecified analyses for the reader to determine whether there was selective nonreporting of any trial results. 71 When it is not feasible to report on all prespecified analyses in a single trial report (eg, trials with a large number of prespecified secondary outcomes), authors should report where the results of any other prespecified outcome analyses can be found (eg, in linked publications or an online repository) or signal their intention to report later in the case of longer-term follow-up.

A recent study showed that adherence to prespecified statistical analyses remains low in published trials, with unexplained discrepancies between the prespecified and reported analyses. 71 This item extends CONSORT 2010 statement checklist item 18 on ancillary analyses to recommend that an explanation should be provided for any analyses that were not prespecified (eg, in the trial protocol or statistical analysis plan), but that are being reported in the trial report. These types of analyses can be called either exploratory analyses or analyses that were not prespecified. Communicating the rationale for any analyses that were not prespecified, but which were performed and reported, is important for trial transparency and for correct appraisal of the trial’s credibility. It can be important to state when such additional analyses were performed (eg, before or after seeing any results from comparative analyses for other outcomes). Multiple analyses of the same data create a risk for false-positive findings, and selective reporting of analyses that were not prespecified could lead to bias.

The CONSORT-Outcomes 2022 extension provides evidence- and consensus-based guidance for reporting outcomes in published clinical trial reports, extending the CONSORT 2010 statement checklist with 17 additional reporting items and harmonizing reporting recommendations with guidance from the SPIRIT-Outcomes 2022 extension. 17 Alignment across these 2 extension guidelines creates a cohesive continuum of reporting from the trial protocol to the completed trial that will facilitate both the researcher’s production of the trial protocol and trial report and, importantly, any assessment of the final report’s adherence to the trial protocol. 20 Similar to the CONSORT 2010 statement, 41 the CONSORT-Outcomes 2022 extension applies to the content of the trial report, including the tables and figures and online-only supplementary material. 72 The current recommendations are similarly not prescriptive regarding the structure or location of reporting this information; authors should “address checklist items somewhere in the article, with ample detail and lucidity.” 41

Users of the CONSORT-Outcomes 2022 extension checklist should note that these additional checklist items represent the minimum essential items for outcomes reporting and are being added to the CONSORT 2010 statement 2 , 3 guidelines to maximize trial utility, transparency, and replication and to limit selective nonreporting of results (eTable 5 in the Supplement ). In some cases, it may be important to report additional outcome-specific information in trial reports, 4 such as the items in eTable 6 in the Supplement, or to refer to CONSORT-PRO for PRO-specific reporting guidance 14 and to the CONSORT extension for reporting harms. 13 Authors adhering to the CONSORT-Outcomes 2022 extension should explain why any item is not relevant to their trial. For example, this extension checklist, which is for reporting systematically assessed outcomes, might not be applicable to outcomes that are not systematically collected or prespecified such as spontaneously reported adverse events. When constrained by journal word count, authors can refer to open access trial protocols, statistical analysis plans, or trial registry data, or provide online-only supplementary materials.

We anticipate that the key users of the CONSORT-Outcomes 2022 extension will be trial authors, journal editors, peer reviewers, systematic reviewers, meta-analysis researchers, academic institutions, patients (including trial participants), and the broader public (eTable 5 in the Supplement ). Use of this extension by these groups may help improve trial utility, transparency, and replication. Patient and public engagement was successfully embedded into a consensus meeting for a methodologically complex topic, a rarity in reporting guideline development to date. Future reporting guideline development should engage patients and members of the public throughout the process. The CONSORT-Outcomes 2022 extension will be disseminated as outlined previously, 15 including through the EQUATOR Network and the CONSORT website. End users can provide their input on the content, clarity, and usability online, 73 which will inform any future updates.

This study has several limitations. First, the included checklist items are appropriate for systematically collected outcomes, including most potential benefits and some harms; however, other items might be applicable for reporting harms not systematically assessed. 74

Second, because these checklist items are not yet integrated in the main CONSORT checklist, finding and using multiple checklists may be considered burdensome by some authors and editors, which may affect uptake. 75 Future efforts to integrate these additional checklist items in the main CONSORT checklist might promote implementation in practice.

Third, although a large, multinational group of experts and end users was involved in the development of these recommendations with the aim of increasing usability among the broader research community, the Delphi voting results could have been affected by a nonresponse bias because panelists were self-selecting (ie, interested individuals signed up to take part in the Delphi voting process).

Fourth, the consensus meeting panelists were purposively sampled based on their expertise and roles relevant to clinical trial conduct, oversight, and reporting. 15 The views of individuals not well represented by the consensus meeting panelists (eg, trialists outside North America and Europe) may differ. The systematic and evidence-based approach 15 , 16 used to develop this guideline, including a rigorous scoping review of outcome reporting guidance, 4 , 18 will help mitigate the potential effect of these limitations.

This CONSORT-Outcomes 2022 extension of the CONSORT 2010 statement provides 17 outcome-specific items that should be addressed in all published clinical trial reports and may help increase trial utility, replicability, and transparency and may minimize the risk of selective nonreporting of trial results.

Corresponding Author: Nancy J. Butcher, PhD, Peter Gilgan Centre for Research and Learning, The Hospital for Sick Children, 686 Bay St, Toronto, ON M5G 0A4, Canada.

Accepted for Publication: October 25, 2022.

Author Contributions: Dr Butcher had full access to all of the data in the study and takes responsibility for the integrity of the data and the accuracy of the data analysis.

Concept and design: All authors.

Acquisition, analysis, or interpretation of data: All authors.

Drafting of the manuscript: Butcher.

Critical revision of the manuscript for important intellectual content: All authors.

Statistical analysis: Monsour.

Obtained funding: Offringa.

Administrative, technical, or material support: Monsour, Mew, Baba.

Supervision: Butcher, Offringa.

Conflict of Interest Disclosures: Dr Butcher reported receiving grant funding from CHILD-BRIGHT and the Cundill Centre for Child and Youth Depression at the Centre for Addiction and Mental Health (CAMH) and receiving personal fees from Nobias Therapeutics. Ms Mew reported receiving salary support through a Canadian Institutes of Health Research doctoral foreign study award. Dr Kelly reported receiving funding from the Canadian Cancer Society, Research Manitoba, the Children’s Hospital Research Institute of Manitoba, Mitacs, and the SickKids Foundation. Dr Askie reported being a co-convenor of the Cochrane Prospective Meta-Analysis Methods Group. Dr Farid-Kapadia reported currently being an employee of Hoffmann La-Roche and holding shares in the company. Dr Williamson reported chairing the COMET initiative management group. Dr Szatmari reported receiving funding from the CAMH. Dr Tugwell reported co-chairing the OMERACT executive committee; receiving personal fees from the Reformulary Group, UCB Pharma GmbH, Parexel International, PRA Health Sciences, Amgen, AstraZeneca, Bristol Myers Squibb, Celgene, Eli Lilly, Genentech, Roche, Genzyme, Sanofi, Horizon Therapeutics, Merck, Novartis, Pfizer, PPD Inc, QuintilesIMS (now IQVIA), Regeneron Pharmaceuticals, Savient Pharmaceuticals, Takeda Pharmaceutical Co Ltd, Vertex Pharmaceuticals, Forest Pharmaceuticals, and Bioiberica; serving on data and safety monitoring boards for UCB Pharma GmbH, Parexel International, and PRA Health Sciences; and receiving unrestricted educational grants from the American College of Rheumatology and the European League of Rheumatology. Dr Monga reported receiving funding from the Cundill Centre for Child and Youth Depression at CAMH; receiving royalties from Springer for Assessing and Treating Anxiety Disorders in Young Children ; and receiving personal fees from the TD Bank Financial Group for serving as a chair in child and adolescent psychiatry. 
Dr Ungar reported being supported by the Canada research chair in economic evaluation and technology assessment in child health. No other disclosures were reported.

Funding/Support: This work, project 148953, received financial support from the Canadian Institutes of Health Research (supported the work of Drs Butcher, Kelly, Szatmari, Monga, and Offringa and Mss Saeed and Marlin).

Role of the Funder/Sponsor: The Canadian Institutes of Health Research had no role in the design and conduct of the study; collection, management, analysis, and interpretation of the data; preparation, review, or approval of the manuscript; and decision to submit the manuscript for publication.

Disclaimers: Dr Golub is Executive Deputy Editor of JAMA , but he was not involved in any of the decisions regarding review of the manuscript or its acceptance. This article reflects the views of the authors, the Delphi panelists, and the consensus meeting panelists and may not represent the views of the broader stakeholder groups, the authors’ institutions, or other affiliations.

Additional Contributions: We gratefully acknowledge the additional contributions made by the project core team, the executive team, the operations team, the Delphi panelists, and the international consensus meeting panelists (eAppendix 3 in the Supplement ). We thank Andrea Chiaramida, BA, for administrative project support and Lisa Stallwood, MSc, for administrative manuscript support (both with The Hospital for Sick Children). We thank Petros Pechlivanoglou, PhD, and Robin Hayeems, PhD, for piloting and providing feedback on the format of the Delphi survey (both with The Hospital for Sick Children). We thank Roger F. Soll, MD (Cochrane Neonatal and the Division of Neonatal-Perinatal Medicine, Larner College of Medicine, University of Vermont), and James Webbe, MB BChir, PhD, and Chris Gale, MBBS, PhD (both with Neonatal Medicine, School of Public Health, Imperial College London), for pilot testing the CONSORT-Outcomes 2022 extension checklist. None of these individuals received compensation for their role in the study.

Additional Information: The project materials and data are publicly available on the Open Science Framework at https://osf.io/arwy8/ .

Guidelines for Reporting Outcomes in Trial Reports: The CONSORT-Outcomes 2022 Extension

Affiliations.

- 1 Child Health Evaluative Sciences, The Hospital for Sick Children Research Institute, Toronto, Ontario, Canada.

- 2 Department of Psychiatry, University of Toronto, Toronto, Ontario, Canada.

- 3 Department of Chronic Disease Epidemiology, School of Public Health, Yale University, New Haven, Connecticut.

- 4 Department of Medicine, Women's College Research Institute, University of Toronto, Toronto, Ontario, Canada.

- 5 Centre for Journalology, Clinical Epidemiology Program, Ottawa Hospital Research Institute, Ottawa, Ontario, Canada.

- 6 School of Epidemiology and Public Health, University of Ottawa, Ottawa, Ontario, Canada.

- 7 Department of Epidemiology, Gillings School of Global Public Health, University of North Carolina, Chapel Hill.

- 8 Amsterdam University Medical Centers, Vrije Universiteit, Department of Epidemiology and Data Science, Amsterdam, the Netherlands.

- 9 Department of Methodology, Amsterdam Public Health Research Institute, Amsterdam, the Netherlands.

- 10 public panel member, Toronto, Ontario, Canada.

- 11 Clinical Epidemiology Program, Ottawa Hospital Research Institute, Ottawa, Ontario, Canada.

- 12 Department of Medicine, University of Ottawa, Ottawa, Ontario, Canada.

- 13 Department of Pharmacology and Therapeutics, University of Manitoba, Winnipeg, Canada.

- 14 Children's Hospital Research Institute of Manitoba, Winnipeg, Canada.

- 15 Health Research Methods, Evidence, and Impact, McMaster University, Hamilton, Ontario, Canada.

- 16 NHMRC Clinical Trials Centre, University of Sydney, Sydney, New South Wales, Australia.

- 17 patient panel member, Ottawa, Ontario, Canada.

- 18 MRC-NIHR Trials Methodology Research Partnership, Department of Health Data Science, University of Liverpool, Liverpool, England.

- 19 Cundill Centre for Child and Youth Depression, Centre for Addiction and Mental Health, Toronto, Ontario, Canada.

- 20 Department of Psychiatry, The Hospital for Sick Children, Toronto, Ontario, Canada.

- 21 Bruyère Research Institute, Ottawa, Ontario, Canada.

- 22 Ottawa Hospital Research Institute, Ottawa, Ontario, Canada.

- 23 Department of Medicine, Feinberg School of Medicine, Northwestern University, Chicago, Illinois.

- 24 Departments of Pediatrics and Psychiatry, Faculty of Medicine and Dentistry, University of Alberta, Edmonton, Canada.

- 25 Clinical Trials Ontario, Toronto, Canada.

- 26 Department of Public Health Sciences, Queen's University, Kingston, Ontario, Canada.

- 27 Institute of Health Policy, Management, and Evaluation, University of Toronto, Toronto, Ontario, Canada.

- 28 Division of Neonatology, The Hospital for Sick Children, Toronto, Ontario, Canada.

- PMID: 36511921

- DOI: 10.1001/jama.2022.21022

Importance: Clinicians, patients, and policy makers rely on published results from clinical trials to help make evidence-informed decisions. To critically evaluate and use trial results, readers require complete and transparent information regarding what was planned, done, and found. Specific and harmonized guidance as to what outcome-specific information should be reported in publications of clinical trials is needed to reduce deficient reporting practices that obscure issues with outcome selection, assessment, and analysis.

Objective: To develop harmonized, evidence- and consensus-based standards for reporting outcomes in clinical trial reports through integration with the Consolidated Standards of Reporting Trials (CONSORT) 2010 statement.

Evidence review: Using the Enhancing the Quality and Transparency of Health Research (EQUATOR) methodological framework, the CONSORT-Outcomes 2022 extension of the CONSORT 2010 statement was developed by (1) generation and evaluation of candidate outcome reporting items via consultation with experts and a scoping review of existing guidance for reporting trial outcomes (published within the 10 years prior to March 19, 2018) identified through expert solicitation, electronic database searches of MEDLINE and the Cochrane Methodology Register, gray literature searches, and reference list searches; (2) a 3-round international Delphi voting process (November 2018-February 2019) completed by 124 panelists from 22 countries to rate and identify additional items; and (3) an in-person consensus meeting (April 9-10, 2019) attended by 25 panelists to identify essential items for the reporting of outcomes in clinical trial reports.

Findings: The scoping review and consultation with experts identified 128 recommendations relevant to reporting outcomes in trial reports, the majority (83%) of which were not included in the CONSORT 2010 statement. All recommendations were consolidated into 64 items for Delphi voting; after the Delphi survey process, 30 items met criteria for further evaluation at the consensus meeting and possible inclusion in the CONSORT-Outcomes 2022 extension. The discussions during and after the consensus meeting yielded 17 items that elaborate on the CONSORT 2010 statement checklist items and are related to completely defining and justifying the trial outcomes, including how and when they were assessed (CONSORT 2010 statement checklist item 6a), defining and justifying the target difference between treatment groups during sample size calculations (CONSORT 2010 statement checklist item 7a), describing the statistical methods used to compare groups for the primary and secondary outcomes (CONSORT 2010 statement checklist item 12a), and describing the prespecified analyses and any outcome analyses not prespecified (CONSORT 2010 statement checklist item 18).

Conclusions and relevance: This CONSORT-Outcomes 2022 extension of the CONSORT 2010 statement provides 17 outcome-specific items that should be addressed in all published clinical trial reports and may help increase trial utility, replicability, and transparency and may minimize the risk of selective nonreporting of trial results.

Publication types

- Research Support, Non-U.S. Gov't

- Checklist / standards

- Clinical Trials as Topic* / standards

- Guidelines as Topic*

- Research Design* / standards

Grants and funding

- MR/S014357/1/MRC_/Medical Research Council/United Kingdom

- CIHR/Canada

Case Reports Vs Clinical Studies

This post discusses whether the validity of a case report is in question when it is authored by an employee of the company reporting it, in this case Roho. It answers the following questions about clinical studies and clinical evidence:

- What is the difference between a clinical study and a case report?

- Who can observe and document the results of a clinical study?

- What circumstances call the validity of a case report into question?

(The information below was taken from ClinicalTrials.gov, a service of the National Institutes of Health.)

Definition of case report and clinical study

In medicine, a case report is a detailed report of the symptoms, signs, diagnosis, treatment, and follow-up of an individual patient. Case reports may contain a demographic profile of the patient, but usually describe an unusual or novel occurrence.

The case report is written on one individual patient.

Clinical Study

A research study using human subjects to evaluate biomedical or health-related outcomes. Two types of clinical studies are Interventional Studies (or clinical trials) and Observational Studies. A clinical study involves multiple patients.

Observational Clinical Studies have a qualified investigator.

In an observational study, investigators assess health outcomes in groups of participants according to a research plan or protocol. Participants may receive interventions (which can include medical products such as drugs or devices) or procedures as part of their routine medical care, but participants are not assigned to specific interventions by the investigator (as in a clinical trial).

The Key Responsibilities of a Clinical Study Investigator:

- Be qualified to practice medicine or psychiatry and meet the qualifications specified by applicable national regulatory requirement(s)

- Be qualified by education, training, and experience to assume responsibility for the proper conduct of the study

- Be familiar with and compliant with Good Clinical Practice (GCP) ICH E6 Guideline and applicable ethical and regulatory requirements prior to commencement of work on the study.

- Provide evidence of his/her qualification using the Abbreviated TransCelerate Curriculum Vitae (CV) form

The internal validity of a medical device case report is questioned if bias is present. One must consider bias in a case report authored by an employee of the company that makes the device described in the report.

These are the facts about the clinical studies published on the Roho website.

- The Roho website lists 15 items that Roho calls clinical studies. Based on the definitions above, these are not clinical studies but case reports.

- Of these 15 case reports, only one pertains to a seat cushion improving a pressure ulcer.

This single case report was written by Cynthia Fleck, an employee of Crown Therapeutics, which is a division of Roho.

After selling 1 million cushions over 45 years in business, Roho has exactly one such case report, and it was written by an employee of Roho, which calls the validity of the report into question.

NIH Extramural Nexus

Further Refining Case Studies and FAQs about the NIH Definition of a Clinical Trial in Response to Your Questions

In August and September we released case studies and FAQs to help those of you doing human subjects research to determine whether your research study meets the NIH definition of a clinical trial. Correctly making this determination is important to ensure you are following the initiatives we have been implementing to improve the transparency of clinical trials, including the need to pick clinical trial-specific funding opportunity announcements for due dates of January 25, 2018, and beyond.

We have made no changes to the NIH definition of a clinical trial, or how the definition is interpreted. What we have done is revise existing case studies and add a few new ones to help clarify how the definition of clinical trial does or does not apply to: studies of delivery of standard clinical care, device studies, natural experiments, preliminary studies for study procedures, and studies that are primarily focused on the nature or quality of measurements as opposed to biomedical or behavioral outcomes.

As a reminder, the case studies illustrate how to apply the four questions researchers involved in human studies need to ask, and answer, to determine if their study meets the NIH definition of a clinical trial. These questions are:

- Does the study involve human participants?

- Are the participants prospectively assigned to an intervention?

- Is the study designed to evaluate the effect of the intervention on the participants?

- Is the effect that will be evaluated a health-related biomedical or behavioral outcome?

If the answer to all four questions is yes, then we consider your research a clinical trial.
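As a rough illustration only, the four-question test above can be sketched in a few lines of Python. The class, field, and function names below are hypothetical and are not part of any NIH tool; the sketch simply encodes the rule that all four answers must be yes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StudyAnswers:
    """Yes/no answers to the four questions (field names are illustrative)."""
    involves_human_participants: bool
    prospectively_assigned_to_intervention: bool
    designed_to_evaluate_intervention_effect: bool
    outcome_is_health_related_biomedical_or_behavioral: bool


def meets_clinical_trial_definition(answers: StudyAnswers) -> bool:
    """Return True only when every one of the four answers is yes."""
    return all((
        answers.involves_human_participants,
        answers.prospectively_assigned_to_intervention,
        answers.designed_to_evaluate_intervention_effect,
        answers.outcome_is_health_related_biomedical_or_behavioral,
    ))


# Hypothetical example: participants are not prospectively assigned to an
# intervention (as in an observational study), so the second answer is no.
observational = StudyAnswers(True, False, True, True)
all_yes = StudyAnswers(True, True, True, True)

print(meets_clinical_trial_definition(observational))
print(meets_clinical_trial_definition(all_yes))
```

A single no is enough to take a study outside the definition, which is why the sketch uses `all` rather than any kind of scoring.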

Note that if the answers to all four questions are yes, your study meets the NIH definition of a clinical trial, even if…

- You are studying healthy participants

- Your study does not have a comparison group (e.g., placebo or control)

- Your study is only designed to assess the pharmacokinetics, safety, and/or maximum tolerated dose of an investigational drug

- Your study is utilizing a behavioral intervention

Studies intended solely to refine measures are not considered clinical trials.

The adjustments to the case studies include the following:

- #7a, #8a, #24, #31a: Clarified whether it meets definition of intervention

- #18c: Replaced with a more illustrative case study

- #18d, #24, and #33: Clarified whether study was designed to assess the nature or quality of a measurement, as opposed to the effect of an intervention on a behavioral or biomedical outcome

- #18g: New case study about testing procedures

- #36 a-b: New case studies about standard clinical care

- #37: New case study about Phase 1 device studies

- #38: New case study about natural experiments.

- #39: Proposed case study about preliminary tests for study procedures.

- New case studies specific to select NIH Institutes and Centers

We recognize that sometimes in an attempt to be helpful we end up providing a lot of material to look through. So to help you quickly find the case studies that are most relevant to your research we have added the ability to filter the case studies by keyword.

We also added two new FAQs on standard clinical care and Phase 1 devices.

Thank you for your continuing dialog on this topic. We look forward to continuing to work with you as we move towards higher levels of trust and transparency with our clinical trials.

Update: Some of these case studies have been revised since this publication.

“We have made no changes to the NIH definition of a clinical trial, or how the definition is interpreted.”

Heaven forbid the NIH responds to investigators’ concerns.

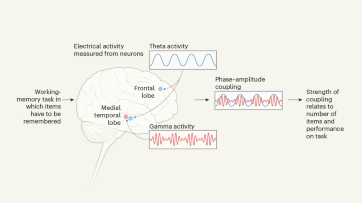

I appreciate the fact that the NIH is working to refine its case studies, which have been largely helpful. However, I would like to point out what I think is not so helpful in your revision of 18c, and compare it to 18a. In the former, the case study states that feedback to subjects of winning or losing in a gambling task would be an intervention qualifying as a clinical trial. But 18a, which could involve a working memory task, is not a clinical trial. However, what happens if after each memory trial, a subject were to receive correct/incorrect feedback? This would affect some of the same brain areas as the win/lose feedback in a gambling task. The implication of this is that studying working memory with feedback is a clinical trial, but without feedback, it’s not a clinical trial? Why is it that studying reward processes makes it a clinical trial, but studying memory processes does not? It becomes very hard to discern the rules that you are using as to what constitutes an intervention, and what does not.

Steve Taylor

The distinction between 18A (an fMRI study that is not a clinical trial) and 18C (an fMRI study that is a clinical trial) is not at all clear-cut. Please consider: