Understanding Peer Review in Science

Peer review is an essential element of the scientific publishing process that helps ensure that research articles are evaluated, critiqued, and improved before they are released to the academic community. Take a look at the significance of peer review in scientific publications, the typical steps of the process, and how to approach peer review if you are asked to assess a manuscript.

What Is Peer Review?

Peer review is the evaluation of work by peers, who are people with comparable experience and competency. Peers assess each other’s work in educational settings, in professional settings, and in the publishing world. The goals of peer review are to improve quality, define and maintain standards, and help people learn from one another.

In the context of scientific publication, peer review helps editors determine which submissions merit publication and improves the quality of manuscripts prior to their final release.

Types of Peer Review for Manuscripts

There are three main types of peer review:

- Single-blind review: The reviewers know the identities of the authors, but the authors do not know the identities of the reviewers.

- Double-blind review: Both the authors and reviewers remain anonymous to each other.

- Open peer review: The identities of both the authors and reviewers are disclosed, promoting transparency and collaboration.

Each method has advantages and disadvantages. Anonymous review reduces bias but limits collaboration, while open review is more transparent but can increase bias.

Key Elements of Peer Review

Proper selection of a peer group improves the outcome of the process:

- Expertise: Reviewers should possess adequate knowledge and experience in the relevant field to provide constructive feedback.

- Objectivity: Reviewers assess the manuscript impartially and without personal bias.

- Confidentiality: The peer review process maintains confidentiality to protect intellectual property and encourage honest feedback.

- Timeliness: Reviewers provide feedback within a reasonable timeframe to ensure timely publication.

Steps of the Peer Review Process

The typical peer review process for scientific publications involves the following steps:

- Submission: Authors submit their manuscript to a journal that aligns with their research topic.

- Editorial assessment: The journal editor examines the manuscript and determines whether or not it is suitable for publication. If it is not, the manuscript is rejected.

- Peer review: If it is suitable, the editor sends the article to peer reviewers who are experts in the relevant field.

- Reviewer feedback: Reviewers provide feedback, critique, and suggestions for improvement.

- Revision and resubmission: Authors address the feedback and make necessary revisions before resubmitting the manuscript.

- Final decision: The editor makes a final decision on whether to accept or reject the manuscript based on the revised version and reviewer comments.

- Publication: If accepted, the manuscript undergoes copyediting and formatting before being published in the journal.

Pros and Cons

While the goal of peer review is improving the quality of published research, the process isn’t without its drawbacks.

Pros:

- Quality assurance: Peer review helps ensure the quality and reliability of published research.

- Error detection: The process identifies errors and flaws that the authors may have overlooked.

- Credibility: The scientific community generally considers peer-reviewed articles to be more credible.

- Professional development: Reviewers can learn from the work of others and enhance their own knowledge and understanding.

Cons:

- Time-consuming: The peer review process can be lengthy, delaying the publication of potentially valuable research.

- Bias: Reviewers’ personal biases can affect their evaluation of the manuscript.

- Inconsistency: Different reviewers may provide conflicting feedback, making it challenging for authors to address all concerns.

- Limited effectiveness: Peer review does not always detect significant errors or misconduct.

- Poaching: Some reviewers take an idea from a submission and publish it before the original authors do.

Steps for Conducting Peer Review of an Article

Generally, an editor provides guidance when you are asked to provide peer review of a manuscript. Here are typical steps of the process.

- Accept the right assignment: Accept invitations to review articles that align with your area of expertise to ensure you can provide well-informed feedback.

- Manage your time: Allocate sufficient time to thoroughly read and evaluate the manuscript, while adhering to the journal’s deadline for providing feedback.

- Read the manuscript multiple times: First, read the manuscript for an overall understanding of the research. Then, read it more closely to assess the details, methodology, results, and conclusions.

- Evaluate the structure and organization: Check if the manuscript follows the journal’s guidelines and is structured logically, with clear headings, subheadings, and a coherent flow of information.

- Assess the quality of the research: Evaluate the research question, study design, methodology, data collection, analysis, and interpretation. Consider whether the methods are appropriate, the results are valid, and the conclusions are supported by the data.

- Examine the originality and relevance: Determine if the research offers new insights, builds on existing knowledge, and is relevant to the field.

- Check for clarity and consistency: Review the manuscript for clarity of writing, consistent terminology, and proper formatting of figures, tables, and references.

- Identify ethical issues: Look for potential ethical concerns, such as plagiarism, data fabrication, or conflicts of interest.

- Provide constructive feedback: Offer specific, actionable, and objective suggestions for improvement, highlighting both the strengths and weaknesses of the manuscript. Don’t be mean.

- Organize your review: Structure your review with an overview of your evaluation, followed by detailed comments and suggestions organized by section (e.g., introduction, methods, results, discussion, and conclusion).

- Be professional and respectful: Maintain a respectful tone in your feedback, avoiding personal criticism or derogatory language.

- Proofread your review: Before submitting your review, proofread it for typos, grammar, and clarity.

What is peer review?

From a publisher’s perspective, peer review functions as a filter for content, directing better quality articles to better quality journals and so creating journal brands.

Running articles through the process of peer review adds value to them. For this reason, publishers need to make sure that peer review is robust.

Editor Feedback

“Pointing out the specifics about flaws in the paper’s structure is paramount. Are methods valid, is data clearly presented, and are conclusions supported by data?” (Editor feedback)

“If an editor can read your comments and understand clearly the basis for your recommendation, then you have written a helpful review.” (Editor feedback)

Peer Review at Its Best

What peer review does best is improve the quality of published papers by motivating authors to submit good quality work – and helping to improve that work through the peer review process.

In fact, 90% of researchers feel that peer review improves the quality of their published paper (University of Tennessee and CIBER Research Ltd, 2013).

What the Critics Say

The peer review system is not without criticism. Studies show that some articles still contain inaccuracies even after peer review, and that most rejected papers go on to be published somewhere else.

However, these criticisms should be understood within the context of peer review as a human activity. The occasional errors of peer review are not reasons for abandoning the process altogether – the mistakes would be worse without it.

Improving Effectiveness

Some of the ways in which Wiley is seeking to improve the efficiency of the process include:

- Reducing the amount of repeat reviewing by innovating around transferable peer review

- Providing training and best practice guidance to peer reviewers

- Improving recognition of the contribution made by reviewers

Visit our Peer Review Process and Types of Peer Review pages for additional detailed information on peer review.

Transparency in Peer Review

Wiley is committed to increasing transparency in peer review, increasing accountability for the peer review process and giving recognition to the work of peer reviewers and editors. We are also actively exploring other peer review models to give researchers the options that suit them and their communities.

What is peer review?

Peer review is ‘a process where scientists (“peers”) evaluate the quality of other scientists’ work. By doing this, they aim to ensure the work is rigorous, coherent, uses past research and adds to what we already know.’ You can learn more in this explainer from the Social Science Space.

Peer review brings academic research to publication in the following ways:

- Evaluation – Peer review is an effective form of research evaluation to help select the highest quality articles for publication.

- Integrity – Peer review ensures the integrity of the publishing process and the scholarly record. Reviewers are independent of journal publications and the research being conducted.

- Quality – The filtering process and revision advice improve the quality of the final research article as well as offering the author new insights into their research methods and the results that they have compiled. Peer review gives authors access to the opinions of experts in the field who can provide support and insight.

Types of peer review

- Single-anonymized – the name of the reviewer is hidden from the author.

- Double-anonymized – names are hidden from both reviewers and the authors.

- Triple-anonymized – names are hidden from authors, reviewers, and the editor.

- Open peer review comes in many forms. At Sage we offer a form of open peer review on some journals via our Transparent Peer Review program, whereby the reviews are published alongside the article. The names of the reviewers may also be published, depending on the reviewers’ preference.

- Post-publication peer review can offer useful interaction and a discussion forum for the research community. This form of peer review is not common or appropriate in every field.

To learn more about the different types of peer review, see page 14 of ‘The Nuts and Bolts of Peer Review’ from Sense about Science.

Please double-check the manuscript submission guidelines of the journal you are reviewing to make sure you understand which method of peer review it uses.

All About Peer Review

So you need to use scholarly, peer-reviewed articles for an assignment...what does that mean?

Peer review is a process for evaluating research studies before they are published by an academic journal. These studies typically communicate original research or analysis for other researchers.

The Peer Review Process at a Glance:

Looking for peer-reviewed articles? Try searching in OneSearch or a library database and look for options to limit your results to scholarly/peer-reviewed or academic journals. This brief tutorial shows you how: How to Locate a Scholarly (Peer Reviewed) Article

Part 1: Watch the video All About Peer Review (3 min.) and reflect on discussion questions.

After watching the video, reflect on the following questions:

- According to the video, what are some of the pros and cons of the peer review process?

- Why is the peer review process important to scholarship?

- Do you think peer reviewers should be paid for their work? Why or why not?

Part 2: Take an interactive tutorial on reading a research article for your major.

The tutorial includes a certificate of completion to download and upload to Canvas.

Social Sciences

(e.g. Psychology, Sociology)

(e.g. Health Science, Biology)

Arts & Humanities

(e.g. Visual & Media Arts, Cultural Studies, Literature, History)

Teaching Peer Review for Instructors

In class or for homework, watch the video “All About Peer Review” (3 min.).

Video discussion questions:

- According to the video, what are some of the pros and cons of the peer review process?

Assignment Ideas

- Ask students to conduct their own peer review of an important journal article in your field. Ask them to reflect on the process. What was hard to critique?

- Have students examine a journal’s web page with information for authors. What does it tell authors about the journal’s peer review process?

- Assign this reading by CSUDH faculty member Terry McGlynn, "Should journals pay for manuscript reviews?" What is the author's argument? Who profits the most from published research? You could also hold a debate with one side for paying reviewers and the other side against.

- Search a database like Cabell’s for information on the journal submission process for a particular title or subject. How long does peer review take for a particular title? Is it a blind review? How many reviewers are solicited? What is the acceptance rate?

- Assign short readings that address peer review models. We recommend this Nature issue on the peer review debate and open review and this Chronicle of Higher Education article on open review in Shakespeare Quarterly.

Proof of Completion

Mix and match this suite of instructional materials for your course needs!

For questions about integrating a graded online component into your class, contact the Online Learning Librarian, Rebecca Nowicki ([email protected]).

Example of a certificate of completion:

- Last Updated: Sep 20, 2023 2:41 PM

- URL: https://libguides.sdsu.edu/UnderstandingPeerReview

What is peer review?

Prepublication peer review has been part of science for a long time. Philosophical Transactions, the first peer-reviewed journal, published its first paper in 1665. But peer review may be even older still, because there are records of physicians in the Arab world reviewing the effectiveness of each other’s treatments in the 9th century.

Peer review is a critical part of the modern scientific process. For science to progress, research methods and findings need to be closely examined to decide on the best direction for future research. After a study has gone through peer review and is accepted for publication, scientists and the public can be confident that the study has met certain standards, and that the results can be trusted.

After an editor receives a manuscript, their first step is to check that the manuscript meets the journal’s rules for content and format. If it does, then the editor moves to the next step, which is peer review. The editor will send the manuscript to one or more experts in the field to get their opinion. The experts – called peer reviewers – will then prepare a report that assesses the manuscript, and return it to the editor. After reading the peer reviewer's report, the editor will decide to do one of three things: reject the manuscript, accept the manuscript, or ask the authors to revise and resubmit the manuscript after responding to the peer reviewer feedback. If the authors resubmit the manuscript, editors will sometimes ask the same peer reviewers to look over the manuscript again to see if their concerns have been addressed.

Some of the problems that peer reviewers may find in a manuscript include errors in the study’s methods or analysis that raise questions about the findings, or sections that need clearer explanations so that the manuscript is easily understood. From a journal editor’s point of view, comments on the importance and novelty of a manuscript, and on whether it will interest the journal’s audience, are particularly useful in helping them decide which manuscripts are likely to be highly read and cited, and thus which are worth publishing.

Original URL: http://www.springer.com/authors/journal+authors/peer-review-academy?SGWID=0-1741413-12-959404-0

Peer Reviewed Literature

Research Librarian

For more help on this topic, please contact our Research Help Desk: [email protected] or 781-768-7303. Stay up-to-date on our current hours. Note: all hours are EST.

This Guide was created by Carolyn Swidrak (retired).

Research findings are communicated in many ways. One of the most important ways is through publication in scholarly, peer-reviewed journals.

Research published in scholarly journals is held to a high standard. It must make a credible and significant contribution to the discipline. To ensure a very high level of quality, articles that are submitted to scholarly journals undergo a process called peer-review.

Once an article has been submitted for publication, it is reviewed by other independent, academic experts (at least two) in the same field as the authors. These are the peers. The peers evaluate the research and decide if it is good enough and important enough to publish. Usually there is a back-and-forth exchange between the reviewers and the authors, including requests for revisions, before an article is published.

Peer review is a rigorous process, but its intensity varies by journal. Some journals are very prestigious and receive many submissions for publication. They publish only the very best, most highly regarded research.

The terms scholarly, academic, peer-reviewed and refereed are sometimes used interchangeably, although there are slight differences.

Scholarly and academic may refer to peer-reviewed articles, but not all scholarly and academic journals are peer-reviewed (although most are). For example, the Harvard Business Review is an academic journal, but it is editorially reviewed, not peer-reviewed.

Peer-reviewed and refereed are identical terms.

From Peer Review in 3 Minutes [Video], by the North Carolina State University Library, 2014, YouTube (https://youtu.be/rOCQZ7QnoN0).

Peer reviewed articles can include:

- Original research (empirical studies)

- Review articles

- Systematic reviews

- Meta-analyses

There is much excellent, credible information in existence that is NOT peer-reviewed. Peer-review is simply ONE MEASURE of quality.

Much of this information is referred to as "gray literature."

Government Agencies

Government websites, such as that of the Centers for Disease Control and Prevention (CDC), publish high-level, trustworthy information. However, most of it is not peer-reviewed. (Some of their publications are peer-reviewed, though; the journal Emerging Infectious Diseases, published by the CDC, is one example.)

Conference Proceedings

Papers from conference proceedings are not usually peer-reviewed. They may go on to become published articles in a peer-reviewed journal.

Dissertations

Dissertations are written by doctoral candidates, and while they are academic, they are not peer-reviewed.

Many students like Google Scholar because it is easy to use. While the results from Google Scholar are generally academic, they are not necessarily peer-reviewed. Typically, you will find:

- Peer reviewed journal articles (although they are not identified as peer-reviewed)

- Unpublished scholarly articles (not peer-reviewed)

- Master's theses, doctoral dissertations and other degree publications (not peer-reviewed)

- Book citations and links to some books (not necessarily peer-reviewed)

- Last Updated: Feb 12, 2024 9:39 AM

- URL: https://libguides.regiscollege.edu/peer_review

Explainer: what is peer review?

Professor of Organisational Behaviour, Cass Business School, City, University of London

Novak Druce Research Fellow, University of Oxford

Disclosure statement

Thomas Roulet does not work for, consult to, own shares in or receive funding from any company or organisation that would benefit from this article, and has no relevant affiliations.

Andre Spicer does not work for, consult, own shares in or receive funding from any company or organisation that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.

City, University of London provides funding as a founding partner of The Conversation UK.

University of Oxford provides funding as a member of The Conversation UK.

We’ve all heard the phrase “peer review” as giving credence to research and scholarly papers, but what does it actually mean? How does it work?

Peer review is one of the gold standards of science. It’s a process where scientists (“peers”) evaluate the quality of other scientists’ work. By doing this, they aim to ensure the work is rigorous, coherent, uses past research and adds to what we already know.

Most scientific journals, conferences and grant applications have some sort of peer review system. In most cases it is “double-blind” peer review. This means evaluators do not know the author(s), and the author(s) do not know the identity of the evaluators. The intention behind this system is to ensure that evaluation is not biased.

The more prestigious the journal, conference, or grant, the more demanding the review process and the more likely a rejection. This prestige is why papers in these venues tend to be read and cited more.

The process in detail

The peer review process for journals involves at least three stages.

1. The desk evaluation stage

When a paper is submitted to a journal, it receives an initial evaluation by the chief editor, or an associate editor with relevant expertise.

At this stage, either can “desk reject” the paper: that is, reject the paper without sending it to blind referees. Generally, papers are desk rejected if the paper doesn’t fit the scope of the journal or there is a fundamental flaw which makes it unfit for publication.

In this case, the rejecting editor might write a letter summarising his or her concerns. Some journals, such as the British Medical Journal, desk reject two-thirds or more of submitted papers.

2. The blind review

If the editorial team judges there are no fundamental flaws, they send the paper to blind referees for review. The number of reviewers depends on the field: in finance there might be only one reviewer, while journals in other fields of social sciences might ask up to four reviewers. The editor selects these reviewers on the basis of their expert knowledge and their lack of any connection to the authors.

Reviewers will recommend whether to reject the paper, accept it as it is (which rarely happens), or ask for the paper to be revised. A request for revision means the author needs to change the paper in line with the reviewers’ concerns.

Usually the reviews deal with the validity and rigour of the empirical method, and the importance and originality of the findings (what is called the “contribution” to the existing literature). The editor collects those comments, weighs them, makes a decision, and writes a letter summarising the reviewers’ and his or her own concerns.

As a result, even when the reviewers are hostile, the editor may offer the paper another round of revision. In the best journals in the social sciences, 10% to 20% of papers are offered a “revise-and-resubmit” after the first round.

3. The revisions – if you are lucky enough

If the paper has not been rejected after this first round of review, it is sent back to the author(s) for a revision. The process is repeated as many times as necessary for the editor to reach a consensus point on whether to accept or reject the paper. In some cases this can last for several years.

Ultimately, fewer than 10% of submitted papers are accepted in the best journals in the social sciences. The renowned journal Nature publishes around 7% of submitted papers.

Strengths and weaknesses of the peer review process

The peer review process is seen as the gold standard in science because it ensures the rigour, novelty, and consistency of academic outputs. Typically, through rounds of review, flawed ideas are eliminated and good ideas are strengthened and improved. Peer reviewing also ensures that science is relatively independent.

Because scientific ideas are judged by other scientists, the crucial yardstick is scientific standards. If other people from outside of the field were involved in judging ideas, other criteria such as political or economic gain might be used to select ideas. Peer reviewing is also seen as a crucial way of removing personalities and bias from the process of judging knowledge.

Despite the undoubted strengths, the peer review process as we know it has been criticised. It involves a number of social interactions that might create biases – for example, authors might be identified by reviewers if they are in the same field, and desk rejections are not blind.

It might also favour incremental (adding to past research) rather than innovative (new) research. Finally, reviewers are human after all and can make mistakes, misunderstand elements, or miss errors.

Are there any alternatives?

Defenders of the peer review system say although there are flaws, we’re yet to find a better system to evaluate research. However, a number of innovations have been introduced in the academic review system to improve its objectivity and efficiency.

Some new open-access journals (such as PLOS ONE) publish papers with very little evaluation (they check only that the work is not deeply flawed methodologically). The focus there is on post-publication peer review: all readers can comment on and criticise the paper.

Some journals, such as Nature, have made part of the review process public (“open” review), offering a hybrid system in which peer reviewers act as the primary gatekeepers, but the public community of scholars judges the value of the research in parallel (or, at some other journals, afterwards).

Another idea is to have a set of reviewers rating the paper each time it is revised. In this case, authors will be able to choose whether they want to invest more time in a revision to obtain a better rating, and get their work publicly recognised.

Libraries & Cultural Resources

What is peer review?

Until you've been through it at least once, the review process can be confusing and opaque. Although there is a great deal of variation in how peer review works at different publication venues, the resources on this page can help you "see inside" the process before you submit. Resources on this page focus on scholarly journal publishing; other venues (e.g. university presses) use slightly different processes.

Review process:

This image is adapted from "Types of Review" by Jessica Lange, McGill Library, and is used under a Creative Commons CC BY 4.0 International license.


Editorial Review

When you submit an article for publication, the first person to look at it is typically a member of the journal's editorial staff. They assess whether the article is within the scope of the journal (topic, length, format, etc.) and whether it is of sufficient quality to warrant peer review.

Sometimes, certain sections of publications are subject only to editorial review. This is more common for non-research articles such as reviews, commentary, letters, etc.

Peer Review

Experts in the subject area of your article will review your article and provide feedback on it. Depending on the journal and the availability of reviewers, it is typical for one to three external experts to review your paper.

There are a number of different types of peer review. It's good to know what type your target publication uses, and this information should be on the publication's website. The table below outlines some of the types.

* Single anonymous and double anonymous review were formerly called single-blind and double-blind review. "Anonymous" is now the preferred term.

Source: PKP School, Different Types of Peer Review.

Peer Review: Your Questions Answered

Can I submit a work to more than one publication at a time? No. This rule protects the labour that goes into the review process. You can submit your work to a second publication only after the first one has rejected it.

How do I ensure that my work is anonymized prior to submitting? If you're submitting to a venue that uses double anonymous peer review, you will be directed to anonymize your manuscript. Remove any references to yourself or to things that could identify you:

- References to your own works in the text: (Author, 2021).

- Bibliography: Author. 2021.

- Any roles, collaborators, institutions, etc.

You will also have to anonymize the 'hidden' metadata of your file in your word processor, such as the author name stored in its document properties.

Is there any guarantee that my work will be accepted? No. Even for experienced authors, the peer review process decides whether or not a piece is accepted. It is normal for papers to be rejected multiple times, and for the whole process to take months or even years. This is why many authors have more than one work in the publication "pipeline" at any one time.

How long does the peer review process take? It can be a long time! One study found that the average time from submission to acceptance is five months, with delays of over a year being common. If the process seems very slow, feel free to follow up with an editor. You are also free to withdraw your item and submit elsewhere if the timeline is too long.

How do I address the feedback of peer reviewers? You will be asked to make revisions. This is normal, and it's OK to have feelings (sad, grumpy, outraged) about this. Many experienced authors suggest waiting several hours or days before addressing revisions. You should address every change requested by reviewers, but you don't have to adopt every one. Some authors find it very helpful to respond to reviewers in a table. A table makes explicit the difference between addressing a suggestion (responding to it) and adopting it (making the change).


The reviewers have asked for so many changes! Is this normal?! The volume, nature, and style of reviewer comments vary hugely. Still, it is absolutely normal for authors, even very experienced ones, to receive a seemingly overwhelming amount of feedback. The techniques outlined above, including breaking each piece of feedback out into a table, can help you manage it.

I don't agree with the feedback I received. What do I do? Sometimes you may receive contradictory feedback, or a response that does not fit the aims of your paper. This can happen for a variety of reasons. Perhaps that section of your work was unclear and the reviewer misunderstood you. Perhaps the reviewer lacks expertise in your topic, or was expecting a different type of paper altogether. Remember, you know your topic, and you can push back against inappropriate feedback as long as you do so respectfully. If feedback is very contradictory or just plain rude, you may wish to raise the issue with the editor. Unacceptable reviewer comments include swear words or profanity, discriminatory language or comments, and personal attacks on character or ability. Contact the editor if you receive any of these.

Acknowledgements

Some of the content on this page has been adapted from:

Scholarly Journal Publishing Guide by Jessica Lange, licensed under a Creative Commons Attribution 4.0 International License.

Introduction to Peer Review: Authors by Kate Cawthorn, licensed under a Creative Commons Attribution Share Alike 4.0 International License.

Unless otherwise noted, content in this guide is licensed under a Creative Commons Attribution 4.0 International License.


- Last Updated: Apr 4, 2024 10:11 AM

- URL: https://libguides.ucalgary.ca/publishing


What Is Peer Review? | Types & Examples

Published on 6 May 2022 by Tegan George . Revised on 2 September 2022.

Peer review, sometimes referred to as refereeing, is the process of evaluating submissions to an academic journal. Using strict criteria, a panel of reviewers in the same subject area decides whether to accept each submission for publication.

Peer-reviewed articles are considered a highly credible source due to the stringent process they go through before publication.

There are various types of peer review. The main difference between them is to what extent the authors, reviewers, and editors know each other’s identities. The most common types are:

- Single-blind review

- Double-blind review

- Triple-blind review

- Collaborative review

- Open review

Relatedly, peer assessment is a process in which your peers give you feedback on something you’ve written, based on a set of criteria or benchmarks from an instructor. Their feedback can include constructive criticism, compliments, or guidance to help you improve your draft.


Many academic fields use peer review, largely to determine whether a manuscript is suitable for publication. Peer review enhances the credibility of the manuscript. For this reason, academic journals are among the most credible sources you can refer to, provided that the journal itself is trustworthy and well regarded.

However, peer review is also common in non-academic settings. The United Nations, the European Union, and many individual nations use peer review to evaluate grant applications. It is also widely used in medical and health-related fields as a teaching or quality-of-care measure.

Peer assessment is often used in the classroom as a pedagogical tool. Both receiving feedback and providing it are thought to enhance the learning process, helping students think critically and collaboratively.


Depending on the journal, there are several types of peer review.

Single-blind peer review

The most common type of peer review is single-blind (or single anonymised) review. Here, the names of the reviewers are not known to the author.

While this gives the reviewers the ability to give feedback without the possibility of interference from the author, there has been substantial criticism of this method in the last few years. Many argue that single-blind reviewing can lead to poaching or intellectual theft or that anonymised comments cause reviewers to be too harsh.

Double-blind peer review

In double-blind (or double anonymised) review, both the author and the reviewers are anonymous.

Arguments for double-blind review highlight that this mitigates any risk of prejudice on the side of the reviewer, while protecting the nature of the process. In theory, it also leads to manuscripts being published on merit rather than on the reputation of the author.

Triple-blind peer review

While triple-blind (or triple anonymised) review – where the identities of the author, reviewers, and editors are all anonymised – does exist, it is difficult to carry out in practice.

Proponents of adopting triple-blind review for journal submissions argue that it minimises potential conflicts of interest and biases. However, ensuring anonymity is logistically challenging, and current editing software is not always able to fully anonymise everyone involved in the process.

Collaborative peer review

In collaborative review, authors and reviewers interact with each other directly throughout the process, although the author does not know the reviewer's identity. This lets all parties resolve inconsistencies or contradictions in real time and gives them a rich forum for discussion. It can reduce the need for multiple rounds of editing and minimise back-and-forth.

Collaborative review can be time- and resource-intensive for the journal, however. For these collaborations to occur, there has to be a set system in place, often a technological platform, with staff monitoring and fixing any bugs or glitches.

Open peer review

Lastly, in open review, all parties know each other’s identities throughout the process. Open review can also include feedback from a larger audience, such as an online forum, or publish reviewer feedback as part of the final product.

While many argue that greater transparency prevents plagiarism or unnecessary harshness, there is also concern about the quality of future scholarship if reviewers feel they have to censor their comments.

In general, the peer review process includes the following steps:

- First, the author submits the manuscript to the editor.

- Next, the editor can either:

  - Reject the manuscript and send it back to the author, or

  - Send it onward to the selected peer reviewer(s).

- Next, the peer review process occurs. The reviewer provides feedback on any major or minor issues with the manuscript and advises on what edits should be made.

- Lastly, the edited manuscript is sent back to the author, who inputs the edits and resubmits it to the editor for publication.

In an effort to be transparent, many journals are now disclosing who reviewed each article in the published product. There are also increasing opportunities for collaboration and feedback, with some journals allowing open communication between reviewers and authors.

It can seem daunting at first to conduct a peer review or peer assessment. If you’re not sure where to start, there are several best practices you can use.

Summarise the argument in your own words

Summarising the main argument helps the author see how their argument is interpreted by readers, and gives you a jumping-off point for providing feedback. If you’re having trouble doing this, it’s a sign that the argument needs to be clearer, more concise, or worded differently.

If the author sees that you’ve interpreted their argument differently than they intended, they have an opportunity to address any misunderstandings when they get the manuscript back.

Separate your feedback into major and minor issues

It can be challenging to keep feedback organised. One strategy is to start out with any major issues and then flow into the more minor points. It’s often helpful to keep your feedback in a numbered list, so the author has concrete points to refer back to.

Major issues typically consist of any problems with the style, flow, or key points of the manuscript. Minor issues include spelling errors, citation errors, or other smaller, easy-to-apply feedback.

The best feedback you can provide is anything that helps the author strengthen their argument or resolve major stylistic issues.

Give the type of feedback that you would like to receive

No one likes being criticised, and it can be difficult to give honest feedback without sounding overly harsh or critical. One strategy you can use here is the ‘compliment sandwich’, where you ‘sandwich’ your constructive criticism between two compliments.

Be sure you are giving concrete, actionable feedback that will help the author submit a successful final draft. While you shouldn’t tell them exactly what they should do, your feedback should help them resolve any issues they may have overlooked.

As a rule of thumb, your feedback should be:

- Easy to understand

- Constructive

Below is a brief research example of the kind of text you might be asked to give feedback on.

Influence of phone use on sleep

Studies show that teens from the US are getting less sleep than they were a decade ago (Johnson, 2019). On average, teens only slept for 6 hours a night in 2021, compared to 8 hours a night in 2011. Johnson mentions several potential causes, such as increased anxiety, changed diets, and increased phone use.

The current study focuses on the effect phone use before bedtime has on the number of hours of sleep teens are getting.

For this study, a sample of 300 teens was recruited using social media, such as Facebook, Instagram, and Snapchat. The first week, all teens were allowed to use their phone the way they normally would, in order to obtain a baseline.

The sample was then divided into 3 groups:

- Group 1 was not allowed to use their phone before bedtime.

- Group 2 used their phone for 1 hour before bedtime.

- Group 3 used their phone for 3 hours before bedtime.

All participants were asked to go to sleep around 10 p.m. to control for variation in bedtime. In the morning, their Fitbit showed the number of hours they’d slept. They kept track of these numbers themselves for 1 week.

Two independent t tests were used to compare Group 1 with Group 2, and Group 1 with Group 3. The first t test showed no significant difference (p > .05) between the number of hours for Group 1 (M = 7.8, SD = 0.6) and Group 2 (M = 7.0, SD = 0.8). The second t test showed a significant difference (p < .01) between the average difference for Group 1 (M = 7.8, SD = 0.6) and Group 3 (M = 6.1, SD = 1.5).

This shows that teens sleep fewer hours a night if they use their phone for over an hour before bedtime, compared to teens who use their phone for 0 to 1 hours.

Peer review is an established and hallowed process in academia, dating back hundreds of years. It provides various fields of study with metrics, expectations, and guidance to ensure published work is consistent with predetermined standards.

- Protects the quality of published research

Peer review can stop obviously problematic, falsified, or otherwise untrustworthy research from being published. Any content that raises red flags for reviewers can be closely examined in the review stage, preventing plagiarised or duplicated research from being published.

- Gives you access to feedback from experts in your field

Peer review represents an excellent opportunity to get feedback from renowned experts in your field and to improve your writing through their feedback and guidance. Experts with knowledge about your subject matter can give you feedback on both style and content, and they may also suggest avenues for further research that you hadn’t yet considered.

- Helps you identify any weaknesses in your argument

Peer review acts as a first defence, helping you ensure your argument is clear and that there are no gaps, vague terms, or unanswered questions for readers who weren’t involved in the research process. This way, you’ll end up with a more robust, more cohesive article.

While peer review is a widely accepted metric for credibility, it’s not without its drawbacks.

- Reviewer bias

The double-blind system, in which reviewers do not know the authors' identities, is not yet very common, and this can lead to bias in reviewing. A common criticism is that an excellent paper by a new researcher may be declined, while an objectively lower-quality submission by an established researcher may be accepted.

- Delays in publication

The thoroughness of the peer review process can lead to significant delays in publishing time. Research that was current at the time of submission may not be as current by the time it’s published.

- Risk of human error

By its very nature, peer review carries a risk of human error. In particular, falsification often cannot be detected, given that reviewers would have to replicate entire experiments to ensure the validity of results.


Cite this Scribbr article


George, T. (2022, September 02). What Is Peer Review? | Types & Examples. Scribbr. Retrieved 22 April 2024, from https://www.scribbr.co.uk/research-methods/peer-reviews/


Holman Library

Research Guide: Scholarly Journals


Attribution & Thanks!

Permission to reuse content in this guide, namely regarding the peer-review process , was generously given by the librarians at the Lloyd Sealy Library at the John Jay College of Criminal Justice .

What is Peer-Review?


What is the peer review process?

In academic publishing, the goal of peer review is to assess the quality of articles submitted for publication in a scholarly journal. Before an article is deemed appropriate to be published in a peer-reviewed journal, it must undergo the following process:

- The author of the article must submit it to the journal editor who forwards the article to experts in the field. Because the reviewers specialize in the same scholarly area as the author, they are considered the author’s peers (hence “peer review”).

- These impartial reviewers are charged with carefully evaluating the quality of the submitted manuscript.

- The peer reviewers check the manuscript for accuracy and assess the validity of the research methodology and procedures.

- If appropriate, they suggest revisions. If they find the article lacking in scholarly validity and rigor, they reject it.

Because a peer-reviewed journal will not publish articles that fail to meet the standards established for a given discipline, peer-reviewed articles that are accepted for publication exemplify the best research practices in a field.

Attribution: Much of the information in these boxes about the peer-review process was used with permission from the awesome librarians at the Lloyd Sealy Library at the John Jay College of Criminal Justice.

Common elements of a peer-reviewed article

When you are determining whether or not the article you found is a peer-reviewed article, you should consider the following.


Also consider...

- Is the journal in which you found the article published or sponsored by a professional scholarly society, professional association, or university academic department? Does it describe itself as a peer-reviewed publication? (To find out, check the journal's website.)

- Did you find a citation for it in one of the databases that include scholarly publications (Academic Search Complete, PsycINFO, etc.)? Read the database description to see whether it includes scholarly publications.

- In the database, did you limit your search to scholarly or peer-reviewed publications? (See video tutorial below for a demonstration.)

- Is the topic of the article narrowly focused and explored in depth?

- Is the article based on either original research or authorities in the field (as opposed to personal opinion)?

- Is the article written for readers with some prior knowledge of the subject?

Peer-reviewed articles also commonly include sections such as:

- Introduction

- Theory or Background

- Literature review

The easiest and fastest way to find peer-reviewed articles is to search the online library databases, many of which include peer-reviewed journals. To make sure your results come from peer-reviewed (also called "scholarly" or "academic") journals, do the following:

Read the database description to determine whether it features peer-reviewed articles. All of the GRC databases have short descriptions of the topics they cover and the types of articles they contain. Many, if not most, of the databases contain journal articles.

When you search for articles, choose the Advanced Search option. On the search screen, look for a check box that limits your results to peer-reviewed articles only. You can often find this option, along with a "full-text" limit, in the advanced settings or on the left-hand side of the database's results page.

Video: Peer Review in 3 Minutes

Source: "Peer Review in 3 Minutes" by libncsu, licensed under a Standard YouTube License.


- Last Updated: Mar 15, 2024 1:18 PM

- URL: https://libguides.greenriver.edu/scholarlyjournals

Harvard Library

Computer Science Library Research Guide

What Is Peer Review?


- Last Updated: Feb 27, 2024 1:52 PM

- URL: https://guides.library.harvard.edu/cs


University of Texas Libraries

Finding Journal Articles 101


What Does "Peer-reviewed" or "Refereed" Mean?

Peer review is a process that journals use to ensure the articles they publish represent the best scholarship currently available. When an article is submitted to a peer reviewed journal, the editors send it out to other scholars in the same field (the author's peers) to get their opinion on the quality of the scholarship, its relevance to the field, its appropriateness for the journal, etc.

Publications that don't use peer review (Time, Cosmo, Salon) rely solely on the judgment of their editors as to whether an article is up to snuff. That's why you can't count on them for solid, scientific scholarship.

Note: This is an entirely different concept from "Review Articles."

How do I know if a journal publishes peer-reviewed articles?

Usually, you can tell just by looking: a scholarly journal is visibly different from other magazines. Occasionally, though, it can be hard to tell, or you may just want to be extra certain. In that case, turn to Ulrich's Periodicals Directory Online. Type the journal's title into the text box and hit "submit." You'll get back a report that tells you (among other things) whether the journal contains peer-reviewed articles or, as Ulrich's calls it, whether it is Refereed.

Remember, even journals that use peer review may have some content that does not undergo peer review. The ultimate determination must be made on an article-by-article basis.

For example, the journal Science publishes a mix of peer-reviewed and non-peer-reviewed content. Here are two articles from the same issue of Science.

This one is not peer-reviewed: https://science-sciencemag-org.ezproxy.lib.utexas.edu/content/303/5655/154.1

This one is a peer-reviewed research article: https://science-sciencemag-org.ezproxy.lib.utexas.edu/content/303/5655/226

That is consistent with the Ulrichsweb description of Science, which states, "Provides news of recent international developments and research in all fields of science. Publishes original research results, reviews and short features."

Test these periodicals in Ulrichsweb:

- Advances in Dental Research

- Clinical Anatomy

- Molecular Cancer Research

- Journal of Clinical Electrophysiology

- Last Updated: Aug 28, 2023 9:25 AM

- URL: https://guides.lib.utexas.edu/journalarticles101

What are Peer-Reviewed Journals?



Additional Resources

- What are Peer-reviewed Articles and How Do I Find Them? From Capella University Libraries

Introduction

Peer-reviewed journals (also called scholarly or refereed journals) are a key information source for your college papers and projects. They are written by scholars for scholars and are a reliable source of information on a topic or discipline. You can find these journals either in the library's online databases or in the library's local holdings. This guide will help you identify whether a journal is peer-reviewed and give you tips on finding peer-reviewed journals.

What is Peer-Review?

Peer-review is a process where an article is verified by a group of scholars before it is published.

When an author submits an article to a peer-reviewed journal, the editor sends it to a group of scholars in the related field (the author's peers). They review the article, checking that its sources are reliable, that the information it presents is consistent with the research, etc. The article is published only after they give it their "okay."

The peer-review process makes sure that only quality research is published: research that will further the scholarly work in the field.

When you use articles from peer-reviewed journals, someone has already reviewed the article and said that it is reliable, so you don't have to take the steps to evaluate the author or his/her sources. The hard work is already done for you!

Identifying Peer-Reviewed Journals

If you have the physical journal, you can look for the following features to identify if it is peer-reviewed.

Masthead (the first few pages): includes information on the submission process, the editorial board, and maybe even a phrase stating that the journal is "peer-reviewed."

Publisher: Peer-reviewed journals are typically published by professional organizations or associations (like the American Chemical Society). They also may be affiliated with colleges/universities.

Graphics: Typically there either won't be any images at all, or the few charts/graphs are only there to supplement the text information. They are usually in black and white.

Authors: The authors are listed at the beginning of the article, usually with information on their affiliated institutions, or contact information like email addresses.

Abstracts: At the beginning of the article, the authors provide an abstract summarizing their research and any conclusions they were able to draw.

Terminology: Since the articles are written by scholars for scholars, they use uncommon terminology specific to their field and typically do not define the words used.

Citations: At the end of each article is a list of citations/references. These allow other scholars to double-check the authors' work and help scholars who are researching in the same general area.

Advertisements: Peer-reviewed journals rarely have advertisements. If they do the ads are for professional organizations or conferences, not for national products.

Identifying Articles from Databases

When you are looking at an article in an online database, identifying that it comes from a peer-reviewed journal can be more difficult. You do not have access to the physical journal to check areas like the masthead or advertisements, but you can use some of the same basic principles.

Points you may want to keep in mind when you are evaluating an article from a database:

- A lot of databases provide you with the option to limit your results to only those from peer-reviewed or refereed journals. Choosing this option means all of your results will be from those types of sources.

- When possible, choose the PDF version of the article's full text. Because the PDF reproduces the journal's printed pages, it gives you a better idea of the journal's layout, graphics, advertisements, etc.

- Even in an online database you still should be able to check for author information, abstracts, terminology, and citations.


- Last Updated: Dec 12, 2023 4:06 PM

- URL: https://libguides.bridgewater.edu/c.php?g=945314


- 16 April 2024

Structure peer review to make it more robust

Mario Malički

Mario Malički is associate director of the Stanford Program on Research Rigor and Reproducibility (SPORR) and co-editor-in-chief of the Research Integrity and Peer Review journal.


In February, I received two peer-review reports for a manuscript I’d submitted to a journal. One report contained 3 comments, the other 11. Apart from one point, all the feedback was different. It focused on expanding the discussion and some methodological details — there were no remarks about the study’s objectives, analyses or limitations.

My co-authors and I duly replied, working under two assumptions that are common in scholarly publishing: first, that anything the reviewers didn't comment on they had found acceptable for publication; second, that they had the expertise to assess all aspects of our manuscript. But, as history has shown, those assumptions are not always accurate (see Lancet 396, 1056; 2020). And inaccurate, sloppy and falsified research can slip through the cracks.

As co-editor-in-chief of the journal Research Integrity and Peer Review (an open-access journal published by BMC, which is part of Springer Nature), I’m invested in ensuring that the scholarly peer-review system is as trustworthy as possible. And I think that to be robust, peer review needs to be more structured. By that, I mean that journals should provide reviewers with a transparent set of questions to answer that focus on methodological, analytical and interpretative aspects of a paper.

For example, editors might ask peer reviewers to consider whether the methods are described in sufficient detail to allow another researcher to reproduce the work, whether extra statistical analyses are needed, and whether the authors’ interpretation of the results is supported by the data and the study methods. Should a reviewer find anything unsatisfactory, they should provide constructive criticism to the authors. And if reviewers lack the expertise to assess any part of the manuscript, they should be asked to declare this.


Other aspects of a study, such as novelty, potential impact, language and formatting, should be handled by editors, journal staff or even machines, reducing the workload for reviewers.

The list of questions reviewers will be asked should be published on the journal’s website, allowing authors to prepare their manuscripts with this process in mind. And, as others have argued before, review reports should be published in full. This would allow readers to judge for themselves how a paper was assessed, and would enable researchers to study peer-review practices.

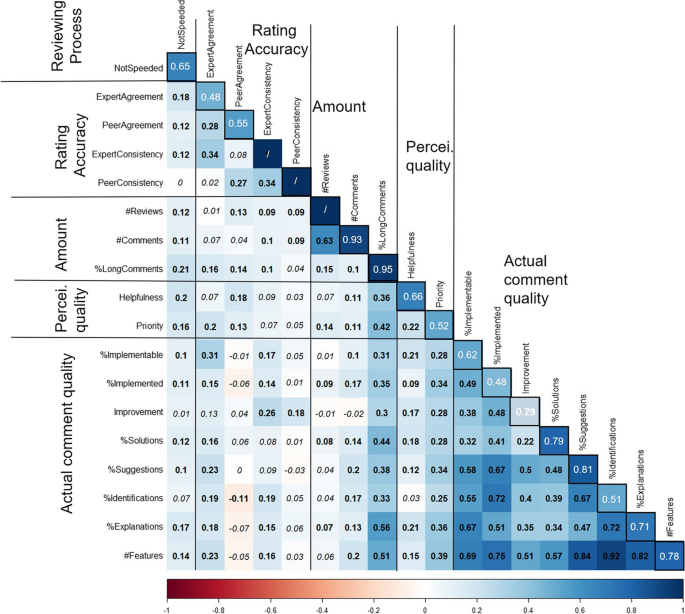

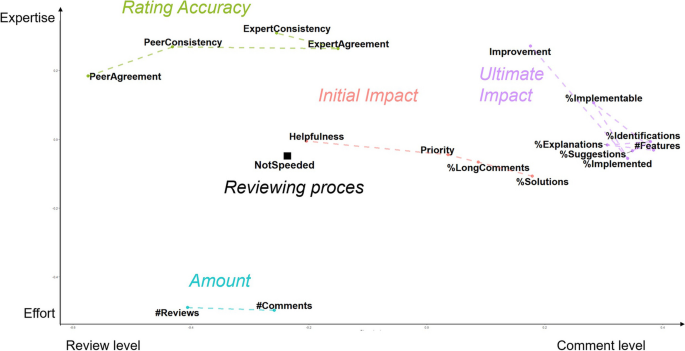

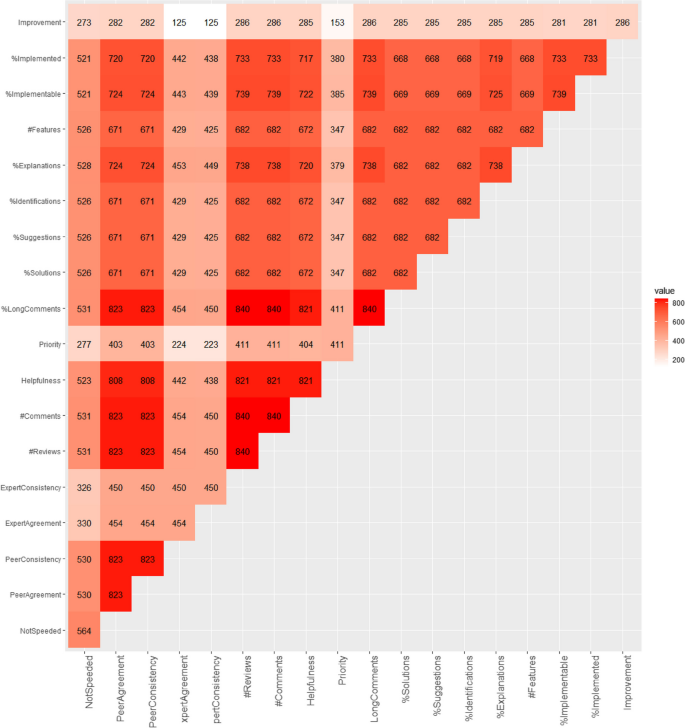

To see how this works in practice, since 2022 I've been working with the publisher Elsevier on a pilot study of structured peer review in 23 of its journals, covering the health, life, physical and social sciences. The preliminary results indicate that, when guided by the same questions, reviewers made the same initial recommendation about whether to accept, revise or reject a paper 41% of the time, compared with 31% before these journals implemented structured peer review. Moreover, reviewers' comments were in agreement about specific parts of a manuscript up to 72% of the time (M. Malički and B. Mehmani Preprint at bioRxiv https://doi.org/mrdv; 2024). In my opinion, reaching such agreement is important for science, which proceeds mainly through consensus.


I invite editors and publishers to follow in our footsteps and experiment with structured peer reviews. Anyone can trial our template questions (see go.nature.com/4ab2ppc), or tailor them to suit specific fields or study types. For instance, mathematics journals might also ask whether referees agree with the logic or completeness of a proof. Some journals might ask reviewers if they have checked the raw data or the study code. Publications that employ editors who are less embedded in the research they handle than are academics might need to include questions about a paper's novelty or impact.

Scientists can also use these questions, either as a checklist when writing papers or when they are reviewing for journals that don’t apply structured peer review.

Some journals, including Proceedings of the National Academy of Sciences, the PLOS family of journals, F1000 journals and some Springer Nature journals, already have their own sets of structured questions for peer reviewers. But, in general, these journals do not disclose the questions they ask, and the questions are not consistent from one journal to the next. This means that core peer-review checks are still not standardized, and reviewers are tasked with different questions when working for different journals.

Some might argue that, because different journals have different thresholds for publication, they should adhere to different standards of quality control. I disagree. Not every study is groundbreaking, but scientists should view quality control of the scientific literature in the same way as quality control in other sectors: as a way to ensure that a product is safe for use by the public. People should be able to see what types of check were done, and when, before an aeroplane was approved as safe for flying. We should apply the same rigour to scientific research.

Ultimately, I hope for a future in which all journals use the same core set of questions for specific study types and make all of their review reports public. I fear that a lack of standard practice in this area is delaying the progress of science.

Nature 628, 476 (2024)

doi: https://doi.org/10.1038/d41586-024-01101-9


Competing Interests

M.M. is co-editor-in-chief of the Research Integrity and Peer Review journal that publishes signed peer review reports alongside published articles. He is also the chair of the European Association of Science Editors Peer Review Committee.


Psychology Research: What is Peer Review?


Peer Review

"Peer review" (or "refereed") means that an article is reviewed by experts in that field before the article gets published. This means that if a scientist writes an article on stem cells, other experts on stem cells will review the article to make sure it’s of high enough quality to be published. The peer review or referee process ensures that the research described in a journal's articles is sound and of high quality.

Types of Peer Review

Single-blind

- Reviewers know who wrote the article.

Double-blind

- Reviewers do not know who wrote the article.

- Designed to increase objectivity in the review process.

Searching for Peer-Reviewed Articles

Many databases available through GALILEO give you the option to search for only peer-reviewed items.

For example:

- Enter your search terms (keywords)

- Click on the box beside Scholarly (Peer Reviewed) Journals

- You can also select the option for peer reviewed from your results screen

Using Ulrich's to Check Peer Review Status

- Ulrich's Periodicals Directory (Ulrichsweb): You can search Ulrich's to see whether the journal that published an article is refereed (peer-reviewed).

- Search for the journal title, not the article title. If you are unsure which title is the journal title, ask a librarian.

- Click the magnifying glass icon

- Some journals have very similar names; librarians can help you find the correct journal.

- You can click on the journal title to see more information. Look for the Refereed field under Basic Description. If Refereed is marked Yes, the journal is peer-reviewed.

- Refereed is the same as peer-reviewed.

- When a journal is peer-reviewed, it means that most of the articles published in it are peer-reviewed. Other content, such as editorials, letters to the editor, and responses to previously published articles, is not peer-reviewed.

Peer Review and Other Journal Information From Database

Some databases will tell you if the article is in a peer-reviewed journal.

- Click on the article title to see more information about the article.

- In some databases, you will see more information about the journal, including whether it is peer-reviewed.

- In other databases, clicking on the journal title runs a search for all articles published in that title.



- Last Updated: Jan 11, 2024 3:37 PM