The Ultimate Guide to Writing a Research Paper

Few things strike more fear in academics than the accursed research paper, a term synonymous with long hours and hard work. Luckily, there’s a secret to help you get through them. As long as you know how to write a research paper properly, you’ll find they’re not so bad . . . or at least less painful.

In this guide we concisely explain how to write an academic research paper step by step. We’ll cover areas like how to start a research paper, how to write a research paper outline, how to use citations and evidence, and how to write a conclusion for a research paper.

But before we get into the details, let’s take a look at what a research paper is and how it’s different from other writing.


What is a research paper?

A research paper is a type of academic writing that provides an in-depth analysis, evaluation, or interpretation of a single topic, based on empirical evidence. Research papers are similar to analytical essays, except that research papers emphasize the use of statistical data and preexisting research, along with a strict code for citations.

Research papers are a bedrock of modern science and the most effective way to share information across a wide network. However, most people are familiar with research papers from school; college courses often use them to test a student’s knowledge of a particular area or their research skills in general.

Considering their gravity, research papers favor formal, even bland language that strips the writing of any bias. Researchers state their findings plainly and with corresponding evidence so that other researchers can consequently use the paper in their own research.

Keep in mind that writing a research paper is different from writing a research proposal. Essentially, a research proposal is written to acquire the funding needed to gather the data for a research paper.

How long should a research paper be?

The length of a research paper depends on the topic or assignment. Typically, research papers run around 4,000–6,000 words, but it’s common to see short papers around 2,000 words or long papers over 10,000 words.

If you’re writing a paper for school, the recommended length should be provided in the assignment. Otherwise, let your topic dictate the length: Complicated topics or extensive research will require more explanation.

How to write a research paper in 9 steps

Below is a step-by-step guide to writing a research paper, tailored specifically to students rather than professional researchers. While some steps may not apply to your particular assignment, think of this as a general guideline to keep you on track.

1 Understand the assignment

For some of you this goes without saying, but you might be surprised at how many students start a research paper without even reading the assignment guidelines.

So your first step should be to review the assignment and carefully read the writing prompt. Specifically, look for technical requirements such as length, formatting requirements (single- vs. double-spacing, indentations, etc.), and citation style. Also pay attention to the particulars, such as whether or not you need to write an abstract or include a cover page.

Once you understand the assignment, the next steps in how to write a research paper follow the usual writing process, more or less. There are some extra steps involved because research papers have extra rules, but the gist of the writing process is the same.

2 Choose your topic

In open-ended assignments, the student must choose their own topic. While it may seem simple enough, choosing a topic is actually the most important decision you’ll make in writing a research paper, since it determines everything that follows.

Your top priority when choosing a research paper topic is whether it will provide enough content and substance for an entire paper. You’ll want to choose a topic with enough data and complexity to enable a rich discussion. However, you also want to avoid overly general topics and instead stick with topics specific enough that you can cover all the relevant information without cutting too much.

3 Gather preliminary research

The sooner you start researching, the better—after all, it’s called a research paper for a reason.

To refine your topic and prepare your thesis statement, find out what research is available for your topic as soon as possible. Early research can help dispel any misconceptions you have about the topic and reveal the best paths and approaches to find more material.

Typically, you can find sources either online or in a library. If you’re searching online, make sure you use credible sources like science journals or academic papers. Some search engines—mentioned below in the Tools and resources section—allow you to browse only accredited sources and academic databases.

Keep in mind the difference between primary and secondary sources as you search. Primary sources are firsthand accounts, like published articles or autobiographies; secondary sources are more removed, like critical reviews or secondhand biographies.

When gathering your research, it’s better to skim sources instead of reading each potential source fully. If a source seems useful, set it aside to give it a full read later. Otherwise, you’ll be stuck poring over sources that you ultimately won’t use, and that time could be better spent finding a worthwhile source.

Sometimes you’re required to submit a literature review, which explains your sources and presents them to an authority for confirmation. Even if no literature review is required, it’s still helpful to compile an early list of potential sources; you’ll be glad you did later.

4 Write a thesis statement

Using what you found in your preliminary research, write a thesis statement that succinctly summarizes what your research paper will be about. This is usually the first sentence in your paper, making it your reader’s introduction to the topic.

A thesis statement is the best answer for how to start a research paper. Aside from preparing your reader, the thesis statement also makes it easier for other researchers to assess whether or not your paper is useful to them for their own research. Likewise, you should read the thesis statements of other research papers to decide how useful they are to you.

A good thesis statement mentions all the important parts of the discussion without disclosing too many of the details. If you’re having trouble putting it into words, try to phrase your topic as a question and then answer it.

For example, if your research paper topic is about separating students with ADHD from other students, you’d first ask yourself, “Does separating students with ADHD improve their learning?” The answer—based on your preliminary research—is a good basis for your thesis statement.

5 Determine supporting evidence

At this stage of how to write an academic research paper, it’s time to knuckle down and do the actual research. Here’s when you go through all the sources you collected earlier and find the specific information you’d like to use in your paper.

Normally, you find your supporting evidence by reading each source and taking notes. Isolate only the information that’s directly relevant to your topic; don’t bog down your paper with tangents or unnecessary context, however interesting they may be. And always write down page numbers, not only for you to find the information later, but also because you’ll need them for your citations.

Aside from highlighting text and writing notes, another common tactic is to use bibliography cards. These are simple index cards with a fact or direct quotation on one side and the bibliographical information (source citation, page numbers, subtopic category) on the other. While bibliography cards are not necessary, some students find them useful for staying organized, especially when it’s time to write an outline.
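If you prefer to keep your cards digitally, the same idea can be sketched in a few lines of Python. This is only an illustration: the class name, field names, and example cards below are assumptions made up for this sketch, and the sources are placeholders rather than real works.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class BibliographyCard:
    """One index card: a fact or quotation plus where it came from."""
    note: str      # front of the card: the fact or direct quotation
    source: str    # back of the card: the source citation
    pages: str     # page numbers, needed later for citations
    subtopic: str  # subtopic category used to sort the cards


def group_by_subtopic(cards):
    """Sort cards into piles, one pile per subtopic, keeping input order."""
    piles = defaultdict(list)
    for card in cards:
        piles[card.subtopic].append(card)
    return dict(piles)


# Hypothetical example cards; every value here is a placeholder.
cards = [
    BibliographyCard("Placeholder fact A", "Author One (2020)", "12-14", "background"),
    BibliographyCard("Placeholder quotation B", "Author Two (2018)", "87", "methods"),
    BibliographyCard("Placeholder fact C", "Author One (2020)", "30", "background"),
]

piles = group_by_subtopic(cards)
for subtopic, pile in piles.items():
    print(subtopic, [card.pages for card in pile])
```

Grouping the cards by subtopic gives you ready-made piles to draw on when you start your outline.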

6 Write a research paper outline

A lot of students want to know how to write a research paper outline. More than informal essays, research papers require a methodical and systematic structure to make sure all issues are addressed, and that makes outlines especially important.

First make a list of all the important categories and subtopics you need to cover—an outline for your outline! Consider all the information you gathered when compiling your supporting evidence and ask yourself what the best way to separate and categorize everything is.

Once you have a list of what you want to talk about, consider the best order to present the information. Which subtopics are related and should go next to each other? Are there any subtopics that don’t make sense if they’re presented out of sequence? If your information is fairly straightforward, feel free to take a chronological approach and present the information in the order it happened.

Because research papers can get complicated, consider breaking your outline into paragraphs. For starters, this helps you stay organized if you have a lot of information to cover. Moreover, it gives you greater control over the flow and direction of the research paper. It’s always better to fix structural problems in the outline phase than later after everything’s already been written.

Don’t forget to include your supporting evidence in the outline as well. Chances are you’ll have a lot you want to include, so putting it in your outline helps prevent some things from falling through the cracks.

7 Write the first draft

Once your outline is finished, it’s time to start actually writing your research paper. This is by far the longest and most involved step, but if you’ve properly prepared your sources and written a thorough outline, everything should run smoothly.

If you don’t know how to write an introduction for a research paper, the beginning can be difficult. That’s why writing your thesis statement beforehand is crucial. Open with your thesis statement and then fill out the rest of your introduction with the secondary information—save the details for the body of your research paper, which comes next.

The body contains the bulk of your research paper. Unlike essays, research papers usually divide the body into sections with separate headers to facilitate browsing and scanning. Use the divisions in your outline as a guide.

Follow your outline and go paragraph by paragraph. Because this is just the first draft, don’t worry about getting each word perfect. Later you’ll be able to revise and fine-tune your writing, but for now focus simply on saying everything that needs to be said. In other words, it’s OK to make mistakes since you’ll go back later to correct them.

One of the most common problems with writing long works like research papers is connecting paragraphs to each other. The longer your writing is, the harder it is to tie everything together smoothly. Use transition sentences to improve the flow of your paper, especially for the first and last sentences in a paragraph.

Even after the body is written, you still need to know how to write a conclusion for a research paper. Just like an essay conclusion, your research paper conclusion should restate your thesis, reiterate your main evidence, and summarize your findings in a way that’s easy to understand.

Don’t add any new information in your conclusion, but feel free to share your own perspective or interpretation if it helps the reader understand the big picture.

8 Cite your sources correctly

Citations are part of what sets research papers apart from more casual nonfiction like personal essays. Citing your sources both validates your data and links your research paper to the greater scientific community. Because of their importance, citations must follow precise formatting rules . . . problem is, there’s more than one set of rules!

You need to check with the assignment to see which formatting style is required. Typically, academic research papers follow one of two formatting styles for citing sources:

- MLA (Modern Language Association)

- APA (American Psychological Association)

The links above explain the specific formatting guidelines for each style, along with an automatic citation generator to help you get started.

In addition to MLA and APA styles, you occasionally see requirements for CMOS (The Chicago Manual of Style), AMA (American Medical Association) and IEEE (Institute of Electrical and Electronics Engineers).

Citations may seem confusing at first with all their rules and specific information. However, once you get the hang of them, you’ll be able to properly cite your sources without even thinking about it. Keep in mind that each formatting style has specific guidelines for citing just about any kind of source, including photos, websites, speeches, and YouTube videos.
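To see how the same source looks under two different sets of rules, here is a minimal Python sketch that formats one book with one author in a basic MLA-style and a basic APA-style pattern. It is a simplification under stated assumptions: the `Book` class and the example book are hypothetical, and the sketch ignores italics, title capitalization rules, multiple authors, editions, and every other source type, so always check the official style guide for real citations.

```python
from dataclasses import dataclass


@dataclass
class Book:
    """A single-author book; all fields are plain text."""
    first_name: str
    last_name: str
    title: str
    publisher: str
    year: int


def mla_citation(book):
    """Basic MLA-style pattern: Last, First. Title. Publisher, Year."""
    return (f"{book.last_name}, {book.first_name}. "
            f"{book.title}. {book.publisher}, {book.year}.")


def apa_citation(book):
    """Basic APA-style pattern: Last, F. (Year). Title. Publisher."""
    initial = book.first_name[0]  # APA uses the first-name initial only
    return (f"{book.last_name}, {initial}. ({book.year}). "
            f"{book.title}. {book.publisher}.")


# Hypothetical book used purely for illustration.
book = Book("Jane", "Doe", "A Placeholder Title", "Example Press", 2020)

print(mla_citation(book))  # Doe, Jane. A Placeholder Title. Example Press, 2020.
print(apa_citation(book))  # Doe, J. (2020). A Placeholder Title. Example Press.
```

Notice that the two styles carry the same information but order and punctuate it differently, which is why you need to confirm the required style before you start.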

9 Edit and proofread

Last but not least, you want to go through your research paper to correct all the mistakes by proofreading. We recommend going over it twice: once for structural issues such as adding/deleting parts or rearranging paragraphs and once for word choice, grammatical, and spelling mistakes. Doing two different editing sessions helps you focus on one area at a time instead of doing them both at once.

To help you catch everything, here’s a quick checklist to keep in mind while you edit:

Structural edit:

- Is your thesis statement clear and concise?

- Is your paper well-organized, and does it flow from beginning to end with logical transitions?

- Do your ideas follow a logical sequence in each paragraph?

- Have you used concrete details and facts and avoided generalizations?

- Do your arguments support and prove your thesis?

- Have you avoided repetition?

- Are your sources properly cited?

- Have you checked for accidental plagiarism?

Word choice, grammar, and spelling edit:

- Is your language clear and specific?

- Do your sentences flow smoothly and clearly?

- Have you avoided filler words and phrases?

- Have you checked for proper grammar, spelling, and punctuation?
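Part of the word-choice pass can be roughed out in code. The short Python sketch below counts a few filler words and phrases in a draft; the phrase list and sample sentence are illustrative assumptions, not a definitive list, and a count is only a prompt to reread a sentence, not a verdict on it.

```python
import re
from collections import Counter

# Illustrative list only; adjust it to the fillers you tend to overuse.
FILLER_PHRASES = ["very", "really", "basically", "in order to"]


def count_fillers(text, phrases=FILLER_PHRASES):
    """Count whole-word occurrences of each filler phrase, ignoring case."""
    lowered = text.lower()
    counts = Counter()
    for phrase in phrases:
        pattern = r"\b" + re.escape(phrase) + r"\b"
        hits = len(re.findall(pattern, lowered))
        if hits:
            counts[phrase] = hits
    return counts


sample = "This is really a very, very basic draft written in order to test."
counts = count_fillers(sample)
for phrase, hits in counts.most_common():
    print(f"{phrase}: {hits}")
```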

Some people find it useful to read their paper out loud to catch problems they might miss when reading in their head. Another solution is to have someone else read your paper and point out areas for improvement and/or technical mistakes.

Revising is a separate skill from writing, and being good at one doesn’t necessarily make you good at the other. If you want to improve your revision skills, read our guide on self-editing, which includes a more complete checklist and advanced tips on improving your revisions.

Technical issues like grammatical mistakes and misspelled words can be handled effortlessly if you use a spellchecker with your word processor, or even better, a digital writing assistant that also suggests improvements for word choice and tone, like Grammarly (we explain more in the Tools and resources section below).

Tools and resources

If you want to know more about how to write a research paper, or if you want some help with each step, take a look at the tools and resources below.

Google Scholar

This is Google’s own search engine, which is dedicated exclusively to academic papers. It’s a great way to find new research and sources. Plus, it’s free to use.

Zotero

Zotero is a freemium, open-source research manager, a cross between an organizational CMS and a search engine for academic research. With it, you can browse the internet for research sources relevant to your topic and share them easily with colleagues. Also, it automatically generates citations.

FocusWriter

Writing long research papers is always a strain on your attention span. If you have trouble avoiding distractions during those long stretches, FocusWriter might be able to help. FocusWriter is a minimalist word processor that removes all the distracting icons and sticks only to what you type. You’re also free to choose your own customized backgrounds, with other special features like timed alarms, daily goals, and optional typewriter sound effects.

Google Charts

This useful and free tool from Google lets you create simple charts and graphs based on whatever data you input. Charts and graphs are excellent visual aids for expressing numeric data, a perfect complement if you need to explain complicated evidential research.

Grammarly

Grammarly goes way beyond grammar, helping you hone word choice, checking your text for plagiarism, detecting your tone, and more. For foreign-language learners, it can make your English sound more fluent, and even those who speak English as their primary language benefit from Grammarly’s suggestions.

Research paper FAQs

What is a research paper?

A research paper is a piece of academic writing that analyzes, evaluates, or interprets a single topic with empirical evidence and statistical data.

When will I need to write a research paper in college?

Many college courses use research papers to test a student’s knowledge of a particular topic or their research skills in general. While research papers depend on the course or professor, you can expect to write at least a few before graduation.

How do I determine a topic for my research paper?

If the topic is not assigned, try to find a topic that’s general enough to provide ample evidence but specific enough that you’re able to cover all the basics. If possible, choose a topic you’re personally interested in—it makes the work easier.

Where can I conduct research for my paper?

Today most research is conducted either online or in libraries. Some topics might benefit from old periodicals like newspapers or magazines, as well as visual media like documentaries. Museums, parks, and historical monuments can also be useful.

How do I cite sources for a research paper?

The correct formatting for citations depends on which style you’re using, so check the assignment guidelines. Most school research reports use either MLA or APA styles, although there are others.

This article was originally written by Karen Hertzberg in 2017. It’s been updated to include new information.


What is a research paper?

A research paper is a paper that makes an argument about a topic based on research and analysis.

Any paper requiring the writer to research a particular topic is a research paper. Unlike essays, which are often based largely on opinion and are written from the author's point of view, research papers are based in fact.

A research paper requires you to form an opinion on a topic, research and gain expert knowledge on that topic, and then back up your own opinions and assertions with facts found through your thorough research.


What is the difference between a research paper and a thesis?

A thesis is a large paper, or multi-chapter work, based on a topic relating to your field of study.

A thesis is a document students of higher education write to obtain an academic degree or qualification. Usually, it is longer than a research paper and takes multiple years to complete.

Generally associated with graduate/postgraduate studies, it is carried out under the supervision of a professor or other academic of the university.

A major difference between a research paper and a thesis is that:

- a research paper presents certain facts that have already been researched and explained by others

- a thesis starts with a certain scholarly question or statement, which then leads to further research and new findings

This means that a thesis requires the author to input original work and their own findings in a certain field, whereas the research paper can be completed with extensive research only.


Frequently Asked Questions about research papers

Take a look at this list of the top 21 Free Online Journal and Research Databases , such as ScienceOpen , Directory of Open Access Journals , ERIC , and many more.

Mason Porter, Professor at UCLA, explains in this forum post the main reasons to write a research paper:

- To create new knowledge and disseminate it.

- To teach science and how to write about it in an academic style.

- Some practical benefits: prestige, establishing credentials, requirements for grants or to help one get a future grant proposal, and so on.

Research papers are generally written by people involved in academia, mostly higher education students and professional researchers.

A research paper is the same as a scientific paper. Both papers have the same purpose and format.

A major difference between a research paper and a thesis is that the former presents certain facts that have already been researched and explained by others, whereas the latter starts with a certain scholarly question or statement, which then leads to further research and new findings.


How To Write A Research Paper

By: Derek Jansen (MBA) | Expert Reviewer: Dr Eunice Rautenbach | March 2024

For many students, crafting a strong research paper from scratch can feel like a daunting task – and rightly so! In this post, we’ll unpack what a research paper is, what it needs to do, and how to write one – in three easy steps. 🙂

Overview: Writing A Research Paper

- What (exactly) is a research paper

- How to write a research paper

- Stage 1: Topic & literature search

- Stage 2: Structure & outline

- Stage 3: Iterative writing

- Key takeaways

Let’s start by asking the most important question, “What is a research paper?”.

Simply put, a research paper is a scholarly written work where the writer (that’s you!) answers a specific question (this is called a research question) through evidence-based arguments. Evidence-based is the keyword here. In other words, a research paper is different from an essay or other writing assignments that draw from the writer’s personal opinions or experiences. With a research paper, it’s all about building your arguments based on evidence (we’ll talk more about that evidence a little later).

Now, it’s worth noting that there are many different types of research papers, including analytical papers (the type I just described), argumentative papers, and interpretative papers. Here, we’ll focus on analytical papers, as these are some of the most common – but if you’re keen to learn about other types of research papers, be sure to check out the rest of the blog.

With that basic foundation laid, let’s get down to business and look at how to write a research paper.

Overview: The 3-Stage Process

While there are, of course, many potential approaches you can take to write a research paper, there are typically three stages to the writing process. So, in this tutorial, we’ll present a straightforward three-step process that we use when working with students at Grad Coach.

These three steps are:

- Finding a research topic and reviewing the existing literature

- Developing a provisional structure and outline for your paper, and

- Writing up your initial draft and then refining it iteratively

Let’s dig into each of these.


Step 1: Find a topic and review the literature

As we mentioned earlier, in a research paper, you, as the researcher, will try to answer a question. More specifically, that’s called a research question, and it sets the direction of your entire paper. What’s important to understand though is that you’ll need to answer that research question with the help of high-quality sources – for example, journal articles, government reports, case studies, and so on. We’ll circle back to this in a minute.

The first stage of the research process is deciding on what your research question will be and then reviewing the existing literature (in other words, past studies and papers) to see what they say about that specific research question. In some cases, your professor may provide you with a predetermined research question (or set of questions). However, in many cases, you’ll need to find your own research question within a certain topic area.

Finding a strong research question hinges on identifying a meaningful research gap – in other words, an area that’s lacking in existing research. There’s a lot to unpack here, so if you wanna learn more, check out the plain-language explainer video below.

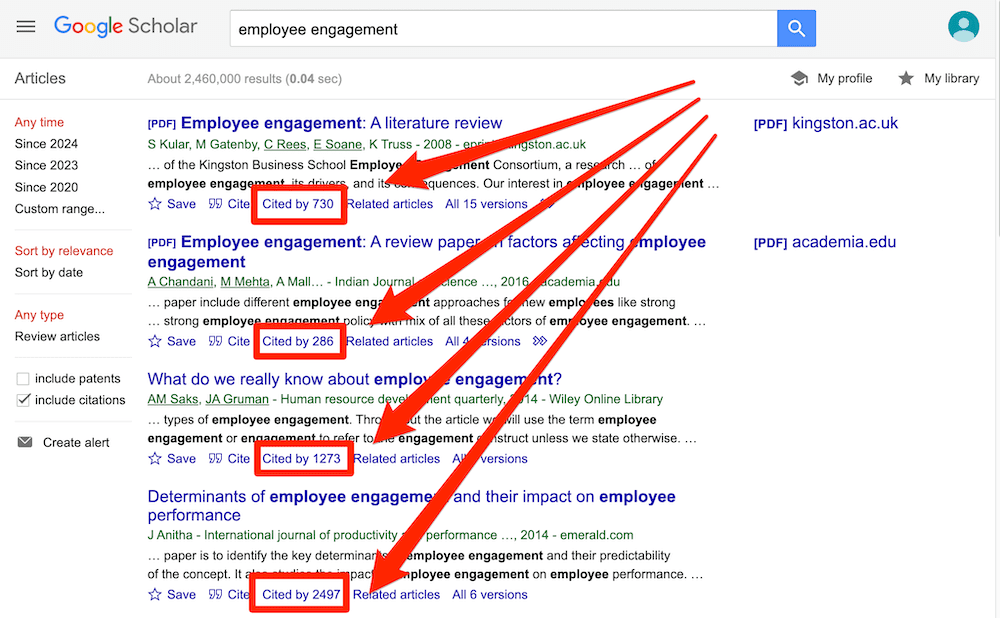

Once you’ve figured out which question (or questions) you’ll attempt to answer in your research paper, you’ll need to do a deep dive into the existing literature – this is called a “literature search”. Again, there are many ways to go about this, but your most likely starting point will be Google Scholar.

If you’re new to Google Scholar, think of it as Google for the academic world. You can start by simply entering a few different keywords that are relevant to your research question and it will then present a host of articles for you to review. What you want to pay close attention to here is the number of citations for each paper – the more citations a paper has, the more credible it is (generally speaking – there are some exceptions, of course).

Ideally, what you’re looking for are well-cited papers that are highly relevant to your topic. That said, keep in mind that citations are a cumulative metric, so older papers will often have more citations than newer papers – just because they’ve been around for longer. So, don’t fixate on this metric in isolation – relevance and recency are also very important.

Beyond Google Scholar, you’ll also definitely want to check out academic databases and aggregators such as ScienceDirect, PubMed, JSTOR and so on. These will often overlap with the results that you find in Google Scholar, but they can also reveal some hidden gems – so, be sure to check them out.
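One rough way to account for the cumulative nature of citation counts is to divide by the paper's age. The Python sketch below does this for two made-up papers; the citations-per-year heuristic, the function name, and all the numbers are assumptions for illustration, not a method prescribed here, and relevance still has to be judged by reading.

```python
def citations_per_year(citations, published_year, current_year):
    """Average citations per year since publication (minimum age of 1 year)."""
    age = max(current_year - published_year, 1)
    return citations / age


# Hypothetical papers with invented citation counts.
papers = [
    {"title": "Older paper (placeholder)", "citations": 400, "year": 2005},
    {"title": "Newer paper (placeholder)", "citations": 120, "year": 2021},
]

CURRENT_YEAR = 2025  # fixed so the example is reproducible

ranked = sorted(
    papers,
    key=lambda p: citations_per_year(p["citations"], p["year"], CURRENT_YEAR),
    reverse=True,
)

for paper in ranked:
    rate = citations_per_year(paper["citations"], paper["year"], CURRENT_YEAR)
    print(f"{paper['title']}: {rate:.1f} citations/year")
```

Here the older paper has more citations in total (400 vs. 120), but the newer one is being cited faster (30 per year vs. 20 per year), which is the kind of nuance the raw count hides.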

Once you’ve worked your way through all the literature, you’ll want to catalogue all this information in some sort of spreadsheet so that you can easily recall who said what, when and within what context. If you’d like, we’ve got a free literature spreadsheet that helps you do exactly that.
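If you would rather build a simple catalogue yourself, the idea of recording "who said what, when and within what context" can be sketched in Python with the standard `csv` module. The column names and example rows below are hypothetical choices for this sketch (they are not the layout of the spreadsheet mentioned above), and the entries are placeholders rather than real studies.

```python
import csv
import io

# Hypothetical columns: who, when, what, and in what context.
FIELDS = ["author", "year", "title", "key_argument", "context"]


def catalogue_to_csv(entries):
    """Serialise catalogue entries to CSV text that any spreadsheet can open."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(entries)
    return buffer.getvalue()


def find_by_author(entries, author):
    """Recall everything a given author said."""
    return [entry for entry in entries if entry["author"] == author]


# Placeholder entries for illustration only.
entries = [
    {"author": "Author One", "year": 2020, "title": "Placeholder study A",
     "key_argument": "Argues X", "context": "Survey of students"},
    {"author": "Author Two", "year": 2018, "title": "Placeholder study B",
     "key_argument": "Disputes X", "context": "Case study"},
]

csv_text = catalogue_to_csv(entries)
print(csv_text)
print(find_by_author(entries, "Author Two"))
```

Saving `csv_text` to a `.csv` file lets you keep sorting and filtering the catalogue in your spreadsheet tool of choice.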

Step 2: Develop a structure and outline

With your research question pinned down and your literature digested and catalogued, it’s time to move on to planning your actual research paper.

It might sound obvious, but it’s really important to have some sort of rough outline in place before you start writing your paper. So often, we see students eagerly rushing into the writing phase, only to land up with a disjointed research paper that rambles on in multiple directions.

Now, the secret here is to not get caught up in the fine details. Realistically, all you need at this stage is a bullet-point list that describes (in broad strokes) what you’ll discuss and in what order. It’s also useful to remember that you’re not glued to this outline – in all likelihood, you’ll chop and change some sections once you start writing, and that’s perfectly okay. What’s important is that you have some sort of roadmap in place from the start.

At this stage you might be wondering, “But how should I structure my research paper?”. Well, there’s no one-size-fits-all solution here, but in general, a research paper will consist of a few relatively standardised components:

- Introduction

- Literature review

- Methodology

- Results (or analysis)

- Discussion

- Conclusion

Let’s take a look at each of these.

First up is the introduction section. As the name suggests, the purpose of the introduction is to set the scene for your research paper. There are usually (at least) four ingredients that go into this section – these are the background to the topic, the research problem and resultant research question, and the justification or rationale.

The next section of your research paper will typically be your literature review. Remember all that literature you worked through earlier? Well, this is where you’ll present your interpretation of all that content. You’ll do this by writing about recent trends, developments, and arguments within the literature – but more specifically, those that are relevant to your research question. The literature review can oftentimes seem a little daunting, even to seasoned researchers, so be sure to check out our extensive collection of literature review content here.

With the introduction and lit review out of the way, the next section of your paper is the research methodology. In a nutshell, the methodology section should describe to your reader what you did (beyond just reviewing the existing literature) to answer your research question. For example, what data did you collect, how did you collect that data, how did you analyse that data and so on? For each choice, you’ll also need to justify why you chose to do it that way, and what the strengths and weaknesses of your approach were.

Now, it’s worth mentioning that for some research papers, this aspect of the project may be a lot simpler. For example, you may only need to draw on secondary sources (in other words, existing data sets). In some cases, you may just be asked to draw your conclusions from the literature search itself (in other words, there may be no data analysis at all). But, if you are required to collect and analyse data, you’ll need to pay a lot of attention to the methodology section.

By this stage of your paper, you will have explained what your research question is, what the existing literature has to say about that question, and how you analysed additional data to try to answer your question. So, the natural next step is to present your analysis of that data. This section is usually called the “results” or “analysis” section and this is where you’ll showcase your findings.

Depending on your school’s requirements, you may need to present and interpret the data in one section – or you might split the presentation and the interpretation into two sections. In the latter case, your “results” section will just describe the data, and the “discussion” is where you’ll interpret that data and explicitly link your analysis back to your research question. If you’re not sure which approach to take, check in with your professor or take a look at past papers to see what the norms are for your programme.

Alright – once you’ve presented and discussed your results, it’s time to wrap it up. This usually takes the form of the “conclusion” section. In the conclusion, you’ll need to highlight the key takeaways from your study and close the loop by explicitly answering your research question. Again, the exact requirements here will vary depending on your programme (and you may not even need a conclusion section at all) – so be sure to check with your professor if you’re unsure.

Step 3: Write and refine

Finally, it’s time to get writing. All too often though, students hit a brick wall right about here… So, how do you avoid this happening to you?

Well, there’s a lot to be said when it comes to writing a research paper (or any sort of academic piece), but we’ll share three practical tips to help you get started.

First and foremost , it’s essential to approach your writing as an iterative process. In other words, you need to start with a really messy first draft and then polish it over multiple rounds of editing. Don’t waste your time trying to write a perfect research paper in one go. Instead, take the pressure off yourself by adopting an iterative approach.

Secondly , it’s important to always lean towards critical writing , rather than descriptive writing. What does this mean? Well, at the simplest level, descriptive writing focuses on the “ what ”, while critical writing digs into the “ so what ” – in other words, the implications . If you’re not familiar with these two types of writing, don’t worry! You can find a plain-language explanation here.

Last but not least, you’ll need to get your referencing right. Specifically, you’ll need to provide credible, correctly formatted citations for the statements you make. We see students making referencing mistakes all the time and it costs them dearly. The good news is that you can easily avoid this by using a simple reference manager. If you don’t have one, check out our video about Mendeley, an easy (and free) reference management tool that you can start using today.

Recap: Key Takeaways

We’ve covered a lot of ground here. To recap, the three steps to writing a high-quality research paper are:

- To choose a research question and review the literature

- To plan your paper structure and draft an outline

- To take an iterative approach to writing, focusing on critical writing and strong referencing

Remember, this is just a big-picture overview of the research paper development process and there’s a lot more nuance to unpack. So, be sure to grab a copy of our free research paper template to learn more about how to write a research paper.




COMMENTS

In a term or research paper, a large portion of the content is your report on the research you read about your topic (called the literature). You’ll need to summarize and discuss how others view the topic, and even more important, provide your own perspective.

A research paper is a written document that presents the author’s original research, analysis, and interpretation of a specific topic or issue. It is typically based on empirical evidence, and may involve qualitative or quantitative research methods, or a combination of both.

The four research paper writing steps according to the LEAP approach: Lay out the facts. Explain the results. Advertize the research. Prepare for submission. I will show each of these steps in detail. And you will be able to download the LEAP cheat sheet for using with every paper you write.

Any paper requiring the writer to research a particular topic is a research paper. Unlike essays, which are often based largely on opinion and are written from the author's point of view, research papers are based in fact.

A research paper is a piece of academic writing that provides analysis, interpretation, and argument based on in-depth independent research. Research papers are similar to academic essays, but they are usually longer and more detailed assignments, designed to assess not only your writing skills but also your skills in scholarly research ...

Simply put, a research paper is a scholarly written work where the writer (that’s you!) answers a specific question (this is called a research question) through evidence-based arguments. Evidence-based is the keyword here.

The introduction to a research paper is where you set up your topic and approach for the reader. It has several key goals: Present your topic and get the reader interested. Provide background or summarize existing research. Position your own approach. Detail your specific research problem and problem statement.

How to write a research paper. 31 Mar 2023. 1:10 PM ET. By Elisabeth Pain. Condensing months or years of research into a few pages can be a mighty exercise even for experienced writers.

A research paper is an extended piece of writing based on in-depth independent research. It may involve conducting empirical research or analyzing primary and secondary sources. Writing a good research paper requires you to demonstrate a strong knowledge of your topic and advance an original argument.