Hypothesis Testing - Chi Squared Test

Lisa Sullivan, PhD

Professor of Biostatistics

Boston University School of Public Health

Introduction

This module will continue the discussion of hypothesis testing, where a specific statement or hypothesis is generated about a population parameter, and sample statistics are used to assess the likelihood that the hypothesis is true. The hypothesis is based on available information and the investigator's belief about the population parameters. The specific tests considered here are called chi-square tests and are appropriate when the outcome is discrete (dichotomous, ordinal or categorical). For example, in some clinical trials the outcome is a classification such as hypertensive, pre-hypertensive or normotensive. We could use the same classification in an observational study such as the Framingham Heart Study to compare men and women in terms of their blood pressure status - again using the classification of hypertensive, pre-hypertensive or normotensive status.

The technique to analyze a discrete outcome uses what is called a chi-square test. Specifically, the test statistic follows a chi-square probability distribution. We will consider chi-square tests here with one, two and more than two independent comparison groups.

Learning Objectives

After completing this module, the student will be able to:

- Perform chi-square tests by hand

- Appropriately interpret results of chi-square tests

- Identify the appropriate hypothesis testing procedure based on type of outcome variable and number of samples

Tests with One Sample, Discrete Outcome

Here we consider hypothesis testing with a discrete outcome variable in a single population. Discrete variables are variables that take on two or more distinct responses or categories, and the responses can be ordered or unordered (i.e., the outcome can be ordinal or categorical). The procedure we describe here can be used for dichotomous (exactly 2 response options), ordinal or categorical discrete outcomes and the objective is to compare the distribution of responses, or the proportions of participants in each response category, to a known distribution. The known distribution is derived from another study or report and it is again important in setting up the hypotheses that the comparator distribution specified in the null hypothesis is a fair comparison. The comparator is sometimes called an external or a historical control.

In one-sample tests for a discrete outcome, we set up our hypotheses against an appropriate comparator. We select a sample and compute descriptive statistics on the sample data. Specifically, we compute the sample size (n) and the proportions of participants in each response category.

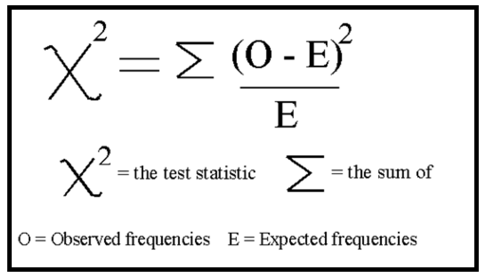

Test Statistic for Testing $H_0: p_1 = p_{10},\ p_2 = p_{20},\ \ldots,\ p_k = p_{k0}$

$$\chi^2 = \sum \frac{(O - E)^2}{E}$$

We find the critical value in a table of probabilities for the chi-square distribution with degrees of freedom (df) = k-1. In the test statistic, O = observed frequency and E = expected frequency in each of the response categories. The observed frequencies are those observed in the sample and the expected frequencies are computed as described below. $\chi^2$ (chi-square) is another probability distribution and ranges from 0 to ∞. The test statistic formula above is appropriate for large samples, defined as expected frequencies of at least 5 in each of the response categories.

When we conduct a $\chi^2$ test, we compare the observed frequencies in each response category to the frequencies we would expect if the null hypothesis were true. These expected frequencies are determined by allocating the sample to the response categories according to the distribution specified in $H_0$. This is done by multiplying the observed sample size (n) by the proportions specified in the null hypothesis ($p_{10}, p_{20}, \ldots, p_{k0}$). To ensure that the sample size is appropriate for the use of the test statistic above, we need to ensure the following: $\min(np_{10}, np_{20}, \ldots, np_{k0}) \geq 5$.

The test of hypothesis with a discrete outcome measured in a single sample, where the goal is to assess whether the distribution of responses follows a known distribution, is called the χ 2 goodness-of-fit test. As the name indicates, the idea is to assess whether the pattern or distribution of responses in the sample "fits" a specified population (external or historical) distribution. In the next example we illustrate the test. As we work through the example, we provide additional details related to the use of this new test statistic.

A University conducted a survey of its recent graduates to collect demographic and health information for future planning purposes as well as to assess students' satisfaction with their undergraduate experiences. The survey revealed that a substantial proportion of students were not engaging in regular exercise, many felt their nutrition was poor and a substantial number were smoking. In response to a question on regular exercise, 60% of all graduates reported getting no regular exercise, 25% reported exercising sporadically and 15% reported exercising regularly as undergraduates. The next year the University launched a health promotion campaign on campus in an attempt to increase health behaviors among undergraduates. The program included modules on exercise, nutrition and smoking cessation. To evaluate the impact of the program, the University again surveyed graduates and asked the same questions. The survey was completed by 470 graduates and the following data were collected on the exercise question:

Based on the data, is there evidence of a shift in the distribution of responses to the exercise question following the implementation of the health promotion campaign on campus? Run the test at a 5% level of significance.

In this example, we have one sample and a discrete (ordinal) outcome variable (with three response options). We specifically want to compare the distribution of responses in the sample to the distribution reported the previous year (i.e., 60%, 25%, 15% reporting no, sporadic and regular exercise, respectively). We now run the test using the five-step approach.

- Step 1. Set up hypotheses and determine level of significance.

The null hypothesis again represents the "no change" or "no difference" situation. If the health promotion campaign has no impact then we expect the distribution of responses to the exercise question to be the same as that measured prior to the implementation of the program.

$H_0: p_1 = 0.60,\ p_2 = 0.25,\ p_3 = 0.15$, or equivalently $H_0$: Distribution of responses is 0.60, 0.25, 0.15

$H_1$: $H_0$ is false. α=0.05

Notice that the research hypothesis is written in words rather than in symbols. The research hypothesis as stated captures any difference in the distribution of responses from that specified in the null hypothesis. We do not specify a particular alternative distribution; instead, we are testing whether the sample data "fit" the distribution in $H_0$ or not. With the $\chi^2$ goodness-of-fit test there is no upper- or lower-tailed version of the test.

- Step 2. Select the appropriate test statistic.

The test statistic is:

$$\chi^2 = \sum \frac{(O - E)^2}{E}$$

We must first assess whether the sample size is adequate. Specifically, we need to check $\min(np_{10}, np_{20}, \ldots, np_{k0}) \geq 5$. The sample size here is n=470 and the proportions specified in the null hypothesis are 0.60, 0.25 and 0.15. Thus, min(470(0.60), 470(0.25), 470(0.15)) = min(282, 117.5, 70.5) = 70.5. The sample size is more than adequate so the formula can be used.
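The adequacy check above can be sketched in a few lines of Python. This is only an illustration: the function names `expected_counts` and `sample_size_adequate` are hypothetical helpers, not part of the module, and the threshold of 5 is the "at least 5" rule stated earlier.

```python
def expected_counts(n, null_props):
    """Allocate a sample of size n to categories using the H0 proportions."""
    if abs(sum(null_props) - 1.0) > 1e-9:
        raise ValueError("null proportions must sum to 1")
    return [n * p for p in null_props]


def sample_size_adequate(expected, minimum=5.0):
    """Large-sample rule: every expected frequency is at least `minimum`."""
    return min(expected) >= minimum


# Exercise survey: n = 470, H0 proportions 0.60, 0.25, 0.15
expected = expected_counts(470, [0.60, 0.25, 0.15])
print([round(e, 1) for e in expected])   # [282.0, 117.5, 70.5]
print(sample_size_adequate(expected))    # True
```

The same two helpers apply unchanged to any goodness-of-fit test in this module; only the sample size and null proportions change.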

- Step 3. Set up decision rule.

The decision rule for the χ 2 test depends on the level of significance and the degrees of freedom, defined as degrees of freedom (df) = k-1 (where k is the number of response categories). If the null hypothesis is true, the observed and expected frequencies will be close in value and the χ 2 statistic will be close to zero. If the null hypothesis is false, then the χ 2 statistic will be large. Critical values can be found in a table of probabilities for the χ 2 distribution. Here we have df=k-1=3-1=2 and a 5% level of significance. The appropriate critical value is 5.99, and the decision rule is as follows: Reject H 0 if χ 2 > 5.99.
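Critical values are normally read from a printed table, but they can also be computed. The sketch below is one possible approach using only the Python standard library: `chi2_sf` (a hypothetical helper name) evaluates the upper-tail probability of a chi-square distribution with integer degrees of freedom using the standard closed-form finite series, and `chi2_critical` finds the critical value by bisection. Neither function comes from the module itself.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X > x) for chi-square with integer df >= 1."""
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) times a finite sum of (x/2)^i / i!
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= half / i
            total += term
        return math.exp(-half) * total
    # Odd df: normal-tail term plus a finite correction series
    tail = math.erfc(math.sqrt(half))
    term = math.sqrt(2.0 * x / math.pi) * math.exp(-half)
    total = 0.0
    for j in range((df - 1) // 2):
        total += term
        term *= x / (2 * j + 3)
    return tail + total


def chi2_critical(df, alpha):
    """Value c with P(X > c) = alpha, found by bisection on chi2_sf."""
    lo, hi = 0.0, 1.0
    while chi2_sf(hi, df) > alpha:
        hi *= 2.0
    for _ in range(100):
        mid = (lo + hi) / 2.0
        if chi2_sf(mid, df) > alpha:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


for df in (1, 2, 3, 4, 6):
    print(df, round(chi2_critical(df, 0.05), 2))
# 1 3.84
# 2 5.99
# 3 7.81
# 4 9.49
# 6 12.59
```

These are the 5% critical values used in the examples throughout this module.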

- Step 4. Compute the test statistic.

We now compute the expected frequencies using the sample size and the proportions specified in the null hypothesis. We then substitute the sample data (observed frequencies) and the expected frequencies into the formula for the test statistic identified in Step 2. The computations can be organized as follows.

Notice that the expected frequencies are taken to one decimal place and that the sum of the observed frequencies is equal to the sum of the expected frequencies. The test statistic is computed as follows:

- Step 5. Conclusion.

We reject $H_0$ because 8.46 > 5.99. We have statistically significant evidence at α=0.05 to show that $H_0$ is false, or that the distribution of responses is not 0.60, 0.25, 0.15. The p-value is approximately 0.015 (with df=2, 0.01 < p < 0.025).
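The computation in Steps 4 and 5 can be reproduced with a short Python sketch. The observed counts are taken from the surrounding text: 255 and 90 are quoted in the discussion of the results, and the sporadic-exercise count of 125 is inferred here as 470 - 255 - 90. The p-value line relies on the fact that, for df=2 only, the chi-square upper-tail probability is exp(-x/2).

```python
import math

# Observed counts: no regular, sporadic, regular exercise
# (125 is inferred from the total of 470; it is not quoted directly)
observed = [255, 125, 90]
null_props = [0.60, 0.25, 0.15]

n = sum(observed)
expected = [n * p for p in null_props]

# Chi-square statistic: sum over categories of (O - E)^2 / E
chi_sq = sum((o - e) ** 2 / e for o, e in zip(observed, expected))

# For df = 2 only, P(chi-square > x) = exp(-x / 2)
p_value = math.exp(-chi_sq / 2)

print(round(chi_sq, 2))   # 8.46
print(round(p_value, 3))  # 0.015
```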

In the $\chi^2$ goodness-of-fit test, we conclude that either the distribution specified in $H_0$ is false (when we reject $H_0$) or that we do not have sufficient evidence to show that the distribution specified in $H_0$ is false (when we fail to reject $H_0$). Here, we reject $H_0$ and conclude that the distribution of responses to the exercise question following the implementation of the health promotion campaign was not the same as the distribution prior. The test itself does not provide details of how the distribution has shifted. A comparison of the observed and expected frequencies will provide some insight into the shift (when the null hypothesis is rejected). Does it appear that the health promotion campaign was effective?

Consider the following:

If the null hypothesis were true (i.e., no change from the prior year) we would have expected more students to fall in the "No Regular Exercise" category and fewer in the "Regular Exercise" category. In the sample, 255/470 = 54% reported no regular exercise and 90/470 = 19% reported regular exercise. Thus, there is a shift toward more regular exercise following the implementation of the health promotion campaign. There is evidence of a statistical difference, but is this a meaningful difference? Is there room for improvement?

The National Center for Health Statistics (NCHS) provided data on the distribution of weight (in categories) among Americans in 2002. The distribution was based on specific values of body mass index (BMI) computed as weight in kilograms over height in meters squared. Underweight was defined as BMI< 18.5, Normal weight as BMI between 18.5 and 24.9, overweight as BMI between 25 and 29.9 and obese as BMI of 30 or greater. Americans in 2002 were distributed as follows: 2% Underweight, 39% Normal Weight, 36% Overweight, and 23% Obese. Suppose we want to assess whether the distribution of BMI is different in the Framingham Offspring sample. Using data from the n=3,326 participants who attended the seventh examination of the Offspring in the Framingham Heart Study we created the BMI categories as defined and observed the following:

- Step 1. Set up hypotheses and determine level of significance.

$H_0: p_1 = 0.02,\ p_2 = 0.39,\ p_3 = 0.36,\ p_4 = 0.23$, or equivalently

$H_0$: Distribution of responses is 0.02, 0.39, 0.36, 0.23

$H_1$: $H_0$ is false. α=0.05

The formula for the test statistic is:

$$\chi^2 = \sum \frac{(O - E)^2}{E}$$

We must assess whether the sample size is adequate. Specifically, we need to check $\min(np_{10}, np_{20}, \ldots, np_{k0}) \geq 5$. The sample size here is n=3,326 and the proportions specified in the null hypothesis are 0.02, 0.39, 0.36 and 0.23. Thus, min(3326(0.02), 3326(0.39), 3326(0.36), 3326(0.23)) = min(66.5, 1297.1, 1197.4, 765.0) = 66.5. The sample size is more than adequate, so the formula can be used.

Here we have df=k-1=4-1=3 and a 5% level of significance. The appropriate critical value is 7.81 and the decision rule is as follows: Reject $H_0$ if $\chi^2$ > 7.81.

We now compute the expected frequencies using the sample size and the proportions specified in the null hypothesis. We then substitute the sample data (observed frequencies) into the formula for the test statistic identified in Step 2. We organize the computations in the following table.

The test statistic is computed as follows:

We reject $H_0$ because 233.53 > 7.81. We have statistically significant evidence at α=0.05 to show that $H_0$ is false, or that the distribution of BMI in Framingham is different from the national data reported in 2002 (p < 0.005).

Again, the $\chi^2$ goodness-of-fit test allows us to assess whether the distribution of responses "fits" a specified distribution. Here we show that the distribution of BMI in the Framingham Offspring Study is different from the national distribution. To understand the nature of the difference we can compare observed and expected frequencies or observed and expected proportions (or percentages). The frequencies are large because of the large sample size. The observed percentages of participants in the Framingham sample are as follows: 0.6% underweight, 28% normal weight, 41% overweight and 30% obese. In the Framingham Offspring sample there are higher percentages of overweight and obese persons (41% and 30% in Framingham as compared to 36% and 23% in the national data), and lower percentages of underweight and normal weight persons (0.6% and 28% in Framingham as compared to 2% and 39% in the national data). Are these meaningful differences?

In the module on hypothesis testing for means and proportions, we discussed hypothesis testing applications with a dichotomous outcome variable in a single population. We presented a test using a test statistic Z to test whether an observed (sample) proportion differed significantly from a historical or external comparator. The chi-square goodness-of-fit test can also be used with a dichotomous outcome and the results are mathematically equivalent.

In the prior module, we considered the following example. Here we show the equivalence to the chi-square goodness-of-fit test.

The NCHS report indicated that in 2002, 75% of children aged 2 to 17 saw a dentist in the past year. An investigator wants to assess whether use of dental services is similar in children living in the city of Boston. A sample of 125 children aged 2 to 17 living in Boston are surveyed and 64 reported seeing a dentist over the past 12 months. Is there a significant difference in use of dental services between children living in Boston and the national data?

We presented the following approach to the test using a Z statistic.

- Step 1. Set up hypotheses and determine level of significance

$H_0: p = 0.75$

$H_1: p \neq 0.75$ α=0.05

We must first check that the sample size is adequate. Specifically, we need to check that $\min(np_0, n(1-p_0)) \geq 5$. Here, min(125(0.75), 125(1-0.75)) = min(93.75, 31.25) = 31.25. The sample size is more than adequate so the following formula can be used:

$$Z = \frac{\hat{p} - p_0}{\sqrt{p_0(1 - p_0)/n}}$$

This is a two-tailed test, using a Z statistic and a 5% level of significance. Reject H 0 if Z < -1.960 or if Z > 1.960.

We now substitute the sample data into the formula for the test statistic identified in Step 2. The sample proportion is:

We reject $H_0$ because -6.15 < -1.960. We have statistically significant evidence at α=0.05 to show that there is a difference in the use of dental services by children living in Boston as compared to the national data (p < 0.0001).

We now conduct the same test using the chi-square goodness-of-fit test. First, we summarize our sample data as follows:

$H_0: p_1 = 0.75,\ p_2 = 0.25$, or equivalently $H_0$: Distribution of responses is 0.75, 0.25

We must assess whether the sample size is adequate. Specifically, we need to check $\min(np_{10}, np_{20}, \ldots, np_{k0}) \geq 5$. The sample size here is n=125 and the proportions specified in the null hypothesis are 0.75 and 0.25. Thus, min(125(0.75), 125(0.25)) = min(93.75, 31.25) = 31.25. The sample size is more than adequate so the formula can be used.

Here we have df=k-1=2-1=1 and a 5% level of significance. The appropriate critical value is 3.84, and the decision rule is as follows: Reject $H_0$ if $\chi^2$ > 3.84. (Note that $1.96^2 = 3.84$, where 1.96 was the critical value used in the Z test for proportions shown above.)

(Note that $(-6.15)^2 = 37.8$, where -6.15 was the value of the Z statistic in the test for proportions shown above.)

We reject $H_0$ because 37.8 > 3.84. We have statistically significant evidence at α=0.05 to show that there is a difference in the use of dental services by children living in Boston as compared to the national data (p < 0.0001). This is the same conclusion we reached when we conducted the test using the Z test above. With a dichotomous outcome, $Z^2 = \chi^2$. In statistics, there are often several approaches that can be used to test hypotheses.
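The equivalence of the two approaches can be checked numerically. The sketch below uses only the figures given in the dental example (n=125, 64 children who saw a dentist, null proportion 0.75) and the standard one-sample Z statistic for a proportion; the variable names are illustrative.

```python
import math

n, visits, p0 = 125, 64, 0.75

# Z test for one proportion
p_hat = visits / n
z = (p_hat - p0) / math.sqrt(p0 * (1 - p0) / n)

# Chi-square goodness-of-fit test on the same data (two categories)
observed = [visits, n - visits]
expected = [n * p0, n * (1 - p0)]
chi_sq = sum((o - e) ** 2 / e for o, e in zip(observed, expected))

print(round(p_hat, 3))   # 0.512
print(round(z, 2))       # -6.15
print(round(chi_sq, 1))  # 37.8
print(math.isclose(z ** 2, chi_sq))  # True
```

The squared Z statistic and the chi-square statistic agree up to floating-point rounding, which is why the two tests always lead to the same decision for a dichotomous outcome.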

Tests for Two or More Independent Samples, Discrete Outcome

Here we extend the application of the chi-square test to the case with two or more independent comparison groups. Specifically, the outcome of interest is discrete with two or more responses and the responses can be ordered or unordered (i.e., the outcome can be dichotomous, ordinal or categorical). We now consider the situation where there are two or more independent comparison groups and the goal of the analysis is to compare the distribution of responses to the discrete outcome variable among several independent comparison groups.

The test is called the χ 2 test of independence and the null hypothesis is that there is no difference in the distribution of responses to the outcome across comparison groups. This is often stated as follows: The outcome variable and the grouping variable (e.g., the comparison treatments or comparison groups) are independent (hence the name of the test). Independence here implies homogeneity in the distribution of the outcome among comparison groups.

The null hypothesis in the χ 2 test of independence is often stated in words as: H 0 : The distribution of the outcome is independent of the groups. The alternative or research hypothesis is that there is a difference in the distribution of responses to the outcome variable among the comparison groups (i.e., that the distribution of responses "depends" on the group). In order to test the hypothesis, we measure the discrete outcome variable in each participant in each comparison group. The data of interest are the observed frequencies (or number of participants in each response category in each group). The formula for the test statistic for the χ 2 test of independence is given below.

Test Statistic for Testing $H_0$: Distribution of outcome is independent of groups

$$\chi^2 = \sum \frac{(O - E)^2}{E}$$

and we find the critical value in a table of probabilities for the chi-square distribution with df = (r-1)(c-1).

Here O = observed frequency, E=expected frequency in each of the response categories in each group, r = the number of rows in the two-way table and c = the number of columns in the two-way table. r and c correspond to the number of comparison groups and the number of response options in the outcome (see below for more details). The observed frequencies are the sample data and the expected frequencies are computed as described below. The test statistic is appropriate for large samples, defined as expected frequencies of at least 5 in each of the response categories in each group.

The data for the χ 2 test of independence are organized in a two-way table. The outcome and grouping variable are shown in the rows and columns of the table. The sample table below illustrates the data layout. The table entries (blank below) are the numbers of participants in each group responding to each response category of the outcome variable.

Table - Possible outcomes are listed in the columns; the groups being compared are listed in the rows.

In the table above, the grouping variable is shown in the rows of the table; r denotes the number of independent groups. The outcome variable is shown in the columns of the table; c denotes the number of response options in the outcome variable. Each combination of a row (group) and column (response) is called a cell of the table. The table has r*c cells and is sometimes called an r x c ("r by c") table. For example, if there are 4 groups and 5 categories in the outcome variable, the data are organized in a 4 X 5 table. The row and column totals are shown along the right-hand margin and the bottom of the table, respectively. The total sample size, N, can be computed by summing the row totals or the column totals. Similar to ANOVA, N does not refer to a population size here but rather to the total sample size in the analysis. The sample data can be organized into a table like the above. The numbers of participants within each group who select each response option are shown in the cells of the table and these are the observed frequencies used in the test statistic.

The test statistic for the χ 2 test of independence involves comparing observed (sample data) and expected frequencies in each cell of the table. The expected frequencies are computed assuming that the null hypothesis is true. The null hypothesis states that the two variables (the grouping variable and the outcome) are independent. The definition of independence is as follows:

Two events, A and B, are independent if P(A|B) = P(A), or equivalently, if P(A and B) = P(A) P(B).

The second statement indicates that if two events, A and B, are independent then the probability of their intersection can be computed by multiplying the probability of each individual event. To conduct the χ 2 test of independence, we need to compute expected frequencies in each cell of the table. Expected frequencies are computed by assuming that the grouping variable and outcome are independent (i.e., under the null hypothesis). Thus, if the null hypothesis is true, using the definition of independence:

P(Group 1 and Response Option 1) = P(Group 1) P(Response Option 1).

The above states that the probability that an individual is in Group 1 and their outcome is Response Option 1 is computed by multiplying the probability that a person is in Group 1 by the probability that a person is in Response Option 1. To conduct the $\chi^2$ test of independence, we need expected frequencies and not expected probabilities. To convert the above probability to a frequency, we multiply by N. Consider the following small example.

The data shown above are measured in a sample of size N=150. The frequencies in the cells of the table are the observed frequencies. If Group and Response are independent, then we can compute the probability that a person in the sample is in Group 1 and Response category 1 using:

P(Group 1 and Response 1) = P(Group 1) P(Response 1),

P(Group 1 and Response 1) = (25/150) (62/150) = 0.069.

Thus if Group and Response are independent we would expect 6.9% of the sample to be in the top left cell of the table (Group 1 and Response 1). The expected frequency is 150(0.069) = 10.4. We could do the same for Group 2 and Response 1:

P(Group 2 and Response 1) = P(Group 2) P(Response 1),

P(Group 2 and Response 1) = (50/150) (62/150) = 0.138.

The expected frequency in Group 2 and Response 1 is 150(0.138) = 20.7.

Thus, the formula for determining the expected cell frequencies in the χ 2 test of independence is as follows:

Expected Cell Frequency = (Row Total * Column Total)/N.

The above computes the expected frequency in one step rather than computing the expected probability first and then converting to a frequency.
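The one-step formula can be written as a small function. This is a minimal sketch using the totals quoted in the small example above (row totals of 25 and 50, a column total of 62, and N=150); `expected_cell` is a hypothetical helper name.

```python
def expected_cell(row_total, col_total, n):
    """Expected frequency under independence: (row total * column total) / N."""
    return row_total * col_total / n


# Group 1, Response 1 and Group 2, Response 1 from the small example
print(round(expected_cell(25, 62, 150), 1))  # 10.3
print(round(expected_cell(50, 62, 150), 1))  # 20.7
```

The one-step result for Group 1 (10.3) differs slightly from the 10.4 obtained above because that calculation rounded the probability to 0.069 before multiplying by N. Computing the frequency directly avoids this intermediate rounding.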

In a prior example we evaluated data from a survey of university graduates which assessed, among other things, how frequently they exercised. The survey was completed by 470 graduates. In the prior example we used the χ 2 goodness-of-fit test to assess whether there was a shift in the distribution of responses to the exercise question following the implementation of a health promotion campaign on campus. We specifically considered one sample (all students) and compared the observed distribution to the distribution of responses the prior year (a historical control). Suppose we now wish to assess whether there is a relationship between exercise on campus and students' living arrangements. As part of the same survey, graduates were asked where they lived their senior year. The response options were dormitory, on-campus apartment, off-campus apartment, and at home (i.e., commuted to and from the university). The data are shown below.

Based on the data, is there a relationship between exercise and students' living arrangements? Do you think where a person lives affects their exercise status? Here we have four independent comparison groups (living arrangement) and a discrete (ordinal) outcome variable with three response options. We specifically want to test whether living arrangement and exercise are independent. We will run the test using the five-step approach.

H 0 : Living arrangement and exercise are independent

H 1 : H 0 is false. α=0.05

The null and research hypotheses are written in words rather than in symbols. The research hypothesis is that the grouping variable (living arrangement) and the outcome variable (exercise) are dependent or related.

- Step 2. Select the appropriate test statistic.

The condition for appropriate use of the above test statistic is that each expected frequency is at least 5. In Step 4 we will compute the expected frequencies and we will ensure that the condition is met.

The decision rule depends on the level of significance and the degrees of freedom, defined as df = (r-1)(c-1), where r and c are the numbers of rows and columns in the two-way data table. The row variable is the living arrangement and there are 4 arrangements considered, thus r=4. The column variable is exercise and 3 responses are considered, thus c=3. For this test, df=(4-1)(3-1)=3(2)=6. Again, with $\chi^2$ tests there are no upper, lower or two-tailed tests. If the null hypothesis is true, the observed and expected frequencies will be close in value and the $\chi^2$ statistic will be close to zero. If the null hypothesis is false, then the $\chi^2$ statistic will be large. The rejection region for the $\chi^2$ test of independence is always in the upper (right-hand) tail of the distribution. For df=6 and a 5% level of significance, the appropriate critical value is 12.59 and the decision rule is as follows: Reject $H_0$ if $\chi^2$ > 12.59.

We now compute the expected frequencies using the formula,

Expected Frequency = (Row Total * Column Total)/N.

The computations can be organized in a two-way table. The top number in each cell of the table is the observed frequency and the bottom number is the expected frequency. The expected frequencies are shown in parentheses.

Notice that the expected frequencies are taken to one decimal place and that the sums of the observed frequencies are equal to the sums of the expected frequencies in each row and column of the table.

Recall in Step 2 a condition for the appropriate use of the test statistic was that each expected frequency is at least 5. This is true for this sample (the smallest expected frequency is 9.6) and therefore it is appropriate to use the test statistic.

We reject $H_0$ because 60.5 > 12.59. We have statistically significant evidence at α=0.05 to show that $H_0$ is false or that living arrangement and exercise are not independent (i.e., they are dependent or related), p < 0.005.
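The whole procedure (expected frequencies, adequacy check, test statistic, degrees of freedom and decision) can be sketched as one Python function. An important caveat: the two-way table of observed counts is not reproduced in this text, so the counts below are an assumption. They are illustrative values chosen to be consistent with what the text does report (N=470, column totals of 255, 125 and 90, a smallest expected frequency of 9.6, and a statistic close to 60.5), and should not be read as the published data. The function name `independence_test` is also hypothetical.

```python
def independence_test(table):
    """Chi-square test of independence for an r x c table of observed counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    # Expected frequency in each cell = (row total * column total) / N
    expected = [[r * c / n for c in col_totals] for r in row_totals]
    stat = sum(
        (o - e) ** 2 / e
        for obs_row, exp_row in zip(table, expected)
        for o, e in zip(obs_row, exp_row)
    )
    df = (len(row_totals) - 1) * (len(col_totals) - 1)
    return stat, df, expected


# ASSUMED counts (not shown in the text); columns are
# no regular exercise, sporadic exercise, regular exercise
observed = [
    [32, 30, 28],    # dormitory
    [74, 64, 42],    # on-campus apartment
    [110, 25, 15],   # off-campus apartment
    [39, 6, 5],      # at home
]

stat, df, expected = independence_test(observed)
smallest = min(min(row) for row in expected)

print(df)                  # 6
print(round(smallest, 1))  # 9.6
print(round(stat, 1))      # 60.4
print(stat > 12.59)        # True, so reject H0 at the 5% level
```

With these assumed counts the statistic is 60.4 when computed without intermediate rounding; rounding the expected frequencies to one decimal place first, as in a hand calculation, moves it slightly.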

Again, the χ 2 test of independence is used to test whether the distribution of the outcome variable is similar across the comparison groups. Here we rejected H 0 and concluded that the distribution of exercise is not independent of living arrangement, or that there is a relationship between living arrangement and exercise. The test provides an overall assessment of statistical significance. When the null hypothesis is rejected, it is important to review the sample data to understand the nature of the relationship. Consider again the sample data.

Because there are different numbers of students in each living situation, it makes the comparisons of exercise patterns difficult on the basis of the frequencies alone. The following table displays the percentages of students in each exercise category by living arrangement. The percentages sum to 100% in each row of the table. For comparison purposes, percentages are also shown for the total sample along the bottom row of the table.

From the above, it is clear that higher percentages of students living in dormitories and in on-campus apartments reported regular exercise (31% and 23%) as compared to students living in off-campus apartments and at home (10% each).

Test Yourself

Pancreaticoduodenectomy (PD) is a procedure that is associated with considerable morbidity. A study was recently conducted on 553 patients who had a successful PD between January 2000 and December 2010 to determine whether their Surgical Apgar Score (SAS) is related to 30-day perioperative morbidity and mortality. The table below gives the number of patients experiencing no, minor, or major morbidity by SAS category.

Question: What would be an appropriate statistical test to examine whether there is an association between Surgical Apgar Score and patient outcome? Using 14.13 as the value of the test statistic for these data, carry out the appropriate test at a 5% level of significance. Show all parts of your test.

In the module on hypothesis testing for means and proportions, we discussed hypothesis testing applications with a dichotomous outcome variable and two independent comparison groups. We presented a test using a test statistic Z to test for equality of independent proportions. The chi-square test of independence can also be used with a dichotomous outcome and the results are mathematically equivalent.

In the prior module, we considered the following example. Here we show the equivalence to the chi-square test of independence.

A randomized trial is designed to evaluate the effectiveness of a newly developed pain reliever designed to reduce pain in patients following joint replacement surgery. The trial compares the new pain reliever to the pain reliever currently in use (called the standard of care). A total of 100 patients undergoing joint replacement surgery agreed to participate in the trial. Patients were randomly assigned to receive either the new pain reliever or the standard pain reliever following surgery and were blind to the treatment assignment. Before receiving the assigned treatment, patients were asked to rate their pain on a scale of 0-10 with higher scores indicative of more pain. Each patient was then given the assigned treatment and after 30 minutes was again asked to rate their pain on the same scale. The primary outcome was a reduction in pain of 3 or more scale points (defined by clinicians as a clinically meaningful reduction). The following data were observed in the trial.

We tested whether there was a significant difference in the proportions of patients reporting a meaningful reduction (i.e., a reduction of 3 or more scale points) using a Z statistic, as follows.

$H_0: p_1 = p_2$

$H_1: p_1 \neq p_2$ α=0.05

Here the new or experimental pain reliever is group 1 and the standard pain reliever is group 2.

We must first check that the sample size is adequate. Specifically, we need to ensure that we have at least 5 successes and 5 failures in each comparison group or that:

$$\min\left(n_1\hat{p}_1,\ n_1(1-\hat{p}_1),\ n_2\hat{p}_2,\ n_2(1-\hat{p}_2)\right) \geq 5$$

In this example, we have

Therefore, the sample size is adequate, so the following formula can be used:

Reject H 0 if Z < -1.960 or if Z > 1.960.

We now substitute the sample data into the formula for the test statistic identified in Step 2. We first compute the overall proportion of successes:

We now substitute to compute the test statistic.

- Step 5. Conclusion.

We now conduct the same test using the chi-square test of independence.

H 0 : Treatment and outcome (meaningful reduction in pain) are independent

H 1 : H 0 is false. α=0.05

The formula for the test statistic is:

For this test, df = (2-1)(2-1) = 1. At a 5% level of significance, the appropriate critical value is 3.84 and the decision rule is as follows: Reject H0 if χ² > 3.84. (Note that 1.96² = 3.84, where 1.96 was the critical value used in the Z test for proportions shown above.)

We now compute the expected frequencies using:
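The formulas for the test statistic and the expected frequencies are not reproduced in this text. In the usual notation, assumed here, O is an observed cell frequency, E is the corresponding expected frequency under independence, and N is the total sample size:

\[\chi^2 = \sum \frac{(O-E)^2}{E}, \qquad E = \frac{\text{row total} \times \text{column total}}{N}\]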

The computations can be organized in a two-way table. The top number in each cell of the table is the observed frequency and the bottom number is the expected frequency. The expected frequencies are shown in parentheses.

A condition for the appropriate use of the test statistic was that each expected frequency is at least 5. This is true for this sample (the smallest expected frequency is 22.0) and therefore it is appropriate to use the test statistic.

(Note that (2.53)² = 6.4, where 2.53 was the value of the Z statistic in the test for proportions shown above.)
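The equivalence noted here (the square of the Z statistic equals the chi-square statistic for a 2x2 table) can be checked numerically. The following is a minimal Python sketch using hypothetical counts chosen for illustration; they are not the trial's data, which are not reproduced in this text.

```python
import math

# Hypothetical 2x2 data (NOT the trial's data): successes and group sizes.
x1, n1 = 24, 40   # group 1: number of successes, group size
x2, n2 = 14, 40   # group 2: number of successes, group size

# Two-sample Z statistic for proportions, using the overall (pooled) proportion.
p1, p2 = x1 / n1, x2 / n2
p_overall = (x1 + x2) / (n1 + n2)
z = (p1 - p2) / math.sqrt(p_overall * (1 - p_overall) * (1 / n1 + 1 / n2))

# Chi-square test of independence on the same counts.
observed = [[x1, n1 - x1], [x2, n2 - x2]]
total = n1 + n2
row_totals = [sum(row) for row in observed]
col_totals = [sum(col) for col in zip(*observed)]
chi2 = 0.0
for i in range(2):
    for j in range(2):
        expected = row_totals[i] * col_totals[j] / total
        chi2 += (observed[i][j] - expected) ** 2 / expected

print(f"Z = {z:.3f}, Z squared = {z ** 2:.3f}, chi-square = {chi2:.3f}")
```

With any 2x2 table the two printed values on the right should agree up to rounding, which is why the two tests lead to the same decision at the same significance level.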

Chi-Squared Tests in R

The video below by Mike Marin demonstrates how to perform chi-squared tests in the R programming language.

Answer to Problem on Pancreaticoduodenectomy and Surgical Apgar Scores

We have 3 independent comparison groups (Surgical Apgar Score) and a categorical outcome variable (morbidity/mortality). We can run a Chi-Squared test of independence.

H0: Apgar scores and patient outcome are independent of one another.

HA: Apgar scores and patient outcome are not independent.

Chi-squared = 14.3

Since 14.3 is greater than 9.49, we reject H0.

There is an association between Apgar scores and patient outcome. The lowest Apgar score group (0 to 4) experienced the highest percentage of major morbidity or mortality (16 out of 57=28%) compared to the other Apgar score groups.

9.4: Probability and Chi-Square Analysis

- City Tech CUNY

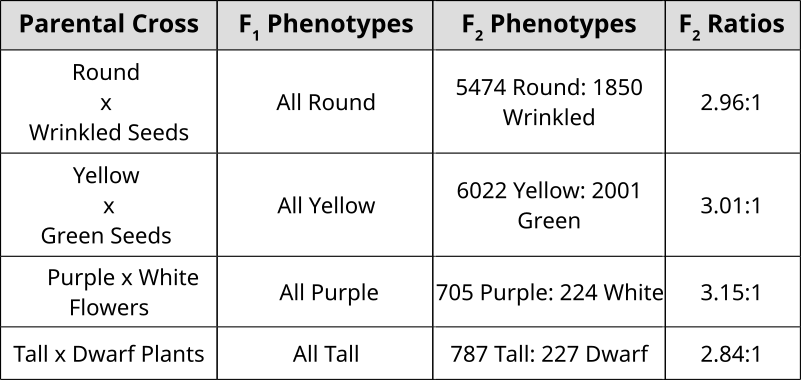

Mendel’s Observations

Probability: Past Punnett Squares

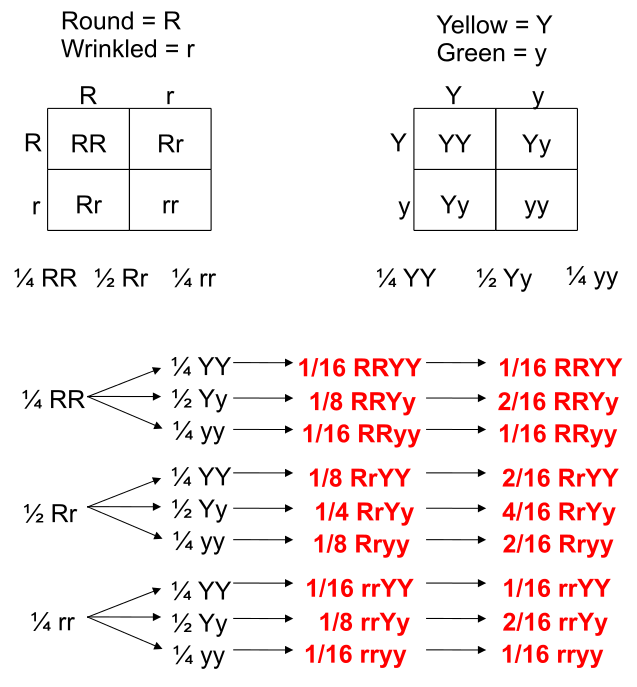

Punnett Squares are convenient for predicting the outcome of monohybrid or dihybrid crosses. The expectation of two heterozygous parents is 3:1 in a single trait cross or 9:3:3:1 in a two-trait cross. Performing a three or four trait cross becomes very messy. In these instances, it is better to follow the rules of probability. Probability is the chance that an event will occur expressed as a fraction or percentage. In the case of a monohybrid cross, 3:1 ratio means that there is a \(\frac{3}{4}\) (0.75) chance of the dominant phenotype with a \(\frac{1}{4}\) (0.25) chance of a recessive phenotype.

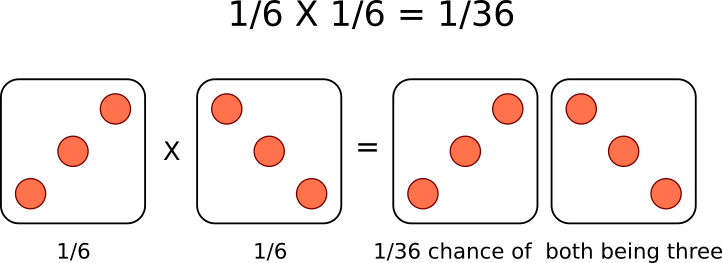

A single die has a 1 in 6 chance of being a specific value. In this case, there is a \(\frac{1}{6}\) probability of rolling a 3. It is understood that rolling a second die simultaneously is not influenced by the first and is therefore independent. This second die also has a \(\frac{1}{6}\) chance of being a 3.

We can understand these rules of probability by applying them to the dihybrid cross and realizing we come to the same outcome as the 2 monohybrid Punnett Squares as with the single dihybrid Punnett Square.

This forked line method of calculating probability of offspring with various genotypes and phenotypes can be scaled and applied to more characteristics.
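As a rough illustration of how this scales, the Python sketch below multiplies the per-trait probabilities of a monohybrid cross (\(\frac{3}{4}\) dominant, \(\frac{1}{4}\) recessive) to obtain the phenotype probabilities for any number of independently assorting traits. The function name `combine_traits` is hypothetical, and the sketch assumes every trait follows the same 3:1 ratio.

```python
from fractions import Fraction
from itertools import product

# Phenotype probabilities for one trait in a cross of two heterozygotes (3:1).
single_trait = {"dominant": Fraction(3, 4), "recessive": Fraction(1, 4)}

def combine_traits(n_traits):
    """Apply the product rule to n independent traits.

    Returns a dict mapping each phenotype combination (a tuple with one
    entry per trait) to its probability.
    """
    outcomes = {}
    for combo in product(single_trait, repeat=n_traits):
        probability = Fraction(1)
        for phenotype in combo:
            probability *= single_trait[phenotype]
        outcomes[combo] = probability
    return outcomes

# Two traits reproduce the 9:3:3:1 ratio of the dihybrid Punnett Square.
dihybrid = combine_traits(2)
for combo, probability in dihybrid.items():
    print(combo, probability)
```

Calling `combine_traits(3)` or `combine_traits(4)` gives the three- and four-trait cases that would be unwieldy to draw as a Punnett Square.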

The Chi-Square Test

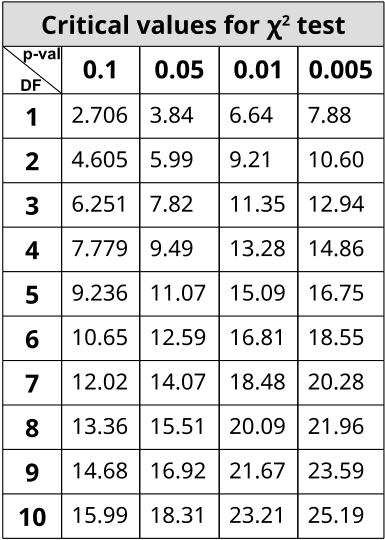

The χ² statistic is used in genetics to test whether observed outcomes deviate from the outcomes expected for the alleles in a population. The general assumption of any statistical test is that there are no significant deviations between the measured results and the predicted ones. This lack of deviation is called the null hypothesis (H0). The χ² statistic is compared against a distribution table of critical values at varying levels of probability. If the χ² value is greater than the critical value at a specific probability, then the null hypothesis is rejected and a significant deviation from predicted values was observed. Using Mendel’s laws, we can count phenotypes after a cross to compare against those predicted by probabilities (or a Punnett Square).

In order to use the table, one must determine the stringency of the test. The lower the p-value threshold, the more stringent the test. Degrees of Freedom (DF) are also calculated to determine which value on the table to use. Degrees of Freedom is the number of classes or categories there are in the observations minus 1: DF = n – 1.

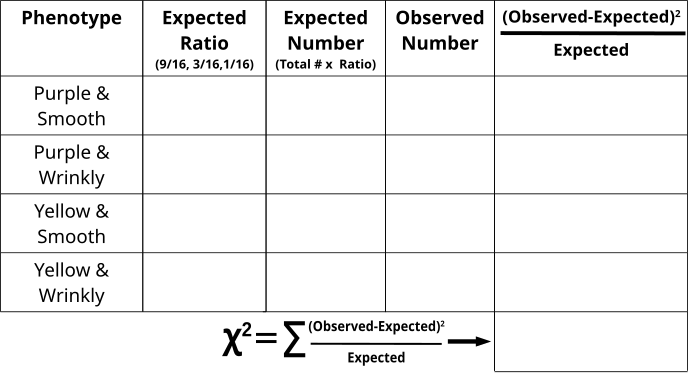

In the example of corn kernel color and texture, there are 4 classes: Purple & Smooth, Purple & Wrinkled, Yellow & Smooth, Yellow & Wrinkled. Therefore, DF = 4 – 1 = 3. Choosing p < 0.05 as the threshold for significance (rejection of the null hypothesis), the χ² value must be greater than 7.82 for the deviation from what is expected to be significant. With this dihybrid cross example, we expect a ratio of 9:3:3:1 in phenotypes, where \(\frac{1}{16}\) of the population are recessive for both texture and color while \(\frac{9}{16}\) of the population display the dominant phenotype for both color and texture. \(\frac{3}{16}\) will be dominant for one phenotype while recessive for the other, and the remaining \(\frac{3}{16}\) will be the opposite combination.

With this in mind, we can predict expected outcomes using these ratios. Taking a total count of 200 events in a population, \(\frac{9}{16} \times 200 = 112.5\), and so forth. Formally, the χ² value is generated by summing, over all classes, the quantity:

\[\frac{(Observed-Expected)^2}{Expected}\]
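A minimal Python sketch of this calculation for the 9:3:3:1 case follows. The observed counts are hypothetical values that sum to 200, chosen only for illustration; the critical value 7.82 (DF = 3, p < 0.05) is the one quoted above, and the function name `chi_square_gof` is an assumption of this sketch.

```python
def chi_square_gof(observed, expected_ratios):
    """Goodness-of-fit statistic for counts against an expected ratio.

    Returns (statistic, expected counts, degrees of freedom).
    """
    total = sum(observed)
    ratio_sum = sum(expected_ratios)
    expected = [total * ratio / ratio_sum for ratio in expected_ratios]
    statistic = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    return statistic, expected, len(observed) - 1

# Hypothetical counts, in the order: Purple & Smooth, Purple & Wrinkled,
# Yellow & Smooth, Yellow & Wrinkled (illustration only, not real data).
observed_counts = [110, 40, 38, 12]
statistic, expected_counts, dof = chi_square_gof(observed_counts, [9, 3, 3, 1])

CRITICAL_DF3_P05 = 7.82  # value quoted in the text for DF = 3, p < 0.05
print("Expected counts:", expected_counts)
print("Chi-square:", round(statistic, 3), "DF:", dof)
print("Reject the null hypothesis:", statistic > CRITICAL_DF3_P05)
```

For a total of 200 the expected counts come out as 112.5, 37.5, 37.5 and 12.5, matching the 9/16 calculation in the text.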

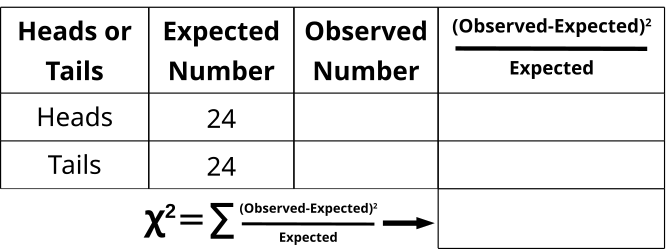

Chi-Square Test: Is This Coin Fair or Weighted? (Activity)

- Everyone in the class should flip a coin twice and record the results (this assumes a class of 24).

- 50% of 48 results should be 24.

- 24 heads and 24 tails are already written in the “Expected” column.

- As a class, compile the results in the “Observed” column (total of 48 coin flips).

- In the last column, subtract the expected heads from the observed heads and square it, then divide by the number of expected heads.

- In the last column, subtract the expected tails from the observed tails and square it, then divide by the number of expected tails.

- Add the values together from the last column to generate the χ² value.

- There are 2 classes or categories (head or tail), so DF = 2 – 1 = 1.

- Were the coin flips fair (not significantly deviating from 50:50)?

Let’s say that the coin tosses yielded 26 Heads and 22 Tails. Can we assume that the coin was unfair? If we toss a coin an odd number of times (e.g., 51), then we would expect that the results would yield 25.5 (50%) Heads and 25.5 (50%) Tails. But this isn’t a possibility. This is where the χ² test is important, as it determines whether 26:25 or 30:21, etc., are within the range of outcomes expected for a fair coin.
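The three outcomes mentioned here can be worked through with a short Python sketch. It assumes the usual critical value of 3.84 for DF = 1 at p < 0.05; the function name `coin_chi_square` is hypothetical.

```python
def coin_chi_square(heads, tails):
    """Chi-square statistic for a 50:50 expectation (DF = 1)."""
    expected = (heads + tails) / 2  # may be fractional, e.g. 25.5 for 51 flips
    return (heads - expected) ** 2 / expected + (tails - expected) ** 2 / expected

CRITICAL_DF1_P05 = 3.84  # assumed table value for DF = 1, p < 0.05

results = {}
for heads, tails in [(26, 22), (26, 25), (30, 21)]:
    statistic = coin_chi_square(heads, tails)
    results[(heads, tails)] = statistic
    verdict = "significant" if statistic > CRITICAL_DF1_P05 else "not significant"
    print(f"{heads}:{tails} -> chi-square = {statistic:.3f} ({verdict})")
```

Under these assumptions none of the three outcomes exceeds the critical value, so none of them gives grounds to call the coin unfair.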

Chi-Square Test of Kernel Coloration and Texture in an F2 Population (Activity)

- From the counts, one can assume which phenotypes are dominant and recessive.

- Fill in the “Observed” category with the appropriate counts.

- Fill in the “Expected Ratio” with either 9/16, 3/16 or 1/16.

- The total number of the counted event was 200, so multiply the “Expected Ratio” x 200 to generate the “Expected Number” fields.

- Calculate the \(\frac{(Observed-Expected)^2}{Expected}\) for each phenotype combination

- Add all \(\frac{(Observed-Expected)^2}{Expected}\) values together to generate the χ² value and compare with the value on the table where DF = 3.

- What would it mean if the Null Hypothesis was rejected? Can you explain a case in which we have observed values that are significantly altered from what is expected?

Mastering the Chi-Square Test: A Comprehensive Guide

The Chi-Square Test is a statistical method used to determine if there’s a significant association between two categorical variables in a sample data set. It checks the independence of these variables, making it a robust and flexible tool for data analysis.

Introduction to Chi-Square Test

The Chi-Square Test of Independence is an important tool in the statistician’s arsenal. Its primary function is determining whether a significant association exists between two categorical variables in a sample data set. Essentially, it’s a test of independence, gauging if variations in one variable can impact another.

This comprehensive guide gives you a deeper understanding of the Chi-Square Test, its mechanics, importance, and correct implementation.

- Chi-Square Test assesses the association between two categorical variables.

- Chi-Square Test requires the data to be a random sample.

- Chi-Square Test is designed for categorical or nominal variables.

- Each observation in the Chi-Square Test must be mutually exclusive and exhaustive.

- Chi-Square Test can’t establish causality, only an association between variables.

Case Study: Chi-Square Test in Real-World Scenario

Let’s delve into a real-world scenario to illustrate the application of the Chi-Square Test. Picture this: you’re the lead data analyst for a burgeoning shoe company. The company has an array of products but wants to enhance its marketing strategy by understanding if there’s an association between gender (Male, Female) and product preference (Sneakers, Loafers).

To start, you collect data from a random sample of customers, using a survey to identify their gender and their preferred shoe type. This data then gets organized into a contingency table, with gender across the top and shoe type down the side.

Next, you apply the Chi-Square Test to this data. The null hypothesis (H0) is that gender and shoe preference are independent. In contrast, the alternative hypothesis (H1) proposes that these variables are associated. After calculating the expected frequencies and the Chi-Square statistic, you compare this statistic with the critical value from the Chi-Square distribution.

Suppose the Chi-Square statistic is higher than the critical value in our scenario, leading to the rejection of the null hypothesis. This result indicates a significant association between gender and shoe preference. With this insight, the shoe company has valuable information for targeted marketing campaigns.

For instance, if the data shows that females prefer sneakers over loafers, the company might emphasize its sneaker line in marketing materials directed toward women. Conversely, if men show a higher preference for loafers, the company can highlight these products in campaigns targeting men.

This case study exemplifies the power of the Chi-Square Test. It’s a simple and effective tool that can drive strategic decisions in various real-world contexts, from marketing to medical research.

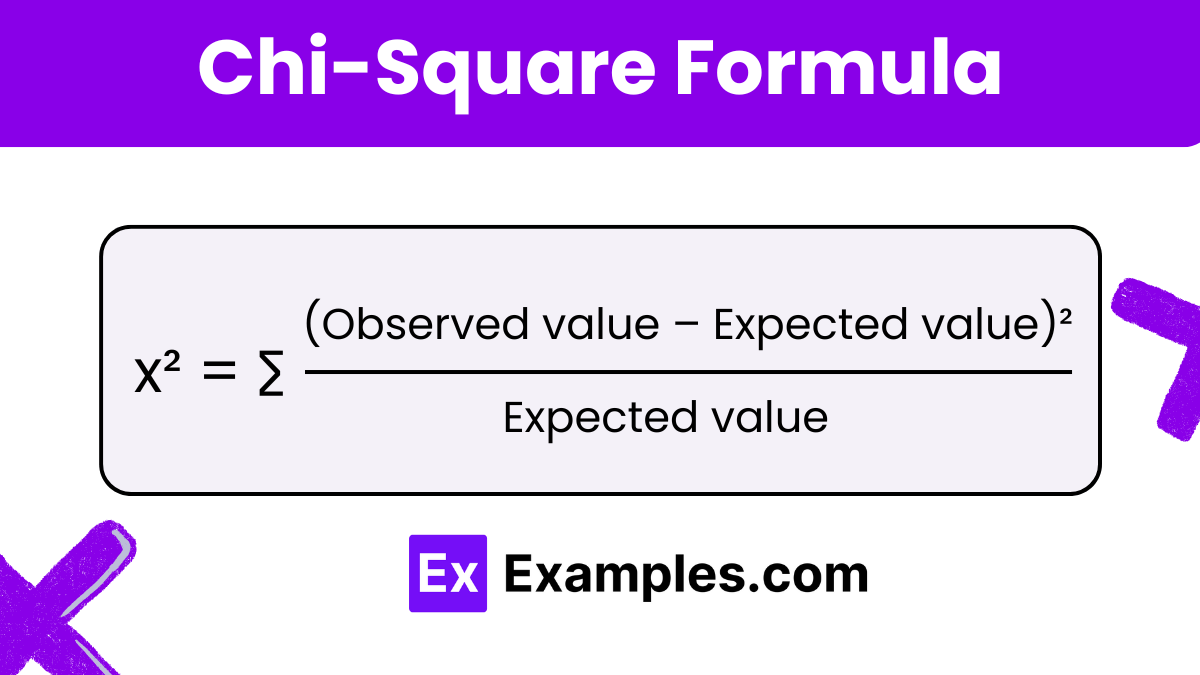

The Mathematics Behind Chi-Square Test

At the heart of the Chi-Square Test lies the calculation of the discrepancy between observed data and the expected data under the assumption of variable independence. This discrepancy, termed the Chi-Square statistic, is calculated as the sum of squared differences between observed (O) and expected (E) frequencies, normalized by the expected frequencies in each category.

In mathematical terms, the Chi-Square statistic (χ²) can be represented as follows: χ² = Σ [ (Oᵢ – Eᵢ)² / Eᵢ ], where the summation (Σ) is carried over all categories.

This formula quantifies the discrepancy between our observations and what we would expect if the null hypothesis of independence were true. We can decide on the variables’ independence by comparing the calculated Chi-Square statistic to a critical value from the Chi-Square distribution. If the computed χ² is greater than the critical value, we reject the null hypothesis, indicating a significant association between the variables.

Step-by-Step Guide to Perform Chi-Square Test

To effectively execute a Chi-Square Test, follow these methodical steps:

State the Hypotheses: The null hypothesis (H0) posits no association between the variables — i.e., independent — while the alternative hypothesis (H1) posits an association between the variables.

Construct a Contingency Table: Create a matrix to present your observations, with one variable defining the rows and the other defining the columns. Each table cell shows the frequency of observations corresponding to a particular combination of variable categories.

Calculate the Expected Values: For each cell in the contingency table, calculate the expected frequency assuming that H0 is true. This is calculated by multiplying the row total by the column total for that cell and dividing by the total number of observations.

Compute the Chi-Square Statistic: Apply the formula χ² = Σ [ (Oᵢ – Eᵢ)² / Eᵢ ] to compute the Chi-Square statistic.

Compare Your Test Statistic: Evaluate your test statistic against a Chi-Square distribution to find the p-value, which will indicate the statistical significance of your test. If the p-value is less than your chosen significance level (usually 0.05), you reject H0.
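The steps above can be sketched in plain Python as follows. The contingency table is hypothetical (invented counts for the shoe-preference scenario, with shoe type as rows and gender as columns), the function names are assumptions of this sketch, and the p-value helper relies on a shortcut that is valid only for one degree of freedom, i.e. a 2×2 table.

```python
import math

def chi_square_independence(table):
    """Return (statistic, degrees of freedom, expected table) for a
    contingency table given as a list of rows of observed counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    # Expected count under H0: row total * column total / grand total.
    expected = [[r * c / n for c in col_totals] for r in row_totals]
    statistic = sum(
        (o - e) ** 2 / e
        for obs_row, exp_row in zip(table, expected)
        for o, e in zip(obs_row, exp_row)
    )
    dof = (len(row_totals) - 1) * (len(col_totals) - 1)
    return statistic, dof, expected

def p_value_df1(statistic):
    """Upper-tail chi-square probability; valid ONLY for 1 degree of freedom."""
    return math.erfc(math.sqrt(statistic / 2))

# Hypothetical survey counts.  Rows: Sneakers, Loafers.  Columns: Male, Female.
shoe_table = [[30, 50],
              [45, 25]]
chi2_stat, dof, expected_table = chi_square_independence(shoe_table)
p_value = p_value_df1(chi2_stat)

print("Expected frequencies:", expected_table)
print(f"chi-square = {chi2_stat:.3f}, df = {dof}, p = {p_value:.4f}")
print("Reject H0 at the 0.05 level:", p_value < 0.05)
```

For larger tables the statistic and degrees of freedom from `chi_square_independence` still apply, but the p-value would need a general chi-square distribution function from a statistics library or a printed table.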

Interpretation of the results should always be in the context of your research question and hypothesis. This includes considering practical significance — not just statistical significance — and ensuring your findings align with the broader theoretical understanding of the topic.

Assumptions, Limitations, and Misconceptions

The Chi-Square Test, a vital tool in statistical analysis, comes with certain assumptions and distinct limitations. Firstly, it presumes that the data used are a random sample from a larger population and that the variables under investigation are nominal or categorical. Each observation must fall into one unique category or cell in the analysis, meaning observations are mutually exclusive and exhaustive.

The Chi-Square Test has limitations when deployed with small sample sizes. The expected frequency of any cell in the contingency table should ideally be 5 or more. If it falls short, this can cause distortions in the test findings, potentially triggering a Type I or Type II error.

Misuse and misconceptions about this test often center on its application and interpretability. A common error is using it for continuous or ordinal data without appropriate categorization, leading to misleading results. Also, a significant result from a Chi-Square Test indicates an association between variables, but it doesn’t imply causality. Interpreting the association as proof of causality is a frequent misconception; the test doesn’t offer information about whether changes in one variable cause changes in another.

Moreover, a significant Chi-Square test alone is not enough to understand the relationship between variables comprehensively. To get a more nuanced interpretation, it’s crucial to accompany the test with a measure of effect size, such as Cramér’s V, or the Phi coefficient for a 2×2 contingency table. These measures provide information about the strength of the association, adding another dimension to the interpretation of results. This is essential as statistically significant results do not necessarily imply a practically significant effect. An effect size measure is especially important with large samples, where even minor deviations from independence might result in a significant Chi-Square test.
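To make the point about large samples concrete, here is a small Python sketch. It uses the common definition of Cramér’s V, the square root of χ² / (N × min(rows – 1, columns – 1)), which reduces to the Phi coefficient for a 2×2 table. Both tables are hypothetical; the second is simply the first with every count multiplied by 100.

```python
import math

def chi_square_statistic(table):
    """Chi-square statistic for a contingency table (list of rows)."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    return sum(
        (table[i][j] - row_totals[i] * col_totals[j] / n) ** 2
        / (row_totals[i] * col_totals[j] / n)
        for i in range(len(row_totals))
        for j in range(len(col_totals))
    )

def cramers_v(table):
    """Cramér's V effect size; equals Phi for a 2x2 table."""
    n = sum(sum(row) for row in table)
    smaller_dim = min(len(table) - 1, len(table[0]) - 1)
    return math.sqrt(chi_square_statistic(table) / (n * smaller_dim))

# Hypothetical tables with identical proportions but different sample sizes.
small_table = [[52, 48], [48, 52]]
large_table = [[5200, 4800], [4800, 5200]]

for name, table in [("small", small_table), ("large", large_table)]:
    print(name, "chi-square =", round(chi_square_statistic(table), 2),
          "V =", round(cramers_v(table), 3))
```

In this sketch the statistic grows with the sample size while V stays the same, so the larger table crosses the df = 1 critical value of 3.84 even though the strength of association has not changed.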

Conclusion and Further Reading

Mastering the Chi-Square Test is vital in any data analyst’s or statistician’s journey. Its wide range of applications and robustness make it a tool you’ll turn to repeatedly.

For further learning, statistical textbooks and online courses can provide more in-depth knowledge and practice. Don’t hesitate to delve deeper and keep exploring the fascinating world of data analysis.

- Effect Size for Chi-Square Tests

- Assumptions for the Chi-Square Test

- Assumptions for Chi-Square Test (Story)

- Chi Square Test – an overview (External Link)

- Understanding the Null Hypothesis in Chi-Square

- What is the Difference Between the T-Test vs. Chi-Square Test?

- How to Report Chi-Square Test Results in APA Style: A Step-By-Step Guide

Frequently Asked Questions (FAQ)

It’s a statistical test used to determine if there’s a significant association between two categorical variables.

The test is suitable for categorical or nominal variables.

No, the test can only indicate an association, not a causal relationship.

The test assumes that the data is a random sample and that observations are mutually exclusive and exhaustive.

It measures the discrepancy between observed and expected data, calculated by χ² = Σ [ (Oᵢ – Eᵢ)² / Eᵢ ].

The result is generally considered statistically significant if the p-value is less than 0.05.

Misuse can lead to misleading results, making it crucial to use it with categorical data only.

Small sample sizes can lead to wrong results, especially when expected cell frequencies are less than 5.

Low expected cell frequencies can lead to Type I or Type II errors.

Results should be interpreted in context, considering the statistical significance and the broader understanding of the topic.

Chi-Square (Χ²) Test & How To Calculate Formula Equation

Benjamin Frimodig

Science Expert

B.A., History and Science, Harvard University

Ben Frimodig is a 2021 graduate of Harvard College, where he studied the History of Science.

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Chi-square (χ2) is used to test hypotheses about the distribution of observations into categories with no inherent ranking.

What Is a Chi-Square Statistic?

The Chi-square test (pronounced Kai) looks at the pattern of observations and will tell us if certain combinations of the categories occur more frequently than we would expect by chance, given the total number of times each category occurred.

It looks for an association between the variables. We cannot use a correlation coefficient to look for the patterns in this data because the categories often do not form a continuum.

There are three main types of Chi-square tests, tests of goodness of fit, the test of independence, and the test for homogeneity. All three tests rely on the same formula to compute a test statistic.

These tests function by deciphering relationships between observed sets of data and theoretical or “expected” sets of data that align with the null hypothesis.

What is a Contingency Table?

Contingency tables (also known as two-way tables) are grids in which Chi-square data is organized and displayed. They provide a basic picture of the interrelation between two variables and can help find interactions between them.

In contingency tables, one variable and each of its categories are listed vertically, and the other variable and each of its categories are listed horizontally.

Additionally, including column and row totals, also known as “marginal frequencies,” will help facilitate the Chi-square testing process.

In order for the Chi-square test to be considered trustworthy, each cell of your expected contingency table must have a value of at least five.

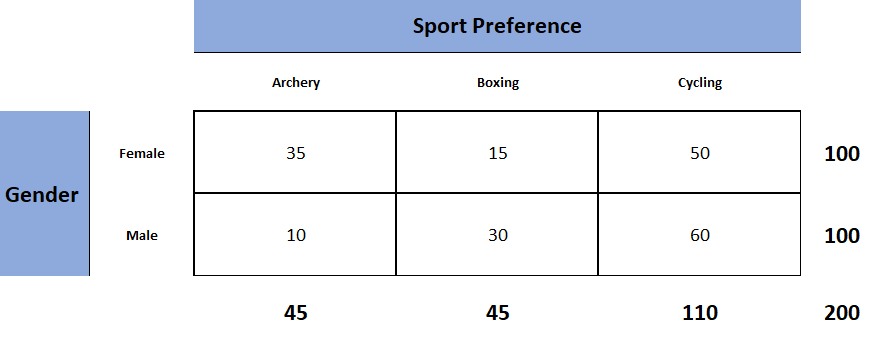

Each Chi-square test will have one contingency table representing observed counts (see Fig. 1) and one contingency table representing expected counts (see Fig. 2).

Figure 1. Observed table (which contains the observed counts).

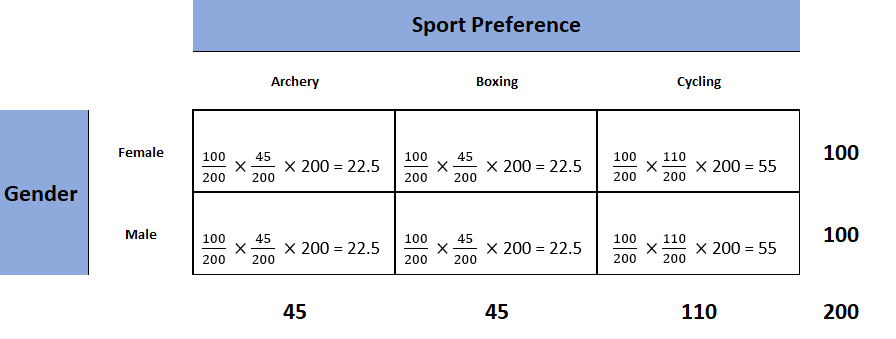

To obtain the expected frequencies for any cell in any cross-tabulation in which the two variables are assumed independent, multiply the row and column totals for that cell and divide the product by the total number of cases in the table.

Figure 2. Expected table (what we expect the two-way table to look like if the two categorical variables are independent).

To decide if our calculated value for χ2 is significant, we also need to work out the degrees of freedom for our contingency table using the following formula: df= (rows – 1) x (columns – 1).

Formula Calculation

Calculate the chi-square statistic (χ2) by completing the following steps:

- Calculate the expected frequencies and the observed frequencies.

- For each observed number in the table, subtract the corresponding expected number (O – E).

- Square the difference (O – E)².

- Divide the squares obtained for each cell in the table by the expected number for that cell (O – E)² / E.

- Sum all the values for (O – E)² / E. This is the chi-square statistic.

- Calculate the degrees of freedom for the contingency table using the following formula; df= (rows – 1) x (columns – 1).

Once we have calculated the degrees of freedom (df) and the chi-squared value (χ2), we can use the χ2 table (often at the back of a statistics book) to check if our value for χ2 is higher than the critical value given in the table. If it is, then our result is significant at the level given.
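The procedure above can be followed literally in a few lines of Python. This is only a sketch: the 2 x 3 table is hypothetical, the function name is an assumption, and the small dictionary holds commonly tabulated critical values at the .05 level rather than a full χ2 table. The sketch also tracks the smallest expected count, since each expected cell should be at least five.

```python
# Commonly tabulated critical values at the .05 level, keyed by df.
CRITICAL_05 = {1: 3.84, 2: 5.99, 3: 7.82, 4: 9.49}

def chi_square_steps(observed):
    """Follow the listed steps for a table given as a list of rows.

    Returns (statistic, df, smallest expected count).
    """
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    n = sum(row_totals)
    statistic = 0.0
    smallest_expected = float("inf")
    for i, row in enumerate(observed):
        for j, o in enumerate(row):
            e = row_totals[i] * col_totals[j] / n  # expected frequency
            difference = o - e                     # O - E
            statistic += difference ** 2 / e       # (O - E)^2 / E, summed
            smallest_expected = min(smallest_expected, e)
    df = (len(observed) - 1) * (len(observed[0]) - 1)
    return statistic, df, smallest_expected

# Hypothetical observed counts (2 rows x 3 columns), for illustration only.
observed_table = [[20, 30, 50],
                  [30, 30, 40]]
chi2_value, df_value, min_expected = chi_square_steps(observed_table)
is_significant = chi2_value > CRITICAL_05[df_value]

print(f"chi-square = {chi2_value:.3f}, df = {df_value}")
print("Smallest expected count:", min_expected)
print("Significant at the .05 level:", is_significant)
```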

Interpretation

The chi-square statistic tells you how much difference exists between the observed count in each table cell and the count you would expect if there were no relationship at all in the population.

Small Chi-Square Statistic: If the chi-square statistic is small and the p-value is large (usually greater than 0.05), this often indicates that the observed frequencies in the sample are close to what would be expected under the null hypothesis.

The null hypothesis usually states no association between the variables being studied or that the observed distribution fits the expected distribution.

In theory, if the observed and expected values were equal (no difference), then the chi-square statistic would be zero — but this is unlikely to happen in real life.

Large Chi-Square Statistic : If the chi-square statistic is large and the p-value is small (usually less than 0.05), then the conclusion is often that the data does not fit the model well, i.e., the observed and expected values are significantly different. This often leads to the rejection of the null hypothesis.

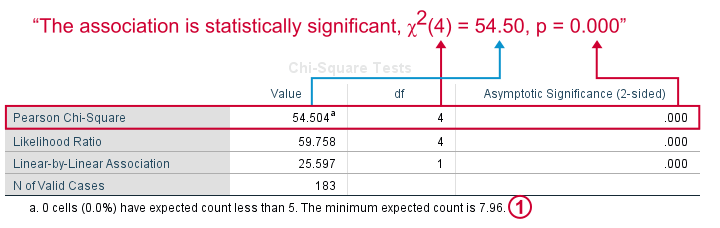

How to Report

To report a chi-square output in an APA-style results section, always rely on the following template:

χ2 (degrees of freedom, N = sample size) = chi-square statistic value, p = p value.

In the case of the above example, the results would be written as follows:

A chi-square test of independence showed that there was a significant association between gender and post-graduation education plans, χ2 (4, N = 101) = 54.50, p < .001.

APA Style Rules

- Do not use a zero before a decimal when the statistic cannot be greater than 1 (proportion, correlation, level of statistical significance).

- Report exact p values to two or three decimals (e.g., p = .006, p = .03).

- However, report p values less than .001 as “ p < .001.”

- Put a space before and after a mathematical operator (e.g., minus, plus, greater than, less than, equals sign).

- Do not repeat statistics in both the text and a table or figure.
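As an illustration of the template and the rules about leading zeros and small p values, here is a hedged Python sketch of a formatting helper. The function names are hypothetical, the output follows the template exactly as written in this article, and the p value passed in the usage line is a placeholder below .001, because the example above reports only p < .001.

```python
def format_p(p):
    """Format a p value without a leading zero; values below .001 are
    reported as 'p < .001'."""
    if p < 0.001:
        return "p < .001"
    text = f"{p:.3f}"          # three decimals, e.g. '0.030'
    if text.startswith("0"):
        text = text[1:]        # drop the leading zero -> '.030'
    return f"p = {text}"

def apa_chi_square(statistic, df, n, p):
    """Build a results string following the template given in the text."""
    return f"χ2 ({df}, N = {n}) = {statistic:.2f}, {format_p(p)}"

# Placeholder p value (anything below .001 prints the same way).
report = apa_chi_square(54.50, 4, 101, p=0.0000001)
print(report)
print(format_p(0.006))
print(format_p(0.03))
```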

p -value Interpretation

You test whether a given χ2 is statistically significant by testing it against a table of chi-square distributions, according to the number of degrees of freedom for your sample. For a goodness-of-fit test this is the number of categories minus 1; for a contingency table it is (rows – 1) x (columns – 1), as given above. The chi-square test assumes an expected count of at least 5 in each category.

If you are using SPSS, the output will include the p-value.

For a chi-square test, a p-value that is less than or equal to the .05 significance level indicates that the observed values are significantly different from the expected values.

Thus, low p-values (p< .05) indicate a likely difference between the theoretical population and the collected sample. You can conclude that a relationship exists between the categorical variables.

Remember that p -values do not indicate the odds that the null hypothesis is true but rather provide the probability that one would obtain the sample distribution observed (or a more extreme distribution) if the null hypothesis was true.
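This definition can be made concrete with a small Python sketch. For the simplest case, two categories that are equally likely under the null hypothesis, it enumerates every possible sample and adds up the probability of all outcomes whose statistic is at least as large as the observed one. This is an exact binomial calculation, not the chi-square table approximation, so the result can differ slightly from a table-based p-value. The counts and function names are hypothetical.

```python
from math import comb

def two_category_statistic(k, n):
    """Chi-square statistic when k of n observations fall in category A
    and both categories are expected to be equally frequent."""
    expected = n / 2
    return ((k - expected) ** 2 + ((n - k) - expected) ** 2) / expected

def exact_p_value(observed_k, n):
    """Probability, if the null hypothesis is true, of a statistic at
    least as extreme as the one observed."""
    observed_stat = two_category_statistic(observed_k, n)
    # Count the equally likely sequences that give a statistic >= observed.
    as_extreme = sum(
        comb(n, k)
        for k in range(n + 1)
        if two_category_statistic(k, n) >= observed_stat - 1e-12
    )
    return as_extreme / 2 ** n

# Hypothetical sample: 32 of 48 observations in category A.
p_exact = exact_p_value(32, 48)
print(f"statistic = {two_category_statistic(32, 48):.3f}, exact p = {p_exact:.4f}")
```

Note that the function never computes the probability that the null hypothesis is true; it only describes how surprising the sample would be if it were.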

A level of confidence necessary to accept the null hypothesis can never be reached. Therefore, the conclusion is either to fail to reject the null hypothesis or to reject it in favor of the alternative hypothesis, depending on the calculated p-value.

The six steps below show you how to analyze your data using a chi-square goodness-of-fit test in SPSS (when you have hypothesized that you have equal expected proportions).

Step 1 : Analyze > Nonparametric Tests > Legacy Dialogs > Chi-square… on the top menu as shown below:

Step 2 : Move the variable indicating categories into the “Test Variable List:” box.

Step 3 : If you want to test the hypothesis that all categories are equally likely, click “OK.”

Step 4 : Otherwise, specify the expected count for each category by first clicking the “Values” button under “Expected Values.”

Step 5 : Then, in the box to the right of “Values,” enter the expected count for category one and click the “Add” button. Now enter the expected count for category two and click “Add.” Continue in this way until all expected counts have been entered.

Step 6 : Then click “OK.”

The five steps below show you how to analyze your data using a chi-square test of independence in SPSS Statistics.

Step 1 : Open the Crosstabs dialog (Analyze > Descriptive Statistics > Crosstabs).

Step 2 : Select the variables you want to compare using the chi-square test. Click one variable in the left window and then click the arrow at the top to move the variable. Select the row variable and the column variable.

Step 3 : Click Statistics (a new pop-up window will appear). Check Chi-square, then click Continue.

Step 4 : (Optional) Check the box for Display clustered bar charts.

Step 5 : Click OK.

Goodness-of-Fit Test

The Chi-square goodness of fit test is used to compare a randomly collected sample containing a single, categorical variable to a larger population.

This test is most commonly used to compare a random sample to the population from which it was potentially collected.

The test begins with the creation of a null and alternative hypothesis. In this case, the hypotheses are as follows:

Null Hypothesis (Ho) : The null hypothesis (Ho) is that the observed frequencies are the same (except for chance variation) as the expected frequencies. The collected data is consistent with the population distribution.

Alternative Hypothesis (Ha) : The collected data is not consistent with the population distribution.

The next step is to create a contingency table that represents how the data would be distributed if the null hypothesis were exactly correct.

The sample’s overall deviation from this theoretical/expected data will allow us to draw a conclusion, with a more severe deviation resulting in smaller p-values.

Test for Independence

The Chi-square test for independence looks for an association between two categorical variables within the same population.

Unlike the goodness of fit test, the test for independence does not compare a single observed variable to a theoretical population but rather two variables within a sample set to one another.

The hypotheses for a Chi-square test of independence are as follows:

Null Hypothesis (Ho) : There is no association between the two categorical variables in the population of interest.

Alternative Hypothesis (Ha) : There is an association between the two categorical variables in the population of interest.

The next step is to create a contingency table of expected values that reflects how a data set that perfectly aligns with the null hypothesis would appear.

The simplest way to do this is to calculate the marginal frequencies of each row and column; the expected frequency of each cell is equal to the product of the row and column marginal frequencies that correspond to that cell in the observed contingency table, divided by the total sample size.

Test for Homogeneity

The Chi-square test for homogeneity is organized and executed exactly the same as the test for independence.

The main difference to remember between the two is that the test for independence looks for an association between two categorical variables within the same population, while the test for homogeneity determines if the distribution of a variable is the same in each of several populations (thus allocating population itself as the second categorical variable).

Null Hypothesis (Ho) : There is no difference in the distribution of a categorical variable for several populations or treatments.

Alternative Hypothesis (Ha) : There is a difference in the distribution of a categorical variable for several populations or treatments.

The difference between these two tests can be a bit tricky to determine, especially in the practical applications of a Chi-square test. A reliable rule of thumb is to determine how the data was collected.

If the data consists of only one random sample with the observations classified according to two categorical variables, it is a test for independence. If the data consists of more than one independent random sample, it is a test for homogeneity.

What is the chi-square test?

The Chi-square test is a non-parametric statistical test used to determine if there’s a significant association between two or more categorical variables in a sample.

It works by comparing the observed frequencies in each category of a cross-tabulation with the frequencies expected under the null hypothesis, which assumes there is no relationship between the variables.

This test is often used in fields like biology, marketing, sociology, and psychology for hypothesis testing.

What does chi-square tell you?

The Chi-square test informs whether there is a significant association between two categorical variables. If the calculated Chi-square value is above the critical value from the Chi-square distribution, it suggests a significant relationship between the variables, and the null hypothesis of no association is rejected.

How to calculate chi-square?

To calculate the Chi-square statistic, follow these steps:

1. Create a contingency table of observed frequencies for each category.

2. Calculate expected frequencies for each category under the null hypothesis.

3. Compute the Chi-square statistic using the formula: Χ² = Σ [ (O_i – E_i)² / E_i ], where O_i is the observed frequency and E_i is the expected frequency.

4. Compare the calculated statistic with the critical value from the Chi-square distribution to draw a conclusion.
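
The four steps above can be sketched as follows. This is a rough illustration under stated assumptions: the table of counts is hypothetical, the degrees of freedom are taken as (rows - 1) x (columns - 1), which is the standard choice for a contingency table, and the critical values are the usual tabulated 5% values for 1 to 4 degrees of freedom rather than values computed by the code.

```python
def chi_square_statistic(observed):
    """Return (statistic, degrees of freedom) for a table of observed counts."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(observed):
        for j, o in enumerate(row):
            e = row_totals[i] * col_totals[j] / n  # step 2: expected frequency
            stat += (o - e) ** 2 / e               # step 3: (O - E)^2 / E
    df = (len(row_totals) - 1) * (len(col_totals) - 1)
    return stat, df


# Step 1: hypothetical observed contingency table (illustrative data only).
observed = [[20, 30, 50],
            [30, 30, 40]]

stat, df = chi_square_statistic(observed)

# Step 4: compare with the tabulated 5% critical value for this df.
CRITICAL_05 = {1: 3.841, 2: 5.991, 3: 7.815, 4: 9.488}
reject = stat > CRITICAL_05[df]
print(f"chi-square = {stat:.3f}, df = {df}, reject H0 at 5%: {reject}")
```

For these illustrative counts the statistic falls below the critical value, so the null hypothesis would not be rejected at the 5% level.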

9.6: Chi-Square Tests

- Kyle Siegrist

- University of Alabama in Huntsville via Random Services

In this section, we will study a number of important hypothesis tests that fall under the general term chi-square tests. These are named, as you might guess, because in each case the test statistic has (in the limit) a chi-square distribution. Although there are several different tests in this general category, they all share some common themes:

- In each test, there are one or more underlying multinomial samples. Of course, the multinomial model includes the Bernoulli model as a special case.

- Each test works by comparing the observed frequencies of the various outcomes with expected frequencies under the null hypothesis.

- If the model is incompletely specified, some of the expected frequencies must be estimated; this reduces the degrees of freedom in the limiting chi-square distribution.

We will start with the simplest case, where the derivation is the most straightforward; in fact this test is equivalent to a test we have already studied. We then move to successively more complicated models.

The One-Sample Bernoulli Model

Suppose that \(\bs{X} = (X_1, X_2, \ldots, X_n)\) is a random sample from the Bernoulli distribution with unknown success parameter \(p \in (0, 1)\). Thus, these are independent random variables taking the values 1 and 0 with probabilities \(p\) and \(1 - p\) respectively. We want to test \(H_0: p = p_0\) versus \(H_1: p \ne p_0\), where \(p_0 \in (0, 1)\) is specified. Of course, we have already studied such tests in the Bernoulli model. But keep in mind that our methods in this section will generalize to a variety of new models that we have not yet studied.

Let \(O_1 = \sum_{j=1}^n X_j\) and \(O_0 = n - O_1 = \sum_{j=1}^n (1 - X_j)\). These statistics give the number of times (frequency) that outcomes 1 and 0 occur, respectively. Moreover, we know that each has a binomial distribution; \(O_1\) has parameters \(n\) and \(p\), while \(O_0\) has parameters \(n\) and \(1 - p\). In particular, \(\E(O_1) = n p\), \(\E(O_0) = n (1 - p)\), and \(\var(O_1) = \var(O_0) = n p (1 - p)\). Moreover, recall that \(O_1\) is sufficient for \(p\). Thus, any good test statistic should be a function of \(O_1\). Next, recall that when \(n\) is large, the distribution of \(O_1\) is approximately normal, by the central limit theorem. Let \[ Z = \frac{O_1 - n p_0}{\sqrt{n p_0 (1 - p_0)}} \] Note that \(Z\) is the standard score of \(O_1\) under \(H_0\). Hence if \(n\) is large, \(Z\) has approximately the standard normal distribution under \(H_0\), and therefore \(V = Z^2\) has approximately the chi-square distribution with 1 degree of freedom under \(H_0\). As usual, let \(\chi_k^2\) denote the quantile function of the chi-square distribution with \(k\) degrees of freedom.

An approximate test of \(H_0\) versus \(H_1\) at the \(\alpha\) level of significance is to reject \(H_0\) if and only if \(V \gt \chi_1^2(1 - \alpha)\).

The test above is equivalent to the unbiased test with test statistic \(Z\) (the approximate normal test) derived in the section on Tests in the Bernoulli model.

For purposes of generalization, the critical result in the next exercise is a special representation of \(V\). Let \(e_0 = n (1 - p_0)\) and \(e_1 = n p_0\). Note that these are the expected frequencies of the outcomes 0 and 1, respectively, under \(H_0\).

\(V\) can be written in terms of the observed and expected frequencies as follows: \[ V = \frac{(O_0 - e_0)^2}{e_0} + \frac{(O_1 - e_1)^2}{e_1} \]
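
One way to verify this representation, sketched here as a supplementary step: since \(O_0 = n - O_1\) and \(e_0 = n - e_1\), we have \(O_0 - e_0 = -(O_1 - e_1)\). Using \(e_0 + e_1 = n\) and \(e_0 e_1 = n^2 p_0 (1 - p_0)\), \[ \frac{(O_0 - e_0)^2}{e_0} + \frac{(O_1 - e_1)^2}{e_1} = (O_1 - e_1)^2 \left( \frac{1}{e_0} + \frac{1}{e_1} \right) = (O_1 - n p_0)^2 \, \frac{e_0 + e_1}{e_0 e_1} = \frac{(O_1 - n p_0)^2}{n p_0 (1 - p_0)} = Z^2 \]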

This representation shows that our test statistic \(V\) measures the discrepancy between the expected frequencies, under \(H_0\), and the observed frequencies. Of course, large values of \(V\) are evidence in favor of \(H_1\). Finally, note that although there are two terms in the expansion of \(V\) above, there is only one degree of freedom since \(O_0 + O_1 = n\). The observed and expected frequencies could be stored in a \(1 \times 2\) table.
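
As a numerical check of the one-sample test, the following sketch computes \(Z\), \(V = Z^2\), and the frequency form of \(V\) for hypothetical data (60 successes in 100 trials, with \(p_0 = 0.5\)). The function names are illustrative. The p-value uses the fact that, with 1 degree of freedom, the chi-square tail probability \(\P(V \gt v)\) equals the two-sided standard normal tail, which the standard library exposes through `math.erfc`.

```python
import math


def bernoulli_chi_square(successes, n, p0):
    """Return (Z, Z^2, V from observed/expected frequencies) for H0: p = p0."""
    z = (successes - n * p0) / math.sqrt(n * p0 * (1 - p0))
    o1, o0 = successes, n - successes      # observed frequencies of 1 and 0
    e1, e0 = n * p0, n * (1 - p0)          # expected frequencies under H0
    v_freq = (o0 - e0) ** 2 / e0 + (o1 - e1) ** 2 / e1
    return z, z ** 2, v_freq


def chi2_sf_1df(v):
    """P(chi-square with 1 df > v), via the two-sided standard normal tail."""
    return math.erfc(math.sqrt(v / 2))


# Hypothetical data (illustrative only): 60 successes in 100 trials, p0 = 0.5.
z, v_z, v_freq = bernoulli_chi_square(60, 100, 0.5)
p_value = chi2_sf_1df(v_freq)
print(f"Z = {z:.3f}, Z^2 = {v_z:.3f}, V = {v_freq:.3f}, p-value = {p_value:.4f}")
```

The two forms of \(V\) agree, and the test rejects \(H_0\) at level \(\alpha\) exactly when the p-value is below \(\alpha\).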

The Multi-Sample Bernoulli Model

Suppose now that we have samples from several (possibly) different, independent Bernoulli trials processes. Specifically, suppose that \(\bs{X}_i = (X_{i,1}, X_{i,2}, \ldots, X_{i,n_i})\) is a random sample of size \(n_i\) from the Bernoulli distribution with unknown success parameter \(p_i \in (0, 1)\) for each \(i \in \{1, 2, \ldots, m\}\). Moreover, the samples \((\bs{X}_1, \bs{X}_2, \ldots, \bs{X}_m)\) are independent. We want to test hypotheses about the unknown parameter vector \(\bs{p} = (p_1, p_2, \ldots, p_m)\). There are two common cases that we consider below, but first let's set up the essential notation that we will need for both cases. For \(i \in \{1, 2, \ldots, m\}\) and \(j \in \{0, 1\}\), let \(O_{i,j}\) denote the number of times that outcome \(j\) occurs in sample \(\bs{X}_i\). The observed frequency \(O_{i,j}\) has a binomial distribution; \(O_{i,1}\) has parameters \(n_i\) and \(p_i\) while \(O_{i,0}\) has parameters \(n_i\) and \(1 - p_i\).

The Completely Specified Case

Consider a specified parameter vector \(\bs{p}_0 = (p_{0,1}, p_{0,2}, \ldots, p_{0,m}) \in (0, 1)^m\). We want to test the null hypothesis \(H_0: \bs{p} = \bs{p}_0\), versus \(H_1: \bs{p} \ne \bs{p}_0\). Since the null hypothesis specifies the value of \(p_i\) for each \(i\), this is called the completely specified case. Now let \(e_{i,0} = n_i (1 - p_{0,i})\) and let \(e_{i,1} = n_i p_{0,i}\). These are the expected frequencies of the outcomes 0 and 1, respectively, from sample \(\bs{X}_i\) under \(H_0\).

If \(n_i\) is large for each \(i\), then under \(H_0\) the following test statistic has approximately the chi-square distribution with \(m\) degrees of freedom: \[ V = \sum_{i=1}^m \sum_{j=0}^1 \frac{(O_{i,j} - e_{i,j})^2}{e_{i,j}} \]

This follows from the result above and independence.

As a rule of thumb, large means that we need \(e_{i,j} \ge 5\) for each \(i \in \{1, 2, \ldots, m\}\) and \(j \in \{0, 1\}\). But of course, the larger these expected frequencies the better.

Under the large sample assumption, an approximate test of \(H_0\) versus \(H_1\) at the \(\alpha\) level of significance is to reject \(H_0\) if and only if \(V \gt \chi_m^2(1 - \alpha)\).

Once again, note that the test statistic \(V\) measures the discrepancy between the expected and observed frequencies, over all outcomes and all samples. There are \(2 \, m\) terms in the expansion of \(V\) above, but only \(m\) degrees of freedom, since \(O_{i,0} + O_{i,1} = n_i\) for each \(i \in \{1, 2, \ldots, m\}\). The observed and expected frequencies could be stored in an \(m \times 2\) table.
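
A minimal sketch of the completely specified test, assuming hypothetical data with \(m = 3\) samples of size 100 each. The function name, the success counts, and the hypothesized probabilities are all illustrative, and the critical value 7.815 is the usual tabulated value of \(\chi_3^2(0.95)\) rather than something the code computes.

```python
def completely_specified_statistic(successes, sizes, p0):
    """Return (V, degrees of freedom) for H0: p_i equals the given p0[i] for all i."""
    v = 0.0
    for o1, n_i, p in zip(successes, sizes, p0):
        o0 = n_i - o1                     # observed count of outcome 0
        e1, e0 = n_i * p, n_i * (1 - p)   # expected counts under H0
        v += (o0 - e0) ** 2 / e0 + (o1 - e1) ** 2 / e1
    return v, len(sizes)                  # m degrees of freedom


# Hypothetical data (illustrative only).
successes = [60, 45, 30]
sizes = [100, 100, 100]
p0 = [0.5, 0.5, 0.25]

v, df = completely_specified_statistic(successes, sizes, p0)
critical = 7.815  # tabulated 0.95 quantile of chi-square with 3 df
reject = v > critical
print(f"V = {v:.3f}, df = {df}, reject H0 at 5%: {reject}")
```

All expected frequencies in this example are at least 25, so the rule of thumb \(e_{i,j} \ge 5\) is comfortably satisfied.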

The Equal Probability Case

Suppose now that we want to test the null hypothesis \(H_0: p_1 = p_2 = \cdots = p_m\) that all of the success probabilities are the same, versus the complementary alternative hypothesis \(H_1\) that the probabilities are not all the same. Note, in contrast to the previous model, that the null hypothesis does not specify the value of the common success probability \(p\). But note also that under the null hypothesis, the \(m\) samples can be combined to form one large sample of Bernoulli trials with success probability \(p\). Thus, a natural approach is to estimate \(p\) and then define the test statistic that measures the discrepancy between the expected and observed frequencies, just as before. The challenge will be to find the distribution of the test statistic.

Let \(n = \sum_{i=1}^m n_i\) denote the total sample size when the samples are combined. Then the overall sample mean, which in this context is the overall sample proportion of successes, is \[ P = \frac{1}{n} \sum_{i=1}^m \sum_{j=1}^{n_i} X_{i,j} = \frac{1}{n} \sum_{i=1}^m O_{i,1} \] The sample proportion \(P\) is the best estimate of \(p\), in just about any sense of the word. Next, let \(E_{i,0} = n_i \, (1 - P)\) and \(E_{i,1} = n_i \, P\). These are the estimated expected frequencies of 0 and 1, respectively, from sample \(\bs{X}_i\) under \(H_0\). Of course these estimated frequencies are now statistics (and hence random) rather than parameters. Just as before, we define our test statistic \[ V = \sum_{i=1}^m \sum_{j=0}^1 \frac{(O_{i,j} - E_{i,j})^2}{E_{i,j}} \] It turns out that under \(H_0\), the distribution of \(V\) converges to the chi-square distribution with \(m - 1\) degrees of freedom as \(n \to \infty\).

An approximate test of \(H_0\) versus \(H_1\) at the \(\alpha\) level of significance is to reject \(H_0\) if and only if \(V \gt \chi_{m-1}^2(1 - \alpha)\).

Intuitively, we lost a degree of freedom over the completely specified case because we had to estimate the unknown common success probability \(p\). Again, the observed and expected frequencies could be stored in an \(m \times 2\) table.
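
The equal probability test can be sketched the same way. The data below are hypothetical, the function name is illustrative, and the critical value 5.991 is the usual tabulated value of \(\chi_2^2(0.95)\). The only change from the completely specified case is that the common success probability is replaced by the pooled sample proportion \(P\), and the degrees of freedom drop to \(m - 1\).

```python
def equal_probability_statistic(successes, sizes):
    """Return (V, degrees of freedom, pooled proportion) for H0: p_1 = ... = p_m."""
    n = sum(sizes)
    p_hat = sum(successes) / n            # pooled estimate P of the common p
    v = 0.0
    for o1, n_i in zip(successes, sizes):
        o0 = n_i - o1
        e1, e0 = n_i * p_hat, n_i * (1 - p_hat)  # estimated expected counts
        v += (o0 - e0) ** 2 / e0 + (o1 - e1) ** 2 / e1
    return v, len(sizes) - 1, p_hat       # one df lost to estimating p


# Hypothetical data (illustrative only): m = 3 samples of size 100.
successes = [60, 45, 30]
sizes = [100, 100, 100]

v, df, p_hat = equal_probability_statistic(successes, sizes)
critical = 5.991  # tabulated 0.95 quantile of chi-square with 2 df
reject = v > critical
print(f"P = {p_hat:.3f}, V = {v:.3f}, df = {df}, reject H0 at 5%: {reject}")
```

With these illustrative counts the sample proportions 0.60, 0.45 and 0.30 differ enough that \(V\) exceeds the critical value, so the hypothesis of equal success probabilities would be rejected at the 5% level.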

The One-Sample Multinomial Model