Study Notes

- Operationalisation

Last updated 22 Mar 2021

Operationalisation means that the researcher defines a variable precisely and develops a way of measuring it for the research.

This is not always easy and care must be taken to ensure that the method of measurement gives a valid measure for the variable.

The term operationalisation can be applied to independent variables (IV), dependent variables (DV) or co-variables (in a correlational design).

Examples of operationalised variables are given in the table below:

© 2002-2024 Tutor2u Limited. Company Reg no: 04489574. VAT reg no 816865400.

What is operationalization?

Last updated 5 February 2023

Operationalization is the process of turning abstract concepts or ideas into observable and measurable phenomena. This process is often used in the social sciences to quantify vague or intangible concepts, such as emotions and attitudes, so they can be studied more effectively.

In this article, we will look at operationalization’s definition, benefits, and limitations. We will also provide a step-by-step guide on how to operationalize a concept, including examples and tips for choosing appropriate indicators.

- Defining operationalization

Operationalization is the process of defining abstract concepts in a way that makes them observable and measurable.

For example, suppose a researcher wants to study the concept of anxiety. They might operationalize it by measuring anxiety levels using a standardized questionnaire or by observing physiological changes, like increased heart rate.

Operationalization is mainly a social sciences tool, but it is also applied in many other disciplines. It allows concepts that cannot be observed directly to be measured through indicators, enabling researchers to study and understand them with more accuracy.

- Why does operationalization matter?

As a researcher, accurately defining the types of variables you intend to study is vital. Transparent and specific operational definitions can help you measure relevant concepts and apply methods consistently.

Here are a few reasons why operationalization matters:

Improved reliability and validity: Researchers can ensure that their results are more reliable and valid when they clearly define and measure variables. This is especially important when comparing results from different studies, as it gives researchers confidence that they are measuring the same thing.

Enhanced objectivity: Operationalization helps reduce subjectivity in research by providing clear guidelines for measuring variables. This can help minimize bias and lead to more objective results.

Better decision-making: Operationalization allows researchers to collect and analyze quantifiable data. This can be useful for making informed decisions in various settings. For example, operationalization can be used to assess group or individual performance in the workplace, leading to improved productivity and execution.

Enhanced understanding of abstract concepts: Operationalizing abstract concepts helps researchers study and understand them more effectively. This can lead to new insights and a deeper understanding of complex phenomena.

Operationalization can reduce the possibility of research bias, minimize subjectivity, and enhance a study’s reliability.

- How to operationalize concepts

Researchers can operationalize abstract concepts in different ways. They will need to measure slightly varying aspects of a concept, so they must be specific about what they are measuring.

Testing a hypothesis using multiple operationalizations of an abstract concept allows you to analyze whether the results depend on the type of measure you use. Your results can be described as “robust” if they stay consistent across the different measures.
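As a rough illustration of checking robustness across operationalizations, the sketch below correlates one predictor with two different measures of the same concept and asks whether both point in the same direction. The participant data, the measure names, and the "same sign" criterion are all assumptions made for this example; a real study would use a more careful comparison.

```python
from math import sqrt
from statistics import mean

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / (sx * sy)

# Hypothetical data for eight participants (invented for illustration only).
daily_social_media_hours = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 5.0]

# Two hypothetical operationalizations of "attention span".
attention_measures = {
    "test_score": [92, 88, 85, 80, 74, 70, 69, 60],  # standardized test, 0-100
    "self_rating": [9, 9, 8, 7, 7, 5, 6, 4],         # self-report, 1-10
}

def correlation_by_measure(predictor, measures):
    """Correlate the predictor with each operationalization of the outcome."""
    return {name: pearson_r(predictor, values) for name, values in measures.items()}

def is_robust(correlations):
    """Simplified criterion: every measure shows the same direction of effect."""
    return len({r > 0 for r in correlations.values()}) == 1

correlations = correlation_by_measure(daily_social_media_hours, attention_measures)
robust = is_robust(correlations)
```

Here both measures move in the same direction, so `robust` is true under this simplified criterion. If the two measures disagreed, that would suggest the finding depends on how the concept was operationalized.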

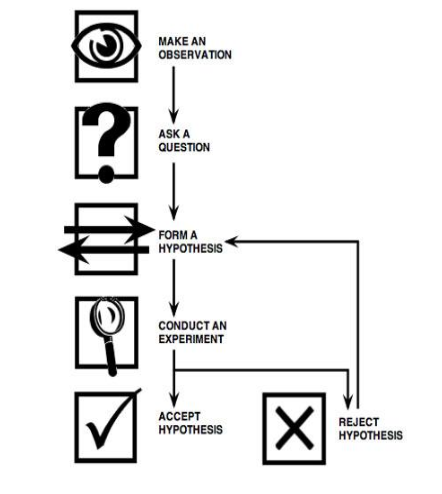

The three main steps of operationalization are:

1. Identifying the main concepts you are interested in studying

Begin by defining your research topic and proposing an initial research question. For example, “What effects does daily social media use have on young teenagers’ attention spans?” Here, the main concepts are social media use and attention span.

2. Choosing variables to represent each concept

Each main concept will typically have several measurable properties or variables that can be used to represent it.

For example, the concept of social media use has the following variables:

Number of hours spent

Frequency of use

Preferred social media platform

The concept of attention span has the following variables:

Quality of attention

Amount of attention span

You can find additional variables to use in your study. Consider reviewing previous related studies and identifying underused or relevant variables to fill gaps in the existing literature.

3. Selecting indicators to measure your variables

Indicators are specific methods or tools used to numerically measure variables. There are two main types of indicators: objective and subjective.

Objective indicators are based on external, observable data, such as scores on a standardized test. You might use a standardized attention span test to measure the variable “amount of attention span.”

Subjective indicators are based on self-reported data, such as questionnaire responses. You might use a self-report questionnaire to measure the variable “quality of attention.”

Choose indicators that are appropriate for the variables you are studying and that will provide accurate and reliable data.

Once you have operationalized your concepts, report your study variables and indicators in the methodology section. Evaluate how your operationalization choice may have impacted your results or interpretations under the discussion section.
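The three steps can be sketched as a small data structure that records each concept, its variables, and the indicator chosen for each variable. The class and field names here are hypothetical, chosen only for illustration. Indicators for social media use are left blank because none are specified above.

```python
from dataclasses import dataclass, field

@dataclass
class Variable:
    name: str
    indicator: str = ""        # tool or method used to measure the variable
    indicator_type: str = ""   # "objective" or "subjective"

@dataclass
class Concept:
    name: str
    variables: list[Variable] = field(default_factory=list)

    def unmeasured(self) -> list[str]:
        """Names of variables that do not yet have an indicator."""
        return [v.name for v in self.variables if not v.indicator]

# Step 1: main concepts. Step 2: variables. Step 3: indicators.
attention_span = Concept(
    name="attention span",
    variables=[
        Variable("amount of attention span",
                 "standardized attention span test", "objective"),
        Variable("quality of attention",
                 "self-report questionnaire", "subjective"),
    ],
)

social_media_use = Concept(
    name="social media use",
    variables=[
        Variable("number of hours spent"),            # indicator not yet chosen
        Variable("frequency of use"),                 # indicator not yet chosen
        Variable("preferred social media platform"),  # indicator not yet chosen
    ],
)

for concept in (attention_span, social_media_use):
    print(concept.name, "-> still needs indicators for:", concept.unmeasured())
```

Writing the plan down this way makes gaps visible: any variable returned by `unmeasured()` has not been fully operationalized yet.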

- Strengths of operationalization

Operationalizing concepts in research allows you to measure variables across various contexts consistently. Below are the strengths of operationalization for your research purposes:

Objectivity

Collecting data with a standardized approach reduces the opportunity for biased or subjective interpretation of observations. Operationalization provides clear guidelines for measuring variables, which allows you to interpret observations objectively.

Scientific research relies on observable and measurable findings. Operationalization breaks down abstract, unmeasurable concepts into observable and measurable elements.

Reliability

A good operationalization makes it more likely that other researchers can replicate your study. Clearly defining and measuring variables helps you ensure your results are reliable and valid. This is especially important when comparing results from different studies, as it gives you confidence that you’re measuring the same thing.

Better decision-making

Operationalization allows researchers to collect and analyze quantifiable data. It can aid informed decision-making in various settings. For example, operationalization can be used to assess group or individual performance in the workplace, leading to improved productivity and performance.

- Limitations of operationalization

Operationalization has many benefits, but it also has some limitations that researchers should be aware of:

Measurement error

Operationalization relies on the use of indicators to measure variables. These can be subject to measurement errors. For example, response bias can occur with self-reported questionnaires, and the concept being measured may not be accurately captured.

The Mars Climate Orbiter failure illustrates what can happen when measurements are not standardized. The expensive spacecraft was lost on its arrival at Mars in 1999, ending the mission.

The failure was traced to a unit mismatch in the thruster calculations: one engineering team's software produced values in imperial units while another team's software expected metric units. This inconsistency resulted in the loss of hundreds of millions of dollars and several wasted years of planning and construction.

Limited scope

Operationalization is limited to the specific variables and indicators chosen by the researcher. This issue is further compounded by the fact that concepts generally vary across different time periods and social settings. This means that certain aspects of a concept may be overlooked or captured inaccurately.

Reductiveness

By reducing complex concepts to numbers, operational definitions can easily miss valuable subjective perceptions of a concept.

Careful consideration is necessary

Researchers must carefully consider their operational definitions and choose appropriate indicators to measure their variables accurately. Failing to do so can lead to inaccurate or misleading results.

For instance, context-specific operationalization can help capture real-life experiences. On the other hand, it becomes challenging to compare studies when their measures vary greatly.

- Examples of operationalization

Operationalization is used to convert abstract concepts into observable and measurable traits.

For example, the concept of social anxiety is virtually impossible to measure directly, but you can operationalize it in different ways.

Asking participants to rate themselves on a social anxiety scale is one such way. You can also count recent incidents of avoidance behavior, such as avoiding crowded places. Observing and measuring the level of physical anxiety symptoms in social situations is another option.

The following are more examples of how researchers might operationalize different concepts:

Concept: happiness

Variables: life satisfaction, positive emotions, negative emotions

Indicators: self-report questionnaire, daily mood diary, facial expression analysis

Concept: intelligence

Variables: verbal ability, spatial ability, memory

Indicators: standardized intelligence test, reaction time tasks, memory tests

Concept: parenting styles

Variables: authoritative, authoritarian, permissive, neglectful

Indicators: parenting style questionnaire, observations of parent–child interactions, parent-reported child behavior

Operationalization can also be used to conduct research in a typical workplace setting.

- Applications of operationalization

Operationalization can be applied in a range of situations, including research studies, workplace performance assessments, and decision-making processes.

Here are a few examples of how operationalization might be used in different settings:

Research studies: It is commonly used in research studies to define and measure variables systematically and objectively. This allows researchers to collect and analyze quantifiable data that can be used to answer research questions and test hypotheses.

Workplace performance assessments: Operationalization can be used to assess group or individual performance in the workplace by defining and measuring relevant variables such as productivity, efficiency, and teamwork. This can help identify areas for improvement and increase overall workplace performance.

Decision-making processes: It can aid informed decision-making in various settings by defining and measuring relevant variables. For example, a business might use operationalization to compare the costs and benefits of different marketing strategies or to assess the effectiveness of employee training programs.

Business: Operationalization can be used in business settings to assess the performance of employees, departments, or entire organizations. It can also be used to measure the effectiveness of business processes or strategies, such as customer satisfaction or marketing campaigns.

Health: It can be used in the health field to define and measure variables such as disease prevalence, treatment effectiveness, and patient satisfaction. Personnel and organizational performance can also be measured through operationalization.

Education: Operationalization can be used in education settings to define and measure variables such as student achievement, teacher effectiveness, or school performance. It can also be used to assess the effectiveness of educational programs or interventions.

By defining and measuring variables in a systematic and objective way, operationalization can help researchers and professionals make more informed decisions, improve performance, and better understand complex concepts.

What is the process of operationalization in research?

Operationalization is the process of defining abstract concepts through measurable observations and quantifiable data. It involves identifying the main concepts you are interested in studying, choosing variables to represent each concept, and selecting indicators to measure those variables.

Operationalization helps researchers study abstract concepts in a more systematic and objective way, improving the reliability and validity of their research and reducing subjectivity and bias.

What does it mean to operationalize a variable?

Operationalizing a variable involves clearly defining and measuring it in a way that allows researchers to collect and analyze quantifiable data.

It typically involves selecting indicators to measure the variable and determining how the data will be interpreted.

Operationalization helps researchers measure variables with more accuracy and consistency, improving the reliability and validity of their research.

Operational Hypothesis

An Operational Hypothesis is a testable statement or prediction made in research that not only proposes a relationship between two or more variables but also clearly defines those variables in operational terms, meaning how they will be measured or manipulated within the study. It forms the basis of an experiment that seeks to support or refute the assumed relationship, thus helping to drive scientific research.

The Core Components of an Operational Hypothesis

Understanding an operational hypothesis involves identifying its key components and how they interact.

The Variables

An operational hypothesis must contain two or more variables — factors that can be manipulated, controlled, or measured in an experiment.

The Proposed Relationship

Beyond identifying the variables, an operational hypothesis specifies the type of relationship expected between them. This could be a correlation, a cause-and-effect relationship, or another type of association.

The Importance of Operationalizing Variables

Operationalizing variables — defining them in measurable terms — is a critical step in forming an operational hypothesis. This process ensures the variables are quantifiable, enhancing the reliability and validity of the research.

Constructing an Operational Hypothesis

Creating an operational hypothesis is a fundamental step in the scientific method and research process. It involves generating a precise, testable statement that predicts the outcome of a study based on the research question. An operational hypothesis must clearly identify and define the variables under study and describe the expected relationship between them. The process of creating an operational hypothesis involves several key steps:

Steps to Construct an Operational Hypothesis

- Define the Research Question: Start by clearly identifying the research question. This question should highlight the key aspect or phenomenon that the study aims to investigate.

- Identify the Variables: Next, identify the key variables in your study. Variables are elements that you will measure, control, or manipulate in your research. There are typically two types of variables in a hypothesis: the independent variable (the cause) and the dependent variable (the effect).

- Operationalize the Variables: Once you’ve identified the variables, you must operationalize them. This involves defining your variables in such a way that they can be easily measured, manipulated, or controlled during the experiment.

- Predict the Relationship: The final step involves predicting the relationship between the variables. This could be an increase, a decrease, or any other type of relationship between the independent and dependent variables.

By following these steps, you will create an operational hypothesis that provides a clear direction for your research, ensuring that your study is grounded in a testable prediction.

Evaluating the Strength of an Operational Hypothesis

Not all operational hypotheses are created equal. The strength of an operational hypothesis can significantly influence the validity of a study. There are several key factors that contribute to the strength of an operational hypothesis:

- Clarity: A strong operational hypothesis is clear and unambiguous. It precisely defines all variables and the expected relationship between them.

- Testability: A key feature of an operational hypothesis is that it must be testable. That is, it should predict an outcome that can be observed and measured.

- Operationalization of Variables: The operationalization of variables contributes to the strength of an operational hypothesis. When variables are clearly defined in measurable terms, it enhances the reliability of the study.

- Alignment with Research: Finally, a strong operational hypothesis aligns closely with the research question and the overall goals of the study.

By carefully crafting and evaluating an operational hypothesis, researchers can ensure that their work provides valuable, valid, and actionable insights.

Examples of Operational Hypotheses

To illustrate the concept further, this section will provide examples of well-constructed operational hypotheses in various research fields.

The operational hypothesis is a fundamental component of scientific inquiry, guiding the research design and providing a clear framework for testing assumptions. By understanding how to construct and evaluate an operational hypothesis, we can ensure our research is both rigorous and meaningful.

Examples of Operational Hypothesis:

- In Education: An operational hypothesis in an educational study might be: “Students who receive tutoring (Independent Variable) will show a 20% improvement in standardized test scores (Dependent Variable) compared to students who did not receive tutoring.”

- In Psychology: In a psychological study, an operational hypothesis could be: “Individuals who meditate for 20 minutes each day (Independent Variable) will report a 15% decrease in self-reported stress levels (Dependent Variable) after eight weeks compared to those who do not meditate.”

- In Health Science: An operational hypothesis in a health science study might be: “Participants who drink eight glasses of water daily (Independent Variable) will show a 10% decrease in reported fatigue levels (Dependent Variable) after three weeks compared to those who drink four glasses of water daily.”

- In Environmental Science: In an environmental study, an operational hypothesis could be: “Cities that implement recycling programs (Independent Variable) will see a 25% reduction in landfill waste (Dependent Variable) after one year compared to cities without recycling programs.”
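Because each of these hypotheses names a specific percentage, checking a result against the prediction comes down to simple arithmetic. The sketch below is one way that check might look. The function names and the group means are hypothetical, and meeting the threshold in a sample does not by itself show that the difference is statistically significant.

```python
def percent_change(baseline: float, outcome: float) -> float:
    """Percentage change from baseline to outcome (positive means an increase)."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (outcome - baseline) / baseline * 100

def meets_prediction(comparison_mean: float, treatment_mean: float,
                     predicted_change: float) -> bool:
    """True if the observed change is at least as large as the predicted
    change and in the same direction (use a negative value for a decrease)."""
    observed = percent_change(comparison_mean, treatment_mean)
    if predicted_change >= 0:
        return observed >= predicted_change
    return observed <= predicted_change

# Hypothetical group means, invented for illustration only.
untutored_score, tutored_score = 65.0, 80.0          # standardized test scores
no_meditation_stress, meditation_stress = 40.0, 33.0  # self-reported stress

print(round(percent_change(untutored_score, tutored_score), 2))            # about 23.08
print(meets_prediction(untutored_score, tutored_score, 20.0))              # 20% improvement predicted
print(meets_prediction(no_meditation_stress, meditation_stress, -15.0))    # 15% decrease predicted
```

Stating the threshold and the comparison group in advance, as the hypotheses above do, is what makes this kind of check possible.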

Research Hypothesis In Psychology: Types, & Examples

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD, is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

A research hypothesis (plural: hypotheses) is a specific, testable prediction about the anticipated results of a study, established at its outset. It is a key component of the scientific method.

Hypotheses connect theory to data and guide the research process towards expanding scientific understanding.

Some key points about hypotheses:

- A hypothesis expresses an expected pattern or relationship. It connects the variables under investigation.

- It is stated in clear, precise terms before any data collection or analysis occurs. This makes the hypothesis testable.

- A hypothesis must be falsifiable. It should be possible, even if unlikely in practice, to collect data that disconfirms rather than supports the hypothesis.

- Hypotheses guide research. Scientists design studies to explicitly evaluate hypotheses about how nature works.

- For a hypothesis to be valid, it must be testable against empirical evidence. The evidence can then confirm or disprove the testable predictions.

- Hypotheses are informed by background knowledge and observation, but go beyond what is already known to propose an explanation of how or why something occurs.

Predictions typically arise from a thorough knowledge of the research literature, curiosity about real-world problems or implications, and integrating this to advance theory. They build on existing literature while providing new insight.

Types of Research Hypotheses

Alternative Hypothesis

The research hypothesis is often called the alternative or experimental hypothesis in experimental research.

It typically suggests a potential relationship between two key variables: the independent variable, which the researcher manipulates, and the dependent variable, which is measured based on those changes.

The alternative hypothesis states a relationship exists between the two variables being studied (one variable affects the other).

In summary, a hypothesis is a precise, testable statement of what researchers expect to happen in a study and why. Hypotheses connect theory to data and guide the research process towards expanding scientific understanding.

An experimental hypothesis predicts what change(s) will occur in the dependent variable when the independent variable is manipulated.

It states that the results are not due to chance and are significant in supporting the theory being investigated.

The alternative hypothesis can be directional, indicating a specific direction of the effect, or non-directional, suggesting a difference without specifying its nature. It’s what researchers aim to support or demonstrate through their study.

Null Hypothesis

The null hypothesis states no relationship exists between the two variables being studied (one variable does not affect the other). There will be no changes in the dependent variable due to manipulating the independent variable.

It states results are due to chance and are not significant in supporting the idea being investigated.

The null hypothesis, positing no effect or relationship, is a foundational contrast to the research hypothesis in scientific inquiry. It establishes a baseline for statistical testing, promoting objectivity by initiating research from a neutral stance.

Many statistical methods are tailored to test the null hypothesis, determining the likelihood of observed results if no true effect exists.

This dual-hypothesis approach provides clarity, ensuring that research intentions are explicit, and fosters consistency across scientific studies, enhancing the standardization and interpretability of research outcomes.
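One way to make "the likelihood of observed results if no true effect exists" concrete is a permutation test. The sketch below is a minimal version for two independent groups, using only the standard library. The scores are invented for illustration, and the function name and number of resamples are arbitrary choices rather than a prescribed method.

```python
import random
from statistics import mean

def permutation_p_value(group_a, group_b, n_resamples=10_000, seed=0):
    """Estimate how often chance alone yields a difference in means at least
    as large (in absolute value) as the observed one, assuming the null
    hypothesis that group membership makes no difference."""
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)  # reassign scores to groups at random
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    # Add one to numerator and denominator so the estimate is never exactly zero.
    return (extreme + 1) / (n_resamples + 1)

# Hypothetical scores for two groups (made up for this example).
group_a = [12, 15, 14, 16, 13, 17, 15, 14]
group_b = [10, 11, 13, 9, 12, 10, 11, 12]

p_value = permutation_p_value(group_a, group_b)
print(f"Estimated p-value: {p_value:.4f}")
```

A small p-value means that a difference this large would rarely arise by chance if the null hypothesis were true, which is the usual basis for rejecting it.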

Non-directional Hypothesis

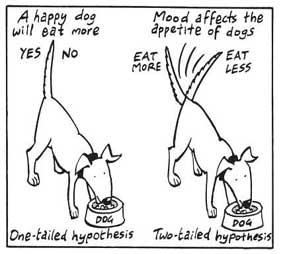

A non-directional hypothesis, also known as a two-tailed hypothesis, predicts that there is a difference or relationship between two variables but does not specify the direction of this relationship.

It merely indicates that a change or effect will occur without predicting which group will have higher or lower values.

For example, “There is a difference in performance between Group A and Group B” is a non-directional hypothesis.

Directional Hypothesis

A directional (one-tailed) hypothesis predicts the nature of the effect of the independent variable on the dependent variable. It predicts the direction in which the change will take place (e.g., greater, smaller, less, more).

It specifies whether one variable will be greater or lesser than another, rather than just indicating that there’s a difference without specifying its nature.

For example, “Exercise increases weight loss” is a directional hypothesis.
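The terms "one-tailed" and "two-tailed" refer to how the p-value is computed from a test statistic. The sketch below assumes a test statistic that follows a standard normal distribution under the null hypothesis. The z value of 1.8 is hypothetical, picked to show how the same result can fall on different sides of a 0.05 threshold depending on the type of hypothesis.

```python
from statistics import NormalDist

def p_values_from_z(z: float) -> dict[str, float]:
    """P-values for one observed z statistic under three kinds of hypothesis."""
    std_normal = NormalDist()  # mean 0, standard deviation 1
    return {
        # Directional: predicts the effect is greater than zero.
        "directional_greater": 1 - std_normal.cdf(z),
        # Directional: predicts the effect is less than zero.
        "directional_less": std_normal.cdf(z),
        # Non-directional: predicts a difference in either direction.
        "non_directional": 2 * (1 - std_normal.cdf(abs(z))),
    }

p = p_values_from_z(1.8)  # hypothetical observed statistic
for hypothesis, value in p.items():
    print(f"{hypothesis}: {value:.4f}")
```

With this z value, the directional "greater" p-value is below 0.05 while the non-directional p-value is above it. That is why the direction must be chosen before looking at the data, not afterwards.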

Falsifiability

The Falsification Principle, proposed by Karl Popper, is a way of demarcating science from non-science. It suggests that for a theory or hypothesis to be considered scientific, it must be testable and refutable.

Falsifiability emphasizes that scientific claims shouldn’t just be confirmable but should also have the potential to be proven wrong.

It means that there should exist some potential evidence or experiment that could prove the proposition false.

However many confirming instances exist for a theory, it only takes one counter-observation to falsify it. For example, the hypothesis that “all swans are white” can be falsified by observing a black swan.

For Popper, science should attempt to disprove a theory rather than attempt to continually provide evidence to support a research hypothesis.

Can a Hypothesis be Proven?

Hypotheses make probabilistic predictions. They state the expected outcome if a particular relationship exists. However, a study result supporting a hypothesis does not definitively prove it is true.

All studies have limitations. There may be unknown confounding factors or issues that limit the certainty of conclusions. Additional studies may yield different results.

In science, hypotheses can realistically only be supported with some degree of confidence, not proven. The process of science is to incrementally accumulate evidence for and against hypothesized relationships in an ongoing pursuit of better models and explanations that best fit the empirical data. But hypotheses remain open to revision and rejection if that is where the evidence leads.

- Disproving a hypothesis is definitive. Solid disconfirmatory evidence will falsify a hypothesis and require altering or discarding it based on the evidence.

- However, confirming evidence is always open to revision. Other explanations may account for the same results, and additional or contradictory evidence may emerge over time.

We can never 100% prove the alternative hypothesis. Instead, we see if we can disprove, or reject the null hypothesis.

If we reject the null hypothesis, this doesn’t mean that our alternative hypothesis is correct but does support the alternative/experimental hypothesis.

Upon analysis of the results, an alternative hypothesis can be rejected or supported, but it can never be proven to be correct. We must avoid any reference to results proving a theory as this implies 100% certainty, and there is always a chance that evidence may exist which could refute a theory.

How to Write a Hypothesis

- Identify variables. The researcher manipulates the independent variable, and the dependent variable is the measured outcome.

- Operationalize the variables being investigated. Operationalization of a hypothesis refers to the process of making the variables physically measurable or testable, e.g., if you are about to study aggression, you might count the number of punches given by participants.

- Decide on a direction for your prediction. If there is evidence in the literature to support a specific effect of the independent variable on the dependent variable, write a directional (one-tailed) hypothesis. If there are limited or ambiguous findings in the literature regarding the effect of the independent variable on the dependent variable, write a non-directional (two-tailed) hypothesis.

- Make it testable. Ensure your hypothesis can be tested through experimentation or observation. It should be possible to prove it false (principle of falsifiability).

- Use clear and concise language. A strong hypothesis is concise (typically one to two sentences long) and formulated using clear and straightforward language, ensuring it’s easily understood and testable.

Consider a hypothesis many teachers might subscribe to: students work better on Monday morning than on Friday afternoon (IV = day, DV = standard of work).

Now, if we decide to study this by giving the same group of students a lesson on a Monday morning and a Friday afternoon and then measuring their immediate recall of the material covered in each session, we would end up with the following:

- The alternative hypothesis states that students will recall significantly more information on a Monday morning than on a Friday afternoon.

- The null hypothesis states that there will be no significant difference in the amount recalled on a Monday morning compared to a Friday afternoon. Any difference will be due to chance or confounding factors.
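Because the same students are tested on both days, each student provides a pair of recall scores. One way to test these two hypotheses is an exact sign-flip (paired permutation) test, sketched below. The recall scores are invented for illustration, and this test is an assumption of the example rather than something the study design above requires.

```python
from itertools import product

def paired_sign_flip_p(monday, friday):
    """Exact one-tailed p-value for 'Monday recall is higher than Friday recall'.

    Under the null hypothesis, each student's Monday-minus-Friday difference
    is equally likely to be positive or negative, so every pattern of sign
    flips is equally probable.
    """
    diffs = [m - f for m, f in zip(monday, friday)]
    observed = sum(diffs)
    at_least_as_large = 0
    total = 0
    for signs in product((1, -1), repeat=len(diffs)):
        total += 1
        if sum(s * d for s, d in zip(signs, diffs)) >= observed:
            at_least_as_large += 1
    return at_least_as_large / total

# Hypothetical recall scores for eight students (items recalled per session).
monday_recall = [14, 12, 15, 11, 13, 16, 12, 14]
friday_recall = [11, 10, 14, 9, 12, 13, 11, 12]

p_value = paired_sign_flip_p(monday_recall, friday_recall)
print(f"One-tailed p-value: {p_value}")
```

In this made-up data every student recalled more on Monday, so only one of the 256 possible sign patterns is as extreme as the observed one. Enumerating all patterns is only practical for small samples, since the number of patterns doubles with each additional student.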

More Examples

- Memory: Participants exposed to classical music during study sessions will recall more items from a list than those who studied in silence.

- Social Psychology: Individuals who frequently engage in social media use will report higher levels of perceived social isolation compared to those who use it infrequently.

- Developmental Psychology: Children who engage in regular imaginative play have better problem-solving skills than those who don’t.

- Clinical Psychology: Cognitive-behavioral therapy will be more effective in reducing symptoms of anxiety over a 6-month period compared to traditional talk therapy.

- Cognitive Psychology: Individuals who multitask between various electronic devices will have shorter attention spans on focused tasks than those who single-task.

- Health Psychology: Patients who practice mindfulness meditation will experience lower levels of chronic pain compared to those who don’t meditate.

- Organizational Psychology: Employees in open-plan offices will report higher levels of stress than those in private offices.

- Behavioral Psychology: Rats rewarded with food after pressing a lever will press it more frequently than rats who receive no reward.


Operationalization

Operationalization is the process of strictly defining variables into measurable factors. The process defines fuzzy concepts and allows them to be measured, empirically and quantitatively.


For experimental research, where interval or ratio measurements are used, the scales are usually well defined and strict.

Operationalization also sets down exact definitions of each variable, increasing the quality of the results and improving the robustness of the design.

For many fields, such as social science, which often use ordinal measurements, operationalization is essential. It determines how the researchers are going to measure an emotion or concept, such as the level of distress or aggression.

Such measurements are arbitrary, but allow others to replicate the research, as well as perform statistical analysis of the results.

Fuzzy Concepts

Fuzzy concepts are vague ideas, concepts that lack clarity or are only partially true. These are often referred to as "conceptual variables".

It is important to define the variables to facilitate accurate replication of the research process. For example, a scientist might propose the hypothesis:

“Children grow more quickly if they eat vegetables.”

What does the statement mean by 'children'? Are they from America or Africa? What age are they? Are the children boys or girls? There are billions of children in the world, so how do you define the sample groups?

How is 'growth' defined? Is it weight, height, mental growth or strength? The statement does not strictly define the measurable, dependent variable.

What does the term 'more quickly' mean? What units, and what timescale, will be used to measure this? A short-term experiment, lasting one month, may give wildly different results than a longer-term study.

The frequency of sampling is important for operationalization, too.

If you were conducting the experiment over one year, it would make no sense to measure weight every 5 minutes, or as rarely as once a month. The former is impractical, and the latter will not generate enough data points for analysis.

What are 'vegetables'? There are hundreds of different types of vegetable, each containing different levels of vitamins and minerals. Are the children fed raw vegetables, or are they cooked? How does the researcher standardize diets, and ensure that the children eat their greens?

The above hypothesis is not a bad statement, but it needs clarifying and strengthening, a process called operationalization.

The researcher could narrow down the range of children, by specifying age, sex, nationality, or a combination of attributes. As long as the sample group is representative of the wider group, then the statement is more clearly defined.

Growth may be defined as height or weight. The researcher must select a definable and measurable variable, which will form part of the research problem and hypothesis.

Again, 'more quickly' would be redefined as a rate of change over a stated period, with a stipulated frequency of sampling. The initial research design could specify three months or one year, giving a reasonable time scale and taking into account time and budget constraints.

Each sample group could be fed the same diet, or different combinations of vegetables. The researcher might decide that the hypothesis could revolve around vitamin C intake, so the vegetables could be analyzed for the average vitamin content.

Alternatively, a researcher might decide to use an ordinal scale of measurement, asking subjects to fill in a questionnaire about their dietary habits.

Already, the fuzzy concept has undergone a period of operationalization, and the hypothesis takes on a testable format.
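One way to make the outcome of this process concrete is to write the operational definition down as a structured record. The sketch below is only an illustration: the class name `OperationalDefinition`, its fields, and every value filled in (age range, servings, sampling interval) are hypothetical choices, not figures from the text.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class OperationalDefinition:
    population: str
    independent_variable: str
    dependent_variable: str
    unit: str
    duration_days: int
    sampling_interval_days: int

    def measurement_count(self) -> int:
        """Number of measurements per child, including the baseline at day 0."""
        return self.duration_days // self.sampling_interval_days + 1

# Hypothetical operationalization of "children grow more quickly
# if they eat vegetables".
growth_study = OperationalDefinition(
    population="girls aged 8 to 10 attending one school district",
    independent_variable="daily vegetable portions (0 vs 3 servings)",
    dependent_variable="standing height",
    unit="cm",
    duration_days=365,
    sampling_interval_days=14,
)
print(growth_study.measurement_count())
```

Writing the definition out this way forces each vague term ('children', 'growth', 'more quickly', 'vegetables') to be replaced by something another researcher could reproduce.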

The Importance of Operationalization

Of course, strictly speaking, concepts such as seconds, kilograms and centigrade are artificial constructs, a way in which we define variables.

Pounds and Fahrenheit are no less accurate, but were jettisoned in favor of the metric system. A researcher must justify their scale of scientific measurement.

Operationalization defines the exact measuring method used, and allows other scientists to follow exactly the same methodology. One example of the dangers of non-operationalization is the failure of the Mars Climate Orbiter.

This expensive spacecraft was lost on arrival at Mars, and the mission completely failed. Subsequent investigation found that software supplied by the contractor, Lockheed Martin, had reported thruster impulse in imperial units instead of the metric units the specification required.

A failure in operationalization meant that the units used across the project were not standardized. The contractor's software produced values in pound-force seconds, while NASA's navigation software, correctly, expected metric newton-seconds.

This led to a large error in the trajectory calculations, and the spacecraft approached Mars at a far lower altitude than planned, where it is believed to have been destroyed by atmospheric stresses. This failure in operationalization cost hundreds of millions of dollars, and years of planning and construction were wasted.
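The size of a pound-force versus newton mix-up is easy to work out. The sketch below is a minimal illustration with a made-up impulse value; only the conversion factor (1 lbf = 4.4482216152605 N) is a fixed definition, and the function name is hypothetical.

```python
LBF_TO_NEWTON = 4.4482216152605  # exact by definition of the pound-force

def lbf_s_to_newton_s(impulse_lbf_s: float) -> float:
    """Convert an impulse from pound-force seconds to newton-seconds."""
    return impulse_lbf_s * LBF_TO_NEWTON

reported = 100.0  # hypothetical thruster impulse, in pound-force seconds
correct = lbf_s_to_newton_s(reported)
misread = reported  # the same number wrongly treated as newton-seconds

print(f"correct: {correct:.1f} N*s, misread: {misread:.1f} N*s")
print(f"underestimate factor: {correct / misread:.2f}")
```

Any figure read without conversion is too small by a factor of roughly 4.45, which is why stating the unit is part of the operational definition and not an afterthought.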


Martyn Shuttleworth (Jan 17, 2008). Operationalization. Retrieved May 05, 2024 from Explorable.com: https://explorable.com/operationalization

You Are Allowed To Copy The Text

The text in this article is licensed under the Creative Commons-License Attribution 4.0 International (CC BY 4.0).

This means you're free to copy, share and adapt any parts (or all) of the text in the article, as long as you give appropriate credit and provide a link/reference to this page.

That is it. You don't need our permission to copy the article; just include a link/reference back to this page. You can use it freely (with some kind of link), and we're also okay with people reprinting in publications like books, blogs, newsletters, course-material, papers, wikipedia and presentations (with clear attribution).



Travis Dixon October 24, 2016 Assessment (IB) , Internal Assessment (IB) , Research Methodology


Updated June 2020

Writing good hypotheses in IB Psychology IAs is something many students find challenging. After moderating another 175+ IAs this year, I could see some common errors students were making. This post aims to give a clear explanation, with examples, to help with this tricky task.

Null and Alternative Hypotheses

Null Hypothesis (H0)


The term “null” means having no value, significance or effect. It also refers to something associated with zero. A null hypothesis in a student’s IA, therefore, should state that there is (or will be) no effect of the IV on the DV. This is what we assume to be true until we have the evidence to suggest otherwise.

A common misconception is that the hypothesis is based on the sample in the study. Our hypotheses should actually be about the population from which we’ve drawn the sample, not the sample itself. Therefore, when writing our hypotheses we can use present tense instead of future tense (e.g. “There is…” instead of “There will be…”).

Having said that, in the IB Psych IA, the IB is apparently assuming the hypotheses are based on the sample (because variables need to be operationalized), so writing your hypotheses as predictions of what might happen in the experiment is fine (see below for examples).

IB Psych IA Tip: It’s fine (and even recommended) to state in your null hypothesis that there will be no significant difference between the two conditions in your experiment, or that any differences are due to chance (see footnote 1).

The Alternative Hypothesis (H1)

This is also referred to as the research hypothesis or the experimental hypothesis. It’s an alternative hypothesis to the null because if the null is not true, there must be an alternative explanation.

Generally speaking it’s not a prediction of what will happen in the study, but it’s an assumption about what is true for the population being studied. But, similar to the null hypothesis in the IB Psych IA you can (and should) write this about a prediction of what you think will happen in your study (see examples below).

This must be operationalized: it must be evident how the variables will be quantified, and may be either one- or two-tailed (directional or non-directional).


To avoid issues with copying and plagiarism, the following examples are from studies that students cannot do for the internal assessment. Some are taken from this post on how to operationalize definitions of variables.

A Fictional Drug Trial

- H1: Taking paroxetine will decrease symptoms of PTSD.

- H0: Taking paroxetine will not decrease symptoms of PTSD.

Operationalized (as if for an IB Psych IA):

- H1: The experimental group, who take 20mg of paroxetine (as a pill) every morning for 7 days, will show a larger decrease in symptoms (as measured by the CAPS scale) than the control group, who take an identical placebo pill every morning for 7 days.

A Fictional Study on Body Image*

- H1: Viewing media that portrays the thin ideal increases feelings of body image dissatisfaction.

- H0: The type of media viewed does not affect body image dissatisfaction.

Operationalized:

- H1: Watching a video portraying the thin ideal in a Baywatch film trailer will result in higher scores on the Body Shape Questionnaire (BSQ-34) compared with watching media with “normal” body types in the Grown Ups film trailer.

*This entire IA exemplar is included in the IA Teacher Support Pack.

A Fictional Study on Weight Training

- H1: Listening to music affects training performance.

- H0: Music has no effect on training performance.

Operationalized:

- H1: Listening to heavy metal rock music (AC/DC songs) causes a difference in the number of push-ups performed compared to listening to classical music (Mozart’s Symphony No. 41).

One vs. Two Tailed

It is important to know if your hypothesis is one- or two-tailed. This will influence the type of inferential statistical test you use later. If you have a one-tailed hypothesis, you should use a one-tailed test. And if you have a two-tailed hypothesis? You guessed it – a two-tailed test.

The one- vs two-tailed debate still continues in psychology. The IB ignores this and makes it simple: one-tailed hypothesis = one-tailed test. No ifs, ands, or buts!

If you are predicting that one of your conditions in your experiment will have a higher value than the other, it’s one-tailed (because you know the direction of the effect – the IV is increasing the DV). Similarly, your hypothesis is one-tailed if you are predicting that manipulating the IV will cause a decrease in the DV.

However, if you think your IV will have an effect, but you’re not sure if it will increase or decrease it, this is two-tailed.
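To see why the choice matters for the later test, the sketch below compares one- and two-tailed p-values for the same test statistic. It assumes a z statistic and a standard normal reference distribution purely for simplicity; the value 1.8 and the function name `p_values` are hypothetical, and an actual IA would use whichever test suits its data.

```python
from statistics import NormalDist

def p_values(z: float) -> tuple[float, float]:
    """Return (one-tailed, two-tailed) p-values for a z statistic.

    The one-tailed value assumes H1 predicts a positive effect.
    """
    std_normal = NormalDist()
    one_tailed = 1.0 - std_normal.cdf(z)
    two_tailed = 2.0 * (1.0 - std_normal.cdf(abs(z)))
    return one_tailed, two_tailed

one, two = p_values(1.8)  # hypothetical test statistic
print(f"one-tailed p = {one:.3f}, two-tailed p = {two:.3f}")
```

For a positive statistic the two-tailed p-value is twice the one-tailed value, so the same data can fall below the 0.05 threshold under a directional hypothesis and above it under a non-directional one. That is why the direction must be fixed before looking at the results.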

Of the three examples above, can you tell which one is two-tailed and which one is one-tailed?


Points to Remember

- Hypotheses are based on the population, not the sample, so you can write in present tense. However, the norm for IB Psych IAs is to write in the future tense as a prediction of what will happen in your experiment.

- In IB IAs, we’re hypothesizing about a causal relationship of an IV on a DV in a population, so the hypotheses should reflect that causal relationship.

- Inferential tests are tests of the null hypothesis (hence the name null hypothesis testing). We conduct the tests to see the probability of obtaining our results if the null were true (i.e. there is no effect).

Footnote 1: Saying “there will be no significant difference between the two conditions or any differences are due to chance” is technically an incorrect way to state a null hypothesis. That’s because when we conduct our inferential tests we’re seeing what the probability is of getting our results if our null were true. So if we get a p value of, say, 0.10 (10%), according to the above null hypothesis we’re saying there is a 10% chance that there will be no significant difference between the two conditions, which isn’t actually accurate (don’t worry if I’ve lost you, it’s mind-bending stuff). This is one of those instances where poor statistical practice has ingrained itself in IB assessment. But on the plus side it does make it easier for students (and not enough time is spent on this for the bad habits to be too ingrained anyway).

Travis Dixon is an IB Psychology teacher, author, workshop leader, examiner and IA moderator.

Frequently asked questions

What is operationalisation?

Operationalisation means turning abstract conceptual ideas into measurable observations.

For example, the concept of social anxiety isn’t directly observable, but it can be operationally defined in terms of self-rating scores, behavioural avoidance of crowded places, or physical anxiety symptoms in social situations.

Before collecting data, it’s important to consider how you will operationalise the variables that you want to measure.

Frequently asked questions: Methodology

Quantitative observations involve measuring or counting something and expressing the result in numerical form, while qualitative observations involve describing something in non-numerical terms, such as its appearance, texture, or color.

To make quantitative observations, you need to use instruments that are capable of measuring the quantity you want to observe. For example, you might use a ruler to measure the length of an object or a thermometer to measure its temperature.

Scope of research is determined at the beginning of your research process, prior to the data collection stage. Sometimes called “scope of study,” your scope delineates what will and will not be covered in your project. It helps you focus your work and your time, ensuring that you’ll be able to achieve your goals and outcomes.

Defining a scope can be very useful in any research project, from a research proposal to a thesis or dissertation. A scope is needed for all types of research: quantitative, qualitative, and mixed methods.

To define your scope of research, consider the following:

- Budget constraints or any specifics of grant funding

- Your proposed timeline and duration

- Specifics about your population of study, your proposed sample size, and the research methodology you’ll pursue

- Any inclusion and exclusion criteria

- Any anticipated control, extraneous, or confounding variables that could bias your research if not accounted for properly.

Inclusion and exclusion criteria are predominantly used in non-probability sampling. In purposive sampling and snowball sampling, restrictions apply as to who can be included in the sample.

Inclusion and exclusion criteria are typically presented and discussed in the methodology section of your thesis or dissertation.

The purpose of theory-testing mode is to find evidence in order to disprove, refine, or support a theory. As such, generalisability is not the aim of theory-testing mode.

Due to this, the priority of researchers in theory-testing mode is to eliminate alternative causes for relationships between variables. In other words, they prioritise internal validity over external validity, including ecological validity.

Convergent validity shows how much a measure of one construct aligns with other measures of the same or related constructs.

On the other hand, concurrent validity is about how a measure matches up to some known criterion or gold standard, which can be another measure.

Although both types of validity are established by calculating the association or correlation between a test score and another variable, they represent distinct validation methods.

Validity tells you how accurately a method measures what it was designed to measure. There are 4 main types of validity:

- Construct validity: Does the test measure the construct it was designed to measure?

- Face validity: Does the test appear to be suitable for its objectives?

- Content validity: Does the test cover all relevant parts of the construct it aims to measure?

- Criterion validity: Do the results accurately measure the concrete outcome they are designed to measure?

Criterion validity evaluates how well a test measures the outcome it was designed to measure. An outcome can be, for example, the onset of a disease.

Criterion validity consists of two subtypes depending on the time at which the two measures (the criterion and your test) are obtained:

- Concurrent validity is a validation strategy where the scores of a test and the criterion are obtained at the same time

- Predictive validity is a validation strategy where the criterion variables are measured after the scores of the test

Attrition refers to participants leaving a study. It always happens to some extent – for example, in randomised controlled trials for medical research.

Differential attrition occurs when attrition or dropout rates differ systematically between the intervention and the control group. As a result, the characteristics of the participants who drop out differ from the characteristics of those who stay in the study. Because of this, study results may be biased.
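A first check for differential attrition is simply to compare dropout rates between groups. The sketch below uses invented enrolment and completion counts, and the function name `attrition_rate` is hypothetical.

```python
def attrition_rate(enrolled: int, completed: int) -> float:
    """Proportion of enrolled participants who left before the study ended."""
    return (enrolled - completed) / enrolled

# Hypothetical counts for a two-arm trial.
treatment_rate = attrition_rate(enrolled=100, completed=70)
control_rate = attrition_rate(enrolled=100, completed=90)
gap = treatment_rate - control_rate

print(f"treatment {treatment_rate:.0%}, control {control_rate:.0%}, gap {gap:.0%}")
```

A gap in rates is only a warning sign. Whether the dropout is systematic also depends on who left and why, which these counts alone cannot show.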

Criterion validity and construct validity are both types of measurement validity. In other words, they both show you how accurately a method measures something.

While construct validity is the degree to which a test or other measurement method measures what it claims to measure, criterion validity is the degree to which a test can predictively (in the future) or concurrently (in the present) measure something.

Construct validity is often considered the overarching type of measurement validity. You need to have face validity, content validity, and criterion validity in order to achieve construct validity.

Convergent validity and discriminant validity are both subtypes of construct validity. Together, they help you evaluate whether a test measures the concept it was designed to measure.

- Convergent validity indicates whether a test that is designed to measure a particular construct correlates with other tests that assess the same or similar construct.

- Discriminant validity indicates whether two tests that should not be highly related to each other are indeed not related. This type of validity is also called divergent validity.

You need to assess both in order to demonstrate construct validity. Neither one alone is sufficient for establishing construct validity.
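Both checks are usually carried out with correlations. The sketch below computes Pearson's r by hand for three sets of made-up scores; the variable names and data are hypothetical, and real validation work would use far larger samples.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

# Hypothetical scores from six participants.
new_scale = [1, 2, 3, 4, 5, 6]          # the test being validated
established_scale = [2, 1, 4, 3, 6, 5]  # measures the same construct
unrelated_scale = [3, 1, 2, 2, 1, 3]    # measures a different construct

convergent_r = pearson_r(new_scale, established_scale)
discriminant_r = pearson_r(new_scale, unrelated_scale)
print(f"convergent r = {convergent_r:.2f}, discriminant r = {discriminant_r:.2f}")
```

A high correlation with the established scale supports convergent validity, and a correlation near zero with the unrelated scale supports discriminant validity; as the text notes, neither result is sufficient on its own.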

Face validity and content validity are similar in that they both evaluate how suitable the content of a test is. The difference is that face validity is subjective, and assesses content at surface level.

When a test has strong face validity, anyone would agree that the test’s questions appear to measure what they are intended to measure.

For example, looking at a 4th grade math test consisting of problems in which students have to add and multiply, most people would agree that it has strong face validity (i.e., it looks like a math test).

On the other hand, content validity evaluates how well a test represents all the aspects of a topic. Assessing content validity is more systematic and relies on expert evaluation of each question, analysing whether each one covers the aspects that the test was designed to cover.

A 4th grade math test would have high content validity if it covered all the skills taught in that grade. Experts (in this case, math teachers) would have to evaluate the content validity by comparing the test to the learning objectives.

Content validity shows you how accurately a test or other measurement method taps into the various aspects of the specific construct you are researching.

In other words, it helps you answer the question: “does the test measure all aspects of the construct I want to measure?” If it does, then the test has high content validity.

The higher the content validity, the more accurate the measurement of the construct.

If the test fails to include parts of the construct, or irrelevant parts are included, the validity of the instrument is threatened, which brings your results into question.

Construct validity refers to how well a test measures the concept (or construct) it was designed to measure. Assessing construct validity is especially important when you’re researching concepts that can’t be quantified and/or are intangible, like introversion. To ensure construct validity, your test should be based on known indicators of introversion (operationalisation).

On the other hand, content validity assesses how well the test represents all aspects of the construct. If some aspects are missing or irrelevant parts are included, the test has low content validity.


Construct validity has convergent and discriminant subtypes, which help determine whether a test measures the intended concept.

The reproducibility and replicability of a study can be ensured by writing a transparent, detailed method section and using clear, unambiguous language.

Reproducibility and replicability are related terms.

- A successful reproduction shows that the data analyses were conducted in a fair and honest manner.

- A successful replication shows that the reliability of the results is high.

- Reproducing research entails reanalysing the existing data in the same manner.

- Replicating (or repeating) the research entails reconducting the entire analysis, including the collection of new data.

Snowball sampling is a non-probability sampling method. Unlike probability sampling (which involves some form of random selection), the initial individuals selected to be studied are the ones who recruit new participants.

Because not every member of the target population has an equal chance of being recruited into the sample, selection in snowball sampling is non-random.

Snowball sampling is a non-probability sampling method, where there is not an equal chance for every member of the population to be included in the sample.

This means that you cannot use inferential statistics and make generalisations – often the goal of quantitative research. As such, a snowball sample is not representative of the target population, and is usually a better fit for qualitative research.

Snowball sampling relies on the use of referrals. Here, the researcher recruits one or more initial participants, who then recruit the next ones.

Participants share similar characteristics and/or know each other. Because of this, not every member of the population has an equal chance of being included in the sample, giving rise to sampling bias.

Snowball sampling is best used in the following cases:

- If there is no sampling frame available (e.g., people with a rare disease)

- If the population of interest is hard to access or locate (e.g., people experiencing homelessness)

- If the research focuses on a sensitive topic (e.g., extra-marital affairs)

Stratified sampling and quota sampling both involve dividing the population into subgroups and selecting units from each subgroup. The purpose in both cases is to select a representative sample and/or to allow comparisons between subgroups.

The main difference is that in stratified sampling, you draw a random sample from each subgroup (probability sampling). In quota sampling you select a predetermined number or proportion of units, in a non-random manner (non-probability sampling).

Random sampling or probability sampling is based on random selection. This means that each unit has an equal chance (i.e., equal probability) of being included in the sample.

On the other hand, convenience sampling involves stopping people at random, which means that not everyone has an equal chance of being selected depending on the place, time, or day you are collecting your data.

Convenience sampling and quota sampling are both non-probability sampling methods. They both use non-random criteria like availability, geographical proximity, or expert knowledge to recruit study participants.

However, in convenience sampling, you continue to sample units or cases until you reach the required sample size.

In quota sampling, you first need to divide your population of interest into subgroups (strata) and estimate their proportions (quota) in the population. Then you can start your data collection, using convenience sampling to recruit participants, until the proportions in each subgroup coincide with the estimated proportions in the population.

A sampling frame is a list of every member in the entire population. It is important that the sampling frame is as complete as possible, so that your sample accurately reflects your population.

Stratified and cluster sampling may look similar, but bear in mind that groups created in cluster sampling are heterogeneous, so the individual characteristics in the cluster vary. In contrast, groups created in stratified sampling are homogeneous, as units share characteristics.

Relatedly, in cluster sampling you randomly select entire groups and include all units of each group in your sample. However, in stratified sampling, you select some units of all groups and include them in your sample. In this way, both methods can ensure that your sample is representative of the target population.
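The difference between the two selection rules can be sketched in a few lines. The population below (four school classes of six students) and the function names are hypothetical, and the fixed seed is only there to make the sketch repeatable.

```python
import random

def stratified_sample(groups, per_group, rng):
    """Draw a random sample of units from every group (stratum)."""
    return {name: rng.sample(units, per_group) for name, units in groups.items()}

def cluster_sample(groups, n_clusters, rng):
    """Randomly pick whole groups (clusters) and keep all of their units."""
    chosen = rng.sample(sorted(groups), n_clusters)
    return {name: list(groups[name]) for name in chosen}

# Hypothetical population: four school classes of six students each.
classes = {f"class_{c}": [f"{c}{i}" for i in range(1, 7)] for c in "ABCD"}
rng = random.Random(42)  # fixed seed so the sketch is repeatable

stratified = stratified_sample(classes, per_group=2, rng=rng)
clustered = cluster_sample(classes, n_clusters=2, rng=rng)

print("stratified:", stratified)
print("clustered:", clustered)
```

The stratified sample contains some students from every class, while the cluster sample contains every student from only some classes.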

When your population is large in size, geographically dispersed, or difficult to contact, it’s necessary to use a sampling method.

This allows you to gather information from a smaller part of the population, i.e. the sample, and make accurate statements by using statistical analysis. A few sampling methods include simple random sampling, convenience sampling, and snowball sampling.

The two main types of social desirability bias are:

- Self-deceptive enhancement (self-deception): The tendency to see oneself in a favorable light without realizing it.

- Impression management (other-deception): The tendency to inflate one’s abilities or achievement in order to make a good impression on other people.

Response bias refers to conditions or factors that take place during the process of responding to surveys, affecting the responses. One type of response bias is social desirability bias.

Demand characteristics are aspects of experiments that may give away the research objective to participants. Social desirability bias occurs when participants automatically try to respond in ways that make them seem likeable in a study, even if it means misrepresenting how they truly feel.

Participants may use demand characteristics to infer social norms or experimenter expectancies and act in socially desirable ways, so you should try to control for demand characteristics wherever possible.

A systematic review is secondary research because it uses existing research. You don’t collect new data yourself.

Ethical considerations in research are a set of principles that guide your research designs and practices. These principles include voluntary participation, informed consent, anonymity, confidentiality, potential for harm, and results communication.

Scientists and researchers must always adhere to a certain code of conduct when collecting data from others.

These considerations protect the rights of research participants, enhance research validity, and maintain scientific integrity.

Research ethics matter for scientific integrity, human rights and dignity, and collaboration between science and society. These principles make sure that participation in studies is voluntary, informed, and safe.

Research misconduct means making up or falsifying data, manipulating data analyses, or misrepresenting results in research reports. It’s a form of academic fraud.

These actions are committed intentionally and can have serious consequences; research misconduct is not a simple mistake or a point of disagreement but a serious ethical failure.

Anonymity means you don’t know who the participants are, while confidentiality means you know who they are but remove identifying information from your research report. Both are important ethical considerations.

You can only guarantee anonymity by not collecting any personally identifying information – for example, names, phone numbers, email addresses, IP addresses, physical characteristics, photos, or videos.

You can keep data confidential by using aggregate information in your research report, so that you only refer to groups of participants rather than individuals.

Peer review is a process of evaluating submissions to an academic journal. Utilising rigorous criteria, a panel of reviewers in the same subject area decide whether to accept each submission for publication.

For this reason, academic journals are often considered among the most credible sources you can use in a research project – provided that the journal itself is trustworthy and well regarded.

In general, the peer review process follows these steps:

- First, the author submits the manuscript to the editor.

- The editor then either rejects the manuscript and sends it back to the author, or sends it onward to the selected peer reviewer(s).

- Next, the peer review process occurs. The reviewer provides feedback, addressing any major or minor issues with the manuscript, and gives their advice regarding what edits should be made.

- Lastly, the edited manuscript is sent back to the author. They input the edits, and resubmit it to the editor for publication.

Peer review can stop obviously problematic, falsified, or otherwise untrustworthy research from being published. It also represents an excellent opportunity to get feedback from renowned experts in your field.

It acts as a first defence, helping you ensure your argument is clear and that there are no gaps, vague terms, or unanswered questions for readers who weren’t involved in the research process.

Peer-reviewed articles are considered a highly credible source due to this stringent process they go through before publication.

Many academic fields use peer review, largely to determine whether a manuscript is suitable for publication. Peer review enhances the credibility of the published manuscript.

However, peer review is also common in non-academic settings. The United Nations, the European Union, and many individual nations use peer review to evaluate grant applications. It is also widely used in medical and health-related fields as a teaching or quality-of-care measure.

Peer assessment is often used in the classroom as a pedagogical tool. Both receiving feedback and providing it are thought to enhance the learning process, helping students think critically and collaboratively.

- In a single-blind study, only the participants are blinded.

- In a double-blind study, both participants and experimenters are blinded.

- In a triple-blind study, the assignment is hidden not only from participants and experimenters, but also from the researchers analysing the data.

Blinding is important to reduce bias (e.g., observer bias, demand characteristics) and ensure a study’s internal validity.

If participants know whether they are in a control or treatment group, they may adjust their behaviour in ways that affect the outcome that researchers are trying to measure. If the people administering the treatment are aware of group assignment, they may treat participants differently and thus directly or indirectly influence the final results.

Blinding means hiding who is assigned to the treatment group and who is assigned to the control group in an experiment.

Explanatory research is a research method used to investigate how or why something occurs when only a small amount of information is available pertaining to that topic. It can help you increase your understanding of a given topic.

Explanatory research is used to investigate how or why a phenomenon occurs. Therefore, this type of research is often one of the first stages in the research process, serving as a jumping-off point for future research.

Exploratory research is a methodological approach that explores research questions that have not previously been studied in depth. It is often used when the issue you’re studying is new, or the data collection process is challenging in some way.

Exploratory research is often used when the issue you’re studying is new or when the data collection process is challenging for some reason.

You can use exploratory research if you have a general idea or a specific question that you want to study but there is no preexisting knowledge or paradigm with which to study it.

To implement random assignment, assign a unique number to every member of your study’s sample.

Then, you can use a random number generator or a lottery method to randomly assign each number to a control or experimental group. You can also do so manually, by flipping a coin or rolling a die to randomly assign participants to groups.
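The procedure above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the `randomly_assign` function, the group labels, and the `seed` parameter are assumptions made for the example. It shuffles the participant numbers and then deals them out in turn, which is one way to keep group sizes equal.

```python
import random


def randomly_assign(participant_ids, groups=("control", "experimental"), seed=None):
    """Randomly assign each participant number to one of the groups."""
    rng = random.Random(seed)  # a fixed seed makes the assignment reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    # Deal the shuffled numbers out in turn so group sizes differ by at most one.
    return {pid: groups[i % len(groups)] for i, pid in enumerate(shuffled)}


# Hypothetical sample of 20 participants numbered 1 to 20.
assignment = randomly_assign(range(1, 21), seed=42)
for pid in sorted(assignment):
    print(pid, assignment[pid])
```

Recording the seed alongside the study materials lets someone else regenerate and audit the same assignment later.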

Random selection, or random sampling, is a way of selecting members of a population for your study’s sample.

In contrast, random assignment is a way of sorting the sample into control and experimental groups.

Random sampling enhances the external validity or generalisability of your results, while random assignment improves the internal validity of your study.

Random assignment is used in experiments with a between-groups or independent measures design. In this research design, there’s usually a control group and one or more experimental groups. Random assignment helps ensure that the groups are comparable.

In general, you should always use random assignment in this type of experimental design when it is ethically possible and makes sense for your study topic.

Clean data are valid, accurate, complete, consistent, unique, and uniform. Dirty data include inconsistencies and errors.

Dirty data can come from any part of the research process, including poor research design, inappropriate measurement materials, or flawed data entry.

Data cleaning takes place between data collection and data analyses. But you can use some methods even before collecting data.

For clean data, you should start by designing measures that collect valid data. Data validation at the time of data entry or collection helps you minimise the amount of data cleaning you’ll need to do.

After data collection, you can use data standardisation and data transformation to clean your data. You’ll also deal with any missing values, outliers, and duplicate values.
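To make these steps concrete, here is a small Python sketch that cleans a list of recorded weights. Everything in it is illustrative: the `clean_weights` function, the sample rows, the choice to keep the first of any duplicate entries, and the 30 to 300 kg plausibility range are assumptions for the example, not rules from any particular study.

```python
LB_TO_KG = 0.45359237  # kilograms per pound


def clean_weights(raw_rows, plausible_kg=(30.0, 300.0)):
    """Clean (participant_id, raw_weight) pairs.

    Returns (cleaned, flagged): cleaned maps each ID to a weight in kg,
    or None if the value is missing; flagged lists IDs whose weight falls
    outside the assumed plausible range and needs manual review.
    """
    cleaned, flagged = {}, []
    low, high = plausible_kg
    for pid, raw in raw_rows:
        if pid in cleaned:  # duplicate entry: keep the first one (assumption)
            continue
        text = raw.strip().lower()
        if not text:  # missing value: record it explicitly as None
            cleaned[pid] = None
            continue
        # Standardise units so every weight is stored in kilograms.
        if text.endswith("kg"):
            kg = float(text[:-2])
        elif text.endswith("lb"):
            kg = float(text[:-2]) * LB_TO_KG
        else:
            kg = float(text)  # assume kilograms when no unit is given
        cleaned[pid] = kg
        if not low <= kg <= high:  # possible outlier or entry error
            flagged.append(pid)
    return cleaned, flagged


rows = [(1, "70kg"), (2, " 154 lb"), (2, "154 lb"), (3, ""), (4, "700")]
cleaned, flagged = clean_weights(rows)
print(cleaned)
print(flagged)
```

The sketch flags the implausible value rather than deleting it, since whether an outlier is an error or a genuine observation is a judgement for the researcher.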

Data cleaning involves spotting and resolving potential data inconsistencies or errors to improve your data quality. An error is any value (e.g., recorded weight) that doesn’t reflect the true value (e.g., actual weight) of something that’s being measured.

In this process, you review, analyse, detect, modify, or remove ‘dirty’ data to make your dataset ‘clean’. Data cleaning is also called data cleansing or data scrubbing.

Data cleaning is necessary for valid and appropriate analyses. Dirty data contain inconsistencies or errors, but cleaning your data helps you minimise or resolve these.

Without data cleaning, you could end up with a Type I or II error in your conclusion. These types of erroneous conclusions can be practically significant with important consequences, because they lead to misplaced investments or missed opportunities.

Observer bias occurs when a researcher’s expectations, opinions, or prejudices influence what they perceive or record in a study. It usually affects studies when observers are aware of the research aims or hypotheses. This type of research bias is also called detection bias or ascertainment bias.

The observer-expectancy effect occurs when researchers influence the results of their own study through interactions with participants.

Researchers’ own beliefs and expectations about the study results may unintentionally influence participants through demand characteristics.

You can use several tactics to minimise observer bias.

- Use masking (blinding) to hide the purpose of your study from all observers.

- Triangulate your data with different data collection methods or sources.

- Use multiple observers and ensure inter-rater reliability.

- Train your observers to make sure data is consistently recorded between them.

- Standardise your observation procedures to make sure they are structured and clear.
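One common way to check inter-rater reliability between two observers is Cohen’s kappa, which compares their observed agreement with the agreement expected by chance. The text above does not name a specific statistic, so treat the choice of kappa, the `cohens_kappa` function, and the example codes below as illustrative assumptions.

```python
from collections import Counter


def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two observers who coded the same observations."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("both observers must rate the same non-empty set of items")
    n = len(ratings_a)
    # Proportion of items on which the two observers agree.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Agreement expected by chance, from each observer's category frequencies.
    counts_a, counts_b = Counter(ratings_a), Counter(ratings_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1:  # both observers used a single identical category
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical codes from two observers for the same ten observations.
observer_1 = ["on", "on", "off", "off", "on", "off", "on", "on", "off", "off"]
observer_2 = ["on", "on", "off", "off", "on", "off", "on", "off", "off", "on"]
kappa = cohens_kappa(observer_1, observer_2)
print(round(kappa, 2))
```

Here the observers agree on 8 of 10 observations and chance agreement is 0.5, so kappa is about 0.6. Values closer to 1 indicate stronger agreement beyond chance.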

Naturalistic observation is a valuable tool because of its flexibility, external validity, and suitability for topics that can’t be studied in a lab setting.

The downsides of naturalistic observation include its lack of scientific control, ethical considerations, and potential for bias from observers and subjects.

Naturalistic observation is a qualitative research method where you record the behaviours of your research subjects in real-world settings. You avoid interfering or influencing anything in a naturalistic observation.

You can think of naturalistic observation as ‘people watching’ with a purpose.

Closed-ended, or restricted-choice, questions offer respondents a fixed set of choices to select from. These questions are easier to answer quickly.

Open-ended or long-form questions allow respondents to answer in their own words. Because there are no restrictions on their choices, respondents can answer in ways that researchers may not have otherwise considered.

You can organise the questions logically, with a clear progression from simple to complex, or randomly between respondents. A logical flow helps respondents process the questionnaire more easily and quickly, but it may lead to bias. Randomisation can minimise the bias from order effects.
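As a minimal sketch of the two ordering options, the Python snippet below either keeps the logical order or shuffles the questions separately for each respondent. The question texts, the `question_order` function, and the idea of seeding the shuffle with a respondent ID are assumptions for illustration.

```python
import random

# Hypothetical questionnaire items, listed from simple to complex.
QUESTIONS = [
    "How often do you exercise?",
    "What type of exercise do you prefer?",
    "How has exercise affected your mood?",
    "What barriers stop you from exercising more?",
]


def question_order(questions, respondent_id, randomise=True):
    """Return the order in which one respondent sees the questions."""
    order = list(questions)  # copy so the master list keeps its logical order
    if randomise:
        # Seeding with the respondent ID gives each respondent their own
        # order while keeping that order reproducible for later analysis.
        random.Random(respondent_id).shuffle(order)
    return order


print(question_order(QUESTIONS, respondent_id=7))
print(question_order(QUESTIONS, respondent_id=7, randomise=False))
```

Storing the order each respondent saw makes it possible to check for order effects afterwards.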

Questionnaires can be self-administered or researcher-administered.

Self-administered questionnaires can be delivered online or in paper-and-pen formats, in person or by post. All questions are standardised so that all respondents receive the same questions with identical wording.

Researcher-administered questionnaires are interviews that take place by phone, in person, or online between researchers and respondents. You can gain deeper insights by clarifying questions for respondents or asking follow-up questions.

In a controlled experiment, all extraneous variables are held constant so that they can’t influence the results. Controlled experiments require:

- A control group that receives a standard treatment, a fake treatment, or no treatment

- Random assignment of participants to ensure the groups are equivalent

Depending on your study topic, there are various other methods of controlling variables.

An experimental group, also known as a treatment group, receives the treatment whose effect researchers wish to study, whereas a control group does not. They should be identical in all other ways.

A true experiment (aka a controlled experiment) always includes at least one control group that doesn’t receive the experimental treatment.

However, some experiments use a within-subjects design to test treatments without a control group. In these designs, you usually compare one group’s outcomes before and after a treatment (instead of comparing outcomes between different groups).

For strong internal validity, it’s usually best to include a control group if possible. Without a control group, it’s harder to be certain that the outcome was caused by the experimental treatment and not by other variables.

A questionnaire is a data collection tool or instrument, while a survey is an overarching research method that involves collecting and analysing data from people using questionnaires.

A Likert scale is a rating scale that quantitatively assesses opinions, attitudes, or behaviours. It is made up of four or more questions that measure a single attitude or trait when response scores are combined.

To use a Likert scale in a survey, you present participants with Likert-type questions or statements, and a continuum of items, usually with five or seven possible responses, to capture their degree of agreement.

Individual Likert-type questions are generally considered ordinal data, because the response options have a clear rank order but are not necessarily evenly spaced.

Overall Likert scale scores are sometimes treated as interval data. These scores are considered to have directionality and even spacing between them.
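Combining item responses into an overall scale score can be sketched as follows. This is a simplified illustration that assumes a five-point response format coded 1 to 5 and a simple sum of items; the `likert_scale_score` function and the example responses are hypothetical.

```python
def likert_scale_score(item_responses, points=5):
    """Sum one respondent's item responses into an overall scale score."""
    if len(item_responses) < 4:
        raise ValueError("a Likert scale combines four or more items")
    for response in item_responses:
        if not 1 <= response <= points:
            raise ValueError(f"response {response} is outside 1..{points}")
    return sum(item_responses)


# Hypothetical responses to four items measuring the same attitude
# (1 = strongly disagree, 5 = strongly agree).
responses = [4, 5, 3, 4]
total = likert_scale_score(responses)
print(total)
```

Each individual response stays ordinal. It is the combined score that is sometimes treated as interval data.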

The type of data determines what statistical tests you should use to analyse your data.

A research hypothesis is your proposed answer to your research question. The research hypothesis usually includes an explanation (‘x affects y because …’).

A statistical hypothesis, on the other hand, is a mathematical statement about a population parameter. Statistical hypotheses always come in pairs: the null and alternative hypotheses. In a well-designed study, the statistical hypotheses correspond logically to the research hypothesis.

A hypothesis states your predictions about what your research will find. It is a tentative answer to your research question that has not yet been tested. For some research projects, you might have to write several hypotheses that address different aspects of your research question.

A hypothesis is not just a guess. It should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations, and statistical analysis of data).

Cross-sectional studies are less expensive and time-consuming than many other types of study. They can provide useful insights into a population’s characteristics and identify correlations for further research.

Sometimes only cross-sectional data are available for analysis; other times your research question may only require a cross-sectional study to answer it.

Cross-sectional studies cannot establish a cause-and-effect relationship or analyse behaviour over a period of time. To investigate cause and effect, you need to do a longitudinal study or an experimental study.

Longitudinal studies and cross-sectional studies are two different types of research design. In a cross-sectional study you collect data from a population at a specific point in time; in a longitudinal study you repeatedly collect data from the same sample over an extended period of time.

Longitudinal studies are better for establishing the correct sequence of events, identifying changes over time, and providing insight into cause-and-effect relationships, but they also tend to be more expensive and time-consuming than other types of studies.

The 1970 British Cohort Study, which has collected data on the lives of 17,000 Brits since their births in 1970, is one well-known example of a longitudinal study.