- Open access

- Published: 11 March 2021

Evaluating cancer research impact: lessons and examples from existing reviews on approaches to research impact assessment

- Catherine R. Hanna ORCID: orcid.org/0000-0002-0907-7747 1 ,

- Kathleen A. Boyd 2 &

- Robert J. Jones 1

Health Research Policy and Systems volume 19, Article number: 36 (2021)

Performing cancer research relies on substantial financial investment, and contributions in time and effort from patients. It is therefore important that this research has real life impacts which are properly evaluated. The optimal approach to cancer research impact evaluation is not clear. The aim of this study was to undertake a systematic review of review articles that describe approaches to impact assessment, and to identify examples of cancer research impact evaluation within these reviews.

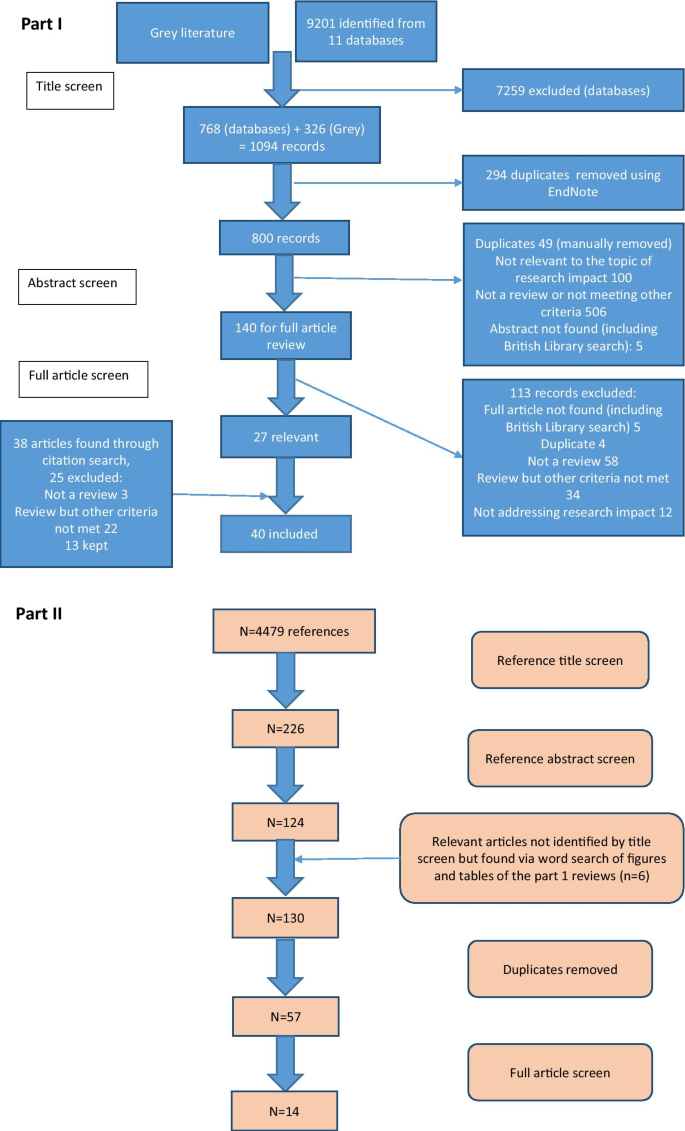

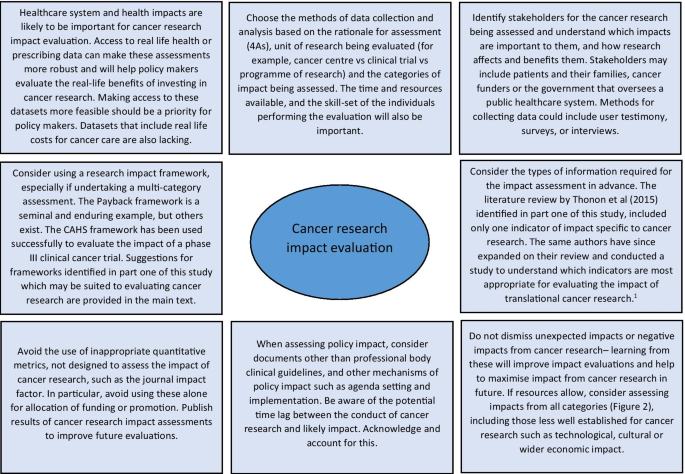

In total, 11 publication databases and the grey literature were searched to identify review articles addressing the topic of approaches to research impact assessment. Information was extracted on methods for data collection and analysis, impact categories and frameworks used for the purposes of evaluation. Empirical examples of impact assessments of cancer research were identified from these literature reviews. Approaches used in these examples were appraised, with a reflection on which methods would be suited to cancer research impact evaluation going forward.

In total, 40 literature reviews were identified. Important methods to collect and analyse data for impact assessments were surveys, interviews and documentary analysis. Key categories of impact spanning the reviews were summarised, and a list of frameworks commonly used for impact assessment was generated. The Payback Framework was most often described. Fourteen examples of impact evaluation for cancer research were identified. They ranged from those assessing the impact of a national, charity-funded portfolio of cancer research to the clinical practice impact of a single trial. A set of recommendations for approaching cancer research impact assessment was generated.

Conclusions

Impact evaluation can demonstrate if and why conducting cancer research is worthwhile. Using a mixed-methods, multi-category assessment organised within a framework will provide a robust evaluation, but the ability to perform this type of assessment may be constrained by time and resources. Whichever approach is used, easily measured but inappropriate metrics should be avoided. Going forward, dissemination of the results of cancer research impact assessments will allow the cancer research community to learn how to conduct these evaluations.

Cancer research attracts substantial public funding globally. For example, the National Cancer Institute (NCI) in the United States of America (USA) had a 2020 budget of over 6 billion United States (US) dollars. In addition to public funds, there is also huge monetary investment from private pharmaceutical companies, as well as altruistic investment of time and effort to participate in cancer research from patients and their families. In the United Kingdom (UK), over 25,000 patients were recruited to cancer trials funded by one charity (Cancer Research UK (CRUK)) alone in 2018 [ 1 ]. The need to conduct research within the field of oncology is an ongoing priority because cancer is highly prevalent, with up to one in two people now having a diagnosis of cancer in their lifetime [ 2 , 3 ], and despite current treatments, mortality and morbidity from cancer are still high [ 2 ].

In the current era of increasing austerity, there is a desire to ensure that the money and effort to conduct any type of research delivers tangible downstream benefits for society with minimal waste [ 4 , 5 , 6 ]. These wider, real-life benefits from research are often referred to as research impact. Given the significant resources required to conduct cancer research in particular, it is reasonable to question if this investment is leading to the longer-term benefits expected, and to query the opportunity cost of not spending the same money directly within other public sectors such as health and social care, the environment or education.

The interest in evaluating research impact has been rising, partly driven by the actions of national bodies and governments. For example, in 2014, the UK government allocated its £2 billion annual research funding to higher education institutions, in part based on an assessment of the impact of research performed by each institution in an assessment exercise known as the Research Excellence Framework (REF). The proportion of funding dependent on impact assessment will increase from 20% in 2014 to 25% in 2021 [ 7 ].

Despite the clear rationale and contemporary interest in research impact evaluation, assessing the impact of research comes with challenges. First, there is no single definition of what research impact encompasses, with potential differences in the evaluation approach depending on the definition. Second, despite the recent surge of interest, knowledge of how best to perform assessments and the infrastructure for, and experience in doing so, are lagging [ 6 , 8 , 9 ]. For the purposes of this review, the definition of research impact given by the UK Research Councils is used (see Additional file 1 for full definition). This definition was chosen because it takes a broad perspective, which includes academic, economic and societal views of research impact [ 10 ].

There is a lack of clarity on how to perform research impact evaluation, and this extends to cancer research. Although there is substantial interest from cancer funders and researchers [ 11 ], this interest is not accompanied by instruction or reflection on which approaches would be suited to assessing the impact of cancer research specifically. In a survey of Australian cancer researchers, respondents indicated that they felt a responsibility to deliver impactful research, but that evaluating and communicating this impact to stakeholders was difficult. Respondents also suggested that the types of impact expected from research, and the approaches used, should be discipline-specific [ 12 ]. Being cognisant of the discipline-specific nature of impact assessment, and understanding the uniqueness of cancer research in approaching such evaluations, underpins the rationale for this study.

The aim of this study was to explore approaches to research impact assessment, identify those approaches that have been used previously for cancer research, and use this information to make recommendations for future evaluations. For the purposes of this study, cancer research included both basic science and applied research, research into any malignant disease, concerning paediatric or adult cancer, and studies spanning nursing, medical and public health elements of cancer research.

The study objectives were to:

- Identify existing literature reviews that report approaches to research impact assessment and summarise these approaches.

- Use these literature reviews to identify examples of cancer research impact evaluations, describe the approaches to evaluation used within these studies, and compare them to those described in the broader literature.

This approach was taken because of the anticipated challenge of conducting a primary review of empirical examples of cancer research impact evaluation, and to allow a critique of empirical studies in the context of lessons learnt from the wider literature. A primary review would have been difficult because examples of cancer research impact evaluation, for example, the assessment of research impact on clinical guidelines [ 13 ], or clinical practice [ 14 , 15 , 16 ], are often not categorised in publication databases under the umbrella term of research impact. Reasons for this are the lack of a medical subject heading (MeSH) search term relating to research impact assessment and the differing definitions of research impact. In addition, many authors do not recognise their evaluations as sitting within the discipline of research impact assessment, which is a novel and emerging field of study.

General approach

A systematic search of the literature was performed to identify existing reviews of approaches to assess the impact of research. No restrictions were placed on the discipline, field, or scope (national/global) of research for this part of the study. In the second part of this study, the reference lists of the literature reviews identified were searched to find empirical examples of the evaluation of the impact of cancer research specifically.

Data sources and searches

For the first part of the study, 11 publication databases and the grey literature from January 1998 to May 2019 were searched. The electronic databases were Medline, Embase, Health Management and Policy Database, Education Resources Information Center, Cochrane, Cumulative Index to Nursing and Allied Health Literature, Applied Social Sciences Index and Abstracts, Social Services Abstracts, Sociological Abstracts, Health Business Elite and Emerald. The search strategy specified that article titles must contain the word “impact”, as well as a second term indicating that the article described the evaluation of impact, such as “model” or “measurement” or “method”. Additional file 1 provides a full list of search terms. The grey literature was searched using a proforma. Keywords were inserted into the search function of websites listed on the proforma and the first 50 results were screened. Title searches were performed by either a specialist librarian or the primary researcher (Dr. C Hanna). All further screening of records was performed by the primary researcher.

Following an initial title screen, 800 abstracts were reviewed and 140 selected for full review. Articles were kept for final inclusion in the study by assessing each article against specific inclusion criteria (Additional file 1 ). There was no assessment of the quality of the included reviews other than to describe the search strategy used. If two articles drew primarily on the same review but contributed a different critique of the literature or methods to evaluate impact, both were kept. If a review article was part of a grey literature report, for example a thesis, but was also later published in a journal, the journal article only was kept. Out of 140 articles read in full, 27 met the inclusion criteria and a further 13 relevant articles were found through reference list searching from the included reviews [ 17 ].

For the second part of the study, the reference lists from the literature reviews were manually screened [ 17 ] (n = 4479 titles) by the primary researcher to identify empirical examples of assessment of the impact of cancer research. Summary tables and diagrams from the reviews were also searched using the words “cancer” and “oncology” to identify relevant articles that may have been missed by reference list searching. After removal of duplicates, 57 full articles were read and assessed against inclusion criteria (Additional file 1 ). Figure 1 shows the search strategy for both parts of the study according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines [ 18 ].

Search strategies for this study
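The screening counts reported above can be tallied in a few lines. This is a minimal sketch that uses only the numbers stated in the text; the variable names are illustrative assumptions, and the derived exclusion counts are simple differences rather than figures reported by the authors.

```python
# Counts reported in the text for part one (literature reviews).
abstracts_reviewed = 800
full_texts_read = 140
met_inclusion_criteria = 27
added_from_reference_lists = 13

# Counts reported in the text for part two (empirical cancer examples).
reference_titles_screened = 4479
cancer_full_texts_read = 57
cancer_examples_included = 14

# Derived quantities (illustrative names, simple differences and sums).
excluded_at_abstract = abstracts_reviewed - full_texts_read
excluded_at_full_text = full_texts_read - met_inclusion_criteria
reviews_included = met_inclusion_criteria + added_from_reference_lists
cancer_excluded_at_full_text = cancer_full_texts_read - cancer_examples_included

print(f"Part one: {reviews_included} reviews included "
      f"({excluded_at_abstract} dropped at abstract stage, "
      f"{excluded_at_full_text} at full-text stage)")
print(f"Part two: {cancer_examples_included} of {cancer_full_texts_read} "
      f"full articles included ({cancer_excluded_at_full_text} excluded)")
```

The sum of 27 and 13 reproduces the 40 included reviews reported in the results.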

Data extraction and analysis

A data extraction form produced in Microsoft® Word 2016 was used to collect details for each literature review. This included year of publication, location of primary author, research discipline, aims of the review as described by the authors and the search strategy (if any) used. Information on approaches to impact assessment was extracted under three specific themes which had been identified from a prior scoping review as important factors when planning and conducting research impact evaluation. These themes were: (i) categorisation of impact into different types depending on who or what is affected by the research (individuals, institutions, parts of society or the environment) and how they are affected (for example, health, monetary gain or sustainability); (ii) methods of data collection and analysis for the purposes of evaluation; and (iii) frameworks to organise and communicate research impact. There was space to document any other key findings the researcher deemed important. After data extraction, lists of commonly described categories, methods of data collection and analysis, and frameworks were compiled. These lists were tabulated or presented graphically, and narrative analysis was used to describe and discuss the approaches listed.

For the second part of the study, a separate data extraction form produced in Microsoft® Excel 2016 was used. Basic information on each study was collected, such as year of publication, location of primary authors, research discipline, aims of evaluation as described by the authors and research type under assessment. Data were also extracted from these empirical examples using the same three themes as outlined above, and the approaches used in these studies were compared to those identified from the literature reviews. Finally, a set of recommendations for future evaluations of cancer research impact was developed by identifying the strengths of the empirical examples and using the lists generated from the first part of the study to identify improvements that could be made.
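The extraction form for the empirical examples could be represented as a simple record type. The sketch below is an assumption about how such a form might be structured in code; the class name, field names and placeholder values are hypothetical and are not taken from the authors' actual Excel form or from any included study.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ImpactEvaluationRecord:
    """One row of a hypothetical extraction form for an empirical study."""
    year_of_publication: int
    primary_author_location: str
    research_discipline: str
    evaluation_aims: str
    research_type_assessed: str
    # The three extraction themes described in the text.
    impact_categories: List[str] = field(default_factory=list)
    data_methods: List[str] = field(default_factory=list)
    frameworks: List[str] = field(default_factory=list)

    def missing_themes(self) -> List[str]:
        """Return the names of themes with nothing recorded yet."""
        themes = {
            "impact_categories": self.impact_categories,
            "data_methods": self.data_methods,
            "frameworks": self.frameworks,
        }
        return [name for name, values in themes.items() if not values]


# Placeholder values for illustration only; not data from the review.
example = ImpactEvaluationRecord(
    year_of_publication=2010,
    primary_author_location="(placeholder)",
    research_discipline="oncology",
    evaluation_aims="(placeholder)",
    research_type_assessed="clinical trial",
    impact_categories=["academic"],
    data_methods=["survey", "interview"],
)
print(example.missing_themes())  # ['frameworks']
```

Keeping the three themes as separate list fields makes it easy to see at a glance which studies did not report, for example, the use of a framework.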

Part one: Identification and analysis of literature reviews describing approaches to research impact assessment

Characteristics of included literature reviews

Forty literature reviews met the pre-specified inclusion criteria and the characteristics of each review are outlined in Table 1 . A large proportion (20/40; 50%) were written by primary authors based in the UK, followed by the USA (5/40; 13%) and Australia (5/40; 13%), with the remainder from Germany (3/40; 8%), Italy (3/40; 8%), the Netherlands (1/40; 3%), Canada (1/40; 3%), France (1/40; 3%) and Iran (1/40; 3%). All reviews had been published since 2003, although the search covered publications from 1998 onwards. Raftery et al. 2016 [ 19 ] was an update to Hanney et al. 2007 [ 20 ] and both were reviews of studies assessing research impact relevant to a programme of health technology assessment research. The narrative review article by Greenhalgh et al. [ 21 ] was based on the same search strategy used by Raftery et al. [ 19 ].
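The country proportions above can be reproduced with a short calculation. This is a sketch only: the helper name `percent_half_up` is an assumption, and the round-half-up convention is inferred from the reported figures (for example, 5/40 = 12.5% is reported as 13%), not stated by the authors.

```python
def percent_half_up(count: int, total: int) -> int:
    """Percentage rounded to the nearest integer, with halves rounded up.

    Integer arithmetic is used because Python's built-in round() rounds
    halves to the nearest even number, so round(12.5) gives 12, not 13.
    """
    return (200 * count + total) // (2 * total)


# Counts of reviews by location of primary author, as reported in the text.
reviews_by_country = {
    "UK": 20, "USA": 5, "Australia": 5, "Germany": 3, "Italy": 3,
    "Netherlands": 1, "Canada": 1, "France": 1, "Iran": 1,
}

total_reviews = sum(reviews_by_country.values())
shares = {
    country: percent_half_up(count, total_reviews)
    for country, count in reviews_by_country.items()
}

for country, share in shares.items():
    print(f"{country}: {reviews_by_country[country]}/{total_reviews} ({share}%)")
```

Because each share is rounded separately, the rounded percentages add up to more than 100 even though the counts sum to exactly 40.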

Approximately half of the reviews (19/40; 48%) described approaches to evaluate research impact without focusing on a specific discipline and nearly as many (16/40; 40%) focused on evaluating the impact of health or biomedical research. Two reviews looked at approaches to impact evaluation for environmental research and one focused on social sciences and humanities research. Finally, two reviews provided a critique of impact evaluation methods used by different countries at a national level [ 22 , 23 ]. None of these reviews focused specifically on cancer research.

Twenty-five reviews (25/40; 63%) specified search criteria and 11 of these included a PRISMA diagram. The articles that did not outline a search strategy were often expert reviews of the approaches to impact assessment methods and the authors stated they had chosen the articles included based on their prior knowledge of the topic. Most reviews were found by searching traditional publication databases; however, seven (7/40; 18%) were found from the grey literature. These included four reports written by an independent, not-for-profit research institution (Research and Development (RAND) Europe) [ 23 , 24 , 25 , 26 ], one literature review which was part of a Doctor of Philosophy (PhD) thesis [ 27 ], a literature review informing a quantitative study [ 28 ] and a review that provided background information for a report to the UK government on the best use of impact metrics [ 29 ].

Key findings from the reviews: approaches to research impact evaluation

Categorisation of impact for the purpose of impact assessment

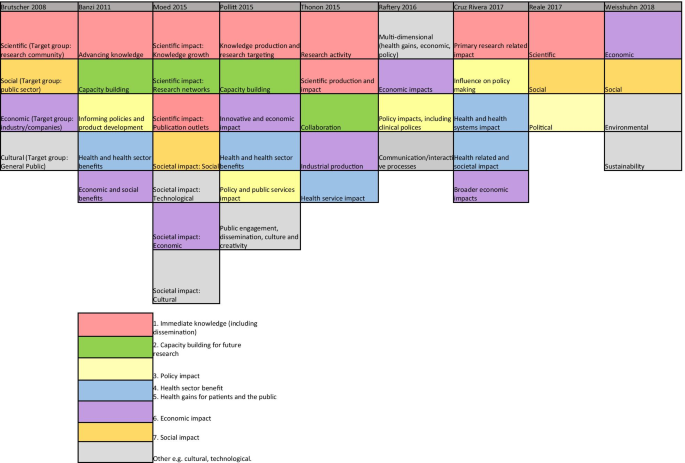

Nine reviews attempted to categorise the type of research impact being assessed according to who or what is affected by research, and how they are affected. In Fig. 2 , colour coding was used to identify overlap between impact types identified in these reviews to produce a summary list of seven main impact categories.

The first two categories of impact refer to the immediate knowledge produced from research and the contribution research makes to driving innovation and building capacity for future activities within research institutions. The former is often referred to as the academic impact of research. The academic impact of cancer research may include the knowledge gained from conducting experiments or performing clinical trials that is subsequently disseminated via journal publications. The latter may refer to securing future funding for cancer research, providing knowledge that allows development of later phase clinical trials or training cancer researchers of the future.

The third category identified was the impact of research on policy. Three of the review articles included in this overview specifically focused on policy impact evaluation [ 30 , 31 , 32 ]. In their review, Hanney et al. [ 30 ] suggested that policy impact (of health research) falls into one of three sub-categories: impact on national health policies from the government, impact on clinical guidelines from professional bodies, and impact on local health service policies. Cruz Rivera and colleagues [ 33 ] specifically distinguished impact on policy making from impact on clinical guidelines, which they described under health impact. This shows that the lines between categories will often blur.

Impact on health was the next category identified and several of the reviews differentiated health sector impact from impact on health gains. For cancer research, both types of health impact will be important given that it is a health condition which is a major burden for healthcare systems and the patients they treat. Economic impact of research was the fifth category. For cancer research, there is likely to be close overlap between healthcare system and economic impacts because of the high cost of cancer care for healthcare services globally.

In their 2004 article, Buxton et al. [ 34 ] searched the literature for examples of the evaluation of economic return on investment in health research and found four main approaches, which were referenced in several later reviews [ 19 , 25 , 35 , 36 ]. These were (i) measuring direct cost savings to the healthcare system, (ii) estimating benefits to the economy from a healthy workforce, (iii) evaluating benefits to the economy from commercial development and (iv) measuring the intrinsic value to society of the health gain from research. In a later review [ 25 ], they added an additional approach of estimating the spillover contribution of research to the Gross Domestic Product (GDP) of a nation.

The final category was social impact. This term was commonly used in a specific sense to refer to research improving human rights, well-being, employment, education and social inclusion [ 33 , 37 ]. Two of the reviews which included this category focused on the impact of non-health-related research (social sciences and agriculture), indicating that this type of impact may be less relevant or less obvious for health-related disciplines such as oncology. Social impact is distinct from the term societal impact, which was used in a wider sense to describe impact that is external to traditional academic benefits [ 38 , 39 ]. Other categories of impact identified that did not show significant overlap between the reviews included cultural and technological impact. In two of the literature reviews [ 33 , 40 ], the authors provided a list of indicators of impact within each of their categories. In the review by Thonon et al. [ 40 ], only one (1%) of these indicators was specific to evaluating the impact of cancer research.

Methods for data collection and analysis

In total, 36 (90%) reviews discussed methods to collect or analyse the data required to conduct an impact evaluation. The common methods described, and the strengths and weaknesses of each approach, are shown in Additional file 2 : Table S1. Many authors advocated using a mixture of methods and in particular, the triangulation of surveys, interviews (of researchers or research users), and documentary analysis [ 20 , 30 , 31 , 32 ]. A large number of reviews cautioned against the use of quantitative metrics, such as bibliometrics, alone [ 29 , 30 , 41 , 42 , 43 , 44 , 45 , 46 , 47 , 48 ]. Concerns included that these metrics were often not designed to be comparable between research programmes [ 49 ], their use may incentivise researchers to focus on quantity rather than quality [ 42 ], and these metrics could be gamed and used in the wrong context to make decisions about researcher funding, employment and promotion [ 41 , 43 , 45 ].

Several reviews explained that the methods for data collection and analysis chosen for impact evaluation depended on both the unit of research under analysis and the rationale for the impact analysis [ 23 , 24 , 26 , 31 , 36 , 50 , 51 ]. Specific to cancer research, the unit of analysis may be a single clinical trial or a programme of trials, research performed at a cancer centre or research funded by a specific institution or charity. The rationale for research impact assessment was categorised in multiple reviews under four headings (“the 4 As”): advocacy, accountability, analysis and allocation [ 19 , 20 , 23 , 24 , 30 , 31 , 32 , 33 , 36 , 46 , 52 , 53 ]. Finally, Boaz and colleagues found that there was a lack of information on the cost-effectiveness of research impact evaluation methods but suggested that pragmatic and often cheaper approaches to evaluation, such as surveys, were least likely to give in-depth insights into the processes through which research impact occurred [ 31 ].

Using a framework within a research impact evaluation

Applied to research impact evaluation, a framework provides a way of organising collected data, which encourages a more objective and structured evaluation than would be possible with an ad hoc analysis. In total, 27 (68%) reviews discussed the use of a framework in this context. Additional file 2 : Table S2 lists the frameworks mentioned in three or more of the included reviews. The most frequently described framework was the Payback Framework, developed by Buxton and Hanney in 1996 [ 54 ], and many of the other frameworks identified reported that they were developed by adapting key elements of the Payback Framework. None of the frameworks identified were specifically developed to assess the impact of cancer research; however, several were specific to health research. The unit of cancer research being evaluated will dictate the most suitable framework to use in any evaluation. The unit of research most suited to each framework is outlined in Additional file 2 : Table S2.

Categories of impact identified in the included literature reviews

Additional findings from the included reviews

The challenges of research impact evaluation were commonly discussed in these reviews. Several mentioned that the time lag [ 24 , 25 , 33 , 35 , 38 , 46 , 50 , 53 , 55 ] between research completion and impact occurring should influence when an impact evaluation is carried out: too early and impact will not have occurred, too late and it is difficult to link impact to the research in question. This overlapped with the challenge of attributing impact to a particular piece of research [ 24 , 26 , 33 , 34 , 35 , 37 , 38 , 39 , 46 , 50 , 56 ]. Many authors argued that the ability to show attribution was inversely related to the time since the research was carried out [ 24 , 25 , 31 , 46 , 53 ].

Part II: Empirical examples of cancer research impact evaluation

Study characteristics.

In total, 14 empirical impact evaluations relevant to cancer research were identified from the reference lists of the literature reviews included in the first part of this study. These empirical studies were published between 1994 and 2015 by primary authors located in the UK (7/14; 50%), USA (2/14; 14%), Italy (2/14; 14%), Canada (2/14; 14%) and Brazil (1/14; 7%). Table 2 lists these studies with the rationale for each assessment (defined using the “4As”), the unit of analysis of cancer research evaluated and the main findings from each evaluation. The categories of impact evaluated, methods of data collection and analysis, and impact frameworks utilised are also summarised in Table 2 and discussed in more detail below.

Approaches to cancer research impact evaluation used in empirical studies

Categories of impact evaluated in cancer research impact assessments

Several of the empirical studies focused on academic impact. For example, Ugolini and colleagues evaluated scholarly outputs from one cancer research centre in Italy [ 57 ] and in a second study looked at the academic impact of cancer research from European countries [ 58 ]. Saed et al. [ 59 ] used submissions to an international cancer conference (American Society of Clinical Oncology (ASCO)) to evaluate the dissemination of cancer research to the academic community, and Lewison and colleagues [ 60 , 61 , 62 , 63 ] assessed academic, as well as policy impact and dissemination of cancer research findings to the lay media.

Health impact was also commonly evaluated, with a particular focus on the assessment of survival gains. Life years gained or deaths averted [ 64 ], life expectancy gains [ 65 ] and years of extra survival [ 66 ] were all used as indicators of the health impact attributable to cancer research. Glover and colleagues [ 67 ] used a measure of health utility, the quality adjusted life year (QALY), which combines both survival and quality of life assessments. Lakdawalla and colleagues [ 66 ] considered the impact of research on both cancer screening and treatments, and concluded that survival gains were 80% attributable to treatment improvement. In contrast, Glover and colleagues [ 67 ] acknowledged the importance of improved cancer therapies due to research but also highlighted the major impacts from research around smoking cessation, as well as cervical and bowel cancer screening. Several of the studies that assessed health impact also used the information on health gains to assess the economic impact of the same research [ 64 , 65 , 66 , 67 ].

Finally, two studies [ 68 , 69 ] performed multi-dimensional research impact assessments, which incorporated nearly all of the seven categories of impact identified from the previous literature (Fig. 2 ). In their assessment of the impact of research funded by one breast cancer charity in Australia, Donovan and colleagues [ 69 ] evaluated academic, capacity building, policy, health, and wider economic impacts. Montague and Valentim [ 68 ] assessed the impact of one randomised clinical trial (MA17) which investigated the use of a hormonal medication as an adjuvant treatment for patients with breast cancer. In their study, they assessed the dissemination of research findings, academic impact, capacity building for future trials and international collaborations, policy citation, and the health impact of decreased breast cancer recurrence attributable to the clinical trial.

Methods of data collection and analysis for cancer research impact evaluation

Methods for data collection and analysis used in these studies aligned with the categories of impact assessed. For example, studies assessing academic impact used traditional bibliometric searching of publication databases and associated metrics. Ugolini et al. [ 57 ] applied a normalised journal impact factor to publications from a cancer research centre as an indicator of the research quality and productivity from that centre. This analysis was adjusted for the number of employees within each department and the scores were used to apportion 20% of future research funding. The same bibliometric method of analysis was used in a second study by the same authors to compare and contrast national-level cancer research efforts across Europe [ 58 ]. They assessed the quantity and the mean impact factor of the journals for publications from each country and compared this to the location-specific population and GDP. A similar approach was used for the manual assessment of 10% of cancer research abstracts submitted to an international conference (ASCO) in 2001–2003 and 2006–2008 [ 59 ]. These authors examined whether the location of authors affected the likelihood of the abstract being presented orally, as a face-to-face poster or online only.
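
The funding apportionment described for [ 57 ] can be sketched in a few lines. This is only an illustration of the arithmetic under stated assumptions: the source does not give the exact formula, so the score used here (sum of normalised impact factors divided by staff numbers), the function name, the department names and all figures are hypothetical.

```python
def apportion_performance_pool(departments, total_budget, pool_fraction=0.20):
    """Split a performance-linked share of the budget across departments.

    Assumption (not taken from the source): a department's score is the sum
    of its normalised journal impact factors divided by its number of staff,
    and the pool is shared in proportion to these scores.
    """
    pool = total_budget * pool_fraction
    scores = {
        name: sum(d["normalised_ifs"]) / d["staff"]
        for name, d in departments.items()
    }
    total_score = sum(scores.values())
    return {name: pool * score / total_score for name, score in scores.items()}


# Hypothetical departments and budget, for illustration only.
departments = {
    "Dept A": {"normalised_ifs": [1.0, 2.0, 3.0], "staff": 3},
    "Dept B": {"normalised_ifs": [2.0, 4.0], "staff": 1},
}
shares = apportion_performance_pool(departments, total_budget=1_000_000)
for name, amount in shares.items():
    print(f"{name}: {amount:,.0f}")
```

The remaining 80% of the budget is outside the scope of this sketch, since the source does not say how it was allocated.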

Lewison and colleagues, who performed four of the studies identified [ 60 , 61 , 62 , 63 ], used a different bibliometric method of publication citation count to analyse the dissemination, academic, and policy impact of cancer research. The authors also assigned a research level to publications to differentiate if the research was a basic science or clinical cancer study by coding the words in the title of each article or the journal in which the paper was published. The cancer research types assessed by these authors included cancer research at a national level for two different countries (UK and Russia) and research performed by cancer centres in the UK.

To assess policy impact, these authors extracted journal publications from cancer clinical guidelines, and for media impact they looked at publications cited in articles stored within an online repository from a well-known UK media organisation (British Broadcasting Corporation). Interestingly, most of the cancer research publications contained in guidelines and cited in the UK media were clinical studies, whereas a much higher proportion of those published by UK cancer centres were basic science studies. These authors also identified that funders of cancer research played a critical role as commentators to explain the importance of the research in the lay media. The top ten most frequent commentators (each commenting on more than 19 of the 725 media articles) were all representatives from the UK charity CRUK.

A combination of clinical trial findings and documentary analysis of large data repositories was used to estimate health system or health impact. In their study, Montague and Valentim [ 68 ] cited the effect size for a decrease in cancer recurrence from a clinical trial and implied the same health gains would be expected in real life for patients with breast cancer living in Canada. In their study of the impact of charitable and publicly funded cancer research in the UK, Glover et al. [ 67 ] used CRUK and Office for National Statistics (ONS) cancer incidence data, as well as national hospital databases listing episodes of radiotherapy delivered, number of cancer surgeries performed and systemic anti-cancer treatments prescribed, to evaluate changes in practice attributable to cancer research. In their study from a USA perspective, Lakdawalla et al. [ 66 ] used the population-based Surveillance, Epidemiology and End Results Program (SEER) database to evaluate the number of patients likely to be affected by the implementation of cancer research findings. Survival calculations from clinical trials were also applied to population incidence estimates to predict the scale of survival gain attributable to cancer research [ 64 , 66 ].
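
Applying a trial-derived survival gain to population incidence can be sketched as a simple product. The sketch below is an assumption about the general form of such a calculation, not the method of any cited study; the function name, the eligibility and uptake fractions, and all figures are hypothetical.

```python
def population_life_years_gained(annual_incidence, eligible_fraction,
                                 uptake_fraction, gain_per_patient_years):
    """Scale a per-patient survival gain from a trial to a population.

    Assumes the trial effect carries over unchanged to routine practice,
    which is the kind of simplification the text cautions about.
    """
    treated_patients = annual_incidence * eligible_fraction * uptake_fraction
    return treated_patients * gain_per_patient_years


# Hypothetical inputs, for illustration only.
life_years = population_life_years_gained(
    annual_incidence=10_000,      # new cases per year from a registry
    eligible_fraction=0.5,        # share of cases the trial findings apply to
    uptake_fraction=0.8,          # share of eligible patients actually treated
    gain_per_patient_years=1.5,   # mean survival gain reported by the trial
)
print(f"Estimated life years gained per annual cohort: {life_years:,.0f}")
```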

The methods of data collection and analysis used for economic evaluations aligned with the categories of assessment identified by Buxton in their 2004 literature review [ 34 ]. For example, three studies [ 65 , 66 , 67 ] estimated direct healthcare cost savings from implementation of cancer research. This was particularly relevant in one ex-ante assessment of the potential impact of a clinical trial testing the equivalence of using less intensive follow up for patients following cancer surgery [ 65 ]. These authors assessed the number of years of implementing the hypothetical clinical trial findings that it would take (“years to payback”) for the savings to outweigh the money spent developing and running the trial. The return on investment calculation was performed by estimating direct cost savings to the healthcare system from using less intensive follow up without any detriment to survival.
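
The “years to payback” idea can be illustrated with a minimal sketch. It assumes a constant annual saving and no discounting, which may not match the calculation in [ 65 ]; the function name and all figures are hypothetical.

```python
import math


def years_to_payback(research_cost, annual_saving):
    """Whole years of constant annual savings needed to cover the research cost.

    Assumes no discounting and the same saving every year (simplifications).
    """
    if annual_saving <= 0:
        raise ValueError("annual_saving must be positive")
    return math.ceil(research_cost / annual_saving)


# Hypothetical figures, for illustration only.
trial_cost = 1_500_000      # cost of developing and running the trial
saving_per_year = 400_000   # direct saving from less intensive follow up
print(years_to_payback(trial_cost, saving_per_year))
```

A fuller model would discount future savings and allow uptake of the trial findings to grow over time, both of which lengthen the payback period.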

The second of Buxton’s categories was an estimation of productivity loss using the human capital approach. In this method, the economic value of survival gains from cancer research is calculated by measuring the monetary contribution from patients surviving longer who are of working age. This approach was used in two studies [ 64 , 66 ] and in both, estimates of average income (USA) were utilised. Buxton’s fourth category, an estimation of an individual’s willingness to pay for a statistical life, was used in two assessments [ 65 , 66 ], and Glover and colleagues [ 67 ] adapted this method, placing a monetary value on the opportunity cost of QALYs forgone in the UK health service within a fixed budget [ 70 ]. One of the studies that used this method identified that there may be differences in how patients diagnosed with distinct cancer types value the impact of research on cancer specific survival [ 66 ]. In particular, individuals with pancreatic cancer seemed to be willing to spend up to 80% of their annual income for the extra survival attributable to implementation of cancer research findings, whereas this fell to below 50% for breast and colorectal cancer. Only one of the studies considered Buxton’s third category of benefits to the economy from commercial development [ 66 ]. These authors calculated the gain to commercial companies from sales of on-patent pharmaceuticals and concluded that economic gains to commercial producers were small relative to gains from research experienced by cancer patients.
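
The human capital approach described above can be sketched as follows. This is a simplified illustration, assuming a single average income and a fixed working-age range; the function name, the age range and all figures are hypothetical and are not taken from the cited studies.

```python
def human_capital_value(life_years_gained_by_age, average_income,
                        working_age=(16, 64)):
    """Value survival gains as income earned during working-age life years.

    life_years_gained_by_age maps an age to the total life years gained by
    patients at that age. Years gained outside the working-age range are
    given no monetary value, which is a known limitation of this approach.
    """
    low, high = working_age
    working_years = sum(
        years
        for age, years in life_years_gained_by_age.items()
        if low <= age <= high
    )
    return working_years * average_income


# Hypothetical inputs, for illustration only.
gains = {45: 100, 60: 50, 70: 200}   # age -> life years gained
value = human_capital_value(gains, average_income=30_000)
print(f"Human capital value of survival gains: {value:,}")
```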

The cost estimates used in these impact evaluations came from documentary analysis, clinical trial publications, real-life data repositories, surveys, and population average income estimates. For example, in one study, cost information from NCI trials was supplemented by using a telephone survey of pharmacies, historical Medicare documents and estimates of the average income from the 1986 US Bureau of the Census Consumer Income [ 64 ]. In their study, Coyle et al. [ 65 ] costed annual follow up and treatment for cancer recurrence based on the Ontario Health Insurance plan, a cost model relevant to an Ottawa hospital and cost estimates from Statistics Canada [ 71 ]. The data used to calculate the cost of performing cancer research were usually from funding bodies and research institutions. For example, charity reports and Canadian research institution documents were used to estimate that it costs the National Cancer Institute in Canada $1500 per patient accrued to a clinical trial [ 65 ]. Government research investment outgoings were used to calculate that $300 billion was spent on cancer research in the USA from 1971 to 2000, 25% of which was contributed by the NCI [ 66 ], and that the NCI spent over USD 10 million in the 1980s to generate the knowledge that adjuvant chemotherapy was beneficial to colorectal cancer patients [ 64 ]. Charity and research institution spending reports, along with an estimation of the proportion of funds spent specifically on cancer research, were used to demonstrate that £15 billion of UK charity and public money was spent on cancer research between 1970 and 2009 [ 67 ].

Lastly, the two studies [ 68 , 69 ] which adopted a multi-category approach to impact assessment used the highest number and broadest range of methods identified from the previous literature (Additional file 2 : Table S1). The methods utilised included surveys and semi-structured telephone interviews with clinicians, documentary analysis of funding and project reports, case studies, content analysis of media releases, peer review, bibliometrics, budget analysis, large data repository review, and observations of meetings.

Frameworks for cancer research impact evaluation

Only two of the empirical studies identified used an impact framework. Unsurprisingly, these were also the studies that performed a multi-category assessment and used the broadest range of methods within their analyses. Donovan et al. [ 69 ] used the Payback Framework (Additional file 2 : Table S2) to guide the categories of impact assessed and the questions in their researcher surveys and interviews. They also reported the results of their evaluation using the same categories: from knowledge production, through capacity building to health and wider economic impacts. Montague and Valentim [ 68 ] used the Canadian Academy of Health Sciences (CAHS) Framework (Additional file 2 : Table S2). Rather than using the framework in its original form, they arranged impact indicators from the CAHS framework within a hierarchy to illustrate impacts occurring over time. The authors distinguished short term, intermediate and longer-term changes resulting from one clinical cancer trial, aligning with the concept of categorising impacts based on when they occur, which was described in one of the literature reviews identified in the first part of this study [ 33 ].

Lastly, the challenges of time lags and attribution of impact were identified and addressed by several of these empirical studies. Lewison and colleagues tracked the citation of over 3000 cancer publications in UK cancer clinical guidelines over time [ 61 ], and in their analysis Donovan et al. [ 69 ] explicitly acknowledged that the short time frame between their analysis and funding of the research projects under evaluation was likely to under-estimate the impact achieved. Glover et al. [ 67 ] used bibliometric analysis of citations in clinical cancer guidelines to estimate the average time from publication to clinical practice change (8 years). They added 7 years to account for the time between funding allocation and publication of research results, giving an overall time lag from funding cancer research to impact of 15 years. The challenge of attribution was addressed in one study by using a time-line to describe impacts occurring at different time-points but linking back to the original research in question [ 68 ]. The difficulties of estimating time lags and attributing impact to cancer research were both specifically addressed in a companion study [ 72 ] to the one conducted by Glover and colleagues. In this study, instead of quantifying the return on cancer research investment, qualitative methods of assessment were used. This approach identified factors that enhanced and accelerated the process of impact occurring and helped to provide a narrative to link impacts to research.

This study has identified several examples of the evaluation of the impact of cancer research. These evaluations were performed over three decades, and mostly assessed research performed in high-income countries. Justification for the approach to searching the literature used in this study is given by looking at the titles of the articles identified. In only 14% (2/14) was the word “impact” included, suggesting that performing a search for empirical examples of cancer research impact evaluation using traditional publication databases would have been challenging. Furthermore, all the studies identified were included within reviews of approaches to research impact evaluation, which negated the subjective decision of whether the studies complied with a particular definition of research impact.

Characteristics of research that were specifically relevant to cancer studies can be identified from these impact assessments. Firstly, many of these evaluations acknowledged the contribution of both basic and applied studies to the body of cancer research, and several studies categorised research publications based on this distinction. Second, the strong focus on health impact and the expectation that cancer research will improve health was not surprising. The focus on survival in particular, especially in economic studies looking at the value of health gains, reflects the high mortality of cancer as a disease entity. This contrasts with similar evaluations of musculoskeletal or mental health research, which have focused on improvements in morbidity [ 73 , 74 ]. Third, several studies highlighted the distinction between research looking at different aspects of the cancer care continuum: from screening, prevention and diagnosis, to treatment and end of life care. The division of cancer as a disease entity by the site of disease was also recognised. Studies that analysed the number of patients diagnosed with cancer, or population-level survival gains, often used site-specific cancer incidence, and other studies evaluated research relating to only one type of cancer [ 64 , 65 , 68 , 69 ]. Lastly, the empirical examples of cancer research impact identified in this study confirm the huge investment into cancer research that exists, and the desire by many research organisations and funders to quantify a rate of return on that investment. Most of these studies concluded that the return on cancer research investment far exceeded expectations. Even using the simple measure of future research grants attracted by researchers funded by one cancer charity, the monetary value of these grants outweighed the initial investment [ 69 ].

There were limitations in the approaches to impact evaluation used in these studies which were recognised by reflecting on the findings from the broader literature. Several studies assessed academic impact in isolation, and studies using the journal impact factor or the location of authors on publications were limited in the information they provided. In particular, using the journal impact factor (JIF) to allocate research funding, as was done in one study, is now considered outdated and controversial. The policy impact of cancer research was commonly evaluated by using clinical practice guidelines, but other policy types that could be used in impact assessment [ 30 ], such as national government reports or local guidelines, were rarely used. In addition, using cancer guidelines as a surrogate for clinical practice change and health service impact could have drawbacks. For example, guidelines can often be outdated, irrelevant or simply not used by cancer clinicians and, in addition, local hospitals often have their own local clinical guidelines, which may take precedence over national documents. Furthermore, the other aspects of policy impact described in the broader literature [ 30 ], such as impact on policy agenda setting and implementation, were rarely assessed. There were also no specific examples of social, environmental or cultural impacts and very few of the studies mentioned wider economic benefits from cancer research, such as spin out companies and patents. It may be that these types of impact were less relevant to the cancer research being assessed; however, unexpected impacts may have been identified if they had been considered at the time of impact evaluation.

Reflecting on how the methods of data collection and analysis used in these studies aligned with those listed in Additional file 2 : Table S1, bibliometrics, alternative metrics (media citation), documentary analysis, surveys and economic approaches were often used. Methods less commonly adopted were interviews, use of a scale and focus groups. This may have been due to the time and resource implications of using qualitative techniques and more in-depth analysis, or a lack of awareness by authors regarding the types of scales that could be used. An example of a scale that could be used to assess the impact of research on policy is provided in one of the literature reviews identified [ 30 ]. The method of collecting expert testimony from researchers was utilised in the studies identified, but there were no obvious examples of testimony about the impact of cancer research from stakeholders such as cancer patients or their families.

Lastly, despite the large number of examples identified from the previous literature, a minority of the empirical assessments used an impact framework. The Payback Framework and an iteration of the CAHS Framework were used with success, and these studies are excellent examples of how frameworks can be used for cancer research impact evaluation in future. Other frameworks identified from the literature (Additional file 2 : Table S2) that may be appropriate for the assessment of cancer research impact in future include Anthony Weiss’s logic model [ 75 ], the research impact framework [ 76 ] and the research utilisation ladder [ 77 ]. Weiss’s model is specific to medical research and encourages evaluation of how clinical trial publication results are implemented in practice and lead to health gain. He describes an efficacy-efficiency gap [ 75 ] between clinical decision makers becoming aware of research findings, changing their practice and this having impact on health. The Research Impact Framework, developed by the Department of Public Health and Policy at the UK London School of Hygiene and Tropical Medicine [ 76 ], is an aid for researchers to self-evaluate their research impact, and offers an extensive list of categories and indicators of research which could be applied to evaluating the impact of cancer research. Finally, Landry’s Research Utilisation Ladder [ 77 ] has similarities to the hierarchy used in the empirical study by Montague and Valentim [ 68 ], and focuses on the role of the individual researcher in determining how research is utilised and its subsequent impact.

Reflecting on the strengths and limitations of the empirical approaches to cancer research impact identified in this study, Fig. 3 outlines recommendations for the future. One of these recommendations refers to improving the use of real-life data to assess the actual impact of research on incidence, treatment, and outcomes, rather than predicting these impacts by using clinical trial results. Databases for cancer incidence, such as SEER (USA) and the Office for National Statistics (UK), are relatively well established. However, those that collect data on treatments delivered and patient outcomes are less so, and when they do exist, they have been difficult to establish and maintain and often have large quantities of missing data [ 78 , 79 ]. In their study, Glover et al. [ 67 ] specifically identified the lack of good quality data documenting radiotherapy use in the UK in 2012.

1 Thonon F, Boulkedid R, Teixeira M, Gottot S, Saghatchian M, Alberti C. Identifying potential indicators to measure the outcome of translational cancer research: a mixed methods approach. Health Res Policy Syst. 2015;13:72

Suggestions for approaching cancer research impact evaluation.

The recommendations also suggest that impact assessment for cancer and other health research could be made more robust by giving researchers access to cost data linked to administrative datasets. This type of data was used in empirical impact assessments performed in the USA [ 64 , 66 ] because the existing Medicare and Medicaid health service infrastructure collects and provides access to this data. In the UK, hospital cost data is collected for accounting purposes but this could be unlocked as a resource for research impact assessments going forward. A good example of where attempts are being made to link resource use to cost data for cancer care in the UK is through the UK Colorectal Cancer Intelligence Hub [ 80 ].

Lastly, several empirical examples highlighted that impact from cancer research can be increased when researchers or research organisations advocate, publicise and help to interpret research findings for a wider audience [ 60 , 72 ]. In addition, it is clear from these studies that organisations that want to evaluate the impact of their cancer research must also appreciate that research impact evaluation is a multi-disciplinary effort, requiring input from individuals with different skill sets, such as basic scientists, clinicians, social scientists, health economists, statisticians, and information technology analysts. Furthermore, the users and beneficiaries of cancer research, such as patients and their families, should not be forgotten, and asking them which impacts from cancer research are important will help direct and improve future evaluations.

A strength of this study is the broad, yet systematic approach used to identify existing reviews within the research impact literature. This allowed a more informed assessment of cancer research evaluations than would have been possible if a primary review of these empirical examples had been undertaken. Limitations of the study include the fact that the review protocol was not registered in advance and that one researcher screened the full articles for review. The latter was partly mitigated by using pre-defined inclusion criteria.

Impact assessment is a way of communicating to funders and patients the merits of undertaking cancer research and learning from previous research to develop better studies that will have positive impacts on society in the future. To the best of our knowledge, this is the first review to consider how to approach evaluation of the impact of cancer research. At the policy level, a lesson learned from this study for institutions, governments, and funders of cancer research is that an exact prescription for how to conduct cancer research impact evaluation cannot be provided, but a multi-disciplinary approach and sufficient resources are required if a meaningful assessment is to be achieved. The approach to impact evaluation used to assess cancer research will depend on the type of research being assessed, the unit of analysis, rationale for the assessment and the resources available. This study has added to an important dialogue for cancer researchers, funders and patients about how cancer research can be evaluated and ultimately how future cancer research impact can be improved.

Availability of data and materials

Additional files included. No primary research data analysed.

Abbreviations

NCI: National Cancer Institute

USA: United States of America

US: United States

UK: United Kingdom

CRUK: Cancer Research UK

MeSH: Medical subject heading

PRISMA: Preferred reporting items for systematic reviews and meta-analysis

GDP: Gross Domestic Product

ASCO: American Society of Clinical Oncology

SEER: Surveillance, Epidemiology and End Results Program

JIF: Journal impact factor

REF: Research evaluation framework

HTA: Health Technology Assessment

PhD: Doctor of Philosophy

R&D: Research and Development

QALY: Quality adjusted life year

CAHS: Canadian Academy of Health Sciences

ONS: Office for National Statistics

Cancer Research UK. Current clinical trial research. https://www.cancerresearchuk.org/our-research-by-cancer-topic/our-clinical-trial-research/current-clinical-trial-research . Accessed 20th May 2019.

Cancer Research UK. Cancer statistics for the UK. https://www.cancerresearchuk.org/health-professional/cancer-statistics-for-the-uk . Accessed 20th May 2019.

Cancer Research UK. Cancer risk statistics 2019. https://www.cancerresearchuk.org/health-professional/cancer-statistics/risk . Accessed 20th Dec 2019.

Chalmers I, Bracken MB, Djulbegovic B, Garattini S, Grant J, Gulmezoglu AM, et al. How to increase value and reduce waste when research priorities are set. Lancet. 2014;383(9912):156–65.

Ioannidis JPA, Greenland S, Hlatky MA, Khoury MJ, Macleod MR, Moher D, et al. Increasing value and reducing waste in research design, conduct, and analysis. Lancet. 2014;383(9912):166–75.

Richard S. BMJ blogs. In: Godlee F, editor. 2018. https://blogs.bmj.com/bmj/2018/07/30/richard-smith-measuring-research-impact-rage-hard-get-right/ . Accessed 31st July 2019.

Department for the Economy, Higher Education Funding Council for Wales, Research England, Scottish Funding Council. Draft guidance on submissions REF 2018/01 2018 July 2018. Report No.

Gunn A, Mintrom M. Social science space network blogger. 2017. https://www.socialsciencespace.com/2017/01/five-considerations-policy-measure-research-impact/ . Accessed 2019.

Callaway E. Beat it, impact factor! Publishing elite turns against controversial metric. Nature. 2016;535(7611):210–1.

Research Councils UK. Excellence with impact 2018. https://webarchive.nationalarchives.gov.uk/20180322123208/http://www.rcuk.ac.uk/innovation/impact/ . Accessed 24th Aug 2020.

Cancer Research UK. Measuring the impact of research 2017. https://www.cancerresearchuk.org/funding-for-researchers/research-features/2017-06-20-measuring-the-impact-of-research . Accessed 24th Aug 2020.

Gordon LG, Bartley N. Views from senior Australian cancer researchers on evaluating the impact of their research: results from a brief survey. Health Res Policy Syst. 2016;14:2.

Thompson MK, Poortmans P, Chalmers AJ, Faivre-Finn C, Hall E, Huddart RA, et al. Practice-changing radiation therapy trials for the treatment of cancer: where are we 150 years after the birth of Marie Curie? Br J Cancer. 2018;119(4):389–407.

Downing A, Morris EJ, Aravani A, Finan PJ, Lawton S, Thomas JD, et al. The effect of the UK coordinating centre for cancer research anal cancer trial (ACT1) on population-based treatment and survival for squamous cell cancer of the anus. Clin Oncol. 2015;27(12):708–12.

Tsang Y, Ciurlionis L, Kirby AM, Locke I, Venables K, Yarnold JR, et al. Clinical impact of IMPORT HIGH trial (CRUK/06/003) on breast radiotherapy practices in the United Kingdom. Br J Radiol. 2015;88(1056):20150453.

South A, Parulekar WR, Sydes MR, Chen BE, Parmar MK, Clarke N, et al. Estimating the impact of randomised control trial results on clinical practice: results from a survey and modelling study of androgen deprivation therapy plus radiotherapy for locally advanced prostate cancer. Eur Urol Focus. 2016;2(3):276–83.

Horsley T DO, Sampson M. Checking reference lists to find additional studies for systematic reviews. Cochrane Database Syst Rev. 2011(8).

Moher D, Liberati A, Tetzlaff J, Altman DG, Group P. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA Statement. Open medicine : a peer-reviewed, independent, open-access journal. 2009;3(3):e123–30.

Raftery JH, Greenhalgh S, Glover T, Blatch-Jones MA. Models and applications for measuring the impact of health research: update of a systematic review for the Health Technology Assessment programme. Health Technol Assess. 2016;20(76):1–254.

Hanney SB, Buxton M, Green C, Coulson D, Raftery J. An assessment of the impact of the NHS Health Technology Assessment Programme. Health Technol Assess. 2007;11(53):82.

Greenhalgh T, Raftery J, Hanney S, Glover M. Research impact: a narrative review. BMC Med. 2016;14:78.

Coryn CLS, Hattie JA, Scriven M, Hartmann DJ. Models and mechanisms for evaluating government-funded research—an international comparison. Am J Eval. 2007;28(4):437–57.

Brutscher PB, Wooding S, Grant J. Health research evaluation frameworks: an international comparison. RAND Europe. 2008.

Guthrie S, Wamae W, Diepeveen S, Wooding S, Grant J. Measuring Research. A guide to research evaluation frameworks and tools. RAND Europe Cooperation 2013.

Health Economics Research Group, Office of Health Economics, RAND Europe. Medical research: what’s it worth? Estimating the economic benefits from medical research in the UK. London: UK Evaluation Forum; 2008.

Marjanovic S, Hanney S, Wooding S. A historical reflection on research evaluation studies, their recurrent themes and challenges. Technical report. 2009.

Chikoore L. Perceptions, motivations and behaviours towards 'research impact': a cross-disciplinary perspective. University of Loughborough; 2016.

Pollitt A, Potoglou D, Patil S, Burge P, Guthrie S, King S, et al. Understanding the relative valuation of research impact: a best-worst scaling experiment of the general public and biomedical and health researchers. BMJ Open. 2016;6(8).

Wouters P, Thelwall M, Kousha K, Waltman L, de Rijcke S, Rushforth A, Franssen T. The metric tide literature review (Supplementary report I to the independent review of the role of metrics in research assessment and management). 2015.

Hanney SR, Gonzalez-Block MA, Buxton MJ, Kogan M. The utilisation of health research in policy-making: concepts, examples and methods of assessment. Health Res Policy Syst. 2003;1(1):2.

Boaz A, Fitzpatrick S, Shaw B. Assessing the impact of research on policy: a literature review. Sci Public Policy. 2009;36(4):255–70.

Newson R, King L, Rychetnik L, Milat A, Bauman A. Looking both ways: a review of methods for assessing research impacts on policy and the policy utilisation of research. Health Res Policy Syst. 2018;16(1):54.

Cruz Rivera S, Kyte DG, Aiyegbusi OL, Keeley TJ, Calvert MJ. Assessing the impact of healthcare research: a systematic review of methodological frameworks. PLoS Med. 2017;14(8):e1002370.

Buxton M, Hanney S, Jones T. Estimating the economic value to societies of the impact of health research: a critical review. Bull World Health Organ. 2004;82(10):733–9.

Yazdizadeh B, Majdzadeh R, Salmasian H. Systematic review of methods for evaluating healthcare research economic impact. Health Res Policy Syst. 2010;8:6.

Hanney S. Ways of assessing the economic value or impact of research: is it a step too far for nursing research? J Res Nurs. 2011;16(2):151–66.

Banzi R, Moja L, Pistotti V, Facchini A, Liberati A. Conceptual frameworks and empirical approaches used to assess the impact of health research: an overview of reviews. Health Res Policy Syst. 2011;9:26.

Bornmann L. What is societal impact of research and how can it be assessed? A literature survey. J Am Soc Inform Sci Technol. 2013;64(2):217–33.

Pedrini M, Langella V, Battaglia MA, Zaratin P. Assessing the health research’s social impact: a systematic review. Scientometrics. 2018;114(3):1227–50.

Thonon F, Boulkedid R, Delory T, Rousseau S, Saghatchian M, van Harten W, et al. Measuring the outcome of biomedical research: a systematic literature review. PLoS ONE. 2015;10(4):e0122239.

Raftery J, Hanney S, Greenhalgh T, Glover M, Blatch-Jones A. Models and applications for measuring the impact of health research: update of a systematic review for the Health Technology Assessment Programme. Health Technol Assess. 2016;20(76).

Ruscio J, Seaman F, D'Oriano C, Stremlo E, Mahalchik K. Measuring scholarly impact using modern citation-based indices. Meas Interdiscip Res Perspect. 2012;10(3):123–46.

Carpenter CR, Cone DC, Sarli CC. Using publication metrics to highlight academic productivity and research impact. Acad Emerg Med. 2014;21(10):1160–72.

Patel VM, Ashrafian H, Ahmed K, Arora S, Jiwan S, Nicholson JK, et al. How has healthcare research performance been assessed? A systematic review. J R Soc Med. 2011;104(6):251–61.

Smith KM, Crookes E, Crookes PA. Measuring research "Impact" for academic promotion: issues from the literature. J High Educ Policy Manag. 2013;35(4):410–20.

Penfield T, Baker M, Scoble R, Wykes M. Assessment, evaluations and definitions of research impact: a review. Res Eval. 2014;23(1):21–32.

Agarwal A, Durairajanayagam D, Tatagari S, Esteves SC, Harlev A, Henkel R, et al. Bibliometrics: tracking research impact by selecting the appropriate metrics. Asian J Androl. 2016;18(2):296–309.

Braithwaite J, Herkes J, Churruca K, Long J, Pomare C, Boyling C, et al. Comprehensive researcher achievement model (CRAM): a framework for measuring researcher achievement, impact and influence derived from a systematic literature review of metrics and models. BMJ Open. 2019;9(3):e025320.

Moed HF, Halevi G. Multidimensional assessment of scholarly research impact. J Assoc Inf Sci Technol. 2015;66(10):1988–2002.

Milat AJ, Bauman AE, Redman S. A narrative review of research impact assessment models and methods. Health Res Policy Syst. 2015;13:18.

Weißhuhn P, Helming K, Ferretti J. Research impact assessment in agriculture—a review of approaches and impact areas. Res Eval. 2018;27(1):36–42.

Deeming S, Searles A, Reeves P, Nilsson M. Measuring research impact in Australia’s medical research institutes: a scoping literature review of the objectives for and an assessment of the capabilities of research impact assessment frameworks. Health Res Policy Syst. 2017;15(1):22.

Peter N, Kothari A, Masood S. Identifying and understanding research impact: a review for occupational scientists. J Occup Sci. 2017;24(3):377–92.

Buxton M, Hanney S. How can payback from health services research be assessed? J Health Serv Res Policy. 1996;1(1):35–43.

Bornmann L. Measuring impact in research evaluations: a thorough discussion of methods for, effects of and problems with impact measurements. High Educ Int J High Educ Res. 2017;73(5):775–87.

Reale E, Avramov D, Canhial K, Donovan C, Flecha R, Holm P, et al. A review of literature on evaluating the scientific, social and political impact of social sciences and humanities research. Res Eval. 2017;27(4):298–308.

Ugolini D, Bogliolo A, Parodi S, Casilli C, Santi L. Assessing research productivity in an oncology research institute: the role of the documentation center. Bull Med Libr Assoc. 1997;85(1):33–8.

Ugolini D, Casilli C, Mela GS. Assessing oncological productivity: is one method sufficient? Eur J Cancer. 2002;38(8):1121–5.

Saad ED, Mangabeira A, Masson AL, Prisco FE. The geography of clinical cancer research: analysis of abstracts presented at the American Society of Clinical Oncology Annual Meetings. Ann Oncol. 2010;21(3):627–32.

Lewison G, Tootell S, Roe P, Sullivan R. How do the media report cancer research? A study of the UK’s BBC website. Br J Cancer. 2008;99(4):569–76.

Lewison GS, Sullivan R. The impact of cancer research: how publications influence UK cancer clinical guidelines. Br J Cancer. 2008;98(12):1944–50.

Lewison G, Markusova V. The evaluation of Russian cancer research. Res Eval. 2010;19(2):129–44.

Sullivan R, Lewison G, Purushotham AD. An analysis of research activity in major UK cancer centres. Eur J Cancer. 2011;47(4):536–44.

Brown ML, Nayfield SG, Shibley LM. Adjuvant therapy for stage III colon cancer: economics returns to research and cost-effectiveness of treatment. J Natl Cancer Inst. 1994;86(6):424–30.

Coyle D, Grunfeld E, Wells G. The assessment of the economic return from controlled clinical trials. A framework applied to clinical trials of colorectal cancer follow-up. Eur J Health Econ. 2003;4(1):6–11.

Lakdawalla DN, Sun EC, Jena AB, Reyes CM, Goldman DP, Philipson TJ. An economic evaluation of the war on cancer. J Health Econ. 2010;29(3):333–46.

Glover M, Buxton M, Guthrie S, Hanney S, Pollitt A, Grant J. Estimating the returns to UK publicly funded cancer-related research in terms of the net value of improved health outcomes. BMC Med. 2014;12:99.

Montague S, Valentim R. Evaluation of RT&D: from ‘prescriptions for justifying’ to ‘user-oriented guidance for learning.’ Res Eval. 2010;19(4):251–61.

Donovan C, Butler L, Butt AJ, Jones TH, Hanney SR. Evaluation of the impact of National Breast Cancer Foundation-funded research. Med J Aust. 2014;200(4):214–8.

National Institute for Health and Care Excellence. Guide to the methods of technology appraisal: 2013. 2013.

Government of Canada. Statistics Canada 2020. https://www.statcan.gc.ca/eng/start . Accessed 31st Aug 2020.

Guthrie S, Pollitt A, Hanney S, Grant J. Investigating time lags and attribution in the translation of cancer research: a case study approach. Rand Health Q. 2014;4(2):16.

Glover M, Montague E, Pollitt A, Guthrie S, Hanney S, Buxton M, et al. Estimating the returns to United Kingdom publicly funded musculoskeletal disease research in terms of net value of improved health outcomes. Health Res Policy Syst. 2018;16(1):1.

Wooding S, Pollitt A, Castle-Clarke S, Cochrane G, Diepeveen S, Guthrie S, et al. Mental health retrosight: understanding the returns from research (lessons from schizophrenia): policy report. Rand Health Q. 2014;4(1):8.

Weiss AP. Measuring the impact of medical research: moving from outputs to outcomes. Am J Psychiatry. 2007;164(2):206–14.

Kuruvilla S, Mays N, Pleasant A, Walt G. Describing the impact of health research: a research impact framework. BMC Health Serv Res. 2006;6:134.

Landry R, Amara N, Lamari M. Climbing the ladder of research utilization. Evidence from social science research. Sci Commun. 2001;22(4):396–422.

Eisemann N, Waldmann A, Katalinic A. Imputation of missing values of tumour stage in population-based cancer registration. BMC Med Res Methodol. 2011;11(1):129.

Morris EJ, Taylor EF, Thomas JD, Quirke P, Finan PJ, Coleman MP, et al. Thirty-day postoperative mortality after colorectal cancer surgery in England. Gut. 2011;60(6):806–13.

University of Leeds. CORECT-R 2020. https://bci.leeds.ac.uk/applications/ . Accessed 24th Aug 2020.

Acknowledgements

We would like to acknowledge the help of Ms Lorraine MacLeod, specialist librarian from the Beatson West of Scotland Cancer Network in NHS Greater Glasgow and Clyde, for her assistance in formulating the search strategy. We would also like to acknowledge that Professor Stephen Hanney provided feedback on an earlier version of this review.

Dr. Catherine Hanna has a CRUK and University of Glasgow grant. Grant ID: C61974/A2429.

Author information

Authors and affiliations.

CRUK Clinical Trials Unit, Institute of Cancer Sciences, University of Glasgow, Glasgow, United Kingdom

Catherine R. Hanna & Robert J. Jones

Health Economics and Health Technology Assessment, Institute of Health and Wellbeing, University of Glasgow, Glasgow, United Kingdom

Kathleen A. Boyd

Contributions

All authors contributed to the concept and design of the study. CH was responsible for the main data analysis and writing of the manuscript. KAB and RJJ were responsible for writing, editing and final approval of the manuscript. All authors read and approved the final manuscript.

Corresponding author

Correspondence to Catherine R. Hanna.

Ethics declarations

Ethics approval and consent to participate.

No ethical approval required.

Consent for publication

No patient level or third party copyright material used.

Competing interests

Nil declared.

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Additional file 1.

Research Council UK Impact definition, summary of search terms for part one, and inclusion criteria for both parts of the study.

Additional file 2.

Table S1 (List of methods for research impact evaluation) and Table S2 (List of frameworks for research impact evaluation).

Rights and permissions