Dynamic Programming


- Karleigh Moore

- Norbert Madarász

Dynamic programming refers to a problem-solving approach in which we precompute and store the solutions to simpler, similar subproblems in order to build up the solution to a complex problem. It is similar to recursion, in which calculating the base cases allows us to inductively determine the final value. This bottom-up approach works well when the new value depends only on previously calculated values.

An important property of a problem that is being solved through dynamic programming is that it should have overlapping subproblems. This is what distinguishes DP from divide and conquer in which storing the simpler values isn't necessary.

To show how powerful the technique can be, here are some of the most famous problems commonly approached through dynamic programming:

- Backpack Problem : Given a set of treasures with known values and weights, which of them should you pick to maximize your profit whilst not damaging your backpack which has a fixed capacity?

- Egg Dropping : What is the best way to drop \(n\) eggs from an \(m\)-floored building to figure out the lowest height from which the eggs when dropped crack?

- Longest Common Subsequence : Given two sequences, which is the longest subsequence common to both of them?

- Subset Sum Problem : Given a set and a value \(n,\) is there a subset the sum of whose elements is \(n?\)

- Fibonacci Numbers : Is there a better way to compute Fibonacci numbers than plain recursion?

In a contest environment, dynamic programming almost always comes up (and often in a surprising way, no matter how familiar the contestant is with it).

Motivational Example: Change of Coins

Contents: Recursion with Memoization; Bidimensional Dynamic Programming: Example; Example: Maximum Paths.

What is the minimum number of coins of values \(v_1,v_2, v_3, \ldots, v_n\) required to make a total of \(V?\) You may use a denomination more than once.

Optimal Substructure

The most important aspect of this problem that encourages us to solve this through dynamic programming is that it can be simplified to smaller subproblems.

Let \(f(N)\) represent the minimum number of coins required for a value of \(N\).

Visualize \(f(N)\) as a stack of coins. What is the coin at the top of the stack? It could be any of \(v_1,v_2, v_3, \ldots, v_n\). In case it were \(v_1\), the rest of the stack would amount to \(N-v_1;\) or if it were \(v_2\), the rest of the stack would amount to \(N-v_2\), and so on.

How do we decide which it is? We do not know yet, so we need to see which of them minimizes the number of coins required.

Going by the above argument, we could state the problem as follows:

\[f(V) = \min \Big( \big\{ 1 + f(V - v_1), 1 + f(V-v_2), \ldots, 1 + f(V-v_n) \big \} \Big). \]

The 1 in each term counts the coin at the top of the stack; the remaining term covers the rest of the stack.

Overlapping Subproblems

It is easy to see that the subproblems could be overlapping.

For example, if we are trying to make a stack of $11 using $1, $2, and $5, our look-up pattern would be like this:

\[\begin{align} f(11) &= \min \Big( \big\{ 1+f(10),\ 1+ f(9),\ 1 + f(6) \big\} \Big) \\ &= \min \Big ( \big \{ 1+ \min {\small \left ( \{ 1 + f(9), 1+ f(8), 1+ f(5) \} \right )},\ 1+ f(9),\ 1 + f(6) \big \} \Big ). \end{align} \]

Clearly enough, we'll need to use the value of \(f(9)\) several times.

One of the most important aspects of optimizing our algorithms is that we do not recompute these values. To do this, we compute and store all the values of \(f\) from 1 onwards for potential future use.

The recursion has to bottom out somewhere, in other words, at a known value from which it can start.

For this problem, we need to take care of two things:

Zero : It is clear enough that \(f(0) = 0\) since we do not require any coins at all to make a stack amounting to 0.

Negative and Unreachable Values : One way of dealing with such values is to mark them with a sentinel value so that our code deals with them in a special way. A good choice of a sentinel is \(\infty\), since the minimum value between a reachable value and \(\infty\) could never be infinity.

The Algorithm

Let's sum up the ideas and see how we could implement this as an actual algorithm:
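The ideas above (the recurrence, the base case \(f(0) = 0\), and \(\infty\) as a sentinel) can be combined into a bottom-up table. The following is a minimal Python sketch, not the original listing; the names `min_coins`, `target`, and `denominations` are assumptions introduced for illustration.

```python
import math


def min_coins(target, denominations):
    """Return the fewest coins that sum to target, or math.inf if impossible."""
    # f[x] holds the fewest coins needed to make x; infinity marks "unreachable".
    f = [math.inf] * (target + 1)
    f[0] = 0  # base case: no coins are needed to make 0
    for amount in range(1, target + 1):
        for coin in denominations:
            # Coins larger than the amount would lead to a negative value: skip them.
            if coin <= amount and f[amount - coin] + 1 < f[amount]:
                f[amount] = f[amount - coin] + 1
    return f[target]


print(min_coins(11, [1, 2, 5]))  # 3, for example 5 + 5 + 1
```

Each entry is filled exactly once from smaller entries, so the running time is proportional to the target value times the number of denominations.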

We have claimed that naive recursion is a bad way to solve problems with overlapping subproblems. Why is that? Mainly because of all the recomputations involved.

Another way to avoid this problem is to compute each value the first time it is needed and store it as we go, in a top-down fashion.

Let's look at how one could potentially solve the previous coin change problem in the memoization way.

```python
def coinsChange(V, v):
    memo = {}  # cache: amount -> minimum number of coins

    def Change(V):
        if V in memo:
            return memo[V]
        if V == 0:
            return 0  # base case: nothing left to pay
        if V < 0:
            return float("inf")  # sentinel for unreachable amounts
        memo[V] = min([1 + Change(V - vi) for vi in v])
        return memo[V]

    return Change(V)
```

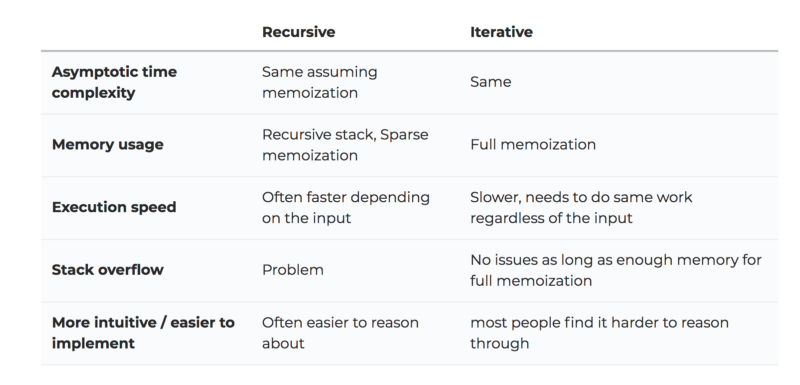

Dynamic Programming vs Recursion with Caching

There are \(k\) types of brackets each with its own opening bracket and closing bracket. We assume that the first pair is denoted by the numbers 1 and \(k+1,\) the second by 2 and \(k+2,\) and so on. Thus the opening brackets are denoted by \(1, 2, \ldots, k,\) and the corresponding closing brackets are denoted by \(k+1, k+2, \ldots, 2k,\) respectively.

Some sequences with elements from \(1, 2, \ldots, 2k\) form well-bracketed sequences while others don't. A sequence is well-bracketed if we can match or pair up opening brackets of the same type in such a way that the following holds:

- Every bracket is paired up.

- In each matched pair, the opening bracket occurs before the closing bracket.

- For a matched pair, any other matched pair lies either completely between them or outside them.

In this problem, you are given a sequence of brackets of length \(N\): \(B[1], \ldots, B[N]\), where each \(B[i]\) is one of the brackets. You are also given an array of Values: \(V[1],\ldots, V[N] \).

Among all the subsequences in the Values array, such that the corresponding bracket subsequence in the B Array is a well-bracketed sequence, you need to find the maximum sum.

Task: Solve the above problem for this input.

Input Format

One line, which contains \((2\times N + 2)\) space-separated integers. The first integer denotes \(N.\) The next integer is \(k.\) The next \(N\) integers are \(V[1],..., V[N].\) The last \(N\) integers are \(B[1],..., B[N].\)

Constraints

- \(1 \leq k \leq 7\)

- \(-10^6 \leq V[i] \leq 10^6\), for all \(i\)

- \(1 \leq B[i] \leq 2k\), for all \(i\)

Illustrated Examples

For the examples discussed here, let us assume that \(k = 2\).

- The sequence 1, 1, 3 is not well-bracketed, as one of the two 1's cannot be paired.

- The sequence 3, 1, 3, 1 is not well-bracketed, as there is no way to match the second 1 to a closing bracket occurring after it.

- The sequence 1, 2, 3, 4 is not well-bracketed, as the matched pair 2, 4 is neither completely between the matched pair 1, 3 nor completely outside of it. That is, the matched pairs cannot overlap.

- The sequence 1, 2, 4, 3, 1, 3 is well-bracketed. We match the first 1 with the first 3, the 2 with the 4, and the second 1 with the second 3, satisfying all three conditions.

If you rewrite these sequences using [, {, ], } instead of 1, 2, 3, 4 respectively, this will be quite clear.

Suppose \(N = 6, k = 3,\) and the values of \(V\) and \(B\) are as follows:

| \(i\) | 1 | 2 | 3 | 4 | 5 | 6 |
| --- | --- | --- | --- | --- | --- | --- |
| \(V[i]\) | 4 | 5 | -2 | 1 | 1 | 6 |
| \(B[i]\) | 1 | 3 | 4 | 2 | 5 | 6 |

- The brackets in positions 1, 3 form a well-bracketed sequence (1, 4), and the sum of the values in these positions is 2 (4 + (-2) = 2).

- The brackets in positions 1, 3, 4, 5 form a well-bracketed sequence (1, 4, 2, 5), and the sum of the values in these positions is 4.

- The brackets in positions 2, 4, 5, 6 form a well-bracketed sequence (3, 2, 5, 6), and the sum of the values in these positions is 13.

- The sum of the values in positions 1, 2, 5, 6 is 16, but the brackets in these positions (1, 3, 5, 6) do not form a well-bracketed sequence.

You can check that the best sum from positions whose brackets form a well-bracketed sequence is 13.

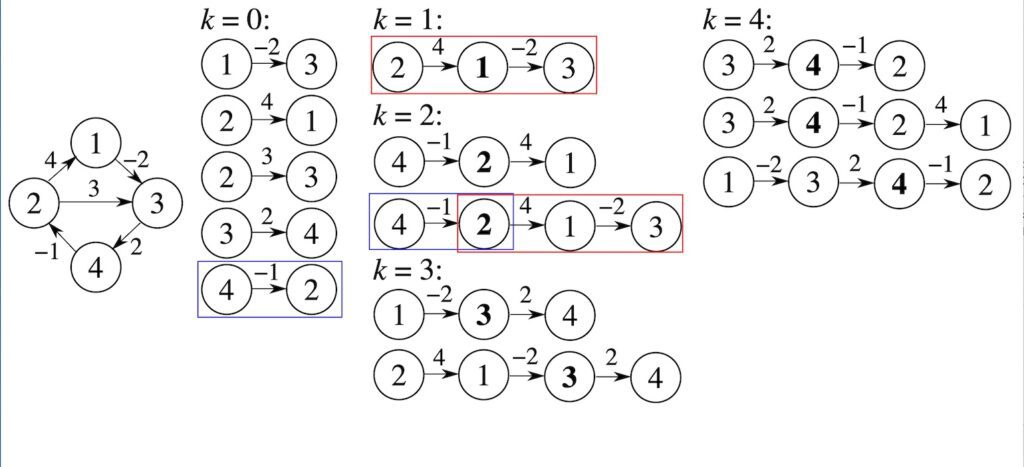

We'll try to solve this problem with the help of a dynamic program in which the state, or the set of parameters that describes the problem, consists of two variables.

First, we set up a two-dimensional array dp[start][end] where each entry solves the indicated problem for the part of the sequence between start and end inclusive.

We'll try to think what happens when we run across a new end value, and need to solve the new problem in terms of the previously solved subproblems. Here are all the possibilities:

- When end <= start, there are no valid subsequences.

- When b[end] <= k, i.e., the last entry is an open bracket, no valid subsequence can end with it. Effectively, the result is the same as if we hadn't included the last entry at all.

- When b[end] > k, i.e., the last entry is a closing bracket, one has to find the best match for it, or simply ignore it, whichever maximizes the sum.

Can you use these ideas to solve the problem?
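One way the three cases above could be turned into code is sketched below in Python, using recursion with caching over the state (start, end). This is an illustrative sketch under stated assumptions rather than the reference solution: the function name `max_bracket_sum` is hypothetical, positions are 0-based, the empty subsequence is assumed to count with sum 0, and the sample values are chosen to agree with the sums quoted in the illustrated example.

```python
from functools import lru_cache


def max_bracket_sum(values, brackets, k):
    """Best sum over subsequences whose brackets are well-bracketed."""

    @lru_cache(maxsize=None)
    def best(start, end):
        # Case 1: fewer than two positions, so no matched pair is possible.
        if end <= start:
            return 0
        # Case 2: an opening bracket at the end cannot close anything.
        if brackets[end] <= k:
            return best(start, end - 1)
        # Case 3: a closing bracket; either ignore it or match it with an
        # earlier opening bracket of the same type at position m.
        result = best(start, end - 1)
        for m in range(start, end):
            if brackets[m] == brackets[end] - k:
                candidate = (best(start, m - 1) + values[m]
                             + best(m + 1, end - 1) + values[end])
                result = max(result, candidate)
        return result

    return best(0, len(values) - 1)


# Assumed sample data (N = 6, k = 3), consistent with the example sums.
print(max_bracket_sum([4, 5, -2, 1, 1, 6], [1, 3, 4, 2, 5, 6], 3))  # 13
```

The sketch runs in roughly cubic time in \(N\) and recurses to depth \(N\), which is fine for small inputs; a large input would call for an iterative table filled in order of increasing range length.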

Very often, dynamic programming helps solve problems that ask us to find the most profitable (or least costly) path in an implicit graph setting. Let us try to illustrate this with an example.

You are supposed to start at the top of a number triangle and choose your path all the way down by selecting between the numbers below you to the immediate left or right. Your goal is to maximize the sum of the elements lying in your path. For example, in the triangle below, the red path maximizes the sum.

To see the optimal substructure and the overlapping subproblems, notice that every time we make a move from the top to the bottom right or the bottom left, we are still left with a smaller number triangle, much like this:

We could break each of the sub-problems in a similar way until we reach an edge-case at the bottom:

In this case, the solution is a + max(b,c).

A bottom-up dynamic programming solution is to allocate a number triangle that stores the maximum reachable sum if we were to start from that position. It is easy to compute this triangle from the bottom row upward using the fact that

\[\text{best from this point} = \text{this point} + \max(\text{best from the left, best from the right}).\]

Let me demonstrate this principle through the iterations.

Iteration 1:
8 5 9 3

Iteration 2:
10 13 15
8 5 9 3

Iteration 3:
20 19
10 13 15
8 5 9 3

Iteration 4:
23
20 19
10 13 15
8 5 9 3

So, the effective best we could do from the top is 23, which is our answer.
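The same bottom-up sweep can be sketched in a few lines of Python. The function name `max_path_sum` is an assumption, and the input triangle is not given explicitly in the text: it is inferred here by working backwards from the iteration values (for instance, 10 = 2 + max(8, 5)).

```python
def max_path_sum(triangle):
    """Return the largest top-to-bottom path sum in a number triangle."""
    # Start with the bottom row: the best sum from a bottom cell is the cell itself.
    best = list(triangle[-1])
    # Move upward one row at a time, combining each cell with the better
    # of its two neighbours in the row below.
    for row in reversed(triangle[:-1]):
        best = [value + max(best[i], best[i + 1]) for i, value in enumerate(row)]
    return best[0]


# Triangle inferred from the iterations above (an assumption).
triangle = [
    [3],
    [7, 4],
    [2, 4, 6],
    [8, 5, 9, 3],
]
print(max_path_sum(triangle))  # 23
```

Only one row of best values is kept at a time, so the extra memory is proportional to the width of the bottom row.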



Complete Guide to Dynamic Programming

In this complete guide to dynamic programming, you will learn the basics of dynamic programming, how to get started with it, learning strategies, resources, problems, and much more.


Getting Started with Dynamic Programming

Dynamic Programming (DP) Tutorial with Problems

What is memoization? A Complete tutorial

Overlapping Subproblems Property in Dynamic Programming | DP-1

Optimal Substructure Property in Dynamic Programming | DP-2

Steps for how to solve a Dynamic Programming Problem

Dynamic Programming Vs Other Algorithms

Greedy Approach vs Dynamic programming

Comparison among Greedy, Divide and Conquer and Dynamic Programming algorithm

Easy Problems on Dynamic Programming

Nth Fibonacci Number

Program for factorial of a number

Count all combinations of coins to make a given value sum (Coin Change II)

Longest Common Subsequence (LCS)

Longest Increasing Subsequence (LIS)

Largest Sum Contiguous Subarray (Kadane's Algorithm)

0/1 Knapsack Problem

Unbounded Knapsack (Repetition of items allowed)

Cutting a Rod | DP-13

Medium Problems on Dynamic Programming

14 articles

Maximum sum rectangle in a 2D matrix | DP-27

Maximum size square sub-matrix with all 1s

Edit Distance

Longest Palindromic Subsequence (LPS)

Longest Palindromic Substring

Longest Common Increasing Subsequence (LCS + LIS)

Shortest Common Supersequence

Maximum Product Subarray

Egg Dropping Puzzle | DP-11

Partition problem | DP-18

Word Break Problem | DP-32

Word Break Problem | DP-32 | Set - 2

Box Stacking Problem | DP-22

Floyd Warshall Algorithm

Hard Problems on Dynamic Programming

12 articles

Longest Bitonic Subsequence | DP-15

Word Wrap Problem

The Painter's Partition Problem

Matrix Chain Multiplication | DP-8

Maximum profit by buying and selling a share at most k times

Maximum size rectangle binary sub-matrix with all 1s

Count Distinct Subsequences

Wildcard Pattern Matching

Travelling Salesman Problem using Dynamic Programming

Longest Increasing Path in Matrix

Longest Zig-Zag Subsequence

Dynamic Programming on Tree

Introduction to Dynamic Programming on Trees

Maximum height of Tree when any Node can be considered as Root

DP on Trees | Set-3 ( Diameter of N-ary Tree )

Maximal Point Path

Maximum Path sum in a N-ary Tree

Bitmasking in Dynamic Programming

Bitmasking and Dynamic Programming | Set 1 (Count ways to assign unique cap to every person)

Bitmasking and Dynamic Programming | Travelling Salesman Problem

Partition of a set into K subsets with equal sum using BitMask and DP

Digit Dynamic Programming

10 articles

Digit DP | Introduction

Longest subsequence such that adjacent elements have at least one common digit

Count of N digit numbers which contains all single digit primes

Count of N-digit numbers with at least one digit repeating

Count numbers from a given range whose product of digits is K

Count numbers in range [L, R] having K consecutive set bits

Count the numbers with N digits and whose suffix is divisible by K

Count of integers from the range [0, N] whose digit sum is a multiple of K

Count Numbers in Range with difference between Sum of digits at even and odd positions as Prime

Count numbers in given range such that sum of even digits is greater than sum of odd digits

Quiz on Dynamic Programming

Top MCQs on Dynamic Programming with Answers

About the Complete Guide to Dynamic Programming

Our Complete Guide to Dynamic Programming offers a comprehensive overview of this powerful problem-solving technique. Whether you're an experienced coder looking to enhance your skills or a beginner aiming to master this essential concept, this guide equips you with the knowledge and tools to efficiently solve complex problems by breaking them down into manageable subproblems.

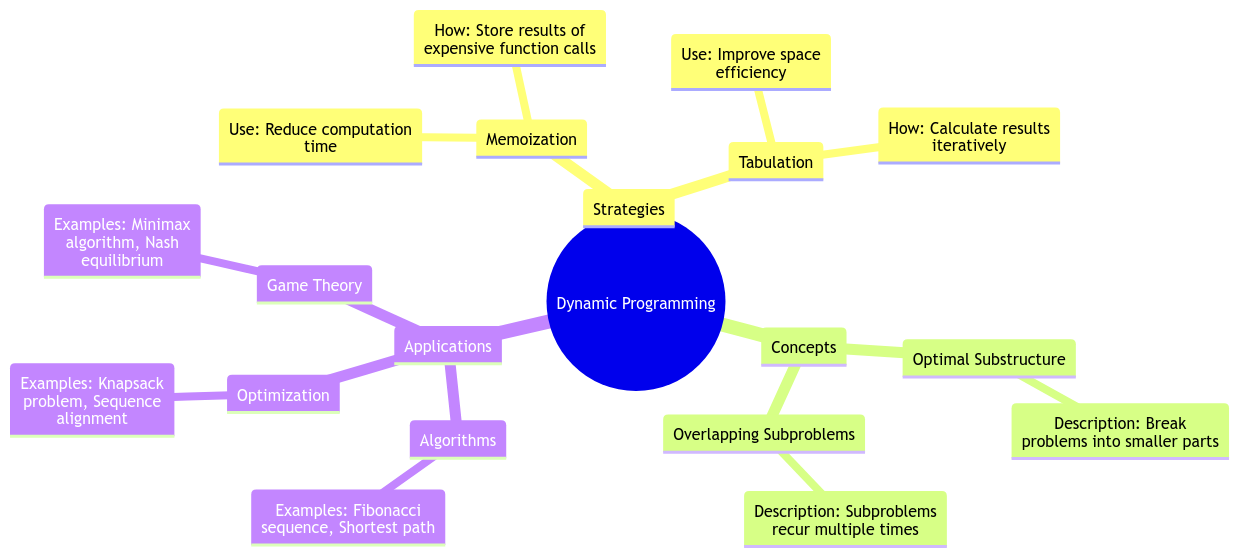

Basic Terminologies of Dynamic Programming

While learning about Dynamic Programming in this Complete Guide on Dynamic Programming, you will come across some common terms that will be used multiple times. Some of these terms are:

- Optimal Substructure: Problems can be solved using solutions to their subproblems.

- Overlapping Subproblems: Some subproblems are solved repeatedly.

- Memoization: Caching and reusing computed results.

- Tabulation: Building solutions iteratively in a table.

- State: Parameters defining a subproblem.

- Recurrence Relation: Mathematical equation for problem-solving.

- Top-Down: Recursive approach, starting with the main problem.

- Bottom-Up: Iterative approach, solving simple subproblems first.

- State Space: All possible states in a problem.

- Optimal Solution: The best solution to the original problem.

Why is Dynamic Programming needed?

Dynamic Programming is needed to efficiently solve complex problems by breaking them into smaller, solvable subproblems, saving time and resources in finding optimal solutions.


How can I start this course?

You just need to click on the button that says START YOUR JOURNEY, and that's it. You will be taken to your first chapter.

Is this a language-specific course?

No. Most topics in the course include implementations in popular programming languages like C, C++, Java, Python, C#, and JavaScript.

Does the course include programming questions?

Yes, the course focuses on DS & Algo with a mix of theoretical topics and programming questions.

Can I learn DSA live?

Yes, we do have LIVE batches for DSA. You may call us on our toll-free number: +91-7838223507 or drop us an email at [email protected] for any queries.


The complete beginners guide to dynamic programming

Dynamic programming isn't about design patterns; it's a way of thinking that breaks down a problem into individual components.

If you've been programming for long enough, you've probably heard the term dynamic programming. Often a key subject in technical interviews, the idea will also come up in design review meetings or regular interactions with fellow developers. This essay will examine what dynamic programming is and why you would use it. I'll be illustrating this concept with specific code examples in Swift, but the concepts I introduce can be applied to your language of choice. Let's begin!

A way of thinking

Unlike specific coding syntax or design patterns, dynamic programming isn't a particular algorithm but a way of thinking. Therefore, the technique takes many forms when it comes to implementation.

The main idea of dynamic programming is to consider a significant problem and break it into smaller, individualized components. When it comes to implementation, optimal techniques rely on data storage and reuse to increase algorithm efficiency. As we'll see, many questions in software development are solved using various forms of dynamic programming. The trick is recognizing when optimal solutions can be devised using a simple variable or require a sophisticated data structure or algorithm.

For example, code variables can be considered an elementary form of dynamic programming. As we know, a variable's purpose is to reserve a specific place in memory for a value to be recalled later.
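To make the point about variables concrete, here is a small Python sketch; the essay's own examples are in Swift, so this is a stand-in rather than the original listing. The snake_case names are assumptions that mirror the `addNumbersMemo` function the essay refers to.

```python
def add_numbers(lhs, rhs):
    # Computes the sum and returns it immediately; nothing is kept.
    return lhs + rhs


def add_numbers_memo(lhs, rhs):
    # Stores the sum in a variable first, so it could be reused before returning.
    result = lhs + rhs
    return result


print(add_numbers(2, 3), add_numbers_memo(2, 3))  # 5 5
```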

While addNumbersMemo above does provide a simple introduction, the goal of a dynamic programming solution is to preserve previously seen values because the alternative could either be inefficient or could prevent you from answering the question. This design technique is known as memoization.

Code challenge—Pair of numbers

Over the years, I've had the chance to conduct mock interviews with dozens of developers preparing for interviews at top companies like Apple, Facebook, and Amazon. Most of us would be happy to skip the realities of the dreaded whiteboard or take-home coding project. However, the fact is that many of these brain-teaser questions are designed to test for a basic understanding of computer science fundamentals. For example, consider the following challenge: given a sequence of numbers, find a pair of values whose sum equals a target (11 in the discussion below).

As developers, we know there are usually multiple ways to arrive at a solution. In this case, the goal is knowing which numbers should be paired to achieve the expected result. As people, it's easy for us to quickly scan the sequence of numbers and promptly come up with the pair of 9 and 2. However, an algorithm will need to either check and compare each value in the sequence or develop a more streamlined solution to help us find the values we are seeking. Let's review both techniques.

A brute force approach

Our first approach involves looking at the first value, then reviewing each subsequent value to determine if it will provide the difference needed to solve the question. For example, once our algorithm checks the value of the first array item, 8, it will then scan the remaining values for 3 (e.g., 11 - 8 = 3). However, we can see the value of 3 doesn't exist, so the algorithm will repeat the same process for the next value (in our case, 10) until it finds a successful matching pair. Without going into the details of big-O notation, we can assume this type of solution would have an average runtime of O(n^2) or greater, mainly because our algorithm works by comparing each value with every other value. In code, this can be represented as follows:
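The essay's listing is in Swift and is not reproduced here, so the following Python sketch stands in for it. The function name `pair_values_brute` and the sample sequence are assumptions; the sequence is chosen to match the walkthrough (it starts with 8 and 10, contains 9 and 2, and has no 3).

```python
def pair_values_brute(sequence, target):
    """Return the first pair of values that sums to target, or None."""
    for i in range(len(sequence)):
        # Compare the current value with every value that comes after it.
        for j in range(i + 1, len(sequence)):
            if sequence[i] + sequence[j] == target:
                return (sequence[i], sequence[j])
    return None


numbers = [8, 10, 2, 9, 7, 5]  # assumed sample data
print(pair_values_brute(numbers, 11))  # (2, 9)
```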

A memoized approach

Next, let's try a different approach using the idea of memoization. Before implementing our code, we can brainstorm how storing previously seen values will help streamline the process. While using a standard array is feasible, a set collection object (also referred to as a hash table or hash map) could provide an optimized solution.
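A Python sketch of the set-based idea follows; as before, it is a stand-in for the essay's Swift code, and the name `pair_values_memo` and the sample sequence are assumptions. Each value is checked against the set of values already seen, so the sequence is scanned only once.

```python
def pair_values_memo(sequence, target):
    """Return a pair of values that sums to target, or None."""
    seen = set()  # values encountered so far
    for value in sequence:
        needed = target - value
        # If the complement was seen earlier, the pair is complete.
        if needed in seen:
            return (needed, value)
        seen.add(value)
    return None


numbers = [8, 10, 2, 9, 7, 5]  # assumed sample data
print(pair_values_memo(numbers, 11))  # (2, 9)
```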

Using a memoized approach, we've improved the algorithm's average run time efficiency to O(n + d) by adding previously seen values to a set collection object. Those familiar with hash-based structures will know that item insertion and retrieval occur in O(1), constant time. This further streamlines the solution, as the set is designed to retrieve values in an optimized way regardless of size.

The Fibonacci sequence

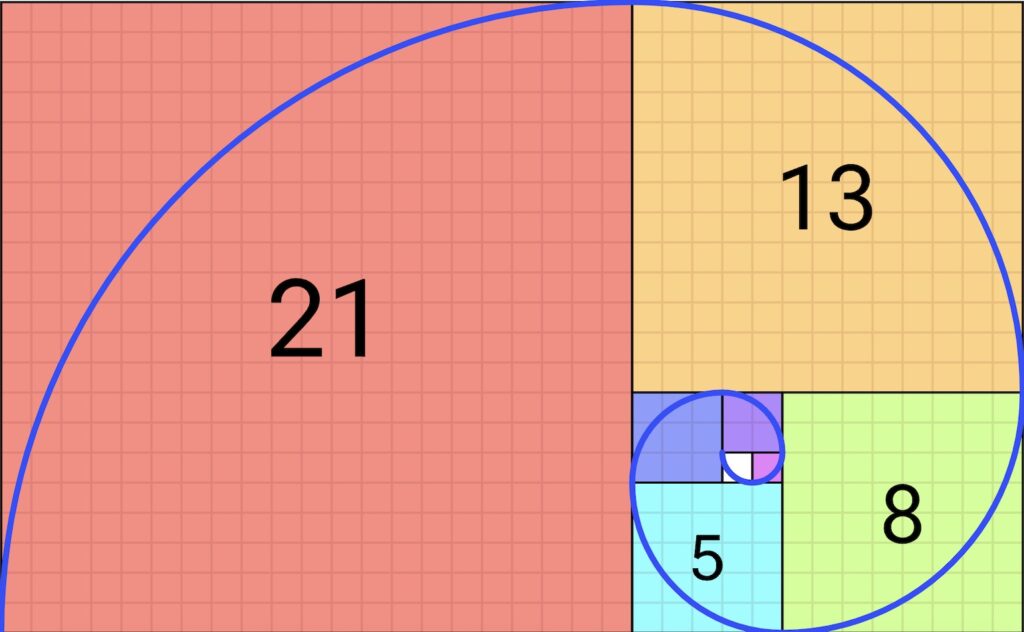

When learning various programming techniques, one topic that comes to mind is recursion. Recursive solutions work by having a model that refers to itself. As such, recursive techniques are implemented through algorithms or data structures. A well-known example of recursion can be seen with the Fibonacci sequence—a numerical sequence made by adding the two preceding numbers (0, 1, 1, 2, 3, 5, 8, 13, 21, etc):
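A recursive version might look like the Python sketch below (the essay's own code is in Swift). The snake_case name `fib_rec` mirrors the essay's `fibRec`; the call counter and the 1-based positions are assumptions, chosen so that the number of calls agrees with the counts quoted in this section.

```python
call_count = 0  # number of times fib_rec has been entered


def fib_rec(n):
    """Return the value at position n (1-based) of 0, 1, 1, 2, 3, 5, ..."""
    global call_count
    call_count += 1
    if n <= 2:
        return n - 1  # positions 1 and 2 hold 0 and 1
    return fib_rec(n - 1) + fib_rec(n - 2)


def count_calls(n):
    """Reset the counter, run fib_rec(n), and report how many calls were made."""
    global call_count
    call_count = 0
    fib_rec(n)
    return call_count


print(fib_rec(10))      # 34
print(count_calls(10))  # 109
```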

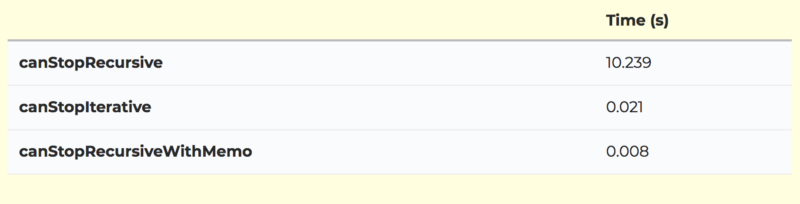

When examined, our code is error-free and works as expected. However, notice a few things about the performance of the algorithm:

| Positions (n) | fibRec() - Number of times called |
| --- | --- |
| 2 | 1 |
| 4 | 5 |
| 10 | 109 |
| 15 | 1219 |

As shown, there's a significant increase in the number of times our function is called. Similar to our previous example, the algorithm's performance decreases exponentially based on the input size. This occurs because the operation does not store previously calculated values. Without access to stored variables, the only way we can obtain the required (preceding) values is through recursion. Assuming this code is used in a production setting, the function could introduce bugs or performance errors. Let's refactor the code to support a memoized approach:
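One way to write such a refactoring is sketched below in Python, as a stand-in for the essay's Swift code. The name `fib_memo` and the choice to return the whole sequence are assumptions; the key idea is that each new value is built from the two stored just before it.

```python
def fib_memo(n):
    """Return the first n values of the sequence 0, 1, 1, 2, 3, 5, ..."""
    sequence = [0, 1]  # stored values replace the recursive calls
    for _ in range(2, n):
        # Add the two most recent values and append the result.
        sequence.append(sequence[-1] + sequence[-2])
    # Trim for n < 2 so that fib_memo(1) is [0] and fib_memo(0) is [].
    return sequence[:max(n, 0)]


print(fib_memo(10))  # [0, 1, 1, 2, 3, 5, 8, 13, 21, 34]
```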

This revised solution now supports memoization through the use of stored variables. Notice how the refactored code no longer requires a recursive technique. The two most recent values are added to produce a result, which is appended to the main array sequence. Even though the algorithm's performance still depends on the sequence size, our revisions have improved algorithmic efficiency to O(n), linear time. In addition, our iterative solution should be easier to revise, test and debug since a single function is added to the call stack, thus reducing complexities with memory management and object scope.

We've learned that dynamic programming isn't so much a specific design pattern as it is a way of thinking. Its goal is to create a solution that preserves previously seen values to increase time efficiency. While examples include basic algorithms, dynamic programming provides a foundation in almost all programs. This includes the use of simple variables and complex data structures.

Dynamic Programming: An Approach to Solving Computing Problems

Sometimes in computer science, you will run into problems. You can divide these into subproblems, which can, in turn, be divided into smaller subproblems. If the smaller subproblems overlap, then you can save the result in memory for future reference. This way, you don’t need to compute the same result multiple times, thus increasing the efficiency of the program substantially. This way of solving these problems is referred to as dynamic programming.

In this article, you will learn what dynamic programming is. I will also show how to compute Fibonacci numbers, which is a simple problem that dynamic programming can solve. I will compare the dynamic programming solutions to the naive solution that uses recursion. These examples are written in Python syntax. Finally, I’ll also give some general pointers to keep in mind when attempting to solve problems using dynamic programming.

Dynamic programming

More From Artturi Jalli: Python Cheat Sheet: A Handy Guide to Python

What Types of Problems Can Dynamic Programming Solve?

Dynamic programming is typically a way to optimize solutions to certain problems that use recursion. If a recursive solution to a problem has to compute solutions for subproblems with the same inputs repeatedly, then you can optimize it through dynamic programming. As mentioned earlier, in this case, you would simply save the result of the computation for use later if and when it’s needed. This optimization can reduce the time complexity of an algorithm from exponential time to polynomial time. This means that the number of computations scales like a polynomial in the input size n instead of like an exponential as n increases. In general, polynomial expressions grow much more slowly than exponential expressions.

There are two conditions that need to be satisfied to use dynamic programming:

- Overlapping subproblems

- Optimal substructure property

What Are Overlapping Subproblems?

I alluded to the overlapping subproblems condition earlier. It simply means that when solving the problem in question, the solutions for the same subproblems are repeatedly necessary. In this case, the solutions to these subproblems can be stored for later use to skip recomputation.

Another way to think about this condition is to turn it upside down. If there are no overlapping subproblems, then there’s no point in saving the solutions for the subproblems, and you can’t use dynamic programming.

There are two different ways of storing the solutions to the overlapping subproblems:

- Memoization (top-down)

- Tabulation (bottom-up)

What Is Memoization?

The memoization approach to dynamic programming is very similar to the naive recursion approach, with only a small change. The difference is that we use a lookup table to store solutions to the subproblems, and then use this table to check whether that solution already exists.

If the solution for a certain subproblem already exists in the lookup table, then that solution is returned from the lookup table. If it does not, then it needs to be computed (through recursion) and added to the lookup table.

For the sake of clarity, let’s define a solution for a subproblem in our dynamic programming problem to be DP[X], with DP[N] being the desired solution and DP[0] being the base solution. In the memoization approach, the program starts from DP[N] and asks for the solutions from which DP[N] can be reached (these should be subproblems of lower order, DP[n<N]). Then, from these states, the same process is repeated recursively, until reaching the base solution DP[0].

If this feels a little too abstract, don’t worry. The examples introduced later in this article should clarify what I mean.

Memoization is known as a top-down approach to dynamic programming since the program will start from the desired, last (top) state and work its way down recursively.

What Is Tabulation?

The tabulation approach to dynamic programming works in a reverse manner compared to the memoization approach. The program will start from the base (or bottom) solution for the subproblem and work its way up, solving the subproblems one by one until reaching the desired solution.

In terms of solutions to subproblems, the tabulation approach starts from the base solution DP[0] and then calculates DP[1], DP[2], … DP[N] until reaching the desired solution for the subproblem DP[N]. Since we started from the base solution DP[0] and worked towards the desired solution DP[N], the tabulation approach is also known as a bottom-up approach.

Again, the examples below should make this easier to understand.

What Is Optimal Substructure Property?

If the optimal solution to a problem can be obtained using the optimal solution to its subproblems, then the problem is said to have optimal substructure property.

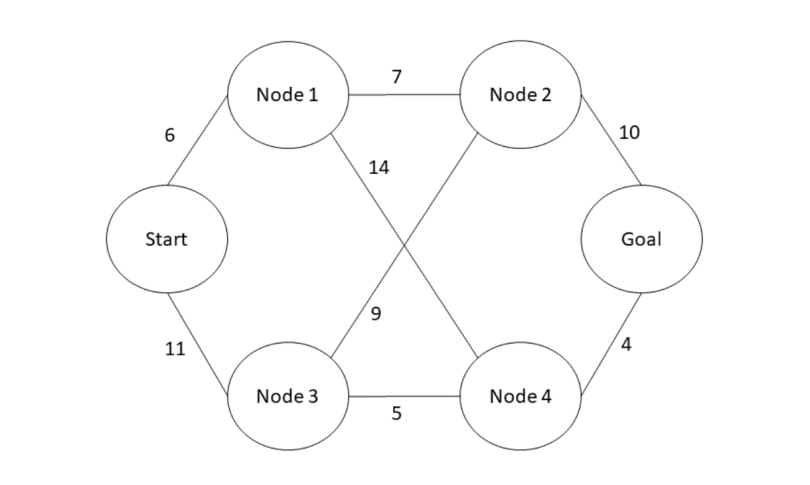

As an example, let’s consider the problem of finding the shortest path between ‘Start’ and ‘Goal’ nodes in the graph below. The nodes are connected to other nodes via edges, and the distance between two connected nodes is marked with a number next to the edge.

The shortest path from the Start node to the Goal node is through nodes three and four.

This problem clearly has optimal substructure property. Since the shortest path from the Start node to the Goal node goes through Node Four, it clearly follows that this path is a combination of the shortest path from the Start node to Node Four and the shortest path from Node Four to the Goal node.

Many problems do not have optimal substructure property. For example, the problem of finding the longest path (with no cycles) between the Start node and the Goal node in the above graph doesn’t. Here’s how you can see this:

The longest path is: Start - Node Three - Node Two - Node One - Node Four - Goal. However, this does not imply that the longest path from the Start node to Node Two is Start - Node Three - Node Two. The longest path from the Start node to Node Two is actually Start - Node One - Node Four - Node Three - Node Two (and Start - Node One - Node Four - Goal - Node Two, which has equal length to the first one).

Dynamic Programming Example: Calculating Fibonacci Numbers

One of the simplest examples of dynamic programming is the computation of Fibonacci numbers, which are numbers from the Fibonacci sequence. The first Fibonacci number is zero, the second is one, and the subsequent numbers are the sum of the previous two Fibonacci numbers. The first 10 Fibonacci numbers are zero, one, one, two, three, five, eight, 13, 21, and 34.

Let’s first start with the naive, recursive solution. Here’s a Python function to calculate the nth Fibonacci number (indexing starts from zero):
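The article's own listing is not reproduced here; a naive recursive function along those lines could look like the sketch below. The name `fib_naive` is an assumption, and indexing starts from zero as stated in the text.

```python
def fib_naive(n):
    """Return the nth Fibonacci number (zero-indexed) by plain recursion."""
    if n < 2:
        return n  # base cases: F[0] = 0 and F[1] = 1
    # Each call spawns two further calls, so the same values are recomputed many times.
    return fib_naive(n - 1) + fib_naive(n - 2)


print([fib_naive(i) for i in range(10)])  # [0, 1, 1, 2, 3, 5, 8, 13, 21, 34]
```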

From this example it is easy to see that this problem satisfies the overlapping subproblems condition since the function clearly has to calculate the previous Fibonacci numbers multiple times (when n > three). The smallest Fibonacci numbers are computed most often, when this function is called for a large n.

This problem also clearly has optimal substructure since there is only a single solution for the subproblems, which are used to compute the solution to the desired problem.

Due to the recursion, this function runs in exponential time.

Let’s next look at how this could be solved using dynamic programming. Let’s start with a top-down solution using memoization. Here’s a Python function that calculates the nth Fibonacci number that implements dynamic programming through memoization:
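A top-down version with a lookup table might be sketched as follows. The name `fib_memoized` and the use of a dictionary passed through the recursion are assumptions, not necessarily how the article's listing is written.

```python
def fib_memoized(n, lookup=None):
    """Return the nth Fibonacci number (zero-indexed) using a lookup table."""
    if lookup is None:
        lookup = {}  # a fresh table for each top-level call
    if n < 2:
        return n
    if n not in lookup:
        # Compute the value once, then store it for later requests.
        lookup[n] = fib_memoized(n - 1, lookup) + fib_memoized(n - 2, lookup)
    return lookup[n]


print(fib_memoized(50))  # 12586269025
```

Because the recursion still goes n levels deep, very large values of n can exceed Python's default recursion limit; the bottom-up approach avoids that.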

This approach is quite similar to the recursive approach since it starts from calculating the nth Fibonacci number and, in order to calculate it, goes onto calculating the n-1st and n-2nd Fibonacci numbers. The difference is in the lookup table, where the smaller Fibonacci numbers will be saved as they are calculated, so that they do not need to be calculated again and again.

This makes this function actually run in linear time instead of exponential time.

For the sake of an example, let’s also look at a Python function that solves the same problem in a bottom-up manner with dynamic programming using tabulation:
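A possible tabulated version is sketched here, assuming the same zero-based indexing. The name `fib_tab` is illustrative.

```python
def fib_tab(n):
    """Return the nth Fibonacci number (zero-based) by filling a table bottom-up."""
    if n < 2:
        return n
    table = [0] * (n + 1)
    table[1] = 1
    for i in range(2, n + 1):
        # Every entry depends only on the two entries filled in just before it.
        table[i] = table[i - 1] + table[i - 2]
    return table[n]
```

Since only the last two entries are ever read, the table could be replaced by two variables to reduce memory use to a constant.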

In this solution, the nth Fibonacci number is calculated in a bottom-up manner, starting by calculating the first Fibonacci number, then the second, third and so on until reaching the nth Fibonacci number. This function also runs in linear time.


How to Solve Problems Using Dynamic Programming

The first step to solving a problem using dynamic programming is to identify it as a dynamic programming problem. If you can validate that the problem has overlapping subproblems and that it satisfies the optimal substructure property, you can be sure that you can solve it with dynamic programming.

The second step is to decide on a state expression. This state is the set of parameters that uniquely identifies the different subproblems.

In the Fibonacci numbers example, the parameter identifying the state would just be the serial number of a certain Fibonacci number.

There can be more than one parameter identifying a state. You should always use as few parameters as possible, however.

The third — and probably hardest — step in solving problems using dynamic programming is formulating the relation between the different states.

In the Fibonacci number case this is simple, however, since the nth Fibonacci number is the sum of the n-1st and n-2nd Fibonacci numbers. So F[n] = F[n-1] + F[n-2].

The fourth step is to implement memoization or tabulation for the states that you decided upon, using the relation you discovered between the states. This simply means saving the state (in other words, the solution to a certain subproblem) so it can be recovered from memory without recomputation when it is needed again. This should be fairly simple if you did steps one to three well.


Dynamic Programming: Examples, Common Problems, and Solutions

Dynamic programming problems can catch you off-guard in an interview or exam. Check out the most common problems and the solutions here.

There’s no doubt that dynamic programming problems can be very intimidating in a coding interview. Even when you may know that a problem needs to be solved using a dynamic programming method, it’s a challenge to be able to come up with a working solution in a limited time frame.

The best way to be good at dynamic programming problems is to go through as many of them as you can. While you don’t necessarily need to memorize the solution to every problem, it’s good to have an idea of how to go about implementing one.

What Is Dynamic Programming?

Simply put, dynamic programming is an optimization method for recursive algorithms, most of which are used to solve computing or mathematical problems.

You can also call it an algorithmic technique for solving an optimization problem by breaking it into simpler sub-problems. A key principle that dynamic programming is based on is that the optimal solution to a problem depends on the solutions to its sub-problems.

Wherever we see a recursive solution that has repeated calls for the same inputs, we can optimize it using dynamic programming. The idea is to simply store the results of subproblems so that we do not have to re-compute them when needed later.

Dynamic programming solutions often run in polynomial time, which is much faster than plain recursion or backtracking on the same problem. In many cases, dynamic programming reduces the time complexity, expressed in big-O notation, from exponential to polynomial.

Now that you have a good idea of what dynamic programming is, it’s time to check out a few common problems and their solutions.

Dynamic Programming Problems

1. Knapsack Problem

Problem Statement

Given a set of items, each with a weight and a value, determine the number of each item to include in a collection so that the total weight doesn’t exceed a given limit and the total value is as large as possible.

You’re given two integer arrays values[0..n-1] and weights[0..n-1] which represent values and weights associated with n items respectively. Also given is an integer W which represents the knapsack capacity.

Here we’re solving the 0/1 knapsack problem, which means that we can choose to either add an item or exclude it.

- Create a two-dimensional array with n+1 rows and W+1 columns. A row number i denotes the set of items from 1 to i, and a column number j denotes the maximum carrying capacity of the bag.

- The numeric value at [i][j] denotes the maximum total value of items chosen from items 1 to i in a bag that can carry a maximum weight of j. Row 0 and column 0 are filled with zeros.

- At every coordinate [i][j] in the array, pick the maximum value that we can obtain without item i, or the maximum value that we can obtain with item i, whichever is larger. Item i can only be included if its weight does not exceed j.

- The maximum obtainable value by including item i is the sum of the value of item i and the maximum value that can be obtained from items 1 to i-1 with the remaining capacity of the knapsack.

- Perform this step until the table is filled. The answer is the value at [n][W].
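The steps above can be sketched in Python as follows. The function name `knapsack` and the sample data in the usage note are illustrative assumptions; the parameters mirror the `values`, `weights` and `W` from the problem statement.

```python
def knapsack(values, weights, capacity):
    """Return the best total value for the 0/1 knapsack problem."""
    n = len(values)
    # table[i][j]: best value using only the first i items with capacity j.
    table = [[0] * (capacity + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        for j in range(capacity + 1):
            # Option 1: leave item i out.
            table[i][j] = table[i - 1][j]
            # Option 2: take item i, if it fits in the remaining capacity.
            if weights[i - 1] <= j:
                with_item = values[i - 1] + table[i - 1][j - weights[i - 1]]
                table[i][j] = max(table[i][j], with_item)
    return table[n][capacity]
```

For example, with values `[60, 100, 120]`, weights `[10, 20, 30]` and a capacity of 50, the best choice is the second and third items, for a total value of 220.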

2. Coin Change Problem

Suppose you’re given an array of numbers that represent the values of each coin. Given a specific amount, find the minimum number of coins that are needed to make that amount.

- Initialize an array of size n+1, where n is the amount. Initialize the value of every index i in the array to n+1. No amount up to n can need more than n coins (the worst case uses only coins of denomination 1), so n+1 acts as a marker for an amount that has not been reached yet.

- Since no coins are needed to make an amount of 0, initialise the base case array[0] = 0.

- For every other index i, we compare the value in it (which is initially set to n+1) with the value array[i-k] + 1, where k is a coin denomination that is less than or equal to i. This checks, for each coin, the amount that remains after using that coin once.

- If the value array[i-k] + 1 is less than the existing value at array[i], replace the value at array[i] with array[i-k] + 1.
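A short Python sketch of these steps is given below. The function name `min_coins` and the choice to return -1 when the amount cannot be formed are assumptions made for this example.

```python
def min_coins(coins, amount):
    """Return the fewest coins needed to make amount, or -1 if impossible."""
    sentinel = amount + 1  # larger than any achievable coin count
    table = [sentinel] * (amount + 1)
    table[0] = 0  # zero coins make amount 0
    for i in range(1, amount + 1):
        for k in coins:
            # Using coin k once leaves the smaller amount i - k.
            if k <= i and table[i - k] + 1 < table[i]:
                table[i] = table[i - k] + 1
    return table[amount] if table[amount] != sentinel else -1
```

With coins `[1, 2, 5]` and an amount of 11, the sketch returns 3 (5 + 5 + 1).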

3. Fibonacci

The Fibonacci Series is a sequence of integers where the next integer in the series is the sum of the previous two.

It’s defined by the following recursive relation: F(0) = 0, F(1) = 1, F(n) = F(n-1) + F(n-2) for n ≥ 2, where F(n) is the nth term. In this problem, we have to generate all the numbers in a Fibonacci sequence up till a given nth term.

- First, use a recursive approach to implement the given recurrence relation.

- Recursively solving this problem entails breaking down F(n) into F(n-1) + F(n-2), and then calling the function with n-1 and n-2 as arguments. We do this until the base cases, n = 0 or n = 1, are reached.

- Now, we use a technique called memoization. Store the results of all function calls in an array. This will ensure that for every n, F(n) only needs to be calculated once.

- For any subsequent calculations, its value can simply be retrieved from the array in constant time.

4. Longest Increasing Subsequence

Find the length of the longest increasing subsequence inside a given array. The longest increasing subsequence is a subsequence within an array of numbers with an increasing order. The numbers within the subsequence have to be unique and in ascending order.

Also, the items of the sequence do not need to be consecutive.

- Start with a recursive approach where you calculate the length of the longest increasing subsequence of every possible subarray from index zero to index i, where i is less than the size of the array.

- To turn this method into a dynamic one, create an array to store the value for every subsequence. Initialise all the values of this array to 0.

- Every index i of this array corresponds to the length of the longest increasing subsequence that ends at index i.

- Now, for every recursive call of findLIS(arr, n), check the nth index of the array. If this value is 0, then calculate the value using the method in the first step and store it at the nth index.

- Finally, return the maximum value from the array. This is the length of the longest increasing subsequence of the given array of size n.
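One way to realise these steps is sketched below. It assumes that each cache entry holds the length of the longest increasing subsequence ending at that index, with 0 meaning "not computed yet". The names `find_lis` and `ending_at` are illustrative.

```python
def find_lis(arr):
    """Return the length of the longest strictly increasing subsequence."""
    n = len(arr)
    cache = [0] * n  # 0 marks an index that has not been computed yet

    def ending_at(i):
        if cache[i] == 0:
            best = 1  # the element on its own
            for j in range(i):
                if arr[j] < arr[i]:
                    # Extend the best subsequence that ends at an earlier, smaller element.
                    best = max(best, ending_at(j) + 1)
            cache[i] = best
        return cache[i]

    return max((ending_at(i) for i in range(n)), default=0)
```

This runs in quadratic time. For very long arrays, an iterative version of the same table avoids deep recursion.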

Solutions to Dynamic Programming Problems

Now that you’ve gone through some of the most popular dynamic programming problems, it’s time to try implementing the solutions by yourself. If you’re stuck, you can always come back and refer to the algorithm section for each problem above.

Given how popular techniques such as recursion and dynamic programming are today, it won’t hurt to check out some popular platforms where you can learn such concepts and hone your coding skills. While you might not run into these problems on a daily basis, you'll surely encounter them in a technical interview.

Naturally, having the know-how of common problems is bound to pay dividends when you go for your next interview. So open up your favourite IDE, and get started!


Dynamic Programming


Dynamic Programming is a technique in computer programming that helps to efficiently solve a class of problems that have overlapping subproblems and optimal substructure property.

If a problem can be divided into subproblems, which in turn are divided into smaller subproblems, and if these subproblems overlap, then the solutions to these subproblems can be saved for future reference. In this way, the same work is not repeated and the overall computation becomes more efficient. This method of solving a problem is referred to as dynamic programming.

Such problems involve repeatedly calculating the value of the same subproblems to find the optimum solution.

Dynamic Programming Example

Let's find the Fibonacci sequence up to the 5th term. A Fibonacci series is a sequence of numbers in which each number is the sum of the two preceding ones, for example 0, 1, 1, 2, 3.

1. The first term is 0.

2. The second term is 1.

3. The third term is the sum of 0 (from step 1) and 1 (from step 2), which is 1.

4. The fourth term is the sum of the third term (from step 3) and the second term (from step 2), i.e. 1 + 1 = 2.

5. The fifth term is the sum of the fourth term (from step 4) and the third term (from step 3), i.e. 2 + 1 = 3.

Hence, we have the sequence 0, 1, 1, 2, 3. Each step reused the results of the previous steps instead of recomputing them. This is called a dynamic programming approach.

How Dynamic Programming Works

Dynamic programming works by storing the result of subproblems so that when their solutions are required, they are at hand and we do not need to recalculate them.

This technique of storing the value of subproblems is called memoization. By saving the values in the array, we save time for computations of sub-problems we have already come across.

Dynamic programming by memoization is a top-down approach to dynamic programming. By reversing the direction in which the algorithm works i.e. by starting from the base case and working towards the solution, we can also implement dynamic programming in a bottom-up manner.

Recursion vs Dynamic Programming

Dynamic programming is mostly applied to recursive algorithms. This is not a coincidence: many optimization problems are naturally expressed with recursion, and dynamic programming is used for optimization.

But not all problems that use recursion can use Dynamic Programming. Unless the subproblems overlap, as they do in the Fibonacci sequence problem, a recursion can only reach the solution using a divide and conquer approach.

That is the reason why a recursive algorithm like Merge Sort cannot use Dynamic Programming, because the subproblems are not overlapping in any way.
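The difference can be made visible by counting how often each subproblem is requested. The sketch below is an illustration only: the helper names, the Fibonacci index 10 and the array length 16 are arbitrary assumptions, and the merge sort helper records the index ranges it would recurse on without actually sorting anything.

```python
from collections import Counter

def fib_calls(n, seen):
    """Naive Fibonacci that records how often each argument n is requested."""
    seen[n] += 1
    if n < 2:
        return n
    return fib_calls(n - 1, seen) + fib_calls(n - 2, seen)

def merge_sort_calls(lo, hi, seen):
    """Record the half-open ranges [lo, hi) that merge sort would recurse on."""
    seen[(lo, hi)] += 1
    if hi - lo <= 1:
        return
    mid = (lo + hi) // 2
    merge_sort_calls(lo, mid, seen)
    merge_sort_calls(mid, hi, seen)

fib_seen = Counter()
fib_calls(10, fib_seen)
sort_seen = Counter()
merge_sort_calls(0, 16, sort_seen)

print("most repeated Fibonacci subproblem:", max(fib_seen.values()))
print("most repeated merge sort subproblem:", max(sort_seen.values()))
```

Every merge sort range is requested exactly once, so a cache would never be hit, while the small Fibonacci arguments are requested many times.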

Greedy Algorithms vs Dynamic Programming

Greedy Algorithms are similar to dynamic programming in the sense that they are both tools for optimization.

However, greedy algorithms look for locally optimum solutions, or in other words a greedy choice, in the hope of finding a global optimum. Hence greedy algorithms can make a choice that looks optimum at the time but becomes costly down the line, and they do not guarantee a global optimum.

Dynamic programming, on the other hand, finds the optimal solution to subproblems and then makes an informed choice to combine the results of those subproblems to find the optimal solution.
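A small coin example shows the difference. The denominations 1, 3 and 4 and the amount 6 are illustrative assumptions chosen so that the greedy choice goes wrong; the function names are hypothetical.

```python
def greedy_coins(coins, amount):
    """Always take the largest coin that still fits; returns None if stuck."""
    count = 0
    for coin in sorted(coins, reverse=True):
        count += amount // coin
        amount %= coin
    return count if amount == 0 else None

def dp_coins(coins, amount):
    """Fewest coins for every total up to amount, built from smaller totals."""
    best = [0] + [float("inf")] * amount
    for total in range(1, amount + 1):
        for coin in coins:
            if coin <= total:
                best[total] = min(best[total], best[total - coin] + 1)
    return best[amount] if best[amount] != float("inf") else None

print(greedy_coins([1, 3, 4], 6))  # greedy picks 4 + 1 + 1
print(dp_coins([1, 3, 4], 6))      # dynamic programming finds 3 + 3
```

The greedy version commits to the coin of value 4 and needs three coins, while the dynamic programming version compares all options for every smaller total and needs only two.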


Dynamic Programming: Strategies for Solving Complex Problems Efficiently

Dynamic Programming Demystified 🚀

Hey there, fellow tech enthusiasts! 🤖 Today, let’s delve into the fascinating world of Dynamic Programming 🌟. Don’t be scared off by the jargon; I’m here to break it down with a sprinkle of humor and a dollop of enthusiasm! So, grab your virtual seat and let’s dive right in! 💻

Understanding Dynamic Programming

So, what in the world is Dynamic Programming? 🤔 Imagine you have this colossal problem to solve, and it’s so complex that you feel like pulling your hair out! Dynamic Programming swoops in like a superhero, offering you a strategy to break down this mammoth task into bite-sized, manageable chunks. Voila! Problem solved! 💪

Definition of Dynamic Programming

Dynamic Programming is like the master chef in the kitchen of algorithms. It’s a methodical way of solving complex problems by breaking them down into simpler subproblems. 🍳 Each subproblem’s solution is stored to avoid redundant calculations, making the overall process more efficient. Efficiency for the win! 🎉

Importance of Dynamic Programming

Picture this: You’re at a buffet, and you want to sample every dish. Dynamic Programming allows you to do just that in the realm of algorithms – optimize your solutions and tackle even the most intricate problems with finesse. It’s like having a secret recipe book for cracking challenging puzzles in record time! 🍽️

Basic Principles of Dynamic Programming

Let’s talk about the fundamental rules that make Dynamic Programming the rockstar of problem-solving techniques! 🌟

- Overlapping Subproblems: It’s like finding money in the pockets of your old jeans. Dynamic Programming identifies these recurring subproblems and saves their solutions for later use, eliminating unnecessary work. It’s all about efficiency, baby! 💸

- Optimal Substructure: This principle is like building a sturdy LEGO tower. Each piece (subproblem) fits perfectly to create the optimal solution. Dynamic Programming ensures that each subproblem’s solution contributes to the overall best answer. It’s all about that solid foundation! 🏗️

Strategies for Applying Dynamic Programming

Now that we’ve got the basics under our belt, let’s explore the two dynamic strategies that make the magic happen! 🎩✨

- Top-down Approach: Imagine you’re skydiving from the top. This approach starts with the main problem and recursively breaks it down into subproblems. It’s adventurous, exhilarating, and definitely keeps you on your toes! 🪂

- Bottom-up Approach: Ever built a tower from the ground up? That’s the bottom-up approach. You start with the smallest subproblems, gradually solving larger ones until you conquer the main problem like a champ. It’s a steady climb to success! 🗼

Applications of Dynamic Programming

Dynamic Programming isn’t just a fancy term; it’s the powerhouse behind some incredible real-world applications! 🌐 Let’s take a peek at a couple of them:

- Fibonacci Sequence: Ah, the Fibonacci sequence, nature’s favorite mathematical marvel! Dynamic Programming nimbly calculates Fibonacci numbers faster than you can say “Golden Ratio.” It’s all about those efficient number-crunching skills! 🔢

- Shortest Path Algorithms: Navigating through a maze? Dynamic Programming has your back! It’s the GPS guiding you through the shortest route with speed and precision. Say goodbye to taking the long, scenic route! 🗺️

Challenges and Tips for Mastering Dynamic Programming

Ah, every hero has their kryptonite, right? Let’s uncover some challenges in mastering Dynamic Programming and how to conquer them like a pro! 🦸‍♂️

- Identifying Optimal Substructure: Sometimes the forest (complex problem) is so dense that you can’t see the trees (optimal substructure). Mastering Dynamic Programming involves honing your detective skills to spot these crucial patterns. Sherlock, who? 🕵️

- Handling State Transitions Efficiently: It’s like switching gears in a Formula 1 race. Efficiently transitioning between states is key to zipping through problems with ease. Rev up those mental engines and zoom past any hurdles! 🏎️

Overall, Mastering Dynamic Programming Like a Pro! 🚀

So, there you have it, folks! Dynamic Programming, the unsung hero of problem-solving, ready to tackle any challenge with finesse. Remember, practice makes perfect, and with a dash of determination and a sprinkle of creativity, you’ll be mastering Dynamic Programming like a seasoned pro in no time! 💪

Thanks for tuning in, and remember: Keep coding, keep smiling, and embrace the dynamic journey ahead! 🌟

Stay Dynamically Awesome, Techies! 🚀👩‍💻

In closing, thanks a ton for joining me on this Dynamic Programming rollercoaster! 🎢 Keep shining bright and solving those tech puzzles like a boss! See you in the next coding adventure! ✨

Program Code – Dynamic Programming: Strategies for Solving Complex Problems Efficiently
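A minimal sketch consistent with the explanation that follows: a `fibonacci(n, memo)` function with a dictionary cache and a base case that returns 1 for n up to 2. The handling of the default `memo` argument and the exact print statement are assumptions, not the author's original listing.

```python
def fibonacci(n, memo=None):
    """Return the nth Fibonacci number, counting the first two numbers as 1."""
    if memo is None:
        memo = {}  # cache of already computed Fibonacci numbers
    if n <= 2:
        # Base case: the first two Fibonacci numbers are 1.
        return 1
    if n not in memo:
        # Not computed yet: recurse, then store the result for later calls.
        memo[n] = fibonacci(n - 1, memo) + fibonacci(n - 2, memo)
    return memo[n]

print(f"The 10th Fibonacci number is: {fibonacci(10)}")
```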

Code Output: The 10th Fibonacci number is: 55

Code Explanation:

In the given dynamic programming example, we solve for the nth Fibonacci number, a popular problem showcasing the elegance and efficiency of the dynamic programming approach. The recursive nature of the Fibonacci sequence, where each number is the sum of the two preceding ones (excluding the first two numbers, which are both considered as 1), makes it an ideal candidate for dynamic programming, particularly memoization.

The code defines a fibonacci function that takes two arguments: n, the position in the Fibonacci sequence whose value we want to find, and memo, a dictionary used as a cache to store the results of the Fibonacci numbers we’ve already computed.

At the function’s core, we employ a base case for when n is less than or equal to 2. For these cases, we simply return 1, as the first two Fibonacci numbers by definition are 1.

The true power and efficiency lie in the subsequent lines. Before plunging headlong into a recursive frenzy, we first check if our current n is in the memo. If it’s not, this means we haven’t computed it yet, and then we proceed to perform the recursive operations to calculate fibonacci(n-1) and fibonacci(n-2). Crucially, we then store this computed value in memo[n]. This storage step is what saves us from the redundant work if we encounter the same n value again in our computations.

In essence, any subsequent calls for the same Fibonacci number won’t have to go through the recursion again; instead, they’ll directly fetch the result from our memo, dramatically reducing the time complexity from what would have been exponential in a naive recursive approach (O(2^n)) to O(n) in our dynamic programming approach.

Let’s not forget—the function concludes by returning the memoized or newly computed value of the nth Fibonacci number, giving us our desired result efficiently and elegantly. Dynamic programming, with its memoization strategy, thus transforms an otherwise computationally intensive problem into a tractable one, showcasing its power in optimizing the performance of algorithms dealing with overlapping subproblems.

Frequently Asked Questions (FAQ) on Dynamic Programming

What is dynamic programming and how does it work?

Dynamic programming is a method for solving complex problems by breaking them down into simpler subproblems. It involves solving each subproblem only once and storing the solution to avoid redundant calculations. This approach can significantly improve the efficiency of solving problems with overlapping subproblems.

When should I use dynamic programming to solve a problem?

Dynamic programming is most useful when a problem can be broken down into overlapping subproblems, and the optimal solution to the problem can be constructed from optimal solutions to its subproblems. It is commonly used in optimization problems, such as finding the shortest path or maximizing profit.

What are the key characteristics of problems that are suitable for dynamic programming?

Problems suitable for dynamic programming usually exhibit two key characteristics: optimal substructure and overlapping subproblems. Optimal substructure means that the optimal solution to the problem can be constructed from the optimal solutions to its subproblems. Overlapping subproblems refer to the fact that the same subproblems are solved multiple times in a recursive algorithm.

Can you provide an example of a problem that can be solved using dynamic programming?

One classic example of a problem solved using dynamic programming is the Fibonacci sequence. By storing the results of subproblems (calculating Fibonacci numbers for smaller indices), we can avoid redundant calculations and compute the nth Fibonacci number efficiently.

Are there different strategies or approaches to dynamic programming?

Yes, there are several strategies for approaching dynamic programming problems, such as top-down with memoization and bottom-up with tabulation. Top-down with memoization involves solving the problem recursively while storing the results of subproblems to avoid redundant calculations. Bottom-up with tabulation involves solving the problem iteratively, starting from the smallest subproblems and building up to the desired solution.

What are some common pitfalls to avoid when using dynamic programming?

One common pitfall when using dynamic programming is not recognizing the overlapping subproblems and failing to store and reuse intermediate results. It is essential to identify the repeating subproblems to benefit from the efficiency of dynamic programming. Additionally, starting with a brute-force approach before optimizing with dynamic programming can help in understanding the problem better.

How can I improve my skills in dynamic programming?

To improve your skills in dynamic programming, practice solving a variety of problems that can be optimized using dynamic programming techniques. Online coding platforms, coding contests, and algorithmic problem-solving websites offer a wide range of problems to help you sharpen your skills. Additionally, studying different dynamic programming patterns and approaches can enhance your problem-solving abilities.

What are some resources to learn more about dynamic programming?

There are plenty of resources available to deepen your understanding of dynamic programming, including online courses, textbooks, and tutorials. Websites like LeetCode, GeeksforGeeks, and CodeSignal offer practice problems and explanations related to dynamic programming. Additionally, joining online coding communities and forums can provide valuable insights and tips from experienced programmers in the field.


Dynamic Programming: Definition, Methods, and Practice Questions

Dynamic programming is a useful problem-solving technique that every developer should know. While the basics are easy to learn, dynamic programming can be difficult to master. In this post, we break down the fundamentals of dynamic programming and share challenge questions to start practicing.

What is Dynamic Programming?

Dynamic programming is a problem-solving paradigm used to find a solution by breaking the larger problem into subproblems. This approach takes advantage of the fact that the optimal solution to a problem depends upon the optimal solution to its subproblems.

But dynamic programming isn’t the right approach for every problem. You can use dynamic programming to solve a problem if that problem has two characteristics:

- Overlapping Subproblems: The problem can be divided into a number of subproblems of similar type but of smaller size than the original one, and the same subproblems come up repeatedly while solving it.

- Optimal Substructure Property: The optimal solution to the problem can be formulated from the optimal solution to its subproblems.

Dynamic Programming vs Greedy Algorithms

One helpful way to understand dynamic programming is to compare it to greedy algorithms.

A greedy algorithm is a simple, intuitive algorithm that is used in optimization problems. The algorithm makes the locally optimal choice at each step in the hope of finding the global or overall optimal solution to the entire problem.

However, a greedy algorithm isn’t always the right approach, as it can create an inaccurate or suboptimal solution. In some situations, the largest sum or most optimal path is “hiding behind the door” of an answer that at first appears small or suboptimal.

In contrast, dynamic programming can break those decisions into components and solve for the overall optimal solution. The drawback, however, is that dynamic programming can be challenging, compared to the easier greedy algorithms.

Dynamic Programming Methods

Dynamic Programming has two methods that can be used to solve problems: top-down and bottom-up.

Top-Down Approach

A top-down (recursive) approach tries to solve the bigger problem by recursively finding the solution to smaller problems while also storing local solutions in a look-up table so that they are not recomputed each time. This technique is called memoization.

The advantage of the top-down approach is that it’s more intuitive to come up with solutions than the other method. However, it doesn’t always produce the most efficient solution, as the recursion overhead often leads to code that is slower and lengthier.

Consider the following recurrence:

\[ F(n) = \begin{cases} F(n-1) + F(n-3), & \text{if } n \ge 3 \\ 7, & \text{otherwise} \end{cases} \]

Given n, find the nth term of the recurrence.

Top-Down Solution

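    #include <cstring>  // std::memset

    const int MAXSIZE = 50;  // assumed bound on n; not specified in the original
    int F[MAXSIZE];

    // Set F to some unique value like -1 before the first call, e.g.
    // std::memset(F, -1, sizeof F);
    int solve(int n) {
        if (n < 3) {
            return 7;  // recurrence definition
        }
        int &ret = F[n];  // cache technique: reference into the table
        if (ret != -1) {  // a value other than -1 means this is already computed
            return ret;   // use this computed result
        }
        ret = solve(n - 3) + solve(n - 1);  // compute otherwise; this also stores into F[n]
        return ret;  // return the computed answer
    }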

Bottom-Up Approach

The bottom-up (iterative) approach is the opposite of the top-down approach in that you start from the smallest subproblem and work up to the required solution. The bottom-up approach tends to produce shorter, more efficient code. However, thinking of a bottom-up solution is less intuitive, and its base cases are often trickier than top-down base cases.

Bottom-Up Solution

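    // Uses the same table F as the top-down solution; assumes 0 <= n < MAXSIZE.
    int solveBottomUp(int n) {  // function name is illustrative
        F[0] = F[1] = F[2] = 7;  // recurrence definition (base cases)
        for (int i = 3; i <= n; i++) {
            F[i] = F[i - 1] + F[i - 3];  // recurrence definition
        }
        return F[n];
    }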

Dynamic Programming Questions

Problem: Row of Numbers

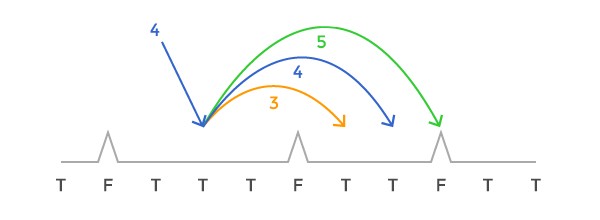

You have a row of numbers. In every turn, you can remove either the leftmost or the rightmost number and get points equal to turn_number x value of removed number. What is the maximum number of points you can get?

While you might think to use a greedy algorithm, that solution is not correct. [2, 3, 5, 1, 4] is a counter-example. Always removing the smaller of the two end numbers leads to the answer 2 x 1 + 3 x 2 + 4 x 3 + 1 x 4 + 5 x 5 = 49. The optimal answer in this case would be 2 x 1 + 4 x 2 + 1 x 3 + 3 x 4 + 5 x 5 = 50.

The correct solution is a dynamic programming approach.

Assume we have a function f(i, j) which gives us the optimal score of picking numbers with indices from i to j assuming the picking begins at turn number 1. Then, our answer would be f(1, n) where n is the total count of numbers.

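However, notice that since we can only pick the leftmost one or the rightmost one,

\[ f(1, n) = \max\bigl(g(2, n) + \text{val}_1,\; g(1, n-1) + \text{val}_n\bigr), \]

where \(g(i, j)\) is the optimal score assuming picking begins at turn number 2.

A small observation: \(g(i, j) = f(i, j) + \text{sum}(i, j)\), where \(\text{sum}(i, j)\) is the sum of all numbers from i to j. Starting one turn later adds each remaining number exactly once more.

Which means:

\[ f(i, j) = \max\bigl(f(i, j-1) + \text{sum}(i, j-1) + \text{val}_j,\; f(i+1, j) + \text{sum}(i+1, j) + \text{val}_i\bigr) \]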

Computing this relation by recursion will take an exponential amount of time because the problem of size j – i gets reduced to two instances of problem sizes j – i – 1 each.

This gives the recurrence:

\[ T(n) = 2T(n-1) \]

or, \(T(n) = O(2^n)\).

Let’s try to handle this.

First of all, notice that this problem has both the required properties of a dynamic programming problem. The answer depends on the answers to smaller subproblems.

These subproblems overlap with each other: f(i, j – 1) and f(i + 1, j) both call f(i + 1, j – 1).

However, there are only \(O(n^2)\) different parameters of f and, therefore, only \(O(n^2)\) different values of f.

So, we can use memoization while calculating f. Once a state has been evaluated, all subsequent calls to this state are answered using the stored value.

The complexity of this solution is the number of states multiplied by the number of transitions from each state. With \(O(n^2)\) states and two transitions per state, that is \(O(n^2)\) for this problem, provided each range sum is looked up in constant time (for example, from prefix sums).

It is important to note that every recursion must have a base case in order to terminate. The base case here is pretty simple: f(i, i) = val_i for all i.
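Putting the recurrence, the memoization, and the base case together, a minimal Python sketch might look like the following. The names `max_points` and `range_sum` are assumptions for illustration, indices are 0-based rather than the 1-based indices used in the text, and prefix sums are used so that sum(i, j) costs O(1). Both branches of the recurrence add up to sum(i, j), so it is factored out of the max.

```python
from functools import lru_cache
from itertools import accumulate


def max_points(values):
    """Maximum score when removing from either end, scored by turn number."""
    n = len(values)
    if n == 0:
        return 0
    prefix = [0] + list(accumulate(values))  # prefix[k] = sum of values[:k]

    def range_sum(i, j):
        return prefix[j + 1] - prefix[i]  # sum of values[i..j], inclusive

    @lru_cache(maxsize=None)  # memoization: each (i, j) is computed once
    def f(i, j):
        if i == j:
            return values[i]  # base case: one number left, taken at turn 1
        # Taking either end at turn 1 shifts the rest by one turn, which
        # adds the sum of the remaining numbers to their optimal score.
        return range_sum(i, j) + max(f(i, j - 1), f(i + 1, j))

    return f(0, n - 1)


print(max_points([2, 3, 5, 1, 4]))  # 50
```

Because this version is recursive, very long rows can exceed Python's default recursion limit; an iterative table filled in order of increasing interval length avoids that.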

Problem: Max Array Sum

Difficulty Level: Medium

Given an array of integers, find the subset of non-adjacent elements with the maximum sum. Calculate the sum of that subset. It is possible that the maximum sum is 0, the case when all elements are negative.

For example, for arr = [-2, 1, 3, -4, 5] (the array implied by the subsets listed), the following subsets with more than 1 element exist. These exclude the empty subset and single element subsets which are also valid.

Subset        Sum
[-2, 3, 5]    6
[-2, 3]       1
[-2, -4]      -6
[-2, 5]       3
[1, -4]       -3
[1, 5]        6
[3, 5]        8

The maximum subset sum is 8. Note that any individual element is a subset as well.

arr = [-2, -3, -1]

In this case, it is best to choose no element, so the maximum sum is 0.

Function Description

Complete the maxSubsetSum function in the editor below.

maxSubsetSum has the following parameter(s):

- int arr[n]: an array of integers

Returns

- int: the maximum subset sum

Input Format

The first line contains an integer, n.

The second line contains n space-separated integers arr[i].

Constraints

- 1 <= n <= 10^5

- -10^4 <= arr[i] <= 10^4

Sample Input

Sample Output

Explanation

Our subsets are [2, 5, 4], [2, 5], [2, 8], [2, 4], [1, 8], [1, 4] and [5, 4]. The maximum subset sum is 11, from the first subset listed.

To solve the problem with dynamic programming, work through the array, keeping track of the max at each position until you get to the last value of the array. You should start with the base cases defined before iterating through the remainder of the array.
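The approach described above can be sketched as follows. This is one possible implementation, not the reference solution: the name `max_subset_sum` is a Python-style stand-in for maxSubsetSum, and the sketch keeps only the best sums for the previous two positions instead of a full array. The empty subset is allowed, which is why an all-negative array yields 0.

```python
def max_subset_sum(arr):
    """Maximum sum of a subset of non-adjacent elements (empty subset = 0)."""
    best_two_back = 0  # best sum using elements up to position i - 2
    best_one_back = 0  # best sum using elements up to position i - 1
    for value in arr:
        # Either skip this element, or take it on top of the best sum
        # that does not use its left neighbour.
        best_here = max(best_one_back, best_two_back + value)
        best_two_back, best_one_back = best_one_back, best_here
    return best_one_back


print(max_subset_sum([-2, 1, 3, -4, 5]))  # 8, from the subset [3, 5]
print(max_subset_sum([-2, -3, -1]))       # 0, choose no element
```

This runs in O(n) time and O(1) extra space, which comfortably fits the stated constraints.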

Challenge Problem: Billboards

Difficulty Level: Advanced

Below is an advanced-level dynamic programming problem that covers topics such as dynamic programming and priority queue. Only 36.09% of developers in the HackerRank Community that have attempted this problem have succeeded. Good luck!

ADZEN is a popular advertising firm in your city that owns all n billboard locations on Main Street. The city council passed a new zoning ordinance mandating that no more than k consecutive billboards may be up at any given time. For example, if there are n = 3 billboards on Main street and k = 1, ADZEN must remove either the middle billboard, the first two billboards, the last two billboards, or the first and last billboard.

Being a for-profit company, ADZEN wants to lose as little advertising revenue as possible when removing the billboards. They want to comply with the new ordinance in such a way that the remaining billboards maximize their total revenues (i.e., the sum of revenues generated by the billboards left standing on Main street).

Given n, k, and the revenue of each of the n billboards, find and print the maximum profit that ADZEN can earn while complying with the zoning ordinance. Assume that Main street is a straight, contiguous block of n billboards that can be removed but cannot be reordered in any way.

For example, if there are n = 7 billboards, and k = 3 is the maximum number of consecutive billboards that can be active, with revenues = [5, 6, 4, 2, 10, 8, 4], then the maximum revenue that can be generated is 37: 5 + 6 + 4 + 10 + 8 + 4 (the billboard with revenue 2 is removed).

Complete the billboards function in the editor below. It should return an integer that represents the maximum revenue that can be generated under the rules.

billboards has the following parameter(s):

- k: an integer that represents the longest contiguous group of billboards allowed

- revenue: an integer array where each element represents the revenue potential for a billboard at that index

The first line contains two space-separated integers, n (the number of billboards) and k (the maximum number of billboards that can stand together on any part of the road).

Each line i of the n subsequent lines contains an integer denoting the revenue value of billboard i (where 0 <= i < n).

- 1 <= n < 10^5

- 1 <= k <= n

- 0 <= revenue value of any billboard <= 2 * 10^9

Output Format

Print a single integer denoting the maximum profit ADZEN can earn from Main street after complying with the city’s ordinance.

Sample Input 0

Sample Output 0

Explanation 0

There are n = 6 billboards, and we must remove some of them so that no more than k = 2 billboards are immediately next to one another.

We remove the first and fourth billboards, which gives us the configuration _ 2 3 _ 6 10 and a profit of 2 + 3 + 6 + 10 = 21. As no other configuration has a profit greater than 21, we print 21 as our answer.

Sample Input 1

Sample Output 1

Explanation 1

There are n = 5 billboards, and we must remove some of them so that no more than k = 4 billboards are immediately next to one another.

We remove the first billboard, which gives us the configuration _ 2 3 4 5 and a profit of 2 + 3 + 4 + 5 = 14. As no other configuration has a profit greater than 14, we print 14 as our answer.
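One way to approach this problem, sketched here under stated assumptions rather than as the official solution, is to minimize the revenue that is removed. Every run of k + 1 consecutive billboards must contain at least one removed billboard, so if cost[i] is the cheapest way to handle billboards 0..i with billboard i removed, then cost[i] = revenue[i] + min(cost[j]) over the previous k + 1 positions j. The sketch below uses a monotonic deque for that sliding-window minimum in place of the priority queue mentioned in the problem description. The variable names and the two virtual "removed" positions at -1 and n are illustrative choices.

```python
from collections import deque


def billboards(k, revenue):
    """Maximum revenue with at most k consecutive billboards standing."""
    n = len(revenue)
    total = sum(revenue)
    # window holds (index, cost) pairs with strictly increasing cost, so the
    # front is always the cheapest valid "previous removed billboard".
    window = deque([(-1, 0)])  # virtual removed billboard before the street
    cost = 0
    for i in range(n + 1):  # i == n is a virtual removed billboard at the end
        # The previous removal must lie within the last k + 1 positions.
        while window[0][0] < i - k - 1:
            window.popleft()
        cost = window[0][1] + (revenue[i] if i < n else 0)
        # Drop candidates that are both older and no cheaper than this one.
        while window and window[-1][1] >= cost:
            window.pop()
        window.append((i, cost))
    return total - cost  # cost now holds the minimum total revenue removed


print(billboards(3, [5, 6, 4, 2, 10, 8, 4]))  # 37
```

Each index enters and leaves the deque at most once, so the whole pass is O(n).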


What Is Dynamic Programming?

Updated on October 26th, 2023

Table of Contents

- What Is It Used For?

- The DP Process

- Top-Down vs Bottom-Up DP

- Other Techniques

- Why Learn DP?

- How to Learn DP

- Learn DP Basics

Also referred to as DP, dynamic programming is a powerful technique for solving complex problems in computer science, engineering, and mathematics.

While it has a reputation for being intimidating, learning dynamic programming is much easier once you understand the basics.

In the simplest terms, dynamic programming is a method of solving problems by breaking them down into smaller sub-problems and reusing the solutions to these sub-problems to build up to the solution for the original problem.

This approach allows us to solve problems much more efficiently.

Dynamic programming techniques can be used to solve a wide range of problems, from finding the shortest path in a graph to solving mathematical optimization problems.


In this introduction to dynamic programming, we’ll explore dynamic programming basics like what it’s used for, steps in the process, and the different dynamic programming techniques, types, and algorithms that make it happen.

Plus, we’ll look at some real-world dynamic programming examples, compare it to other programming methods (like dynamic programming vs recursion and greedy algorithms), and share tips on how to learn dynamic programming yourself!

Disclosure: I’m a proud affiliate for some of the resources mentioned in this article. If you buy a product through my links on this page, I may get a small commission for referring you. Thanks!

Dynamic programming is a technique used in computer science and mathematics to solve problems efficiently. It helps you avoid having to solve the same problem over and over again.

🎮 Think about it like playing a video game.

In a video game, you often have to solve small problems to progress to the next level. Sometimes you encounter a problem that you’ve already solved before—so instead of going through the whole process again, you use what you learned from the last time to solve it faster.

Dynamic programming is similar. It helps you find the solution to a big problem by breaking it down into smaller, easier-to-solve problems.

You’ll solve those problems first, then take what you’ve learned from designing those solutions and apply that knowledge to solve the bigger one.

This way, you don’t have to start from scratch, and the original problem feels less insurmountable.

🌟 As this tweet explains: “It’s like having a time machine for your code; it helps you travel back to the simpler version of a problem to solve it more efficiently.”

🌟 This Redditor also has a good way of explaining what dynamic programming is: “Say I put a bunch of matches down on the table, and ask you to count them. After a few moments you say ‘12,’ then I add another match and say ‘How many are there now?’ You wouldn’t have to count them again to tell me there are 13. You already knew there were 12, and I’ve added one more. DP is this. Each individual subtask is ‘counting a match,’ and you are memorizing the previous result to get the next result, in this case, 12 matches, to count to 13 matches.”


Dynamic programming vs dynamic programming languages

You might assume that a dynamic programming language is just a coding language used for DP. However, while dynamic programming and dynamic programming languages share the term “dynamic,” they are actually not directly related to each other.

Dynamic programming, as you’ve just learned, is a CS/mathematical optimization technique.

Dynamic programming languages are coding languages (like JavaScript and Python) that allow for the modification of a program’s structure while it is running.

Don’t get confused wondering what the connection is: as far as tech concepts go, they’re not in the same category.


What Is Dynamic Programming Used For?

Dynamic programming is commonly used for solving optimization problems, where the goal is to find the best possible solution given certain constraints.

This obviously becomes useful in a wide range of applications, such as:

- 🧮 Combinatorial mathematics: DP is used for optimization problems such as the traveling salesman problem or the knapsack problem.

- 🧬 Bioinformatics: DP can help align sequences of DNA or protein, where the goal is to find the optimal match between two sequences.

- 📅 Management & operations: Dynamic programming can be leveraged to help ops teams and managers allocate resources efficiently (e.g., in scheduling).

- 👁️ Computer vision and image processing: It can be used in applications to solve problems such as image segmentation and pattern recognition.

- 🤖 Natural language processing applications: DP can assist with processes like speech recognition and machine translation, to find the optimal solution for problems including sequence alignment and language modeling.

Steps in the Dynamic Programming Process

When you boil down dynamic programming basics to their simplest forms, it doesn’t seem so difficult! There are essentially just five steps:

- Identify sub-problems: The first step is to identify the smaller sub-problems that make up the larger problem.

- Store solutions to sub-problems: Create an array or matrix to store the solutions to each sub-problem.

- Solve sub-problems: Solve each sub-problem, one at a time, and store that solution in the array or matrix.

- Use stored solutions: Use the stored solutions to build up to the solution for the original problem.

- Optimize the solution: Finally, optimize the solution by removing any redundant computations and ensuring that it is as efficient as possible.
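The five steps above can be sketched on a small example. Fibonacci numbers are used here purely as an illustration (they are not part of this list), and the function names are hypothetical. The first function follows steps 1 to 4 with an explicit table; the second applies step 5 by noticing that only the last two stored values are ever needed.

```python
def fib_table(n):
    """Steps 1-4: tabulate F(0), F(1), ..., F(n)."""
    if n < 2:
        return n
    # Step 1: the sub-problems are F(0), F(1), ..., F(n - 1).
    # Step 2: create an array to hold the solution to each sub-problem.
    table = [0] * (n + 1)
    table[1] = 1
    # Step 3: solve the sub-problems one at a time, smallest first.
    for i in range(2, n + 1):
        table[i] = table[i - 1] + table[i - 2]
    # Step 4: the stored solutions give the answer to the original problem.
    return table[n]


def fib_optimized(n):
    """Step 5: keep only the two values the next step depends on."""
    prev, curr = 0, 1
    for _ in range(n):
        prev, curr = curr, prev + curr
    return prev


print(fib_table(10), fib_optimized(10))  # 55 55
```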

➡️ Learn more about the steps in the dynamic programming process in this post.

Top-Down vs Bottom-Up Dynamic Programming

Bottom-up and top-down dynamic programming are two different ways to solve problems using DP.

Think of them as two different ways to put together a puzzle.

🖼️ In top-down dynamic programming (also known as “memoization”), the algorithm starts by solving the original problem and then recursively breaks it down into smaller sub-problems. You then solve each small piece, one at a time, until the entire puzzle is solved. If you compare it to a jigsaw puzzle, it’s like looking at the box as you put it together.

🧩 Bottom-up dynamic programming (also called “tabulation”) is like starting with the small puzzle pieces and putting them together, piece by piece, until you have the big picture. You start by solving the smallest, easiest sub-problems first, and then use that information to solve bigger and bigger problems, until you’ve solved the whole thing.

Both top-down and bottom-up dynamic programming can be useful, depending on the problem you’re trying to solve. The choice between the two often depends on the size of the problem and how well it can be broken down into smaller parts.
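To compare the two styles side by side, here is a minimal sketch that solves the same problem both ways: the minimum number of coins needed to reach a target amount, where each denomination may be used more than once. The function names, the example denominations, and the convention of returning -1 when no combination works are all assumptions made for this illustration.

```python
import math
from functools import lru_cache


def min_coins_top_down(coins, target):
    """Top-down (memoization): start at the target and recurse downward."""

    @lru_cache(maxsize=None)  # remembers the answer for each amount
    def solve(amount):
        if amount == 0:
            return 0
        best = math.inf
        for coin in coins:
            if coin <= amount:
                best = min(best, solve(amount - coin) + 1)
        return best

    result = solve(target)
    return -1 if result == math.inf else result


def min_coins_bottom_up(coins, target):
    """Bottom-up (tabulation): fill a table from amount 0 up to the target."""
    table = [0] + [math.inf] * target  # table[a] = fewest coins for amount a
    for amount in range(1, target + 1):
        for coin in coins:
            if coin <= amount:
                table[amount] = min(table[amount], table[amount - coin] + 1)
    return -1 if table[target] == math.inf else table[target]


print(min_coins_top_down([1, 3, 4], 6))   # 2, using 3 + 3
print(min_coins_bottom_up([1, 3, 4], 6))  # 2
```

The top-down version only visits amounts that are actually reachable from the target, but it recurses and can hit Python's recursion limit for large targets. The bottom-up version visits every amount once in a plain loop.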