An Introduction to Data Analysis

- First Online: 28 September 2018

- Fabio Nelli

In this chapter, you begin to take the first steps in the world of data analysis, learning in detail about all the concepts and processes that make up this discipline. The concepts discussed in this chapter are helpful background for the following chapters, where these concepts and procedures will be applied in the form of Python code, through the use of several libraries that will be discussed in just as many chapters.

Author information

Authors and affiliations.

Rome, Italy

Fabio Nelli

Copyright information

© 2018 Fabio Nelli

About this chapter

Nelli, F. (2018). An Introduction to Data Analysis. In: Python Data Analytics. Apress, Berkeley, CA. https://doi.org/10.1007/978-1-4842-3913-1_1

Published : 28 September 2018

Publisher Name : Apress, Berkeley, CA

Print ISBN : 978-1-4842-3912-4

Online ISBN : 978-1-4842-3913-1

eBook Packages : Professional and Applied Computing Apress Access Books Professional and Applied Computing (R0)

- HCA Healthc J Med

- v.1(2); 2020

- PMC10324782

Introduction to Research Statistical Analysis: An Overview of the Basics

Christian Vandever

1 HCA Healthcare Graduate Medical Education

Description

This article covers many statistical ideas essential to research statistical analysis. Sample size is explained through the concepts of statistical significance level and power. Variable types and definitions are included to clarify necessities for how the analysis will be interpreted. Categorical and quantitative variable types are defined, as well as response and predictor variables. Statistical tests described include t-tests, ANOVA and chi-square tests. Multiple regression is also explored for both logistic and linear regression. Finally, the most common statistics produced by these methods are explored.

Introduction

Statistical analysis is necessary for any research project seeking to make quantitative conclusions. The following is a primer for research-based statistical analysis. It is intended to be a high-level overview of appropriate statistical testing, without diving too deep into any specific methodology. Some of the information is more applicable to retrospective projects, where analysis is performed on data that has already been collected, but most of it will be suitable to any type of research. This primer is meant to help the reader understand research results in coordination with a statistician, not to perform the actual analysis. Analysis is commonly performed using statistical programming software such as R, SAS or SPSS. These allow analysis to be replicated while minimizing the risk of error. Resources are listed later for those working on analysis without a statistician.

After coming up with a hypothesis for a study, including any variables to be used, one of the first steps is to think about the patient population to which the question applies. Results are only relevant to the population that the underlying data represents. Since it is impractical to include everyone with a certain condition, a subset of the population of interest should be taken. This subset should be large enough to have power, which means there is enough data to detect a true effect as statistically significant and to accurately reflect the study’s population.

The first statistics of interest are related to significance level and power: alpha and beta. Alpha (α) is the significance level and the probability of a type I error, the rejection of the null hypothesis when it is true. The null hypothesis is generally that there is no difference between the groups compared. A type I error is also known as a false positive. An example would be an analysis that finds one medication statistically better than another, when in reality there is no difference in efficacy between the two. Beta (β) is the probability of a type II error, the failure to reject the null hypothesis when it is actually false. A type II error is also known as a false negative. This occurs when the analysis finds no difference between two medications when in reality one works better than the other. Power is defined as 1-β and should be calculated prior to running any sort of statistical testing. Ideally, alpha should be as small as possible while power should be as large as possible. Power generally increases with a larger sample size, but so do cost and the effect of any bias in the study design. Additionally, as the sample size gets bigger, the chance of a statistically significant result goes up, even when the underlying differences are too small to matter practically. Power calculators take the magnitude of the effect into account in order to guard against this exaggeration, so that significant results reflect an actual impact. The calculators take inputs like the mean, effect size and desired power, and output the required minimum sample size for analysis. Effect size is calculated using statistical information on the variables of interest. If that information is not available, most tests have commonly used values for small, medium or large effect sizes.
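As a rough illustration of what a power calculator does, the sketch below estimates the minimum sample size per group for a two-sided comparison of two means. It is not taken from the article: it assumes the standard normal-approximation formula, a standardized effect size (Cohen's d), and the conventional small, medium and large values of 0.2, 0.5 and 0.8. The function name `sample_size_per_group` is hypothetical.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate n per group for a two-sided, two-sample comparison of means.

    Assumption: normal approximation,
    n = 2 * ((z_(1 - alpha/2) + z_power) / d) ** 2,
    where d is the standardized effect size (Cohen's d).
    """
    standard_normal = NormalDist()
    z_alpha = standard_normal.inv_cdf(1 - alpha / 2)  # controls type I error
    z_power = standard_normal.inv_cdf(power)          # power = 1 - beta
    return ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


# Conventional effect sizes (assumed, not from the article).
for label, d in [("small", 0.2), ("medium", 0.5), ("large", 0.8)]:
    print(label, sample_size_per_group(d))
```

Smaller effects need far more patients to reach the same power, which is why the effect size is a required input. Dedicated power software typically uses the t distribution and will give slightly larger numbers than this approximation.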

When the desired patient population is decided, the next step is to define the variables previously chosen to be included. Variables come in different types that determine which statistical methods are appropriate and useful. One way variables can be split is into categorical and quantitative variables (Table 1). Categorical variables place patients into groups, such as gender, race and smoking status. Quantitative variables measure or count some quantity of interest. Common quantitative variables in research include age and weight. An important note is that there is often a choice of whether to treat a variable as quantitative or categorical. For example, in a study looking at body mass index (BMI), BMI could be defined as a quantitative variable or as a categorical variable, with each patient’s BMI listed as a category (underweight, normal, overweight, and obese) rather than as the numeric value. The decision whether a variable is quantitative or categorical will affect what conclusions can be made when interpreting results from statistical tests. Keep in mind that, since quantitative variables are treated on a continuous scale, it would be inappropriate to turn a variable such as which medication was given into a quantitative variable with values 1, 2 and 3.

Table 1. Categorical vs. Quantitative Variables
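The choice between a quantitative and a categorical treatment can be made concrete with a short sketch. The cut-points 18.5, 25 and 30 are the conventional adult BMI thresholds and are an assumption here, since the article does not state them. The helper names `bmi_category` and `one_hot` and the patient records are hypothetical.

```python
def bmi_category(bmi):
    """Map a numeric BMI (kg/m^2) onto the four categories named in the text.

    Assumption: conventional adult cut-points of 18.5, 25 and 30.
    """
    if bmi < 18.5:
        return "underweight"
    if bmi < 25:
        return "normal"
    if bmi < 30:
        return "overweight"
    return "obese"


def one_hot(value, levels):
    """Encode a categorical value as 0/1 indicators instead of codes 1, 2, 3."""
    return {f"is_{level}": int(value == level) for level in levels}


MEDICATIONS = ["apixaban", "rivaroxaban", "dabigatran"]

# Hypothetical patient records, for illustration only.
patients = [
    {"bmi": 17.9, "medication": "apixaban"},
    {"bmi": 27.4, "medication": "rivaroxaban"},
    {"bmi": 31.2, "medication": "dabigatran"},
]

for patient in patients:
    print(bmi_category(patient["bmi"]), one_hot(patient["medication"], MEDICATIONS))
```

Indicator columns avoid implying that one medication is "twice" another, which is what a single column coded 1, 2 and 3 would suggest to a method that treats it as continuous.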

Both of these types of variables can also be split into response and predictor variables (Table 2). Predictor variables are explanatory, or independent, variables that help explain changes in a response variable. Conversely, response variables are outcome, or dependent, variables whose changes can be partially explained by the predictor variables.

Table 2. Response vs. Predictor Variables

Choosing the correct statistical test depends on the types of variables being compared and the question being answered. Some common statistical tests include t-tests, ANOVA and chi-square tests.

T-tests compare whether there are differences in a quantitative variable between two values of a categorical variable. For example, a t-test could be useful to compare the length of stay for knee replacement surgery patients between those that took apixaban and those that took rivaroxaban. A t-test could examine whether there is a statistically significant difference in the length of stay between the two groups. The t-test will output a p-value, a number between zero and one, which represents the probability of seeing a difference between the two groups at least as large as the one in the data if the groups were actually the same. A p-value closer to zero indicates stronger evidence of a difference, in this case in length of stay, than a value closer to one. Prior to collecting the data, set a significance level, the previously defined alpha. Alpha is typically set at 0.05, but is often reduced in order to limit the chance of a type I error, or false positive. Going back to the example above, if alpha is set at 0.05 and the analysis gives a p-value of 0.039, then a statistically significant difference in length of stay is observed between apixaban and rivaroxaban patients. If the analysis gives a p-value of 0.91, then there is no statistical evidence of a difference in length of stay between the two medications. Other statistical summaries or methods examine how big that difference might be. These other summaries are known as post-hoc analysis since they are performed after the original test to provide additional context to the results.
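The two-group comparison can be sketched with the Python standard library alone. Two caveats apply. First, the length-of-stay values below are made up for illustration. Second, a standard t-test reads its p-value from the t distribution, which the standard library does not provide, so this sketch substitutes an exact permutation test on the same t statistic: it counts how many relabelings of the patients give a statistic at least as extreme as the observed one.

```python
from itertools import combinations
from math import sqrt
from statistics import mean, variance


def welch_t(a, b):
    """Two-sample t statistic (Welch form, no equal-variance assumption)."""
    return (mean(a) - mean(b)) / sqrt(variance(a) / len(a) + variance(b) / len(b))


def permutation_p_value(a, b):
    """Two-sided exact permutation p-value for the t statistic.

    Substitute for the t-distribution lookup of a classical t-test.
    """
    pooled = list(a) + list(b)
    observed = abs(welch_t(a, b))
    indices = range(len(pooled))
    extreme = total = 0
    for chosen in combinations(indices, len(a)):
        chosen_set = set(chosen)
        group_a = [pooled[i] for i in chosen]
        group_b = [pooled[i] for i in indices if i not in chosen_set]
        total += 1
        # Small tolerance so ties with the observed statistic are counted.
        if abs(welch_t(group_a, group_b)) >= observed - 1e-12:
            extreme += 1
    return extreme / total


# Hypothetical length of stay in days, for illustration only.
apixaban_los = [3.1, 2.8, 3.5, 2.9, 3.3, 3.0]
rivaroxaban_los = [3.9, 3.6, 4.2, 3.4, 4.0, 3.8]

alpha = 0.05  # significance level, set before looking at the data
p_value = permutation_p_value(apixaban_los, rivaroxaban_los)
print("p-value:", p_value, "significant:", p_value < alpha)
```

Enumerating every relabeling is only practical for small groups like these. With realistic sample sizes, a statistical package's t-test routine is the usual choice.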

Analysis of variance, or ANOVA, tests for mean differences in a quantitative variable between values of a categorical variable, typically one with three or more values, which distinguishes it from a t-test. ANOVA could add patients given dabigatran to the previous population and evaluate whether the length of stay was significantly different across the three medications. If the p-value is lower than the designated significance level then the hypothesis that length of stay was the same across the three medications is rejected. Summaries and post-hoc tests also could be performed to look at the differences in length of stay and at which individual medications showed statistically significant differences in length of stay from the other medications.

A chi-square test examines the association between two categorical variables. An example would be to consider whether the rate of having a post-operative bleed is the same across patients provided with apixaban, rivaroxaban and dabigatran. A chi-square test can compute a p-value determining whether the bleeding rates were significantly different or not. Post-hoc tests could then give the bleeding rate for each medication, as well as a breakdown as to which specific medications may have a significantly different bleeding rate from each other.
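Both tests can be sketched for the three-medication example using only the standard library. The data are invented, and the function names are hypothetical. The p-value formulas are closed forms that hold only in this special case: an F distribution with 2 numerator degrees of freedom (three groups) and a chi-square distribution with 2 degrees of freedom (a 3 x 2 table). General-purpose software handles any number of groups.

```python
from math import exp
from statistics import mean


def anova_three_groups(g1, g2, g3):
    """One-way ANOVA F statistic and p-value for exactly three groups."""
    groups = [g1, g2, g3]
    n_total = sum(len(g) for g in groups)
    grand_mean = mean([x for g in groups for x in g])
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_within = n_total - 3
    f_stat = (ss_between / 2) / (ss_within / df_within)
    # Upper tail of F(2, df_within); this closed form needs numerator df = 2.
    p_value = (1 + 2 * f_stat / df_within) ** (-df_within / 2)
    return f_stat, p_value


def chi_square_three_by_two(table):
    """Chi-square test of association for a 3 x 2 table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # Upper tail of chi-square with (3 - 1) * (2 - 1) = 2 degrees of freedom.
    p_value = exp(-chi2 / 2)
    return chi2, p_value


# Hypothetical length of stay (days) per medication.
f_stat, anova_p = anova_three_groups([1, 2, 3], [2, 3, 4], [3, 4, 5])

# Hypothetical counts per medication: [bleed, no bleed].
bleeds = [[5, 95], [10, 90], [15, 85]]
chi2, chi2_p = chi_square_three_by_two(bleeds)

print("ANOVA: F =", f_stat, "p =", anova_p)
print("Chi-square:", chi2, "p =", chi2_p)
```

Neither p-value says which medication differs from which. That is the job of the post-hoc comparisons described above.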

A slightly more advanced way of examining a question can come through multiple regression. Regression allows more predictor variables to be analyzed and can act as a control when looking at associations between variables. Common control variables are age, sex and any comorbidities likely to affect the outcome variable that are not closely related to the other explanatory variables. Control variables can be especially important in reducing the effect of bias in a retrospective population. Since retrospective data was not built with the research question in mind, it is important to eliminate threats to the validity of the analysis. Testing that controls for confounding variables, such as regression, is often more valuable with retrospective data because it can ease these concerns. The two main types of regression are linear and logistic. Linear regression is used to predict differences in a quantitative, continuous response variable, such as length of stay. Logistic regression predicts differences in a dichotomous, categorical response variable, such as 90-day readmission. So whether the outcome variable is categorical or quantitative, regression can be appropriate. An example of each type can be found in two similar cases. For both examples, define the predictor variables as age, gender and anticoagulant usage. In the first, use the predictor variables in a linear regression to evaluate their individual effects on length of stay, a quantitative variable. For the second, use the same predictor variables in a logistic regression to evaluate their individual effects on whether the patient had a 90-day readmission, a dichotomous categorical variable. Analysis can compute a p-value for each included predictor variable to determine whether it is significantly associated with the response.
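The linear case can be sketched as an ordinary least squares fit solved through the normal equations. This is a minimal illustration, not the procedure a statistical package uses: it returns coefficients only, with no p-values, and it does not cover logistic regression. The data are synthetic, generated from known coefficients so the fit can be checked, and `fit_ols` is a hypothetical helper name.

```python
def fit_ols(rows, y):
    """Least squares coefficients [intercept, b1, b2, ...].

    Solves the normal equations (X^T X) beta = X^T y by Gauss-Jordan
    elimination with partial pivoting.
    """
    X = [[1.0] + list(row) for row in rows]  # prepend intercept column
    k = len(X[0])
    # Augmented matrix [X^T X | X^T y].
    A = [
        [sum(x[i] * x[j] for x in X) for j in range(k)]
        + [sum(x[i] * yi for x, yi in zip(X, y))]
        for i in range(k)
    ]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


# Synthetic predictors: age in years, and 1 if rivaroxaban else 0 (apixaban).
ages = [50, 60, 70, 80, 55, 65, 75, 85]
on_rivaroxaban = [0, 0, 0, 0, 1, 1, 1, 1]
predictors = list(zip(ages, on_rivaroxaban))

# Synthetic response: length of stay built from known coefficients.
length_of_stay = [1.0 + 0.05 * age + 0.5 * drug for age, drug in predictors]

intercept, age_effect, drug_effect = fit_ols(predictors, length_of_stay)
print(intercept, age_effect, drug_effect)
```

Each coefficient is the estimated change in length of stay for a one-unit change in that predictor with the others held fixed, which is what "controlling for" age means in this setting.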
The statistical tests in this article generate an associated test statistic, which is used to determine the probability that the results could be obtained if there were no association between the compared variables. These results often come with coefficients, which give the degree of the association and the degree to which one variable changes with another. Most tests, including all listed in this article, also have confidence intervals, which give a range for the estimate with a specified level of confidence. Even if these tests do not give statistically significant results, the results are still important. Not reporting statistically insignificant findings creates a bias in research. A study can be repeated enough times that statistically significant results are eventually reached, even though there is no true effect. In some cases with very large sample sizes, p-values will almost always be significant. In this case the effect size is critical, as even the smallest, meaningless differences can be found to be statistically significant.
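The effect of sample size on significance can be shown with a small sketch. It assumes a two-sample z-test with a known, common standard deviation, which is a simplification chosen so the example needs only the standard library. The difference of 0.05 days and the standard deviation of 2 days are invented, and `two_sample_z` is a hypothetical name.

```python
from math import sqrt
from statistics import NormalDist


def two_sample_z(mean_diff, sd, n_per_group, confidence=0.95):
    """Two-sided p-value and confidence interval for a difference in means.

    Assumption: known common standard deviation and equal group sizes.
    """
    standard_normal = NormalDist()
    se = sd * sqrt(2 / n_per_group)  # standard error of the difference
    z = mean_diff / se
    p_value = 2 * (1 - standard_normal.cdf(abs(z)))
    half_width = standard_normal.inv_cdf(0.5 + confidence / 2) * se
    return p_value, (mean_diff - half_width, mean_diff + half_width)


# The same tiny difference (0.05 days) at two very different sample sizes.
p_small, ci_small = two_sample_z(mean_diff=0.05, sd=2.0, n_per_group=100)
p_large, ci_large = two_sample_z(mean_diff=0.05, sd=2.0, n_per_group=1_000_000)

print("n = 100:      ", p_small, ci_small)
print("n = 1,000,000:", p_large, ci_large)
```

The estimated difference is identical in both runs. Only the width of the confidence interval and the p-value change, which is why the effect size has to be judged on its practical meaning rather than on significance alone.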

These variables and tests are just some things to keep in mind before, during and after the analysis process in order to make sure that the statistical reports are supporting the questions being answered. The patient population, types of variables and statistical tests are all important things to consider in the process of statistical analysis. Any results are only as useful as the process used to obtain them. This primer can be used as a reference to help ensure appropriate statistical analysis.

Funding Statement

This research was supported (in whole or in part) by HCA Healthcare and/or an HCA Healthcare affiliated entity.

Conflicts of Interest

The author declares he has no conflicts of interest.

Christian Vandever is an employee of HCA Healthcare Graduate Medical Education, an organization affiliated with the journal’s publisher.

The views expressed in this publication represent those of the author(s) and do not necessarily represent the official views of HCA Healthcare or any of its affiliated entities.

- Volume 3, Issue 3

- Data analysis in qualitative research

- Sally Thorne , RN, PhD

- School of Nursing, University of British Columbia Vancouver, British Columbia, Canada

https://doi.org/10.1136/ebn.3.3.68

Unquestionably, data analysis is the most complex and mysterious of all of the phases of a qualitative project, and the one that receives the least thoughtful discussion in the literature. For neophyte nurse researchers, many of the data collection strategies involved in a qualitative project may feel familiar and comfortable. After all, nurses have always based their clinical practice on learning as much as possible about the people they work with, and detecting commonalities and variations among and between them in order to provide individualised care. However, creating a database is not sufficient to conduct a qualitative study. In order to generate findings that transform raw data into new knowledge, a qualitative researcher must engage in active and demanding analytic processes throughout all phases of the research. Understanding these processes is therefore an important aspect not only of doing qualitative research, but also of reading, understanding, and interpreting it.

For readers of qualitative studies, the language of analysis can be confusing. It is sometimes difficult to know what the researchers actually did during this phase and to understand how their findings evolved out of the data that were collected or constructed. Furthermore, in describing their processes, some authors use language that accentuates this sense of mystery and magic. For example, they may claim that their conceptual categories “emerged” from the data 1 —almost as if they left the raw data out overnight and awoke to find that the data analysis fairies had organised the data into a coherent new structure that explained everything! In this EBN notebook, I will try to help readers make sense of some of the assertions that are made about qualitative data analysis so that they can develop a critical eye for when an analytical claim is convincing and when it is not.

Qualitative data

Qualitative data come in various forms. In many qualitative nursing studies, the database consists of interview transcripts from open ended, focused, but exploratory interviews. However, there is no limit to what might possibly constitute a qualitative database, and increasingly we are seeing more and more creative use of such sources as recorded observations (both video and participatory), focus groups, texts and documents, multi-media or public domain sources, policy manuals, photographs, and lay autobiographical accounts.

Qualitative analytic reasoning processes

What makes a study qualitative is that it usually relies on inductive reasoning processes to interpret and structure the meanings that can be derived from data. Distinguishing inductive from deductive inquiry processes is an important step in identifying what counts as qualitative research. Generally, inductive reasoning uses the data to generate ideas (hypothesis generating), whereas deductive reasoning begins with the idea and uses the data to confirm or negate the idea (hypothesis testing). 2 In actual practice, however, many quantitative studies involve much inductive reasoning, whereas good qualitative analysis often requires access to a full range of strategies. 3 A traditional quantitative study in the health sciences typically begins with a theoretical grounding, takes direction from hypotheses or explicit study questions, and uses a predetermined (and auditable) set of steps to confirm or refute the hypothesis. It does this to add evidence to the development of specific, causal, and theoretical explanations of phenomena. 3 In contrast, qualitative research often takes the position that an interpretive understanding is only possible by way of uncovering or deconstructing the meanings of a phenomenon. Thus, a distinction between explaining how something operates (explanation) and why it operates in the manner that it does (interpretation) may be a more effective way to distinguish quantitative from qualitative analytic processes involved in any particular study.

Because data collection and analysis processes tend to be concurrent, with new analytic steps informing the process of additional data collection and new data informing the analytic processes, it is important to recognise that qualitative data analysis processes are not entirely distinguishable from the actual data. The theoretical lens from which the researcher approaches the phenomenon, the strategies that the researcher uses to collect or construct data, and the understandings that the researcher has about what might count as relevant or important data in answering the research question are all analytic processes that influence the data. Analysis also occurs as an explicit step in conceptually interpreting the data set as a whole, using specific analytic strategies to transform the raw data into a new and coherent depiction of the thing being studied. Although there are many qualitative data analysis computer programs available on the market today, these are essentially aids to sorting and organising sets of qualitative data, and none are capable of the intellectual and conceptualising processes required to transform data into meaningful findings.

Specific analytic strategies

Although a description of the actual procedural details and nuances of every qualitative data analysis strategy is well beyond the scope of a short paper, a general appreciation of the theoretical assumptions underlying some of the more common approaches can be helpful in understanding what a researcher is trying to say about how data were sorted, organised, conceptualised, refined, and interpreted.

CONSTANT COMPARATIVE ANALYSIS

Many qualitative analytic strategies rely on a general approach called “constant comparative analysis”. Originally developed for use in the grounded theory methodology of Glaser and Strauss, 4 which itself evolved out of the sociological theory of symbolic interactionism, this strategy involves taking one piece of data (one interview, one statement, one theme) and comparing it with all others that may be similar or different in order to develop conceptualisations of the possible relations between various pieces of data. For example, by comparing the accounts of 2 different people who had a similar experience, a researcher might pose analytical questions like: why is this different from that? and how are these 2 related? In many qualitative studies whose purpose it is to generate knowledge about common patterns and themes within human experience, this process continues with the comparison of each new interview or account until all have been compared with each other. A good example of this process is reported in a grounded theory study of how adults with brain injury cope with the social attitudes they face (see Evidence-Based Nursing, April 1999, p64).

Constant comparative analysis is well suited to grounded theory because this design is specifically used to study those human phenomena for which the researcher assumes that fundamental social processes explain something of human behaviour and experience, such as stages of grieving or processes of recovery. However, many other methodologies draw from this analytical strategy to create knowledge that is more generally descriptive or interpretive, such as coping with cancer, or living with illness. Naturalistic inquiry, thematic analysis, and interpretive description are methods that depend on constant comparative analysis processes to develop ways of understanding human phenomena within the context in which they are experienced.

PHENOMENOLOGICAL APPROACHES

Constant comparative analysis is not the only approach in qualitative research. Some qualitative methods are not oriented toward finding patterns and commonalities within human experience, but instead seek to discover some of the underlying structure or essence of that experience through the intensive study of individual cases. For example, rather than explain the stages and transitions within grieving that are common to people in various circumstances, a phenomenological study might attempt to uncover and describe the essential nature of grieving and represent it in such a manner that a person who had not grieved might begin to appreciate the phenomenon. The analytic methods that would be employed in these studies explicitly avoid cross comparisons and instead orient the researcher toward the depth and detail that can be appreciated only through an exhaustive, systematic, and reflective study of experiences as they are lived.

Although constant comparative methods might well permit the analyst to use some pre-existing or emergent theory against which to test all new pieces of data that are collected, these more phenomenological approaches typically challenge the researcher to set aside or “bracket” all such preconceptions so that they can work inductively with the data to generate entirely new descriptions and conceptualisations. There are numerous forms of phenomenological research; however, many of the most popular approaches used by nurses derive from the philosophical work of Husserl on modes of awareness (epistemology) and the hermeneutic tradition of Heidegger, which emphasises modes of being (ontology). 5 These approaches differ from one another in the degree to which interpretation is acceptable, but both represent strategies for immersing oneself in data, engaging with data reflectively, and generating a rich description that will enlighten a reader as to the deeper essential structures underlying a particular human experience. Examples of the kinds of human experience that are amenable to this type of inquiry are the suffering experienced by individuals who have a drinking problem (see Evidence-Based Nursing, October 1998, p134) and the emotional experiences of parents of terminally ill adolescents (see Evidence-Based Nursing, October 1999, p132). Sometimes authors explain their approaches not by the phenomenological position they have adopted, but by naming the theorist whose specific techniques they are borrowing. Colaizzi and Giorgi are phenomenologists who have rendered the phenomenological attitude into a set of manageable steps and processes for working with such data and have therefore become popular reference sources among phenomenological nurse researchers.

ETHNOGRAPHIC METHODS

Ethnographic research methods derive from anthropology's tradition of interpreting the processes and products of cultural behaviour. Ethnographers documented such aspects of human experience as beliefs, kinship patterns and ways of living. In the healthcare field, nurses and others have used ethnographic methods to uncover and record variations in how different social and cultural groups understand and enact health and illness. An example of this kind of study is an investigation of how older adults adjust to living in a nursing home environment (see Evidence-Based Nursing, October 1999, p136). When a researcher claims to have used ethnographic methods, we can assume that he or she has come to know a culture or group through immersion and engagement in fieldwork or participant observation and has also undertaken to portray that culture through text. 6 Ethnographic analysis uses an iterative process in which cultural ideas that arise during active involvement “in the field” are transformed, translated, or represented in a written document. It involves sifting and sorting through pieces of data to detect and interpret thematic categorisations, search for inconsistencies and contradictions, and generate conclusions about what is happening and why.

NARRATIVE ANALYSIS AND DISCOURSE ANALYSIS

Many qualitative nurse researchers have discovered the extent to which human experience is shaped, transformed, and understood through linguistic representation. The vague and subjective sensations that characterise cognitively unstructured life experiences take on meaning and order when we try to articulate them in communication. Putting experience into words, whether we do this verbally, in writing, or in thought, transforms the actual experience into a communicable representation of it. Thus, speech forms are not the experiences themselves, but a socially and culturally constructed device for creating shared understandings about them. Narrative analysis is a strategy that recognises the extent to which the stories we tell provide insights about our lived experiences. 7 For example, it was used as a strategy to learn more about the experiences of women who discover that they have a breast lump (see Evidence-Based Nursing, July 1999, p93). Through analytic processes that help us detect the main narrative themes within the accounts people give about their lives, we discover how they understand and make sense of their lives.

By contrast, discourse analysis recognises speech not as a direct representation of human experience, but as an explicit linguistic tool constructed and shaped by numerous social or ideological influences. Discourse analysis strategies draw heavily upon theories developed in such fields as sociolinguistics and cognitive psychology to try to understand what is represented by the various ways in which people communicate ideas. They capitalise on critical inquiry into the language that is used and the way that it is used to uncover the societal influences underlying our behaviours and thoughts. 8 Thus, although discourse analysis and narrative analysis both rely heavily on speech as the most relevant data form, their reasons for analysing speech differ. The table illustrates the distinctions among the analytic strategies described above using breast cancer research as an example.

General distinctions between selected qualitative research approaches: an illustration using breast cancer research

Cognitive processes inherent in qualitative analysis

The term “qualitative research” encompasses a wide range of philosophical positions, methodological strategies, and analytical procedures. Morse 1 has summarised the cognitive processes involved in qualitative research in a way that can help us to better understand how the researcher's cognitive processes interact with qualitative data to bring about findings and generate new knowledge. Morse believes that all qualitative analysis, regardless of the specific approach, involves:

- comprehending the phenomenon under study

- synthesising a portrait of the phenomenon that accounts for relations and linkages within its aspects

- theorising about how and why these relations appear as they do, and

- recontextualising, or putting the new knowledge about phenomena and relations back into the context of how others have articulated the evolving knowledge.

Although the form that each of these steps will take may vary according to such factors as the research question, the researcher's orientation to the inquiry, or the setting and context of the study, this set of steps helps to depict a series of intellectual processes by which data in their raw form are considered, examined, and reformulated to become a research product.

Quality measures in qualitative analysis

It used to be a tradition among qualitative nurse researchers to claim that such issues as reliability and validity were irrelevant to the qualitative enterprise. Instead, they might say that the proof of the quality of the work rested entirely on the reader's acceptance or rejection of the claims that were made. If the findings “rang true” to the intended audience, then the qualitative study was considered successful. More recently, nurse researchers have taken a lead among their colleagues in other disciplines in trying to work out more formally how the quality of a piece of qualitative research might be judged. Many of these researchers have concluded that systematic, rigorous, and auditable analytical processes are among the most significant factors distinguishing good from poor quality research. 9 Researchers are therefore encouraged to articulate their findings in such a manner that the logical processes by which they were developed are accessible to a critical reader, the relation between the actual data and the conclusions about data is explicit, and the claims made in relation to the data set are rendered credible and believable. Through this short description of analytical approaches, readers will be in a better position to critically evaluate individual qualitative studies, and decide whether and when to apply the findings of such studies to their nursing practice.

- Morse JM. “Emerging from the data”: the cognitive processes of analysis in qualitative inquiry. In: JM Morse, editor. Critical issues in qualitative research methods. Thousand Oaks, CA: Sage, 1994:23–43.

- Holloway I. Basic concepts for qualitative research. Oxford: Blackwell Science, 1997.

- Schwandt TA. Qualitative inquiry: a dictionary of terms. Thousand Oaks, CA: Sage, 1997.

- Glaser BG, Strauss AL. The discovery of grounded theory. Hawthorne, NY: Aldine, 1967.

- Ray MA. The richness of phenomenology: philosophic, theoretic, and methodologic concerns. In: JM Morse, editor. Critical issues in qualitative research methods. Thousand Oaks, CA: Sage, 1994:117–33.

- Boyle JS. Styles of ethnography. In: JM Morse, editor. Critical issues in qualitative research methods. Thousand Oaks, CA: Sage, 1994:159–85.

- Sandelowski M. We are the stories we tell: narrative knowing in nursing practice. J Holist Nurs 1994;12:23–33.

- Boutain DM. Critical language and discourse study: their transformative relevance for critical nursing inquiry. ANS Adv Nurs Sci 1999;21:1–8.

- Thorne S. The art (and science) of critiquing qualitative research. In: JM Morse, editor. Completing a qualitative project: details and dialogue. Thousand Oaks, CA: Sage, 1997:117–32.

14 Quantitative analysis: Descriptive statistics

Numeric data collected in a research project can be analysed quantitatively using statistical tools in two different ways. Descriptive analysis refers to statistically describing, aggregating, and presenting the constructs of interest or associations between these constructs. Inferential analysis refers to the statistical testing of hypotheses (theory testing). In this chapter, we will examine statistical techniques used for descriptive analysis, and the next chapter will examine statistical techniques for inferential analysis. Much of today’s quantitative data analysis is conducted using software programs such as SPSS or SAS. Readers are advised to familiarise themselves with one of these programs for understanding the concepts described in this chapter.

Data preparation

In research projects, data may be collected from a variety of sources: postal surveys, interviews, pretest or posttest experimental data, observational data, and so forth. These data must be converted into a machine-readable, numeric format, such as a spreadsheet or a text file, so that they can be analysed by computer programs like SPSS or SAS. Data preparation usually involves the following steps:

Data coding. Coding is the process of converting data into numeric format. A codebook should be created to guide the coding process. A codebook is a comprehensive document containing a detailed description of each variable in a research study, items or measures for that variable, the format of each item (numeric, text, etc.), the response scale for each item (i.e., whether it is measured on a nominal, ordinal, interval, or ratio scale, and whether this scale is a five-point, seven-point scale, etc.), and how to code each value into a numeric format. For instance, if we have a measurement item on a seven-point Likert scale with anchors ranging from ‘strongly disagree’ to ‘strongly agree’, we may code that item as 1 for strongly disagree, 4 for neutral, and 7 for strongly agree, with the intermediate anchors in between. Nominal data such as industry type can be coded in numeric form using a coding scheme such as: 1 for manufacturing, 2 for retailing, 3 for financial, 4 for healthcare, and so forth (of course, nominal data cannot be analysed statistically). Ratio scale data such as age, income, or test scores can be coded as entered by the respondent. Sometimes, data may need to be aggregated into a different form than the format used for data collection. For instance, if a survey measuring a construct such as ‘benefits of computers’ provided respondents with a checklist of benefits that they could select from, and respondents were encouraged to choose as many of those benefits as they wanted, then the total number of checked items could be used as an aggregate measure of benefits. Note that many other forms of data—such as interview transcripts—cannot be converted into a numeric format for statistical analysis. 
Codebooks are especially important for large complex studies involving many variables and measurement items, where the coding process is conducted by different people, to help the coding team code data in a consistent manner, and also to help others understand and interpret the coded data.
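
As a rough illustration of how a codebook can drive the coding step, the sketch below maps text responses to numeric codes and aggregates a checklist into a count. The dictionary layout, the wording of the intermediate Likert anchors, the function names, and the sample responses are all assumptions made for illustration; only the 1/4/7 anchors and the industry codes come from the description above.

```python
# Hypothetical codebook: each variable maps response labels to numeric codes.
CODEBOOK = {
    "agreement": {
        "strongly disagree": 1,
        "disagree": 2,             # intermediate anchor wordings are assumed
        "somewhat disagree": 3,
        "neutral": 4,
        "somewhat agree": 5,
        "agree": 6,
        "strongly agree": 7,
    },
    "industry": {"manufacturing": 1, "retailing": 2, "financial": 3, "healthcare": 4},
}


def code_response(variable, raw_value):
    """Return the numeric code for a raw response, or None if missing or unrecognised."""
    if raw_value is None:
        return None
    return CODEBOOK[variable].get(raw_value.strip().lower())


def count_checked(checklist):
    """Aggregate a checklist item into the number of boxes that were ticked."""
    return sum(1 for checked in checklist.values() if checked)


coded = [code_response("agreement", r) for r in ["Strongly agree", " neutral ", None]]
benefits = count_checked({"speed": True, "accuracy": False, "cost": True})
print(coded, benefits)
```

Keeping the mapping in one place, rather than scattered through the entry process, is what lets several coders produce consistent values.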

Data entry. Coded data can be entered into a spreadsheet, database, text file, or directly into a statistical program like SPSS. Most statistical programs provide a data editor for entering data. However, these programs store data in their own native format—e.g., SPSS stores data as .sav files—which makes it difficult to share that data with other statistical programs. Hence, it is often better to enter data into a spreadsheet or database where it can be reorganised as needed, shared across programs, and subsets of data can be extracted for analysis. Smaller data sets with less than 65,000 observations and 256 items can be stored in a spreadsheet created using a program such as Microsoft Excel, while larger datasets with millions of observations will require a database. Each observation can be entered as one row in the spreadsheet, and each measurement item can be represented as one column. Data should be checked for accuracy during and after entry via occasional spot checks on a set of items or observations. Furthermore, while entering data, the coder should watch out for obvious evidence of bad data, such as the respondent selecting the ‘strongly agree’ response to all items irrespective of content, including reverse-coded items. If so, such data can be entered but should be excluded from subsequent analysis.

Data transformation. Sometimes, it is necessary to transform data values before they can be meaningfully interpreted. For instance, reverse coded items—where items convey the opposite meaning of that of their underlying construct—should be reversed (e.g., in a 1-7 interval scale, 8 minus the observed value will reverse the value) before they can be compared or combined with items that are not reverse coded. Other kinds of transformations may include creating scale measures by adding individual scale items, creating a weighted index from a set of observed measures, and collapsing multiple values into fewer categories (e.g., collapsing incomes into income ranges).
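
The transformations described above can be sketched in a few lines. This is a minimal example, not a prescribed procedure: the item names, the choice of which item is reverse coded, and the income cut-offs are hypothetical.

```python
def reverse_code(value, scale_min=1, scale_max=7):
    """Reverse a score on a scale_min..scale_max scale (8 - value on a 1-7 scale)."""
    if not scale_min <= value <= scale_max:
        raise ValueError(f"{value} is outside the {scale_min}-{scale_max} scale")
    return scale_min + scale_max - value


def collapse_income(income):
    """Collapse an income into a range label (cut-offs are hypothetical)."""
    if income < 25_000:
        return "low"
    if income < 75_000:
        return "middle"
    return "high"


# One respondent's answers; item "q3" is assumed to be the reverse-coded item.
answers = {"q1": 6, "q2": 7, "q3": 2}
answers["q3"] = reverse_code(answers["q3"])
scale_score = sum(answers.values())  # scale measure created by adding the items
print(answers, scale_score, collapse_income(30_000))
```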

Univariate analysis

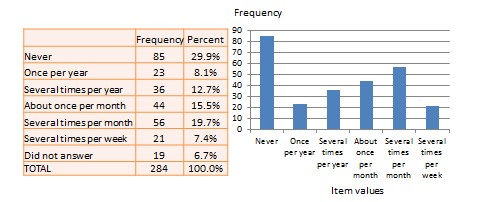

Univariate analysis—or analysis of a single variable—refers to a set of statistical techniques that can describe the general properties of one variable. Univariate statistics include: frequency distribution, central tendency, and dispersion. The frequency distribution of a variable is a summary of the frequency—or percentages—of individual values or ranges of values for that variable. For instance, we can measure how many times a sample of respondents attend religious services—as a gauge of their ‘religiosity’—using a categorical scale: never, once per year, several times per year, about once a month, several times per month, several times per week, and an optional category for ‘did not answer’. If we count the number or percentage of observations within each category—except ‘did not answer’ which is really a missing value rather than a category—and display it in the form of a table, as shown in Figure 14.1, what we have is a frequency distribution. This distribution can also be depicted in the form of a bar chart, as shown on the right panel of Figure 14.1, with the horizontal axis representing each category of that variable and the vertical axis representing the frequency or percentage of observations within each category.
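
A frequency distribution of this kind can be computed with a simple counter. The sketch below uses invented responses (they are not the data behind Figure 14.1) and treats `None` as 'did not answer', which is excluded as a missing value.

```python
from collections import Counter

CATEGORIES = [
    "never",
    "once per year",
    "several times per year",
    "about once a month",
    "several times per month",
    "several times per week",
]

# Hypothetical responses; None stands for 'did not answer'.
responses = [
    "never", "once per year", "never", None, "about once a month",
    "several times per week", "never", "once per year", None,
    "several times per year",
]


def frequency_distribution(values, categories):
    """Return (category, count, percent) rows, ignoring missing values."""
    valid = [v for v in values if v is not None]
    counts = Counter(valid)
    total = len(valid)
    return [
        (c, counts[c], 100 * counts[c] / total if total else 0.0)
        for c in categories
    ]


table = frequency_distribution(responses, CATEGORIES)
for category, count, percent in table:
    # The row of '#' characters is a crude text version of the bar chart.
    print(f"{category:<25}{count:>3} {percent:5.1f}%  {'#' * count}")
```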

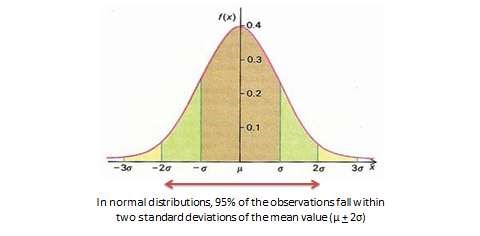

With very large samples, where observations are independent and random, the frequency distribution tends to follow a plot that looks like a bell-shaped curve—a smoothed bar chart of the frequency distribution—similar to that shown in Figure 14.2. Here most observations are clustered toward the centre of the range of values, with fewer and fewer observations clustered toward the extreme ends of the range. Such a curve is called a normal distribution .

Lastly, the mode is the most frequently occurring value in a distribution of values. In the previous example, the most frequently occurring value is 15, which is the mode of the above set of test scores. Note that any value that is estimated from a sample, such as the mean, median, mode, or any of the later estimates, is called a statistic.
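
The three measures of central tendency can be computed with Python's standard `statistics` module. The scores below are invented for illustration; they are chosen only so that the mode is 15, as in the text, and should not be read as the original example data.

```python
import statistics

# Hypothetical test scores (assumed values, chosen so that the mode is 15).
scores = [15, 22, 21, 18, 36, 15, 25, 15]

mean_score = statistics.mean(scores)      # arithmetic average
median_score = statistics.median(scores)  # middle value of the sorted scores
mode_score = statistics.mode(scores)      # most frequently occurring value

print(mean_score, median_score, mode_score)
```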

Bivariate analysis

Bivariate analysis examines how two variables are related to one another. The most common bivariate statistic is the bivariate correlation (often simply called ‘correlation’), which is a number between -1 and +1 denoting the strength of the relationship between two variables. Say that we wish to study how age is related to self-esteem in a sample of 20 respondents: as age increases, does self-esteem increase, decrease, or remain unchanged? If self-esteem increases, then we have a positive correlation between the two variables; if self-esteem decreases, then we have a negative correlation; and if it remains the same, we have a zero correlation. To calculate the value of this correlation, consider the hypothetical dataset shown in Table 14.1.
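
For readers who want to see the computation spelled out, here is a minimal sketch of the Pearson correlation coefficient, the usual form of bivariate correlation. The age and self-esteem values are invented for illustration and are not the values in Table 14.1; the function name is likewise an assumption.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation: covariance of x and y divided by the product of their spreads."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length, at least 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / sqrt(var_x * var_y)


# Hypothetical sample: age in years and a self-esteem score.
ages = [21, 25, 30, 36, 42, 50, 58, 63]
self_esteem = [3.1, 3.4, 3.3, 3.9, 4.0, 4.2, 4.1, 4.6]
r = pearson_r(ages, self_esteem)
print(round(r, 3))
```

A value of `r` near +1 or -1 indicates a strong positive or negative linear relationship, and a value near 0 indicates little or no linear relationship.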

After computing bivariate correlation, researchers are often interested in knowing whether the correlation is significant (i.e., a real one) or caused by mere chance. Answering such a question would require testing the following hypothesis:

Social Science Research: Principles, Methods and Practices (Revised edition) Copyright © 2019 by Anol Bhattacherjee is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.

Your Modern Business Guide To Data Analysis Methods And Techniques

Table of Contents

1) What Is Data Analysis?

2) Why Is Data Analysis Important?

3) What Is The Data Analysis Process?

4) Types Of Data Analysis Methods

5) Top Data Analysis Techniques To Apply

6) Quality Criteria For Data Analysis

7) Data Analysis Limitations & Barriers

8) Data Analysis Skills

9) Data Analysis In The Big Data Environment

In our data-rich age, understanding how to analyze and extract true meaning from our business’s digital insights is one of the primary drivers of success.

Despite the colossal volume of data we create every day, a mere 0.5% is actually analyzed and used for data discovery , improvement, and intelligence. While that may not seem like much, considering the amount of digital information we have at our fingertips, half a percent still accounts for a vast amount of data.

With so much data and so little time, knowing how to collect, curate, organize, and make sense of all of this potentially business-boosting information can be a minefield – but online data analysis is the solution.

In science, data analysis uses a more complex approach with advanced techniques to explore and experiment with data. On the other hand, in a business context, data is used to make data-driven decisions that will enable the company to improve its overall performance. In this post, we will cover the analysis of data from an organizational point of view while still going through the scientific and statistical foundations that are fundamental to understanding the basics of data analysis.

To put all of that into perspective, we will answer a host of important analytical questions, explore analytical methods and techniques, while demonstrating how to perform analysis in the real world with a 17-step blueprint for success.

What Is Data Analysis?

Data analysis is the process of collecting, modeling, and analyzing data using various statistical and logical methods and techniques. Businesses rely on analytics processes and tools to extract insights that support strategic and operational decision-making.

All these various methods are largely based on two core areas: quantitative and qualitative research.

Gaining a better understanding of different techniques and methods in quantitative research as well as qualitative insights will give your analyzing efforts a more clearly defined direction, so it’s worth taking the time to allow this particular knowledge to sink in. Additionally, you will be able to create a comprehensive analytical report that will skyrocket your analysis.

Apart from qualitative and quantitative categories, there are also other types of data that you should be aware of before diving into complex data analysis processes. These categories include:

- Big data: Refers to massive data sets that need to be analyzed using advanced software to reveal patterns and trends. It is considered to be one of the best analytical assets as it provides larger volumes of data at a faster rate.

- Metadata: Putting it simply, metadata is data that provides insights about other data. It summarizes key information about specific data that makes it easier to find and reuse for later purposes.

- Real time data: As its name suggests, real time data is presented as soon as it is acquired. From an organizational perspective, this is the most valuable data as it can help you make important decisions based on the latest developments. Our guide on real time analytics will tell you more about the topic.

- Machine data: This is more complex data that is generated solely by a machine such as phones, computers, or even websites and embedded systems, without previous human interaction.

Why Is Data Analysis Important?

Before we go into detail about the categories of analysis along with its methods and techniques, you must understand the potential that analyzing data can bring to your organization.

- Informed decision-making : From a management perspective, you can benefit from analyzing your data as it helps you make decisions based on facts and not simple intuition. For instance, you can understand where to invest your capital, detect growth opportunities, predict your income, or tackle uncommon situations before they become problems. Through this, you can extract relevant insights from all areas in your organization, and with the help of dashboard software , present the data in a professional and interactive way to different stakeholders.

- Reduce costs : Another great benefit is to reduce costs. With the help of advanced technologies such as predictive analytics, businesses can spot improvement opportunities, trends, and patterns in their data and plan their strategies accordingly. In time, this will help you avoid wasting money and resources on the wrong strategies. Beyond that, by predicting different scenarios such as sales and demand, you can also anticipate production and supply needs.

- Target customers better : Customers are arguably the most crucial element in any business. By using analytics to get a 360° vision of all aspects related to your customers, you can understand which channels they use to communicate with you, their demographics, interests, habits, purchasing behaviors, and more. In the long run, this will make your marketing strategies more successful, allow you to identify new potential customers, and help you avoid wasting resources on targeting the wrong people or sending the wrong message. You can also track customer satisfaction by analyzing your clients’ reviews or your customer service department’s performance.

What Is The Data Analysis Process?

When we analyze data, there is a sequence to follow in order to reach the needed conclusions. The analysis process consists of 5 key stages. We will cover each of them in more detail later in the post, but to provide the context needed to understand what is coming next, here is a rundown of the 5 essential steps of data analysis.

- Identify: Before you get your hands dirty with data, you first need to identify why you need it in the first place. The identification is the stage in which you establish the questions you will need to answer. For example, what is the customer's perception of our brand? Or what type of packaging is more engaging to our potential customers? Once the questions are outlined you are ready for the next step.

- Collect: As its name suggests, this is the stage where you start collecting the needed data. Here, you define which sources of data you will use and how you will use them. The collection of data can come in different forms such as internal or external sources, surveys, interviews, questionnaires, and focus groups, among others. An important note here is that the way you collect the data will be different in a quantitative and qualitative scenario.

- Clean: Once you have the necessary data, it is time to clean it and leave it ready for analysis. Not all the data you collect will be useful: when collecting large amounts of data in different formats, it is very likely that you will find yourself with duplicate or badly formatted data. To avoid this, before you start working with your data, make sure to remove any stray white spaces, duplicate records, and formatting errors. This way you avoid hurting your analysis with bad-quality data.

- Analyze : With the help of various techniques such as statistical analysis, regressions, neural networks, text analysis, and more, you can start analyzing and manipulating your data to extract relevant conclusions. At this stage, you find trends, correlations, variations, and patterns that can help you answer the questions you first thought of in the identify stage. Various technologies in the market assist researchers and average users with the management of their data. Some of them include business intelligence and visualization software, predictive analytics, and data mining, among others.

- Interpret: Last but not least you have one of the most important steps: it is time to interpret your results. This stage is where the researcher comes up with courses of action based on the findings. For example, here you would understand if your clients prefer packaging that is red or green, plastic or paper, etc. Additionally, at this stage, you can also find some limitations and work on them.
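
To make the cleaning stage above more concrete, here is a minimal sketch that removes stray white space, normalises one field, and drops duplicate records. The record layout, the `email` field, and the function name are hypothetical; real cleaning rules depend on the data you have collected.

```python
def clean_records(records):
    """Strip white space, lower-case the (hypothetical) email field, drop duplicates."""
    seen = set()
    cleaned = []
    for record in records:
        # Remove leading and trailing white space from every text field.
        tidy = {
            key: value.strip() if isinstance(value, str) else value
            for key, value in record.items()
        }
        tidy["email"] = tidy["email"].lower()  # fix inconsistent formatting
        fingerprint = tuple(sorted(tidy.items()))
        if fingerprint not in seen:            # keep only the first copy
            seen.add(fingerprint)
            cleaned.append(tidy)
    return cleaned


raw = [
    {"name": " Ana ", "email": "ANA@example.com"},
    {"name": "Ana", "email": "ana@example.com "},
    {"name": "Ben", "email": "ben@example.com"},
]
cleaned = clean_records(raw)
print(cleaned)
```

Note that the two "Ana" rows only become recognisable as duplicates after the white space and letter case have been normalised, which is why the formatting fixes come first.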

Now that you have a basic understanding of the key data analysis steps, let’s look at the top 17 essential methods.

17 Essential Types Of Data Analysis Methods

Before diving into the 17 essential types of methods, it is important that we quickly go over the main analysis categories. Moving from descriptive up to prescriptive analysis, the complexity and effort of data evaluation increase, but so does the added value for the company.

a) Descriptive analysis - What happened.

The descriptive analysis method is the starting point for any analytic reflection, and it aims to answer the question of what happened? It does this by ordering, manipulating, and interpreting raw data from various sources to turn it into valuable insights for your organization.

Performing descriptive analysis is essential, as it enables us to present our insights in a meaningful way. Although it is relevant to mention that this analysis on its own will not allow you to predict future outcomes or tell you the answer to questions like why something happened, it will leave your data organized and ready to conduct further investigations.

b) Exploratory analysis - How to explore data relationships.

As its name suggests, the main aim of the exploratory analysis is to explore. Prior to it, there is still no notion of the relationship between the data and the variables. Once the data is investigated, exploratory analysis helps you to find connections and generate hypotheses and solutions for specific problems. A typical area of application for it is data mining.

c) Diagnostic analysis - Why it happened.

Diagnostic data analytics empowers analysts and executives by helping them gain a firm contextual understanding of why something happened. If you know why something happened as well as how it happened, you will be able to pinpoint the exact ways of tackling the issue or challenge.

Designed to provide direct and actionable answers to specific questions, this is one of the most important methods in research, and it also supports key organizational functions such as retail analytics.

d) Predictive analysis - What will happen.

The predictive method allows you to look into the future to answer the question: what will happen? In order to do this, it uses the results of the previously mentioned descriptive, exploratory, and diagnostic analysis, in addition to machine learning (ML) and artificial intelligence (AI). Through this, you can uncover future trends, potential problems or inefficiencies, connections, and causalities in your data.

With predictive analysis, you can unfold and develop initiatives that will not only enhance your various operational processes but also help you gain an all-important edge over the competition. If you understand why a trend, pattern, or event happened through data, you will be able to develop an informed projection of how things may unfold in particular areas of the business.

e) Prescriptive analysis - How will it happen.

Prescriptive analysis is another of the most effective types of analysis methods in research. Prescriptive data techniques cross over from predictive analysis in that they revolve around using patterns or trends to develop responsive, practical business strategies.

By drilling down into prescriptive analysis, you will play an active role in the data consumption process by taking well-arranged sets of visual data and using them as a powerful fix to emerging issues in a number of key areas, including marketing, sales, customer experience, HR, fulfillment, finance, logistics analytics, and others.

As mentioned at the beginning of the post, data analysis methods can be divided into two big categories: quantitative and qualitative. Each of these categories holds a powerful analytical value that changes depending on the scenario and type of data you are working with. Below, we will discuss 17 methods that are divided into qualitative and quantitative approaches.

Without further ado, here are the 17 essential types of data analysis methods with some use cases in the business world:

A. Quantitative Methods

To put it simply, quantitative analysis refers to all methods that use numerical data or data that can be turned into numbers (e.g. category variables like gender, age, etc.) to extract valuable insights. It is used to draw conclusions about relationships and differences and to test hypotheses. Below we discuss some of the key quantitative methods.

1. Cluster analysis

Cluster analysis is the action of grouping a set of data elements so that the elements in one group are more similar (in a particular sense) to each other than to those in other groups, hence the term ‘cluster.’ Since there is no target variable when clustering, the method is often used to find hidden patterns in the data. The approach is also used to provide additional context to a trend or dataset.

Let's look at it from an organizational perspective. In a perfect world, marketers would be able to analyze each customer separately and give them the best-personalized service, but let's face it, with a large customer base, it is practically impossible to do that in a reasonable amount of time. That's where clustering comes in. By grouping customers into clusters based on demographics, purchasing behaviors, monetary value, or any other factor that might be relevant for your company, you will be able to immediately optimize your efforts and give your customers the best experience based on their needs.
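
One common way to form such groups is k-means clustering. The text does not name a specific algorithm, so the sketch below is only one possible choice. The customer features (orders per year, average basket value), the data points, and the naive initialisation from the first `k` points are all assumptions made for illustration.

```python
from math import dist


def k_means(points, k, iterations=20):
    """Plain k-means: assign each point to its nearest centroid, then recompute centroids."""
    centroids = [points[i] for i in range(k)]  # naive start: the first k points
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: dist(p, centroids[i]))
            clusters[nearest].append(p)
        new_centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster))
            if cluster else centroids[i]       # keep an empty cluster's old centroid
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:         # assignments have stabilised
            break
        centroids = new_centroids
    return centroids, clusters


# Hypothetical customers: (orders per year, average basket value).
customers = [(2, 20), (3, 25), (2, 22), (20, 200), (22, 210), (21, 190)]
centroids, clusters = k_means(customers, k=2)
print(centroids)
print(clusters)
```

In practice the features would usually be rescaled first so that one large-valued attribute does not dominate the distance calculation.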

2. Cohort analysis

This type of data analysis approach uses historical data to examine and compare a determined segment of users' behavior, which can then be grouped with others with similar characteristics. By using this methodology, it's possible to gain a wealth of insight into consumer needs or a firm understanding of a broader target group.

Cohort analysis can be really useful for performing analysis in marketing as it will allow you to understand the impact of your campaigns on specific groups of customers. To exemplify, imagine you send an email campaign encouraging customers to sign up for your site. For this, you create two versions of the campaign with different designs, CTAs, and ad content. Later on, you can use cohort analysis to track the performance of the campaign for a longer period of time and understand which type of content is driving your customers to sign up, repurchase, or engage in other ways.

A useful tool to start performing cohort analysis is Google Analytics. You can learn more about the benefits and limitations of using cohorts in GA in this useful guide. In the image below, you see an example of how you visualize a cohort in this tool. The segments (device traffic) are divided into date cohorts (usage of devices) and then analyzed week by week to extract insights into performance.
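
Outside of a tool like Google Analytics, the core of a cohort analysis is a retention table: users are grouped by when they signed up, and each group is tracked week by week. The sketch below shows the idea with an invented activity log; the tuple layout and function name are assumptions, not the format of any particular tool.

```python
from collections import defaultdict

# Hypothetical activity log: (user_id, signup_week, active_week).
events = [
    ("u1", 1, 1), ("u1", 1, 2), ("u1", 1, 3),
    ("u2", 1, 1), ("u2", 1, 2),
    ("u3", 2, 2), ("u3", 2, 3),
    ("u4", 2, 2),
]


def retention_table(events):
    """Return {signup_week: {weeks_since_signup: share of the cohort still active}}."""
    cohort_users = defaultdict(set)  # signup_week -> users in that cohort
    active = defaultdict(set)        # (signup_week, offset) -> users active then
    for user, signup_week, active_week in events:
        cohort_users[signup_week].add(user)
        active[(signup_week, active_week - signup_week)].add(user)
    table = {}
    for (cohort, offset), users in active.items():
        table.setdefault(cohort, {})[offset] = len(users) / len(cohort_users[cohort])
    return table


table = retention_table(events)
for cohort in sorted(table):
    print(cohort, dict(sorted(table[cohort].items())))
```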

3. Regression analysis

Regression uses historical data to understand how a dependent variable's value is affected when one (simple linear regression) or more (multiple regression) independent variables change or stay the same. By understanding each variable's relationship and how it developed in the past, you can anticipate possible outcomes and make better decisions in the future.

Let's break it down with an example. Imagine you did a regression analysis of your sales in 2019 and discovered that variables like product quality, store design, customer service, marketing campaigns, and sales channels affected the overall result. Now you want to use regression to analyze which of these variables changed or if any new ones appeared during 2020. For example, you couldn’t sell as much in your physical store due to COVID lockdowns. Therefore, your sales could’ve either dropped in general or increased in your online channels. Through this, you can understand which independent variables affected the overall performance of your dependent variable, annual sales.
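
For the simplest case, one independent variable, the regression line can be fitted with ordinary least squares in a few lines. This is a minimal sketch: the marketing-spend and sales figures are invented, and a real analysis with several independent variables would normally rely on a statistics library.

```python
def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length, at least 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    if sxx == 0:
        raise ValueError("x must vary for a slope to be defined")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: marketing spend (independent) and sales (dependent), same units.
marketing_spend = [1, 2, 3, 4, 5]
sales = [12, 15, 21, 24, 28]
slope, intercept = fit_line(marketing_spend, sales)
forecast = intercept + slope * 6  # anticipated sales at a spend of 6
print(slope, intercept, forecast)
```

The slope estimates how much the dependent variable changes for each one-unit change in the independent variable.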

If you want to go deeper into this type of analysis, check out this article and learn more about how you can benefit from regression.

4. Neural networks

The neural network forms the basis for the intelligent algorithms of machine learning. It is a form of analytics that attempts, with minimal intervention, to understand how the human brain would generate insights and predict values. Neural networks learn from each and every data transaction, meaning that they evolve and advance over time.

A typical area of application for neural networks is predictive analytics. There are BI reporting tools that have this feature implemented within them, such as the Predictive Analytics Tool from datapine. This tool enables users to quickly and easily generate all kinds of predictions. All you have to do is select the data to be processed based on your KPIs, and the software automatically calculates forecasts based on historical and current data. Thanks to its user-friendly interface, anyone in your organization can manage it; there’s no need to be an advanced scientist.

Here is an example of how you can use the predictive analysis tool from datapine:

5. Factor analysis

Factor analysis, also called “dimension reduction”, is a type of data analysis used to describe variability among observed, correlated variables in terms of a potentially lower number of unobserved variables called factors. The aim here is to uncover independent latent variables, which makes it an ideal method for streamlining specific segments.

A good way to understand this data analysis method is a customer evaluation of a product. The initial assessment is based on different variables like color, shape, wearability, current trends, materials, comfort, the place where they bought the product, and frequency of usage. Like this, the list can be endless, depending on what you want to track. In this case, factor analysis comes into the picture by summarizing all of these variables into homogeneous groups, for example, by grouping the variables color, materials, quality, and trends into a broader latent variable of design.

If you want to start analyzing data using factor analysis we recommend you take a look at this practical guide from UCLA.

6. Data mining

A method of data analysis that is the umbrella term for engineering metrics and insights for additional value, direction, and context. By using exploratory statistical evaluation, data mining aims to identify dependencies, relations, patterns, and trends to generate advanced knowledge. When considering how to analyze data, adopting a data mining mindset is essential to success - as such, it’s an area that is worth exploring in greater detail.

An excellent use case of data mining is datapine intelligent data alerts . With the help of artificial intelligence and machine learning, they provide automated signals based on particular commands or occurrences within a dataset. For example, if you’re monitoring supply chain KPIs , you could set an intelligent alarm to trigger when invalid or low-quality data appears. By doing so, you will be able to drill down deep into the issue and fix it swiftly and effectively.

In the following picture, you can see how the intelligent alarms from datapine work. By setting up ranges on daily orders, sessions, and revenues, the alarms will notify you if the goal was not completed or if it exceeded expectations.

7. Time series analysis

As its name suggests, time series analysis is used to analyze a set of data points collected over a specified period of time. Although analysts use this method to monitor the data points in a specific interval of time rather than just monitoring them intermittently, time series analysis is not used solely for the purpose of collecting data over time. Instead, it allows researchers to understand whether variables changed during the study, how the different variables depend on one another, and how they reached the end result.

In a business context, this method is used to understand the causes of different trends and patterns to extract valuable insights. Another way of using this method is with the help of time series forecasting. Powered by predictive technologies, businesses can analyze various data sets over a period of time and forecast different future events.

A great use case to put time series analysis into perspective is seasonality effects on sales. By using time series forecasting to analyze sales data of a specific product over time, you can understand if sales rise over a specific period of time (e.g. swimwear during summertime, or candy during Halloween). These insights allow you to predict demand and prepare production accordingly.
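
A simple way to expose a seasonality effect like this is a seasonal index: the average for each calendar month divided by the overall average. The sketch below uses two years of invented monthly swimwear sales; the figures and the function name are assumptions, and this is a descriptive calculation rather than a full forecasting model.

```python
from collections import defaultdict


def seasonal_index(observations):
    """observations: (month, value) pairs. Returns {month: month mean / overall mean}."""
    by_month = defaultdict(list)
    for month, value in observations:
        by_month[month].append(value)
    overall = sum(value for _, value in observations) / len(observations)
    return {
        month: (sum(values) / len(values)) / overall
        for month, values in sorted(by_month.items())
    }


# Hypothetical monthly swimwear sales for two consecutive years (January first).
year1 = [10, 10, 20, 30, 50, 80, 90, 80, 40, 20, 10, 10]
year2 = [12, 11, 22, 33, 55, 85, 99, 88, 42, 21, 12, 10]
observations = [
    (month, value)
    for year in (year1, year2)
    for month, value in enumerate(year, start=1)
]

index = seasonal_index(observations)
peak_month = max(index, key=index.get)
print(peak_month, round(index[peak_month], 2))
```

An index above 1 marks a month that sells better than average, which is the signal you would use to plan production ahead of the peak.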

8. Decision Trees

The decision tree analysis aims to act as a support tool to make smart and strategic decisions. By visually displaying potential outcomes, consequences, and costs in a tree-like model, researchers and company users can easily evaluate all factors involved and choose the best course of action. Decision trees are helpful to analyze quantitative data and they allow for an improved decision-making process by helping you spot improvement opportunities, reduce costs, and enhance operational efficiency and production.

But how does a decision tree actually work? This method works like a flowchart that starts with the main decision that you need to make and branches out based on the different outcomes and consequences of each decision. Each outcome will outline its own consequences, costs, and gains and, at the end of the analysis, you can compare each of them and make the smartest decision.

Businesses can use them to understand which project is more cost-effective and will bring more earnings in the long run. For example, imagine you need to decide if you want to update your software app or build a new app entirely. Here you would compare the total costs, the time needed to be invested, potential revenue, and any other factor that might affect your decision. In the end, you would be able to see which of these two options is more realistic and attainable for your company or research.
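The app example can be sketched as a tiny decision tree in Python. All figures are hypothetical, and attaching a probability to each outcome is an assumption of this sketch rather than something the example above specifies. Each option is a branch with an upfront cost and a set of possible outcomes, and branches are compared by expected net value.

```python
# Hypothetical figures: each option has an upfront cost and possible outcomes
# given as (probability, revenue). None of these numbers come from real data.
options = {
    "update existing app": {
        "cost": 50_000,
        "outcomes": [(0.7, 120_000), (0.3, 60_000)],
    },
    "build new app": {
        "cost": 200_000,
        "outcomes": [(0.4, 500_000), (0.6, 100_000)],
    },
}

def expected_net_value(option):
    """Probability-weighted revenue of a branch minus its upfront cost."""
    probabilities = [p for p, _ in option["outcomes"]]
    if abs(sum(probabilities) - 1.0) > 1e-9:
        raise ValueError("outcome probabilities must sum to 1")
    expected_revenue = sum(p * revenue for p, revenue in option["outcomes"])
    return expected_revenue - option["cost"]

results = {name: expected_net_value(option) for name, option in options.items()}
best = max(results, key=results.get)

for name, value in results.items():
    print(f"{name}: expected net value {value:,.0f}")
print("Best option under these assumptions:", best)
```

Expected value is only one possible decision rule. A team with limited cash might still prefer the cheaper branch, which is why the time and risk factors mentioned above belong in the comparison too.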

9. Conjoint Analysis

Last but not least, we have the conjoint analysis. This approach is usually used in surveys to understand how individuals value different attributes of a product or service and it is one of the most effective methods to extract consumer preferences. When it comes to purchasing, some clients might be more price-focused, others more features-focused, and others might have a sustainable focus. Whatever your customer's preferences are, you can find them with conjoint analysis. Through this, companies can define pricing strategies, packaging options, subscription packages, and more.

A great example of conjoint analysis is in marketing and sales. For instance, a cupcake brand might use conjoint analysis and find that its clients prefer gluten-free options and cupcakes with healthier toppings over super sugary ones. Thus, the cupcake brand can turn these insights into advertisements and promotions to increase sales of this particular type of product. And not just that, conjoint analysis can also help businesses segment their customers based on their interests. This allows them to send different messaging that will bring value to each of the segments.
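To make the cupcake example concrete, here is a minimal sketch of how preferences could be estimated from survey ratings. It assumes a balanced design in which respondents rate every combination of attribute levels, so that a level's "part-worth" can be approximated as its average rating minus the overall average. The ratings and the function names `part_worths` and `importance` are hypothetical, and real conjoint studies usually rely on regression or dedicated software.

```python
from statistics import mean

# Hypothetical average ratings (1-10) for every combination of two attributes.
profiles = [
    ({"base": "regular", "topping": "sugary"}, 3),
    ({"base": "regular", "topping": "healthy"}, 5),
    ({"base": "gluten-free", "topping": "sugary"}, 7),
    ({"base": "gluten-free", "topping": "healthy"}, 9),
]

def part_worths(rated_profiles):
    """Part-worth of a level = mean rating of profiles with it - overall mean."""
    overall = mean(rating for _, rating in rated_profiles)
    worths = {}
    for attribute in rated_profiles[0][0]:
        levels = {profile[attribute] for profile, _ in rated_profiles}
        worths[attribute] = {
            level: mean(r for p, r in rated_profiles if p[attribute] == level) - overall
            for level in levels
        }
    return worths

def importance(worths):
    """Relative importance = an attribute's part-worth range / sum of all ranges."""
    ranges = {a: max(lv.values()) - min(lv.values()) for a, lv in worths.items()}
    total = sum(ranges.values())
    return {a: r / total for a, r in ranges.items()}

worths = part_worths(profiles)
weights = importance(worths)
print(worths)
print(weights)
```

With these invented ratings, the base matters more to respondents than the topping, which is the kind of result a brand could use when deciding what to promote.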

10. Correspondence Analysis

Also known as reciprocal averaging, correspondence analysis is a method used to analyze the relationship between categorical variables presented within a contingency table. A contingency table is a table that displays two (simple correspondence analysis) or more (multiple correspondence analysis) categorical variables across rows and columns that show the distribution of the data, which is usually answers to a survey or questionnaire on a specific topic.

This method starts by calculating an “expected value” for each table cell, which is done by multiplying the cell’s row total by its column total and dividing the result by the grand total of the table. The “expected value” is then subtracted from the original value, resulting in a “residual number”, which is what allows you to draw conclusions about relationships and distribution. The results of this analysis are later displayed using a map that represents the relationship between the different values. The closer two values are on the map, the stronger the relationship. Let’s put it into perspective with an example.

Imagine you are carrying out a market research analysis about outdoor clothing brands and how they are perceived by the public. For this analysis, you ask a group of people to match each brand with a certain attribute which can be durability, innovation, quality materials, etc. When calculating the residual numbers, you can see that brand A has a positive residual for innovation but a negative one for durability. This means that brand A is not positioned as a durable brand in the market, something that competitors could take advantage of.
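The residual calculation for this brand example can be sketched as follows. The survey counts are invented, and the function name `residuals` is hypothetical. The sketch uses the standard expected count under independence (row total times column total, divided by the grand total) and stops at the raw residuals. A full correspondence analysis would go on to standardize them and derive the map coordinates.

```python
# Hypothetical survey counts: how many respondents linked each brand
# to each attribute. The numbers are invented for illustration.
attributes = ["durability", "innovation", "quality materials"]
observed = {
    "Brand A": [10, 40, 25],
    "Brand B": [35, 15, 25],
    "Brand C": [30, 20, 25],
}

def residuals(table):
    """Return observed minus expected counts for every cell of the table."""
    row_totals = {brand: sum(counts) for brand, counts in table.items()}
    col_totals = [sum(column) for column in zip(*table.values())]
    grand_total = sum(row_totals.values())
    result = {}
    for brand, counts in table.items():
        # Expected count if brand and attribute were independent.
        expected = [row_totals[brand] * c / grand_total for c in col_totals]
        result[brand] = [o - e for o, e in zip(counts, expected)]
    return result

res = residuals(observed)
for brand, values in res.items():
    print(brand, dict(zip(attributes, values)))
```

A positive residual means the brand is associated with the attribute more often than independence would predict, and a negative residual means less often.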

11. Multidimensional Scaling (MDS)

MDS is a method used to observe the similarities or disparities between objects, which can be colors, brands, people, geographical coordinates, and more. The objects are plotted using an “MDS map” that positions similar objects together and disparate ones far apart. The (dis)similarities between objects are represented using one or more dimensions that can be observed using a numerical scale. For example, if you want to know how people feel about the COVID-19 vaccine, you can use 1 for “don’t believe in the vaccine at all” and 10 for “firmly believe in the vaccine” and a scale of 2 to 9 for in-between responses. When analyzing an MDS map, the only thing that matters is the distance between the objects. The orientation of the dimensions is arbitrary and has no meaning at all.

Multidimensional scaling is a valuable technique for market research, especially when it comes to evaluating product or brand positioning. For instance, if a cupcake brand wants to know how they are positioned compared to competitors, it can define 2-3 dimensions such as taste, ingredients, shopping experience, or more, and do a multidimensional scaling analysis to find improvement opportunities as well as areas in which competitors are currently leading.

Another business example is in procurement when deciding on different suppliers. Decision makers can generate an MDS map to see how the different prices, delivery times, technical services, and more of the different suppliers differ and pick the one that suits their needs the best.

A final example comes from a research paper titled "An Improved Study of Multilevel Semantic Network Visualization for Analyzing Sentiment Word of Movie Review Data". The researchers picked a two-dimensional MDS map to display the distances and relationships between different sentiments in movie reviews. They used 36 sentiment words and distributed them based on their emotional distance, as we can see in the image below, where the words "outraged" and "sweet" are on opposite sides of the map, marking the distance between the two emotions very clearly.

Aside from being a valuable technique to analyze dissimilarities, MDS also serves as a dimension-reduction technique for high-dimensional data.
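For readers who want to see how an MDS map is produced, here is a minimal sketch of classical (metric) MDS using only the Python standard library. It is one variant of MDS among several, and the dissimilarity scores between the four brands are hypothetical. The function `classical_mds` double-centers the squared distances and extracts the leading eigenvectors with power iteration, a simple stand-in for the linear algebra routines a statistics package would use.

```python
import math

def classical_mds(dist, dims=2, iterations=500):
    """Embed objects in `dims` dimensions from a symmetric distance matrix."""
    n = len(dist)
    # Double-center the squared distances to obtain the matrix B.
    sq = [[d * d for d in row] for row in dist]
    row_mean = [sum(row) / n for row in sq]
    grand_mean = sum(row_mean) / n
    b = [[-0.5 * (sq[i][j] - row_mean[i] - row_mean[j] + grand_mean)
          for j in range(n)] for i in range(n)]

    def matvec(v):
        return [sum(b[i][j] * v[j] for j in range(n)) for i in range(n)]

    coords = [[0.0] * dims for _ in range(n)]
    for k in range(dims):
        # Power iteration for the largest remaining eigenpair of B.
        v = [math.sin(i + 1) for i in range(n)]  # arbitrary deterministic start
        start_norm = math.sqrt(sum(x * x for x in v))
        v = [x / start_norm for x in v]
        for _ in range(iterations):
            w = matvec(v)
            norm = math.sqrt(sum(x * x for x in w))
            if norm < 1e-12:
                break
            v = [x / norm for x in w]
        eigenvalue = sum(x * y for x, y in zip(v, matvec(v)))
        scale = math.sqrt(max(eigenvalue, 0.0))
        for i in range(n):
            coords[i][k] = scale * v[i]
        # Deflate B so the next pass finds the next eigenpair.
        for i in range(n):
            for j in range(n):
                b[i][j] -= eigenvalue * v[i] * v[j]
    return coords

# Hypothetical dissimilarity scores between four brands (0 = identical).
brands = ["A", "B", "C", "D"]
dissimilarity = [
    [0, 4, 5, 3],
    [4, 0, 3, 5],
    [5, 3, 0, 4],
    [3, 5, 4, 0],
]

coords = classical_mds(dissimilarity)
for brand, (x, y) in zip(brands, coords):
    print(f"{brand}: ({x:.2f}, {y:.2f})")
```

Only the distances between the plotted points carry meaning. Rotating or mirroring the resulting map would represent the same dissimilarities equally well.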

B. Qualitative Methods

Qualitative data analysis methods are defined as the observation of non-numerical data that is gathered and produced using methods of observation such as interviews, focus groups, questionnaires, and more. As opposed to quantitative methods, qualitative data is more subjective and highly valuable in analyzing customer retention and product development.

12. Text Analysis

Text analysis, also known in the industry as text mining, works by taking large sets of textual data and arranging them in a way that makes them easier to manage. By working through this cleansing process in stringent detail, you will be able to extract the data that is truly relevant to your organization and use it to develop actionable insights that will propel you forward.

Modern software accelerates the application of text analytics. Thanks to the combination of machine learning and intelligent algorithms, you can perform advanced analytical processes such as sentiment analysis. This technique allows you to understand the intentions and emotions of a text, for example, whether it is positive, negative, or neutral, and then give it a score depending on certain factors and categories that are relevant to your brand. Sentiment analysis is often used to monitor brand and product reputation and to understand how successful your customer experience is.
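As a rough illustration of sentiment scoring, the sketch below uses a tiny hand-made word list instead of the machine learning models that modern tools rely on. The word lists and the function name `sentiment` are hypothetical, and a lexicon this small would miss negation, sarcasm, and most real vocabulary.

```python
import re

# Hypothetical mini-lexicons; real tools use far larger lists or trained models.
POSITIVE = {"great", "love", "fast", "friendly", "excellent"}
NEGATIVE = {"slow", "broken", "rude", "terrible", "late"}

def sentiment(text):
    """Return (score, label): positive word count minus negative word count."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        label = "positive"
    elif score < 0:
        label = "negative"
    else:
        label = "neutral"
    return score, label

for review in [
    "Great product and fast delivery",
    "The courier was rude and late",
    "It arrived on Tuesday",
]:
    print(review, "->", sentiment(review))
```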

By analyzing data from various word-based sources, including product reviews, articles, social media communications, and survey responses, you will gain invaluable insights into your audience, as well as their needs, preferences, and pain points. This will allow you to create campaigns, services, and communications that meet your prospects’ needs on a personal level, growing your audience while boosting customer retention. There are various other “sub-methods” that are an extension of text analysis. Each of them serves a more specific purpose and we will look at them in detail next.

13. Content Analysis

This is a straightforward and very popular method that examines the presence and frequency of certain words, concepts, and subjects in different content formats such as text, image, audio, or video. For example, it can count the number of times the name of a celebrity is mentioned on social media or in online tabloids. It does this by coding text data that is later categorized and tabulated in a way that can provide valuable insights, making it the perfect mix of quantitative and qualitative analysis.

There are two types of content analysis. The first one is the conceptual analysis which focuses on explicit data, for instance, the number of times a concept or word is mentioned in a piece of content. The second one is relational analysis, which focuses on the relationship between different concepts or words and how they are connected within a specific context.
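Both types can be sketched with the Python standard library. The reviews and the list of concepts are hypothetical. Conceptual analysis is approximated here by counting how often each concept appears, and relational analysis by counting how often two concepts appear in the same review, which is only one simple way to measure a relationship.

```python
import re
from collections import Counter
from itertools import combinations

# Hypothetical customer reviews and concepts of interest.
reviews = [
    "The delivery was fast and the price was fair.",
    "Great price, but the delivery was slow.",
    "Fast delivery and friendly support.",
]
concepts = {"delivery", "price", "support", "fast", "slow"}

def tokenize(text):
    """Lowercase the text and split it into words."""
    return re.findall(r"[a-z']+", text.lower())

concept_counts = Counter()  # conceptual analysis: frequency of each concept
pair_counts = Counter()     # relational analysis: co-occurrence within a review

for review in reviews:
    found = [token for token in tokenize(review) if token in concepts]
    concept_counts.update(found)
    for pair in combinations(sorted(set(found)), 2):
        pair_counts[pair] += 1

print(concept_counts.most_common())
print(pair_counts.most_common(3))
```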

Content analysis is often used by marketers to measure brand reputation and customer behavior, for example, by analyzing customer reviews. It can also be used to analyze customer interviews and find directions for new product development. It is also important to note that, in order to extract the maximum potential out of this analysis method, it is necessary to have a clearly defined research question.

14. Thematic Analysis

Very similar to content analysis, thematic analysis also helps in identifying and interpreting patterns in qualitative data, with the main difference being that content analysis can also be applied to quantitative analysis. The thematic method analyzes large pieces of text data such as focus group transcripts or interviews and groups them into themes or categories that come up frequently within the text. It is a great method when trying to figure out people’s views and opinions about a certain topic. For example, if you are a brand that cares about sustainability, you can do a survey of your customers to analyze their views and opinions about sustainability and how they apply it to their lives. You can also analyze customer service call transcripts to find common issues and improve your service.

Thematic analysis is a very subjective technique that relies on the researcher’s judgment. Therefore, to avoid biases, it has 6 steps that include familiarization, coding, generating themes, reviewing themes, defining and naming themes, and writing up. It is also important to note that, because it is a flexible approach, the data can be interpreted in multiple ways and it can be hard to select what data is more important to emphasize.

15. Narrative Analysis

A bit more complex in nature than the two previous ones, narrative analysis is used to explore the meaning behind the stories that people tell and most importantly, how they tell them. By looking into the words that people use to describe a situation you can extract valuable conclusions about their perspective on a specific topic. Common sources for narrative data include autobiographies, family stories, opinion pieces, and testimonials, among others.

From a business perspective, narrative analysis can be useful to analyze customer behaviors and feelings towards a specific product, service, feature, or others. It provides unique and deep insights that can be extremely valuable. However, it has some drawbacks.

The biggest weakness of this method is that the sample sizes are usually very small due to the complexity and time-consuming nature of the collection of narrative data. Plus, the way a subject tells a story will be significantly influenced by his or her specific experiences, making it very hard to replicate in a subsequent study.

16. Discourse Analysis

Discourse analysis is used to understand the meaning behind any type of written, verbal, or symbolic discourse based on its political, social, or cultural context. It mixes the analysis of languages and situations together. This means that the way the content is constructed and the meaning behind it are significantly influenced by the culture and society it takes place in. For example, if you are analyzing political speeches you need to consider different context elements such as the politician's background, the current political context of the country, the audience to which the speech is directed, and so on.

From a business point of view, discourse analysis is a great market research tool. It allows marketers to understand how the norms and ideas of the specific market work and how their customers relate to those ideas. It can be very useful to build a brand mission or develop a unique tone of voice.

17. Grounded Theory Analysis

Traditionally, researchers decide on a method and hypothesis and start to collect the data to prove that hypothesis. The grounded theory is the only method that doesn’t require an initial research question or hypothesis as its value lies in the generation of new theories. With the grounded theory method, you can go into the analysis process with an open mind and explore the data to generate new theories through tests and revisions. In fact, it is not necessary to collect the data and then start to analyze it. Researchers usually start to find valuable insights as they are gathering the data.

All of these elements make grounded theory a very valuable method as theories are fully backed by data instead of initial assumptions. It is a great technique to analyze poorly researched topics or find the causes behind specific company outcomes. For example, product managers and marketers might use the grounded theory to find the causes of high levels of customer churn and look into customer surveys and reviews to develop new theories about the causes.

How To Analyze Data? Top 17 Data Analysis Techniques To Apply

Now that we’ve answered the question “what is data analysis?”, explained why it is important, and covered the different data analysis types, it’s time to dig deeper into how to perform your analysis by working through these 17 essential techniques.

1. Collaborate your needs