How to Write a Peer Review

When you write a peer review for a manuscript, what should you include in your comments? What should you leave out? And how should the review be formatted?

This guide provides quick tips for writing and organizing your reviewer report.

Review Outline

Use an outline for your reviewer report so it’s easy for the editors and author to follow. This will also help you keep your comments organized.

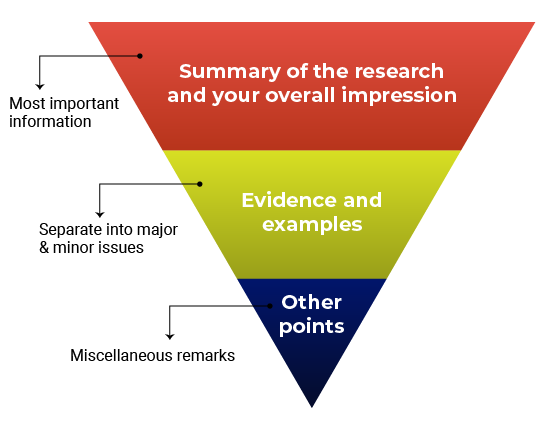

Think about structuring your review like an inverted pyramid. Put the most important information at the top, followed by details and examples in the center, and any additional points at the very bottom.

Here’s how your outline might look:

1. Summary of the research and your overall impression

In your own words, summarize what the manuscript claims to report. This shows the editor how you interpreted the manuscript and will highlight any major differences in perspective between you and the other reviewers. Give an overview of the manuscript’s strengths and weaknesses. Think about this as your “take-home” message for the editors. End this section with your recommended course of action.

2. Discussion of specific areas for improvement

It’s helpful to divide this section into two parts: one for major issues and one for minor issues. Within each section, you can talk about the biggest issues first or go systematically figure-by-figure or claim-by-claim. Number each item so that your points are easy to follow (this will also make it easier for the authors to respond to each point). Refer to specific lines, pages, sections, or figure and table numbers so the authors (and editors) know exactly what you’re talking about.

Major vs. minor issues

What’s the difference between a major and minor issue? Major issues should consist of the essential points the authors need to address before the manuscript can proceed. Make sure you focus on what is fundamental for the current study. In other words, it’s not helpful to recommend additional work that would be considered the “next step” in the study. Minor issues are still important but typically will not affect the overall conclusions of the manuscript. Here are some examples of what might go in the “minor” category:

- Missing references (but depending on what is missing, this could also be a major issue)

- Technical clarifications (e.g., the authors should clarify how a reagent works)

- Data presentation (e.g., the authors should present p-values differently)

- Typos, spelling, grammar, and phrasing issues

3. Any other points

Confidential comments for the editors.

Some journals have a space for reviewers to enter confidential comments about the manuscript. Use this space to mention concerns about the submission that you’d want the editors to consider before sharing your feedback with the authors, such as concerns about ethical guidelines or language quality. Any serious issues should be raised directly and immediately with the journal as well.

This section is also where you will disclose any potentially competing interests, and mention whether you’re willing to look at a revised version of the manuscript.

Do not use this space to critique the manuscript, since comments entered here will not be passed along to the authors. If you’re not sure what should go in the confidential comments, read the reviewer instructions or check with the journal first before submitting your review. If you are reviewing for a journal that does not offer a space for confidential comments, consider writing to the editorial office directly with your concerns.

Giving Feedback

Giving feedback is hard. Giving effective feedback can be even more challenging. Remember that your ultimate goal is to discuss what the authors would need to do in order to qualify for publication. The point is not to nitpick every piece of the manuscript. Your focus should be on providing constructive and critical feedback that the authors can use to improve their study.

If you’ve ever had your own work reviewed, you already know that it’s not always easy to receive feedback. Follow the golden rule: Write the type of review you’d want to receive if you were the author. Even if you decide not to identify yourself in the review, you should write comments that you would be comfortable signing your name to.

In your comments, use phrases like “the authors’ discussion of X” instead of “your discussion of X.” This will depersonalize the feedback and keep the focus on the manuscript instead of the authors.

General guidelines for effective feedback

- Justify your recommendation with concrete evidence and specific examples.

- Be specific so the authors know what they need to do to improve.

- Be thorough. This might be the only time you read the manuscript.

- Be professional and respectful. The authors will be reading these comments too.

- Remember to say what you liked about the manuscript!

Don’t

- Recommend additional experiments or unnecessary elements that are out of scope for the study or for the journal criteria.

- Tell the authors exactly how to revise their manuscript—you don’t need to do their work for them.

- Use the review to promote your own research or hypotheses.

- Focus on typos and grammar. If the manuscript needs significant editing for language and writing quality, just mention this in your comments.

- Submit your review without proofreading it and checking everything one more time.

Before and After: Sample Reviewer Comments

Keeping in mind the guidelines above, how do you put your thoughts into words? Here are some sample “before” and “after” reviewer comments:

✗ Before

“The authors appear to have no idea what they are talking about. I don’t think they have read any of the literature on this topic.”

✓ After

“The study fails to address how the findings relate to previous research in this area. The authors should rewrite their Introduction and Discussion to reference the related literature, especially recently published work such as Darwin et al.”

✗ Before

“The writing is so bad, it is practically unreadable. I could barely bring myself to finish it.”

✓ After

“While the study appears to be sound, the language is unclear, making it difficult to follow. I advise the authors work with a writing coach or copyeditor to improve the flow and readability of the text.”

✗ Before

“It’s obvious that this type of experiment should have been included. I have no idea why the authors didn’t use it. This is a big mistake.”

✓ After

“The authors are off to a good start, however, this study requires additional experiments, particularly [type of experiment]. Alternatively, the authors should include more information that clarifies and justifies their choice of methods.”

Suggested Language for Tricky Situations

You might find yourself in a situation where you’re not sure how to explain the problem or provide feedback in a constructive and respectful way. Here is some suggested language for common issues you might experience.

What you think: The manuscript is fatally flawed. What you could say: “The study does not appear to be sound” or “the authors have missed something crucial”.

What you think: You don’t completely understand the manuscript. What you could say: “The authors should clarify the following sections to avoid confusion…”

What you think: The technical details don’t make sense. What you could say: “The technical details should be expanded and clarified to ensure that readers understand exactly what the researchers studied.”

What you think: The writing is terrible. What you could say: “The authors should revise the language to improve readability.”

What you think: The authors have over-interpreted the findings. What you could say: “The authors aim to demonstrate [XYZ], however, the data does not fully support this conclusion. Specifically…”

What does a good review look like?

Check out the peer review examples at F1000 Research to see how other reviewers write up their reports and give constructive feedback to authors.

Time to Submit the Review!

Be sure you turn in your report on time. Need an extension? Tell the journal so that they know what to expect. If you need a lot of extra time, the journal might need to contact other reviewers or notify the author about the delay.

Tip: Building a relationship with an editor

You’ll be more likely to be asked to review again if you provide high-quality feedback and if you turn in the review on time. Especially if it’s your first review for a journal, it’s important to show that you are reliable. Prove yourself once and you’ll get asked to review again!

Peer review templates, expert examples and free training courses

Joanna Wilkinson

Learning how to write a constructive peer review is an essential step in helping to safeguard the quality and integrity of published literature. Read on for resources that will get you on the right track, including peer review templates, example reports and the Web of Science™ Academy: our free, online course that teaches you the core competencies of peer review through practical experience (try it today).

How to write a peer review

Understanding the principles, forms and functions of peer review will enable you to write solid, actionable review reports. It will form the basis for a comprehensive and well-structured review, and help you comment on the quality, rigor and significance of the research paper. It will also help you identify potential breaches of normal ethical practice.

This may sound daunting but it doesn’t need to be. There are plenty of peer review templates, resources and experts out there to help you, including:

- Peer review training courses and in-person workshops

- Peer review templates (found in our Web of Science Academy)

- Expert examples of peer review reports

- Co-reviewing (sharing the task of peer reviewing with a senior researcher)

- Other peer review resources, blogs, and guidelines

We’ll go through each one of these in turn below, but first: a quick word on why learning peer review is so important.

Why learn to peer review?

Peer reviewers and editors are gatekeepers of the research literature used to document and communicate human discovery. Reviewers, therefore, need a sound understanding of their role and obligations to ensure the integrity of this process. This understanding also helps them maintain research quality and protect the public from flawed and misleading research findings.

Learning to peer review is also an important step in improving your own professional development.

By learning to review, you’ll become a better writer and a more successful published author. It gives you a critical vantage point and you’ll begin to understand what editors are looking for. It will also help you keep abreast of new research and best-practice methods in your field.

We strongly encourage you to learn the core concepts of peer review by joining a course or workshop. You can attend in-person workshops to learn from and network with experienced reviewers and editors. As an example, Sense about Science offers peer review workshops every year. To learn more about what might be in store at one of these, researcher Laura Chatland shares her experience at one of the workshops in London.

There are also plenty of free, online courses available, including courses in the Web of Science Academy such as ‘Reviewing in the Sciences’, ‘Reviewing in the Humanities’ and ‘An introduction to peer review’.

The Web of Science Academy also supports co-reviewing with a mentor to teach peer review through practical experience. You learn by writing reviews of preprints, published papers, or even ‘real’ unpublished manuscripts with guidance from your mentor. You can work with one of our community mentors or your own PhD supervisor or postdoc advisor, or even a senior colleague in your department.

Peer review templates

Peer review templates are helpful to use as you work your way through a manuscript. As part of our free Web of Science Academy courses, you’ll gain exclusive access to comprehensive guidelines and a peer review report. It offers points to consider for all aspects of the manuscript, including the abstract, methods and results sections. It also teaches you how to structure your review and will get you thinking about the overall strengths and impact of the paper at hand.

- Web of Science Academy template (requires joining one of the free courses)

- PLOS’s review template

- Wiley’s peer review guide (not a template as such, but a thorough guide with questions to consider in the first and second reading of the manuscript)

Beyond following a template, it’s worth asking your editor or checking the journal’s peer review management system. That way, you’ll learn whether you need to follow a formal or specific peer review structure for that particular journal. If no such formal approach exists, try asking the editor for examples of other reviews performed for the journal. This will give you a solid understanding of what they expect from you.

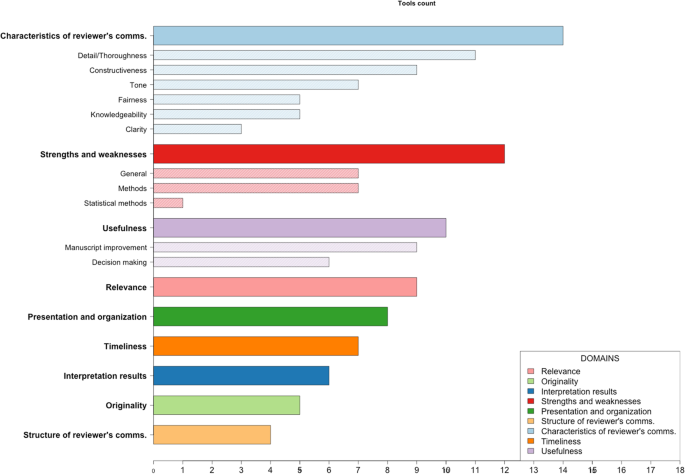

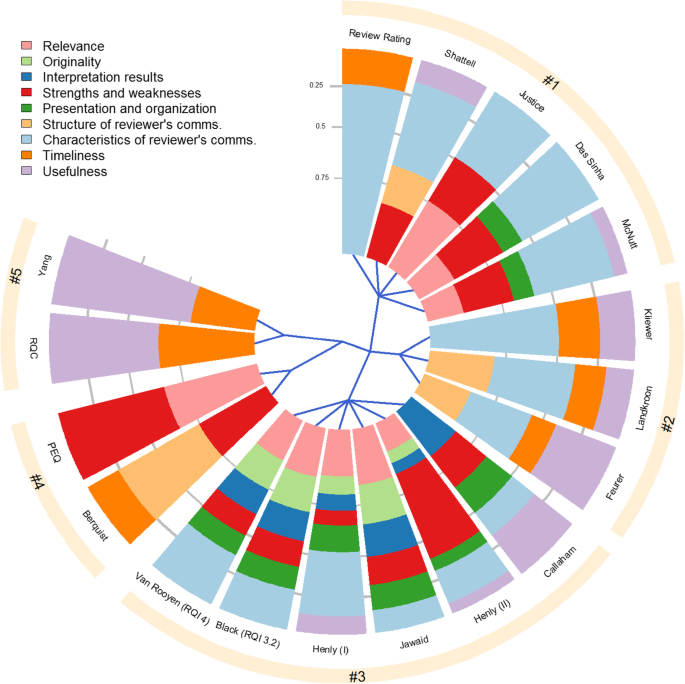

Peer review examples

Understand what a constructive peer review looks like by learning from the experts.

Here’s a sample of pre- and post-publication peer reviews displayed on Web of Science publication records to help guide you through your first few reviews. Some of these are transparent peer reviews, which means the entire process is open and visible, from initial review and response through to revision and final publication decision. You may wish to scroll to the bottom of these pages so you can first read the initial reviews, and make your way up the page to read the editor and author’s responses.

- Pre-publication peer review: Patterns and mechanisms in instances of endosymbiont-induced parthenogenesis

- Pre-publication peer review: Can Ciprofloxacin be Used for Precision Treatment of Gonorrhea in Public STD Clinics? Assessment of Ciprofloxacin Susceptibility and an Opportunity for Point-of-Care Testing

- Transparent peer review: Towards a standard model of musical improvisation

- Transparent peer review: Complex mosaic of sexual dichromatism and monochromatism in Pacific robins results from both gains and losses of elaborate coloration

- Post-publication peer review: Brain state monitoring for the future prediction of migraine attacks

- Web of Science Academy peer review: Students’ Perception on Training in Writing Research Article for Publication

F1000 has also put together a nice list of expert reviewer comments pertaining to the various aspects of a review report.

Co-reviewing

Co-reviewing (sharing peer review assignments with senior researchers) is one of the best ways to learn peer review. It gives researchers a hands-on, practical understanding of the process.

In an article in The Scientist, the team at Future of Research argues that co-reviewing can be a valuable learning experience for peer review, as long as it’s done properly and with transparency. It is worth spelling out how co-reviewing works because the practice does have its downsides. It can leave early-career researchers unaware of the core concepts of peer review, and it can make it hard for them to later join an editor’s reviewer pool if they haven’t received adequate recognition for their share of the review work. (If you are asked to write a peer review on behalf of a senior colleague or researcher, get recognition for your efforts by asking your senior colleague to verify the collaborative co-review on your Web of Science researcher profiles.)

The Web of Science Academy course ‘Co-reviewing with a mentor’ is uniquely practical in this sense. You will gain experience in peer review by practicing on real papers and working with a mentor to get feedback on how your peer review can be improved. Students submit their peer review report as their course assignment and, after internal evaluation, receive a course certificate and an Academy graduate badge on their Web of Science researcher profile, and are put in front of top editors in their field through the Reviewer Locator at Clarivate.

Here are some external peer review resources found around the web:

- Peer Review Resources from Sense about Science

- Peer Review: The Nuts and Bolts by Sense about Science

- How to review journal manuscripts by R. M. Rosenfeld for Otolaryngology – Head and Neck Surgery

- Ethical guidelines for peer review from COPE

- An Instructional Guide for Peer Reviewers of Biomedical Manuscripts by Callaham, Schriger & Cooper for Annals of Emergency Medicine (requires Flash or Adobe)

- EQUATOR Network’s reporting guidelines for health researchers

And finally, we’ve written a number of blogs about handy peer review tips. Check out some of our top picks:

- How to Write a Peer Review: 12 things you need to know

- Want To Peer Review? Top 10 Tips To Get Noticed By Editors

- Review a manuscript like a pro: 6 tips from a Web of Science Academy supervisor

- How to write a structured reviewer report: 5 tips from an early-career researcher

Want to learn more? Become a master of peer review and connect with top journal editors. The Web of Science Academy – your free online hub of courses designed by expert reviewers, editors and Nobel Prize winners. Find out more today.

Related posts

Journal citation reports 2024 preview: unified rankings for more inclusive journal assessment.

Introducing the Clarivate Academic AI Platform

Reimagining research impact: Introducing Web of Science Research Intelligence

Advertisement

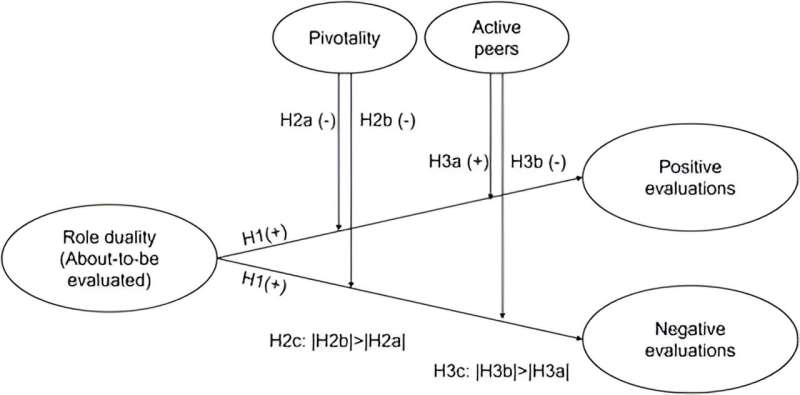

The Impact of Peer Assessment on Academic Performance: A Meta-analysis of Control Group Studies

- Meta-Analysis

- Open access

- Published: 10 December 2019

- Volume 32, pages 481–509 (2020)

- Kit S. Double (ORCID: orcid.org/0000-0001-8120-1573),

- Joshua A. McGrane &

- Therese N. Hopfenbeck

Peer assessment has been the subject of considerable research interest over the last three decades, with numerous educational researchers advocating for the integration of peer assessment into schools and instructional practice. Research synthesis in this area has, however, largely relied on narrative reviews to evaluate the efficacy of peer assessment. Here, we present a meta-analysis (54 studies, k = 141) of experimental and quasi-experimental studies that evaluated the effect of peer assessment on academic performance in primary, secondary, or tertiary students across subjects and domains. An overall small to medium effect of peer assessment on academic performance was found (g = 0.31, p < .001). The results suggest that peer assessment improves academic performance compared with no assessment (g = 0.31, p = .004) and teacher assessment (g = 0.28, p = .007), but was not significantly different in its effect from self-assessment (g = 0.23, p = .209). Additionally, meta-regressions examined the moderating effects of several feedback and educational characteristics (e.g., online vs offline, frequency, education level). Results suggested that the effectiveness of peer assessment was remarkably robust across a wide range of contexts. These findings provide support for peer assessment as a formative practice and suggest several implications for the implementation of peer assessment into the classroom.

Feedback is often regarded as a central component of educational practice and crucial to students’ learning and development (Fyfe & Rittle-Johnson, 2016; Hattie and Timperley 2007; Hays, Kornell, & Bjork, 2010; Paulus, 1999). Peer assessment has been identified as one method for delivering feedback efficiently and effectively to learners (Topping 1998; van Zundert et al. 2010). The use of students to generate feedback about the performance of their peers is referred to in the literature using various terms, including peer assessment, peer feedback, peer evaluation, and peer grading. In this article, we adopt the term peer assessment, as it more generally refers to the method of peers assessing or being assessed by each other, whereas the term feedback is used when we refer to the actual content or quality of the information exchanged between peers. This feedback can be delivered in a variety of forms including written comments, grading, or verbal feedback (Topping 1998). Importantly, by performing both the role of assessor and being assessed themselves, students’ learning can potentially benefit more than if they are just assessed (Reinholz 2016).

Peer assessments tend to be highly correlated with teacher assessments of the same students (Falchikov and Goldfinch 2000; Li et al. 2016; Sanchez et al. 2017). However, in addition to establishing comparability between teacher and peer assessment scores, it is important to determine whether peer assessment also has a positive effect on future academic performance. Several narrative reviews have argued for the positive formative effects of peer assessment (e.g., Black and Wiliam 1998a; Topping 1998; van Zundert et al. 2010) and have additionally identified a number of potentially important moderators for the effect of peer assessment. This meta-analysis will build upon these reviews and provide quantitative evaluations for some of the instructional features identified in these narrative reviews by utilising them as moderators within our analysis.

Evaluating the Evidence for Peer Assessment

Empirical Studies

Despite the optimism surrounding peer assessment as a formative practice, there are relatively few control group studies that evaluate the effect of peer assessment on academic performance (Flórez and Sammons 2013; Strijbos and Sluijsmans 2010). Most studies on peer assessment have tended to focus on either students’ or teachers’ subjective perceptions of the practice rather than its effect on academic performance (e.g., Brown et al. 2009; Young and Jackman 2014). Moreover, interventions involving peer assessment often confound the effect of peer assessment with other assessment practices that are theoretically related under the umbrella of formative assessment (Black and Wiliam 2009). For instance, Wiliam et al. (2004) reported a mean effect size of .32 in favor of a formative assessment intervention but they were unable to determine the unique contribution of peer assessment to students’ achievement, as it was one of more than 15 assessment practices included in the intervention.

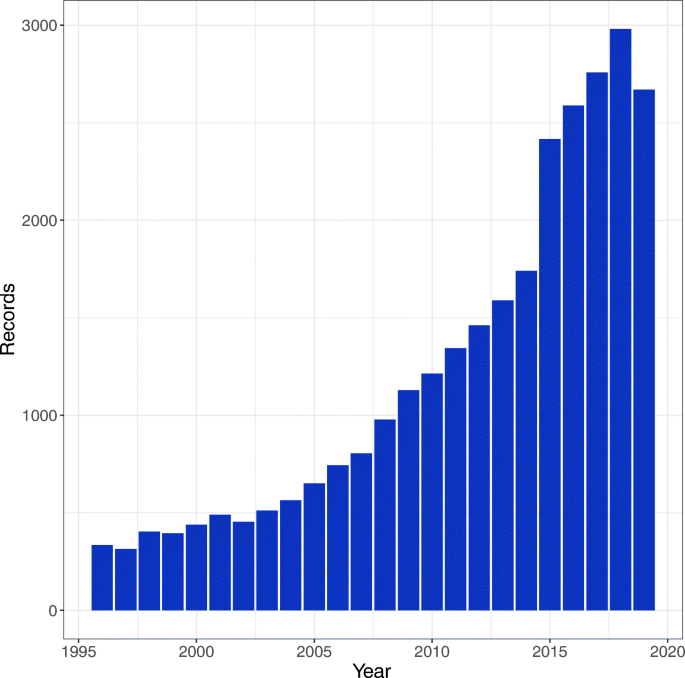

However, as shown in Fig. 1, there has been a sharp increase in the number of studies related to peer assessment, with over 75% of relevant studies published in the last decade. Although it is still far from being the dominant outcome measure in research on formative practices, many of these recent studies have examined the effect of peer assessment on objective measures of academic performance (e.g., Gielen et al. 2010a; Liu et al. 2016; Wang et al. 2014a). The number of studies of peer assessment using control group designs also appears to be increasing in frequency (e.g., van Ginkel et al. 2017; Wang et al. 2017). These studies have typically compared the formative effect of peer assessment with either teacher assessment (e.g., Chaney and Ingraham 2009; Sippel and Jackson 2015; van Ginkel et al. 2017) or no assessment conditions (e.g., Kamp et al. 2014; L. Li and Steckelberg 2004; Schonrock-Adema et al. 2007). Given the increase in peer assessment research, and in particular experimental research, it seems pertinent to synthesise this new body of research, as it provides a basis for critically evaluating the overall effectiveness of peer assessment and its moderators.

Fig. 1 Number of records returned by year. Data were collated by searching Web of Science (www.webofknowledge.com) for the following keywords: ‘peer assessment’ or ‘peer grading’ or ‘peer evaluation’ or ‘peer feedback’, and categorising by year

Previous Reviews

Efforts to synthesise peer assessment research have largely been limited to narrative reviews, which have made very strong claims regarding the efficacy of peer assessment. For example, in a review of peer assessment with tertiary students, Topping (1998) argued that the effects of peer assessment are, ‘as good as or better than the effects of teacher assessment’ (p. 249). Similarly, in a review on peer and self-assessment with tertiary students, Dochy et al. (1999) concluded that peer assessment can have a positive effect on learning but may be hampered by social factors such as friendships, collusion, and perceived fairness. Reviews into peer assessment have also tended to focus on determining the accuracy of peer assessments, which is typically established by the correlation between peer and teacher assessments for the same performances. High correlations have been observed between peer and teacher assessments in three meta-analyses to date (r = .69, .63, and .68 respectively; Falchikov and Goldfinch 2000; H. Li et al. 2016; Sanchez et al. 2017). Given that peer assessment is often advocated as a formative practice (e.g., Black and Wiliam 1998a; Topping 1998), it is important to expand on these correlational meta-analyses to examine the formative effect that peer assessment has on academic performance.

In addition to examining the correlation between peer and teacher grading, Sanchez et al. (2017) performed a meta-analysis on the formative effect of peer grading (i.e., a numerical or letter grade was provided to a student by their peer) in intervention studies. They found that there was a significant positive effect of peer grading on academic performance for primary and secondary (grades 3 to 12) students (g = .29). However, it is unclear whether their findings would generalise to other forms of peer feedback (e.g., written or verbal feedback) and to tertiary students, both of which we will evaluate in the current meta-analysis.
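The effect sizes quoted in this section are reported as g. As a rough illustration of how such a value can arise from a single study's summary statistics, the sketch below computes a standardised mean difference with the common small-sample correction (Hedges' g). The function name `hedges_g` and the example numbers are hypothetical and do not come from the article, and the meta-analysis itself may have used a different estimator or adjustment.

```python
import math


def hedges_g(mean_t, mean_c, sd_t, sd_c, n_t, n_c):
    """Standardised mean difference between two independent groups.

    Hypothetical helper for illustration only: pooled-SD Cohen's d
    multiplied by the usual approximate small-sample correction factor.
    """
    df = n_t + n_c - 2
    # Pooled standard deviation across treatment and control groups
    pooled_sd = math.sqrt(((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / df)
    d = (mean_t - mean_c) / pooled_sd
    # Approximate correction for small-sample bias: J = 1 - 3 / (4*df - 1)
    correction = 1 - 3 / (4 * df - 1)
    return d * correction


# Made-up example: a peer assessment group scoring 75 vs a control group
# scoring 70, both with SD 10 and 30 students each.
print(round(hedges_g(75.0, 70.0, 10.0, 10.0, 30, 30), 3))  # 0.494
```

A positive value means the first group outperformed the second. With equal standard deviations of 10, the five-point difference is half a standard deviation before the correction is applied.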

Moderators of the Effectiveness of Peer Assessment

Theoretical frameworks of peer assessment propose that it is beneficial in at least two respects. Firstly, peer assessment allows students to critically engage with the assessed material, to compare and contrast performance with their peers, and to identify gaps or errors in their own knowledge (Topping 1998). In addition, peer assessment may improve the communication of feedback, as peers may use similar and more accessible language, as well as reduce negative feelings of being evaluated by an authority figure (Liu et al. 2016). However, the efficacy of peer assessment, like traditional feedback, is likely to be contingent on a range of factors including characteristics of the learning environment, the student, and the assessment itself (Kluger and DeNisi 1996; Ossenberg et al. 2018). Some of the characteristics that have been proposed to moderate the efficacy of feedback include anonymity (e.g., Rotsaert et al. 2018; Yu and Liu 2009), scaffolding (e.g., Panadero and Jonsson 2013), quality and timing of the feedback (Diab 2011), and elaboration (e.g., Gielen et al. 2010b). Drawing on the previously mentioned narrative reviews and empirical evidence, we now briefly outline the evidence for each of the included theoretical moderators.

It is somewhat surprising that most studies that examine the effect of peer assessment tend to only assess the impact on the assessee and not the assessor (van Popta et al. 2017). Assessing may confer several distinct advantages such as drawing comparisons with peers’ work and increased familiarity with evaluative criteria. Several studies have compared the effect of assessing with being assessed. Lundstrom and Baker (2009) found that assessing a peer’s written work was more beneficial for their own writing than being assessed by a peer. Meanwhile, Graner (1987) found that students who were receiving feedback from a peer and acted as an assessor did not perform better than students who acted as an assessor but did not receive peer feedback. Reviewing peers’ work is also likely to help students become better reviewers of their own work and to revise and improve their own work (Rollinson 2005). While, in practice, students will most often act as both assessor and assessee during peer assessment, it is useful to gain a greater insight into the relative impact of performing each of these roles for both practical reasons and to help determine the mechanisms by which peer assessment improves academic performance.

Peer Assessment Type

The characteristics of peer assessment vary greatly both in practice and within the research literature. Because meta-analysis is unable to capture all of the nuanced dimensions that determine the type, intensity, and quality of peer assessment, we focus on distinguishing between what we regard as the most prevalent types of peer assessment in the literature: grading, peer dialogs, and written assessment. Each of these peer assessment types is widely used in the classroom and often in various combinations (e.g., written qualitative feedback in combination with a numerical grade). While these assessment types differ substantially in terms of their cognitive complexity and comprehensiveness, each has shown at least some evidence of impacting academic performance (e.g., Sanchez et al. 2017; Smith et al. 2009; Topping 2009).

Freeform/Scaffolding

Peer assessment is often implemented in conjunction with some form of scaffolding, for example, rubrics and scoring scripts. Scaffolding has been shown to improve both the quality of peer assessment and the amount of feedback assessors provide (Peters, Körndle & Narciss, 2018). Peer assessment has also been shown to be more accurate when rubrics are utilised. For example, Panadero, Romero, & Strijbos (2013) found that students were less likely to overscore their peers.

Increasingly, peer assessment has been performed online due in part to the growth in online learning activities as well as the ease by which peer assessment can be implemented online (van Popta et al. 2017). Conducting peer assessment online can significantly reduce the logistical burden of implementing peer assessment (e.g., Tannacito and Tuzi 2002). Several studies have shown that peer assessment can effectively be carried out online (e.g., Hsu 2016; Li and Gao 2016). Van Popta et al. (2017) argue that the cognitive processes involved in peer assessment, such as evaluating, explaining, and suggesting, similarly play out in online and offline environments. However, the social processes involved in peer assessment are likely to substantively differ between online and offline peer assessment (e.g., collaborating, discussing), and it is unclear whether this might limit the benefits of peer assessment through one or the other medium. To the authors’ knowledge, no prior studies have compared the effects of online and offline peer assessment on academic performance.

Because peer assessment is fundamentally a collaborative assessment practice, interpersonal variables play a substantial role in determining the type and quality of peer assessment (Strijbos and Wichmann 2018). Some researchers have argued that anonymous peer assessment is advantageous because assessors are more likely to be honest in their feedback, and interpersonal processes cannot influence how assessees receive the assessment feedback (Rotsaert et al. 2018). Qualitative evidence suggests that anonymous peer assessment results in improved feedback quality and more positive perceptions towards peer assessment (Rotsaert et al. 2018; Vanderhoven et al. 2015). A recent qualitative review by Panadero and Alqassab (2019) found that three studies had compared anonymous peer assessment to a control group (i.e., open peer assessment) and looked at academic performance as the outcome. Their review found mixed evidence regarding the benefit of anonymity in peer assessment, with one of the included studies finding an advantage of anonymity and the other two finding little benefit. Others have questioned whether anonymity impairs cognitive and interpersonal development by limiting the collaborative nature of peer assessment (Strijbos and Wichmann 2018).

Peers are often novices at providing constructive assessment, and inexperienced learners tend to provide limited feedback (Hattie and Timperley 2007). Several studies have therefore suggested that peer assessment becomes more effective as students’ experience with peer assessment increases. For example, with greater experience, peers tend to use scoring criteria to a greater extent (Sluijsmans et al. 2004). Similarly, training students in peer assessment over time can improve the quality of the feedback they provide, although the effects may be limited by the extent of a student’s relevant domain knowledge (Alqassab et al. 2018). Frequent peer assessment may also increase positive learner perceptions of peer assessment (e.g., Sluijsmans et al. 2004). However, other studies have found that learner perceptions of peer assessment are not necessarily positive (Alqassab et al. 2018). This may suggest that learner perceptions of peer assessment vary depending on its characteristics (e.g., quality, detail).

Current Study

Given the previous reliance on narrative reviews and the increasing research and teacher interest in peer assessment, as well as the popularity of instructional theories advocating for peer assessment and formative assessment practices in the classroom, we present a quantitative meta-analytic review to develop and synthesise the evidence in relation to peer assessment. This meta-analysis evaluates the effect of peer assessment on academic performance when compared to no assessment as well as teacher assessment. To do this, the meta-analysis only evaluates intervention studies that utilised experimental or quasi-experimental designs, i.e., only studies with control groups, so that the effects of maturation and other confounding variables are mitigated. Control groups can be either passive (e.g., no feedback) or active (e.g., teacher feedback). We meta-analytically address two related research questions:

What effect do peer assessment interventions have on academic performance relative to the observed control groups?

What characteristics moderate the effectiveness of peer assessment?

Working Definitions

The specific methods of peer assessment can vary considerably, but there are a number of shared characteristics across most methods. Peers are defined as individuals at similar (i.e., within 1–2 grades) or identical education levels. Peer assessment must involve assessing or being assessed by peers, or both. Peer assessment requires the communication (either written, verbal, or online) of task-relevant feedback, although the style of feedback can differ markedly, from elaborate written and verbal feedback to holistic ratings of performance.

We took a deliberately broad definition of academic performance for this meta-analysis, including traditional outcomes (e.g., test performance or essay writing) and also practical skills (e.g., constructing a circuit in science class). Despite this broad interpretation of academic performance, we excluded studies carried out in professional/organisational settings, with one exception: studies of professional skills (e.g., teacher training) that were being taught in a traditional educational setting (e.g., a university) were included.

Selection Criteria

To be included in this meta-analysis, studies had to meet several criteria. Firstly, a study needed to examine the effect of peer assessment. Secondly, the assessment could be delivered in any form (e.g., written, verbal, online), but needed to be distinguishable from peer-coaching/peer-tutoring. Thirdly, a study needed to compare the effect of peer assessment with a control group. Pre-post designs that did not include a control/comparison group were excluded because we could not discount the effects of maturation or other confounding variables. Moreover, the comparison group could take the form of either a passive control (e.g., a no assessment condition) or an active control (e.g., teacher assessment). Fourthly, a study needed to examine the effect of peer assessment on a non-self-reported measure of academic performance.

In addition to these criteria, a study needed to be carried out in an educational context or be related to educational outcomes in some way. Any level of education (i.e., tertiary, secondary, primary) was acceptable. A study also needed to provide sufficient data to calculate an effect size. If insufficient data was available in the manuscript, the authors were contacted by email to request the necessary data (additional information was provided for a single study). Studies also needed to be written in English.

Literature Search

The literature search was carried out on 8 June 2018 using PsycInfo , Google Scholar , and ERIC. Google Scholar was used to check for additional references as it does not allow for the exporting of entries. These three electronic databases were selected due to their relevance to educational instruction and practice. Results were not filtered based on publication date, but ERIC only holds records from 1966 to present. A deliberately wide selection of search terms was used in the first instance to capture all relevant articles. The search terms included ‘peer grading’ or ‘peer assessment’ or ‘peer evaluation’ or ‘peer feedback’, which were paired with ‘learning’ or ‘performance’ or ‘academic achievement’ or ‘academic performance’ or ‘grades’. All peer assessment-related search terms were included with and without hyphenation. In addition, an ancestry search (i.e., back-search) was performed on the reference lists of the included articles. Conference programs for major educational conferences were searched. Finally, unpublished results were sourced by emailing prominent authors in the field and through social media. Although there is significant disagreement about the inclusion of unpublished data and conference abstracts, i.e., ‘grey literature’ (Cook et al. 1993 ), we opted to include it in the first instance because including only published studies can result in a meta-analysis over-estimating effect sizes due to publication bias (Hopewell et al. 2007 ). It should, however, be noted that none of the substantive conclusions changed when the analyses were re-run with the grey literature excluded.

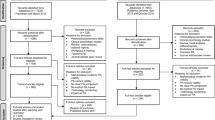

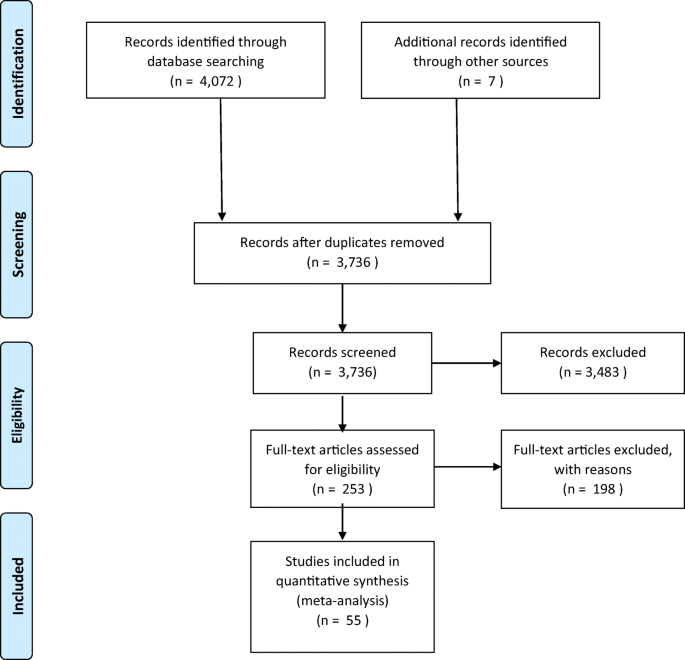

The database search returned 4072 records. An ancestry search returned an additional 37 potentially relevant articles. No unpublished data could be found. After duplicates were removed, two reviewers independently screened titles and abstracts for relevance. A kappa statistic was calculated to assess inter-rater reliability between the two coders and was found to be .78 (89.06% overall agreement, CI .63 to .94), which is above the recommended minimum levels of inter-rater reliability (Fleiss 1971). Subsequently, the full text of articles that were deemed relevant based on their abstracts was examined to ensure that they met the selection criteria described previously. Disagreements between the coders were discussed and, when necessary, resolved by a third coder. Ultimately, 55 articles with 143 effect sizes met the inclusion criteria and were included in the meta-analysis. The search process is depicted in Fig. 2.

Flow chart for the identification, screening protocol, and inclusion of publications in the meta-analyses
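As an illustration of the agreement statistic reported for the screening step, the following is a minimal sketch of a two-rater kappa. It assumes Cohen's formulation for two coders; the text cites Fleiss (1971) and does not state which variant was computed. The function name and the `"in"`/`"out"` labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Chance-corrected agreement between two raters (Cohen's kappa).

    Assumption: two raters, nominal categories, one label per item.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must label the same non-empty set of items.")

    n = len(rater_a)
    # Proportion of items on which the two raters gave the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Agreement expected by chance, from each rater's marginal label frequencies.
    count_a = Counter(rater_a)
    count_b = Counter(rater_b)
    categories = set(count_a) | set(count_b)
    expected = sum(count_a[c] * count_b[c] for c in categories) / n ** 2

    if expected == 1.0:
        # Both raters used a single identical category; agreement is trivially perfect.
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Hypothetical screening decisions for four abstracts.
kappa = cohens_kappa(["in", "in", "out", "out"], ["in", "out", "out", "out"])
```

Raw percentage agreement (here 75%) overstates reliability because some agreement occurs by chance, which is why kappa is reported alongside it.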

Data Extraction

A research assistant and the first author extracted data from the included papers. We took an iterative approach to the coding procedure whereby the coders refined the classification of each variable as they progressed through the included studies to ensure that the classifications best characterised the extant literature. Below, the coding strategy is reviewed along with the classifications utilised. Frequency statistics and inter-rater reliability for the extracted data for the different classifications are presented in Table 1. All extracted variables showed at least moderate agreement except for whether the peer assessment was freeform or structured, which showed fair agreement (Landis and Koch 1977).

Publication Type

Publications were classified into journal articles, conference papers, dissertations, reports, or unpublished records.

Education Level

Education level was coded as either graduate tertiary, undergraduate tertiary, secondary, or primary. Given the small number of studies that utilised graduate samples ( N = 2), we subsequently combined this classification with undergraduate to form a general tertiary category. In addition, we recorded the grade level of the students. Generally speaking, primary education refers to the ages of 6–12, secondary education refers to education from 13–18, and tertiary education is undertaken after the age of 18.

Age and Sex

The percentage of students in a study that were female was recorded. In addition, we recorded the mean age from each study. Unfortunately, only 55.5% of studies recorded participants’ sex and only 18.5% of studies recorded mean age information.

The subject area associated with the academic performance measure was coded. We also recorded the nature of the academic performance variable for descriptive purposes.

Assessment Role

Studies were coded as to whether the students acted as peer assessors, assessees, or both assessors and assessees.

Comparison Group

Four types of comparison group were found in the included studies: no assessment, teacher assessment, self-assessment, and reader-control. In many instances, a no assessment condition could be characterised as typical instruction; that is, two versions of a course were run—one with peer assessment and one without peer assessment. As such, while no specific teacher assessment comparison condition is referenced in the article, participants would most likely have received some form of teacher feedback as is typical in standard instructional practice. Studies were classified as having teacher assessment on the basis of a specific reference to teacher feedback being provided.

Studies were classified as self-assessment controls if there was an explicit reference to a self-assessment activity, e.g., self-grading/rating. Studies that only included revision, e.g., working alone on revising an assignment, were classified as no assessment rather than self-assessment because they did not necessarily involve explicit self-assessment. Studies where both the comparison and intervention groups received teacher assessment (in addition to peer assessment in the case of the intervention group) were coded as no assessment to reflect the fact that the comparison group received no additional assessment compared to the peer assessment condition. In addition, Philippakos and MacArthur ( 2016 ) and Cho and MacArthur ( 2011 ) were notable in that they utilised a reader-control condition whereby students read, but did not assess peers’ work. Due to the small frequency of this control condition, we ultimately classified them as no assessment controls.

Peer assessment was characterised using coding we believed best captured the theoretical distinctions in the literature. Our typology of peer assessment used three distinct components, which were combined for classification:

Did the peer feedback include a dialog between peers?

Did the peer feedback include written comments?

Did the peer feedback include grading?

Each study was classified using a dichotomous present/absent scoring system for each of the three components.

Studies were dichotomously classified as to whether a specific rubric, assessment script, or scoring system was provided to students. Studies that only provided basic instructions to students to conduct the peer feedback were coded as freeform.

Was the Assessment Online?

Studies were classified based on whether the peer assessment was online or offline.

Studies were classified based on whether the peer assessment was anonymous or identified.

Frequency of Assessment

Studies were coded dichotomously as to whether they involved only a single peer assessment occasion or, alternatively, whether students provided/received peer feedback on multiple occasions.

The level of transfer between the peer assessment task and the academic performance measure was coded into three categories:

No transfer—the peer-assessed task was the same as the academic performance measure. For example, a student’s assignment was assessed by peers and this feedback was utilised to make revisions before it was graded by their teacher.

Near transfer—the peer-assessed task was in the same or very similar format as the academic performance measure, e.g., an essay on a different, but similar topic.

Far transfer—the peer-assessed task was in a different form to the academic performance task, although they may have overlapping content. For example, a student’s assignment was peer assessed, while the final course exam grade was the academic performance measure.

We recorded how participants were allocated to a condition. Three categories of allocation were found in the included studies: random allocation at the class level, at the student level, or at the year/semester level. As only two studies allocated students to conditions at the year/semester level, we combined these studies with the studies allocated at the classroom level (i.e., as quasi-experiments).

Statistical Analyses of Effect Sizes

Effect size estimation and heterogeneity.

A random effects, multi-level meta-analysis was carried out using R version 3.4.3 (R Core Team 2017). The primary outcome was the standardised mean difference between peer assessment and comparison (i.e., control) conditions. A common effect size metric, Hedges’ g, was calculated. A positive Hedges’ g value indicates comparatively higher values in the dependent variable in the peer assessment group (i.e., higher academic performance). Heterogeneity in the effect sizes was estimated using the I² statistic. I² is equivalent to the percentage of variation between studies that is due to heterogeneity rather than chance (Schwarzer et al. 2015). Large values of the I² statistic suggest higher heterogeneity between studies in the analysis.
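To make the two quantities above concrete, the sketch below computes Hedges' g from group summary statistics and a classical Q-based I². It is an illustration only: the analysis itself was run in R, and an I² from a multi-level or robust-variance model will generally differ from this simple version. All function and parameter names are hypothetical.

```python
import math


def hedges_g(mean_t, mean_c, sd_t, sd_c, n_t, n_c):
    """Return (g, var_g) for treatment vs. control group summaries."""
    df = n_t + n_c - 2
    # Pooled standard deviation across the two groups.
    pooled_sd = math.sqrt(((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / df)
    d = (mean_t - mean_c) / pooled_sd
    # Small-sample correction factor that turns Cohen's d into Hedges' g.
    j = 1.0 - 3.0 / (4.0 * df - 1.0)
    var_d = (n_t + n_c) / (n_t * n_c) + d ** 2 / (2.0 * (n_t + n_c))
    return j * d, j ** 2 * var_d


def i_squared(effects, variances):
    """Classical I² (in percent) from Cochran's Q with inverse-variance weights."""
    weights = [1.0 / v for v in variances]
    pooled = sum(w * e for w, e in zip(weights, effects)) / sum(weights)
    q = sum(w * (e - pooled) ** 2 for w, e in zip(weights, effects))
    df = len(effects) - 1
    if q <= 0:
        return 0.0
    # Share of total variation beyond what sampling error alone would produce.
    return max(0.0, (q - df) / q) * 100.0


g, var_g = hedges_g(mean_t=10, mean_c=8, sd_t=2, sd_c=2, n_t=20, n_c=20)
heterogeneity = i_squared([0.0, 1.0], [0.01, 0.01])
```

A positive `g` here means the first group (peer assessment, by the convention in the text) scored higher than the comparison group.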

Meta-regressions were performed to examine the moderating effects of the various factors that differed across the studies. We report the results of these meta-regressions alongside sub-groups analyses. While it was possible to determine whether sub-groups differed significantly from each other by examining whether the confidence intervals around their effect sizes overlap, sub-groups analysis may also produce biased estimates when heteroscedasticity or multicollinearity is present (Steel and Kammeyer-Mueller 2002). We performed meta-regressions separately for each predictor to test the overall effect of a moderator.

Finally, as this meta-analysis included students from primary school to graduate school, which are highly varied participant and educational contexts, we opted to analyse the data both in complete form, as well as after controlling for each level of education. As such, we were able to look at the effect of each moderator across education levels and for each education level separately.

Robust Variance Estimation

Often meta-analyses include multiple effect sizes from the same sample (e.g., the effect of peer assessment on two different measures of academic performance). Including these dependent effect sizes in a meta-analysis can be problematic, as this can potentially bias the results of the analysis in favour of studies that have more effect sizes. Recently, Robust Variance Estimation (RVE) was developed as a technique to address such concerns (Hedges et al. 2010 ). RVE allows for the modelling of dependence between effect sizes even when the nature of the dependence is not specifically known. Under such situations, RVE results in unbiased estimates of fixed effects when dependent effect sizes are included in the analysis (Moeyaert et al. 2017 ). A correlated effects structure was specified for the meta-analysis (i.e., the random error in the effects from a single paper were expected to be correlated due to similar participants, procedures). A rho value of .8 was specified for the correlated effects (i.e., effects from the same study) as is standard practice when the correlation is unknown (Hedges et al. 2010 ). A sensitivity analysis indicated that none of the results varied as a function of the chosen rho. We utilised the ‘robumeta’ package (Fisher et al. 2017 ) to perform the meta-analyses. Our approach was to use only summative dependent variables when they were provided (e.g., overall writing quality score rather than individual trait measures), but to utilise individual measures when overall indicators were not available. When a pre-post design was used in a study, we adjusted the effect size for pre-intervention differences in academic performance as long as there was sufficient data to do so (e.g., t tests for pre-post change).
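The idea behind the correlated-effects approach can be sketched for the simplest case, an intercept-only model. The version below is a simplified illustration and not the ‘robumeta’ implementation: it takes the between-study variance `tau_sq` as a given input (in the full method it is estimated, and rho enters through that estimate) and omits small-sample corrections. All names are hypothetical.

```python
import math
from collections import defaultdict


def rve_intercept(effects, variances, study_ids, tau_sq=0.0):
    """Pooled effect and robust standard error, clustering effect sizes by study."""
    by_study = defaultdict(list)
    for effect, variance, study in zip(effects, variances, study_ids):
        by_study[study].append((effect, variance))

    # Approximate correlated-effects weights: each effect size in a study with
    # k effect sizes receives 1 / (k * (mean sampling variance + tau_sq)), so a
    # study does not gain influence simply by reporting more outcomes.
    weights = {}
    for study, rows in by_study.items():
        k = len(rows)
        mean_variance = sum(v for _, v in rows) / k
        weights[study] = 1.0 / (k * (mean_variance + tau_sq))

    total_weight = sum(weights[s] * len(rows) for s, rows in by_study.items())
    estimate = sum(
        weights[s] * sum(e for e, _ in rows) for s, rows in by_study.items()
    ) / total_weight

    # Sandwich ("robust") variance: residuals are summed within each study
    # before squaring, so within-study dependence need not be modelled.
    meat = sum(
        (weights[s] * sum(e - estimate for e, _ in rows)) ** 2
        for s, rows in by_study.items()
    )
    return estimate, math.sqrt(meat) / total_weight


# Hypothetical data: study "A" contributes two effect sizes, study "B" one.
estimate, robust_se = rve_intercept(
    effects=[0.2, 0.4, 0.6],
    variances=[0.04, 0.04, 0.04],
    study_ids=["A", "A", "B"],
)
```

In this toy example both studies carry the same total weight even though study "A" reports twice as many effect sizes.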

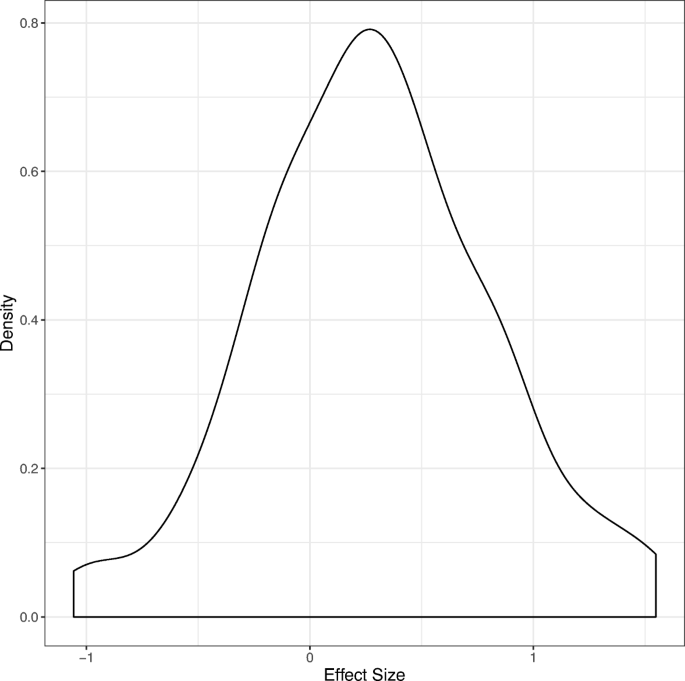

Overall Meta-analysis of the Effect of Peer Assessment

Prior to conducting the analysis, two effect sizes (g = 2.06 and 1.91) were identified as outliers and removed using the outlier labelling rule (Hoaglin and Iglewicz 1987). Descriptive characteristics of the included studies are presented in Table 2. The meta-analysis indicated that there was a significant positive effect of peer assessment on academic performance (g = 0.31, SE = .06, 95% CI = .18 to .44, p < .001). A density graph of the recorded effect sizes is provided in Fig. 3. A sensitivity analysis indicated that the effect size estimates did not differ with different values of rho. Heterogeneity between the studies’ effect sizes was large, I² = 81.08%, supporting the use of a meta-regression/sub-groups analysis in order to explain the observed heterogeneity in effect sizes.

A density plot of effect sizes
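The outlier labelling rule mentioned above flags values that fall more than a fixed multiple of the interquartile range beyond the quartiles. The sketch below assumes the multiplier of 2.2 commonly associated with Hoaglin and Iglewicz (1987) and Python's "inclusive" quartile definition; the text does not state which multiplier or quartile method was used, and the data are made up.

```python
import statistics


def label_outliers(values, multiplier=2.2):
    """Split values into (kept, outliers) using quartile-based fences."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    spread = multiplier * (q3 - q1)
    lower, upper = q1 - spread, q3 + spread
    kept = [v for v in values if lower <= v <= upper]
    outliers = [v for v in values if v < lower or v > upper]
    return kept, outliers


# Hypothetical effect sizes with one extreme value.
kept, outliers = label_outliers([0.1, 0.2, 0.3, 0.4, 0.5, 2.06])
```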

Meta-Regressions and Sub-Groups Analyses

Effect sizes for sub-groups are presented in Table 3 . The results of the meta-regressions are presented in Table 4 .

A meta-regression with tertiary students as the reference category indicated that there was no significant difference in effect size as a function of education level. The effect of peer assessment was similar for secondary students (g = .44, p < .001) and primary school students (g = .41, p = .006) and smaller for tertiary students (g = .21, p = .043). There is, however, a strong theoretical basis for examining effects separately at different education levels (primary, secondary, tertiary), because of the large degree of heterogeneity across such a wide span of learning contexts (e.g., pedagogical practices, intellectual and social development of the students). We therefore report the data both as a whole and separately for each of the education levels for all of the moderators considered here. Education level is contrast coded such that tertiary is compared to the average of secondary and primary, and secondary and primary are compared to each other.

A meta-regression indicated that the effect size was not significantly different when comparing peer assessment with teacher assessment, than when comparing peer assessment with no assessment ( b = .02, 95% CI − .26 to .31, p = .865). The difference between peer assessment vs. no assessment and peer assessment vs. self-assessment was also not significant ( b = − .03, CI − .44 to .38, p = .860), see Table 4 . An examination of sub-groups suggested that peer assessment had a moderate positive effect compared to no assessment controls ( g = .31, p = .004) and teacher assessment ( g = .28, p = .007) and was not significantly different compared with self-assessment ( g = .23, p = .209). The meta-regression was also re-run with education level as a covariate but the results were unchanged.

Meta-regressions indicated that the participant’s role was not a significant moderator of the effect size; see Table 4. However, given the extremely small number of studies in which participants acted only as assessees (n = 2) or only as assessors (n = 4) rather than as both, we did not perform a sub-groups analysis, as such analyses are unreliable with small samples (Fisher et al. 2017).

Subject Area

Given that many subject areas had few studies (see Table 1) and the writing subject area made up the majority of effect sizes (40.74%), we opted to perform a meta-regression comparing writing with other subject areas. However, the effect of peer assessment did not differ between writing (g = .30, p = .001) and other subject areas (g = .31, p = .002); b = −.003, 95% CI −.25 to .25, p = .979. Similarly, the results did not substantially change when education level was entered into the model.

The effect of peer assessment did not differ significantly between studies where peer assessment included a written component (g = .35, p < .001) and those where it did not (g = .20, p = .015), b = .144, 95% CI −.10 to .39, p = .241. Including education as a variable in the model did not change the effect of written feedback. Similarly, studies with a dialog component (g = .21, p = .033) did not differ significantly from those without one (g = .35, p < .001), b = −.137, 95% CI −.39 to .12, p = .279.

Studies where peer feedback included a grading component (g = .37, p < .001) did not differ significantly from those that did not (g = .17, p = .138). However, when education level was included in the model, the model indicated a significant interaction effect between grading in tertiary students and the average effect of grading in primary and secondary students (b = .395, 95% CI .06 to .73, p = .022). A follow-up sub-groups analysis showed that grading was beneficial for academic performance in tertiary students (g = .55, p = .009), but not secondary school students (g = .002, p = .991) or primary school students (g = −.08, p = .762). When the three variables used to characterise peer assessment were entered simultaneously, the results were unchanged.

The average effect size was not significantly different for studies where assessment was freeform, i.e., where no specific script or rubric was given ( g = .42, p = .030) compared to those where a specific script or rubric was provided ( g = .29, p < .001); b = − .13, 95% CI − .51 to .25, p = .455. However, there were few studies where feedback was freeform ( n = 9, k =29). The results were unchanged when education level was controlled for in the meta-regression.

Studies where peer assessment was online ( g = .38, p = .003) did not differ from studies where assessment was offline ( g = .24, p = .004); b = .16, 95% CI − .10 to .42, p = .215. This result was unchanged when education level was included in the meta-regression.

There was no significant difference in terms of effect size between studies where peer assessment was anonymised (g = .27, p = .019) and those where it was not (g = .25, p = .004); b = .03, 95% CI −.22 to .28, p = .811. Nor was the effect significant when education level was controlled for.

Studies where peer assessment was performed just a single time (g = .19, p = .103) did not differ significantly from those where it was performed multiple times (g = .37, p < .001); b = −.17, 95% CI −.45 to .11, p = .223. It is worth noting, however, that the results of the sub-groups analysis suggest that the effect of peer assessment was not significant when only considering studies that applied it a single time. The result did not change when education was included in the model.

There was no significant difference in effect size between studies utilising far transfer (g = .21, p = .124) and those with near transfer (g = .42, p < .001) or no transfer (g = .29, p = .017). It is worth noting, however, that the sub-groups analysis suggests that the effect of peer assessment was significant for near transfer and no transfer but not for far transfer. As shown in Table 4, the differences between transfer levels were also not significant when analysed using meta-regressions either with or without education in the model.

Studies that allocated participants to experimental condition at the student level (g = .21, p = .14) did not differ from those that allocated condition at the classroom or semester level (g = .31, p < .001 and g = .79, p = .223, respectively); see Table 4 for the meta-regressions.

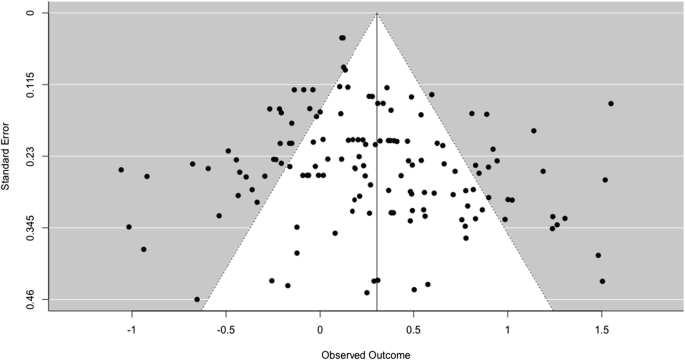

Publication Bias

Risk of publication bias was assessed by inspecting the funnel plots (see Fig. 4) of the relationship between observed effects and standard error for asymmetry (Schwarzer et al. 2015). Egger’s test was also run by including standard error as a predictor in a meta-regression. Based on the funnel plots and a non-significant Egger’s test of asymmetry (b = .886, p = .226), risk of publication bias was judged to be low.

A funnel plot showing the relationship between standard error and observed effect size for the academic performance meta-analysis
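The regression form of Egger's test described above can be sketched as a weighted regression of effect size on standard error, where a slope far from zero indicates funnel-plot asymmetry. This is a simplified illustration using inverse-variance weights and ordinary weighted least squares; it does not reproduce the robust-variance meta-regression used for the reported test and does not compute a p-value. The function name and data are hypothetical.

```python
def egger_regression(effects, std_errors):
    """Return (slope, intercept) of effect size regressed on standard error."""
    weights = [1.0 / se ** 2 for se in std_errors]
    total = sum(weights)
    mean_se = sum(w * se for w, se in zip(weights, std_errors)) / total
    mean_effect = sum(w * e for w, e in zip(weights, effects)) / total

    sxx = sum(w * (se - mean_se) ** 2 for w, se in zip(weights, std_errors))
    if sxx == 0:
        raise ValueError("Standard errors must vary to estimate a slope.")
    sxy = sum(
        w * (se - mean_se) * (e - mean_effect)
        for w, se, e in zip(weights, std_errors, effects)
    )
    slope = sxy / sxx
    return slope, mean_effect - slope * mean_se


# Hypothetical data in which less precise studies report larger effects.
slope, intercept = egger_regression([0.3, 0.5, 0.7], [0.1, 0.2, 0.3])
```

A positive slope, as in this made-up example, is the pattern expected when small, imprecise studies with large effects are over-represented.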

Proponents of peer assessment argue that it is an effective classroom technique for improving academic performance (Topping 2009). While previous narrative reviews have argued for the benefits of peer assessment, the current meta-analysis quantifies the effect of peer assessment interventions on academic performance within educational contexts. Overall, the results suggest that there is a positive effect of peer assessment on academic performance in primary, secondary, and tertiary students. The magnitude of the overall effect size was within the small to medium range for effect sizes (Sawilowsky 2009). These findings also suggest that the benefits of peer assessment are robust across many contextual factors, including different feedback and educational characteristics.

Recently, researchers have increasingly advocated for the role of assessment in promoting learning in educational practice (Wiliam 2018). Peer assessment forms a core part of theories of formative assessment because it is seen as providing new information about the learning process to the teacher or student, which in turn facilitates later performance (Pellegrino et al. 2001). The current results provide support for the position that peer assessment can be an effective classroom technique for improving academic performance. The results suggest that peer assessment is effective compared to both no assessment (which often involved ‘teaching as usual’) and teacher assessment, suggesting that peer assessment can play an important formative role in the classroom. The findings suggest that structuring classroom activities in a way that utilises peer assessment may be an effective way to promote learning and optimise the use of teaching resources by permitting the teacher to focus on assisting students with greater difficulties or on more complex tasks. Importantly, the results indicate that peer assessment can be effective across a wide range of subject areas, education levels, and assessment types. Pragmatically, this suggests that classroom teachers can implement peer assessment in a variety of ways and tailor the peer assessment design to the particular characteristics and constraints of their classroom context.

Notably, the results of this quantitative meta-analysis align well with past narrative reviews (e.g., Black and Wiliam 1998a; Topping 1998; van Zundert et al. 2010). The fact that both quantitative and qualitative syntheses of the literature suggest that peer assessment can be beneficial provides a stronger basis for recommending peer assessment as a practice. However, several of the moderators of the effectiveness of peer feedback that have been argued for in the available narrative reviews (e.g., rubrics; Panadero and Jonsson 2013) have received little support from this quantitative meta-analysis. As detailed below, this may suggest that the prominence of such feedback characteristics in narrative reviews is driven more by theoretical considerations than by quantitative empirical evidence. However, many of these moderating variables are complex (for example, rubrics can take many forms) and, due to this complexity, may not lend themselves as well to quantitative synthesis/aggregation (for a detailed discussion on combining qualitative and quantitative evidence, see Gorard 2002).

Mechanisms and Moderators

Indeed, the current findings suggest that the feedback characteristics deemed important by current theories of peer assessment may not be as significant as first thought. Previously, individual studies have argued for the importance of characteristics such as rubrics (Panadero and Jonsson 2013 ), anonymity (Bloom & Hautaluoma, 1987 ), and allowing students to practice peer assessment (Smith, Cooper, & Lancaster, 2002 ). While these feedback characteristics have been shown to affect the efficacy of peer assessment in individual studies, we find little evidence that they moderate the effect of peer assessment when analysed across studies. Many of the current models of peer assessment rely on qualitative evidence, theoretical arguments, and pedagogical experience to formulate theories about what determines effective peer assessment. While such evidence should not be discounted, the current findings also point to the need for better quantitative and experimental studies to test some of the assumptions embedded in these models. We suggest that the null findings observed in this meta-analysis regarding the proposed moderators of peer assessment efficacy should be interpreted cautiously, as more studies that experimentally manipulate these variables are needed to provide more definitive insight into how to design better peer assessment procedures.

While the current findings are ambiguous regarding the mechanisms of peer assessment, it is worth noting that without a solid understanding of the mechanisms underlying peer assessment effects, it is difficult to identify important moderators or optimally use peer assessment in the classroom. Often the research literature makes somewhat broad claims about the possible benefits of peer assessment. For example, Topping ( 1998 , p.256) suggested that peer assessment may, ‘promote a sense of ownership, personal responsibility, and motivation… [and] might also increase variety and interest, activity and interactivity, identification and bonding, self-confidence, and empathy for others’. Others have argued that peer assessment is beneficial because it is less personally evaluative—with evidence suggesting that teacher assessment is often personally evaluative (e.g., ‘good boy, that is correct’) which may have little or even negative effects on performance particularly if the assessee has low self-efficacy (Birney, Beckmann, Beckmann & Double 2017 ; Double and Birney 2017 , 2018 ; Hattie and Timperley 2007 ). However, more research is needed to distinguish between the many proposed mechanisms for peer assessment’s formative effects made within the extant literature, particularly as claims about the mechanisms of the effectiveness of peer assessment are often evidenced by student self-reports about the aspects of peer assessment they rate as useful. While such self-reports may be informative, more experimental research that systematically manipulates aspects of the design of peer assessment is likely to provide greater clarity about what aspects of peer assessment drive the observed benefits.

Our findings did indicate an important role for grading in determining the effectiveness of peer feedback. We found that peer grading was beneficial for tertiary students but not for primary or secondary school students. This finding suggests that grading adds little to the peer feedback process for non-tertiary students. In contrast, a recent meta-analysis by Sanchez et al. (2017) on peer grading found a benefit for non-tertiary students, albeit based on a relatively small number of studies compared with the current meta-analysis. The present findings instead suggest that there may be significant qualitative differences in the performance of peer grading as students develop. For example, the criteria students use to assess ability may change as they age (Stipek and Iver 1989). It is difficult to ascertain precisely why grading has positive additive effects only in tertiary students, but there are substantial differences in pedagogy, curriculum, motivation of learning, and grading systems that may account for these differences. One possibility is that tertiary students are more ‘grade orientated’ and therefore put more weight on peer assessment which includes a specific grade. Further research is needed to explore the effects of grading at different educational levels.

One of the more unexpected findings of this meta-analysis was the positive effect of peer assessment compared to teacher assessment. This finding is somewhat counterintuitive given the greater qualifications and pedagogical experience of the teacher. In addition, in many of the studies, the teacher had privileged knowledge about, and often graded, the outcome assessment. Thus, it seems reasonable to expect that teacher feedback would better align with assessment objectives and therefore produce better outcomes. Despite all these advantages, teacher assessment appeared to be less efficacious than peer assessment for academic performance. It is possible that the pedagogical disadvantages of peer assessment are compensated for by affective or motivational aspects of peer assessment, or by the substantial benefits of acting as an assessor. However, more experimental research is needed to rule out the effects of the potential methodological issues discussed in detail below.

Limitations

A major limitation of the current results is that they cannot adequately distinguish between the effect of assessing versus being an assessee. Most of the current studies confound giving and receiving peer assessment in their designs (i.e., the students in the peer assessment group both provide assessment and receive it), and therefore, no substantive conclusions can be drawn about whether the benefits of peer assessment extend from giving feedback, receiving feedback, or both. This raises the possibility that the benefit of peer assessment comes more from assessing, rather than being assessed (Usher 2018). Consistent with this, Lundstrom and Baker (2009) directly compared the effects of giving and receiving assessment on students’ writing performance and found that assessing was more beneficial than being assessed. Similarly, Graner (1987) found that assessing papers without being assessed was as effective for improving writing performance as assessing papers and receiving feedback.

Furthermore, more true experiments are needed, as there is evidence from these results that they produce more conservative estimates of the effect of peer assessment. Most of the studies included in this meta-analysis were randomly allocated at the classroom level (i.e., quasi-experiments), and all but one were analysed without appropriate techniques for clustered data (e.g., multi-level modelling). This is problematic because it makes disentangling classroom-level effects (e.g., teacher quality) from the intervention effect difficult, which may lead to biased statistical inferences (Hox 1998). While experimental designs with individual allocation are often not pragmatic for classroom interventions, online peer assessment interventions appear to be obvious candidates for more true experiments. In particular, carefully controlled experimental designs that examine the effect of specific assessment characteristics, rather than ‘black-box’ studies of the effectiveness of peer assessment, are crucial for understanding when and how peer assessment is most likely to be effective. For example, peer assessment may be counterproductive when learning novel tasks due to students’ inadequate domain knowledge (Könings et al. 2019).
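The concern about classroom-level allocation can be illustrated with the standard design effect for equal-sized clusters, 1 + (m − 1) × ICC, where m is the cluster size and ICC is the intraclass correlation. The sketch below is illustrative only: the class size, sample size, and ICC are hypothetical values chosen for the example, not figures from the included studies, and the function names are our own.

```python
def design_effect(cluster_size: float, icc: float) -> float:
    """Design effect for equal-sized clusters: 1 + (m - 1) * ICC."""
    if cluster_size < 1:
        raise ValueError("cluster_size must be at least 1")
    if not 0.0 <= icc <= 1.0:
        raise ValueError("icc must lie between 0 and 1")
    return 1.0 + (cluster_size - 1.0) * icc


def effective_sample_size(n_total: float, cluster_size: float, icc: float) -> float:
    """Number of independent observations the clustered sample is worth."""
    return n_total / design_effect(cluster_size, icc)


if __name__ == "__main__":
    # Hypothetical scenario: 200 students taught in classes of 25,
    # with an assumed intraclass correlation of 0.2.
    deff = design_effect(cluster_size=25, icc=0.2)
    n_eff = effective_sample_size(n_total=200, cluster_size=25, icc=0.2)
    print(f"design effect: {deff:.2f}")
    print(f"effective sample size: {n_eff:.1f}")
```

Under these assumed values the design effect is about 5.8, so 200 students carry roughly the information of 34 independent observations. An analysis that treats all 200 as independent would therefore understate its standard errors, which is one way clustering can bias inference when it is ignored.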

While the current results provide an overall estimate of the efficacy of peer assessment in improving academic performance when compared to teacher and no assessment, it should be noted that these effects are averaged across a wide range of outcome measures, including science project grades, essay writing ratings, and end-of-semester exam scores. Aggregating across such disparate outcomes is always problematic in meta-analysis and is a particular concern for meta-analyses in educational research, as some outcome measures are likely to be more sensitive to interventions than others (William, 2010). A further issue is that the effect of moderators may differ between academic domains. For example, some assessment characteristics may be important when teaching writing but not mathematics. Because there were too few studies in the individual academic domains (with the exception of writing), we are unable to account for these differential effects. The effects of the moderators reported here therefore need to be considered as overall averages that provide information about the extent to which the effect of a moderator generalises across domains.

Finally, the findings of the current meta-analysis are also somewhat limited by the fact that few studies gave a complete profile of the participants and measures used. For example, few studies indicated the ability of the peer reviewer relative to the reviewee, and the age difference between the peers was not always clear. Furthermore, it was not possible to classify the academic performance measures in the current study further, such as based on novelty, or to code for the quality of the measures, including their reliability and validity, because very few studies provided comprehensive details about the outcome measure(s) they utilised. Moreover, other important variables such as fidelity of treatment were almost never reported in the included manuscripts. Indeed, many of the included variables were not explicitly stated and needed to be coded based on inferences from the included studies’ text, even when one would reasonably expect that information to be made clear in a peer-reviewed manuscript. The observed effect sizes reported here should therefore be taken as an indicator of average efficacy based on the extant literature and not an indication of expected effects for specific implementations of peer assessment.

Overall, our findings provide support for the use of peer assessment as a formative practice for improving academic performance. The results indicate that peer assessment is more effective than no assessment and teacher assessment and not significantly different in its effect from self-assessment. These findings are consistent with current theories of formative assessment and instructional best practice and provide strong empirical support for the continued use of peer assessment in the classroom and other educational contexts. Further experimental work is needed to clarify the contextual and educational factors that moderate the effectiveness of peer assessment, but the present findings are encouraging for those looking to utilise peer assessment to enhance learning.

References marked with an * were included in the meta-analysis

* AbuSeileek, A. F., & Abualsha'r, A. (2014). Using peer computer-mediated corrective feedback to support EFL learners'. Language Learning & Technology, 18 (1), 76–95.

Alqassab, M., Strijbos, J. W., & Ufer, S. (2018). Training peer-feedback skills on geometric construction tasks: Role of domain knowledge and peer-feedback levels. European Journal of Psychology of Education, 33 (1), 11–30.

* Anderson, N. O., & Flash, P. (2014). The power of peer reviewing to enhance writing in horticulture: Greenhouse management. International Journal of Teaching and Learning in Higher Education, 26 (3), 310–334.

* Bangert, A. W. (1995). Peer assessment: an instructional strategy for effectively implementing performance-based assessments. (Unpublished doctoral dissertation). University of South Dakota.

* Benson, N. L. (1979). The effects of peer feedback during the writing process on writing performance, revision behavior, and attitude toward writing. (Unpublished doctoral dissertation). University of Colorado, Boulder.

* Bhullar, N., Rose, K. C., Utell, J. M., & Healey, K. N. (2014). The impact of peer review on writing in a psychology course: Lessons learned. Journal on Excellence in College Teaching, 25(2), 91–106.

* Birjandi, P., & Hadidi Tamjid, N. (2012). The role of self-, peer and teacher assessment in promoting Iranian EFL learners’ writing performance. Assessment & Evaluation in Higher Education, 37 (5), 513–533.

Birney, D. P., Beckmann, J. F., Beckmann, N., & Double, K. S. (2017). Beyond the intellect: Complexity and learning trajectories in Raven’s Progressive Matrices depend on self-regulatory processes and conative dispositions. Intelligence, 61 , 63–77.

Black, P., & Wiliam, D. (1998a). Assessment and classroom learning. Assessment in Education: Principles, Policy & Practice, 5 (1), 7–74.

Black, P., & Wiliam, D. (2009). Developing the theory of formative assessment. Educational Assessment, Evaluation and Accountability (formerly: Journal of Personnel Evaluation in Education), 21 (1), 5.

Bloom, A. J., & Hautaluoma, J. E. (1987). Effects of message valence, communicator credibility, and source anonymity on reactions to peer feedback. The Journal of Social Psychology, 127 (4), 329–338.

Brown, G. T., Irving, S. E., Peterson, E. R., & Hirschfeld, G. H. (2009). Use of interactive–informal assessment practices: New Zealand secondary students' conceptions of assessment. Learning and Instruction, 19 (2), 97–111.

* Califano, L. Z. (1987). Teacher and peer editing: Their effects on students' writing as measured by t-unit length, holistic scoring, and the attitudes of fifth and sixth grade students. (Unpublished doctoral dissertation). Northern Arizona University.

* Chaney, B. A., & Ingraham, L. R. (2009). Using peer grading and proofreading to ratchet student expectations in preparing accounting cases. American Journal of Business Education, 2 (3), 39–48.

* Chang, S. H., Wu, T. C., Kuo, Y. K., & You, L. C. (2012). Project-based learning with an online peer assessment system in a photonics instruction for enhancing LED design skills. Turkish Online Journal of Educational Technology-TOJET, 11(4), 236–246.

* Cho, K., & MacArthur, C. (2011). Learning by reviewing. Journal of Educational Psychology, 103 (1), 73.

Cho, K., Schunn, C. D., & Charney, D. (2006). Commenting on writing: Typology and perceived helpfulness of comments from novice peer reviewers and subject matter experts. Written Communication, 23 (3), 260–294.

Cook, D. J., Guyatt, G. H., Ryan, G., Clifton, J., Buckingham, L., Willan, A., et al. (1993). Should unpublished data be included in meta-analyses?: Current convictions and controversies. JAMA, 269 (21), 2749–2753.

* Crowe, J. A., Silva, T., & Ceresola, R. (2015). The effect of peer review on student learning outcomes in a research methods course. Teaching Sociology, 43 (3), 201–213.

* Diab, N. M. (2011). Assessing the relationship between different types of student feedback and the quality of revised writing. Assessing Writing, 16(4), 274–292.

Demetriadis, S., Egerter, T., Hanisch, F., & Fischer, F. (2011). Peer review-based scripted collaboration to support domain-specific and domain-general knowledge acquisition in computer science. Computer Science Education, 21 (1), 29–56.

Dochy, F., Segers, M., & Sluijsmans, D. (1999). The use of self-, peer and co-assessment in higher education: A review. Studies in Higher Education, 24 (3), 331–350.

Double, K. S., & Birney, D. (2017). Are you sure about that? Eliciting confidence ratings may influence performance on Raven’s progressive matrices. Thinking & Reasoning, 23 (2), 190–206.

Double, K. S., & Birney, D. P. (2018). Reactivity to confidence ratings in older individuals performing the Latin square task. Metacognition and Learning, 13(3), 309–326.

* Enders, F. B., Jenkins, S., & Hoverman, V. (2010). Calibrated peer review for interpreting linear regression parameters: Results from a graduate course. Journal of Statistics Education, 18 (2).

* English, R., Brookes, S. T., Avery, K., Blazeby, J. M., & Ben-Shlomo, Y. (2006). The effectiveness and reliability of peer-marking in first-year medical students. Medical Education, 40 (10), 965–972.

* Erfani, S. S., & Nikbin, S. (2015). The effect of peer-assisted mediation vs. tutor-intervention within dynamic assessment framework on writing development of Iranian Intermediate EFL Learners. English Language Teaching, 8 (4), 128–141.