- Review Article

- Published: 08 March 2018

Meta-analysis and the science of research synthesis

- Jessica Gurevitch 1,

- Julia Koricheva 2,

- Shinichi Nakagawa 3, 4 &

- Gavin Stewart 5

Nature volume 555, pages 175–182 (2018)

- Biodiversity

- Outcomes research

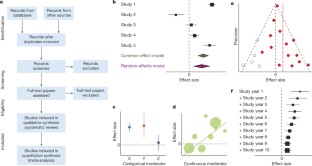

Meta-analysis is the quantitative, scientific synthesis of research results. Since the term and modern approaches to research synthesis were first introduced in the 1970s, meta-analysis has had a revolutionary effect in many scientific fields, helping to establish evidence-based practice and to resolve seemingly contradictory research outcomes. At the same time, its implementation has engendered criticism and controversy, in some cases general and in others specific to particular disciplines. Here we take the opportunity provided by the recent fortieth anniversary of meta-analysis to reflect on the accomplishments, limitations, recent advances and directions for future developments in the field of research synthesis.

Jennions, M. D ., Lortie, C. J. & Koricheva, J. in The Handbook of Meta-analysis in Ecology and Evolution (eds Koricheva, J . et al.) Ch. 23 , 364–380 (Princeton Univ. Press, 2013)

Article Google Scholar

Roberts, P. D ., Stewart, G. B. & Pullin, A. S. Are review articles a reliable source of evidence to support conservation and environmental management? A comparison with medicine. Biol. Conserv. 132 , 409–423 (2006)

Bastian, H ., Glasziou, P . & Chalmers, I. Seventy-five trials and eleven systematic reviews a day: how will we ever keep up? PLoS Med. 7 , e1000326 (2010)

Article PubMed PubMed Central Google Scholar

Borman, G. D. & Grigg, J. A. in The Handbook of Research Synthesis and Meta-analysis 2nd edn (eds Cooper, H. M . et al.) 497–519 (Russell Sage Foundation, 2009)

Ioannidis, J. P. A. The mass production of redundant, misleading, and conflicted systematic reviews and meta-analyses. Milbank Q. 94 , 485–514 (2016)

Koricheva, J . & Gurevitch, J. Uses and misuses of meta-analysis in plant ecology. J. Ecol. 102 , 828–844 (2014)

Littell, J. H . & Shlonsky, A. Making sense of meta-analysis: a critique of “effectiveness of long-term psychodynamic psychotherapy”. Clin. Soc. Work J. 39 , 340–346 (2011)

Morrissey, M. B. Meta-analysis of magnitudes, differences and variation in evolutionary parameters. J. Evol. Biol. 29 , 1882–1904 (2016)

Article CAS PubMed Google Scholar

Whittaker, R. J. Meta-analyses and mega-mistakes: calling time on meta-analysis of the species richness-productivity relationship. Ecology 91 , 2522–2533 (2010)

Article PubMed Google Scholar

Begley, C. G . & Ellis, L. M. Drug development: Raise standards for preclinical cancer research. Nature 483 , 531–533 (2012); clarification 485 , 41 (2012)

Article CAS ADS PubMed Google Scholar

Hillebrand, H . & Cardinale, B. J. A critique for meta-analyses and the productivity-diversity relationship. Ecology 91 , 2545–2549 (2010)

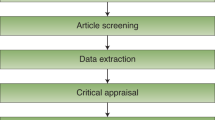

Moher, D . et al. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 6 , e1000097 (2009). This paper provides a consensus regarding the reporting requirements for medical meta-analysis and has been highly influential in ensuring good reporting practice and standardizing language in evidence-based medicine, with further guidance for protocols, individual patient data meta-analyses and animal studies.

Moher, D . et al. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Syst. Rev. 4 , 1 (2015)

Nakagawa, S . & Santos, E. S. A. Methodological issues and advances in biological meta-analysis. Evol. Ecol. 26 , 1253–1274 (2012)

Nakagawa, S ., Noble, D. W. A ., Senior, A. M. & Lagisz, M. Meta-evaluation of meta-analysis: ten appraisal questions for biologists. BMC Biol. 15 , 18 (2017)

Hedges, L. & Olkin, I. Statistical Methods for Meta-analysis (Academic Press, 1985)

Viechtbauer, W. Conducting meta-analyses in R with the metafor package. J. Stat. Softw. 36 , 1–48 (2010)

Anzures-Cabrera, J . & Higgins, J. P. T. Graphical displays for meta-analysis: an overview with suggestions for practice. Res. Synth. Methods 1 , 66–80 (2010)

Egger, M ., Davey Smith, G ., Schneider, M. & Minder, C. Bias in meta-analysis detected by a simple, graphical test. Br. Med. J. 315 , 629–634 (1997)

Article CAS Google Scholar

Duval, S . & Tweedie, R. Trim and fill: a simple funnel-plot-based method of testing and adjusting for publication bias in meta-analysis. Biometrics 56 , 455–463 (2000)

Article CAS MATH PubMed Google Scholar

Leimu, R . & Koricheva, J. Cumulative meta-analysis: a new tool for detection of temporal trends and publication bias in ecology. Proc. R. Soc. Lond. B 271 , 1961–1966 (2004)

Higgins, J. P. T . & Green, S. (eds) Cochrane Handbook for Systematic Reviews of Interventions : Version 5.1.0 (Wiley, 2011). This large collaborative work provides definitive guidance for the production of systematic reviews in medicine and is of broad interest for methods development outside the medical field.

Lau, J ., Rothstein, H. R . & Stewart, G. B. in The Handbook of Meta-analysis in Ecology and Evolution (eds Koricheva, J . et al.) Ch. 25 , 407–419 (Princeton Univ. Press, 2013)

Lortie, C. J ., Stewart, G ., Rothstein, H. & Lau, J. How to critically read ecological meta-analyses. Res. Synth. Methods 6 , 124–133 (2015)

Murad, M. H . & Montori, V. M. Synthesizing evidence: shifting the focus from individual studies to the body of evidence. J. Am. Med. Assoc. 309 , 2217–2218 (2013)

Rasmussen, S. A ., Chu, S. Y ., Kim, S. Y ., Schmid, C. H . & Lau, J. Maternal obesity and risk of neural tube defects: a meta-analysis. Am. J. Obstet. Gynecol. 198 , 611–619 (2008)

Littell, J. H ., Campbell, M ., Green, S . & Toews, B. Multisystemic therapy for social, emotional, and behavioral problems in youth aged 10–17. Cochrane Database Syst. Rev. https://doi.org/10.1002/14651858.CD004797.pub4 (2005)

Schmidt, F. L. What do data really mean? Research findings, meta-analysis, and cumulative knowledge in psychology. Am. Psychol. 47 , 1173–1181 (1992)

Button, K. S . et al. Power failure: why small sample size undermines the reliability of neuroscience. Nat. Rev. Neurosci. 14 , 365–376 (2013); erratum 14 , 451 (2013)

Parker, T. H . et al. Transparency in ecology and evolution: real problems, real solutions. Trends Ecol. Evol. 31 , 711–719 (2016)

Stewart, G. Meta-analysis in applied ecology. Biol. Lett. 6 , 78–81 (2010)

Sutherland, W. J ., Pullin, A. S ., Dolman, P. M . & Knight, T. M. The need for evidence-based conservation. Trends Ecol. Evol. 19 , 305–308 (2004)

Lowry, E . et al. Biological invasions: a field synopsis, systematic review, and database of the literature. Ecol. Evol. 3 , 182–196 (2013)

Article PubMed Central Google Scholar

Parmesan, C . & Yohe, G. A globally coherent fingerprint of climate change impacts across natural systems. Nature 421 , 37–42 (2003)

Jennions, M. D ., Lortie, C. J . & Koricheva, J. in The Handbook of Meta-analysis in Ecology and Evolution (eds Koricheva, J . et al.) Ch. 24 , 381–403 (Princeton Univ. Press, 2013)

Balvanera, P . et al. Quantifying the evidence for biodiversity effects on ecosystem functioning and services. Ecol. Lett. 9 , 1146–1156 (2006)

Cardinale, B. J . et al. Effects of biodiversity on the functioning of trophic groups and ecosystems. Nature 443 , 989–992 (2006)

Rey Benayas, J. M ., Newton, A. C ., Diaz, A. & Bullock, J. M. Enhancement of biodiversity and ecosystem services by ecological restoration: a meta-analysis. Science 325 , 1121–1124 (2009)

Article ADS PubMed CAS Google Scholar

Leimu, R ., Mutikainen, P. I. A ., Koricheva, J. & Fischer, M. How general are positive relationships between plant population size, fitness and genetic variation? J. Ecol. 94 , 942–952 (2006)

Hillebrand, H. On the generality of the latitudinal diversity gradient. Am. Nat. 163 , 192–211 (2004)

Gurevitch, J. in The Handbook of Meta-analysis in Ecology and Evolution (eds Koricheva, J . et al.) Ch. 19 , 313–320 (Princeton Univ. Press, 2013)

Rustad, L . et al. A meta-analysis of the response of soil respiration, net nitrogen mineralization, and aboveground plant growth to experimental ecosystem warming. Oecologia 126 , 543–562 (2001)

Adams, D. C. Phylogenetic meta-analysis. Evolution 62 , 567–572 (2008)

Hadfield, J. D . & Nakagawa, S. General quantitative genetic methods for comparative biology: phylogenies, taxonomies and multi-trait models for continuous and categorical characters. J. Evol. Biol. 23 , 494–508 (2010)

Lajeunesse, M. J. Meta-analysis and the comparative phylogenetic method. Am. Nat. 174 , 369–381 (2009)

Rosenberg, M. S ., Adams, D. C . & Gurevitch, J. MetaWin: Statistical Software for Meta-Analysis with Resampling Tests Version 1 (Sinauer Associates, 1997)

Wallace, B. C . et al. OpenMEE: intuitive, open-source software for meta-analysis in ecology and evolutionary biology. Methods Ecol. Evol. 8 , 941–947 (2016)

Gurevitch, J ., Morrison, J. A . & Hedges, L. V. The interaction between competition and predation: a meta-analysis of field experiments. Am. Nat. 155 , 435–453 (2000)

Adams, D. C ., Gurevitch, J . & Rosenberg, M. S. Resampling tests for meta-analysis of ecological data. Ecology 78 , 1277–1283 (1997)

Gurevitch, J . & Hedges, L. V. Statistical issues in ecological meta-analyses. Ecology 80 , 1142–1149 (1999)

Schmid, C. H . & Mengersen, K. in The Handbook of Meta-analysis in Ecology and Evolution (eds Koricheva, J . et al.) Ch. 11 , 145–173 (Princeton Univ. Press, 2013)

Eysenck, H. J. Exercise in mega-silliness. Am. Psychol. 33 , 517 (1978)

Simberloff, D. Rejoinder to: Don’t calculate effect sizes; study ecological effects. Ecol. Lett. 9 , 921–922 (2006)

Cadotte, M. W ., Mehrkens, L. R . & Menge, D. N. L. Gauging the impact of meta-analysis on ecology. Evol. Ecol. 26 , 1153–1167 (2012)

Koricheva, J ., Jennions, M. D. & Lau, J. in The Handbook of Meta-analysis in Ecology and Evolution (eds Koricheva, J . et al.) Ch. 15 , 237–254 (Princeton Univ. Press, 2013)

Lau, J ., Ioannidis, J. P. A ., Terrin, N ., Schmid, C. H . & Olkin, I. The case of the misleading funnel plot. Br. Med. J. 333 , 597–600 (2006)

Vetter, D ., Rucker, G. & Storch, I. Meta-analysis: a need for well-defined usage in ecology and conservation biology. Ecosphere 4 , 1–24 (2013)

Mengersen, K ., Jennions, M. D. & Schmid, C. H. in The Handbook of Meta-analysis in Ecology and Evolution (eds Koricheva, J. et al.) Ch. 16 , 255–283 (Princeton Univ. Press, 2013)

Patsopoulos, N. A ., Analatos, A. A. & Ioannidis, J. P. A. Relative citation impact of various study designs in the health sciences. J. Am. Med. Assoc. 293 , 2362–2366 (2005)

Kueffer, C . et al. Fame, glory and neglect in meta-analyses. Trends Ecol. Evol. 26 , 493–494 (2011)

Cohnstaedt, L. W. & Poland, J. Review Articles: The black-market of scientific currency. Ann. Entomol. Soc. Am. 110 , 90 (2017)

Longo, D. L. & Drazen, J. M. Data sharing. N. Engl. J. Med. 374 , 276–277 (2016)

Gauch, H. G. Scientific Method in Practice (Cambridge Univ. Press, 2003)

Science Staff. Dealing with data: introduction. Challenges and opportunities. Science 331 , 692–693 (2011)

Nosek, B. A . et al. Promoting an open research culture. Science 348 , 1422–1425 (2015)

Article CAS ADS PubMed PubMed Central Google Scholar

Stewart, L. A . et al. Preferred reporting items for a systematic review and meta-analysis of individual participant data: the PRISMA-IPD statement. J. Am. Med. Assoc. 313 , 1657–1665 (2015)

Saldanha, I. J . et al. Evaluating Data Abstraction Assistant, a novel software application for data abstraction during systematic reviews: protocol for a randomized controlled trial. Syst. Rev. 5 , 196 (2016)

Tipton, E. & Pustejovsky, J. E. Small-sample adjustments for tests of moderators and model fit using robust variance estimation in meta-regression. J. Educ. Behav. Stat. 40 , 604–634 (2015)

Mengersen, K ., MacNeil, M. A . & Caley, M. J. The potential for meta-analysis to support decision analysis in ecology. Res. Synth. Methods 6 , 111–121 (2015)

Ashby, D. Bayesian statistics in medicine: a 25 year review. Stat. Med. 25 , 3589–3631 (2006)

Article MathSciNet PubMed Google Scholar

Senior, A. M . et al. Heterogeneity in ecological and evolutionary meta-analyses: its magnitude and implications. Ecology 97 , 3293–3299 (2016)

McAuley, L ., Pham, B ., Tugwell, P . & Moher, D. Does the inclusion of grey literature influence estimates of intervention effectiveness reported in meta-analyses? Lancet 356 , 1228–1231 (2000)

Koricheva, J ., Gurevitch, J . & Mengersen, K. (eds) The Handbook of Meta-Analysis in Ecology and Evolution (Princeton Univ. Press, 2013) This book provides the first comprehensive guide to undertaking meta-analyses in ecology and evolution and is also relevant to other fields where heterogeneity is expected, incorporating explicit consideration of the different approaches used in different domains.

Lumley, T. Network meta-analysis for indirect treatment comparisons. Stat. Med. 21 , 2313–2324 (2002)

Zarin, W . et al. Characteristics and knowledge synthesis approach for 456 network meta-analyses: a scoping review. BMC Med. 15 , 3 (2017)

Elliott, J. H . et al. Living systematic reviews: an emerging opportunity to narrow the evidence-practice gap. PLoS Med. 11 , e1001603 (2014)

Vandvik, P. O ., Brignardello-Petersen, R . & Guyatt, G. H. Living cumulative network meta-analysis to reduce waste in research: a paradigmatic shift for systematic reviews? BMC Med. 14 , 59 (2016)

Jarvinen, A. A meta-analytic study of the effects of female age on laying date and clutch size in the Great Tit Parus major and the Pied Flycatcher Ficedula hypoleuca . Ibis 133 , 62–67 (1991)

Arnqvist, G. & Wooster, D. Meta-analysis: synthesizing research findings in ecology and evolution. Trends Ecol. Evol. 10 , 236–240 (1995)

Hedges, L. V ., Gurevitch, J . & Curtis, P. S. The meta-analysis of response ratios in experimental ecology. Ecology 80 , 1150–1156 (1999)

Gurevitch, J ., Curtis, P. S. & Jones, M. H. Meta-analysis in ecology. Adv. Ecol. Res 32 , 199–247 (2001)

Lajeunesse, M. J. phyloMeta: a program for phylogenetic comparative analyses with meta-analysis. Bioinformatics 27 , 2603–2604 (2011)

CAS PubMed Google Scholar

Pearson, K. Report on certain enteric fever inoculation statistics. Br. Med. J. 2 , 1243–1246 (1904)

Fisher, R. A. Statistical Methods for Research Workers (Oliver and Boyd, 1925)

Yates, F. & Cochran, W. G. The analysis of groups of experiments. J. Agric. Sci. 28 , 556–580 (1938)

Cochran, W. G. The combination of estimates from different experiments. Biometrics 10 , 101–129 (1954)

Smith, M. L . & Glass, G. V. Meta-analysis of psychotherapy outcome studies. Am. Psychol. 32 , 752–760 (1977)

Glass, G. V. Meta-analysis at middle age: a personal history. Res. Synth. Methods 6 , 221–231 (2015)

Cooper, H. M ., Hedges, L. V . & Valentine, J. C. (eds) The Handbook of Research Synthesis and Meta-analysis 2nd edn (Russell Sage Foundation, 2009). This book is an important compilation that builds on the ground-breaking first edition to set the standard for best practice in meta-analysis, primarily in the social sciences but with applications to medicine and other fields.

Rosenthal, R. Meta-analytic Procedures for Social Research (Sage, 1991)

Hunter, J. E ., Schmidt, F. L. & Jackson, G. B. Meta-analysis: Cumulating Research Findings Across Studies (Sage, 1982)

Gurevitch, J ., Morrow, L. L ., Wallace, A . & Walsh, J. S. A meta-analysis of competition in field experiments. Am. Nat. 140 , 539–572 (1992). This influential early ecological meta-analysis reports multiple experimental outcomes on a longstanding and controversial topic that introduced a wide range of ecologists to research synthesis methods.

O’Rourke, K. An historical perspective on meta-analysis: dealing quantitatively with varying study results. J. R. Soc. Med. 100 , 579–582 (2007)

Shadish, W. R . & Lecy, J. D. The meta-analytic big bang. Res. Synth. Methods 6 , 246–264 (2015)

Glass, G. V. Primary, secondary, and meta-analysis of research. Educ. Res. 5 , 3–8 (1976)

DerSimonian, R . & Laird, N. Meta-analysis in clinical trials. Control. Clin. Trials 7 , 177–188 (1986)

Lipsey, M. W . & Wilson, D. B. The efficacy of psychological, educational, and behavioral treatment. Confirmation from meta-analysis. Am. Psychol. 48 , 1181–1209 (1993)

Chalmers, I. & Altman, D. G. Systematic Reviews (BMJ Publishing Group, 1995)

Moher, D . et al. Improving the quality of reports of meta-analyses of randomised controlled trials: the QUOROM statement. Quality of reporting of meta-analyses. Lancet 354 , 1896–1900 (1999)

Higgins, J. P. & Thompson, S. G. Quantifying heterogeneity in a meta-analysis. Stat. Med. 21 , 1539–1558 (2002)

Download references

Acknowledgements

We dedicate this Review to the memory of Ingram Olkin and William Shadish, founding members of the Society for Research Synthesis Methodology who made tremendous contributions to the development of meta-analysis and research synthesis and to the supervision of generations of students. We thank L. Lagisz for help in preparing the figures. We are grateful to the Center for Open Science and the Laura and John Arnold Foundation for hosting and funding a workshop, from which this article originated. S.N. is supported by an Australian Research Council Future Fellowship (FT130100268). J.G. acknowledges funding from the US National Science Foundation (ABI 1262402).

Author information

Authors and affiliations.

Department of Ecology and Evolution, Stony Brook University, Stony Brook, 11794-5245, New York, USA

Jessica Gurevitch

School of Biological Sciences, Royal Holloway University of London, Egham, TW20 0EX, Surrey, UK

Julia Koricheva

Evolution and Ecology Research Centre and School of Biological, Earth and Environmental Sciences, University of New South Wales, Sydney, 2052, New South Wales, Australia

Shinichi Nakagawa

Diabetes and Metabolism Division, Garvan Institute of Medical Research, 384 Victoria Street, Darlinghurst, Sydney, 2010, New South Wales, Australia

School of Natural and Environmental Sciences, Newcastle University, Newcastle upon Tyne, NE1 7RU, UK

Gavin Stewart

Contributions

All authors contributed equally in designing the study and writing the manuscript, and so are listed alphabetically.

Corresponding authors

Correspondence to Jessica Gurevitch, Julia Koricheva, Shinichi Nakagawa or Gavin Stewart.

Ethics declarations

Competing interests.

The authors declare no competing financial interests.

Additional information

Reviewer Information Nature thanks D. Altman, M. Lajeunesse, D. Moher and G. Romero for their contribution to the peer review of this work.

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article.

Gurevitch, J., Koricheva, J., Nakagawa, S. et al. Meta-analysis and the science of research synthesis. Nature 555, 175–182 (2018). https://doi.org/10.1038/nature25753

Received: 04 March 2017

Accepted: 12 January 2018

Published: 08 March 2018

Issue Date: 08 March 2018

DOI: https://doi.org/10.1038/nature25753
