
Doing Survey Research | A Step-by-Step Guide & Examples

Published on 6 May 2022 by Shona McCombes. Revised on 10 October 2022.

Survey research means collecting information about a group of people by asking them questions and analysing the results. To conduct an effective survey, follow these six steps:

- Determine who will participate in the survey

- Decide the type of survey (mail, online, or in-person)

- Design the survey questions and layout

- Distribute the survey

- Analyse the responses

- Write up the results

Surveys are a flexible method of data collection that can be used in many different types of research.

Table of contents

- What are surveys used for?

- Step 1: Define the population and sample

- Step 2: Decide on the type of survey

- Step 3: Design the survey questions

- Step 4: Distribute the survey and collect responses

- Step 5: Analyse the survey results

- Step 6: Write up the survey results

- Frequently asked questions about surveys

What are surveys used for?

Surveys are used as a method of gathering data in many different fields. They are a good choice when you want to find out about the characteristics, preferences, opinions, or beliefs of a group of people.

Common uses of survey research include:

- Social research: Investigating the experiences and characteristics of different social groups

- Market research: Finding out what customers think about products, services, and companies

- Health research: Collecting data from patients about symptoms and treatments

- Politics: Measuring public opinion about parties and policies

- Psychology: Researching personality traits, preferences, and behaviours

Surveys can be used in both cross-sectional studies, where you collect data just once, and longitudinal studies, where you survey the same sample several times over an extended period.


Step 1: Define the population and sample

Before you start conducting survey research, you should already have a clear research question that defines what you want to find out. Based on this question, you need to determine exactly who you will target to participate in the survey.

Populations

The target population is the specific group of people that you want to find out about. This group can be very broad or relatively narrow. For example:

- The population of Brazil

- University students in the UK

- Second-generation immigrants in the Netherlands

- Customers of a specific company aged 18 to 24

- British transgender women over the age of 50

Your survey should aim to produce results that can be generalised to the whole population. That means you need to carefully define exactly who you want to draw conclusions about.

It’s rarely possible to survey the entire population of your research – it would be very difficult to get a response from every person in Brazil or every university student in the UK. Instead, you will usually survey a sample from the population.

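The sample size you need depends on how big the population is, and also on how precise you want your results to be (the margin of error and confidence level you are willing to accept). You can use an online sample calculator to work out how many responses you need.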
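Many sample size calculators use a calculation along the lines of Cochran's formula with a finite population correction. The sketch below makes three assumptions: simple random sampling, a question whose answer is a proportion (for example, the share of respondents who agree), and the conservative default p = 0.5. The function name `required_sample_size` is only illustrative, and individual calculators may differ in the details.

```python
import math

def required_sample_size(population=None, margin_of_error=0.05, z=1.96, p=0.5):
    """Estimate how many completed responses are needed to estimate a proportion.

    Cochran's formula: n0 = z^2 * p * (1 - p) / e^2.
    If the population size N is known, apply the finite population
    correction: n = n0 / (1 + (n0 - 1) / N). p = 0.5 gives the largest n.
    """
    n0 = (z ** 2) * p * (1 - p) / margin_of_error ** 2
    if population is None:  # very large or unknown population
        return math.ceil(n0)
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)  # round up: you cannot collect part of a response

# 95% confidence (z = 1.96) and a margin of error of +/- 5 percentage points
print(required_sample_size())                 # 385
print(required_sample_size(population=1000))  # 278
```

The result shrinks noticeably for small populations and barely changes once the population runs into the tens of thousands. This is why sample size depends only partly on population size.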

There are many sampling methods that allow you to generalise to broad populations. In general, though, the sample should aim to be representative of the population as a whole. The larger and more representative your sample, the more valid your conclusions.
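One of the simplest of these methods is simple random sampling. It gives every member of the sampling frame (the list of people you can draw from) an equal chance of selection. The following is a minimal Python sketch that assumes you already have such a list. The student IDs and the function name are made up for illustration.

```python
import random

def simple_random_sample(sampling_frame, n, seed=None):
    """Draw n people without replacement; everyone has the same chance of selection."""
    if n > len(sampling_frame):
        raise ValueError("sample size cannot exceed the size of the sampling frame")
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible
    return rng.sample(sampling_frame, n)

# Hypothetical sampling frame: 2,000 student IDs
frame = [f"student_{i:04d}" for i in range(1, 2001)]
sample = simple_random_sample(frame, 300, seed=42)
print(len(sample), len(set(sample)))  # 300 300
```

A random draw only produces a representative sample if the sampling frame itself covers the whole target population.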

Step 2: Decide on the type of survey

There are two main types of survey:

- A questionnaire, where a list of questions is distributed by post, online, or in person, and respondents fill it out themselves

- An interview, where the researcher asks a set of questions by phone or in person and records the responses

Which type you choose depends on the sample size and location, as well as the focus of the research.

Questionnaires

Sending out a paper survey by post is a common method of gathering demographic information (for example, in a government census of the population).

- You can easily access a large sample.

- You have some control over who is included in the sample (e.g., residents of a specific region).

- The response rate is often low.

Online surveys are a popular choice for students doing dissertation research, due to the low cost and flexibility of this method. There are many online tools available for constructing surveys, such as SurveyMonkey and Google Forms.

- You can quickly access a large sample without constraints on time or location.

- The data is easy to process and analyse.

- The anonymity and accessibility of online surveys mean you have less control over who responds.

If your research focuses on a specific location, you can distribute a written questionnaire to be completed by respondents on the spot. For example, you could approach the customers of a shopping centre or ask all students to complete a questionnaire at the end of a class.

- You can screen respondents to make sure only people in the target population are included in the sample.

- You can collect time- and location-specific data (e.g., the opinions of a shop’s weekday customers).

- The sample size will be smaller, so this method is less suitable for collecting data on broad populations.

Oral interviews are a useful method for smaller sample sizes. They allow you to gather more in-depth information on people’s opinions and preferences. You can conduct interviews by phone or in person.

- You have personal contact with respondents, so you know exactly who will be included in the sample in advance.

- You can clarify questions and ask for follow-up information when necessary.

- The lack of anonymity may cause respondents to answer less honestly, and there is more risk of researcher bias.

Like questionnaires, interviews can be used to collect quantitative data: the researcher records each response as a category or rating and statistically analyses the results. But they are more commonly used to collect qualitative data: the interviewees’ full responses are transcribed and analysed individually to gain a richer understanding of their opinions and feelings.

Step 3: Design the survey questions

Next, you need to decide which questions you will ask and how you will ask them. It’s important to consider:

- The type of questions

- The content of the questions

- The phrasing of the questions

- The ordering and layout of the survey

Open-ended vs closed-ended questions

There are two main forms of survey questions: open-ended and closed-ended. Many surveys use a combination of both.

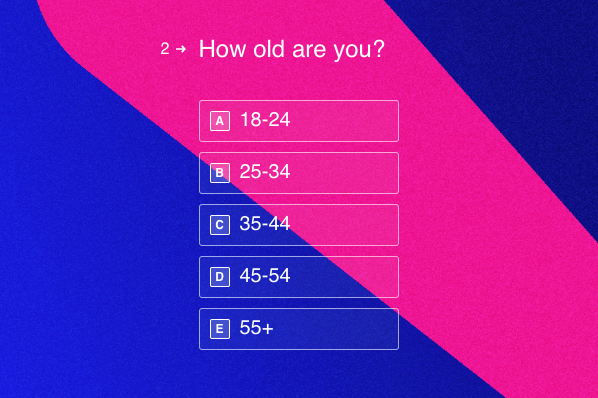

Closed-ended questions give the respondent a predetermined set of answers to choose from. A closed-ended question can include:

- A binary answer (e.g., yes/no or agree/disagree)

- A scale (e.g., a Likert scale with five points ranging from strongly agree to strongly disagree)

- A list of options with a single answer possible (e.g., age categories)

- A list of options with multiple answers possible (e.g., leisure interests)

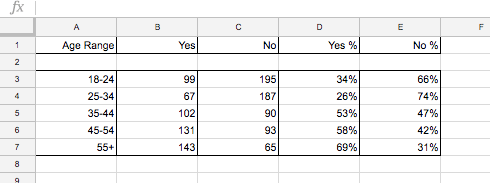

Closed-ended questions are best for quantitative research. They provide you with numerical data that can be statistically analysed to find patterns, trends, and correlations.
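Closed-ended answers are usually converted into numbers before they are analysed. The sketch below shows one common scheme. It assumes a five-point agreement scale coded 1 to 5 and a hypothetical list of leisure interests. The labels and codes are illustrative, not a standard.

```python
# Hypothetical coding scheme for a five-point agreement scale
LIKERT_CODES = {
    "strongly disagree": 1,
    "disagree": 2,
    "neither agree nor disagree": 3,
    "agree": 4,
    "strongly agree": 5,
}

def encode_likert(answer):
    """Map a Likert response label to its numeric code (1-5), ignoring case and spaces."""
    return LIKERT_CODES[answer.strip().lower()]

def encode_multi_select(selected, options):
    """Turn a 'select all that apply' answer into one 0/1 indicator per option."""
    chosen = set(selected)
    return {option: int(option in chosen) for option in options}

leisure_options = ["sport", "music", "reading", "gaming"]  # hypothetical answer list
print(encode_likert("Agree"))  # 4
print(encode_multi_select(["music", "reading"], leisure_options))
# {'sport': 0, 'music': 1, 'reading': 1, 'gaming': 0}
```

Each option of a multiple-answer question becomes its own 0/1 variable. This keeps the data in a form that most statistics programs can analyse directly.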

Open-ended questions are best for qualitative research. This type of question has no predetermined answers to choose from. Instead, the respondent answers in their own words.

Open questions are most common in interviews, but you can also use them in questionnaires. They are often useful as follow-up questions to ask for more detailed explanations of responses to the closed questions.

The content of the survey questions

To ensure the validity and reliability of your results, you need to carefully consider each question in the survey. All questions should be narrowly focused with enough context for the respondent to answer accurately. Avoid questions that are not directly relevant to the survey’s purpose.

When constructing closed-ended questions, ensure that the options cover all possibilities. If you include a list of options that isn’t exhaustive, you can add an ‘other’ field.

Phrasing the survey questions

In terms of language, the survey questions should be as clear and precise as possible. Tailor the questions to your target population, keeping in mind their level of knowledge of the topic.

Use language that respondents will easily understand, and avoid words with vague or ambiguous meanings. Make sure your questions are phrased neutrally, with no bias towards one answer or another.

Ordering the survey questions

The questions should be arranged in a logical order. Start with easy, non-sensitive, closed-ended questions that will encourage the respondent to continue.

If the survey covers several different topics or themes, group together related questions. You can divide a questionnaire into sections to help respondents understand what is being asked in each part.

If a question refers back to or depends on the answer to a previous question, place the two questions directly next to each other.

Step 4: Distribute the survey and collect responses

Before you start, create a clear plan for where, when, how, and with whom you will conduct the survey. Determine in advance how many responses you require and how you will gain access to the sample.

When you are satisfied that you have created a strong research design suitable for answering your research questions, you can conduct the survey through your method of choice – by post, online, or in person.

Step 5: Analyse the survey results

There are many methods of analysing the results of your survey. First you have to process the data, usually with the help of a computer program to sort all the responses. You should also cleanse the data by removing incomplete or incorrectly completed responses.
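What counts as "incomplete" or "incorrectly completed" depends on your survey, so decide the rules before you look at the results. The sketch below assumes a hypothetical survey with two required questions and a 1 to 7 satisfaction rating. It keeps only the responses that pass both checks.

```python
# Hypothetical survey: two required questions, satisfaction rated on a 1-7 scale
REQUIRED = ["age_group", "satisfaction"]
VALID_SATISFACTION = range(1, 8)

def is_usable(response):
    """Keep a response only if every required question has a valid answer."""
    if any(response.get(q) in (None, "") for q in REQUIRED):
        return False  # incomplete: a required question was skipped
    # incorrectly completed: a rating outside the 1-7 scale
    return response["satisfaction"] in VALID_SATISFACTION

responses = [
    {"id": 1, "age_group": "18-24", "satisfaction": 6},
    {"id": 2, "age_group": "", "satisfaction": 4},       # missing age group
    {"id": 3, "age_group": "25-34", "satisfaction": 9},  # impossible rating
    {"id": 4, "age_group": "35-44", "satisfaction": 2},
]
clean = [r for r in responses if is_usable(r)]
print([r["id"] for r in clean])  # [1, 4]
```

Report how many responses you removed and why, because this affects the response rate you describe in your methodology.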

If you asked open-ended questions, you will have to code the responses by assigning labels to each response and organising them into categories or themes. You can also use more qualitative methods, such as thematic analysis, which is especially suitable for analysing interviews.
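Coding open-ended responses ultimately depends on the researcher's judgement. Even so, a simple keyword-based first pass can help you organise a large number of answers before you read them closely. The codebook below is hypothetical. In practice you would develop and refine the codes from the data itself.

```python
from collections import Counter

# Hypothetical codebook: each code (theme) with some indicative keywords
CODEBOOK = {
    "cost": ["price", "expensive", "cheap"],
    "service": ["staff", "friendly", "rude", "helpful"],
    "convenience": ["location", "parking", "opening hours"],
}

def code_response(text):
    """Return every code whose keywords appear in the response (empty if none match)."""
    lowered = text.lower()
    return sorted(code for code, keywords in CODEBOOK.items()
                  if any(keyword in lowered for keyword in keywords))

answers = [
    "The staff were helpful but parking is a nightmare.",
    "Too expensive for what you get.",
    "No complaints.",
]
coded = [code_response(a) for a in answers]
print(coded)  # [['convenience', 'service'], ['cost'], []]

# Count how often each theme occurs across all responses
print(Counter(code for codes in coded for code in codes))
```

Responses that match no code, like the third one here, still need to be read and coded by hand. Keyword matches should also be checked, because a keyword can appear in an unrelated context.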

Statistical analysis is usually conducted using programs like SPSS or Stata. The same set of survey data can be subject to many analyses.

Step 6: Write up the survey results

Finally, when you have collected and analysed all the necessary data, you will write it up as part of your thesis, dissertation, or research paper.

In the methodology section, you describe exactly how you conducted the survey. You should explain the types of questions you used, the sampling method, when and where the survey took place, and the response rate. You can include the full questionnaire as an appendix and refer to it in the text if relevant.
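A simple way to calculate the response rate is to divide the number of completed responses by the number of eligible people you invited. This sketch covers one basic definition only. Formal standards such as the AAPOR Standard Definitions distinguish several variants. The figures are made up.

```python
def response_rate(completed, invited, ineligible=0):
    """Completed responses as a percentage of the eligible people who were invited."""
    eligible = invited - ineligible
    if eligible <= 0:
        raise ValueError("the number of eligible invitees must be positive")
    return 100 * completed / eligible

# Made-up figures: 500 questionnaires sent, 20 recipients turned out to be
# outside the target population, 168 usable questionnaires returned
print(f"{response_rate(168, 500, ineligible=20):.1f}%")  # 35.0%
```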

Then introduce the analysis by describing how you prepared the data and the statistical methods you used to analyse it. In the results section, you summarise the key results from your analysis.

Frequently asked questions about surveys

A Likert scale is a rating scale that quantitatively assesses opinions, attitudes, or behaviours. It is made up of four or more questions that measure a single attitude or trait when response scores are combined.

To use a Likert scale in a survey, you present participants with Likert-type questions or statements, and a continuum of items, usually with five or seven possible responses, to capture their degree of agreement.

Individual Likert-type questions are generally considered ordinal data, because the items have a clear rank order, but the intervals between them cannot be assumed to be equal.

Overall Likert scale scores are sometimes treated as interval data. These scores are considered to have directionality and even spacing between them.
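The distinction between item-level and scale-level data shows up in how the scores are handled. The sketch below assumes a hypothetical four-item, five-point scale in which one item is negatively worded. That item is reverse-coded before the items are summed. Reverse-coding is a common convention, not something this guide prescribes.

```python
from statistics import median

def reverse_code(score, points=5):
    """Flip a negatively worded item so that a higher score always means more agreement."""
    return points + 1 - score

def scale_score(item_scores, reversed_items=(), points=5):
    """Sum one respondent's item scores into an overall Likert scale score."""
    return sum(reverse_code(s, points) if i in reversed_items else s
               for i, s in enumerate(item_scores))

# One respondent's answers to a hypothetical four-item scale (item index 2 is negatively worded)
answers = [4, 5, 2, 4]
print(scale_score(answers, reversed_items={2}))  # 4 + 5 + 4 + 4 = 17

# A single item is ordinal, so summarise it with the median rather than the mean
item_1_across_respondents = [4, 2, 5, 4, 3]
print(median(item_1_across_respondents))  # 4
```

The summed score is what is sometimes treated as interval data. A single item, being ordinal, is better described with the median or with frequency counts.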

The type of data determines what statistical tests you should use to analyse your data.

A questionnaire is a data collection tool or instrument, while a survey is an overarching research method that involves collecting and analysing data from people using questionnaires.

Cite this Scribbr article


McCombes, S. (2022, October 10). Doing Survey Research | A Step-by-Step Guide & Examples. Scribbr. Retrieved 14 May 2024, from https://www.scribbr.co.uk/research-methods/surveys/


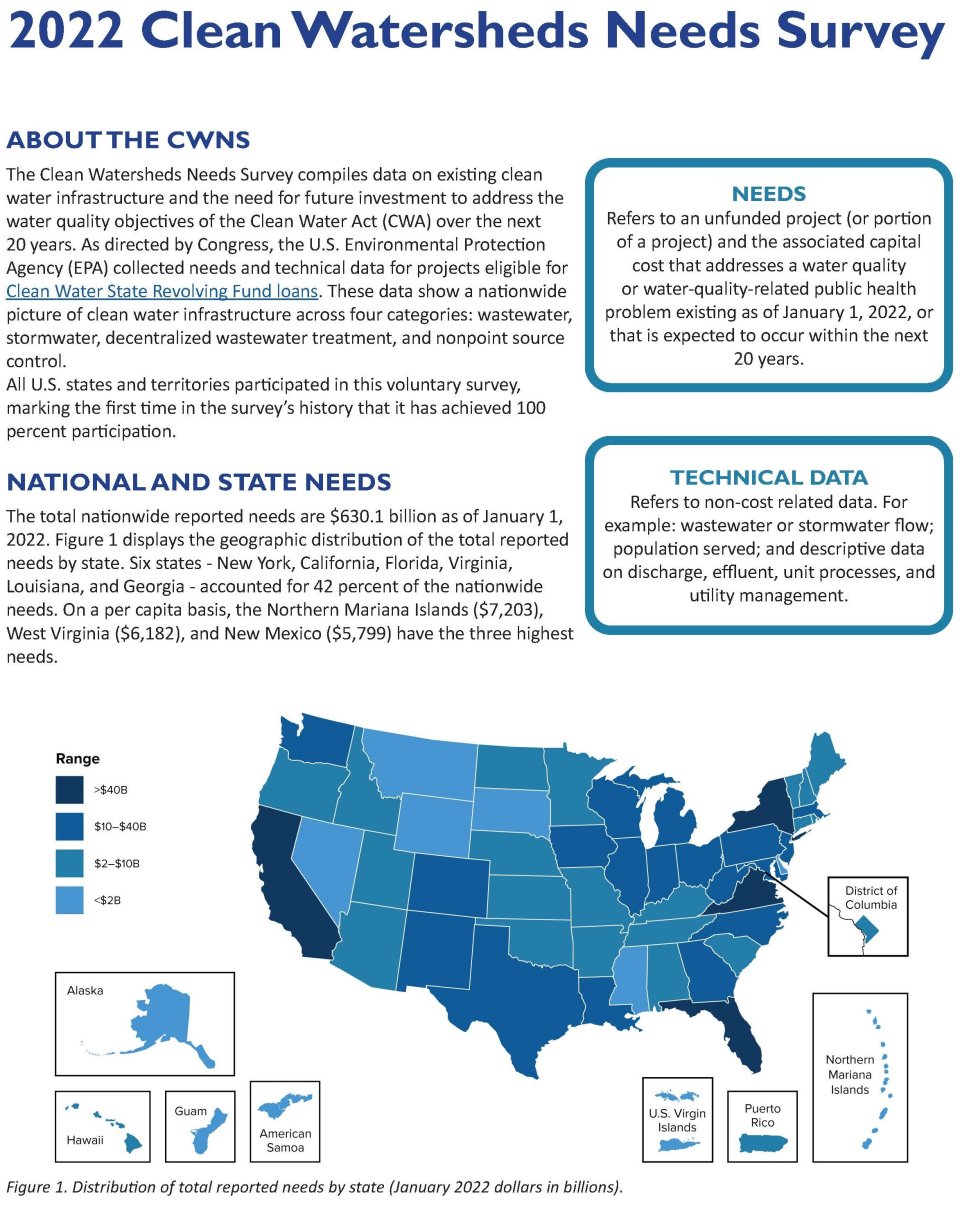


Understanding and Evaluating Survey Research

J Adv Pract Oncol, v.6(2); Mar-Apr 2015

A variety of methodologic approaches exist for individuals interested in conducting research. Selection of a research approach depends on a number of factors, including the purpose of the research, the type of research questions to be answered, and the availability of resources. The purpose of this article is to describe survey research as one approach to the conduct of research so that the reader can critically evaluate the appropriateness of the conclusions from studies employing survey research.

SURVEY RESEARCH

Survey research is defined as "the collection of information from a sample of individuals through their responses to questions" (Check & Schutt, 2012, p. 160). This type of research allows for a variety of methods for recruiting participants, collecting data, and applying instruments. Survey research can use quantitative research strategies (e.g., using questionnaires with numerically rated items), qualitative research strategies (e.g., using open-ended questions), or both strategies (i.e., mixed methods). Because it is often used to describe and explore human behavior, survey research is frequently used in social and psychological research (Singleton & Straits, 2009).

Information has been obtained from individuals and groups through the use of survey research for decades. It can range from asking a few targeted questions of individuals on a street corner to obtain information related to behaviors and preferences, to a more rigorous study using multiple valid and reliable instruments. Common examples of less rigorous surveys include marketing or political surveys of consumer patterns and public opinion polls.

Survey research has historically included large population-based data collection. The primary purpose of this type of survey research was to obtain information describing characteristics of a large sample of individuals of interest relatively quickly. Large census surveys obtaining information reflecting demographic and personal characteristics and consumer feedback surveys are prime examples. These surveys were often provided through the mail and were intended to describe demographic characteristics of individuals or obtain opinions on which to base programs or products for a population or group.

More recently, survey research has developed into a rigorous approach to research, with scientifically tested strategies detailing who to include (representative sample), what and how to distribute (survey method), and when to initiate the survey and follow up with nonresponders (reducing nonresponse error), in order to ensure a high-quality research process and outcome. Currently, the term "survey" can reflect a range of research aims, sampling and recruitment strategies, data collection instruments, and methods of survey administration.

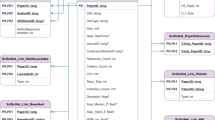

Given this range of options in the conduct of survey research, it is imperative for the consumer/reader of survey research to understand the potential for bias in survey research as well as the tested techniques for reducing bias, in order to draw appropriate conclusions about the information reported in this manner. Common types of error in research, along with the sources of error and strategies for reducing error as described throughout this article, are summarized in the Table.

Sources of Error in Survey Research and Strategies to Reduce Error

The goal of sampling strategies in survey research is to obtain a sufficient sample that is representative of the population of interest. It is often not feasible to collect data from an entire population of interest (e.g., all individuals with lung cancer); therefore, a subset of the population, or sample, is used to estimate the population responses (e.g., individuals with lung cancer currently receiving treatment). A large random sample increases the likelihood that the responses from the sample will accurately reflect the entire population. In order to accurately draw conclusions about the population, the sample must include individuals with characteristics similar to the population.

It is therefore necessary to correctly identify the population of interest (e.g., individuals with lung cancer currently receiving treatment vs. all individuals with lung cancer). The sample will ideally include individuals who reflect the intended population in terms of all characteristics of the population (e.g., sex, socioeconomic characteristics, symptom experience) and contain a similar distribution of individuals with those characteristics. As discussed by Mady Stovall beginning on page 162, Fujimori et al. (2014), for example, were interested in the population of oncologists. The authors obtained a sample of oncologists from two hospitals in Japan. These participants may or may not have similar characteristics to all oncologists in Japan.

Participant recruitment strategies can affect the adequacy and representativeness of the sample obtained. Using diverse recruitment strategies can help increase the size of the sample and help ensure adequate coverage of the intended population. For example, if a survey researcher intends to obtain a sample of individuals with breast cancer representative of all individuals with breast cancer in the United States, the researcher would want to use recruitment strategies that would recruit both women and men, individuals from rural and urban settings, individuals receiving and not receiving active treatment, and so on. Because of the difficulty in obtaining samples representative of a large population, researchers may narrow the population of interest to a subset of individuals (e.g., women with stage III or IV breast cancer). Large census surveys, by contrast, are intended to represent the entire population and therefore require extremely large samples to represent its characteristics adequately.
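Proportional stratified sampling is one common way to obtain a sample whose distribution of characteristics mirrors the population. The population is divided into strata (e.g., urban, suburban, and rural residents), and each stratum is sampled in proportion to its share of the population. The author does not describe this technique. The sketch below, with hypothetical population counts, shows only the allocation step.

```python
import math

def proportional_allocation(stratum_sizes, n):
    """Split a total sample of n across strata in proportion to each stratum's population share.

    Largest-remainder rounding makes the allocations add up to exactly n.
    """
    total = sum(stratum_sizes.values())
    quotas = {name: n * size / total for name, size in stratum_sizes.items()}
    alloc = {name: math.floor(q) for name, q in quotas.items()}
    shortfall = n - sum(alloc.values())
    # Hand out the places lost to rounding down, largest fractional remainder first
    by_remainder = sorted(quotas, key=lambda name: quotas[name] - alloc[name], reverse=True)
    for name in by_remainder[:shortfall]:
        alloc[name] += 1
    return alloc

# Hypothetical population counts by area type
population = {"urban": 6200, "suburban": 2500, "rural": 1300}
print(proportional_allocation(population, 200))  # {'urban': 124, 'suburban': 50, 'rural': 26}
print(proportional_allocation(population, 157))  # {'urban': 97, 'suburban': 39, 'rural': 21}
```

Individuals would then be selected at random within each stratum. Proportional allocation matches the sample to the population only on the characteristics used to form the strata, not on any others.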

DATA COLLECTION METHODS

Survey research may use a variety of data collection methods, the most common being questionnaires and interviews. Questionnaires may be self-administered or administered by a professional, may be administered individually or in a group, and typically include a series of items reflecting the research aims. Questionnaires may include demographic questions in addition to valid and reliable research instruments (Costanzo, Stawski, Ryff, Coe, & Almeida, 2012; DuBenske et al., 2014; Ponto, Ellington, Mellon, & Beck, 2010). It is helpful to the reader when authors describe the contents of the survey questionnaire so that the reader can interpret and evaluate the potential for errors of validity (e.g., items or instruments that do not measure what they are intended to measure) and reliability (e.g., items or instruments that do not measure a construct consistently). Helpful examples of articles that describe the survey instruments exist in the literature (Buerhaus et al., 2012).

Questionnaires may be in paper form and mailed to participants, delivered in an electronic format via email or an Internet-based program such as SurveyMonkey, or a combination of both, giving the participant the option to choose which method is preferred (Ponto et al., 2010). Using a combination of methods of survey administration can help to ensure better sample coverage (i.e., all individuals in the population having a chance of inclusion in the sample), thereby reducing coverage error (Dillman, Smyth, & Christian, 2014; Singleton & Straits, 2009). For example, if a researcher were to use only an Internet-delivered questionnaire, individuals without access to a computer would be excluded from participation. Self-administered mailed, group, or Internet-based questionnaires are relatively low cost and practical for a large sample (Check & Schutt, 2012).

Dillman et al. (2014) have described and tested a tailored design method for survey research. Improving the visual appeal and graphics of surveys by using a font size appropriate for the respondents, ordering items logically without creating unintended response bias, and arranging items clearly on each page can increase the response rate to electronic questionnaires. Attending to these and other issues in electronic questionnaires can help reduce measurement error (i.e., lack of validity or reliability) and help ensure a better response rate.

Conducting interviews is another approach to data collection used in survey research. Interviews may be conducted by phone, computer, or in person. When the interviewer can see the interviewee, they have the benefit of observing the interviewee's nonverbal responses and then clarifying the intended question. An interviewer can use probing comments to obtain more information about a question or topic and can request clarification of an unclear response (Singleton & Straits, 2009). Interviews can be costly and time intensive, and are therefore relatively impractical for large samples.

Some authors advocate for using mixed methods for survey research when no one method is adequate to address the planned research aims, to reduce the potential for measurement and non-response error, and to better tailor the study methods to the intended sample (Dillman et al., 2014; Singleton & Straits, 2009). For example, a mixed methods survey research approach may begin with distributing a questionnaire and following up with telephone interviews to clarify unclear survey responses (Singleton & Straits, 2009). Mixed methods might also be used when visual or auditory deficits preclude an individual from completing a questionnaire or participating in an interview.

FUJIMORI ET AL.: SURVEY RESEARCH

Fujimori et al. (2014) described the use of survey research in a study of the effect of communication skills training for oncologists on oncologist and patient outcomes (e.g., oncologist’s performance and confidence and patient’s distress, satisfaction, and trust). A sample of 30 oncologists from two hospitals was obtained. Although the authors provided a power analysis concluding that the number of oncologist participants was adequate to detect differences between baseline and follow-up scores, the conclusions of the study may not be generalizable to a broader population of oncologists. Oncologists were randomized to either an intervention group (i.e., communication skills training) or a control group (i.e., no training).

Fujimori et al. (2014) chose a quantitative approach to collect data from oncologist and patient participants regarding the study outcome variables. Self-report numeric ratings were used to measure oncologist confidence and patient distress, satisfaction, and trust. Oncologist confidence was measured using two instruments, each using 10-point Likert rating scales. The Hospital Anxiety and Depression Scale (HADS) was used to measure patient distress and has demonstrated validity and reliability in a number of populations including individuals with cancer (Bjelland, Dahl, Haug, & Neckelmann, 2002). Patient satisfaction and trust were measured using 0 to 10 numeric rating scales. Numeric observer ratings were used to measure oncologist performance of communication skills based on a videotaped interaction with a standardized patient. Participants completed the same questionnaires at baseline and follow-up.

The authors clearly describe what data were collected from all participants. Providing additional information about the manner in which questionnaires were distributed (e.g., electronic, mail), the setting in which data were collected (e.g., home, clinic), and the design of the survey instruments (e.g., visual appeal, format, content, arrangement of items) would assist the reader in drawing conclusions about the potential for measurement and nonresponse error. The authors describe conducting a follow-up phone call or mail inquiry for nonresponders; using the Dillman et al. (2014) tailored design for survey research follow-up may have reduced nonresponse error.

CONCLUSIONS

Survey research is a useful and legitimate approach to research that has clear benefits in helping to describe and explore variables and constructs of interest. Survey research, like all research, has the potential for a variety of sources of error, but several strategies exist to reduce the potential for error. Advanced practitioners aware of the potential sources of error and strategies to improve survey research can better determine how and whether the conclusions from a survey research study apply to practice.

The author has no potential conflicts of interest to disclose.


University of Massachusetts Lowell, University Libraries

Survey Research: Design and Presentation

Planning a Thesis Proposal

The goal of a proposal is to demonstrate that you are ready to start your research project by presenting a distinct idea, question or issue which has great interest for you, along with the method you have chosen to explore it.

The process of developing your research question is related to the literature review. As you discover more from your research, your question will be shaped by what you find.

The clarity of your idea dictates the plan for your dissertation or thesis work.

From the University of North Texas faculty member Dr. Abraham Benavides:

Elements of a Thesis Proposal

(Adapted from the Department of Communication, University of Washington)

Dissertation proposals vary, but most share the following elements, though not necessarily in this order.

1. The Introduction

In anything from three paragraphs to three or four pages, introduce your question simply. Use a narrative style to engage readers. A well-known issue in your field, controversy surrounding some texts, or the policy implications of your topic are some ways to add context to the proposal.

2. Research Questions

State your question early in your proposal. Even if you are going to restate your research question as part of the literature review, you may wish to mention it briefly at the end of the introduction.

If you have secondary questions that follow from your main question, make this clear. The research questions should include any boundaries you have placed on your inquiry, for instance time, place, and topics. Terms with unusual meanings should be defined.

3. Literature Synthesis or Review

The proposal must be situated within the broader body of scholarship around the topic. This is part of establishing the significance of your research. The discussion of the literature typically shows how your project will extend what’s already known.

In writing your literature review, think about the important theories and concepts related to your project and organize your discussion accordingly; you usually want to avoid a strictly chronological discussion (i.e., earliest study, next study, etc.).

What research is directly related to your topic? Discuss it thoroughly.

What literature provides context for your research? Discuss it briefly.

In your proposal you should avoid writing a genealogy of your field’s research. For instance, you don’t need to tell your committee about the development of research in the entire field in order to justify the particular project you propose. Instead, isolate the major areas of research within your field that are relevant to your project.

4. Significance of your Research Question

Good proposals leave readers with a clear understanding of the dissertation project’s overall significance. Consider the following:

- advancing theoretical understandings

- introducing new interpretations

- analyzing the relationship between variables

- testing a theory

- replicating earlier studies

- exploring whether earlier findings can be demonstrated to hold true in new times, places, or circumstances

- refining a method of inquiry.

5. Research Method

The research method that will be used involves three levels of concern:

- overall research design

- delineation of the method

- procedures for executing it.

At the outset you have to show that your overall design is appropriate for the questions you’re posing.

Next, you need to outline your specific research method. What data will you analyze?

How will you collect the data? Supervisors sometimes expect proposals to sketch instruments (e.g., coding sheets, questionnaires, protocols) central to the project.

Third, what procedures will you follow as you conduct your research? What will you do with your data? A key here is your plan for analyzing data. You want to gather data in a form in which you can analyze it. [In this case the method is a survey administered to a group of people]. If appropriate, you should indicate what rules for interpretation or what kinds of statistical tests you’ll use.

6. Tentative Dissertation Outline

Give your committee a sense of how your thesis will be organized. You can write a short (two- or three-sentence) paragraph summarizing what you expect to include in each section of the thesis.

7. Tentative Schedule for Completion

Be realistic in projecting your timeline. Don’t forget to include time for human subjects review, if appropriate.

8. References

If you didn’t use footnotes or endnotes throughout, you should include a list of references to the literature cited in the proposal.

9. Selected Bibliography of Other Sources

You might want to append a more extensive bibliography (check with your supervisor). If you include one, you might want to divide it into several subsections, for instance by concept, topic or field.


- Last Updated: Jan 22, 2024 2:05 PM

- URL: https://libguides.uml.edu/rohland_surveys

Dissertation surveys: Questions, examples, and best practices

Collect data for your dissertation with little effort and great results.

Dissertation surveys are one of the most powerful tools to get valuable insights and data for the culmination of your research. However, creating one is also one of the most stressful and time-consuming tasks you need to do. You want useful data from a representative sample that you can analyze and present as part of your dissertation. At SurveyPlanet, we’re committed to making it as easy and stress-free as possible to get the most out of your study.

With an intuitive and user-friendly design, our templates and premade questions can be your allies while creating a survey for your dissertation. Explore all the options we offer by simply signing up for an account—and leave the stress behind.

How to write dissertation survey questions

The first thing to do is to figure out which group of people is relevant for your study. When you know that, you’ll also be able to adjust the survey and write questions that will get the best results.

The next step is to write down the goal of your research and define it properly. Online surveys are one of the best and least expensive ways to reach respondents and achieve your goal.

Before writing any questions, think about how you’ll analyze the results. You don’t want to write and distribute a survey without keeping in mind how you’ll report your findings. Once your thesis questionnaire is out in the real world, it’s too late to discover that the data you’re collecting can’t be used for your analysis. That’s why you need to write your questions with analysis in mind.

You may find our article on five survey analysis tips for better insights helpful; we recommend reading it before analyzing your results.

Once you understand the parameters of your representative sample, goals, and analysis methodology, then it’s time to think about distribution. Survey distribution may feel like a headache, but you’ll find that many people will gladly participate.

Find communities where your targeted group hangs out and share the link to your survey with them. If you’re not sure how large your research sample should be, gauge it easily with the survey sample size calculator.
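The source doesn’t say which formula its sample size calculator uses. As a rough illustration only, the sketch below uses Cochran’s formula for estimating a proportion, with an optional finite population correction. This is one common approach. The function name `sample_size` and its defaults are our own assumptions: z = 1.96 for 95% confidence, and p = 0.5, which is the most conservative guess about how answers will split. The result is the number of completed responses needed, not the number of people to invite.

```python
import math


def sample_size(margin_of_error, confidence_z=1.96, proportion=0.5, population=None):
    """Estimate completed responses needed (hypothetical helper).

    Cochran's formula for a proportion: n0 = z^2 * p * (1 - p) / e^2,
    optionally adjusted with the finite population correction
    n = n0 / (1 + (n0 - 1) / N).
    """
    n0 = confidence_z ** 2 * proportion * (1 - proportion) / margin_of_error ** 2
    if population is not None:
        # A small, known population needs fewer responses for the same precision.
        n0 = n0 / (1 + (n0 - 1) / population)
    # Round up: you can't collect a fraction of a response.
    return math.ceil(n0)


print(sample_size(0.05))                   # 385 (95% confidence, +/-5 points, large population)
print(sample_size(0.05, population=1000))  # 278 (same precision, population of 1,000)
```

Response rates are rarely 100%, so you would typically invite more people than this figure.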

Need help with writing survey questions? Read our guide on well-written examples of good survey questions.

Dissertation survey examples

Whatever field you’re studying, we’re sure the following questions will prove useful when crafting your own.

At the beginning of every questionnaire, inform respondents of your topic and provide a consent form. After that, start with questions like:

- Please select your gender:

- What is the highest educational level you’ve completed?

  - High school

  - Bachelor’s degree

  - Master’s degree

- On a scale of 1-7, how satisfied are you with your current job?

- Please rate the following statements:

  - I always wait for people to text me first.

    - Strongly disagree

    - Neither agree nor disagree

    - Strongly agree

  - My friends always complain that I never invite them anywhere.

  - I prefer spending time alone.

- Rank which personality traits are most important when choosing a partner, from 1 (most important) to 7 (least important).

  - Flexibility

  - Independence

- How openly do you share feelings with your partner?

  - Almost never

  - Almost always

- In the last two weeks, how often did you experience headaches?

Dissertation survey best practices

There are a lot of DOs and DON’Ts you should keep in mind when conducting any survey, especially for your dissertation. To get valuable data from your targeted sample, follow these best practices:

Use a consent form

The consent form is a must when distributing a research questionnaire. A respondent has to know how you’ll use their answers and that the survey is anonymous.

Avoid leading and double-barreled questions

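Leading and double-barreled questions will produce inconclusive results, and you don’t want that. A question such as “Do you like to watch TV and play video games?” is double-barreled because it asks about two things at once. A respondent who likes TV but not video games has no accurate answer, and you can’t tell which activity a “yes” refers to.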

On the other hand, leading questions such as “On a scale from 1-10 how would you rate the amazing experience with our customer support?” influence respondents to answer in a certain way, which produces biased results.

Use easy and straightforward language and questions

Don’t use terms and professional jargon that respondents won’t understand. Take into consideration their educational level and demographic traits and use easy-to-understand language when writing questions.

Mix close-ended and open-ended questions

Too many open-ended questions will annoy respondents, and their answers are also harder to analyze. For the best results, use mostly close-ended questions and only a few open-ended ones.

Strategically use different types of responses

Likert scale, multiple-choice, and ranking are all types of responses you can use to collect data. But some response types suit some questions better. Make sure to strategically fit questions with response types.
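The response type also decides how the answers can be summarized. The Python sketch below illustrates this, and each response type gets its own treatment:

- **Likert items** use a hypothetical five-point coding. The example questions above show only three of the labels, so “Disagree” and “Agree” are our assumption. The sketch reports a median and counts rather than a mean, a common choice because Likert responses are ordinal.
- **Multiple-choice answers** are simply counted.
- **Ranking answers** are averaged per item.

```python
from collections import Counter
from statistics import mean, median

# Hypothetical coding for a 5-point Likert item (labels 2 and 4 are assumed).
LIKERT = {
    "Strongly disagree": 1,
    "Disagree": 2,
    "Neither agree nor disagree": 3,
    "Agree": 4,
    "Strongly agree": 5,
}


def summarize_likert(answers):
    """Likert answers are ordinal: report the median score plus label counts."""
    scores = [LIKERT[a] for a in answers]
    return {"median": median(scores), "counts": Counter(answers)}


def summarize_multiple_choice(answers):
    """Multiple-choice answers are categorical: count each option."""
    return Counter(answers)


def summarize_ranking(rankings):
    """Each ranking maps item -> rank; a lower mean rank means more important."""
    items = rankings[0].keys()
    return {item: mean(r[item] for r in rankings) for item in items}


likert = summarize_likert(["Agree", "Strongly agree", "Neither agree nor disagree"])
print(likert["median"])  # 4
print(summarize_multiple_choice(["High school", "Master's degree", "High school"]).most_common(1))
ranks = summarize_ranking([
    {"Flexibility": 1, "Independence": 2},
    {"Flexibility": 2, "Independence": 1},
    {"Flexibility": 1, "Independence": 2},
])
print(min(ranks, key=ranks.get))  # Flexibility has the lowest mean rank
```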

Ensure that data privacy is a priority

Make sure to use an online survey tool that has SSL/TLS encryption and secure data processing. You don’t want to risk all your hard work going to waste because of poorly managed data security. Collect only data that’s relevant to your dissertation survey, and leave out any questions (such as asking for a name) that could identify respondents.
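The advice above is to avoid collecting identifying data in the first place. Your survey tool might still record such fields, for example an email address captured at sign-in. In that case, one option is to drop those fields before analysis. The sketch below assumes hypothetical field names such as `name` and `email`. Removing direct identifiers does not by itself guarantee anonymity, because a combination of demographic answers can still point to one person.

```python
# Hypothetical field names; adjust to match your own survey export.
IDENTIFYING_FIELDS = {"name", "email", "phone", "ip_address"}


def strip_identifiers(responses):
    """Return new records without directly identifying fields (originals untouched)."""
    return [
        {field: value for field, value in record.items() if field not in IDENTIFYING_FIELDS}
        for record in responses
    ]


raw = [{"name": "A. Respondent", "email": "a@example.com", "q1": "Agree", "q2": 6}]
print(strip_identifiers(raw))  # [{'q1': 'Agree', 'q2': 6}]
```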

Create dissertation questionnaires with SurveyPlanet

Overall, surveys are a great way to collect data from participants in your research study. SurveyPlanet gives you all the tools required for creating a dissertation survey; you only need to sign up. With powerful features like question branching, custom formatting, multiple languages, image choice questions, and easy export, you will find everything needed to create, distribute, and analyze a dissertation survey.

Happy data gathering!


How to collect data for your thesis


After choosing a topic for your thesis, you’ll need to start gathering data. In this article, we focus on how to effectively collect theoretical and empirical data.

Empirical data: original research that may be quantitative, qualitative, or mixed.

Theoretical data: secondary, scholarly sources like books and journal articles that provide theoretical context for your research.

Thesis: the culminating, multi-chapter project for a bachelor’s, master’s, or doctoral degree.

Qualitative data: information that cannot be measured numerically, such as observations and interview responses.

Quantitative data: information that can be measured and expressed in numbers.

Collecting theoretical data

At this point in your academic life, you are already familiar with ways of finding potential references. Some obvious sources of theoretical material are:

- edited volumes

- conference proceedings

- online databases like Google Scholar, ERIC, or Scopus

You can also take a look at the top list of academic search engines.

Search for theses on your topic

Looking at other theses on your topic can help you see what approaches have been taken and what aspects other writers have focused on. Pay close attention to their reference lists and follow the breadcrumbs back to the original theories and specialized authors.

Use content-sharing platforms

Another way to gather theoretical data is to read through content-sharing platforms, where many people share their papers and writing. You can hunt for sources, get inspiration for your own work, or discover new angles on your topic.

Some popular content-sharing sites are Medium, Issuu, and Slideshare.

With these sites, you have to check the credibility of the sources. Much of the content is reliable, but we recommend double-checking to be sure. Take a look at our guide, “What are credible sources?”

The more you know, the better. The guide “How to undertake a literature search and review for dissertations and final year projects” will give you all the tools you need for finding literature.

Collecting empirical data

To successfully collect empirical data, you first have to choose what type of data you want as an outcome. There are essentially two options: qualitative or quantitative data. Many people confuse the two terms, so it’s important to understand the differences between qualitative and quantitative research.

Boiled down, qualitative data means words and quantitative data means numbers. Both types are considered primary sources. Whichever one best suits your research will determine the methodology you carry out, so choose wisely.

In the end, knowing what type of outcome you intend and how much time you have will lead you to the best type of empirical data for your research. For a detailed description of each methodology type mentioned above, read more about collecting data.

Once you gather enough theoretical and empirical data, you will need to start writing. But before the actual writing part, you have to structure your thesis to avoid getting lost in the sea of information. Take a look at our guide on how to structure your thesis for some tips and tricks.

Frequently asked questions about gathering data for your thesis

The key to knowing what type of data you should collect for your thesis is knowing in advance the type of outcome you intend to have and the amount of time available.

Some obvious sources of theoretical material are journals, libraries and online databases like Google Scholar, ERIC or Scopus, or take a look at the top list of academic search engines. You can also search for theses on your topic or read content-sharing platforms, like Medium, Issuu, or Slideshare.

To gather empirical data, you first have to choose what type of data you want. There are two options: qualitative or quantitative data. You can gather data through observations, interviews, focus groups, or with surveys, tests, and existing databases.

Qualitative data means words: information that cannot be measured. It may involve multimedia material or non-textual data. This type of data is often described as detailed, nuanced and contextual.

Quantitative data means numbers: information that can be measured and written with numbers. This type of data is often described as credible, scientific and exact.

How To Write The Results/Findings Chapter

For qualitative studies (dissertations & theses).

By: Jenna Crossley (PhD). Expert Reviewed By: Dr. Eunice Rautenbach | August 2021

So, you’ve collected and analysed your qualitative data, and it’s time to write up your results chapter. But where do you start? In this post, we’ll guide you through the qualitative results chapter (also called the findings chapter), step by step.

Overview: Qualitative Results Chapter

- What (exactly) the qualitative results chapter is

- What to include in your results chapter

- How to write up your results chapter

- A few tips and tricks to help you along the way

- Free results chapter template

What exactly is the results chapter?

The results chapter in a dissertation or thesis (or any formal academic research piece) is where you objectively and neutrally present the findings of your qualitative analysis (or analyses, if you used multiple qualitative analysis methods). This chapter can sometimes be combined with the discussion chapter (where you interpret the data and discuss its meaning), depending on your university’s preference. We’ll treat the two chapters as separate, as that’s the most common approach.

In contrast to a quantitative results chapter that presents numbers and statistics, a qualitative results chapter presents data primarily in the form of words. But this doesn’t mean that a qualitative study can’t have quantitative elements – you could, for example, present the number of times a theme or topic pops up in your data, depending on the analysis method(s) you adopt.

Adding a quantitative element to your study can add some rigour, which strengthens your results by providing more evidence for your claims. This is particularly common when using qualitative content analysis. Keep in mind, though, that qualitative research aims to achieve depth and richness and to identify nuances, so don’t get tunnel vision by focusing on the numbers. They’re just the cream on top in a qualitative analysis.
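The Python sketch below illustrates the kind of theme count mentioned above. It assumes you have already coded each excerpt with one or more themes; the participants, excerpts and theme names are invented for the example. It reports how many excerpts mention each theme and how many distinct participants raised it. The second figure matters because one talkative participant can inflate raw mention counts.

```python
from collections import Counter

# Hypothetical coded data: each excerpt was tagged with themes during analysis.
coded_excerpts = [
    {"participant": "P1", "themes": ["workload", "support"]},
    {"participant": "P2", "themes": ["workload"]},
    {"participant": "P3", "themes": ["support", "autonomy"]},
    {"participant": "P1", "themes": ["workload"]},
]

# Number of excerpts in which each theme appears.
mentions = Counter(theme for excerpt in coded_excerpts for theme in excerpt["themes"])

# Number of distinct participants who raised each theme.
participants = {
    theme: len({e["participant"] for e in coded_excerpts if theme in e["themes"]})
    for theme in mentions
}

print(mentions)
print(participants)
```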

So, to recap, the results chapter is where you objectively present the findings of your analysis, without interpreting them (you’ll save that for the discussion chapter). With that out of the way, let’s take a look at what you should include in your results chapter.

What should you include in the results chapter?

As we’ve mentioned, your qualitative results chapter should purely present and describe your results, not interpret them in relation to the existing literature or your research questions. Any speculations or discussion about the implications of your findings should be reserved for your discussion chapter.

In your results chapter, you’ll want to talk about your analysis findings and whether or not they support your hypotheses (if you have any). Naturally, the exact contents of your results chapter will depend on which qualitative analysis method (or methods) you use. For example, if you were to use thematic analysis, you’d detail the themes identified in your analysis, using extracts from the transcripts or text to support your claims.

While you do need to present your analysis findings in some detail, you should avoid dumping large amounts of raw data in this chapter. Instead, focus on presenting the key findings and using a handful of select quotes or text extracts to support each finding. The reams of data and analysis can be relegated to your appendices.

While it’s tempting to include every last detail you found in your qualitative analysis, it is important to make sure that you report only that which is relevant to your research aims, objectives and research questions. Always keep these three components, as well as your hypotheses (if you have any), front of mind when writing the chapter, and use them as a filter to decide what’s relevant and what’s not.


How do I write the results chapter?

Now that we’ve covered the basics, it’s time to look at how to structure your chapter. Broadly speaking, the results chapter needs to contain three core components – the introduction, the body and the concluding summary. Let’s take a look at each of these.

Section 1: Introduction

The first step is to craft a brief introduction to the chapter. This intro is vital as it provides some context for your findings. In your introduction, you should begin by reiterating your problem statement and research questions and highlight the purpose of your research. Make sure that you spell this out for the reader so that the rest of your chapter is well contextualised.

The next step is to briefly outline the structure of your results chapter. In other words, explain what’s included in the chapter and what the reader can expect. In the results chapter, you want to tell a story that is coherent, flows logically, and is easy to follow, so make sure that you plan your structure out well and convey that structure (at a high level), so that your reader is well oriented.

The introduction section shouldn’t be lengthy. Two or three short paragraphs should be more than adequate. It is merely an introduction and overview, not a summary of the chapter.

Pro Tip – To help you structure your chapter, it can be useful to set up an initial draft with (sub)section headings so that you’re able to easily (re)arrange parts of your chapter. This will also help your reader to follow your results and give your chapter some coherence. Be sure to use level-based heading styles (e.g. Heading 1, 2, 3 styles) to help the reader differentiate between levels visually. You can find these options in Microsoft Word.

Section 2: Body

Before we get started on what to include in the body of your chapter, it’s vital to remember that a results section should be completely objective and descriptive, not interpretive. So, be careful not to use words such as “suggests” or “implies”, as these usually accompany some form of interpretation – that’s reserved for your discussion chapter.

The structure of your body section is very important, so make sure that you plan it out well. When planning out your qualitative results chapter, create sections and subsections so that you can maintain the flow of the story you’re trying to tell. Be sure to systematically and consistently describe each portion of results. Try to adopt a standardised structure for each portion so that you achieve a high level of consistency throughout the chapter.

For qualitative studies, results chapters tend to be structured according to themes, which makes it easier for readers to follow. However, keep in mind that not all results chapters have to be structured in this manner. For example, if you’re conducting a longitudinal study, you may want to structure your chapter chronologically. Similarly, you might structure this chapter based on your theoretical framework. The exact structure of your chapter will depend on the nature of your study, especially your research questions.

As you work through the body of your chapter, make sure that you use quotes to substantiate every one of your claims. You can present these quotes in italics to differentiate them from your own words. A general rule of thumb is to use at least two pieces of evidence per claim, and these should be linked directly to your data. Also, remember that you need to include all relevant results, not just the ones that support your assumptions or initial leanings.

In addition to including quotes, you can also link your claims to the data by using appendices, which you should reference throughout your text. When you reference, make sure that you include both the name/number of the appendix and the line(s) from which you drew your data.

As referencing styles can vary greatly, be sure to look up the appendix referencing conventions of your university’s prescribed style (e.g. APA, Harvard, etc.) and keep this consistent throughout your chapter.

Section 3: Concluding summary

The concluding summary is very important because it summarises your key findings and lays the foundation for the discussion chapter. Keep in mind that some readers may skip directly to this section (from the introduction section), so make sure that it can be read and understood well in isolation.

In this section, you need to remind the reader of the key findings. That is, the results that directly relate to your research questions and that you will build upon in your discussion chapter. Remember, your reader has digested a lot of information in this chapter, so you need to use this section to remind them of the most important takeaways.

Importantly, the concluding summary should not present any new information and should only describe what you’ve already presented in your chapter. Keep it concise – you’re not summarising the whole chapter, just the essentials.

Tips for writing an A-grade results chapter

Now that you’ve got a clear picture of what the qualitative results chapter is all about, here are some quick tips and reminders to help you craft a high-quality chapter:

- Your results chapter should be written in the past tense. You’ve done the work already, so you want to tell the reader what you found, not what you are currently finding.

- Make sure that you review your work multiple times and check that every claim is adequately backed up by evidence. Aim for at least two examples per claim, and make use of an appendix to reference these.

- When writing up your results, make sure that you stick to only what is relevant. Don’t waste time on data that are not relevant to your research objectives and research questions.

- Use headings and subheadings to create an intuitive, easy-to-follow piece of writing. Make use of Microsoft Word’s “heading styles” and be sure to use them consistently.

- When referring to numerical data, tables and figures can provide a useful visual aid. When using these, make sure that they can be read and understood independently of your body text (i.e. that they can stand alone). To this end, use clear, concise labels for each of your tables or figures, and use colour coding to indicate differences or hierarchy.

- Similarly, when you’re writing up your chapter, it can be useful to highlight topics and themes in different colours. This can help you to differentiate between your data if you get a bit overwhelmed and will also help you to ensure that your results flow logically and coherently.

If you have any questions, leave a comment below and we’ll do our best to help. If you’d like 1-on-1 help with your results chapter (or any chapter of your dissertation or thesis), check out our private dissertation coaching service here or book a free initial consultation to discuss how we can help you.


20 Comments

This was extremely helpful. Thanks a lot guys

Hi, thanks for the great research support platform created by the gradcoach team!

I wanted to ask- While “suggests” or “implies” are interpretive terms, what terms could we use for the results chapter? Could you share some examples of descriptive terms?

I think that instead of saying ‘The data suggested’ or ‘The data implied,’ you can say ‘The data showed, revealed, illustrated or outlined’… If it’s interview data, you may say Jane Doe illuminated or elaborated, or Jane Doe described… or Jane Doe expressed or stated.

I found this article very useful. Thank you very much for the outstanding work you are doing.

What if I have 3 different interviewees answering the same interview questions? Should I then present the results in the form of a table divided into the 3 perspectives, or rather present the results as text and highlight who said what?

I think this tabular representation of results is a great idea. I am doing it too, along with the text. Thanks

That was helpful; I was struggling to separate the discussion from the findings

This was very useful, thank you.

Very helpful, I am confident to write my results chapter now.

It is so helpful! It is a good job. Thank you very much!

Very useful, well explained. Many thanks.

Hello, I appreciate the supportive comments you provided on tips for presenting qualitative results.

I loved this! It explains everything needed, and it has helped me better organize my thoughts. What words should I not use while writing my results section, other than subjective ones?

Thanks a lot, it is really helpful

Thank you so much, dear; I really appreciate your nice explanations about this.

Thank you so much for this! I was wondering if anyone could help with how to properly integrate quotations (excerpts) from interviews in the findings chapter of qualitative research. Please, GradCoach, address this issue and provide examples.

What if I’m not doing any interviews myself and all the information is coming from case studies that have already done the research?

Very helpful thank you.

This was very helpful as I was wondering how to structure this part of my dissertation, to include the quotes… Thanks for this explanation

This is very helpful, thanks! I am required to write up my results chapters with the discussion in each of them – any tips and tricks for this strategy?

Submit a Comment Cancel reply

Your email address will not be published. Required fields are marked *

Save my name, email, and website in this browser for the next time I comment.

- Print Friendly

- Skip to main content

- Skip to primary sidebar

- Skip to footer

- QuestionPro

- Solutions Industries Gaming Automotive Sports and events Education Government Travel & Hospitality Financial Services Healthcare Cannabis Technology Use Case NPS+ Communities Audience Contactless surveys Mobile LivePolls Member Experience GDPR Positive People Science 360 Feedback Surveys

- Resources Blog eBooks Survey Templates Case Studies Training Help center

Home Surveys

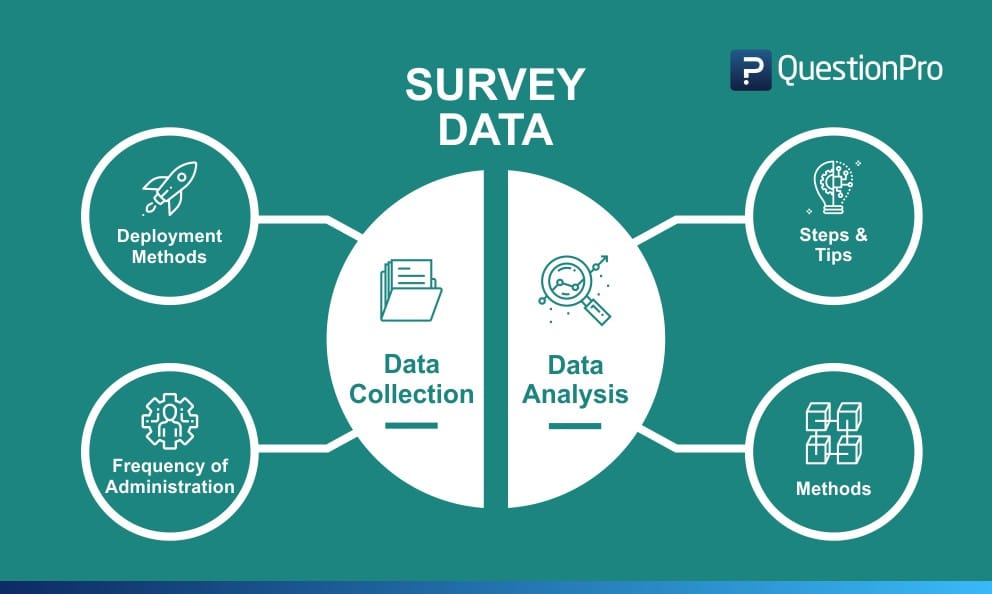

Survey Data Collection: Definition, Methods with Examples and Analysis

Survey Data: Definition

Survey data is the data collected from a sample of respondents who took a survey. It is comprehensive information gathered from a target audience about a specific topic for research purposes. There are many methods for collecting survey data and analyzing it statistically.

Various mediums are used to collect feedback and opinions from the desired sample of individuals. While conducting survey research, researchers may use several sources to gather data, such as online, telephone, and face-to-face surveys. The medium used to collect survey data determines which people can be reached and how many must be contacted to obtain the required number of survey responses.


Factors such as how the interviewer will contact the respondent (online or offline) and how the information is communicated to respondents determine how effective the gathered data is.


Survey Data Collection Methods with Examples

The methods used to collect survey data have evolved with changes in technology. From face-to-face and telephone surveys to today’s online and email surveys, survey data collection has changed over time. Each survey data collection method has its pros and cons, and every researcher has a preferred way of gathering accurate information from the target sample.

Response rates differ across these data collection methods because their reach and impact differ. Researchers choose a method according to the characteristics of the target population and the behavior they intend to examine in various situations.

Types of survey data based on deployment methods:

There are four main survey data collection methods: online surveys, face-to-face surveys, telephone surveys, and paper surveys.

- Online Surveys

Online surveys are the most cost-effective method and can reach more people than the other mediums. Their reach is much wider than that of other data collection methods. When there is more than one question to ask the target sample, some researchers prefer online surveys over traditional face-to-face or telephone surveys.

Online surveys can use computational logic and branching to collect more accurate survey data than traditional survey methods. They are straightforward to implement and take up little of the respondents’ time. The investment required for online survey data collection is also negligible compared with the other methods. Results are collected in real time, so researchers can analyze them and decide on corrective measures.

A very good example of an online survey is a hotel chain using an online survey to collect guest satisfaction metrics after a stay or an event at the property.


Online surveys are safe and secure to conduct. Because there is no in-person interaction or direct communication, they are quite useful in times of global crisis. For instance, many organizations moved to contactless surveys during the pandemic, which helped them confirm that employees were not experiencing any COVID-19 symptoms before coming to the office.


- Face-to-face Surveys

Gathering information from respondents face to face can be much more effective than other mediums because respondents tend to trust the surveyor and give honest, clear feedback about the subject at hand.

Researchers can easily tell whether respondents are uncomfortable with a question, which makes this method especially productive when sensitive topics are involved. However, it costs more than the other methods. Interviewers must also be trained for the geographic or psychographic segment they survey in order to gather accurate information.

For example, a job evaluation survey is conducted in person between an HR representative or a manager and the employee. This method works best face-to-face because it gathers the most accurate information possible.


- Telephone Surveys

Telephone surveys require much less investment than face-to-face surveys. Depending on the required reach, they cost as much as or a little more than online surveys. Contacting respondents by telephone also requires less effort and manpower than face-to-face surveys.

If interviewers are located in the same place, they can cross-check their questions to ensure that error-free questions are asked of the target audience. The main drawback of telephone surveys is that building rapport with the respondent is harder when the conversation happens over the phone. Respondents are also highly likely to want to remain anonymous when giving feedback over the phone, as they may question the researcher’s credibility.

For example, if a retail giant would like to understand purchasing decisions, it can conduct a telephone survey on buying motivation and experience to collect data about the entire purchasing experience.


- Paper Surveys

The other commonly used survey method is the paper survey. Paper surveys can be used where laptops, computers, and tablets cannot go, relying on the age-old data collection method of pen and paper. This method helps collect survey data in field research and can increase both the number of responses collected and their validity.

A popular example or use case of a paper survey is a fast-food restaurant survey, where the fast-food chain would like to collect feedback on its patrons’ dining experience.

Types of survey data based on the frequency at which they are administered:

Surveys can be divided into three distinct types based on how often they are administered. They are:

- Cross-Sectional Surveys

Cross-sectional surveys are an observational research method that analyzes data on variables collected at a single point in time across a sample population or a predefined subset. The survey data from this method helps the researcher understand how respondents feel at a certain point, so it helps measure opinions in a particular situation.

For example, if the researcher would like to understand movie rental habits, a survey can be conducted across demographics and geographical locations. The cross-sectional study might show, for example, that men aged 21-28 rent action movies while women aged 35-45 rent romantic comedies. This survey data can then serve as the basis for a longitudinal study.


- Longitudinal Surveys

Longitudinal surveys are those surveys that help researchers to make an observation and collect data over an extended period of time. This survey data can be qualitative or quantitative in nature, and the survey creator does not interfere with the survey respondents.

For example, a longitudinal study can be carried out over several years to help understand whether mine workers are more prone to lung diseases. Such a study takes years and discounts any pre-existing conditions.

- Retrospective Surveys

In retrospective surveys, researchers ask respondents to report events from the past. This survey method offers in-depth survey data but takes less time to complete than a longitudinal survey. By deploying this kind of survey, researchers can gather data based on people’s past experiences and beliefs.

For example, if hikers are asked about a certain hike – the conditions of the hiking trail, ease of hike, weather conditions, trekking conditions, etc. after they have completed the trek, it is a retrospective study.


Survey Data Analysis

After the survey data has been collected, it has to be analyzed so that it serves the end research objective. There are different ways of conducting this analysis, and some steps to follow. They are as below:

Survey Data Analysis: Steps and Tips

There are four main steps in survey data analysis:

- Understand the top survey research questions: The questions in the survey should align with its overall purpose; only then will the collected data be useful to researchers. For example, after a seminar, researchers might send attendees a post-seminar feedback survey whose primary goal is to find out whether they are interested in attending future seminars. The key question would be: “How likely are you to attend future seminars?” The answers to this question indicate how likely future seminars are to succeed.

- Filter the results using cross-tabulation: Cross-tabulation shows how the different groups within the target audience answered. For example, if business owners, administrators, and students attended the seminar, a cross-tabulation can show whether each group would prefer to attend future seminars.

- Evaluate the derived numbers: Analyzing the gathered information is critical. How many attendees say they will attend future seminars, and how many say they will not? These figures need to be evaluated based on the results obtained from the sample.

- Draw conclusions: Build a story from the collected and analyzed data. What was the intention of the survey research, and how well does the data meet that objective? Answer these questions to develop accurate, well-supported conclusions.
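To make the cross-tabulation and evaluation steps more concrete, here is a minimal Python sketch using only the standard library. The seminar responses, role names, and the `cross_tab` and `row_percentages` helpers are hypothetical illustrations, not part of any survey platform's API.

```python
from collections import Counter, defaultdict

# Hypothetical post-seminar responses: (attendee role, answer to
# "How likely are you to attend future seminars?")
responses = [
    ("Business owner", "Likely"),
    ("Business owner", "Unlikely"),
    ("Administrator", "Likely"),
    ("Student", "Likely"),
    ("Student", "Likely"),
    ("Student", "Unlikely"),
]

def cross_tab(rows):
    """Count answers per role: {role: Counter({answer: count})}."""
    table = defaultdict(Counter)
    for role, answer in rows:
        table[role][answer] += 1
    return table

def row_percentages(table):
    """Convert each role's counts into percentages of that role's total."""
    result = {}
    for role, counts in table.items():
        total = sum(counts.values())
        result[role] = {answer: 100 * n / total for answer, n in counts.items()}
    return result

table = cross_tab(responses)
for role, pcts in row_percentages(table).items():
    print(role, {answer: round(p, 1) for answer, p in pcts.items()})

# Evaluate the overall numbers: how many say they will (or will not) return
overall = Counter(answer for _, answer in responses)
print(overall)
```

Row percentages are used so that groups of different sizes can be compared directly; with real data, it is worth also checking how many respondents each group contains before drawing conclusions from a small subgroup.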

Survey Data Analysis Methods

Conducting a survey is pointless if you cannot access the resulting data and draw conclusions from it, so it is essential to have access to the survey's analytics. Results collected with traditional methods such as pen and paper are difficult to analyze and require additional manpower. Survey data analysis becomes much easier when you collect data online with a survey platform such as market research survey software or customer survey software.


Statistical analysis can be conducted on the survey data to make sense of what has been collected. There are multiple methods for analyzing quantitative data. Some commonly used ones are:

- Cross-tabulation: Cross-tabulation is one of the most widely used data analysis methods. It uses a basic tabulation framework, arranging data into easily understandable rows and columns, which helps draw parallels between different research parameters. It can be applied to categories that are mutually exclusive or that are related to one another.

- Trend analysis: Trend analysis is a statistical method for looking at survey data over a long period of time. Plotting aggregated response data over time makes it possible to draw a trend line showing how perceptions of a common variable change, if at all.

- MaxDiff analysis: MaxDiff analysis is used to gauge what customers prefer in a product or service across multiple parameters. For example, a product's features, how it differs from the competition, ease of use, and pricing can form the basis for a MaxDiff analysis. In its simplest form, the method is also called the “best-worst” method, because respondents pick the most and least preferred option from each set shown to them. It is similar to conjoint analysis but much easier to implement.


- Conjoint analysis: As mentioned above, conjoint analysis is similar to MaxDiff analysis but more complex, and it can collect and analyze more advanced survey data. It analyzes each parameter behind a person's purchasing behavior, making it possible to understand exactly what matters to a customer and which aspects they evaluate before a purchase.

- TURF analysis: TURF (Total Unduplicated Reach and Frequency) analysis is a statistical research method that assesses the total market reach of a product, a service, or a combination of both. Organizations use it to understand how often, and through which channels, their messaging reaches customers and prospective customers, which helps them adjust their go-to-market strategies.

- Gap analysis: Gap analysis uses a side-by-side matrix question type to measure the difference between expected and actual performance. This method helps identify what has to be done to move performance from its actual level to the planned level.

- SWOT analysis: SWOT analysis, another widely used method, organizes survey data into the strengths, weaknesses, opportunities, and threats of an organization, product, or service, giving a holistic picture of the competition. This method helps create effective business strategies.

- Text analysis: Text analysis is an advanced method in which intelligent tools make sense of qualitative, open-ended data and quantify or structure it into easily understandable data. This method is used when the survey data is unstructured.
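Several of these methods come down to simple arithmetic on response data. The sketch below shows simplified versions of trend analysis (a least-squares slope), count-based MaxDiff scoring, TURF reach, and gap analysis in plain Python. All function names and sample data are hypothetical, and the code is illustrative only: production MaxDiff studies usually estimate preference scores with statistical models such as multinomial logit or hierarchical Bayes rather than raw counts, and full TURF studies consider frequency as well as reach.

```python
from collections import Counter
from itertools import combinations

def trend_slope(periods, mean_scores):
    """Trend analysis: least-squares slope of the mean score per period."""
    n = len(periods)
    mean_x = sum(periods) / n
    mean_y = sum(mean_scores) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(periods, mean_scores))
    var = sum((x - mean_x) ** 2 for x in periods)
    return cov / var  # positive = improving perception, negative = declining

def maxdiff_scores(tasks):
    """Count-based MaxDiff: (times chosen best - times chosen worst) / times shown.

    Each task is (items_shown, best_item, worst_item).
    """
    shown, best, worst = Counter(), Counter(), Counter()
    for items, best_item, worst_item in tasks:
        shown.update(items)
        best[best_item] += 1
        worst[worst_item] += 1
    return {item: (best[item] - worst[item]) / shown[item] for item in shown}

def turf_best_combo(preferences, k):
    """TURF: the k-item combination with the largest unduplicated reach.

    preferences holds one set per respondent of the items they would choose;
    a respondent is reached if they would choose at least one item in the combo.
    """
    items = sorted(set().union(*preferences))

    def reach(combo):
        return sum(1 for prefs in preferences if prefs & set(combo))

    best = max(combinations(items, k), key=reach)
    return best, reach(best)

def gap_analysis(expected, actual):
    """Gap analysis: expected minus actual rating for each attribute."""
    return {attr: expected[attr] - actual[attr] for attr in expected}

# Hypothetical example data
print(trend_slope([1, 2, 3, 4], [3.0, 3.2, 3.4, 3.6]))
print(maxdiff_scores([
    (("Price", "Ease of use", "Features"), "Price", "Features"),
    (("Price", "Support", "Features"), "Support", "Features"),
    (("Ease of use", "Support", "Price"), "Price", "Support"),
]))
print(turf_best_combo([{"A", "B"}, {"B"}, {"C"}, {"A"}, {"C", "D"}], k=2))
print(gap_analysis({"Support": 9, "Speed": 8}, {"Support": 7, "Speed": 8}))
```

The exhaustive search in `turf_best_combo` is fine for a handful of items, but the number of combinations grows quickly, so larger TURF studies typically rely on heuristics or dedicated software.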


How to analyze survey data

Learn how SurveyMonkey can help you analyze your survey data effectively, as well as create better surveys with ease.

The results are back from your online surveys. Now it’s time to tap the power of survey data analysis to make sense of the results and present them in ways that are easy to understand and act on. After you’ve collected statistical survey results and have a data analysis plan, it’s time to begin calculating the results you got back. Here’s how our survey research scientists make sense of quantitative data (versus qualitative data): they structure their reporting around the survey responses that will answer their research questions. Even for the experts, it can be hard to parse the insights in raw data.

To reach your survey goals, start by relying on the survey methodology suggested by our experts. Then, once you have results, you can analyze them effectively using all the data analysis tools available to you, including statistical analysis, data analytics, and charts and graphs that capture your survey metrics.


Survey data analysis made easy

Sound survey data analysis is key to getting the information and insights you need to make better business decisions. Yet it’s important to be aware of potential challenges that can make analysis more difficult or even skew results.

Asking too many open-ended questions can add time and complexity to your analysis because they produce qualitative results that aren’t numerically based. Closed-ended questions, by contrast, generate results that are easier to analyze. Analysis can also be hampered by leading or biased questions, or by questions that are confusing or too complex. Being equipped with the right tools and know-how helps ensure that survey analysis is both easy and effective.


See how SurveyMonkey makes analyzing results a breeze

With its many data analysis techniques, SurveyMonkey makes it easy for you to turn your raw data into actionable insights presented in easy-to-grasp formats. Features such as automatic charts and graphs and word clouds help bring data to life. For instance, Sentiment Analysis gives you an instant summary of how people feel across thousands or even millions of open-text responses. You can review positive, neutral, and negative sentiment at a glance, or filter a question by sentiment to identify areas that need attention. Imagine being able to turn all those text responses into a quantitative data set.

Word clouds let you quickly interpret open-ended responses through a visual display of the most frequently used words. You can customize the look of your word clouds in a range of ways from selecting colors or fonts for specific words to easily hiding non-relevant words.
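A word cloud is driven by word frequencies. As a rough sketch of that idea (not SurveyMonkey's implementation), the Python below counts the most frequent words across open-ended answers and skips a hypothetical list of non-relevant words; drawing the cloud itself would be left to a visualization tool. The `HIDDEN_WORDS` set, the `word_frequencies` helper, and the sample answers are all illustrative assumptions.

```python
import re
from collections import Counter

# Hypothetical words to hide (common stop words plus non-relevant terms)
HIDDEN_WORDS = {"the", "a", "and", "was", "is", "to", "of", "it", "very"}

def word_frequencies(responses, hidden=HIDDEN_WORDS, top_n=10):
    """Return the top_n most frequent words across open-ended responses."""
    counts = Counter()
    for text in responses:
        # Lowercase and keep only letters/apostrophes so punctuation is ignored
        words = re.findall(r"[a-z']+", text.lower())
        counts.update(w for w in words if w not in hidden)
    return counts.most_common(top_n)

answers = [
    "The speakers were great and the venue was great",
    "Great speakers, but the venue was too cold",
    "Venue was crowded",
]
print(word_frequencies(answers, top_n=3))
```

In a word cloud, each word's display size would then be scaled by its count, so hiding non-relevant words before counting keeps them from crowding out meaningful terms.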

Our wide range of features and tools can help you address analysis challenges, and quickly generate graphics and robust reports. Check out how a last-minute report request can be met in a snap through SurveyMonkey.


To begin calculating survey results more effectively, follow these 6 steps:

- Take a look at your top survey questions

- Determine sample size

- Use cross tabulation to filter your results

- Benchmarking, trending, and comparative data

- Crunch the numbers

- Draw conclusions
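The list does not say how to determine sample size. One common approach, assumed here rather than taken from the source, is Cochran's formula for estimating a proportion, n0 = z² · p(1 - p) / e², with a finite population correction when the population is small and known. The defaults in this sketch (z = 1.96 for 95% confidence, a 5% margin of error, p = 0.5) and the `sample_size` helper are illustrative assumptions.

```python
import math

def sample_size(population=None, margin_of_error=0.05, z=1.96, p=0.5):
    """Estimate respondents needed to estimate a proportion (Cochran's formula).

    z=1.96 corresponds to 95% confidence; p=0.5 is the most conservative
    assumption about the true proportion because it maximizes p * (1 - p).
    """
    n0 = (z ** 2) * p * (1 - p) / margin_of_error ** 2
    if population is not None:
        # Finite population correction for a small, known population
        n0 = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n0)  # round up: you cannot survey a fraction of a person

print(sample_size())      # large or unknown population
print(sample_size(1200))  # e.g., a population of 1,200 attendees
```

Because this is the number of completed responses needed, you would typically invite more people than this to allow for non-response.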

Calculating results using your top survey questions

First, let’s talk about how you’d go about calculating survey results from your top research questions. Did you feature empirical research questions? Did you consider probability sampling? Remember that you should have outlined your top research questions when you set a goal for your survey.

For example, if you held an education conference and gave attendees a post-event feedback survey, one of your top research questions may look like this: How did the attendees rate the conference overall? Now take a look at the answers you collected for a specific survey question that speaks to that top research question:

Do you plan to attend this conference next year?

Notice that in the responses, you’ve got some percentages (71%, 18%) and some raw numbers (852, 216). The percentages are just that—the percent of people who gave a particular answer. Put another way, the percentages represent the number of people who gave each answer as a proportion of the number of people who answered the question. So, 71% of your survey respondents (852 of the 1,200 surveyed) plan on coming back next year.

This table also shows you that 18% say they are not planning to return and 11% say they are not sure.
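The percentages in this example are simply each answer's count divided by the number of people who answered, multiplied by 100. The sketch below reproduces that arithmetic. The answer labels "Yes", "No", and "Not sure" are assumed, and the "Not sure" count of 132 is derived as 1,200 - 852 - 216 rather than read from the original table.

```python
def answer_percentages(counts):
    """Express each answer's count as a percentage of everyone who answered."""
    total = sum(counts.values())
    return {answer: 100 * n / total for answer, n in counts.items()}

# Counts from the conference example; "Not sure" (132) is derived, not quoted
conference = {"Yes": 852, "No": 216, "Not sure": 132}
print(answer_percentages(conference))
```

Keeping the raw counts alongside the percentages, as the survey results do, lets readers see how many people each percentage represents.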